Seasonal Forecast Verification: What, Why and How? (Simon J. Mason)

- Slides: 39

Seasonal Forecast Verification: What, Why and How?
Simon J. Mason, simon@iri.columbia.edu
International Research Institute for Climate and Society, The Earth Institute of Columbia University
CariCOF Seasonal Forecast Verification Training Workshop, Port of Spain, Trinidad, 22–25 May 2013

What makes a good forecast?
Forecast: West Indies will win the 2012 ICC Twenty20 World Cup.
Verification: Correct!
Correctness is only one aspect of forecast quality: how well do the forecasts correspond with the observations?

What makes a good forecast?
Forecast: West Indies will win the 2012 ICC Twenty20 World Cup Final by 30 runs.
Verification: They won by 36 runs.
Accuracy may be more appropriate than correctness.

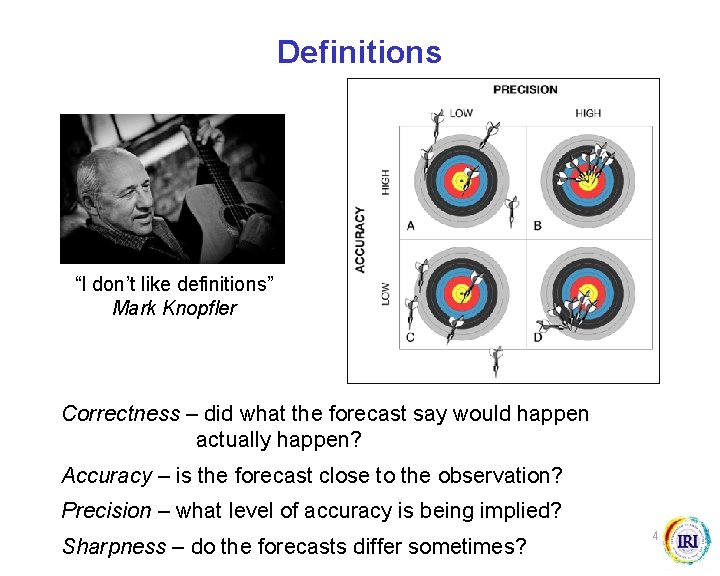

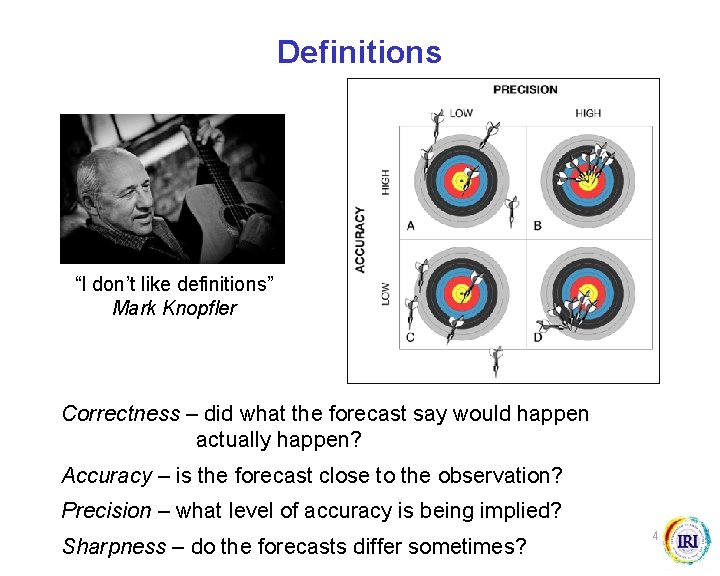

Definitions
“I don’t like definitions” (Mark Knopfler)
• Correctness: did what the forecast said would happen actually happen?
• Accuracy: is the forecast close to the observation?
• Precision: what level of accuracy is being implied?
• Sharpness: do the forecasts vary, or are they always the same?
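The difference between correctness and accuracy can be made concrete with the two cricket forecasts above. This is an illustrative sketch only: the function names are hypothetical, and accuracy is measured here as the absolute difference between the forecast and observed winning margins, which is just one simple choice.

```python
def is_correct(forecast_outcome: str, observed_outcome: str) -> bool:
    """Correctness: did what the forecast said would happen actually happen?"""
    return forecast_outcome == observed_outcome


def absolute_error(forecast_value: float, observed_value: float) -> float:
    """Accuracy: how far was the forecast from the observation?"""
    return abs(forecast_value - observed_value)


# The winner forecast is simply right or wrong...
print(is_correct("West Indies", "West Indies"))  # True
# ...whereas the margin forecast (30 runs) is judged by how close it came (36 runs).
print(absolute_error(30, 36))  # 6
```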

What makes a good forecast?
Forecast: Sri Lanka will lose the 2012 ICC Twenty20 World Cup Final.
When issued: in the seventeenth over of the second innings.
Timeliness. Example: Are seasonal climate forecasts issued too late for important decisions?

What makes a good forecast?
Forecast: Trinidad and Tobago will do well in the 2012 Olympics.
Verification: 4 medals. Is that “doing well”?
Ambiguity. Example: Is the precise meaning of the forecasts clear?

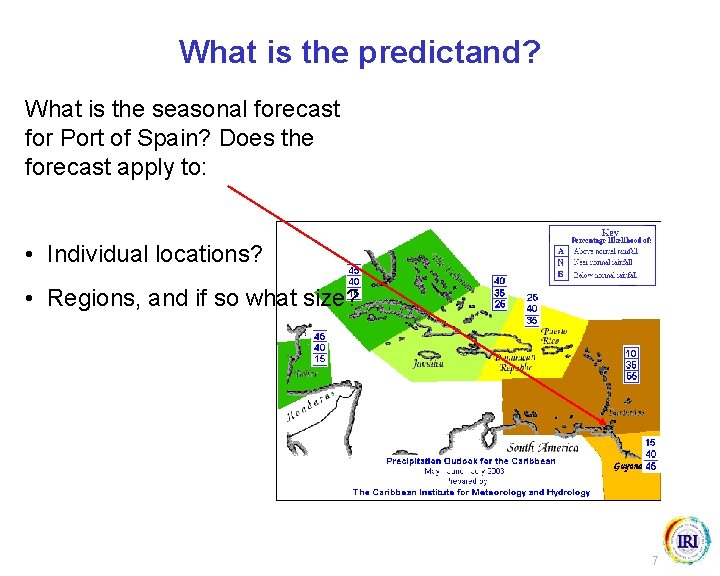

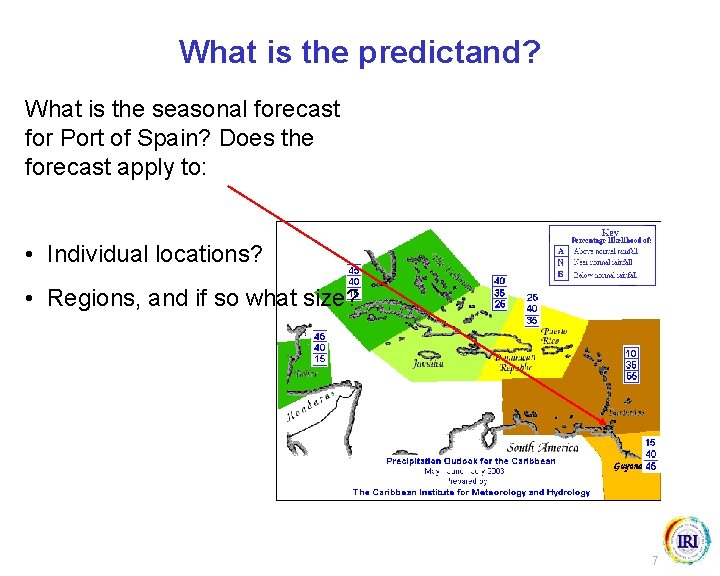

What is the predictand?
What is the seasonal forecast for Port of Spain? Does the forecast apply to:
• individual locations?
• regions, and if so, of what size?

“Good” forecasts
Forecast: Laos will not top the 2012 Olympic medal table.
Verification: Who needs to ask? (Laos has never won an Olympic medal.)
Uncertainty. Examples: short-term forecasts of the SPI (Standardized Precipitation Index) during a drought; temperature forecasts.

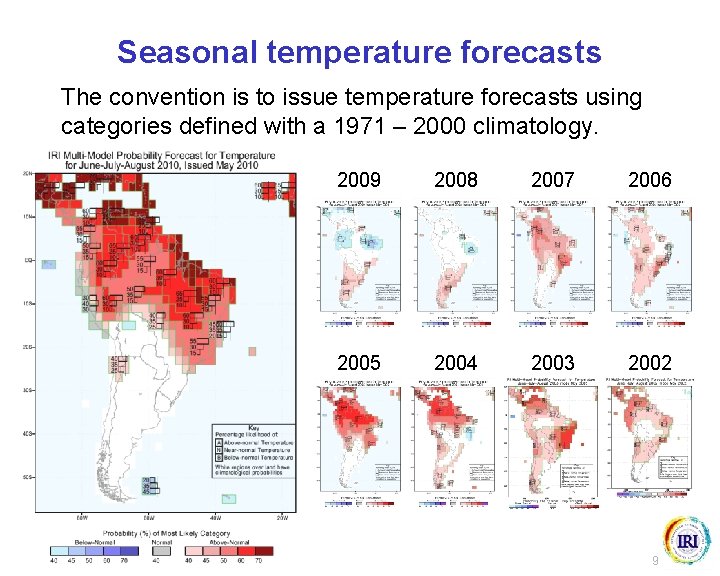

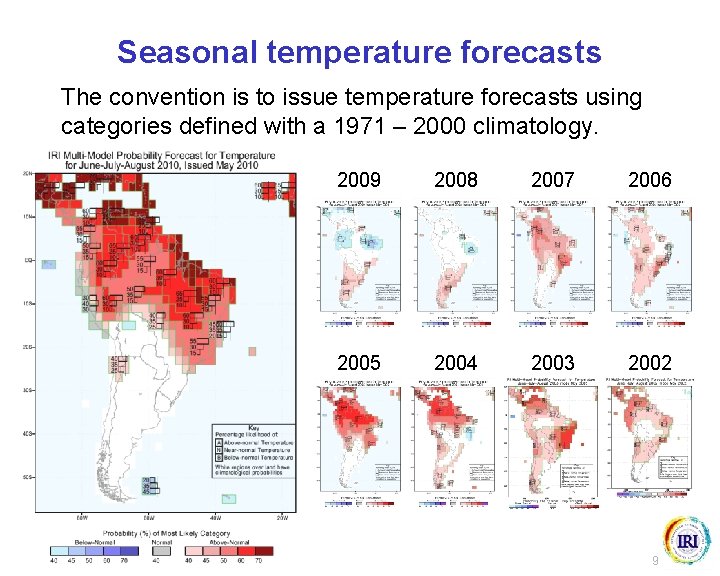

Seasonal temperature forecasts
The convention is to issue temperature forecasts using categories defined with a 1971–2000 climatology.
[Figure: years 2002–2009]
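Tercile categories are fixed by the climatological period, so any later value warmer than the upper boundary counts as above-normal however recent it is. A minimal sketch of deriving and applying those boundaries, assuming the "exclusive" quantile method (the default of Python's `statistics.quantiles`); the function names and the temperature values are hypothetical and synthetic, not real observations.

```python
from statistics import quantiles


def tercile_bounds(climatology):
    """Lower and upper tercile boundaries of a climatological sample.

    Assumes the 'exclusive' quantile method (the default of
    statistics.quantiles); other methods give slightly different boundaries.
    """
    lower, upper = quantiles(climatology, n=3)
    return lower, upper


def categorize(value, lower, upper):
    """Assign a value to a tercile category (values on a boundary count as normal)."""
    if value < lower:
        return "below-normal"
    if value > upper:
        return "above-normal"
    return "normal"


# Synthetic stand-in for 30 years (e.g. 1971-2000) of seasonal temperatures.
climatology = [26.0 + 0.02 * year for year in range(30)]
lower, upper = tercile_bounds(climatology)
print(categorize(27.0, lower, upper))  # above-normal
```

How ties on a boundary are assigned is a design choice; here they fall into the normal category.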

What makes a good forecast?
Forecast: Usain Bolt will win gold in the 2012 Olympic 100 m.
But: I lied. I thought he would come second.
Consistency. Example: Is there a preference for forecasting high probabilities of normal rainfall? Is the forecast what we really think, or what we are most comfortable saying? Between 70% and 80% of all African RCOF forecasts have the highest probability on “normal”.
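A simple consistency check is to count how often a set of issued forecasts puts its highest probability on normal, as in the 70% to 80% figure quoted above. A minimal sketch, assuming each forecast is stored as a (below, normal, above) probability triple; the function name and the example probabilities are hypothetical.

```python
def fraction_favouring_normal(forecasts):
    """Fraction of forecasts whose highest probability is on the normal category.

    Each forecast is a (below, normal, above) probability triple; ties for the
    highest probability are not counted as favouring normal.
    """
    if not forecasts:
        raise ValueError("no forecasts supplied")
    favouring = sum(1 for below, normal, above in forecasts
                    if normal > below and normal > above)
    return favouring / len(forecasts)


# Hypothetical tercile probabilities (%) for three forecast regions.
example = [(30, 40, 30), (40, 35, 25), (20, 40, 40)]
print(fraction_favouring_normal(example))
```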

What makes a good forecast?
Forecast: Phil Taylor and Adrian Lewis (England) will successfully defend the 2013 PDC World Cup of Darts.
Verification: Who cares? (They did, defeating the Belgians.)
Relevance. Example: Are tercile forecasts really what users want to know?

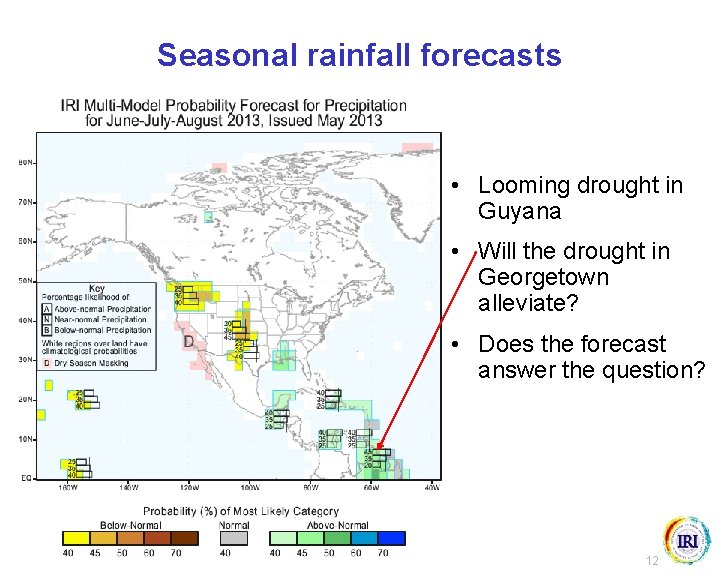

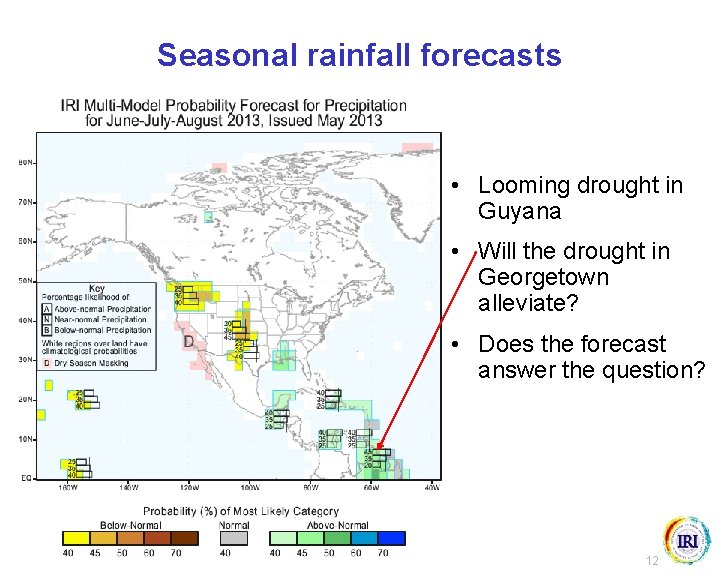

Seasonal rainfall forecasts
• Looming drought in Guyana
• Will the drought in Georgetown ease?
• Does the forecast answer the question?

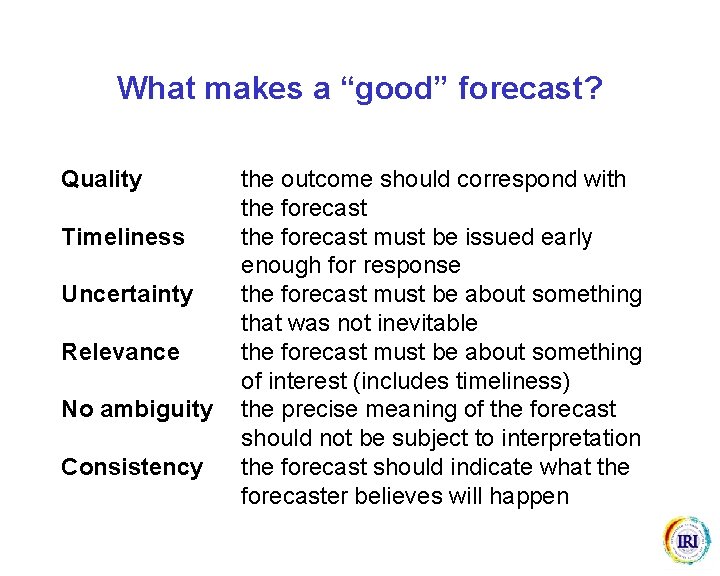

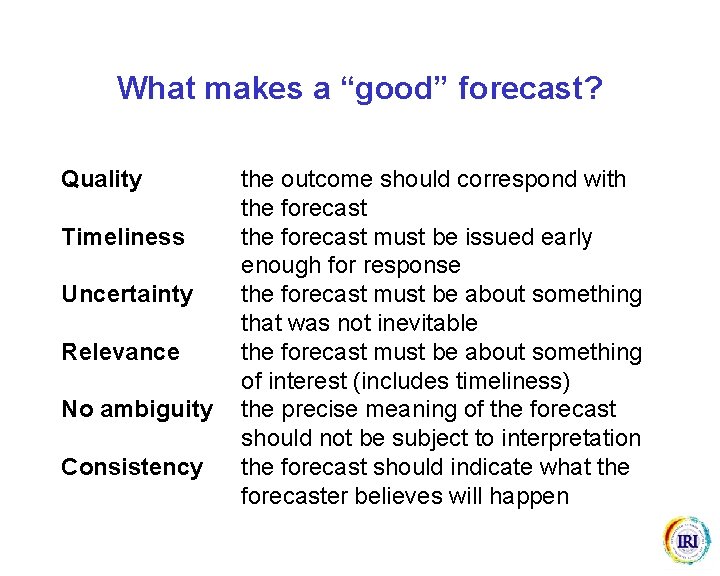

What makes a “good” forecast?
• Quality: the outcome should correspond with the forecast.
• Timeliness: the forecast must be issued early enough for a response.
• Uncertainty: the forecast must be about something that was not inevitable.
• Relevance: the forecast must be about something of interest (includes timeliness).
• No ambiguity: the precise meaning of the forecast should not be subject to interpretation.
• Consistency: the forecast should indicate what the forecaster believes will happen.

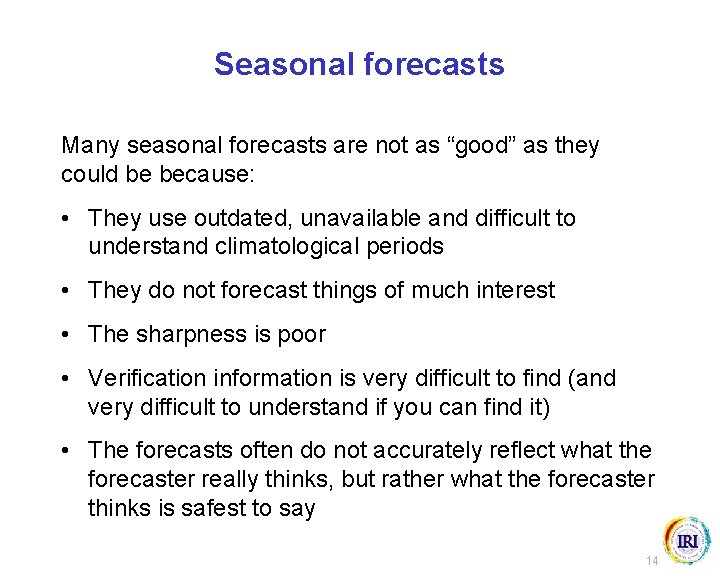

Seasonal forecasts
Many seasonal forecasts are not as “good” as they could be because:
• they use outdated, unavailable and difficult-to-understand climatological periods;
• they do not forecast things of much interest;
• their sharpness is poor;
• verification information is very difficult to find (and very difficult to understand if you can find it);
• they often do not accurately reflect what the forecaster really thinks, but rather what the forecaster thinks is safest to say.

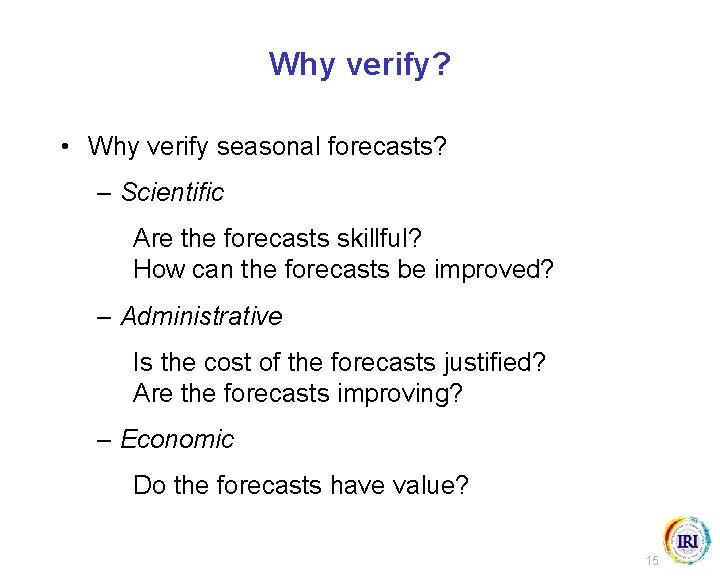

Why verify?
Why verify seasonal forecasts?
• Scientific: Are the forecasts skillful? How can the forecasts be improved?
• Administrative: Is the cost of the forecasts justified? Are the forecasts improving?
• Economic: Do the forecasts have value?

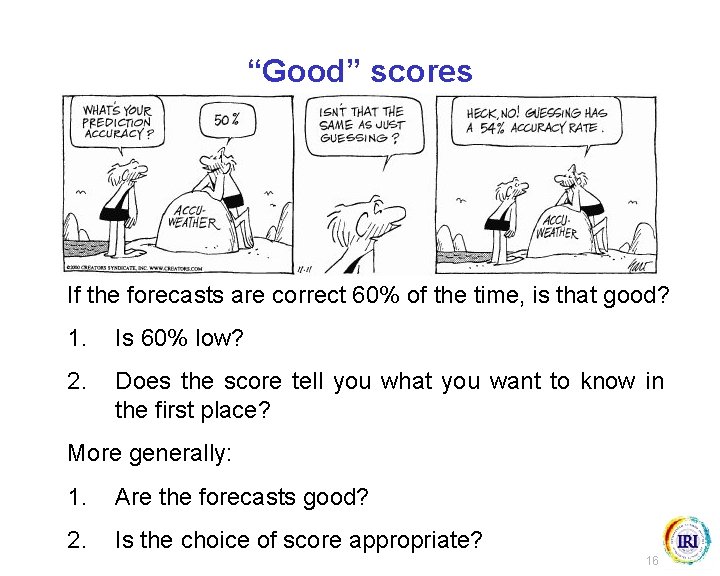

“Good” scores
If the forecasts are correct 60% of the time, is that good?
1. Is 60% low?
2. Does the score tell you what you want to know in the first place?
More generally:
1. Are the forecasts good?
2. Is the choice of score appropriate?
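One way to judge whether 60% correct is good is to compare it with what trivial strategies would score on the same observations. This sketch is an illustration rather than a prescribed procedure; the function name, category labels and observations are hypothetical.

```python
from collections import Counter


def baseline_proportions_correct(observed, categories=("below", "normal", "above")):
    """Proportion correct achieved by two simple reference strategies.

    - random guessing among equally likely categories (expected value 1/K);
    - always forecasting the category that was observed most often
      (this uses hindsight, so it is an optimistic reference).
    """
    if not observed:
        raise ValueError("no observations supplied")
    counts = Counter(observed)
    random_guessing = 1 / len(categories)
    most_common = max(counts[c] for c in categories) / len(observed)
    return random_guessing, most_common


# Hypothetical record of observed tercile categories.
obs = ["below", "normal", "above", "above", "normal", "above"]
print(baseline_proportions_correct(obs))
```

For tercile forecasts, 60% correct is well above the one-in-three expected from random guessing, but whether it beats the hindsight strategy depends on the record.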

What makes a good forecast?
Forecast: There is a 40% probability that West Indies will win the 2012 ICC Twenty20 World Cup Final.
Verification: ?
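A single probabilistic forecast cannot be called right or wrong, but it can be scored. One widely used option, shown here purely as an illustration (the slide does not name a score), is the Brier score: the mean squared difference between the forecast probability and the outcome coded as 1 (occurred) or 0 (did not occur), where lower is better. The function name is hypothetical.

```python
def brier_score(probabilities, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    if len(probabilities) != len(outcomes) or not probabilities:
        raise ValueError("need equal-length, non-empty inputs")
    return sum((p - o) ** 2 for p, o in zip(probabilities, outcomes)) / len(outcomes)


# 40% probability that West Indies would win; they did win (outcome = 1).
print(round(brier_score([0.4], [1]), 2))  # 0.36
```

In practice the score is averaged over many forecasts; one value on its own says little about forecast quality.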

How to verify forecasts?
Procedures for verifying forecasts depend on:
• the format of the observations;
• the format of the forecasts;
• how many forecasts and observations we have.

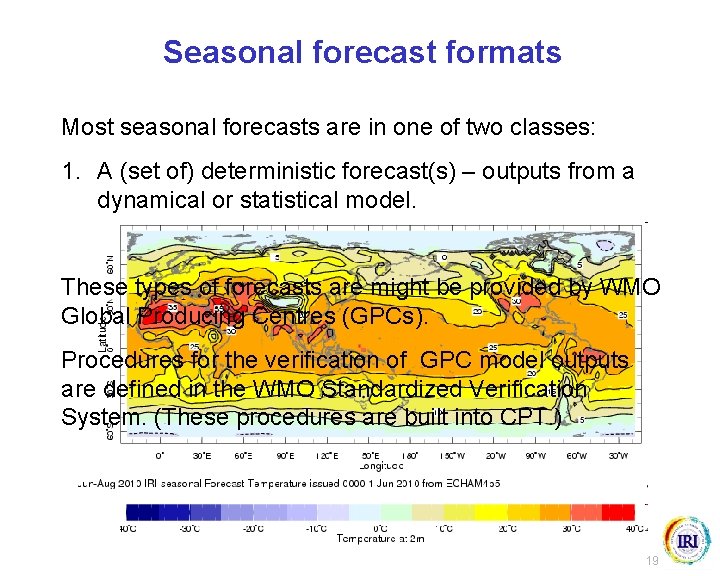

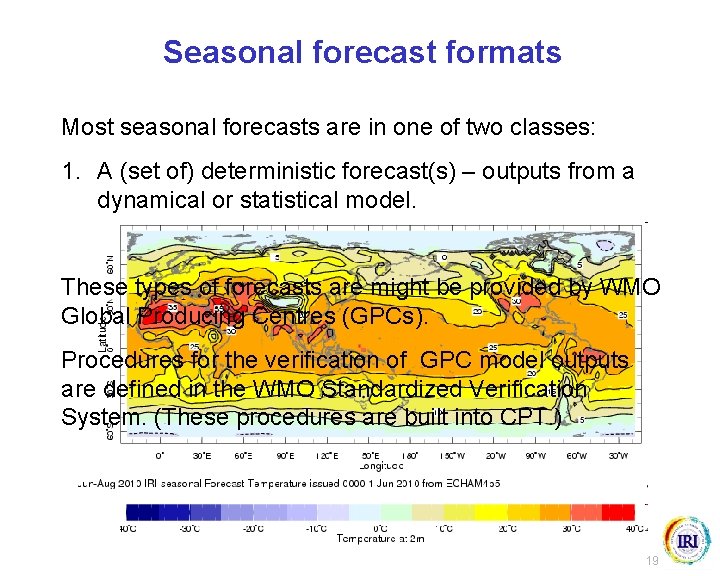

Seasonal forecast formats
Most seasonal forecasts are in one of two classes:
1. A (set of) deterministic forecast(s): outputs from a dynamical or statistical model. These types of forecasts may be provided by WMO Global Producing Centres (GPCs). Procedures for the verification of GPC model outputs are defined in the WMO Standardized Verification System. (These procedures are built into CPT, the Climate Predictability Tool.)

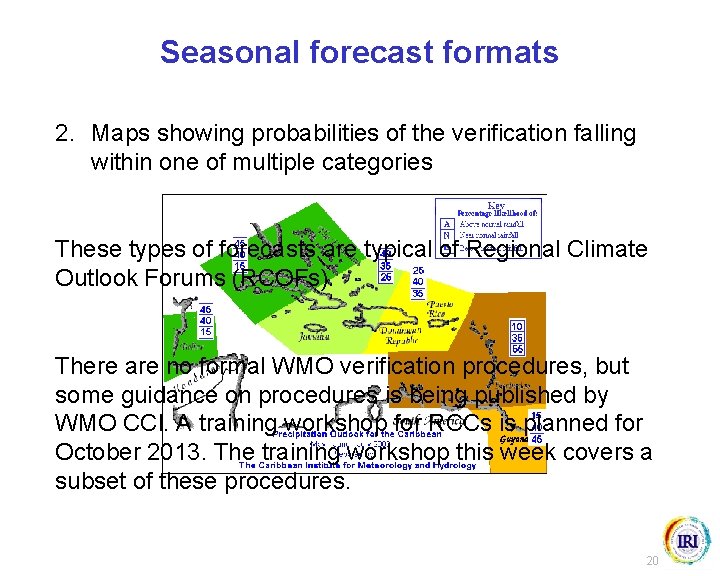

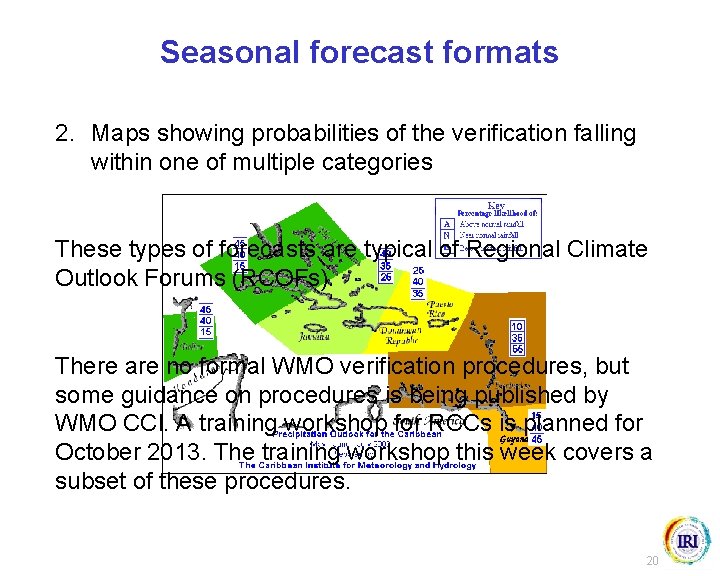

Seasonal forecast formats
2. Maps showing the probabilities of the verification (the observed outcome) falling within each of several categories. These types of forecasts are typical of Regional Climate Outlook Forums (RCOFs). There are no formal WMO verification procedures, but some guidance on procedures is being published by the WMO Commission for Climatology (CCl). A training workshop for Regional Climate Centres (RCCs) is planned for October 2013. The training workshop this week covers a subset of these procedures.

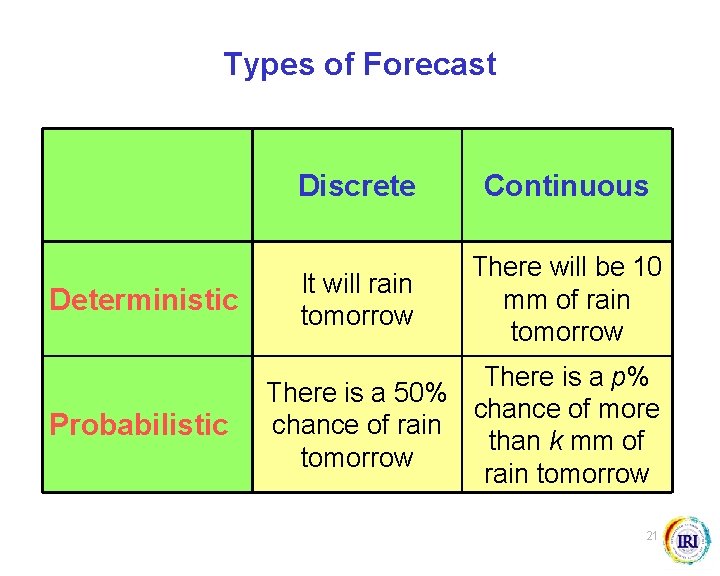

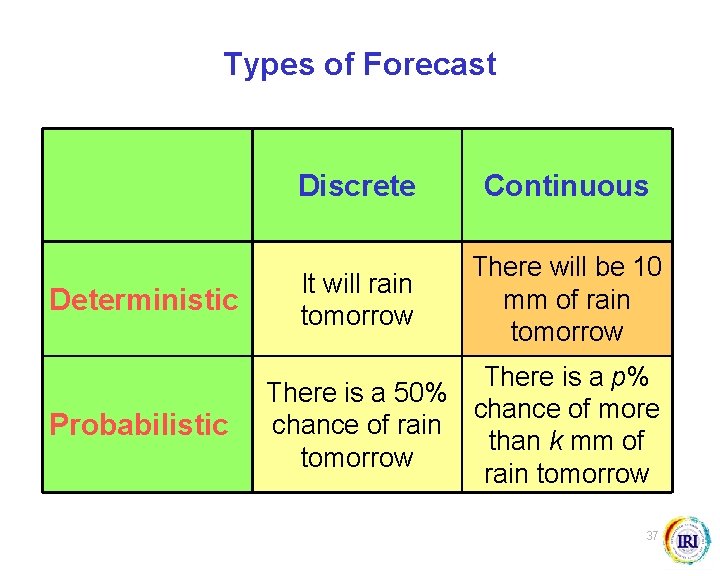

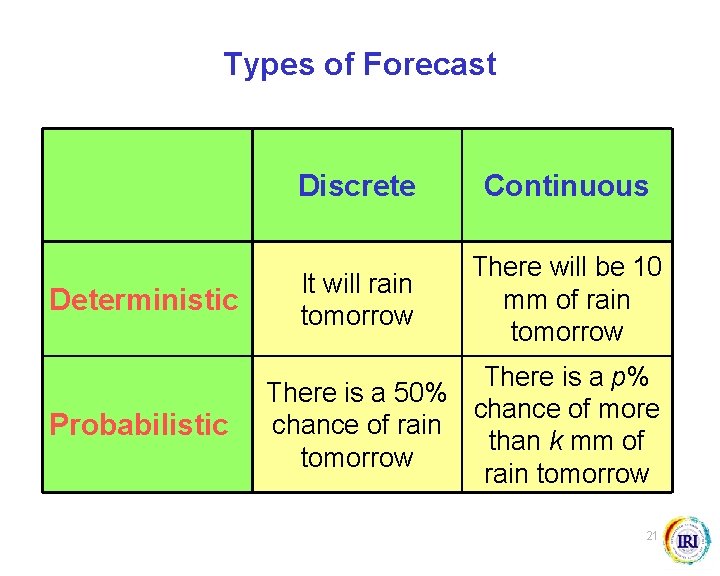

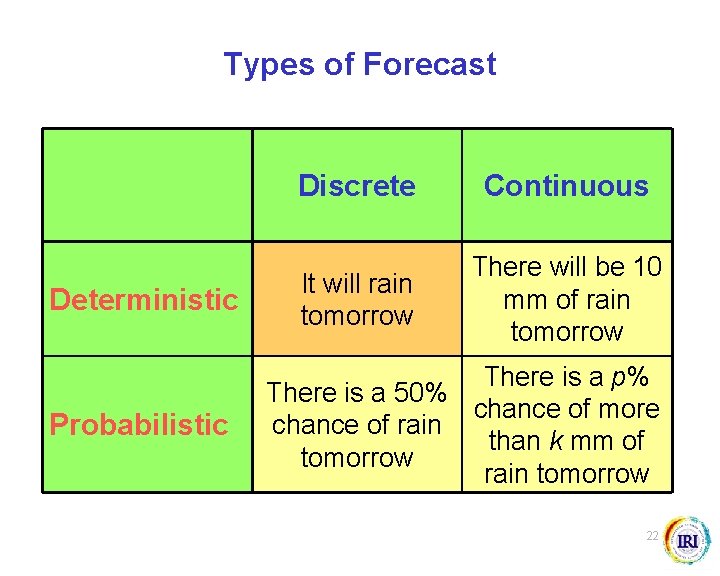

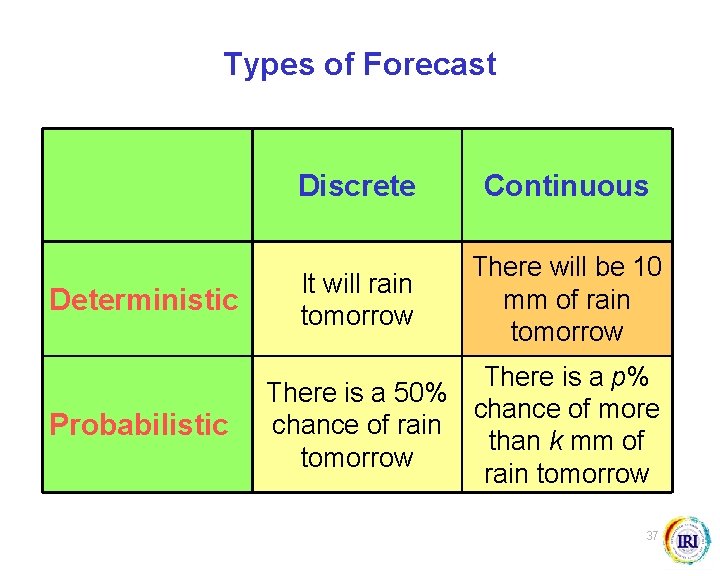

Types of Forecast
• Deterministic, discrete: It will rain tomorrow.
• Deterministic, continuous: There will be 10 mm of rain tomorrow.
• Probabilistic, discrete: There is a p% chance of rain tomorrow.
• Probabilistic, continuous: There is a 50% chance of more than k mm of rain tomorrow.

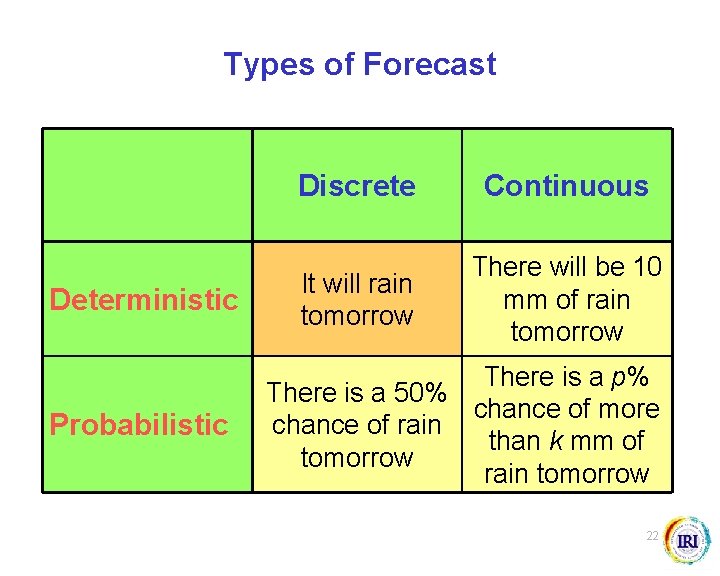


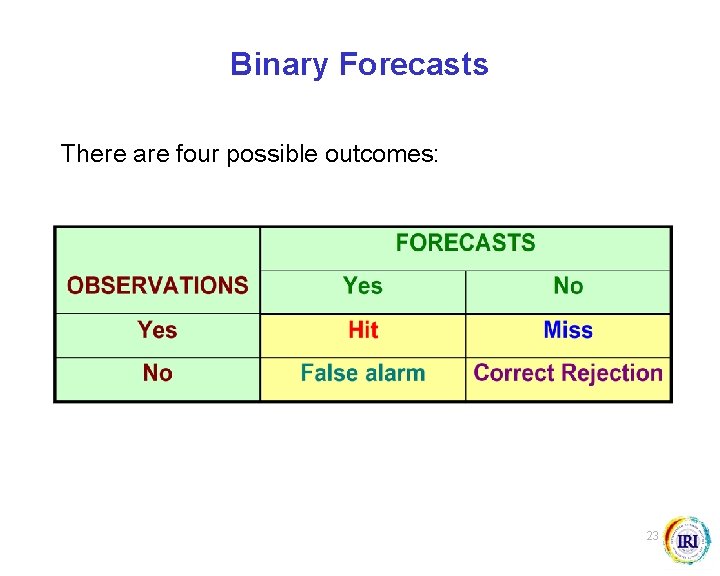

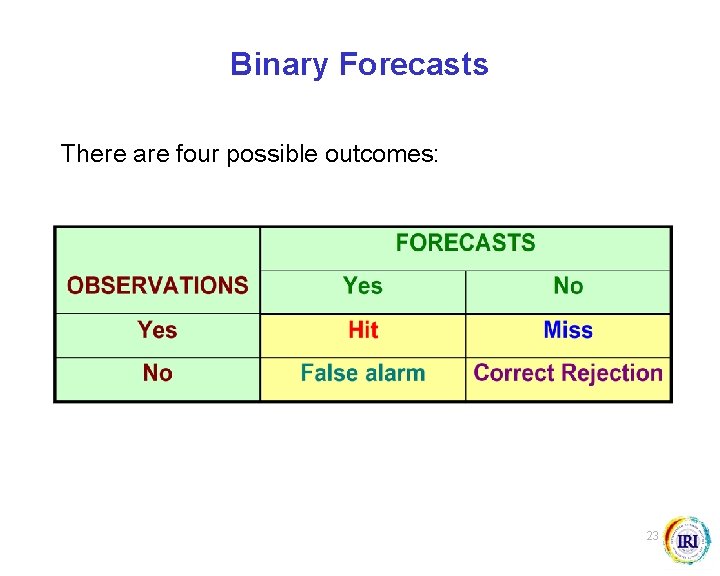

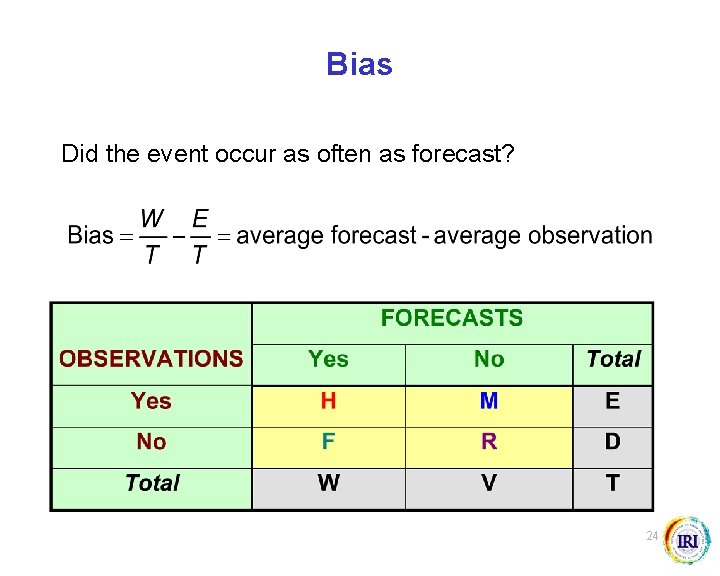

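Binary Forecasts
There are four possible outcomes: hits (event forecast and observed), false alarms (event forecast but not observed), misses (event observed but not forecast) and correct rejections (event neither forecast nor observed).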
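Counting the four outcomes from paired yes/no forecasts and observations gives the contingency table on which the following scores are built. A minimal sketch; the class and function names are hypothetical.

```python
from typing import NamedTuple, Sequence


class ContingencyTable(NamedTuple):
    hits: int                # event forecast, event observed
    false_alarms: int        # event forecast, no event observed
    misses: int              # no event forecast, event observed
    correct_rejections: int  # no event forecast, no event observed


def contingency_table(forecast: Sequence[bool], observed: Sequence[bool]) -> ContingencyTable:
    """Count the four possible outcomes of a set of yes/no forecasts."""
    if len(forecast) != len(observed):
        raise ValueError("forecast and observed must have the same length")
    pairs = list(zip(forecast, observed))
    return ContingencyTable(
        hits=sum(1 for f, o in pairs if f and o),
        false_alarms=sum(1 for f, o in pairs if f and not o),
        misses=sum(1 for f, o in pairs if not f and o),
        correct_rejections=sum(1 for f, o in pairs if not f and not o),
    )


print(contingency_table([True, True, False, False, True],
                        [True, False, True, False, True]))
# ContingencyTable(hits=2, false_alarms=1, misses=1, correct_rejections=1)
```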

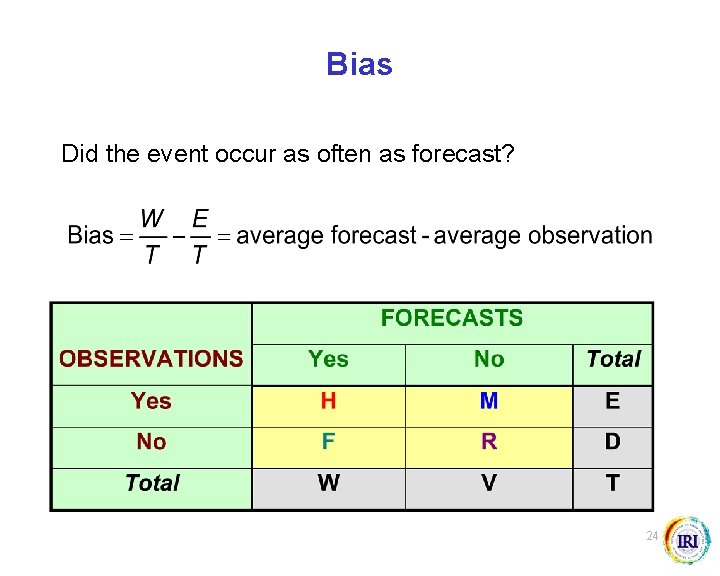

Bias
Did the event occur as often as it was forecast?
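Bias compares how often the event was forecast with how often it occurred, using counts from the binary contingency table; it says nothing about whether the forecasts and the events coincided. A minimal sketch of this frequency bias; the function name is hypothetical.

```python
def frequency_bias(hits: int, false_alarms: int, misses: int) -> float:
    """Number of times the event was forecast divided by the number of times it occurred.

    1 means unbiased; above 1 means the event was forecast too often,
    below 1 means too rarely.
    """
    observed_events = hits + misses
    if observed_events == 0:
        raise ValueError("the event never occurred, so bias is undefined")
    return (hits + false_alarms) / observed_events


print(frequency_bias(hits=3, false_alarms=3, misses=1))  # 1.5
```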

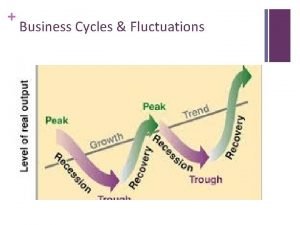

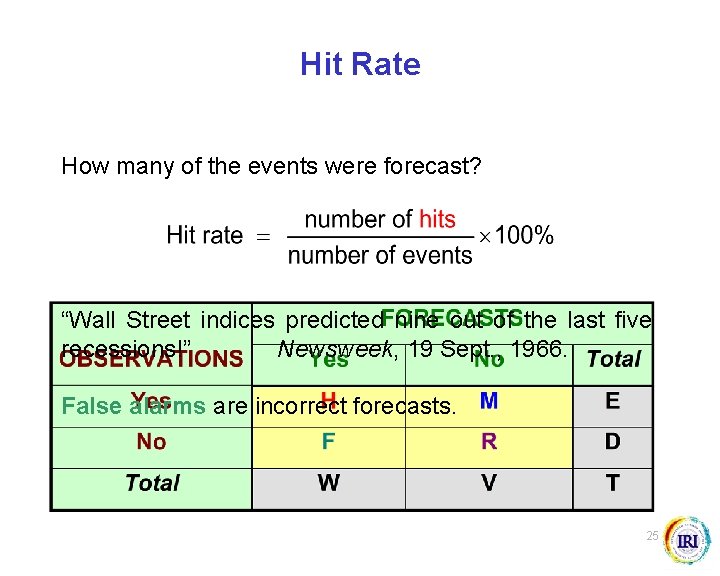

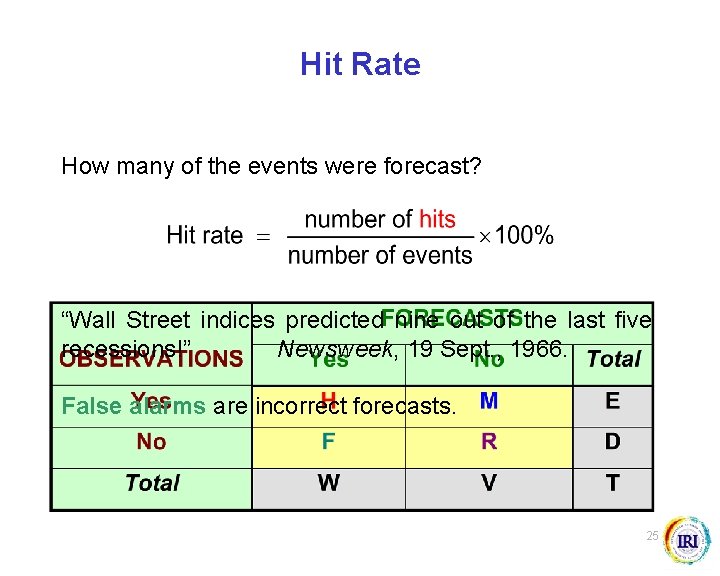

Hit Rate
How many of the events were forecast?
“Wall Street indices predicted nine out of the last five recessions!” (Newsweek, 19 September 1966)
False alarms are forecasts of events that did not occur.
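The hit rate counts only how many observed events were forecast, so it ignores false alarms entirely. A minimal sketch (function name hypothetical), with the Newsweek quip read as a hypothetical table of 5 hits, 4 false alarms and no misses.

```python
def hit_rate(hits: int, misses: int) -> float:
    """Proportion of observed events that were forecast: hits / (hits + misses)."""
    if hits + misses == 0:
        raise ValueError("no events were observed")
    return hits / (hits + misses)


# "Nine predictions, five recessions" read as 5 hits, 4 false alarms, 0 misses:
# the hit rate is perfect despite the false alarms.
print(hit_rate(hits=5, misses=0))  # 1.0
```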

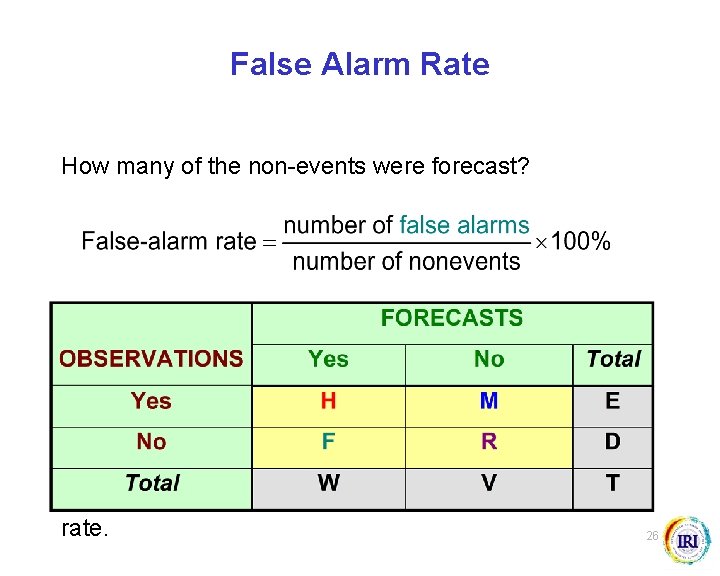

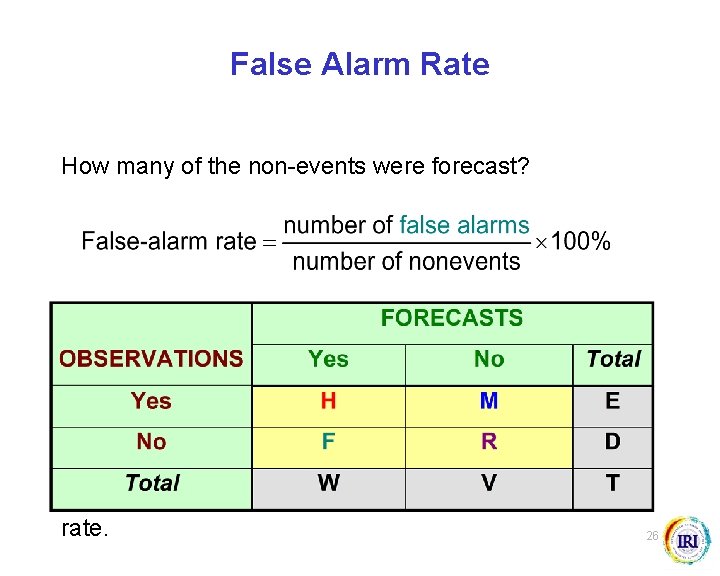

False Alarm Rate
How many of the non-events were forecast?
If we issue more warnings, the hit rate can be increased, but the false-alarm rate will also increase: there is a trade-off. We want the hit rate to be as high as possible and the false-alarm rate as low as possible; at the very least, the hit rate should be higher than the false-alarm rate.
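The trade-off can be seen by turning a numerical forecast into warnings at different thresholds: lowering the threshold issues more warnings, which raises both the hit rate and the false-alarm rate. A minimal sketch; the forecast index, observations and function name are all invented for illustration.

```python
def rates_at_threshold(scores, observed, threshold):
    """Hit rate and false-alarm rate when a warning is issued whenever score >= threshold."""
    warn = [s >= threshold for s in scores]
    hits = sum(1 for w, o in zip(warn, observed) if w and o)
    misses = sum(1 for w, o in zip(warn, observed) if not w and o)
    false_alarms = sum(1 for w, o in zip(warn, observed) if w and not o)
    correct_rejections = sum(1 for w, o in zip(warn, observed) if not w and not o)
    if hits + misses == 0 or false_alarms + correct_rejections == 0:
        raise ValueError("need both events and non-events")
    hit_rate = hits / (hits + misses)
    false_alarm_rate = false_alarms / (false_alarms + correct_rejections)
    return hit_rate, false_alarm_rate


# Hypothetical forecast index and whether the event occurred.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
observed = [True, True, False, True, False, True, False, False]
for threshold in (0.65, 0.25):
    print(threshold, rates_at_threshold(scores, observed, threshold))
```

With these data, the higher threshold gives a hit rate of 0.5 and a false-alarm rate of 0.25; the lower one raises them to 1.0 and 0.5.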

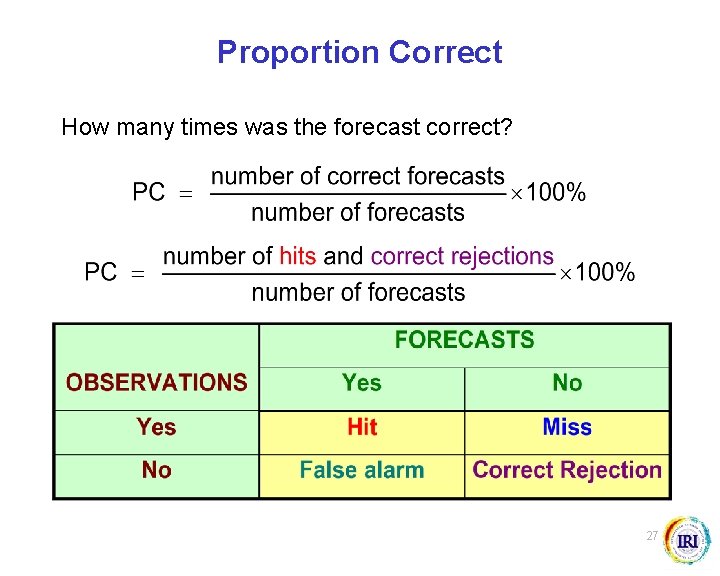

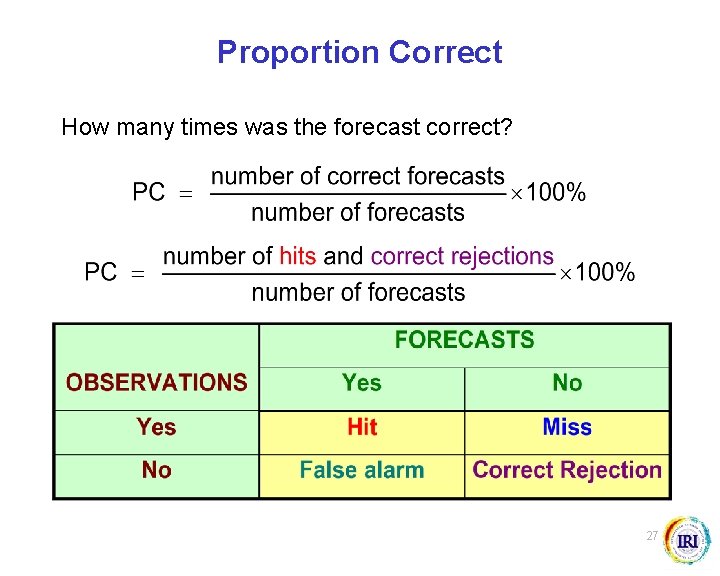

Proportion Correct
How many times was the forecast correct?
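Proportion correct counts both hits and correct rejections as correct forecasts. A minimal sketch working from the four contingency-table counts; the function name is hypothetical.

```python
def proportion_correct(hits, false_alarms, misses, correct_rejections):
    """Fraction of all forecasts that were correct (hits plus correct rejections)."""
    total = hits + false_alarms + misses + correct_rejections
    if total == 0:
        raise ValueError("empty contingency table")
    return (hits + correct_rejections) / total


print(proportion_correct(2, 1, 1, 1))  # 0.6
```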

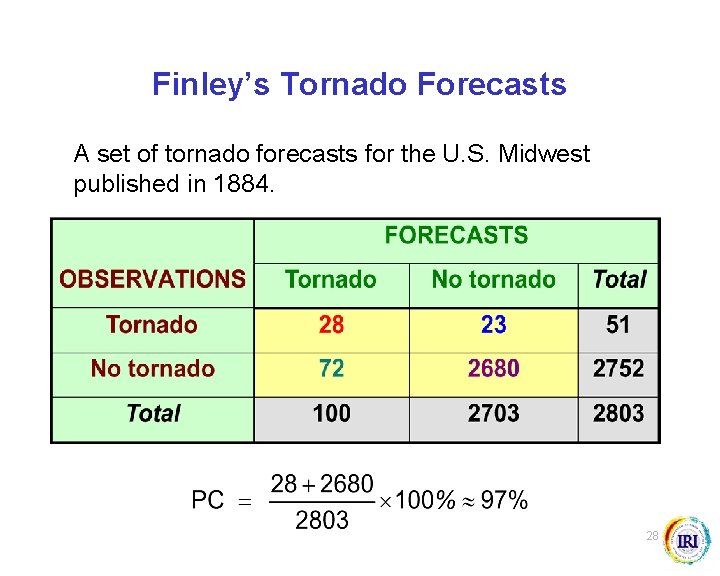

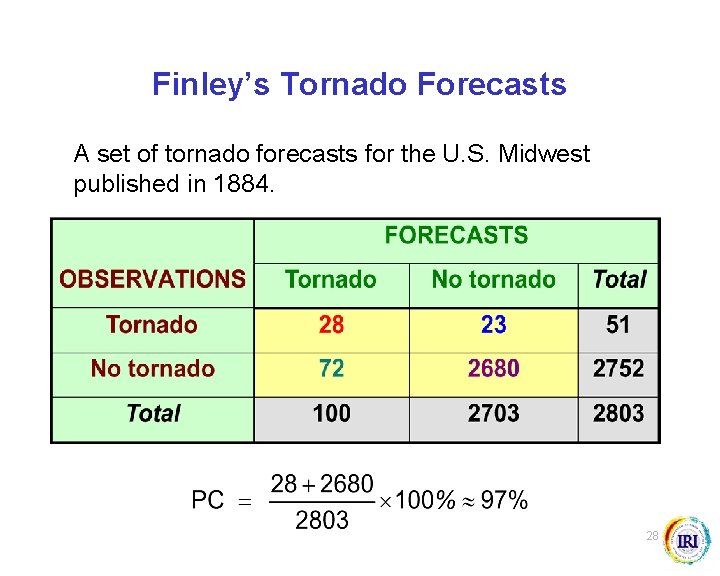

Finley’s Tornado Forecasts
A set of tornado forecasts for the U.S. Midwest, published in 1884.

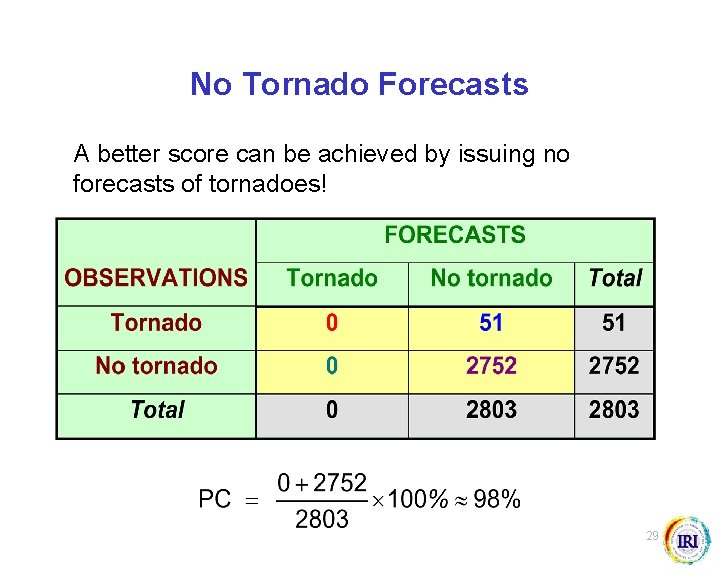

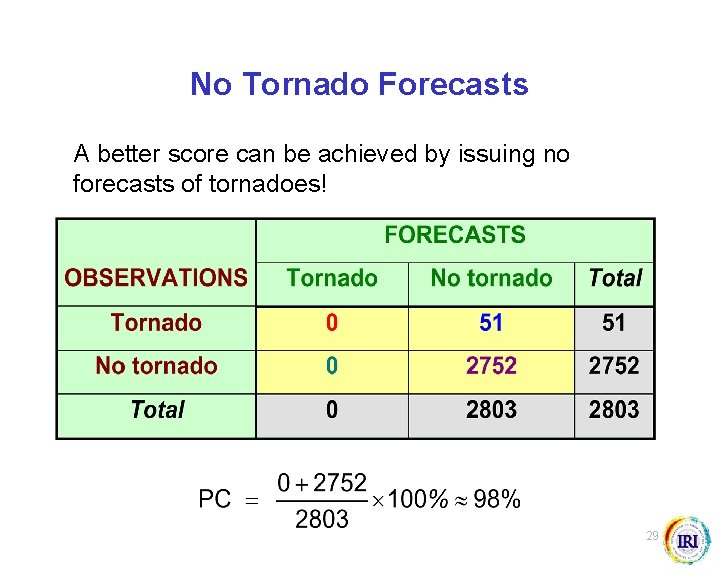

No Tornado Forecasts
A better proportion correct can be achieved by never forecasting a tornado at all!
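The paradox can be checked with the counts commonly cited for Finley’s forecasts: 28 hits, 72 false alarms, 23 misses and 2680 correct rejections (2803 forecasts). These counts come from the standard published tabulation rather than from this text, so compare them with the table on the slide. The function name is hypothetical.

```python
def proportion_correct(hits, false_alarms, misses, correct_rejections):
    """Fraction of all forecasts that were correct."""
    total = hits + false_alarms + misses + correct_rejections
    return (hits + correct_rejections) / total


# Finley's forecasts as commonly tabulated.
finley = proportion_correct(28, 72, 23, 2680)
# Never forecasting a tornado turns every hit into a miss and every false alarm
# into a correct rejection: 0 hits, 0 false alarms, 51 misses, 2752 correct rejections.
never = proportion_correct(0, 0, 28 + 23, 72 + 2680)
print(f"Finley: {finley:.3f}, never forecast a tornado: {never:.3f}")
# Finley: 0.966, never forecast a tornado: 0.982
```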

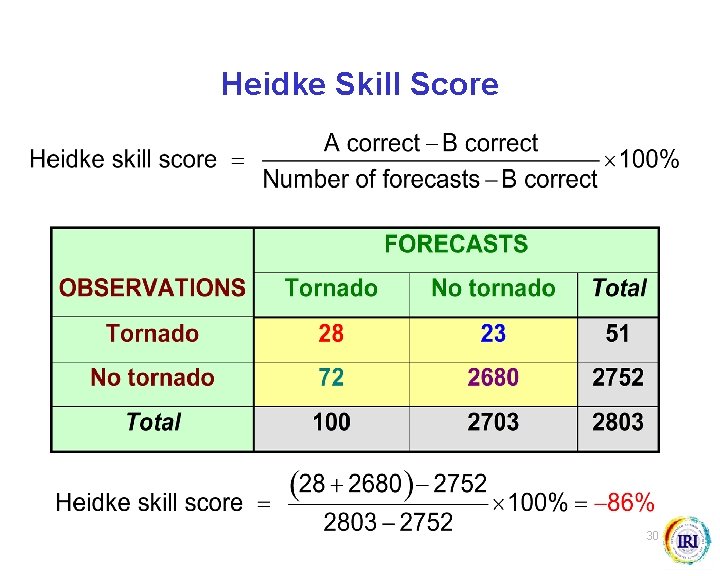

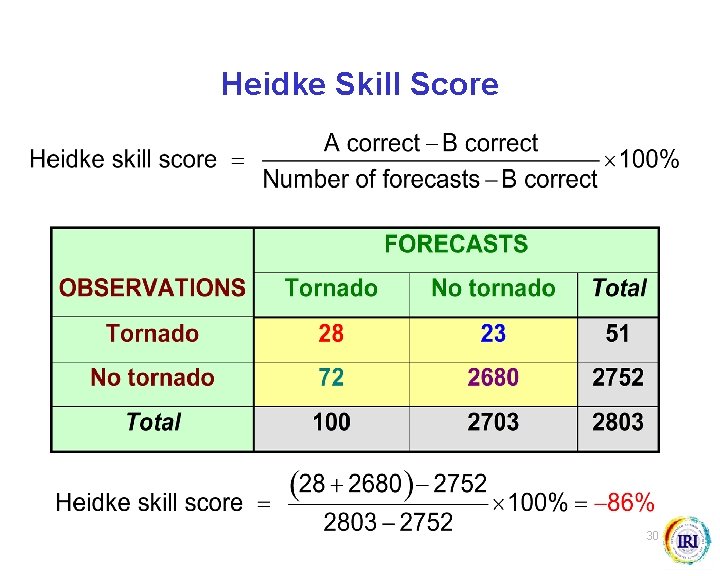

Heidke Skill Score
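A common form of the Heidke skill score for a 2×2 table compares the proportion correct, PC, with the proportion correct expected by chance, E, given the row and column totals: (PC − E) / (1 − E). The sketch uses the algebraically equivalent closed form in the four counts. Applied to the Finley counts commonly cited (28, 72, 23, 2680), it gives a positive score, while never forecasting a tornado scores exactly zero. The function name is hypothetical.

```python
def heidke_skill_score(hits, false_alarms, misses, correct_rejections):
    """Heidke skill score for a 2x2 contingency table.

    Equivalent to (PC - E) / (1 - E), where PC is the proportion correct and
    E is the proportion correct expected by chance given the row and column totals.
    """
    a, b, c, d = hits, false_alarms, misses, correct_rejections
    denominator = (a + c) * (c + d) + (a + b) * (b + d)
    if denominator == 0:
        raise ValueError("score undefined for this table")
    return 2 * (a * d - b * c) / denominator


print(round(heidke_skill_score(28, 72, 23, 2680), 3))  # 0.355
print(heidke_skill_score(0, 0, 51, 2752))              # 0.0
```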

What is “skill”?
Is one set of forecasts better than another?

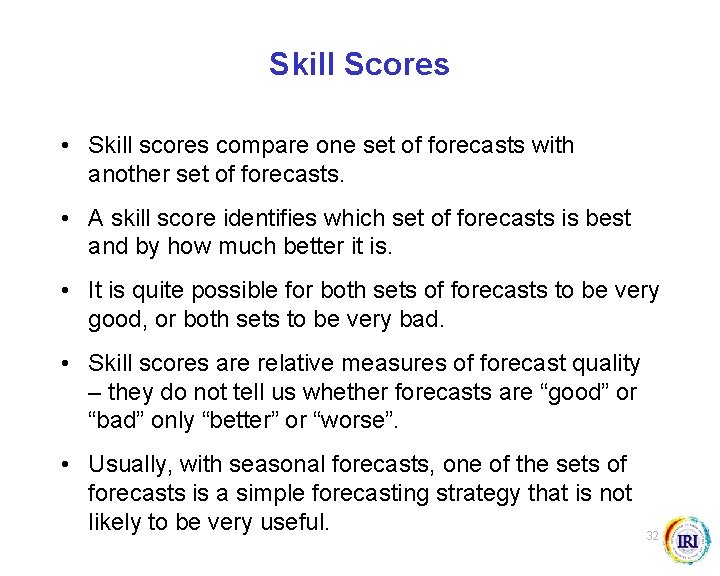

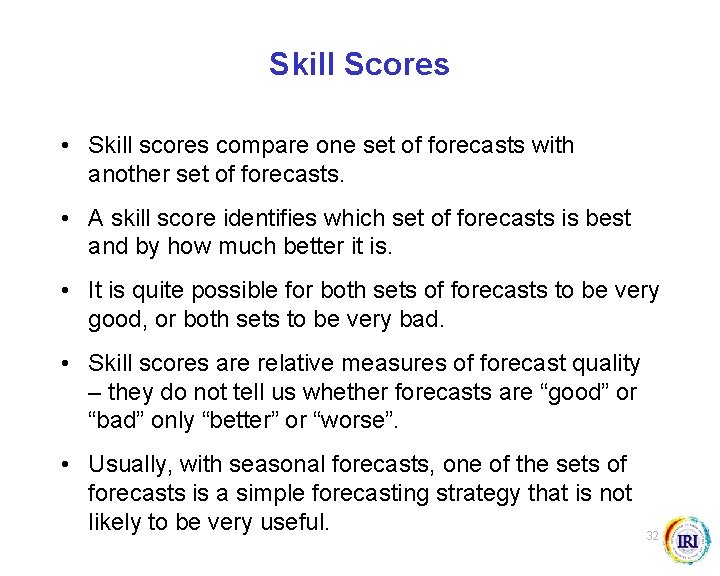

Skill Scores
• Skill scores compare one set of forecasts with another set of forecasts.
• A skill score identifies which set of forecasts is better, and by how much.
• It is quite possible for both sets of forecasts to be very good, or both sets to be very bad.
• Skill scores are relative measures of forecast quality: they do not tell us whether forecasts are “good” or “bad”, only whether they are “better” or “worse”.
• Usually, with seasonal forecasts, one of the sets of forecasts is a simple forecasting strategy that is not likely to be very useful.
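Many skill scores share one form: the improvement of a score over a reference set of forecasts, expressed as a fraction of the improvement that perfect forecasts would achieve. A minimal sketch; the function name is hypothetical, and the reference used in the example (right one time in three, as random tercile guessing would be on average) is an assumption for illustration.

```python
def skill_score(score, reference_score, perfect_score):
    """Generic skill score: fraction of the possible improvement over a reference achieved.

    1 = perfect, 0 = no better than the reference, negative = worse than the reference.
    """
    if perfect_score == reference_score:
        raise ValueError("reference is already perfect; skill is undefined")
    return (score - reference_score) / (perfect_score - reference_score)


# 60% correct versus a reference that is right one time in three (perfect = 100%).
print(round(skill_score(0.60, 1 / 3, 1.0), 2))  # 0.4
# For an error measure such as mean squared error, the perfect score is 0.
print(skill_score(2.0, 4.0, 0.0))  # 0.5
```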

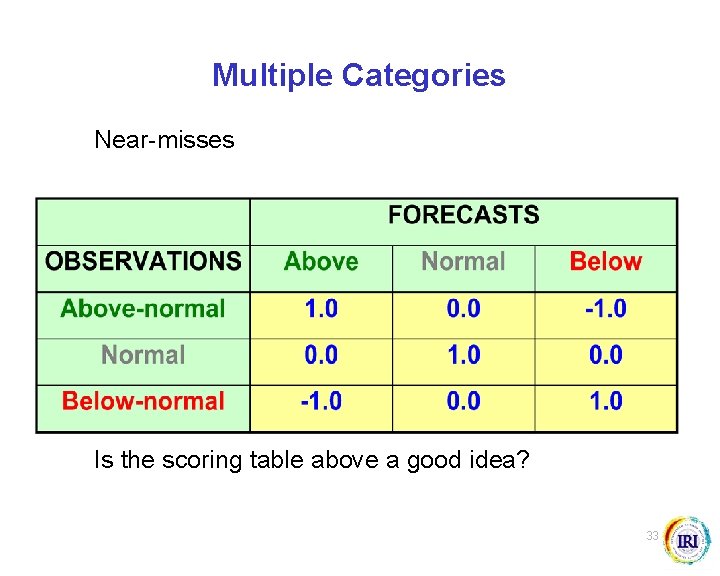

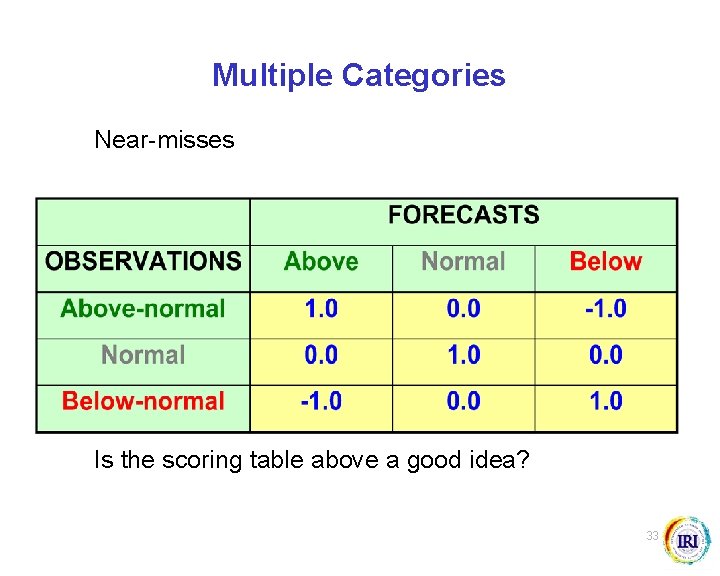

Multiple Categories
Near-misses: Is the scoring table above a good idea?

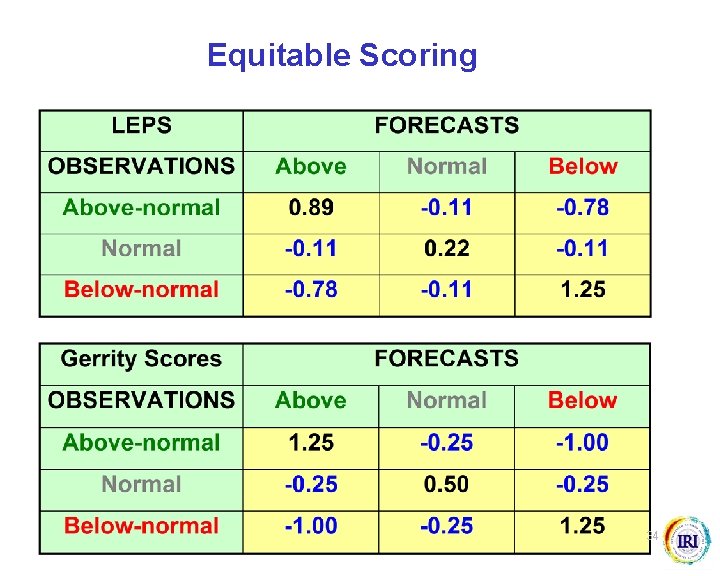

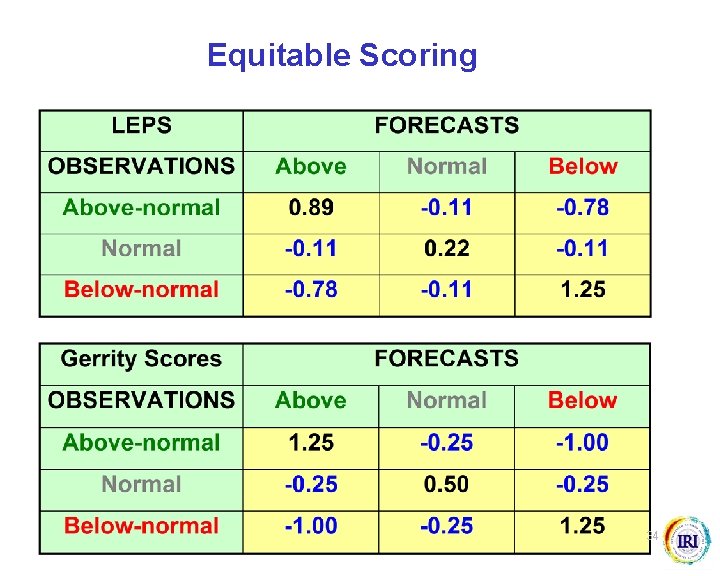

Equitable Scoring

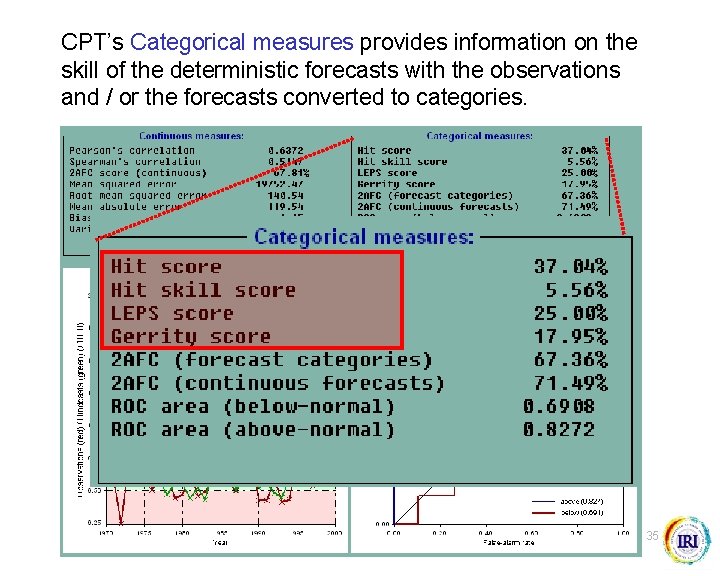

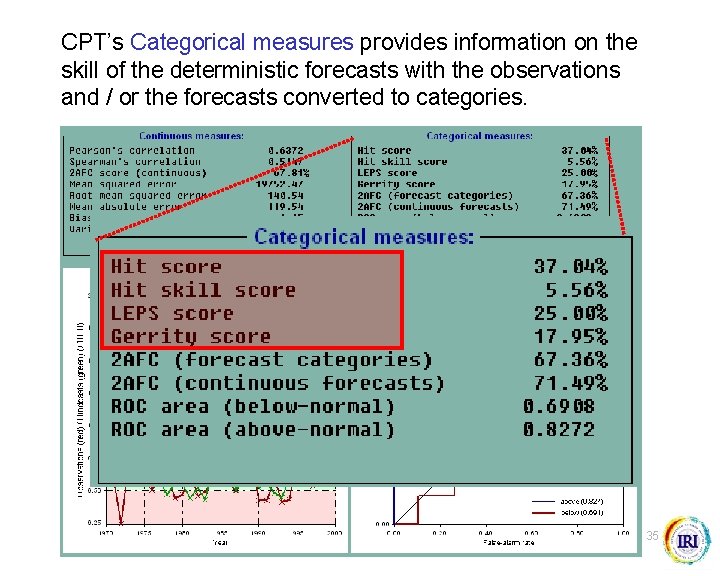

CPT’s Categorical measures provide information on the skill of the deterministic forecasts after the observations and/or the forecasts have been converted to categories.

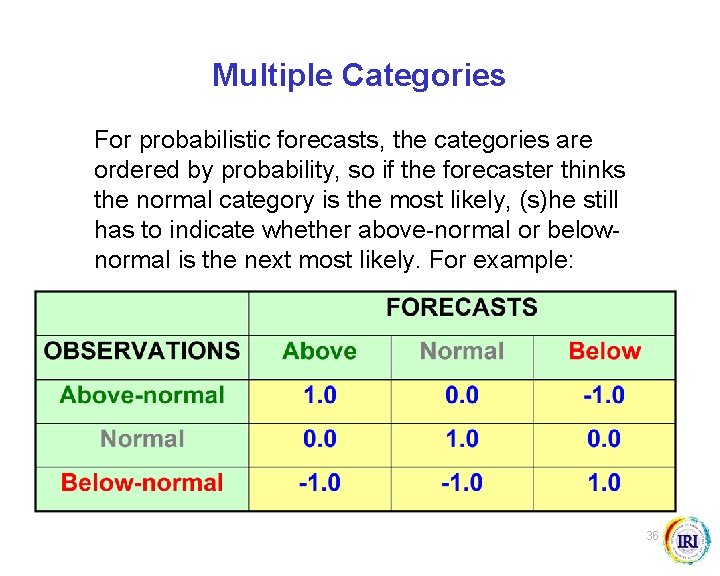

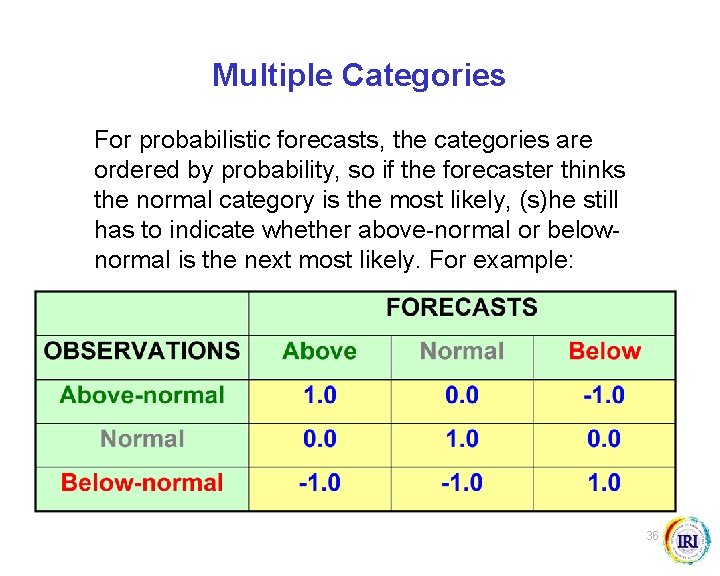

Multiple Categories
For probabilistic forecasts, the categories are ordered by probability, so even if the forecaster thinks the normal category is the most likely, (s)he still has to indicate whether above-normal or below-normal is the next most likely. For example:


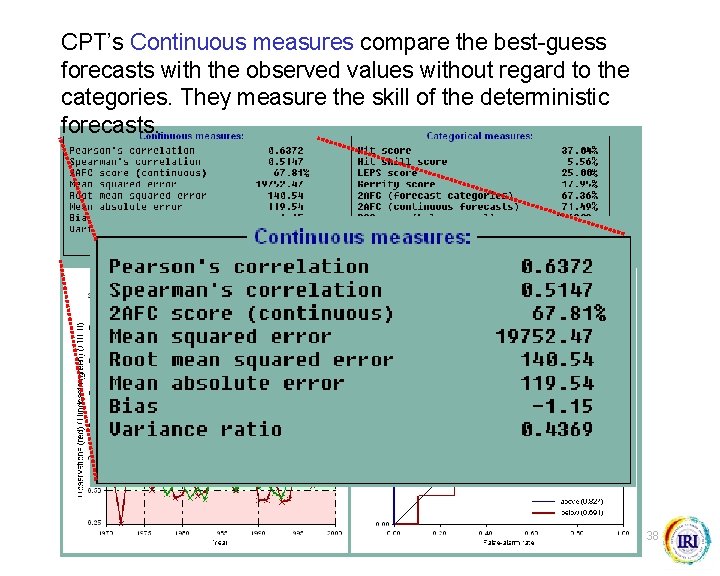

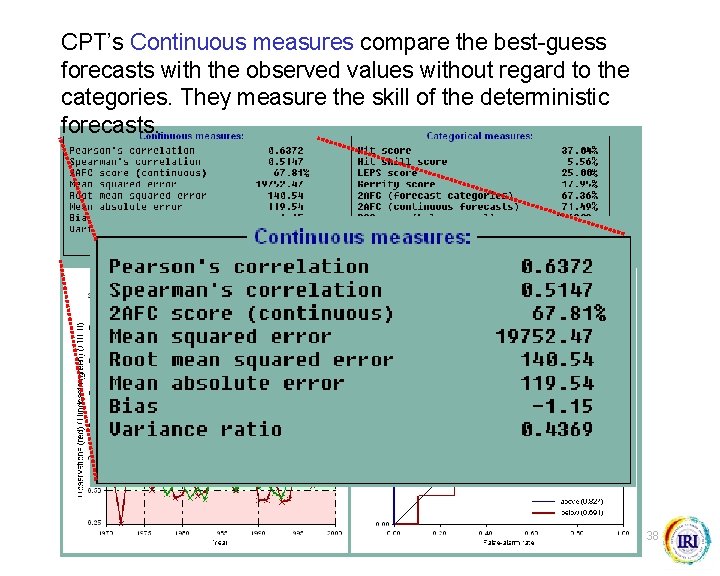

CPT’s Continuous measures compare the best-guess forecasts with the observed values without regard to the categories. They measure the skill of the deterministic forecasts.

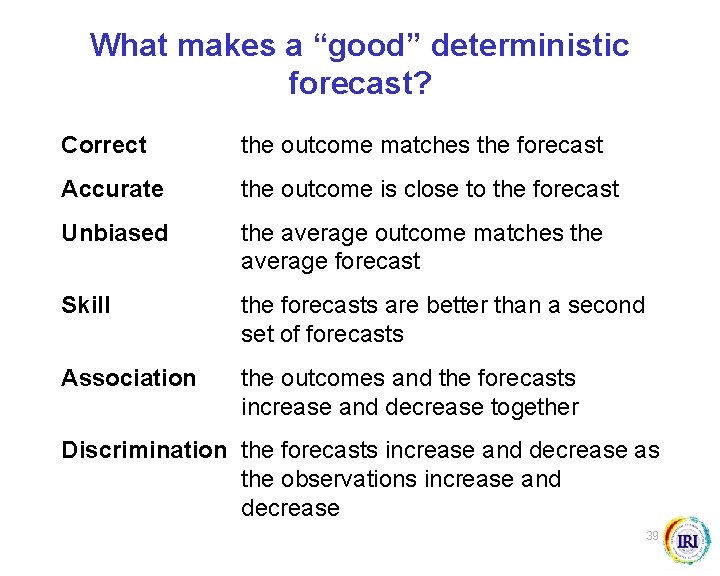

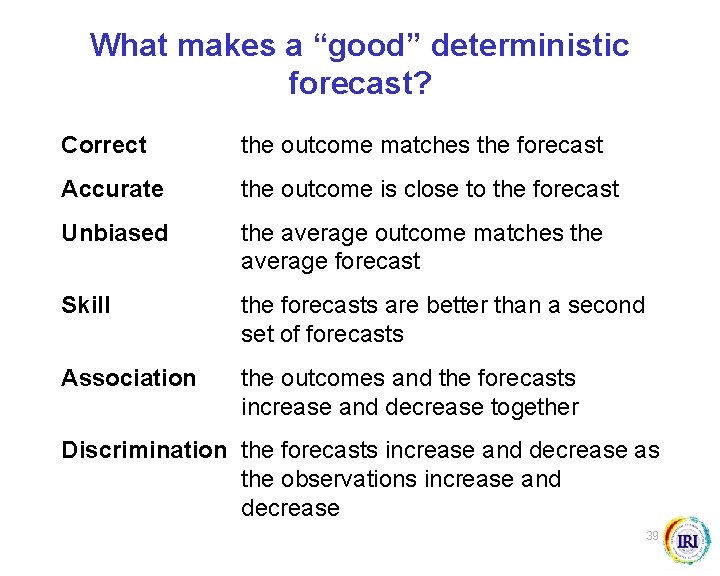

What makes a “good” deterministic forecast?
• Correct: the outcome matches the forecast.
• Accurate: the outcome is close to the forecast.
• Unbiased: the average outcome matches the average forecast.
• Skill: the forecasts are better than a second set of forecasts.
• Association: the outcomes and the forecasts increase and decrease together.
• Discrimination: the forecasts increase and decrease as the observations increase and decrease.
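Three of these attributes can be measured with familiar continuous scores that compare best-guess forecasts with observed values: mean error for bias, root-mean-square error for accuracy, and Pearson correlation for association. This is a minimal sketch, not CPT’s implementation; the function names and data are hypothetical. The example shows that forecasts can be perfectly associated with the observations yet still biased and inaccurate.

```python
from math import sqrt


def mean_error(forecasts, observations):
    """Average of forecast minus observation (0 = unbiased)."""
    return sum(f - o for f, o in zip(forecasts, observations)) / len(observations)


def rmse(forecasts, observations):
    """Root-mean-square error: a measure of accuracy (0 = perfect)."""
    return sqrt(sum((f - o) ** 2 for f, o in zip(forecasts, observations)) / len(observations))


def pearson_correlation(forecasts, observations):
    """Association: do forecasts and observations increase and decrease together?"""
    n = len(observations)
    mean_f = sum(forecasts) / n
    mean_o = sum(observations) / n
    cov = sum((f - mean_f) * (o - mean_o) for f, o in zip(forecasts, observations))
    var_f = sum((f - mean_f) ** 2 for f in forecasts)
    var_o = sum((o - mean_o) ** 2 for o in observations)
    if var_f == 0 or var_o == 0:
        raise ValueError("correlation undefined for constant series")
    return cov / sqrt(var_f * var_o)


# Hypothetical forecasts that are always 1 unit too low.
fcst = [1.0, 2.0, 3.0, 4.0]
obs = [2.0, 3.0, 4.0, 5.0]
print(mean_error(fcst, obs), rmse(fcst, obs), pearson_correlation(fcst, obs))
# -1.0 1.0 1.0
```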

Jma seasonal forecast

Jma seasonal forecast Iri columbia seasonal forecast

Iri columbia seasonal forecast Asia seasonal forecast

Asia seasonal forecast Jma seasonal forecast

Jma seasonal forecast Iri columbia seasonal forecast

Iri columbia seasonal forecast Andreas carlsson bye bye bye

Andreas carlsson bye bye bye Northern hemisphere seasons diagram

Northern hemisphere seasons diagram Don't ask why why why

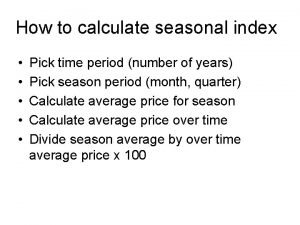

Don't ask why why why Seasonal index

Seasonal index How to calculate average seasonal variation

How to calculate average seasonal variation Nursery pond management ppt

Nursery pond management ppt Seasonal unemployment example

Seasonal unemployment example How to calculate average seasonal variation

How to calculate average seasonal variation Animal represent freedom

Animal represent freedom From statsmodels.tsa.seasonal import stl

From statsmodels.tsa.seasonal import stl Seasonal movement definition

Seasonal movement definition What is ferrel cell

What is ferrel cell How to calculate seasonal index

How to calculate seasonal index Seasonal work means

Seasonal work means Temperate deciduous forest plant adaptations

Temperate deciduous forest plant adaptations Definition of participatory rural appraisal

Definition of participatory rural appraisal Icd 10 code for allergies

Icd 10 code for allergies Deciduous forest adaptations

Deciduous forest adaptations Variasi musiman adalah

Variasi musiman adalah Taiga climatogram

Taiga climatogram Symptomen van seasonal affective disorder

Symptomen van seasonal affective disorder Seasonal winds that dominate india's climate

Seasonal winds that dominate india's climate Seasonal plants examples

Seasonal plants examples Hadley cells

Hadley cells Seasonal unemployment investopedia

Seasonal unemployment investopedia Observed demand formula

Observed demand formula Seasonal round

Seasonal round Sundial meaning

Sundial meaning Recognized seasonal employer visa

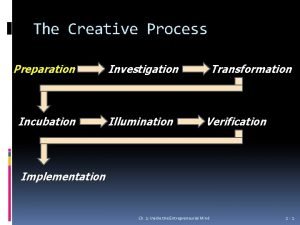

Recognized seasonal employer visa Transformation in creative process

Transformation in creative process Verification and validation

Verification and validation Verification and validation

Verification and validation Testing types in software engineering

Testing types in software engineering Verification and validation plan

Verification and validation plan Verification principle strengths and weaknesses

Verification principle strengths and weaknesses