- Slides: 44

Analysis

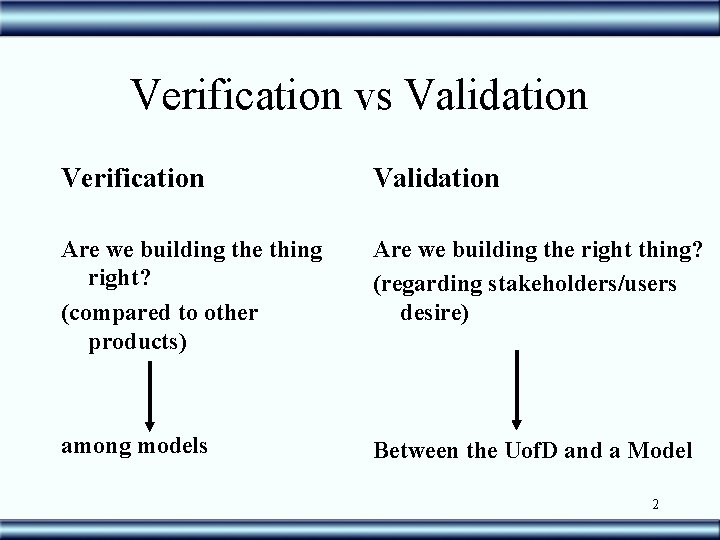

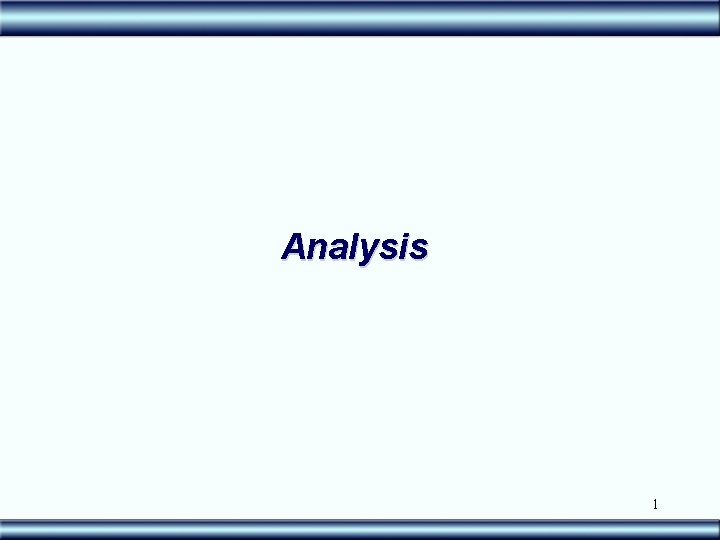

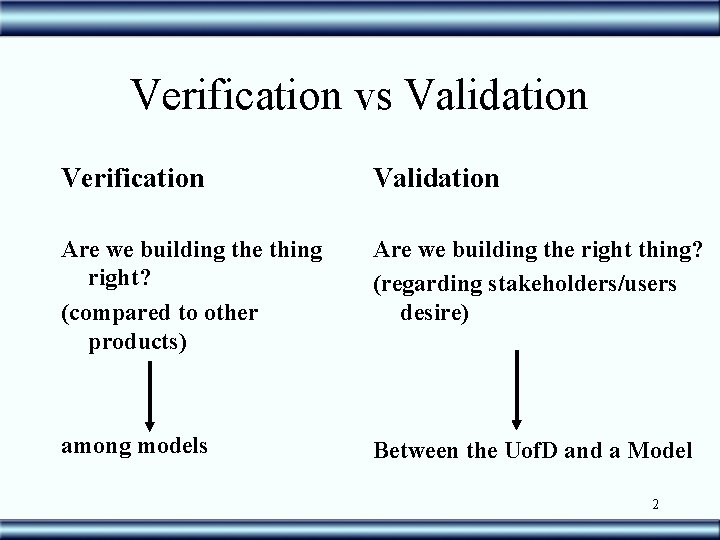

Verification vs Validation

| | Verification | Validation |
|---|---|---|
| Question | Are we building the thing right? | Are we building the right thing? |
| Compared against | Other products; among models | Stakeholders'/users' desires; between the UofD (Universe of Discourse) and a model |

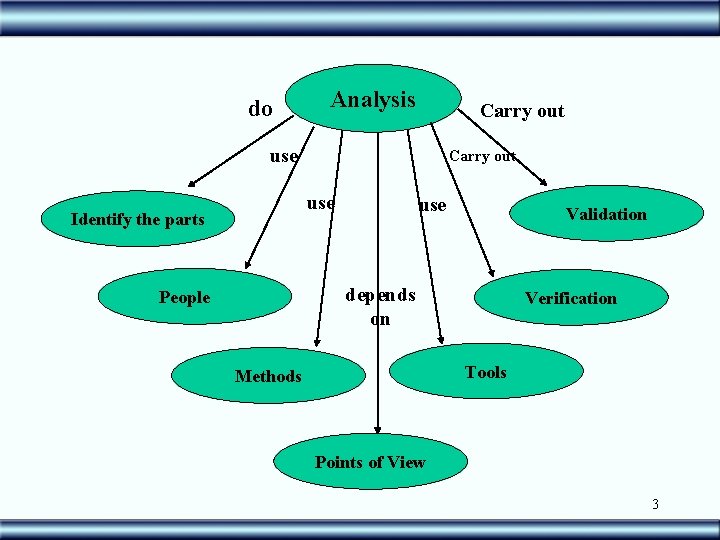

[Diagram: doing analysis; identifying the parts, validation and verification; depends on people, tools, methods and points of view]

Analysis
- Identify the parts
  - How is it organized?
  - How is it stored?
  - Traceability

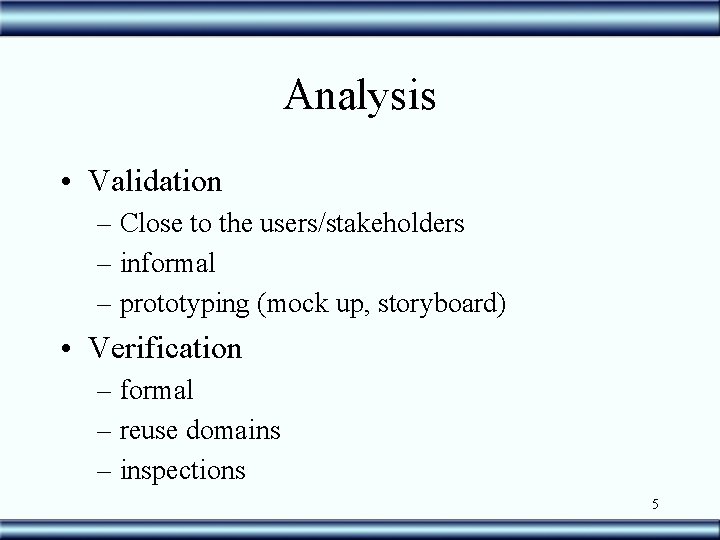

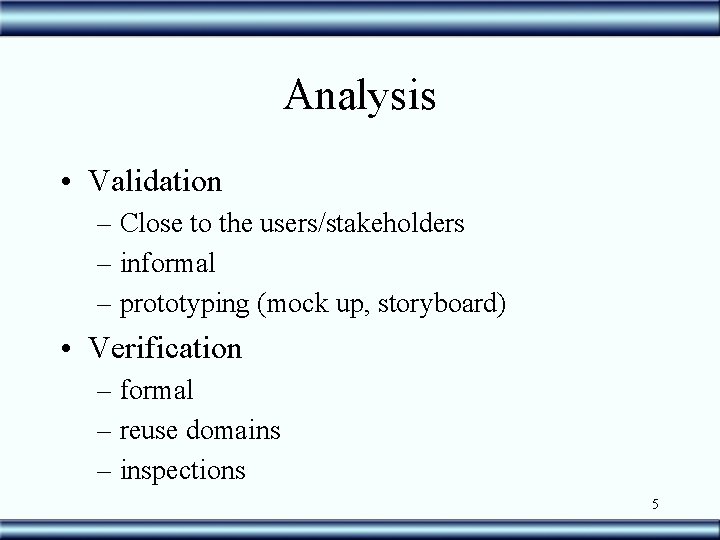

Analysis
- Validation
  - Close to the users/stakeholders
  - Informal
  - Prototyping (mock-up, storyboard)
- Verification
  - Formal
  - Domain reuse
  - Inspections

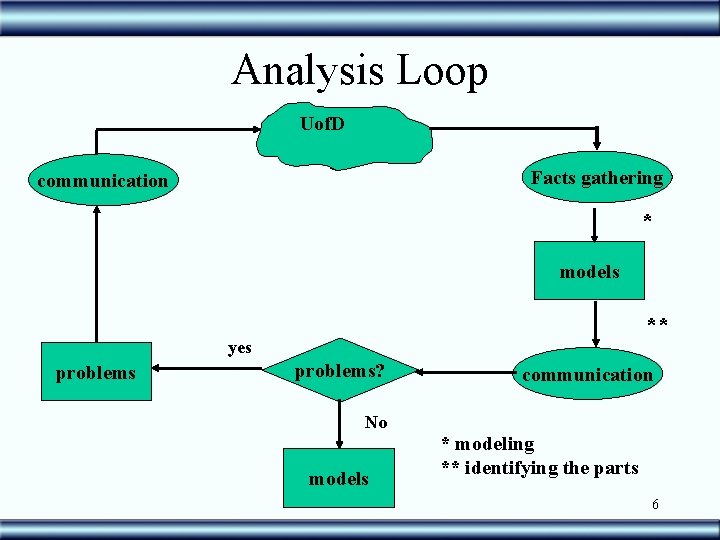

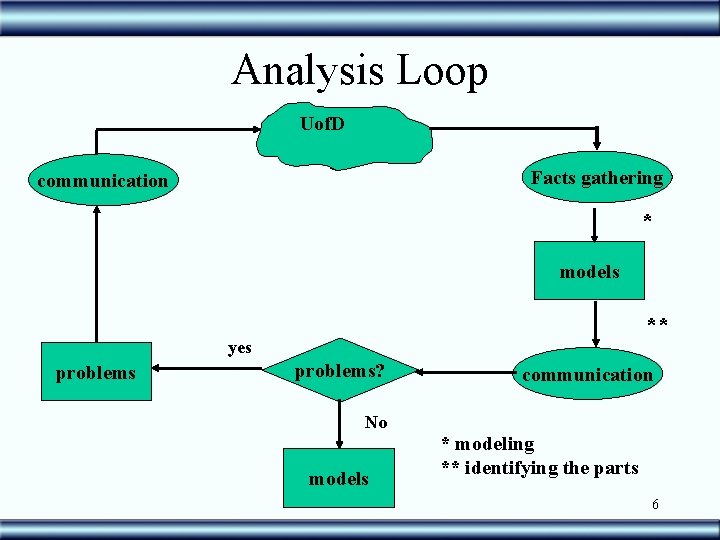

[Diagram: the analysis loop, linking the UofD, fact gathering, communication, modeling (*), models, identifying the parts (**) and the decision “problems?” (yes/no)]

Identification of the parts
- Depends on how the models are organized and stored
- Linked to modeling and elicitation
- 90% of the problems are in 10% of the system

Validation
- Are we building the right product?
- We have to compare the UofD with the users'/stakeholders' expectations
- Run scenarios (read them in meetings)
- Prototype

Validation strategies
- Informal corroboration
- Storyboards
- Prototypes

Validating through the use of scenarios
- As many times as possible
- The earlier the better
  - If possible, validate the list of candidate scenarios
- Goal of scenario validation: build the DEO list (Discrepancies, Errors and Omissions)
- Users' commitment is essential

Be careful

Validation through scenarios
- Gradual confirmation of the scenario parts (objective, actors, resources)
- Feedback for the LEL (Language Extended Lexicon)
- Tag scenarios where doubts arise
- Make notes of discrepancies, errors or omissions
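A DEO list is essentially a log of findings per scenario. The sketch below is a minimal, hypothetical Python representation. The class and field names are ours and are not part of the method. Each entry records the scenario, the part being confirmed (objective, actors, resources), and whether the finding is a discrepancy, an error or an omission. Scenarios where doubts arise can also be tagged.

```python
from dataclasses import dataclass, field
from enum import Enum


class DEOKind(Enum):
    """The three categories of the DEO list."""
    DISCREPANCY = "D"
    ERROR = "E"
    OMISSION = "O"


@dataclass
class DEOEntry:
    scenario: str  # title of the scenario under validation
    part: str      # scenario part, e.g. "objective", "actors", "resources"
    kind: DEOKind
    note: str      # what the users pointed out


@dataclass
class DEOList:
    entries: list[DEOEntry] = field(default_factory=list)
    doubtful: set[str] = field(default_factory=set)  # scenarios tagged during validation

    def record(self, scenario: str, part: str, kind: DEOKind, note: str) -> None:
        self.entries.append(DEOEntry(scenario, part, kind, note))

    def tag_doubt(self, scenario: str) -> None:
        self.doubtful.add(scenario)

    def by_kind(self, kind: DEOKind) -> list[DEOEntry]:
        return [e for e in self.entries if e.kind is kind]

    def summary(self) -> dict[str, int]:
        # Number of entries per category, keyed by "D", "E" and "O".
        return {k.value: len(self.by_kind(k)) for k in DEOKind}


if __name__ == "__main__":
    deo = DEOList()
    deo.record("Withdraw cash", "actors", DEOKind.OMISSION, "Bank clerk is missing")
    deo.record("Withdraw cash", "resources", DEOKind.ERROR, "Card reader listed twice")
    deo.tag_doubt("Withdraw cash")
    print(deo.summary())  # {'D': 0, 'E': 1, 'O': 1}
```

Keeping the scenario part in each entry makes it easy to feed omissions back into the scenario (and into the LEL) after the meeting.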

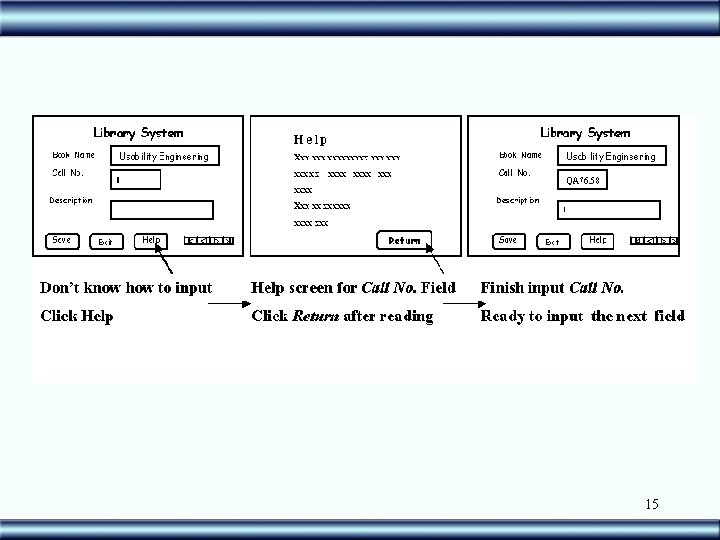

Mainstream
- Validate scenarios with users through semi-structured interviews (other techniques are possible too)
- Strategies:
  - Read the scenarios aloud together with the users
  - Ask if they have anything to add or change
  - Ask why

![Storyboard (Leffingwell & Widrig): elicit reactions such as “Yes, but…”; passive, active](https://slidetodoc.com/presentation_image/8fd72c8bd6baa6390d1f6c06aea473b4/image-14.jpg)

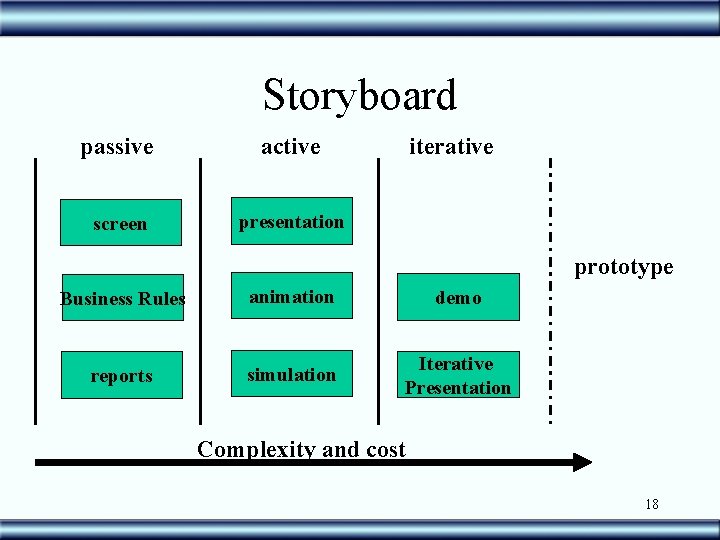

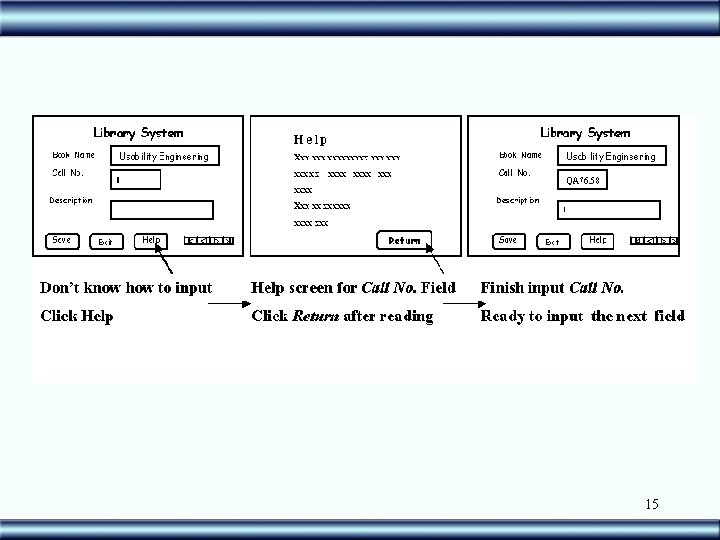

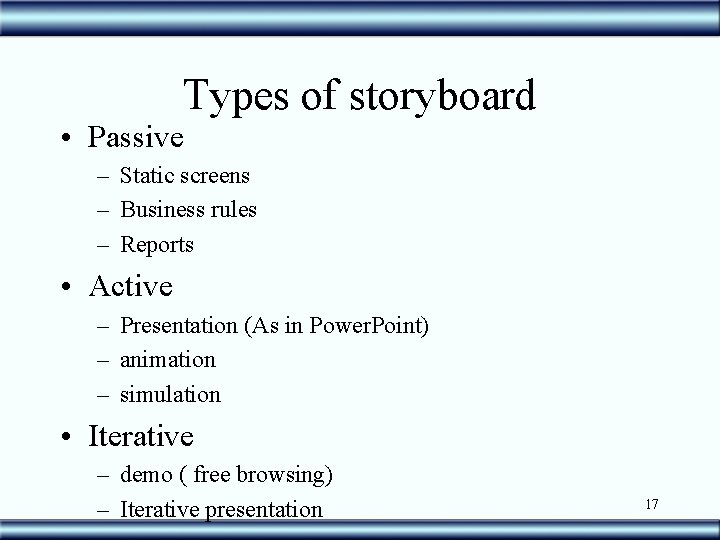

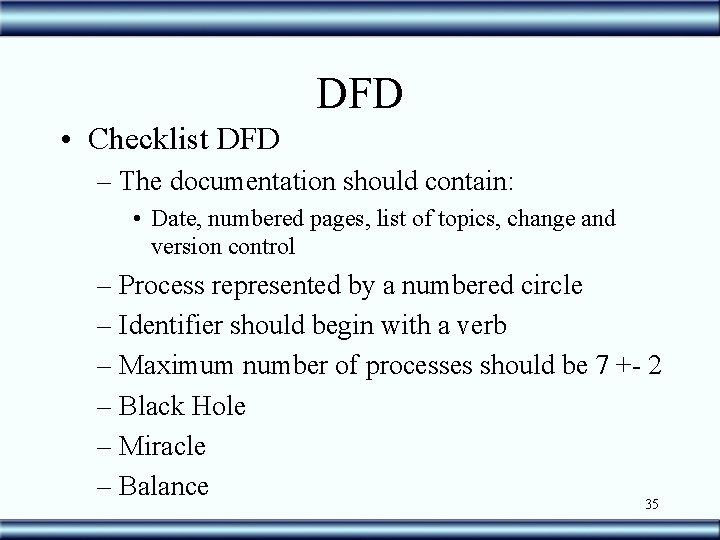

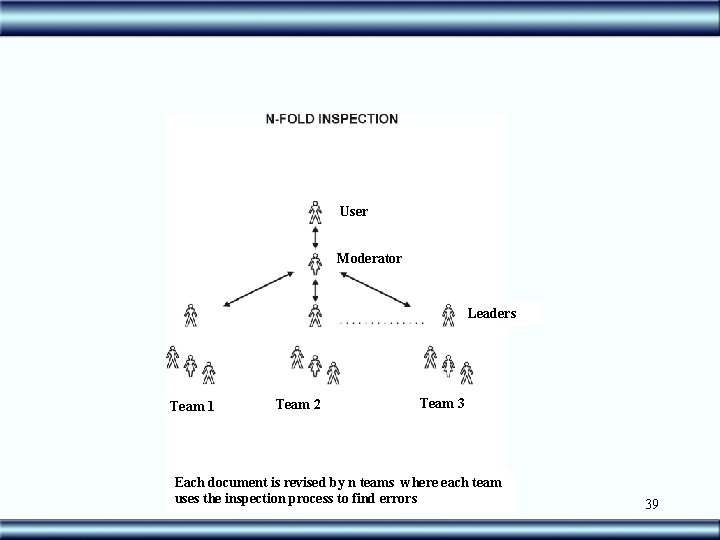

Storyboard [Leffingwell & Widrig]
- Elicit reactions such as “Yes, but…”
- Passive, active or interactive
- Identify the actors, explain what happens to them and describe how it happens
- More effective for projects with innovative or unknown content


Storyboard
- Pros:
  - Cheap
  - User friendly, informal and interactive
  - Allow the system interface to be critiqued early in the project
  - Easy to create and modify

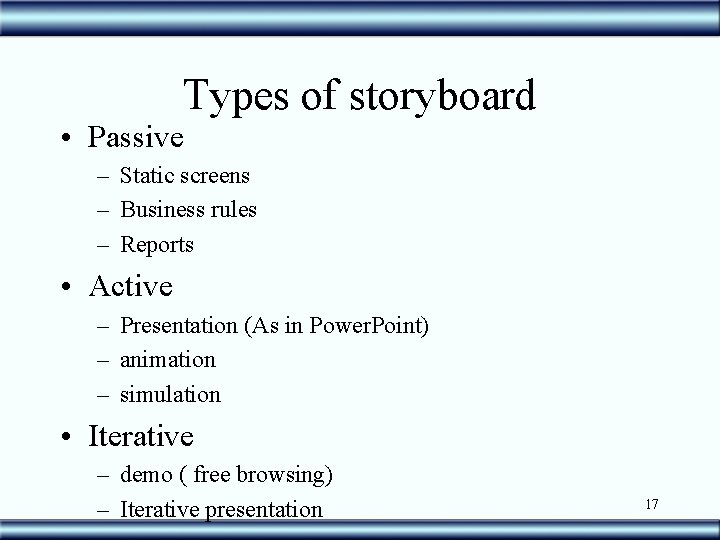

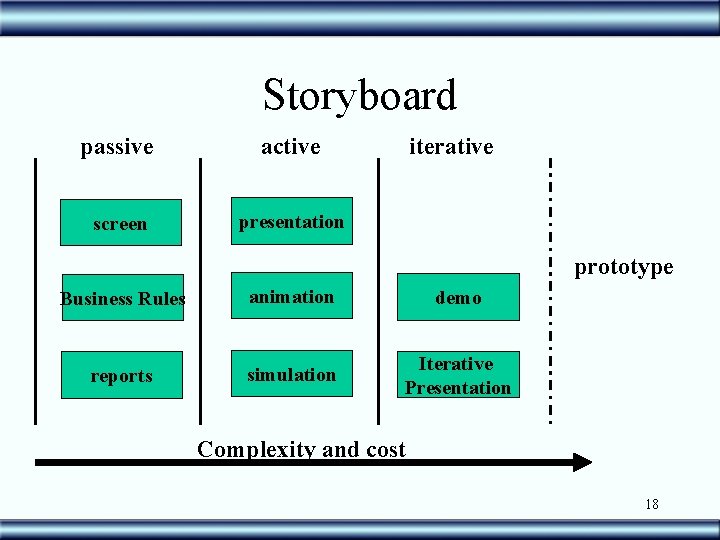

Types of storyboard
- Passive
  - Static screens
  - Business rules
  - Reports
- Active
  - Presentation (as in PowerPoint)
  - Animation
  - Simulation
- Interactive
  - Demo (free browsing)
  - Interactive presentation

[Figure: storyboard types arranged by complexity and cost. Passive: screens, business rules, reports. Active: presentation, animation, simulation. Interactive: prototype, demo, interactive presentation.]

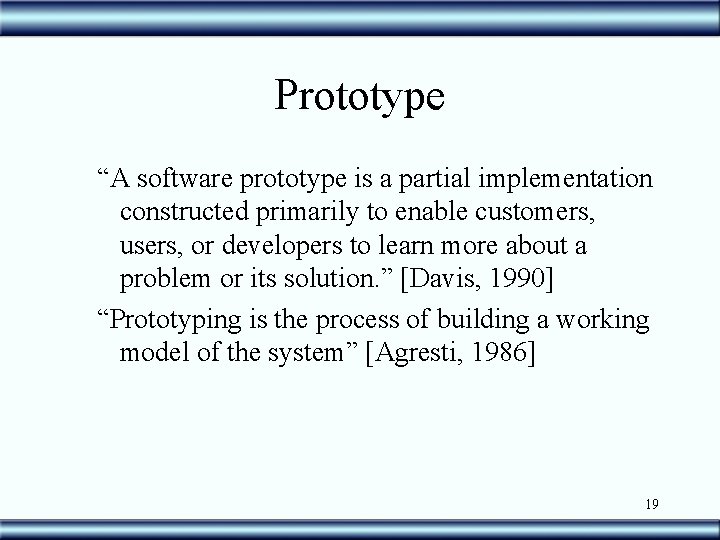

Prototype
- “A software prototype is a partial implementation constructed primarily to enable customers, users, or developers to learn more about a problem or its solution.” [Davis, 1990]
- “Prototyping is the process of building a working model of the system” [Agresti, 1986]

Prototypes
- Also help to elicit reactions such as “Yes, but…”
- Help to clarify fuzzy requirements
  - Requirements that are known but not well defined or not well understood
- Help elicit reactions such as “Now that I can see it working, it occurs to me that I also need…”
- Tools are available that help build fast and cheap prototypes

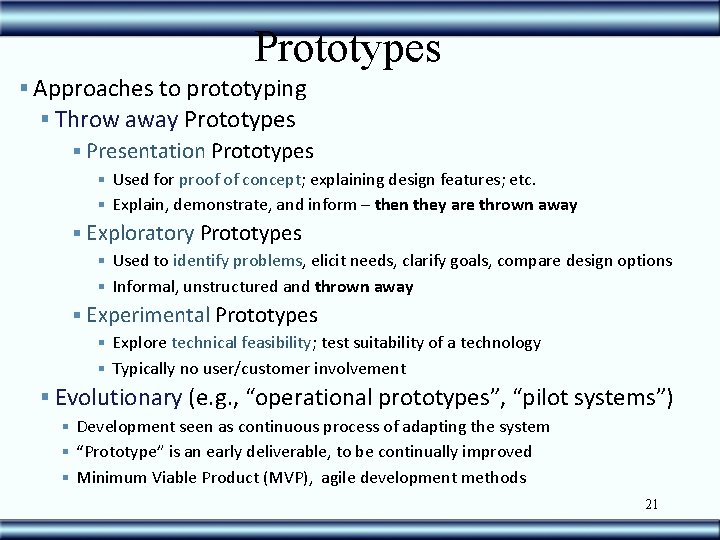

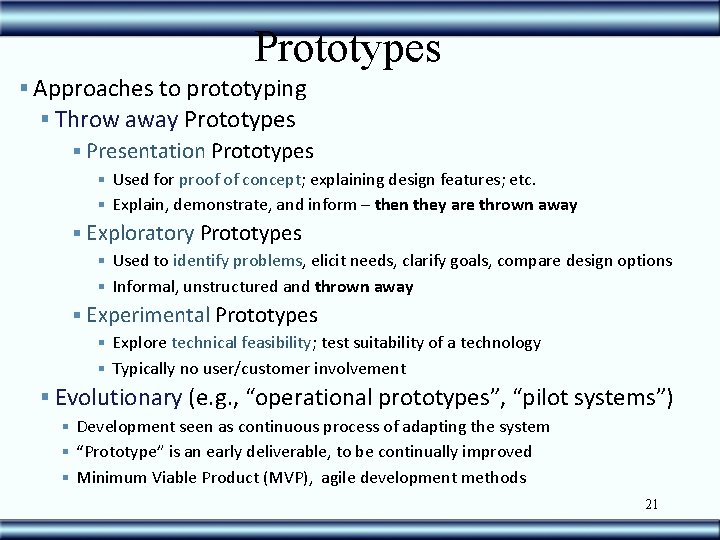

Prototypes
- Approaches to prototyping
  - Throwaway prototypes
    - Presentation prototypes
      - Used for proof of concept, explaining design features, etc.
      - Explain, demonstrate and inform; then they are thrown away
    - Exploratory prototypes
      - Used to identify problems, elicit needs, clarify goals and compare design options
      - Informal, unstructured and thrown away
    - Experimental prototypes
      - Explore technical feasibility; test the suitability of a technology
      - Typically no user/customer involvement
  - Evolutionary prototypes (e.g., “operational prototypes”, “pilot systems”)
    - Development is seen as a continuous process of adapting the system
    - The “prototype” is an early deliverable, to be continually improved
    - Minimum Viable Product (MVP), agile development methods

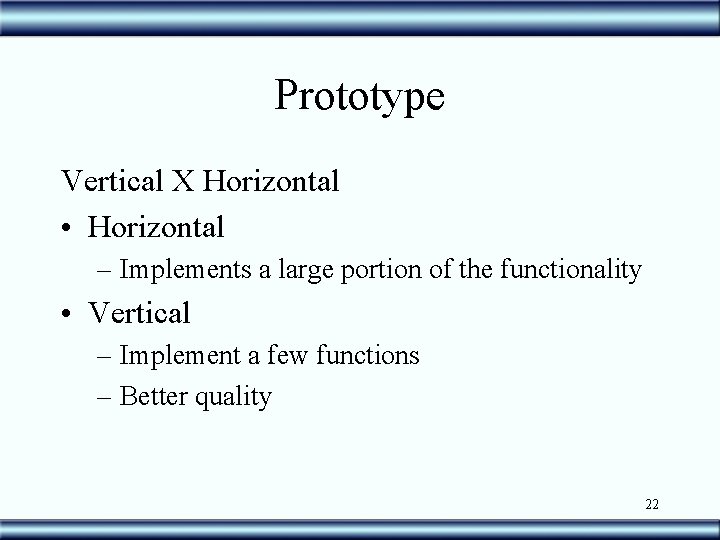

Prototype: vertical x horizontal
- Horizontal
  - Implements a large portion of the functionality, but only superficially
- Vertical
  - Implements a few functions in depth
  - Better quality

Verification
- Are we building the product correctly?
- Use of models
  - Representations/languages
- Use of formalisms
- Informal techniques

Use of formalisms
- Formal proof of a model
  - Theorem proving
- Detection of discrepancies between the model and the metamodels
  - Model proving

Techniques
- Inspection: a formal evaluation technique in which artifacts are examined in detail by a person or group other than the author to detect errors, violations of development standards, and other problems. Formal, initiated by the project team, and planned; the author is not the presenter.
- Walkthrough: a review process in which a developer leads one or more members of the development team through a segment of an artifact that he or she has written, while the other members ask questions and make comments about technique, style, possible errors, violations of development standards, and other problems. Semi-formal, initiated by the author, and quite frequently poorly planned.

![Walk-through: ad hoc preparation, select participants, meeting with author(s), evaluator(s), secretary and leader](https://slidetodoc.com/presentation_image/8fd72c8bd6baa6390d1f6c06aea473b4/image-26.jpg)

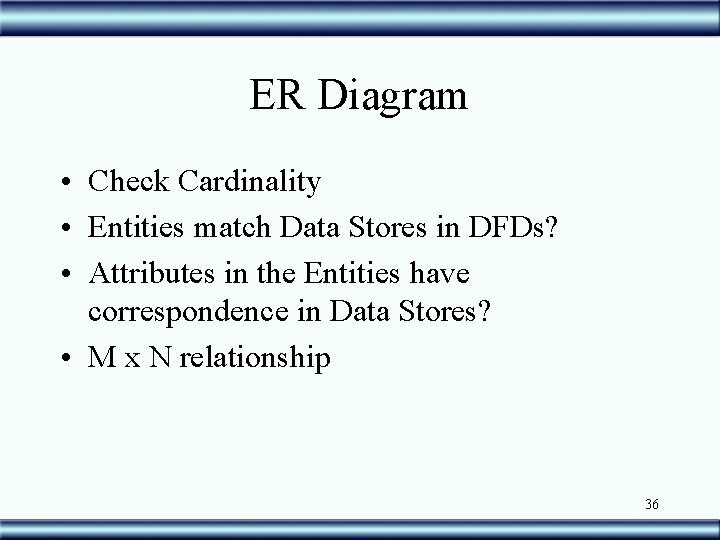

Walk-through
- Ad hoc preparation
  - Select participants
  - Meeting: author(s), evaluator(s), secretary, (leader)
  - Set date and location
  - Highlight the importance of stakeholder participation
- Prepare the material
  - Have some hard copies
  - Project the requirements document onto the wall
  - Send the material to be reviewed to the participants

- Reading
  - The author reads
- Go section by section
  - Evaluators hear/read it
- Give evaluators time to read it
  - Have questions prepared to stimulate discussion in case you get a passive audience
  - Evaluators point out problems (questions)
  - Secretaries write down problems
  - Ensure the meeting focuses on the requirements, not on how to build them or on the process
- List of problems/changes

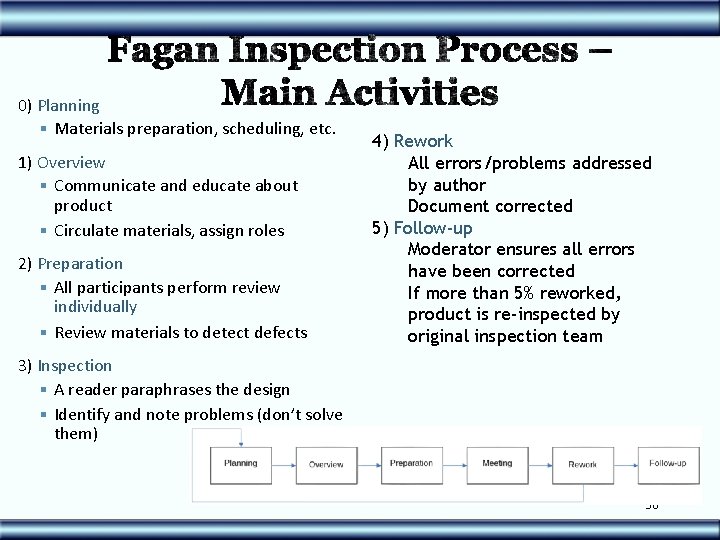

Inspections
- Created in 1972 by Fagan at IBM to improve code quality
- Currently used to check any type of artefact produced in the software development process
- Inspections can detect between 30% and 90% of existing errors
- A reading technique applied to an artefact, aiming at detecting errors in it according to pre-established criteria

Fagan Inspection Process
- Fagan inspection: a structured review process
- Aim: finding defects in development documents, including requirements specifications
- Named after Michael Fagan, who is credited with inventing formal software inspections (at IBM in the 1970s)
- Every activity has entry and exit criteria (i.e., pre-/postconditions)
- The Fagan inspection process offers a way to validate whether the output complies with the exit criteria intended for that activity

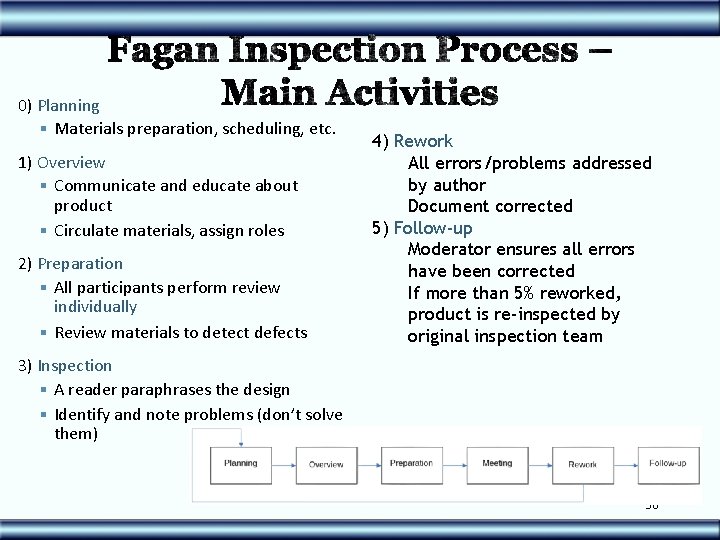

0) Planning
   - Materials preparation, scheduling, etc.
1) Overview
   - Communicate and educate about the product
   - Circulate materials, assign roles
2) Preparation
   - All participants perform the review individually
   - Review the materials to detect defects
3) Inspection
   - A reader paraphrases the design
   - Identify and note problems (don't solve them)
4) Rework
   - All errors/problems are addressed by the author
   - The document is corrected
5) Follow-up
   - The moderator ensures all errors have been corrected
   - If more than 5% of the product was reworked, it is re-inspected by the original inspection team
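The phase order and the follow-up rule can be sketched as a small state machine. This is a hypothetical Python sketch. The exit criteria are reduced to a single boolean. "5% reworked" is assumed to be measured in some size unit of the product (pages, lines or requirements), which the slide does not specify.

```python
from enum import IntEnum


class Phase(IntEnum):
    PLANNING = 0
    OVERVIEW = 1
    PREPARATION = 2
    INSPECTION = 3
    REWORK = 4
    FOLLOW_UP = 5


REINSPECTION_THRESHOLD = 0.05  # more than 5% reworked -> re-inspection


def next_phase(current: Phase, exit_criteria_met: bool) -> Phase:
    """Move to the next phase only when the current one meets its exit criteria."""
    if not exit_criteria_met:
        return current  # stay in this phase until its exit criteria hold
    if current is Phase.FOLLOW_UP:
        raise ValueError("follow-up is the last phase; decide with follow_up()")
    return Phase(current + 1)


def follow_up(total_units: int, reworked_units: int, open_defects: int) -> str:
    """Moderator's decision; 'units' is a hypothetical size measure (pages, lines...)."""
    if open_defects > 0:
        return "rework"     # not all errors have been corrected yet
    if reworked_units / total_units > REINSPECTION_THRESHOLD:
        return "reinspect"  # the original team inspects the product again
    return "close"


if __name__ == "__main__":
    phase = Phase.PLANNING
    while phase is not Phase.FOLLOW_UP:
        phase = next_phase(phase, exit_criteria_met=True)
        print(phase.name)
    print(follow_up(total_units=200, reworked_units=14, open_defects=0))  # reinspect
```

Making each transition depend on the exit criteria mirrors the idea that every activity has pre- and postconditions. A phase is never skipped. It is only repeated until its output is acceptable.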

Inspections
- Help to find errors before we move to the next phase
- Which information should be checked?
- How to identify defects in the chosen models?
- Techniques for reading a requirements document
  - Ad hoc (based on personal experience)
  - Checklist (list of items to be checked)
  - Perspective-based reading (good for requirements in natural language)

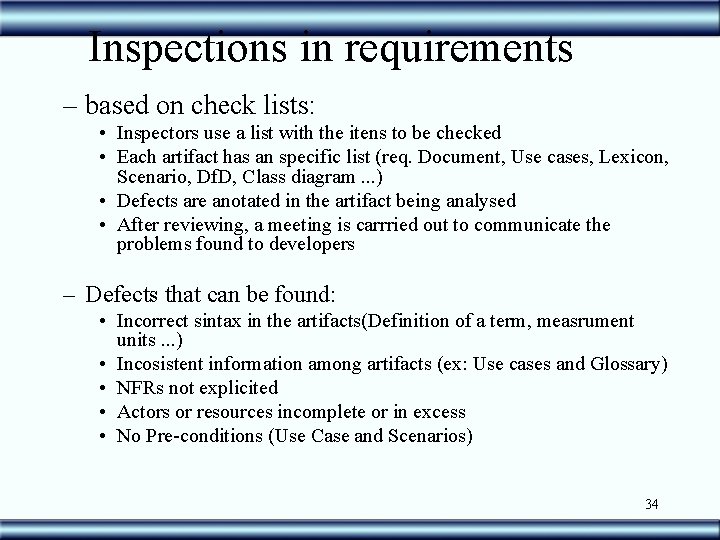

During inspections, avoid
- Devil’s advocates
  - Contradicting for the sake of argument
- Debugging
  - Find problems; don't try to fix them (there is no time)
- Reviewing the author instead of the product
- Engaging in debates and rebuttals
  - Record issues for later discussion
- Violating rules and deviating from the agenda
  - Have people put, say, $1 into the party fund when they speak out of turn
  - Take away the chairs (stand-up review)
  - Use a timer to limit how long participants can talk

Inspections: roles
- Organizer: responsible for organizing the whole process
- Author: presents a global view of the artefact before the inspection begins
- Inspector: analyses the artefacts following a predefined reading technique, annotating all the defects found
- Secretary: documents the inspection; collects the defects found by the inspectors and consolidates them into one document
- Moderator: responsible for conducting the meeting and managing possible conflicts

Inspections in requirements
- Based on checklists:
  - Inspectors use a list of the items to be checked
  - Each artifact has a specific list (requirements document, use cases, lexicon, scenarios, DFD, class diagram...)
  - Defects are annotated in the artifact being analysed
  - After reviewing, a meeting is carried out to communicate the problems found to the developers
- Defects that can be found:
  - Incorrect syntax in the artifacts (definition of a term, measurement units...)
  - Inconsistent information among artifacts (e.g., use cases and glossary)
  - NFRs not made explicit
  - Actors or resources incomplete or in excess
  - No pre-conditions (use cases and scenarios)
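Checklist-based inspection means that each artifact type has its own list of questions. The minimal Python sketch below illustrates this under explicit assumptions. Artifacts are modelled as plain dicts with a `"kind"` key. The two checklists and all function names are hypothetical. The last function shows one cross-artifact check: terms used in use cases that the glossary does not define.

```python
from typing import Callable

# One checklist item: the question asked, and a predicate that is True when the artifact passes.
Check = tuple[str, Callable[[dict], bool]]

# Hypothetical checklists, one per artifact type.
CHECKLISTS: dict[str, list[Check]] = {
    "use_case": [
        ("Are the pre-conditions stated?", lambda a: bool(a.get("preconditions"))),
        ("Is there at least one actor?", lambda a: bool(a.get("actors"))),
    ],
    "scenario": [
        ("Are the pre-conditions stated?", lambda a: bool(a.get("preconditions"))),
        ("Are the resources listed?", lambda a: bool(a.get("resources"))),
    ],
}


def run_checklist(artifact: dict) -> list[str]:
    """Return the checklist questions this artifact fails (its annotated defects)."""
    return [question for question, passes in CHECKLISTS[artifact["kind"]]
            if not passes(artifact)]


def terms_missing_from_glossary(used_terms: set[str], glossary: set[str]) -> set[str]:
    """Cross-artifact check: terms used in the use cases that the glossary does not define."""
    return used_terms - glossary


if __name__ == "__main__":
    uc = {"kind": "use_case", "name": "Withdraw cash", "actors": ["Customer"]}
    print(run_checklist(uc))  # ['Are the pre-conditions stated?']
    print(terms_missing_from_glossary({"account", "PIN"}, {"account"}))  # {'PIN'}
```

Only mechanical items can be automated this way. Judging whether NFRs are missing or actors are in excess still needs a human inspector.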

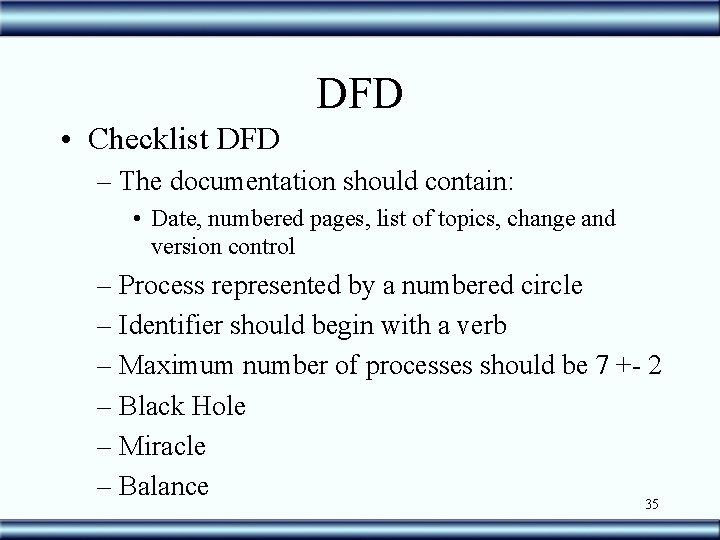

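DFD
- Checklist for DFDs
  - The documentation should contain:
    - Date, numbered pages, list of topics, change and version control
  - Each process is represented by a numbered circle
  - The identifier should begin with a verb
  - The maximum number of processes per diagram should be 7 ± 2
  - Black hole: a process with inputs but no outputs
  - Miracle: a process with outputs but no inputs
  - Balance: the flows entering and leaving a process must match those of the diagram that decomposes it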
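Several items of this checklist are mechanical and can be automated. Below is a minimal Python sketch. It assumes a hypothetical representation of a DFD as numbered processes plus named flows. The `Flow` class and the function names are illustrative. The "identifier should begin with a verb" item is left out because it needs linguistic judgement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    name: str
    source: str  # process number, external entity or data store
    target: str


def check_dfd(processes: dict[str, str], flows: list[Flow]) -> list[str]:
    """processes maps a process number (e.g. "1" or "1.2") to its label."""
    problems = []
    if not 5 <= len(processes) <= 9:
        problems.append(f"{len(processes)} processes; expected 7 +/- 2")
    for number in processes:
        if not number.replace(".", "").isdigit():
            problems.append(f"process {number!r} is not numbered")
        has_input = any(f.target == number for f in flows)
        has_output = any(f.source == number for f in flows)
        if has_input and not has_output:
            problems.append(f"process {number}: black hole (inputs but no outputs)")
        if has_output and not has_input:
            problems.append(f"process {number}: miracle (outputs but no inputs)")
    return problems


def is_balanced(parent: str, parent_flows: list[Flow],
                child_processes: set[str], child_flows: list[Flow]) -> bool:
    """Flows in and out of a parent process must match its child diagram's external flows."""
    parent_in = {f.name for f in parent_flows if f.target == parent}
    parent_out = {f.name for f in parent_flows if f.source == parent}
    child_in = {f.name for f in child_flows
                if f.target in child_processes and f.source not in child_processes}
    child_out = {f.name for f in child_flows
                 if f.source in child_processes and f.target not in child_processes}
    return parent_in == child_in and parent_out == child_out


if __name__ == "__main__":
    procs = {str(i): f"Process {i}" for i in range(1, 6)}
    flows = [Flow("order", "Customer", "1"), Flow("invoice", "1", "Customer"),
             Flow("complaint", "Customer", "2"), Flow("report", "3", "Manager")]
    for problem in check_dfd(procs, flows):
        print(problem)
```

Balance is checked by flow names only. A real inspection would also compare the data carried by each flow.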

ER Diagram
- Check cardinality
- Do the entities match the data stores in the DFDs?
- Do the attributes of the entities have counterparts in the data stores?
- M:N (many-to-many) relationships
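The cross-checks between the ER diagram and the DFDs can be sketched as simple set comparisons. This is a hypothetical Python sketch. It assumes that an entity and its corresponding data store share the same name, and that cardinality is recorded as a maximum of `"1"` or `"N"` on each side. M:N relationships are only flagged so that the inspector can look at them.

```python
def cross_check(entities: dict[str, set[str]], stores: dict[str, set[str]]) -> dict:
    """entities: entity -> attributes (ER diagram); stores: data store -> fields (DFDs)."""
    report = {
        "entities_without_store": sorted(entities.keys() - stores.keys()),
        "stores_without_entity": sorted(stores.keys() - entities.keys()),
        "missing_attributes": {},
    }
    # For entities that do have a data store, list attributes the store lacks.
    for name in sorted(entities.keys() & stores.keys()):
        missing = entities[name] - stores[name]
        if missing:
            report["missing_attributes"][name] = sorted(missing)
    return report


def many_to_many(relationships: list[tuple[str, str, str, str]]) -> list[tuple[str, str]]:
    """relationships: (entity_a, entity_b, max_a, max_b) with max cardinality "1" or "N"."""
    return [(a, b) for a, b, max_a, max_b in relationships if max_a == max_b == "N"]


if __name__ == "__main__":
    entities = {"Customer": {"id", "name"}, "Order": {"id"}}
    stores = {"Customer": {"id"}, "Product": {"sku"}}
    print(cross_check(entities, stores))
    print(many_to_many([("Student", "Course", "N", "N"), ("Customer", "Order", "1", "N")]))
```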

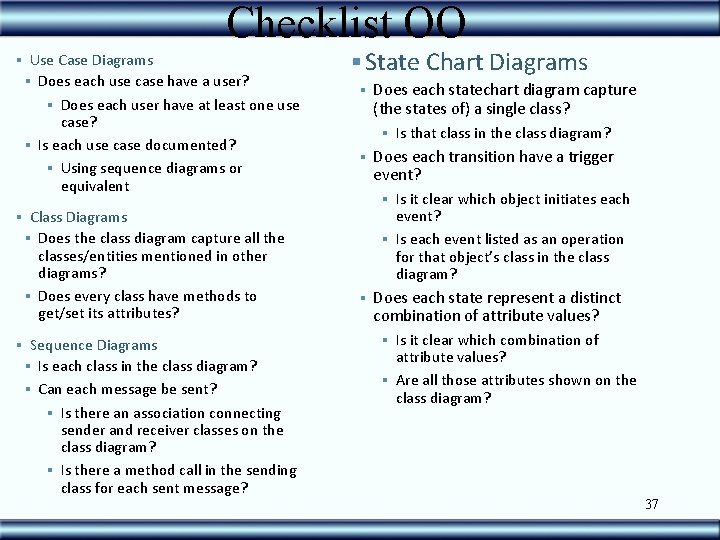

Checklist OO
- Use case diagrams
  - Does each use case have a user?
  - Does each user have at least one use case?
  - Is each use case documented?
    - Using sequence diagrams or equivalent
- Class diagrams
  - Does the class diagram capture all the classes/entities mentioned in other diagrams?
  - Does every class have methods to get/set its attributes?
- Sequence diagrams
  - Is each class in the class diagram?
  - Can each message be sent?
    - Is there an association connecting the sender and receiver classes on the class diagram?
    - Is there a method call in the sending class for each sent message?
- Statechart diagrams
  - Does each statechart diagram capture (the states of) a single class?
    - Is that class in the class diagram?
  - Does each transition have a trigger event?
    - Is it clear which object initiates each event?
    - Is each event listed as an operation for that object’s class in the class diagram?
  - Does each state represent a distinct combination of attribute values?
    - Is it clear which combination of attribute values?
    - Are all those attributes shown on the class diagram?
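Some of these questions can be answered mechanically once the diagrams are available as data. The Python sketch below is hypothetical. It covers the use case items and a simplified reading of the sequence diagram items. Each message is assumed to correspond to an operation of the same name declared by the receiving class. The data layout (`ClassDiagram`, message tuples) is ours.

```python
from dataclasses import dataclass, field


@dataclass
class ClassDiagram:
    methods: dict[str, set[str]]  # class name -> operation names
    associations: set[frozenset[str]] = field(default_factory=set)

    def associated(self, a: str, b: str) -> bool:
        # A class may always send messages to itself.
        return a == b or frozenset((a, b)) in self.associations


def check_use_cases(links: set[tuple[str, str]], actors: set[str],
                    use_cases: set[str]) -> list[str]:
    """links: (actor, use case) pairs taken from the use case diagram."""
    problems = [f"use case {u!r} has no user" for u in sorted(use_cases)
                if not any(uc == u for _, uc in links)]
    problems += [f"actor {a!r} has no use case" for a in sorted(actors)
                 if not any(actor == a for actor, _ in links)]
    return problems


def check_sequence(messages: list[tuple[str, str, str]], cd: ClassDiagram) -> list[str]:
    """messages: (sender class, receiver class, message name) from a sequence diagram."""
    problems = []
    for sender, receiver, message in messages:
        for cls in (sender, receiver):
            if cls not in cd.methods:
                problems.append(f"class {cls!r} missing from class diagram")
        if not cd.associated(sender, receiver):
            problems.append(f"no association {sender} -> {receiver}")
        if message not in cd.methods.get(receiver, set()):
            problems.append(f"{receiver} has no method {message!r}")
    return problems


if __name__ == "__main__":
    cd = ClassDiagram(methods={"ATM": {"start"}, "Bank": {"debit"}},
                      associations={frozenset(("ATM", "Bank"))})
    print(check_use_cases({("Customer", "Withdraw")}, {"Customer", "Clerk"},
                          {"Withdraw", "Deposit"}))
    print(check_sequence([("ATM", "Bank", "credit")], cd))
```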

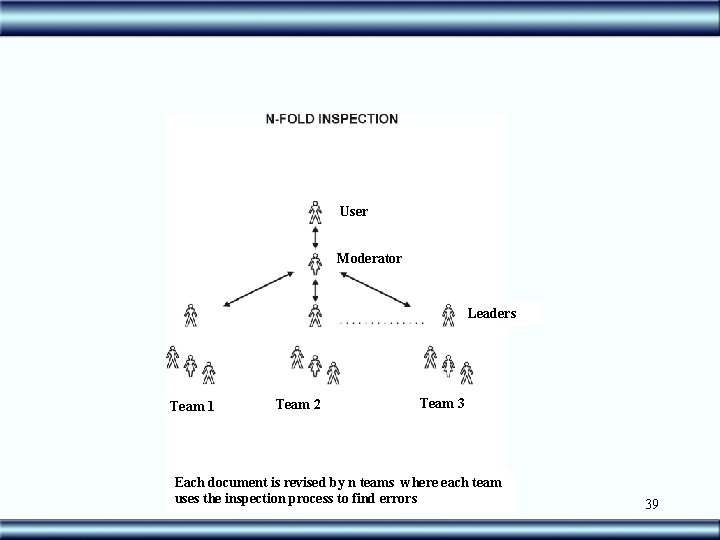

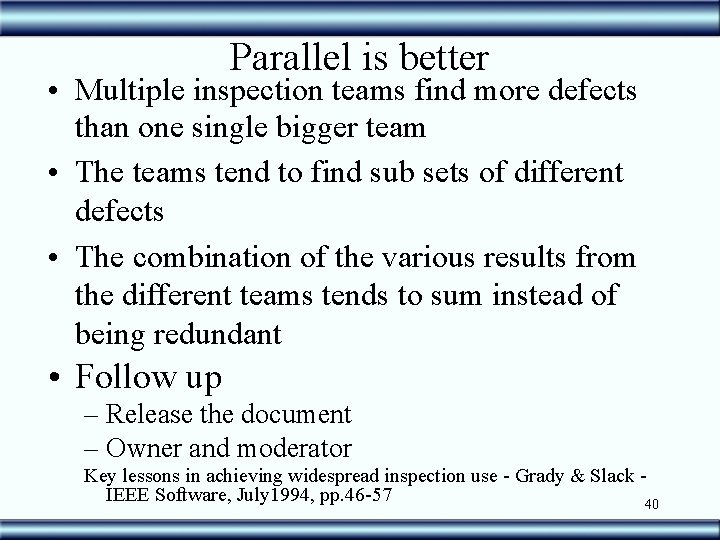

N-fold inspection
- Many teams
- Each one carries out an independent inspection process
- Compare results
- Final report

[Diagram: user, moderator, team leaders; Team 1, Team 2, Team 3]

Each document is reviewed by n teams, each of which uses the inspection process to find errors.

Parallel is better
- Multiple inspection teams find more defects than one single, bigger team
- The teams tend to find different subsets of defects
- Combining the results of the different teams tends to be additive rather than redundant
- Follow-up
  - Release the document
  - Owner and moderator

[Grady & Slack, “Key lessons in achieving widespread inspection use”, IEEE Software, July 1994, pp. 46-57]
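Combining the teams' results is essentially a set union. The Python sketch below illustrates this and shows how much each team contributed on its own. It assumes that the defects reported by different teams have already been matched to shared identifiers. In practice, the moderator has to match the teams' descriptions by hand. The function name and the identifiers are hypothetical.

```python
def combine(reports: dict[str, set[str]]) -> tuple[set[str], dict[str, set[str]], int]:
    """Merge the defect lists of n teams.

    Returns the merged list, the defects each team found alone, and how many
    reports were redundant (the same defect reported by more than one team).
    """
    merged = set().union(*reports.values())
    unique = {
        team: found - set().union(*(f for t, f in reports.items() if t != team))
        for team, found in reports.items()
    }
    redundant = sum(len(found) for found in reports.values()) - len(merged)
    return merged, unique, redundant


if __name__ == "__main__":
    reports = {"Team 1": {"D1", "D2"}, "Team 2": {"D2", "D3"}, "Team 3": {"D4"}}
    merged, unique, redundant = combine(reports)
    print(sorted(merged), redundant)  # ['D1', 'D2', 'D3', 'D4'] 1
```

When `redundant` is small compared with the size of `merged`, the teams found mostly different subsets, which is the effect described above.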

Challenges of N-fold inspections
- Large requirements document
  - Informal and incremental reviews during the development of the specification
  - Each inspector starts from a different point
  - Divide into many small teams, each inspecting a specific part of the document

Challenges of inspections
- Large inspection teams
  - Difficult to schedule meetings
  - Parallel conversations
  - Difficult to reach agreement
- What to do?
  - Make sure the participants are there to inspect, not to “spy on” the specification or to maintain a political status

Challenges of inspections
- Large inspection teams
  - Understand which point of view (client, user, developer) each inspector is using, and keep only one inspector per interested party
  - Establish many small teams and carry out the inspection in parallel; combine the lists and remove redundancies

Challenges of inspections
- Geographical distance between inspectors
  - Videoconference, teleconference, e-mail, web
  - Difficult to observe body language and facial expressions
  - Difficult to moderate
  - 25% reduction in effectiveness

[Wiegers 98] K. Wiegers, “The seven deadly sins of software reviews”, Software Development 6(3), pp. 44-47