Reverse Time Migration on GMAC NVIDIA GTC 22

- Slides: 56

Reverse Time Migration on GMAC NVIDIA GTC 22 nd of September, 2010 Javier Cabezas Mauricio Araya Isaac Gelado Thomas Bradley Gladys González José María Cela Nacho Navarro BSC Repsol/BSC UPC/UIUC NVIDIA Repsol UPC/BSC

Outline • Introduction • Reverse Time Migration on CUDA • GMAC at a glance • Reverse Time Migration on GMAC • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 2

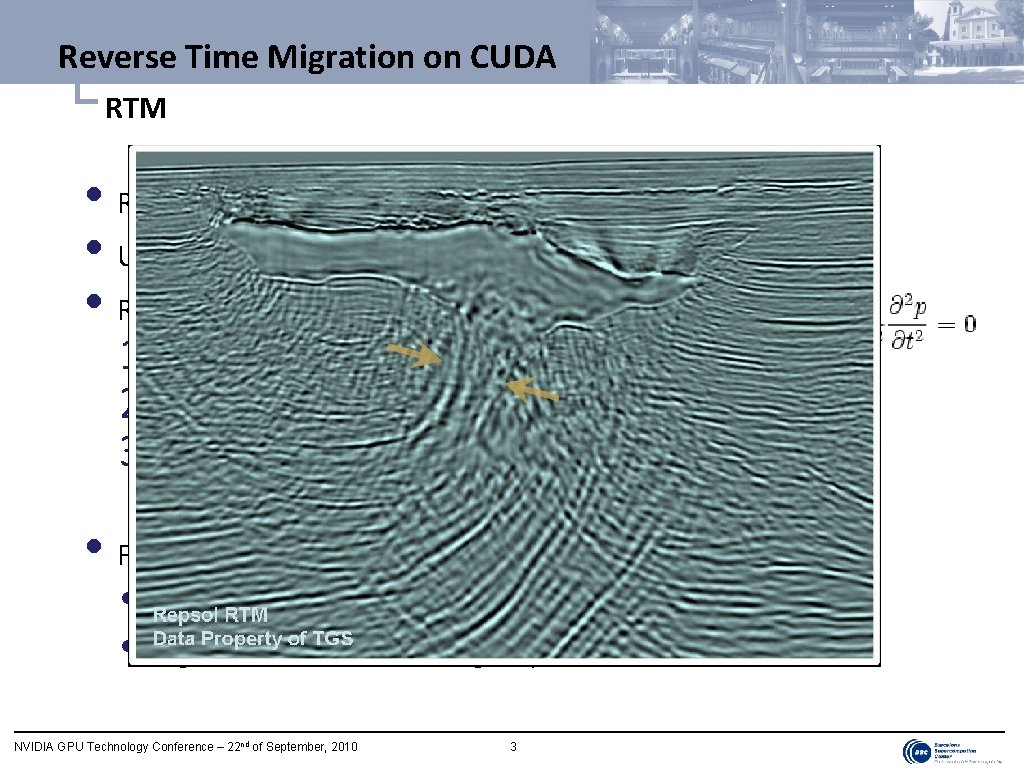

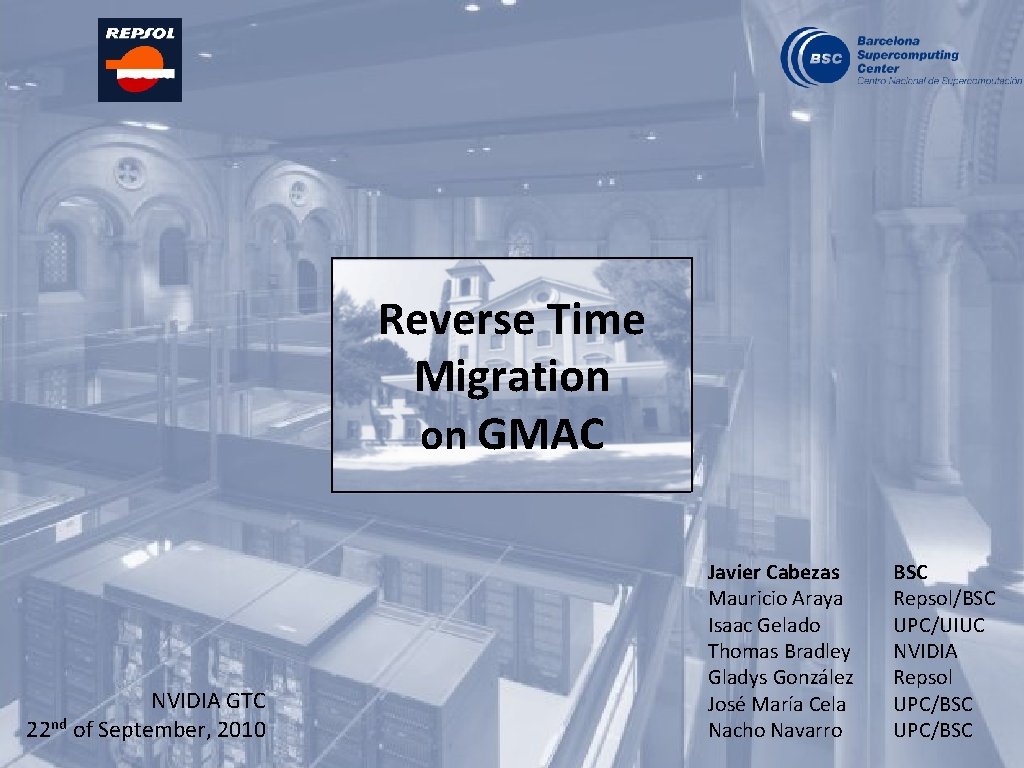

Reverse Time Migration on CUDA └ RTM • RTM generates an image of the subsurface layers • Uses traces recorded by sensors in the field • RTM’s algorithm 1. Propagation of a modeled wave (forward in time) 2. Propagation of the recorded traces (backward in time) 3. Correlation of the forward and backward wavefields • Last forward wavefield with the first backward wavefield • FDTD are preferred to FFT • • 2 nd-order finite differencing in time High-order finite differencing in space NVIDIA GPU Technology Conference – 22 nd of September, 2010 3

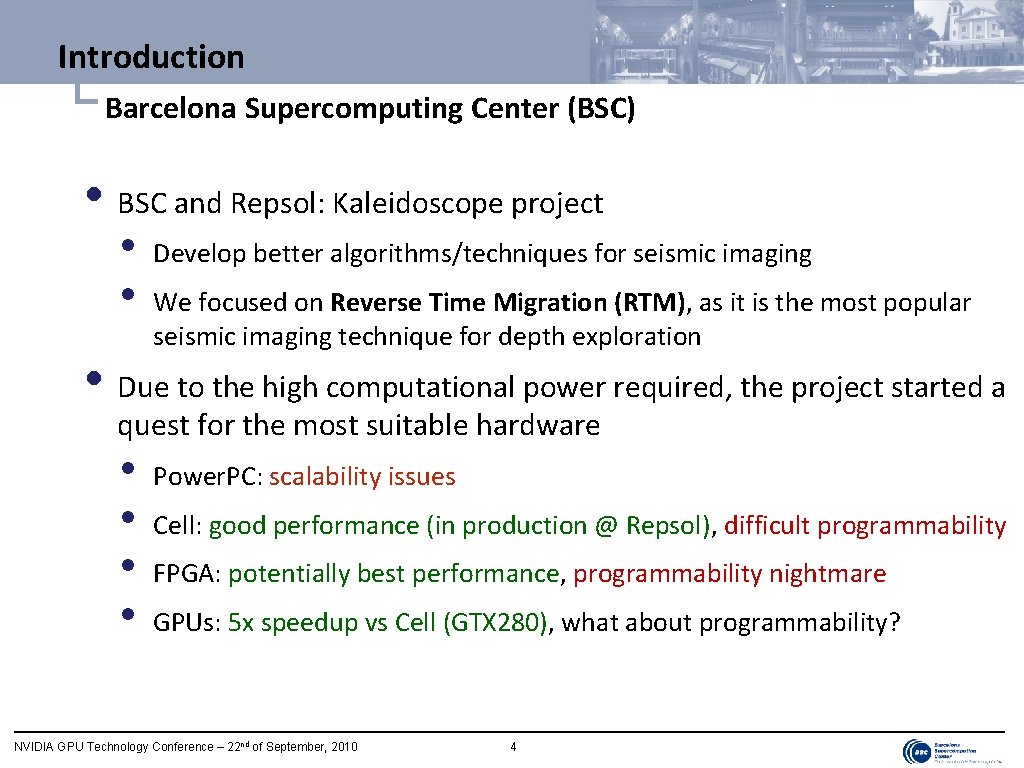

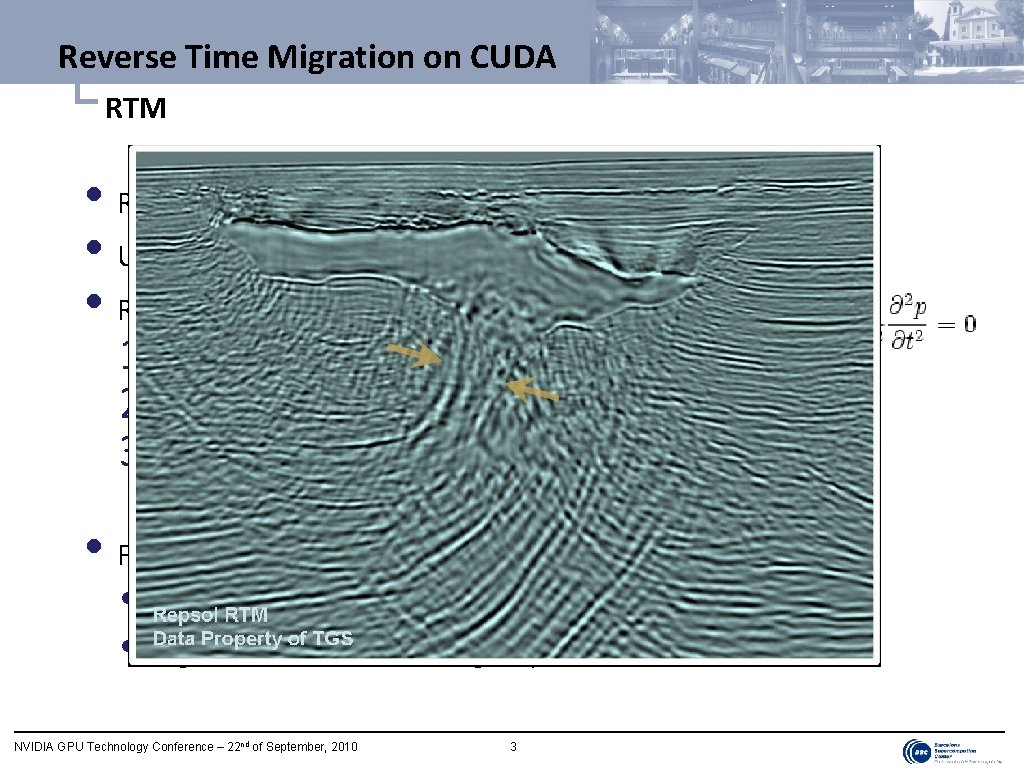

Introduction └ Barcelona Supercomputing Center (BSC) • BSC and Repsol: Kaleidoscope project • • Develop better algorithms/techniques for seismic imaging We focused on Reverse Time Migration (RTM), as it is the most popular seismic imaging technique for depth exploration • Due to the high computational power required, the project started a quest for the most suitable hardware • • Power. PC: scalability issues Cell: good performance (in production @ Repsol), difficult programmability FPGA: potentially best performance, programmability nightmare GPUs: 5 x speedup vs Cell (GTX 280), what about programmability? NVIDIA GPU Technology Conference – 22 nd of September, 2010 4

Outline • Introduction • Reverse Time Migration on CUDA →General approach • Disk I/O • Domain decomposition • Overlapping computation and communication • GMAC at a glance • Reverse Time Migration on GMAC • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 5

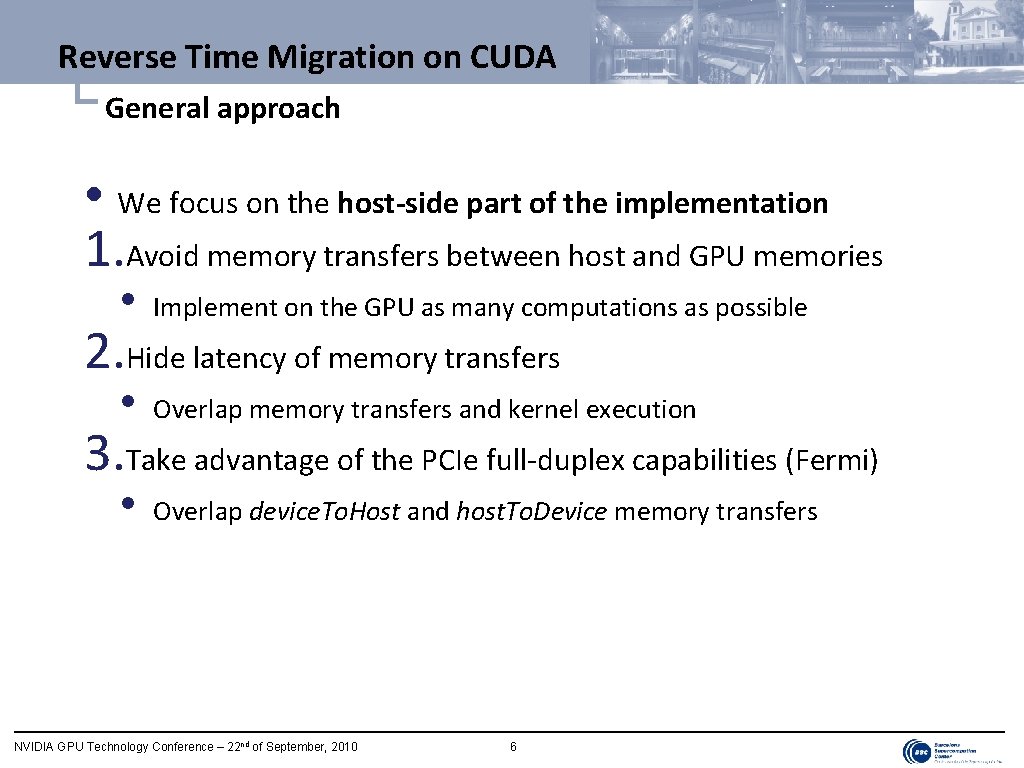

Reverse Time Migration on CUDA └ General approach • We focus on the host-side part of the implementation 1. Avoid memory transfers between host and GPU memories • Implement on the GPU as many computations as possible • Overlap memory transfers and kernel execution • Overlap device. To. Host and host. To. Device memory transfers 2. Hide latency of memory transfers 3. Take advantage of the PCIe full-duplex capabilities (Fermi) NVIDIA GPU Technology Conference – 22 nd of September, 2010 6

Reverse Time Migration on CUDA └ General approach Forward Backward 3 D-Stencil Absorbing Boundary Conditions Source insertion Traces insertion Compression Read from disk Write to disk Decompression Correlation NVIDIA GPU Technology Conference – 22 nd of September, 2010 7

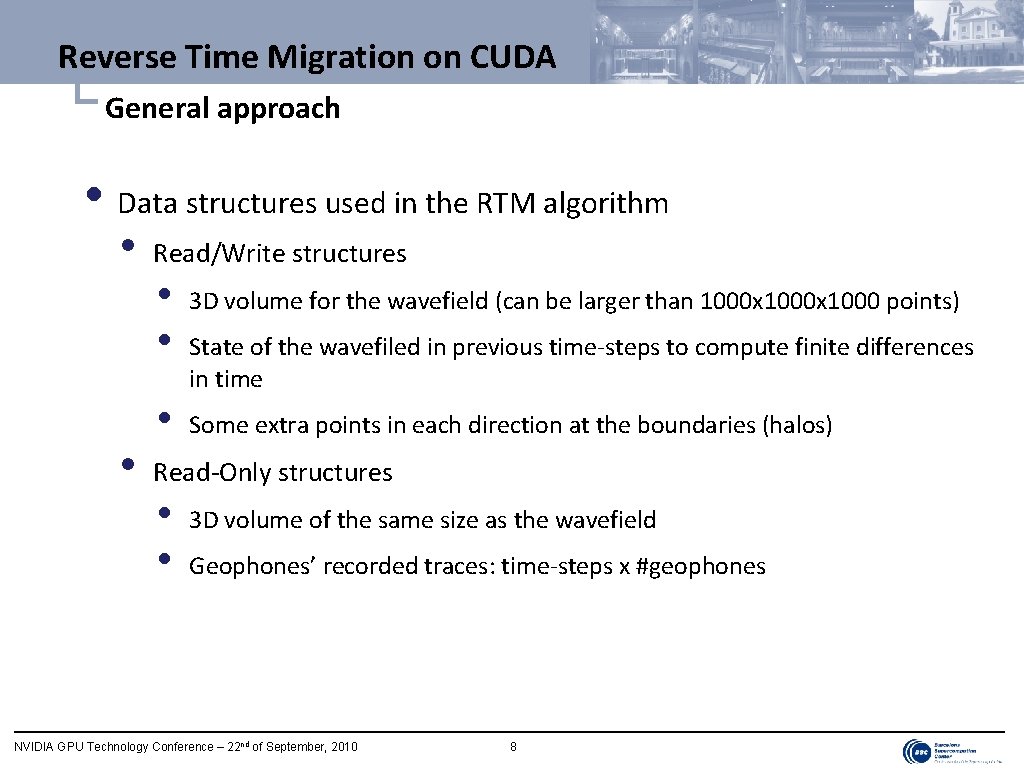

Reverse Time Migration on CUDA └ General approach • Data structures used in the RTM algorithm • • Read/Write structures • • • 3 D volume for the wavefield (can be larger than 1000 x 1000 points) State of the wavefiled in previous time-steps to compute finite differences in time Some extra points in each direction at the boundaries (halos) Read-Only structures • • 3 D volume of the same size as the wavefield Geophones’ recorded traces: time-steps x #geophones NVIDIA GPU Technology Conference – 22 nd of September, 2010 8

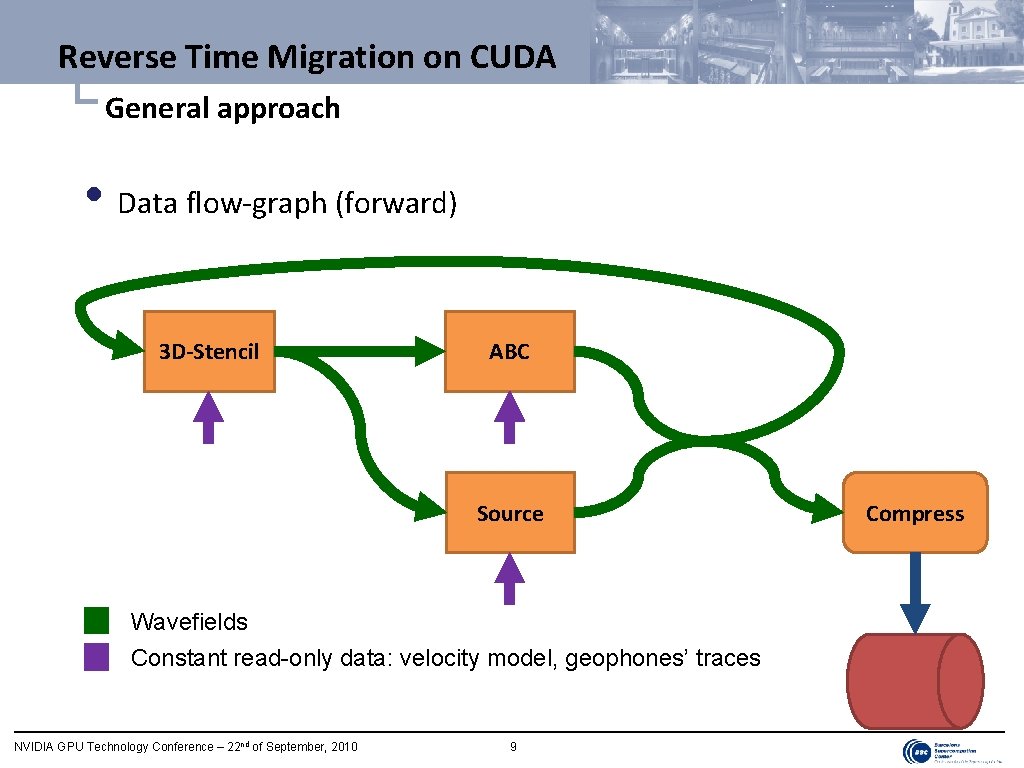

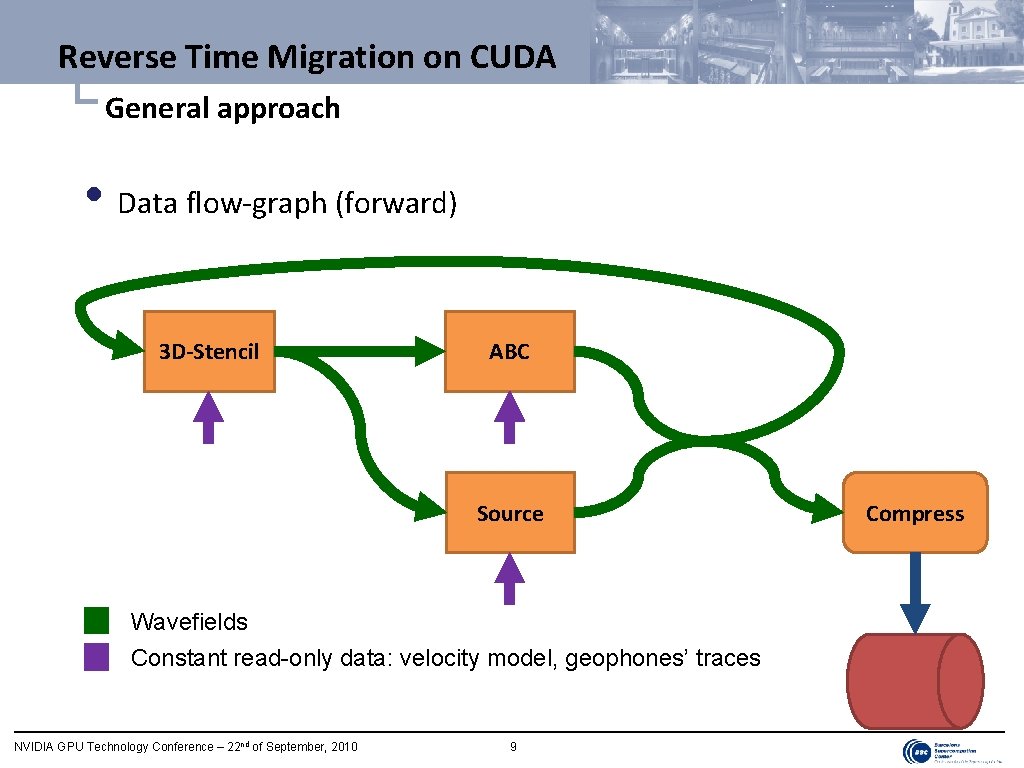

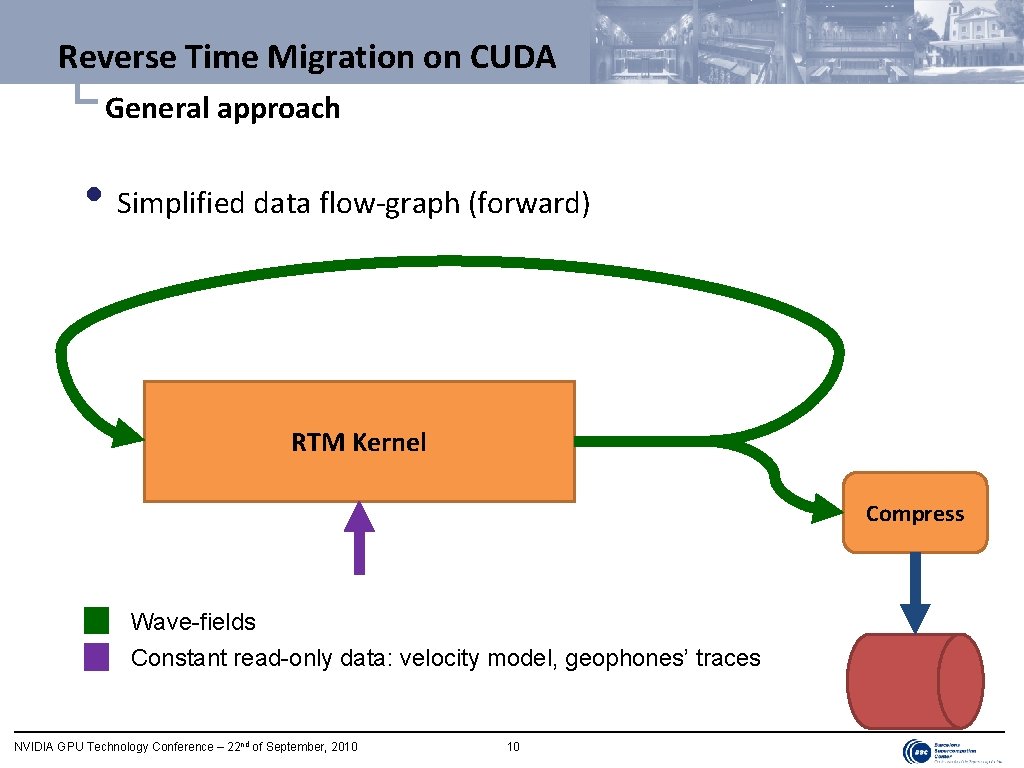

Reverse Time Migration on CUDA └ General approach • Data flow-graph (forward) 3 D-Stencil ABC Source Wavefields Constant read-only data: velocity model, geophones’ traces NVIDIA GPU Technology Conference – 22 nd of September, 2010 9 Compress

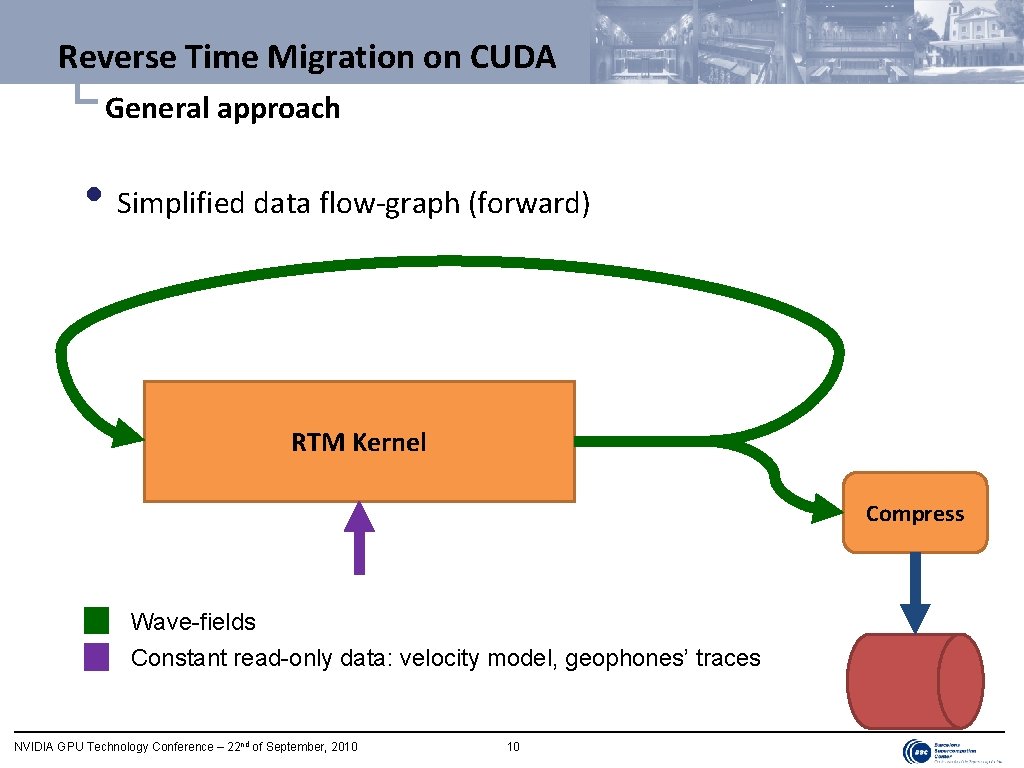

Reverse Time Migration on CUDA └ General approach • Simplified data flow-graph (forward) RTM Kernel Compress Wave-fields Constant read-only data: velocity model, geophones’ traces NVIDIA GPU Technology Conference – 22 nd of September, 2010 10

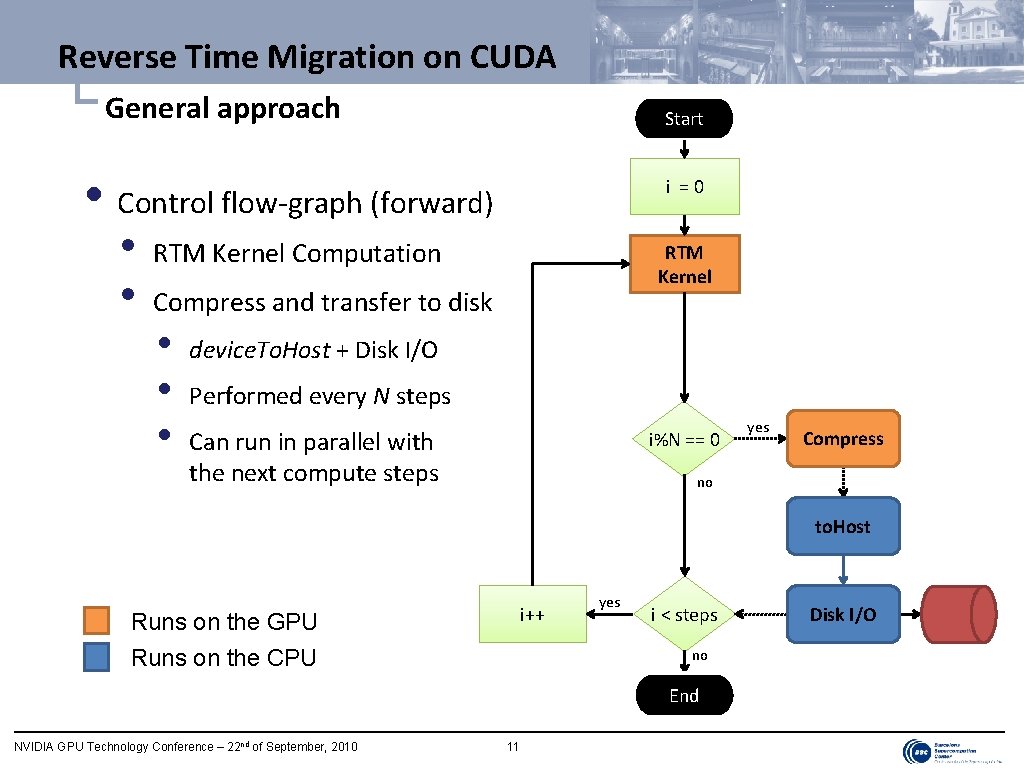

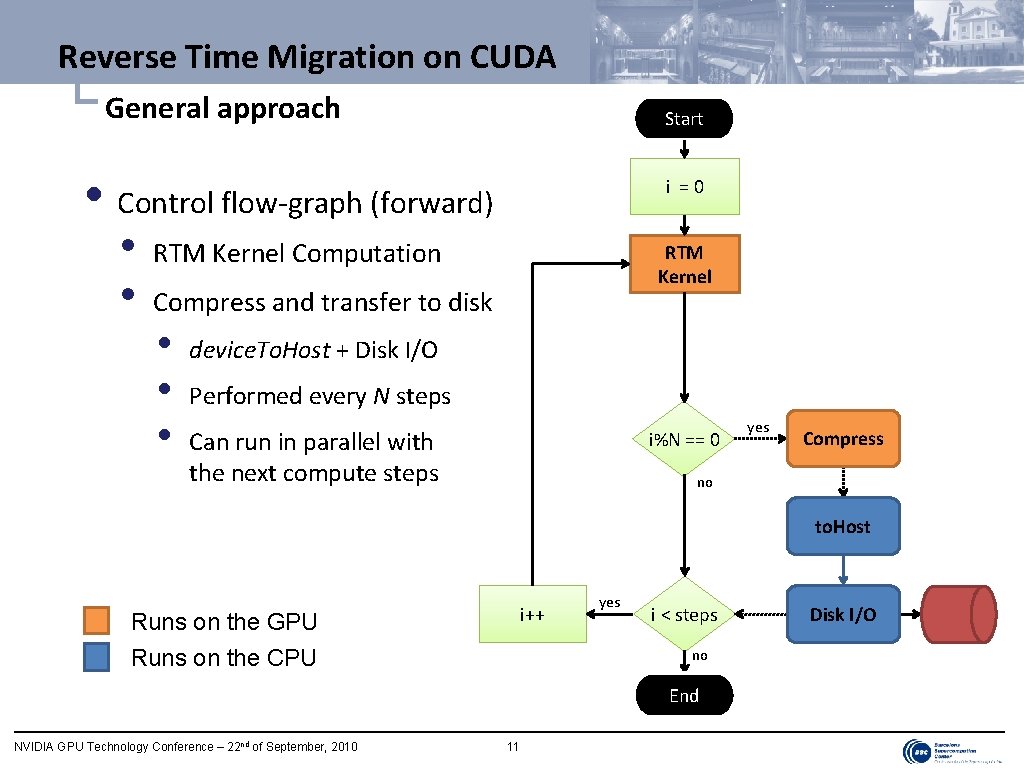

Reverse Time Migration on CUDA └ General approach Start • Control flow-graph (forward) • • i =0 RTM Kernel Computation RTM Kernel Compress and transfer to disk • • • device. To. Host + Disk I/O Performed every N steps Can run in parallel with the next compute steps i%N == 0 yes Compress no to. Host i++ Runs on the GPU Runs on the CPU yes i < steps no End NVIDIA GPU Technology Conference – 22 nd of September, 2010 11 Disk I/O

Outline • Introduction • Reverse Time Migration on CUDA • General approach →Disk I/O • Domain decomposition • Overlapping computation and communication • GMAC at a glance • Reverse Time Migration on GMAC • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 12

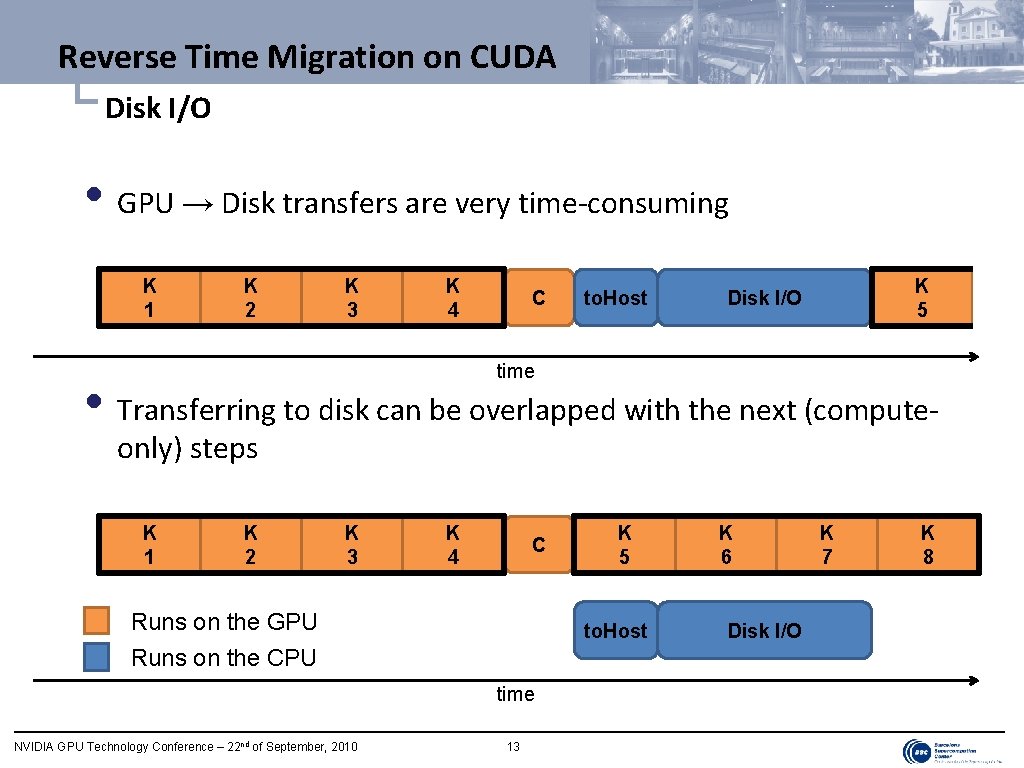

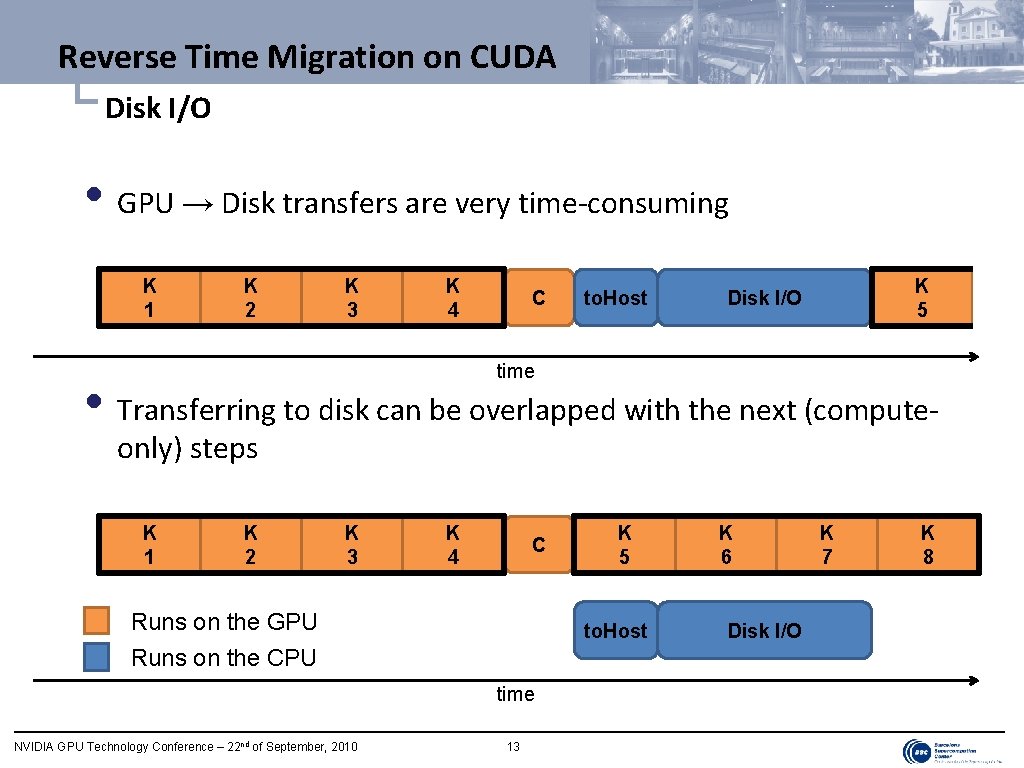

Reverse Time Migration on CUDA └ Disk I/O • GPU → Disk transfers are very time-consuming K 1 K 2 K 3 K 4 C to. Host K 5 Disk I/O time • Transferring to disk can be overlapped with the next (computeonly) steps K 1 K 2 K 3 K 4 C Runs on the GPU to. Host Runs on the CPU time NVIDIA GPU Technology Conference – 22 nd of September, 2010 K 5 13 K 6 Disk I/O K 7 K 8

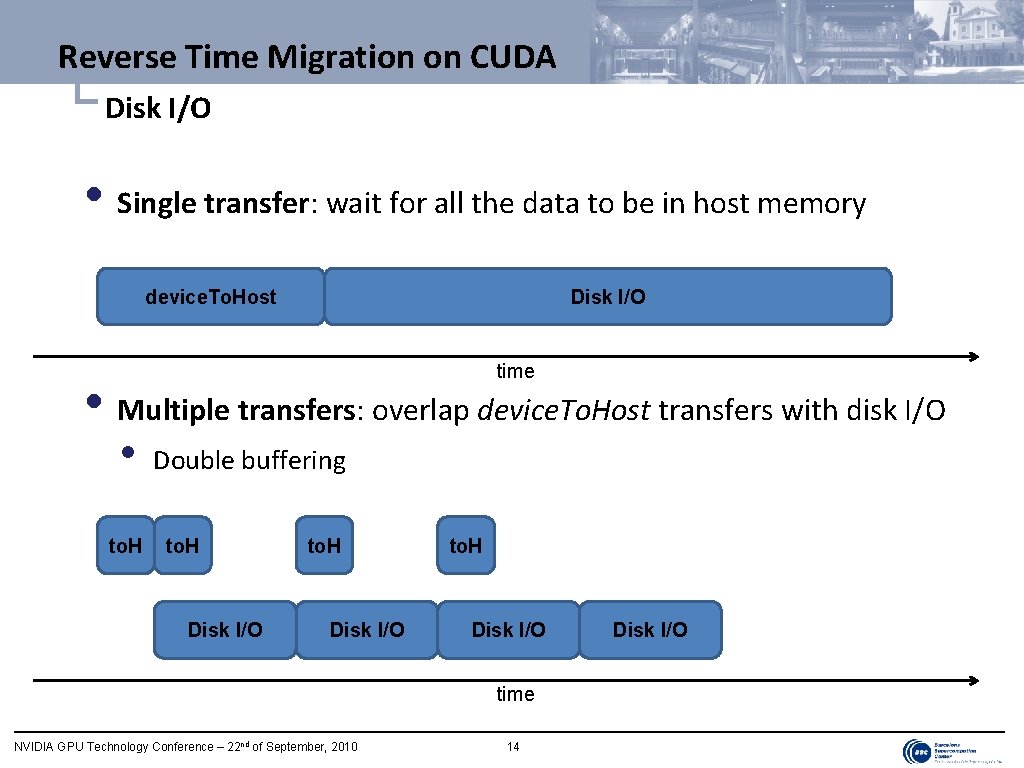

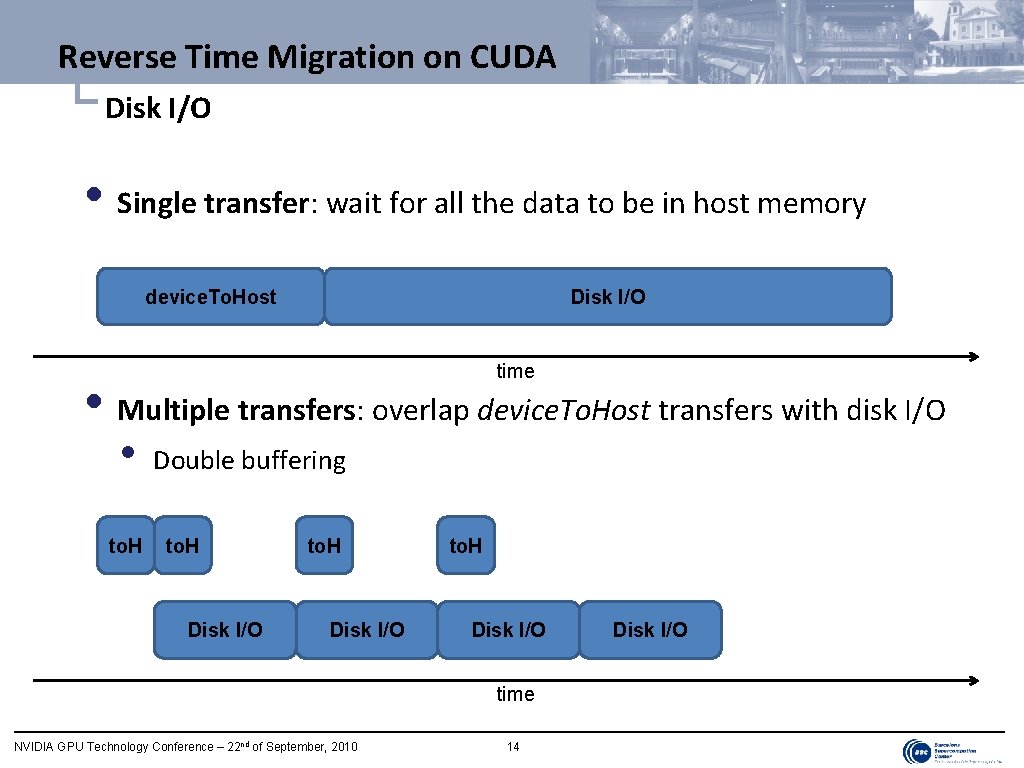

Reverse Time Migration on CUDA └ Disk I/O • Single transfer: wait for all the data to be in host memory device. To. Host Disk I/O time • Multiple transfers: overlap device. To. Host transfers with disk I/O • to. H Double buffering to. H Disk I/O time NVIDIA GPU Technology Conference – 22 nd of September, 2010 14 Disk I/O

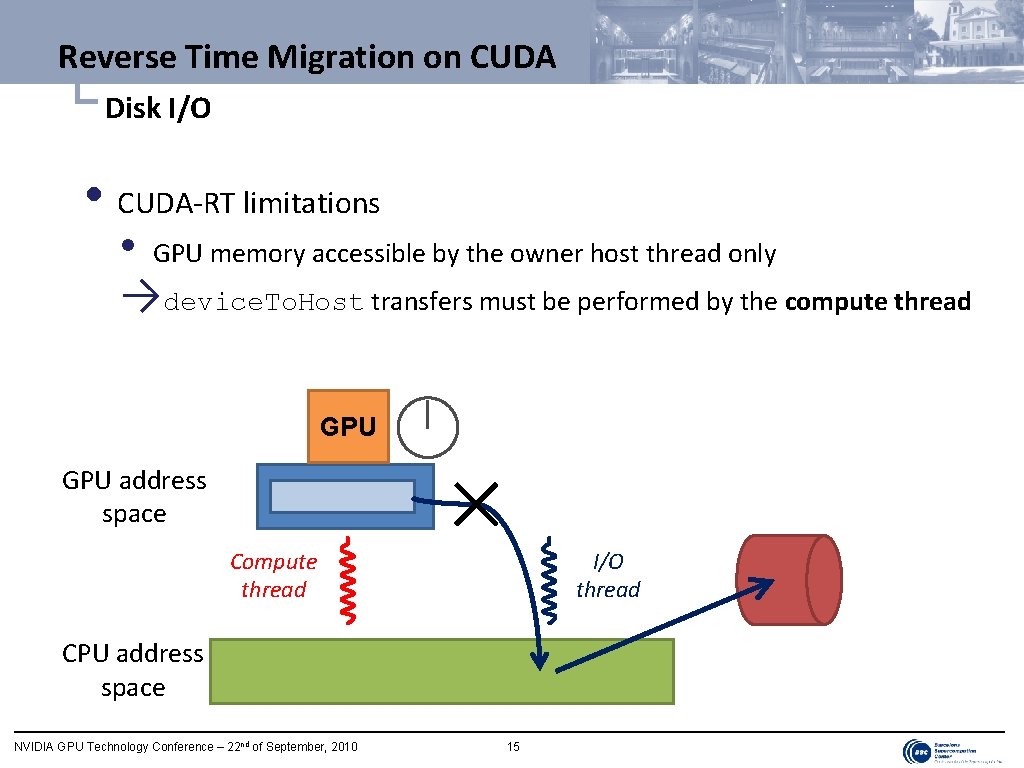

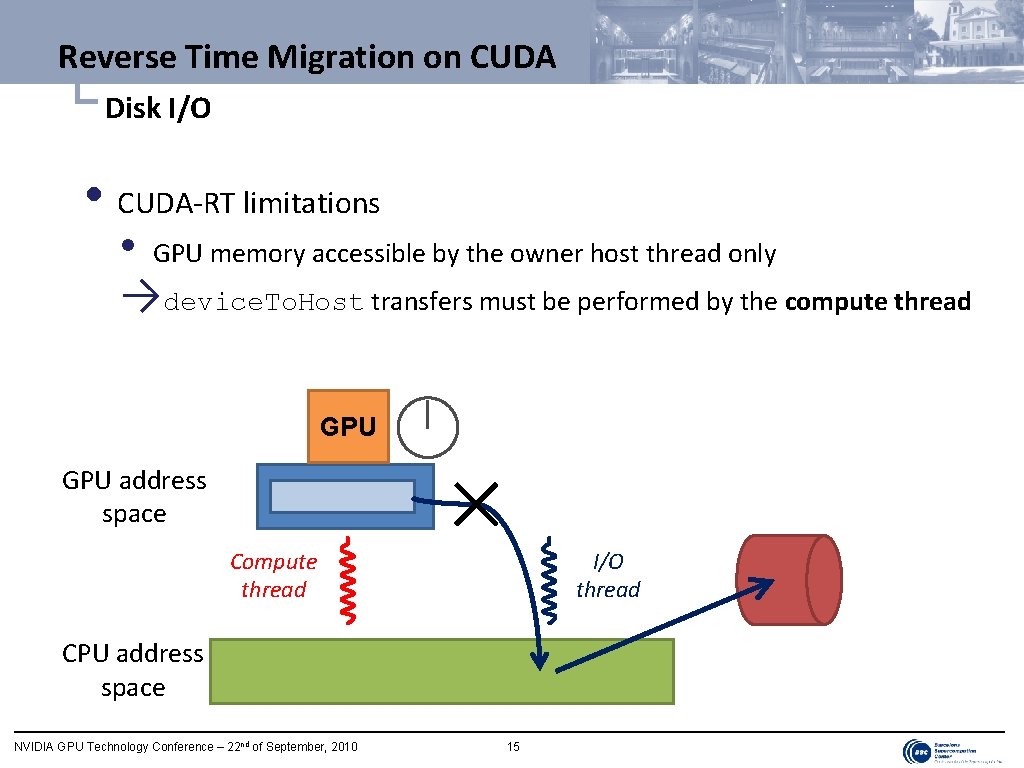

Reverse Time Migration on CUDA └ Disk I/O • CUDA-RT limitations • GPU memory accessible by the owner host thread only →device. To. Host transfers must be performed by the compute thread GPU address space Compute thread I/O thread CPU address space NVIDIA GPU Technology Conference – 22 nd of September, 2010 15

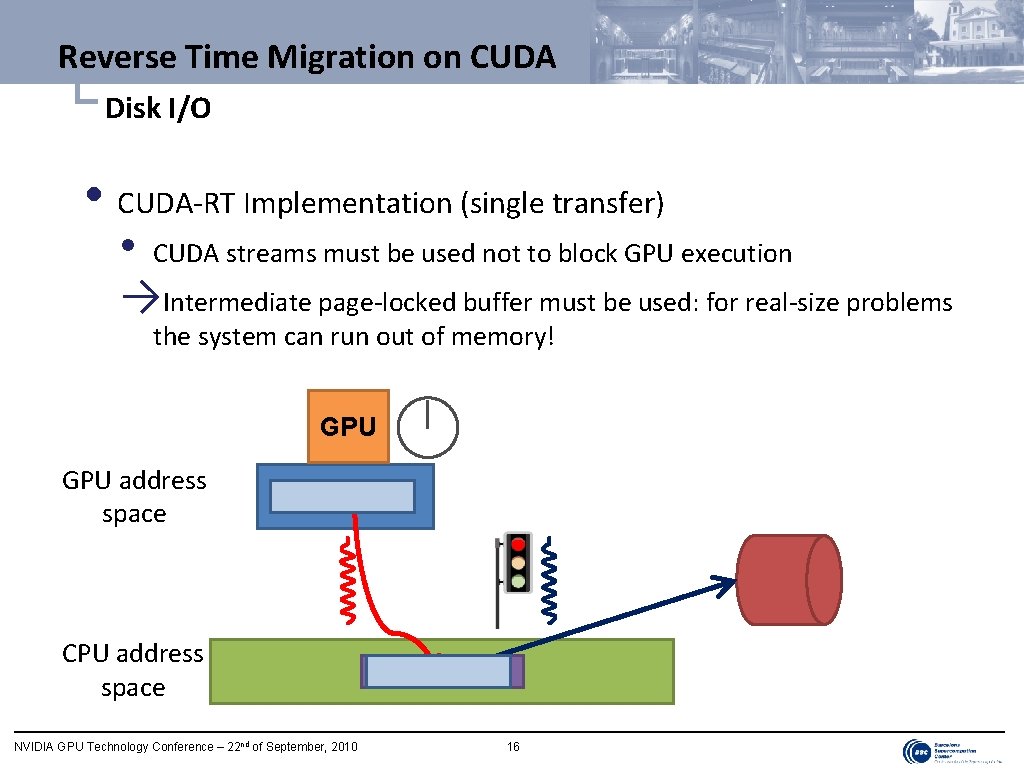

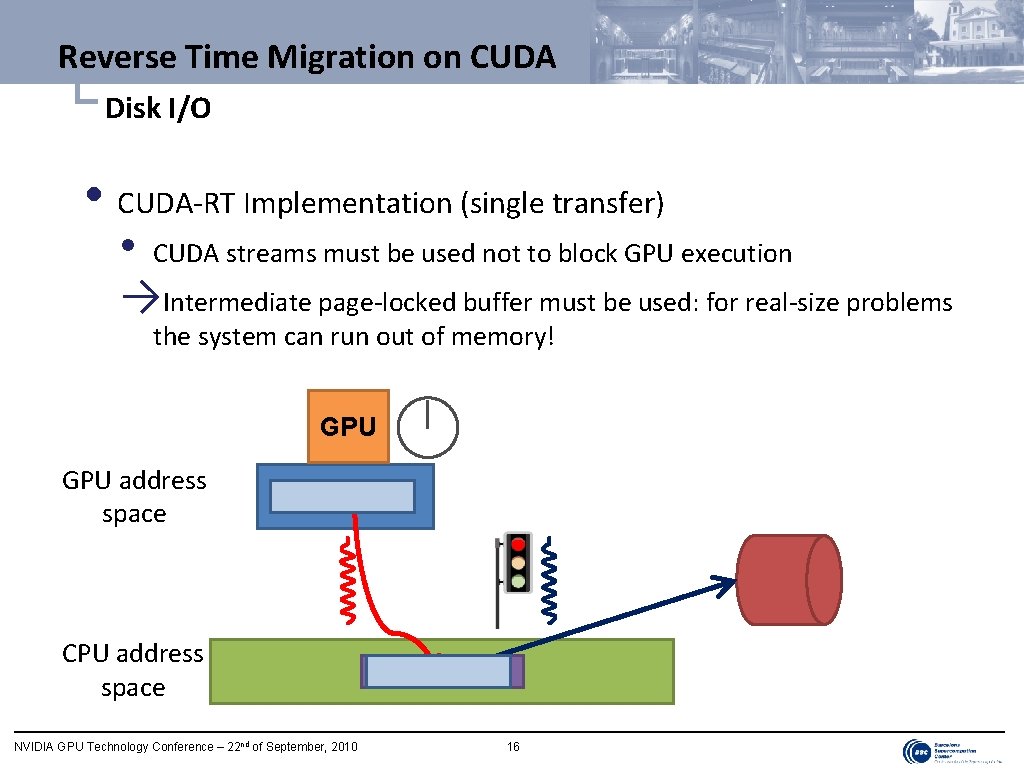

Reverse Time Migration on CUDA └ Disk I/O • CUDA-RT Implementation (single transfer) • CUDA streams must be used not to block GPU execution →Intermediate page-locked buffer must be used: for real-size problems the system can run out of memory! GPU address space CPU address space NVIDIA GPU Technology Conference – 22 nd of September, 2010 16

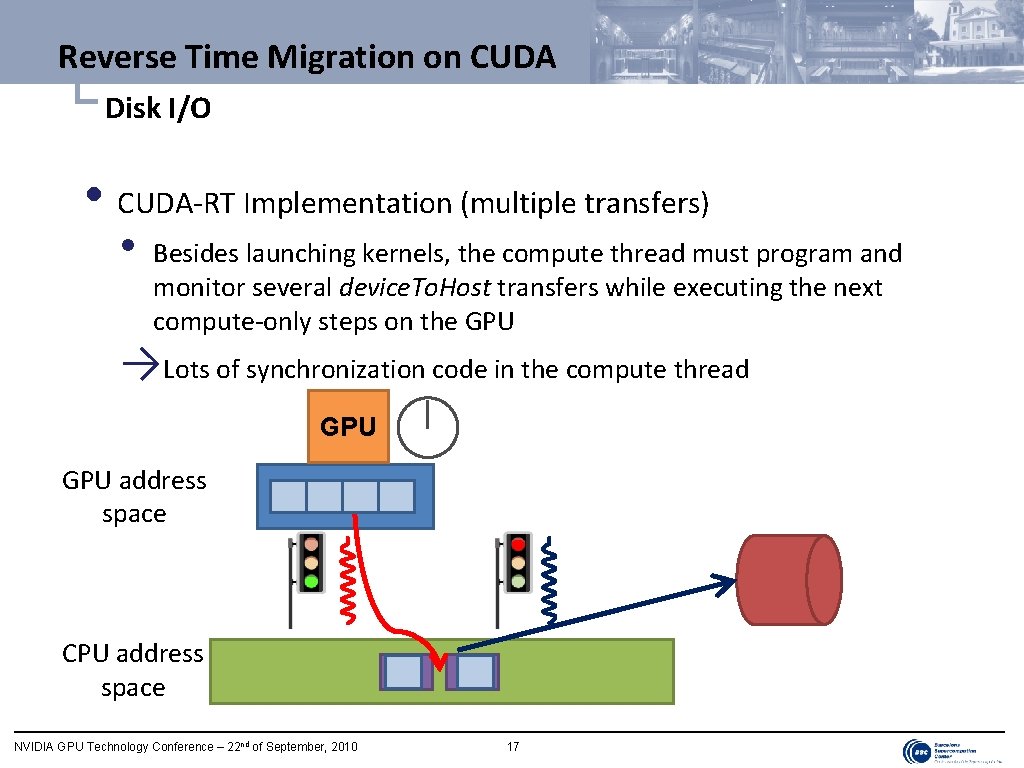

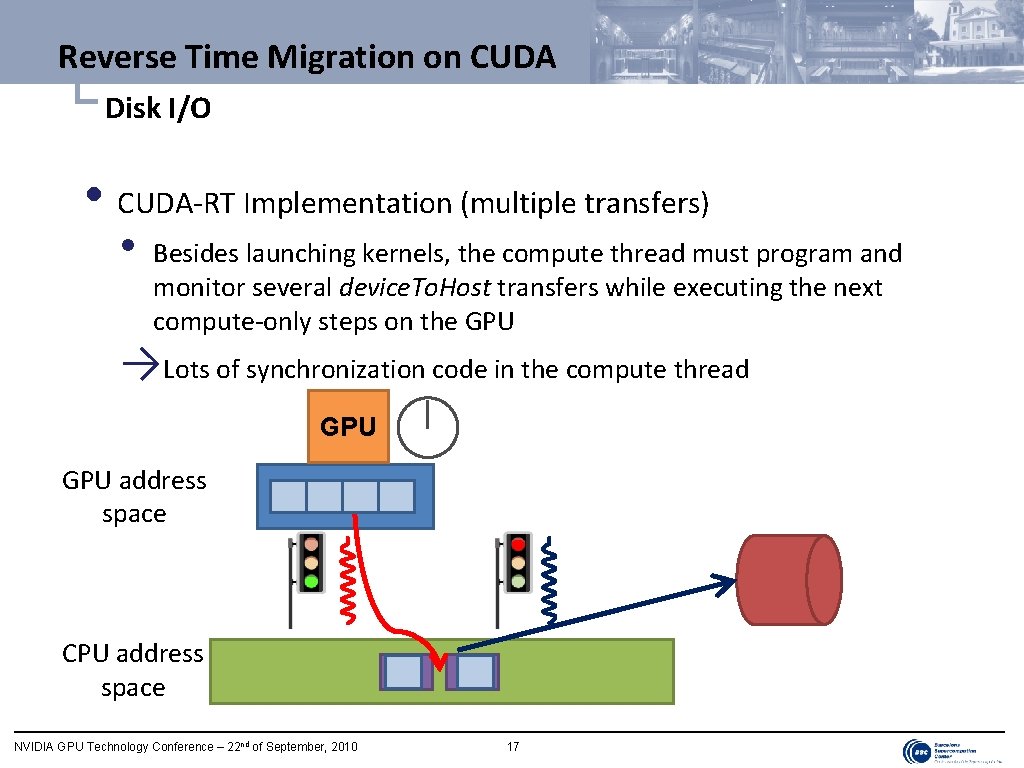

Reverse Time Migration on CUDA └ Disk I/O • CUDA-RT Implementation (multiple transfers) • Besides launching kernels, the compute thread must program and monitor several device. To. Host transfers while executing the next compute-only steps on the GPU →Lots of synchronization code in the compute thread GPU address space CPU address space NVIDIA GPU Technology Conference – 22 nd of September, 2010 17

Outline • Introduction • Reverse Time Migration on CUDA • General approach • Disk I/O →Domain decomposition • Overlapping computation and communication • GMAC at a glance • Reverse Time Migration on GMAC • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 18

Reverse Time Migration on CUDA └ Domain decomposition • But… wait, real-size problems require > 16 GB of data! • Volumes are split into tiles (along the Z-axis) • 3 D-Stencil introduces data dependencies D 4 D 2 x y z D 1 NVIDIA GPU Technology Conference – 22 nd of September, 2010 D 3 19

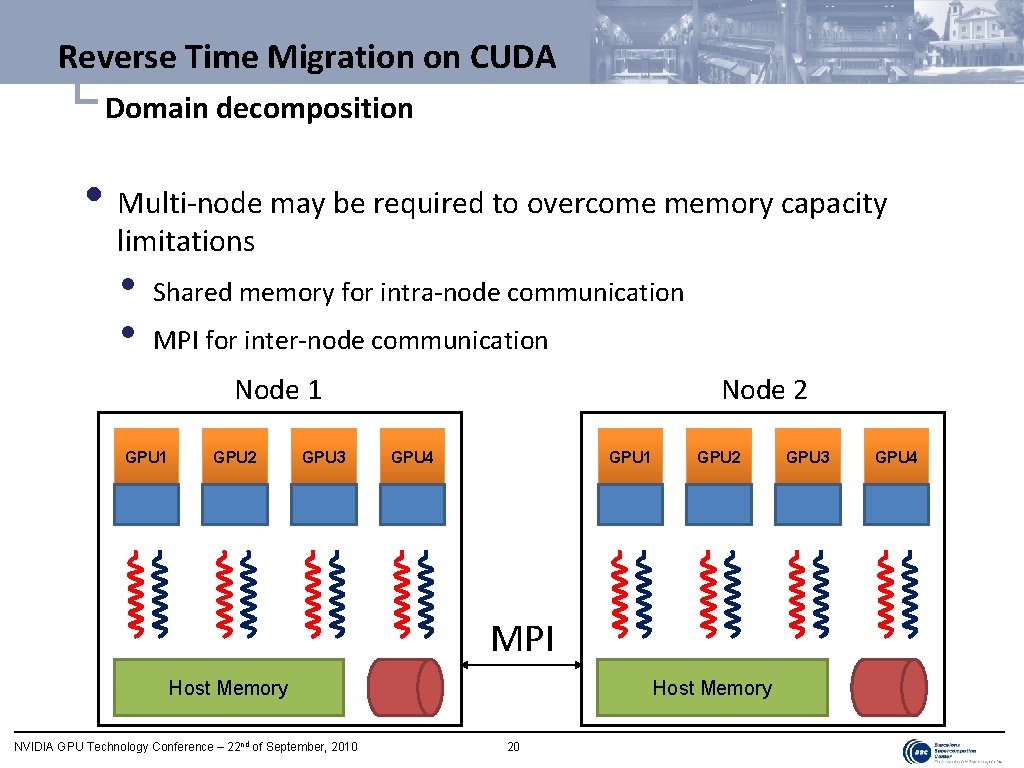

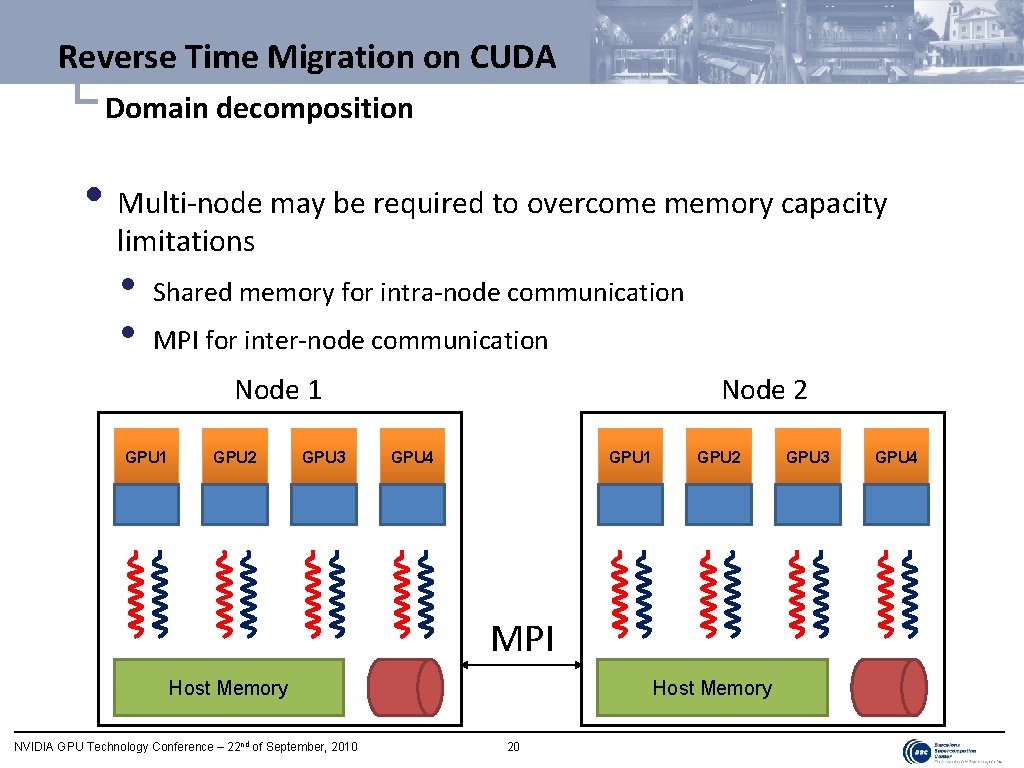

Reverse Time Migration on CUDA └ Domain decomposition • Multi-node may be required to overcome memory capacity limitations • • Shared memory for intra-node communication MPI for inter-node communication Node 2 Node 1 GPU 2 GPU 3 GPU 4 GPU 1 GPU 2 MPI Host Memory NVIDIA GPU Technology Conference – 22 nd of September, 2010 Host Memory 20 GPU 3 GPU 4

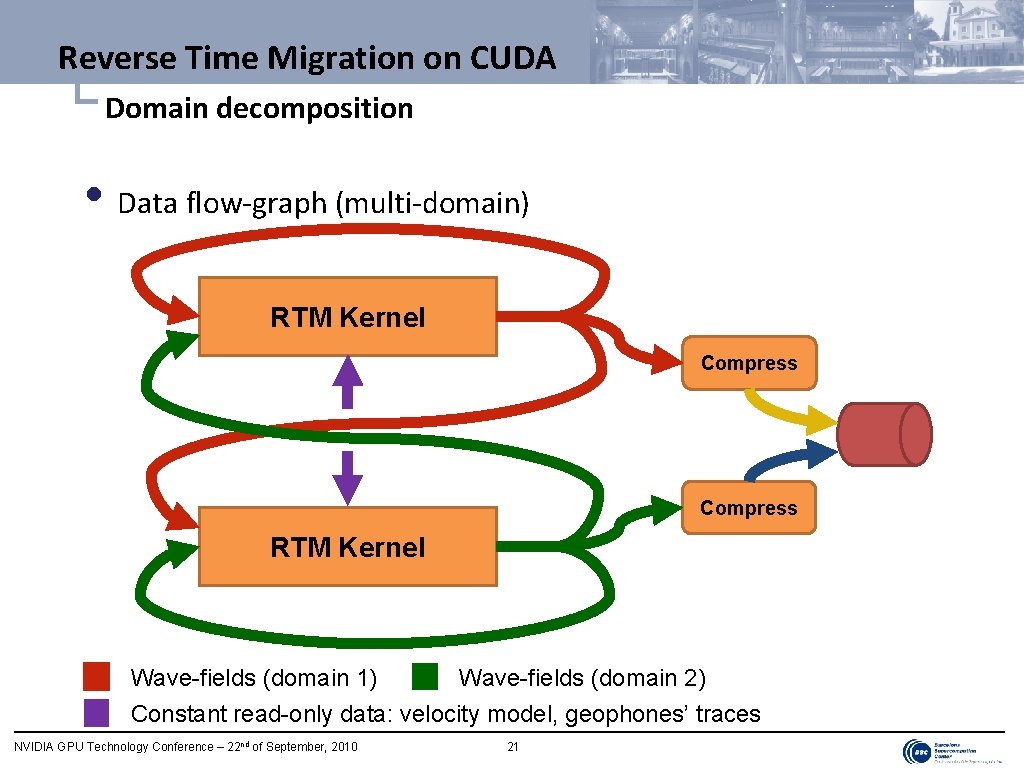

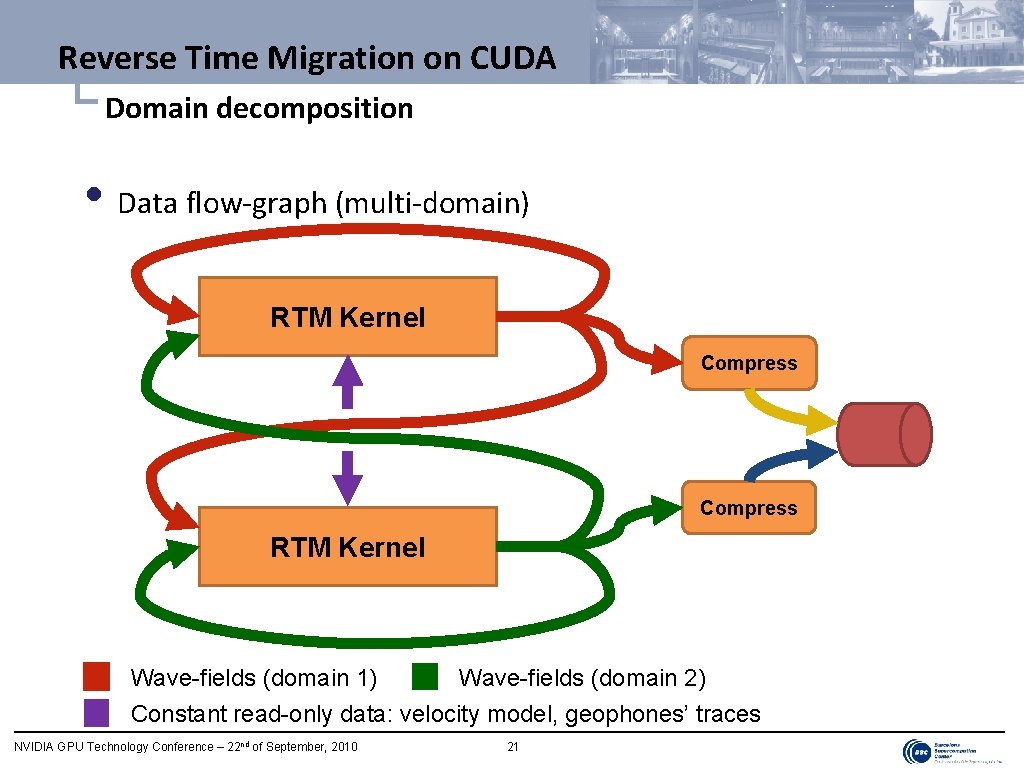

Reverse Time Migration on CUDA └ Domain decomposition • Data flow-graph (multi-domain) RTM Kernel Compress RTM Kernel Wave-fields (domain 1) Wave-fields (domain 2) Constant read-only data: velocity model, geophones’ traces NVIDIA GPU Technology Conference – 22 nd of September, 2010 21

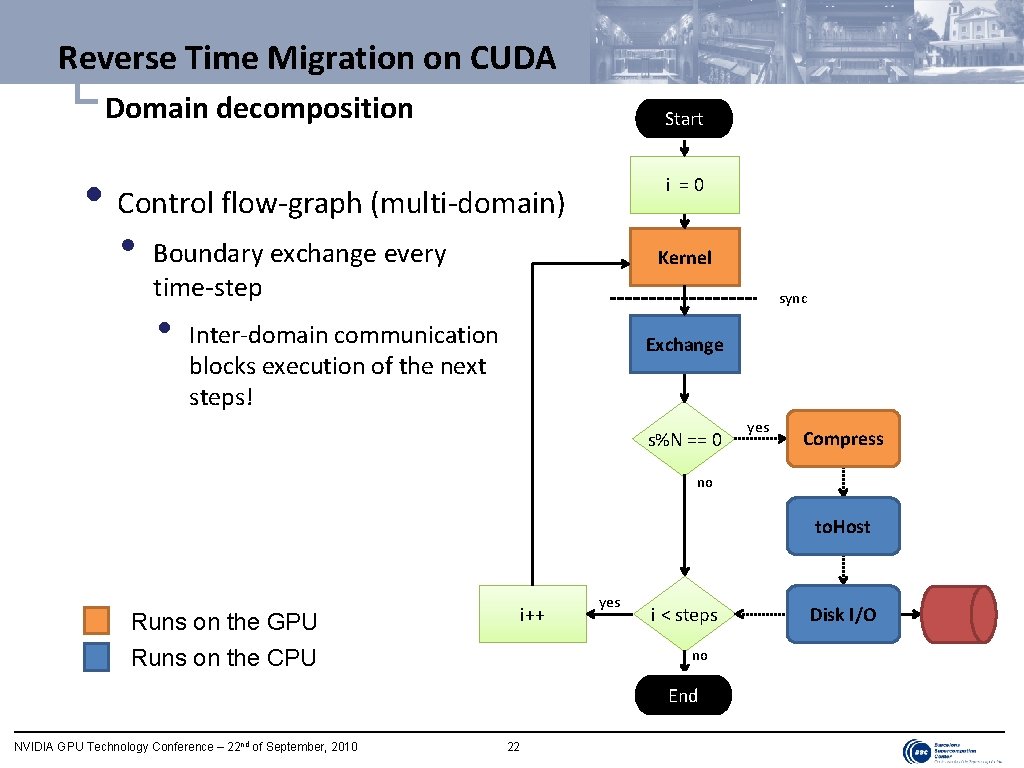

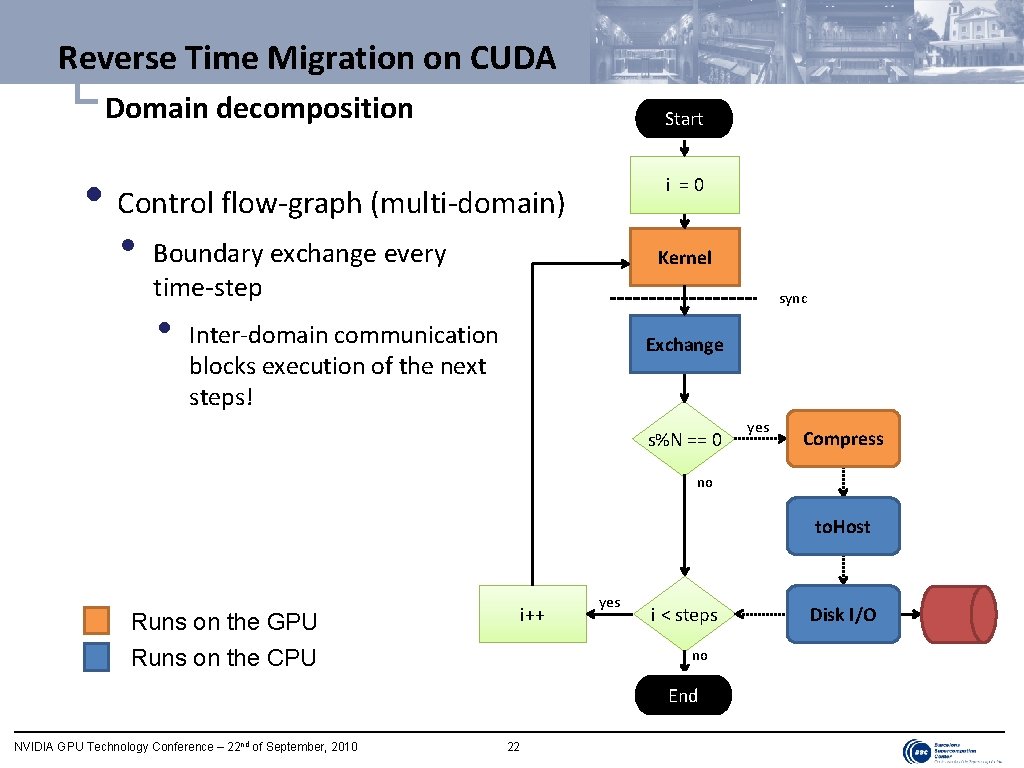

Reverse Time Migration on CUDA └ Domain decomposition Start • Control flow-graph (multi-domain) • i =0 Boundary exchange every time-step • Kernel sync Inter-domain communication blocks execution of the next steps! Exchange s%N == 0 yes Compress no to. Host i++ Runs on the GPU Runs on the CPU yes i < steps no End NVIDIA GPU Technology Conference – 22 nd of September, 2010 22 Disk I/O

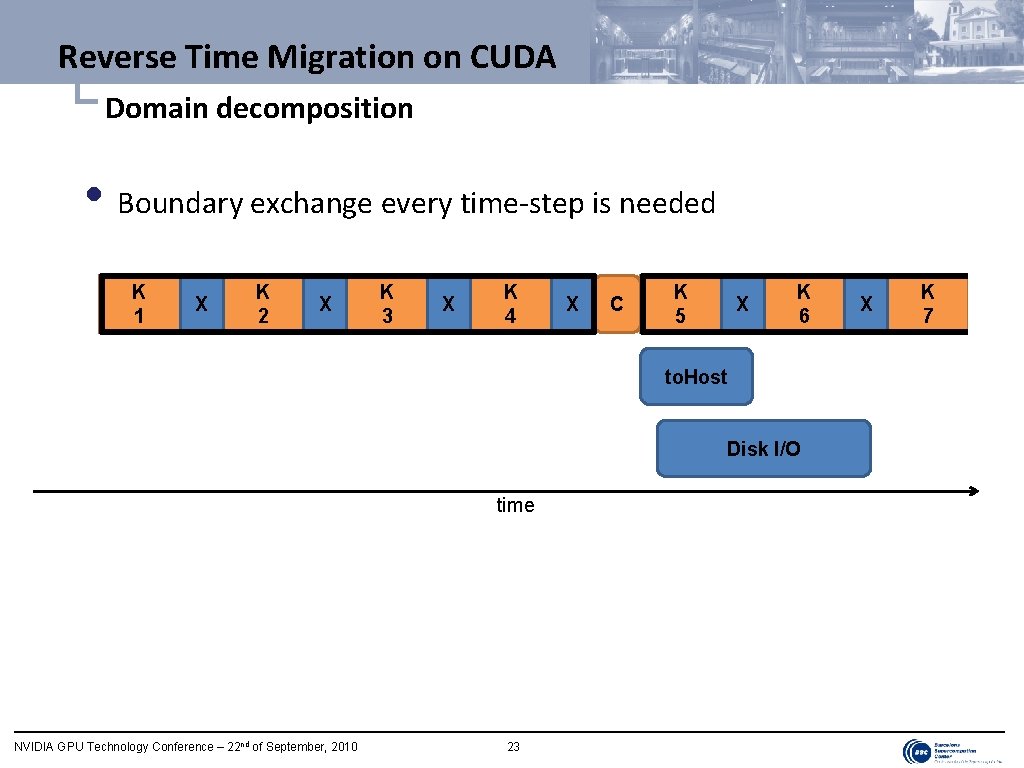

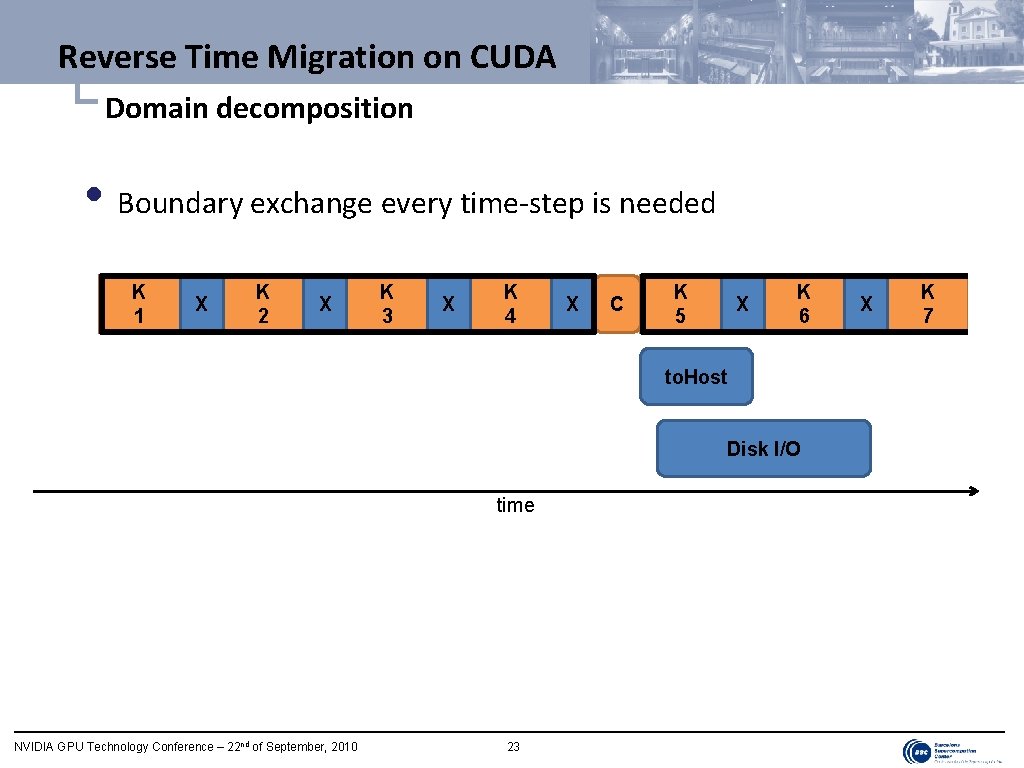

Reverse Time Migration on CUDA └ Domain decomposition • Boundary exchange every time-step is needed K 1 X K 2 X K 3 X K 4 X C K 5 X K 6 to. Host Disk I/O time NVIDIA GPU Technology Conference – 22 nd of September, 2010 23 X K 7

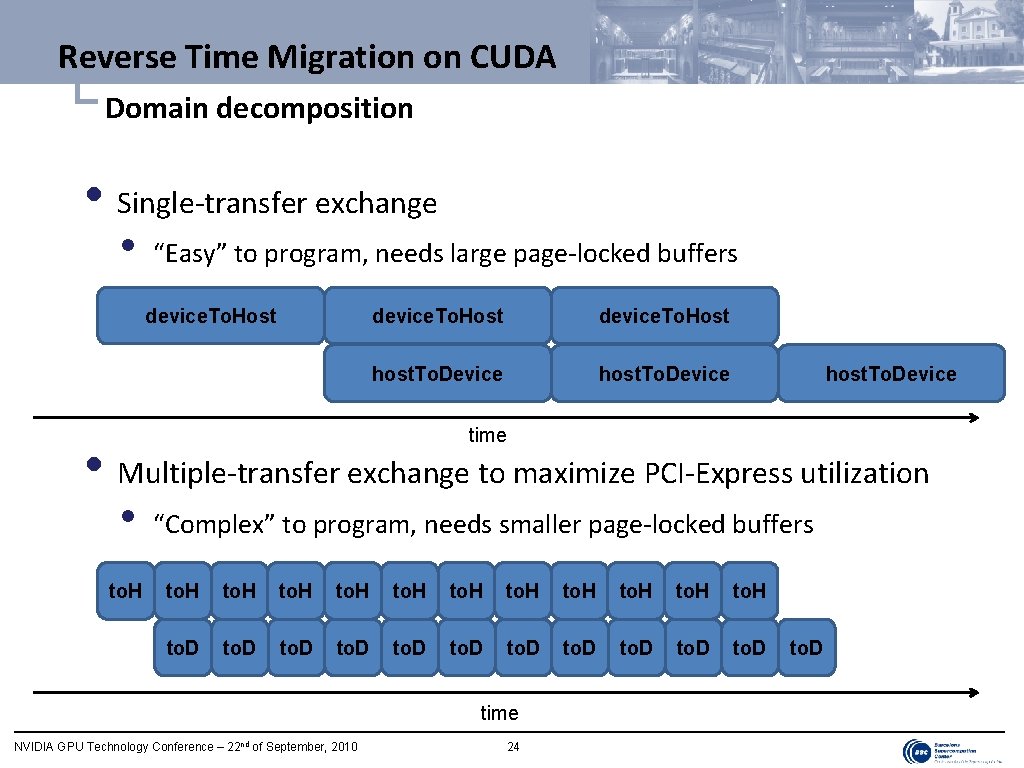

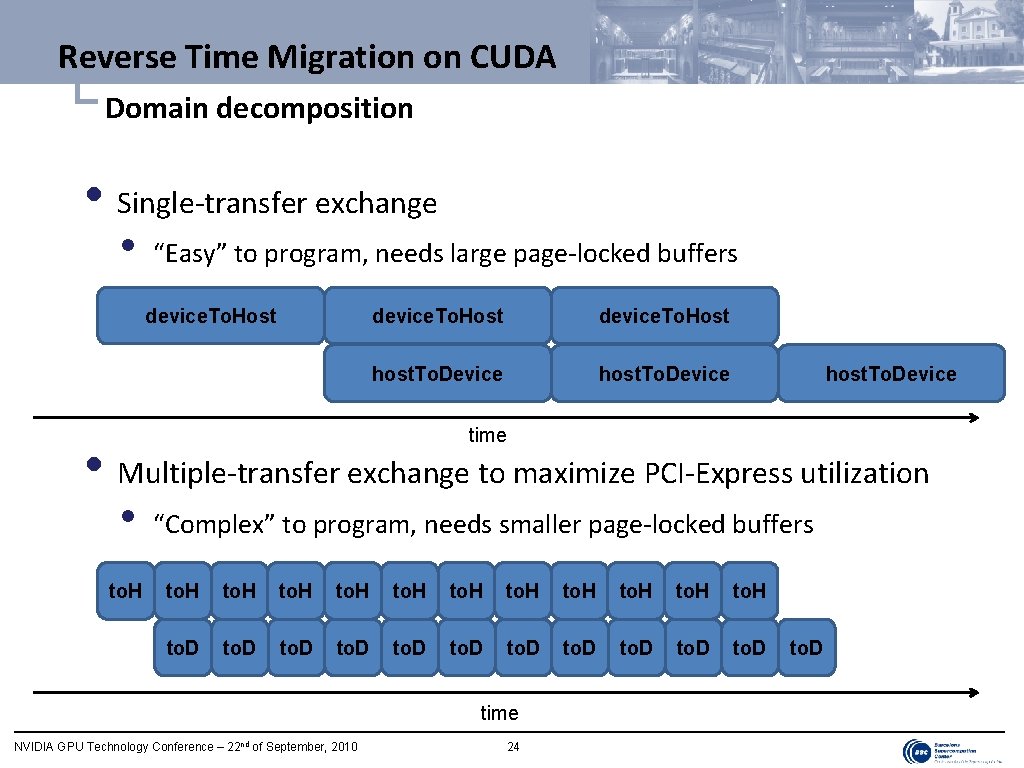

Reverse Time Migration on CUDA └ Domain decomposition • Single-transfer exchange • “Easy” to program, needs large page-locked buffers device. To. Host host. To. Device time • Multiple-transfer exchange to maximize PCI-Express utilization • to. H “Complex” to program, needs smaller page-locked buffers to. H to. D to. D time NVIDIA GPU Technology Conference – 22 nd of September, 2010 24 to. D

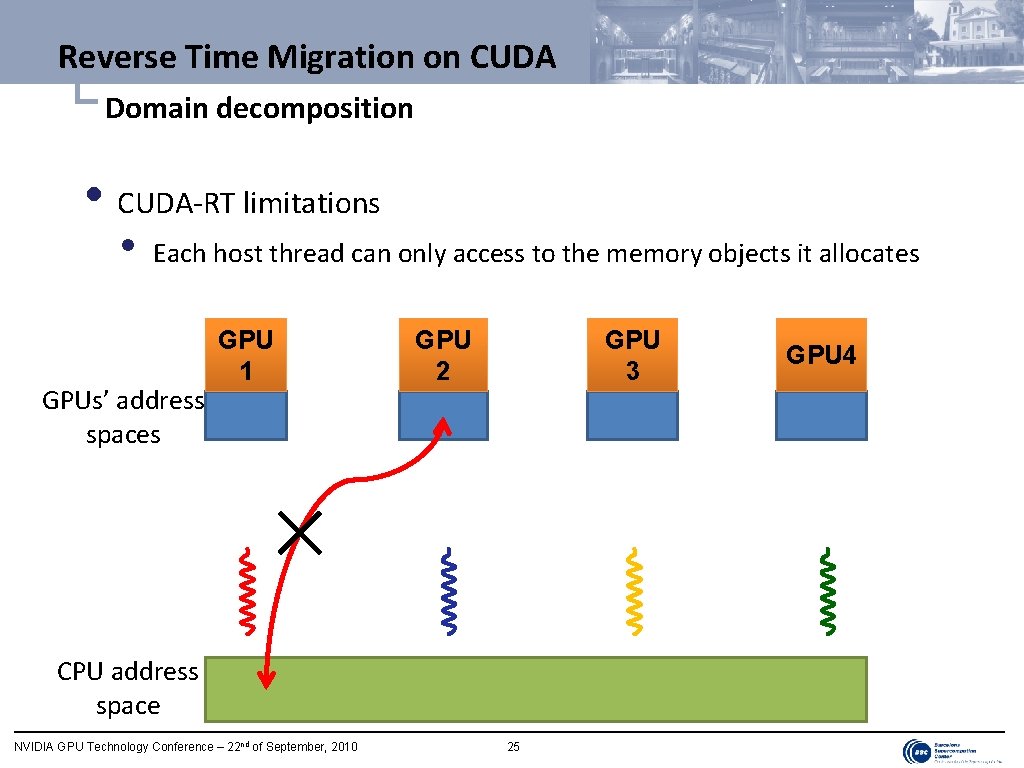

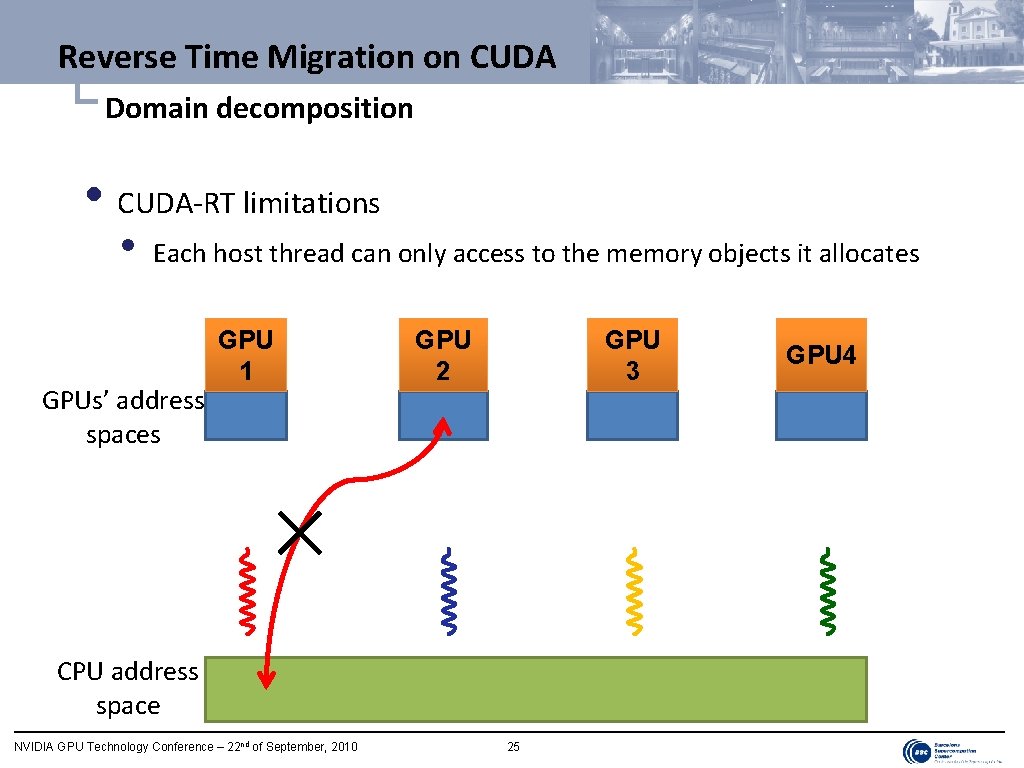

Reverse Time Migration on CUDA └ Domain decomposition • CUDA-RT limitations • Each host thread can only access to the memory objects it allocates GPUs’ address spaces GPU 1 GPU 2 GPU 3 CPU address space NVIDIA GPU Technology Conference – 22 nd of September, 2010 25 GPU 4

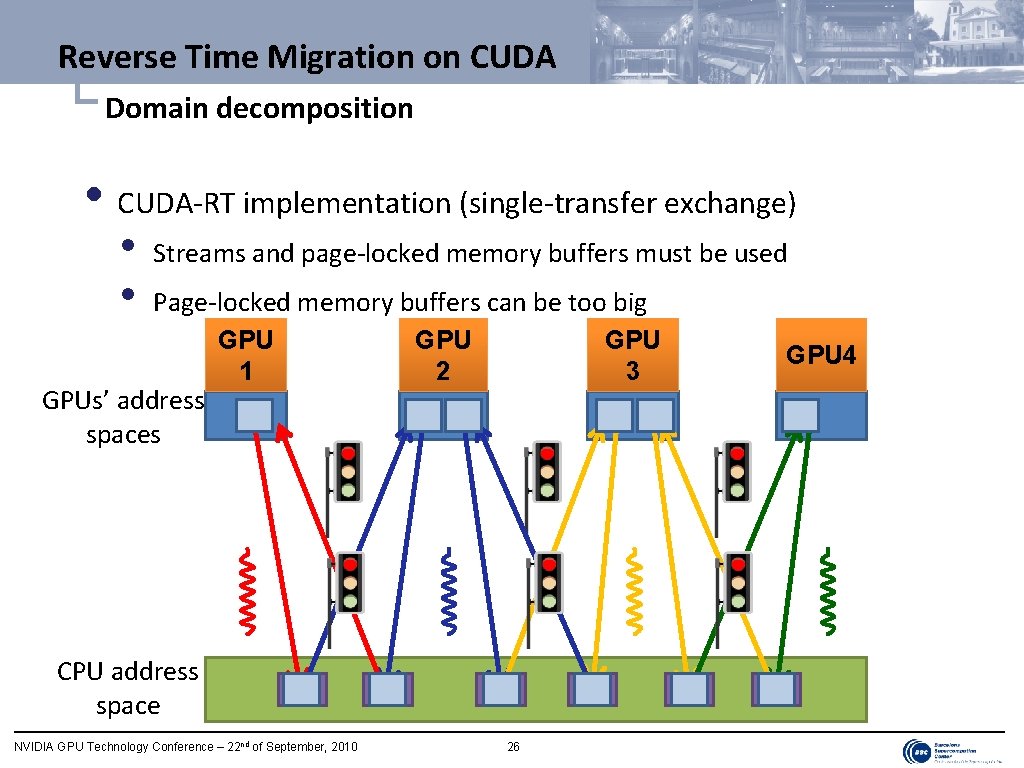

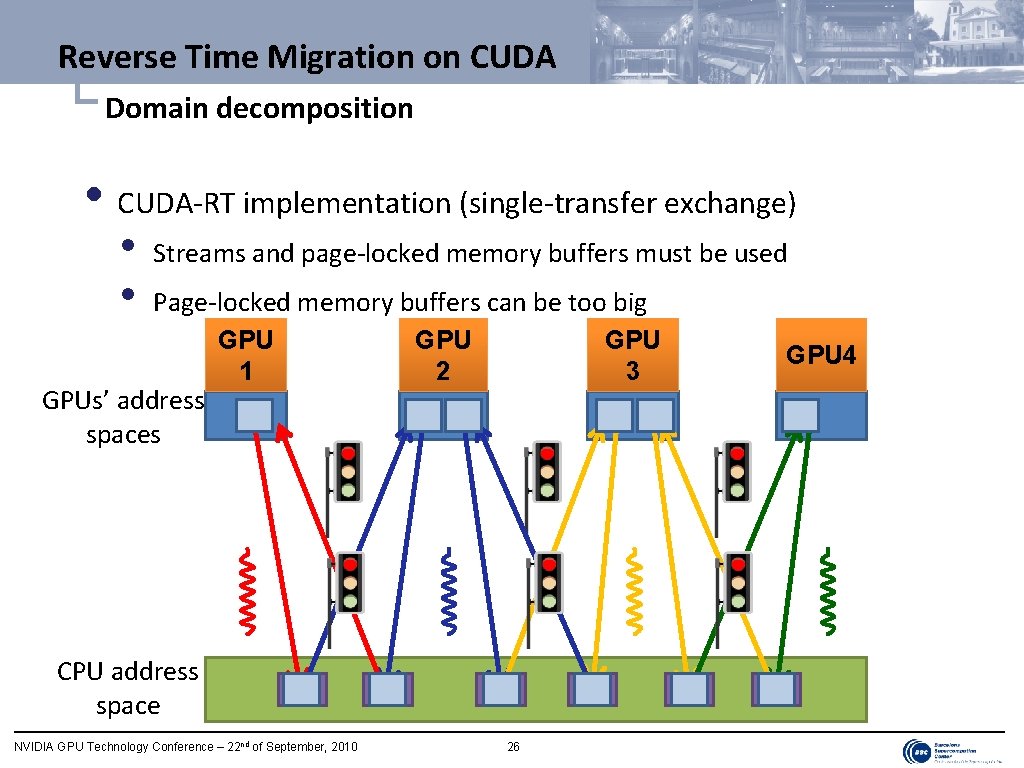

Reverse Time Migration on CUDA └ Domain decomposition • CUDA-RT implementation (single-transfer exchange) • • Streams and page-locked memory buffers must be used Page-locked memory buffers can be too big GPUs’ address spaces GPU 1 GPU 2 GPU 3 CPU address space NVIDIA GPU Technology Conference – 22 nd of September, 2010 26 GPU 4

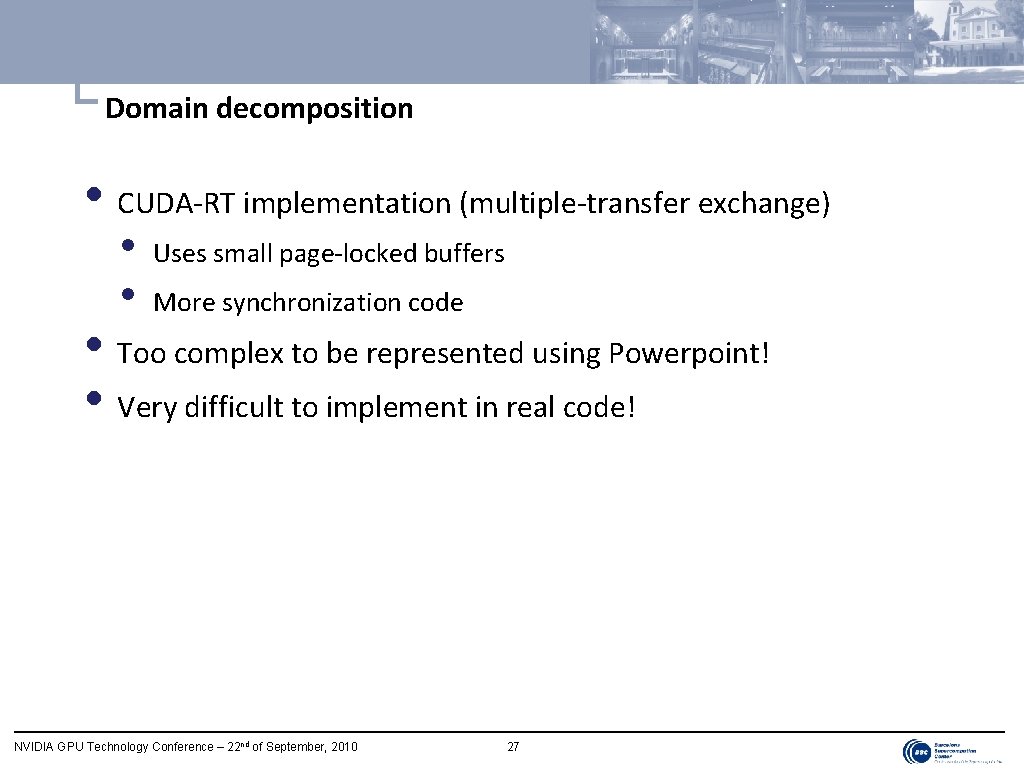

└ Domain decomposition • CUDA-RT implementation (multiple-transfer exchange) • • Uses small page-locked buffers More synchronization code • Too complex to be represented using Powerpoint! • Very difficult to implement in real code! NVIDIA GPU Technology Conference – 22 nd of September, 2010 27

Outline • Introduction • Reverse Time Migration on CUDA • General approach • Disk I/O • Domain decomposition →Overlapping computation and communication • GMAC at a glance • Reverse Time Migration on GMAC • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 28

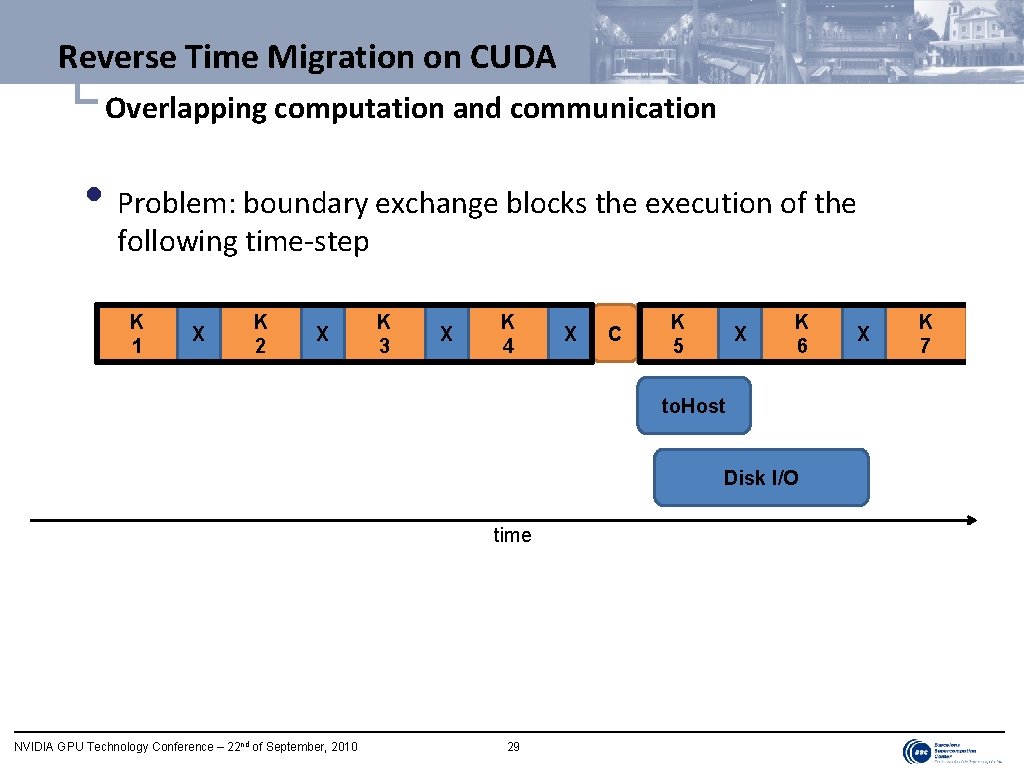

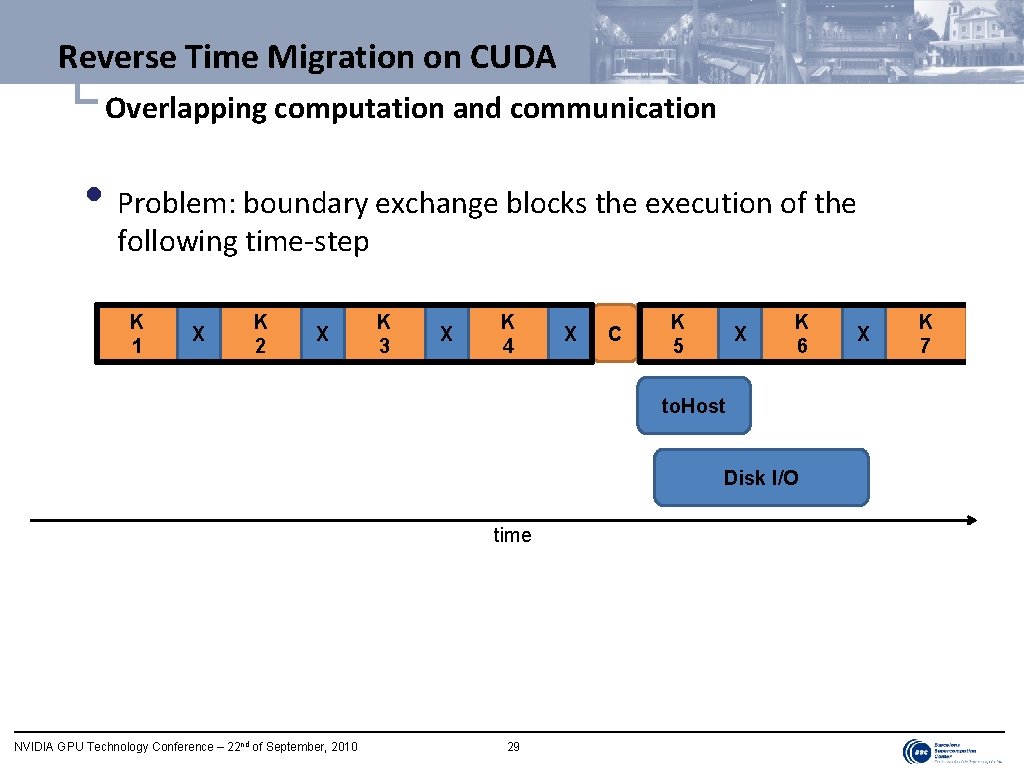

Reverse Time Migration on CUDA └ Overlapping computation and communication • Problem: boundary exchange blocks the execution of the following time-step K 1 X K 2 X K 3 X K 4 X C K 5 X K 6 to. Host Disk I/O time NVIDIA GPU Technology Conference – 22 nd of September, 2010 29 X K 7

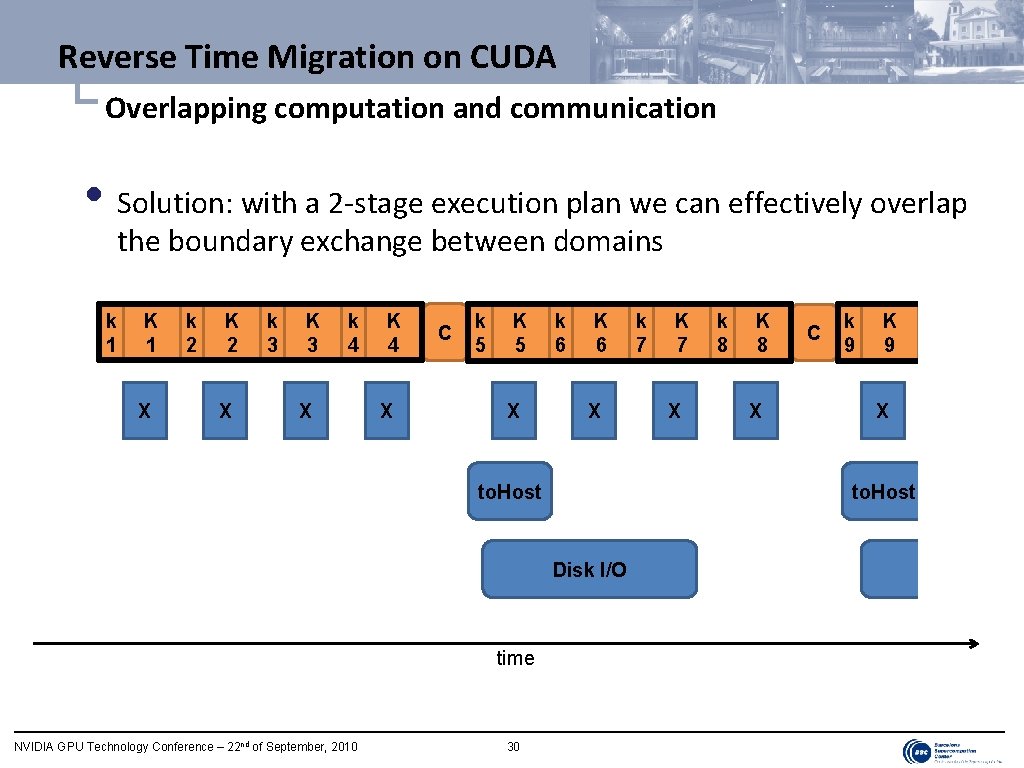

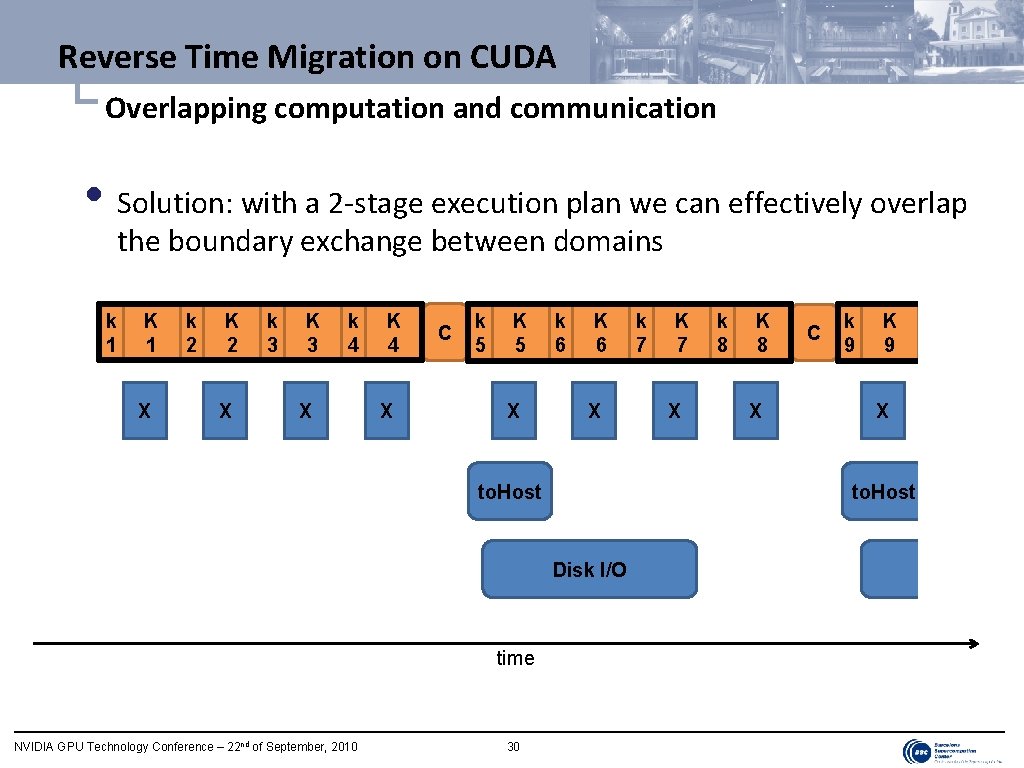

Reverse Time Migration on CUDA └ Overlapping computation and communication • Solution: with a 2 -stage execution plan we can effectively overlap the boundary exchange between domains k 1 K 1 X k 2 K 2 X k 3 K 3 k 4 X K 4 X C k 5 K 5 X k 6 K 6 X to. Host NVIDIA GPU Technology Conference – 22 nd of September, 2010 30 K 7 X k 8 K 8 X C k 9 K 9 X to. Host Disk I/O time k 7 Disk I/O

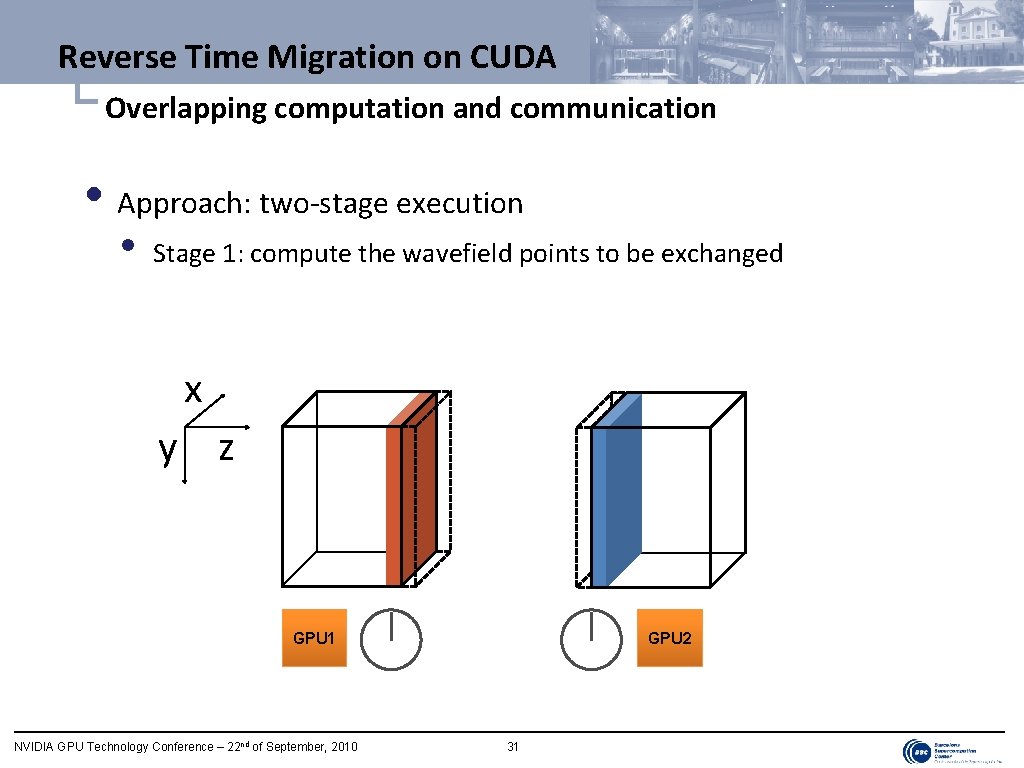

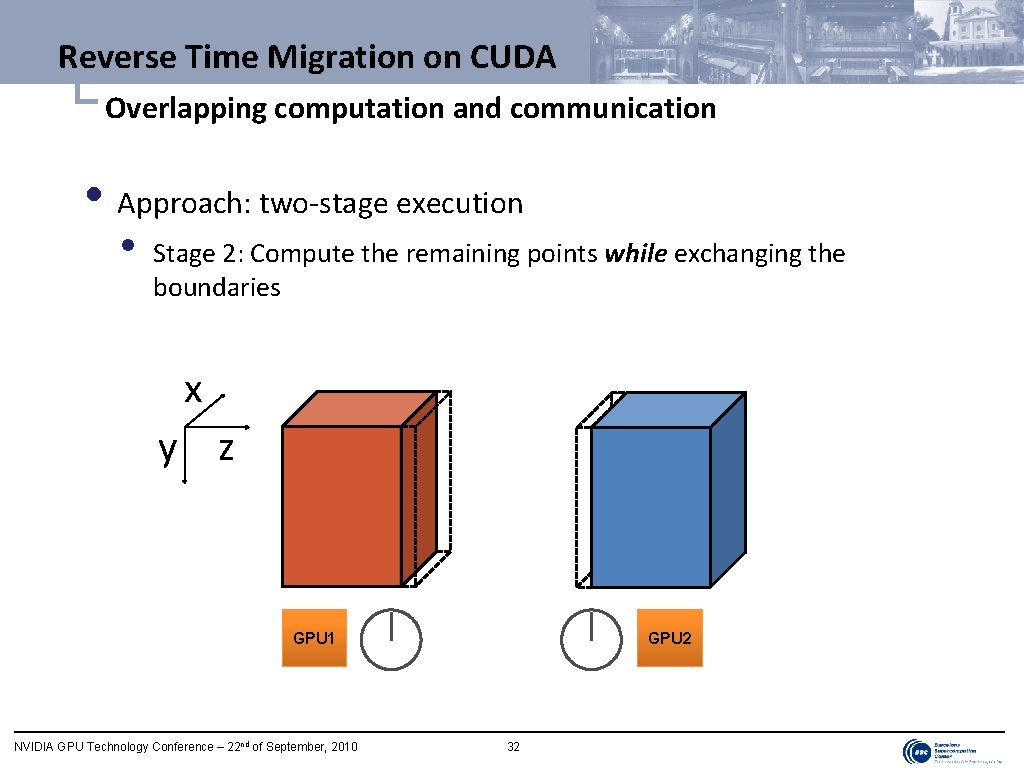

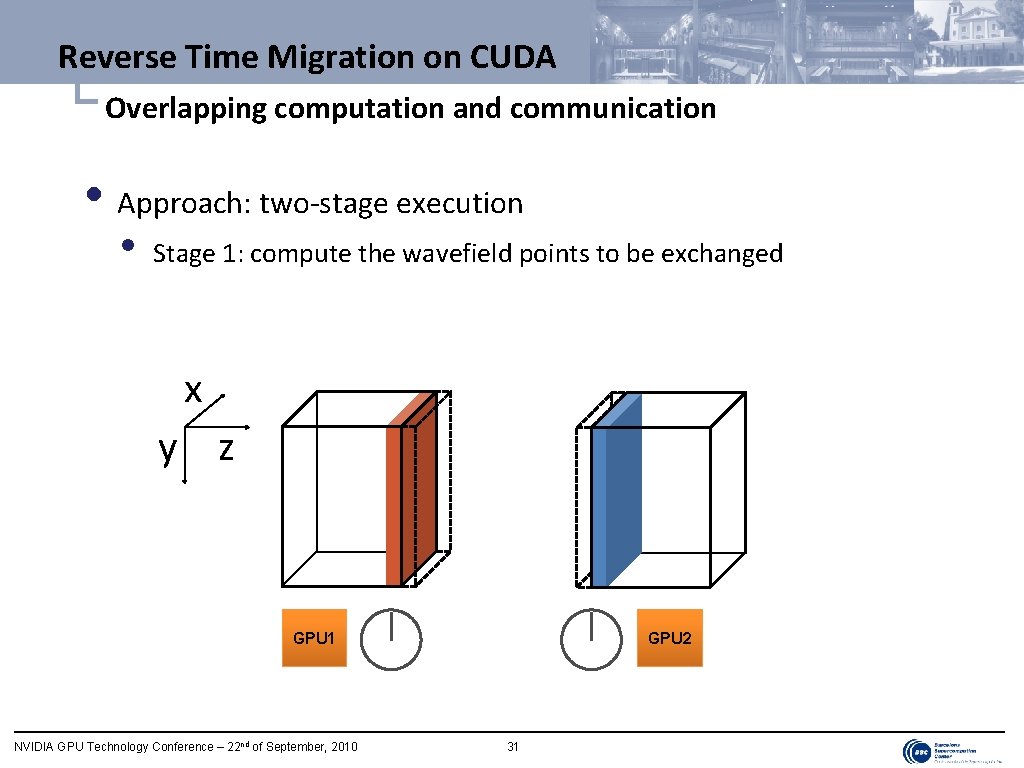

Reverse Time Migration on CUDA └ Overlapping computation and communication • Approach: two-stage execution • Stage 1: compute the wavefield points to be exchanged x y z GPU 1 NVIDIA GPU Technology Conference – 22 nd of September, 2010 GPU 2 31

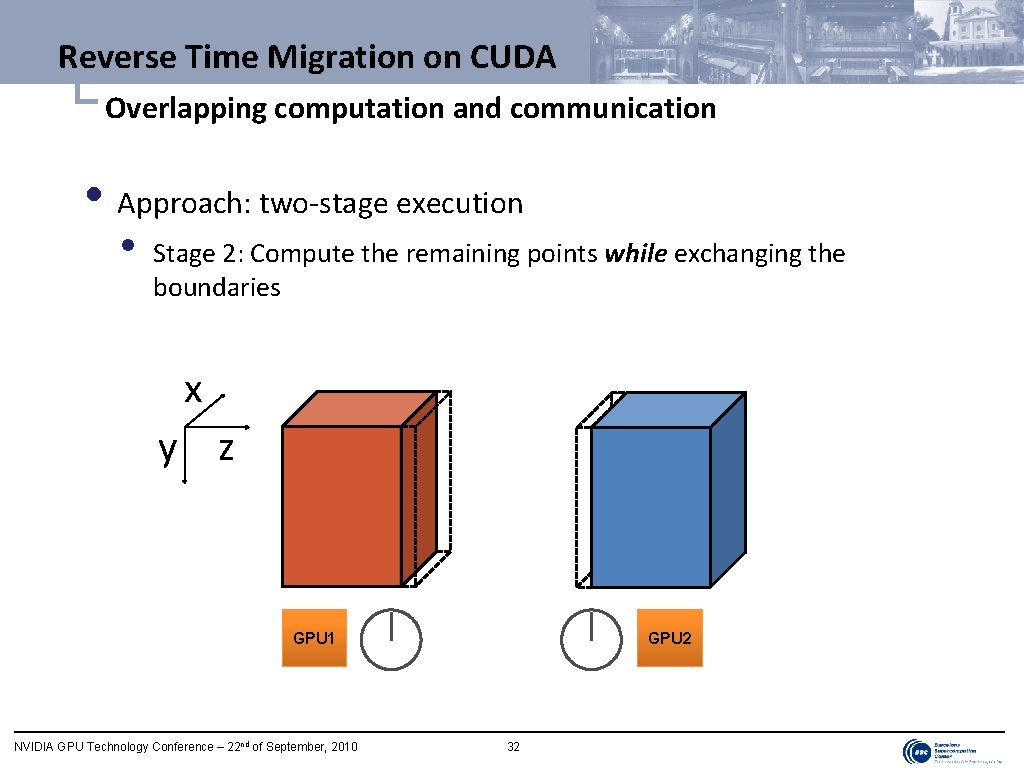

Reverse Time Migration on CUDA └ Overlapping computation and communication • Approach: two-stage execution • Stage 2: Compute the remaining points while exchanging the boundaries x y z GPU 1 NVIDIA GPU Technology Conference – 22 nd of September, 2010 GPU 2 32

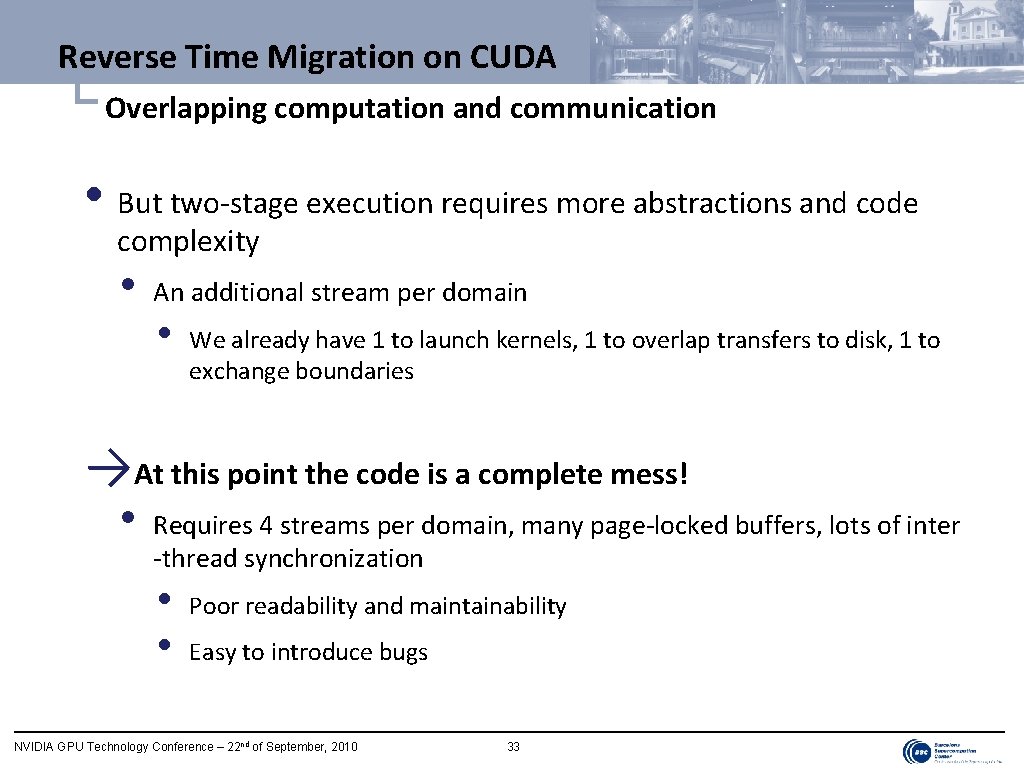

Reverse Time Migration on CUDA └ Overlapping computation and communication • But two-stage execution requires more abstractions and code complexity • An additional stream per domain • We already have 1 to launch kernels, 1 to overlap transfers to disk, 1 to exchange boundaries →At this point the code is a complete mess! • Requires 4 streams per domain, many page-locked buffers, lots of inter -thread synchronization • • Poor readability and maintainability Easy to introduce bugs NVIDIA GPU Technology Conference – 22 nd of September, 2010 33

Outline • Introduction • Reverse Time Migration on CUDA • GMAC at a glance →Features • Code examples • Reverse Time Migration on GMAC • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 34

GMAC at a glance └ Introduction • Library that enhances the host programming model of CUDA • Freely available at http: //code. google. com/p/adsm/ • • • Developed by BSC and UIUC NCSA license (BSD-like) Works in Linux and Mac. OS X (Windows version coming soon) • Presented in detail tomorrow at 9 am @ San Jose Ballroom NVIDIA GPU Technology Conference – 22 nd of September, 2010 35

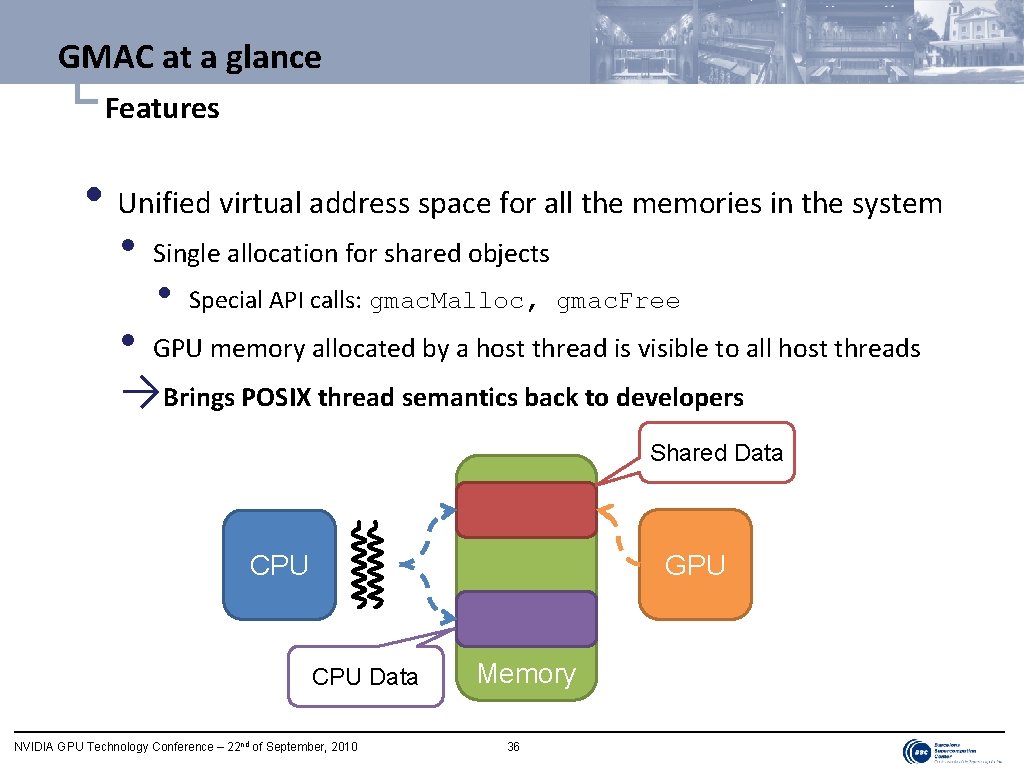

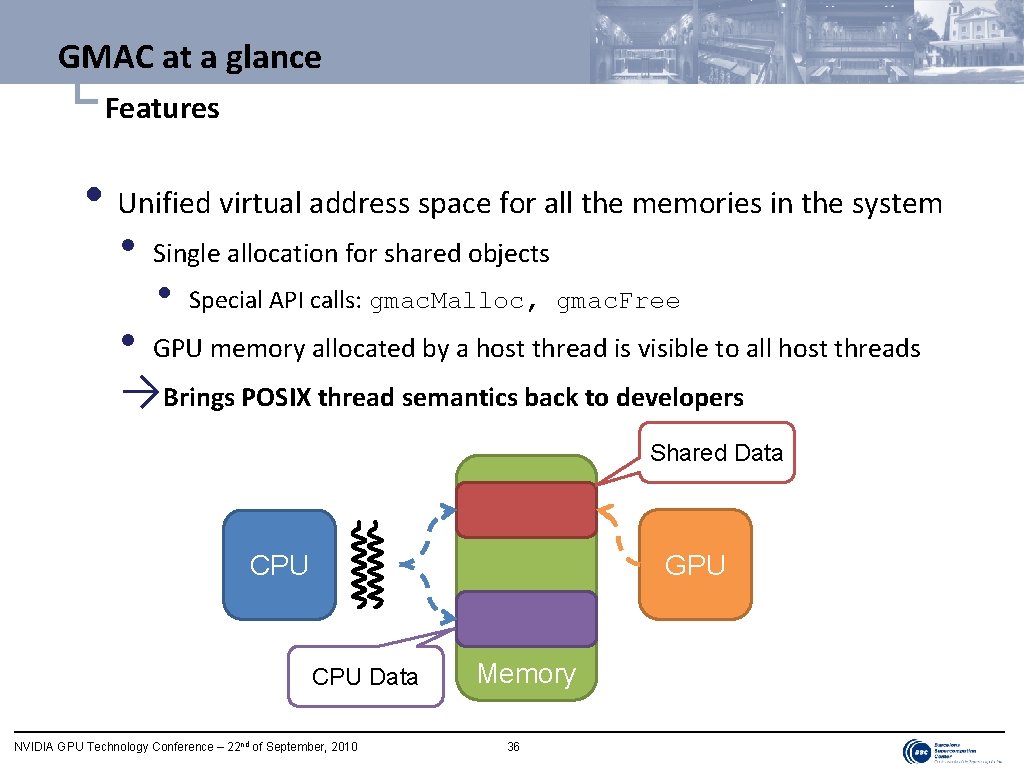

GMAC at a glance └ Features • Unified virtual address space for all the memories in the system • Single allocation for shared objects • Special API calls: gmac. Malloc, gmac. Free • GPU memory allocated by a host thread is visible to all host threads →Brings POSIX thread semantics back to developers Shared Data GPU CPU Data NVIDIA GPU Technology Conference – 22 nd of September, 2010 Memory 36

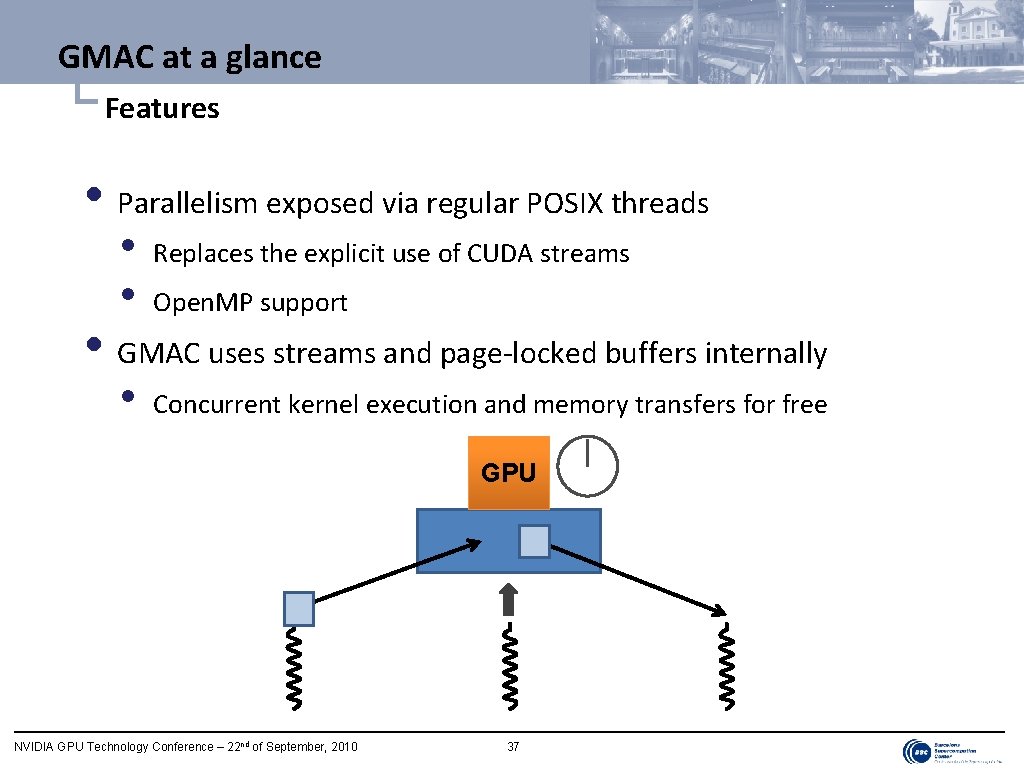

GMAC at a glance └ Features • Parallelism exposed via regular POSIX threads • • Replaces the explicit use of CUDA streams Open. MP support • GMAC uses streams and page-locked buffers internally • Concurrent kernel execution and memory transfers for free GPU NVIDIA GPU Technology Conference – 22 nd of September, 2010 37

GMAC at a glance └ Features • Optimized bulk memory operations via library interposition • • • File I/O • • Standard I/O functions: fwrite, fread Automatic overlap of Disk I/O and host. To. Device and device. To. Host transfers Optimized GPU to GPU transfers via regular memcpy Enhanced versions of the MPI send/receive calls NVIDIA GPU Technology Conference – 22 nd of September, 2010 38

Outline • Introduction • Reverse Time Migration on CUDA • GMAC at a glance • Features →Code examples • Reverse Time Migration on GMAC • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 39

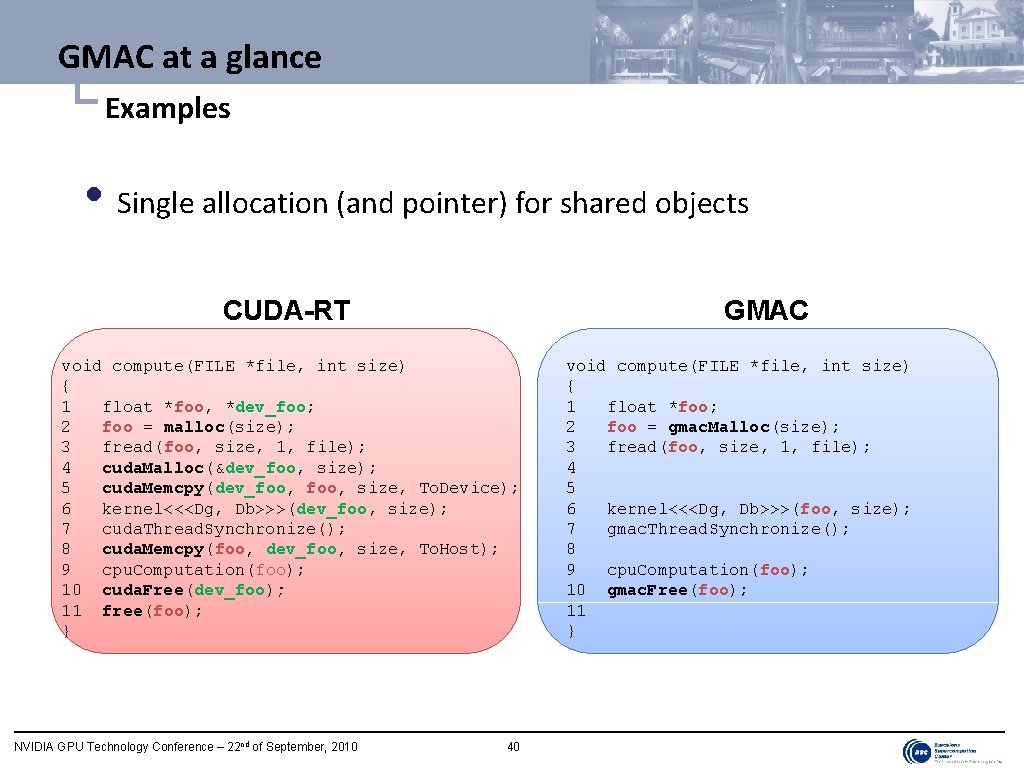

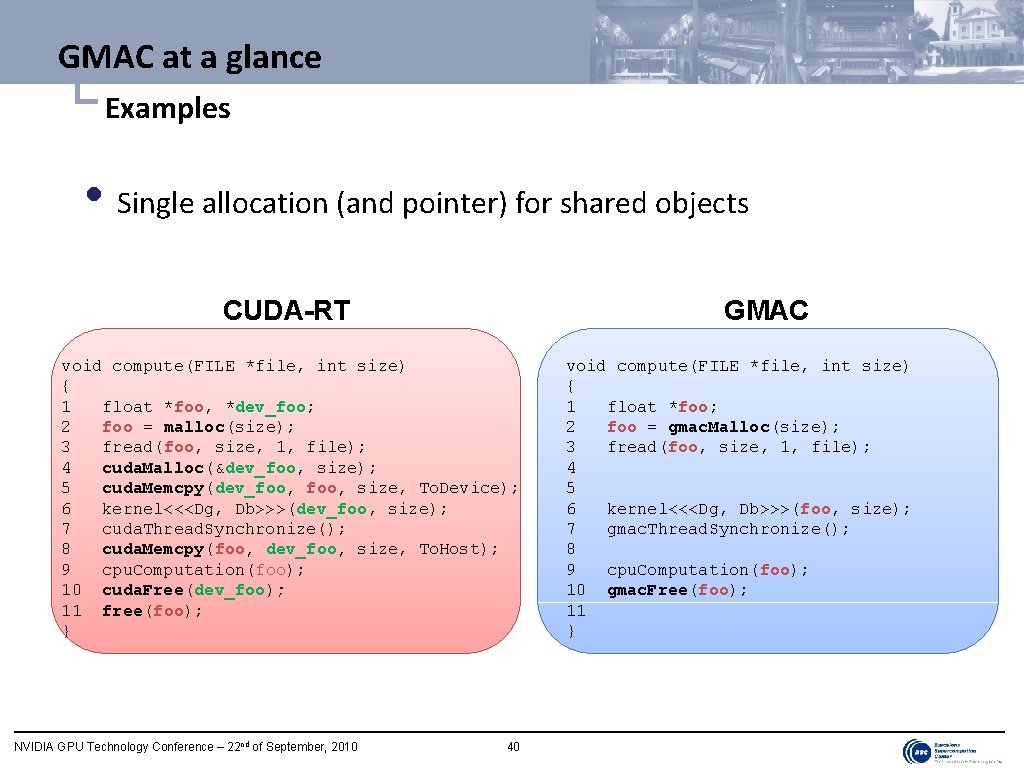

GMAC at a glance └ Examples • Single allocation (and pointer) for shared objects CUDA-RT GMAC void compute(FILE *file, int size) { 1 float *foo, *dev_foo; 2 foo = malloc(size); 3 fread(foo, size, 1, file); 4 cuda. Malloc(&dev_foo, size); 5 cuda. Memcpy(dev_foo, size, To. Device); 6 kernel<<<Dg, Db>>>(dev_foo, size); 7 cuda. Thread. Synchronize(); 8 cuda. Memcpy(foo, dev_foo, size, To. Host); 9 cpu. Computation(foo); 10 cuda. Free(dev_foo); 11 free(foo); } NVIDIA GPU Technology Conference – 22 nd of September, 2010 40 void compute(FILE *file, int size) { 1 float *foo; 2 foo = gmac. Malloc(size); 3 fread(foo, size, 1, file); 4 5 6 kernel<<<Dg, Db>>>(foo, size); 7 gmac. Thread. Synchronize(); 8 9 cpu. Computation(foo); 10 gmac. Free(foo); 11 }

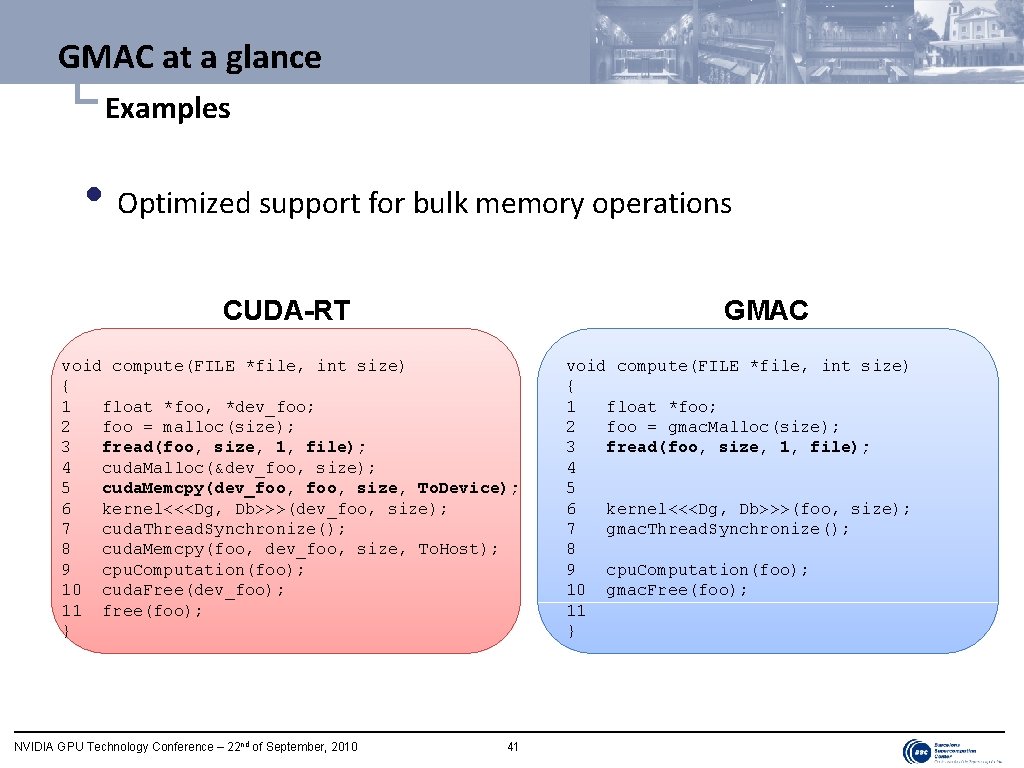

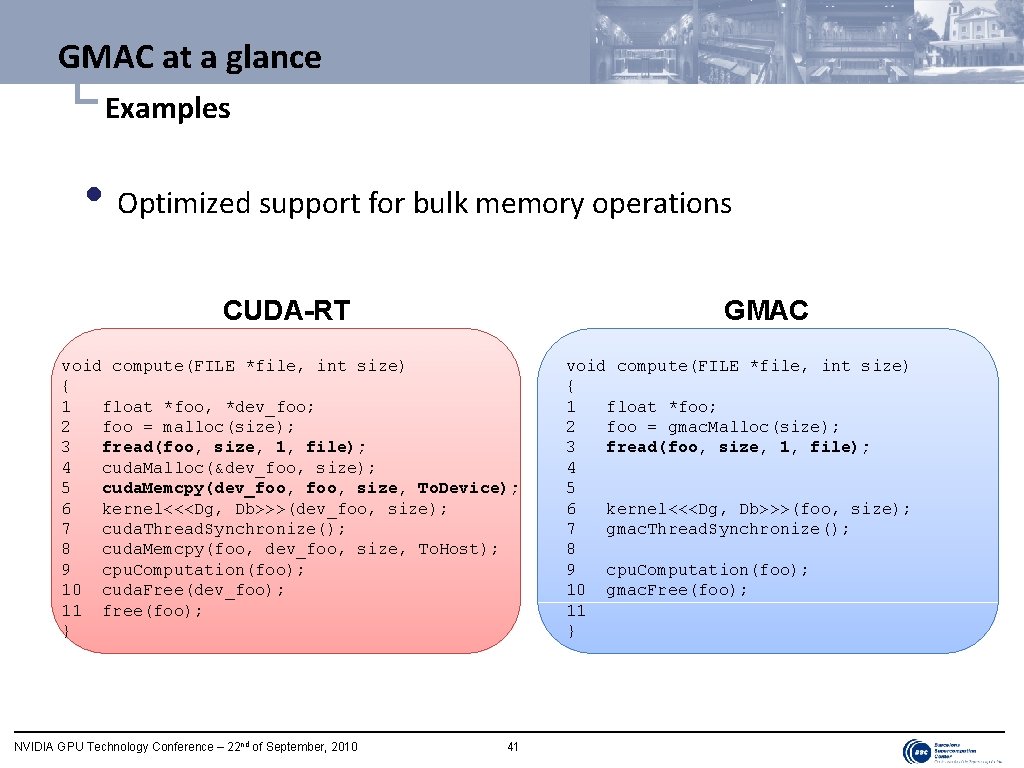

GMAC at a glance └ Examples • Optimized support for bulk memory operations CUDA-RT GMAC void compute(FILE *file, int size) { 1 float *foo, *dev_foo; 2 foo = malloc(size); 3 fread(foo, size, 1, file); 4 cuda. Malloc(&dev_foo, size); 5 cuda. Memcpy(dev_foo, size, To. Device); 6 kernel<<<Dg, Db>>>(dev_foo, size); 7 cuda. Thread. Synchronize(); 8 cuda. Memcpy(foo, dev_foo, size, To. Host); 9 cpu. Computation(foo); 10 cuda. Free(dev_foo); 11 free(foo); } NVIDIA GPU Technology Conference – 22 nd of September, 2010 41 void compute(FILE *file, int size) { 1 float *foo; 2 foo = gmac. Malloc(size); 3 fread(foo, size, 1, file); 4 5 6 kernel<<<Dg, Db>>>(foo, size); 7 gmac. Thread. Synchronize(); 8 9 cpu. Computation(foo); 10 gmac. Free(foo); 11 }

Outline • Introduction • GMAC at a glance • Reverse Time Migration on GMAC →Disk I/O • Domain decomposition • Overlapping computation and communication • Development cycle and debugging • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 42

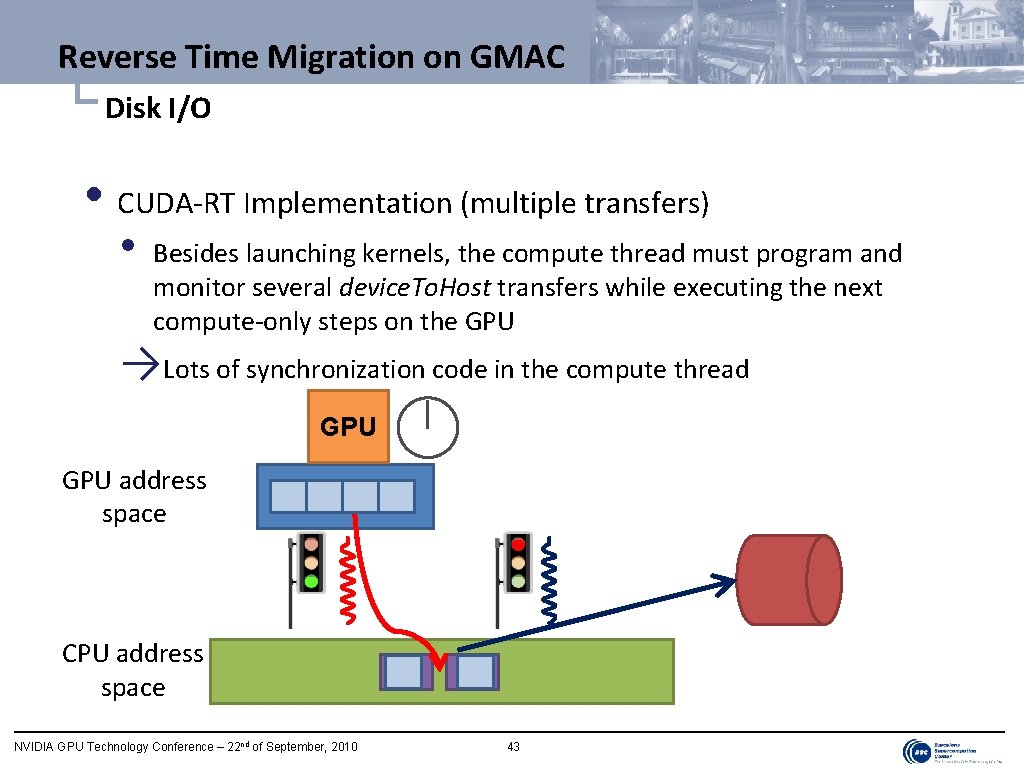

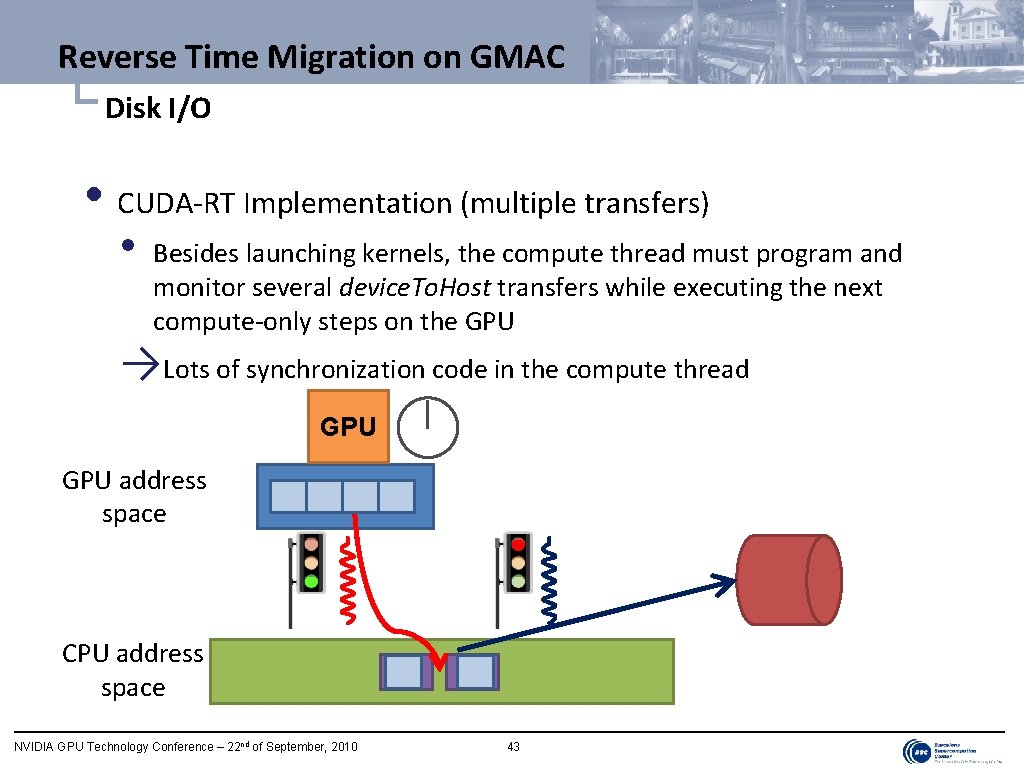

Reverse Time Migration on GMAC └ Disk I/O • CUDA-RT Implementation (multiple transfers) • Besides launching kernels, the compute thread must program and monitor several device. To. Host transfers while executing the next compute-only steps on the GPU →Lots of synchronization code in the compute thread GPU address space CPU address space NVIDIA GPU Technology Conference – 22 nd of September, 2010 43

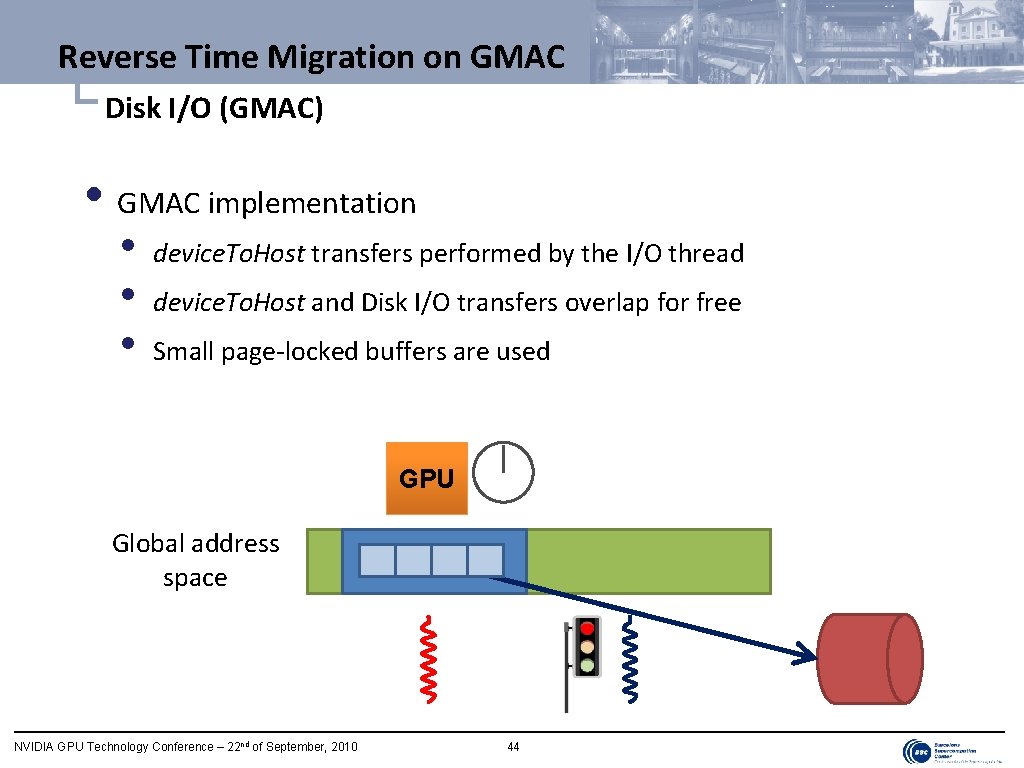

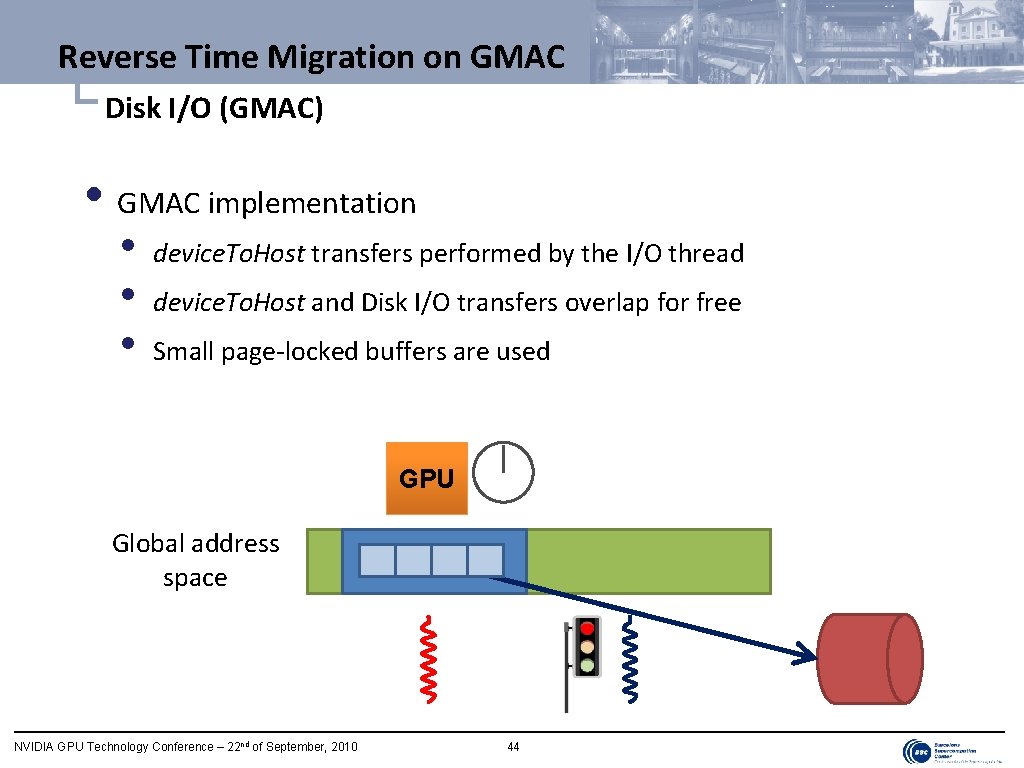

Reverse Time Migration on GMAC └ Disk I/O (GMAC) • GMAC implementation • • • device. To. Host transfers performed by the I/O thread device. To. Host and Disk I/O transfers overlap for free Small page-locked buffers are used GPU Global address space NVIDIA GPU Technology Conference – 22 nd of September, 2010 44

Outline • Introduction • GMAC at a glance • Reverse Time Migration on GMAC • Disk I/O →Domain decomposition • Overlapping computation and communication • Development cycle and debugging • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 45

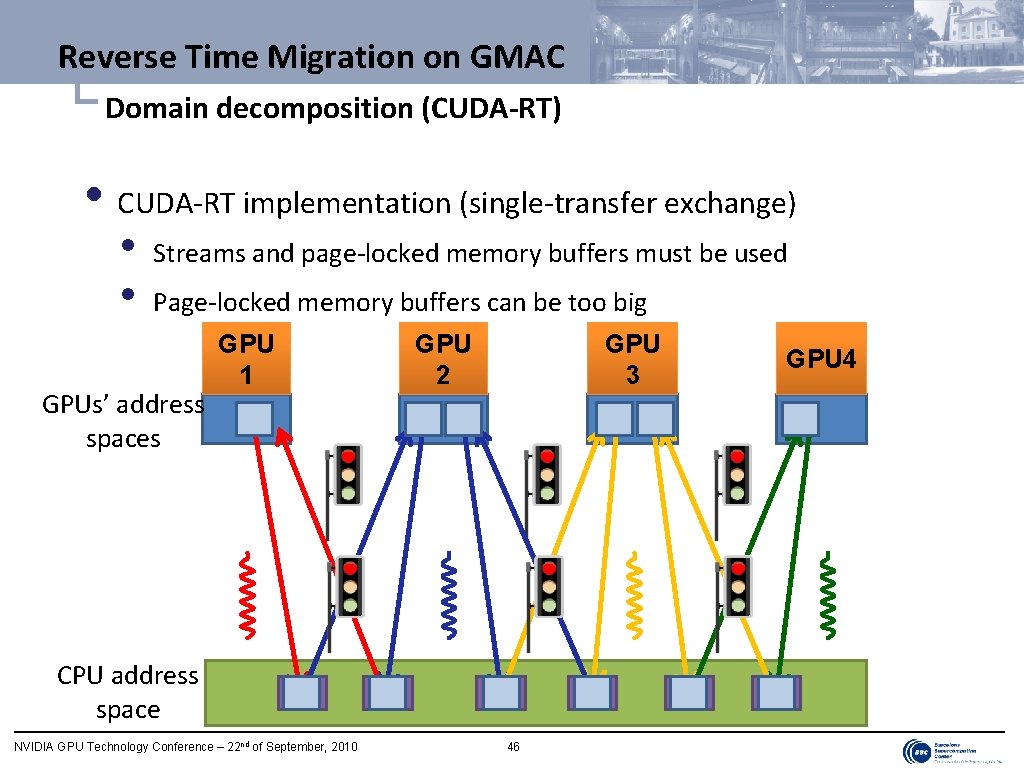

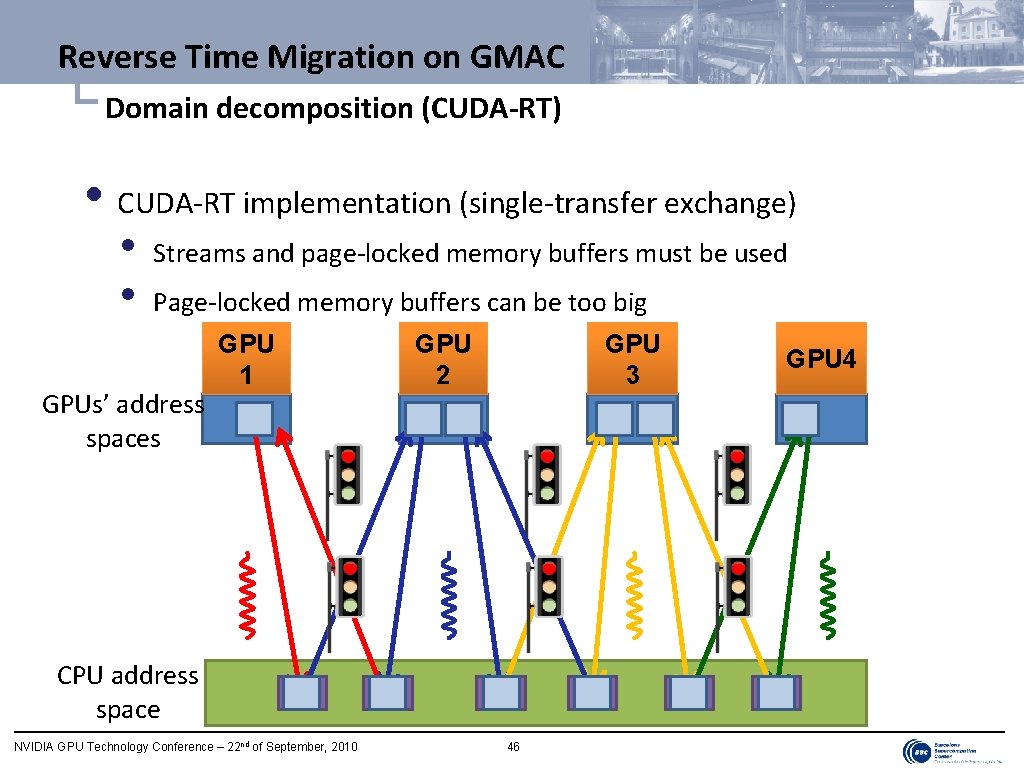

Reverse Time Migration on GMAC └ Domain decomposition (CUDA-RT) • CUDA-RT implementation (single-transfer exchange) • • Streams and page-locked memory buffers must be used Page-locked memory buffers can be too big GPUs’ address spaces GPU 1 GPU 2 GPU 3 CPU address space NVIDIA GPU Technology Conference – 22 nd of September, 2010 46 GPU 4

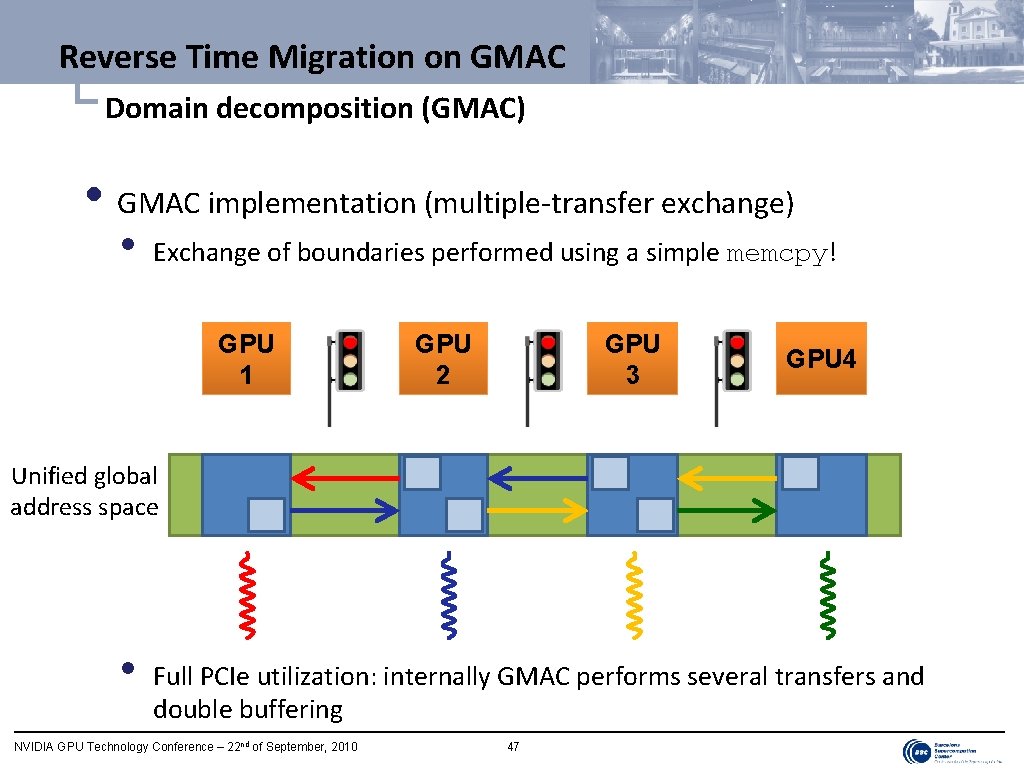

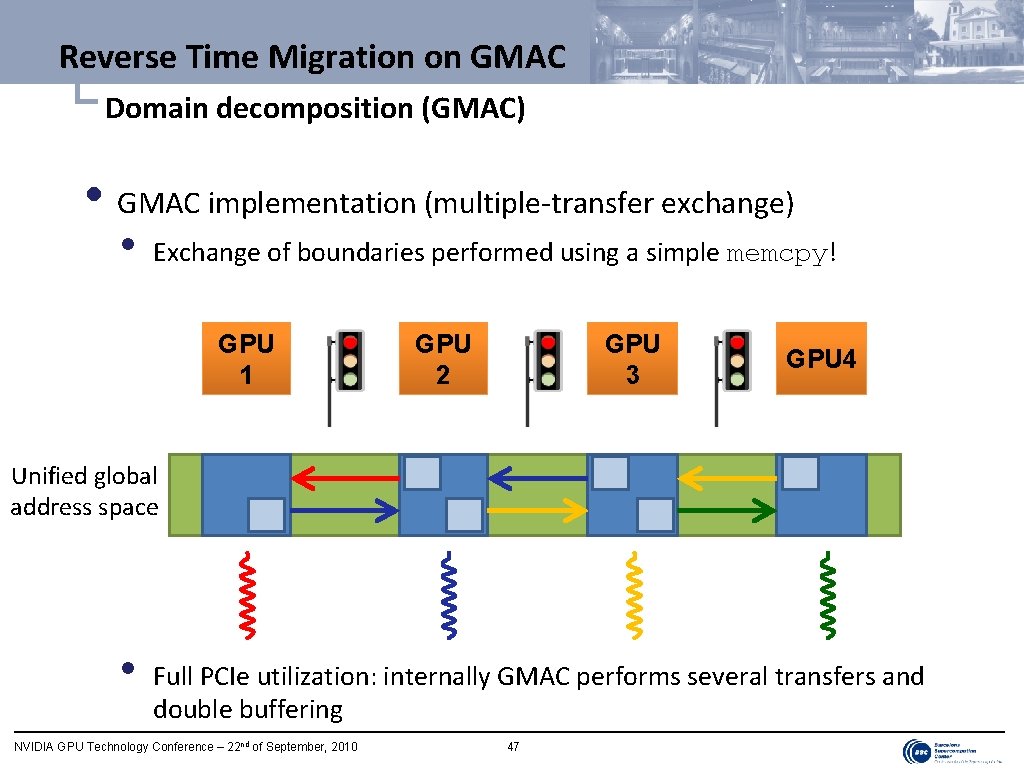

Reverse Time Migration on GMAC └ Domain decomposition (GMAC) • GMAC implementation (multiple-transfer exchange) • Exchange of boundaries performed using a simple memcpy! GPU 1 GPU 2 GPU 3 GPU 4 Unified global address space • Full PCIe utilization: internally GMAC performs several transfers and double buffering NVIDIA GPU Technology Conference – 22 nd of September, 2010 47

Outline • Introduction • GMAC at a glance • Reverse Time Migration on GMAC • Disk I/O • Domain decomposition →Overlapping computation and communication • Development cycle and debugging • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 48

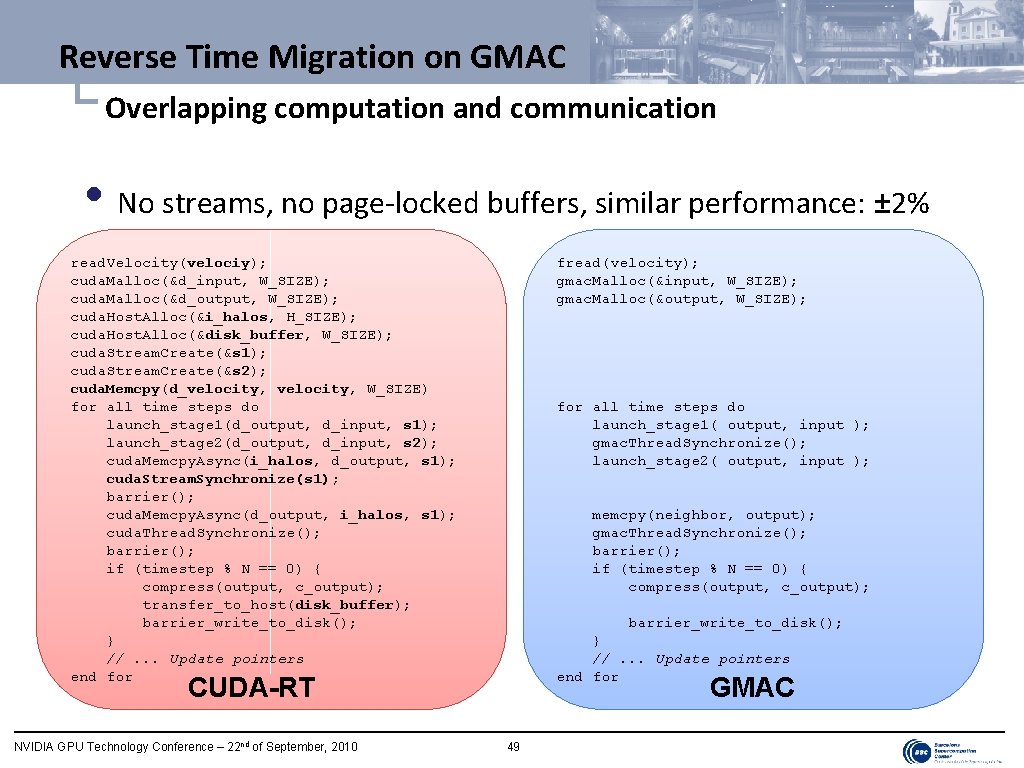

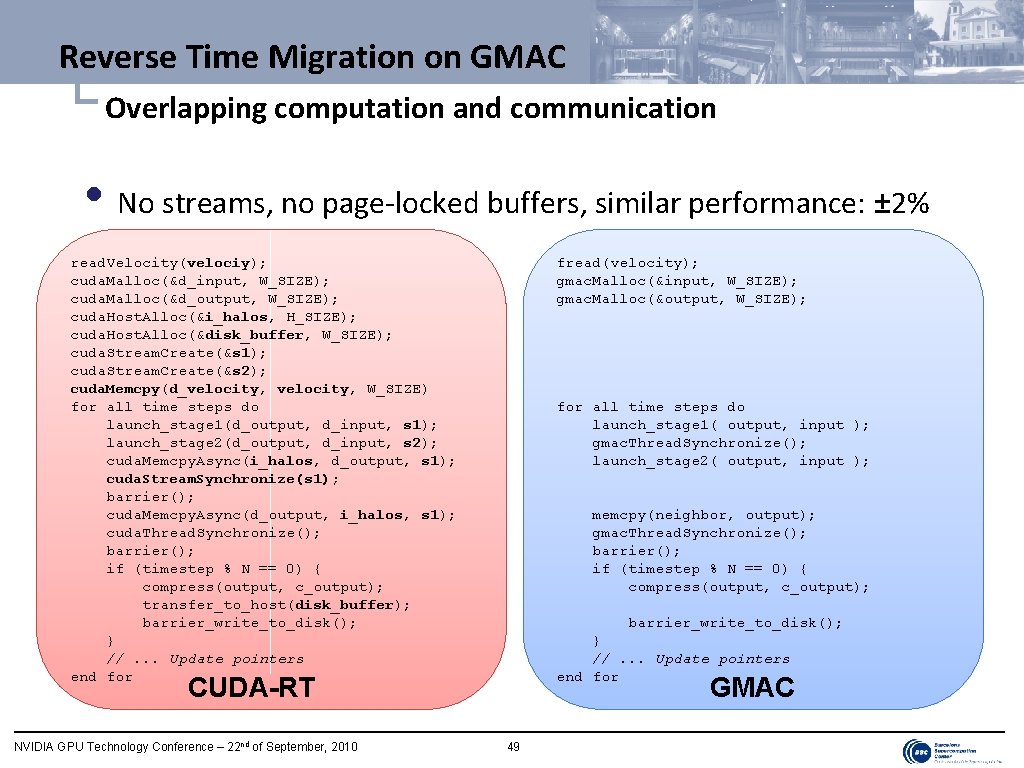

Reverse Time Migration on GMAC └ Overlapping computation and communication • No streams, no page-locked buffers, similar performance: ± 2% fread(velocity); gmac. Malloc(&input, W_SIZE); gmac. Malloc(&output, W_SIZE); read. Velocity(velociy); cuda. Malloc(&d_input, W_SIZE); cuda. Malloc(&d_output, W_SIZE); cuda. Host. Alloc(&i_halos, H_SIZE); cuda. Host. Alloc(&disk_buffer, W_SIZE); cuda. Stream. Create(&s 1); cuda. Stream. Create(&s 2); cuda. Memcpy(d_velocity, W_SIZE) for all time steps do launch_stage 1(d_output, d_input, s 1); launch_stage 2(d_output, d_input, s 2); cuda. Memcpy. Async(i_halos, d_output, s 1); cuda. Stream. Synchronize(s 1); barrier(); cuda. Memcpy. Async(d_output, i_halos, s 1); cuda. Thread. Synchronize(); barrier(); if (timestep % N == 0) { compress(output, c_output); transfer_to_host(disk_buffer); barrier_write_to_disk(); } //. . . Update pointers end for all time steps do launch_stage 1( output, input ); gmac. Thread. Synchronize(); launch_stage 2( output, input ); memcpy(neighbor, output); gmac. Thread. Synchronize(); barrier(); if (timestep % N == 0) { compress(output, c_output); barrier_write_to_disk(); } //. . . Update pointers end for CUDA-RT NVIDIA GPU Technology Conference – 22 nd of September, 2010 GMAC 49

Outline • Introduction • GMAC at a glance • Reverse Time Migration on GMAC • Disk I/O • Domain decomposition • Inter-domain communication →Development cycle and debugging • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 50

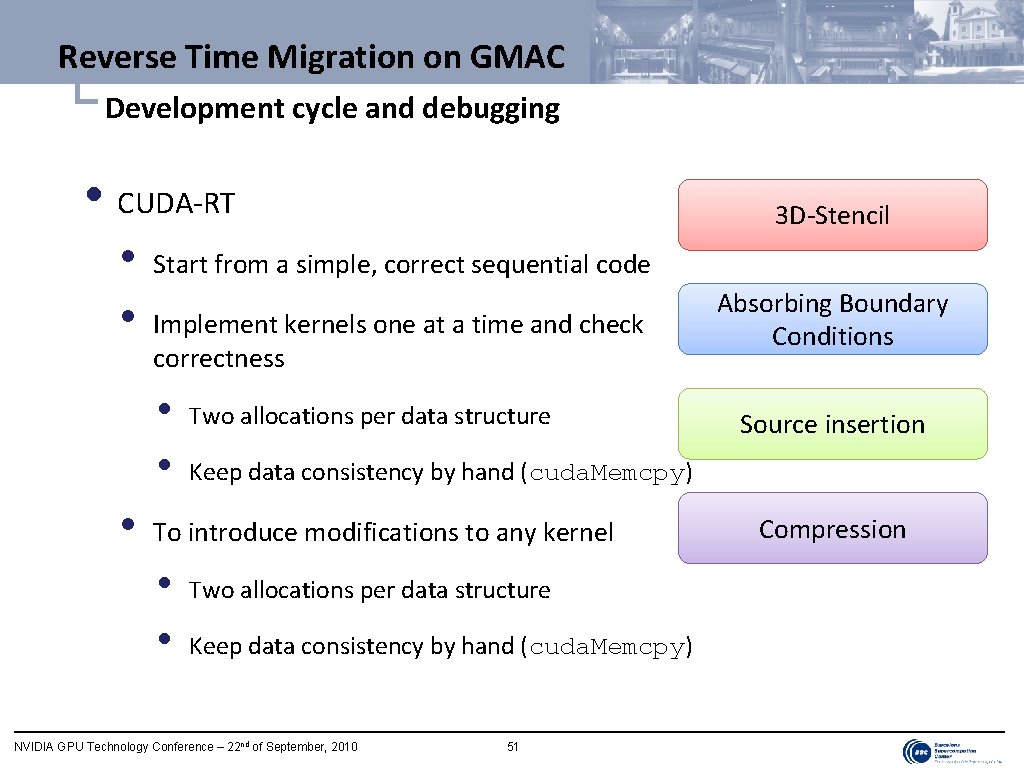

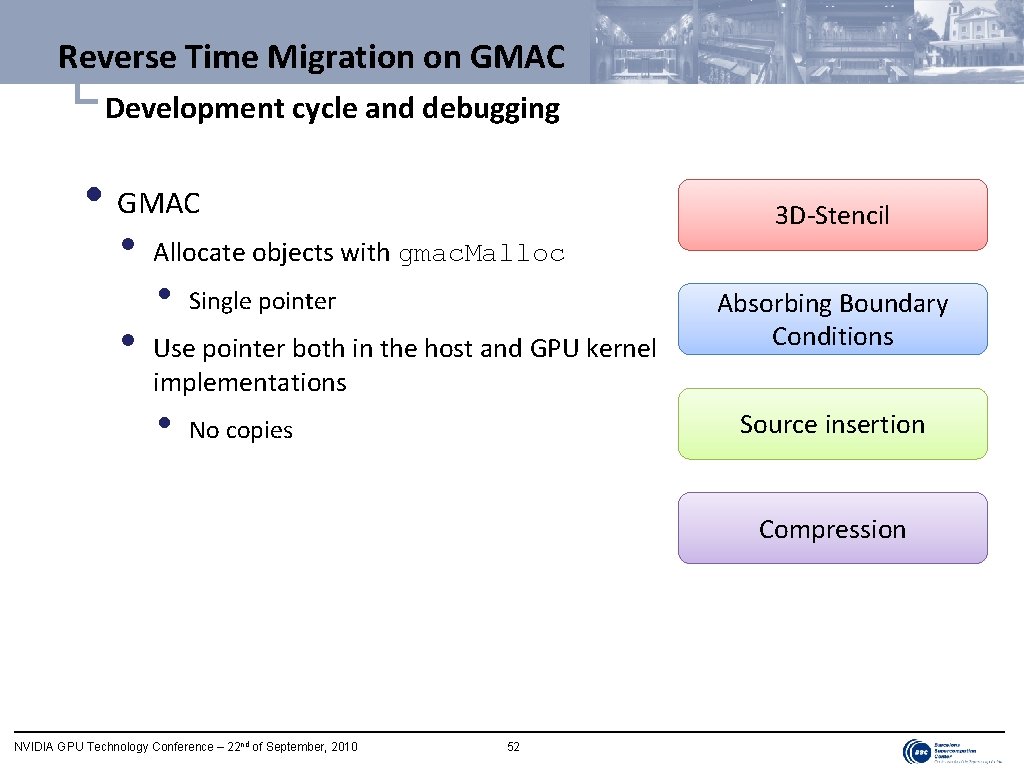

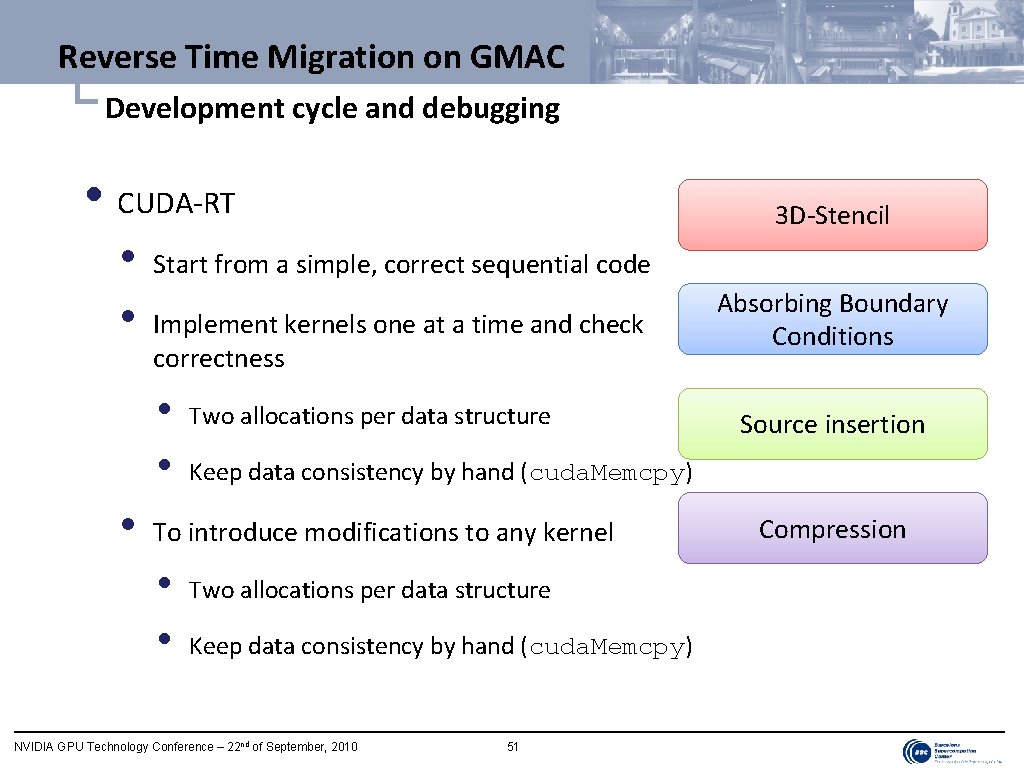

Reverse Time Migration on GMAC └ Development cycle and debugging • CUDA-RT • • Start from a simple, correct sequential code Implement kernels one at a time and check correctness • • • 3 D-Stencil Two allocations per data structure Source insertion Keep data consistency by hand (cuda. Memcpy) To introduce modifications to any kernel • • Absorbing Boundary Conditions Two allocations per data structure Keep data consistency by hand (cuda. Memcpy) NVIDIA GPU Technology Conference – 22 nd of September, 2010 51 Compression

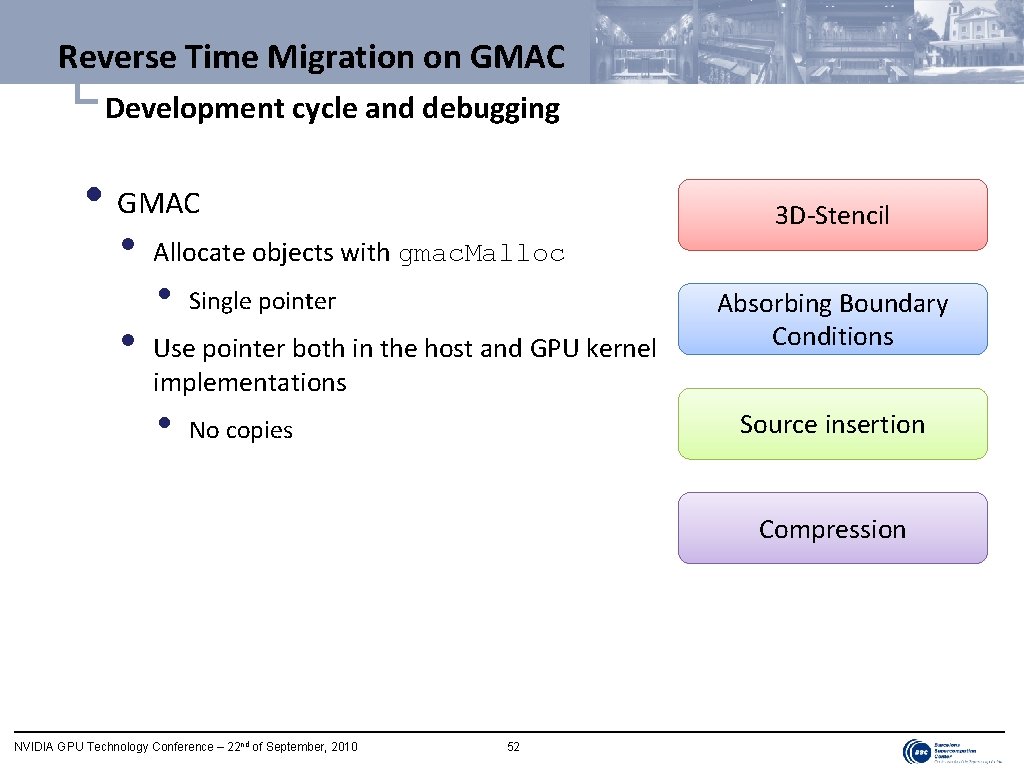

Reverse Time Migration on GMAC └ Development cycle and debugging • GMAC • • 3 D-Stencil Allocate objects with gmac. Malloc • Single pointer Use pointer both in the host and GPU kernel implementations • Absorbing Boundary Conditions Source insertion No copies Compression NVIDIA GPU Technology Conference – 22 nd of September, 2010 52

Outline • Introduction • Reverse Time Migration on CUDA • GMAC at a glance • Reverse Time Migration on GMAC • Conclusions NVIDIA GPU Technology Conference – 22 nd of September, 2010 53

Conclusions • Heterogeneous systems based on GPUs are currently the most appropriate to implement RTM • CUDA has programmability issues • • CUDA provides a good language to expose data parallelism in the code to be run on the GPU The host-side interface provided by the CUDA-RT makes difficult to implement even some basic optimizations ØGMAC eases the development of applications for GPU-based systems with no performance penalty Ø 6 -month part-time single programmer: full RTM version (5 x speedup over the previous Cell implementation) NVIDIA GPU Technology Conference – 22 nd of September, 2010 54

Acknowledgements • Barcelona Supercomputing Center • Repsol • Universitat Politècnica de Catalunya • University of Illinois at Urbana-Champaign NVIDIA GPU Technology Conference – 22 nd of September, 2010 55

Thank you! Questions? NVIDIA GPU Technology Conference – 22 nd of September, 2010 56