PROJECT MEASUREMENT AND CONTROL QUALITATIVE AND QUANTITATIVE DATA

- Slides: 79

PROJECT MEASUREMENT AND CONTROL

QUALITATIVE AND QUANTITATIVE DATA

- Software project managers need both qualitative and quantitative data to make decisions and control software projects, so that control can be exerted if there are any deviations from the plan.
- Control actions may include:
  - Extending the schedule
  - Adding more resources
  - Using superior resources
  - Improving software processes
  - Reducing scope (product requirements)

MEASURES AND SOFTWARE METRICS

- Measurements enable managers to gain insight for objective project evaluation.
- If we do not measure, judgments and decision making can be based only on intuition and subjective evaluation.
- A measure provides a quantitative indication of the extent, amount, dimension, capacity, or size of some attribute of a product or a process.
- IEEE defines a software metric as “a quantitative measure of the degree to which a system, component, or process possesses a given attribute”.

MEASURES AND SOFTWARE METRICS

- Engineering is a quantitative discipline, and direct measures such as voltage, mass, velocity, or temperature are taken routinely.
- Unlike other engineering disciplines, however, software engineering is not grounded in the basic laws of physics.
- Some members of the software community argue that software is not measurable. There will always be qualitative assessments, but project managers need software metrics to gain insight and control.

“Just as temperature measurement began with an index finger... and grew to sophisticated scales, tools, and techniques, so too is software measurement maturing”.

DIRECT AND INDIRECT MEASURES

- A direct measure is obtained by applying measurement rules directly to the phenomenon of interest.
- For example, by using specified counting rules, a software program’s “Lines of Code” can be measured directly. http://sunset.usc.edu/research/CODECOUNT/
- An indirect measure is obtained by combining direct measures.
- For example, the number of “Function Points” is an indirect measure determined by counting a system’s inputs, outputs, queries, files, and interfaces.
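
The idea of an indirect measure built from direct counts can be sketched as a weighted sum. This is only an illustration: the weights below are commonly quoted "average complexity" values and are an assumption, not something stated on the slides, and the function name and example counts are hypothetical. The result is an unadjusted count only.

```python
# Illustrative sketch: an unadjusted function point count as an indirect measure.
# ASSUMPTION: these are commonly quoted "average complexity" weights,
# not values taken from the slides.
ASSUMED_AVERAGE_WEIGHTS = {
    "inputs": 4,
    "outputs": 5,
    "queries": 4,
    "files": 10,
    "interfaces": 7,
}


def unadjusted_function_points(counts, weights=ASSUMED_AVERAGE_WEIGHTS):
    """Combine direct counts into one indirect measure (a weighted sum)."""
    return sum(counts[name] * weight for name, weight in weights.items())


# Hypothetical direct counts for a small system.
example_counts = {"inputs": 3, "outputs": 2, "queries": 2, "files": 1, "interfaces": 4}
example_ufp = unadjusted_function_points(example_counts)
print(example_ufp)
```

Each entry in `example_counts` is a direct measure; only their combination is the indirect one.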

SOFTWARE METRIC TYPES

- Product metrics, also called predictor metrics, are measures of the software product. They are mainly used to determine the quality of the product, such as its performance.
- Process metrics, also called control metrics, are measures of the software process. They are mainly used to determine the efficiency and effectiveness of the process, for example through defects discovered during unit testing. They are used for Software Process Improvement (SPI).
- Project metrics are measures of effort, cost, schedule, and risk. They are used to assess the status of a project and track risks.

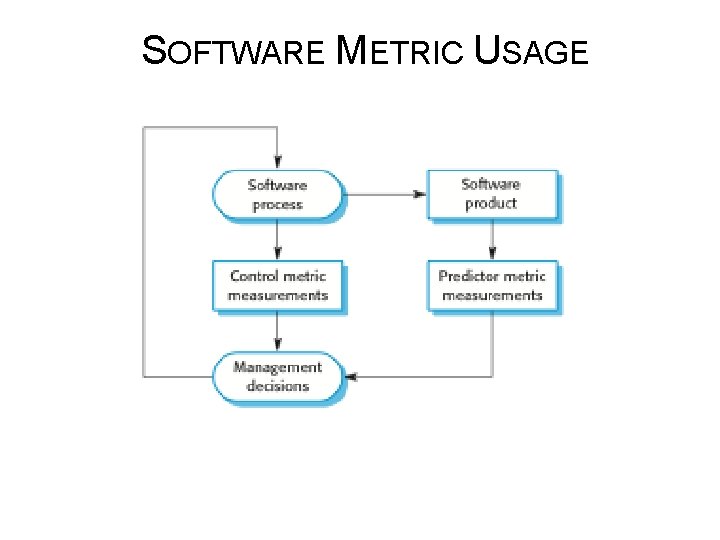

SOFTWARE METRIC USAGE

Product, process, and project metrics support:

- Project time
- Project cost
- Product scope/quality
- Risk management
- Future project estimation
- Software process improvement

These metrics are kept in a metrics repository.

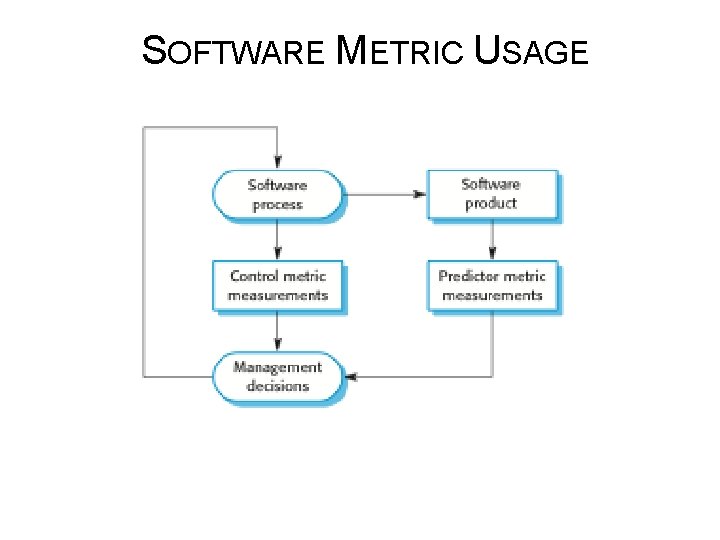

SOFTWARE METRIC USAGE

SOFTWARE METRICS COLLECTION

- Very few companies have made a long-term commitment to collecting metrics.
- In the 1990s, several large companies such as Hewlett-Packard, AT&T, and Nokia introduced metrics programs.
- Most of the focus was on collecting metrics on software program defects and the Verification & Validation processes.
- There is little information publicly available about the current use of systematic software measurement in industry.

SOFTWARE METRICS COLLECTION

- Some companies do collect information about their software, such as the number of requirements change requests or the number of defects discovered during testing.
- However, it is not clear whether they then use this information. The reasons are:
  - It is impossible to quantify the Return On Investment (ROI) of introducing an organizational metrics program.
  - There are no standards for software metrics or standardized processes for measurement and analysis.

SOFTWARE METRICS COLLECTION

- In many companies, processes are not standardized and are poorly defined.
- Much of the research has focused on code-based metrics and plan-driven development processes. However, much software is now developed using ERP packages, COTS products, and agile methods.
- Software metrics are the basis of empirical software engineering. This is a research area in which experiments on software systems and the collection of data about real projects have been used to form and validate hypotheses about methods and techniques.

REVIEWS AND INSPECTIONS

- A group examines part or all of a process or system and its documentation to find potential problems.
- Software or documents may be 'signed off' at a review, which signifies that progress to the next development stage has been approved by management.
- There are different types of review with different objectives:
  - Inspections for defect removal (product)
  - Reviews for progress assessment (product and process)
  - Quality reviews (product and standards)

REVIEWS AND INSPECTIONS

- A group of people carefully examine part or all of a software system and its associated documentation.
- Code, designs, specifications, test plans, standards, etc. can all be reviewed.

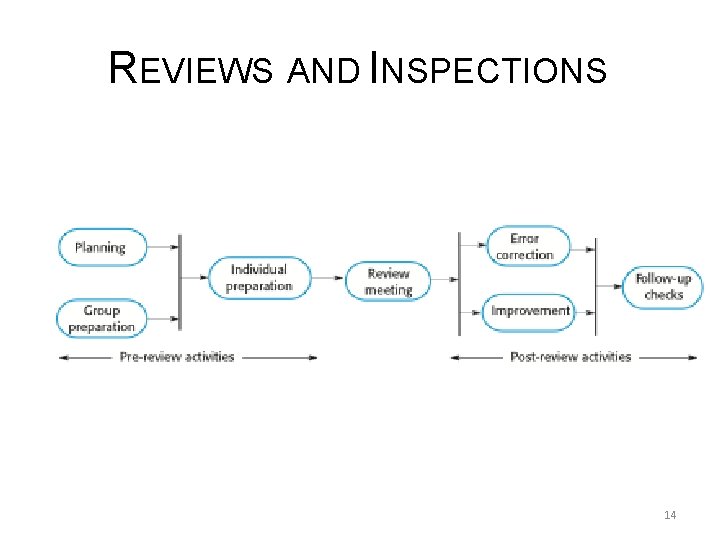

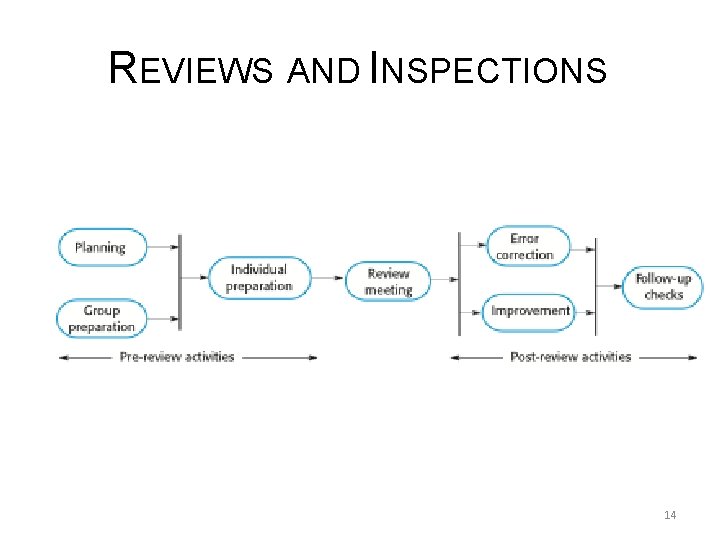

REVIEWS AND INSPECTIONS

REVIEWS AND INSPECTIONS

- The review process in agile software development is usually informal.
  - In Scrum, for example, there is a review meeting after each iteration of the software has been completed (a sprint review), where quality issues and problems may be discussed.
- In extreme programming (XP), pair programming ensures that code is constantly being examined and reviewed by another team member.
- XP relies on individuals taking the initiative to improve and refactor code. Agile approaches are not usually standards-driven, so issues of standards compliance are not usually considered.

REVIEWS AND INSPECTIONS

- Inspections are peer reviews where engineers examine the source of a system with the aim of discovering anomalies and defects.
- Inspections do not require execution of a system, so they may be used before implementation.
- They may be applied to any representation of the system (requirements, design, configuration data, test data, etc.).
- They have been shown to be an effective technique for discovering program errors.

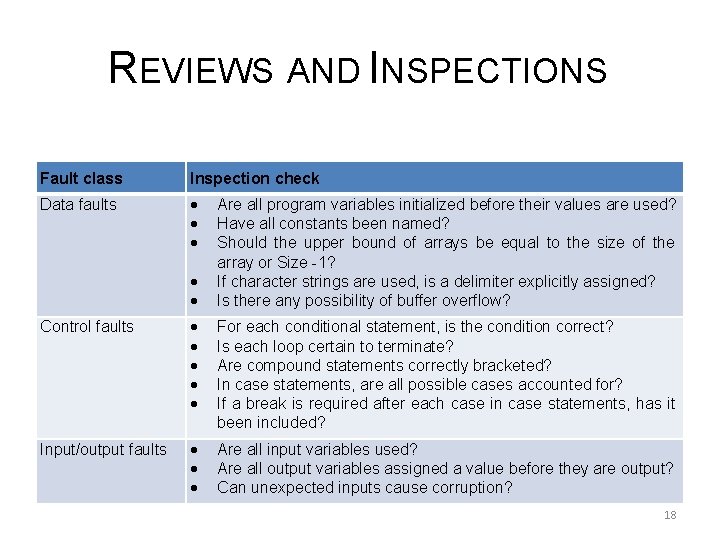

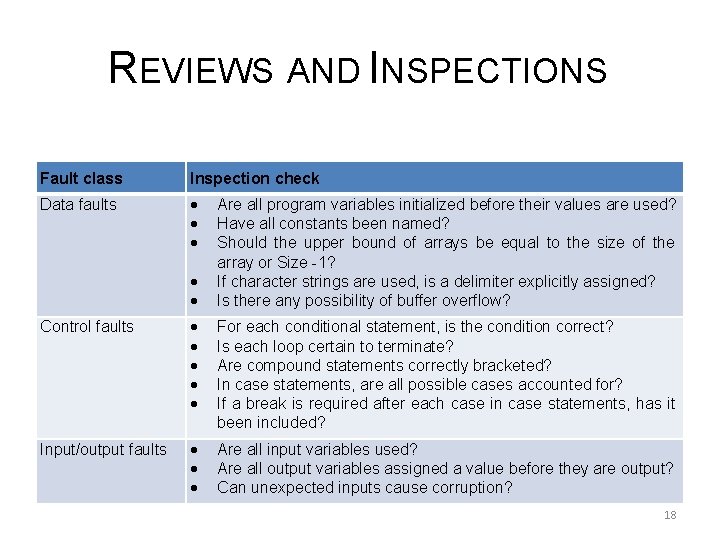

REVIEWS AND INSPECTIONS

- A checklist of common errors should be used to drive the inspection.
- Error checklists are programming-language dependent and reflect the characteristic errors that are likely to arise in the language.
- In general, the 'weaker' the type checking, the larger the checklist.
- Examples: initialization, constant naming, loop termination, array bounds, etc.

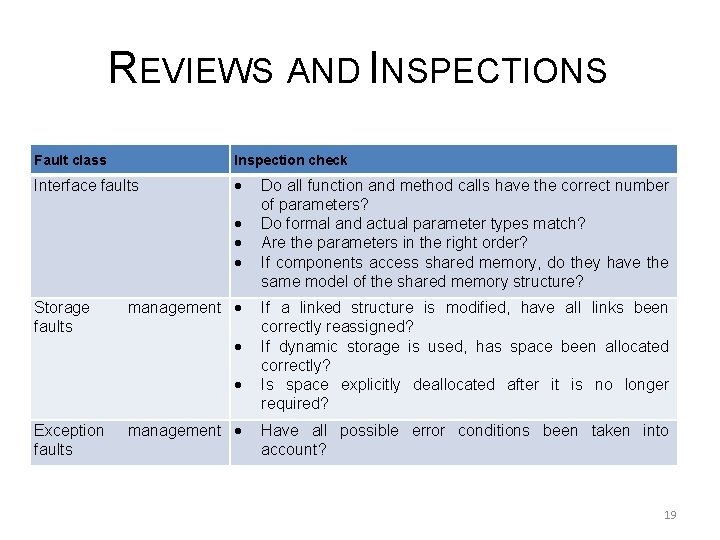

REVIEWS AND INSPECTIONS

| Fault class | Inspection check |
|---|---|
| Data faults | Are all program variables initialized before their values are used? |
| | Have all constants been named? |
| | Should the upper bound of arrays be equal to the size of the array or Size - 1? |
| | If character strings are used, is a delimiter explicitly assigned? |
| | Is there any possibility of buffer overflow? |
| Control faults | For each conditional statement, is the condition correct? |
| | Is each loop certain to terminate? |
| | Are compound statements correctly bracketed? |
| | In case statements, are all possible cases accounted for? |
| | If a break is required after each case in case statements, has it been included? |
| Input/output faults | Are all input variables used? |
| | Are all output variables assigned a value before they are output? |
| | Can unexpected inputs cause corruption? |

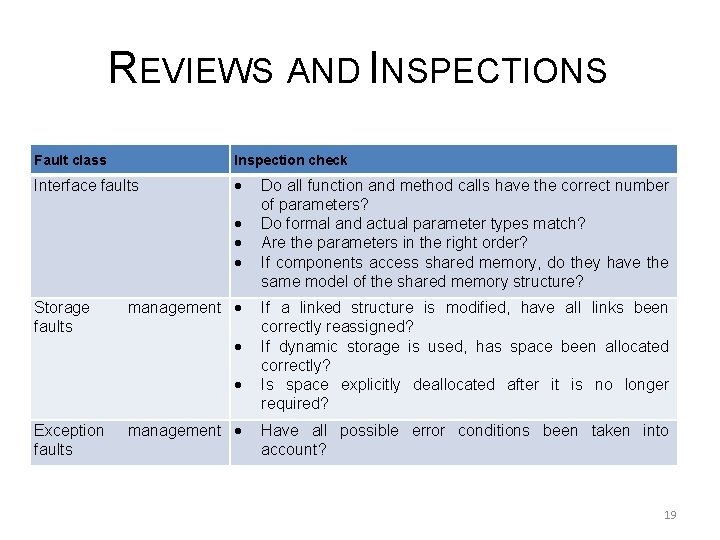

REVIEWS AND INSPECTIONS

| Fault class | Inspection check |
|---|---|
| Interface faults | Do all function and method calls have the correct number of parameters? |
| | Do formal and actual parameter types match? |
| | Are the parameters in the right order? |
| | If components access shared memory, do they have the same model of the shared memory structure? |
| Storage management faults | If a linked structure is modified, have all links been correctly reassigned? |
| | If dynamic storage is used, has space been allocated correctly? |
| | Is space explicitly deallocated after it is no longer required? |
| Exception management faults | Have all possible error conditions been taken into account? |

REVIEWS AND INSPECTIONS

- Agile processes rarely use formal inspection or peer review processes.
- Rather, they rely on team members cooperating to check each other’s code, and on informal guidelines such as ‘check before check-in’, which suggest that programmers should check their own code.
- Extreme programming practitioners argue that pair programming is an effective substitute for inspection, as it is in effect a continual inspection process.
- Two people look at every line of code and check it before it is accepted.

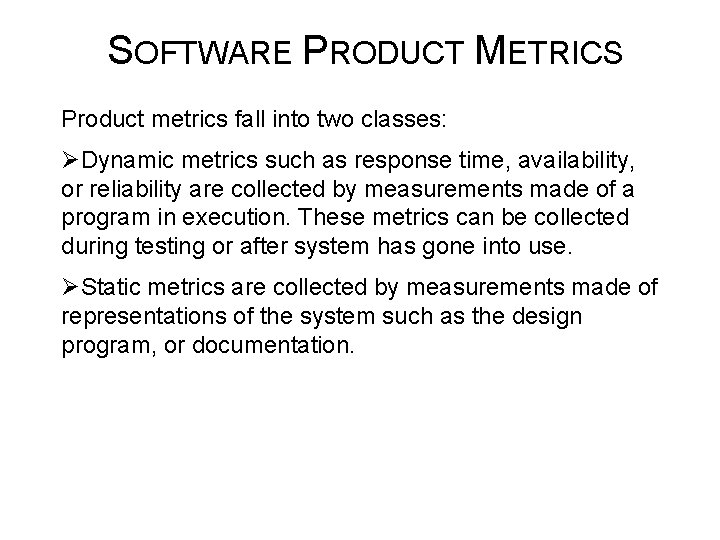

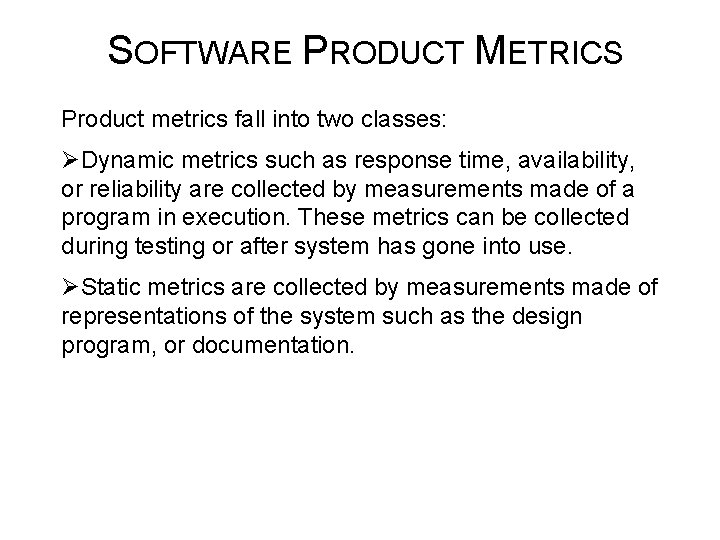

SOFTWARE PRODUCT METRICS

Product metrics fall into two classes:

- Dynamic metrics, such as response time, availability, or reliability, are collected by measurements made of a program in execution. These metrics can be collected during testing or after the system has gone into use.
- Static metrics are collected by measurements made of representations of the system, such as the design, program, or documentation.

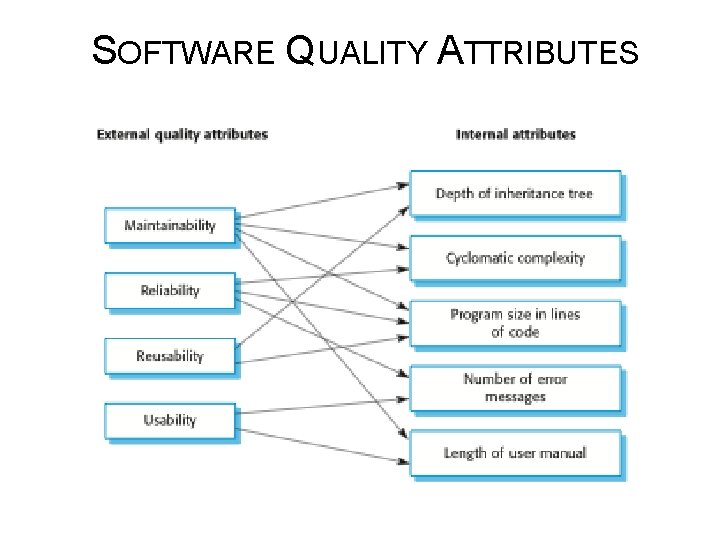

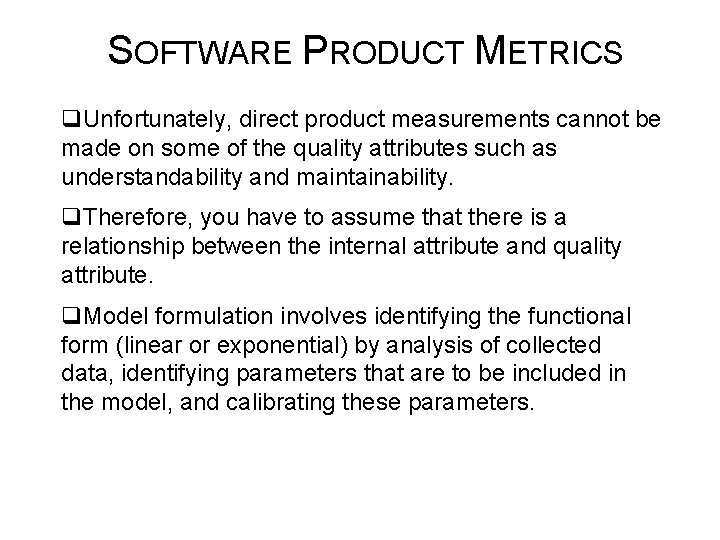

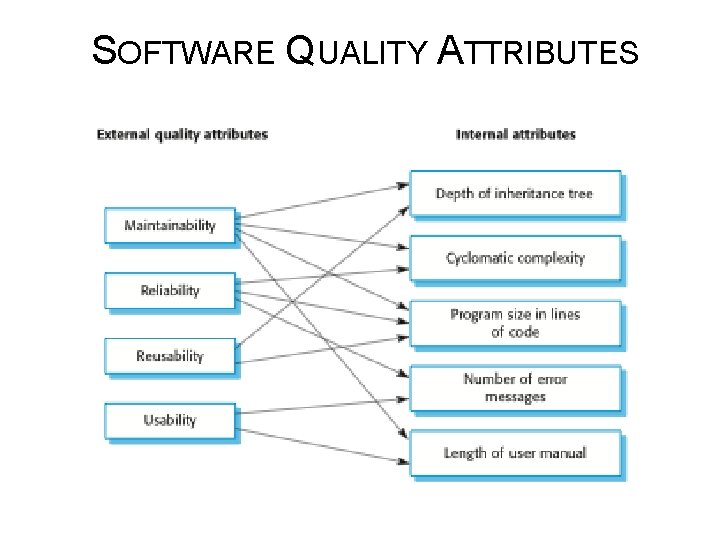

SOFTWARE PRODUCT METRICS

- Unfortunately, direct product measurements cannot be made on some quality attributes, such as understandability and maintainability.
- Therefore, you have to assume that there is a relationship between an internal attribute that can be measured and the quality attribute of interest.
- Model formulation involves identifying the functional form (linear or exponential) by analysis of collected data, identifying the parameters that are to be included in the model, and calibrating these parameters.

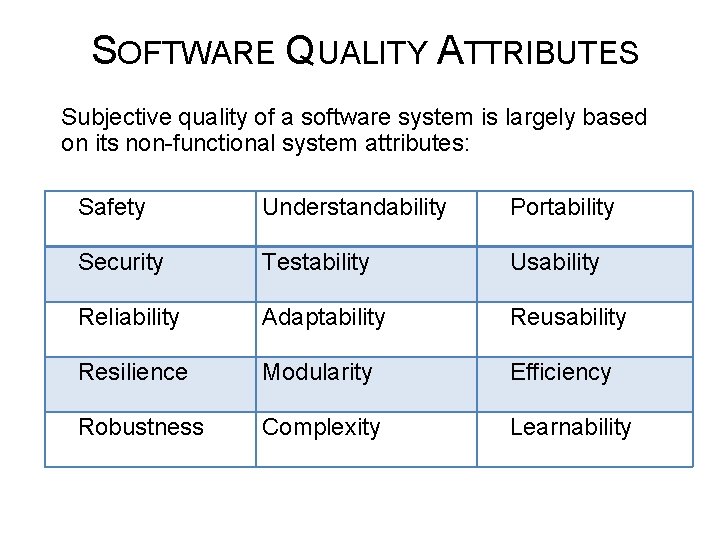

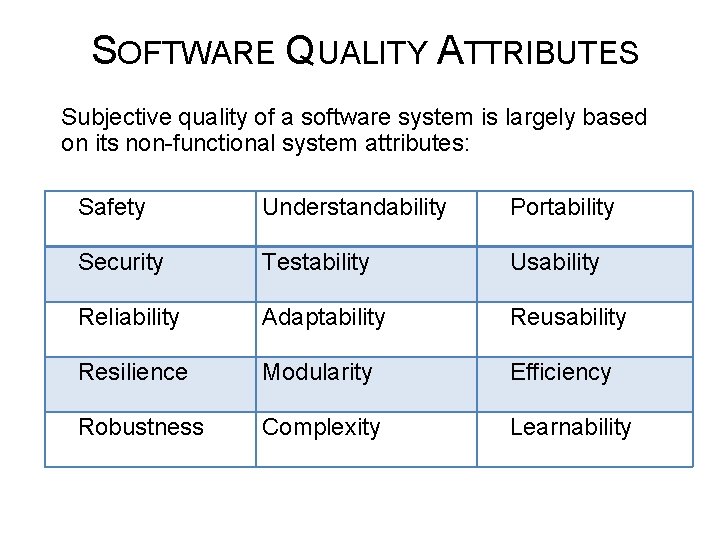

SOFTWARE QUALITY ATTRIBUTES

The subjective quality of a software system is largely based on its non-functional system attributes:

| | | |
|---|---|---|
| Safety | Understandability | Portability |
| Security | Testability | Usability |
| Reliability | Adaptability | Reusability |
| Resilience | Modularity | Efficiency |
| Robustness | Complexity | Learnability |

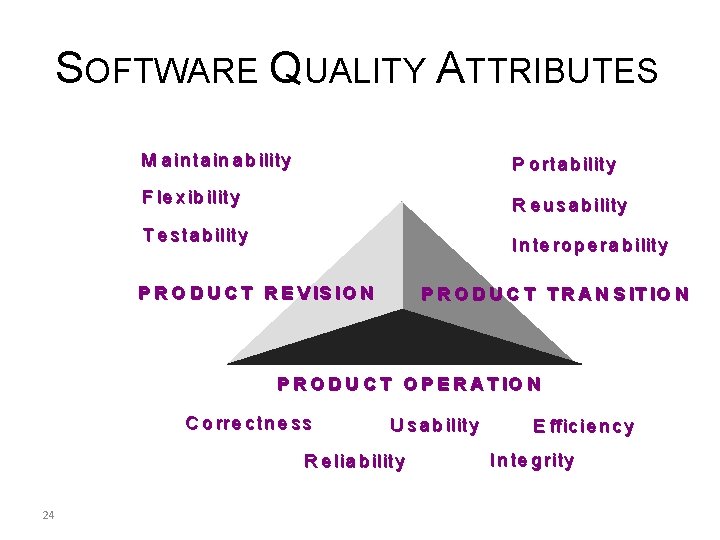

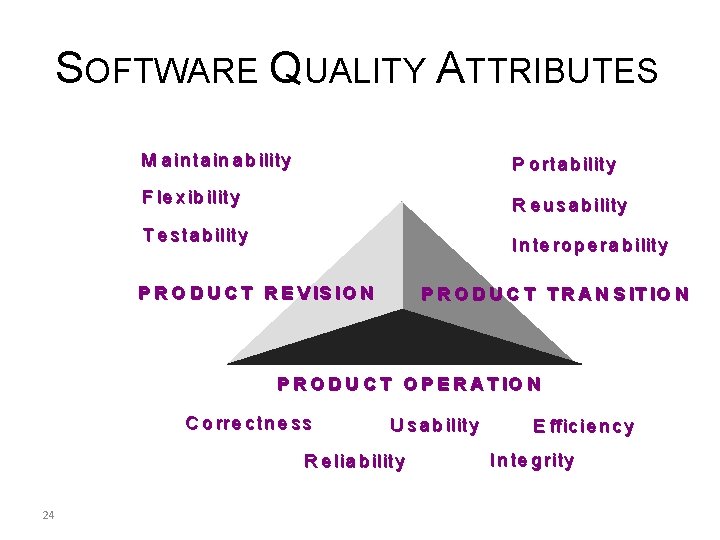

SOFTWARE QUALITY ATTRIBUTES

- Product revision: maintainability, flexibility, testability
- Product transition: portability, reusability, interoperability
- Product operation: correctness, usability, reliability, efficiency, integrity

SOFTWARE QUALITY ATTRIBUTES

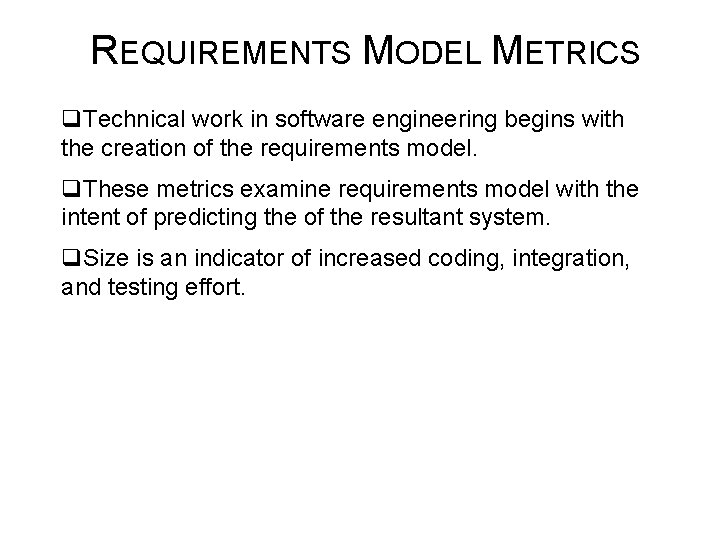

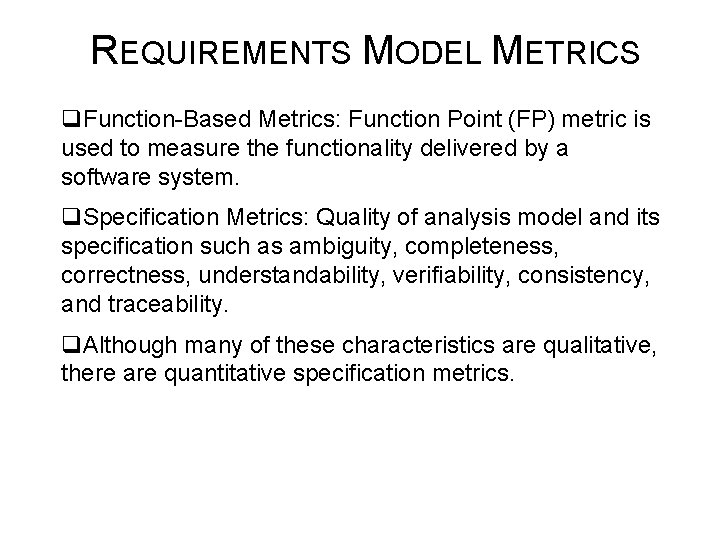

REQUIREMENTS MODEL METRICS

- Technical work in software engineering begins with the creation of the requirements model.
- These metrics examine the requirements model with the intent of predicting the size of the resultant system.
- Size is an indicator of increased coding, integration, and testing effort.

REQUIREMENTS MODEL METRICS

- Function-based metrics: the Function Point (FP) metric is used to measure the functionality delivered by a software system.
- Specification metrics: these assess the quality of the analysis model and its specification in terms of ambiguity, completeness, correctness, understandability, verifiability, consistency, and traceability.
- Although many of these characteristics are qualitative, there are quantitative specification metrics.

REQUIREMENTS MODEL METRICS

- Number of total requirements: $N_r = N_f + N_{nf}$, where
  - $N_r$ = number of total requirements
  - $N_f$ = number of functional requirements
  - $N_{nf}$ = number of non-functional requirements
- Lack of ambiguity (specificity): $Q = N_{ui} / N_r$, where $N_{ui}$ = number of requirements for which all reviewers had identical interpretations. The closer the value of $Q$ is to 1, the lower the ambiguity of the specification.
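
As a small worked illustration of the two formulas above, the sketch below computes the total requirement count and the ratio Q. The function names and the example numbers are hypothetical and chosen only for illustration.

```python
def total_requirements(n_functional, n_nonfunctional):
    """N_r = N_f + N_nf."""
    return n_functional + n_nonfunctional


def specificity(n_identical, n_total):
    """Q = N_ui / N_r; values close to 1 indicate low ambiguity."""
    if n_total <= 0:
        raise ValueError("the specification must contain at least one requirement")
    if not 0 <= n_identical <= n_total:
        raise ValueError("N_ui must lie between 0 and N_r")
    return n_identical / n_total


# Hypothetical review outcome: 40 functional and 10 non-functional requirements,
# 45 of which every reviewer interpreted in the same way.
n_r = total_requirements(40, 10)
q = specificity(45, n_r)
print(n_r, q)
```

With these numbers, 5 of the 50 requirements were read differently by at least one reviewer, so Q falls short of 1.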

REQUIREMENTS MODEL METRICS

- The adequacy of use cases can be measured using an ordered triple (Low, Medium, High) to indicate:
  - Level of granularity (excessive detail) specified
  - Level in the primary and secondary scenarios
  - Sufficiency of the number of secondary scenarios in specifying alternatives to the primary scenario
- The semantics of analysis UML models such as sequence, state, and class diagrams can also be measured.

OO DESIGN MODEL METRICS

- Coupling: the physical connections between elements of an OO design, such as the number of collaborations between classes or the number of messages passed between objects.
- Cohesion: the cohesiveness of a class is determined by examining the degree to which the set of properties it possesses is part of the problem or design domain.

CLASS-ORIENTED METRICS – THE CK METRICS SUITE

- Depth of the inheritance tree (DIT) is the maximum length from the node to the root of the tree. As DIT grows, it is likely that lower-level classes will inherit many methods. This leads to potential difficulties when attempting to predict the behavior of a class, but large DIT values also imply that many methods may be reused.
- Coupling between object classes (CBO) is the number of collaborations listed for a class on its CRC index card, so the CRC model may be used to determine its value. As CBO increases, it is likely that the reusability of a class will decrease.
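
A minimal sketch of how DIT could be computed for Python classes follows. It assumes that the built-in `object` class is treated as the root of the tree (a Python-specific convention, not something the CK suite prescribes), and the example classes are hypothetical.

```python
def depth_of_inheritance(cls):
    """Longest path from cls up to the root class `object` (assumed root)."""
    if cls is object:
        return 0
    # With multiple inheritance, follow the deepest base class.
    return 1 + max(depth_of_inheritance(base) for base in cls.__bases__)


# Hypothetical class hierarchy used only for illustration.
class Shape:
    pass


class Polygon(Shape):
    pass


class Square(Polygon):
    pass


for cls in (Shape, Polygon, Square):
    print(cls.__name__, depth_of_inheritance(cls))
```

`Square` sits deepest in this hierarchy, so it inherits from the most ancestors and its behavior depends on the most classes.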

USER INTERFACE DESIGN METRICS

- Layout Appropriateness (LA) measures the user’s movements from one layout entity (such as icons, text, menus, and windows) to another.
- Web page metrics include the number of words, links, graphics, colors, and fonts contained within a Web page.

USER INTERFACE DESIGN METRICS

- Does the user interface promote usability?
- Are the aesthetics of the WebApp appropriate for the application domain and pleasing to the user?
- Is the content designed in a manner that imparts the most information with the least effort?
- Is navigation efficient and straightforward?
- Has the WebApp architecture been designed to accommodate the special goals and objectives of WebApp users, the structure of content and functionality, and the flow of navigation required to use the system effectively?
- Are components designed in a manner that reduces procedural complexity and enhances correctness, reliability, and performance?

SOURCE CODE METRICS

- Cyclomatic complexity is a measure of the number of independent paths through the code. It measures structural complexity.
- Values greater than 10 to 12 are considered too complex: the code is difficult to understand, modify, and test.
- $C = E - N + 2$, where
  - $C$ = complexity
  - $E$ = number of edges in the flow graph
  - $N$ = number of nodes in the flow graph
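
The formula can be tried on a small flow graph. The sketch below models a single if/else statement as a hypothetical graph with one entry and one exit; the node names and the threshold constant are illustrative assumptions.

```python
def cyclomatic_complexity(nodes, edges):
    """C = E - N + 2 for a connected flow graph with one entry and one exit."""
    return len(edges) - len(nodes) + 2


# Hypothetical flow graph of one if/else statement.
nodes = ["entry", "test", "then", "else", "exit"]
edges = [
    ("entry", "test"),
    ("test", "then"),
    ("test", "else"),
    ("then", "exit"),
    ("else", "exit"),
]

ASSUMED_THRESHOLD = 10  # lower end of the 10 to 12 range mentioned above

complexity = cyclomatic_complexity(nodes, edges)
print(complexity, complexity > ASSUMED_THRESHOLD)
```

One decision point gives two independent paths (the "then" path and the "else" path), which matches the computed value.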

SOURCE CODE METRICS

- Length of identifiers is the average length of identifiers (names for variables, methods, etc.). The longer the identifiers, the more likely they are to be meaningful, and thus the more maintainable the code.
- Depth of conditional nesting measures the nesting of if-statements. Deeply nested if-statements are hard to understand and potentially error-prone.
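
Depth of conditional nesting can be measured automatically. The sketch below uses Python's standard `ast` module on a hypothetical code sample. One caveat of this simple approach: Python's parser represents `elif` as an `if` nested inside the `else` branch, so `elif` chains are counted as additional nesting.

```python
import ast


def max_if_nesting(source):
    """Return the deepest level of nested if-statements in Python source."""
    def depth(node, current):
        if isinstance(node, ast.If):
            current += 1
        deepest = current
        for child in ast.iter_child_nodes(node):
            deepest = max(deepest, depth(child, current))
        return deepest

    return depth(ast.parse(source), 0)


# Hypothetical code under inspection.
SAMPLE = '''
def classify(x):
    if x > 0:
        if x > 10:
            if x > 100:
                return "huge"
            return "big"
        return "small"
    return "non-positive"
'''

print(max_if_nesting(SAMPLE))
```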

PROCESS METRICS

- Process metrics are quantitative data about software processes, such as the time taken to perform some process activity.
- Three types of process metrics can be collected:
  - Time taken: time devoted to a process or spent by particular engineers
  - Resources required: effort, travel costs, or computer resources
  - Number of occurrences of events: for example, the number of defects, the number of requirements changes, or the number of lines of code modified in response to a requirements change

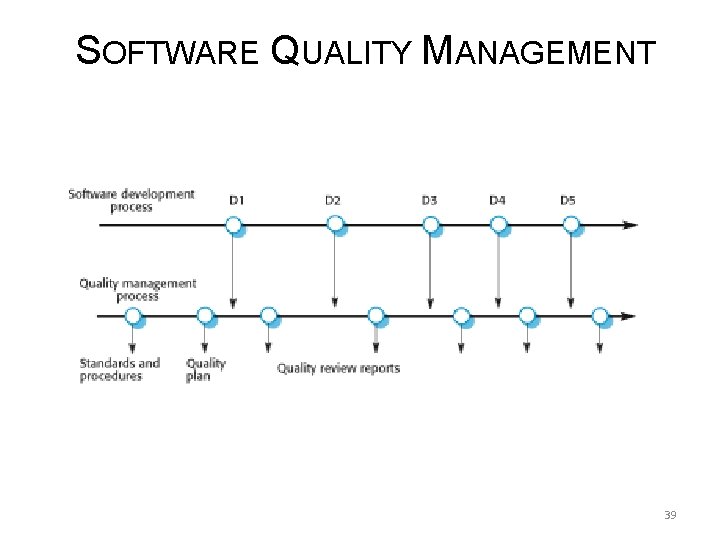

SOFTWARE QUALITY MANAGEMENT

- Software quality management is concerned with ensuring that the required level of quality is achieved in a software product.
- Three principal concerns:
  - At the organizational level, quality management is concerned with establishing a framework.
  - At the project level, quality management involves the application of specific quality processes and checking that these planned processes have been followed.
  - At the project level, quality management is also concerned with establishing a quality plan for a project. The quality plan should set out the quality goals for the project and define what processes and standards are to be used.

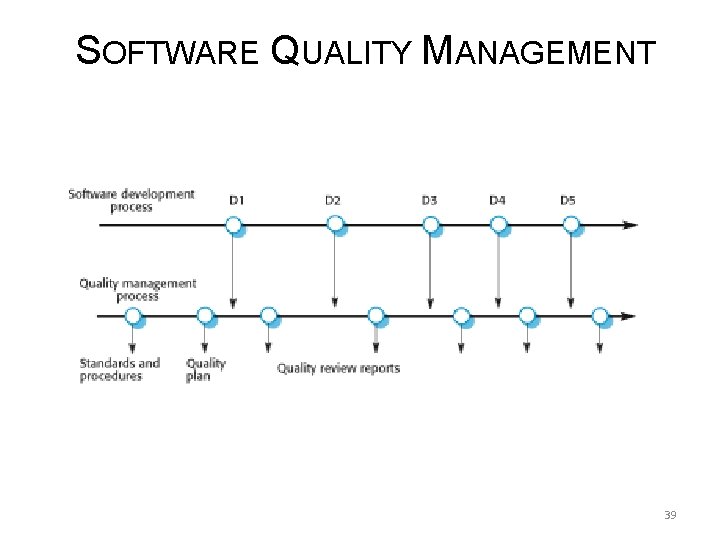

SOFTWARE QUALITY MANAGEMENT

- Quality management provides an independent check on the software development process.
- The quality management process checks the project deliverables to ensure that they are consistent with organizational standards and goals.
- The quality team should be independent from the development team so that they can take an objective view of the software. This allows them to report on software quality without being influenced by software development issues.

SOFTWARE QUALITY MANAGEMENT

SOFTWARE QUALITY PLANNING

- A quality plan sets out the desired product qualities and how these are assessed, and defines the most significant quality attributes.
- The quality plan should define the quality assessment process.
- It should set out which organizational standards should be applied and, where necessary, define new standards to be used.

SOFTWARE QUALITY PLANNING

- Quality plan structure:
  - Product introduction
  - Product plans
  - Process descriptions
  - Quality goals
  - Risks and risk management
- Quality plans should be short, succinct documents.
  - If they are too long, no one will read them.

SOFTWARE QUALITY

- Quality, simplistically, means that a product should meet its specification.
- This is problematic for software systems:
  - There is a tension between customer quality requirements (efficiency, reliability, etc.) and developer quality requirements (maintainability, reusability, etc.).
  - Some quality requirements are difficult to specify in an unambiguous way.
  - Software specifications are usually incomplete and often inconsistent.
- The focus may be ‘fitness for purpose’ rather than specification conformance.

SOFTWARE QUALITY

- Have programming and documentation standards been followed in the development process?
- Has the software been properly tested?
- Is the software sufficiently dependable to be put into use?
- Is the performance of the software acceptable for normal use?
- Is the software usable?
- Is the software well-structured and understandable?

SOFTWARE STANDARDS

- Standards define the required attributes of a product or process. They play an important role in quality management.
- Standards may be international, organizational, or project standards.
- Product standards define characteristics that all software components should exhibit, e.g. a common programming style.
- Process standards define how the software process should be enacted.

SOFTWARE STANDARDS

- Standards encapsulate best practice, which avoids repetition of past mistakes.
- They are a framework for defining what quality means in a particular setting, i.e. that organization’s view of quality.
- They provide continuity: new staff can understand the organization by understanding the standards that are used.

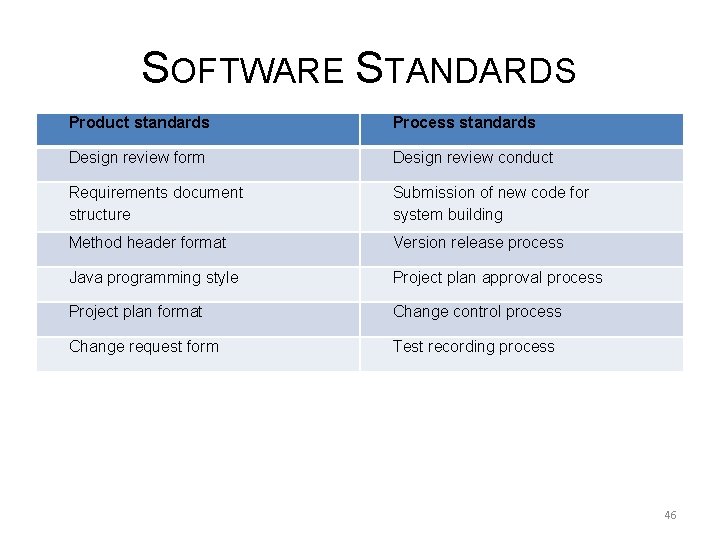

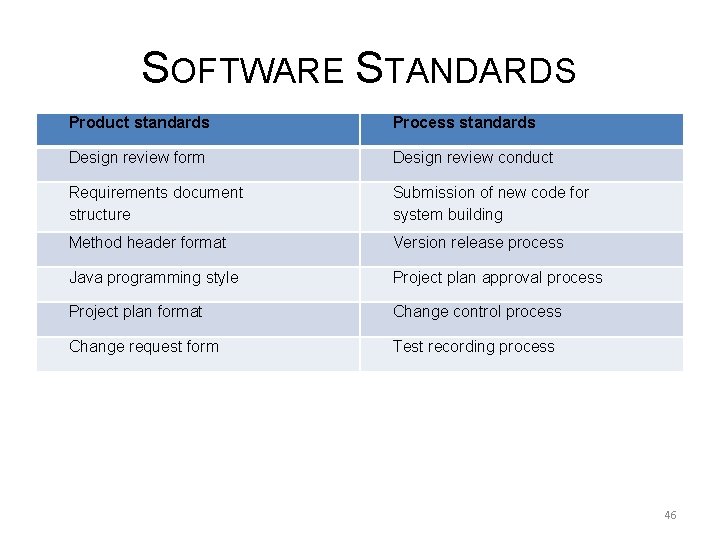

SOFTWARE STANDARDS

| Product standards | Process standards |
|---|---|
| Design review form | Design review conduct |
| Requirements document structure | Submission of new code for system building |
| Method header format | Version release process |
| Java programming style | Project plan approval process |
| Project plan format | Change control process |
| Change request form | Test recording process |

SOFTWARE PROCESS IMPROVEMENT

- Software Process Improvement (SPI) means understanding existing processes and changing these processes to increase product quality or reduce cost and development time.
  - Effort/duration per work product
  - Number of errors found before release of software
  - Defects reported by end users
  - Number of work products delivered (productivity)

SOFTWARE PROCESS IMPROVEMENT

- The American engineer W. Edwards Deming pioneered process improvement.
- He worked with Japanese manufacturing industry after World War II to help improve the quality of manufactured goods.
- Deming and several others introduced statistical quality control, which is based on measuring the number of product defects and relating these defects to the process.
- The aim is to reduce the number of defects by analyzing and modifying the process.

SOFTWARE PROCESS IMPROVEMENT

- However, the same techniques cannot be applied to software development.
- The relationship between process quality and product quality is less obvious when the product is intangible and mostly dependent on intellectual processes that cannot be automated.
- People’s skills and experience are significant.
- Still, for very large projects, the principal factor that affects product quality is the software process.

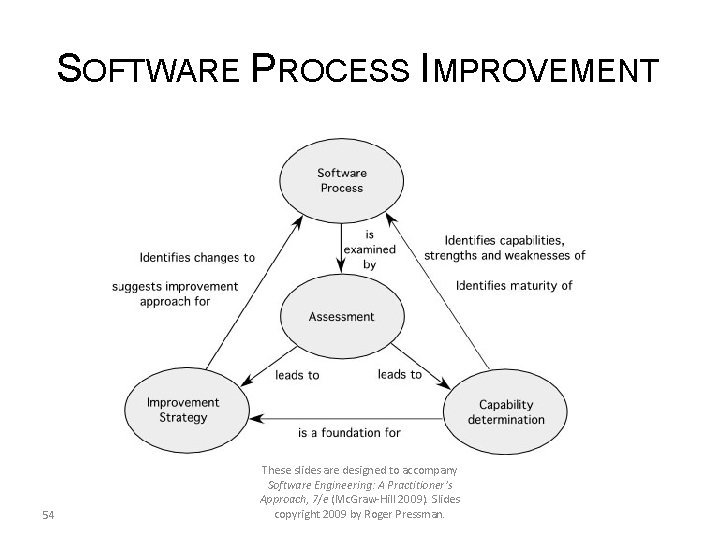

SOFTWARE PROCESS IMPROVEMENT

SOFTWARE PROCESS IMPROVEMENT

- SPI encompasses a set of activities that will lead to a better software process and, as a consequence, higher-quality software delivered in a more timely manner.
- SPI helps software engineering companies to find their process inefficiencies and improve them.

SOFTWARE PROCESS IMPROVEMENT

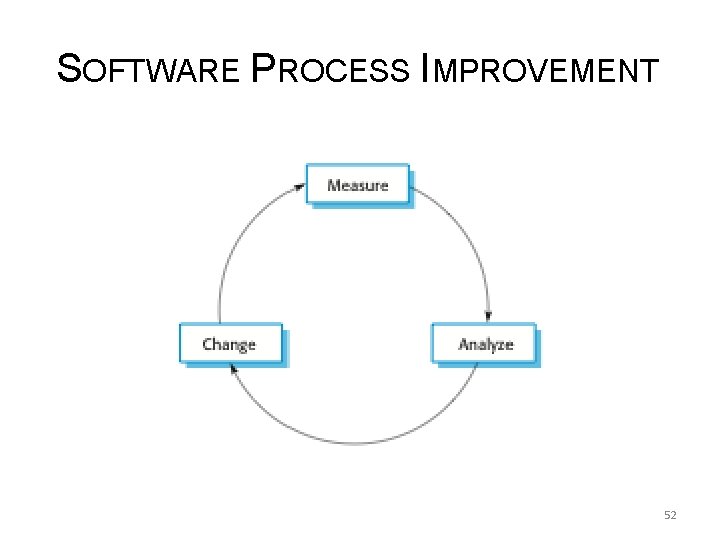

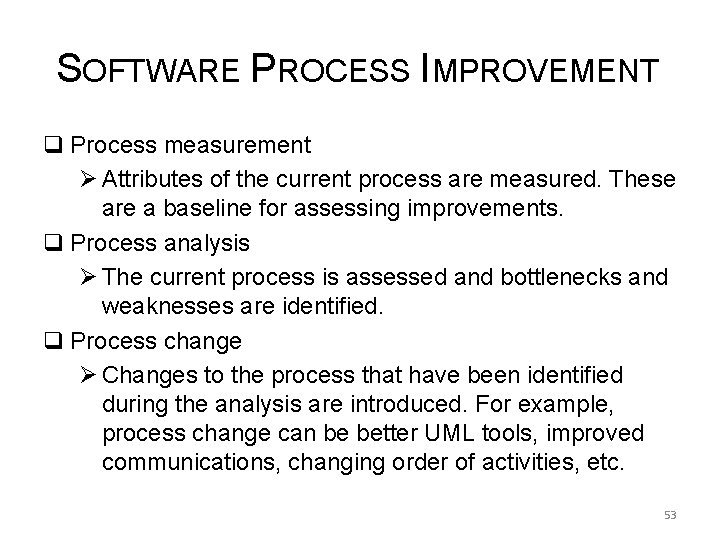

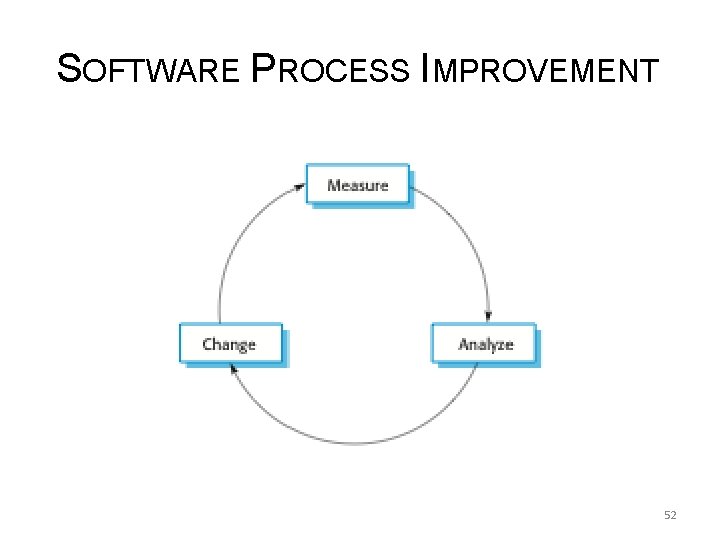

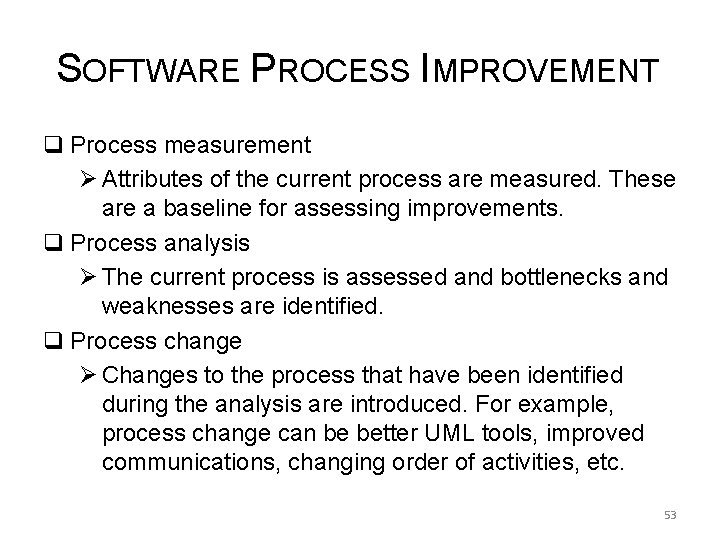

SOFTWARE PROCESS IMPROVEMENT

- Process measurement
  - Attributes of the current process are measured. These are a baseline for assessing improvements.
- Process analysis
  - The current process is assessed, and bottlenecks and weaknesses are identified.
- Process change
  - Changes to the process that have been identified during the analysis are introduced. For example, a process change can be better UML tools, improved communications, a changed order of activities, etc.

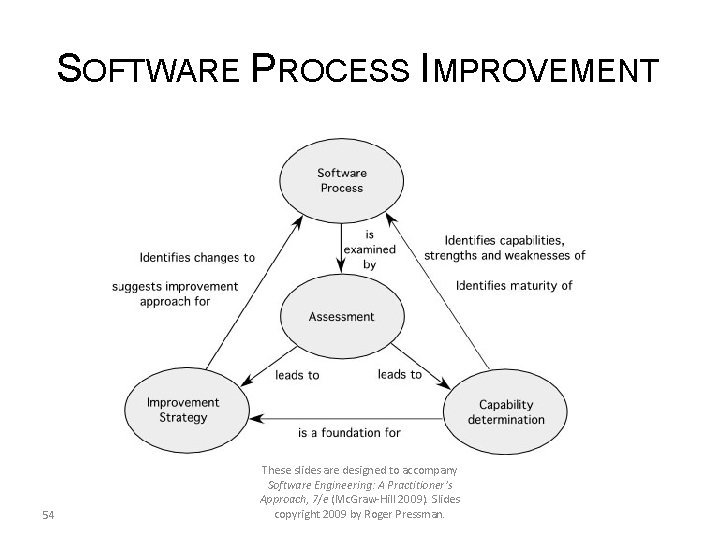

SOFTWARE PROCESS IMPROVEMENT

These slides are designed to accompany Software Engineering: A Practitioner’s Approach, 7/e (McGraw-Hill 2009). Slides copyright 2009 by Roger Pressman.

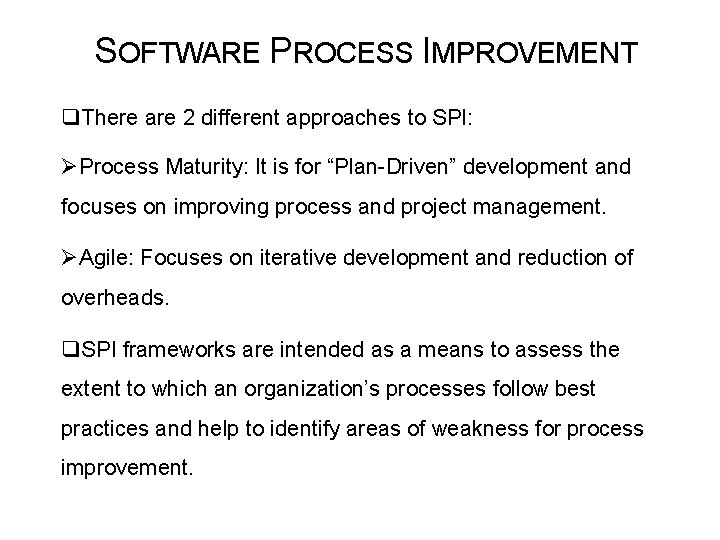

SOFTWARE PROCESS IMPROVEMENT

- There are two different approaches to SPI:
  - Process maturity: intended for “plan-driven” development; focuses on improving process and project management.
  - Agile: focuses on iterative development and the reduction of overheads.
- SPI frameworks are intended as a means to assess the extent to which an organization’s processes follow best practices and to help identify areas of weakness for process improvement.

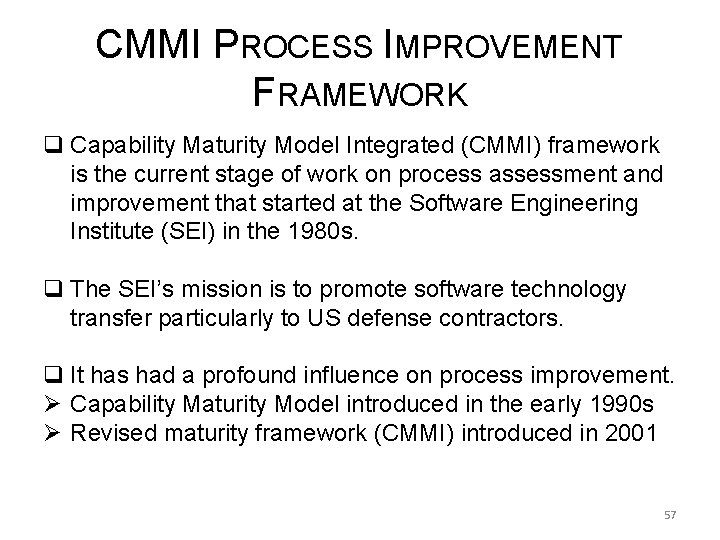

CMMI PROCESS IMPROVEMENT FRAMEWORK

- There are several process maturity models:
  - SPICE
  - ISO/IEC 15504
  - Bootstrap
  - Personal Software Process (PSP)
  - Team Software Process (TSP)
  - TickIT
  - SEI CMMI

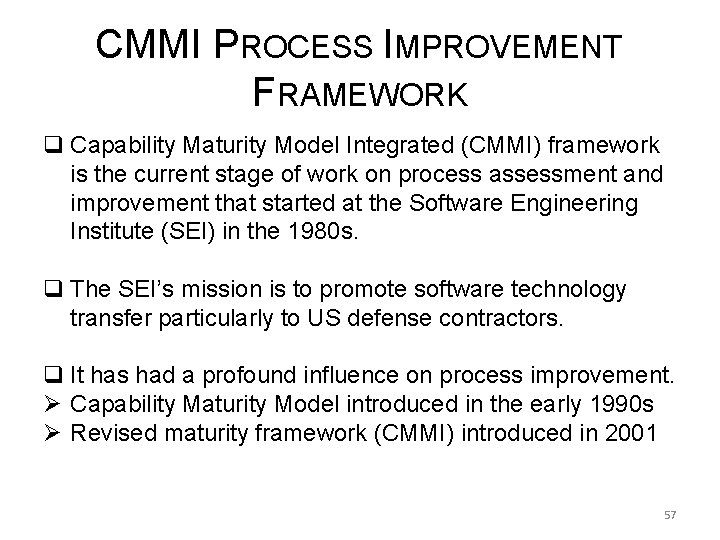

CMMI PROCESS IMPROVEMENT FRAMEWORK

- The Capability Maturity Model Integration (CMMI) framework is the current stage of work on process assessment and improvement that started at the Software Engineering Institute (SEI) in the 1980s.
- The SEI’s mission is to promote software technology transfer, particularly to US defense contractors.
- It has had a profound influence on process improvement.
  - Capability Maturity Model introduced in the early 1990s
  - Revised maturity framework (CMMI) introduced in 2001

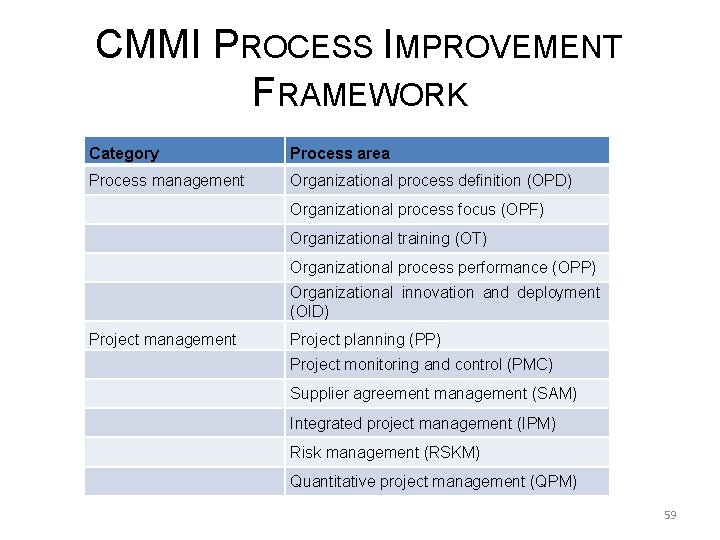

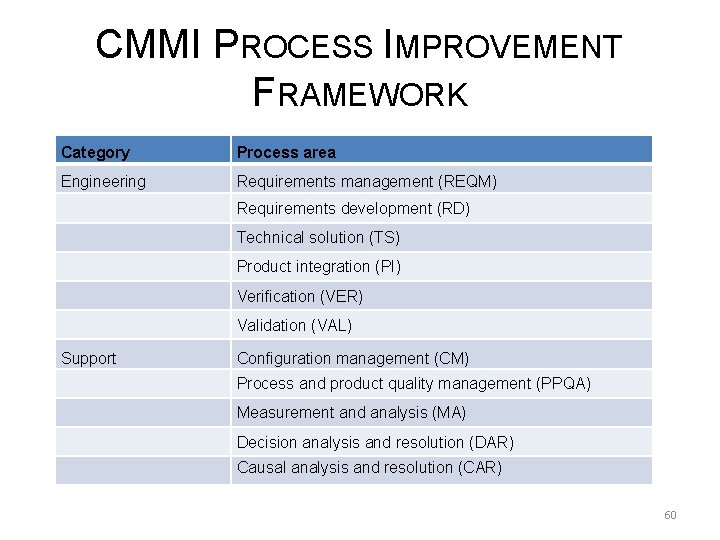

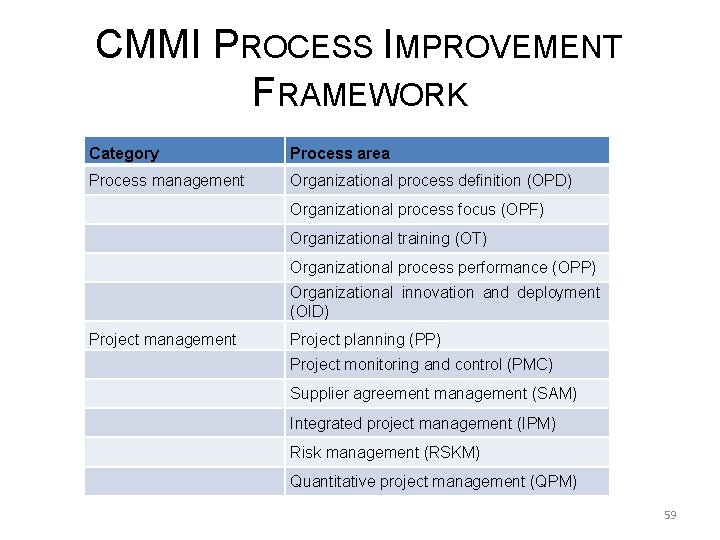

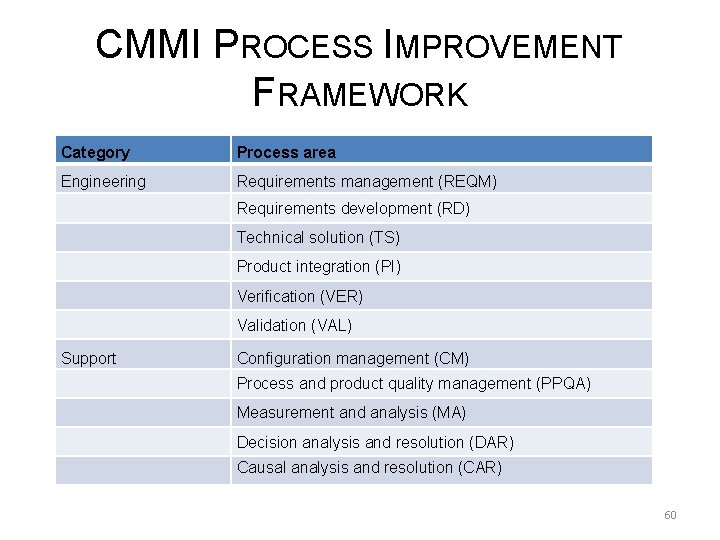

CMMI PROCESS IMPROVEMENT FRAMEWORK

- CMMI allows a software company’s development and management processes to be assessed and assigned a score.
- There are 4 process groups, which include 22 process areas.
- These process areas are relevant to software process capability and improvement.

CMMI PROCESS IMPROVEMENT FRAMEWORK

| Category | Process area |
|---|---|
| Process management | Organizational process definition (OPD) |
| | Organizational process focus (OPF) |
| | Organizational training (OT) |
| | Organizational process performance (OPP) |
| | Organizational innovation and deployment (OID) |
| Project management | Project planning (PP) |
| | Project monitoring and control (PMC) |
| | Supplier agreement management (SAM) |
| | Integrated project management (IPM) |
| | Risk management (RSKM) |
| | Quantitative project management (QPM) |

CMMI PROCESS IMPROVEMENT FRAMEWORK

| Category | Process area |
|---|---|
| Engineering | Requirements management (REQM) |
| | Requirements development (RD) |
| | Technical solution (TS) |
| | Product integration (PI) |
| | Verification (VER) |
| | Validation (VAL) |
| Support | Configuration management (CM) |
| | Process and product quality assurance (PPQA) |
| | Measurement and analysis (MA) |
| | Decision analysis and resolution (DAR) |
| | Causal analysis and resolution (CAR) |

CMMI GOALS AND PRACTICES

- Process areas are defined in terms of Specific Goals (SG) and the Specific Practices (SP) required to achieve these goals.
- SGs are the characteristics that a process area must have to be effective.
- SPs refine a goal into a set of process-related activities.

CMMI GOALS AND PRACTICES

For example, for Project Planning (PP), the following SGs and SPs are defined:

- SG 1: Establish Estimates
  - SP 1.1-1 Estimate the Scope of the Project
  - SP 1.2-1 Establish Estimates of Work Product and Task Attributes
  - SP 1.3-1 Define Project Life Cycle
  - SP 1.4-1 Determine Estimates of Effort and Cost

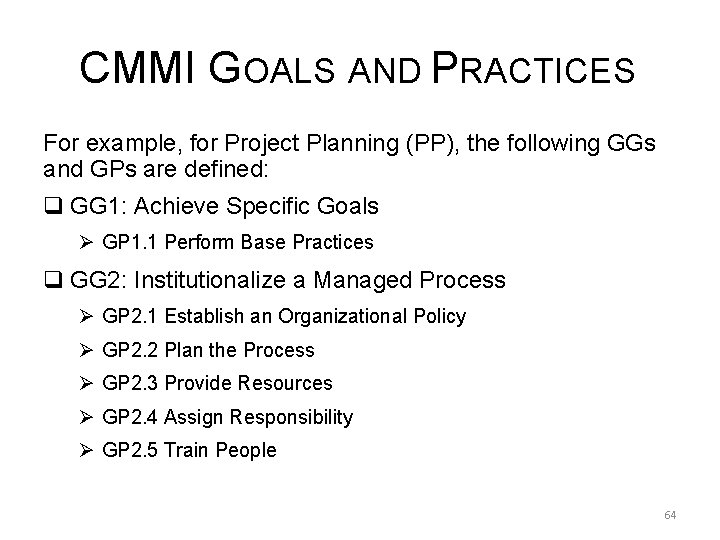

CMMI GOALS AND PRACTICES

- In addition to SGs and SPs, CMMI also defines a set of 5 Generic Goals (GG) and Generic Practices (GP) for each process area.
- Each of these 5 GGs corresponds to one of the 5 capability levels.
- To achieve a particular capability level, the GG for that level and the GPs that correspond to that GG must be achieved.

CMMI GOALS AND PRACTICES

For example, for Project Planning (PP), the following GGs and GPs are defined:

- GG 1: Achieve Specific Goals
  - GP 1.1 Perform Base Practices
- GG 2: Institutionalize a Managed Process
  - GP 2.1 Establish an Organizational Policy
  - GP 2.2 Plan the Process
  - GP 2.3 Provide Resources
  - GP 2.4 Assign Responsibility
  - GP 2.5 Train People

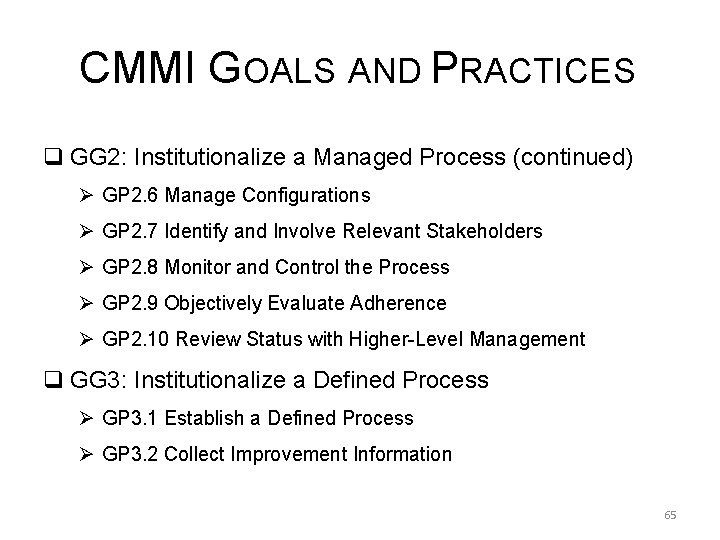

CMMI GOALS AND PRACTICES

- GG 2: Institutionalize a Managed Process (continued)
  - GP 2.6 Manage Configurations
  - GP 2.7 Identify and Involve Relevant Stakeholders
  - GP 2.8 Monitor and Control the Process
  - GP 2.9 Objectively Evaluate Adherence
  - GP 2.10 Review Status with Higher-Level Management
- GG 3: Institutionalize a Defined Process
  - GP 3.1 Establish a Defined Process
  - GP 3.2 Collect Improvement Information

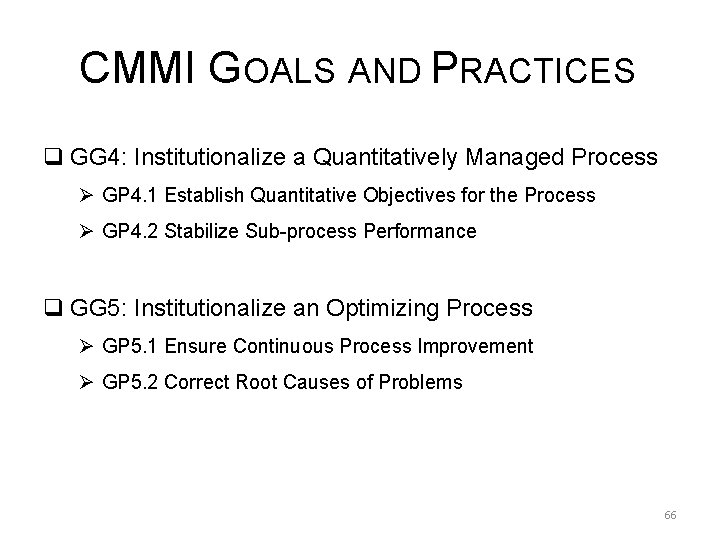

CMMI GOALS AND PRACTICES

- GG 4: Institutionalize a Quantitatively Managed Process
  - GP 4.1 Establish Quantitative Objectives for the Process
  - GP 4.2 Stabilize Sub-process Performance
- GG 5: Institutionalize an Optimizing Process
  - GP 5.1 Ensure Continuous Process Improvement
  - GP 5.2 Correct Root Causes of Problems

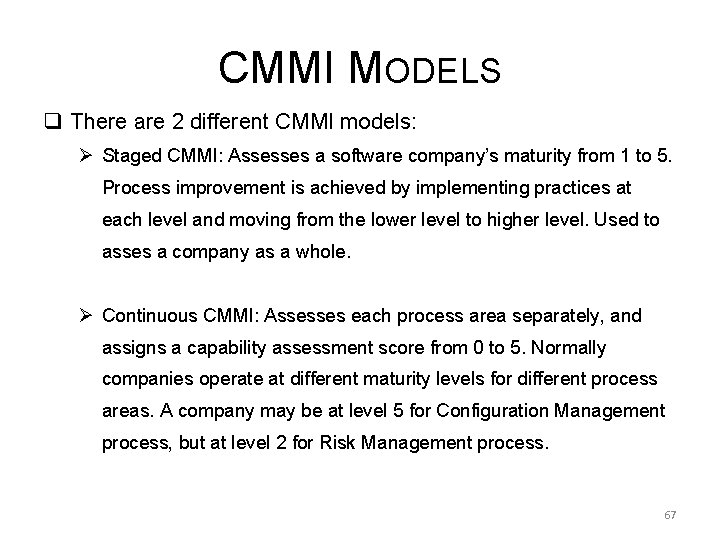

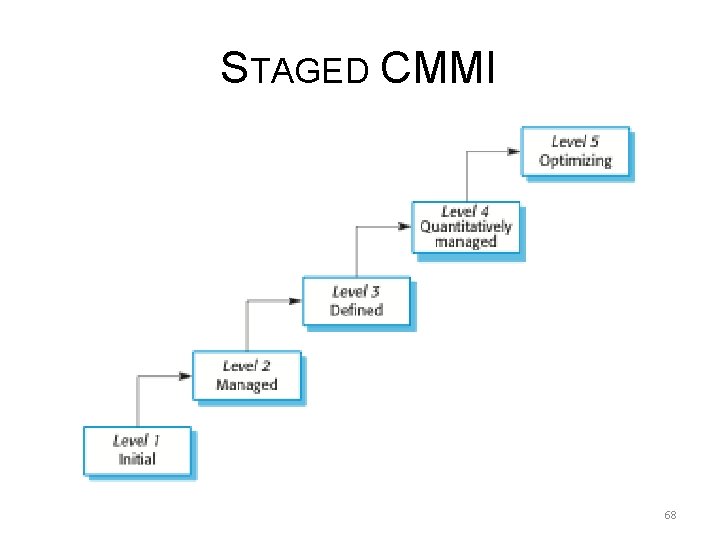

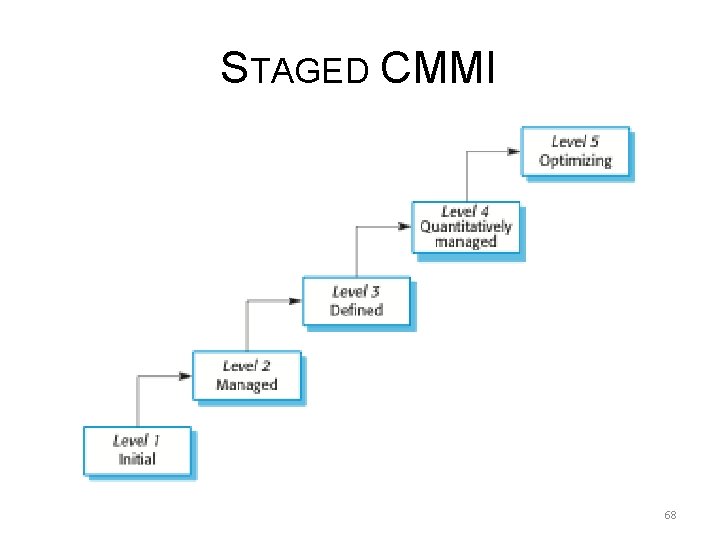

CMMI MODELS

- There are two different CMMI models:
  - Staged CMMI: assesses a software company’s maturity on a scale from 1 to 5. Process improvement is achieved by implementing the practices at each level and moving from a lower level to a higher level. It is used to assess a company as a whole.
  - Continuous CMMI: assesses each process area separately and assigns a capability score from 0 to 5. Companies normally operate at different maturity levels for different process areas. A company may be at level 5 for its Configuration Management process but at level 2 for its Risk Management process.

STAGED CMMI

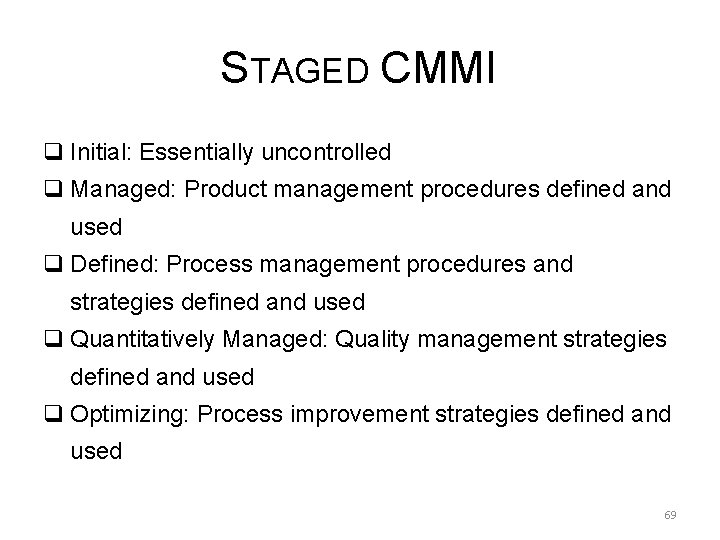

STAGED CMMI

- Initial: essentially uncontrolled
- Managed: product management procedures defined and used
- Defined: process management procedures and strategies defined and used
- Quantitatively Managed: quality management strategies defined and used
- Optimizing: process improvement strategies defined and used

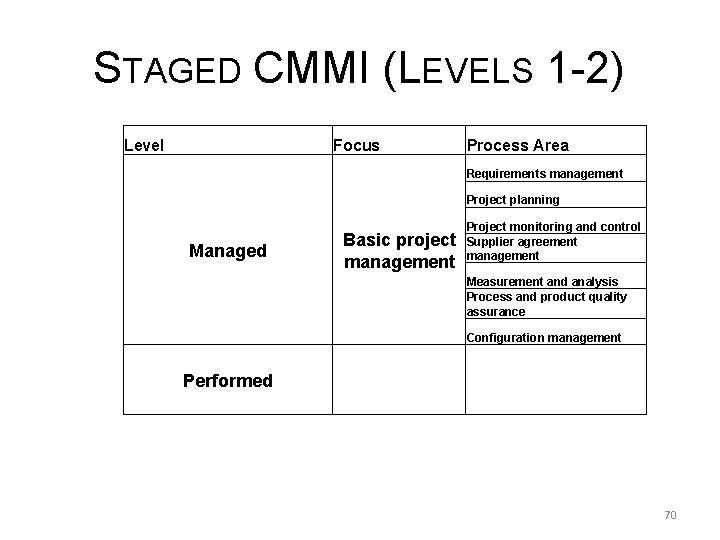

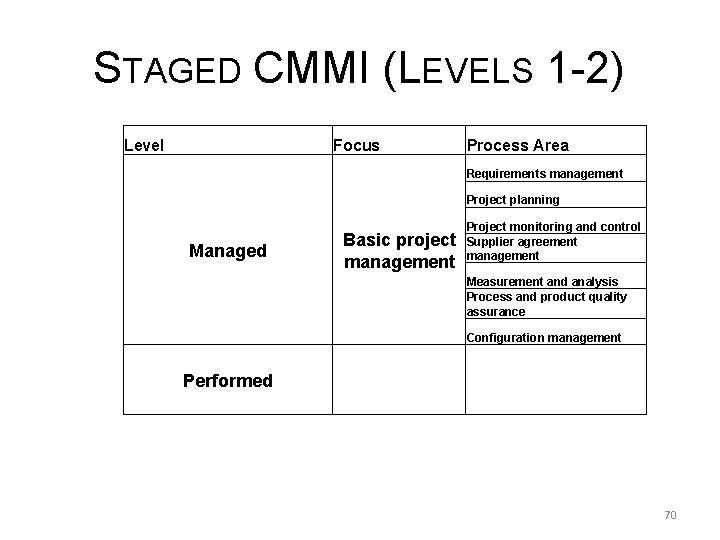

STAGED CMMI (LEVELS 1-2)

| Level | Focus | Process area |
|---|---|---|
| Managed | Basic project management | Requirements management |
| | | Project planning |
| | | Project monitoring and control |
| | | Supplier agreement management |
| | | Measurement and analysis |
| | | Process and product quality assurance |
| | | Configuration management |
| Performed | | |

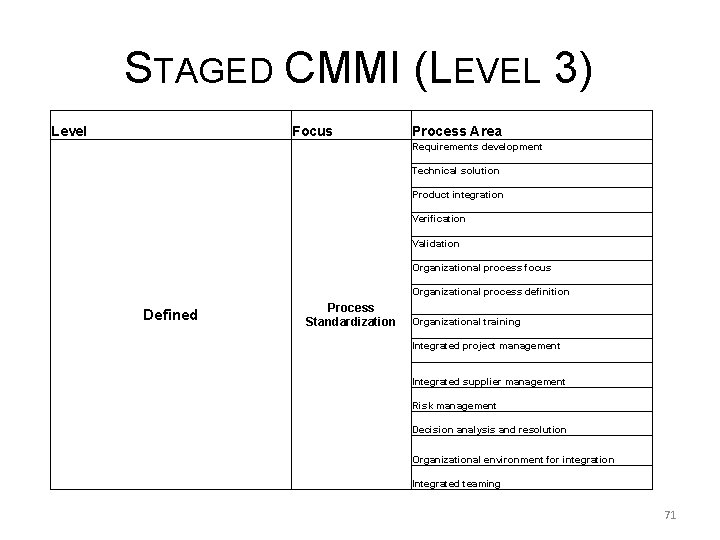

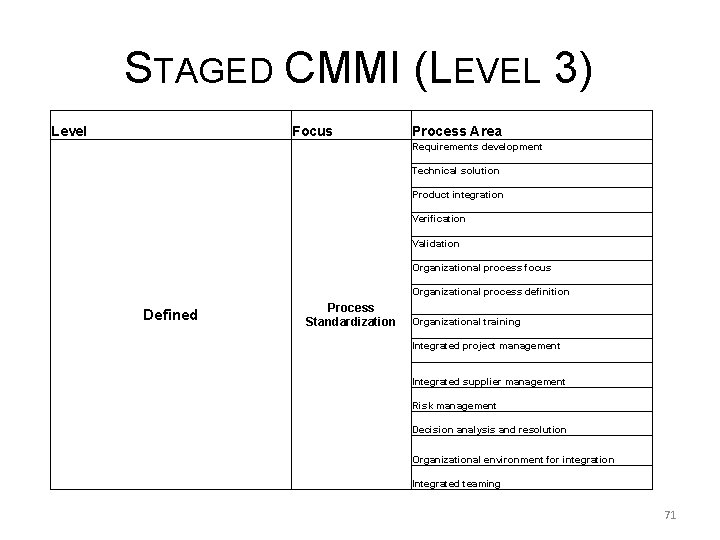

STAGED CMMI (LEVEL 3)

| Level | Focus | Process area |
|---|---|---|
| Defined | Process standardization | Requirements development |
| | | Technical solution |
| | | Product integration |
| | | Verification |
| | | Validation |
| | | Organizational process focus |
| | | Organizational process definition |
| | | Organizational training |
| | | Integrated project management |
| | | Integrated supplier management |
| | | Risk management |
| | | Decision analysis and resolution |
| | | Organizational environment for integration |
| | | Integrated teaming |

STAGED CMMI (LEVELS 5-4)

| Level | Focus | Process area |
|---|---|---|
| Optimizing | Continuous process improvement | Organizational innovation and deployment |
| | | Causal analysis and resolution |
| Quantitatively managed | Quantitative management | Organizational process performance |
| | | Quantitative project management |

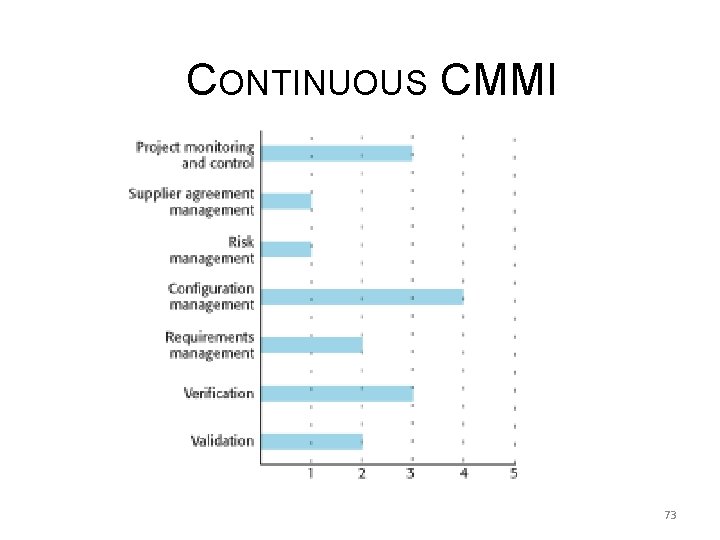

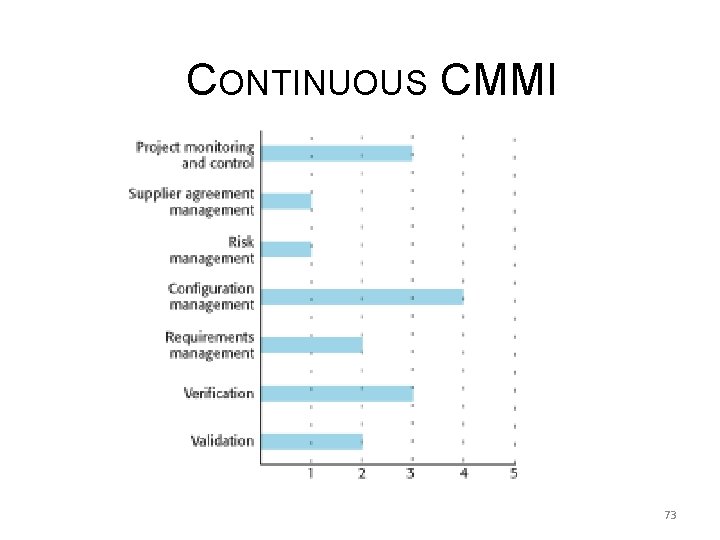

CONTINUOUS CMMI

CONTINUOUS CMMI

- The maturity assessment is not a single value but a set of values showing the organization’s maturity in each area.
- The continuous model examines the processes used in an organization and assesses their maturity in each process area.
- The advantage of a continuous approach is that organizations can pick and choose process areas to improve according to their local needs.
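
A continuous assessment can be pictured as a capability profile: one score from 0 to 5 per process area. The sketch below is illustrative only. The Configuration Management and Risk Management values echo the example given for the continuous model, while the Project Planning value, the target level, and the function name are hypothetical.

```python
def areas_below(profile, target_level):
    """List process areas whose capability score is below the target level."""
    for area, level in profile.items():
        if not 0 <= level <= 5:
            raise ValueError(f"capability level for {area!r} must be between 0 and 5")
    return sorted(area for area, level in profile.items() if level < target_level)


# Capability profile: process area -> capability level (0 to 5).
capability_profile = {
    "Configuration management": 5,
    "Risk management": 2,
    "Project planning": 3,  # hypothetical value
}

# Hypothetical local goal: every assessed area should reach at least level 3.
print(areas_below(capability_profile, target_level=3))
```

An organization could use such a list to choose which process areas to work on first.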

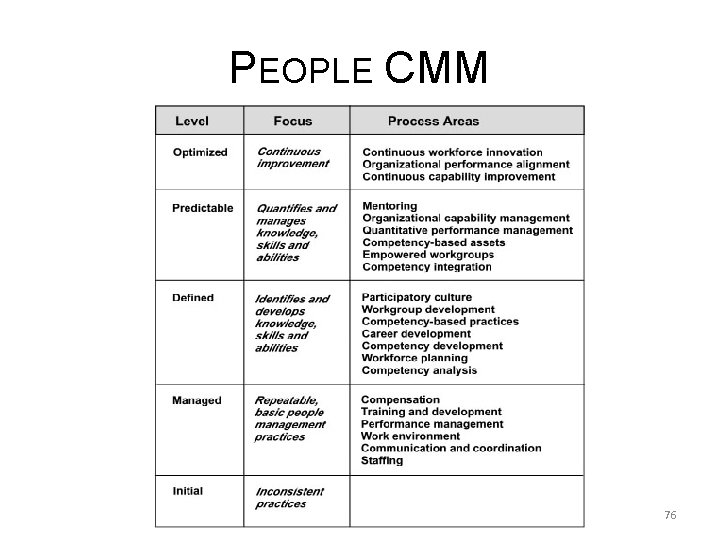

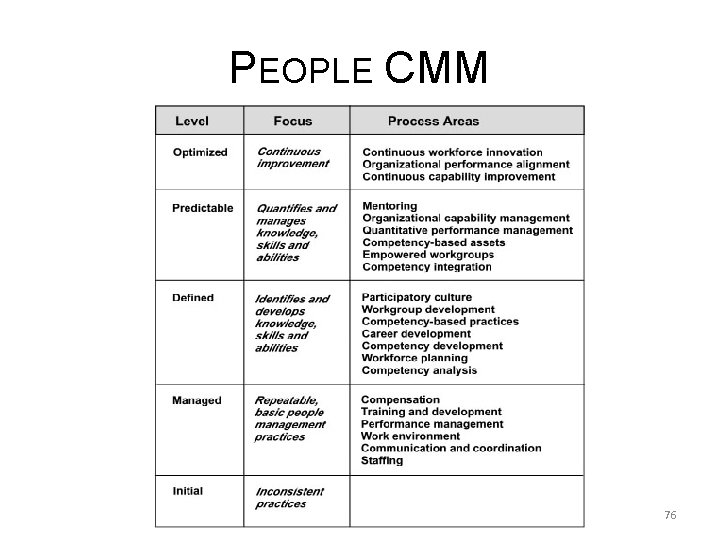

PEOPLE CMM

- Used to improve the workforce.
- Defines a set of 5 organizational maturity levels that indicate the sophistication of workforce practices and processes.
- People CMM complements any SPI framework.

PEOPLE CMM

PROJECT METRICS

- Unlike software process metrics, which are used for strategic purposes, software project metrics are tactical.
- They are used to adjust time, cost, and quality and to perform risk management.
- As the project proceeds, measures of effort and calendar time expended are compared to the original estimates.
- The project manager uses these data to monitor and control progress.

PROJECT TRACKING

- Binary tracking records the status of work packages as 0% or 100% complete.
  - As a result, the progress of a project can be measured in terms of work packages that are completed or not completed.
- Earned Value Management (EVM) measures the progress of a project by combining technical performance, schedule performance, and cost performance:
  - Work accomplished
  - Schedule
  - Budget
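
Binary tracking and EVM can be combined in a short sketch. It assumes the usual EVM reading of the three items above: work accomplished as earned value, schedule as planned value, and spending against the budget as actual cost. The variance and index formulas are the standard EVM ones rather than something taken from the slides, and the class, function, and project data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WorkPackage:
    name: str
    budget: float       # planned cost of the work package
    planned_done: bool  # scheduled to be finished by the status date?
    done: bool          # binary tracking: False = 0%, True = 100% complete


def earned_value_report(packages, actual_cost):
    """Return basic earned value indicators for a list of work packages."""
    planned_value = sum(p.budget for p in packages if p.planned_done)
    earned_value = sum(p.budget for p in packages if p.done)
    if planned_value <= 0 or actual_cost <= 0:
        raise ValueError("planned value and actual cost must be positive")
    return {
        "planned_value": planned_value,
        "earned_value": earned_value,
        "actual_cost": actual_cost,
        "schedule_variance": earned_value - planned_value,
        "cost_variance": earned_value - actual_cost,
        "schedule_index": earned_value / planned_value,
        "cost_index": earned_value / actual_cost,
    }


# Hypothetical project status at some status date.
packages = [
    WorkPackage("design", 100.0, planned_done=True, done=True),
    WorkPackage("coding", 200.0, planned_done=True, done=False),
    WorkPackage("testing", 150.0, planned_done=False, done=False),
]
report = earned_value_report(packages, actual_cost=120.0)
print(report)
```

Negative variances, or indices below 1, suggest that the project is behind schedule or over cost. In this example both indices are below 1. The keys are named `schedule_index` and `cost_index` to avoid confusion with SPI as used in these slides for Software Process Improvement.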

REFERENCES

- Roger S. Pressman, Software Engineering: A Practitioner’s Approach, Seventh Edition, McGraw-Hill International Edition, 2010. ISBN: 978-007-126782-3 (MHID: 007-126782-4).
- Ian Sommerville (University of St Andrews, Scotland), Software Engineering, 9/E, Addison-Wesley. ISBN-13: 9780137035151.