Overview for Intel Xeon Processors and Intel Xeon Phi Coprocessors


- Slides: 26

Overview for Intel Xeon Processors and Intel Xeon Phi Coprocessors

Original author: James Reinders, Intel. Presented by: Aditya Ambardekar.

References:
- Intel® Xeon Phi™ Coprocessor (codename Knights Corner). http://software.intel.com/en-us/articles/intel-xeon-phi-coprocessor-codename-knights-corner
- Intel® Many Integrated Core Architecture: An Overview and Programming Models. http://www.olcf.ornl.gov/wp-content/training/electronic-structure2012/ORNL_Elec_Struct_WS_02062012.pdf
- Intel details Knights Corner architecture at long last. http://semiaccurate.com/2012/08/28/intel-details-knights-corner-architecture-at-long-last/
- Xeon Phi Update. http://www.advancedclustering.com/news/xeon-phi-update.html
- Optimization and Performance Tuning for Intel® Xeon Phi™ Coprocessors - Part 1: Optimization Essentials. http://software.intel.com/en-us/articles/optimization-and-performance-tuning-for-intel-xeon-phi-coprocessors-part-1-optimization
- Results at TeraGrid 2011 conference. http://www.hpcwire.com/hpcwire/2011-08-15/adventures_with_hpc_accelerators:_gpus_and_intel_mic_coprocessors.html
- NCCS introduction to MIC. http://www.nccs.nasa.gov/images/Intro-to-MIC012913.pdf
- NCSA Scientist Backs MICs over GPUs. http://goparallel.sourceforge.net/ncsa-scientist-backs-mics-gpus/

Paper flow
- Introduction (performance capability, parallelism, and the dual transforming-tuning advantage).
- Features of Knights Corner, with an architecture diagram (MIC).
- Tuning your applications for parallel performance (the author's favorite point).
- Performance and cache optimizations.
- Compiler and programming models (MPI vs. offload).
- Xeon Phi vs. GPU (the author doesn't go into detail, so we cover it as an additional topic).
- Summary.

Introduction to Many Integrated Core (MIC)
- The basis of the Intel MIC architecture is to leverage the x86 legacy by creating an x86-compatible multiprocessor architecture that can use existing parallelization software tools.[14] Programming tools include OpenMP, OpenCL,[39] Intel Cilk Plus and specialized versions of Intel's Fortran, C++[40] and math libraries.
- More than 50 cores, with multiple threads per core.

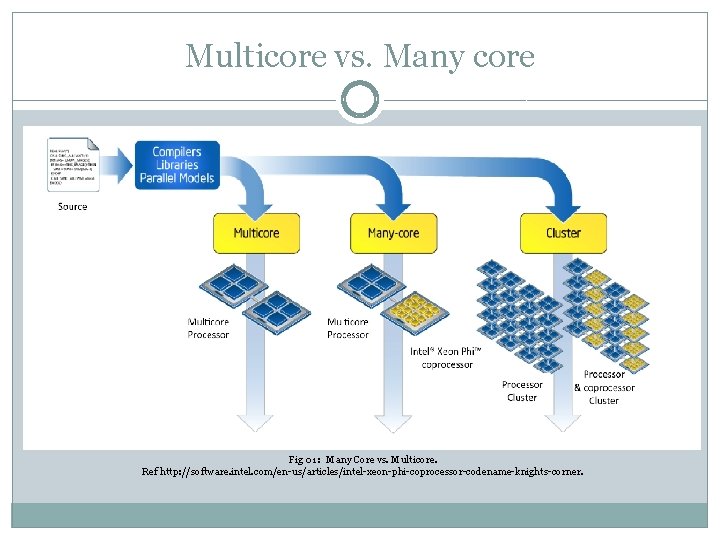

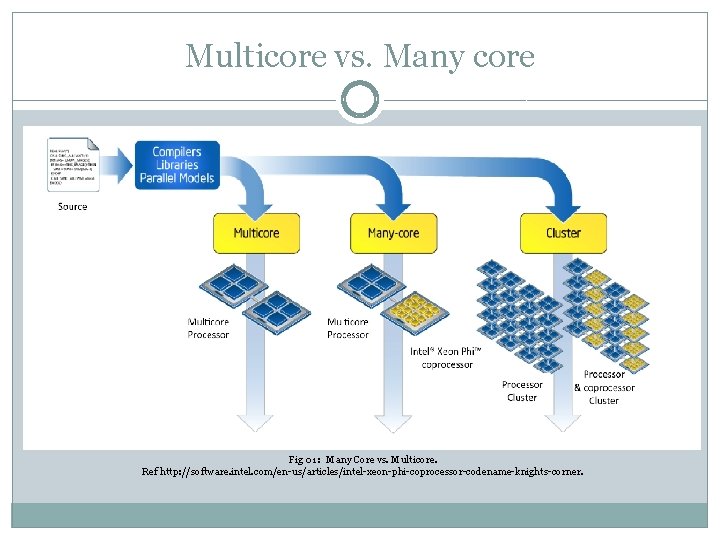

Multicore vs. Many-core

Fig 01: Many-core vs. Multicore. Ref: http://software.intel.com/en-us/articles/intel-xeon-phi-coprocessor-codename-knights-corner.

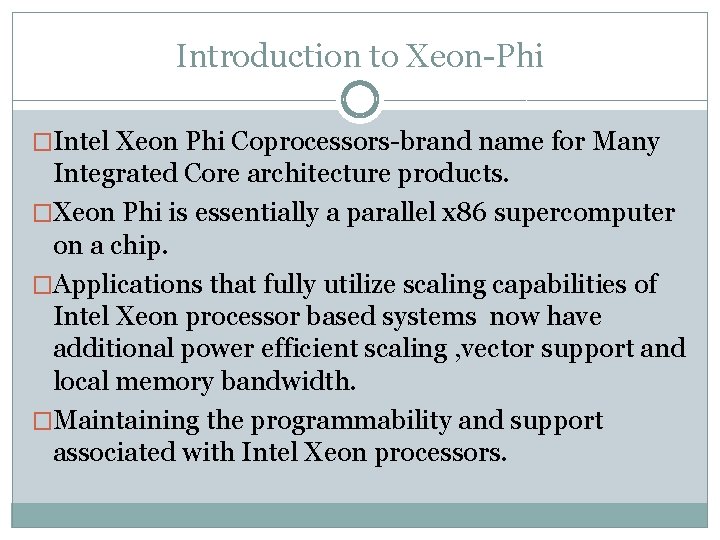

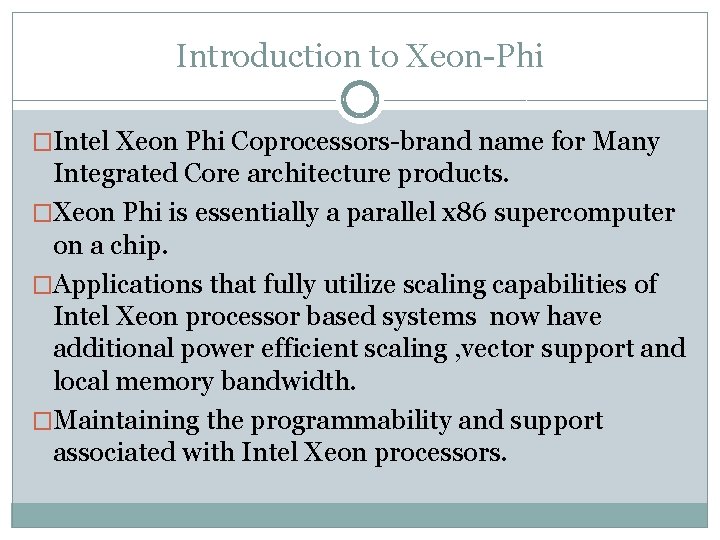

Introduction to Xeon Phi
- Intel Xeon Phi coprocessors: the brand name for Many Integrated Core architecture products.
- Xeon Phi is essentially a parallel x86 supercomputer on a chip.
- Applications that fully utilize the scaling capabilities of Intel Xeon processor-based systems now gain additional power-efficient scaling, vector support and local memory bandwidth, while maintaining the programmability and support associated with Intel Xeon processors.

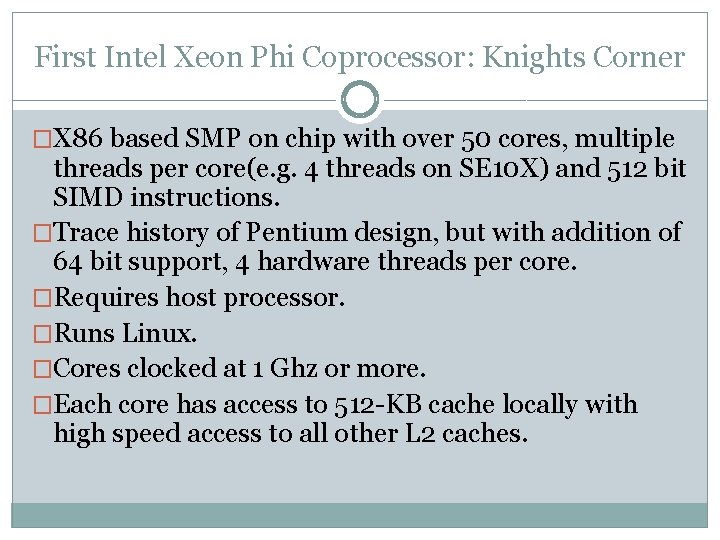

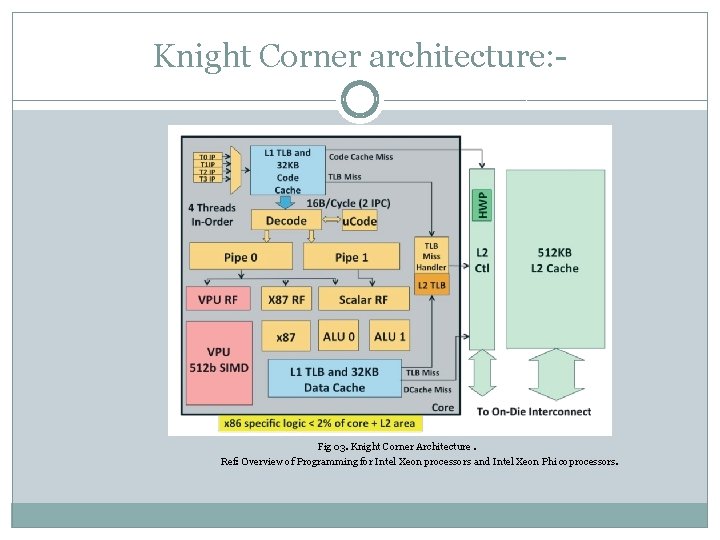

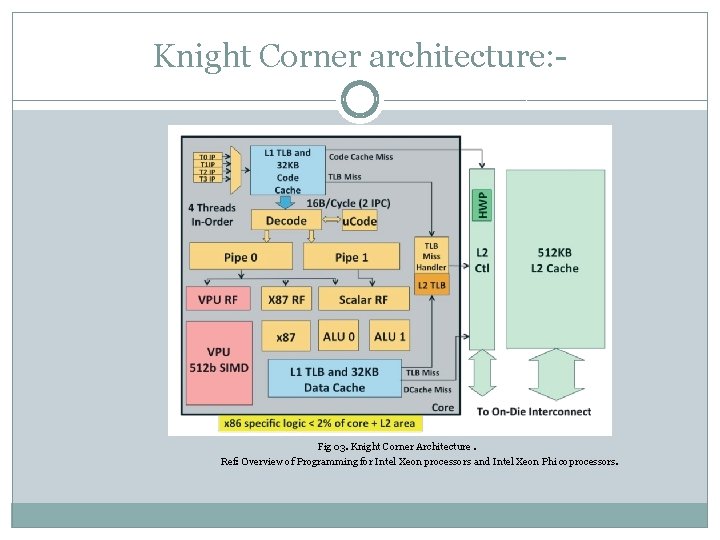

First Intel Xeon Phi Coprocessor: Knights Corner
- An x86-based SMP-on-a-chip with over 50 cores, multiple threads per core (e.g. 4 threads on the SE10X) and 512-bit SIMD instructions.
- Traces its history to the Pentium design, but with the addition of 64-bit support and 4 hardware threads per core.
- Requires a host processor.
- Runs Linux.
- Cores clocked at 1 GHz or more.
- Each core has access to a 512-KB local L2 cache, with high-speed access to all other L2 caches.

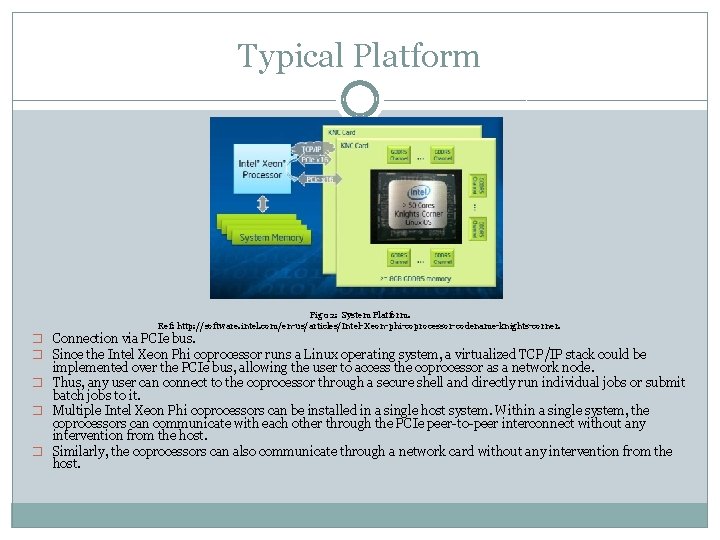

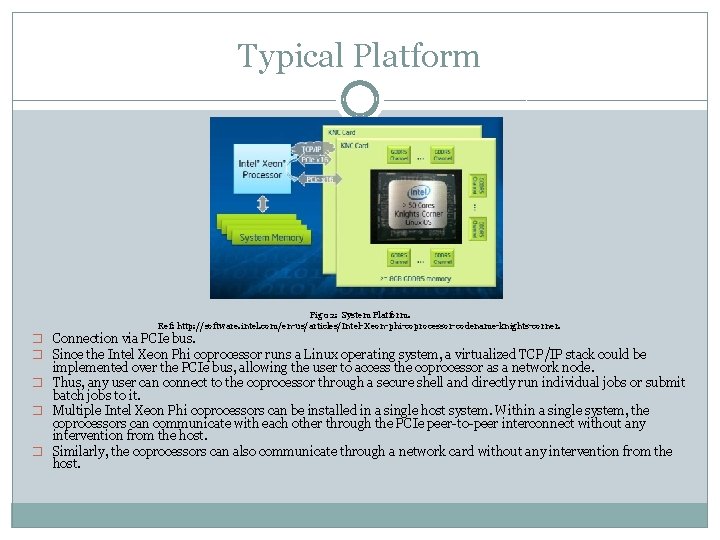

Typical Platform

Fig 02: System Platform. Ref: http://software.intel.com/en-us/articles/Intel-Xeon-phi-coprocessor-codename-knights-corner.

- Connection via the PCIe bus.
- Since the Intel Xeon Phi coprocessor runs a Linux operating system, a virtualized TCP/IP stack could be implemented over the PCIe bus, allowing the user to access the coprocessor as a network node.
- Thus, any user can connect to the coprocessor through a secure shell and directly run individual jobs or submit batch jobs to it.
- Multiple Intel Xeon Phi coprocessors can be installed in a single host system. Within a single system, the coprocessors can communicate with each other through the PCIe peer-to-peer interconnect without any intervention from the host.
- Similarly, the coprocessors can also communicate through a network card without any intervention from the host.

Knights Corner architecture

Fig 03: Knights Corner Architecture. Ref: Overview of Programming for Intel Xeon processors and Intel Xeon Phi coprocessors.

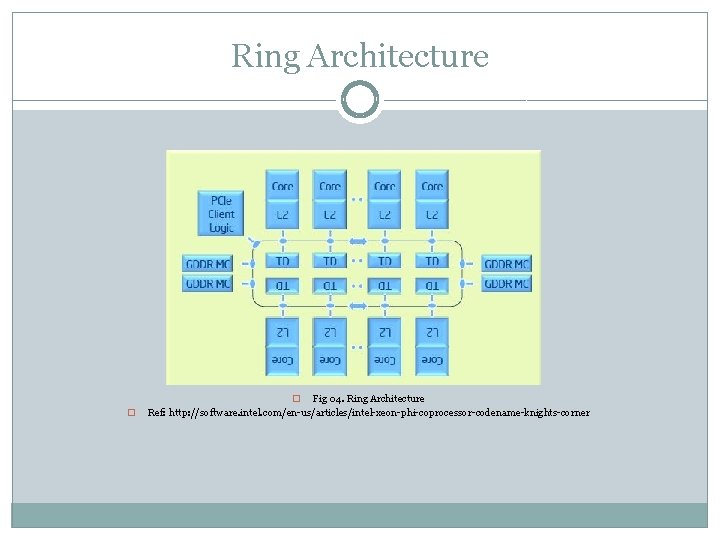

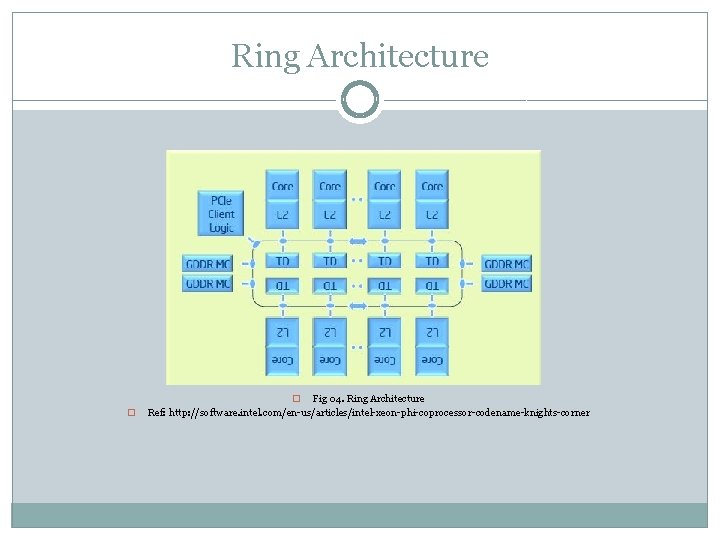

Ring Architecture

Fig 04: Ring Architecture. Ref: http://software.intel.com/en-us/articles/intel-xeon-phi-coprocessor-codename-knights-corner

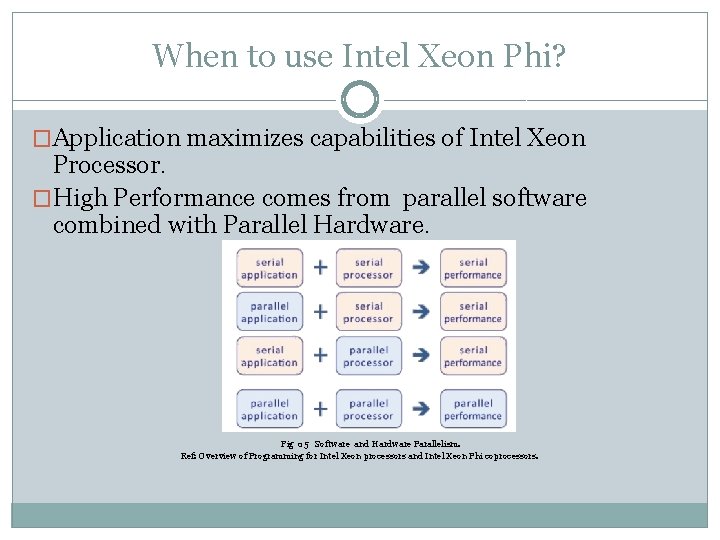

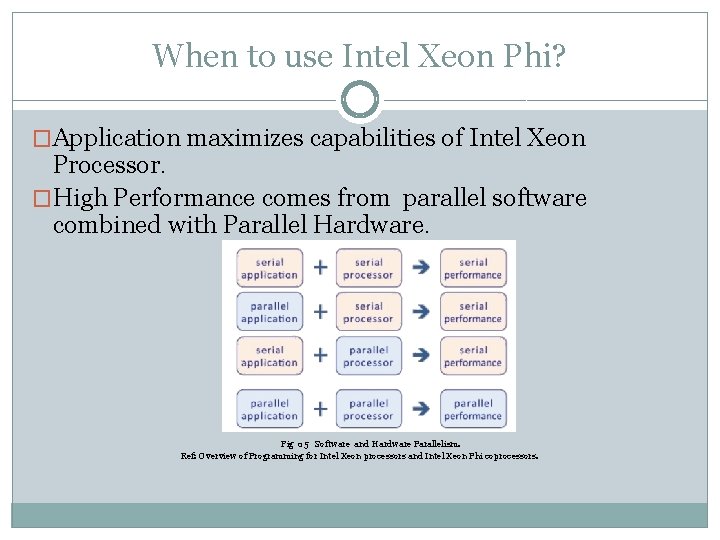

When to use Intel Xeon Phi?
- When the application already maximizes the capabilities of the Intel Xeon processor.
- High performance comes from parallel software combined with parallel hardware.

Fig 05: Software and Hardware Parallelism. Ref: Overview of Programming for Intel Xeon processors and Intel Xeon Phi coprocessors.

When to use Intel Xeon Phi?
- Applications that scale well past 100 threads qualify as highly parallel.
- More parallelism = better performance.
- Two fundamental considerations for an application: A) scaling, B) vectorization.
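One standard way to see why the bar is set so high is Amdahl's law (not part of the original slides), where $p$ is the fraction of run time that runs in parallel and $N$ is the number of threads. Taking $N = 240$ (an assumed 60 cores × 4 threads) as an illustration:

$$S(N) = \frac{1}{(1 - p) + p/N}$$

$$S(240)\big|_{p=0.99} = \frac{1}{0.01 + 0.99/240} \approx 70.8, \qquad S(240)\big|_{p=0.999} = \frac{1}{0.001 + 0.999/240} \approx 193.7$$

Even a 1% serial fraction caps the speedup far below the thread count, which is consistent with the slide's rule that only applications that keep scaling past 100 threads are good candidates.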

Tuning your applications for Xeon Phi
- Check scaling: create a simple graph of performance as you run with various numbers of threads on an Intel Xeon processor-based system.
- Check vectorization: compile your application with and without vectorization and compare the performance. Intel Xeon Phi coprocessors are used most effectively when most of the executed cycles are spent in vector instructions.
- Check memory bandwidth: for this to be efficient, the application needs to exhibit good locality of reference and use the caches well in its core computations.

Tuning your applications for Xeon Phi
- When using MPI, the communication-to-computation ratio helps decide between the native and offload models.
- Strategy: overlap communication and I/O with computation.

Tools to measure performance
- Intel VTune Amplifier XE 2013: measures computation (e.g. L1 compute density).
- Intel Trace Analyzer and Collector: profiles MPI communications.

Performance Optimizations
- Memory access and loop transformations (e.g. cache blocking, loop unrolling, prefetching, tiling…).
- Blocking or tiling: code runs faster when data are reused while they are still in the processor registers or the processor cache. It is frequently possible to block or tile operations so that data are reused before they are evicted from cache.
- Data structure transformations: code runs best when data are accessed in sequential address order from memory. Developers frequently change the data structure to allow this linear access pattern. A common transformation is from an array of structures to a structure of arrays (AoS to SoA).
- Algorithm selection: favor algorithms that are parallelization- and vectorization-friendly.
- Large page considerations: use the Linux libhugetlbfs library, which provides easy access to huge memory pages. It can be preloaded to back text, data, malloc() or shared memory with huge pages.

Cache Optimizations
- Make maximum effective use of the caches.
- Maximize locality of reference, organized first around the threads used per core and then around all the threads across the coprocessor.
- Ensure prefetching is used efficiently. Organizing data streams is best c

![Offload vs. native model: coprocessor usage in an application](https://slidetodoc.com/presentation_image/43ad989bce8e7ba399cc3a8d6ecd47f3/image-18.jpg)

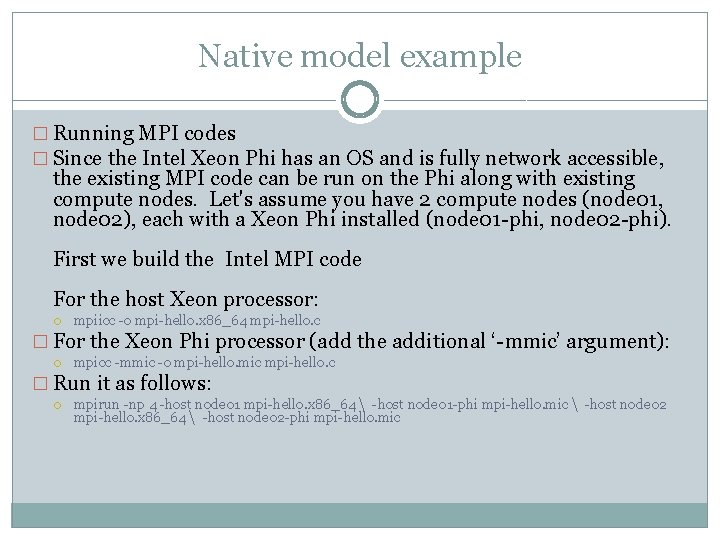

Offload vs. Native model

Coprocessor usage in an application:
1. Processor-centric "offload" model: the program is viewed as running on processors and offloading select work to coprocessors.
2. Native model: the program runs natively on both processors and coprocessors, which may communicate with each other.

Offload Model
- Fortran/C++ pragma support.
- A future version of OpenMP is to include offload directives.
- Offload complex program components that Xeon Phi can process.
- No need to worry about placing ranks on coprocessor cores in order to load-balance work across all the available cores.

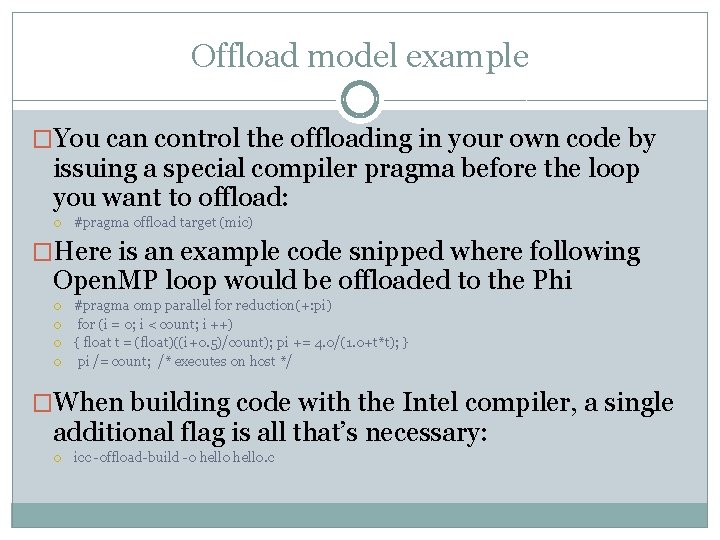

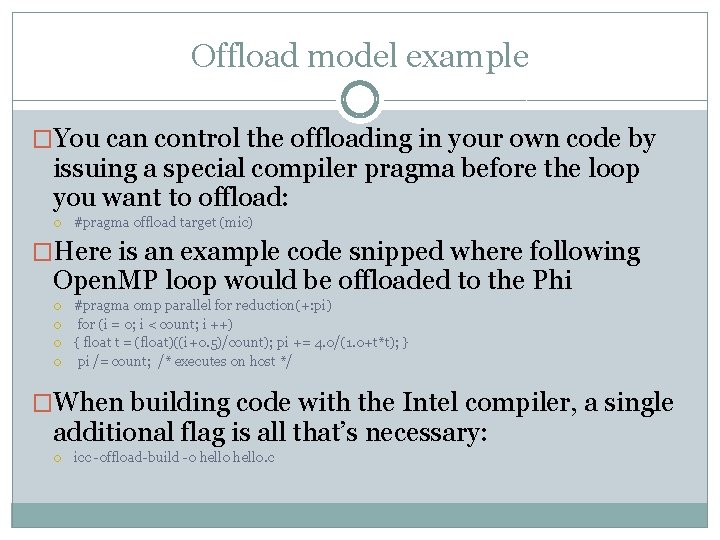

Offload model example
- You can control offloading in your own code by issuing a special compiler pragma before the loop you want to offload: `#pragma offload target(mic)`
- Here is an example code snippet in which the following OpenMP loop would be offloaded to the Phi:

```c
#pragma offload target(mic)
#pragma omp parallel for reduction(+:pi)
for (i = 0; i < count; i++) {
    float t = (float)((i + 0.5) / count);
    pi += 4.0 / (1.0 + t * t);
}
pi /= count; /* executes on host */
```

- When building code with the Intel compiler, beyond the usual `-openmp` flag, a single additional flag is all that's necessary (note that `-o` takes the output name, not the source file):

```
icc -offload-build -openmp -o hello hello.c
```

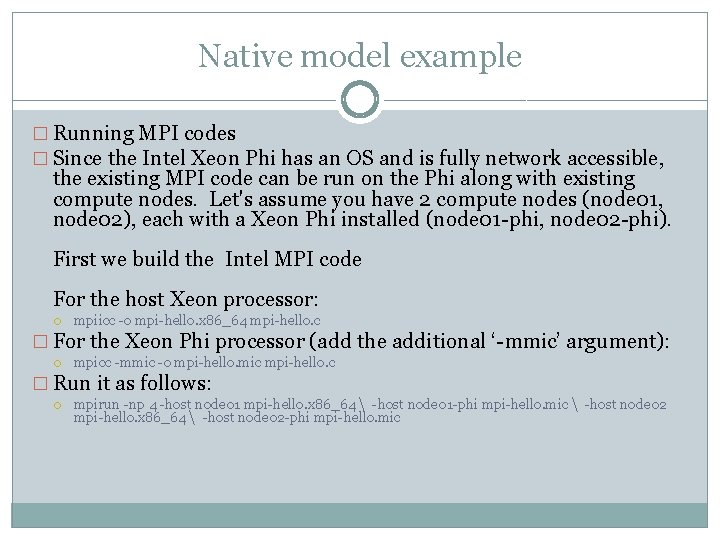

Native Model
- An MPI program may run natively, with ranks on both coprocessors and processors.
- The problem must fit in the coprocessor environment.
- Memory on the coprocessor is limited.
- Work must be load-balanced between Xeon processor cores and Xeon Phi coprocessor cores.

Native model example: running MPI codes
- Since the Intel Xeon Phi has an OS and is fully network accessible, existing MPI code can be run on the Phi along with existing compute nodes. Let's assume you have 2 compute nodes (node01, node02), each with a Xeon Phi installed (node01-phi, node02-phi).
- First, build the Intel MPI code for the host Xeon processor:

```
mpiicc -o mpi-hello.x86_64 mpi-hello.c
```

- For the Xeon Phi coprocessor, add the `-mmic` argument (using the same Intel compiler wrapper, `mpiicc`):

```
mpiicc -mmic -o mpi-hello.mic mpi-hello.c
```

- Run it as follows, with one rank on each host and each coprocessor (four ranks in total; `:` separates the per-host executables):

```
mpirun -n 1 -host node01 mpi-hello.x86_64 : -n 1 -host node01-phi mpi-hello.mic : -n 1 -host node02 mpi-hello.x86_64 : -n 1 -host node02-phi mpi-hello.mic
```

Recommended compilers/programming models
- Fortran programmers: OpenMP, DO CONCURRENT and MPI.
- C++ programmers: Intel TBB, Intel Cilk Plus and OpenMP.
- C programmers: OpenMP and Intel Cilk Plus.

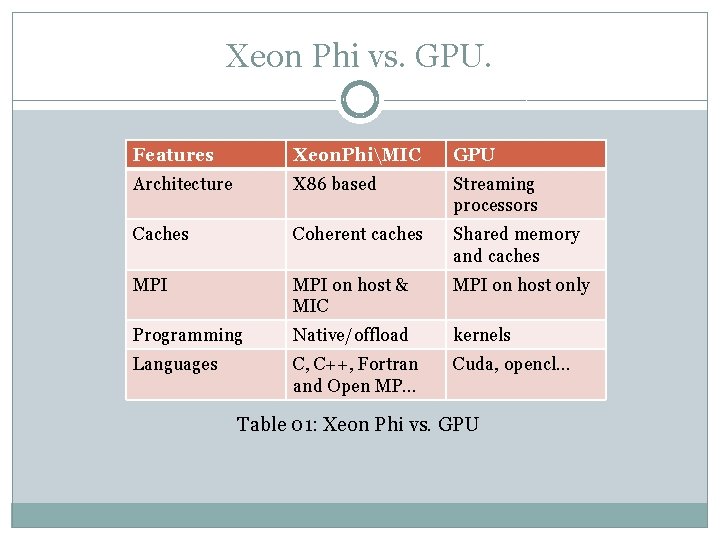

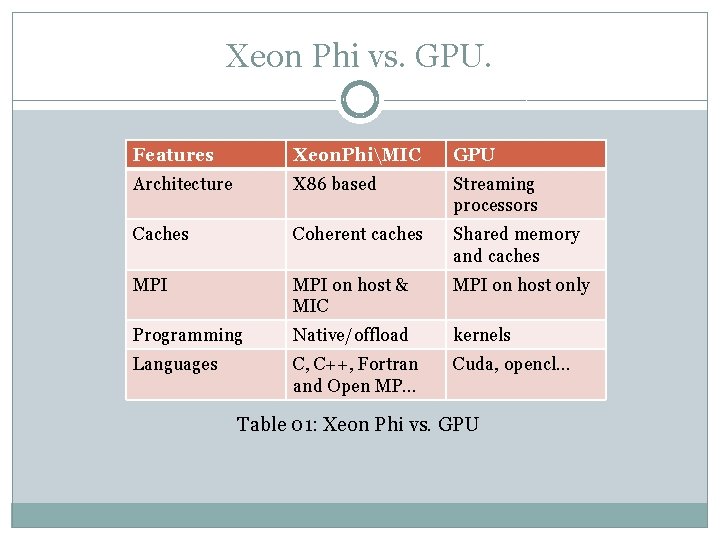

Xeon Phi vs. GPU

| Feature | Xeon Phi (MIC) | GPU |
|---|---|---|
| Architecture | x86-based | Streaming processors |
| Caches | Coherent caches | Shared memory and caches |
| MPI | On host and MIC | On host only |
| Programming | Native/offload | Kernels |
| Languages | C, C++, Fortran and OpenMP… | CUDA, OpenCL… |

Table 01: Xeon Phi vs. GPU

Summary
- Fundamentals: maximize parallel computation and minimize data movement.
- Parallel computation: scaling and vectorization.
- "Transforming and tuning": tune applications for scaling, vector usage and memory usage, for use on both Xeon processors and Xeon Phi coprocessors.
- MIC offers an x86-based architecture with legacy support, as opposed to the newer streaming-based architecture of GPUs.

Questions