Introduction to Spark Shannon Quinn with thanks to

- Slides: 52

Introduction to Spark Shannon Quinn (with thanks to Paco Nathan and Databricks)

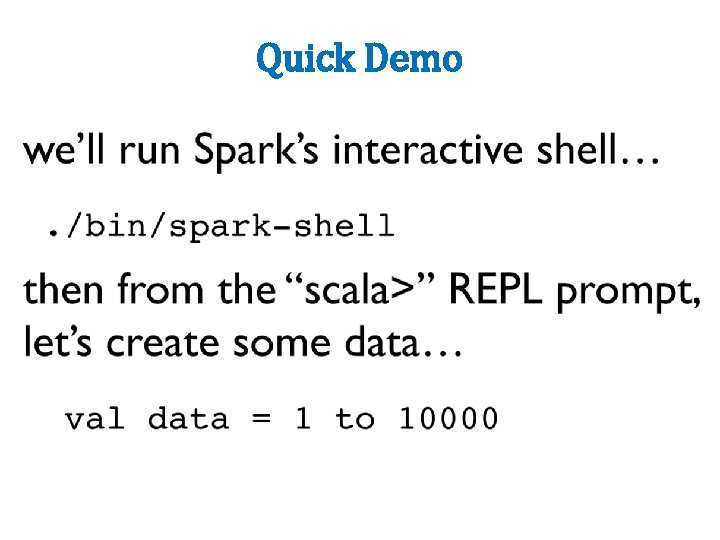

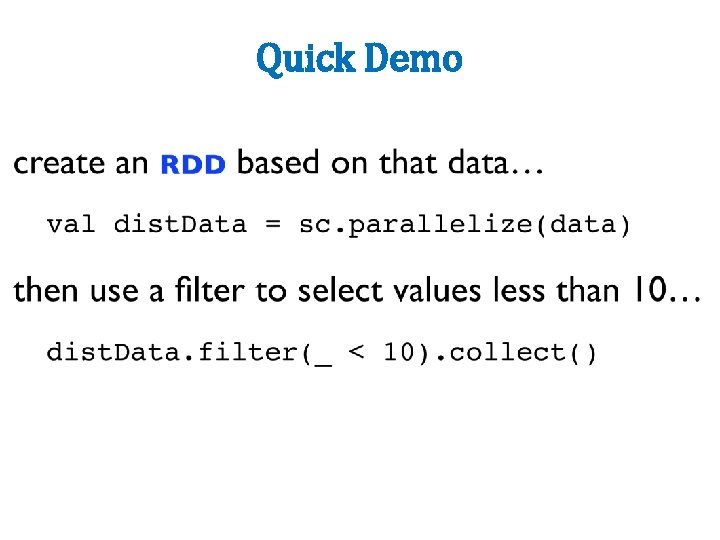

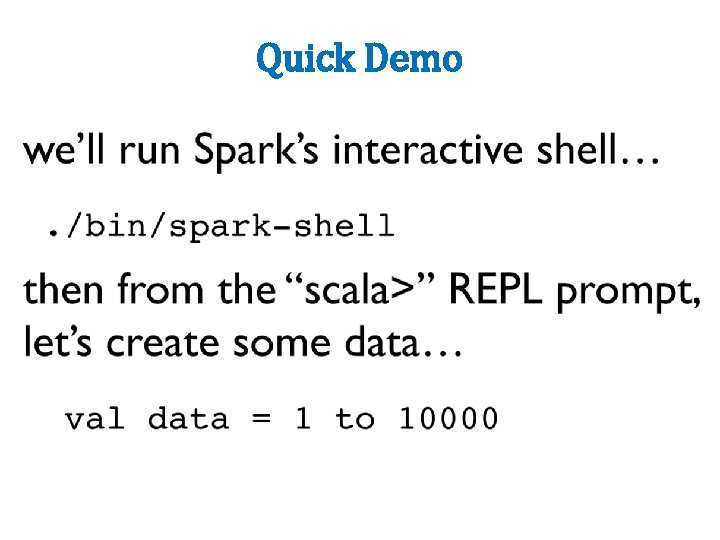

Quick Demo

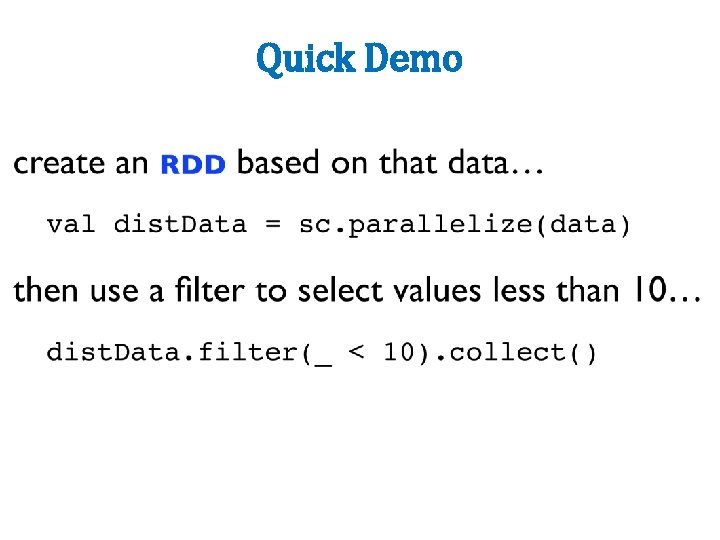

Quick Demo

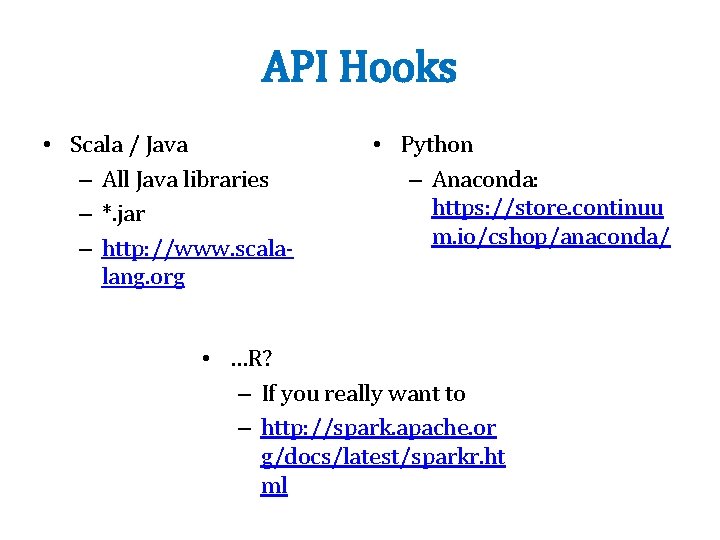

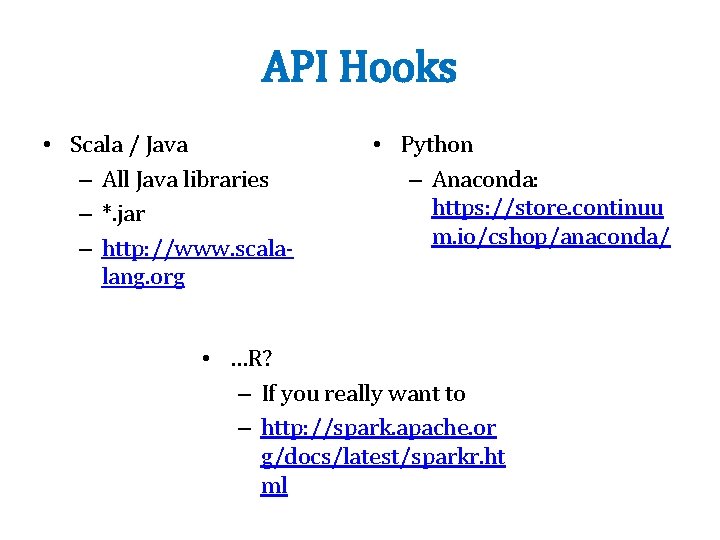

API Hooks • Scala / Java – All Java libraries – *. jar – http: //www. scalalang. org • Python – Anaconda: https: //store. continuu m. io/cshop/anaconda/ • …R? – If you really want to – http: //spark. apache. or g/docs/latest/sparkr. ht ml

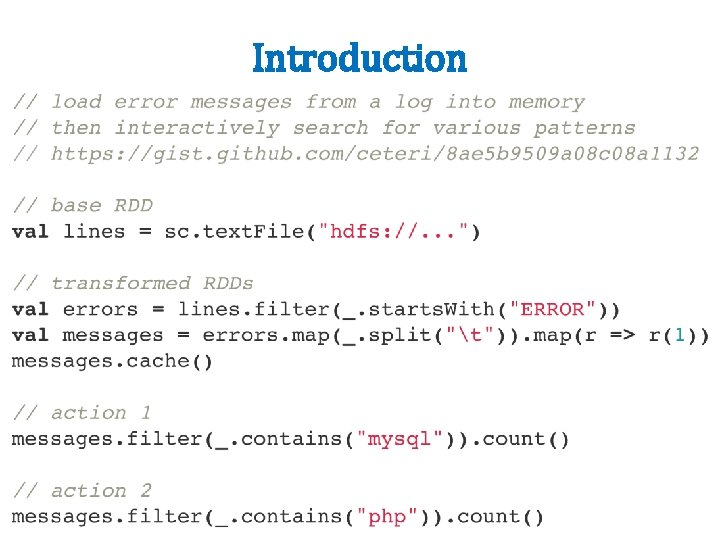

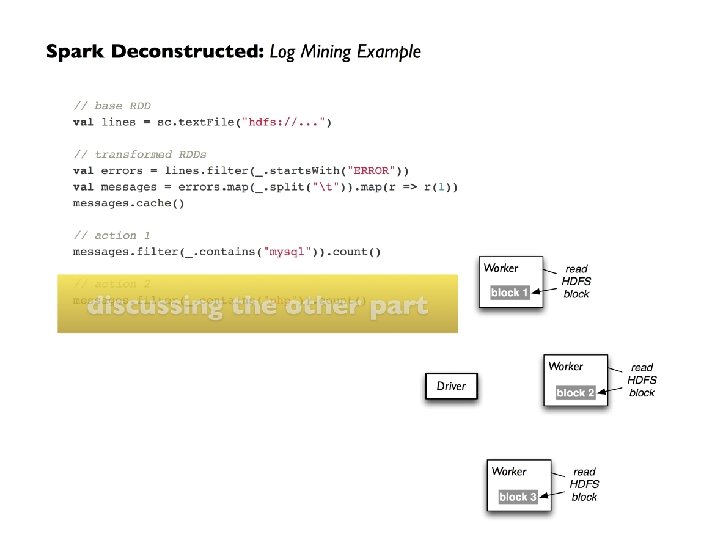

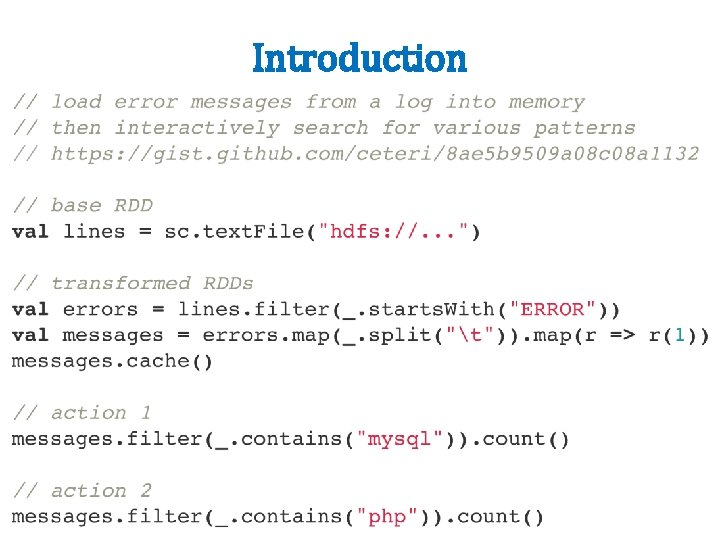

Introduction

Spark Structure • Start Spark on a cluster • Submit code to be run on it

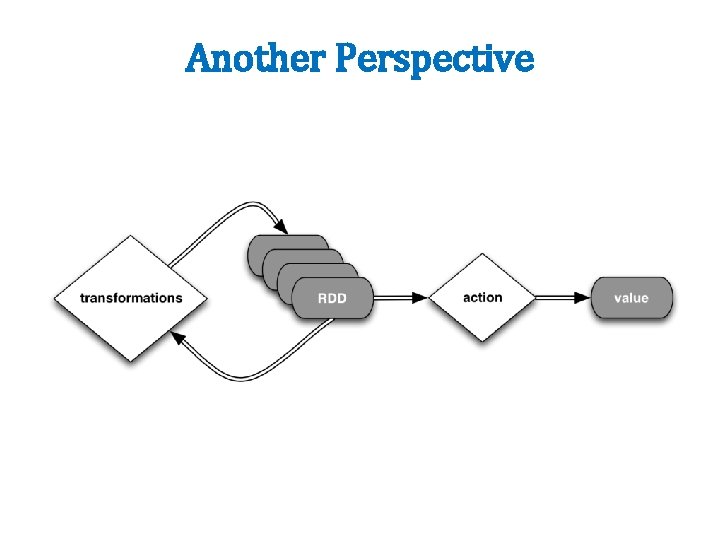

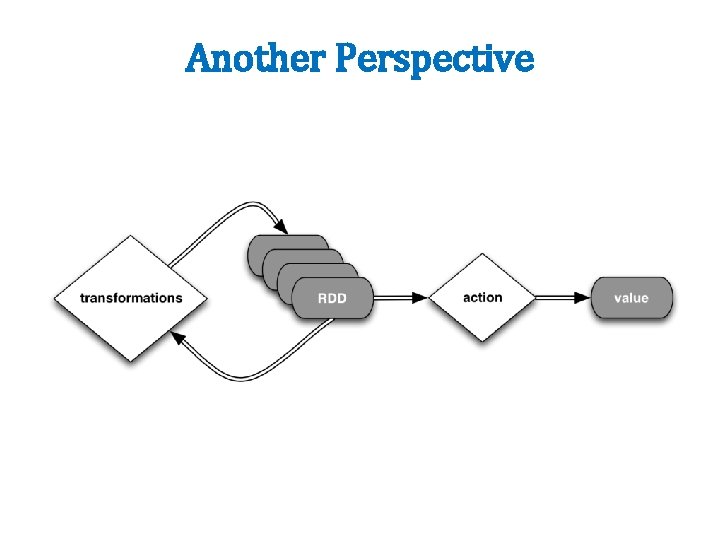

Another Perspective

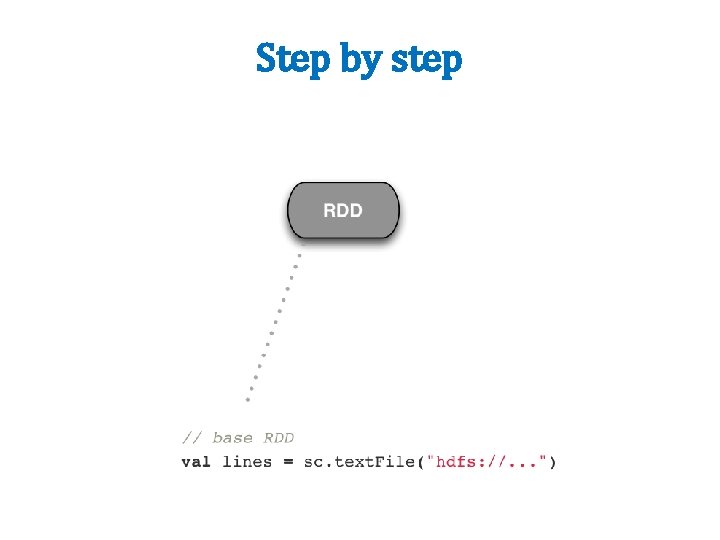

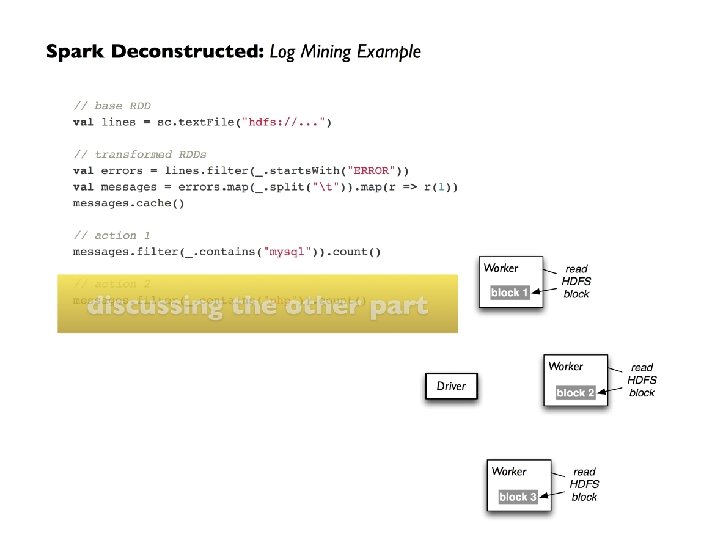

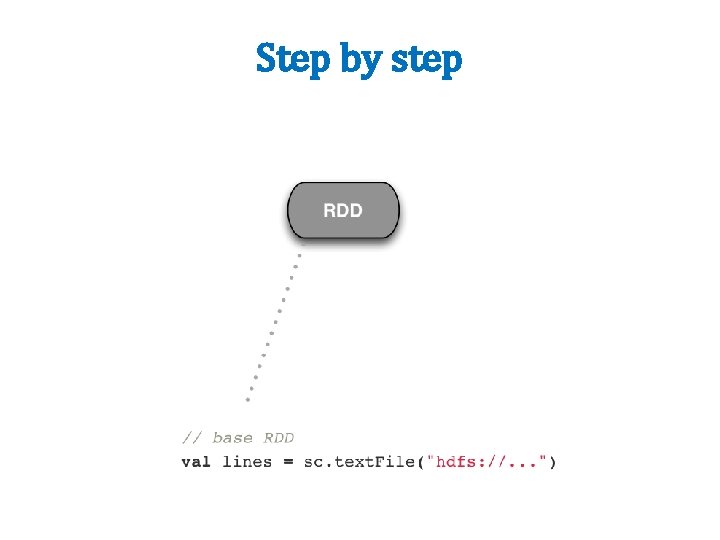

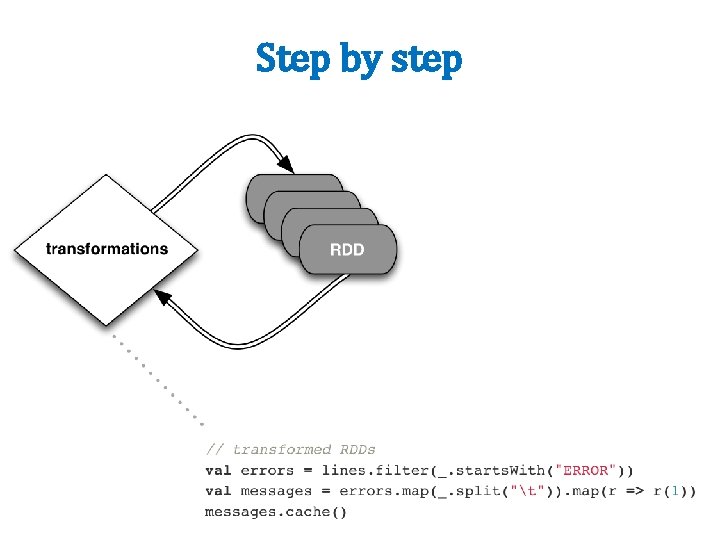

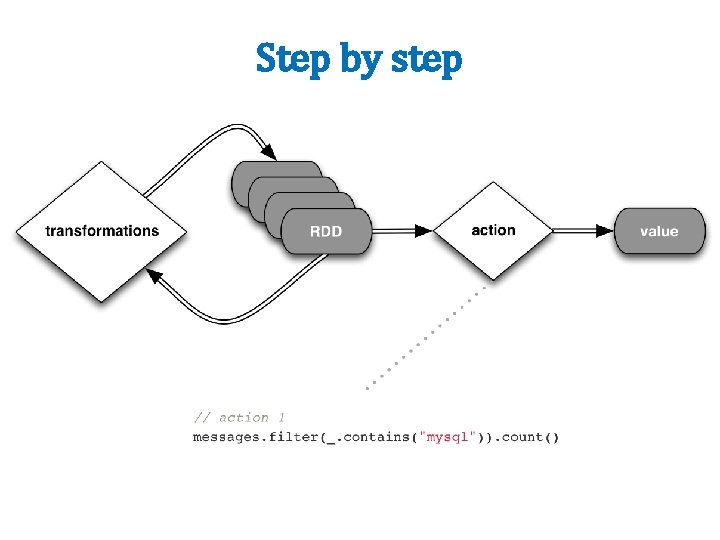

Step by step

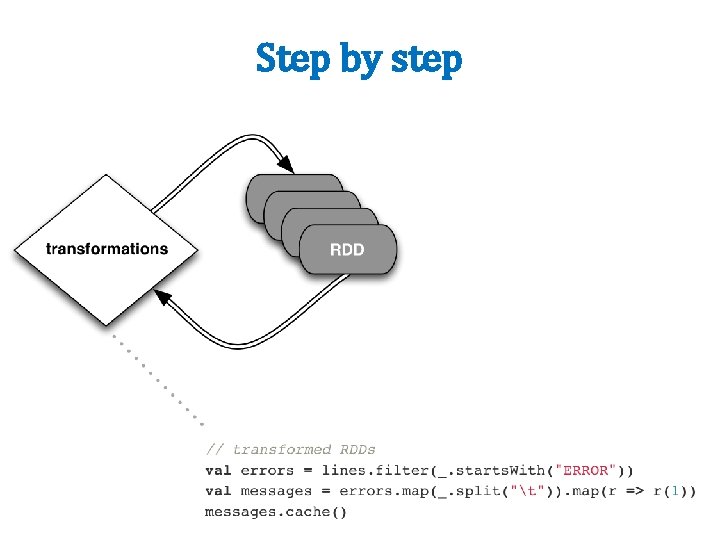

Step by step

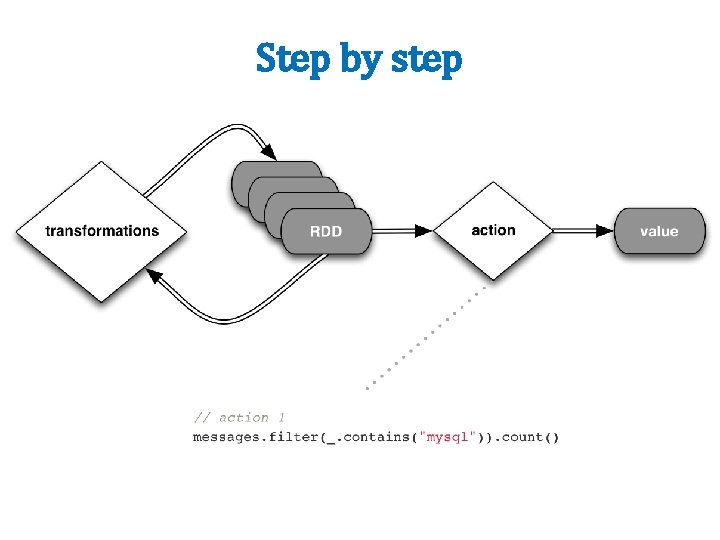

Step by step

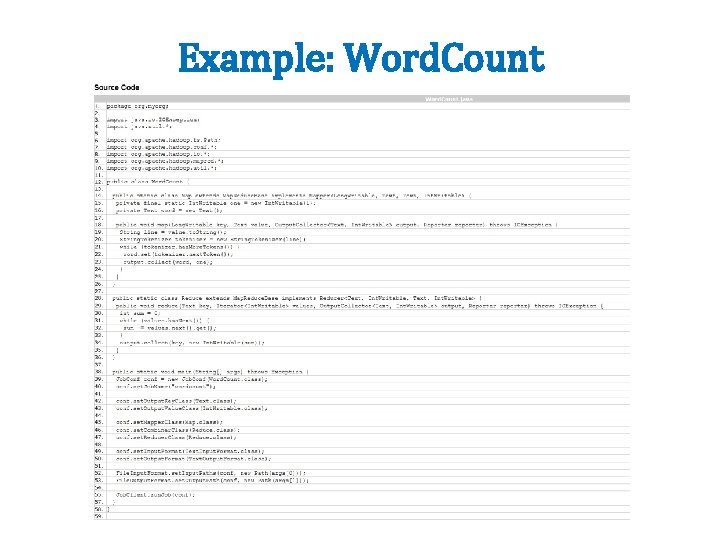

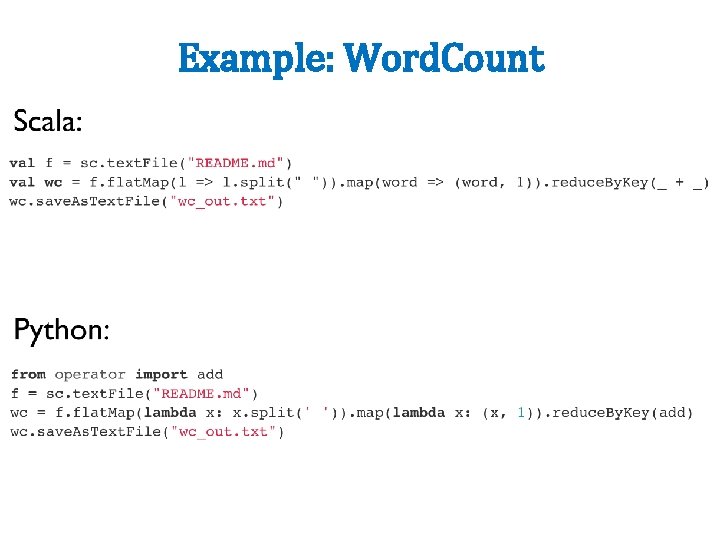

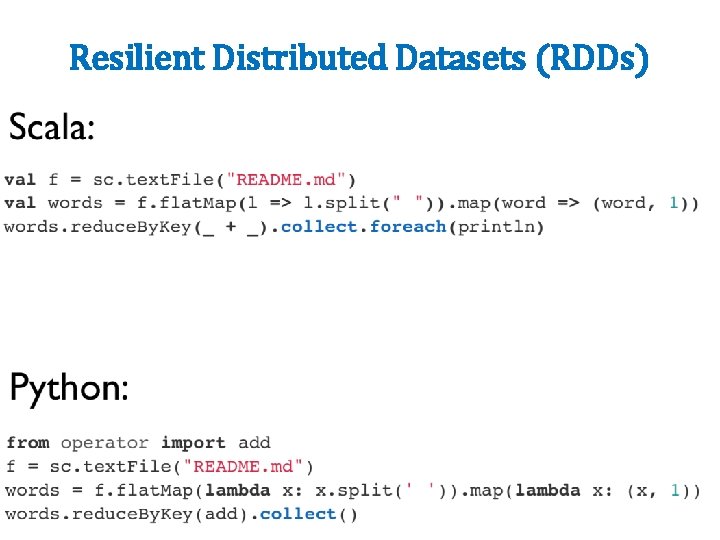

Example: Word. Count

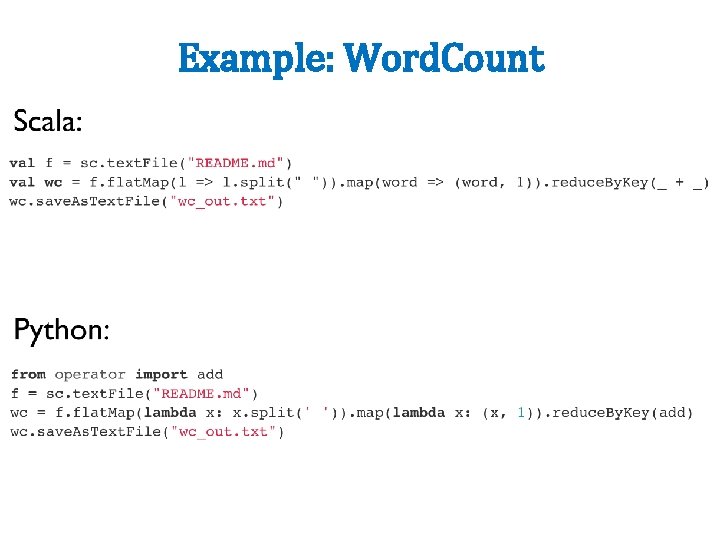

Example: Word. Count

Limitations of Map. Reduce • Performance bottlenecks—not all jobs can be cast as batch processes – Graphs? • Programming in Hadoop is hard – Boilerplate boilerplate everywhere

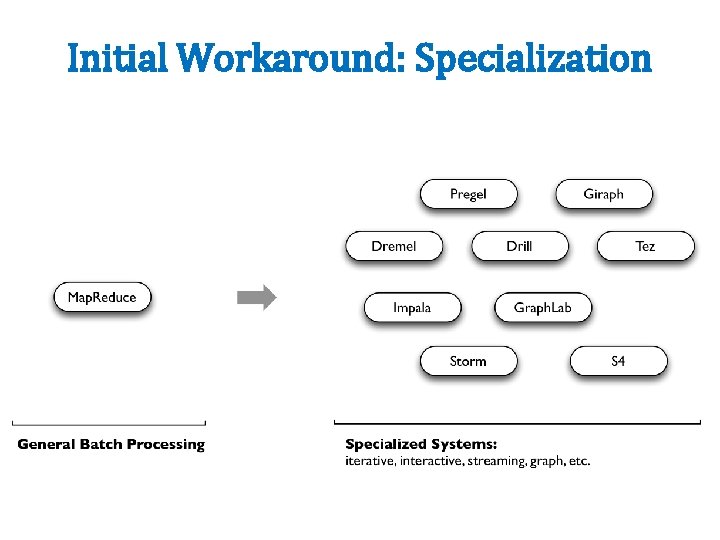

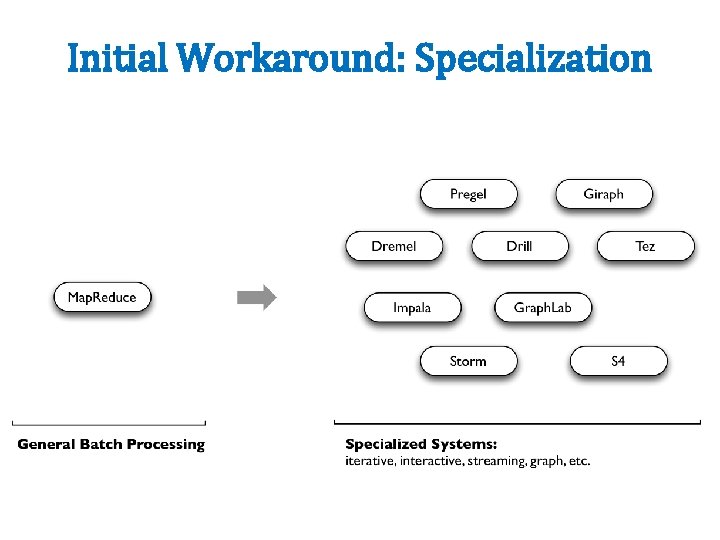

Initial Workaround: Specialization

Along Came Spark • Spark’s goal was to generalize Map. Reduce to support new applications within the same engine • Two additions: – Fast data sharing – General DAGs (directed acyclic graphs) • Best of both worlds: easy to program & more efficient engine in general

Codebase Size

More on Spark • More general – Supports map/reduce paradigm – Supports vertex-based paradigm – Supports streaming algorithms – General compute engine (DAG) • More API hooks – Scala, Java, Python, R • More interfaces – Batch (Hadoop), real-time (Storm), and interactive (? ? ? )

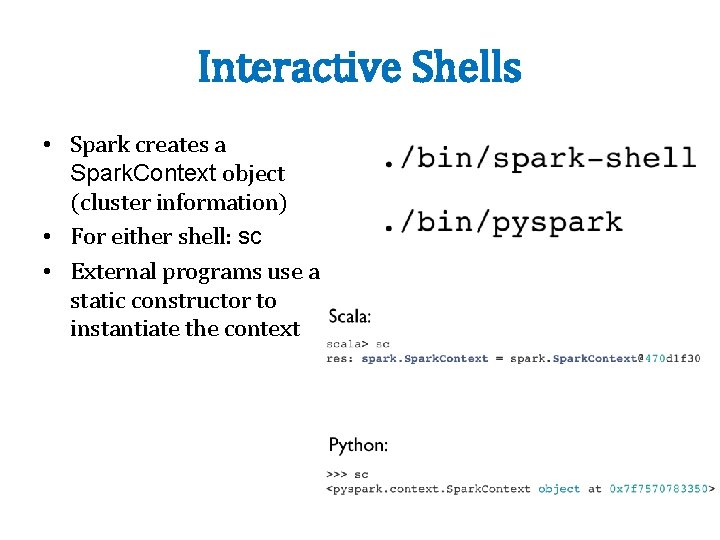

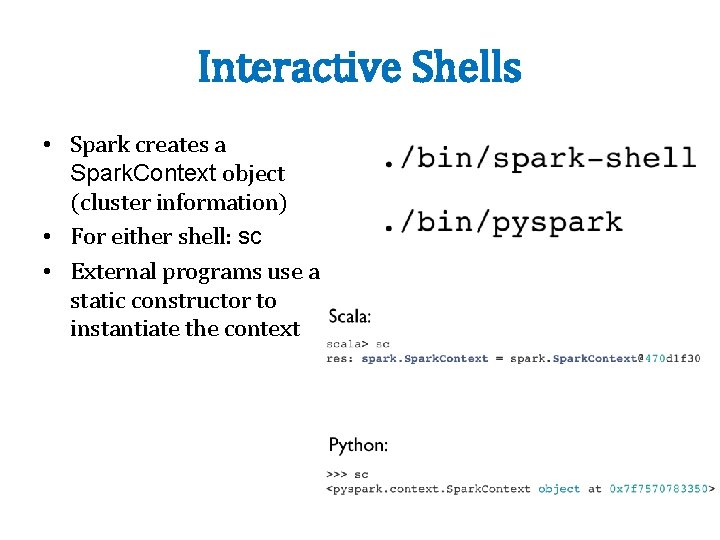

Interactive Shells • Spark creates a Spark. Context object (cluster information) • For either shell: sc • External programs use a static constructor to instantiate the context

Interactive Shells • spark-shell --master

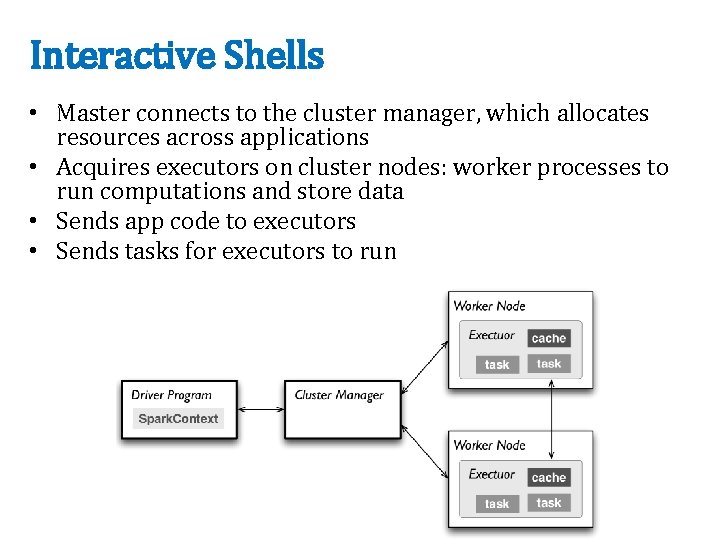

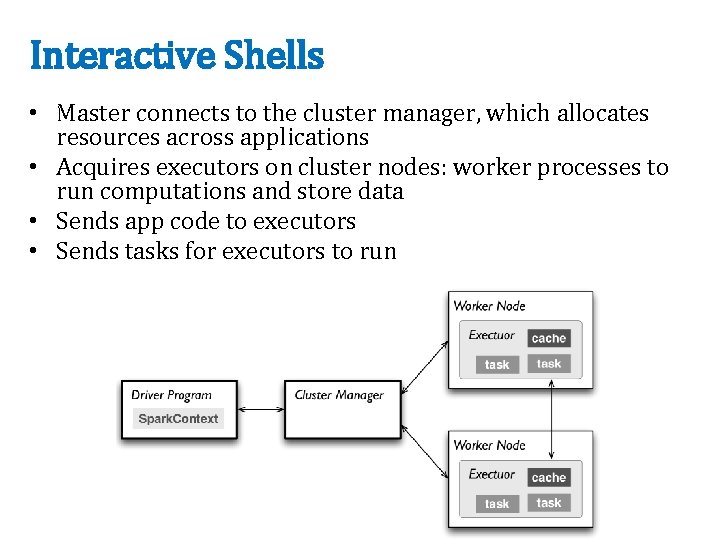

Interactive Shells • Master connects to the cluster manager, which allocates resources across applications • Acquires executors on cluster nodes: worker processes to run computations and store data • Sends app code to executors • Sends tasks for executors to run

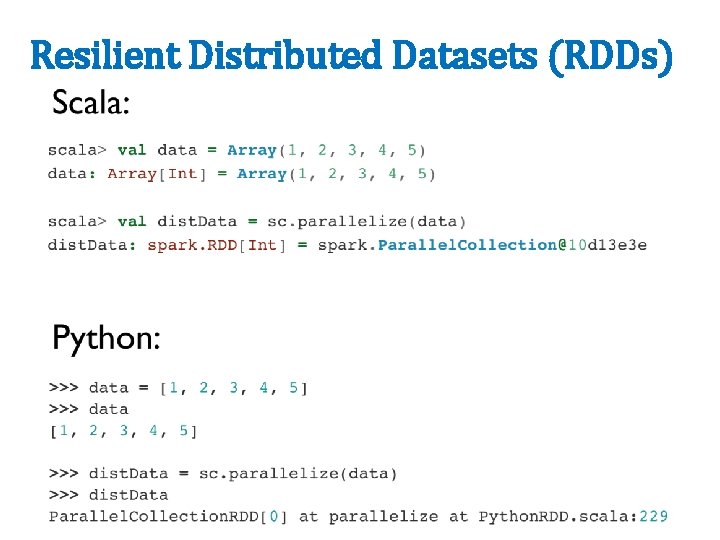

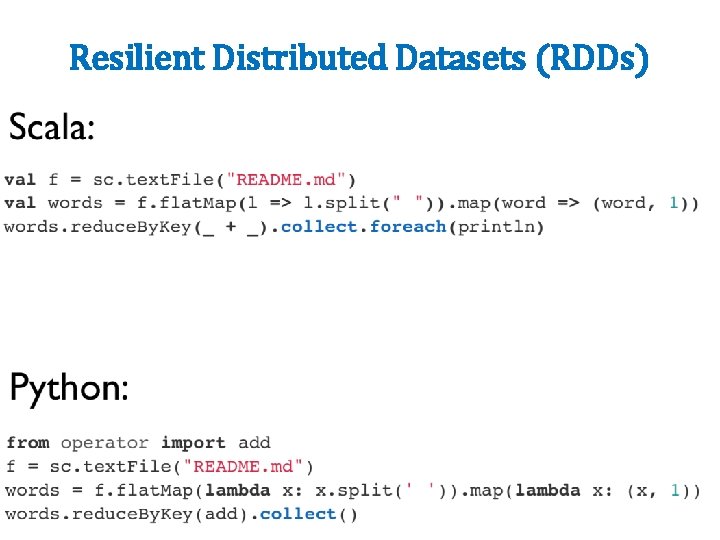

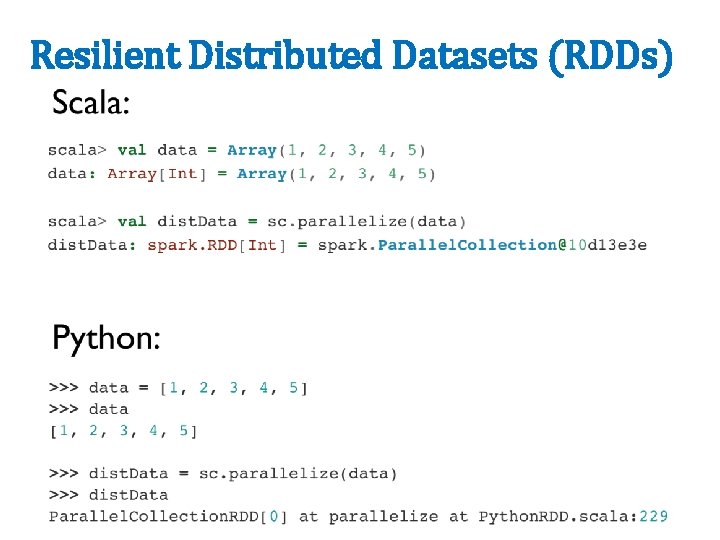

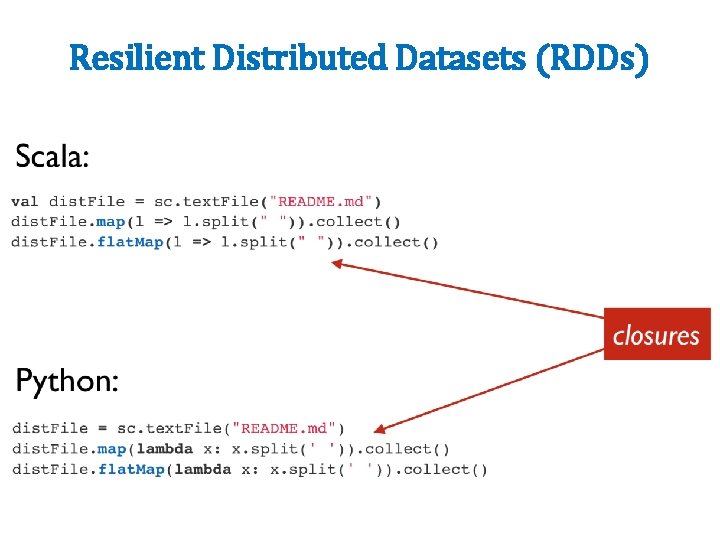

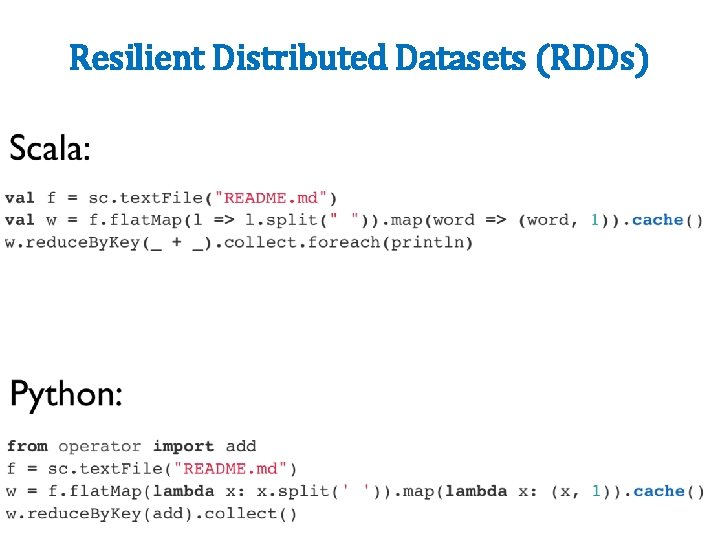

Resilient Distributed Datasets (RDDs) • Resilient Distributed Datasets (RDDs) are primary data abstraction in Spark – Fault-tolerant – Can be operated on in parallel 1. Parallelized Collections 2. Hadoop datasets • Two types of RDD operations 1. Transformations (lazy) 2. Actions (immediate)

Resilient Distributed Datasets (RDDs)

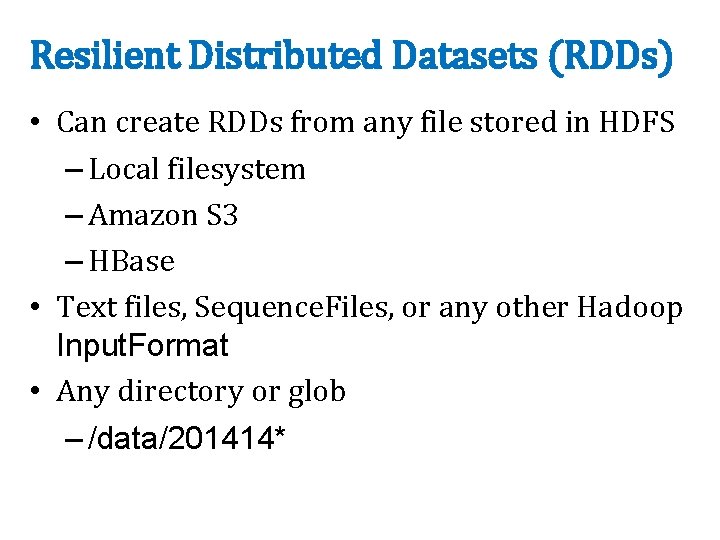

Resilient Distributed Datasets (RDDs) • Can create RDDs from any file stored in HDFS – Local filesystem – Amazon S 3 – HBase • Text files, Sequence. Files, or any other Hadoop Input. Format • Any directory or glob – /data/201414*

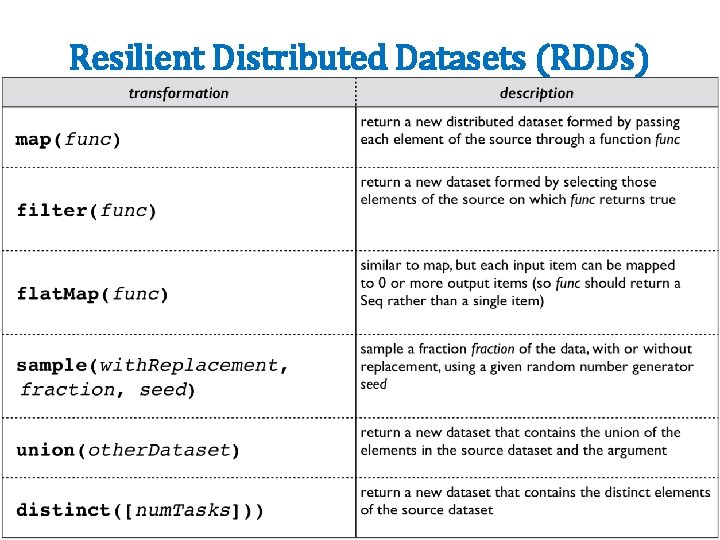

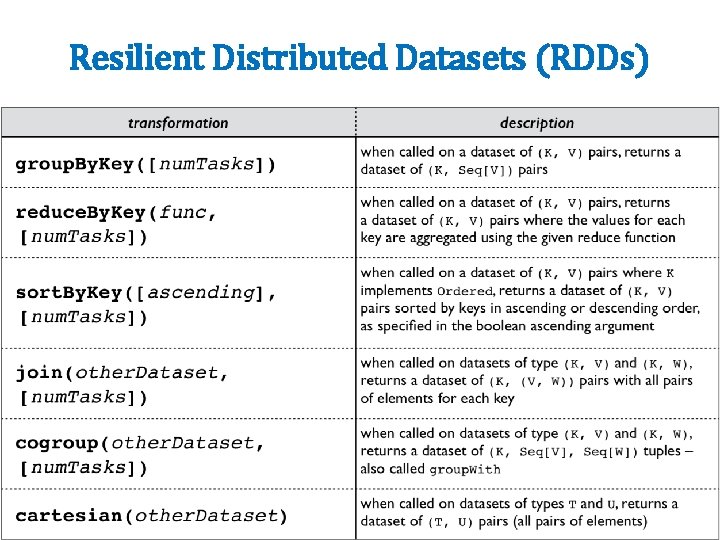

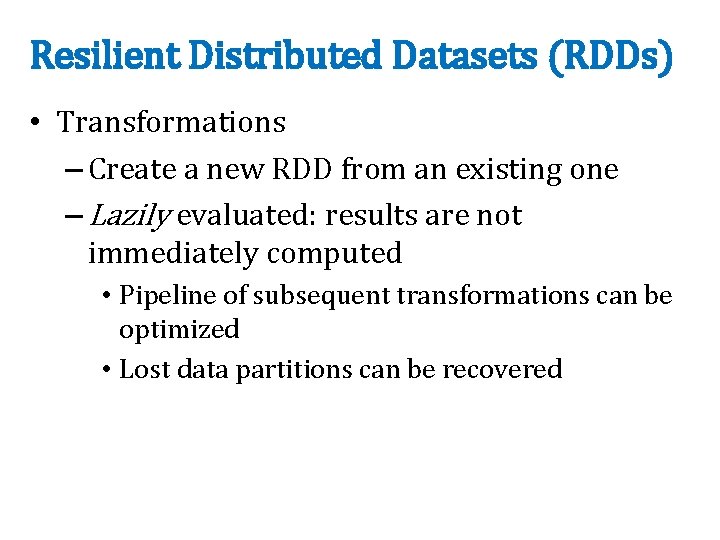

Resilient Distributed Datasets (RDDs) • Transformations – Create a new RDD from an existing one – Lazily evaluated: results are not immediately computed • Pipeline of subsequent transformations can be optimized • Lost data partitions can be recovered

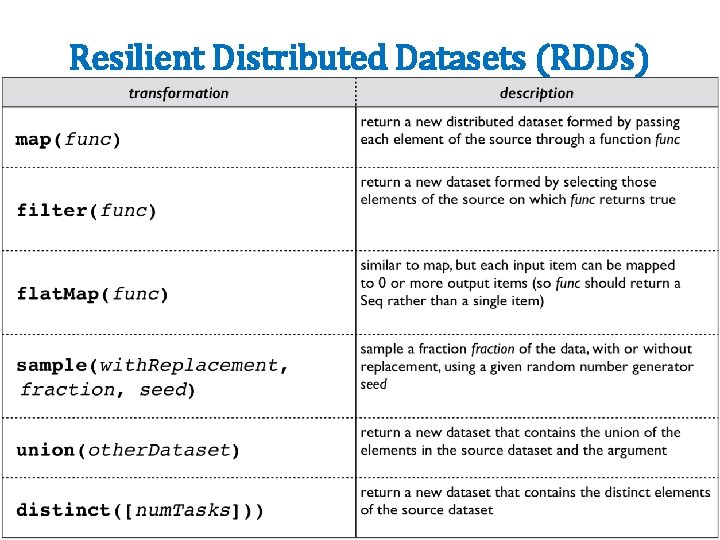

Resilient Distributed Datasets (RDDs)

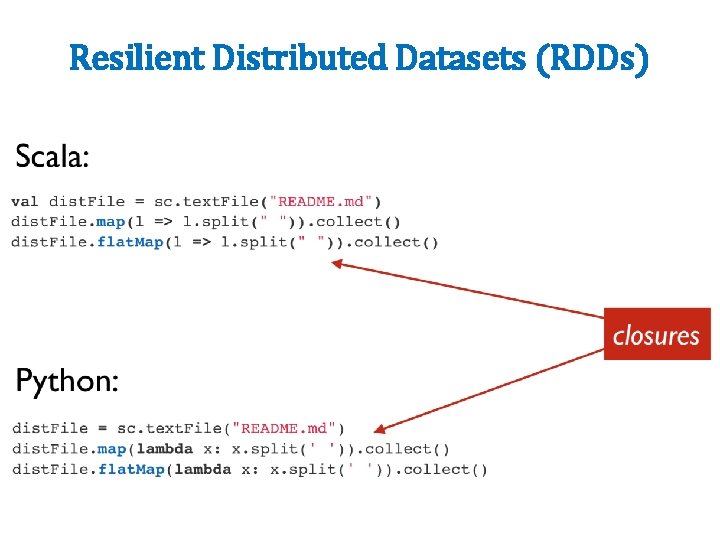

Resilient Distributed Datasets (RDDs)

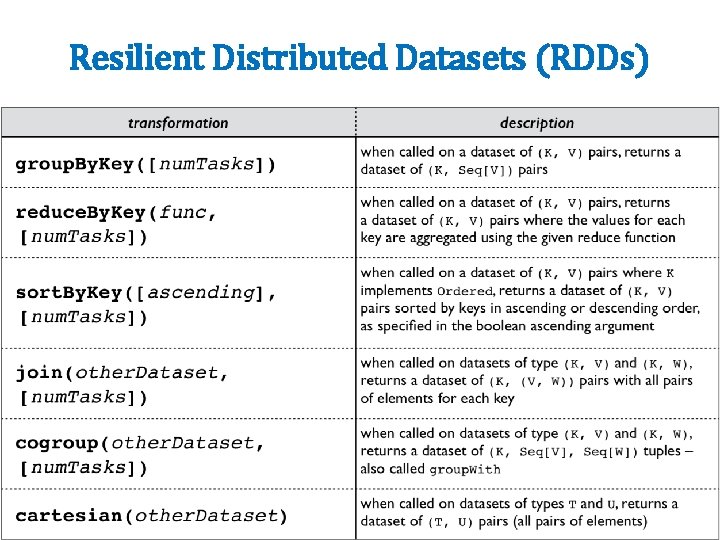

Resilient Distributed Datasets (RDDs)

Resilient Distributed Datasets (RDDs)

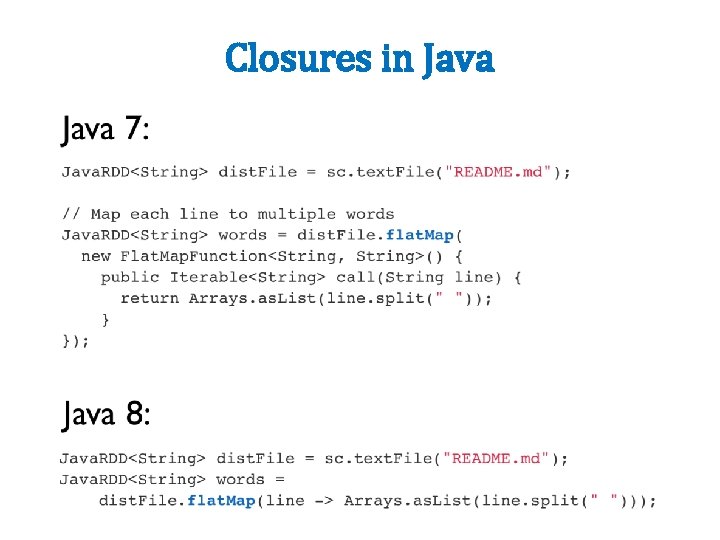

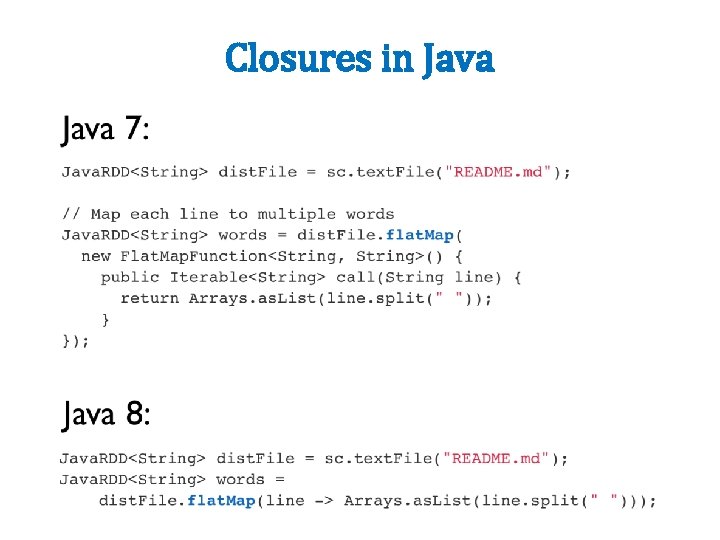

Closures in Java

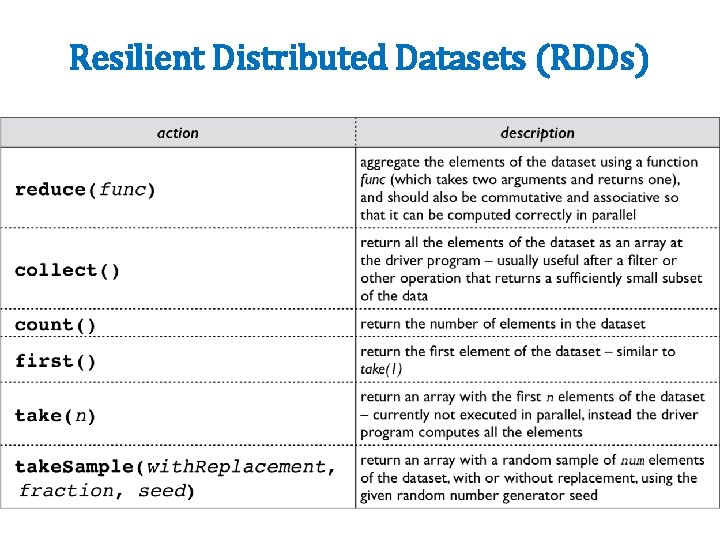

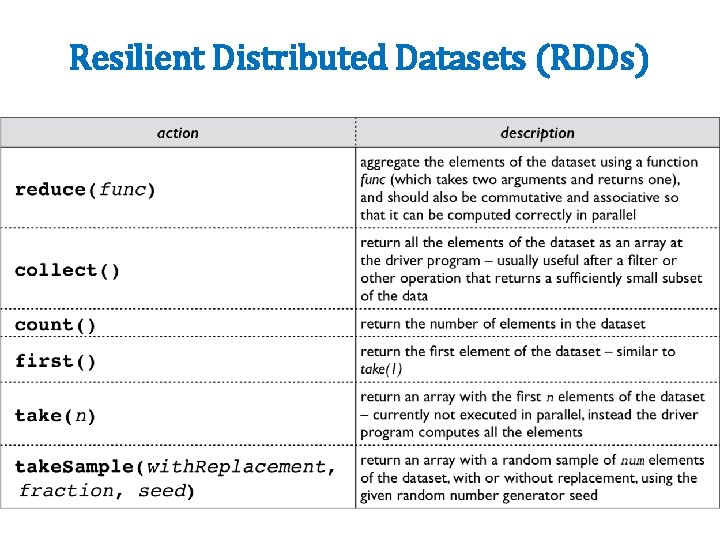

Resilient Distributed Datasets (RDDs) • Actions – Create a new RDD from an existing one – Eagerly evaluated: results are immediately computed • Applies previous transformations • (cache results? )

Resilient Distributed Datasets (RDDs)

Resilient Distributed Datasets (RDDs)

Resilient Distributed Datasets (RDDs)

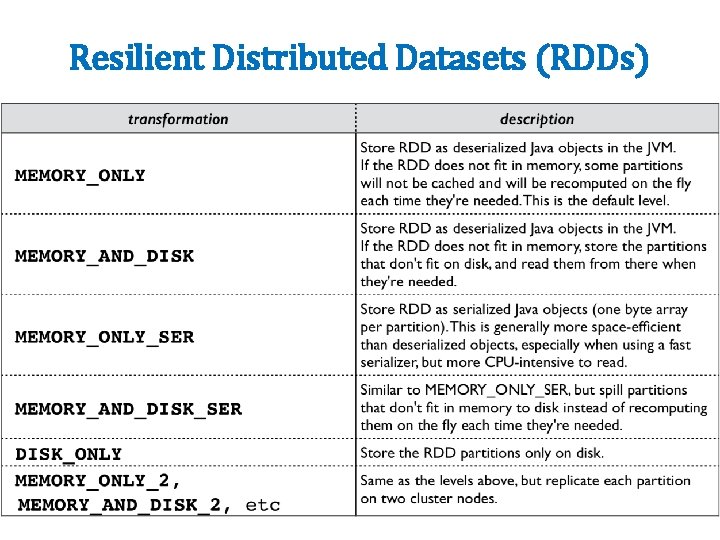

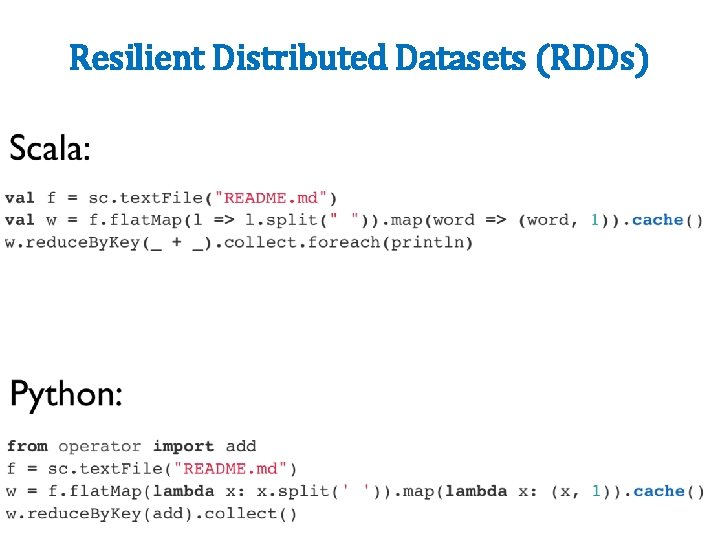

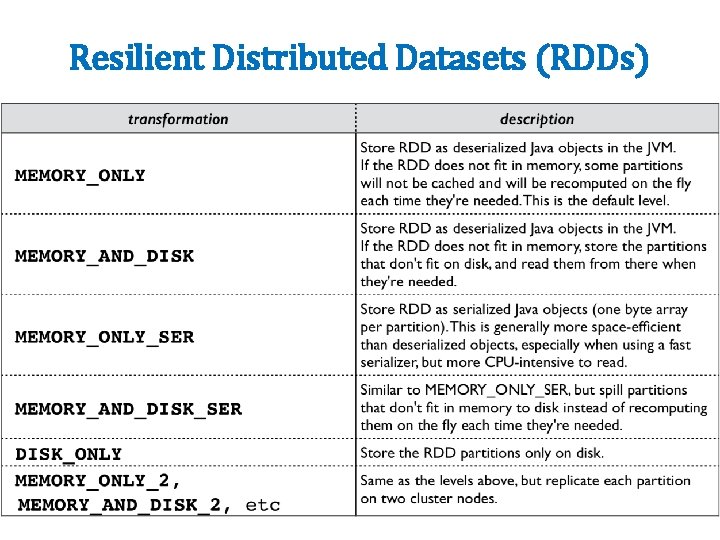

Resilient Distributed Datasets (RDDs) • Spark can persist / cache an RDD in memory across operations • Each slice is persisted in memory and reused in subsequent actions involving that RDD • Cache provides fault-tolerance: if partition is lost, it will be recomputed using transformations that created it

Resilient Distributed Datasets (RDDs)

Resilient Distributed Datasets (RDDs)

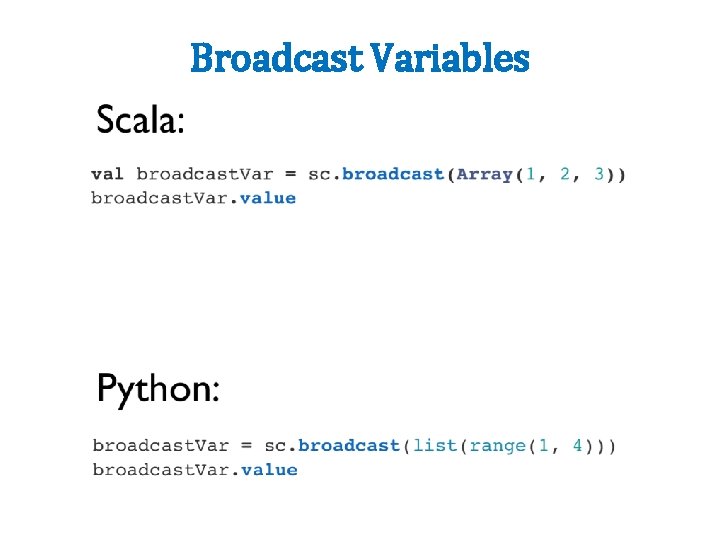

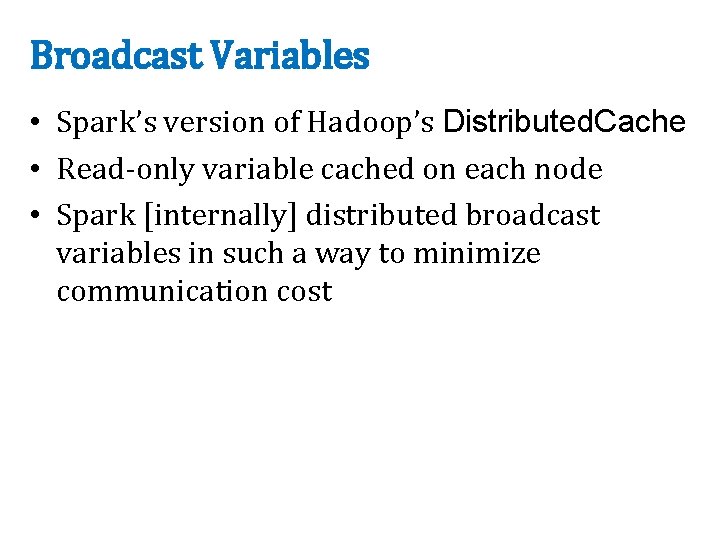

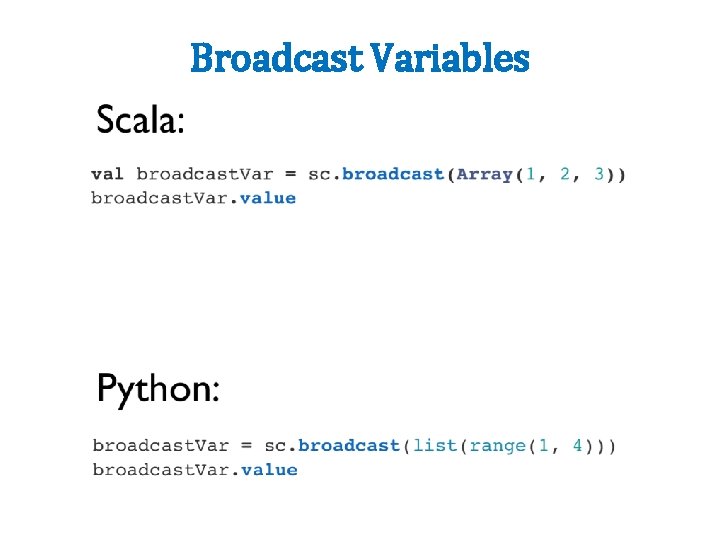

Broadcast Variables • Spark’s version of Hadoop’s Distributed. Cache • Read-only variable cached on each node • Spark [internally] distributed broadcast variables in such a way to minimize communication cost

Broadcast Variables

Accumulators • Spark’s version of Hadoop’s Counter • Variables that can only be added through an associative operation • Native support of numeric accumulator types and standard mutable collections – Users can extend to new types • Only driver program can read accumulator value

Accumulators

Key/Value Pairs

Resources • Original slide deck: http: //cdn. liber 118. com/workshop/itas_work shop. pdf • Code samples: – https: //gist. github. com/ceteri/f 2 c 3486062 c 9610 eac 1 d – https: //gist. github. com/ceteri/8 ae 5 b 9509 a 08 c 08 a 1132 – https: //gist. github. com/ceteri/11381941

Assignment 3! • Clustering on Spark • Data: more text documents! – NIPS abstracts (~1. 9 M) – NY Times articles (~100 M) – Pub. Med abstracts (~730 M) • Spark APIs • Compare clustering methods

Spark sql: relational data processing in spark

Spark sql: relational data processing in spark Introduction to apache spark

Introduction to apache spark Edel quinn

Edel quinn Ucf capstone cheating

Ucf capstone cheating Hornby cdu

Hornby cdu Marc quinn, self, 1991

Marc quinn, self, 1991 Oh mary conceived without sin

Oh mary conceived without sin Dr john quinn beaumont

Dr john quinn beaumont Ashlynn quinn

Ashlynn quinn Quinn modell

Quinn modell Instrumento ocai

Instrumento ocai Duane quinn

Duane quinn Feargal quinn

Feargal quinn Self – marc quinn, 1991

Self – marc quinn, 1991 Jim quinn net worth

Jim quinn net worth Penny quinn

Penny quinn Strongback bridging

Strongback bridging Paulyn marrinan quinn

Paulyn marrinan quinn Unos region 5

Unos region 5 Jenny tsang-quinn

Jenny tsang-quinn Quinn prob

Quinn prob Alleluia alleluia give thanks to the risen lord lyrics

Alleluia alleluia give thanks to the risen lord lyrics Thanks for the victory

Thanks for the victory Thank you for listening slide

Thank you for listening slide Thanks for listen

Thanks for listen Gustatory system

Gustatory system Thanks for listening

Thanks for listening How to reply thanks

How to reply thanks Thanks any question

Thanks any question Give thanks to the lord our god and king

Give thanks to the lord our god and king Christian kittl

Christian kittl Thanks to god for my redeemer

Thanks to god for my redeemer Oh give thanks to the lord for he is good

Oh give thanks to the lord for he is good Thanks for your attencion

Thanks for your attencion Ten thousand thanks to jesus

Ten thousand thanks to jesus Thanks for your listening!

Thanks for your listening! Thanks for teamwork

Thanks for teamwork Kwakiutl prayer of thanks

Kwakiutl prayer of thanks Thanks a lot for your letter

Thanks a lot for your letter Vote of thanks format

Vote of thanks format Is the gulf stream warm or cold

Is the gulf stream warm or cold From woodlands to plains

From woodlands to plains Dear ben thank you for your letter

Dear ben thank you for your letter Challengers leaders niche players visionaries

Challengers leaders niche players visionaries Give thanks michael w smith

Give thanks michael w smith First of all thanks to allah

First of all thanks to allah Farabi is flora's best friend passage model question

Farabi is flora's best friend passage model question Welcome thanks for joining us

Welcome thanks for joining us Thanks for bringing us together

Thanks for bringing us together Thanks for being here

Thanks for being here Thank you to all the sponsors

Thank you to all the sponsors Thanks for your kind notice

Thanks for your kind notice Thanks for solving the problem

Thanks for solving the problem