Introduction to Message Passing Interface (MPI)



Introduction to Message Passing Interface (MPI): Introduction. 9/4/2012. Parallel Programming, UNCW, C. Ferner, 2012. slides2.ppt

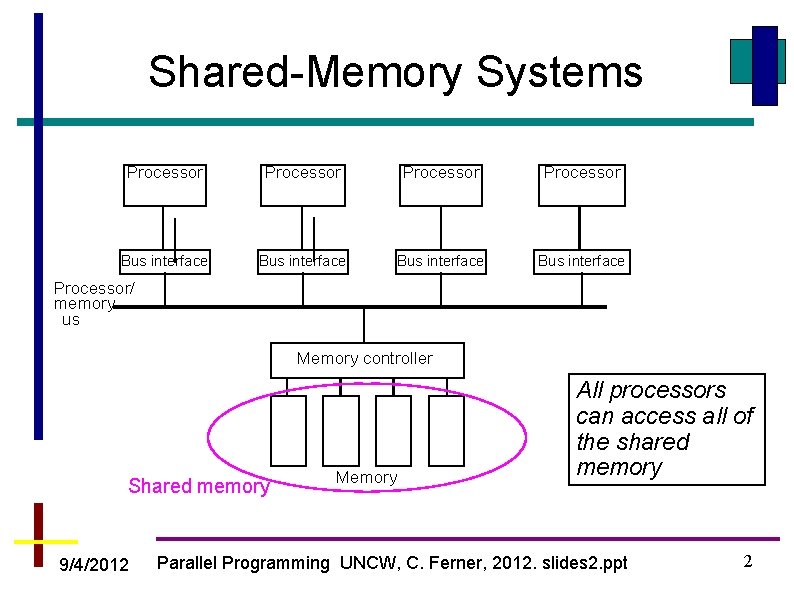

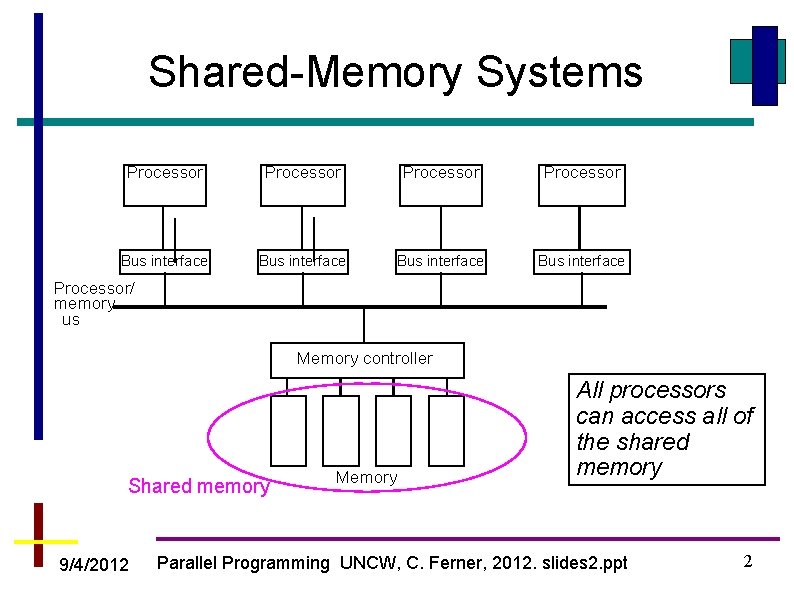

Shared-Memory Systems

[Diagram: processors, each with a bus interface, connected over a processor/memory bus to a memory controller and the shared memory.]

All processors can access all of the shared memory.

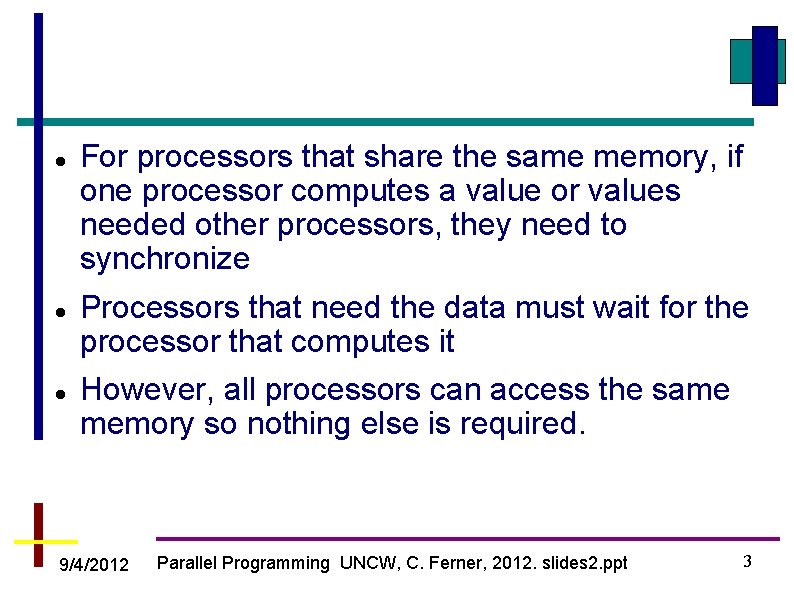

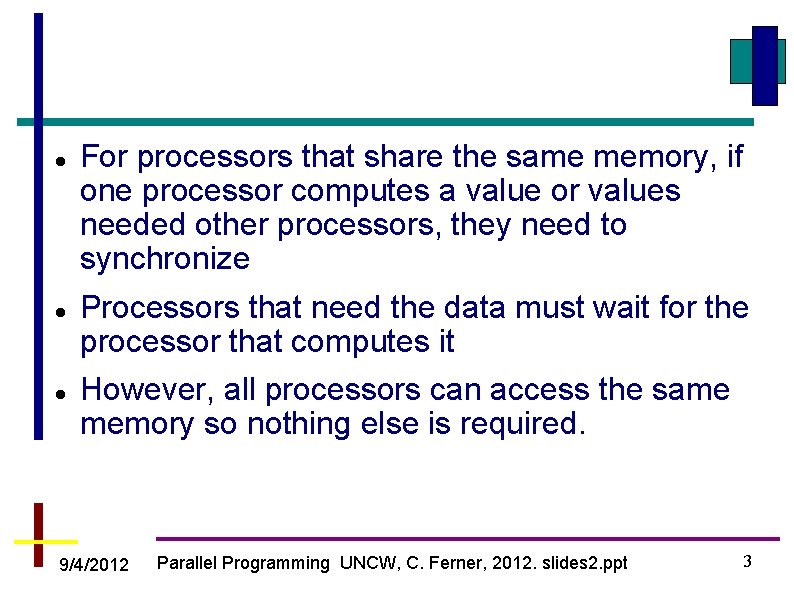

For processors that share the same memory, if one processor computes a value (or values) needed by other processors, the processors must synchronize: those that need the data must wait for the processor that computes it. However, because all processors can access the same memory, nothing else is required.

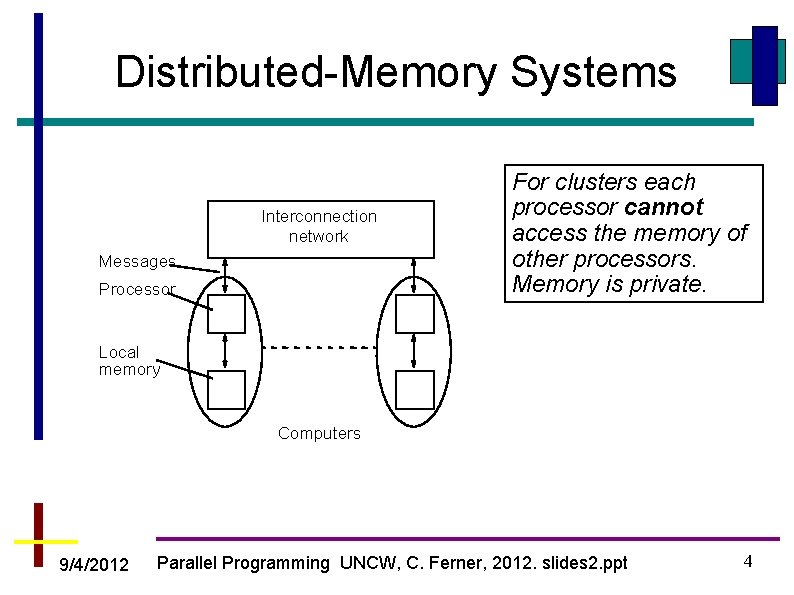

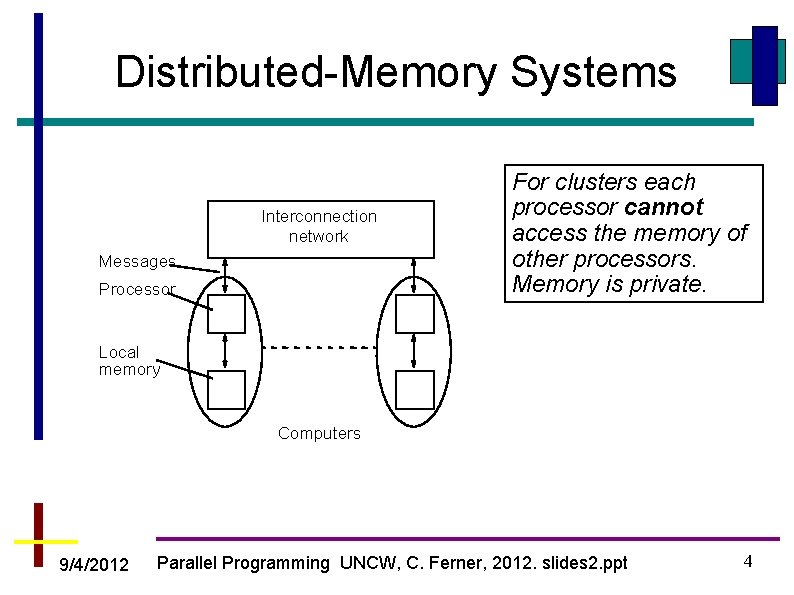

Distributed-Memory Systems

[Diagram: computers, each with a processor and local memory, exchanging messages over an interconnection network.]

In a cluster, a processor cannot access the memory of other processors. Memory is private.

If one processor computes a value (or values) needed by other processors, it has to transmit that data. This requires message passing.

Message-Passing

- Sockets (very low level)
- Parallel Virtual Machine (created by Oak Ridge National Lab)
- MPI (Message Passing Interface), a standard with several implementations:
  - LAM
  - MPICH
  - Open MPI (we use this one)

All of these are libraries that can be used from within a C program.

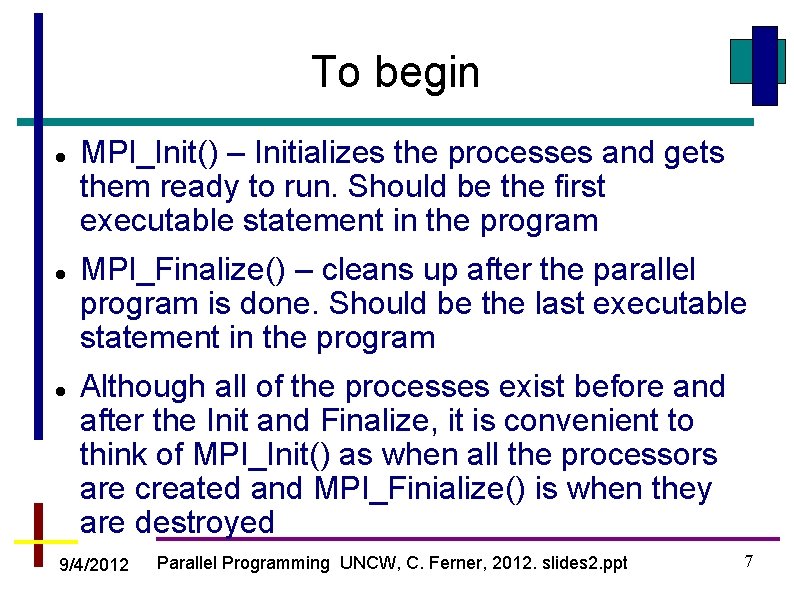

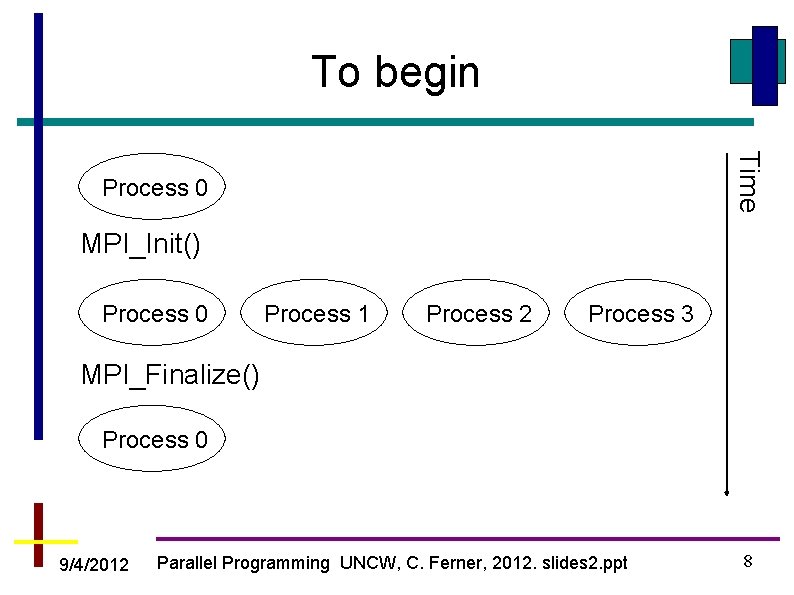

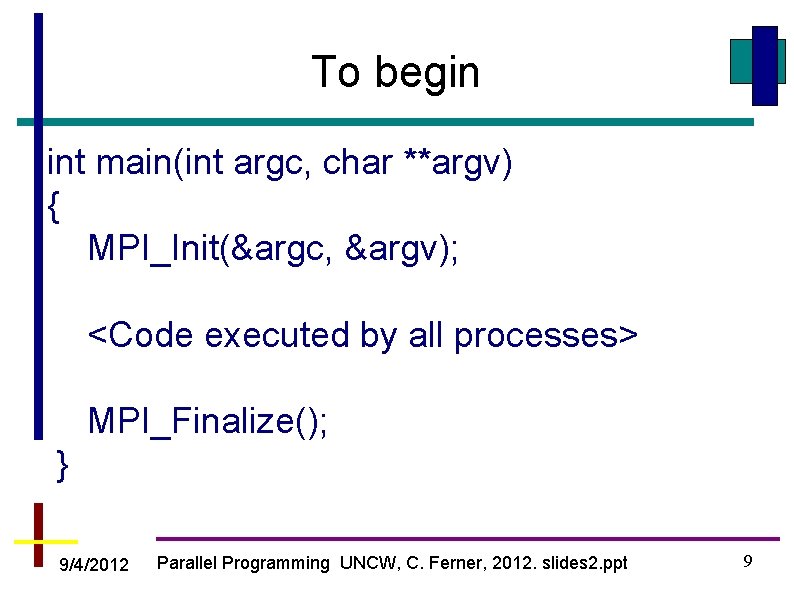

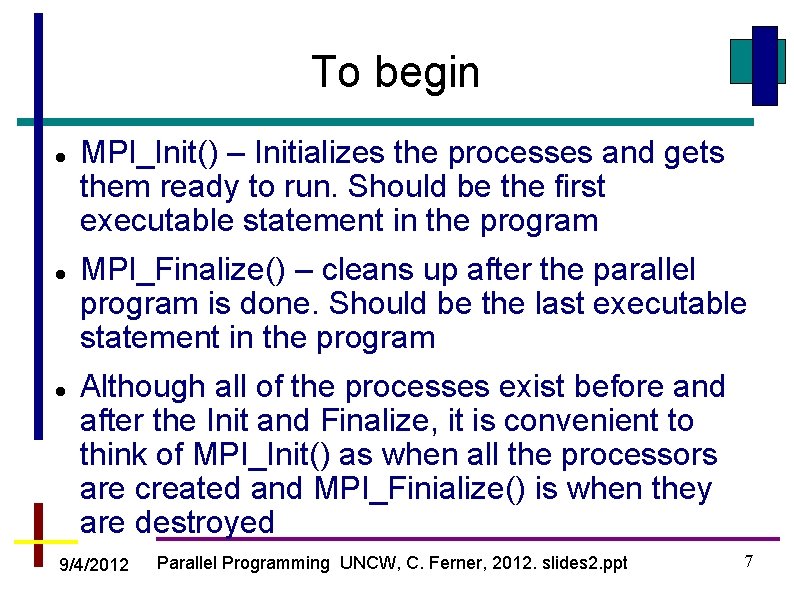

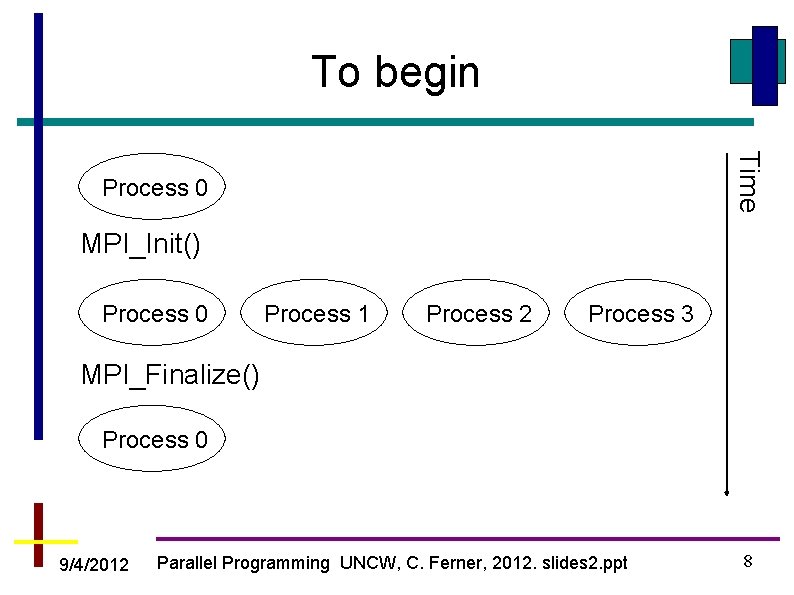

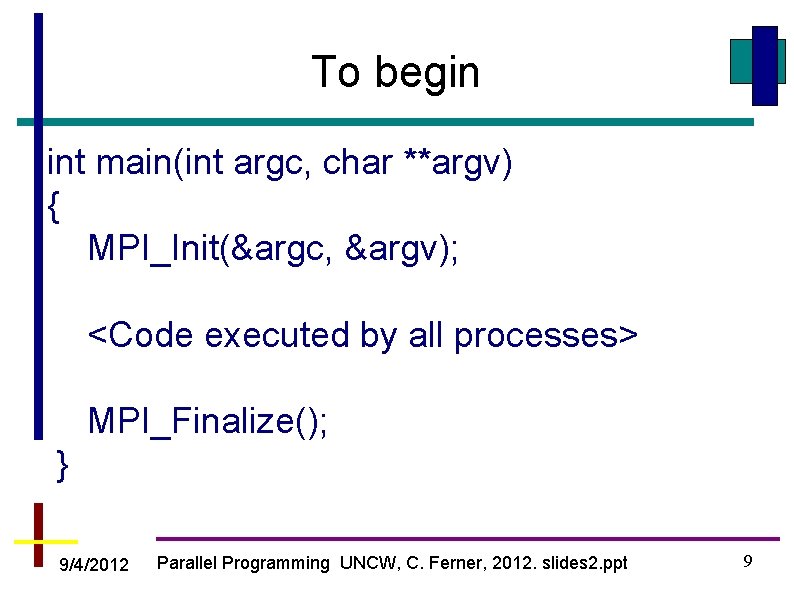

To begin

- MPI_Init() initializes the processes and gets them ready to run. It should be the first executable statement in the program.
- MPI_Finalize() cleans up after the parallel program is done. It should be the last executable statement in the program.

Although all of the processes exist before and after the calls to MPI_Init() and MPI_Finalize(), it is convenient to think of MPI_Init() as the point where all the processes are created and MPI_Finalize() as the point where they are destroyed.

To begin

[Timeline diagram: process 0 before MPI_Init(); processes 0, 1, 2, and 3 between MPI_Init() and MPI_Finalize(); process 0 after MPI_Finalize().]

To begin

    int main(int argc, char **argv)
    {
        MPI_Init(&argc, &argv);

        /* <Code executed by all processes> */

        MPI_Finalize();
    }

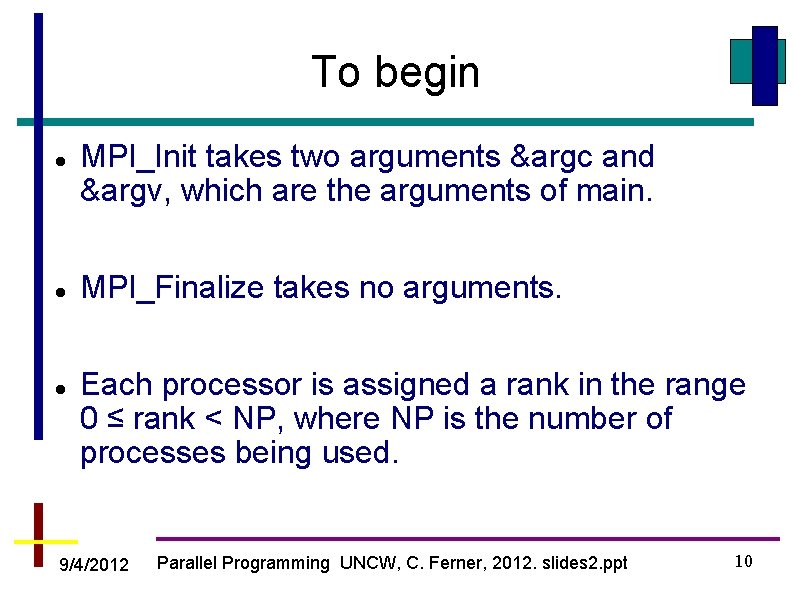

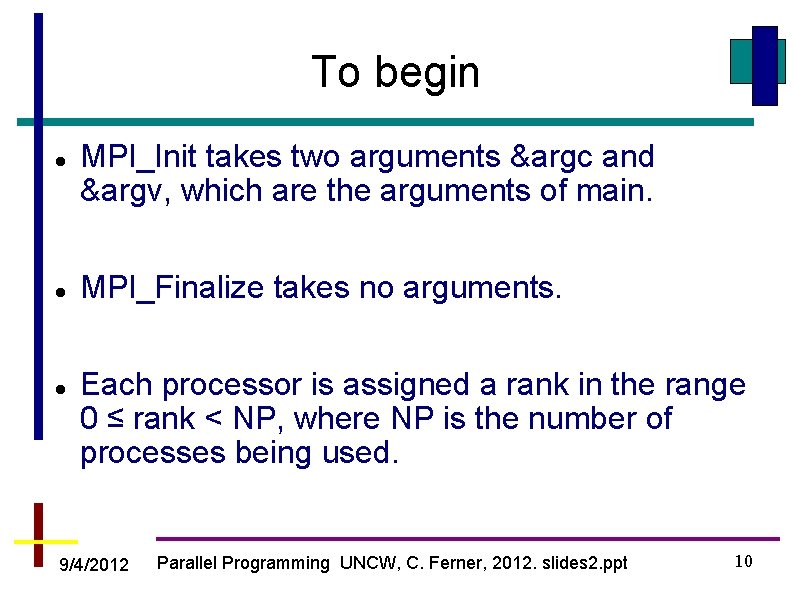

To begin

- MPI_Init takes two arguments, &argc and &argv, which are the addresses of the arguments of main.
- MPI_Finalize takes no arguments.
- Each process is assigned a rank in the range 0 ≤ rank < NP, where NP is the number of processes being used.

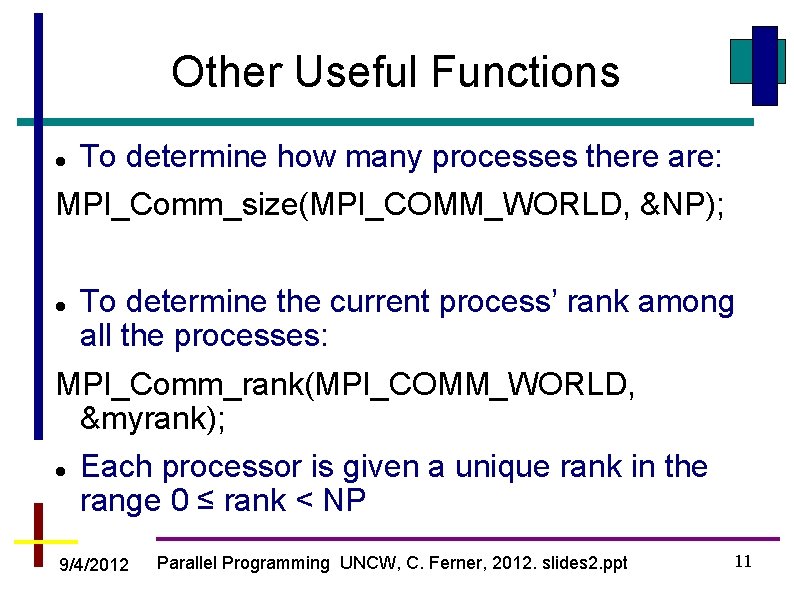

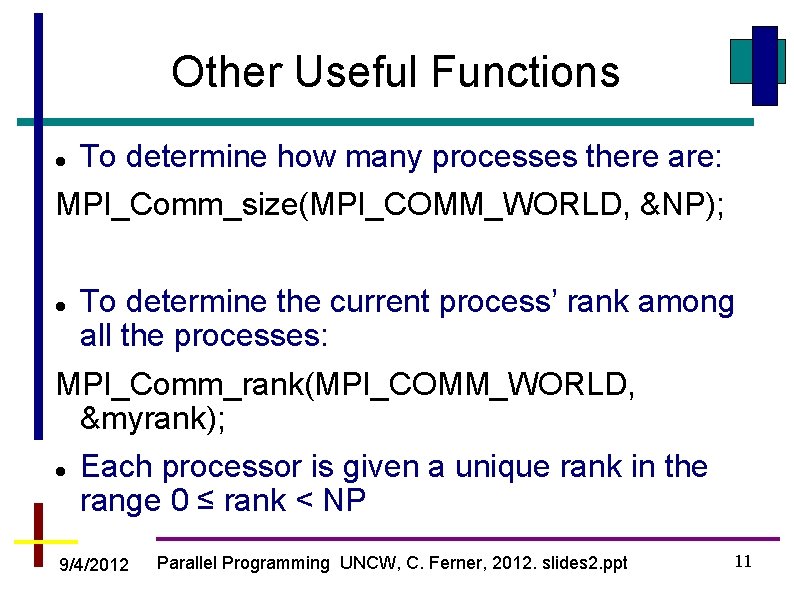

Other Useful Functions

To determine how many processes there are:

    MPI_Comm_size(MPI_COMM_WORLD, &NP);

To determine the current process's rank among all the processes:

    MPI_Comm_rank(MPI_COMM_WORLD, &myrank);

Each process is given a unique rank in the range 0 ≤ rank < NP.

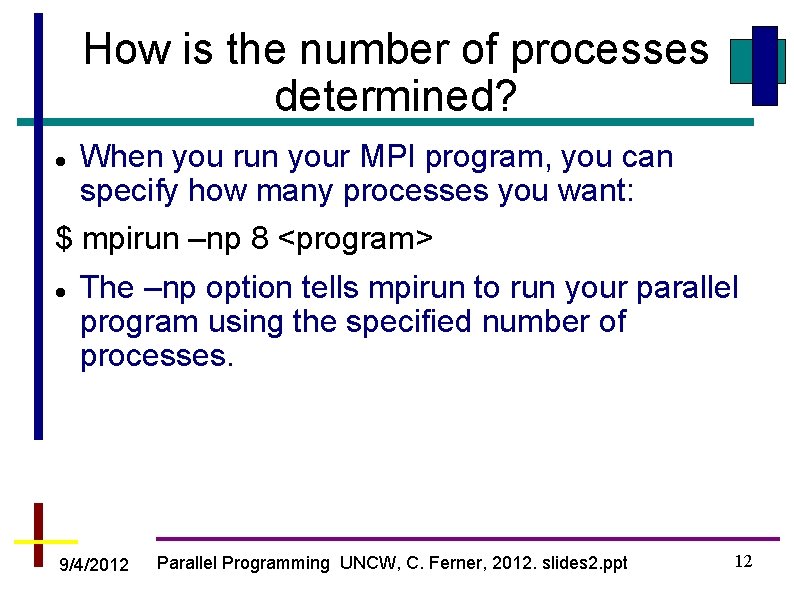

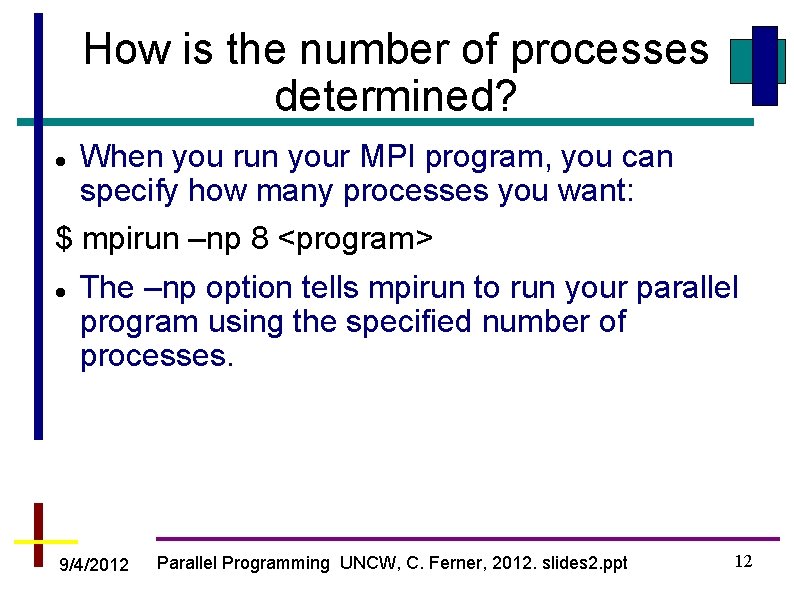

How is the number of processes determined?

When you run your MPI program, you can specify how many processes you want:

    $ mpirun -np 8 <program>

The -np option tells mpirun to run your parallel program using the specified number of processes.

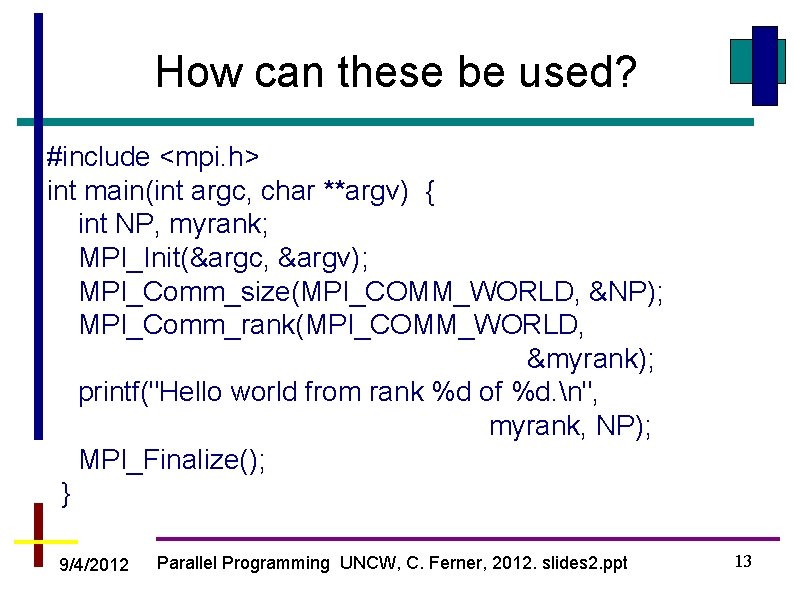

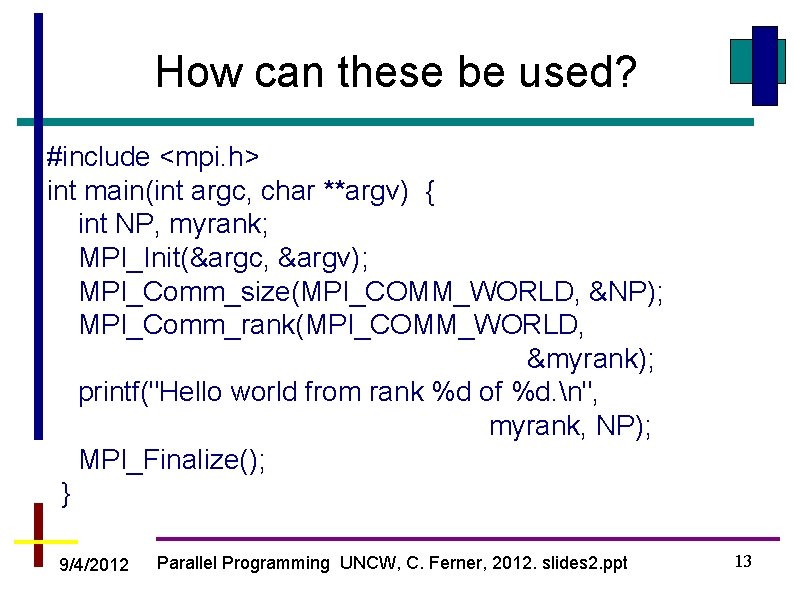

How can these be used?

    #include <stdio.h>   /* for printf */
    #include <mpi.h>

    int main(int argc, char **argv)
    {
        int NP, myrank;

        MPI_Init(&argc, &argv);
        MPI_Comm_size(MPI_COMM_WORLD, &NP);
        MPI_Comm_rank(MPI_COMM_WORLD, &myrank);

        printf("Hello world from rank %d of %d.\n", myrank, NP);

        MPI_Finalize();
        return 0;
    }

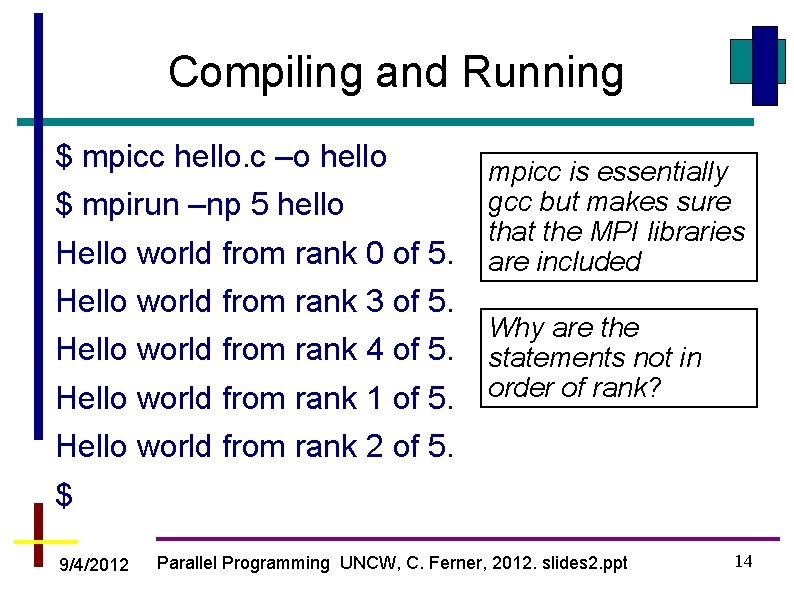

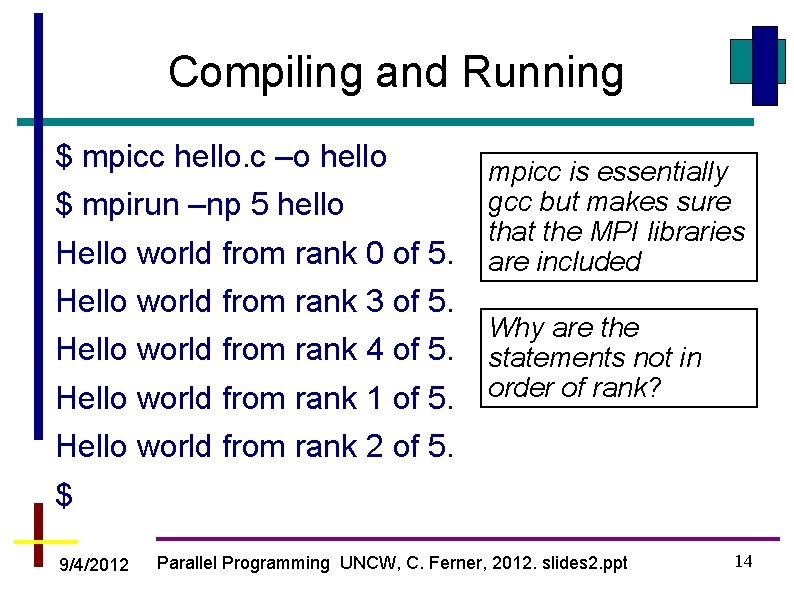

Compiling and Running

    $ mpicc hello.c -o hello
    $ mpirun -np 5 hello
    Hello world from rank 0 of 5.
    Hello world from rank 3 of 5.
    Hello world from rank 4 of 5.
    Hello world from rank 1 of 5.
    Hello world from rank 2 of 5.
    $

mpicc is essentially gcc, but it makes sure that the MPI libraries are included.

Why are the statements not in order of rank?

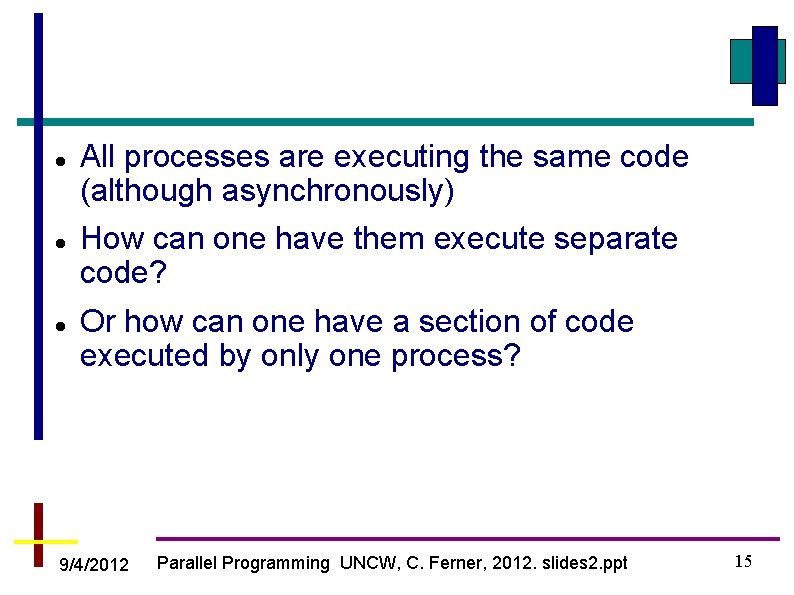

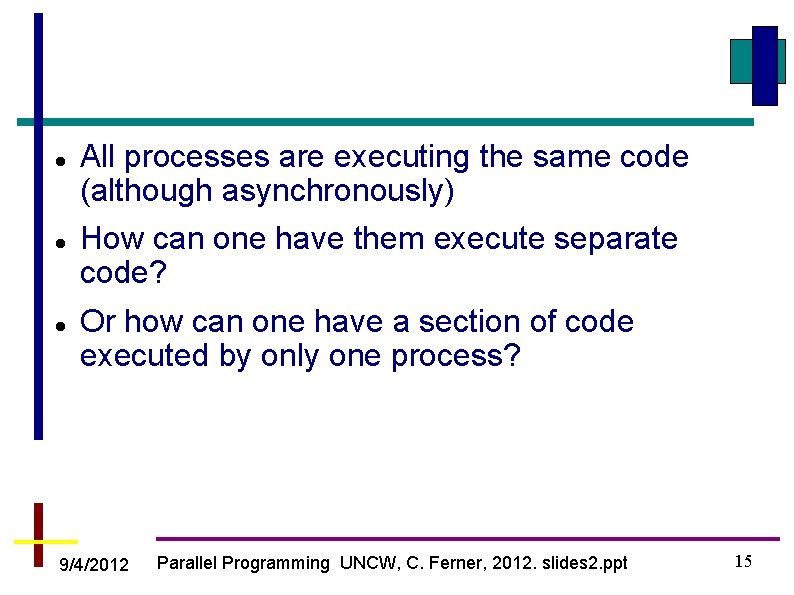

All processes execute the same code (although asynchronously). How can one have them execute separate code? Or how can one have a section of code executed by only one process?

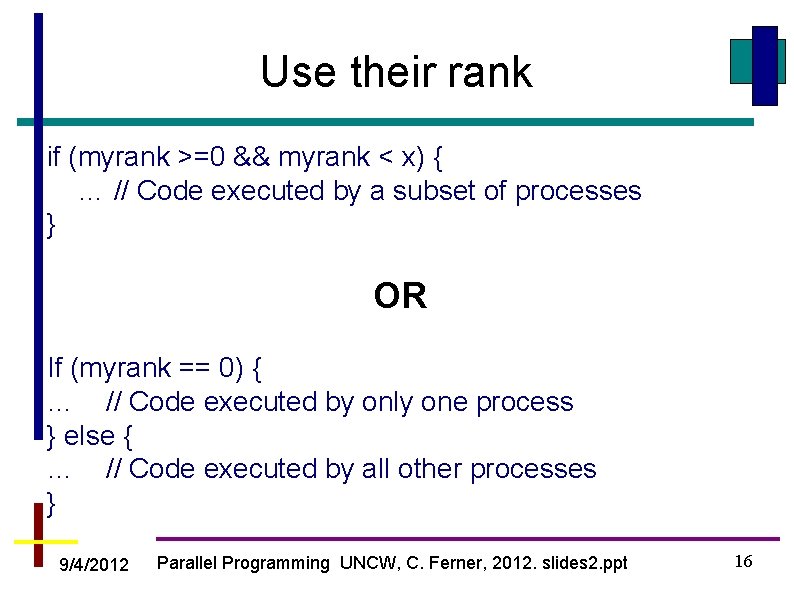

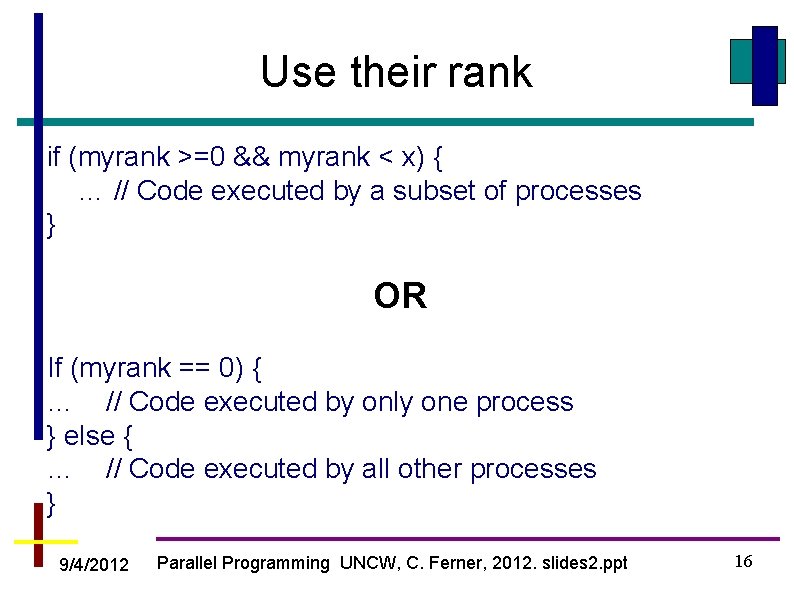

Use their rank

    if (myrank >= 0 && myrank < x) {
        … // Code executed by a subset of processes
    }

OR

    if (myrank == 0) {
        … // Code executed by only one process
    } else {
        … // Code executed by all other processes
    }

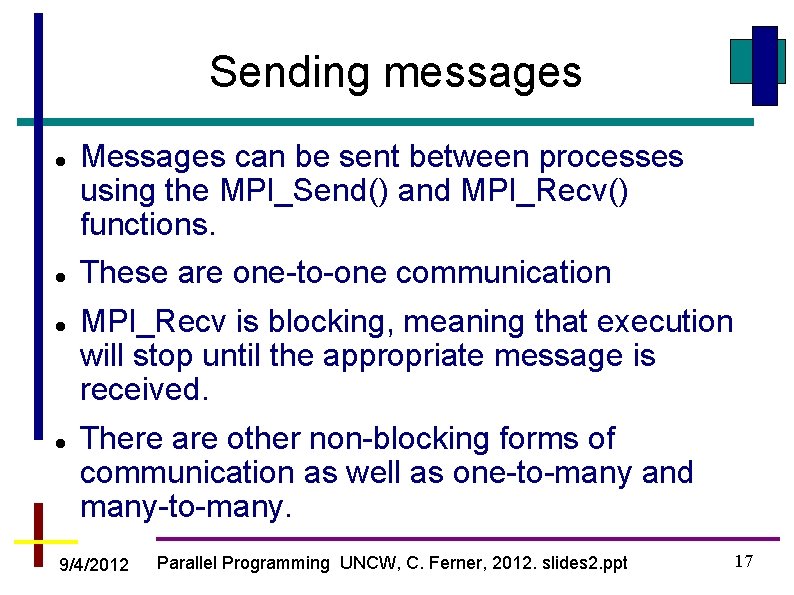

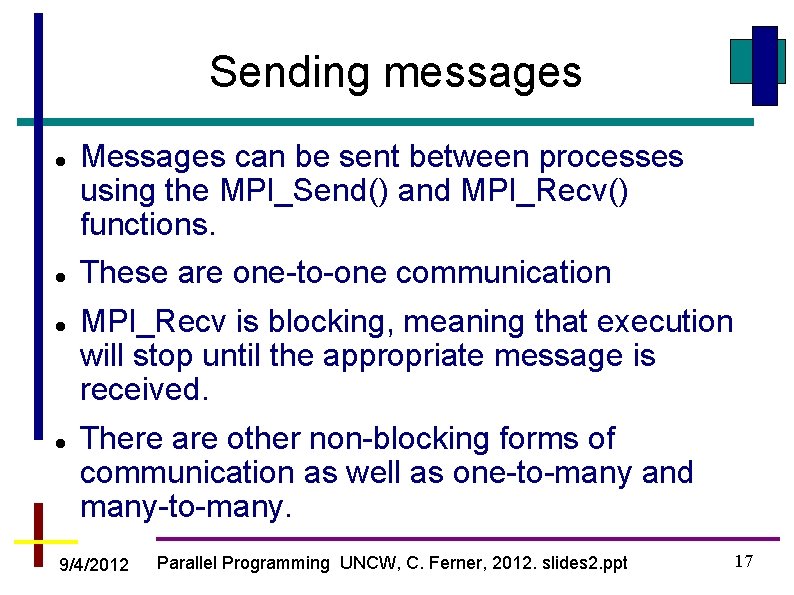

Sending messages

Messages can be sent between processes using the MPI_Send() and MPI_Recv() functions.

- These provide one-to-one communication.
- MPI_Recv is blocking, meaning that execution will stop until the appropriate message is received.
- There are also non-blocking forms of communication, as well as one-to-many and many-to-many communication.

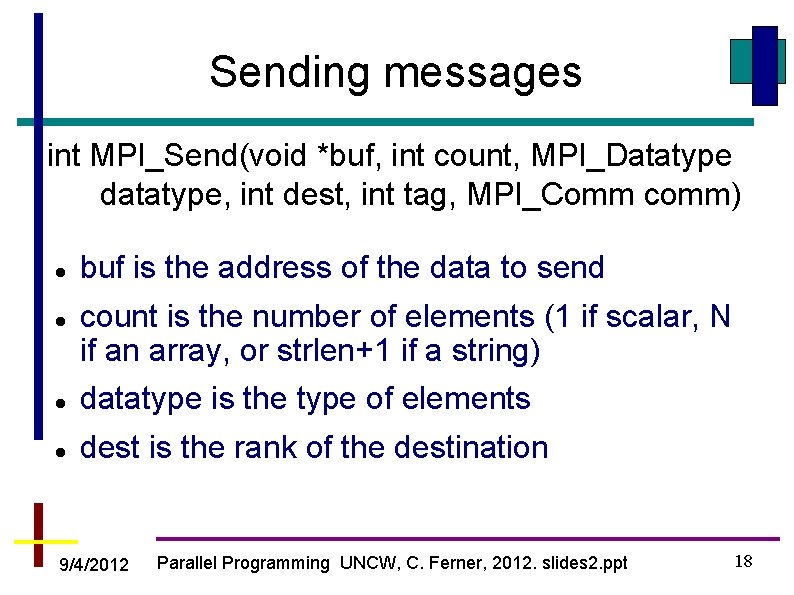

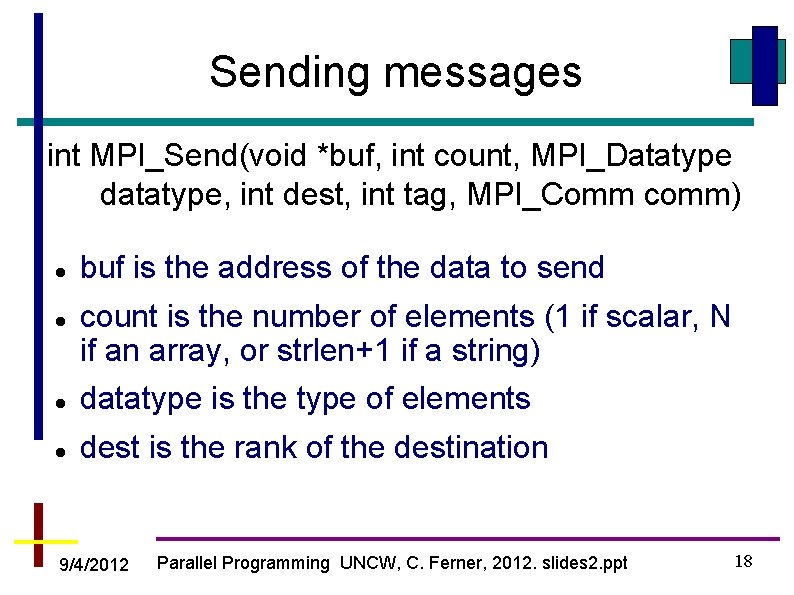

Sending messages

    int MPI_Send(void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)

- buf is the address of the data to send
- count is the number of elements (1 if scalar, N if an array, or strlen+1 if a string)
- datatype is the type of the elements
- dest is the rank of the destination

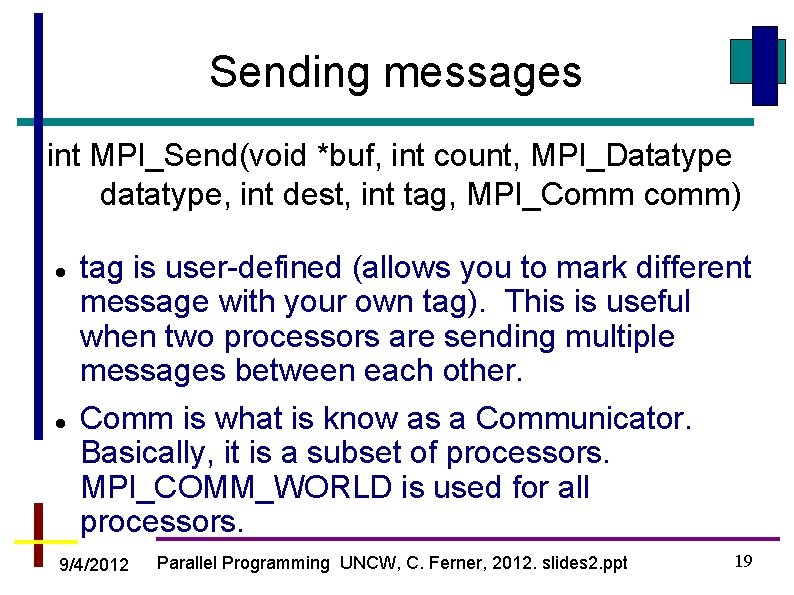

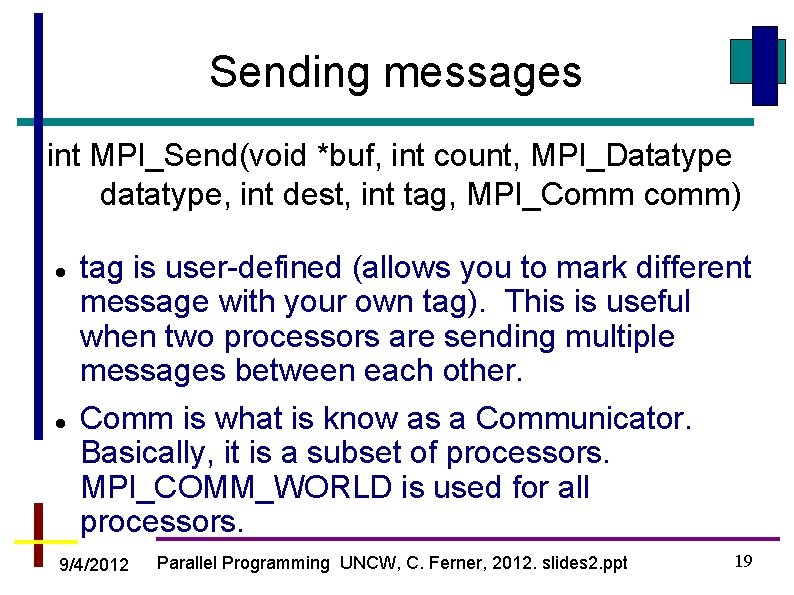

Sending messages

    int MPI_Send(void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)

- tag is user-defined (it allows you to mark different messages with your own tag). This is useful when two processes are sending multiple messages to each other.
- comm is what is known as a communicator. Basically, it is a subset of processes. MPI_COMM_WORLD is used for all processes.

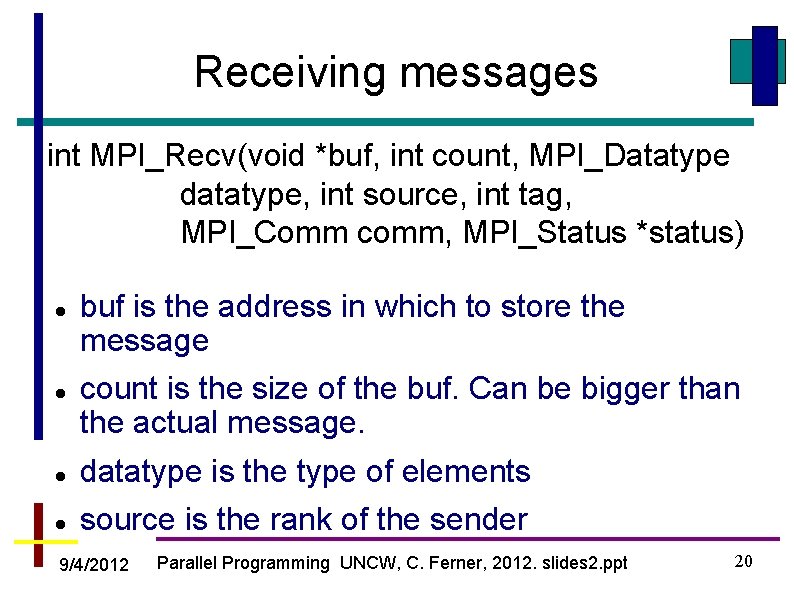

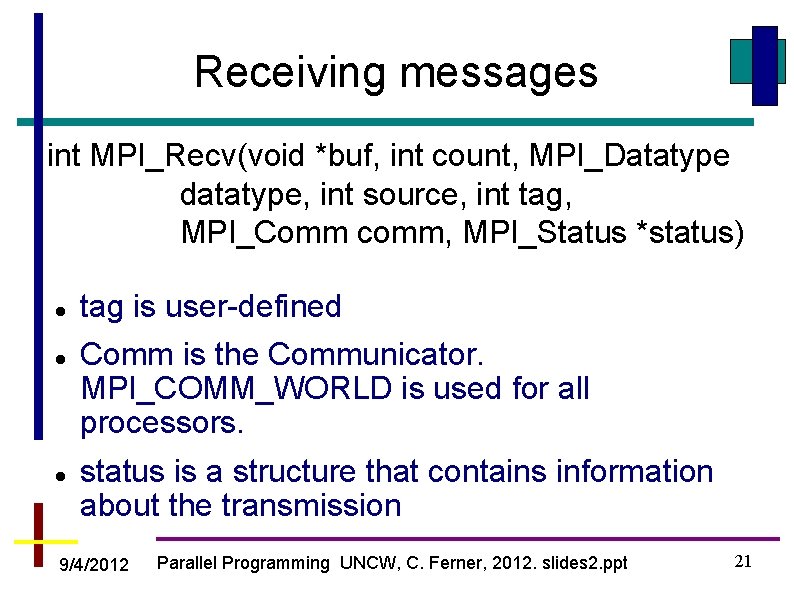

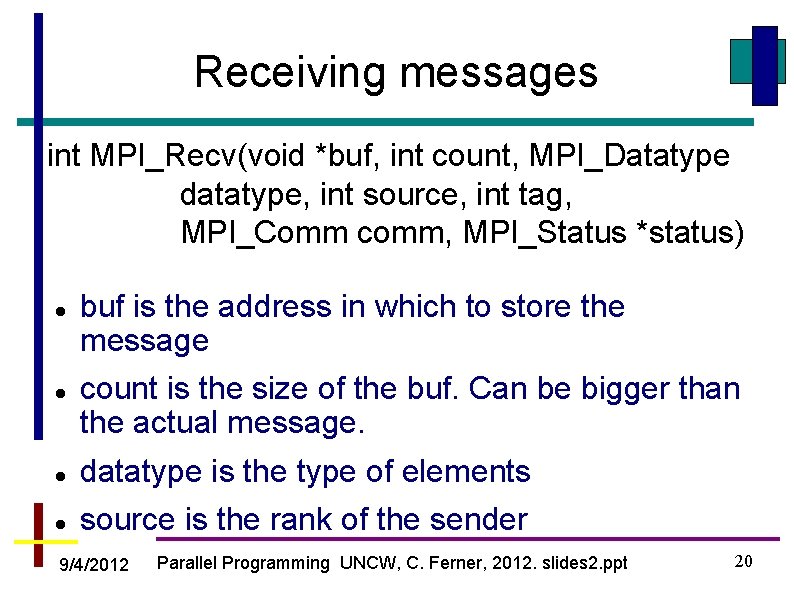

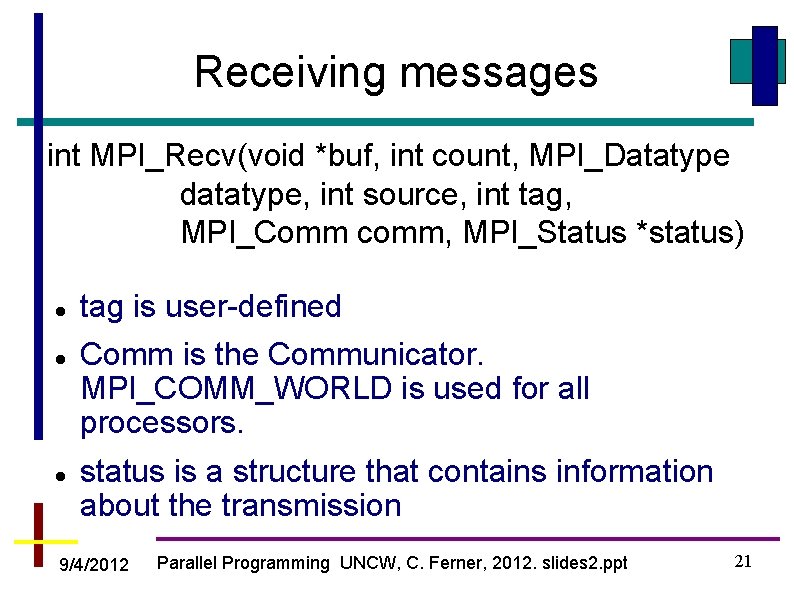

Receiving messages

    int MPI_Recv(void *buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status)

- buf is the address in which to store the message
- count is the size of buf, in elements. It can be bigger than the actual message.
- datatype is the type of the elements
- source is the rank of the sender

Receiving messages

    int MPI_Recv(void *buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status)

- tag is user-defined
- comm is the communicator. MPI_COMM_WORLD is used for all processes.
- status is a structure that contains information about the transmission

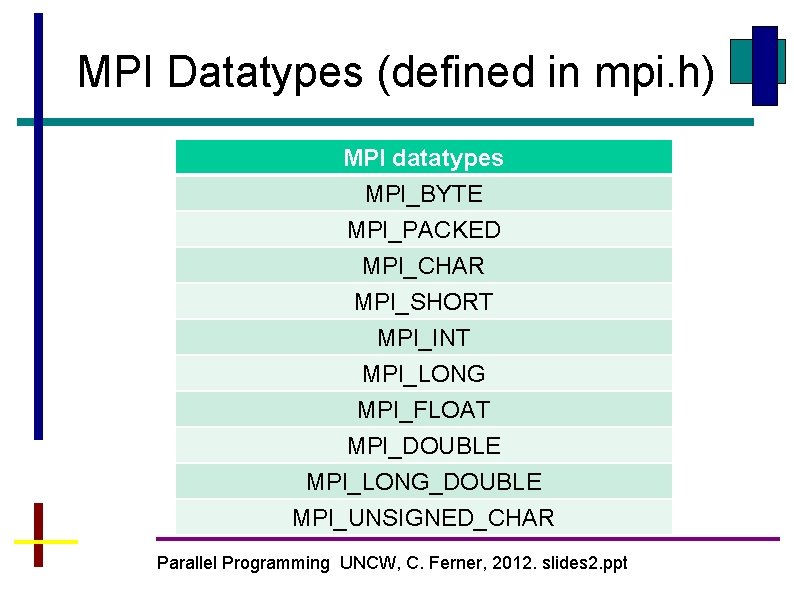

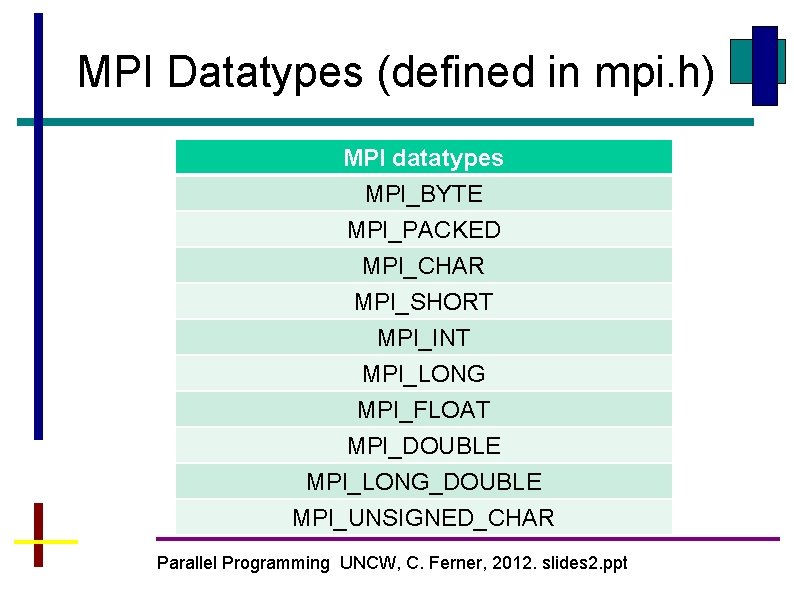

MPI Datatypes (defined in mpi.h)

- MPI_BYTE
- MPI_PACKED
- MPI_CHAR
- MPI_SHORT
- MPI_INT
- MPI_LONG
- MPI_FLOAT
- MPI_DOUBLE
- MPI_LONG_DOUBLE
- MPI_UNSIGNED_CHAR

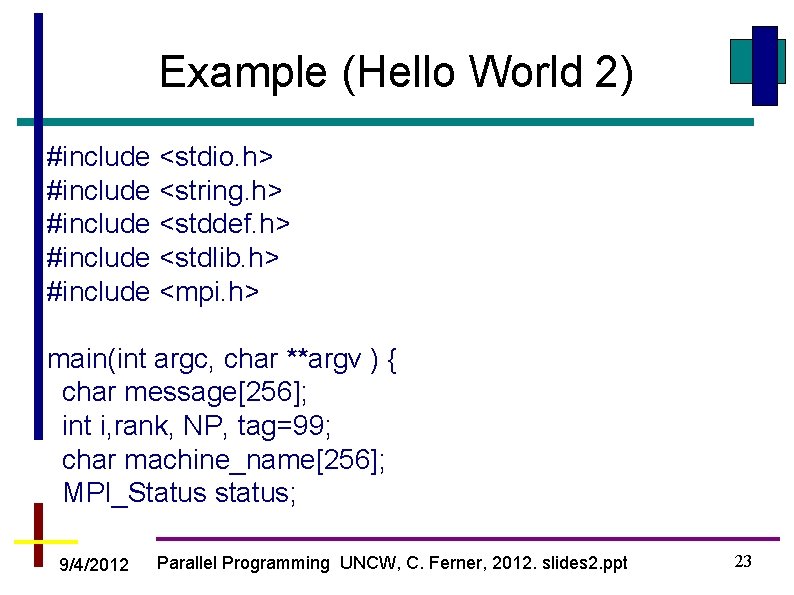

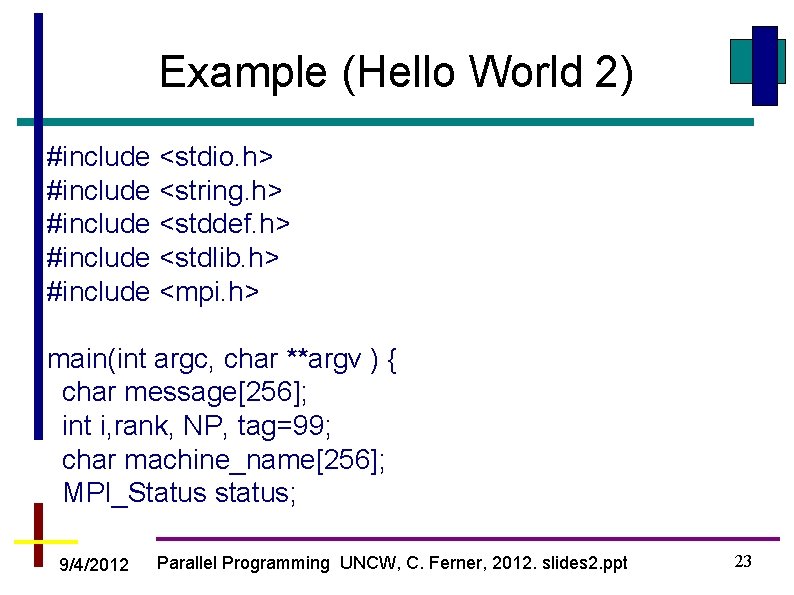

Example (Hello World 2)

    #include <stdio.h>
    #include <string.h>
    #include <stddef.h>
    #include <stdlib.h>
    #include <unistd.h>   /* for gethostname */
    #include <mpi.h>

    int main(int argc, char **argv)
    {
        char message[256];
        int i, rank, NP, tag = 99;
        char machine_name[256];
        MPI_Status status;

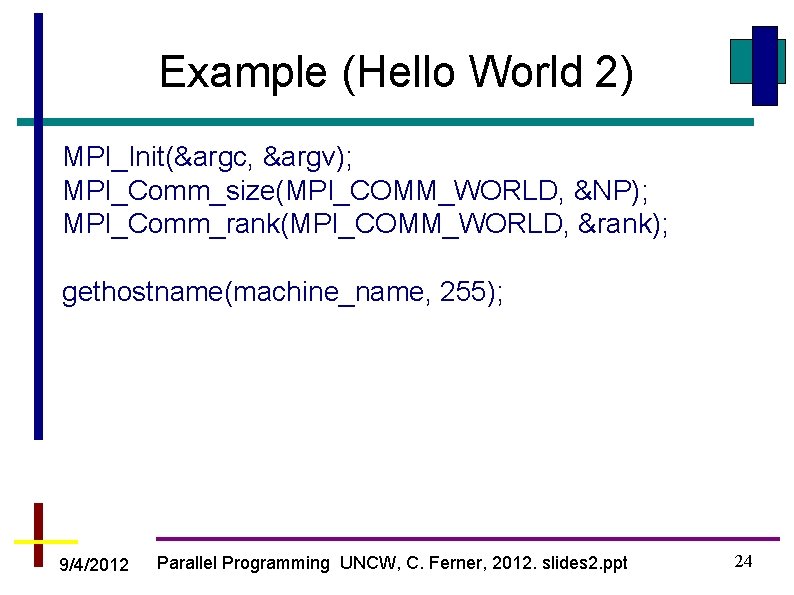

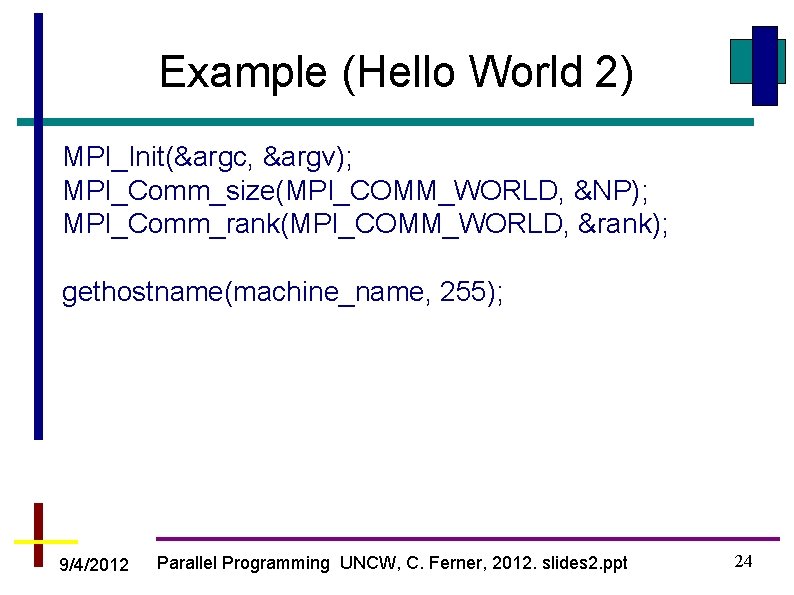

Example (Hello World 2)

        MPI_Init(&argc, &argv);
        MPI_Comm_size(MPI_COMM_WORLD, &NP);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);

        gethostname(machine_name, 255);

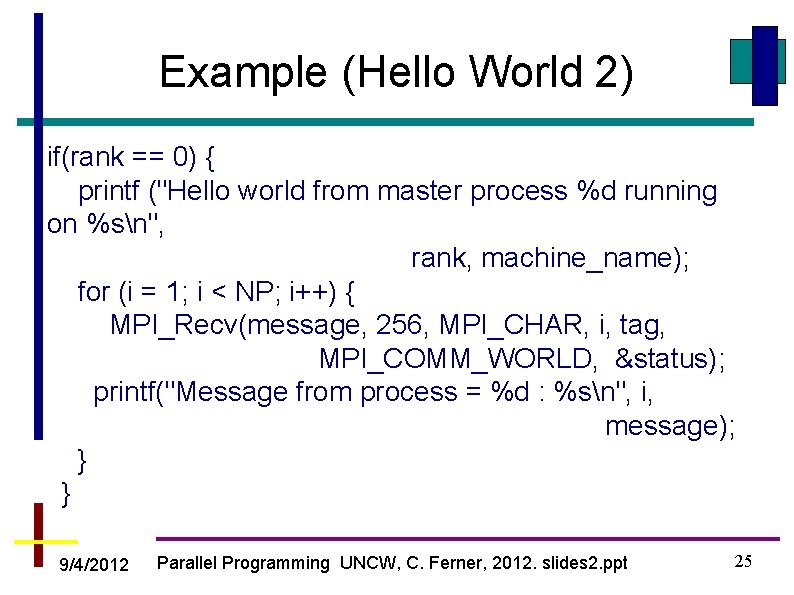

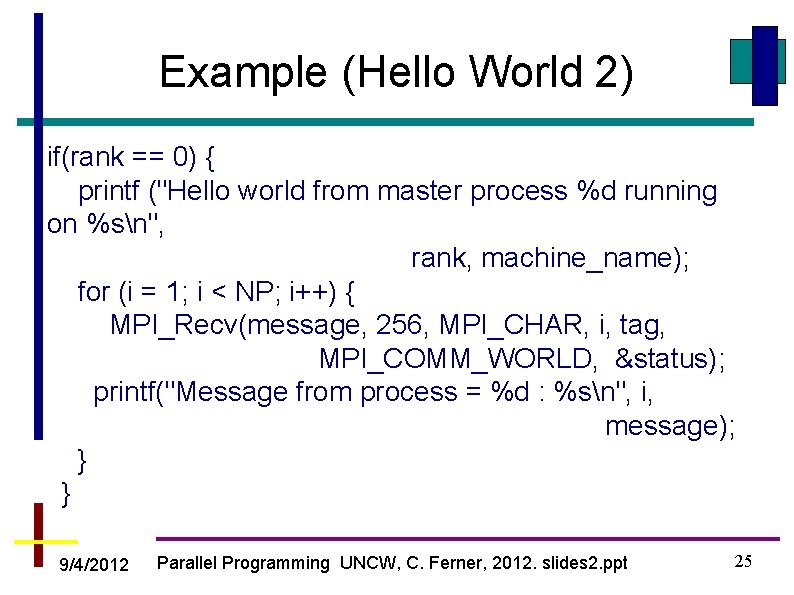

Example (Hello World 2)

        if (rank == 0) {
            printf("Hello world from master process %d running on %s\n", rank, machine_name);

            // The master receives one message from each other process, in rank order.
            for (i = 1; i < NP; i++) {
                MPI_Recv(message, 256, MPI_CHAR, i, tag, MPI_COMM_WORLD, &status);
                printf("Message from process = %d : %s\n", i, message);
            }
        }

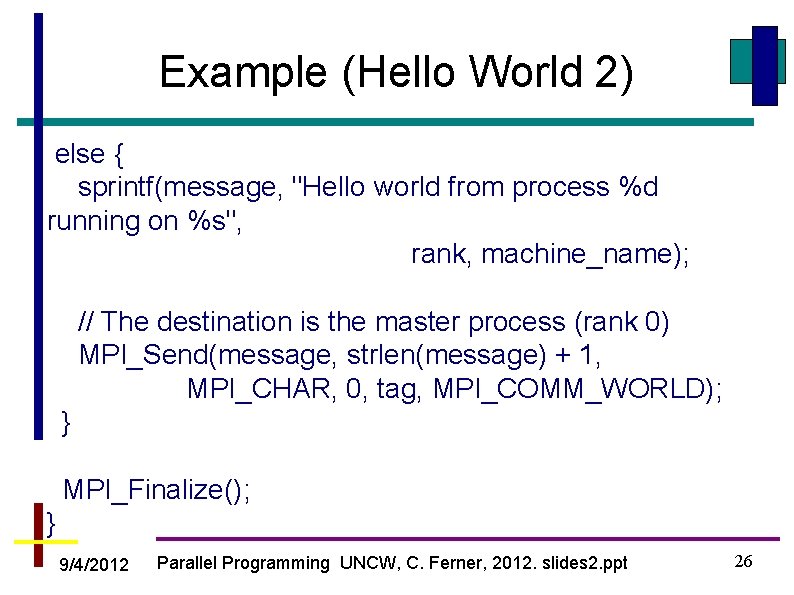

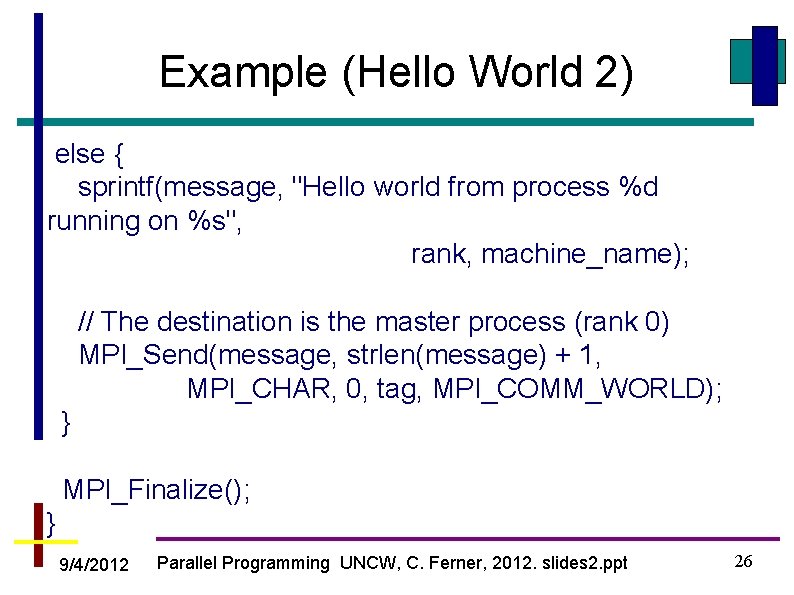

Example (Hello World 2)

        else {
            sprintf(message, "Hello world from process %d running on %s", rank, machine_name);

            // The destination is the master process (rank 0)
            MPI_Send(message, strlen(message) + 1, MPI_CHAR, 0, tag, MPI_COMM_WORLD);
        }

        MPI_Finalize();
        return 0;
    }

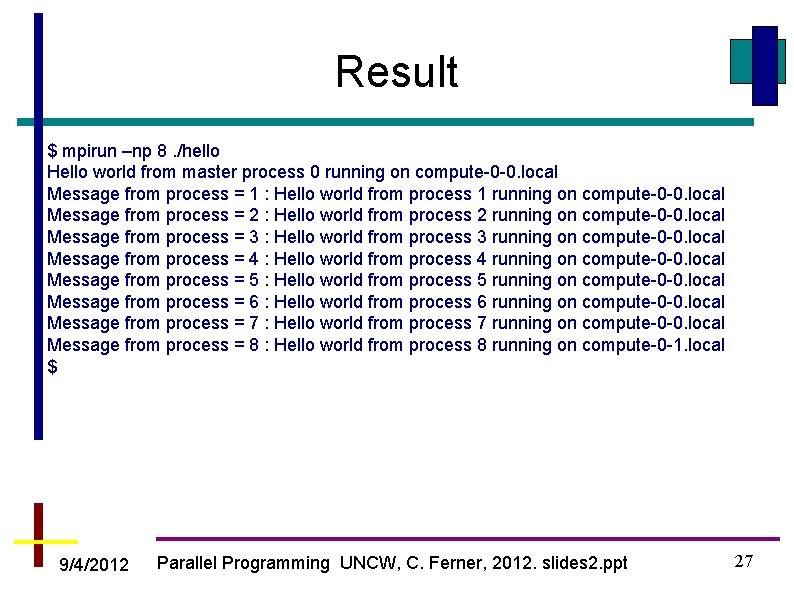

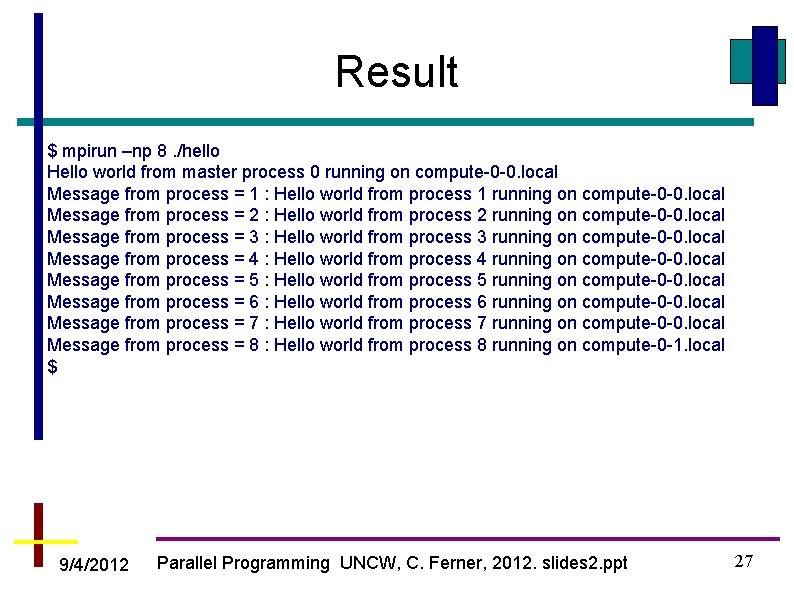

Result

    $ mpirun -np 8 ./hello
    Hello world from master process 0 running on compute-0-0.local
    Message from process = 1 : Hello world from process 1 running on compute-0-0.local
    Message from process = 2 : Hello world from process 2 running on compute-0-0.local
    Message from process = 3 : Hello world from process 3 running on compute-0-0.local
    Message from process = 4 : Hello world from process 4 running on compute-0-0.local
    Message from process = 5 : Hello world from process 5 running on compute-0-0.local
    Message from process = 6 : Hello world from process 6 running on compute-0-0.local
    Message from process = 7 : Hello world from process 7 running on compute-0-0.local
    Message from process = 8 : Hello world from process 8 running on compute-0-1.local
    $

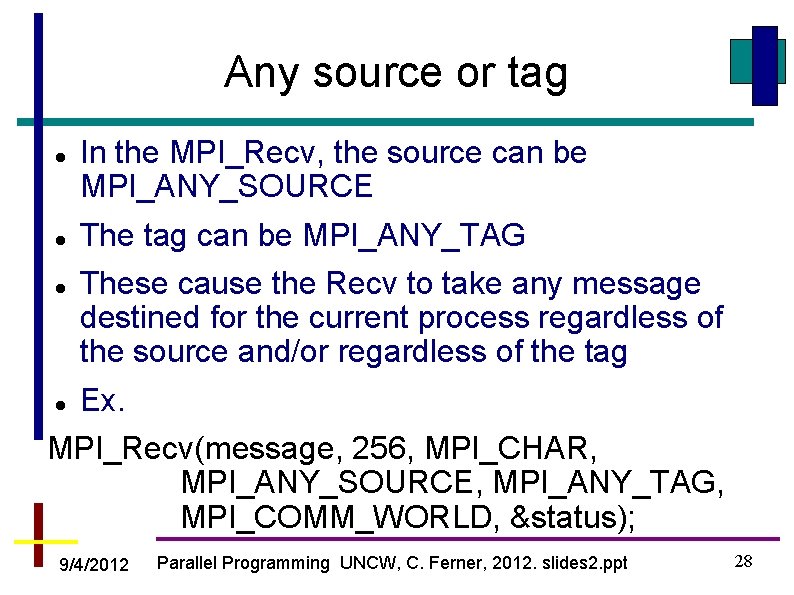

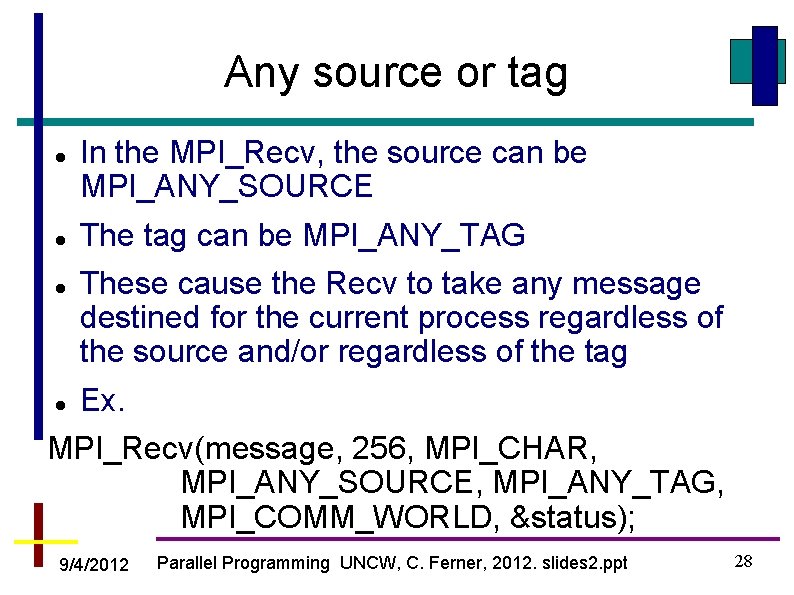

Any source or tag

- In MPI_Recv, the source can be MPI_ANY_SOURCE.
- The tag can be MPI_ANY_TAG.

These cause the receive to take any message destined for the current process, regardless of the source and/or regardless of the tag. For example:

    MPI_Recv(message, 256, MPI_CHAR, MPI_ANY_SOURCE, MPI_ANY_TAG, MPI_COMM_WORLD, &status);

![Another Example array source int array100 rank 0 fills the array with Another Example (array) source int array[100]; … // rank 0 fills the array with](https://slidetodoc.com/presentation_image_h/00f97aa9e23106c1270fb4ba7a09e7a4/image-29.jpg)

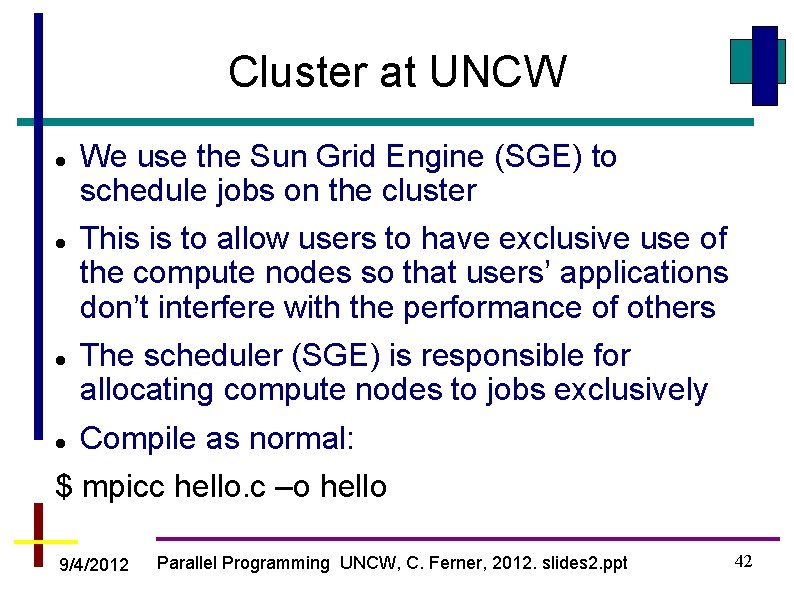

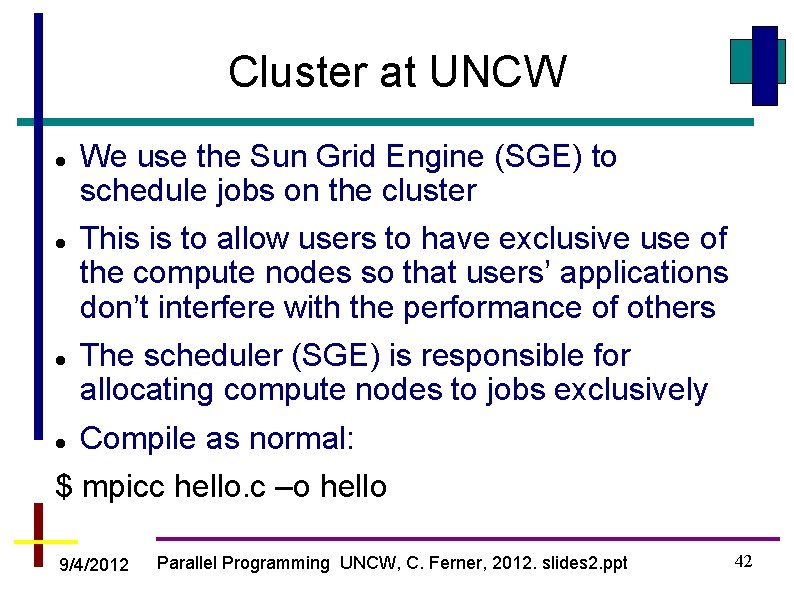

Another Example (array)

    int array[100];
    … // rank 0 fills the array with data

    if (rank == 0)
        MPI_Send(array, 100, MPI_INT, 1, 0, MPI_COMM_WORLD);
    else if (rank == 1)
        MPI_Recv(array, 100, MPI_INT, 0, 0, MPI_COMM_WORLD, &status);

In both calls, 100 is the number of elements. In MPI_Send, 1 is the destination rank and 0 is the tag. In MPI_Recv, the first 0 is the source rank and the second 0 is the tag.

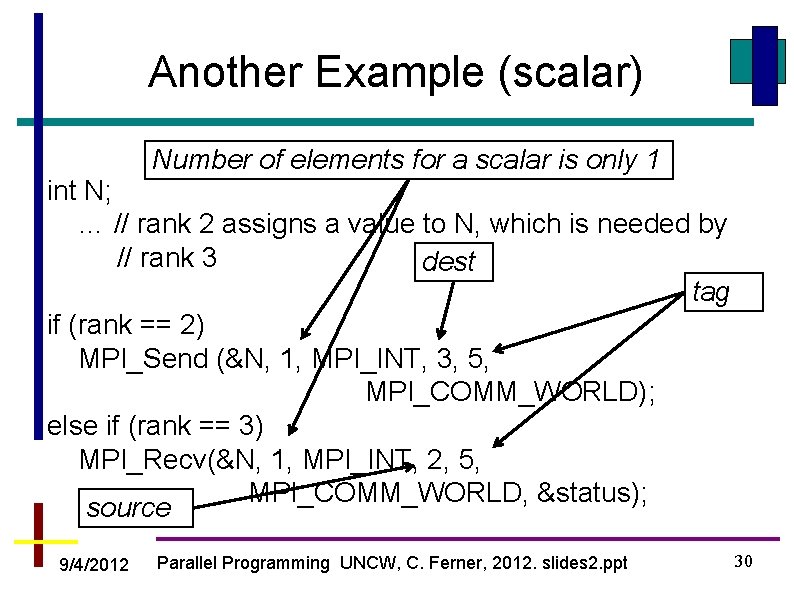

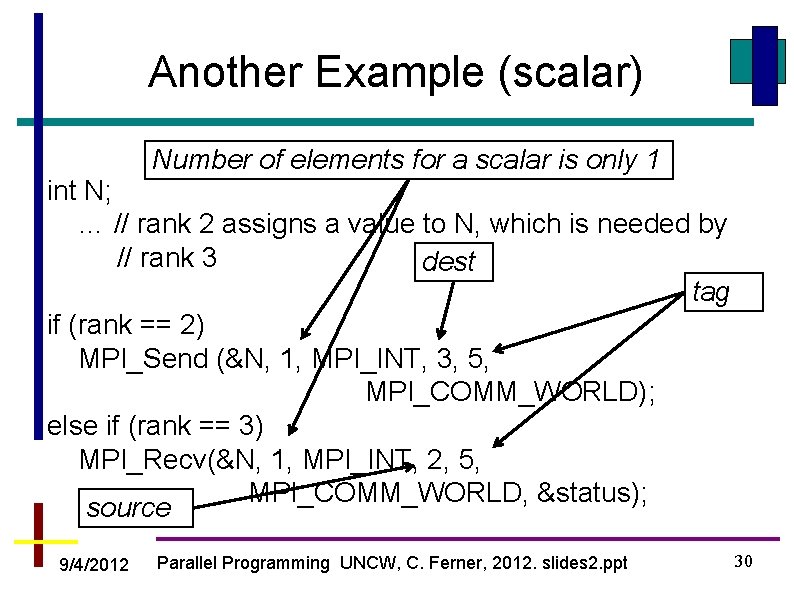

Another Example (scalar)

The number of elements for a scalar is only 1.

    int N;
    … // rank 2 assigns a value to N, which is needed by rank 3

    if (rank == 2)
        MPI_Send(&N, 1, MPI_INT, 3, 5, MPI_COMM_WORLD);
    else if (rank == 3)
        MPI_Recv(&N, 1, MPI_INT, 2, 5, MPI_COMM_WORLD, &status);

In MPI_Send, 3 is the destination rank and 5 is the tag. In MPI_Recv, 2 is the source rank and 5 is the tag.

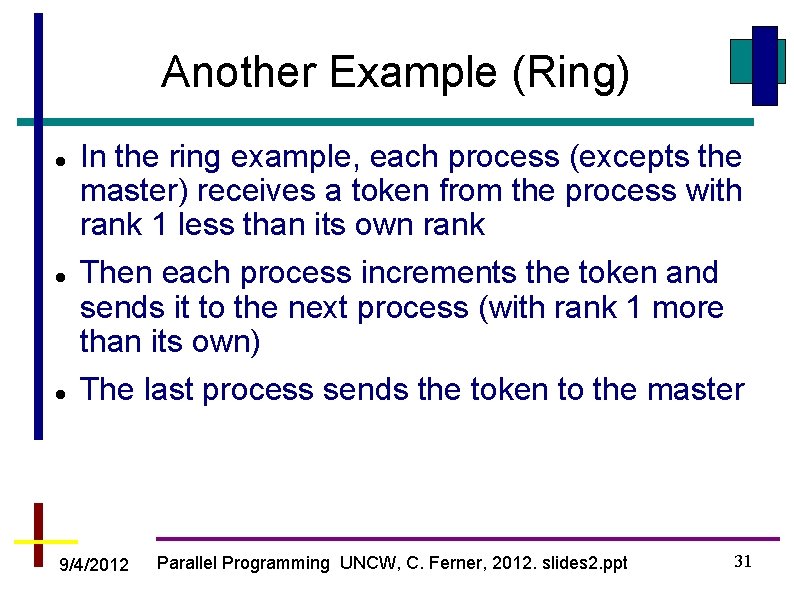

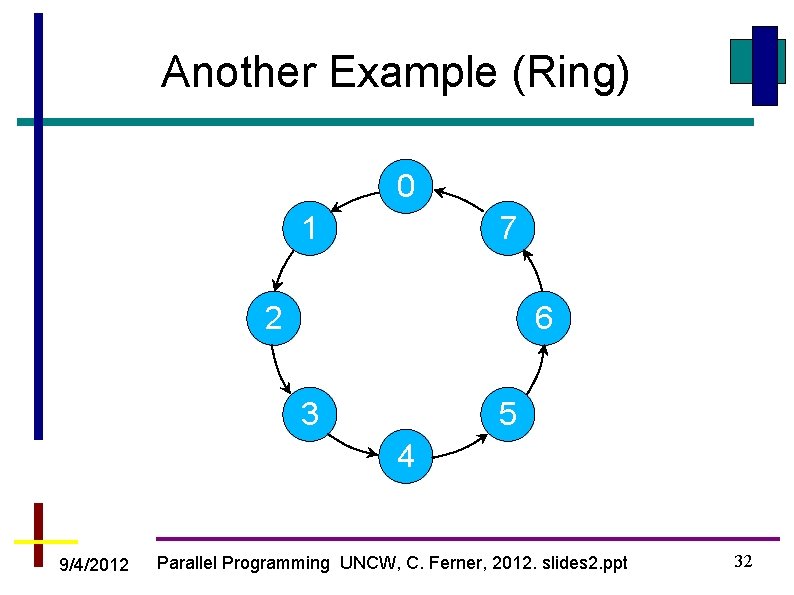

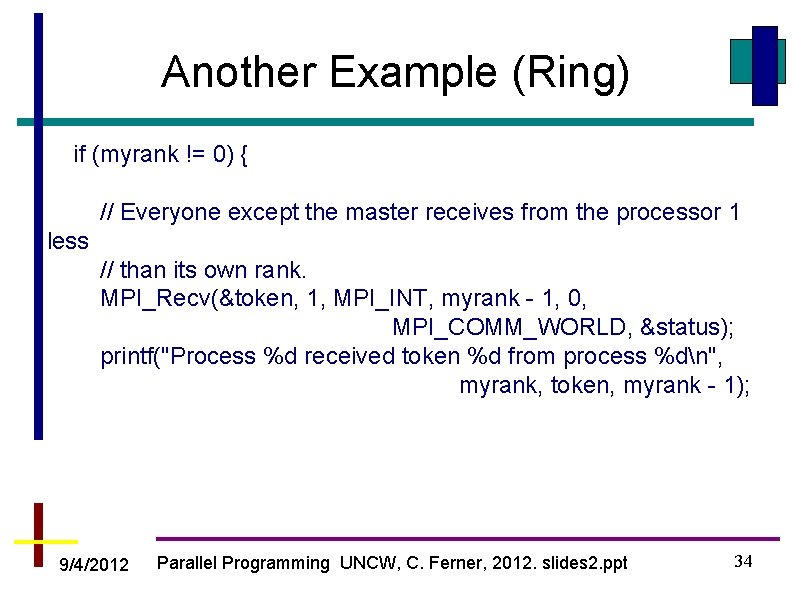

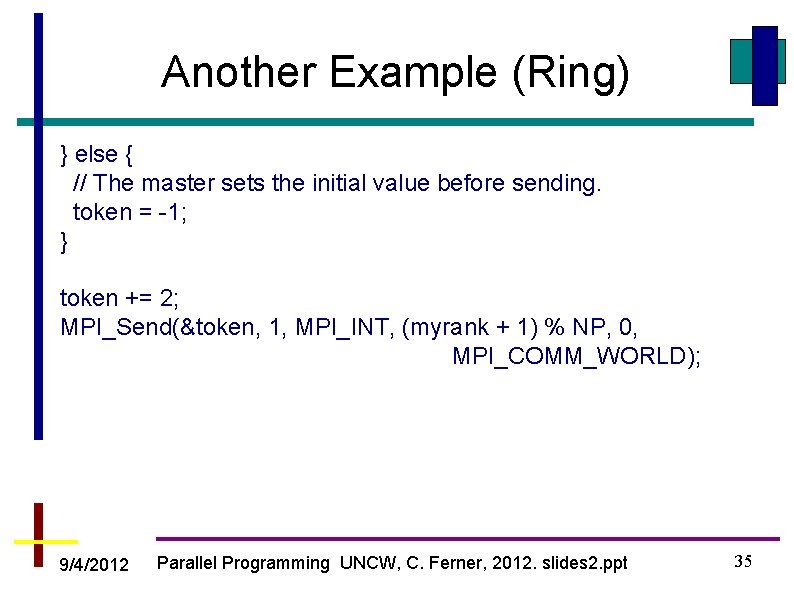

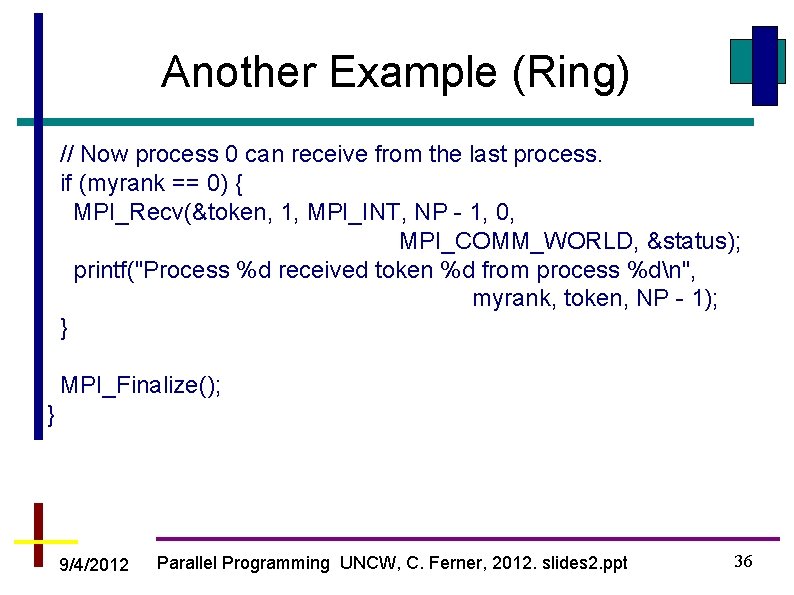

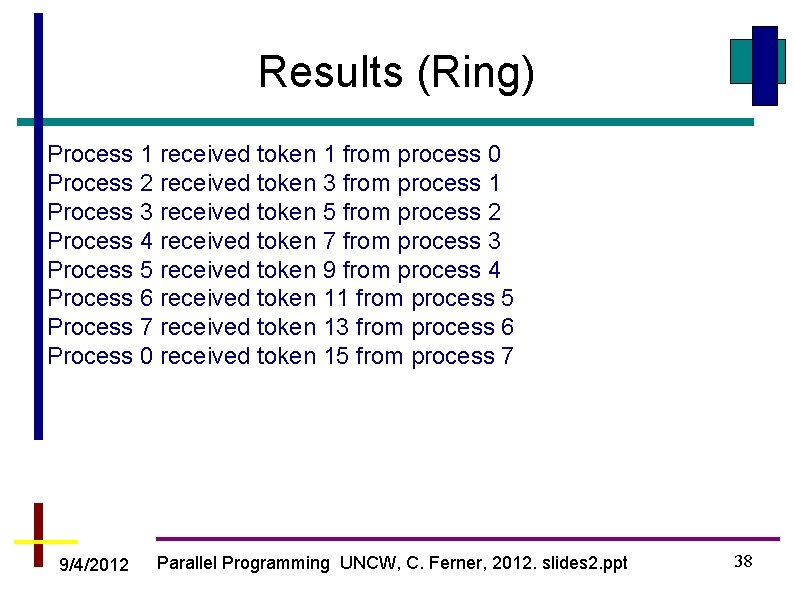

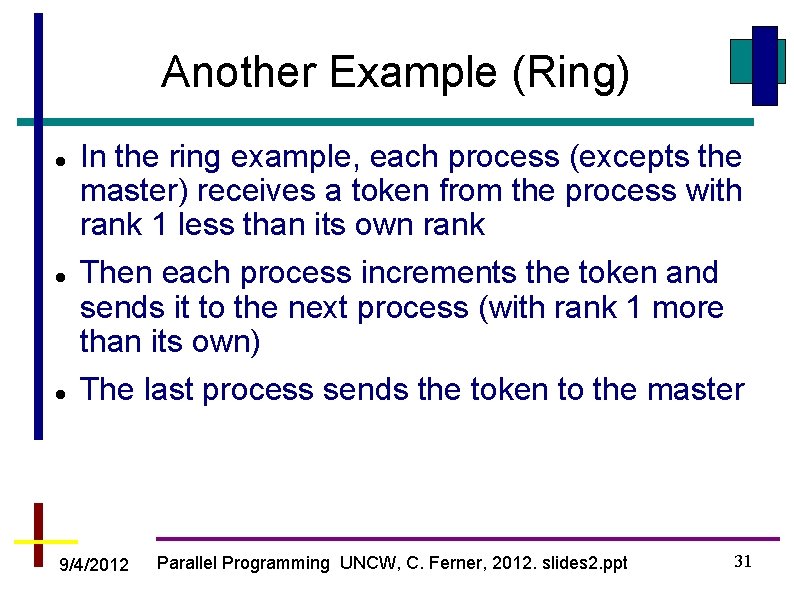

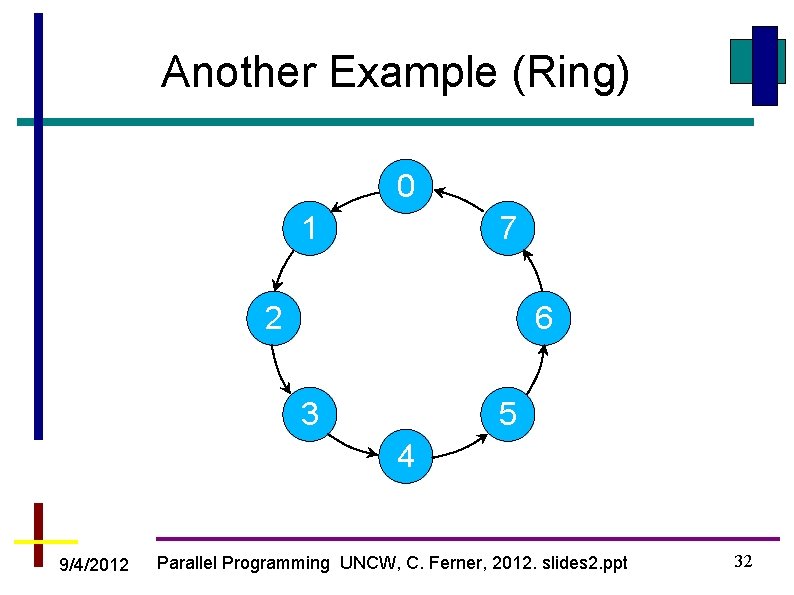

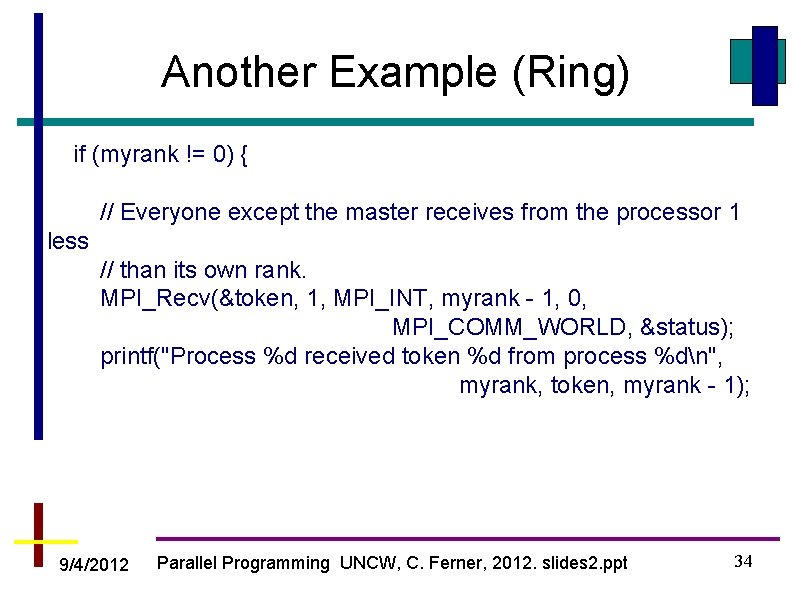

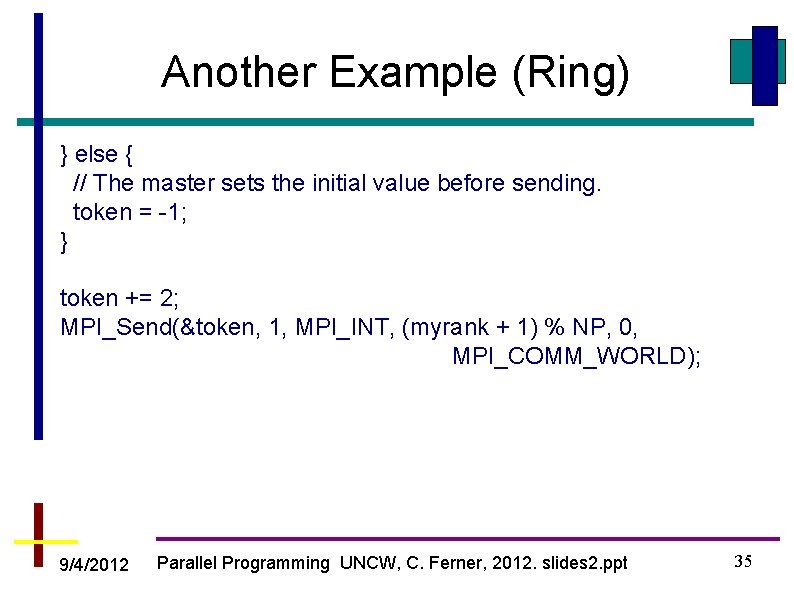

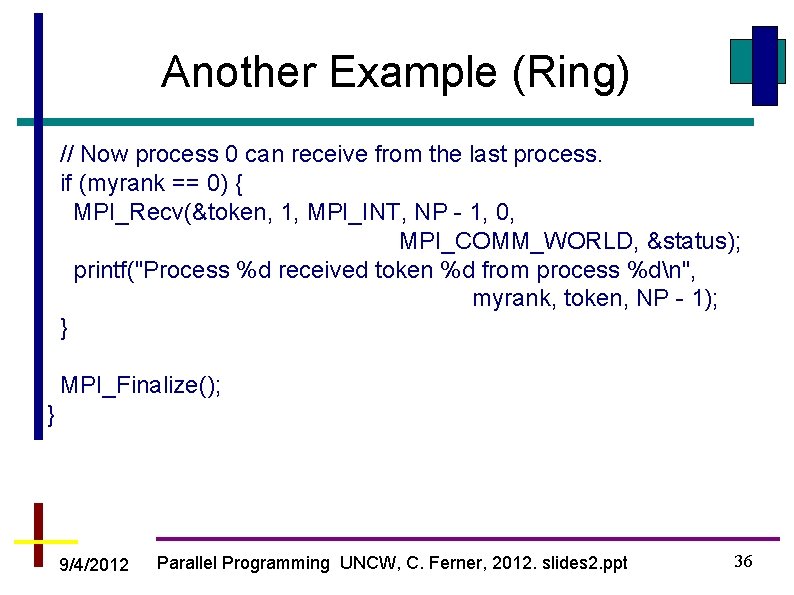

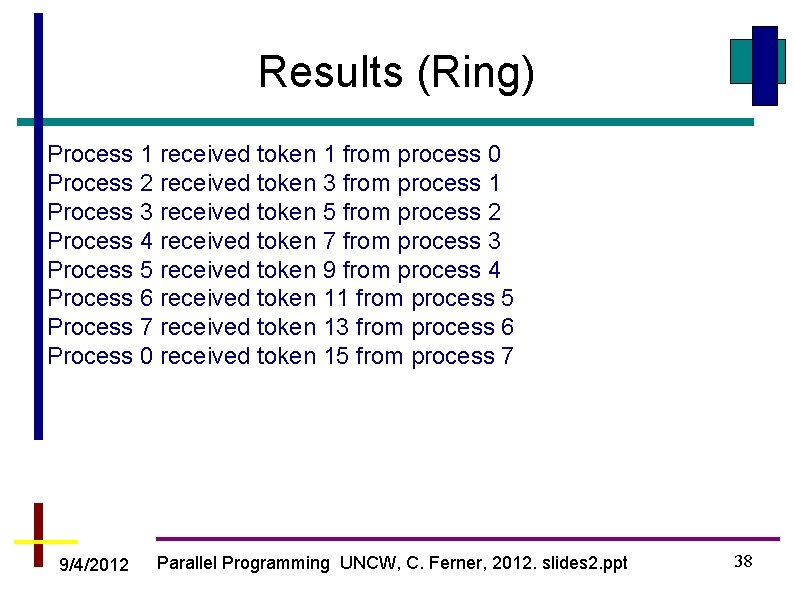

Another Example (Ring)

- In the ring example, each process (except the master) receives a token from the process with rank 1 less than its own rank.
- Then each process increments the token and sends it to the next process (with rank 1 more than its own).
- The last process sends the token to the master.

Another Example (Ring)

[Diagram: eight processes, ranks 0 through 7, arranged in a ring.]

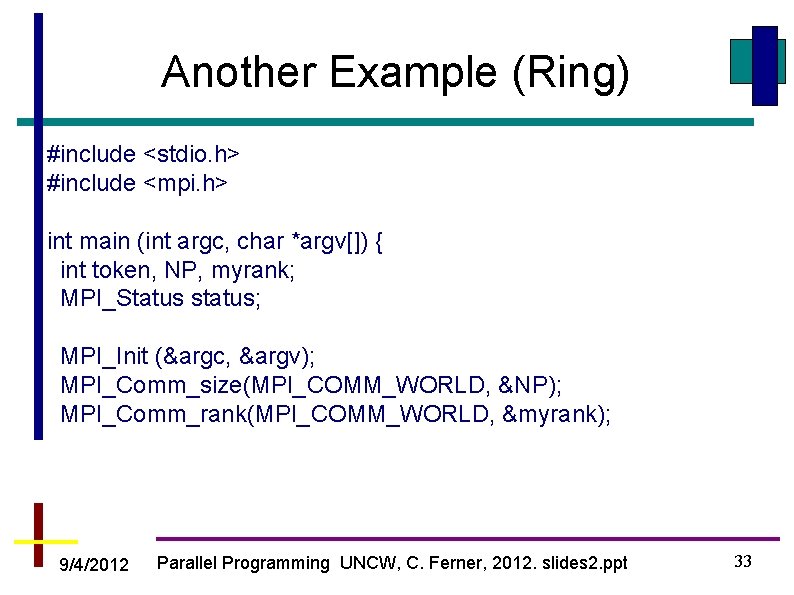

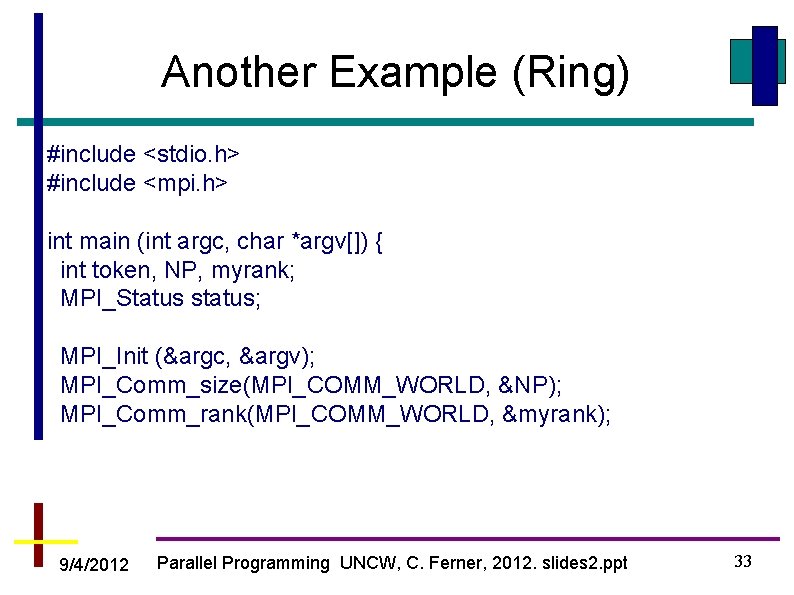

Another Example (Ring)

    #include <stdio.h>
    #include <mpi.h>

    int main(int argc, char *argv[])
    {
        int token, NP, myrank;
        MPI_Status status;

        MPI_Init(&argc, &argv);
        MPI_Comm_size(MPI_COMM_WORLD, &NP);
        MPI_Comm_rank(MPI_COMM_WORLD, &myrank);

Another Example (Ring)

        if (myrank != 0) {
            // Everyone except the master receives from the process 1 less
            // than its own rank.
            MPI_Recv(&token, 1, MPI_INT, myrank - 1, 0, MPI_COMM_WORLD, &status);
            printf("Process %d received token %d from process %d\n", myrank, token, myrank - 1);

Another Example (Ring)

        } else {
            // The master sets the initial value before sending.
            token = -1;
        }

        token += 2;
        MPI_Send(&token, 1, MPI_INT, (myrank + 1) % NP, 0, MPI_COMM_WORLD);

Another Example (Ring)

        // Now process 0 can receive from the last process.
        if (myrank == 0) {
            MPI_Recv(&token, 1, MPI_INT, NP - 1, 0, MPI_COMM_WORLD, &status);
            printf("Process %d received token %d from process %d\n", myrank, token, NP - 1);
        }

        MPI_Finalize();
        return 0;
    }


Results (Ring)

    Process 1 received token 1 from process 0
    Process 2 received token 3 from process 1
    Process 3 received token 5 from process 2
    Process 4 received token 7 from process 3
    Process 5 received token 9 from process 4
    Process 6 received token 11 from process 5
    Process 7 received token 13 from process 6
    Process 0 received token 15 from process 7

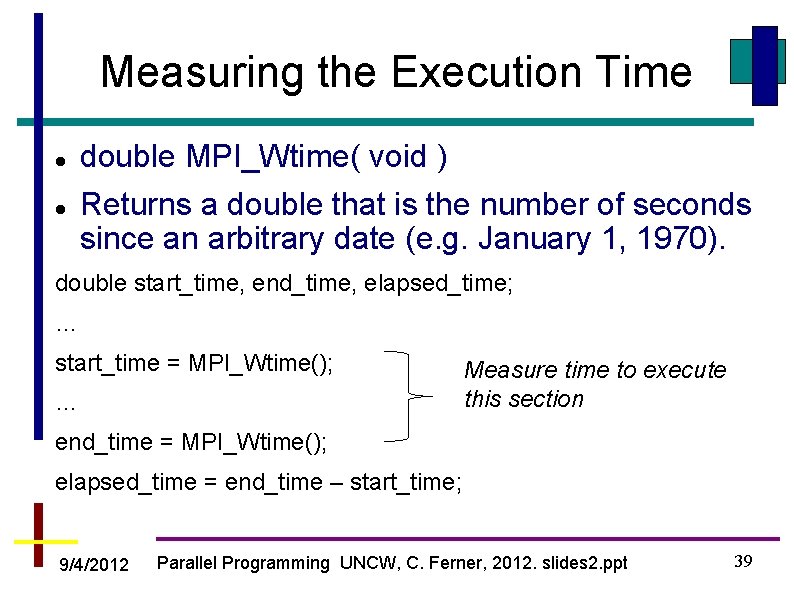

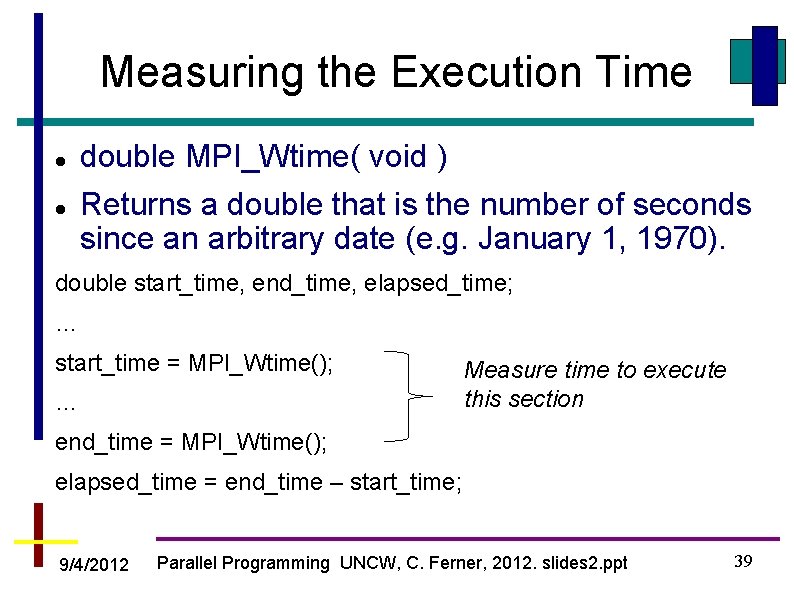

Measuring the Execution Time

    double MPI_Wtime(void)

Returns a double that is the number of seconds since an arbitrary time in the past (e.g., January 1, 1970).

    double start_time, end_time, elapsed_time;
    …
    start_time = MPI_Wtime();
    … // Measure time to execute this section
    end_time = MPI_Wtime();
    elapsed_time = end_time - start_time;

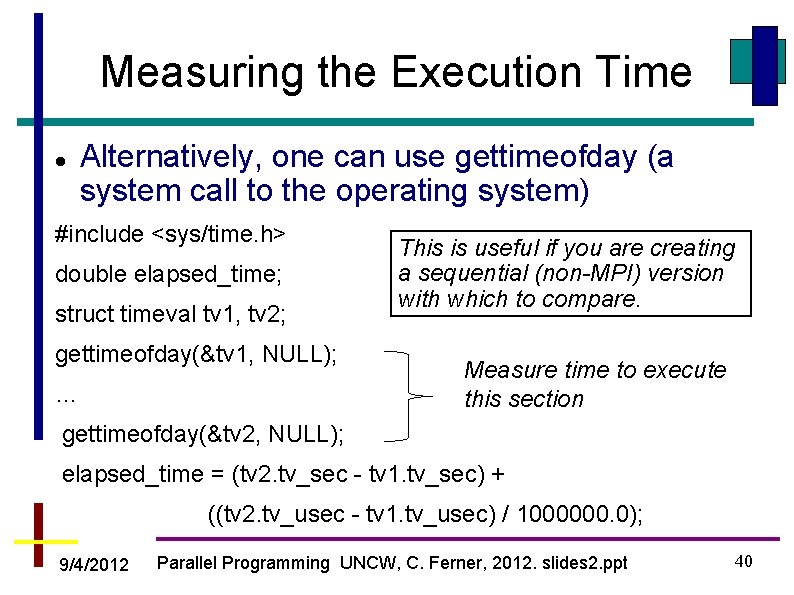

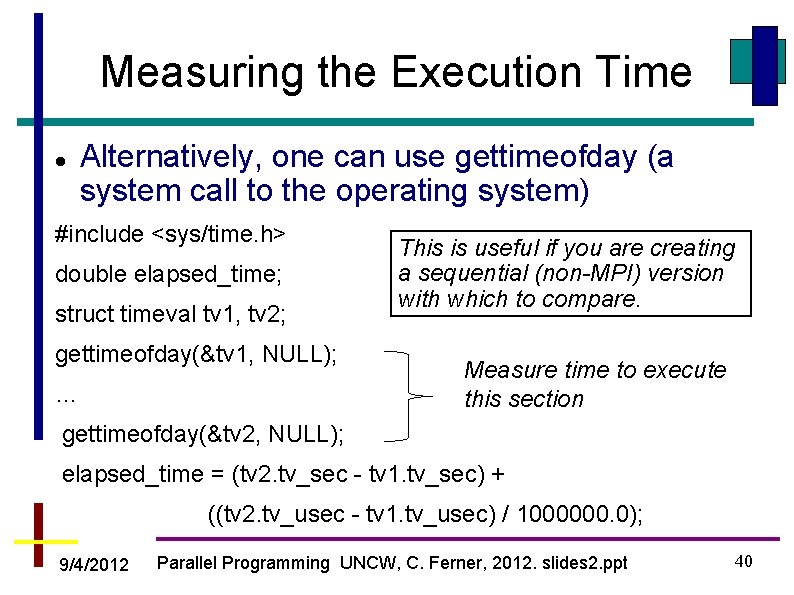

Measuring the Execution Time

Alternatively, one can use gettimeofday (a system call to the operating system). This is useful if you are creating a sequential (non-MPI) version with which to compare.

    #include <sys/time.h>

    double elapsed_time;
    struct timeval tv1, tv2;

    gettimeofday(&tv1, NULL);
    … // Measure time to execute this section
    gettimeofday(&tv2, NULL);

    elapsed_time = (tv2.tv_sec - tv1.tv_sec) + ((tv2.tv_usec - tv1.tv_usec) / 1000000.0);

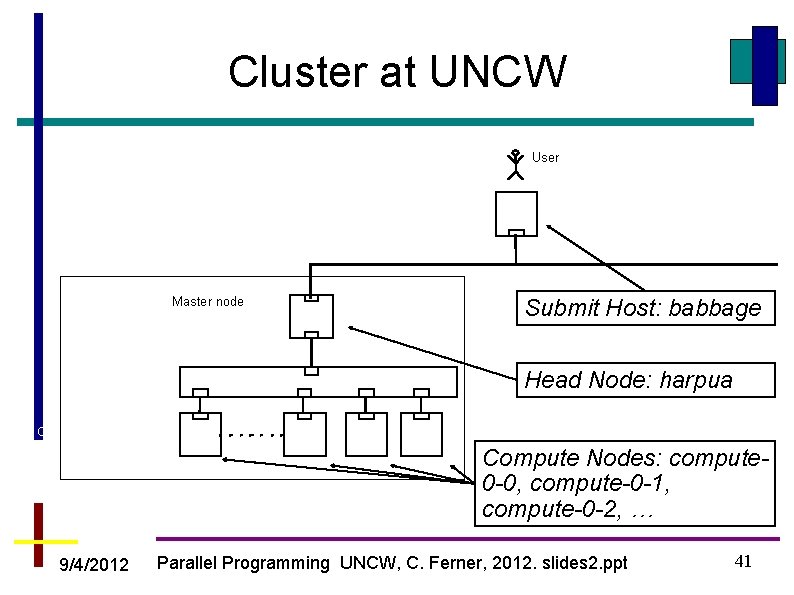

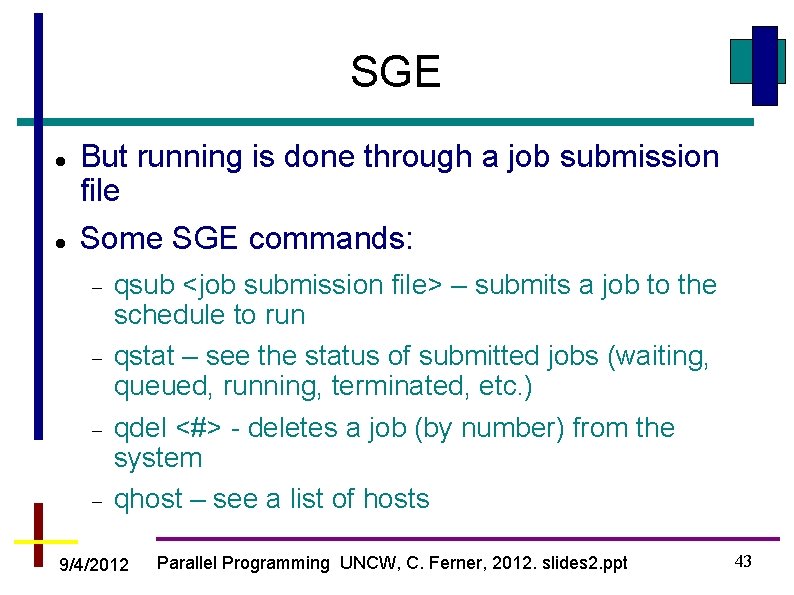

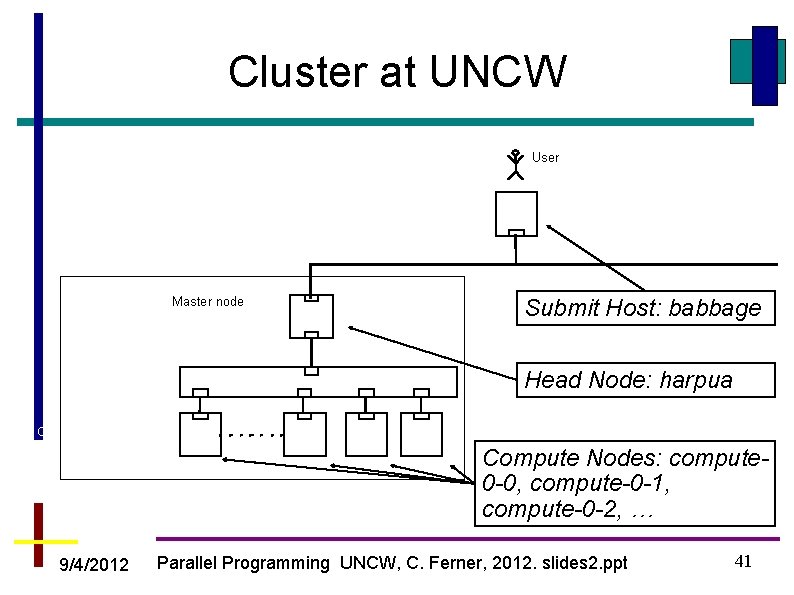

Cluster at UNCW

[Diagram: user computers connect to the dedicated cluster; the master node's Ethernet interface connects through a switch to the compute nodes.]

- Submit host: babbage
- Head node: harpua
- Compute nodes: compute-0-0, compute-0-1, compute-0-2, …

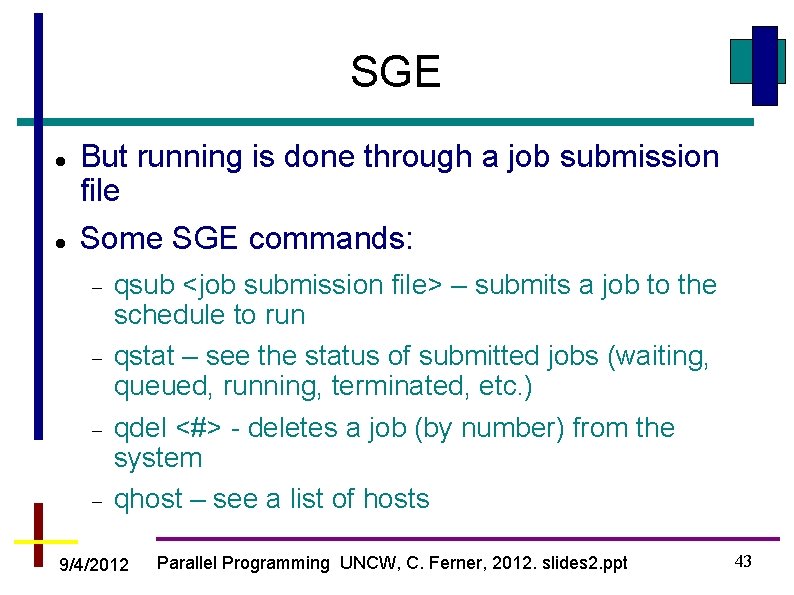

Cluster at UNCW

- We use the Sun Grid Engine (SGE) to schedule jobs on the cluster.
- This allows users to have exclusive use of the compute nodes, so that one user's application does not interfere with the performance of another's.
- The scheduler (SGE) is responsible for allocating compute nodes to jobs exclusively.

Compile as normal:

    $ mpicc hello.c -o hello

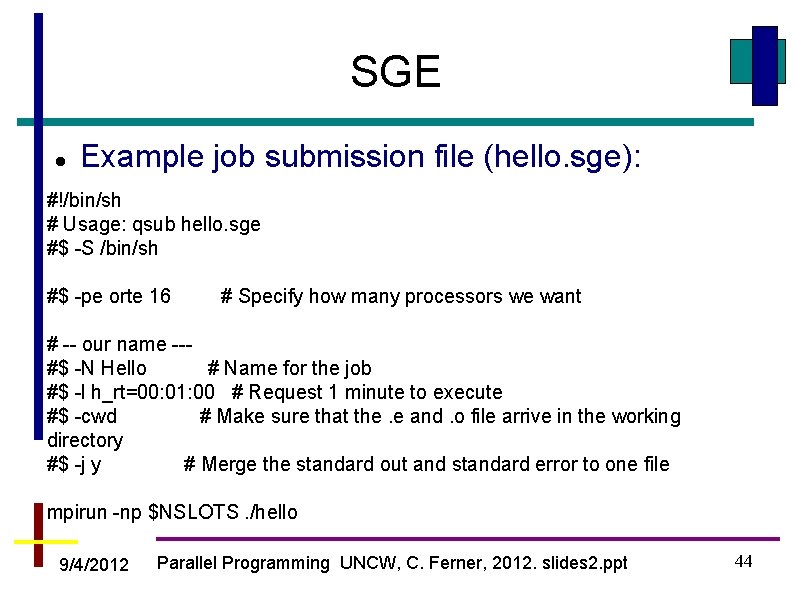

SGE

Running, however, is done through a job submission file.

Some SGE commands:

- qsub <job submission file>: submits a job to the scheduler to run
- qstat: shows the status of submitted jobs (waiting, queued, running, terminated, etc.)
- qdel <#>: deletes a job (by number) from the system
- qhost: shows a list of hosts

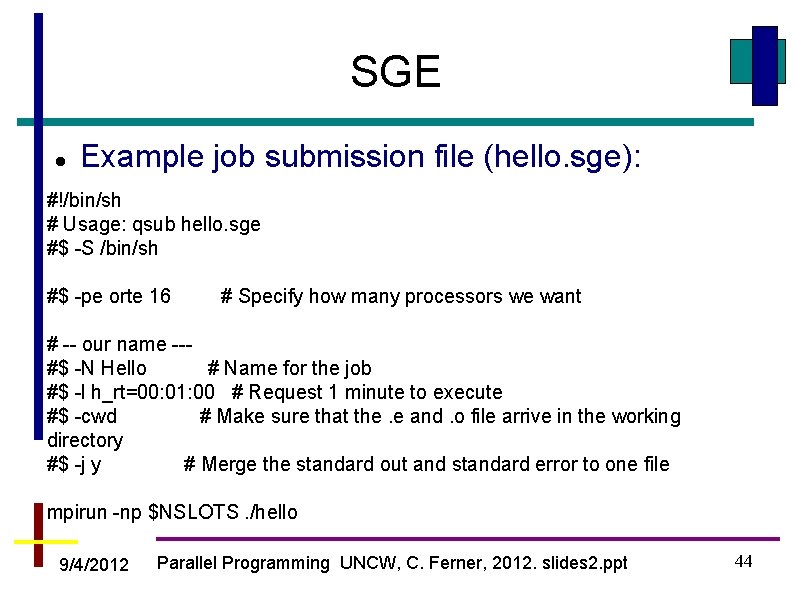

SGE

Example job submission file (hello.sge):

    #!/bin/sh
    # Usage: qsub hello.sge
    #$ -S /bin/sh
    #$ -pe orte 16        # Specify how many processors we want
    # -- our name --
    #$ -N Hello           # Name for the job
    #$ -l h_rt=00:01:00   # Request 1 minute to execute
    #$ -cwd               # Make sure that the .e and .o file arrive in the working directory
    #$ -j y               # Merge the standard out and standard error to one file
    mpirun -np $NSLOTS ./hello

SGE

Example job submission file (hello.sge):

    #!/bin/sh
    # Usage: qsub hello.sge
    #$ -S /bin/sh
    #$ -pe orte 16        # Specify how many processors we want

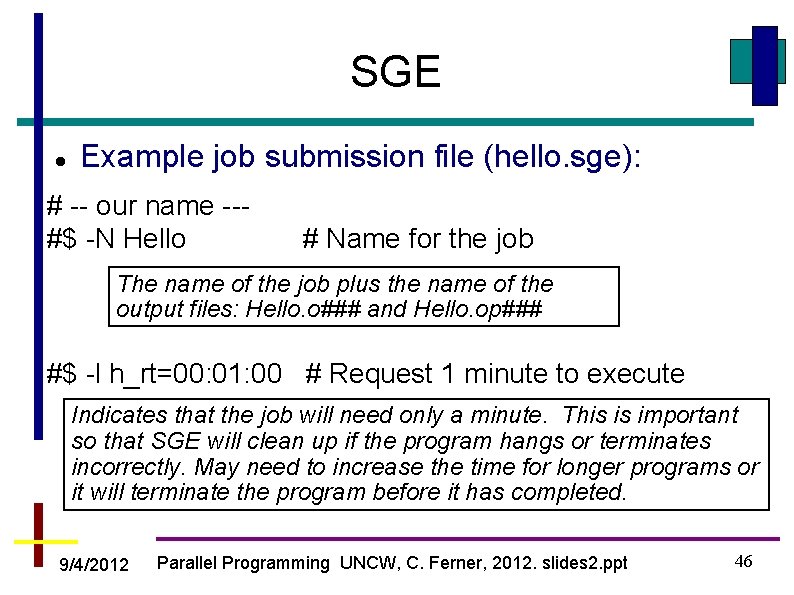

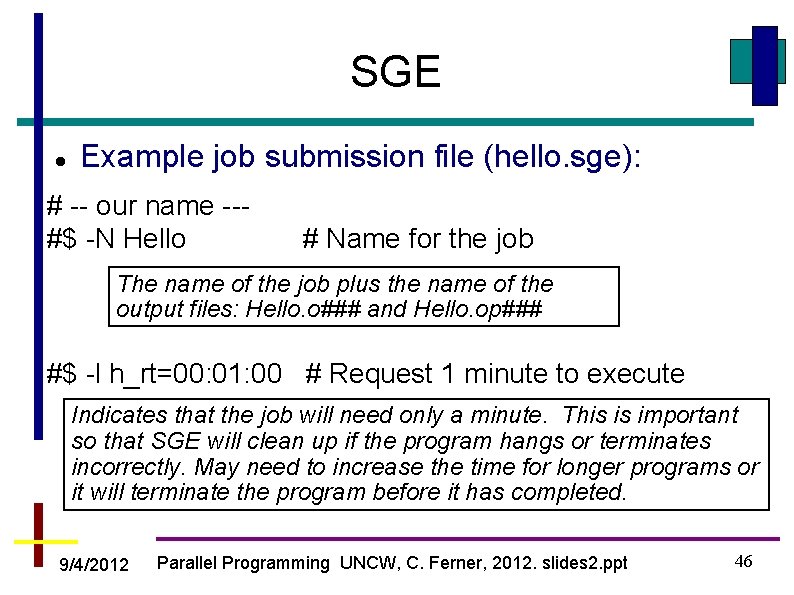

SGE

Example job submission file (hello.sge):

    # -- our name --
    #$ -N Hello           # Name for the job

This sets the name of the job, which is also used to name the output files: Hello.o### and Hello.po###.

    #$ -l h_rt=00:01:00   # Request 1 minute to execute

This indicates that the job will need only a minute. It is important so that SGE will clean up if the program hangs or terminates incorrectly. You may need to increase the time for longer programs, or SGE will terminate the program before it has completed.

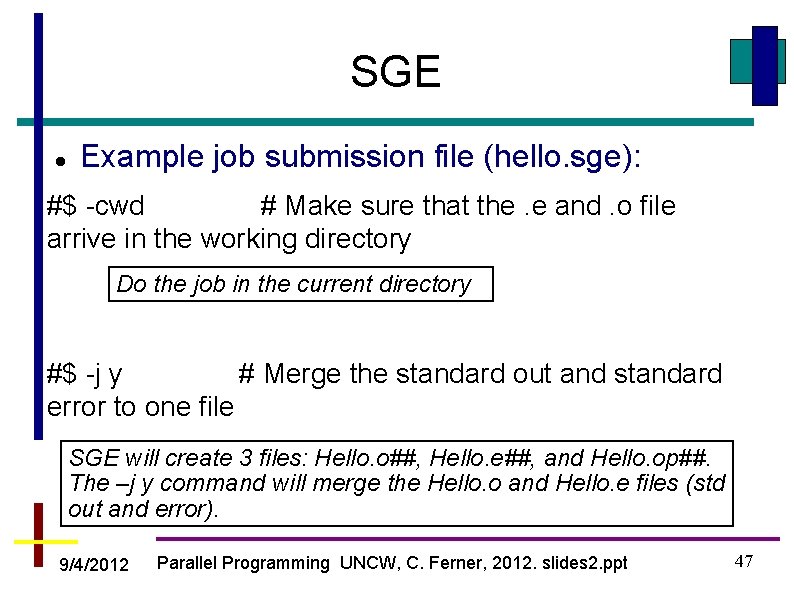

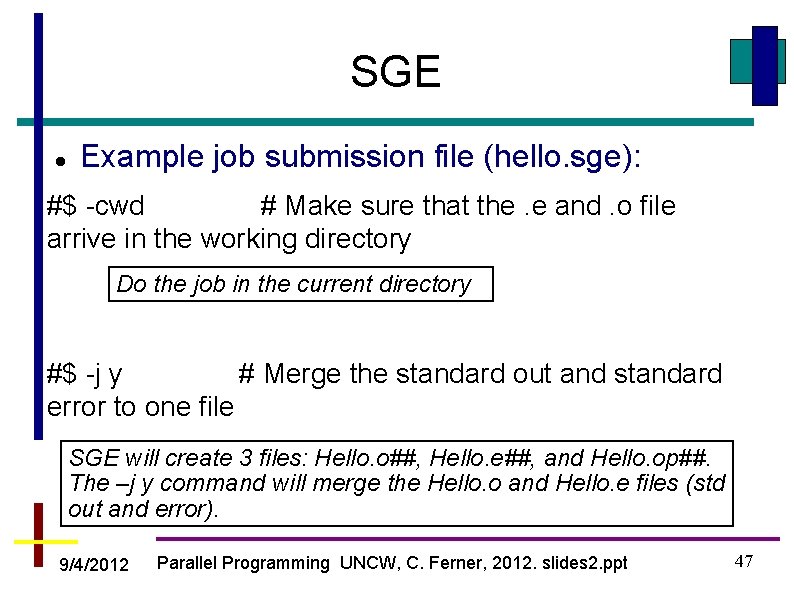

SGE

Example job submission file (hello.sge):

    #$ -cwd               # Make sure that the .e and .o file arrive in the working directory

Do the job in the current directory.

    #$ -j y               # Merge the standard out and standard error to one file

SGE will create 3 files: Hello.o##, Hello.e##, and Hello.po##. The -j y option will merge the Hello.o and Hello.e files (standard output and standard error).

SGE

Example job submission file (hello.sge):

    mpirun -np $NSLOTS ./hello

And finally, the command to run the MPI program. $NSLOTS is the same number given on the #$ -pe orte 16 line.

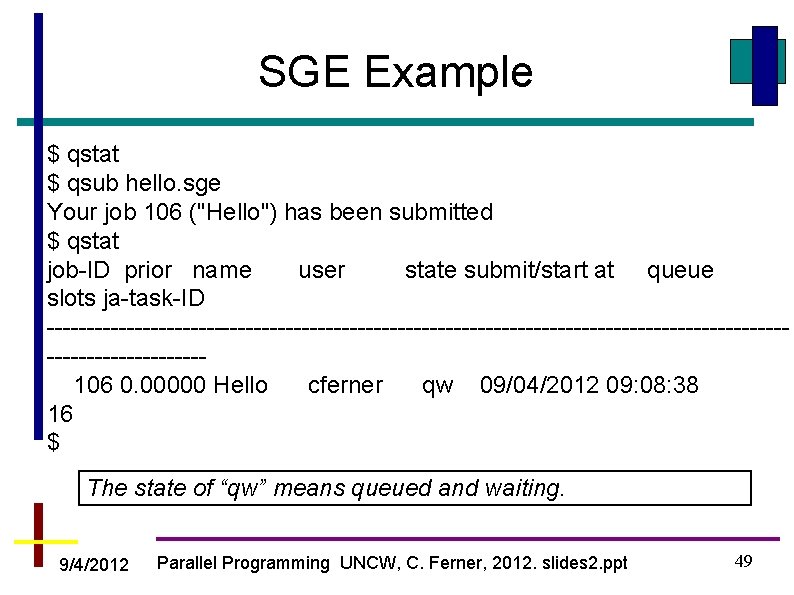

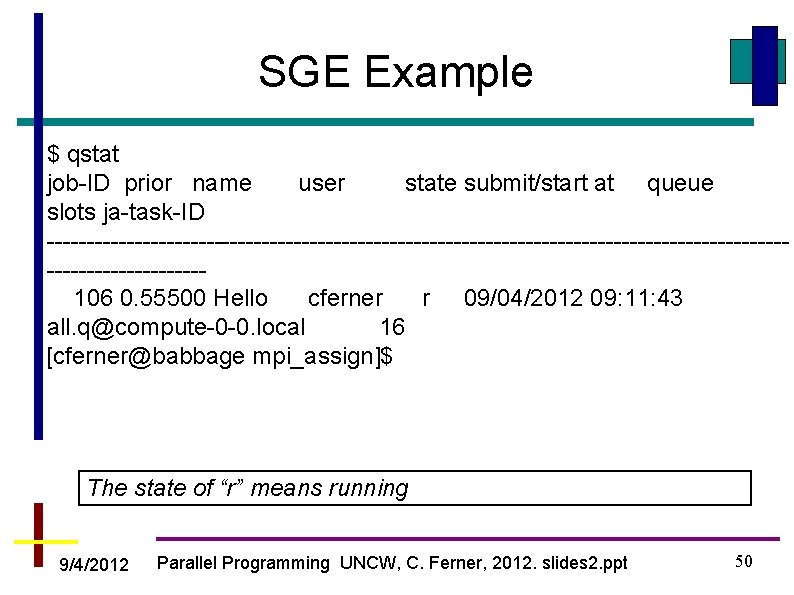

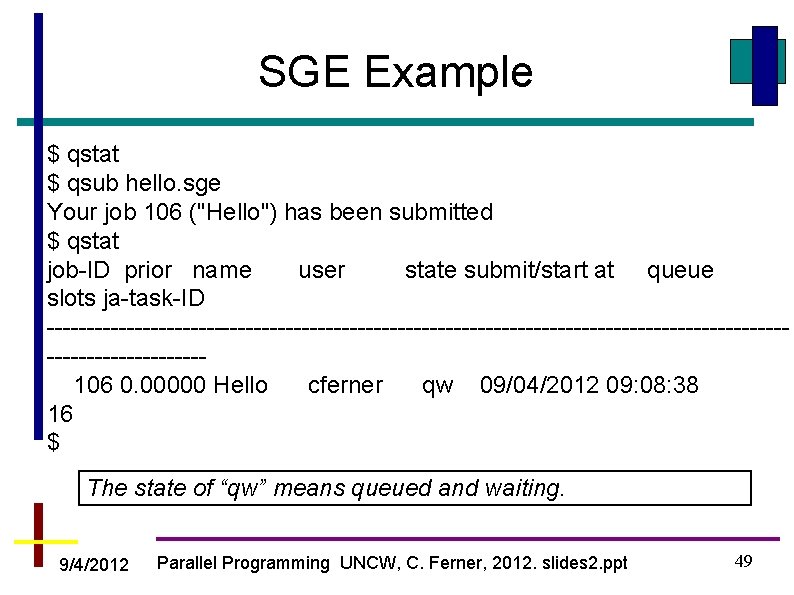

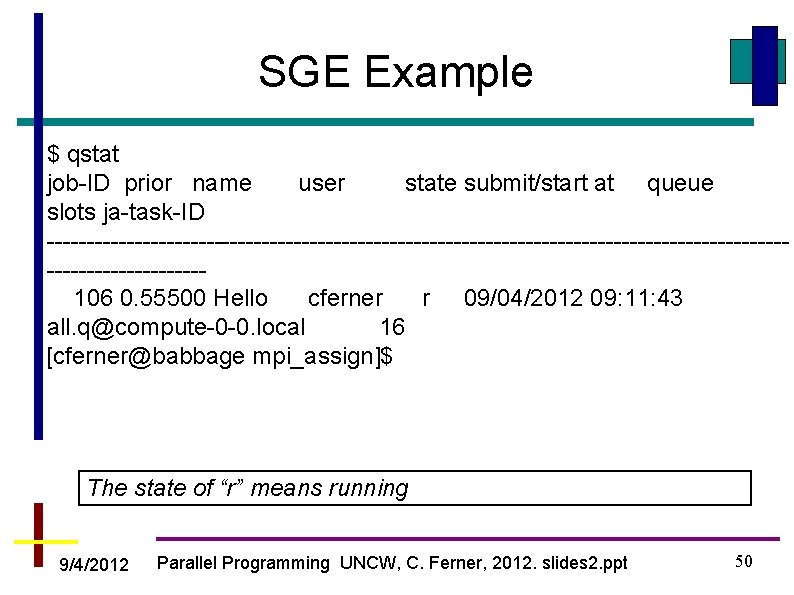

SGE Example

    $ qstat
    $ qsub hello.sge
    Your job 106 ("Hello") has been submitted
    $ qstat
    job-ID  prior    name   user     state  submit/start at      queue  slots  ja-task-ID
    ---------------------------------------------------------------------------------
    106     0.00000  Hello  cferner  qw     09/04/2012 09:08:38         16
    $

The state of "qw" means queued and waiting.

SGE Example

    $ qstat
    job-ID  prior    name   user     state  submit/start at      queue                          slots  ja-task-ID
    ----------------------------------------------------------------------------------------------------------
    106     0.55500  Hello  cferner  r      09/04/2012 09:11:43  all.q@compute-0-0.local        16
    [cferner@babbage mpi_assign]$

The state of "r" means running.

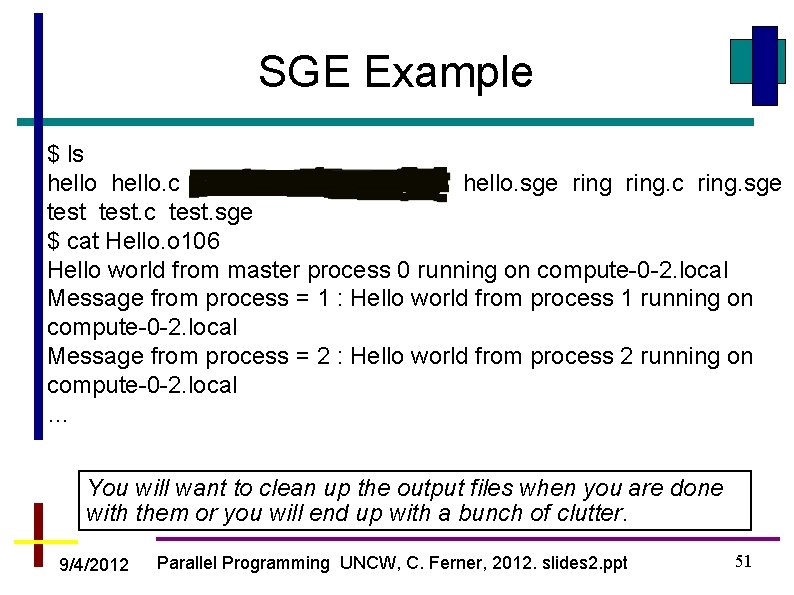

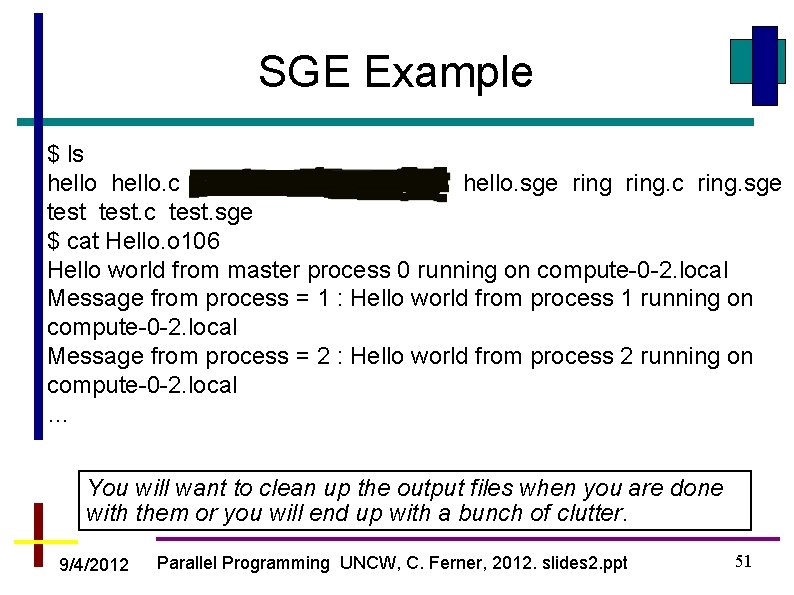

SGE Example

    $ ls
    hello.c  Hello.o106  Hello.po106  hello.sge  ring.c  ring.sge  test.c  test.sge
    $ cat Hello.o106
    Hello world from master process 0 running on compute-0-2.local
    Message from process = 1 : Hello world from process 1 running on compute-0-2.local
    Message from process = 2 : Hello world from process 2 running on compute-0-2.local
    …

You will want to clean up the output files when you are done with them, or you will end up with a bunch of clutter.

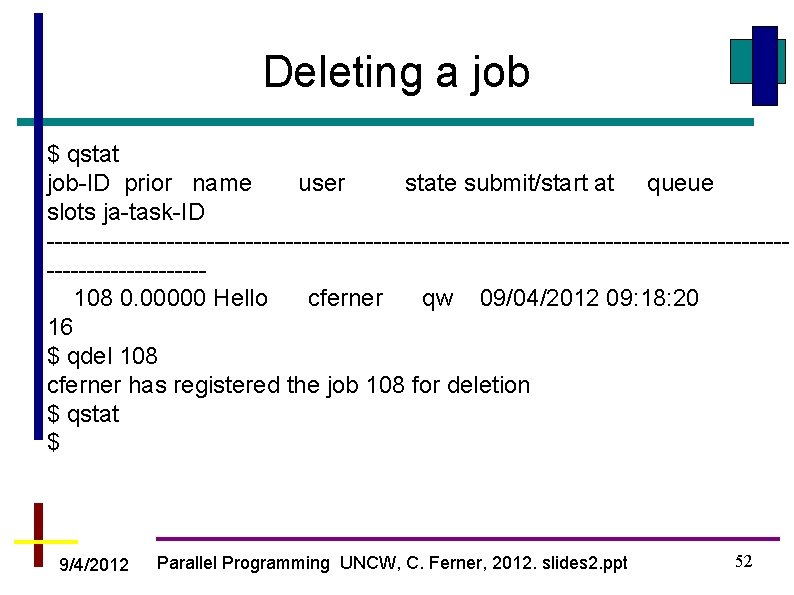

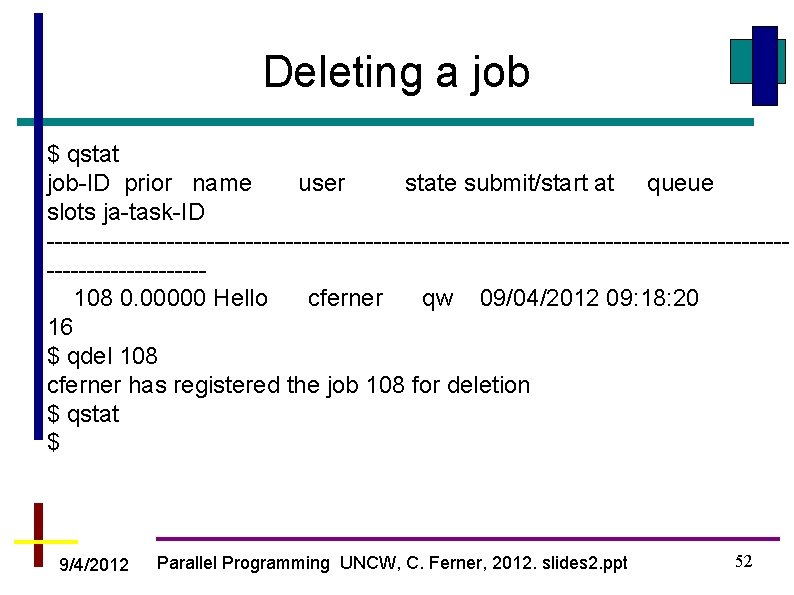

Deleting a job

    $ qstat
    job-ID  prior    name   user     state  submit/start at      queue  slots  ja-task-ID
    ---------------------------------------------------------------------------------
    108     0.00000  Hello  cferner  qw     09/04/2012 09:18:20         16
    $ qdel 108
    cferner has registered the job 108 for deletion
    $ qstat
    $

Questions?