Parallel Sorting: Odd-Even Sort. David Monismith, CS 599

- Slides: 14

Parallel Sorting – Odd-Even Sort
David Monismith
CS 599
Notes based upon multiple sources provided in the footers of each slide

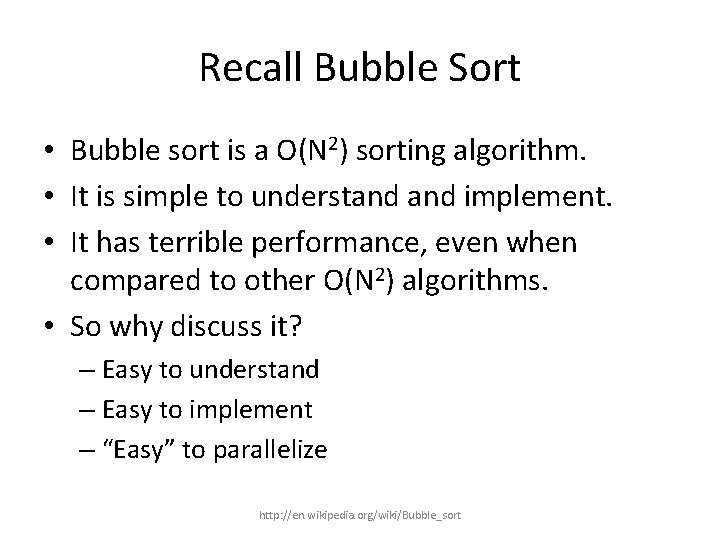

Recall Bubble Sort
• Bubble sort is an O(N^2) sorting algorithm.
• It is simple to understand and implement.
• It has terrible performance, even when compared to other O(N^2) algorithms.
• So why discuss it?
  – Easy to understand
  – Easy to implement
  – “Easy” to parallelize
http://en.wikipedia.org/wiki/Bubble_sort

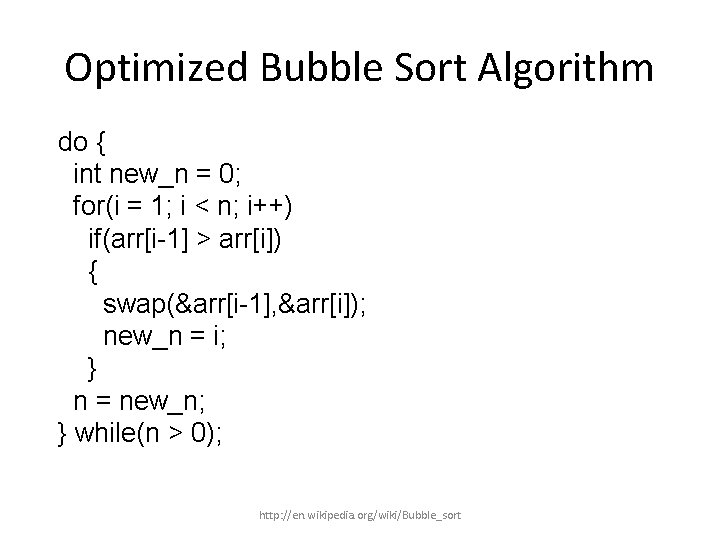

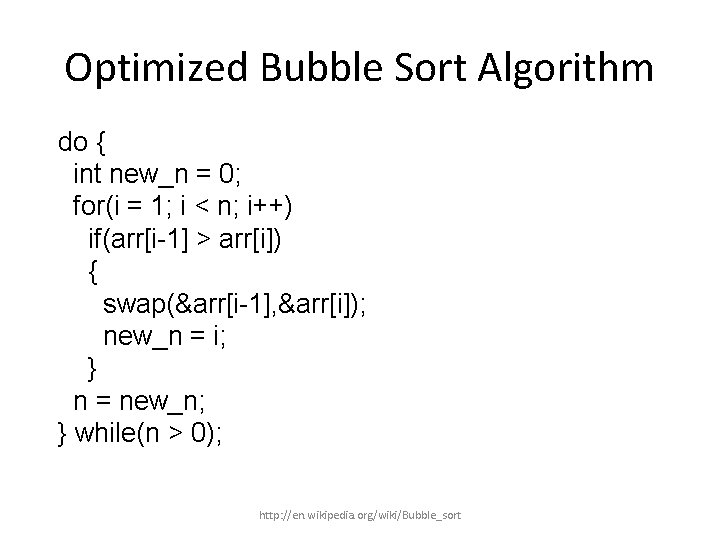

Optimized Bubble Sort Algorithm

    do {
        int new_n = 0;
        for(i = 1; i < n; i++)
            if(arr[i-1] > arr[i]) {
                swap(&arr[i-1], &arr[i]);
                new_n = i;
            }
        n = new_n;
    } while(n > 0);

http://en.wikipedia.org/wiki/Bubble_sort

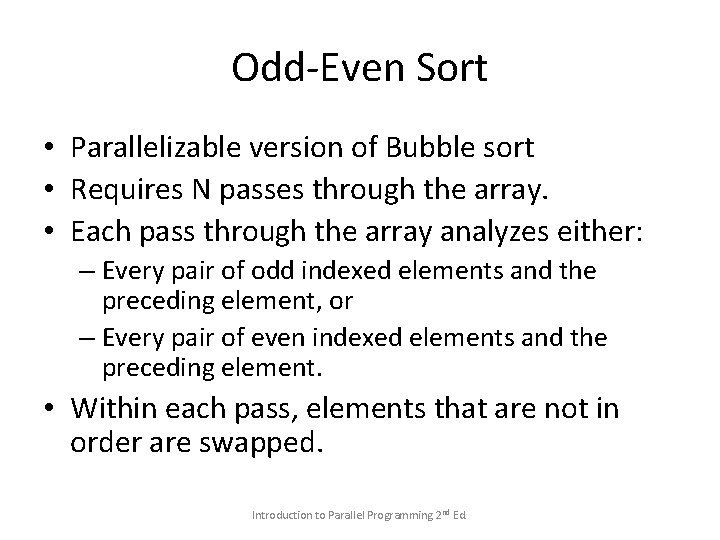

Odd-Even Sort
• Parallelizable version of bubble sort.
• Requires N passes through the array.
• Each pass through the array analyzes either:
  – Every odd-indexed element and the element preceding it, or
  – Every even-indexed element (other than index 0) and the element preceding it.
• Within each pass, pairs of elements that are not in order are swapped.
Introduction to Parallel Programming, 2nd Ed.

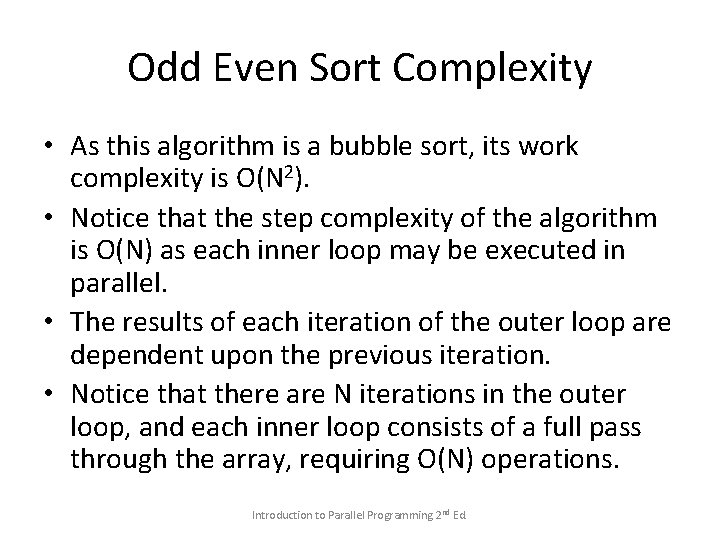

Odd-Even Sort Complexity
• As this algorithm is a variant of bubble sort, its work complexity is O(N^2).
• Notice that the step complexity of the algorithm is O(N), as the comparisons within each inner loop may be executed in parallel.
• The results of each iteration of the outer loop depend upon the previous iteration, so the outer loop must run in order.
• Notice that there are N iterations of the outer loop, and each inner loop consists of a full pass through the array, requiring O(N) operations.
Introduction to Parallel Programming, 2nd Ed.
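The bounds above can be checked by counting comparisons. This is a sketch, assuming N passes that alternate between the two kinds of pass. A pass over odd-indexed elements compares ⌊N/2⌋ pairs and a pass over even-indexed elements compares ⌊(N-1)/2⌋ pairs. The symbols W (work) and S (steps) are notation introduced here, not taken from the slides.

```latex
\begin{align*}
W(N) &= \underbrace{\left\lceil \tfrac{N}{2} \right\rceil \left\lfloor \tfrac{N}{2} \right\rfloor}_{\text{passes over odd-indexed elements}}
      + \underbrace{\left\lfloor \tfrac{N}{2} \right\rfloor \left\lfloor \tfrac{N-1}{2} \right\rfloor}_{\text{passes over even-indexed elements}}
      = \frac{N(N-1)}{2} = O(N^2), \\
S(N) &= N \cdot O(1) = O(N)
      \quad \text{(with about } N/2 \text{ processors, one per pair).}
\end{align*}
```

For example, N = 8 gives 4 · 4 + 4 · 3 = 28 = 8 · 7 / 2 comparisons in total, but only 8 sequential steps when every pair in a pass is compared at the same time.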

Example
• An example of odd-even sort will be provided on the board.
• Students will complete a worksheet problem to trace an odd-even sort.
• The goal of this exercise is to see how the algorithm can be parallelized.

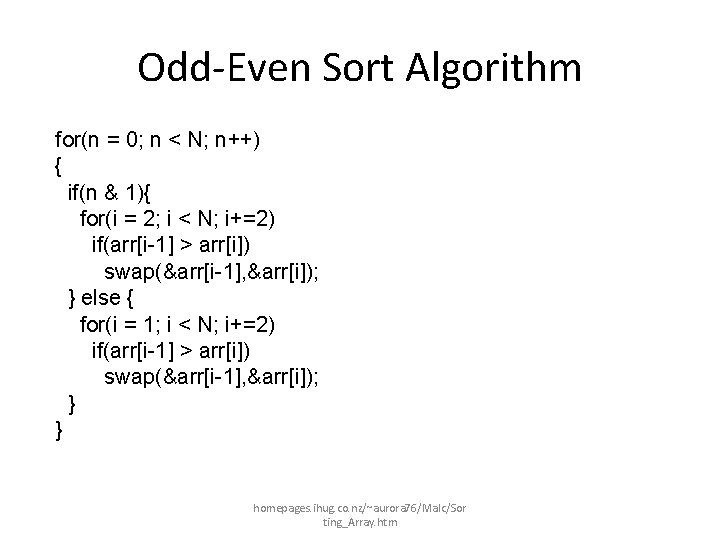

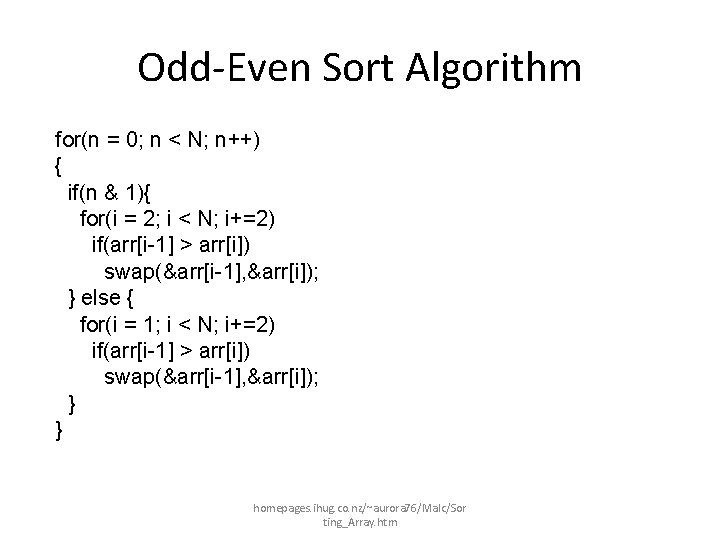

Odd-Even Sort Algorithm

    for(n = 0; n < N; n++) {
        if(n & 1) {
            for(i = 2; i < N; i+=2)
                if(arr[i-1] > arr[i])
                    swap(&arr[i-1], &arr[i]);
        } else {
            for(i = 1; i < N; i+=2)
                if(arr[i-1] > arr[i])
                    swap(&arr[i-1], &arr[i]);
        }
    }

homepages.ihug.co.nz/~aurora76/Malc/Sorting_Array.htm
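To trace the algorithm by hand, it can help to print the array after every pass. The following is a minimal sketch of the loop above wrapped in a function. The names `odd_even_sort_trace` and `print_pass` are made up for this illustration, and `swap` is assumed to exchange two ints through pointers, as its use in the slides suggests.

```c
#include <stdio.h>

/* Exchange two ints through pointers (assumed behavior of the slides' swap). */
static void swap(int *a, int *b) {
    int tmp = *a;
    *a = *b;
    *b = tmp;
}

/* Print the array on one line, prefixed by the pass number. */
static void print_pass(int pass, const int *arr, int N) {
    printf("pass %d:", pass);
    for (int i = 0; i < N; i++)
        printf(" %d", arr[i]);
    printf("\n");
}

/* Serial odd-even sort that prints the array after every pass. */
void odd_even_sort_trace(int *arr, int N) {
    for (int n = 0; n < N; n++) {
        /* Odd-numbered pass: start at i = 2; even-numbered pass: start at i = 1. */
        int start = (n & 1) ? 2 : 1;
        for (int i = start; i < N; i += 2)
            if (arr[i - 1] > arr[i])
                swap(&arr[i - 1], &arr[i]);
        print_pass(n, arr, N);
    }
}
```

Calling `odd_even_sort_trace(a, 8)` on an 8-element array prints eight lines, one per pass. Within a single pass, every comparison touches a different pair of positions, which is what makes the pass safe to run in parallel.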

Why does Odd-Even Sort work?

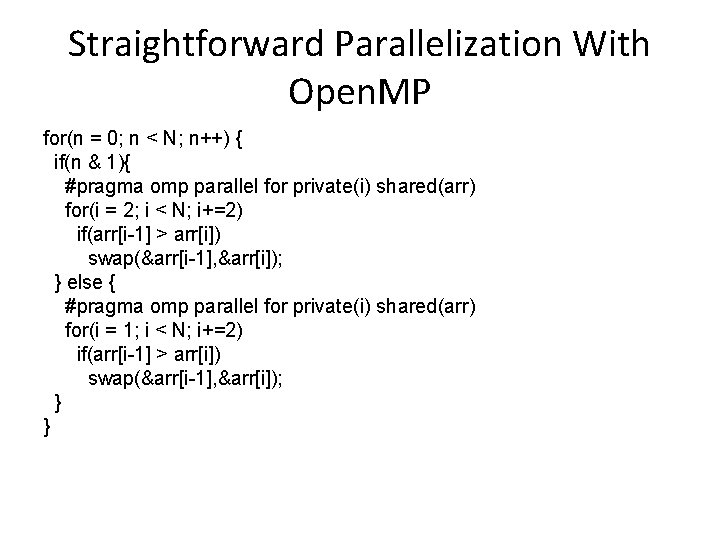

OpenMP Parallelization
• Parallelization of the Odd-Even Sort Algorithm is straightforward, as the swap operations performed within each pass are independent of one another.
• Thus each odd or even step may be fully parallelized through OpenMP directives.

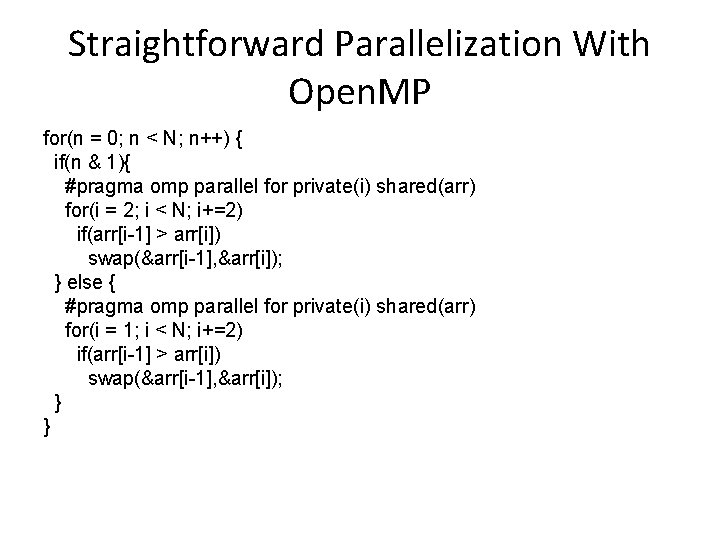

Straightforward Parallelization With OpenMP

    for(n = 0; n < N; n++) {
        if(n & 1) {
            #pragma omp parallel for private(i) shared(arr)
            for(i = 2; i < N; i+=2)
                if(arr[i-1] > arr[i])
                    swap(&arr[i-1], &arr[i]);
        } else {
            #pragma omp parallel for private(i) shared(arr)
            for(i = 1; i < N; i+=2)
                if(arr[i-1] > arr[i])
                    swap(&arr[i-1], &arr[i]);
        }
    }
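The version above starts a new parallel region on every pass. One possible variation, not shown in the slides, opens a single `parallel` region and places a work-sharing `for` inside it, so the same team of threads is reused for all N passes. The following is a sketch under that assumption; the function name `odd_even_sort_omp` is hypothetical.

```c
/* Odd-even sort that reuses one OpenMP thread team for all passes.
 * Compile with an OpenMP flag (for example -fopenmp with GCC); without it
 * the pragmas are ignored and the function runs serially. */
void odd_even_sort_omp(int *arr, int N) {
    #pragma omp parallel
    {
        /* phase, start, and i are declared inside the region,
         * so every thread has its own private copy. */
        for (int phase = 0; phase < N; phase++) {
            int start = (phase & 1) ? 2 : 1;
            /* The loop iterations are divided among the threads. The implicit
             * barrier at the end of the loop keeps the passes in order. */
            #pragma omp for
            for (int i = start; i < N; i += 2) {
                if (arr[i - 1] > arr[i]) {
                    int tmp = arr[i - 1];
                    arr[i - 1] = arr[i];
                    arr[i] = tmp;
                }
            }
        }
    }
}
```

Whether this is faster than the straightforward version depends on the OpenMP runtime and the array size, so it is worth timing both.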

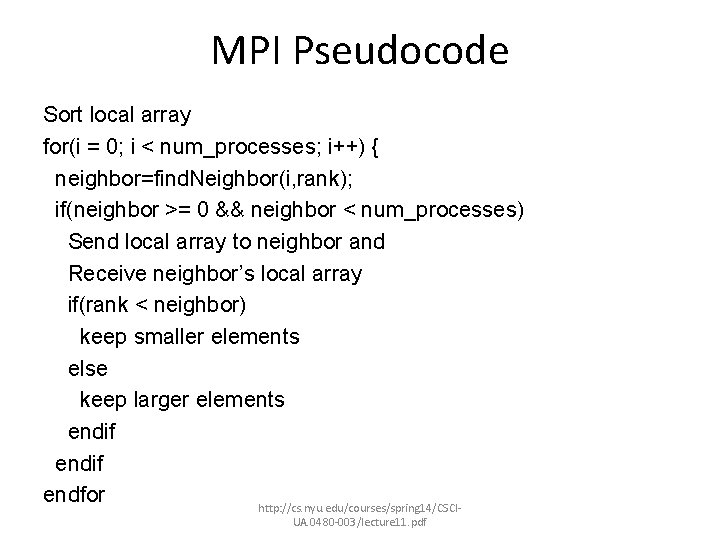

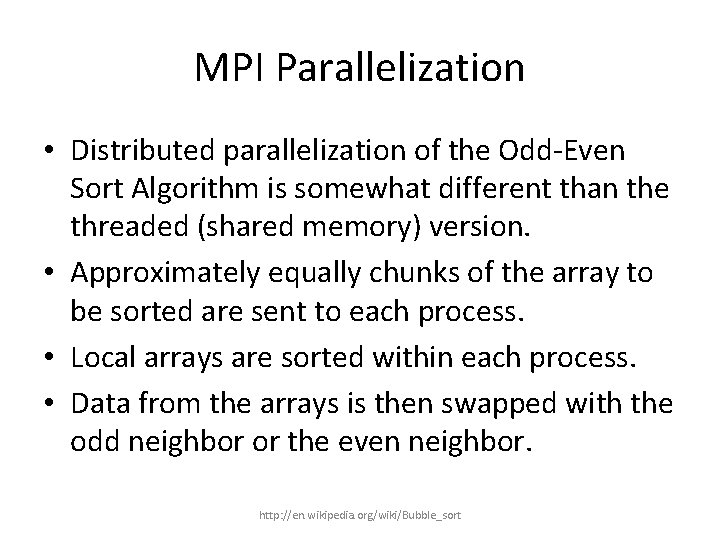

MPI Parallelization
• Distributed parallelization of the Odd-Even Sort Algorithm is somewhat different from the threaded (shared memory) version.
• Approximately equal chunks of the array to be sorted are sent to each process.
• Local arrays are sorted within each process.
• Data from the arrays is then exchanged with the odd neighbor or the even neighbor.
http://en.wikipedia.org/wiki/Bubble_sort
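One way to compute "approximately equal chunks" is sketched below: every process gets N / P elements, and the first N % P processes get one more. The function name `chunk_layout` and its parameters are made up for this illustration. The `counts` and `displs` arrays have the shape that `MPI_Scatterv` takes for its send counts and displacements.

```c
/* Split N elements across P processes so that chunk sizes differ by at
 * most one. counts[p] is the chunk size for process p, and displs[p] is
 * the index in the full array where that chunk begins. */
void chunk_layout(int N, int P, int *counts, int *displs) {
    int base = N / P;    /* minimum chunk size */
    int extra = N % P;   /* number of processes that get one extra element */
    int offset = 0;
    for (int p = 0; p < P; p++) {
        counts[p] = base + (p < extra ? 1 : 0);
        displs[p] = offset;
        offset += counts[p];
    }
}
```

For N = 10 and P = 4 this yields chunk sizes 3, 3, 2, 2. If every process must hold the same number of elements, N would need to be a multiple of P or the array would need padding.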

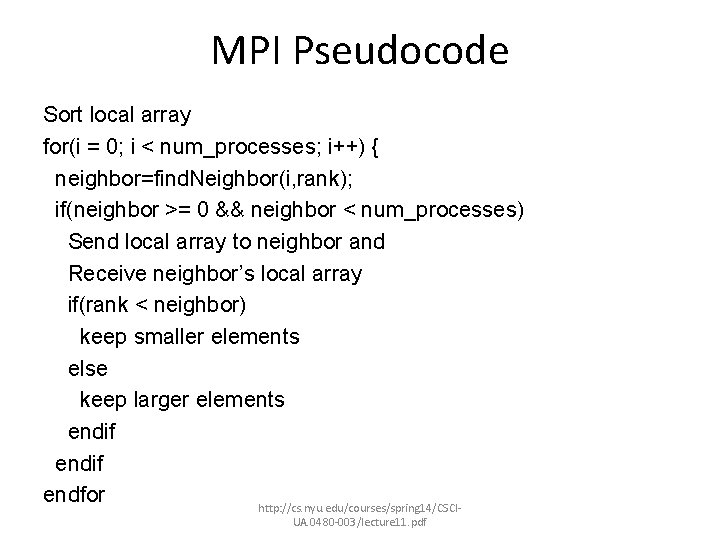

MPI Pseudocode

    Sort local array
    for(i = 0; i < num_processes; i++)
        neighbor = findNeighbor(i, rank, num_processes)
        if(neighbor >= 0 && neighbor < num_processes)
            Send local array to neighbor and receive neighbor’s local array
            if(rank < neighbor)
                keep smaller elements
            else
                keep larger elements
            endif
        endif
    endfor

http://cs.nyu.edu/courses/spring14/CSCI-UA.0480-003/lecture11.pdf

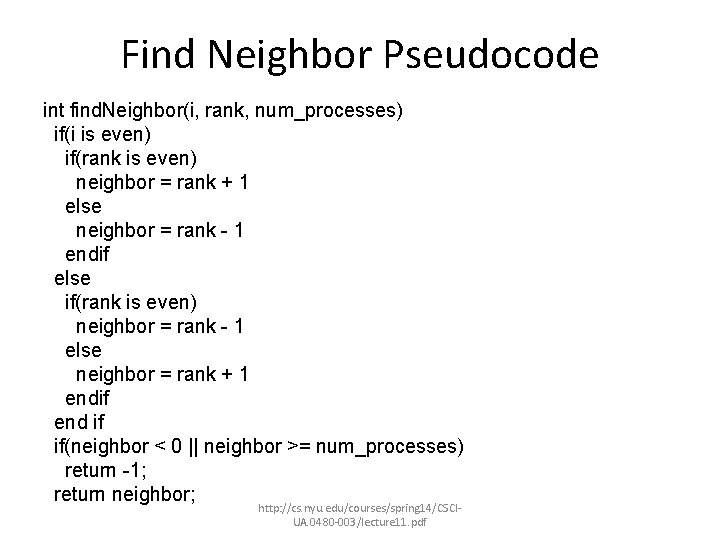

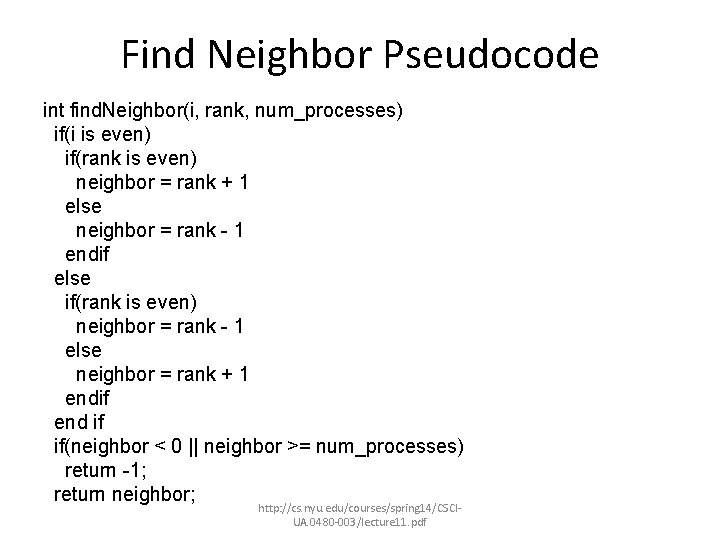

Find Neighbor Pseudocode

    int findNeighbor(i, rank, num_processes)
        if(i is even)
            if(rank is even)
                neighbor = rank + 1
            else
                neighbor = rank - 1
            endif
        else
            if(rank is even)
                neighbor = rank - 1
            else
                neighbor = rank + 1
            endif
        endif
        if(neighbor < 0 || neighbor >= num_processes)
            return -1
        return neighbor

http://cs.nyu.edu/courses/spring14/CSCI-UA.0480-003/lecture11.pdf
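The pseudocode above translates almost directly into C. The sketch below folds the four cases into a single parity test: when the phase number and the rank have the same parity the partner is `rank + 1`, otherwise it is `rank - 1`.

```c
/* Partner of process `rank` in phase `i`, or -1 if it has no partner.
 * Mirrors the pseudocode: in even phases even ranks pair with rank + 1,
 * in odd phases even ranks pair with rank - 1. */
int findNeighbor(int i, int rank, int num_processes) {
    int neighbor;
    if ((i % 2 == 0) == (rank % 2 == 0))
        neighbor = rank + 1;   /* phase and rank have the same parity */
    else
        neighbor = rank - 1;   /* phase and rank have different parity */
    if (neighbor < 0 || neighbor >= num_processes)
        return -1;             /* process sits out this phase */
    return neighbor;
}
```

With four processes, phase 0 pairs ranks (0, 1) and (2, 3). Phase 1 pairs ranks (1, 2), while ranks 0 and 3 have no partner and sit out.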

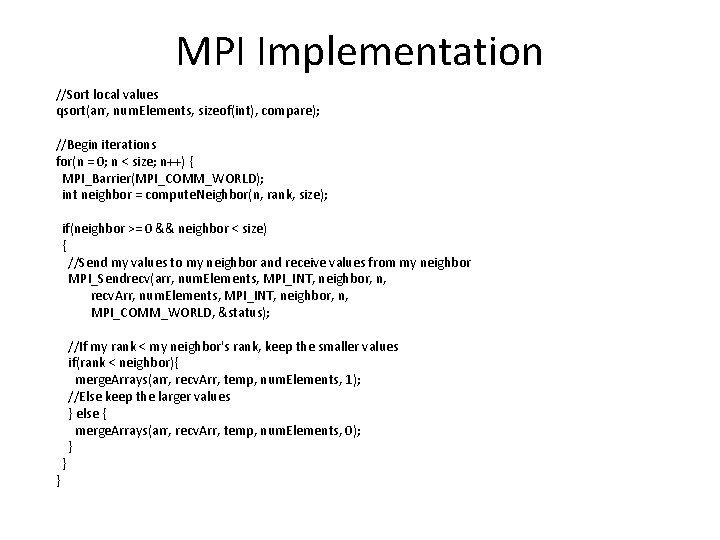

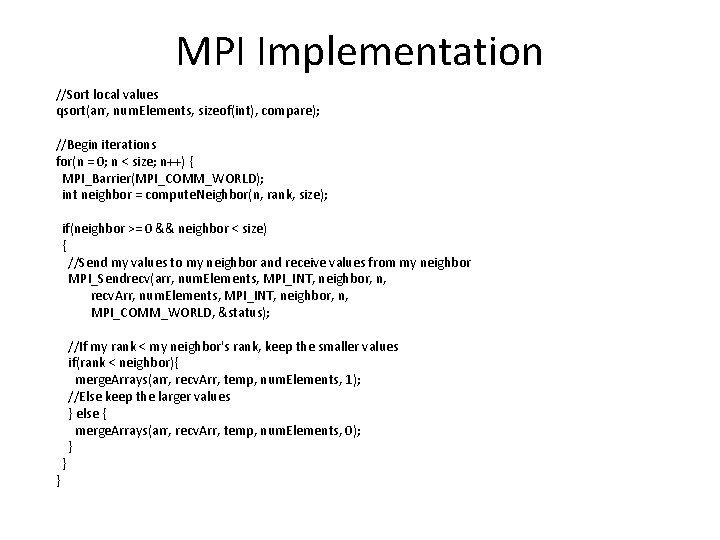

MPI Implementation

    //Sort local values
    qsort(arr, numElements, sizeof(int), compare);
    //Begin iterations
    for(n = 0; n < size; n++) {
        MPI_Barrier(MPI_COMM_WORLD);
        int neighbor = computeNeighbor(n, rank, size);
        if(neighbor >= 0 && neighbor < size) {
            //Send my values to my neighbor and receive values from my neighbor
            MPI_Sendrecv(arr, numElements, MPI_INT, neighbor, n,
                         recvArr, numElements, MPI_INT, neighbor, n,
                         MPI_COMM_WORLD, &status);
            //If my rank < my neighbor's rank, keep the smaller values
            if(rank < neighbor) {
                mergeArrays(arr, recvArr, temp, numElements, 1);
            //Else keep the larger values
            } else {
                mergeArrays(arr, recvArr, temp, numElements, 0);
            }
        }
    }
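The implementation calls `mergeArrays` without showing its body. The following is a guess at what such a helper might look like, not the author's code. It assumes both arrays hold `numElements` sorted ints, that `temp` is scratch space of the same size, and that a nonzero last argument means "keep the smaller half".

```c
#include <string.h>

/* Hypothetical mergeArrays: arr and recvArr each hold numElements sorted ints.
 * If keepSmall is nonzero, arr ends up with the numElements smallest of the
 * combined values; otherwise it ends up with the numElements largest.
 * temp must have room for numElements ints. */
void mergeArrays(int *arr, const int *recvArr, int *temp,
                 int numElements, int keepSmall) {
    if (keepSmall) {
        /* Merge from the front and stop after numElements outputs. */
        int a = 0, b = 0;
        for (int k = 0; k < numElements; k++)
            temp[k] = (arr[a] <= recvArr[b]) ? arr[a++] : recvArr[b++];
    } else {
        /* Merge from the back and stop after numElements outputs. */
        int a = numElements - 1, b = numElements - 1;
        for (int k = numElements - 1; k >= 0; k--)
            temp[k] = (arr[a] >= recvArr[b]) ? arr[a--] : recvArr[b--];
    }
    /* Copy the kept half back into the local array. */
    memcpy(arr, temp, (size_t)numElements * sizeof(int));
}
```

Because only `numElements` values are written, neither index can run past the end of its array, so no extra bounds checks are needed. Each merge costs O(numElements) rather than the cost of re-sorting the combined data.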