PVM and MPI: What Else is Needed For Cluster Computing?

- Slides: 23

PVM and MPI: What Else is Needed For Cluster Computing?

Al Geist, Oak Ridge National Laboratory

www.csm.ornl.gov/~geist

DAPSYS/EuroPVM-MPI, Balatonfured, Hungary, September 11, 2000

EuroPVM-MPI

Dedicated to the hottest developments of PVM and MPI.

- PVM and MPI are the most used tools for parallel programming.
- The hottest trend driving PVM and MPI today is PC clusters running Linux and/or Windows.

This talk will look at the gaps in what PVM and MPI provide for cluster computing, what role the GRID may play, and what is happening to fill the gaps.

PVM Latest News

New release this summer: PVM 3.4.3 includes:

- Optimized msgbox routines: more scalable, more robust
- New Beowulf-linux port: allows clusters to be behind firewalls yet work together
- Smart virtual machine startup: automatically determines the reason for “Can’t start pvmd”
- Works with Windows 2000: Installshield version available, improved Win32 communication performance

New third-party PVM software: PythonPVM 0.9, JavaPVM, PVM port using SCI interface

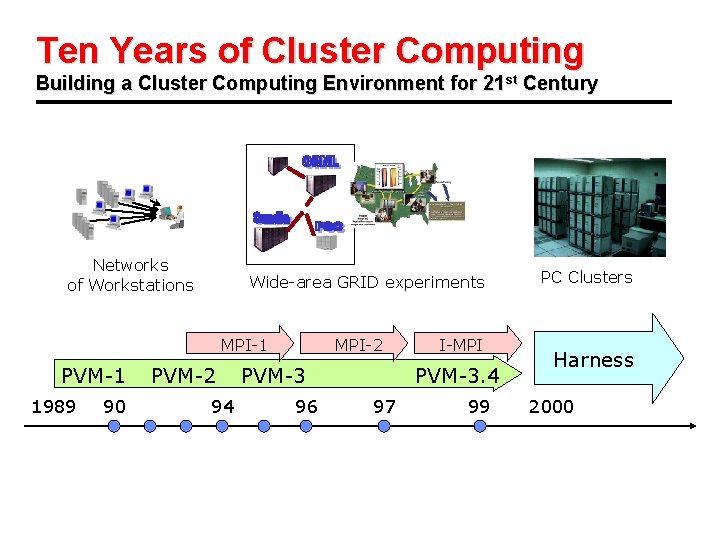

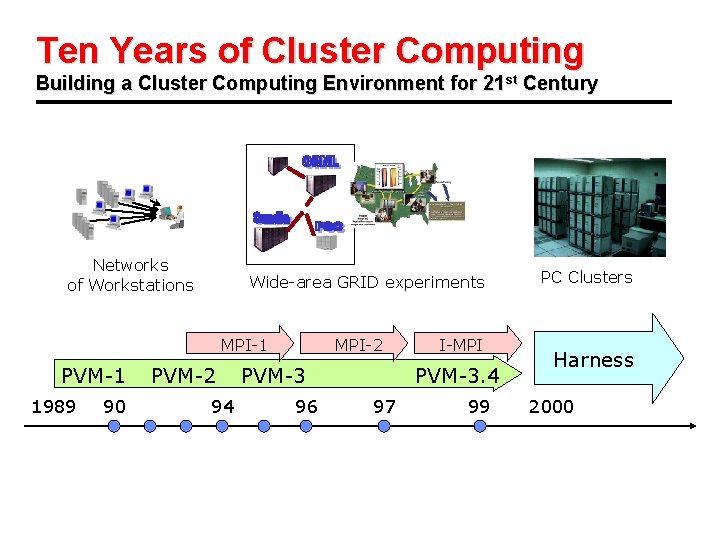

Ten Years of Cluster Computing

Building a Cluster Computing Environment for 21st Century

[Timeline figure, 1989 to 2000: PVM-1, PVM-2, PVM-3, PVM-3.4, MPI-1, MPI-2, I-MPI, and Harness, set against the eras of networks of workstations, wide-area GRID experiments, and PC clusters.]

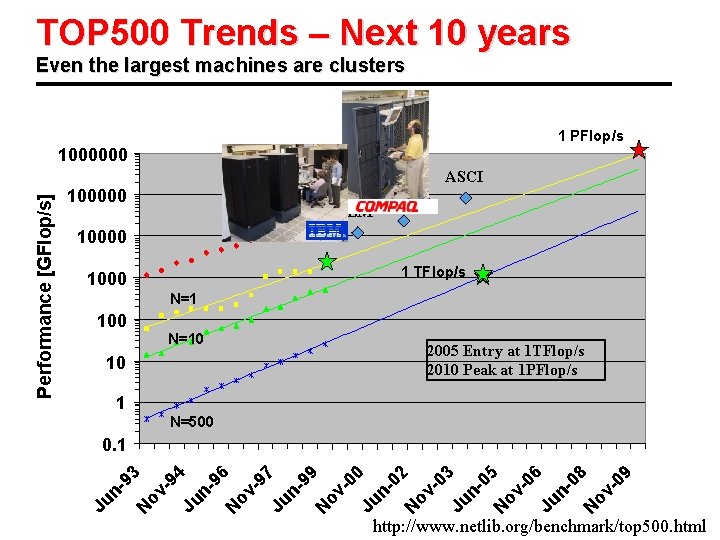

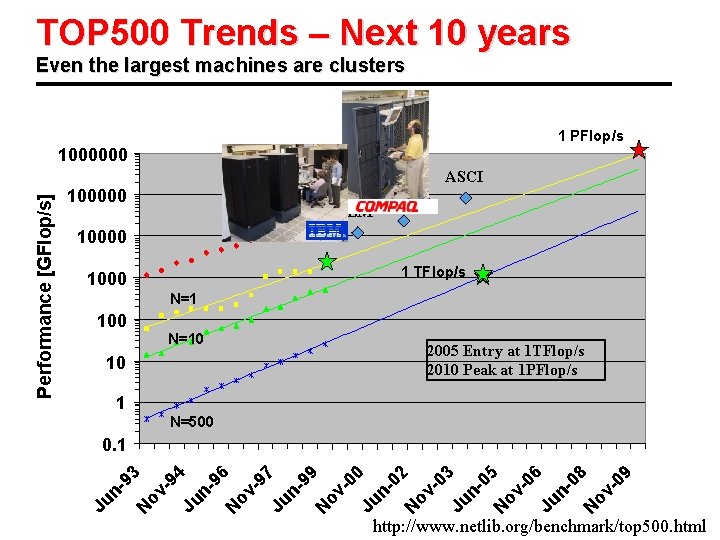

TOP 500 Trends: Next 10 years

Even the largest machines are clusters.

[Figure: TOP500 performance in GFlop/s versus list date (June 1993 through November 2009), with curves for N=1, N=10, and N=500 and labels for ASCI, IBM, and Compaq. Projections: 2005 entry at 1 TFlop/s, 2010 peak at 1 PFlop/s.]

http://www.netlib.org/benchmark/top500.html

Trend in Affordable PC clusters

PC clusters are cost effective from a hardware perspective. Many universities and companies can afford 16 to 100 nodes.

System administration is an overlooked cost:

- people to maintain the cluster
- software written for each cluster
- higher failure rates for COTS

Presently there is a lack of tools for managing large clusters.

www.csm.ornl.gov/torc

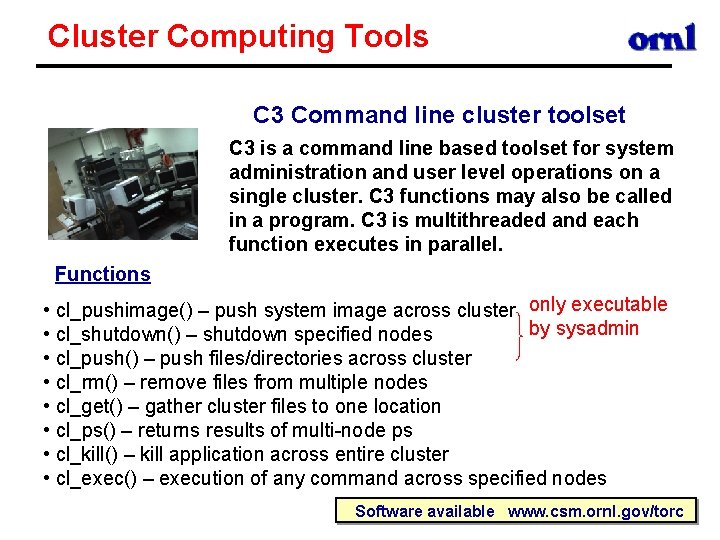

Cluster Computing Tools: C3 command line cluster toolset

C3 is a command line based toolset for system administration and user level operations on a single cluster. C3 functions may also be called in a program. C3 is multithreaded and each function executes in parallel.

Functions

- cl_pushimage(): push a system image across the cluster (executable only by the sysadmin)
- cl_shutdown(): shut down specified nodes
- cl_push(): push files/directories across the cluster
- cl_rm(): remove files from multiple nodes
- cl_get(): gather cluster files to one location
- cl_ps(): return the results of a multi-node ps
- cl_kill(): kill an application across the entire cluster
- cl_exec(): execute any command across specified nodes

Software available: www.csm.ornl.gov/torc
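The statement that each C3 function executes in parallel can be illustrated with a minimal Python sketch. This is not C3's implementation or interface: `run_on_nodes`, `fake_runner`, and the node names are hypothetical, and the runner only echoes the command instead of contacting a real node.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def run_on_nodes(nodes: List[str], command: str,
                 runner: Callable[[str, str], str],
                 max_workers: int = 16) -> Dict[str, str]:
    """Run `command` on every node concurrently and collect output per node."""
    if not nodes:
        return {}
    workers = min(max_workers, len(nodes))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # One task per node; all tasks are submitted before any result is read.
        futures = {node: pool.submit(runner, node, command) for node in nodes}
        return {node: future.result() for node, future in futures.items()}


def fake_runner(node: str, command: str) -> str:
    # Hypothetical stand-in for a remote shell call; it only echoes its input.
    return f"{node}: {command}"


if __name__ == "__main__":
    print(run_on_nodes(["node1", "node2"], "uptime", fake_runner))
```

Passing the runner in as a parameter keeps the fan-out logic separate from whatever remote-execution mechanism a site actually uses.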

Visualization using Clusters

Lowering the Cost of High Performance Graphics

- VTK ported to Linux clusters: visualization toolkit making use of the C3 cluster package
- AVS Express ported to PC cluster: expensive but standard package asked for by apps; requires an AVS site license to eliminate the cost of individual node licenses
- Cumulvs plug-in for AVS Express: combines the interactive visualization and computational steering of Cumulvs with the visualization tools of AVS

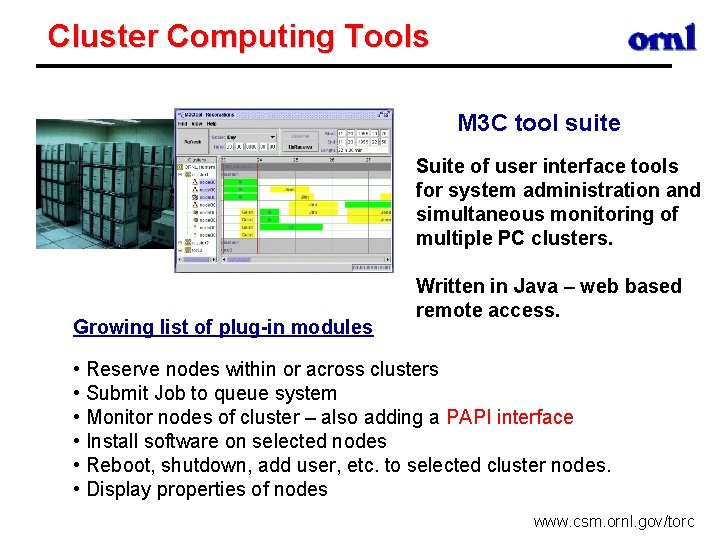

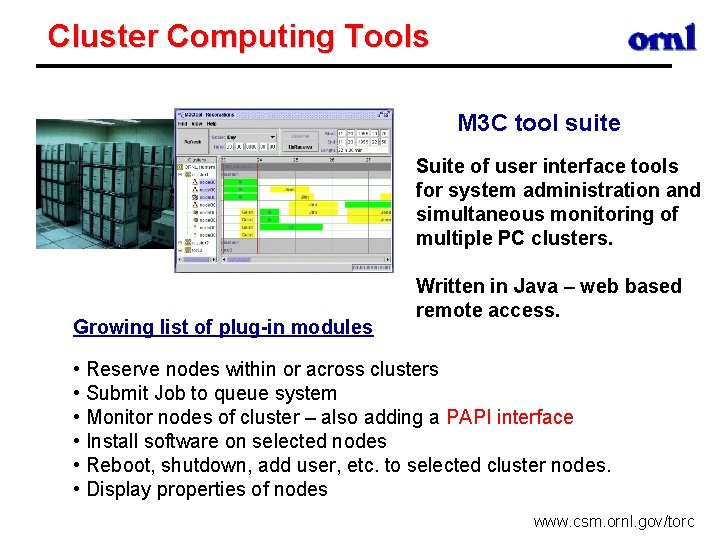

Cluster Computing Tools: M3C tool suite

Suite of user interface tools for system administration and simultaneous monitoring of multiple PC clusters. Growing list of plug-in modules. Written in Java, with web based remote access.

- Reserve nodes within or across clusters
- Submit job to queue system
- Monitor nodes of cluster (also adding a PAPI interface)
- Install software on selected nodes
- Reboot, shutdown, add user, etc. to selected cluster nodes
- Display properties of nodes

www.csm.ornl.gov/torc

OSCAR: National Consortium for Cluster software

OSCAR is a collection of the best known software for building, programming, and using clusters. The collection effort is led by a national consortium which includes IBM, SGI, Intel, ORNL, NCSA, and MCS Software. Other vendors are invited.

Goals

- Bring uniformity to cluster creation and use
- Make clusters more broadly acceptable
- Foster commercial versions of the cluster software

For more details see Stephen Scott (talk Monday 12:00, DAPSYS track).

www.csm.ornl.gov/oscar

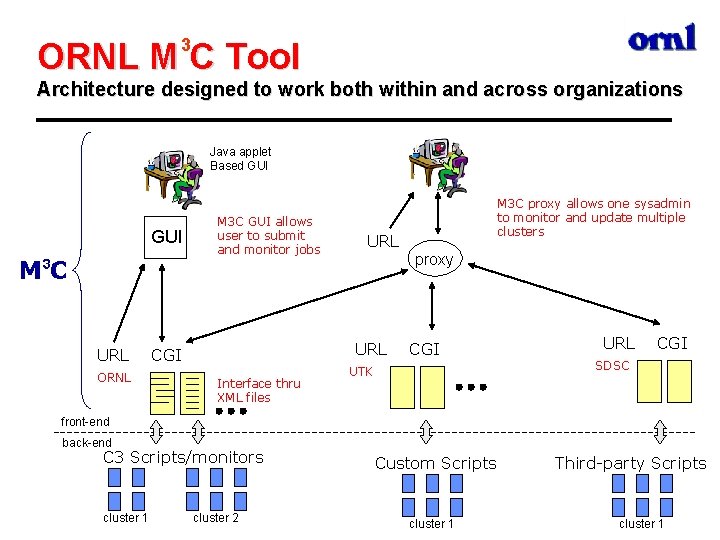

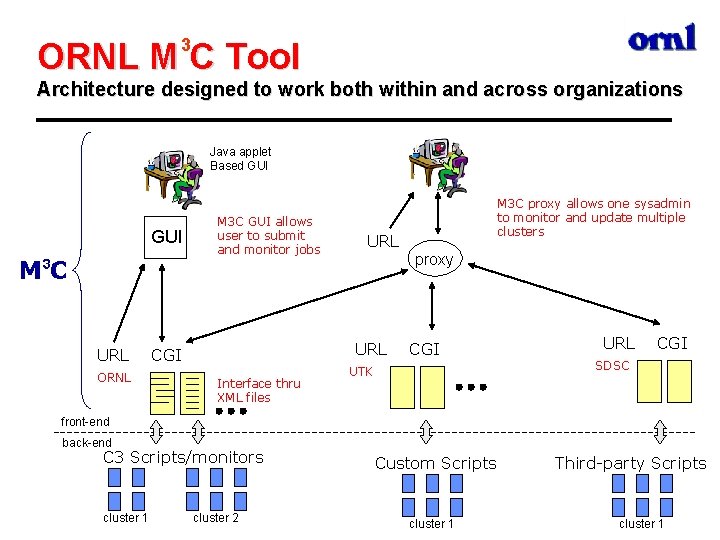

M3C Tool Architecture

Designed to work both within and across organizations.

- Java applet based GUI: the M3C GUI allows a user to submit and monitor jobs
- Interface thru XML files
- M3C proxy: allows one sysadmin to monitor and update multiple clusters

[Diagram: the M3C GUI (front-end) connects by URL to CGI interfaces at ORNL, UTK, and SDSC, directly or via the proxy. On the back-end, C3 scripts/monitors, custom scripts, and third-party scripts drive the clusters (cluster 1, cluster 2).]

GRID “Ubiquitous” Computing

GRID Forum is helping define higher level services:

- Information services
- Uniform naming, locating, and allocating distributed resources
- Data management and access
- Single log-on security

MPI and PVM are often seen as lower level capabilities that GRID frameworks support.

GRID projects named on the slide: Globus, NetSolve, Condor, Legion, Neos, SinRG

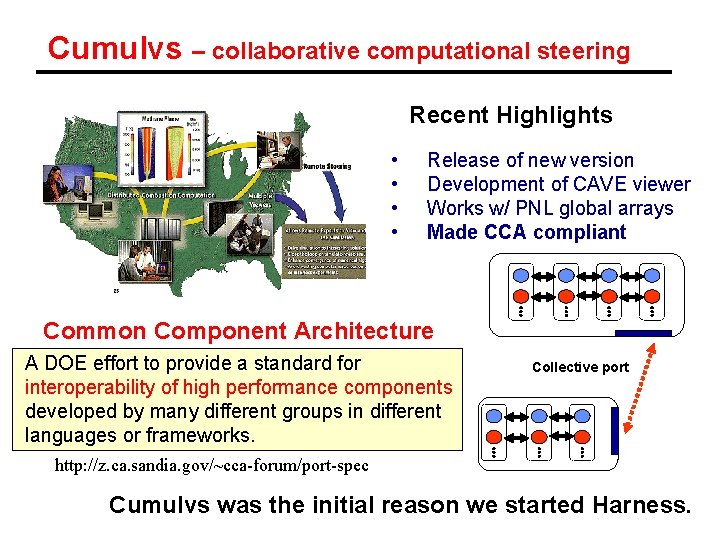

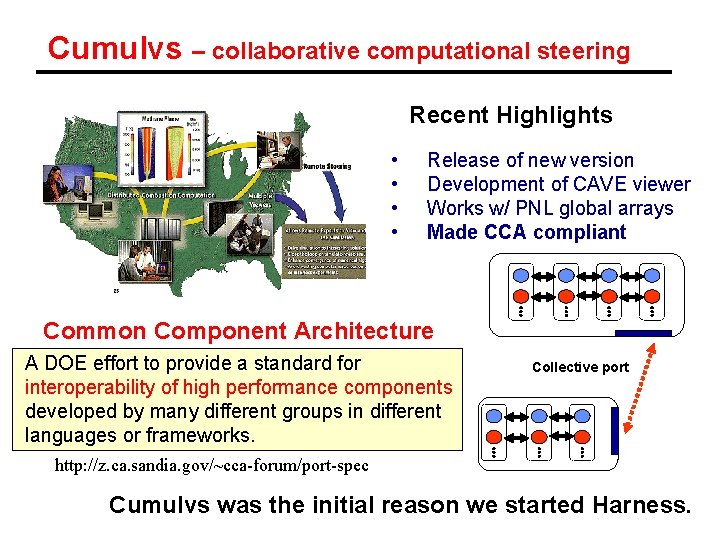

Cumulvs: collaborative computational steering

Recent Highlights

- Release of new version
- Development of CAVE viewer
- Works w/ PNL global arrays
- Made CCA compliant

Common Component Architecture (CCA): a DOE effort to provide a standard for interoperability of high performance components developed by many different groups in different languages or frameworks.

Collective port: http://z.ca.sandia.gov/~cca-forum/port-spec

Cumulvs was the initial reason we started Harness.

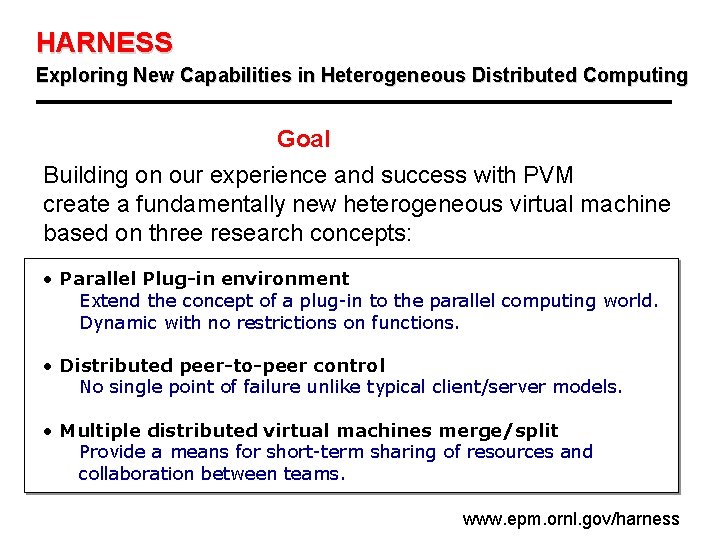

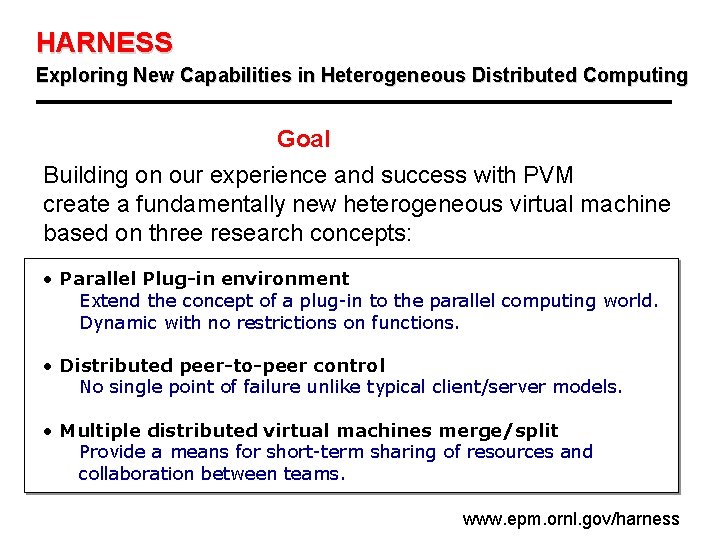

HARNESS: Exploring New Capabilities in Heterogeneous Distributed Computing

Goal: building on our experience and success with PVM, create a fundamentally new heterogeneous virtual machine based on three research concepts:

- Parallel plug-in environment: extend the concept of a plug-in to the parallel computing world. Dynamic, with no restrictions on functions.
- Distributed peer-to-peer control: no single point of failure, unlike typical client/server models.
- Multiple distributed virtual machines merge/split: provide a means for short-term sharing of resources and collaboration between teams.

www.epm.ornl.gov/harness

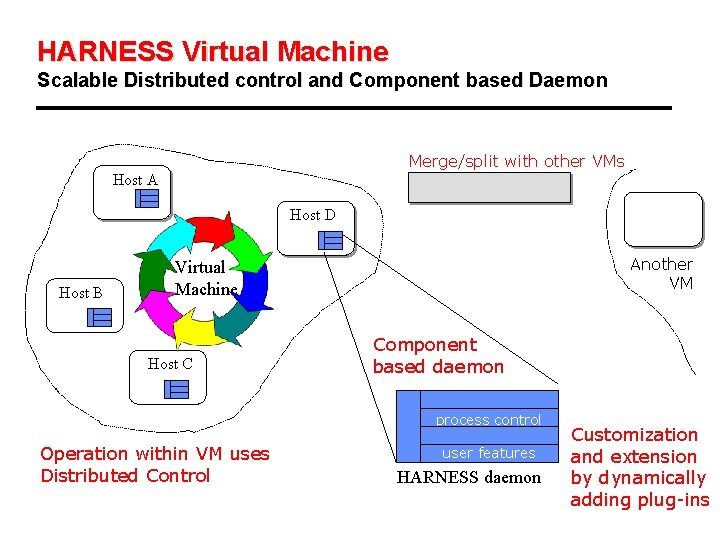

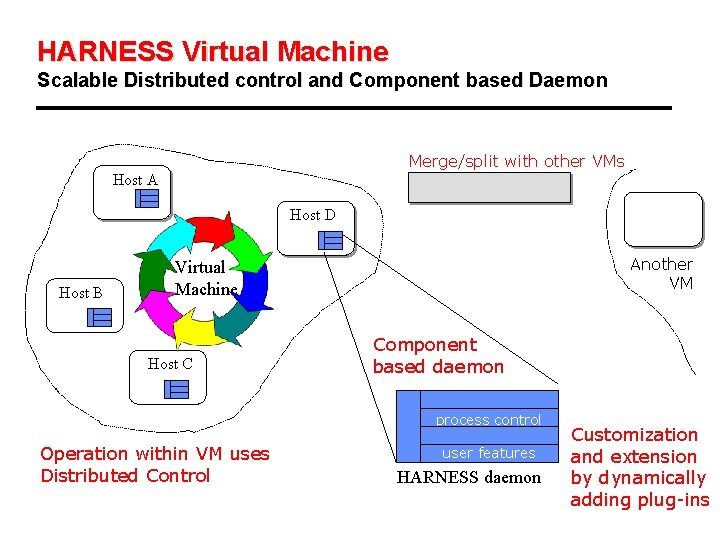

HARNESS Virtual Machine

Scalable Distributed Control and Component Based Daemon

[Diagram: a virtual machine spanning Host A, Host B, Host C, and Host D that can merge/split with another VM. Operation within the VM uses distributed control. Each host runs a component based HARNESS daemon (process control, user features), with customization and extension by dynamically adding plug-ins.]

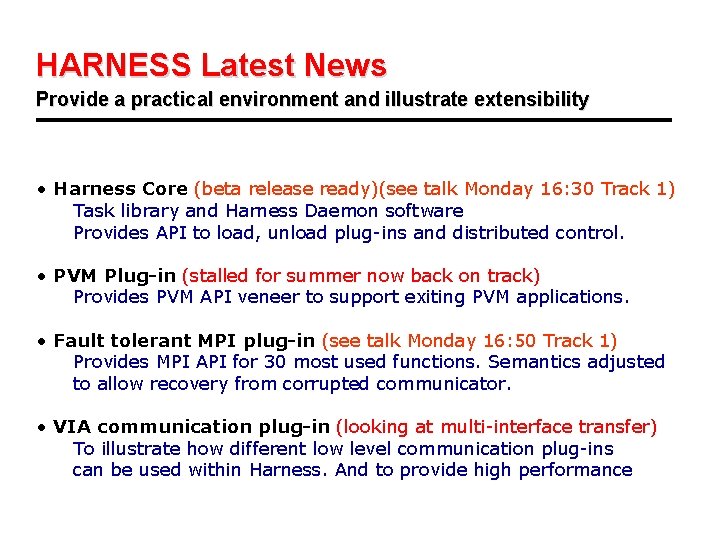

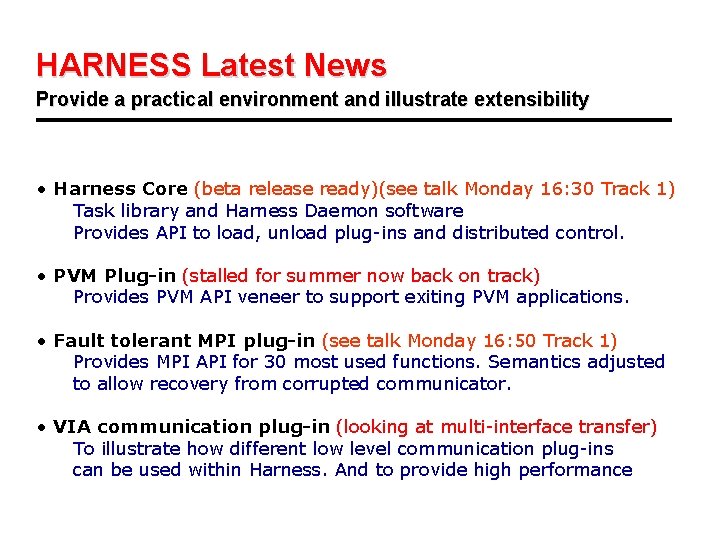

HARNESS Latest News

Provide a practical environment and illustrate extensibility.

- Harness Core (beta release ready; see talk Monday 16:30, Track 1): task library and Harness daemon software. Provides an API to load and unload plug-ins and for distributed control.
- PVM plug-in (stalled for the summer, now back on track): provides a PVM API veneer to support existing PVM applications.
- Fault tolerant MPI plug-in (see talk Monday 16:50, Track 1): provides the MPI API for the 30 most used functions. Semantics adjusted to allow recovery from a corrupted communicator.
- VIA communication plug-in (looking at multi-interface transfer): illustrates how different low level communication plug-ins can be used within Harness, and provides high performance.
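The idea of an API that loads and unloads plug-ins at run time can be sketched in a few lines of Python. This is only an illustration of the concept, not the Harness Core API: the `PluginHost` class, its method names, and the example plug-in are all hypothetical.

```python
from typing import Any, Callable, Dict, List


class PluginHost:
    """Toy registry that lets named plug-ins be added and removed at run time."""

    def __init__(self) -> None:
        self._plugins: Dict[str, Dict[str, Callable[..., Any]]] = {}

    def load(self, name: str, functions: Dict[str, Callable[..., Any]]) -> None:
        # Refuse to silently replace a plug-in that is already loaded.
        if name in self._plugins:
            raise ValueError(f"plug-in {name!r} is already loaded")
        self._plugins[name] = dict(functions)

    def unload(self, name: str) -> None:
        # Raises KeyError if the plug-in was never loaded.
        del self._plugins[name]

    def loaded(self) -> List[str]:
        return sorted(self._plugins)

    def call(self, plugin: str, function: str, *args: Any) -> Any:
        return self._plugins[plugin][function](*args)


if __name__ == "__main__":
    host = PluginHost()
    host.load("example", {"double": lambda x: 2 * x})
    print(host.call("example", "double", 21))
    host.unload("example")
    print(host.loaded())
```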

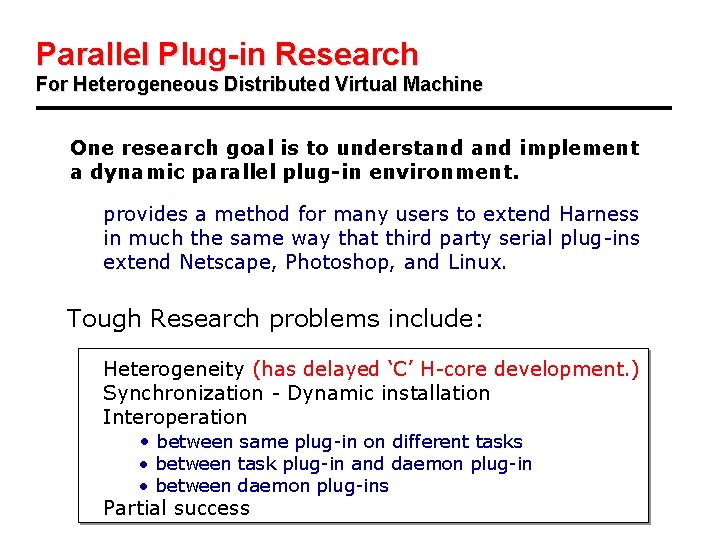

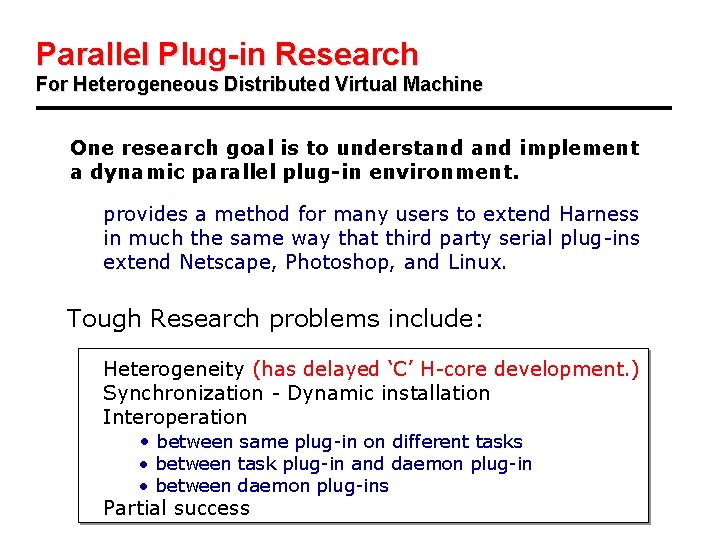

Parallel Plug-in Research For Heterogeneous Distributed Virtual Machine

One research goal is to understand and implement a dynamic parallel plug-in environment. It provides a method for many users to extend Harness in much the same way that third party serial plug-ins extend Netscape, Photoshop, and Linux.

Tough research problems include:

- Heterogeneity (has delayed ‘C’ H-core development)
- Synchronization - Dynamic installation
- Interoperation
  - between same plug-in on different tasks
  - between task plug-in and daemon plug-in
  - between daemon plug-ins

Partial success

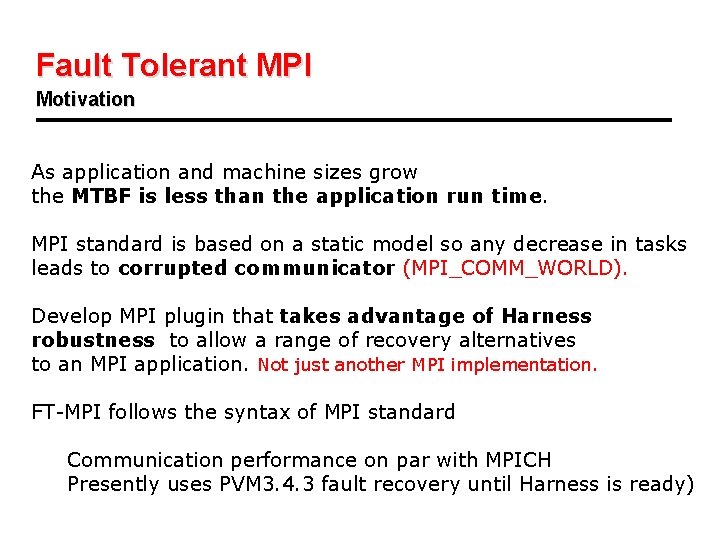

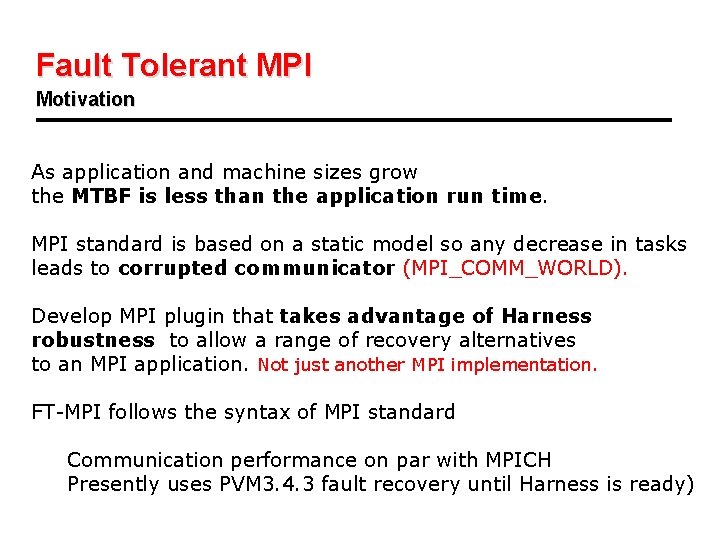

Fault Tolerant MPI

Motivation

- As application and machine sizes grow, the MTBF becomes less than the application run time.
- The MPI standard is based on a static model, so any decrease in tasks leads to a corrupted communicator (MPI_COMM_WORLD).

Develop an MPI plug-in that takes advantage of Harness robustness to allow a range of recovery alternatives to an MPI application. Not just another MPI implementation.

- FT-MPI follows the syntax of the MPI standard
- Communication performance on par with MPICH
- Presently uses PVM 3.4.3 fault recovery (until Harness is ready)
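The first motivation point can be made concrete with a little arithmetic. The sketch below assumes independent node failures with a constant failure rate (an assumption, not something the slide states), in which case the MTBF of an N-node machine is the per-node MTBF divided by N. The function names and the example numbers are hypothetical.

```python
import math


def system_mtbf_hours(node_mtbf_hours: float, nodes: int) -> float:
    """MTBF of the whole machine, assuming independent, constant-rate failures."""
    return node_mtbf_hours / nodes


def prob_no_failure(run_hours: float, node_mtbf_hours: float, nodes: int) -> float:
    """Probability that a run of `run_hours` sees no node failure at all."""
    return math.exp(-run_hours / system_mtbf_hours(node_mtbf_hours, nodes))


if __name__ == "__main__":
    # Hypothetical figures: 50,000-hour node MTBF, 1,000 nodes, 100-hour job.
    print(system_mtbf_hours(50_000, 1_000))
    print(prob_no_failure(100, 50_000, 1_000))
```

Under these assumptions the machine-level MTBF shrinks linearly with node count, which is why a long-running job on a large cluster should expect at least one failure.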

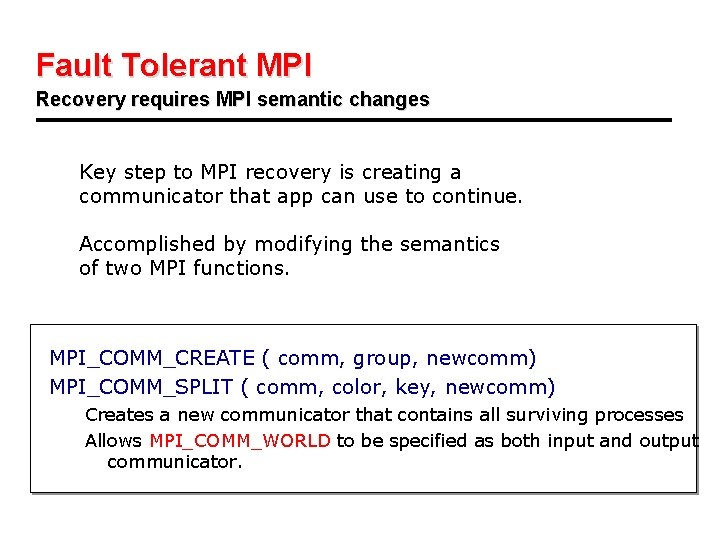

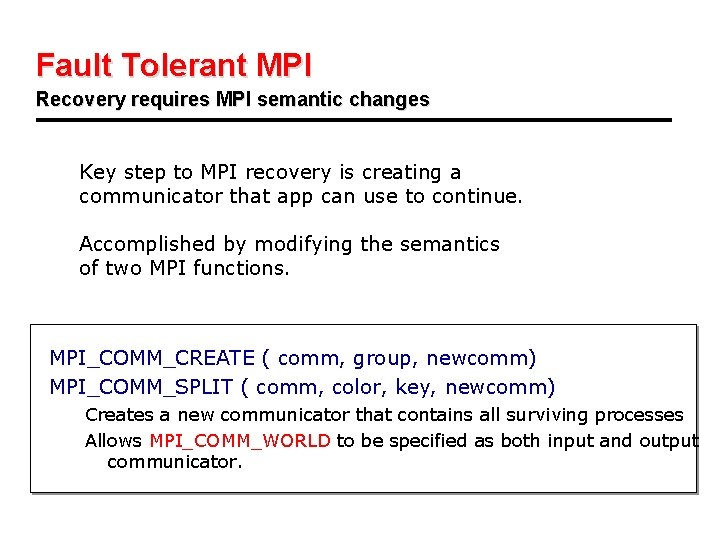

Fault Tolerant MPI

Recovery requires MPI semantic changes

The key step to MPI recovery is creating a communicator that the app can use to continue. This is accomplished by modifying the semantics of two MPI functions:

- MPI_COMM_CREATE(comm, group, newcomm)
- MPI_COMM_SPLIT(comm, color, key, newcomm)

The modified calls create a new communicator that contains all surviving processes, and allow MPI_COMM_WORLD to be specified as both the input and output communicator.
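The effect of building a communicator from the surviving processes can be modeled without MPI at all. The Python sketch below is a toy model, not FT-MPI code: a communicator is just an ordered list of process names, and the choice to renumber survivors contiguously is an assumption made for illustration. `rebuild_communicator` and `rank_map` are hypothetical names.

```python
from typing import Dict, Iterable, List


def rebuild_communicator(members: List[str], failed: Iterable[str]) -> List[str]:
    """Return a new member list holding only the survivors, in original order."""
    failed_set = set(failed)
    return [m for m in members if m not in failed_set]


def rank_map(old: List[str], new: List[str]) -> Dict[int, int]:
    """Map each surviving process's old rank to its rank in the new list."""
    new_rank = {member: rank for rank, member in enumerate(new)}
    return {old_rank: new_rank[member]
            for old_rank, member in enumerate(old)
            if member in new_rank}


if __name__ == "__main__":
    world = ["p0", "p1", "p2", "p3"]
    # Assumed renumbering: survivors are packed into ranks 0..n-1.
    world = rebuild_communicator(world, failed=["p1"])
    print(world)
```

Reassigning the result to `world` mirrors the slide's point that the same communicator can serve as both input and output.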

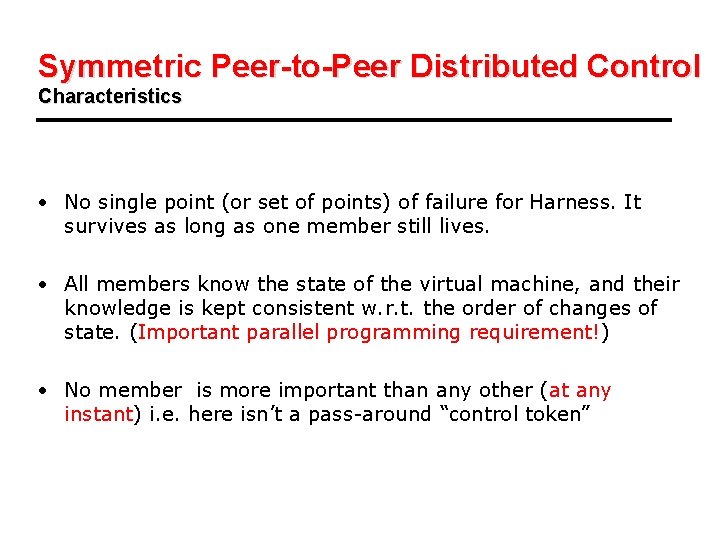

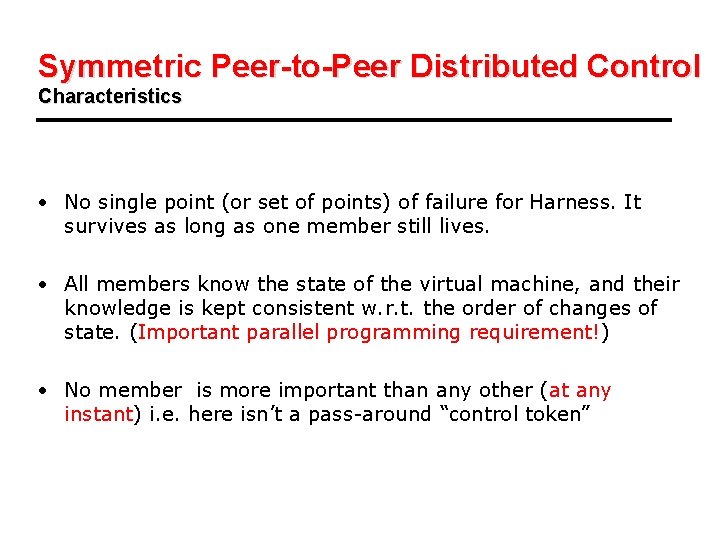

Symmetric Peer-to-Peer Distributed Control

Characteristics

- No single point (or set of points) of failure for Harness. It survives as long as one member still lives.
- All members know the state of the virtual machine, and their knowledge is kept consistent w.r.t. the order of changes of state. (Important parallel programming requirement!)
- No member is more important than any other (at any instant), i.e., there isn’t a pass-around “control token”.

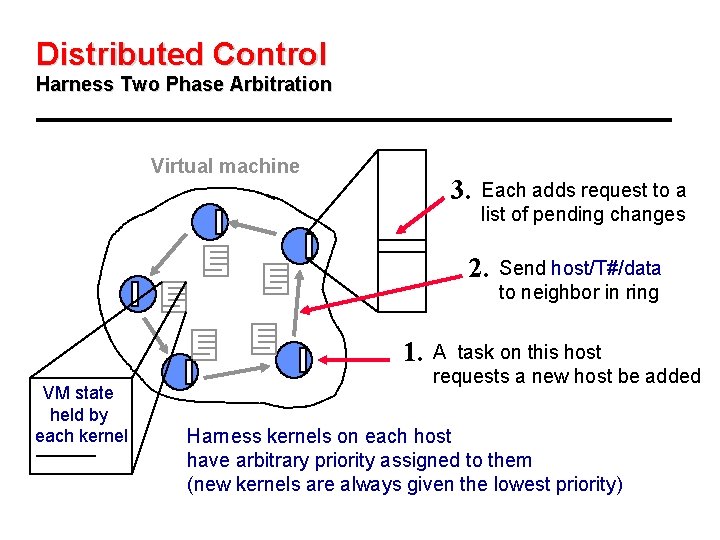

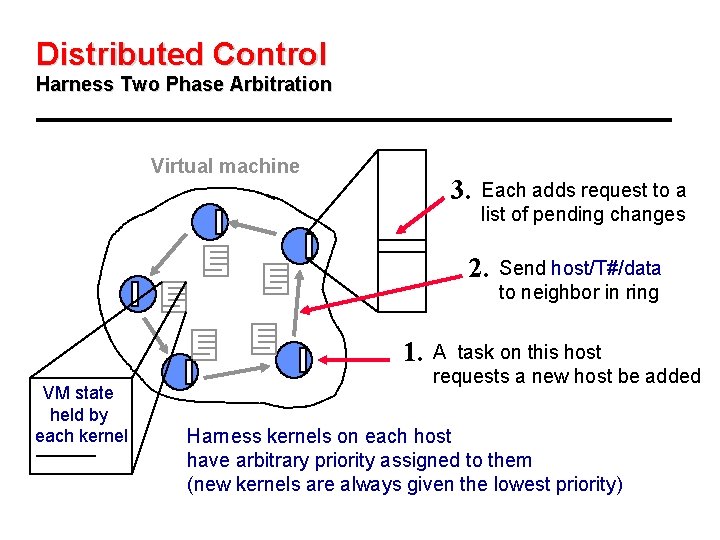

Distributed Control: Harness Two Phase Arbitration

[Diagram: a virtual machine whose Harness kernels form a ring, with the VM state held by each kernel.]

1. A task on this host requests a new host be added
2. Send host/T#/data to neighbor in ring
3. Each adds request to a list of pending changes

Harness kernels on each host have an arbitrary priority assigned to them (new kernels are always given the lowest priority).
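A toy Python simulation can show how a request passed around a ring ends up in every kernel's pending list. It is a sketch under stated assumptions, not the Harness protocol: the slide shows only the request phase, so the second (commit) pass is assumed here, and priorities, concurrency, and failures are ignored. `Kernel` and `add_host` are hypothetical names.

```python
from typing import List


class Kernel:
    """One kernel in the ring, holding its own copy of the VM state."""

    def __init__(self, name: str, hosts: List[str]) -> None:
        self.name = name
        self.state = list(hosts)       # hosts currently in the virtual machine
        self.pending: List[str] = []   # requested changes not yet applied


def add_host(ring: List[Kernel], origin: int, new_host: str) -> None:
    size = len(ring)
    # Phase 1: the request travels once around the ring from the originator;
    # each kernel records it as a pending change.
    for step in range(size):
        ring[(origin + step) % size].pending.append(new_host)
    # Phase 2 (assumed): a commit pass makes every kernel apply the change.
    for step in range(size):
        kernel = ring[(origin + step) % size]
        kernel.pending.remove(new_host)
        kernel.state.append(new_host)


if __name__ == "__main__":
    hosts = ["A", "B", "C"]
    ring = [Kernel(h, hosts) for h in hosts]
    add_host(ring, origin=1, new_host="D")
    print([k.state for k in ring])
```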

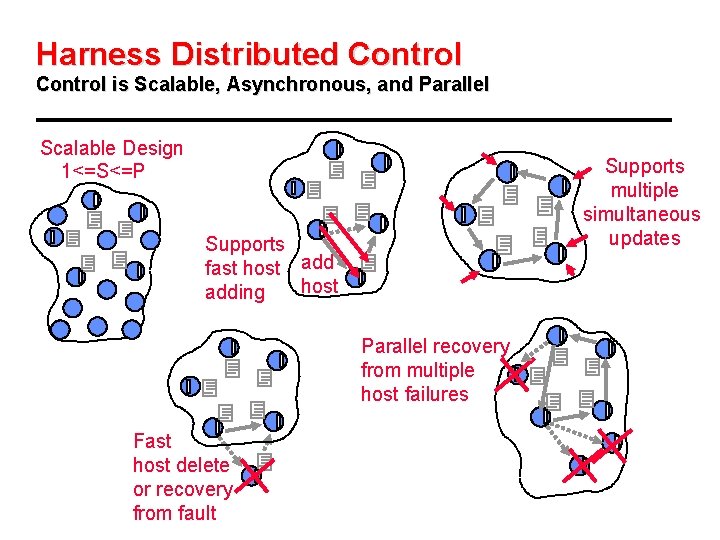

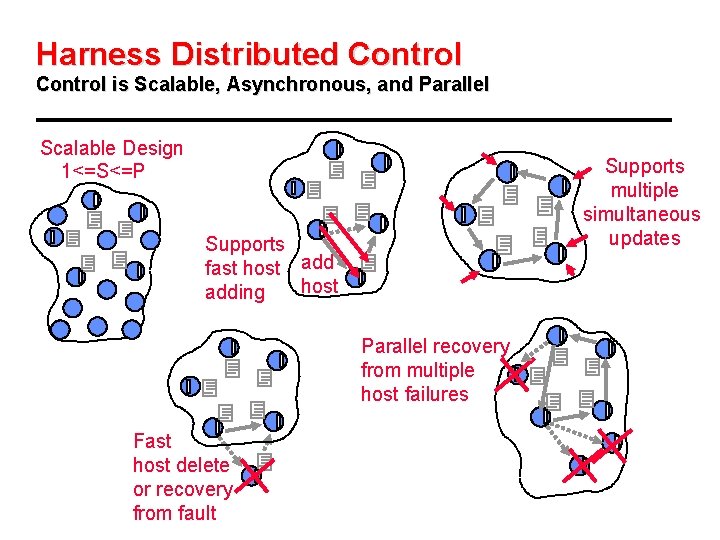

Harness Distributed Control is Scalable, Asynchronous, and Parallel

- Scalable design: 1<=S<=P
- Supports multiple simultaneous updates
- Supports fast host adding
- Parallel recovery from multiple host failures
- Fast host delete or recovery from fault

For more Information

Follow the links from my Web site: www.csm.ornl.gov/~geist

A copy of these slides is also available there.