Introduction to Message Passing Interface (MPI) Part I

- Slides: 35

Introduction to Message Passing Interface (MPI) Part I
SoCal Annual AAP workshop
October 30, 2006
San Diego Supercomputer Center
SAN DIEGO SUPERCOMPUTER CENTER at the UNIVERSITY OF CALIFORNIA, SAN DIEGO

Overview
• MPI: Background and Key Concepts
• Compilers and Include files for MPI
• Initialization
• Basic Communications in MPI
• Data types
• Summary of 6 basic MPI calls
• Example using basic MPI calls

Message Passing Interface: Background
• MPI - Message Passing Interface
• Library standard defined by a committee of vendors, implementers, and parallel programmers
• Used to create parallel SPMD (single program, multiple data) programs based on message passing
• Available on almost all parallel machines in C and Fortran
• About 125 routines, including advanced routines
• 6 basic routines

MPI Implementations
• Most parallel machine vendors have optimized versions
• Some other popular implementations include:
  • http://www-unix.mcs.anl.gov/mpich/
  • http://www.lam-mpi.org/
  • http://www.open-mpi.org/
  • http://icl.cs.utk.edu/ftmpi/
  • http://public.lanl.gov/lampi/

MPI: Key Concepts
• Parallel SPMD programs based on message passing.
• Universal multiprocessor model that fits well on separate processors connected by a fast or slow network.
• Normally the same program runs on several different nodes.
• Nodes communicate using message passing.
• Message passing lets the programmer explicitly associate specific data with processes, which allows the compiler and cache-management hardware to function fully.

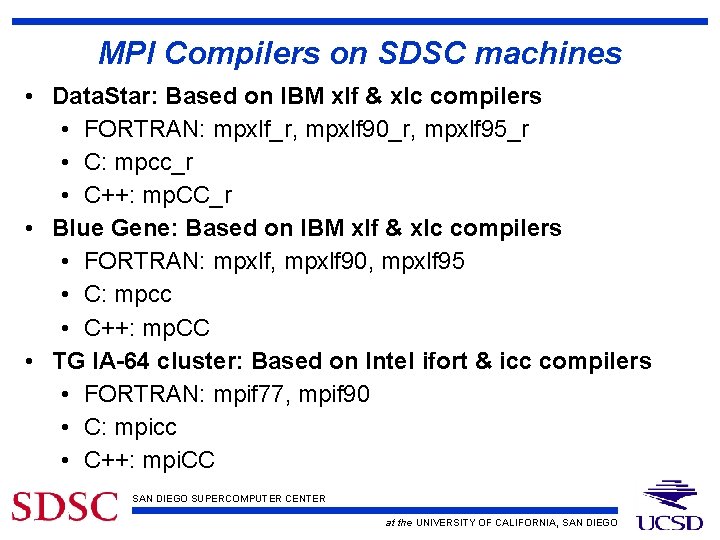

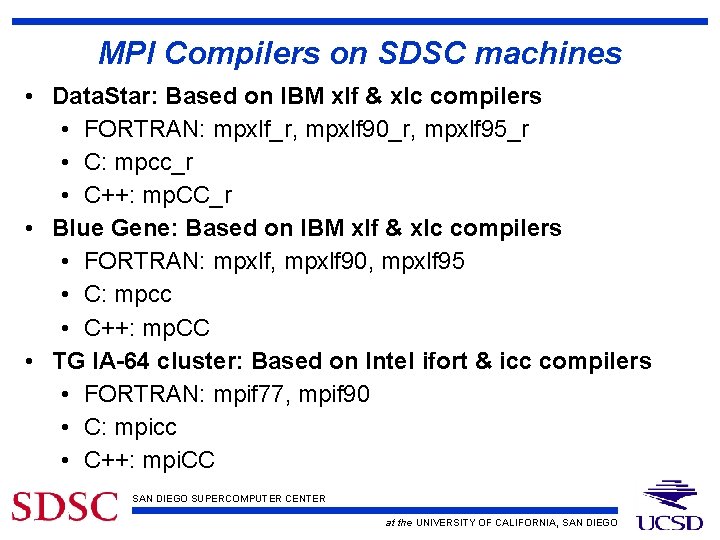

MPI Compilers on SDSC machines
• DataStar: Based on IBM xlf & xlc compilers
  • FORTRAN: mpxlf_r, mpxlf90_r, mpxlf95_r
  • C: mpcc_r
  • C++: mpCC_r
• Blue Gene: Based on IBM xlf & xlc compilers
  • FORTRAN: mpxlf, mpxlf90, mpxlf95
  • C: mpcc
  • C++: mpCC
• TG IA-64 cluster: Based on Intel ifort & icc compilers
  • FORTRAN: mpif77, mpif90
  • C: mpicc
  • C++: mpiCC

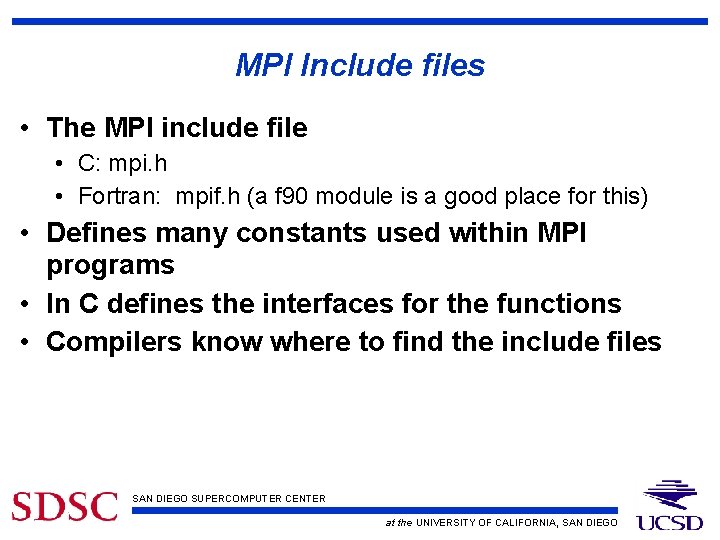

MPI Include files
• The MPI include file
  • C: mpi.h
  • Fortran: mpif.h (an f90 module is a good place for this)
• Defines many constants used within MPI programs
• In C, defines the interfaces for the functions
• Compilers know where to find the include files

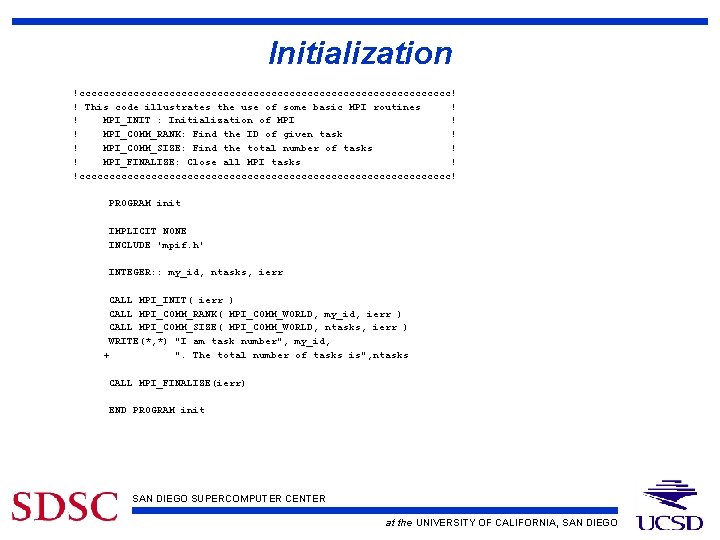

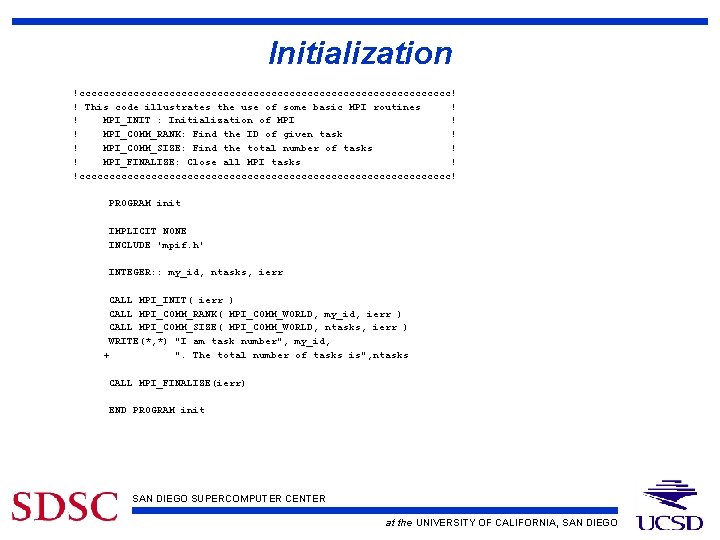

Initialization

    !ccccccccccccccccccccccccccccccccccccccccccccccccccccccccccc!
    ! This code illustrates the use of some basic MPI routines  !
    !   MPI_INIT      : Initialization of MPI                   !
    !   MPI_COMM_RANK : Find the ID of given task               !
    !   MPI_COMM_SIZE : Find the total number of tasks          !
    !   MPI_FINALIZE  : Close all MPI tasks                     !
    !ccccccccccccccccccccccccccccccccccccccccccccccccccccccccccc!
          PROGRAM init
          IMPLICIT NONE
          INCLUDE 'mpif.h'
          INTEGER :: my_id, ntasks, ierr
          CALL MPI_INIT( ierr )
          CALL MPI_COMM_RANK( MPI_COMM_WORLD, my_id, ierr )
          CALL MPI_COMM_SIZE( MPI_COMM_WORLD, ntasks, ierr )
          WRITE(*,*) "I am task number", my_id,
         +  ". The total number of tasks is", ntasks
          CALL MPI_FINALIZE(ierr)
          END PROGRAM init

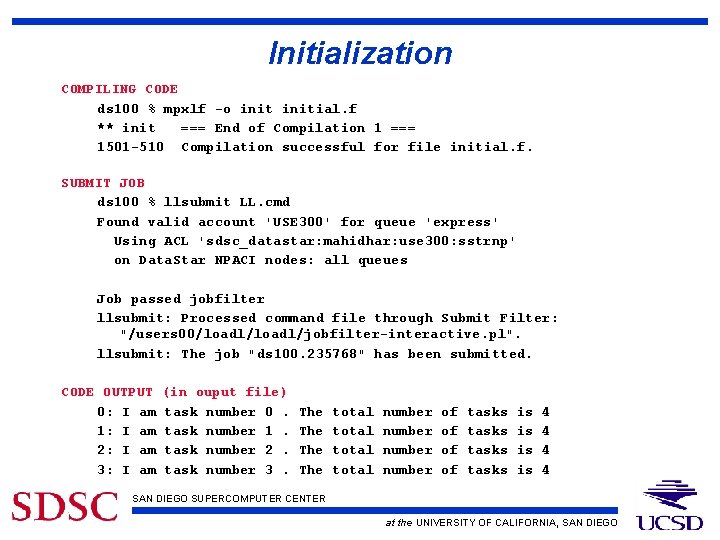

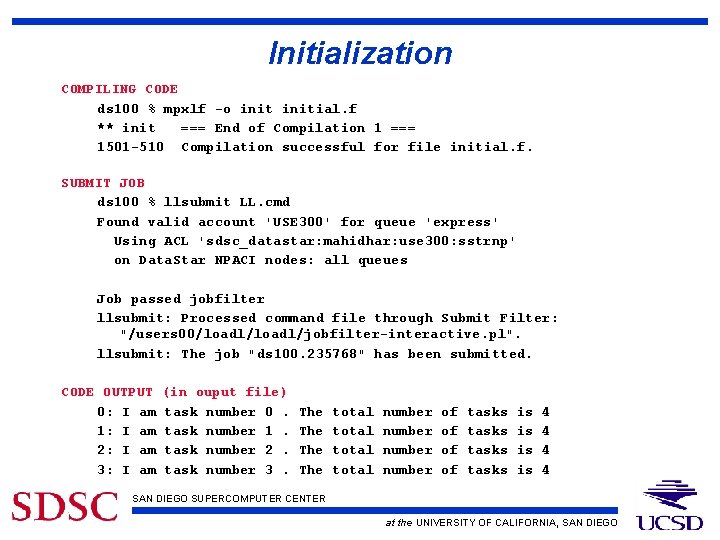

Initialization

COMPILING CODE

    ds100 % mpxlf -o initial.f
    ** init   === End of Compilation 1 ===
    1501-510  Compilation successful for file initial.f.

SUBMIT JOB

    ds100 % llsubmit LL.cmd
    Found valid account 'USE300' for queue 'express'
    Using ACL 'sdsc_datastar:mahidhar:use300:sstrnp' on DataStar NPACI nodes: all queues
    Job passed jobfilter
    llsubmit: Processed command file through Submit Filter: "/users00/loadl/jobfilter-interactive.pl".
    llsubmit: The job "ds100.235768" has been submitted.

CODE OUTPUT (in output file)

    0: I am task number 0. The total number of tasks is 4
    1: I am task number 1. The total number of tasks is 4
    2: I am task number 2. The total number of tasks is 4
    3: I am task number 3. The total number of tasks is 4

Communicators
• A parameter for most MPI calls
• A collection of processors working on some part of a parallel job
• MPI_COMM_WORLD is defined in the MPI include file as all of the processors in your job
• Can create subsets of MPI_COMM_WORLD
• Processors within a communicator are assigned numbers 0 to n-1

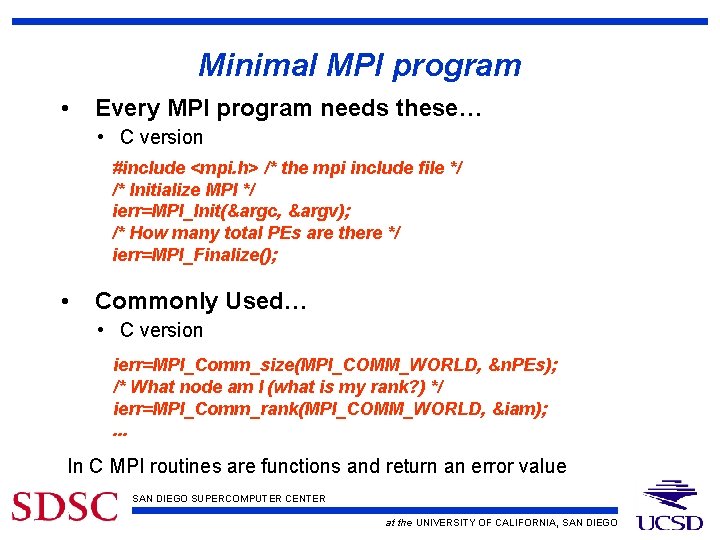

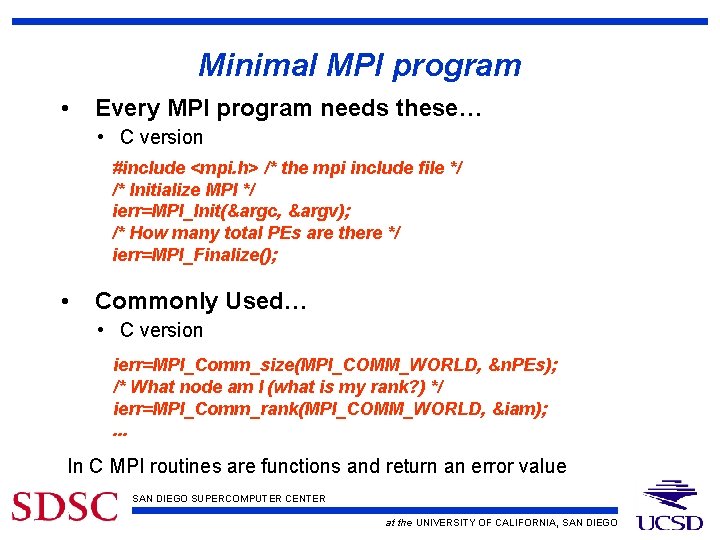

Minimal MPI program
• Every MPI program needs these…
  • C version

        #include <mpi.h>   /* the mpi include file */
        /* Initialize MPI */
        ierr=MPI_Init(&argc, &argv);
        ...
        ierr=MPI_Finalize();

• Commonly Used…
  • C version

        /* How many total PEs are there */
        ierr=MPI_Comm_size(MPI_COMM_WORLD, &nPEs);
        /* What node am I (what is my rank?) */
        ierr=MPI_Comm_rank(MPI_COMM_WORLD, &iam);
        ...

In C, MPI routines are functions and return an error value

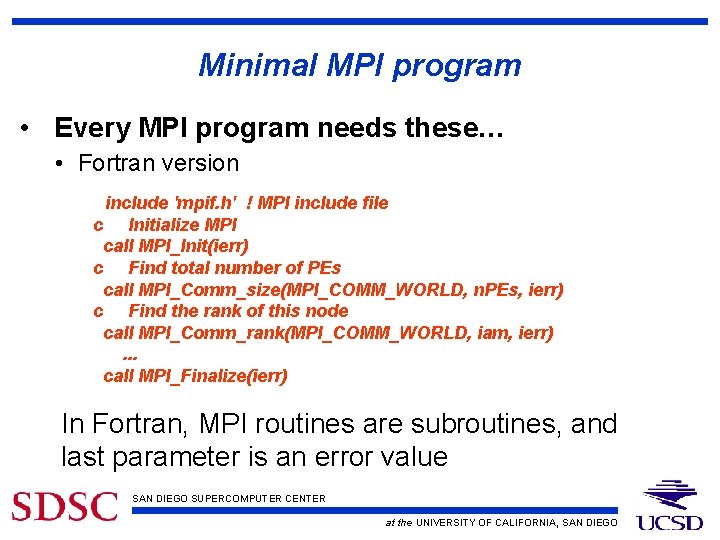

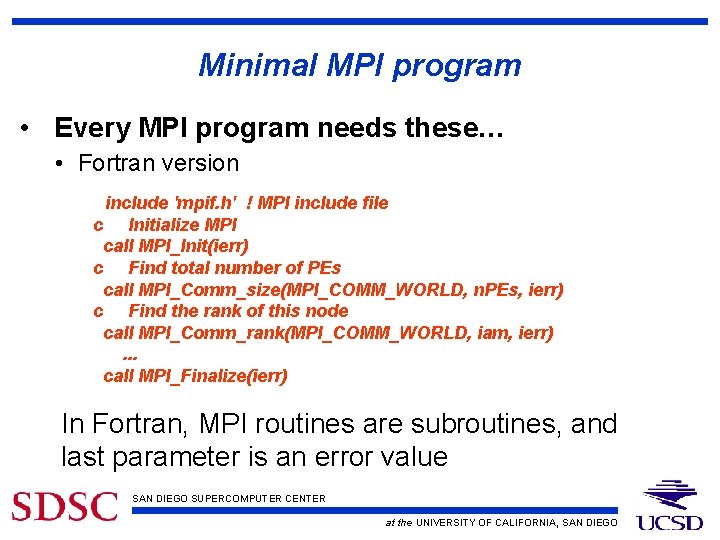

Minimal MPI program
• Every MPI program needs these…
  • Fortran version

          include 'mpif.h'   ! MPI include file
    c Initialize MPI
          call MPI_Init(ierr)
    c Find total number of PEs
          call MPI_Comm_size(MPI_COMM_WORLD, nPEs, ierr)
    c Find the rank of this node
          call MPI_Comm_rank(MPI_COMM_WORLD, iam, ierr)
          ...
          call MPI_Finalize(ierr)

In Fortran, MPI routines are subroutines, and the last parameter is an error value

Basic Communications in MPI
• Data values are transferred from one processor to another
  • One process sends the data
  • Another receives the data
• Standard, Blocking
  • Call does not return until the message buffer is free to be reused
• Standard, Nonblocking
  • Call indicates the start of a send or receive, and another call is made to determine whether it has finished

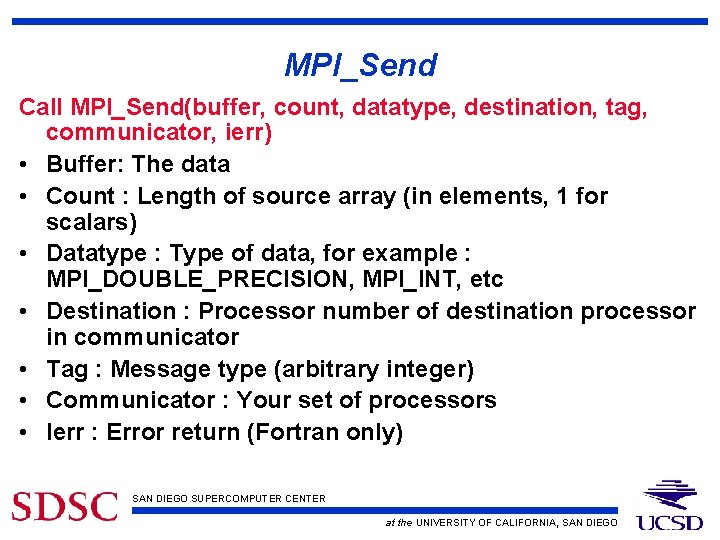

Standard, Blocking Send
• MPI_Send: Sends data to another processor
• Use MPI_Recv to "get" the data
• C
  • MPI_Send(&buffer, count, datatype, destination, tag, communicator);
• Fortran
  • Call MPI_Send(buffer, count, datatype, destination, tag, communicator, ierr)
• Call blocks until the message is on its way (the buffer can then be reused)

MPI_Send
Call MPI_Send(buffer, count, datatype, destination, tag, communicator, ierr)
• Buffer: The data
• Count: Length of source array (in elements, 1 for scalars)
• Datatype: Type of data, for example: MPI_DOUBLE_PRECISION, MPI_INT, etc.
• Destination: Processor number of destination processor in communicator
• Tag: Message type (arbitrary integer)
• Communicator: Your set of processors
• Ierr: Error return (Fortran only)

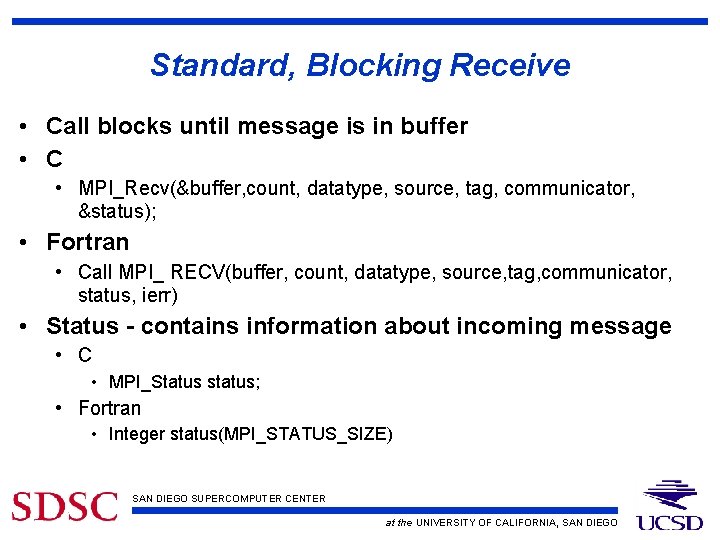

Standard, Blocking Receive
• Call blocks until message is in buffer
• C
  • MPI_Recv(&buffer, count, datatype, source, tag, communicator, &status);
• Fortran
  • Call MPI_RECV(buffer, count, datatype, source, tag, communicator, status, ierr)
• Status - contains information about incoming message
  • C
    • MPI_Status status;
  • Fortran
    • Integer status(MPI_STATUS_SIZE)

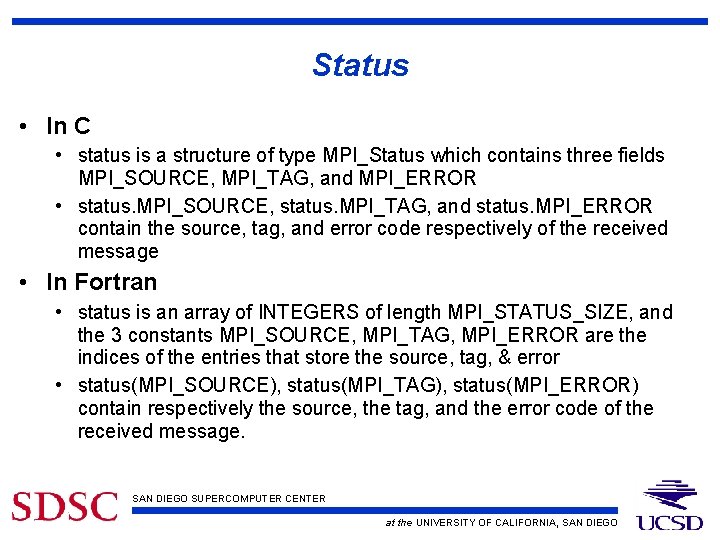

Status
• In C
  • status is a structure of type MPI_Status, which contains three fields: MPI_SOURCE, MPI_TAG, and MPI_ERROR
  • status.MPI_SOURCE, status.MPI_TAG, and status.MPI_ERROR contain the source, tag, and error code, respectively, of the received message
• In Fortran
  • status is an array of INTEGERs of length MPI_STATUS_SIZE, and the 3 constants MPI_SOURCE, MPI_TAG, and MPI_ERROR are the indices of the entries that store the source, tag, and error code
  • status(MPI_SOURCE), status(MPI_TAG), and status(MPI_ERROR) contain, respectively, the source, the tag, and the error code of the received message

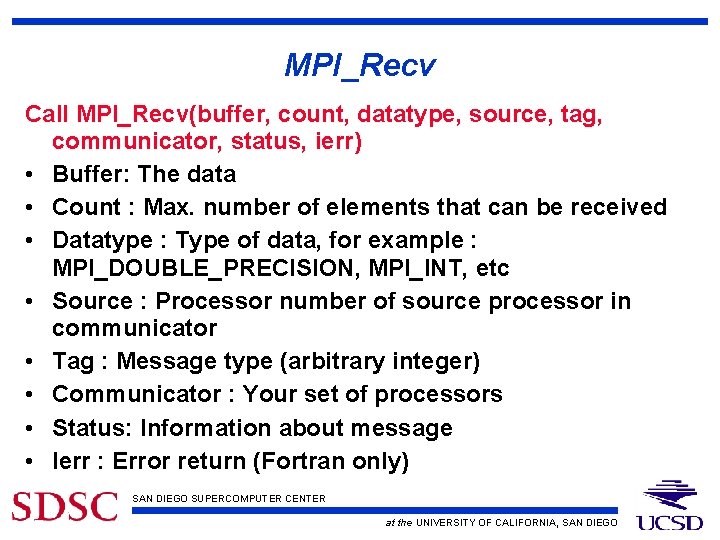

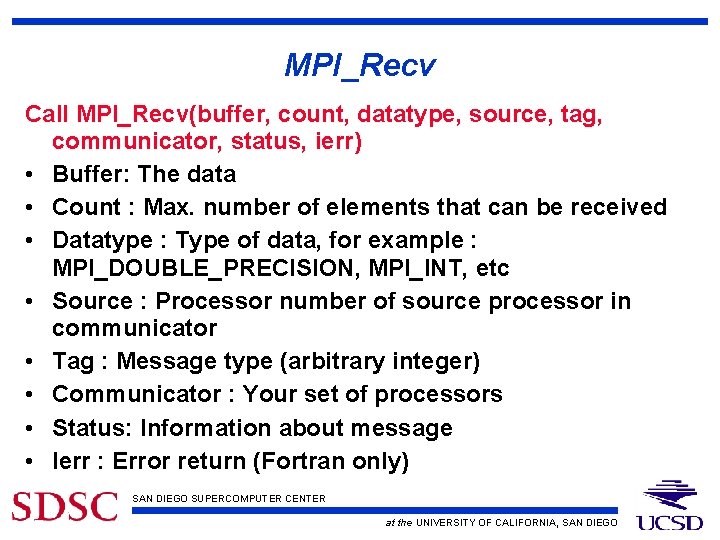

MPI_Recv
Call MPI_Recv(buffer, count, datatype, source, tag, communicator, status, ierr)
• Buffer: The data
• Count: Max. number of elements that can be received
• Datatype: Type of data, for example: MPI_DOUBLE_PRECISION, MPI_INT, etc.
• Source: Processor number of source processor in communicator
• Tag: Message type (arbitrary integer)
• Communicator: Your set of processors
• Status: Information about message
• Ierr: Error return (Fortran only)

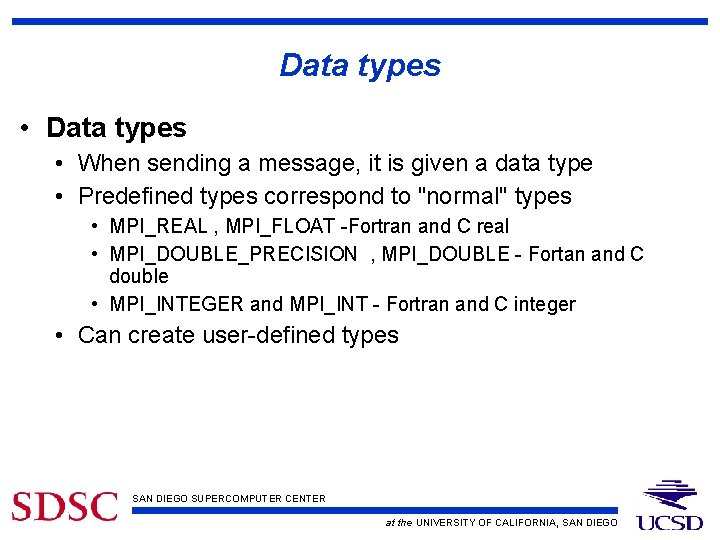

Data types
• When sending a message, it is given a data type
• Predefined types correspond to "normal" types
  • MPI_REAL, MPI_FLOAT - Fortran REAL and C float
  • MPI_DOUBLE_PRECISION, MPI_DOUBLE - Fortran DOUBLE PRECISION and C double
  • MPI_INTEGER, MPI_INT - Fortran INTEGER and C int
• Can create user-defined types

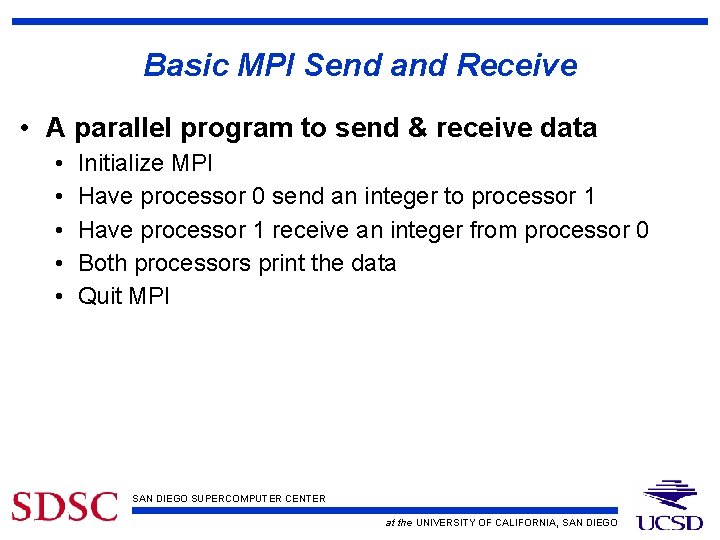

Basic MPI Send and Receive
• A parallel program to send & receive data
  • Initialize MPI
  • Have processor 0 send an integer to processor 1
  • Have processor 1 receive an integer from processor 0
  • Both processors print the data
  • Quit MPI

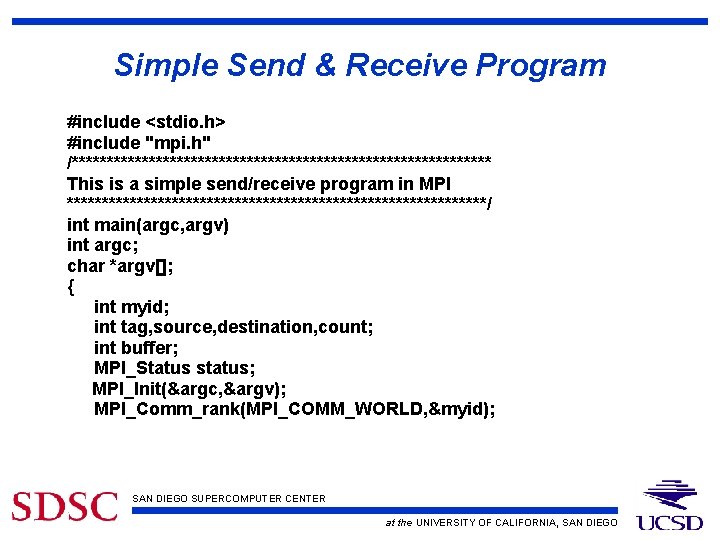

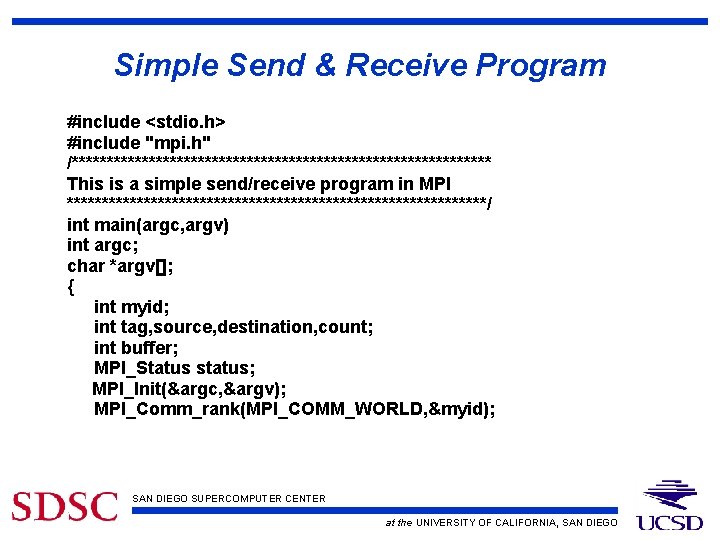

Simple Send & Receive Program

    #include <stdio.h>
    #include "mpi.h"
    /******************************
     This is a simple send/receive program in MPI
     ******************************/
    int main(int argc, char *argv[])
    {
        int myid;
        int tag, source, destination, count;
        int buffer;
        MPI_Status status;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &myid);

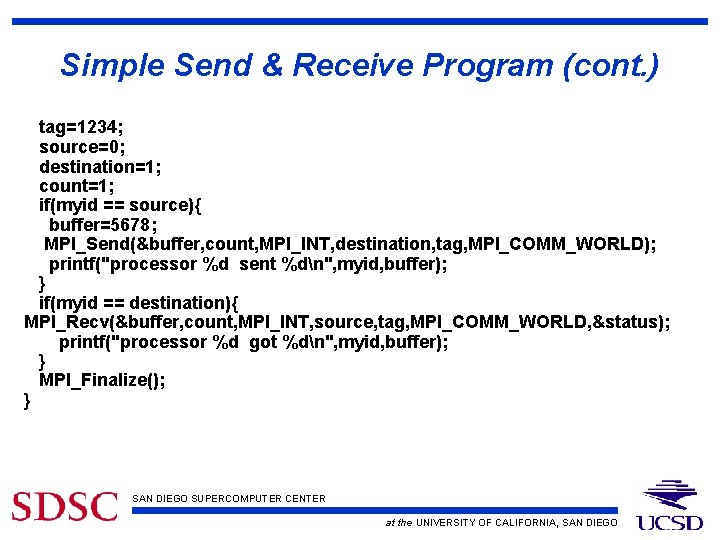

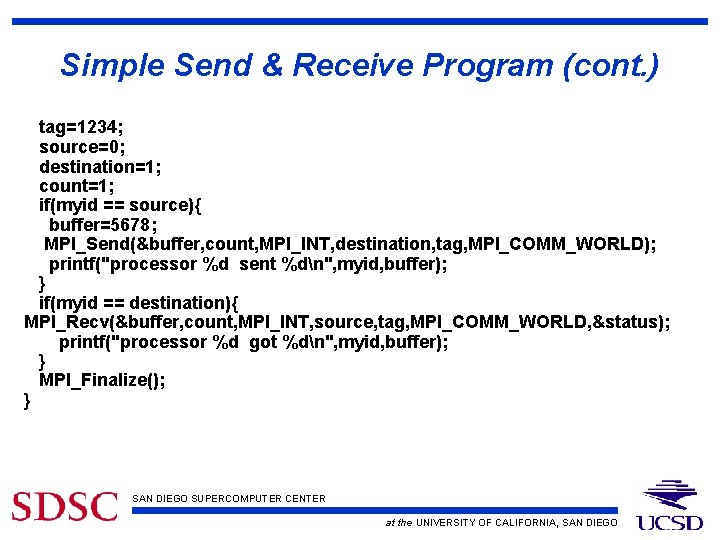

Simple Send & Receive Program (cont.)

        tag=1234;
        source=0;
        destination=1;
        count=1;
        if(myid == source){
            buffer=5678;
            MPI_Send(&buffer, count, MPI_INT, destination, tag, MPI_COMM_WORLD);
            printf("processor %d sent %d\n", myid, buffer);
        }
        if(myid == destination){
            MPI_Recv(&buffer, count, MPI_INT, source, tag, MPI_COMM_WORLD, &status);
            printf("processor %d got %d\n", myid, buffer);
        }
        MPI_Finalize();
        return 0;
    }

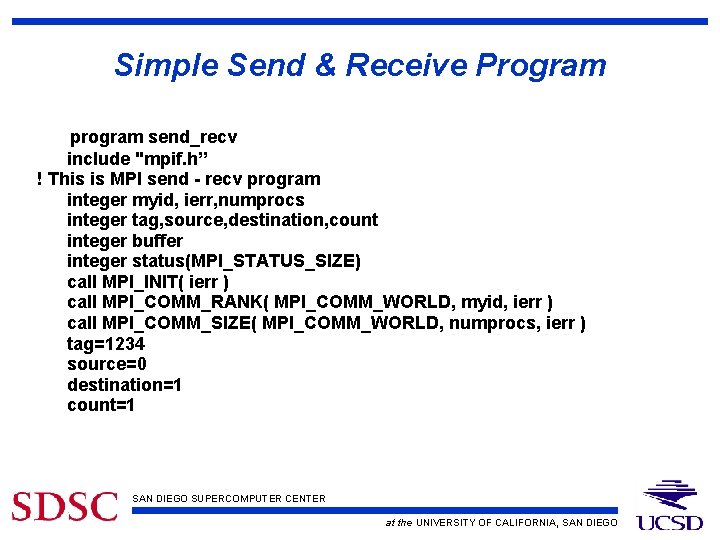

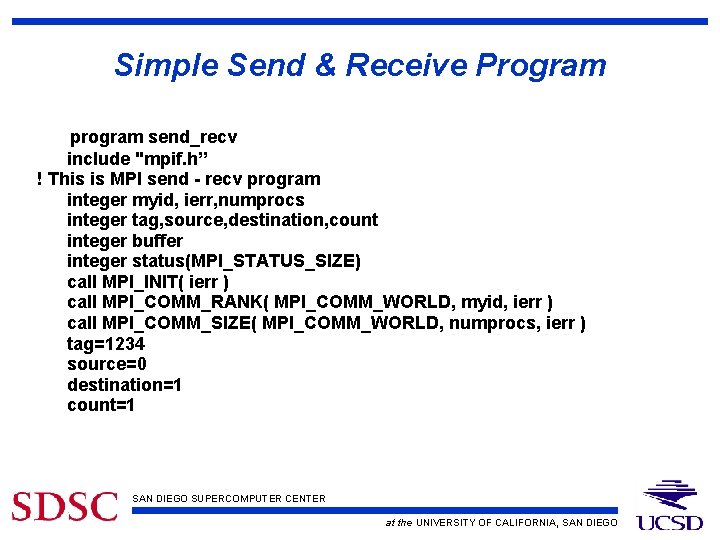

Simple Send & Receive Program

          program send_recv
          include "mpif.h"
    ! This is MPI send - recv program
          integer myid, ierr, numprocs
          integer tag, source, destination, count
          integer buffer
          integer status(MPI_STATUS_SIZE)
          call MPI_INIT( ierr )
          call MPI_COMM_RANK( MPI_COMM_WORLD, myid, ierr )
          call MPI_COMM_SIZE( MPI_COMM_WORLD, numprocs, ierr )
          tag=1234
          source=0
          destination=1
          count=1

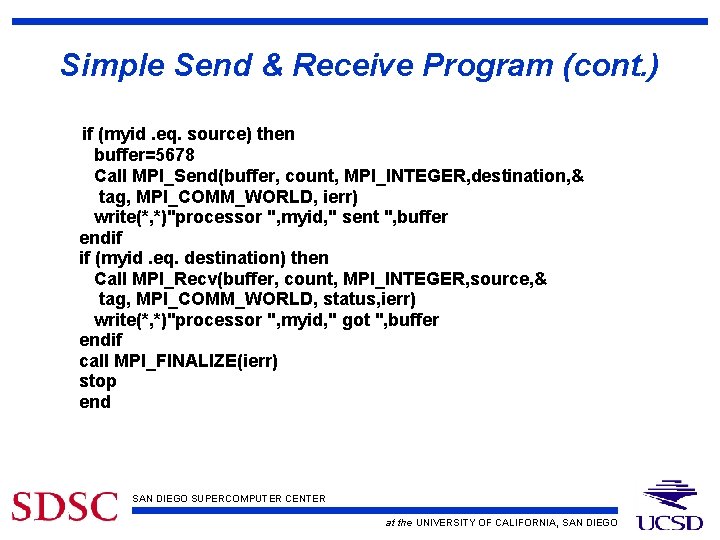

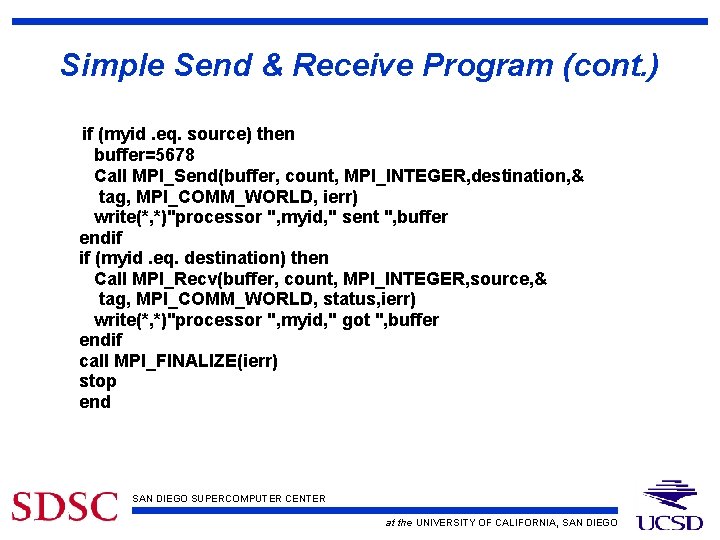

Simple Send & Receive Program (cont.)

          if (myid .eq. source) then
             buffer=5678
             call MPI_Send(buffer, count, MPI_INTEGER, destination,
         &        tag, MPI_COMM_WORLD, ierr)
             write(*,*) "processor ", myid, " sent ", buffer
          endif
          if (myid .eq. destination) then
             call MPI_Recv(buffer, count, MPI_INTEGER, source,
         &        tag, MPI_COMM_WORLD, status, ierr)
             write(*,*) "processor ", myid, " got ", buffer
          endif
          call MPI_FINALIZE(ierr)
          stop
          end

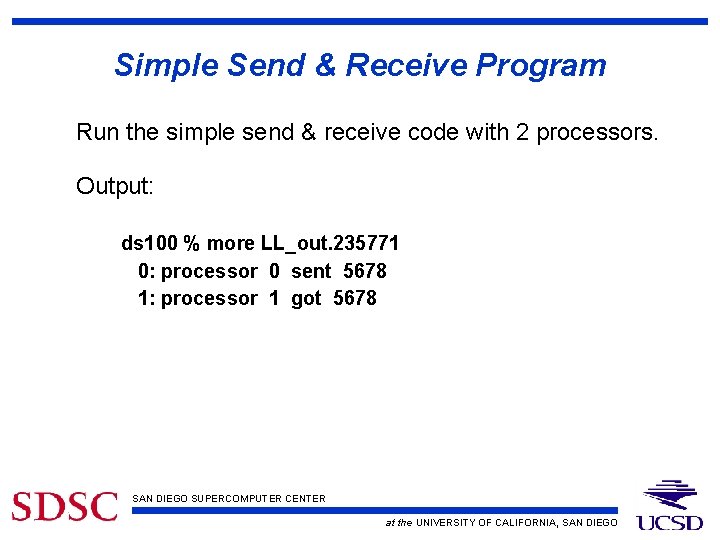

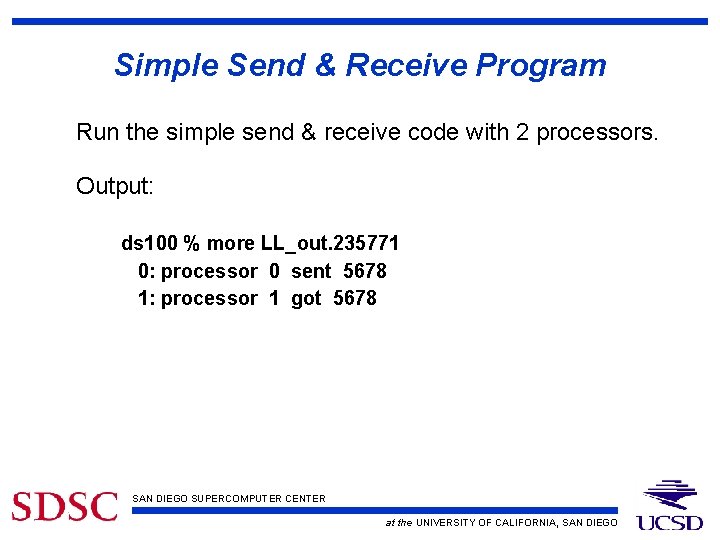

Simple Send & Receive Program
Run the simple send & receive code with 2 processors.
Output:

    ds100 % more LL_out.235771
    0: processor 0 sent 5678
    1: processor 1 got 5678

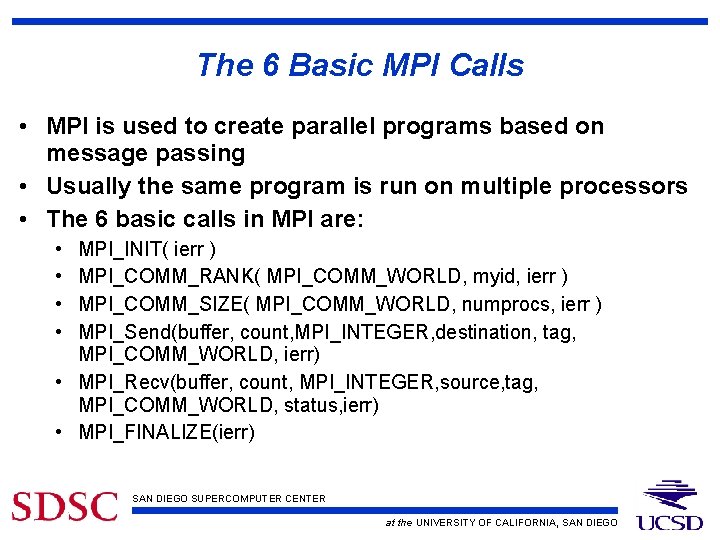

The 6 Basic MPI Calls
• MPI is used to create parallel programs based on message passing
• Usually the same program is run on multiple processors
• The 6 basic calls in MPI are:
  • MPI_INIT( ierr )
  • MPI_COMM_RANK( MPI_COMM_WORLD, myid, ierr )
  • MPI_COMM_SIZE( MPI_COMM_WORLD, numprocs, ierr )
  • MPI_Send(buffer, count, MPI_INTEGER, destination, tag, MPI_COMM_WORLD, ierr)
  • MPI_Recv(buffer, count, MPI_INTEGER, source, tag, MPI_COMM_WORLD, status, ierr)
  • MPI_FINALIZE(ierr)

Example MPI program using basic routines

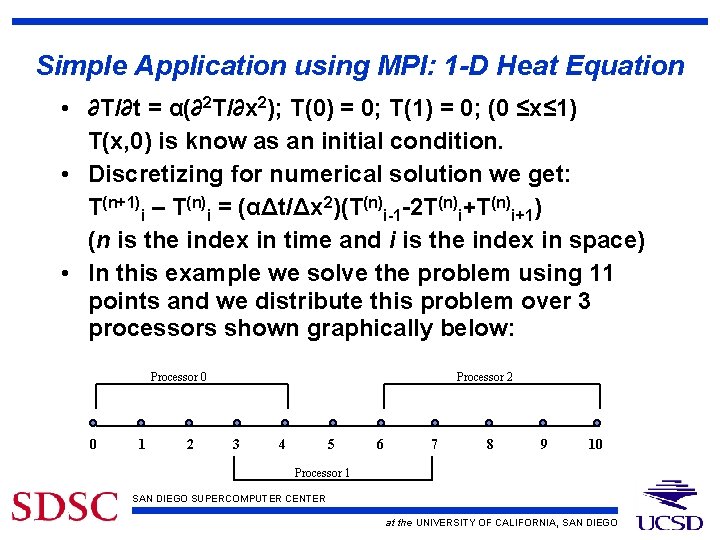

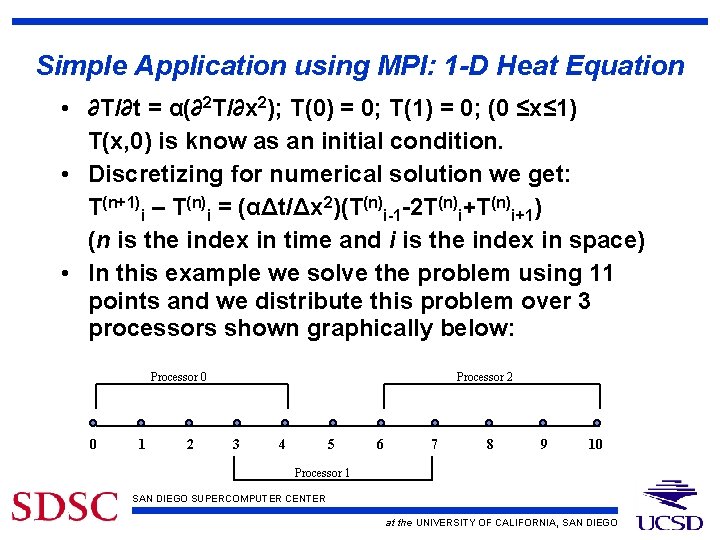

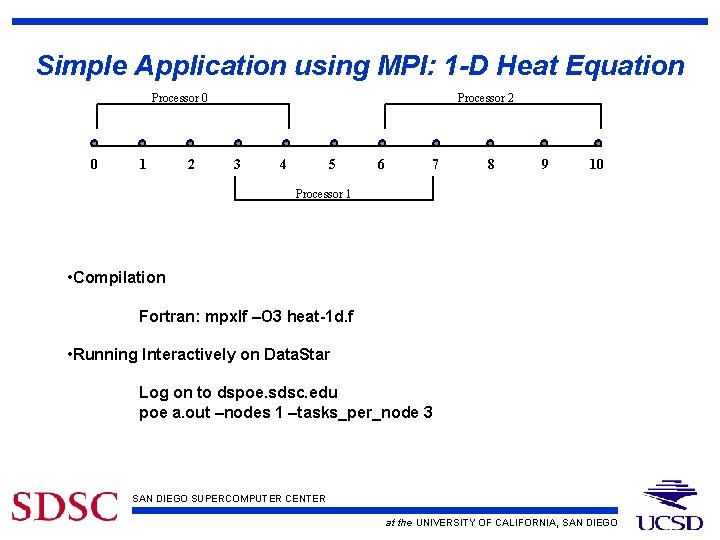

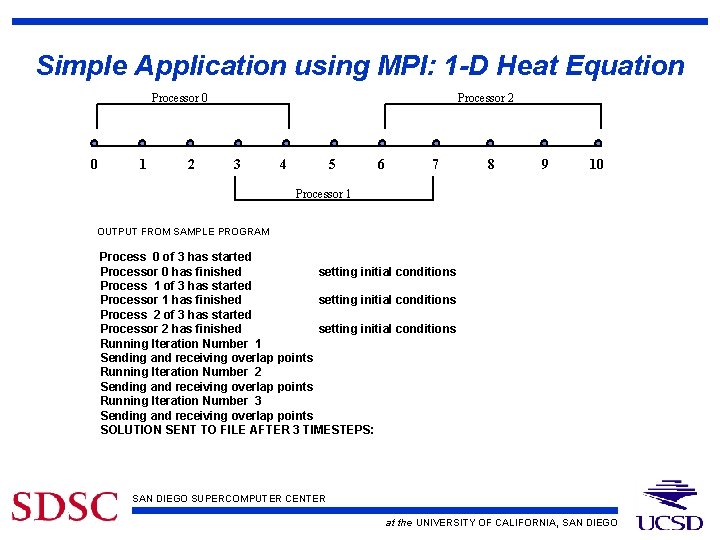

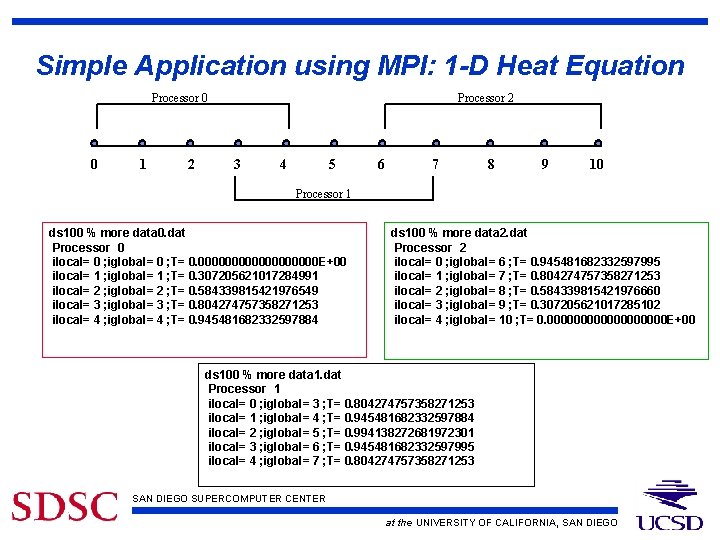

Simple Application using MPI: 1-D Heat Equation
• $\partial T/\partial t = \alpha\,(\partial^2 T/\partial x^2)$; $T(0) = 0$; $T(1) = 0$; $(0 \le x \le 1)$. $T(x, 0)$ is known as an initial condition.
• Discretizing for numerical solution we get: $T_i^{n+1} - T_i^{n} = \dfrac{\alpha\,\Delta t}{\Delta x^2}\left(T_{i-1}^{n} - 2\,T_i^{n} + T_{i+1}^{n}\right)$ ($n$ is the index in time and $i$ is the index in space)
• In this example we solve the problem using 11 points and we distribute this problem over 3 processors, shown graphically below:
[Figure: grid points 0 to 10 along x, divided among Processor 0, Processor 1, and Processor 2]

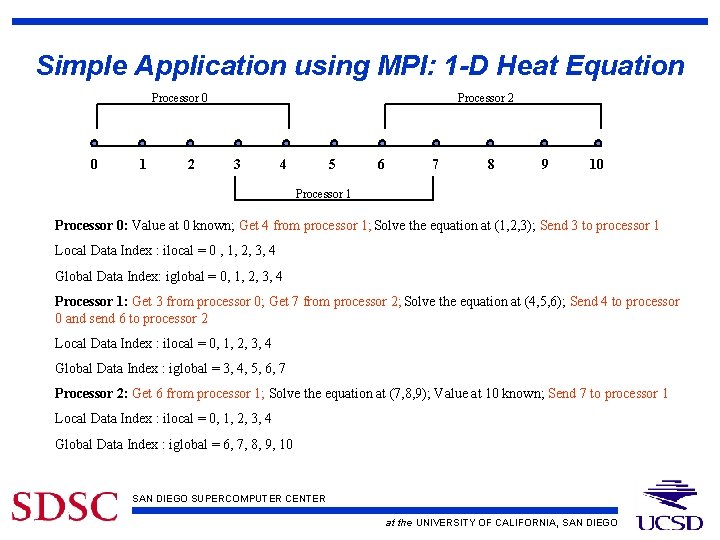

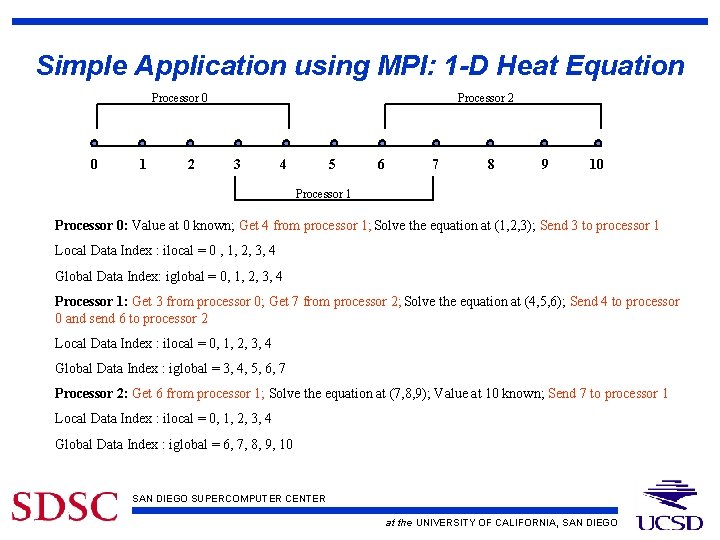

Simple Application using MPI: 1-D Heat Equation
• Processor 0: Value at 0 known; get 4 from processor 1; solve the equation at (1, 2, 3); send 3 to processor 1
  • Local data index: ilocal = 0, 1, 2, 3, 4
  • Global data index: iglobal = 0, 1, 2, 3, 4
• Processor 1: Get 3 from processor 0; get 7 from processor 2; solve the equation at (4, 5, 6); send 4 to processor 0 and send 6 to processor 2
  • Local data index: ilocal = 0, 1, 2, 3, 4
  • Global data index: iglobal = 3, 4, 5, 6, 7
• Processor 2: Get 6 from processor 1; solve the equation at (7, 8, 9); value at 10 known; send 7 to processor 1
  • Local data index: ilocal = 0, 1, 2, 3, 4
  • Global data index: iglobal = 6, 7, 8, 9, 10

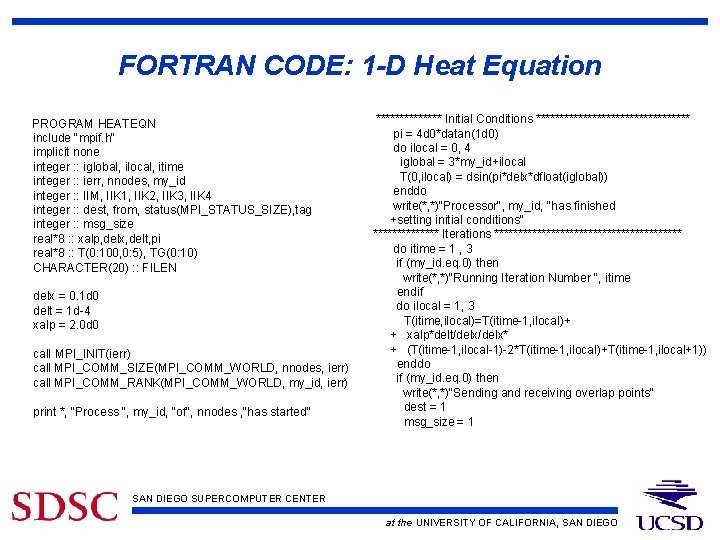

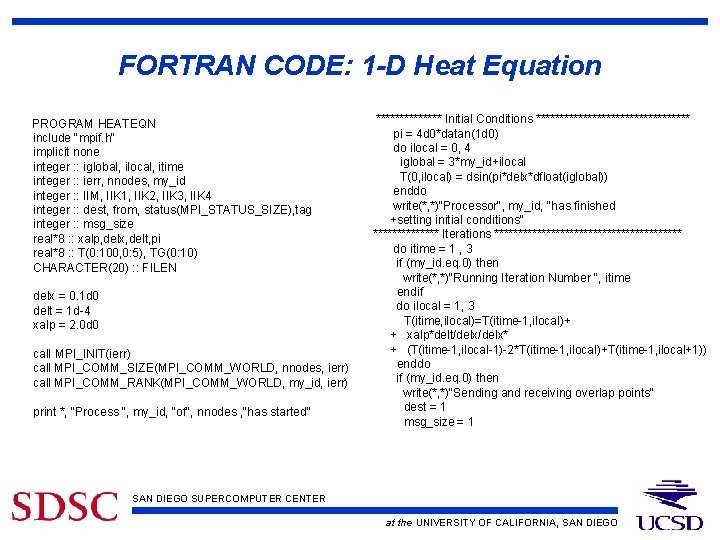

FORTRAN CODE: 1-D Heat Equation

          PROGRAM HEATEQN
          implicit none
          include "mpif.h"
          integer :: iglobal, ilocal, itime
          integer :: ierr, nnodes, my_id
          integer :: IIM, IIK1, IIK2, IIK3, IIK4
          integer :: dest, from, status(MPI_STATUS_SIZE), tag
          integer :: msg_size
          real*8 :: xalp, delx, delt, pi
          real*8 :: T(0:100, 0:5), TG(0:10)
          CHARACTER(20) :: FILEN

          delx = 0.1d0
          delt = 1d-4
          xalp = 2.0d0
    * Message tag: sender and receiver must use the same value
          tag = 1
    * Zero T so the fixed end points stay 0 at every time level
          T = 0.0d0

          call MPI_INIT(ierr)
          call MPI_COMM_SIZE(MPI_COMM_WORLD, nnodes, ierr)
          call MPI_COMM_RANK(MPI_COMM_WORLD, my_id, ierr)
          print *, "Process ", my_id, "of", nnodes, "has started"

    ******* Initial Conditions *****************
          pi = 4d0*datan(1d0)
          do ilocal = 0, 4
             iglobal = 3*my_id+ilocal
             T(0, ilocal) = dsin(pi*delx*dfloat(iglobal))
          enddo
          write(*,*) "Processor", my_id,
         +  "has finished setting initial conditions"

    ******* Iterations ********************
          do itime = 1, 3
             if (my_id .eq. 0) then
                write(*,*) "Running Iteration Number ", itime
             endif
    * Explicit update of the points this processor solves (1..3)
             do ilocal = 1, 3
                T(itime, ilocal) = T(itime-1, ilocal) +
         +       xalp*delt/delx**2*
         + (T(itime-1,ilocal-1)-2*T(itime-1,ilocal)+T(itime-1,ilocal+1))
             enddo
             if (my_id .eq. 0) then
                write(*,*) "Sending and receiving overlap points"
                dest = 1
                msg_size = 1

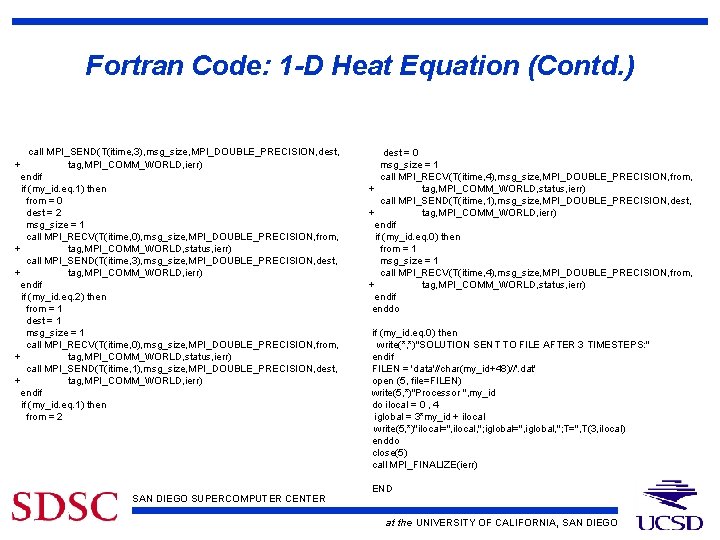

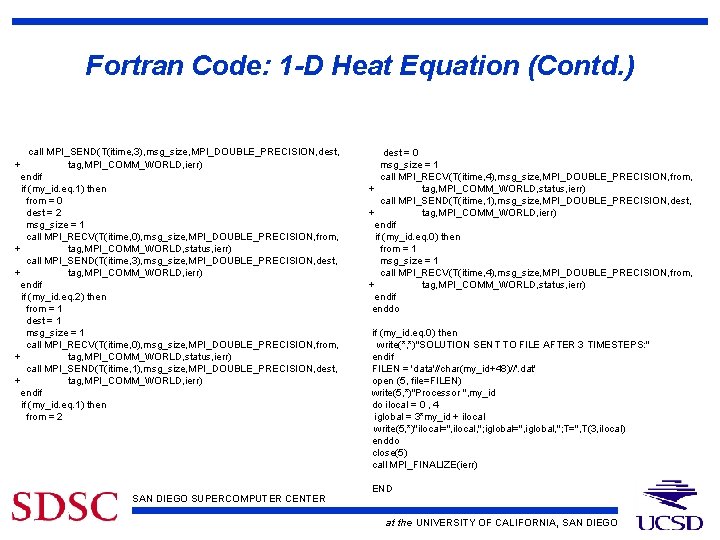

Fortran Code: 1-D Heat Equation (Contd.)

                call MPI_SEND(T(itime,3), msg_size, MPI_DOUBLE_PRECISION,
         +           dest, tag, MPI_COMM_WORLD, ierr)
             endif
             if (my_id .eq. 1) then
                from = 0
                dest = 2
                msg_size = 1
                call MPI_RECV(T(itime,0), msg_size, MPI_DOUBLE_PRECISION,
         +           from, tag, MPI_COMM_WORLD, status, ierr)
                call MPI_SEND(T(itime,3), msg_size, MPI_DOUBLE_PRECISION,
         +           dest, tag, MPI_COMM_WORLD, ierr)
             endif
             if (my_id .eq. 2) then
                from = 1
                dest = 1
                msg_size = 1
                call MPI_RECV(T(itime,0), msg_size, MPI_DOUBLE_PRECISION,
         +           from, tag, MPI_COMM_WORLD, status, ierr)
                call MPI_SEND(T(itime,1), msg_size, MPI_DOUBLE_PRECISION,
         +           dest, tag, MPI_COMM_WORLD, ierr)
             endif
             if (my_id .eq. 1) then
                from = 2
                dest = 0
                msg_size = 1
                call MPI_RECV(T(itime,4), msg_size, MPI_DOUBLE_PRECISION,
         +           from, tag, MPI_COMM_WORLD, status, ierr)
                call MPI_SEND(T(itime,1), msg_size, MPI_DOUBLE_PRECISION,
         +           dest, tag, MPI_COMM_WORLD, ierr)
             endif
             if (my_id .eq. 0) then
                from = 1
                msg_size = 1
                call MPI_RECV(T(itime,4), msg_size, MPI_DOUBLE_PRECISION,
         +           from, tag, MPI_COMM_WORLD, status, ierr)
             endif
          enddo

          if (my_id .eq. 0) then
             write(*,*) "SOLUTION SENT TO FILE AFTER 3 TIMESTEPS: "
          endif
          FILEN = 'data'//char(my_id+48)//'.dat'
          open (5, file=FILEN)
          write(5,*) "Processor ", my_id
          do ilocal = 0, 4
             iglobal = 3*my_id + ilocal
             write(5,*) "ilocal=", ilocal, "; iglobal=", iglobal,
         +        "; T=", T(3, ilocal)
          enddo
          close(5)
          call MPI_FINALIZE(ierr)
          END

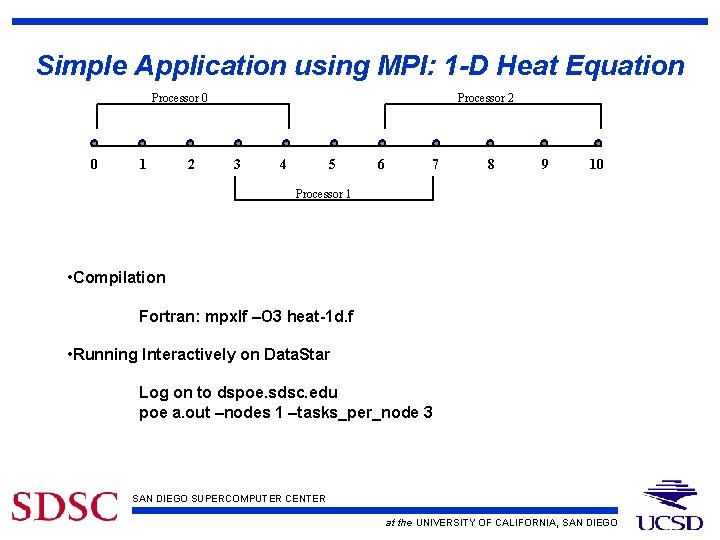

Simple Application using MPI: 1-D Heat Equation
• Compilation
  • Fortran: mpxlf -O3 heat-1d.f
• Running interactively on DataStar
  • Log on to dspoe.sdsc.edu
  • poe a.out -nodes 1 -tasks_per_node 3

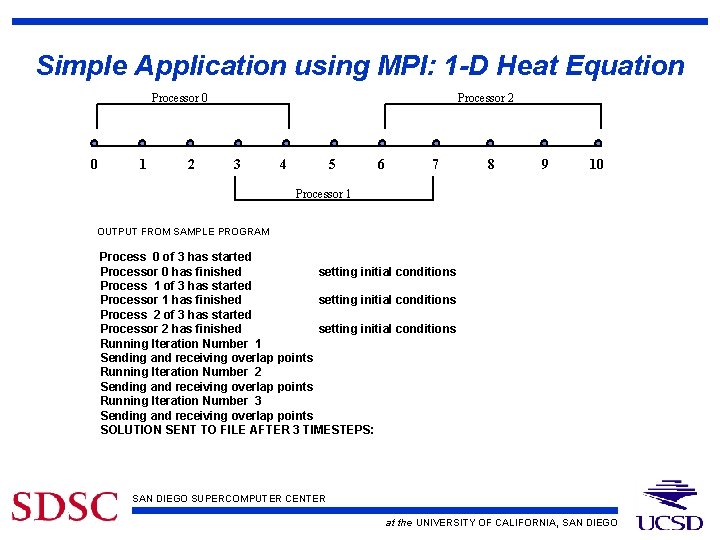

Simple Application using MPI: 1-D Heat Equation
OUTPUT FROM SAMPLE PROGRAM

    Process 0 of 3 has started
    Processor 0 has finished setting initial conditions
    Process 1 of 3 has started
    Processor 1 has finished setting initial conditions
    Process 2 of 3 has started
    Processor 2 has finished setting initial conditions
    Running Iteration Number 1
    Sending and receiving overlap points
    Running Iteration Number 2
    Sending and receiving overlap points
    Running Iteration Number 3
    Sending and receiving overlap points
    SOLUTION SENT TO FILE AFTER 3 TIMESTEPS:

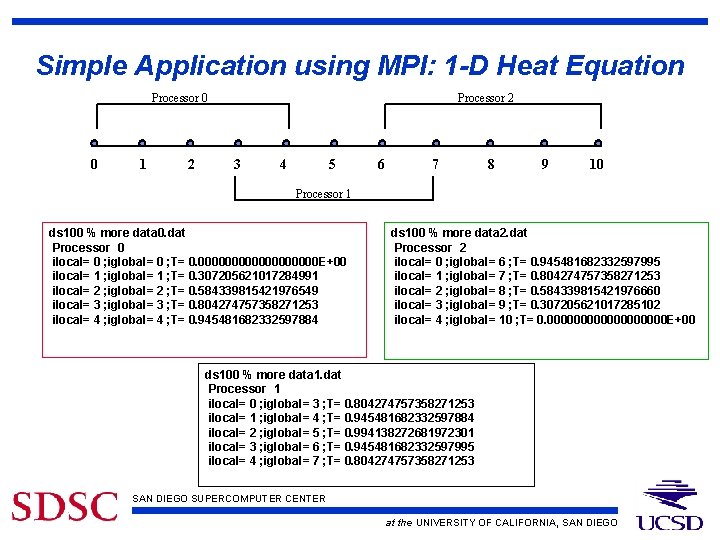

Simple Application using MPI: 1-D Heat Equation

    ds100 % more data0.dat
    Processor 0
    ilocal= 0 ; iglobal= 0 ; T= 0.000000000E+00
    ilocal= 1 ; iglobal= 1 ; T= 0.307205621017284991
    ilocal= 2 ; iglobal= 2 ; T= 0.584339815421976549
    ilocal= 3 ; iglobal= 3 ; T= 0.804274757358271253
    ilocal= 4 ; iglobal= 4 ; T= 0.945481682332597884

    ds100 % more data1.dat
    Processor 1
    ilocal= 0 ; iglobal= 3 ; T= 0.804274757358271253
    ilocal= 1 ; iglobal= 4 ; T= 0.945481682332597884
    ilocal= 2 ; iglobal= 5 ; T= 0.994138272681972301
    ilocal= 3 ; iglobal= 6 ; T= 0.945481682332597995
    ilocal= 4 ; iglobal= 7 ; T= 0.804274757358271253

    ds100 % more data2.dat
    Processor 2
    ilocal= 0 ; iglobal= 6 ; T= 0.945481682332597995
    ilocal= 1 ; iglobal= 7 ; T= 0.804274757358271253
    ilocal= 2 ; iglobal= 8 ; T= 0.584339815421976660
    ilocal= 3 ; iglobal= 9 ; T= 0.307205621017285102
    ilocal= 4 ; iglobal= 10 ; T= 0.000000000E+00

References
• LLNL MPI tutorial: http://www.llnl.gov/computing/tutorials/mpi/
• NERSC MPI tutorial: http://www.nersc.gov/nusers/help/tutorials/mpi/intro/
• LAM MPI tutorials: http://www.lam-mpi.org/tutorials/
• Tutorial on MPI: The Message-Passing Interface, by William Gropp: http://www-unix.mcs.anl.gov/mpi/tutorial/gropp/talk.html