High Performance Computing: OpenMP, MPI, and GPUs

- Slides: 32

High Performance Computing: OpenMP, MPI, and GPUs. An overview with examples. Geoff McQueen, 28 August 2018

What is high performance computing?
- Applied in engineering, medicine, business, science, and government
- Generally achieved through parallelism, which comes in many forms
- Multiple compute units in the same:
  - Chip: multicore CPU, GPU, Xeon Phi et al., FPGA
  - Board: multi-socket servers, purpose-built devices
  - Chassis: multiple boards connected via a backplane
  - Room: multiple systems connected via an interconnect (a “cluster”)
  - Planet: grid and cloud computing
- Heterogeneous computing

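Flynn’s Taxonomy
- Classifies computer architectures
- Introduced by Michael Flynn in 1966
- SISD: Single Instruction, Single Data
- SIMD: Single Instruction, Multiple Data
  - Vector processors, GPUs
- MISD: Multiple Instruction, Single Data
  - Fault tolerance
- MIMD: Multiple Instruction, Multiple Data
  - Multicore systems
  - Memory may be shared or distributed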

Multiprocessing
- Multiple CPUs or “cores” in one system
- Memory is shared, so communication overhead is low
- Ubiquitous
- Can be harnessed using the standard libraries of many programming languages: C++, Java, Python, C#, etc.

Programming with Threads is Hard
- Many libraries have their own conventions that developers must master to realize multithreaded (MT) designs
  - Synchronization constructs such as mutexes, semaphores, and monitors
  - Thread pools, schedulers
  - Implicit multithreading
- APIs and platforms vary
- Error-prone
- Not studied by undergraduates

OpenMP
- Extensions to the C/C++ and Fortran languages, mostly via compiler directives
- Portable, platform-agnostic
- Simple
- “Automatic”

OpenMP: A Simple C Example

```c
#include <omp.h>
#include <stdio.h>   // for printf

void sayHello()
{
    #pragma omp parallel
    {
        int myId = omp_get_thread_num();   // ID of the calling thread within the team
        printf("Hello from thread %d!\n", myId);
    }
}
```
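
The hello example prints each thread's ID with `omp_get_thread_num`. A minimal sketch of two related controls, assuming a compiler with OpenMP enabled (`-fopenmp` on GCC and Clang): the `num_threads` clause requests a team size, and `omp_get_num_threads` reports the size the runtime actually granted. The helper name `teamSize` is hypothetical. The `#ifdef _OPENMP` guard lets the same file also build serially, in which case it reports one thread.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/*
 * Hypothetical helper: requests `requested` threads and returns the team
 * size the runtime actually provided (it may grant fewer).
 * Without OpenMP support the function reports a single thread.
 */
int teamSize(int requested)
{
    int size = 1;   /* declared outside the region, so shared by all threads */
#ifdef _OPENMP
    #pragma omp parallel num_threads(requested)
    {
        #pragma omp single   /* exactly one thread records the team size */
        size = omp_get_num_threads();
    }
#else
    (void)requested;   /* unused in a serial build */
#endif
    return size;
}
```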

![Slide: OpenMP for-loop](https://slidetodoc.com/presentation_image/0278b1230171639675fac2d9e1dee95d/image-8.jpg)

OpenMP: for-loop

```c
void process(int value);   // assumed to be defined elsewhere

void processAll(int input[], int length)
{
    #pragma omp parallel for
    for (int i = 0; i < length; i++)
    {
        process(input[i]);
    }
}
```

Limitations annotated on the slide:
- The loop-control expressions cannot have side effects.
- The loop-control expressions can only take certain forms.
- The iteration variable must not be modified inside the loop body.
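
Because `process` is not defined on the slide, here is a self-contained sketch that substitutes a hypothetical in-place squaring step. The loop has the canonical shape OpenMP expects: an integer index, a `<` test against a bound that does not change, a plain `i++` increment, and iterations that each touch only their own element.

```c
/*
 * Hypothetical stand-in for processAll: squares every element in place.
 * Each iteration reads and writes only input[i], so iterations are
 * independent and can be divided among threads safely.
 */
void squareAll(int input[], int length)
{
    #pragma omp parallel for
    for (int i = 0; i < length; i++)
    {
        input[i] = input[i] * input[i];
    }
}
```

By contrast, a loop whose test also inspects the data (for example `i < length && input[i] != 0`) or whose body changes `i` does not fit the canonical form, so it is not a valid target for `#pragma omp parallel for` as written.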

![Slide: OpenMP synchronization](https://slidetodoc.com/presentation_image/0278b1230171639675fac2d9e1dee95d/image-9.jpg)

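OpenMP: Synchronization

```c
int process(int value);   // assumed to be defined elsewhere

int processAndAdd(int input[], int length)
{
    int output = 0;
    #pragma omp parallel for
    for (int i = 0; i < length; i++)
    {
        int addend = process(input[i]);   // computed in parallel
        #pragma omp critical
        {
            output += addend;   // only one thread at a time executes this block
        }
    }
    return output;
}
```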
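
When the protected code is a single update of a scalar, as with `output += addend` here, OpenMP's `atomic` directive is a lighter-weight alternative to `critical`. A sketch, assuming a hypothetical `process` that doubles its input; a `reduction` clause avoids per-iteration synchronization altogether.

```c
/* Hypothetical stand-in for the slide's process(): doubles its input. */
static int process(int x) { return 2 * x; }

int processAndAddAtomic(int input[], int length)
{
    int output = 0;
    #pragma omp parallel for
    for (int i = 0; i < length; i++)
    {
        int addend = process(input[i]);   /* private to this iteration */
        #pragma omp atomic                /* protects only this one update */
        output += addend;
    }
    return output;
}
```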

![Slide: OpenMP reduction](https://slidetodoc.com/presentation_image/0278b1230171639675fac2d9e1dee95d/image-10.jpg)

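OpenMP: Reduction

```c
int process(int value);   // assumed to be defined elsewhere

int processAndAdd(int input[], int length)
{
    int output = 0;
    #pragma omp parallel for reduction(+ : output)
    for (int i = 0; i < length; i++)
    {
        output += process(input[i]);   // each thread accumulates a private copy
    }
    return output;   // the private copies have been summed into output
}
```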
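
The same pattern works with other operators. For example, C and C++ support `reduction(max : var)` from OpenMP 3.1 onward. A sketch, assuming a hypothetical `process` that squares its input and an input of at least one element:

```c
/* Hypothetical stand-in for process(): squares its input. */
static int process(int x) { return x * x; }

/*
 * Largest processed value. Each thread keeps a private maximum, and
 * OpenMP combines those with the initial value of `best` at the end.
 * Assumes length >= 1.
 */
int processAndMax(int input[], int length)
{
    int best = process(input[0]);
    #pragma omp parallel for reduction(max : best)
    for (int i = 1; i < length; i++)
    {
        int value = process(input[i]);
        if (value > best)
            best = value;
    }
    return best;
}
```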

OpenMP: Conclusions
- Can be used to parallelize a serial program with little effort
  - Or just portions of it
- Also easy to introduce bugs
  - Can be difficult to debug
  - The compiler won’t help you
  - Another reason to keep it simple
- More complicated OpenMP directives exist
  - These stray from the major benefit: ease of use for programmers
  - However, all OpenMP programs are portable
- Speedup is acceptable but not the best possible
  - This is the price you pay for automation/abstraction

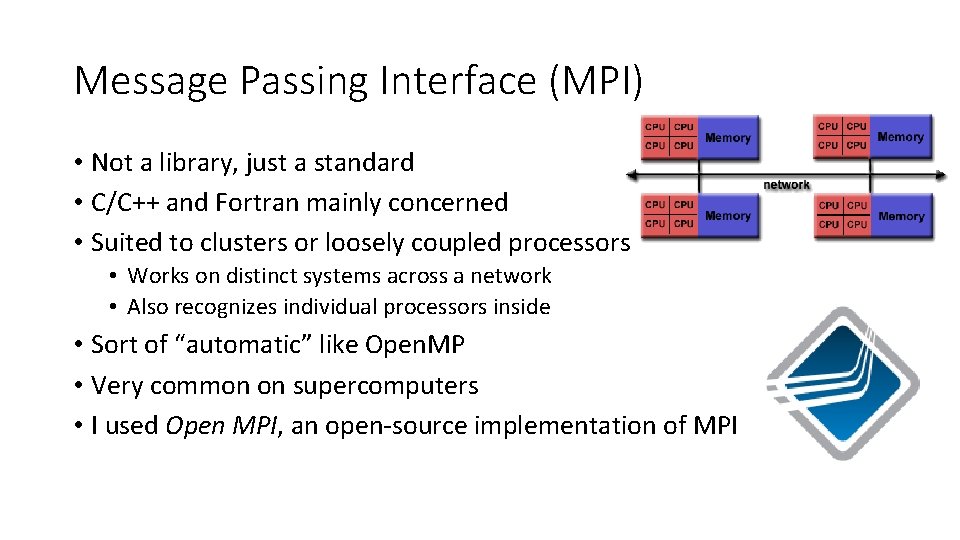

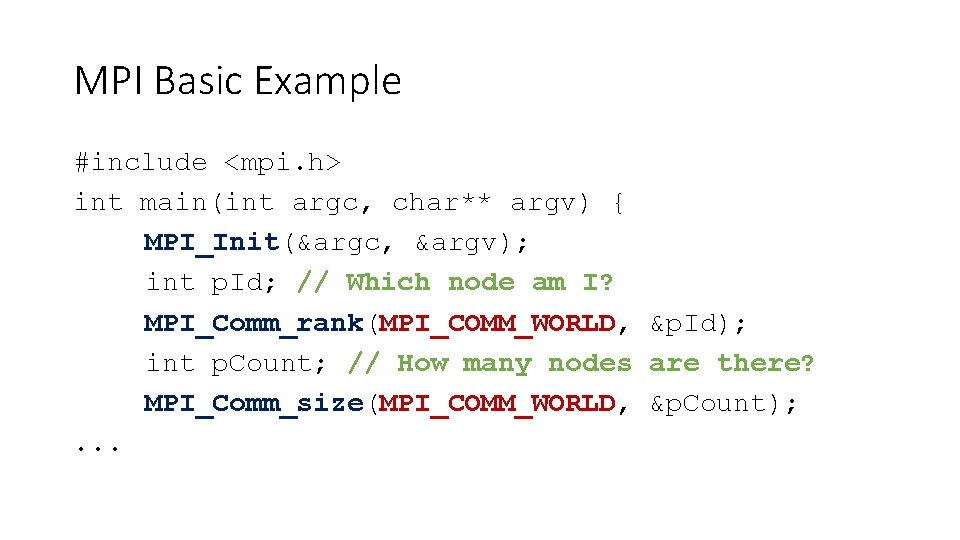

Message Passing Interface (MPI)
- Not a library, just a standard
- Mainly concerned with C/C++ and Fortran
- Suited to clusters or loosely coupled processors
  - Works on distinct systems across a network
  - Also recognizes the individual processors inside each system
- Sort of “automatic”, like OpenMP
- Very common on supercomputers
- I used Open MPI, an open-source implementation of MPI

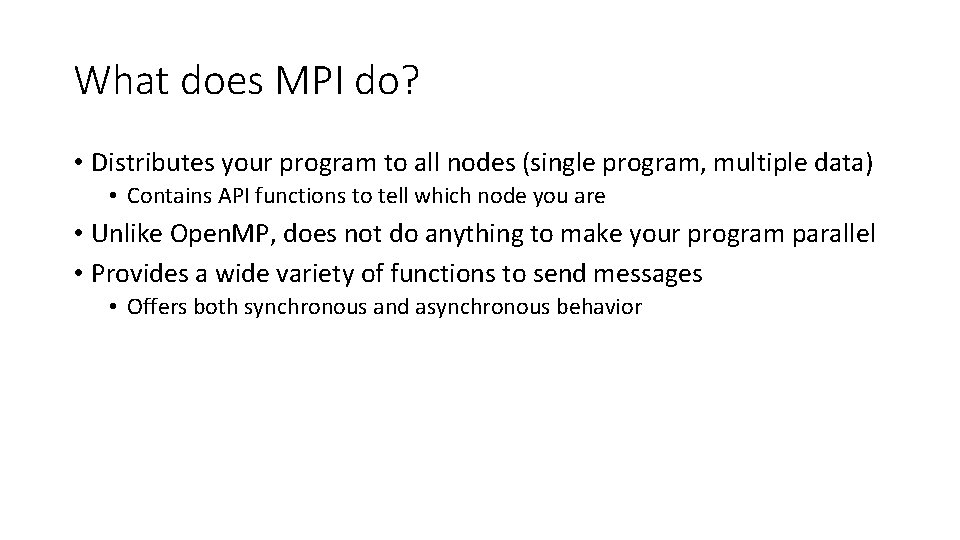

What does MPI do?
- Distributes your program to all nodes (single program, multiple data)
- Provides API functions that tell each process which node it is
- Unlike OpenMP, does not do anything to make your program parallel
- Provides a wide variety of functions to send messages
- Offers both synchronous and asynchronous behavior
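
The SPMD idea can be sketched without any MPI calls: every process runs the same function, and only its rank and the total process count differ. In a real program those two values would come from `MPI_Comm_rank` and `MPI_Comm_size`; here they are plain parameters so the logic can be checked without an MPI runtime, and the per-item `work` function is a hypothetical placeholder. The split shown is cyclic (round-robin), one common choice among several.

```c
/* Hypothetical per-item work: the square of the item's index. */
static long work(int i) { return (long)i * i; }

/*
 * Cyclic split: the process with rank r handles items r, r + size,
 * r + 2*size, ... so every item is handled by exactly one rank.
 * Returns this rank's partial result; a real program would then
 * combine the partial results with a message or a collective.
 */
long partialSumCyclic(int rank, int size, int n)
{
    long sum = 0;
    for (int i = rank; i < n; i += size)
    {
        sum += work(i);
    }
    return sum;
}
```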

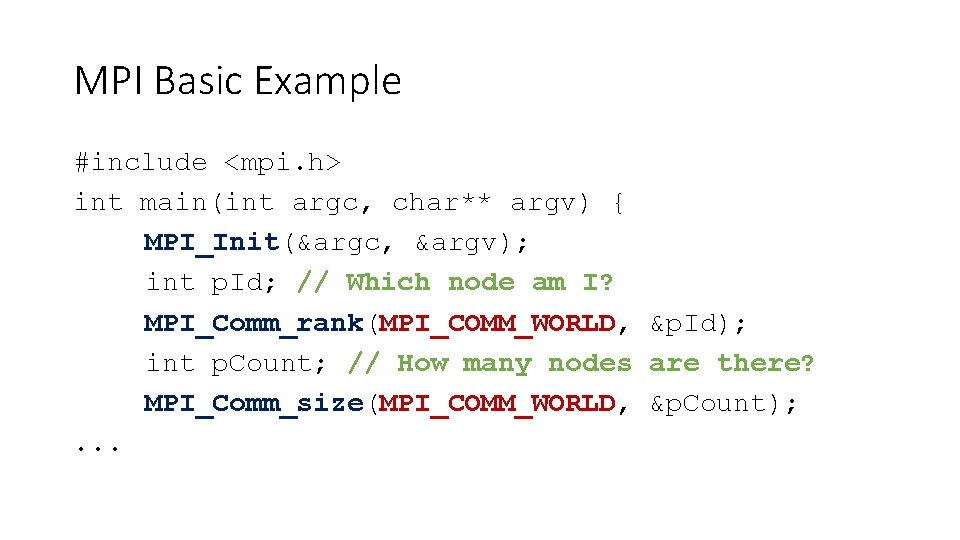

MPI Basic Example

```c
#include <mpi.h>
#include <stdio.h>    // printf, snprintf
#include <stdlib.h>   // calloc, free

int main(int argc, char** argv)
{
    MPI_Init(&argc, &argv);

    int pId;      // Which node am I?
    MPI_Comm_rank(MPI_COMM_WORLD, &pId);

    int pCount;   // How many nodes are there?
    MPI_Comm_size(MPI_COMM_WORLD, &pCount);
    ...
```

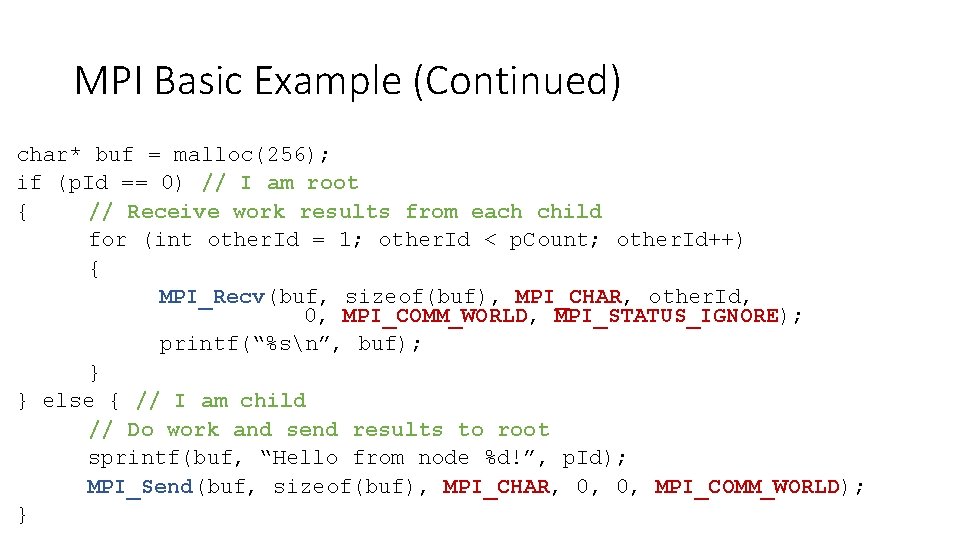

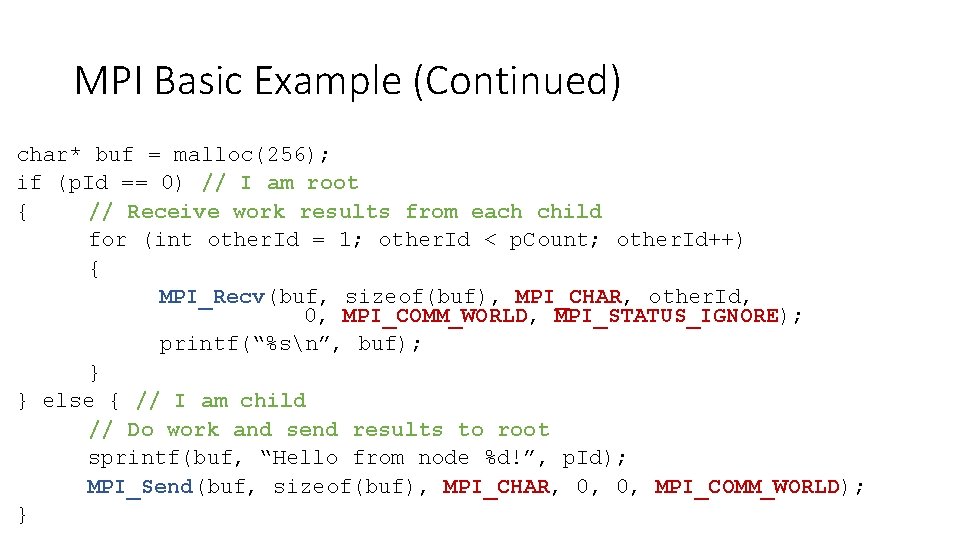

MPI Basic Example (Continued)

```c
    const int bufSize = 256;
    char* buf = calloc(bufSize, 1);   // zero-filled message buffer

    if (pId == 0)   // I am root
    {
        // Receive work results from each child
        for (int otherId = 1; otherId < pCount; otherId++)
        {
            // Pass the buffer's capacity: sizeof(buf) is only the size of a pointer
            MPI_Recv(buf, bufSize, MPI_CHAR, otherId, 0, MPI_COMM_WORLD, MPI_STATUS_IGNORE);
            printf("%s\n", buf);
        }
    }
    else            // I am child
    {
        // Do work and send results to root
        snprintf(buf, bufSize, "Hello from node %d!", pId);
        MPI_Send(buf, bufSize, MPI_CHAR, 0, 0, MPI_COMM_WORLD);
    }

    free(buf);
```

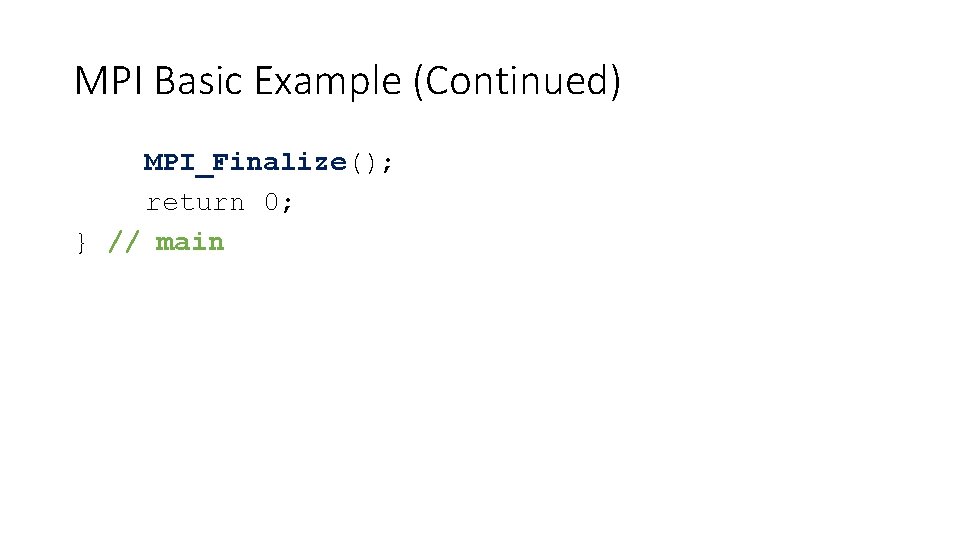

MPI Basic Example (Continued)

```c
    MPI_Finalize();
    return 0;
} // main
```

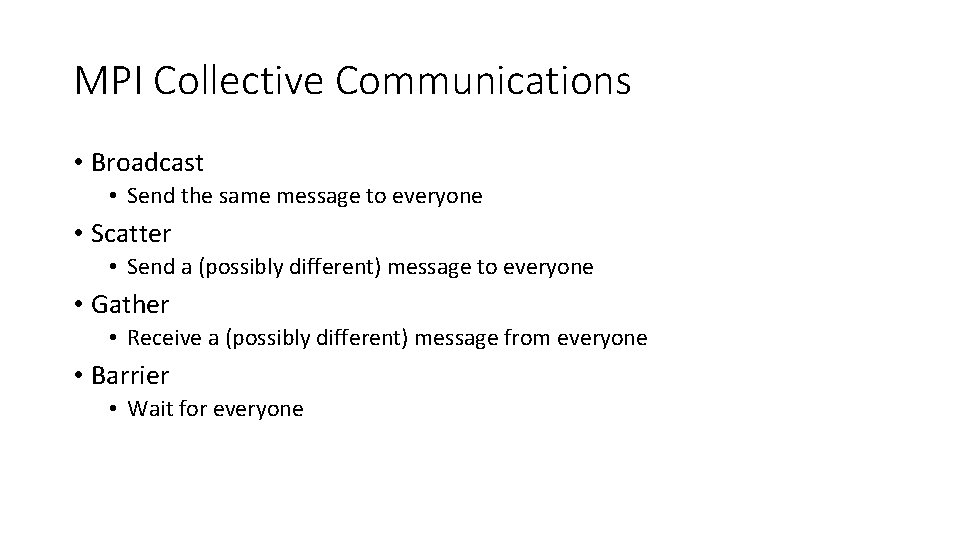

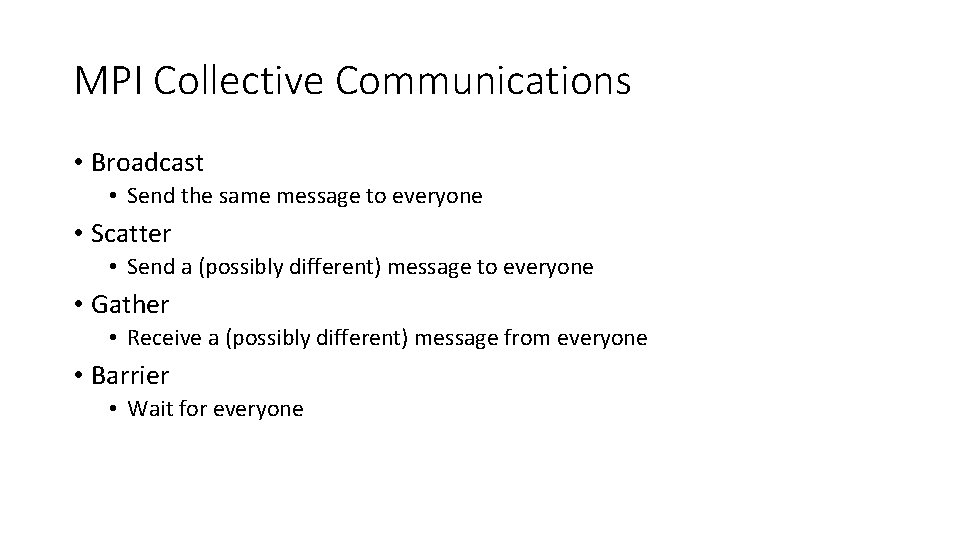

MPI Collective Communications
- Broadcast: send the same message to everyone
- Scatter: send a (possibly different) message to everyone
- Gather: receive a (possibly different) message from everyone
- Barrier: wait for everyone
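
`MPI_Scatter` gives every process the same number of items. When the item count does not divide evenly, `MPI_Scatterv` instead takes a `sendcounts` array and a `displs` array: each process's item count and its starting offset in the root's send buffer. A plain-C sketch of computing those two arrays, giving the first `n % size` processes one extra item; the function name `scattervLayout` is hypothetical.

```c
/*
 * Fill counts[] and displs[] (each of length size) for splitting n items
 * as evenly as possible across size processes. These are the values
 * the root would pass to MPI_Scatterv as sendcounts and displs.
 */
void scattervLayout(int n, int size, int counts[], int displs[])
{
    int base = n / size;    /* every rank gets at least this many */
    int extra = n % size;   /* the first `extra` ranks get one more */
    int offset = 0;
    for (int r = 0; r < size; r++)
    {
        counts[r] = base + (r < extra ? 1 : 0);
        displs[r] = offset;
        offset += counts[r];
    }
}
```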

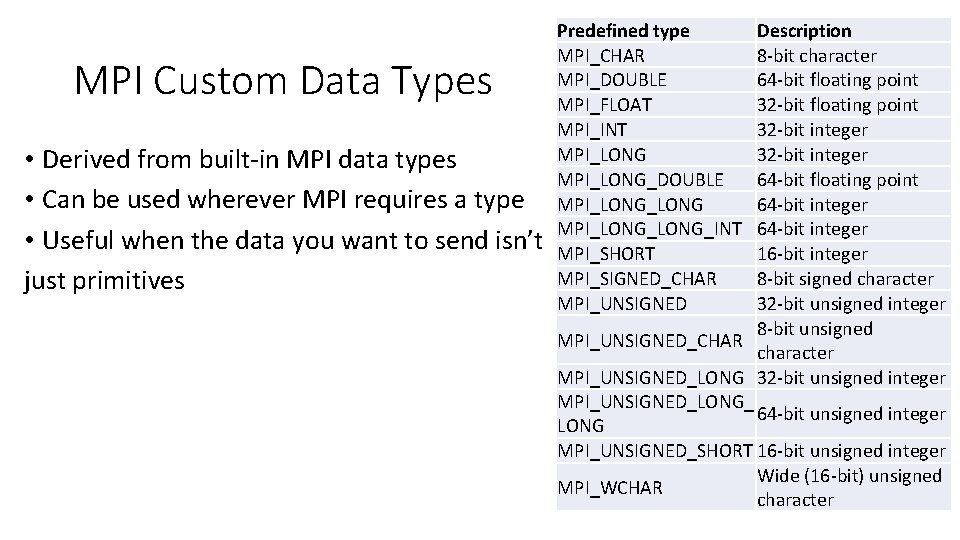

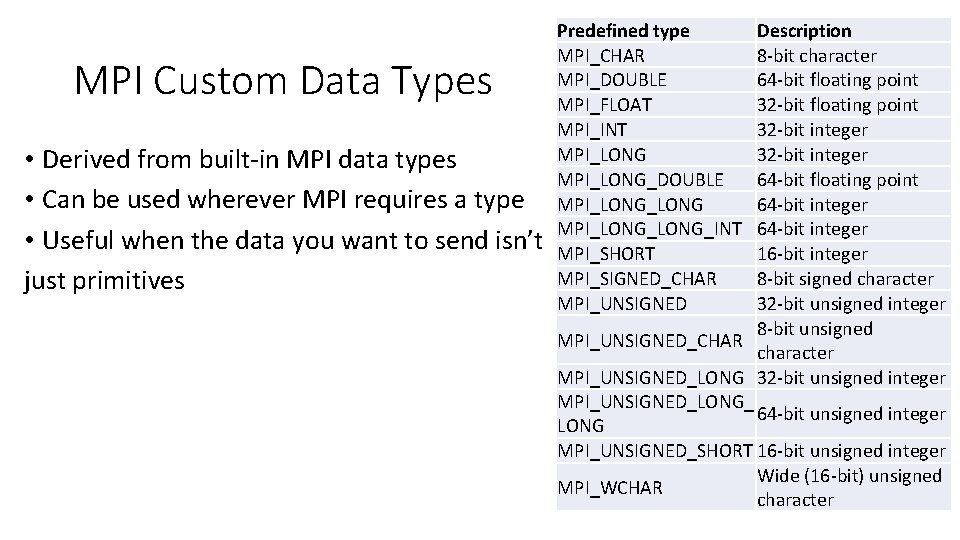

MPI Custom Data Types
- Derived from built-in MPI data types
- Can be used wherever MPI requires a type
- Useful when the data you want to send isn’t just primitives

| Predefined type | Description |
|---|---|
| MPI_CHAR | 8-bit character |
| MPI_DOUBLE | 64-bit floating point |
| MPI_FLOAT | 32-bit floating point |
| MPI_INT | 32-bit integer |
| MPI_LONG_DOUBLE | 64-bit floating point |
| MPI_LONG_LONG_INT | 64-bit integer |
| MPI_SHORT | 16-bit integer |
| MPI_SIGNED_CHAR | 8-bit signed character |
| MPI_UNSIGNED | 32-bit unsigned integer |
| MPI_UNSIGNED_CHAR | 8-bit unsigned character |
| MPI_UNSIGNED_LONG | 32-bit unsigned integer |
| MPI_UNSIGNED_LONG_LONG | 64-bit unsigned integer |
| MPI_UNSIGNED_SHORT | 16-bit unsigned integer |
| MPI_WCHAR | Wide (16-bit) unsigned character |

Each MPI type matches the corresponding C type, so widths depend on the platform. The widths above match a 64-bit Windows toolchain; on 64-bit Linux, for example, `unsigned long` is 64 bits and `long double` is wider than 64 bits.

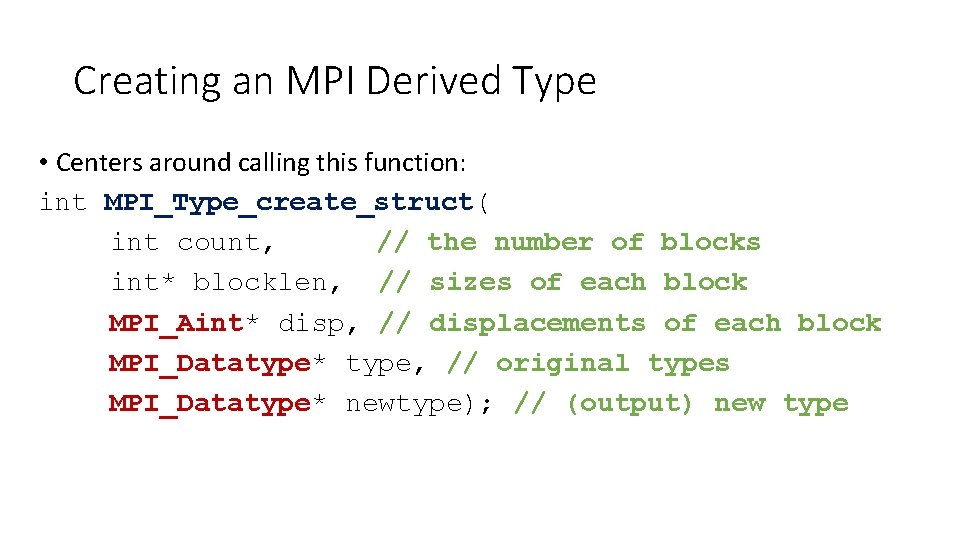

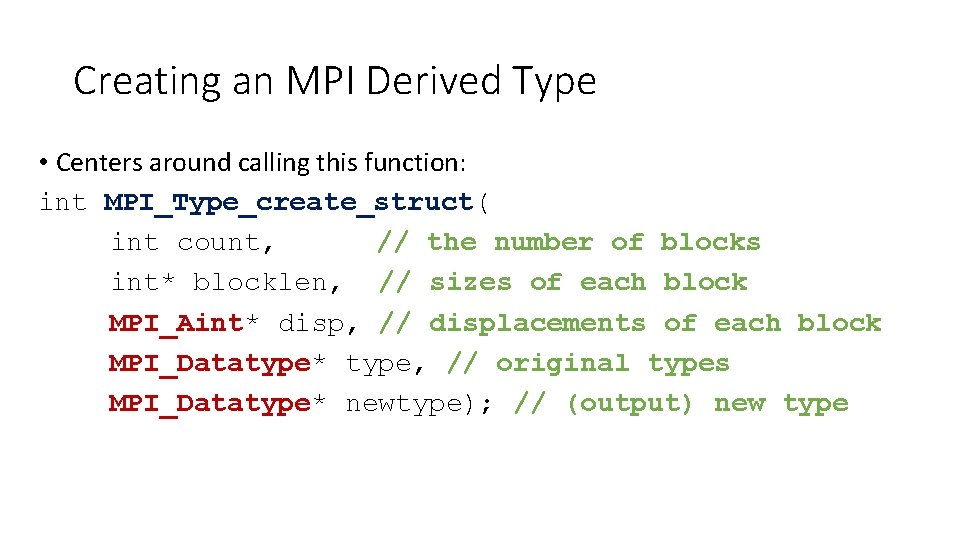

Creating an MPI Derived Type
- Centers around calling this function:

```c
int MPI_Type_create_struct(
    int count,               // the number of blocks
    int* blocklen,           // number of elements in each block
    MPI_Aint* disp,          // displacement of each block, in bytes
    MPI_Datatype* type,      // original type of each block
    MPI_Datatype* newtype);  // (output) new type
```

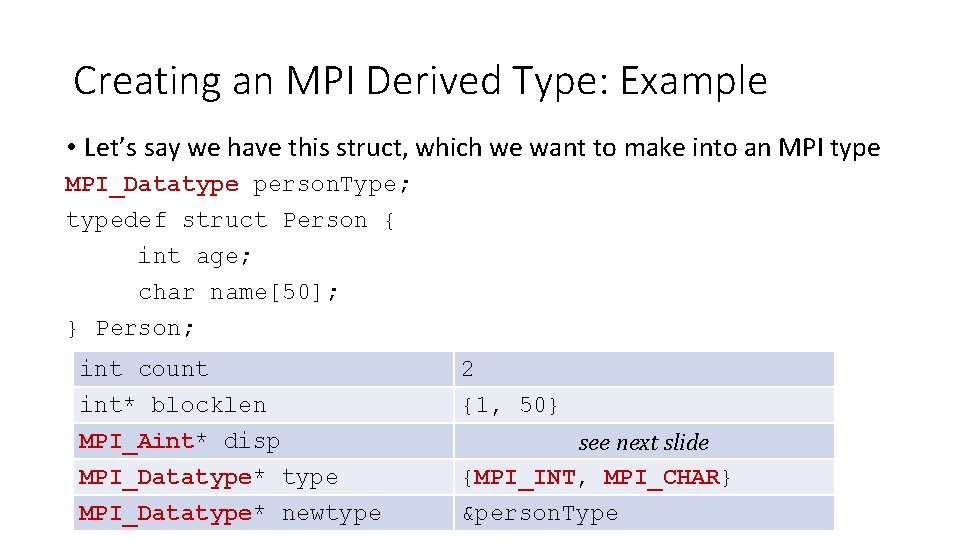

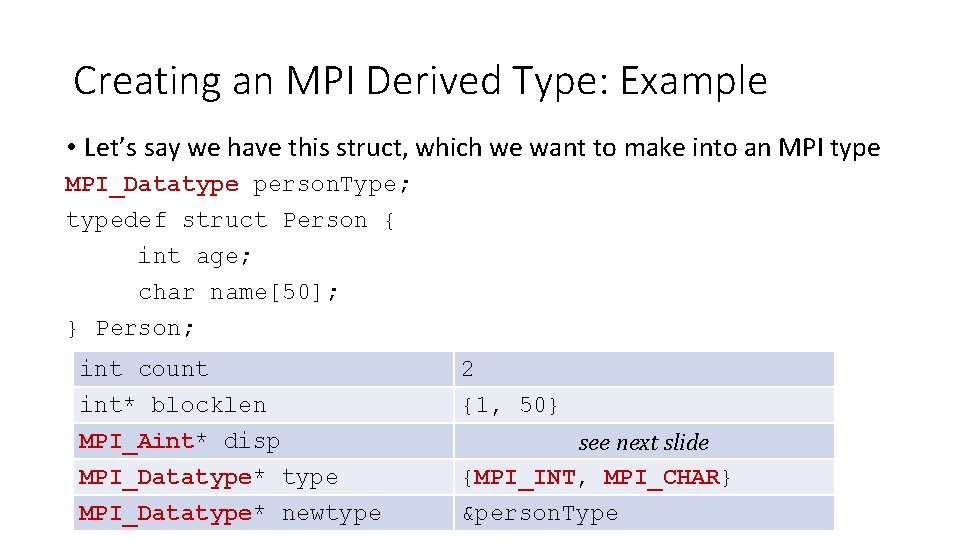

Creating an MPI Derived Type: Example
- Let’s say we have this struct, which we want to make into an MPI type:

```c
MPI_Datatype personType;

typedef struct Person {
    int age;
    char name[50];
} Person;
```

| Parameter | Argument |
|---|---|
| `int count` | `2` |
| `int* blocklen` | `{1, 50}` |
| `MPI_Aint* disp` | see next slide |
| `MPI_Datatype* type` | `{MPI_INT, MPI_CHAR}` |
| `MPI_Datatype* newtype` | `&personType` |

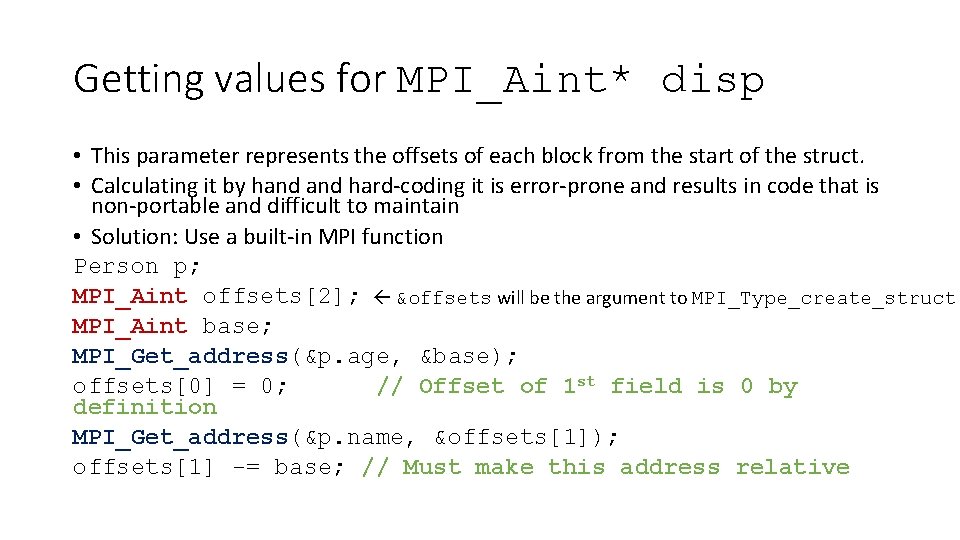

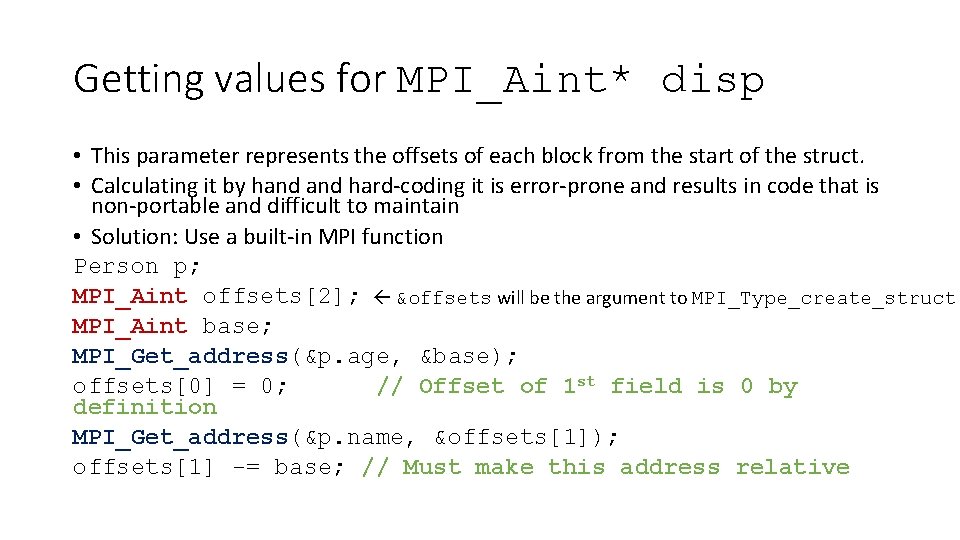

Getting values for MPI_Aint* disp
- This parameter holds the offset of each block from the start of the struct.
- Calculating and hard-coding it by hand is error-prone and results in code that is non-portable and difficult to maintain.
- Solution: use a built-in MPI function.

```c
Person p;
MPI_Aint offsets[2];   // offsets will be the disp argument to MPI_Type_create_struct

MPI_Aint base;
MPI_Get_address(&p.age, &base);
offsets[0] = 0;                          // Offset of 1st field is 0 by definition
MPI_Get_address(&p.name, &offsets[1]);
offsets[1] -= base;                      // Must make this address relative
```
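
Standard C offers a second way to obtain the same relative displacements: the `offsetof` macro from `<stddef.h>`. The sketch below computes them for `Person` without any MPI calls. It uses `long` in place of `MPI_Aint` so it compiles without `mpi.h`, and the helper name `personDisplacements` is hypothetical. In a real program, the resulting type must also be passed to `MPI_Type_commit` before it is used in communication.

```c
#include <stddef.h>   /* offsetof */

typedef struct Person {
    int age;
    char name[50];
} Person;

/*
 * Hypothetical helper: byte offsets of each field from the start of a
 * Person, i.e. the values MPI_Get_address differences would produce.
 * (Real MPI code would store these in an MPI_Aint array.)
 */
void personDisplacements(long disp[2])
{
    disp[0] = (long)offsetof(Person, age);    /* first member: always 0 */
    disp[1] = (long)offsetof(Person, name);
}
```

Note that `sizeof(Person)` can exceed the sum of its fields because of trailing padding. If you send arrays of `Person`, check that the MPI type's extent matches `sizeof(Person)`; `MPI_Type_create_resized` can adjust it.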

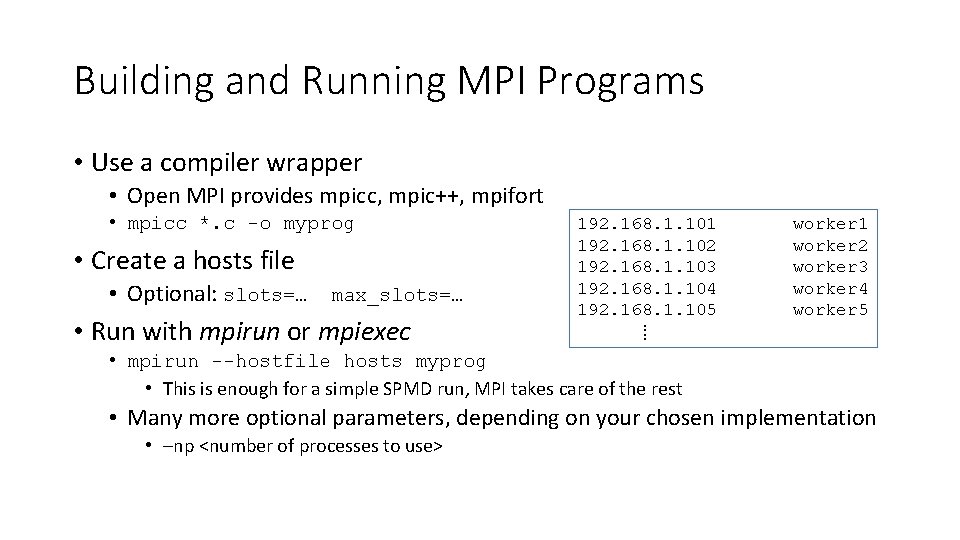

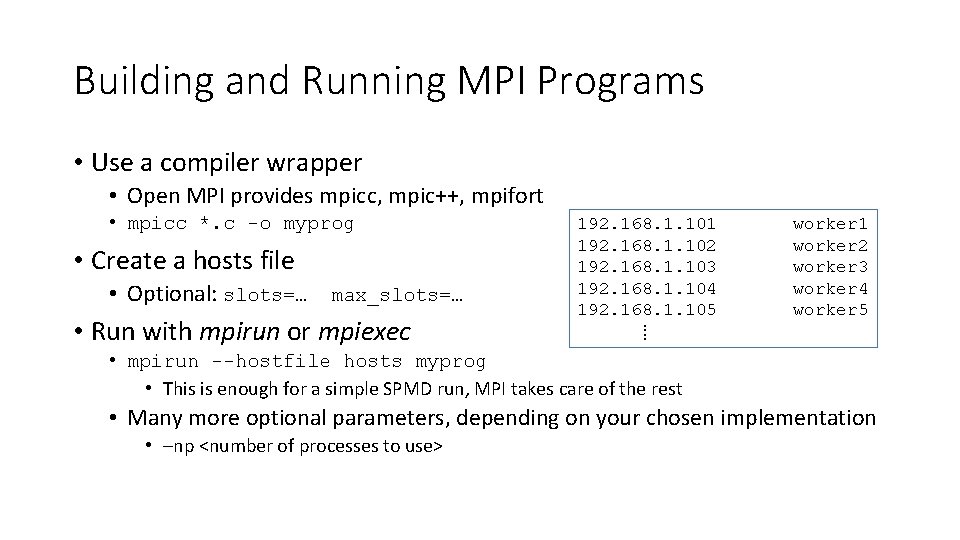

Building and Running MPI Programs
- Use a compiler wrapper
  - Open MPI provides mpicc, mpic++, mpifort
  - `mpicc *.c -o myprog`
- Create a hosts file
  - Optional: `slots=…` `max_slots=…`
- Run with mpirun or mpiexec
  - `mpirun --hostfile hosts myprog`
  - This is enough for a simple SPMD run; MPI takes care of the rest
  - Many more optional parameters, depending on your chosen implementation
    - `-np <number of processes to use>`

Example hosts file, one node per line (the slide labels these nodes worker 1 through worker 5):

```
192.168.1.101
192.168.1.102
192.168.1.103
192.168.1.104
192.168.1.105
⁞
```

MPI: Conclusions
- “the de facto standard for distributed-memory or shared-nothing programming” (Barlas 2015)
- Automates discovery of, and communication between, network nodes
- Straightforward and easy enough to use, but low-level

GPU (Graphics Processing Unit)
- SPMD
- Motivated by the need to drive computer displays
  - Millions of pixels, each representing around 24 bits of information
  - 60 fps × 2 MP × 24 bpp ≈ 3 Gb/s of meaningful data, even if no work is done on it
- Conclusion: GPUs exceed CPUs in raw computational power
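
The slide's bandwidth estimate, written out with units (taking 2 MP as 2 × 10⁶ pixels):

$$
60\,\frac{\text{frames}}{\text{s}} \times 2 \times 10^{6}\,\frac{\text{pixels}}{\text{frame}} \times 24\,\frac{\text{bits}}{\text{pixel}} = 2.88 \times 10^{9}\,\frac{\text{bits}}{\text{s}} \approx 3\ \text{Gb/s} \;(= 360\ \text{MB/s})
$$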

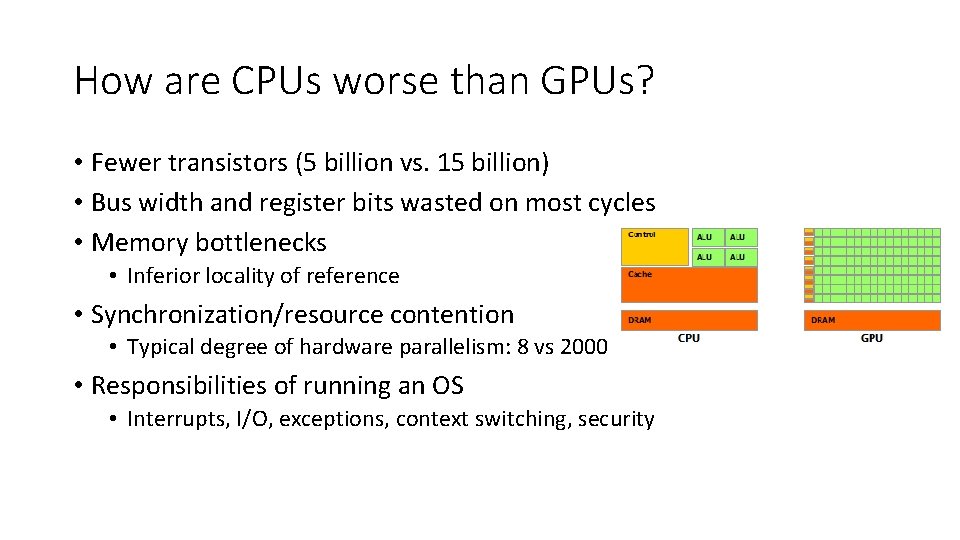

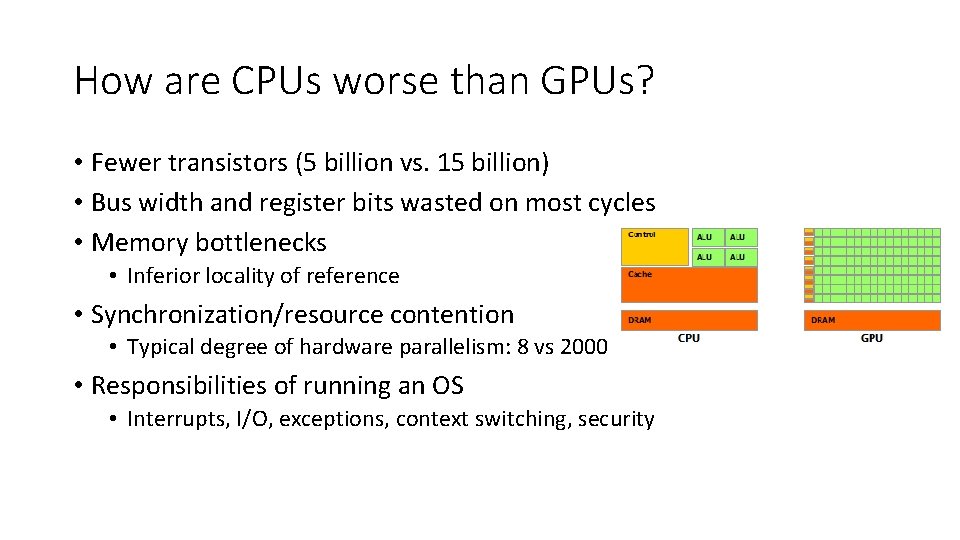

How are CPUs worse than GPUs?
- Fewer transistors (5 billion vs. 15 billion)
- Bus width and register bits wasted on most cycles
- Memory bottlenecks
  - Inferior locality of reference
  - Synchronization/resource contention
- Typical degree of hardware parallelism: 8 vs. 2000
- Responsibilities of running an OS
  - Interrupts, I/O, exceptions, context switching, security

Challenges of GPU Programming: Memory
- On most devices, the GPU has its own memory
- PCIe only offers around 16 GB/s vs. the GPU’s 300 GB/s
- Mitigation: don’t use the system bus!
  - Copy as infrequently as possible
  - Prefer peer-to-peer transfers
    - GPU to GPU vs. GPU to RAM to GPU
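
Using the slide's figures, a rough worked comparison for moving 1 GB of data (ignoring latency and assuming the quoted peak rates are reached):

$$
t_{\text{PCIe}} = \frac{1\ \text{GB}}{16\ \text{GB/s}} = 62.5\ \text{ms}, \qquad t_{\text{GPU memory}} = \frac{1\ \text{GB}}{300\ \text{GB/s}} \approx 3.3\ \text{ms}
$$

The bus transfer is roughly 19 times slower (300 / 16 ≈ 18.75), and a GPU-to-RAM-to-GPU route crosses the bus twice.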

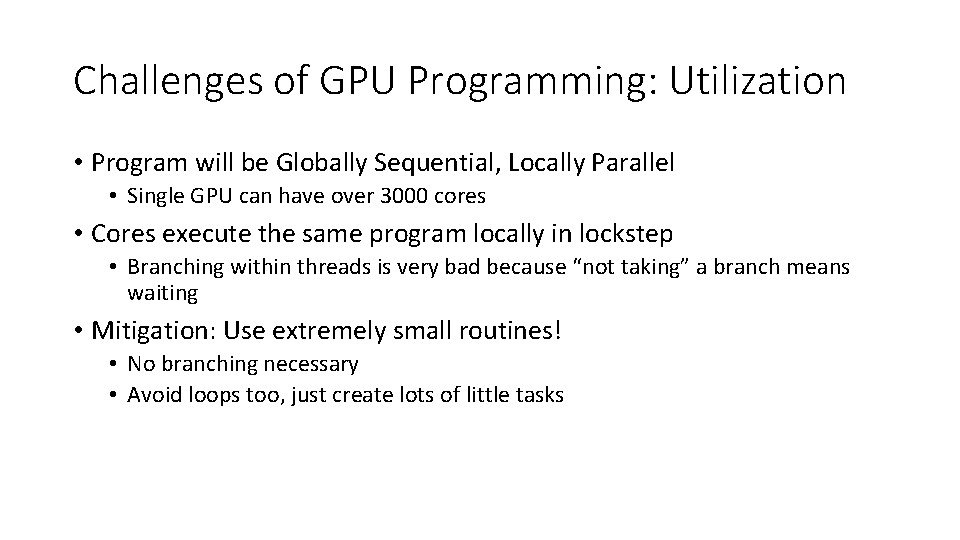

Challenges of GPU Programming: Utilization
- The program will be Globally Sequential, Locally Parallel
- A single GPU can have over 3000 cores
- Cores execute the same program locally, in lockstep
- Branching within threads is very bad: threads that do not take a branch must wait while the others execute it
- Mitigation: use extremely small routines!
  - No branching necessary
  - Avoid loops too; just create lots of little tasks

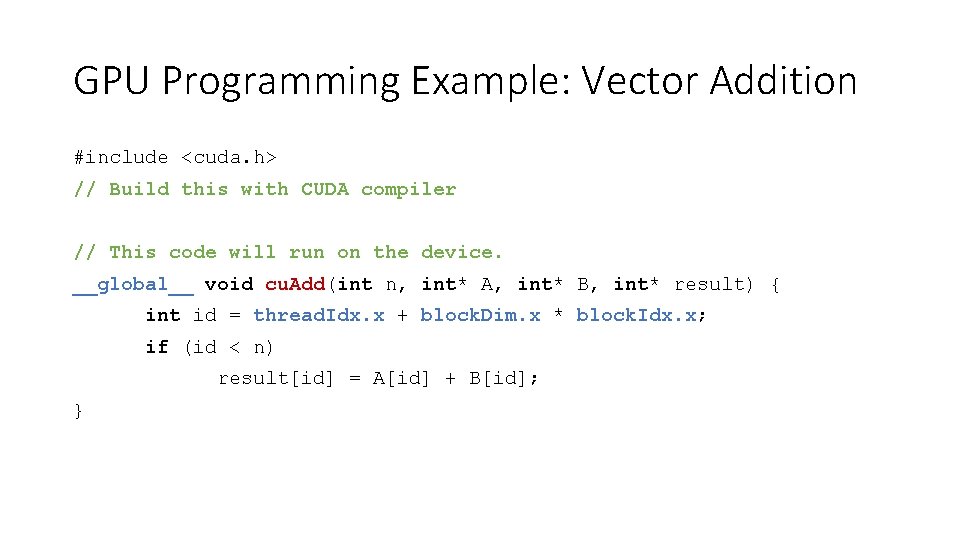

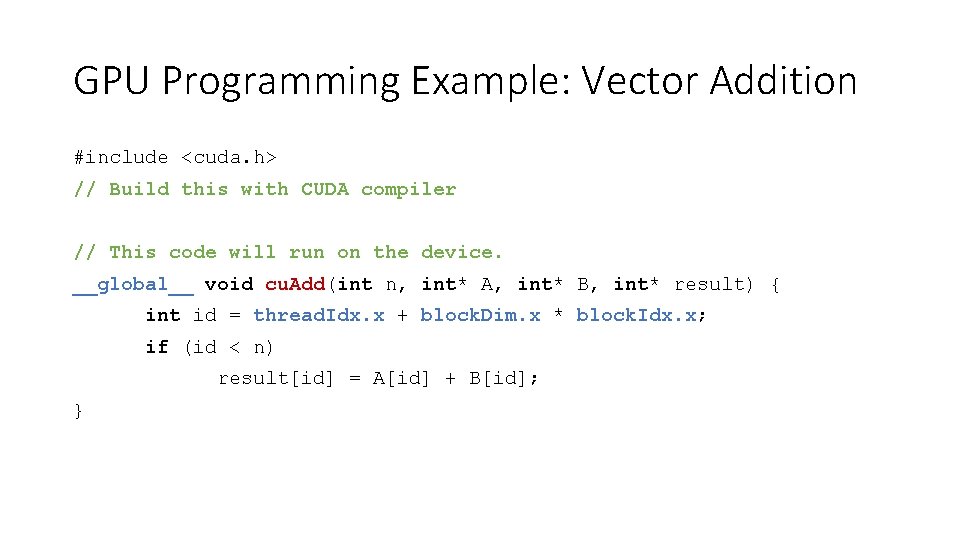

GPU Programming Example: Vector Addition

```cuda
#include <cuda.h>   // Build this with the CUDA compiler (nvcc)

// This code will run on the device.
__global__ void cuAdd(int n, int* A, int* B, int* result)
{
    // Global index of this thread across all blocks
    int id = threadIdx.x + blockDim.x * blockIdx.x;
    if (id < n)   // the last block may extend past the end of the arrays
        result[id] = A[id] + B[id];
}
```
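
To see why the kernel computes `id` this way and needs the `id < n` guard, here is a CPU-only emulation of the launch in plain C (not CUDA). The two nested loops stand in for `blockIdx.x` and `threadIdx.x`; on a GPU these iterations run in parallel rather than in sequence. The function name `emulateAdd` is hypothetical.

```c
/*
 * Sequential emulation of cuAdd<<<gridSize, blockSize>>>(n, A, B, result).
 * Returns how many emulated threads passed the bounds check (id < n).
 */
int emulateAdd(int gridSize, int blockSize, int n,
               const int *A, const int *B, int *result)
{
    int active = 0;
    for (int block = 0; block < gridSize; block++)            /* blockIdx.x */
    {
        for (int thread = 0; thread < blockSize; thread++)    /* threadIdx.x */
        {
            int id = thread + blockSize * block;   /* same formula as cuAdd */
            if (id < n)                            /* skip the overhang */
            {
                result[id] = A[id] + B[id];
                active++;
            }
        }
    }
    return active;
}
```

With 2 blocks of 4 threads and `n = 5`, eight ids are generated but only five pass the guard; without it, ids 5 to 7 would write past the end of `result`.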

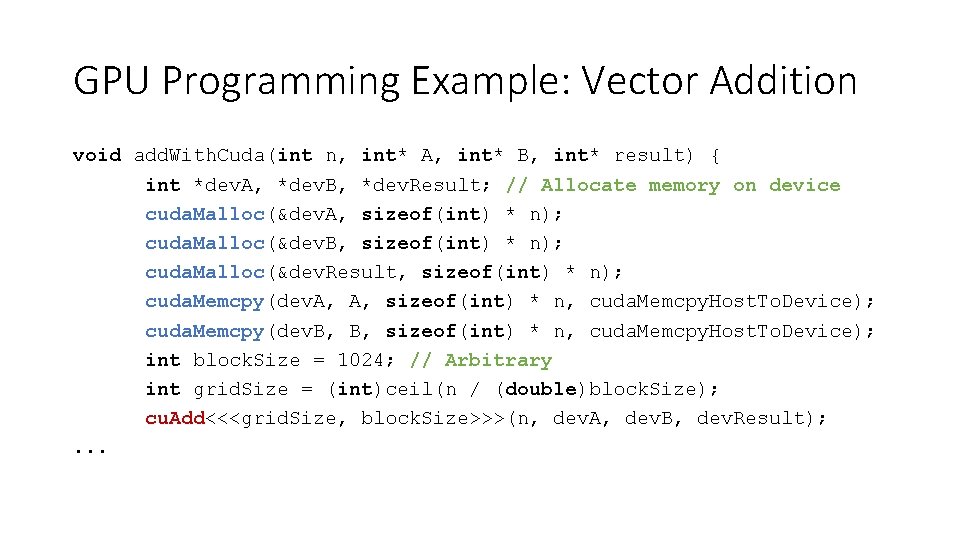

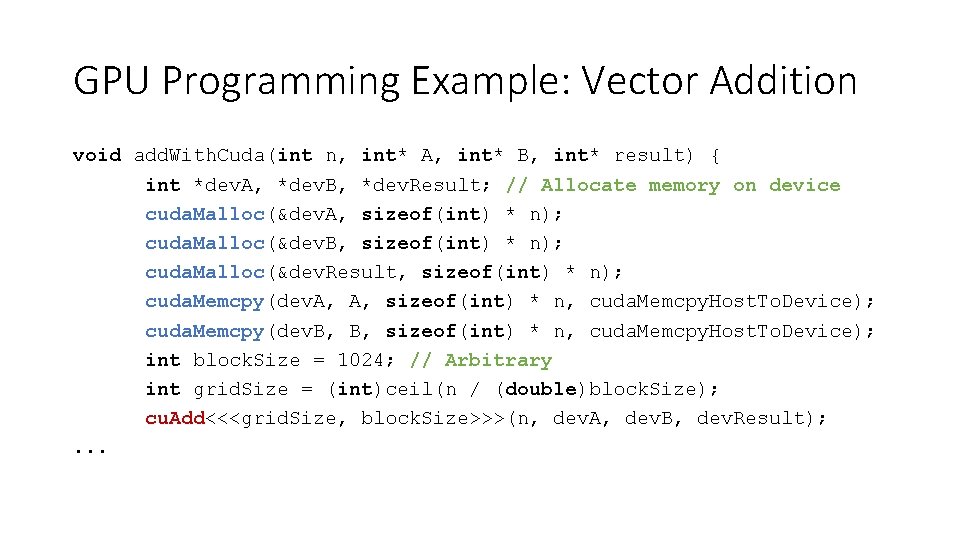

GPU Programming Example: Vector Addition

```cuda
void addWithCuda(int n, int* A, int* B, int* result)
{
    int *devA, *devB, *devResult;

    // Allocate memory on device
    cudaMalloc(&devA, sizeof(int) * n);
    cudaMalloc(&devB, sizeof(int) * n);
    cudaMalloc(&devResult, sizeof(int) * n);

    // Copy inputs from host to device
    cudaMemcpy(devA, A, sizeof(int) * n, cudaMemcpyHostToDevice);
    cudaMemcpy(devB, B, sizeof(int) * n, cudaMemcpyHostToDevice);

    int blockSize = 1024;   // Arbitrary
    int gridSize = (int)ceil(n / (double)blockSize);   // enough blocks to cover all n elements
    cuAdd<<<gridSize, blockSize>>>(n, devA, devB, devResult);
    ...
```
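
The launch needs enough blocks that `gridSize * blockSize >= n`. The slide computes this with `ceil` on a `double`; a common integer-only alternative is sketched below. The function name `gridSizeFor` is hypothetical, and it assumes `n >= 0` and `blockSize > 0`.

```c
/*
 * Smallest number of blocks whose total thread count covers n items:
 * integer ceiling division, with no floating point involved.
 */
int gridSizeFor(int n, int blockSize)
{
    return (n + blockSize - 1) / blockSize;
}
```

If `n` can be 0, skip the launch, since a grid of zero blocks is not a valid launch configuration.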

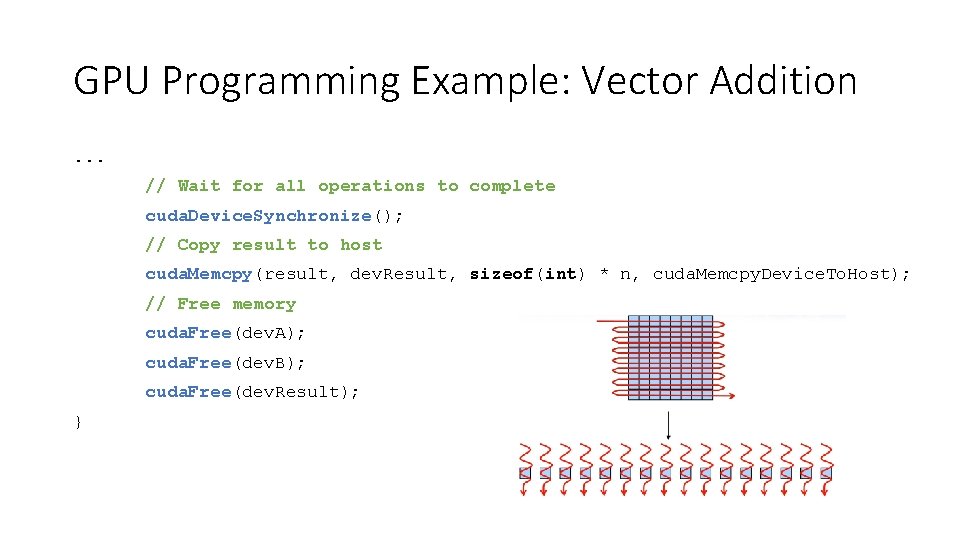

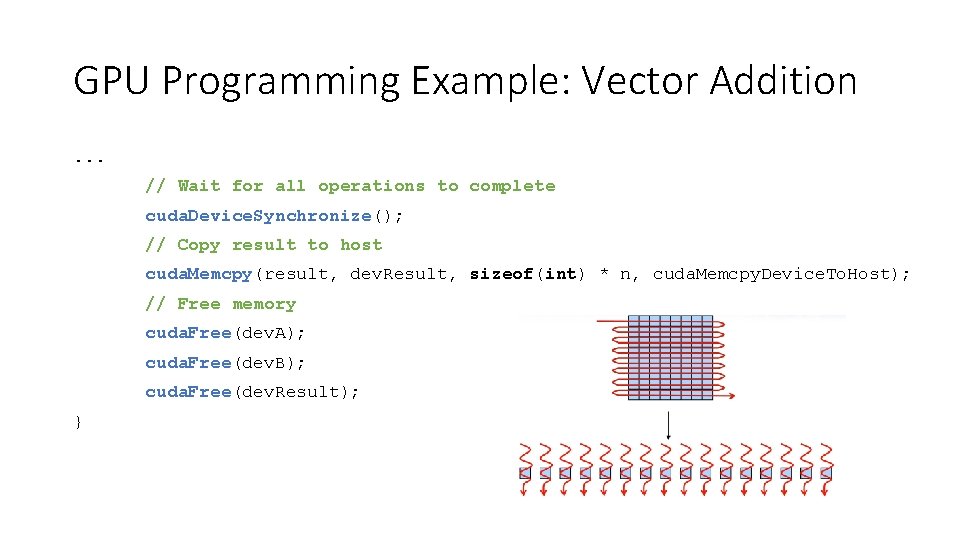

GPU Programming Example: Vector Addition

```cuda
    ...
    // Wait for all operations to complete
    cudaDeviceSynchronize();

    // Copy result to host
    cudaMemcpy(result, devResult, sizeof(int) * n, cudaMemcpyDeviceToHost);

    // Free memory
    cudaFree(devA);
    cudaFree(devB);
    cudaFree(devResult);
}
```

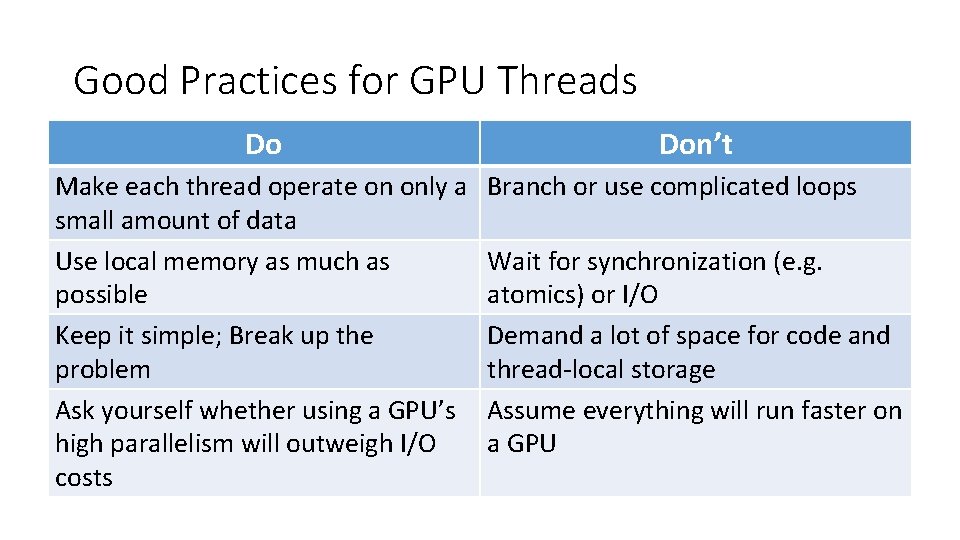

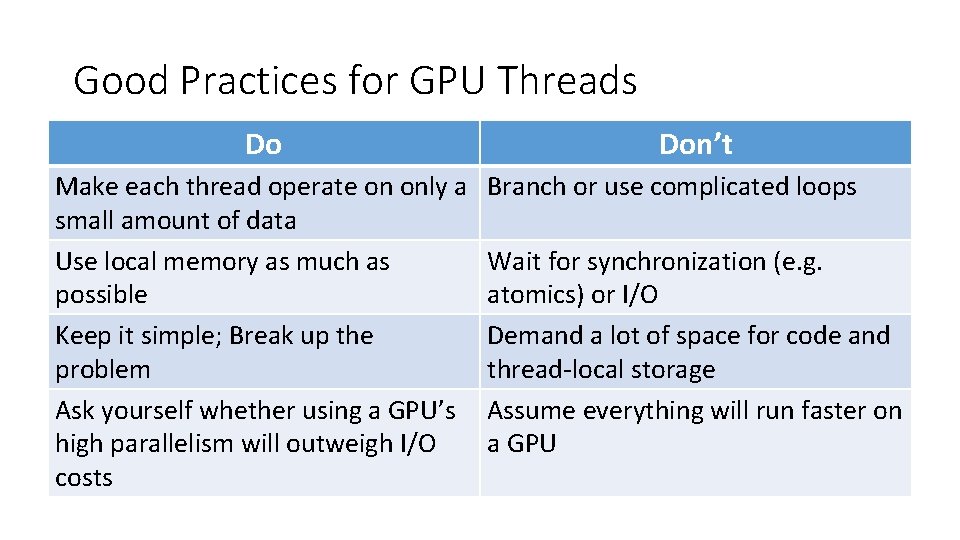

Good Practices for GPU Threads

Do:
- Make each thread operate on only a small amount of data
- Use local memory as much as possible
- Keep it simple; break up the problem
- Ask yourself whether using a GPU’s high parallelism will outweigh I/O costs

Don’t:
- Branch or use complicated loops
- Wait for synchronization (e.g., atomics) or I/O
- Demand a lot of space for code and thread-local storage
- Assume everything will run faster on a GPU

Summary
- OpenMP
  - https://www.openmp.org/resources/openmp-compilers-tools/
- MPI
  - http://www.mpich.org/downloads/
- CUDA
  - https://developer.nvidia.com/cuda-downloads