An MPI Approach to High Performance Computing with FPGAs

- Slides: 18

An MPI Approach to High Performance Computing with FPGAs

Chris Madill
Molecular Structure and Function, Hospital for Sick Children
Department of Biochemistry, University of Toronto

Supervised by Dr. Paul Chow
Electrical and Computer Engineering, University of Toronto

SHARCNET Symposium on GPU and CELL Computing 2008

Introduction
- Many scientific applications can be accelerated by targeting parallel machines.
- Coarse-grained parallelization allows applications to be distributed across hundreds or thousands of nodes.
- FPGAs can accelerate many computing tasks by two or three orders of magnitude over a CPU.
- This work demonstrates a method for combining high-performance computer clusters with FPGAs for maximum computational power.

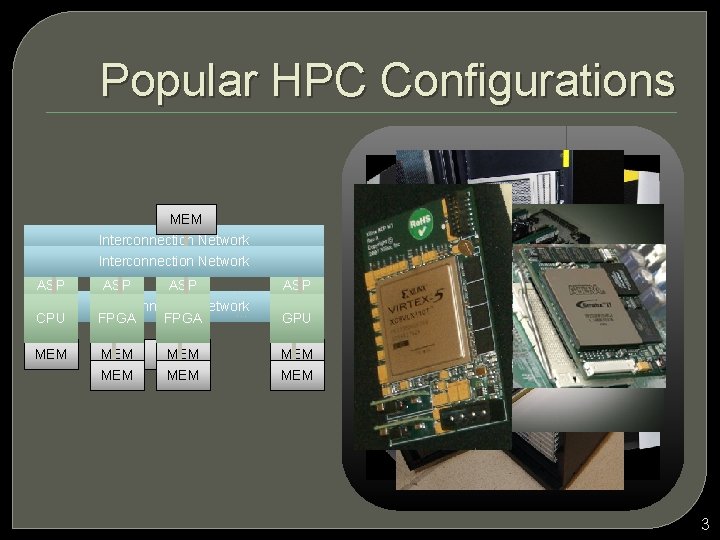

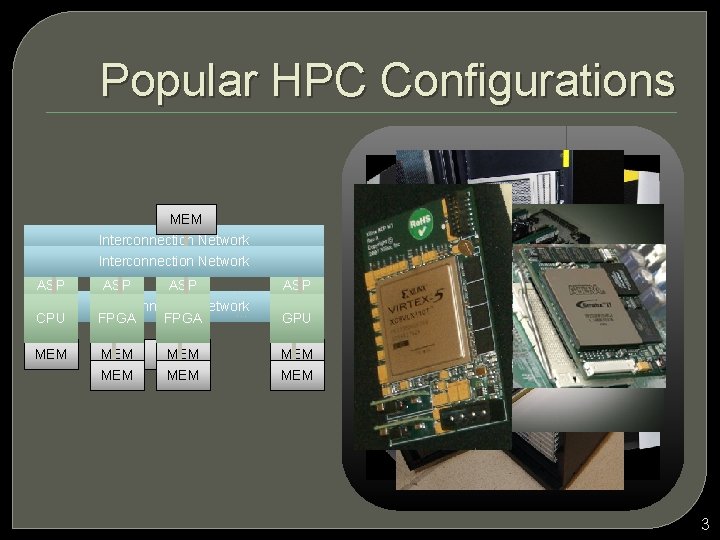

Popular HPC Configurations

[Figure: three configurations on an interconnection network: plain CPU nodes with memory; CPU nodes each paired with an application-specific processor (ASP: GPU or FPGA); and ASPs (FPGA, GPU) attached alongside CPUs and memory]

A Demanding Application

How Do You Program This?
- FPGAs can speed up applications; however:
  - there is a high barrier to entry for designing digital hardware
  - developing monolithic FPGA designs is very daunting
- How can one easily take advantage of FPGAs to accelerate HPC applications?

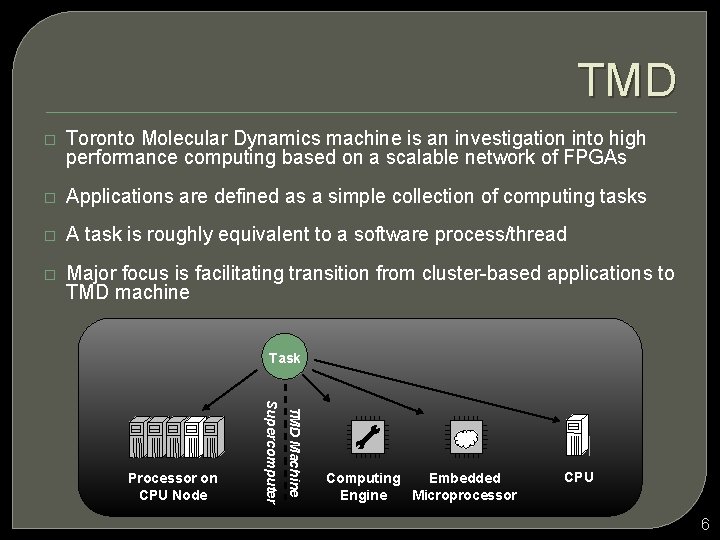

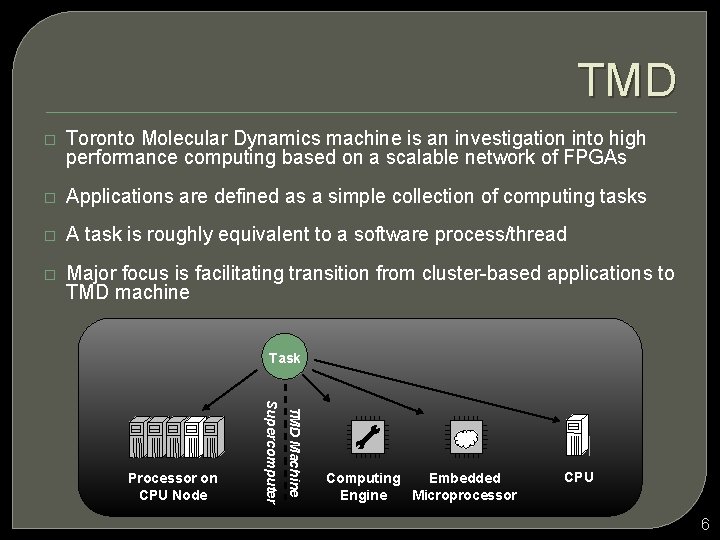

TMD
- The Toronto Molecular Dynamics (TMD) machine is an investigation into high-performance computing based on a scalable network of FPGAs.
- Applications are defined as a simple collection of computing tasks.
- A task is roughly equivalent to a software process or thread.
- A major focus is easing the transition from cluster-based applications to the TMD machine.

[Figure: a task corresponds to a process on a CPU node in a conventional supercomputer, and to a computing engine or an embedded microprocessor on the TMD machine]

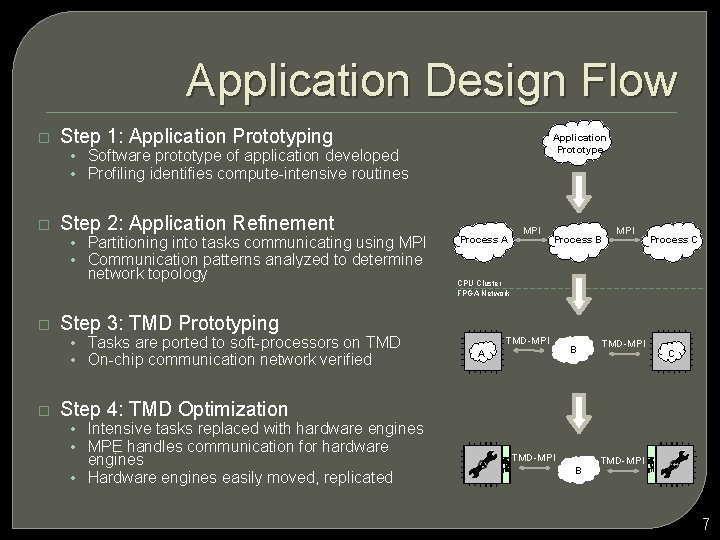

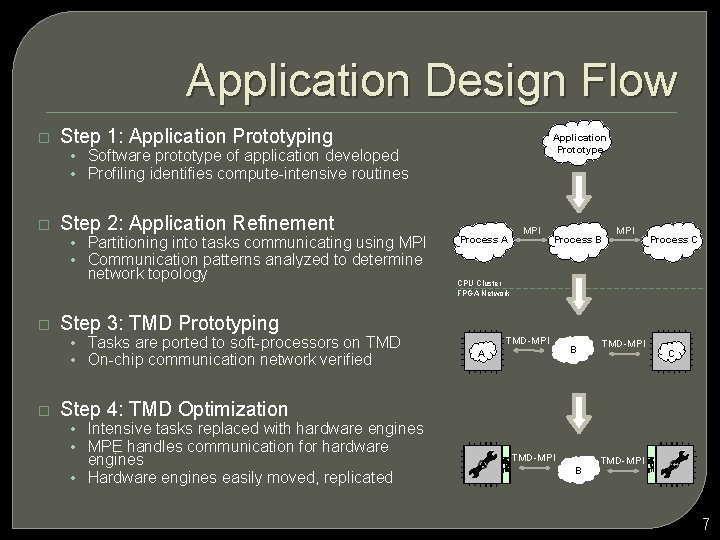

Application Design Flow
- Step 1: Application Prototyping
  - A software prototype of the application is developed
  - Profiling identifies compute-intensive routines
- Step 2: Application Refinement
  - The application is partitioned into tasks communicating using MPI
  - Communication patterns are analyzed to determine the network topology
- Step 3: TMD Prototyping
  - Tasks are ported to soft processors on the TMD
  - The on-chip communication network is verified
- Step 4: TMD Optimization
  - Intensive tasks are replaced with hardware engines
  - The MPE handles communication for hardware engines
  - Hardware engines are easily moved and replicated

[Figure: processes A, B and C moving from MPI processes on a CPU cluster to TMD-MPI tasks on an FPGA network]

Communication
- Uses an essential subset of the MPI standard
- A software library serves tasks running on processors
- A hardware Message Passing Engine (MPE) serves hardware-based tasks
- Tasks do not know (or care) whether remote tasks run as software processes or hardware engines
- MPI isolation of tasks facilitates C-to-gates compilers

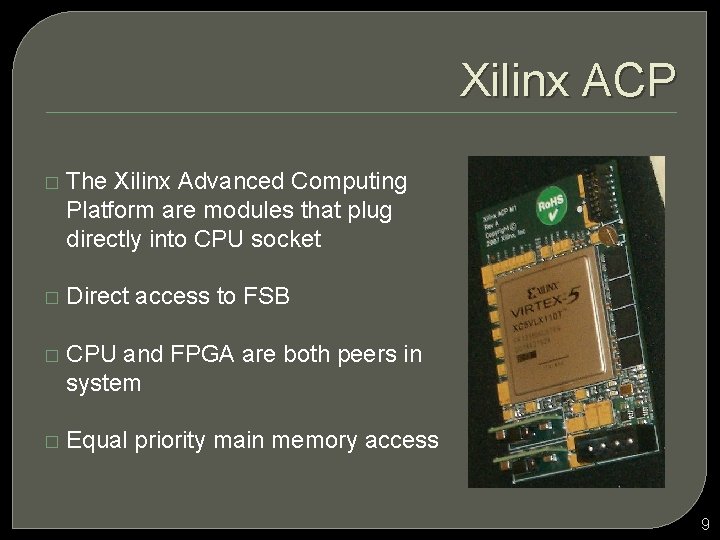

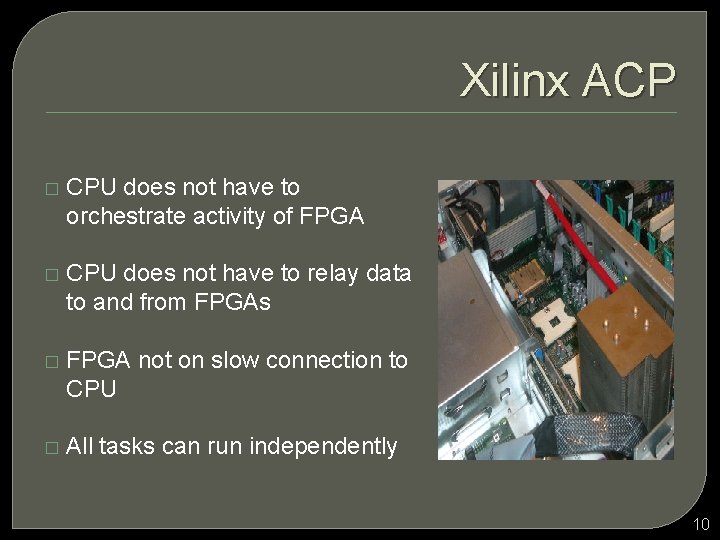

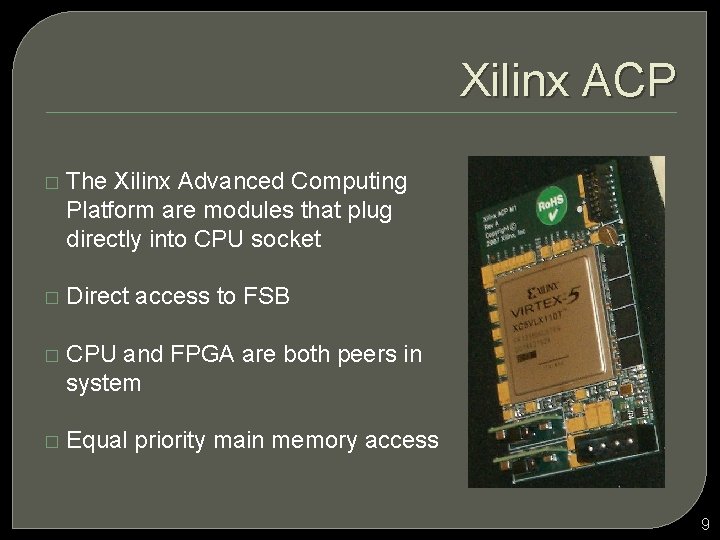

Xilinx ACP
- Xilinx Advanced Computing Platform (ACP) modules plug directly into a CPU socket
- Direct access to the front-side bus (FSB)
- The CPU and FPGA are peers in the system
- Equal-priority access to main memory

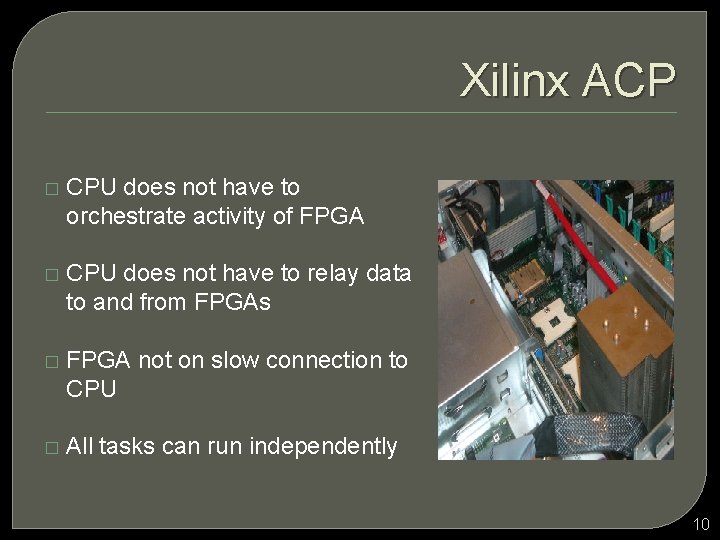

Xilinx ACP
- The CPU does not have to orchestrate the activity of the FPGA
- The CPU does not have to relay data to and from the FPGAs
- The FPGA is not on a slow connection to the CPU
- All tasks can run independently

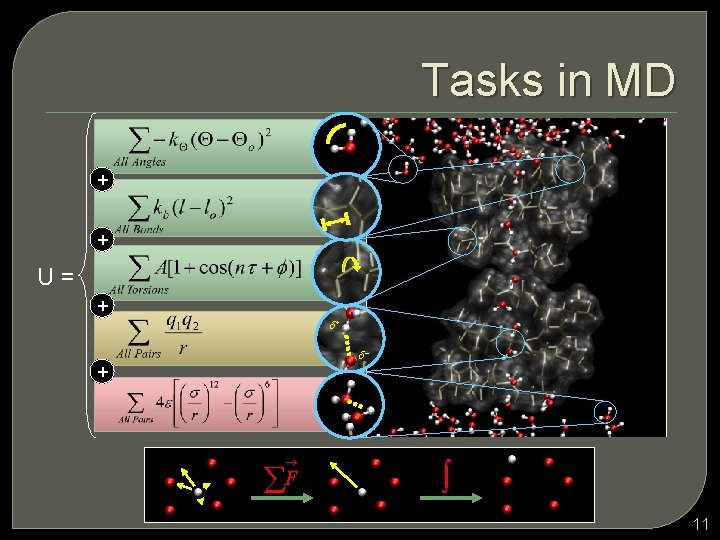

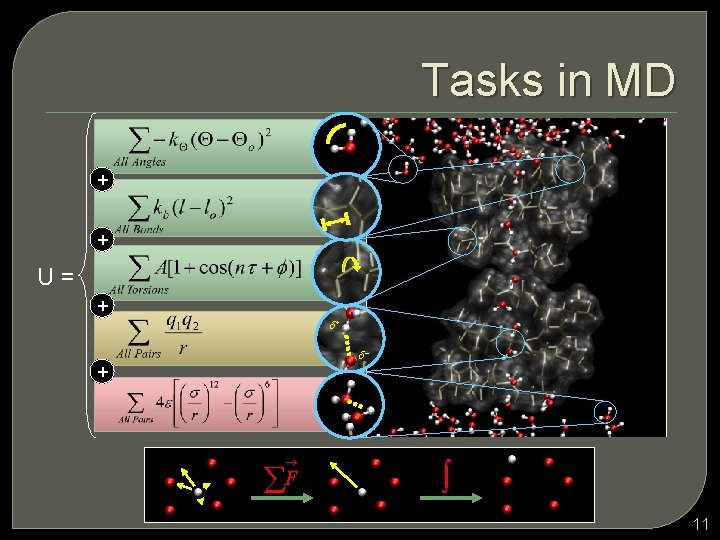

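Tasks in MD

[Figure: the potential energy U expressed as a sum of interaction terms, including electrostatic interactions between partial charges (δ+, δ−); the resulting forces (ΣF) are integrated (∫) to advance the simulation]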

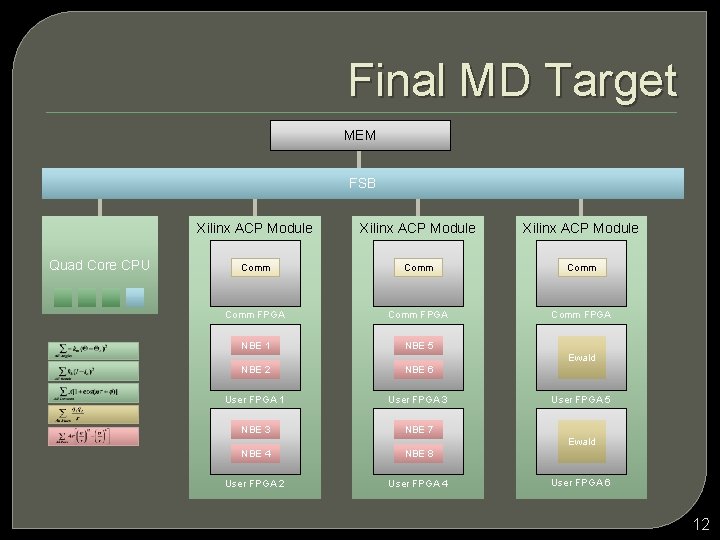

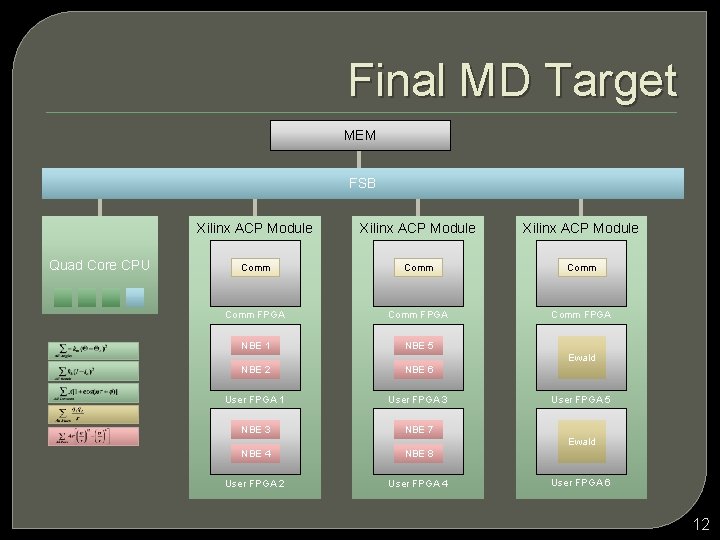

Final MD Target

[Figure: memory and a quad-core CPU on the FSB alongside a Xilinx ACP module; two Comm FPGAs connect User FPGAs 1 to 4, each hosting NBE engines, and User FPGAs 5 and 6, each hosting an Ewald engine]

Conclusion
- The target system combines software running on CPUs with FPGA hardware accelerators.
- The key to performance is identifying hotspots and adding corresponding hardware acceleration.
- The hardware engineer need only focus on a small part of the overall application.
- MPI facilitates hardware/software isolation and collaboration.

Acknowledgements
- SOCRN
- Prof. Paul Chow
- Prof. Régis Pomès (1, 2)
- Arches Computing: Arun Patel, Manuel Saldaña
- TMD Group: Danny Gupta, Alireza Heiderbarghi, Alex Kaganov, Daniel Ly, Chris Madill (1, 2), Daniel Nunes, Emanuel Ramalho, David Woods
- Past Members: David Chui, Christopher Comis, Sam Lee, Daniel Ly, Lesley Shannon, Mike Yan

(1) Molecular Structure and Function, The Hospital for Sick Children
(2) Department of Biochemistry, University of Toronto

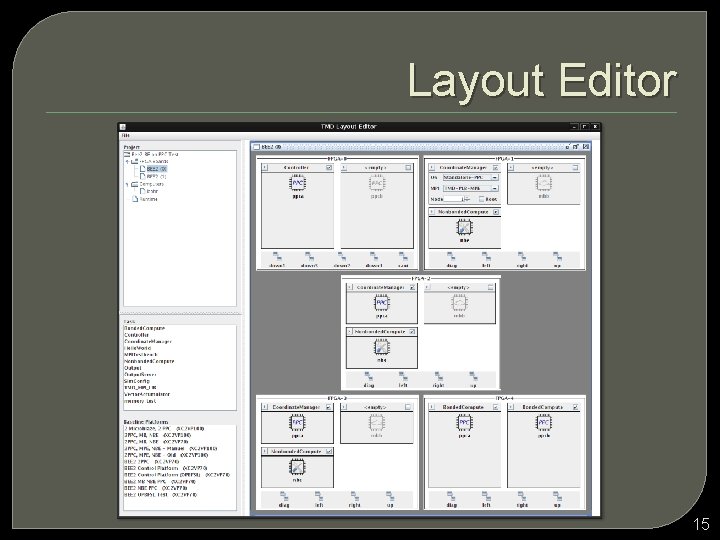

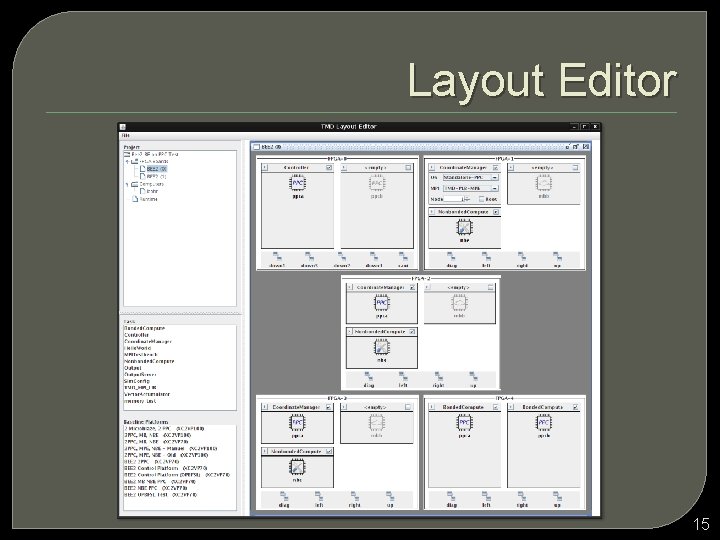

Layout Editor

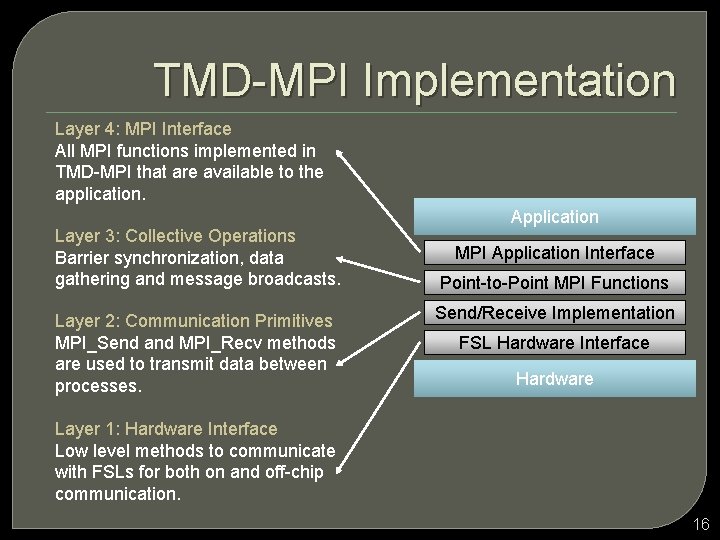

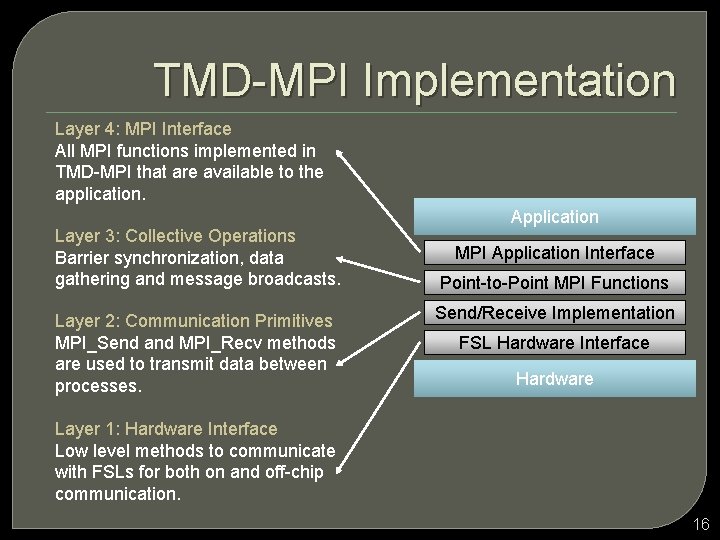

TMD-MPI Implementation
- Layer 4: MPI Interface. All MPI functions implemented in TMD-MPI that are available to the application.
- Layer 3: Collective Operations. Barrier synchronization, data gathering and message broadcasts.
- Layer 2: Communication Primitives. MPI_Send and MPI_Recv are used to transmit data between processes.
- Layer 1: Hardware Interface. Low-level methods to communicate with FSLs for both on-chip and off-chip communication.

[Figure: layer stack from the application down to the hardware: MPI Application Interface, Point-to-Point MPI Functions, Send/Receive Implementation, FSL Hardware Interface]

Intra-FPGA Communication
- Communication links are based on Fast Simplex Links (FSL)
  - Unidirectional point-to-point FIFO
  - Provides buffering and flow control
  - Can be used to isolate different clock domains
- FSLs simplify component interconnects
  - Standardized interface, used by both hardware engines and processors
  - System modules can be assembled rapidly
- Application-specific network topologies can be defined

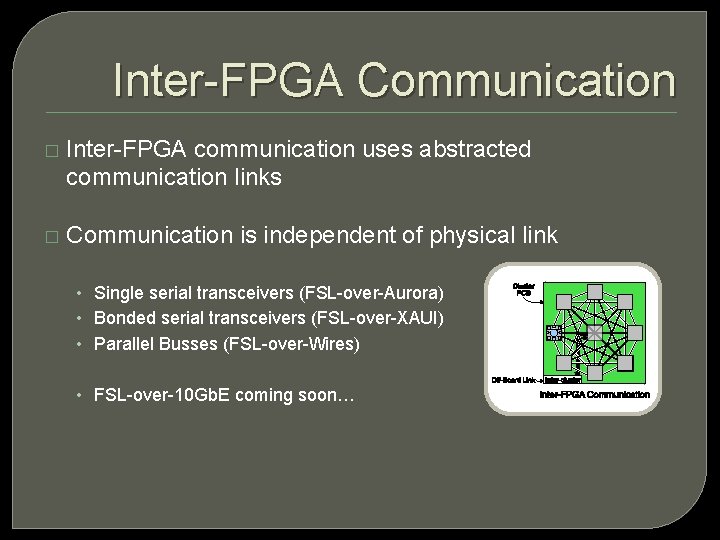

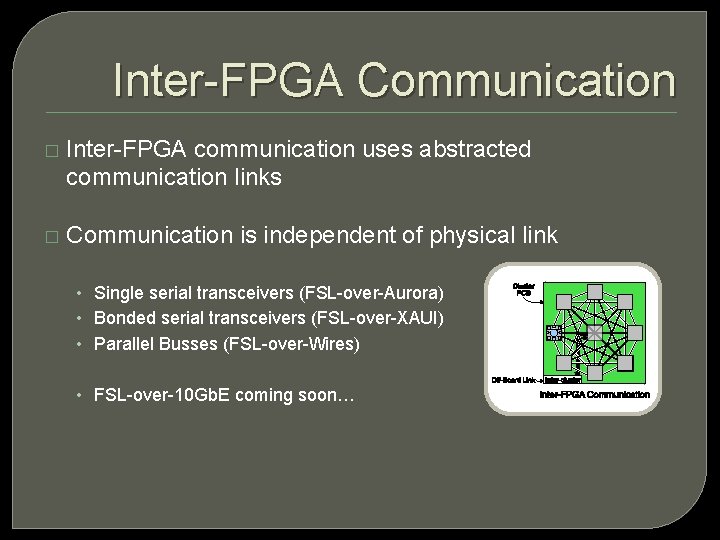

Inter-FPGA Communication
- Inter-FPGA communication uses abstracted communication links
- Communication is independent of the physical link
  - Single serial transceivers (FSL-over-Aurora)
  - Bonded serial transceivers (FSL-over-XAUI)
  - Parallel buses (FSL-over-Wires)
  - FSL-over-10 GbE coming soon…