No Free Lunch, No Hidden Cost: How Can Co-Design Help?

- Slides: 25

No Free Lunch, No Hidden Cost: How Can Co-Design Help?

X. Sharon Hu
Department of Computer Science and Engineering, University of Notre Dame
The Salishan Conference on High-Speed Computing, CSE Dept. (XHU)

Theme: Exposing Hidden Execution Costs
- Cost of execution: performance and power
  - Computation
  - Communication
  - Data motion
  - Synchronization
  - …
- How can we strike a balance between the extremes?
  - Hide as much as possible?
  - Explicitly manage "all" costs?
- My "position":
  - Expose widely and choose wisely
  - Focus on power

Why Take This Position?
- Expose widely
  - Better understand the contribution of each component
  - Allow application-specific tradeoffs
  - Provide opportunities for powerful co-design tools
- Choose wisely
  - Requires sophisticated co-design tools
  - Explore more algorithm/software options

But Easier Said Than Done!
- Heterogeneity
  - Compute nodes: (multi-core) CPU, GP-GPU, FPGA, …
  - Memory components: on-chip, on-board, disks, …
  - Communication infrastructure: bus, NoC, networks, …
- Parallelism ("non-determinism")
  - Data access: movement, coherence, …
  - Resource contention
  - Synchronization

Outline
- Why expose widely?
- How to benefit from exposing widely?
- How to choose wisely?
- Going forward

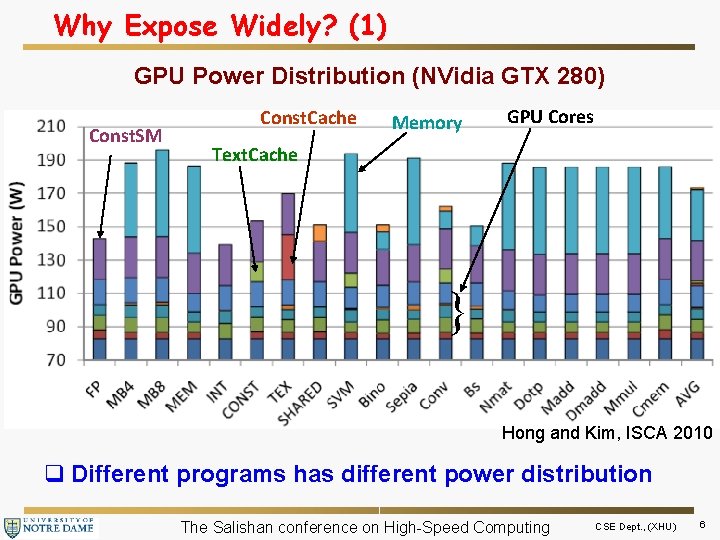

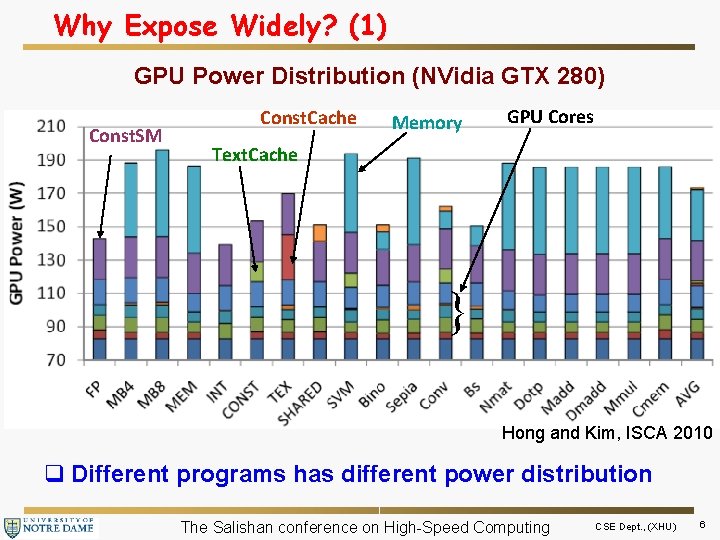

Why Expose Widely? (1)
[Figure: GPU power distribution (NVIDIA GTX 280) across Const SM, Const cache, memory, GPU cores and texture cache; Hong and Kim, ISCA 2010]
- Different programs have different power distributions

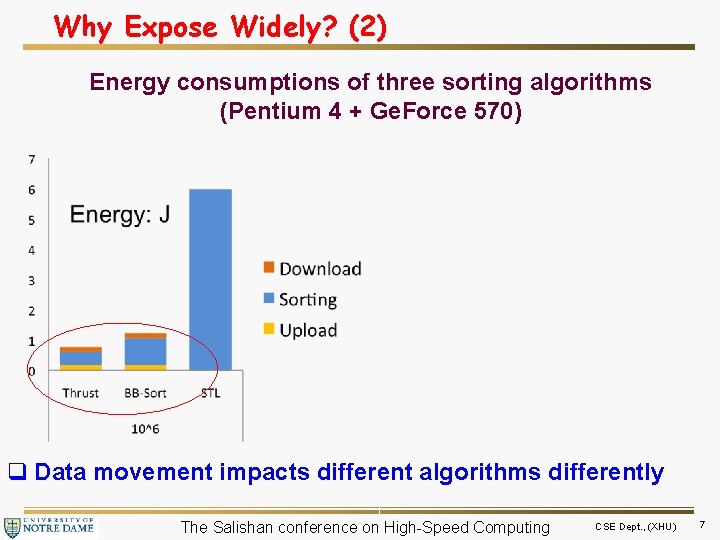

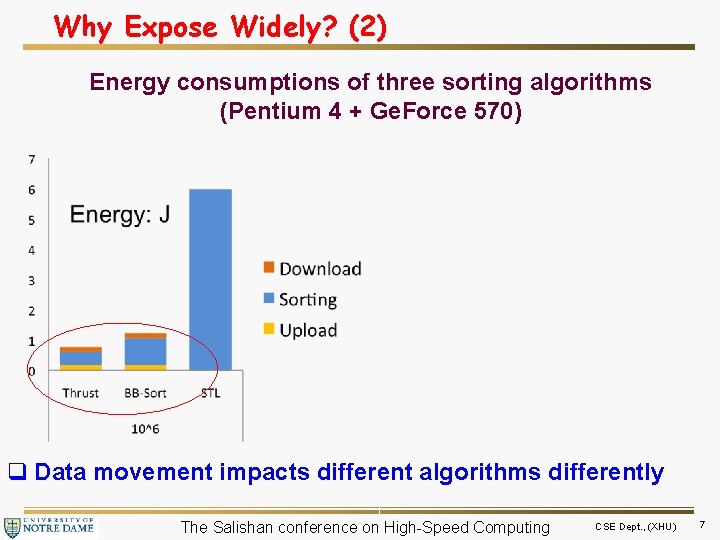

Why Expose Widely? (2)
[Figure: Energy consumption of three sorting algorithms (Pentium 4 + GeForce 570)]
- Data movement affects different algorithms differently

Why Expose Widely? (3)
[Figure: Performance degradation due to memory bus contention; Masaaki Kondo et al., SIGARCH 2007]
- The degradation is application dependent

How to Benefit from "Exposing Widely"?
- Co-design is the key
- Expose all factors impacting the "execution model"
  - Computation: processing resources
  - Data motion: memory components and hierarchy
  - Communication: bus and network
  - Resource contention, synchronization, …
  - Some examples
    - Software macromodeling
    - Hardware module-based modeling
- Optimize through power management
  - Keep in mind Amdahl's law
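
To make the Amdahl's-law caveat concrete, the sketch below applies the same reasoning to energy: if a managed component accounts for a fraction f of total energy and power management cuts that component's energy by a factor s, the normalized total becomes (1 - f) + f/s, and it can never drop below 1 - f. The function names and the 30% / 2x numbers are illustrative assumptions, not data from the talk.

```python
def amdahl_energy(fraction: float, reduction: float) -> float:
    """Normalized total energy after reducing one component's energy.

    fraction  -- share of total energy spent in the managed component (0..1)
    reduction -- factor by which that component's energy is cut (>= 1)
    Returns the new total relative to the original (1.0 = unchanged).
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    if reduction < 1.0:
        raise ValueError("reduction must be >= 1")
    return (1.0 - fraction) + fraction / reduction


def amdahl_energy_limit(fraction: float) -> float:
    """Best case: the managed component's energy is eliminated entirely."""
    return 1.0 - fraction


if __name__ == "__main__":
    # Hypothetical case: memory is 30% of energy and DVFS halves its energy.
    print(amdahl_energy(0.30, 2.0))   # about 0.85, i.e. 15% total savings
    print(amdahl_energy_limit(0.30))  # no policy on memory alone beats 0.7
```

Even an aggressive policy on one component is capped by the share of energy it actually controls, which is why exposing where the energy goes matters before choosing what to manage.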

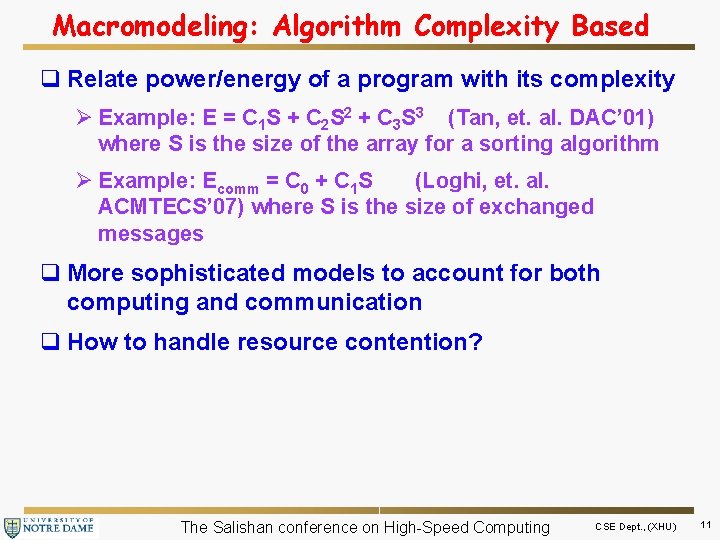

Macromodeling: Algorithm Complexity Based
- Relate the power/energy of a program to its complexity
  - Example: $E = C_1 S + C_2 S^2 + C_3 S^3$ (Tan et al., DAC'01), where $S$ is the size of the array for a sorting algorithm
  - Example: $E_{comm} = C_0 + C_1 S$ (Loghi et al., ACM TECS'07), where $S$ is the size of the exchanged messages
- More sophisticated models to account for both computation and communication
- How to handle resource contention?
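
The coefficients of a complexity-based macromodel such as $E = C_1 S + C_2 S^2 + C_3 S^3$ are typically obtained by measuring energy at several input sizes and fitting. Below is a minimal least-squares sketch in plain Python (normal equations, no external libraries). The helper names and the synthetic characterization data are hypothetical, and the cited papers may use a different fitting procedure.

```python
from typing import List, Sequence, Tuple


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: use more distinct sizes S")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_macromodel(samples: Sequence[Tuple[float, float]], degree: int = 3) -> List[float]:
    """Least-squares fit of E = C1*S + ... + Cd*S^d (no constant term).

    samples -- (S, measured energy) pairs from a characterization run.
    Returns [C1, ..., Cd].
    """
    basis = [[s ** k for k in range(1, degree + 1)] for s, _ in samples]
    energies = [e for _, e in samples]
    # Normal equations: (X^T X) c = X^T E
    xtx = [[sum(row[i] * row[j] for row in basis) for j in range(degree)]
           for i in range(degree)]
    xte = [sum(row[i] * e for row, e in zip(basis, energies)) for i in range(degree)]
    return _solve(xtx, xte)


def predict(coeffs: Sequence[float], s: float) -> float:
    """Evaluate the fitted macromodel at input size s."""
    return sum(c * s ** (k + 1) for k, c in enumerate(coeffs))


if __name__ == "__main__":
    # Hypothetical characterization data generated from known coefficients.
    true = (2.0, 0.5, 0.01)
    data = [(float(s), predict(true, s)) for s in range(1, 9)]
    print(fit_macromodel(data))  # close to [2.0, 0.5, 0.01]
```

The same approach covers the communication model $E_{comm} = C_0 + C_1 S$ by adding a constant column to the basis; this version omits the constant term to match the sorting model.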

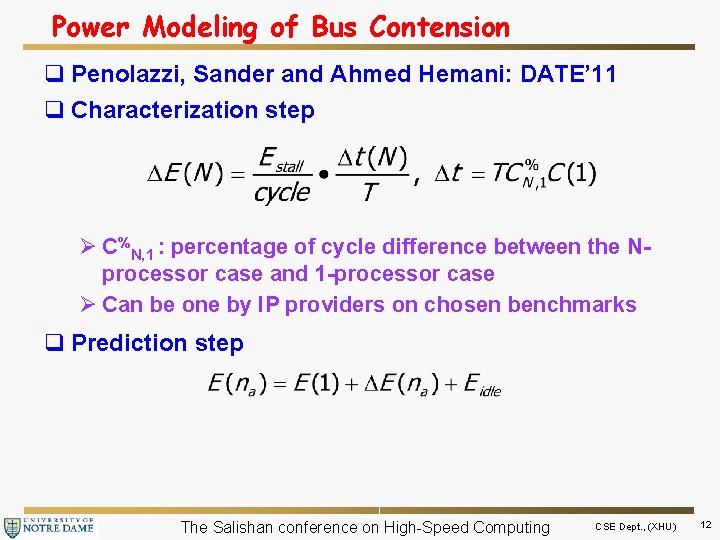

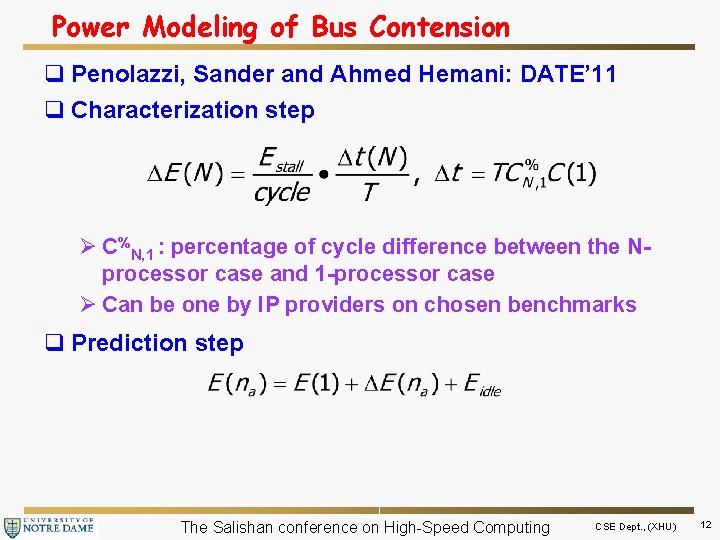

Power Modeling of Bus Contention
- Penolazzi, Sander and Hemani, DATE'11
- Characterization step
  - $C\%_{N,1}$: percentage difference in cycle count between the N-processor case and the 1-processor case
  - Can be done by IP providers on chosen benchmarks
- Prediction step
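
A minimal sketch of the two steps, assuming the characterized $C\%_{N,1}$ is averaged over benchmarks and that prediction simply scales a 1-processor cycle count by that overhead. The averaging, the scaling rule, and the per-cycle stall energy are illustrative assumptions; the DATE'11 method itself is not reproduced here.

```python
from statistics import mean
from typing import Dict, Tuple


def contention_percentage(cycles_1: float, cycles_n: float) -> float:
    """C%_{N,1}: percentage cycle difference between the N-processor and the
    1-processor run of the same benchmark."""
    return 100.0 * (cycles_n - cycles_1) / cycles_1


def characterize(bench_cycles: Dict[str, Tuple[float, float]]) -> float:
    """Characterization step (assumed: plain average over chosen benchmarks).
    Each entry maps a benchmark name to (cycles with 1 PU, cycles with N PUs)."""
    return mean(contention_percentage(c1, cn) for c1, cn in bench_cycles.values())


def predict_cycles(cycles_1: float, c_percent: float) -> float:
    """Prediction step (assumed form): inflate a 1-processor cycle count by
    the characterized contention overhead."""
    return cycles_1 * (1.0 + c_percent / 100.0)


def predict_energy(energy_1: float, cycles_1: float, c_percent: float,
                   stall_energy_per_cycle: float) -> float:
    """Hypothetical energy estimate: each extra contention cycle costs a
    fixed stall energy on top of the 1-processor energy."""
    extra = predict_cycles(cycles_1, c_percent) - cycles_1
    return energy_1 + extra * stall_energy_per_cycle


if __name__ == "__main__":
    benches = {"bench_a": (1000.0, 1100.0), "bench_b": (2000.0, 2300.0)}
    c = characterize(benches)          # mean of 10% and 15%
    print(c, predict_cycles(4000.0, c))
```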

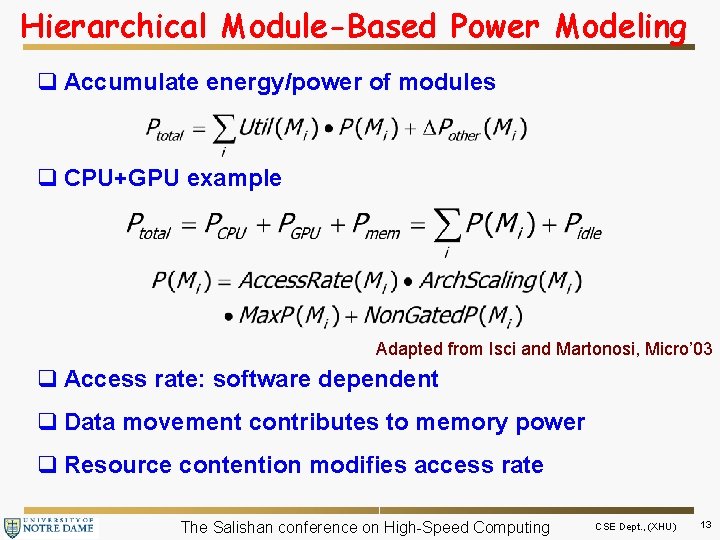

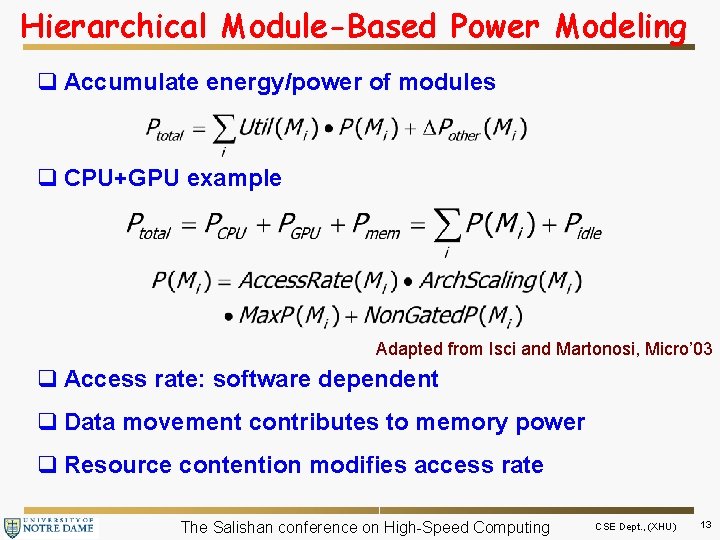

Hierarchical Module-Based Power Modeling
- Accumulate the energy/power of individual modules
- CPU+GPU example (adapted from Isci and Martonosi, MICRO'03)
- Access rate: software dependent
- Data movement contributes to memory power
- Resource contention modifies the access rate
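
The sketch below accumulates power over a module hierarchy. Each leaf unit uses the per-unit form commonly associated with Isci and Martonosi's counter-based model (access rate x architectural scaling x maximum power, plus non-gated clock power). Modeling contention as a multiplicative change to the access rate, and all unit names and wattages, are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Unit:
    """Leaf hardware unit, e.g. an ALU, a cache, or DRAM (values hypothetical)."""
    name: str
    max_power: float               # W when accessed every cycle
    access_rate: float             # normalized to [0, 1]; software dependent
    arch_scaling: float = 1.0      # architectural scaling factor
    clock_power: float = 0.0       # non-gated clock power, W
    contention_scale: float = 1.0  # assumed effect of contention on access rate

    def power(self) -> float:
        rate = min(1.0, self.access_rate * self.contention_scale)
        return rate * self.arch_scaling * self.max_power + self.clock_power


@dataclass
class Module:
    """Composite module: idle power plus the sum over its units and submodules."""
    name: str
    units: List[Unit] = field(default_factory=list)
    children: List["Module"] = field(default_factory=list)
    idle_power: float = 0.0

    def power(self) -> float:
        return (self.idle_power
                + sum(u.power() for u in self.units)
                + sum(m.power() for m in self.children))


if __name__ == "__main__":
    cpu = Module("CPU", units=[Unit("int_alu", 4.0, 0.5), Unit("l2_cache", 3.0, 0.2)])
    gpu = Module("GPU", units=[Unit("cores", 60.0, 0.8), Unit("texture_cache", 5.0, 0.3)])
    # Contention on the shared memory is assumed to lower DRAM's effective access rate.
    dram = Module("DRAM", units=[Unit("dram", 20.0, 0.4, contention_scale=0.75)])
    system = Module("CPU+GPU system", children=[cpu, gpu, dram], idle_power=30.0)
    for m in system.children:
        print(m.name, round(m.power(), 2))
    print("total", round(system.power(), 2))
```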

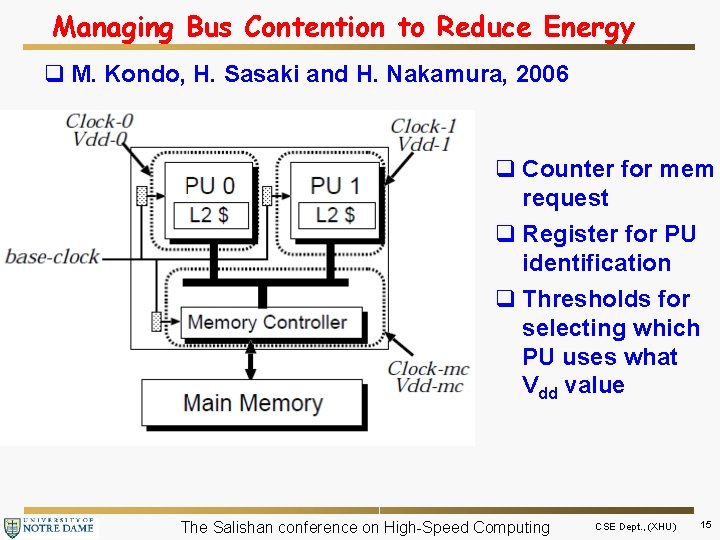

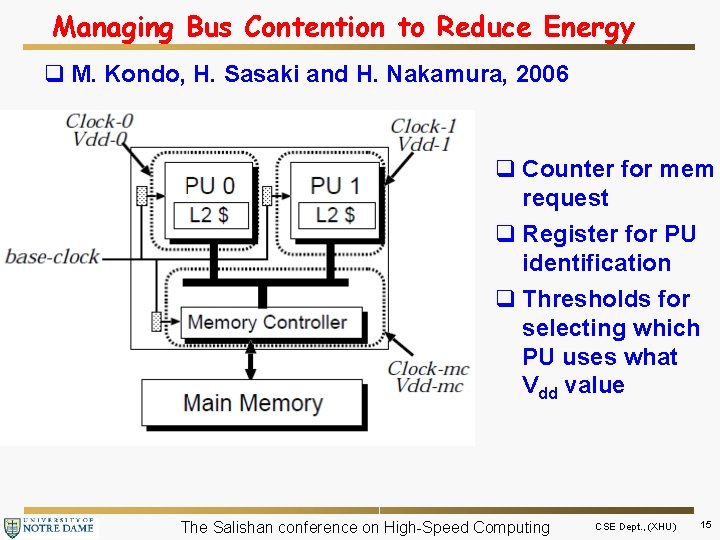

Managing Bus Contention to Reduce Energy
- M. Kondo, H. Sasaki and H. Nakamura, 2006
- Counter for memory requests
- Register for PU identification
- Thresholds for selecting which Vdd value each PU uses
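
The counter/register/threshold mechanism can be sketched as a per-interval lookup: memory requests are counted per PU id, and each count is compared against ascending thresholds to pick a supply voltage. The threshold values, the Vdd levels, and the policy that more memory-bound PUs receive a lower Vdd are hypothetical, not taken from Kondo et al.

```python
from bisect import bisect_right
from collections import Counter
from typing import Dict, Iterable, Sequence


def count_requests(trace: Iterable[int]) -> Dict[int, int]:
    """Per-PU memory-request counters for one sampling interval.
    Each trace entry is the id of the PU that issued the request."""
    return dict(Counter(trace))


def select_vdd(counts: Dict[int, int], thresholds: Sequence[int],
               vdd_levels: Sequence[float]) -> Dict[int, float]:
    """Pick a Vdd per PU from its request count.

    vdd_levels runs from highest to lowest voltage and has one more entry than
    the ascending thresholds; a PU gets vdd_levels[j], where j is the number of
    thresholds less than or equal to its count (assumed policy).
    """
    if len(vdd_levels) != len(thresholds) + 1:
        raise ValueError("need exactly one more Vdd level than thresholds")
    if list(thresholds) != sorted(thresholds):
        raise ValueError("thresholds must be ascending")
    return {pu: vdd_levels[bisect_right(thresholds, c)] for pu, c in counts.items()}


if __name__ == "__main__":
    trace = [0] * 120 + [1] * 900 + [2] * 4000  # hypothetical interval
    counts = count_requests(trace)
    print(select_vdd(counts, thresholds=[500, 2000], vdd_levels=[1.2, 1.0, 0.8]))
```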

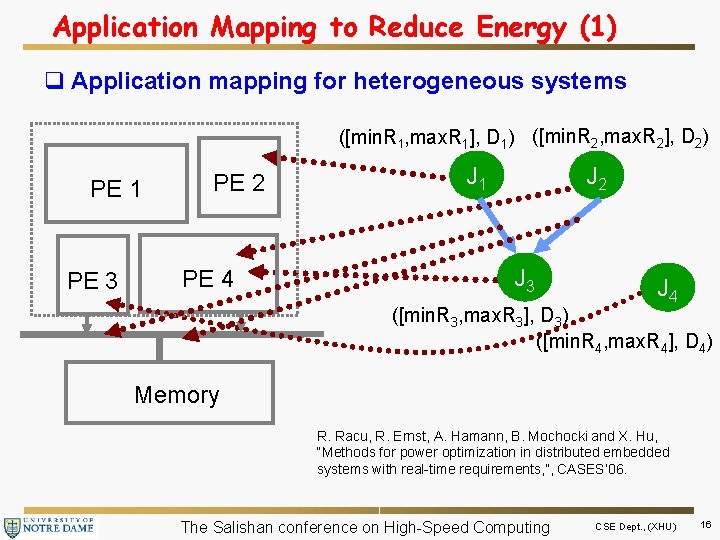

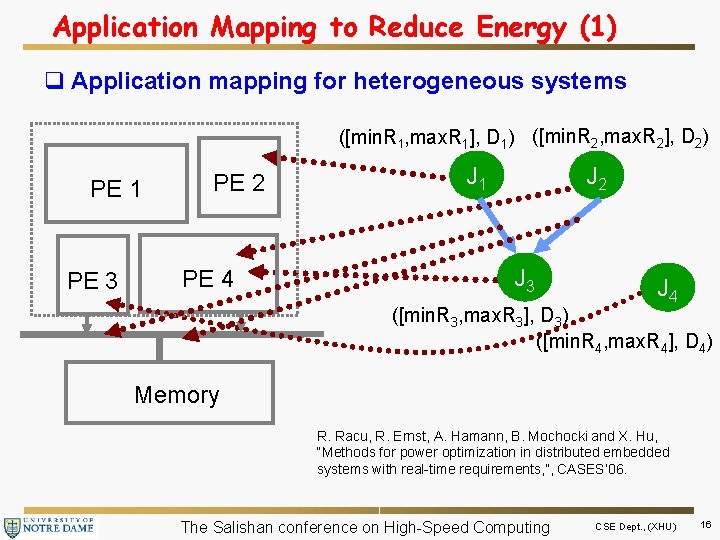

Application Mapping to Reduce Energy (1)
- Application mapping for heterogeneous systems

[Figure: jobs J1 to J4, each annotated with ([minR_i, maxR_i], D_i), mapped onto processing elements PE1 to PE4 that share a memory]

R. Racu, R. Ernst, A. Hamann, B. Mochocki and X. Hu, "Methods for power optimization in distributed embedded systems with real-time requirements," CASES'06.

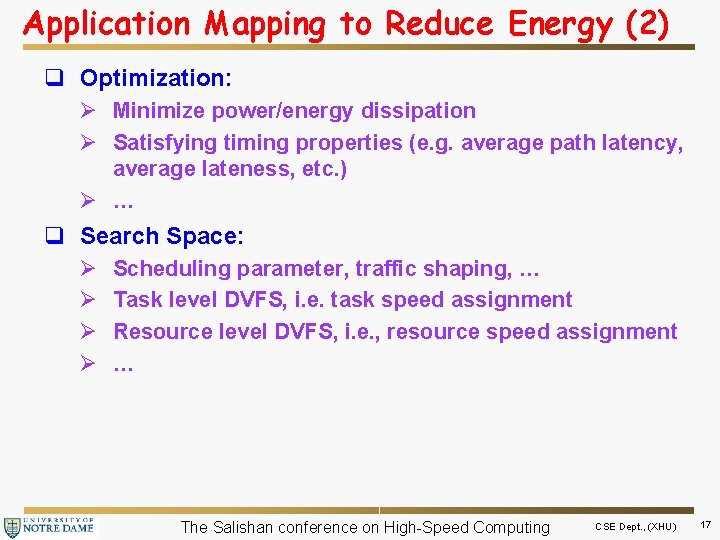

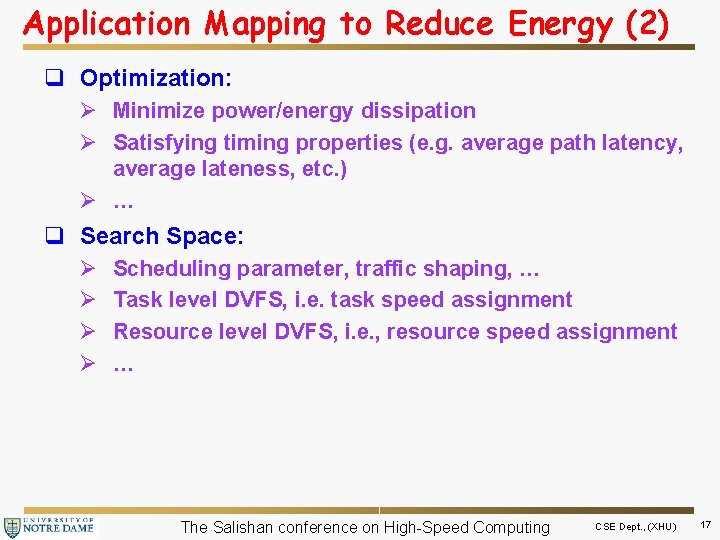

Application Mapping to Reduce Energy (2)
- Optimization:
  - Minimize power/energy dissipation
  - Satisfy timing properties (e.g., average path latency, average lateness)
  - …
- Search space:
  - Scheduling parameters, traffic shaping, …
  - Task-level DVFS, i.e., task speed assignment
  - Resource-level DVFS, i.e., resource speed assignment
  - …
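
As a toy instance of the task-level DVFS part of the search space, the sketch below exhaustively assigns one discrete speed per task in a chain so that total latency meets a deadline at minimum energy. It assumes execution time scales as cycles/speed and dynamic energy as cycles x speed^2 (supply voltage scaled with frequency); scheduling parameters, traffic shaping and resource-level DVFS are omitted.

```python
from itertools import product
from typing import Optional, Sequence, Tuple


def assign_speeds(cycles: Sequence[float], speeds: Sequence[float],
                  deadline: float, f_max: float = 1.0
                  ) -> Optional[Tuple[Tuple[float, ...], float]]:
    """Exhaustive task-level speed assignment for a chain of tasks.

    Assumed model: tasks run back to back, time = cycles / (speed * f_max),
    and dynamic energy per task is proportional to cycles * speed**2.
    Returns (speeds, energy) of the lowest-energy assignment that meets the
    deadline, or None if no assignment does.
    """
    best = None
    for assignment in product(speeds, repeat=len(cycles)):
        latency = sum(w / (s * f_max) for w, s in zip(cycles, assignment))
        if latency > deadline:
            continue  # violates the timing constraint
        energy = sum(w * s ** 2 for w, s in zip(cycles, assignment))
        if best is None or energy < best[1]:
            best = (assignment, energy)
    return best


if __name__ == "__main__":
    # Hypothetical workloads (in units of f_max cycles) and speed levels.
    print(assign_speeds([2.0, 1.0], [0.5, 1.0], deadline=5.0))
    print(assign_speeds([2.0, 1.0], [0.5, 1.0], deadline=2.0))  # infeasible
```

Enumeration grows as (number of speeds)^(number of tasks), which is why heuristic searches such as genetic algorithms are used on realistic systems.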

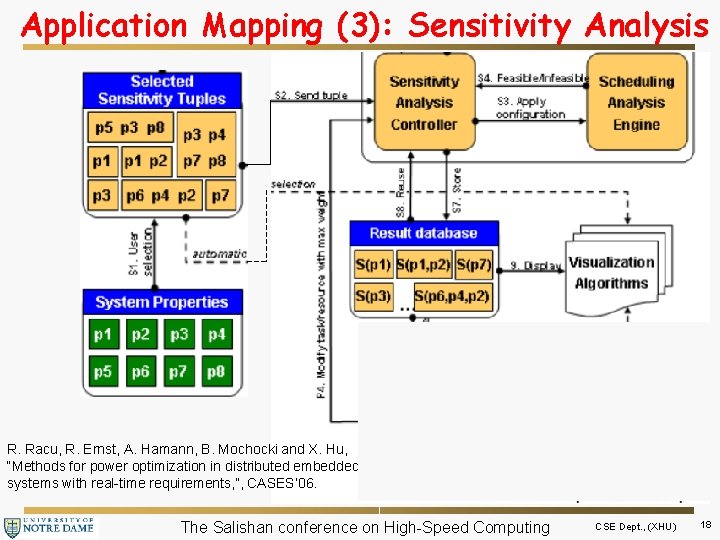

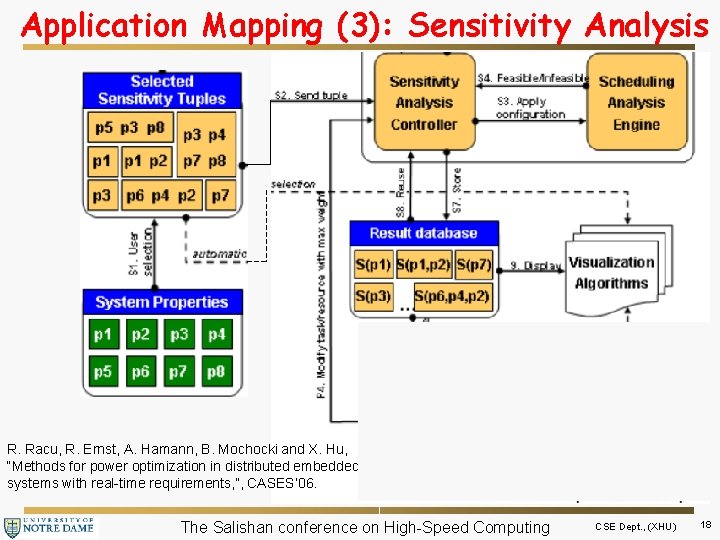

Application Mapping (3): Sensitivity Analysis

R. Racu, R. Ernst, A. Hamann, B. Mochocki and X. Hu, "Methods for power optimization in distributed embedded systems with real-time requirements," CASES'06.

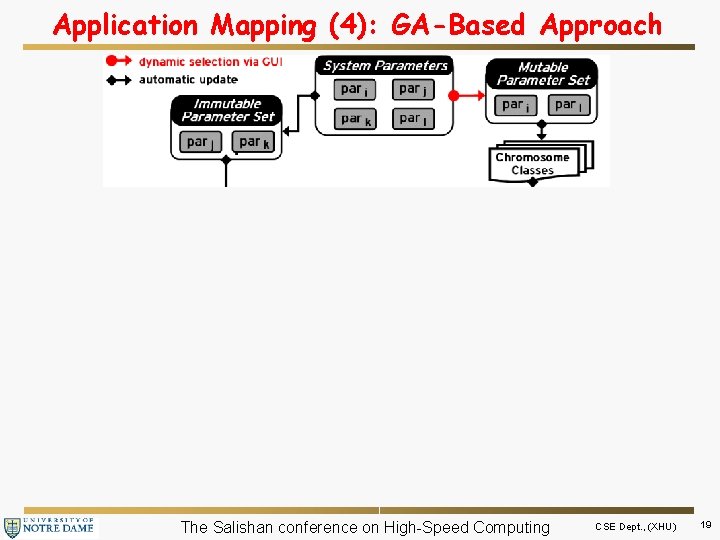

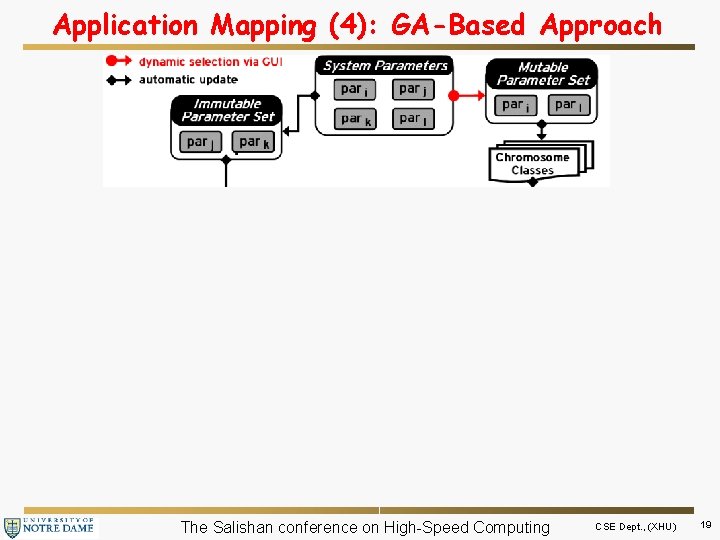

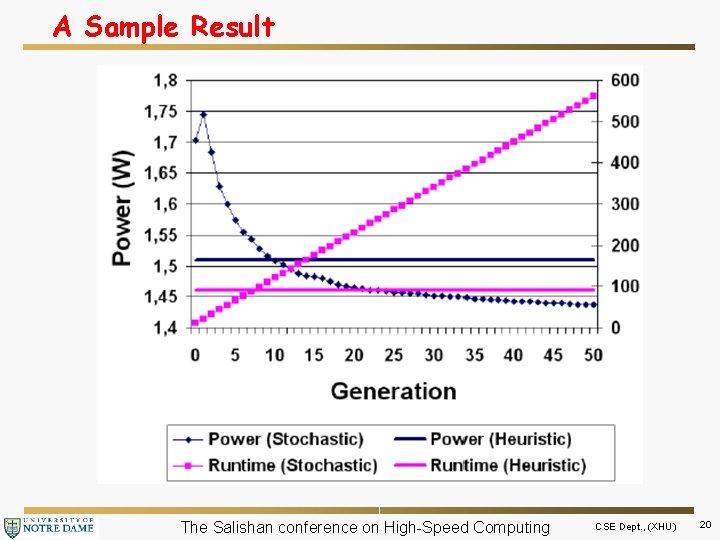

Application Mapping (4): GA-Based Approach
[Figure: GA-based optimization flow; visible steps include "2'. Scheduling trace" and "3'. Power dissipation", the latter produced by a power analyzer]
- A power model is needed
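
A compact genetic-algorithm sketch of the loop on this slide: candidate speed assignments are evaluated by a stand-in "power analyzer", and fitness combines energy with a penalty for missing the deadline. The genome encoding, penalty weight, tournament selection, one-point crossover and energy model (cycles x speed^2) are assumptions chosen for brevity, not details of the CASES'06 tool.

```python
import random
from typing import List, Sequence, Tuple

Genome = List[int]  # one speed index per task


def analyze(genome: Genome, cycles: Sequence[float],
            speeds: Sequence[float]) -> Tuple[float, float]:
    """Stand-in power analyzer for a chain of tasks (assumed model):
    time = cycles / speed, energy proportional to cycles * speed**2."""
    latency = sum(w / speeds[g] for w, g in zip(cycles, genome))
    energy = sum(w * speeds[g] ** 2 for w, g in zip(cycles, genome))
    return latency, energy


def fitness(genome: Genome, cycles: Sequence[float], speeds: Sequence[float],
            deadline: float, penalty: float = 1e3) -> float:
    """Lower is better: energy plus a penalty per unit of deadline overrun."""
    latency, energy = analyze(genome, cycles, speeds)
    return energy + penalty * max(0.0, latency - deadline)


def ga_search(cycles: Sequence[float], speeds: Sequence[float], deadline: float,
              pop_size: int = 20, generations: int = 60,
              mutation_rate: float = 0.1, seed: int = 0) -> Tuple[Genome, float]:
    """Evolve per-task speed indices; returns (best genome, its energy)."""
    rng = random.Random(seed)
    n, k = len(cycles), len(speeds)
    fastest = max(range(k), key=lambda i: speeds[i])

    def score(g: Genome) -> float:
        return fitness(g, cycles, speeds, deadline)

    # Random initial population, seeded with the all-fastest schedule.
    pop = [[rng.randrange(k) for _ in range(n)] for _ in range(pop_size - 1)]
    pop.append([fastest] * n)
    for _ in range(generations):
        pop.sort(key=score)
        next_pop = pop[:2]  # elitism: carry over the two best genomes
        while len(next_pop) < pop_size:
            # Tournament selection: best of three random individuals.
            p1 = min(rng.sample(pop, 3), key=score)
            p2 = min(rng.sample(pop, 3), key=score)
            cut = rng.randrange(1, n) if n > 1 else 0
            child = p1[:cut] + p2[cut:]  # one-point crossover
            # Mutation: resample each gene with probability mutation_rate.
            child = [rng.randrange(k) if rng.random() < mutation_rate else g
                     for g in child]
            next_pop.append(child)
        pop = next_pop
    best = min(pop, key=score)
    return best, analyze(best, cycles, speeds)[1]


if __name__ == "__main__":
    cycles = [2.0, 1.0, 1.0, 3.0]  # hypothetical task workloads
    speeds = [0.5, 0.75, 1.0]      # normalized DVFS levels
    genome, energy = ga_search(cycles, speeds, deadline=10.0)
    print([speeds[g] for g in genome], energy)
```

Elitism guarantees the returned schedule is never worse than the all-fastest seed; the penalty weight must be large enough that any real deadline miss outweighs the energy savings.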

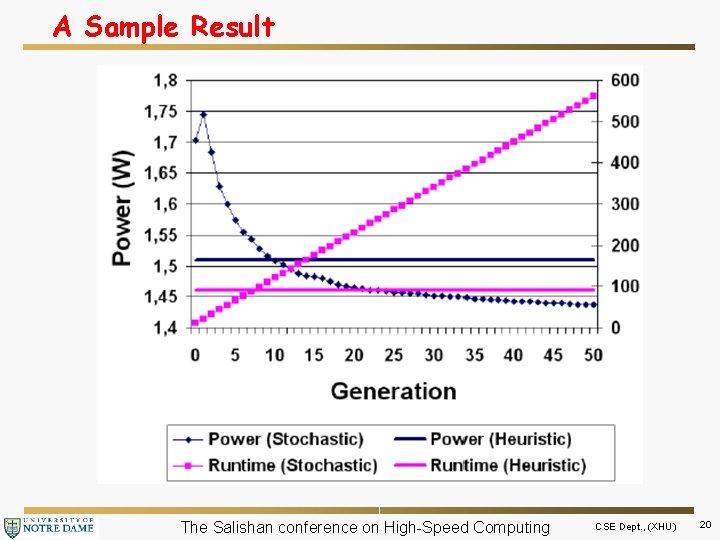

A Sample Result

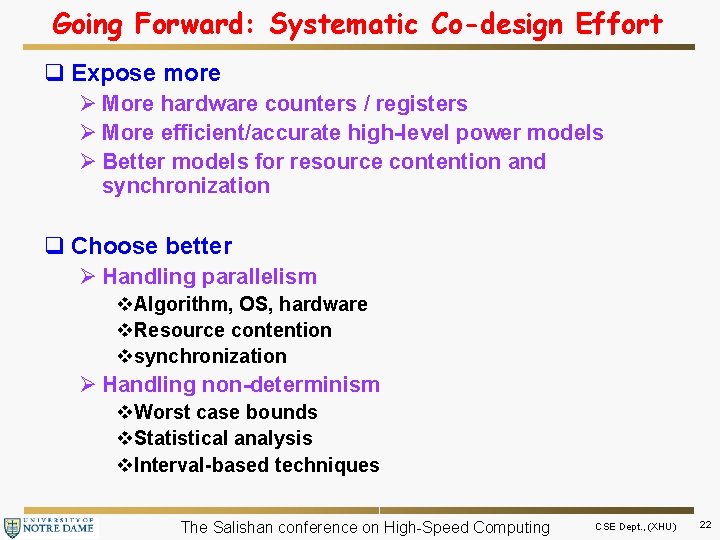

Going Forward: Systematic Co-design Effort
- Expose more
  - More hardware counters/registers
  - More efficient/accurate high-level power models
  - Better models for resource contention and synchronization
- Choose better
  - Handling parallelism
    - Algorithm, OS, hardware
    - Resource contention
    - Synchronization
  - Handling non-determinism
    - Worst-case bounds
    - Statistical analysis
    - Interval-based techniques

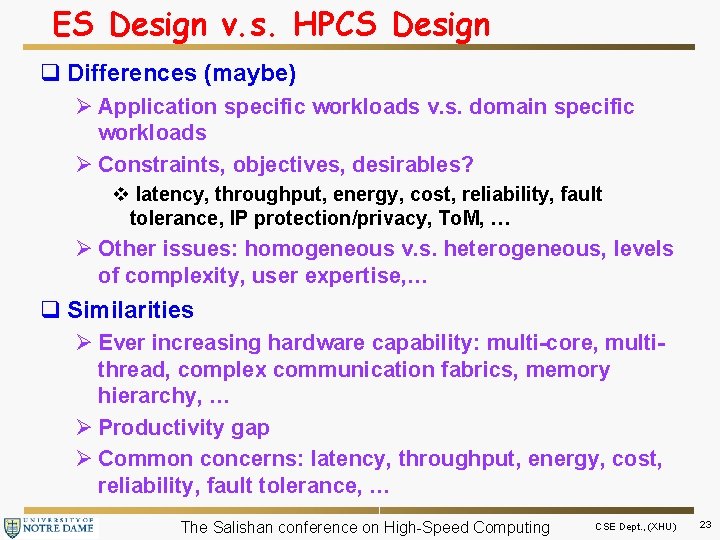

ES Design vs. HPCS Design
- Differences (maybe)
  - Application-specific workloads vs. domain-specific workloads
  - Constraints, objectives, desirables?
    - Latency, throughput, energy, cost, reliability, fault tolerance, IP protection/privacy, time-to-market (ToM), …
  - Other issues: homogeneous vs. heterogeneous, levels of complexity, user expertise, …
- Similarities
  - Ever-increasing hardware capability: multi-core, multithreading, complex communication fabrics, memory hierarchy, …
  - Productivity gap
  - Common concerns: latency, throughput, energy, cost, reliability, fault tolerance, …

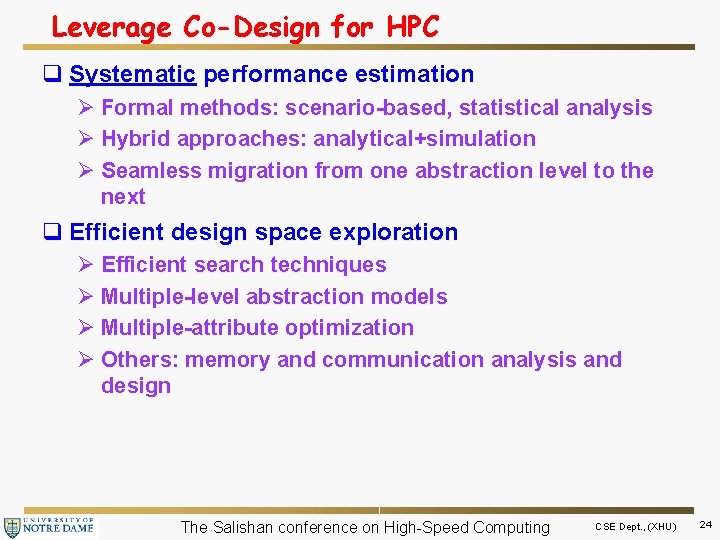

Leverage Co-Design for HPC
- Systematic performance estimation
  - Formal methods: scenario-based, statistical analysis
  - Hybrid approaches: analytical + simulation
  - Seamless migration from one abstraction level to the next
- Efficient design space exploration
  - Efficient search techniques
  - Multiple-level abstraction models
  - Multiple-attribute optimization
  - Others: memory and communication analysis and design
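
Multiple-attribute optimization in design space exploration usually starts by discarding dominated design points. This sketch keeps the Pareto front over objective vectors where lower is better; the design names and (latency, energy) values in the usage example are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[str, Tuple[float, ...]]  # (design name, objectives; lower is better)


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """a dominates b if it is no worse in every objective and better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: Sequence[Point]) -> List[Point]:
    """Keep the non-dominated design points (O(n^2), fine for small spaces)."""
    return [p for p in points if not any(dominates(q[1], p[1]) for q in points)]


if __name__ == "__main__":
    designs = [("A", (10.0, 5.0)), ("B", (8.0, 7.0)),
               ("C", (12.0, 6.0)), ("D", (9.0, 5.0))]  # (latency ms, energy J)
    print([name for name, _ in pareto_front(designs)])
```

The designer (or a later weighted or constrained step) then chooses among the remaining tradeoff points rather than among the whole space.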

Thank you!