GPU VSIPL: High Performance VSIPL Implementation for GPUs

- Slides: 15

GPU VSIPL: High Performance VSIPL Implementation for GPUs

Andrew Kerr, Dan Campbell*, Mark Richards, Mike Davis

andrew.kerr@gtri.gatech.edu, dan.campbell@gtri.gatech.edu, mark.richards@ece.gatech.edu, mike.davis@gtri.gatech.edu

High Performance Embedded Computing (HPEC) Workshop, 24 September 2008

Distribution Statement (A): Approved for public release; distribution is unlimited.

This work was supported in part by DARPA and AFRL under contracts FA8750-06-1-0012 and FA8650-07-C-7724. The opinions expressed are those of the authors.

Signal Processing on Graphics Processors

- GPUs' original role: turning 3-D polygons into 2-D pixels…
- …which also makes them a cheap & plentiful source of FLOPs
- Leverages volume & competition in the entertainment industry
- Primary role is highly parallel and very regular
- Typically a <$500 drop-in addition to a standard PC
- Outstripping CPU capacity, and growing more quickly
- Peak theoretical throughput ~1 TFLOP
- Power draw: GTX 280 = 200 W; Q6600 = 100 W
- Graphics, the market application, still improves with more parallelism, so growth continues

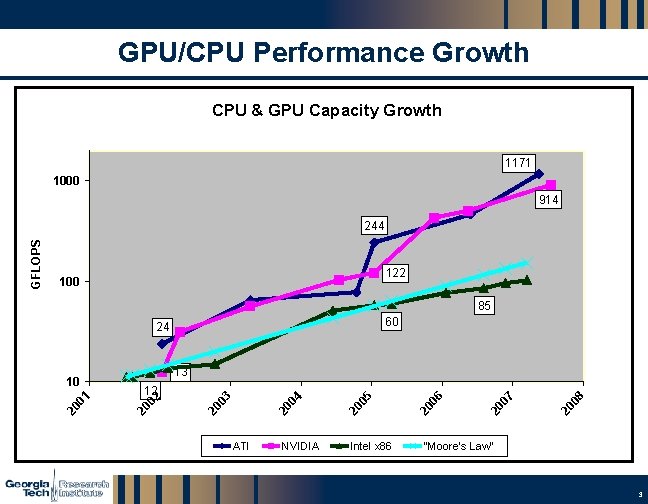

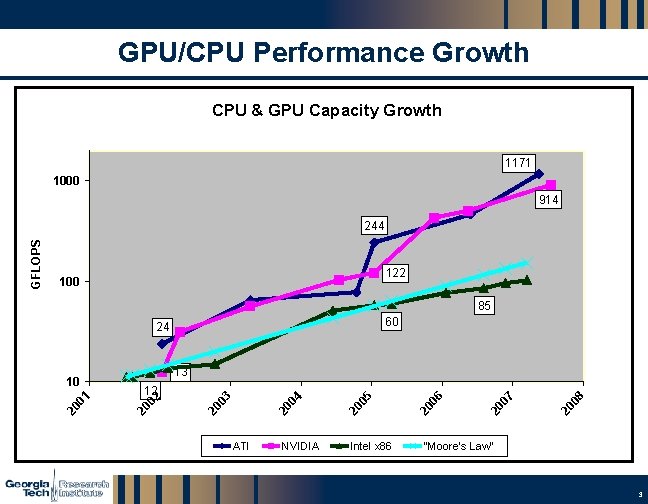

GPU/CPU Performance Growth

Chart: CPU & GPU Capacity Growth. GFLOPS (log scale) by year, 2001 to 2008, for ATI, NVIDIA, and Intel x86, with a "Moore's Law" trend line.

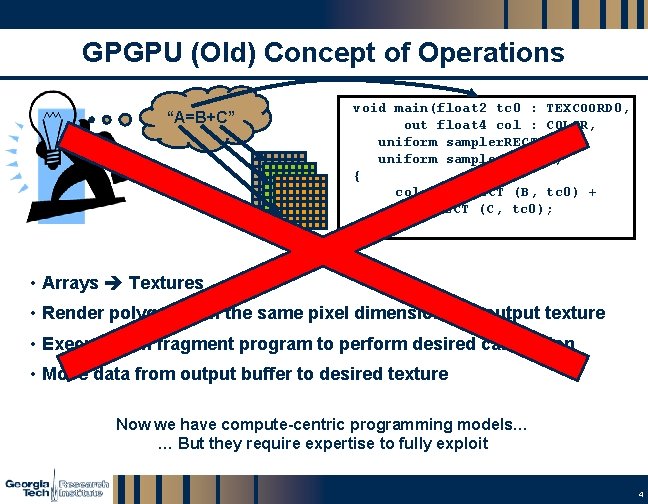

GPGPU (Old) Concept of Operations

“A=B+C”

```cg
void main(float2 tc0 : TEXCOORD0,
          out float4 col : COLOR,
          uniform samplerRECT B,
          uniform samplerRECT C)
{
    col = texRECT(B, tc0) + texRECT(C, tc0);
}
```

- Arrays → textures
- Render a polygon with the same pixel dimensions as the output texture
- Execute it with a fragment program that performs the desired calculation
- Move data from the output buffer to the desired texture

Now we have compute-centric programming models…

…but they require expertise to fully exploit.
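To make the old concept of operations concrete, here is a minimal CPU emulation in C. It is an illustrative sketch, not how any GPU driver actually works: `render_add` plays the role of rasterizing a polygon over every output pixel and running the fragment program once per pixel, and `copy_to_texture` is the final move from the output buffer into the destination texture. The `texture` struct and all function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for a GPU texture: a 2-D float array stored row-major. */
typedef struct {
    size_t width, height;
    float *texels;
} texture;

/* "Fragment program": computes one output value per pixel, mirroring
   col = texRECT(B, tc0) + texRECT(C, tc0) in the Cg code on this slide. */
static float add_fragment(const texture *b, const texture *c, size_t x, size_t y)
{
    size_t i = y * b->width + x;   /* texture lookup at coordinate (x, y) */
    return b->texels[i] + c->texels[i];
}

/* "Render" a polygon covering every pixel of the output texture,
   running the fragment program once per pixel. */
static void render_add(const texture *b, const texture *c, texture *out)
{
    assert(b->width == out->width && b->height == out->height);
    assert(c->width == out->width && c->height == out->height);
    for (size_t y = 0; y < out->height; ++y)
        for (size_t x = 0; x < out->width; ++x)
            out->texels[y * out->width + x] = add_fragment(b, c, x, y);
}

/* Move the result from the output buffer into the destination texture A. */
static void copy_to_texture(const texture *out, texture *a)
{
    assert(a->width == out->width && a->height == out->height);
    memcpy(a->texels, out->texels, out->width * out->height * sizeof(float));
}
```

Three steps and a dedicated output buffer for a single vector add is exactly the overhead that compute-centric models such as CUDA remove.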

VSIPL: Vector Signal Image Processing Library

- Portable API for linear algebra, image & signal processing
- Originally sponsored by DARPA in the mid-’90s
- Targeted embedded processors; portability was the primary aim
- Open standard, forum-based
- Initial API approved April 2000
- Functional coverage:
  - Vector, matrix, tensor
  - Basic math operations, linear algebra, solvers, FFT, FIR/IIR, bookkeeping, etc.
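VSIPL separates data storage (blocks) from the views that index into them. The C sketch below is a simplified, hypothetical model of that idea, not the VSIPL API itself (real code uses types such as `vsip_vview_f` and functions such as `vsip_vadd_f`): a view is an (offset, stride, length) triple over a block, so a single block can back several views, here the even and odd elements of one array.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified vector view: it does not own data, it only
   describes which elements of a block it covers. Not the VSIPL types. */
typedef struct {
    float    *block;   /* underlying storage */
    size_t    offset;  /* index of the view's first element in the block */
    ptrdiff_t stride;  /* distance between consecutive view elements */
    size_t    length;  /* number of elements in the view */
} vview;

/* Address of element i of the view. */
static float *vview_at(const vview *v, size_t i)
{
    assert(i < v->length);
    return v->block + (ptrdiff_t)v->offset + (ptrdiff_t)i * v->stride;
}

/* r = a + b elementwise, honoring each view's offset and stride. */
static void vadd_view(const vview *a, const vview *b, const vview *r)
{
    assert(a->length == b->length && a->length == r->length);
    for (size_t i = 0; i < r->length; ++i)
        *vview_at(r, i) = *vview_at(a, i) + *vview_at(b, i);
}
```

For a block `{0, 1, ..., 7}`, the views `{data, 0, 2, 4}` and `{data, 1, 2, 4}` select the even and odd elements, and adding them yields `{1, 5, 9, 13}`. Because operations see only views, the API can hide where the block's data actually lives.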

VSIPL & GPU: Well Matched

- VSIPL is great for exploiting GPUs
  - High-level API with good coverage for dense linear algebra
  - Allows non-experts to benefit from hero programmers
  - Explicit memory access controls
  - API precision flexibility
- GPUs are great for VSIPL
  - Improves prototyping by speeding algorithm testing
  - Cheap addition allows more engineers access to HPC
  - Large speedups without needing explicit parallelism at the application level
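The "explicit memory access controls" point refers to VSIPL's admit/release discipline (`vsip_blockadmit_f` / `vsip_blockrelease_f`): between admit and release the library owns the user's data, so a GPU backend can tell exactly when host/device copies are needed. The C sketch below models one plausible way to exploit that. The `gblock` type, its functions, and the simulated "device" buffer (ordinary heap memory here) are all hypothetical; these slides do not describe GPU-VSIPL's actual mechanism.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical block: user-owned host array plus a simulated device copy. */
typedef struct {
    float *user;      /* host array supplied by the user */
    float *device;    /* stand-in for GPU memory (plain heap memory here) */
    size_t length;
    bool   admitted;  /* true while the library owns the data */
} gblock;

static bool gblock_init(gblock *b, float *user, size_t length)
{
    b->user = user;
    b->length = length;
    b->admitted = false;
    b->device = malloc(length * sizeof(float));
    return b->device != NULL;
}

/* Admit: library takes ownership; copy host -> "device" if requested. */
static void gblock_admit(gblock *b, bool update)
{
    assert(!b->admitted);
    if (update)
        memcpy(b->device, b->user, b->length * sizeof(float));
    b->admitted = true;
}

/* A library operation: legal only while admitted, touches device data only. */
static void gblock_scale(gblock *b, float k)
{
    assert(b->admitted);
    for (size_t i = 0; i < b->length; ++i)
        b->device[i] *= k;
}

/* Release: ownership returns to the user; copy "device" -> host if requested. */
static float *gblock_release(gblock *b, bool update)
{
    assert(b->admitted);
    if (update)
        memcpy(b->user, b->device, b->length * sizeof(float));
    b->admitted = false;
    return b->user;
}

static void gblock_destroy(gblock *b)
{
    free(b->device);
    b->device = NULL;
}
```

In this model, any number of operations between admit and release run without host transfers; only the two boundary calls pay the copy cost.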

GPU-VSIPL Implementation

- Full, compliant implementation of the VSIPL Core-Lite Profile
- Fully encapsulated CUDA backend
- Leverages the CUFFT library
- All VSIPL functions accelerated
- Core-Lite Profile:
  - Single-precision floating point, some basic integer
  - Vector & scalar, complex & real support
  - Basic elementwise, FFT, FIR, histogram, RNG, and support functions
  - Full list: http://www.vsipl.org/coreliteprofile.pdf
- Also some matrix support, including vsip_fftm_f

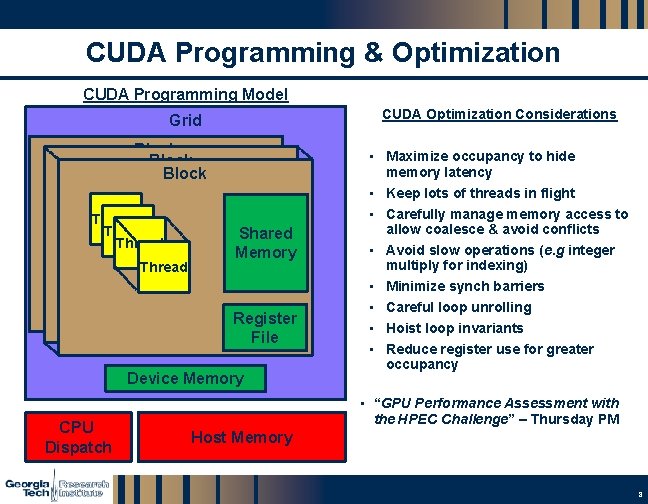

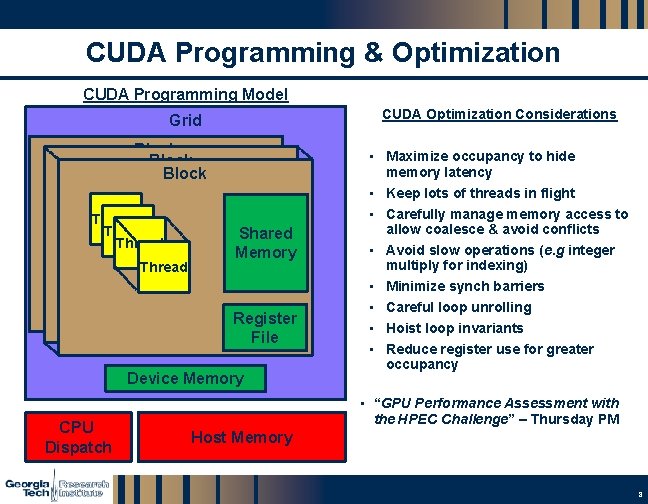

CUDA Programming & Optimization

Diagram: CUDA programming model. CPU dispatch from host memory to a grid of blocks; each block contains threads (datapaths with register files) and a shared memory; all blocks access device memory.

CUDA optimization considerations:

- Maximize occupancy to hide memory latency
- Keep lots of threads in flight
- Carefully manage memory access to allow coalescing & avoid conflicts
- Avoid slow operations (e.g. integer multiply for indexing)
- Minimize synchronization barriers
- Careful loop unrolling
- Hoist loop invariants
- Reduce register use for greater occupancy

See also “GPU Performance Assessment with the HPEC Challenge”, Thursday PM.
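Coalescing is easiest to see by counting memory segments. The C sketch below uses a deliberately simplified model, which is an assumption rather than the exact hardware rules (those vary by compute capability and are stricter on 8800 GTX-class devices): each of the 16 threads in a half-warp reads one `float` at `base + t*stride`, and the function counts how many distinct aligned 64-byte segments those reads touch.

```c
#include <assert.h>
#include <stddef.h>

enum { HALF_WARP = 16, SEGMENT_BYTES = 64 };

/* Simplified model (assumption): number of distinct aligned 64-byte
   segments touched when thread t of a half-warp reads float element
   base + t*stride. Fewer segments means fewer memory transactions. */
static size_t segments_touched(size_t base, size_t stride)
{
    size_t seen[HALF_WARP];
    size_t count = 0;
    for (size_t t = 0; t < HALF_WARP; ++t) {
        size_t seg = (base + t * stride) * sizeof(float) / SEGMENT_BYTES;
        size_t j = 0;
        while (j < count && seen[j] != seg)   /* already counted? */
            ++j;
        if (j == count)
            seen[count++] = seg;
    }
    return count;
}
```

Under this model, aligned unit-stride access (`segments_touched(0, 1)`) touches one segment, shifting the base by 8 elements splits the same reads across two, and a stride of 16 floats touches one segment per thread (16 in total).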

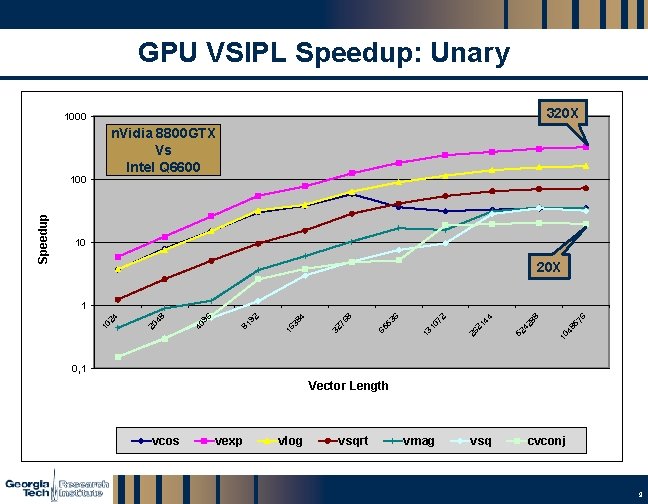

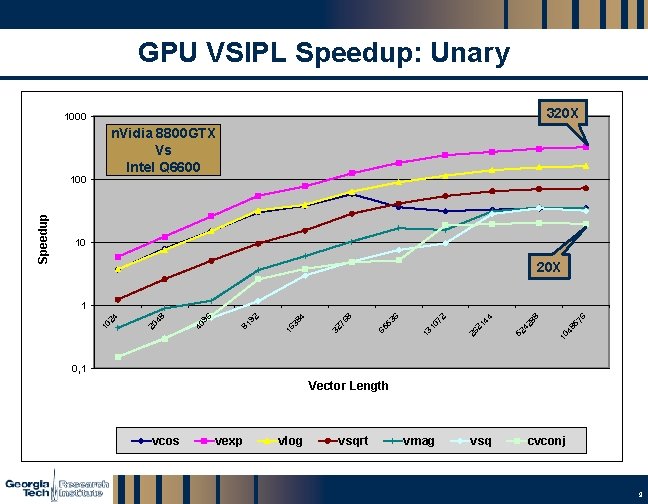

GPU VSIPL Speedup: Unary

Chart: speedup (log scale) of NVIDIA 8800 GTX vs. Intel Q6600 against vector length (1024 to 1048576) for vcos, vexp, vlog, vsqrt, vmag, vsq, and cvconj; annotated speedups: 320X and 20X.
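For reference, three of the unary functions in this chart are simple elementwise maps: vsq squares each element, vmag takes the absolute value, and cvconj conjugates a complex vector. The C99 sketch below gives plain CPU versions with simplified signatures (raw arrays and a length instead of VSIPL views); the `ref_` names are hypothetical.

```c
#include <assert.h>
#include <complex.h>
#include <math.h>
#include <stddef.h>

/* vsq: r[i] = a[i]^2 */
static void ref_vsq(const float *a, float *r, size_t n)
{
    for (size_t i = 0; i < n; ++i)
        r[i] = a[i] * a[i];
}

/* vmag (real): r[i] = |a[i]| */
static void ref_vmag(const float *a, float *r, size_t n)
{
    for (size_t i = 0; i < n; ++i)
        r[i] = fabsf(a[i]);
}

/* cvconj: r[i] = conj(a[i]) */
static void ref_cvconj(const float complex *a, float complex *r, size_t n)
{
    for (size_t i = 0; i < n; ++i)
        r[i] = conjf(a[i]);
}
```

Each output depends only on the matching input element, which is why these functions map directly onto one GPU thread per element.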

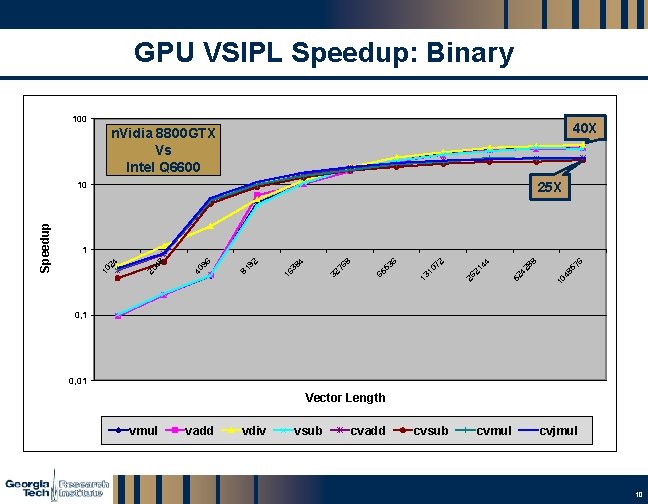

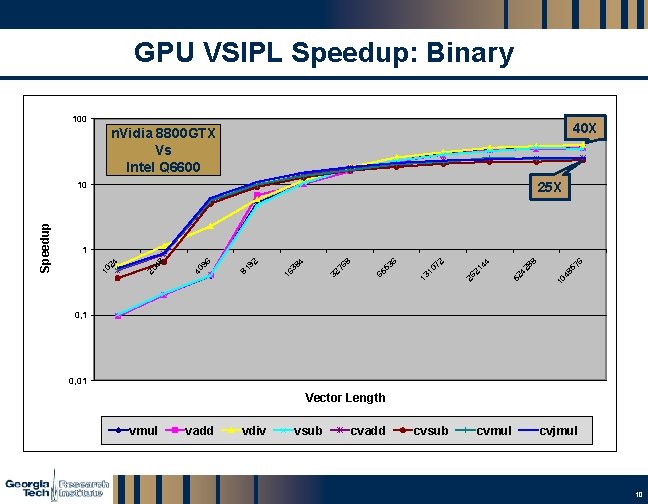

GPU VSIPL Speedup: Binary

Chart: speedup (log scale) of NVIDIA 8800 GTX vs. Intel Q6600 against vector length (1024 to 1048576) for vmul, vadd, vdiv, vsub, cvadd, cvsub, cvmul, and cvjmul; annotated speedups: 40X and 25X.
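The complex binary functions are also elementwise. The one that differs from ordinary arithmetic is cvjmul, which multiplies by the complex conjugate of its second operand, r = a · conj(b), a form commonly used in frequency-domain correlation. A minimal C99 sketch with hypothetical `ref_` names and simplified signatures:

```c
#include <assert.h>
#include <complex.h>
#include <stddef.h>

/* cvmul: r[i] = a[i] * b[i] */
static void ref_cvmul(const float complex *a, const float complex *b,
                      float complex *r, size_t n)
{
    for (size_t i = 0; i < n; ++i)
        r[i] = a[i] * b[i];
}

/* cvjmul: r[i] = a[i] * conj(b[i]) */
static void ref_cvjmul(const float complex *a, const float complex *b,
                       float complex *r, size_t n)
{
    for (size_t i = 0; i < n; ++i)
        r[i] = a[i] * conjf(b[i]);
}
```

For a = 1+2i and b = 3+4i, cvmul gives -5+10i while cvjmul gives 11+2i.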

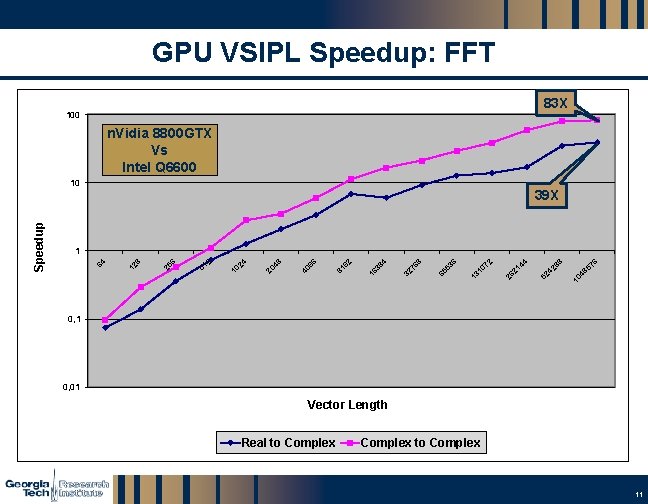

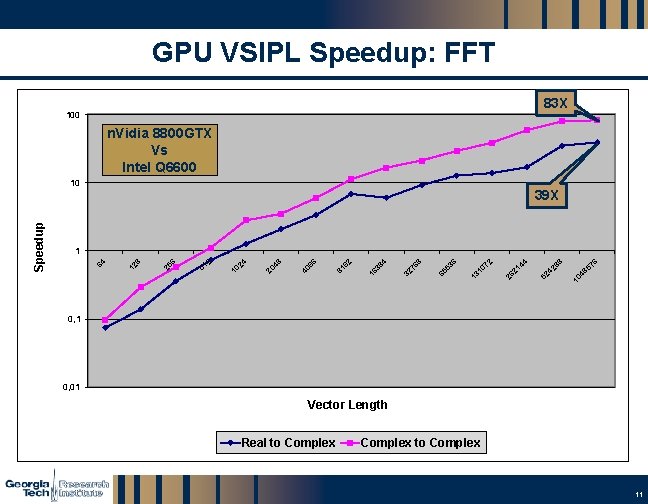

GPU VSIPL Speedup: FFT

Chart: speedup (log scale) of NVIDIA 8800 GTX vs. Intel Q6600 for real-to-complex FFTs against vector length (64 to 1048576); annotated speedups: 83X and 39X.

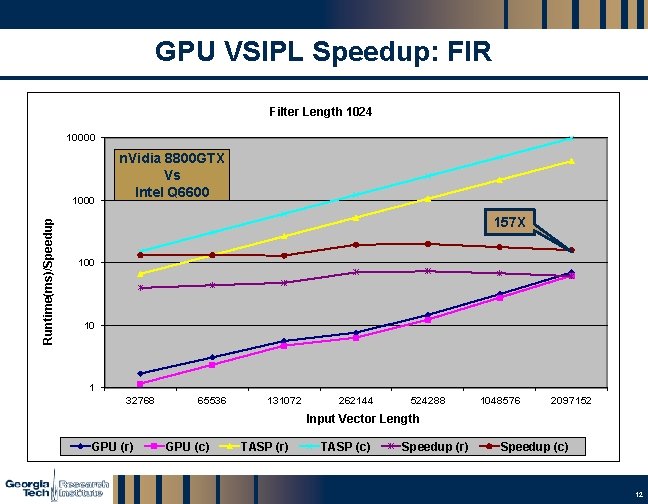

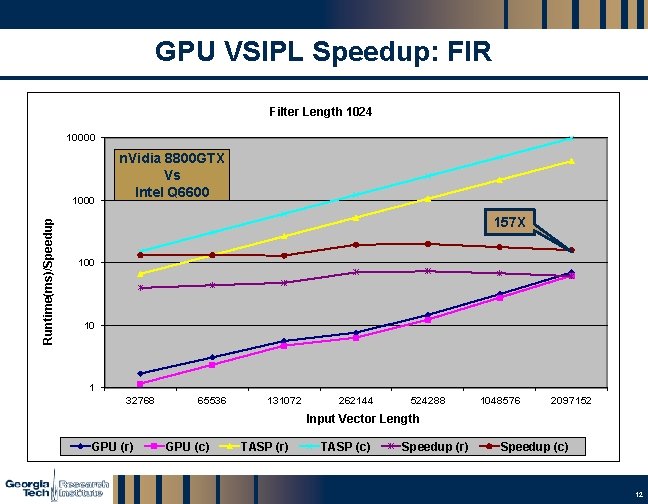

GPU VSIPL Speedup: FIR

Filter length 1024

Chart: runtime (ms) and speedup (log scale) of NVIDIA 8800 GTX vs. Intel Q6600 against input vector length (32768 to 2097152); series: GPU (r), GPU (c), TASP (r), TASP (c), Speedup (r), Speedup (c), where (r) is real and (c) is complex; annotated speedup: 157X.
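With a 1024-tap filter, each output sample costs 1024 multiply-accumulates, and every output sample can be computed independently. Below is a minimal direct-form FIR in C under simplifying assumptions: zero initial state, no decimation, and no state carried between calls (VSIPL's FIR objects support both decimation and saved state). The function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Direct-form FIR: y[i] = sum_{k=0}^{m-1} h[k] * x[i-k],
   treating samples before x[0] as zero (zero initial state). */
static void fir_direct(const float *h, size_t m,
                       const float *x, float *y, size_t n)
{
    assert(m > 0);
    for (size_t i = 0; i < n; ++i) {
        float acc = 0.0f;
        size_t kmax = (i + 1 < m) ? i + 1 : m;   /* taps overlapping x[0..i] */
        for (size_t k = 0; k < kmax; ++k)
            acc += h[k] * x[i - k];
        y[i] = acc;
    }
}
```

With h = {1, 1} and x = {1, 2, 3, 4}, the output is {1, 3, 5, 7}.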

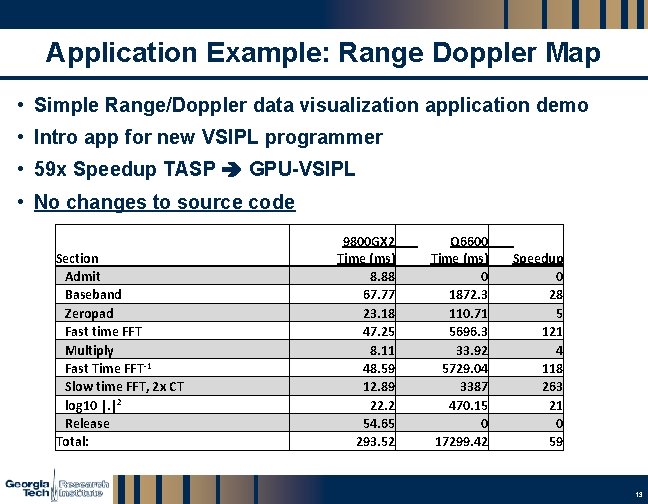

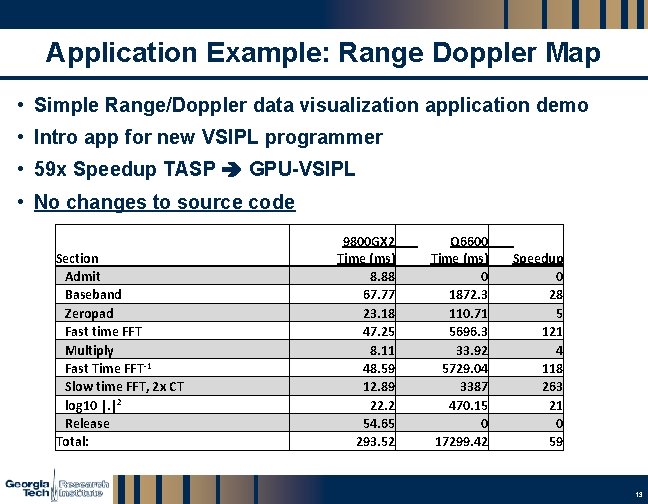

Application Example: Range Doppler Map

- Simple range/Doppler data visualization application demo
- Intro app for new VSIPL programmers
- 59x speedup, TASP → GPU-VSIPL
- No changes to source code

| Section | 9800 GX2 Time (ms) | Q6600 Time (ms) | Speedup |
|---|---|---|---|
| Admit | 8.88 | 0 | 0 |
| Baseband | 67.77 | 1872.3 | 28 |
| Zeropad | 23.18 | 110.71 | 5 |
| Fast time FFT | 47.25 | 5696.3 | 121 |
| Multiply | 8.11 | 33.92 | 4 |
| Fast time inverse FFT | 48.59 | 5729.04 | 118 |
| Slow time FFT, 2x CT | 12.89 | 3387 | 263 |
| log10 \|·\|² | 22.2 | 470.15 | 21 |
| Release | 54.65 | 0 | 0 |
| Total | 293.52 | 17299.42 | 59 |
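Each speedup entry is the Q6600 time divided by the 9800 GX2 time, and the 59x total is the ratio of the two totals (17299.42 ms / 293.52 ms ≈ 58.9). The short C sketch below recomputes these ratios from the timing data on this slide; the struct and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Per-stage timings in ms, copied from the Range Doppler Map slide. */
typedef struct {
    const char *stage;
    double gpu_ms;   /* 9800 GX2 */
    double cpu_ms;   /* Q6600 */
} stage_time;

static const stage_time stages[] = {
    {"Admit",                  8.88,     0.0},
    {"Baseband",              67.77,  1872.3},
    {"Zeropad",               23.18,   110.71},
    {"Fast time FFT",         47.25,  5696.3},
    {"Multiply",               8.11,    33.92},
    {"Fast time inverse FFT", 48.59,  5729.04},
    {"Slow time FFT, 2x CT",  12.89,  3387.0},
    {"log10 |.|^2",           22.2,    470.15},
    {"Release",               54.65,     0.0},
};

#define NUM_STAGES (sizeof stages / sizeof stages[0])

/* Speedup = CPU time / GPU time (0 for stages with no CPU-side time). */
static double stage_speedup(const stage_time *s)
{
    return s->cpu_ms / s->gpu_ms;
}

/* Sum the GPU column (use_gpu != 0) or the CPU column over all stages. */
static double total_ms(int use_gpu)
{
    double sum = 0.0;
    for (size_t i = 0; i < NUM_STAGES; ++i)
        sum += use_gpu ? stages[i].gpu_ms : stages[i].cpu_ms;
    return sum;
}
```

The stage sums reproduce the Total row (293.52 ms and 17299.42 ms), and the slow-time FFT stage gives the largest per-stage ratio, about 263.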

GPU-VSIPL: Future Plans

- Expand matrix support
- Move toward full Core Profile
- More linear algebra/solvers
- VSIPL++
- Double precision support

Conclusions

- GPUs are fast, cheap signal processors
- VSIPL is a portable, intuitive means to exploit GPUs
- GPU-VSIPL allows easy access to GPU performance without becoming an expert CUDA/GPU programmer
- 10-100x speed improvement possible with no code change
- Not yet released, but unsupported previews may show up at: http://gpu-vsipl.gtri.gatech.edu