MPI and OpenMP


- Slides: 46


How to Get and Install MPI?

- http://www-unix.mcs.anl.gov/mpi/
- mpich2-doc-install.pdf (installation guide)
- mpich2-doc-user.pdf (user guide)

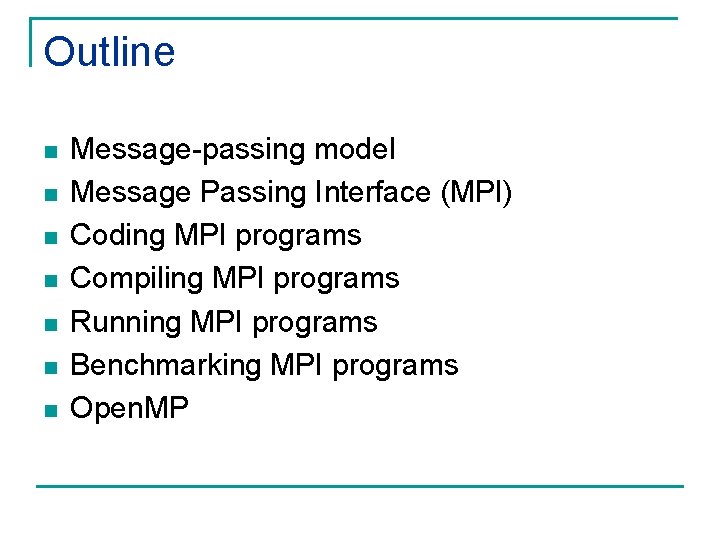

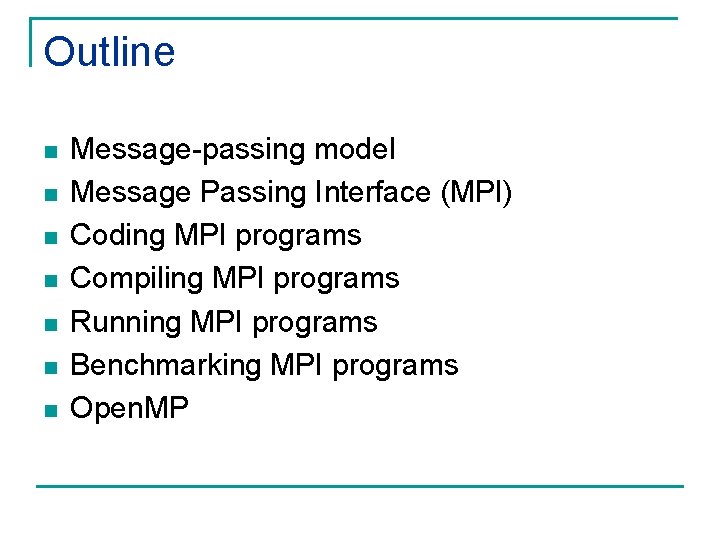

Outline

- Message-passing model
- Message Passing Interface (MPI)
- Coding MPI programs
- Compiling MPI programs
- Running MPI programs
- Benchmarking MPI programs
- OpenMP

Message-passing Model

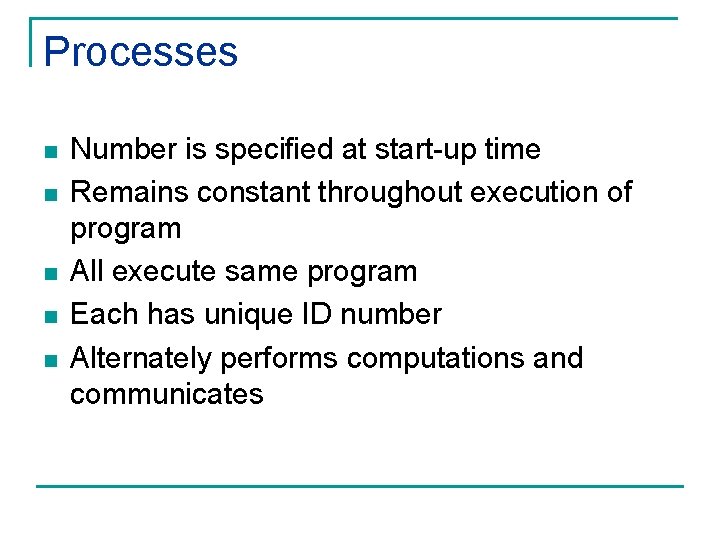

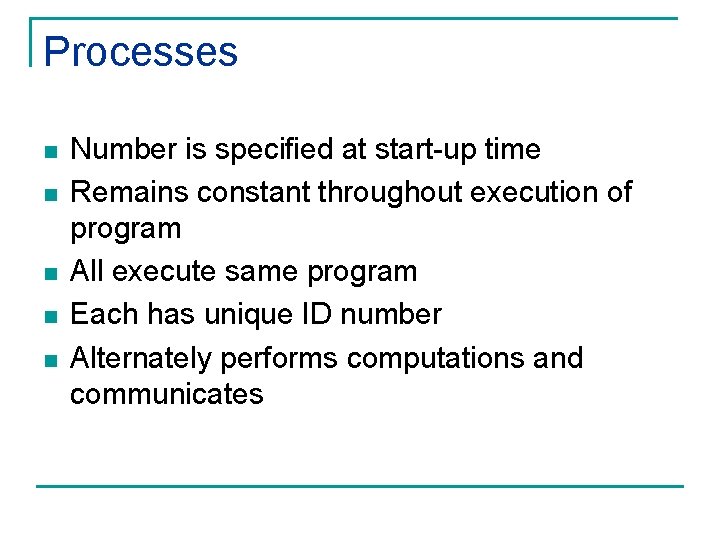

Processes

- Number is specified at start-up time
- Remains constant throughout execution of program
- All execute same program
- Each has unique ID number
- Alternately performs computations and communicates

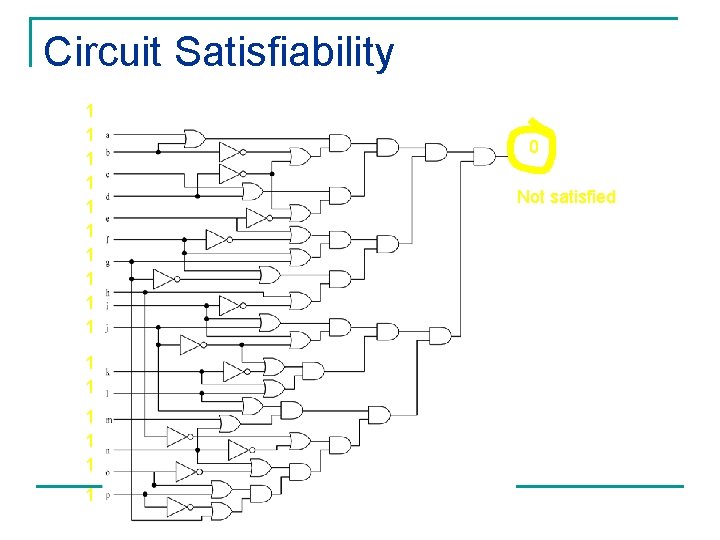

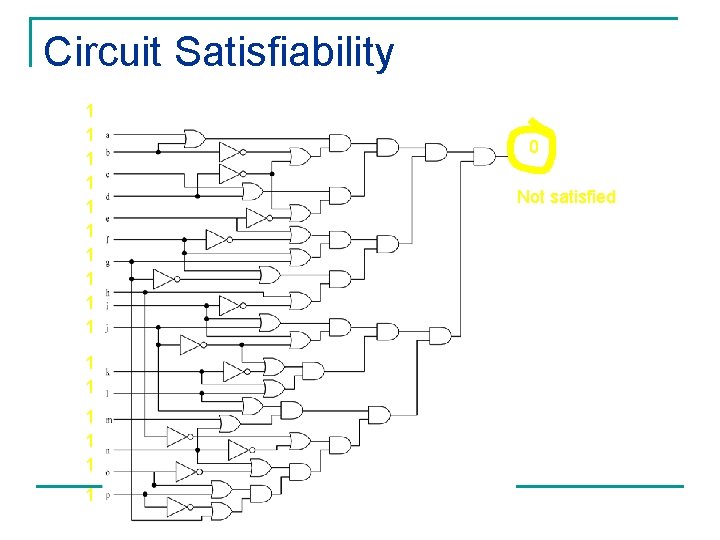

Circuit Satisfiability

[Figure: circuit diagram with example input bits; this assignment does not satisfy the circuit.]

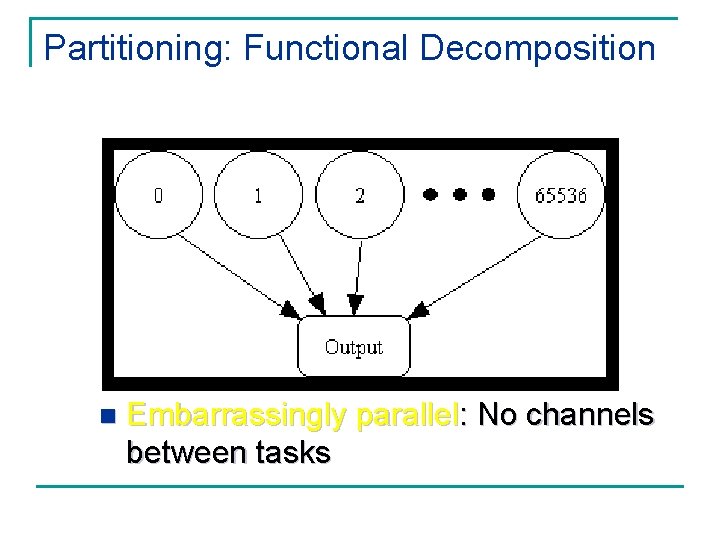

Solution Method

- Circuit satisfiability is NP-complete
- No known algorithms to solve in polynomial time
- We seek all solutions
- We find them through exhaustive search
- 16 inputs give 65,536 combinations to test

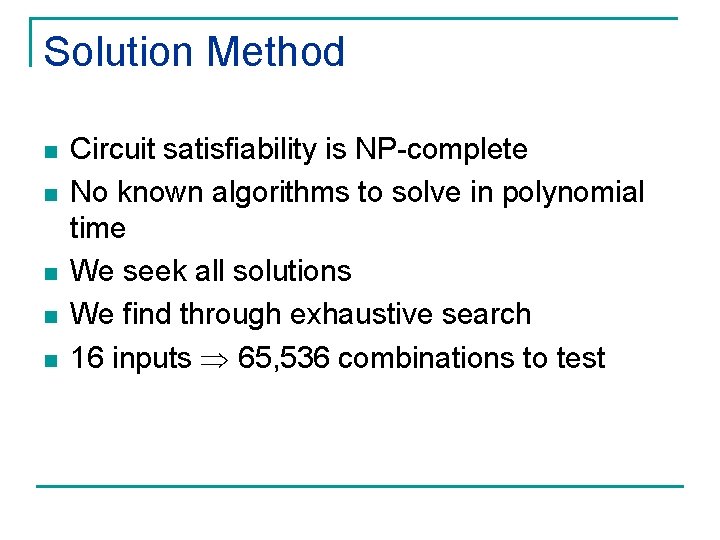

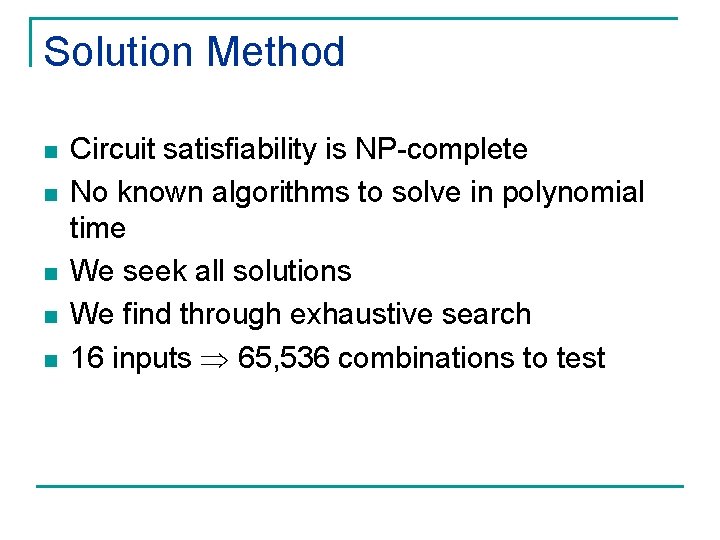

Partitioning: Functional Decomposition

- Embarrassingly parallel: No channels between tasks

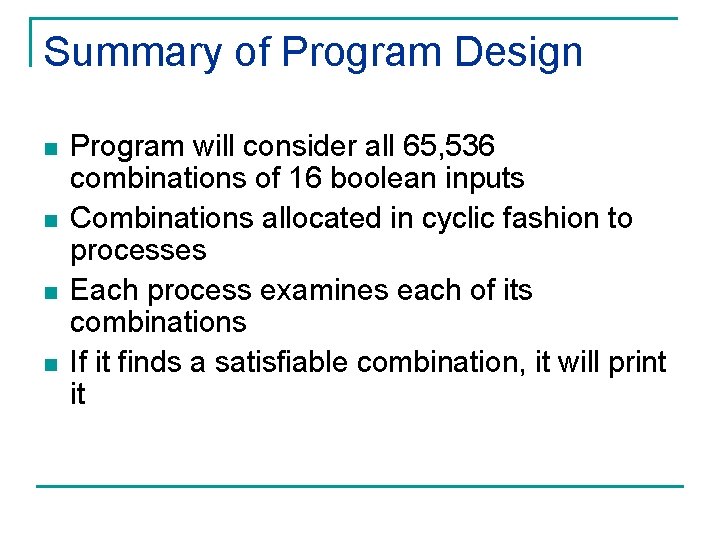

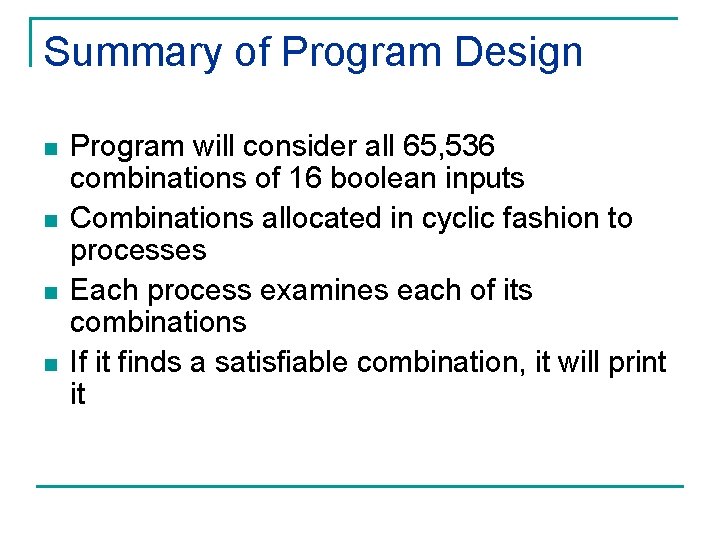

Agglomeration and Mapping

- Properties of parallel algorithm
  - Fixed number of tasks
  - No communications between tasks
  - Time needed per task is variable
- Consult mapping strategy decision tree
  - Map tasks to processors in a cyclic fashion

Cyclic (interleaved) Allocation

- Assume p processes
- Each process gets every pth piece of work
- Example: 5 processes and 12 pieces of work
  - P0: 0, 5, 10
  - P1: 1, 6, 11
  - P2: 2, 7
  - P3: 3, 8
  - P4: 4, 9

Summary of Program Design

- Program will consider all 65,536 combinations of 16 boolean inputs
- Combinations allocated in cyclic fashion to processes
- Each process examines each of its combinations
- If it finds a satisfiable combination, it will print it

Include Files

- `#include <mpi.h>`: MPI header file
- `#include <stdio.h>`: Standard I/O header file

![Local Variables int main int argc char argv int i int id Local Variables int main (int argc, char *argv[]) { int i; int id; /*](https://slidetodoc.com/presentation_image_h/002f71f27f28c06cfa82ac18b7e35935/image-13.jpg)

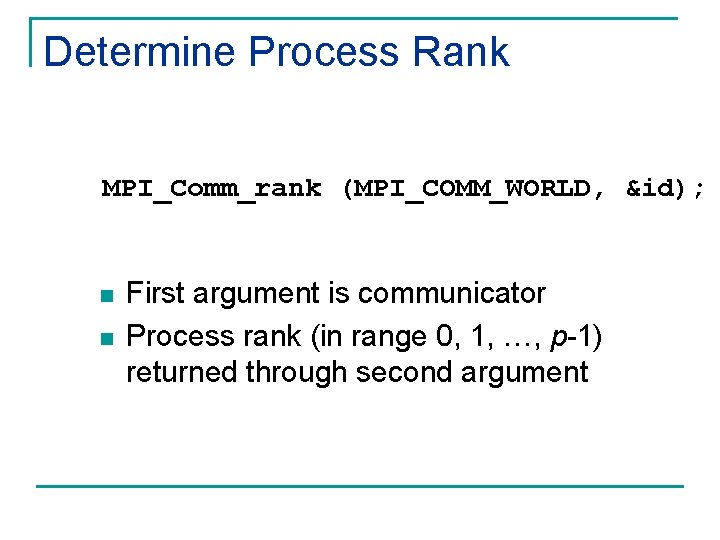

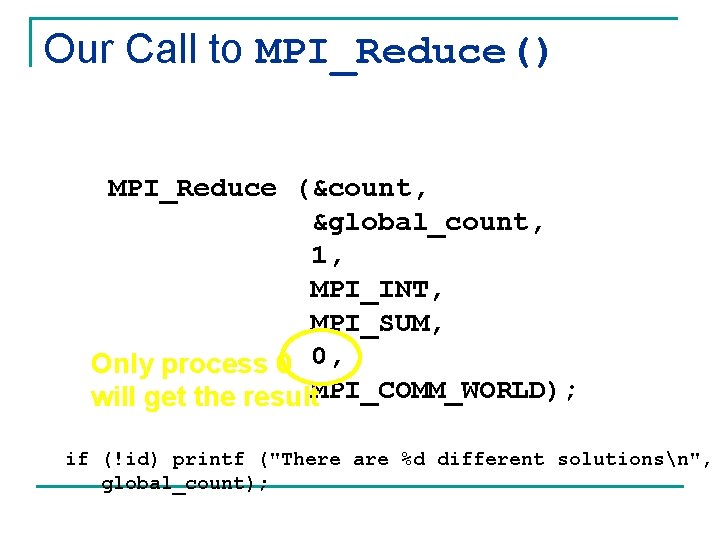

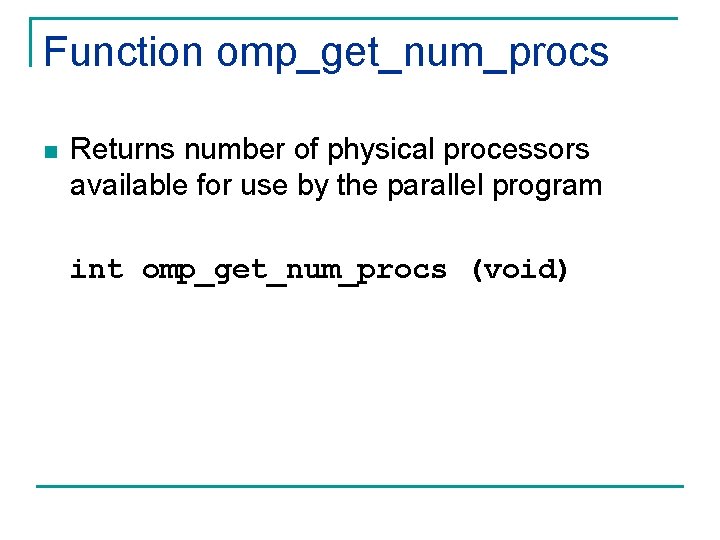

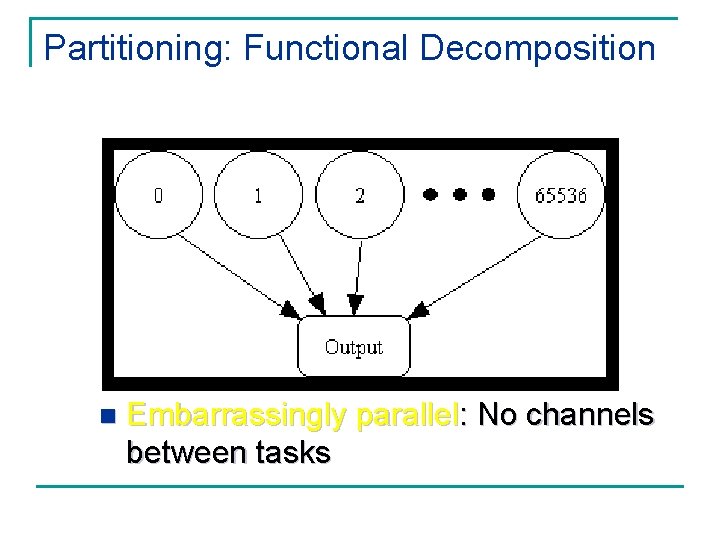

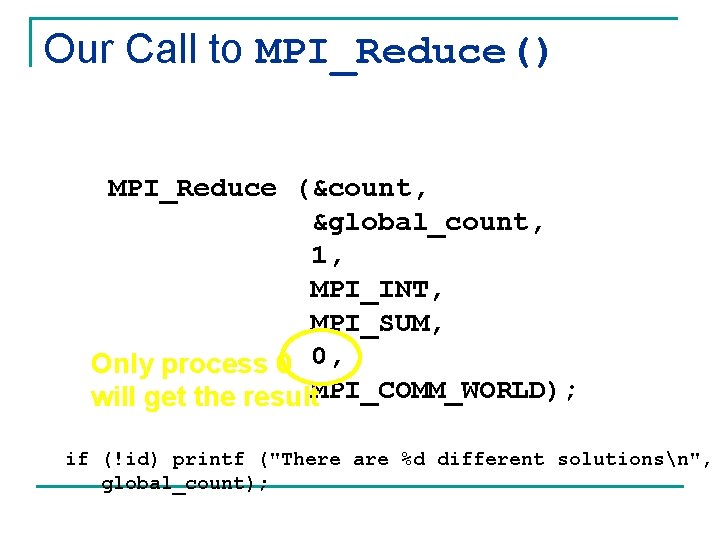

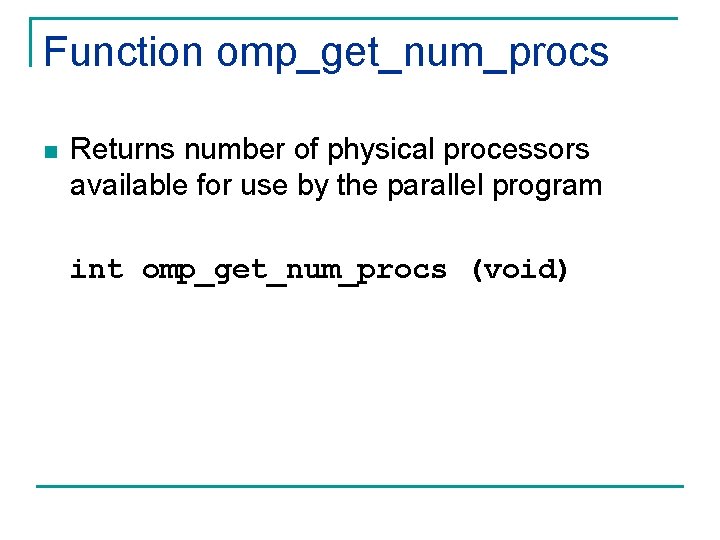

Local Variables

    int main (int argc, char *argv[]) {
        int i;
        int id;  /* Process rank */
        int p;   /* Number of processes */
        void check_circuit (int, int);

- Include argc and argv: they are needed to initialize MPI
- One copy of every variable for each process running this program

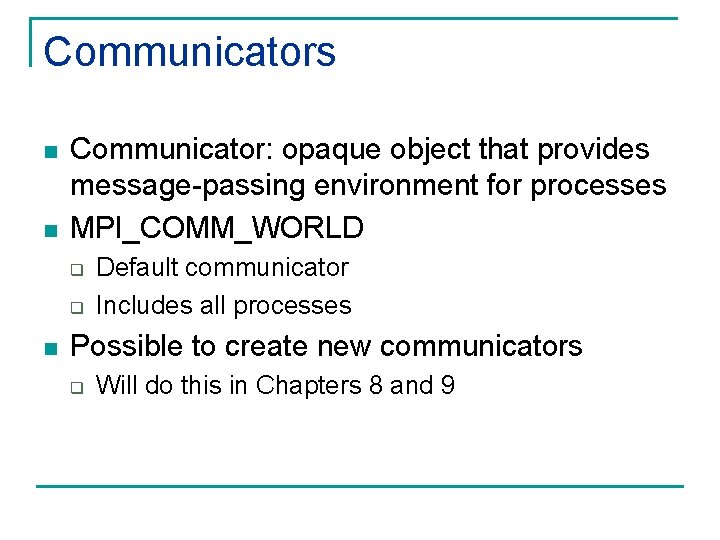

Initialize MPI

`MPI_Init (&argc, &argv);`

- First MPI function called by each process
- Not necessarily first executable statement
- Allows system to do any necessary setup

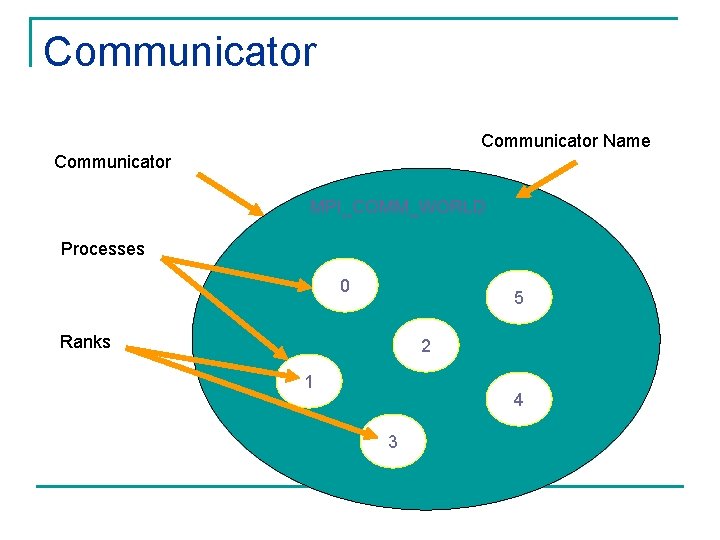

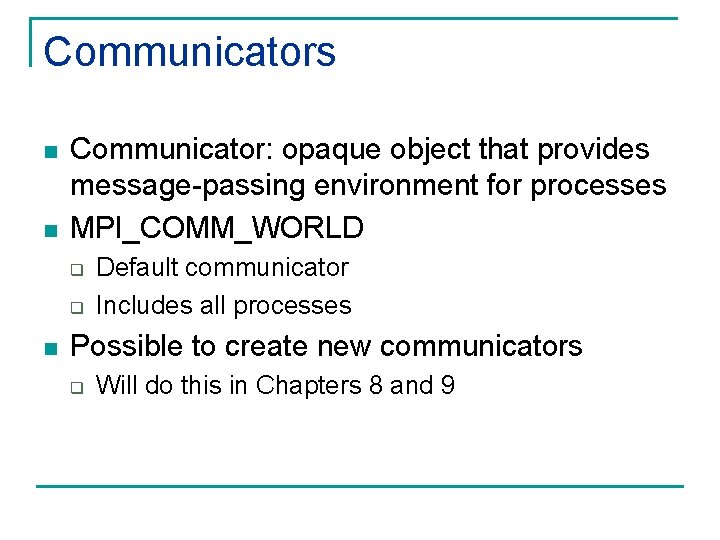

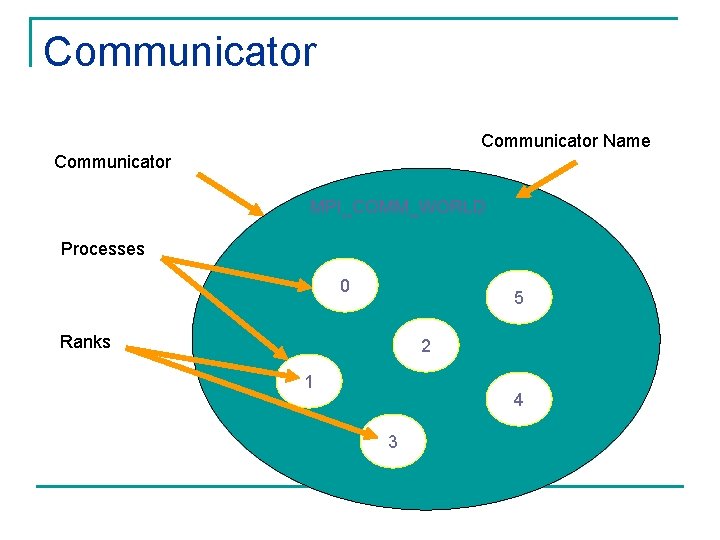

Communicators

- Communicator: opaque object that provides message-passing environment for processes
- MPI_COMM_WORLD
  - Default communicator
  - Includes all processes
- Possible to create new communicators
  - Will do this in Chapters 8 and 9

Communicator

[Figure: the communicator named MPI_COMM_WORLD, containing six processes with ranks 0 through 5.]

Determine Number of Processes

`MPI_Comm_size (MPI_COMM_WORLD, &p);`

- First argument is communicator
- Number of processes returned through second argument

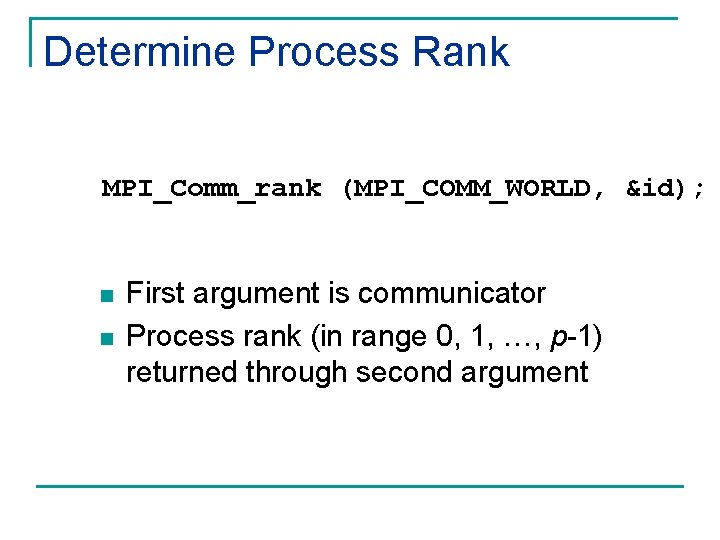

Determine Process Rank

`MPI_Comm_rank (MPI_COMM_WORLD, &id);`

- First argument is communicator
- Process rank (in range 0, 1, …, p-1) returned through second argument

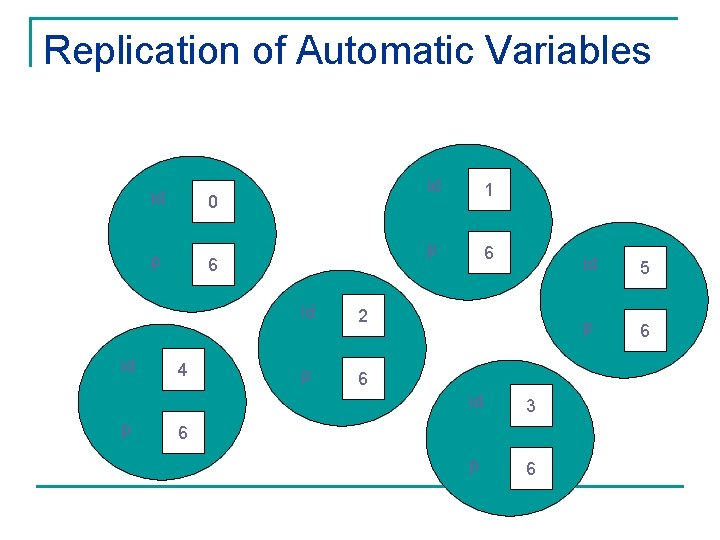

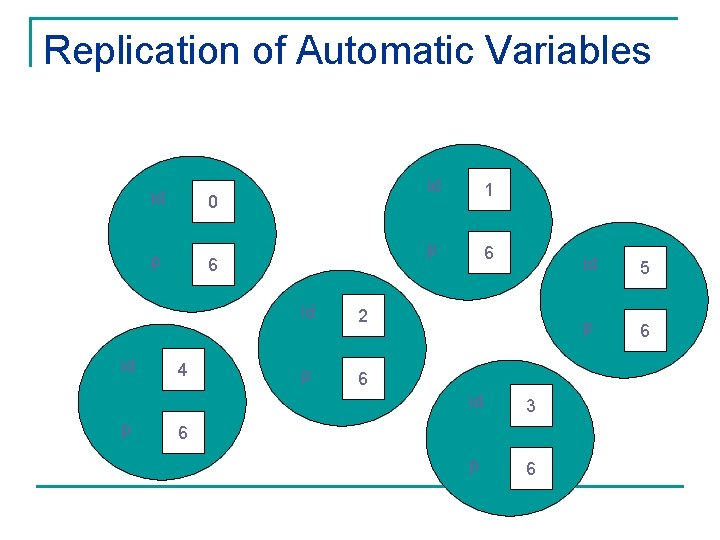

Replication of Automatic Variables

[Figure: six processes, each holding its own copy of id (0 through 5) and p (6).]

![What about External Variables int total int main int argc char argv int What about External Variables? int total; int main (int argc, char *argv[]) { int](https://slidetodoc.com/presentation_image_h/002f71f27f28c06cfa82ac18b7e35935/image-20.jpg)

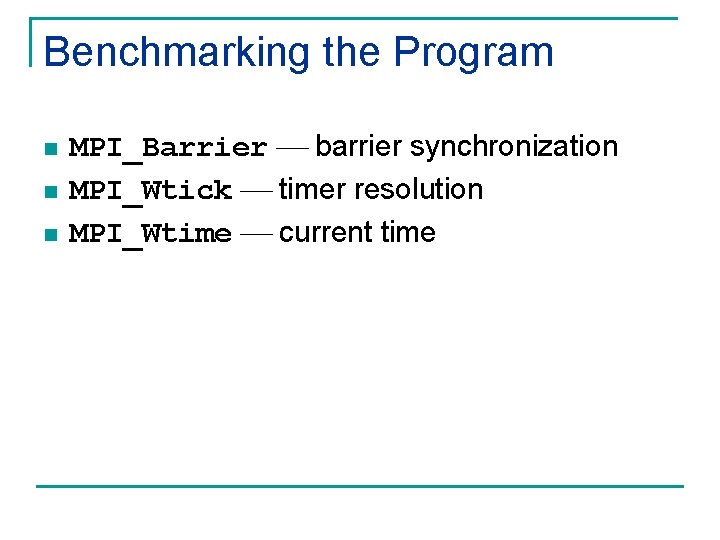

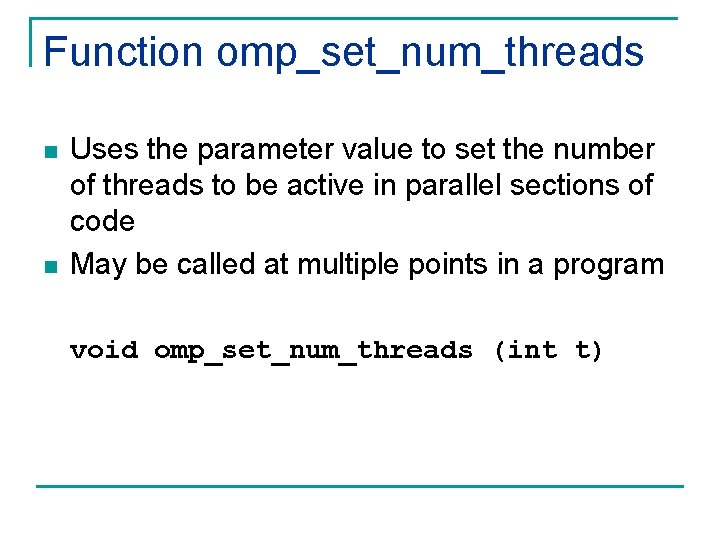

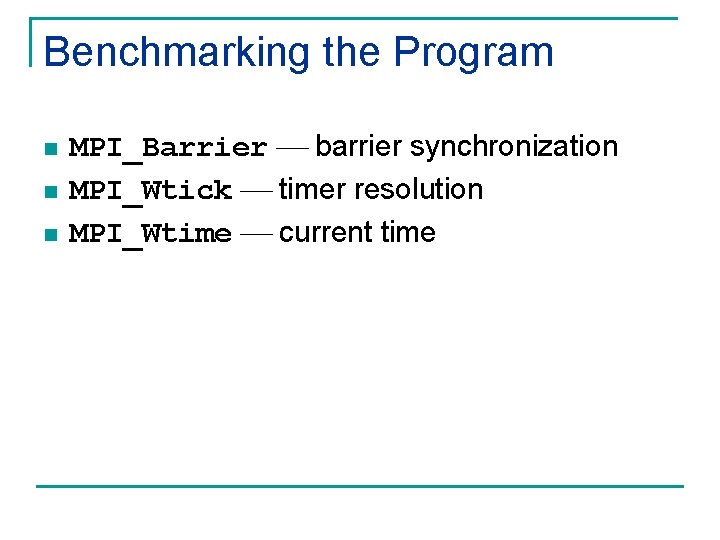

What about External Variables?

    int total;
    int main (int argc, char *argv[]) {
        int i;
        int id;
        int p;
        …

- Where is variable total stored?

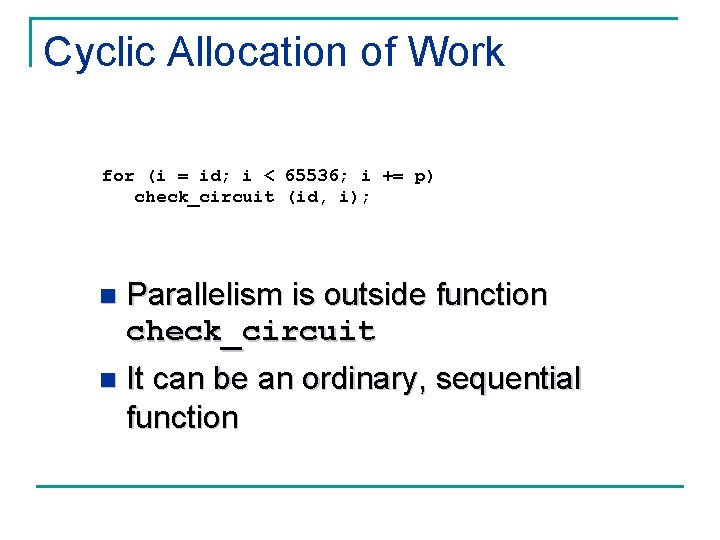

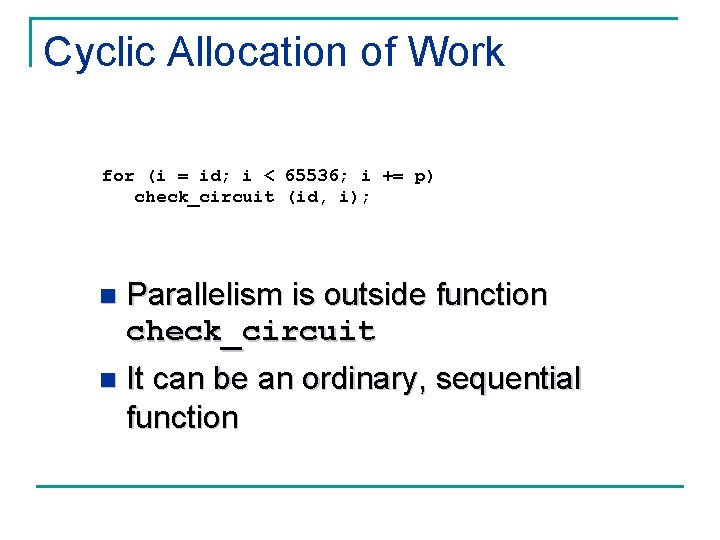

Cyclic Allocation of Work

    for (i = id; i < 65536; i += p)
        check_circuit (id, i);

- Parallelism is outside function check_circuit
- It can be an ordinary, sequential function

Shutting Down MPI

`MPI_Finalize();`

- Call after all other MPI library calls
- Allows system to free up MPI resources

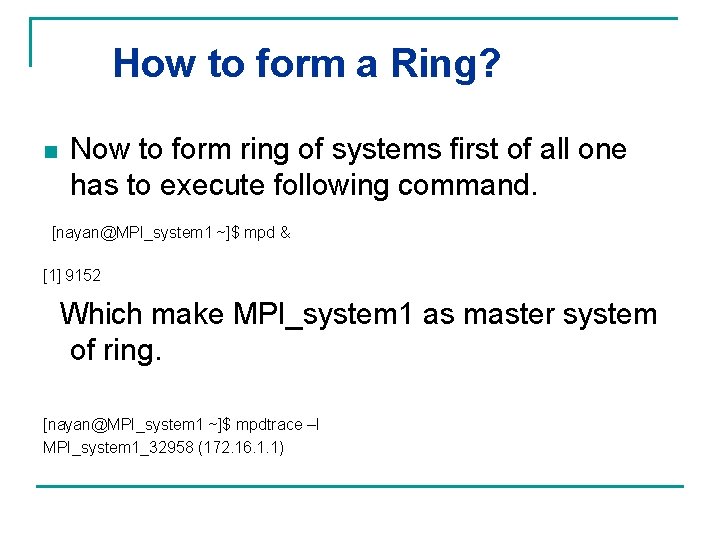

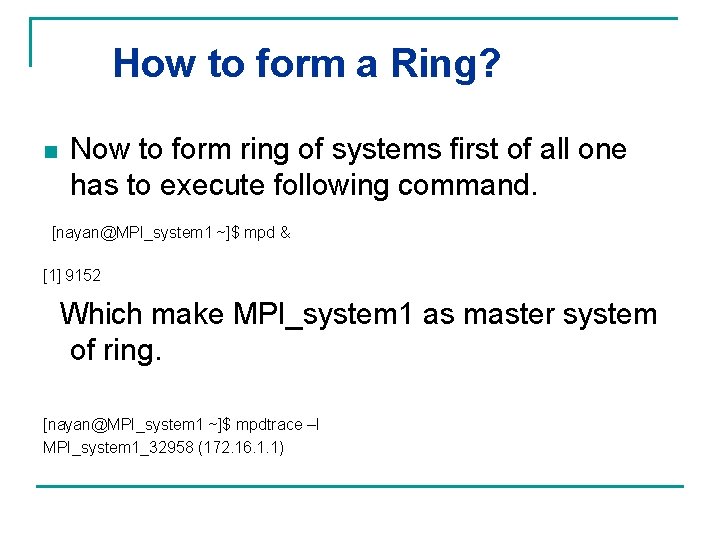

Our Call to MPI_Reduce()

    MPI_Reduce (&count, &global_count, 1, MPI_INT, MPI_SUM, 0, MPI_COMM_WORLD);
    if (!id) printf ("There are %d different solutions\n", global_count);

- The sixth argument (0) is the root: only process 0 will get the result.

Benchmarking the Program

- MPI_Barrier: barrier synchronization
- MPI_Wtick: timer resolution
- MPI_Wtime: current time

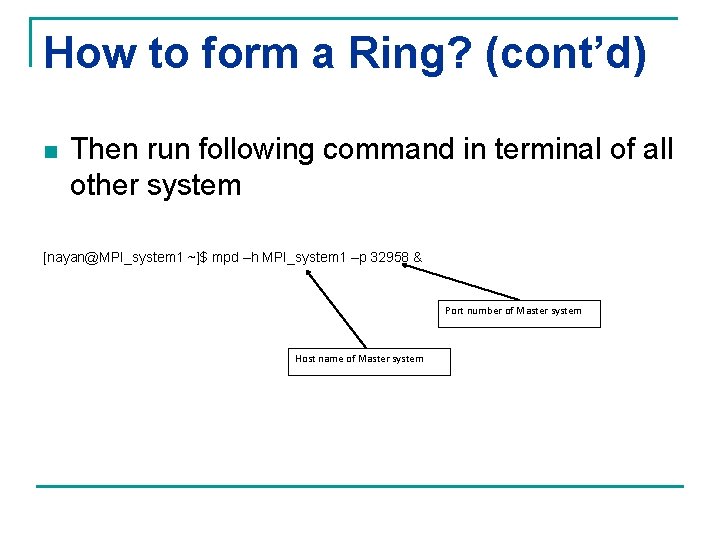

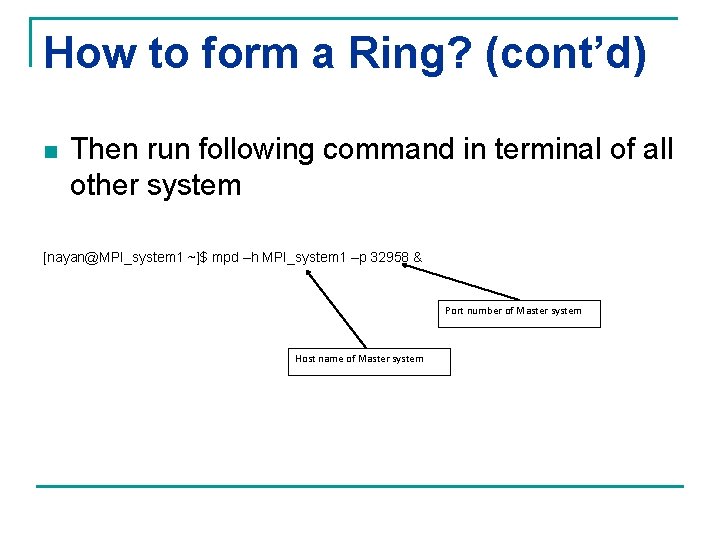

How to form a Ring?

To form a ring of systems, first run the following command:

    [nayan@MPI_system1 ~]$ mpd &
    [1] 9152

This makes MPI_system1 the master system of the ring. To list the ring members along with the master's port number, run:

    [nayan@MPI_system1 ~]$ mpdtrace -l
    MPI_system1_32958 (172.16.1.1)

How to form a Ring? (cont’d)

Then run the following command in a terminal on every other system:

    [nayan@MPI_system1 ~]$ mpd -h MPI_system1 -p 32958 &

- `-h MPI_system1`: host name of the master system
- `-p 32958`: port number of the master system

How to kill a Ring?

To kill the ring, run the following command on the master system:

    [nayan@MPI_system1 ~]$ mpdallexit

Compiling MPI Programs

To compile and execute the program above, first compile sat1.c by running the following command on the master system:

    [nayan@MPI_system1 ~]$ mpicc -o sat1.out sat1.c

Here `mpicc` is the MPI compiler command, sat1.c is the source file, and sat1.out is the output file.

Running MPI Programs

To run the output file, type the following command on the master system:

    [nayan@MPI_system1 ~]$ mpiexec -n 1 ./sat1.out

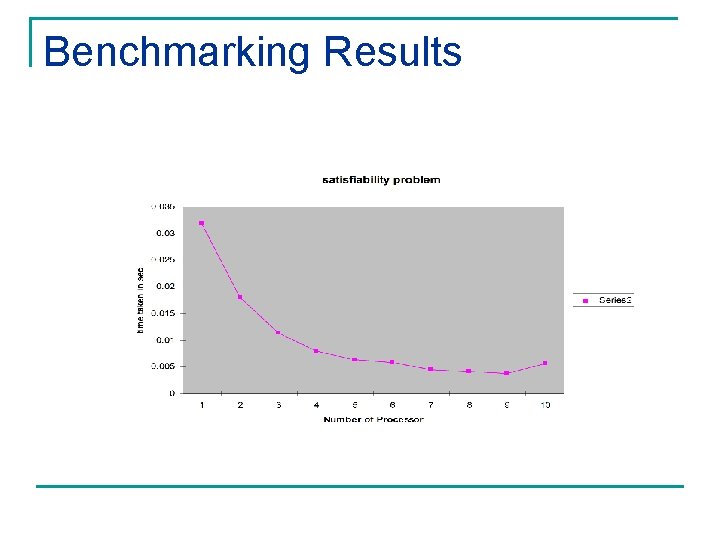

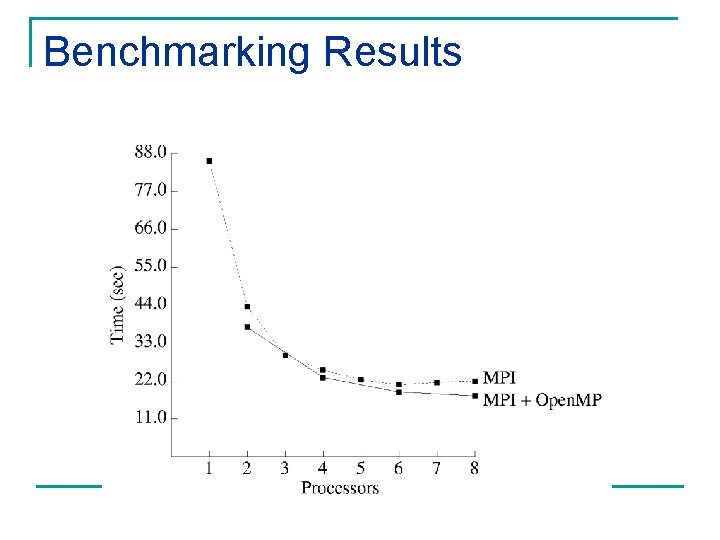

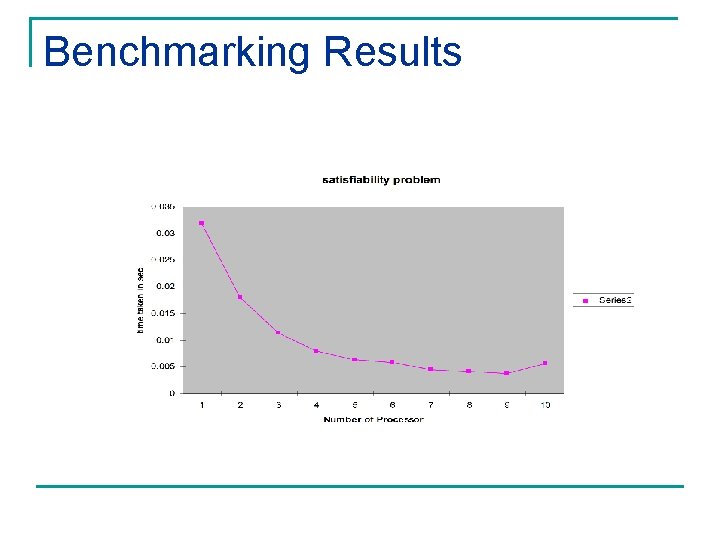

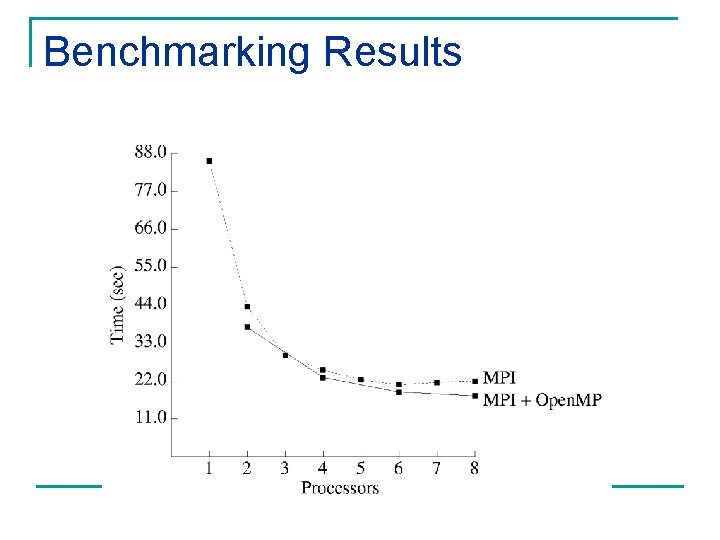

Benchmarking Results

OpenMP

- OpenMP: An application programming interface (API) for parallel programming on multiprocessors
  - Compiler directives
  - Library of support functions
- OpenMP works in conjunction with Fortran, C, or C++

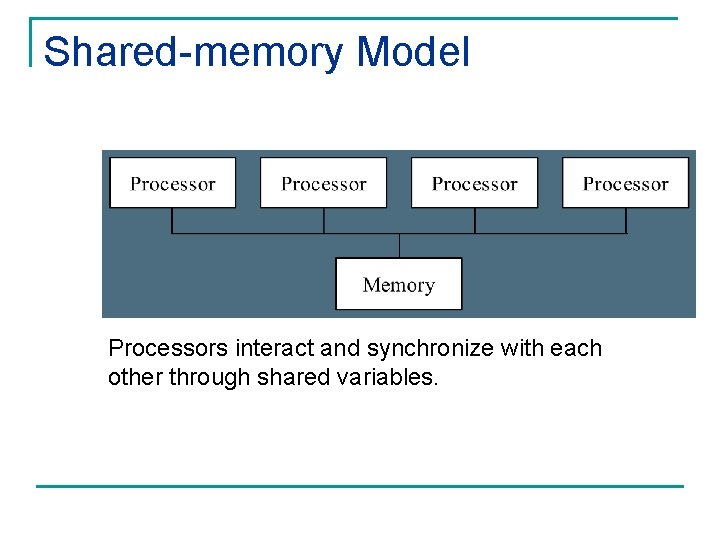

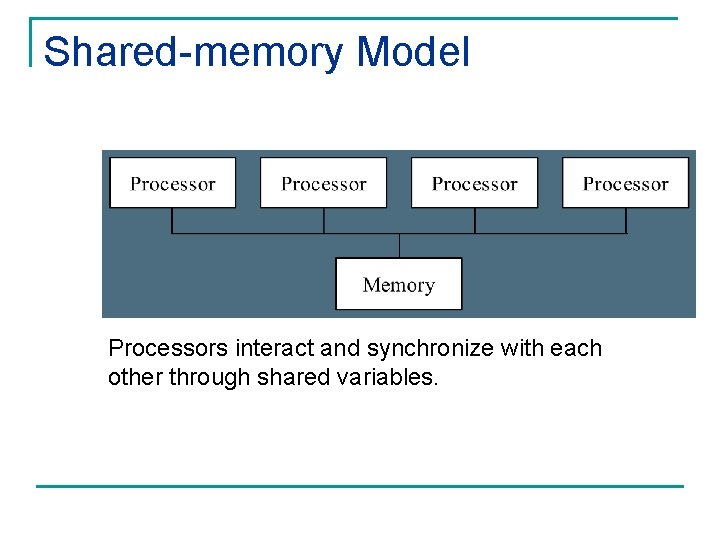

Shared-memory Model Processors interact and synchronize with each other through shared variables.

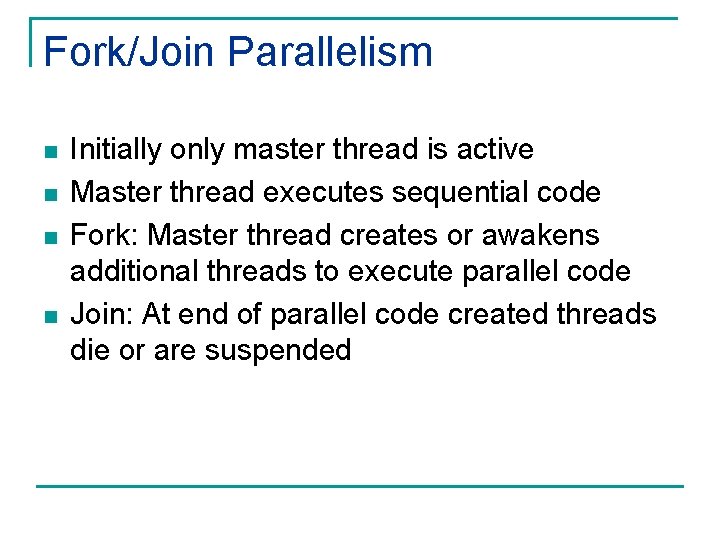

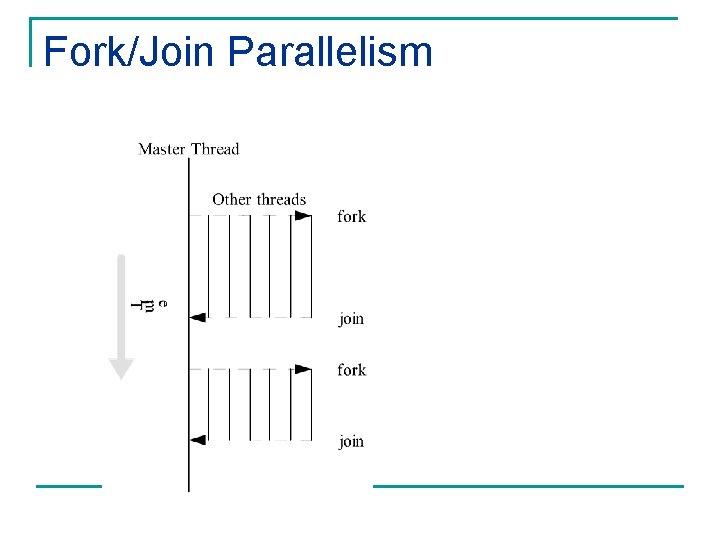

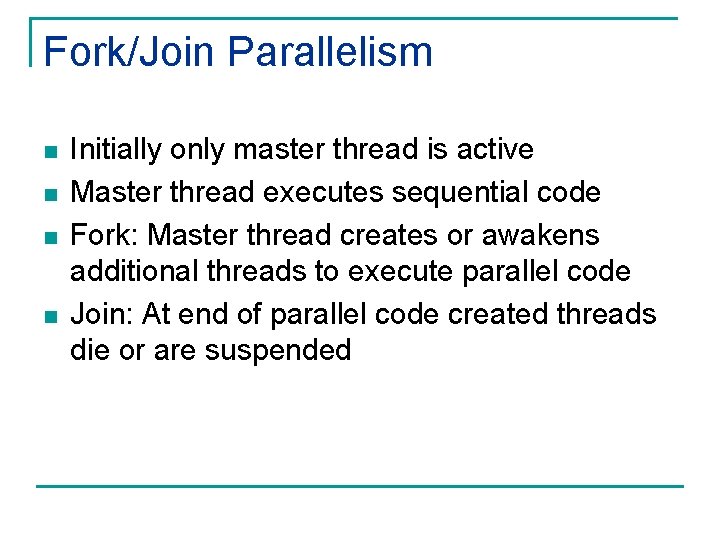

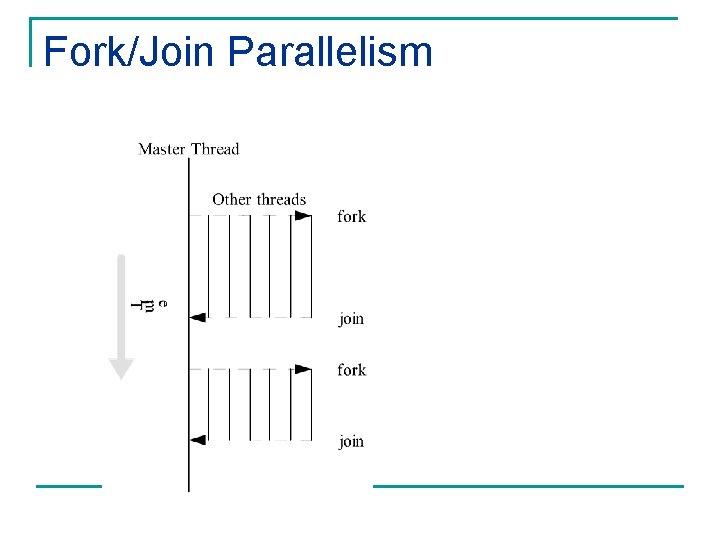

Fork/Join Parallelism

- Initially only master thread is active
- Master thread executes sequential code
- Fork: Master thread creates or awakens additional threads to execute parallel code
- Join: At end of parallel code, created threads die or are suspended

Fork/Join Parallelism

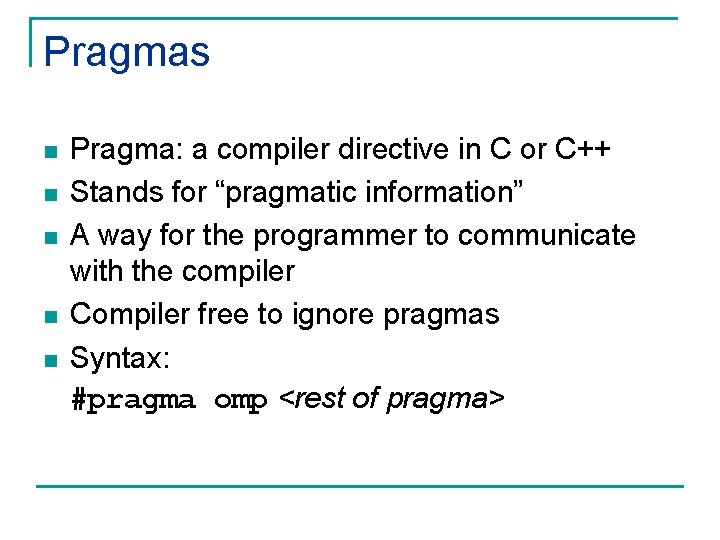

Shared-memory Model vs. Message-passing Model

- Shared-memory model
  - Number of active threads is 1 at start and finish of program, and changes dynamically during execution
- Message-passing model
  - All processes active throughout execution of program

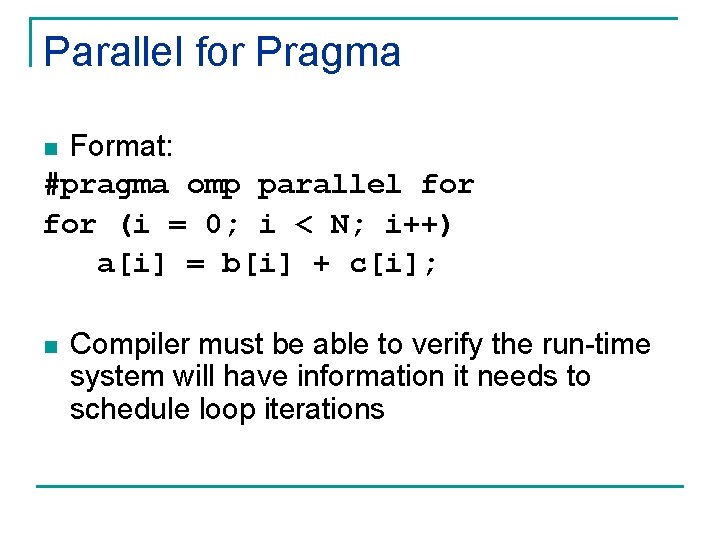

Parallel for Loops

- C programs often express data-parallel operations as for loops

      for (i = first; i < size; i += prime)
          marked[i] = 1;

- OpenMP makes it easy to indicate when the iterations of a loop may execute in parallel
- Compiler takes care of generating code that forks/joins threads and allocates the iterations to threads

Pragmas

- Pragma: a compiler directive in C or C++
- Stands for “pragmatic information”
- A way for the programmer to communicate with the compiler
- Compiler free to ignore pragmas
- Syntax: `#pragma omp <rest of pragma>`

Parallel for Pragma

Format:

    #pragma omp parallel for
    for (i = 0; i < N; i++)
        a[i] = b[i] + c[i];

- Compiler must be able to verify the run-time system will have information it needs to schedule loop iterations

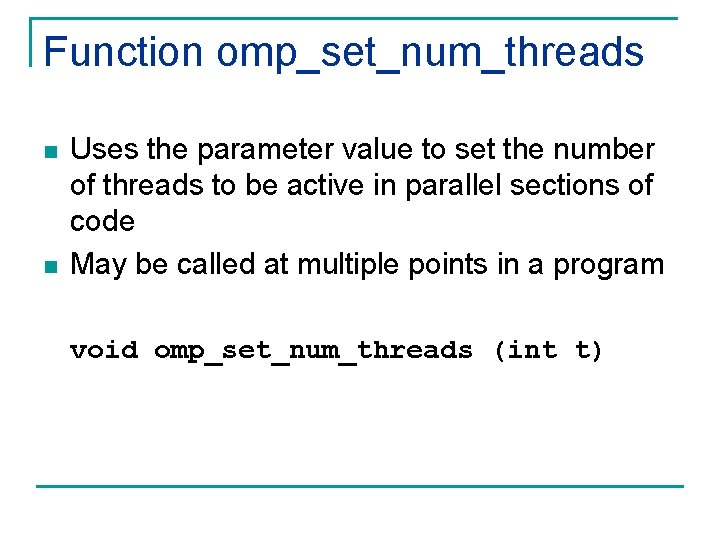

Function omp_get_num_procs

- Returns number of physical processors available for use by the parallel program

`int omp_get_num_procs (void)`

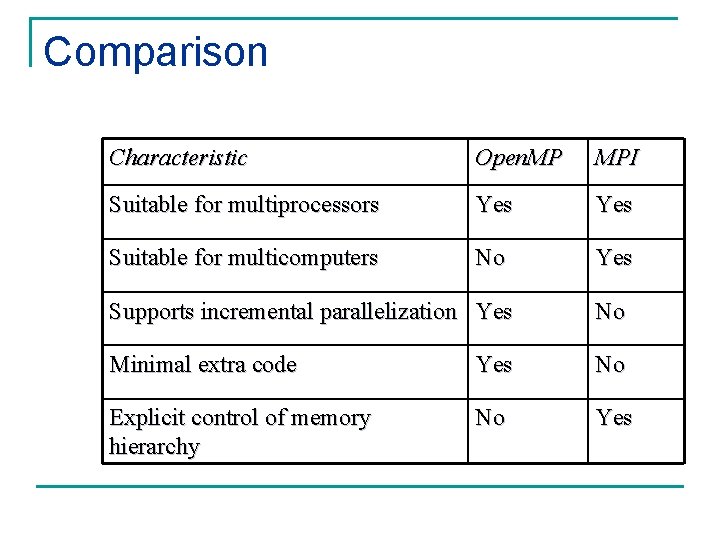

Function omp_set_num_threads

- Uses the parameter value to set the number of threads to be active in parallel sections of code
- May be called at multiple points in a program

`void omp_set_num_threads (int t)`

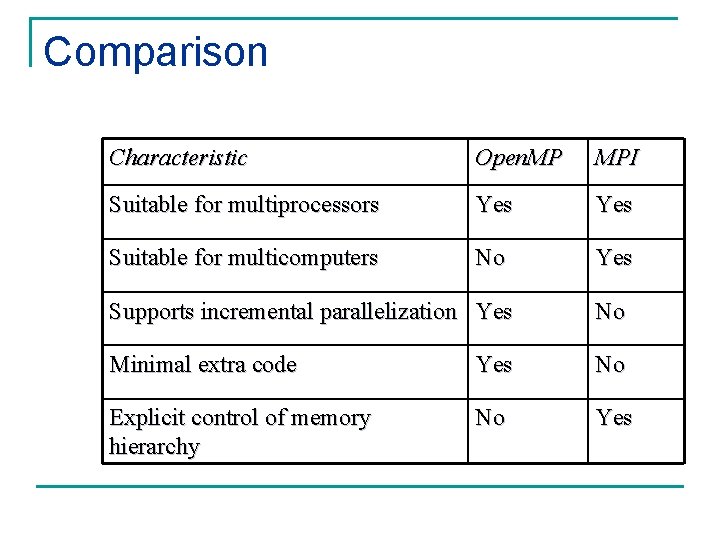

Comparison

| Characteristic | OpenMP | MPI |
| --- | --- | --- |
| Suitable for multiprocessors | Yes | Yes |
| Suitable for multicomputers | No | Yes |
| Supports incremental parallelization | Yes | No |
| Minimal extra code | Yes | No |
| Explicit control of memory hierarchy | No | Yes |

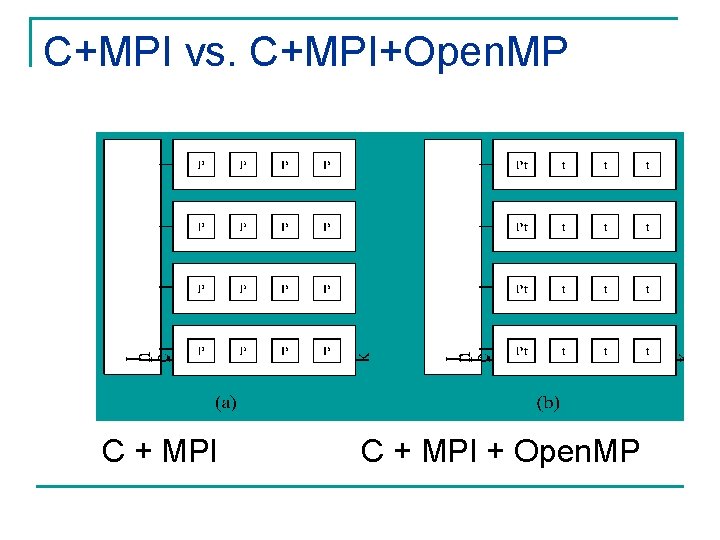

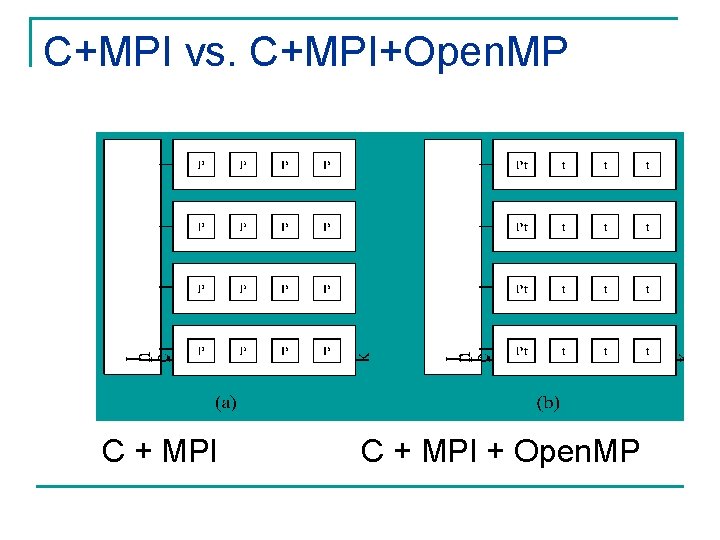

C+MPI vs. C+MPI+OpenMP

[Figure: C + MPI compared with C + MPI + OpenMP.]

Benchmarking Results

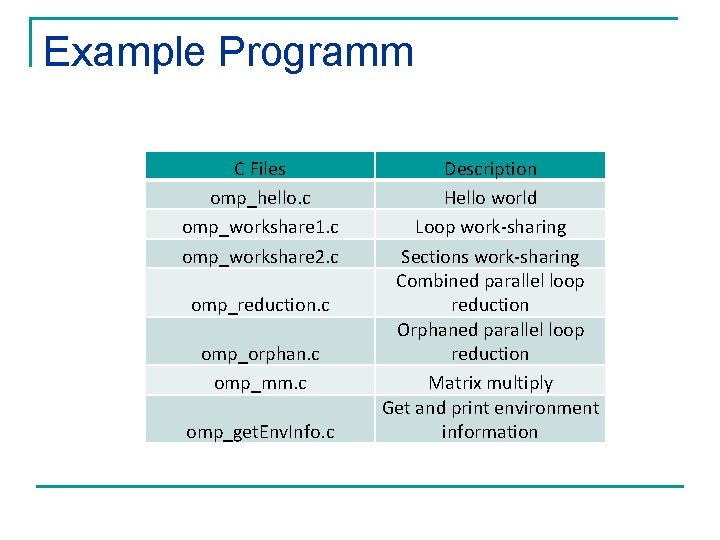

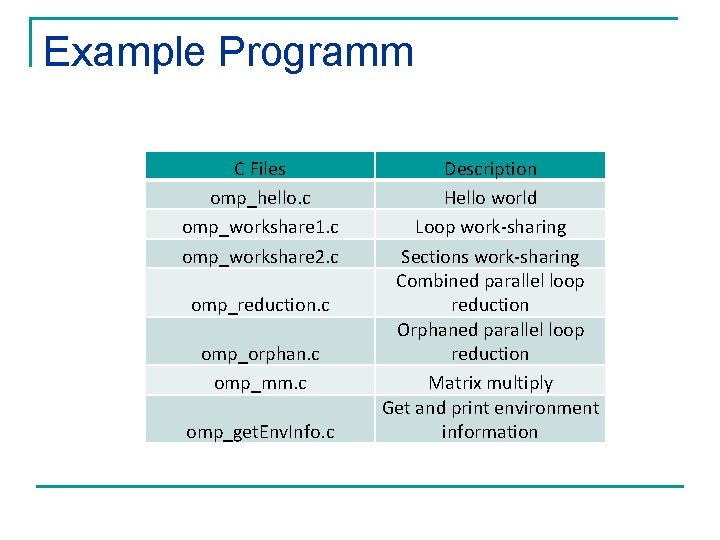

Example Programs

| C Files | Description |
| --- | --- |
| omp_hello.c | Hello world |
| omp_workshare1.c | Loop work-sharing |
| omp_workshare2.c | Sections work-sharing |
| omp_reduction.c | Combined parallel loop reduction |
| omp_orphan.c | Orphaned parallel loop reduction |
| omp_mm.c | Matrix multiply |
| omp_getEnvInfo.c | Get and print environment information |

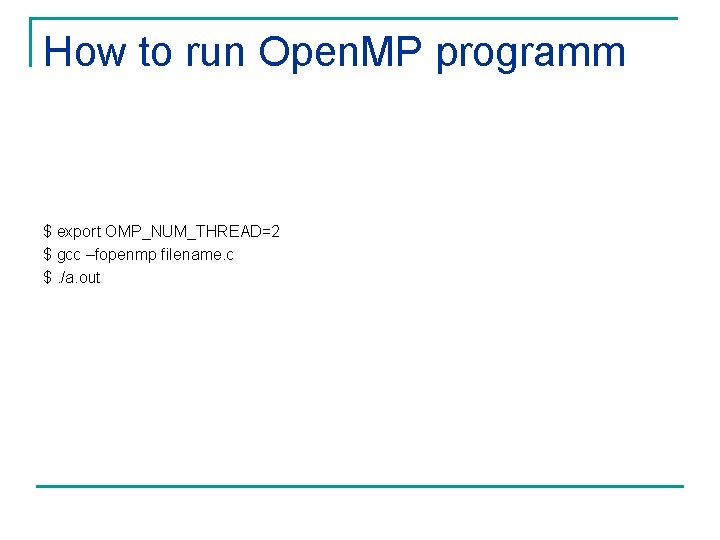

How to run an OpenMP program

    $ export OMP_NUM_THREADS=2
    $ gcc -fopenmp filename.c
    $ ./a.out

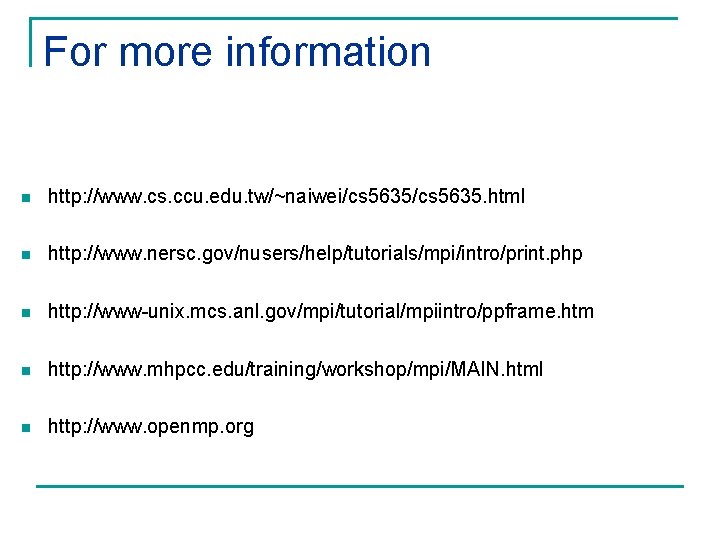

For more information

- http://www.cs.ccu.edu.tw/~naiwei/cs5635.html
- http://www.nersc.gov/nusers/help/tutorials/mpi/intro/print.php
- http://www-unix.mcs.anl.gov/mpi/tutorial/mpiintro/ppframe.htm
- http://www.mhpcc.edu/training/workshop/mpi/MAIN.html
- http://www.openmp.org