Foundations of Privacy, Lecture 7. Lecturer: Moni Naor



Recap of last week's lecture
- Counting queries
  - Hardness results
  - Traitor tracing and hardness results for general (non-synthetic) databases

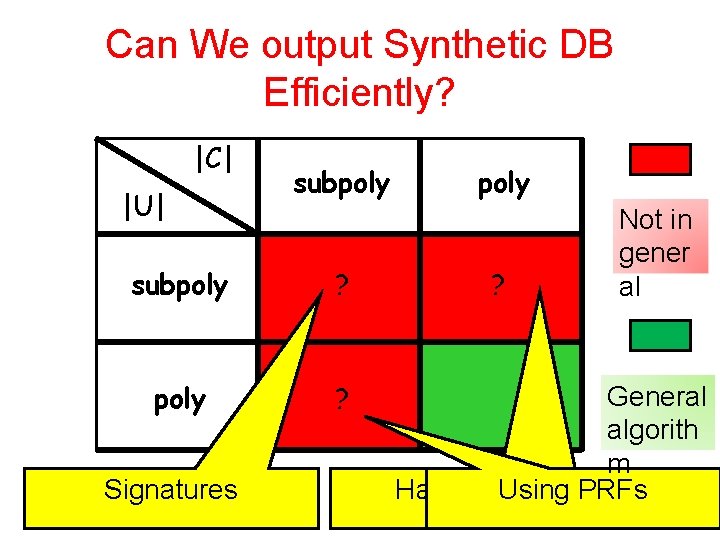

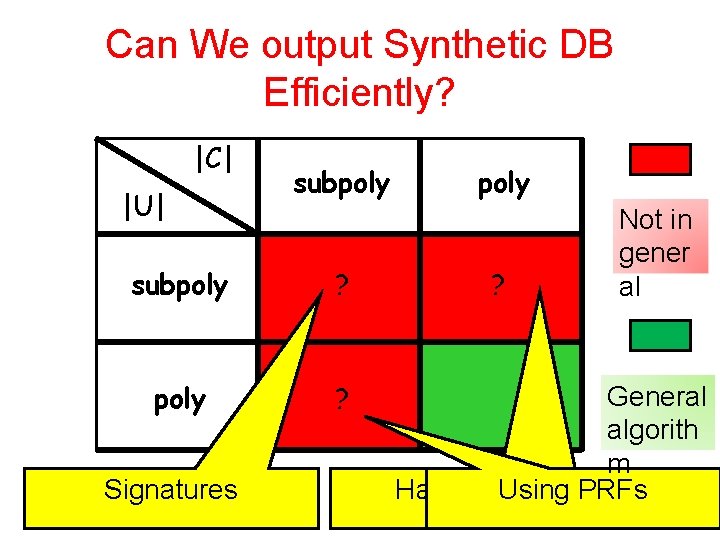

Can we output a synthetic DB efficiently?
(Table: rows |C|, columns |U|, each either subpoly or poly. Recoverable cell labels: "?", "Signatures", "?", "Not in general", "General algorithm", "Hard on avg. using PRFs".)

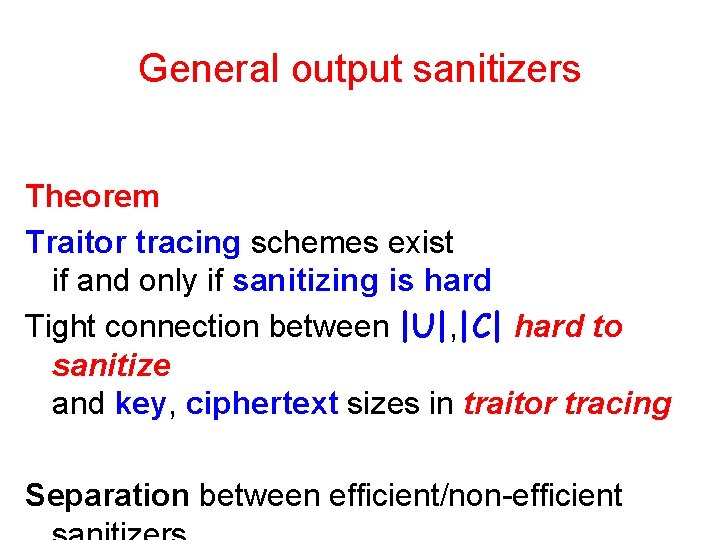

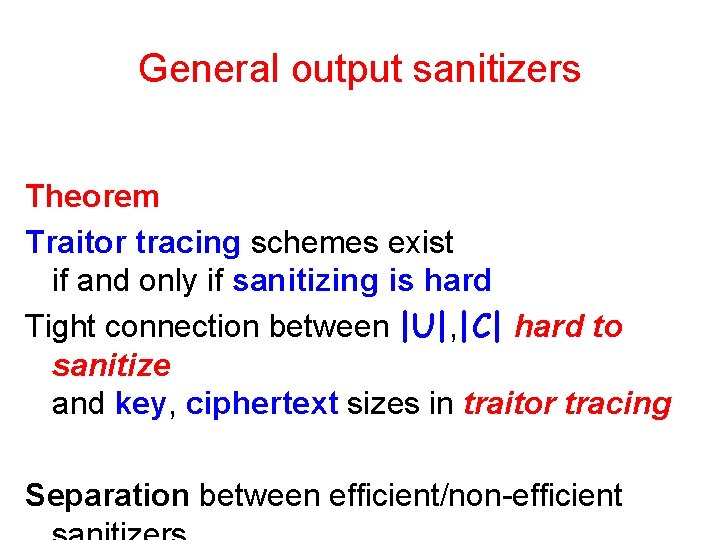

General output sanitizers

Theorem: Traitor-tracing schemes exist if and only if sanitizing is hard.
- Tight connection between the sizes |U|, |C| that are hard to sanitize and the key and ciphertext sizes in traitor tracing
- Separation between efficient and non-efficient sanitizers

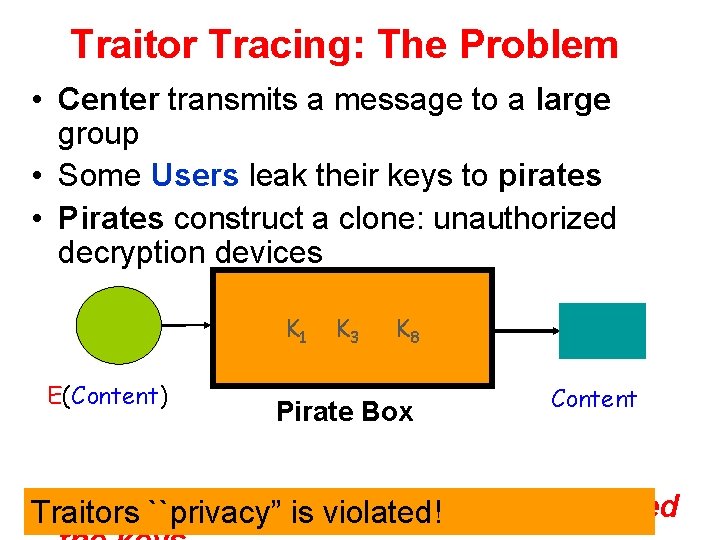

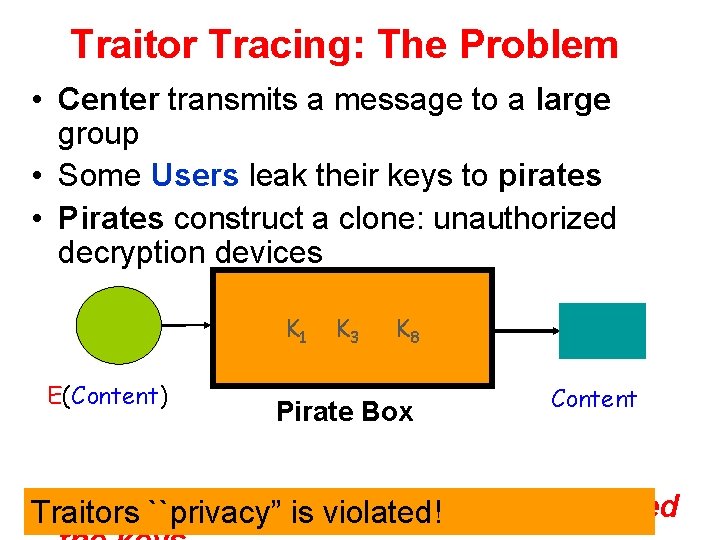

Traitor Tracing: The Problem
- The center transmits a message to a large group
- Some users leak their keys to pirates
- Pirates construct a clone: an unauthorized decryption device (figure: keys K1, K3, K8 go into a Pirate Box that turns E(Content) into Content)
- Given a Pirate Box, we want to find who leaked the keys: the traitors' "privacy" is violated!

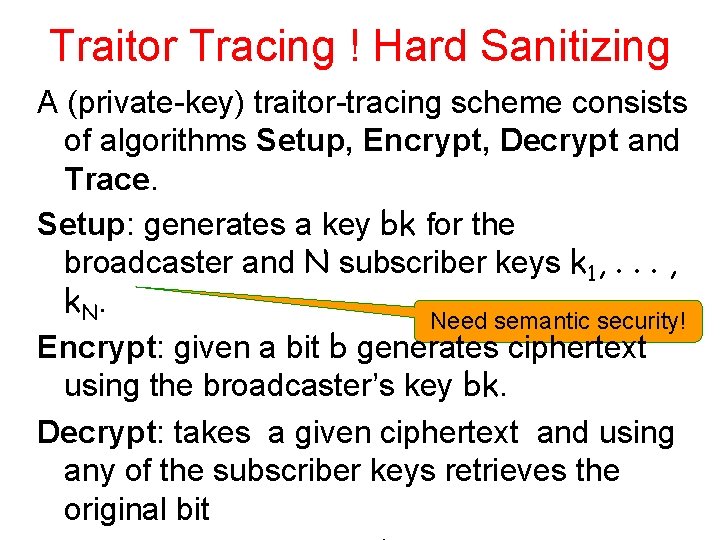

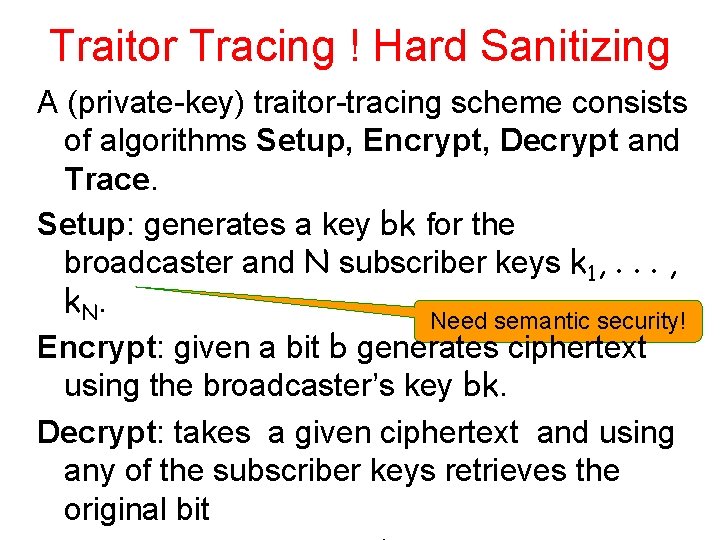

Traitor Tracing ⇒ Hard Sanitizing

A (private-key) traitor-tracing scheme consists of the algorithms Setup, Encrypt, Decrypt and Trace.
- Setup: generates a key bk for the broadcaster and N subscriber keys $k_1, \ldots, k_N$.
- Encrypt: given a bit b, generates a ciphertext using the broadcaster's key bk. Need semantic security!
- Decrypt: takes a ciphertext and, using any of the subscriber keys, retrieves the original bit.

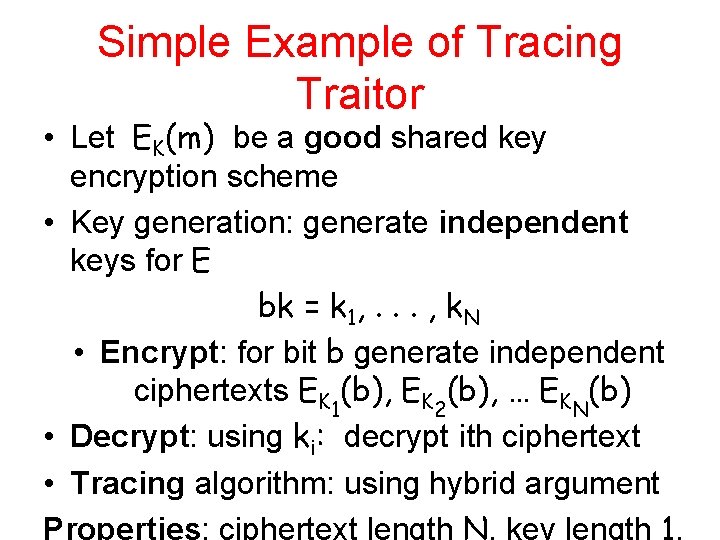

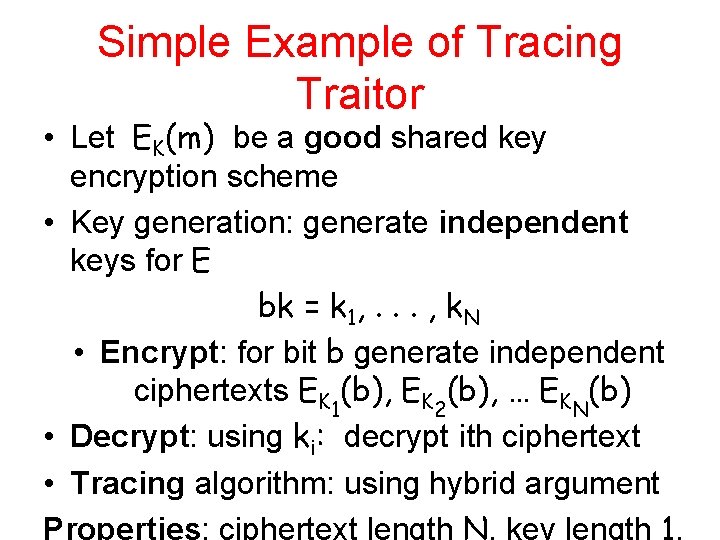

Simple Example of Traitor Tracing
- Let $E_K(m)$ be a good shared-key encryption scheme
- Key generation: generate independent keys for E, $bk = (k_1, \ldots, k_N)$
- Encrypt: for a bit b, generate independent ciphertexts $E_{k_1}(b), E_{k_2}(b), \ldots, E_{k_N}(b)$
- Decrypt: using $k_i$, decrypt the i-th ciphertext
- Tracing algorithm: using a hybrid argument

Properties: ciphertext length N, key length 1.

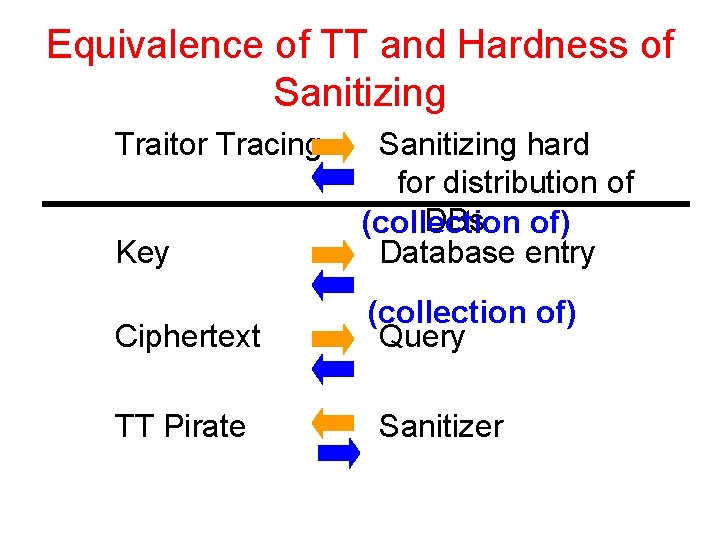

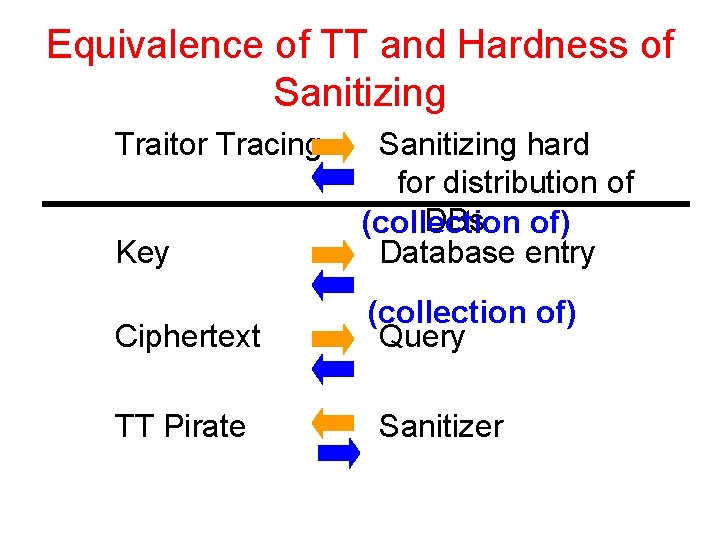

Equivalence of TT and Hardness of Sanitizing

| Traitor Tracing | Sanitizing hard for a distribution of DBs |
|---|---|
| Key | Database entry |
| (Collection of) ciphertexts | (Collection of) queries |
| TT pirate | Sanitizer |

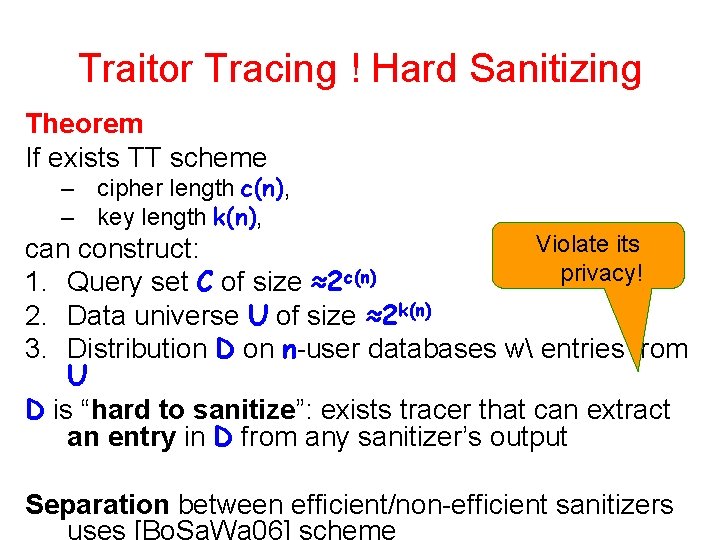

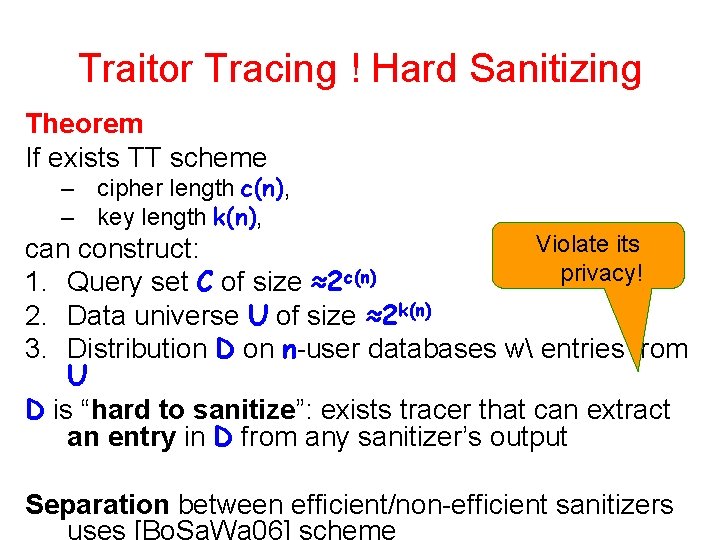

Traitor Tracing ⇒ Hard Sanitizing

Theorem: If there exists a TT scheme with
- ciphertext length c(n),
- key length k(n),

then we can construct:
1. A query set C of size ≈ $2^{c(n)}$
2. A data universe U of size ≈ $2^{k(n)}$
3. A distribution D on n-user databases with entries from U

D is "hard to sanitize": there exists a tracer that can extract an entry in D from any sanitizer's output, violating its privacy!

Separation between efficient and non-efficient sanitizers.

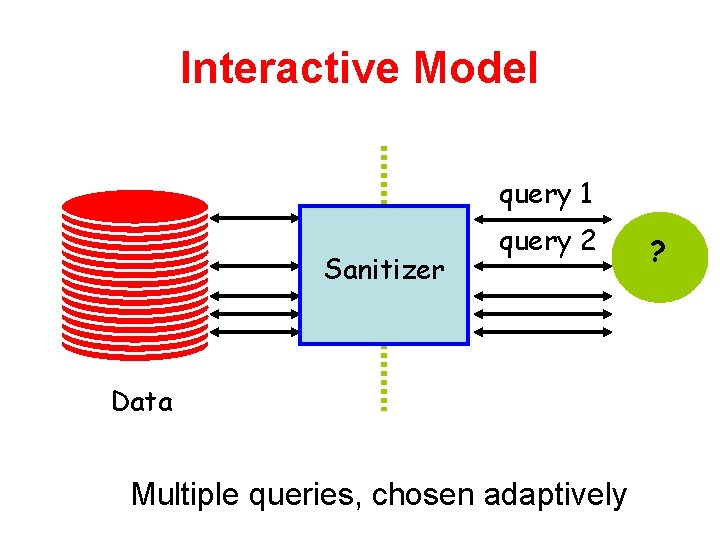

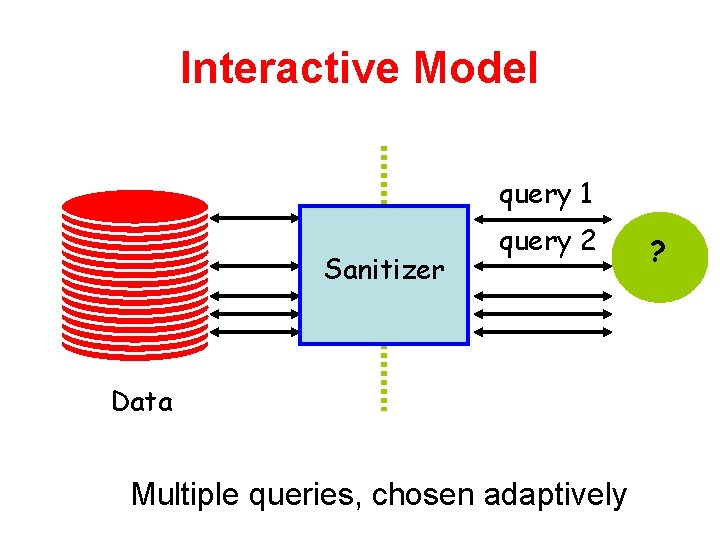

Interactive Model: query 1, query 2, ... are sent to the sanitizer, which holds the data. Multiple queries, chosen adaptively.

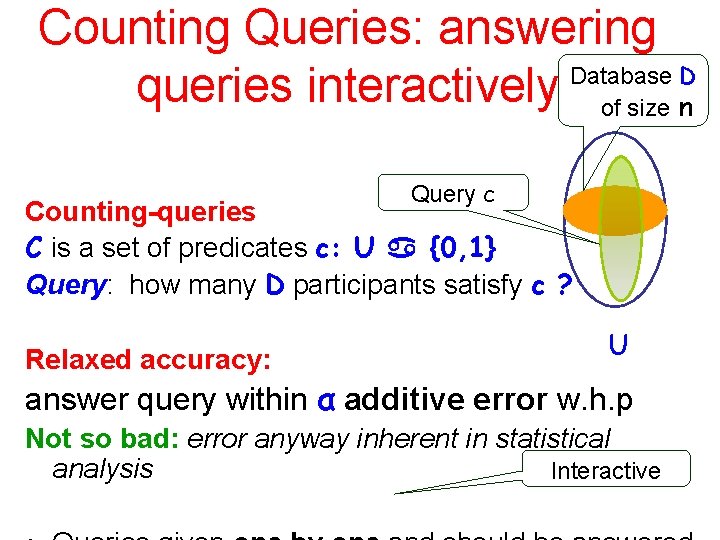

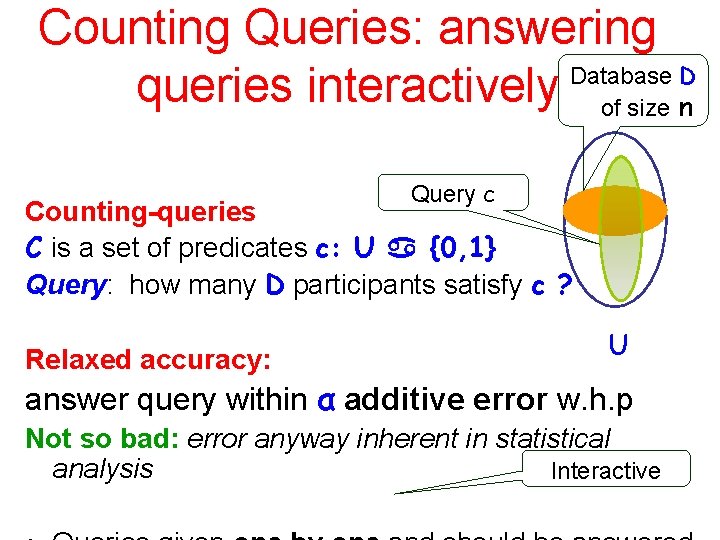

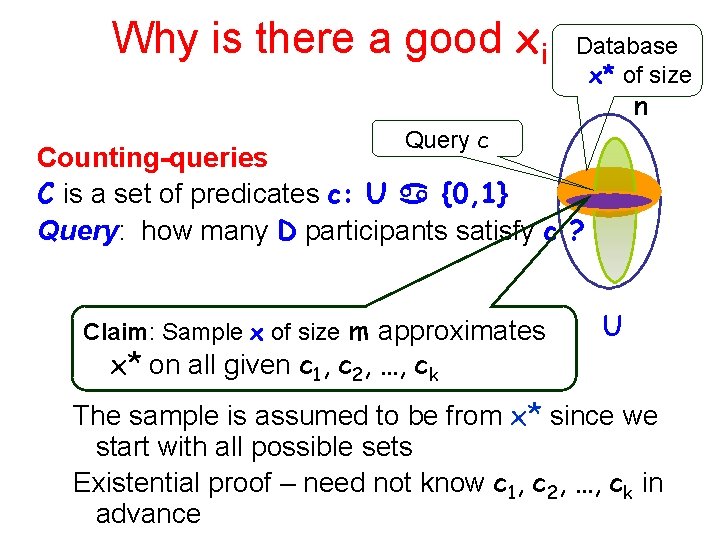

Counting Queries: answering queries interactively
- Database D of size n over universe U
- Counting queries: C is a set of predicates $c: U \to \{0, 1\}$
- Query: how many participants of D satisfy c?
- Relaxed accuracy: answer each query within α additive error w.h.p.
  - Not so bad: error is anyway inherent in statistical analysis

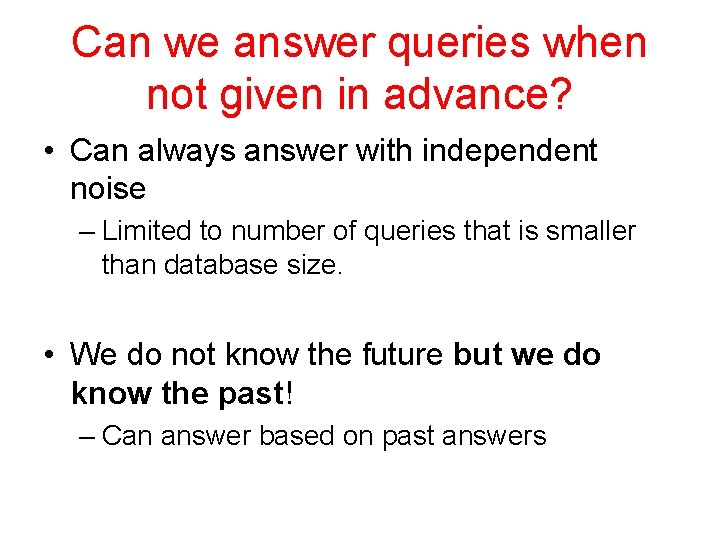

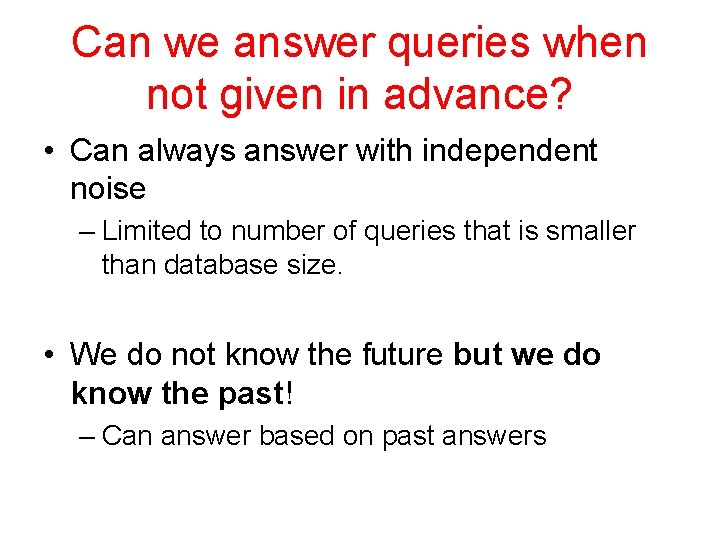

Can we answer queries when they are not given in advance?
- We can always answer with independent noise
  - Limited to a number of queries that is smaller than the database size
- We do not know the future, but we do know the past!
  - Can answer based on past answers
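The "independent noise" baseline can be sketched for counting queries as follows. The sketch assumes the ε budget is split evenly over k queries by basic composition, so each answer gets Laplace noise of scale k/ε, since a counting query has sensitivity 1. The function names are hypothetical.

```python
import random

def laplace(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def counting_query(db: list, pred) -> int:
    """How many participants of the database satisfy c: U -> {0, 1}."""
    return sum(1 for row in db if pred(row))

def answer_independently(db: list, queries: list, epsilon: float,
                         rng: random.Random) -> list:
    """Answer k counting queries with independent Laplace noise.

    Basic composition: each answer gets scale k / epsilon, so the additive
    error grows linearly in k and stays well below n only for few queries.
    """
    scale = len(queries) / epsilon
    return [counting_query(db, q) + laplace(scale, rng) for q in queries]

rng = random.Random(0)
db = [rng.randrange(100) for _ in range(1000)]            # n = 1000, U = {0..99}
queries = [lambda u, t=t: u < t for t in (10, 50, 90)]    # threshold predicates
print(answer_independently(db, queries, epsilon=1.0, rng=rng))
```

Because the noise does not depend on earlier answers, nothing is gained from the past. The list-based approach that follows exploits exactly that.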

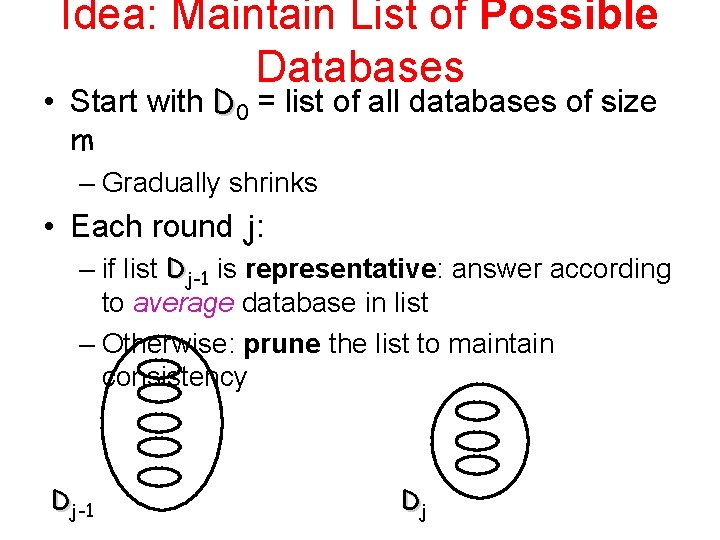

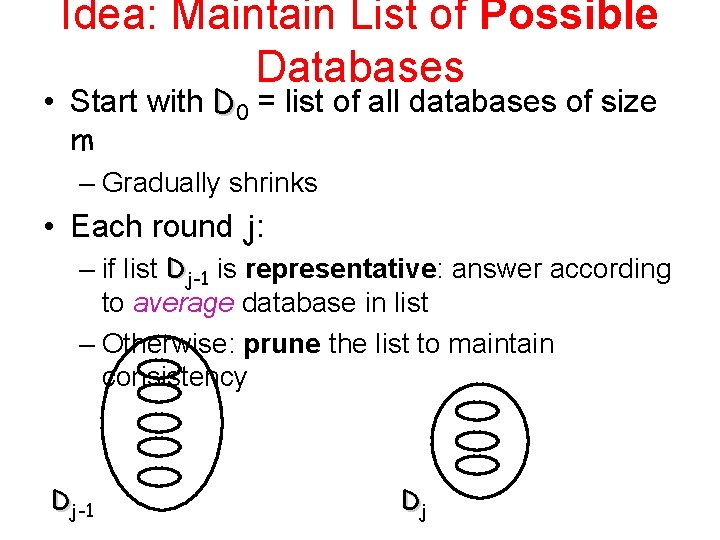

Idea: Maintain a List of Possible Databases
- Start with $D_0$ = list of all databases of size m
  - Gradually shrinks
- Each round j:
  - If the list $D_{j-1}$ is representative: answer according to the average database in the list
  - Otherwise: prune the list to maintain consistency, giving $D_j$

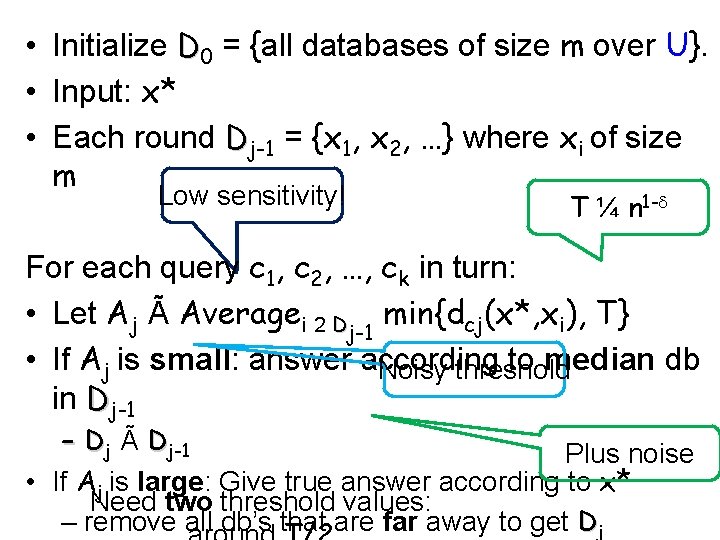

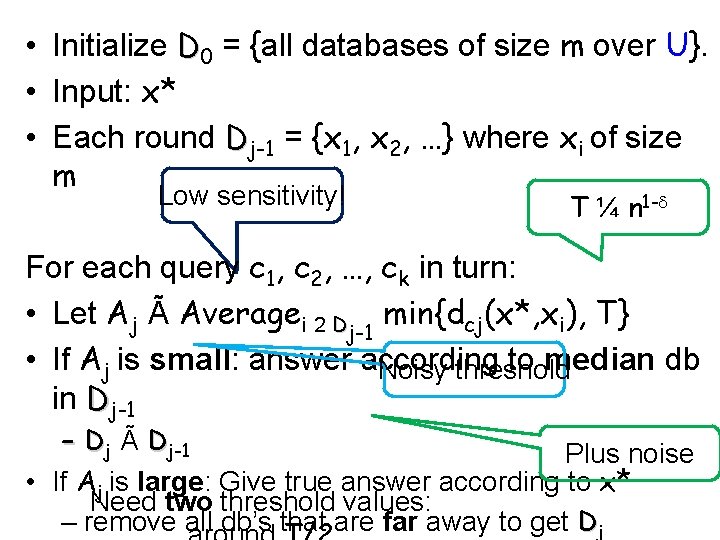

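- Initialize $D_0$ = {all databases of size m over U}.
- Input: $x^*$.
- Each round, $D_{j-1} = \{x_1, x_2, \ldots\}$, where each $x_i$ has size m.
- For each query $c_1, c_2, \ldots, c_k$ in turn:
  - Let $A_j \leftarrow \mathrm{Average}_{x_i \in D_{j-1}} \min\{d_{c_j}(x^*, x_i), T\}$. Low sensitivity! ($T \approx n^{1-\ldots}$)
  - If $A_j$ is small (noisy threshold): answer according to the median database in $D_{j-1}$, and set $D_j \leftarrow D_{j-1}$.
  - If $A_j$ is large: give the true answer according to $x^*$, plus noise, and remove all databases that are far away to get $D_j$.
- Need two threshold values: one deciding whether $A_j$ is small or large, and one deciding which databases are far away.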
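The round structure above can be sketched as toy Python over a tiny universe. The helper names (`list_pruning`, `frac`) and every parameter are hypothetical. Distances are measured on normalized counts, and the noise scale is illustrative, not calibrated to any particular ε. $D_0$ is enumerated as all multisets of size m, which is only feasible for toy sizes.

```python
import itertools
import random
import statistics

def laplace(scale, rng):
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def frac(db, c):
    """Normalized counting query: fraction of entries of db satisfying c."""
    return sum(1 for u in db if c(u)) / len(db)

def list_pruning(x_star, universe, m, queries, T, small, far, noise, rng):
    """Toy list-of-databases mechanism (illustrative parameters only).

    T: cap on each distance (keeps A_j low-sensitivity); small: threshold for
    small vs. large rounds; far: pruning radius; noise: Laplace scale.
    """
    dbs = list(itertools.combinations_with_replacement(universe, m))  # D_0
    answers, large_rounds = [], []
    for j, c in enumerate(queries):
        truth = frac(x_star, c)
        a_j = statistics.fmean(min(abs(frac(x, c) - truth), T) for x in dbs)
        if a_j + laplace(noise, rng) < small:
            # Small round: the list is representative. Answer with the
            # median candidate and keep D_j = D_{j-1}.
            answers.append(statistics.median(frac(x, c) for x in dbs))
        else:
            # Large round: release the true answer plus noise, then drop
            # every candidate that is far from the released answer.
            released = truth + laplace(noise, rng)
            answers.append(released)
            large_rounds.append(j)
            kept = [x for x in dbs if abs(frac(x, c) - released) <= far]
            dbs = kept or dbs  # toy guard; the lecture argues D_j is never empty
    return answers, large_rounds

x_star = [0, 0, 1, 2, 3, 3, 3, 3]
qs = [lambda u, v=v: u == v for v in range(4)] + [lambda u: u >= 2]
print(list_pruning(x_star, range(4), 4, qs, T=0.5, small=0.05, far=0.2,
                   noise=0.01, rng=random.Random(0)))
```

Only large rounds touch $x^*$ beyond the noisy threshold test, which is why the privacy argument focuses on how many large rounds there are.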

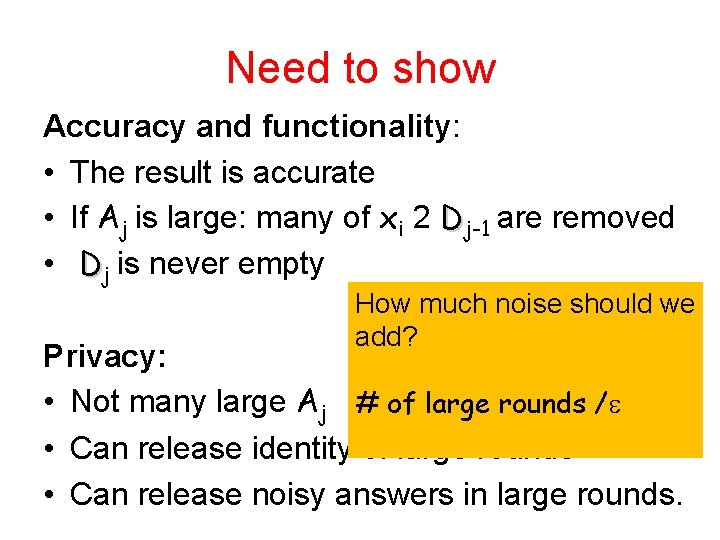

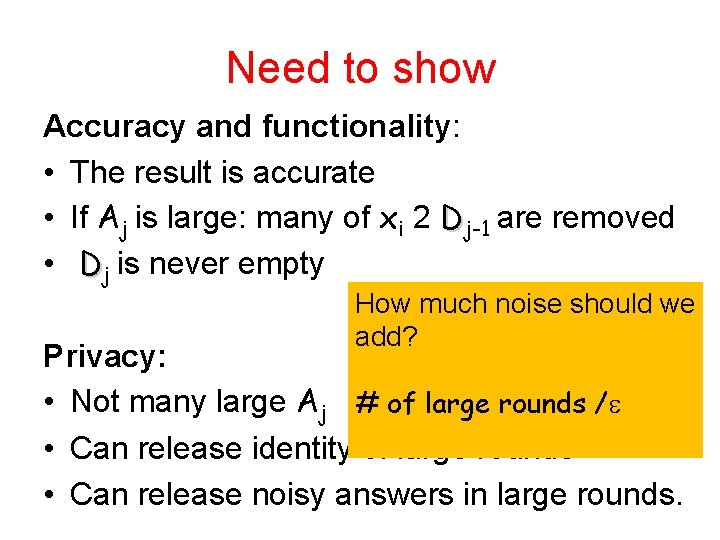

Need to show:
- Accuracy and functionality:
  - The result is accurate
  - If $A_j$ is large: many of the $x_i \in D_{j-1}$ are removed
  - $D_j$ is never empty
- Privacy:
  - Not many large $A_j$
  - Can release the identity of large rounds
  - Can release noisy answers in large rounds
- How much noise should we add? (# of large rounds / …)

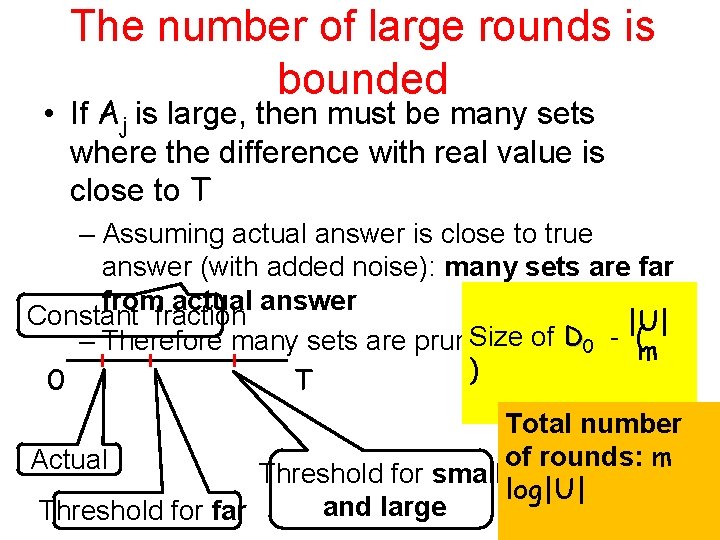

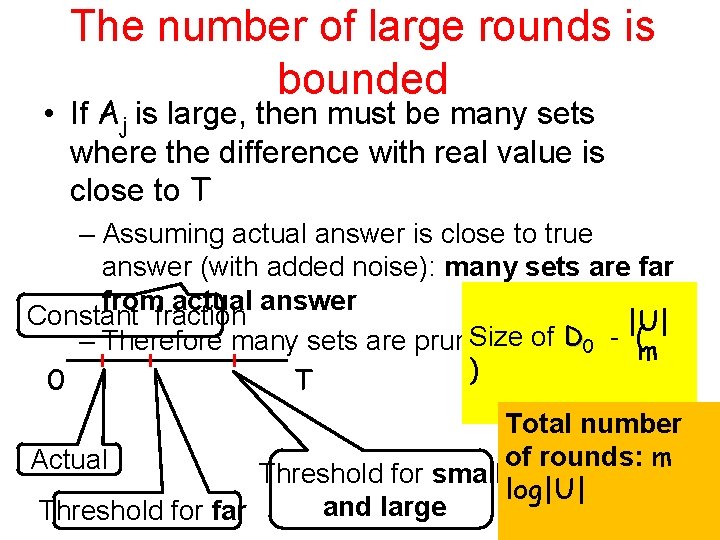

The number of large rounds is bounded
- If $A_j$ is large, there must be many sets whose difference from the real value is close to T
  - Assuming the actual (noisy) answer is close to the true answer, many sets are far from the actual answer
  - Therefore many sets, a constant fraction, are pruned
- Size of $D_0$: $\binom{|U|}{m}$
- Total number of large rounds: $m \log |U|$

(Figure: a number line from 0 to T marking the actual answer, the threshold for small vs. large, and the threshold for far.)
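The pruning argument can be written out as a short calculation. This sketch assumes that every large round removes at least a γ fraction of the current list. Here γ ∈ (0, 1] is a name introduced for the slide's "constant fraction", and $D^{(r)}$ denotes the list after r large rounds. The bound $|D_0| \le |U|^m$ holds whether $D_0$ counts sets, multisets or sequences of size m.

$$
\begin{aligned}
1 \le |D^{(r)}| &\le (1-\gamma)^r\,|D_0| \le e^{-\gamma r}\,|U|^m
  && \text{($D_j$ is never empty)}\\
\Longrightarrow\quad r &\le \frac{\ln |D_0|}{\gamma} \le \frac{m \ln |U|}{\gamma} = O(m \log |U|).
\end{aligned}
$$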

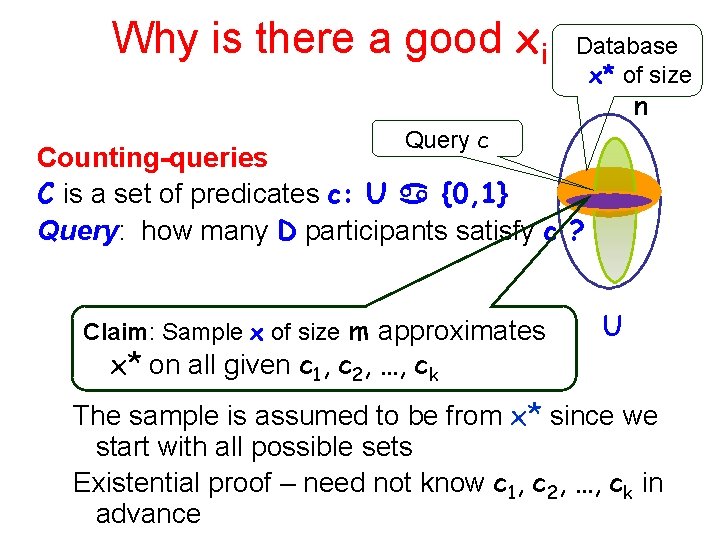

Why is there a good $x_i$?
- Database $x^*$ of size n; counting queries: C is a set of predicates $c: U \to \{0, 1\}$; query: how many participants of $x^*$ satisfy c?
- Claim: a sample x of size m from $x^*$ approximates $x^*$ on all the given $c_1, c_2, \ldots, c_k$.
- Such a sample is in the list, since we start with all possible sets.
- Existential proof: we need not know $c_1, c_2, \ldots, c_k$ in advance.

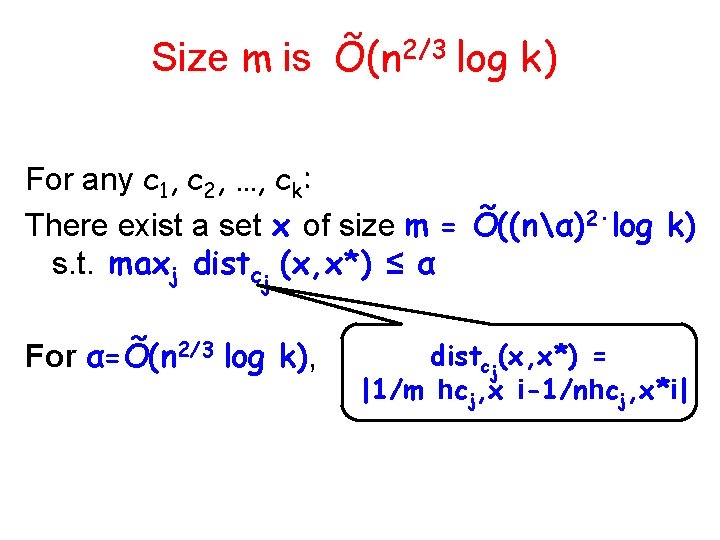

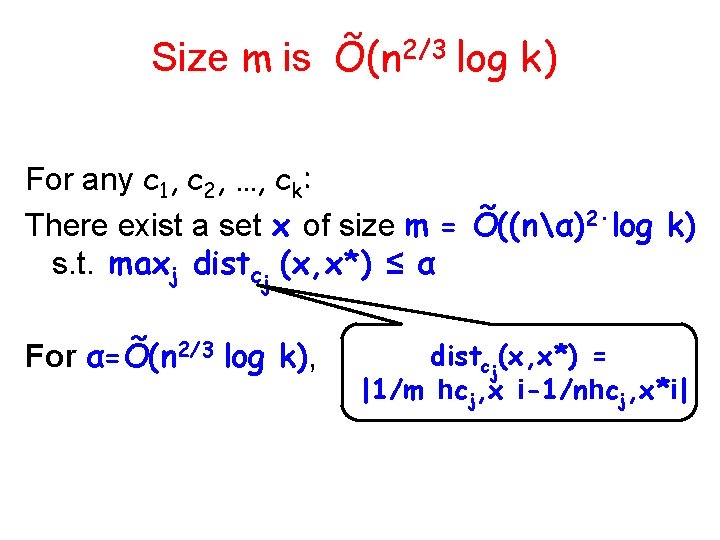

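Size m is $\tilde O(n^{2/3} \log k)$

For any $c_1, c_2, \ldots, c_k$ there exists a set x of size $m = \tilde O((n/\alpha)^2 \cdot \log k)$ such that $\max_j \mathrm{dist}_{c_j}(x, x^*) \le \alpha / n$, where

$$\mathrm{dist}_{c_j}(x, x^*) = \left| \frac{1}{m}\langle c_j, x\rangle - \frac{1}{n}\langle c_j, x^*\rangle \right|.$$

For $\alpha = \tilde O(n^{2/3} \log k)$ this gives $m = \tilde O(n^{2/3} \log k)$.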
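The existence claim can be checked with a concrete sampling bound. This sketch assumes a uniform sample with replacement from $x^*$ and uses Hoeffding's inequality plus a union bound over the k queries. The normalized error target `eps` plays the role of α/n, so $m = O(\log k / \varepsilon^2) = O((n/\alpha)^2 \log k)$. The helper names are hypothetical.

```python
import math
import random

def frac(db, c):
    """Fraction of entries of db satisfying predicate c."""
    return sum(1 for u in db if c(u)) / len(db)

def sample_size(k: int, eps: float, delta: float) -> int:
    """Hoeffding + union bound: with m >= ln(2k/delta) / (2 eps^2), a uniform
    sample is within eps on all k fractions w.p. at least 1 - delta."""
    return math.ceil(math.log(2 * k / delta) / (2 * eps ** 2))

def max_sample_error(x_star, queries, m, rng):
    """Draw m entries of x_star with replacement; report the worst query error."""
    sample = [rng.choice(x_star) for _ in range(m)]
    return max(abs(frac(sample, c) - frac(x_star, c)) for c in queries)

x_star = [i % 100 for i in range(10000)]                   # n = 10000
queries = [lambda u, t=t: u < t for t in range(0, 100, 5)]  # k = 20
m = sample_size(len(queries), eps=0.05, delta=1e-6)         # independent of n
print(m, max_sample_error(x_star, queries, m, random.Random(0)))
```

The sample size depends on k and the accuracy, not on n, which is what makes a small list element $x_i$ of size m possible.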

Why can we release when large rounds occur?
- Do not expect more than $O(m \log |U|)$ large rounds
- Make the threshold noisy
- For every pair of neighboring databases $x^*$ and $x'^*$:
  - Consider the vector of threshold noises, of length k
  - If a point is far away from the threshold, the outcome is the same in both

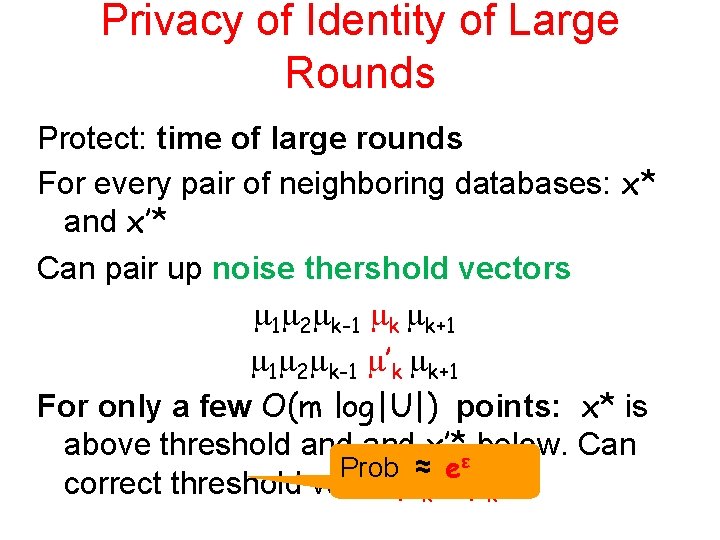

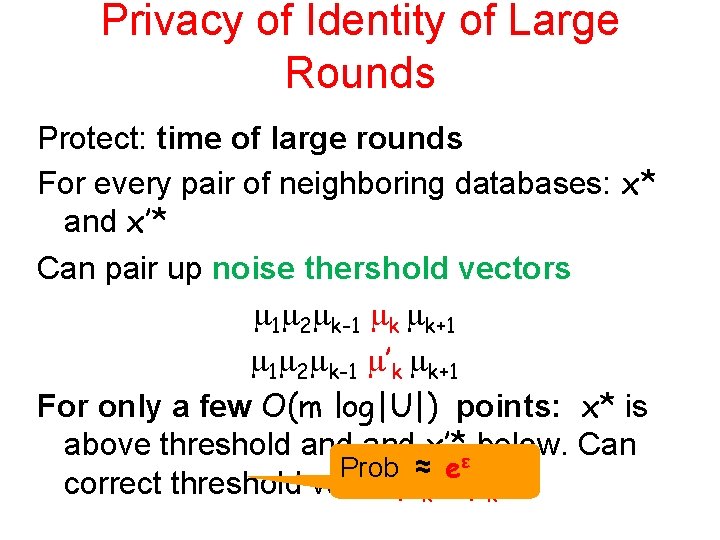

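Privacy of Identity of Large Rounds

Protect: the times of large rounds.

For every pair of neighboring databases $x^*$ and $x'^*$, we can pair up the threshold-noise vectors $(\eta_1, \eta_2, \ldots, \eta_{k-1}, \eta_k, \eta_{k+1}, \ldots)$ and $(\eta_1, \eta_2, \ldots, \eta_{k-1}, \eta'_k, \eta_{k+1}, \ldots)$.

Only at a few, $O(m \log |U|)$, points is $x^*$ above the threshold and $x'^*$ below. There we can correct the threshold value by setting $\eta'_k = \eta_k + 1$, which changes the probability by a factor ≈ $e^{\varepsilon}$.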
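The "Prob ≈ $e^{\varepsilon}$" step is the standard density-ratio bound for Laplace noise. The calculation below assumes the threshold noise is Laplace with magnitude 1/ε. That is the scale this lecture uses for the counters; this slide does not fix it.

$$
\begin{aligned}
p(\eta) &= \tfrac{\varepsilon}{2}\, e^{-\varepsilon |\eta|}, \\
\frac{p(\eta+1)}{p(\eta)} &= e^{-\varepsilon\,(|\eta+1| - |\eta|)} \in [e^{-\varepsilon}, e^{\varepsilon}]
\quad \text{since } \big|\,|\eta+1| - |\eta|\,\big| \le 1.
\end{aligned}
$$

Each shifted coordinate costs a factor of at most $e^{\varepsilon}$. The pairing needs a shift only at the $O(m \log |U|)$ points where $x^*$ and $x'^*$ fall on opposite sides of the threshold, so the factors multiply over those points.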

Summary of Algorithms

Three algorithms:
- BLR
- DNRRV
- RR (with help from HR)

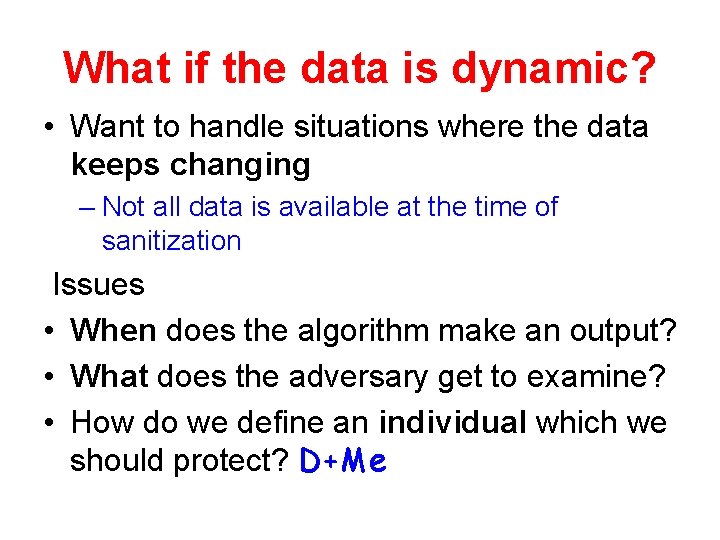

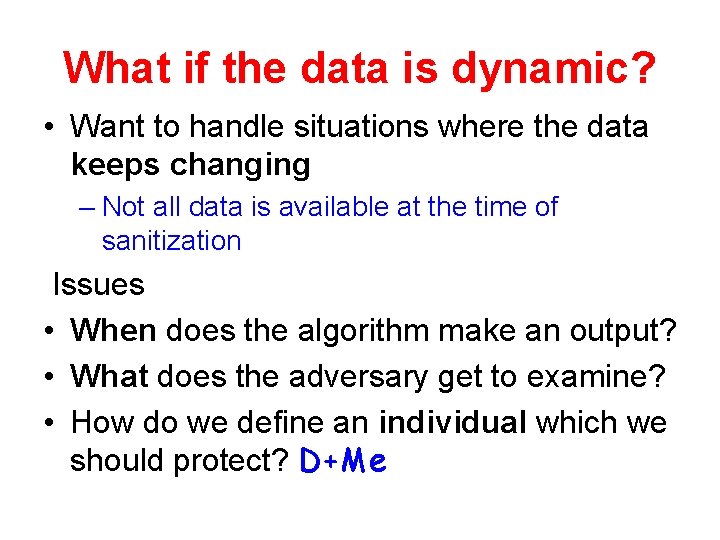

What if the data is dynamic?
- Want to handle situations where the data keeps changing
  - Not all data is available at the time of sanitization

(Figure: curator/sanitizer)

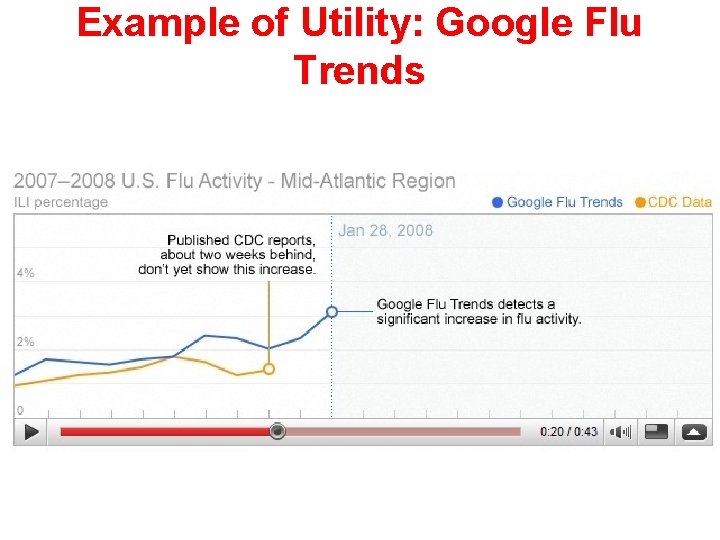

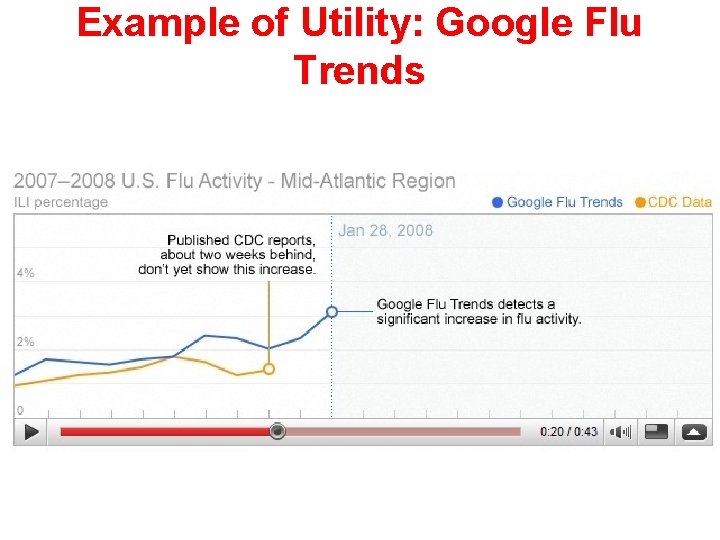

Google Flu Trends: “We've found that certain search terms are good indicators of flu activity. Google Flu Trends uses aggregated Google search data to estimate current flu activity around the world in near realtime.”

Example of Utility: Google Flu Trends

Issues with dynamic data:
- When does the algorithm produce an output?
- What does the adversary get to examine?
- How do we define an individual whom we should protect? (Figure: D + Me)

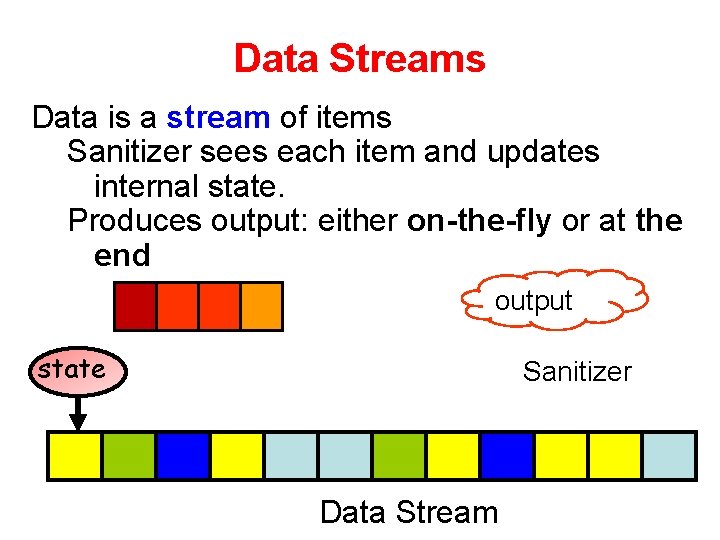

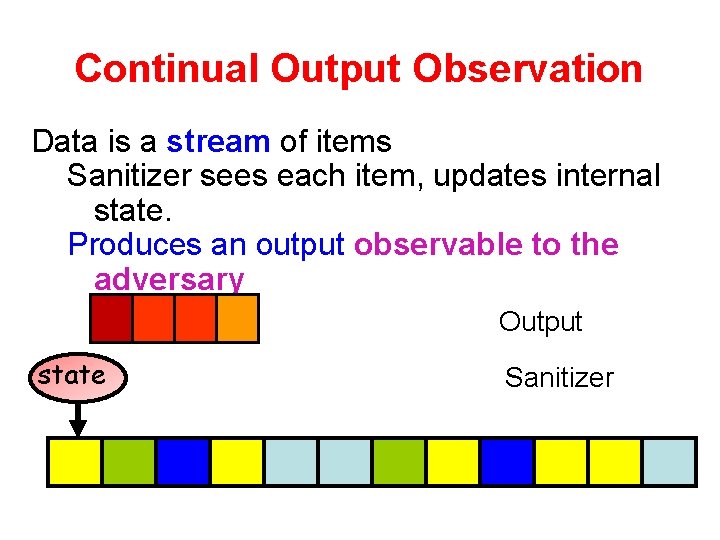

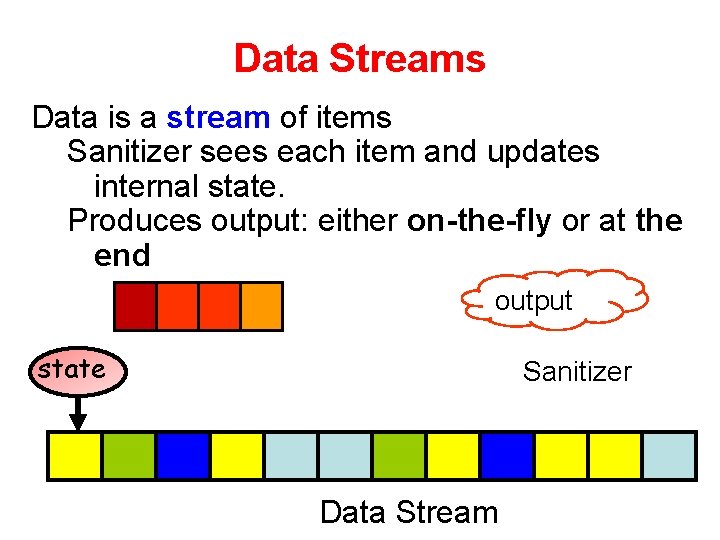

Data Streams
- Data is a stream of items
- The sanitizer sees each item and updates its internal state
- Produces output: either on-the-fly or at the end

(Figure: data stream → sanitizer (state) → output)

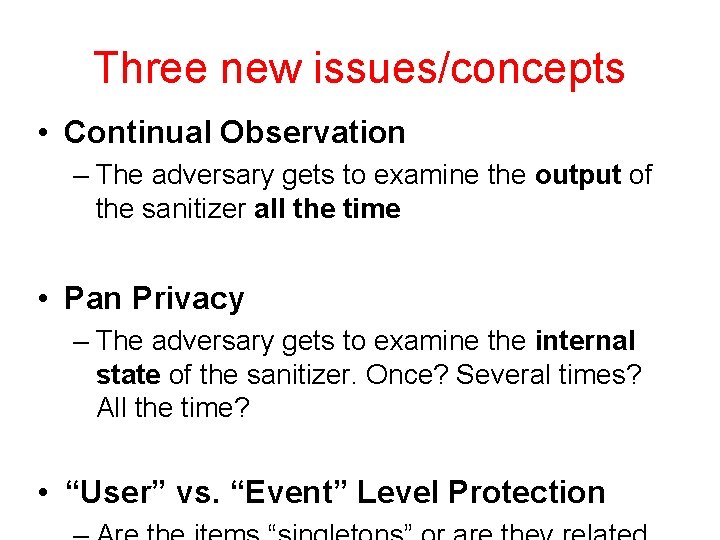

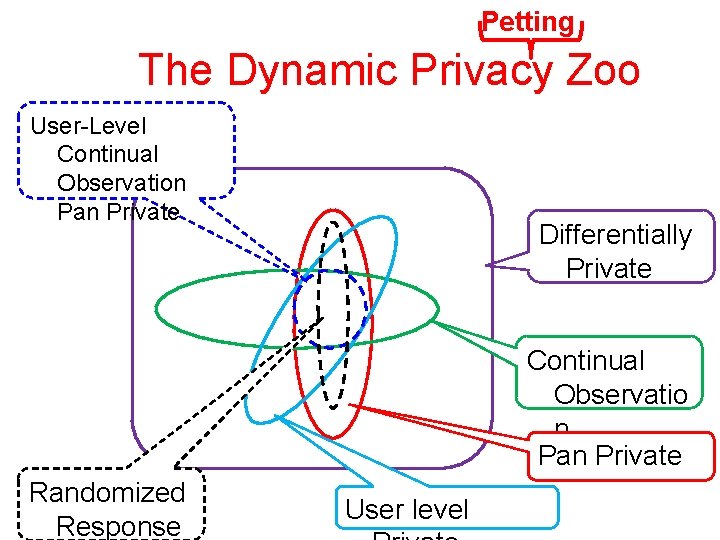

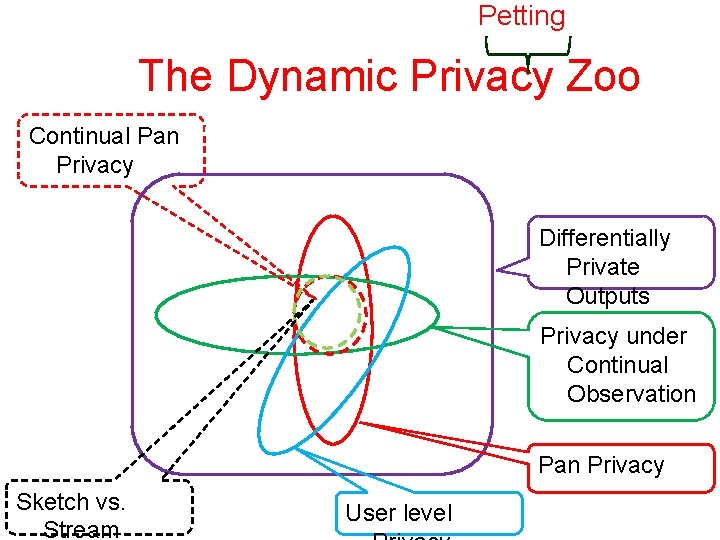

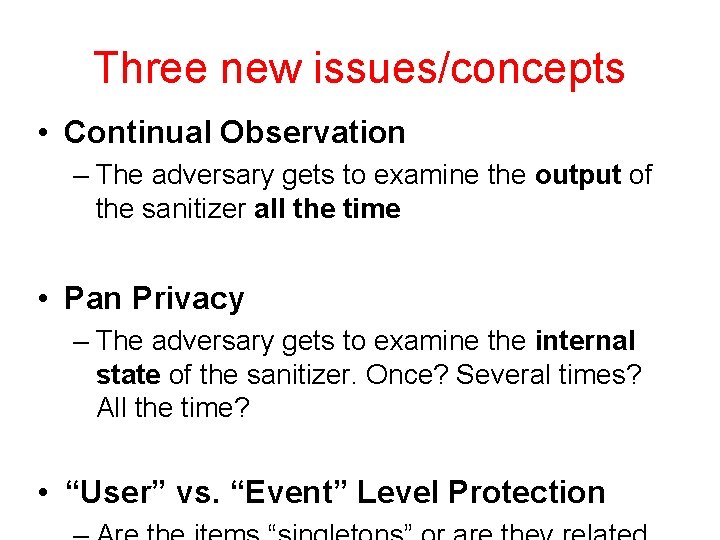

Three new issues/concepts
- Continual observation
  - The adversary gets to examine the output of the sanitizer all the time
- Pan privacy
  - The adversary gets to examine the internal state of the sanitizer. Once? Several times? All the time?
- "User" vs. "event" level protection


Randomized Response
- Randomized Response Technique [Warner 1965]
  - Method for polling stigmatizing questions
  - Idea: lie with known probability
    - Specific answers are deniable
    - Aggregate results are still valid
- The data is never stored "in the plain": "trust no-one"
- Popular in the DB literature: Mishra and Sandler, …

(Figure: each bit in the DB, e.g. 1, 1, 0, is stored as bit + noise.)
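A minimal sketch of randomized response shows why specific answers are deniable while aggregates stay valid. It uses the common ε-differentially private variant: report the true bit with probability $p = e^{\varepsilon}/(1+e^{\varepsilon})$, otherwise the flipped bit. Warner's original parametrization differs. The function names are hypothetical.

```python
import math
import random

def randomized_response(bit: int, epsilon: float, rng: random.Random) -> int:
    """Report the true bit w.p. p = e^eps / (1 + e^eps), else the flipped bit.

    Since p / (1 - p) = e^eps, any single report is epsilon-deniable.
    """
    p = math.exp(epsilon) / (1 + math.exp(epsilon))
    return bit if rng.random() < p else 1 - bit

def estimate_fraction(reports: list, epsilon: float) -> float:
    """Unbiased estimate of the true fraction f of 1's.

    E[report] = p*f + (1 - p)*(1 - f), hence f = (mean - (1 - p)) / (2p - 1).
    """
    p = math.exp(epsilon) / (1 + math.exp(epsilon))
    mean = sum(reports) / len(reports)
    return (mean - (1 - p)) / (2 * p - 1)

rng = random.Random(0)
truth = [1] * 30000 + [0] * 70000          # true fraction of 1's: 0.3
eps = math.log(3)                          # p = 3/4
reports = [randomized_response(b, eps, rng) for b in truth]
print(estimate_fraction(reports, eps))     # close to 0.3
```

The curator stores only the noisy reports, never the true bits, which is what makes randomized response a natural fit for the pan-private setting.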

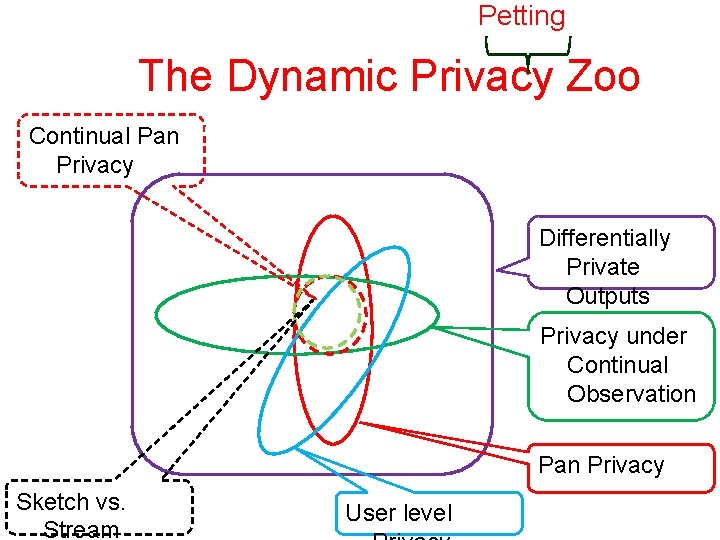

Petting the Dynamic Privacy Zoo

(Figure: diagram relating differentially private, continual-observation, pan-private and user-level algorithms, with randomized response as an example.)

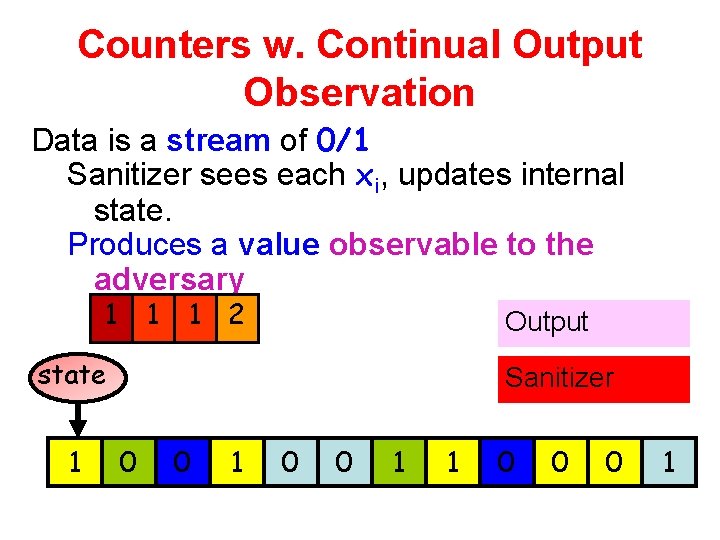

Continual Output Observation
- Data is a stream of items
- The sanitizer sees each item and updates its internal state
- Produces an output observable to the adversary

(Figure: stream → sanitizer state → output)

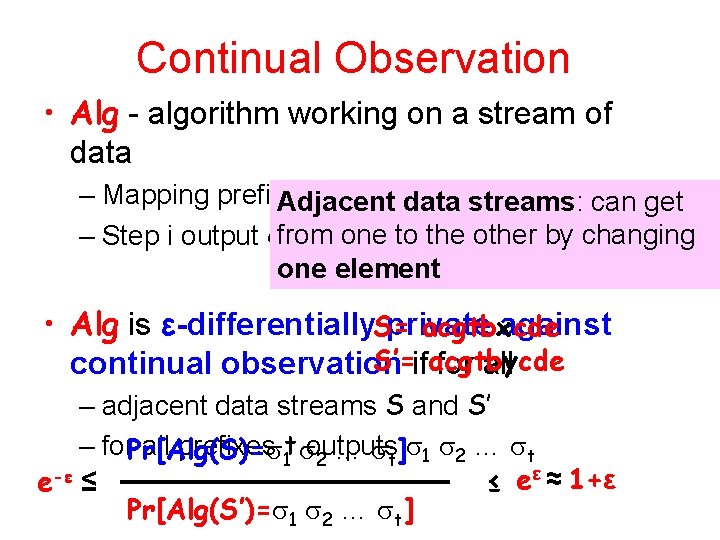

Continual Observation
- Alg: an algorithm working on a stream of data
  - Maps prefixes of data streams to outputs
  - Step i: output $\sigma_i$
- Adjacent streams: one can get from one to the other by changing one element, e.g. S = acgtbxcde and S' = acgtbycde
- Alg is ε-differentially private against continual observation if for all
  - adjacent data streams S and S', and
  - all prefixes t and outputs $\sigma_1 \sigma_2 \ldots \sigma_t$:

$$e^{-\varepsilon} \le \frac{\Pr[\mathrm{Alg}(S) = \sigma_1 \sigma_2 \ldots \sigma_t]}{\Pr[\mathrm{Alg}(S') = \sigma_1 \sigma_2 \ldots \sigma_t]} \le e^{\varepsilon} \approx 1 + \varepsilon$$

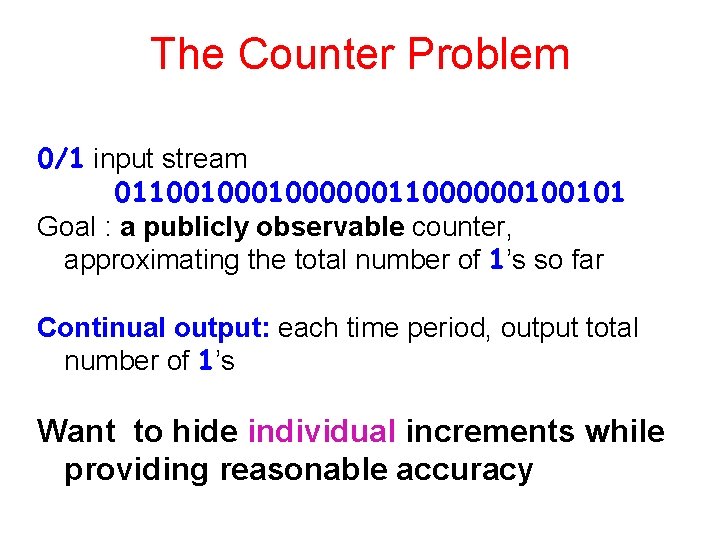

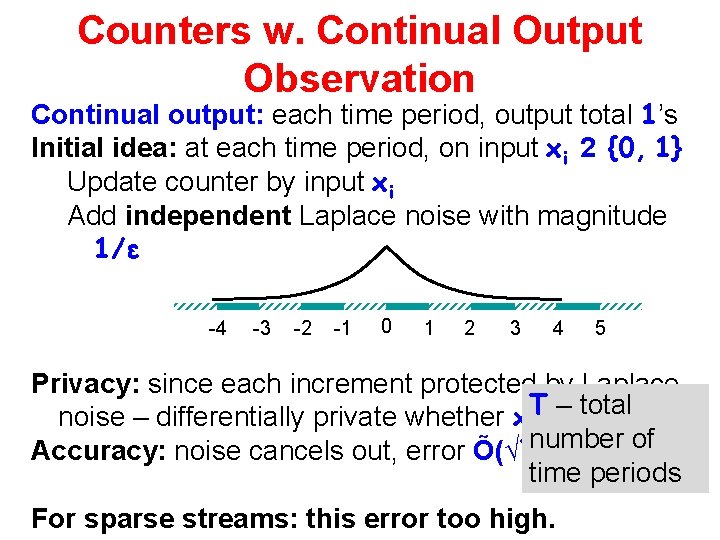

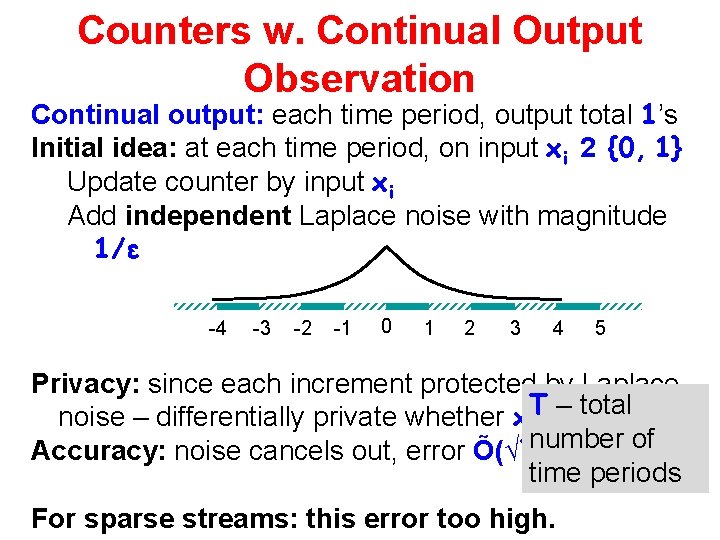

The Counter Problem
- 0/1 input stream, e.g. 01100100000011000000100101
- Goal: a publicly observable counter approximating the total number of 1's so far
- Continual output: in each time period, output the total number of 1's
- Want to hide individual increments while providing reasonable accuracy

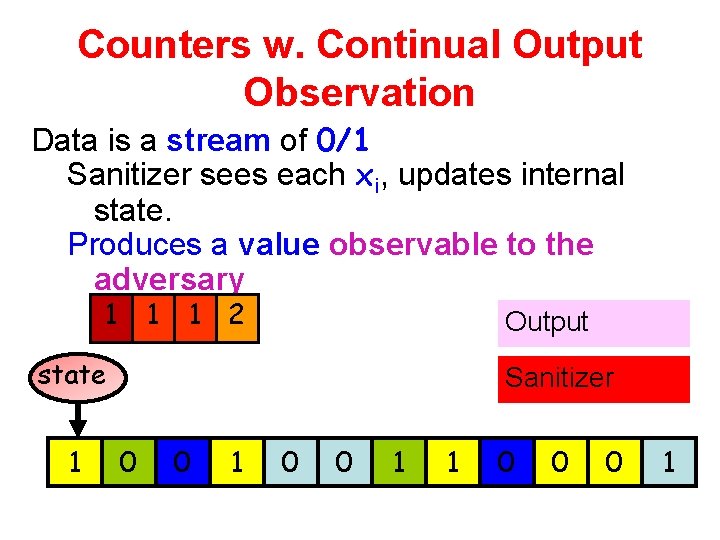

Counters w. Continual Output Observation
- Data is a stream of 0/1 values
- The sanitizer sees each $x_i$ and updates its internal state
- Produces a value observable to the adversary

(Figure: a 0/1 stream enters the sanitizer, which keeps internal state and emits running counts.)

Counters w. Continual Output Observation

Continual output: in each time period, output the total number of 1's.

Initial idea: at each time period, on input $x_i \in \{0, 1\}$:
- Update the counter by input $x_i$
- Add independent Laplace noise with magnitude 1/ε

(Figure: Laplace density plotted over −4 … 5)

Privacy: since each increment is protected by Laplace noise, it is differentially private whether $x_i$ is 0 or 1.

Accuracy: the noise cancels out; the total error is $\tilde O(\sqrt{T})$, where T is the number of time periods.

For sparse streams this error is too high.
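The initial idea can be sketched as a function that turns a 0/1 stream into a sequence of noisy running totals. It assumes event-level adjacency, meaning one $x_i$ changes. Each increment carries its own Lap(1/ε) noise, so the output at time t is $\sum_{i \le t}(x_i + \text{noise}_i)$, and the accumulated noise has standard deviation $\sqrt{2t}/\varepsilon$. The name `naive_counter` is hypothetical.

```python
import random

def laplace(scale, rng):
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def naive_counter(stream, epsilon, rng):
    """Continual counter: add x_i plus fresh Lap(1/eps) noise at every step.

    Changing one x_i can be absorbed by shifting that step's noise by 1,
    so every output prefix is epsilon-DP at the event level, but the
    noise adds up: the standard deviation at time t is sqrt(2t)/eps.
    """
    total, outputs = 0.0, []
    for x in stream:
        total += x + laplace(1 / epsilon, rng)
        outputs.append(total)
    return outputs

stream = [int(b) for b in "01100100000011000000100101"]
print(naive_counter(stream, epsilon=1.0, rng=random.Random(0)))
```

The noise is added even when $x_i = 0$, which is exactly why sparse streams suffer.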

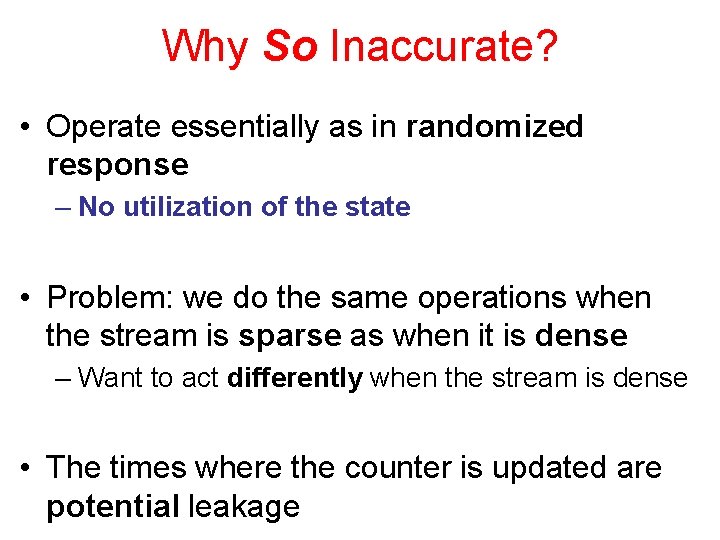

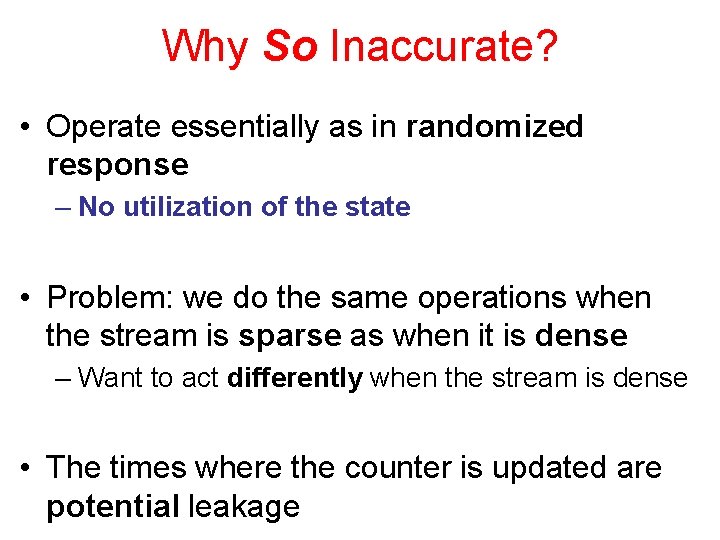

Why So Inaccurate?
- We operate essentially as in randomized response
  - No utilization of the state
- Problem: we do the same operations when the stream is sparse as when it is dense
  - Want to act differently when the stream is dense
- The times at which the counter is updated are a potential leak

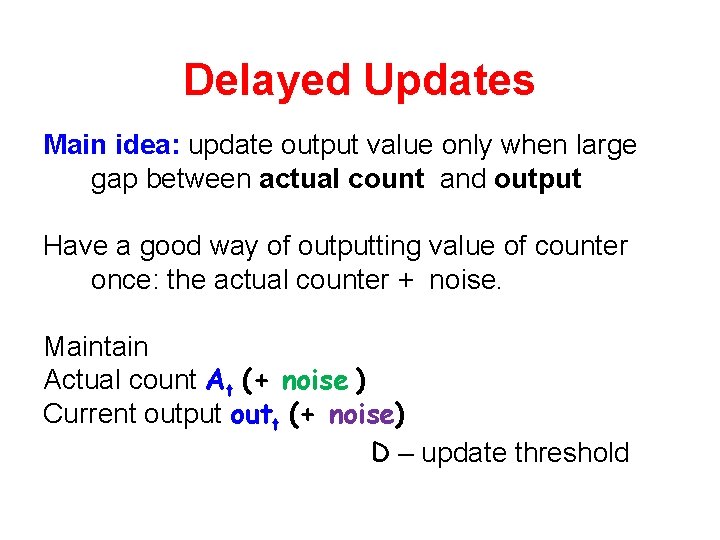

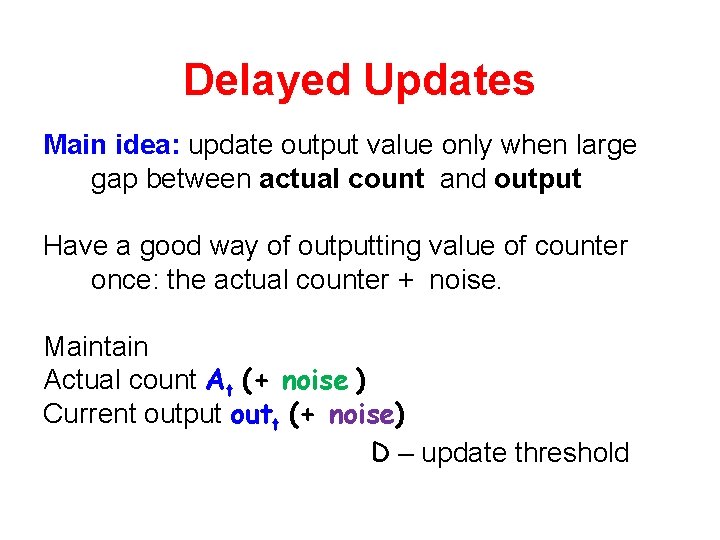

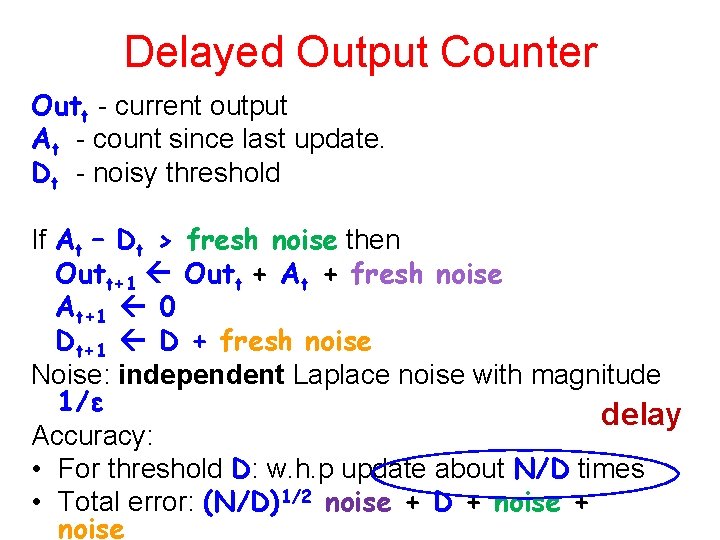

Delayed Updates

Main idea: update the output value only when there is a large gap between the actual count and the output.

We have a good way of outputting the value of the counter once: the actual counter + noise.

Maintain:
- Actual count $A_t$ (+ noise)
- Current output $\mathrm{out}_t$ (+ noise)
- D: update threshold

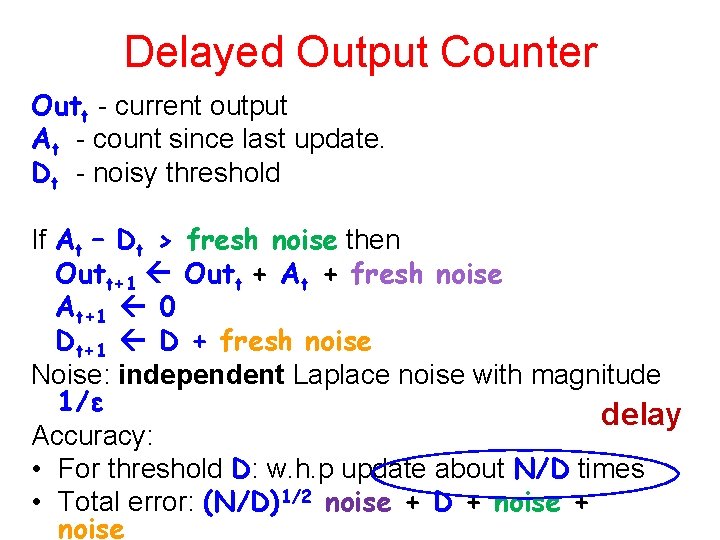

Delayed Output Counter
- $\mathrm{Out}_t$: current output
- $A_t$: count since the last update
- $D_t$: noisy threshold

If $A_t - D_t >$ fresh noise, then:
- $\mathrm{Out}_{t+1} \leftarrow \mathrm{Out}_t + A_t +$ fresh noise
- $A_{t+1} \leftarrow 0$
- $D_{t+1} \leftarrow D +$ fresh noise

Otherwise the output stays the same.

Noise: independent Laplace noise with magnitude 1/ε.

Accuracy:
- For threshold D: w.h.p. we update about N/D times
- Total error: $(N/D)^{1/2} \cdot$ noise + D (the delay) + noise
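The update rule above can be sketched as a streaming function. The noise magnitudes follow the slide's 1/ε and are not tuned to a proven privacy constant. The name `delayed_counter` is hypothetical.

```python
import random

def laplace(scale, rng):
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def delayed_counter(stream, D, epsilon, rng):
    """Publish a new count only when the unpublished count A_t clears a
    noisy threshold; otherwise repeat the previous output."""
    scale = 1 / epsilon
    out = 0.0                               # Out_t: current public output
    a = 0                                   # A_t: count since the last update
    threshold = D + laplace(scale, rng)     # D_t: noisy threshold
    outputs = []
    for x in stream:
        a += x
        if a - threshold > laplace(scale, rng):     # compare with fresh noise
            out += a + laplace(scale, rng)          # Out_{t+1} = Out_t + A_t + noise
            a = 0                                   # A_{t+1} = 0
            threshold = D + laplace(scale, rng)     # D_{t+1} = D + noise
        outputs.append(out)
    return outputs

rng = random.Random(0)
stream = [1 if rng.random() < 0.3 else 0 for _ in range(1000)]
out = delayed_counter(stream, D=20, epsilon=1.0, rng=random.Random(1))
print(sum(stream), out[-1])   # true count vs. published count
```

With about N/D updates, only about N/D noise terms accumulate. The price is a lag of up to roughly D, matching the error terms listed on the slide.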

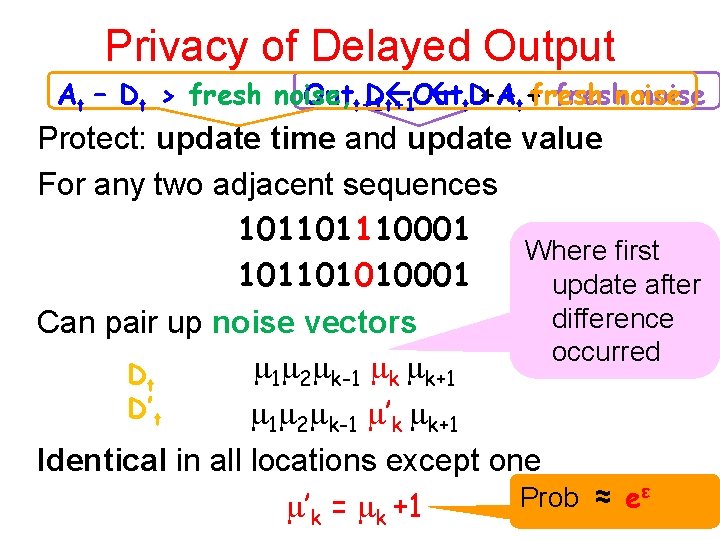

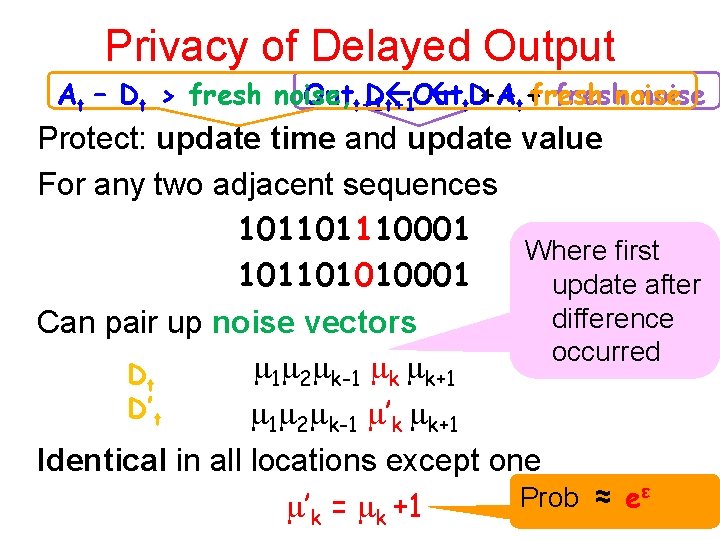

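Privacy of Delayed Output

Recall: if $A_t - D_t >$ fresh noise, then $\mathrm{Out}_{t+1} \leftarrow \mathrm{Out}_t + A_t +$ fresh noise and $D_{t+1} \leftarrow D +$ fresh noise.

Protect: the update time and the update value.

Take any two adjacent sequences, e.g. 101101110001 and 101101010001, and consider the first update after the difference occurred. We can pair up the noise vectors $(\eta_1, \eta_2, \ldots, \eta_{k-1}, \eta_k, \eta_{k+1}, \ldots)$ (thresholds $D_t$) and $(\eta_1, \eta_2, \ldots, \eta_{k-1}, \eta'_k, \eta_{k+1}, \ldots)$ (thresholds $D'_t$). They are identical in all locations except one, where $\eta'_k = \eta_k + 1$. The probability changes by a factor ≈ $e^{\varepsilon}$.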

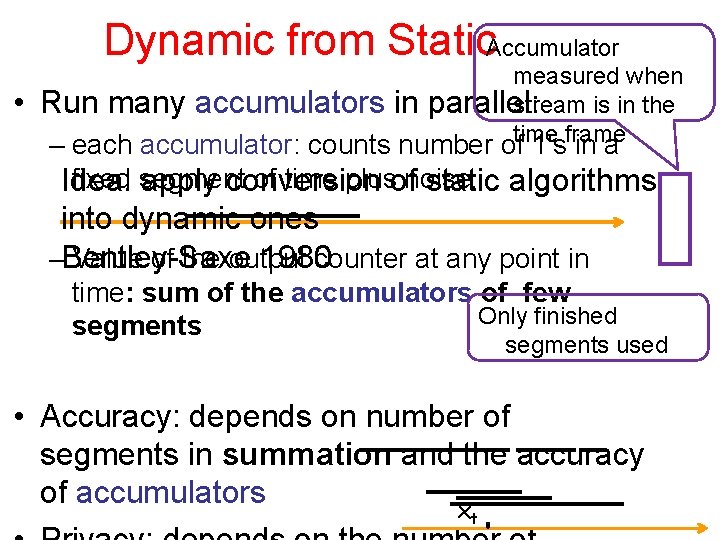

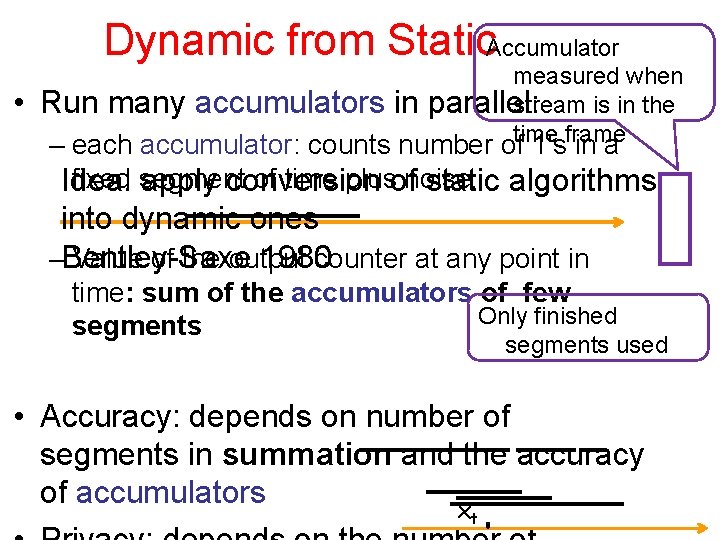

Dynamic from Static

Idea: apply the conversion of static algorithms into dynamic ones [Bentley-Saxe 1980].
- Run many accumulators in parallel:
  - Each accumulator counts the number of 1's in a fixed segment of time, plus noise; an accumulator is measured when the stream is in its time frame
- Value of the output counter at any point in time: the sum of the accumulators of a few segments
  - Only finished segments are used
- Accuracy: depends on the number of segments in the summation and the accuracy of the accumulators

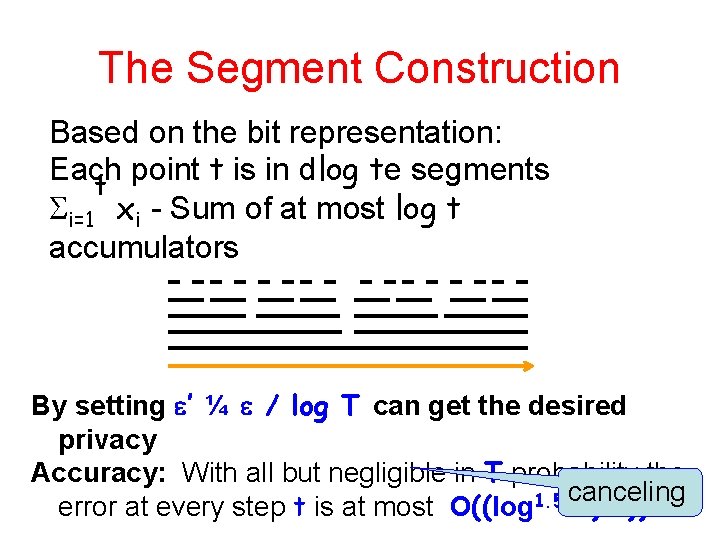

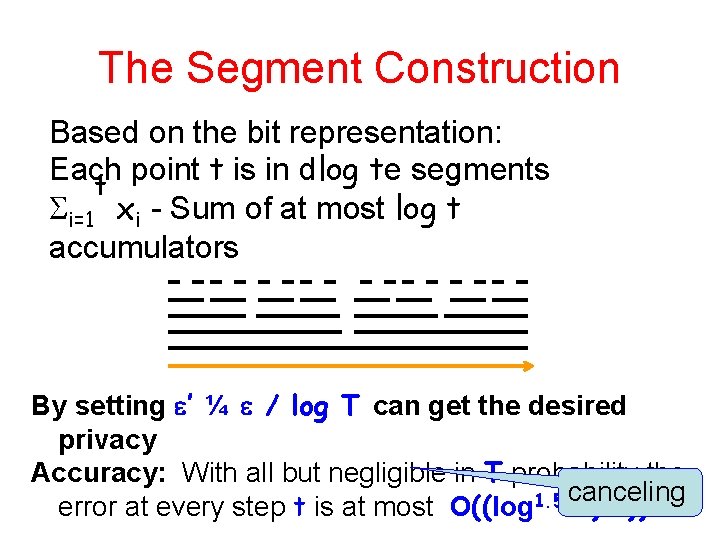

The Segment Construction

Based on the bit representation:
- Each point t is in $\lceil \log t \rceil$ segments
- $\sum_{i=1}^{t} x_i$ is the sum of at most $\log t$ accumulators

By setting $\varepsilon' \approx \varepsilon / \log T$ we get the desired privacy.

Accuracy: with all but negligible-in-T probability, the error at every step t is at most $O((\log^{1.5} T)/\varepsilon)$.
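The segment construction can be sketched as the usual dyadic decomposition. It assumes a known horizon T and uses `levels = T.bit_length()` levels, roughly log T, matching ε' ≈ ε / log T. Level l holds accumulators for the segments $(j 2^l, (j+1) 2^l]$. Each element lies in exactly one segment per level, and the prefix $[1, t]$ splits along the bits of t. The name `binary_counter` is hypothetical.

```python
import random

def laplace(scale, rng):
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def binary_counter(stream, T, epsilon, rng):
    """Segment (binary-tree) counter for streams of length at most T.

    Each element is counted in one segment per level, so every accumulator
    gets Lap(levels / epsilon) noise, i.e. epsilon' = epsilon / levels.
    """
    levels = max(1, T.bit_length())
    scale = levels / epsilon
    partial = [0] * levels   # true count of the currently open segment per level
    finished = {}            # (level, end >> level) -> noisy count of a finished segment
    outputs = []
    for t, x in enumerate(stream, start=1):
        if t > T:
            raise ValueError("stream longer than T")
        for l in range(levels):
            partial[l] += x
            if t % (1 << l) == 0:   # the level-l segment ending at t is finished
                finished[(l, t >> l)] = partial[l] + laplace(scale, rng)
                partial[l] = 0
        # [1, t] is the union of one finished segment per set bit of t
        total, end = 0.0, 0
        for l in reversed(range(levels)):
            if (t >> l) & 1:
                end += 1 << l
                total += finished[(l, end >> l)]
        outputs.append(total)
    return outputs

stream = [int(b) for b in "01100100000011000000100101"]
print(binary_counter(stream, T=32, epsilon=1.0, rng=random.Random(0)))
```

Every output sums at most `levels` noisy accumulators, which is the source of the polylogarithmic error on the slide. By contrast, the initial idea's error grows with $\sqrt{T}$.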

Synthetic Counter

Can make the counter synthetic:
- Monotone
- Each round the counter goes up by at most 1

Apply to any monotone function.
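The slide does not say how the counter is made synthetic. One simple possibility, assumed here and not necessarily the lecture's method, is to post-process the noisy counts. Each round, move toward the rounded noisy value, but never down and never up by more than 1. Post-processing does not affect differential privacy, and exact counts pass through unchanged. The name `make_synthetic` is hypothetical.

```python
def make_synthetic(noisy_counts):
    """Post-process noisy counts into an integer, monotone counter that
    increases by at most 1 per round (an assumed, illustrative rule)."""
    out, prev = [], 0
    for v in noisy_counts:
        nxt = min(prev + 1, max(prev, round(v)))  # clamp into [prev, prev + 1]
        out.append(nxt)
        prev = nxt
    return out

print(make_synthetic([0.4, -1.2, 2.7, 5.0, 3.1]))  # [0, 0, 1, 2, 3]
```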

Petting the Dynamic Privacy Zoo

(Figure: diagram relating differentially private outputs, privacy under continual observation, pan privacy and user-level privacy, with the sketch vs. stream distinction.)
