Foundations of Cryptography Lecture 9 Lecturer: Moni Naor

Recap of Lecture 8
- Tree signature scheme
- Proof of security of tree signature schemes
- Encryption

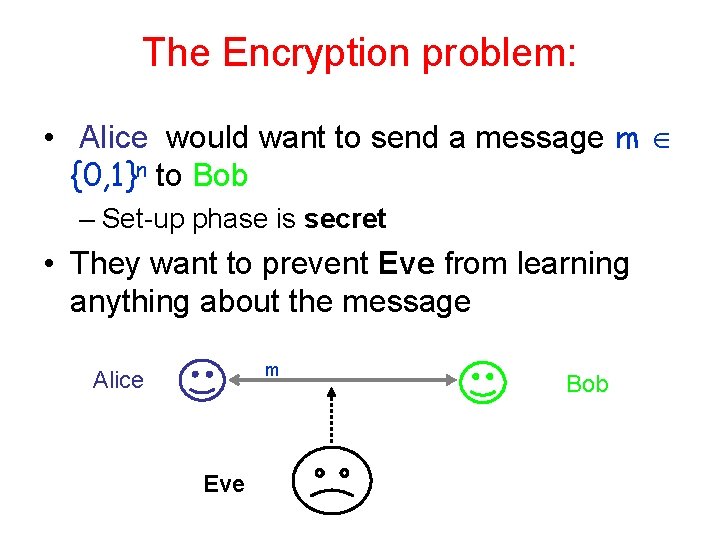

The encryption problem
- Alice wants to send a message $m \in \{0,1\}^n$ to Bob
  - The set-up phase is secret
- They want to prevent Eve from learning anything about the message $m$

[Figure: Alice, Eve and Bob]

The encryption problem
- Relevant both in the shared-key and in the public-key setting
- Want to use the scheme many times
- Also add authentication…
- Other disruptions by Eve

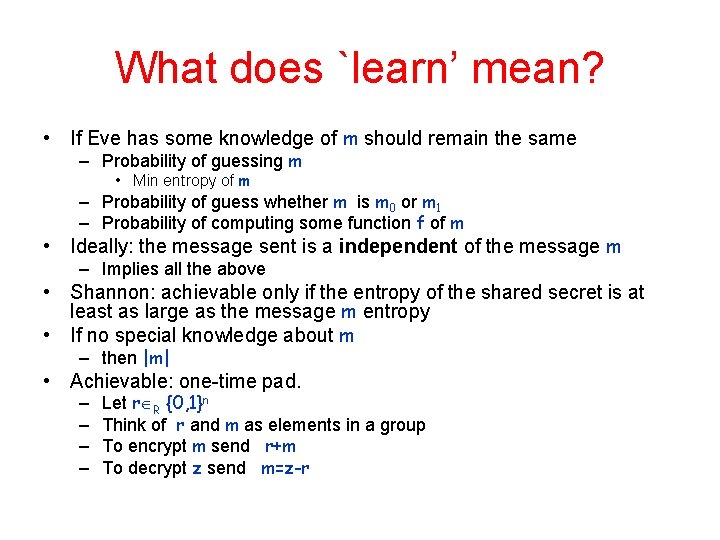

What does "learn" mean?
- Whatever knowledge Eve has about $m$ should remain the same after she sees the transmission, for example:
  - The probability of guessing $m$
    - Min-entropy of $m$
  - The probability of guessing whether $m$ is $m_0$ or $m_1$
  - The probability of computing some function $f$ of $m$
- Ideally: the message sent is independent of the message $m$
  - Implies all of the above
- Shannon: achievable only if the entropy of the shared secret is at least as large as the entropy of $m$
  - If there is no special knowledge about $m$, the shared secret must be at least $|m|$ bits long
- Achievable: the one-time pad
  - Let $r \in_R \{0,1\}^n$
  - Think of $r$ and $m$ as elements in a group
  - To encrypt $m$, send $z = r + m$
  - To decrypt $z$, compute $m = z - r$
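The one-time pad can be sketched in Python, assuming the group is $\{0,1\}^n$ under bitwise XOR (so both $r + m$ and $z - r$ become XOR) and that messages are byte strings; the function names are illustrative, not from the lecture.

```python
import secrets

def keygen(n_bytes: int) -> bytes:
    """Sample the shared pad r uniformly at random (8 * n_bytes bits)."""
    return secrets.token_bytes(n_bytes)

def encrypt(r: bytes, m: bytes) -> bytes:
    """Compute z = r + m in the group ({0,1}^n, XOR)."""
    if len(r) != len(m):
        raise ValueError("pad and message must have the same length")
    return bytes(a ^ b for a, b in zip(r, m))

def decrypt(r: bytes, z: bytes) -> bytes:
    """Compute m = z - r; in ({0,1}^n, XOR) subtraction is again XOR."""
    return encrypt(r, z)

if __name__ == "__main__":
    m = b"attack at dawn"
    r = keygen(len(m))
    z = encrypt(r, m)
    assert decrypt(r, z) == m
```

For a uniform $r$, the ciphertext $z = r \oplus m$ is uniform for every fixed $m$, hence independent of $m$. Reusing the same $r$ for two messages would reveal $m_1 \oplus m_2$, which is why the pad must be as long as everything it encrypts.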

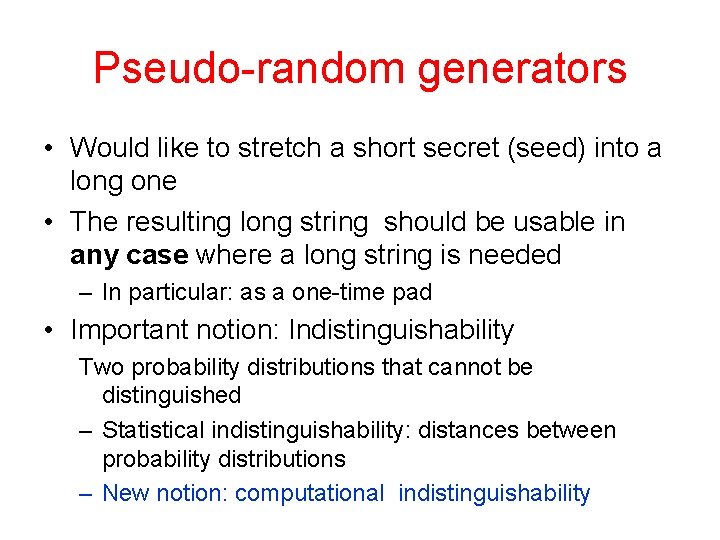

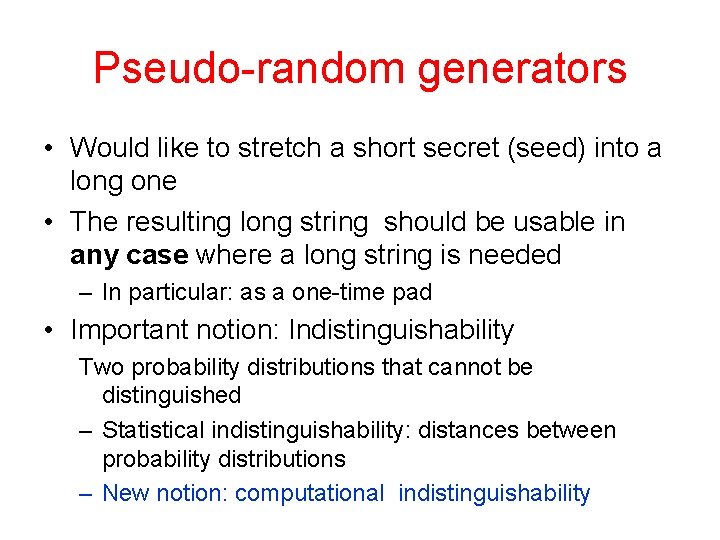

Pseudo-random generators
- Would like to stretch a short secret (seed) into a long one
- The resulting long string should be usable in any case where a long string is needed
  - In particular: as a one-time pad
- Important notion: indistinguishability, i.e., two probability distributions that cannot be distinguished
  - Statistical indistinguishability: distances between probability distributions
  - New notion: computational indistinguishability
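Statistical distance between two distributions on a finite set can be computed directly as $\frac12\sum_w |P(w) - Q(w)|$. A small Python sketch, assuming distributions are given as dictionaries mapping outcomes to exact probabilities. The example compares uniform 2-bit strings with a hypothetical "generator" that outputs $bb$ for a random bit $b$.

```python
from fractions import Fraction

def statistical_distance(p: dict, q: dict) -> Fraction:
    """Total variation distance: (1/2) * sum over w of |P(w) - Q(w)|."""
    support = set(p) | set(q)
    total = sum(
        (abs(Fraction(p.get(w, 0)) - Fraction(q.get(w, 0))) for w in support),
        Fraction(0),
    )
    return total / 2

uniform2 = {s: Fraction(1, 4) for s in ("00", "01", "10", "11")}
repeated = {"00": Fraction(1, 2), "11": Fraction(1, 2)}
print(statistical_distance(uniform2, repeated))  # 1/2
```

The statistical distance equals the best advantage achievable by an unbounded distinguisher; computational indistinguishability only asks that polynomial-time distinguishers have small advantage.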

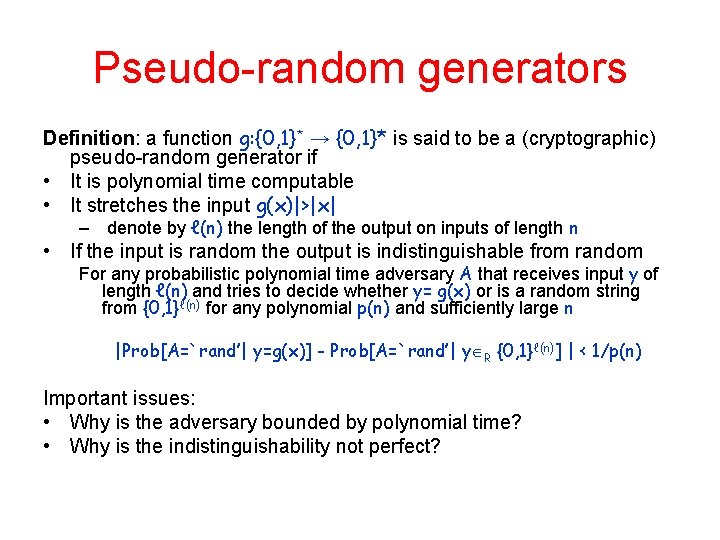

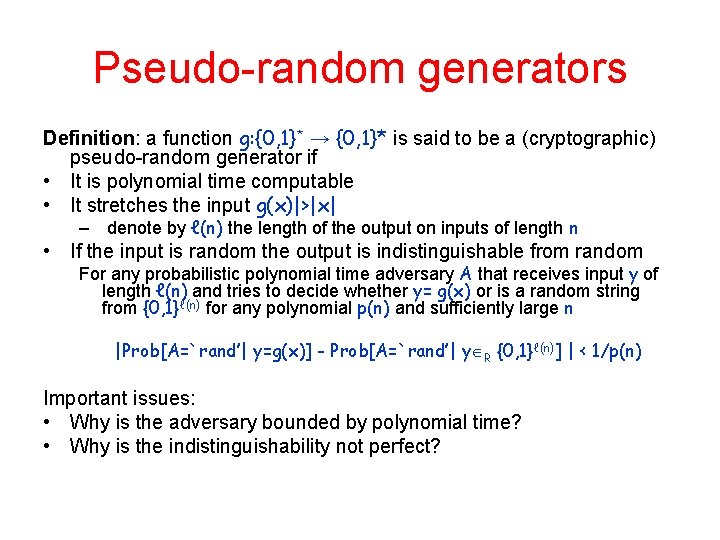

Pseudo-random generators

Definition: a function $g: \{0,1\}^* \to \{0,1\}^*$ is said to be a (cryptographic) pseudo-random generator if
- It is polynomial-time computable
- It stretches the input: $|g(x)| > |x|$
  - Denote by $\ell(n)$ the length of the output on inputs of length $n$
- If the input is random, the output is indistinguishable from random: for any probabilistic polynomial-time adversary $A$ that receives input $y$ of length $\ell(n)$ and tries to decide whether $y = g(x)$ for a random $x \in \{0,1\}^n$ or $y$ is a random string from $\{0,1\}^{\ell(n)}$, for any polynomial $p(n)$ and sufficiently large $n$
  $$\left|\Pr[A = \texttt{rand} \mid y = g(x)] - \Pr[A = \texttt{rand} \mid y \in_R \{0,1\}^{\ell(n)}]\right| < \frac{1}{p(n)}$$

Important issues:
- Why is the adversary bounded by polynomial time?
- Why is the indistinguishability not perfect?
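To make the definition concrete, the sketch below estimates the advantage $\left|\Pr[A = \texttt{rand} \mid y = g(x)] - \Pr[A = \texttt{rand} \mid y \in_R \{0,1\}^{\ell(n)}]\right|$ empirically. The stretching function `g_toy` (append the parity of $x$) is a hypothetical example chosen to fail the definition: the adversary that checks the parity has advantage about $1/2$, far above any $1/p(n)$.

```python
import random

def g_toy(x: list[int]) -> list[int]:
    """Hypothetical stretching function (NOT a PRG): append the parity of x."""
    return x + [sum(x) % 2]

def adversary(y: list[int]) -> str:
    """Answer 'rand' exactly when the last bit differs from the parity of the rest."""
    return "rand" if sum(y[:-1]) % 2 != y[-1] else "pseudo"

def estimate_advantage(n: int, trials: int, rng: random.Random) -> float:
    """Empirical |Pr[A = rand | y = g(x)] - Pr[A = rand | y uniform]|."""
    rand_on_g = rand_on_uniform = 0
    for _ in range(trials):
        x = [rng.randrange(2) for _ in range(n)]
        rand_on_g += adversary(g_toy(x)) == "rand"
        u = [rng.randrange(2) for _ in range(n + 1)]  # uniform, length l(n) = n + 1
        rand_on_uniform += adversary(u) == "rand"
    return abs(rand_on_g - rand_on_uniform) / trials

if __name__ == "__main__":
    print(estimate_advantage(16, 20000, random.Random(0)))  # close to 0.5
```

On outputs of `g_toy` the adversary never says `rand`, while on uniform strings it does so half the time.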

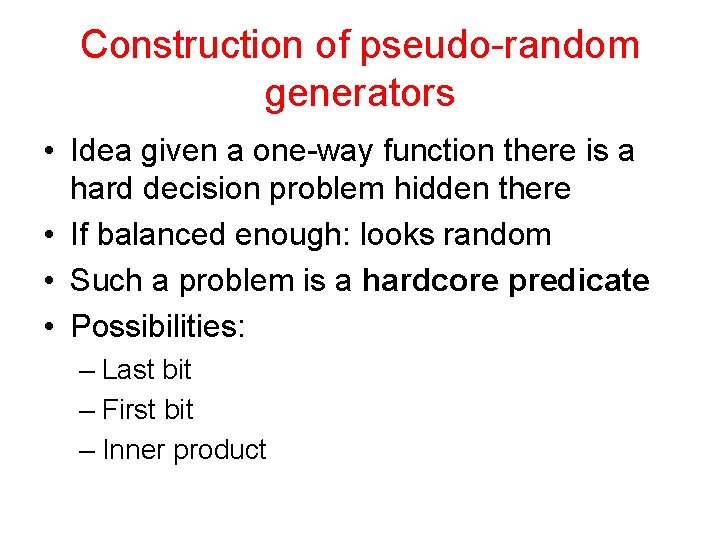

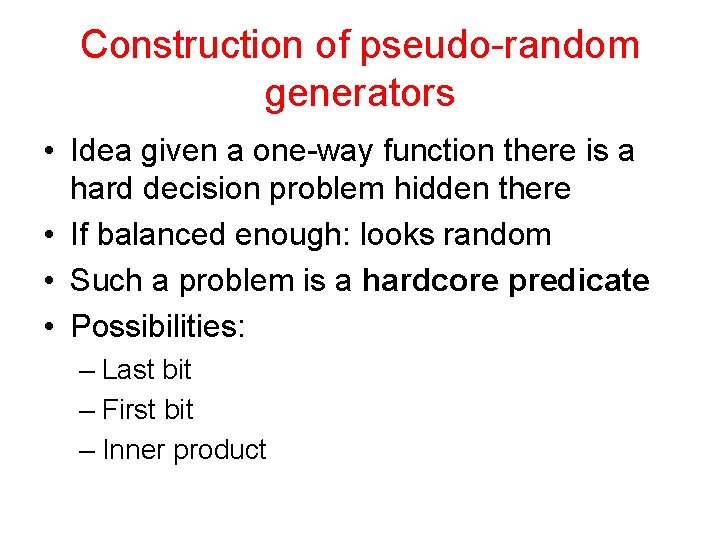

Construction of pseudo-random generators
- Idea: given a one-way function, there is a hard decision problem hidden there
- If balanced enough, it looks random
- Such a problem is a hardcore predicate
- Possibilities:
  - Last bit
  - First bit
  - Inner product

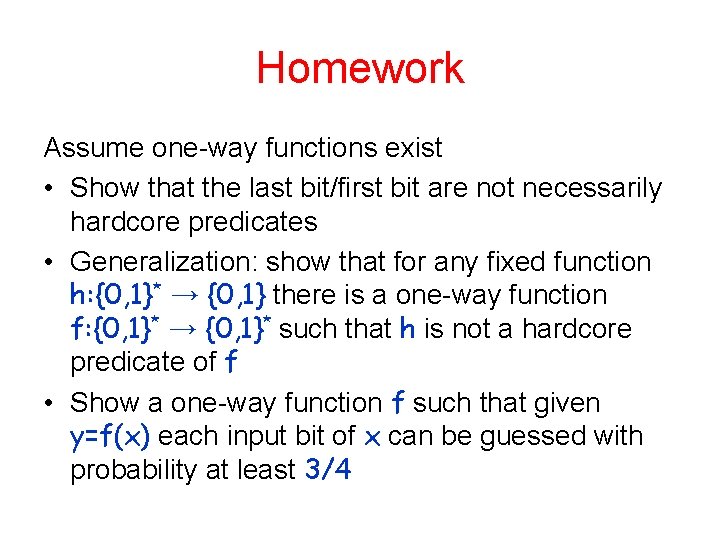

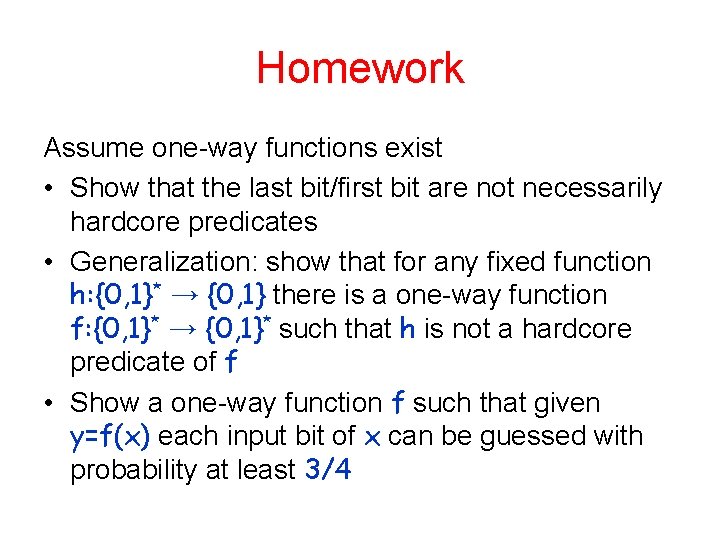

Homework

Assume one-way functions exist.
- Show that the last bit/first bit are not necessarily hardcore predicates
- Generalization: show that for any fixed function $h: \{0,1\}^* \to \{0,1\}$ there is a one-way function $f: \{0,1\}^* \to \{0,1\}^*$ such that $h$ is not a hardcore predicate of $f$
- Show a one-way function $f$ such that, given $y = f(x)$, each input bit of $x$ can be guessed with probability at least $3/4$

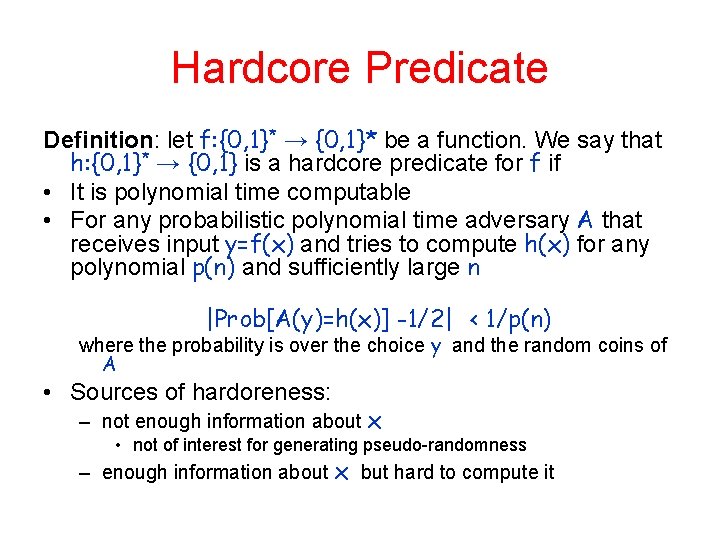

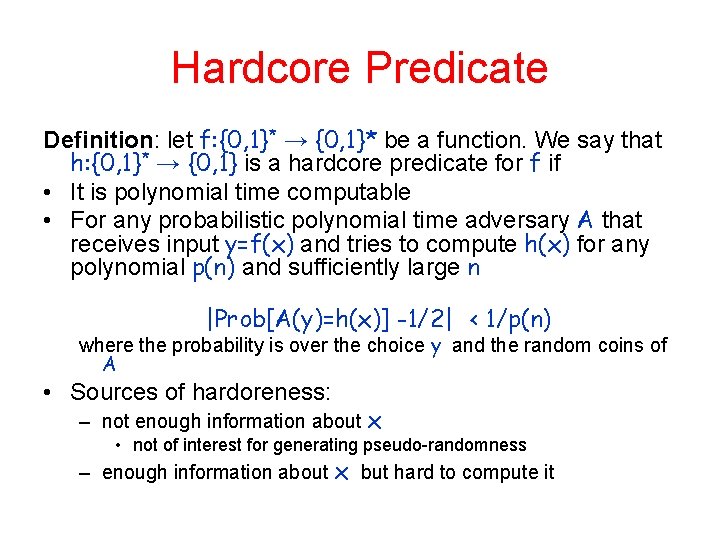

Hardcore predicate

Definition: let $f: \{0,1\}^* \to \{0,1\}^*$ be a function. We say that $h: \{0,1\}^* \to \{0,1\}$ is a hardcore predicate for $f$ if
- It is polynomial-time computable
- For any probabilistic polynomial-time adversary $A$ that receives input $y = f(x)$ and tries to compute $h(x)$, for any polynomial $p(n)$ and sufficiently large $n$
  $$\left|\Pr[A(y) = h(x)] - \tfrac12\right| < \frac{1}{p(n)}$$
  where the probability is over the choice of $y$ and the random coins of $A$

Sources of hardcoreness:
- Not enough information about $x$
  - Not of interest for generating pseudo-randomness
- Enough information about $x$, but it is hard to compute

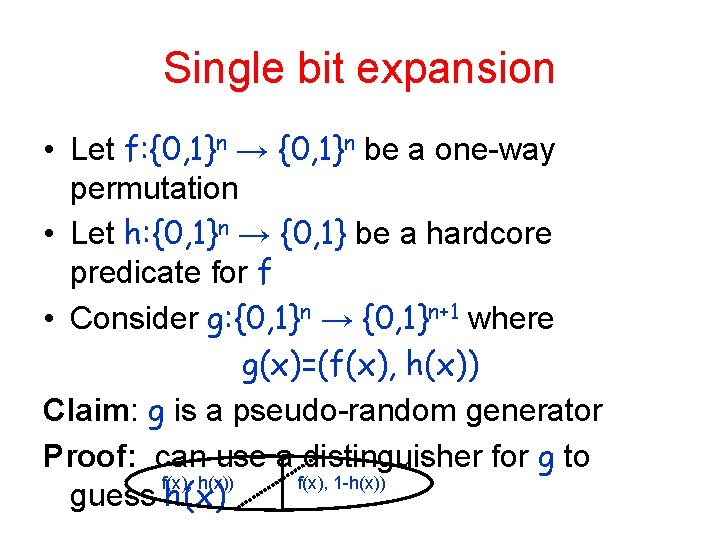

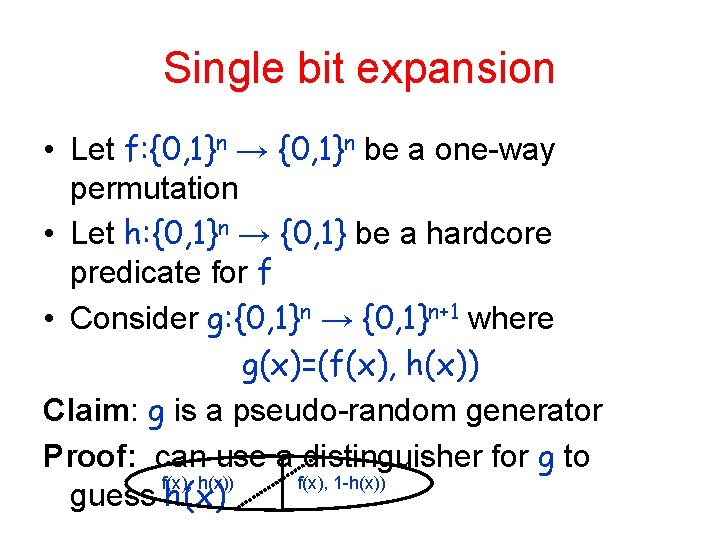

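Single-bit expansion
- Let $f: \{0,1\}^n \to \{0,1\}^n$ be a one-way permutation
- Let $h: \{0,1\}^n \to \{0,1\}$ be a hardcore predicate for $f$
- Consider $g: \{0,1\}^n \to \{0,1\}^{n+1}$ where $g(x) = (f(x), h(x))$

Claim: $g$ is a pseudo-random generator

Proof: a distinguisher for $g$ can be used to guess $h(x)$. Since $f$ is a permutation, a uniformly random $(n+1)$-bit string is distributed as $(f(x), b)$ for random $x$ and a random bit $b$; so a distinguisher must tell $(f(x), h(x))$ apart from $(f(x), 1 - h(x))$, and this yields a guess for $h(x)$.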
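The reduction in the proof can be sketched as follows: pick a random bit $b$, ask the distinguisher about $(y, b)$, and output $b$ if it says "looks like $g(x)$", else $1 - b$. Writing $D = 1$ for that answer, one can check that $\Pr[P(f(x)) = h(x)] = \frac12 + \left(\Pr[D = 1 \mid g(x)] - \Pr[D = 1 \mid \text{uniform}]\right)$. The toy instance below (identity $f$, parity $h$, a perfect `D`) is hypothetical and not one-way; it only exercises the mechanics.

```python
import random
from typing import Callable

Bits = tuple[int, ...]

def predictor_from_distinguisher(
    D: Callable[[Bits, int], bool], y: Bits, rng: random.Random
) -> int:
    """Guess h(x) from y = f(x), given D(y, b) -> True meaning 'looks like g(x)'."""
    b = rng.randrange(2)  # random candidate for the last bit
    return b if D(y, b) else 1 - b

# Toy instance (NOT one-way): f is the identity permutation, h is parity,
# and D recognizes g(x) = (f(x), h(x)) perfectly.
def f(x: Bits) -> Bits:
    return x

def h(x: Bits) -> int:
    return sum(x) % 2

def D(y: Bits, b: int) -> bool:
    return h(y) == b  # since f is the identity, h(x) = parity(y)

if __name__ == "__main__":
    rng = random.Random(0)
    xs = [tuple(rng.randrange(2) for _ in range(8)) for _ in range(1000)]
    hits = sum(predictor_from_distinguisher(D, f(x), rng) == h(x) for x in xs)
    print(hits / len(xs))  # 1.0
```

With a perfect distinguisher the predictor is always right; a distinguisher with advantage $\delta$ gives a predictor with success $\frac12 + \delta$, contradicting the hardcore property when $\delta \ge 1/p(n)$.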

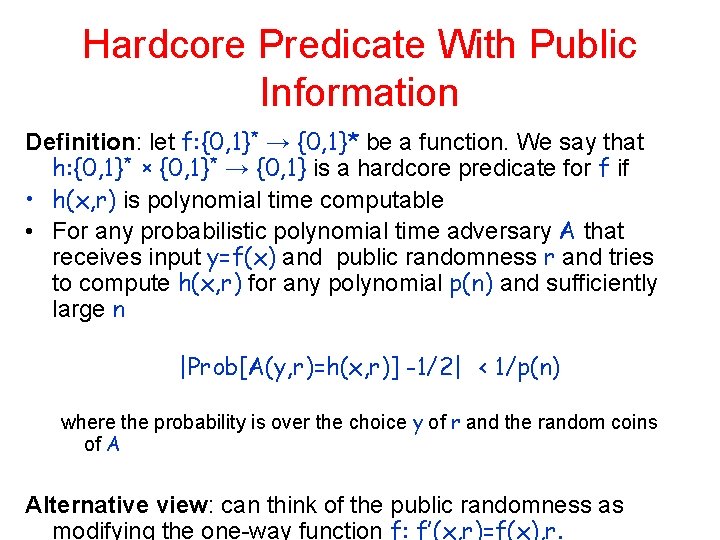

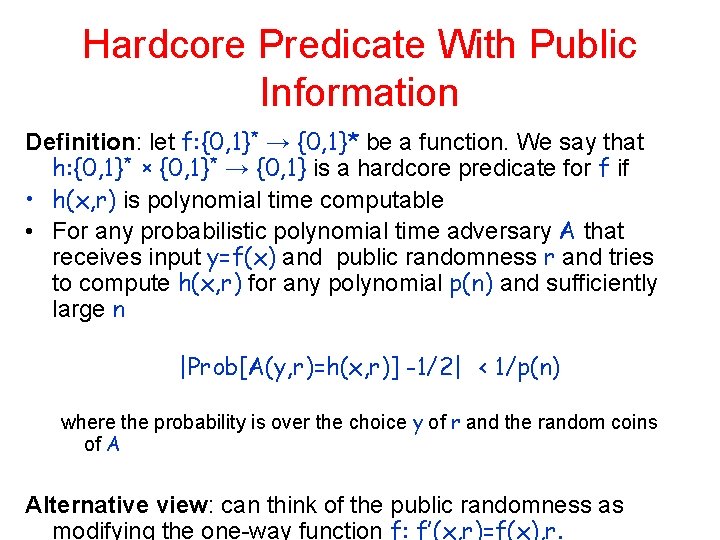

Hardcore predicate with public information

Definition: let $f: \{0,1\}^* \to \{0,1\}^*$ be a function. We say that $h: \{0,1\}^* \times \{0,1\}^* \to \{0,1\}$ is a hardcore predicate for $f$ if
- $h(x, r)$ is polynomial-time computable
- For any probabilistic polynomial-time adversary $A$ that receives input $y = f(x)$ and public randomness $r$ and tries to compute $h(x, r)$, for any polynomial $p(n)$ and sufficiently large $n$
  $$\left|\Pr[A(y, r) = h(x, r)] - \tfrac12\right| < \frac{1}{p(n)}$$
  where the probability is over the choice of $y$, of $r$ and the random coins of $A$

Alternative view: the public randomness can be thought of as modifying the one-way function $f$ into $f'(x, r) = (f(x), r)$.

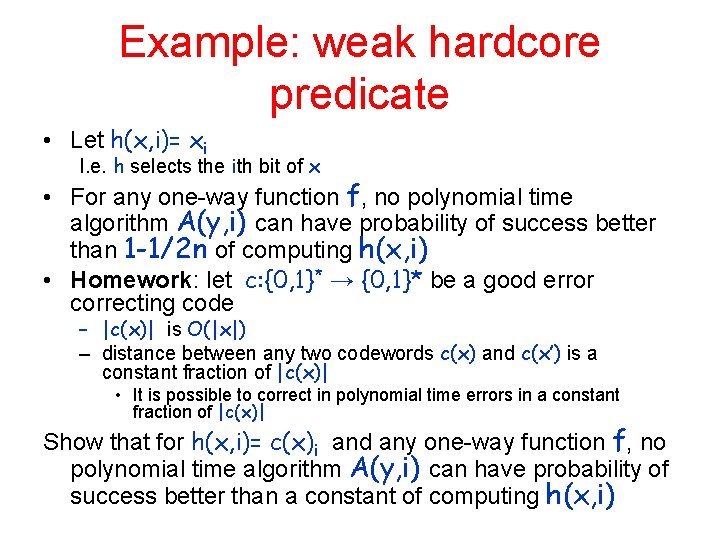

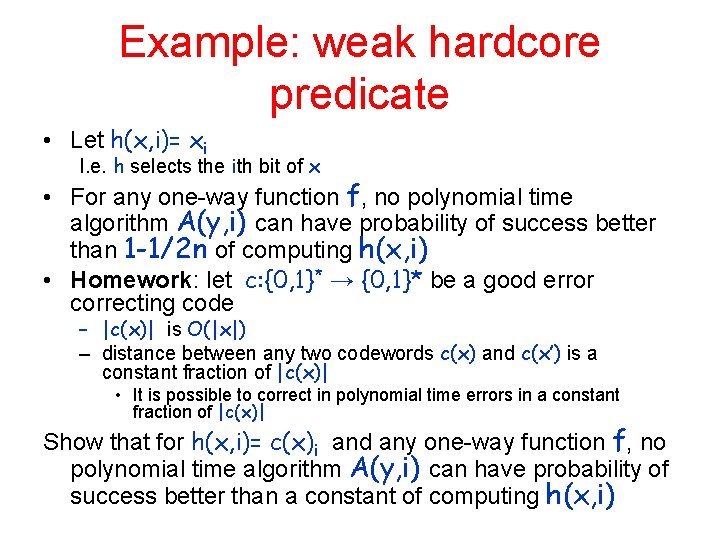

Example: weak hardcore predicate
- Let $h(x, i) = x_i$, i.e., $h$ selects the $i$th bit of $x$
- For any one-way function $f$, no polynomial-time algorithm $A(y, i)$ can have probability of success better than $1 - \frac{1}{2n}$ of computing $h(x, i)$
- Homework: let $c: \{0,1\}^* \to \{0,1\}^*$ be a good error-correcting code
  - $|c(x)|$ is $O(|x|)$
  - The distance between any two codewords $c(x)$ and $c(x')$ is a constant fraction of $|c(x)|$
  - It is possible to correct in polynomial time errors in a constant fraction of $|c(x)|$

  Show that for $h(x, i) = c(x)_i$ and any one-way function $f$, no polynomial-time algorithm $A(y, i)$ can have probability of success better than some constant (less than 1) of computing $h(x, i)$

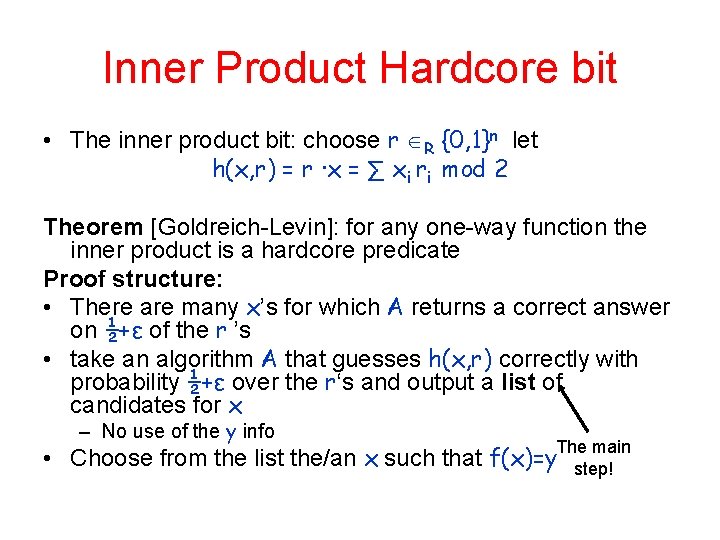

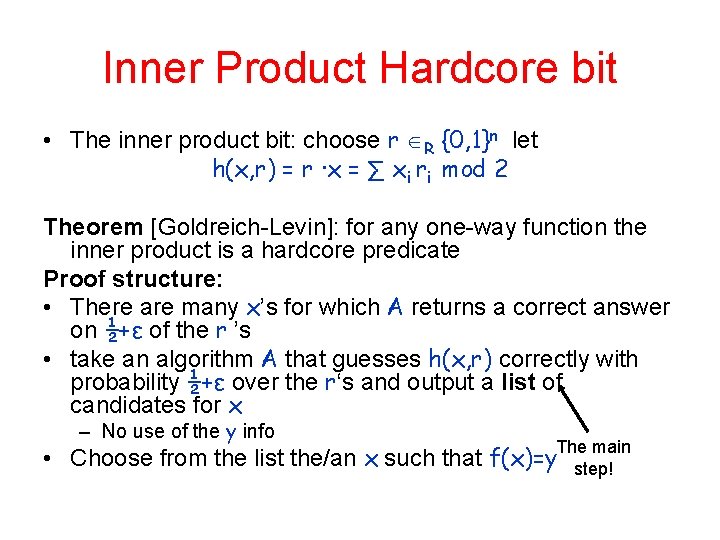

Inner product hardcore bit
- The inner product bit: choose $r \in_R \{0,1\}^n$ and let $h(x, r) = r \cdot x = \sum_i x_i r_i \bmod 2$

Theorem [Goldreich-Levin]: for any one-way function, the inner product is a hardcore predicate.

Proof structure:
- There are many $x$'s for which $A$ returns a correct answer on a $\frac12 + \varepsilon$ fraction of the $r$'s
- The main step: take an algorithm $A$ that guesses $h(x, r)$ correctly with probability $\frac12 + \varepsilon$ over the $r$'s, and output a list of candidates for $x$
  - No use of the $y$ info
- Choose from the list the/an $x$ such that $f(x) = y$
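A sketch of $h(x, r)$ in Python, assuming $n$-bit vectors are packed into integers (bit $i$ holds $x_i$), so that vector addition over $\mathrm{GF}(2)$ is `^`. The assertion checks linearity in $r$, which the warm-up procedures rely on.

```python
def inner_product_bit(x: int, r: int) -> int:
    """h(x, r) = r . x = sum_i x_i r_i mod 2, with bit vectors packed into ints."""
    return bin(x & r).count("1") % 2

# Linearity in r: (r1 + r2) . x = r1 . x + r2 . x  (mod 2)
x, r1, r2 = 0b1011, 0b0110, 0b1101
assert inner_product_bit(x, r1 ^ r2) == inner_product_bit(x, r1) ^ inner_product_bit(x, r2)
print(inner_product_bit(0b1011, 0b0110))  # 1
```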

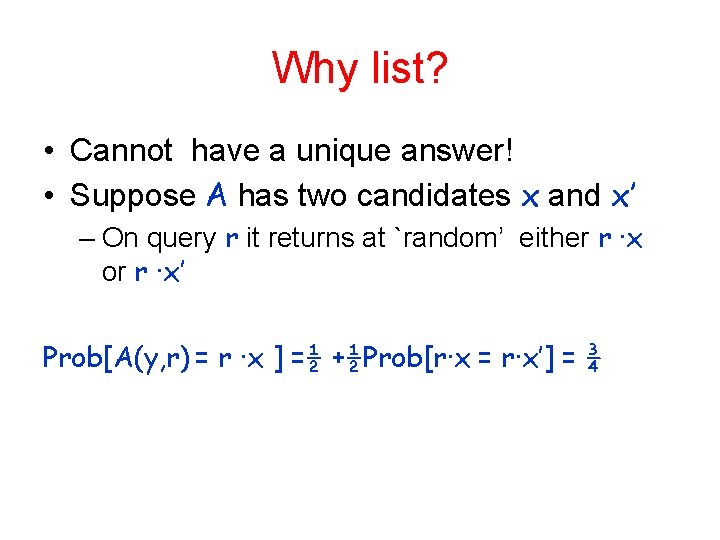

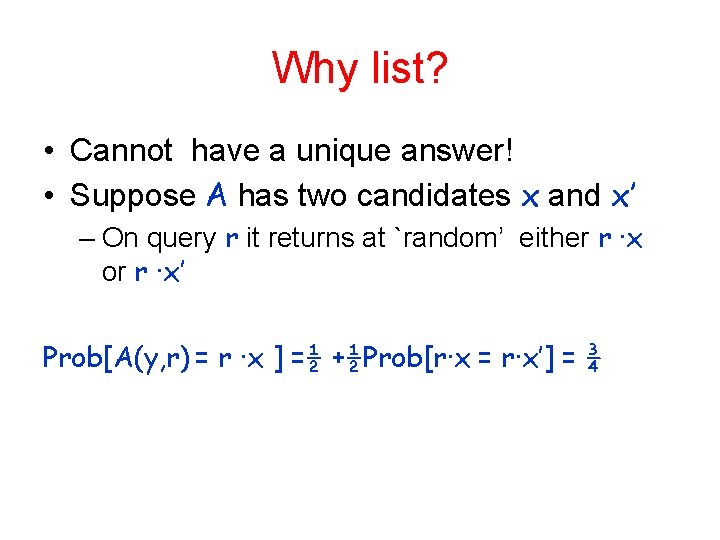

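Why a list?
- We cannot have a unique answer!
- Suppose $A$ has two candidates $x \neq x'$
  - On query $r$ it returns, at random, either $r \cdot x$ or $r \cdot x'$
  - Since $x \neq x'$, $\Pr_r[r \cdot x = r \cdot x'] = \frac12$, so
  $$\Pr[A(y, r) = r \cdot x] = \tfrac12 + \tfrac12 \Pr[r \cdot x = r \cdot x'] = \tfrac34$$
  - By symmetry the same holds for $x'$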
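The $3/4$ can be checked exactly by enumerating all $r \in \{0,1\}^n$ for small $n$. A minimal sketch, assuming the two-candidate oracle from the example (answer $r \cdot x$ or $r \cdot x'$ with probability $\frac12$ each) and bit vectors packed into integers; `success_prob` is an illustrative name.

```python
from fractions import Fraction

def ip(a: int, b: int) -> int:
    """Inner product mod 2 of bit vectors packed into ints."""
    return bin(a & b).count("1") % 2

def success_prob(target: int, other: int, n: int) -> Fraction:
    """Exact Pr_r[A(r) = r.target] when A answers r.target or r.other w.p. 1/2 each."""
    total = Fraction(0)
    for r in range(1 << n):
        if ip(r, target) == ip(r, other):
            total += 1               # both possible answers are right
        else:
            total += Fraction(1, 2)  # right only if A picks target
    return total / (1 << n)

x, x_prime = 0b101, 0b011
print(success_prob(x, x_prime, 3), success_prob(x_prime, x, 3))  # 3/4 3/4
```

Both candidates are served equally well, so no procedure can single out one of them from $A$'s answers alone.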

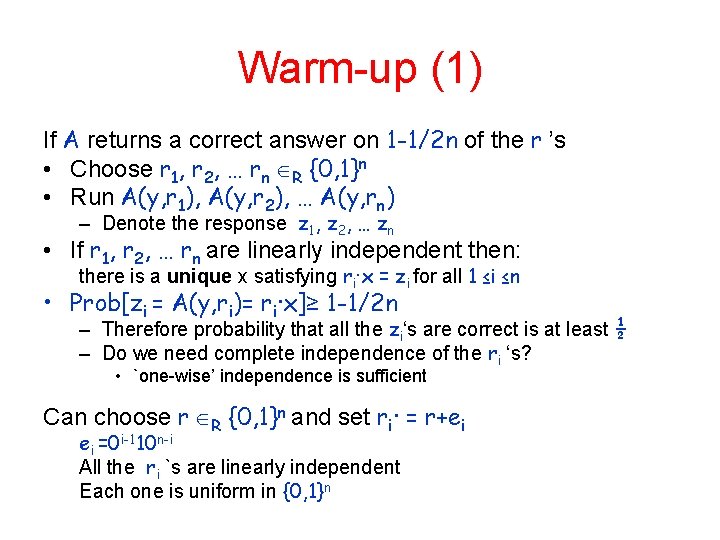

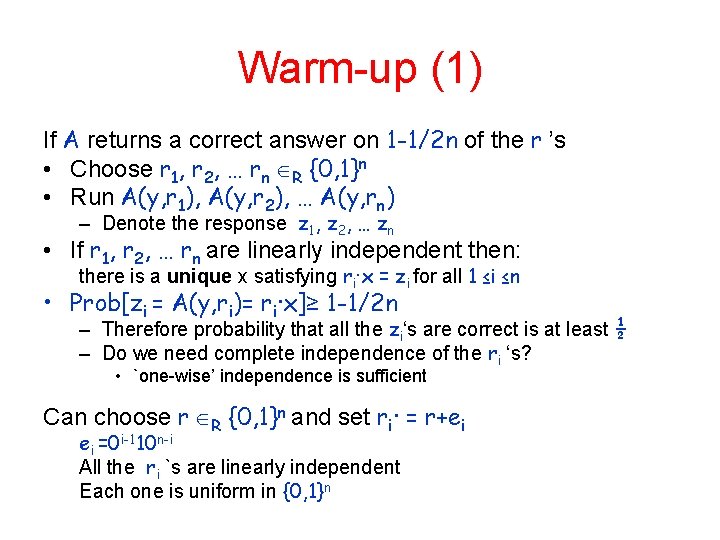

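Warm-up (1): if $A$ returns a correct answer on a $1 - \frac{1}{2n}$ fraction of the $r$'s
- Choose $r_1, r_2, \ldots, r_n \in_R \{0,1\}^n$
- Run $A(y, r_1), A(y, r_2), \ldots, A(y, r_n)$
  - Denote the responses by $z_1, z_2, \ldots, z_n$
- If $r_1, r_2, \ldots, r_n$ are linearly independent, then there is a unique $x$ satisfying $r_i \cdot x = z_i$ for all $1 \le i \le n$
- $\Pr[z_i = A(y, r_i) = r_i \cdot x] \ge 1 - \frac{1}{2n}$
  - Therefore, by the union bound, the probability that all the $z_i$'s are correct is at least $\frac12$
- Do we need complete independence of the $r_i$'s?
  - "One-wise" independence is sufficient: the union bound only uses that each $r_i$ is uniform
  - Can choose $r \in_R \{0,1\}^n$ and set $r_i = r + e_i$, where $e_i = 0^{i-1}10^{n-i}$
  - Each $r_i$ is uniform in $\{0,1\}^n$
  - The $r_i$'s are linearly independent only when $r$ has even weight, but this is not needed: since $r_i \cdot x = r \cdot x + x_i$, guessing the single bit $r \cdot x$ (two possibilities) gives $x_i = z_i + r \cdot x$ for every $i$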
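The one-wise independent variant can be sketched in Python. It assumes $A$ is given as a hypothetical callable on $n$-bit integers (the fixed $y$ is dropped), with bit $i$ playing the role of $e_i$, and it resolves the two possible values of $r \cdot x$ by returning both candidates; in the actual reduction one keeps the candidate with $f(x) = y$.

```python
import random
from typing import Callable

def ip(a: int, b: int) -> int:
    """Inner product mod 2 of bit vectors packed into ints."""
    return bin(a & b).count("1") % 2

def warmup1_candidates(A: Callable[[int], int], n: int,
                       rng: random.Random) -> list[int]:
    """Query A on r + e_i (i = 0..n-1) and return the two candidates for x.

    A(q) is a hypothetical oracle for the bit q . x (y is fixed and omitted).
    """
    r = rng.getrandbits(n)
    z = [A(r ^ (1 << i)) for i in range(n)]  # z_i should equal r.x + x_i
    candidates = []
    for guess in (0, 1):                     # both possible values of r . x
        x = 0
        for i, z_i in enumerate(z):
            x |= (z_i ^ guess) << i          # x_i = z_i + r . x
        candidates.append(x)
    return candidates

if __name__ == "__main__":
    rng = random.Random(3)
    n = 16
    secret = rng.getrandbits(n)

    def A(q: int) -> int:
        return ip(q, secret)                 # a perfect oracle, for illustration

    print(secret in warmup1_candidates(A, n, rng))  # True
```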

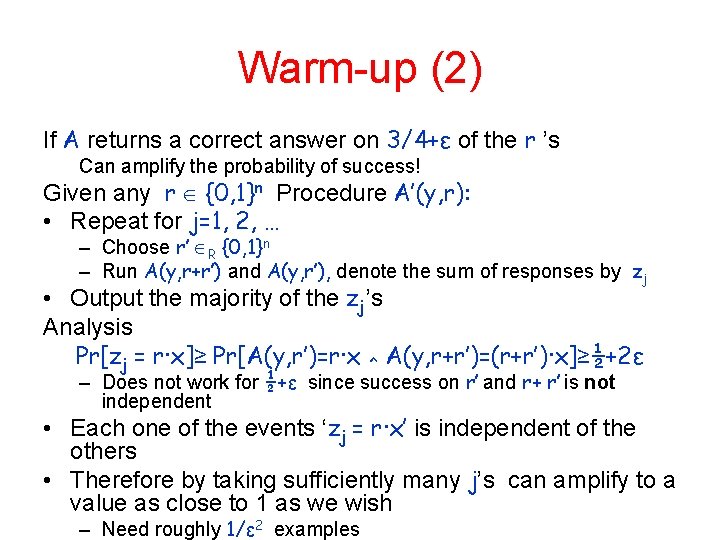

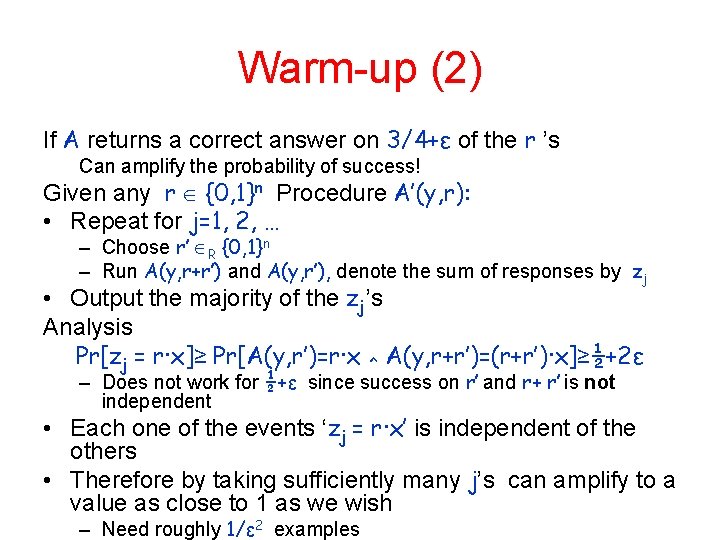

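Warm-up (2): if $A$ returns a correct answer on a $\frac34 + \varepsilon$ fraction of the $r$'s

Can amplify the probability of success! Given any $r \in \{0,1\}^n$:

Procedure $A'(y, r)$:
- Repeat for $j = 1, 2, \ldots$
  - Choose $r' \in_R \{0,1\}^n$
  - Run $A(y, r + r')$ and $A(y, r')$, and denote the sum of the responses by $z_j$
- Output the majority of the $z_j$'s

Analysis:
$$\Pr[z_j = r \cdot x] \ge \Pr[A(y, r') = r' \cdot x \wedge A(y, r + r') = (r + r') \cdot x] \ge \tfrac12 + 2\varepsilon$$
- Both $r'$ and $r + r'$ are uniform, so each answer is wrong with probability at most $\frac14 - \varepsilon$; the union bound gives the last inequality
  - This does not work for $\frac12 + \varepsilon$, since success on $r'$ and on $r + r'$ is not independent
- Each one of the events "$z_j = r \cdot x$" is independent of the others
- Therefore, by taking sufficiently many $j$'s, we can amplify to a value as close to 1 as we wish
  - Need roughly $1/\varepsilon^2$ examples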
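Procedure $A'$ can be sketched in Python with a simulated oracle. The oracle `A` in the demo is hypothetical: it answers $r \cdot x$ correctly except on a fixed random set of about 15% of the $r$'s (so it is correct on more than $3/4$ of them), and `reps = 101` is an arbitrary odd choice so the majority has no ties.

```python
import random
from typing import Callable

def ip(a: int, b: int) -> int:
    """Inner product mod 2 of bit vectors packed into ints."""
    return bin(a & b).count("1") % 2

def amplified(A: Callable[[int], int], r: int, n: int, reps: int,
              rng: random.Random) -> int:
    """Procedure A'(y, r): majority over reps of A(r + r') + A(r')."""
    votes = 0
    for _ in range(reps):
        r_prime = rng.getrandbits(n)
        votes += A(r ^ r_prime) ^ A(r_prime)  # equals r.x if both answers are right
    return int(2 * votes > reps)              # reps odd, so no ties

if __name__ == "__main__":
    rng = random.Random(7)
    n = 10
    secret = rng.getrandbits(n)
    wrong = {q for q in range(1 << n) if rng.random() < 0.15}  # A errs on ~15%

    def A(q: int) -> int:
        return ip(q, secret) ^ (q in wrong)

    base = sum(A(r) == ip(r, secret) for r in range(1 << n)) / (1 << n)
    amp = sum(amplified(A, r, n, 101, rng) == ip(r, secret)
              for r in range(1 << n)) / (1 << n)
    print(f"A correct on {base:.3f} of r's, A' correct on {amp:.3f}")
```

Note that $A'$ is also correct on the $r$'s where $A$ itself errs, because it never queries $A$ at $r$ directly.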

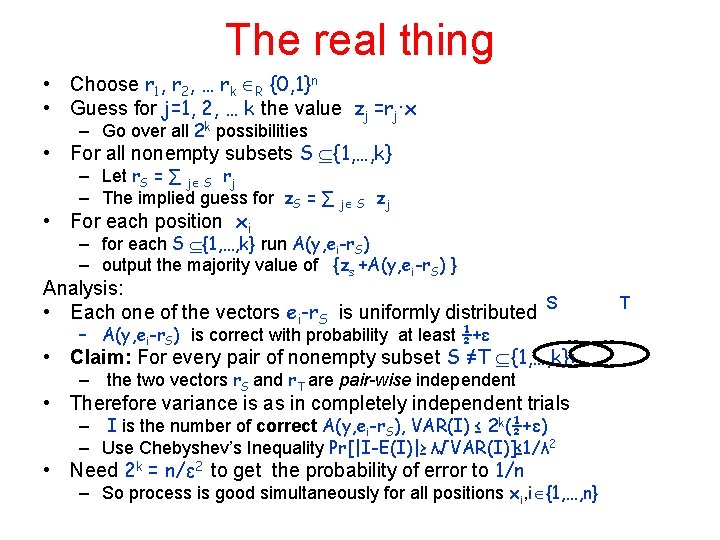

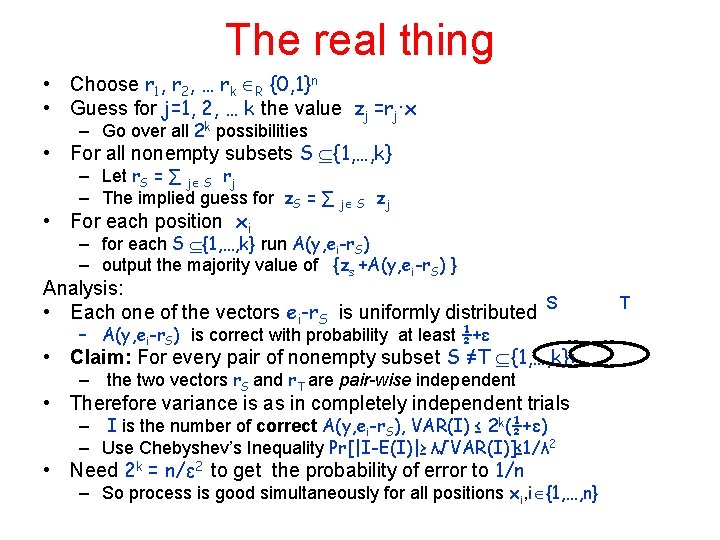

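The real thing
- Choose $r_1, r_2, \ldots, r_k \in_R \{0,1\}^n$
- Guess for $j = 1, 2, \ldots, k$ the value $z_j = r_j \cdot x$
  - Go over all $2^k$ possibilities
- For all nonempty subsets $S \subseteq \{1, \ldots, k\}$
  - Let $r_S = \sum_{j \in S} r_j$
  - The implied guess for $r_S \cdot x$ is $z_S = \sum_{j \in S} z_j$
- For each position $x_i$
  - For each nonempty $S \subseteq \{1, \ldots, k\}$ run $A(y, e_i - r_S)$
  - Output the majority value of $\{z_S + A(y, e_i - r_S)\}_S$
  - Over $\mathrm{GF}(2)$, $e_i - r_S = e_i + r_S$, so if the guesses are correct then $z_S + A(y, e_i - r_S) = x_i$ whenever $A$ answers correctly

Analysis:
- Each one of the vectors $e_i - r_S$ is uniformly distributed
  - $A(y, e_i - r_S)$ is correct with probability at least $\frac12 + \varepsilon$
- Claim: for every pair of nonempty subsets $S \neq T \subseteq \{1, \ldots, k\}$, the two vectors $r_S$ and $r_T$ are pairwise independent
- Therefore the variance is as in completely independent trials
  - If $I$ is the number of correct answers $A(y, e_i - r_S)$, then $\mathrm{VAR}(I) \le 2^k(\frac12 + \varepsilon)$
  - Use Chebyshev's inequality: $\Pr[|I - E(I)| \ge \lambda\sqrt{\mathrm{VAR}(I)}] \le 1/\lambda^2$
- Need $2^k = n/\varepsilon^2$ to get the probability of error down to $1/n$
  - So the process is good simultaneously for all positions $x_i$, $i \in \{1, \ldots, n\}$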
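The list-decoding procedure can be sketched in Python with a simulated oracle. Assumptions: `A` is a hypothetical callable on $n$-bit integers (no use of $y$), subsets $S$ are bitmasks over $\{1, \ldots, k\}$, and the demo oracle is correct on about 80% of queries ($\varepsilon \approx 0.3$), so $k = 7$ gives $2^k = 128 \approx n/\varepsilon^2$ for $n = 10$. Since the queries $e_i - r_S$ do not depend on the guess, this sketch asks each one once and reuses the answer across all $2^k$ guesses.

```python
import random
from typing import Callable

def ip(a: int, b: int) -> int:
    """Inner product mod 2 of bit vectors packed into ints."""
    return bin(a & b).count("1") % 2

def gl_candidates(A: Callable[[int], int], n: int, k: int,
                  rng: random.Random) -> list[int]:
    """List-decode x from an oracle A(q) ~ q.x that is right on 1/2 + eps of the q's."""
    rs = [rng.getrandbits(n) for _ in range(k)]
    subsets = range(1, 1 << k)          # nonempty S as bitmasks
    r_S = {}
    for S in subsets:
        acc = 0
        for j in range(k):
            if (S >> j) & 1:
                acc ^= rs[j]            # r_S = sum_{j in S} r_j
        r_S[S] = acc
    # Query e_i - r_S (= e_i XOR r_S over GF(2)) once per (i, S).
    answers = {(i, S): A((1 << i) ^ r_S[S]) for i in range(n) for S in subsets}
    candidates = []
    for guess in range(1 << k):         # bit j of guess is the guess for z_j
        x = 0
        for i in range(n):
            # z_S = sum_{j in S} z_j = parity of (guess AND S)
            ones = sum(ip(guess, S) ^ answers[(i, S)] for S in subsets)
            if 2 * ones > len(subsets): # 2^k - 1 votes: odd, no ties
                x |= 1 << i
        candidates.append(x)
    return candidates

if __name__ == "__main__":
    rng = random.Random(2024)
    n, k = 10, 7
    secret = rng.getrandbits(n)
    wrong = {q for q in range(1 << n) if rng.random() < 0.2}  # A right on ~80%

    def A(q: int) -> int:
        return ip(q, secret) ^ (q in wrong)

    cands = gl_candidates(A, n, k, rng)
    print(len(cands), secret in cands)
```

The candidate produced by the correct guess equals $x$ with high probability; a real reduction would then keep the candidate with $f(x) = y$.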

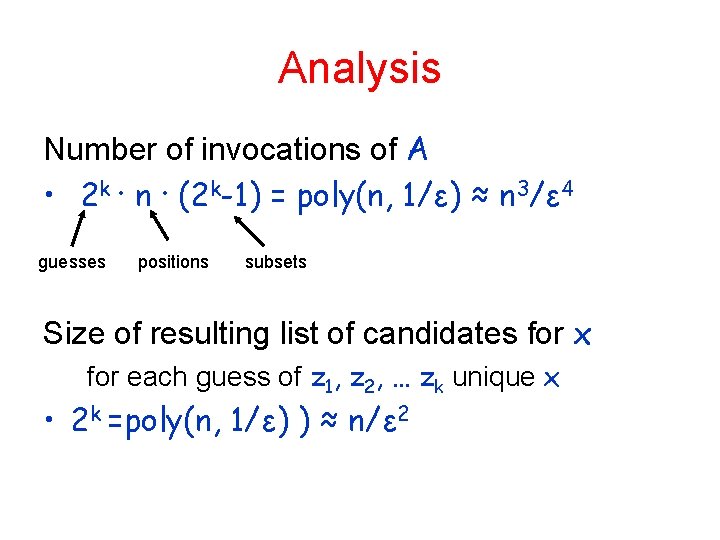

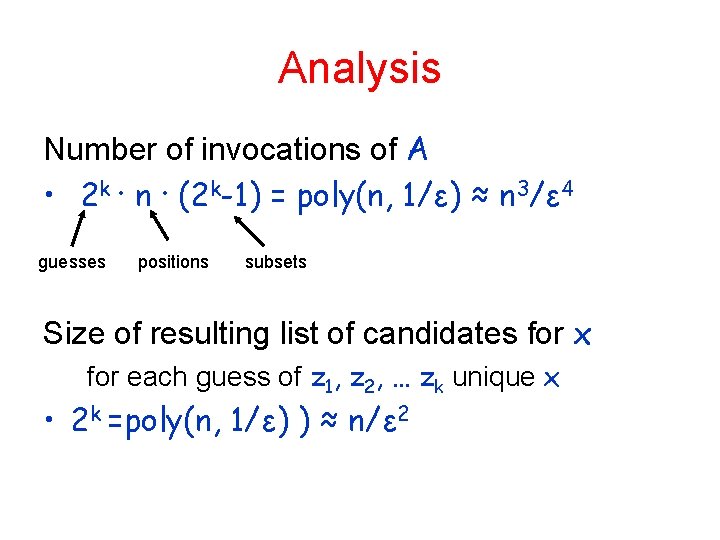

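Analysis
- Number of invocations of $A$:
  $$\underbrace{2^k}_{\text{guesses}} \cdot \underbrace{n}_{\text{positions}} \cdot \underbrace{(2^k - 1)}_{\text{subsets}} = \mathrm{poly}(n, 1/\varepsilon) \approx n^3/\varepsilon^4$$
- Size of the resulting list of candidates for $x$: for each guess of $z_1, z_2, \ldots, z_k$ there is a unique $x$, so the list has size
  $$2^k = \mathrm{poly}(n, 1/\varepsilon) \approx n/\varepsilon^2$$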
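These counts can be checked numerically. A small sketch, assuming $k$ is taken as the smallest integer with $2^k \ge n/\varepsilon^2$ (the slide's $2^k = n/\varepsilon^2$ rounded up) and counting one invocation per (guess, position, subset) triple, as in the slide's accounting; `gl_cost` is an illustrative name.

```python
import math

def gl_cost(n: int, eps: float) -> tuple[int, int, int]:
    """Return (k, invocations of A, list size) for the smallest k with 2^k >= n/eps^2."""
    k = math.ceil(math.log2(n / eps ** 2))
    guesses = 2 ** k                          # one candidate per guess
    invocations = guesses * n * (2 ** k - 1)  # guesses x positions x subsets
    return k, invocations, guesses

print(gl_cost(8, 0.5))  # (5, 7936, 32)
```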

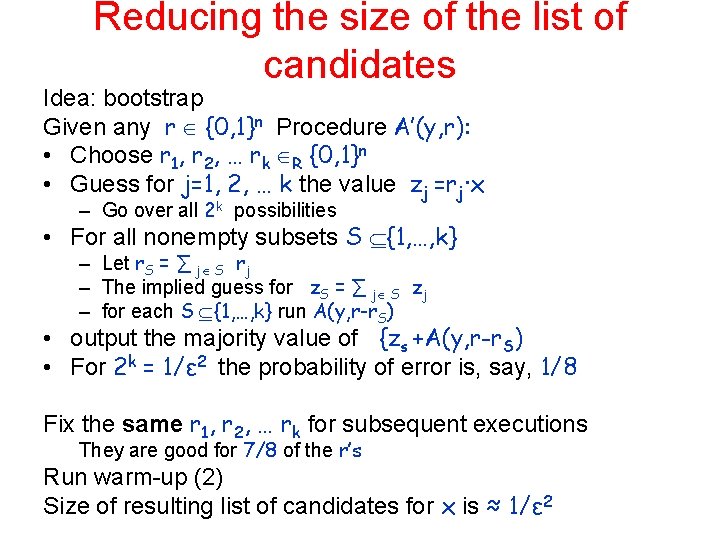

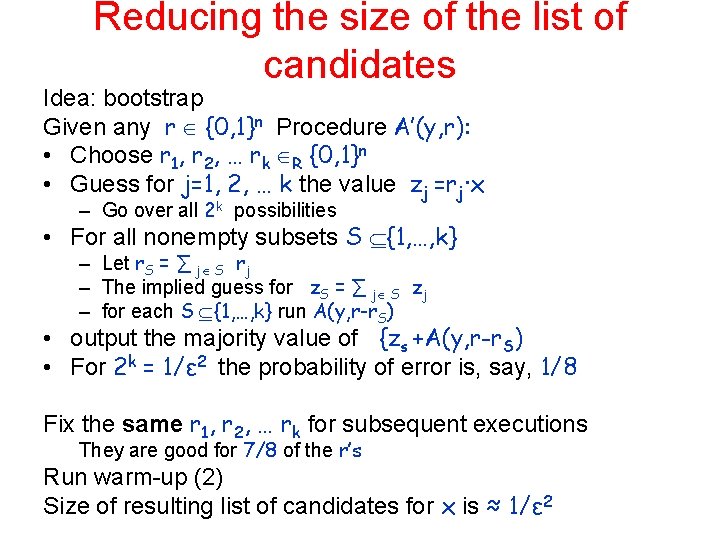

Reducing the size of the list of candidates

Idea: bootstrap. Given any $r \in \{0,1\}^n$:

Procedure $A'(y, r)$:
- Choose $r_1, r_2, \ldots, r_k \in_R \{0,1\}^n$
- Guess for $j = 1, 2, \ldots, k$ the value $z_j = r_j \cdot x$
  - Go over all $2^k$ possibilities
- For all nonempty subsets $S \subseteq \{1, \ldots, k\}$
  - Let $r_S = \sum_{j \in S} r_j$
  - The implied guess for $r_S \cdot x$ is $z_S = \sum_{j \in S} z_j$
  - Run $A(y, r - r_S)$
- Output the majority value of $\{z_S + A(y, r - r_S)\}_S$
- For $2^k = 1/\varepsilon^2$ the probability of error is, say, $1/8$

Fix the same $r_1, r_2, \ldots, r_k$ for subsequent executions:
- They are good for $7/8$ of the $r$'s
- Run Warm-up (2) on top of $A'$

Size of the resulting list of candidates for $x$ is $\approx 1/\varepsilon^2$

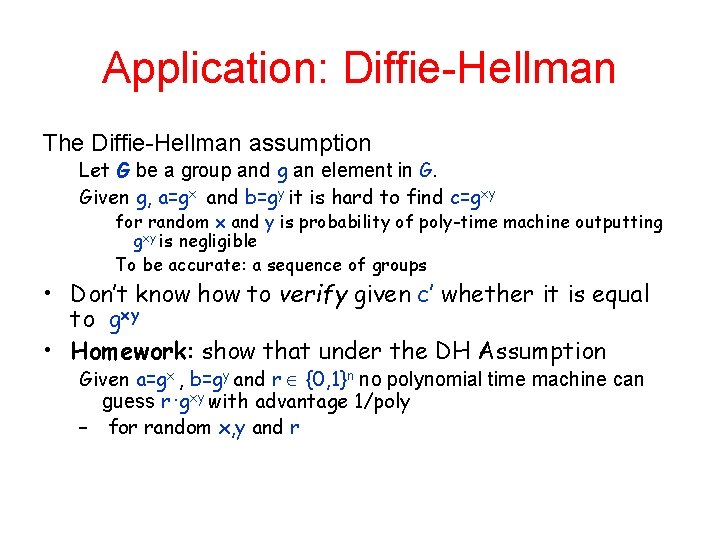

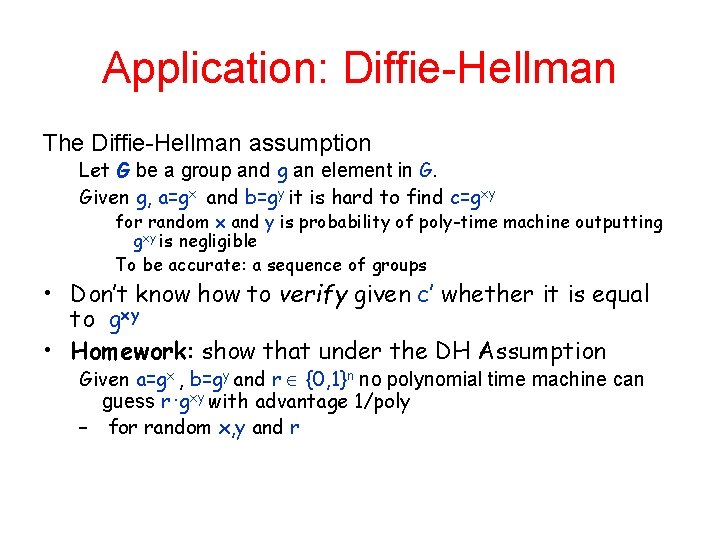

Application: Diffie-Hellman

The Diffie-Hellman assumption: let $G$ be a group and $g$ an element in $G$. Given $g$, $a = g^x$ and $b = g^y$, it is hard to find $c = g^{xy}$ for random $x$ and $y$, i.e., the probability that a polynomial-time machine outputs $g^{xy}$ is negligible
- To be accurate: a sequence of groups
- We don't know how to verify, given $c'$, whether it is equal to $g^{xy}$
- Homework: show that under the DH assumption, given $a = g^x$, $b = g^y$ and $r \in \{0,1\}^n$, no polynomial-time machine can guess $r \cdot g^{xy}$ with advantage $1/\mathrm{poly}$
  - for random $x$, $y$ and $r$
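To see what $r \cdot g^{xy}$ refers to, here is a toy Python sketch of the exchange modulo a prime, assuming group elements are encoded as integers (bit strings of length $n = 127$). The parameters `P` and `G` are illustrative and unvetted, not a recommended group. Both parties end up with the same bit $r \cdot g^{xy}$, which the homework asks to show is unpredictable to an eavesdropper under the DH assumption.

```python
import secrets

# Toy parameters, for illustration only: the Mersenne prime 2^127 - 1 as
# modulus and 3 as the base element (not checked to be a generator).
P = 2**127 - 1
G = 3
N_BITS = 127

def ip(a: int, b: int) -> int:
    """Inner product mod 2 of bit vectors packed into ints."""
    return bin(a & b).count("1") % 2

x = secrets.randbelow(P - 2) + 1           # Alice's secret exponent
y = secrets.randbelow(P - 2) + 1           # Bob's secret exponent
a, b = pow(G, x, P), pow(G, y, P)          # public values g^x and g^y
r = secrets.randbits(N_BITS)               # public random r in {0,1}^n

alice_key = pow(b, x, P)                   # (g^y)^x
bob_key = pow(a, y, P)                     # (g^x)^y
assert alice_key == bob_key                # both equal g^{xy}
print(ip(alice_key, r) == ip(bob_key, r))  # True: the shared bit r . g^{xy}
```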
