Fairness-oriented Scheduling Support for Multicore Systems
Changdae Kim and Jaehyuk Huh, KAIST

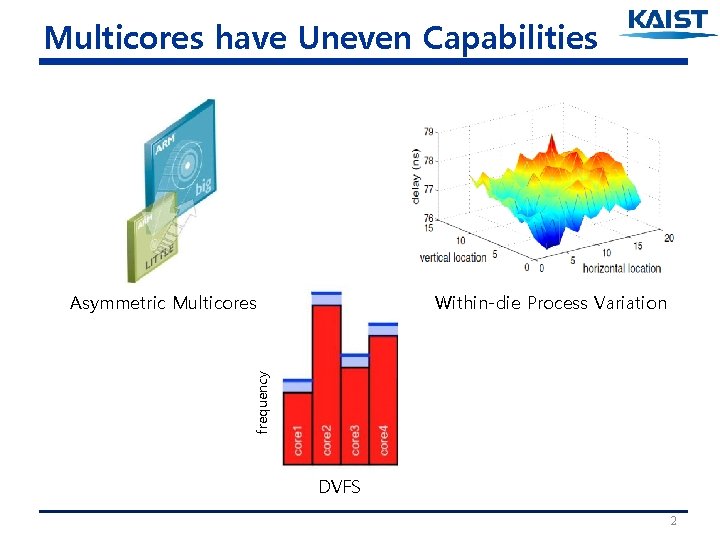

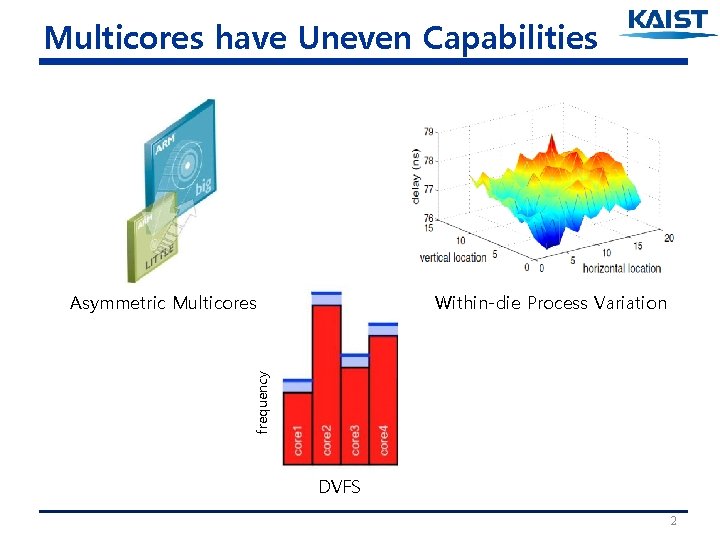

Multicores Have Uneven Capabilities
• Within-die process variation (frequency)
• Asymmetric multicores
• DVFS

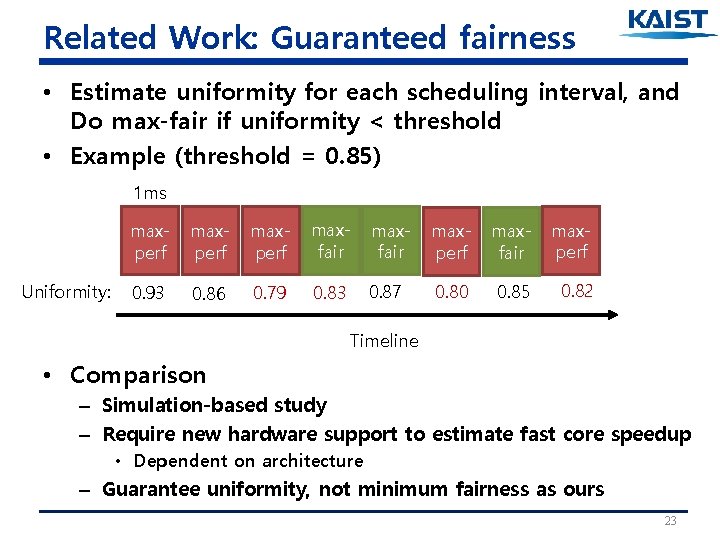

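Throughput-maximizing Scheduling
• Max-perf: maximize the overall throughput [Kumar’03], [Koufaty’10], [Kwon’11], [Saez’10], [Shelepov’09], [Craeynest’12]
• Example (figure): apps with fast-core speedups of 3.0, 2.9, and 1.5 share a fast core and slow cores; the fast core goes to the app with the highest speedup (3.0), which is unfair to the app with speedup 2.9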

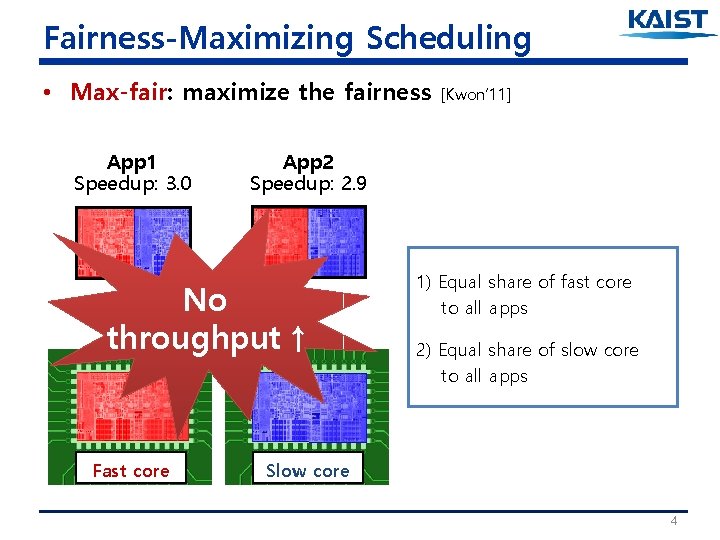

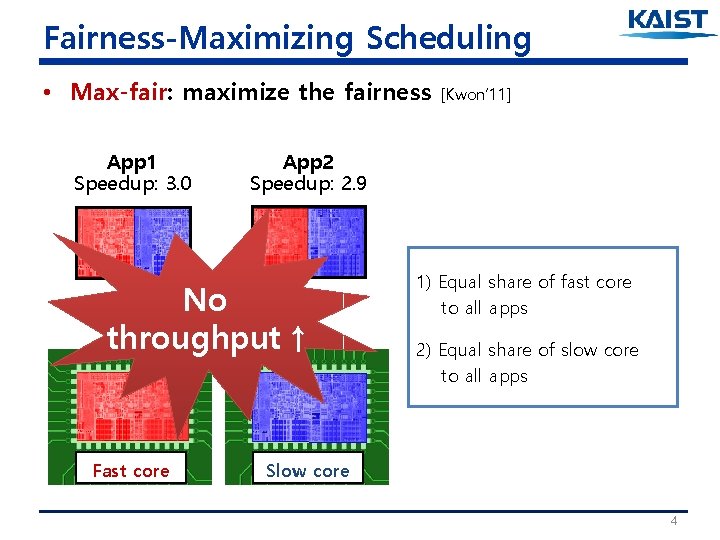

Fairness-Maximizing Scheduling
• Max-fair: maximize the fairness [Kwon’11]
  1) Give every app an equal share of the fast core
  2) Give every app an equal share of the slow cores
• Example (figure): App 1 (speedup 3.0) and App 2 (speedup 2.9) alternate between the fast core and the slow core; no throughput↑

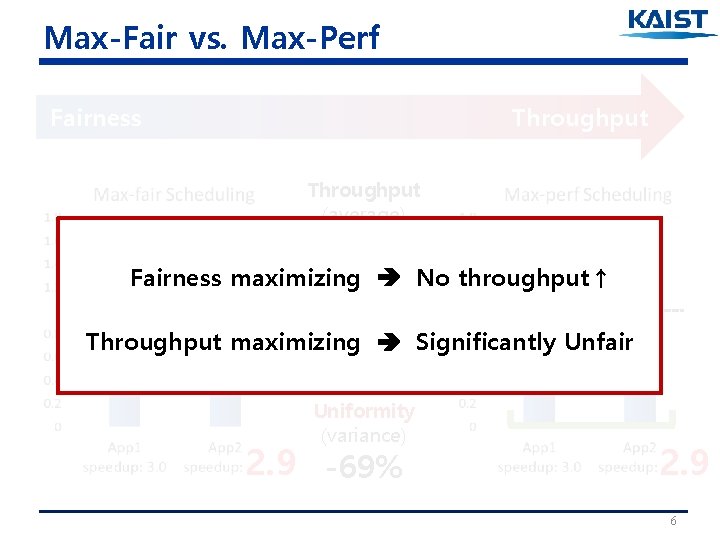

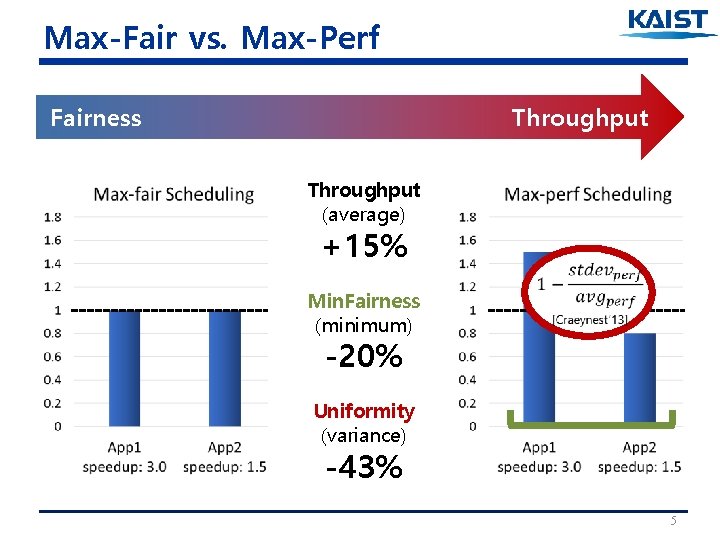

Max-Fair vs. Max-Perf (change under Max-perf relative to Max-fair)
• Throughput (average): +15%
• Fairness
  – Min. Fairness (minimum): -20%
  – Uniformity (variance): -43%

Max-Fair vs. Max-Perf with apps of similar speedup (change under Max-perf relative to Max-fair)
• Throughput (average): +1%
• Fairness
  – Min. Fairness (minimum): -49%
  – Uniformity (variance): -69%
• Fairness maximizing: no throughput↑; throughput maximizing: significantly unfair
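To make the three metrics concrete, here is a toy sketch under assumptions the slides do not state: one app per core, an app that spends a fraction f of its time on a fast core runs at f·s + (1 − f) relative to a slow core (s is its fast-core speedup), each app's fairness is its performance normalized to the Max-fair schedule (the result slides normalize to Max-fair), and uniformity is taken as 1 − stdev/mean, a stand-in for the variance-based metric. All function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, List

def perf(speedup: float, fast_share: float) -> float:
    """Toy model: performance relative to running only on a slow core."""
    return fast_share * speedup + (1.0 - fast_share)

def max_fair_shares(speedups: List[float], n_fast: int) -> List[float]:
    """Every app gets the same fraction of fast-core time."""
    return [n_fast / len(speedups)] * len(speedups)

def max_perf_shares(speedups: List[float], n_fast: int) -> List[float]:
    """Fast cores go to the apps with the highest speedups."""
    shares = [0.0] * len(speedups)
    remaining = float(n_fast)
    for i in sorted(range(len(speedups)), key=lambda i: speedups[i], reverse=True):
        shares[i] = min(1.0, remaining)
        remaining -= shares[i]
    return shares

def metrics(speedups: List[float], shares: List[float], n_fast: int) -> Dict[str, float]:
    base = max_fair_shares(speedups, n_fast)
    # Per-app fairness: performance normalized to the Max-fair schedule
    fairness = [perf(s, f) / perf(s, b) for s, f, b in zip(speedups, shares, base)]
    avg = mean(fairness)
    return {
        "throughput": avg,                           # average
        "min_fairness": min(fairness),               # minimum
        "uniformity": 1.0 - pstdev(fairness) / avg,  # assumed stand-in metric
    }

speedups = [3.0, 2.9]  # hypothetical two-app example, one fast and one slow core
print(metrics(speedups, max_perf_shares(speedups, 1), 1))
```

Under this toy model, Max-perf gives the 3.0/2.9 pair about +0.6% throughput and about -49% Min. Fairness relative to Max-fair.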

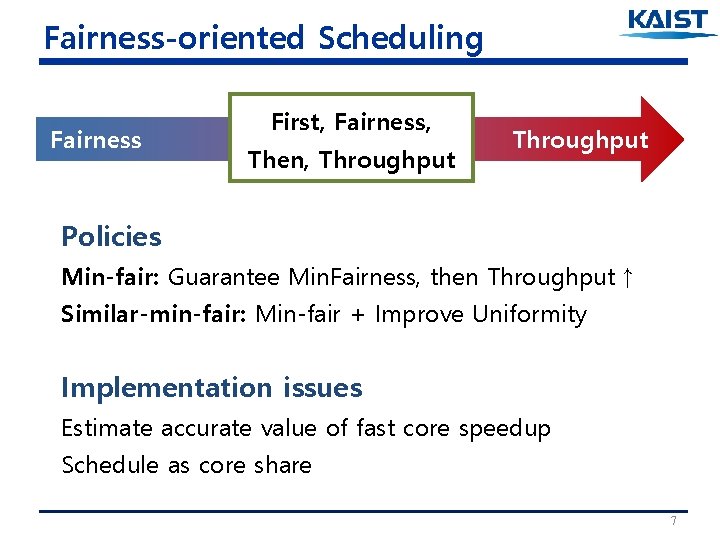

Fairness-oriented Scheduling
• First, fairness; then, throughput
• Policies
  – Min-fair: guarantee Min. Fairness, then improve throughput
  – Similar-min-fair: Min-fair + improve uniformity
• Implementation issues
  – Estimate the fast-core speedup accurately
  – Schedule threads according to their core shares

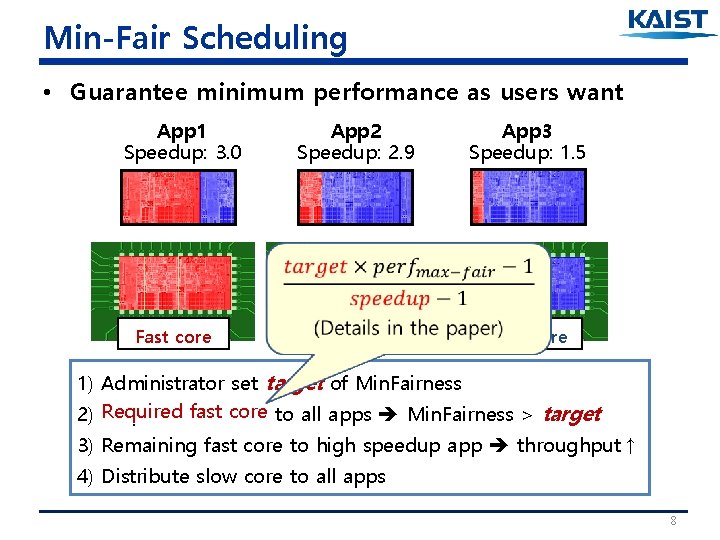

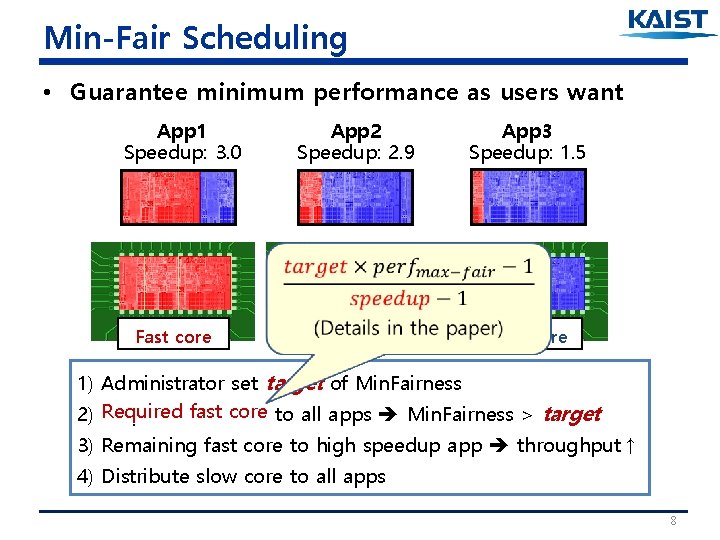

Min-Fair Scheduling
• Guarantee the minimum performance users want
  1) The administrator sets a target Min. Fairness
  2) Give every app the fast-core share it needs so that Min. Fairness > target
  3) Give the remaining fast-core share to the highest-speedup app → throughput↑
  4) Distribute the slow cores among all apps
• Example (figure): App 1 (speedup 3.0), App 2 (speedup 2.9), and App 3 (speedup 1.5) on one fast core and slow cores
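A minimal sketch of the four steps, under assumptions not given on the slide: one app per core, performance modeled as f·s + (1 − f) relative to a slow core, and fairness measured against the Max-fair share F/N (N apps, F fast cores). The functions `perf`, `required_fast_share`, and `min_fair` are hypothetical names, and the required-share formula is derived from this toy model, not taken from the paper.

```python
from typing import List

def perf(speedup: float, fast_share: float) -> float:
    """Toy model: performance relative to running only on a slow core."""
    return fast_share * speedup + (1.0 - fast_share)

def required_fast_share(speedup: float, target: float, base_share: float) -> float:
    """Smallest fast-core share with perf >= target * perf under Max-fair."""
    if speedup <= 1.0:
        return 0.0  # the fast core does not help this app
    need = (target * perf(speedup, base_share) - 1.0) / (speedup - 1.0)
    return min(max(need, 0.0), 1.0)

def min_fair(speedups: List[float], target: float, n_fast: int) -> List[float]:
    """Return each app's fraction of time on a fast core."""
    if not 0.0 < target <= 1.0:
        raise ValueError("target Min. Fairness must be in (0, 1]")
    base = n_fast / len(speedups)  # Max-fair share (equal split)
    # Step 2: every app gets the fast-core share it needs to meet the target
    shares = [required_fast_share(s, target, base) for s in speedups]
    # Step 3: leftover fast-core share goes to the highest-speedup apps
    remaining = max(0.0, n_fast - sum(shares))
    for i in sorted(range(len(speedups)), key=lambda i: speedups[i], reverse=True):
        extra = min(1.0 - shares[i], remaining)
        shares[i] += extra
        remaining -= extra
    # Step 4: each app spends the rest of its time (1 - share) on slow cores
    return shares

print(min_fair([3.0, 2.9, 1.5], target=0.85, n_fast=1))
```

With the slide's speedups, one fast core, and an 85% target, App 1 ends with about 0.80 of the fast core, App 2 with about 0.20 (just enough for 85%), and App 3 with none, since its low speedup already keeps it above the target.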

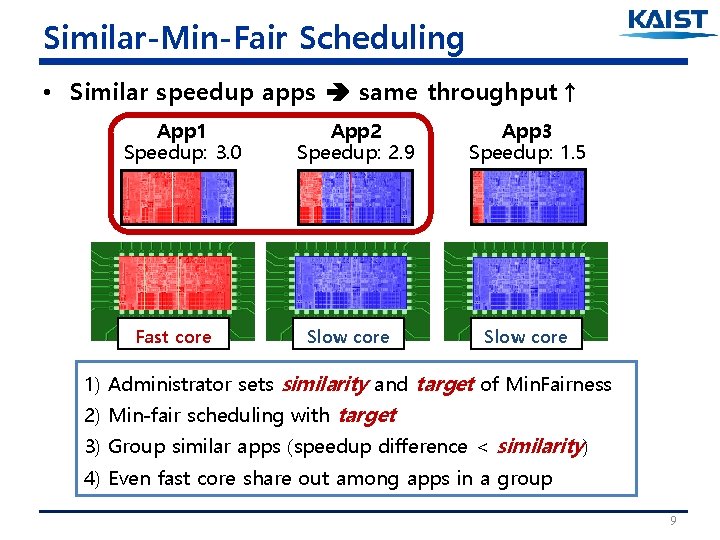

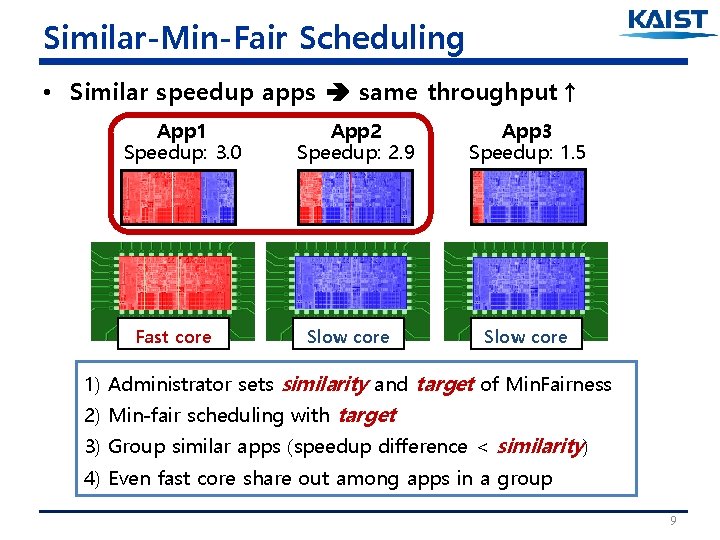

Similar-Min-Fair Scheduling
• Apps with similar speedups get the same throughput↑
  1) The administrator sets the similarity threshold and the target Min. Fairness
  2) Run min-fair scheduling with the target
  3) Group similar apps (speedup difference < similarity)
  4) Split the group's fast-core share evenly among the apps in the group
• Example (figure): App 1 (speedup 3.0), App 2 (speedup 2.9), and App 3 (speedup 1.5) on one fast core and slow cores
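Steps 3 and 4 can be sketched as follows, taking a min-fair result as input. Two details are assumptions rather than the slide's definition: a group collects apps whose speedup is within `similarity` of the group's highest-speedup app, and "split evenly" is implemented as water-filling, which divides the group's fast-core share as evenly as possible without pushing any app below the share it needs for the Min. Fairness target. All function names are hypothetical.

```python
from typing import List

def group_similar(speedups: List[float], similarity: float) -> List[List[int]]:
    """Group apps whose speedup is within `similarity` of the group's fastest app."""
    order = sorted(range(len(speedups)), key=lambda i: speedups[i], reverse=True)
    groups: List[List[int]] = []
    for i in order:
        if groups and speedups[groups[-1][0]] - speedups[i] < similarity:
            groups[-1].append(i)
        else:
            groups.append([i])
    return groups

def even_out(required: List[float], total: float) -> List[float]:
    """Split `total` as evenly as possible while keeping share[i] >= required[i]."""
    k = len(required)
    order = sorted(range(k), key=lambda i: required[i], reverse=True)
    fixed = 0.0
    for m in range(k):
        level = (total - fixed) / (k - m)  # equal share for apps not yet fixed
        if level >= required[order[m]]:
            shares = [level] * k
            for i in order[:m]:  # apps whose requirement exceeds the level
                shares[i] = required[i]
            return shares
        fixed += required[order[m]]
    return list(required)  # only reached if sum(required) > total

def similar_min_fair(speedups: List[float], min_fair_shares: List[float],
                     required: List[float], similarity: float) -> List[float]:
    shares = list(min_fair_shares)
    for group in group_similar(speedups, similarity):
        total = sum(min_fair_shares[i] for i in group)
        evened = even_out([required[i] for i in group], total)
        for i, share in zip(group, evened):
            shares[i] = share
    return shares

# Hypothetical min-fair output and per-app requirements for the slide's three apps
print(similar_min_fair([3.0, 2.9, 1.5], [0.8, 0.2, 0.0], [0.21, 0.20, 0.0], 0.2))
```

With similarity 0.2, Apps 1 and 2 form one group, so a min-fair split of 0.8/0.2 becomes 0.5/0.5 while App 3 is unchanged; the group's total fast-core share, and thus most of the throughput gain, is preserved.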

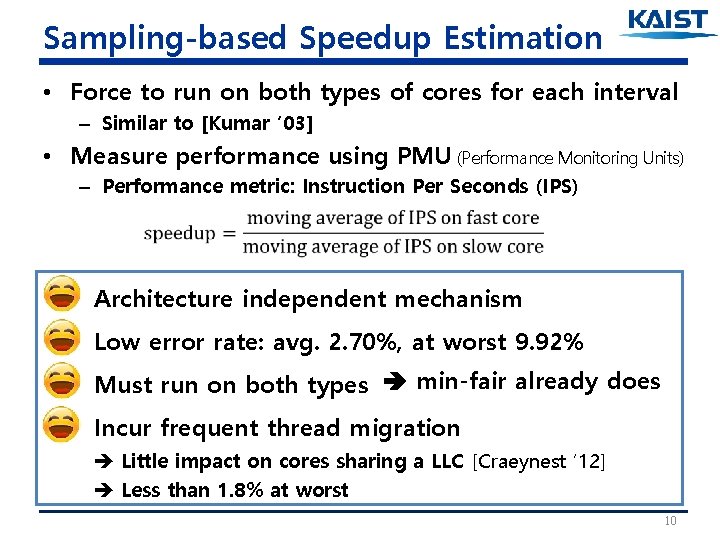

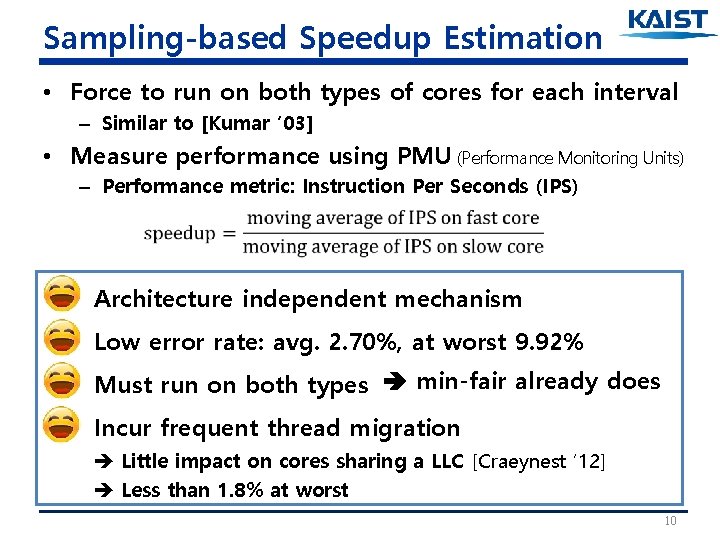

Sampling-based Speedup Estimation
• Force each app to run on both core types in every interval
  – Similar to [Kumar’03]
• Measure performance with the PMU (Performance Monitoring Unit)
  – Performance metric: instructions per second (IPS)
• Architecture-independent mechanism
• Low error rate: avg. 2.70%, at worst 9.92%
• Apps must run on both core types → min-fair already does this
• Incurs frequent thread migration → little impact on cores sharing an LLC [Craeynest’12]; less than 1.8% overhead at worst
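A sketch of the estimate, assuming per-interval instruction counts and run times have already been read from the PMU (reading the counters is platform specific and not shown; `IntervalSample` and the sample numbers are hypothetical). The speedup of one interval is IPS on the fast core divided by IPS on the slow core, and the app's estimate averages the intervals.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List

@dataclass
class IntervalSample:
    """Hypothetical per-interval PMU readings for one app."""
    fast_instructions: int
    fast_seconds: float
    slow_instructions: int
    slow_seconds: float

def ips(instructions: int, seconds: float) -> float:
    return instructions / seconds

def interval_speedup(s: IntervalSample) -> float:
    # Fast-core speedup in one interval: IPS on fast core / IPS on slow core
    return ips(s.fast_instructions, s.fast_seconds) / ips(s.slow_instructions, s.slow_seconds)

def estimate_speedup(samples: List[IntervalSample]) -> float:
    return mean(interval_speedup(s) for s in samples)

samples = [
    IntervalSample(3_000_000_000, 1.0, 1_000_000_000, 1.0),  # speedup 3.0
    IntervalSample(1_400_000_000, 0.5, 500_000_000, 0.5),    # speedup 2.8
]
print(estimate_speedup(samples))
```

Using IPS rather than a microarchitectural model is what keeps the mechanism architecture independent: only instruction counts and time are needed.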

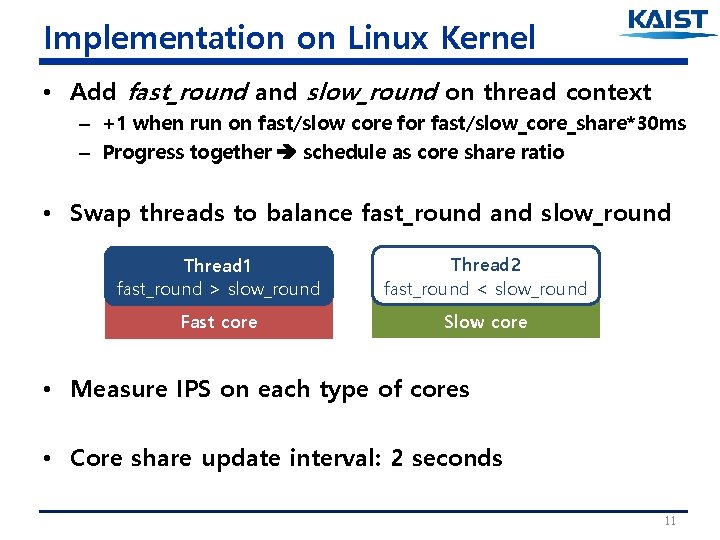

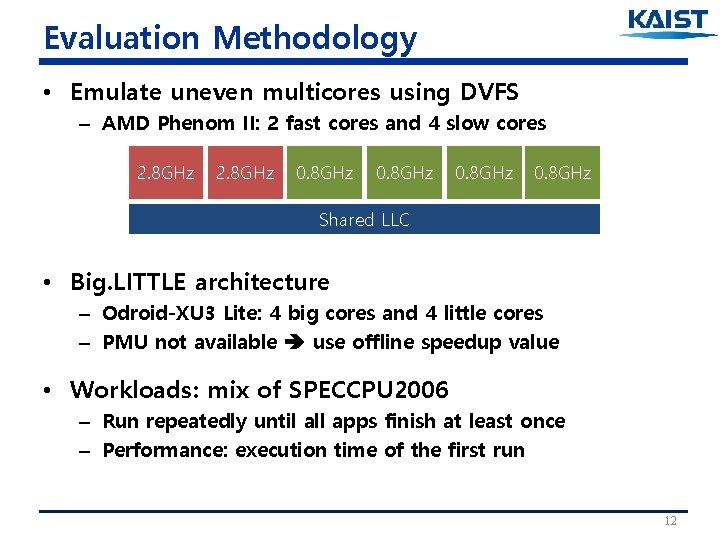

Implementation on Linux Kernel
• Add fast_round and slow_round to the thread context
  – +1 after running on a fast/slow core for fast/slow_core_share × 30 ms
  – Both rounds progress together → threads are scheduled according to their core-share ratio
• Swap threads to balance fast_round and slow_round
  – Thread 1 on the fast core (fast_round > slow_round) ↔ Thread 2 on a slow core (fast_round < slow_round)
• Measure IPS on each type of core
• Core share update interval: 2 seconds
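The round bookkeeping can be simulated in user space to see why it tracks the core-share ratio. This is a hypothetical sketch, not the kernel patch: time advances in 1 ms ticks, each core runs exactly one thread, swaps are checked on every tick, and each thread's fast-core share must lie strictly between 0 and 1.

```python
from dataclasses import dataclass
from typing import List

ROUND_MS = 30  # a round lasts share * 30 ms (value from the slide)

@dataclass
class Thread:
    fast_share: float      # target fraction of time on fast cores
    fast_round: int = 0
    slow_round: int = 0
    fast_acc: float = 0.0  # ms on a fast core since the last fast_round increment
    slow_acc: float = 0.0  # ms on a slow core since the last slow_round increment
    fast_ms: int = 0       # total ms on fast cores (to check the result)

def tick(t: Thread, on_fast: bool) -> None:
    """Account 1 ms of execution and bump the matching round counter."""
    if on_fast:
        t.fast_ms += 1
        t.fast_acc += 1
        quantum = t.fast_share * ROUND_MS
        while t.fast_acc >= quantum:
            t.fast_round += 1
            t.fast_acc -= quantum
    else:
        t.slow_acc += 1
        quantum = (1.0 - t.fast_share) * ROUND_MS
        while t.slow_acc >= quantum:
            t.slow_round += 1
            t.slow_acc -= quantum

def simulate(shares: List[float], n_fast: int, total_ms: int) -> List[float]:
    """Return each thread's measured fraction of time on fast cores."""
    if any(not 0.0 < s < 1.0 for s in shares):
        raise ValueError("this sketch assumes 0 < fast share < 1")
    threads = [Thread(s) for s in shares]
    fast = list(range(n_fast))                # thread indices on fast cores
    slow = list(range(n_fast, len(threads)))  # one thread per slow core
    for _ in range(total_ms):
        for i in fast:
            tick(threads[i], True)
        for i in slow:
            tick(threads[i], False)
        # Swap a fast-core thread that is ahead on fast_round with a
        # slow-core thread that is behind on fast_round.
        for a, i in enumerate(fast):
            if threads[i].fast_round > threads[i].slow_round:
                for b, j in enumerate(slow):
                    if threads[j].fast_round < threads[j].slow_round:
                        fast[a], slow[b] = j, i
                        break
    return [t.fast_ms / total_ms for t in threads]

print(simulate([0.5, 0.3, 0.2], n_fast=1, total_ms=30_000))
```

Because each thread's two rounds advance in step, a thread's time on fast cores approaches its share as long as the shares add up to the number of fast cores (here 0.5 + 0.3 + 0.2 = 1 fast core).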

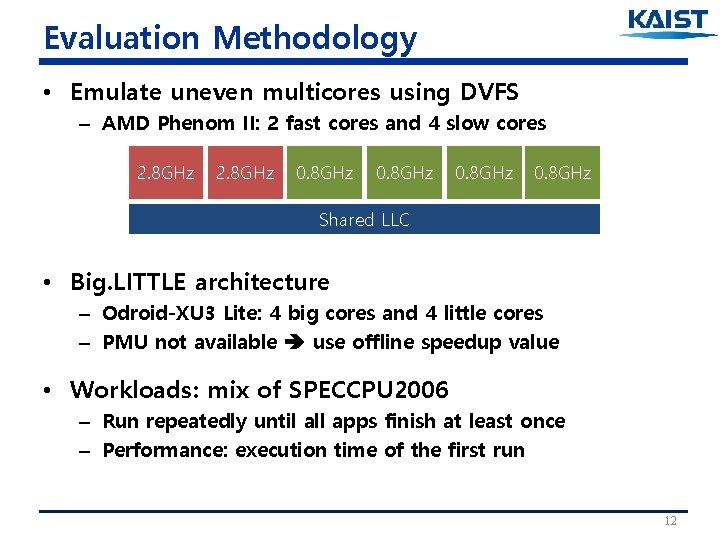

Evaluation Methodology
• Emulate uneven multicores using DVFS
  – AMD Phenom II: 2 fast cores (2.8 GHz) and 4 slow cores (0.8 GHz), shared LLC
• big.LITTLE architecture
  – Odroid-XU3 Lite: 4 big cores and 4 little cores
  – PMU not available → use offline speedup values
• Workloads: mixes of SPEC CPU2006
  – Run repeatedly until every app finishes at least once
  – Performance: execution time of the first run

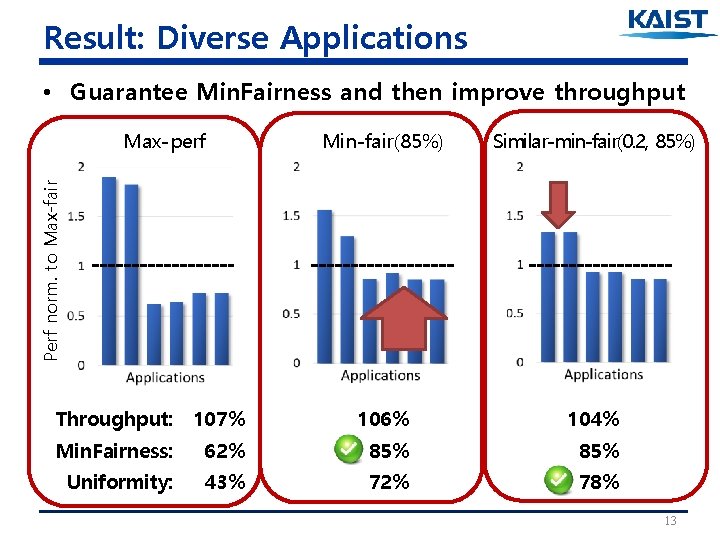

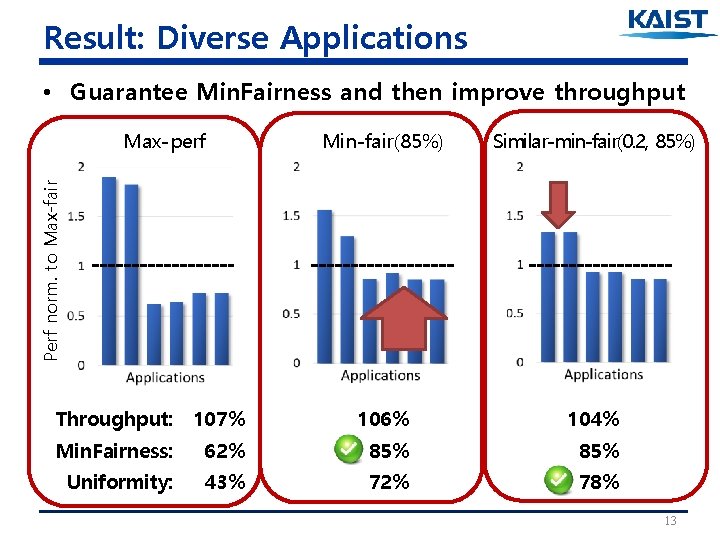

Result: Diverse Applications (performance normalized to Max-fair)
• Guarantee Min. Fairness, then improve throughput
• Throughput: Max-perf 107%, Min-fair(85%) 106%, Similar-min-fair(0.2, 85%) 104%
• Min. Fairness: Max-perf 62%, Min-fair(85%) 85%
• Uniformity: Max-perf 43%, Min-fair(85%) 72%, Similar-min-fair(0.2, 85%) 78%

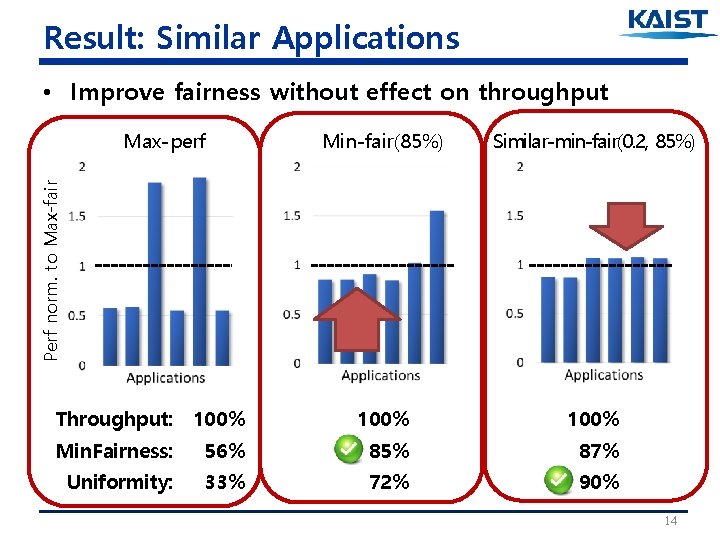

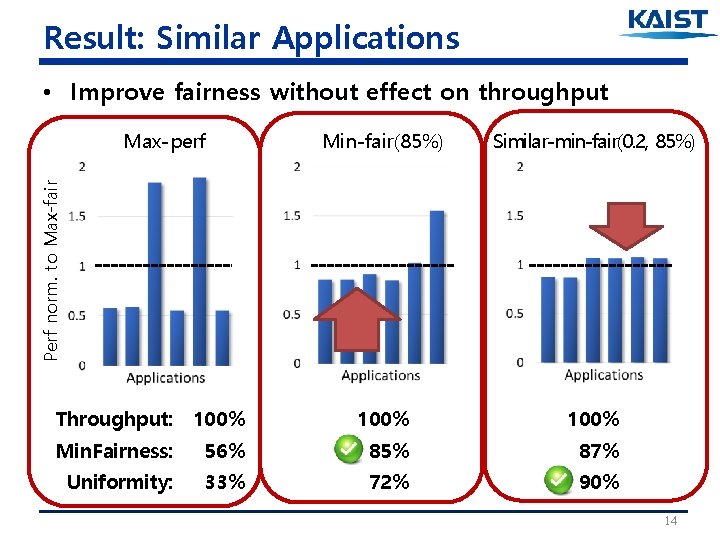

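Result: Similar Applications (performance normalized to Max-fair)
• Improve fairness without affecting throughput
• Throughput: 100% for Max-perf, Min-fair(85%), and Similar-min-fair(0.2, 85%)
• Min. Fairness: Max-perf 56%, Min-fair(85%) 85%, Similar-min-fair(0.2, 85%) 87%
• Uniformity: Max-perf 33%, Min-fair(85%) 72%, Similar-min-fair(0.2, 85%) 90%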

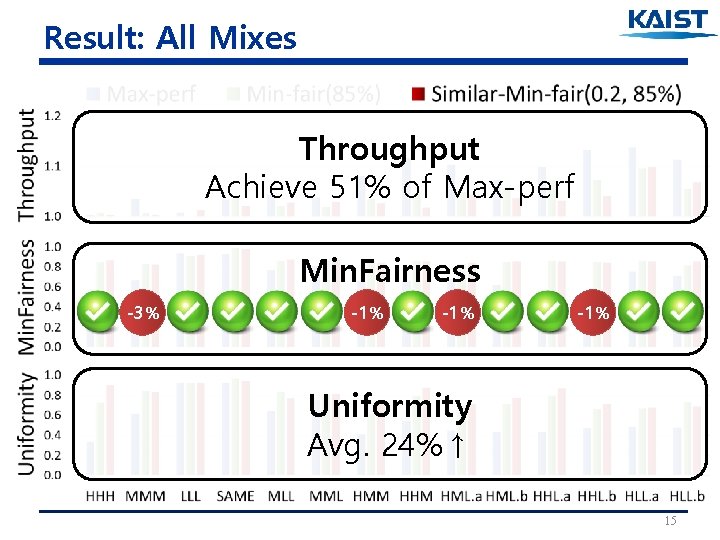

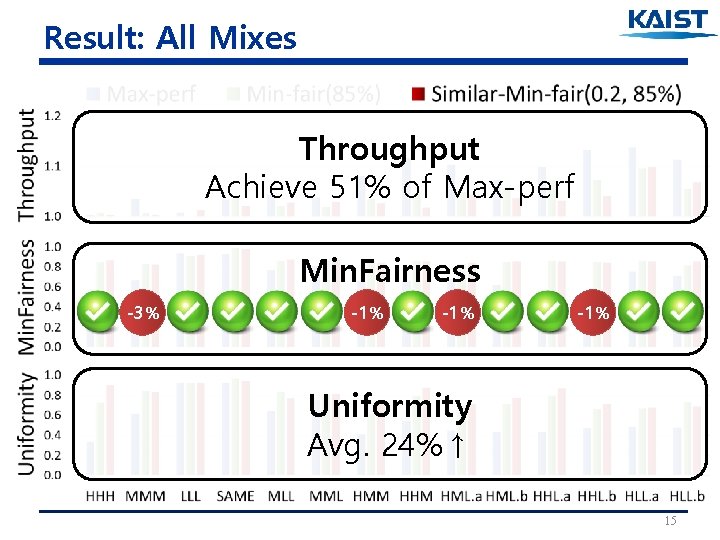

Result: All Mixes
• Throughput: achieves 51% of Max-perf
• Min. Fairness: largest misses of the target are -3%, -1%, and -1%
• Uniformity: avg. 24%↑

Conclusion
• Fairness-oriented scheduling for uneven multicores
  – First, fairness; then, throughput
• Architecture-independent speedup estimation
  – High accuracy and little overhead
• Implemented on Linux kernel 3.7.3
• Real-machine results
  – Min. Fairness: mostly guaranteed (missed by less than 3%)
  – Uniformity: avg. 24%↑
  – Throughput: achieves 51% of Max-perf

Questions
Please speak slowly, or pick one of these questions:
1) Why just two core types?
2) What is the effect of caches or other shared resources?
3) What other work exists on fairness for uneven multicores?
4) How is the required fast-core share computed?
5) What about multithreaded applications?
6) The 2 s interval is long; what about short applications?
7) Is Max-fair really fair?
8) Why is (min.) fairness important?
9) Does it lose the energy efficiency of AMPs?
10) How is the accuracy of the speedup estimate measured?
11) Does a complex scheduler burden the CPU?
12) "Less than 1.8% overhead": what overhead?

Backup Slides

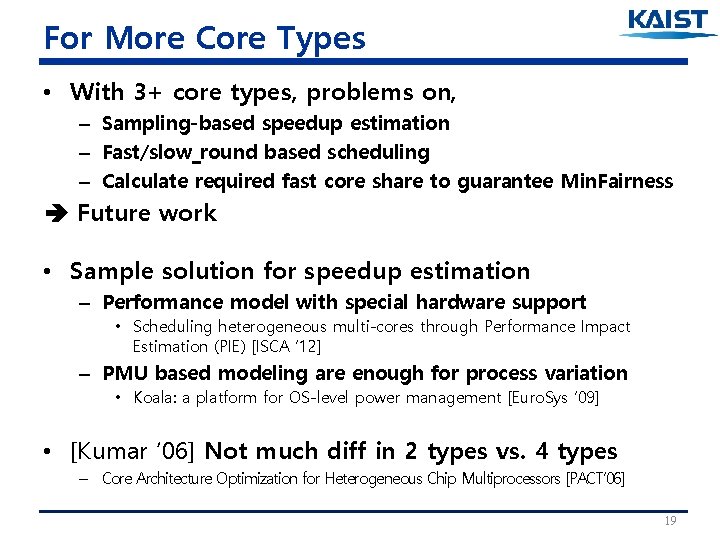

For More Core Types
• With 3+ core types, problems arise in
  – Sampling-based speedup estimation
  – fast/slow_round-based scheduling
  – Calculating the required fast-core share to guarantee Min. Fairness
  → Future work
• Sample solutions for speedup estimation
  – Performance model with special hardware support
    • Scheduling heterogeneous multi-cores through Performance Impact Estimation (PIE) [ISCA’12]
  – PMU-based modeling is enough for process variation
    • Koala: a platform for OS-level power management [EuroSys’09]
• [Kumar’06]: not much difference between 2 and 4 core types
  – Core Architecture Optimization for Heterogeneous Chip Multiprocessors [PACT’06]

Effect of Shared Cache
• Beyond the scope of our paper
• Unevenness of cores has the primary impact
  – App runtime with different co-runners under Max-fair: >20% difference in only 2 mixes
  – Fast-core speedup ranges from 1.7 to 3.5, but co-runner effects are <20%
• Corner cases → find other ways
  – e.g., cache partitioning

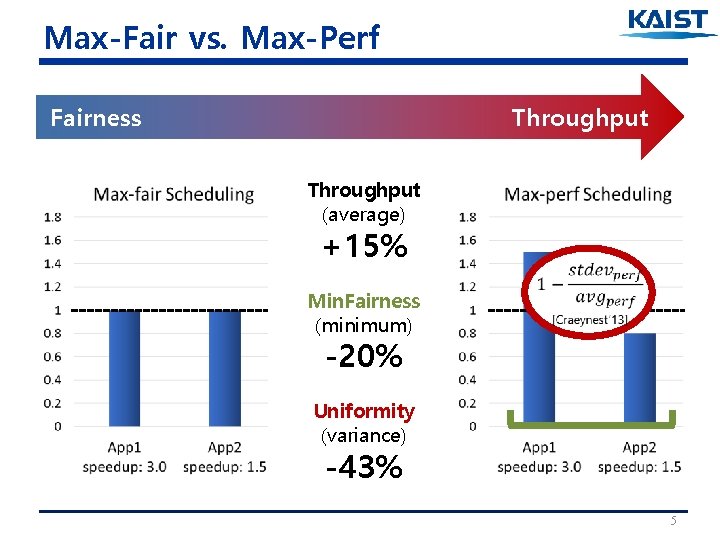

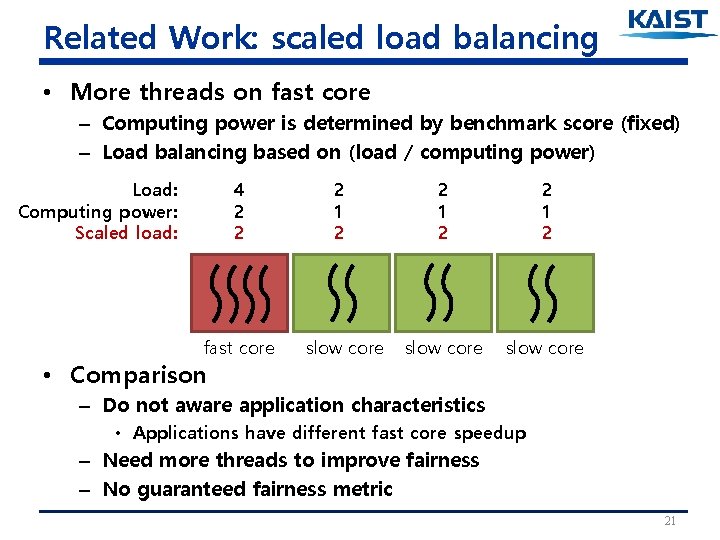

Related Work: Scaled Load Balancing
• More threads on the fast core
  – Computing power is determined by a benchmark score (fixed)
  – Load balancing is based on load / computing power
  – Example (figure): fast core: load 4, computing power 2, scaled load 2; slow core: load 2, computing power 1, scaled load 2
• Comparison
  – Not aware of application characteristics
    • Applications have different fast-core speedups
  – Needs more threads to improve fairness
  – No guaranteed fairness metric
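A sketch of the balancing rule, assuming a new thread goes to the core whose scaled load (load / computing power) would be lowest after placing it; the `Core` class and `place_thread` function are hypothetical, not the cited scheduler's code. The numbers reproduce the slide's example, where both cores have a scaled load of 2.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Core:
    name: str
    load: float             # e.g., number of runnable threads
    computing_power: float  # fixed, from a benchmark score

    @property
    def scaled_load(self) -> float:
        return self.load / self.computing_power

def place_thread(cores: List[Core], thread_load: float = 1.0) -> str:
    """Put a new thread where the resulting scaled load is smallest."""
    best = min(cores, key=lambda c: (c.load + thread_load) / c.computing_power)
    best.load += thread_load
    return best.name

cores = [Core("fast", 4, 2), Core("slow", 2, 1)]
print([c.scaled_load for c in cores])  # both cores are balanced at 2.0
print(place_thread(cores))             # the fast core absorbs the extra load better
```

Note that the rule looks only at fixed computing power, never at how much a particular app speeds up on the fast core, which is the limitation the comparison points out.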

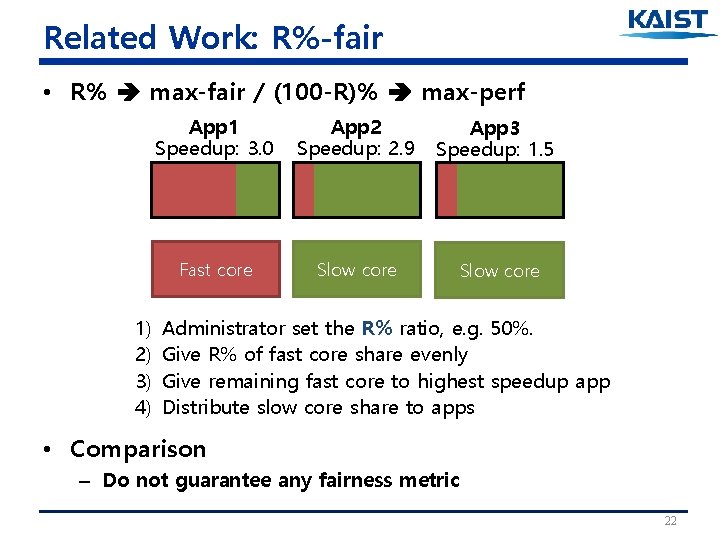

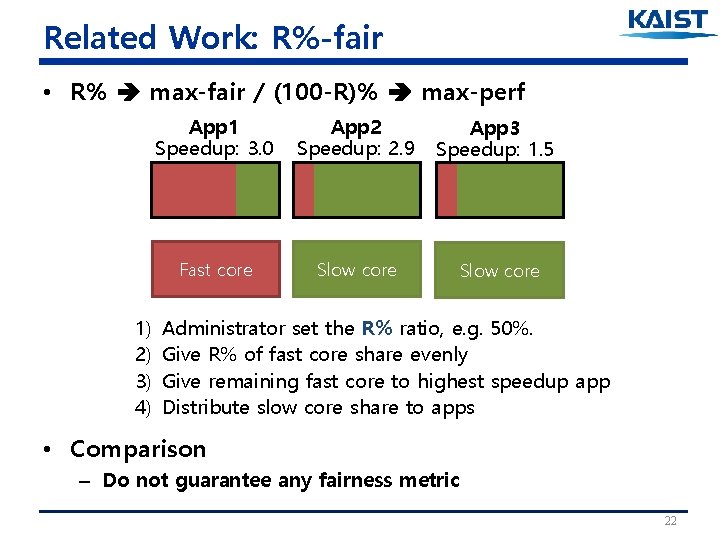

Related Work: R%-fair
• R% max-fair / (100-R)% max-perf
  1) The administrator sets the R% ratio, e.g., 50%
  2) Give R% of the fast-core share evenly to all apps
  3) Give the remaining fast-core share to the highest-speedup app
  4) Distribute the slow-core share to the apps
• Example (figure): App 1 (speedup 3.0), App 2 (speedup 2.9), and App 3 (speedup 1.5) on a fast core and slow cores
• Comparison
  – Does not guarantee any fairness metric
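A sketch of the R%-fair split, assuming one app per core and fast-core share expressed as a fraction of time (each app at most 1.0); `r_fair` is a hypothetical name. R = 1 reduces to Max-fair and R = 0 to Max-perf.

```python
from typing import List

def r_fair(speedups: List[float], r: float, n_fast: int) -> List[float]:
    """Fraction of time each app spends on a fast core under R%-fair."""
    n = len(speedups)
    # Step 2: R% of the fast-core capacity is split evenly
    shares = [r * n_fast / n] * n
    # Step 3: the rest goes to the highest-speedup apps (at most 1.0 each)
    remaining = (1.0 - r) * n_fast
    for i in sorted(range(n), key=lambda i: speedups[i], reverse=True):
        extra = min(1.0 - shares[i], remaining)
        shares[i] += extra
        remaining -= extra
    # Step 4: each app runs on slow cores for the rest of its time
    return shares

print(r_fair([3.0, 2.9, 1.5], r=0.5, n_fast=1))
```

Nothing in the split depends on a fairness target, so the fairness an app actually receives varies with the mix, which is why R%-fair gives no guaranteed metric.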

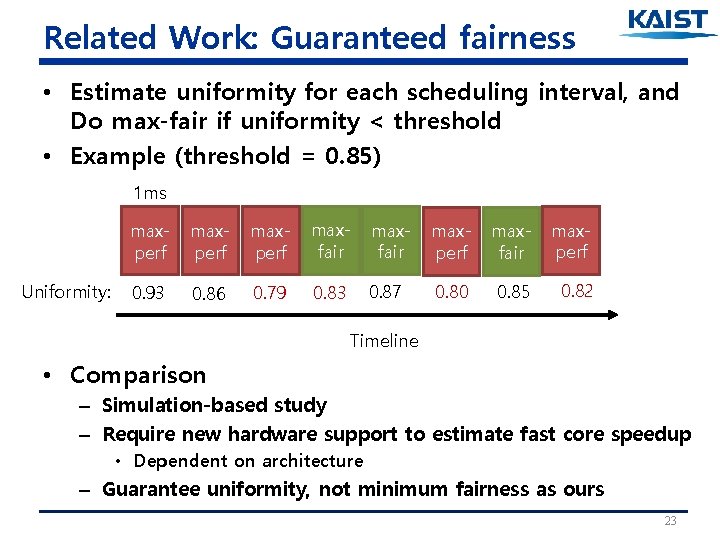

Related Work: Guaranteed Fairness
• Estimate uniformity in each scheduling interval, and run max-fair if uniformity < threshold
• Example (figure, threshold = 0.85, 1 ms intervals): uniformity along the timeline is 0.93, 0.86, 0.79, 0.83, 0.87, 0.80, 0.85, 0.82, with the scheduler switching maxperf → maxfair → maxperf
• Comparison
  – Simulation-based study
  – Requires new hardware support to estimate the fast-core speedup
    • Architecture dependent
  – Guarantees uniformity, not minimum fairness as ours does
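The per-interval decision can be sketched as a feedback rule. The uniformity formula here (1 − stdev/mean over each app's normalized progress) is an assumed stand-in, not necessarily the metric of the cited work, and `uniformity` and `next_policy` are hypothetical names.

```python
from statistics import mean, pstdev
from typing import List

def uniformity(progress: List[float]) -> float:
    """Assumed metric: 1 - coefficient of variation of per-app progress."""
    m = mean(progress)
    return 1.0 - pstdev(progress) / m

def next_policy(progress: List[float], threshold: float = 0.85) -> str:
    """Choose the policy for the next interval from this interval's uniformity."""
    return "max-fair" if uniformity(progress) < threshold else "max-perf"

print(next_policy([1.0, 1.0]))  # perfectly uniform progress: keep max-perf
print(next_policy([1.5, 0.5]))  # uniformity 0.5 < 0.85: switch to max-fair
```

Because the rule only reacts to the average spread, an individual app can still fall far behind, which is the gap the minimum-fairness guarantee targets.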

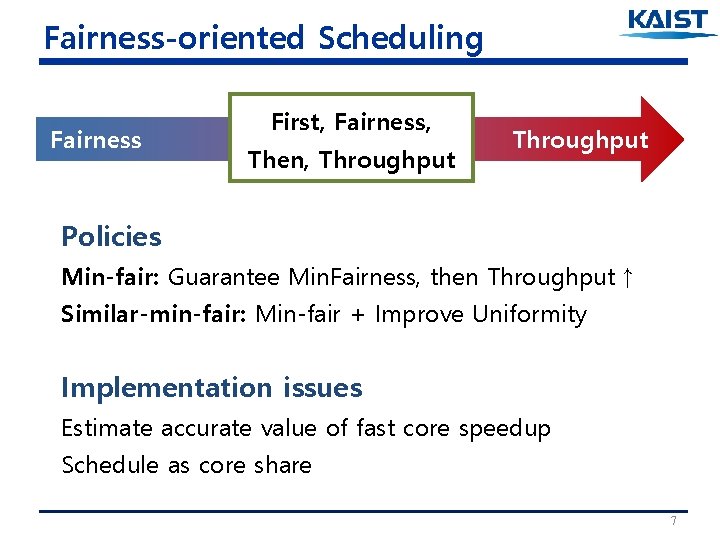

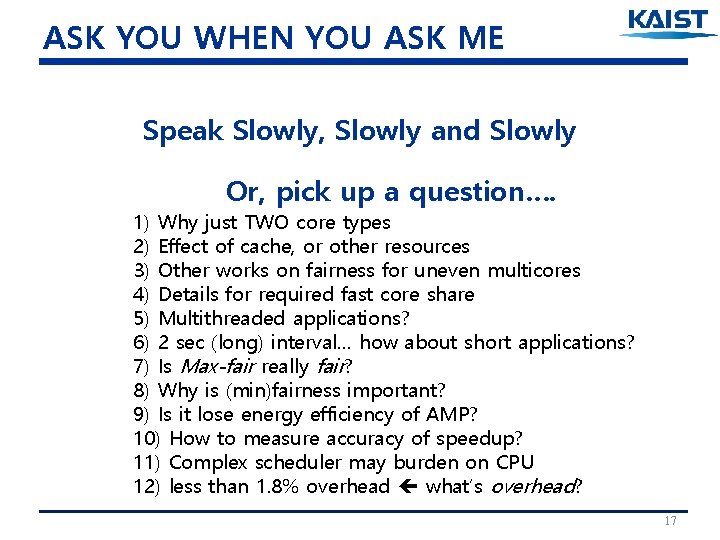

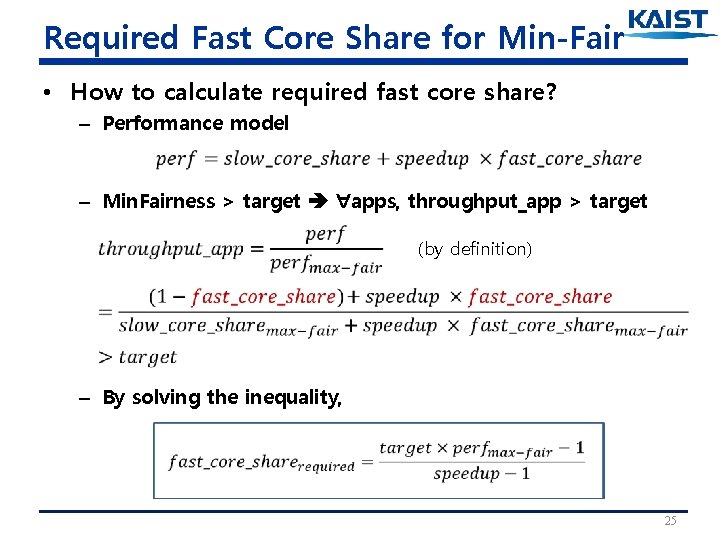

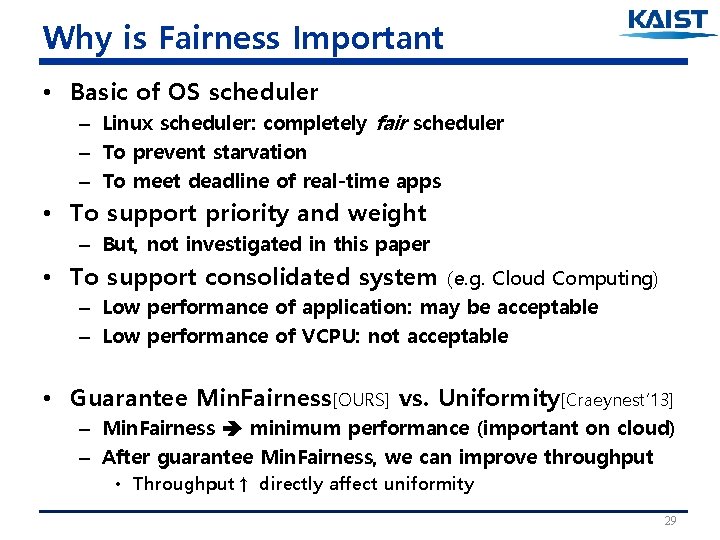

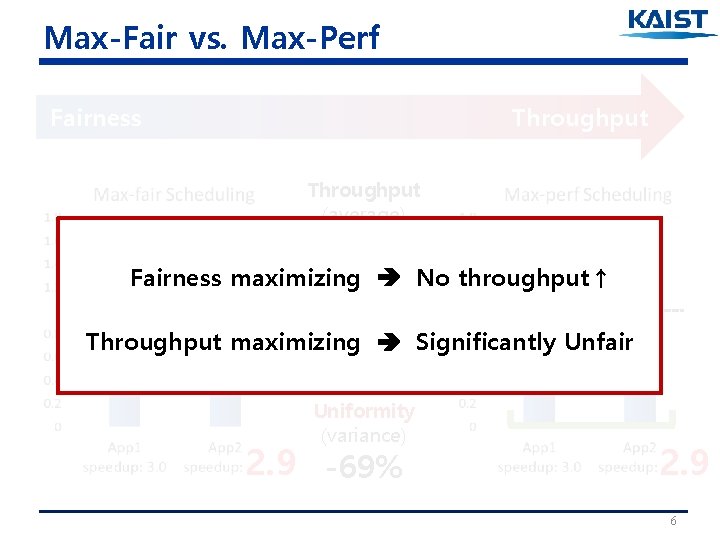

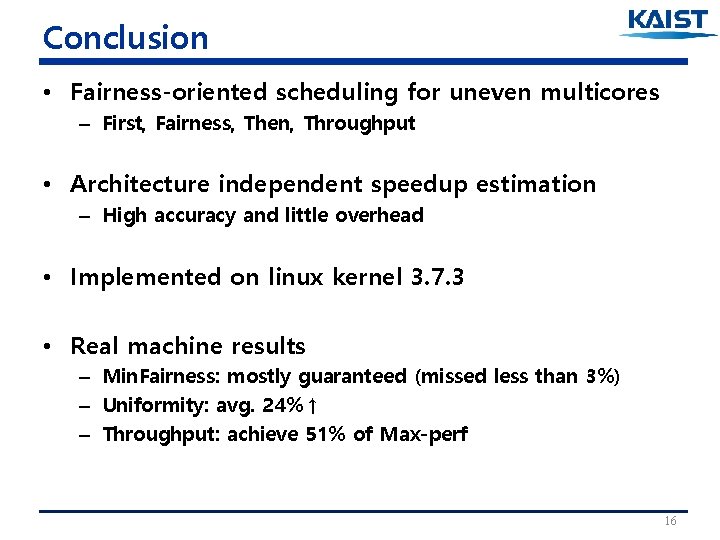

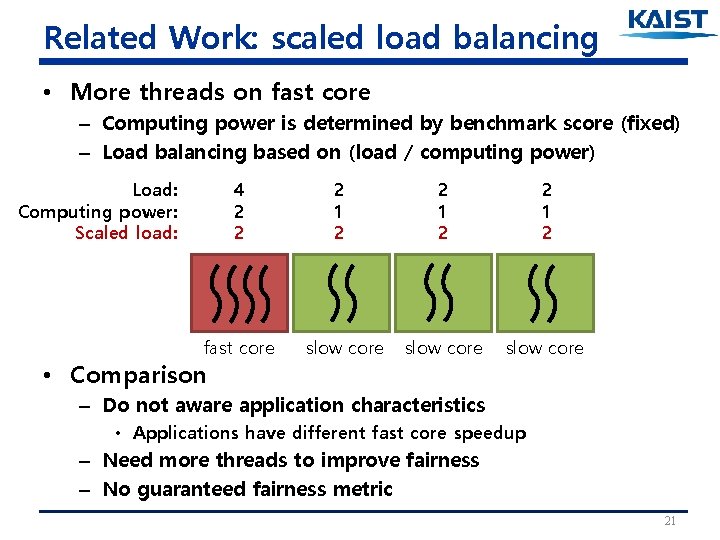

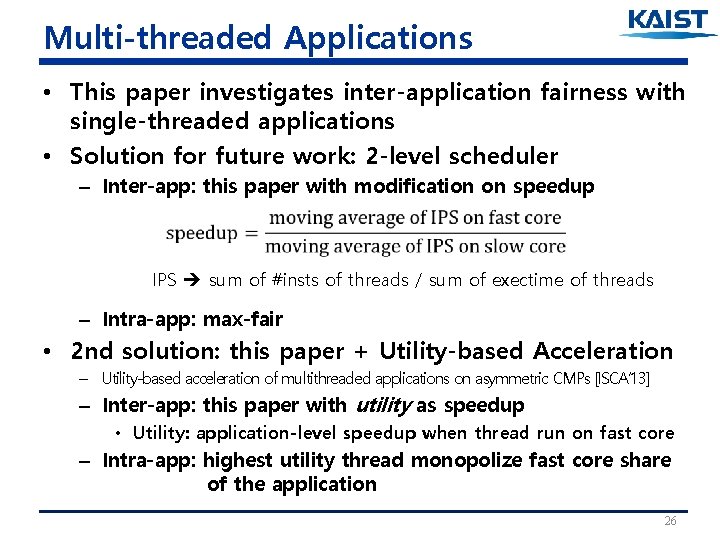

Related Work: Comparisons
Compared: Scaled load balancing [1], R%-fair [2], Guaranteed fairness [3], Sim-min-fair (proposed)
• Aware of application characteristics? NO YES YES
• Estimate speedup at runtime? NO YES YES
• Implemented on a real machine? YES NO YES
• Requires extra HW support? [1] NO, [2] NO, [3] YES, Sim-min-fair NO
• Guaranteed fairness metric: [1] none, [2] none, [3] uniformity, Sim-min-fair minimum fairness
[1] Tong Li et al., “Efficient operating system scheduling for…”, SC’07
[2] Youngjin Kwon et al., “Virtualizing performance asymmetric…”, ISCA’11
[3] Kenzo Van Craeynest et al., “Fairness-aware scheduling on…”, PACT’13

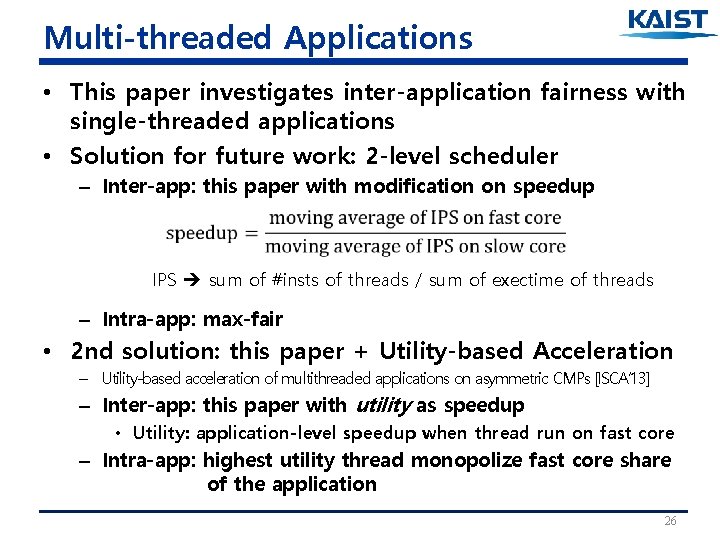

Required Fast Core Share for Min-Fair
• How is the required fast-core share calculated?
  – Performance model
  – Min. Fairness > target ⇔ ∀ apps, throughput_app > target (by definition)
  – Solve this inequality for each app's fast-core share
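One way to carry out the derivation, under a toy linear model that is an assumption rather than the paper's published formula: app i with fast-core speedup s_i > 1 spends a fraction f_i of its time on a fast core, N apps share F fast cores, performance relative to a slow core is 1 + f_i(s_i − 1), and throughput_app is normalized to the Max-fair share F/N. For a target t, the per-app condition solves to:

$$
\frac{1 + f_i\,(s_i - 1)}{1 + \frac{F}{N}\,(s_i - 1)} \ge t
\;\Longleftrightarrow\;
f_i \ge \frac{t\left(1 + \frac{F}{N}(s_i - 1)\right) - 1}{s_i - 1}
= t\,\frac{F}{N} - \frac{1 - t}{s_i - 1}
$$

The required share is then clamped to [0, 1]. For t ≤ 1 it never exceeds the Max-fair share F/N, so the required shares of all apps always fit within the F fast cores, and the subtracted term shrinks as s_i grows, so high-speedup apps need a larger share to reach the same target.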

Multi-threaded Applications
• This paper investigates inter-application fairness with single-threaded applications
• Solution for future work: a 2-level scheduler
  – Inter-app: this paper's scheduler, with speedup computed from app-level IPS = (sum of #insts of all threads) / (sum of execution time of all threads)
  – Intra-app: max-fair
• Second solution: this paper + utility-based acceleration
  – Utility-based acceleration of multithreaded applications on asymmetric CMPs [ISCA’13]
  – Inter-app: this paper's scheduler, using utility as the speedup
    • Utility: application-level speedup when a thread runs on the fast core
  – Intra-app: the highest-utility thread monopolizes the application's fast-core share
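The app-level IPS from the first bullet can be sketched directly; the per-thread `(instructions, seconds)` tuples and function names are hypothetical stand-ins for PMU readings.

```python
from typing import List, Tuple

# (instructions retired, execution seconds) for one thread of an app
ThreadSample = Tuple[int, float]

def app_ips(threads: List[ThreadSample]) -> float:
    """Sum of instructions over all threads / sum of their execution times."""
    return sum(n for n, _ in threads) / sum(t for _, t in threads)

def app_speedup(on_fast: List[ThreadSample], on_slow: List[ThreadSample]) -> float:
    return app_ips(on_fast) / app_ips(on_slow)

fast = [(2_000_000_000, 1.0), (4_000_000_000, 1.0)]  # threads sampled on fast cores
slow = [(1_000_000_000, 1.0), (1_000_000_000, 1.0)]  # threads sampled on slow cores
print(app_speedup(fast, slow))
```

Summing before dividing weights each thread by its execution time, so a briefly running thread does not distort the app's speedup as much as a plain average of per-thread IPS would.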

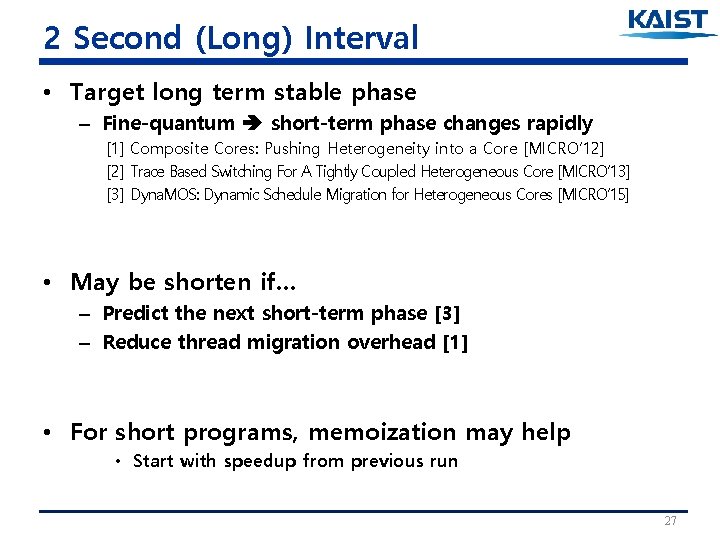

2 Second (Long) Interval
• Targets long-term stable phases
  – Short-term phases at fine quanta change rapidly [1], [2], [3]
  [1] Composite Cores: Pushing Heterogeneity into a Core [MICRO’12]
  [2] Trace Based Switching For A Tightly Coupled Heterogeneous Core [MICRO’13]
  [3] DynaMOS: Dynamic Schedule Migration for Heterogeneous Cores [MICRO’15]
• The interval may be shortened if we
  – Predict the next short-term phase [3]
  – Reduce thread migration overhead [1]
• For short programs, memoization may help
  – Start with the speedup from the previous run
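A sketch of the memoization idea: remember the last estimated speedup per program and use it as the starting value on the next run. `SpeedupMemo` is a hypothetical helper, it keeps state only in memory, and the program name in the example is illustrative.

```python
from typing import Dict, Optional

class SpeedupMemo:
    """Remembers the last estimated fast-core speedup per program (hypothetical)."""

    def __init__(self) -> None:
        self._last: Dict[str, float] = {}

    def initial_speedup(self, program: str) -> Optional[float]:
        # None means no history: the scheduler must sample from scratch
        return self._last.get(program)

    def record(self, program: str, speedup: float) -> None:
        # Called when a run ends, with the speedup estimated during that run
        self._last[program] = speedup

memo = SpeedupMemo()
print(memo.initial_speedup("mcf"))  # first run: no history
memo.record("mcf", 1.7)
print(memo.initial_speedup("mcf"))  # next run starts from 1.7
```

A short program can then get a sensible core share in its first interval instead of waiting for a full 2 s of sampling.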

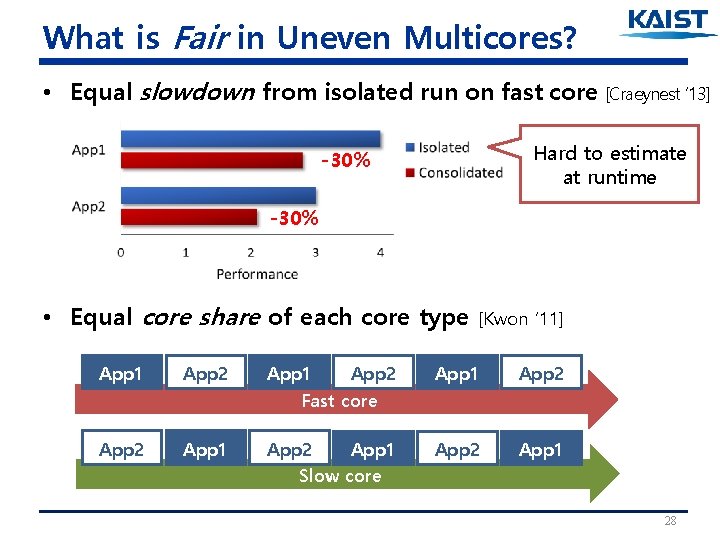

What is Fair in Uneven Multicores?
• Equal slowdown relative to an isolated run on the fast core [Craeynest’13]
  – Hard to estimate at runtime (-30%)
• Equal core share of each core type [Kwon’11]
  – Example (figure): App 1 and App 2 alternate between the fast core and the slow core

Why is Fairness Important?
• A basic role of the OS scheduler
  – Linux scheduler: Completely Fair Scheduler
  – Prevents starvation
  – Meets deadlines of real-time apps
• Supports priorities and weights
  – Not investigated in this paper
• Supports consolidated systems (e.g., cloud computing)
  – Low performance of an application: may be acceptable
  – Low performance of a VCPU: not acceptable
• Guaranteeing Min. Fairness [OURS] vs. uniformity [Craeynest’13]
  – Min. Fairness → minimum performance (important in the cloud)
  – After guaranteeing Min. Fairness, we can improve throughput
    • Throughput↑ directly affects uniformity

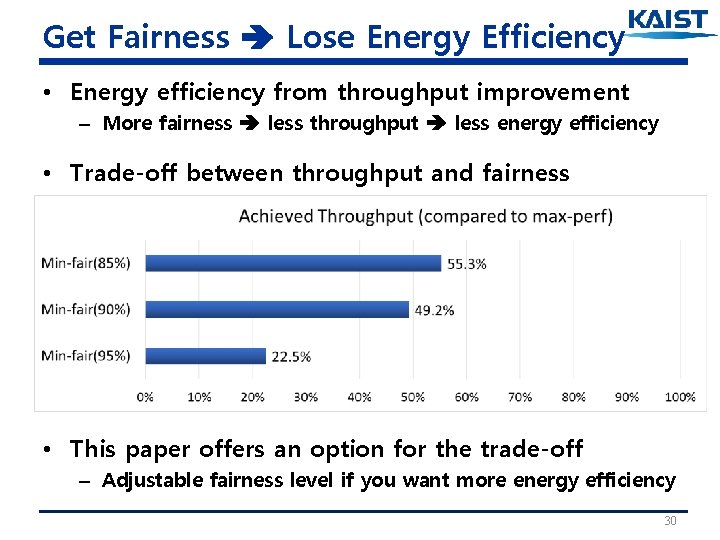

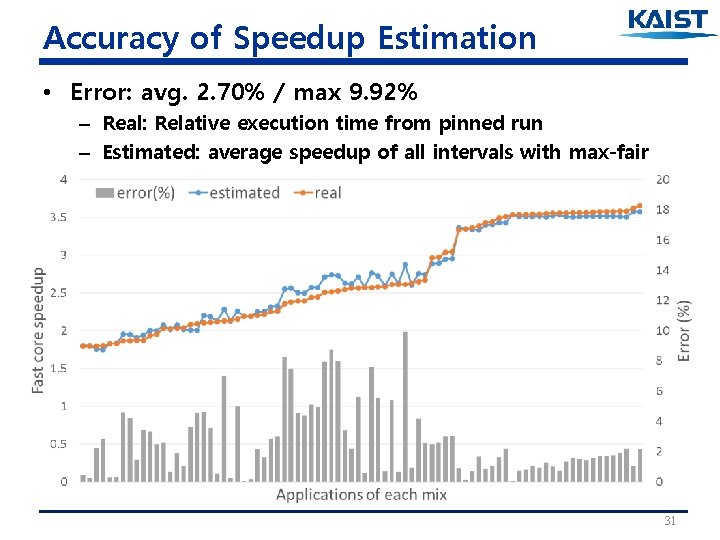

Get Fairness → Lose Energy Efficiency?
• Energy efficiency comes from throughput improvement
  – More fairness → less throughput → less energy efficiency
• Trade-off between throughput and fairness
• This paper offers an option for the trade-off
  – Adjustable fairness level if you want more energy efficiency

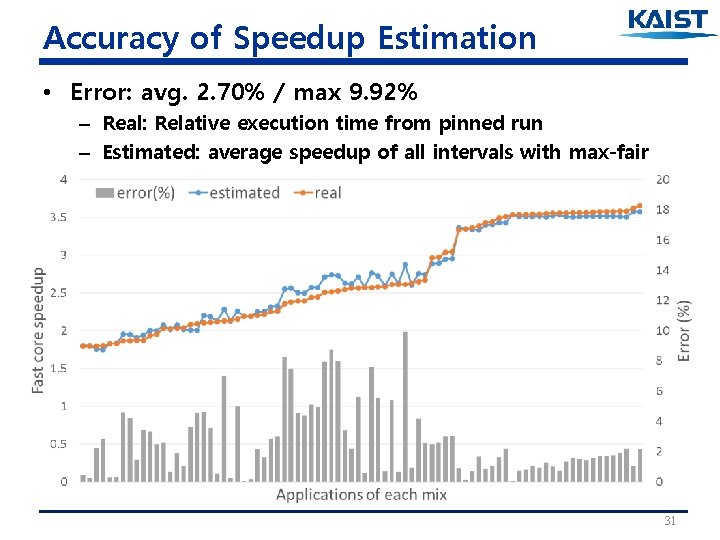

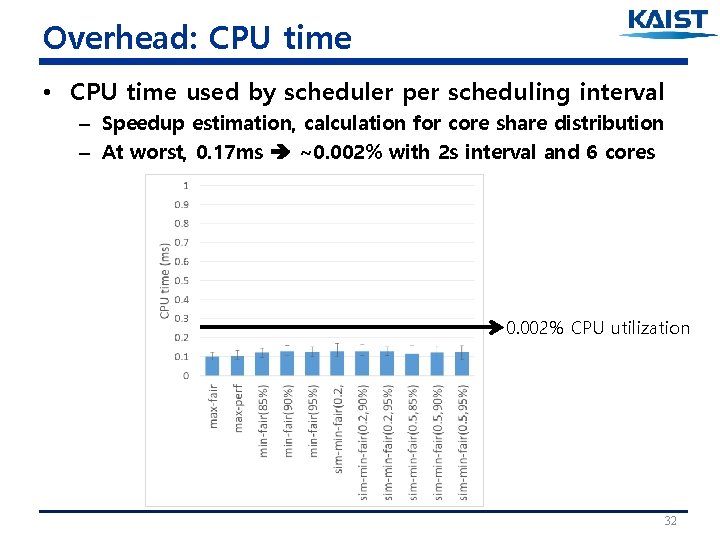

Accuracy of Speedup Estimation
• Error: avg. 2.70% / max 9.92%
  – Real: relative execution time from pinned runs
  – Estimated: average speedup over all intervals with max-fair
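The error metric can be sketched as a relative error between the averaged interval estimate and the speedup from pinned runs. Defining the real speedup as (execution time pinned to a slow core) / (execution time pinned to a fast core), and the function names and numbers, are assumptions for illustration.

```python
from typing import List

def real_speedup(slow_exec_s: float, fast_exec_s: float) -> float:
    """Speedup from pinned runs: slow-core execution time / fast-core execution time."""
    return slow_exec_s / fast_exec_s

def estimation_error(interval_speedups: List[float],
                     slow_exec_s: float, fast_exec_s: float) -> float:
    """Relative error of the interval-averaged estimate."""
    estimated = sum(interval_speedups) / len(interval_speedups)
    real = real_speedup(slow_exec_s, fast_exec_s)
    return abs(estimated - real) / real

# Hypothetical app: 250 s pinned to a slow core, 100 s pinned to a fast core
print(estimation_error([2.5, 2.7], slow_exec_s=250.0, fast_exec_s=100.0))
```

Here the estimate (2.6) overshoots the real speedup (2.5) by 4%, which would sit between the reported average (2.70%) and maximum (9.92%) errors.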

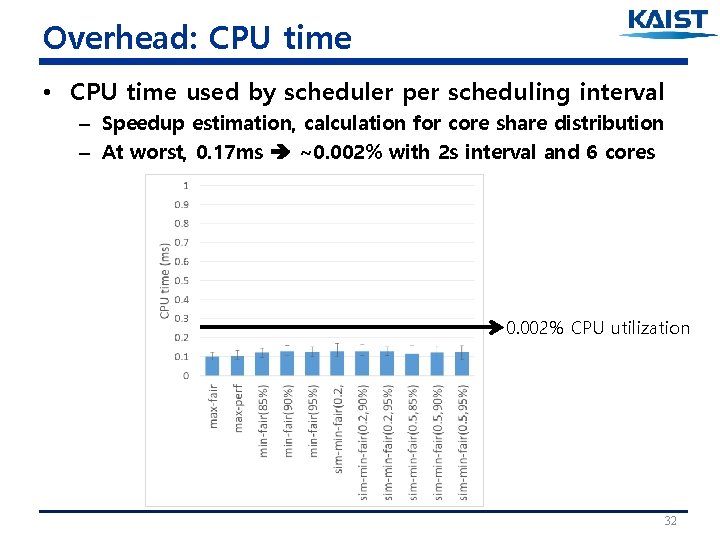

Overhead: CPU Time
• CPU time used by the scheduler per scheduling interval
  – Speedup estimation and calculation of the core share distribution
  – At worst 0.17 ms, ~0.002% CPU utilization with a 2 s interval and 6 cores

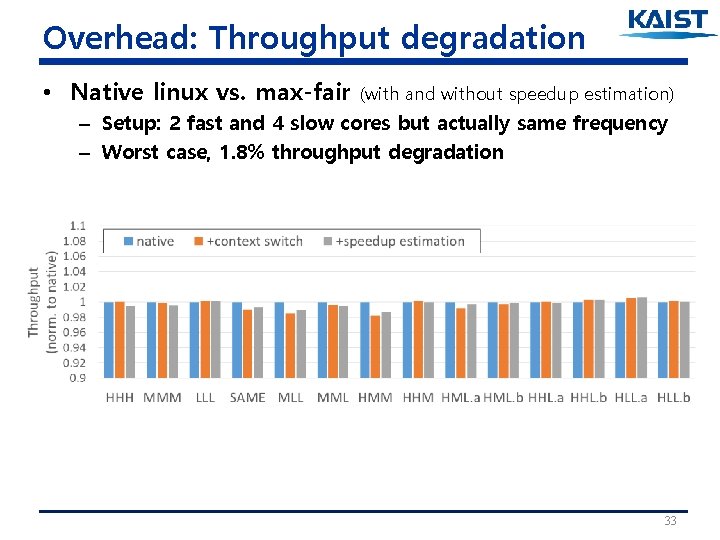

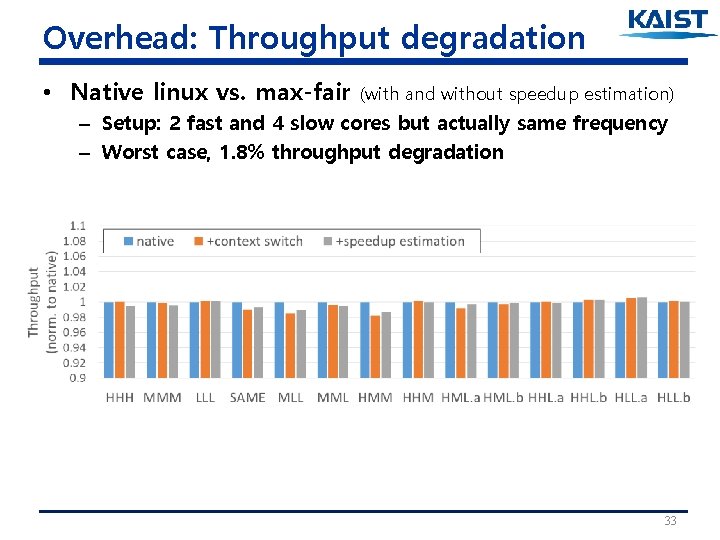

Overhead: Throughput Degradation
• Native Linux vs. max-fair (with and without speedup estimation)
  – Setup: 2 "fast" and 4 "slow" cores, actually all at the same frequency
  – Worst case: 1.8% throughput degradation

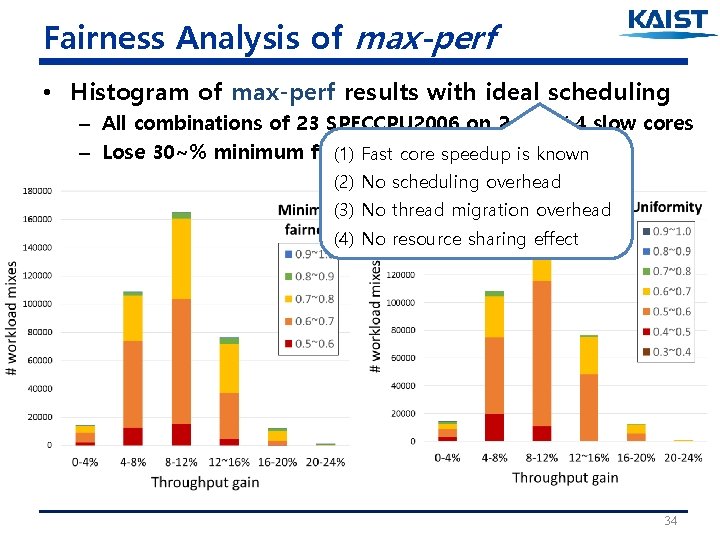

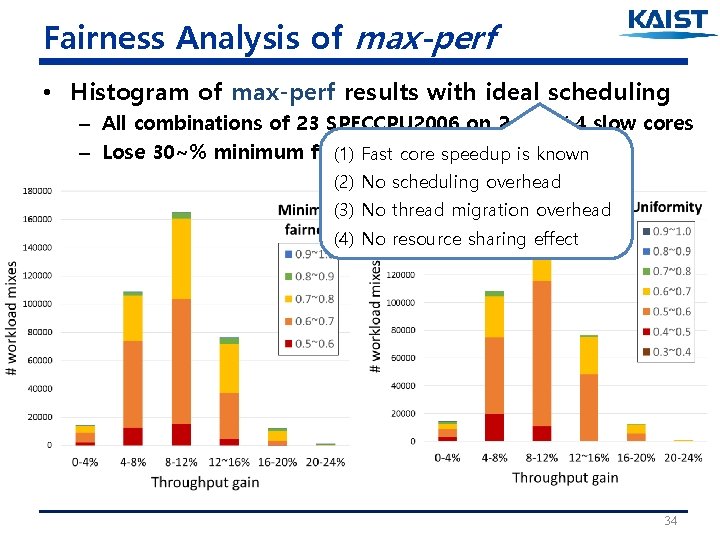

Fairness Analysis of Max-perf
• Histogram of max-perf results with ideal scheduling
  – All combinations of 23 SPEC CPU2006 apps on 2 fast / 4 slow cores
  – Loses ~30% of minimum fairness to gain ~4% in throughput
• Ideal scheduling assumes
  (1) Fast-core speedup is known
  (2) No scheduling overhead
  (3) No thread migration overhead
  (4) No resource-sharing effects