Evaluating Coprocessor Effectiveness for DART



Evaluating Coprocessor Effectiveness for DART
- Ye Feng, SIParCS, UCAR/NCAR, Boulder, CO
- University of Wyoming
- Mentors: Helen Kershaw, Nancy Collins

Introduction
- DART
  - Data Assimilation Research Testbed
  - Developed and maintained by DAReS at NCAR
- GPU
  - NVIDIA Tesla K20X
  - CUDA Fortran
- Previous work
  - get_close_obs

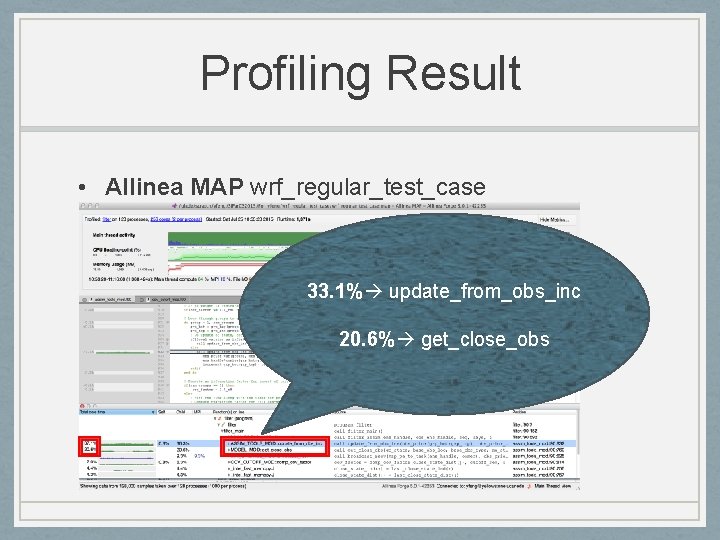

Profiling Result
- Allinea MAP, wrf_regular_test_case
  - 33.1% update_from_obs_inc
  - 20.6% get_close_obs

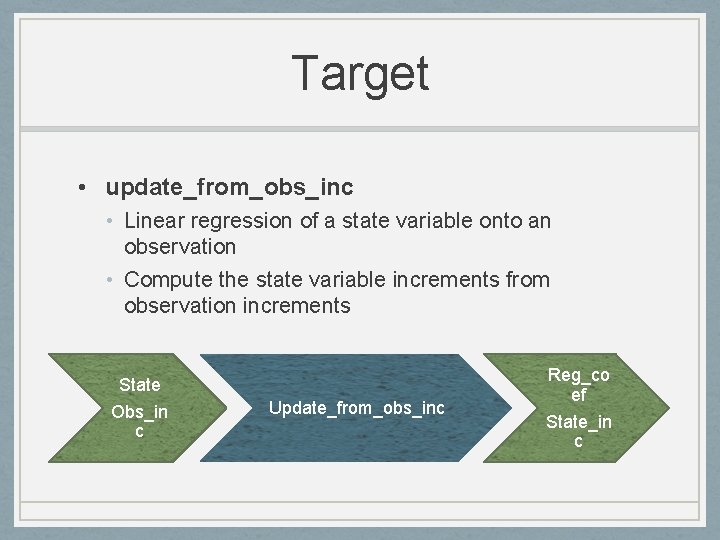

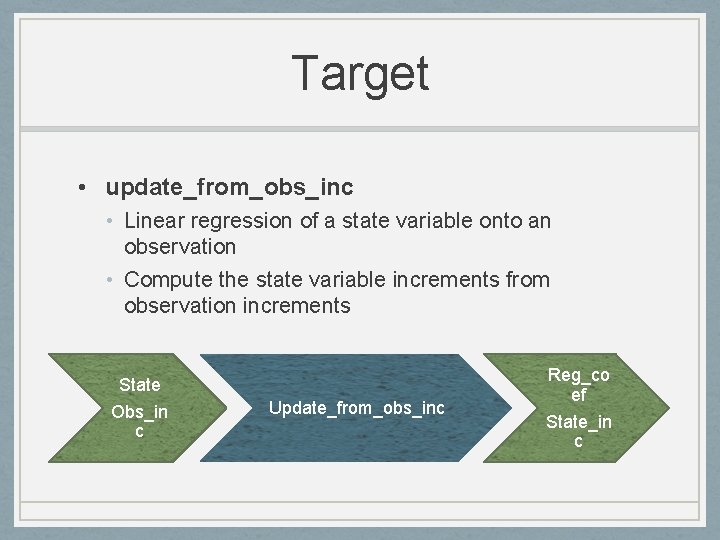

Target
- update_from_obs_inc
  - Linear regression of a state variable onto an observation
  - Computes the state variable increments from the observation increments
- Diagram: state and obs_inc go into update_from_obs_inc, which produces reg_coef and state_inc

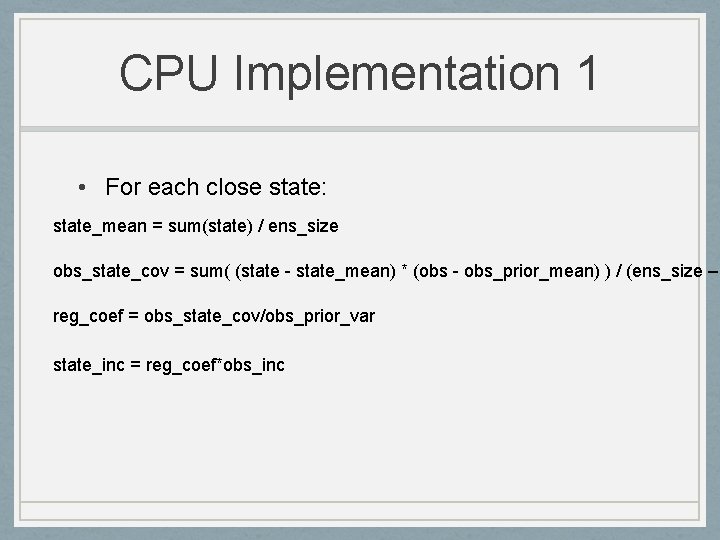

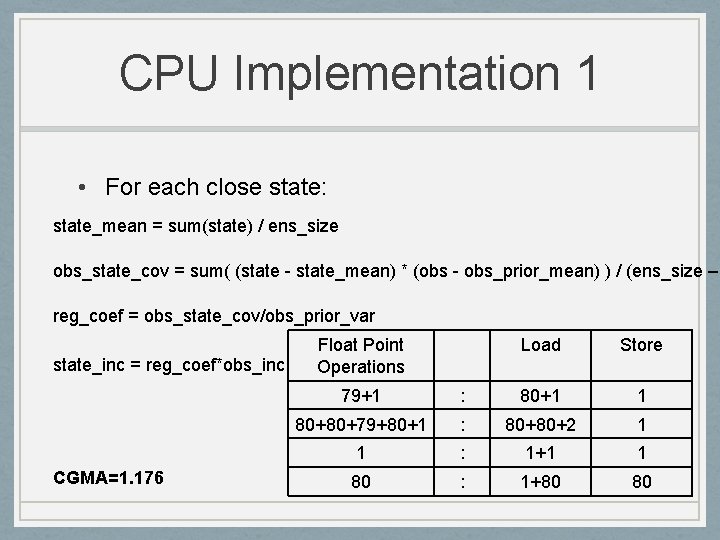

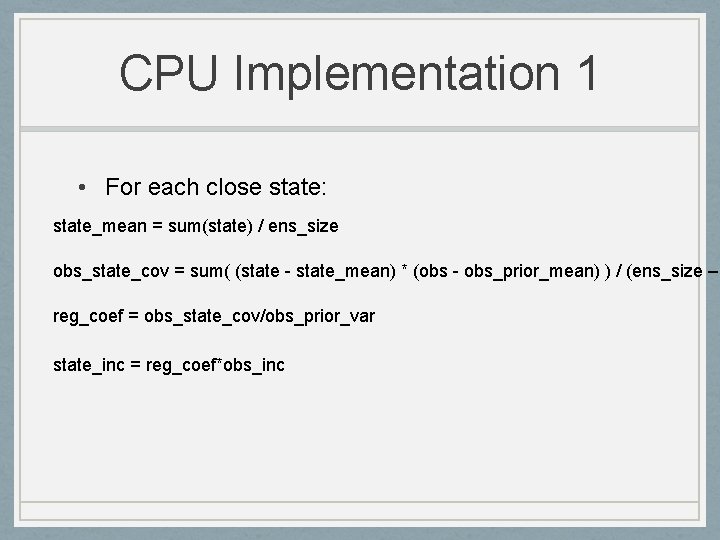

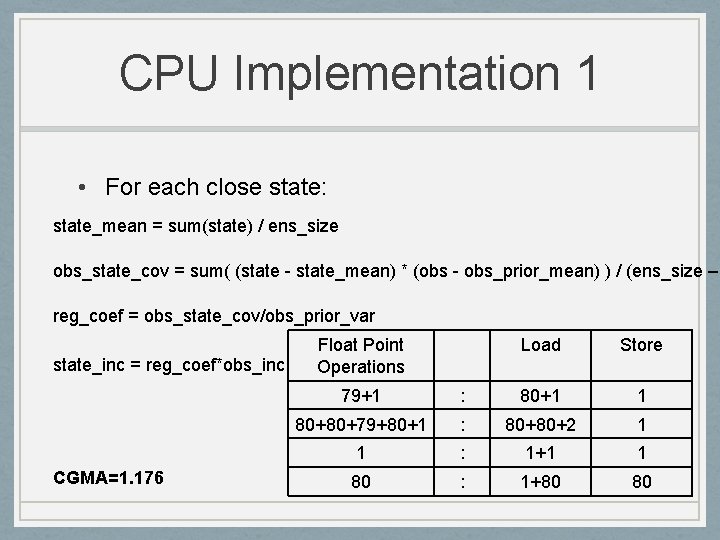

CPU Implementation 1
- For each close state:
  - `state_mean = sum(state) / ens_size`
  - `obs_state_cov = sum((state - state_mean) * (obs - obs_prior_mean)) / (ens_size - 1)`
  - `reg_coef = obs_state_cov / obs_prior_var`
  - `state_inc = reg_coef * obs_inc`
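The four statements above can be sketched in plain Python for a single close state. This is only an illustration of the arithmetic, not the DART Fortran routine; the function name `update_from_obs_inc_v1` and the list-based arguments are assumptions made for the example.

```python
def update_from_obs_inc_v1(state, obs, obs_prior_mean, obs_prior_var, obs_inc):
    """Regress one state variable's ensemble onto an observation.

    state, obs and obs_inc are sequences with one entry per ensemble member.
    (Hypothetical helper; mirrors the pseudocode of CPU Implementation 1.)
    """
    ens_size = len(state)
    state_mean = sum(state) / ens_size
    # Sample covariance between the state ensemble and the observation ensemble.
    obs_state_cov = sum(
        (s - state_mean) * (o - obs_prior_mean) for s, o in zip(state, obs)
    ) / (ens_size - 1)
    reg_coef = obs_state_cov / obs_prior_var
    # Scale every observation increment by the regression coefficient.
    state_inc = [reg_coef * inc for inc in obs_inc]
    return reg_coef, state_inc


if __name__ == "__main__":
    obs = [2.0, 4.0, 6.0, 8.0]
    reg_coef, state_inc = update_from_obs_inc_v1(
        state=[1.0, 2.0, 3.0, 4.0],
        obs=obs,
        obs_prior_mean=5.0,
        obs_prior_var=20.0 / 3.0,  # sample variance of obs
        obs_inc=[0.2, 0.2, 0.2, 0.2],
    )
    print(reg_coef, state_inc)
```

Note that `state_mean` and the `(obs - obs_prior_mean)` terms are recomputed for every close state in this form.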

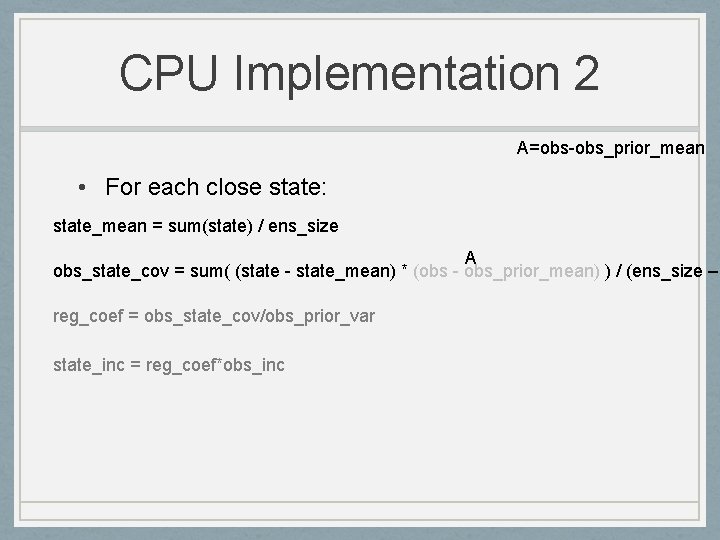

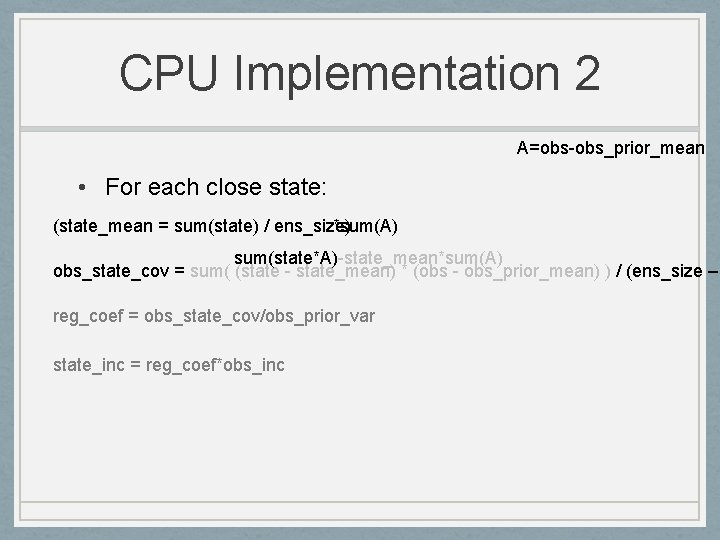

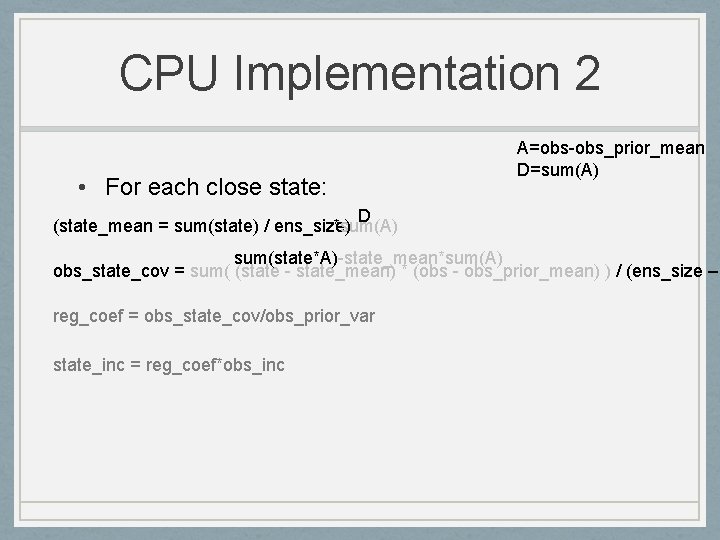

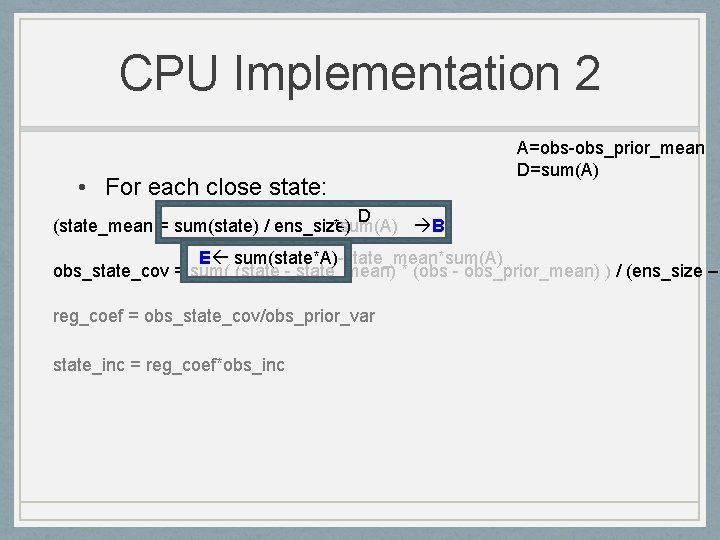

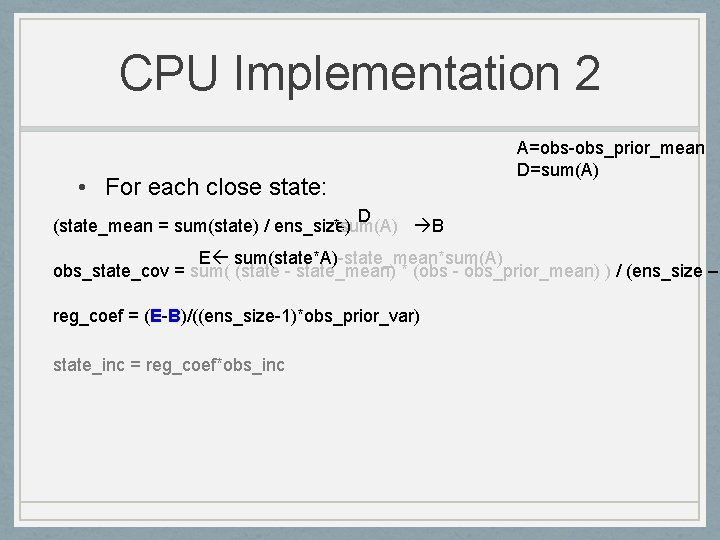

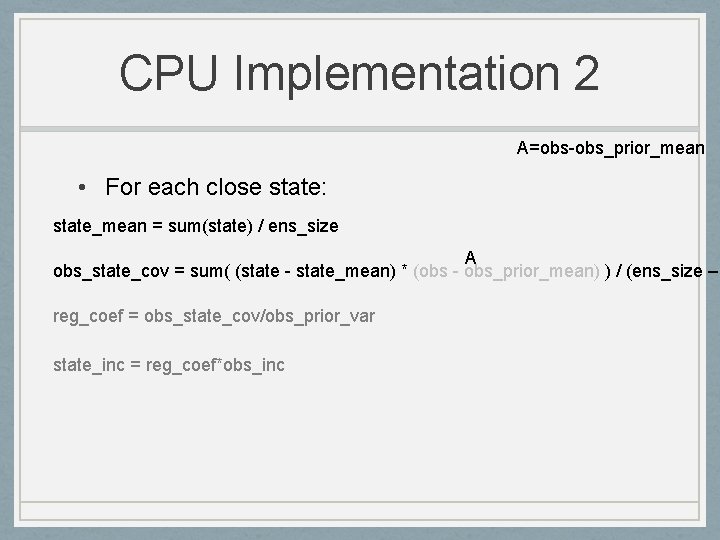

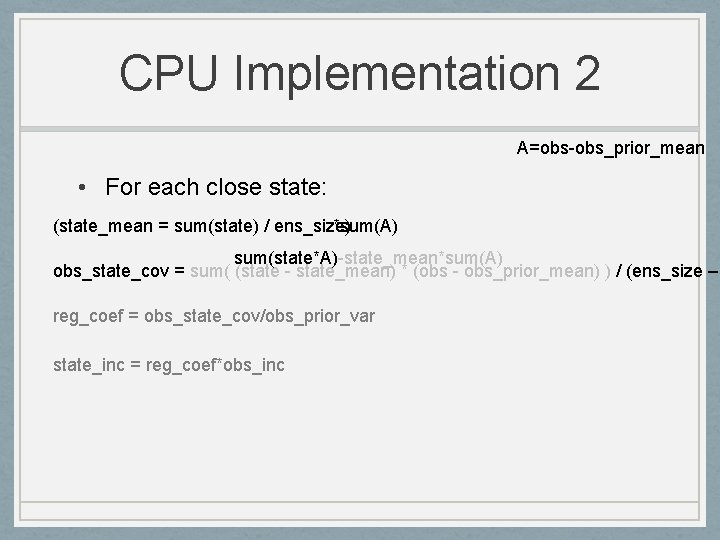

CPU Implementation 2
- Computed once, before the loop: `A = obs - obs_prior_mean`
- For each close state:
  - `state_mean = sum(state) / ens_size`
  - `obs_state_cov = sum((state - state_mean) * A) / (ens_size - 1)`
  - `reg_coef = obs_state_cov / obs_prior_var`
  - `state_inc = reg_coef * obs_inc`

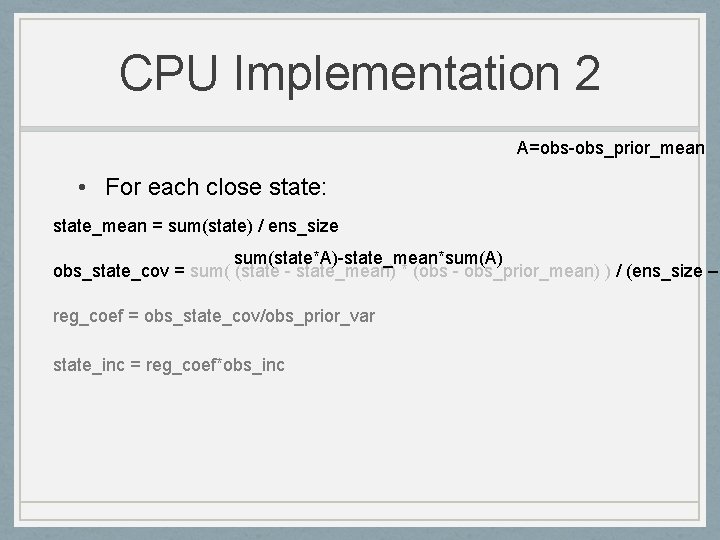

CPU Implementation 2
- Computed once, before the loop: `A = obs - obs_prior_mean`
- Expanding the product: `sum((state - state_mean) * A) = sum(state * A) - state_mean * sum(A)`
- For each close state:
  - `state_mean = sum(state) / ens_size`
  - `obs_state_cov = (sum(state * A) - state_mean * sum(A)) / (ens_size - 1)`
  - `reg_coef = obs_state_cov / obs_prior_var`
  - `state_inc = reg_coef * obs_inc`

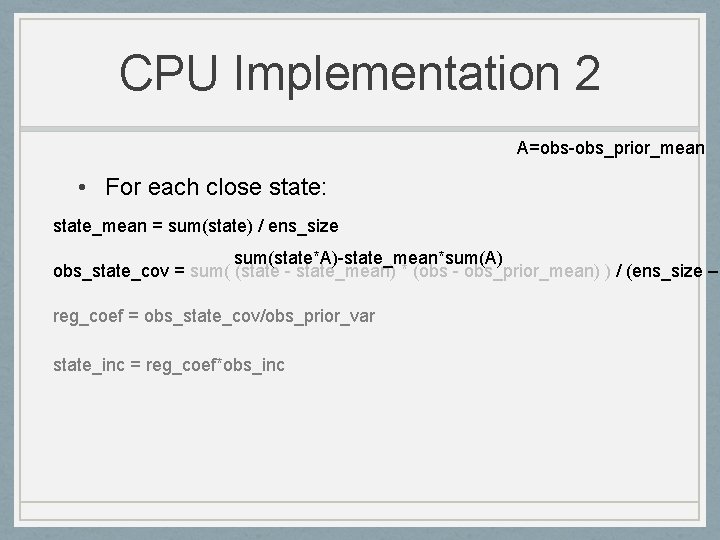

CPU Implementation 2
- Computed once, before the loop: `A = obs - obs_prior_mean`
- Substituting `state_mean = sum(state) / ens_size` into the second term
- For each close state:
  - `obs_state_cov = (sum(state * A) - (sum(state) / ens_size) * sum(A)) / (ens_size - 1)`
  - `reg_coef = obs_state_cov / obs_prior_var`
  - `state_inc = reg_coef * obs_inc`

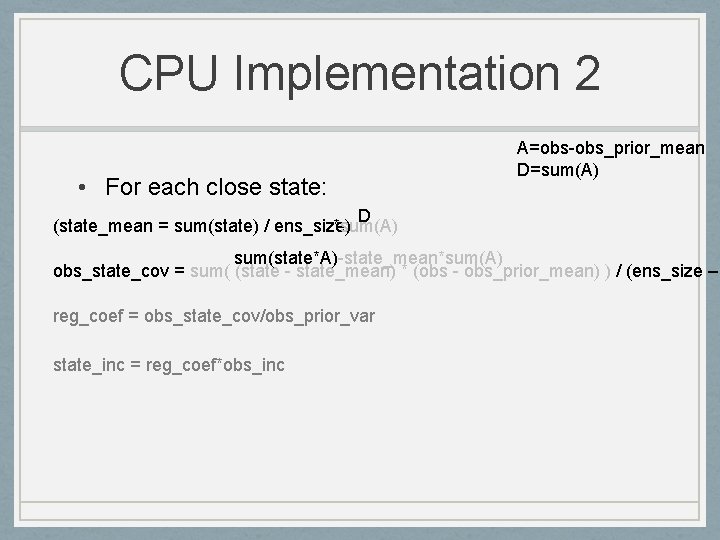

CPU Implementation 2
- Computed once, before the loop: `A = obs - obs_prior_mean`, `D = sum(A)`
- For each close state:
  - `obs_state_cov = (sum(state * A) - (sum(state) / ens_size) * D) / (ens_size - 1)`
  - `reg_coef = obs_state_cov / obs_prior_var`
  - `state_inc = reg_coef * obs_inc`

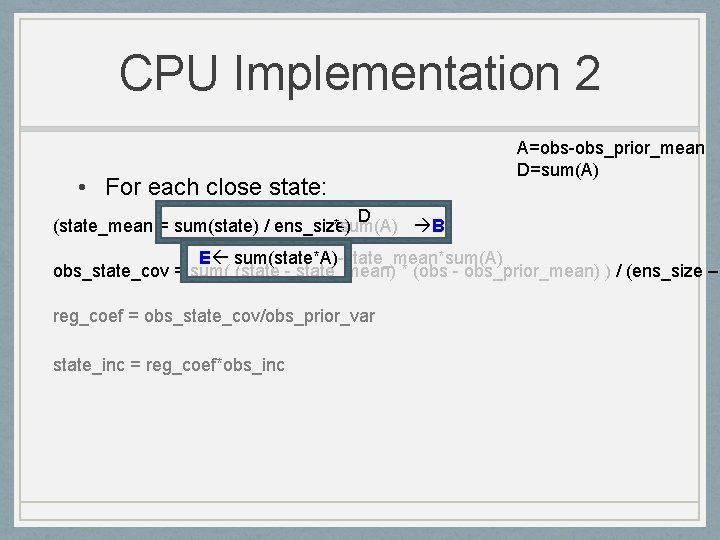

CPU Implementation 2
- Computed once, before the loop: `A = obs - obs_prior_mean`, `D = sum(A)`
- For each close state:
  - `B = (sum(state) / ens_size) * D`
  - `E = sum(state * A)`
  - `obs_state_cov = (E - B) / (ens_size - 1)`
  - `reg_coef = obs_state_cov / obs_prior_var`
  - `state_inc = reg_coef * obs_inc`

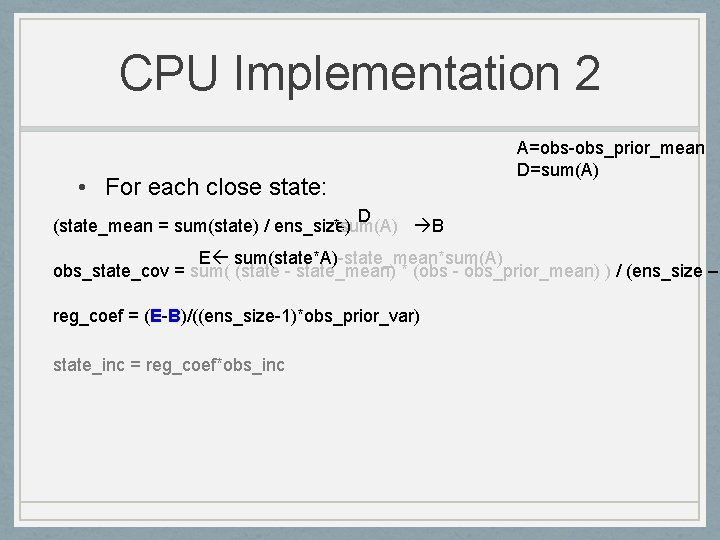

CPU Implementation 2
- Computed once, before the loop: `A = obs - obs_prior_mean`, `D = sum(A)`
- For each close state:
  - `B = (sum(state) / ens_size) * D`
  - `E = sum(state * A)`
  - `reg_coef = (E - B) / ((ens_size - 1) * obs_prior_var)`
  - `state_inc = reg_coef * obs_inc`

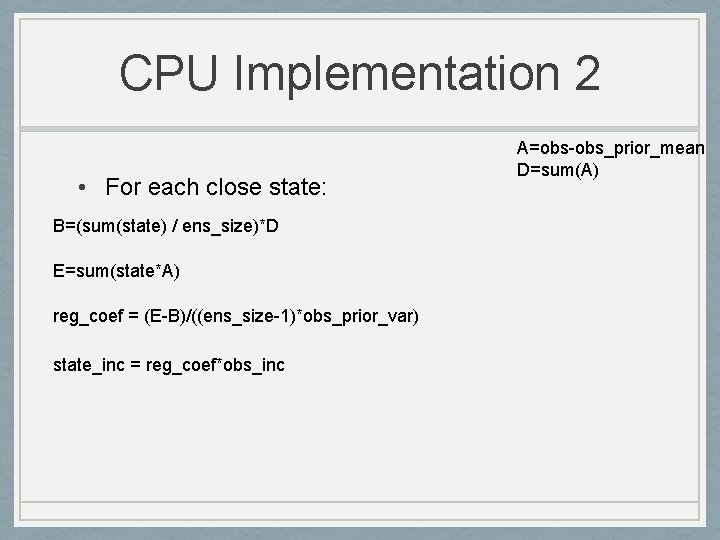

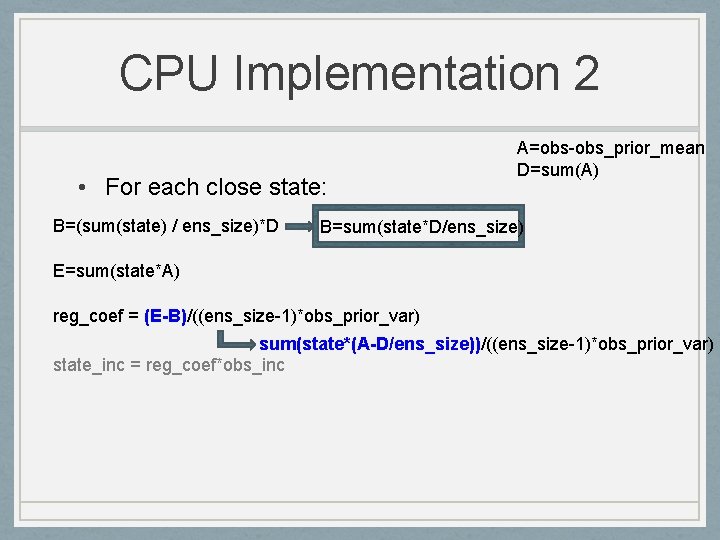

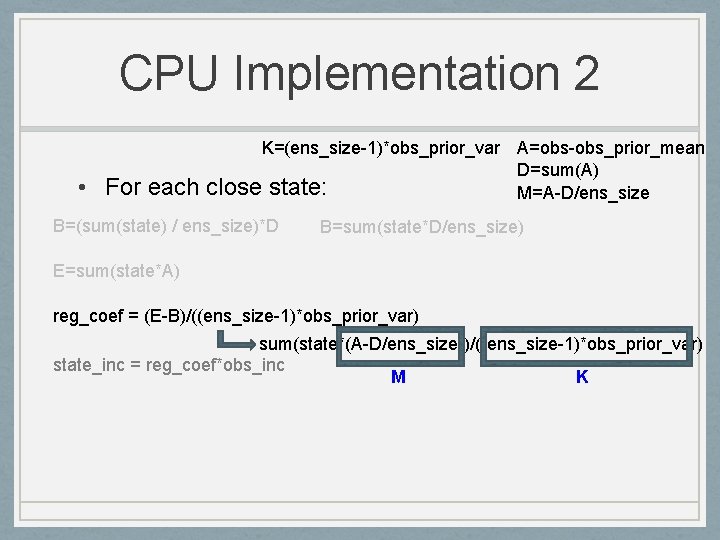

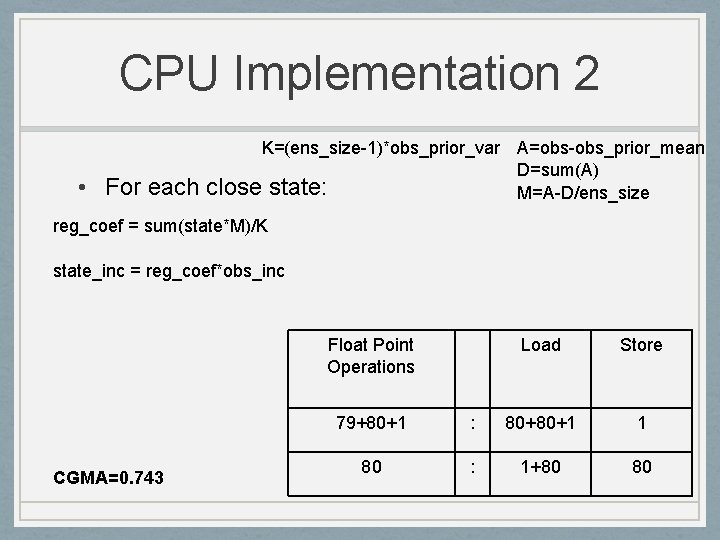

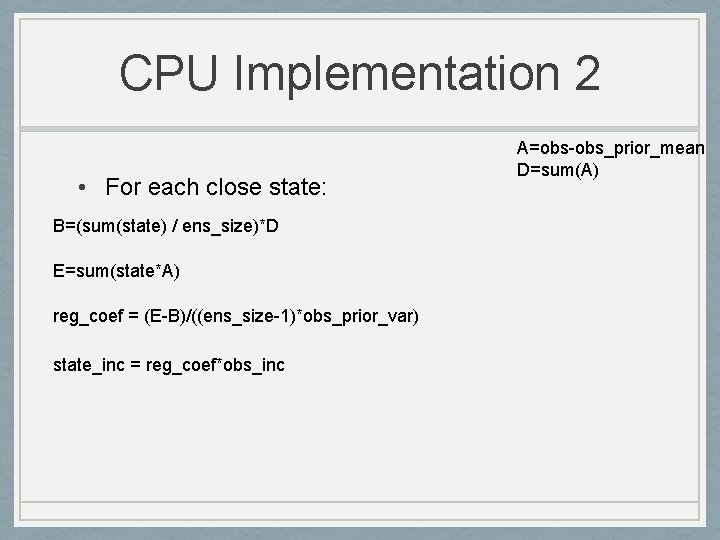

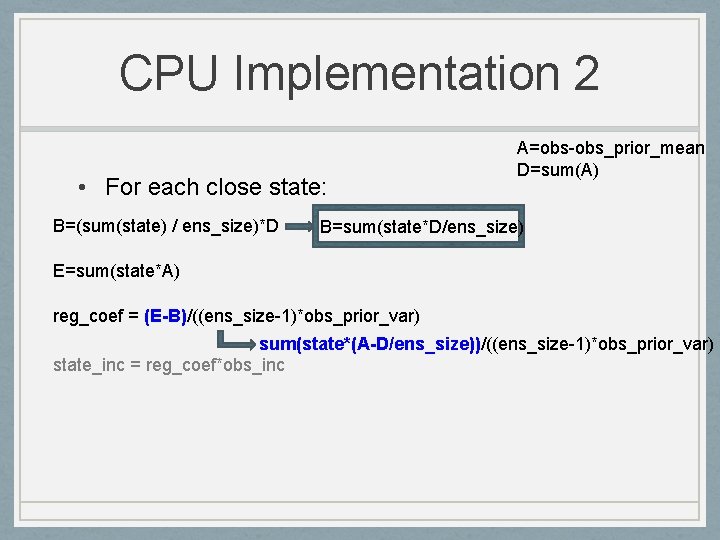

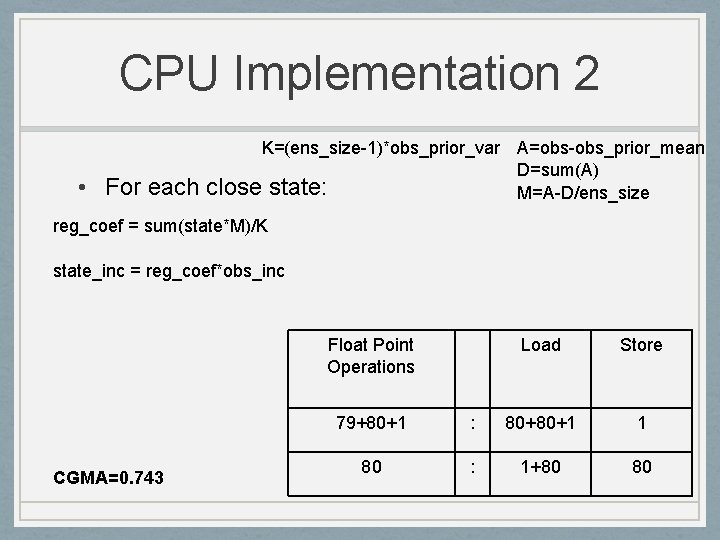

CPU Implementation 2
- Computed once, before the loop: `A = obs - obs_prior_mean`, `D = sum(A)`
- For each close state:
  - `B = (sum(state) / ens_size) * D`
  - `E = sum(state * A)`
  - `reg_coef = (E - B) / ((ens_size - 1) * obs_prior_var)`
  - `state_inc = reg_coef * obs_inc`

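CPU Implementation 2
- Computed once, before the loop: `A = obs - obs_prior_mean`, `D = sum(A)`
- Rewriting B as a sum: `B = (sum(state) / ens_size) * D = sum(state * D / ens_size)`
- With `E = sum(state * A)`, the two sums merge: `E - B = sum(state * (A - D / ens_size))`
- For each close state:
  - `reg_coef = sum(state * (A - D / ens_size)) / ((ens_size - 1) * obs_prior_var)`
  - `state_inc = reg_coef * obs_inc`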
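The algebra behind this rewrite can be written out in one chain. The symbols here are shorthand introduced only for this derivation: $N$ is `ens_size`, $s_j$ and $o_j$ are the ensemble members of `state` and `obs`, $\bar{s}$ is `state_mean`, $\bar{o}$ is `obs_prior_mean`, and $\sigma_o^2$ is `obs_prior_var`.

$$
\begin{aligned}
A_j &= o_j - \bar{o}, \qquad D = \sum_{j=1}^{N} A_j, \qquad \bar{s} = \frac{1}{N}\sum_{j=1}^{N} s_j \\
\sum_{j=1}^{N} (s_j - \bar{s})\,A_j
  &= \underbrace{\sum_{j=1}^{N} s_j A_j}_{E} \;-\; \underbrace{\bar{s}\,D}_{B} \\
  &= \sum_{j=1}^{N} s_j A_j - \sum_{j=1}^{N} s_j \frac{D}{N}
   = \sum_{j=1}^{N} s_j \left(A_j - \frac{D}{N}\right) \\
\text{reg\_coef}
  &= \frac{E - B}{(N-1)\,\sigma_o^2}
   = \frac{\sum_{j=1}^{N} s_j \left(A_j - D/N\right)}{(N-1)\,\sigma_o^2}
\end{aligned}
$$

The term $A_j - D/N$ and the denominator $(N-1)\,\sigma_o^2$ depend only on the observation, so neither needs to be recomputed per close state.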

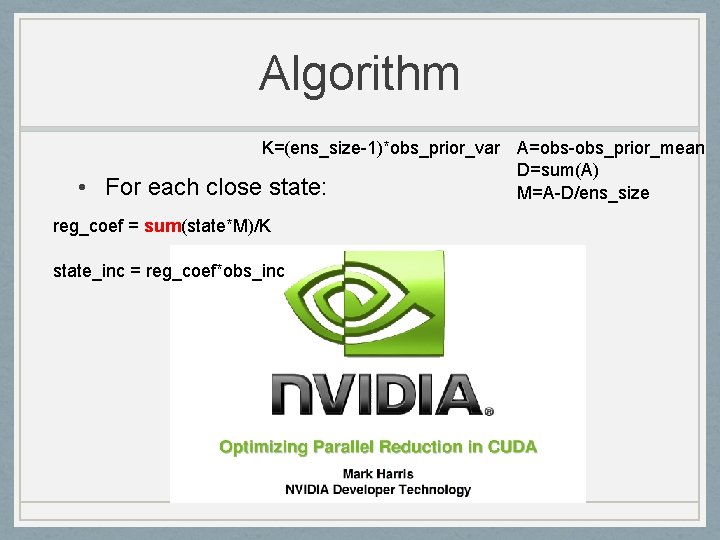

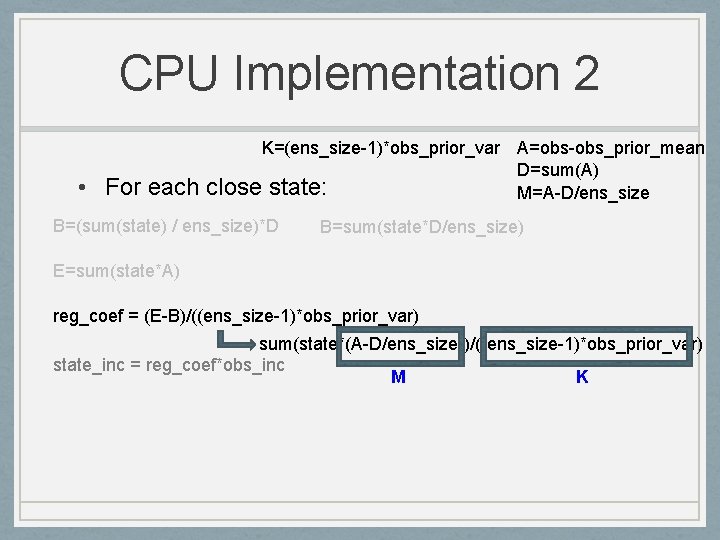

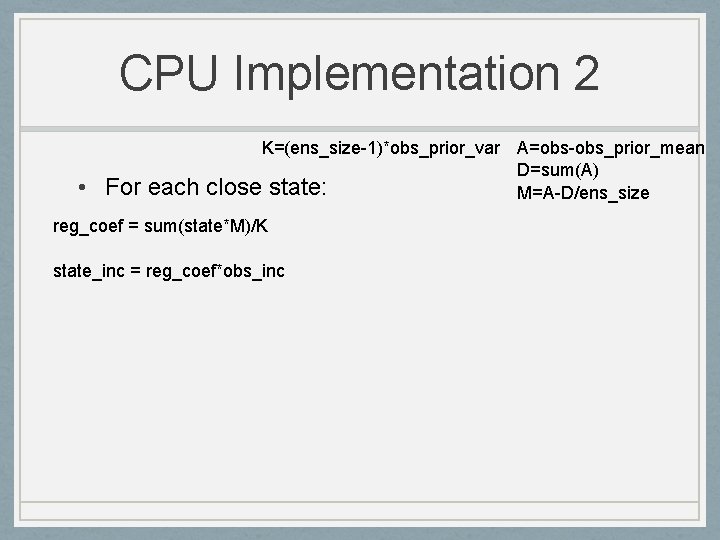

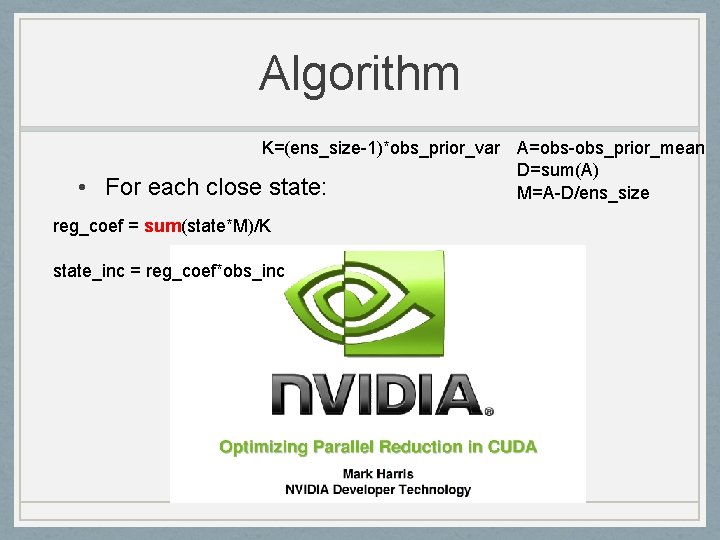

CPU Implementation 2
- Computed once, before the loop:
  - `K = (ens_size - 1) * obs_prior_var`
  - `A = obs - obs_prior_mean`
  - `D = sum(A)`
  - `M = A - D / ens_size`
- For each close state:
  - `reg_coef = sum(state * (A - D / ens_size)) / ((ens_size - 1) * obs_prior_var)`, where the numerator factor is M and the denominator is K
  - `state_inc = reg_coef * obs_inc`

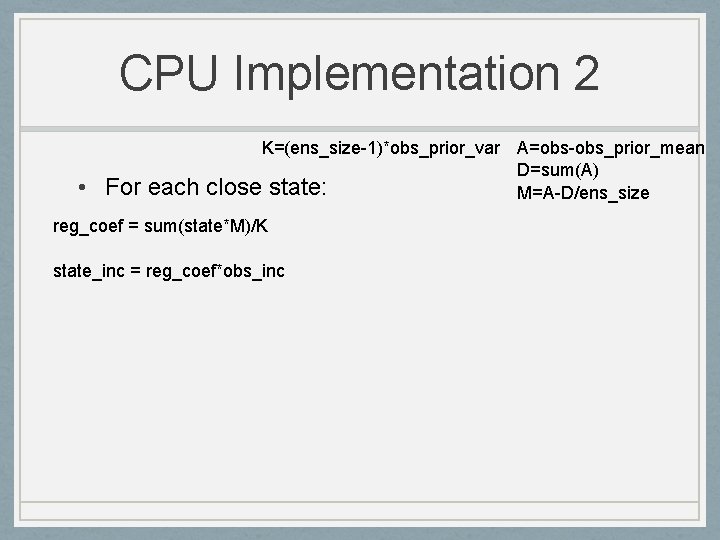

CPU Implementation 2
- Computed once, before the loop:
  - `K = (ens_size - 1) * obs_prior_var`
  - `A = obs - obs_prior_mean`
  - `D = sum(A)`
  - `M = A - D / ens_size`
- For each close state:
  - `reg_coef = sum(state * M) / K`
  - `state_inc = reg_coef * obs_inc`
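A minimal Python sketch of this restructured loop follows. The function names `precompute_obs_terms` and `update_close_states`, and the list-of-lists layout for the close states, are assumptions for illustration and are not taken from DART.

```python
def precompute_obs_terms(obs, obs_prior_mean, obs_prior_var):
    """Compute the observation-only terms M and K once per observation."""
    ens_size = len(obs)
    K = (ens_size - 1) * obs_prior_var
    A = [o - obs_prior_mean for o in obs]
    D = sum(A)
    M = [a - D / ens_size for a in A]
    return M, K


def update_close_states(states, M, K, obs_inc):
    """For each close state, one dot product and one scaling remain."""
    results = []
    for state in states:
        reg_coef = sum(s * m for s, m in zip(state, M)) / K
        state_inc = [reg_coef * inc for inc in obs_inc]
        results.append((reg_coef, state_inc))
    return results


if __name__ == "__main__":
    obs = [2.0, 4.0, 6.0, 8.0]
    M, K = precompute_obs_terms(obs, obs_prior_mean=5.0, obs_prior_var=20.0 / 3.0)
    states = [[1.0, 2.0, 3.0, 4.0], [4.0, 3.0, 2.0, 1.0]]
    for reg_coef, state_inc in update_close_states(states, M, K, [0.2] * 4):
        print(reg_coef, state_inc)
```

The per-state work drops to a single pass over the ensemble for `reg_coef` plus the scaling for `state_inc`; everything that depends only on the observation is hoisted out of the loop.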

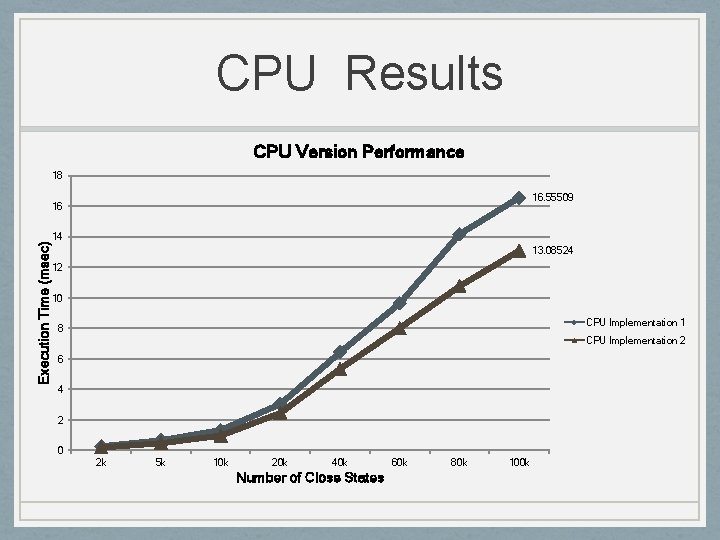

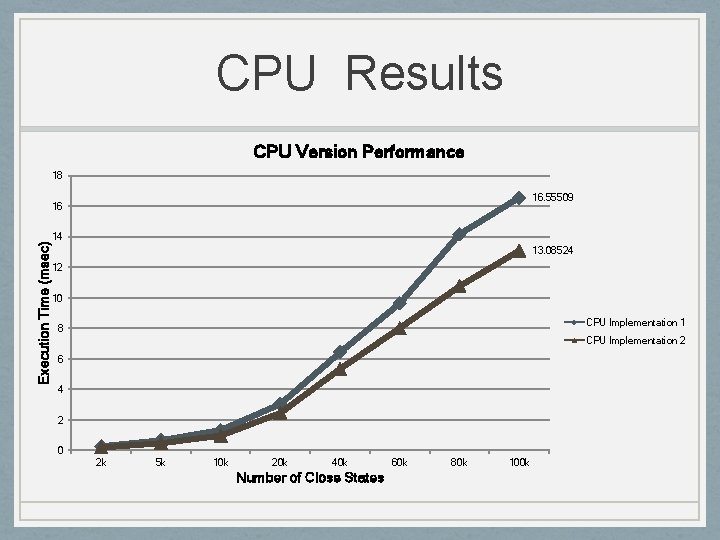

CPU Results
- Figure: CPU Version Performance. Execution time (msec) against number of close states (2k, 5k, 10k, 20k, 40k, 60k, 80k, 100k) for CPU Implementation 1 and CPU Implementation 2.
- Labeled values: 16.55509 msec (CPU Implementation 1) and 13.08524 msec (CPU Implementation 2).

Algorithm
- Computed once, before the loop:
  - `K = (ens_size - 1) * obs_prior_var`
  - `A = obs - obs_prior_mean`
  - `D = sum(A)`
  - `M = A - D / ens_size`
- For each close state:
  - `reg_coef = sum(state * M) / K`
  - `state_inc = reg_coef * obs_inc`

![Algorithm: Not Enough Computation](https://slidetodoc.com/presentation_image/0dcc0e343c4100425a9bd4eabed89f32/image-19.jpg)

Algorithm
- Not enough computation
  - sum(array[80])
    - 79 sums
    - 7 steps (after padding)
  - sum over 4 * 1024 * 1024 = 4,194,304 elements
    - 4,194,303 sums
    - 22 steps
- Image credits: www.manutritionniste.com, en.wikipedia.org
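The step and sum counts for a pairwise (tree) reduction can be checked with a small Python sketch. The helper `tree_sum` is hypothetical and assumes a non-empty input; it carries an unpaired element to the next level instead of padding, which yields the same number of steps for 80 elements.

```python
def tree_sum(values):
    """Pairwise tree reduction; returns (total, additions, steps)."""
    level = list(values)
    adds = 0
    steps = 0
    while len(level) > 1:
        # Add neighbouring pairs; each pass is one parallel step.
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        adds += len(nxt)
        if len(level) % 2:
            nxt.append(level[-1])  # odd element is carried forward unchanged
        level = nxt
        steps += 1
    return level[0], adds, steps


if __name__ == "__main__":
    total, adds, steps = tree_sum(range(80))
    print(total, adds, steps)
```

With only 80 elements per reduction, each tree has very few additions to spread across threads, which is the point the slide makes about the problem size.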

Algorithm
- Low CGMA
  - CGMA: compute to global memory access ratio

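CPU Implementation 1: CGMA = 1.176

| Statement (for each close state) | Floating-point operations | Loads | Stores |
|---|---|---|---|
| `state_mean = sum(state) / ens_size` | 79+1 | 80+1 | 1 |
| `obs_state_cov = sum((state - state_mean) * (obs - obs_prior_mean)) / (ens_size - 1)` | 80+80+79+80+1 | 80+80+2 | 1 |
| `reg_coef = obs_state_cov / obs_prior_var` | 1 | 1+1 | 1 |
| `state_inc = reg_coef * obs_inc` | 80 | 1+80 | 80 |

Totals for an 80-member ensemble: 481 floating-point operations over 409 memory accesses (326 loads and 83 stores).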

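CPU Implementation 2: CGMA = 0.743
- Computed once, before the loop: `K = (ens_size - 1) * obs_prior_var`, `A = obs - obs_prior_mean`, `D = sum(A)`, `M = A - D / ens_size`

| Statement (for each close state) | Floating-point operations | Loads | Stores |
|---|---|---|---|
| `reg_coef = sum(state * M) / K` | 79+80+1 | 80+80+1 | 1 |
| `state_inc = reg_coef * obs_inc` | 80 | 1+80 | 80 |

Totals for an 80-member ensemble: 240 floating-point operations over 323 memory accesses (242 loads and 81 stores).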
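The two CGMA figures follow directly from the per-statement counts on the slides. The short Python check below reproduces them; the tuple layout and the function name `cgma` are choices made for this example only.

```python
def cgma(counts):
    """Compute-to-global-memory-access ratio.

    counts is a list of (flops, loads, stores) tuples, one per statement.
    """
    flops = sum(f for f, _, _ in counts)
    accesses = sum(l + s for _, l, s in counts)
    return flops / accesses


# Per-statement counts for an 80-member ensemble, as listed on the slides.
IMPL_1 = [
    (79 + 1, 80 + 1, 1),                      # state_mean
    (80 + 80 + 79 + 80 + 1, 80 + 80 + 2, 1),  # obs_state_cov
    (1, 1 + 1, 1),                            # reg_coef
    (80, 1 + 80, 80),                         # state_inc
]
IMPL_2 = [
    (79 + 80 + 1, 80 + 80 + 1, 1),            # reg_coef = sum(state * M) / K
    (80, 1 + 80, 80),                         # state_inc
]

if __name__ == "__main__":
    print(round(cgma(IMPL_1), 3), round(cgma(IMPL_2), 3))
```

Removing redundant arithmetic lowers the ratio because the remaining work is dominated by loads and stores of the ensemble data.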

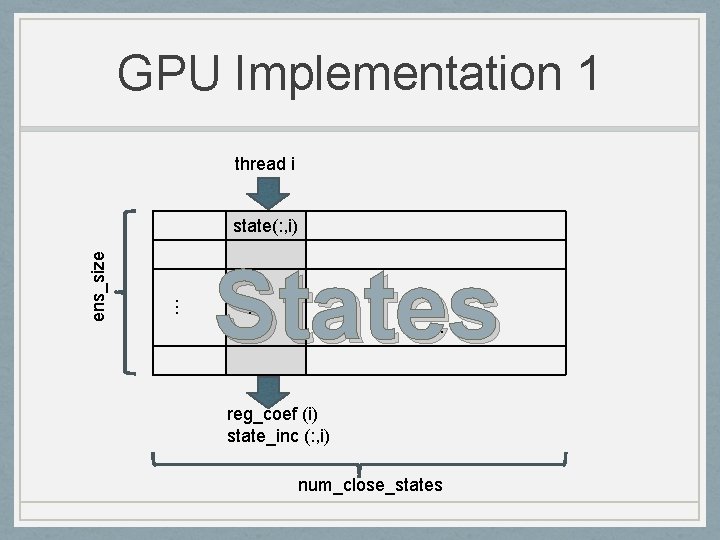

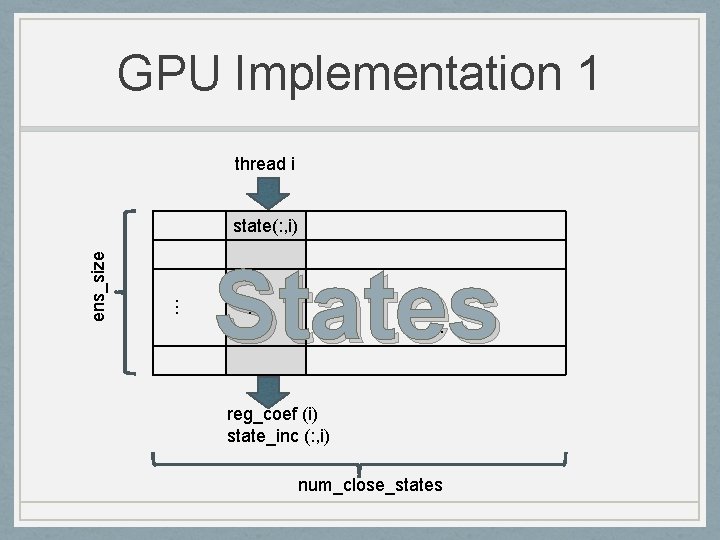

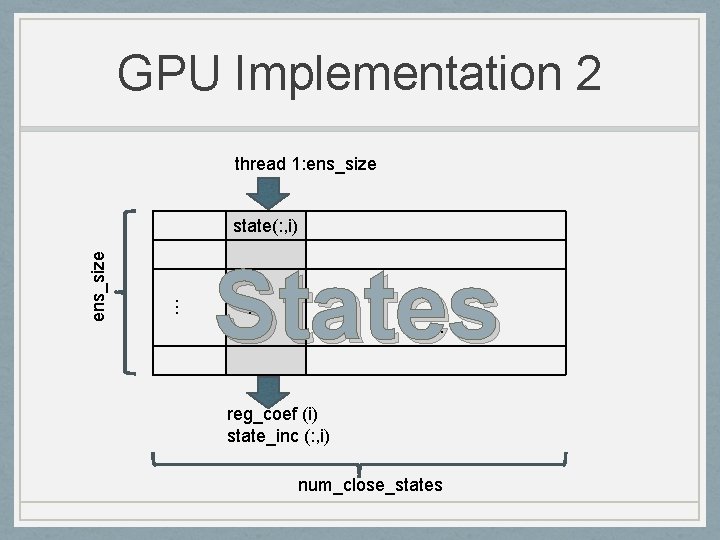

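GPU Implementation 1
- One thread per close state: thread i reads the column state(:, i), which holds ens_size members
- Thread i writes reg_coef(i) and state_inc(:, i)
- The states array has num_close_states columns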

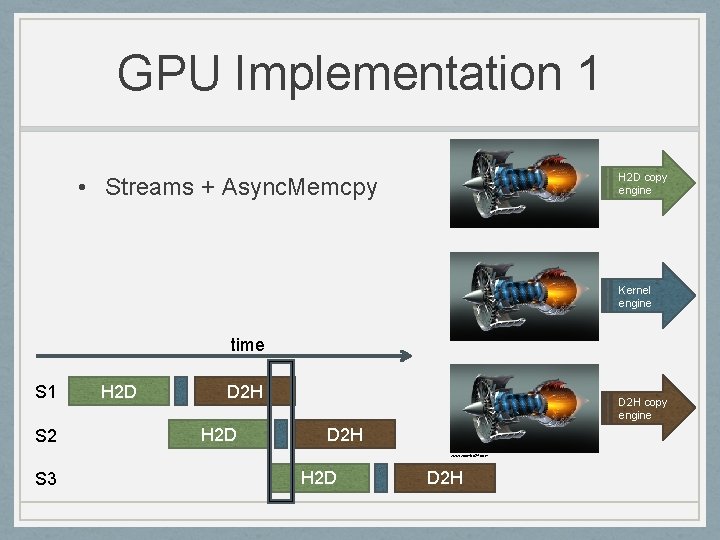

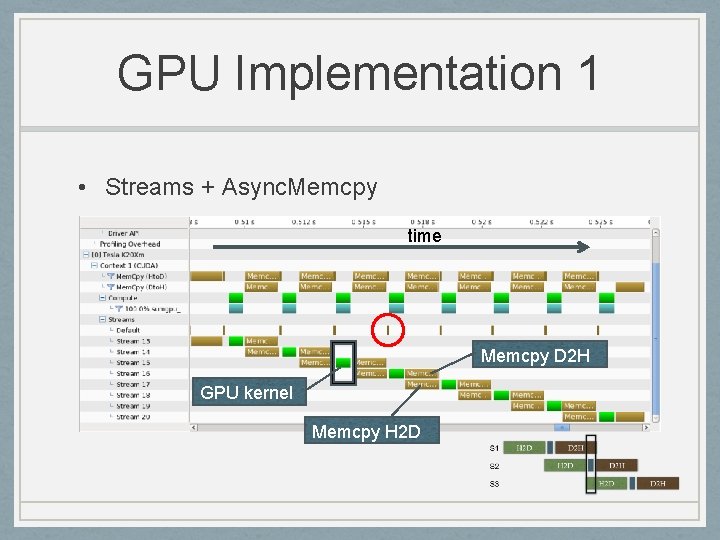

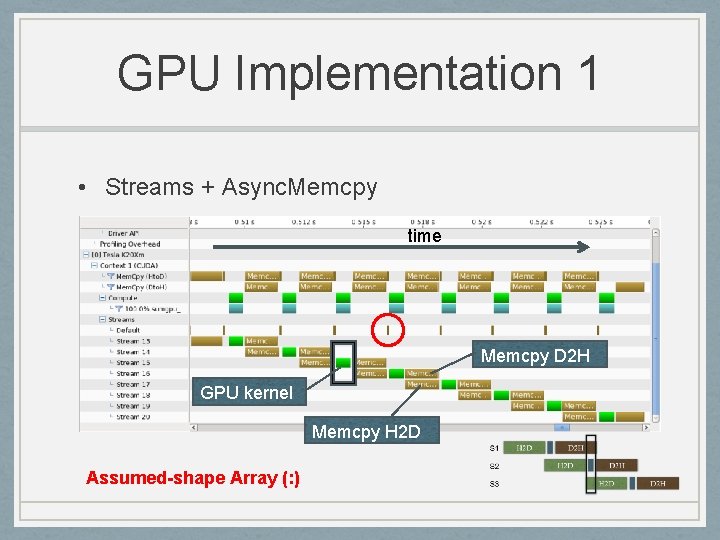

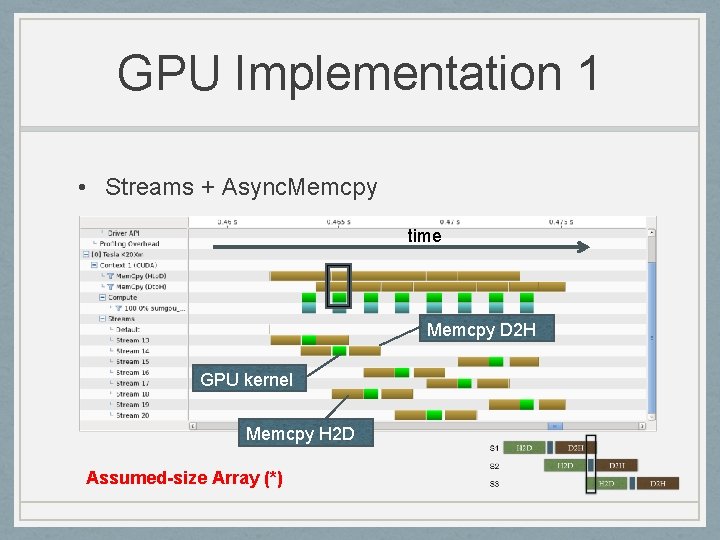

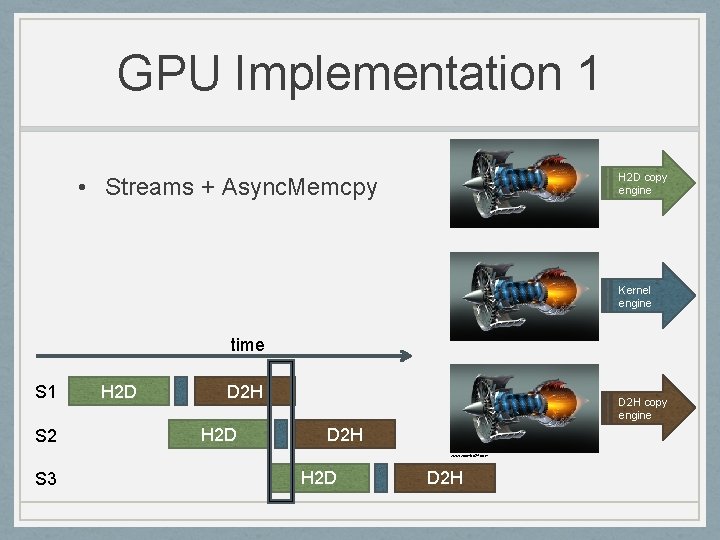

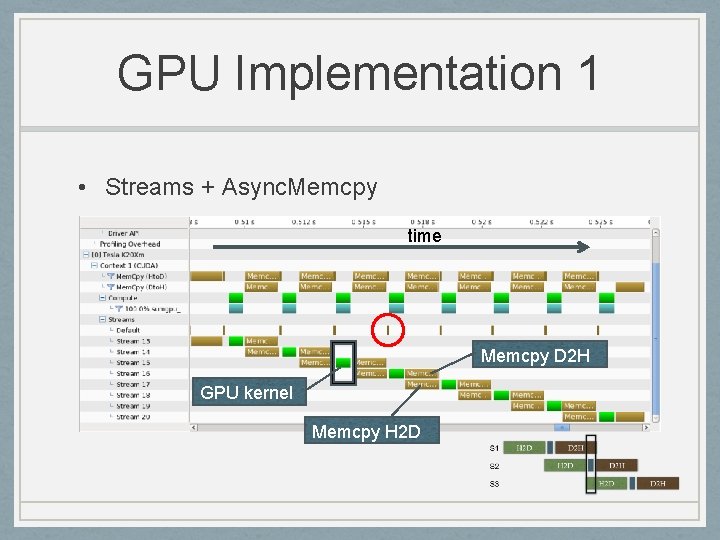

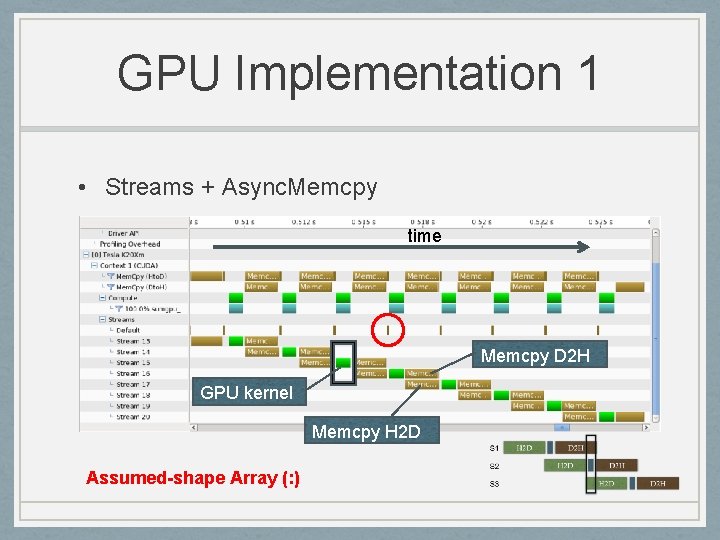

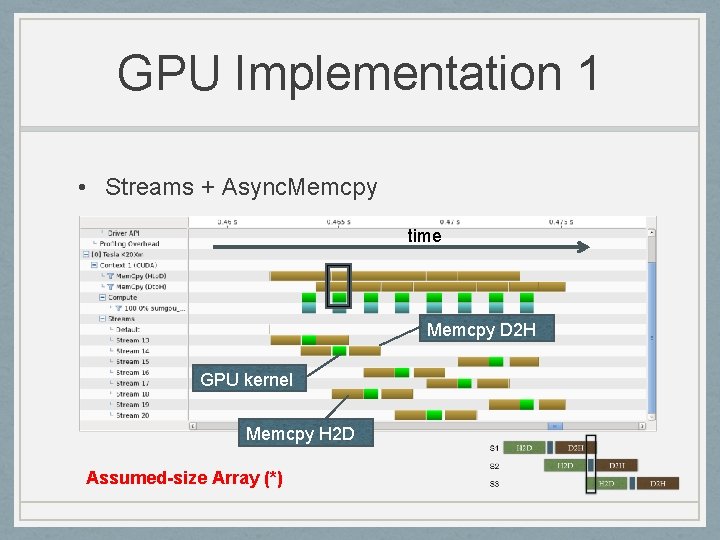

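GPU Implementation 1
- Streams + async memcpy
- Timeline diagram: streams S1, S2, and S3 each pass through the H2D copy engine, the kernel engine, and the D2H copy engine, so copies and kernels from different streams overlap in time
- Image credit: www.zoombd24.com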
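The benefit of overlapping the three engines can be illustrated with a toy timing model in Python. This is not CUDA code and uses no measured data: the durations are hypothetical time units, and the model assumes each engine (H2D copy, kernel, D2H copy) handles one chunk at a time, in order.

```python
def serial_time(n_chunks, h2d, kernel, d2h):
    """Every chunk finishes its copy-in, kernel and copy-out before the next starts."""
    return n_chunks * (h2d + kernel + d2h)


def pipelined_time(n_chunks, h2d, kernel, d2h):
    """Chunks flow through three engines; each engine is busy with one chunk at a time."""
    h2d_free = kernel_free = d2h_free = 0.0
    for _ in range(n_chunks):
        h2d_done = h2d_free + h2d
        h2d_free = h2d_done
        # The kernel starts once its input has arrived and the kernel engine is idle.
        kernel_done = max(h2d_done, kernel_free) + kernel
        kernel_free = kernel_done
        # The copy-out starts once the kernel is done and the D2H engine is idle.
        d2h_done = max(kernel_done, d2h_free) + d2h
        d2h_free = d2h_done
    return d2h_free


if __name__ == "__main__":
    # Hypothetical durations in arbitrary units, three chunks (streams).
    print(serial_time(3, 1.0, 1.0, 1.0), pipelined_time(3, 1.0, 1.0, 1.0))
```

In this model the pipelined total approaches the time of the slowest engine multiplied by the number of chunks, so a transfer-dominated workload stays limited by the copies even with perfect overlap.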

GPU Implementation 1
- Streams + async memcpy
- Timeline rows over time: Memcpy D2H, GPU kernel, Memcpy H2D

GPU Implementation 1
- Streams + async memcpy, with assumed-shape array arguments `(:)`
- Timeline rows over time: Memcpy D2H, GPU kernel, Memcpy H2D

GPU Implementation 1
- Streams + async memcpy, with assumed-size array arguments `(*)`
- Timeline rows over time: Memcpy D2H, GPU kernel, Memcpy H2D

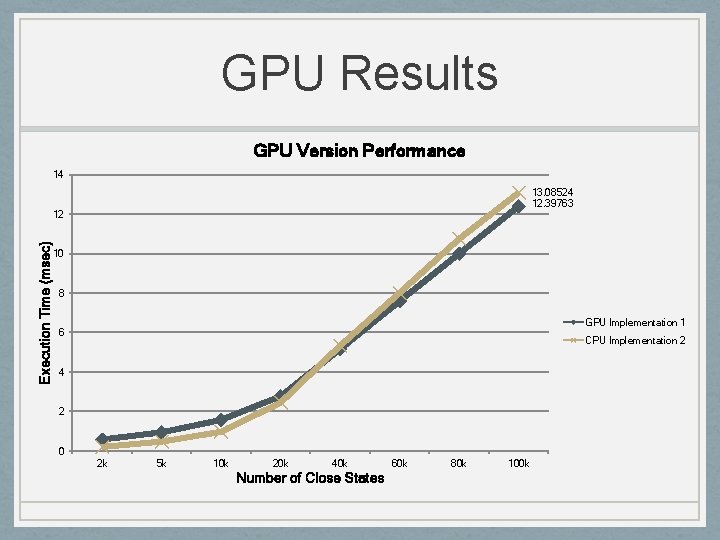

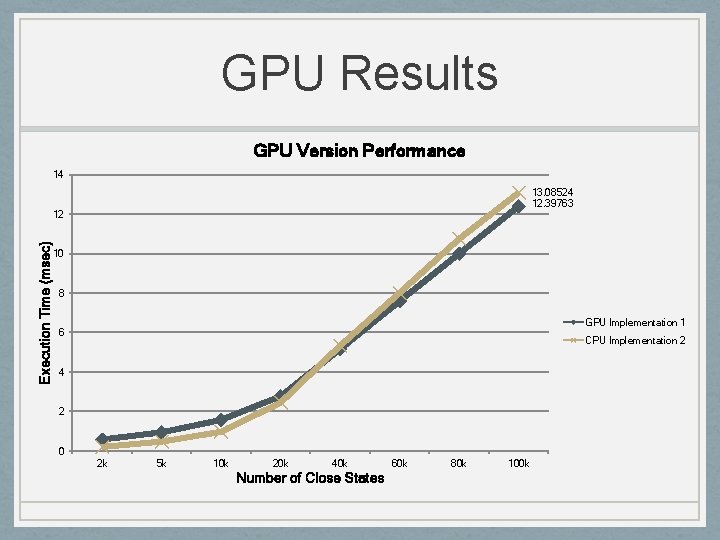

GPU Results
- Figure: GPU Version Performance. Execution time (msec) against number of close states (2k, 5k, 10k, 20k, 40k, 60k, 80k, 100k) for GPU Implementation 1 and CPU Implementation 2.
- Labeled values: 13.08524 msec (CPU Implementation 2) and 12.39763 msec (GPU Implementation 1).

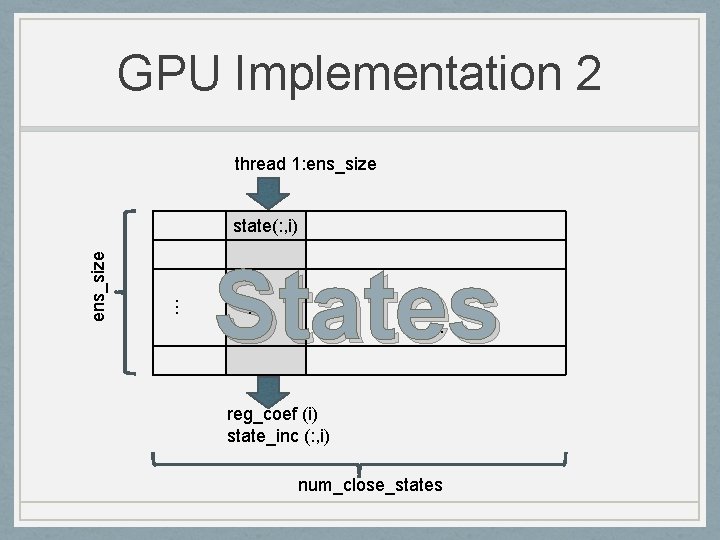

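GPU Implementation 2
- Threads 1:ens_size work together on one close state: the column state(:, i), which holds ens_size members
- Outputs for close state i: reg_coef(i) and state_inc(:, i)
- The states array has num_close_states columns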

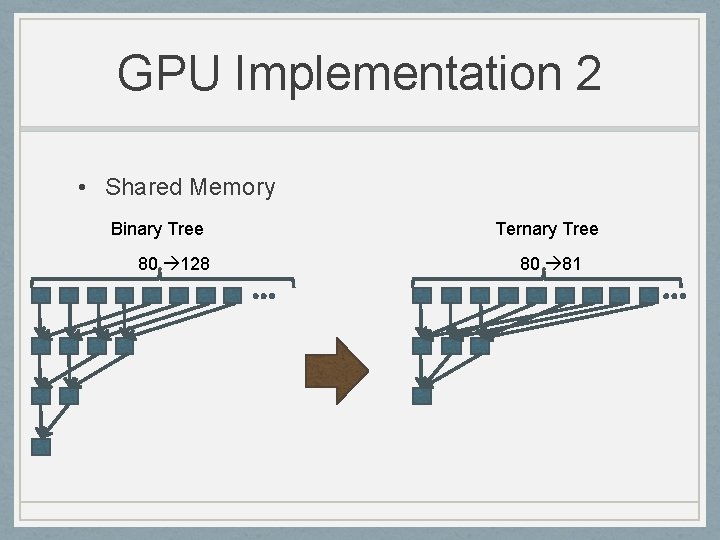

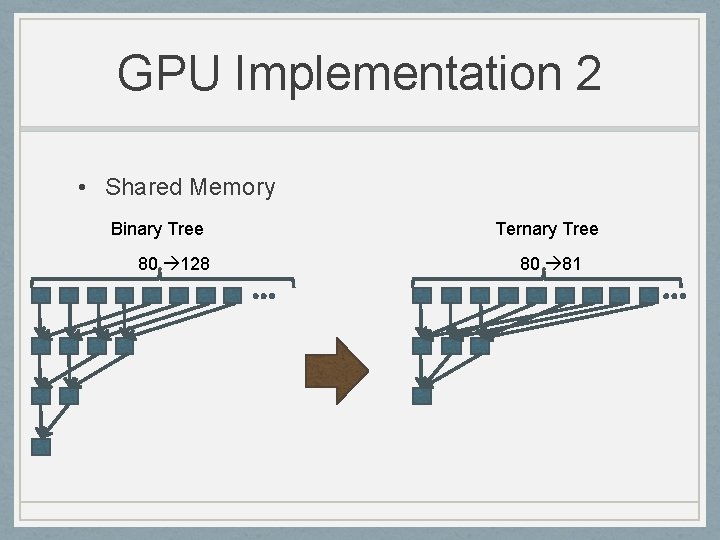

GPU Implementation 2
- Shared memory
- Binary tree: 80 elements padded to 128
- Ternary tree: 80 elements padded to 81
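The padded sizes follow from rounding the element count up to the next power of the tree's branching factor. A small Python sketch, with the hypothetical helper name `padded_size`, reproduces the two figures and the matching number of reduction steps.

```python
def padded_size(n, radix):
    """Smallest power of radix that is >= n, and the number of tree levels."""
    size, steps = 1, 0
    while size < n:
        size *= radix
        steps += 1
    return size, steps


if __name__ == "__main__":
    print(padded_size(80, 2))  # binary tree
    print(padded_size(80, 3))  # ternary tree
```

For 80 elements the ternary tree wastes one padded slot instead of 48 and needs fewer levels, at the cost of two additions per node at each level.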

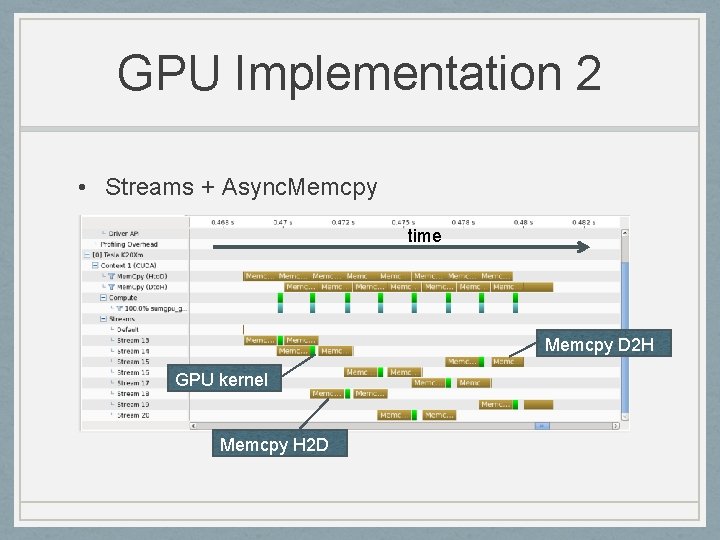

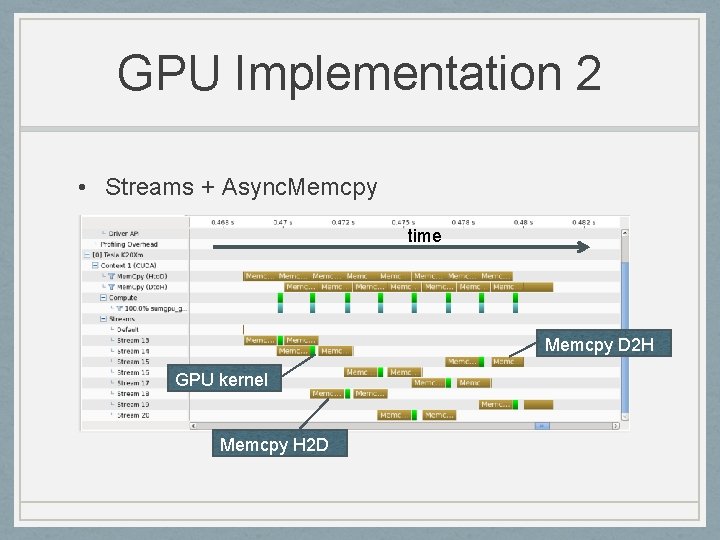

GPU Implementation 2
- Streams + async memcpy
- Timeline rows over time: Memcpy D2H, GPU kernel, Memcpy H2D

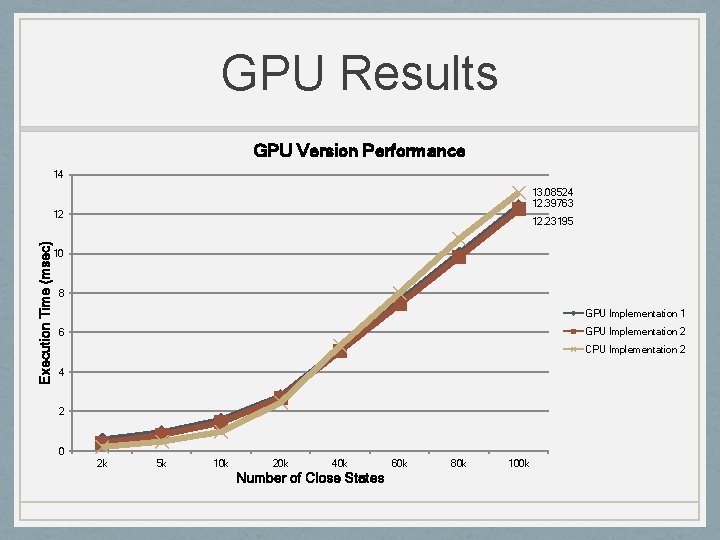

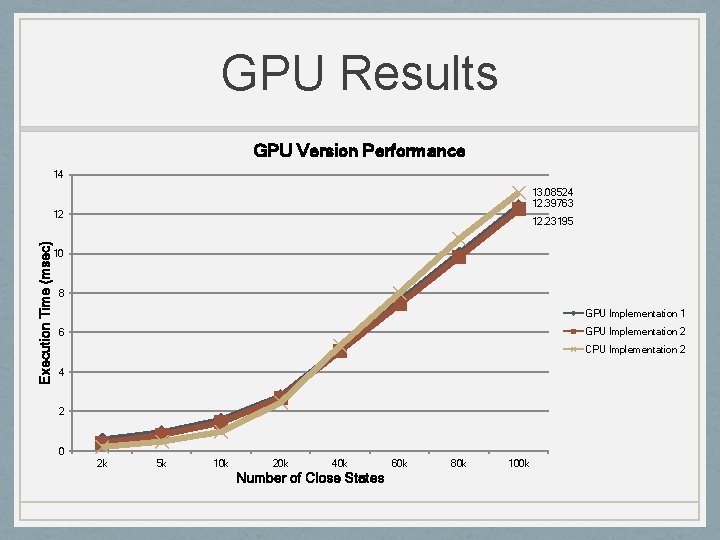

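GPU Results
- Figure: GPU Version Performance. Execution time (msec) against number of close states (2k, 5k, 10k, 20k, 40k, 60k, 80k, 100k) for GPU Implementation 1, GPU Implementation 2, and CPU Implementation 2.
- Labeled values: 13.08524 msec (CPU Implementation 2), 12.39763 msec (GPU Implementation 1), and 12.23195 msec (GPU Implementation 2).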

GPU Implementation 3
- Image credit: pixshark.com

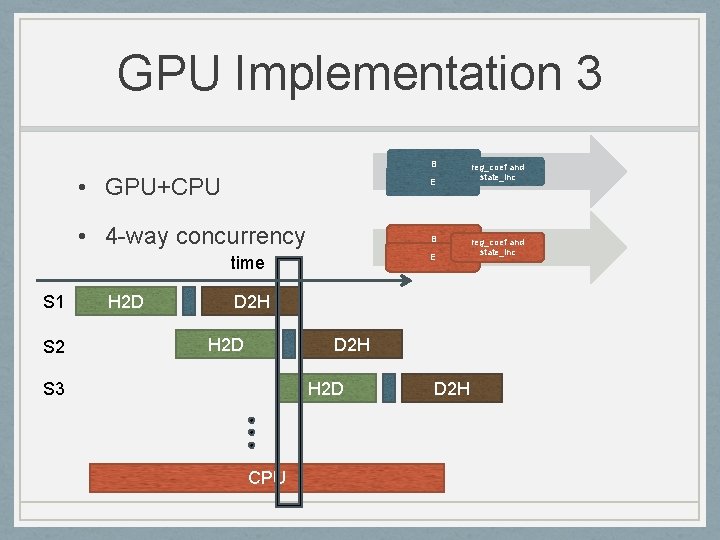

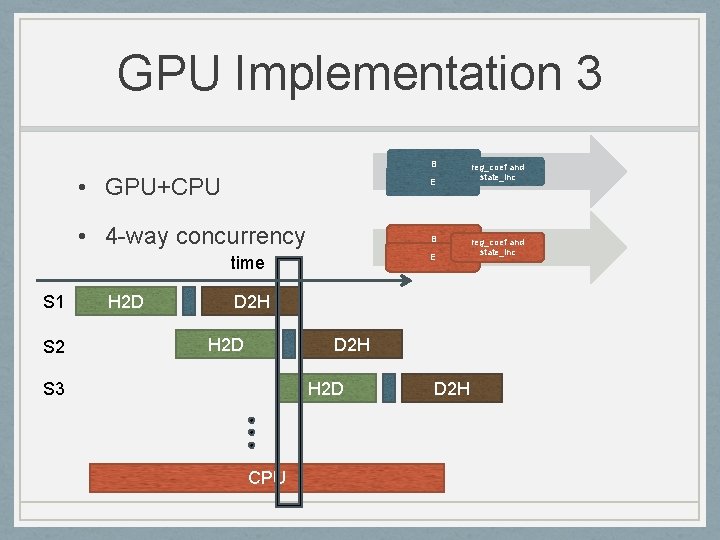

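GPU Implementation 3
- GPU + CPU
- 4-way concurrency
- Timeline diagram: streams S1, S2, and S3 each perform an H2D copy, a kernel labeled with B and E, and a D2H copy, while a CPU row labeled "reg_coef and state_inc" runs alongside them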

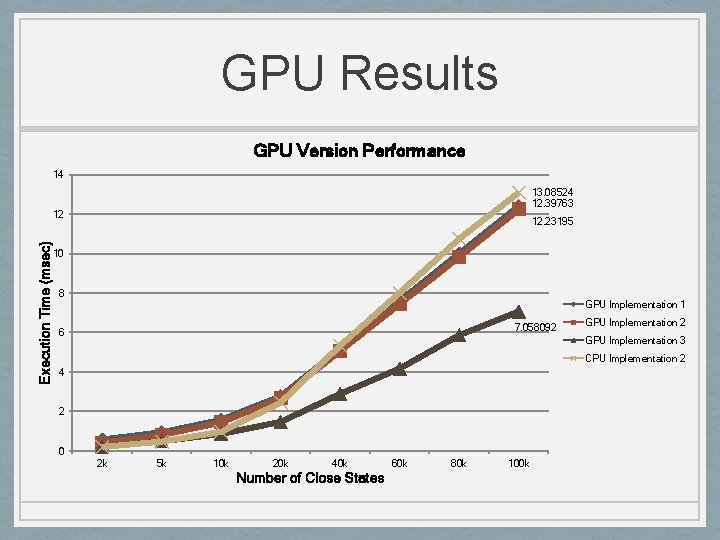

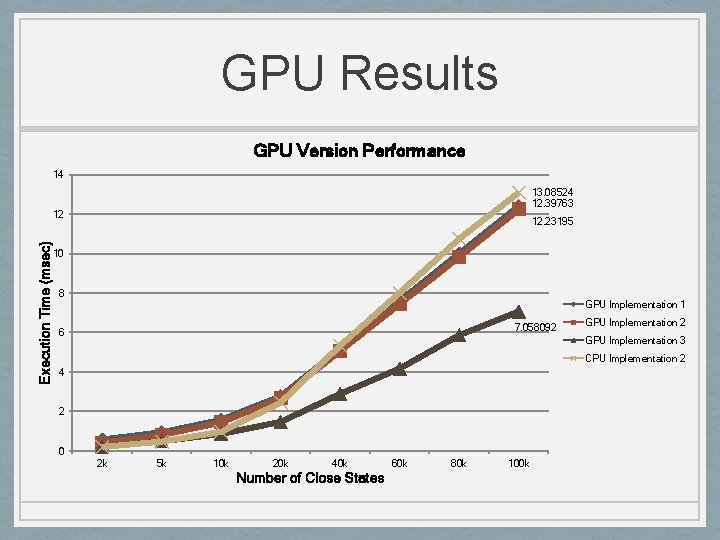

GPU Results
- Figure: GPU Version Performance. Execution time (msec) against number of close states (2k, 5k, 10k, 20k, 40k, 60k, 80k, 100k) for GPU Implementations 1, 2, and 3 and CPU Implementation 2.
- Labeled values: 13.08524 msec (CPU Implementation 2), 12.39763 msec (GPU Implementation 1), 12.23195 msec (GPU Implementation 2), and 7.058092 msec (GPU Implementation 3).

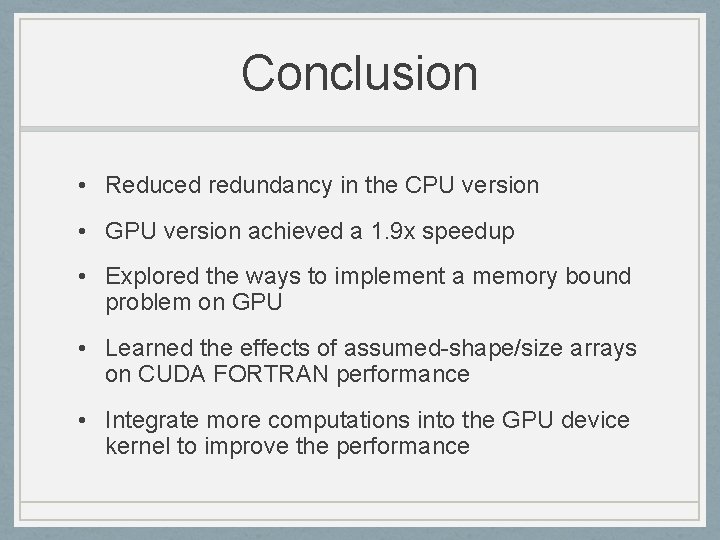

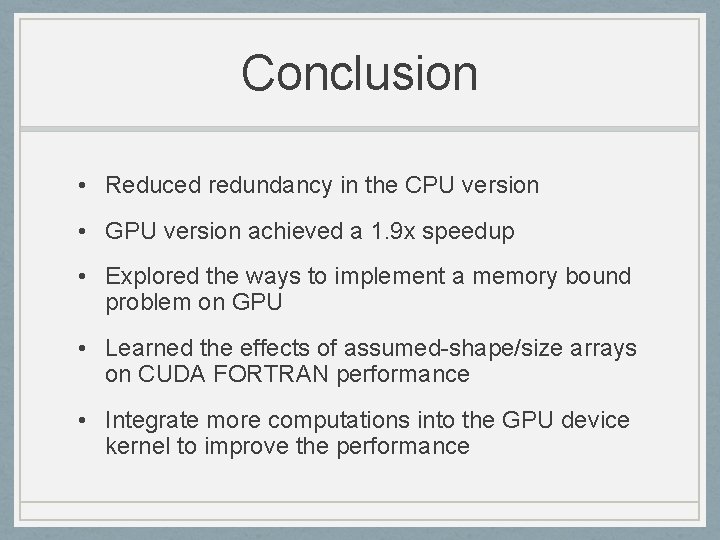

Conclusion
- Reduced redundancy in the CPU version
- GPU version achieved a 1.9x speedup
- Explored ways to implement a memory-bound problem on the GPU
- Learned the effects of assumed-shape and assumed-size arrays on CUDA Fortran performance
- Integrate more computation into the GPU device kernel to improve performance

Acknowledgement
- NCAR / UCAR
- University of Wyoming
- DAReS: Jeff Anderson, Nancy Collins, Helen Kershaw, Tim Hoar, Kevin Raeder
- Silvia Gentile
- CISL / SIParCS: Rich Loft, Raghu Raj Kumar

Thank you!