Evaluating Coprocessor Effectiveness for the Data Assimilation Research Testbed

- Slides: 20

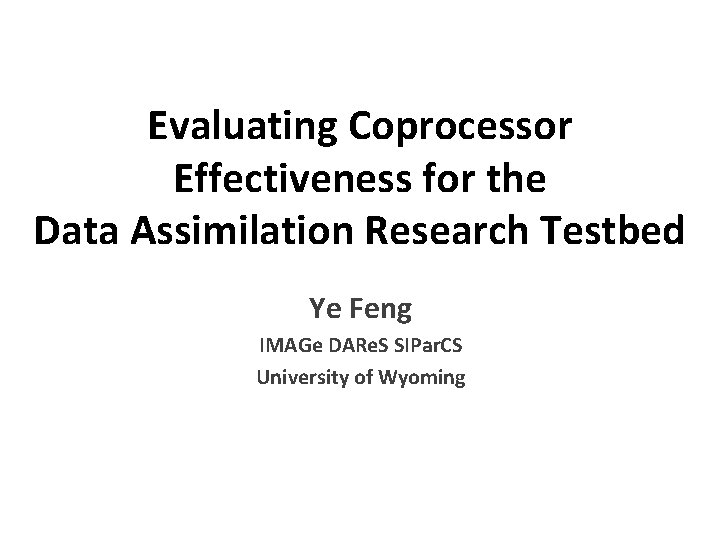

Evaluating Coprocessor Effectiveness for the Data Assimilation Research Testbed. Ye Feng, IMAGe DAReS, SIParCS, University of Wyoming.

Introduction

- Task: evaluate the feasibility and effectiveness of a coprocessor for DART.
- Target: get_close_obs (profiling result: computationally intensive and executed multiple times during a typical DART run).
- Coprocessor: NVIDIA GPUs with CUDA Fortran.
- Result: a parallel version of the exhaustive search on the GPU is faster than on the CPU.

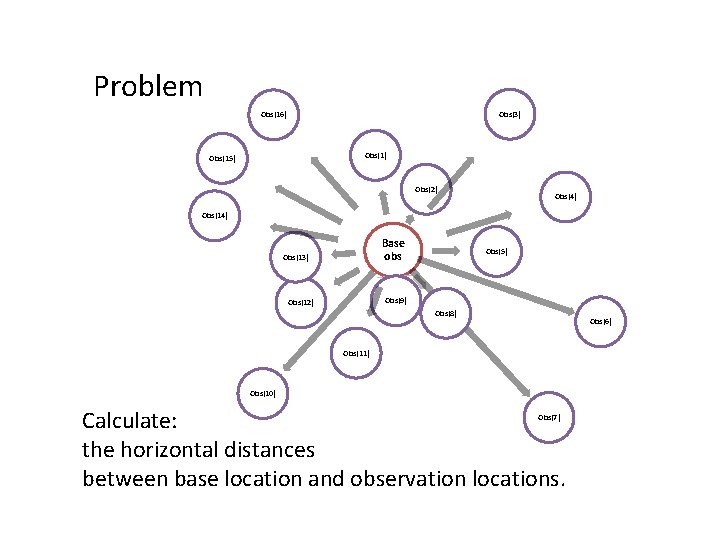

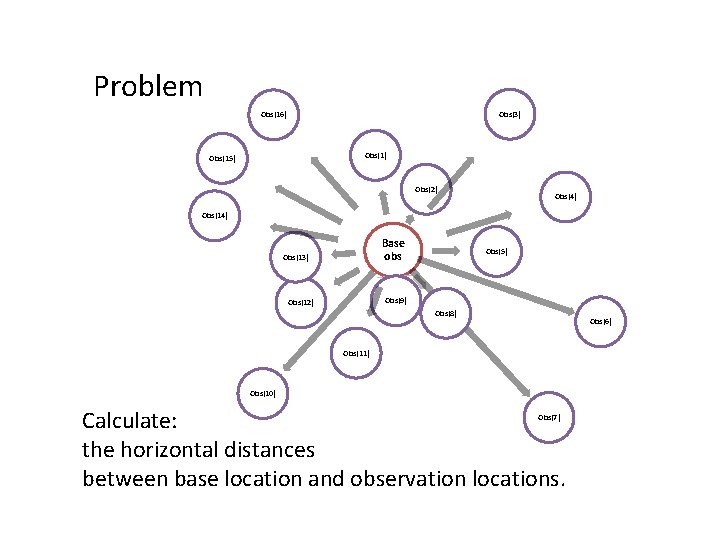

Problem: calculate the horizontal distances between the base location and the observation locations. (Figure: a base observation, "Base obs", surrounded by observations Obs(2) through Obs(16).)
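The distance step can be sketched as follows. The slides do not give the distance formula, so this is a minimal sketch that assumes locations are (longitude, latitude) pairs in radians on a unit sphere and uses the haversine great-circle distance. The function names `horizontal_distance` and `all_distances` are hypothetical, chosen for illustration only.

```python
import math

def horizontal_distance(base, obs):
    """Great-circle distance in radians between two (lon, lat) points.

    Assumption: both points are given in radians on a unit sphere.
    """
    lon1, lat1 = base
    lon2, lat2 = obs
    dlon = lon2 - lon1
    dlat = lat2 - lat1
    # Haversine formula; the min() guards against rounding slightly above 1.
    a = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * math.asin(min(1.0, math.sqrt(a)))

def all_distances(base, obs_list):
    """Distance from the base location to every observation location."""
    return [horizontal_distance(base, obs) for obs in obs_list]
```

Each distance depends only on the base location and one observation, so this step has no dependency between observations.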

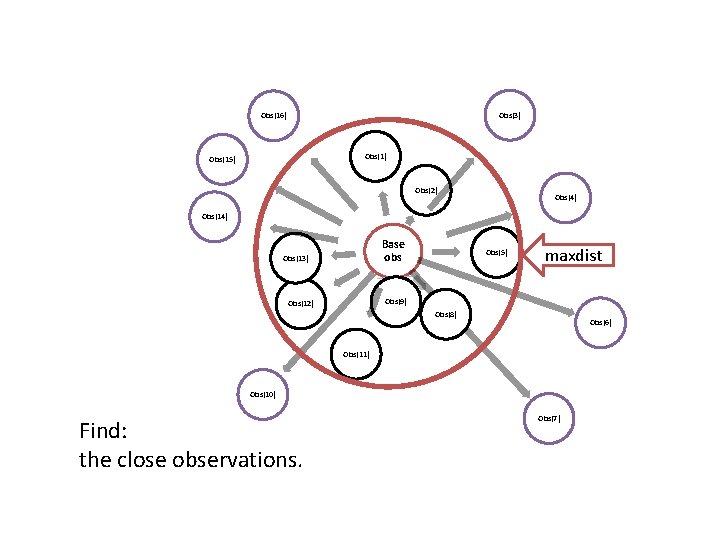

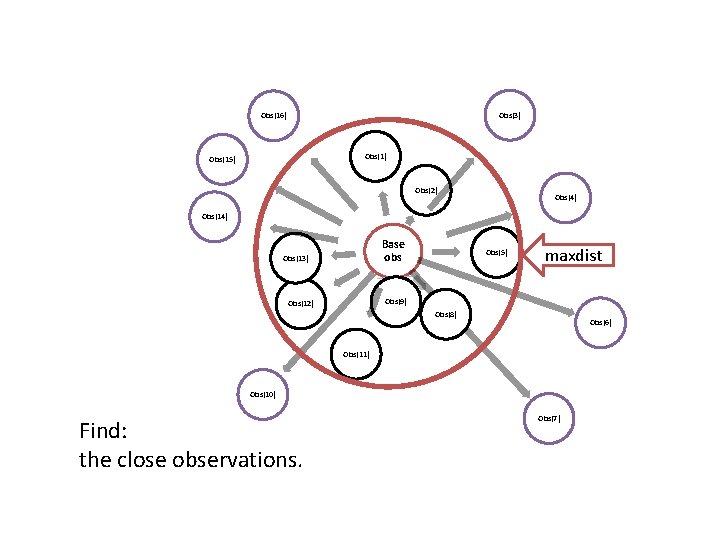

Find: the close observations. (Figure: the same base observation and Obs(2) through Obs(16), with maxdist marked.)

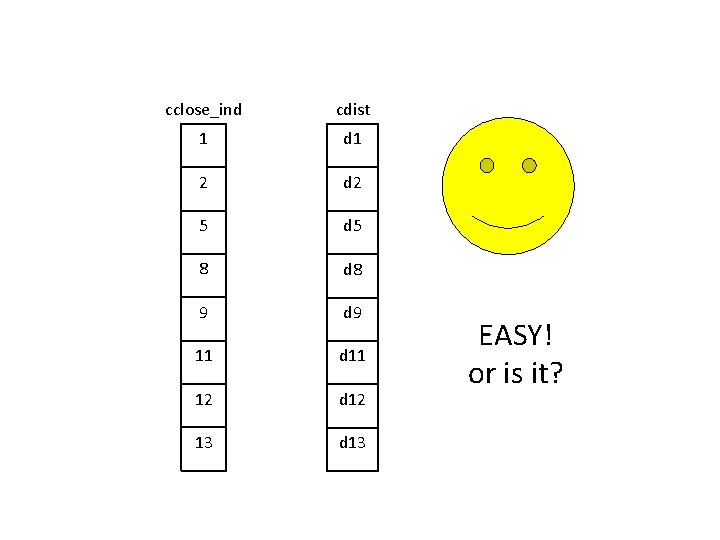

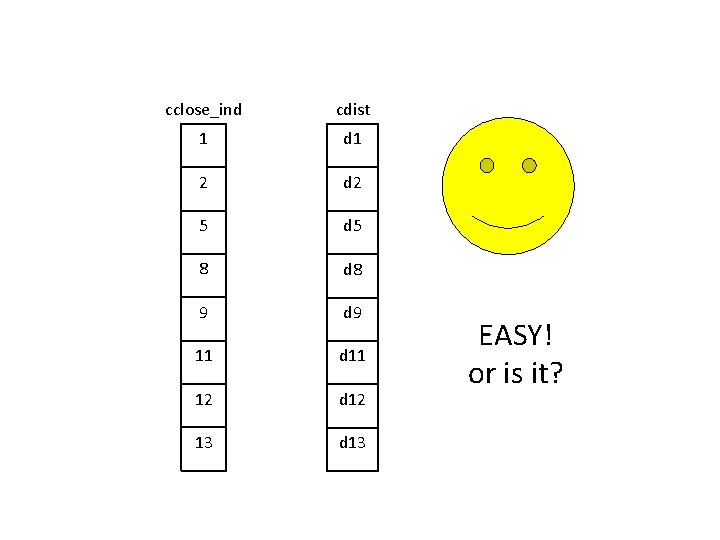

| cclose_ind | cdist |
|------------|-------|
| 1          | d1    |
| 2          | d2    |
| 5          | d5    |
| 8          | d8    |
| 9          | d9    |
| 11         | d11   |
| 12         | d12   |
| 13         | d13   |

EASY! Or is it?

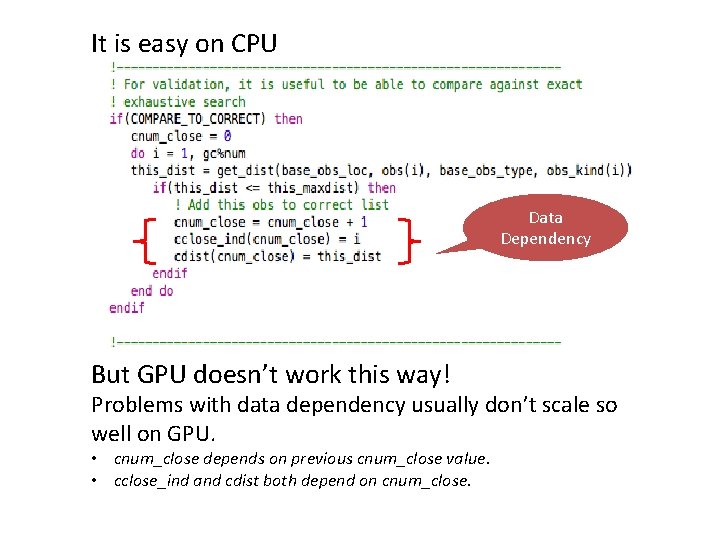

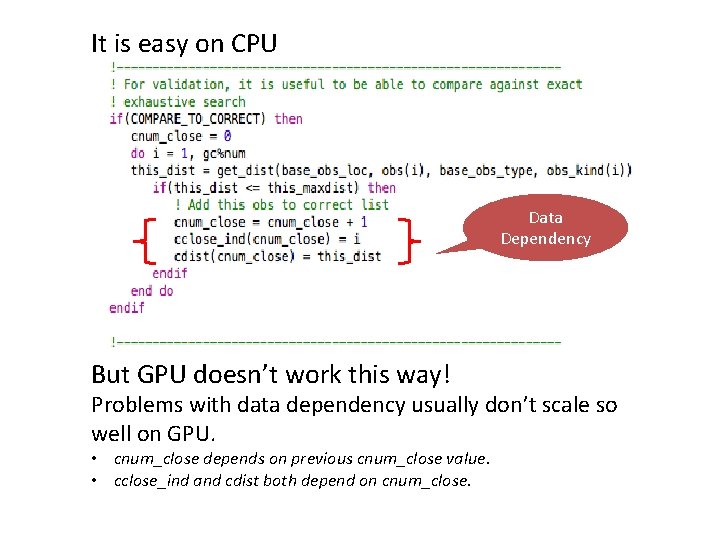

It is easy on a CPU. But a GPU doesn't work this way! Data dependency: problems with data dependency usually don't scale well on a GPU.

- cnum_close depends on the previous cnum_close value.
- cclose_ind and cdist both depend on cnum_close.
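The dependency described above can be seen in a sequential sketch. This is an illustrative Python loop, not the DART source; the function name `get_close_sequential` is hypothetical, and indices are 1-based to match the slides.

```python
def get_close_sequential(dist, maxdist):
    """Sequential search: collect indices and distances of close observations."""
    n = len(dist)
    cclose_ind = [0] * n
    cdist = [0.0] * n
    cnum_close = 0
    for i in range(n):
        if dist[i] < maxdist:
            # The write position is the running count, so iteration i
            # cannot proceed until all earlier iterations have finished.
            cclose_ind[cnum_close] = i + 1   # 1-based index, as on the slides
            cdist[cnum_close] = dist[i]
            cnum_close += 1
    return cnum_close, cclose_ind[:cnum_close], cdist[:cnum_close]
```

Because every write position is taken from `cnum_close`, the iterations cannot simply be handed to independent threads.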

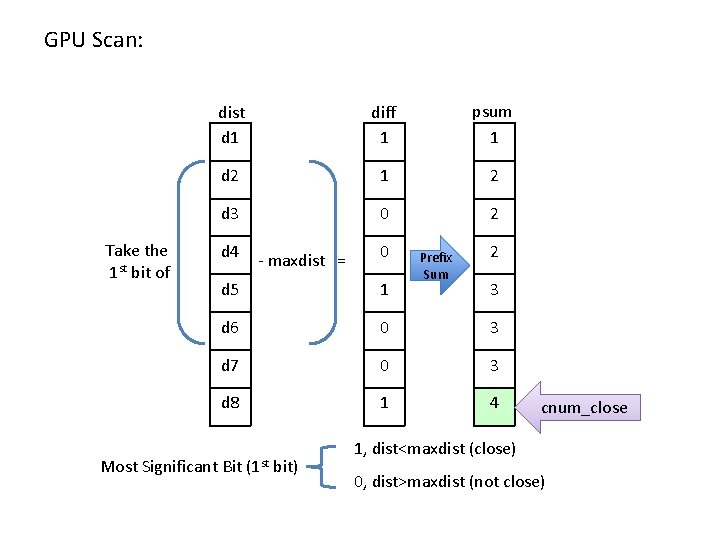

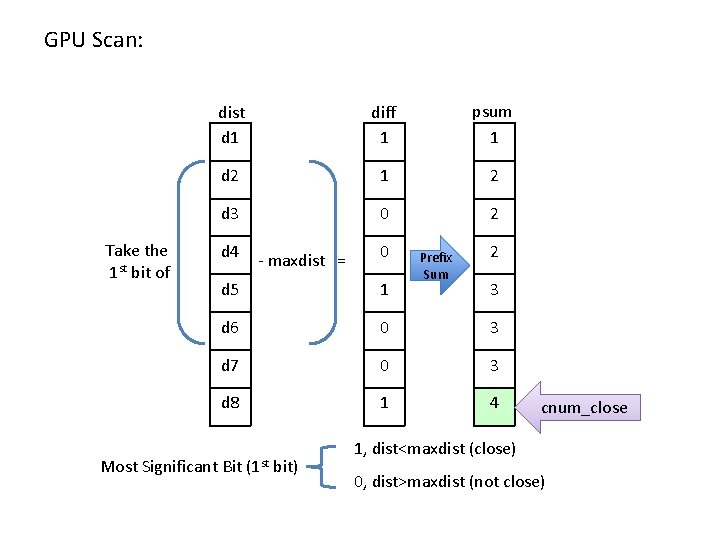

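GPU Scan: take the 1st bit (the most significant bit) of dist - maxdist as diff, then compute the prefix sum (psum) of diff.

- diff = 1: dist < maxdist (close)
- diff = 0: dist > maxdist (not close)

| dist | diff | psum |
|------|------|------|
| d1   | 1    | 1    |
| d2   | 1    | 2    |
| d3   | 0    | 2    |
| d4   | 0    | 2    |
| d5   | 1    | 3    |
| d6   | 0    | 3    |
| d7   | 0    | 3    |
| d8   | 1    | 4    |

The last psum value is cnum_close.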
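The flag-and-scan step can be sketched in Python. Two assumptions are made here: distances are stored as 32-bit IEEE 754 floats (the slides do not state the precision), and a serial `itertools.accumulate` stands in for the parallel scan that the GPU kernel would perform. The function names are hypothetical.

```python
import struct
from itertools import accumulate

def sign_bit(x):
    """Most significant bit of x when stored as a 32-bit IEEE 754 float."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    return bits >> 31   # 1 for negative values, 0 otherwise

def close_flags_and_psum(dist, maxdist):
    """diff[i] is 1 when dist[i] < maxdist; psum is the inclusive prefix sum of diff."""
    diff = [sign_bit(d - maxdist) for d in dist]
    psum = list(accumulate(diff))   # serial stand-in for the parallel scan
    return diff, psum
```

Reading the sign bit replaces the comparison `dist < maxdist` with a bit operation, so every element is handled by the same instructions.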

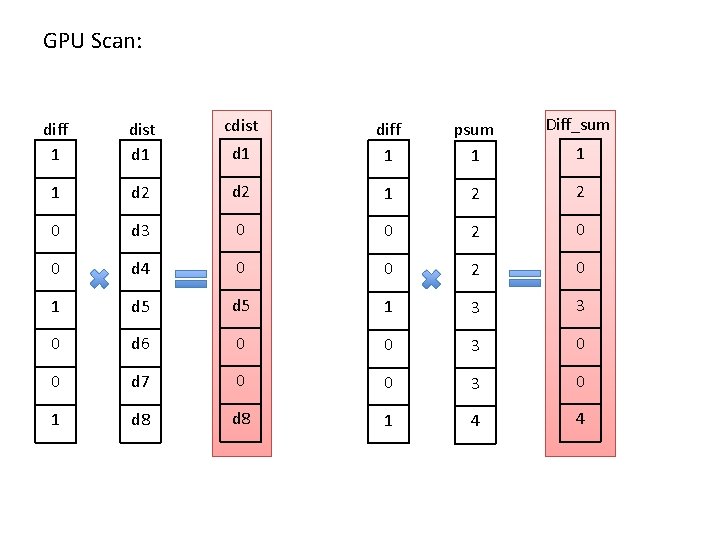

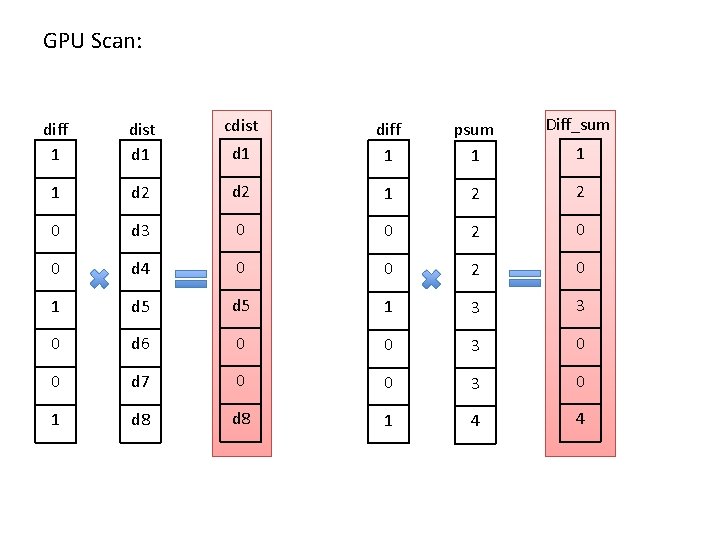

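GPU Scan: Diff_sum keeps the psum value where diff is 1 and is 0 elsewhere; cdist keeps dist where diff is 1 and is 0 elsewhere.

| dist | diff | psum | Diff_sum | cdist |
|------|------|------|----------|-------|
| d1   | 1    | 1    | 1        | d1    |
| d2   | 1    | 2    | 2        | d2    |
| d3   | 0    | 2    | 0        | 0     |
| d4   | 0    | 2    | 0        | 0     |
| d5   | 1    | 3    | 3        | d5    |
| d6   | 0    | 3    | 0        | 0     |
| d7   | 0    | 3    | 0        | 0     |
| d8   | 1    | 4    | 4        | d8    |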
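One way to produce the Diff_sum and cdist columns without an if-test is to multiply by the 0/1 flag. Whether the original kernel uses multiplication is an assumption; the sketch below only reproduces the values shown on the slide. The function name `mask_with_flags` is hypothetical.

```python
def mask_with_flags(dist, diff, psum):
    """Zero out psum and dist wherever the flag diff is 0 (assumed: by multiplication)."""
    diff_sum = [f * p for f, p in zip(diff, psum)]
    cdist = [f * d for f, d in zip(diff, dist)]
    return diff_sum, cdist
```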

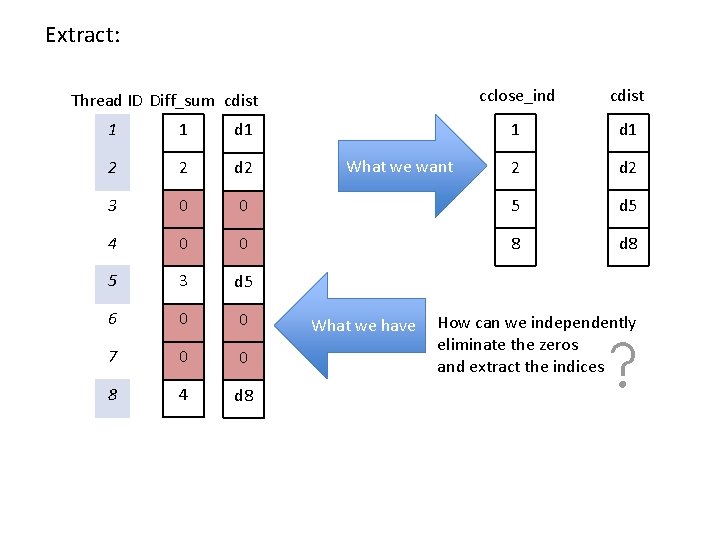

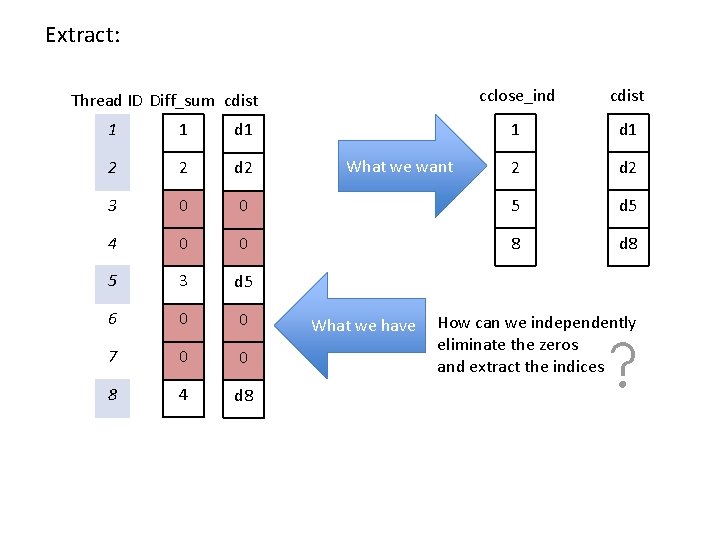

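Extract:

What we have:

| Thread ID | Diff_sum | cdist |
|-----------|----------|-------|
| 1         | 1        | d1    |
| 2         | 2        | d2    |
| 3         | 0        | 0     |
| 4         | 0        | 0     |
| 5         | 3        | d5    |
| 6         | 0        | 0     |
| 7         | 0        | 0     |
| 8         | 4        | d8    |

What we want:

| cclose_ind | cdist |
|------------|-------|
| 1          | d1    |
| 2          | d2    |
| 5          | d5    |
| 8          | d8    |

How can we independently eliminate the zeros and extract the indices?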

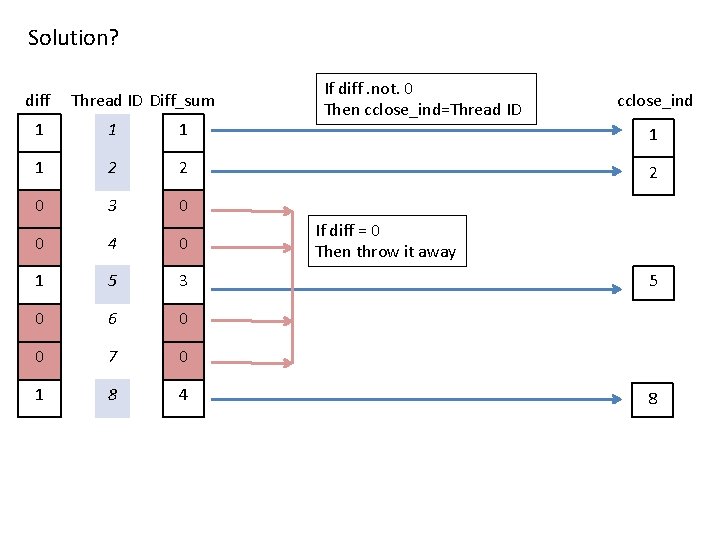

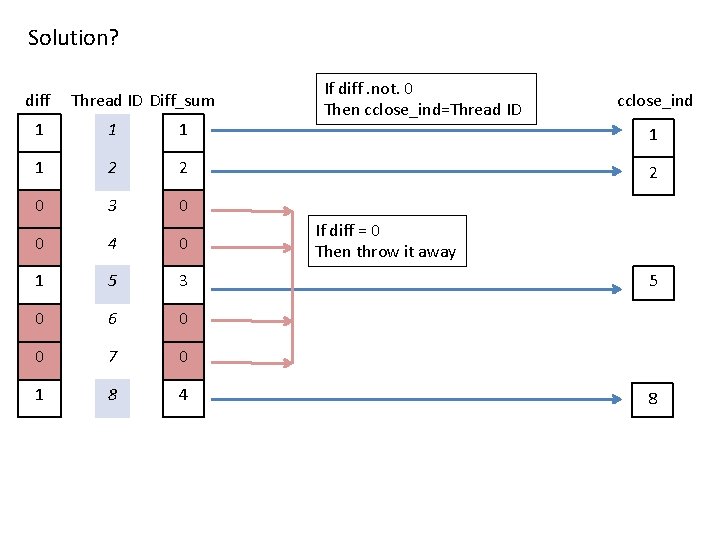

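Solution?

| diff | Thread ID | Diff_sum |
|------|-----------|----------|
| 1    | 1         | 1        |
| 1    | 2         | 2        |
| 0    | 3         | 0        |
| 0    | 4         | 0        |
| 1    | 5         | 3        |
| 0    | 6         | 0        |
| 0    | 7         | 0        |
| 1    | 8         | 4        |

- If diff .not. 0, then cclose_ind = Thread ID.
- If diff = 0, then throw it away.

Resulting cclose_ind: 1, 2, 5, 8.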

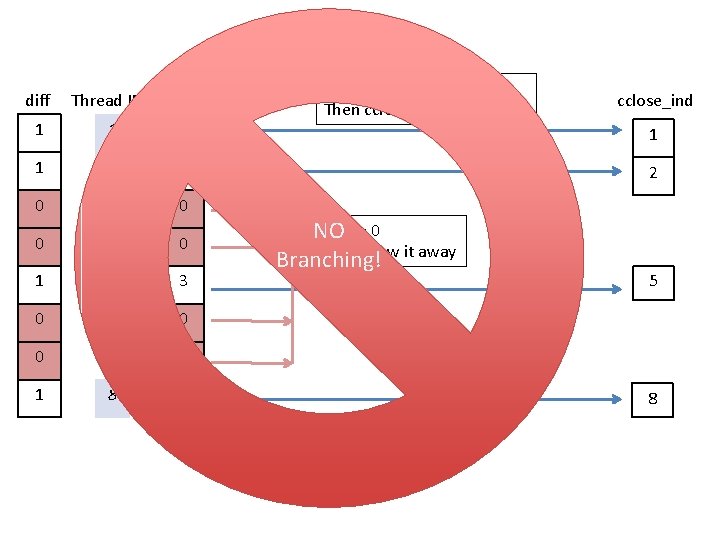

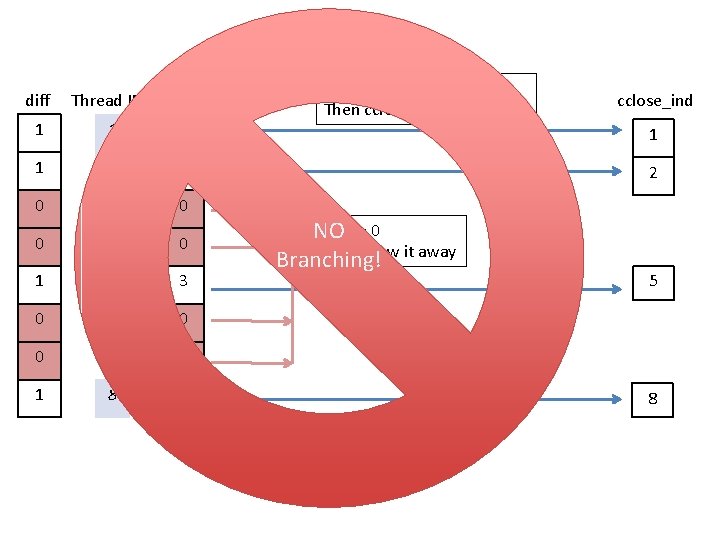

The same table and if-test as on the previous slide, now marked: NO. Branching!

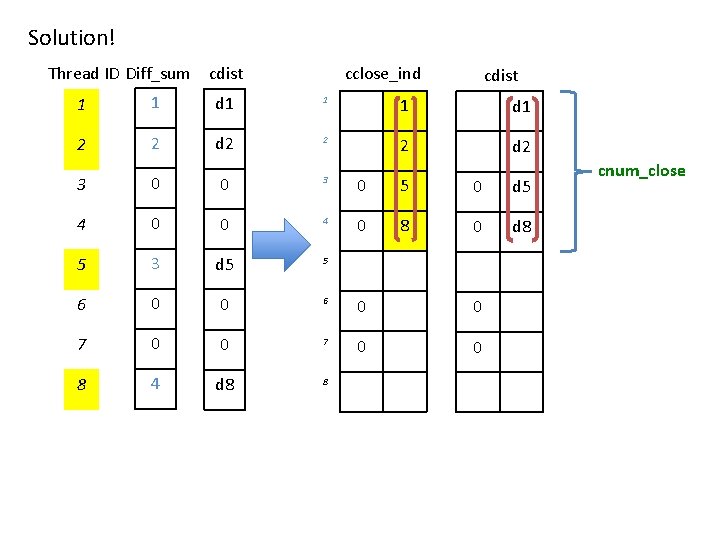

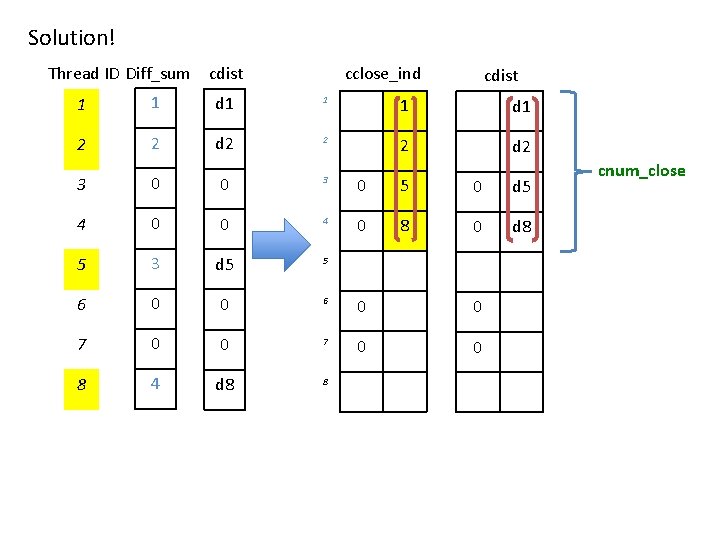

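Solution!

| Thread ID | Diff_sum | cdist |
|-----------|----------|-------|
| 1         | 1        | d1    |
| 2         | 2        | d2    |
| 3         | 0        | 0     |
| 4         | 0        | 0     |
| 5         | 3        | d5    |
| 6         | 0        | 0     |
| 7         | 0        | 0     |
| 8         | 4        | d8    |

Result (the first cnum_close entries):

| cclose_ind | cdist |
|------------|-------|
| 1          | d1    |
| 2          | d2    |
| 5          | d5    |
| 8          | d8    |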
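The slide does not spell out how the result is produced without branching. One plausible reading, sketched below as an assumption, is a scatter: each thread uses its Diff_sum value as the output position, and every "not close" thread writes to a scratch slot at position 0 that is discarded afterwards. The function name `extract_branch_free` and the scratch-slot design are hypothetical.

```python
def extract_branch_free(diff_sum, cdist_masked):
    """Scatter thread IDs and distances to the positions given by diff_sum.

    Assumes a non-empty input. Slot 0 is a scratch slot: every thread whose
    diff_sum is 0 writes there, so no if-test is needed inside the loop body.
    """
    n = len(diff_sum)
    cclose_ind = [0] * (n + 1)
    cdist = [0.0] * (n + 1)
    for tid in range(1, n + 1):        # each iteration stands in for one GPU thread
        pos = diff_sum[tid - 1]
        cclose_ind[pos] = tid
        cdist[pos] = cdist_masked[tid - 1]
    cnum_close = max(diff_sum)         # equals the last prefix-sum value
    return cnum_close, cclose_ind[1:cnum_close + 1], cdist[1:cnum_close + 1]
```

Every iteration executes the same two writes, so the threads of a block would follow one instruction stream. On a real GPU several threads would write to slot 0 concurrently; that is harmless in this sketch only because the slot is never read.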

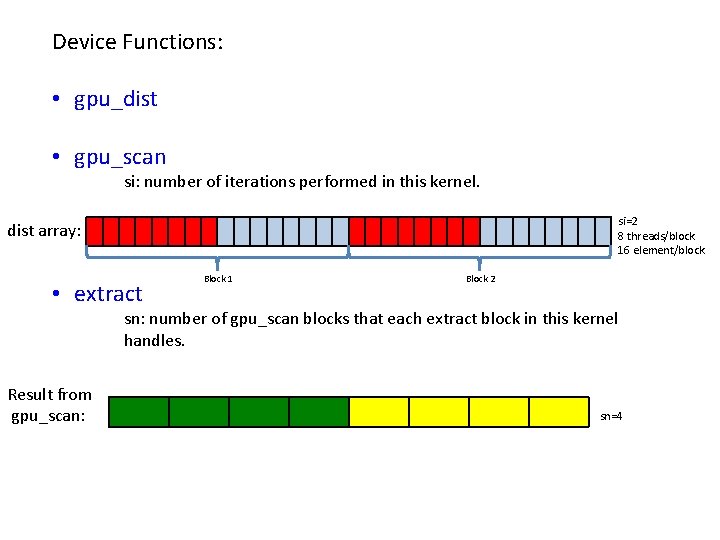

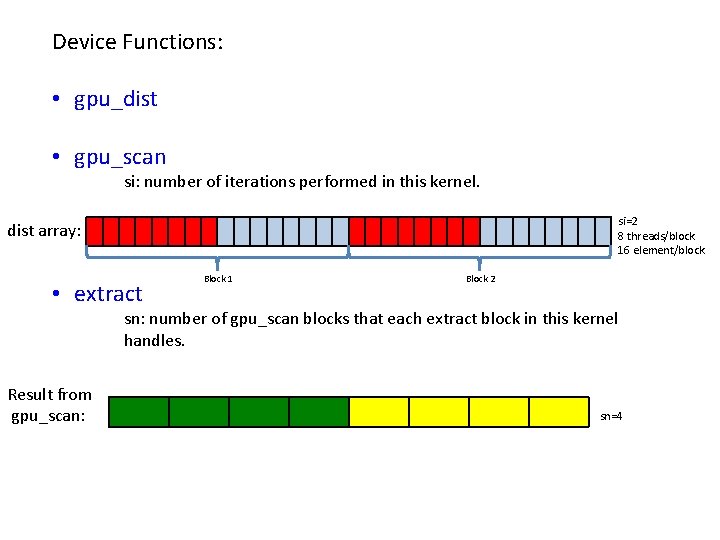

Device Functions:

- gpu_dist
- gpu_scan. si: number of iterations performed in this kernel. Example: si=2 with 8 threads/block gives 16 elements/block, so the dist array is split into Block 1, Block 2, and so on.
- extract. sn: number of gpu_scan blocks that each extract block in this kernel handles. Example: sn=4, applied to the result from gpu_scan.
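The arithmetic behind si and sn can be sketched as follows. This is a serial model under stated assumptions, not the kernels themselves: each gpu_scan block is assumed to cover threads_per_block * si elements and to scan them locally, and each extract block is assumed to cover sn consecutive scan blocks. The names `launch_shape` and `blocked_scan` are hypothetical.

```python
from itertools import accumulate

def launch_shape(n, threads_per_block, si, sn):
    """Return (elements per scan block, scan block count, extract block count)."""
    elems_per_block = threads_per_block * si
    n_scan_blocks = -(-n // elems_per_block)    # ceiling division
    n_extract_blocks = -(-n_scan_blocks // sn)
    return elems_per_block, n_scan_blocks, n_extract_blocks

def blocked_scan(flags, threads_per_block, si):
    """Scan each block locally, then add the running total of earlier blocks."""
    size = threads_per_block * si
    out, offset = [], 0
    for start in range(0, len(flags), size):
        local = list(accumulate(flags[start:start + size]))   # per-block scan
        out.extend(offset + p for p in local)
        offset += local[-1]
    return out
```

With 8 threads per block and si=2, `launch_shape(128, 8, 2, 4)` gives 16 elements per scan block, 8 scan blocks, and 2 extract blocks. Larger si or sn means fewer blocks that each do more work, which is the trade-off being tuned.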

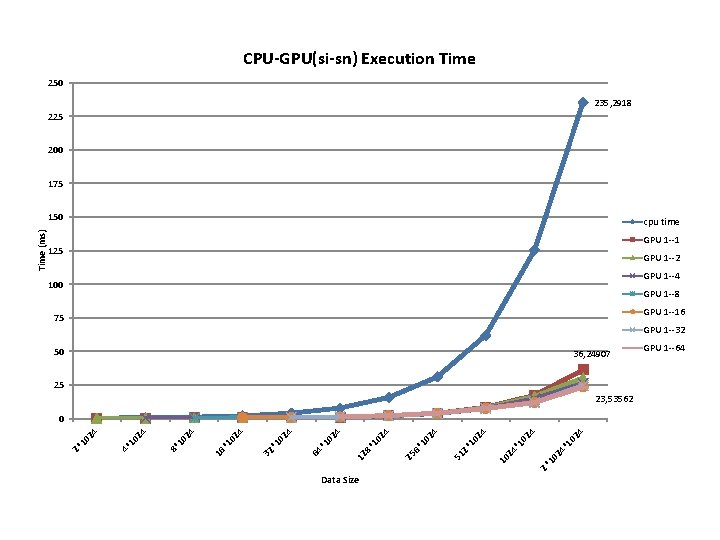

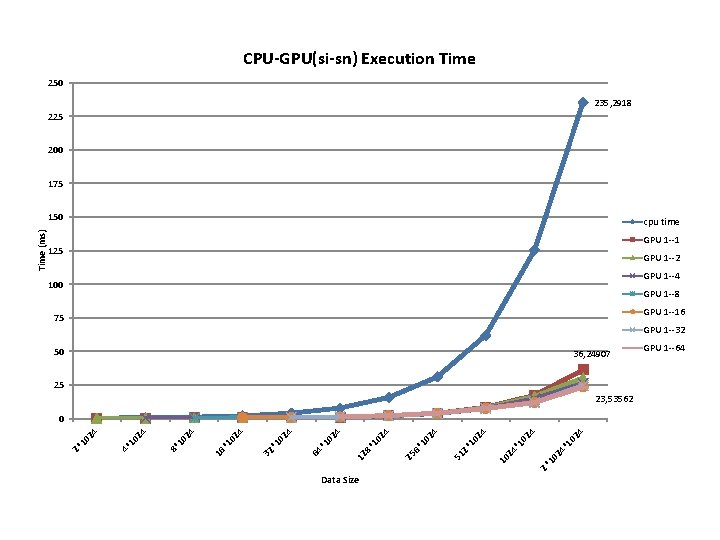

Figure: CPU-GPU(si-sn) Execution Time. Y-axis: Time (ms), 0 to 250. X-axis: Data Size, in multiples of 1024. Series: cpu time and GPU 1--1, 1--2, 1--4, 1--8, 1--16, 1--32, 1--64. Labeled values: 235.2918, 36.24907, 23.53562.

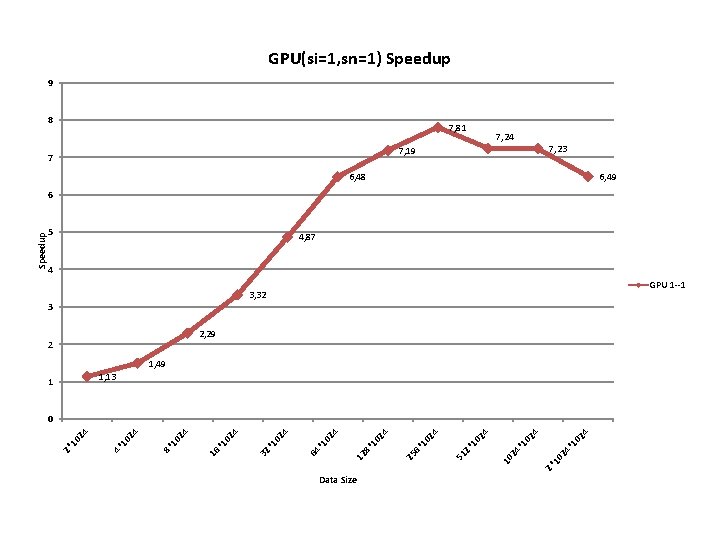

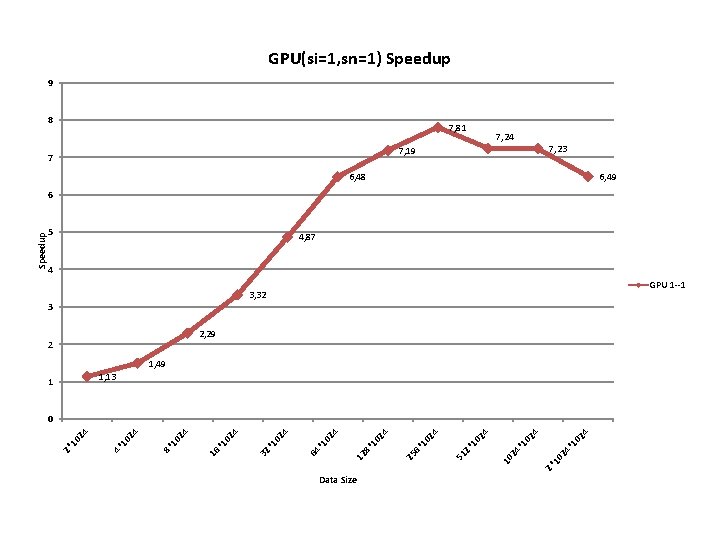

Figure: GPU(si=1, sn=1) Speedup. Y-axis: Speedup. X-axis: Data Size, in multiples of 1024. Series: GPU 1--1. Labeled values, in ascending order: 1.13, 1.49, 2.29, 3.32, 4.87, 6.48, 6.49, 7.19, 7.23, 7.24, 7.81.

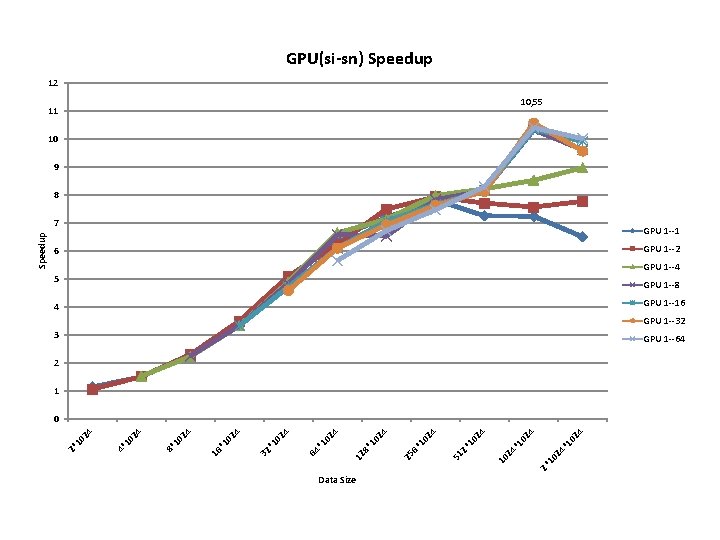

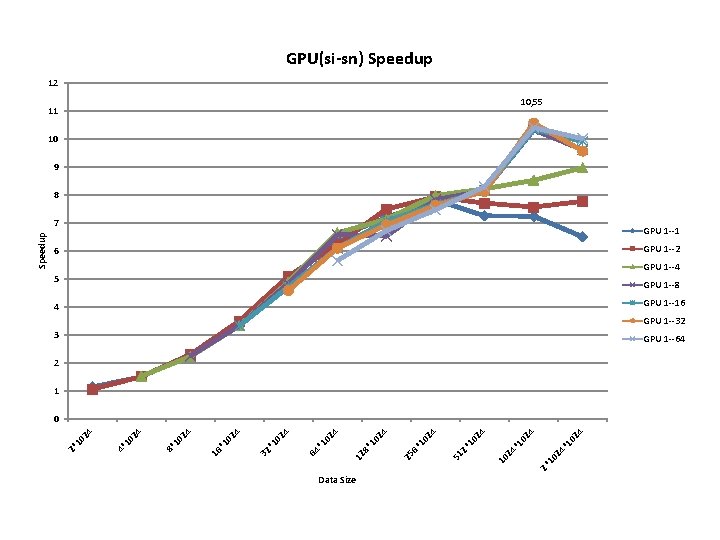

Figure: GPU(si-sn) Speedup. Y-axis: Speedup, 1 to 12. X-axis: Data Size, in multiples of 1024. Series: GPU 1--1, 1--2, 1--4, 1--8, 1--16, 1--32, 1--64. Labeled value: 10.55.

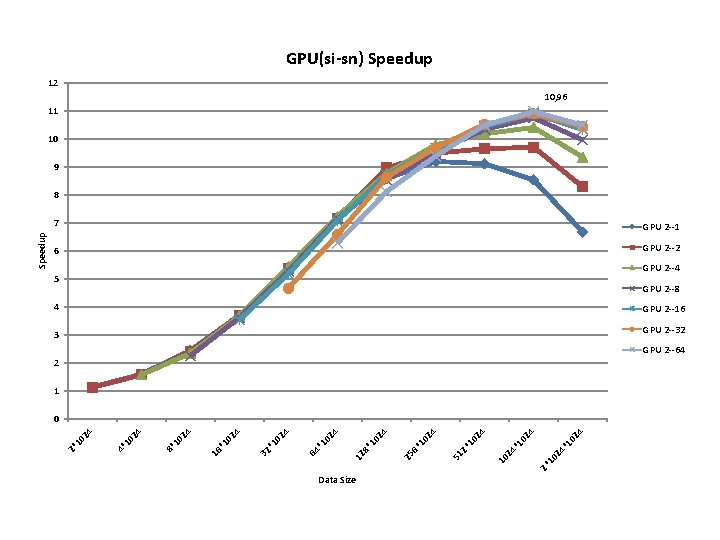

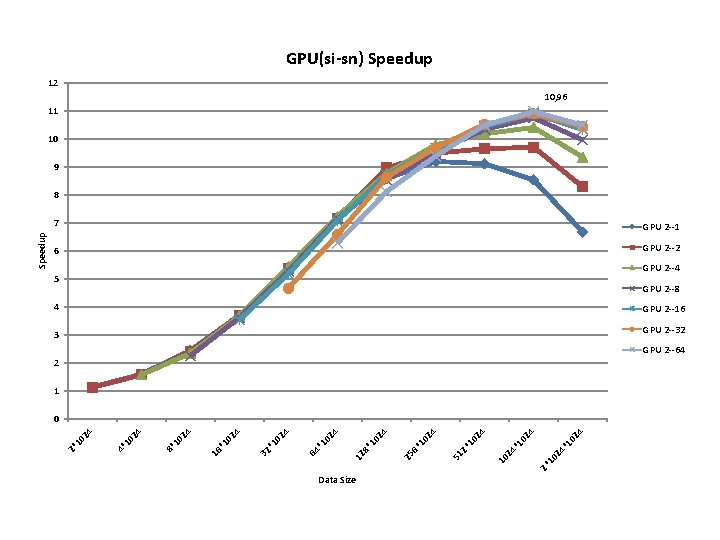

Figure: GPU(si-sn) Speedup. Y-axis: Speedup, 1 to 12. X-axis: Data Size, in multiples of 1024. Series: GPU 2--1, 2--2, 2--4, 2--8, 2--16, 2--32, 2--64. Labeled value: 10.96.

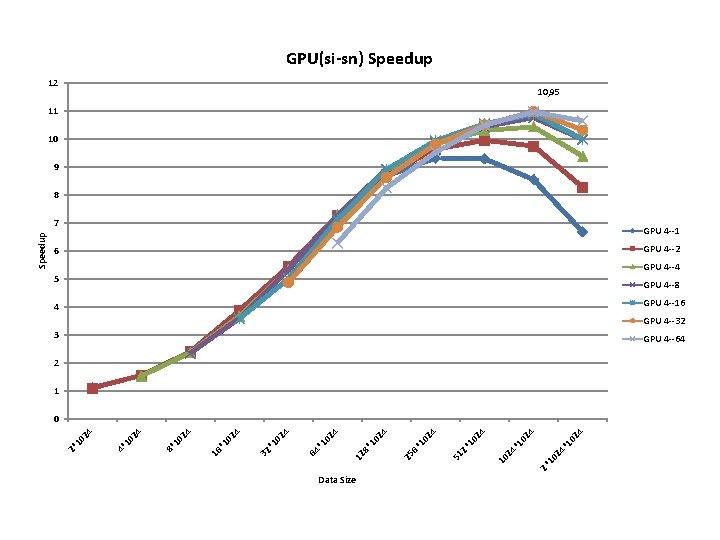

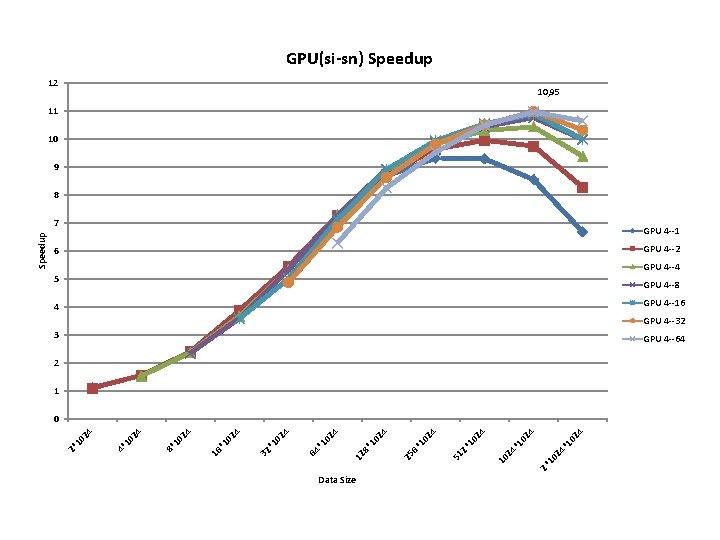

Figure: GPU(si-sn) Speedup. Y-axis: Speedup, 1 to 12. X-axis: Data Size, in multiples of 1024. Series: GPU 4--1, 4--2, 4--4, 4--8, 4--16, 4--32, 4--64. Labeled value: 10.95.

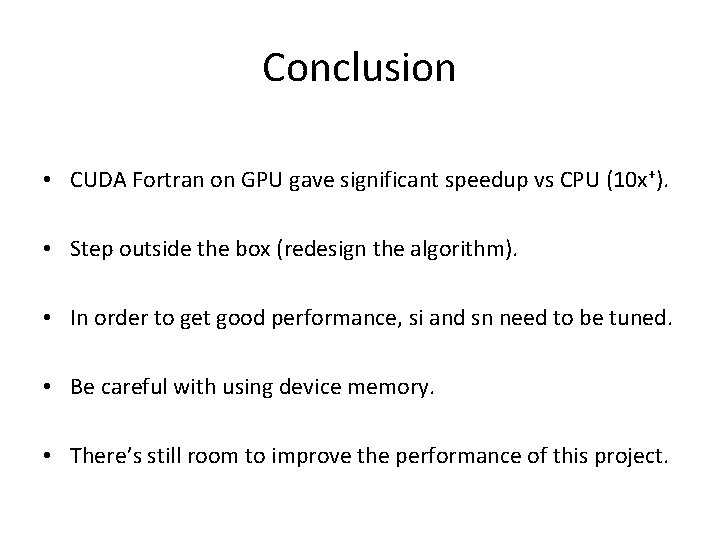

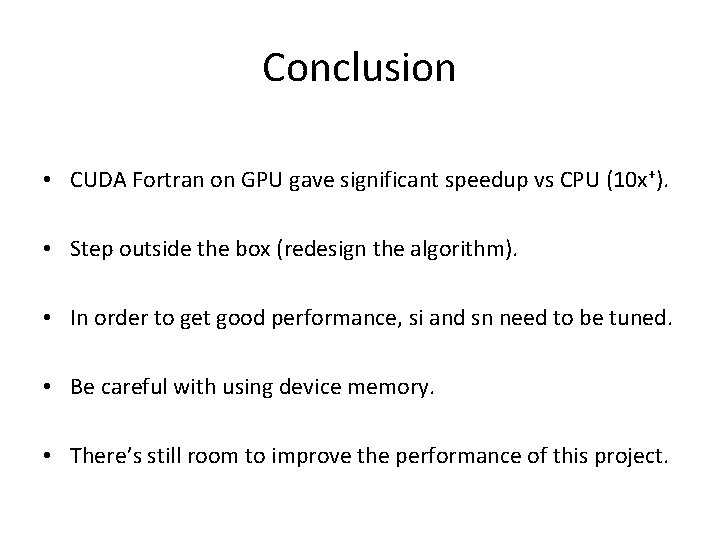

Conclusion

- CUDA Fortran on the GPU gave a significant speedup over the CPU (10x+).
- Step outside the box (redesign the algorithm).
- To get good performance, si and sn need to be tuned.
- Be careful when using device memory.
- There is still room to improve the performance of this project.

Acknowledgements: DAReS/IMAGe: Helen Kershaw (Mentor), Nancy Collins (Mentor), Jeff Anderson, Tim Hoar, Kevin Raeder. UCAR, NCAR. Richard Loft, Raghu Raj Prasanna Kumar, Kristin Mooney, Silvia Gentile, Carolyn Mueller. University of Wyoming.