CS 539 Project Report Evaluating hypothesis Mingyu Feng

- Slides: 5

CS 539 Project Report -- Evaluating hypothesis

Mingyu Feng

Feb 24th, 2004

Feb 24th, 2005

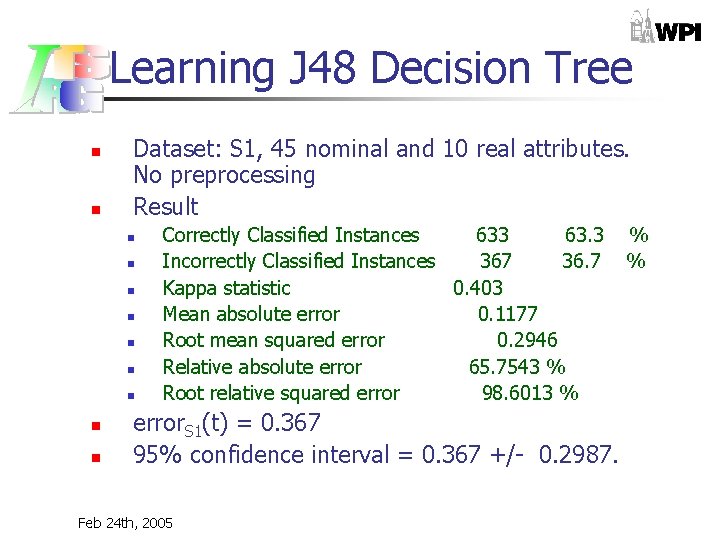

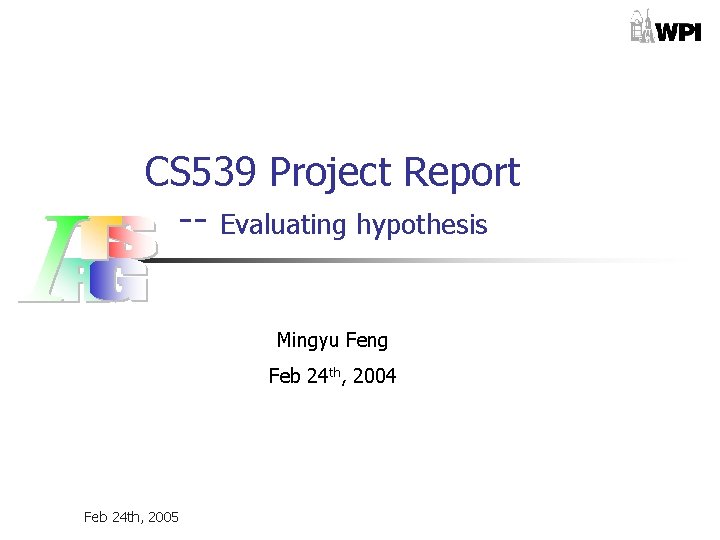

Learning J48 Decision Tree

- Dataset: S1, 45 nominal and 10 real attributes. No preprocessing.
- Result:
  - Correctly Classified Instances: 633 (63.3 %)
  - Incorrectly Classified Instances: 367 (36.7 %)
  - Kappa statistic: 0.403
  - Mean absolute error: 0.1177
  - Root mean squared error: 0.2946
  - Relative absolute error: 65.7543 %
  - Root relative squared error: 98.6013 %
- error_S1(t) = 0.367
- 95% confidence interval = 0.367 +/- 0.02987
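The interval for the decision tree's true error can be sketched with the usual normal approximation to the binomial, using the 367 misclassified instances out of 1000 reported above. This is only a minimal illustration: the function name `error_confidence_interval` is made up for this example, and z = 1.96 is the standard two-sided 95% constant.

```python
import math


def error_confidence_interval(num_errors, n, z=1.96):
    """Normal-approximation interval for the true error.

    Returns (sample_error, half_width) so that the interval is
    sample_error +/- half_width. The function name is illustrative.
    """
    sample_error = num_errors / n
    # Standard deviation of the sample error under the binomial model.
    std = math.sqrt(sample_error * (1 - sample_error) / n)
    return sample_error, z * std


# 367 of the 1000 test instances were misclassified by the J48 tree.
e, half_width = error_confidence_interval(367, 1000)
print(f"{e:.3f} +/- {half_width:.4f}")
```

With these inputs the half-width comes out near 0.03, so the interval spans roughly 0.337 to 0.397.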

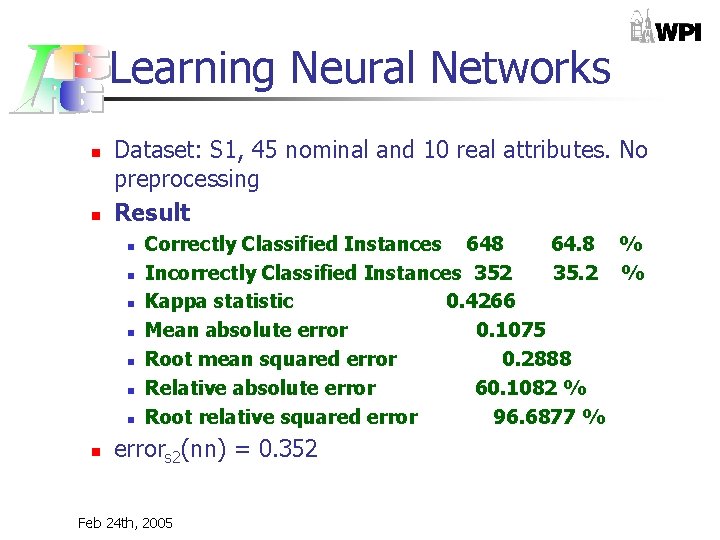

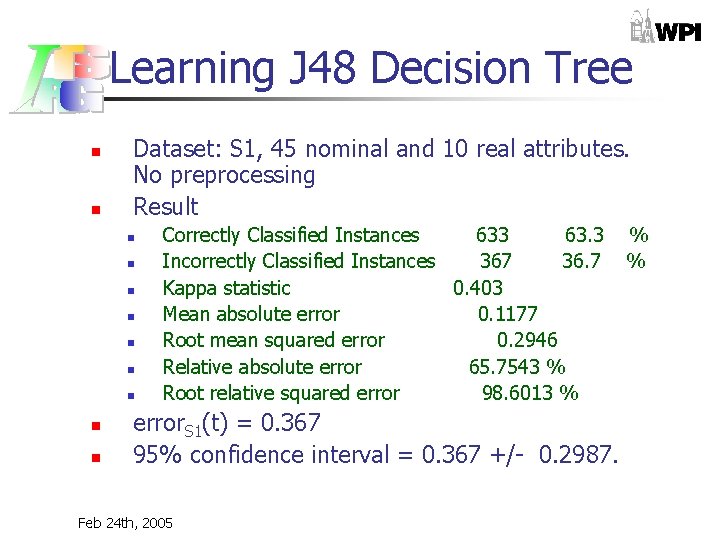

Learning Neural Networks

- Dataset: S1, 45 nominal and 10 real attributes. No preprocessing.
- Result:
  - Correctly Classified Instances: 648 (64.8 %)
  - Incorrectly Classified Instances: 352 (35.2 %)
  - Kappa statistic: 0.4266
  - Mean absolute error: 0.1075
  - Root mean squared error: 0.2888
  - Relative absolute error: 60.1082 %
  - Root relative squared error: 96.6877 %
- error_S2(nn) = 0.352

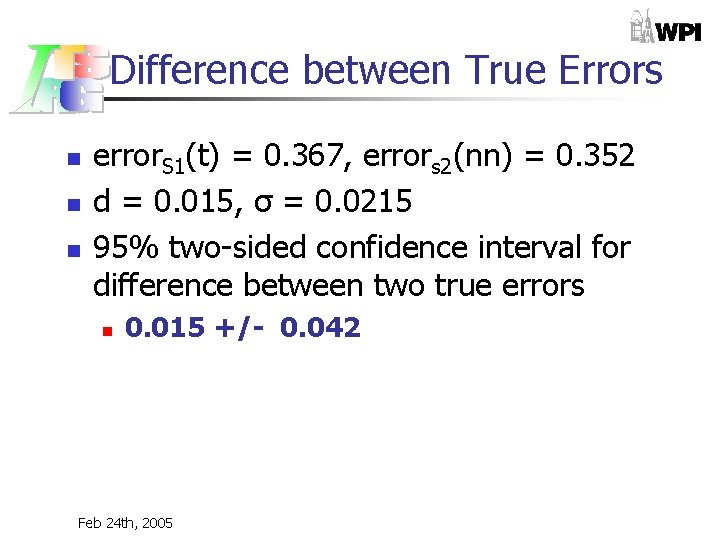

Difference between True Errors

- error_S1(t) = 0.367, error_S2(nn) = 0.352
- d = 0.015, σ = 0.0215
- 95% two-sided confidence interval for the difference between the two true errors:
  - 0.015 +/- 0.042
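The values of d and σ above are consistent with the standard estimate for the difference of two independently measured sample errors. The sketch below assumes both test sets contain 1000 instances (as the instance counts on the earlier slides suggest). The function name `difference_interval` is hypothetical.

```python
import math


def difference_interval(e1, n1, e2, n2, z=1.96):
    """Interval for the difference between two true errors.

    e1 and e2 are sample errors measured on independent test sets
    of sizes n1 and n2. Returns (d, sigma, half_width).
    """
    d = e1 - e2
    # The variances of the two independent estimates add.
    sigma = math.sqrt(e1 * (1 - e1) / n1 + e2 * (1 - e2) / n2)
    return d, sigma, z * sigma


# Decision tree error 0.367 and neural network error 0.352,
# each assumed to be measured on 1000 instances.
d, sigma, half_width = difference_interval(0.367, 1000, 0.352, 1000)
print(f"d = {d:.3f}, sigma = {sigma:.4f}, interval = {d:.3f} +/- {half_width:.3f}")
```

Because the interval contains zero, the observed difference of 0.015 does not by itself show that either hypothesis has the lower true error.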

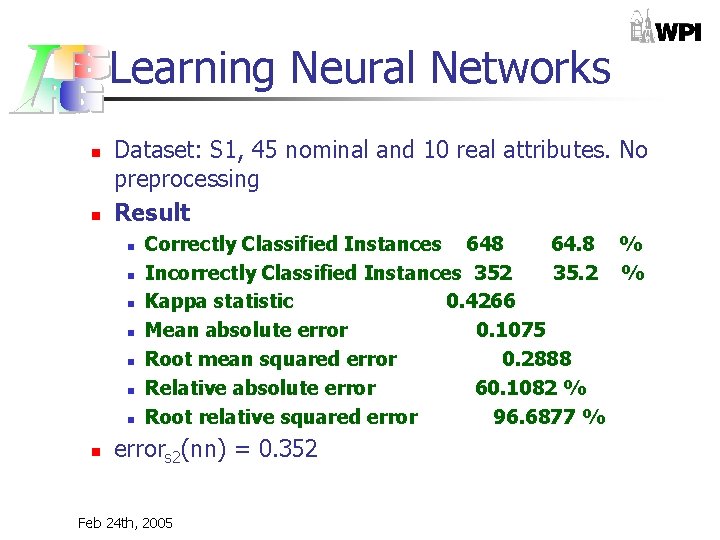

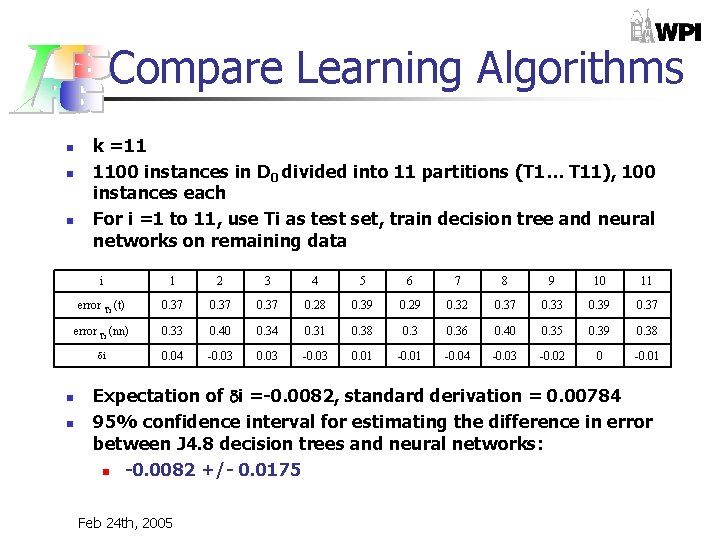

Compare Learning Algorithms

- k = 11
- 1100 instances in D0 divided into 11 partitions (T1 … T11), 100 instances each
- For i = 1 to 11, use Ti as test set, train decision tree and neural networks on remaining data

- i: 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11
- error_Ti(t): 0.37, 0.28, 0.39, 0.29, 0.32, 0.37, 0.33, 0.39, 0.37
- error_Ti(nn): 0.33, 0.40, 0.34, 0.31, 0.38, 0.36, 0.40, 0.35, 0.39, 0.38
- δi: 0.04, -0.03, 0.01, -0.04, -0.03, -0.02, 0, -0.01

- Expectation of δi = -0.0082, standard deviation = 0.00784
- 95% confidence interval for estimating the difference in error between J48 decision trees and neural networks:
  - -0.0082 +/- 0.0175
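The reported interval matches a paired comparison over the k = 11 folds using Student's t with k - 1 = 10 degrees of freedom. The sketch below makes two assumptions: that 0.00784 is the estimated standard deviation of the mean difference, and that the critical value is the tabulated 2.228. The helper `paired_difference_interval` is a hypothetical name, and it is not applied to the per-fold values here because the slide's table is incomplete.

```python
import math

# Two-sided 95% critical value of Student's t with 10 degrees of freedom
# (standard table value; k - 1 = 10 for k = 11 folds).
T_95_DF10 = 2.228


def paired_difference_interval(deltas, t_crit):
    """Paired interval over per-fold error differences.

    Returns (mean, s, half_width), where s estimates the standard
    deviation of the mean difference:
        s = sqrt( sum((d_i - mean)^2) / (k * (k - 1)) )
    """
    k = len(deltas)
    mean = sum(deltas) / k
    s = math.sqrt(sum((d - mean) ** 2 for d in deltas) / (k * (k - 1)))
    return mean, s, t_crit * s


# Reproduce the half-width from the summary values reported on the slide.
half_width = T_95_DF10 * 0.00784
print(f"-0.0082 +/- {half_width:.4f}")
```

Given a complete list of the eleven per-fold differences, `paired_difference_interval(deltas, T_95_DF10)` would return the mean, its standard deviation estimate, and the half-width in one call.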