Warp Processor: A Dynamically Reconfigurable Coprocessor, Frank Vahid

Warp Processor: A Dynamically Reconfigurable Coprocessor
Frank Vahid, Professor, Department of Computer Science and Engineering, University of California, Riverside
Associate Director, Center for Embedded Computer Systems, UC Irvine
Work supported by the National Science Foundation, the Semiconductor Research Corporation, Xilinx, Intel, Motorola/Freescale
Contributing Ph.D. students: Roman Lysecky (2005, now asst. prof. at U. Arizona), Greg Stitt (Ph.D. 2006), Kris Miller (MS 2007), David Sheldon (3rd-yr Ph.D.), Scott Sirowy (1st-yr Ph.D.)
Frank Vahid, UC Riverside

Outline
- Intro and Background: Warp Processors
- Work in progress under SRC 3-yr grant
  1. Parallelized-computation memory access
  2. Deriving high-level constructs from binaries
  3. Case studies
  4. Using commercial FPGA fabrics
  5. Application-specific FPGA
- Other ongoing related work
  - Configurable cache tuning

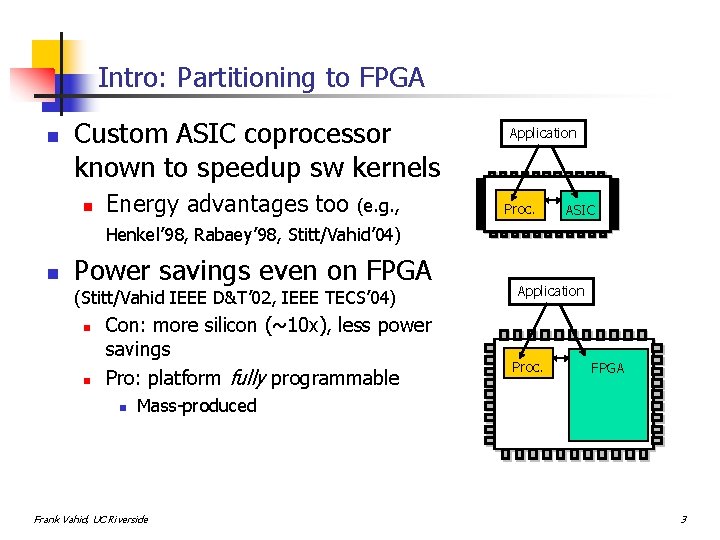

Intro: Partitioning to FPGA
- A custom ASIC coprocessor is known to speed up SW kernels
- Energy advantages too (e.g., Henkel'98, Rabaey'98, Stitt/Vahid'04)
- Power savings even on FPGA (Stitt/Vahid IEEE D&T'02, IEEE TECS'04)
  - Con: more silicon (~10x), smaller power savings
  - Pro: platform fully programmable
Figure: Application Proc. + ASIC vs. Application Proc. + mass-produced FPGA

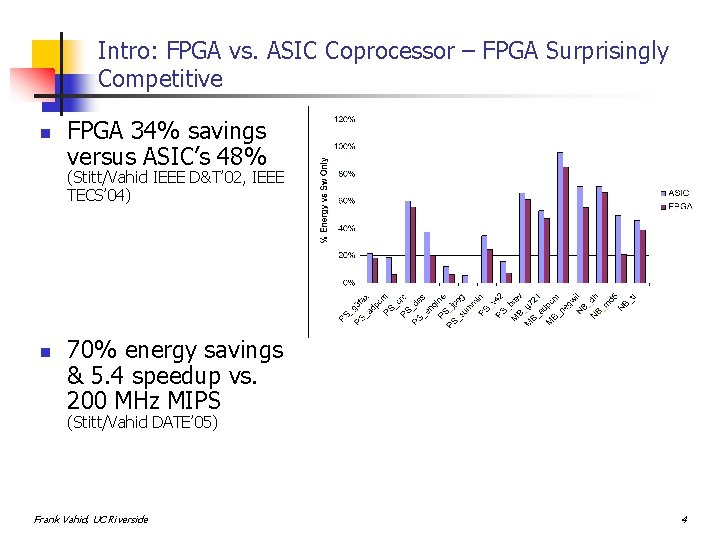

Intro: FPGA vs. ASIC Coprocessor – FPGA Surprisingly Competitive
- FPGA: 34% savings, versus the ASIC's 48% (Stitt/Vahid IEEE D&T'02, IEEE TECS'04)
- 70% energy savings and 5.4x speedup vs. a 200 MHz MIPS (Stitt/Vahid DATE'05)

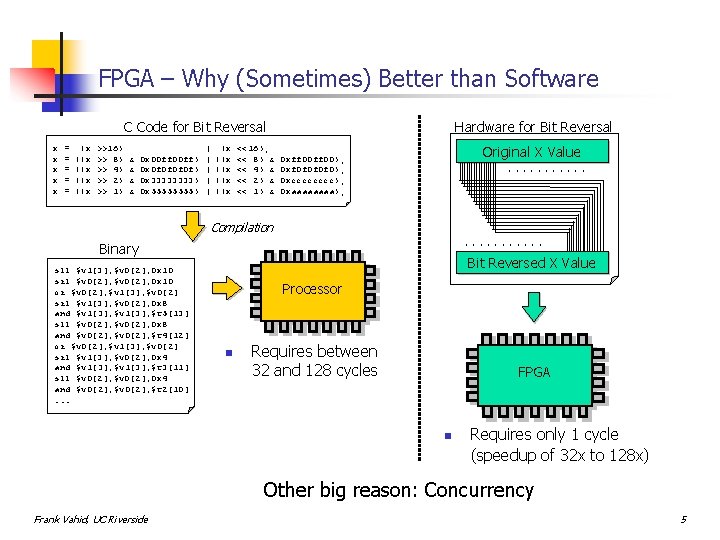

FPGA – Why (Sometimes) Better than Software
C code for bit reversal:

    x = (x >> 16) | (x << 16);
    x = ((x >> 8) & 0x00ff00ff) | ((x << 8) & 0xff00ff00);
    x = ((x >> 4) & 0x0f0f0f0f) | ((x << 4) & 0xf0f0f0f0);
    x = ((x >> 2) & 0x33333333) | ((x << 2) & 0xcccccccc);
    x = ((x >> 1) & 0x55555555) | ((x << 1) & 0xaaaaaaaa);

Compilation produces the binary:

    sll $v1[3], $v0[2], 0x10
    srl $v0[2], 0x10
    or  $v0[2], $v1[3], $v0[2]
    srl $v1[3], $v0[2], 0x8
    and $v1[3], $t5[13]
    sll $v0[2], 0x8
    and $v0[2], $t4[12]
    or  $v0[2], $v1[3], $v0[2]
    srl $v1[3], $v0[2], 0x4
    and $v1[3], $t3[11]
    sll $v0[2], 0x4
    and $v0[2], $t2[10]
    ...

- Processor: requires between 32 and 128 cycles
- FPGA: the bit-reversal hardware is just wiring from the original X value to the bit-reversed X value, so it requires only 1 cycle (speedup of 32x to 128x)
- Other big reason: concurrency
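To make the software side concrete, here is a minimal C sketch (function names are illustrative, not from the slides) of the mask-and-swap routine above next to a naive one-bit-per-iteration loop. Either way a processor spends many instructions, whereas on an FPGA the same mapping is only a fixed permutation of 32 wires.

```c
#include <assert.h>
#include <stdint.h>

/* Mask-and-swap bit reversal, as on the slide: swap the 16-bit halves,
   then bytes, nibbles, bit pairs, and finally adjacent bits. */
uint32_t bit_reverse32(uint32_t x)
{
    x = (x >> 16) | (x << 16);
    x = ((x >> 8) & 0x00ff00ffu) | ((x << 8) & 0xff00ff00u);
    x = ((x >> 4) & 0x0f0f0f0fu) | ((x << 4) & 0xf0f0f0f0u);
    x = ((x >> 2) & 0x33333333u) | ((x << 2) & 0xccccccccu);
    x = ((x >> 1) & 0x55555555u) | ((x << 1) & 0xaaaaaaaau);
    return x;
}

/* Reference version: 32 loop iterations, one bit moved per iteration. */
uint32_t bit_reverse32_loop(uint32_t x)
{
    uint32_t r = 0;
    for (int i = 0; i < 32; i++) {
        r = (r << 1) | (x & 1u);   /* append the lowest bit of x to r */
        x >>= 1;
    }
    return r;
}

/* Usage check: both versions must agree on any input. */
void bit_reverse_selfcheck(uint32_t x)
{
    assert(bit_reverse32(x) == bit_reverse32_loop(x));
}
```

For example, `bit_reverse32(0x00000001u)` yields `0x80000000u`.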

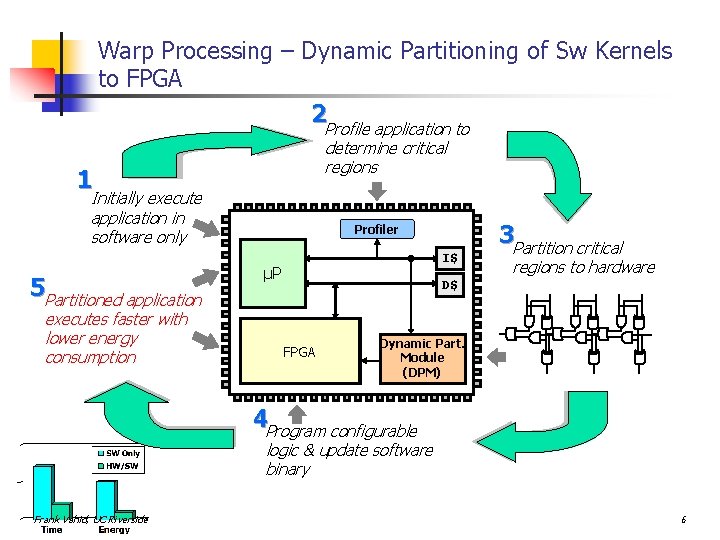

Warp Processing – Dynamic Partitioning of SW Kernels to FPGA
1. Initially execute the application in software only
2. Profile the application to determine critical regions
3. Partition critical regions to hardware
4. Program the configurable logic and update the software binary
5. The partitioned application executes faster with lower energy consumption
Figure: µP with I$ and D$, Profiler, FPGA, and Dynamic Partitioning Module (DPM)

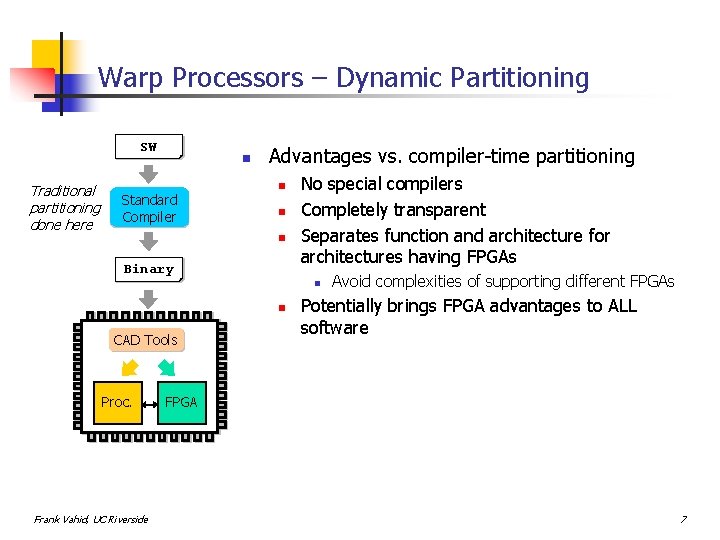

Warp Processors – Dynamic Partitioning
- Advantages vs. compile-time partitioning
  - No special compilers
  - Completely transparent
  - Separates function and architecture for architectures having FPGAs
    - Avoids the complexities of supporting different FPGAs
    - Potentially brings FPGA advantages to ALL software
Figure: SW → standard compiler → binary → profiling and CAD tools → Proc. + FPGA; traditional partitioning is done at the compiler stage

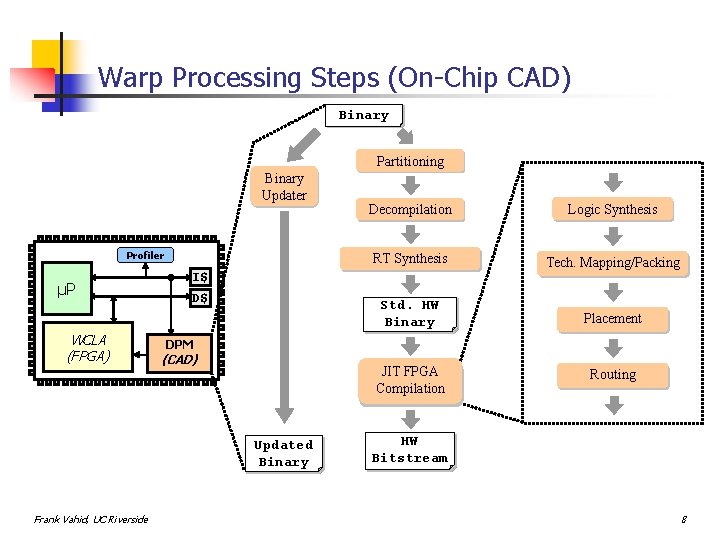

Warp Processing Steps (On-Chip CAD)
Figure: Profiler, µP with I$ and D$, WCLA (FPGA), and DPM (CAD). The on-chip CAD stages are Partitioning, Decompilation, RT Synthesis, and JIT FPGA Compilation (Logic Synthesis, Tech. Mapping/Packing, Placement, Routing), producing a HW binary (bitstream); the Binary Updater produces the updated software binary.

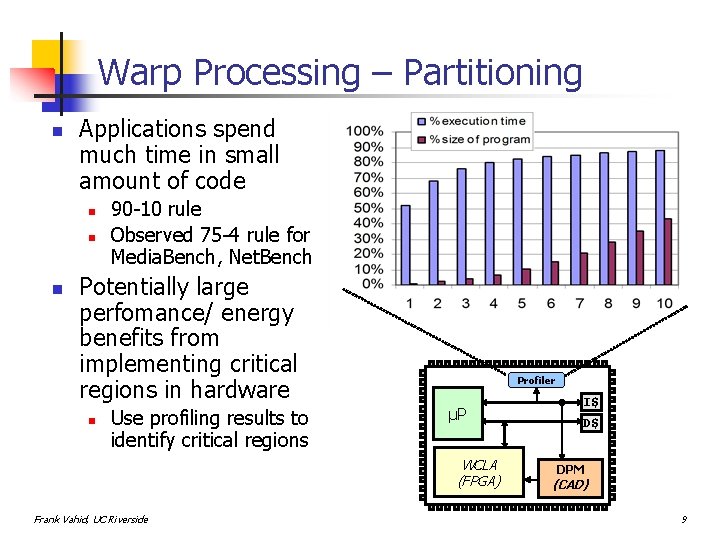

Warp Processing – Partitioning
- Applications spend much of their time in a small amount of code
  - 90-10 rule (about 90% of execution time in 10% of the code)
  - Observed a 75-4 rule for MediaBench and NetBench
- Potentially large performance/energy benefits from implementing critical regions in hardware
- Use profiling results to identify critical regions
Figure: Profiler, µP with I$ and D$, WCLA (FPGA), DPM (CAD)
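One way to see how profiling results could pick the critical region is the C sketch below. It assumes, purely for illustration, that the profiler counts taken backward branches (one count per loop iteration) in a small table and reports the hottest loop. The table size, type names, and policy are hypothetical and do not describe the actual on-chip profiler.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_REGIONS 16  /* hypothetical table size */

typedef struct {
    uint32_t loop_head;  /* target address of a backward branch */
    uint32_t loop_tail;  /* address of the branch itself */
    uint64_t count;      /* times the branch was taken */
} Region;

typedef struct {
    Region regions[MAX_REGIONS];
    size_t used;
} Profile;

/* Record one taken branch. Only backward branches (to < from) are
   treated as loop iterations; forward branches are ignored. */
void profile_branch(Profile *p, uint32_t from, uint32_t to)
{
    assert(p != NULL);
    if (to >= from)
        return;
    for (size_t i = 0; i < p->used; i++) {
        if (p->regions[i].loop_head == to && p->regions[i].loop_tail == from) {
            p->regions[i].count++;
            return;
        }
    }
    if (p->used < MAX_REGIONS)  /* when the table is full, new loops are dropped */
        p->regions[p->used++] = (Region){ to, from, 1 };
}

/* Most frequently executed loop, or NULL if no loop was seen. */
const Region *hottest_region(const Profile *p)
{
    const Region *best = NULL;
    for (size_t i = 0; i < p->used; i++)
        if (best == NULL || p->regions[i].count > best->count)
            best = &p->regions[i];
    return best;
}
```

The loop reported by `hottest_region` is the candidate to hand to the partitioning step.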

Warp Processing – Decompilation
- Synthesis from a binary has a challenge
  - High-level information (e.g., loops, arrays) is lost during compilation
- Solution: recover high-level information through decompilation

Original C code:

    long f( short a[10] ) {
        long accum = 0;
        for (int i=0; i < 10; i++) {
            accum += a[i];
        }
        return accum;
    }

Corresponding assembly:

    Mov reg3, 0
    Mov reg4, 0
    loop:
    Shl reg1, reg3, 1
    Add reg5, reg2, reg1
    Ld reg6, 0(reg5)
    Add reg4, reg6
    Add reg3, 1
    Blt reg3, 10, -5
    Ret reg4

Control/Data Flow Graph Creation:

    reg3 := 0
    reg4 := 0
    loop:
    reg1 := reg3 << 1
    reg5 := reg2 + reg1
    reg6 := mem[reg5 + 0]
    reg4 := reg4 + reg6
    reg3 := reg3 + 1
    if (reg3 < 10) goto loop
    ret reg4

Data Flow Analysis:

    reg3 := 0
    reg4 := 0
    loop:
    reg4 := reg4 + mem[reg2 + (reg3 << 1)]
    reg3 := reg3 + 1
    if (reg3 < 10) goto loop
    ret reg4

Function Recovery:

    long f( long reg2 ) {
        int reg3 = 0;
        int reg4 = 0;
        loop:
        reg4 = reg4 + mem[reg2 + (reg3 << 1)];
        reg3 = reg3 + 1;
        if (reg3 < 10) goto loop;
        return reg4;
    }

Control Structure Recovery:

    long f( long reg2 ) {
        long reg4 = 0;
        for (long reg3 = 0; reg3 < 10; reg3++) {
            reg4 += mem[reg2 + (reg3 << 1)];
        }
        return reg4;
    }

Array Recovery:

    long f( short array[10] ) {
        long reg4 = 0;
        for (long reg3 = 0; reg3 < 10; reg3++) {
            reg4 += array[reg3];
        }
        return reg4;
    }

The original and recovered code are almost identical representations.
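The C sketch below shows the idea behind control structure recovery in the simplest case. It handles a single-block loop ending in a backward `if (r < K) goto head`, where the body bumps `r` once by a positive constant and a constant move sets `r` before the loop. The IR (`Insn`, `Op`) and `recover_for_loop` are hypothetical simplifications, not the actual decompiler.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical three-address IR, just rich enough for the slide's loop. */
typedef enum { OP_MOVI, OP_ADDI, OP_BLT, OP_OTHER } Op;

typedef struct {
    Op  op;
    int dst;     /* register written (for OP_BLT: register tested) */
    int imm;     /* MOVI/ADDI constant, or BLT bound */
    int target;  /* BLT branch target (instruction index) */
} Insn;

typedef struct {
    int found;
    int ivar, init, bound, step;  /* for (ivar = init; ivar < bound; ivar += step) */
    int head, tail;               /* loop spans code[head..tail] */
} LoopInfo;

LoopInfo recover_for_loop(const Insn *code, int n)
{
    LoopInfo li = { 0 };
    assert(code != NULL || n == 0);
    for (int t = 0; t < n; t++) {
        if (code[t].op != OP_BLT || code[t].target > t)
            continue;                          /* need a backward branch */
        int iv = code[t].dst, head = code[t].target, writes = 0, step = 0;
        for (int i = head; i < t; i++) {       /* count writes to iv in the body */
            if (code[i].op == OP_BLT || code[i].dst != iv)
                continue;
            writes++;
            if (code[i].op == OP_ADDI)
                step = code[i].imm;
        }
        if (writes != 1 || step <= 0)
            continue;                          /* iv must be bumped exactly once */
        for (int i = head - 1; i >= 0; i--) {  /* last write to iv before the loop */
            if (code[i].op == OP_BLT || code[i].dst != iv)
                continue;
            if (code[i].op == OP_MOVI)
                return (LoopInfo){ 1, iv, code[i].imm, code[t].imm, step, head, t };
            break;                             /* not a constant initializer */
        }
    }
    return li;
}
```

On the slide's loop (with `reg3` as register 3), this reports `ivar = 3, init = 0, bound = 10, step = 1`. That corresponds to `for (reg3 = 0; reg3 < 10; reg3++)`.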

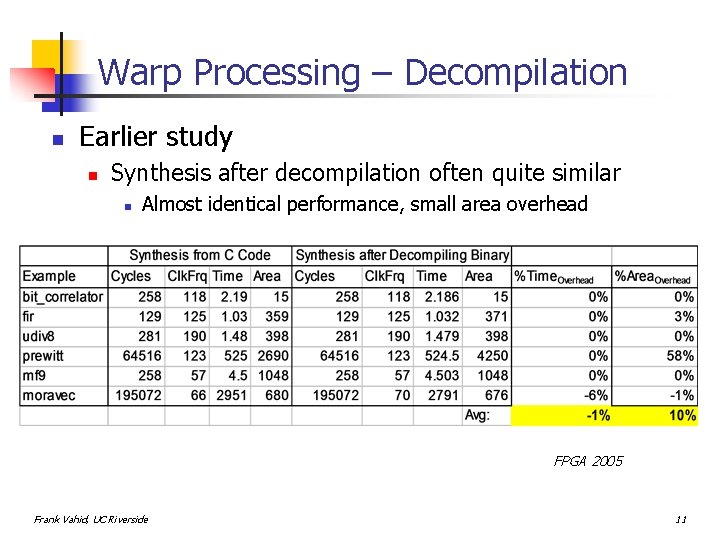

Warp Processing – Decompilation
- Earlier study (FPGA 2005)
  - Synthesis after decompilation is often quite similar to synthesis from the original source
  - Almost identical performance, small area overhead

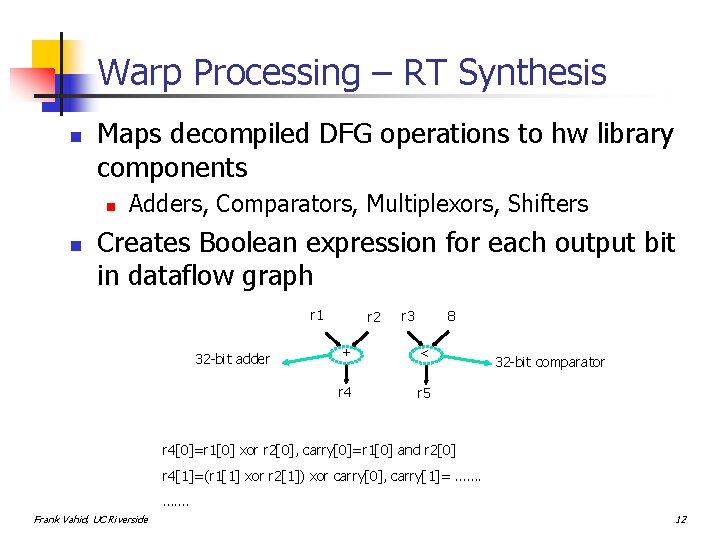

Warp Processing – RT Synthesis
- Maps decompiled DFG operations to HW library components
  - Adders, comparators, multiplexors, shifters
- Creates a Boolean expression for each output bit in the dataflow graph
  - r4[0] = r1[0] xor r2[0], carry[0] = r1[0] and r2[0]
  - r4[1] = (r1[1] xor r2[1]) xor carry[0], carry[1] = ……
Figure: example DFG in which r1, r2, r3, and the constant 8 feed a 32-bit adder (+) and a 32-bit comparator (<), producing r4 and r5
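As an illustration of "one Boolean expression per output bit", the C sketch below models a 32-bit ripple-carry adder bit by bit. Bits 0 and 1 follow the slide's equations. For the carries the slide elides, the code assumes the standard full-adder carry, (a and b) or (carry and (a xor b)). Function names are illustrative.

```c
#include <assert.h>
#include <stdint.h>

/* Bit-level model of a 32-bit adder: each sum bit is an XOR of the two
   operand bits and the incoming carry, as in the slide's r4[i] equations. */
uint32_t ripple_add32(uint32_t r1, uint32_t r2)
{
    uint32_t r4 = 0;
    uint32_t carry = 0;                      /* no carry into bit 0 */
    for (int i = 0; i < 32; i++) {
        uint32_t a = (r1 >> i) & 1u;
        uint32_t b = (r2 >> i) & 1u;
        uint32_t sum = (a ^ b) ^ carry;      /* r4[i] */
        carry = (a & b) | (carry & (a ^ b)); /* carry[i], standard full-adder form */
        r4 |= sum << i;
    }
    return r4;                               /* carry-out of bit 31 is dropped */
}

/* Usage check against the processor's own addition (mod 2^32). */
void adder_selfcheck(uint32_t a, uint32_t b)
{
    assert(ripple_add32(a, b) == (uint32_t)(a + b));
}
```

In hardware, each loop iteration corresponds to one full-adder slice, and the 32 slices exist side by side rather than running in sequence.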

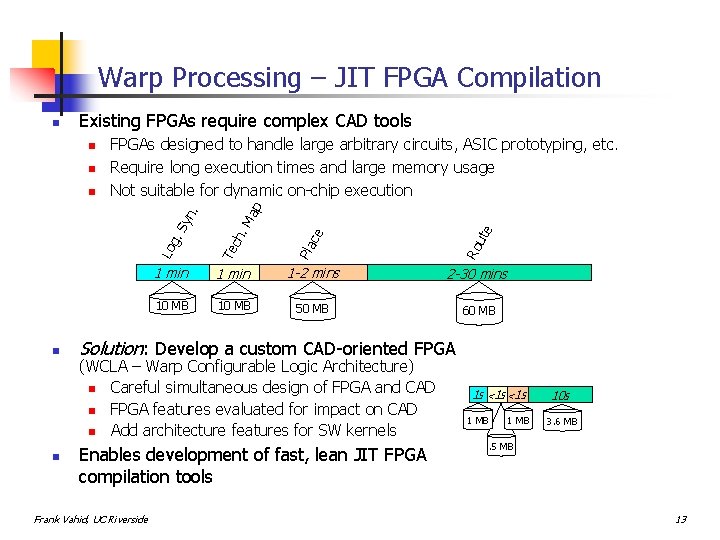

Warp Processing – JIT FPGA Compilation
- Existing FPGAs require complex CAD tools
  - FPGAs are designed to handle large arbitrary circuits, ASIC prototyping, etc.
  - Require long execution times and large memory usage
  - Not suitable for dynamic on-chip execution
- Solution: develop a custom CAD-oriented FPGA (WCLA: Warp Configurable Logic Architecture)
  - Careful simultaneous design of FPGA and CAD
  - FPGA features evaluated for impact on CAD
  - Add architecture features for SW kernels
  - Enables development of fast, lean JIT FPGA compilation tools
Figure: existing CAD stages (Log. Syn., Tech. Map, Place, Route) take from about 1 min to 30 mins and 10 MB to 60 MB per stage; the JIT tools take from under 1 s to about 10 s and 0.5 MB to 3.6 MB

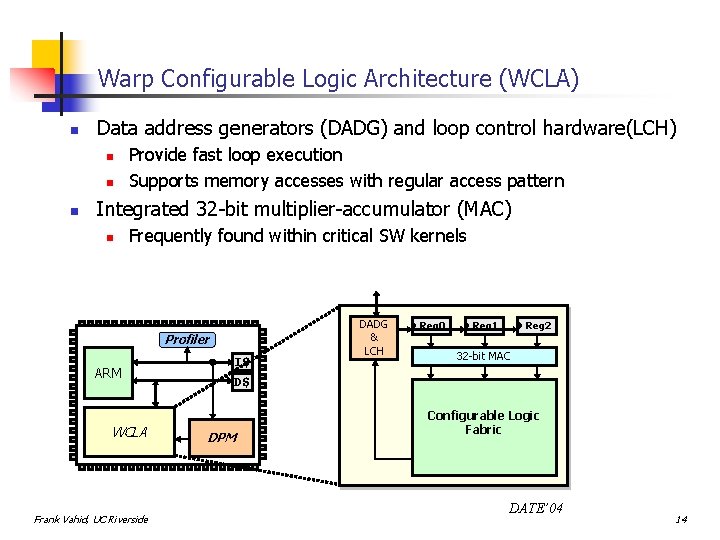

Warp Configurable Logic Architecture (WCLA)
- Data address generators (DADG) and loop control hardware (LCH)
  - Provide fast loop execution
  - Support memory accesses with regular access patterns
- Integrated 32-bit multiplier-accumulator (MAC)
  - Frequently found within critical SW kernels
Figure (DATE'04): ARM with I$ and D$, Profiler, DPM, and WCLA containing DADG & LCH, registers Reg0 to Reg2, a 32-bit MAC, and the configurable logic fabric
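The sketch below is a hypothetical software model of how these blocks cooperate on a kernel such as a dot product. A DADG produces `base + k * stride` for each iteration. The loop counter stands in for the LCH, and a 32-bit accumulator plays the MAC. The `Dadg` fields and function names are illustrative assumptions, not the WCLA's actual configuration interface.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical DADG configuration: element index = base + k * stride. */
typedef struct {
    uint32_t base;    /* index of the first element accessed */
    int32_t  stride;  /* distance between successive accesses (may be negative) */
} Dadg;

/* Address for iteration k. Unsigned arithmetic wraps modulo 2^32,
   so a negative stride steps backwards as expected. */
static uint32_t dadg_address(const Dadg *g, uint32_t k)
{
    return g->base + k * (uint32_t)g->stride;
}

/* One MAC per iteration over two strided operand streams. The loop
   counter models the LCH; acc models a 32-bit MAC that wraps on overflow. */
uint32_t wcla_mac_loop(const uint32_t *mem, Dadg a, Dadg b, uint32_t iterations)
{
    assert(mem != NULL);
    uint32_t acc = 0;
    for (uint32_t k = 0; k < iterations; k++)
        acc += mem[dadg_address(&a, k)] * mem[dadg_address(&b, k)];
    return acc;
}
```

Because the address sequence depends only on `base`, `stride`, and `k`, the hardware can generate addresses without any configurable-logic resources. That is the motivation for making DADG and LCH dedicated blocks.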

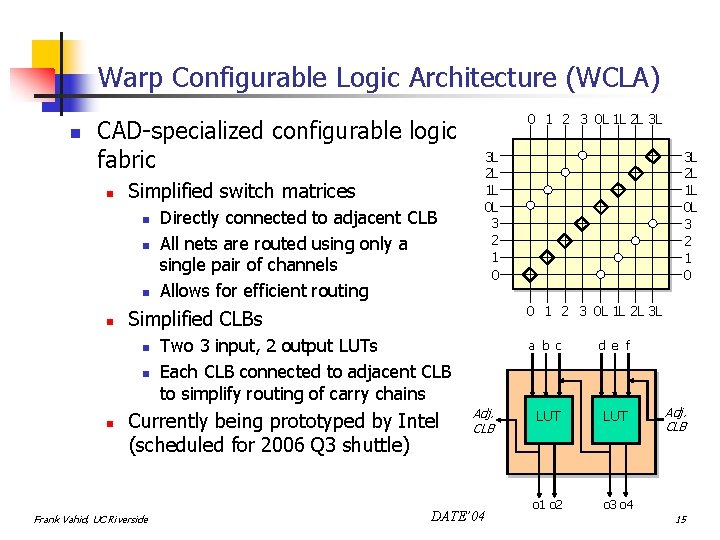

Warp Configurable Logic Architecture (WCLA)
- CAD-specialized configurable logic fabric
  - Simplified switch matrices
    - Directly connected to adjacent CLB
    - All nets are routed using only a single pair of channels
    - Allows for efficient routing
  - Simplified CLBs
    - Two 3-input, 2-output LUTs
    - Each CLB connected to adjacent CLB to simplify routing of carry chains
- Currently being prototyped by Intel (scheduled for 2006 Q3 shuttle)
Figure (DATE'04): switch matrix with channels 0 to 3 and 0L to 3L; CLB with inputs a to f, two LUTs, outputs o1 to o4, and links to adjacent CLBs
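A 3-input, 2-output LUT is simply an 8-entry table with two output bits per entry. The C sketch below models one such LUT and configures it as a full adder (sum, carry-out). It then chains four copies to add 4-bit numbers, mimicking a carry chain passed between adjacent CLBs. Using one LUT per full-adder bit is an illustrative assumption; the real CLB mapping may differ.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of one 3-input, 2-output LUT: 8 configuration
   entries, each holding two output bits (bit 0 -> o1, bit 1 -> o2). */
typedef struct {
    uint8_t table[8];
} Lut3x2;

/* Look up both outputs for inputs a, b, c (each 0 or 1). */
static uint8_t lut_eval(const Lut3x2 *lut, unsigned a, unsigned b, unsigned c)
{
    assert(a <= 1 && b <= 1 && c <= 1);
    return lut->table[(a << 2) | (b << 1) | c];
}

/* Configure the LUT as a full adder: o1 = sum, o2 = carry-out. */
static Lut3x2 make_full_adder(void)
{
    Lut3x2 lut;
    for (unsigned idx = 0; idx < 8; idx++) {
        unsigned a = (idx >> 2) & 1u, b = (idx >> 1) & 1u, c = idx & 1u;
        unsigned sum = a ^ b ^ c;
        unsigned carry = (a & b) | (a & c) | (b & c);
        lut.table[idx] = (uint8_t)(sum | (carry << 1));
    }
    return lut;
}

/* Add two 4-bit numbers with four full-adder LUTs, feeding each
   carry-out into the next LUT's third input (a carry chain). */
unsigned chained_add4(unsigned x, unsigned y)
{
    Lut3x2 fa = make_full_adder();
    unsigned carry = 0, result = 0;
    for (int i = 0; i < 4; i++) {
        uint8_t out = lut_eval(&fa, (x >> i) & 1u, (y >> i) & 1u, carry);
        result |= (unsigned)(out & 1u) << i;
        carry = (out >> 1) & 1u;
    }
    return result | (carry << 4);  /* 5-bit result including the final carry */
}
```

Direct links between neighboring CLBs let each carry travel one hop without passing through the switch matrices. This is the routing simplification the slide describes.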

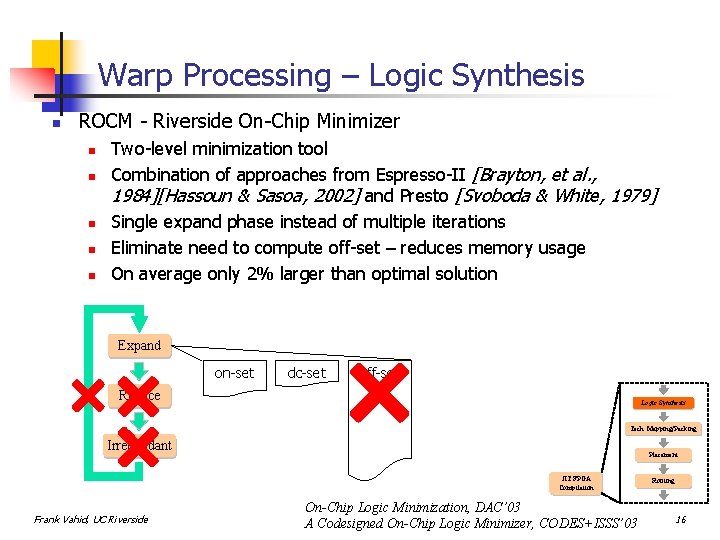

Warp Processing – Logic Synthesis
- ROCM: Riverside On-Chip Minimizer
  - Two-level minimization tool
  - Combination of approaches from Espresso-II [Brayton et al., 1984][Hassoun & Sasao, 2002] and Presto [Svoboda & White, 1979]
  - Single expand phase instead of multiple iterations
  - Eliminates the need to compute the off-set, reducing memory usage
  - On average only 2% larger than the optimal solution
Figure: Expand (on-set, dc-set, off-set) → Reduce → Irredundant
References: On-Chip Logic Minimization, DAC'03; A Codesigned On-Chip Logic Minimizer, CODES+ISSS'03
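The C sketch below shows why an expand step does not need the off-set. A cube may grow as long as every minterm it covers lies in on-set ∪ dc-set, so only those two sets are consulted. This is a simplified greedy minimizer (one expand pass per seed, no Reduce or Irredundant) for at most 6 variables. It is not ROCM's algorithm, and the names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_VARS 6    /* 2^6 = 64 minterms fit in a uint64_t bitset */
#define MAX_CUBES 64

typedef struct {
    uint8_t care;     /* bit v set: variable v appears as a literal */
    uint8_t value;    /* required value of each literal */
} Cube;

/* Bitset of the minterms covered by cube c over n variables. */
static uint64_t cube_minterms(Cube c, int n)
{
    uint64_t set = 0;
    for (unsigned m = 0; m < (1u << n); m++)
        if ((m & c.care) == (c.value & c.care))
            set |= UINT64_C(1) << m;
    return set;
}

/* Single expand pass: drop each literal if the larger cube still lies
   inside `allowed` (on-set | dc-set). The off-set is never built. */
static Cube expand(Cube c, int n, uint64_t allowed)
{
    for (int v = 0; v < n; v++) {
        if (!(c.care & (1u << v)))
            continue;
        Cube bigger = { (uint8_t)(c.care & ~(1u << v)), c.value };
        if ((cube_minterms(bigger, n) & ~allowed) == 0)
            c = bigger;
    }
    return c;
}

/* Greedy cover: expand a cube from each still-uncovered on-set minterm.
   Returns the number of cubes written to cover[]. */
int minimize(uint64_t on, uint64_t dc, int n, Cube cover[MAX_CUBES])
{
    assert(n >= 1 && n <= MAX_VARS);
    uint64_t allowed = on | dc, covered = 0;
    int count = 0;
    for (unsigned m = 0; m < (1u << n); m++) {
        if (!((on >> m) & 1u) || ((covered >> m) & 1u))
            continue;
        Cube seed = { (uint8_t)((1u << n) - 1), (uint8_t)m };
        Cube c = expand(seed, n, allowed);
        cover[count++] = c;
        covered |= cube_minterms(c, n);
    }
    return count;
}
```

For example, with three variables and on-set {0, 1, 2, 3}, a single cube results, containing only the literal x2 = 0. The greedy result depends on seed and literal order, which is one reason real minimizers add further phases.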

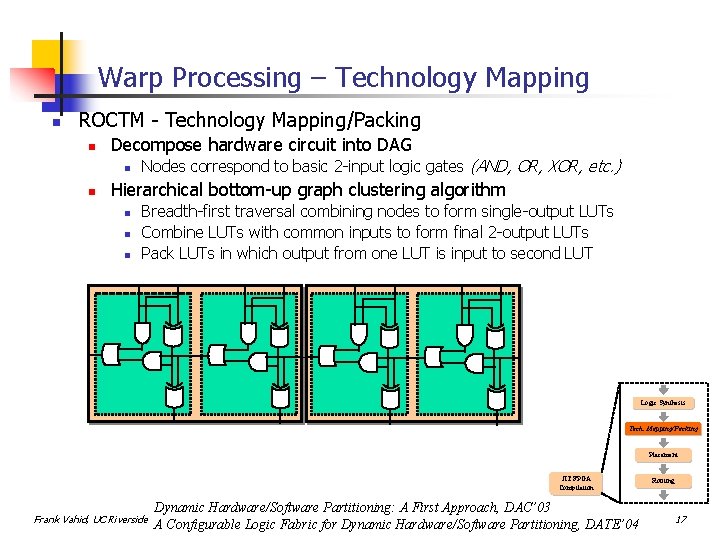

Warp Processing – Technology Mapping
- ROCTM: Technology Mapping/Packing
  - Decompose the hardware circuit into a DAG
    - Nodes correspond to basic 2-input logic gates (AND, OR, XOR, etc.)
  - Hierarchical bottom-up graph clustering algorithm
    - Breadth-first traversal combining nodes to form single-output LUTs
    - Combine LUTs with common inputs to form final 2-output LUTs
    - Pack LUTs in which the output from one LUT is an input to the second LUT
References: Dynamic Hardware/Software Partitioning: A First Approach, DAC'03; A Configurable Logic Fabric for Dynamic Hardware/Software Partitioning, DATE'04
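The first clustering step, merging 2-input gates into single-output LUTs, can be sketched in C as follows. This version swaps in a plain topological (bottom-up) pass for the slide's breadth-first traversal. It also assumes only sink gates are circuit outputs and skips the later 2-output combining and packing steps. A fanin gate is absorbed into its consumer when it has no other fanout and the merged inputs still fit in a 3-input LUT. All names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define LUT_INPUTS 3      /* WCLA LUTs have 3 inputs */
#define MAX_SIGNALS 64

/* Signal ids: 0..num_pis-1 are primary inputs; gate g drives num_pis + g. */
typedef struct {
    int in0, in1;
} Gate;

static int popcount64(uint64_t x)
{
    int c = 0;
    while (x) { x &= x - 1; c++; }
    return c;
}

/* Gates must be in topological order. Returns the number of LUTs;
   absorbed[g] = 1 when gate g was merged into its consumer's LUT. */
int cluster_luts(const Gate *gates, int num_gates, int num_pis, int absorbed[])
{
    assert(num_pis + num_gates <= MAX_SIGNALS);
    int fanout[MAX_SIGNALS] = { 0 };
    uint64_t support[MAX_SIGNALS];            /* input set of the LUT rooted at gate g */
    for (int g = 0; g < num_gates; g++) {
        fanout[gates[g].in0]++;
        fanout[gates[g].in1]++;
        absorbed[g] = 0;
    }
    int luts = num_gates;
    for (int g = 0; g < num_gates; g++) {
        int ins[2] = { gates[g].in0, gates[g].in1 };
        uint64_t s = (UINT64_C(1) << ins[0]) | (UINT64_C(1) << ins[1]);
        for (int k = 0; k < 2; k++) {
            int sig = ins[k];
            if (sig < num_pis || fanout[sig] != 1)
                continue;                     /* primary input or shared gate */
            int f = sig - num_pis;
            uint64_t merged = (s & ~(UINT64_C(1) << sig)) | support[f];
            if (popcount64(merged) <= LUT_INPUTS) {
                s = merged;                   /* fanin gate now lives inside this LUT */
                absorbed[f] = 1;
                luts--;
            }
        }
        support[g] = s;
    }
    return luts;
}
```

For `((a AND b) OR c) XOR d`, the AND merges into the OR's LUT (inputs a, b, c), while the XOR needs its own LUT because a fourth input would not fit. The result is two LUTs.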

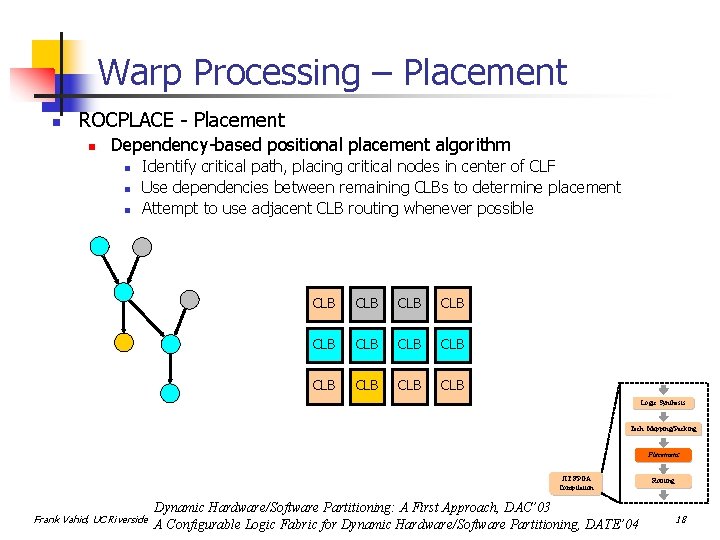

Warp Processing – Placement
- ROCPLACE: Placement
  - Dependency-based positional placement algorithm
    - Identify the critical path, placing critical nodes in the center of the CLF (configurable logic fabric)
    - Use dependencies between the remaining CLBs to determine placement
    - Attempt to use adjacent-CLB routing whenever possible
References: Dynamic Hardware/Software Partitioning: A First Approach, DAC'03; A Configurable Logic Fabric for Dynamic Hardware/Software Partitioning, DATE'04
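A rough C sketch of dependency-based positional placement, under simplifying assumptions: unit delay per CLB, nodes in topological order, at most two fanins each, and a hypothetical 8x8 CLB array. The code finds the longest (critical) path and lays it along the center row. Every other node goes into the free cell nearest its first fanin, so dependent CLBs tend to land next to each other. This illustrates the idea only and is not ROCPLACE itself.

```c
#include <assert.h>
#include <stdlib.h>

#define MAX_NODES 32
#define GRID 8                       /* hypothetical 8x8 CLB array */

typedef struct {
    int nfanin;
    int fanin[2];                    /* earlier node indices (topological order) */
} Node;

typedef struct { int x, y; } Pos;

/* Longest path with unit delay per CLB. Stores the path's nodes in
   path[] from source to sink and returns its length. */
int critical_path(const Node *nodes, int n, int path[])
{
    assert(n >= 1 && n <= MAX_NODES);
    int depth[MAX_NODES], prev[MAX_NODES], end = 0;
    for (int i = 0; i < n; i++) {
        depth[i] = 1;
        prev[i] = -1;
        for (int k = 0; k < nodes[i].nfanin; k++) {
            int f = nodes[i].fanin[k];
            if (depth[f] + 1 > depth[i]) {
                depth[i] = depth[f] + 1;
                prev[i] = f;
            }
        }
        if (depth[i] > depth[end])
            end = i;
    }
    int len = depth[end];
    for (int i = end, k = len - 1; i != -1; i = prev[i], k--)
        path[k] = i;
    return len;
}

/* Critical nodes go along the center row; every other node goes to the
   free cell nearest its first fanin (or the center if it has none). */
void place(const Node *nodes, int n, Pos pos[])
{
    int used[GRID][GRID] = { { 0 } };
    int path[MAX_NODES], on_path[MAX_NODES] = { 0 };
    int len = critical_path(nodes, n, path);
    assert(len <= GRID && n <= GRID * GRID);
    int x0 = (GRID - len) / 2, row = GRID / 2;
    for (int k = 0; k < len; k++) {
        pos[path[k]] = (Pos){ x0 + k, row };
        used[row][x0 + k] = 1;
        on_path[path[k]] = 1;
    }
    for (int i = 0; i < n; i++) {
        if (on_path[i])
            continue;
        Pos want = nodes[i].nfanin > 0 ? pos[nodes[i].fanin[0]] : (Pos){ GRID / 2, GRID / 2 };
        int best = -1, best_d = 0;
        for (int c = 0; c < GRID * GRID; c++) {   /* nearest free cell (Manhattan) */
            int x = c % GRID, y = c / GRID;
            int d = abs(x - want.x) + abs(y - want.y);
            if (!used[y][x] && (best < 0 || d < best_d)) {
                best = c;
                best_d = d;
            }
        }
        pos[i] = (Pos){ best % GRID, best / GRID };
        used[best / GRID][best % GRID] = 1;
    }
}
```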

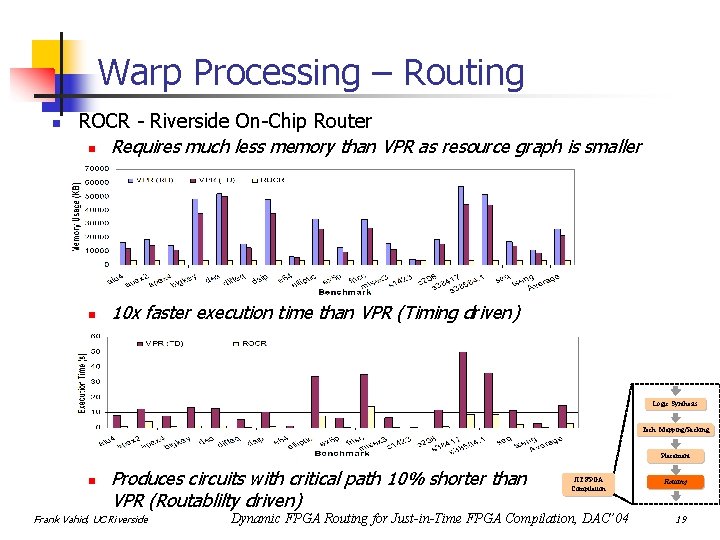

Warp Processing – Routing
- ROCR: Riverside On-Chip Router
  - Requires much less memory than VPR, as its resource graph is smaller
  - 10x faster execution time than VPR (timing driven)
  - Produces circuits with a critical path 10% shorter than VPR (routability driven)
Reference: Dynamic FPGA Routing for Just-in-Time FPGA Compilation, DAC'04

Experiments with Warp Processing
- Warp Processor
  - ARM/MIPS plus our fabric
  - Riverside on-chip CAD tools map the critical region to the configurable fabric
  - Requires less than 2 seconds on a lean embedded processor to perform synthesis and JIT FPGA compilation
- Traditional HW/SW Partitioning
  - ARM/MIPS plus Xilinx Virtex-E FPGA
  - Software manually partitioned; hardware written in VHDL and synthesized using Xilinx ISE 4.1
Figure: ARM with I$/D$, Profiler, WCLA, and DPM vs. ARM with I$/D$ and a Xilinx Virtex-E FPGA

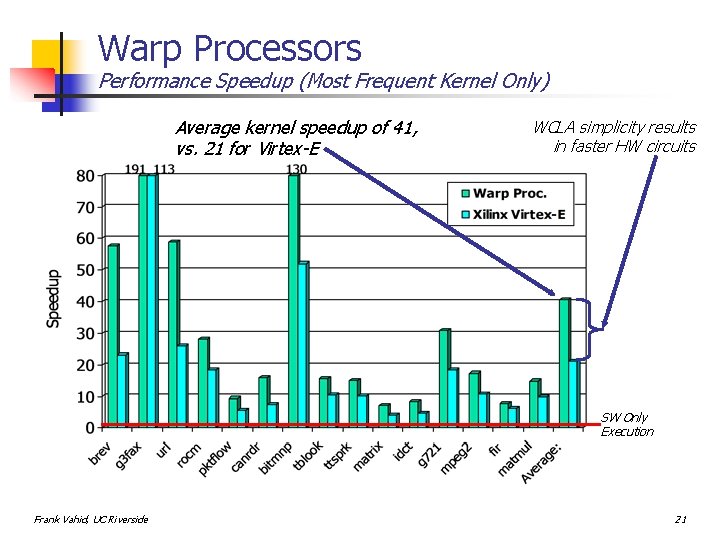

Warp Processors Performance Speedup (Most Frequent Kernel Only)
- Average kernel speedup of 41x, vs. 21x for Virtex-E
- WCLA simplicity results in faster HW circuits
Figure: speedup relative to SW-only execution

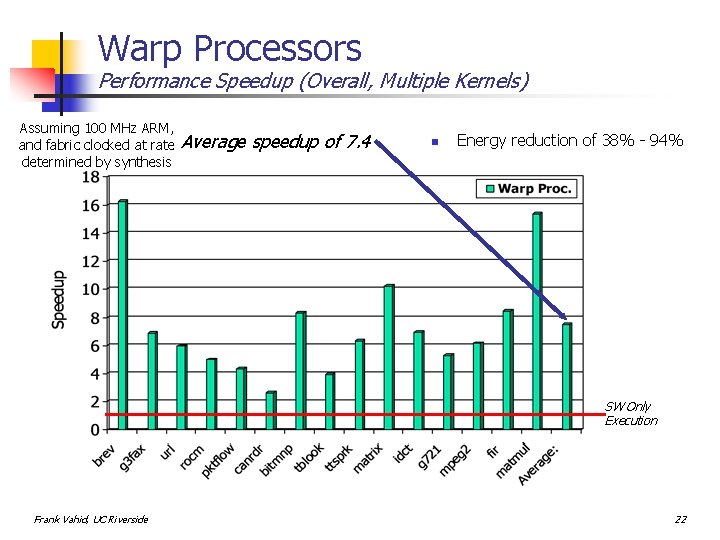

Warp Processors Performance Speedup (Overall, Multiple Kernels)
- Assuming a 100 MHz ARM, and fabric clocked at the rate determined by synthesis
- Average speedup of 7.4x
- Energy reduction of 38% to 94%
Figure: speedup relative to SW-only execution
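Amdahl's law explains why the overall speedup is far below the kernel speedup: the time left in software bounds the gain. The small C helper below applies the multi-kernel form, 1 / ((1 − Σf_i) + Σ f_i/s_i), where f_i is the fraction of original run time spent in kernel i and s_i is that kernel's speedup. The numbers in the test are hypothetical and are not meant to reproduce the benchmark averages above, which are taken over many applications.

```c
#include <assert.h>

/* Overall speedup when kernel i, taking fraction f[i] of the original
   run time, is accelerated by s[i] and everything else is unchanged. */
double amdahl_multi(const double *f, const double *s, int k)
{
    double remaining = 1.0, accelerated = 0.0;
    for (int i = 0; i < k; i++) {
        assert(f[i] >= 0.0 && s[i] > 0.0);
        remaining -= f[i];
        accelerated += f[i] / s[i];
    }
    assert(remaining >= -1e-12);   /* fractions must not sum to more than 1 */
    return 1.0 / (remaining + accelerated);
}
```

For instance, if 90% of run time sits in kernels sped up 41x, the overall speedup is 1 / (0.1 + 0.9/41) = 8.2x, and no kernel speedup can push it past 10x.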

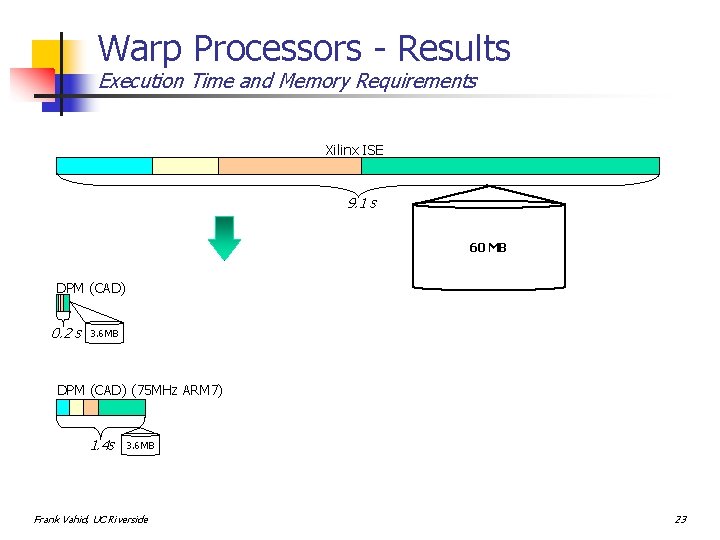

Warp Processors – Results: Execution Time and Memory Requirements

| Tool | Execution time | Memory |
|---|---|---|
| Xilinx ISE | 9.1 s | 60 MB |
| DPM (CAD) | 0.2 s | 3.6 MB |
| DPM (CAD) on a 75 MHz ARM7 | 1.4 s | 3.6 MB |


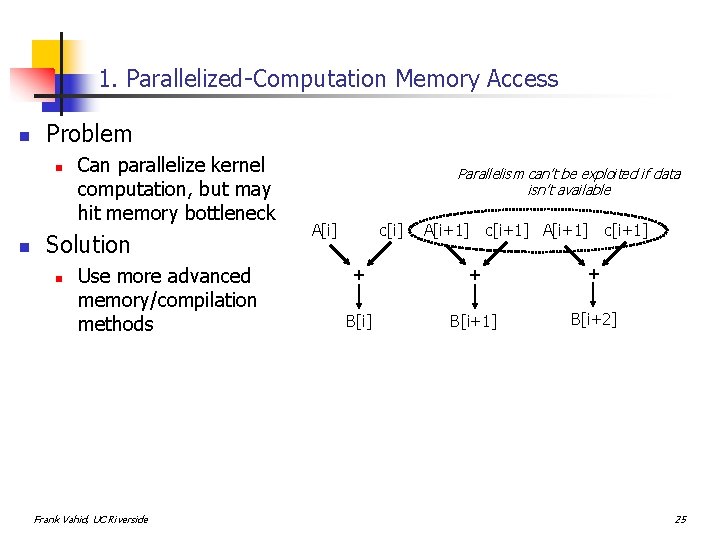

1. Parallelized-Computation Memory Access
- Problem
  - Can parallelize kernel computation, but may hit a memory bottleneck
  - Parallelism can't be exploited if data isn't available
- Solution
  - Use more advanced memory/compilation methods
Figure: parallel adders consuming A[i], B[i], A[i+1], B[i+1], B[i+2] and producing c[i], c[i+1]

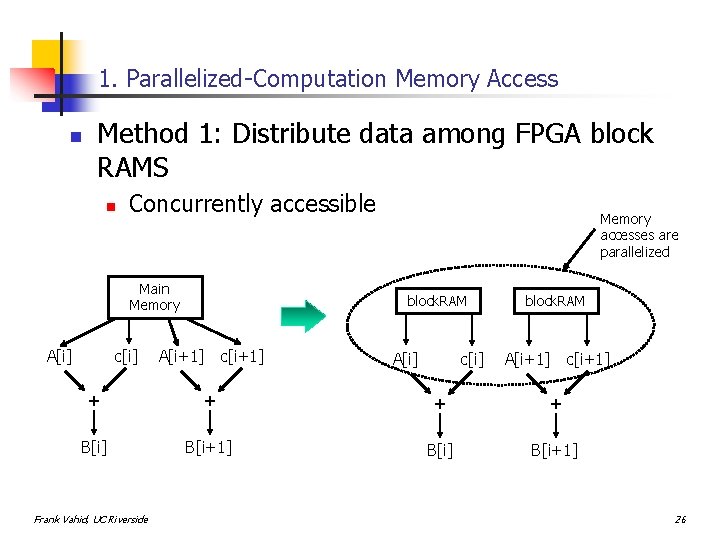

1. Parallelized-Computation Memory Access
- Method 1: Distribute data among FPGA block RAMs
  - Concurrently accessible
  - Memory accesses are parallelized
Figure: A[i], c[i], A[i+1], c[i+1] moved from main memory into separate block RAMs feeding parallel adders with B[i], B[i+1]
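A common way to distribute an array among block RAMs is cyclic (interleaved) partitioning: element i goes to bank i mod B. The C sketch below assumes that scheme and a hypothetical B = 4. It checks whether a set of strided accesses can all be served in the same cycle. Consecutive elements never collide, but a stride that shares a factor with B does.

```c
#include <assert.h>
#include <stdbool.h>

#define NUM_BANKS 4   /* hypothetical number of block RAMs */

typedef struct { unsigned bank, offset; } BankAddr;

/* Cyclic partitioning: element i lives in bank i % NUM_BANKS
   at offset i / NUM_BANKS within that bank. */
BankAddr bank_of(unsigned i)
{
    return (BankAddr){ i % NUM_BANKS, i / NUM_BANKS };
}

/* True if elements first, first+stride, ..., (count of them) all fall in
   different banks, i.e. one cycle can fetch them concurrently. */
bool conflict_free(unsigned first, unsigned stride, unsigned count)
{
    assert(count > 0);
    bool busy[NUM_BANKS] = { false };
    for (unsigned k = 0; k < count; k++) {
        unsigned b = bank_of(first + k * stride).bank;
        if (busy[b])
            return false;   /* two accesses need the same single-ported bank */
        busy[b] = true;
    }
    return true;
}
```

With four banks, `A[i]` through `A[i+3]` are always conflict-free. Accesses at stride 2 collide after two elements, so a real tool would choose the partitioning to match the kernel's access pattern.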

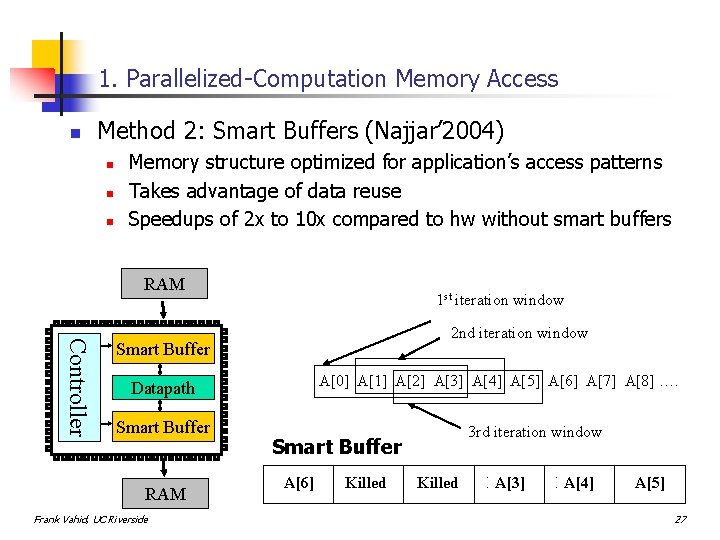

1. Parallelized-Computation Memory Access
- Method 2: Smart Buffers (Najjar 2004)
  - Memory structure optimized for the application's access patterns
  - Takes advantage of data reuse
  - Speedups of 2x to 10x compared to HW without smart buffers
Figure: RAM → smart buffer → datapath → smart buffer → RAM, under a controller; successive iteration windows over A[0], A[1], …, A[8], … in which elements no longer needed are killed and empty slots are refilled
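The reuse a smart buffer exploits appears in any sliding-window kernel. The C sketch below assumes a hypothetical 3-wide window (`out[i] = A[i] + A[i+1] + A[i+2]`) and keeps the window in a small circular buffer. Each element of `A` is then read from RAM exactly once, instead of three times, and the oldest entry is overwritten ("killed") as each new one arrives. This illustrates the principle only, not Najjar's smart-buffer design.

```c
#include <assert.h>
#include <stddef.h>

#define WIN 3   /* hypothetical window width */

/* out[i] = A[i] + A[i+1] + A[i+2] for i = 0 .. n-WIN, using a circular
   buffer so each A element is fetched once. Returns the number of reads. */
size_t window_sum(const int *A, size_t n, int *out)
{
    int win[WIN];
    size_t reads = 0;
    assert(n >= WIN);
    for (size_t k = 0; k + 1 < WIN; k++)        /* prime the buffer */
        win[k] = A[reads++];
    for (size_t i = 0; i + WIN <= n; i++) {
        win[(i + WIN - 1) % WIN] = A[reads++];  /* new element replaces the oldest */
        int sum = 0;
        for (size_t k = 0; k < WIN; k++)
            sum += win[k];
        out[i] = sum;
    }
    return reads;                               /* equals n */
}
```

Without the buffer, the same kernel would read `WIN * (n - WIN + 1)` elements. That is 12 reads instead of 6 for a 6-element array, and the gap grows with the window width.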

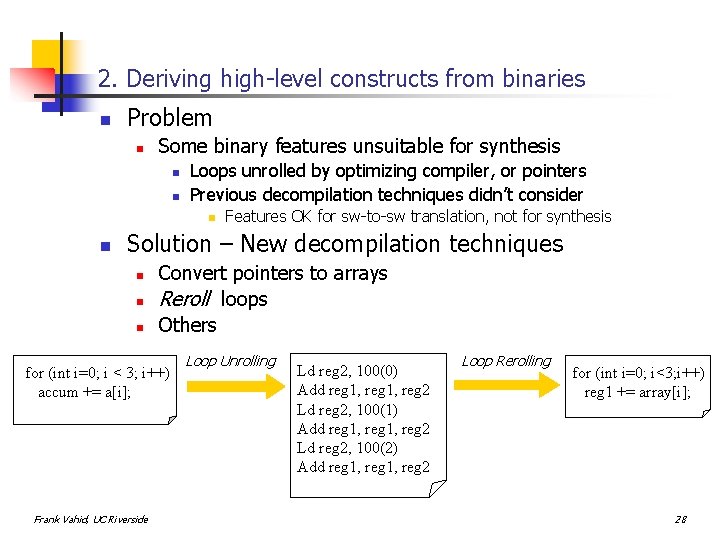

2. Deriving high-level constructs from binaries
- Problem
  - Some binary features are unsuitable for synthesis
    - Loops unrolled by an optimizing compiler, or pointers
  - Previous decompilation techniques didn't consider these
    - Features OK for SW-to-SW translation, not for synthesis
- Solution: new decompilation techniques
  - Convert pointers to arrays
  - Reroll loops
  - Others

Example, original loop:

    for (int i=0; i < 3; i++)
        accum += a[i];

After loop unrolling:

    Ld reg2, 100(0)
    Add reg1, reg2
    Ld reg2, 100(1)
    Add reg1, reg2
    Ld reg2, 100(2)
    Add reg1, reg2

After loop rerolling:

    for (int i=0; i<3; i++)
        reg1 += array[i];
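Loop rerolling reverses the unrolling above by spotting a repeated instruction body whose copies differ only in a steadily advancing address offset. The C sketch below checks whether a straight-line sequence is `trips` copies of a `period`-instruction body. It reads `Ld reg2, 100(k)` as base 100 with offset k, and the `Instr` encoding and `reroll` function are hypothetical simplifications, not the actual decompiler.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum { LD, ADD } Opcode;

/* Hypothetical encoding: LD dst, base(off) or ADD dst, src. */
typedef struct {
    Opcode op;
    int dst, src;      /* registers (src unused by LD) */
    int base, off;     /* LD address base and offset (unused by ADD) */
} Instr;

typedef struct {
    bool ok;
    int trips;         /* loop trip count */
    int first_off;     /* LD offset in iteration 0 */
    int stride;        /* LD offset increment per iteration */
} Reroll;

Reroll reroll(const Instr *code, int n, int period)
{
    Reroll r = { false, 0, 0, 0 };
    assert(period > 0);
    if (n < 2 * period || n % period != 0)
        return r;
    int stride = 0;
    bool have_stride = false;
    for (int i = period; i < n; i++) {
        const Instr *a = &code[i - period], *b = &code[i];
        if (a->op != b->op || a->dst != b->dst || a->src != b->src || a->base != b->base)
            return r;                  /* copies must match apart from LD offsets */
        if (b->op == LD) {
            int d = b->off - a->off;
            if (have_stride && d != stride)
                return r;              /* offsets must advance by one common stride */
            stride = d;
            have_stride = true;
        } else if (a->off != b->off) {
            return r;
        }
    }
    r.ok = true;
    r.trips = n / period;
    r.stride = stride;
    for (int i = 0; i < period; i++)
        if (code[i].op == LD) {
            r.first_off = code[i].off;
            break;
        }
    return r;
}
```

A successful result maps back to `for (i = 0; i < trips; i++) reg1 += array[first_off + i * stride];`. For the slide's sequence this gives 3 trips with stride 1.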

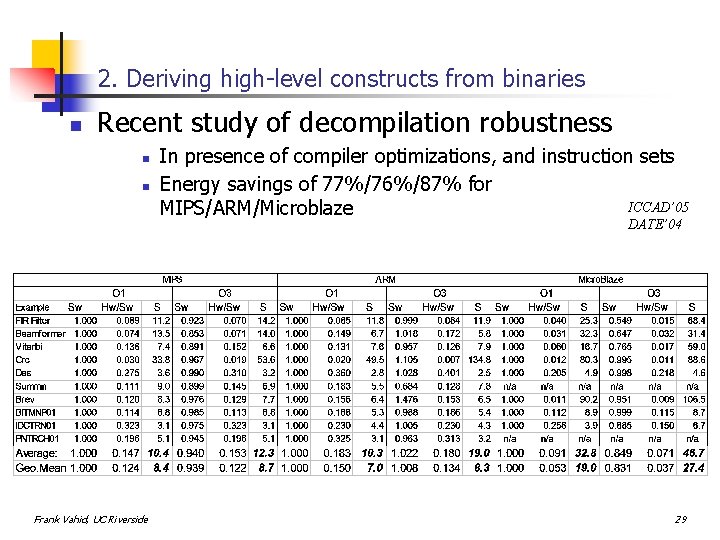

2. Deriving high-level constructs from binaries
- Recent study of decompilation robustness
  - In the presence of compiler optimizations, and across instruction sets
  - Energy savings of 77%/76%/87% for MIPS/ARM/MicroBlaze (ICCAD'05, DATE'04)

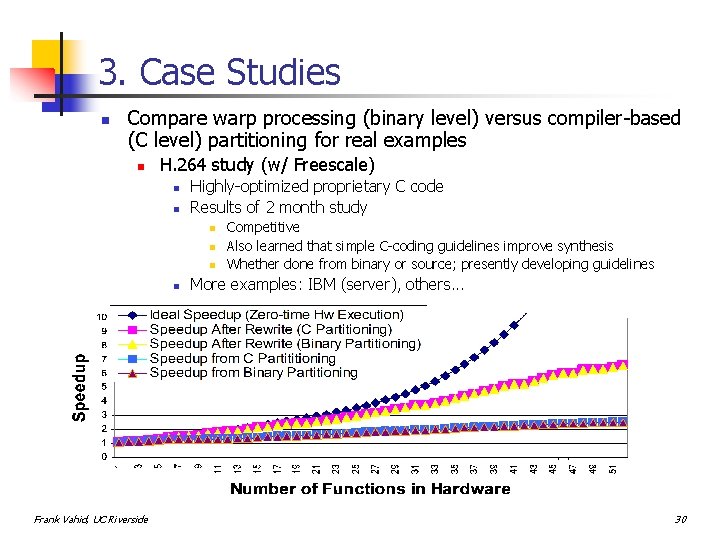

3. Case Studies
- Compare warp processing (binary level) versus compiler-based (C level) partitioning for real examples
- H.264 study (with Freescale)
  - Highly optimized proprietary C code
  - Results of a 2-month study
    - Competitive
    - Also learned that simple C-coding guidelines improve synthesis, whether done from binary or source; presently developing guidelines
- More examples: IBM (server), others...

4. Using Commercial FPGA Fabrics
- Can warp processing utilize commercial FPGAs?
- Approach 1: "Virtual FPGA": map our fabric onto a commercial FPGA
  - Collaboration with Xilinx
  - Initial results: 6x performance overhead, 100x area overhead
  - Main problem is routing
  - Investigating better methods (one-to-one mapping)
Figure: uP + warp fabric, with the warp fabric mapped onto a commercial FPGA to form a "virtual FPGA"

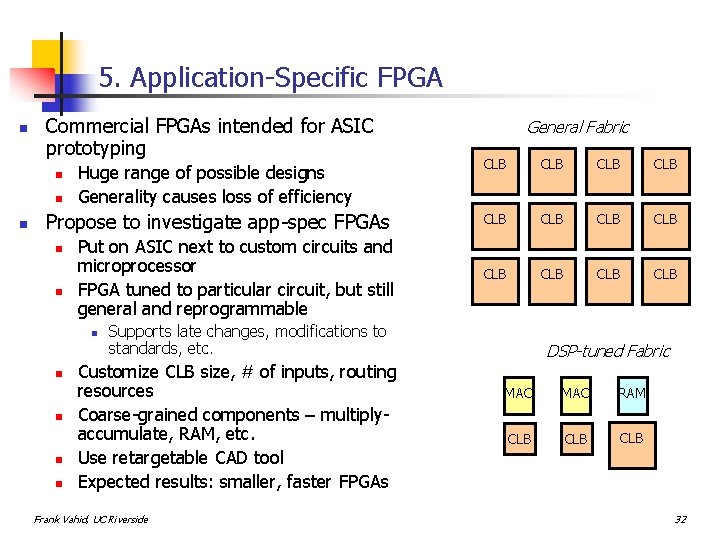

5. Application-Specific FPGA
- Commercial FPGAs are intended for ASIC prototyping
  - Huge range of possible designs
  - Generality causes loss of efficiency
- Propose to investigate app-specific FPGAs
  - Put on the ASIC next to custom circuits and the microprocessor
  - FPGA tuned to a particular circuit, but still general and reprogrammable
    - Supports late changes, modifications to standards, etc.
  - Customize CLB size, # of inputs, routing resources
  - Coarse-grained components: multiply-accumulate, RAM, etc.
  - Use a retargetable CAD tool
  - Expected results: smaller, faster FPGAs
Figure: general fabric of CLBs vs. DSP-tuned fabric with MAC, RAM, and CLBs

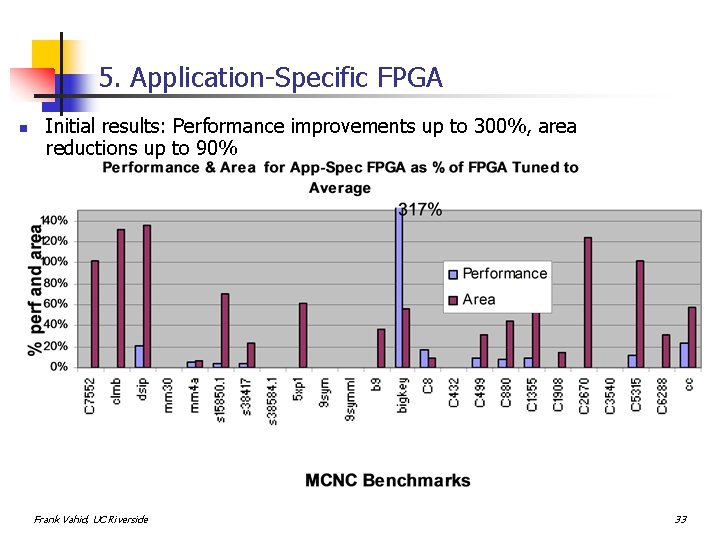

5. Application-Specific FPGA
- Initial results: performance improvements up to 300%, area reductions up to 90%


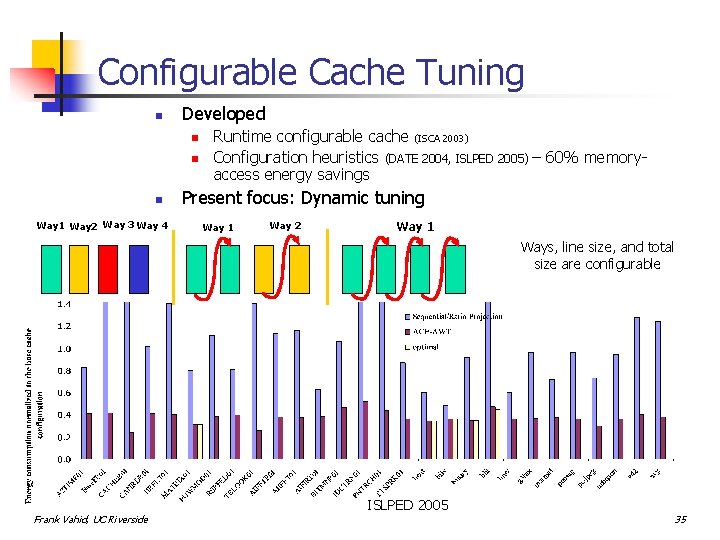

Configurable Cache Tuning
- Developed
  - Runtime configurable cache (ISCA 2003)
  - Configuration heuristics (DATE 2004, ISLPED 2005): 60% memory-access energy savings
- Present focus: dynamic tuning
Figure (ISLPED 2005): a four-way cache (Way 1 to Way 4) reconfigurable as two-way or one-way; ways, line size, and total size are configurable
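A configuration heuristic avoids measuring every combination of ways, line size, and total size. The C sketch below tunes one parameter at a time in the order size, then line size, then ways, keeping the others at their best values so far. It uses 7 energy evaluations instead of the 27 needed for exhaustive search. The tuning order, the parameter values, and the toy energy model are illustrative assumptions, not the published heuristics. A real tuner would measure energy on the running system.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { int size_kb, line_b, ways; } CacheCfg;
typedef double (*EnergyFn)(CacheCfg);   /* measured or modeled energy */

/* Hypothetical candidate values for each parameter. */
static const int SIZES[] = { 2, 4, 8 };
static const int LINES[] = { 16, 32, 64 };
static const int WAYS[]  = { 1, 2, 4 };
#define N_OPT 3

/* One parameter at a time: size, then line size, then ways. */
CacheCfg tune_cache(EnergyFn energy, int *evaluations)
{
    assert(energy != NULL);
    CacheCfg best = { SIZES[0], LINES[0], WAYS[0] };
    double best_e = energy(best);
    int evals = 1;
    for (int dim = 0; dim < 3; dim++) {
        for (int k = 0; k < N_OPT; k++) {
            CacheCfg c = best;
            if (dim == 0) c.size_kb = SIZES[k];
            else if (dim == 1) c.line_b = LINES[k];
            else c.ways = WAYS[k];
            if (c.size_kb == best.size_kb && c.line_b == best.line_b && c.ways == best.ways)
                continue;                  /* already measured */
            double e = energy(c);
            evals++;
            if (e < best_e) { best_e = e; best = c; }
        }
    }
    if (evaluations != NULL)
        *evaluations = evals;
    return best;
}

/* Made-up separable energy model with its minimum at 4 KB, 32 B, 2 ways. */
double example_energy(CacheCfg c)
{
    double e = 1.0;
    e += (c.size_kb > 4 ? c.size_kb - 4 : 4 - c.size_kb);
    e += (c.line_b > 32 ? c.line_b - 32 : 32 - c.line_b) / 16.0;
    e += (c.ways > 2 ? c.ways - 2 : 2 - c.ways);
    return e;
}
```

One-at-a-time search finds the optimum of a separable model like `example_energy`. When parameters interact, it can stop at a local optimum, which is why the search order matters in practice.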

Summary
- Basic warp technology
  - Developed 2002-2005 (NSF, and 1-year CSR grants from SRC)
  - Uses binary synthesis and FPGAs
  - Conclusion: feasible technology, much potential
- Ongoing work (SRC)
  - Improve and validate effectiveness of binary synthesis
  - Examine FPGA implementation issues
- Extensive future work to develop robust warp technology
