Data Analysis

Mark Stamp

- Slides: 46


Topics

- Experimental design
  - Training set, test set, n-fold cross validation, thresholding, imbalance, etc.
- Accuracy
  - False positive, false negative, etc.
- ROC curves
  - Area under the ROC curve (AUC)
  - Partial AUC (sometimes written as AUCp)

Objective

- Assume that we have a proposed method for detecting malware
- We want to determine how well it performs on a specific dataset
  - That is, we want to quantify its effectiveness
- Ideally, compare to previous work
  - But direct comparisons are often difficult
- Comparisons to AV products?

Basic Assumptions

- We have a set of known malware
  - All from a single (metamorphic) “family”…
  - …or, at least, all of a similar type
  - Broader “families” are more difficult
- We also have a representative non-family set
  - Often assumed to be benign files
  - A more diverse set may be more difficult
- Much depends on the specifics of the problem

Experimental Design

- We want to test a malware detection score
  - Refer to the malware dataset as the match set
  - And to the benign dataset as the nomatch set
- Partition the match set into…
  - A training set, used to determine the parameters of the scoring function
  - A test set, reserved to test the scoring function generated from the training set
- Note: we cannot test on the training set

Training and Scoring

- Two phases: training and scoring
- Training phase
  - Train a model using the training set
- Scoring phase
  - Score the data in the test set and score the nomatch (benign) set
- Analyze the results from the scoring phase
  - Assume these results are representative of the general case

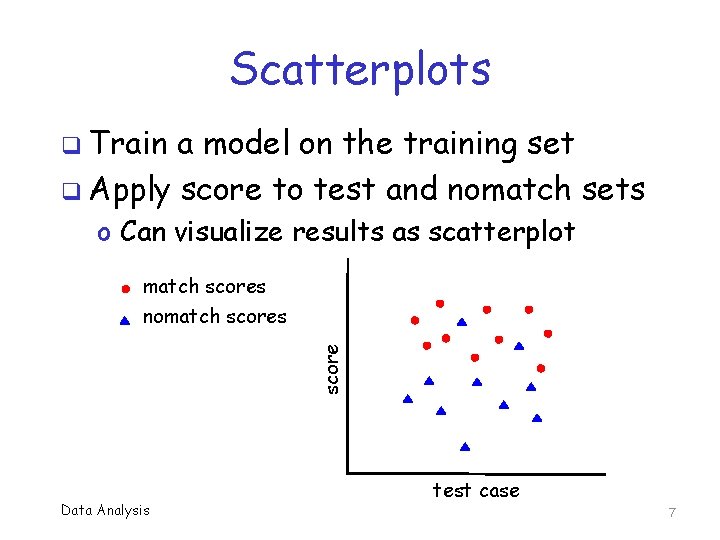

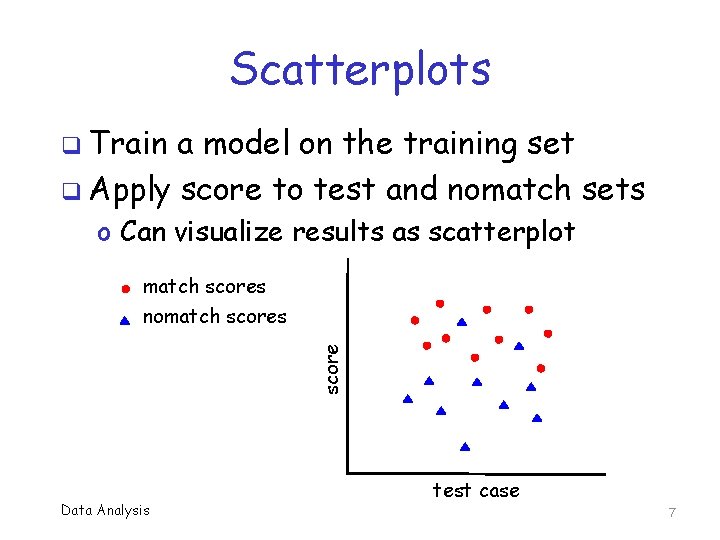

Scatterplots

- Train a model on the training set
- Apply the score to the test and nomatch sets
  - We can visualize the results as a scatterplot

(Figure: scatterplot of score versus test case, showing match scores and nomatch scores.)

Experimental Design

- A couple of potential problems…
  - How to partition the match set?
  - How to get the most out of a limited dataset?
- Why are these things concerns?
  - When we partition the match set, we might get biased training/test sets, and…
  - …more data points is “more better”
- Cross validation solves these problems

n-fold Cross Validation

- Partition the match set into n equal subsets
  - Denote the subsets as $S_1, S_2, \ldots, S_n$
- Let the training set be $S_2 \cup S_3 \cup \cdots \cup S_n$
  - And the test set is $S_1$
- Repeat with training set $S_1 \cup S_3 \cup \cdots \cup S_n$
  - And test set $S_2$
- And so on, for each of the n “folds”
  - In our work, n = 5 is usually sufficient
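A minimal Python sketch of the fold construction described above, assuming the match set is an in-memory list of samples. The function name `n_fold_splits` and the fixed shuffling `seed` are hypothetical choices for illustration, not part of the original method; shuffling before splitting is one common way to keep any ordering in the data from piling up in a single fold. When the size of the match set is not divisible by n, the subsets here differ in size by at most one.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def n_fold_splits(match_set: Sequence[T], n: int = 5,
                  seed: int = 0) -> List[Tuple[List[T], List[T]]]:
    """Return (training_set, test_set) pairs for n-fold cross validation.

    The match set is shuffled once, then dealt round-robin into subsets
    S_1..S_n. Fold i tests on S_i and trains on the union of all other subsets.
    """
    if not 1 < n <= len(match_set):
        raise ValueError("need 2 <= n <= len(match_set)")
    items = list(match_set)
    random.Random(seed).shuffle(items)  # hypothetical: fixed seed for repeatability
    subsets = [items[i::n] for i in range(n)]  # sizes differ by at most one
    folds = []
    for i in range(n):
        test_set = subsets[i]
        training_set = [x for j, s in enumerate(subsets) if j != i for x in s]
        folds.append((training_set, test_set))
    return folds


folds = n_fold_splits(list(range(23)), n=5)
for k, (train, test) in enumerate(folds, start=1):
    print(f"fold {k}: {len(train)} training, {len(test)} test")
```

For example, with 23 samples and n = 5 the test sets have 5, 5, 5, 4 and 4 items, and together they cover every sample exactly once, so every match sample receives exactly one score.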

n-fold Cross Validation

- Benefits of cross validation?
- Any bias in the match data is smoothed out
  - Since bias only affects one (or a few) of the $S_i$
- We obtain many more match scores
  - Usually, there is no shortage of nomatch data
  - But match data may be very limited
- And it’s easy to do, so why not?
  - Best of all, it sounds really fancy…

Thresholding

- Set the threshold after the scoring phase
- Ideally, we have complete separation
  - That is, no overlap in the scatterplot
  - Usually, that doesn’t happen
  - So, where to set the threshold?
- In practical use, thresholding is critical
  - At the research stage, it is more of a distraction…
  - …as we discuss later

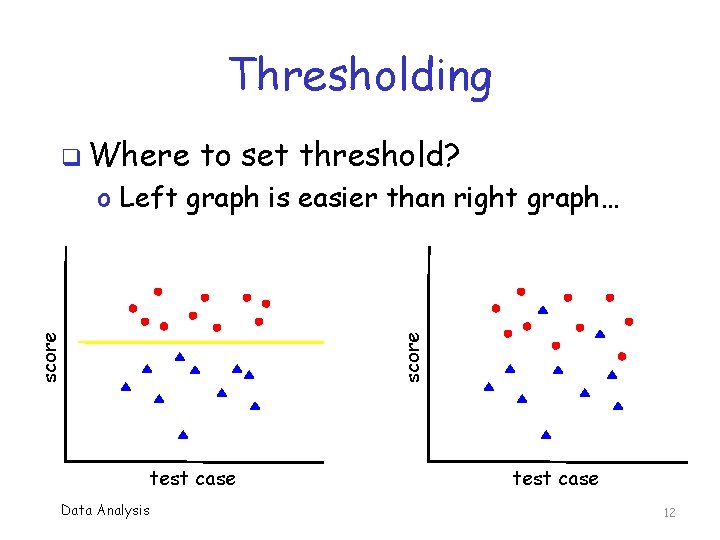

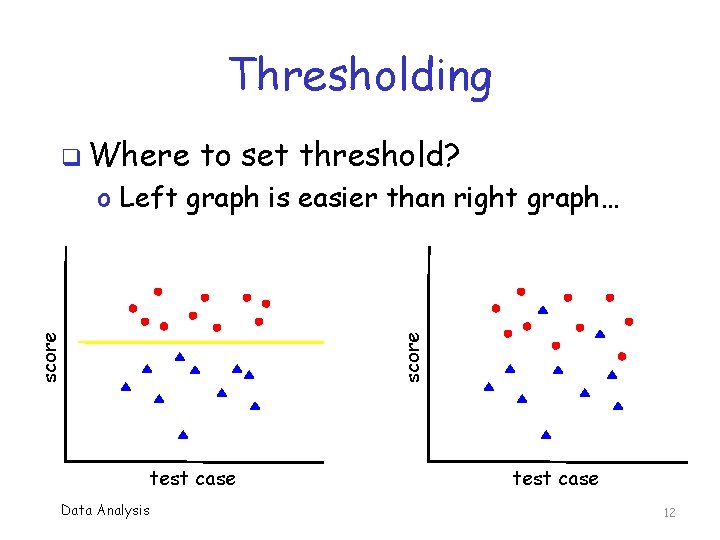

Thresholding

- Where to set the threshold?
  - The left graph is easier than the right graph…

(Figure: two scatterplots of score versus test case.)

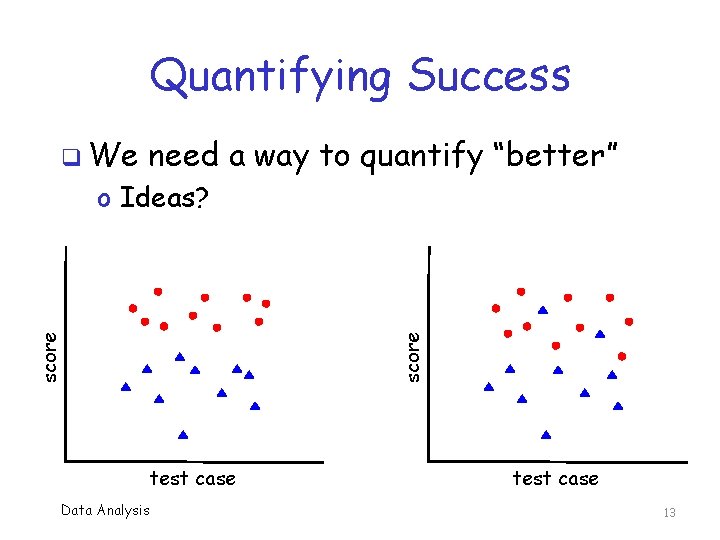

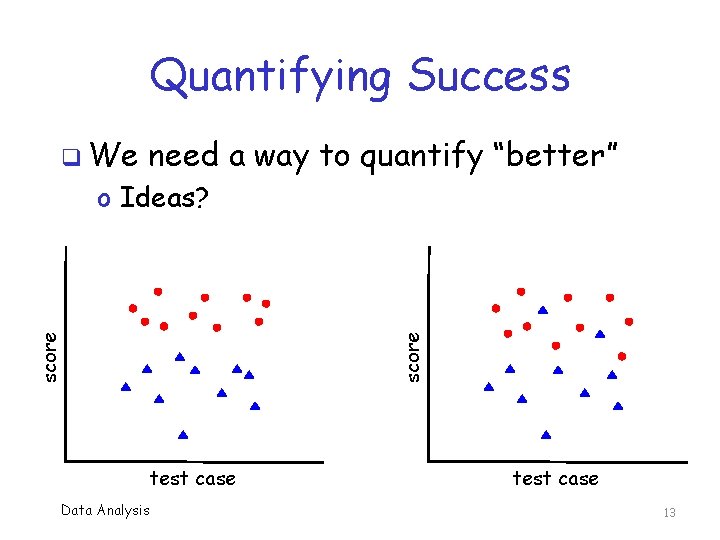

Quantifying Success

- We need a way to quantify “better”
  - Ideas?

(Figure: two scatterplots of score versus test case.)

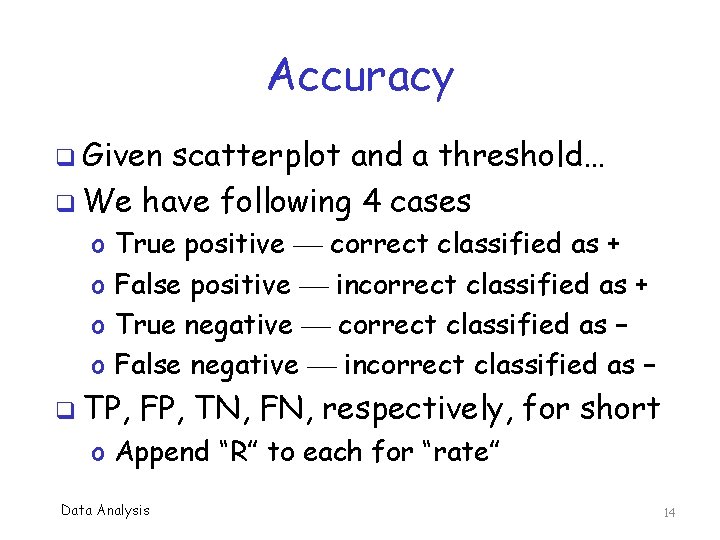

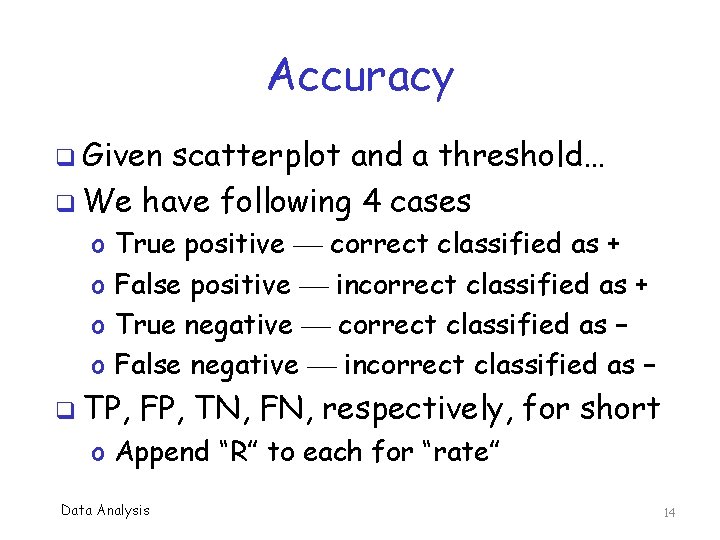

Accuracy

- Given a scatterplot and a threshold…
- We have the following 4 cases:

| Case | Correct? | Classified as |
|---|---|---|
| True positive | correct | + |
| False positive | incorrect | + |
| True negative | correct | − |
| False negative | incorrect | − |

- TP, FP, TN, FN, respectively, for short
  - Append “R” to each for “rate”

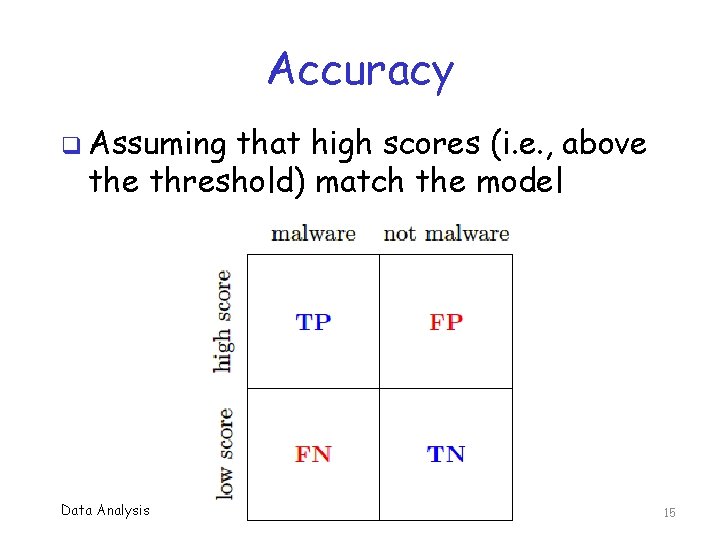

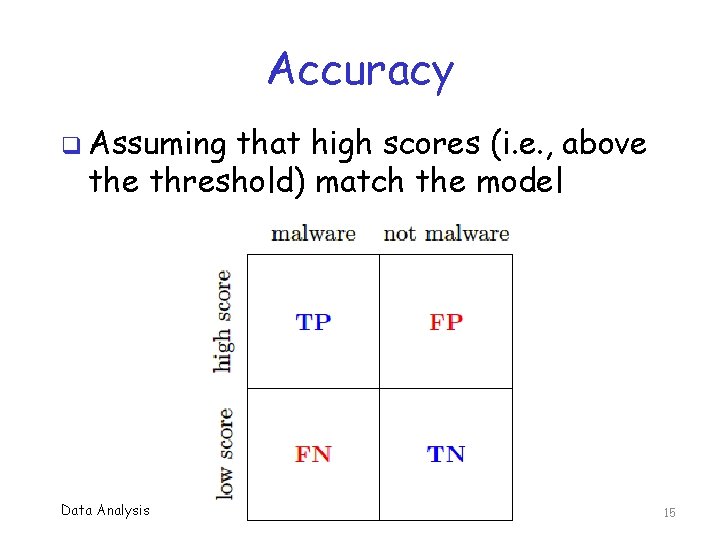

Accuracy

- Assume that high scores (i.e., above the threshold) match the model
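To make the four cases concrete, here is a small Python sketch that counts them for one threshold, assuming (as on this slide) that a score strictly above the threshold is classified as positive. The names `Counts` and `classify_counts` and the example scores are hypothetical.

```python
from typing import Iterable, NamedTuple


class Counts(NamedTuple):
    tp: int  # match (malware) scores above the threshold
    fp: int  # nomatch (benign) scores above the threshold
    tn: int  # nomatch scores at or below the threshold
    fn: int  # match scores at or below the threshold


def classify_counts(match_scores: Iterable[float],
                    nomatch_scores: Iterable[float],
                    threshold: float) -> Counts:
    """Count TP, FP, TN, FN, treating scores above the threshold as positive."""
    pos = list(match_scores)
    neg = list(nomatch_scores)
    tp = sum(1 for s in pos if s > threshold)
    fp = sum(1 for s in neg if s > threshold)
    return Counts(tp=tp, fp=fp, tn=len(neg) - fp, fn=len(pos) - tp)


# Hypothetical scores, for illustration only.
print(classify_counts([0.9, 0.8, 0.4], [0.7, 0.3, 0.2, 0.1], threshold=0.5))
# Counts(tp=2, fp=1, tn=3, fn=1)
```

Moving the threshold changes all four counts at once, which is why every accuracy measure built from them depends on where the threshold is set.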

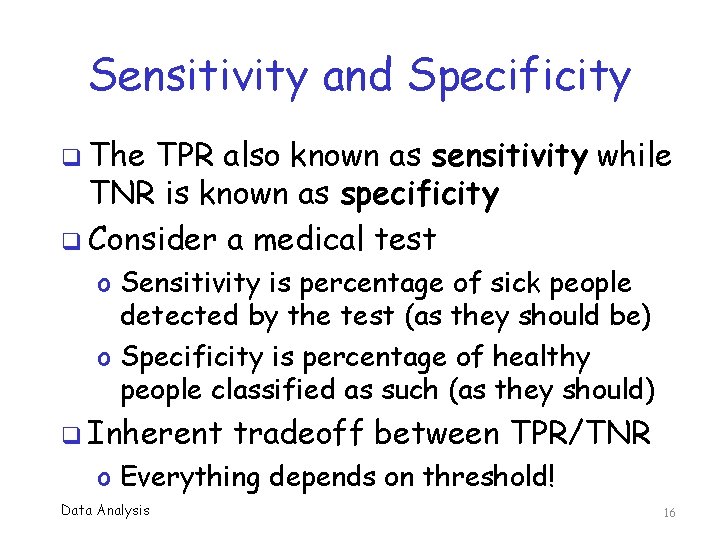

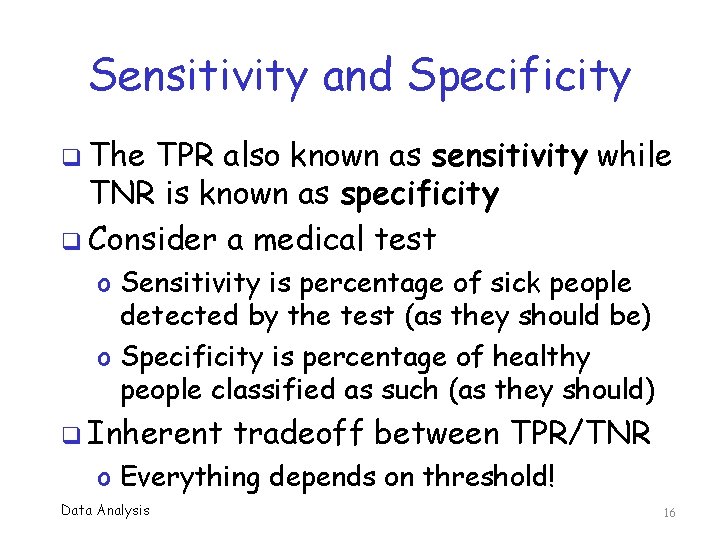

Sensitivity and Specificity

- The TPR is also known as sensitivity, while the TNR is known as specificity
- Consider a medical test
  - Sensitivity is the percentage of sick people detected by the test (as they should be)
  - Specificity is the percentage of healthy people classified as healthy (as they should be)
- There is an inherent tradeoff between TPR and TNR
  - Everything depends on the threshold!
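The four rates follow directly from the counts, with P = TP + FN positive cases and N = TN + FP negative cases. A minimal Python sketch; the function name `rates` and the counts in the example are hypothetical.

```python
def rates(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Return TPR, FNR, TNR and FPR from confusion counts."""
    p = tp + fn  # positive cases tested
    n = tn + fp  # negative cases tested
    return {
        "TPR": tp / p,  # sensitivity
        "FNR": fn / p,  # = 1 - sensitivity
        "TNR": tn / n,  # specificity
        "FPR": fp / n,  # = 1 - specificity
    }


# Hypothetical counts: 4 of 5 malware detected, 3 of 4 benign files cleared.
print(rates(tp=4, fp=1, tn=3, fn=1))
# {'TPR': 0.8, 'FNR': 0.2, 'TNR': 0.75, 'FPR': 0.25}
```

Raising the threshold can only lower (or keep) the TPR and raise (or keep) the TNR, which is the tradeoff noted on the slide.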

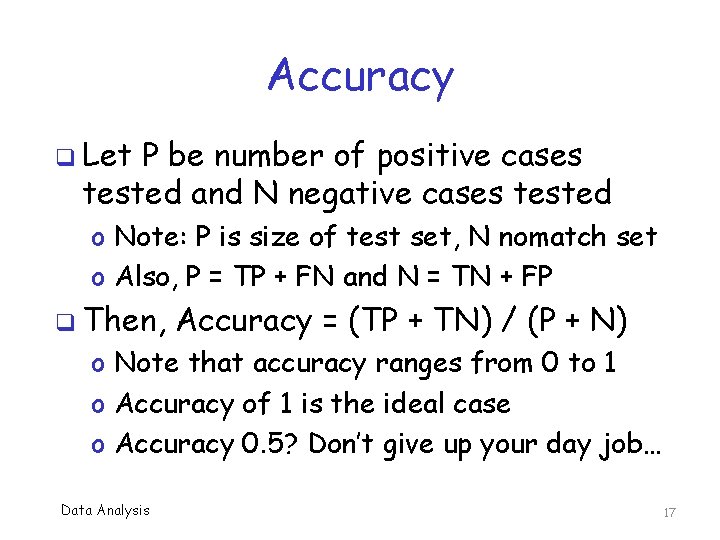

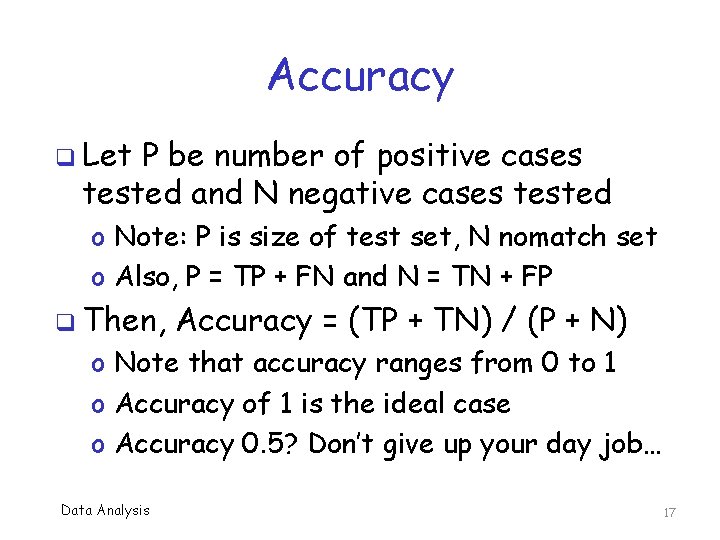

Accuracy

- Let P be the number of positive cases tested and N the number of negative cases tested
  - Note: P is the size of the test set, N the size of the nomatch set
  - Also, P = TP + FN and N = TN + FP
- Then, Accuracy = (TP + TN) / (P + N)
  - Note that accuracy ranges from 0 to 1
  - Accuracy of 1 is the ideal case
  - Accuracy of 0.5? Don’t give up your day job…

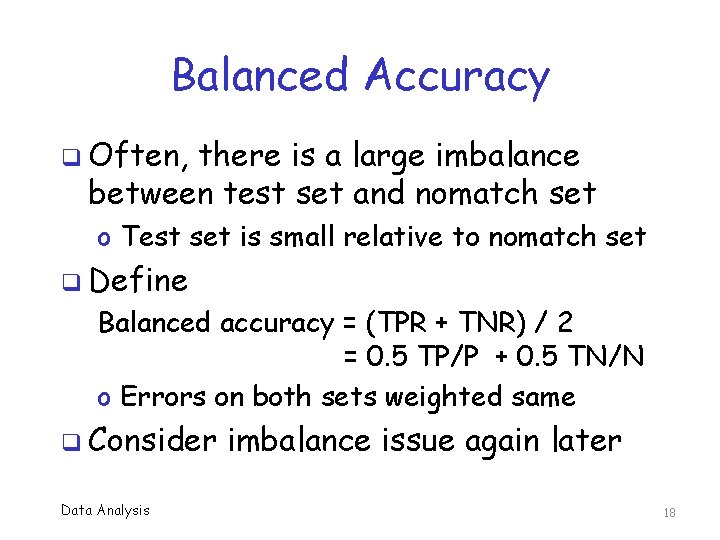

Balanced Accuracy

- Often, there is a large imbalance between the test set and the nomatch set
  - The test set is small relative to the nomatch set
- Define Balanced accuracy = (TPR + TNR) / 2 = 0.5 TP/P + 0.5 TN/N
  - Errors on both sets are weighted the same
- We consider the imbalance issue again later
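A short Python sketch of both formulas, applied to a hypothetical detector that labels every file benign on an imbalanced set of 10 malware and 990 benign files. Plain accuracy rewards it for the imbalance, while balanced accuracy shows that it detects nothing. The function names and counts are illustrative assumptions.

```python
def accuracy(tp: int, fp: int, tn: int, fn: int) -> float:
    """Accuracy = (TP + TN) / (P + N), with P = TP + FN and N = TN + FP."""
    return (tp + tn) / ((tp + fn) + (tn + fp))


def balanced_accuracy(tp: int, fp: int, tn: int, fn: int) -> float:
    """Balanced accuracy = (TPR + TNR) / 2 = 0.5 TP/P + 0.5 TN/N."""
    tpr = tp / (tp + fn)
    tnr = tn / (tn + fp)
    return (tpr + tnr) / 2


# Hypothetical detector that classifies every file as benign.
counts = dict(tp=0, fp=0, tn=990, fn=10)
print(accuracy(**counts))           # 0.99
print(balanced_accuracy(**counts))  # 0.5
```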

Accuracy

- Accuracy tells us something…
  - But it depends on where the threshold is set
  - How should we set the threshold?
  - It seems we are going around in circles, like a dog chasing its tail
- Bottom line? We still don’t have a good way to compare different techniques
  - Next slide, please…

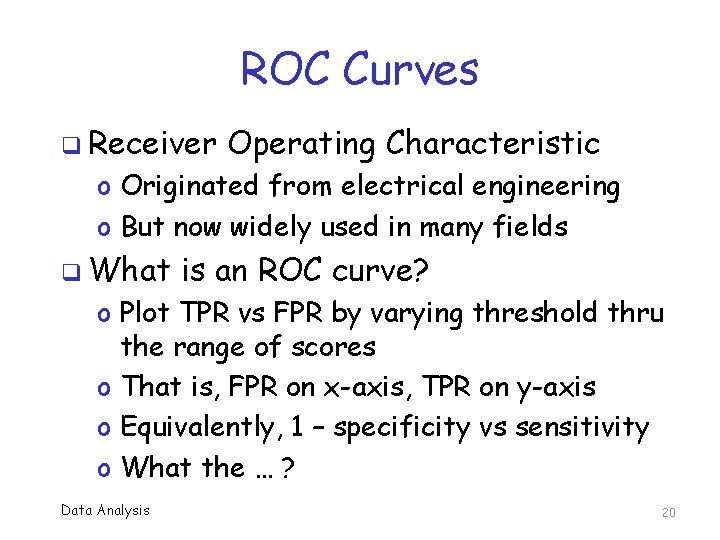

ROC Curves

- Receiver Operating Characteristic
  - Originated in electrical engineering
  - But now widely used in many fields
- What is an ROC curve?
  - Plot TPR vs FPR by varying the threshold through the range of scores
  - That is, FPR on the x-axis, TPR on the y-axis
  - Equivalently, 1 − specificity (x-axis) vs sensitivity (y-axis)
  - What the … ?

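ROC Curve

- Suppose the threshold is set at the yellow line
  - Above yellow, classified as positive
  - Below yellow, classified as negative
- In this case,
  - TPR = 1.0
  - FPR = 1.0 − TNR = 1.0 − 0.0 = 1.0

(Figure: scatterplot of score versus test case with the yellow threshold line, beside an ROC plot with FPR from 0 to 1 on the x-axis and TPR from 0 to 1 on the y-axis.)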

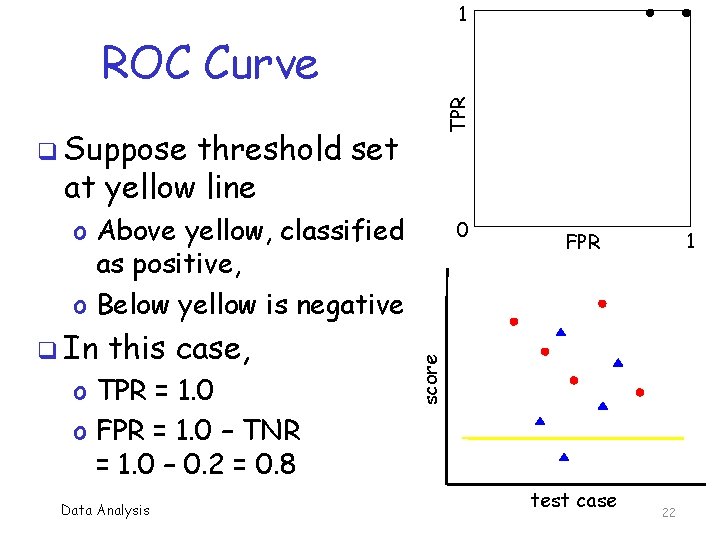

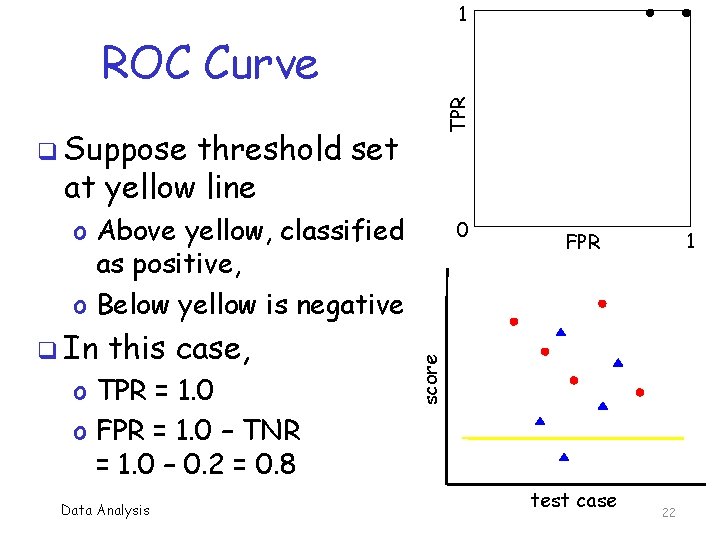

ROC Curve

- Suppose the threshold is set at the yellow line (above is positive, below is negative)
- In this case, TPR = 1.0 and FPR = 1.0 − TNR = 1.0 − 0.2 = 0.8

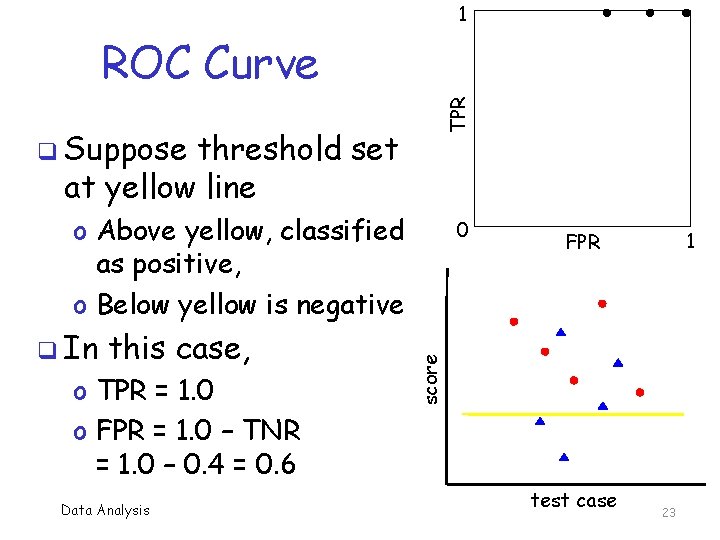

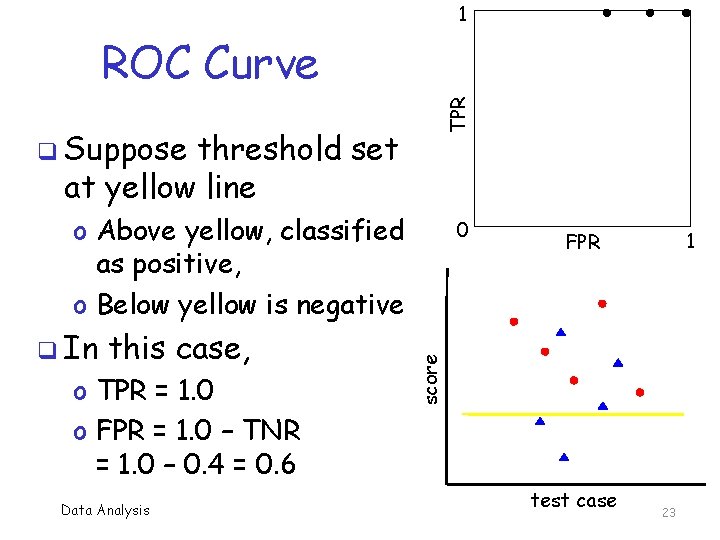

ROC Curve

- Suppose the threshold is set at the yellow line (above is positive, below is negative)
- In this case, TPR = 1.0 and FPR = 1.0 − TNR = 1.0 − 0.4 = 0.6

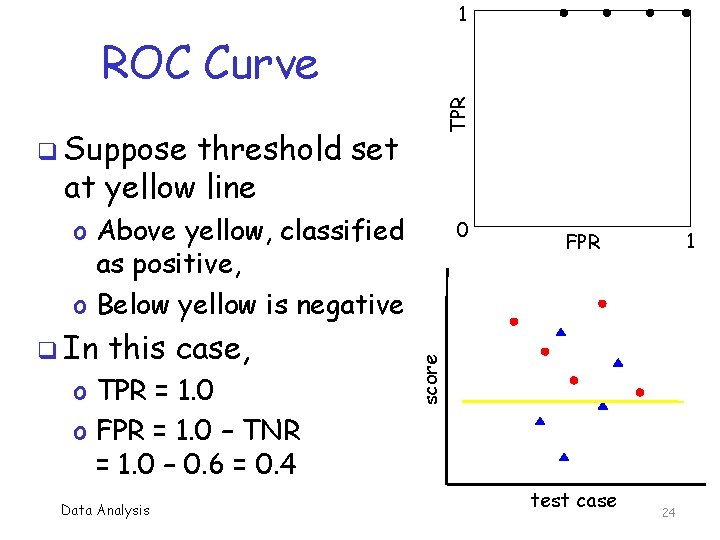

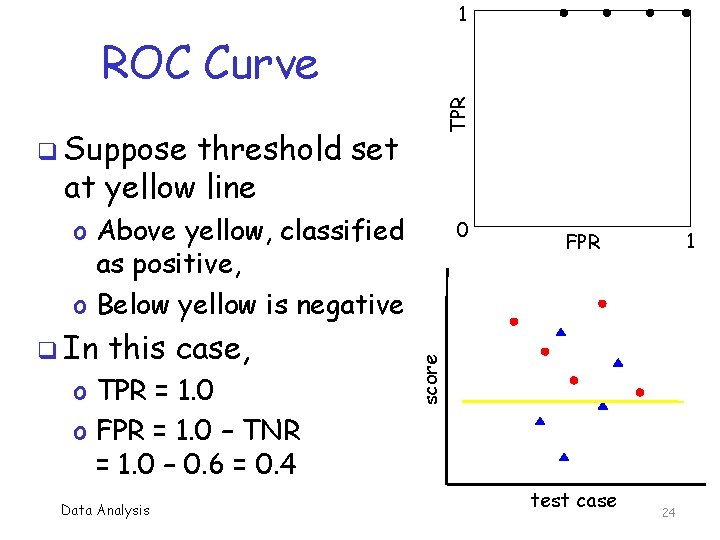

ROC Curve

- Suppose the threshold is set at the yellow line (above is positive, below is negative)
- In this case, TPR = 1.0 and FPR = 1.0 − TNR = 1.0 − 0.6 = 0.4

ROC Curve

- Suppose the threshold is set at the yellow line (above is positive, below is negative)
- In this case, TPR = 0.8 and FPR = 1.0 − TNR = 1.0 − 0.6 = 0.4

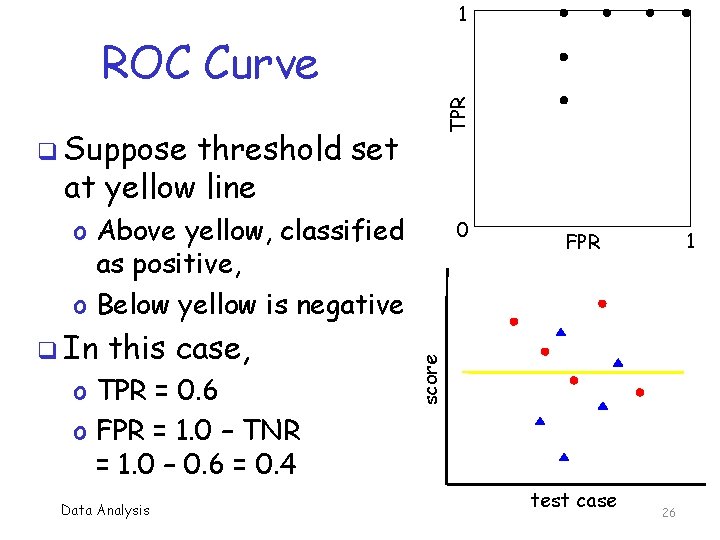

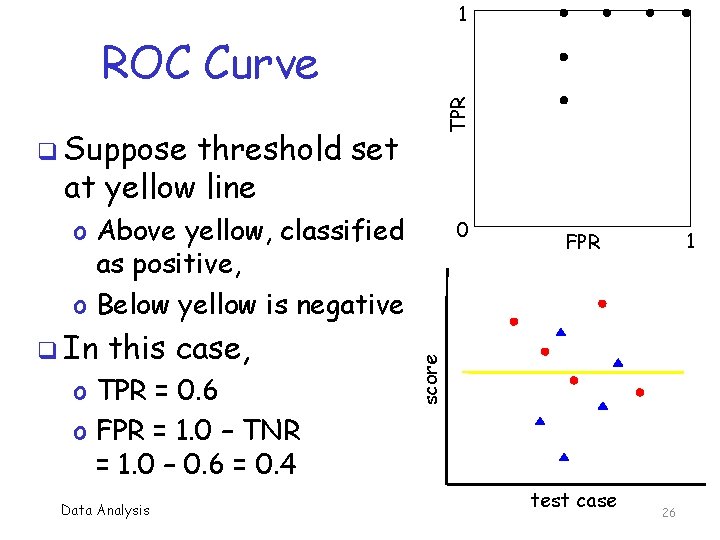

ROC Curve

- Suppose the threshold is set at the yellow line (above is positive, below is negative)
- In this case, TPR = 0.6 and FPR = 1.0 − TNR = 1.0 − 0.6 = 0.4

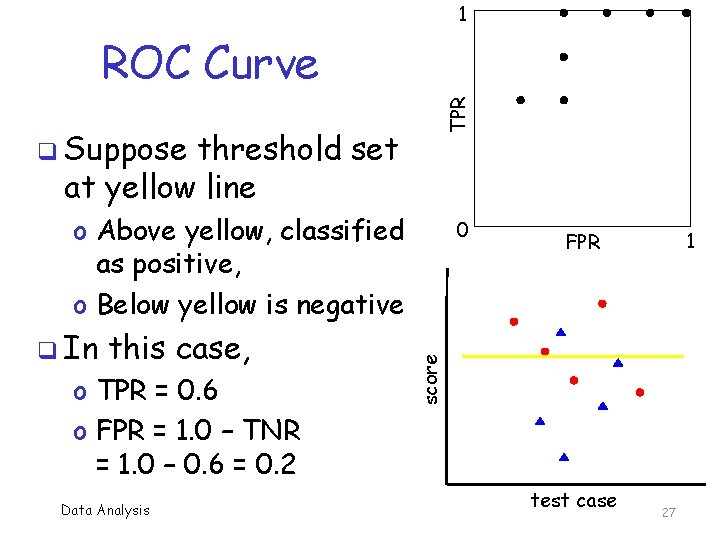

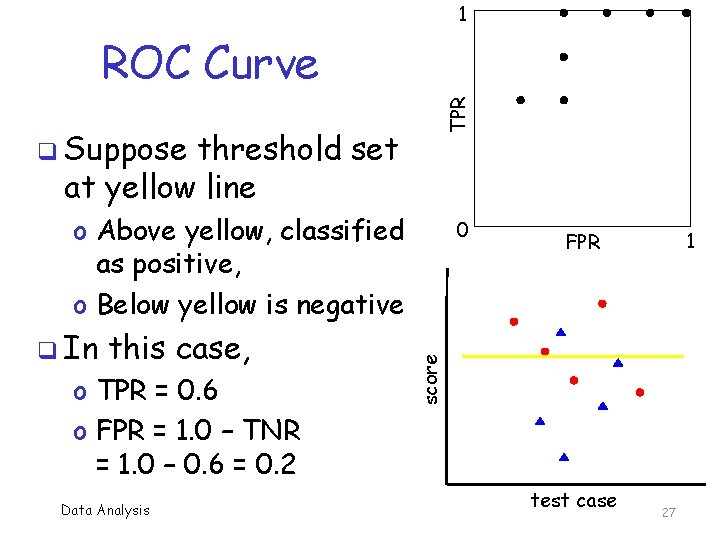

ROC Curve

- Suppose the threshold is set at the yellow line (above is positive, below is negative)
- In this case, TPR = 0.6 and FPR = 1.0 − TNR = 1.0 − 0.8 = 0.2

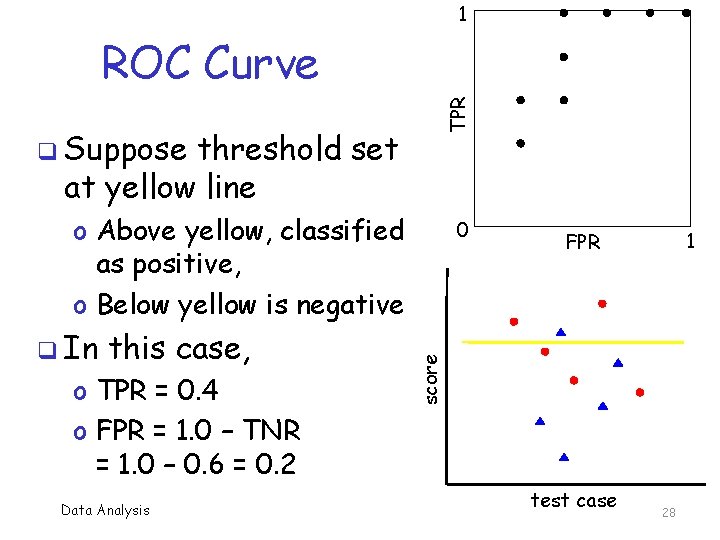

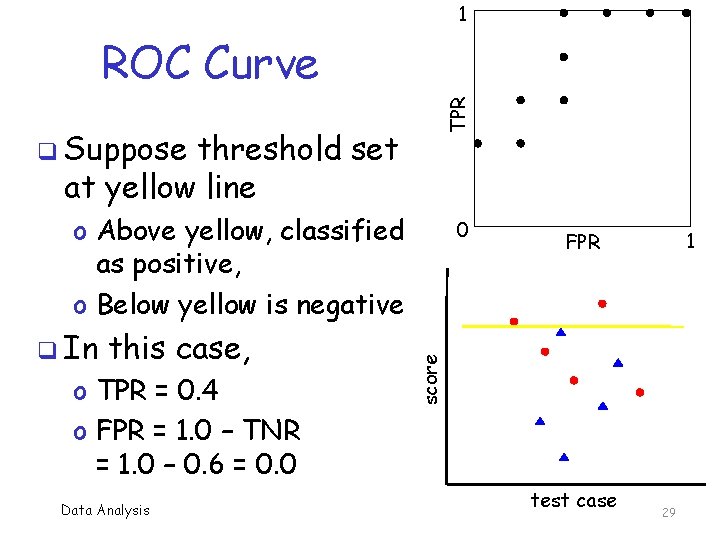

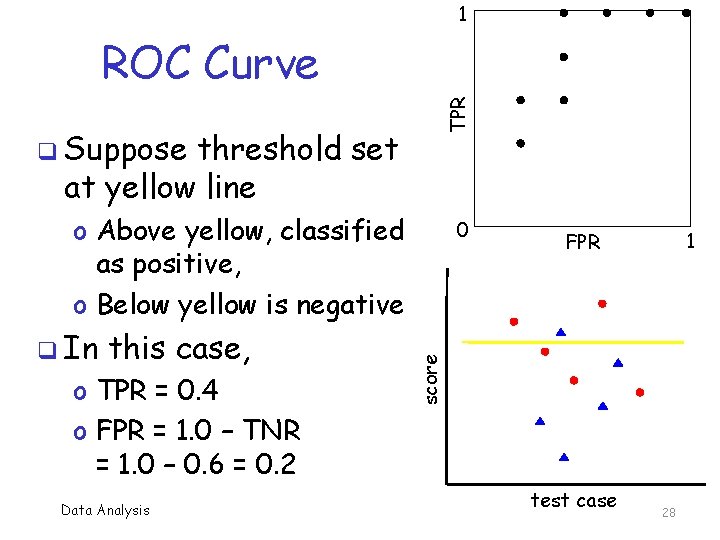

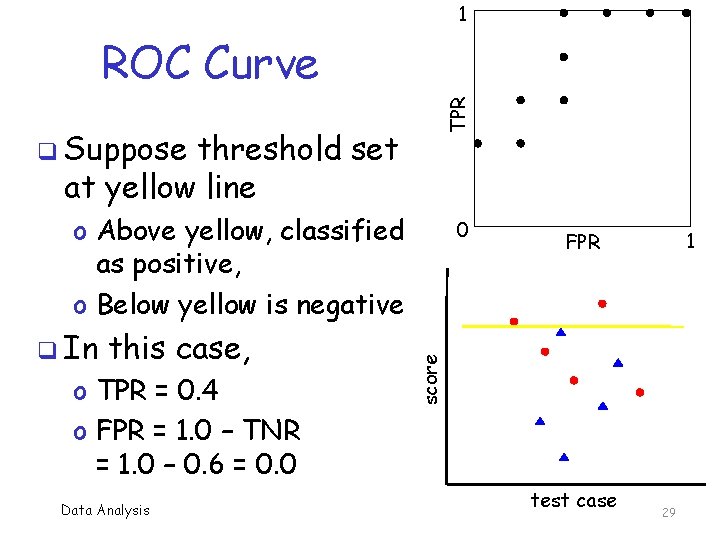

ROC Curve

- Suppose the threshold is set at the yellow line (above is positive, below is negative)
- In this case, TPR = 0.4 and FPR = 1.0 − TNR = 1.0 − 0.8 = 0.2

ROC Curve

- Suppose the threshold is set at the yellow line (above is positive, below is negative)
- In this case, TPR = 0.4 and FPR = 1.0 − TNR = 1.0 − 1.0 = 0.0

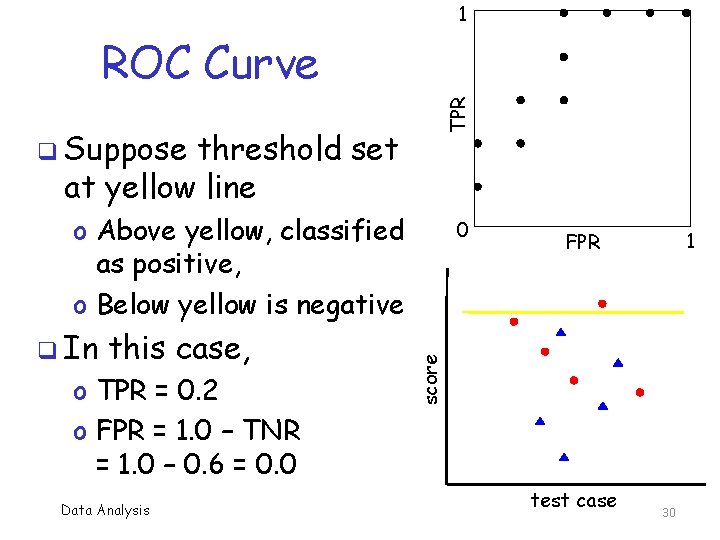

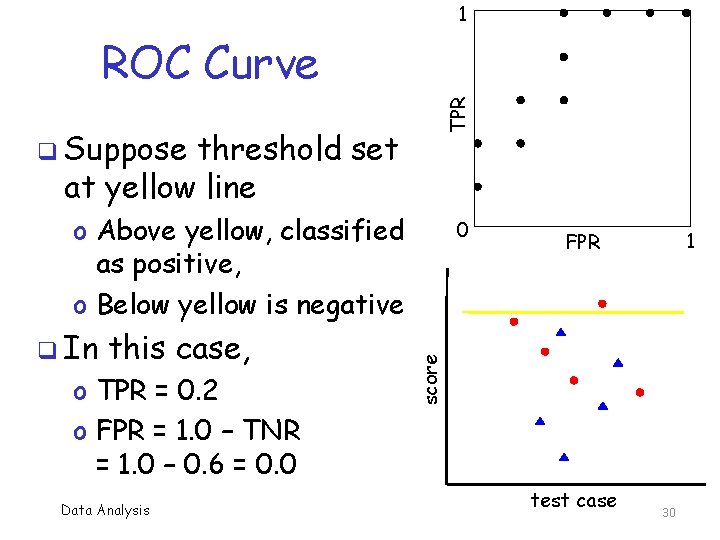

ROC Curve

- Suppose the threshold is set at the yellow line (above is positive, below is negative)
- In this case, TPR = 0.2 and FPR = 1.0 − TNR = 1.0 − 1.0 = 0.0

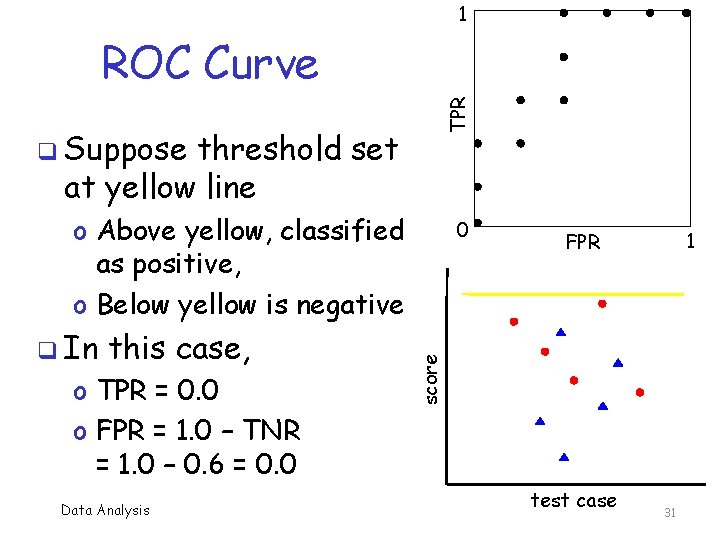

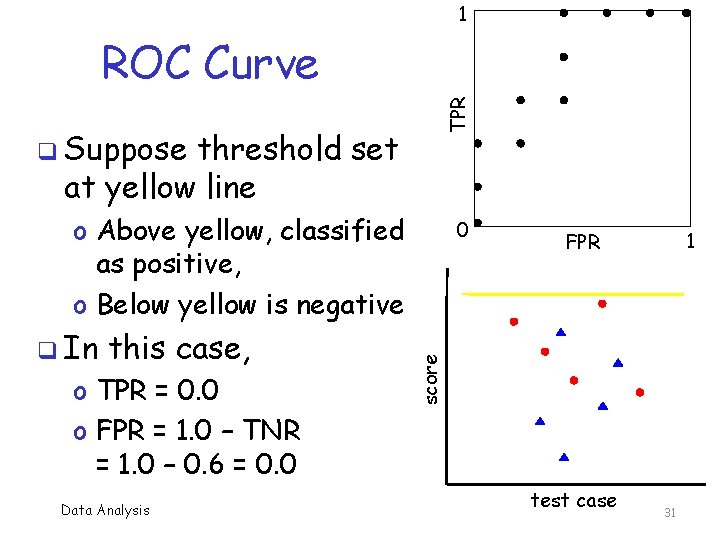

ROC Curve

- Suppose the threshold is set at the yellow line (above is positive, below is negative)
- In this case, TPR = 0.0 and FPR = 1.0 − TNR = 1.0 − 1.0 = 0.0

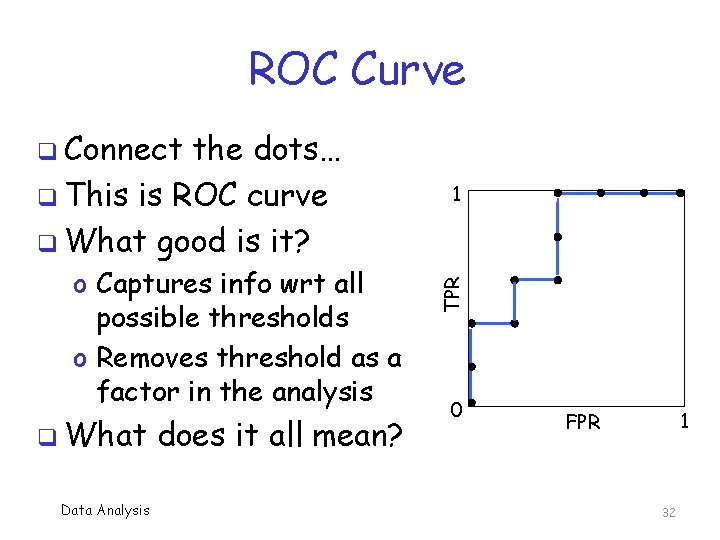

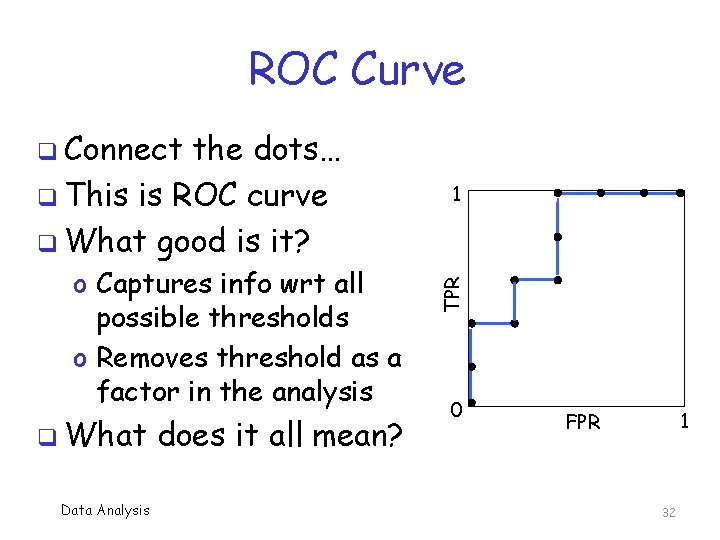

ROC Curve

- Connect the dots…
- This is the ROC curve
- What good is it?
  - Captures information with respect to all possible thresholds
  - Removes the threshold as a factor in the analysis
- What does it all mean?
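The threshold sweep in the walkthrough can be reproduced with a short Python sketch. The scores below are hypothetical: five match and five nomatch scores chosen only so that, from lowest to highest, the classes fall in the same order as in the walkthrough slides (negative, negative, negative, positive, positive, negative, positive, negative, positive, positive). As on the slides, a score strictly above the threshold is classified as positive. The function name `roc_points` is illustrative.

```python
from typing import List, Sequence, Tuple


def roc_points(match_scores: Sequence[float],
               nomatch_scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) points from sweeping the threshold up through all scores.

    The first threshold sits below every score (everything is positive); each later
    threshold equals a distinct score, so the last one classifies everything as negative.
    """
    p, n = len(match_scores), len(nomatch_scores)
    lowest = min(min(match_scores), min(nomatch_scores))
    thresholds = [lowest - 1.0] + sorted(set(match_scores) | set(nomatch_scores))
    points = []
    for t in thresholds:
        tpr = sum(s > t for s in match_scores) / p
        fpr = sum(s > t for s in nomatch_scores) / n
        points.append((fpr, tpr))
    return points


# Hypothetical scores reproducing the class order used in the walkthrough.
match = [4, 5, 7, 9, 10]
nomatch = [1, 2, 3, 6, 8]
for fpr, tpr in roc_points(match, nomatch):
    print(f"FPR = {fpr:.1f}, TPR = {tpr:.1f}")
```

The eleven printed points match the walkthrough, from (FPR, TPR) = (1.0, 1.0) down to (0.0, 0.0); connecting them gives the ROC curve.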

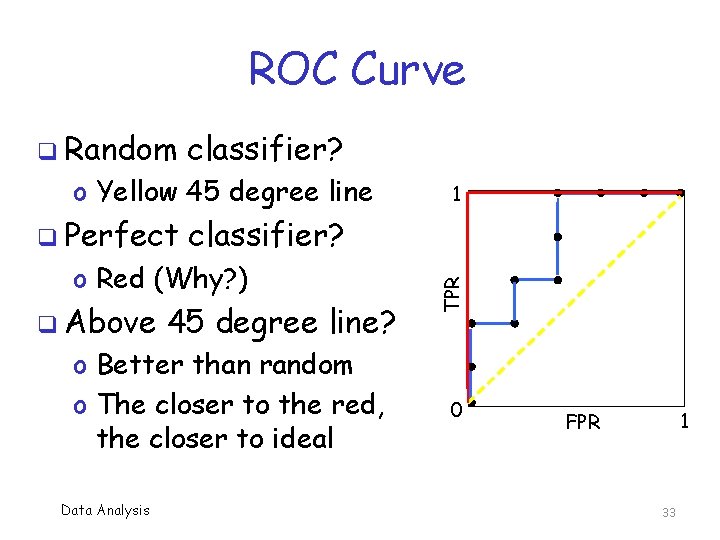

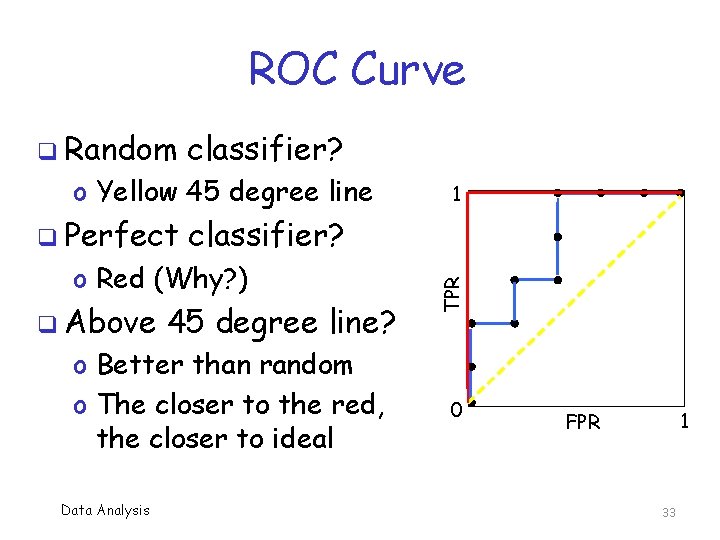

ROC Curve

- Random classifier?
  - Yellow 45-degree line
- Perfect classifier?
  - Red (Why?)
- Above the 45-degree line?
  - Better than random
  - The closer to the red, the closer to ideal

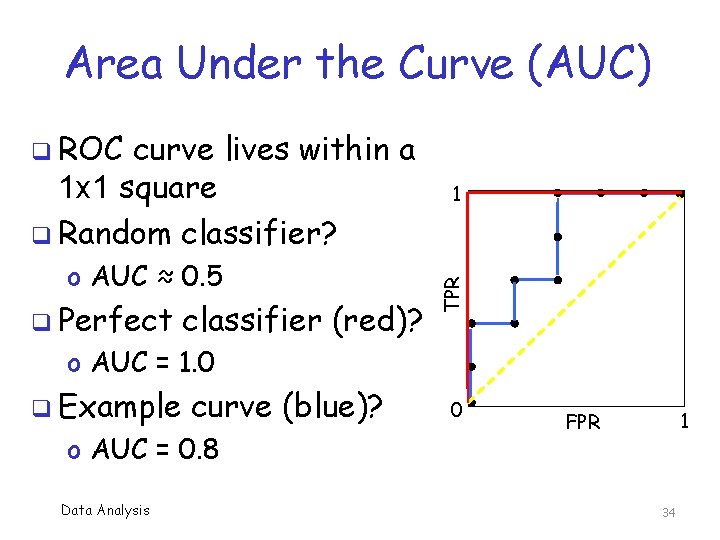

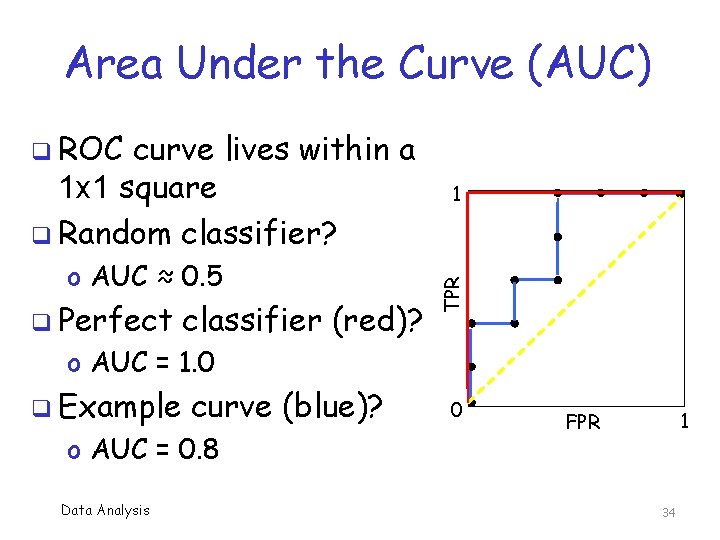

Area Under the Curve (AUC)

- The ROC curve lives within a 1 × 1 square
- Random classifier?
  - AUC ≈ 0.5
- Perfect classifier (red)?
  - AUC = 1.0
- Example curve (blue)?
  - AUC = 0.8

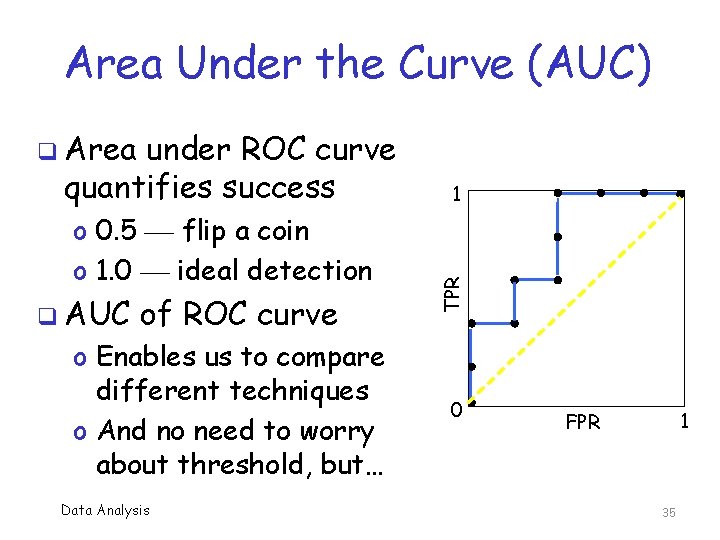

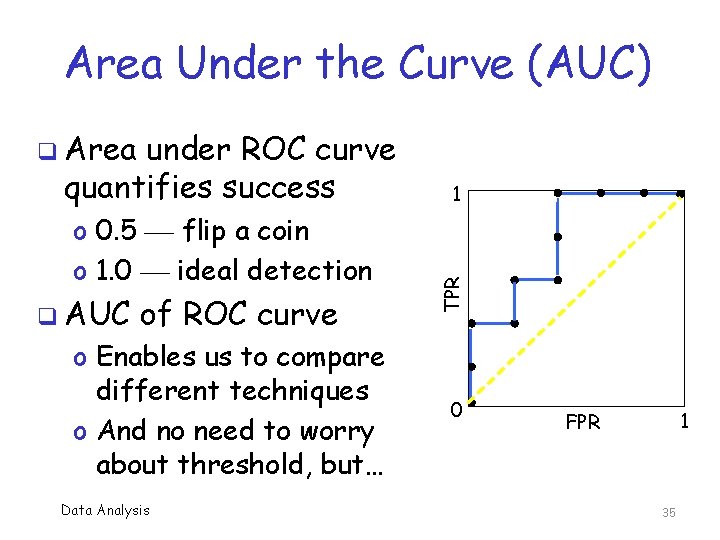

Area Under the Curve (AUC)

- The area under the ROC curve quantifies success
  - 0.5: flip a coin
  - 1.0: ideal detection
- AUC of the ROC curve
  - Enables us to compare different techniques
  - And no need to worry about the threshold, but…
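Because the ROC curve is drawn by connecting the dots with straight segments, its area can be computed with the trapezoidal rule. A minimal Python sketch; the name `auc_trapezoid` is illustrative, and the points are the (FPR, TPR) pairs from the threshold walkthrough, which give an area of 0.8.

```python
from typing import Sequence, Tuple


def auc_trapezoid(points: Sequence[Tuple[float, float]]) -> float:
    """Area under an ROC curve given as (FPR, TPR) points, via the trapezoidal rule."""
    pts = sorted(points)  # ascending FPR; ties broken by ascending TPR
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2  # vertical steps (x1 == x0) add nothing
    return area


# (FPR, TPR) points from the threshold walkthrough.
walkthrough = [(1.0, 1.0), (0.8, 1.0), (0.6, 1.0), (0.4, 1.0), (0.4, 0.8), (0.4, 0.6),
               (0.2, 0.6), (0.2, 0.4), (0.0, 0.4), (0.0, 0.2), (0.0, 0.0)]
print(round(auc_trapezoid(walkthrough), 6))                  # 0.8
print(auc_trapezoid([(0.0, 0.0), (1.0, 1.0)]))               # 0.5, random-classifier diagonal
print(auc_trapezoid([(0.0, 0.0), (0.0, 1.0), (1.0, 1.0)]))   # 1.0, perfect classifier
```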

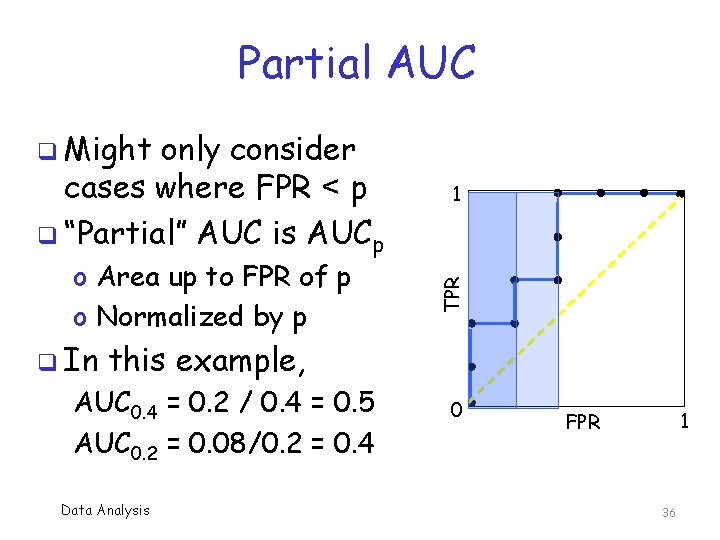

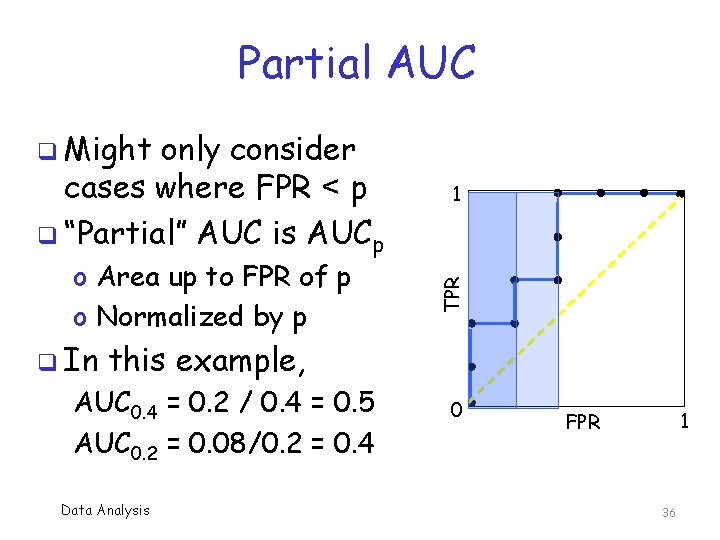

Partial AUC

- Might only consider cases where FPR < p
- “Partial” AUC is $\mathrm{AUC}_p$
  - Area up to an FPR of p
  - Normalized by p
- In this example,
  - $\mathrm{AUC}_{0.4} = 0.2 / 0.4 = 0.5$
  - $\mathrm{AUC}_{0.2} = 0.08 / 0.2 = 0.4$
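A Python sketch of the normalized partial AUC as defined on this slide: integrate the ROC curve from FPR = 0 up to FPR = p, then divide by p. If p falls inside a segment, the segment is clipped by linear interpolation; that clipping rule is an assumption of this sketch. The points are the (FPR, TPR) pairs from the threshold walkthrough, and the function name `partial_auc` is illustrative.

```python
from typing import Sequence, Tuple


def partial_auc(points: Sequence[Tuple[float, float]], p: float) -> float:
    """Area under the ROC curve for FPR in [0, p], normalized by p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    pts = sorted(points)  # ascending FPR
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x0 >= p:
            break  # every remaining segment lies at or beyond FPR = p
        if x1 > p:
            # Clip the segment at FPR = p, interpolating TPR linearly (x1 > x0 here).
            y1 = y0 + (y1 - y0) * (p - x0) / (x1 - x0)
            x1 = p
        area += (x1 - x0) * (y0 + y1) / 2
    return area / p


walkthrough = [(1.0, 1.0), (0.8, 1.0), (0.6, 1.0), (0.4, 1.0), (0.4, 0.8), (0.4, 0.6),
               (0.2, 0.6), (0.2, 0.4), (0.0, 0.4), (0.0, 0.2), (0.0, 0.0)]
print(round(partial_auc(walkthrough, 0.2), 6))  # 0.4
print(round(partial_auc(walkthrough, 0.4), 6))  # 0.5
print(round(partial_auc(walkthrough, 1.0), 6))  # 0.8, the full AUC
```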

Imbalance Problem

- Suppose we train a model for a given malware family
- In practice, we expect to score many more non-family files than family files
  - The number of negative cases is large
  - The number of positive cases is small
- So what?
- Let’s consider an example

Imbalance Problem

- In practice, we need a threshold
- For a given threshold, suppose sensitivity = 0.99 and specificity = 0.98
  - Then TPR = 0.99 and FPR = 0.02
- Assume 1 in 1000 files tested is malware
  - Of the type our model is trained to detect
- Suppose we scan, say, 100k files
  - What do we find?

Imbalance Problem

- Assume TPR = 0.99 and FPR = 0.02
  - And 1 in 1000 files is malware
- After scanning 100k files…
  - Detect 99 of the 100 actual malware (TP)
  - Misclassify 1 malware as benign (FN)
  - Correctly classify 97902 (out of 99900) benign as benign (TN)
  - Misclassify 1998 benign as malware (FP)

Imbalance Problem

- We have 97903 classified as benign
  - Of those, 97902 are actually benign
  - And 97902/97903 > 0.9999
- We classified 2097 as malware
  - Of these, only 99 are actual malware
  - But 99/2097 < 0.05
- Remember the “boy who cried wolf”?
  - Here, the detector cries wolf (a lot)…
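The arithmetic on these slides can be checked with a short Python sketch. Rounding the expected counts to whole files is an assumption of this sketch, and the function name `expected_outcomes` is hypothetical. The last field is the fraction of files flagged as malware that really are malware (often called precision), which is the quantity behind the “crying wolf” effect.

```python
def expected_outcomes(tpr: float, fpr: float, n_files: int, one_in: int) -> dict:
    """Expected TP, FN, TN, FP when 1 in `one_in` of `n_files` scanned files is malware."""
    malware = n_files // one_in
    benign = n_files - malware
    tp = round(tpr * malware)  # assumption: round expected counts to whole files
    fp = round(fpr * benign)
    return {
        "TP": tp,
        "FN": malware - tp,
        "TN": benign - fp,
        "FP": fp,
        "classified_benign": (benign - fp) + (malware - tp),
        "classified_malware": tp + fp,
        "precision": tp / (tp + fp),  # fraction of "malware" alarms that are real
    }


out = expected_outcomes(tpr=0.99, fpr=0.02, n_files=100_000, one_in=1000)
print(out["TP"], out["FN"], out["TN"], out["FP"])               # 99 1 97902 1998
print(out["classified_malware"], round(out["precision"], 4))    # 2097 0.0472
```

Calling it with `tpr=0.92, fpr=0.0003` instead gives 92 TP, 8 FN, 99870 TN and 30 FP, matching the adjusted-threshold example on the following slides.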

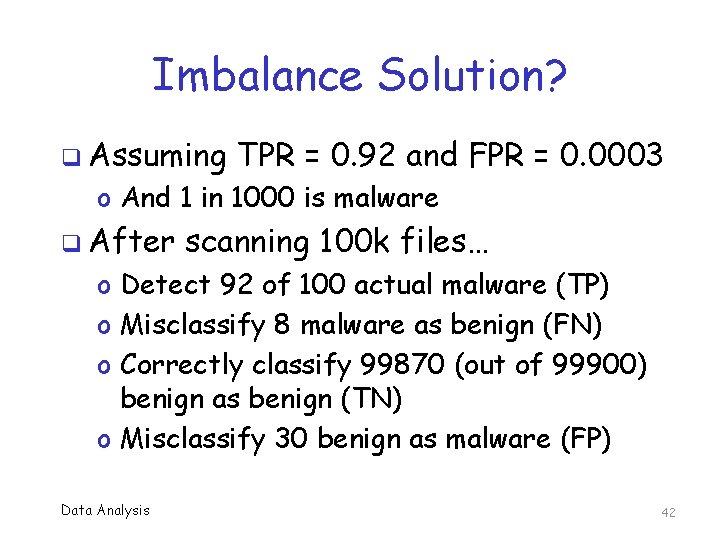

Imbalance Solution?

- What to do?
- There is an inherent tradeoff between sensitivity and specificity
- Suppose we can adjust the threshold so that
  - TPR = 0.92 and FPR = 0.0003
- As before…
  - 1 in 1000 files is malware
  - And we test 100k files

Imbalance Solution?

- Assume TPR = 0.92 and FPR = 0.0003
  - And 1 in 1000 files is malware
- After scanning 100k files…
  - Detect 92 of the 100 actual malware (TP)
  - Misclassify 8 malware as benign (FN)
  - Correctly classify 99870 (out of 99900) benign as benign (TN)
  - Misclassify 30 benign as malware (FP)

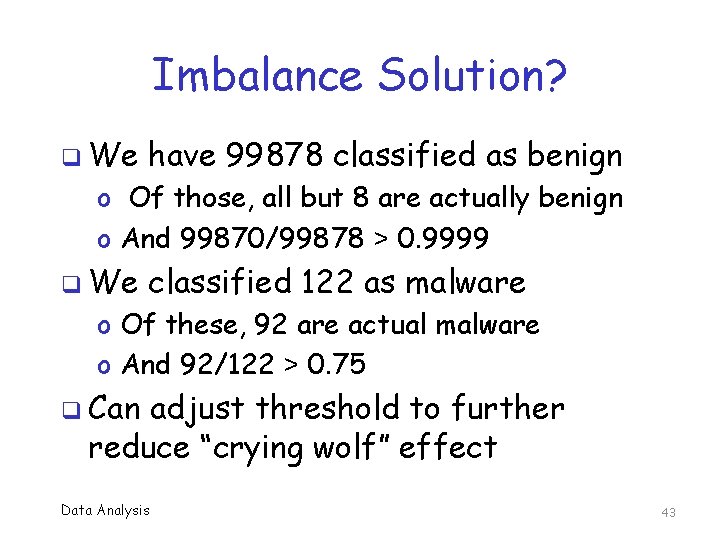

Imbalance Solution?

- We have 99878 classified as benign
  - Of those, all but 8 are actually benign
  - And 99870/99878 > 0.9999
- We classified 122 as malware
  - Of these, 92 are actual malware
  - And 92/122 > 0.75
- We can adjust the threshold to further reduce the “crying wolf” effect
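Why the threshold change helps so much can be seen by writing the fraction of malware alarms that are real in terms of the rates, with P = 100 malware and N = 99,900 benign files as in the example. Because N is about 1000 times P, the false positive term FPR · N dominates the denominator unless the FPR is tiny.

```latex
\frac{TP}{TP + FP}
  = \frac{\mathrm{TPR} \cdot P}{\mathrm{TPR} \cdot P + \mathrm{FPR} \cdot N}

\mathrm{TPR} = 0.99,\ \mathrm{FPR} = 0.02:\quad
  \frac{0.99 \cdot 100}{0.99 \cdot 100 + 0.02 \cdot 99900}
  = \frac{99}{99 + 1998} = \frac{99}{2097} \approx 0.047

\mathrm{TPR} = 0.92,\ \mathrm{FPR} = 0.0003:\quad
  \frac{0.92 \cdot 100}{0.92 \cdot 100 + 0.0003 \cdot 99900}
  = \frac{92}{92 + 29.97} \approx 0.754
```

The slide rounds the 29.97 expected false positives to 30, which gives the same 92/122 ≈ 0.754.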

Imbalance Problem

- A better alternative?
- Instead of lowering the TPR to reduce the FPR…
  - Perform secondary testing on files that are initially classified as malware
  - We can thus weed out most FP cases
- This gives us the best of both worlds
  - Low FPR, so few benign files are reported as malware
- No free lunch, so what’s the cost?
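One way to reason about secondary testing is a back-of-the-envelope model in Python. It assumes, hypothetically, that the primary and secondary tests make independent errors and that a file is reported as malware only if both tests flag it; real detectors are rarely fully independent, so treat the numbers as illustrative. The secondary test’s rates and the function name `two_stage` are invented for illustration. The `secondary_workload` field points at one cost: every file flagged by the primary test must also go through the (presumably more expensive) secondary test.

```python
def two_stage(tpr1: float, fpr1: float, tpr2: float, fpr2: float,
              n_malware: int, n_benign: int) -> dict:
    """Expected outcome of a primary test followed by a secondary test on flagged files.

    Assumes (hypothetically) that the two tests err independently,
    so the combined rates are products of the per-stage rates.
    """
    return {
        # Expected number of files the primary test passes on to the secondary test.
        "secondary_workload": tpr1 * n_malware + fpr1 * n_benign,
        "TPR": tpr1 * tpr2,  # malware is reported only if both tests flag it
        "FPR": fpr1 * fpr2,  # a benign file is misreported only if both tests flag it
    }


# Primary test from the earlier example; secondary rates are hypothetical.
result = two_stage(tpr1=0.99, fpr1=0.02, tpr2=0.99, fpr2=0.01,
                   n_malware=100, n_benign=99_900)
print(round(result["secondary_workload"]), round(result["TPR"], 4), round(result["FPR"], 6))
```

With these assumed rates, about 2097 files need secondary testing, and the combined rates come out near TPR 0.98 and FPR 0.0002, compared with TPR 0.92 and FPR 0.0003 from simply moving the threshold.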

Bottom Line

- Design your experiments properly
  - Use n-fold cross validation (e.g., n = 5)
  - Generally, cross validation is important
- Thresholding is important in practice
  - But not so useful for analyzing results
  - Accuracy is not as informative as ROC analysis
- Use ROC curves and compute the AUC
  - Sometimes, partial AUC may be better
- The imbalance problem may be a significant issue
