Clustering Applications

Mark Stamp

- Slides: 45


K-Means for Malware Classification

Chinmayee Annachhatre, Mark Stamp

Quest for the Holy Grail

- The Holy Grail of malware research is to detect previously unseen malware
  - So-called “zero-day” malware
- If you solve this problem, you’ll be rich
- We don’t consider this problem here
- But we do consider something similar
- Problem here: classify “new” malware
  - New in a sense…

Motivation

- Can we automatically classify malware?
  - Using only HMM scores
- Train HMMs for several compilers and metamorphic generators
- Then cluster malware based on the resulting scores
- Note that the clustered malware does not correspond to any of the HMMs
  - Why not model the clustered families?

Related Work

- Previous work on metamorphic detection using HMMs
- Other work showed that HMMs can identify the compiler used
  - Not too surprising, since a metamorphic generator is a type of “compiler”
- Here, we extend these results to the malware classification problem

Malware Classification

- Some examples of previous work
  - Structural: control flow, call graphs, or other graph structures
  - Behavioral analysis: dynamic analysis
  - Data mining methods: n-gram analysis using Naïve Bayes, SVM, etc.
  - VILO: feature vectors (n-grams), TF-IDF weighting, nearest neighbor

Implementation

- Next, we consider implementation details related to each of the following:
  - Training HMMs
  - Malware dataset
  - Scoring
  - Clustering
- Then we discuss experimental results

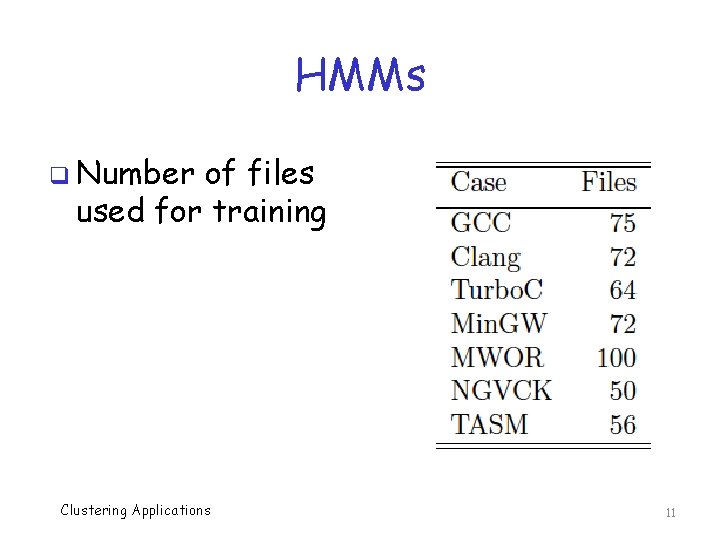

Training HMMs

- Train an HMM for each of the following…
- Four compilers
  - GCC, MinGW, TurboC, Clang
- Hand-written assembly code
  - We refer to this model as TASM
- Two metamorphic families
  - NGVCK and MWOR

HMMs

- Previous work has shown that HMMs are effective at detecting the compiler
  - Based on opcodes
- This implies that each compiler has a distinctive statistical profile
- Previous work included TASM, NGVCK, and MWOR for comparison
  - Here we apply these models to other malware

HMMs

- NGVCK
  - Next Generation Virus Construction Kit
  - Highly metamorphic
  - Studied in much previous research
- MWOR
  - Experimental metamorphic generator
  - Designed to be “stealthy” with respect to statistical analysis

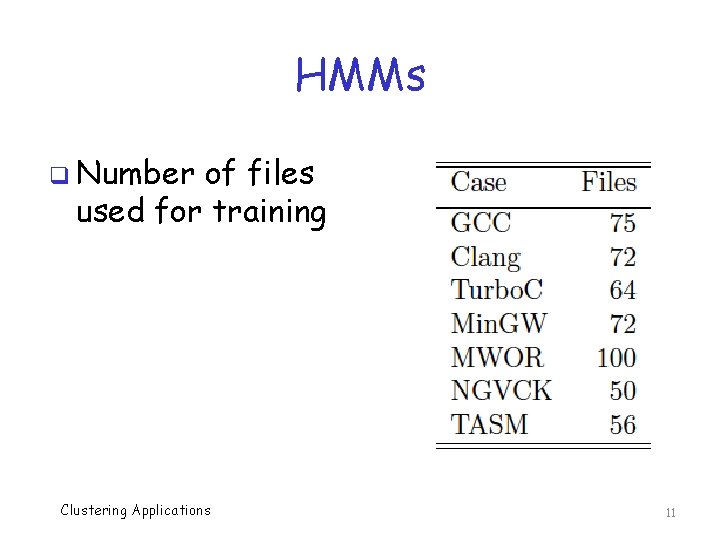

HMMs

- Number of files used for training

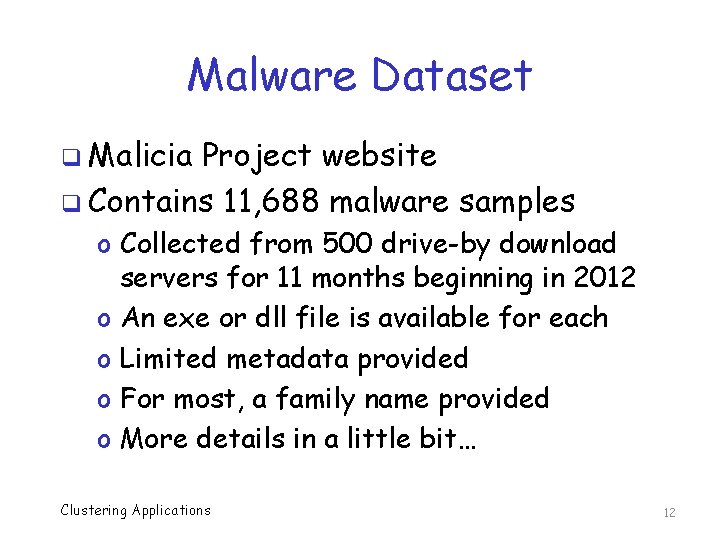

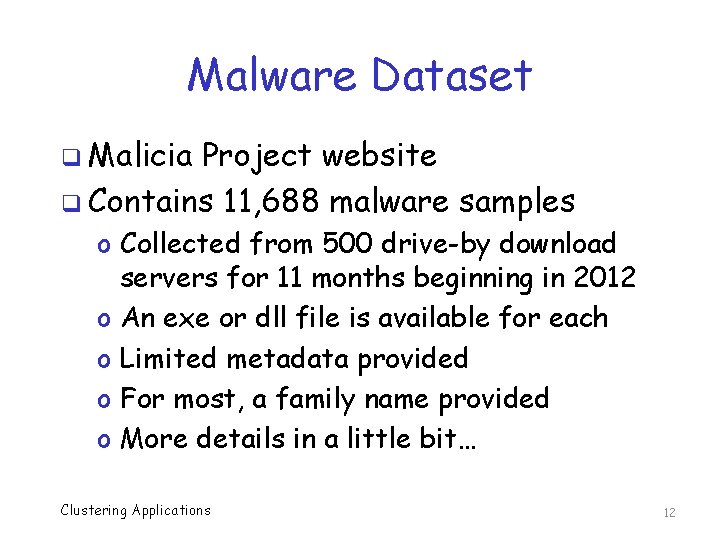

Malware Dataset

- Malicia Project website
- Contains 11,688 malware samples
  - Collected from 500 drive-by download servers over 11 months beginning in 2012
  - An exe or dll file is available for each
  - Limited metadata provided
  - For most samples, a family name is provided
  - More details in a little bit…

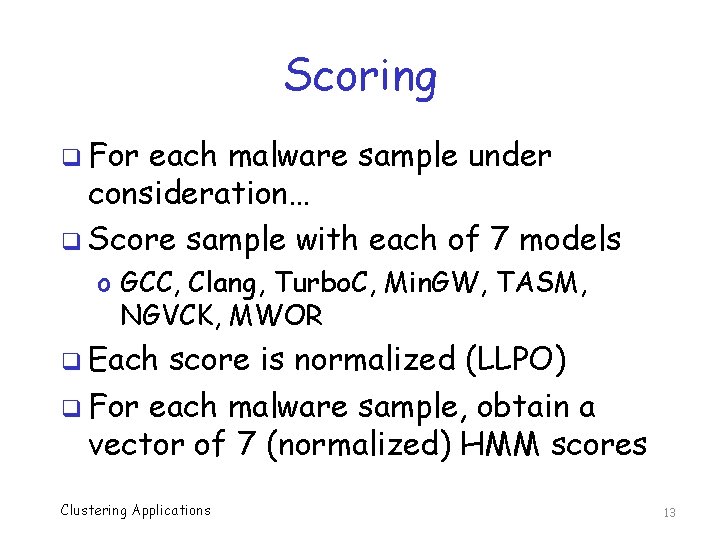

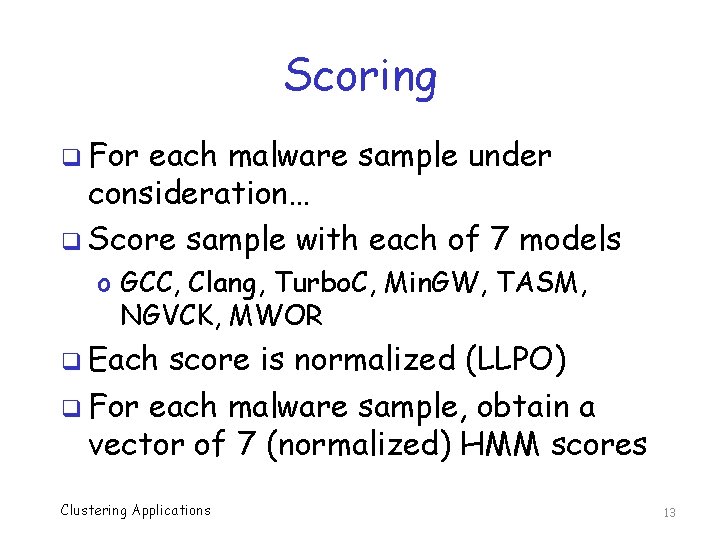

Scoring

- For each malware sample under consideration…
- Score the sample with each of the 7 models
  - GCC, Clang, TurboC, MinGW, TASM, NGVCK, MWOR
- Each score is normalized (LLPO, i.e., log likelihood per opcode)
- For each malware sample, we obtain a vector of 7 (normalized) HMM scores
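The slides do not spell out how LLPO is computed. The following is a minimal sketch, assuming LLPO is the log likelihood $\log P(\mathcal{O} \mid \lambda)$ from the scaled forward algorithm divided by the number of opcodes $T$ in the sample, with opcodes already mapped to integer symbols. The function name `llpo` and the matrix layout (`A` for state transitions, `B` for observation probabilities, `pi` for the initial distribution) are illustrative assumptions.

```python
import math


def llpo(obs, A, B, pi):
    """Log likelihood per opcode (LLPO) of an observation sequence (sketch).

    obs: opcode sequence mapped to integer symbols 0..M-1
    A:   N x N state transition probabilities (list of lists)
    B:   N x M observation probabilities (list of lists)
    pi:  initial state distribution (length N)
    Returns log P(obs | model) / len(obs), via the scaled forward algorithm.
    """
    N, T = len(pi), len(obs)
    log_prob = 0.0
    # alpha_0(i) = pi_i * b_i(O_0)
    alpha = [pi[i] * B[i][obs[0]] for i in range(N)]
    for t in range(T):
        if t > 0:
            # alpha_t(i) = [sum_j alpha_{t-1}(j) * a_ji] * b_i(O_t)
            alpha = [sum(alpha[j] * A[j][i] for j in range(N)) * B[i][obs[t]]
                     for i in range(N)]
        c = sum(alpha)              # scale factor at time t
        alpha = [x / c for x in alpha]
        log_prob += math.log(c)     # log P(O) = sum over t of log c_t
    return log_prob / T
```

Dividing by $T$ makes scores of samples with different lengths comparable; scoring one sample with each of the 7 trained models in this way yields its 7-element score vector.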

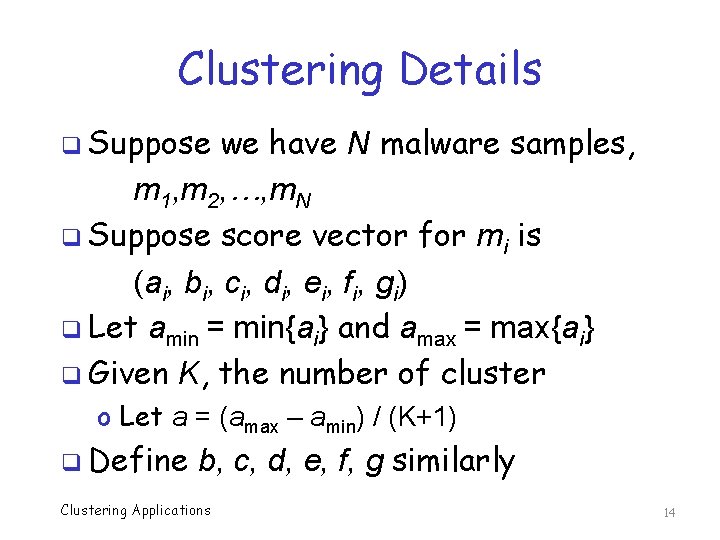

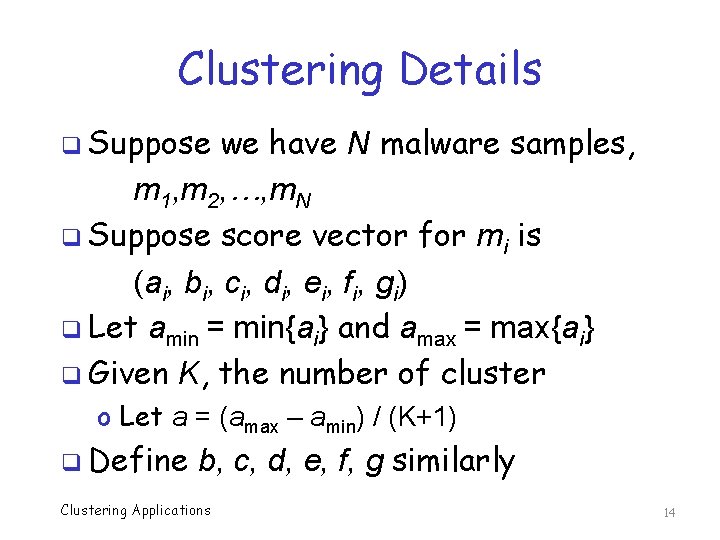

Clustering Details

- Suppose we have $N$ malware samples, $m_1, m_2, \ldots, m_N$
- Suppose the score vector for $m_i$ is $(a_i, b_i, c_i, d_i, e_i, f_i, g_i)$
- Let $a_{\min} = \min_i\{a_i\}$ and $a_{\max} = \max_i\{a_i\}$
- Given $K$, the number of clusters
  - Let $\Delta_a = (a_{\max} - a_{\min}) / (K+1)$
- Define $\Delta_b, \Delta_c, \Delta_d, \Delta_e, \Delta_f, \Delta_g$ similarly

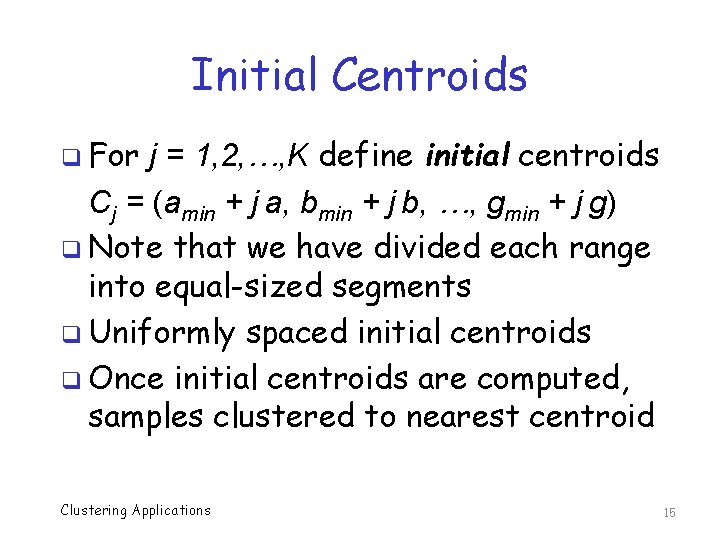

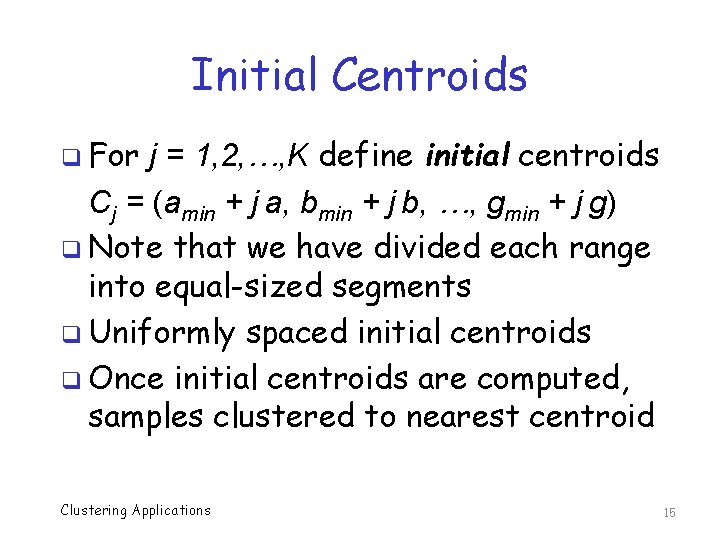

Initial Centroids

- For $j = 1, 2, \ldots, K$, define the initial centroids $C_j = (a_{\min} + j\Delta_a,\ b_{\min} + j\Delta_b,\ \ldots,\ g_{\min} + j\Delta_g)$, where $\Delta_a = (a_{\max} - a_{\min})/(K+1)$ and similarly for $\Delta_b, \ldots, \Delta_g$
- Note that we have divided each range into $K+1$ equal-sized segments
- This gives uniformly spaced initial centroids
- Once the initial centroids are computed, each sample is assigned to its nearest centroid
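The uniform initialization above can be sketched in Python as follows. This is a minimal illustration, assuming each sample is given as a tuple of HMM scores (one coordinate per model); the function name `uniform_initial_centroids` is hypothetical.

```python
def uniform_initial_centroids(vectors, K):
    """Uniformly spaced initial centroids C_1, ..., C_K (sketch).

    For each coordinate, the range [min, max] over all samples is split into
    K + 1 equal segments of width delta = (max - min) / (K + 1), and
    C_j takes the value min + j * delta in that coordinate.
    """
    dims = len(vectors[0])
    lows = [min(v[d] for v in vectors) for d in range(dims)]
    highs = [max(v[d] for v in vectors) for d in range(dims)]
    deltas = [(highs[d] - lows[d]) / (K + 1) for d in range(dims)]
    return [tuple(lows[d] + j * deltas[d] for d in range(dims))
            for j in range(1, K + 1)]


# Two hypothetical 2-d score vectors, K = 3
print(uniform_initial_centroids([(0, 0), (4, 8)], 3))
# [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
```

Using $K+1$ segments keeps every centroid strictly inside the range, so no initial centroid sits on an extreme (possibly outlying) score.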

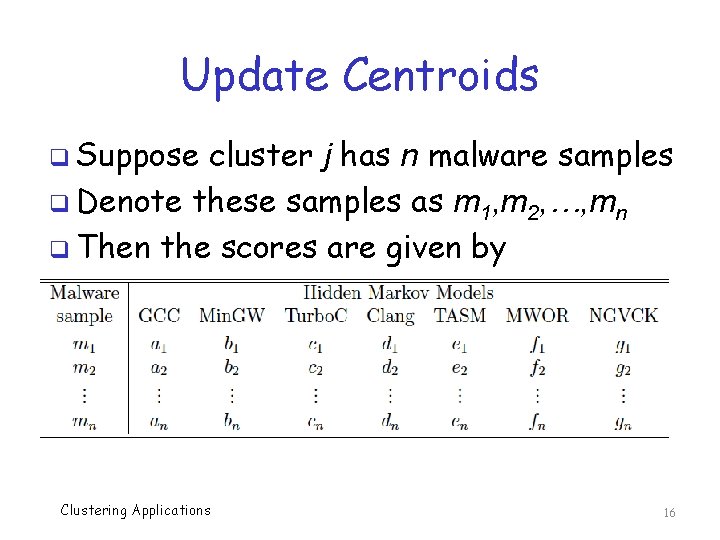

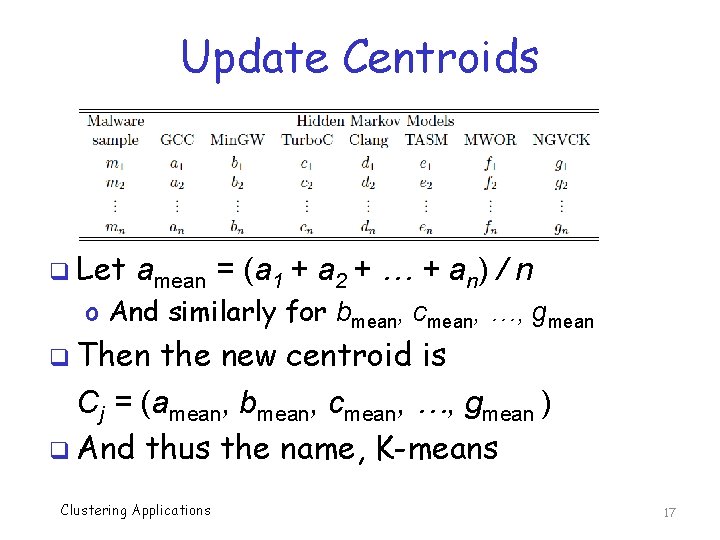

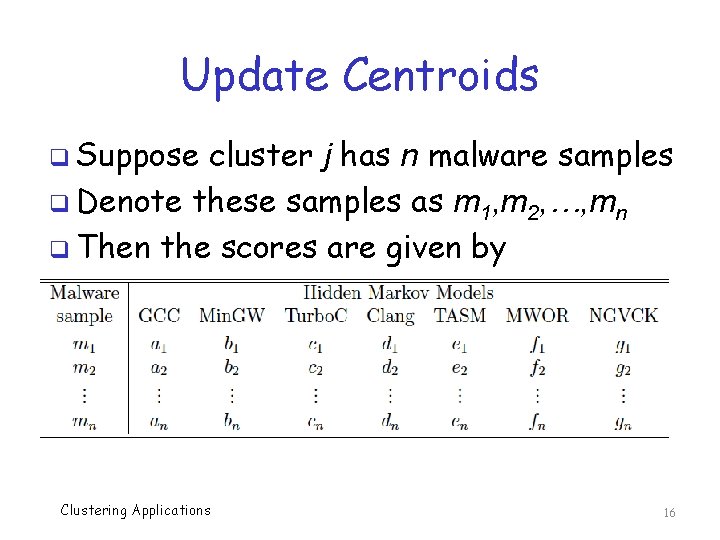

Update Centroids

- Suppose cluster $j$ has $n$ malware samples
- Denote these samples as $m_1, m_2, \ldots, m_n$
- Then the scores are given by

Update Centroids

- Let $a_{\text{mean}} = (a_1 + a_2 + \cdots + a_n) / n$
  - And similarly for $b_{\text{mean}}, c_{\text{mean}}, \ldots, g_{\text{mean}}$
- Then the new centroid is $C_j = (a_{\text{mean}}, b_{\text{mean}}, c_{\text{mean}}, \ldots, g_{\text{mean}})$
- Hence the name, K-means

Update Clusters

- After all $K$ centroids are computed…
- Regroup the malware samples based on nearest centroid
- Recompute the centroids (as on the previous slide) and regroup…
- Continue until no significant change occurs when regrouping
- This is the definition of K-means clustering!
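A minimal sketch of the regroup/recompute loop described on the last few slides, assuming Euclidean distance and treating “no significant change” as “no sample changes cluster”. The names `nearest` and `kmeans`, the `max_iter` cap, and the choice to keep a centroid unchanged if its cluster becomes empty are assumptions, not details from the slides.

```python
import math


def nearest(point, centroids):
    """Index of the centroid closest to point (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda j: math.dist(point, centroids[j]))


def kmeans(vectors, centroids, max_iter=100):
    """K-means sketch: regroup by nearest centroid, recompute means, repeat."""
    centroids = [tuple(c) for c in centroids]
    assignment = None
    for _ in range(max_iter):
        new_assignment = [nearest(v, centroids) for v in vectors]
        if new_assignment == assignment:  # regrouping changed nothing: stop
            break
        assignment = new_assignment
        for j in range(len(centroids)):
            members = [v for v, a in zip(vectors, assignment) if a == j]
            if members:  # new centroid = coordinate-wise mean of its members
                centroids[j] = tuple(sum(col) / len(members) for col in zip(*members))
    return assignment, centroids


labels, cents = kmeans([(0, 0), (0, 1), (10, 10), (10, 11)], [(0, 0), (1, 1)])
print(labels, cents)  # [0, 0, 1, 1] [(0.0, 0.5), (10.0, 10.5)]
```

In the setting of these slides, the starting `centroids` would be the uniformly spaced initial centroids defined earlier, and each vector would hold a sample’s 7 normalized HMM scores.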

Experimental Setup

- Host and virtual (guest) machine
  - Why use a virtual machine?
- Host: Sony Vaio T15, Intel i5-3337U (1.8 GHz), 400 GB RAM, Windows 8
  - All processing not involving malware
- Guest: Oracle VirtualBox VM (1 GB), Ubuntu 12.04.3 LTS
  - For dealing directly with malware

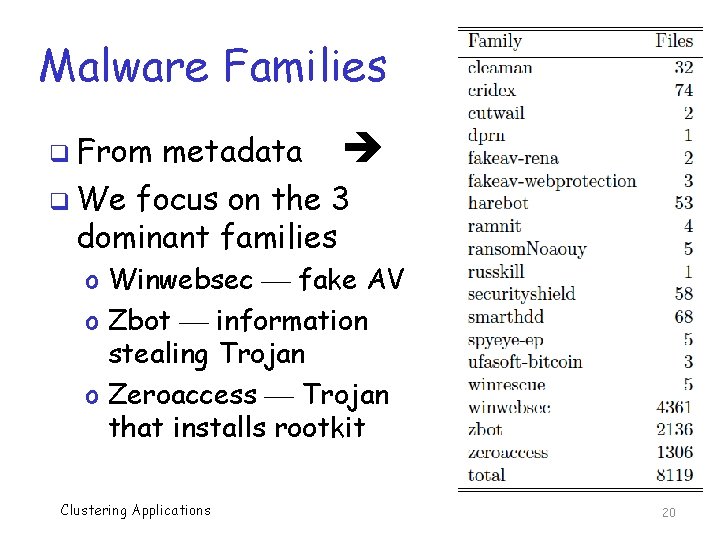

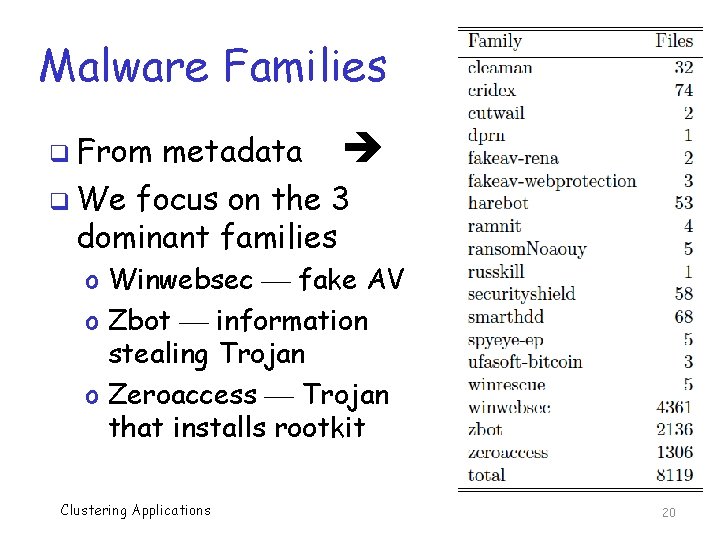

Malware Families

- We focus on the 3 dominant families
- From the metadata:
  - Winwebsec: fake AV
  - Zbot: information-stealing Trojan
  - Zeroaccess: Trojan that installs a rootkit

Experiments

- Performed clustering for K = 2 to K = 15
- Results on the next slides…
  - Using all 7 scores
  - Uniform initial centroids
  - And N = 2 hidden states in all HMMs
- Also experimented with
  - Combinations of 7 (or fewer) scores, uniform vs. random initial centroids, N in HMMs, …

Measuring Success

- Can we quantify the quality of the results?
- Ideally, each cluster should include only one family (i.e., one color)
  - Here, we focus on the 3 dominant families
  - Winwebsec, Zbot, Zeroaccess
- How to measure this?

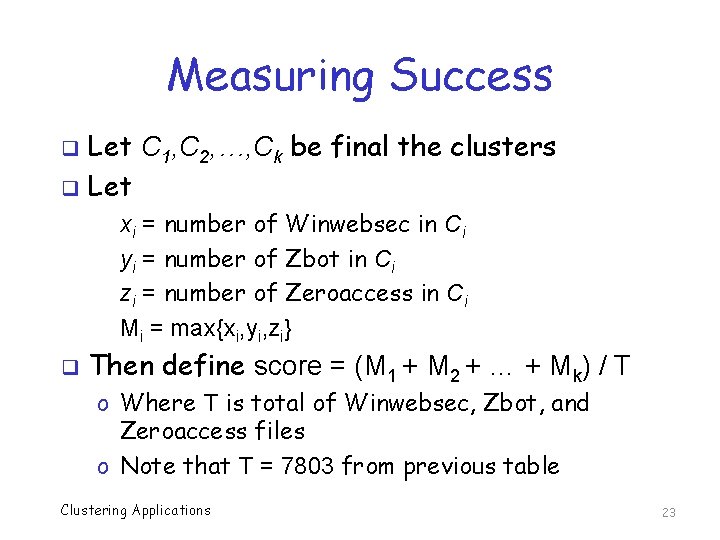

Measuring Success

- Let $C_1, C_2, \ldots, C_K$ be the final clusters
- Let
  - $x_i$ = number of Winwebsec samples in $C_i$
  - $y_i$ = number of Zbot samples in $C_i$
  - $z_i$ = number of Zeroaccess samples in $C_i$
  - $M_i = \max\{x_i, y_i, z_i\}$
- Then define $\text{score} = (M_1 + M_2 + \cdots + M_K) / T$
  - Where $T$ is the total number of Winwebsec, Zbot, and Zeroaccess files
  - Note that $T = 7803$ from the previous table

Measuring Success

- Recall, $\text{score} = (M_1 + M_2 + \cdots + M_K) / T$
  - Note that $0 < \text{score} \le 1$
  - And $\text{score} = 1$ implies all clusters are uniform (with respect to the 3 major families)
- Suppose we classify simply based on the dominant family in a cluster
- Then $\text{score} = 1$ is a perfect result
  - With respect to the three major families
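The score can be computed directly from per-cluster family counts. A minimal sketch; the function name `clustering_score` and the counts in the example are hypothetical, not results from this study.

```python
def clustering_score(counts):
    """counts[i] = (x_i, y_i, z_i): Winwebsec, Zbot, Zeroaccess counts in C_i.

    score = (M_1 + ... + M_K) / T, where M_i = max(x_i, y_i, z_i) and
    T is the total number of samples from the three families.
    """
    T = sum(sum(c) for c in counts)
    return sum(max(c) for c in counts) / T


# Hypothetical: one pure Winwebsec cluster, one evenly mixed Zbot/Zeroaccess cluster
print(clustering_score([(10, 0, 0), (0, 5, 5)]))  # 0.75
```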

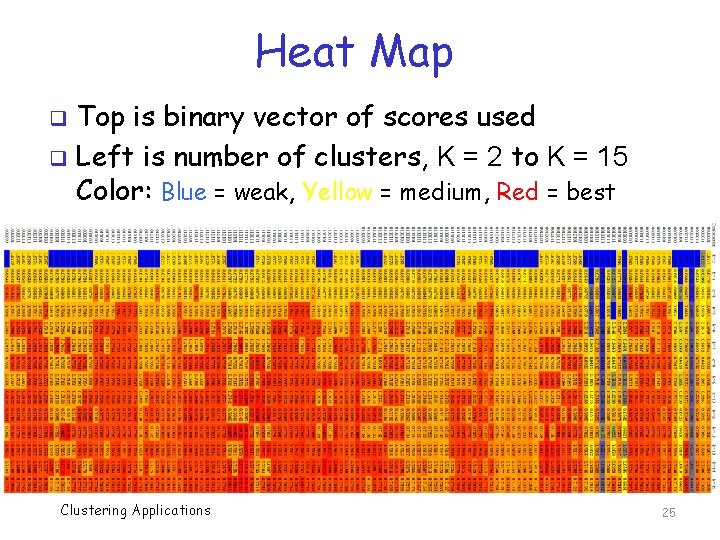

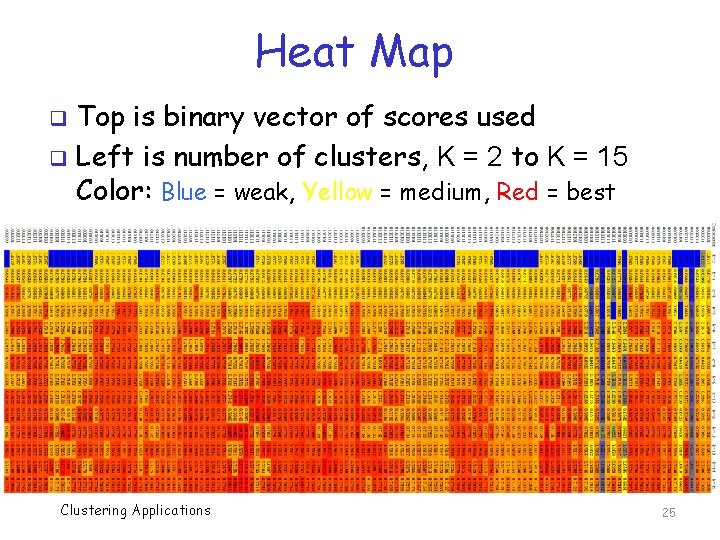

Heat Map

- Top is the binary vector of scores used
- Left is the number of clusters, K = 2 to K = 15
- Color: Blue = weak, Yellow = medium, Red = best

Heat Map

- 7-tuple of scores
  - GCC, MinGW, TurboC, Clang, TASM, MWOR, NGVCK
- Observations…
  - Best score is ≈ 0.82
  - Need 6 or more clusters for best results
  - Do not need to use all of the HMM scores
- So, is 0.82 good or not?
- Better than “random” classification?

Clusters for Classification

- Our “score” is the accuracy if we classify based on the dominant type in each cluster
  - That is, assign a sample of unknown type to the nearest centroid
  - And classify it as the dominant type in that cluster
- The previous slide says we can get about 0.82 correct in this manner
- Good? Bad? Compared to what?
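Classifying an unseen sample in this way amounts to a nearest-centroid lookup followed by a majority vote. A minimal sketch, assuming Euclidean distance and that each cluster’s list of known training labels is non-empty; `classify` and `cluster_labels` are hypothetical names.

```python
import math
from collections import Counter


def classify(sample, centroids, cluster_labels):
    """Assign sample to its nearest centroid; return that cluster's dominant family.

    cluster_labels[j] lists the known family labels of the training samples in cluster j.
    """
    j = min(range(len(centroids)), key=lambda k: math.dist(sample, centroids[k]))
    return Counter(cluster_labels[j]).most_common(1)[0][0]


centroids = [(0, 0), (5, 5)]
cluster_labels = [["Zbot", "Zbot", "Winwebsec"], ["Zeroaccess"]]
print(classify((1, 1), centroids, cluster_labels))  # Zbot
```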

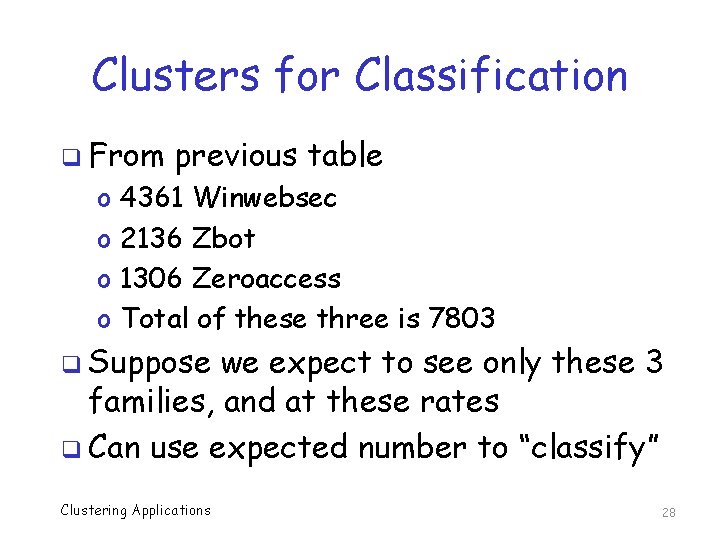

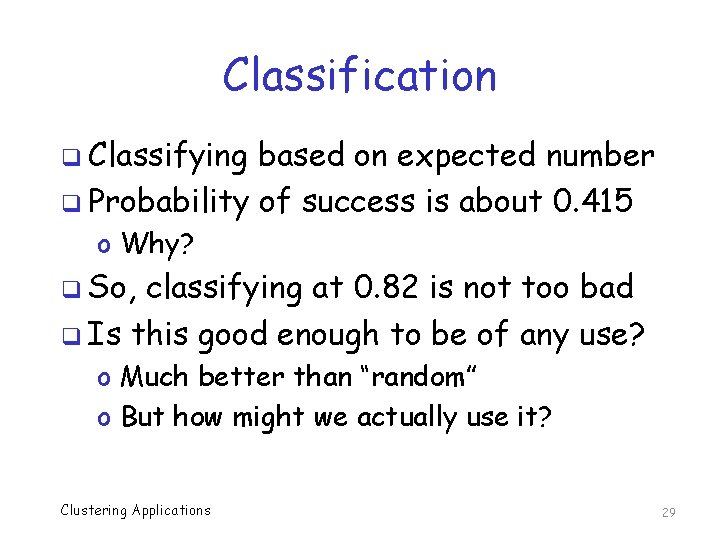

Clusters for Classification

- From the previous table
  - 4361 Winwebsec
  - 2136 Zbot
  - 1306 Zeroaccess
  - The total of these three is 7803
- Suppose we expect to see only these 3 families, and at these rates
- We can use the expected numbers to “classify”

Classification

- Classifying based on expected numbers
- Probability of success is about 0.415
  - Why?
- So, classifying at 0.82 is not too bad
- Is this good enough to be of any use?
  - Much better than “random”
  - But how might we actually use it?
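One way to answer the “Why?”, assuming the “expected number” classifier guesses each family at random with probability equal to its share $p_i$ of the 7803 samples: a sample from family $i$ occurs with probability $p_i$ and is guessed correctly with probability $p_i$, so the overall success probability is $\sum_i p_i^2$. With the counts from the previous table, this gives about 0.415. The function name below is illustrative.

```python
def expected_rate_accuracy(counts):
    """Success probability when each family is guessed at random in proportion
    to its frequency: the sum over families of p_i ** 2."""
    total = sum(counts.values())
    return sum((n / total) ** 2 for n in counts.values())


# Counts from the previous table (T = 7803)
families = {"Winwebsec": 4361, "Zbot": 2136, "Zeroaccess": 1306}
print(round(expected_rate_accuracy(families), 3))  # 0.415
```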

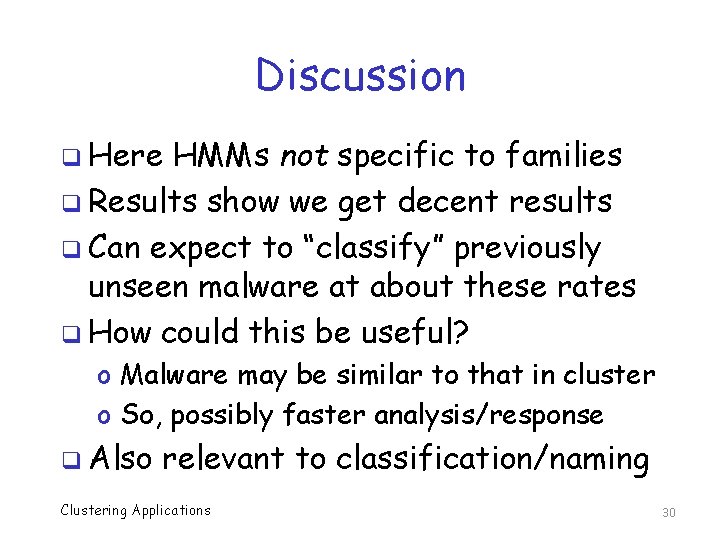

Discussion

- Here the HMMs are not specific to the families being classified
- Nevertheless, the results are decent
- We can expect to “classify” previously unseen malware at about these rates
- How could this be useful?
  - New malware may be similar to the malware in its cluster
  - So, possibly faster analysis/response
- Also relevant to classification/naming

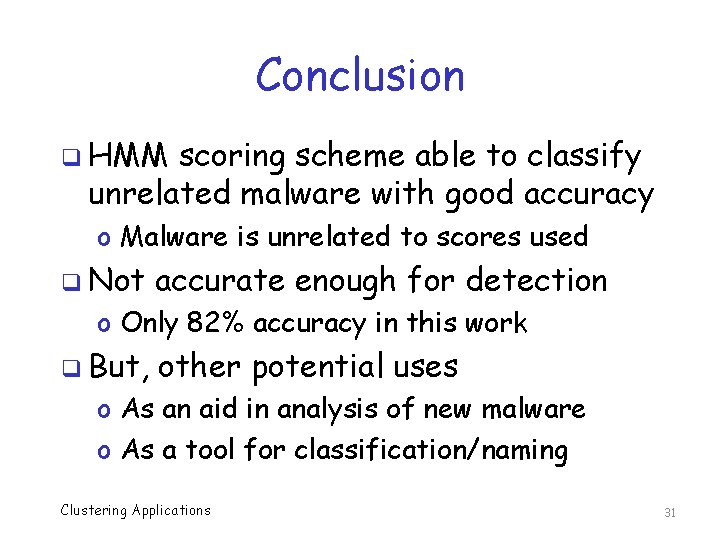

Conclusion

- The HMM scoring scheme is able to classify unrelated malware with good accuracy
  - The malware is unrelated to the models used to generate the scores
- Not accurate enough for detection
  - Only 82% accuracy in this work
- But there are other potential uses
  - As an aid in analysis of new malware
  - As a tool for classification/naming

Future Work

- Other clustering techniques
  - K-medoids, fuzzy K-means, EM, etc.
- Use additional malware-specific models
- Other types of scores/scoring
  - Structural scores (entropy, compression, eigenvector-based, etc.)
  - More advanced combinations of scores
- Experiments with other data sets

EM versus K-Means for Malware Analysis

Swathi Pai, Usha Narra, Mark Stamp

Clustering Malware

- Again, focused on “new” malware
- Can we detect/analyze previously unseen malware using clustering?
- Compare K-means and EM clustering
- Number of clusters varies from 2 to 10
- Number of models (i.e., “dimensions”) varies from 2 to 5
- Analyze clusters vs. dimensions

Data and HMMs

- Again use the Malicia dataset
- And again focus on the 3 main families
  - Zbot, Zeroaccess, Winwebsec
- Train HMMs for each of these 3, plus NGVCK and SmartHDD
  - We have 5 models in total
- Then there are 5 HMM scores for each sample
- Cluster based on (subsets of) these scores

Dimensions

- “Dimension” is the number of models used
  - 2-d: Winwebsec, Zbot
  - 3-d: Winwebsec, Zbot, and Zeroaccess
  - 4-d: Winwebsec, Zbot, Zeroaccess, and NGVCK
  - 5-d: Winwebsec, Zbot, Zeroaccess, NGVCK, and SmartHDD
- Why these subsets?
  - Why not?

Cluster Quality

- Use a simple purity-based measure
  - $p_{ij} = m_{ij} / m_j$
  - Where $m_{ij}$ is the number of samples of type $i$ in cluster $j$
  - And $m_j$ is the number of elements in cluster $j$
- If sample $x$ is in cluster $C_j$, then
  - $\text{score}_i(x) = p_{ij}$
  - That is, $\text{score}_i(x)$ is the proportion of data of type $i$ in the cluster that sample $x$ belongs to
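The purity score can be sketched as follows, assuming each sample has a known family label and a cluster index from the clustering step; `purity_scores` is a hypothetical name.

```python
from collections import Counter


def purity_scores(labels, assignment):
    """For each sample x in cluster C_j, return {i: p_ij} with p_ij = m_ij / m_j.

    labels[x]:     family of sample x
    assignment[x]: index j of the cluster containing sample x
    """
    sizes = Counter(assignment)                     # m_j
    per_cluster = Counter(zip(assignment, labels))  # m_ij, keyed by (j, i)
    families = sorted(set(labels))
    return [{i: per_cluster[(j, i)] / sizes[j] for i in families}
            for j in assignment]


labels = ["Zbot", "Zbot", "Winwebsec", "Zeroaccess"]
assignment = [0, 0, 0, 1]
print(purity_scores(labels, assignment)[3])
# {'Winwebsec': 0.0, 'Zbot': 0.0, 'Zeroaccess': 1.0}
```

Every sample in the same cluster receives the same score vector, which is what makes this measure cheap to compute once the clusters are fixed.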

Clustering Scores

- Let $i=0$ correspond to Zbot
- And $i=1$ correspond to Zeroaccess
- And $i=2$ correspond to Winwebsec
- If sample $x$ is in cluster $C_j$, then
  - $\text{score}_0(x)$ = Zbot score of $x$
  - $\text{score}_1(x)$ = Zeroaccess score of $x$
  - $\text{score}_2(x)$ = Winwebsec score of $x$

Clustering Scores

- ROC curves and AUC based on each of the 3 scores on the previous slide
- For example, we use $\text{score}_0(x)$ to score all samples, where…
  - Zbot is match and all others are nomatch
- Generate a scatterplot and ROC curve
  - Compute the AUC
- Similarly for $\text{score}_1(x)$ and $\text{score}_2(x)$
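The slides do not say how the AUC was computed. A minimal sketch using the standard equivalence between the area under the ROC curve and the probability that a randomly chosen match sample scores higher than a randomly chosen nomatch sample, counting ties as one half. Tie handling matters here, since every sample in a cluster receives the same purity score. The function name `auc` is illustrative.

```python
def auc(match_scores, nomatch_scores):
    """Area under the ROC curve as P(match score > nomatch score), ties = 1/2.

    Simple O(n * m) pairwise version, adequate for an illustration.
    """
    wins = 0.0
    for s in match_scores:
        for t in nomatch_scores:
            if s > t:
                wins += 1.0
            elif s == t:
                wins += 0.5
    return wins / (len(match_scores) * len(nomatch_scores))


# Hypothetical score_0 values: Zbot samples (match) vs. all others (nomatch)
print(auc([0.9, 0.8], [0.1, 0.2]))  # 1.0
```

For $\text{score}_0$, `match_scores` would hold $\text{score}_0(x)$ for the Zbot samples and `nomatch_scores` the same score for all other samples.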

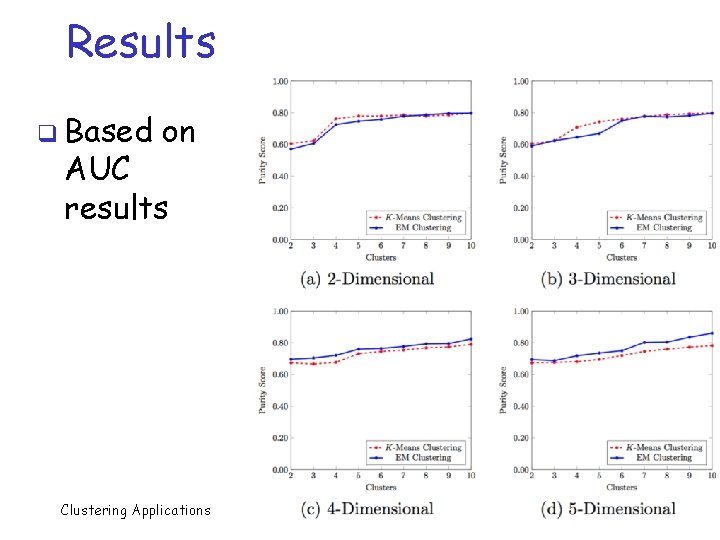

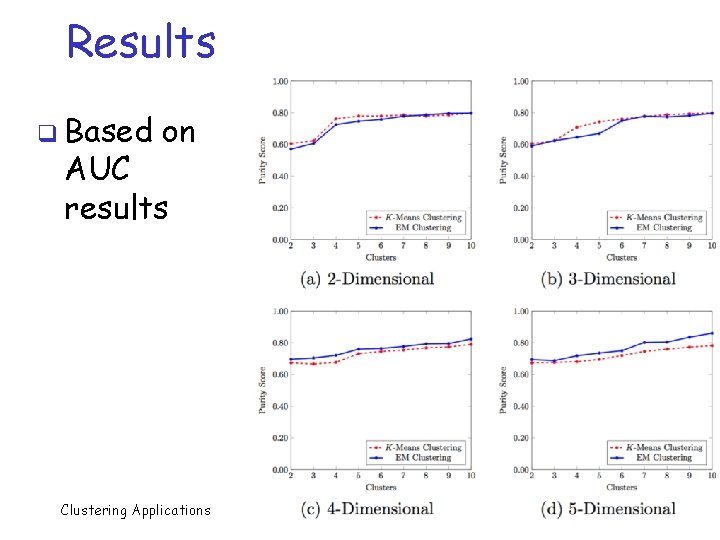

Results

- Based on AUC results

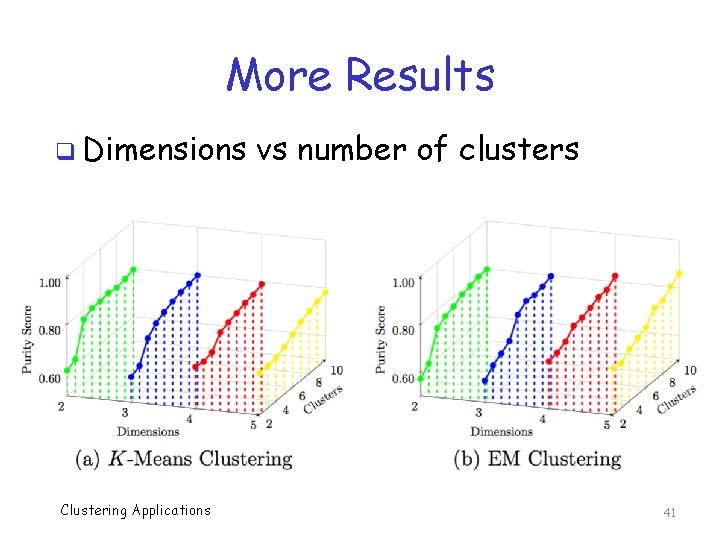

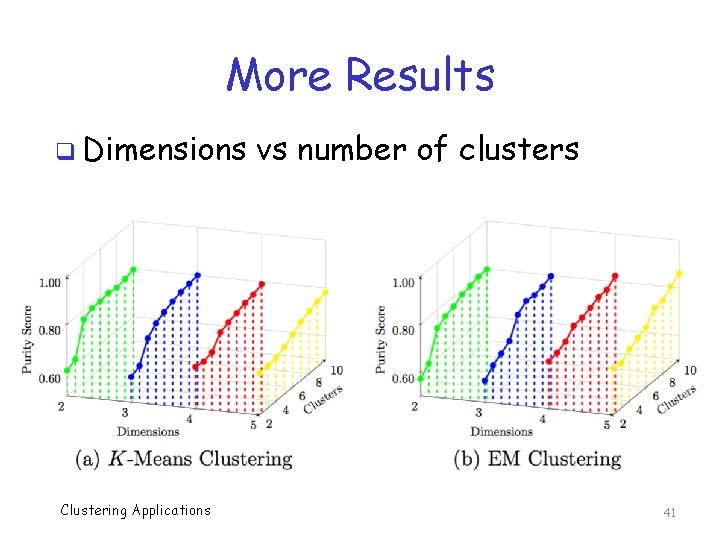

More Results

- Dimensions vs. number of clusters

Discussion

- For K-means, the number of clusters is important, but the dimension, not so much
- For EM, both the number of clusters and the number of dimensions seem to matter
  - Dimension matters more in EM
- And overall, EM is better
  - We generally expect EM to be at least as good, so this is not too surprising

Discussion

- Zero-day malware is malware that has never been seen before
  - So, no signature is available
  - How to detect or analyze it?
- Results indicate that clustering might be useful for such malware
- Reasonably accurate classification
  - Even when the model does not match the family

References

- C. Annachhatre, Hidden Markov models for malware classification, Journal of Computer Virology and Hacking Techniques, 11(2): 59–73, May 2015
- T. H. Austin, et al., Exploring hidden Markov models for virus analysis: A semantic approach, Proceedings of 46th Hawaii International Conference on System Sciences (HICSS 46), January 7–10, 2013

- S. Pai, et al., Clustering for malware classification, Journal of Computer Virology and Hacking Techniques, 13(4): 95–107, May 2017
- U. Narra, et al., Clustering versus SVM for malware detection, Journal of Computer Virology and Hacking Techniques, 12(4): 213–224, Nov. 2016