Finite State Transducers

Mark Stamp

- Slides: 40


Finite State Automata

- FSA: states and transitions
  - Represented as labeled directed graphs
  - An FSA has one label per edge
- States are drawn as circles
  - Double circles for end states
- Beginning state
  - Denoted by an arrowhead
  - Or, sometimes, a bold circle is used

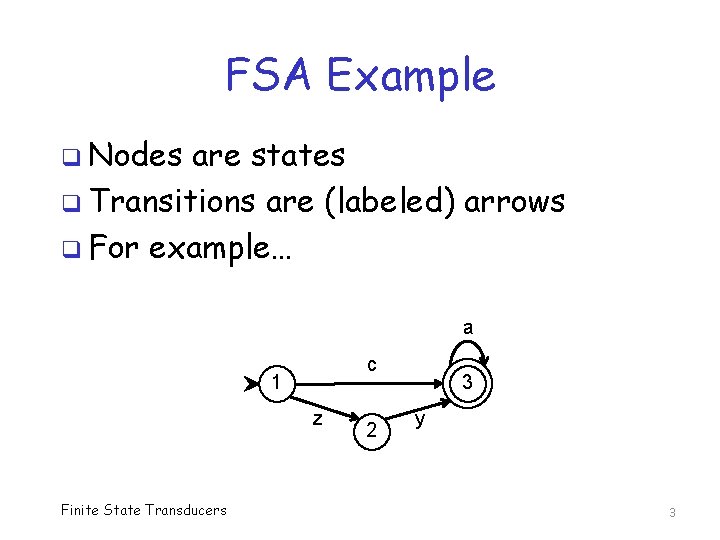

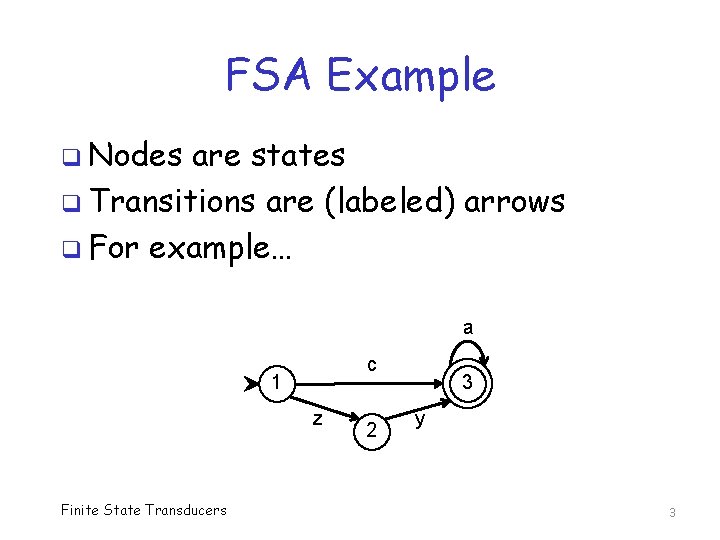

FSA Example

- Nodes are states
- Transitions are (labeled) arrows
- For example… (figure: an FSA with nodes 1, 2, 3 and edge labels a, c, z, y)

Finite State Transducer

- An FST has input and output labels on each edge
  - That is, 2 labels per edge
  - Can be more labels (e.g., edge weights)
  - Recall, an FSA has one label per edge
- An FST is represented as a directed graph
  - And the same symbols are used as for an FSA
  - FSTs may be useful in malware analysis…

Finite State Transducer

- An FST has input and output “tapes”
  - Transducer, i.e., it can map input to output
  - Often viewed as a “translating” machine
  - But somewhat more general
- An FST is a finite automaton with output
  - The usual finite automaton only has input
  - Used in natural language processing (NLP)
  - Also used in many other applications

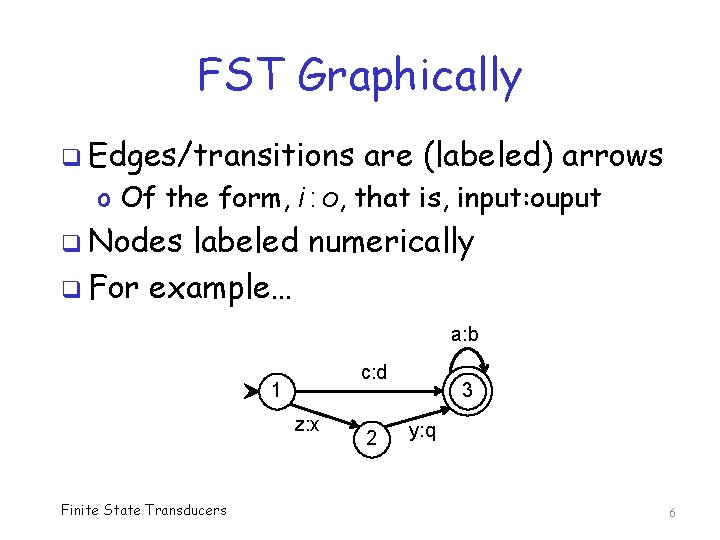

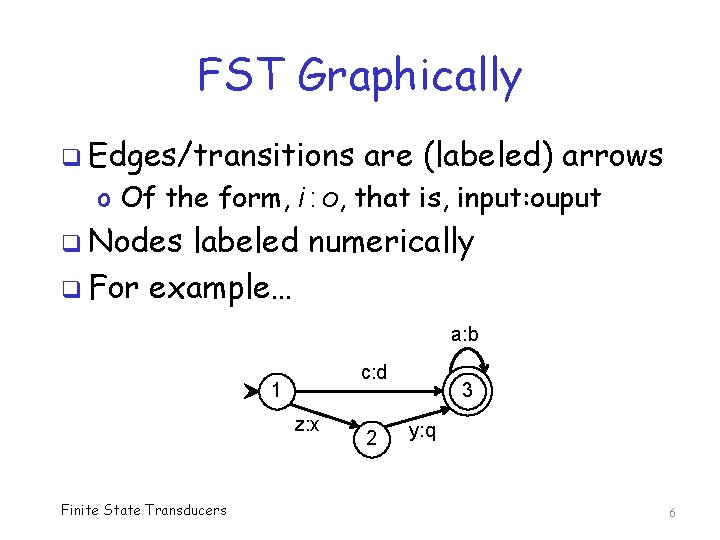

FST Graphically

- Edges/transitions are (labeled) arrows
  - Of the form i : o, that is, input : output
- Nodes are labeled numerically
- For example… (figure: an FST with nodes 1, 2, 3 and edge labels a:b, c:d, z:x, y:q)

FST Modes

- As previously mentioned, an FST is usually viewed as a translating machine
- But an FST can operate in several modes
  - Generation
  - Recognition
  - Translation (left-to-right or right-to-left)
- Examples of modes considered next…

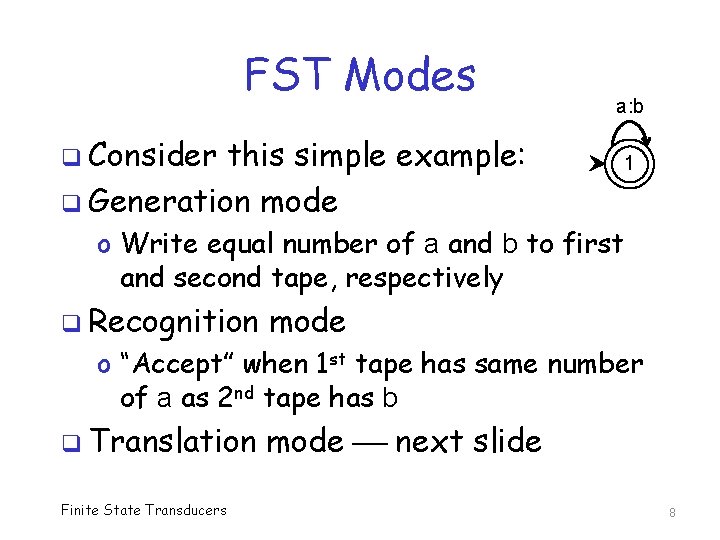

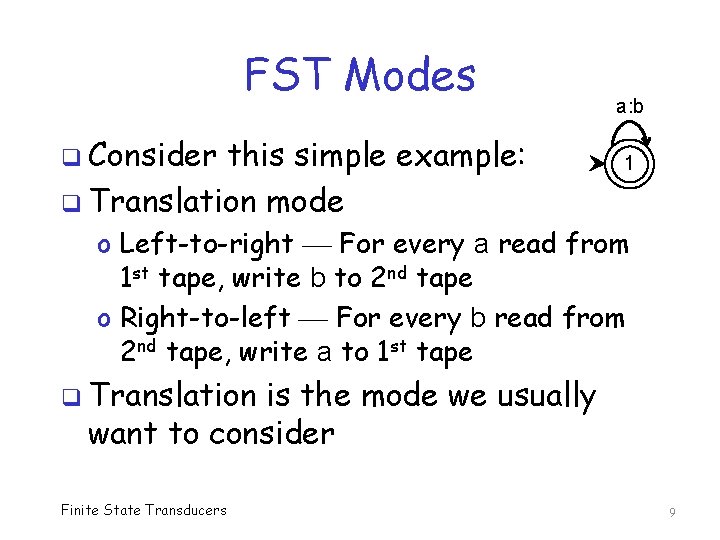

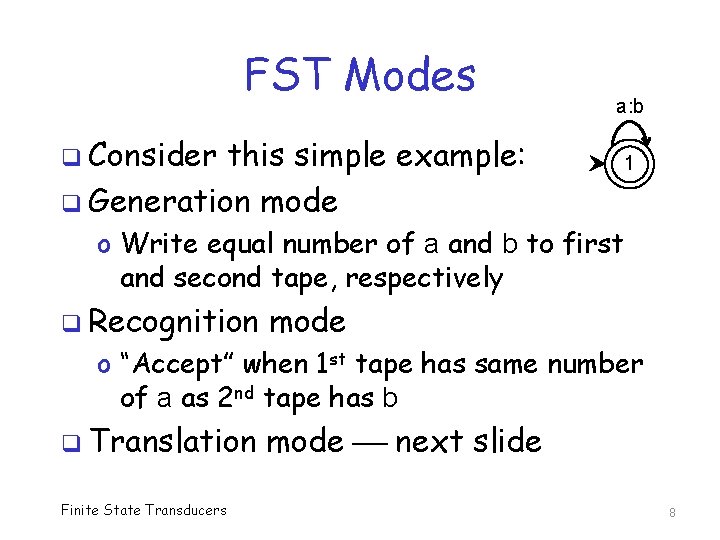

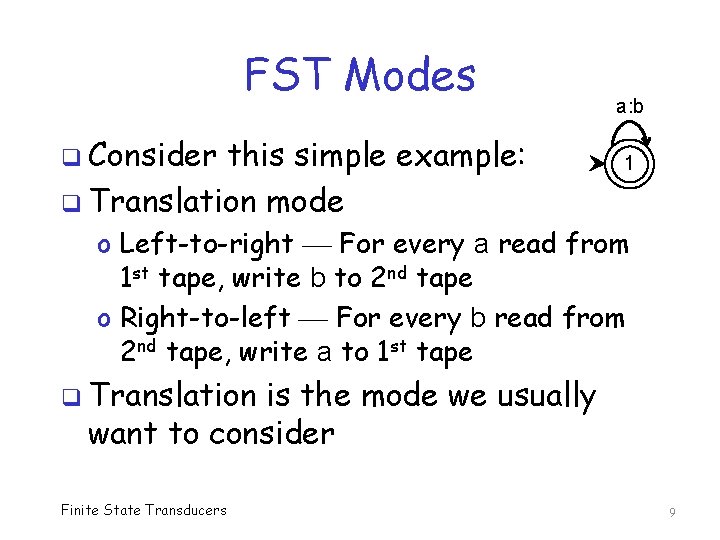

FST Modes

- Consider this simple example (figure: node 1 with an edge labeled a:b)
- Generation mode
  - Write an equal number of a and b to the first and second tape, respectively
- Recognition mode
  - “Accept” when the 1st tape has the same number of a as the 2nd tape has b
- Translation mode: next slide

FST Modes

- Consider this simple example (figure: node 1 with an edge labeled a:b)
- Translation mode
  - Left-to-right: for every a read from the 1st tape, write b to the 2nd tape
  - Right-to-left: for every b read from the 2nd tape, write a to the 1st tape
- Translation is the mode we usually want to consider
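The two translation directions can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes the a:b edge is a self-loop on node 1, assumes the FST is deterministic, and the names `EDGES`, `translate`, and `invert` are made up for this example.

```python
# Hypothetical encoding: (state, input symbol) -> (next state, output symbol).
# Assumption: the a:b edge in the figure is a self-loop on node 1.
EDGES = {(1, "a"): (1, "b")}


def translate(edges, start, tape):
    """Left-to-right translation: read the input tape, write the output tape."""
    state, out = start, []
    for sym in tape:
        if (state, sym) not in edges:
            return None  # no matching edge, so the input is rejected
        state, o = edges[(state, sym)]
        out.append(o)
    return "".join(out)


def invert(edges):
    """Swap input and output labels, which gives right-to-left translation."""
    return {(s, o): (t, i) for (s, i), (t, o) in edges.items()}


print(translate(EDGES, 1, "aaa"))         # bbb
print(translate(invert(EDGES), 1, "bb"))  # aa
```

Inverting the labels and reusing the same loop is one simple way to get the right-to-left mode; a nondeterministic FST would need to track a set of states instead.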

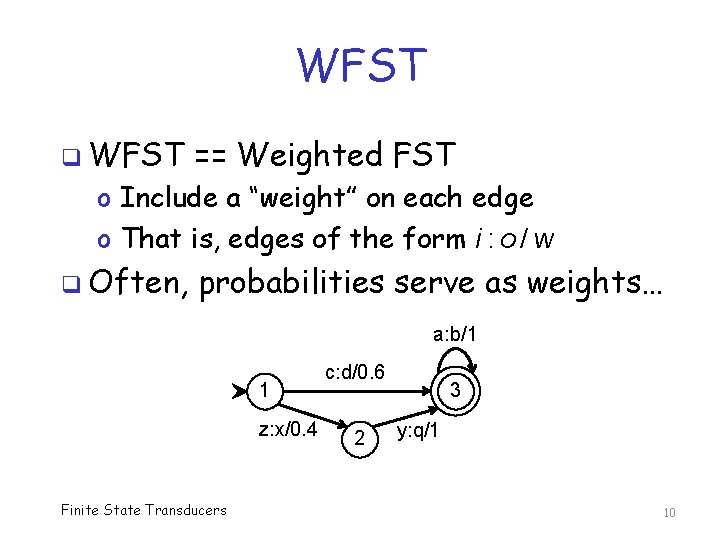

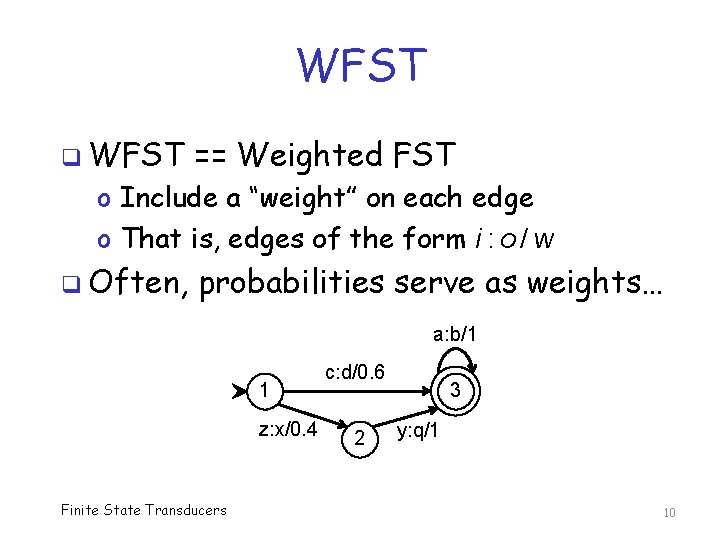

WFST

- WFST == Weighted FST
  - Include a “weight” on each edge
  - That is, edges of the form i : o / w
- Often, probabilities serve as weights… (figure: a WFST with nodes 1, 2, 3 and edge labels a:b/1, c:d/0.6, z:x/0.4, y:q/1)
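When the weights are probabilities, the weight of a path is the product of its edge weights. The sketch below illustrates this with the four labels from the slide. The topology (which nodes each edge connects) is an assumption made for illustration, since only the labels are recoverable here, and `translate_weighted` is a hypothetical helper name.

```python
# Edge format: (source, input, output, weight, target).
# Labels are from the slide; the topology is assumed for illustration only.
EDGES = [
    (1, "a", "b", 1.0, 2),
    (2, "c", "d", 0.6, 3),
    (2, "z", "x", 0.4, 3),
    (3, "y", "q", 1.0, 1),
]


def translate_weighted(edges, start, tape):
    """Return (output, weight) for a deterministic WFST, or None if rejected."""
    state, out, weight = start, [], 1.0
    for sym in tape:
        match = [e for e in edges if e[0] == state and e[1] == sym]
        if not match:
            return None
        _, _, o, w, state = match[0]
        out.append(o)
        weight *= w  # probabilities multiply along a path
    return "".join(out), weight


print(translate_weighted(EDGES, 1, "acy"))
print(translate_weighted(EDGES, 1, "azy"))
```

Under the assumed topology, the input `acy` takes the 0.6 branch and `azy` takes the 0.4 branch.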

FST Example

- Homework…

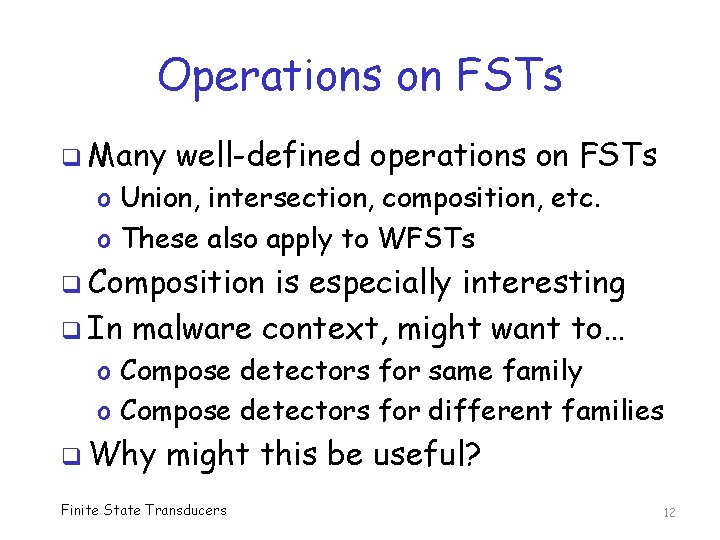

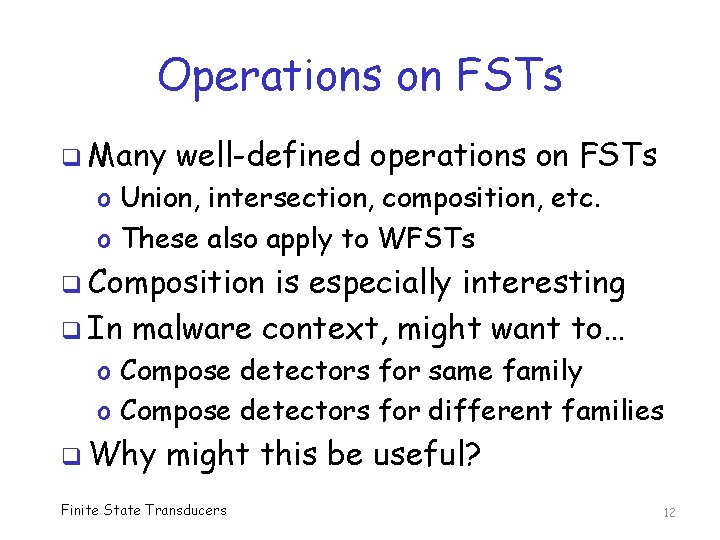

Operations on FSTs

- Many well-defined operations on FSTs
  - Union, intersection, composition, etc.
  - These also apply to WFSTs
- Composition is especially interesting
- In malware context, might want to…
  - Compose detectors for same family
  - Compose detectors for different families
- Why might this be useful?

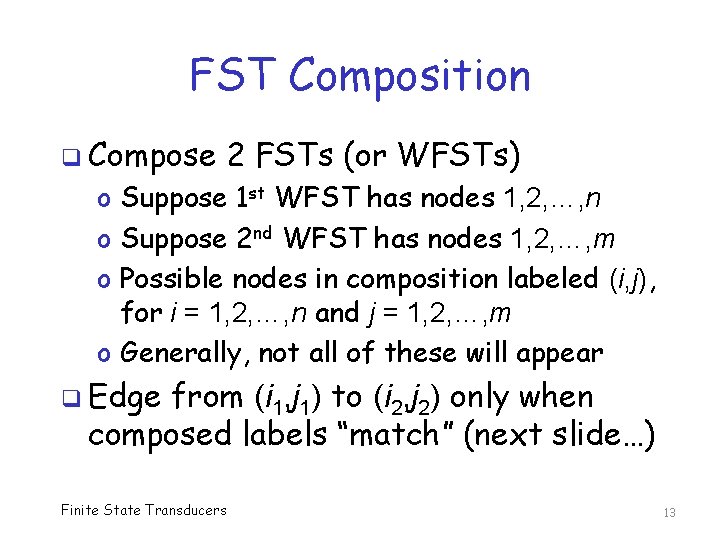

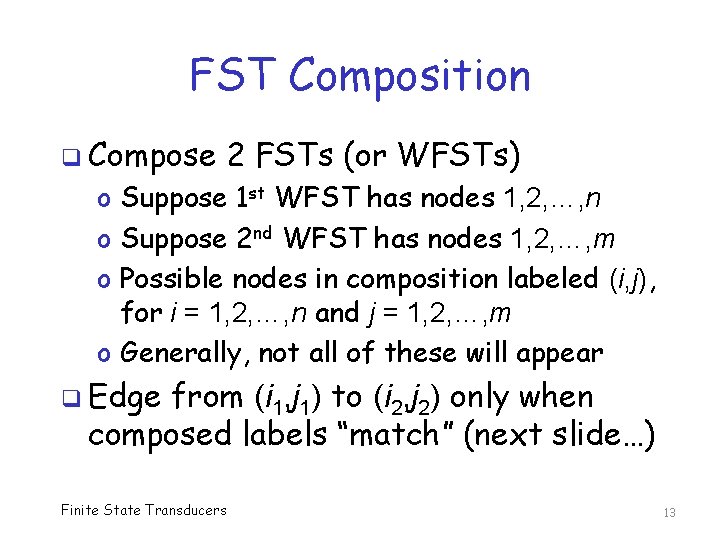

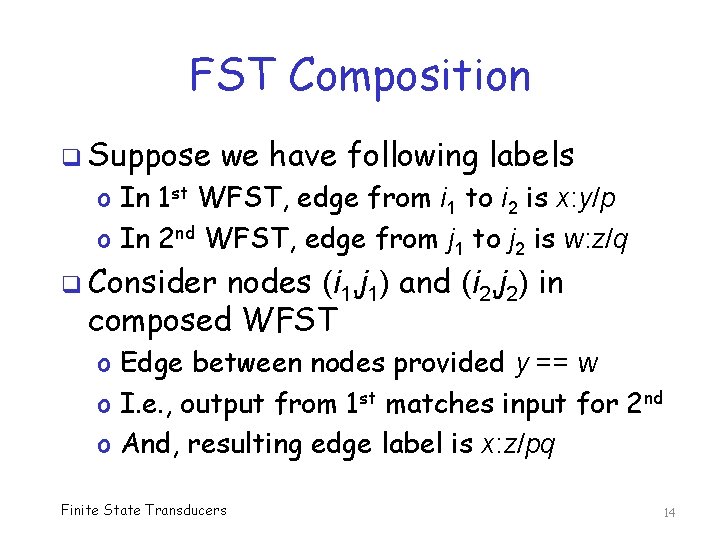

FST Composition

- Compose 2 FSTs (or WFSTs)
  - Suppose the 1st WFST has nodes 1, 2, …, n
  - Suppose the 2nd WFST has nodes 1, 2, …, m
  - Possible nodes in the composition are labeled (i, j), for i = 1, 2, …, n and j = 1, 2, …, m
  - Generally, not all of these will appear
- Edge from (i1, j1) to (i2, j2) only when the composed labels “match” (next slide…)

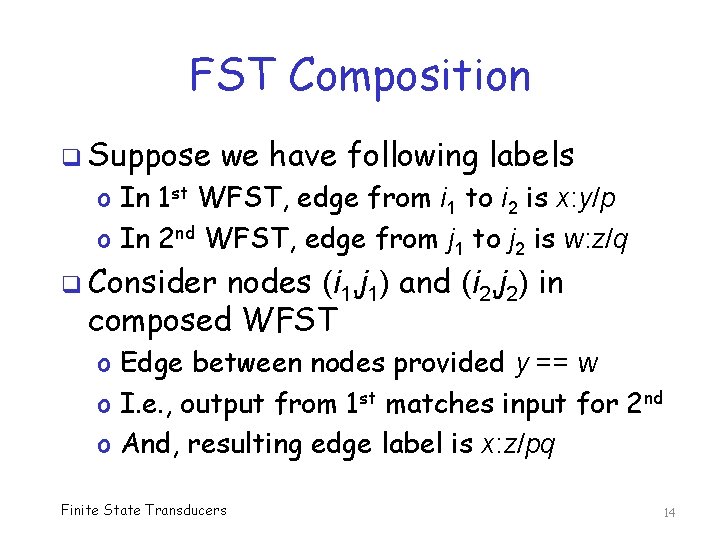

FST Composition

- Suppose we have the following labels
  - In the 1st WFST, the edge from i1 to i2 is x : y / p
  - In the 2nd WFST, the edge from j1 to j2 is w : z / q
- Consider nodes (i1, j1) and (i2, j2) in the composed WFST
  - There is an edge between these nodes provided y == w
  - I.e., the output from the 1st matches the input for the 2nd
  - And the resulting edge label is x : z / pq, where the weight pq is the product of p and q
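The matching rule translates almost directly into code. The sketch below is a naive version under stated assumptions: the two small machines `T1` and `T2` are hypothetical (they are not the WFSTs from the slides), epsilon labels are not handled, and `compose` and `prune_unreachable` are illustrative names.

```python
from itertools import product


def compose(t1, t2):
    """Compose two WFSTs given as lists of (src, inp, out, weight, dst)."""
    composed = []
    for (i1, x, y, p, i2), (j1, w, z, q, j2) in product(t1, t2):
        if y == w:  # output of the 1st must match input of the 2nd
            composed.append(((i1, j1), x, z, p * q, (i2, j2)))
    return composed


def prune_unreachable(edges, start):
    """Keep only edges whose source node is reachable from the start node."""
    seen, frontier = {start}, [start]
    while frontier:
        node = frontier.pop()
        for src, _, _, _, dst in edges:
            if src == node and dst not in seen:
                seen.add(dst)
                frontier.append(dst)
    return [e for e in edges if e[0] in seen]


# Hypothetical two-edge machines, made up for this example.
T1 = [(1, "a", "b", 0.5, 2), (2, "b", "b", 0.4, 2)]
T2 = [(1, "b", "a", 0.2, 2), (2, "b", "b", 0.1, 1)]

for edge in prune_unreachable(compose(T1, T2), (1, 1)):
    print(edge)
```

The pruning step reflects the remark that not all (i, j) pairs appear: here the node (1, 2) is generated by the pairwise match but cannot be reached from the start node (1, 1), so its edge is dropped.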

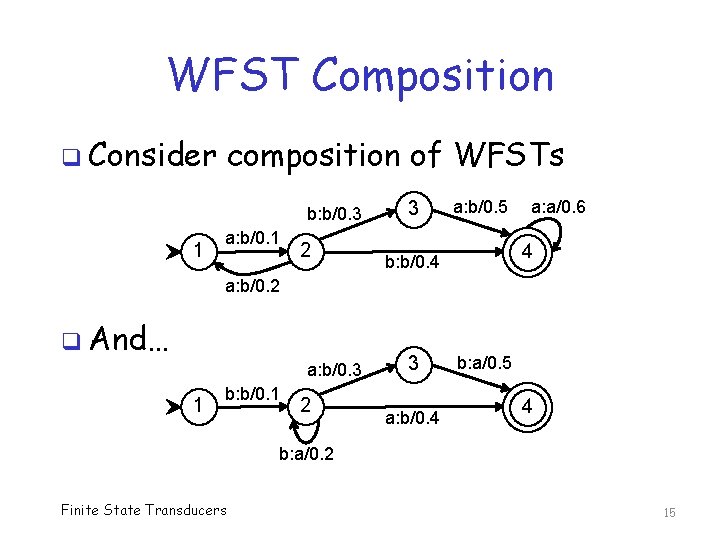

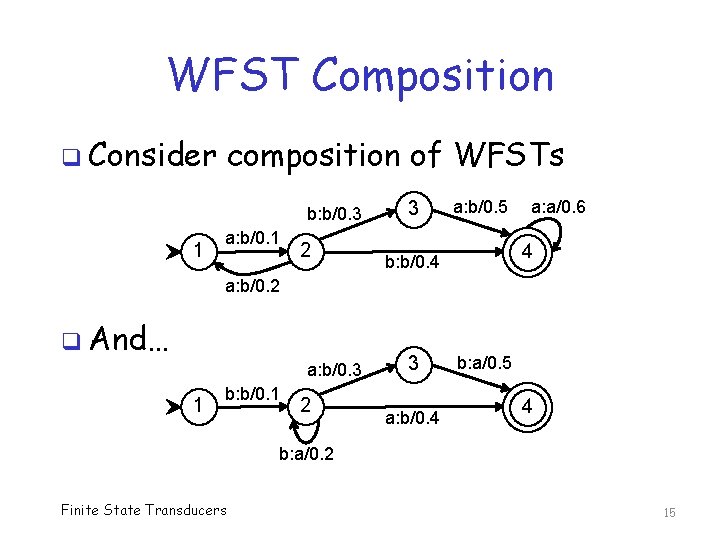

WFST Composition

- Consider the composition of two WFSTs, each with nodes 1, 2, 3, 4 (the graphs appear in the figures)
  - Edge labels of the 1st WFST: a:b/0.1, a:b/0.5, b:b/0.3, a:a/0.6, b:b/0.4, a:b/0.2
  - Edge labels of the 2nd WFST: a:b/0.3, b:b/0.1, a:b/0.4, b:a/0.5, b:a/0.2

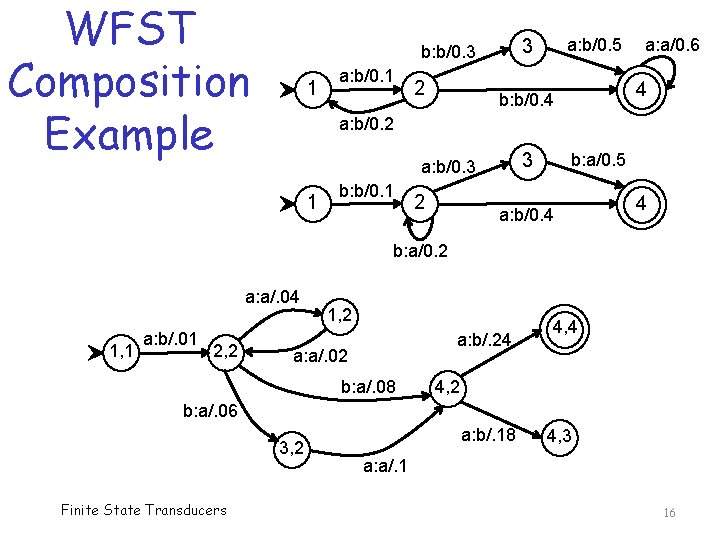

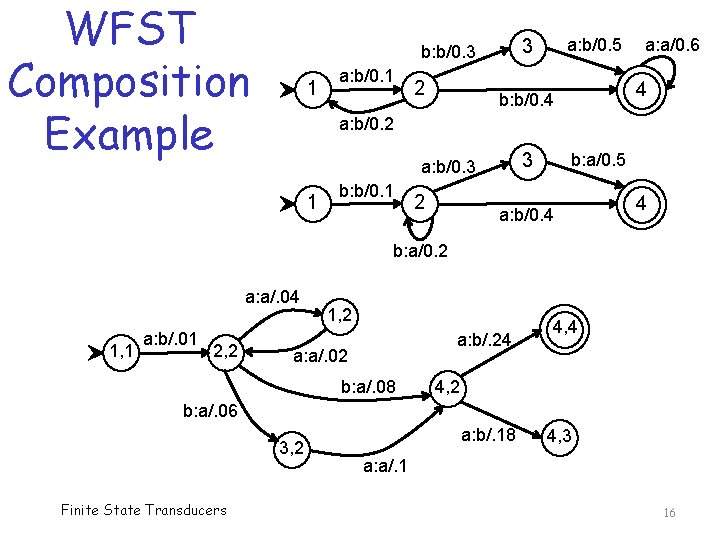

WFST Composition Example

- (Figure: the two WFSTs from the previous slide and their composition)
- Nodes of the composition: (1,1), (1,2), (2,2), (3,2), (4,2), (4,3), (4,4)
- Edge labels of the composition: a:a/.04, a:b/.01, a:b/.24, a:a/.02, b:a/.08, b:a/.06, a:b/.18, a:a/.1

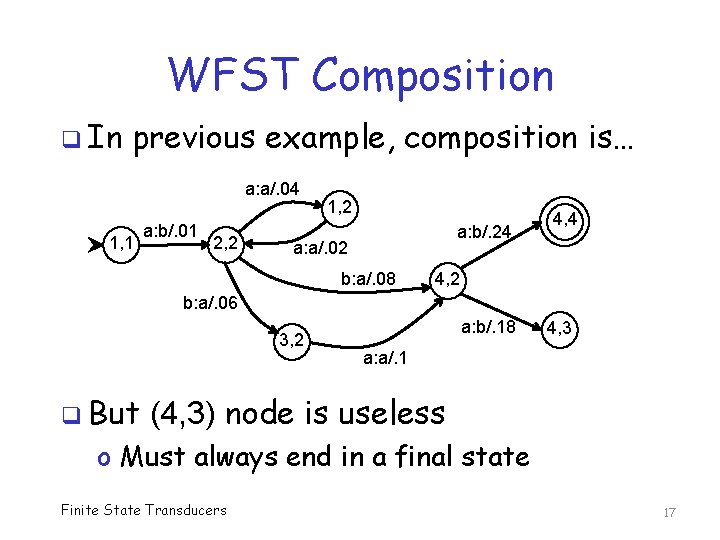

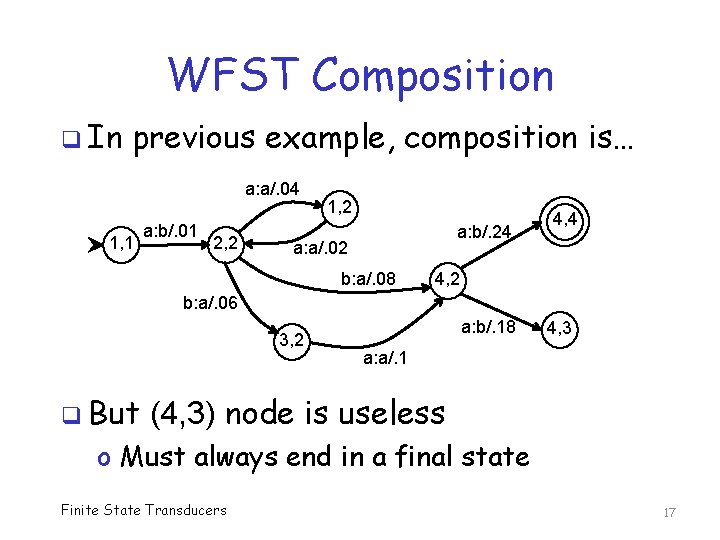

WFST Composition

- In the previous example, the composition has nodes (1,1), (1,2), (2,2), (3,2), (4,2), (4,3), (4,4) (see figure)
- But the (4,3) node is useless
  - Must always end in a final state
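Removing such useless nodes amounts to keeping only nodes from which some final state can be reached. The sketch below shows one way to do that. The three-edge graph is hypothetical: it borrows node names and weights from the slide, but the connections between nodes and the choice of (4, 4) as the final state are assumptions, and `trim` is an illustrative name.

```python
def trim(edges, finals):
    """Drop edges through nodes that cannot reach any final state."""
    useful = set(finals)
    changed = True
    while changed:  # grow the useful set backwards from the final states
        changed = False
        for src, _, _, _, dst in edges:
            if dst in useful and src not in useful:
                useful.add(src)
                changed = True
    return [e for e in edges if e[0] in useful and e[4] in useful]


# Hypothetical topology; (4, 3) is a dead end that never reaches (4, 4).
EDGES = [
    ((1, 1), "a", "b", 0.01, (2, 2)),
    ((2, 2), "a", "a", 0.04, (4, 4)),
    ((2, 2), "a", "b", 0.18, (4, 3)),
]

for edge in trim(EDGES, finals={(4, 4)}):
    print(edge)
```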

FST Approximation of HMM

- Why would we want to approximate an HMM by an FST?
  - Faster scoring using FST
  - Easier to correct misclassification in FST
  - Possible to compose FSTs
  - Most important, it’s really cool and fun…
- Down side?
  - FST may be less accurate than the HMM

FST Approximation of HMM

- How to approximate an HMM by an FST?
- We consider 2 methods, known as
  - n-type approximation
  - s-type approximation
- These usually focus on “problem 2”
  - That is, uncovering the hidden states
  - This is the usual concern in NLP, such as “part of speech” tagging

n-type Approximation

- Let V be the set of distinct observations in the HMM
  - Let λ = (A, B, π) be a trained HMM
  - Recall, A is N x N, B is N x M, π is 1 x N
- Let (input : output / weight) = (Vi : Sj / p)
  - Where i ∈ {1, 2, …, M} and j ∈ {1, 2, …, N}
  - And Sj are the hidden states (rows of B)
  - And the weight is the max probability (from λ)
- Examples later…

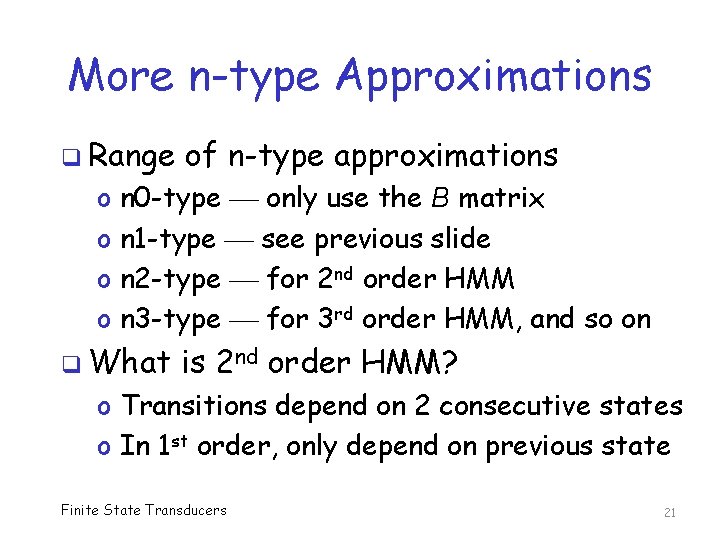

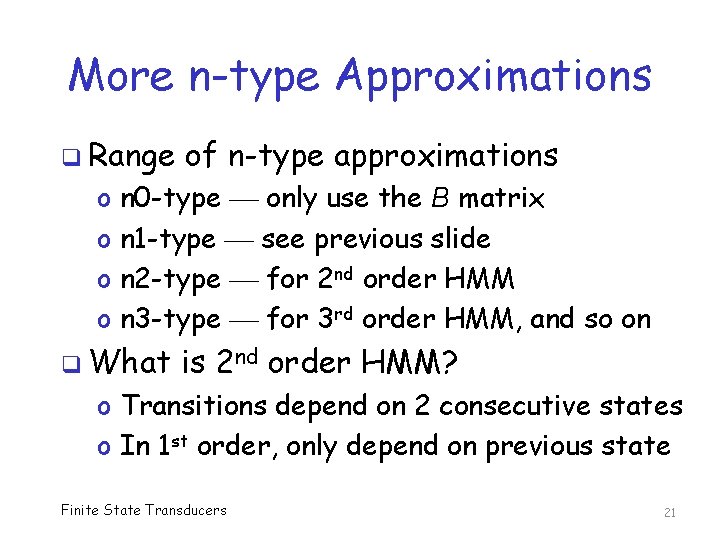

More n-type Approximations

- Range of n-type approximations
  - n0-type: only uses the B matrix
  - n1-type: see previous slide
  - n2-type: for 2nd order HMM
  - n3-type: for 3rd order HMM, and so on
- What is a 2nd order HMM?
  - Transitions depend on 2 consecutive states
  - In 1st order, transitions only depend on the previous state

s-type Approximation

- “Sentence type” approximation
- Use sequences and/or natural breaks
  - In n-type, max probability over one transition using the A and B matrices
  - In s-type, all sequences up to some length
- Ideally, break at boundaries of some sort
  - In NLP, a sentence is such a boundary
  - For malware, not so clear where to break
  - So in malware, maybe just use a fixed length

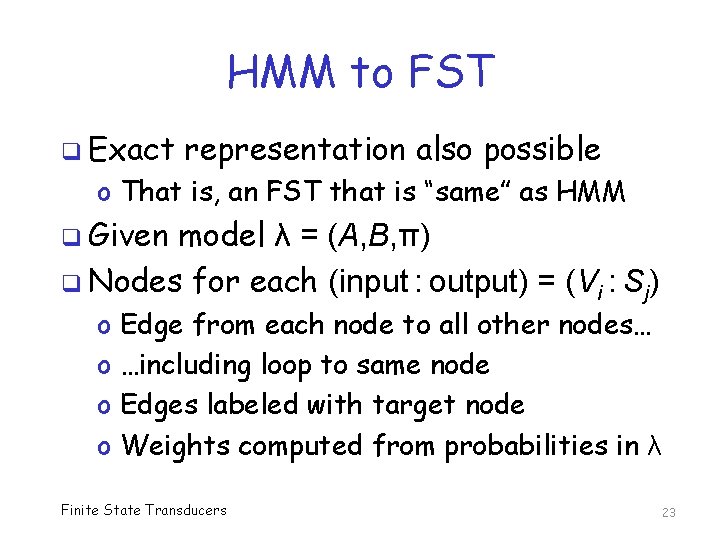

HMM to FST

- Exact representation also possible
  - That is, an FST that is the “same” as the HMM
- Given model λ = (A, B, π)
- Nodes for each (input : output) = (Vi : Sj)
  - Edge from each node to all other nodes…
  - …including a loop to the same node
  - Edges labeled with the target node
  - Weights computed from probabilities in λ

HMM to FST

- Note that some probabilities may be 0
  - Remove edges with 0 probabilities
- A lot of probabilities may be small
  - So, maybe approximate by removing edges with “small” probabilities?
  - Could be an interesting experiment…
  - A reasonable way to approximate an HMM that does not seem to have been studied
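The edge-removal idea can be sketched as a simple threshold filter. The edge list and its weights below are made up for illustration, and `prune` is a hypothetical helper, not part of any library.

```python
def prune(edges, threshold):
    """Keep only edges whose weight exceeds the threshold."""
    return [e for e in edges if e[3] > threshold]


# Hypothetical edges (src, inp, out, weight, dst); the values are made up.
EDGES = [
    (1, "H", "F", 0.45, 1),
    (1, "H", "U", 0.07, 2),
    (2, "T", "F", 0.40, 1),
    (2, "T", "U", 0.00, 2),
]

exact = prune(EDGES, 0.0)   # drops only the zero-probability edge
approx = prune(EDGES, 0.1)  # also drops the "small" 0.07 edge
print(len(exact), len(approx))
```

After pruning, the remaining weights leaving a node no longer sum to what they did before. Whether to renormalize them is a design choice the slides leave open.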

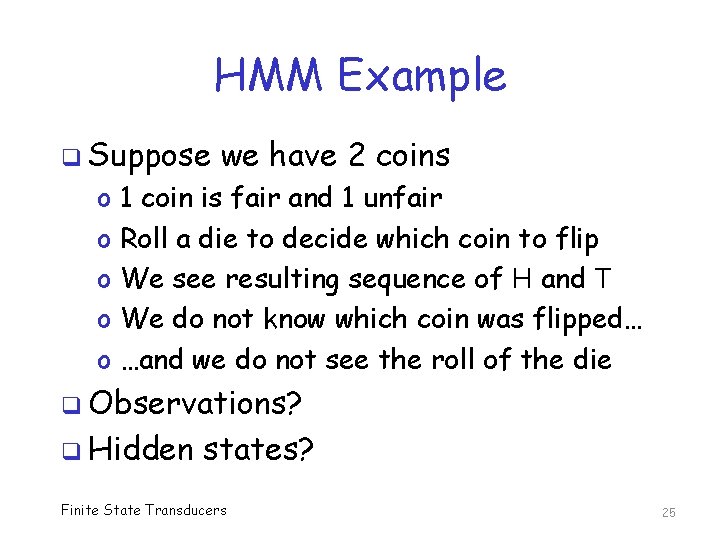

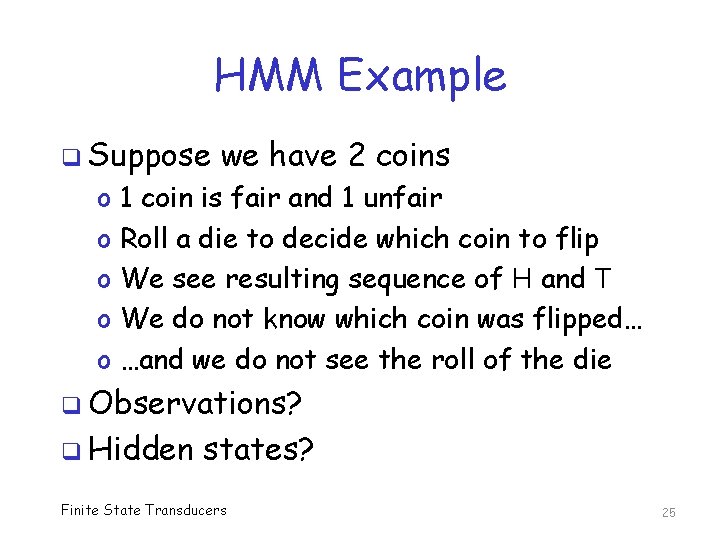

HMM Example

- Suppose we have 2 coins
  - 1 coin is fair and 1 is unfair
  - Roll a die to decide which coin to flip
  - We see the resulting sequence of H and T
  - We do not know which coin was flipped…
  - …and we do not see the roll of the die
- Observations?
- Hidden states?

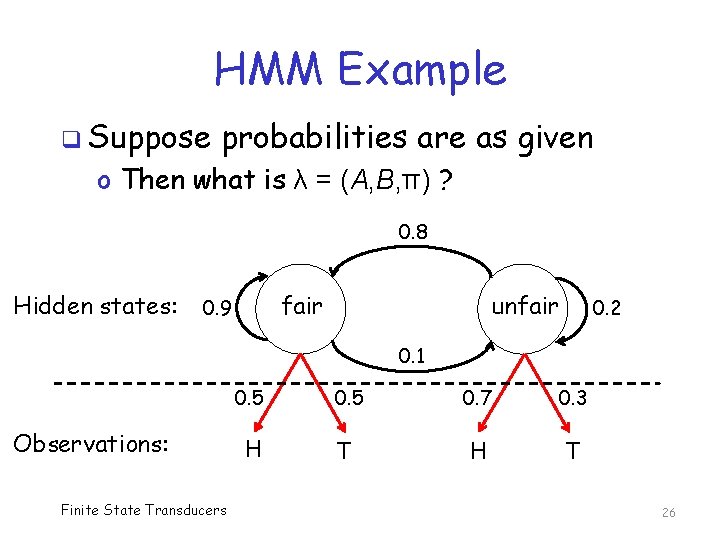

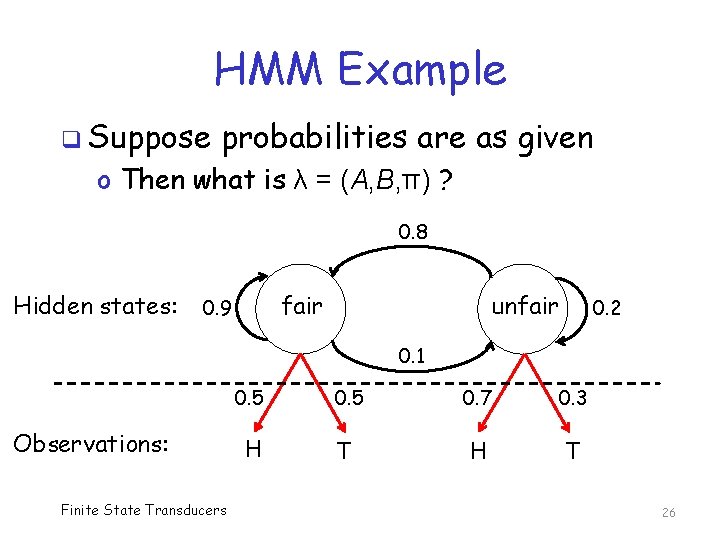

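HMM Example

- Suppose probabilities are as given
  - Then what is λ = (A, B, π)?
- (Figure: state diagram)
  - Hidden states: fair stays fair with probability 0.9 and moves to unfair with 0.1; unfair moves to fair with 0.8 and stays unfair with 0.2
  - Observations: the fair coin gives H or T with probability 0.5 each; the unfair coin gives H with 0.7 and T with 0.3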

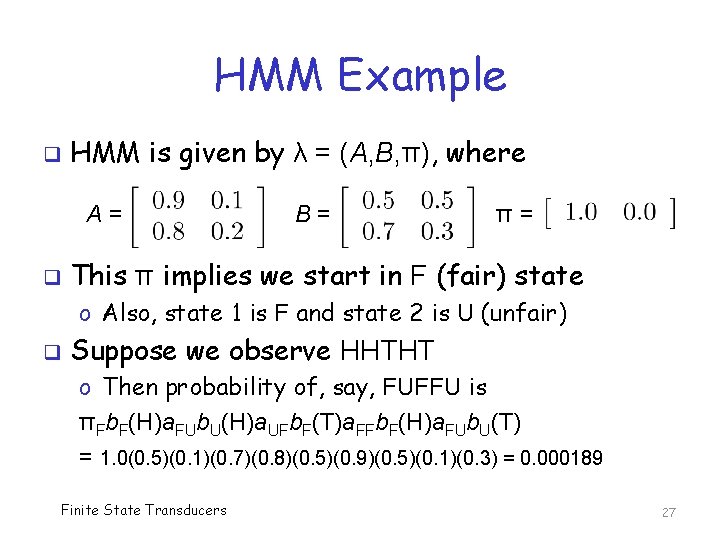

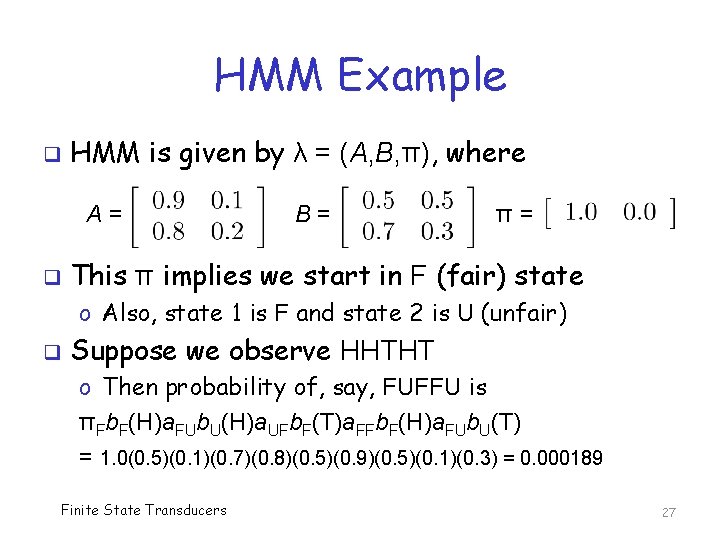

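HMM Example

- HMM is given by λ = (A, B, π), where, with rows and columns of A ordered (F, U) and columns of B ordered (H, T),

  $$A = \begin{pmatrix} 0.9 & 0.1 \\ 0.8 & 0.2 \end{pmatrix}, \quad B = \begin{pmatrix} 0.5 & 0.5 \\ 0.7 & 0.3 \end{pmatrix}, \quad \pi = \begin{pmatrix} 1.0 & 0.0 \end{pmatrix}$$

  - This π implies we start in the F (fair) state
  - Also, state 1 is F and state 2 is U (unfair)
- Suppose we observe HHTHT
  - Then the probability of, say, FUFFU is

  $$\pi_F \, b_F(H) \, a_{FU} \, b_U(H) \, a_{UF} \, b_F(T) \, a_{FF} \, b_F(H) \, a_{FU} \, b_U(T) = 1.0(0.5)(0.1)(0.7)(0.8)(0.5)(0.9)(0.5)(0.1)(0.3) = 0.000189$$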
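The product for FUFFU can be checked with a short Python sketch. The dictionaries `A`, `B`, and `PI` hold the values that appear in the worked product (with a_UU = 0.2 so that the U row of A sums to 1), and `joint` is a hypothetical helper name.

```python
# Model values taken from the worked product on the slide.
A = {"F": {"F": 0.9, "U": 0.1}, "U": {"F": 0.8, "U": 0.2}}
B = {"F": {"H": 0.5, "T": 0.5}, "U": {"H": 0.7, "T": 0.3}}
PI = {"F": 1.0, "U": 0.0}


def joint(states, obs):
    """Probability of a given hidden state sequence together with the observations."""
    p = PI[states[0]] * B[states[0]][obs[0]]
    for prev, cur, o in zip(states, states[1:], obs[1:]):
        p *= A[prev][cur] * B[cur][o]
    return p


print(round(joint("FUFFU", "HHTHT"), 6))  # 0.000189
```

The same function reproduces any row of the score table, for example `joint("FFFFF", "HHTHT")` for the most likely sequence.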

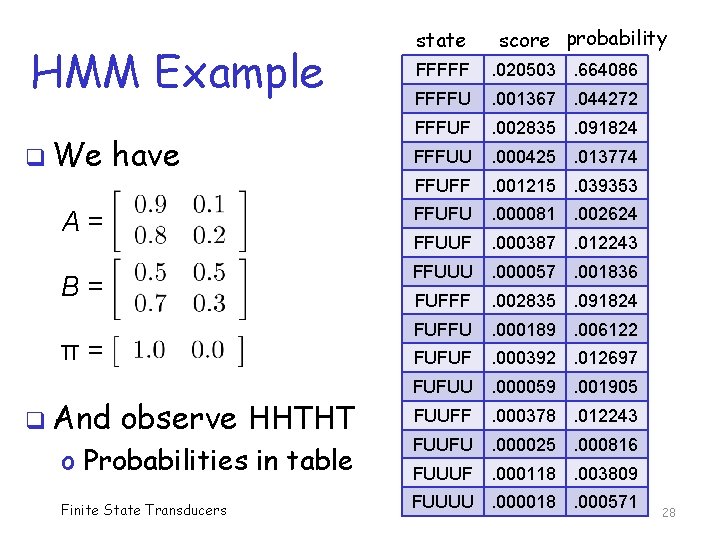

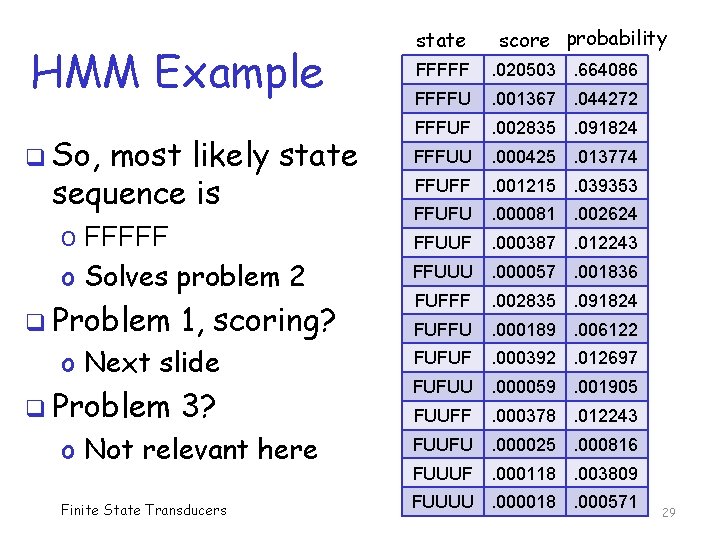

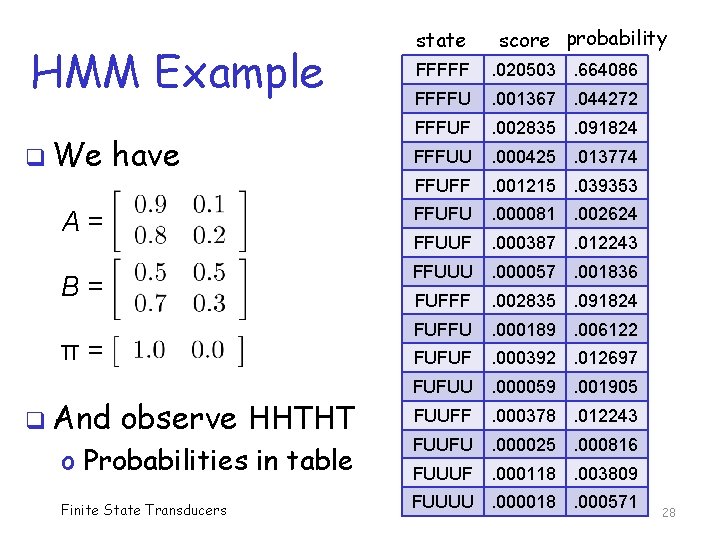

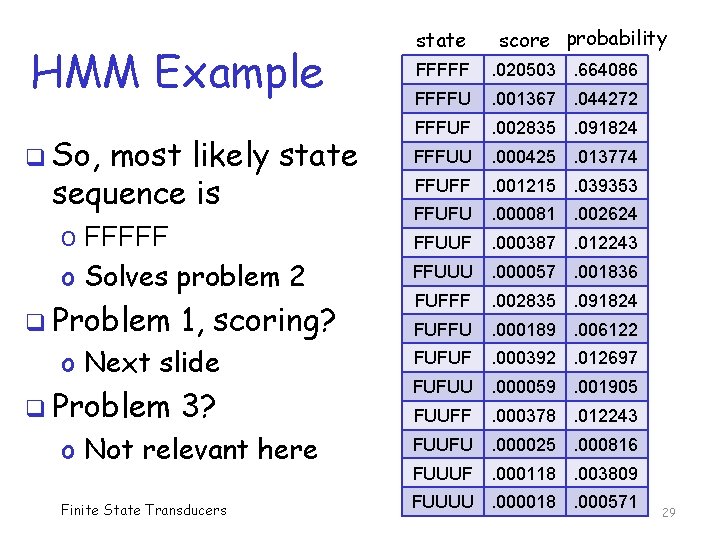

HMM Example

- We have A, B, and π as on the previous slide
- And observe HHTHT
  - Probabilities in table

| state | score | probability |
|-------|---------|---------|
| FFFFF | .020503 | .664086 |
| FFFFU | .001367 | .044272 |
| FFFUF | .002835 | .091824 |
| FFFUU | .000425 | .013774 |
| FFUFF | .001215 | .039353 |
| FFUFU | .000081 | .002624 |
| FFUUF | .000378 | .012243 |
| FFUUU | .000057 | .001836 |
| FUFFF | .002835 | .091824 |
| FUFFU | .000189 | .006122 |
| FUFUF | .000392 | .012697 |
| FUFUU | .000059 | .001905 |
| FUUFF | .000378 | .012243 |
| FUUFU | .000025 | .000816 |
| FUUUF | .000118 | .003809 |
| FUUUU | .000018 | .000571 |

HMM Example

- So, the most likely state sequence is
  - FFFFF
  - Solves problem 2
- Problem 1, scoring?
  - Next slide
- Problem 3?
  - Not relevant here

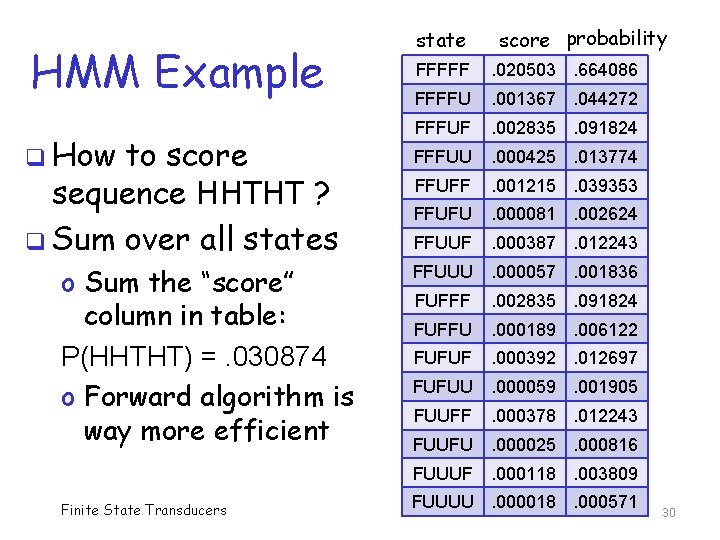

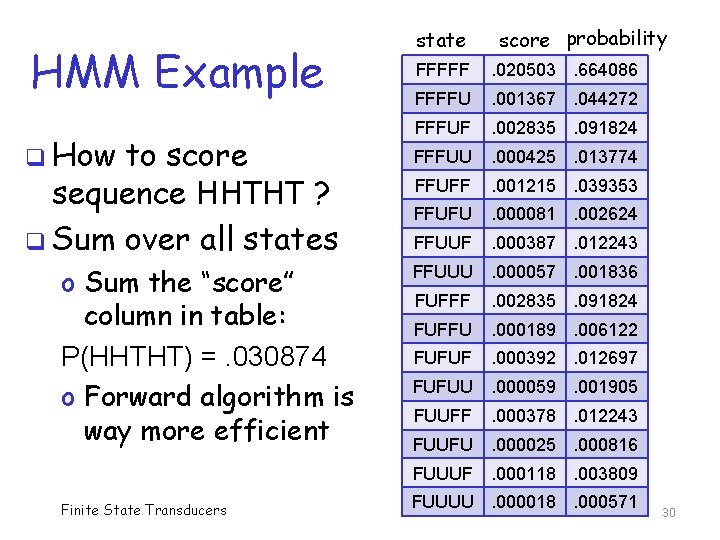

HMM Example

- How to score the sequence HHTHT?
- Sum over all state sequences
  - Sum the “score” column in the table: P(HHTHT) = .030874
  - Forward algorithm is way more efficient
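Both ways of scoring can be compared in a short sketch: a brute-force sum over all state sequences and the forward algorithm. The model values are those used in the worked FUFFU product, and the function names are made up for this example.

```python
from itertools import product

A = {"F": {"F": 0.9, "U": 0.1}, "U": {"F": 0.8, "U": 0.2}}
B = {"F": {"H": 0.5, "T": 0.5}, "U": {"H": 0.7, "T": 0.3}}
PI = {"F": 1.0, "U": 0.0}


def joint(states, obs):
    """Score of one hidden state sequence for the given observations."""
    p = PI[states[0]] * B[states[0]][obs[0]]
    for prev, cur, o in zip(states, states[1:], obs[1:]):
        p *= A[prev][cur] * B[cur][o]
    return p


def brute_force(obs):
    """Sum the score over every state sequence (exponential in len(obs))."""
    return sum(joint(s, obs) for s in product("FU", repeat=len(obs)))


def forward(obs):
    """Forward algorithm: same sum, with work linear in len(obs)."""
    alpha = {s: PI[s] * B[s][obs[0]] for s in PI}
    for o in obs[1:]:
        alpha = {j: sum(alpha[i] * A[i][j] for i in alpha) * B[j][o] for j in PI}
    return sum(alpha.values())


print(round(brute_force("HHTHT"), 6))  # 0.030874
print(round(forward("HHTHT"), 6))      # 0.030874
```

The brute-force sum visits 2^T state sequences for T observations, while the forward pass does a fixed amount of work per observation, which is why it is preferred for scoring.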

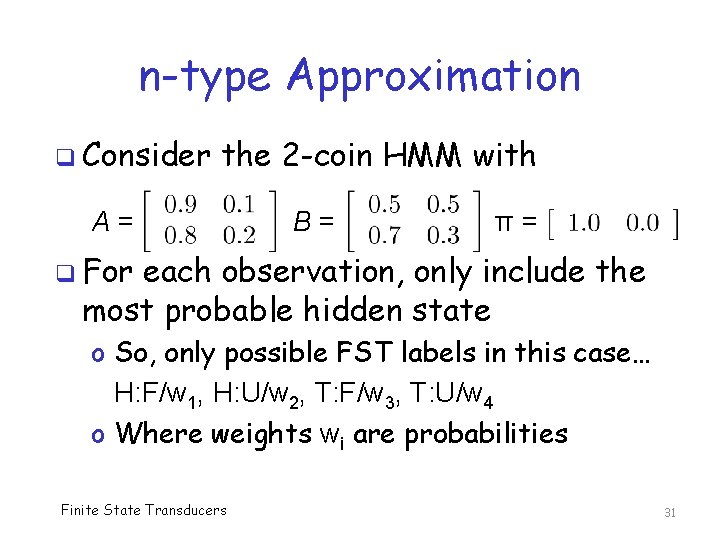

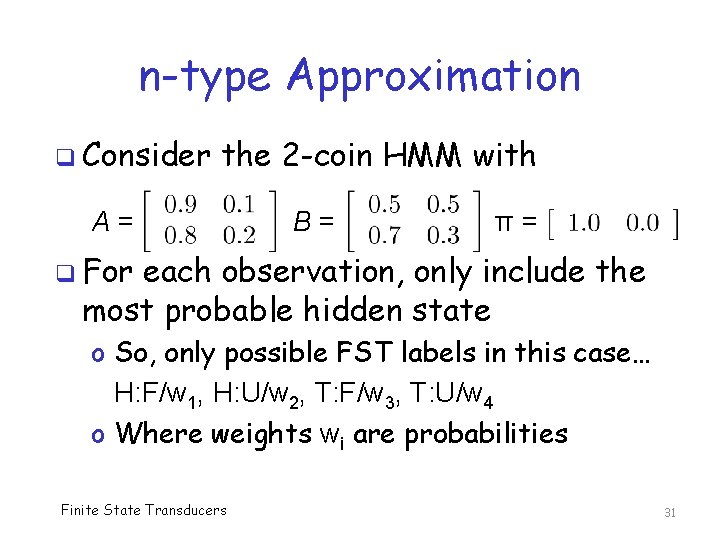

n-type Approximation

- Consider the 2-coin HMM λ = (A, B, π)
- For each observation, only include the most probable hidden state
  - So, the only possible FST labels in this case are H:F/w1, H:U/w2, T:F/w3, T:U/w4
  - Where the weights wi are probabilities

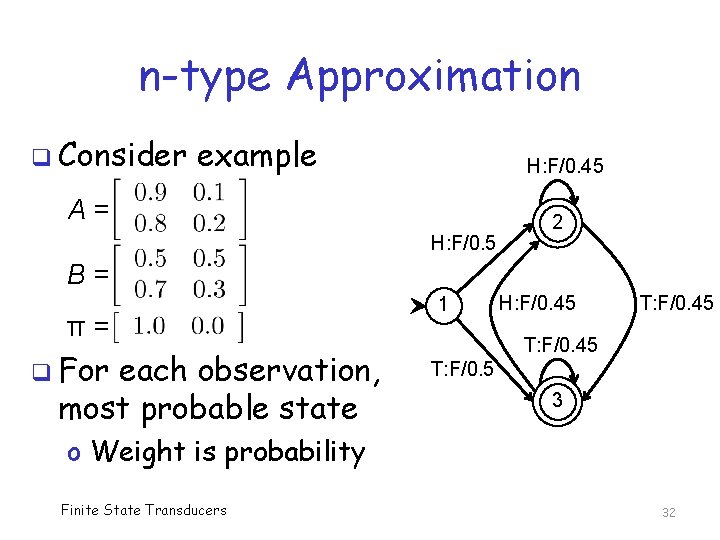

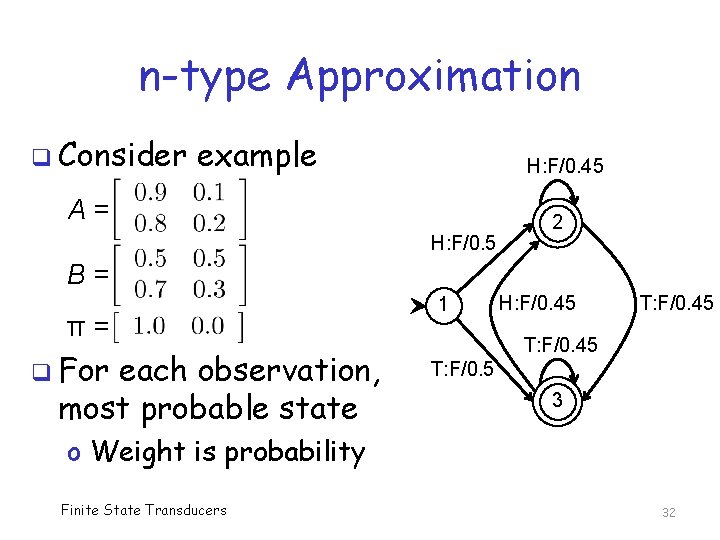

n-type Approximation

- Consider the example λ = (A, B, π) shown on the slide
- For each observation, most probable state
  - Weight is probability
- (Figure: resulting FST with nodes 1, 2, 3; edge labels visible include H:F/0.5, T:F/0.5, and H:F/0.45)
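One reading of the "most probable state for each observation" rule is sketched below: for each previous state and observation, keep only the next state that maximizes the one-step probability. This is an interpretation, not necessarily the exact construction in Kempe's paper. It assumes the 2-coin model with the values from the earlier worked example, and `n1_labels` is a hypothetical name.

```python
A = {"F": {"F": 0.9, "U": 0.1}, "U": {"F": 0.8, "U": 0.2}}
B = {"F": {"H": 0.5, "T": 0.5}, "U": {"H": 0.7, "T": 0.3}}
PI = {"F": 1.0, "U": 0.0}
STATES, OBS = "FU", "HT"


def n1_labels():
    """Map (previous state, observation) to (most probable state, weight)."""
    labels = {}
    for o in OBS:  # edges leaving the initial node use pi instead of A
        j = max(STATES, key=lambda s: PI[s] * B[s][o])
        labels[("start", o)] = (j, PI[j] * B[j][o])
    for i in STATES:
        for o in OBS:
            j = max(STATES, key=lambda s: A[i][s] * B[s][o])
            labels[(i, o)] = (j, A[i][j] * B[j][o])
    return labels


for key, (state, weight) in n1_labels().items():
    print(key, "->", f"{key[1]}:{state}/{weight:.2f}")
```

Under this reading the edges out of the initial node carry weight 0.5 and the edges out of F carry weight 0.45, which agrees with the labels visible in the figure. The unfair state is never chosen, so only F-labeled edges are reachable.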

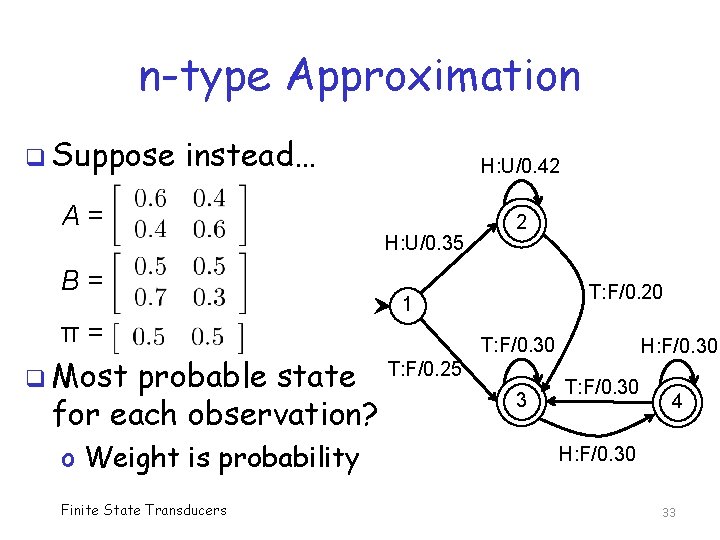

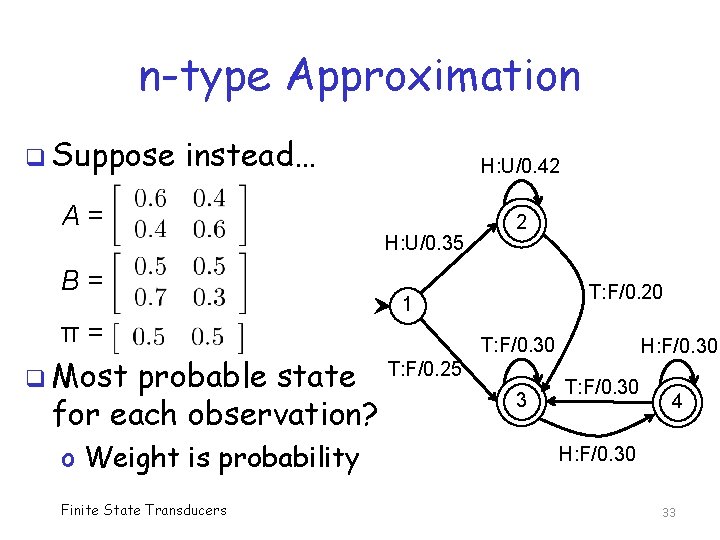

n-type Approximation

- Suppose instead a different λ = (A, B, π), shown on the slide
- Most probable state for each observation?
  - Weight is probability
- (Figure: resulting FST with nodes 1, 2, 3, 4; edge labels visible include H:U/0.42, H:U/0.35, T:F/0.25, T:F/0.20, T:F/0.30, and H:F/0.30)

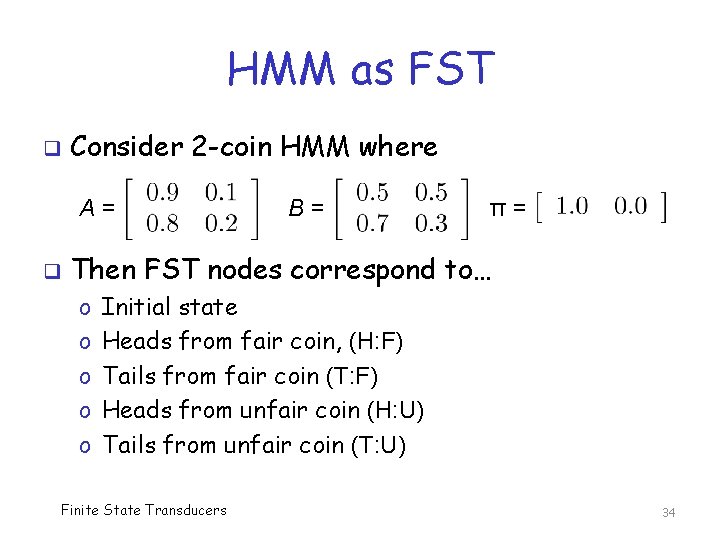

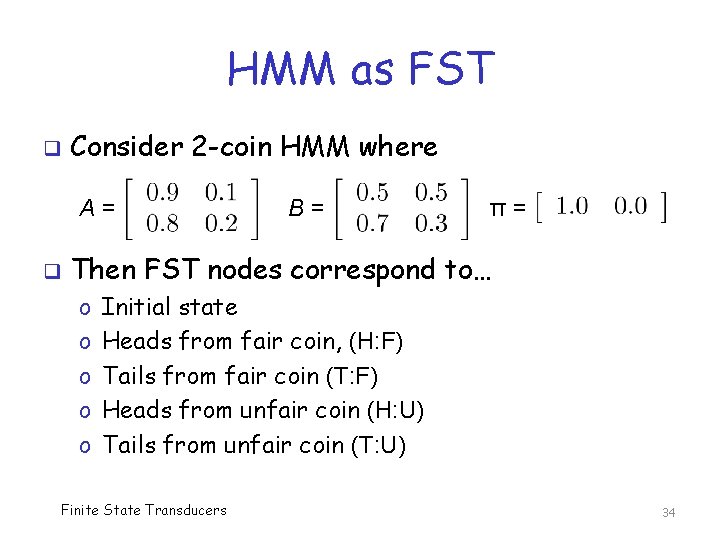

HMM as FST

- Consider the 2-coin HMM λ = (A, B, π)
- Then the FST nodes correspond to…
  - Initial state
  - Heads from fair coin (H:F)
  - Tails from fair coin (T:F)
  - Heads from unfair coin (H:U)
  - Tails from unfair coin (T:U)

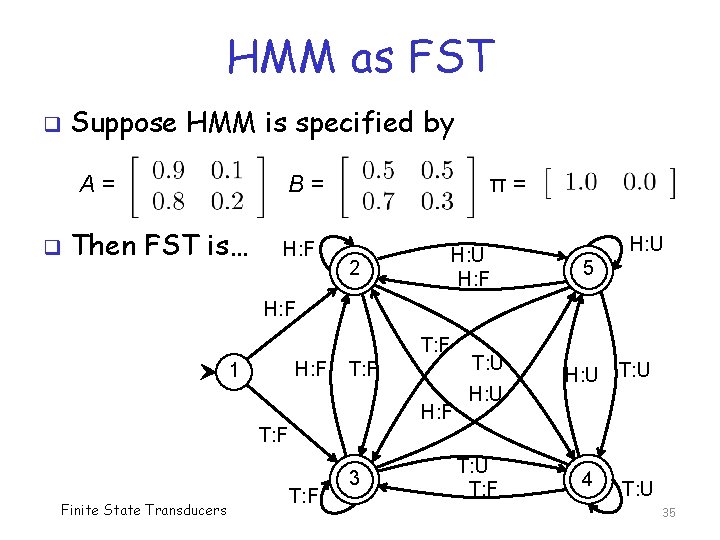

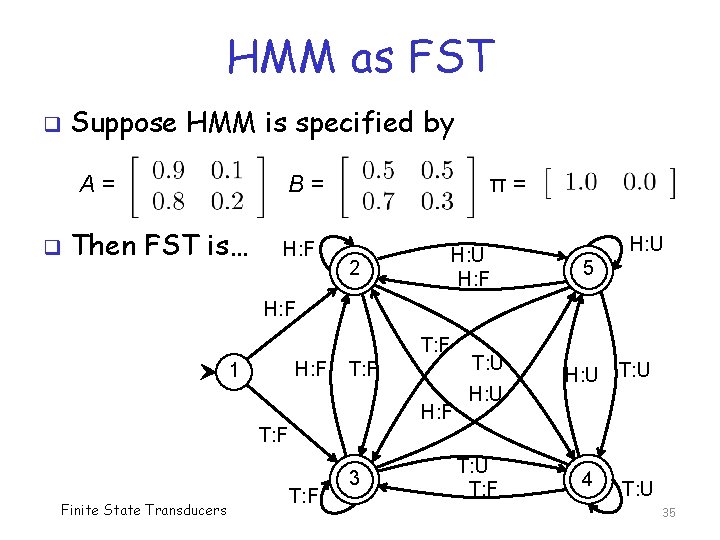

HMM as FST

- Suppose the HMM is specified by λ = (A, B, π)
- Then the FST is… (figure: FST with nodes 1 through 5 and edges labeled H:F, T:F, H:U, T:U)

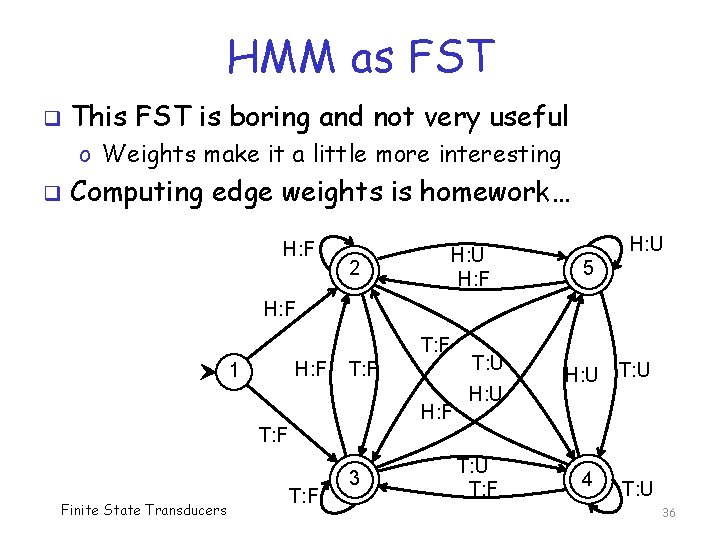

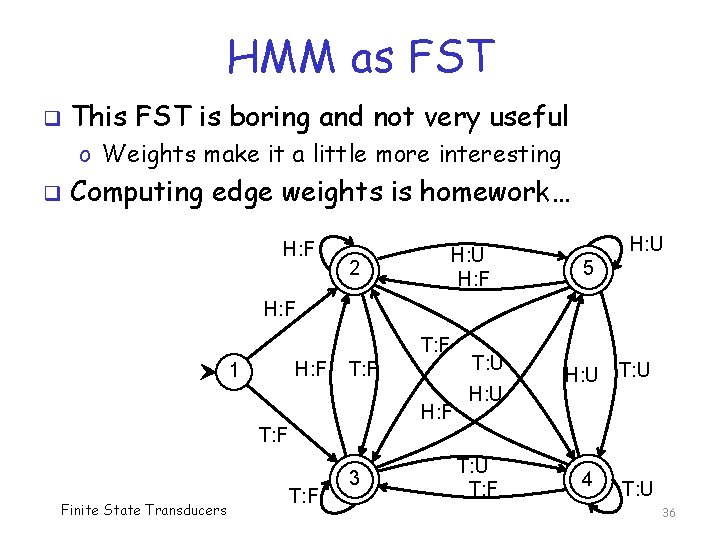

HMM as FST

- This FST is boring and not very useful
  - Weights make it a little more interesting
- Computing edge weights is homework…
- (Figure: FST with nodes 1 through 5 and edges labeled H:F, T:F, H:U, T:U)

Why Consider FSTs?

- FST used as “translating machine”
- Well-defined operations on FSTs
  - Composition is an interesting example
- Can convert HMM to FST
  - Either exact or approximation
  - Approximations may be much simplified, but might not be as accurate
- Advantages of FST over HMM?

Why Consider FSTs?

- Scoring/translating faster with FST
- Able to compose multiple FSTs
  - Where FSTs may be derived from HMMs
- One idea…
  - Multiple HMMs trained on malware (same family and/or different families)
  - Convert each HMM to FST
  - Compose resulting FSTs

Bottom Line

- Can we get best of both worlds?
  - Fast scoring, composition with FSTs
  - Simplify/approximate HMMs via FSTs
  - Tweak FST to improve scoring
  - Efficient training using HMMs
- Other possibilities?
  - Directly compute an FST without HMM
  - Or FST as first pass (e.g., disassembly?)

References

- A. Kempe, Finite state transducers approximating hidden Markov models
- J. R. Novak, Weighted finite state transducers: Important algorithms
- K. Striegnitz, Finite state transducers