Intro to Machine Learning, Mark Stamp

- Slides: 40


What is Machine Learning?

- Definition of machine learning (ML)?
  - Our working definition is…
  - Statistical discrimination, where the “machine” does the hard work of “learning”
  - So, we humans don’t have to think too much
- Often associated with AI
  - But actually much more widely applicable
- Often based on a binary classifier
  - Multiclass problems also of interest
- ML said to generate “data driven” models

What Can ML Do for Me?

- Machine learning is very powerful
  - Practical and useful
- Successfully applied to problems in…
  - Speech recognition and NLP, bioinformatics, stock market analysis, AI (robotics, computer vision, etc.), malware, spam detection, autonomous vehicles, …
  - More and more applications all the time
  - Limited only by your imagination

Black Box Approach

- Machine learning (ML) algorithm often treated as a black box
  - This is one of ML’s main selling points!
- ML black box view often works well
  - Can get good results even if you know nothing about underlying algorithms
- But, black box approach is limiting
  - Especially in new and novel applications

Analogy to a Doctor

- Nurse practitioner (NP) is a nurse with advanced training
  - Physician has much more education
  - Like medical doctor, an NP can diagnose, treat, and manage patients’ problems
- Studies show that NPs can do about 80% to 90% of what physicians do
  - But for the most challenging 10% to 20% of cases, a physician is required

Interesting, But What Does This Have to Do with ML?

- ML version of NP would have knowledge beyond black box, but not too much
- ML version of physician would really understand how/why things work
- Goal is for you to become ML physician
- For doctors, most challenging 10% to 20% of cases are most interesting…
  - …and the most lucrative!

Auto Mechanic Analogy

- The majority of diagnosis work done by auto mechanics is routine
  - Often easy to see what the problem is (not necessarily easy to fix, though!)
- But, there are some difficult cases
  - Where no “cookbook” diagnosis will work
  - Skill needed to analyze such problems
  - Requires deep understanding of inner workings of engine and related systems

ML from 10,000 Feet

- We focus on binary classification
  - Data of “type A” and “not type A”
- First, we train a model on “type A”
- Then given sample of unknown type
  - Score the sample against the model
  - If it scores high, classify it as “type A”
  - Otherwise, classify it as “not type A”
- Key ideas are training and scoring
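
The train/score/classify loop above can be sketched in a few lines of Python. This is only a toy illustration, not a technique from the course: the "model" is just the mean and standard deviation of one made-up feature, and the function names, sample values, and threshold are all assumptions chosen for the example.

```python
from statistics import mean, stdev

def train(samples):
    """Fit a toy model of "type A": mean and standard deviation of one feature."""
    return {"mu": mean(samples), "sigma": stdev(samples)}

def score(model, x):
    """Higher means more "type A"-like: negative distance from the mean, in std devs."""
    return -abs(x - model["mu"]) / model["sigma"]

def classify(model, x, threshold=-2.0):
    """Threshold the score; the threshold value here is an arbitrary assumption."""
    return "type A" if score(model, x) >= threshold else "not type A"

type_a_training = [4.8, 5.1, 5.0, 4.9, 5.2]  # made-up feature values for "type A"
model = train(type_a_training)

print(classify(model, 5.05))  # close to the training data
print(classify(model, 9.0))   # far from the training data
```

Real techniques replace `train` and `score` with something far richer, but the overall shape (train once, then score and threshold unknown samples) stays the same.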

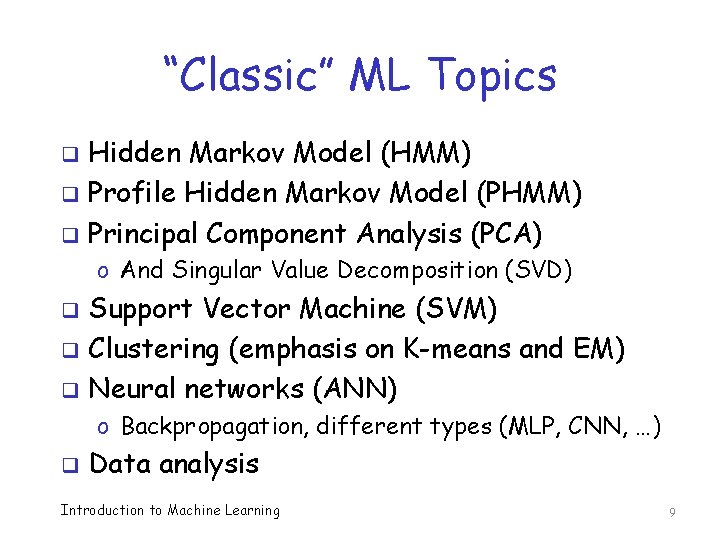

“Classic” ML Topics

- Hidden Markov Model (HMM)
- Profile Hidden Markov Model (PHMM)
- Principal Component Analysis (PCA)
  - And Singular Value Decomposition (SVD)
- Support Vector Machine (SVM)
- Clustering (emphasis on K-means and EM)
- Neural networks (ANN)
  - Backpropagation, different types (MLP, CNN, …)
- Data analysis

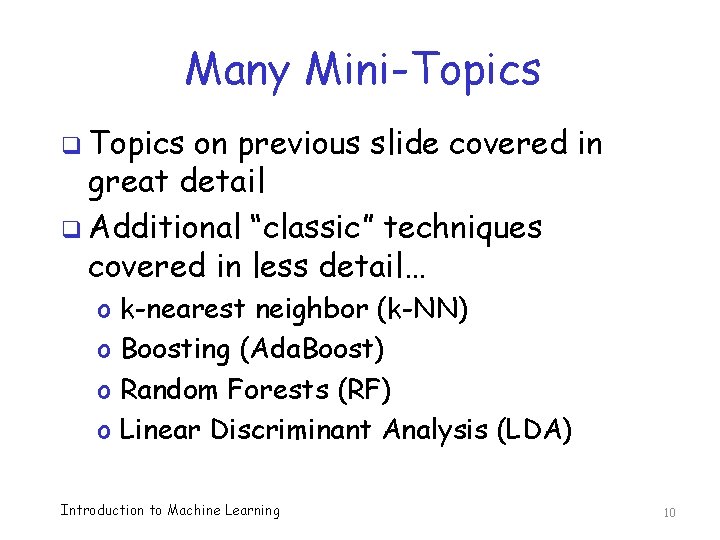

Many Mini-Topics

- Topics on previous slide covered in great detail
- Additional “classic” techniques covered in less detail…
  - k-nearest neighbor (k-NN)
  - Boosting (AdaBoost)
  - Random Forests (RF)
  - Linear Discriminant Analysis (LDA)

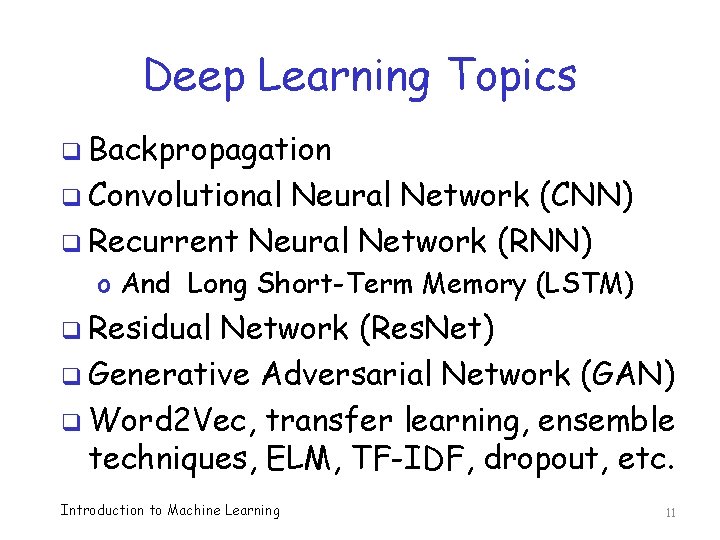

Deep Learning Topics

- Backpropagation
- Convolutional Neural Network (CNN)
- Recurrent Neural Network (RNN)
  - And Long Short-Term Memory (LSTM)
- Residual Network (ResNet)
- Generative Adversarial Network (GAN)
- Word2Vec, transfer learning, ensemble techniques, ELM, TF-IDF, dropout, etc.

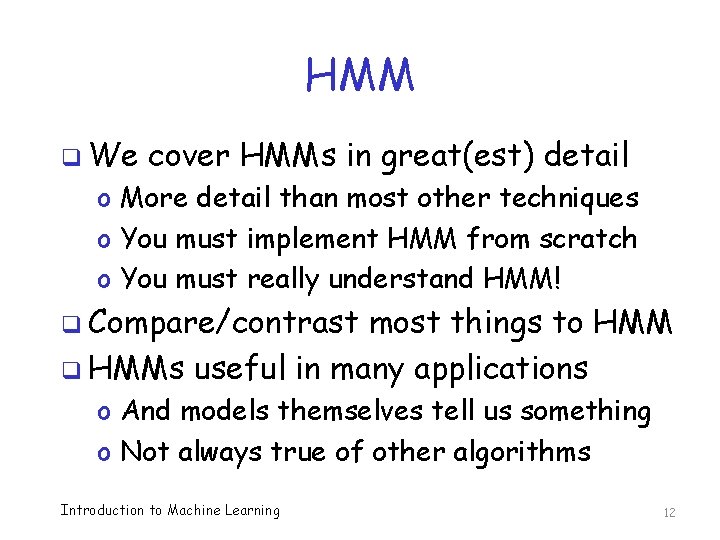

HMM

- We cover HMMs in great(est) detail
  - More detail than most other techniques
  - You must implement HMM from scratch
  - You must really understand HMM!
- Compare/contrast most things to HMM
- HMMs useful in many applications
  - And models themselves tell us something
  - Not always true of other algorithms

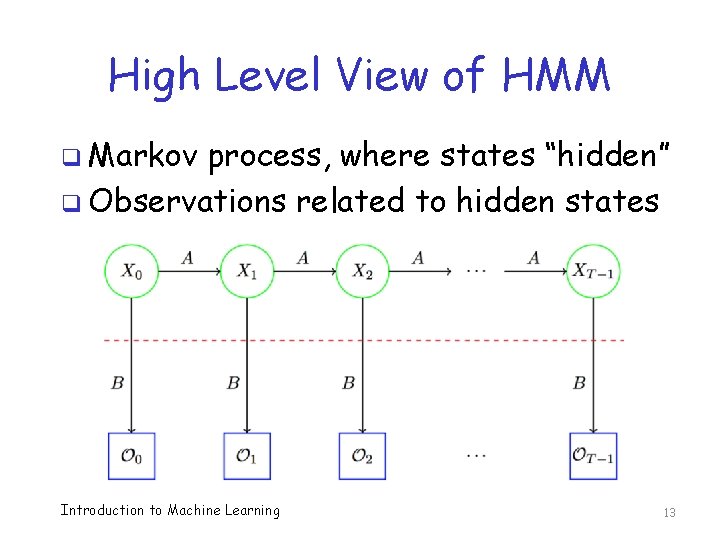

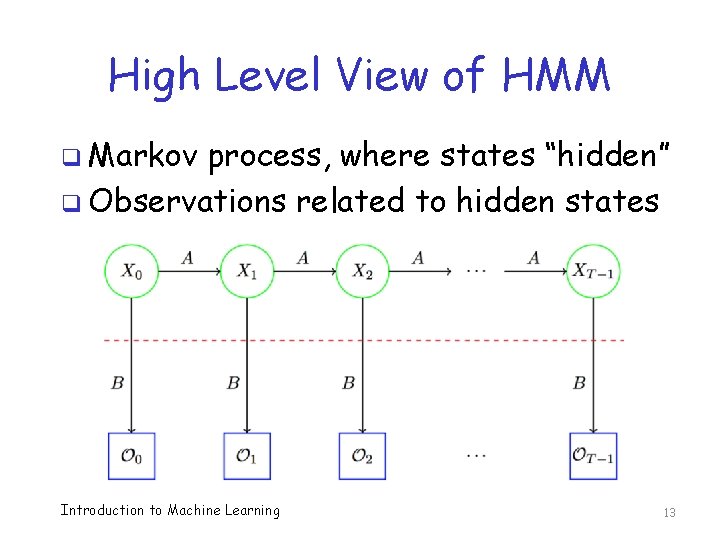

High Level View of HMM

- Markov process, where states “hidden”
- Observations related to hidden states

HMM as Hill Climb

- Hill climb on parameter space
- What is a hill climb?
  - Only go “up”, never “down”
  - In contrast to heuristic search, such as genetic algorithm or simulated annealing
- Advantage(s) of hill climb algorithm?
- Disadvantages/limitations of hill climb
- Alternatives to hill climb?
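
To make "only go up, never down" concrete, here is a generic one-dimensional hill climb in Python. It is a sketch of the general idea only, not the HMM training algorithm; the objective `f`, the step size, and the starting point are assumptions picked for illustration.

```python
def hill_climb(f, x, step=0.1, max_iters=1000):
    """Move to a neighbor only if it improves f; stop when neither neighbor is better."""
    for _ in range(max_iters):
        best = max((x - step, x + step), key=f)
        if f(best) <= f(x):
            return x  # no uphill move left: a (possibly only local) maximum
        x = best
    return x

def f(x):
    """Toy objective with a single peak at x = 2."""
    return -(x - 2.0) ** 2

peak = hill_climb(f, x=0.0)
print(peak)
```

Because the climb never accepts a downhill move, on an objective with several peaks it stops at whichever peak is uphill from the starting point, which is one of the limitations the slide asks about.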

PHMM

- Like HMM with positional information
- Conceptually appealing
  - Details tend to be very problem specific
- Widely used in bioinformatics
  - And other applications where position within sequence is critical information
- Has been used successfully in security research (IDS, malware detection)

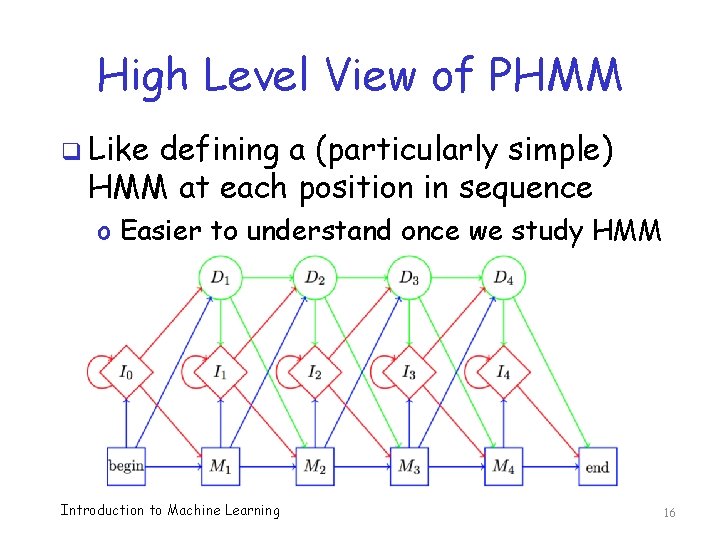

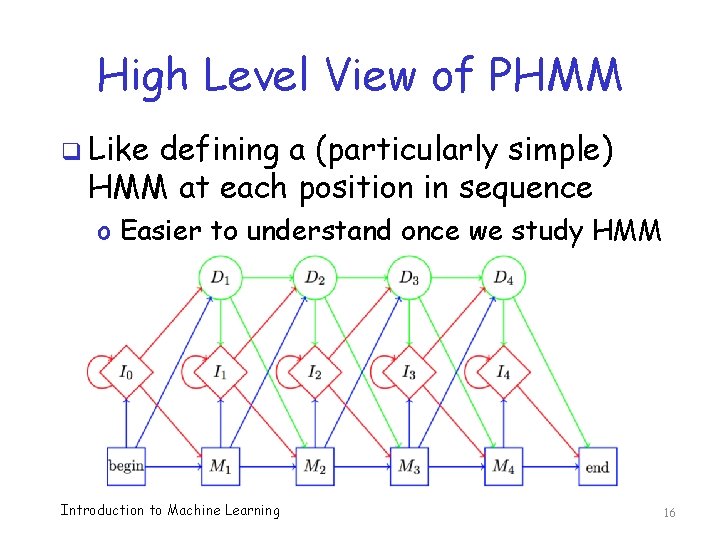

High Level View of PHMM

- Like defining a (particularly simple) HMM at each position in sequence
  - Easier to understand once we study HMM

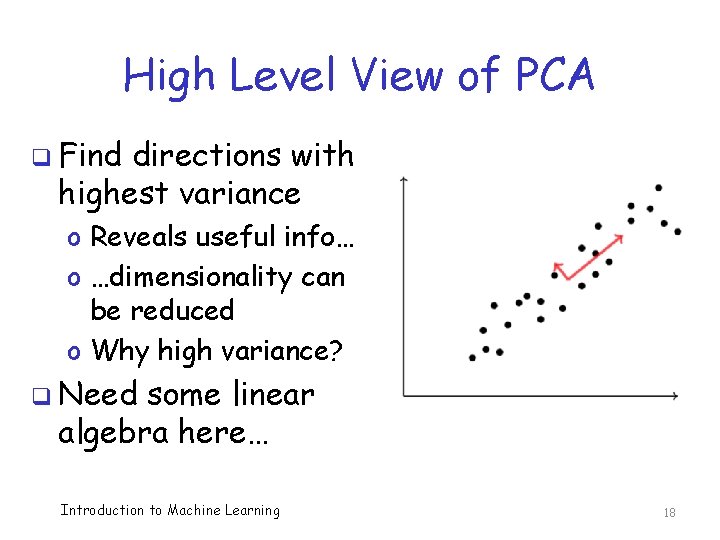

PCA

- PCA serves to reduce dimensionality
- Training is complex (linear algebra)
  - But scoring is fast and efficient
  - So, when the dust settles, PCA is actually easy to use and very efficient
- Singular Value Decomposition (SVD) (almost) synonymous with PCA
  - SVD is one way to train a model in PCA

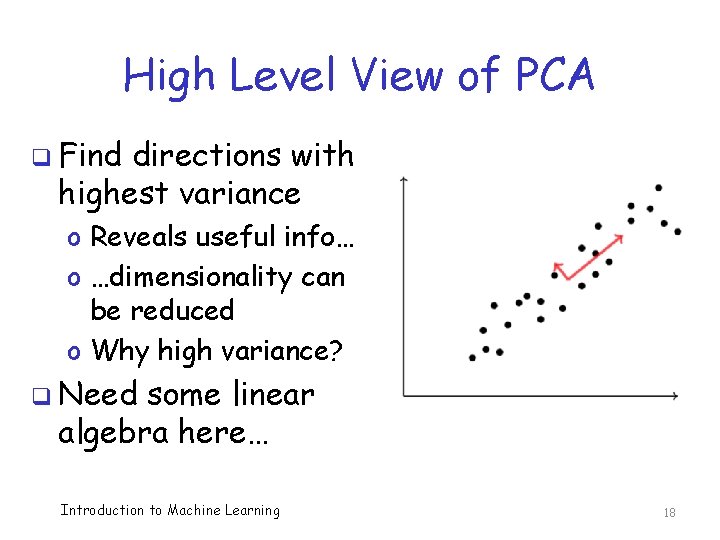

High Level View of PCA

- Find directions with highest variance
  - Reveals useful info…
  - …dimensionality can be reduced
  - Why high variance?
- Need some linear algebra here…
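
As a rough illustration of "find directions with highest variance", the sketch below computes the 2x2 covariance matrix of some made-up 2-D points and uses power iteration to approximate its dominant eigenvector. Power iteration is a stand-in chosen for brevity, not necessarily how the course trains PCA (the slides mention SVD); the data and function name are assumptions.

```python
import math

def top_direction(points, iters=100):
    """Approximate the unit vector along which 2-D points vary the most."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Entries of the 2x2 sample covariance matrix
    cxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    cyy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    cxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    # Power iteration: repeatedly multiply by the covariance matrix and normalize
    v = (1.0, 0.0)
    for _ in range(iters):
        w = (cxx * v[0] + cxy * v[1], cxy * v[0] + cyy * v[1])
        norm = math.hypot(w[0], w[1])
        v = (w[0] / norm, w[1] / norm)
    return v

# Made-up points lying roughly along the line y = x
points = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9), (5.0, 5.1)]
vx, vy = top_direction(points)
print(vx, vy)
```

Projecting each point onto this single direction keeps most of the spread in the data while dropping from two dimensions to one, which is the sense in which dimensionality can be reduced.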

SVM

- SVM has nice geometric interpretation
  - We can draw pretty pictures!
- In SVM, we increase the dimension
  - May be counterintuitive (compare to PCA)
- SVM often used like other ML techniques
  - But also ideal as a “meta-score” to combine other scores/techniques
- Nice combination of theory & practice

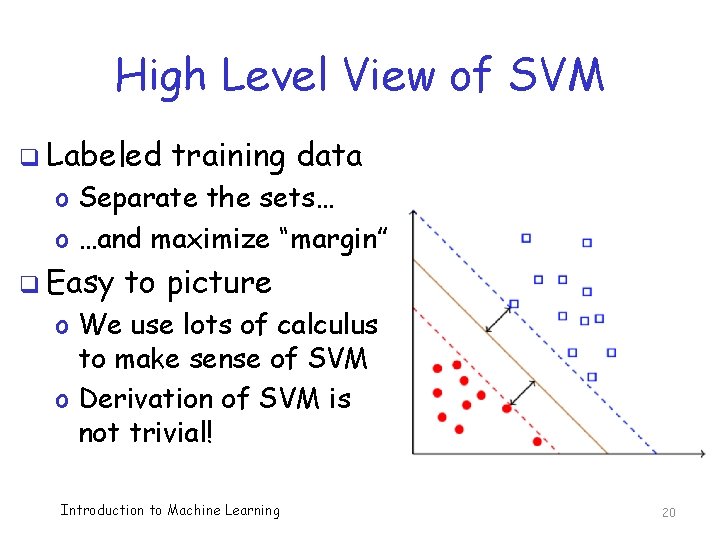

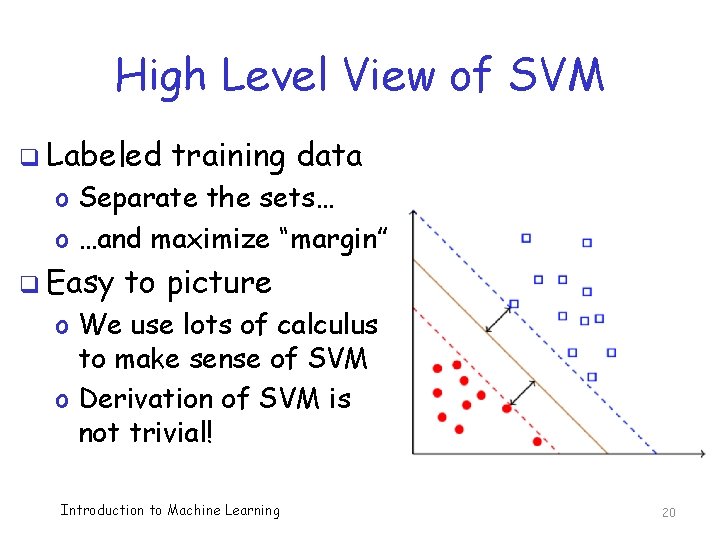

High Level View of SVM

- Labeled training data
  - Separate the sets…
  - …and maximize “margin”
- Easy to picture
  - We use lots of calculus to make sense of SVM
  - Derivation of SVM is not trivial!
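
For reference, "separate the sets and maximize the margin" is commonly written as the following optimization problem for linearly separable data. The notation is an assumption, not taken from the slides: training points $x_i$ with labels $y_i \in \{-1, +1\}$, weight vector $w$, and offset $b$.

$$
\min_{w,\,b} \ \tfrac{1}{2}\,\lVert w \rVert^2
\quad \text{subject to} \quad
y_i\,(w \cdot x_i + b) \ge 1, \qquad i = 1, \dots, n
$$

Under these constraints the margin between the two classes is $2 / \lVert w \rVert$, so minimizing $\lVert w \rVert$ maximizes the margin. This constrained form is where the calculus (Lagrange multipliers) enters.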

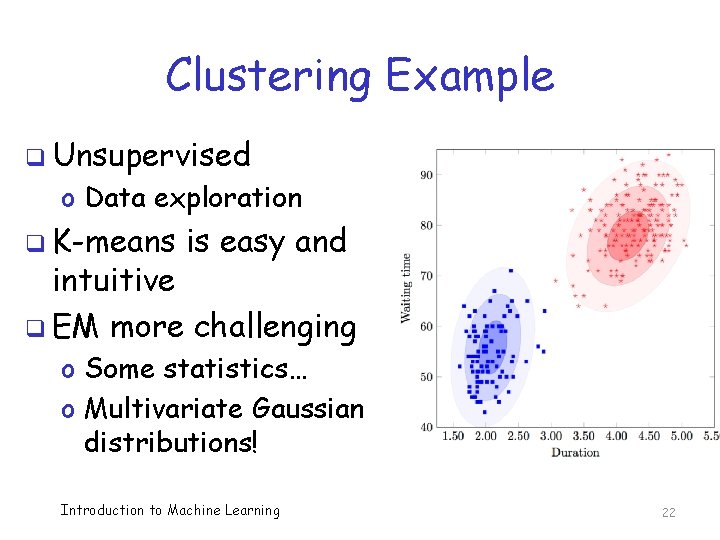

Clustering

- Usually used for “data exploration”
  - I.e., we cluster hoping to learn structure from data that we know little about
  - Observed structure may or may not be meaningful (we can cluster anything)
- In detail, we consider
  - K-means clustering
  - EM (expectation maximization) clustering

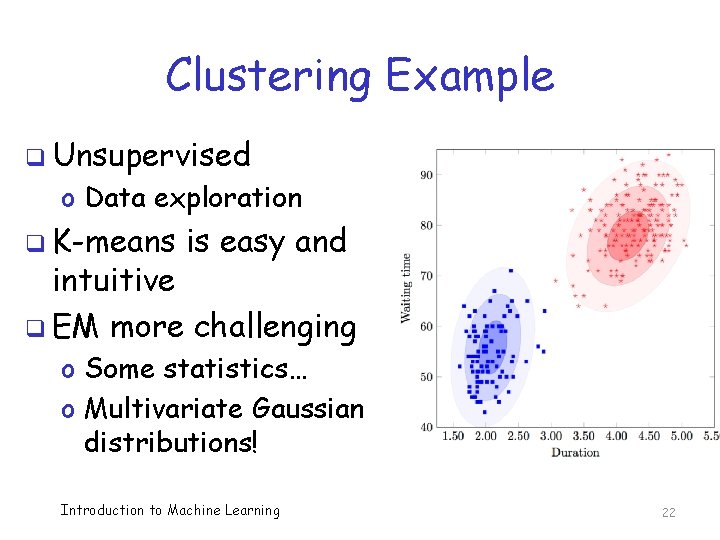

Clustering Example

- Unsupervised
  - Data exploration
- K-means is easy and intuitive
- EM more challenging
  - Some statistics…
  - Multivariate Gaussian distributions!
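
To show why K-means is considered easy and intuitive, here is a minimal one-dimensional sketch: assign each point to its nearest centroid, then move each centroid to the mean of its points, and repeat. The data, the initial centroids, and the fixed iteration count are assumptions made for the example.

```python
def kmeans_1d(data, centroids, iters=10):
    """Plain K-means on 1-D data with user-supplied initial centroids."""
    for _ in range(iters):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in centroids]
        for x in data:
            nearest = min(range(len(centroids)), key=lambda j: abs(x - centroids[j]))
            clusters[nearest].append(x)
        # Update step: each centroid moves to the mean of its cluster
        # (an empty cluster keeps its old centroid)
        centroids = [sum(c) / len(c) if c else centroids[j]
                     for j, c in enumerate(clusters)]
    return centroids, clusters

data = [1.0, 1.2, 0.8, 5.0, 5.3, 4.7]  # made-up values forming two obvious groups
centroids, clusters = kmeans_1d(data, centroids=[0.0, 6.0])
print(centroids)
print(clusters)
```

Note that no labels are used anywhere, which is what makes this unsupervised.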

Classic Mini Topics++

- Boosting
  - Make arbitrarily strong classifier from many (weak) classifiers
  - Focus on AdaBoost (Adaptive Boosting), which is simplest strategy
- Linear Discriminant Analysis (LDA)
  - Discuss this in some detail
  - Interesting connections to PCA and SVM

Classic Mini Topics

- Random forest (RF)
  - Very popular and useful
  - Based on decision trees
  - We cover only the very basics
- K-nearest neighbor (k-NN)
  - Simplest “machine learning” imaginable
  - Often works surprisingly well
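
The claim that k-NN is about as simple as machine learning gets can be seen from a sketch: there is no training step at all, just a majority vote among the `k` closest labeled points. The training points, labels, and choice of `k = 3` below are made up for illustration.

```python
from collections import Counter

def knn_classify(train, x, k=3):
    """train is a list of (feature_vector, label) pairs; vote among the k nearest."""
    def squared_distance(item):
        return sum((a - b) ** 2 for a, b in zip(item[0], x))
    nearest = sorted(train, key=squared_distance)[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Made-up labeled points in two well-separated groups
train = [((1.0, 1.0), "A"), ((1.2, 0.9), "A"), ((0.8, 1.1), "A"),
         ((5.0, 5.0), "B"), ((5.1, 4.8), "B"), ((4.9, 5.2), "B")]

print(knn_classify(train, (1.1, 1.0)))
print(knn_classify(train, (4.5, 5.0)))
```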

Deep Learning Topics

- Artificial neural network (ANN)
  - A mini-topic in the book
  - But now a major topic of the course
- Backpropagation
  - Technique to train most neural networks
  - Essentially, a BIG calculus problem
  - Based on automatic differentiation and…
  - …reverse mode automatic differentiation
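
As a very small instance of the "calculus problem", the sketch below backpropagates through a single sigmoid neuron with squared-error loss, applying the chain rule from the loss back toward the parameters, and compares the result against a finite-difference estimate. The neuron, loss, and numeric values are assumptions for illustration; real networks repeat the same step layer by layer.

```python
import math

def forward(w, b, x):
    """One sigmoid neuron: a = sigmoid(w*x + b)."""
    z = w * x + b
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared error between the neuron's output and the target y."""
    return 0.5 * (forward(w, b, x) - y) ** 2

def backward(w, b, x, y):
    """Chain rule, applied in the reverse order of the forward computation."""
    a = forward(w, b, x)
    dL_da = a - y               # derivative of the loss w.r.t. the activation
    da_dz = a * (1.0 - a)       # derivative of the sigmoid
    dL_dz = dL_da * da_dz
    return dL_dz * x, dL_dz     # dL/dw, dL/db

w, b, x, y = 0.5, -0.2, 1.5, 1.0
grad_w, grad_b = backward(w, b, x, y)

# Sanity check against a central finite difference
eps = 1e-6
numeric_w = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
print(grad_w, numeric_w)
```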

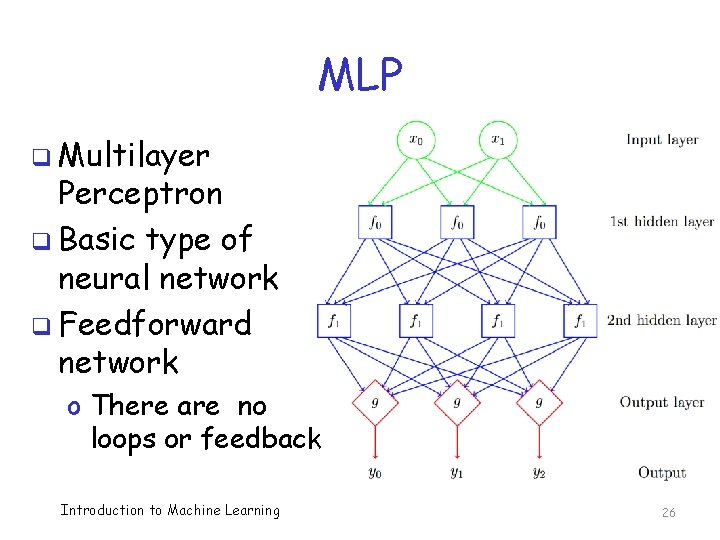

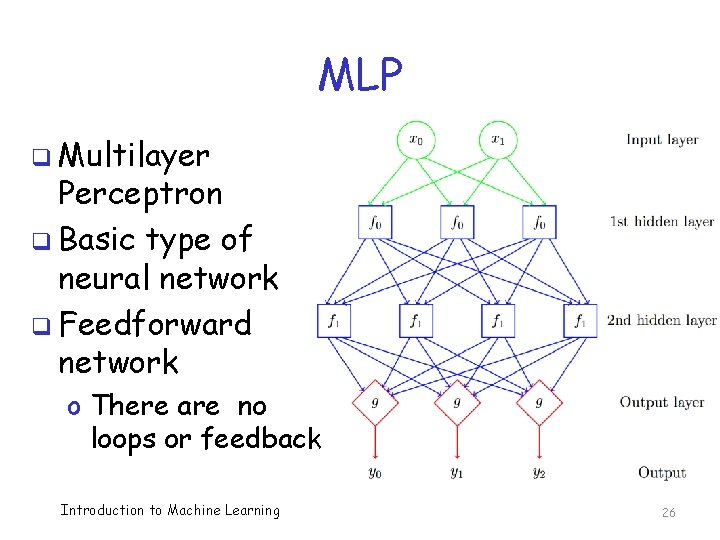

MLP

- Multilayer Perceptron
- Basic type of neural network
- Feedforward network
  - There are no loops or feedback
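
"Feedforward" means data flows strictly from one layer to the next. A minimal forward pass might look like the sketch below; the layer sizes, weights, and the choice of `tanh` as activation are arbitrary assumptions, and no training is shown.

```python
import math

def layer(inputs, weights, biases):
    """One fully connected layer: weighted sum plus bias, then tanh, for each neuron."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def mlp_forward(x, layers):
    """Feed the input through each layer in order; nothing ever flows backward."""
    for weights, biases in layers:
        x = layer(x, weights, biases)
    return x

# Made-up weights: 2 inputs -> 3 hidden neurons -> 1 output
layers = [
    ([[0.5, -0.3], [0.8, 0.2], [-0.6, 0.9]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.0]),
]
out = mlp_forward([1.0, 2.0], layers)
print(out)
```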

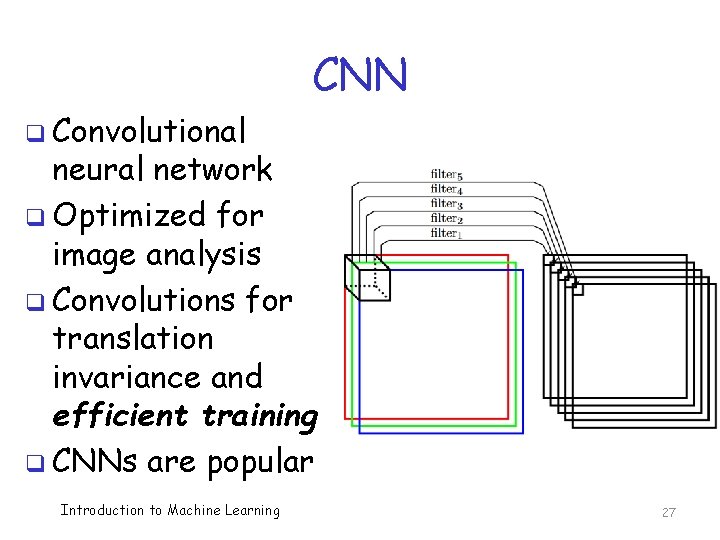

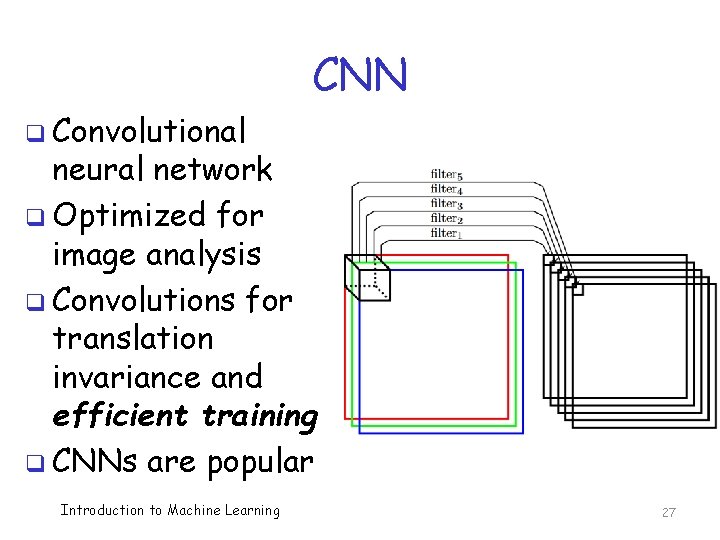

CNN

- Convolutional neural network
- Optimized for image analysis
- Convolutions for translation invariance and efficient training
- CNNs are popular
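
A one-dimensional toy can illustrate both points about convolutions. The same small kernel slides over the whole input, so there are only a couple of weights to train, and shifting the input simply shifts the output. The signals and kernel below are made up; as in most deep learning libraries, the operation computed here is technically cross-correlation.

```python
def conv1d(signal, kernel):
    """Slide the kernel across the signal (no padding, stride 1)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

kernel = [1, -1]                      # tiny edge detector: only two shared weights
signal = [0, 0, 1, 1, 0, 0, 0, 0]     # a "bump" near the start
shifted = [0, 0, 0, 0, 1, 1, 0, 0]    # the same bump, moved two positions right

out_a = conv1d(signal, kernel)
out_b = conv1d(shifted, kernel)
print(out_a)
print(out_b)
print(max(out_a), max(out_b))  # the strongest response is the same in both
```

Taking the maximum response afterward (a crude form of pooling) gives the same value wherever the bump appears, which is the intuition behind translation invariance.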

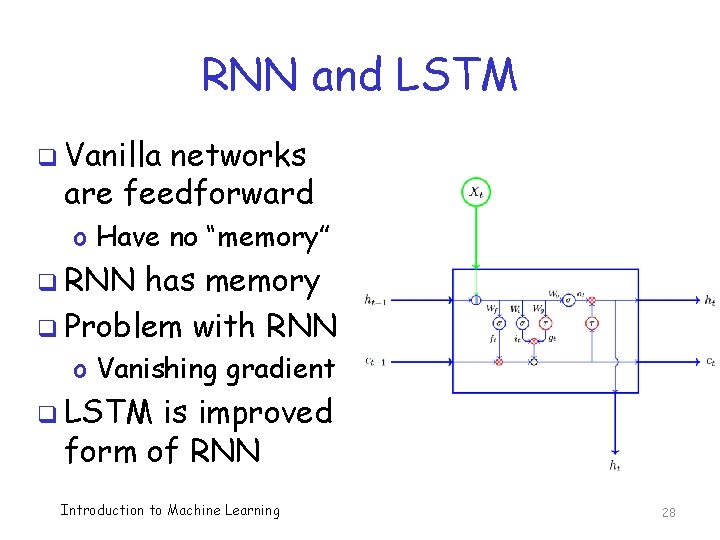

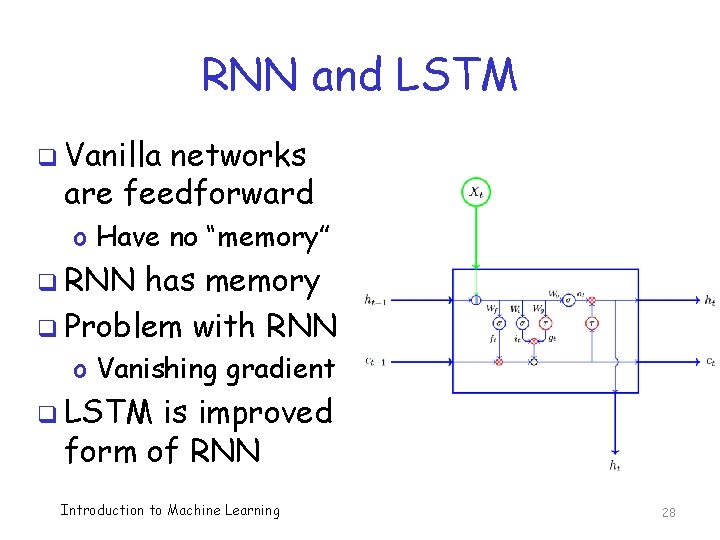

RNN and LSTM

- Vanilla networks are feedforward
  - Have no “memory”
- RNN has memory
- Problem with RNN
  - Vanishing gradient
- LSTM is improved form of RNN
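
The vanishing gradient problem can be seen in a scalar toy recurrence, assumed here to be h_t = tanh(w * h_{t-1}). By the chain rule, the gradient of the final state with respect to the initial state is a product of one factor per time step, and when each factor is below 1 the product shrinks rapidly. The recurrence, weight, and step counts are illustrative assumptions.

```python
import math

def gradient_through_time(w, steps, h0=0.5):
    """d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        grad *= w * (1.0 - h * h)  # chain rule factor for this time step
    return grad

for steps in (1, 5, 20, 50):
    print(steps, gradient_through_time(0.5, steps))
```

With `w = 0.5` every factor is at most 0.5, so early inputs have almost no influence on the gradient after a few dozen steps.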

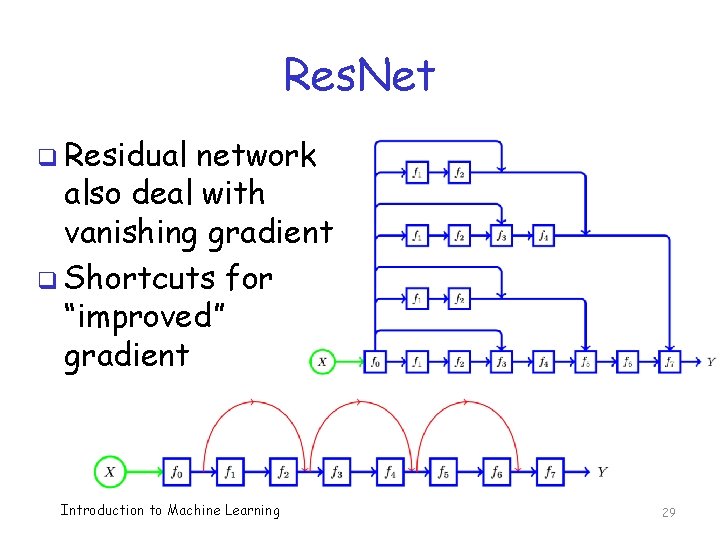

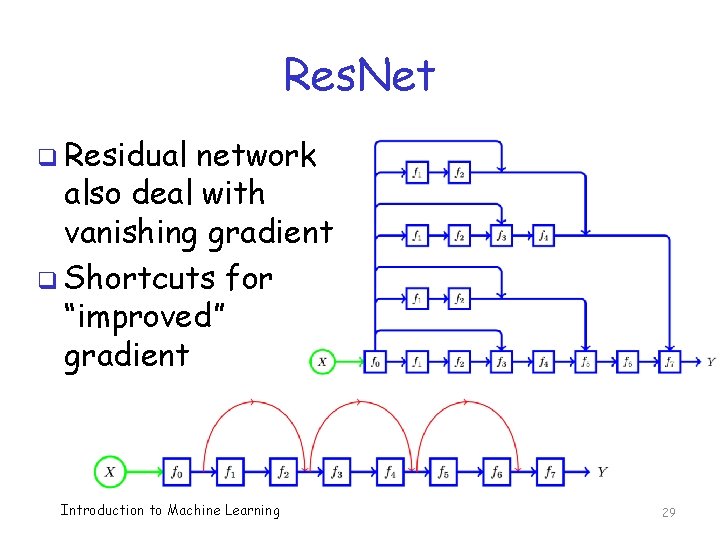

ResNet

- Residual network also deals with vanishing gradient
- Shortcuts for “improved” gradient
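
One way to see why a shortcut helps, using assumed scalar notation where $F$ stands for the layers being skipped: a residual block outputs its input plus the learned transformation, so its derivative always contains a constant term.

$$
y = x + F(x)
\qquad\Longrightarrow\qquad
\frac{dy}{dx} = 1 + F'(x)
$$

Even when $F'(x)$ is close to zero, the gradient passed back through the block stays close to 1 rather than vanishing.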

GAN

- Discriminative network (D) classifies
- Generative network (G) generates (fake) samples that fit training data
- Generative adversarial network (GAN) pits D and G against each other
  - D trained to distinguish between real samples and fake data from G
  - G trained to trick D network
  - D and G compete in a “minimax” game
- Both D and G can improve in training
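
The “minimax” game is usually written as the objective below. The notation is an assumption rather than something taken from the slides: $p_{\text{data}}$ is the distribution of real samples, $p_z$ is the noise distribution fed to G, and $D(\cdot)$ is the probability D assigns to a sample being real.

$$
\min_G \max_D \;
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

D tries to make both terms large (real samples scored near 1, fakes near 0), while G tries to make the second term small by producing samples that D scores as real.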

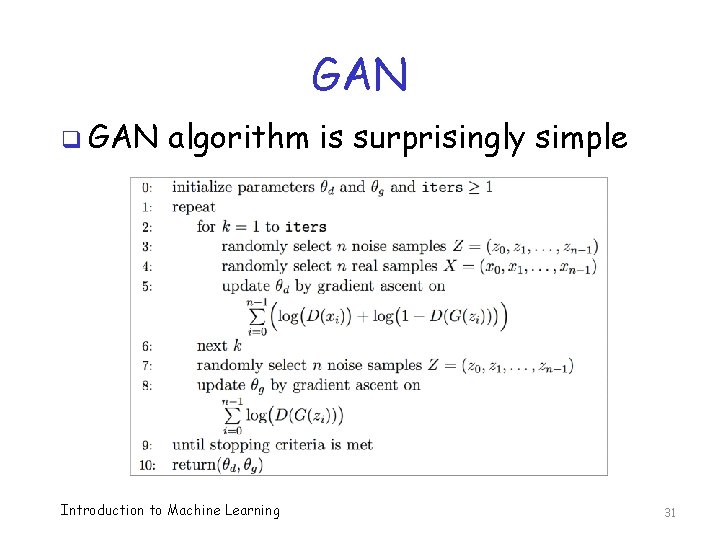

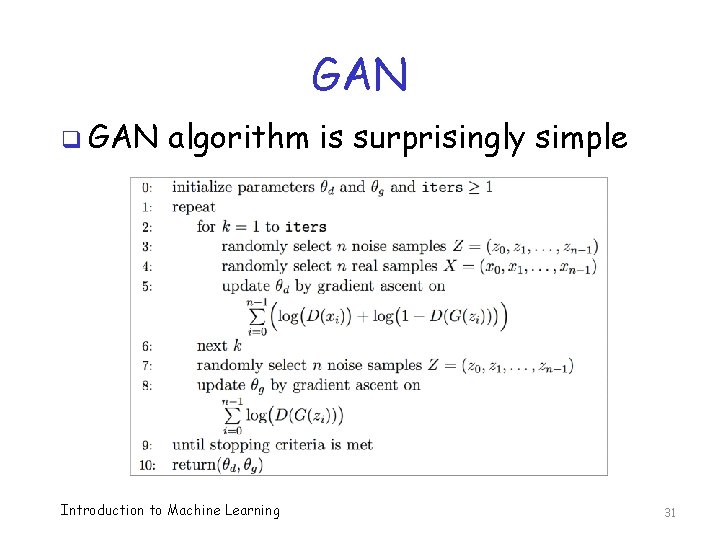

GAN

- GAN algorithm is surprisingly simple

Word2Vec

- Vector “embeddings” of words
- Word2Vec uses shallow neural network
  - Train neural network on words
  - Weights of trained network are the embedding vectors
  - Model not used for any other purpose
- Algebraic properties of Word2Vec embeddings capture “meaning” of language

Deep Learning Mini Topics

- Transfer learning
  - Use pre-trained model
  - Re-train the output layer
- Ensemble techniques
  - Combine multiple techniques
  - Ensembles win most competitions
- Dropout and regularization
  - Ways to avoid overfitting
  - “Learning” vs “memorization”
- And more…
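
As a sketch of how dropout works during training, the function below implements the common "inverted dropout" variant: each activation is zeroed with probability `p_drop`, and the survivors are scaled up so the expected value is unchanged. The function name, the drop rate, and the seeded generator are assumptions for the example.

```python
import random

def dropout(activations, p_drop, rng):
    """Inverted dropout: randomly zero activations, rescale the ones that are kept."""
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)                       # seeded so the run is repeatable
out = dropout([1.0] * 10000, p_drop=0.5, rng=rng)

print(sum(1 for a in out if a == 0.0))       # roughly half are dropped
print(sum(out) / len(out))                   # the average stays near 1.0
```

Because a different random subset of neurons is silenced on each pass, the network cannot rely on any single neuron, which discourages memorizing the training data.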

The Dreaded Math/Stats

- We strive to keep math to a minimum
  - Course is (mostly) self-contained wrt math
  - We do assume knowledge of calculus
- To understand ML, cannot avoid math...
  - HMM/PHMM: discrete probability
  - PCA: fancy linear algebra (eigenvectors)
  - SVM: calculus (Lagrange multipliers)
  - Clustering: statistics/probability
  - Backpropagation: calculus (computational)

Data Analysis

- Critical to analyze data carefully
- Especially true in research mode, as we must compare to previous work
- Often, a major weakness!
- We’ll discuss…
  - Experimental design, cross validation, accuracy, ROC curves, PR curves, imbalance problem, and so on
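
Two of the measures listed above can be computed directly from classifier scores. In this sketch, accuracy depends on a chosen threshold, while the area under the ROC curve (AUC) is computed through its rank interpretation: the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one. The scores and labels are made up.

```python
def accuracy(scores, labels, threshold):
    """Fraction of samples classified correctly when scores >= threshold mean positive."""
    predictions = [s >= threshold for s in scores]
    return sum(p == bool(y) for p, y in zip(predictions, labels)) / len(labels)

def roc_auc(scores, labels):
    """AUC as the chance a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]   # made-up classifier scores
labels = [1, 1, 0, 1, 0, 0]               # 1 = positive class, 0 = negative class

print(accuracy(scores, labels, threshold=0.5))
print(roc_auc(scores, labels))
```

Unlike accuracy, the AUC does not depend on a threshold, which is one reason ROC analysis is useful when comparing to previous work.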

Applications

- Applications mostly from security
  - Malware detection or analysis: HMM, PCA, SVM, CNN, and clustering
  - Masquerade detection: PHMM
  - Image spam: PCA and SVM
  - Classic cryptanalysis: HMM
  - Facial recognition: PCA
  - Text analysis: HMM
  - Old Faithful geyser: clustering

3 Stages to ML Enlightenment

- First stage: elementary-school level
  - “Big picture” from 10k feet
  - See the descriptions in this intro
- Second stage: drill down on big picture
  - More detailed and nuanced than first stage
  - Understand the pictures used in stage 1
- Third stage: deep understanding
  - Learn derivations and (mostly) understand

ML Enlightenment

- In this class, we aim for highest stage of ML enlightenment
  - To pass the class, at a minimum, you must have stage 2++ knowledge…
  - …and ability to effectively use ML…
  - …and understand strengths/weaknesses
- Key to success
  - Work hard on homework and project!

Bottom Line

- We cover selected machine learning techniques in considerable detail
- We discuss many applications, mostly related to information security
- Goal is for students to gain a deep understanding of the techniques
  - And be able to apply ML…
  - …especially in new and novel situations

How to Succeed in ML Class

- Ask questions
  - Good questions are good for everybody
- Treat the math as your friend
  - Math is necessary to make sense of ML
- Do not fear hard work!
  - Machine learning is not a spectator sport
- Learning is a 3-step process
  - Read book, attend lecture, do homework