CS 598 KN Advanced Multimedia Systems Lecture 10

- Slides: 70

CS 598 KN – Advanced Multimedia Systems Lecture 10 – Media Server Klara Nahrstedt Fall 2018 CS 598 KN - Fall 2018

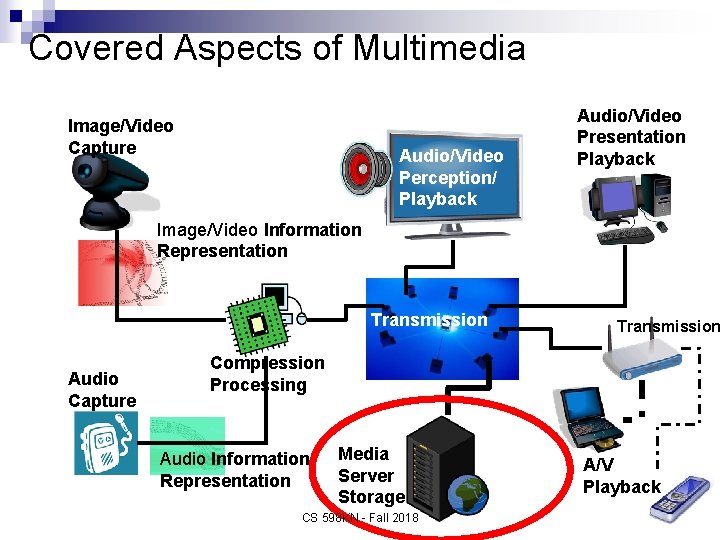

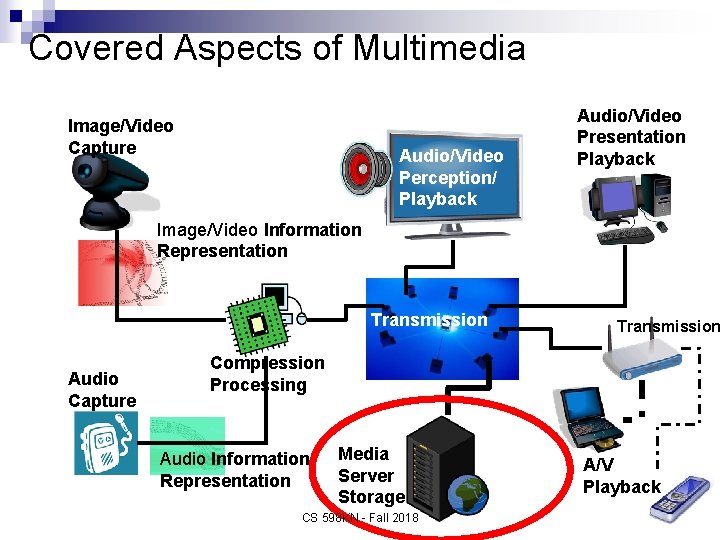

Covered Aspects of Multimedia (diagram): audio capture and image/video capture; audio and image/video information representation; compression and processing; transmission; media server storage; audio/video presentation and playback; audio/video perception.

Outline

- Media Server Requirements
- Media Server Layered Architecture

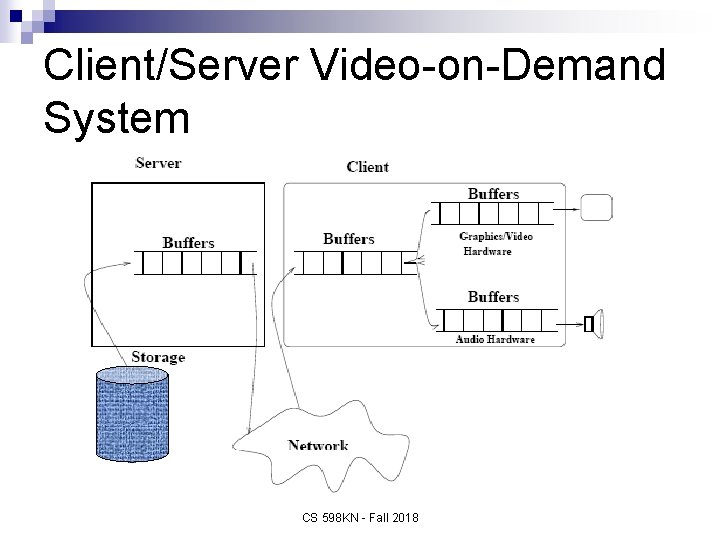

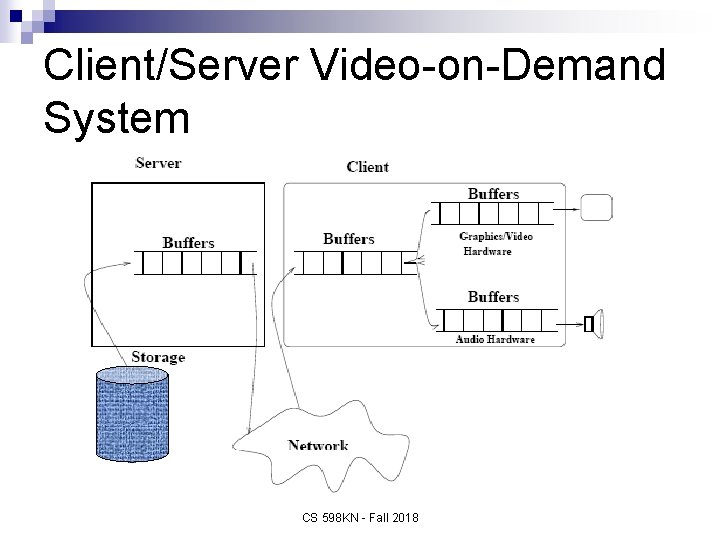

Client/Server Video-on-Demand System

Video-on-Demand systems must be designed with these goals:

- Avoid starvation
- Minimize buffer space requirement
- Minimize initiation latency (video startup time)
- Optimize cost

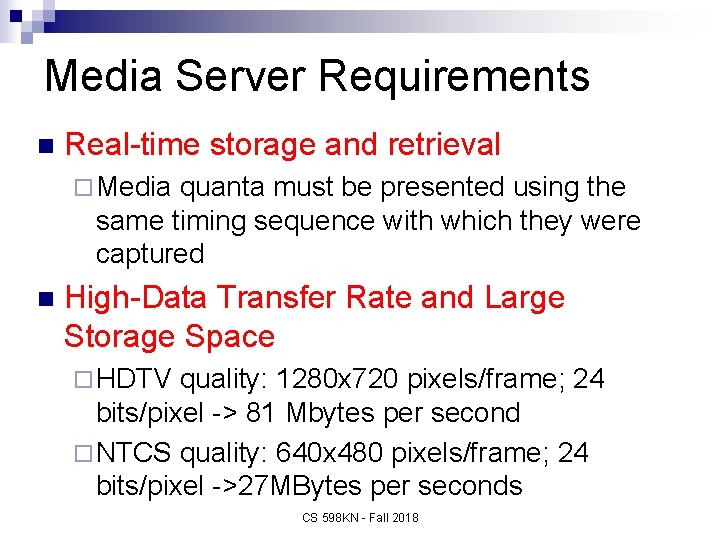

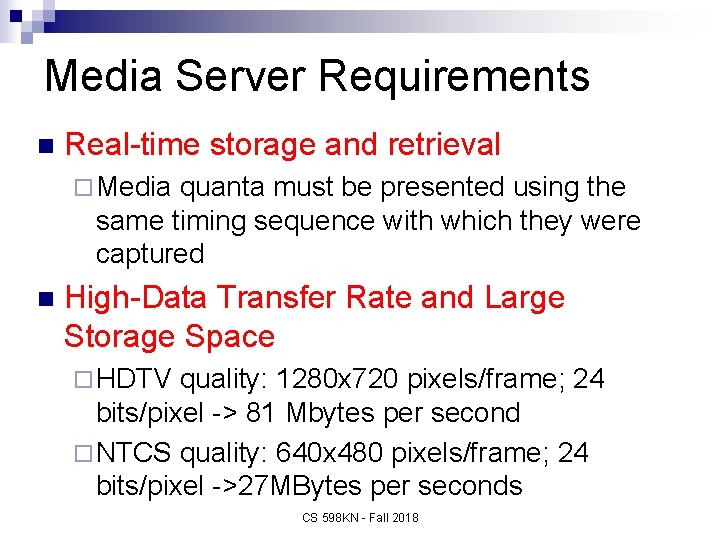

Media Server Requirements

- Real-time storage and retrieval
  - Media quanta must be presented using the same timing sequence with which they were captured
- High data transfer rate and large storage space
  - HDTV quality: 1280 x 720 pixels/frame; 24 bits/pixel -> 81 MBytes per second
  - NTSC quality: 640 x 480 pixels/frame; 24 bits/pixel -> 27 MBytes per second
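The two data rates above can be reproduced with a short calculation. This is a minimal sketch that assumes a frame rate of 30 frames per second, which the slide does not state; under that assumption the results land close to the quoted 81 and 27 MBytes per second (the small differences come from rounding and unit conventions). The function name `raw_video_rate` is illustrative.

```python
def raw_video_rate(width, height, bits_per_pixel, frames_per_second):
    """Return the uncompressed video data rate in bytes per second."""
    bytes_per_frame = width * height * bits_per_pixel // 8
    return bytes_per_frame * frames_per_second


FPS = 30  # assumption: the frame rate is not given on the slide

hdtv = raw_video_rate(1280, 720, 24, FPS)
ntsc = raw_video_rate(640, 480, 24, FPS)

print(f"HDTV: {hdtv / 1e6:.1f} MB/s")
print(f"NTSC: {ntsc / 1e6:.1f} MB/s")
```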

YouTube Video Server

- 1.8 B logged-in users (May 2018)
- 90 countries have YouTube users (February 2017)
- 1 B hours per day: average amount of content watched daily on YouTube (February 2017)
- Number of channels that have over 1 M viewers: 1500 (February 2016)

Source: https://expandedramblings.com/index.php/youtube-statistics/

YouTube Storage

- According to the new recording rate, 20 hours of video per minute could require YouTube to increase its storage capacity by up to 21.0 Terabytes per day, or 7.7 Petabytes per year, roughly 4x the total amount of data generated per year by NCSA's supercomputers in Urbana, IL.

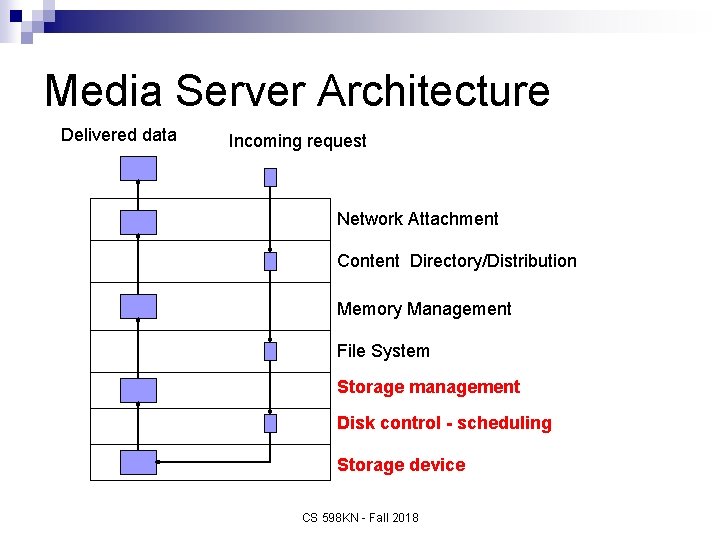

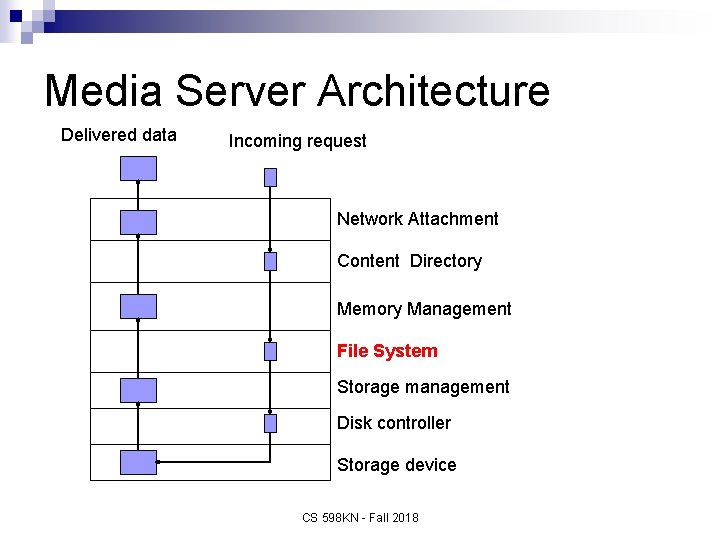

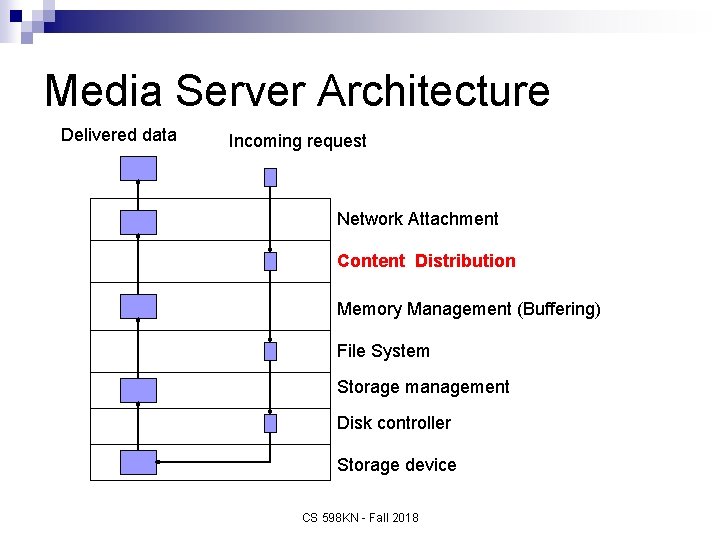

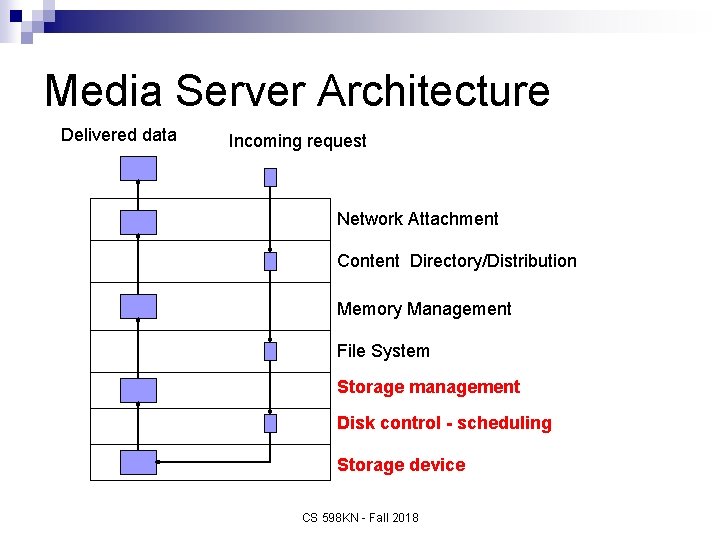

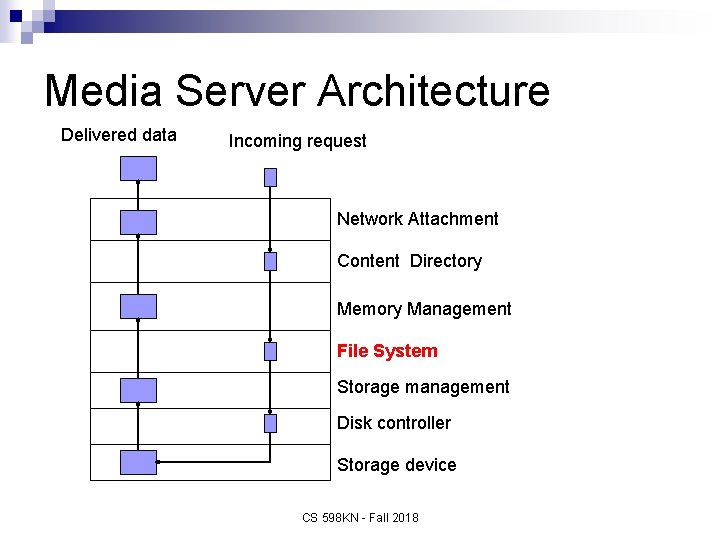

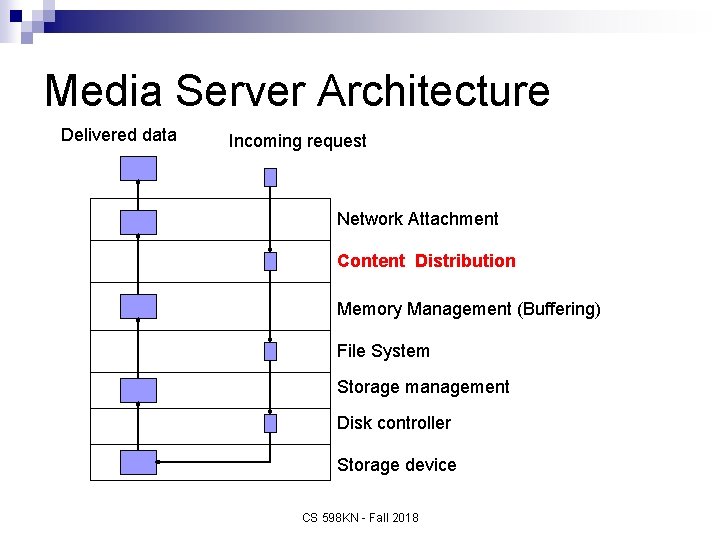

Media Server Architecture (layer diagram; incoming requests enter and delivered data leaves at the top)

- Network Attachment
- Content Directory/Distribution
- Memory Management
- File System
- Storage Management
- Disk Control (scheduling)
- Storage Device

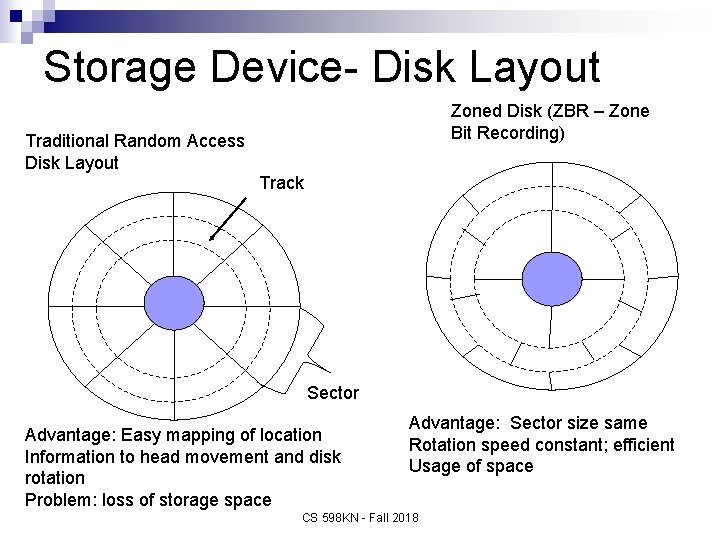

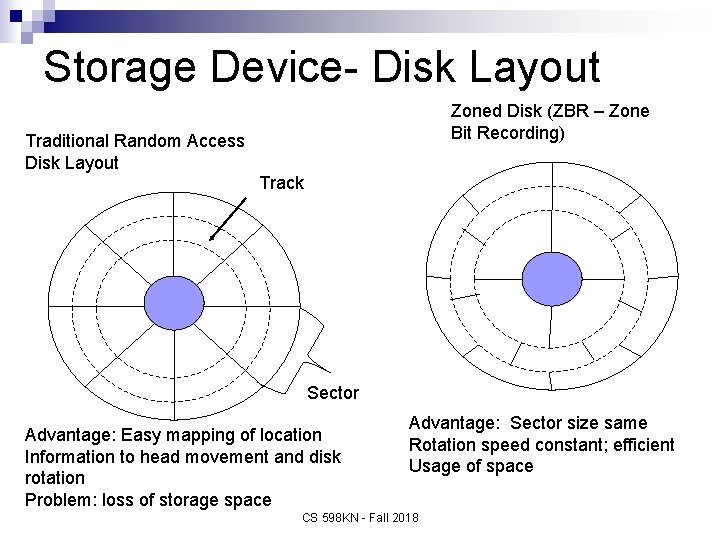

Storage Device - Disk Layout

- Traditional random access disk layout (tracks and sectors)
  - Advantage: easy mapping of location information to head movement and disk rotation
  - Problem: loss of storage space
- Zoned disk (ZBR, Zone Bit Recording)
  - Advantage: sector size is the same; rotation speed is constant; efficient usage of space

Storage/Disk Management

- Optimal placement of MM data blocks
  - Single disk
  - Multiple disks
- Timely disk scheduling algorithms and sufficient buffers to avoid jitter
- Possible admission control

Storage Management

- Storage access time to read/write a disk block is determined by 3 components
  - Seek time: time required for the movement of the read/write head
  - Rotational time (latency time): time during which the transfer cannot proceed until the right block or sector rotates under the read/write head
  - Data transfer time: time needed for data to be copied from disk into main memory
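The three components add up to the access time of one block. The sketch below illustrates that sum under stated assumptions: the average rotational latency is taken as half a revolution, and the seek time, spindle speed, block size and transfer rate in the usage lines are hypothetical values chosen only for illustration.

```python
def access_time_ms(seek_ms, rpm, block_bytes, transfer_bytes_per_s):
    """Estimate the time to read one block: seek + rotation + transfer."""
    full_rotation_ms = 60_000 / rpm
    rotational_ms = full_rotation_ms / 2  # assumption: half a turn on average
    transfer_ms = block_bytes / transfer_bytes_per_s * 1000
    return seek_ms + rotational_ms + transfer_ms


# Hypothetical disk: 8 ms seek, 7200 rpm, 64 KiB block, 100 MB/s transfer.
t = access_time_ms(seek_ms=8, rpm=7200, block_bytes=64 * 1024,
                   transfer_bytes_per_s=100e6)
print(f"{t:.2f} ms per block")
```

With these numbers the seek and rotational delays dominate the transfer time, which is why placement and scheduling matter so much for small blocks.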

Impact of Access Time Metrics

- If data blocks are arbitrarily placed
- If requests are served on an FCFS basis
- Then the effort of locating the data may cost time on the order of 10 ms
- This performance degrades disk efficiency
- Need techniques to reduce seek, rotation and transmission times

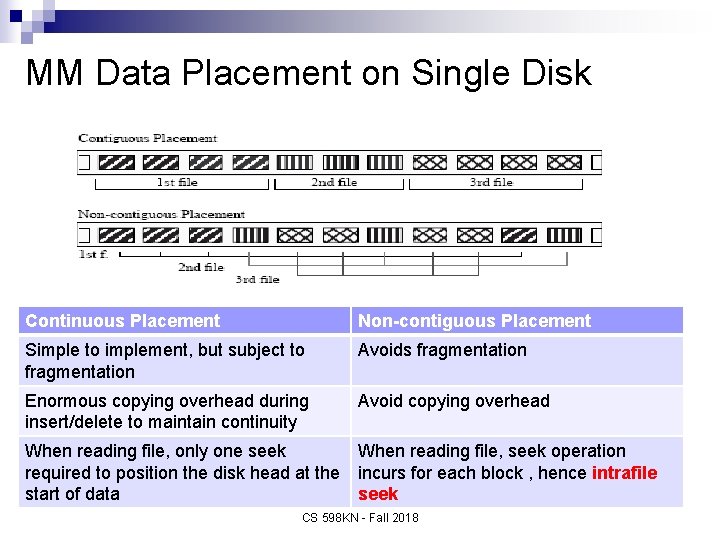

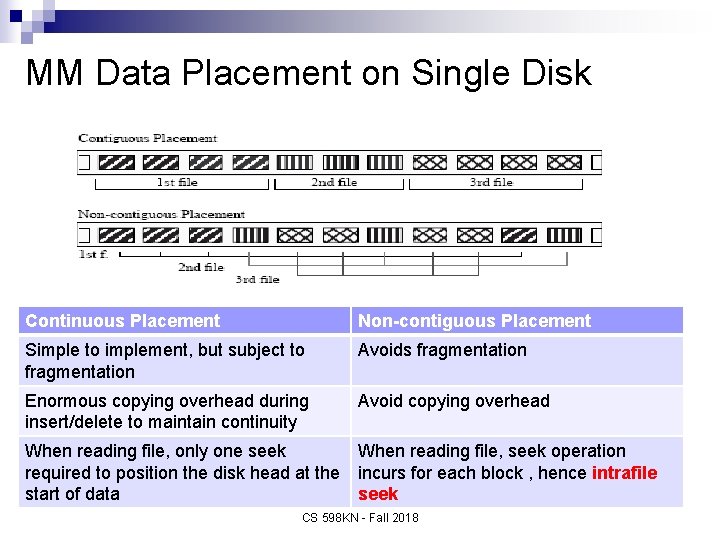

MM Data Placement on Single Disk

- Contiguous placement
  - Simple to implement, but subject to fragmentation
  - Enormous copying overhead during insert/delete to maintain contiguity
  - When reading a file, only one seek is required to position the disk head at the start of the data
- Non-contiguous placement
  - Avoids fragmentation
  - Avoids copying overhead
  - When reading a file, a seek operation is incurred for each block, hence intra-file seeks

Intra-file Seek Time

- Intra-file seek can be avoided in a non-contiguous layout if the amount read from a stream always evenly divides a block
- Solution: select a sufficiently large block and read one block in each round
  - If more than one block is required to prevent starvation prior to the next read, the intra-file seek must be dealt with
- Solution: constrained placement or log-structured placement

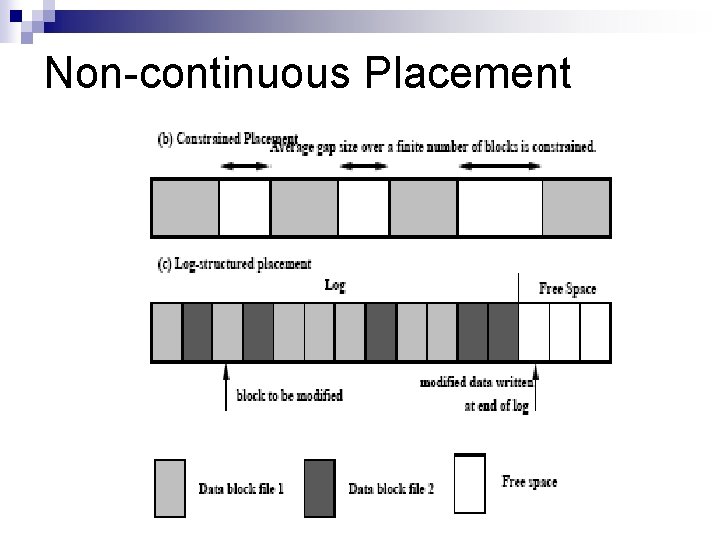

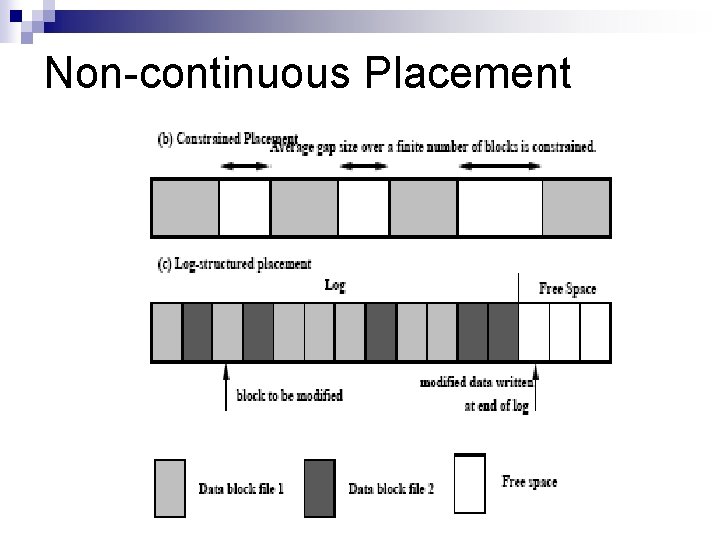

Non-contiguous Placement

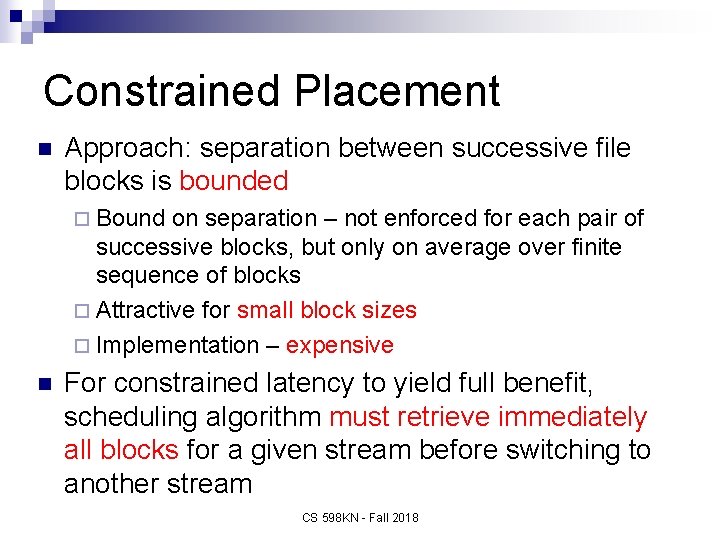

Constrained Placement

- Approach: the separation between successive file blocks is bounded
  - The bound on separation is not enforced for each pair of successive blocks, but only on average over a finite sequence of blocks
  - Attractive for small block sizes
  - Implementation: expensive
- For constrained placement to yield its full benefit, the scheduling algorithm must immediately retrieve all blocks for a given stream before switching to another stream

Log-Structure Placement

- This approach writes modified blocks sequentially in a large contiguous space, instead of requiring a seek for each block in the stream when writing (recording)
  - Reduction of disk seeks
  - Large performance improvements during recording and editing of video and audio
- Problem: bad performance during playback
- Implementation: complex

Disk Scheduling Policies

- Goal of scheduling in traditional disk management
  - Reduce cost of seek time
  - Achieve high throughput
  - Provide fair disk access
- Goal of scheduling in multimedia disk management
  - Meet the deadlines of all time-critical tasks
  - Keep necessary buffer requirements low
  - Serve many streams concurrently
  - Find a balance between time constraints and efficiency

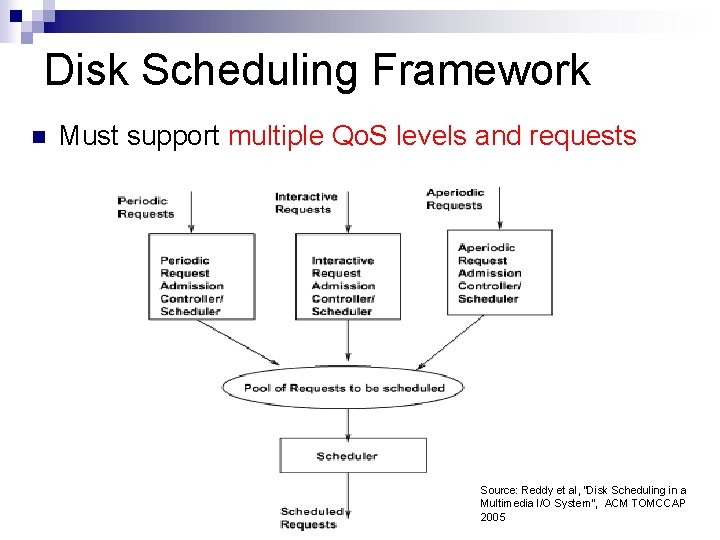

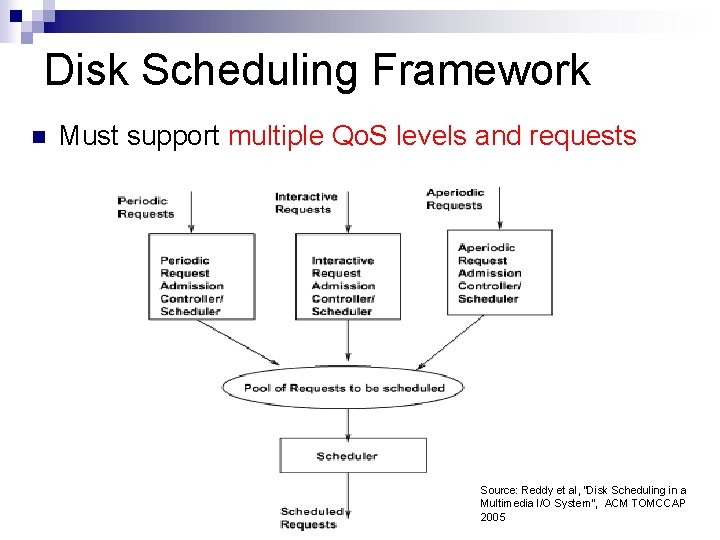

Disk Scheduling Framework

- Must support multiple QoS levels and requests

Source: Reddy et al., "Disk Scheduling in a Multimedia I/O System", ACM TOMCCAP 2005

EDF (Earliest Deadline First) Disk Scheduling

- Each disk block request is tagged with a deadline
  - Very good scheduling policy for periodic requests
- Policy:
  - Schedule the disk block request with the earliest deadline
  - Excessive seek time: high overhead
  - Pure EDF must be adapted or combined with file system strategies
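A minimal sketch of the policy, using a priority queue keyed on the deadline. The request list and the starting head position are hypothetical; as in the lecture's example, the block number is taken to stand for the track number. The helper `total_seek_distance` is an illustrative addition that shows why pure EDF leads to excessive seeking.

```python
import heapq


def edf_order(requests):
    """Serve (deadline, block) requests in order of earliest deadline."""
    heap = list(requests)
    heapq.heapify(heap)
    order = []
    while heap:
        _deadline, block = heapq.heappop(heap)
        order.append(block)
    return order


def total_seek_distance(start, order):
    """Sum of head movements (in tracks) when visiting blocks in order."""
    distance, position = 0, start
    for block in order:
        distance += abs(block - position)
        position = block
    return distance


# Hypothetical requests: (deadline, block number standing for the track).
requests = [(3, 90), (1, 10), (2, 95), (4, 12)]
order = edf_order(requests)
print(order, total_seek_distance(50, order))
```

Because EDF ignores head position, the head sweeps back and forth across the disk; serving the same blocks in track order from the same start would move the head far less.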

EDF Example

Note: the block number implicitly encapsulates the disk track number.

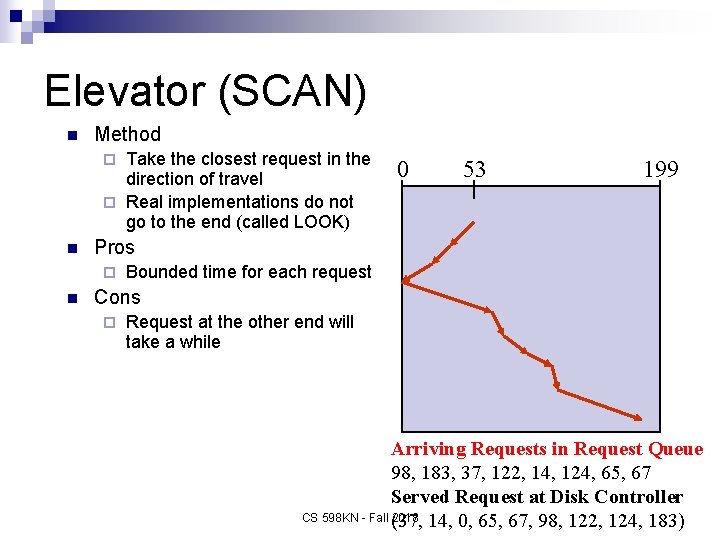

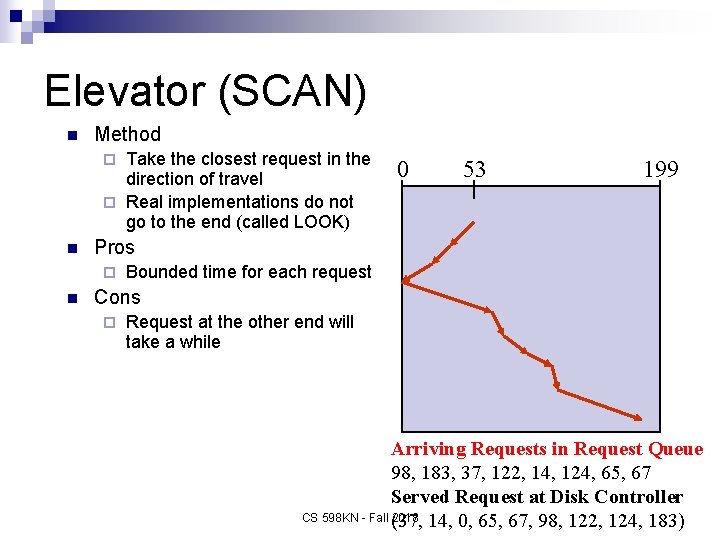

Elevator (SCAN)

- Method
  - Take the closest request in the direction of travel
  - Real implementations do not go to the end (called LOOK)
- Pros
  - Bounded time for each request
- Cons
  - A request at the other end will take a while
- Example (figure marks tracks 0, 53 and 199)
  - Arriving requests in request queue: 98, 183, 37, 122, 14, 124, 65, 67
  - Served requests at disk controller: (37, 14, 0, 65, 67, 98, 122, 124, 183)
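The served order in this example can be reproduced with a short sketch. It assumes the head starts at track 53 and is moving toward track 0, which is inferred from the figure labels and the served order rather than stated in the text. The 0 in the served list is not a request; it marks the head reaching the edge of the disk before reversing, which LOOK skips.

```python
def scan_order(head, requests, lowest_track=0, go_to_end=True):
    """Elevator order for a head that first moves toward lower tracks.

    With go_to_end=True (SCAN) the head travels to lowest_track before
    reversing; with go_to_end=False (LOOK) it reverses at the last request.
    """
    down = sorted((r for r in requests if r <= head), reverse=True)
    up = sorted(r for r in requests if r > head)
    order = list(down)
    reached_edge = bool(down) and down[-1] == lowest_track
    if go_to_end and up and not reached_edge:
        order.append(lowest_track)  # edge visit, not a real request
    return order + up


queue = [98, 183, 37, 122, 14, 124, 65, 67]
print(scan_order(53, queue))                   # SCAN
print(scan_order(53, queue, go_to_end=False))  # LOOK
```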

SCAN-EDF Scheduling Algorithm

- Combination of SCAN and EDF algorithms
- Each disk block request is tagged with an augmented deadline
  - Add a perturbation to each deadline
- Policy:
  - SCAN-EDF chooses the earliest deadline
  - If several requests have the same deadline, choose the request according to the scan direction

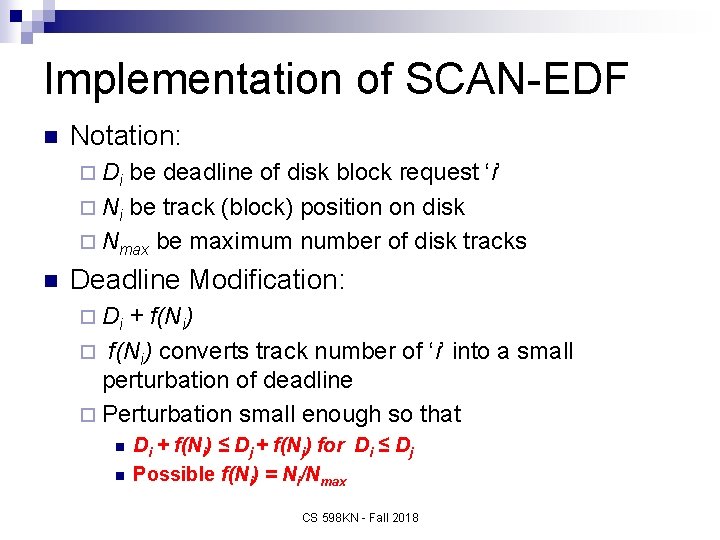

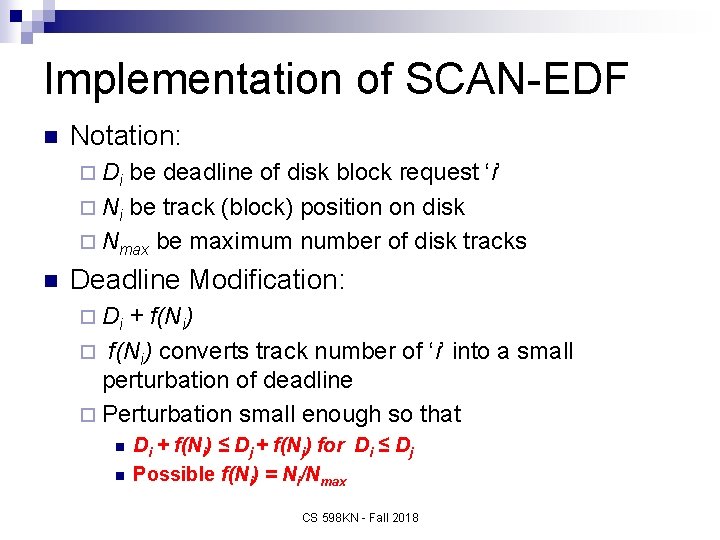

Implementation of SCAN-EDF

- Notation:
  - $D_i$: deadline of disk block request $i$
  - $N_i$: track (block) position on disk
  - $N_{max}$: maximum number of disk tracks
- Deadline modification: $D_i + f(N_i)$
  - $f(N_i)$ converts the track number of request $i$ into a small perturbation of the deadline
  - The perturbation must be small enough that $D_i + f(N_i) \le D_j + f(N_j)$ for $D_i \le D_j$
  - Possible choice: $f(N_i) = N_i / N_{max}$
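The deadline modification can be sketched as a sort on the perturbed deadline. This is an illustration under stated assumptions: deadlines are integers, track numbers are below the maximum track count (so the perturbation stays below 1 and never reorders requests with different deadlines), and the scan direction is toward increasing track numbers. The request list is hypothetical and is not the data from the lecture's figure.

```python
def scan_edf_order(requests, n_max):
    """Order (deadline, track) requests by deadline + track / n_max.

    Requests with equal deadlines end up in increasing track order,
    which corresponds to one SCAN sweep within that deadline group.
    """
    return sorted(requests, key=lambda r: r[0] + r[1] / n_max)


# Hypothetical requests as (deadline, track), with n_max = 100.
pending = [(3, 80), (1, 40), (3, 20), (1, 90), (2, 55)]
schedule = scan_edf_order(pending, n_max=100)
print(schedule)
```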

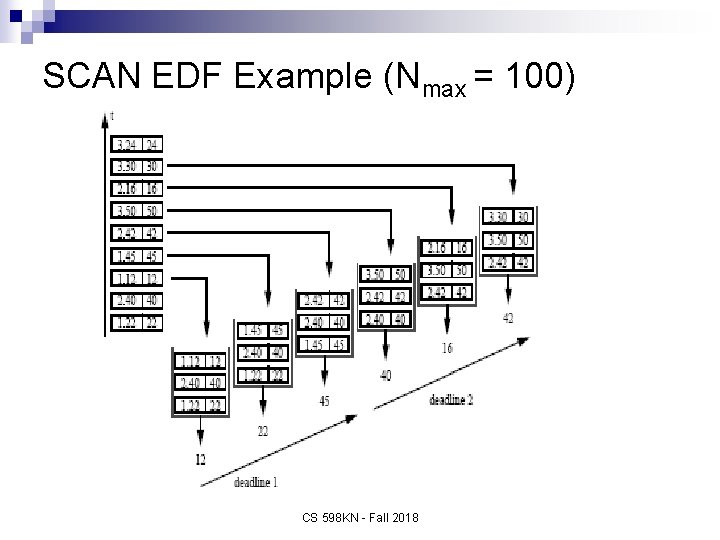

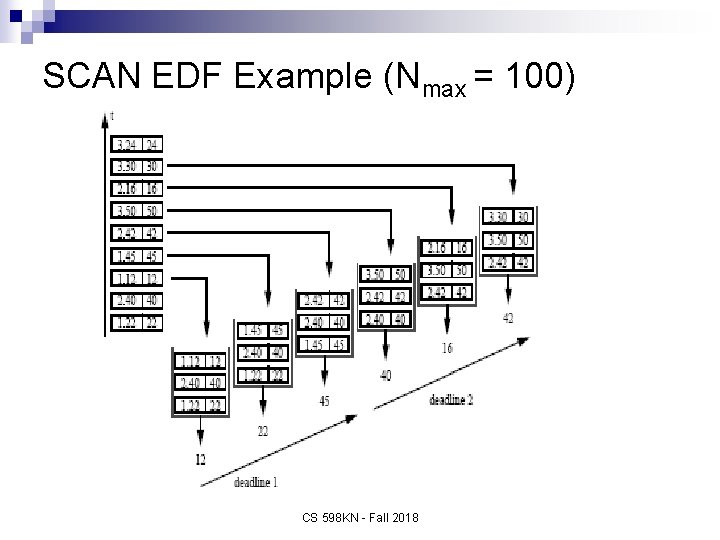

SCAN-EDF Example ($N_{max} = 100$)

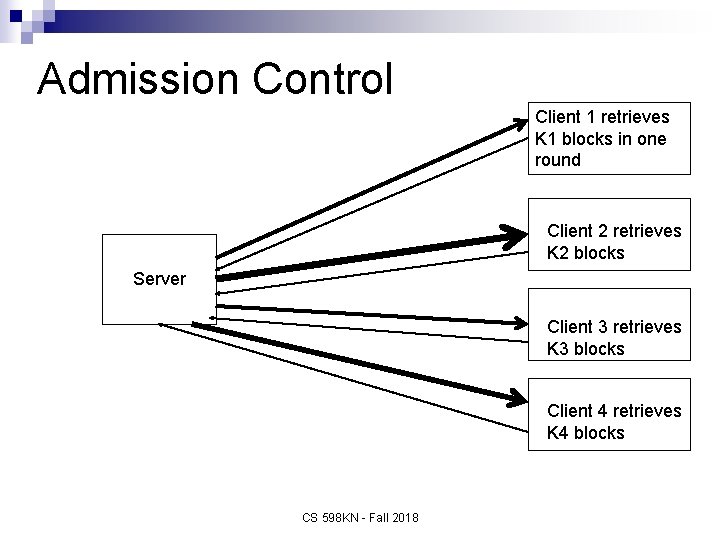

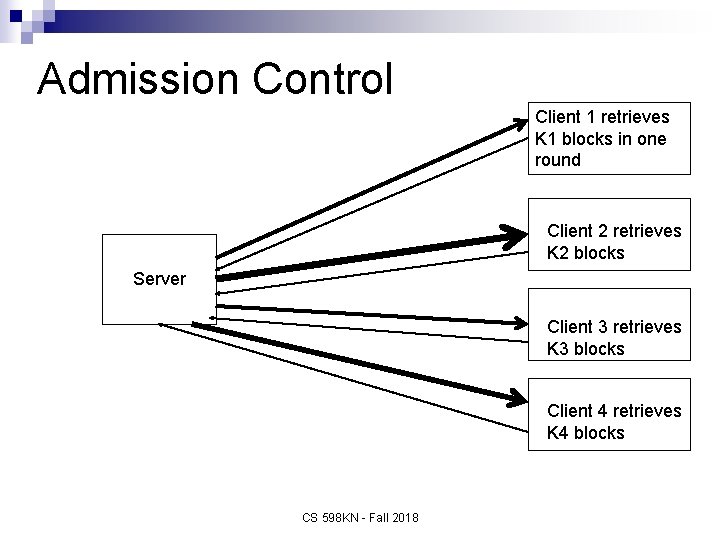

Admission Control (diagram): in one round the server serves Client 1, which retrieves K1 blocks; Client 2, which retrieves K2 blocks; Client 3, which retrieves K3 blocks; and Client 4, which retrieves K4 blocks.

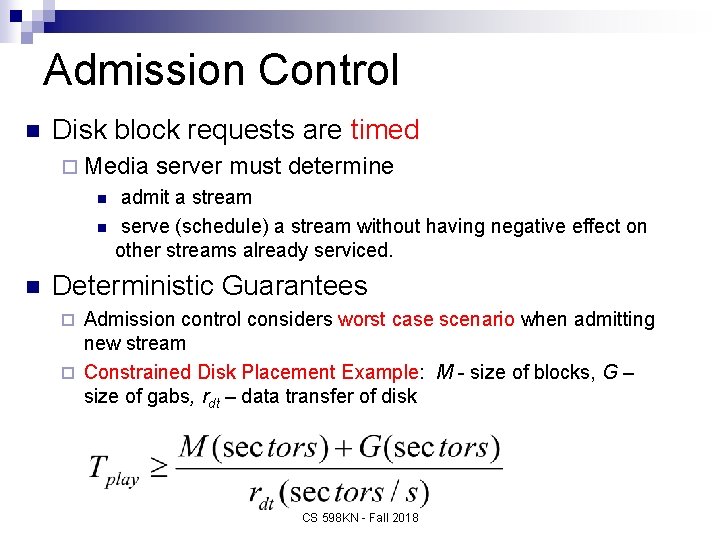

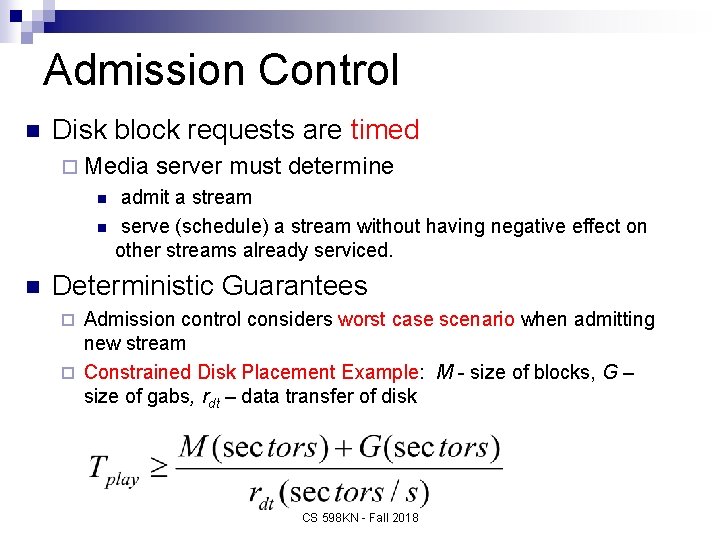

Admission Control

- Disk block requests are timed
  - The media server must determine whether it can admit a stream and serve (schedule) it without having a negative effect on other streams already being serviced
- Deterministic guarantees
  - Admission control considers the worst-case scenario when admitting a new stream
  - Constrained disk placement example: M is the size of blocks, G is the size of gaps, rdt is the data transfer rate of the disk

Media Server Architecture (layer diagram; incoming requests enter and delivered data leaves at the top)

- Network Attachment
- Content Directory
- Memory Management
- File System
- Storage Management
- Disk Controller
- Storage Device

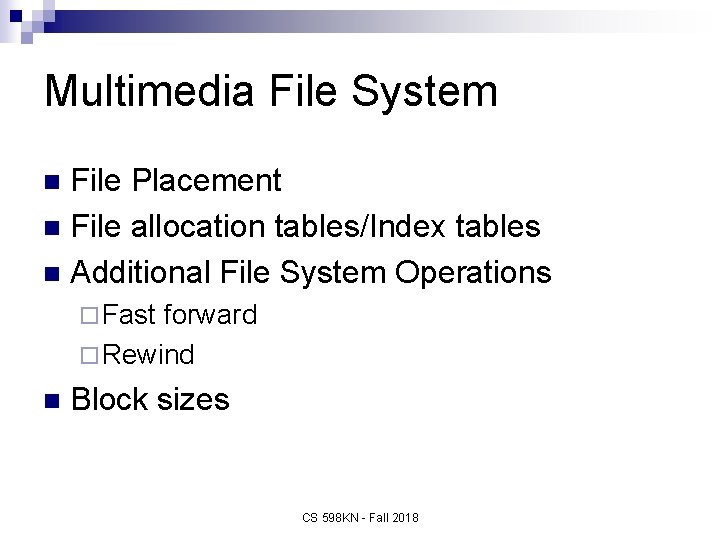

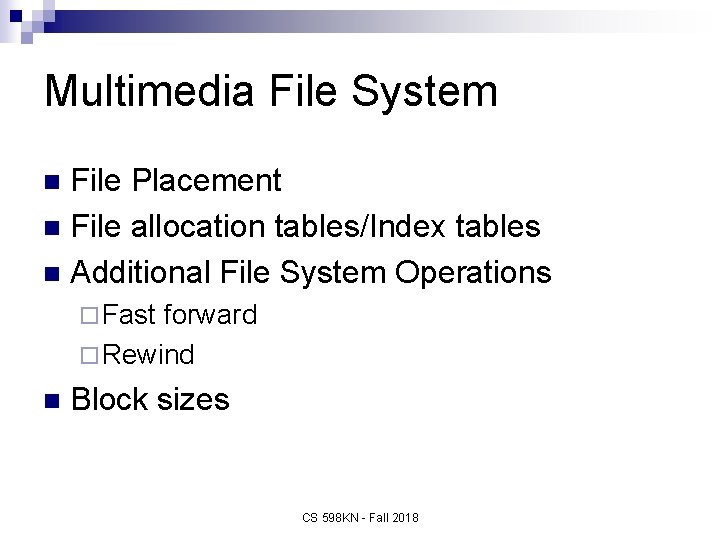

Multimedia File System

- File placement
- File allocation tables/index tables
- Additional file system operations
  - Fast forward
  - Rewind
- Block sizes

Multimedia File Systems

- Real-time characteristics
  - A read operation must be executed before a well-defined deadline with small jitter
  - Additional buffers smooth the data
- File size
  - Can be very large even when compressed
  - Files larger than $2^{32}$ bytes
- Multiple correlated data streams
  - Retrieval of a movie requires processing and synchronization of audio and video streams

Placement of Multiple MM Files on Single Disk

- The popularity of multimedia content is very important
- Take popularity into account when placing movies on disk
- Model of popularity distribution: Zipf's Law
  - A movie is $k$-th ranked if its probability of customer usage is $C/k$
  - $C$ is a normalization factor
  - The condition $C/1 + C/2 + \dots + C/N = 1$ holds
  - $N$ is the number of movies

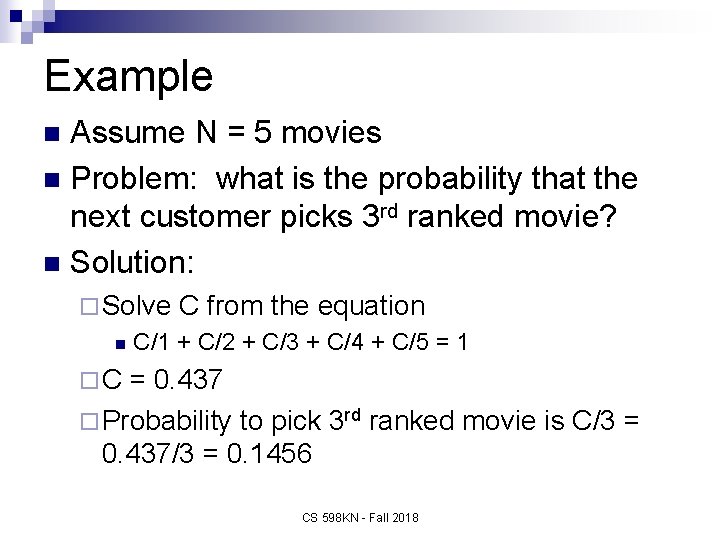

Example

- Assume N = 5 movies
- Problem: what is the probability that the next customer picks the 3rd-ranked movie?
- Solution:
  - Solve for $C$ from the equation $C/1 + C/2 + C/3 + C/4 + C/5 = 1$
  - $C = 60/137 \approx 0.438$
  - The probability of picking the 3rd-ranked movie is $C/3 \approx 0.146$
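The same calculation works for any number of movies. The sketch below computes the normalization factor and the per-rank probabilities; the function name `zipf_probabilities` is illustrative.

```python
def zipf_probabilities(n):
    """Return [C/1, C/2, ..., C/n] with C chosen so the values sum to 1."""
    c = 1 / sum(1 / k for k in range(1, n + 1))
    return [c / k for k in range(1, n + 1)]


probs = zipf_probabilities(5)
print(f"C = {probs[0]:.3f}")              # probability of the top-ranked movie
print(f"P(3rd ranked) = {probs[2]:.3f}")
```

For N = 5 this gives C close to 0.438 and a probability close to 0.146 for the 3rd-ranked movie.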

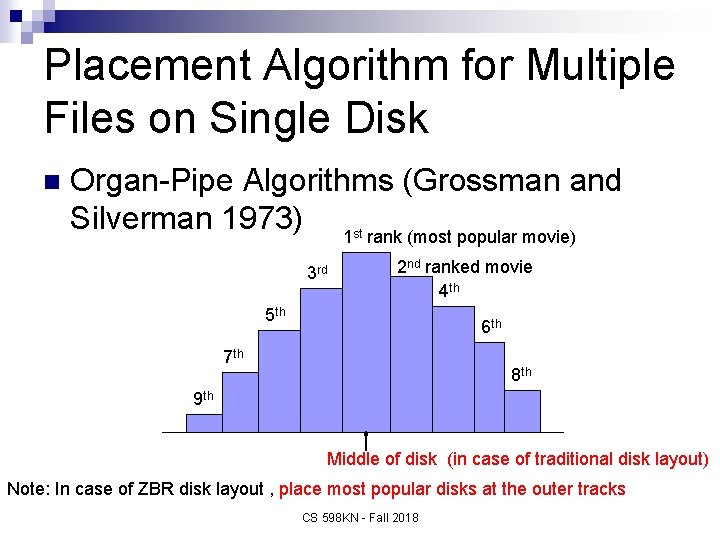

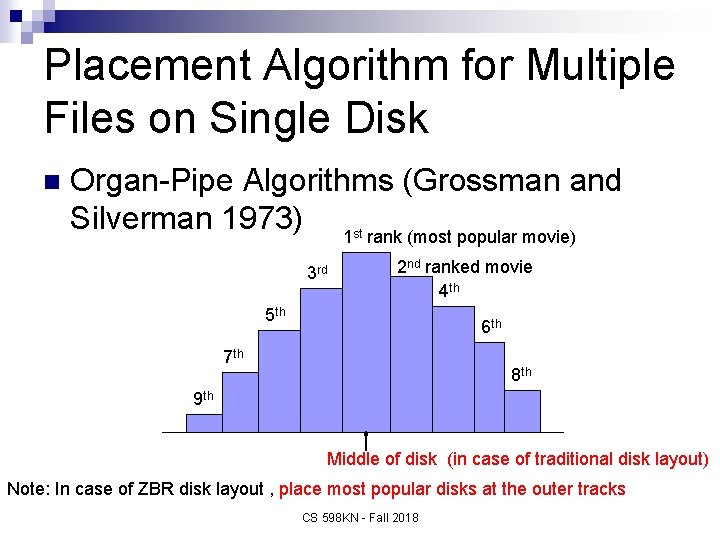

Placement Algorithm for Multiple Files on Single Disk

- Organ-Pipe Algorithm (Grossman and Silverman 1973)
  - Figure: the 1st-ranked (most popular) movie is placed in the middle of the disk (in the case of the traditional disk layout); the 2nd and 3rd ranked movies sit on either side of it, followed by the 4th through 9th ranked movies progressively farther out

Note: in the case of the ZBR disk layout, place the most popular movies on the outer tracks.
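A minimal sketch of the organ-pipe arrangement for the traditional layout: the most popular movie goes in the middle and successive ranks alternate between the two sides. Which side receives the even ranks is an arbitrary assumption here, and `organ_pipe` is an illustrative name.

```python
from collections import deque


def organ_pipe(num_movies):
    """Return movie ranks in disk order, most popular in the middle."""
    layout = deque()
    for rank in range(1, num_movies + 1):
        if rank % 2:
            layout.appendleft(rank)  # odd ranks grow toward one edge
        else:
            layout.append(rank)      # even ranks grow toward the other edge
    return list(layout)


print(organ_pipe(9))
```

Placing popular movies near the middle keeps the expected head travel between consecutive requests short, since the head is most often already close to the next movie requested.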

Placement of Mapping Tables

- Fundamental issue: keep track of which disk blocks belong to each file (I-nodes in UNIX)
- For contiguous files/contiguous placement
  - Don't need maps
- For scattered files
  - Need maps
  - Linked lists (inefficient for multimedia files)
  - File allocation tables (FAT)

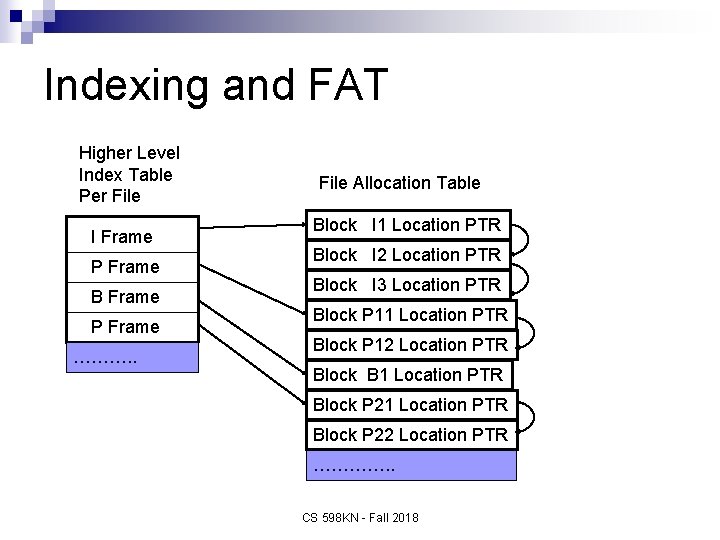

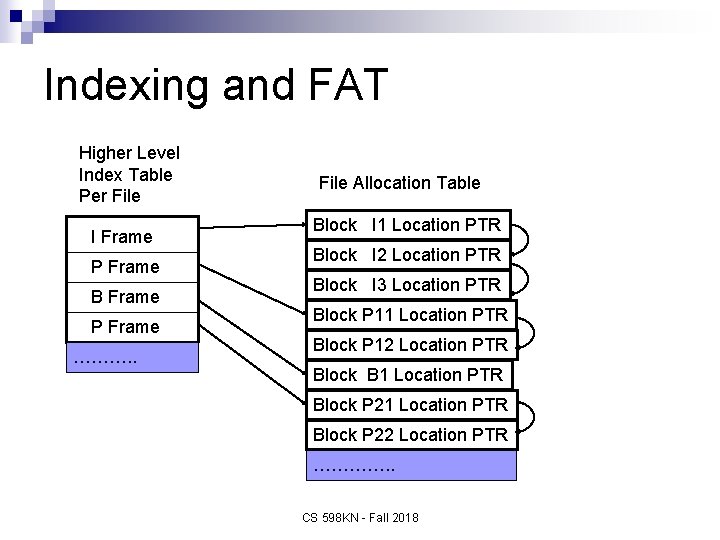

Indexing and FAT (diagram): a higher-level index table per file lists the frames (I frame, P frame, B frame, P frame, ...); each entry points into the file allocation table, whose rows hold a block identifier (I1, I2, I3, P11, P12, B1, P22, ...) and a location pointer.

Constant and Real-time Retrieval of MM Data

- Retrieve index in real-time
- Retrieve block information from FAT
- Retrieve data from disk in real-time
- Real-time playback
  - Implement linked list
- Random seek (fast forward, rewind)
  - Implement indexing
- MM file maps
  - Include metadata about MM objects: creator of video, sync info

Fast Forward and Rewind (Implementation)

- Play back media at a higher rate
  - Not a practical solution
- Continue playback at normal rate, but skip frames
  - Define skip steps, e.g. skip every 3rd or 5th frame
  - Be careful about interdependencies within MPEG frames
- Approaches for FF:
  - Create a separate and highly compressed file
  - Categorize each frame as relevant or irrelevant
  - Intelligent arrangement of blocks for FF

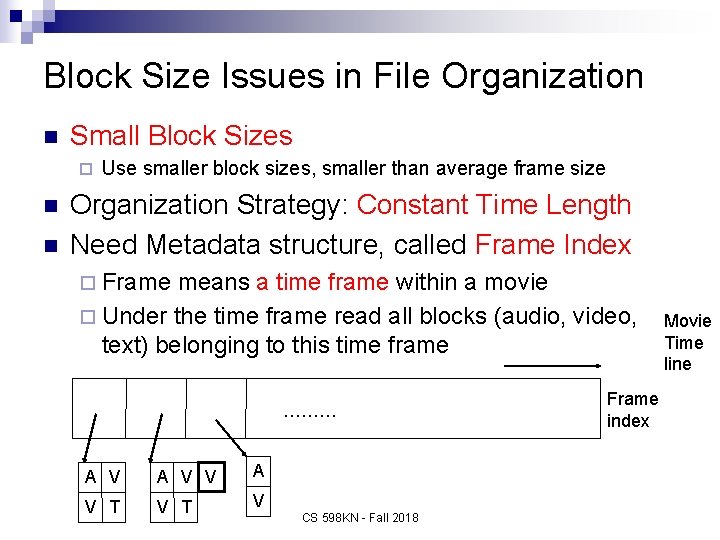

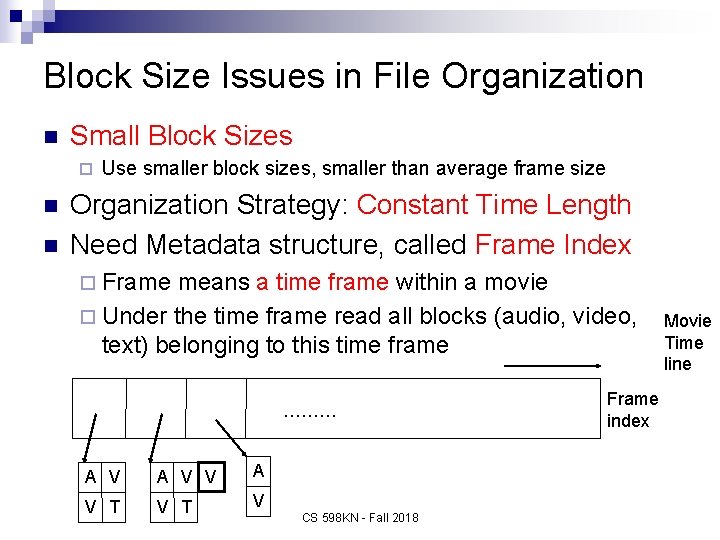

Block Size Issues in File Organization

- Small block sizes
  - Use block sizes smaller than the average frame size
- Organization strategy: constant time length
- Need a metadata structure, called the frame index
  - Frame means a time frame within a movie
  - Under the time frame, read all blocks (audio, video, text) belonging to this time frame

Figure: the frame index along the movie timeline points to audio (A), video (V) and text (T) blocks.

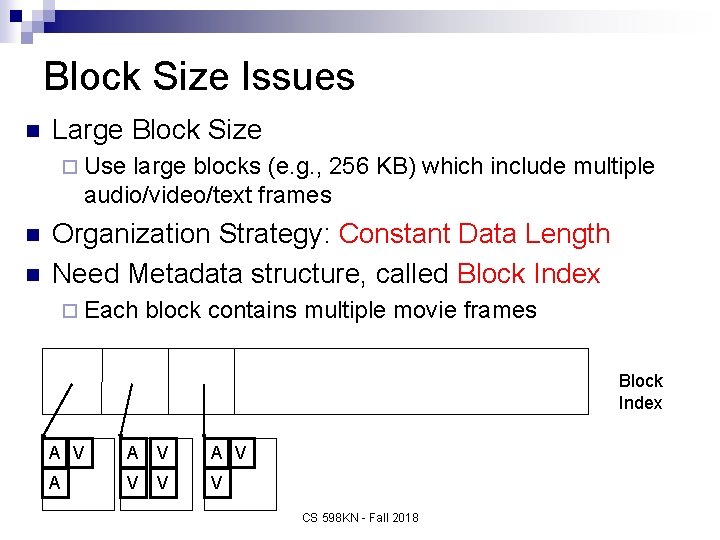

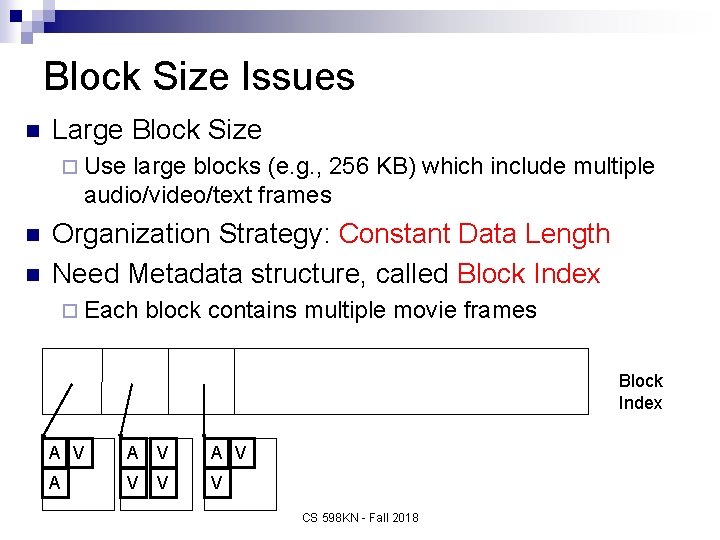

Block Size Issues

- Large block size
  - Use large blocks (e.g., 256 KB) which include multiple audio/video/text frames
- Organization strategy: constant data length
- Need a metadata structure, called the block index
  - Each block contains multiple movie frames

Figure: the block index points to blocks that each hold several audio (A) and video (V) frames.

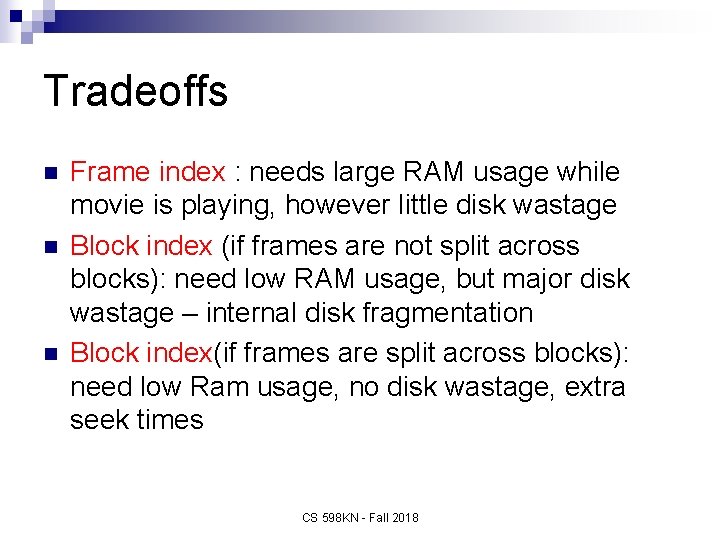

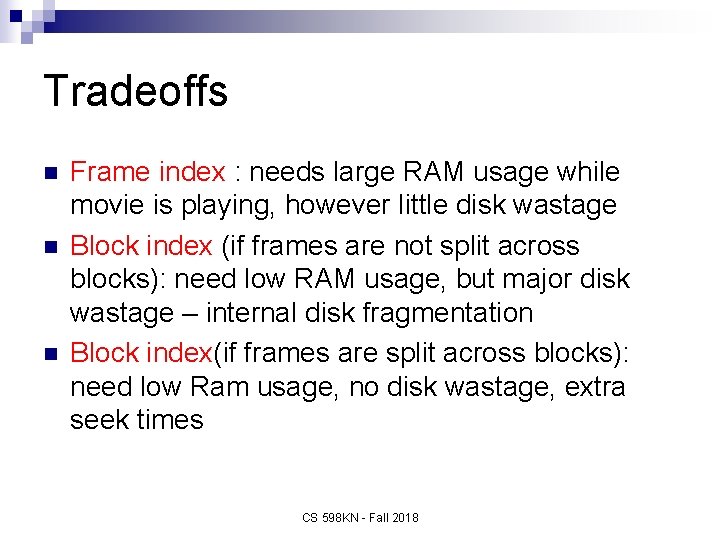

Tradeoffs

- Frame index: needs large RAM usage while the movie is playing, but little disk wastage
- Block index (if frames are not split across blocks): low RAM usage, but major disk wastage due to internal disk fragmentation
- Block index (if frames are split across blocks): low RAM usage, no disk wastage, extra seek times

Media Server Architecture (layer diagram; incoming requests enter and delivered data leaves at the top)

- Network Attachment
- Content Distribution
- Memory Management (Buffering)
- File System
- Storage Management
- Disk Controller
- Storage Device

True Video-on-Demand System

- True VOD: serve thousands of clients simultaneously and allow service at any time (variable access time)
- Goal: minimize the required resource consumption, such as
  - Server bandwidth (disk I/O and network): amount of data per time unit sent from the server to clients
  - Client bandwidth: network bandwidth that a client must be able to receive
  - Client buffer requirements: amount of data the client has to be able to store temporarily
  - Start-up delay: time between issuing the request for playback and the start of playback

Caching for Streaming Media

- Caching is a common technique to enhance the scalability of general information dissemination
- Existing caching schemes are not designed for, and do not take advantage of, streaming characteristics
- Need new caching for streaming media

Techniques for Increasing Server Capacity

- Caching
  - Interval caching
  - Frequency caching
- Key point
  - In conventional systems, caching is used to improve program performance
  - In video servers, caching is used to increase server capacity

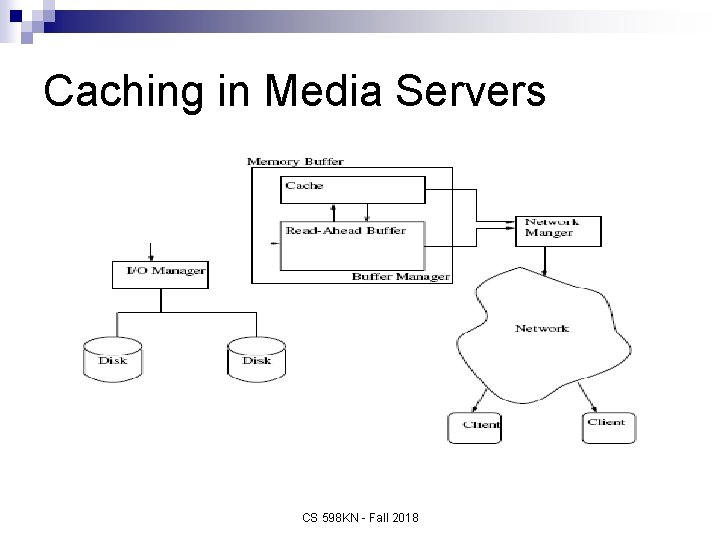

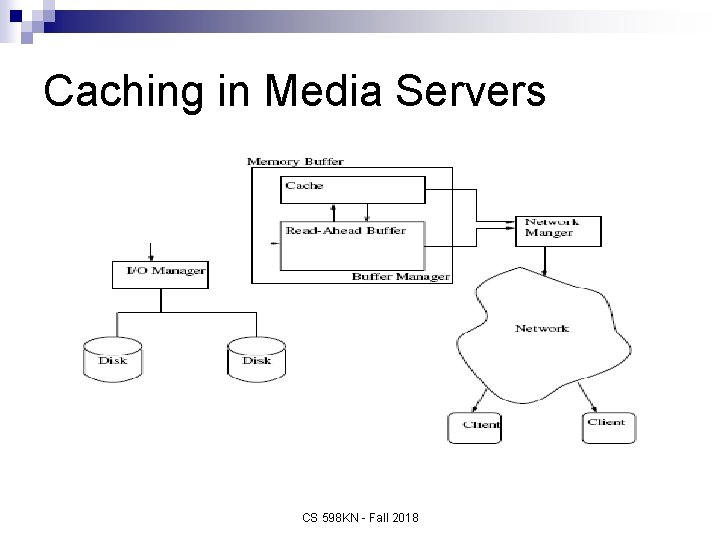

Caching

- Read-ahead buffering
  - Blocks are read and buffered ahead of the time they are needed
  - Early systems assumed separate buffers for each client
- Recent systems assume a global buffer cache, where cached data is shared among all clients

Caching in Media Servers

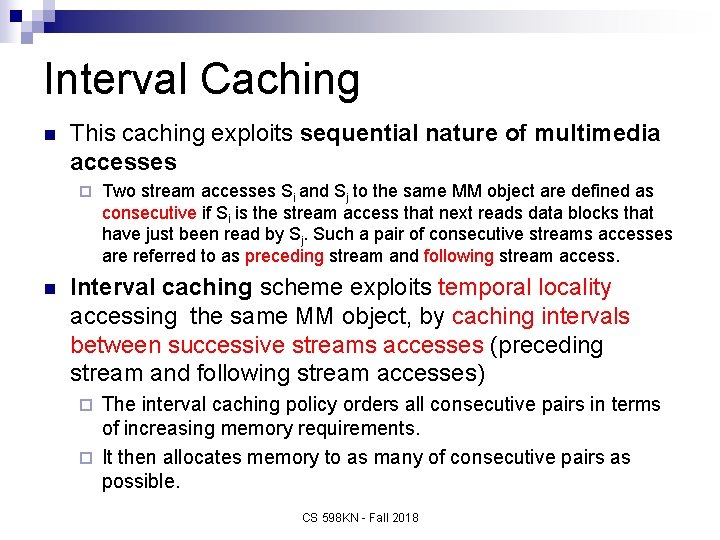

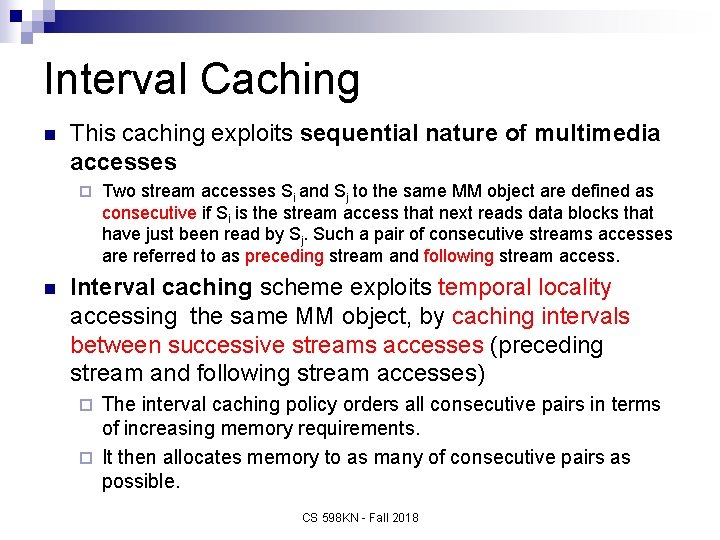

Interval Caching

- This caching exploits the sequential nature of multimedia accesses
  - Two stream accesses $S_i$ and $S_j$ to the same MM object are defined as consecutive if $S_i$ is the stream access that next reads the data blocks that have just been read by $S_j$. Such a pair of consecutive stream accesses is referred to as the preceding stream access ($S_j$) and the following stream access ($S_i$).
- The interval caching scheme exploits temporal locality in accesses to the same MM object by caching the intervals between successive stream accesses (preceding and following stream accesses)
- The interval caching policy orders all consecutive pairs in terms of increasing memory requirements
  - It then allocates memory to as many of the consecutive pairs as possible

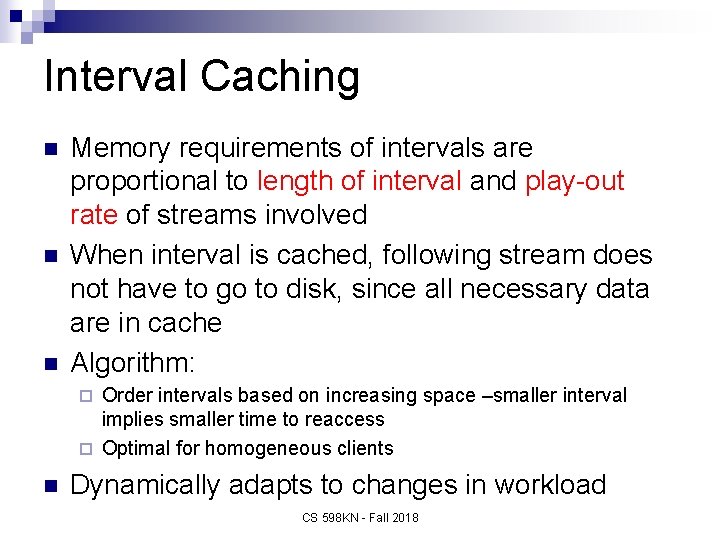

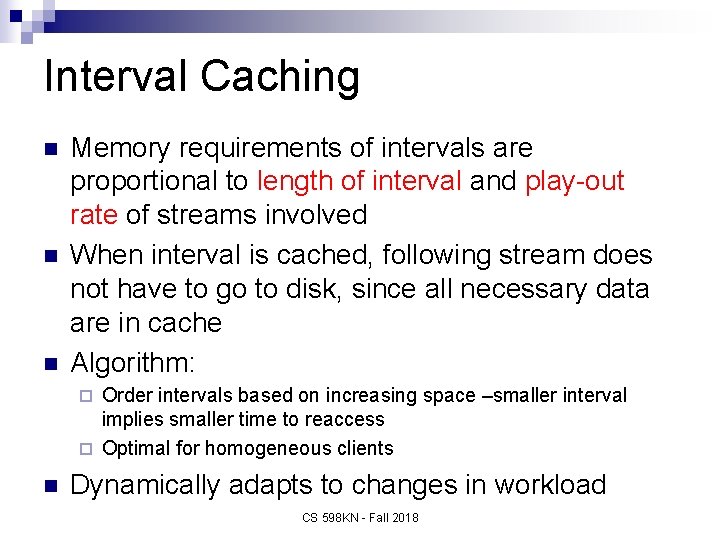

Interval Caching

- Memory requirements of intervals are proportional to the length of the interval and the play-out rate of the streams involved
- When an interval is cached, the following stream does not have to go to disk, since all necessary data are in the cache
- Algorithm: order intervals by increasing space; a smaller interval implies a smaller time to re-access
  - Optimal for homogeneous clients
- Dynamically adapts to changes in workload
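The allocation step can be sketched as a greedy pass over the intervals sorted by memory requirement. This is an illustration only: the interval names, gaps, play-out rate and cache size are hypothetical, and the memory of an interval is modeled simply as the time gap between the two streams multiplied by the play-out rate.

```python
def interval_memory(gap_seconds, playout_bytes_per_s):
    """Memory needed to hold the data between two consecutive streams."""
    return gap_seconds * playout_bytes_per_s


def choose_cached_intervals(intervals, cache_bytes):
    """Pick intervals in order of increasing memory until the cache is full.

    intervals is a list of (name, bytes_needed) pairs.
    Returns the chosen names and the cache space left over.
    """
    chosen, free = [], cache_bytes
    for name, need in sorted(intervals, key=lambda iv: iv[1]):
        if need > free:
            break  # every remaining interval is at least this large
        chosen.append(name)
        free -= need
    return chosen, free


RATE = 500_000  # hypothetical play-out rate in bytes per second
intervals = [
    ("S1->S2", interval_memory(60, RATE)),
    ("S3->S4", interval_memory(24, RATE)),
    ("S5->S6", interval_memory(100, RATE)),
    ("S7->S8", interval_memory(16, RATE)),
]
chosen, free = choose_cached_intervals(intervals, cache_bytes=52_000_000)
print(chosen, free)
```

Each cached interval lets one following stream be served from memory instead of disk, so favoring the smallest intervals maximizes the number of streams taken off the disk for a given cache size.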

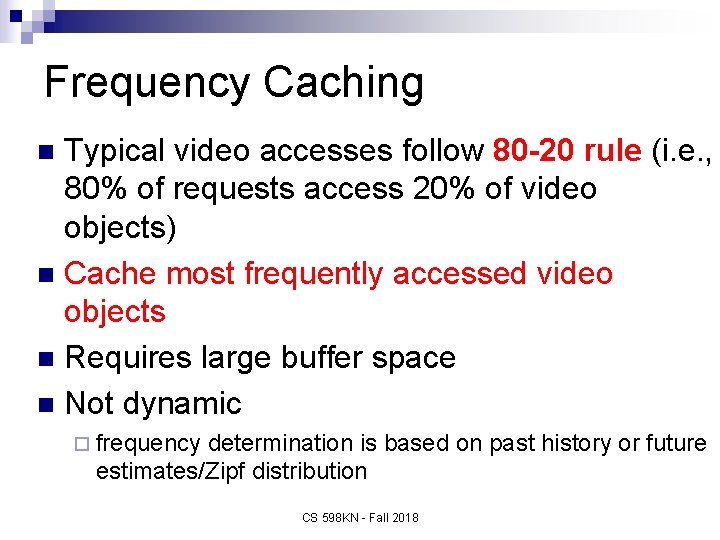

Frequency Caching

- Typical video accesses follow the 80-20 rule (i.e., 80% of requests access 20% of video objects)
- Cache the most frequently accessed video objects
- Requires large buffer space
- Not dynamic
  - Frequency determination is based on past history or future estimates/Zipf distribution

Taxonomy of Cache Replacement Policies

- Recency of access: locality of reference
- Frequency based: hot sets with independent accesses
- Optimal: knowledge of the time of next access
- Size-based: different size objects
- Miss cost based: different times to fetch objects
- Resource-based: resource usage of different object classes

Conclusion

- Data placement, scheduling, block size decisions, caching, support for concurrent clients, and buffering are very important for any media server design and implementation
- Huge explosion in media storage: cloud storage
  - Similar software: Apache Cassandra, Hadoop, Hypertable, Apache Accumulo, Apache HBase, ...

IF TIME PERMITS, EXAMPLES

Examples

- Example of early media server: Medusa
- Example of multimedia file system: Symphony
- Example of industrial multimedia file system: Google File System

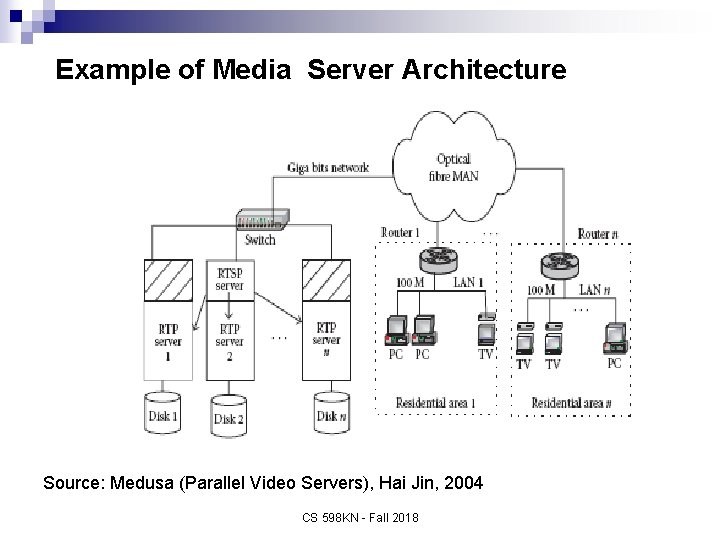

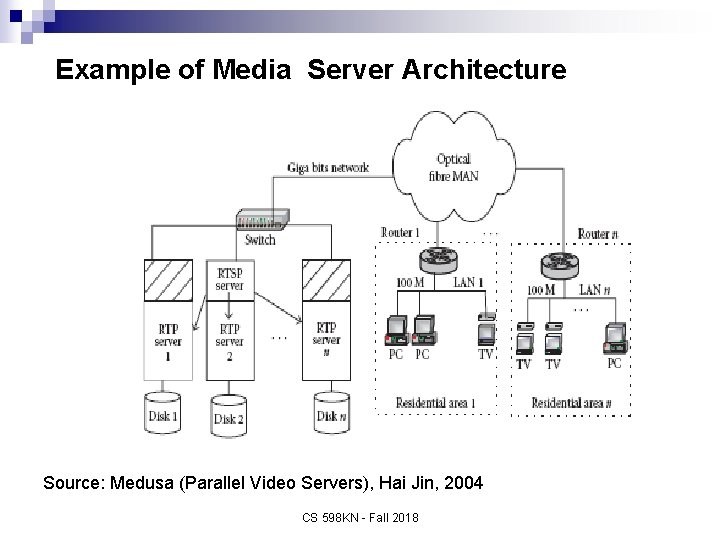

Example of Media Server Architecture

Source: Medusa (Parallel Video Servers), Hai Jin, 2004

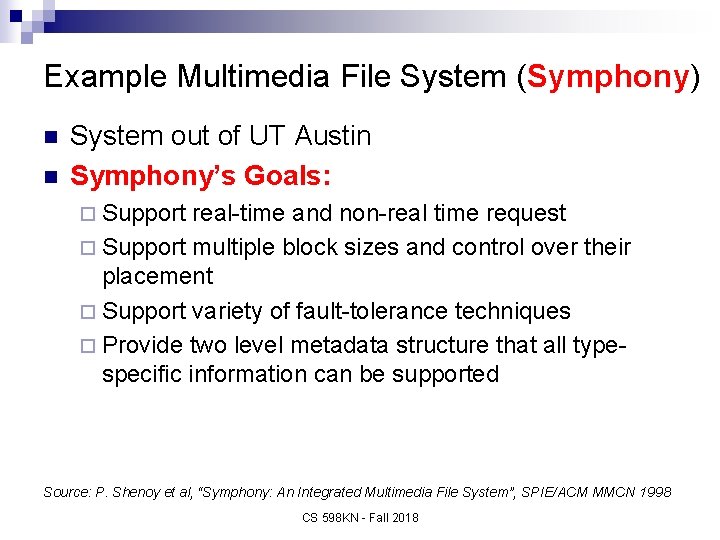

Example Multimedia File System (Symphony)

- System out of UT Austin
- Symphony's goals:
  - Support real-time and non-real-time requests
  - Support multiple block sizes and control over their placement
  - Support a variety of fault-tolerance techniques
  - Provide a two-level metadata structure so that all type-specific information can be supported

Source: P. Shenoy et al., "Symphony: An Integrated Multimedia File System", SPIE/ACM MMCN 1998

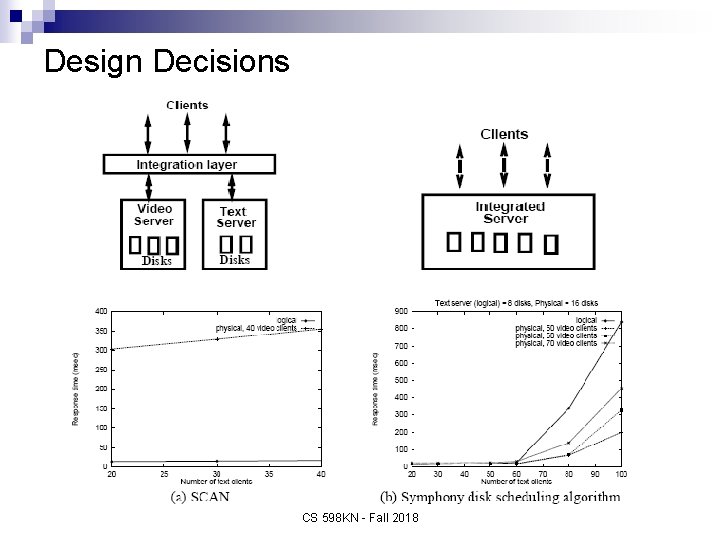

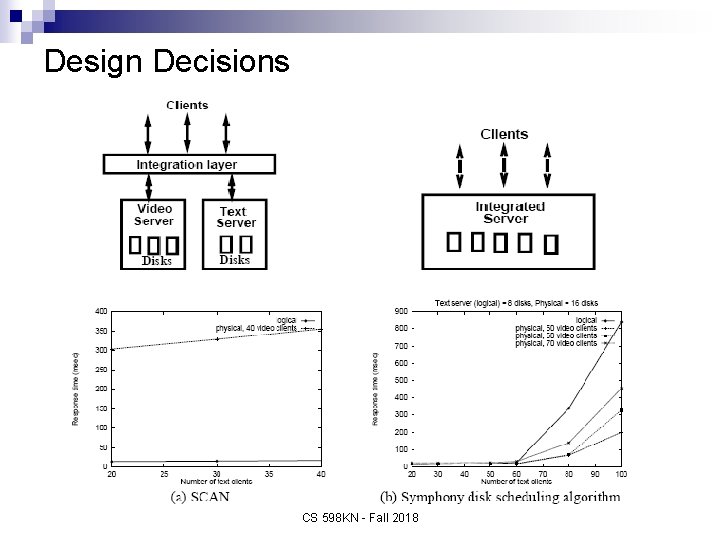

Design Decisions

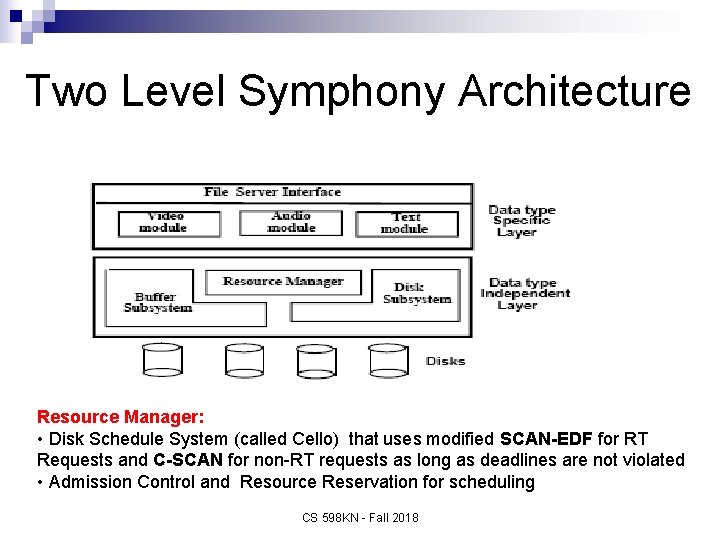

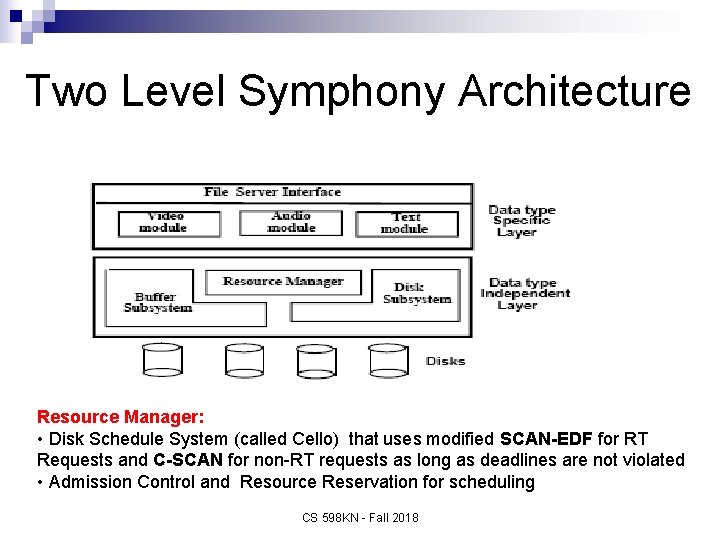

Two-Level Symphony Architecture

Resource Manager:

- Disk scheduling system (called Cello) that uses modified SCAN-EDF for RT requests and C-SCAN for non-RT requests, as long as deadlines are not violated
- Admission control and resource reservation for scheduling

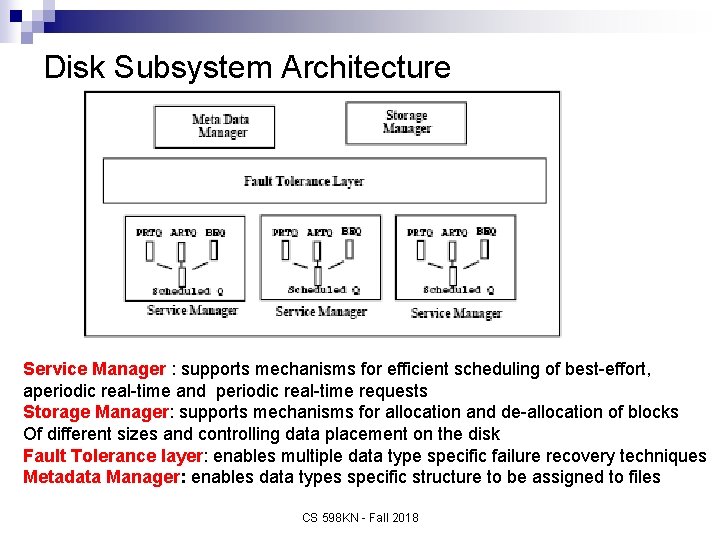

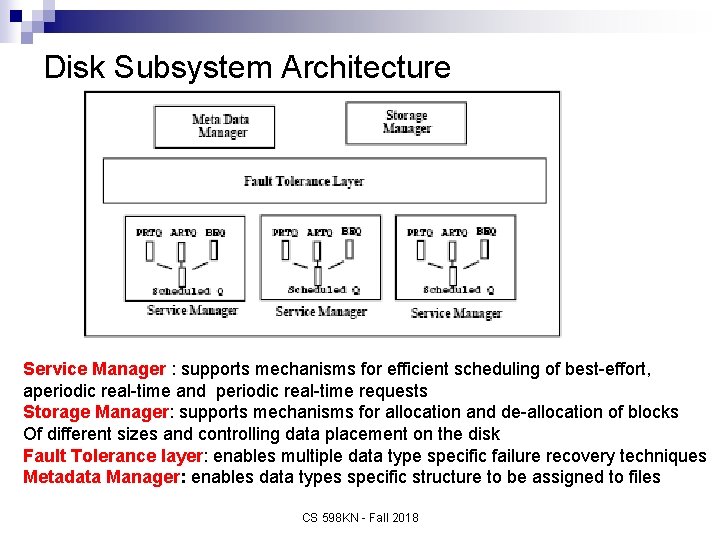

Disk Subsystem Architecture

- Service Manager: supports mechanisms for efficient scheduling of best-effort, aperiodic real-time and periodic real-time requests
- Storage Manager: supports mechanisms for allocation and de-allocation of blocks of different sizes and for controlling data placement on the disk
- Fault Tolerance layer: enables multiple data-type-specific failure recovery techniques
- Metadata Manager: enables data-type-specific structure to be assigned to files

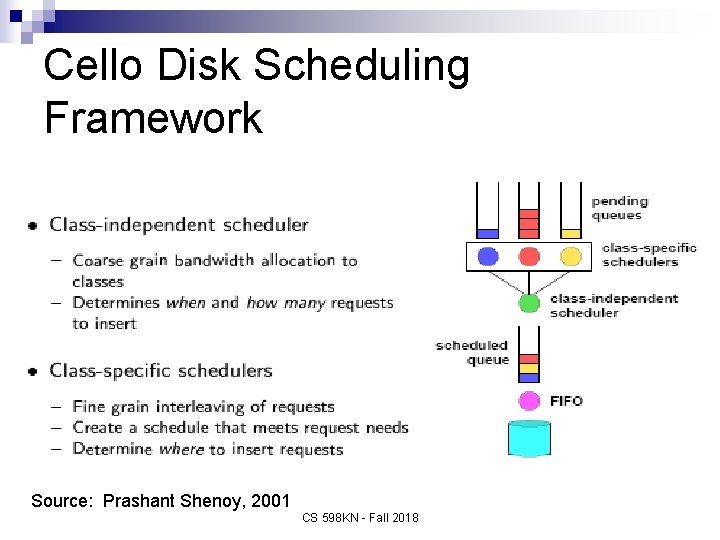

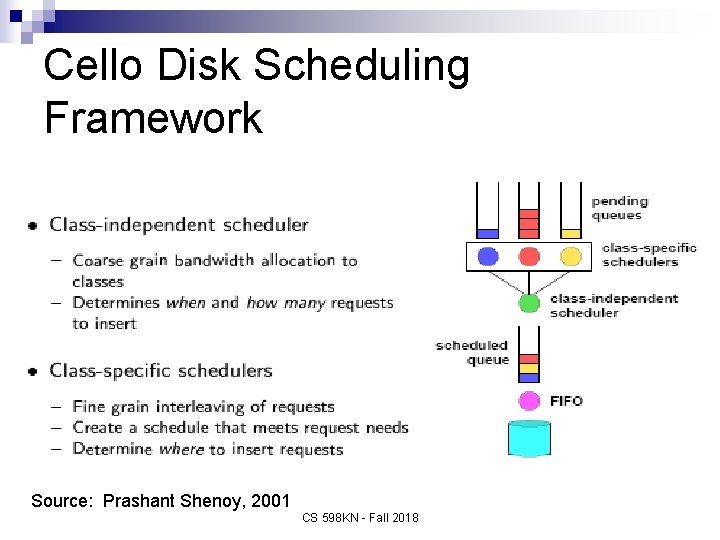

Cello Disk Scheduling Framework

Source: Prashant Shenoy, 2001

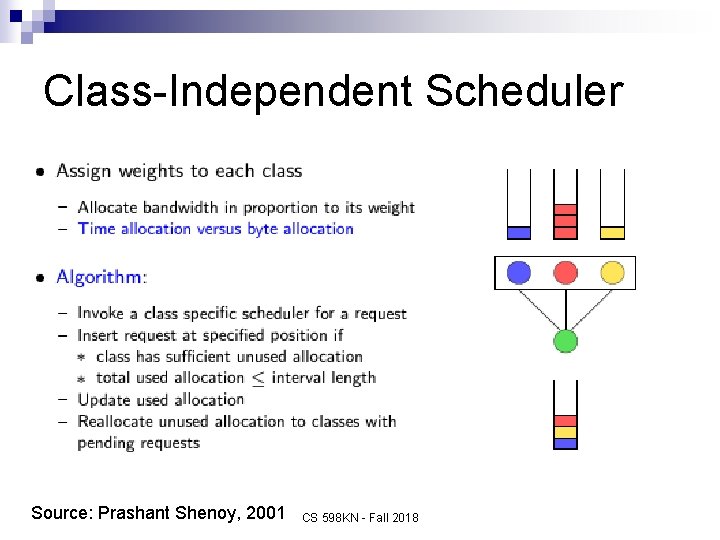

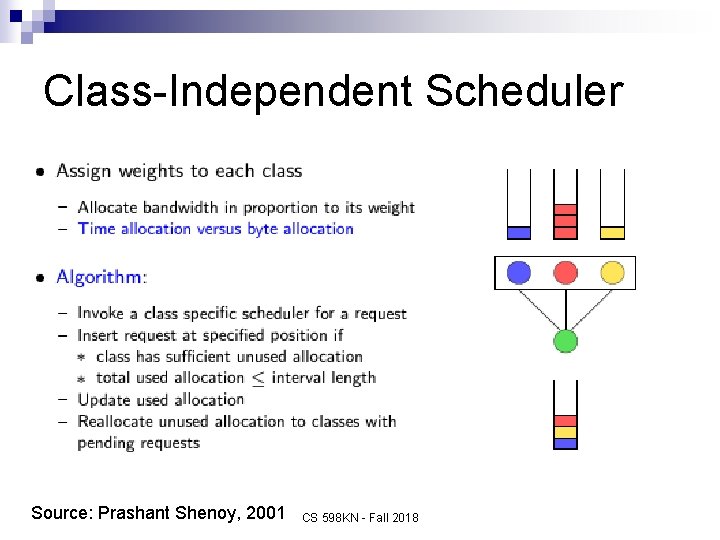

Class-Independent Scheduler

Source: Prashant Shenoy, 2001

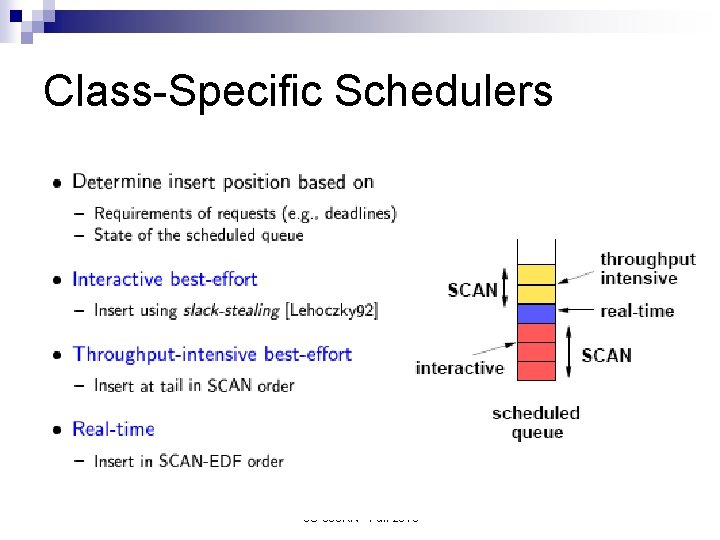

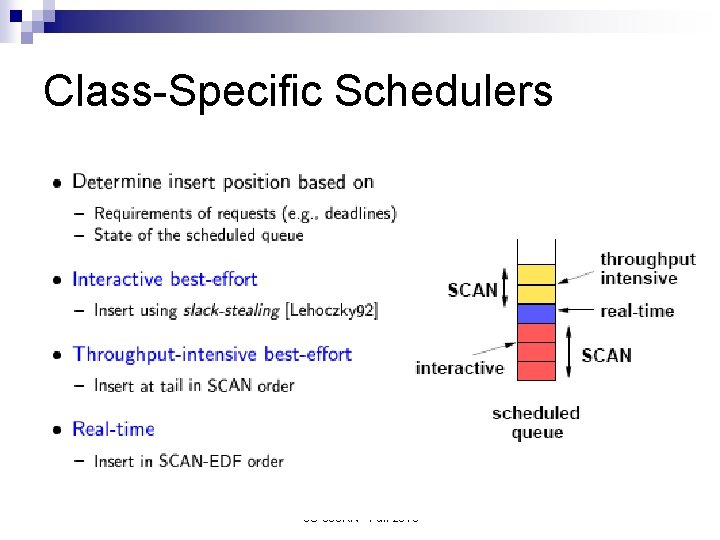

Class-Specific Schedulers

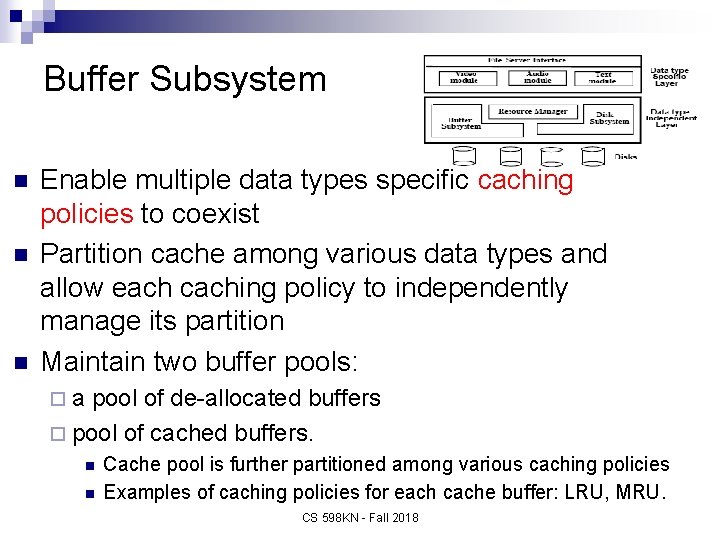

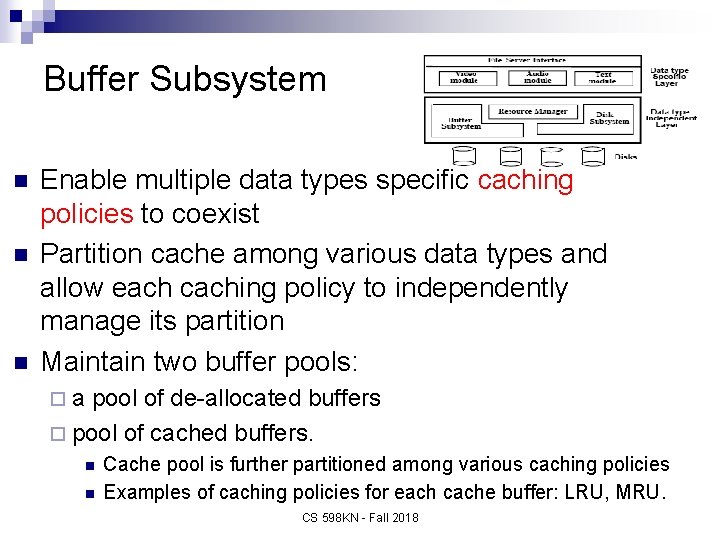

Buffer Subsystem

- Enable multiple data-type-specific caching policies to coexist
- Partition the cache among various data types and allow each caching policy to independently manage its partition
- Maintain two buffer pools:
  - A pool of de-allocated buffers
  - A pool of cached buffers
- The cache pool is further partitioned among various caching policies
- Examples of caching policies for each cache buffer: LRU, MRU

Buffer Subsystem (Protocol)

- Receive a buffer allocation request
- Check if the requested block is cached
  - If yes, return the requested block
  - If cache miss, allocate a buffer from the pool of de-allocated buffers and insert this buffer into the appropriate cache partition
- The caching policy that manages the individual cache determines the position in the buffer cache
- If the pool of de-allocated buffers falls below a watermark, buffers are evicted from the cache and returned to the de-allocated pool
  - Use TTR (Time-To-Reaccess) values to determine victims
  - TTR: estimate of the next time at which the buffer is likely to be accessed
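The protocol can be sketched with a single cache partition. This is a simplified illustration, not Symphony's implementation: the class `BufferCache` and its fields are hypothetical, the TTR estimate is supplied by the caller rather than computed by a caching policy, and the victim is assumed to be the buffer with the largest TTR (the one expected to be re-accessed latest).

```python
class BufferCache:
    """Toy model of a de-allocated pool plus one cache partition."""

    def __init__(self, total_buffers, low_watermark):
        self.free = total_buffers        # buffers in the de-allocated pool
        self.low_watermark = low_watermark
        self.cached = {}                 # block id -> TTR estimate

    def request(self, block, ttr):
        """Handle one buffer allocation request; return 'hit' or 'miss'."""
        if block in self.cached:
            self.cached[block] = ttr     # refresh the estimate on a hit
            return "hit"
        self.free -= 1                   # take a buffer from the free pool
        self.cached[block] = ttr
        # Refill the free pool when it drops below the watermark.
        while self.free < self.low_watermark and self.cached:
            victim = max(self.cached, key=self.cached.get)  # assumed rule
            del self.cached[victim]
            self.free += 1
        return "miss"


cache = BufferCache(total_buffers=3, low_watermark=1)
results = [cache.request("a", 5), cache.request("b", 9),
           cache.request("c", 2), cache.request("a", 4)]
print(results, sorted(cache.cached), cache.free)
```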

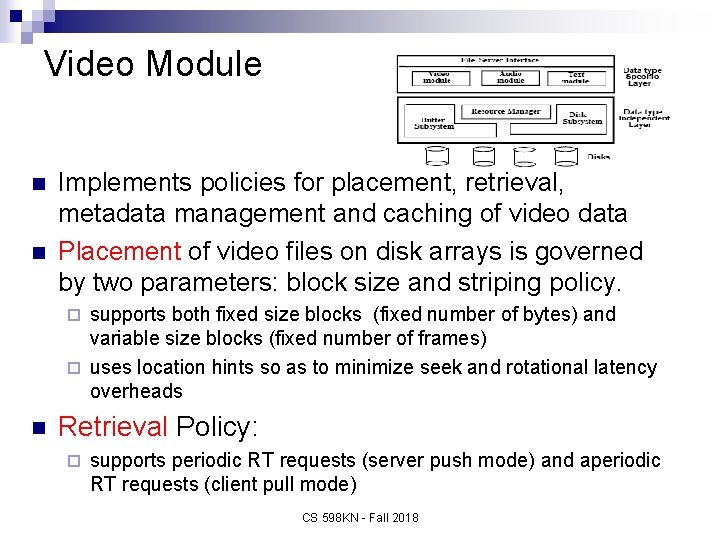

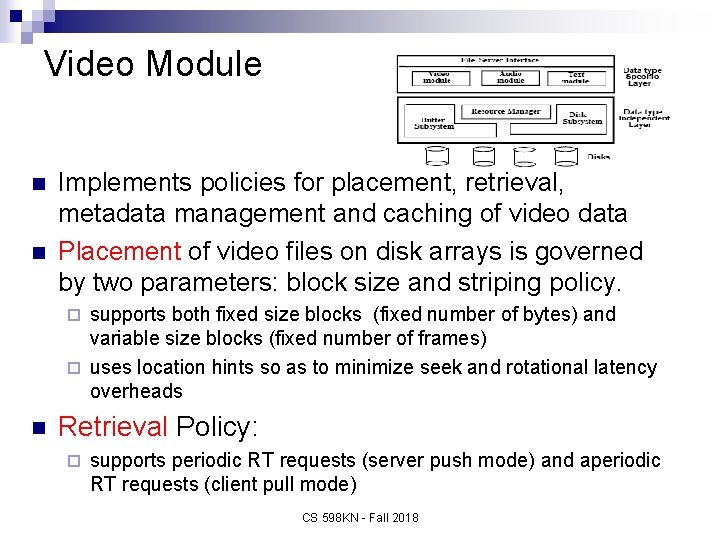

Video Module

- Implements policies for placement, retrieval, metadata management and caching of video data
- Placement of video files on disk arrays is governed by two parameters: block size and striping policy
  - Supports both fixed-size blocks (fixed number of bytes) and variable-size blocks (fixed number of frames)
  - Uses location hints so as to minimize seek and rotational latency overheads
- Retrieval policy:
  - Supports periodic RT requests (server push mode) and aperiodic RT requests (client pull mode)

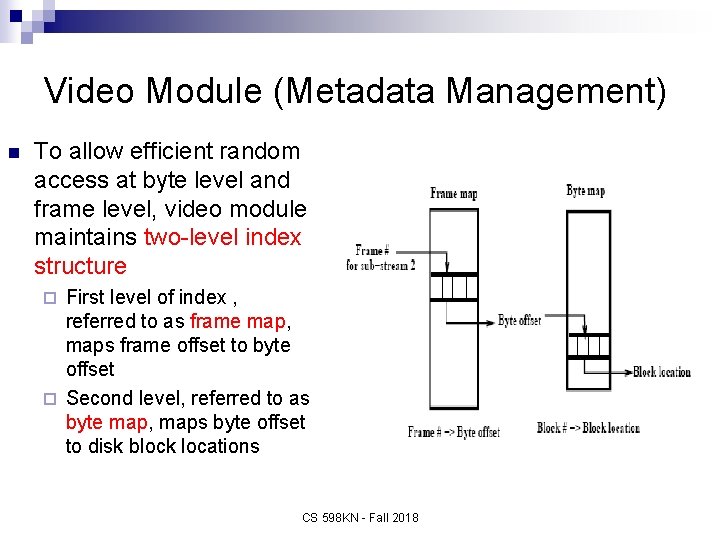

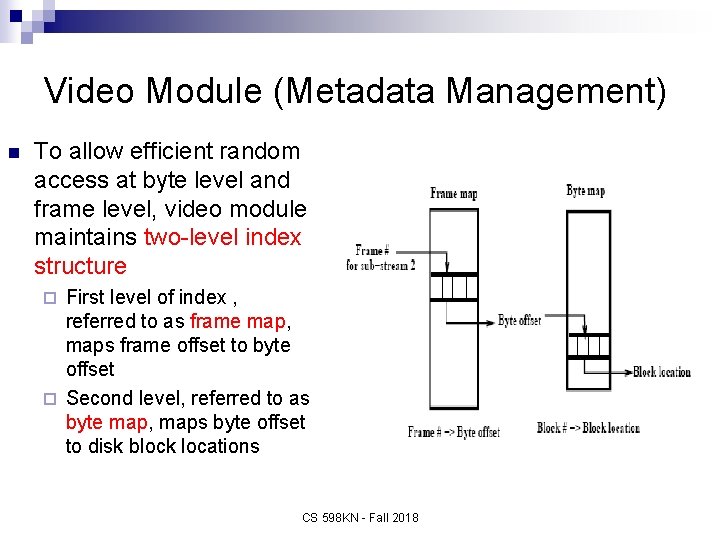

Video Module (Metadata Management)

- To allow efficient random access at byte level and frame level, the video module maintains a two-level index structure
  - The first level of the index, referred to as the frame map, maps frame offset to byte offset
  - The second level, referred to as the byte map, maps byte offset to disk block locations
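A minimal sketch of a lookup through the two levels. The data layout is an assumption made for illustration: the frame map is a list of byte offsets indexed by frame number, the byte map is a list of disk block locations indexed by logical block number, and blocks are of fixed size. All values are hypothetical.

```python
def locate_frame(frame_no, frame_map, byte_map, block_size):
    """Return (disk block location, offset within block) for a frame."""
    byte_offset = frame_map[frame_no]          # level 1: frame -> byte
    logical_block = byte_offset // block_size
    disk_block = byte_map[logical_block]       # level 2: byte -> disk block
    return disk_block, byte_offset % block_size


# Hypothetical file: four frames stored in three 8 KiB blocks.
frame_map = [0, 12_000, 15_500, 21_000]
byte_map = [907, 112, 530]

print(locate_frame(2, frame_map, byte_map, block_size=8192))
```

Random access by frame (for example, during fast forward) goes through both levels, while random access by byte offset only needs the second.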

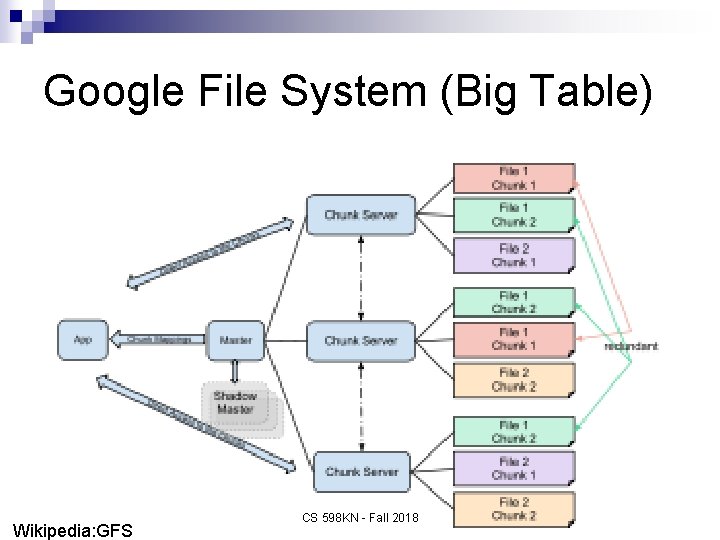

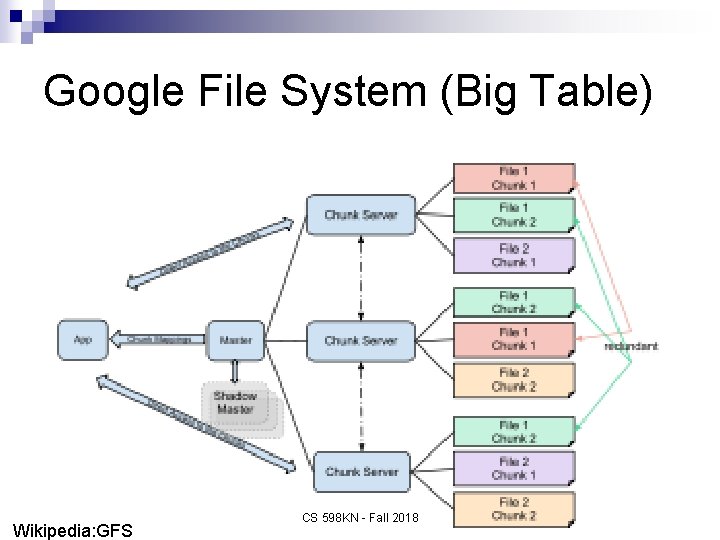

Google File System (Big Table)

Source: Wikipedia, GFS

Google File System

- Files are divided into big fixed-size chunks of 64 MBytes
- Chunk servers
- Files are usually appended to or read
- Runs on cheap commodity computers
- High failure rate
- High data throughput at the cost of latency
- Two types of nodes
  - Master node and a large number of chunk servers
- Designed for system-to-system interaction

Source: Sanjay Ghemawat, Howard Gobioff, and Shun-Tak Leung, "The Google File System", Google, SOSP '03

Big Table

- High-performance data storage system
- Built on top of
  - Google File System
  - Chubby Lock Service
  - SSTable (log-structured storage)
- Supports systems such as
  - MapReduce
  - YouTube
  - Google Earth
  - Google Maps
  - Gmail

Spanner

- Successor to Big Table
- Globally distributed relational database management system (RDBMS)
- Google F1: built on top of Spanner (replaces MySQL)

Source: Corbett, James C.; Dean, Jeffrey; Epstein, Michael; Fikes, Andrew; Frost, Christopher; Furman, JJ; Ghemawat, Sanjay; Gubarev, Andrey; Heiser, Christopher; Hochschild, Peter; Hsieh, Wilson; Kanthak, Sebastian; Kogan, Eugene; Li, Hongyi; Lloyd, Alexander; Melnik, Sergey; Mwaura, David; Nagle, David; Quinlan, Sean; Rao, Rajesh; Rolig, Lindsay; Saito, Yasushi; Szymaniak, Michal; Taylor, Christopher; Wang, Ruth; Woodford, Dale, "Spanner: Google's Globally-Distributed Database", Proceedings of OSDI 2012 (Google), retrieved 18 September 2012.