TensorFlow in Deep Learning, Lecture 2: Neural Network

- Slides: 22

TensorFlow in Deep Learning, Lecture 2: Neural Network

JAHANDAR JAHANIPOUR, jjahanipour@uh.edu

www.easy-tensorflow.com

https://github.com/easy-tensorflow

30/04/2018

Outline

- Neural Network
  - Introduction to Neural Network
  - Implementing a Neural Network in TensorFlow
- TensorBoard
  - Visualize the network graph
  - Write summary

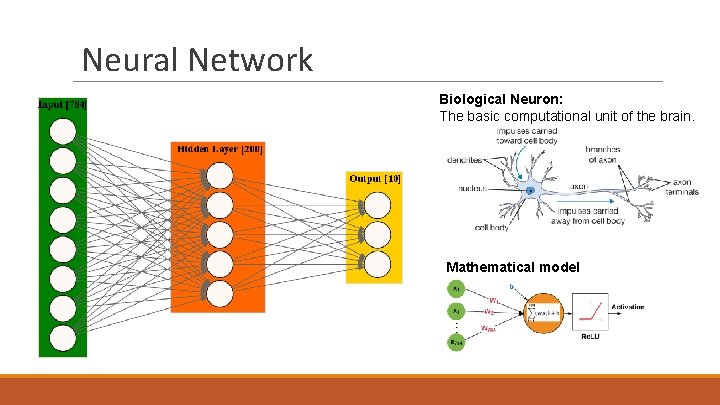

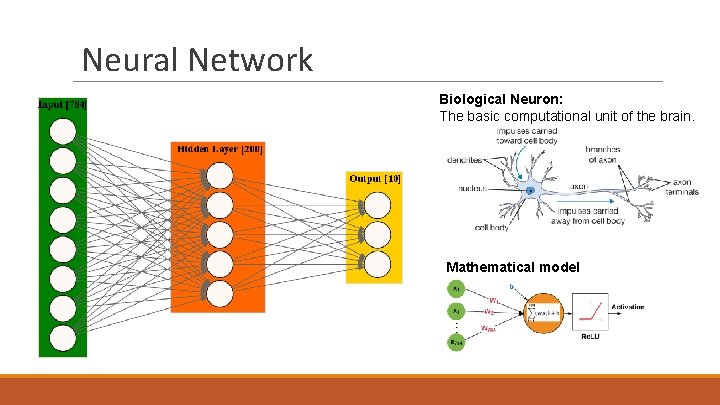

Neural Network

Biological neuron: the basic computational unit of the brain.

Mathematical model:

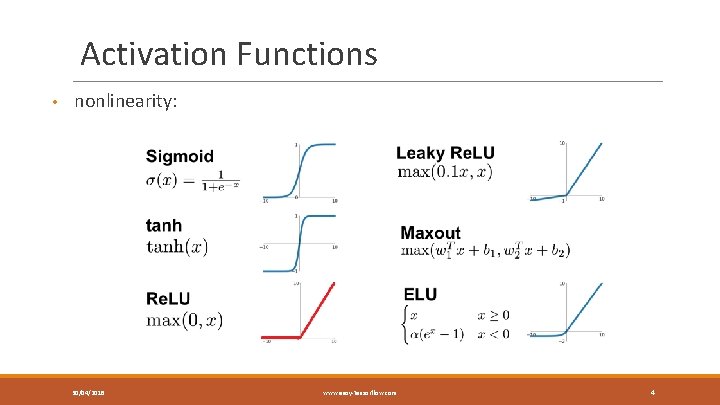

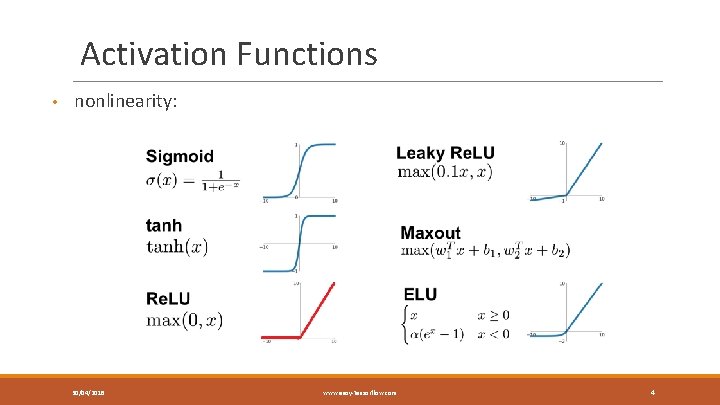

Activation Functions

- Nonlinearity:
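The formulas for this slide live in the images, so the exact functions shown are not recoverable here. As a rough sketch, assuming the usual candidates (sigmoid, tanh, and ReLU, the last being the one later slides apply through `tf.nn.relu`), the nonlinearities can be written in plain Python:

```python
import math


def sigmoid(z):
    """Squash z into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Squash z into the open interval (-1, 1)."""
    return math.tanh(z)


def relu(z):
    """Pass positive values through and clamp negatives to zero."""
    return max(0.0, z)


if __name__ == "__main__":
    # Print each activation at a few sample inputs.
    for z in (-2.0, 0.0, 2.0):
        print(z, sigmoid(z), tanh(z), relu(z))
```

Without a nonlinearity between layers, stacked linear layers collapse into a single linear map, which is why one is applied after the hidden layer.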

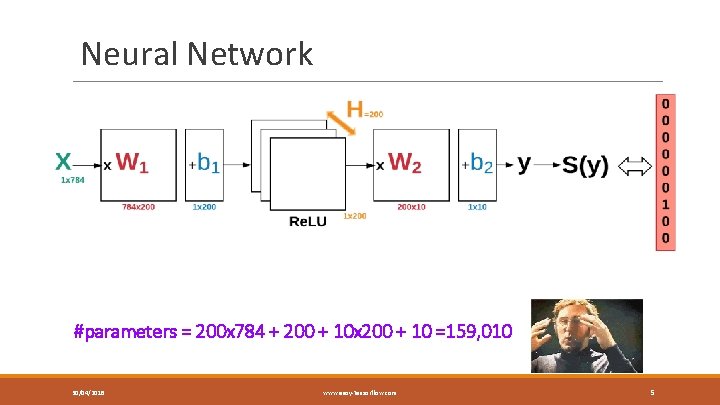

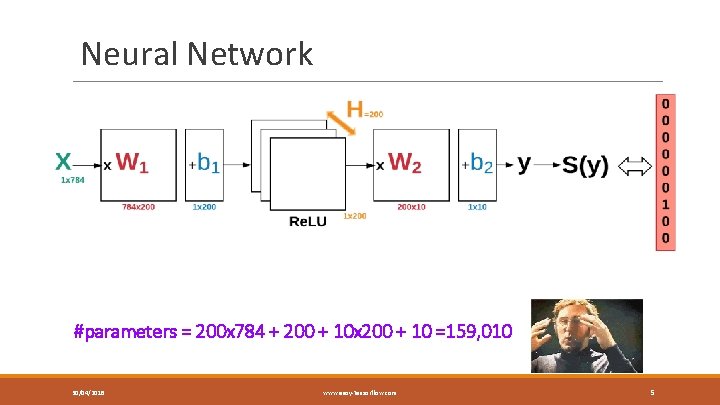

Neural Network

Number of parameters = 200 × 784 + 200 + 10 × 200 + 10 = 159,010
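The count above can be checked with a few lines of Python. This is only a sketch: `count_parameters` is a hypothetical helper, and it assumes a plain fully connected network with one bias per unit.

```python
def count_parameters(layer_sizes):
    """Count weights and biases of a fully connected network.

    layer_sizes lists the units per layer, input first, e.g. [784, 200, 10].
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out  # weight matrix between two layers
        total += n_out         # one bias per output unit
    return total


if __name__ == "__main__":
    # 784 inputs, 200 hidden units, 10 output classes
    print(count_parameters([784, 200, 10]))  # 159010
```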

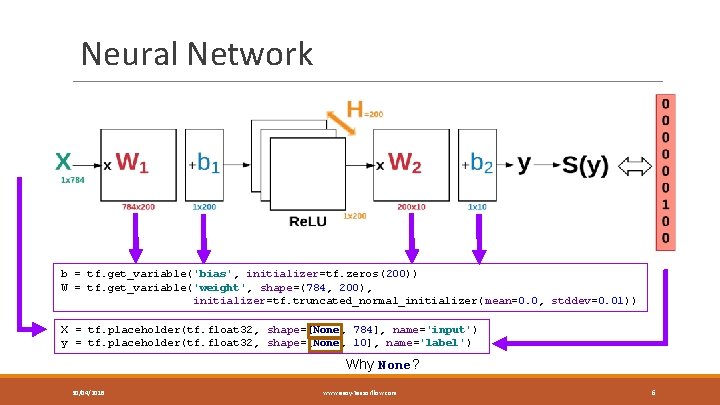

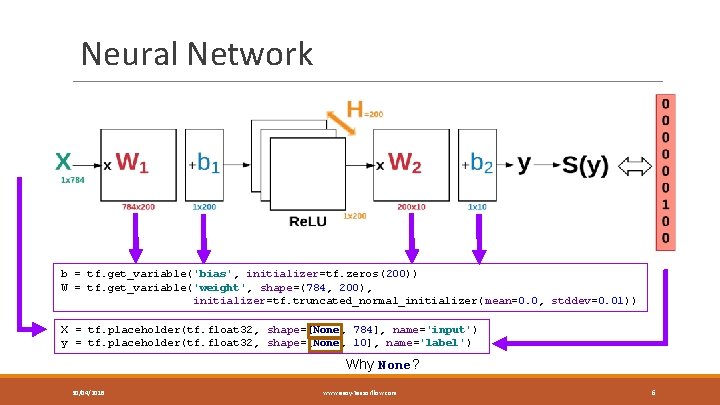

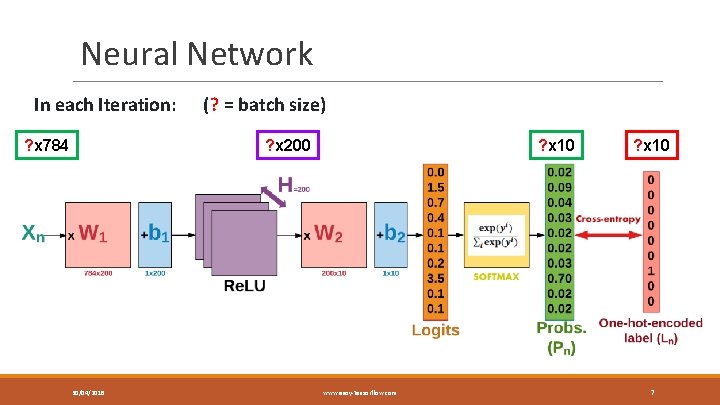

Neural Network

    import tensorflow as tf  # TensorFlow 1.x API

    b = tf.get_variable('bias', initializer=tf.zeros(200))
    W = tf.get_variable('weight', shape=(784, 200),
                        initializer=tf.truncated_normal_initializer(mean=0.0, stddev=0.01))
    X = tf.placeholder(tf.float32, shape=[None, 784], name='input')
    y = tf.placeholder(tf.float32, shape=[None, 10], name='label')

Why None? The first dimension is the batch size, which is left unspecified so the same placeholders accept batches of any size.

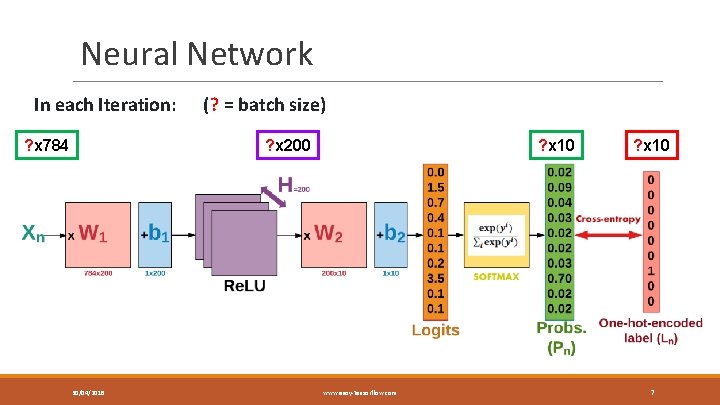

Neural Network

In each iteration (? = batch size):

- Input: ? × 784
- Hidden layer: ? × 200
- Output: ? × 10
- Labels: ? × 10

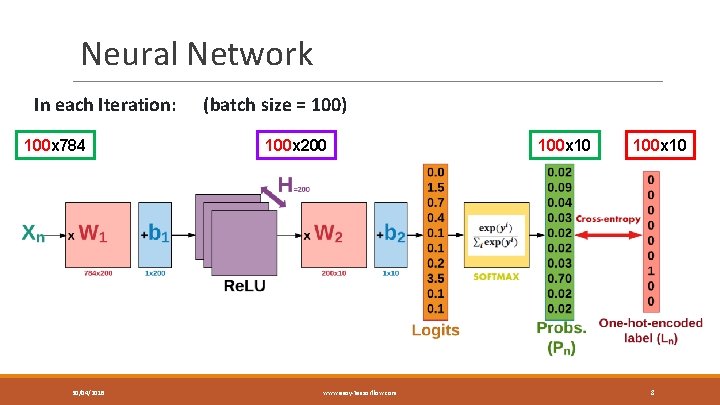

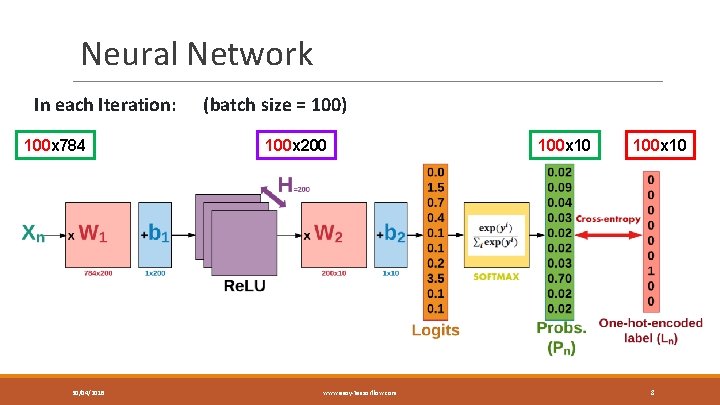

Neural Network

In each iteration (batch size = 100):

- Input: 100 × 784
- Hidden layer: 100 × 200
- Output: 100 × 10

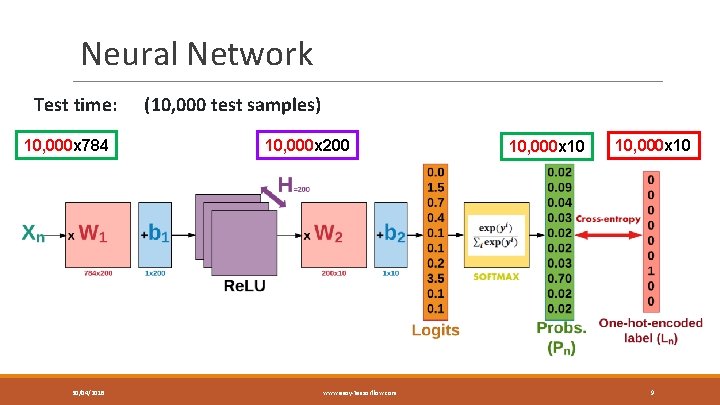

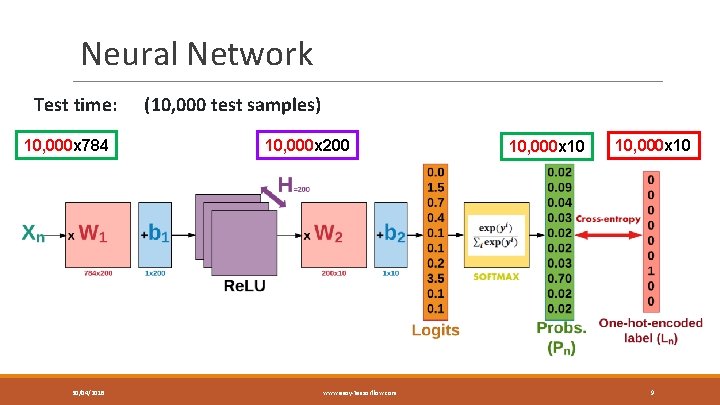

Neural Network

Test time (10,000 test samples):

- Input: 10,000 × 784
- Hidden layer: 10,000 × 200
- Output: 10,000 × 10
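The three shape slides make one point: only the first dimension of the activations changes with the batch size, while the weight shapes stay fixed. A minimal sketch of that bookkeeping, using hypothetical helper names that are not part of the slides:

```python
def activation_shapes(batch_size, layer_sizes):
    """Return the (rows, cols) shape of the activations at every layer."""
    return [(batch_size, units) for units in layer_sizes]


def weight_shapes(layer_sizes):
    """Weight shapes depend only on the layer sizes, never on the batch size."""
    return list(zip(layer_sizes[:-1], layer_sizes[1:]))


if __name__ == "__main__":
    layers = [784, 200, 10]
    print(activation_shapes(100, layers))    # training batch
    print(activation_shapes(10000, layers))  # full test set
    print(weight_shapes(layers))             # identical in both cases
```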

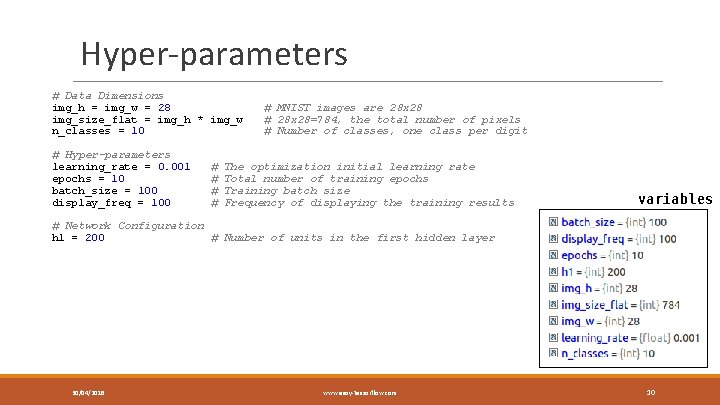

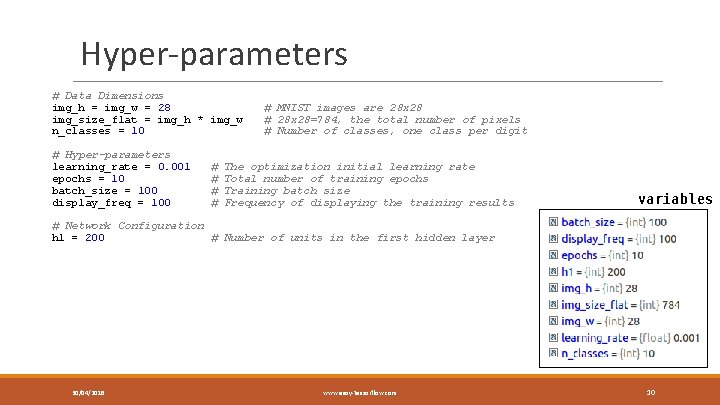

Hyper-parameters

    # Data Dimensions
    img_h = img_w = 28             # MNIST images are 28x28
    img_size_flat = img_h * img_w  # 28x28=784, the total number of pixels
    n_classes = 10                 # Number of classes, one class per digit

    # Hyper-parameters
    learning_rate = 0.001  # The optimization initial learning rate
    epochs = 10            # Total number of training epochs
    batch_size = 100       # Training batch size
    display_freq = 100     # Frequency of displaying the training results

    # Network Configuration
    h1 = 200               # Number of units in the first hidden layer

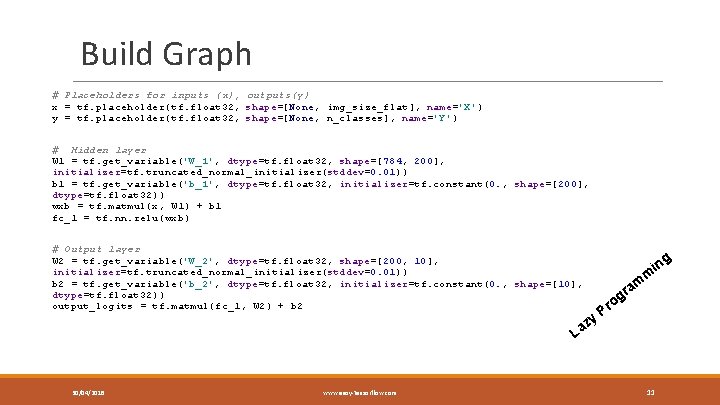

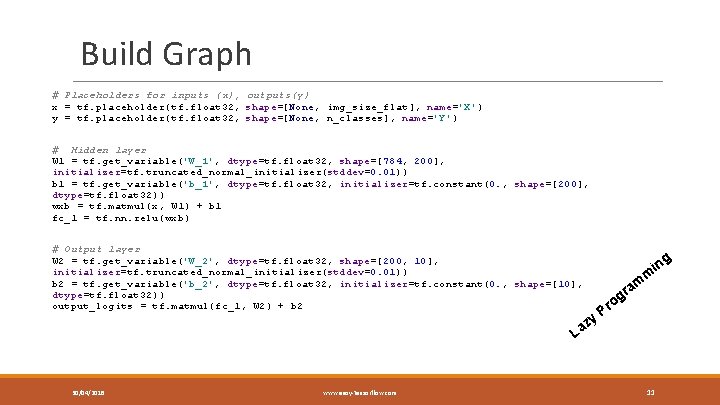

Build Graph

    # Placeholders for inputs (x), outputs (y)
    x = tf.placeholder(tf.float32, shape=[None, img_size_flat], name='X')
    y = tf.placeholder(tf.float32, shape=[None, n_classes], name='Y')

    # Hidden layer
    W1 = tf.get_variable('W_1', dtype=tf.float32, shape=[784, 200],
                         initializer=tf.truncated_normal_initializer(stddev=0.01))
    b1 = tf.get_variable('b_1', dtype=tf.float32,
                         initializer=tf.constant(0., shape=[200], dtype=tf.float32))
    wxb = tf.matmul(x, W1) + b1
    fc_1 = tf.nn.relu(wxb)

    # Output layer
    W2 = tf.get_variable('W_2', dtype=tf.float32, shape=[200, 10],
                         initializer=tf.truncated_normal_initializer(stddev=0.01))
    b2 = tf.get_variable('b_2', dtype=tf.float32,
                         initializer=tf.constant(0., shape=[10], dtype=tf.float32))
    output_logits = tf.matmul(fc_1, W2) + b2

(Slide annotation: Lazy Programming)

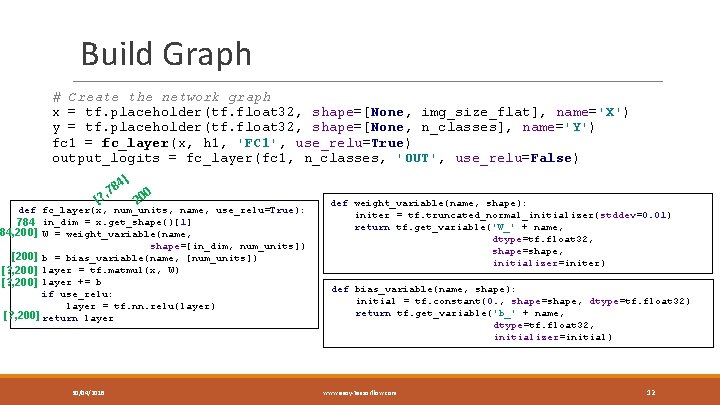

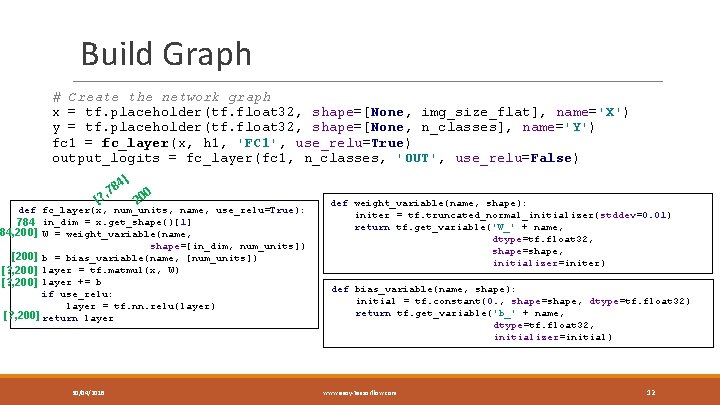

Build Graph

    def weight_variable(name, shape):
        # Weights: truncated normal with a small standard deviation
        initer = tf.truncated_normal_initializer(stddev=0.01)
        return tf.get_variable('W_' + name, dtype=tf.float32,
                               shape=shape, initializer=initer)

    def bias_variable(name, shape):
        # Biases: initialized to zero
        initial = tf.constant(0., shape=shape, dtype=tf.float32)
        return tf.get_variable('b_' + name, dtype=tf.float32,
                               initializer=initial)

    def fc_layer(x, num_units, name, use_relu=True):          # x: [?, 784], num_units: 200
        in_dim = x.get_shape()[1]                             # 784
        W = weight_variable(name, shape=[in_dim, num_units])  # [784, 200]
        b = bias_variable(name, [num_units])                  # [200]
        layer = tf.matmul(x, W)                               # [?, 200]
        layer += b                                            # [?, 200]
        if use_relu:
            layer = tf.nn.relu(layer)                         # [?, 200]
        return layer

    # Create the network graph (the helpers must be defined before this point)
    x = tf.placeholder(tf.float32, shape=[None, img_size_flat], name='X')
    y = tf.placeholder(tf.float32, shape=[None, n_classes], name='Y')
    fc1 = fc_layer(x, h1, 'FC1', use_relu=True)
    output_logits = fc_layer(fc1, n_classes, 'OUT', use_relu=False)
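To make the arithmetic inside `fc_layer` concrete, here is a framework-free sketch of the same computation, `relu(x · W + b)`, on nested Python lists. It is an illustration only, not the TensorFlow implementation: this version takes `W` and `b` as explicit arguments instead of creating variables.

```python
def fc_layer(x, W, b, use_relu=True):
    """Compute x @ W + b (optionally followed by ReLU) for a batch of rows.

    x: list of rows, shape [batch, in_dim]
    W: list of rows, shape [in_dim, num_units]
    b: list of length num_units
    """
    out = []
    for row in x:
        # One output per column of W: dot(row, column j) + bias j.
        z = [sum(xi * W[i][j] for i, xi in enumerate(row)) + b[j]
             for j in range(len(b))]
        if use_relu:
            z = [max(0.0, v) for v in z]
        out.append(z)
    return out


if __name__ == "__main__":
    x = [[1.0, 2.0], [0.0, -1.0]]   # batch of 2 samples, 2 features each
    W = [[1.0, -1.0], [0.5, 0.5]]   # 2 inputs -> 2 units
    b = [0.0, 0.0]
    print(fc_layer(x, W, b))                  # with ReLU
    print(fc_layer(x, W, b, use_relu=False))  # raw logits
```

The batch dimension never appears in `W` or `b`, which matches the `[?, 200]` annotations on the slide.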

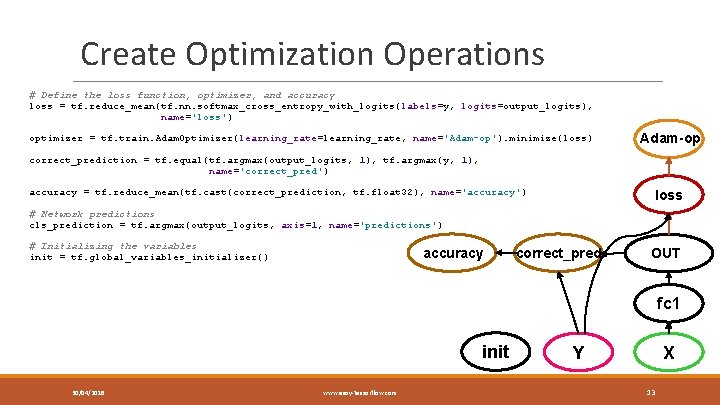

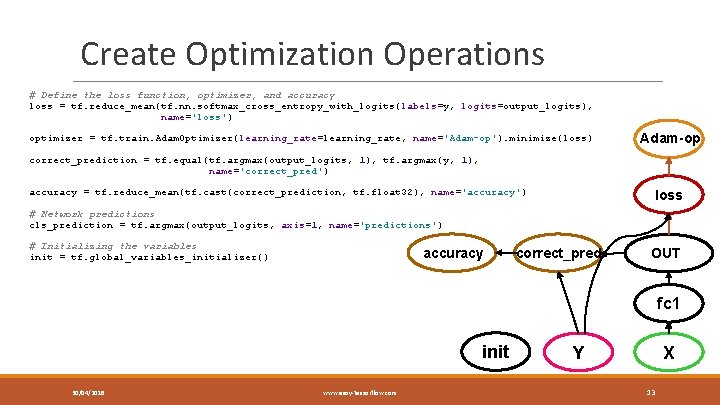

Create Optimization Operations

    # Define the loss function, optimizer, and accuracy
    loss = tf.reduce_mean(
        tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=output_logits),
        name='loss')
    optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate,
                                       name='Adam-op').minimize(loss)
    correct_prediction = tf.equal(tf.argmax(output_logits, 1), tf.argmax(y, 1),
                                  name='correct_pred')
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32), name='accuracy')

    # Network predictions
    cls_prediction = tf.argmax(output_logits, axis=1, name='predictions')

    # Initializing the variables
    init = tf.global_variables_initializer()

Graph nodes shown on the slide: Adam-op, loss, accuracy, correct_pred, OUT, fc1, init, Y, X.
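What the loss and accuracy ops compute can be sketched in plain Python. The helper names below are hypothetical; the sketch assumes one-hot labels, as the `tf.argmax(y, 1)` call on the slide implies.

```python
import math


def softmax_cross_entropy(logits, one_hot_label):
    """Cross-entropy between softmax(logits) and a one-hot label."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    # -sum(label * log_softmax(logits))
    return -sum(t * (v - log_sum_exp) for v, t in zip(logits, one_hot_label))


def argmax(values):
    """Index of the largest entry."""
    return max(range(len(values)), key=lambda i: values[i])


def mean_loss_and_accuracy(batch_logits, batch_labels):
    """Average loss and fraction of correct predictions over a batch."""
    pairs = list(zip(batch_logits, batch_labels))
    losses = [softmax_cross_entropy(l, t) for l, t in pairs]
    correct = [argmax(l) == argmax(t) for l, t in pairs]
    return sum(losses) / len(losses), sum(correct) / len(correct)


if __name__ == "__main__":
    logits = [[2.0, 1.0], [0.0, 3.0]]
    labels = [[1, 0], [1, 0]]
    print(mean_loss_and_accuracy(logits, labels))
```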

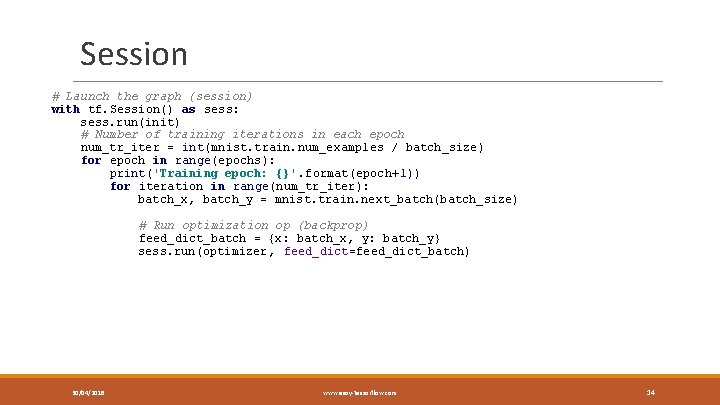

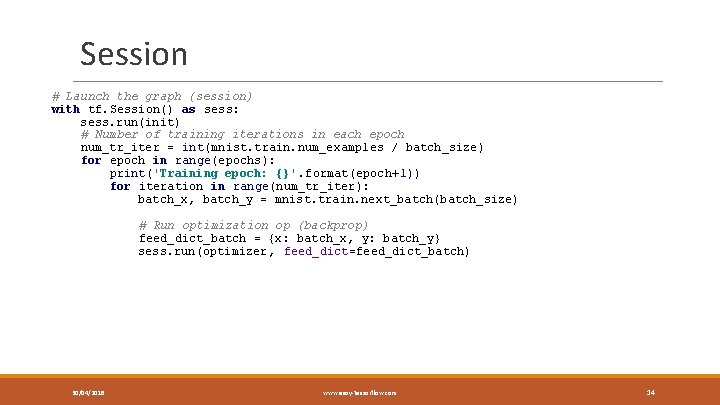

Session

    # Launch the graph (session)
    with tf.Session() as sess:
        sess.run(init)
        # Number of training iterations in each epoch
        # (mnist is the MNIST dataset object; loading it is not shown on this slide)
        num_tr_iter = int(mnist.train.num_examples / batch_size)
        for epoch in range(epochs):
            print('Training epoch: {}'.format(epoch + 1))
            for iteration in range(num_tr_iter):
                batch_x, batch_y = mnist.train.next_batch(batch_size)

                # Run optimization op (backprop)
                feed_dict_batch = {x: batch_x, y: batch_y}
                sess.run(optimizer, feed_dict=feed_dict_batch)
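The loop structure (epochs outside, mini-batches inside) can be sketched without TensorFlow. The helpers are hypothetical, and the figure of 55,000 training examples is an assumption about the MNIST split in use, not something stated on the slide. Unlike `next_batch`, this sketch does not shuffle.

```python
def iterations_per_epoch(num_examples, batch_size):
    """Mirror int(num_examples / batch_size) from the training loop."""
    return int(num_examples / batch_size)


def iterate_batches(samples, batch_size):
    """Yield consecutive full batches; a trailing partial batch is dropped."""
    for i in range(iterations_per_epoch(len(samples), batch_size)):
        start = i * batch_size
        yield samples[start:start + batch_size]


if __name__ == "__main__":
    # Assumed training-set size of 55,000 with the slide's batch size of 100.
    print(iterations_per_epoch(55000, 100))
    for batch in iterate_batches(list(range(10)), 4):
        print(batch)
```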

TensorBoard

TensorBoard is a flashlight for our neural net's black box.

1. Visualize graph
2. Write summaries
3. Embedding visualization

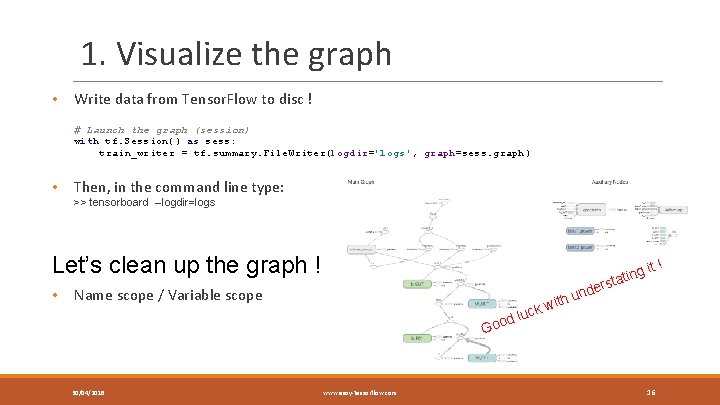

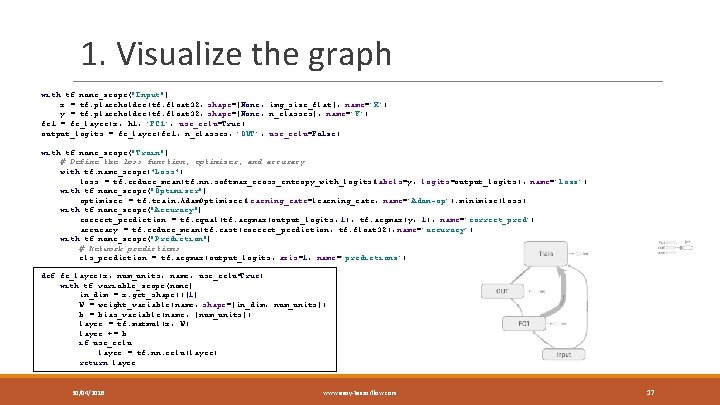

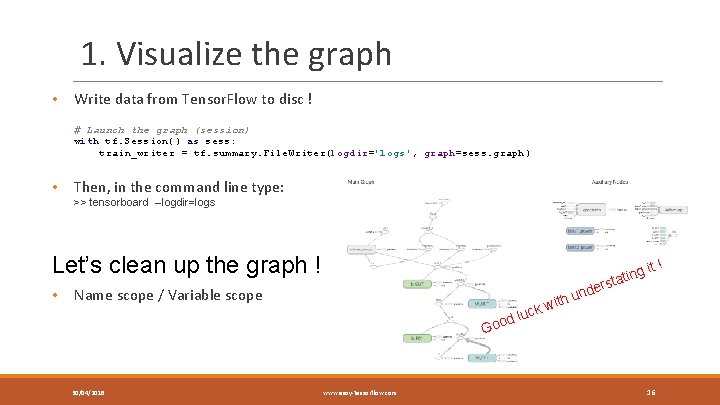

1. Visualize the graph

Write data from TensorFlow to disk:

    # Launch the graph (session)
    with tf.Session() as sess:
        train_writer = tf.summary.FileWriter(logdir='logs', graph=sess.graph)

Then, in the command line, type:

    tensorboard --logdir=logs

Good luck with understanding it!

Let's clean up the graph: use name scopes and variable scopes.

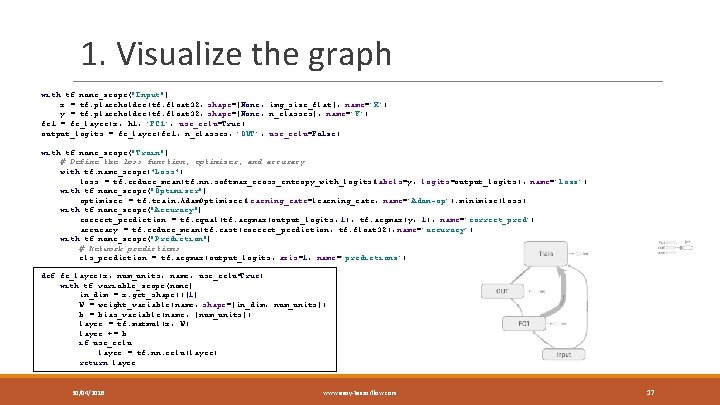

1. Visualize the graph

    def fc_layer(x, num_units, name, use_relu=True):
        # Group the layer's variables and ops under one node in the graph
        with tf.variable_scope(name):
            in_dim = x.get_shape()[1]
            W = weight_variable(name, shape=[in_dim, num_units])
            b = bias_variable(name, [num_units])
            layer = tf.matmul(x, W)
            layer += b
            if use_relu:
                layer = tf.nn.relu(layer)
            return layer

    with tf.name_scope("Input"):
        x = tf.placeholder(tf.float32, shape=[None, img_size_flat], name='X')
        y = tf.placeholder(tf.float32, shape=[None, n_classes], name='Y')
    fc1 = fc_layer(x, h1, 'FC1', use_relu=True)
    output_logits = fc_layer(fc1, n_classes, 'OUT', use_relu=False)
    with tf.name_scope("Train"):
        # Define the loss function, optimizer, and accuracy
        with tf.name_scope("Loss"):
            loss = tf.reduce_mean(
                tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=output_logits),
                name='loss')
        with tf.name_scope("Optimizer"):
            optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate,
                                               name='Adam-op').minimize(loss)
        with tf.name_scope("Accuracy"):
            correct_prediction = tf.equal(tf.argmax(output_logits, 1), tf.argmax(y, 1),
                                          name='correct_pred')
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32),
                                      name='accuracy')
        with tf.name_scope("Prediction"):
            # Network predictions
            cls_prediction = tf.argmax(output_logits, axis=1, name='predictions')

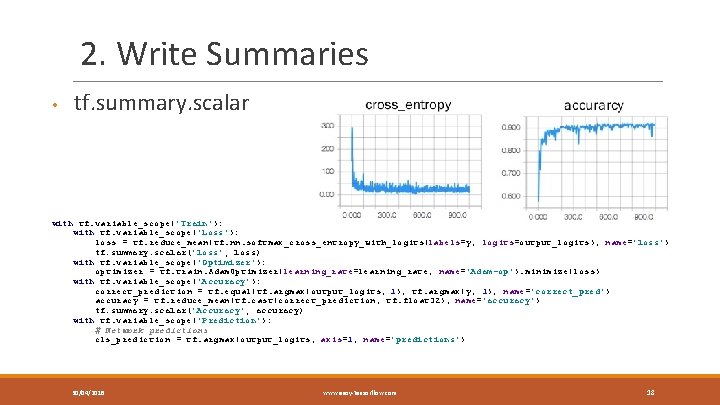

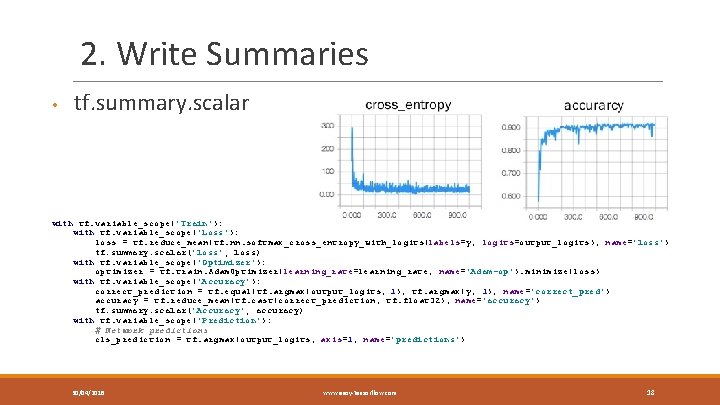

2. Write Summaries

tf.summary.scalar:

    with tf.variable_scope('Train'):
        with tf.variable_scope('Loss'):
            loss = tf.reduce_mean(
                tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=output_logits),
                name='loss')
            tf.summary.scalar('loss', loss)
        with tf.variable_scope('Optimizer'):
            optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate,
                                               name='Adam-op').minimize(loss)
        with tf.variable_scope('Accuracy'):
            correct_prediction = tf.equal(tf.argmax(output_logits, 1), tf.argmax(y, 1),
                                          name='correct_pred')
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32),
                                      name='accuracy')
            tf.summary.scalar('Accuracy', accuracy)
        with tf.variable_scope('Prediction'):
            # Network predictions
            cls_prediction = tf.argmax(output_logits, axis=1, name='predictions')

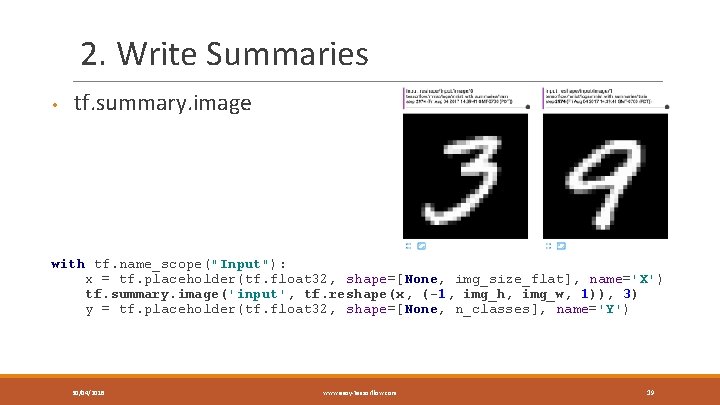

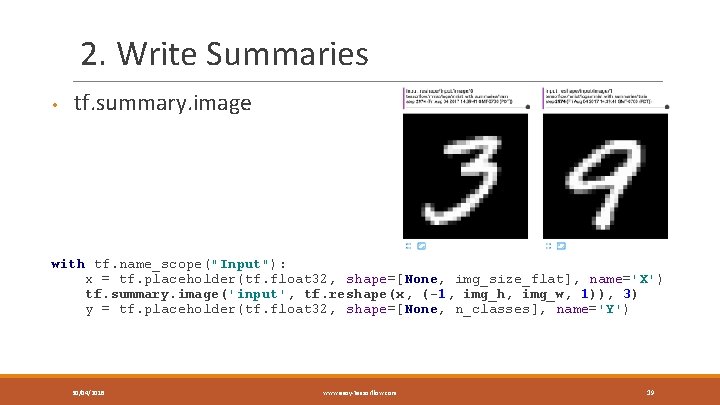

2. Write Summaries

tf.summary.image:

    with tf.name_scope("Input"):
        x = tf.placeholder(tf.float32, shape=[None, img_size_flat], name='X')
        # Reshape the flat vectors back to 28x28x1 images; log at most 3 per step
        tf.summary.image('input', tf.reshape(x, (-1, img_h, img_w, 1)), max_outputs=3)
        y = tf.placeholder(tf.float32, shape=[None, n_classes], name='Y')

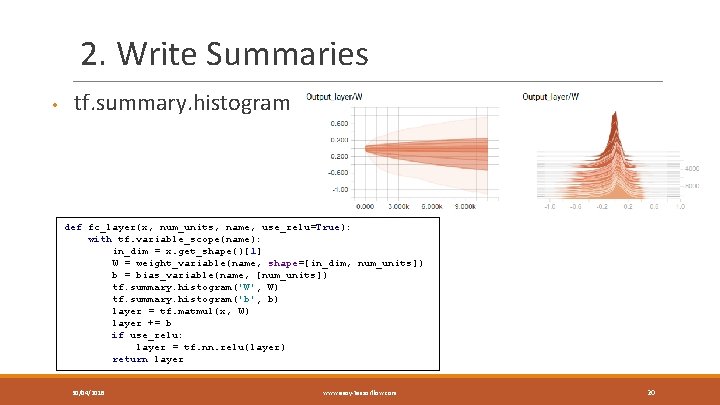

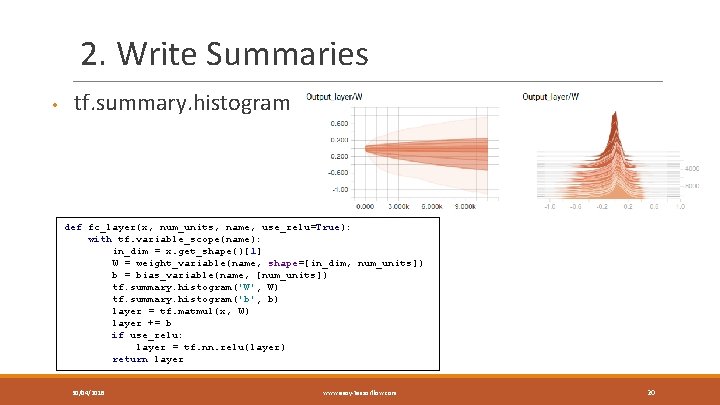

2. Write Summaries

tf.summary.histogram:

    def fc_layer(x, num_units, name, use_relu=True):
        with tf.variable_scope(name):
            in_dim = x.get_shape()[1]
            W = weight_variable(name, shape=[in_dim, num_units])
            b = bias_variable(name, [num_units])
            # Track the distribution of weights and biases over training
            tf.summary.histogram('W', W)
            tf.summary.histogram('b', b)
            layer = tf.matmul(x, W)
            layer += b
            if use_relu:
                layer = tf.nn.relu(layer)
            return layer

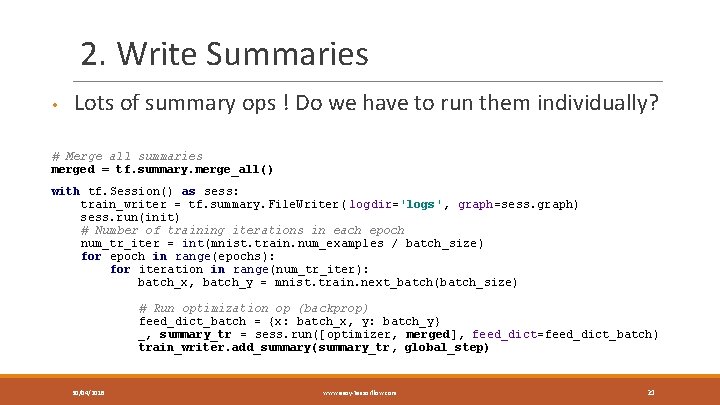

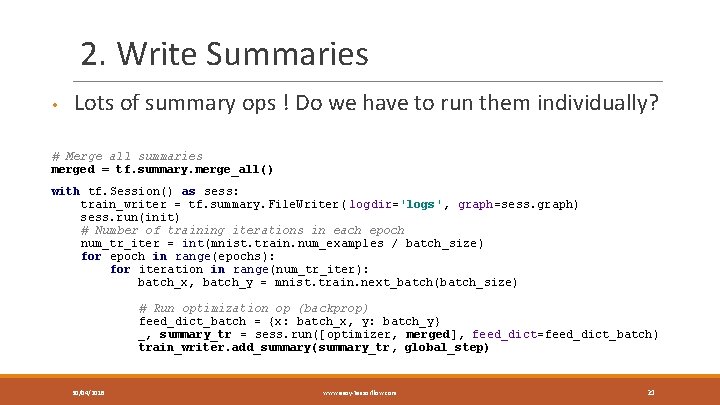

2. Write Summaries

Lots of summary ops! Do we have to run them individually? No: merge them into a single op and run that.

    # Merge all summaries
    merged = tf.summary.merge_all()

    with tf.Session() as sess:
        train_writer = tf.summary.FileWriter(logdir='logs', graph=sess.graph)
        sess.run(init)
        # Number of training iterations in each epoch
        num_tr_iter = int(mnist.train.num_examples / batch_size)
        for epoch in range(epochs):
            for iteration in range(num_tr_iter):
                batch_x, batch_y = mnist.train.next_batch(batch_size)
                # Step index across all epochs (not defined on the slide)
                global_step = epoch * num_tr_iter + iteration

                # Run optimization op (backprop) together with the merged summaries
                feed_dict_batch = {x: batch_x, y: batch_y}
                _, summary_tr = sess.run([optimizer, merged], feed_dict=feed_dict_batch)
                train_writer.add_summary(summary_tr, global_step)
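`add_summary` takes a step index as its second argument, which TensorBoard uses as the x-axis position of each logged point. One simple way to derive it, assuming steps are counted continuously across epochs, is sketched below; `global_step_index` is a hypothetical helper name.

```python
def global_step_index(epoch, iteration, num_tr_iter):
    """Index of the current training step, counted across all epochs."""
    return epoch * num_tr_iter + iteration


if __name__ == "__main__":
    num_tr_iter = 3  # tiny value for illustration
    steps = [global_step_index(e, i, num_tr_iter)
             for e in range(2) for i in range(num_tr_iter)]
    print(steps)  # consecutive integers, no resets between epochs
```

Using the bare `iteration` counter instead would restart at zero every epoch and overlay the curves of different epochs on top of each other.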

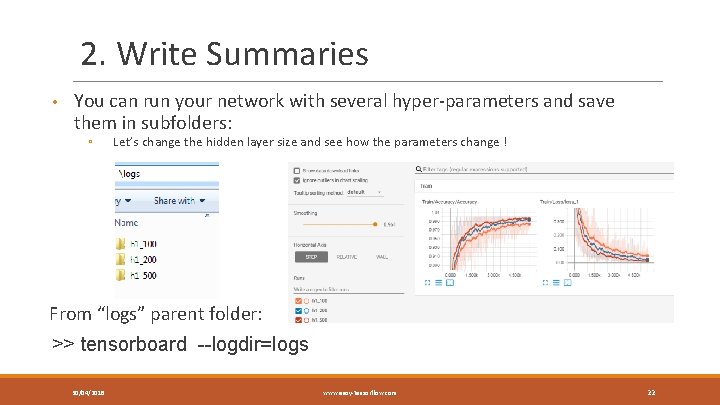

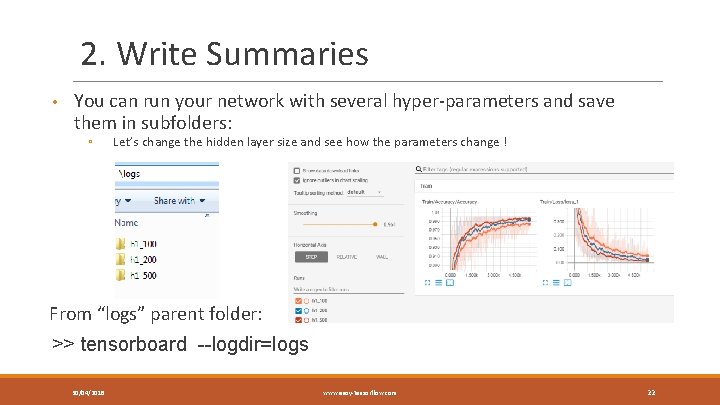

2. Write Summaries

You can run your network with several hyper-parameter settings and save the logs of each run in its own subfolder. For example, change the hidden layer size and see how the parameters change.

From the "logs" parent folder:

    tensorboard --logdir=logs
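A minimal sketch of the subfolder idea: derive one log directory per hyper-parameter setting and pass it as `logdir` when creating the writer. The `h1_<units>` naming scheme and the `run_logdir` helper are assumptions for illustration, not something the slides specify.

```python
import os


def run_logdir(parent, hidden_units):
    """Build a per-run log folder such as logs/h1_200 (naming is hypothetical)."""
    return os.path.join(parent, "h1_{}".format(hidden_units))


if __name__ == "__main__":
    # One subfolder per hidden layer size; each run would write its summaries there.
    for h1 in (100, 200, 500):
        print(run_logdir("logs", h1))
```

Pointing TensorBoard at the parent folder then shows each subfolder as a separate run, so their curves can be compared side by side.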