Multimedia Database Management Systems

© Michael Lang, National University of Ireland, Galway

Multimedia Database Management Systems (MMDBMS)

A true MMDBMS should be able to:
• Operate (create, update, delete, retrieve) on at least all the audio-visual multimedia data types (text, images, video, audio) and perhaps some non-audio-visual types (e.g. olfactory, taste, haptic)
• Fulfil all the standard requirements of a DBMS, i.e. data integrity, persistence, transaction management, concurrency control, system recovery, queries, versioning, data security, etc.
• Manipulate huge data volumes (virtually no restriction on the number of multimedia structures or their size)
• Allow interaction with the user, e.g. object searches, generated media
• Retrieve multimedia data based on their content (attributes, features and concepts)
• Efficiently manage data distribution over the nodes of a computer network (distributed architecture)
• Create new complex objects by combining basic multimedia objects, and derive new object types through “specialisation” and “inheritance”

Multimedia Database Management Systems (MMDBMS)

• Because many different data types are involved, special methods may be needed for optimal storage, access, indexing, and retrieval, in particular for time-based media objects
• An MMDBMS may include advanced features such as character recognition, voice recognition, and image analysis
• In hypermedia databases, aka “hyperbases”, links between generated pages/reports are derived from associations between objects
  ◦ Many hypermedia systems are built on relational or object-relational database technologies, with pages generated by server-side technologies such as Active Server Pages, ColdFusion or JavaServer Pages

Audio-Visual Data

• Taking time-dependency into account, audio-visual multimedia data may be divided into two categories:
  ◦ Data that do not depend on a time scale are called discrete or static data
  ◦ Data that depend on a time scale are called continuous or dynamic data
• Text, graphics and images are discrete data, while audio and video are continuous
• Continuous data are more complex than discrete data, demanding more efficient compression/decompression algorithms and more sophisticated operations for their interpretation and manipulation

Audio-Visual Data

• Problem: how do you search audio-visual data?
  ◦ e.g. consider a news agency wishing to resolve the query “give me a list of all colour photographs of Nelson Mandela taken while he was President of South Africa”
  ◦ Not possible in SQL …
  ◦ … unless we use metadata to tag/catalogue the images
  ◦ Problem with metadata:
    ▪ The BBC has multimedia archives going back to the early 20th century; how do you choose keywords when you cannot predict all possible future uses?
    ▪ Resolution: use a broad set of keywords to catalogue data
• There are some specialised applications, e.g. biometrics (fingerprints, face recognition, voice analysis), which support multimedia data mining using AI heuristics
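A minimal sketch of the metadata workaround, using Python's built-in sqlite3 module as a stand-in for any relational DBMS. The table layout, column names and sample rows are hypothetical; the photographs themselves stay in files and only their catalogue entries are queried. The presidency dates (10 May 1994 to 14 June 1999) are the only external facts used.

```python
import sqlite3

# Hypothetical catalogue: the photographs stay in files; only metadata is stored.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE photo (
        file_name TEXT PRIMARY KEY,
        subject   TEXT,
        is_colour INTEGER,  -- 1 = colour, 0 = black and white
        taken_on  TEXT      -- ISO 8601 date, so text comparison orders correctly
    )
    """
)
conn.executemany(
    "INSERT INTO photo VALUES (?, ?, ?, ?)",
    [
        ("mandela_001.jpg", "Nelson Mandela", 1, "1995-06-24"),
        ("mandela_002.jpg", "Nelson Mandela", 0, "1990-02-11"),
        ("mandela_003.jpg", "Nelson Mandela", 1, "1998-07-18"),
        ("tutu_001.jpg", "Desmond Tutu", 1, "1996-04-15"),
    ],
)

# Mandela was President of South Africa from 10 May 1994 to 14 June 1999.
rows = conn.execute(
    """
    SELECT file_name FROM photo
    WHERE subject = ? AND is_colour = 1
      AND taken_on BETWEEN '1994-05-10' AND '1999-06-14'
    ORDER BY taken_on
    """,
    ("Nelson Mandela",),
).fetchall()
result = [name for (name,) in rows]
print(result)  # ['mandela_001.jpg', 'mandela_003.jpg']
```

The query can only find what a cataloguer chose to record: a photograph whose subject was never tagged, or whose colour flag is missing, is invisible to it, which is exactly the limitation of metadata described above.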

Generated Interactive Media

• “Generated media” are interactive multimedia objects/presentations generated by computers in real time using specific devices and instruments
  ◦ The most popular are animation, music and (vector) graphics
• The main advantage of generated media over audio and video data lies in much better interaction with the user, which is crucial in an MMDBMS

Speech Data

• Due to recent advances in speech recognition and speech understanding, speech data are likely to be treated separately from audio data
• The main difference between “speech recognition” and “speech understanding” is that the latter implies action taken by the system in response to the user's vocal command
• Current speech recognition systems achieve better than 95% accuracy, and the errors that do occur are easy to correct, e.g. IBM ViaVoice, VoiceXML
• Speech understanding is more complex, especially when the semantics of the command play an important role; so far it is limited to basic commands (e.g. “Open File”, “Print”, “Close”) and simple applications (e.g. telebanking phone commands “Yes”, “No”, “1”, “2”, “3”, …)
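The difference between recognition and understanding can be sketched as a thin layer on top of a recogniser. In this hypothetical Python example the recogniser is assumed to have already produced a text transcript; “understanding” is modelled as nothing more than mapping a known phrase to an action, which is why such systems are limited to small command sets. The action names and return strings are invented for illustration.

```python
from typing import Callable, Dict

# Hypothetical actions; a real system would call into the application here.
ACTIONS: Dict[str, Callable[[], str]] = {
    "open file": lambda: "opening file dialog",
    "print": lambda: "sending document to printer",
    "close": lambda: "closing document",
}


def understand(transcript: str) -> str:
    """Act on text that a speech recogniser has already produced.

    Recognition (audio to text) is assumed to happen elsewhere; this function
    only maps a known phrase to an action, so anything outside the small
    command set is rejected.
    """
    action = ACTIONS.get(transcript.strip().lower())
    if action is None:
        return f"unrecognised command: {transcript!r}"
    return action()


print(understand("Open File"))  # opening file dialog
```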

Olfactory Data

• Olfactory (smell) and taste interfaces are the least developed HCI technologies, mainly due to a lack of useful applications compared with other sense-based technologies
• However, the use of scent and taste in the military (chemical and biological warfare detectors), medicine (surgical training) and electronic commerce (samples of groceries, cosmetics, household products) has fostered research on olfactory and taste systems in the last few years
• There are two types of olfactory interfaces: olfactory input interfaces and olfactory delivery (output) systems
  ◦ Olfactory input interfaces, aka “electronic noses”, are used to collect and interpret odours (very useful in product quality control and warfare detectors). There are three basic approaches for this type of input device: gas chromatography, mass spectrometry and chemical sensor arrays
  ◦ Olfactory delivery systems combine at least four processing steps: odour storage (liquids, gels, microencapsulation), odour selection, evacuation and cleaning of exhaled air, and odour display

Olfactory Data

• Olfactory delivery systems are already available for the consumer market, e.g.:
  ◦ the SENX device from TriSenx (http://www.trisenx.com)
  ◦ the Sniffman portable scent system from Ruetz Technologies (Germany), which has already been adapted for a multimedia entertainment application (Duftkino, the smell cinema, at http://www.duftkino.de)
• France Telecom R&D and the Burgundy wine industry association (BIVB) are creating a website that lets visitors take an olfactory stroll through the famous vineyards of Burgundy. Aromas, pictures and sounds will be brought together to recreate the atmosphere of the vineyards

Taste Data

• Tasting systems, or “electronic tongues”, mimic their natural counterparts: they can distinguish between sweet, sour, salty and bitter, and have the potential to respond to an array of subtle flavours
• E-tongues can also “taste” cholesterol levels in blood, cocaine concentration in urine, or toxins in water, which means they can return both qualitative (type of substance) and quantitative (concentration) results
• Most applications of electronic tongues are in quality control (flavours, beverages, fragrances, pharmaceuticals) and medicine (blood and urine tests)

Haptic Data

• Haptic interfaces are devices that measure the motion of, and provide sensory stimulus to, the users' hands and fingers
• Haptic devices make it possible for users to “touch”, feel, manipulate, create and/or alter, with their own hands and fingers, objects presented on computer displays as if they were real physical objects
• Haptic interfaces can be used to train physical skills, such as those of jobs requiring specialised hand-held tools (e.g. surgeons, astronauts, mechanics); to provide haptic-feedback modelling of 3D objects without a physical medium (e.g. for automobile body designers who would otherwise work with clay models); or to mock up developmental prototypes directly from CAD databases (rather than in a machine shop)

Haptic Devices

• Based on the interaction between the user and the machine, haptic devices can be classified as:
  ◦ Finger-based: attached to the user's finger and responding to its movements, e.g. PHANTOM (developed at MIT, commercialised by SensAble); pen-based device from the University of Washington; Rutgers Masters (RM-I, RM-II); Feelit Mouse by Immersion
  ◦ Hand-based: users interact with the device by grasping a rigid tool. The machine gives the human arm the sensation of forces associated with various arbitrary manoeuvres, e.g. TouchSense by Immersion, CyberGlove and CyberTouch by Virtual Technologies
  ◦ Exoskeletal: track the movements of the user's arm, shoulder or even the whole body, allowing high interactivity but at extremely high prices; mostly used in medicine, for people with disabilities, and by the military, e.g. CyberGrasp by Virtual Technologies, Dextrous Arms and Hands from Sarcos, Arm Master by Exos
  ◦ Inherently passive devices (or intelligent assist devices): passive, and therefore safe, robots for direct physical interaction with human operators within shared environments

Haptic Devices

• Besides force feedback, other tactile display technologies include:
  ◦ Vibration: vibration can be used to transmit information about texture, puncture, slip, and impact. Since vibrations are often sensed as diffuse or unlocalised, a single vibrating device for each finger or skin area is often sufficient
  ◦ Thermal display: thermal perception of an object is based on a combination of thermal conductivity, thermal capacity, and temperature. This enables users to infer material composition as well as temperature
  ◦ Small-scale shape or pressure distribution: the most frequently used devices have an array of closely spaced pins that can be raised or lowered individually against the skin to approximate a shape. To match the human ability to perceive tactile sensations, the pins must be spaced within a few millimetres of one another
  ◦ Other tactile display technologies: these include electrorheological devices (which use a “smart fluid” that changes viscosity in an electric field) combined with sensors, electrocutaneous stimulators (using electrodes to stimulate cutaneous nerve endings), ultrasonic friction displays, and rotating disks for creating the sensation of slip, e.g. the MEMICA prototype from Rutgers University

Management of Multimedia Data

• Having considered the various data types, the obvious question is: how do we manage multimedia data?
  ◦ We can easily accommodate textual data
  ◦ Object-oriented and object-relational databases provide some support for image, audio and video data, but there is still some distance to go and specialised databases will probably be required
  ◦ Smell, taste and touch databases will probably evolve separately, with specific storage devices, querying techniques, and presentation methods built for these media types
  ◦ Integration of digital smell, taste and touch into an MMDBMS will most likely take the form of a multimedia federated database system, i.e. multiple specialised databases in an interconnected system architecture
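A federated architecture can be sketched as a mediator that forwards one query to several specialised component stores and merges their answers. In this Python sketch the component databases are hypothetical in-memory functions (an image store and a smell store keyed by keyword) and the class name is invented; a real federation would wrap separate DBMSs, each with its own storage and query techniques.

```python
from typing import Callable, Dict, List

Store = Callable[[str], List[str]]


def image_store(keyword: str) -> List[str]:
    # Hypothetical component database for images.
    data = {"vineyard": ["burgundy_hills.jpg"], "harbour": ["galway_docks.jpg"]}
    return data.get(keyword, [])


def smell_store(keyword: str) -> List[str]:
    # Hypothetical component database for digital scents.
    data = {"vineyard": ["pinot_noir_aroma.scent"]}
    return data.get(keyword, [])


class FederatedMediator:
    """Forwards a query to every registered component store and merges the answers."""

    def __init__(self) -> None:
        self.stores: Dict[str, Store] = {}

    def register(self, media_type: str, store: Store) -> None:
        self.stores[media_type] = store

    def query(self, keyword: str) -> Dict[str, List[str]]:
        # Each component keeps its own storage and query technique;
        # the mediator only integrates the per-media results.
        return {media_type: store(keyword) for media_type, store in self.stores.items()}


mediator = FederatedMediator()
mediator.register("image", image_store)
mediator.register("smell", smell_store)
print(mediator.query("vineyard"))
```

Keeping the mediator ignorant of how each store works is the point of the design: a new medium (say, a touch database) joins the federation by registering one more component.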

Research Challenges for Video Databases

• Development of video processing techniques for automatic object identification and motion tracking
• Development of data models with powerful semantic expressiveness
• Design of efficient indexing, classification and event-based searching techniques with a high degree of precision
• Provision of a suitable video querying environment
• Addressing scalability

Multimedia Data Types

• DBMSs provide different kinds of domains for multimedia data:
  ◦ Large object domains: long, unstructured sequences of data, e.g. Binary Large Objects (BLOBs) or Character Large Objects (CLOBs)
  ◦ File references: instead of holding the data, a file reference contains a link to the data, e.g. MS Windows Object Linking and Embedding (OLE) as in Microsoft Access
  ◦ Actual multimedia domains:
    ▪ object-relational databases support specialised multimedia data types
    ▪ object-oriented databases support specialised multimedia classes
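The difference between a large object domain and a file reference can be sketched with Python's sqlite3 module, since SQLite has a BLOB column type. The table names, the path and the sample bytes are hypothetical; the 8-byte PNG signature stands in for a whole image.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Large object domain: the bytes themselves live in the row.
conn.execute("CREATE TABLE photo_blob (id INTEGER PRIMARY KEY, data BLOB)")
# File reference domain: only the location of an external file is stored.
conn.execute("CREATE TABLE photo_ref (id INTEGER PRIMARY KEY, path TEXT)")

png_signature = b"\x89PNG\r\n\x1a\n"  # first 8 bytes of every PNG file
conn.execute("INSERT INTO photo_blob VALUES (1, ?)", (png_signature,))
conn.execute("INSERT INTO photo_ref VALUES (1, ?)", ("images/employee_1.png",))

blob = conn.execute("SELECT data FROM photo_blob WHERE id = 1").fetchone()[0]
path = conn.execute("SELECT path FROM photo_ref WHERE id = 1").fetchone()[0]

# The DBMS returns the BLOB's bytes directly; for the reference it can only
# return the path, and the application must open the file (which may have moved).
print(len(blob), path)  # 8 images/employee_1.png
```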

Object Linking and Embedding (OLE)

• Object Linking and Embedding was Microsoft's first architecture for integrating files of different types
• Each file type in Windows is associated with an application
• It is possible to place a file of one type inside another, either by embedding a separate copy of the whole file or by placing a link to the actual file
• Microsoft Access supports OLE data, but you can't do much with OLE objects because the data is in a format that Access doesn't understand; e.g. you can plug the foreign data into a report or a form (e.g. an employee photo) and little else

SQL3: Large Objects

• Oracle and SQL3 (SQL:1999) support three large object types:
  ◦ BLOB: stores unstructured binary data in the database, up to 4 GB
  ◦ CLOB: stores up to 4 GB of single-byte character set data
  ◦ NCLOB: stores up to 4 GB of fixed-width and varying-width multi-byte national character set data
• These types support the following operations:
  ◦ Concatenation: makes one LOB by joining two of them together
  ◦ Substring: extracts a section of a LOB
  ◦ Overlay: replaces a substring of one LOB with another
  ◦ Trim: removes particular characters (e.g. whitespace) from the beginning or end
  ◦ Length: returns the length of the LOB
  ◦ Position: returns the position of a substring in a LOB
  ◦ Upper and Lower: convert a CLOB or NCLOB to upper or lower case
• LOBs can only appear in a WHERE clause using “=”, “<>” or “LIKE”, and not in GROUP BY or ORDER BY
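Most of these operations have direct counterparts among SQL string functions. The sketch below runs them through SQLite via Python's sqlite3 module, purely as a stand-in: SQLite is not Oracle, SQL:1999 spells several of these as SUBSTRING, POSITION and OVERLAY, and since SQLite has no OVERLAY function the overlay is emulated here by concatenating substrings. Short literals take the place of real LOB values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
row = conn.execute(
    """
    SELECT
        'multi' || 'media',                  -- concatenation
        substr('multimedia', 6, 5),          -- substring: 5 characters from position 6
        substr('multimedia', 1, 0) || 'hyper' || substr('multimedia', 6),
                                             -- overlay emulated: replace characters 1-5
        trim('  lob  '),                     -- trim leading/trailing spaces
        length('multimedia'),                -- length
        instr('multimedia', 'media'),        -- position (1-based)
        upper('clob'),                       -- upper case
        lower('NCLOB')                       -- lower case
    """
).fetchone()
print(row)  # ('multimedia', 'media', 'hypermedia', 'lob', 10, 6, 'CLOB', 'nclob')
```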

Oracle: BFILE

• The BFILE datatype provides access to binary files of up to 4 gigabytes that are stored in file systems outside an Oracle database
• The BFILE datatype provides read-only support for large binary files: you cannot modify a file through Oracle, although Oracle provides APIs to access the file data

Oracle interMedia

• Oracle interMedia supports multimedia storage, retrieval, and management of:
  ◦ BLOBs stored locally in Oracle8i and containing audio, image, or video data
  ◦ BFILEs stored locally in operating-system-specific files and containing audio, image, or video data
  ◦ URLs pointing to audio, image, or video data stored on any HTTP server, e.g. Oracle Application Server, Microsoft Internet Information Server or Apache
  ◦ Streaming audio or video data stored on specialised media servers, e.g. Oracle Video Server
• SQL*Loader and PL/SQL can be used to load the data
• Oracle also stores metadata, including: source type, location, and source name; MIME type and formatting information; and characteristics such as the height and width of an image, the number of audio channels, video frame rate, etc.

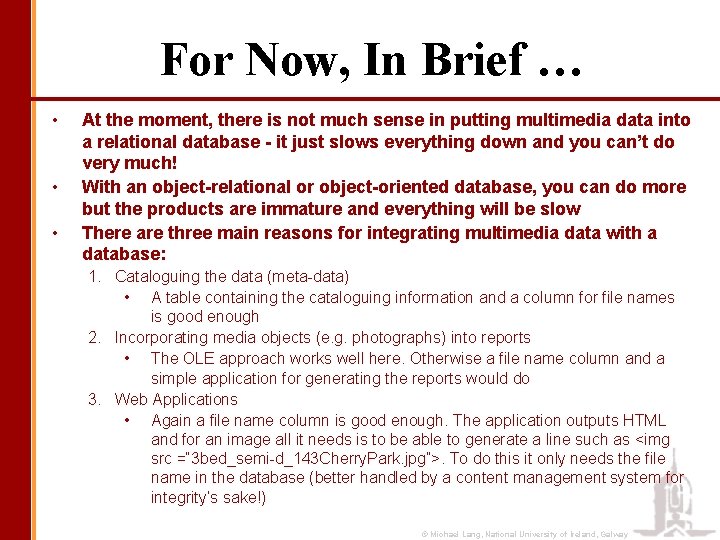
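Characteristics such as image height and width can be read from the media file itself. As an illustration only (this is not interMedia's API), the Python sketch below extracts the width and height that every PNG file stores in its IHDR chunk, immediately after the 8-byte signature; the 640 × 480 test header is synthetic.

```python
import struct
import zlib
from typing import Tuple

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_dimensions(data: bytes) -> Tuple[int, int]:
    """Return (width, height) from the IHDR chunk at the start of a PNG file."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    # Bytes 8-11: chunk length, 12-15: chunk type, 16-23: width, height (big-endian).
    if data[12:16] != b"IHDR":
        raise ValueError("IHDR chunk missing")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


# Synthetic header: 640x480, 8-bit RGB (colour type 2), no interlacing.
ihdr = struct.pack(">IIBBBBB", 640, 480, 8, 2, 0, 0, 0)
header = (
    PNG_SIGNATURE
    + struct.pack(">I", len(ihdr))
    + b"IHDR"
    + ihdr
    + struct.pack(">I", zlib.crc32(b"IHDR" + ihdr))
)
print(png_dimensions(header))  # (640, 480)
```

Values extracted this way are what a catalogue would store as formatting metadata, so they can be queried without opening the media again.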

For Now, In Brief …

• At the moment, there is not much sense in putting multimedia data into a relational database: it just slows everything down and you can't do very much with it!
• With an object-relational or object-oriented database you can do more, but the products are immature and everything will be slow
• There are three main reasons for integrating multimedia data with a database:
  1. Cataloguing the data (metadata)
     ▪ A table containing the cataloguing information and a column for file names is good enough
  2. Incorporating media objects (e.g. photographs) into reports
     ▪ The OLE approach works well here. Otherwise, a file name column and a simple application for generating the reports would do
  3. Web applications
     ▪ Again, a file name column is good enough. The application outputs HTML, and for an image all it needs is to generate a line such as <img src="3bed_semi-d_143CherryPark.jpg">. To do this it only needs the file name in the database (though this is better handled by a content management system, for integrity's sake!)

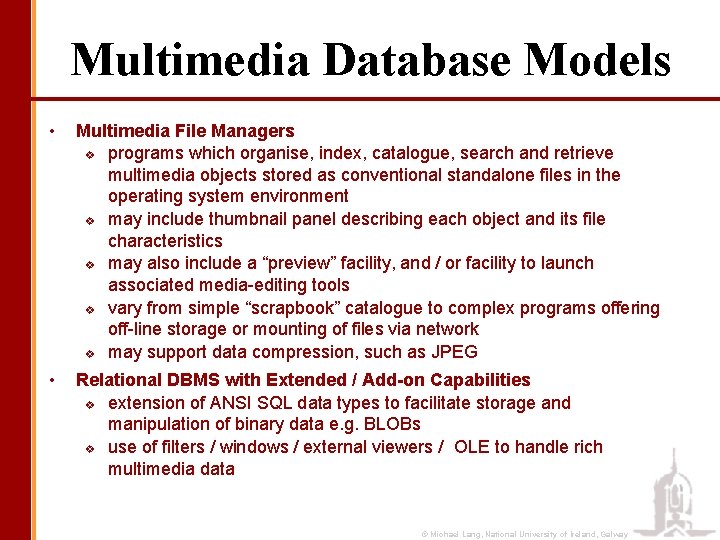
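A minimal sketch of the web-application case: the database holds only a file name, and the application turns it into HTML. The table, column names and listing data are hypothetical; html.escape is used so that characters in stored values cannot break the generated markup.

```python
import html
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE listing (id INTEGER PRIMARY KEY, title TEXT, photo_file TEXT)")
conn.execute(
    "INSERT INTO listing VALUES (?, ?, ?)",
    (1, "3 bed semi-d, 143 Cherry Park", "3bed_semi-d_143CherryPark.jpg"),
)


def render_listing(listing_id: int) -> str:
    """Build an HTML fragment; only the file NAME comes from the database."""
    title, photo = conn.execute(
        "SELECT title, photo_file FROM listing WHERE id = ?", (listing_id,)
    ).fetchone()
    # Escape stored values so they cannot break out of the markup.
    return (
        f"<h2>{html.escape(title)}</h2>\n"
        f'<img src="{html.escape(photo)}" alt="{html.escape(title)}">'
    )


print(render_listing(1))
```

The image file itself is served by the web server like any static file; the database never touches its bytes.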

Multimedia Database Models

• Multimedia file managers
  ◦ programs which organise, index, catalogue, search and retrieve multimedia objects stored as conventional standalone files in the operating system environment
  ◦ may include a thumbnail panel describing each object and its file characteristics
  ◦ may also include a “preview” facility and/or a facility to launch associated media-editing tools
  ◦ vary from simple “scrapbook” catalogues to complex programs offering off-line storage or mounting of files via a network
  ◦ may support data compression, such as JPEG
• Relational DBMS with extended/add-on capabilities
  ◦ extension of ANSI SQL data types to facilitate storage and manipulation of binary data, e.g. BLOBs
  ◦ use of filters/windows/external viewers/OLE to handle rich multimedia data

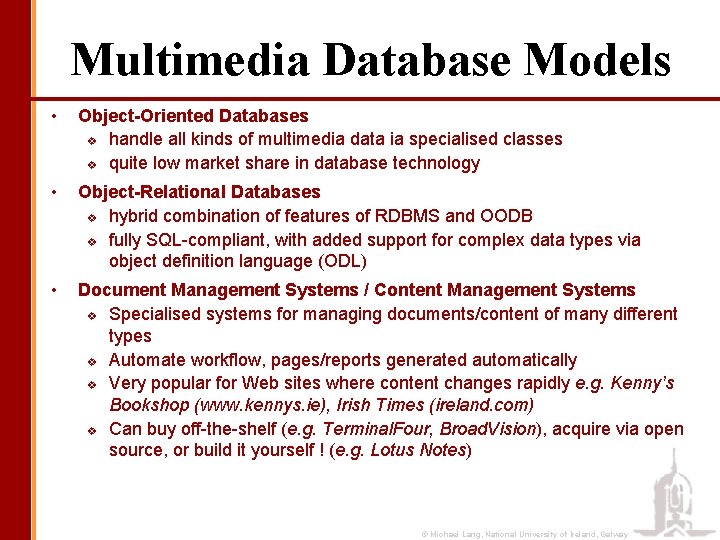

Multimedia Database Models

• Object-oriented databases
  ◦ handle all kinds of multimedia data via specialised classes
  ◦ quite a low market share in database technology
• Object-relational databases
  ◦ hybrid combination of features of RDBMS and OODB
  ◦ fully SQL-compliant, with added support for complex data types via an object definition language (ODL)
• Document management systems/content management systems
  ◦ specialised systems for managing documents/content of many different types
  ◦ automate workflow; pages/reports are generated automatically
  ◦ very popular for websites where content changes rapidly, e.g. Kenny's Bookshop (www.kennys.ie), Irish Times (ireland.com)
  ◦ can be bought off the shelf (e.g. TerminalFour, BroadVision), acquired via open source, or built yourself (e.g. with Lotus Notes)

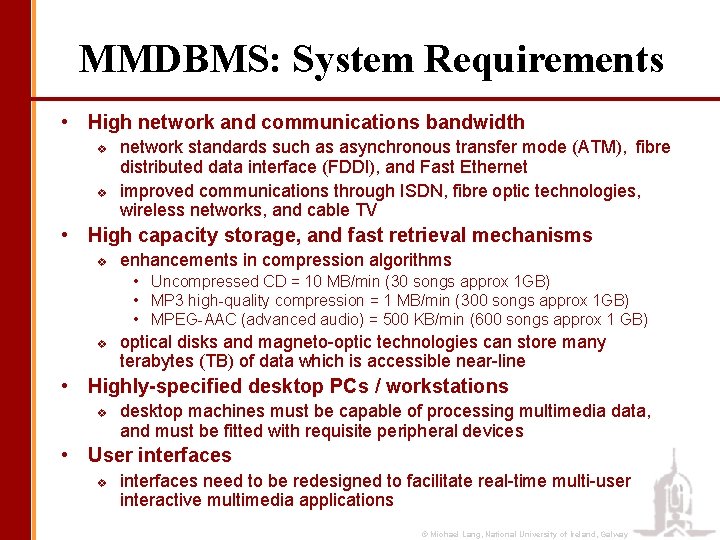

MMDBMS: System Requirements

• High network and communications bandwidth
  ◦ network standards such as asynchronous transfer mode (ATM), fibre distributed data interface (FDDI), and Fast Ethernet
  ◦ improved communications through ISDN, fibre-optic technologies, wireless networks, and cable TV
• High-capacity storage and fast retrieval mechanisms
  ◦ enhancements in compression algorithms:
    ▪ Uncompressed CD audio = 10 MB/min (approx. 30 songs per 1 GB)
    ▪ MP3 high-quality compression = 1 MB/min (approx. 300 songs per 1 GB)
    ▪ MPEG AAC (Advanced Audio Coding) = 500 KB/min (approx. 600 songs per 1 GB)
  ◦ optical disks and magneto-optical technologies can store many terabytes (TB) of data, accessible near-line
• Highly specified desktop PCs/workstations
  ◦ desktop machines must be capable of processing multimedia data, and must be fitted with the requisite peripheral devices
• User interfaces
  ◦ interfaces need to be redesigned to facilitate real-time, multi-user interactive multimedia applications

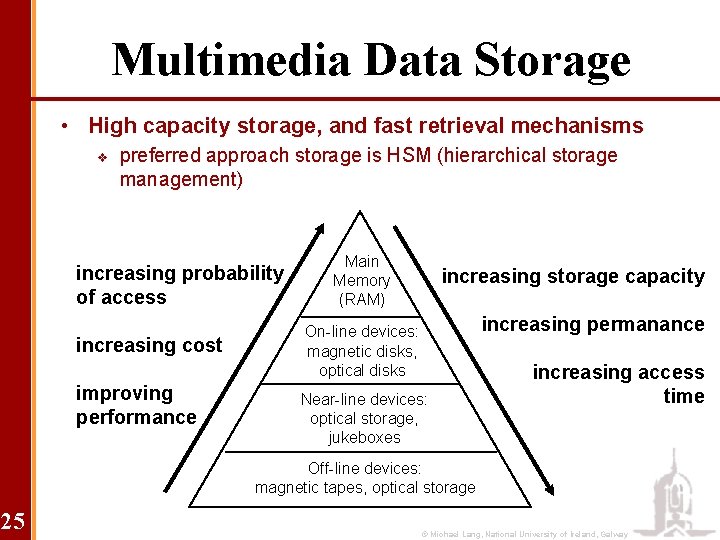
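The uncompressed CD figure follows from the CD audio format (44.1 kHz sampling, 16-bit samples, two channels). The song counts additionally assume an average song length of about 3.3 minutes, which is what the figures above imply, and take 1 GB as roughly 1000 MB:

$$
\begin{aligned}
\text{CD audio rate} &= 44\,100\ \tfrac{\text{samples}}{\text{s}} \times 2\ \tfrac{\text{bytes}}{\text{sample}} \times 2\ \text{channels} \times 60\ \tfrac{\text{s}}{\text{min}} \\
&= 10\,584\,000\ \text{bytes/min} \approx 10\ \text{MB/min} \\
\text{playing time per GB} &\approx \frac{1000\ \text{MB}}{10\ \text{MB/min}} = 100\ \text{min} \approx \frac{100}{3.3} \approx 30\ \text{songs}
\end{aligned}
$$

By the same reasoning, 1 MB/min gives about 1000 minutes (roughly 300 songs) per GB, and 500 KB/min about 2000 minutes (roughly 600 songs).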

Multimedia Data Storage

• High-capacity storage and fast retrieval mechanisms
  ◦ the preferred storage approach is HSM (hierarchical storage management), a hierarchy of four levels:
    1. Main memory (RAM)
    2. On-line devices: magnetic disks, optical disks
    3. Near-line devices: optical storage, jukeboxes
    4. Off-line devices: magnetic tapes, optical storage
  ◦ moving up the hierarchy (towards RAM): increasing probability of access, increasing cost, improving performance
  ◦ moving down the hierarchy (towards off-line devices): increasing storage capacity, increasing permanence, increasing access time
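The promotion/migration idea behind HSM can be sketched in a few lines. This Python toy is an illustration under stated assumptions, not any product's algorithm: RAM caching is ignored, each object sits on exactly one of the three storage tiers, an object accessed at least `promote_after` times is recalled to on-line storage, and a periodic sweep moves objects with no accesses since the last sweep one tier down. The class and tier names are hypothetical.

```python
from collections import Counter
from typing import Dict

TIERS = ["online", "nearline", "offline"]  # fastest and most expensive first


class HierarchicalStore:
    """Toy HSM policy: recall busy objects to on-line storage, migrate idle ones down."""

    def __init__(self, promote_after: int = 2) -> None:
        self.location: Dict[str, str] = {}  # object id -> current tier
        self.hits: Counter = Counter()      # accesses since the last sweep
        self.promote_after = promote_after

    def store(self, obj_id: str, tier: str = "nearline") -> None:
        self.location[obj_id] = tier

    def access(self, obj_id: str) -> str:
        self.hits[obj_id] += 1
        if self.hits[obj_id] >= self.promote_after:
            self.location[obj_id] = "online"  # recall to magnetic disk
        return self.location[obj_id]

    def demote_idle(self) -> None:
        """Periodic sweep: objects not accessed since the last sweep drop one tier."""
        for obj_id, tier in self.location.items():
            level = TIERS.index(tier)
            if self.hits[obj_id] == 0 and level < len(TIERS) - 1:
                self.location[obj_id] = TIERS[level + 1]
        self.hits.clear()


hsm = HierarchicalStore(promote_after=2)
hsm.store("news_clip.mpg")
print(hsm.access("news_clip.mpg"))  # nearline
print(hsm.access("news_clip.mpg"))  # online
```

Promotion reacts immediately to demand while demotion happens in batches, which mirrors the trade-off in the hierarchy: fast tiers are expensive, so only currently busy objects are allowed to stay there.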

Quality of Service (QoS)

• Quality-of-service (QoS) parameters:
  ◦ Average delay: shows response time
  ◦ Speed ratio: relates presentation time to real time
  ◦ Utilisation: describes the ratio between the data available and the data actually used
  ◦ Skew: measures the accumulated deviation between two streams
  ◦ Reliability: gives the average frequency of errors

Quality of Service (QoS)

• Experimental results on permissible skew:
  ◦ 80 milliseconds (ms) for lip synchronisation of video and audio
  ◦ 240 ms for text overlaid on video
  ◦ 80 ms for event correlation between animation and audio (e.g. in dancing)
  ◦ 11 microseconds (µs) for tightly coupled audio with audio (e.g. stereo)
  ◦ 120 ms for loosely coupled audio with audio (e.g. dialogue)
  ◦ 5 ms for tightly coupled audio and image (e.g. music and notes)
  ◦ 500 ms for loosely coupled audio and image (e.g. slide show)
  ◦ 240 ms for text annotation to audio
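The permissible skew values can be used directly as a check on two timestamped streams. In this hypothetical Python sketch, skew is taken as the largest difference between the presentation times of corresponding units (e.g. video frame i and audio block i), which is one reasonable reading of “accumulated deviation”; the relation names and the timestamps are invented, the latter to show audio drifting behind video.

```python
from typing import Dict, List

# Permissible skew from the experimental results above, in milliseconds.
PERMISSIBLE_SKEW_MS: Dict[str, float] = {
    "lip_sync": 80,
    "text_over_video": 240,
    "animation_audio": 80,
    "tight_audio_audio": 0.011,  # 11 microseconds
    "loose_audio_audio": 120,
    "tight_audio_image": 5,
    "loose_audio_image": 500,
    "text_annotation_audio": 240,
}


def max_skew_ms(stream_a: List[float], stream_b: List[float]) -> float:
    """Largest gap between presentation times (ms) of corresponding units."""
    return max(abs(a - b) for a, b in zip(stream_a, stream_b))


def in_sync(stream_a: List[float], stream_b: List[float], relation: str) -> bool:
    return max_skew_ms(stream_a, stream_b) <= PERMISSIBLE_SKEW_MS[relation]


# Invented timestamps: 25 frame/s video, with audio gradually drifting behind.
video = [0, 40, 80, 120]
audio = [0, 42, 95, 210]
print(max_skew_ms(video, audio))                 # 90
print(in_sync(video, audio, "lip_sync"))         # False
print(in_sync(video, audio, "text_over_video"))  # True
```

The same 90 ms drift is unacceptable for lip synchronisation but tolerable for a text overlay, which is why a player has to know which kind of relationship it is enforcing.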

Applications of Multimedia Databases

• Generic business & office information systems: document imaging, document editing tools, multimedia email, multimedia conferencing, multimedia workflow management, teleworking
• Software development: multimedia object libraries; computer-aided software engineering (CASE); multimedia communication histories; multimedia system artefacts
• Education: multimedia encyclopaedias; multimedia courseware & training materials; education-on-demand (distance education, JIT learning)
• Banking: tele-banking
• Retail: home shopping; customer guidance
• Tourism & hospitality: trip visualisation & planning; sales & marketing
• Publishing: electronic publishing; document editing tools; multimedia archives

Applications of Multimedia Databases

• Medicine: digitised X-rays; patient histories; image analysis; teleconsultation
• Criminal investigation: biometrics; fingerprint matching; “photo-fit” analysis
• Entertainment: video-on-demand; interactive TV; virtual reality gaming
• Museums & libraries
• Science: spatial data analysis; cartographic databases; geographic information systems (GISs)
• Engineering: computer-aided design/manufacture (CAD/CAM); collaborative design; concurrent engineering
• Pharmaceuticals: dossier management for new drug applications (NDAs)

Example: Museums/Libraries

• Below is an example of multimedia data types that might be used in a museum or library

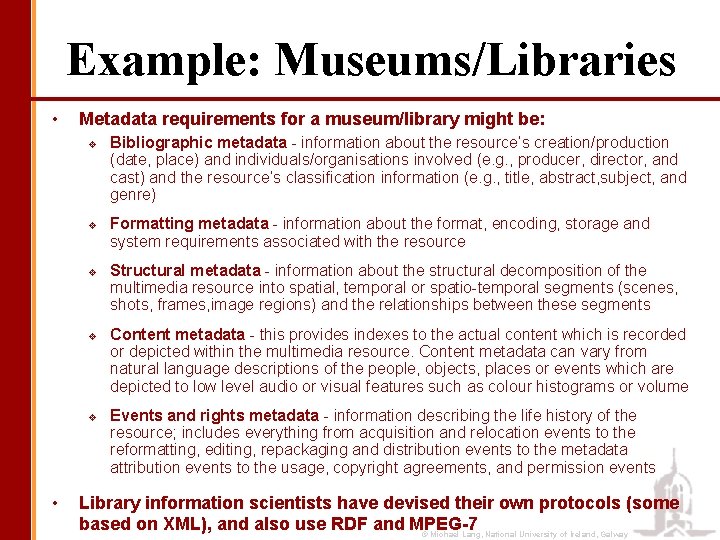

Example: Museums/Libraries

• Metadata requirements for a museum/library might be:
  ◦ Bibliographic metadata: information about the resource's creation/production (date, place) and the individuals/organisations involved (e.g. producer, director, and cast), plus the resource's classification information (e.g. title, abstract, subject, and genre)
  ◦ Formatting metadata: information about the format, encoding, storage and system requirements associated with the resource
  ◦ Structural metadata: information about the structural decomposition of the multimedia resource into spatial, temporal or spatio-temporal segments (scenes, shots, frames, image regions) and the relationships between these segments
  ◦ Content metadata: indexes to the actual content recorded or depicted within the multimedia resource. Content metadata can range from natural-language descriptions of the people, objects, places or events depicted to low-level audio or visual features such as colour histograms or volume
  ◦ Events and rights metadata: information describing the life history of the resource, from acquisition and relocation events, through reformatting, editing, repackaging, distribution and metadata attribution events, to usage, copyright agreement and permission events
• Library information scientists have devised their own protocols (some based on XML), and also use RDF and MPEG-7

Multimedia Data Retrieval

• A multimedia DBMS must support a standard data manipulation language (DML) such as SQL, but will have extended query features for processing rich media objects (such as audio, images, and video)
• Complex media objects may be categorised using metadata and keywords
• Retrieval of complex media objects typically requires “fuzzy”-match/partial-match search mechanisms
• Query results may use a ranking mechanism so that the most relevant matches are at the top of the list
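Partial-match retrieval with ranking can be sketched using colour histograms, a common low-level image feature. This Python example uses histogram intersection (Swain and Ballard's similarity measure) on hypothetical four-bin normalised histograms; a real system would extract far richer features and use an index rather than scoring every item.

```python
from typing import Dict, List, Tuple

Histogram = List[float]


def histogram_intersection(h1: Histogram, h2: Histogram) -> float:
    """Similarity of two normalised histograms: 1.0 = identical, 0.0 = disjoint."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def rank(query: Histogram, collection: Dict[str, Histogram], k: int = 3) -> List[Tuple[str, float]]:
    """Score every item (partial match, not exact equality) and return the top k."""
    scored = [(name, histogram_intersection(query, h)) for name, h in collection.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # most relevant first
    return scored[:k]


# Hypothetical 4-bin colour histograms: (red, green, blue, other), each summing to 1.
collection = {
    "sunset.jpg": [0.6, 0.1, 0.1, 0.2],
    "forest.jpg": [0.1, 0.7, 0.1, 0.1],
    "beach.jpg": [0.3, 0.1, 0.4, 0.2],
}
query = [0.5, 0.1, 0.2, 0.2]
for name, score in rank(query, collection):
    print(f"{name}: {score:.2f}")
```

No item matches the query exactly, yet every item receives a score, so the result is an ordered list of “closest” images rather than a yes/no answer.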

MPEG

• Moving Picture Experts Group
  ◦ Started in 1988 as a working group within ISO/IEC
  ◦ Comprises a number of sub-groups, e.g. Digital Storage Media (DSM), Delivery, Video, Audio
• Generates generic standards for digital video and audio compression
  ◦ MPEG-1: “Coding of Moving Pictures and Associated Audio for Digital Storage Media at up to about 1.5 Mbit/s”
  ◦ MPEG-2: “Generic Coding of Moving Pictures and Associated Audio”
  ◦ MPEG-3: no longer exists (was merged into MPEG-2)
  ◦ MPEG-4: “Coding of Audio-Visual Objects”
  ◦ MPEG-7: “Multimedia Content Description Interface”
  ◦ MPEG-21: “Multimedia Framework”

XML for Media Description

• The benefits of XML as a metadata language are well known:
  ◦ grammar-based; declarative; hierarchical structure; modular; extensible; human-readable; separation of content & structure from presentation & behaviour; etc.
• XML-/SGML-based markup languages for audio/video include:
  ◦ HyTime, SMDL, 4ML, ChordML, FlowML, MusiXML, MNML, MusicXML, VoiceXML, SMIL, MPEG-7
  ◦ See http://xml.coverpages.org/xmlMusic.html
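Because the description is plain, hierarchical XML, any standard parser can query it. The element names below form a hypothetical, simplified schema chosen for illustration; they are not the MPEG-7 description schemes. Python's xml.etree.ElementTree is used to read the fields back.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified description; NOT the MPEG-7 schema.
DESCRIPTION = """<track id="t42">
  <title>Example Song</title>
  <composer>Example Composer</composer>
  <genre>Jazz</genre>
  <instrumentation>
    <instrument>piano</instrument>
    <instrument>double bass</instrument>
  </instrumentation>
</track>"""

root = ET.fromstring(DESCRIPTION)
title = root.findtext("title")
genre = root.findtext("genre")
instruments = [e.text for e in root.findall("instrumentation/instrument")]

# The hierarchy makes simple metadata queries straightforward.
if genre == "Jazz" and "piano" in instruments:
    print(f"{root.get('id')}: {title} features piano")
```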

Music Retrieval

• Consider how you find and browse CDs in a music store:
  [Figure: music store sections labelled Jazz, Rap, Classical, R&B, Rock, World]

Music Retrieval

• Now consider how you find and browse CDs in an online music store … same metaphor! But online stores can have thousands of CDs/tracks
  [Figure: CDNOW “Music Styles” menu listing Classical, Jazz, Rock, R&B, Rap, Dance, World, New Age]

Music Retrieval & Delivery

• When MP3.com was up and running, there were 5,000 new bands each month and 1,200 new songs every day!
  ◦ You probably aren't interested in most of those songs; can we do better than genre tags?
  ◦ How about queries like: “Find me new bands that sound like U2”
  ◦ Collaborative filtering: “Find me new bands that sound like REM that my friend Tom likes”
  ◦ MPEG-7: a new standard for metadata (genre, composer, title, instrumentation, etc.) that can be used in music retrieval applications
• With the proliferation of hand-held personalised digital audio devices, interesting futures lie ahead for music!
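Collaborative filtering can be sketched as user-based recommendation: find listeners whose ratings resemble yours and suggest what they rated that you have not heard. In this Python sketch the listeners, bands and ratings are invented, and similarity is cosine similarity over rating vectors; production recommenders add normalisation, neighbourhood selection and far more data.

```python
from math import sqrt
from typing import Dict, List

# Invented ratings (1-5) of bands by listeners.
ratings: Dict[str, Dict[str, float]] = {
    "tom": {"REM": 5, "U2": 4, "The Frames": 5},
    "anna": {"REM": 5, "U2": 5, "Snow Patrol": 4},
    "me": {"REM": 5, "U2": 4},
}


def cosine(u: Dict[str, float], v: Dict[str, float]) -> float:
    """Cosine similarity of two sparse rating vectors (missing ratings count as 0)."""
    dot = sum(u[b] * v[b] for b in set(u) & set(v))
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(user: str, k: int = 3) -> List[str]:
    """Score unheard bands by other listeners' ratings, weighted by similarity."""
    scores: Dict[str, float] = {}
    for other, their in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], their)
        for band, rating in their.items():
            if band not in ratings[user]:
                scores[band] = scores.get(band, 0.0) + sim * rating
    return sorted(scores, key=lambda band: scores[band], reverse=True)[:k]


print(recommend("me"))  # ['The Frames', 'Snow Patrol']
```

Restricting the loop to a list of friends (e.g. only “tom”) would turn this into the “bands that my friend Tom likes” query; combining it with content features such as those in MPEG-7 descriptions would address “sounds like U2”.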
