CS 258 Parallel Computer Architecture Lecture 1 Introduction



CS 258 Parallel Computer Architecture, Lecture 1: Introduction to Parallel Architecture. January 23, 2002. Prof. John D. Kubiatowicz. CS258 S99

![Computer Architecture Is the attributes of a computing system as seen by the Computer Architecture Is … the attributes of a [computing] system as seen by the](https://slidetodoc.com/presentation_image_h2/b91d171be48aa85bd2ce83e0cd9a11d0/image-2.jpg)

Computer Architecture Is …

"the attributes of a [computing] system as seen by the programmer, i.e., the conceptual structure and functional behavior, as distinct from the organization of the data flows and controls, the logic design, and the physical implementation."

Amdahl, Blaauw, and Brooks, 1964

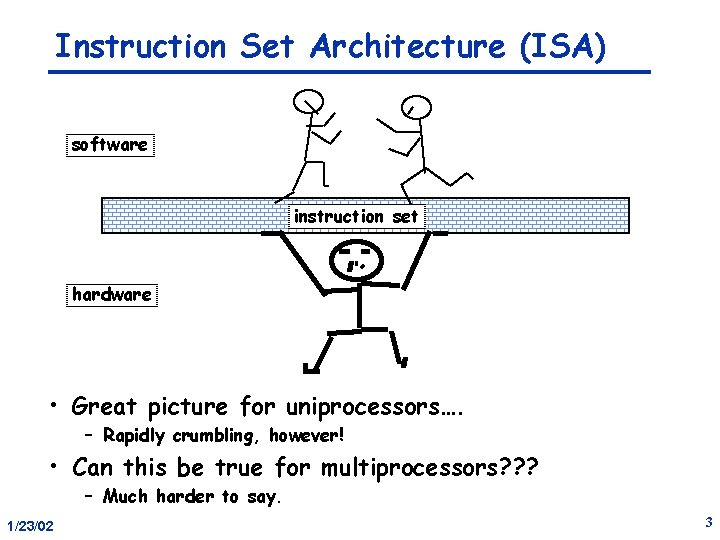

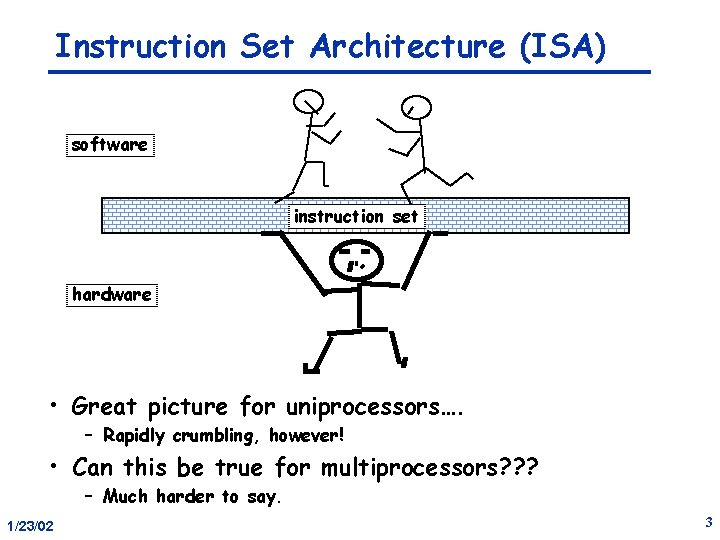

Instruction Set Architecture (ISA)

[Figure: the instruction set as the interface between software and hardware]

- Great picture for uniprocessors…
  - Rapidly crumbling, however!
- Can this be true for multiprocessors???
  - Much harder to say.

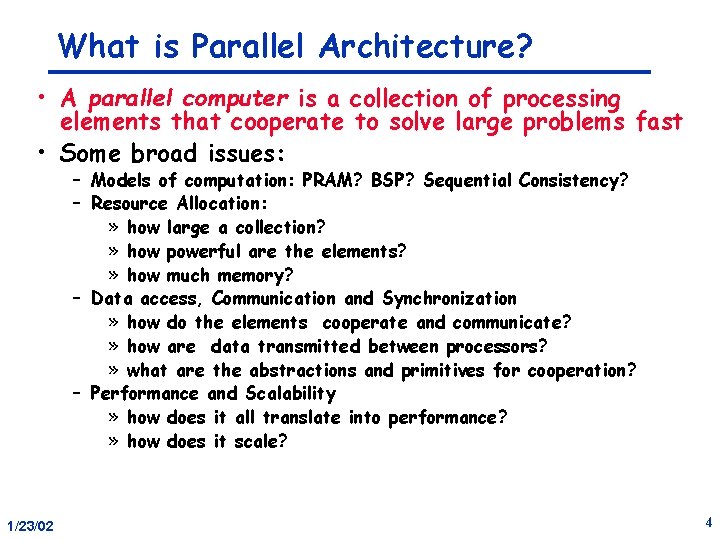

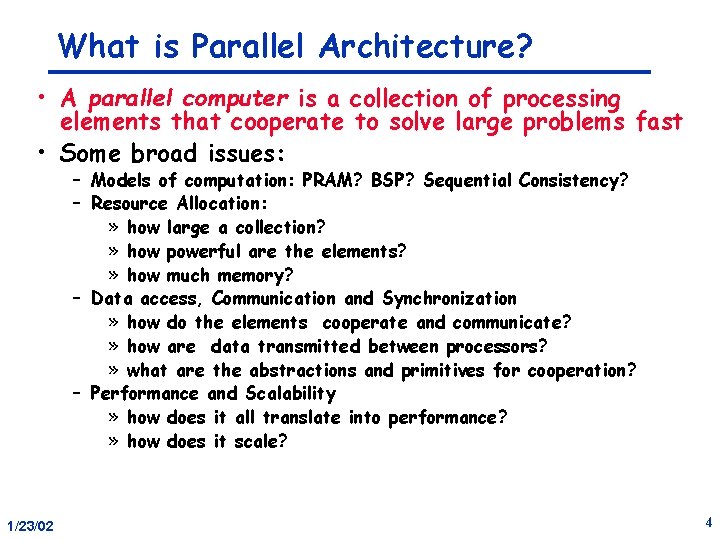

What is Parallel Architecture?

- A parallel computer is a collection of processing elements that cooperate to solve large problems fast
- Some broad issues:
  - Models of computation: PRAM? BSP? Sequential Consistency?
  - Resource Allocation:
    - how large a collection?
    - how powerful are the elements?
    - how much memory?
  - Data access, Communication and Synchronization
    - how do the elements cooperate and communicate?
    - how are data transmitted between processors?
    - what are the abstractions and primitives for cooperation?
  - Performance and Scalability
    - how does it all translate into performance?
    - how does it scale?

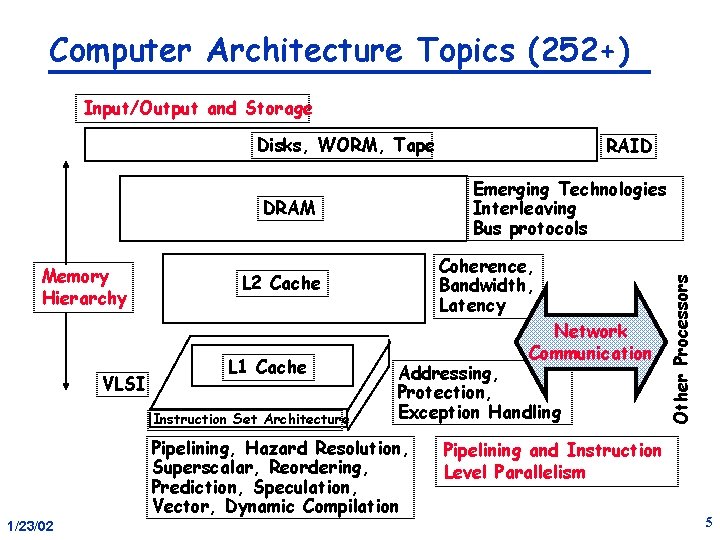

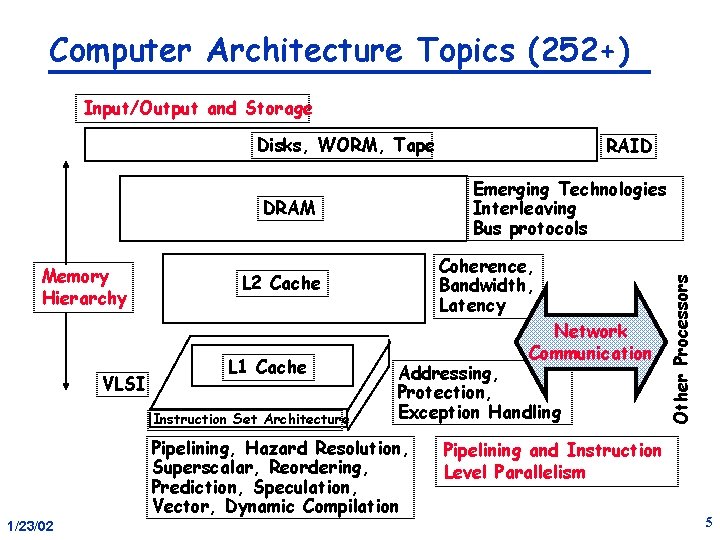

Computer Architecture Topics (252+)

- Input/Output and Storage: Disks, WORM, Tape; RAID
- Emerging Technologies, Interleaving, Bus protocols: DRAM
- Memory Hierarchy: L2 Cache, L1 Cache; Coherence, Bandwidth, Latency
- VLSI
- Instruction Set Architecture: Addressing, Protection, Exception Handling
- Pipelining and Instruction Level Parallelism: Pipelining, Hazard Resolution, Superscalar, Reordering, Prediction, Speculation, Vector, Dynamic Compilation
- Network Communication, Other Processors

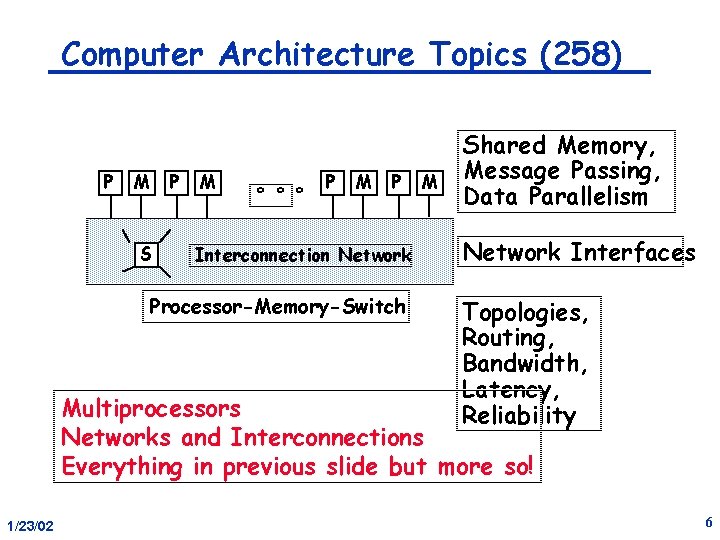

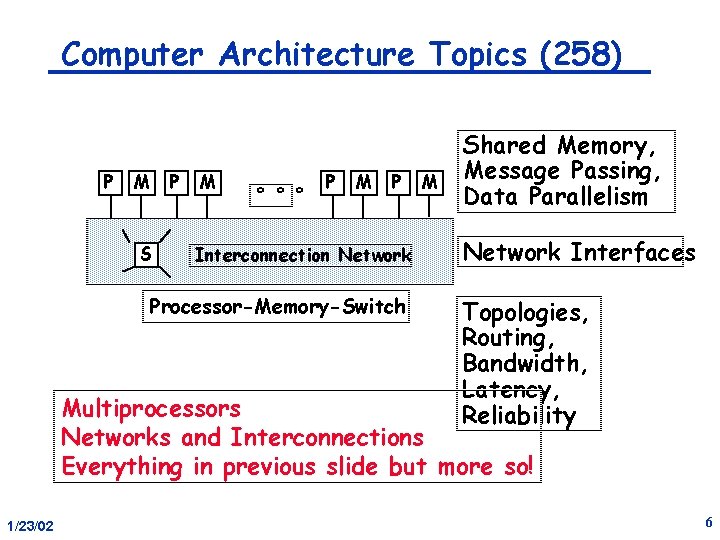

Computer Architecture Topics (258)

[Figure: processor (P) and memory (M) nodes attached through switches (S) to an Interconnection Network]

- Multiprocessors: Shared Memory, Message Passing, Data Parallelism
- Networks and Interconnections: Network Interfaces; Processor-Memory-Switch; Topologies, Routing, Bandwidth, Latency, Reliability
- Everything in previous slide but more so!

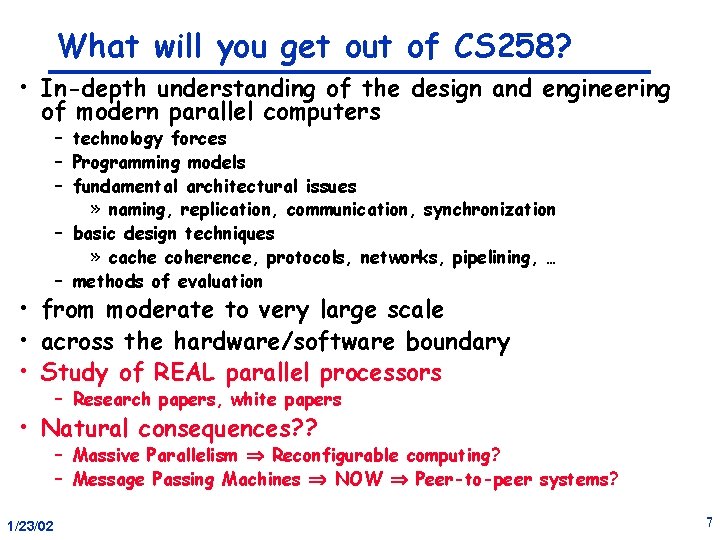

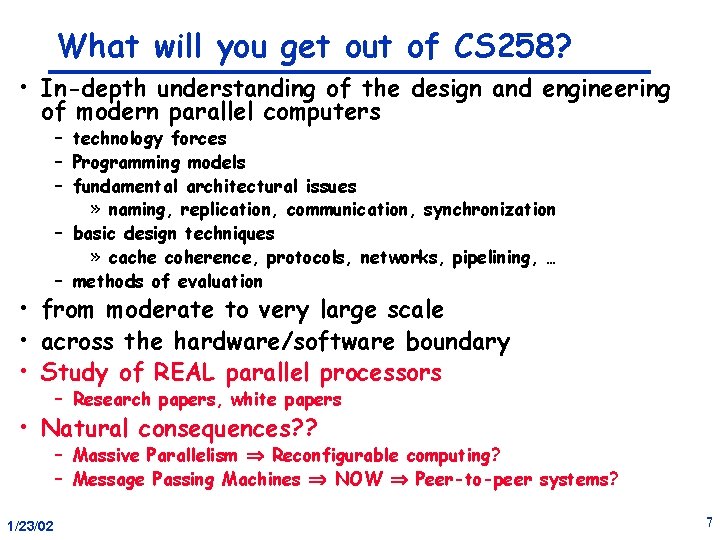

What will you get out of CS 258?

- In-depth understanding of the design and engineering of modern parallel computers
  - technology forces
  - programming models
  - fundamental architectural issues
    - naming, replication, communication, synchronization
  - basic design techniques
    - cache coherence, protocols, networks, pipelining, …
  - methods of evaluation
- from moderate to very large scale
- across the hardware/software boundary
- Study of REAL parallel processors
  - Research papers, white papers
- Natural consequences??
  - Massive Parallelism, Reconfigurable computing?
  - Message Passing Machines, NOW, Peer-to-peer systems?

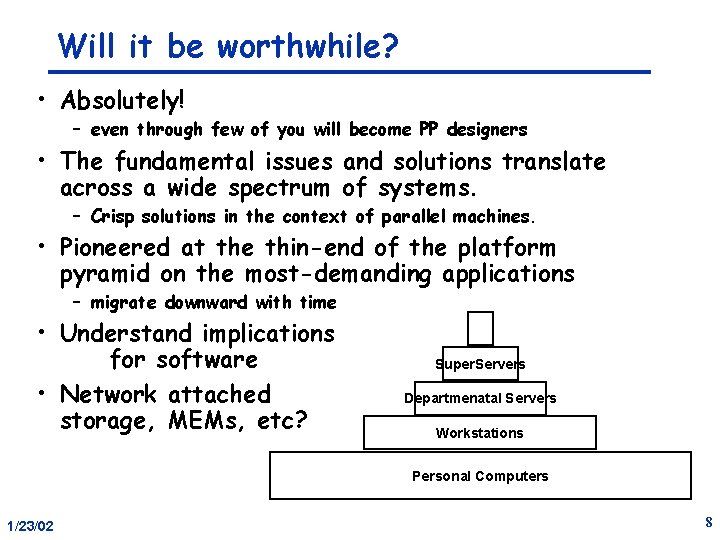

Will it be worthwhile?

- Absolutely!
  - even though few of you will become PP designers
- The fundamental issues and solutions translate across a wide spectrum of systems.
  - Crisp solutions in the context of parallel machines.
- Pioneered at the thin end of the platform pyramid on the most-demanding applications
  - migrate downward with time
- Understand implications for software
- Network attached storage, MEMs, etc?

[Figure: platform pyramid, top to bottom: SuperServers, Departmental Servers, Workstations, Personal Computers]

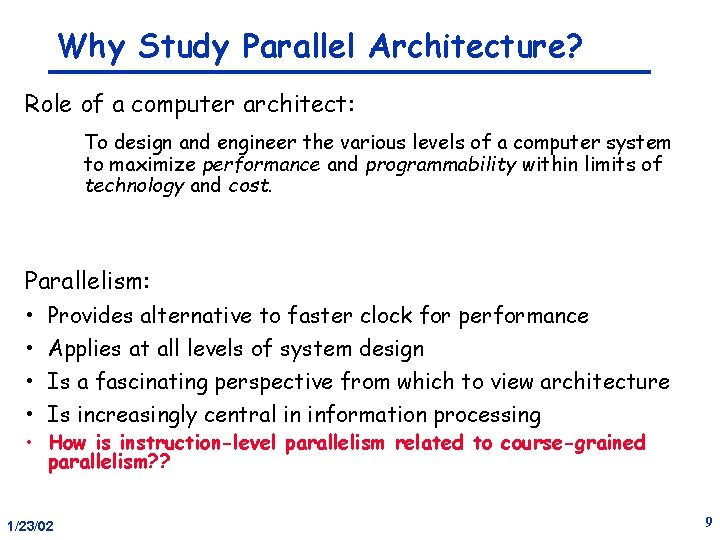

Why Study Parallel Architecture?

Role of a computer architect: To design and engineer the various levels of a computer system to maximize performance and programmability within limits of technology and cost.

Parallelism:

- Provides alternative to faster clock for performance
- Applies at all levels of system design
- Is a fascinating perspective from which to view architecture
- Is increasingly central in information processing
- How is instruction-level parallelism related to coarse-grained parallelism??

Is Parallel Computing Inevitable?

This was certainly not clear just a few years ago. Today, however:

- Application demands: Our insatiable need for computing cycles
- Technology Trends: Easier to build
- Architecture Trends: Better abstractions
- Economics: Cost of pushing uniprocessor
- Current trends:
  - Today’s microprocessors have multiprocessor support
  - Servers and workstations becoming MP: Sun, SGI, DEC, COMPAQ!...
  - Tomorrow’s microprocessors are multiprocessors

Can programmers handle parallelism?

- Humans not as good at parallel programming as they would like to think!
  - Need good model to think of machine
  - Architects pushed on instruction-level parallelism really hard, because it is “transparent”
- Can compiler extract parallelism?
  - Sometimes
- How do programmers manage parallelism??
  - Language to express parallelism?
  - How to schedule varying number of processors?
- Is communication Explicit (message-passing) or Implicit (shared memory)?
  - Are there any ordering constraints on communication?
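The explicit versus implicit distinction can be sketched with ordinary Python threads. This is only an illustration on one machine, not a model of any particular parallel architecture: the function names `sum_shared_memory` and `sum_message_passing` and the data are hypothetical. In the first version the workers communicate implicitly by updating a shared variable; in the second they communicate explicitly by sending messages through a queue.

```python
import queue
import threading


def sum_shared_memory(chunks):
    """Implicit communication: workers accumulate into one shared variable."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)
        with lock:  # synchronization guards the shared location
            total += partial

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def sum_message_passing(chunks):
    """Explicit communication: workers send partial sums as messages."""
    mailbox = queue.Queue()

    def worker(chunk):
        mailbox.put(sum(chunk))  # explicit send

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    total = sum(mailbox.get() for _ in chunks)  # one explicit receive per worker
    for t in threads:
        t.join()
    return total


# Hypothetical workload: the integers 1..100 split into four interleaved slices.
data = list(range(1, 101))
chunks = [data[i::4] for i in range(4)]
print(sum_shared_memory(chunks), sum_message_passing(chunks))
```

Both versions compute the same sum. The difference is where the ordering constraint lives: in the lock around the shared location, or in the send/receive pairing on the queue.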

Granularity:

- Is communication fine or coarse grained?
  - Small messages vs big messages
- Is parallelism fine or coarse grained?
  - Small tasks (frequent synchronization) vs big tasks
- If hardware handles fine-grained parallelism, then easier to get incremental scalability
- Fine-grained communication and parallelism harder than coarse-grained:
  - Harder to build with low overhead
  - Custom communication architectures often needed
- Ultimate coarse-grained communication:
  - GIMPS (Great Internet Mersenne Prime Search)
  - Communication once a month
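A rough cost model shows why fine-grained communication is hard to do with low overhead. The sketch below assumes each message pays a fixed overhead plus a bandwidth-limited transfer time. The function `transfer_time` and all numbers (10 microseconds per message, 100 MB/s) are hypothetical values chosen for illustration, not measurements of any machine.

```python
import math


def transfer_time(total_bytes, message_bytes, overhead_s, bandwidth_bytes_per_s):
    """Time to move total_bytes when every message pays a fixed overhead."""
    messages = math.ceil(total_bytes / message_bytes)
    return messages * overhead_s + total_bytes / bandwidth_bytes_per_s


# Hypothetical parameters (assumptions for illustration only).
TOTAL_BYTES = 1_000_000
OVERHEAD_S = 10e-6       # fixed per-message cost
BANDWIDTH = 100e6        # bytes per second

fine = transfer_time(TOTAL_BYTES, 100, OVERHEAD_S, BANDWIDTH)        # 10,000 small messages
coarse = transfer_time(TOTAL_BYTES, 100_000, OVERHEAD_S, BANDWIDTH)  # 10 big messages
print(f"fine-grained:   {fine:.4f} s")
print(f"coarse-grained: {coarse:.4f} s")
```

With these assumed numbers the per-message overhead dominates the fine-grained case, which is why low-overhead fine-grained communication tends to need custom hardware support.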

CS 258: Staff

- Instructor: Prof. John D. Kubiatowicz
  - Office: 673 Soda Hall, 643-6817, kubitron@cs
  - Office Hours: Thursday 1:30 - 3:00 or by appt.
- Class: Wed, Fri, 1:00 - 2:30 pm, 310 Soda Hall
- Administrative: Veronique Richard
  - Office: 676 Soda Hall, 642-4334, nicou@cs
- Web page: http://www.cs/~kubitron/courses/cs258-S02/
  - Lectures available online before 11:30 AM day of lecture
- Email: cs258@kubi.cs.berkeley.edu
- Clip signup link on web page (as soon as it is up)

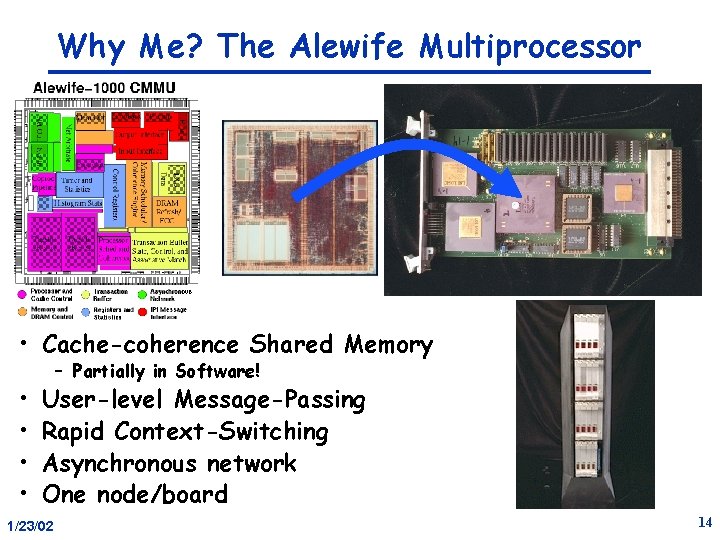

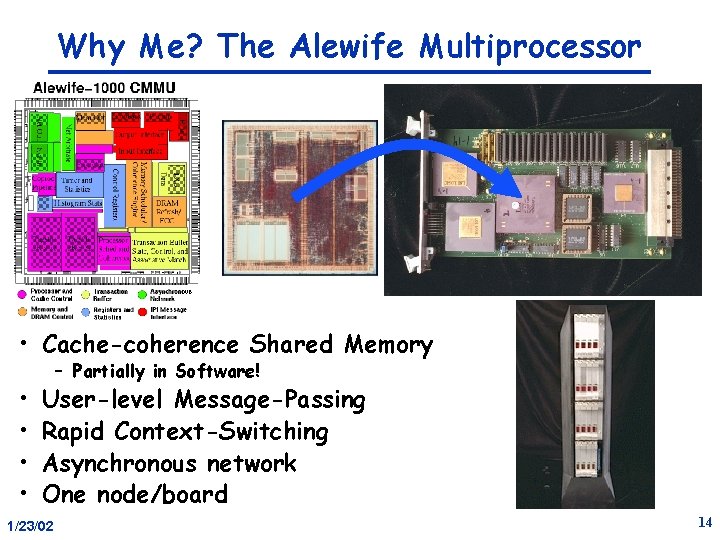

Why Me? The Alewife Multiprocessor

- Cache-coherent Shared Memory
  - Partially in Software!
- User-level Message-Passing
- Rapid Context-Switching
- Asynchronous network
- One node/board

TextBook: Two leaders in field

Text: Parallel Computer Architecture: A Hardware/Software Approach, by David Culler & Jaswinder Singh

Covers a range of topics. We will not necessarily cover them in order.

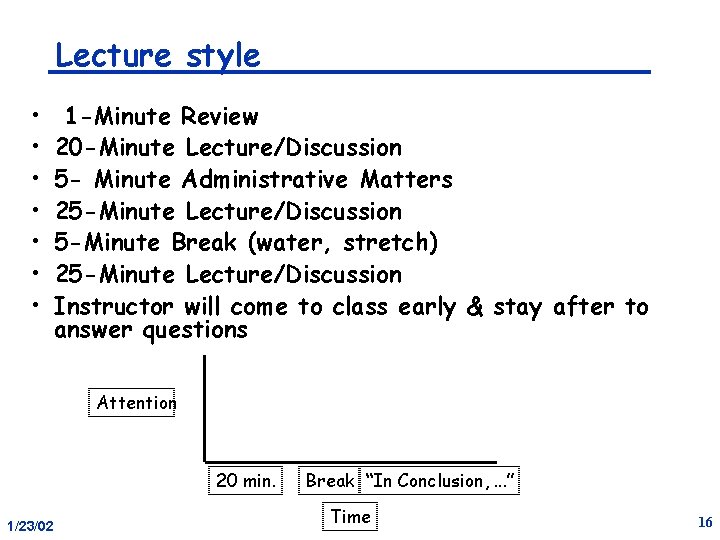

Lecture style

- 1-Minute Review
- 20-Minute Lecture/Discussion
- 5-Minute Administrative Matters
- 25-Minute Lecture/Discussion
- 5-Minute Break (water, stretch)
- 25-Minute Lecture/Discussion
- Instructor will come to class early & stay after to answer questions

[Figure: attention versus time, with marks at 20 min., the break, and “In Conclusion, ...”]

Research Paper Reading

- As graduate students, you are now researchers.
- Most information of importance to you will be in research papers.
- Ability to rapidly scan and understand research papers is key to your success.
- So: you will read lots of papers in this course!
  - Quick 1-paragraph summaries will be due in class
  - Students will take turns discussing papers
- Papers will be scanned and on web page.

How will grading work?

- No TA this term!
- Tentative breakdown:
  - 20% homeworks / paper presentations
  - 30% exam
  - 40% project (teams of 2)
  - 10% participation

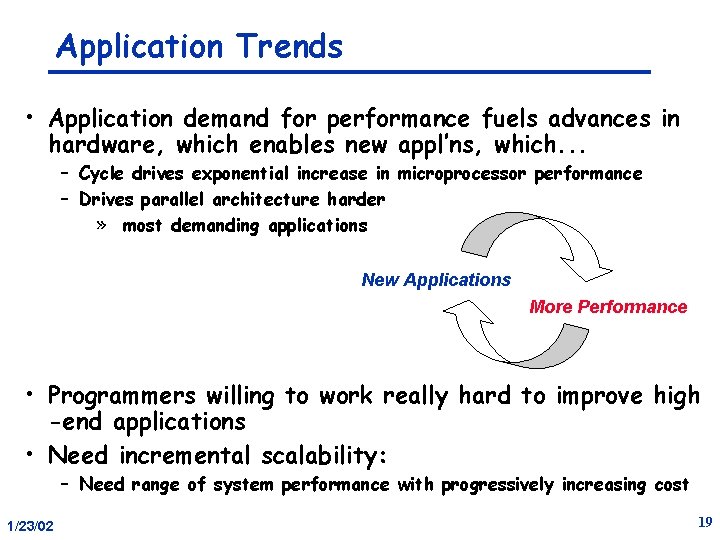

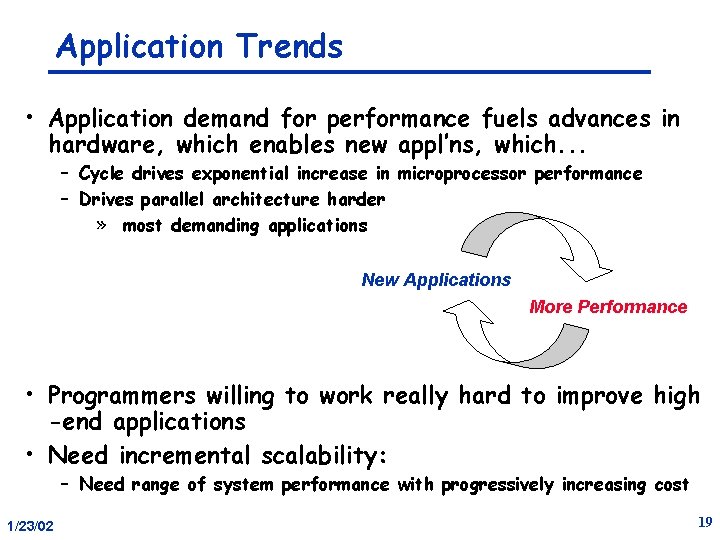

Application Trends

- Application demand for performance fuels advances in hardware, which enables new applications, which...
  - Cycle drives exponential increase in microprocessor performance
  - Drives parallel architecture harder
    - most demanding applications
- Programmers willing to work really hard to improve high-end applications
- Need incremental scalability:
  - Need range of system performance with progressively increasing cost

[Figure: cycle between New Applications and More Performance]

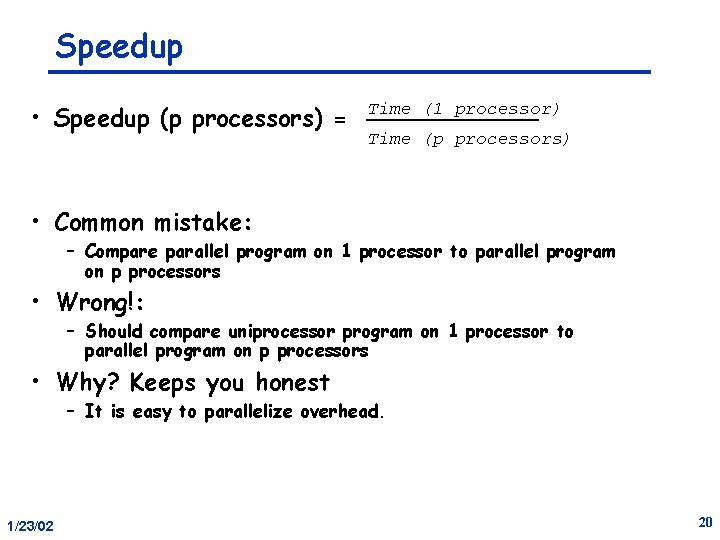

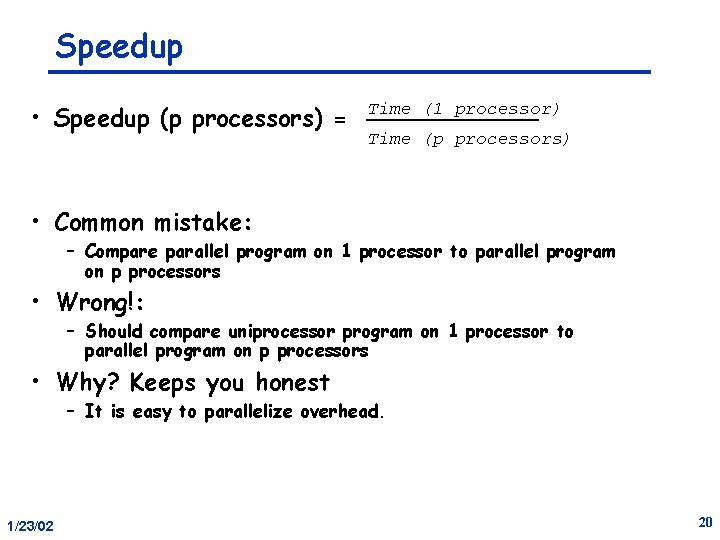

Speedup

$$
\text{Speedup}(p\ \text{processors}) = \frac{\text{Time}(1\ \text{processor})}{\text{Time}(p\ \text{processors})}
$$

- Common mistake:
  - Compare parallel program on 1 processor to parallel program on p processors
- Wrong!
  - Should compare uniprocessor program on 1 processor to parallel program on p processors
- Why? Keeps you honest
  - It is easy to parallelize overhead.
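The difference between the two baselines can be made concrete with a small calculation. The timings below are hypothetical numbers chosen for illustration, not measurements; the parallel program is assumed to run slower on one processor than the uniprocessor program because it carries parallelization overhead.

```python
def speedup(time_baseline: float, time_parallel: float) -> float:
    """Ratio of baseline execution time to parallel execution time."""
    return time_baseline / time_parallel


# Hypothetical timings in seconds (illustrative only).
t_uniprocessor = 100.0    # best uniprocessor program on 1 processor
t_parallel_on_1 = 125.0   # parallel program on 1 processor (includes overhead)
t_parallel_on_8 = 20.0    # parallel program on 8 processors

honest = speedup(t_uniprocessor, t_parallel_on_8)
inflated = speedup(t_parallel_on_1, t_parallel_on_8)
print(f"vs. uniprocessor program:     {honest:.2f}x")
print(f"vs. parallel program on 1 CPU: {inflated:.2f}x")
```

Under these assumed numbers the self-relative comparison reports a larger speedup only because the overhead in the one-processor run is itself being divided across processors.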

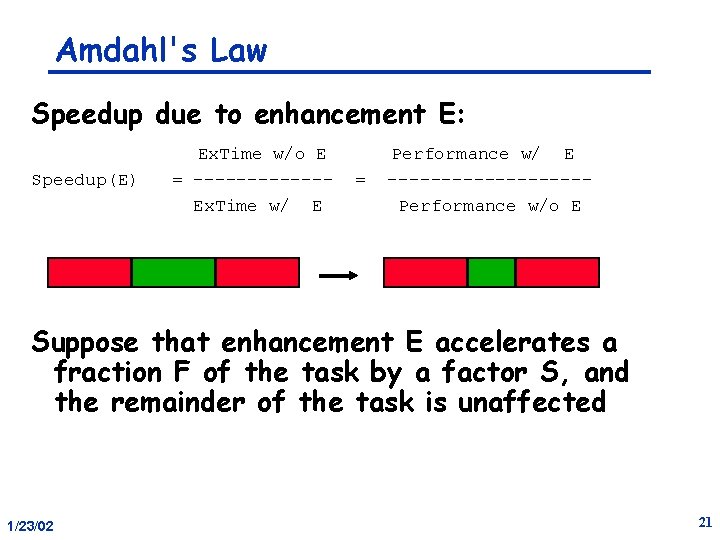

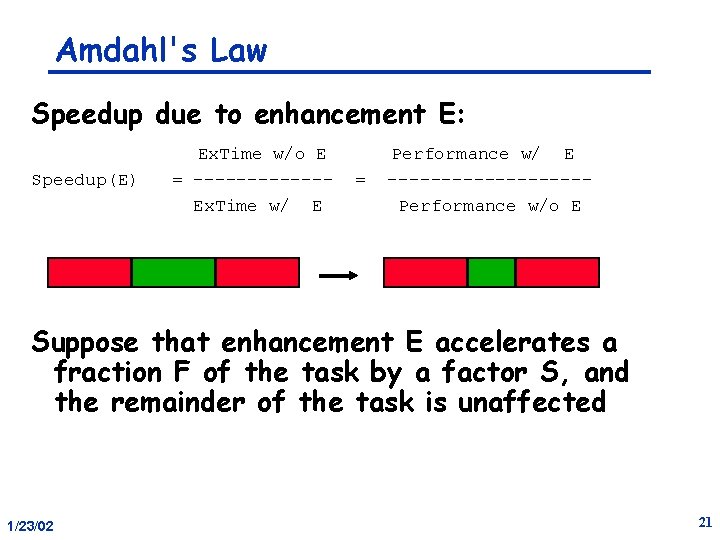

Amdahl's Law

Speedup due to enhancement E:

$$
\text{Speedup}(E) = \frac{\text{ExTime w/o } E}{\text{ExTime w/ } E} = \frac{\text{Performance w/ } E}{\text{Performance w/o } E}
$$

Suppose that enhancement E accelerates a fraction F of the task by a factor S, and the remainder of the task is unaffected.
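Under that assumption about F and S, the usual textbook statement of Amdahl's Law follows in two steps. The subscripts "old" and "new" (without and with E) are notation introduced here for illustration.

$$
\text{ExTime}_{\text{new}} = \text{ExTime}_{\text{old}} \times \left[(1 - F) + \frac{F}{S}\right]
$$

$$
\text{Speedup}(E) = \frac{\text{ExTime}_{\text{old}}}{\text{ExTime}_{\text{new}}} = \frac{1}{(1 - F) + F/S}
$$

For example, F = 0.9 and S = 10 give 1 / (0.1 + 0.09) ≈ 5.26. Even as S grows without bound, the speedup stays below 1 / (1 − F) = 10.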

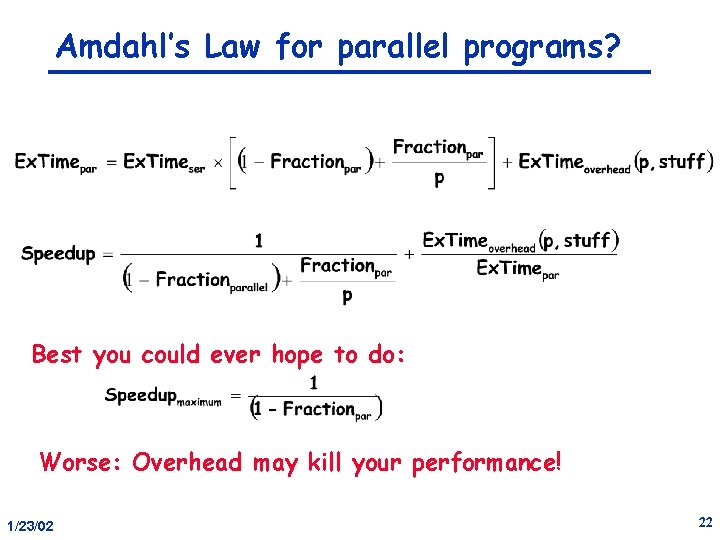

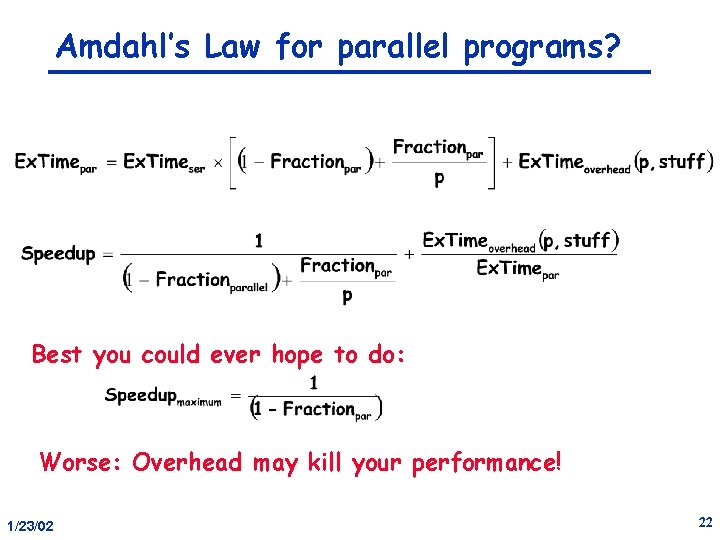

Amdahl’s Law for parallel programs?

Best you could ever hope to do: [formula in slide image]

Worse: Overhead may kill your performance!
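A minimal sketch of how this plays out, assuming the standard form of Amdahl's Law in which a fraction F of the work parallelizes perfectly across p processors. The overhead term is a hypothetical model added for illustration (a cost that grows linearly with p, expressed as a fraction of the sequential time); the name `amdahl_speedup` and the parameter values are likewise assumptions.

```python
def amdahl_speedup(parallel_fraction: float, processors: int,
                   overhead_per_processor: float = 0.0) -> float:
    """Speedup when parallel_fraction of the work is spread over processors.

    overhead_per_processor is a hypothetical cost, as a fraction of the
    sequential time, paid once per processor (e.g. for coordination).
    """
    serial = 1.0 - parallel_fraction
    overhead = overhead_per_processor * processors
    return 1.0 / (serial + parallel_fraction / processors + overhead)


F = 0.95  # assumed parallel fraction
for p in (1, 16, 256):
    ideal = amdahl_speedup(F, p)
    with_overhead = amdahl_speedup(F, p, overhead_per_processor=0.001)
    print(f"p={p:4d}  ideal={ideal:6.2f}  with overhead={with_overhead:6.2f}")
```

Without overhead the speedup can never exceed 1 / (1 − F), here 20, no matter how many processors are added. With the assumed overhead term, adding processors eventually makes the program slower.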

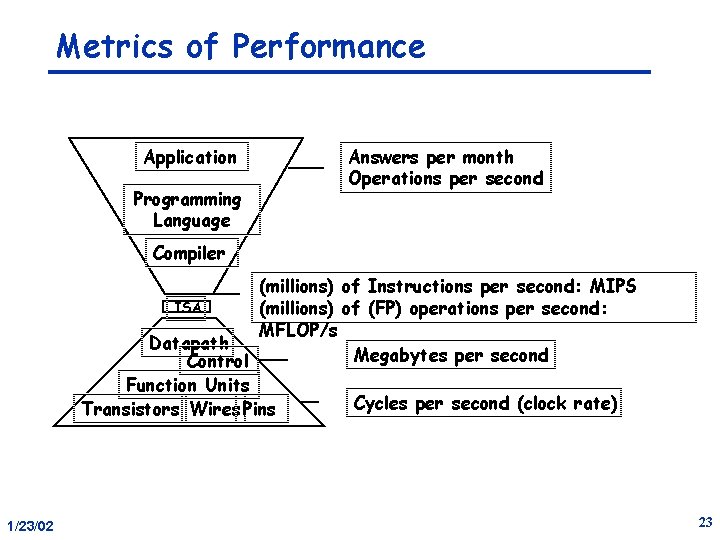

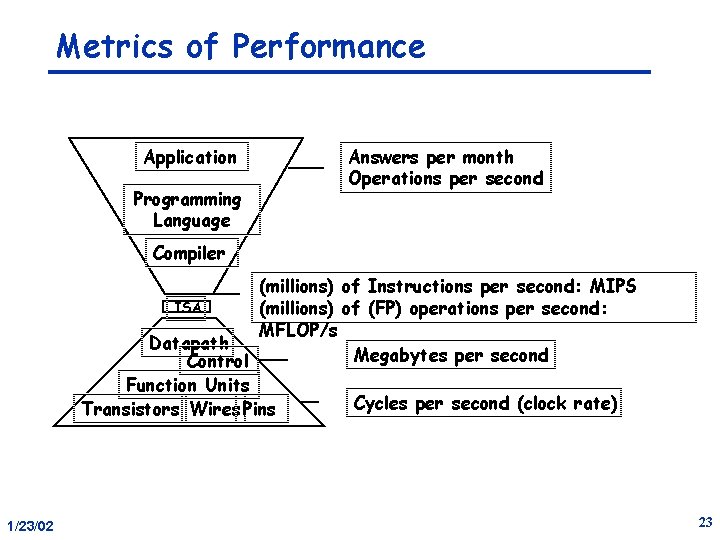

Metrics of Performance

- Application: Answers per month, Operations per second
- Programming Language
- Compiler
- ISA: (millions) of Instructions per second: MIPS; (millions) of (FP) operations per second: MFLOP/s
- Datapath, Control: Megabytes per second
- Function Units: Cycles per second (clock rate)
- Transistors, Wires, Pins

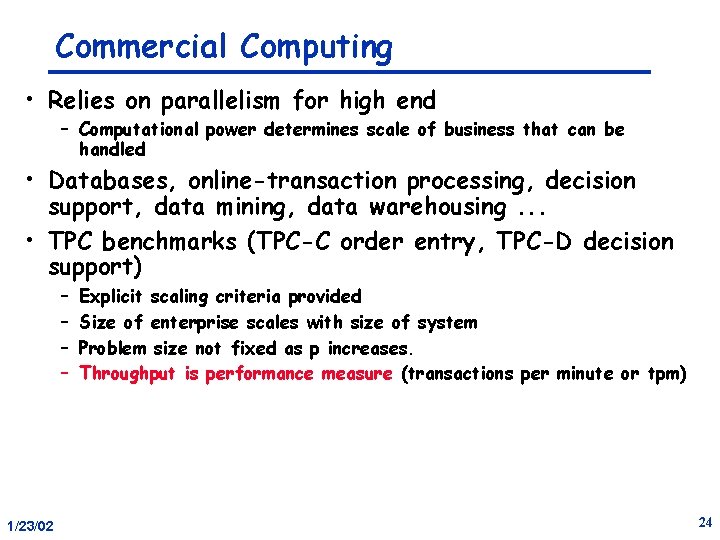

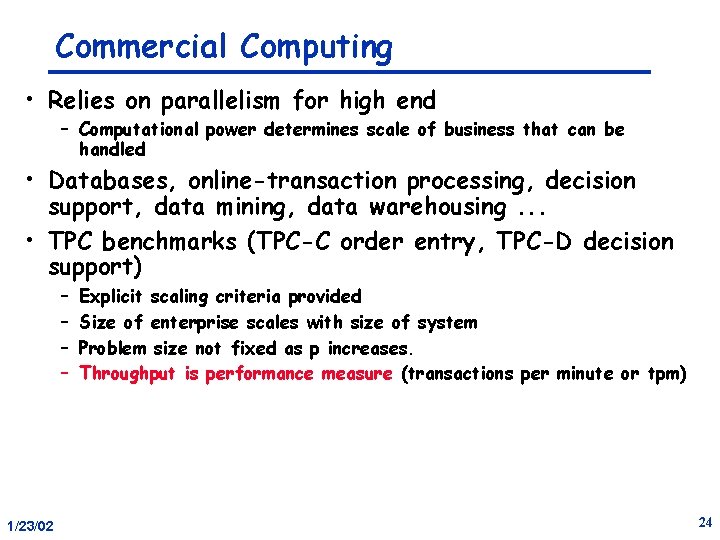

Commercial Computing

- Relies on parallelism for high end
  - Computational power determines scale of business that can be handled
- Databases, online-transaction processing, decision support, data mining, data warehousing...
- TPC benchmarks (TPC-C order entry, TPC-D decision support)
  - Explicit scaling criteria provided
  - Size of enterprise scales with size of system
  - Problem size not fixed as p increases.
  - Throughput is performance measure (transactions per minute or tpm)

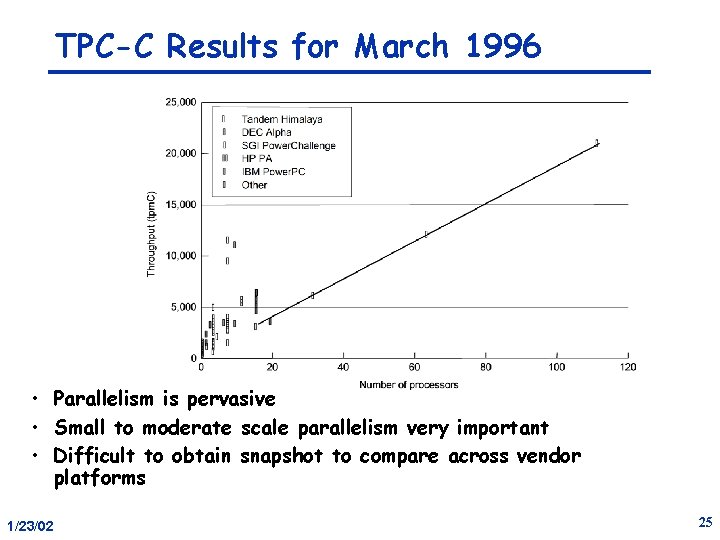

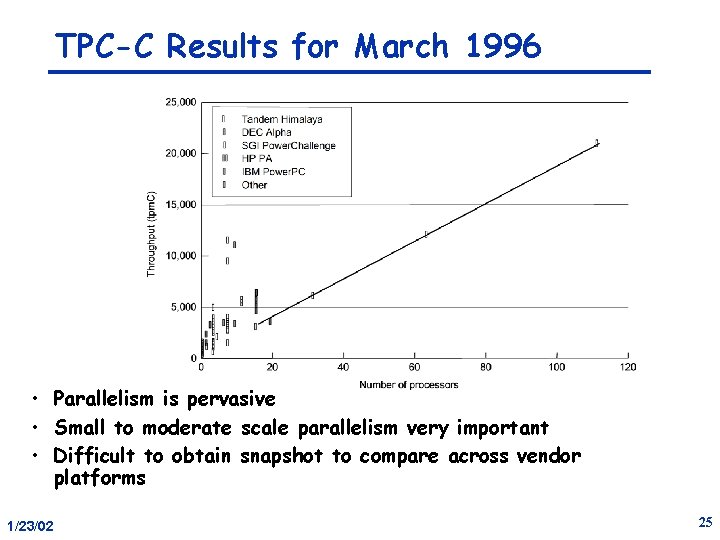

TPC-C Results for March 1996

- Parallelism is pervasive
- Small to moderate scale parallelism very important
- Difficult to obtain snapshot to compare across vendor platforms

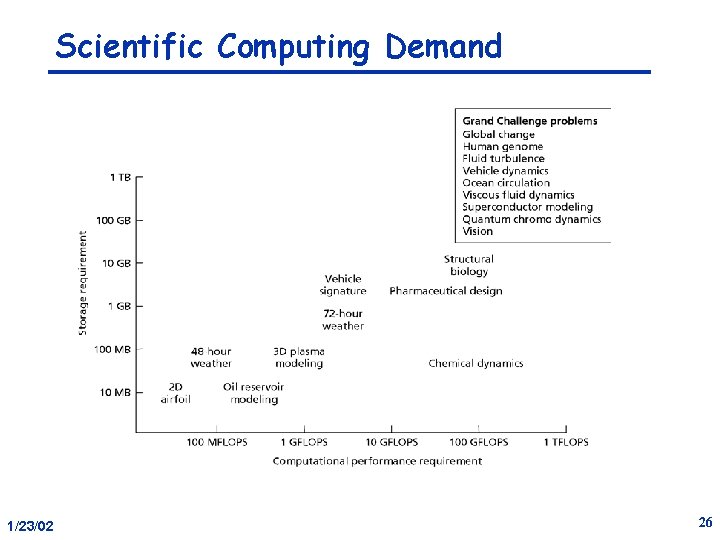

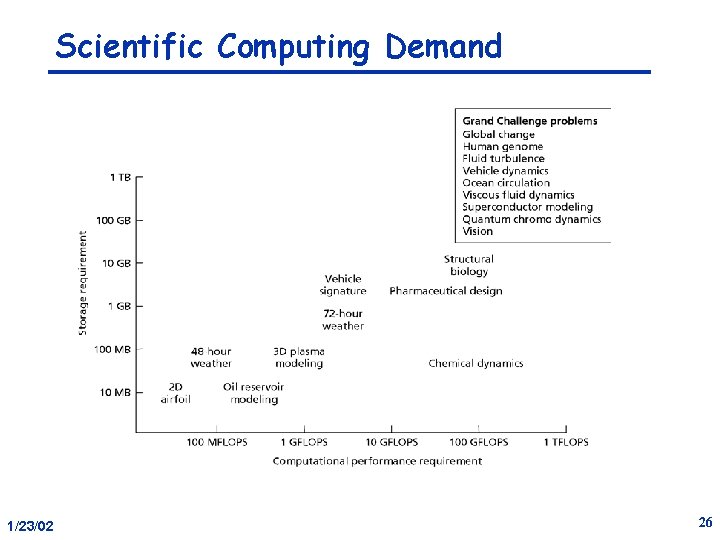

Scientific Computing Demand

Engineering Computing Demand

- Large parallel machines a mainstay in many industries
  - Petroleum (reservoir analysis)
  - Automotive (crash simulation, drag analysis, combustion efficiency)
  - Aeronautics (airflow analysis, engine efficiency, structural mechanics, electromagnetism)
  - Computer-aided design
  - Pharmaceuticals (molecular modeling)
  - Visualization
    - in all of the above
    - entertainment (films like Toy Story)
    - architecture (walk-throughs and rendering)
  - Financial modeling (yield and derivative analysis)
  - etc.

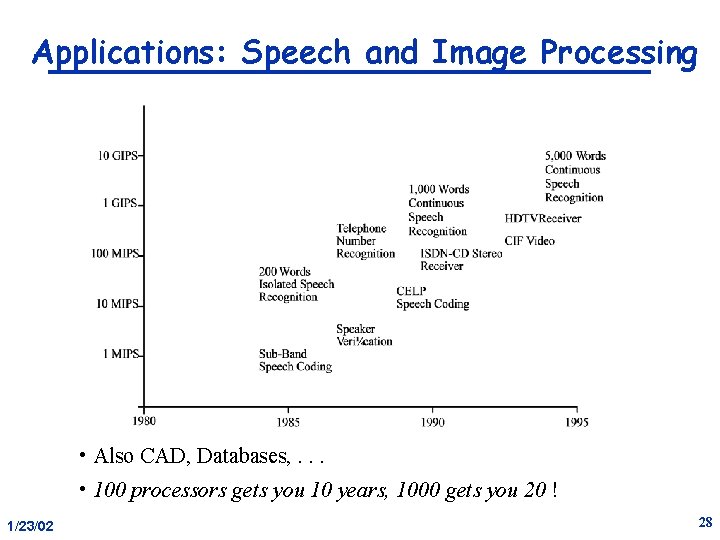

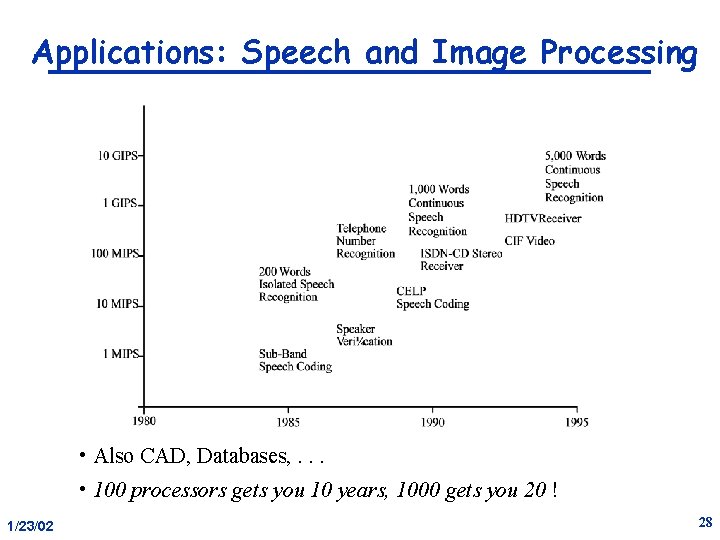

Applications: Speech and Image Processing

- Also CAD, Databases, ...
- 100 processors gets you 10 years, 1000 gets you 20!

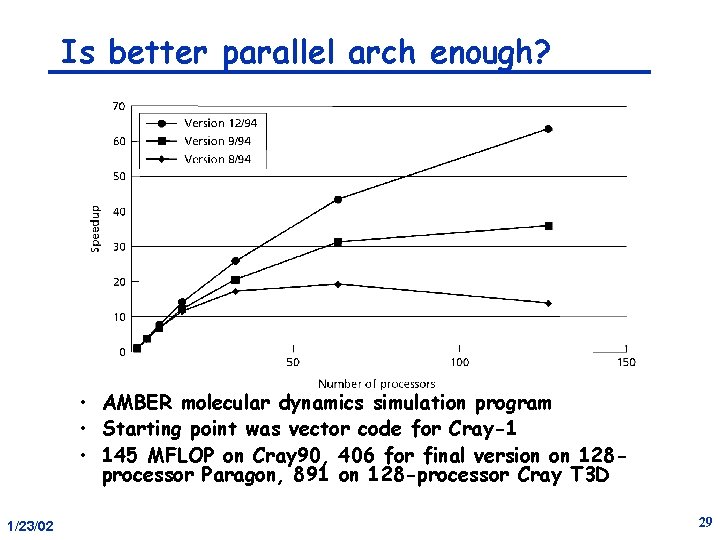

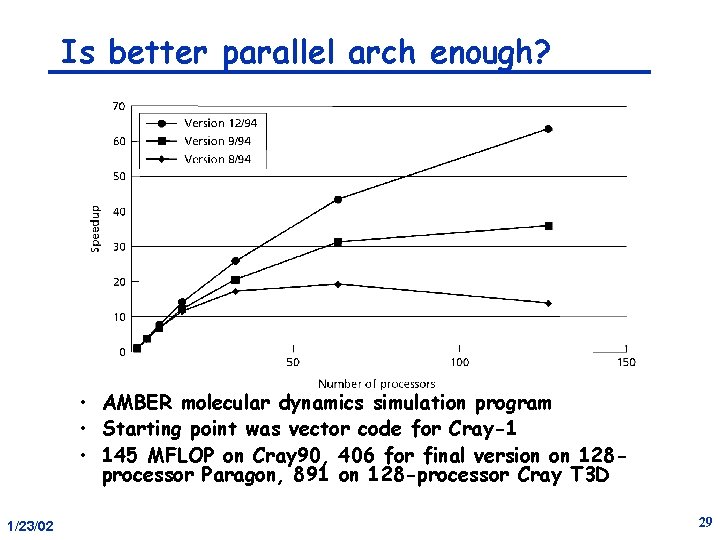

Is better parallel arch enough?

- AMBER molecular dynamics simulation program
- Starting point was vector code for Cray-1
- 145 MFLOP on Cray 90, 406 for final version on 128-processor Paragon, 891 on 128-processor Cray T3D

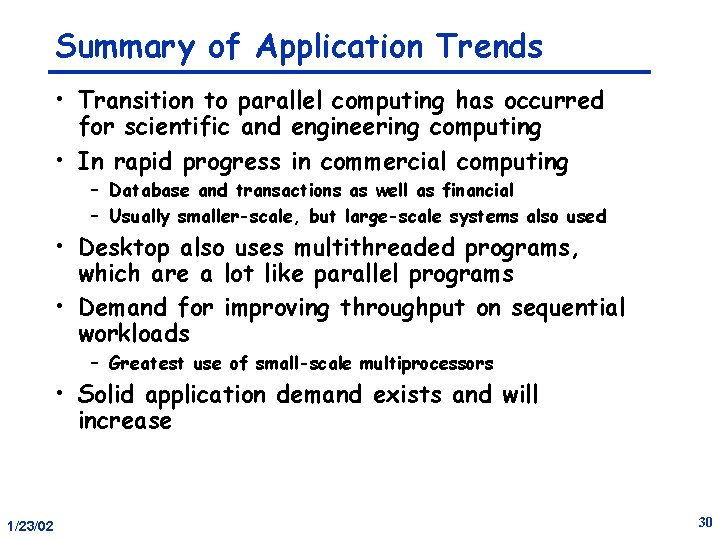

Summary of Application Trends

- Transition to parallel computing has occurred for scientific and engineering computing
- In rapid progress in commercial computing
  - Database and transactions as well as financial
  - Usually smaller-scale, but large-scale systems also used
- Desktop also uses multithreaded programs, which are a lot like parallel programs
- Demand for improving throughput on sequential workloads
  - Greatest use of small-scale multiprocessors
- Solid application demand exists and will increase

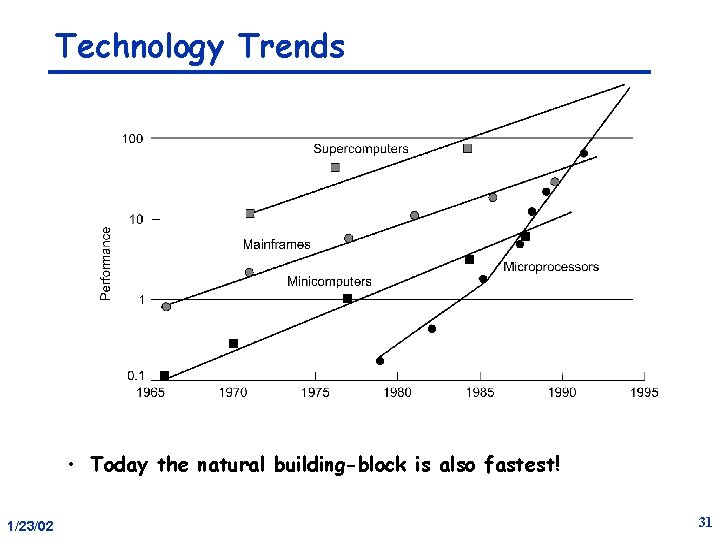

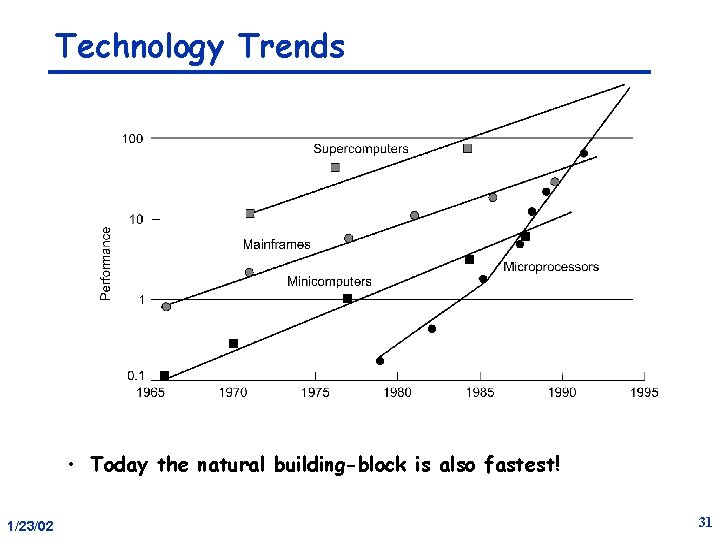

Technology Trends

- Today the natural building-block is also fastest!

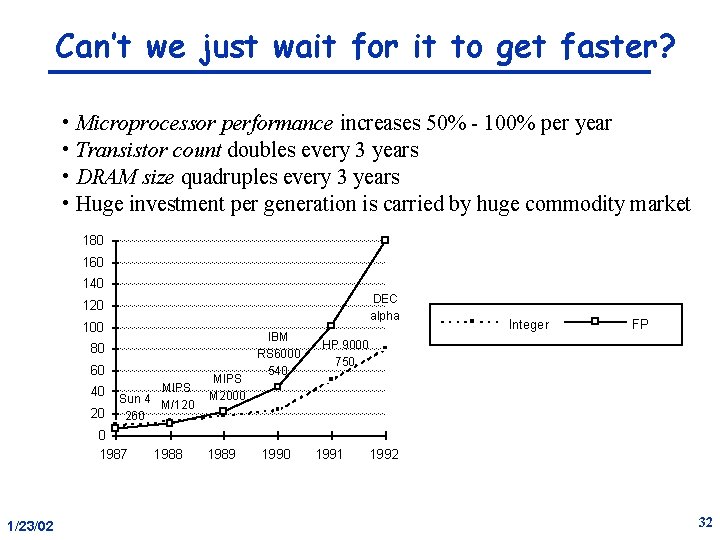

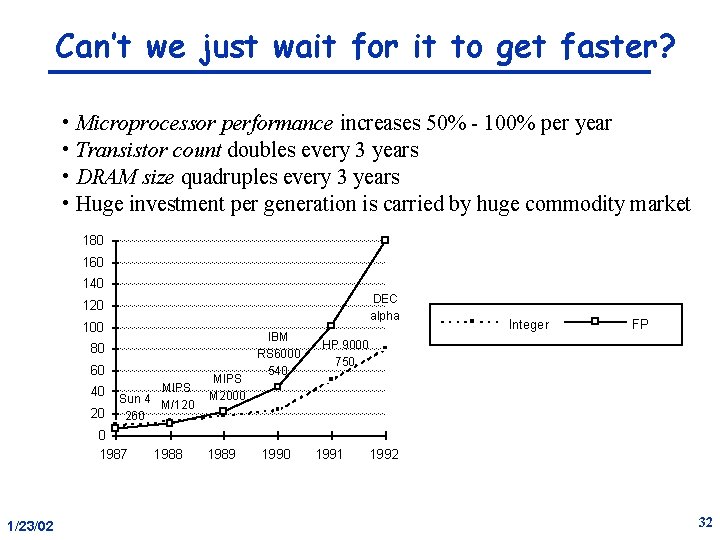

Can’t we just wait for it to get faster?

- Microprocessor performance increases 50% - 100% per year
- Transistor count doubles every 3 years
- DRAM size quadruples every 3 years
- Huge investment per generation is carried by huge commodity market

[Figure: integer and FP performance by year, 1987 to 1992; labeled machines: Sun 4/260, MIPS M/120, MIPS M2000, IBM RS6000/540, HP 9000/750, DEC Alpha]
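To get a feel for what these rates compound to, the sketch below projects each one over a decade. The helper name `growth_over` and the ten-year horizon are choices made for illustration; the rates themselves are the ones quoted in the bullets above.

```python
def growth_over(years: float, factor: float, period_years: float = 1.0) -> float:
    """Total growth after `years` if a quantity multiplies by `factor` every `period_years`."""
    return factor ** (years / period_years)


DECADE = 10
print(f"performance at  50%/yr: {growth_over(DECADE, 1.5):8.1f}x")
print(f"performance at 100%/yr: {growth_over(DECADE, 2.0):8.1f}x")
print(f"transistors (2x / 3 yr): {growth_over(DECADE, 2.0, 3.0):7.1f}x")
print(f"DRAM size   (4x / 3 yr): {growth_over(DECADE, 4.0, 3.0):7.1f}x")
```

Small differences in the annual rate compound into very different decade totals, which is part of why simply waiting for the uniprocessor is a real alternative that parallel machines have to beat.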

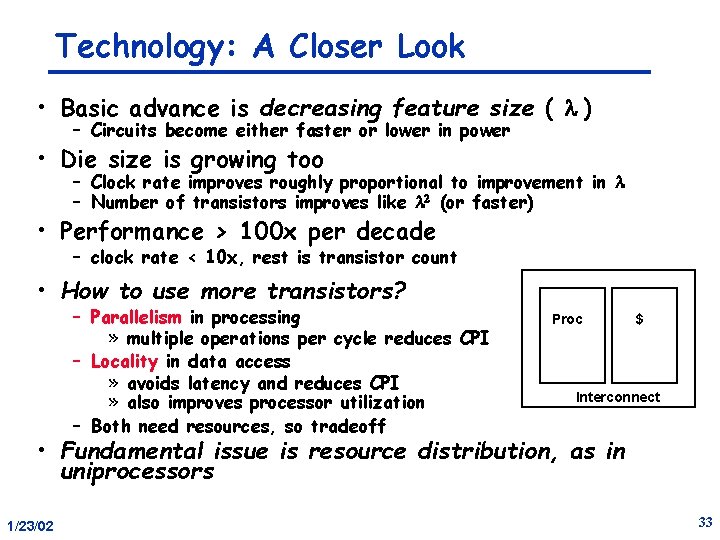

Technology: A Closer Look

- Basic advance is decreasing feature size (λ)
  - Circuits become either faster or lower in power
- Die size is growing too
  - Clock rate improves roughly proportional to improvement in λ
  - Number of transistors improves like λ² (or faster)
- Performance > 100x per decade
  - clock rate < 10x, rest is transistor count
- How to use more transistors?
  - Parallelism in processing
    - multiple operations per cycle reduces CPI
  - Locality in data access
    - avoids latency and reduces CPI
    - also improves processor utilization
  - Both need resources, so tradeoff
- Fundamental issue is resource distribution, as in uniprocessors

[Figure: chip area divided among Proc, $ (cache), and Interconnect]

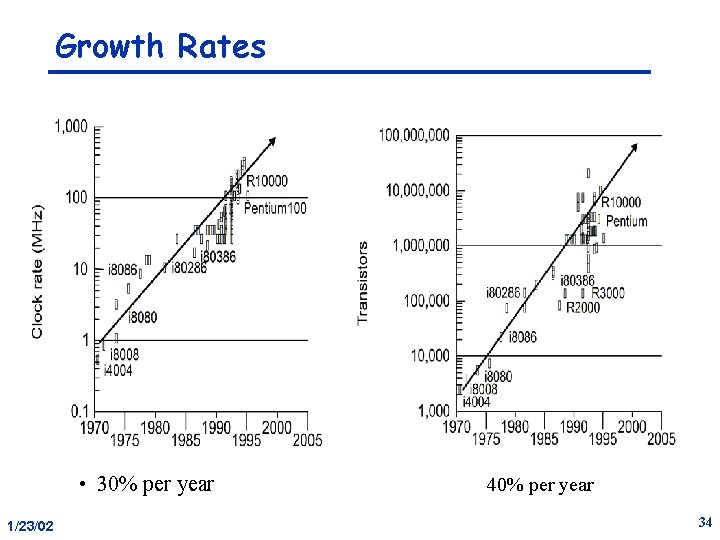

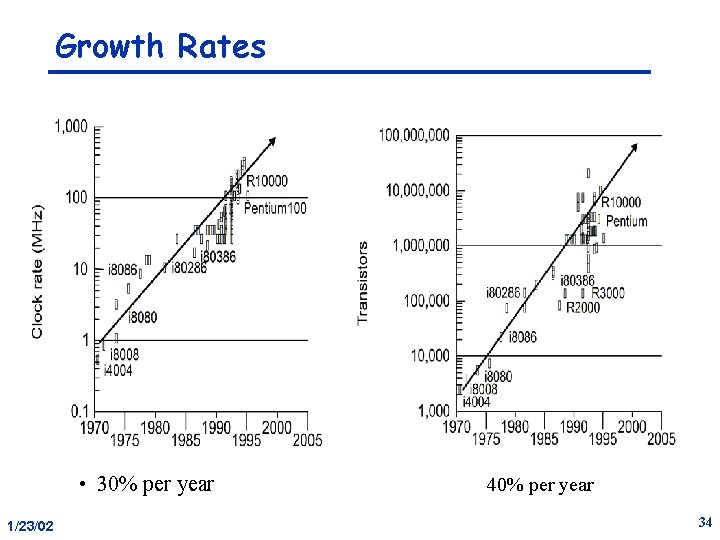

Growth Rates

[Figure: growth-rate plots annotated 30% per year and 40% per year]

Architectural Trends

- Architecture translates technology’s gifts into performance and capability
- Resolves the tradeoff between parallelism and locality
  - Current microprocessor: 1/3 compute, 1/3 cache, 1/3 off-chip connect
  - Tradeoffs may change with scale and technology advances
- Understanding microprocessor architectural trends
  - => Helps build intuition about design issues of parallel machines
  - => Shows fundamental role of parallelism even in “sequential” computers

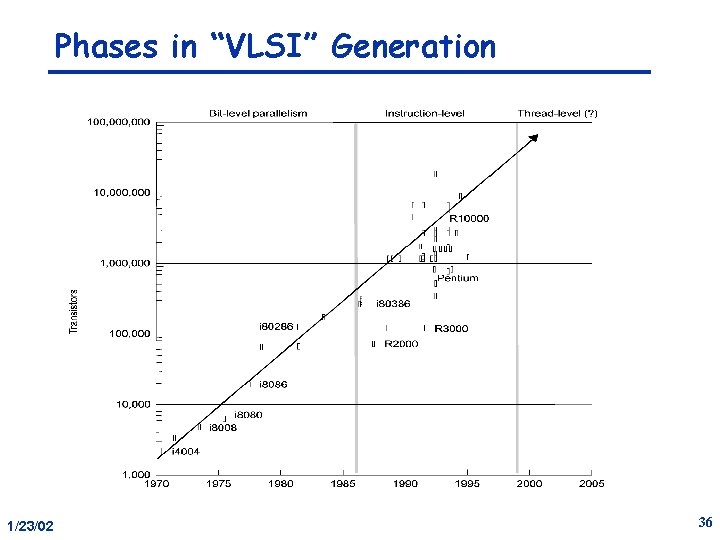

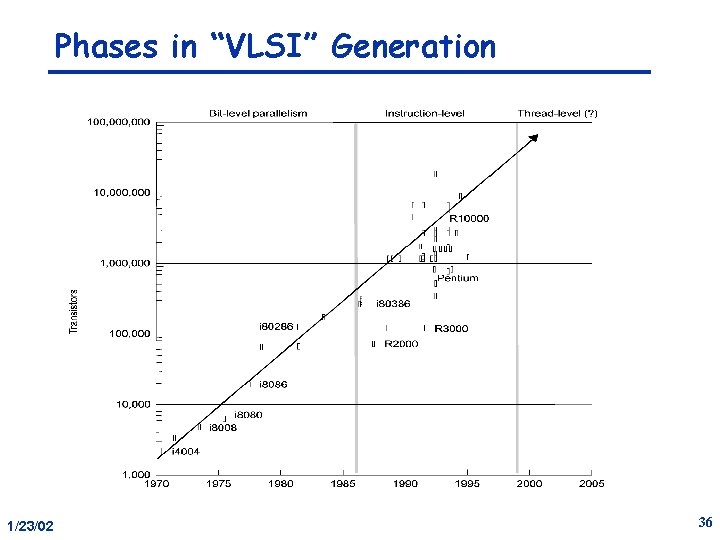

Phases in “VLSI” Generation

Architectural Trends

- Greatest trend in VLSI generation is increase in parallelism
  - Up to 1985: bit-level parallelism: 4-bit -> 8-bit -> 16-bit
    - slows after 32-bit
    - adoption of 64-bit now under way, 128-bit far (not performance issue)
    - great inflection point when 32-bit micro and cache fit on a chip
  - Mid 80s to mid 90s: instruction-level parallelism
    - pipelining and simple instruction sets, + compiler advances (RISC)
    - on-chip caches and functional units => superscalar execution
    - greater sophistication: out-of-order execution, speculation, prediction (to deal with control transfer and latency problems)
  - Next step: thread-level parallelism? Bit-level parallelism?

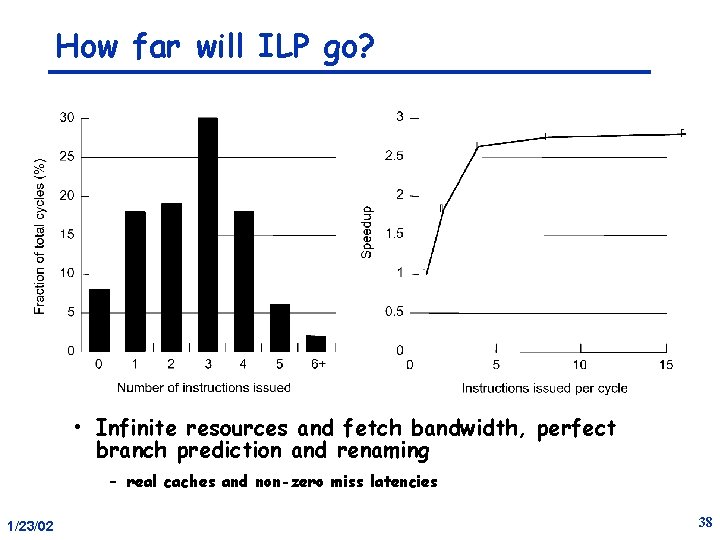

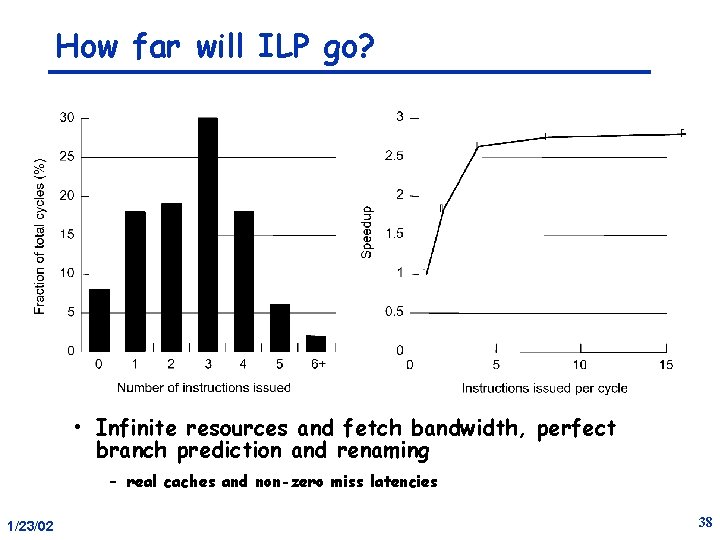

How far will ILP go?

- Infinite resources and fetch bandwidth, perfect branch prediction and renaming
  - real caches and non-zero miss latencies

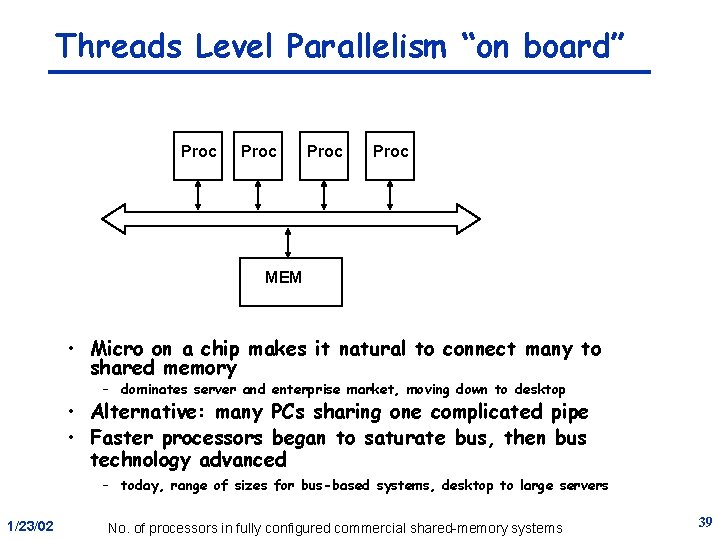

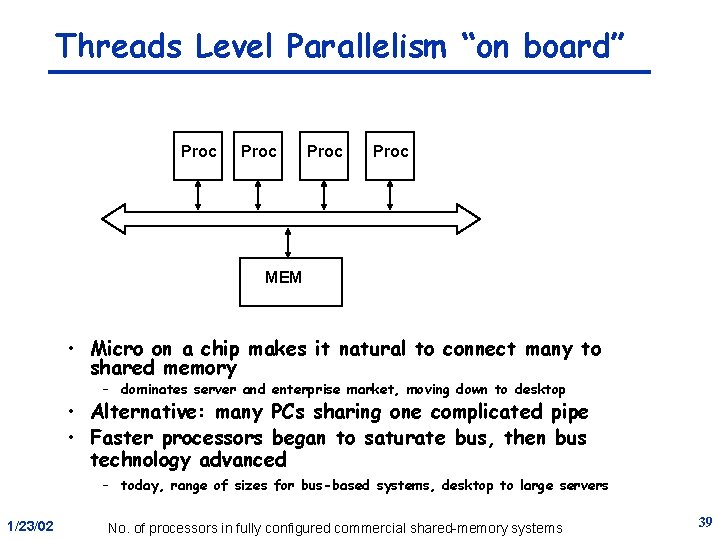

Thread-Level Parallelism “on board”

[Figure: several processors (Proc) sharing one memory (MEM)]

- Micro on a chip makes it natural to connect many to shared memory
  - dominates server and enterprise market, moving down to desktop
- Alternative: many PCs sharing one complicated pipe
- Faster processors began to saturate bus, then bus technology advanced
  - today, range of sizes for bus-based systems, desktop to large servers

[Figure: No. of processors in fully configured commercial shared-memory systems]

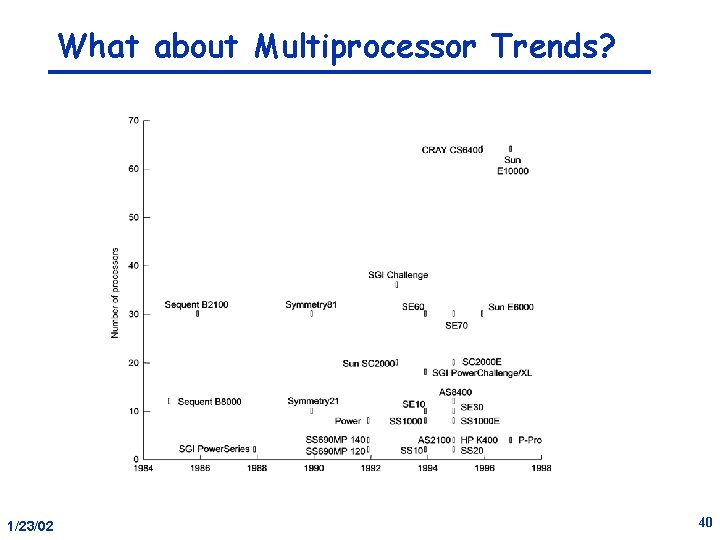

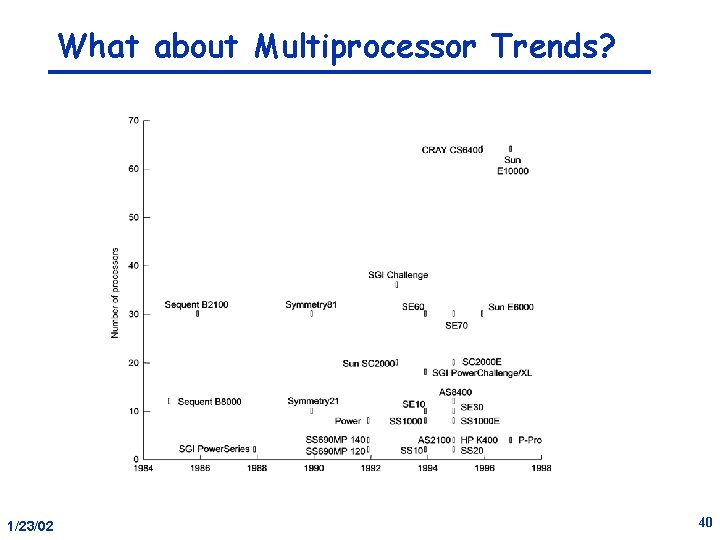

What about Multiprocessor Trends?

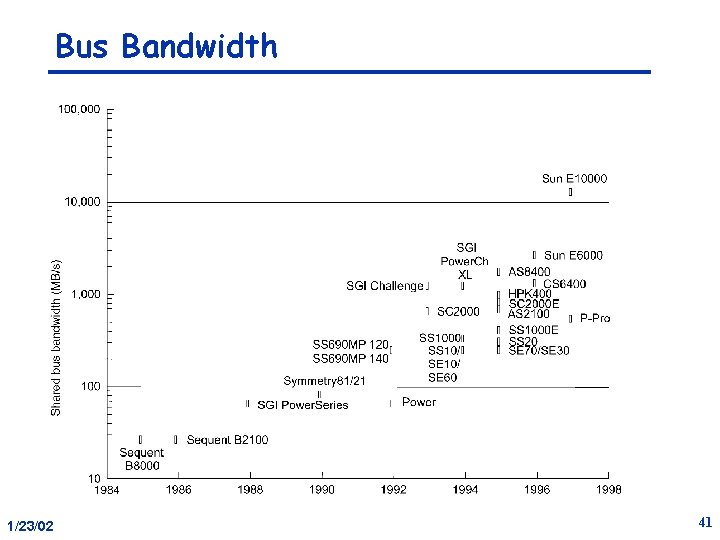

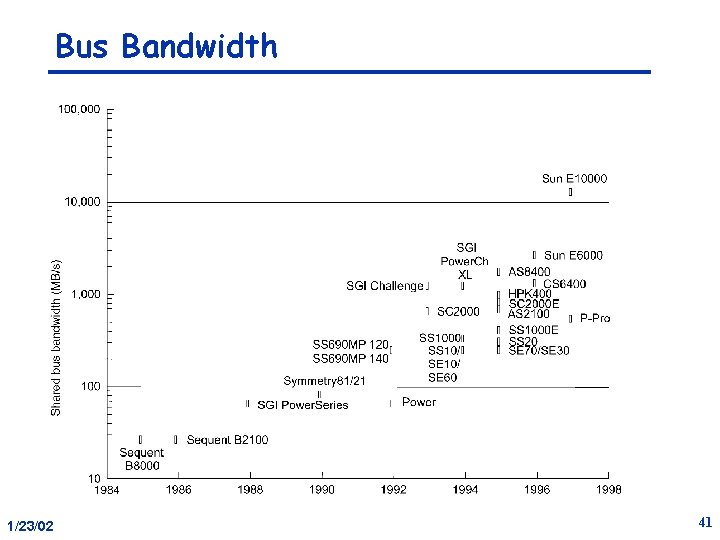

Bus Bandwidth

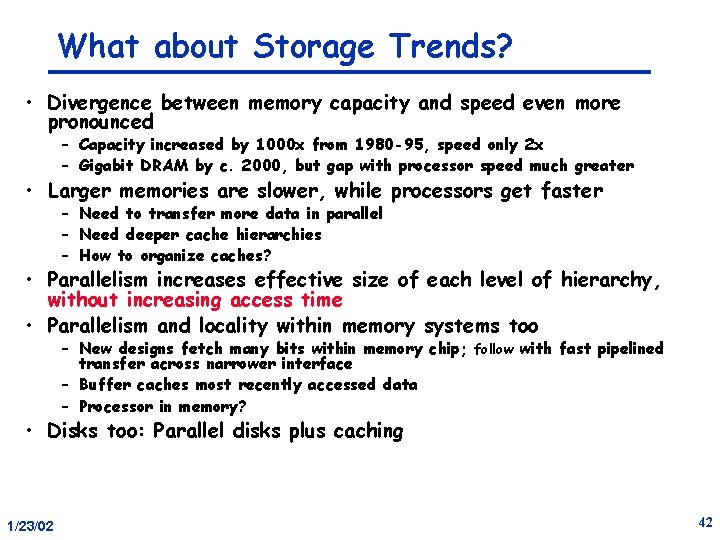

What about Storage Trends?

- Divergence between memory capacity and speed even more pronounced
  - Capacity increased by 1000x from 1980-95, speed only 2x
  - Gigabit DRAM by c. 2000, but gap with processor speed much greater
- Larger memories are slower, while processors get faster
  - Need to transfer more data in parallel
  - Need deeper cache hierarchies
  - How to organize caches?
- Parallelism increases effective size of each level of hierarchy, without increasing access time
- Parallelism and locality within memory systems too
  - New designs fetch many bits within memory chip; follow with fast pipelined transfer across narrower interface
  - Buffer caches most recently accessed data
  - Processor in memory?
- Disks too: Parallel disks plus caching

Economics

- Commodity microprocessors not only fast but CHEAP
  - Development costs tens of millions of dollars
  - BUT, many more are sold compared to supercomputers
  - Crucial to take advantage of the investment, and use the commodity building block
- Multiprocessors being pushed by software vendors (e.g. database) as well as hardware vendors
- Standardization makes small, bus-based SMPs commodity
- Desktop: few smaller processors versus one larger one?
- Multiprocessor on a chip?

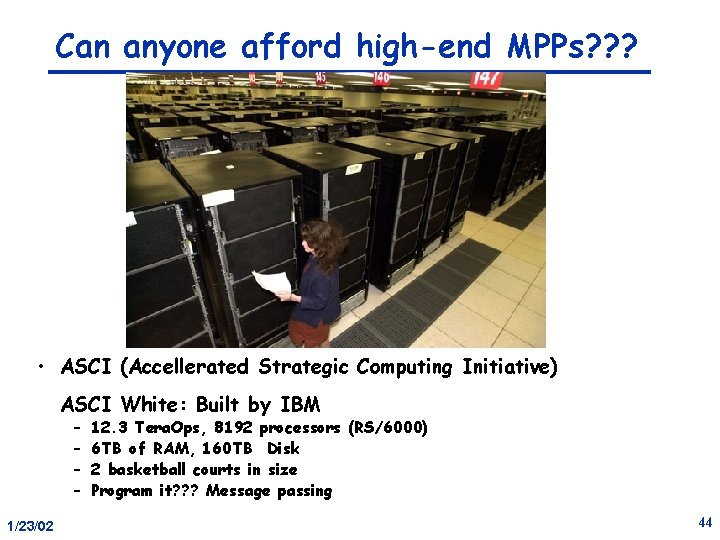

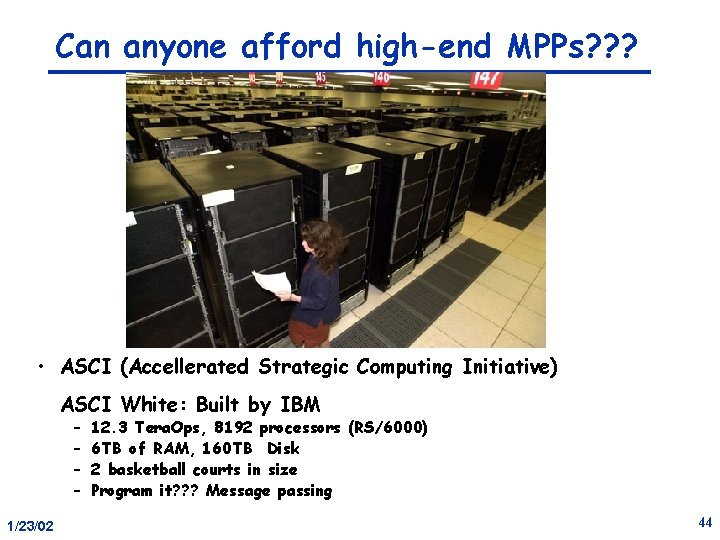

Can anyone afford high-end MPPs???

- ASCI (Accelerated Strategic Computing Initiative)
- ASCI White: built by IBM
  - 12.3 TeraOps, 8192 processors (RS/6000)
  - 6 TB of RAM, 160 TB disk
  - 2 basketball courts in size
  - Program it??? Message passing

Consider Scientific Supercomputing

- Proving ground and driver for innovative architecture and techniques
  - Market smaller relative to commercial as MPs become mainstream
  - Dominated by vector machines starting in 70s
  - Microprocessors have made huge gains in floating-point performance
    - high clock rates
    - pipelined floating point units (e.g., multiply-add every cycle)
    - instruction-level parallelism
    - effective use of caches (e.g., automatic blocking)
  - Plus economics
- Large-scale multiprocessors replace vector supercomputers

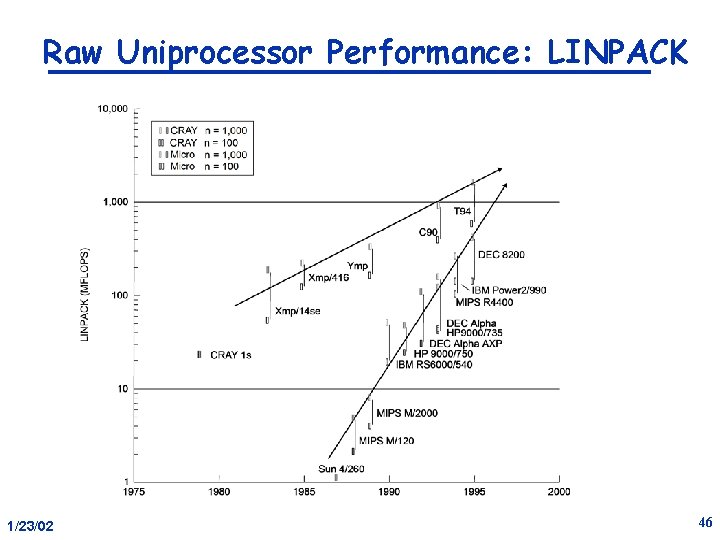

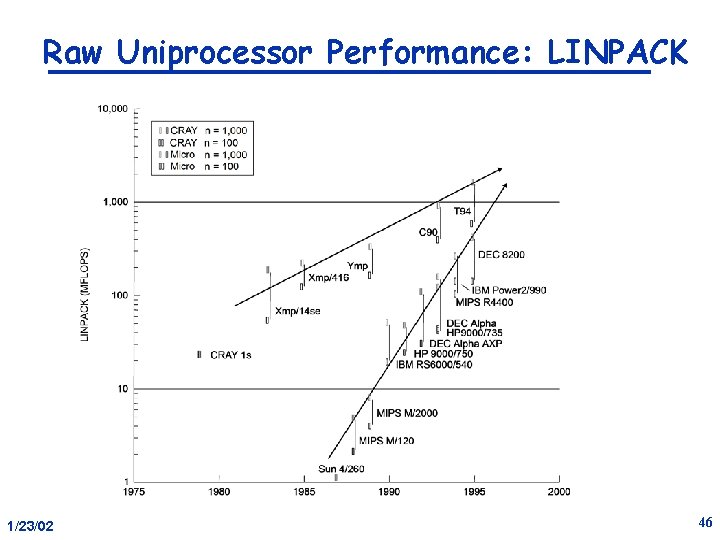

Raw Uniprocessor Performance: LINPACK

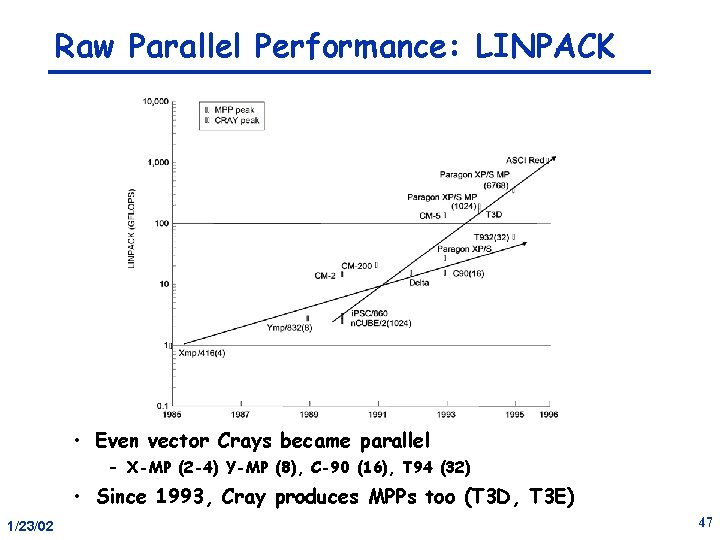

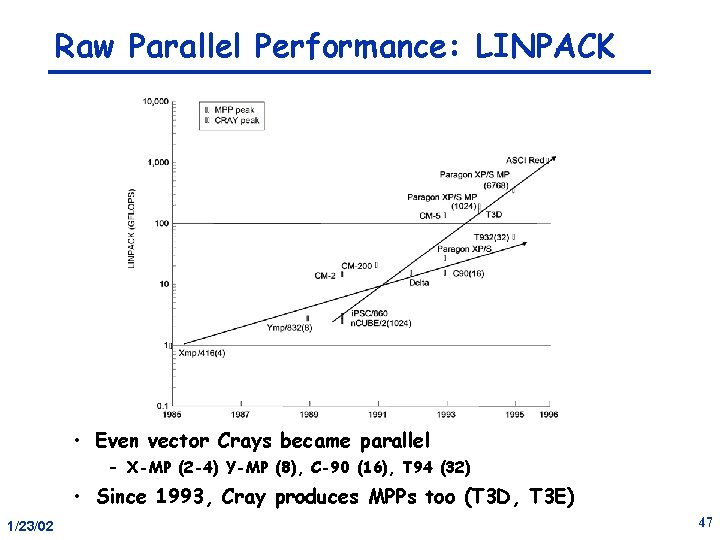

Raw Parallel Performance: LINPACK

- Even vector Crays became parallel
  - X-MP (2-4), Y-MP (8), C-90 (16), T94 (32)
- Since 1993, Cray produces MPPs too (T3D, T3E)

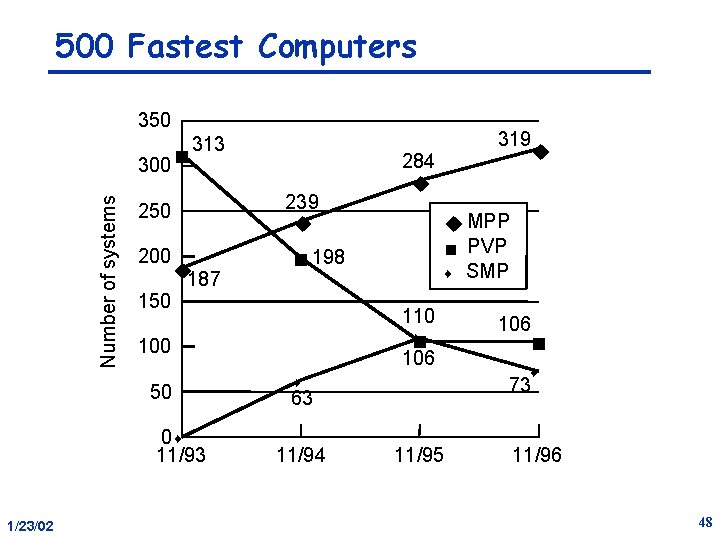

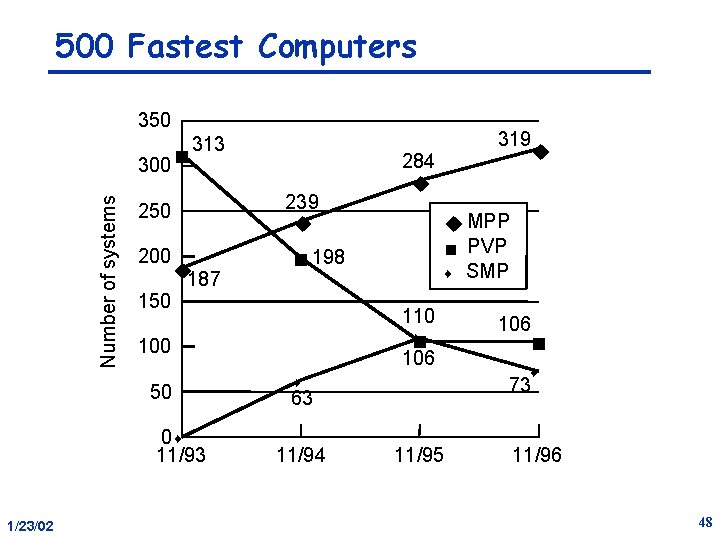

500 Fastest Computers

[Figure: number of systems by class (MPP, PVP, SMP) among the 500 fastest computers, 11/93 through 11/96]

Summary: Why Parallel Architecture?

- Increasingly attractive
  - Economics, technology, architecture, application demand
- Increasingly central and mainstream
- Parallelism exploited at many levels
  - Instruction-level parallelism
  - Multiprocessor servers
  - Large-scale multiprocessors (“MPPs”)
- Focus of this class: multiprocessor level of parallelism
- Same story from memory system perspective
  - Increase bandwidth, reduce average latency with many local memories
- Spectrum of parallel architectures makes sense
  - Different cost, performance and scalability

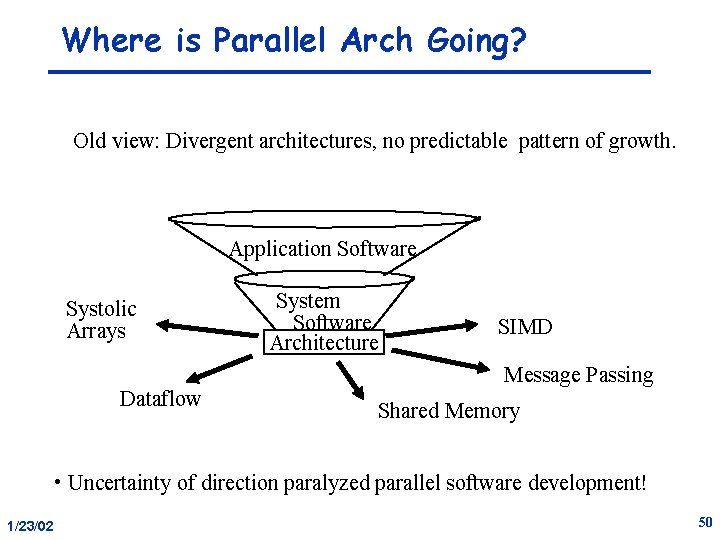

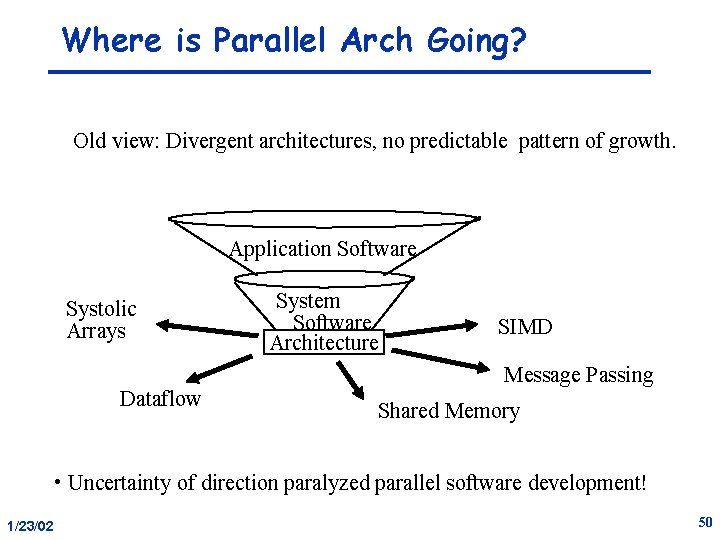

Where is Parallel Arch Going?

Old view: Divergent architectures, no predictable pattern of growth.

[Figure: Application Software and System Software above Architecture, which diverges into Systolic Arrays, Dataflow, SIMD, Message Passing, Shared Memory]

- Uncertainty of direction paralyzed parallel software development!

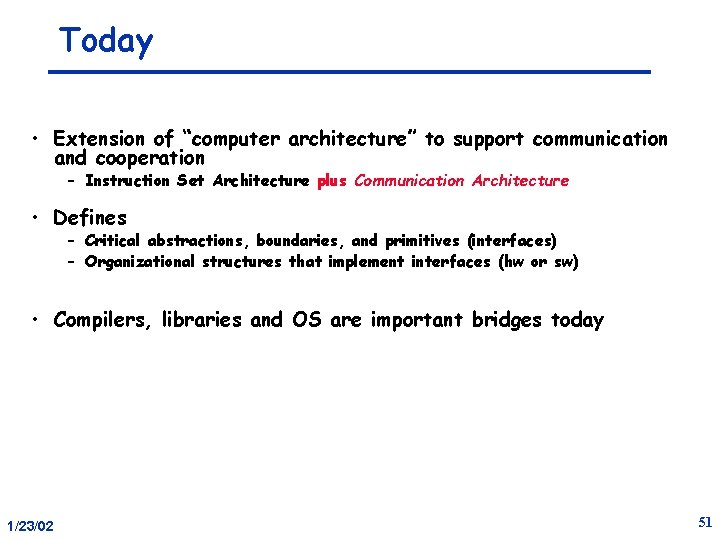

Today

- Extension of “computer architecture” to support communication and cooperation
  - Instruction Set Architecture plus Communication Architecture
- Defines
  - Critical abstractions, boundaries, and primitives (interfaces)
  - Organizational structures that implement interfaces (hw or sw)
- Compilers, libraries and OS are important bridges today