CS 258 Parallel Computer Architecture Lecture 15.1



CS 258 Parallel Computer Architecture, Lecture 15.1

DASH: Directory Architecture for Shared Memory

Implementation, cost, performance

Daniel Lenoski et al., “The DASH Prototype: Implementation and Performance”, Proceedings of the International Symposium on Computer Architecture, 1992.

March 17, 2008, Rhishikesh Limaye

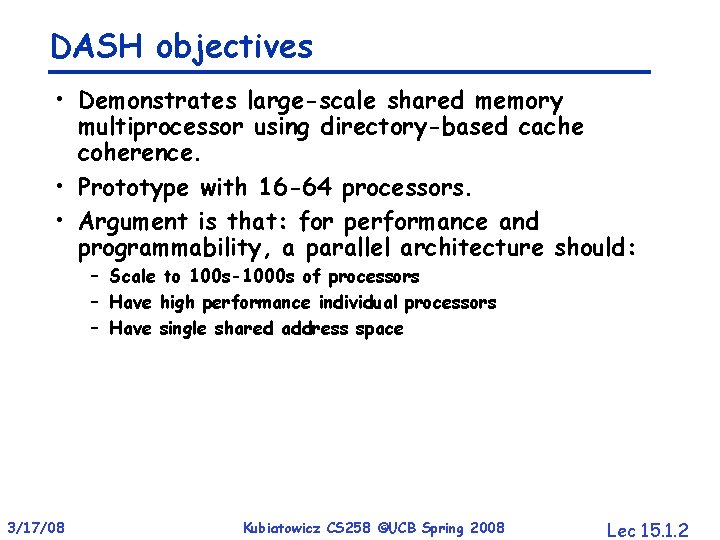

DASH objectives

- Demonstrate a large-scale shared-memory multiprocessor that uses directory-based cache coherence.
- Build a prototype with 16-64 processors.
- The argument: for performance and programmability, a parallel architecture should
  - scale to hundreds or thousands of processors,
  - have high-performance individual processors, and
  - have a single shared address space.

3/17/08 Kubiatowicz CS 258 ©UCB Spring 2008 Lec 15.1.2

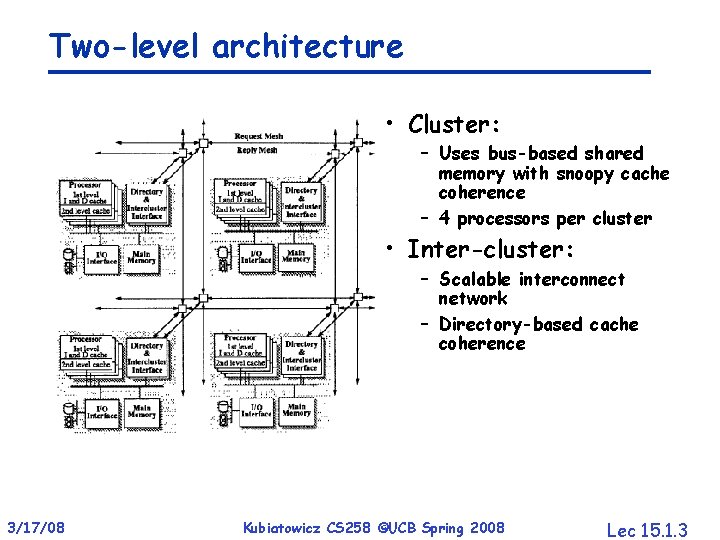

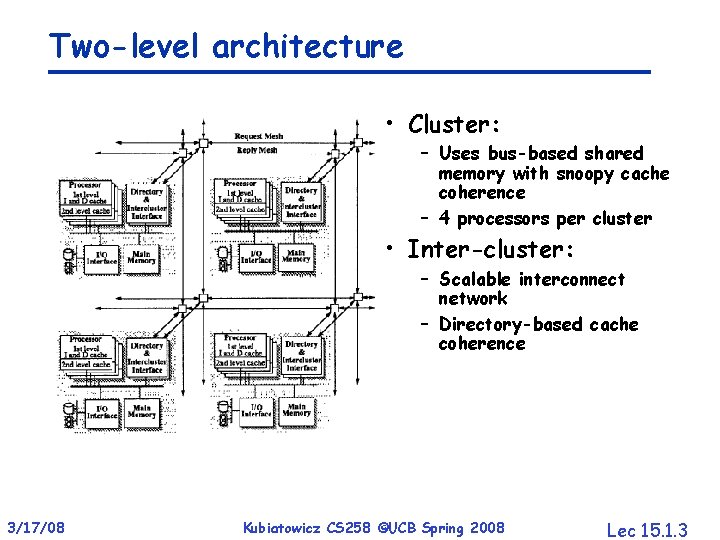

Two-level architecture

- Cluster:
  - Bus-based shared memory with snoopy cache coherence
  - 4 processors per cluster
- Inter-cluster:
  - Scalable interconnection network
  - Directory-based cache coherence
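
A rough way to see how the two levels combine is to ask which part of the machine supplies a read. The following Python sketch is a simplified model, not DASH's controller logic: `service_level` and its parameters are hypothetical, and the remote access cache, races, and retries are ignored. It only encodes the rule that the snoopy bus inside the cluster is tried first, and the directory at the home cluster is consulted when the cluster cannot supply the line.

```python
from __future__ import annotations

from typing import Optional


def service_level(requester: int, home: int, dirty_cluster: Optional[int],
                  in_own_cache: bool, in_local_cache: bool) -> str:
    """Return which part of the machine supplies a read (simplified model).

    requester      -- cluster issuing the read
    home           -- cluster whose memory (and directory) owns the address
    dirty_cluster  -- cluster holding the line dirty, or None
    in_own_cache   -- line already valid in the requesting processor's L1/L2
    in_local_cache -- another cache on the same cluster bus can supply it
    """
    if in_own_cache:
        return "own caches"
    dirty_elsewhere = dirty_cluster is not None and dirty_cluster != requester
    # Inside the cluster: snoopy bus, cache-to-cache transfer or local memory.
    if in_local_cache or (home == requester and not dirty_elsewhere):
        return "local cluster"
    # Across clusters: the home directory supplies clean data (or its own dirty copy).
    if not dirty_elsewhere or dirty_cluster == home:
        return "home cluster"
    # Otherwise the home forwards the request to the cluster holding it dirty.
    return "dirty remote cluster"
```

For example, `service_level(0, 3, 5, False, False)` returns `"dirty remote cluster"`: the line is homed in cluster 3 but held dirty by cluster 5, so the home must forward the request to cluster 5.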

Cluster level

- Minor modifications to an off-the-shelf 4D/340 cluster
- 4 MIPS R3000 processors + 4 R3010 floating-point coprocessors
- L1 write-through, L2 write-back
- Cache coherence:
  - MESI, i.e., the Illinois protocol
    - Cache-to-cache transfers are good for cached remote locations: a remote line already held by one cache in the cluster can be supplied to another local processor.
  - L1 cache is write-through => inclusion property
- Pipelined bus with a maximum bandwidth of 64 MB/s
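
The MESI (Illinois) behavior inside a cluster can be sketched as two transition functions, one for the local processor's accesses and one for transactions snooped on the bus. This is a minimal sketch under assumptions: the bus operation names `BusRd`, `BusRdX`, and `BusUpgr` follow common textbook usage rather than the 4D/340 bus specification, and write-backs and the L1/L2 split are not modeled.

```python
from __future__ import annotations

from enum import Enum
from typing import Optional, Tuple


class State(Enum):
    M = "Modified"
    E = "Exclusive"
    S = "Shared"
    I = "Invalid"


def processor_access(state: State, is_write: bool,
                     others_have_copy: bool) -> Tuple[State, Optional[str]]:
    """Next state and bus transaction (if any) for a local read or write."""
    if not is_write:
        if state is State.I:
            # Read miss: load Exclusive if no other cache signals that it shares the line.
            return (State.S if others_have_copy else State.E), "BusRd"
        return state, None                    # read hit in M, E or S
    if state in (State.M, State.E):
        return State.M, None                  # E -> M is silent: no other copies exist
    if state is State.S:
        return State.M, "BusUpgr"             # invalidate the other sharers
    return State.M, "BusRdX"                  # write miss: read-exclusive


def snoop(state: State, bus_op: str) -> Tuple[State, bool]:
    """Next state and whether this cache may supply data, for another cache's bus op."""
    if state is State.I:
        return State.I, False
    if bus_op == "BusRd":
        # Illinois-style cache-to-cache transfer (in hardware one holder is chosen);
        # a Modified line would also be written back to memory here (not modeled).
        return State.S, True
    if bus_op == "BusRdX":
        return State.I, True
    if bus_op == "BusUpgr":
        return State.I, False                 # the requester already has the data
    raise ValueError(f"unknown bus operation: {bus_op}")
```

The Exclusive state is what makes a private read-then-write cheap: `processor_access(State.E, True, False)` moves to Modified with no bus transaction.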

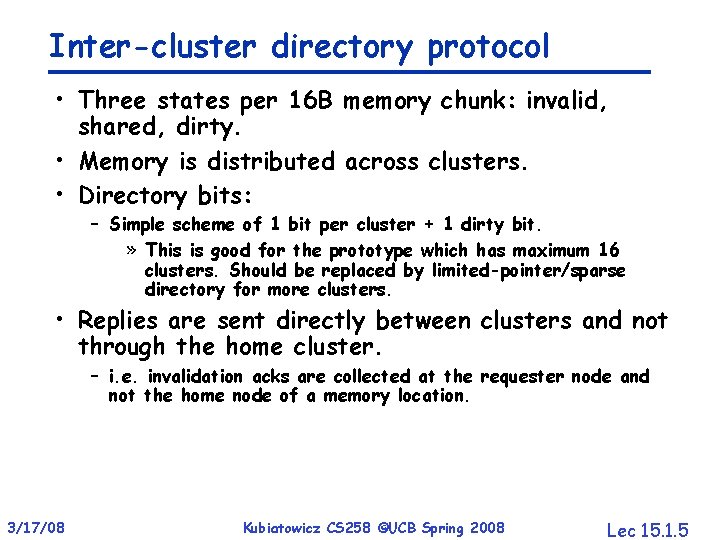

Inter-cluster directory protocol

- Three states per 16-byte memory block: invalid, shared, dirty.
- Memory is distributed across the clusters.
- Directory bits:
  - A simple scheme: 1 presence bit per cluster + 1 dirty bit.
    - This suits the prototype, which has at most 16 clusters. It should be replaced by a limited-pointer or sparse directory for more clusters.
- Replies are sent directly between clusters, not through the home cluster.
  - For example, invalidation acknowledgments are collected at the requesting cluster, not at the home cluster of the memory location.
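
The full bit-vector directory entry can be sketched as a 16-bit presence mask plus a dirty bit. This is a simplified model under stated assumptions: `DirEntry`, `handle_read`, and `handle_write` are hypothetical names, and NAKs, races, and transient states are ignored. It does show the point about replies: on a write, the home returns the list of sharers to invalidate, and their acknowledgments would go to the requester rather than back to the home.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import List, Tuple

NUM_CLUSTERS = 16  # prototype maximum, per the slide


@dataclass
class DirEntry:
    presence: int = 0    # bit c set => cluster c may hold a copy
    dirty: bool = False  # set => the single cluster in `presence` owns it dirty

    def state(self) -> str:
        if self.dirty:
            return "dirty"
        return "shared" if self.presence else "invalid"

    def sharers(self) -> List[int]:
        return [c for c in range(NUM_CLUSTERS) if (self.presence >> c) & 1]


def handle_read(entry: DirEntry, requester: int) -> str:
    """Home-side handling of a read; returns who supplies the data."""
    if entry.dirty:
        owner = entry.sharers()[0]
        # Forwarded: the owner replies directly to the requester and keeps a
        # shared copy, so the line becomes shared by both clusters.
        entry.dirty = False
        entry.presence |= 1 << requester
        return f"forward to owner {owner}"
    entry.presence |= 1 << requester
    return "reply from home memory"


def handle_write(entry: DirEntry, requester: int) -> Tuple[str, List[int]]:
    """Home-side handling of a read-exclusive; returns (data source, clusters to invalidate)."""
    if entry.dirty:
        owner = entry.sharers()[0]
        entry.presence = 1 << requester  # ownership moves to the requester
        return f"forward to owner {owner}", []
    to_invalidate = [c for c in entry.sharers() if c != requester]
    entry.presence = 1 << requester
    entry.dirty = True
    # Invalidation acks from `to_invalidate` are sent to the requester, not the home.
    return "reply from home memory", to_invalidate
```

A write by cluster 7 to a line shared by clusters 2 and 5 returns `("reply from home memory", [2, 5])`; clusters 2 and 5 would then acknowledge directly to cluster 7.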

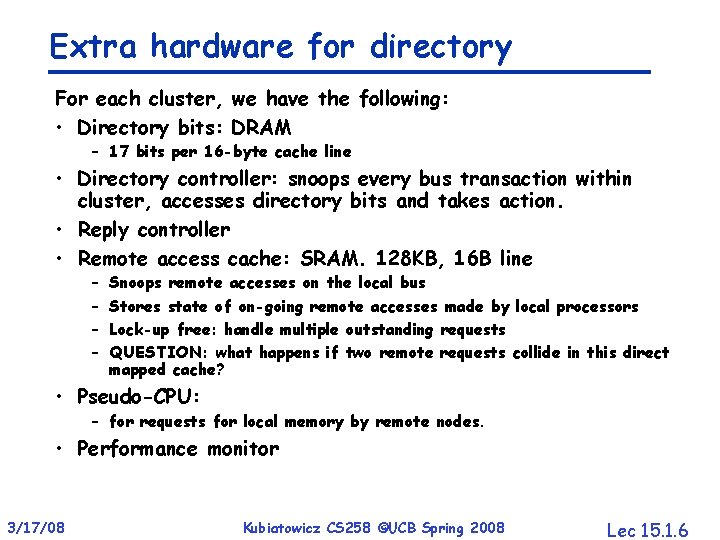

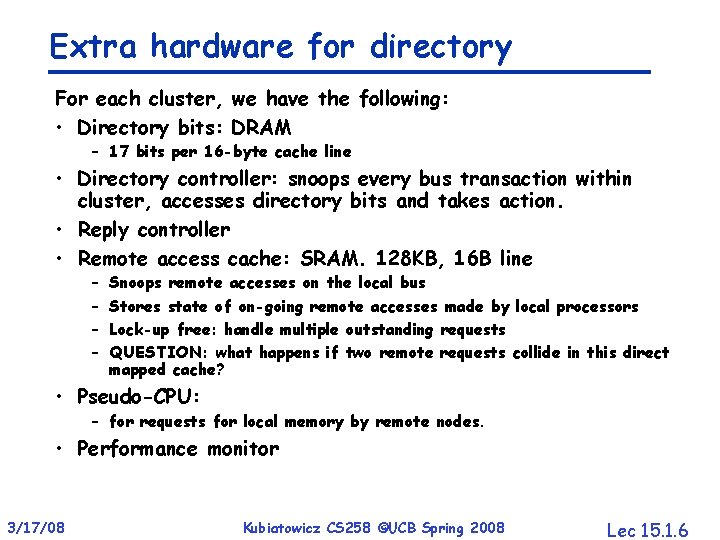

Extra hardware for directory

Each cluster has the following:

- Directory bits: DRAM
  - 17 bits per 16-byte cache line
- Directory controller: snoops every bus transaction within the cluster, accesses the directory bits, and takes the appropriate action.
- Reply controller
- Remote access cache: SRAM, 128 KB, 16-byte lines
  - Snoops remote accesses on the local bus
  - Stores the state of ongoing remote accesses made by local processors
  - Lockup-free: handles multiple outstanding requests
  - QUESTION: what happens if two remote requests collide in this direct-mapped cache?
- Pseudo-CPU:
  - Handles requests for local memory made by remote nodes.
- Performance monitor
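
The remote access cache's geometry gives 128 KB / 16 B = 8192 entries. To make the collision question concrete, the sketch below assumes conventional direct-mapped indexing, where the entry is chosen by the address bits just above the 4-bit line offset. The slide does not say how DASH indexes this cache or resolves a collision, so `rac_index` and `collide` are illustrative only.

```python
RAC_BYTES = 128 * 1024                       # 128 KB of SRAM
LINE_BYTES = 16                              # 16-byte lines
NUM_LINES = RAC_BYTES // LINE_BYTES          # 8192 entries
OFFSET_BITS = (LINE_BYTES - 1).bit_length()  # 4 bits of byte offset


def rac_index(addr: int) -> int:
    """Direct-mapped index: the address bits just above the line offset (assumed)."""
    return (addr >> OFFSET_BITS) % NUM_LINES


def collide(a: int, b: int) -> bool:
    """True if two different lines compete for the same cache entry."""
    same_line = (a >> OFFSET_BITS) == (b >> OFFSET_BITS)
    return not same_line and rac_index(a) == rac_index(b)
```

Under this assumption, any two lines whose addresses differ by a multiple of 128 KB, such as `0x1000` and `0x21000`, map to the same entry, so an outstanding request for one would have to be dealt with before the other could use that entry.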

Memory performance

- 4-level memory hierarchy: (L1, L2), (local L2s + memory), directory home, remote cluster

| Operation | Best case MB/s | Best case clock/word | Worst case MB/s | Worst case clock/word |
|---|---|---|---|---|
| Read from L1 | 133 | 1 | | |
| Fill from L2 | 30 | 4.5 | 9 | 15 |
| Fill from local bus | 17 | 8 | 5 | 29 |
| Fill from remote | 5 | 26 | 1.3 | 101 |
| Fill from dirty remote | 4 | 34 | 1 | 132 |
| Write to cache | 32 | 4 | | |
| Write to local bus | 18 | 7 | 8 | 17 |
| Write to remote | 5 | 25 | 1.5 | 89 |
| Write to dirty-remote | 4 | 33 | 1 | 120 |
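
The two bandwidth columns are related by a simple conversion. Reading the "Read from L1" row as 1 clock/word = 133 MB/s, and assuming 4-byte words, implies a processor clock of about 33 MHz; every other row then satisfies MB/s ≈ 133 / (clock/word). The following sketch checks this for the best-case fill rows. The constants are inferred from the table, not quoted from the paper.

```python
WORD_BYTES = 4                # assumption: 32-bit words (MIPS R3000)
CLOCK_MHZ = 133 / WORD_BYTES  # ~33 MHz, implied by 133 MB/s at 1 clock/word


def mb_per_s(clocks_per_word: float) -> float:
    """Bandwidth in MB/s when each word costs `clocks_per_word` processor clocks."""
    return CLOCK_MHZ * WORD_BYTES / clocks_per_word


# (operation, MB/s from the table, clock/word from the table), best case
BEST_CASE = [
    ("Fill from L2", 30, 4.5),
    ("Fill from local bus", 17, 8),
    ("Fill from remote", 5, 26),
    ("Fill from dirty remote", 4, 34),
]

for name, table_mb, cpw in BEST_CASE:
    print(f"{name:<24} table {table_mb:>3} MB/s   model {mb_per_s(cpw):5.1f} MB/s")
```

The same conversion reproduces the worst-case columns; for example, 101 clock/word gives about 1.3 MB/s.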

![Hardware cost of directory (Table 2 in the paper): 13.7% DRAM](https://slidetodoc.com/presentation_image_h2/97e153ed6a188968886fc290f5ae1a44/image-8.jpg)

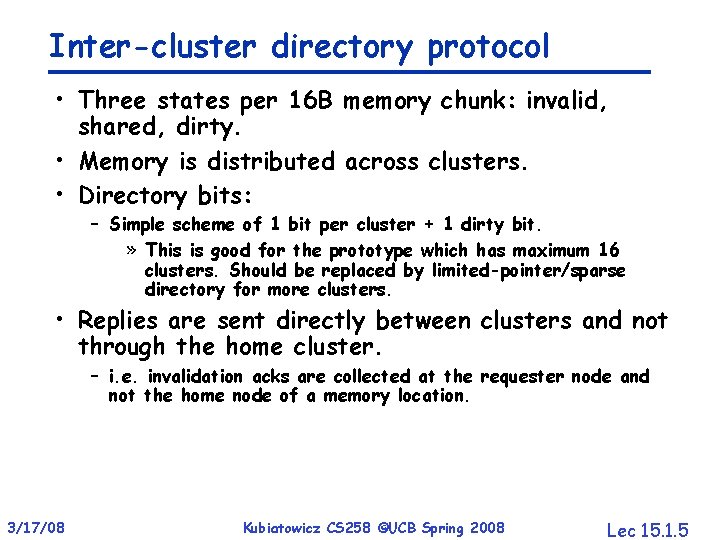

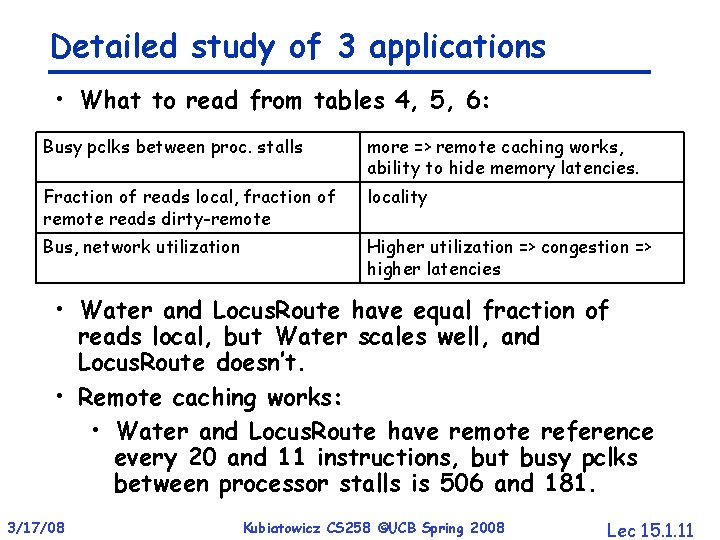

Hardware cost of directory

- [Table 2 in the paper]
- 13.7% DRAM: directory bits
  - For larger systems, a sparse representation is needed.
- 10% SRAM: remote access cache
- 20% logic gates: controllers and network interfaces
- Clustering is important:
  - For a uniprocessor node, the directory logic would be 44%.
- Compared to message passing:
  - Message passing needs about 10% logic and roughly 0 memory cost.
  - Thus, hardware coherence costs about 10% more logic and 10% more memory.
  - It was later argued that the performance improvement is much greater than 10%: 3-4X.
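
The directory-bit cost follows from simple arithmetic: 16 presence bits plus 1 dirty bit per 128-bit (16-byte) line is 17/128 ≈ 13.3% of the data bits. (The 13.7% on the slide comes from Table 2 of the paper and need not be the same ratio.) The following sketch shows why a larger machine needs another representation: the full bit vector grows linearly with the number of clusters. For comparison it uses a hypothetical limited-pointer format with a fixed number of ⌈log2 N⌉-bit cluster pointers plus a dirty bit, ignoring what happens when more clusters share a line than there are pointers. A sparse directory (fewer entries than memory lines) is a different technique and is not modeled.

```python
import math

LINE_BITS = 16 * 8  # 16-byte lines, as in DASH


def full_bit_vector_bits(clusters: int) -> int:
    """One presence bit per cluster plus one dirty bit."""
    return clusters + 1


def limited_pointer_bits(clusters: int, pointers: int) -> int:
    """Hypothetical limited-pointer entry: `pointers` cluster IDs plus one dirty bit."""
    return pointers * math.ceil(math.log2(clusters)) + 1


def overhead(bits: int) -> float:
    """Directory bits as a fraction of the data bits in one line."""
    return bits / LINE_BITS


for n in (16, 64, 256, 1024):
    full = full_bit_vector_bits(n)
    lp = limited_pointer_bits(n, pointers=4)
    print(f"{n:5d} clusters: full vector {full:5d} bits ({overhead(full):6.1%}), "
          f"4 pointers {lp:3d} bits ({overhead(lp):5.1%})")
```

With 4 pointers the entry is 17 bits at 16 clusters, the same as the full bit vector, but only 25 bits at 64 clusters versus 65.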

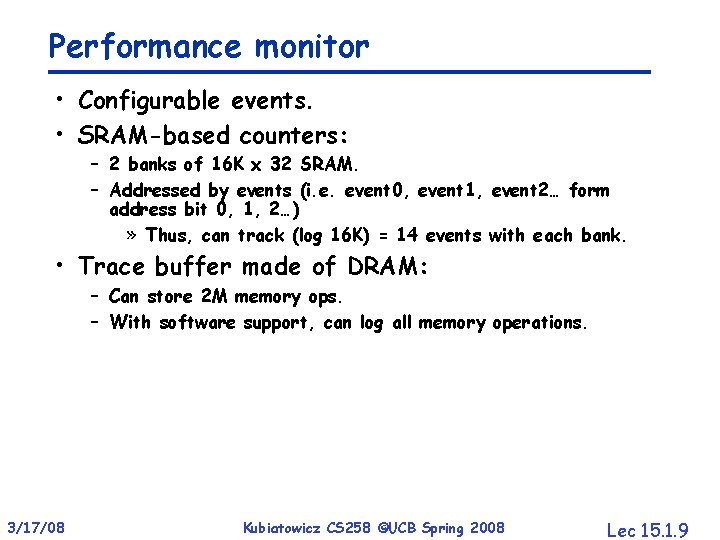

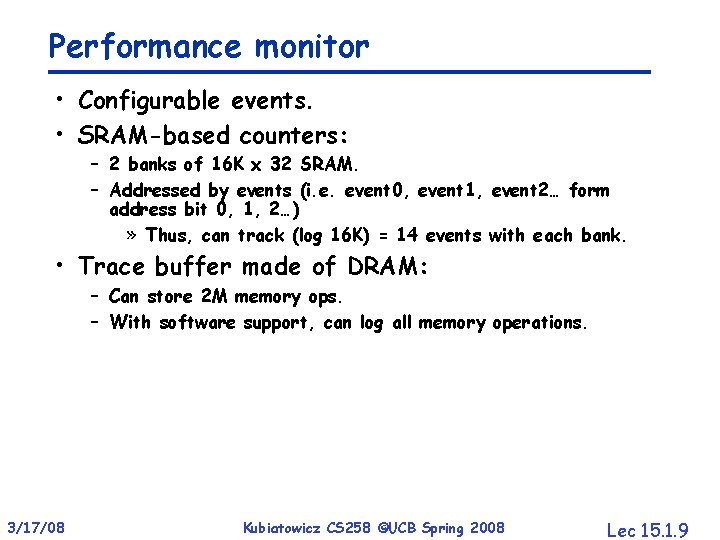

Performance monitor

- Configurable events.
- SRAM-based counters:
  - 2 banks of 16K x 32 SRAM.
  - Addressed by events (i.e., events 0, 1, 2, … form address bits 0, 1, 2, …).
    - Thus, each bank can track log2(16K) = 14 events.
- Trace buffer made of DRAM:
  - Can store 2M memory operations.
  - With software support, can log all memory operations.
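
Addressing the SRAM by event signals means each counter records one combination of events, so software can later sum over addresses to get the count of any single event or any conjunction of events. The following sketch models one 16K x 32 bank under assumptions: `CounterBank`, `tick`, and `count_where` are hypothetical names, and it assumes one counter increment per sample of the 14 event signals, with 32-bit wraparound.

```python
from __future__ import annotations

from typing import List

EVENT_BITS = 14               # log2(16K) address lines per bank
ENTRIES = 1 << EVENT_BITS     # 16K counters
COUNTER_MASK = (1 << 32) - 1  # each counter is 32 bits wide


class CounterBank:
    """Hypothetical model of one 16K x 32 SRAM counter bank."""

    def __init__(self) -> None:
        self.counts: List[int] = [0] * ENTRIES

    def tick(self, events: List[bool]) -> None:
        """One sample: event i drives address bit i, and that counter is incremented."""
        if len(events) != EVENT_BITS:
            raise ValueError(f"expected {EVENT_BITS} event signals, got {len(events)}")
        addr = sum(1 << i for i, asserted in enumerate(events) if asserted)
        self.counts[addr] = (self.counts[addr] + 1) & COUNTER_MASK

    def count_where(self, event: int) -> int:
        """Number of samples in which `event` was asserted."""
        return sum(c for addr, c in enumerate(self.counts) if (addr >> event) & 1)
```

`count_where(e)` sums the 8192 counters whose address has bit `e` set; conjunctions of events work the same way with a mask of several bits.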

Performance results

- 9 applications
- Good speedup on 5 of them, without special optimization for DASH.
- MP3D has poor locality; PSIM4 is an enhanced version of MP3D.
- Cholesky: more processors => too-fine granularity, unless the problem size is increased unreasonably.
- Note the dip after P = 4, i.e., once the run spans more than one 4-processor cluster.

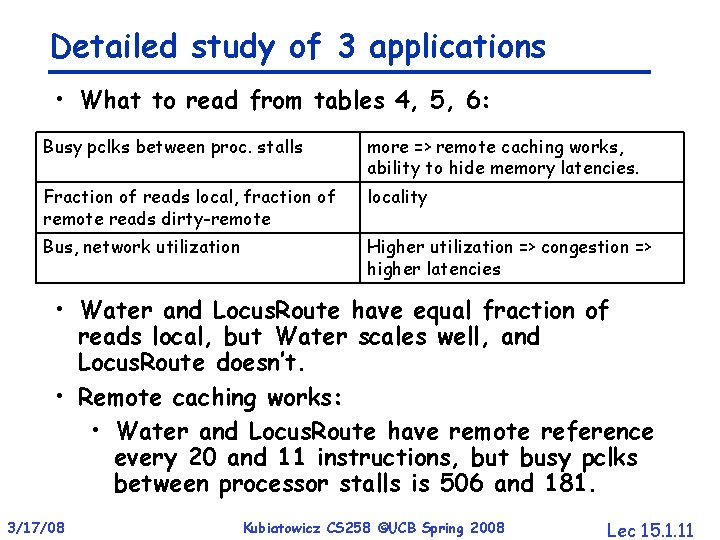

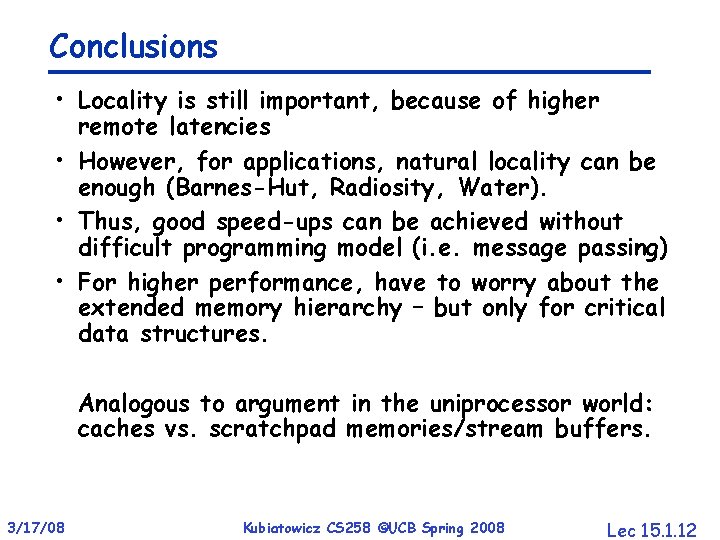

Detailed study of 3 applications

What to read from Tables 4, 5, and 6:

| Metric | What it indicates |
|---|---|
| Busy pclks between processor stalls | More => remote caching works; ability to hide memory latency |
| Fraction of reads that are local; fraction of remote reads that are dirty-remote | Locality |
| Bus and network utilization | Higher utilization => congestion => higher latencies |

- Water and LocusRoute have an equal fraction of local reads, but Water scales well and LocusRoute doesn't.
- Remote caching works:
  - Water and LocusRoute make a remote reference every 20 and 11 instructions respectively, but the busy pclks between processor stalls are 506 and 181.
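
The "remote caching works" argument can be checked with one division. If the processor executes `instrs_per_pclk` instructions per busy clock, then the number of remote references issued between stalls is busy pclks × instrs_per_pclk / (instructions per remote reference). If every remote reference stalled, this ratio would be about 1. The following sketch assumes 1 instruction per pclk by default, which is an idealization: a single-issue processor such as the R3000 cannot exceed that rate, and the parameter lets you lower it.

```python
def remote_refs_per_stall(busy_pclks: float, instrs_per_remote_ref: float,
                          instrs_per_pclk: float = 1.0) -> float:
    """Average number of remote references issued between consecutive stalls."""
    instructions_between_stalls = busy_pclks * instrs_per_pclk
    return instructions_between_stalls / instrs_per_remote_ref


# Figures quoted on the slide: (application, busy pclks between stalls,
# instructions per remote reference)
for app, busy, every in (("Water", 506, 20), ("LocusRoute", 181, 11)):
    print(f"{app:<10} about {remote_refs_per_stall(busy, every):.1f} remote refs per stall")
```

With the default, Water issues about 25 remote references per stall and LocusRoute about 16; even at 0.5 instructions per pclk both stay well above 1. Because the stall count also includes local misses, these figures, if anything, underestimate the number of remote references per remote stall.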

Conclusions

- Locality is still important because of the higher remote latencies.
- However, for applications such as Barnes-Hut, Radiosity, and Water, natural locality can be enough.
- Thus, good speedups can be achieved without a difficult programming model (i.e., message passing).
- For higher performance, programmers have to worry about the extended memory hierarchy, but only for critical data structures.
  - This is analogous to the argument in the uniprocessor world: caches vs. scratchpad memories/stream buffers.