
Computer Organization, CS 224, Fall 2012: “Welcome to our course”

Will Sawyer & Fazlı Can

With thanks to M. J. Irwin, D. Patterson, and J. Hennessy for some lecture slide contents.

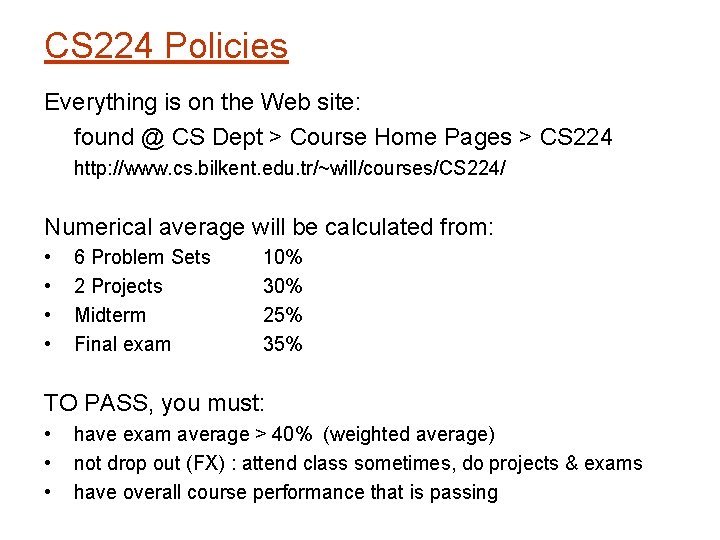

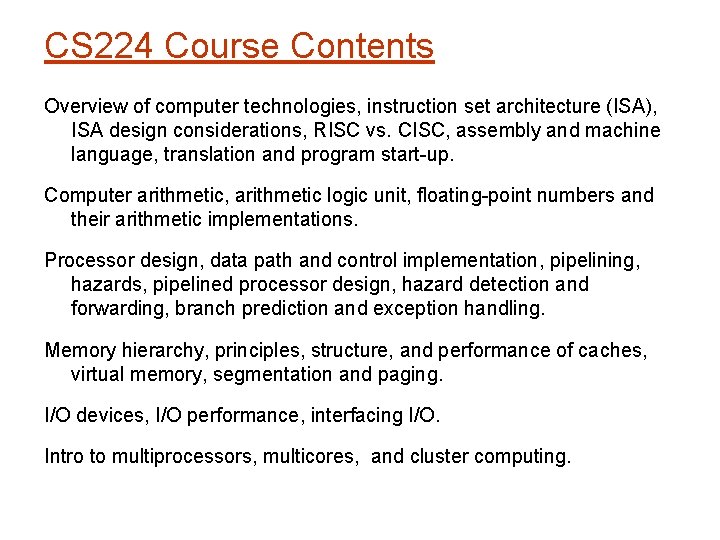

CS 224 Course Contents

- Overview of computer technologies, instruction set architecture (ISA), ISA design considerations, RISC vs. CISC, assembly and machine language, translation and program start-up.
- Computer arithmetic, arithmetic logic unit, floating-point numbers and their arithmetic implementations.
- Processor design, datapath and control implementation, pipelining, hazards, pipelined processor design, hazard detection and forwarding, branch prediction, and exception handling.
- Memory hierarchy: principles, structure, and performance of caches; virtual memory, segmentation, and paging.
- I/O devices, I/O performance, interfacing I/O.
- Introduction to multiprocessors, multicores, and cluster computing.

CS 224 Policies

Everything is on the Web site, found at CS Dept > Course Home Pages > CS 224: http://www.cs.bilkent.edu.tr/~will/courses/CS224/

The numerical average will be calculated from:

| Component | Weight |
|---|---|
| 6 Problem Sets | 10% |
| 2 Projects | 30% |
| Midterm | 25% |
| Final exam | 35% |

TO PASS, you must:

- have an exam average > 40% (weighted average)
- not drop out (FX): attend class sometimes, do projects & exams
- have overall course performance that is passing

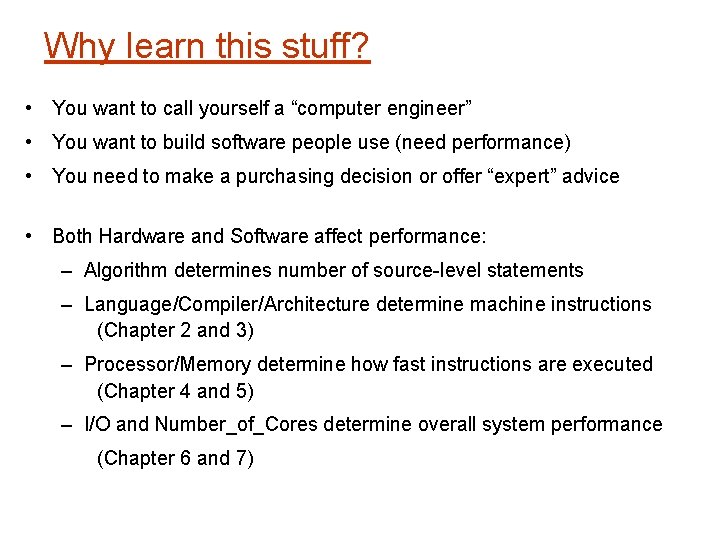

Why learn this stuff?

- You want to call yourself a “computer engineer”
- You want to build software people use (need performance)
- You need to make a purchasing decision or offer “expert” advice
- Both hardware and software affect performance:
  - Algorithm determines the number of source-level statements
  - Language/compiler/architecture determine the machine instructions (Chapters 2 and 3)
  - Processor/memory determine how fast instructions are executed (Chapters 4 and 5)
  - I/O and number of cores determine overall system performance (Chapters 6 and 7)
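The layers in the last bullet correspond to the factors of the standard textbook CPU performance equation: CPU time = instruction count × CPI (average clock cycles per instruction) ÷ clock rate. A minimal sketch, assuming that equation; the function name `cpu_time_seconds` and the example numbers are hypothetical:

```c
#include <stdint.h>

/* CPU time (s) = instruction count * CPI / clock rate (Hz).
 * Instruction count reflects algorithm, language, compiler, and ISA;
 * CPI and clock rate reflect the processor and memory system. */
double cpu_time_seconds(uint64_t instruction_count, double cpi, double clock_hz)
{
    return (double)instruction_count * cpi / clock_hz;
}
```

For example, 2 billion instructions at an average CPI of 1.5 on a 2 GHz clock take 1.5 s. A better algorithm or compiler lowers the instruction count; a faster processor or memory lowers CPI or raises the clock rate.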

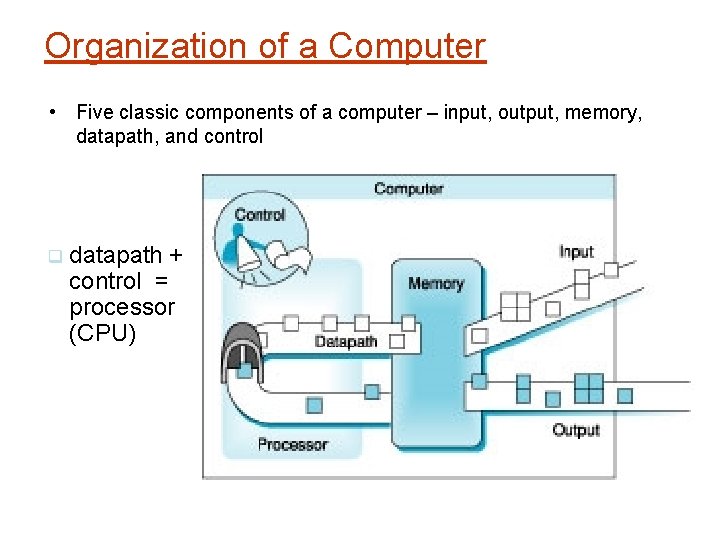

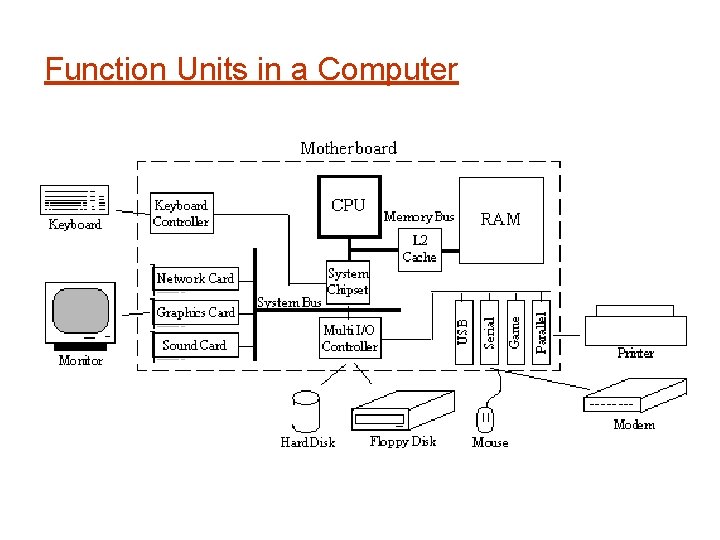

Organization of a Computer

- Five classic components of a computer: input, output, memory, datapath, and control
- datapath + control = processor (CPU)

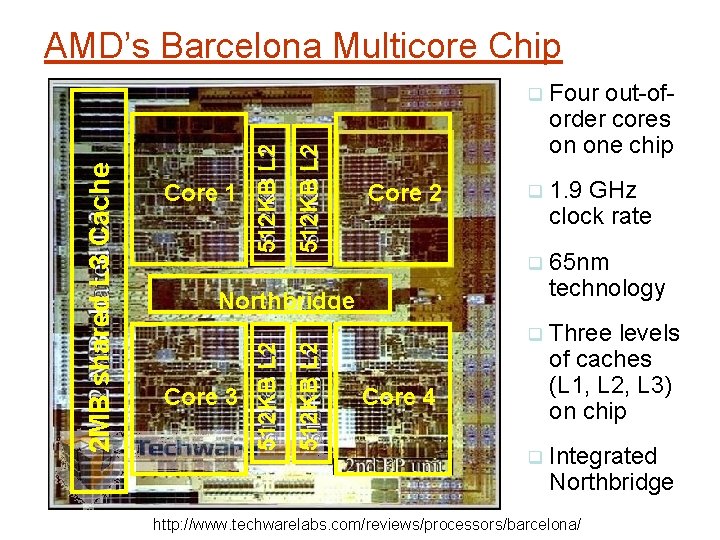

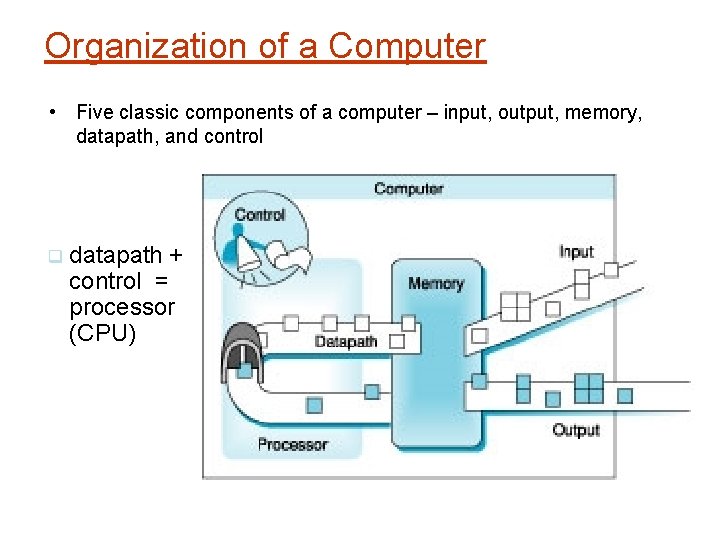

AMD’s Barcelona Multicore Chip

- Four out-of-order cores on one chip (Core 1 to Core 4)
- 1.9 GHz clock rate
- 65 nm technology
- Three levels of caches (L1, L2, L3) on chip: a 512 KB L2 per core and a 2 MB shared L3 cache
- Integrated Northbridge

Source: http://www.techwarelabs.com/reviews/processors/barcelona/

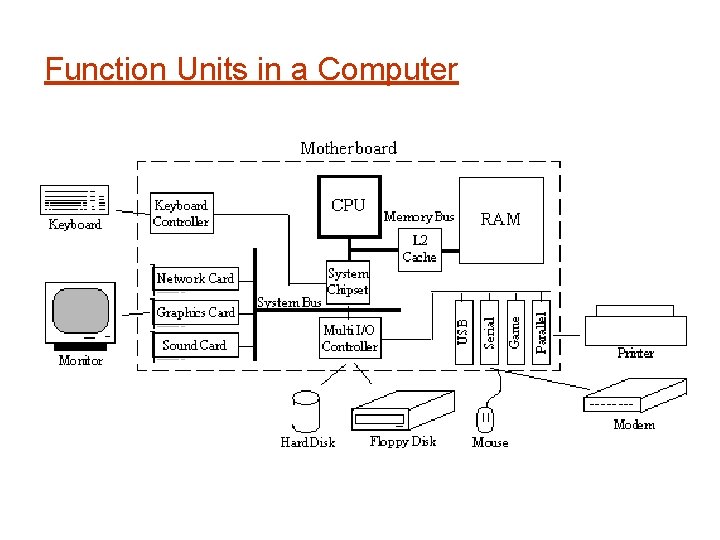

Function Units in a Computer

Magnetic Storage

Disk capacity has been increasing 60% per year for the common form factor. (Source: Quantum Corp.)
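Growth of 60% per year compounds quickly. A small sketch that counts how many whole years that rate needs to multiply capacity by a given factor; `years_to_grow` is a hypothetical helper, and the rate is the slide's historical figure, not a forecast:

```c
/* Whole years of compound growth at annual_rate (0.60 = 60%/year)
 * needed for capacity to grow by at least target_factor.
 * Returns -1 when annual_rate is not positive. */
int years_to_grow(double annual_rate, double target_factor)
{
    if (annual_rate <= 0.0)
        return -1;                     /* rate must be positive */
    double factor = 1.0;
    int years = 0;
    while (factor < target_factor) {
        factor *= 1.0 + annual_rate;   /* one more year of growth */
        years++;
    }
    return years;
}
```

`years_to_grow(0.60, 10.0)` returns 5: at 60% per year, capacity grows about 6.6× in four years and about 10.5× in five.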

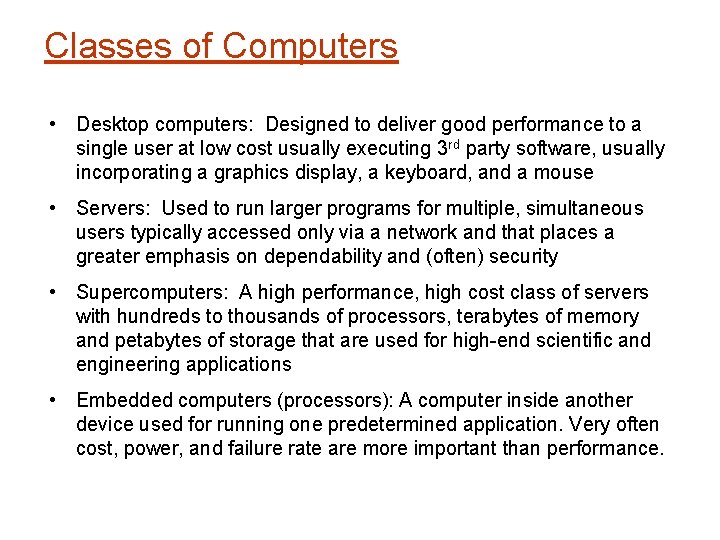

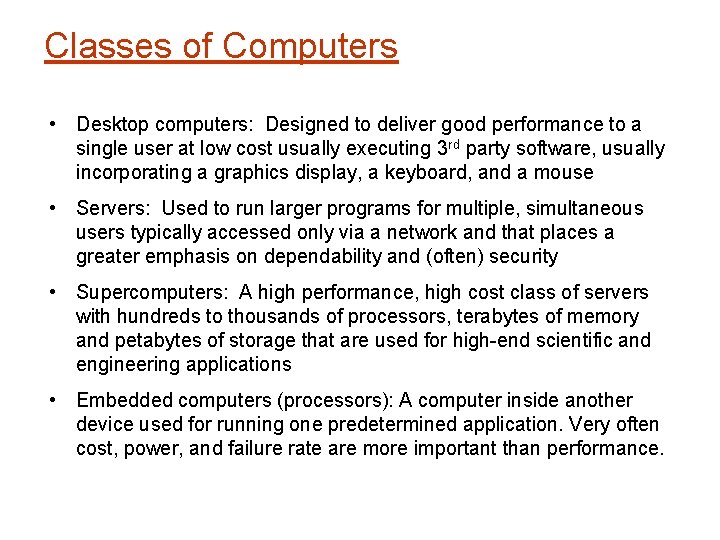

Classes of Computers

- Desktop computers: designed to deliver good performance to a single user at low cost, usually executing third-party software and usually incorporating a graphics display, a keyboard, and a mouse
- Servers: used to run larger programs for multiple simultaneous users; typically accessed only via a network, they place a greater emphasis on dependability and (often) security
- Supercomputers: a high-performance, high-cost class of servers with hundreds to thousands of processors, terabytes of memory, and petabytes of storage, used for high-end scientific and engineering applications
- Embedded computers (processors): a computer inside another device, used for running one predetermined application; very often cost, power, and failure rate are more important than performance

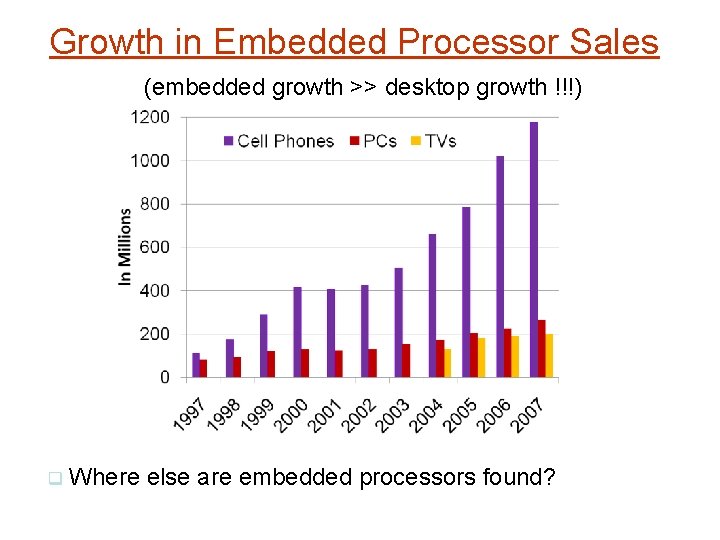

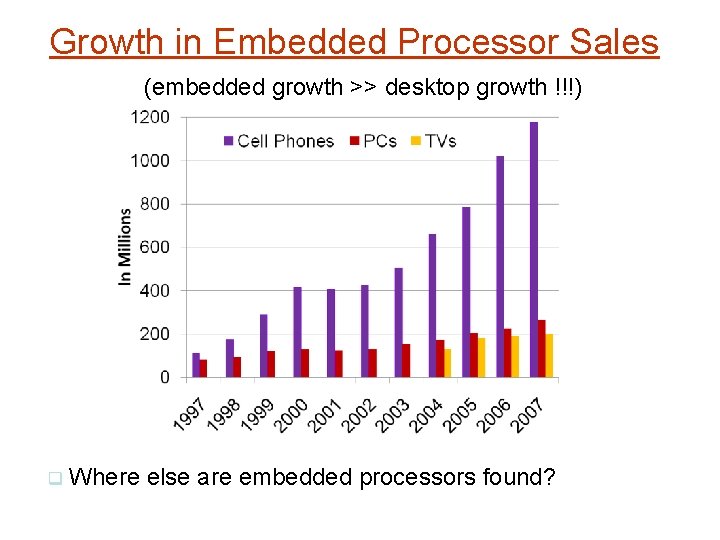

Growth in Embedded Processor Sales (embedded growth >> desktop growth!)

Where else are embedded processors found?

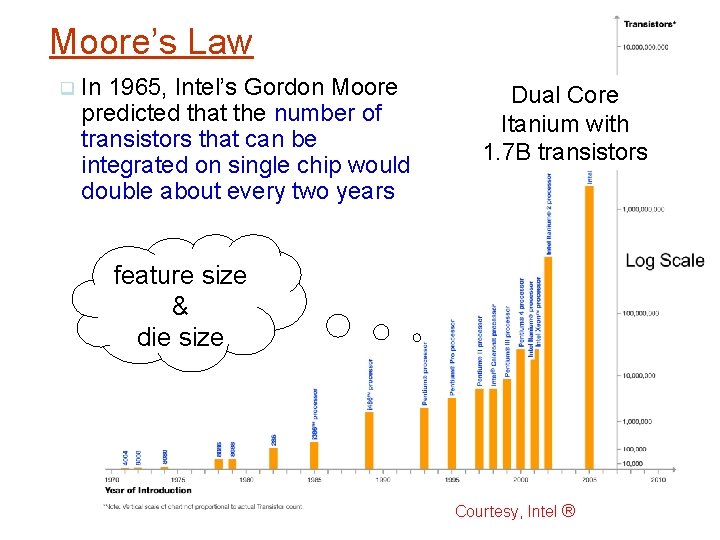

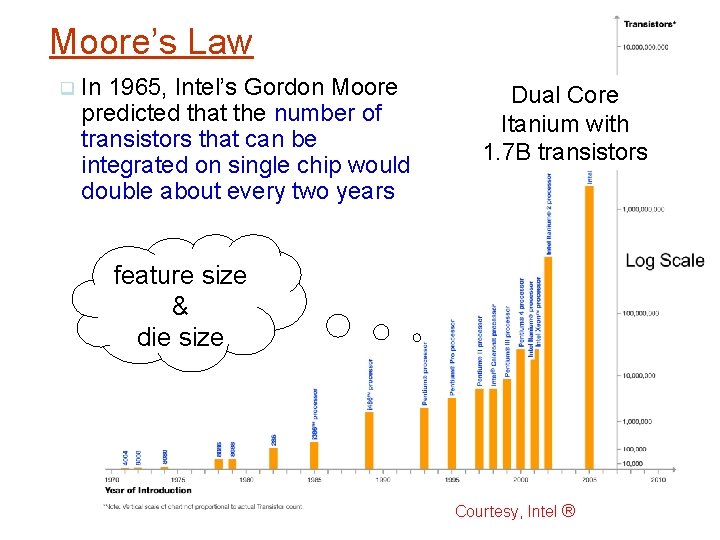

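Moore’s Law

In 1965, Gordon Moore (who later co-founded Intel) predicted that the number of transistors that can be integrated on a single chip would double at a steady rate; in the form usually quoted today (Moore's 1975 revision), the count doubles about every two years.

Image: Dual-Core Itanium with 1.7 B transistors, showing feature size and die size. Courtesy of Intel®.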
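As arithmetic, doubling every two years means N(t) = N0 · 2^(t/2). A minimal sketch that counts only whole two-year periods; `moore_projection` is a hypothetical name, and the result is a trend extrapolation, not a prediction for any specific chip:

```c
#include <stdint.h>

/* Project a transistor count forward, doubling once per whole
 * two-year period (partial periods are ignored). */
uint64_t moore_projection(uint64_t n0, unsigned years)
{
    uint64_t n = n0;
    for (unsigned y = 2; y <= years; y += 2)
        n *= 2;   /* one doubling per two-year period */
    return n;
}
```

Starting from 1,000 transistors, ten years give five doublings, or 32,000.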

Moore’s Law for CPUs and DRAMs

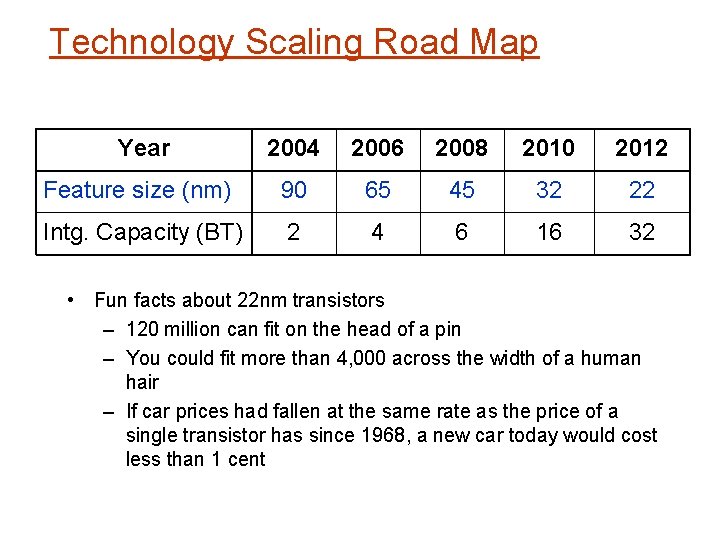

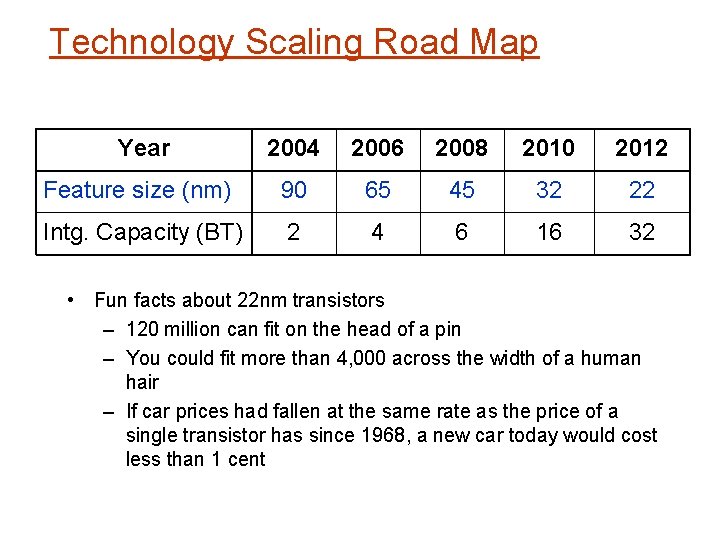

Technology Scaling Road Map

| Year | 2004 | 2006 | 2008 | 2010 | 2012 |
|---|---|---|---|---|---|
| Feature size (nm) | 90 | 65 | 45 | 32 | 22 |
| Integration capacity (billions of transistors) | 2 | 4 | 8 | 16 | 32 |

- Fun facts about 22 nm transistors:
  - 120 million can fit on the head of a pin
  - you could fit more than 4,000 across the width of a human hair
  - if car prices had fallen at the same rate as the price of a single transistor has since 1968, a new car today would cost less than 1 cent
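Each step in the road map shrinks the feature size by roughly 0.7×. A small sketch that computes the ratio between successive nodes from the table's feature sizes; `node_shrink_ratios` is a hypothetical helper:

```c
#include <stddef.h>

/* For n feature sizes (nm), write the n-1 successive shrink ratios
 * feature_nm[i+1] / feature_nm[i] into ratios[]. */
void node_shrink_ratios(const double *feature_nm, size_t n, double *ratios)
{
    for (size_t i = 0; i + 1 < n; i++)
        ratios[i] = feature_nm[i + 1] / feature_nm[i];
}
```

For 90, 65, 45, 32, and 22 nm the ratios are about 0.72, 0.69, 0.71, and 0.69.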

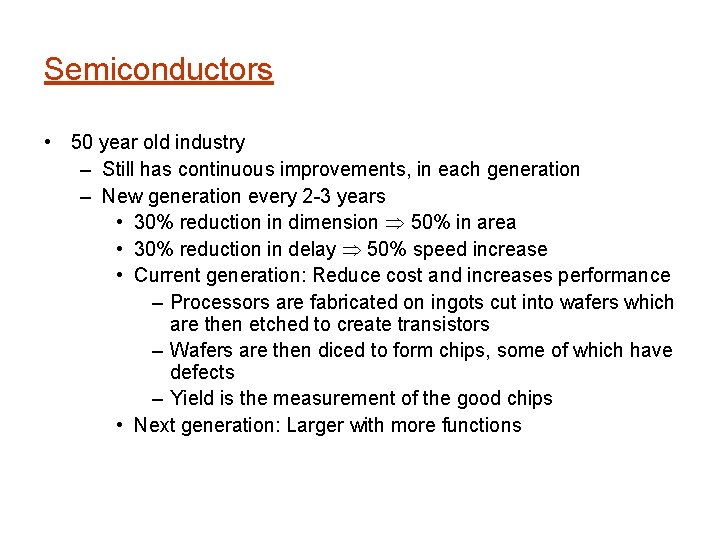

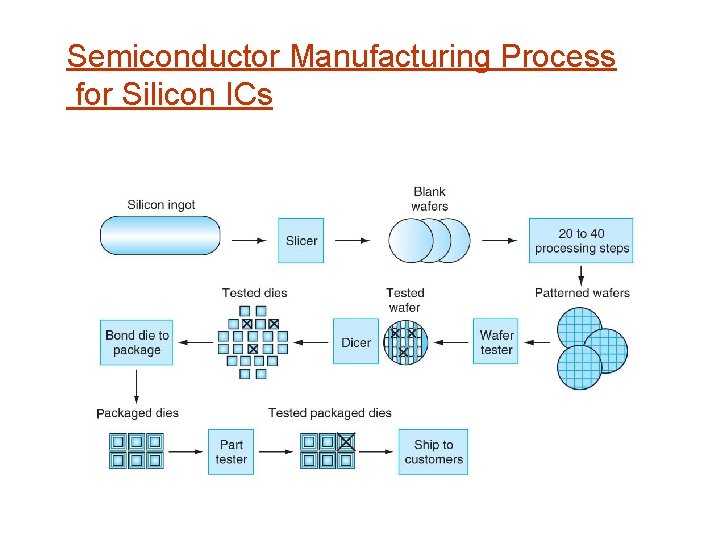

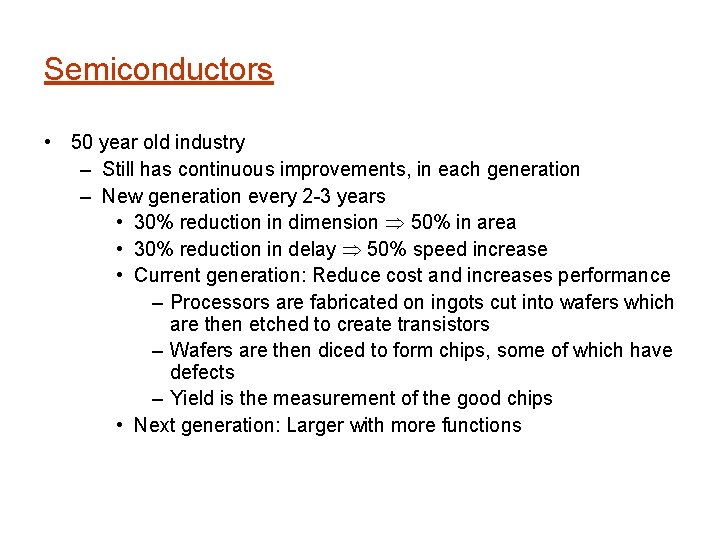

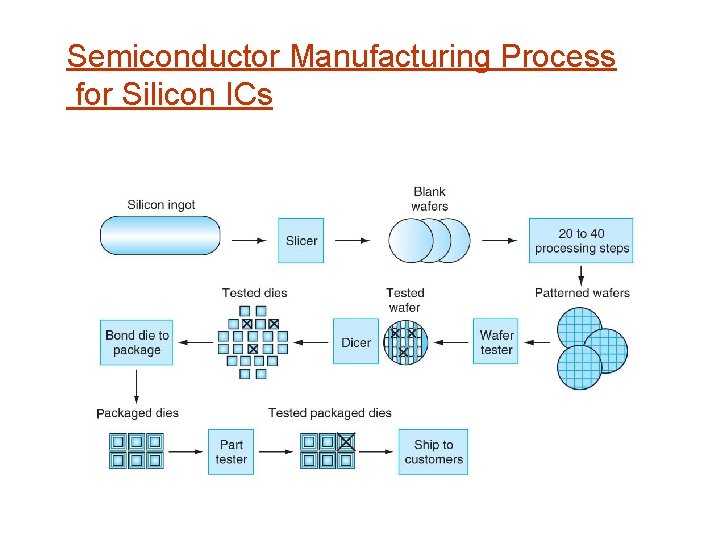

Semiconductors

- A 50-year-old industry that still delivers continuous improvements in each generation:
  - a new generation every 2-3 years
  - 30% reduction in dimension → 50% reduction in area
  - 30% reduction in delay → roughly 50% speed increase
- Current generation: reduced cost and increased performance
  - Silicon ingots are sliced into wafers, which are then processed (patterned and etched) to create transistors
  - Wafers are then diced to form chips, some of which have defects
  - Yield is the fraction of chips on a wafer that are good
- Next generation: larger chips with more functions
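The area figure follows from squaring the linear shrink, and yield is a simple ratio. A minimal sketch; `area_scale`, `die_yield`, and the die counts in the example are hypothetical:

```c
/* Scaling each linear dimension by linear_scale scales area by its square. */
double area_scale(double linear_scale)
{
    return linear_scale * linear_scale;
}

/* Yield = good dies / total dies on a wafer (0 if there are no dies). */
double die_yield(unsigned good_dies, unsigned total_dies)
{
    return total_dies ? (double)good_dies / (double)total_dies : 0.0;
}
```

`area_scale(0.7)` is about 0.49, i.e. half the area per generation; 180 good dies out of 200 is a yield of 0.9.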

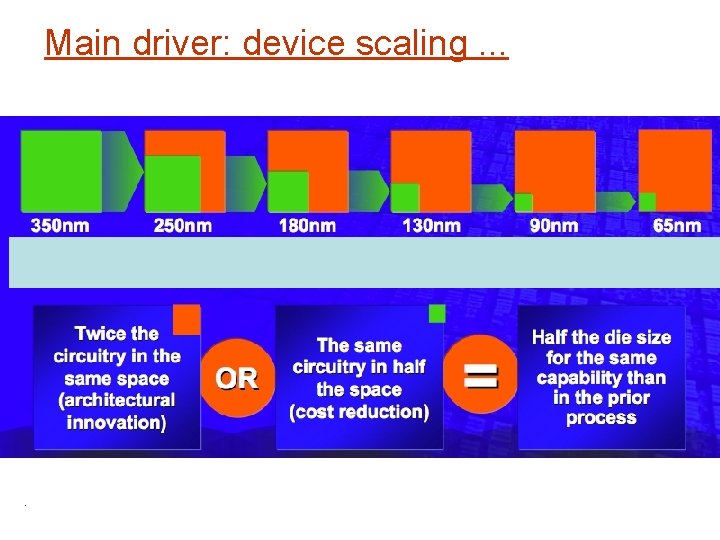

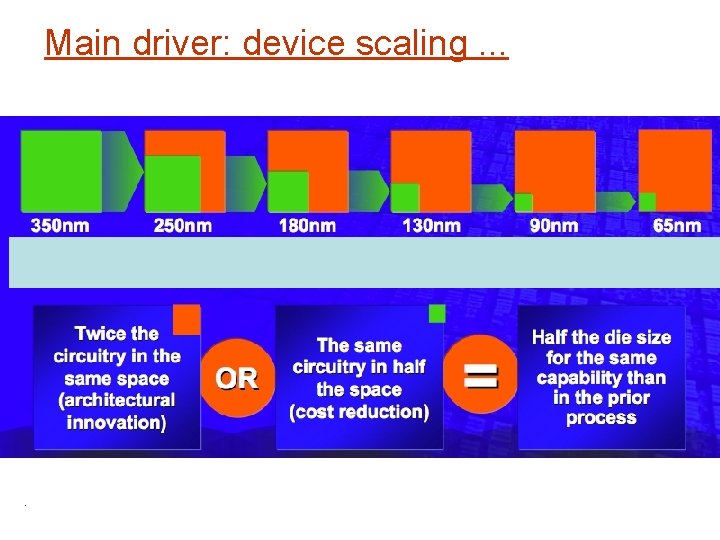

Main driver: device scaling.

Semiconductor Manufacturing Process for Silicon ICs

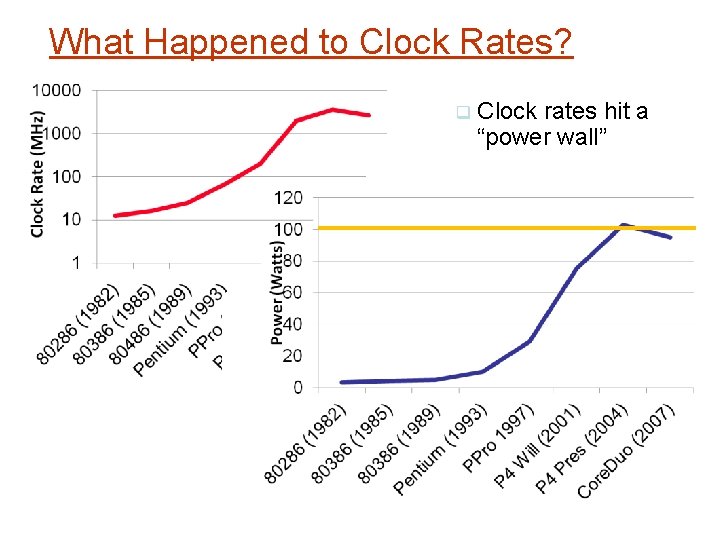

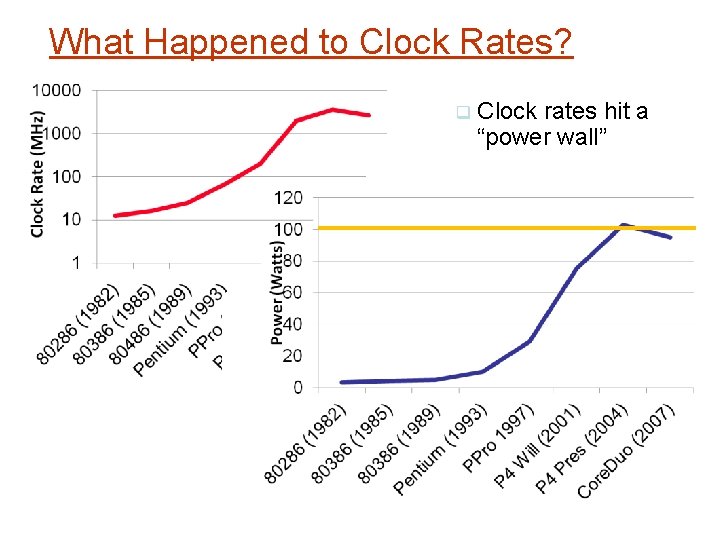

What Happened to Clock Rates?

Clock rates hit a “power wall”.

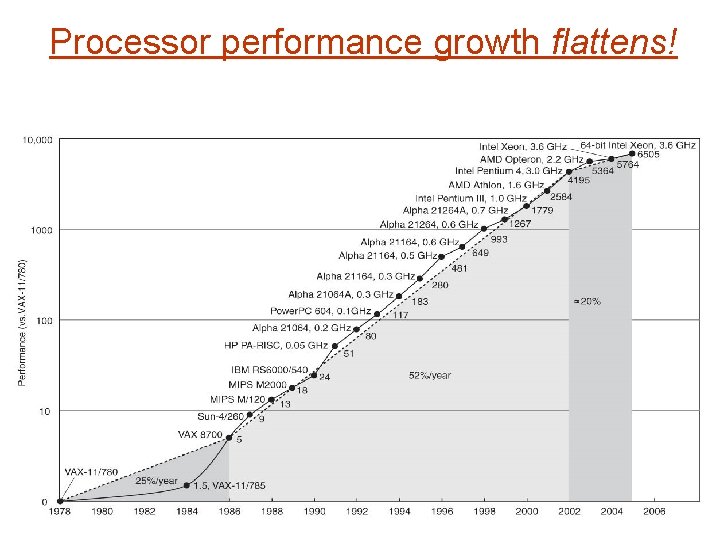

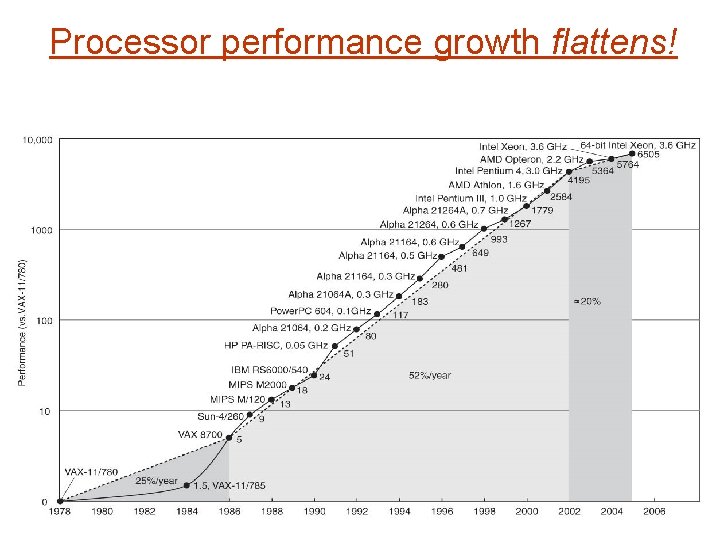

Processor performance growth flattens!

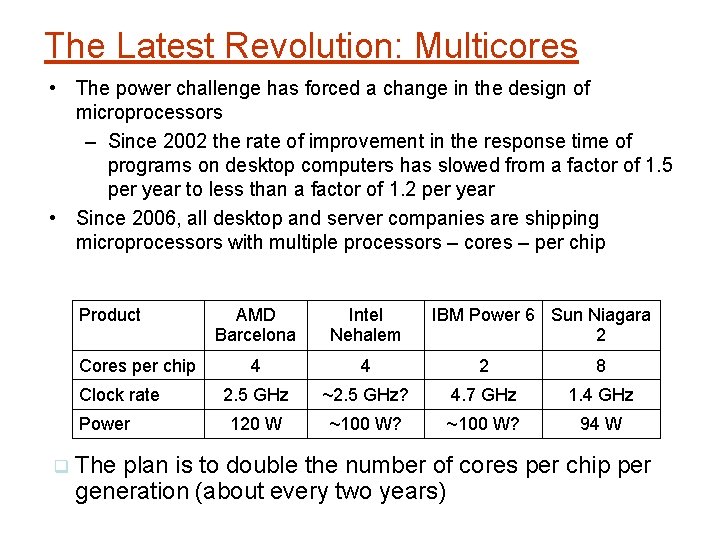

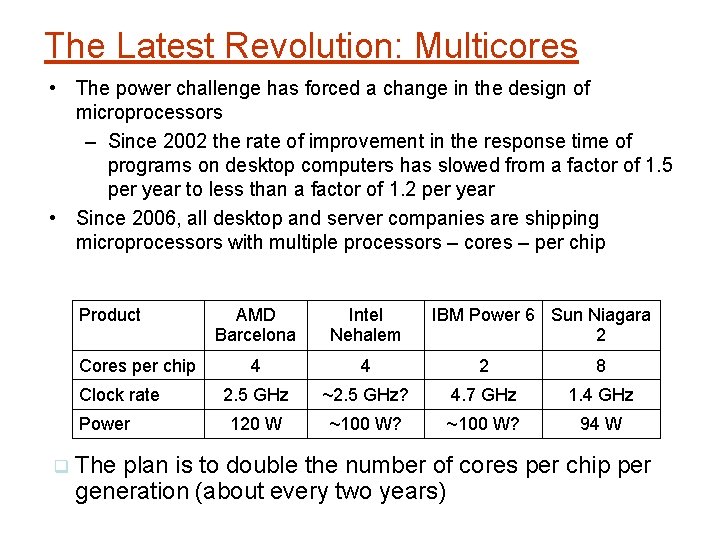

The Latest Revolution: Multicores

- The power challenge has forced a change in the design of microprocessors:
  - since 2002, the rate of improvement in the response time of programs on desktop computers has slowed from a factor of 1.5 per year to less than a factor of 1.2 per year
- Since 2006, all desktop and server companies have been shipping microprocessors with multiple processors (cores) per chip

| Product | Cores per chip | Clock rate | Power |
|---|---|---|---|
| AMD Barcelona | 4 | 2.5 GHz | 120 W |
| Intel Nehalem | 4 | ~2.5 GHz? | ~100 W? |
| IBM Power6 | 2 | 4.7 GHz | |
| Sun Niagara 2 | 8 | 1.4 GHz | 94 W |

The plan is to double the number of cores per chip per generation (about every two years).
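The per-year factors compound, which is why the slowdown matters. A minimal sketch; `cumulative_speedup` is a hypothetical helper, and the factors are the slide's approximate figures:

```c
/* Total performance improvement after `years` when performance
 * improves by `factor` each year (e.g. 1.5 or 1.2). */
double cumulative_speedup(double factor, int years)
{
    double s = 1.0;
    for (int y = 0; y < years; y++)
        s *= factor;   /* one year of improvement */
    return s;
}
```

Over five years, 1.5× per year gives about 7.6× while 1.2× per year gives only about 2.5×.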

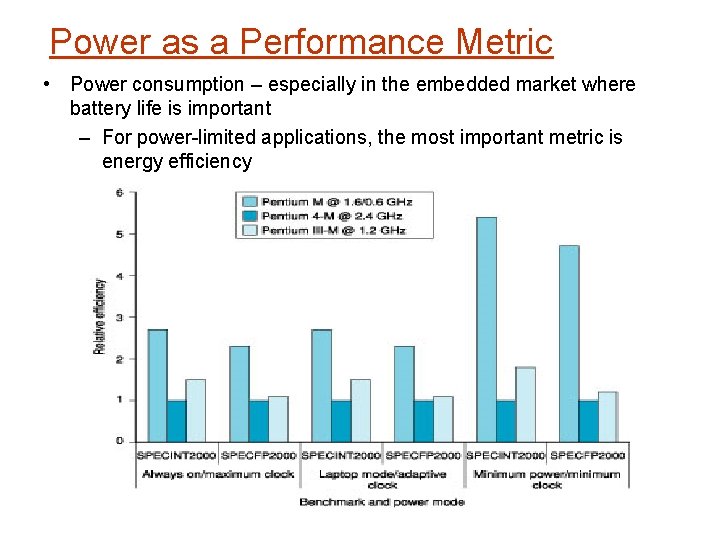

Power as a Performance Metric

- Power consumption matters, especially in the embedded market, where battery life is important
- For power-limited applications, the most important metric is energy efficiency
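Energy is average power multiplied by execution time, so a slower processor can still win on energy. A minimal sketch; `energy_joules` and the two processors' numbers are hypothetical:

```c
/* Energy (J) = average power (W) * execution time (s). */
double energy_joules(double avg_power_w, double seconds)
{
    return avg_power_w * seconds;
}
```

A 100 W processor finishing a task in 10 s uses 1000 J; a 50 W processor needing 15 s uses 750 J, so for a battery-powered device the slower processor is the more energy-efficient choice.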

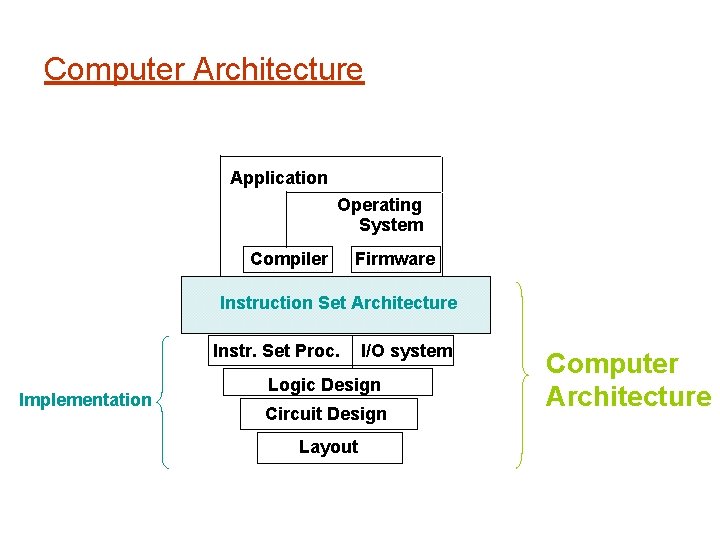

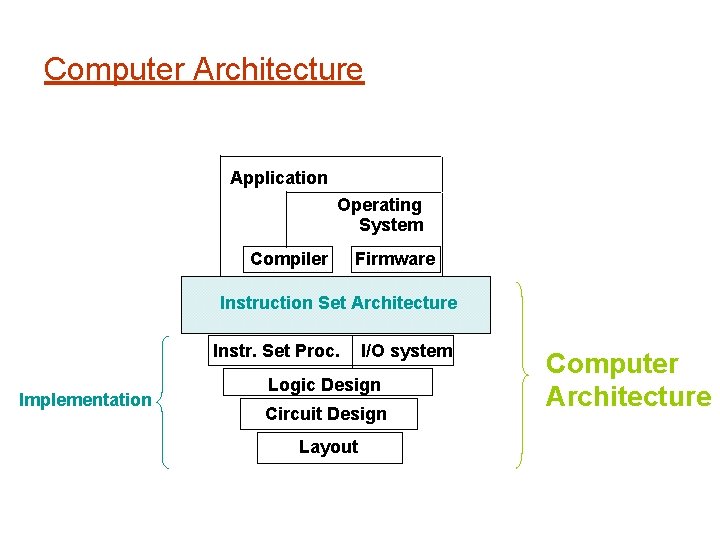

Computer Architecture

Layers, from top to bottom: Application; Operating System; Compiler; Firmware; Instruction Set Architecture; Instruction Set Processor Implementation and I/O System; Logic Design; Circuit Design; Layout.

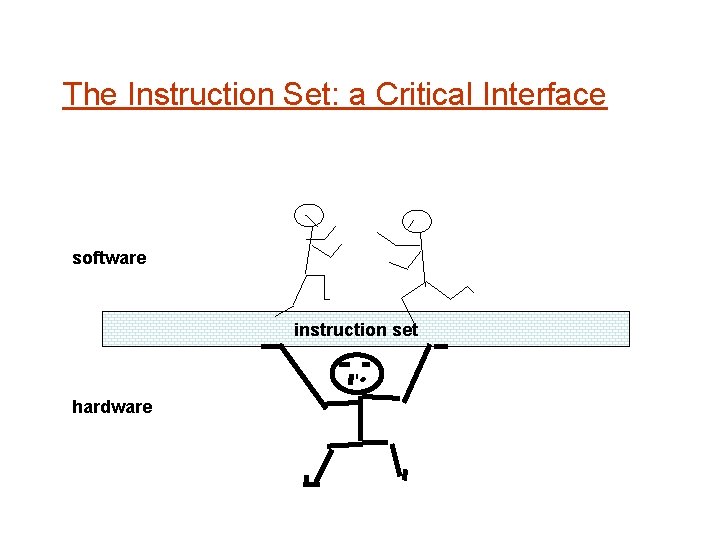

The Instruction Set: a Critical Interface between software (above it) and hardware (below it)

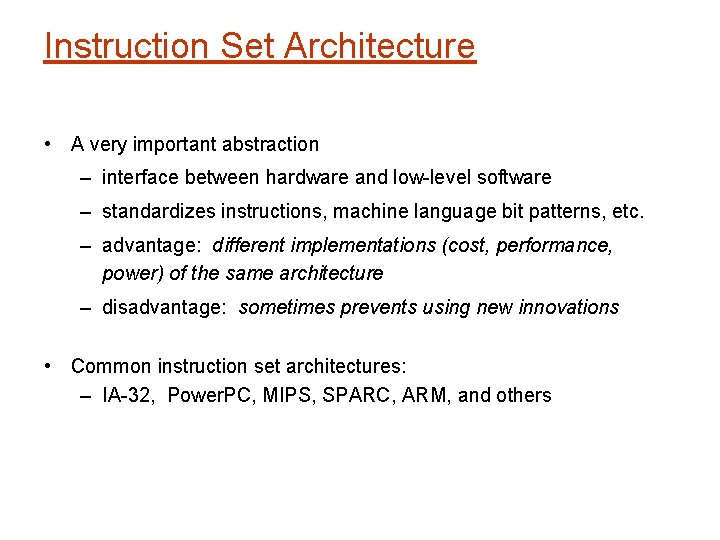

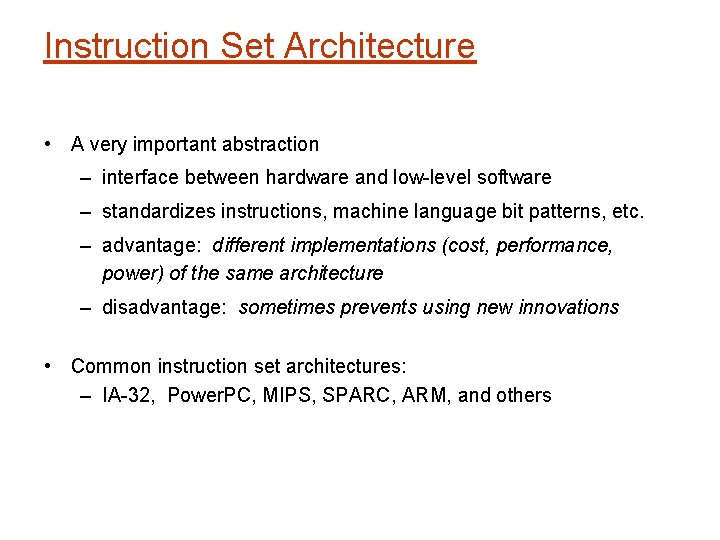

Instruction Set Architecture

- A very important abstraction:
  - interface between hardware and low-level software
  - standardizes instructions, machine language bit patterns, etc.
  - advantage: different implementations (cost, performance, power) of the same architecture
  - disadvantage: sometimes prevents using new innovations
- Common instruction set architectures:
  - IA-32, PowerPC, MIPS, SPARC, ARM, and others

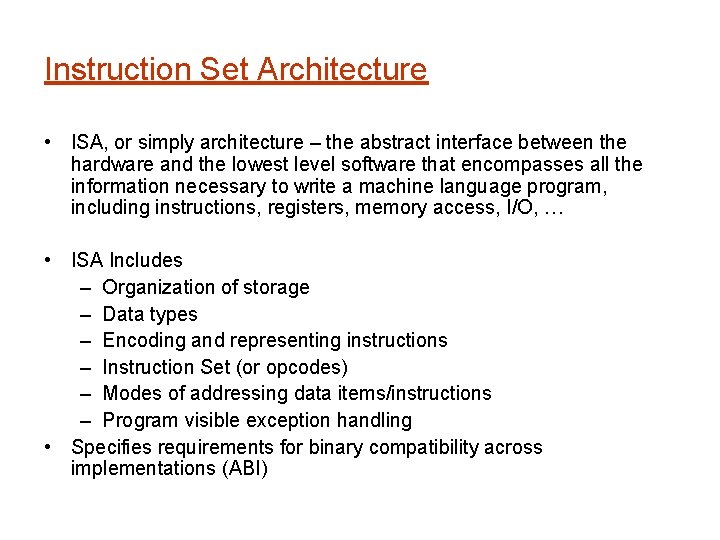

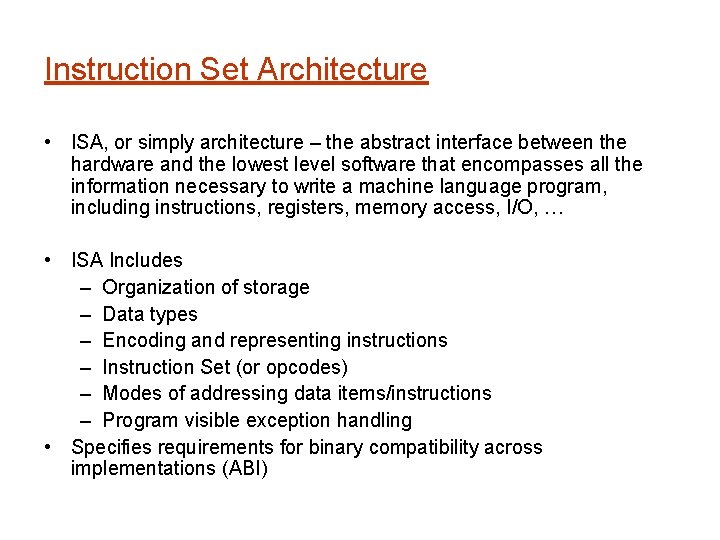

Instruction Set Architecture

- ISA, or simply architecture: the abstract interface between the hardware and the lowest-level software that encompasses all the information necessary to write a machine language program, including instructions, registers, memory access, I/O, …
- An ISA includes:
  - organization of storage
  - data types
  - encoding and representing instructions
  - instruction set (or opcodes)
  - modes of addressing data items/instructions
  - program-visible exception handling
- Specifies requirements for binary compatibility across implementations (ABI)

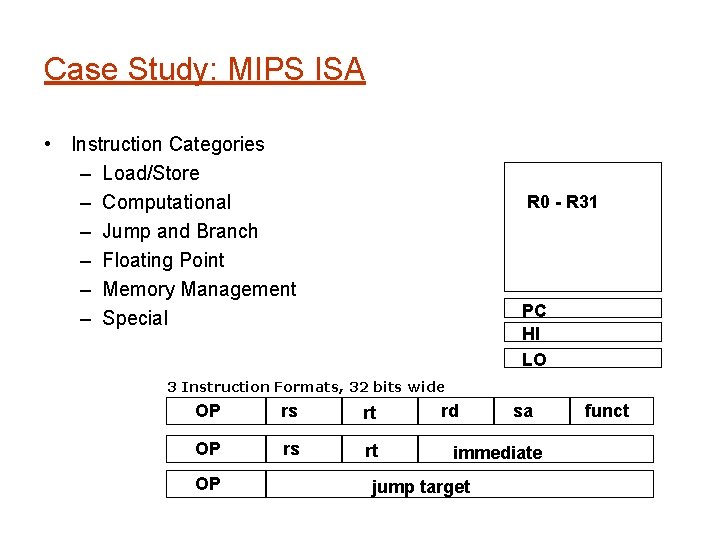

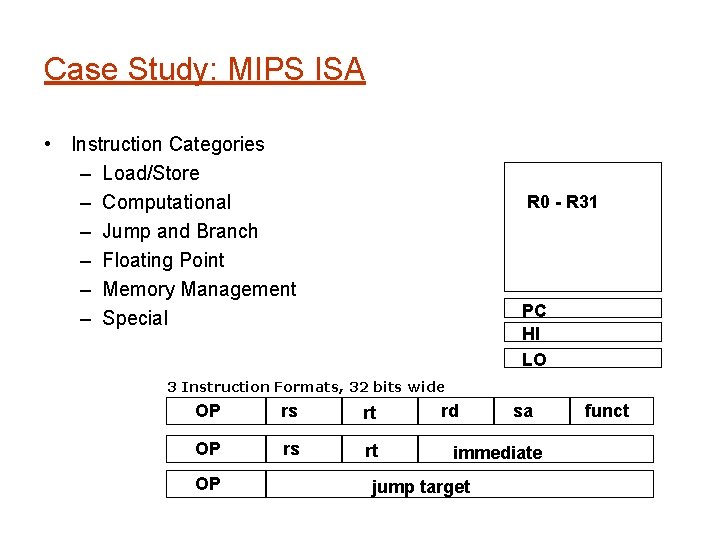

Case Study: MIPS ISA

- Instruction categories: Load/Store, Computational, Jump and Branch, Floating Point, Memory Management, Special
- Registers: R0-R31, PC, HI, LO
- 3 instruction formats, all 32 bits wide:
  - OP | rs | rt | rd | sa | funct
  - OP | rs | rt | immediate
  - OP | jump target
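The three formats can be packed into 32-bit words with shifts and masks. A minimal sketch, assuming the standard MIPS32 field widths, which the slide does not spell out (op 6, rs 5, rt 5, rd 5, sa/shamt 5, funct 6 bits; immediate 16 bits; jump target 26 bits); the function names are hypothetical:

```c
#include <stdint.h>

/* R-format: op(6) rs(5) rt(5) rd(5) sa(5) funct(6), bit 31 down to 0. */
uint32_t mips_r_type(unsigned op, unsigned rs, unsigned rt,
                     unsigned rd, unsigned sa, unsigned funct)
{
    return ((uint32_t)(op & 0x3F) << 26) | ((uint32_t)(rs & 0x1F) << 21) |
           ((uint32_t)(rt & 0x1F) << 16) | ((uint32_t)(rd & 0x1F) << 11) |
           ((uint32_t)(sa & 0x1F) << 6)  | (uint32_t)(funct & 0x3F);
}

/* I-format: op(6) rs(5) rt(5) immediate(16). */
uint32_t mips_i_type(unsigned op, unsigned rs, unsigned rt, uint16_t imm)
{
    return ((uint32_t)(op & 0x3F) << 26) | ((uint32_t)(rs & 0x1F) << 21) |
           ((uint32_t)(rt & 0x1F) << 16) | (uint32_t)imm;
}

/* J-format: op(6) jump target(26). */
uint32_t mips_j_type(unsigned op, uint32_t target)
{
    return ((uint32_t)(op & 0x3F) << 26) | (target & 0x03FFFFFFu);
}
```

For example, `add $8, $17, $18` (op 0, funct 0x20) encodes as 0x02324020, and `lw $15, 0($2)` (op 0x23, base register in rs, destination in rt) as 0x8C4F0000. A negative offset is passed as its 16-bit two's-complement value, e.g. `(uint16_t)-4`.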

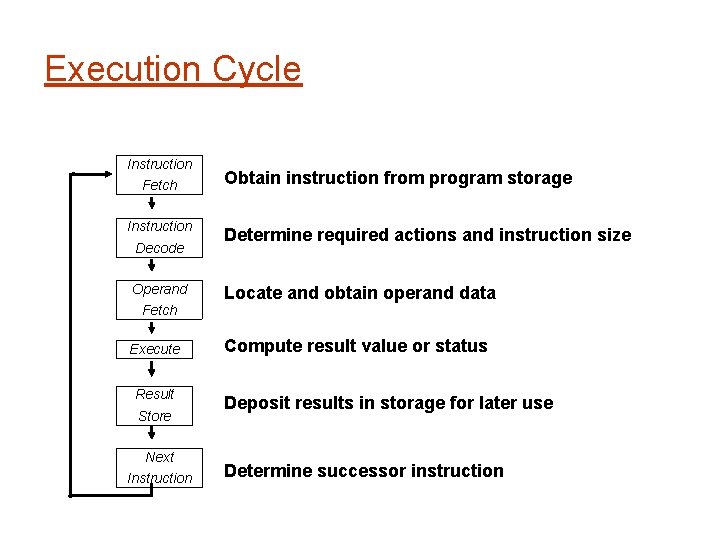

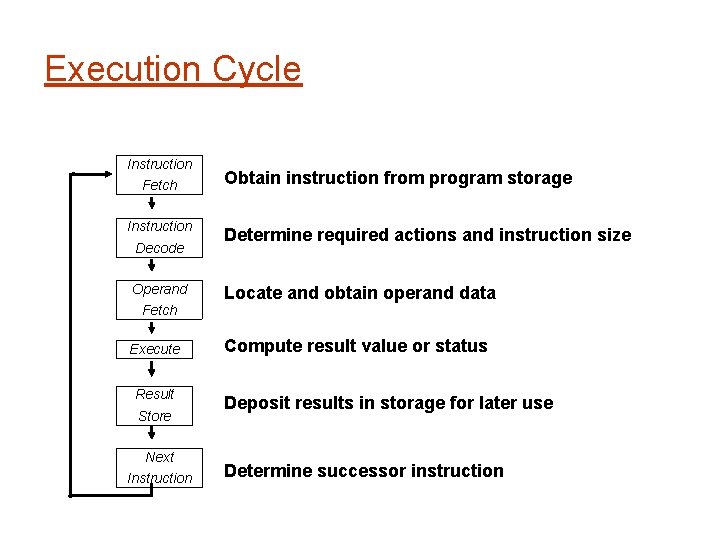

Execution Cycle

- Instruction Fetch: obtain instruction from program storage
- Instruction Decode: determine required actions and instruction size
- Operand Fetch: locate and obtain operand data
- Execute: compute result value or status
- Result Store: deposit results in storage for later use
- Next Instruction: determine successor instruction
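A toy interpreter makes the six steps concrete. The ISA here is entirely hypothetical (16-bit instructions with a 4-bit opcode and three 4-bit register fields; only ADD, SUB, and HALT), as is the function name `run_toy`; MIPS and other real ISAs differ in every detail:

```c
#include <stdint.h>

/* Hypothetical toy ISA: opcode(4) | rd(4) | rs(4) | rt(4). */
enum { OP_HALT = 0, OP_ADD = 1, OP_SUB = 2 };

/* Execute mem[0..mem_len-1] until HALT, an unknown opcode, or the end
 * of memory. Returns the number of instructions executed (HALT excluded). */
unsigned run_toy(const uint16_t *mem, unsigned mem_len, int32_t regs[16])
{
    unsigned pc = 0, executed = 0;
    while (pc < mem_len) {
        uint16_t inst = mem[pc];               /* Instruction Fetch */
        unsigned op = inst >> 12;              /* Instruction Decode */
        unsigned rd = (inst >> 8) & 0xF;
        unsigned rs = (inst >> 4) & 0xF;
        unsigned rt = inst & 0xF;
        if (op == OP_HALT)
            break;
        int32_t a = regs[rs], b = regs[rt];    /* Operand Fetch */
        int32_t result;
        switch (op) {                          /* Execute */
        case OP_ADD: result = a + b; break;
        case OP_SUB: result = a - b; break;
        default:     return executed;          /* unknown opcode: stop */
        }
        regs[rd] = result;                     /* Result Store */
        pc = pc + 1;                           /* Next Instruction */
        executed++;
    }
    return executed;
}
```

Because every toy instruction is one word and there are no branches, the successor is always pc + 1. A real processor must also handle branches and jumps and, for variable-length ISAs such as IA-32, use the instruction size found during decode.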

![Levels of Representation](https://slidetodoc.com/presentation_image/57bf561df6d8e6a54b07fd58a8c7bcb6/image-27.jpg)

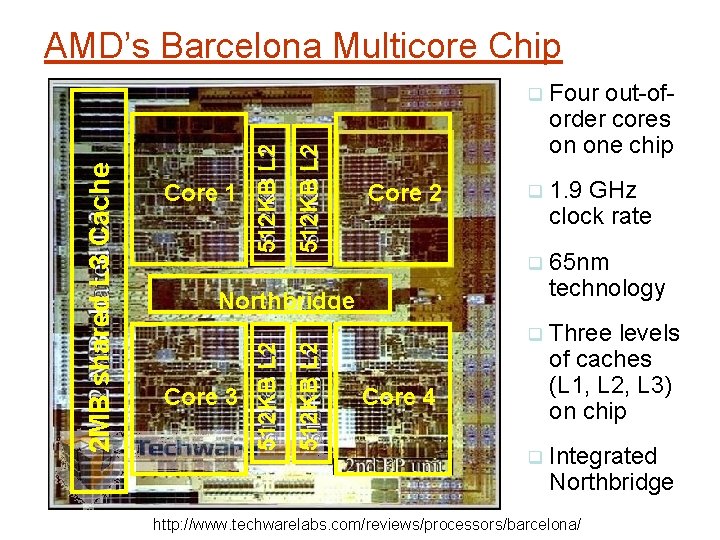

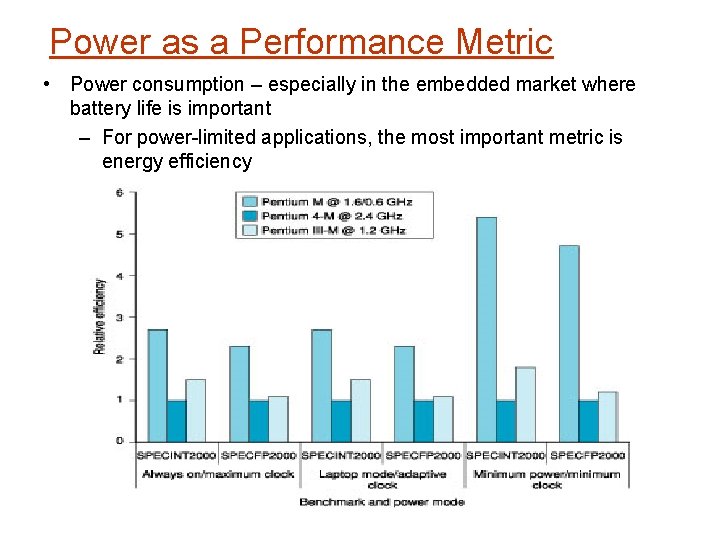

Levels of Representation: High Level Language Program → (Compiler) → Assembly Language Program → (Assembler) → Machine Language Program → (Machine Interpretation) → Control Signal Specification

- High Level Language Program: `temp = v[k]; v[k] = v[k+1]; v[k+1] = temp;`
- Assembly Language Program: `lw $15, 0($2)`; `lw $16, 4($2)`; `sw $16, 0($2)`; `sw $15, 4($2)`
- Machine Language Program: 0000 1010 1100 0101 1001 1111 0110 1000 1100 0101 1010 0000 0110 1000 1111 1001 1010 0000 0101 1100 1111 1000 0110 0101 1100 0000 1010 1000 0110 1001 1111
- Control Signal Specification: `ALUOP[0:3] <= InstReg[9:11] & MASK` (i.e., high/low on control lines)
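The high-level statements on the slide can be wrapped in a C function. A minimal sketch, assuming 4-byte `int` elements and that register $2 holds the address of v[k]; under those assumptions the slide's four instructions perform the swap, and the hex values in the comments are their standard MIPS32 encodings. The function name `swap` is illustrative, and a real compiler may choose different registers:

```c
/* Swap v[k] and v[k+1], as in the slide's high-level program.
 * Possible MIPS translation (register choices follow the slide):
 *   lw $15, 0($2)   # $15 = v[k]        0x8C4F0000
 *   lw $16, 4($2)   # $16 = v[k+1]      0x8C500004
 *   sw $16, 0($2)   # v[k] = $16        0xAC500000
 *   sw $15, 4($2)   # v[k+1] = $15      0xAC4F0004 */
void swap(int v[], int k)
{
    int temp;
    temp = v[k];
    v[k] = v[k + 1];
    v[k + 1] = temp;
}
```

Calling `swap(a, 1)` on `{10, 20, 30}` leaves `{10, 30, 20}`.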

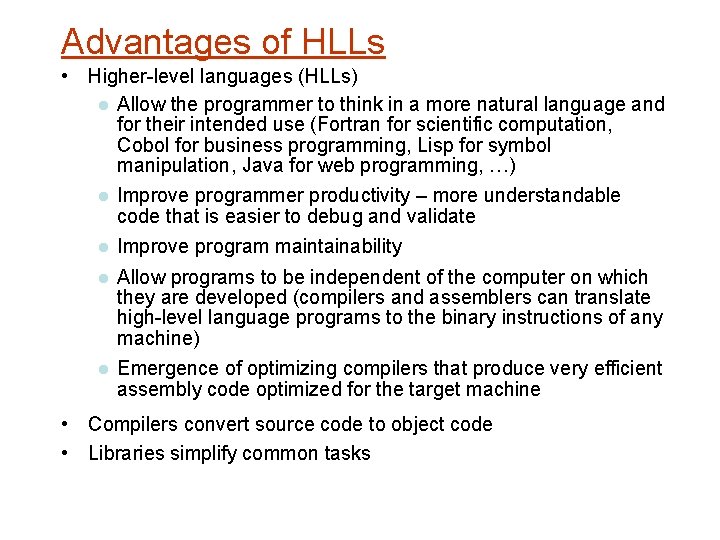

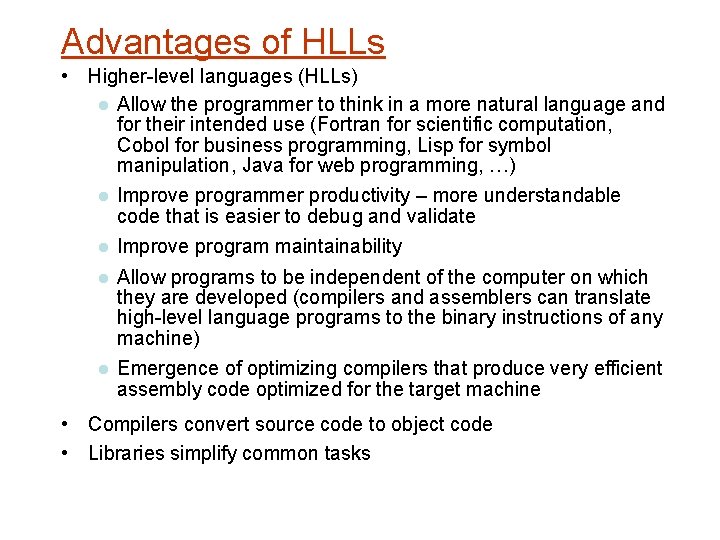

Advantages of HLLs

- Higher-level languages (HLLs):
  - allow the programmer to think in a more natural language suited to the intended use (Fortran for scientific computation, Cobol for business programming, Lisp for symbol manipulation, Java for web programming, …)
  - improve programmer productivity: more understandable code that is easier to debug and validate
  - improve program maintainability
  - allow programs to be independent of the computer on which they are developed (compilers and assemblers can translate high-level language programs to the binary instructions of any machine)
  - led to the emergence of optimizing compilers that produce very efficient assembly code optimized for the target machine
- Compilers convert source code to object code
- Libraries simplify common tasks
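Two of these points in one small example: the C source below is machine-independent (any C compiler can translate it for MIPS, IA-32, ARM, and so on), and it relies on the standard library's `qsort` instead of a hand-written sort. `compare_ints` and `sort_ints` are hypothetical names used only for illustration:

```c
#include <stdlib.h>

/* qsort callback: negative, zero, or positive, like strcmp. */
static int compare_ints(const void *a, const void *b)
{
    int x = *(const int *)a;
    int y = *(const int *)b;
    return (x > y) - (x < y);   /* avoids the overflow risk of x - y */
}

/* Sort an int array in ascending order using the C standard library. */
void sort_ints(int *values, size_t n)
{
    qsort(values, n, sizeof values[0], compare_ints);
}
```

The sorting algorithm itself lives in the library; the program only supplies the comparison.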