Computer Organization CS 224 Fall 2012 Lessons 41 & 42

- Slides: 11

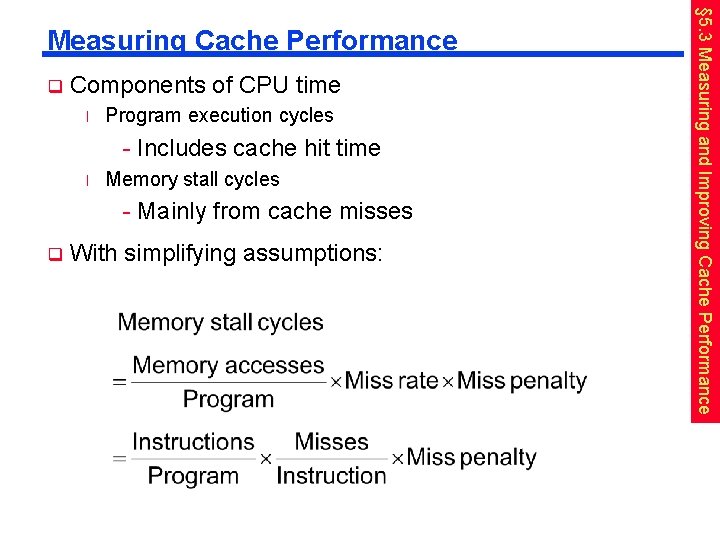

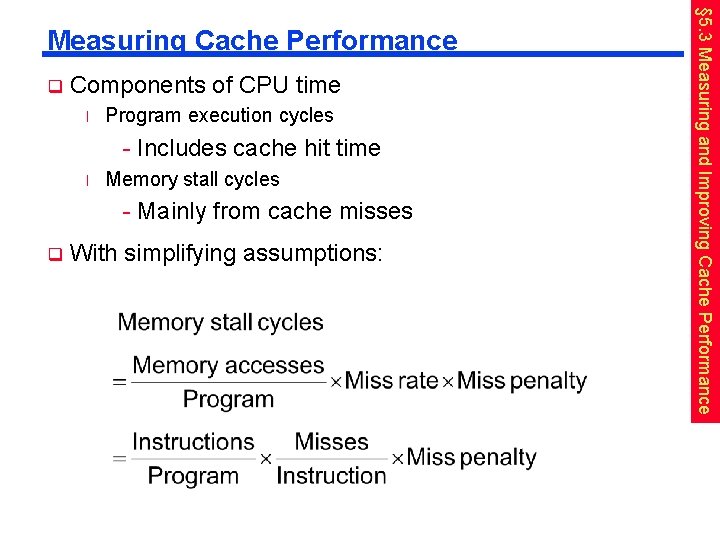

Measuring Cache Performance (§5.3 Measuring and Improving Cache Performance)

- Components of CPU time
  - Program execution cycles
    - Includes cache hit time
  - Memory stall cycles
    - Mainly from cache misses
- With simplifying assumptions:

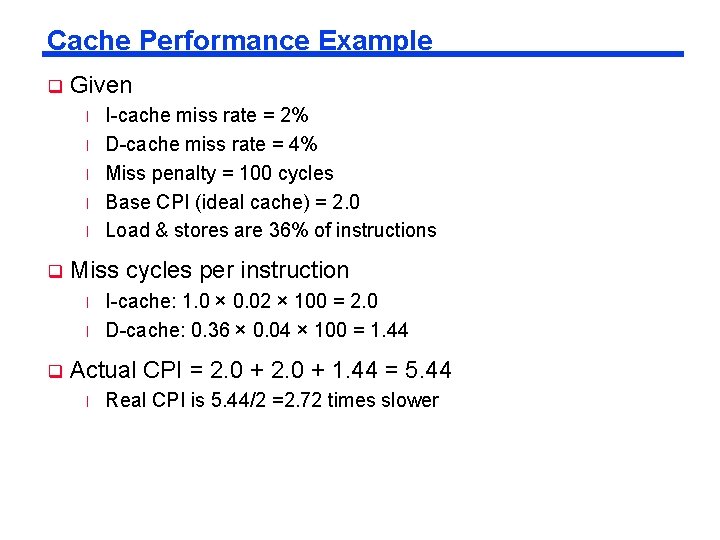

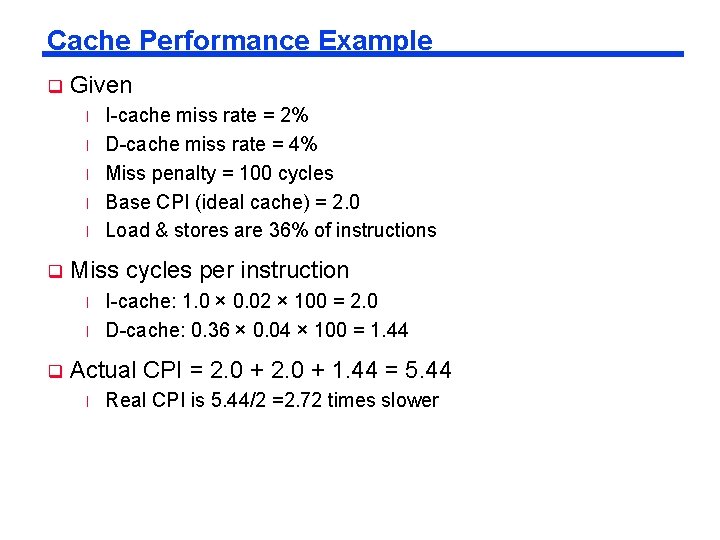

Cache Performance Example

- Given
  - I-cache miss rate = 2%
  - D-cache miss rate = 4%
  - Miss penalty = 100 cycles
  - Base CPI (ideal cache) = 2.0
  - Loads & stores are 36% of instructions
- Miss cycles per instruction
  - I-cache: 1.0 × 0.02 × 100 = 2.0
  - D-cache: 0.36 × 0.04 × 100 = 1.44
- Actual CPI = 2.0 + 2.0 + 1.44 = 5.44
  - The real CPU is 5.44/2 = 2.72 times slower than one with an ideal cache
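The arithmetic in this example can be checked with a short script. This is a minimal sketch: the function name `effective_cpi` and its parameter names are made up for illustration, and it assumes one I-cache access per instruction and the same miss penalty for both caches, as the example does.

```python
def effective_cpi(base_cpi, icache_miss_rate, dcache_miss_rate,
                  mem_ops_per_instr, miss_penalty):
    """Return (I-cache stalls, D-cache stalls, effective CPI), all per instruction."""
    # Every instruction is fetched, so there is 1.0 I-cache access per instruction.
    icache_stalls = 1.0 * icache_miss_rate * miss_penalty
    # Only loads and stores access the D-cache.
    dcache_stalls = mem_ops_per_instr * dcache_miss_rate * miss_penalty
    return icache_stalls, dcache_stalls, base_cpi + icache_stalls + dcache_stalls


# Values taken from the example above.
i_stall, d_stall, cpi = effective_cpi(
    base_cpi=2.0,
    icache_miss_rate=0.02,
    dcache_miss_rate=0.04,
    mem_ops_per_instr=0.36,
    miss_penalty=100,
)
print(f"I-cache stall cycles per instruction: {i_stall:.2f}")
print(f"D-cache stall cycles per instruction: {d_stall:.2f}")
print(f"Effective CPI: {cpi:.2f}")
print(f"Slowdown relative to an ideal cache: {cpi / 2.0:.2f}x")
```

Changing a single argument, such as the D-cache miss rate, shows how sensitive the effective CPI is to each parameter.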

Average Access Time

- Hit time is also important for performance
- Average memory access time (AMAT)
  - AMAT = Hit time + Miss rate × Miss penalty
- Example
  - CPU with 1 ns clock, hit time = 1 cycle, miss penalty = 20 cycles, I-cache miss rate = 5%
  - AMAT = 1 ns + (0.05 × 20 ns) = 2 ns
    - 2 cycles per instruction
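The AMAT formula translates directly into code. The sketch below uses a hypothetical helper named `amat` that works in cycles, then converts to nanoseconds using the 1 ns clock from the example.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time, in the same unit as hit_time and miss_penalty."""
    return hit_time + miss_rate * miss_penalty


CLOCK_NS = 1.0  # 1 ns clock period, from the example above

amat_cycles = amat(hit_time=1, miss_rate=0.05, miss_penalty=20)
amat_ns = amat_cycles * CLOCK_NS
print(f"AMAT = {amat_cycles:.1f} cycles = {amat_ns:.1f} ns")
```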

Performance Summary

- When CPU performance increases
  - Miss penalty becomes more significant
- Decreasing base CPI
  - Greater proportion of time spent on memory stalls
- Increasing clock rate
  - Memory stalls account for more CPU cycles
- Can't neglect cache behavior when evaluating system performance
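Both trends can be illustrated numerically. The sketch below reuses the stall cycles from the earlier cache performance example (2.0 + 1.44 = 3.44 per instruction). The helper names are hypothetical, and the 100 ns memory latency and the list of clock rates are illustrative assumptions rather than values from this slide.

```python
def stall_fraction(base_cpi, stall_cpi):
    """Fraction of execution cycles spent on memory stalls."""
    return stall_cpi / (base_cpi + stall_cpi)


def miss_penalty_cycles(memory_latency_ns, clock_ghz):
    """Miss penalty in cycles for a fixed memory latency (1 GHz = 1 cycle per ns)."""
    return memory_latency_ns * clock_ghz


# Stall cycles per instruction from the earlier example: 2.0 (I-cache) + 1.44 (D-cache).
STALL_CPI = 3.44

# Decreasing base CPI: the same stalls take up a larger share of execution time.
for base_cpi in (2.0, 1.0):
    share = stall_fraction(base_cpi, STALL_CPI)
    print(f"base CPI {base_cpi}: {share:.0%} of cycles are memory stalls")

# Increasing clock rate: a fixed memory latency costs more cycles per miss.
# The 100 ns latency and these clock rates are assumed for illustration.
for clock_ghz in (1, 2, 4):
    print(f"{clock_ghz} GHz: miss penalty = {miss_penalty_cycles(100, clock_ghz)} cycles")
```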

Interactions with Software

- Misses depend on memory access patterns
  - Algorithm behavior
  - Compiler optimization for memory access
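To see how the access pattern alone changes the miss count, the following sketch simulates a small direct-mapped cache and traverses the same matrix in two orders. Everything here is an assumption chosen for illustration: the cache geometry (64 blocks of 8 words), the 256 × 256 row-major matrix, and the function name `count_misses`.

```python
def count_misses(addresses, num_blocks=64, block_words=8):
    """Count misses for a stream of word addresses in a direct-mapped cache."""
    stored = [None] * num_blocks  # block number held by each cache line, None = invalid
    misses = 0
    for addr in addresses:
        block = addr // block_words   # which memory block the word belongs to
        index = block % num_blocks    # the single line that block can occupy
        if stored[index] != block:    # invalid line or a different block: miss
            stored[index] = block
            misses += 1
    return misses


N = 256  # N x N matrix of words, stored in row-major order

# Row-major traversal touches consecutive addresses.
row_major = [i * N + j for i in range(N) for j in range(N)]
# Column-major traversal jumps N words between consecutive accesses.
col_major = [i * N + j for j in range(N) for i in range(N)]

print("row-major misses:   ", count_misses(row_major))
print("column-major misses:", count_misses(col_major))
```

With these assumed parameters, the row-major loop misses once per block, while the column-major loop misses on every access because each block is evicted before its neighboring words are used. Loop interchange by a compiler or programmer is one way to turn the second pattern into the first.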

Replacement Policy

- Direct mapped: no choice
- Set associative
  - Prefer non-valid entry, if there is one
  - Otherwise, choose among entries in the set
- Least-recently used (LRU)
  - Choose the one unused for the longest time
    - Simple for 2-way, manageable for 4-way, too hard beyond that
- Random
  - Gives approximately the same performance as LRU for high associativity
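The policies above can be sketched in software for a single cache set. This is a behavioral model only: the class name `CacheSet` and its interface are hypothetical, and real hardware tracks recency with a few status bits per set rather than an ordered dictionary.

```python
import random
from collections import OrderedDict


class CacheSet:
    """One set of a set-associative cache with LRU or random replacement."""

    def __init__(self, ways, policy="lru", rng=None):
        self.ways = ways
        self.policy = policy
        self.rng = rng or random.Random(0)  # seeded so runs are repeatable
        # Keys are tags, ordered from least to most recently used.
        self.blocks = OrderedDict()

    def access(self, tag):
        """Return True on a hit, False on a miss (the block is then loaded)."""
        if tag in self.blocks:
            self.blocks.move_to_end(tag)  # mark as most recently used
            return True
        if len(self.blocks) >= self.ways:
            # No non-valid entry is left, so a victim must be chosen.
            if self.policy == "lru":
                self.blocks.popitem(last=False)  # evict the least recently used
            else:
                victim = self.rng.choice(list(self.blocks))
                del self.blocks[victim]
        # Fill a free way (either previously non-valid or just vacated).
        self.blocks[tag] = None
        return False


lru_set = CacheSet(ways=2, policy="lru")
trace = [0, 1, 0, 2, 0, 1]
print([lru_set.access(tag) for tag in trace])
```

In this trace, the access to tag 2 evicts tag 1 because tag 0 was used more recently, so the following access to tag 0 still hits.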

Multilevel Caches

- Primary cache attached to CPU
  - Small, but fast
- Level-2 cache services misses from primary cache
  - Larger, slower, but still faster than main memory
- Main memory services L-2 cache misses
- Some high-end systems include L-3 cache

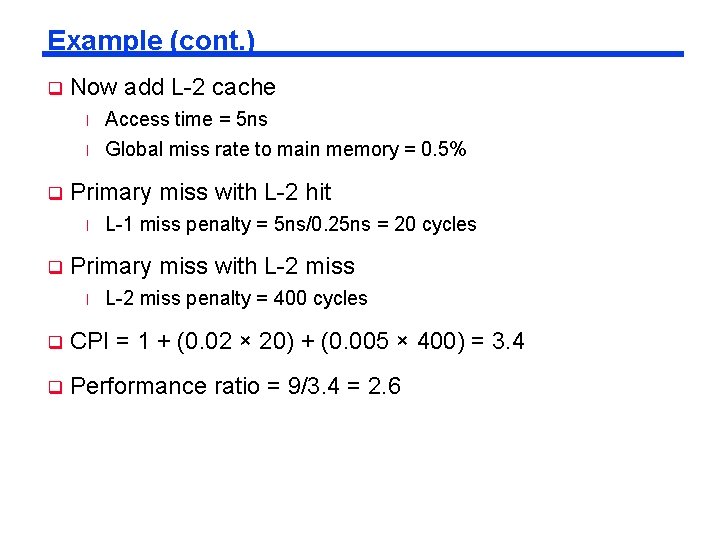

Multilevel Cache Example

- Given
  - CPU base CPI = 1, clock rate = 4 GHz
  - Miss rate/instruction = 2%
  - Main memory access time = 100 ns
- With just primary cache
  - Miss penalty = 100 ns / 0.25 ns = 400 cycles
  - Effective CPI = 1 + 0.02 × 400 = 9

Example (cont.)

- Now add L-2 cache
  - Access time = 5 ns
  - Global miss rate to main memory = 0.5%
- Primary miss with L-2 hit
  - L-1 miss penalty = 5 ns / 0.25 ns = 20 cycles
- Primary miss with L-2 miss
  - L-2 miss penalty = 400 cycles
- CPI = 1 + (0.02 × 20) + (0.005 × 400) = 3.4
- Performance ratio = 9/3.4 = 2.6
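The two-part example can be reproduced as follows. This is a sketch using the example's own numbers; the variable names and the helper `to_cycles` are chosen here for illustration.

```python
def to_cycles(time_ns, clock_ghz):
    """Convert a latency in ns to clock cycles (1 GHz = 1 cycle per ns)."""
    return time_ns * clock_ghz


CLOCK_GHZ = 4.0           # 0.25 ns per cycle
BASE_CPI = 1.0
L1_MISS_RATE = 0.02       # misses per instruction in the primary cache
GLOBAL_MISS_RATE = 0.005  # misses per instruction that go to main memory

mem_penalty = to_cycles(100, CLOCK_GHZ)  # main memory access: 100 ns
l2_penalty = to_cycles(5, CLOCK_GHZ)     # L-2 access: 5 ns

# With just the primary cache, every L-1 miss pays the main memory penalty.
cpi_l1_only = BASE_CPI + L1_MISS_RATE * mem_penalty

# With L-2, every L-1 miss pays the L-2 access time, and only the
# global misses additionally pay the main memory penalty.
cpi_with_l2 = (BASE_CPI
               + L1_MISS_RATE * l2_penalty
               + GLOBAL_MISS_RATE * mem_penalty)

print(f"CPI with L-1 only: {cpi_l1_only:.1f}")
print(f"CPI with L-1 + L-2: {cpi_with_l2:.1f}")
print(f"Performance ratio: {cpi_l1_only / cpi_with_l2:.1f}")
```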

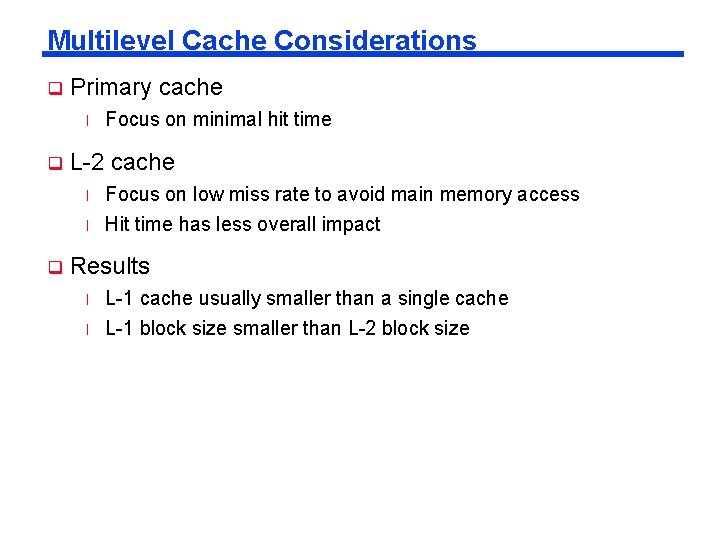

Multilevel Cache Considerations

- Primary cache
  - Focus on minimal hit time
- L-2 cache
  - Focus on low miss rate to avoid main memory access
  - Hit time has less overall impact
- Results
  - L-1 cache usually smaller than a single cache
  - L-1 block size smaller than L-2 block size