Computer Organization CS 224 Fall 2012 Lesson 52

- Slides: 25

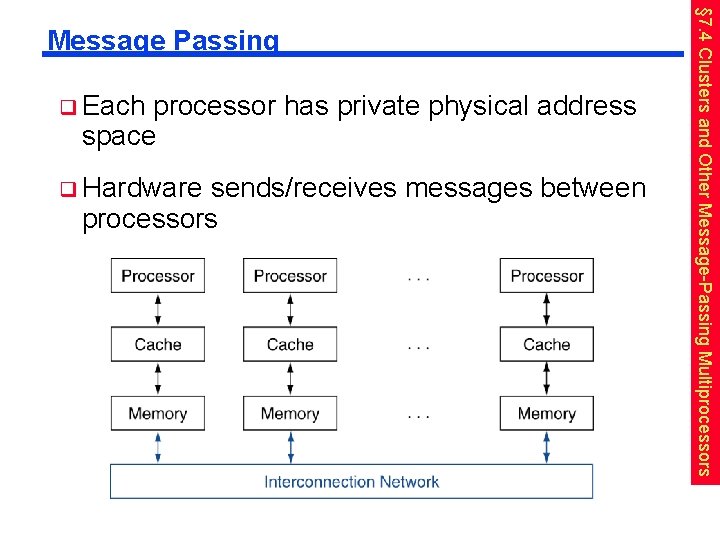

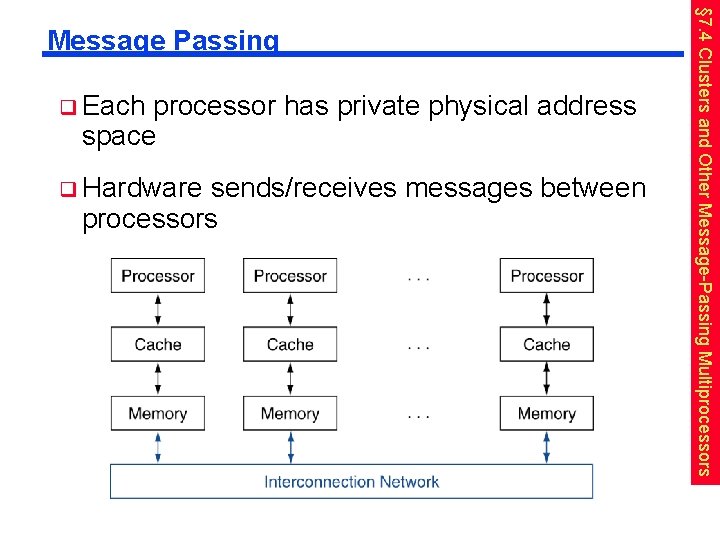

§7.4 Clusters and Other Message-Passing Multiprocessors

Message Passing
- Each processor has a private physical address space
- Hardware sends/receives messages between processors

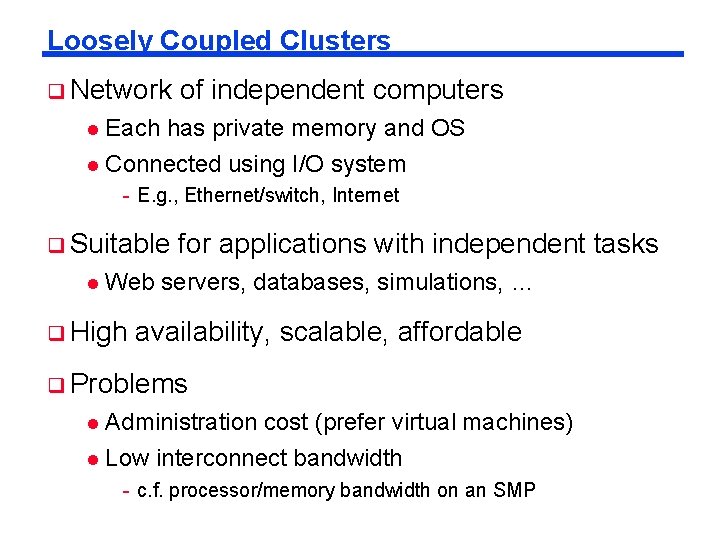

Loosely Coupled Clusters
- Network of independent computers
  - Each has private memory and OS
  - Connected using I/O system
    - E.g., Ethernet/switch, Internet
- Suitable for applications with independent tasks
  - Web servers, databases, simulations, …
- High availability, scalable, affordable
- Problems
  - Administration cost (prefer virtual machines)
  - Low interconnect bandwidth
    - cf. processor/memory bandwidth on an SMP

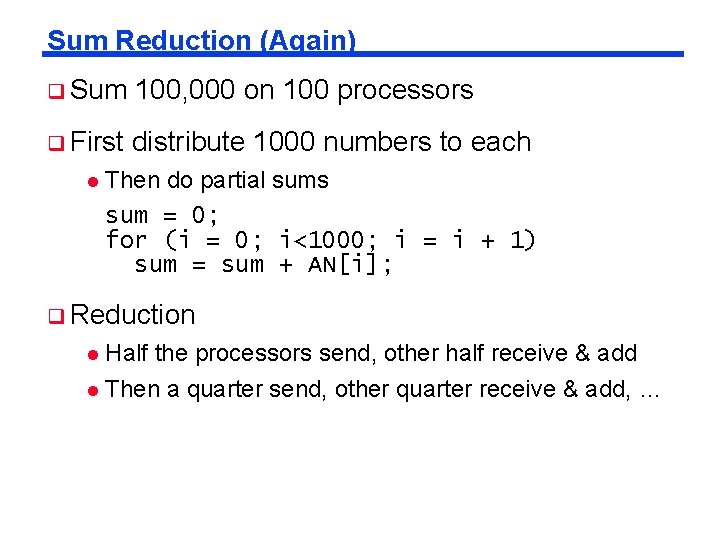

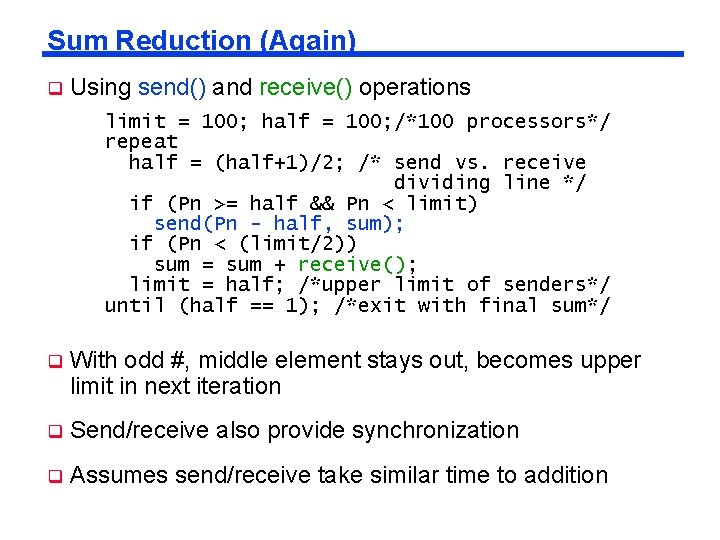

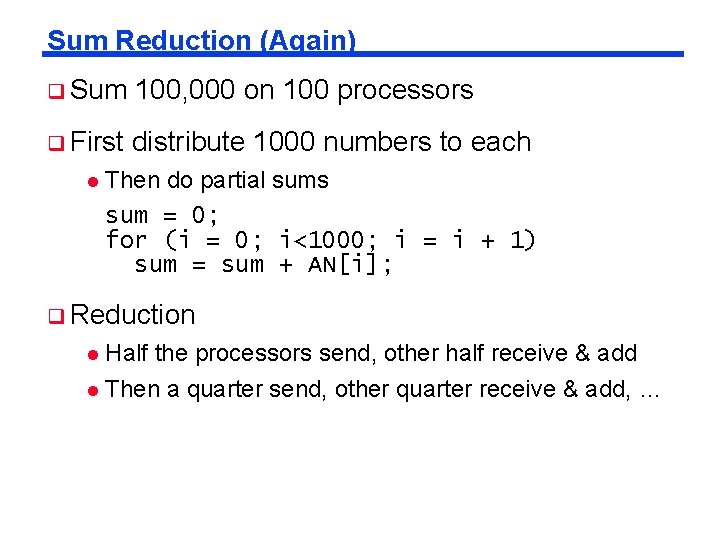

Sum Reduction (Again)
- Sum 100,000 numbers on 100 processors
- First distribute 1000 numbers to each
  - Then do partial sums

        sum = 0;
        for (i = 0; i < 1000; i = i + 1)
          sum = sum + AN[i];

- Reduction
  - Half the processors send, other half receive & add
  - Then a quarter send, other quarter receive & add, …

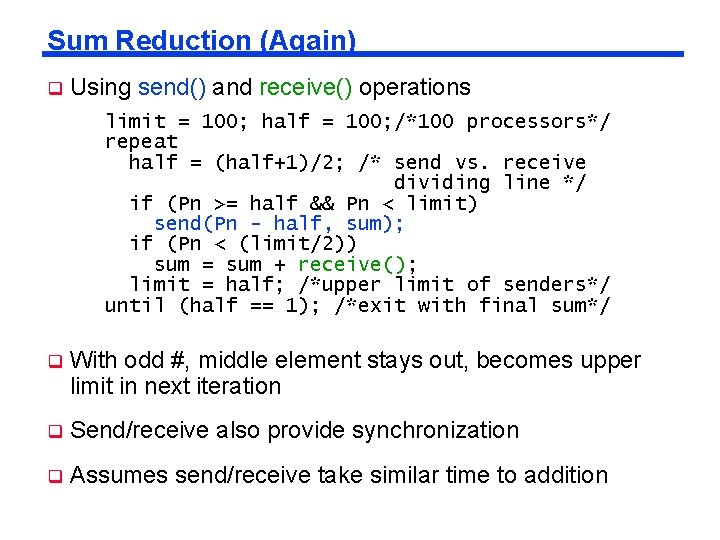

Sum Reduction (Again)
- Using send() and receive() operations

      limit = 100; half = 100;   /* 100 processors */
      repeat
        half = (half+1)/2;       /* send vs. receive dividing line */
        if (Pn >= half && Pn < limit) send(Pn - half, sum);
        if (Pn < (limit/2)) sum = sum + receive();
        limit = half;            /* upper limit of senders */
      until (half == 1);         /* exit with final sum */

- With an odd number of processors, the middle element stays out and becomes the upper limit in the next iteration
- Send/receive also provide synchronization
- Assumes send/receive take similar time to addition
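The reduction loop above can be checked with a small sequential simulation. This is only a sketch under stated assumptions: the 100 processors are modeled as slots of one array, a `send`/`receive` pair is modeled as the receiver adding the sender's slot, and the names `tree_reduce` and `sum_first_n` are hypothetical helpers, not part of the lesson.

```c
#include <assert.h>
#include <stddef.h>

#define NPROC 100      /* number of simulated processors (from the slide) */
#define PER_PROC 1000  /* numbers held by each processor (from the slide) */

/* Sequentially simulate the send/receive tree reduction.
 * sum[pn] is processor pn's partial sum. A send from pn to pn - half is
 * modeled as the receiver adding the sender's slot. The total ends in sum[0]. */
long long tree_reduce(long long sum[], int nproc)
{
    assert(nproc >= 1);
    int limit = nproc;
    int half = nproc;
    do {
        half = (half + 1) / 2;            /* send vs. receive dividing line */
        /* Senders are half..limit-1; their targets are 0..limit/2-1,
         * which are exactly the receivers in the slide's code. */
        for (int pn = half; pn < limit; pn++)
            sum[pn - half] += sum[pn];
        limit = half;                     /* upper limit of senders next round */
    } while (half != 1);
    return sum[0];
}

/* Hypothetical driver: fill the data with 1..100000, form the 100 partial
 * sums of 1000 numbers each, then reduce them. */
long long sum_first_n(void)
{
    static long long a[NPROC * PER_PROC];
    long long partial[NPROC];
    for (size_t i = 0; i < (size_t)NPROC * PER_PROC; i++)
        a[i] = (long long)i + 1;
    for (int pn = 0; pn < NPROC; pn++) {
        partial[pn] = 0;
        for (int i = 0; i < PER_PROC; i++)
            partial[pn] += a[pn * PER_PROC + i];
    }
    return tree_reduce(partial, NPROC);
}
```

With 100 processors the dividing line moves 50, 25, 13, 7, 4, 2, 1, so the reduction takes seven rounds. In the rounds that start from an odd limit (25, 13, and 7), the middle processor neither sends nor receives.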

Grid Computing
- Separate computers interconnected by long-haul networks
  - E.g., Internet connections
  - Work units farmed out, results sent back
- Can make use of idle time on PCs
  - E.g., SETI@home, World Community Grid

Multiprocessor Programming
- Unfortunately, writing programs that take advantage of multiprocessors is not a trivial task
  - Inter-processor communication is required to complete a task
  - Traditional tools require that the programmer understand the specifics of the underlying hardware
  - Amdahl’s law limits performance due to a lack of inherent parallelism in many applications
- Given these issues, a limited number of applications have been rewritten to take advantage of multiprocessor systems
  - Examples: databases, file servers, CAD, MP OSes
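To make the Amdahl's law point concrete, here is a minimal sketch of the usual formula, speedup = 1 / ((1 - f) + f / n), where f is the fraction of execution time that can be parallelized and n is the processor count. The function name `amdahl_speedup` and the 90% figure are illustrative assumptions, not values from the lesson.

```c
#include <assert.h>

/* Amdahl's law: overall speedup when a fraction `parallel_fraction`
 * (between 0.0 and 1.0) of the original run time is spread perfectly
 * over `processors` processors and the rest stays sequential. */
double amdahl_speedup(double parallel_fraction, int processors)
{
    assert(processors >= 1);
    assert(parallel_fraction >= 0.0 && parallel_fraction <= 1.0);
    double serial_part = 1.0 - parallel_fraction;
    double parallel_part = parallel_fraction / processors;
    return 1.0 / (serial_part + parallel_part);
}
```

For example, `amdahl_speedup(0.9, 100)` is a little over 9: even with 100 processors, the 10% sequential part caps the gain at less than 10×.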

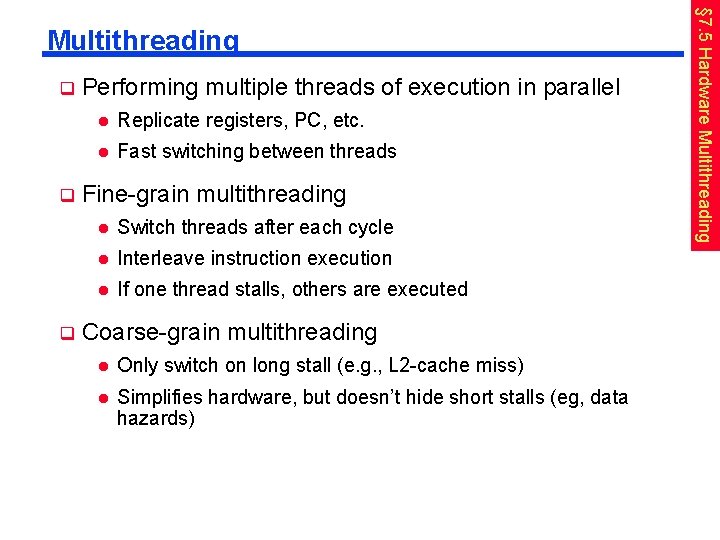

§7.5 Hardware Multithreading

- Performing multiple threads of execution in parallel
  - Replicate registers, PC, etc.
  - Fast switching between threads
- Fine-grain multithreading
  - Switch threads after each cycle
  - Interleave instruction execution
  - If one thread stalls, others are executed
- Coarse-grain multithreading
  - Only switch on long stall (e.g., L2-cache miss)
  - Simplifies hardware, but doesn’t hide short stalls (e.g., data hazards)

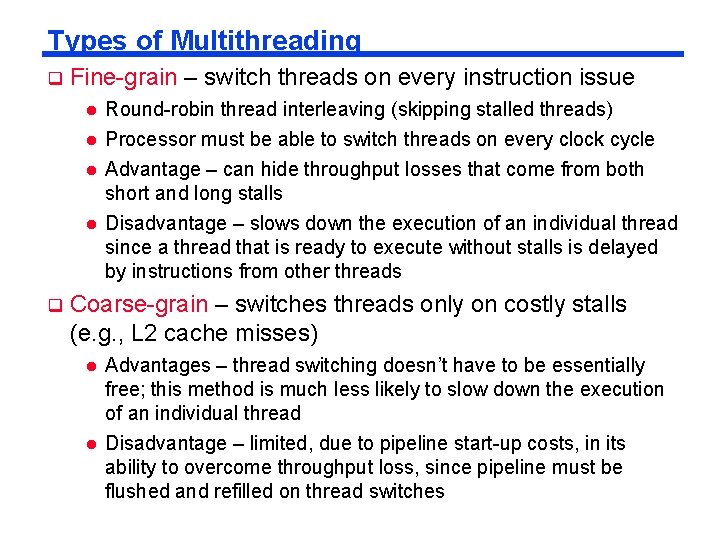

Types of Multithreading
- Fine-grain – switch threads on every instruction issue
  - Round-robin thread interleaving (skipping stalled threads)
  - Processor must be able to switch threads on every clock cycle
  - Advantage – can hide throughput losses that come from both short and long stalls
  - Disadvantage – slows down the execution of an individual thread, since a thread that is ready to execute without stalls is delayed by instructions from other threads
- Coarse-grain – switches threads only on costly stalls (e.g., L2 cache misses)
  - Advantages – thread switching doesn’t have to be essentially free; this method is much less likely to slow down the execution of an individual thread
  - Disadvantage – limited, due to pipeline start-up costs, in its ability to overcome throughput loss, since the pipeline must be flushed and refilled on thread switches
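The fine-grain policy (round-robin interleaving that skips stalled threads) can be sketched as a per-cycle selection function. This is a toy software model, not a description of any real processor: the `ready_at` array (the cycle at which each thread's stall ends) and the function name are assumptions made for illustration.

```c
#include <assert.h>

/* Pick the thread to issue from in `cycle` under fine-grain multithreading.
 * ready_at[t] is the first cycle in which thread t is no longer stalled.
 * `last` is the thread that issued in the previous cycle (-1 if none).
 * Returns the next ready thread in round-robin order, or -1 if every
 * thread is stalled, in which case the cycle is lost. */
int pick_thread_fine_grain(const int ready_at[], int nthreads, int last, int cycle)
{
    assert(nthreads > 0);
    assert(last >= -1 && last < nthreads);
    for (int step = 1; step <= nthreads; step++) {
        int t = (last + step) % nthreads;   /* next candidate in round-robin order */
        if (ready_at[t] <= cycle)
            return t;                       /* first thread that is not stalled */
    }
    return -1;                              /* all threads stalled */
}
```

A coarse-grain policy would instead keep returning `last` until that thread hits a long stall, and would then charge a pipeline refill penalty for the switch.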

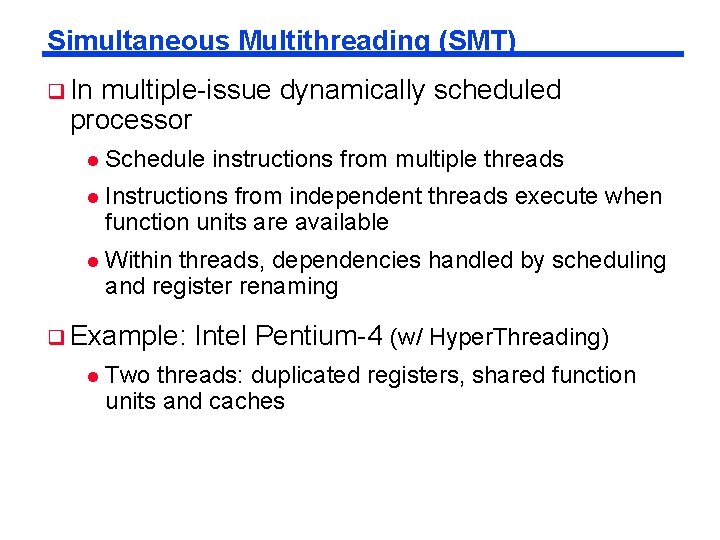

Simultaneous Multithreading (SMT)
- In multiple-issue dynamically scheduled processor
  - Schedule instructions from multiple threads
  - Instructions from independent threads execute when function units are available
  - Within threads, dependencies handled by scheduling and register renaming
- Example: Intel Pentium-4 (w/ HyperThreading)
  - Two threads: duplicated registers, shared function units and caches

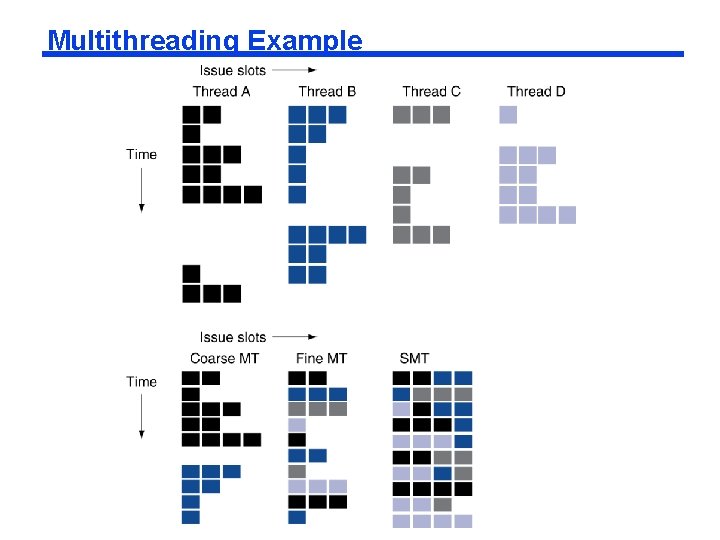

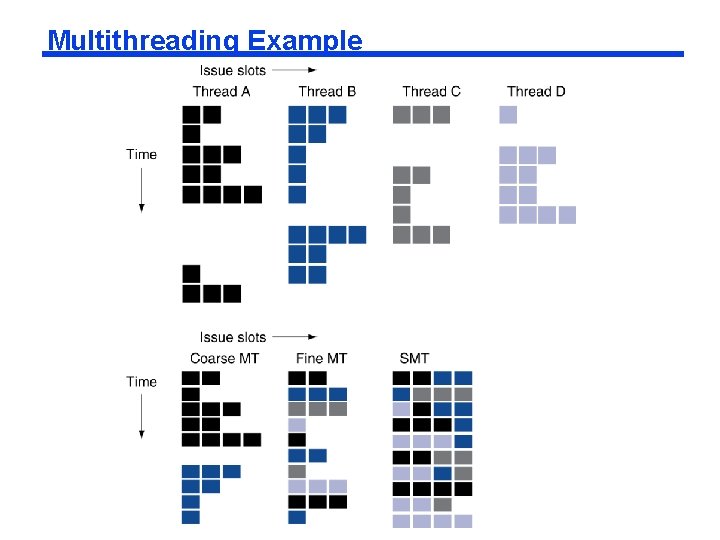

Multithreading Example

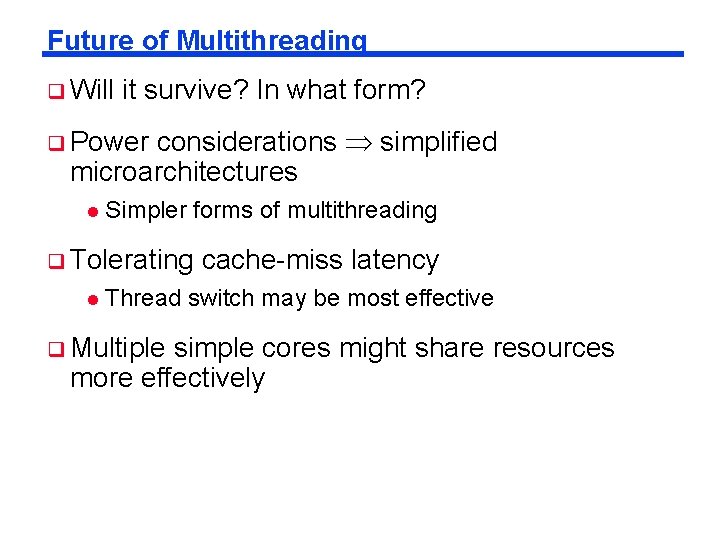

Future of Multithreading
- Will it survive? In what form?
- Power considerations ⇒ simplified microarchitectures
  - Simpler forms of multithreading
- Tolerating cache-miss latency
  - Thread switch may be most effective
- Multiple simple cores might share resources more effectively

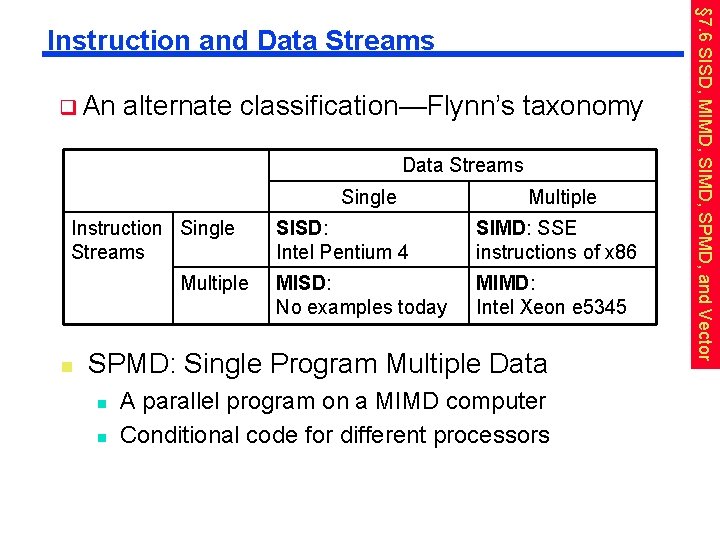

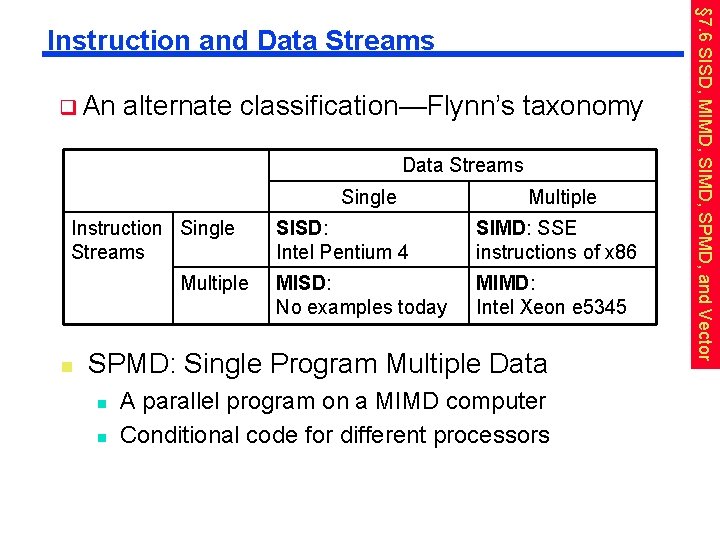

§7.6 SISD, MIMD, SPMD, and Vector

Instruction and Data Streams
- An alternate classification: Flynn’s taxonomy

| | Data Streams: Single | Data Streams: Multiple |
| --- | --- | --- |
| Instruction Streams: Single | SISD: Intel Pentium 4 | SIMD: SSE instructions of x86 |
| Instruction Streams: Multiple | MISD: No examples today | MIMD: Intel Xeon e5345 |

- SPMD: Single Program Multiple Data
  - A parallel program on a MIMD computer
  - Conditional code for different processors

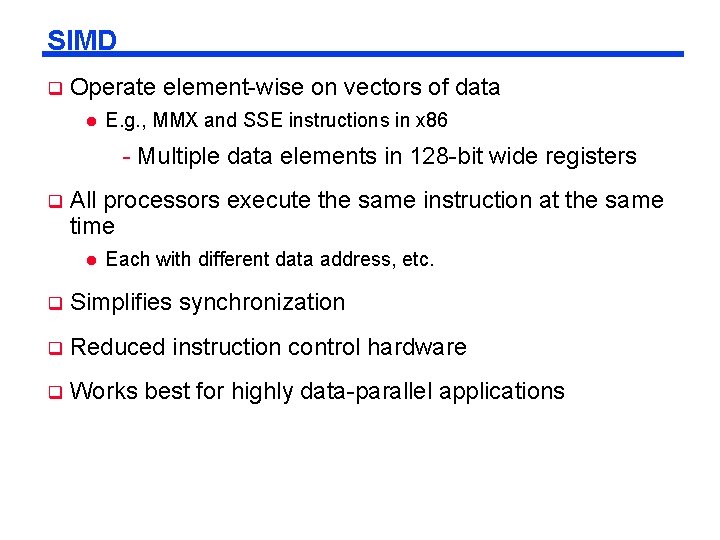

SIMD
- Operate element-wise on vectors of data
  - E.g., MMX and SSE instructions in x86
    - Multiple data elements in 128-bit wide registers
- All processors execute the same instruction at the same time
  - Each with different data address, etc.
- Simplifies synchronization
- Reduced instruction control hardware
- Works best for highly data-parallel applications
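As an illustration of element-wise SIMD operation, the sketch below adds two float arrays four elements at a time using SSE intrinsics (four 32-bit floats fill one 128-bit register). It assumes a compiler that defines `__SSE__` on x86 targets, as GCC and Clang do; elsewhere it falls back to the plain scalar loop. The function name `add_arrays` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#if defined(__SSE__)
#include <xmmintrin.h>   /* SSE intrinsics: __m128, _mm_add_ps, ... */
#endif

/* out[i] = x[i] + y[i] for i in [0, n). */
void add_arrays(const float *x, const float *y, float *out, size_t n)
{
    assert(x != NULL && y != NULL && out != NULL);
    size_t i = 0;
#if defined(__SSE__)
    /* One instruction operates on four packed floats at once. */
    for (; i + 4 <= n; i += 4) {
        __m128 vx = _mm_loadu_ps(x + i);            /* unaligned load of 4 floats */
        __m128 vy = _mm_loadu_ps(y + i);
        _mm_storeu_ps(out + i, _mm_add_ps(vx, vy)); /* 4 additions in parallel */
    }
#endif
    /* Scalar loop handles the leftover elements (or everything without SSE). */
    for (; i < n; i++)
        out[i] = x[i] + y[i];
}
```

The same instruction is applied to every lane, which is why no synchronization between lanes is needed.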

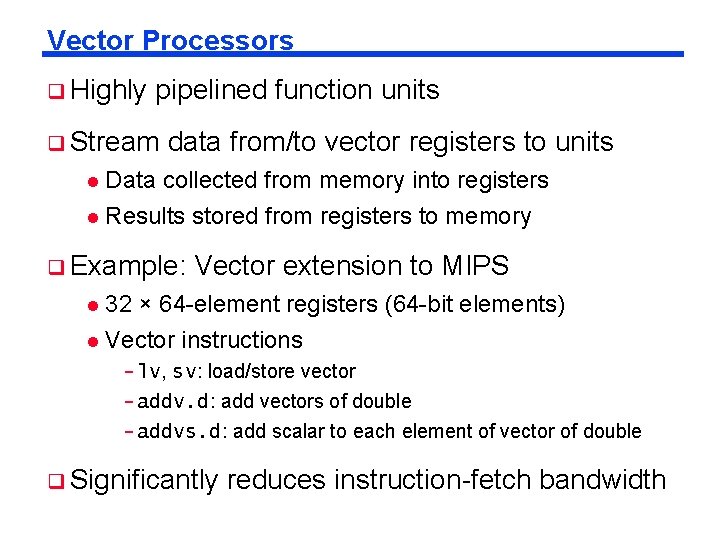

Vector Processors
- Highly pipelined function units
- Stream data from/to vector registers to units
  - Data collected from memory into registers
  - Results stored from registers to memory
- Example: Vector extension to MIPS
  - 32 × 64-element registers (64-bit elements)
  - Vector instructions
    - lv, sv: load/store vector
    - addv.d: add vectors of double
    - addvs.d: add scalar to each element of vector of double
- Significantly reduces instruction-fetch bandwidth

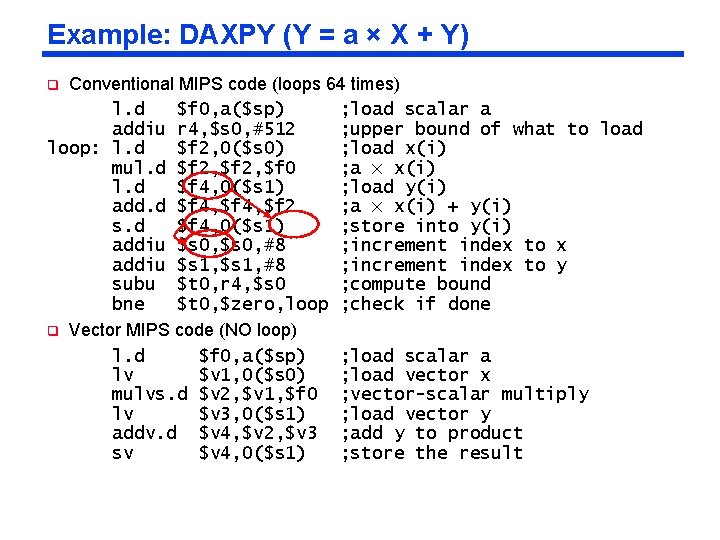

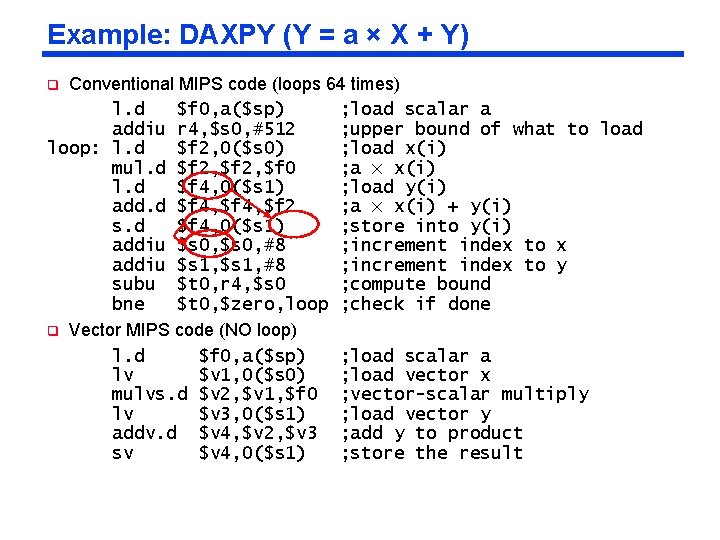

Example: DAXPY (Y = a × X + Y)
- Conventional MIPS code (loops 64 times)

            l.d   $f0, a($sp)        ; load scalar a
            addiu r4, $s0, #512      ; upper bound of what to load
      loop: l.d   $f2, 0($s0)        ; load x(i)
            mul.d $f2, $f2, $f0      ; a × x(i)
            l.d   $f4, 0($s1)        ; load y(i)
            add.d $f4, $f4, $f2      ; a × x(i) + y(i)
            s.d   $f4, 0($s1)        ; store into y(i)
            addiu $s0, $s0, #8       ; increment index to x
            addiu $s1, $s1, #8       ; increment index to y
            subu  $t0, r4, $s0       ; compute bound
            bne   $t0, $zero, loop   ; check if done

- Vector MIPS code (NO loop)

            l.d     $f0, a($sp)      ; load scalar a
            lv      $v1, 0($s0)      ; load vector x
            mulvs.d $v2, $v1, $f0    ; vector-scalar multiply
            lv      $v3, 0($s1)      ; load vector y
            addv.d  $v4, $v2, $v3    ; add y to product
            sv      $v4, 0($s1)      ; store the result
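For reference, the computation both listings perform can be written as a C loop, along with a rough count of dynamic instructions for each version. This is a sketch: the helper names are hypothetical, and the counts simply tally the instructions in the two listings above (2 setup instructions plus a 9-instruction loop body for the scalar code, 6 instructions for the vector code).

```c
#include <assert.h>
#include <stddef.h>

/* DAXPY: y[i] = a * x[i] + y[i] for i in [0, n). */
void daxpy(size_t n, double a, const double *x, double *y)
{
    assert(x != NULL && y != NULL);
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}

/* Instructions executed by the conventional MIPS listing:
 * 2 before the loop (l.d, addiu) plus 9 per iteration. */
int scalar_dynamic_instructions(int iterations)
{
    assert(iterations >= 0);
    return 2 + 9 * iterations;
}

/* Instructions executed by the vector MIPS listing: no loop, 6 in total. */
int vector_dynamic_instructions(void)
{
    return 6;
}
```

For the 64-element case on the slide, the scalar code executes 2 + 9 × 64 = 578 instructions against 6 for the vector code, which is where the reduction in instruction-fetch bandwidth comes from.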

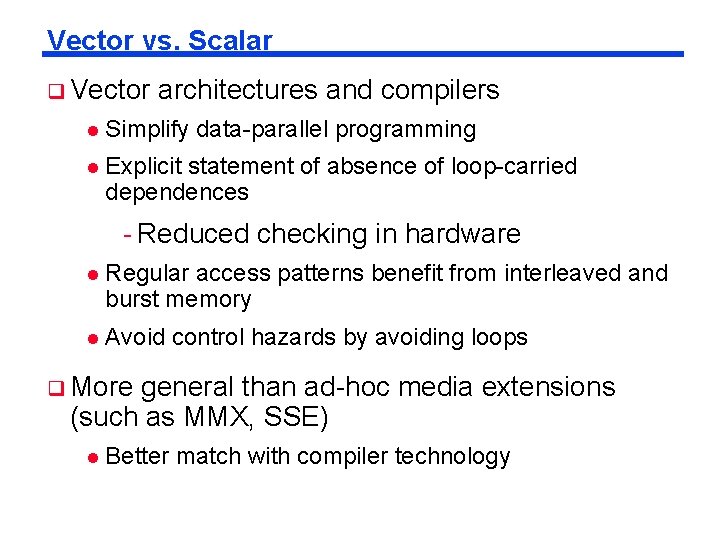

Vector vs. Scalar
- Vector architectures and compilers
  - Simplify data-parallel programming
  - Explicit statement of absence of loop-carried dependences
    - Reduced checking in hardware
  - Regular access patterns benefit from interleaved and burst memory
  - Avoid control hazards by avoiding loops
- More general than ad-hoc media extensions (such as MMX, SSE)
  - Better match with compiler technology

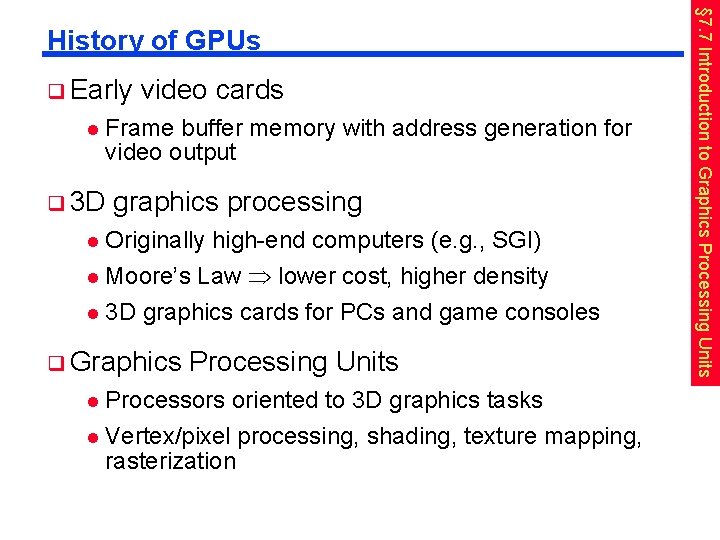

§7.7 Introduction to Graphics Processing Units

History of GPUs
- Early video cards
  - Frame buffer memory with address generation for video output
- 3D graphics processing
  - Originally high-end computers (e.g., SGI)
  - Moore’s Law ⇒ lower cost, higher density
  - 3D graphics cards for PCs and game consoles
- Graphics Processing Units
  - Processors oriented to 3D graphics tasks
  - Vertex/pixel processing, shading, texture mapping, rasterization

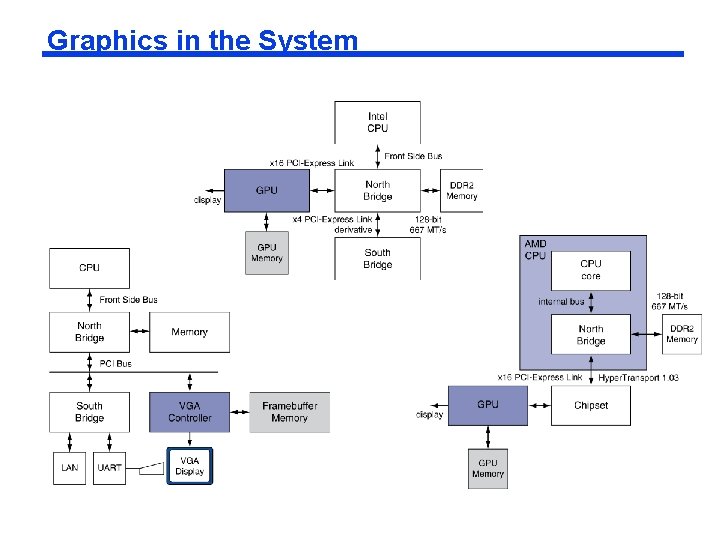

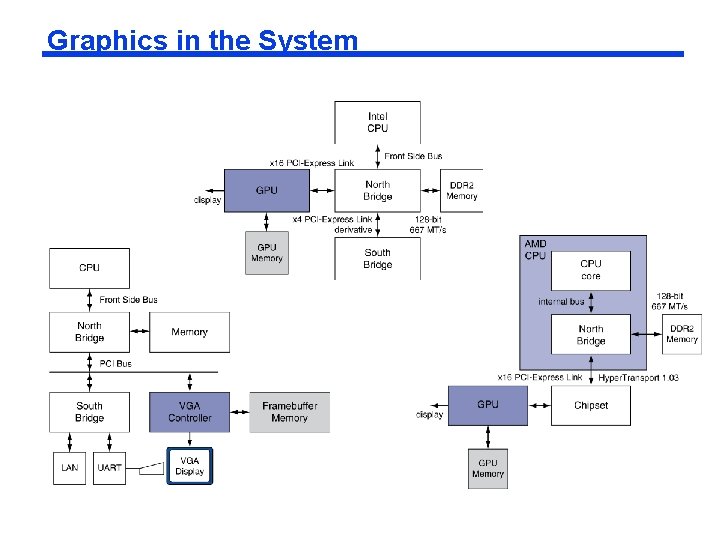

Graphics in the System

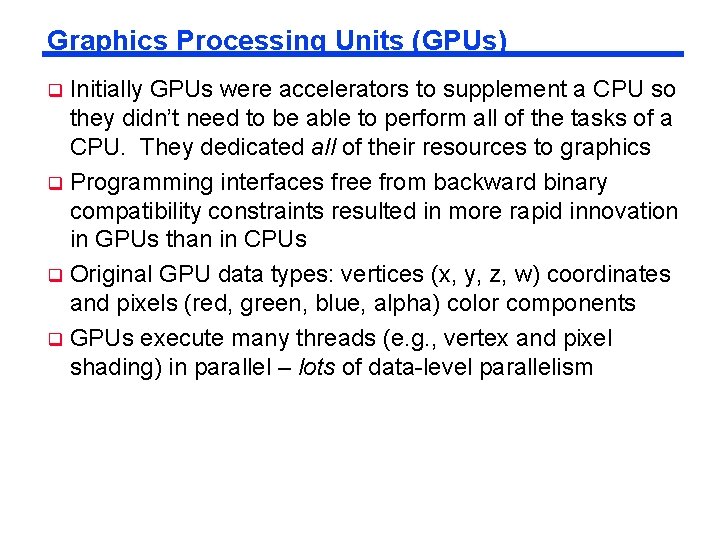

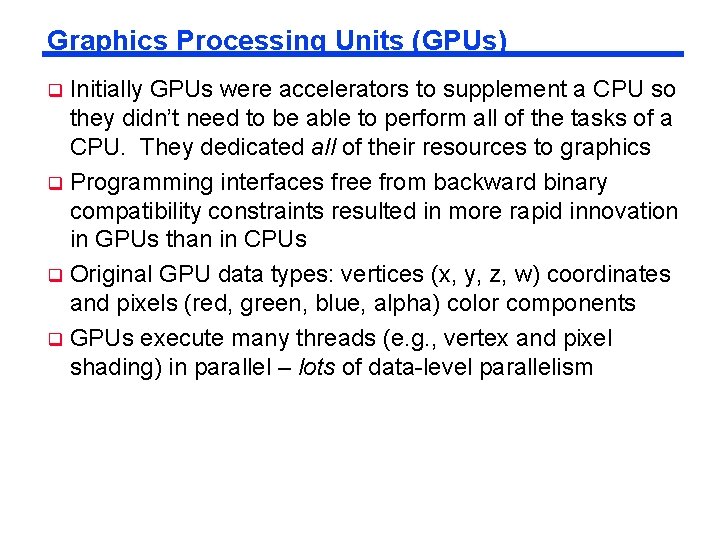

Graphics Processing Units (GPUs)
- Initially, GPUs were accelerators to supplement a CPU, so they didn’t need to be able to perform all of the tasks of a CPU. They dedicated all of their resources to graphics
- Programming interfaces free from backward binary compatibility constraints resulted in more rapid innovation in GPUs than in CPUs
- Original GPU data types: vertices (x, y, z, w coordinates) and pixels (red, green, blue, alpha color components)
- GPUs execute many threads (e.g., vertex and pixel shading) in parallel – lots of data-level parallelism

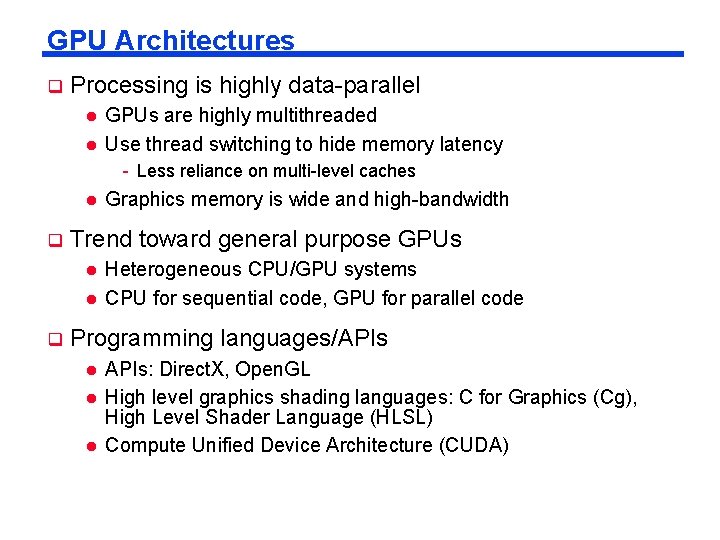

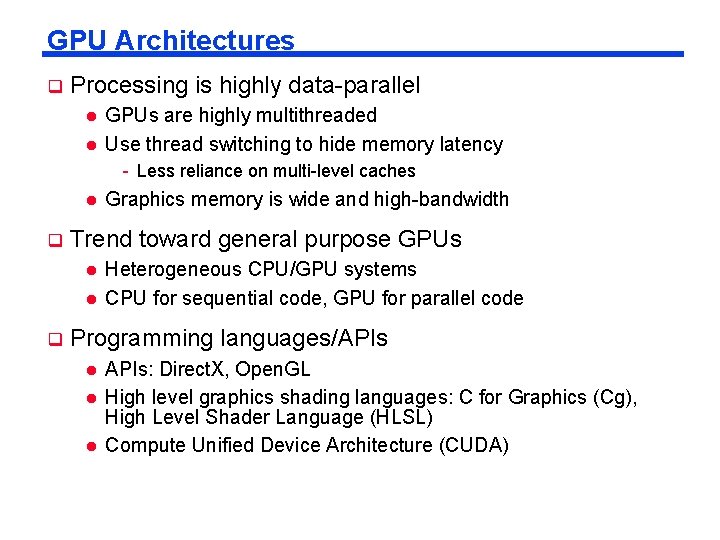

GPU Architectures
- Processing is highly data-parallel
  - GPUs are highly multithreaded
  - Use thread switching to hide memory latency
    - Less reliance on multi-level caches
  - Graphics memory is wide and high-bandwidth
- Trend toward general purpose GPUs
  - Heterogeneous CPU/GPU systems
  - CPU for sequential code, GPU for parallel code
- Programming languages/APIs
  - APIs: DirectX, OpenGL
  - High level graphics shading languages: C for Graphics (Cg), High Level Shader Language (HLSL)
  - Compute Unified Device Architecture (CUDA)

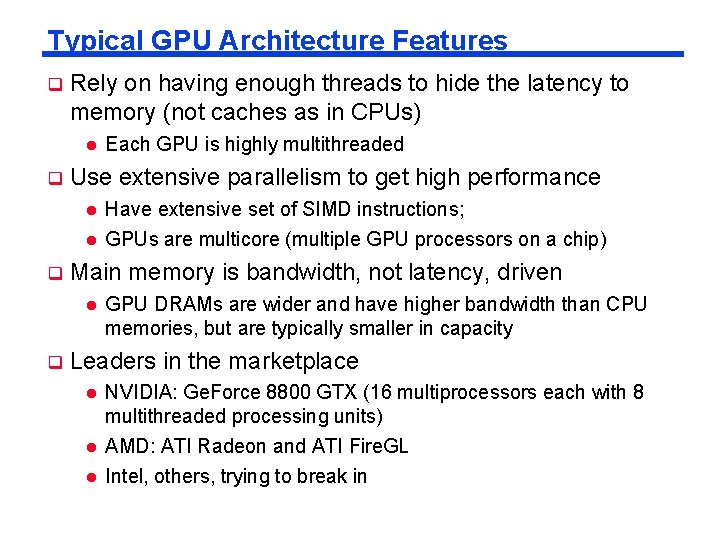

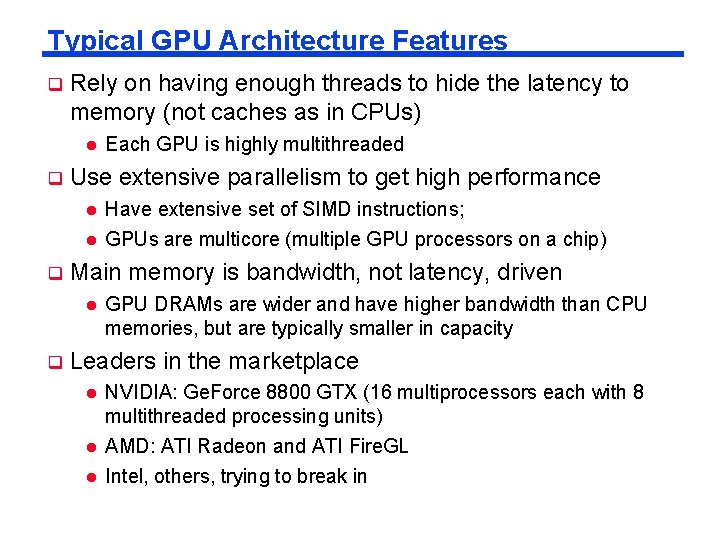

Typical GPU Architecture Features
- Rely on having enough threads to hide the latency to memory (not caches as in CPUs)
  - Each GPU is highly multithreaded
- Use extensive parallelism to get high performance
  - Have extensive set of SIMD instructions
  - GPUs are multicore (multiple GPU processors on a chip)
- Main memory is bandwidth, not latency, driven
  - GPU DRAMs are wider and have higher bandwidth than CPU memories, but are typically smaller in capacity
- Leaders in the marketplace
  - NVIDIA: GeForce 8800 GTX (16 multiprocessors each with 8 multithreaded processing units)
  - AMD: ATI Radeon and ATI FireGL
  - Intel, others, trying to break in

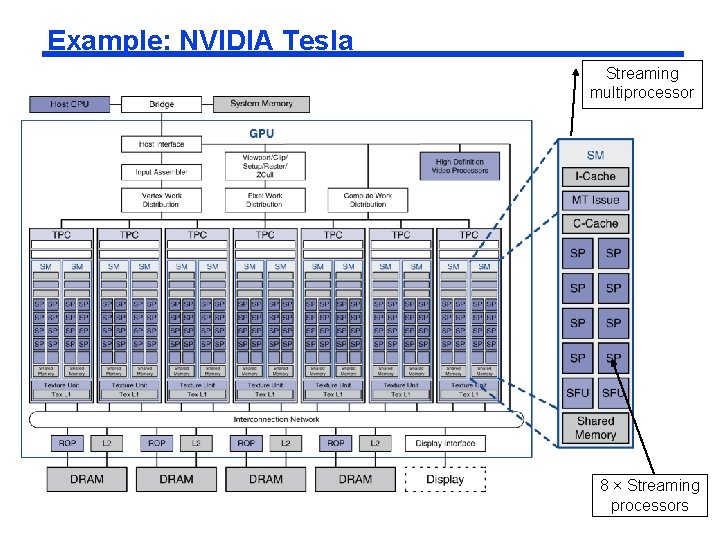

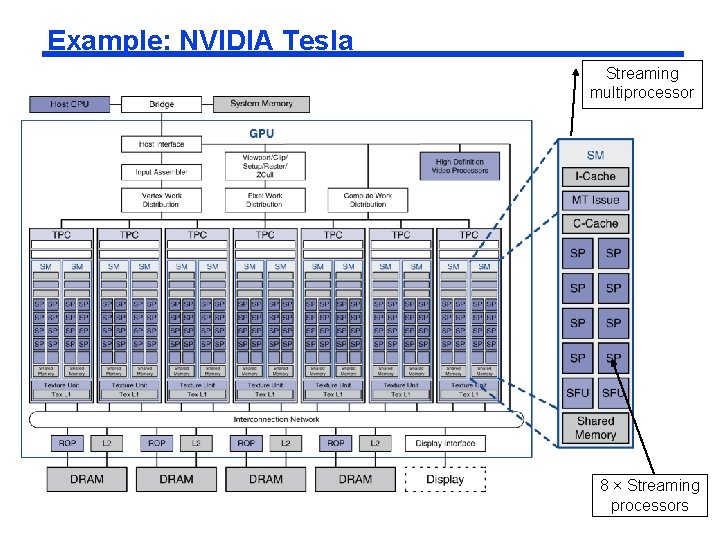

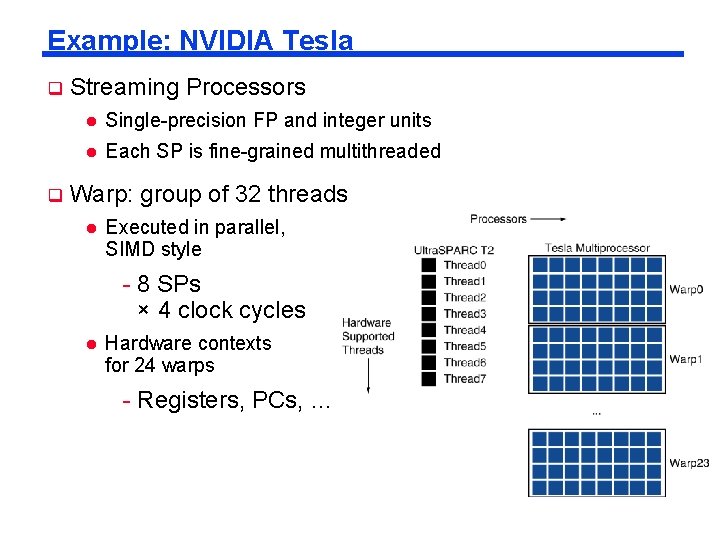

Example: NVIDIA Tesla
- Streaming multiprocessor with 8 × Streaming processors

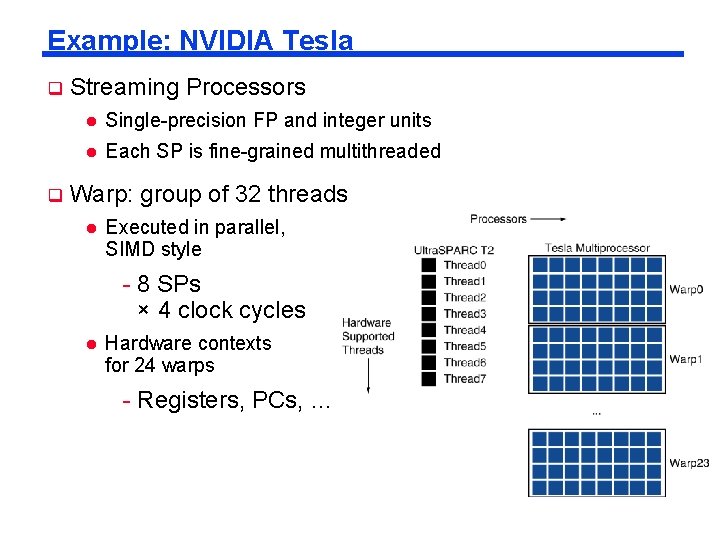

Example: NVIDIA Tesla
- Streaming Processors
  - Single-precision FP and integer units
  - Each SP is fine-grained multithreaded
- Warp: group of 32 threads
  - Executed in parallel, SIMD style
    - 8 SPs × 4 clock cycles
  - Hardware contexts for 24 warps
    - Registers, PCs, …
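The warp figures above fit together with simple arithmetic, sketched here in C. The constants come from the slide; the function names are hypothetical, and the thread total is just the product of the two slide figures rather than a separately sourced number.

```c
#include <assert.h>

enum {
    WARP_THREADS = 32,  /* threads per warp (from the slide) */
    SPS_PER_SM   = 8,   /* streaming processors per multiprocessor */
    WARPS_PER_SM = 24   /* hardware warp contexts per multiprocessor */
};

/* Clock cycles to issue one instruction for a whole warp when
 * each of the 8 SPs handles one thread per cycle. */
int cycles_per_warp_instruction(void)
{
    assert(WARP_THREADS % SPS_PER_SM == 0);
    return WARP_THREADS / SPS_PER_SM;
}

/* Threads whose registers and PCs can be resident on one multiprocessor. */
int resident_threads_per_sm(void)
{
    return WARPS_PER_SM * WARP_THREADS;
}
```

So a 32-thread warp takes 32 / 8 = 4 clock cycles on the 8 SPs, and 24 warp contexts hold 24 × 32 = 768 threads per multiprocessor.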

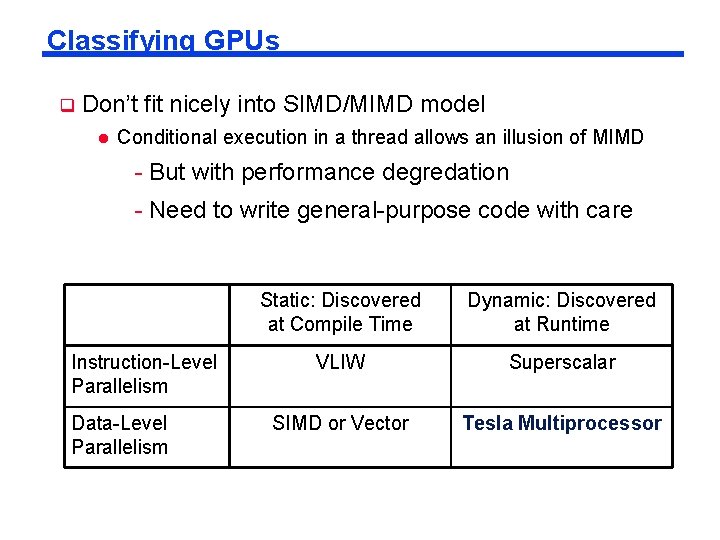

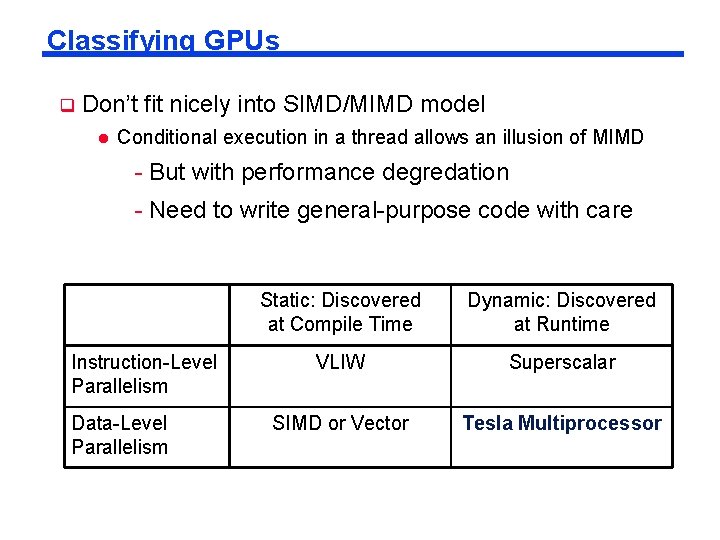

Classifying GPUs
- Don’t fit nicely into SIMD/MIMD model
  - Conditional execution in a thread allows an illusion of MIMD
    - But with performance degradation
    - Need to write general-purpose code with care

| | Static: Discovered at Compile Time | Dynamic: Discovered at Runtime |
| --- | --- | --- |
| Instruction-Level Parallelism | VLIW | Superscalar |
| Data-Level Parallelism | SIMD or Vector | Tesla Multiprocessor |
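The cost of conditional execution can be illustrated with a toy software model of one warp. It is an assumption-laden sketch, not how Tesla hardware is implemented: a 32-bit mask records which threads take the `if` side, each side of the branch is executed as a separate pass with the other threads masked off, and the function returns how many passes were needed. The branch body (negate negatives, double the rest) is an arbitrary example.

```c
#include <assert.h>
#include <stdint.h>

#define WARP_SIZE 32  /* threads per warp, as on the slide */

/* Each thread t computes: if (in[t] < 0) out[t] = -in[t]; else out[t] = 2 * in[t];
 * Returns the number of SIMD passes: 1 if all threads agree, 2 if they diverge. */
int warp_abs_or_double(const int in[WARP_SIZE], int out[WARP_SIZE])
{
    assert(in != 0 && out != 0);

    /* Evaluate the condition for every thread and record it in a mask. */
    uint32_t mask = 0;
    for (int t = 0; t < WARP_SIZE; t++)
        if (in[t] < 0)
            mask |= (uint32_t)1 << t;

    int passes = 0;
    if (mask != 0) {                 /* "then" side: only masked-on threads commit */
        for (int t = 0; t < WARP_SIZE; t++)
            if ((mask >> t) & 1u)
                out[t] = -in[t];
        passes++;
    }
    if (mask != UINT32_MAX) {        /* "else" side: the remaining threads commit */
        for (int t = 0; t < WARP_SIZE; t++)
            if (!((mask >> t) & 1u))
                out[t] = in[t] * 2;
        passes++;
    }
    return passes;
}
```

When every thread takes the same path the warp needs one pass; a single divergent thread is enough to force a second pass, which is the performance degradation noted above.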