Computer Architecture Memory Architecture Dr Eng Amr T

- Slides: 40

Computer Architecture: Memory Architecture

Dr. Eng. Amr T. Abdel-Hamid

Slides are adapted from:

- J. L. Hennessy and D. A. Patterson, Computer Architecture: A Quantitative Approach, 2nd Edition, Morgan Kaufmann Publishing Co., Menlo Park, CA, 1996. Copyright 1998 UCB
- S. Tahar, Computer Architecture, Concordia University

Winter 2012, Computer Architecture, Elect 707

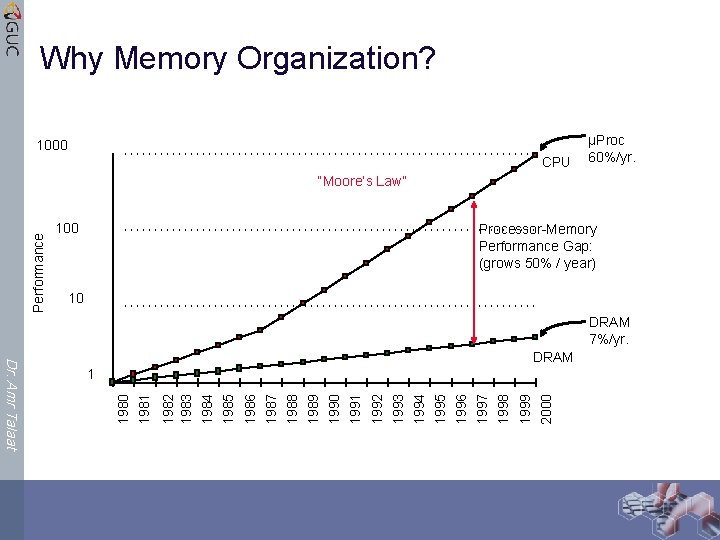

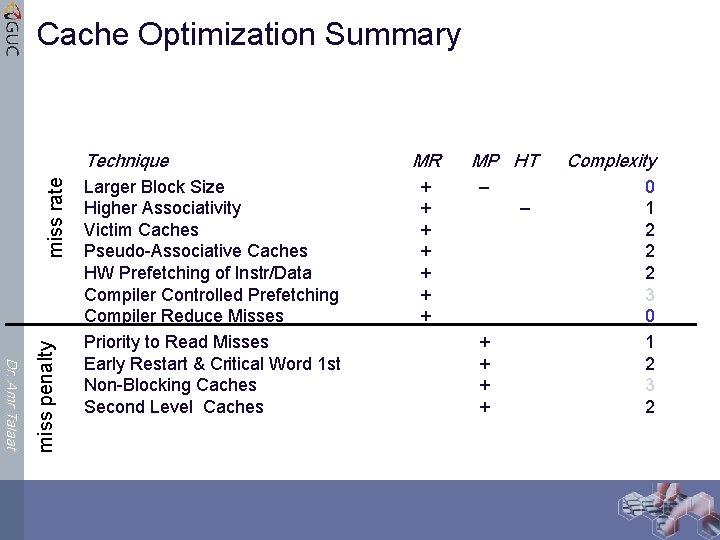

Why Memory Organization?

[Figure: performance (log scale, 1 to 1000) versus year, 1980 to 2000. CPU (µProc) performance grows 60%/yr ("Moore's Law"); DRAM performance grows 7%/yr; the processor-memory performance gap grows 50%/yr.]

Dr. Amr Talaat

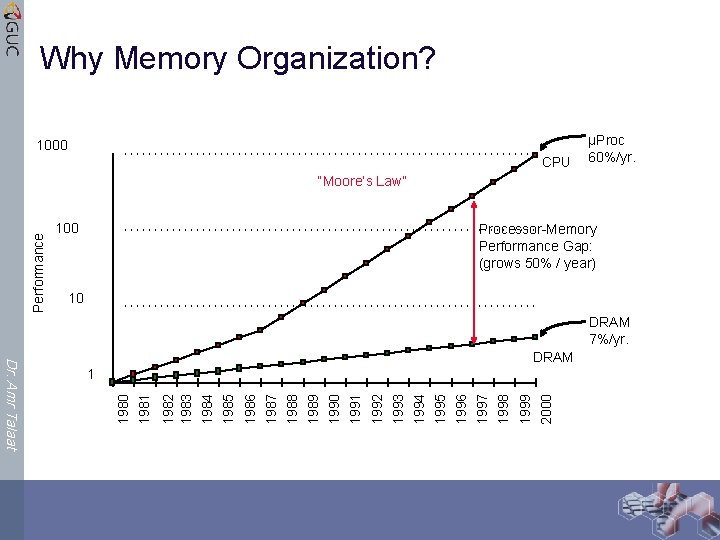

The Principle of Locality

- The Principle of Locality: programs access a relatively small portion of the address space at any instant of time.
- Two different types of locality:
  - Temporal locality (locality in time): if an item is referenced, it will tend to be referenced again soon (e.g., loops, reuse).
  - Spatial locality (locality in space): if an item is referenced, items whose addresses are close by tend to be referenced soon (e.g., straight-line code, array access).
- Locality is the basic principle used to overcome the memory/processor interaction problem.

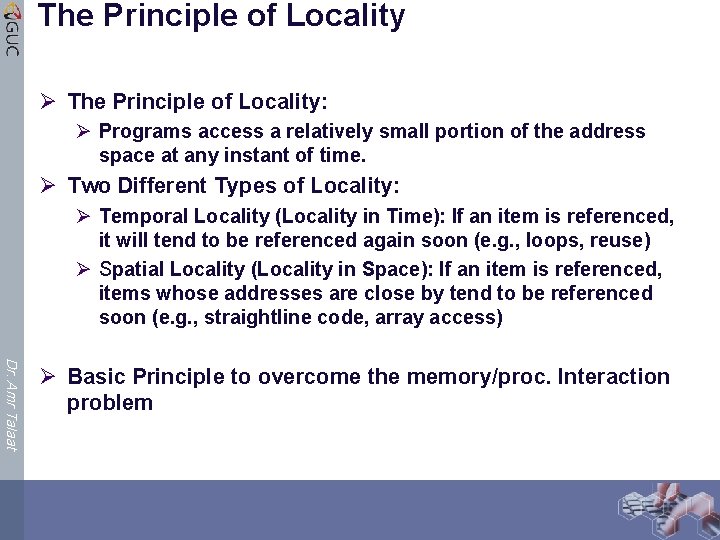

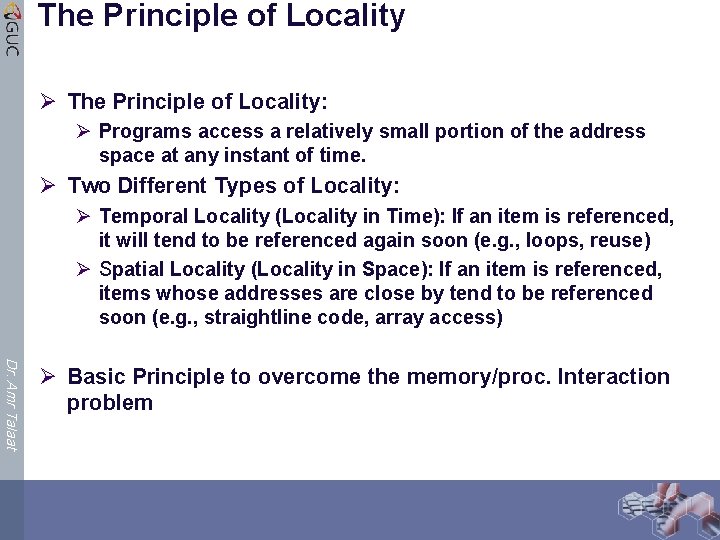

What is a cache?

- Small, fast storage used to improve average access time to slow memory.
- Exploits spatial and temporal locality.
- In computer architecture, almost everything is a cache!
  - Registers: a cache on variables
  - First-level cache: a cache on second-level cache
  - Second-level cache: a cache on memory
  - Memory: a cache on disk (virtual memory)

[Figure: memory hierarchy from Proc/Regs through L1-Cache, L2-Cache, and Memory to Disk, etc.; levels get bigger toward the disk and faster toward the processor.]

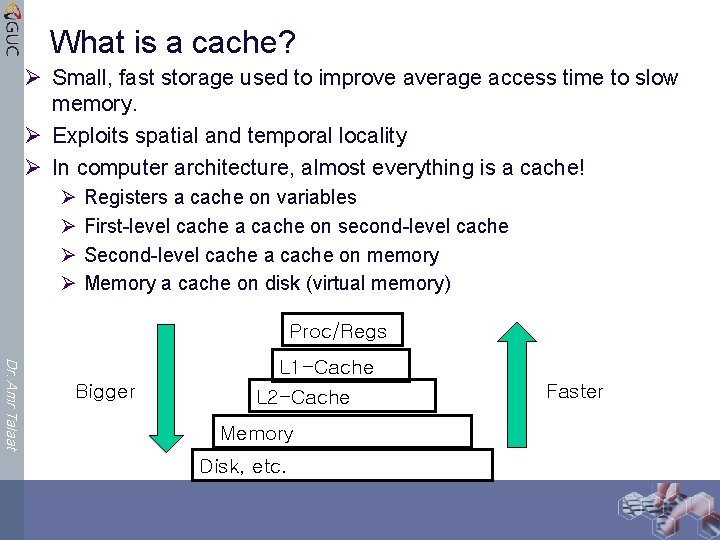

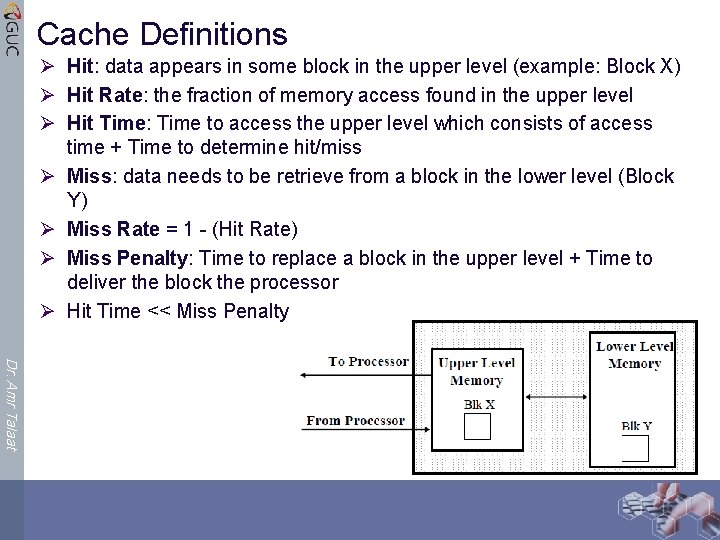

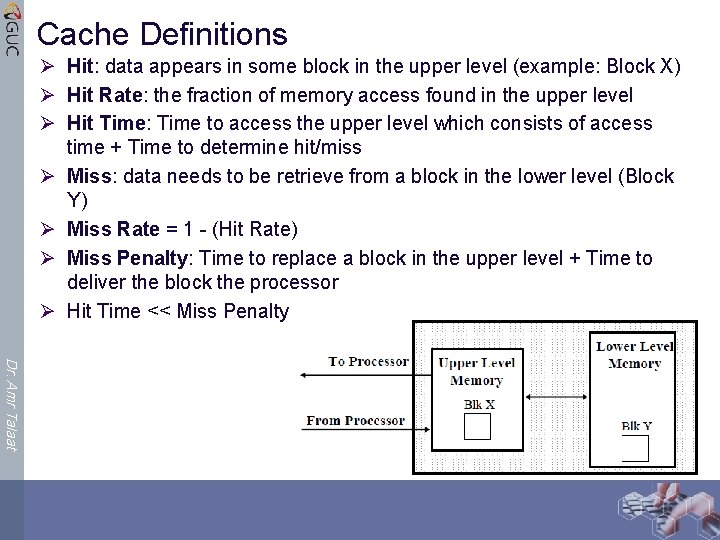

Cache Definitions

- Hit: data appears in some block in the upper level (example: Block X)
  - Hit Rate: the fraction of memory accesses found in the upper level
  - Hit Time: time to access the upper level, which consists of access time + time to determine hit/miss
- Miss: data needs to be retrieved from a block in the lower level (Block Y)
  - Miss Rate = 1 - (Hit Rate)
  - Miss Penalty: time to replace a block in the upper level + time to deliver the block to the processor
- Hit Time << Miss Penalty

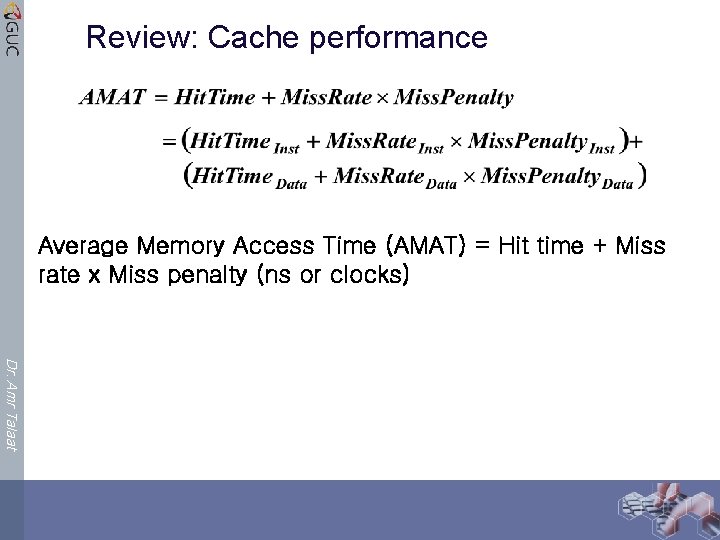

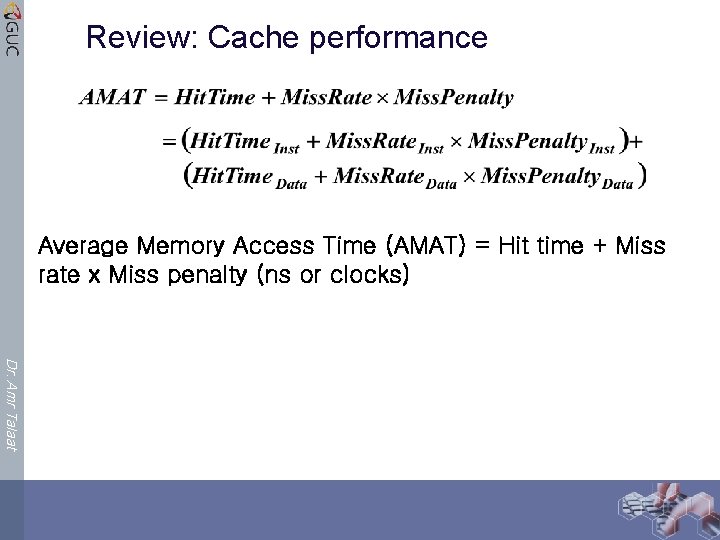

Review: Cache performance

Average Memory Access Time (AMAT) = Hit time + Miss rate × Miss penalty (ns or clocks)
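
The AMAT formula can be written as a one-line helper. This is a minimal sketch; the function name `amat` and its parameter names are chosen here for illustration and do not come from the slides.

```c
#include <assert.h>

/* Average memory access time (AMAT).
 * hit_time and miss_penalty share one unit (ns or clock cycles);
 * miss_rate is a fraction between 0 and 1. */
double amat(double hit_time, double miss_rate, double miss_penalty)
{
    assert(miss_rate >= 0.0 && miss_rate <= 1.0);
    return hit_time + miss_rate * miss_penalty;
}
```

With assumed values of a 1-cycle hit time, a 5% miss rate, and a 50-cycle miss penalty, `amat(1.0, 0.05, 50.0)` evaluates 1 + 0.05 × 50, i.e. 3.5 cycles.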

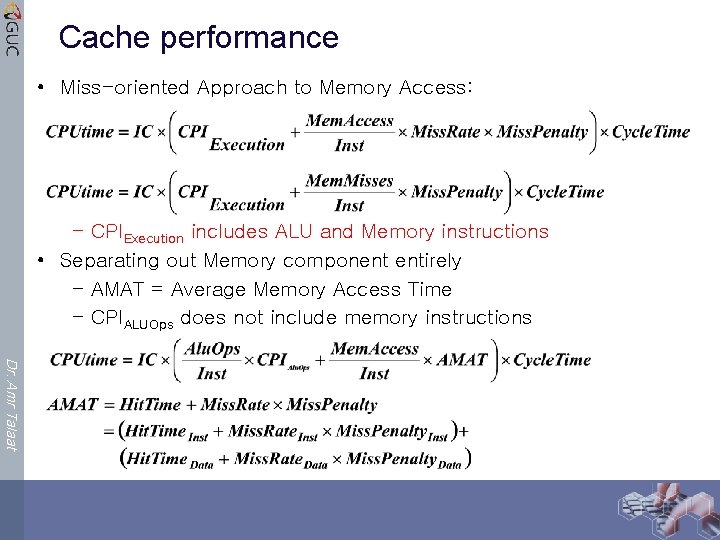

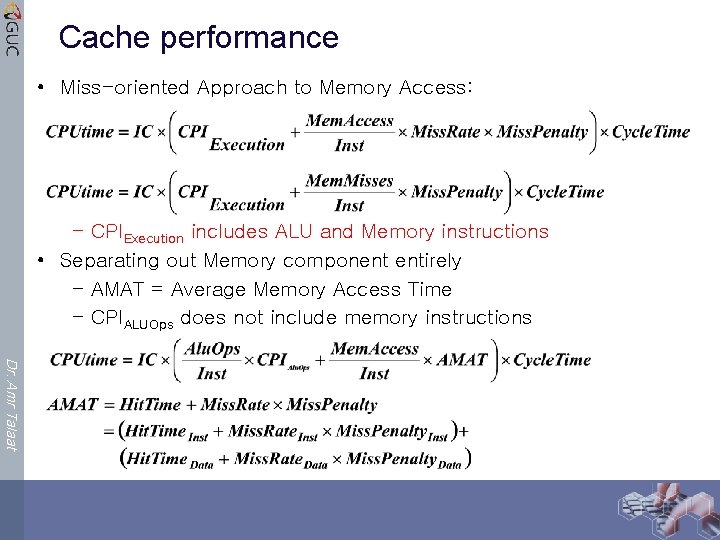

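Cache performance

- Miss-oriented approach to memory access:

$$\text{CPU time} = IC \times \left( CPI_{Execution} + \frac{\text{Memory accesses}}{\text{Instruction}} \times \text{Miss rate} \times \text{Miss penalty} \right) \times \text{Cycle time}$$

  - $CPI_{Execution}$ includes ALU and memory instructions
- Separating out the memory component entirely:

$$\text{CPU time} = IC \times \left( \frac{\text{ALU ops}}{\text{Instruction}} \times CPI_{ALUOps} + \frac{\text{Memory accesses}}{\text{Instruction}} \times AMAT \right) \times \text{Cycle time}$$

  - AMAT = Average Memory Access Time
  - $CPI_{ALUOps}$ does not include memory instructions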

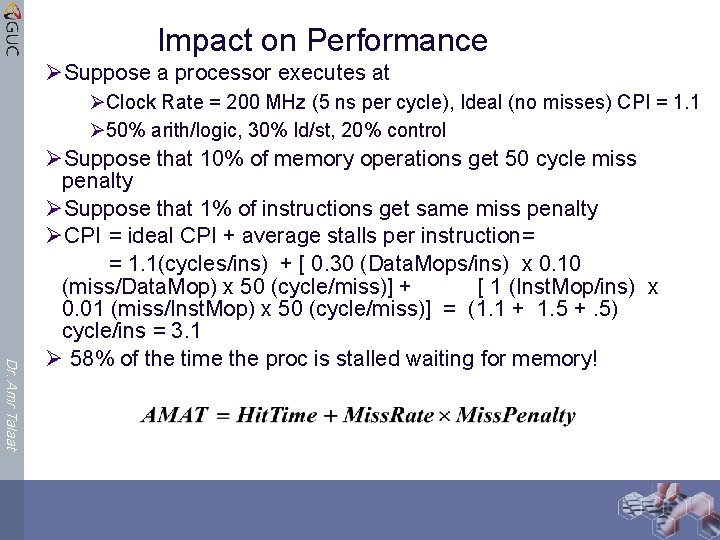

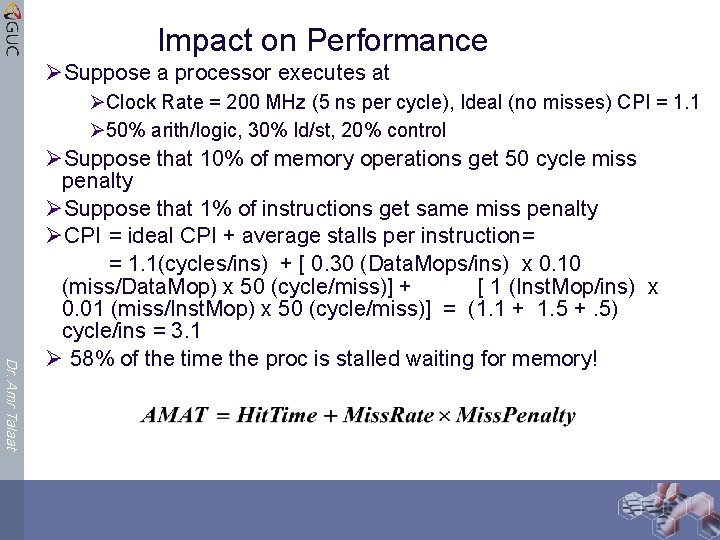

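Impact on Performance

- Suppose a processor executes at:
  - Clock rate = 200 MHz (5 ns per cycle), ideal (no misses) CPI = 1.1
  - 50% arith/logic, 30% ld/st, 20% control
- Suppose that 10% of memory operations get a 50-cycle miss penalty.
- Suppose that 1% of instructions get the same miss penalty.
- CPI = ideal CPI + average stalls per instruction:

$$
\begin{aligned}
\text{CPI} &= 1.1\ \tfrac{\text{cycles}}{\text{ins}}
 + \left[ 0.30\ \tfrac{\text{DataMops}}{\text{ins}} \times 0.10\ \tfrac{\text{miss}}{\text{DataMop}} \times 50\ \tfrac{\text{cycles}}{\text{miss}} \right]
 + \left[ 1\ \tfrac{\text{InstMop}}{\text{ins}} \times 0.01\ \tfrac{\text{miss}}{\text{InstMop}} \times 50\ \tfrac{\text{cycles}}{\text{miss}} \right] \\
&= (1.1 + 1.5 + 0.5)\ \tfrac{\text{cycles}}{\text{ins}} = 3.1
\end{aligned}
$$

- About 65% of the time (2.0 of every 3.1 cycles per instruction), the processor is stalled waiting for memory!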
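
The CPI calculation on this slide can be checked with a short sketch. The function and parameter names below are illustrative assumptions; the numbers plugged in afterwards are the slide's own.

```c
#include <assert.h>

/* CPI including memory stalls. Every instruction makes one instruction
 * fetch; data_ops_per_instr is the fraction that also access data. */
double cpi_with_stalls(double ideal_cpi,
                       double data_ops_per_instr, double data_miss_rate,
                       double instr_miss_rate, double miss_penalty_cycles)
{
    assert(data_miss_rate >= 0.0 && data_miss_rate <= 1.0);
    assert(instr_miss_rate >= 0.0 && instr_miss_rate <= 1.0);
    double data_stalls  = data_ops_per_instr * data_miss_rate * miss_penalty_cycles;
    double instr_stalls = 1.0 * instr_miss_rate * miss_penalty_cycles;
    return ideal_cpi + data_stalls + instr_stalls;
}

/* Fraction of all cycles spent stalled on memory. */
double stall_fraction(double ideal_cpi, double total_cpi)
{
    assert(total_cpi > 0.0 && total_cpi >= ideal_cpi);
    return (total_cpi - ideal_cpi) / total_cpi;
}
```

Calling `cpi_with_stalls(1.1, 0.30, 0.10, 0.01, 50.0)` reproduces 1.1 + 1.5 + 0.5 = 3.1, and `stall_fraction(1.1, 3.1)` gives 2.0 / 3.1, roughly 0.645.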

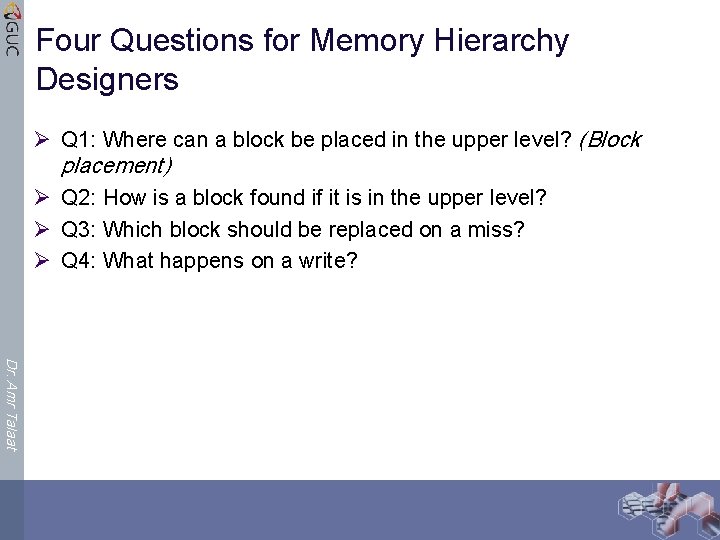

Four Questions for Memory Hierarchy Designers

- Q1: Where can a block be placed in the upper level? (Block placement)
- Q2: How is a block found if it is in the upper level?
- Q3: Which block should be replaced on a miss?
- Q4: What happens on a write?

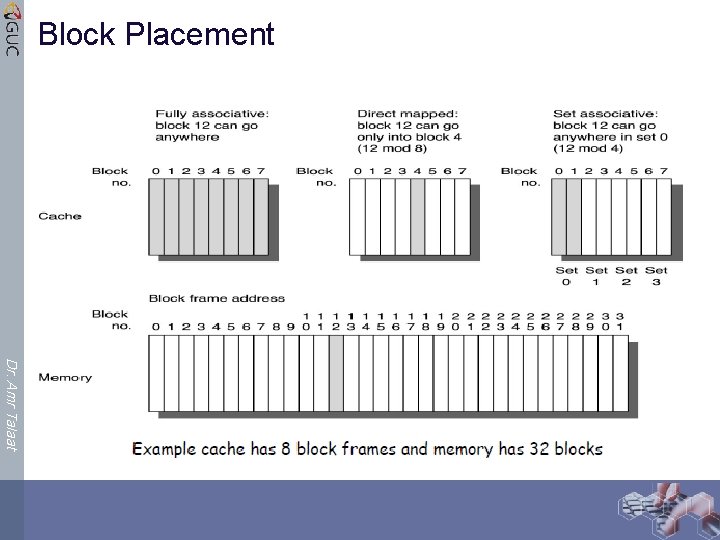

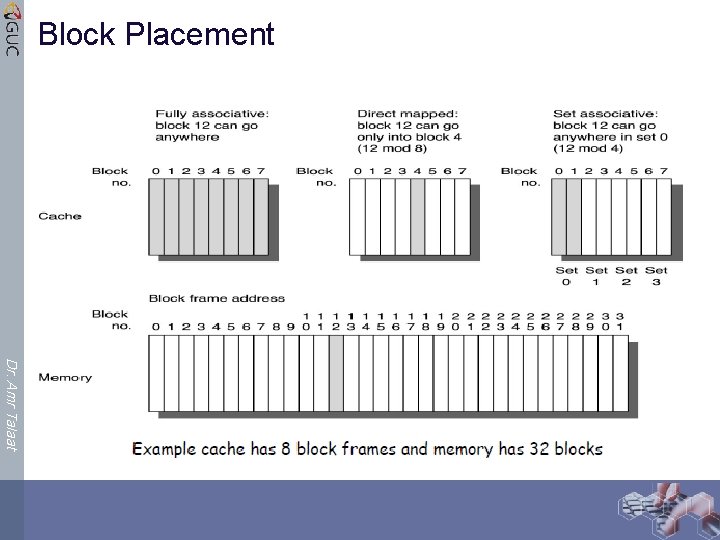

Block Placement

- Q1: Where can a block be placed in the upper level?
  - Fully Associative
  - Set Associative
  - Direct Mapped

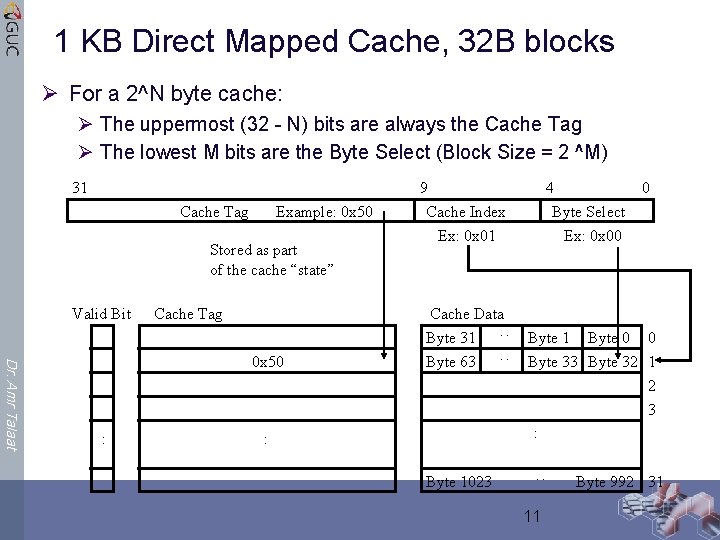

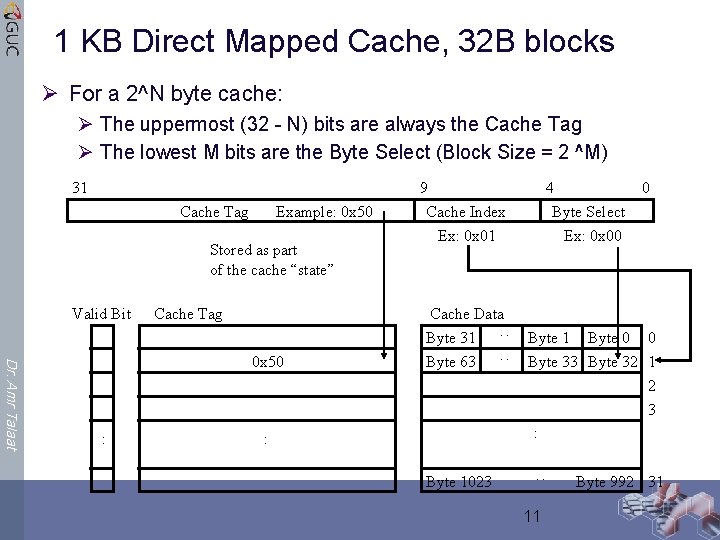

1 KB Direct Mapped Cache, 32 B blocks

- For a 2^N byte cache:
  - The uppermost (32 - N) bits are always the Cache Tag.
  - The lowest M bits are the Byte Select (Block Size = 2^M).

[Figure: a 32-bit address split into Cache Tag (bits 31 to 10, example 0x50), Cache Index (bits 9 to 5, example 0x01), and Byte Select (bits 4 to 0, example 0x00). The cache has 32 entries (index 0 to 31), each holding a Valid Bit, a Cache Tag stored as part of the cache "state", and 32 bytes of Cache Data: Byte 0 to Byte 31 in entry 0, Byte 32 to Byte 63 in entry 1, up to Byte 992 to Byte 1023 in entry 31.]
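
The address split for this 1 KB, 32 B-block cache can be sketched in C. This is only an illustration: the type and function names are assumptions made here, data storage is omitted, and a miss simply installs the new tag.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* 32 B blocks -> 5 offset bits; 1 KB / 32 B = 32 blocks -> 5 index bits. */
enum { OFFSET_BITS = 5, INDEX_BITS = 5, NUM_BLOCKS = 1 << INDEX_BITS };

typedef struct { uint32_t tag, index, offset; } addr_fields;
typedef struct { bool valid; uint32_t tag; } cache_line;

/* Split a 32-bit address into tag, index, and byte select. */
addr_fields split_address(uint32_t addr)
{
    addr_fields f;
    f.offset = addr & ((1u << OFFSET_BITS) - 1u);
    f.index  = (addr >> OFFSET_BITS) & ((1u << INDEX_BITS) - 1u);
    f.tag    = addr >> (OFFSET_BITS + INDEX_BITS);
    return f;
}

/* Returns true on a hit. On a miss the line is refilled with the new tag
 * (the data transfer itself is not modeled). */
bool dm_access(cache_line cache[NUM_BLOCKS], uint32_t addr)
{
    addr_fields f = split_address(addr);
    assert(f.index < NUM_BLOCKS);
    cache_line *line = &cache[f.index];
    if (line->valid && line->tag == f.tag)
        return true;
    line->valid = true;
    line->tag = f.tag;
    return false;
}
```

The address 0x14020 splits into tag 0x50, index 0x01, and byte select 0x00, the example values shown on the slide. A later access to 0x24020 maps to the same index with a different tag, so in a direct-mapped cache it evicts the first block.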

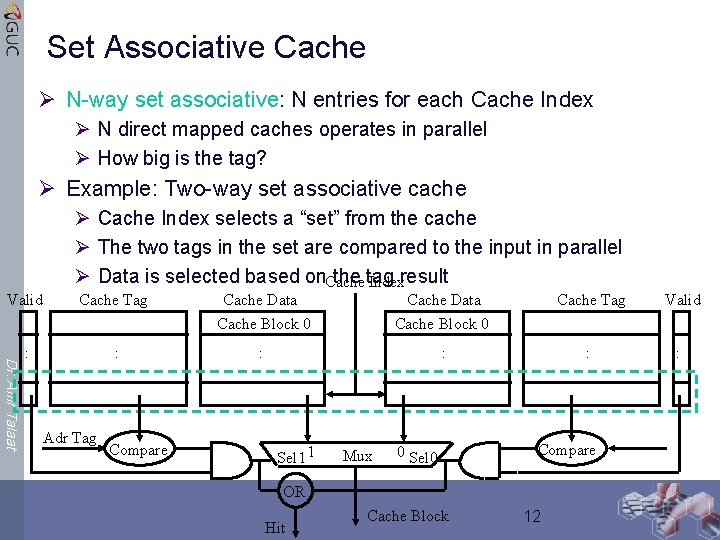

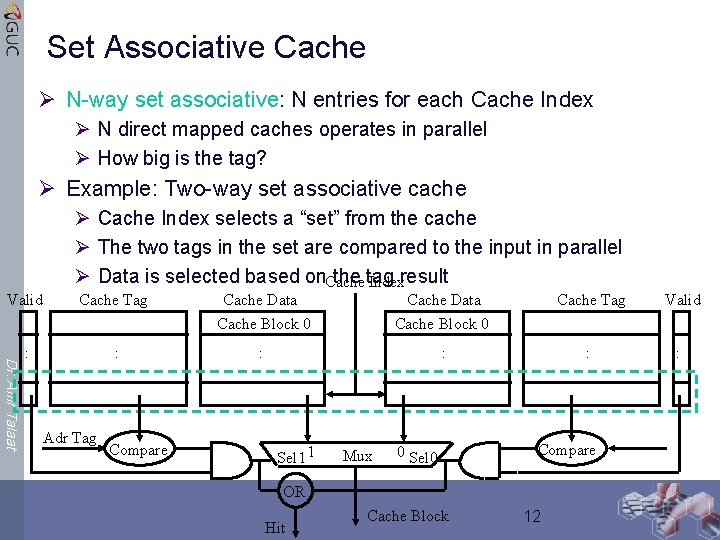

Set Associative Cache

- N-way set associative: N entries for each Cache Index
  - N direct mapped caches operate in parallel
  - How big is the tag?
- Example: two-way set associative cache
  - Cache Index selects a "set" from the cache
  - The two tags in the set are compared to the input in parallel
  - Data is selected based on the tag comparison result

[Figure: two-way set associative cache. The Cache Index selects one Cache Block (with its Valid bit and Cache Tag) from each way; each stored tag is compared with the address tag (Adr Tag); the compare outputs drive the mux selects (Sel0, Sel1) that choose the Cache Block, and are ORed to produce Hit.]

Block Placement

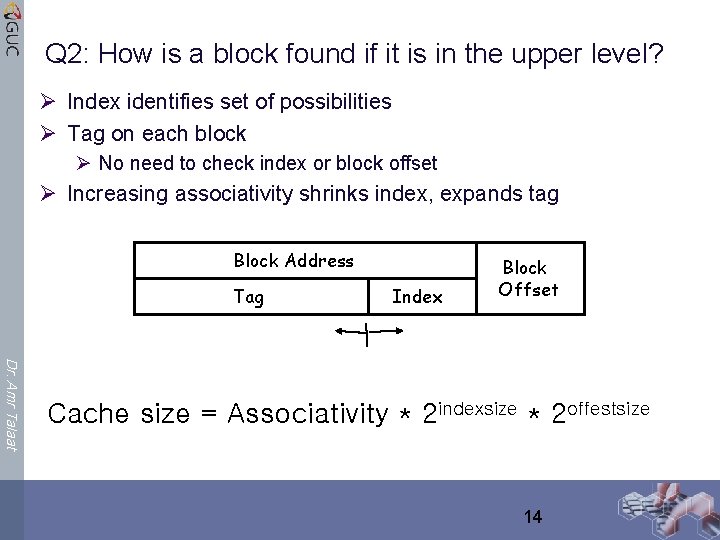

Q2: How is a block found if it is in the upper level?

- Index identifies the set of possibilities
- Tag on each block
  - No need to check index or block offset
- Increasing associativity shrinks index, expands tag

[Figure: address fields Tag, Index, Block Offset; Tag and Index together form the Block Address.]

$$\text{Cache size} = \text{Associativity} \times 2^{\text{index size}} \times 2^{\text{offset size}}$$
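
To see how associativity shifts bits between index and tag, the cache-size relation can be inverted to get the field widths. This is a sketch under the assumption that all sizes are powers of two; `cache_field_widths` and `log2_exact` are names introduced here.

```c
#include <assert.h>

typedef struct { unsigned tag_bits, index_bits, offset_bits; } field_widths;

/* log2 of an exact power of two. */
static unsigned log2_exact(unsigned long x)
{
    assert(x != 0 && (x & (x - 1)) == 0);
    unsigned n = 0;
    while (x > 1) {
        x >>= 1;
        n++;
    }
    return n;
}

/* Field widths from: cache size = associativity * 2^index * 2^offset. */
field_widths cache_field_widths(unsigned long cache_bytes,
                                unsigned long block_bytes,
                                unsigned long associativity,
                                unsigned addr_bits)
{
    unsigned long sets = cache_bytes / (block_bytes * associativity);
    field_widths w;
    w.offset_bits = log2_exact(block_bytes);
    w.index_bits  = log2_exact(sets);
    assert(addr_bits >= w.index_bits + w.offset_bits);
    w.tag_bits = addr_bits - w.index_bits - w.offset_bits;
    return w;
}
```

For a 1 KB cache with 32 B blocks and 32-bit addresses, going from direct mapped to 2-way moves one bit from the index to the tag (index 5 to 4, tag 22 to 23); a fully associative organization (32-way here) has no index bits at all.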

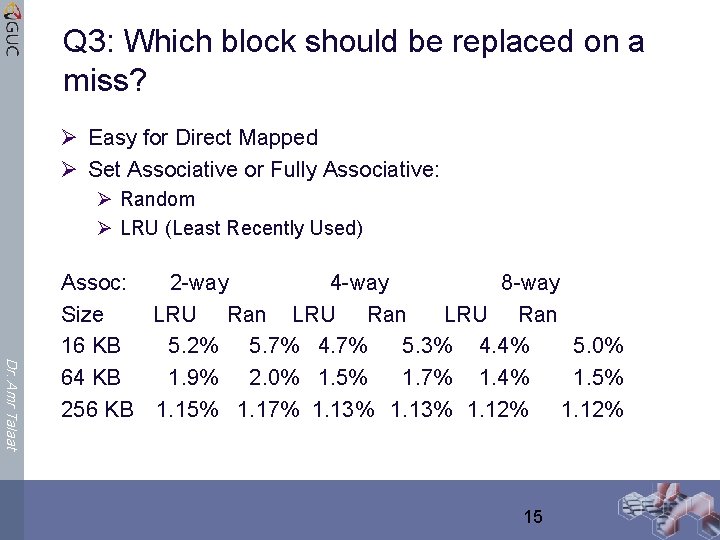

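Q3: Which block should be replaced on a miss?

- Easy for Direct Mapped
- Set Associative or Fully Associative:
  - Random
  - LRU (Least Recently Used)

Miss rates by cache size, associativity, and replacement policy:

| Size | 2-way LRU | 2-way Random | 4-way LRU | 4-way Random | 8-way LRU | 8-way Random |
|---|---|---|---|---|---|---|
| 16 KB | 5.2% | 5.7% | 4.7% | 5.3% | 4.4% | 5.0% |
| 64 KB | 1.9% | 2.0% | 1.5% | 1.7% | 1.4% | 1.5% |
| 256 KB | 1.15% | 1.17% | 1.13% | 1.13% | 1.12% | 1.12% |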
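
LRU replacement within one set can be sketched for the two-way case, where a single field naming the least recently used way is enough. The structure and function names are assumptions for illustration; wider sets need more bookkeeping than this.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum { WAYS = 2 };

typedef struct {
    bool     valid[WAYS];
    uint32_t tag[WAYS];
    int      lru;          /* way that was used least recently */
} cache_set;

/* Returns true on a hit. On a miss, fills an invalid way if one exists,
 * otherwise replaces the least recently used way. */
bool set_access(cache_set *set, uint32_t tag)
{
    assert(set != NULL);
    for (int w = 0; w < WAYS; w++) {
        if (set->valid[w] && set->tag[w] == tag) {
            set->lru = 1 - w;      /* the other way is now the older one */
            return true;
        }
    }
    int victim = set->lru;
    for (int w = 0; w < WAYS; w++) {
        if (!set->valid[w]) {
            victim = w;
            break;
        }
    }
    set->valid[victim] = true;
    set->tag[victim] = tag;
    set->lru = 1 - victim;
    return false;
}
```

With the access sequence A, B, A, C on an empty set, the miss on C evicts B (the least recently used block), so a following access to A still hits while B misses.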

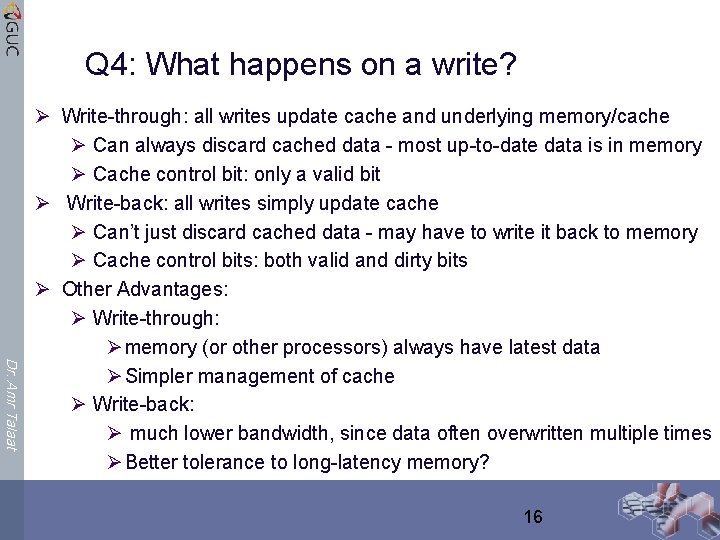

Q4: What happens on a write?

- Write-through: all writes update cache and underlying memory/cache
  - Can always discard cached data; the most up-to-date data is in memory
  - Cache control bit: only a valid bit
- Write-back: all writes simply update cache
  - Can't just discard cached data; may have to write it back to memory
  - Cache control bits: both valid and dirty bits
- Other advantages:
  - Write-through:
    - Memory (or other processors) always has the latest data
    - Simpler management of cache
  - Write-back:
    - Much lower bandwidth, since data is often overwritten multiple times
    - Better tolerance to long-latency memory?
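
The bandwidth difference between the two policies can be illustrated with a toy model of a single cache line that counts the writes reaching the next level. Everything here (`line_model`, `line_write`, `line_evict`) is a hypothetical sketch, not a full cache.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum { WRITE_THROUGH, WRITE_BACK } write_policy;

typedef struct {
    write_policy policy;
    bool valid;
    bool dirty;            /* only used by WRITE_BACK */
    unsigned mem_writes;   /* writes that reached the next level */
} line_model;

/* A write hit on the line. */
void line_write(line_model *l)
{
    assert(l->valid);
    if (l->policy == WRITE_THROUGH)
        l->mem_writes++;   /* every write also goes to memory */
    else
        l->dirty = true;   /* memory is updated later, on eviction */
}

/* Evict the line; a dirty write-back line costs one memory write. */
void line_evict(line_model *l)
{
    if (l->valid && l->policy == WRITE_BACK && l->dirty)
        l->mem_writes++;
    l->valid = false;
    l->dirty = false;
}
```

Three writes to the same line followed by an eviction cost three memory writes under write-through but only one under write-back, which is the "data often overwritten multiple times" effect.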

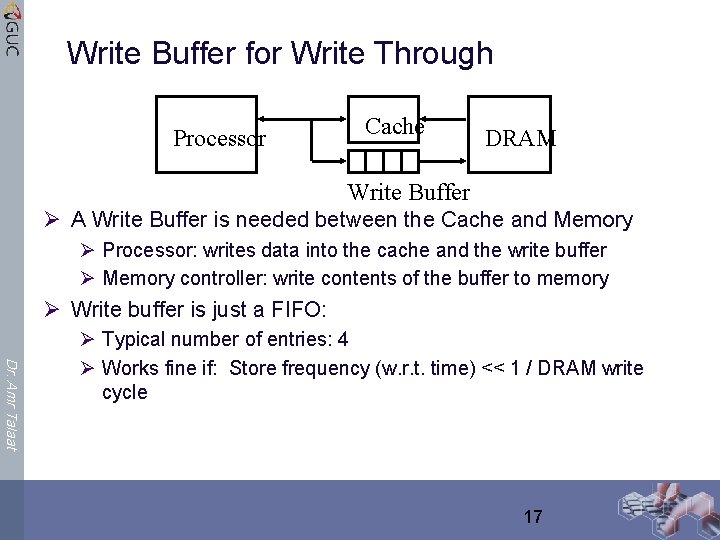

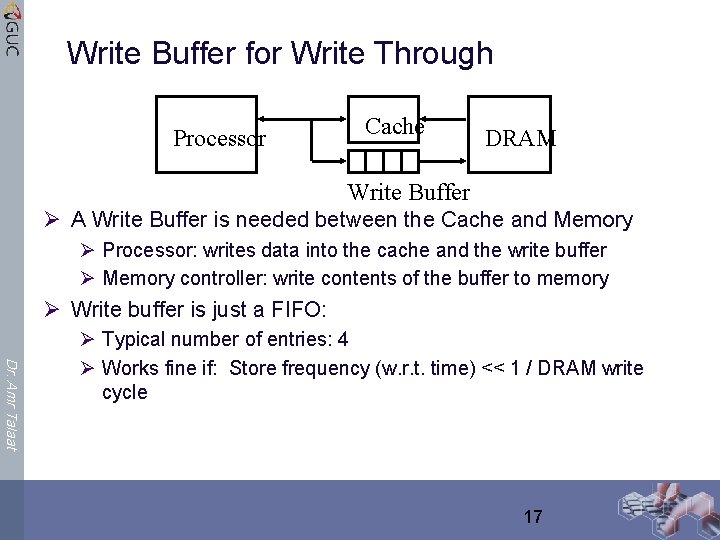

Write Buffer for Write Through

[Figure: Processor connected to Cache and to a Write Buffer; the Write Buffer feeds DRAM.]

- A Write Buffer is needed between the Cache and Memory
  - Processor: writes data into the cache and the write buffer
  - Memory controller: writes contents of the buffer to memory
- Write buffer is just a FIFO:
  - Typical number of entries: 4
  - Works fine if: store frequency (w.r.t. time) << 1 / DRAM write cycle

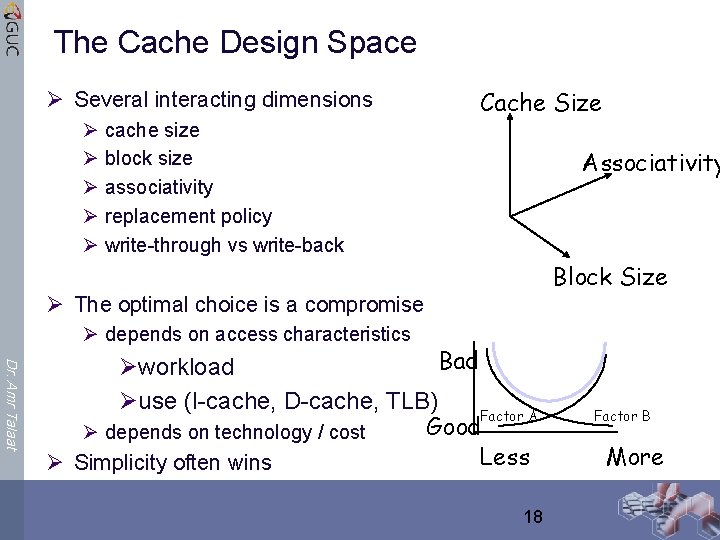

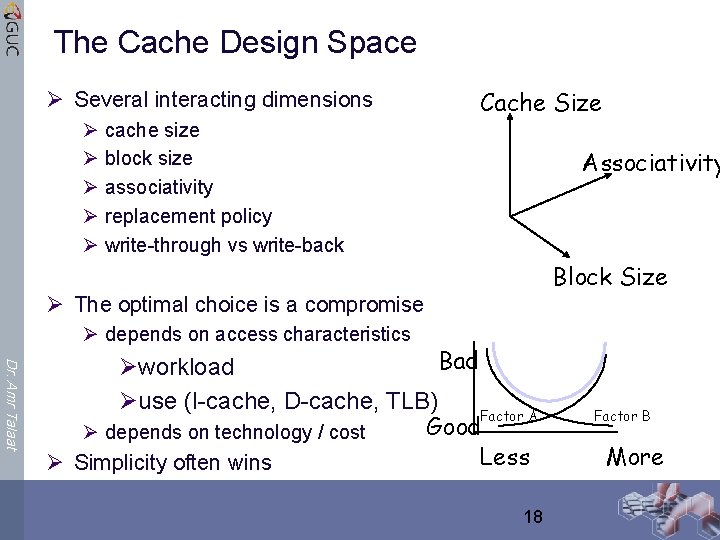

The Cache Design Space

- Several interacting dimensions:
  - cache size
  - block size
  - associativity
  - replacement policy
  - write-through vs write-back
- The optimal choice is a compromise
  - depends on access characteristics
    - workload
    - use (I-cache, D-cache, TLB)
  - depends on technology / cost
- Simplicity often wins

[Figure: the design space drawn with axes Cache Size, Associativity, and Block Size; a second sketch plots Good/Bad against Less/More for two competing factors, Factor A and Factor B.]

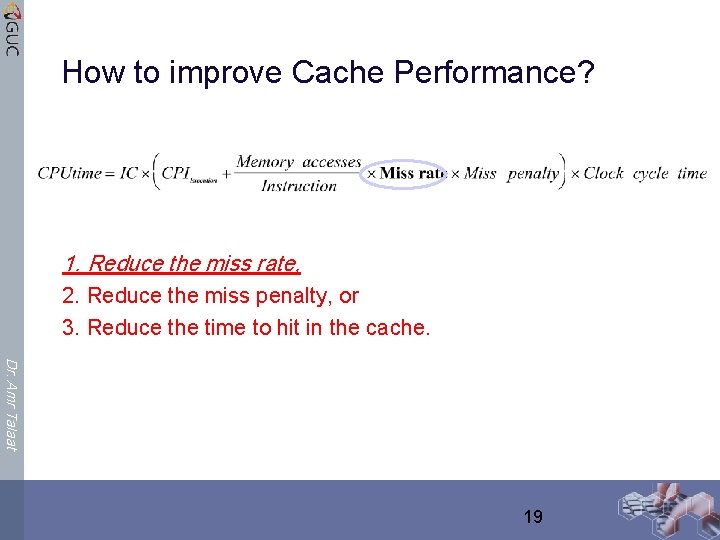

How to improve Cache Performance?

1. Reduce the miss rate,
2. Reduce the miss penalty, or
3. Reduce the time to hit in the cache.

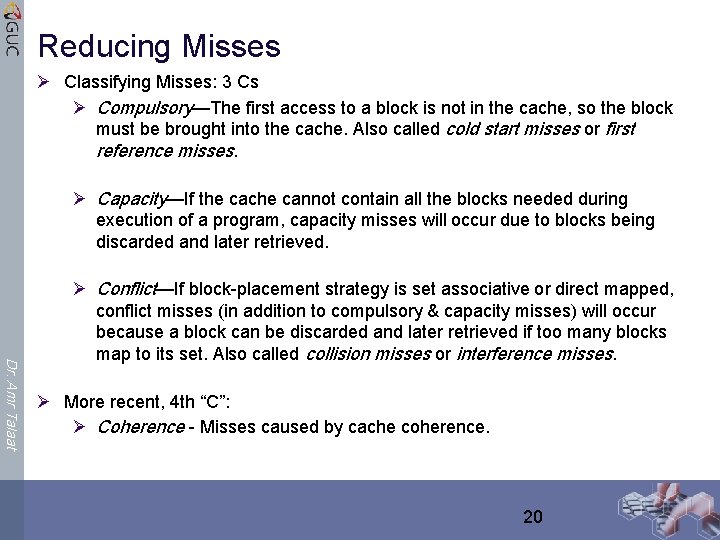

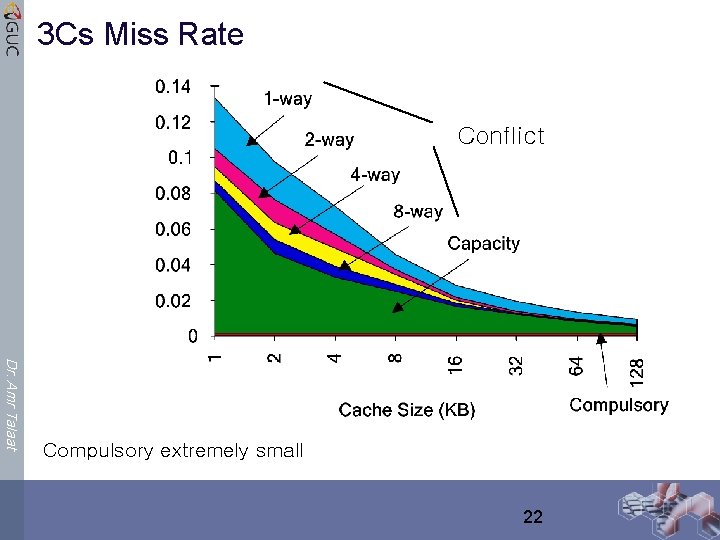

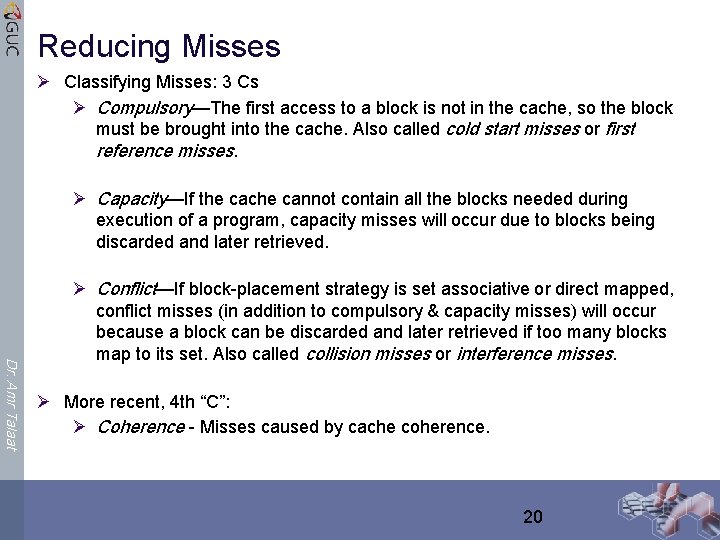

Reducing Misses

- Classifying misses: 3 Cs
  - Compulsory: the first access to a block is not in the cache, so the block must be brought into the cache. Also called cold start misses or first reference misses.
  - Capacity: if the cache cannot contain all the blocks needed during execution of a program, capacity misses will occur due to blocks being discarded and later retrieved.
  - Conflict: if the block-placement strategy is set associative or direct mapped, conflict misses (in addition to compulsory & capacity misses) will occur because a block can be discarded and later retrieved if too many blocks map to its set. Also called collision misses or interference misses.
- More recent, 4th "C":
  - Coherence: misses caused by cache coherence.

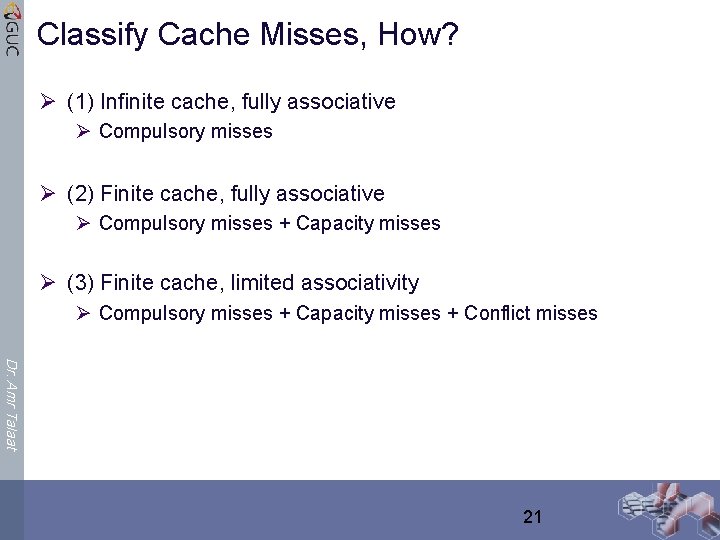

Classify Cache Misses, How?

- (1) Infinite cache, fully associative
  - Compulsory misses
- (2) Finite cache, fully associative
  - Compulsory misses + Capacity misses
- (3) Finite cache, limited associativity
  - Compulsory misses + Capacity misses + Conflict misses

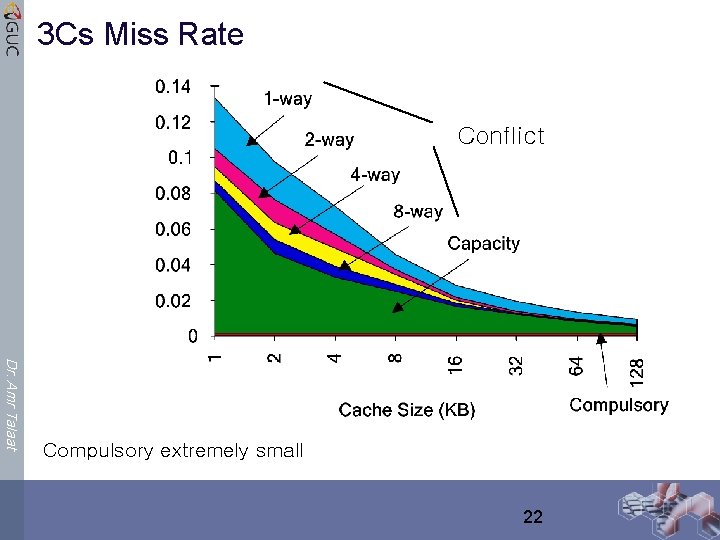

3 Cs Miss Rate

[Figure: miss rate broken down into the 3 Cs; the Conflict component is labeled, and the Compulsory component is extremely small.]

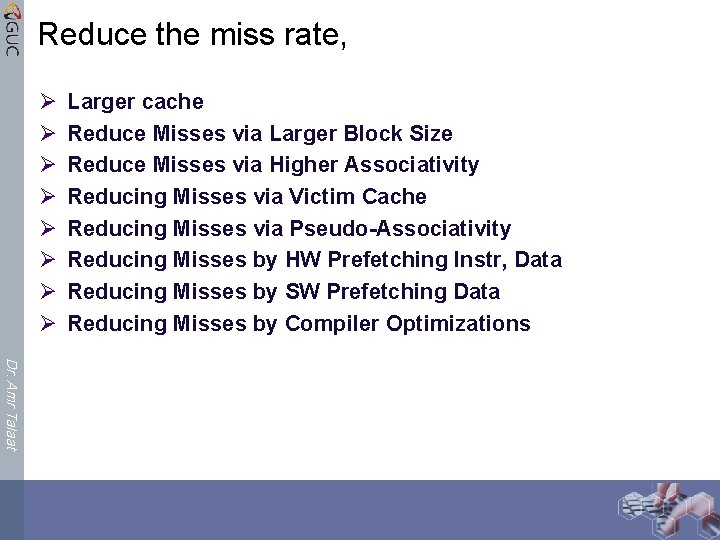

Reduce the miss rate

- Larger cache
- Reduce Misses via Larger Block Size
- Reduce Misses via Higher Associativity
- Reducing Misses via Victim Cache
- Reducing Misses via Pseudo-Associativity
- Reducing Misses by HW Prefetching Instr, Data
- Reducing Misses by SW Prefetching Data
- Reducing Misses by Compiler Optimizations

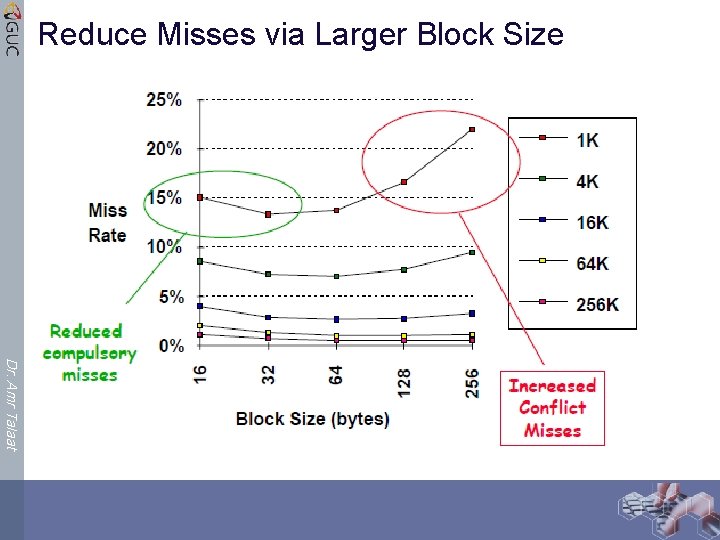

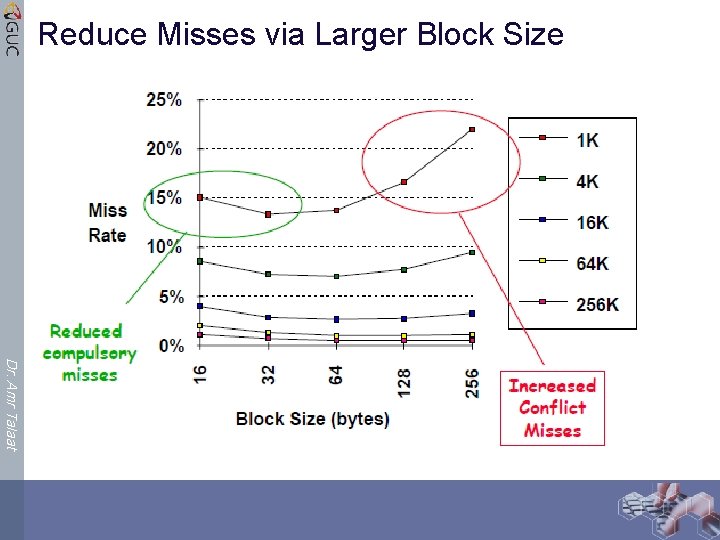

Reduce Misses via Larger Block Size

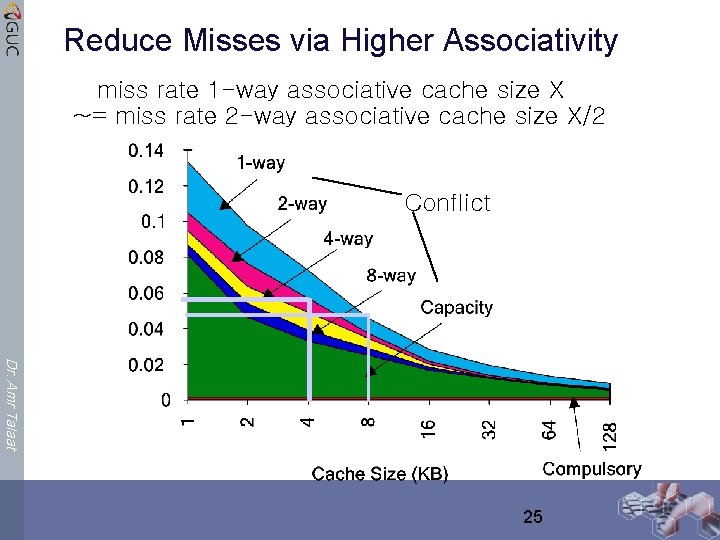

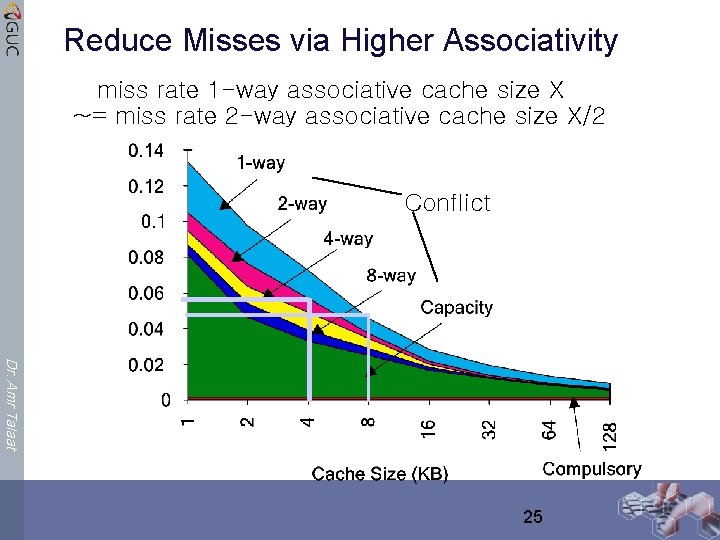

Reduce Misses via Higher Associativity

- Miss rate of a 1-way associative (direct mapped) cache of size X ≈ miss rate of a 2-way associative cache of size X/2

[Figure: miss-rate plot with the Conflict component labeled.]

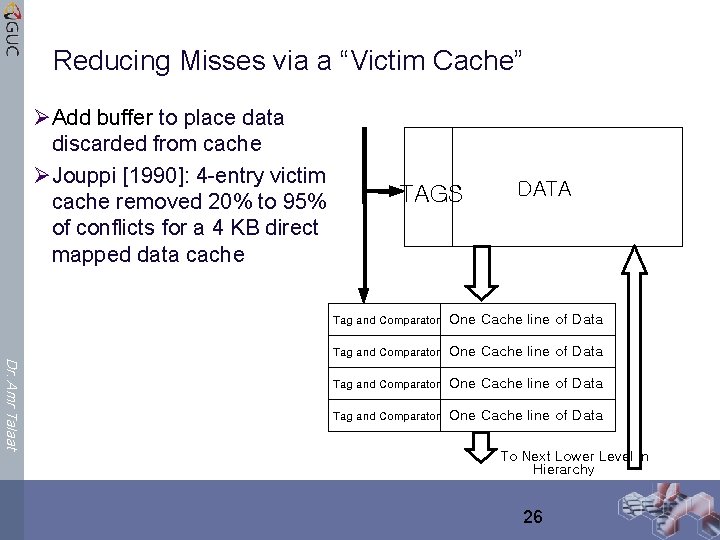

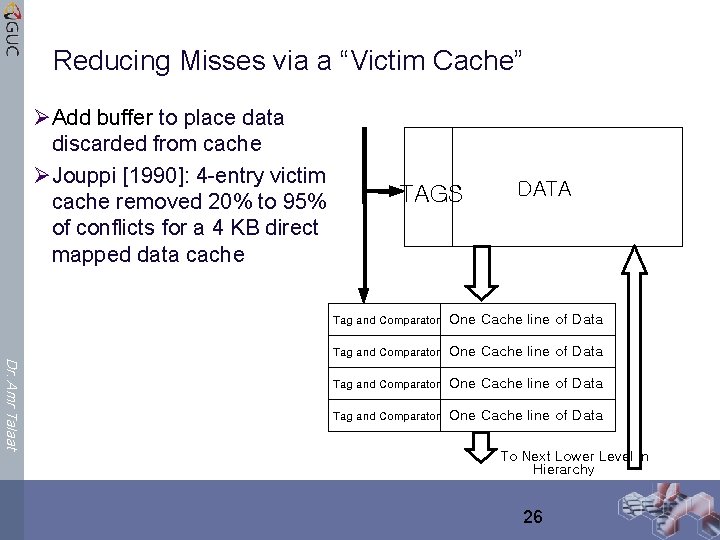

Reducing Misses via a "Victim Cache"

- Add a buffer to hold data discarded from the cache
- Jouppi [1990]: a 4-entry victim cache removed 20% to 95% of conflicts for a 4 KB direct mapped data cache

[Figure: direct mapped cache (TAGS, DATA) backed by victim cache entries, each with a Tag and Comparator and One Cache line of Data, placed on the path To Next Lower Level In Hierarchy.]

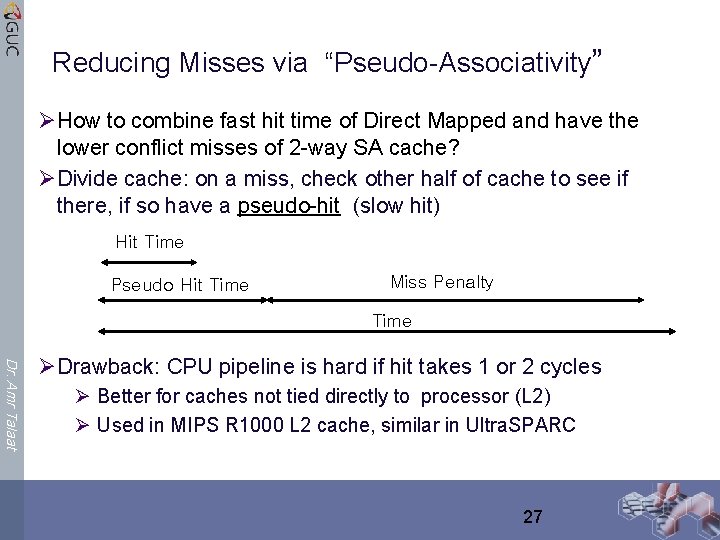

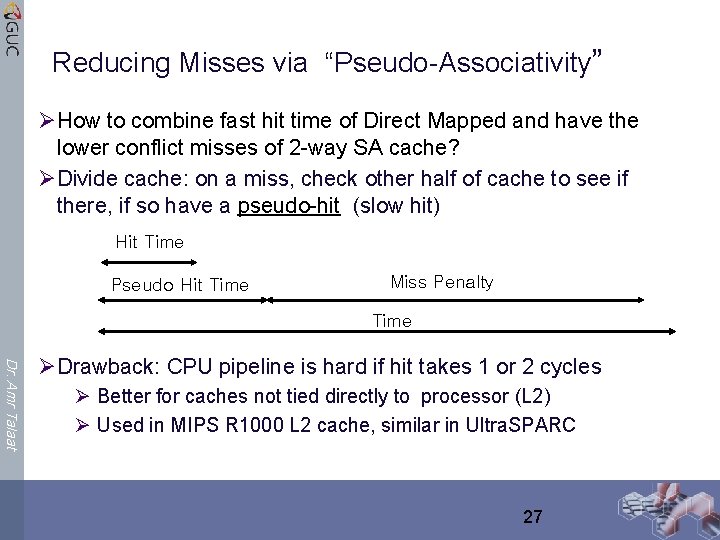

Reducing Misses via "Pseudo-Associativity"

- How to combine the fast hit time of Direct Mapped with the lower conflict misses of a 2-way SA cache?
- Divide the cache: on a miss, check the other half of the cache to see if the block is there; if so, have a pseudo-hit (slow hit)

[Figure: time line showing Hit Time < Pseudo Hit Time < Miss Penalty.]

- Drawback: CPU pipeline design is hard if a hit takes 1 or 2 cycles
  - Better for caches not tied directly to the processor (L2)
  - Used in MIPS R10000 L2 cache, similar in UltraSPARC

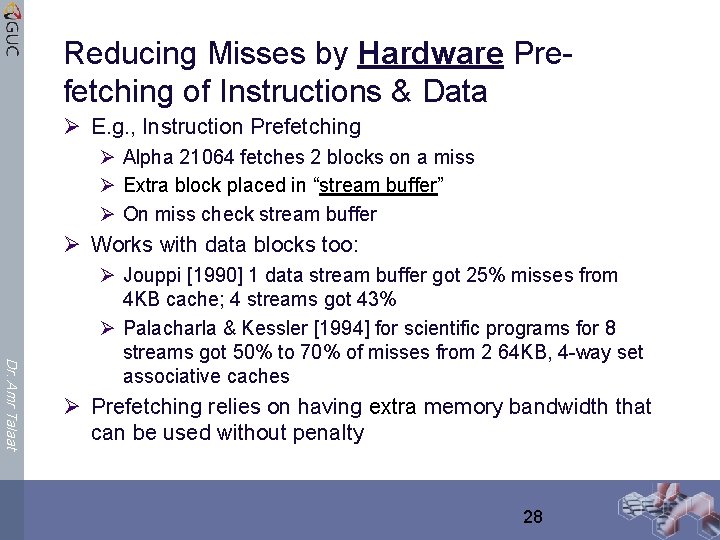

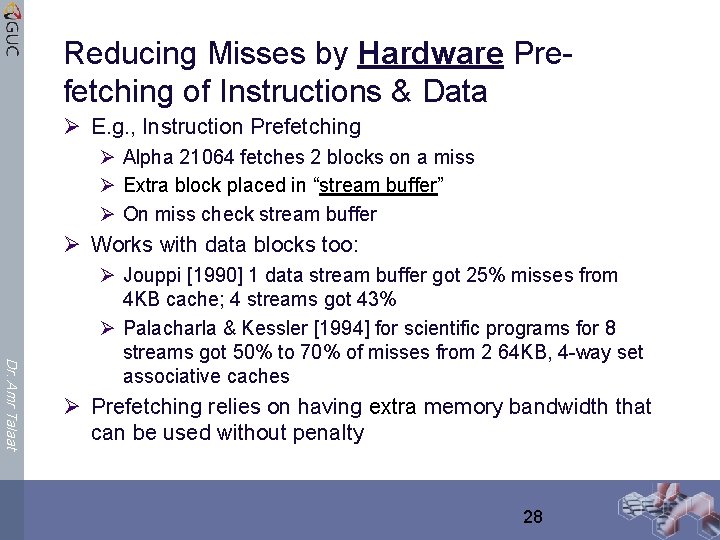

Reducing Misses by Hardware Prefetching of Instructions & Data

- E.g., instruction prefetching
  - Alpha 21064 fetches 2 blocks on a miss
  - Extra block placed in a "stream buffer"
  - On a miss, check the stream buffer
- Works with data blocks too:
  - Jouppi [1990]: 1 data stream buffer caught 25% of the misses from a 4 KB cache; 4 streams caught 43%
  - Palacharla & Kessler [1994]: for scientific programs, 8 streams caught 50% to 70% of the misses from two 64 KB, 4-way set associative caches
- Prefetching relies on having extra memory bandwidth that can be used without penalty

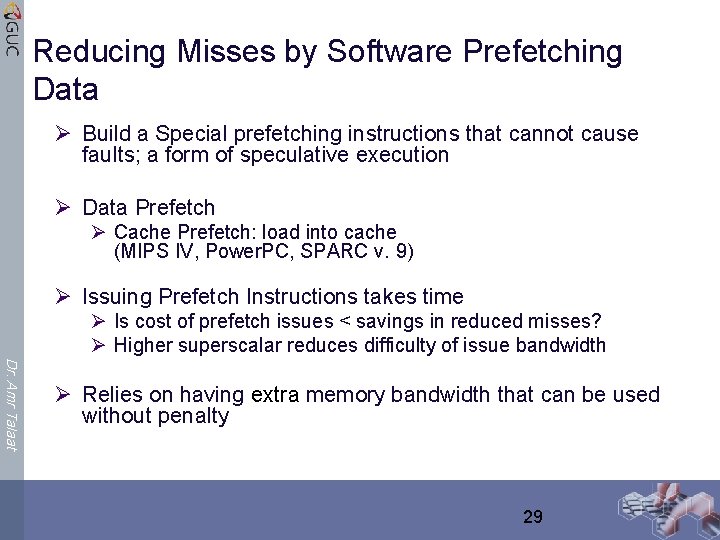

Reducing Misses by Software Prefetching Data

- Build special prefetching instructions that cannot cause faults; a form of speculative execution
- Data prefetch
  - Cache prefetch: load into cache (MIPS IV, PowerPC, SPARC v.9)
- Issuing prefetch instructions takes time
  - Is the cost of issuing prefetches < the savings in reduced misses?
  - Wider superscalar issue reduces the difficulty of issue bandwidth
- Relies on having extra memory bandwidth that can be used without penalty
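
A software prefetch in C might look like the sketch below. It assumes a GCC- or Clang-compatible compiler, whose `__builtin_prefetch` builtin emits a non-faulting prefetch hint where the target supports one; the function name and the prefetch distance of 16 elements are arbitrary choices for illustration and would need tuning on a real machine.

```c
#include <assert.h>
#include <stddef.h>

/* How many elements ahead to prefetch (assumed value, not tuned). */
enum { PREFETCH_DISTANCE = 16 };

/* Sum an array while hinting the cache about elements needed soon. */
long sum_with_prefetch(const int *a, size_t n)
{
    assert(a != NULL || n == 0);
    long sum = 0;
    for (size_t i = 0; i < n; i++) {
        if (i + PREFETCH_DISTANCE < n) {
            /* Arguments: address, 0 = prefetch for read, 1 = low temporal locality. */
            __builtin_prefetch(&a[i + PREFETCH_DISTANCE], 0, 1);
        }
        sum += a[i];
    }
    return sum;
}
```

The prefetch is only a hint and never changes the result; each one still occupies an issue slot, which is the cost the slide weighs against the saved misses. For a simple sequential scan like this one, hardware prefetchers often already do the job, so the benefit is most plausible for irregular access patterns.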

![Reducing Misses by Compiler Optimizations Ø Mc Farling 1989 reduced caches misses by 75 Reducing Misses by Compiler Optimizations Ø Mc. Farling [1989] reduced caches misses by 75%](https://slidetodoc.com/presentation_image_h2/3254d7e2bd90f04d662f68c3482273ba/image-30.jpg)

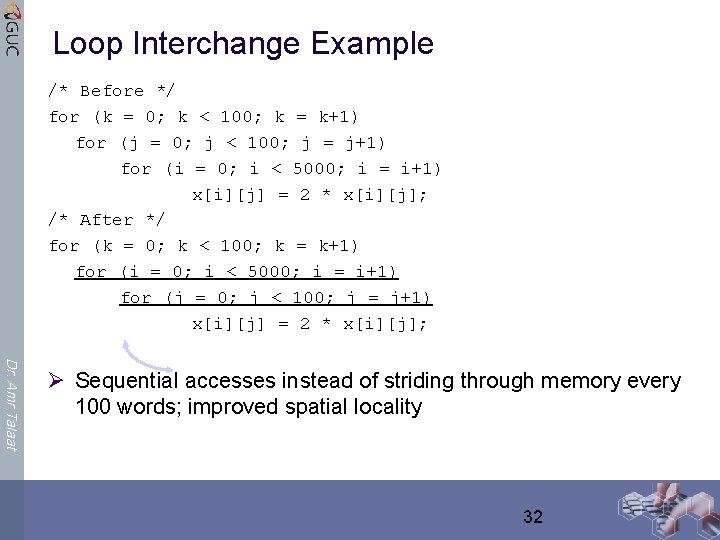

Reducing Misses by Compiler Optimizations

- McFarling [1989] reduced cache misses by 75% on an 8 KB direct mapped cache with 4-byte blocks, in software
- How?
  - For instructions:
    - Look at conflicts (using tools they developed)
    - Reorder procedures in memory so as to reduce conflict misses
  - For data:
    - Merging Arrays: improve spatial locality by using a single array of compound elements instead of 2 arrays
    - Loop Interchange: change the nesting of loops to access data in the order it is stored in memory
    - Loop Fusion: combine 2 independent loops that have the same looping and some overlapping variables

![Merging Arrays Example Before 2 sequential arrays int valSIZE int keySIZE Merging Arrays Example /* Before: 2 sequential arrays */ int val[SIZE]; int key[SIZE]; /*](https://slidetodoc.com/presentation_image_h2/3254d7e2bd90f04d662f68c3482273ba/image-31.jpg)

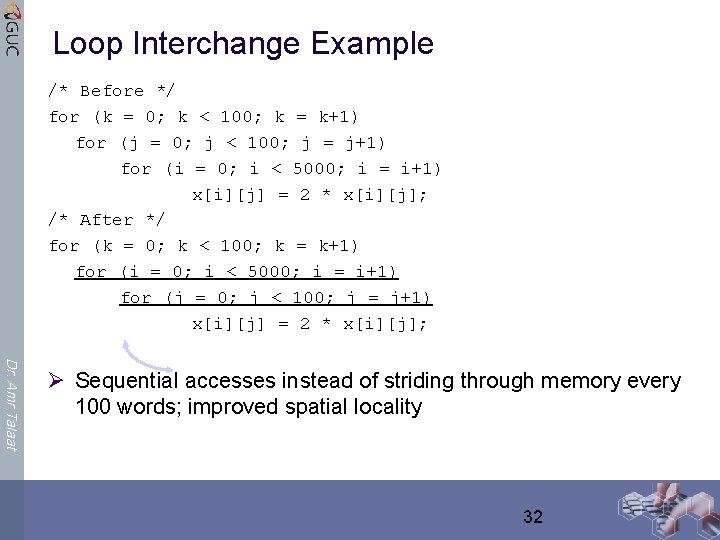

Merging Arrays Example

    /* Before: 2 sequential arrays */
    int val[SIZE];
    int key[SIZE];

    /* After: 1 array of structures */
    struct merge {
        int val;
        int key;
    };
    struct merge merged_array[SIZE];

- Reduces conflicts between val & key; improves spatial locality

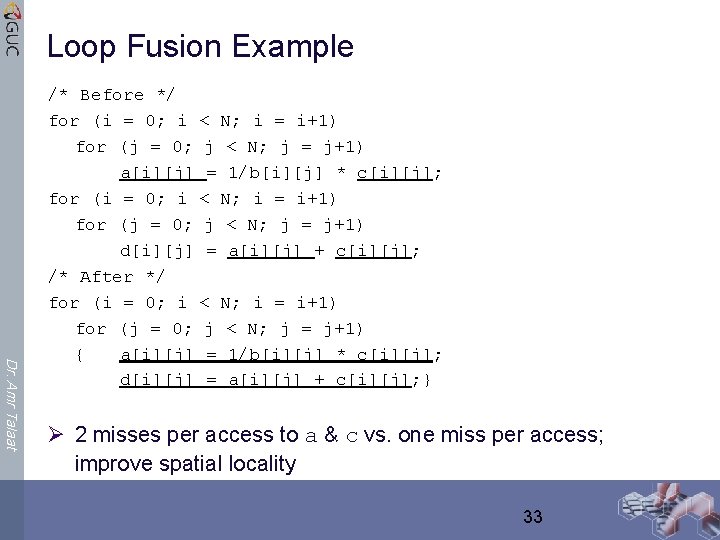

Loop Interchange Example

    /* Before: the inner loop walks down a column of x */
    for (k = 0; k < 100; k = k+1)
        for (j = 0; j < 100; j = j+1)
            for (i = 0; i < 5000; i = i+1)
                x[i][j] = 2 * x[i][j];

    /* After: the inner loop walks along a row of x */
    for (k = 0; k < 100; k = k+1)
        for (i = 0; i < 5000; i = i+1)
            for (j = 0; j < 100; j = j+1)
                x[i][j] = 2 * x[i][j];

- Sequential accesses instead of striding through memory every 100 words; improved spatial locality
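
The "every 100 words" stride follows from C's row-major array layout, which a small sketch can make explicit. The helper names are assumptions, and the array shape x[5000][100] is inferred from the loop bounds in the example.

```c
#include <assert.h>
#include <stddef.h>

enum { ROWS = 5000, COLS = 100 };   /* shape of x[5000][100] */

/* Position of x[i][j], in elements from the start of the array,
 * under C's row-major layout. */
size_t linear_index(size_t i, size_t j)
{
    assert(i < ROWS && j < COLS);
    return i * COLS + j;
}

/* Distance between consecutive accesses when i is the inner loop. */
size_t stride_inner_i(void)
{
    return linear_index(1, 0) - linear_index(0, 0);
}

/* Distance between consecutive accesses when j is the inner loop. */
size_t stride_inner_j(void)
{
    return linear_index(0, 1) - linear_index(0, 0);
}
```

With i innermost, consecutive accesses are 100 elements apart, so each one is likely to touch a different cache block; with j innermost they are adjacent, so one fetched block serves several accesses.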

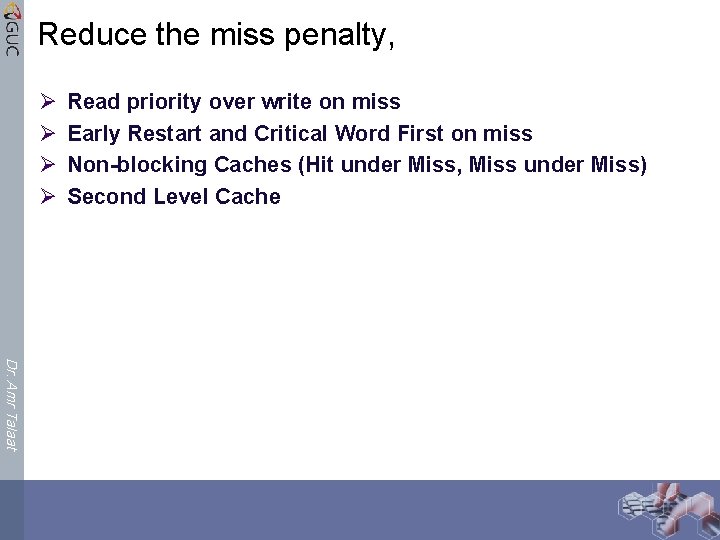

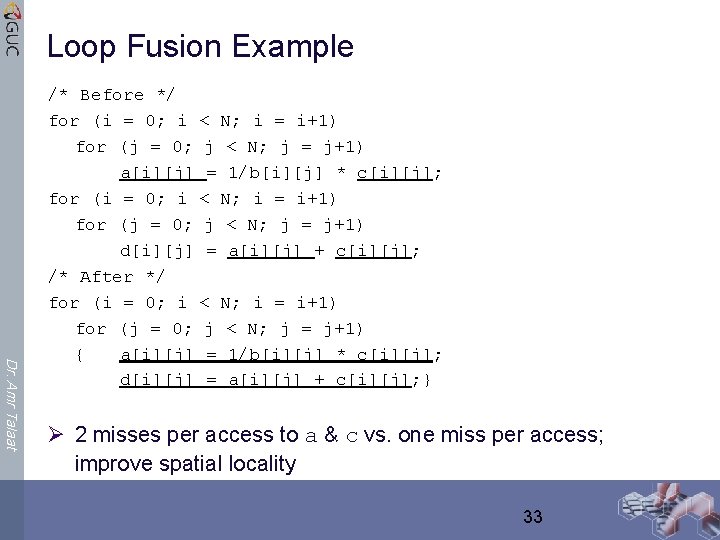

Loop Fusion Example

    /* Before: a and c are traversed twice */
    for (i = 0; i < N; i = i+1)
        for (j = 0; j < N; j = j+1)
            a[i][j] = 1/b[i][j] * c[i][j];
    for (i = 0; i < N; i = i+1)
        for (j = 0; j < N; j = j+1)
            d[i][j] = a[i][j] + c[i][j];

    /* After: a[i][j] and c[i][j] are reused while still in the cache */
    for (i = 0; i < N; i = i+1)
        for (j = 0; j < N; j = j+1) {
            a[i][j] = 1/b[i][j] * c[i][j];
            d[i][j] = a[i][j] + c[i][j];
        }

- 2 misses per access to a & c vs. one miss per access; improves temporal locality, since the second use of a[i][j] and c[i][j] immediately follows the first

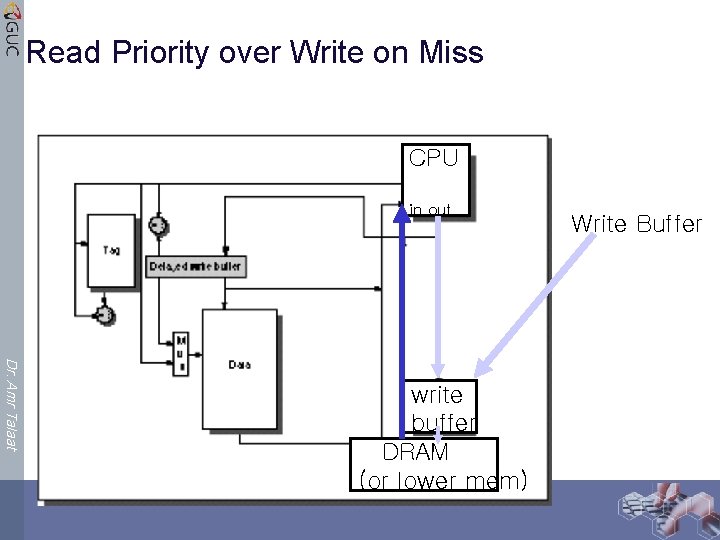

Reduce the miss penalty

- Read priority over write on miss
- Early Restart and Critical Word First on miss
- Non-blocking Caches (Hit under Miss, Miss under Miss)
- Second Level Cache

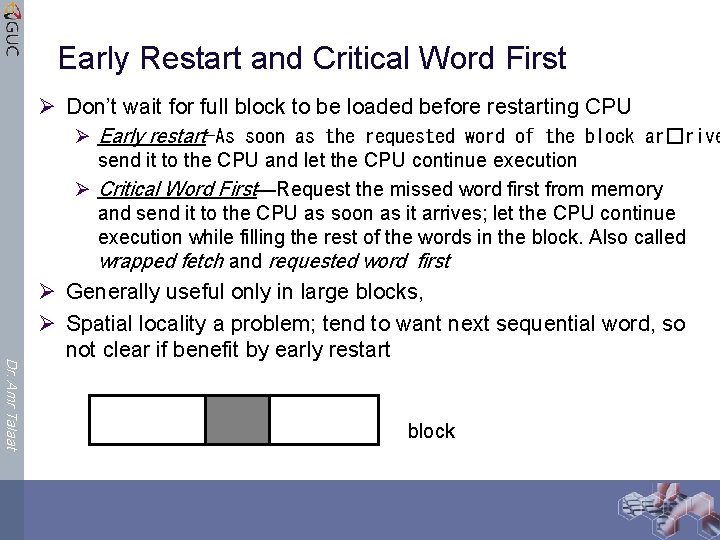

Read Priority over Write on Miss

[Figure: CPU (in, out) connected through a write buffer to DRAM (or lower mem).]

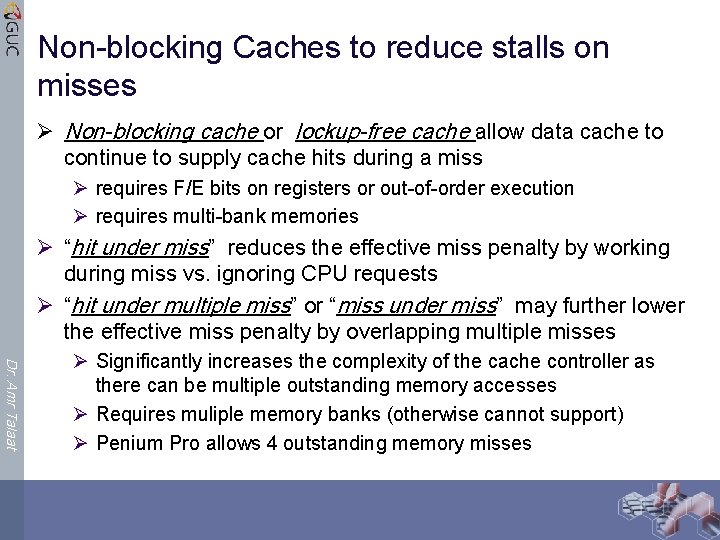

Read Priority over Write on Miss

- Write-through with write buffers => RAW conflicts with main memory reads on cache misses
  - Simply waiting for the write buffer to empty might increase the read miss penalty (old MIPS 1000 by 50%)
  - Check the write buffer contents before the read; if there are no conflicts, let the memory access continue
- Write-back also wants a buffer to hold displaced blocks
  - Read miss replacing a dirty block
  - Normal: write the dirty block to memory, and then do the read
  - Instead: copy the dirty block to a write buffer, then do the read, and then do the write
  - The CPU stalls less since it restarts as soon as the read is done
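
The write-buffer check can be sketched as a small FIFO with a conflict test. This is an illustrative model only: the names are invented here, only addresses are tracked (no data), and the depth of 4 is assumed as a typical value for such a buffer.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

enum { WB_ENTRIES = 4 };   /* assumed buffer depth */

typedef struct {
    uint32_t addr[WB_ENTRIES];  /* addresses of pending writes */
    int head;                   /* index of the oldest entry */
    int count;                  /* number of pending writes */
} write_buffer;

/* Processor side: queue a write. Returns false when the buffer is full,
 * in which case the processor would have to stall. */
bool wb_push(write_buffer *wb, uint32_t addr)
{
    assert(wb->count >= 0 && wb->count <= WB_ENTRIES);
    if (wb->count == WB_ENTRIES)
        return false;
    wb->addr[(wb->head + wb->count) % WB_ENTRIES] = addr;
    wb->count++;
    return true;
}

/* Memory-controller side: retire the oldest write (FIFO order). */
bool wb_pop(write_buffer *wb)
{
    if (wb->count == 0)
        return false;
    wb->head = (wb->head + 1) % WB_ENTRIES;
    wb->count--;
    return true;
}

/* Read-miss check: true if a pending write targets the same address,
 * so the read must not bypass the buffer. */
bool wb_conflicts(const write_buffer *wb, uint32_t addr)
{
    for (int i = 0; i < wb->count; i++) {
        if (wb->addr[(wb->head + i) % WB_ENTRIES] == addr)
            return true;
    }
    return false;
}
```

On a read miss, a controller built this way would call `wb_conflicts` first: when it returns false the read can go to memory ahead of the queued writes, and when it returns true the matching write has to be handled before the read proceeds.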

Early Restart and Critical Word First

- Don't wait for the full block to be loaded before restarting the CPU
  - Early restart: as soon as the requested word of the block arrives, send it to the CPU and let the CPU continue execution
  - Critical Word First: request the missed word first from memory and send it to the CPU as soon as it arrives; let the CPU continue execution while filling the rest of the words in the block. Also called wrapped fetch and requested word first
- Generally useful only with large blocks
- Spatial locality is a problem: programs tend to want the next sequential word, so it is not clear whether early restart brings a benefit

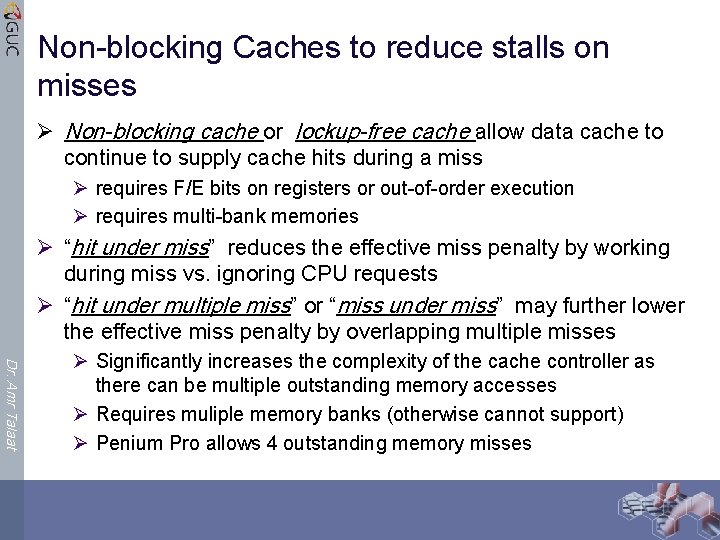

Non-blocking Caches to reduce stalls on misses

- A non-blocking cache or lockup-free cache allows the data cache to continue to supply cache hits during a miss
  - requires F/E bits on registers or out-of-order execution
  - requires multi-bank memories
- "Hit under miss" reduces the effective miss penalty by working during a miss instead of ignoring CPU requests
- "Hit under multiple miss" or "miss under miss" may further lower the effective miss penalty by overlapping multiple misses
  - Significantly increases the complexity of the cache controller, as there can be multiple outstanding memory accesses
  - Requires multiple memory banks (otherwise cannot be supported)
  - Pentium Pro allows 4 outstanding memory misses

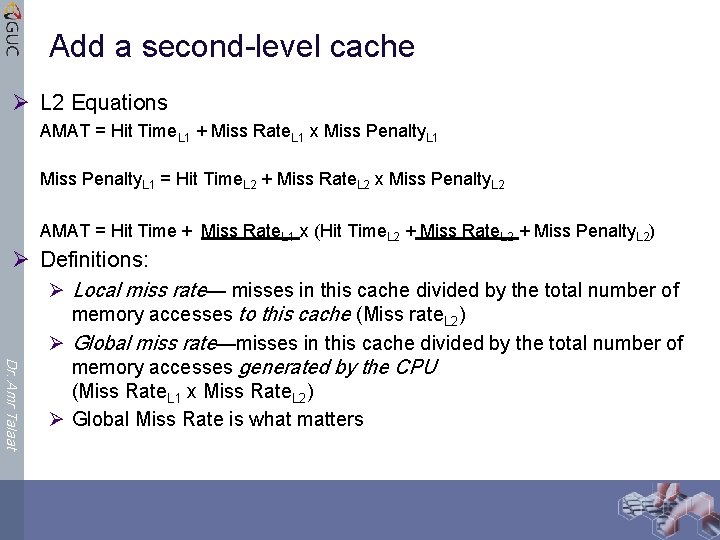

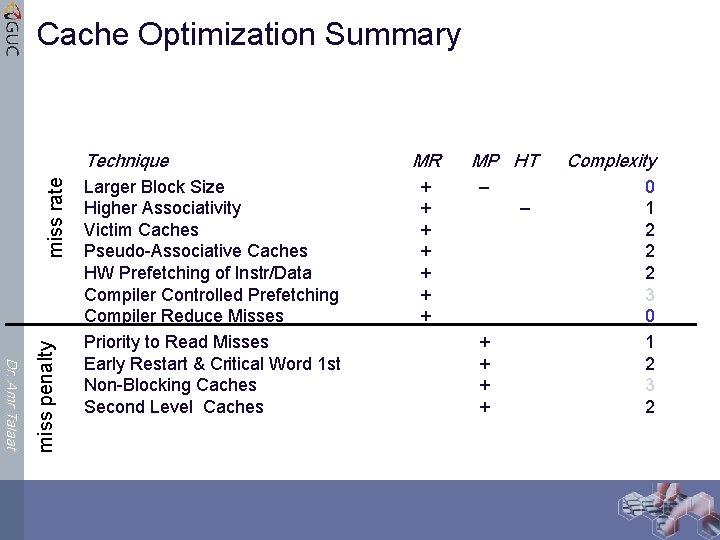

Add a second-level cache

- L2 equations:

$$\text{AMAT} = \text{Hit Time}_{L1} + \text{Miss Rate}_{L1} \times \text{Miss Penalty}_{L1}$$

$$\text{Miss Penalty}_{L1} = \text{Hit Time}_{L2} + \text{Miss Rate}_{L2} \times \text{Miss Penalty}_{L2}$$

$$\text{AMAT} = \text{Hit Time}_{L1} + \text{Miss Rate}_{L1} \times \left( \text{Hit Time}_{L2} + \text{Miss Rate}_{L2} \times \text{Miss Penalty}_{L2} \right)$$

- Definitions:
  - Local miss rate: misses in this cache divided by the total number of memory accesses to this cache ($\text{Miss Rate}_{L2}$)
  - Global miss rate: misses in this cache divided by the total number of memory accesses generated by the CPU ($\text{Miss Rate}_{L1} \times \text{Miss Rate}_{L2}$)
  - Global miss rate is what matters
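
A two-level AMAT calculation can be sketched as follows, taking the L1 miss penalty to be the L2 hit time plus the local L2 miss rate times the L2 miss penalty. The function names and the sample numbers in the note are assumptions for illustration.

```c
#include <assert.h>

/* AMAT for a two-level hierarchy. All times share one unit;
 * local_miss_rate_l2 is measured against accesses that reach L2. */
double amat_two_level(double hit_time_l1, double miss_rate_l1,
                      double hit_time_l2, double local_miss_rate_l2,
                      double miss_penalty_l2)
{
    assert(miss_rate_l1 >= 0.0 && miss_rate_l1 <= 1.0);
    assert(local_miss_rate_l2 >= 0.0 && local_miss_rate_l2 <= 1.0);
    double miss_penalty_l1 = hit_time_l2 + local_miss_rate_l2 * miss_penalty_l2;
    return hit_time_l1 + miss_rate_l1 * miss_penalty_l1;
}

/* Global L2 miss rate: L2 misses per access generated by the CPU. */
double global_miss_rate_l2(double miss_rate_l1, double local_miss_rate_l2)
{
    return miss_rate_l1 * local_miss_rate_l2;
}
```

With assumed values of a 1-cycle L1 hit, 4% L1 miss rate, 10-cycle L2 hit, 50% local L2 miss rate, and 100-cycle L2 miss penalty, the L1 miss penalty is 10 + 0.5 × 100 = 60 cycles and the AMAT is 1 + 0.04 × 60 = 3.4 cycles. The local L2 miss rate of 50% looks alarming, but the global rate is only 0.04 × 0.5 = 2%.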

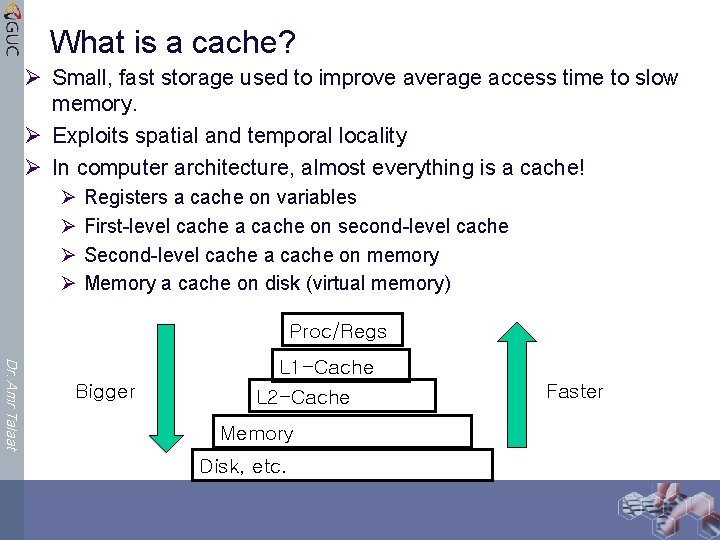

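Cache Optimization Summary

MR = miss rate, MP = miss penalty, HT = hit time; "+" means the technique improves the factor, "-" means it hurts it.

| Group | Technique | MR | MP | HT | Complexity |
|---|---|---|---|---|---|
| miss rate | Larger Block Size | + | - | | 0 |
| miss rate | Higher Associativity | + | | - | 1 |
| miss rate | Victim Caches | + | | | 2 |
| miss rate | Pseudo-Associative Caches | + | | | 2 |
| miss rate | HW Prefetching of Instr/Data | + | | | 2 |
| miss rate | Compiler Controlled Prefetching | + | | | 3 |
| miss rate | Compiler Reduce Misses | + | | | 0 |
| miss penalty | Priority to Read Misses | | + | | 1 |
| miss penalty | Early Restart & Critical Word 1st | | + | | 2 |
| miss penalty | Non-Blocking Caches | | + | | 3 |
| miss penalty | Second Level Caches | | + | | 2 |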