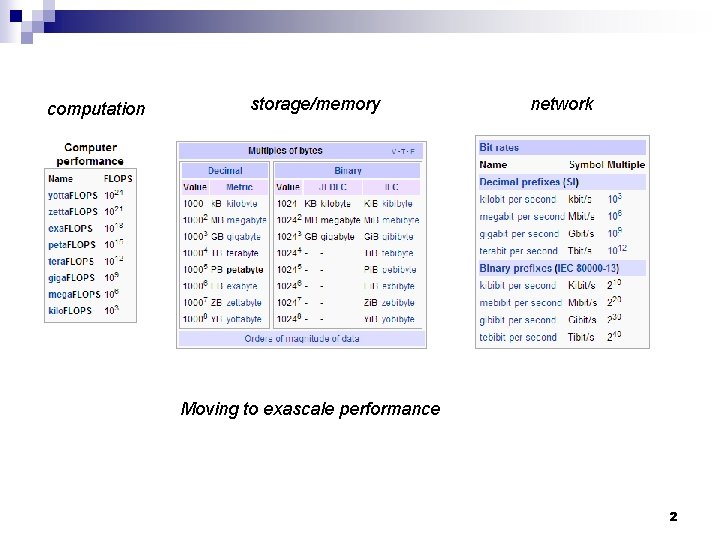

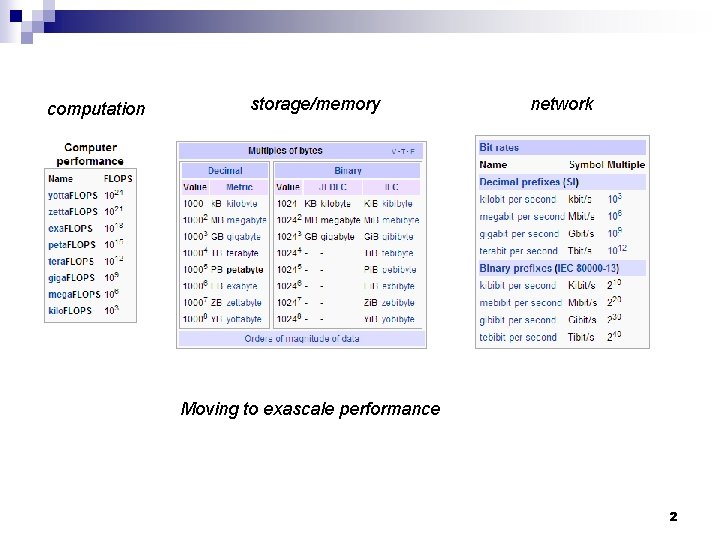

Moving to exascale performance: computation, storage/memory, network

- Slides: 39

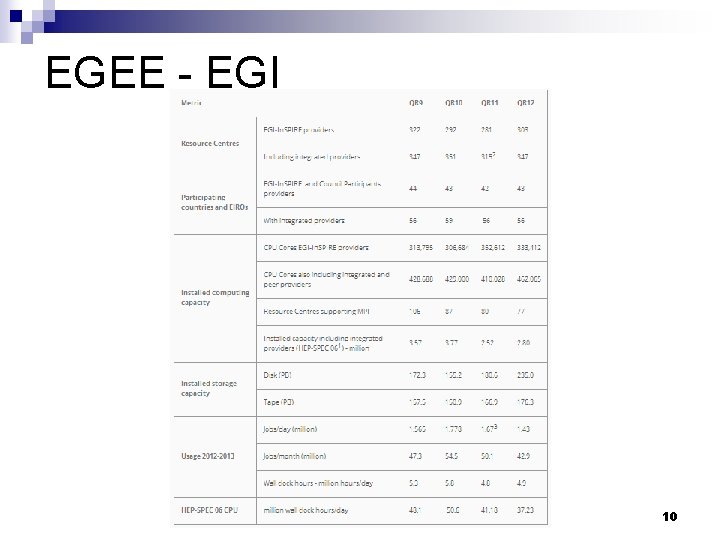

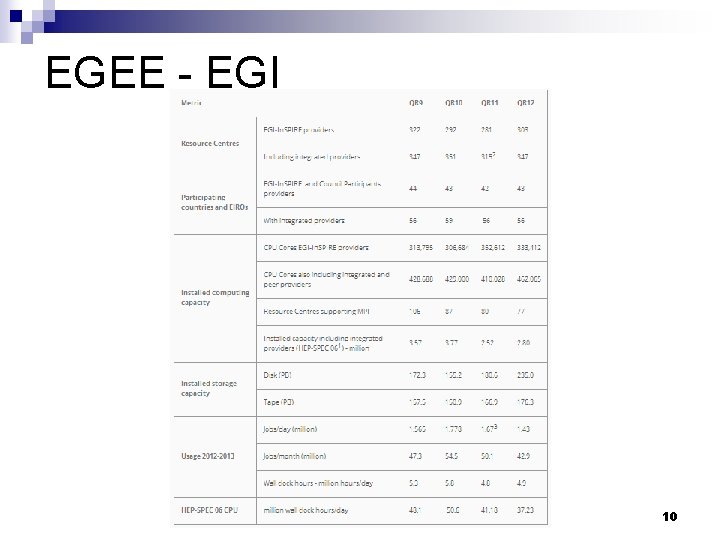

EGEE - EGI

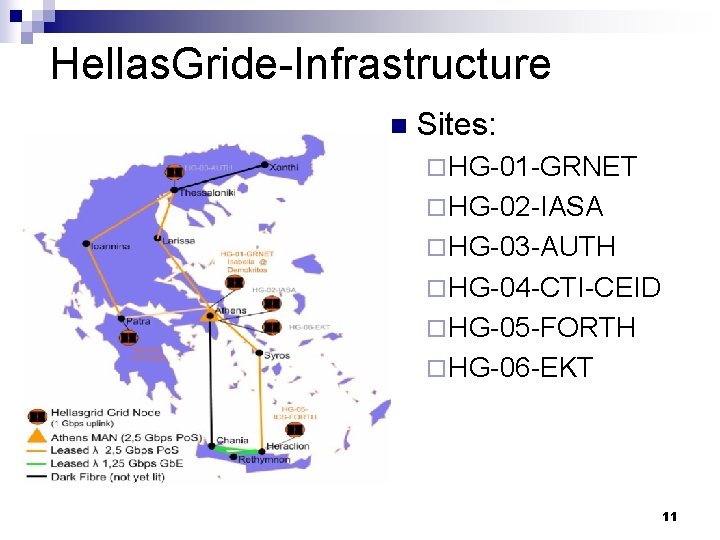

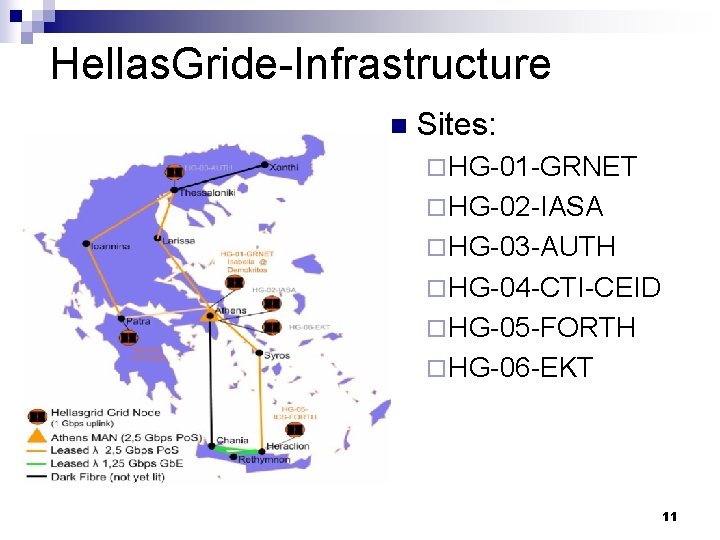

HellasGrid e-Infrastructure

- Sites:
  - HG-01-GRNET
  - HG-02-IASA
  - HG-03-AUTH
  - HG-04-CTI-CEID
  - HG-05-FORTH
  - HG-06-EKT

HG-04-CTI-CEID site

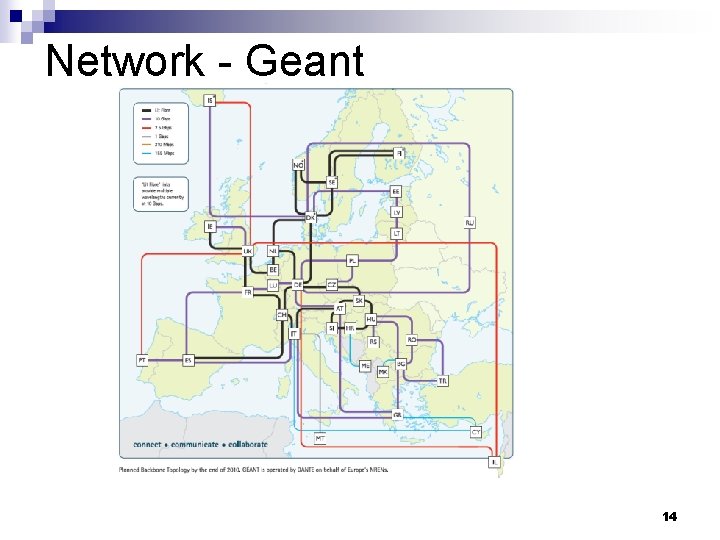

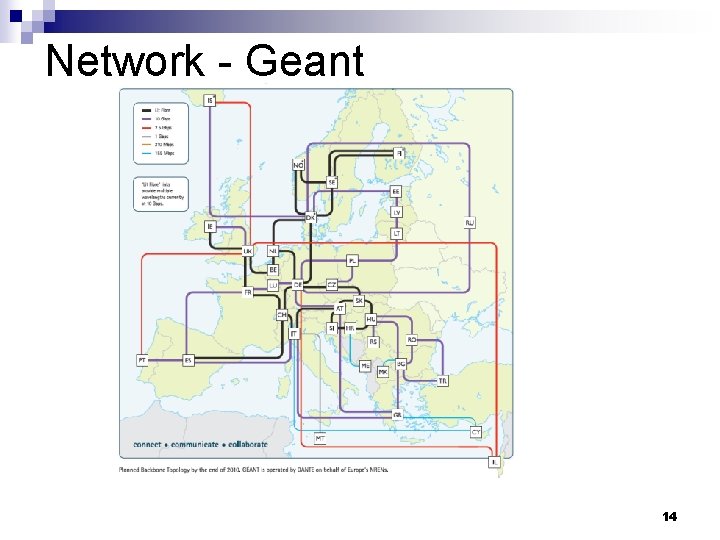

Network - GÉANT

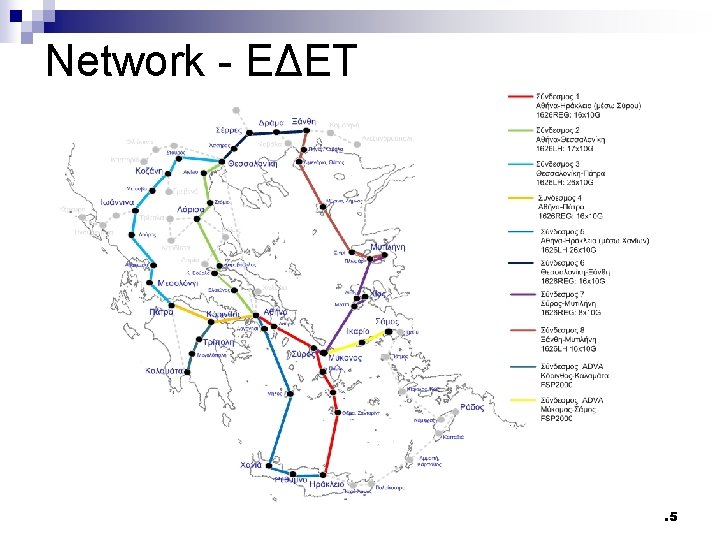

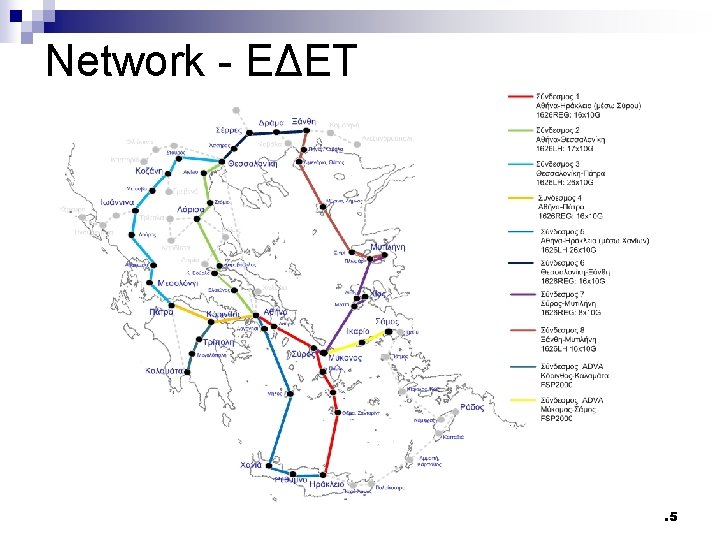

Network - GRNET (ΕΔΕΤ)

Volunteer Computing – Goodwill Grids

- BOINC (Berkeley Open Infrastructure for Network Computing) is an open-source middleware platform for computing with volunteered resources.
- SETI@home is a scientific experiment that uses Internet-connected computers in the Search for Extraterrestrial Intelligence (SETI).
- Folding@home is a distributed computing project for disease research that simulates protein folding, computational drug design, and other types of molecular dynamics.
- "Desktop grid" computing, which uses desktop PCs within an organization, is superficially similar to volunteer computing, but it differs significantly because it has accountability and lacks anonymity.

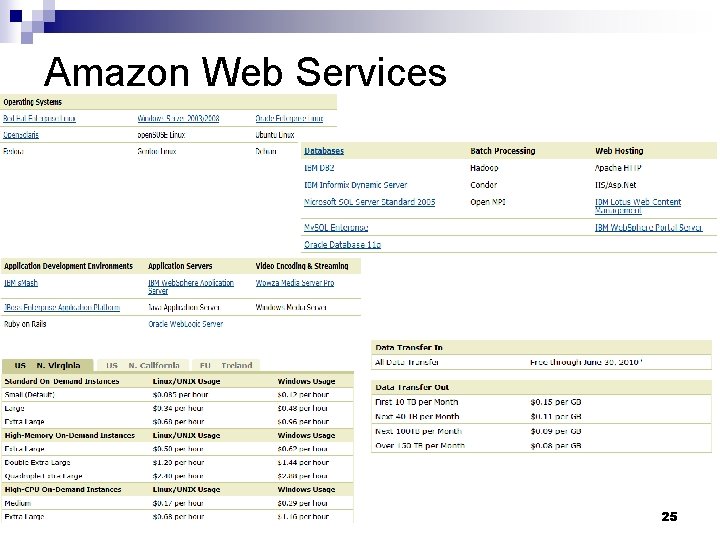

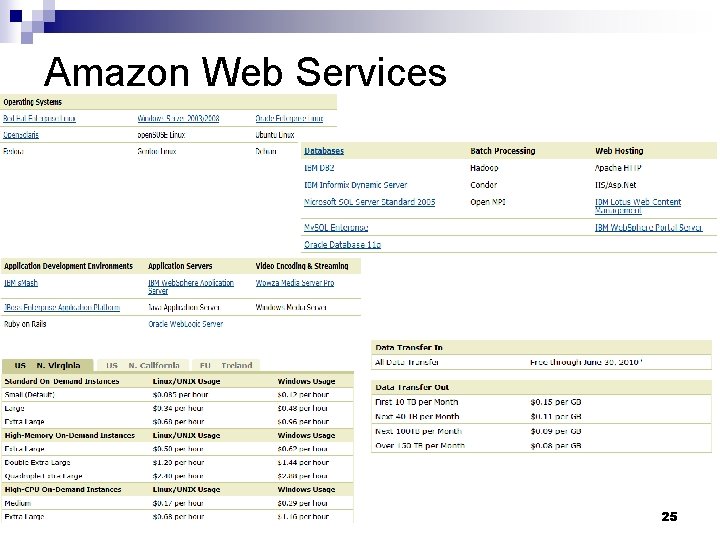

Amazon Web Services

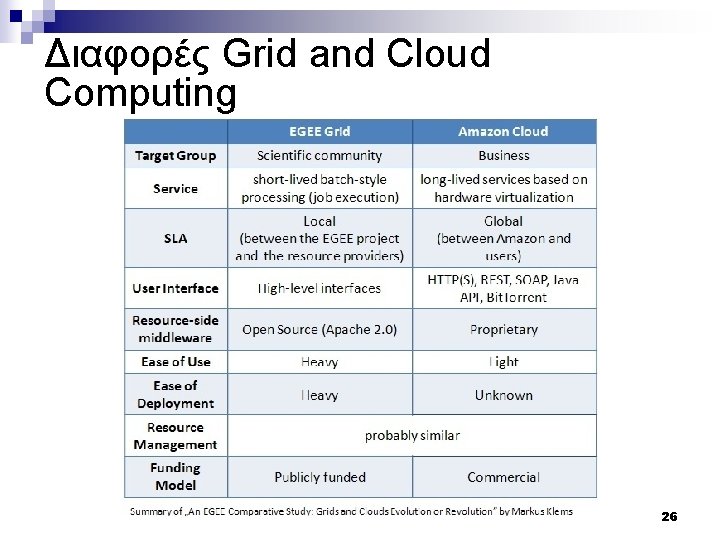

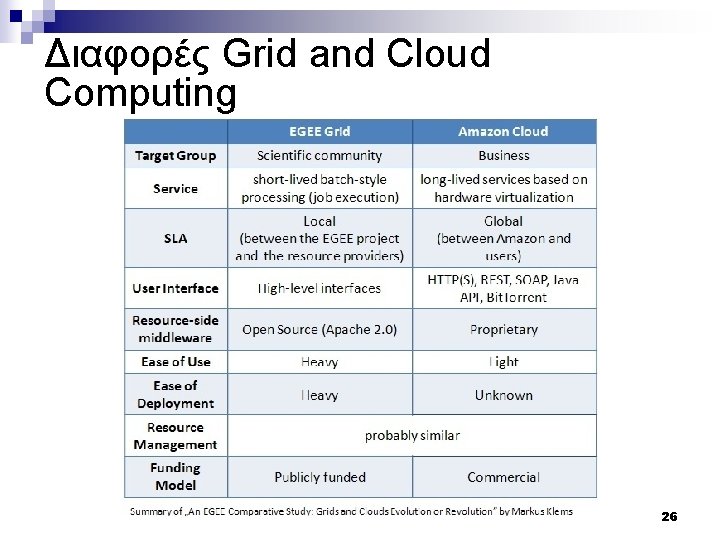

Differences between Grid and Cloud Computing

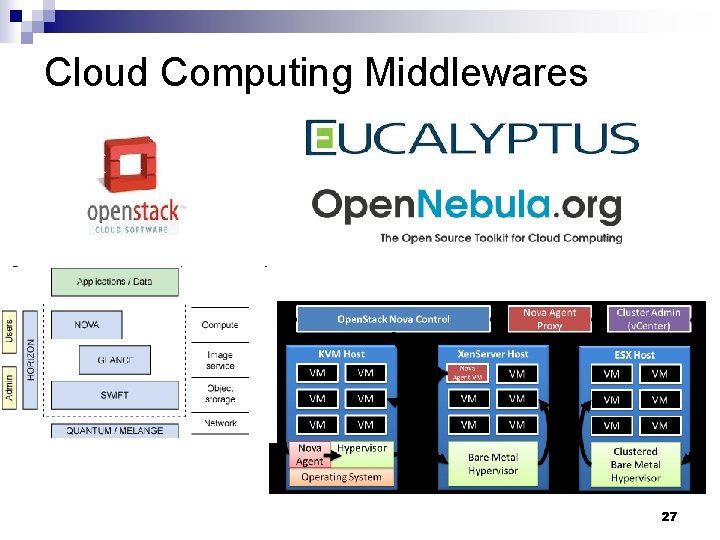

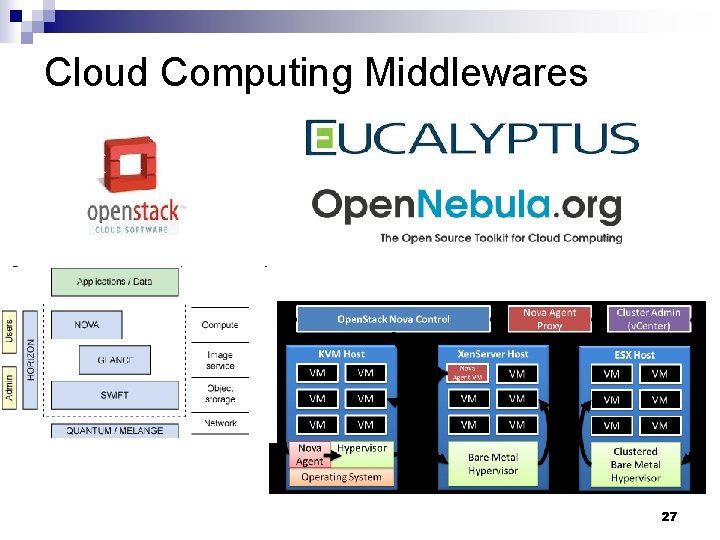

Cloud Computing Middleware

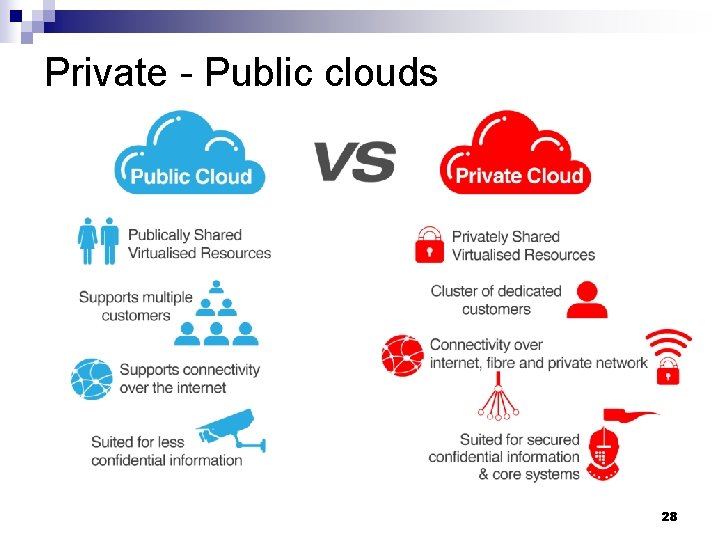

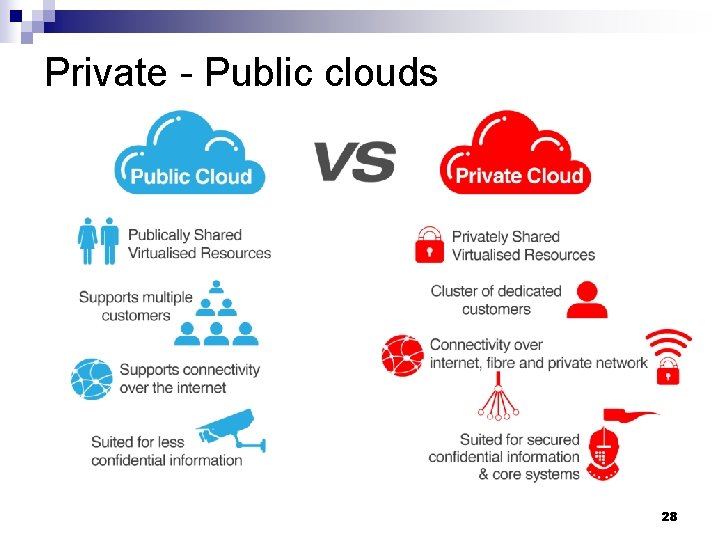

Private - Public clouds

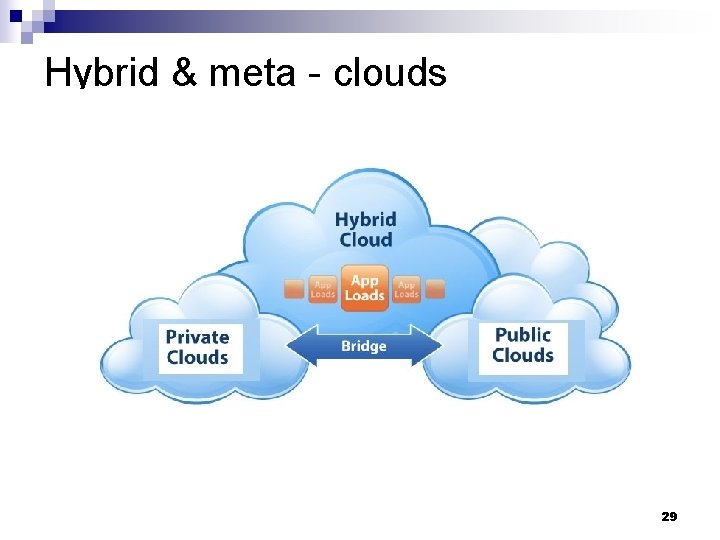

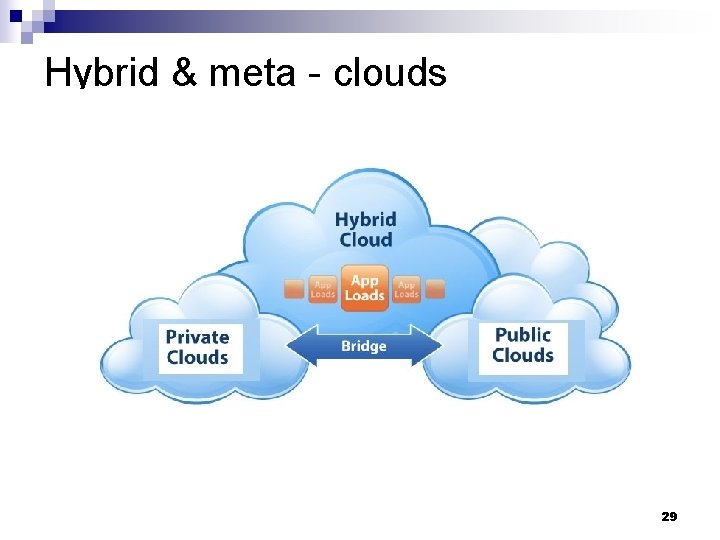

Hybrid & meta-clouds

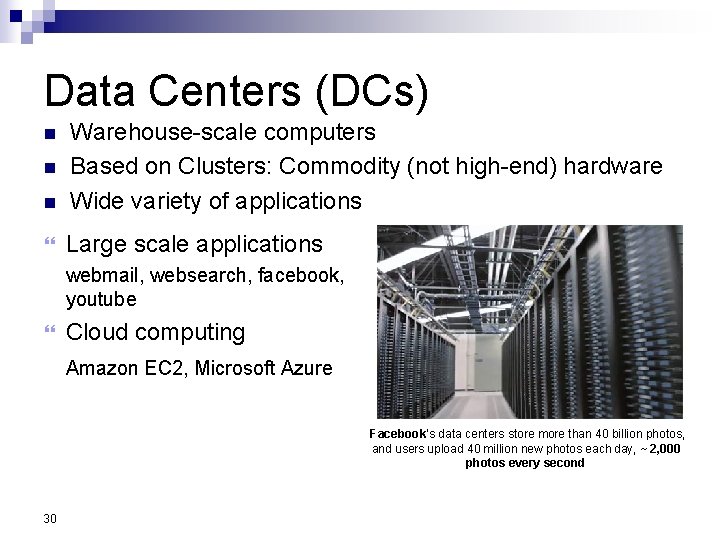

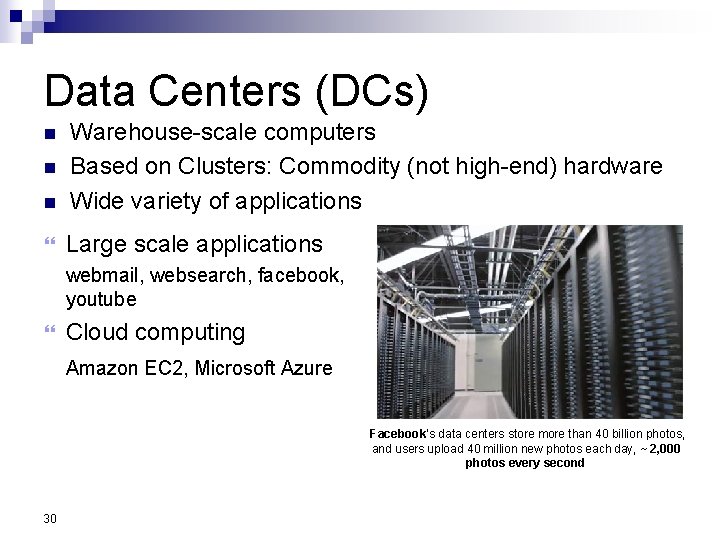

Data Centers (DCs)

- Warehouse-scale computers
  - Based on clusters of commodity (not high-end) hardware
  - Wide variety of applications
- Large-scale applications
  - Webmail, web search, Facebook, YouTube
  - Cloud computing: Amazon EC2, Microsoft Azure
- Facebook's data centers store more than 40 billion photos, and users upload 40 million new photos each day, ~2,000 photos every second.

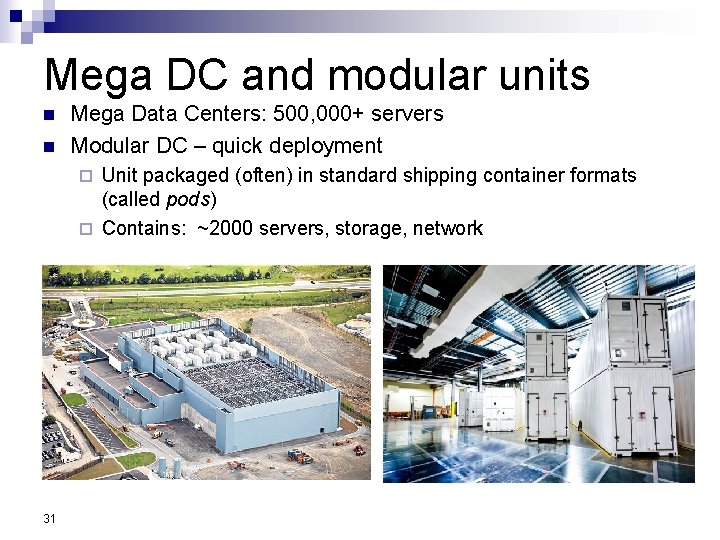

Mega DC and modular units

- Mega data centers: 500,000+ servers
- Modular DC: quick deployment
  - Unit packaged (often) in standard shipping container formats (called pods)
  - Contains ~2,000 servers, storage, network
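To get a feel for the scale, the two figures on this slide can be combined in a short Python sketch. It assumes, purely for illustration, that a mega data center is built entirely from pods of the quoted size; the slide does not claim this, and the function name `pods_needed` is hypothetical.

```python
import math

# Figures quoted on the slide (both are approximate).
MEGA_DC_SERVERS = 500_000   # "500,000+ servers"
SERVERS_PER_POD = 2_000     # "~2,000 servers" per container unit


def pods_needed(total_servers: int, servers_per_pod: int) -> int:
    """Number of pods required to host total_servers.

    Assumption (not from the slide): every server lives in a pod and
    all pods have the same size.
    """
    if servers_per_pod <= 0:
        raise ValueError("servers_per_pod must be positive")
    return math.ceil(total_servers / servers_per_pod)


if __name__ == "__main__":
    print(pods_needed(MEGA_DC_SERVERS, SERVERS_PER_POD))
```

Under these assumptions a 500,000-server facility corresponds to a few hundred container units.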

Supercomputers (SCs)

- Special-purpose high-end systems
- High Performance Computing (HPC) applications: quantum physics, weather forecasting, climate research, oil and gas exploration, molecular modeling, physical simulations
- Dedicated execution mode vs. virtualized services in the Cloud

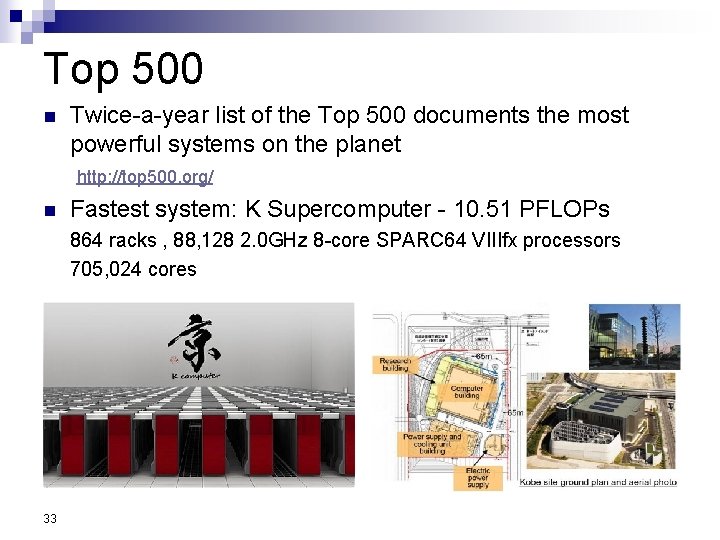

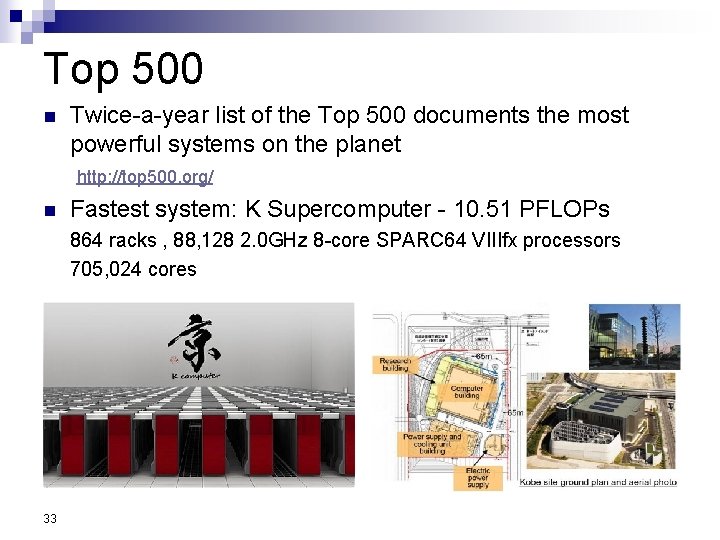

Top 500

- The Top 500 list, published twice a year, documents the most powerful systems on the planet: http://top500.org/
- Fastest system: K Supercomputer, 10.51 PFLOPS
  - 864 racks
  - 88,128 2.0 GHz 8-core SPARC64 VIIIfx processors
  - 705,024 cores
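The core count on this slide follows from the processor count, and the quoted 10.51 PFLOPS can be compared against a theoretical peak. The Python sketch below checks both. The value of 8 floating-point operations per core per cycle is an assumption that is not stated on the slide, and the function names are illustrative.

```python
# Figures quoted on the slide for the K Supercomputer.
PROCESSORS = 88_128
CORES_PER_PROCESSOR = 8
CLOCK_HZ = 2.0e9
MEASURED_PFLOPS = 10.51

# Assumption (not on the slide): each core retires 8 floating-point
# operations per clock cycle.
FLOPS_PER_CYCLE = 8


def total_cores(processors: int, cores_per_processor: int) -> int:
    """Total core count of the machine."""
    return processors * cores_per_processor


def peak_pflops(cores: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak in PFLOPS: cores x clock x flops per cycle."""
    return cores * clock_hz * flops_per_cycle / 1e15


if __name__ == "__main__":
    cores = total_cores(PROCESSORS, CORES_PER_PROCESSOR)
    peak = peak_pflops(cores, CLOCK_HZ, FLOPS_PER_CYCLE)
    print(f"cores: {cores:,}")
    print(f"theoretical peak: {peak:.2f} PFLOPS")
    print(f"measured / peak: {MEASURED_PFLOPS / peak:.1%}")
```

The gap between the measured figure and the computed peak is the usual difference between sustained benchmark performance and theoretical peak.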

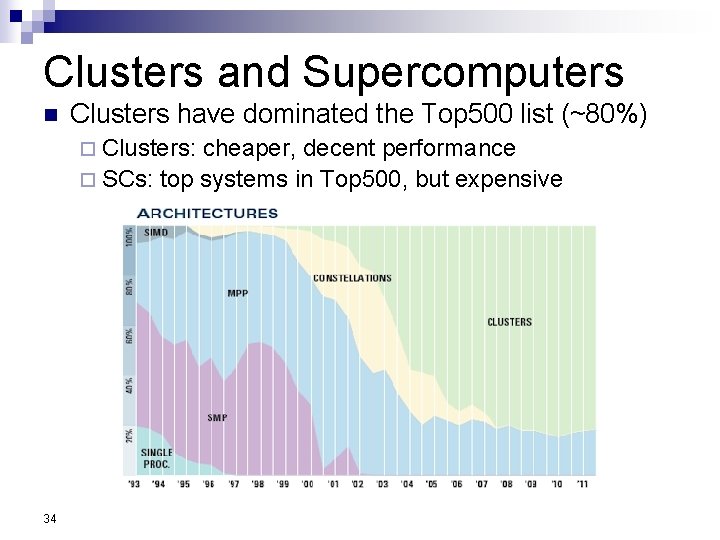

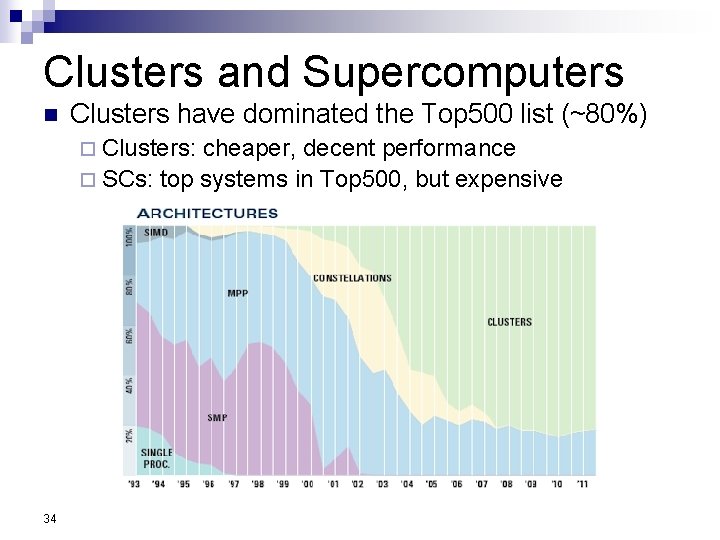

Clusters and Supercomputers

- Clusters have dominated the Top 500 list (~80%)
  - Clusters: cheaper, decent performance
  - SCs: top systems in Top 500, but expensive

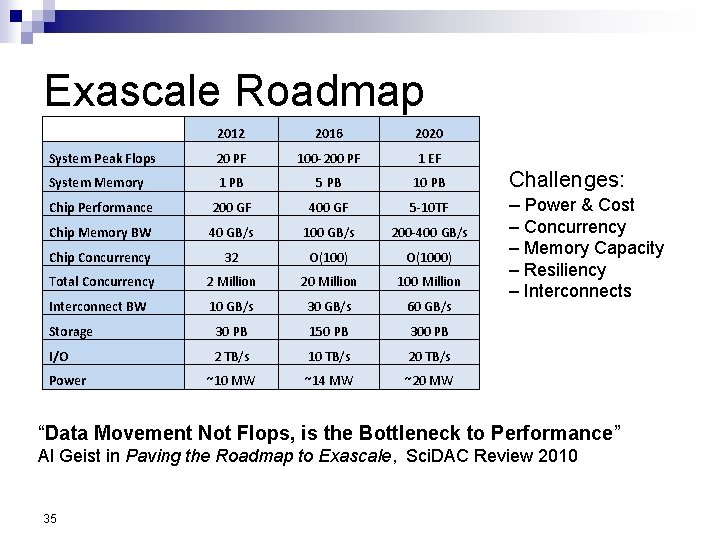

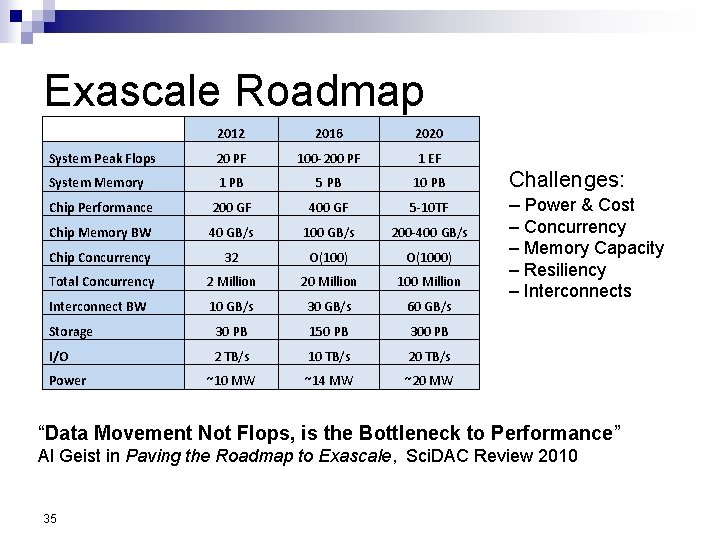

Exascale Roadmap

| | 2012 | 2016 | 2020 |
|---|---|---|---|
| System Peak Flops | 20 PF | 100-200 PF | 1 EF |
| System Memory | 1 PB | 5 PB | 10 PB |
| Chip Performance | 200 GF | 400 GF | 5-10 TF |
| Chip Memory BW | 40 GB/s | 100 GB/s | 200-400 GB/s |
| Chip Concurrency | 32 | O(100) | O(1000) |
| Total Concurrency | 2 Million | 20 Million | 100 Million |
| Interconnect BW | 10 GB/s | 30 GB/s | 60 GB/s |
| Storage | 30 PB | 150 PB | 300 PB |
| I/O | 2 TB/s | 10 TB/s | 20 TB/s |
| Power | ~10 MW | ~14 MW | ~20 MW |

Challenges:

- Power & Cost
- Concurrency
- Memory Capacity
- Resiliency
- Interconnects

"Data Movement Not Flops, is the Bottleneck to Performance" (Al Geist, Paving the Roadmap to Exascale, SciDAC Review, 2010)
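Two ratios derived from the roadmap figures help explain why power and memory capacity are listed among the challenges. The Python sketch below computes memory per unit of peak compute and the required energy efficiency for the 2012 and 2020 columns. The dictionary layout and function names are illustrative, and the approximate power values are treated as exact.

```python
# Roadmap values for the 2012 and 2020 columns, in base units.
# The "~" power figures are treated as exact for this sketch.
ROADMAP = {
    2012: {"peak_flops": 20e15, "memory_bytes": 1e15, "power_watts": 10e6},
    2020: {"peak_flops": 1e18, "memory_bytes": 10e15, "power_watts": 20e6},
}


def bytes_per_flop(entry: dict) -> float:
    """System memory available per unit of peak floating-point rate."""
    return entry["memory_bytes"] / entry["peak_flops"]


def gflops_per_watt(entry: dict) -> float:
    """Energy efficiency needed to reach the peak within the power budget."""
    return entry["peak_flops"] / 1e9 / entry["power_watts"]


if __name__ == "__main__":
    for year, entry in sorted(ROADMAP.items()):
        print(
            f"{year}: {bytes_per_flop(entry):.3f} bytes/flop, "
            f"{gflops_per_watt(entry):.1f} GFLOPS/W"
        )
```

Peak compute grows 50x between the two columns while memory grows 10x and power only 2x, so memory per flop shrinks and the required efficiency per watt rises sharply.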

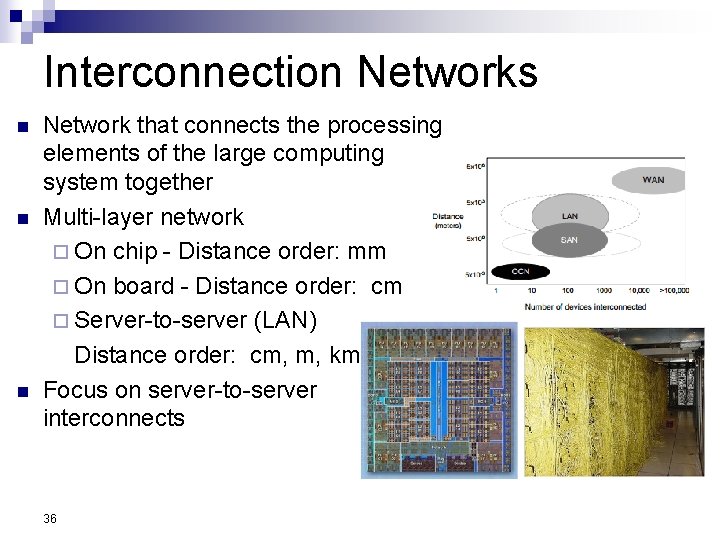

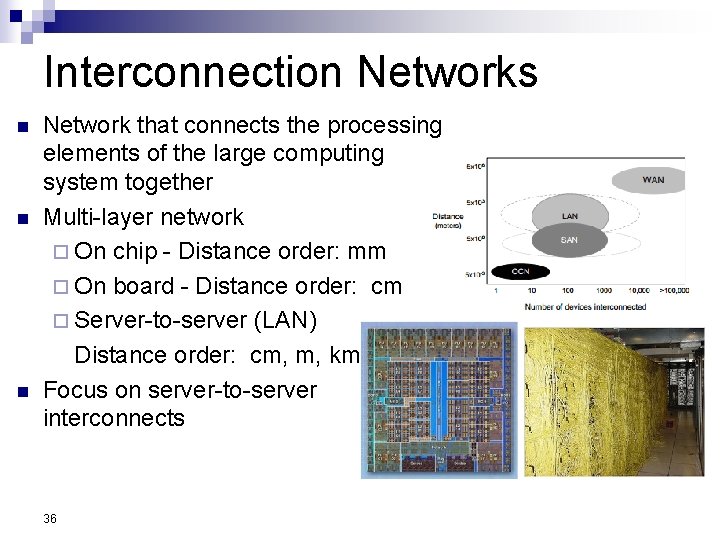

Interconnection Networks

- Network that connects the processing elements of a large computing system
- Multi-layer network
  - On chip: distances on the order of mm
  - On board: distances on the order of cm
  - Server-to-server (LAN): distances on the order of cm, m, km
- Focus on server-to-server interconnects

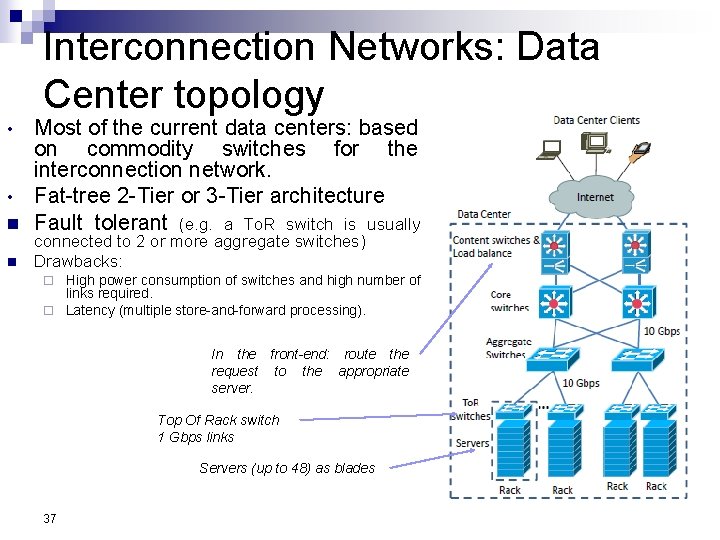

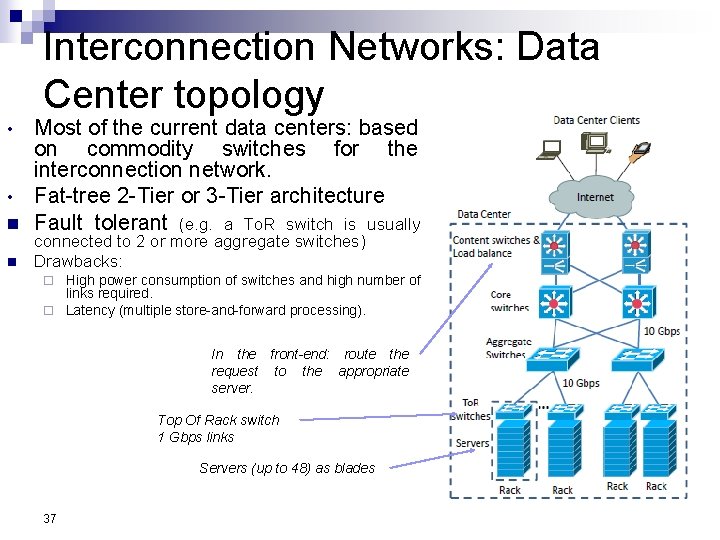

Interconnection Networks: Data Center topology

- Most current data centers are based on commodity switches for the interconnection network.
- Fat-tree 2-tier or 3-tier architecture
- Fault tolerant (e.g. a ToR switch is usually connected to 2 or more aggregate switches)
- Drawbacks:
  - High power consumption of switches and high number of links required
  - Latency (multiple store-and-forward processing)
- In the front-end: route the request to the appropriate server.

Figure labels: Top of Rack (ToR) switch, 1 Gbps links, servers (up to 48) as blades.
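One way to reason about a rack in this topology is its oversubscription ratio: the server-facing bandwidth of the ToR switch divided by its uplink bandwidth. The Python sketch below uses the slide's 48 servers and 1 Gbps links. The uplink speed of 10 Gbps and the count of 2 uplinks are assumptions chosen for illustration only, and the function name is hypothetical.

```python
def oversubscription_ratio(
    servers: int,
    server_link_gbps: float,
    uplinks: int,
    uplink_gbps: float,
) -> float:
    """Ratio of server-facing bandwidth to uplink bandwidth at a ToR switch.

    A ratio of 1.0 means every server can send at full rate towards the
    aggregation layer at the same time; larger values mean contention.
    """
    if uplinks <= 0 or uplink_gbps <= 0:
        raise ValueError("a ToR switch needs at least one working uplink")
    downstream = servers * server_link_gbps
    upstream = uplinks * uplink_gbps
    return downstream / upstream


if __name__ == "__main__":
    # 48 servers and 1 Gbps links come from the slide; the two 10 Gbps
    # uplinks are an assumption, not a figure from the slide.
    ratio = oversubscription_ratio(48, 1.0, uplinks=2, uplink_gbps=10.0)
    print(f"oversubscription: {ratio:.1f}:1")
```

The same function also shows the cost of losing one of the redundant uplinks: with a single uplink left, the ratio doubles.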

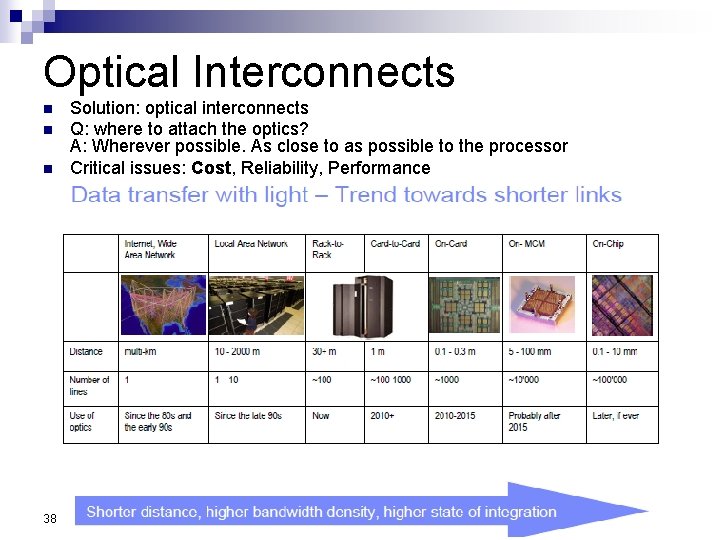

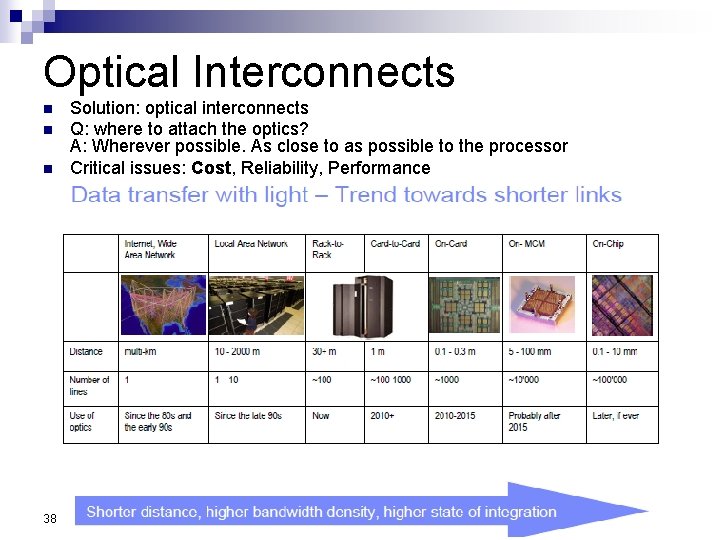

Optical Interconnects

- Solution: optical interconnects
- Q: Where to attach the optics? A: Wherever possible, as close as possible to the processor.
- Critical issues: cost, reliability, performance

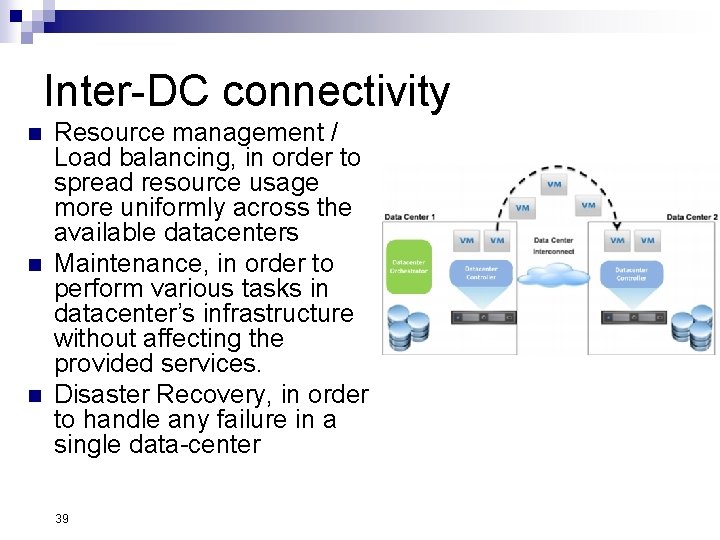

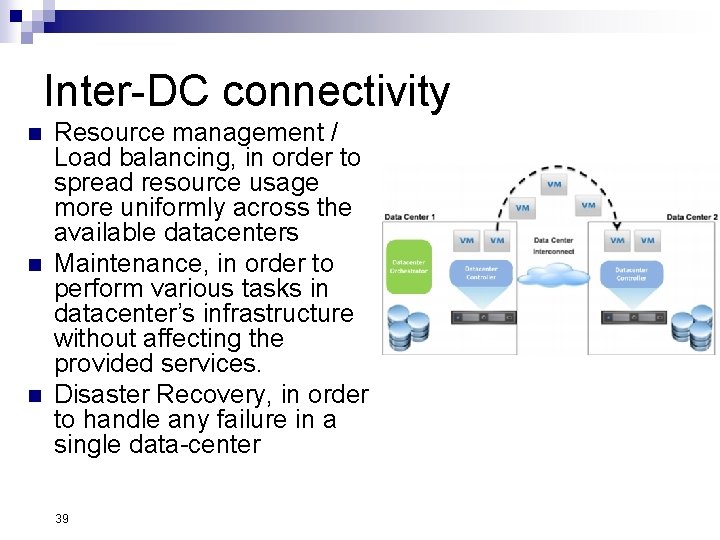

Inter-DC connectivity

- Resource management / load balancing: spread resource usage more uniformly across the available data centers.
- Maintenance: perform various tasks on a data center's infrastructure without affecting the provided services.
- Disaster recovery: handle any failure in a single data center.
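The load balancing and disaster recovery goals above can be illustrated with a minimal greedy placement sketch in Python. It is not a method described in the slides: the function `place_jobs`, the data center names, and the abstract capacity units are all hypothetical. Each job goes to the data center that would end up with the lowest utilization, and data centers marked unavailable (for maintenance or after a failure) are skipped.

```python
def place_jobs(capacities, demands, unavailable=frozenset()):
    """Greedily assign each demand to the least-utilized available site.

    capacities:  mapping of site name -> capacity (abstract units)
    demands:     iterable of job sizes in the same units
    unavailable: sites under maintenance or failed
    Returns (placement list, resulting load per available site).
    """
    load = {dc: 0.0 for dc in capacities if dc not in unavailable}
    if not load:
        raise ValueError("no data center is available")
    placement = []
    for demand in demands:
        # Pick the site whose utilization after placement would be lowest.
        target = min(load, key=lambda dc: (load[dc] + demand) / capacities[dc])
        load[target] += demand
        placement.append(target)
    return placement, load


if __name__ == "__main__":
    sites = {"dc-a": 100, "dc-b": 100}  # hypothetical sites and capacities
    jobs = [10, 10, 10, 10]
    print(place_jobs(sites, jobs))
    # Same jobs while dc-a is down: everything lands on dc-b.
    print(place_jobs(sites, jobs, unavailable={"dc-a"}))
```

A real inter-DC scheduler would also weigh network cost and data locality between sites; this sketch considers utilization only.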