PIPER Performance Insights for Programmers and Exascale Runtimes

- Slides: 24

X-Stack 2 PI Meeting @ LBL, Berkeley, CA – April 6-7, 2016

- Martin Schulz (lead PI, LLNL)
- Todd Gamblin (Co-PI and presenter, LLNL)
- Co-PIs: Peer-Timo Bremer (LLNL), Jeff Hollingsworth (UMD), John Mellor-Crummey (Rice), Bart Miller (UW), Valerio Pascucci (Utah), Nathan Tallent (PNNL)

The PIPER Team

- Lawrence Livermore National Laboratory: Martin Schulz (lead PI), Peer-Timo Bremer, Todd Gamblin, Abhinav Bhatele, David Boehme
- Pacific Northwest National Laboratory: Nathan Tallent
- Rice University: John Mellor-Crummey, Mark Krentel, Laksono Adhianto
- University of Maryland: Jeff Hollingsworth, Ray Chen
- University of Utah: Valerio Pascucci, Yarden Livnat
- University of Wisconsin: Bart Miller, Bill Williams

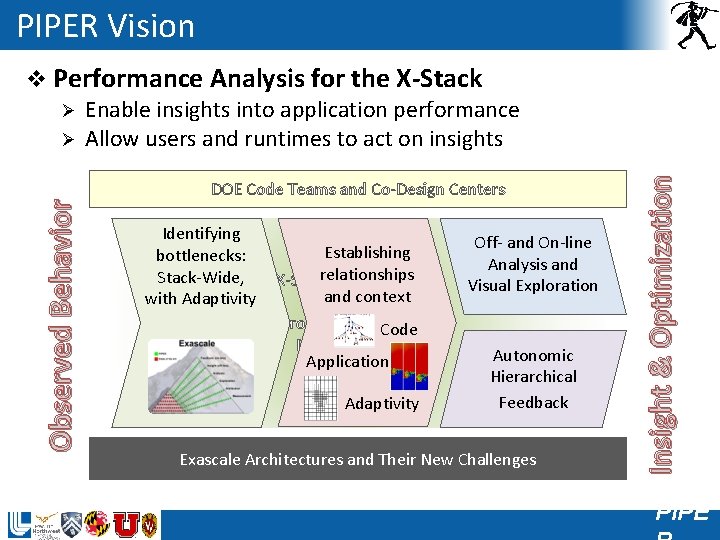

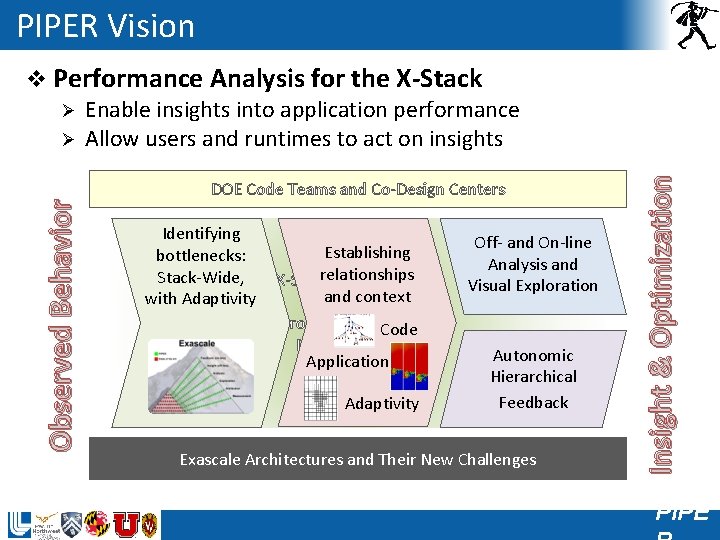

PIPER Vision

- Performance Analysis for the X-Stack
  - Enable insights into application performance
  - Allow users and runtimes to act on insights
- Diagram labels
  - DOE Code Teams and Co-Design Centers
  - X-Stack Software Stack: Programming Models, Runtime Systems, Application Code, Exascale OS
  - Exascale Architectures and Their New Challenges
  - Identifying bottlenecks: stack-wide, with adaptivity
  - Establishing relationships and context
  - Off- and on-line analysis and visual exploration
  - Autonomic hierarchical feedback
  - Observed behavior; insight & optimization

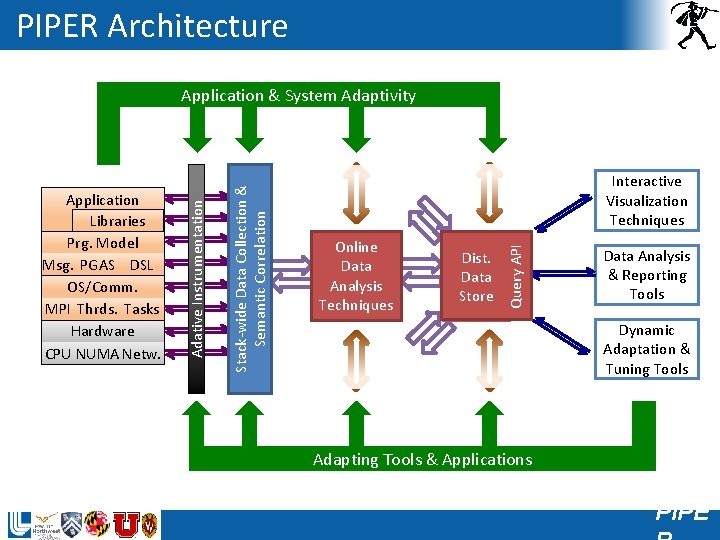

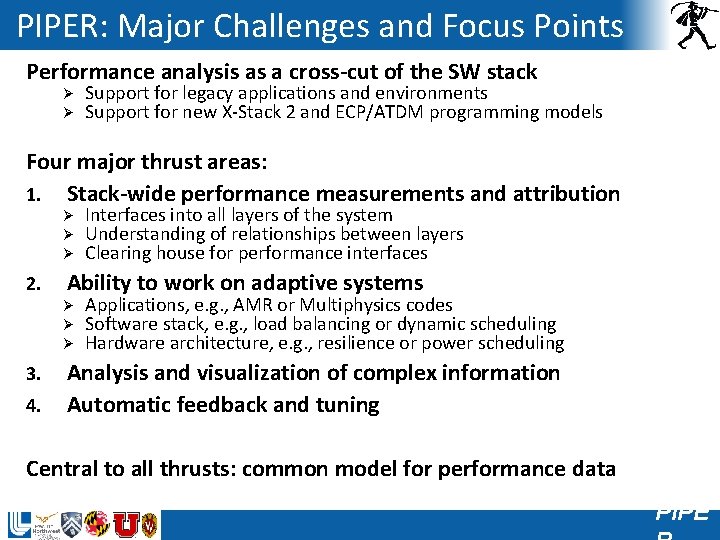

PIPER: Major Challenges and Focus Points

- Performance analysis as a cross-cut of the SW stack
  - Support for legacy applications and environments
  - Support for new X-Stack 2 and ECP/ATDM programming models
- Four major thrust areas:
  1. Stack-wide performance measurements and attribution
     - Interfaces into all layers of the system
     - Understanding of relationships between layers
     - Clearing house for performance interfaces
  2. Ability to work on adaptive systems
     - Applications, e.g., AMR or multiphysics codes
     - Software stack, e.g., load balancing or dynamic scheduling
     - Hardware architecture, e.g., resilience or power scheduling
  3. Analysis and visualization of complex information
  4. Automatic feedback and tuning
- Central to all thrusts: common model for performance data

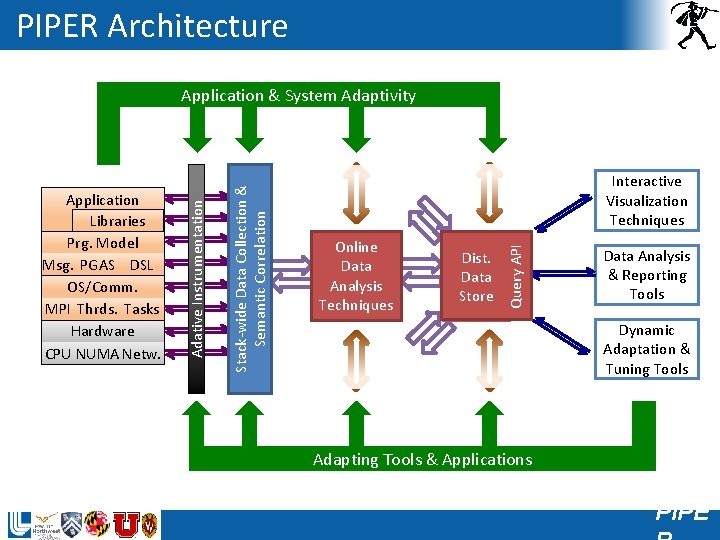

PIPER Architecture

- Application & System Adaptivity
- Adaptive Instrumentation
- Stack-wide Data Collection & Semantic Correlation, covering these layers:
  - Application
  - Libraries
  - Prg. Model: Msg., PGAS, DSL
  - OS/Comm.: MPI, Thrds., Tasks
  - Hardware: CPU, NUMA, Netw.
- Dist. Data Store with Query API
- Online Data Analysis Techniques
- Interactive Visualization Techniques
- Data Analysis & Reporting Tools
- Dynamic Adaptation & Tuning Tools
- Adapting Tools & Applications

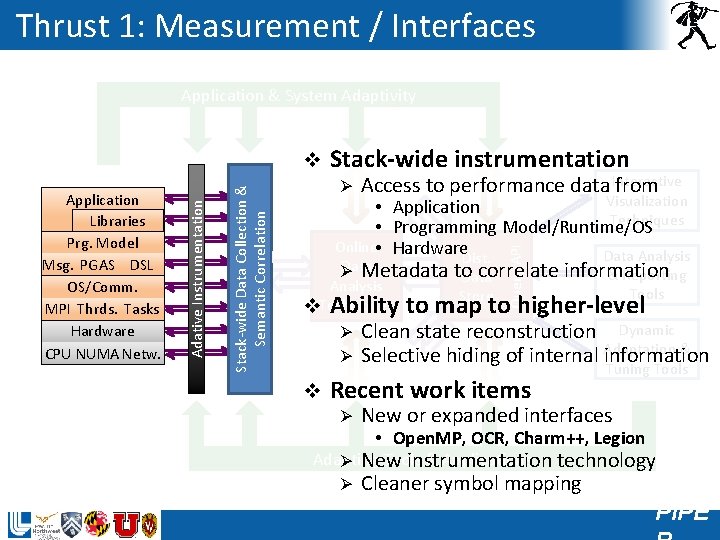

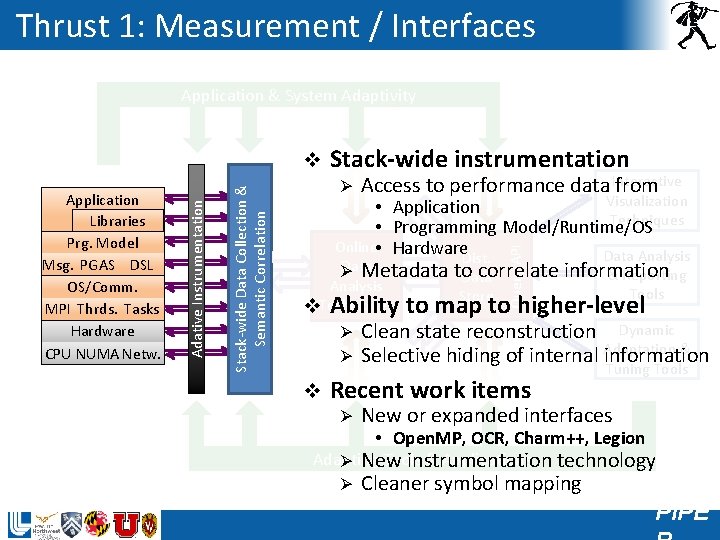

Thrust 1: Measurement / Interfaces

- Stack-wide instrumentation
  - Access to performance data from
    - Application
    - Programming Model/Runtime/OS
    - Hardware
  - Metadata to correlate information
- Ability to map to higher-level abstractions
  - Clean state reconstruction
  - Selective hiding of internal information
- Recent work items
  - New or expanded interfaces: OpenMP, OCR, Charm++, Legion
  - New instrumentation technology
  - Cleaner symbol mapping

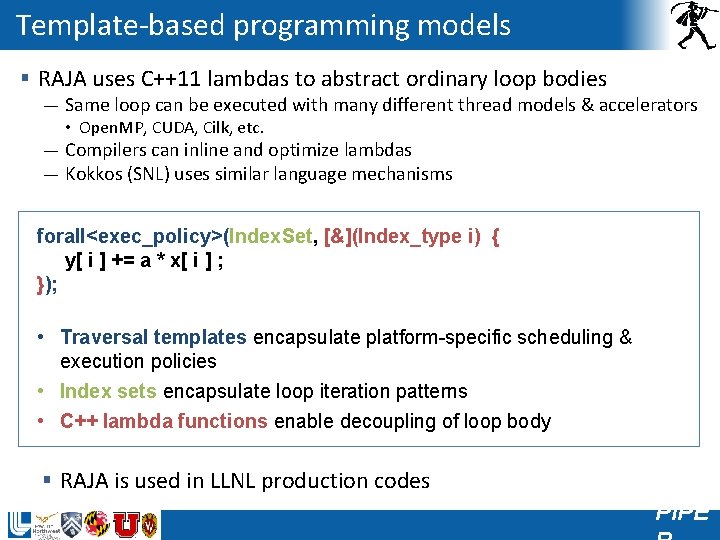

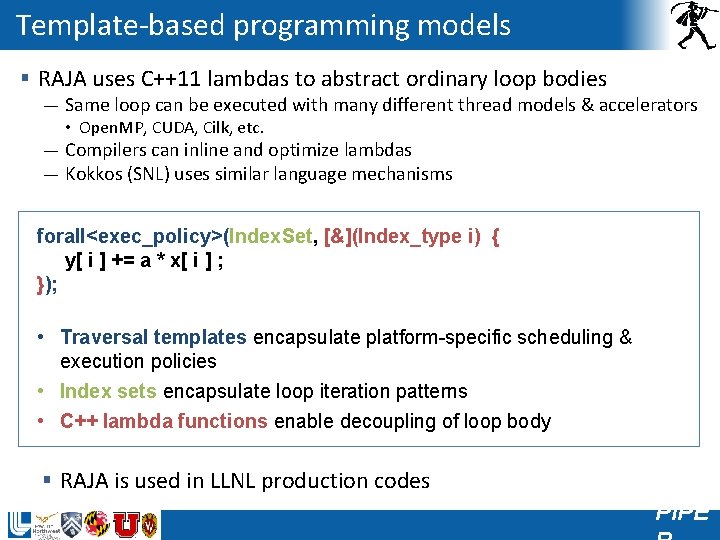

Template-based programming models

- RAJA uses C++11 lambdas to abstract ordinary loop bodies
  - Same loop can be executed with many different thread models & accelerators (OpenMP, CUDA, Cilk, etc.)
  - Compilers can inline and optimize lambdas
  - Kokkos (SNL) uses similar language mechanisms
- Example: `forall<exec_policy>(IndexSet, [&](Index_type i) { y[i] += a * x[i]; });`
  - Traversal templates encapsulate platform-specific scheduling & execution policies
  - Index sets encapsulate loop iteration patterns
  - C++ lambda functions enable decoupling of loop body
- RAJA is used in LLNL production codes
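The `forall` pattern on this slide can be sketched in plain C++. This is a minimal illustration, not RAJA's actual implementation or API: the policy tags `seq_exec` and `reverse_exec`, the `RangeSegment` index set, and the `daxpy` helper are hypothetical names chosen for the example. It shows how a traversal template selects the iteration strategy at compile time while the loop body stays unchanged.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical execution-policy tags (stand-ins, not RAJA's policy types).
struct seq_exec {};      // iterate in ascending index order
struct reverse_exec {};  // iterate in descending order, as "a different schedule"

// Hypothetical index set: the half-open range [begin, end).
struct RangeSegment {
    std::size_t begin;
    std::size_t end;
};

// Traversal for the sequential policy.
template <typename Body>
void forall_impl(seq_exec, const RangeSegment& r, Body&& body) {
    for (std::size_t i = r.begin; i < r.end; ++i) body(i);
}

// Traversal for the reverse policy; the loop body is untouched.
template <typename Body>
void forall_impl(reverse_exec, const RangeSegment& r, Body&& body) {
    for (std::size_t i = r.end; i > r.begin; --i) body(i - 1);
}

// Front end: the policy is a template parameter, dispatched by tag.
template <typename ExecPolicy, typename Body>
void forall(const RangeSegment& r, Body&& body) {
    forall_impl(ExecPolicy{}, r, std::forward<Body>(body));
}

// The slide's loop body, y[i] += a * x[i], written once for any policy.
template <typename ExecPolicy>
std::vector<double> daxpy(double a, const std::vector<double>& x,
                          std::vector<double> y) {
    assert(y.size() >= x.size());
    forall<ExecPolicy>(RangeSegment{0, x.size()},
                       [&](std::size_t i) { y[i] += a * x[i]; });
    return y;
}
```

Calling `daxpy<seq_exec>(...)` and `daxpy<reverse_exec>(...)` on the same inputs yields the same result, because only the traversal changed. A real backend would swap in a threaded or accelerator traversal behind the same tag-dispatch interface.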

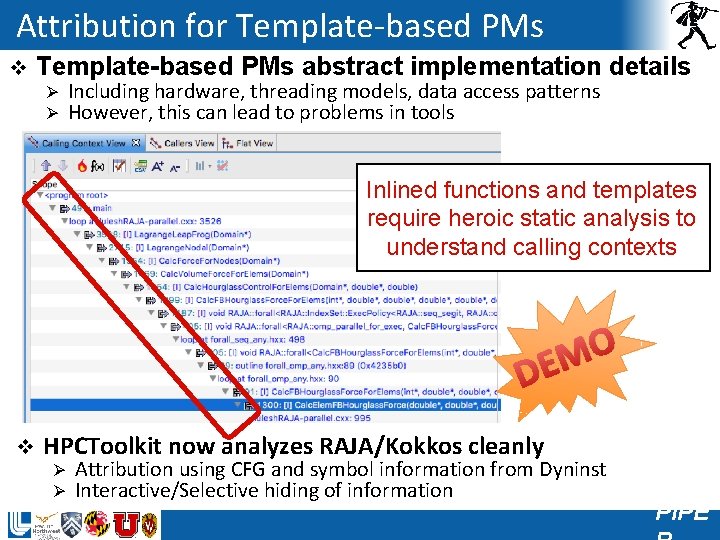

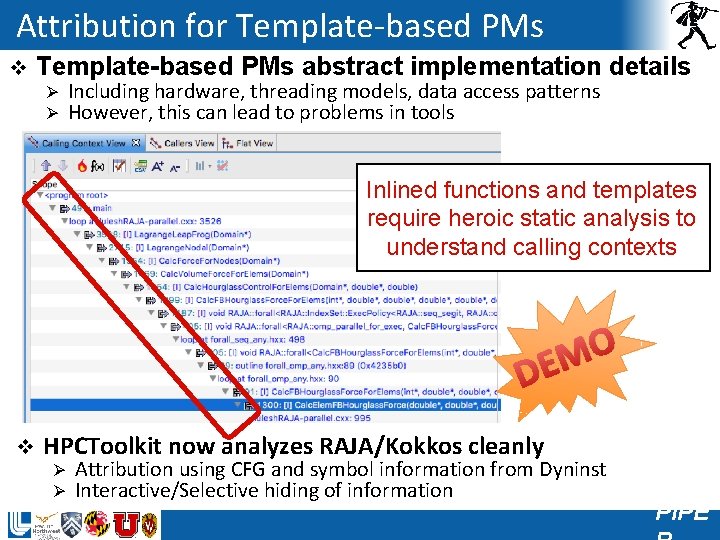

Attribution for Template-based PMs

- Template-based PMs abstract implementation details
  - Including hardware, threading models, data access patterns
  - However, this can lead to problems in tools
  - Inlined functions and templates require heroic static analysis to understand calling contexts
- HPCToolkit now analyzes RAJA/Kokkos cleanly (demo)
  - Attribution using CFG and symbol information from Dyninst
  - Interactive/selective hiding of information

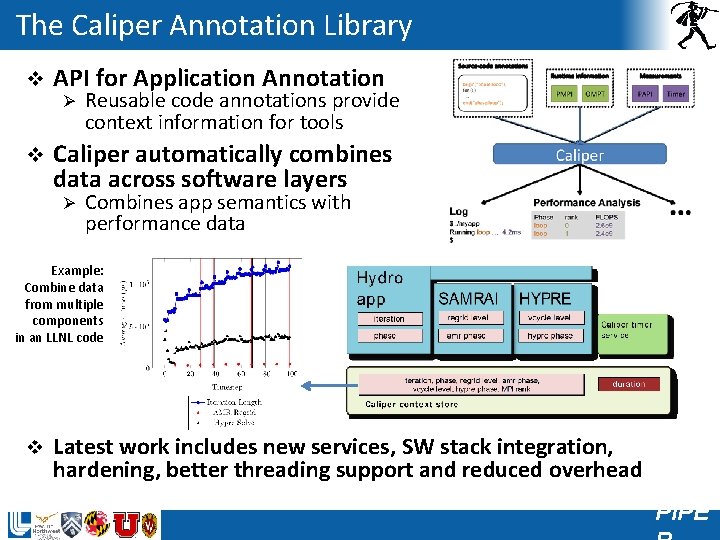

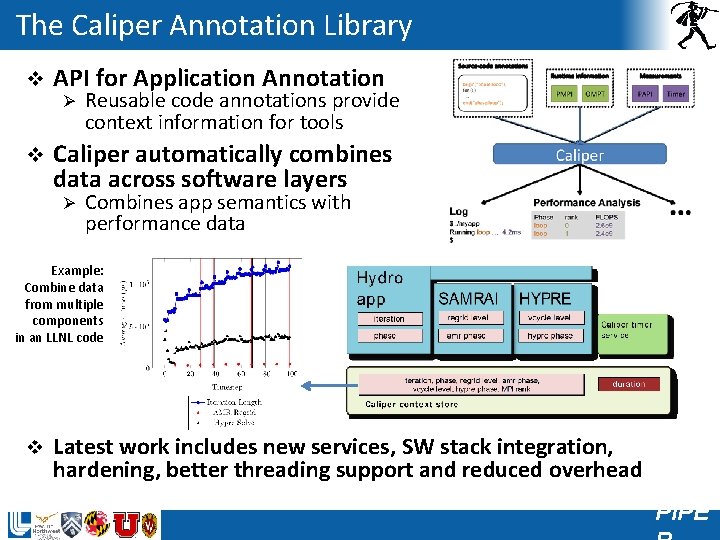

The Caliper Annotation Library

- API for application annotation
  - Reusable code annotations provide context information for tools
- Caliper automatically combines data across software layers
  - Combines app semantics with performance data
  - Example: combine data from multiple components in an LLNL code
- Latest work includes new services, SW stack integration, hardening, better threading support, and reduced overhead
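The idea of combining annotations from several software layers into one context can be sketched as follows. This is a toy model, not Caliper's API: `Context`, `ScopedAnnotation`, `Sample`, and `run_annotated` are hypothetical names, and the attribute names and numeric values are invented for illustration. Each layer pushes an attribute/value pair on entry and pops it on exit; a measurement taken at any point is tagged with the full stack of active annotations.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical shared context: a stack of active (attribute, value) pairs.
class Context {
public:
    void begin(const std::string& attr, const std::string& value) {
        stack_.emplace_back(attr, value);
    }
    void end() {
        if (!stack_.empty()) stack_.pop_back();
    }
    // Render the active annotations, outermost first, as "a=b/c=d".
    std::string snapshot() const {
        std::string out;
        for (const auto& entry : stack_) {
            if (!out.empty()) out += '/';
            out += entry.first + '=' + entry.second;
        }
        return out;
    }

private:
    std::vector<std::pair<std::string, std::string>> stack_;
};

// RAII helper: the annotation is active for the lifetime of the object.
class ScopedAnnotation {
public:
    ScopedAnnotation(Context& ctx, const std::string& attr,
                     const std::string& value)
        : ctx_(ctx) {
        ctx_.begin(attr, value);
    }
    ~ScopedAnnotation() { ctx_.end(); }
    ScopedAnnotation(const ScopedAnnotation&) = delete;
    ScopedAnnotation& operator=(const ScopedAnnotation&) = delete;

private:
    Context& ctx_;
};

// A measurement tagged with whatever context was active when it was taken.
struct Sample {
    std::string context;
    double value;
};

// Application code annotates a phase; a library it calls annotates a kernel.
std::vector<Sample> run_annotated(Context& ctx) {
    std::vector<Sample> samples;
    ScopedAnnotation app(ctx, "phase", "solve");       // application layer
    samples.push_back({ctx.snapshot(), 1.0});
    {
        ScopedAnnotation lib(ctx, "kernel", "daxpy");  // library layer
        samples.push_back({ctx.snapshot(), 2.0});
    }
    samples.push_back({ctx.snapshot(), 3.0});
    return samples;
}
```

The sample recorded inside the library call carries both the application's phase and the library's kernel name, which is the cross-layer correlation the slide describes. Neither layer needs to know about the other's annotations.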

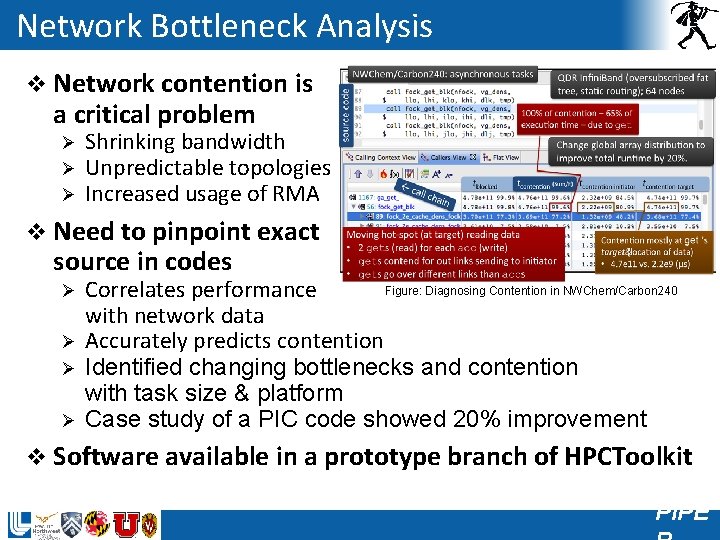

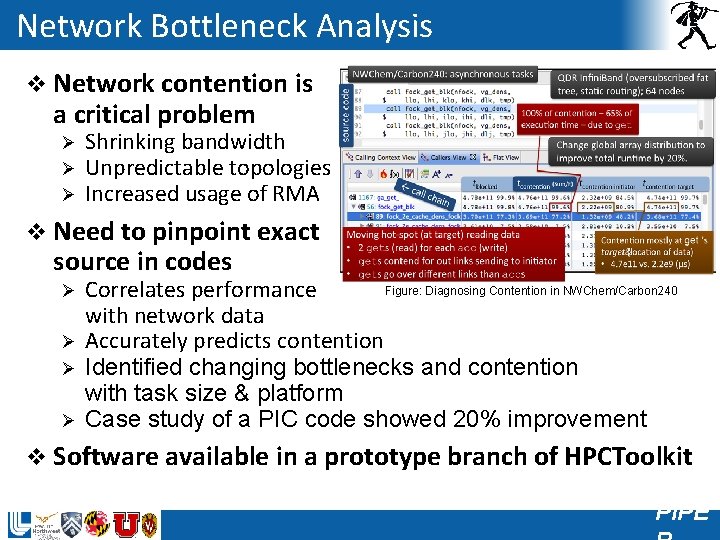

Network Bottleneck Analysis

- Network contention is a critical problem
  - Shrinking bandwidth
  - Unpredictable topologies
  - Increased usage of RMA
- Need to pinpoint exact source in codes
  - Correlates performance with network data
  - Accurately predicts contention
  - Identified changing bottlenecks and contention with task size & platform
  - Case study of a PIC code showed 20% improvement
- Software available in a prototype branch of HPCToolkit
- Figure: Diagnosing Contention in NWChem/Carbon 240

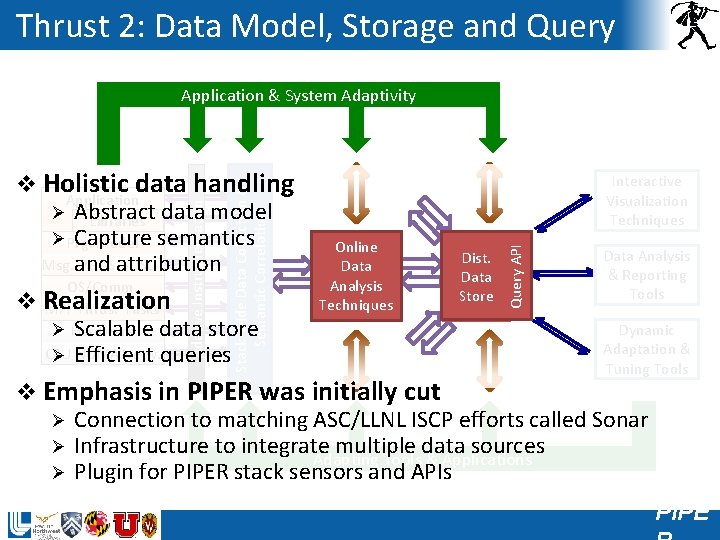

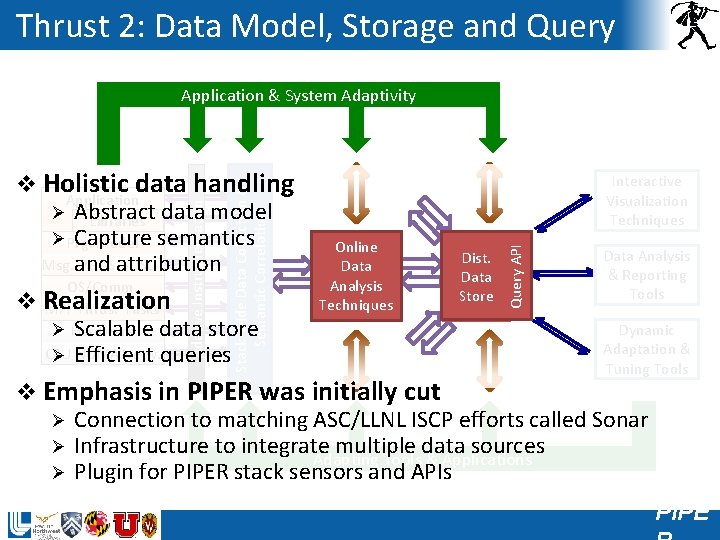

Thrust 2: Data Model, Storage and Query

- Holistic data handling
  - Abstract data model
  - Capture semantics and attribution
- Realization
  - Scalable data store
  - Efficient queries
- Emphasis in PIPER was initially cut
  - Connection to matching ASC/LLNL ISCP efforts called Sonar
  - Infrastructure to integrate multiple data sources
  - Plugin for PIPER stack sensors and APIs

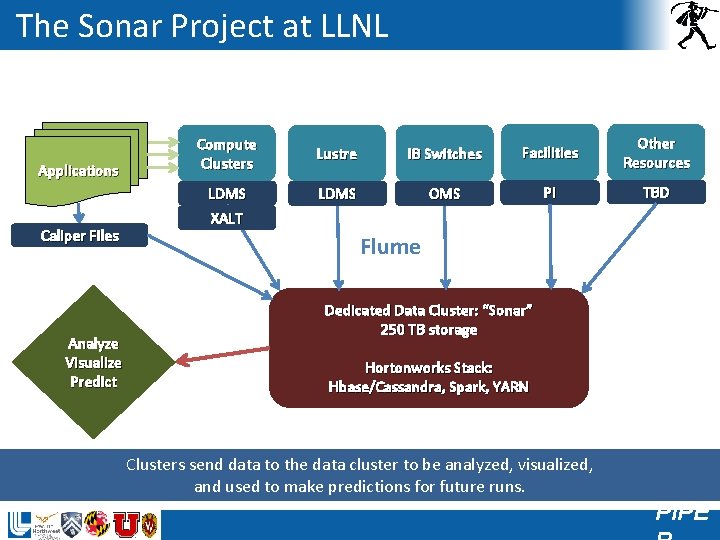

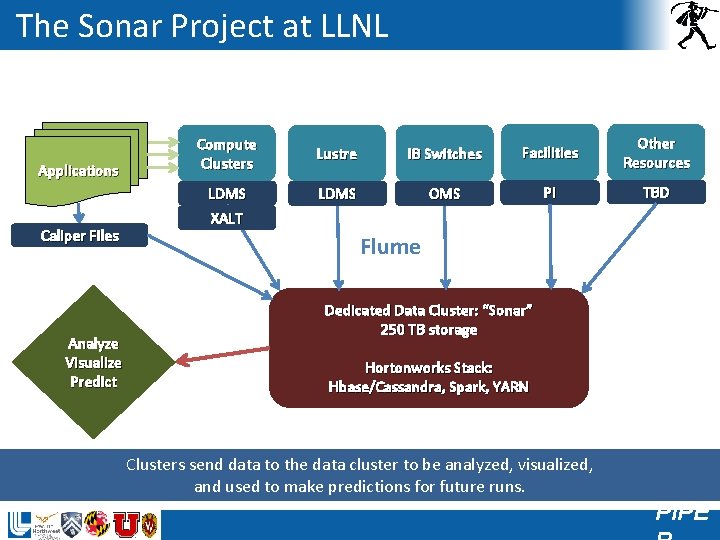

The Sonar Project at LLNL

- Data sources: applications, compute clusters, Lustre, IB switches, facilities, other resources
- Collection and transport (diagram labels): Caliper, files, LDMS, XALT, OMS, PI, Flume; others TBD
- Dedicated data cluster "Sonar"
  - 250 TB storage
  - Hortonworks stack: HBase/Cassandra, Spark, YARN
- Uses: analyze, visualize, predict
- Clusters send data to the data cluster to be analyzed, visualized, and used to make predictions for future runs.

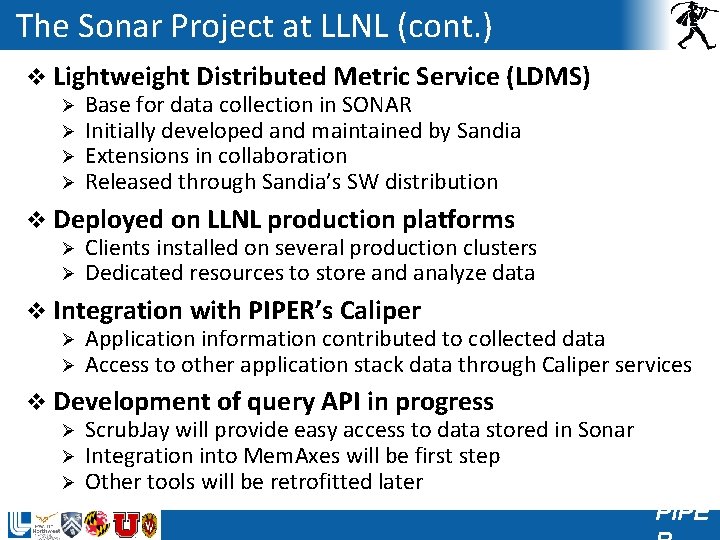

The Sonar Project at LLNL (cont.)

- Lightweight Distributed Metric Service (LDMS)
  - Base for data collection in Sonar
  - Initially developed and maintained by Sandia
  - Extensions in collaboration
  - Released through Sandia's SW distribution
- Deployed on LLNL production platforms
  - Clients installed on several production clusters
  - Dedicated resources to store and analyze data
- Integration with PIPER's Caliper
  - Application information contributed to collected data
  - Access to other application stack data through Caliper services
- Development of query API in progress
  - ScrubJay will provide easy access to data stored in Sonar
  - Integration into MemAxes will be first step
  - Other tools will be retrofitted later

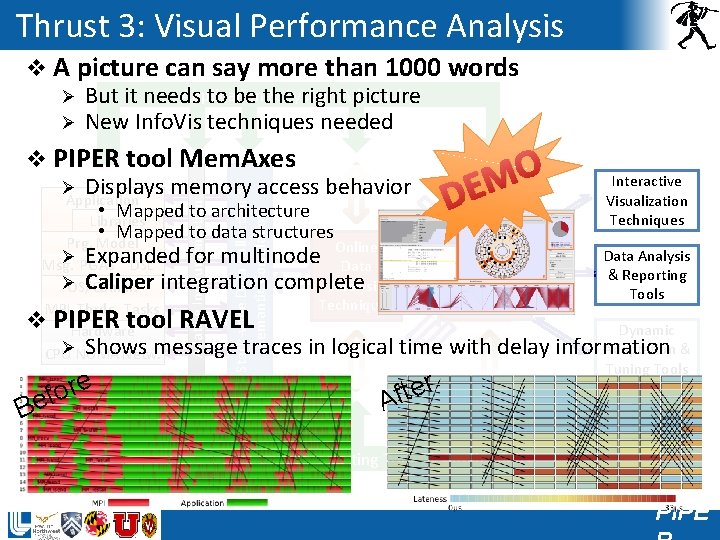

Thrust 3: Visual Performance Analysis

- A picture can say more than 1000 words
  - But it needs to be the right picture
  - New InfoVis techniques needed
- PIPER tool MemAxes (demo)
  - Displays memory access behavior
    - Mapped to architecture
    - Mapped to data structures
  - Expanded for multinode
  - Caliper integration complete
- PIPER tool Ravel
  - Shows message traces in logical time with delay information
  - Figure panels: Before, After

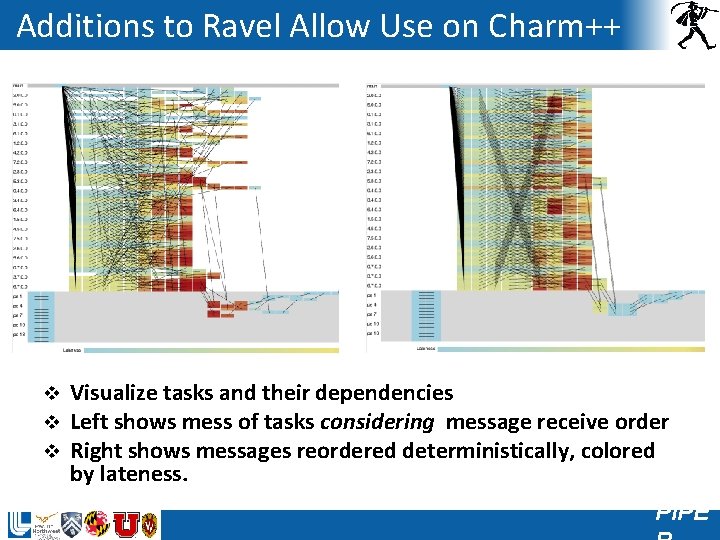

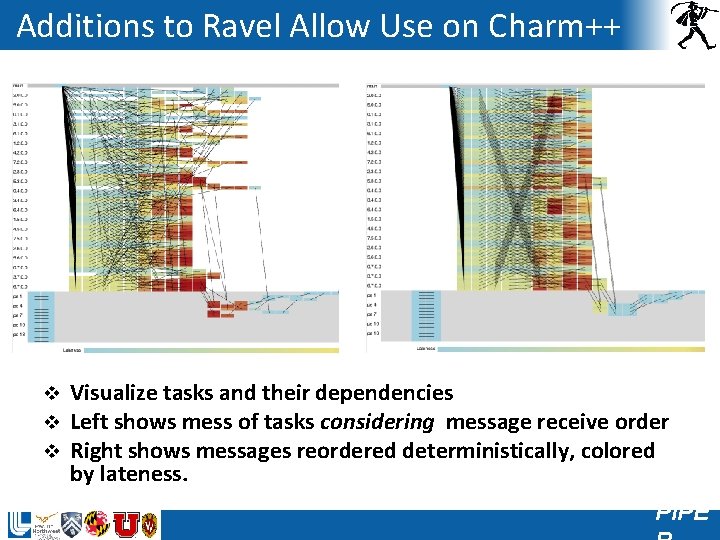

Additions to Ravel Allow Use on Charm++

- Visualize tasks and their dependencies
- Left shows mess of tasks considering message receive order
- Right shows messages reordered deterministically, colored by lateness

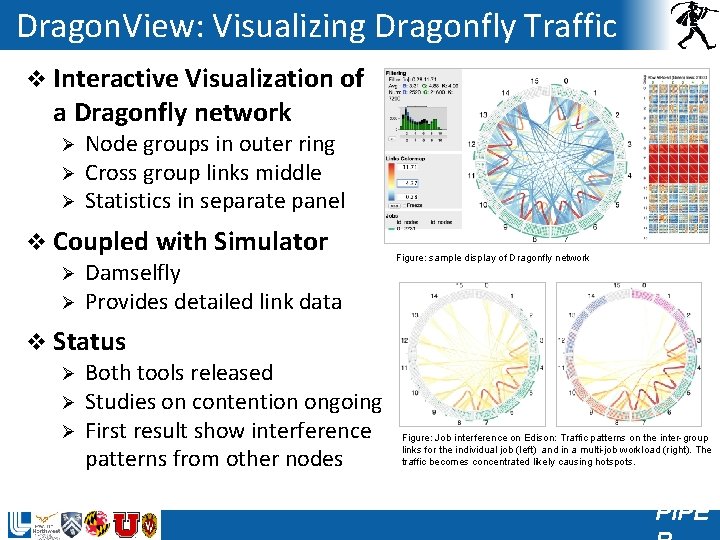

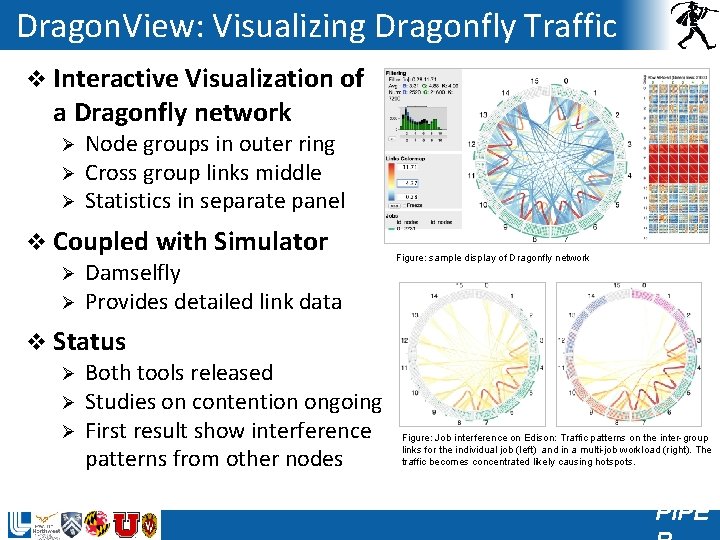

DragonView: Visualizing Dragonfly Traffic

- Interactive visualization of a Dragonfly network
  - Node groups in outer ring
  - Cross-group links in the middle
  - Statistics in separate panel
- Coupled with simulator
  - Damselfly
  - Provides detailed link data
- Status
  - Both tools released
  - Studies on contention ongoing
  - First results show interference patterns from other nodes
- Figure: sample display of Dragonfly network
- Figure: Job interference on Edison: traffic patterns on the inter-group links for the individual job (left) and in a multi-job workload (right). The traffic becomes concentrated, likely causing hotspots.

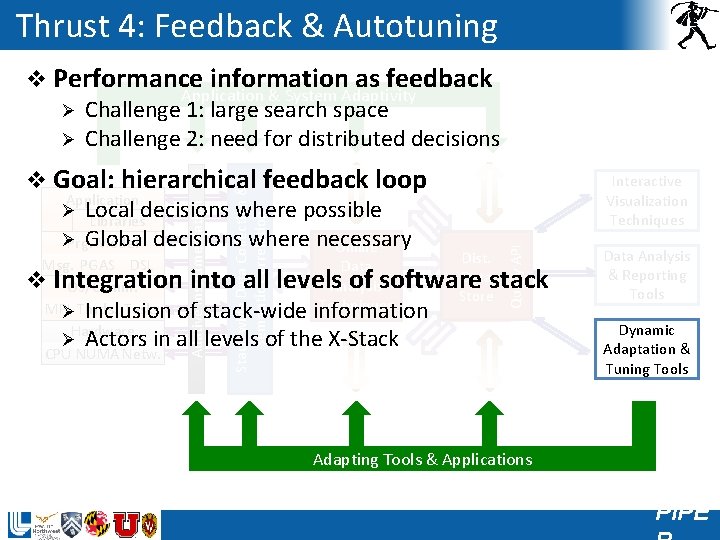

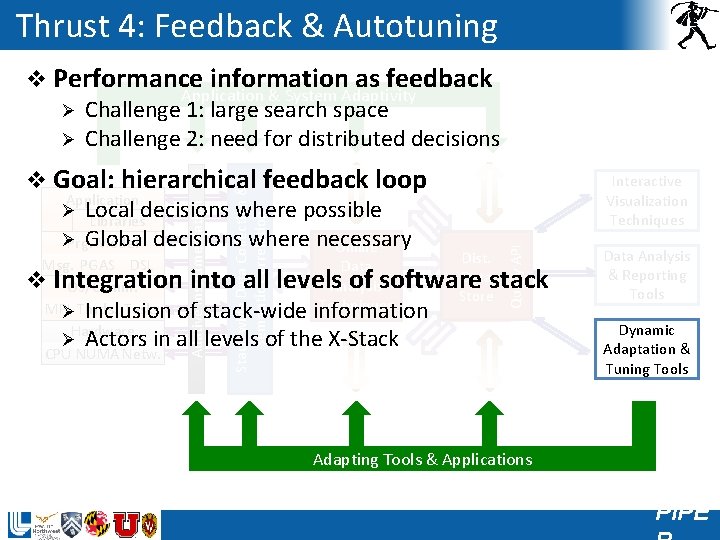

Thrust 4: Feedback & Autotuning

- Performance information as feedback
  - Challenge 1: large search space
  - Challenge 2: need for distributed decisions
- Integration into all levels of software stack
  - Inclusion of stack-wide information
  - Actors in all levels of the X-Stack
- Goal: hierarchical feedback loop
  - Local decisions where possible
  - Global decisions where necessary

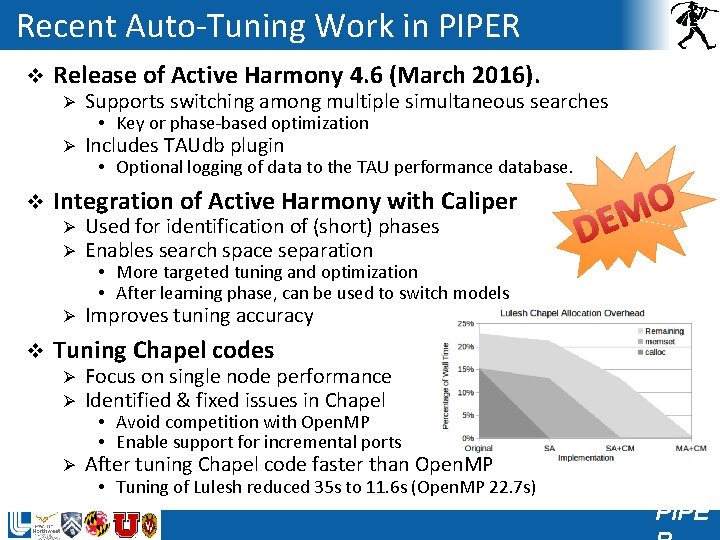

Recent Auto-Tuning Work in PIPER

- Release of Active Harmony 4.6 (March 2016)
  - Supports switching among multiple simultaneous searches
    - Key or phase-based optimization
  - Includes TAUdb plugin
    - Optional logging of data to the TAU performance database
- Integration of Active Harmony with Caliper (demo)
  - Used for identification of (short) phases
  - Enables search space separation
    - More targeted tuning and optimization
  - Improves tuning accuracy
    - After learning phase, can be used to switch models
- Tuning Chapel codes
  - Focus on single node performance
  - Identified & fixed issues in Chapel
    - Avoid competition with OpenMP
    - Enable support for incremental ports
  - After tuning, Chapel code faster than OpenMP
    - Tuning of Lulesh reduced 35 s to 11.6 s (OpenMP 22.7 s)
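Search space separation by phase can be illustrated with a small sketch. This is not Active Harmony's API or search algorithm: `PhaseTuner`, `synthetic_cost`, `tune`, the phase names, and the candidate block sizes are all hypothetical, and the search is a plain exhaustive sweep. The point is only that each phase key owns an independent search, so a parameter that suits one phase does not distort tuning of another.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical tuner that keeps one independent search per phase key.
class PhaseTuner {
public:
    explicit PhaseTuner(std::vector<int> candidates)
        : candidates_(std::move(candidates)) {
        assert(!candidates_.empty());
    }

    // Value to try next in this phase: untested candidates first,
    // then the best value seen so far.
    int suggest(const std::string& phase) {
        State& s = states_[phase];
        return s.next < candidates_.size() ? candidates_[s.next] : s.best_value;
    }

    // Report the measured cost of the value returned by suggest().
    void report(const std::string& phase, int value, double cost) {
        State& s = states_[phase];
        if (cost < s.best_cost) {
            s.best_cost = cost;
            s.best_value = value;
        }
        if (s.next < candidates_.size()) ++s.next;
    }

    // Best value found for a phase; first candidate if the phase is unknown.
    int best(const std::string& phase) const {
        auto it = states_.find(phase);
        return it == states_.end() ? candidates_.front() : it->second.best_value;
    }

private:
    struct State {
        std::size_t next = 0;  // index of the next untested candidate
        int best_value = 0;
        double best_cost = std::numeric_limits<double>::infinity();
    };
    std::vector<int> candidates_;
    std::map<std::string, State> states_;
};

// Synthetic cost model (an assumption): each phase prefers a different value.
double synthetic_cost(const std::string& phase, int block) {
    const int ideal = (phase == "stencil") ? 64 : 8;
    return static_cast<double>((block - ideal) * (block - ideal));
}

// Alternate between two phases, tuning each with its own search.
std::map<std::string, int> tune(int iterations) {
    PhaseTuner tuner({8, 16, 32, 64});
    const std::vector<std::string> phases{"stencil", "reduce"};
    for (int it = 0; it < iterations; ++it) {
        for (const auto& phase : phases) {
            const int value = tuner.suggest(phase);
            tuner.report(phase, value, synthetic_cost(phase, value));
        }
    }
    return {{"stencil", tuner.best("stencil")}, {"reduce", tuner.best("reduce")}};
}
```

With a single shared search, the two phases would be forced onto one compromise value. Keyed by phase, each search converges on its own optimum, and in an annotated application the phase key could come from the annotation context.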

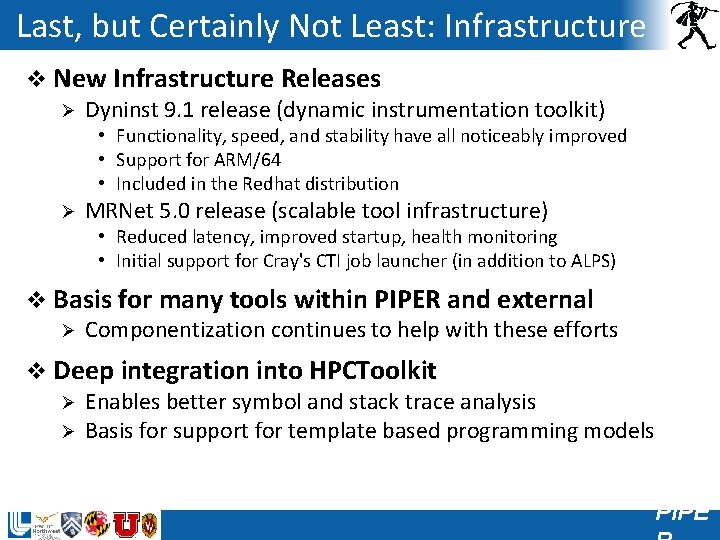

Last, but Certainly Not Least: Infrastructure

- New infrastructure releases
  - Dyninst 9.1 release (dynamic instrumentation toolkit)
    - Functionality, speed, and stability have all noticeably improved
    - Support for ARM/64
    - Included in the Red Hat distribution
  - MRNet 5.0 release (scalable tool infrastructure)
    - Reduced latency, improved startup, health monitoring
    - Initial support for Cray's CTI job launcher (in addition to ALPS)
- Basis for many tools within PIPER and external
  - Componentization continues to help with these efforts
- Deep integration into HPCToolkit
  - Enables better symbol and stack trace analysis
  - Basis for support for template-based programming models

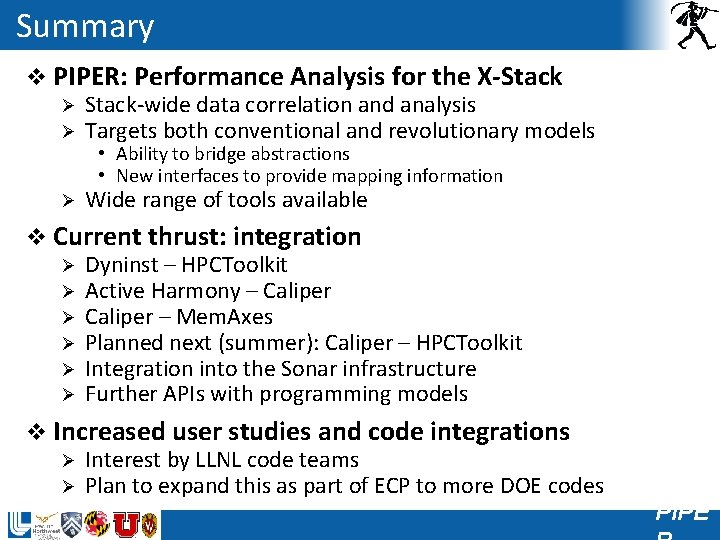

Summary

- PIPER: Performance Analysis for the X-Stack
  - Stack-wide data correlation and analysis
  - Targets both conventional and revolutionary models
    - Ability to bridge abstractions
    - New interfaces to provide mapping information
  - Wide range of tools available
- Current thrust: integration
  - Dyninst – HPCToolkit
  - Active Harmony – Caliper
  - Caliper – MemAxes
  - Planned next (summer): Caliper – HPCToolkit
  - Integration into the Sonar infrastructure
  - Further APIs with programming models
- Increased user studies and code integrations
  - Interest by LLNL code teams
  - Plan to expand this as part of ECP to more DOE codes

Demos Later Today – Come Visit Us!

- Attribution for template-based parallel programming
  - HPCToolkit
  - Applied to RAJA and Kokkos
- Application instrumentation
  - Caliper integration with MemAxes
  - Applied to a Shock-Hydro Code
- Annotations to improve autotuning
  - Active Harmony
  - Using Caliper annotations

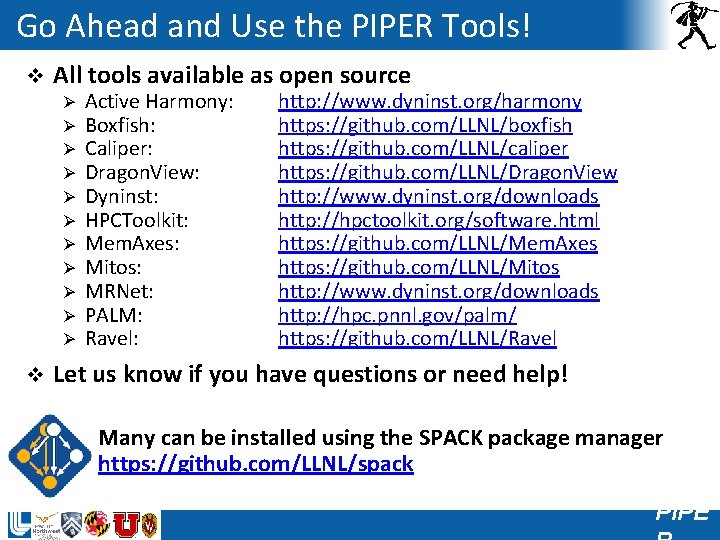

Go Ahead and Use the PIPER Tools!

- All tools available as open source
  - Active Harmony: http://www.dyninst.org/harmony
  - Boxfish: https://github.com/LLNL/boxfish
  - Caliper: https://github.com/LLNL/caliper
  - DragonView: https://github.com/LLNL/DragonView
  - Dyninst: http://www.dyninst.org/downloads
  - HPCToolkit: http://hpctoolkit.org/software.html
  - MemAxes: https://github.com/LLNL/MemAxes
  - Mitos: https://github.com/LLNL/Mitos
  - MRNet: http://www.dyninst.org/downloads
  - PALM: http://hpc.pnnl.gov/palm/
  - Ravel: https://github.com/LLNL/Ravel
- Let us know if you have questions or need help!
- Many can be installed using the Spack package manager: https://github.com/LLNL/spack

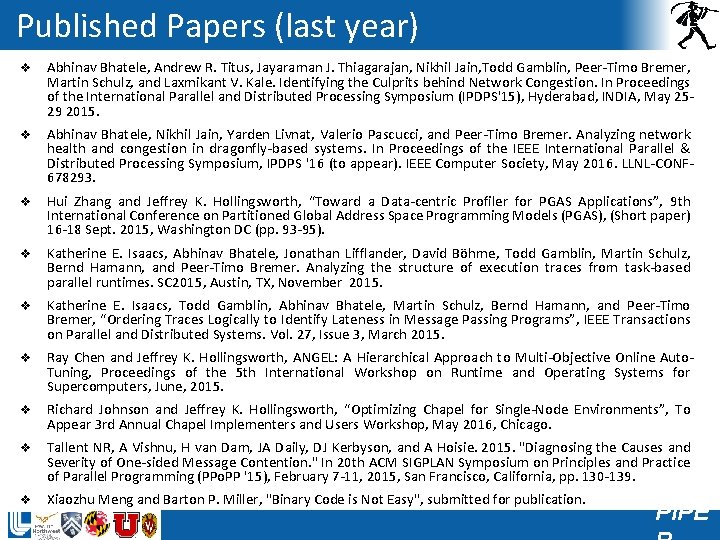

Published Papers (last year)

- Abhinav Bhatele, Andrew R. Titus, Jayaraman J. Thiagarajan, Nikhil Jain, Todd Gamblin, Peer-Timo Bremer, Martin Schulz, and Laxmikant V. Kale. "Identifying the Culprits behind Network Congestion". In Proceedings of the International Parallel and Distributed Processing Symposium (IPDPS '15), Hyderabad, India, May 25-29, 2015.
- Abhinav Bhatele, Nikhil Jain, Yarden Livnat, Valerio Pascucci, and Peer-Timo Bremer. "Analyzing network health and congestion in dragonfly-based systems". In Proceedings of the IEEE International Parallel & Distributed Processing Symposium (IPDPS '16), to appear. IEEE Computer Society, May 2016. LLNL-CONF 678293.
- Hui Zhang and Jeffrey K. Hollingsworth. "Toward a Data-centric Profiler for PGAS Applications". 9th International Conference on Partitioned Global Address Space Programming Models (PGAS), short paper, September 16-18, 2015, Washington DC, pp. 93-95.
- Katherine E. Isaacs, Abhinav Bhatele, Jonathan Lifflander, David Böhme, Todd Gamblin, Martin Schulz, Bernd Hamann, and Peer-Timo Bremer. "Analyzing the structure of execution traces from task-based parallel runtimes". SC 2015, Austin, TX, November 2015.
- Katherine E. Isaacs, Todd Gamblin, Abhinav Bhatele, Martin Schulz, Bernd Hamann, and Peer-Timo Bremer. "Ordering Traces Logically to Identify Lateness in Message Passing Programs". IEEE Transactions on Parallel and Distributed Systems, Vol. 27, Issue 3, March 2015.
- Ray Chen and Jeffrey K. Hollingsworth. "ANGEL: A Hierarchical Approach to Multi-Objective Online AutoTuning". Proceedings of the 5th International Workshop on Runtime and Operating Systems for Supercomputers, June 2015.
- Richard Johnson and Jeffrey K. Hollingsworth. "Optimizing Chapel for Single-Node Environments". To appear, 3rd Annual Chapel Implementers and Users Workshop, May 2016, Chicago.
- Nathan R. Tallent, A. Vishnu, H. van Dam, J. A. Daily, D. J. Kerbyson, and A. Hoisie. "Diagnosing the Causes and Severity of One-sided Message Contention". In 20th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming (PPoPP '15), February 7-11, 2015, San Francisco, California, pp. 130-139.
- Xiaozhu Meng and Barton P. Miller. "Binary Code is Not Easy". Submitted for publication.