1 Efficient crawling through URL ordering 7 th

- Slides: 37

1) Efficient crawling through URL ordering, 7 th WWW Conf. 2) The Page. Rank Citation Ranking: Bringing order to the Web, 7 th WWW Conf. Instructor: P. Krishna Reddy E-mail: pkreddy@iiit. net http: //www. iiit. net/~pkreddy

Course Roadmap • Introduction to Data mining – Introduction, Preprocessing, Clustering, Association Rules, Classification, … • Web structure mining – – – – – • • Authoritative Sources in a Hyperlinked environment, by John. M. Kleonberg Efficient Crawling through URL ordering, J. Cho, H. Garcia Molina and L. Page The Page. Rank Citation Ranking: Bringing order to the Web. The anatomy large scale Hyper-textual Web Search engine, Sergey Brin and Lawrance Page Graph Structure in the Web Focused Crawling: A new approach to topic-specific web resource discovery, by Souman Chakravarthi et al. Trawling the web for emerging cyber communities, Ravi Kumar… Building a cyber-community hierarchy based on link analysis, P. Krishna Reddy and Masaru Kitsuregawa Efficient identification of web communities, GW Flake, S. . Lawrence, et al. Finding related pages in WWW, J Dean and MR Herizinger Web content mining/Information Retrieval Web log mining/Recommendation systems/E-commerce

Efficient Crawling Through URL ordering • • • Introduction Important metrics Problem definition Ordering metrics Experiments

Introduction • A crawler is a program that retrieves web pages; used by search engine of web cache. • It starts off with the URL of initial page P 0. • It retrievs P 0, extracts any URLs in it and adds them to a queue of URLs to be scanned. • The crawler get the URLS in some order. • It must carefully decide what URL to scan in what order. • It must also decide how frequently to revisit the web pages it has already seen.

In this paper • How to select URLs to scan from its queue of known URLs. • Most crawlers may not want to visit all the URLs. – The client may have limited storage capacity. – Crawling takes time • So, it is important to visit important pages first.

Important metrics • Given a web page P, we can define the importance of web page, I(P) in one of the following ways. – Similarity to driving query Q • If a query Q drives the crawling process and I(P) is defined as textual similarity between P and Q. • To compute similarity we can view each document an mdimensional vector (w 1, w 2, …, wn) where wi represents ith word. – Back link count (IB(P)): The value of I(P) is the number of links to P that appear over the entire Web. • A crawler may estimate IB(P) with the # of links to P that have been so far.

Important metrics…. – Page rank: IB(P) metric treats all links equally. • Example: A link from Yahoo home page counts same as the link from the individual home page. • So, a page rank back link metric IR(P) recuursively defines the importance of the page to be the weighted sum of back links to it. – – – Suppose a page p is pointed by pages T 1, …, Tn. Let Ci be the number of links going out of page Ti. Let d be a damping factor IR(P)=(1 -d)+d(IR(T 1)/C 1+…. +IR(Tn)/Cn) One equation per web page. d when the user is on a page, there is some probability ‘d’ that next visited page will be completely random.

Important metrics…. – Forward link count: • IF(P) # of links that emanate from P. – Location metric: • IL(P) is a function of its location not of its contents. • Example: – URLs ending with. com may be more useful than URLs with other endings. – URLs with fewer slashes more useful than with more slashes. • We can also combine the importance. – IC(P)= K 1. IS(P, Q) +K 2. IB(P)

Problem definition • Define a crawler that if possible visits high I(P) pages before lower ranked pages for some definition of I(P). • Options: – Crawl and stop: after visiting K pages – Crawl and stop with threshold: IP(P) >= G is considered HOT. – Limited buffer crawl: After filling the buffer drop unimportant pages, lowest I(P) value.

Ordering Metrics • A crawler keeps a queue of URLs it has seen during a crawl, and selects next URL from this queue. • The ordering metric O is used by the crawler for this selection. – It selects the URL u such that O(u) has the highest value among all URLs in the queue. – The O metric can use the information seen. – The O metric is based on importance metric.

Experiments • Data set – Repository consisting of all Stanford web pages. – Experiments are conducted on this data set. – # of pages=179, 000

Results • X-axis: Fraction of Stanford pages crawled over time. • Y-axis: fraction of the total hot pages that has been crawled at a given point (Pst). • Threshold G=3, 100. • A page with G or more back links considered as hot. • Crawl and stop model is used with G=100. • Ordering metrics: – – – Random Ideal Breadth first: Do nothing Back link: Sorl URL-queue by back-link count Pagerank: Sort URL queue with IR[u] • IR(u)=(1 -0. 9)+0. 9 IR(vi)/ci, where (vi, u) ε links and ci is the number of links in the page vi.

Results • Page rank metric outperforms back link metric. • Reason – IB(P) carwler behaved like depth first one by frequently visiting pages within one cluster. – IR(p) crawler combined breath and depth in a better way, because it is based on ranking.

Similarity based crawlers • Similarity based importance metric: IS(P) measures the relevance of each page to a topic or the query the user has in mind. • For first two experiments: A page is hot if it contains computer in its title or if it has more than 10 occurrences of computer in its body. • Two queues are maintained: – Hot queue – URL queue – Crawler prefers Hot queue. • Observation: – When similarity is important it is effective to use ordering metric that considers the content of anchors and URLs and the distance to the hot pages that have been discovered.

Conclusion • Page rank is excellent ordering metric when pages with many backlinks or with high Page rank or sought. • If similarity to a driving query is important, it is useful to visit the URLs that: – Have anchor text that is similar to the driving query. – Have some of the query terms within the URL itself. – Have a short link distance to a page that is known to be hot.

The Page. Rank Citation Ranking: Bringing Order to the Web

Contents • • • Motivation Related work Page Rank & Random Surfer Model Implementation Application Conclusion

Motivation l Web: heterogeneous and unstructured l The pages are diverse l Containing casual activities to research papers. l Free of quality control on the web l Commercial interest to manipulate ranking l Simplicity of publishing web pages results in a large fraction of low quality web pages that the users are unlikely to read.

Related Work • Academic citation analysis – Theory of information flow in academic community (epidemic process). • Link-based analysis – Charecterizing world wide web ecologies, Jim Pitkow. • Clustering methods of link structure • Hubs & Authorities Model • Notion of Quality from library community

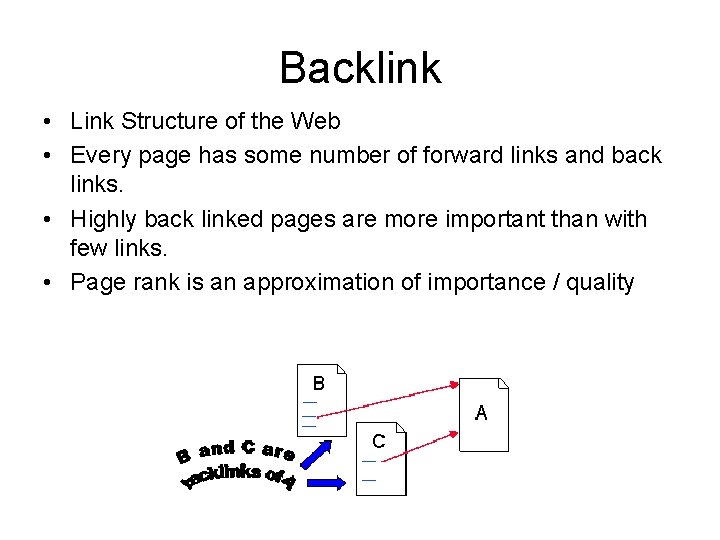

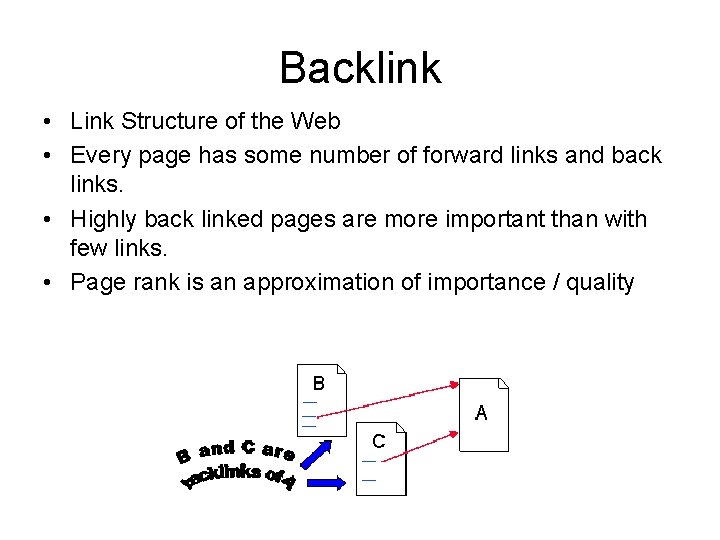

Backlink • Link Structure of the Web • Every page has some number of forward links and back links. • Highly back linked pages are more important than with few links. • Page rank is an approximation of importance / quality

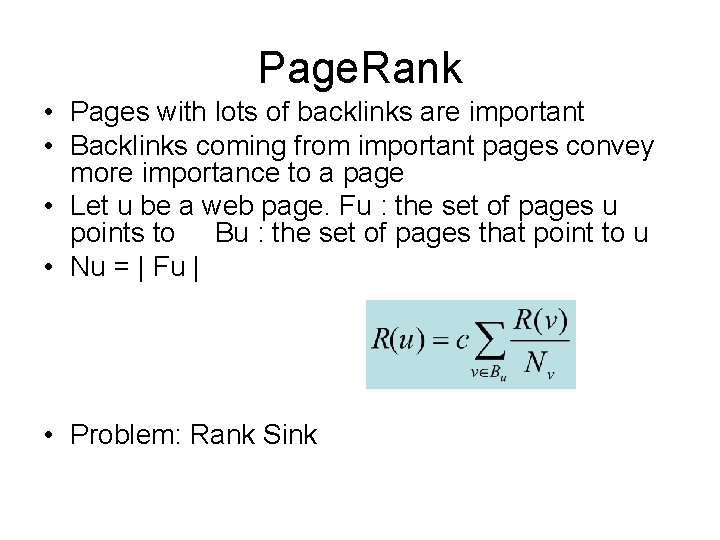

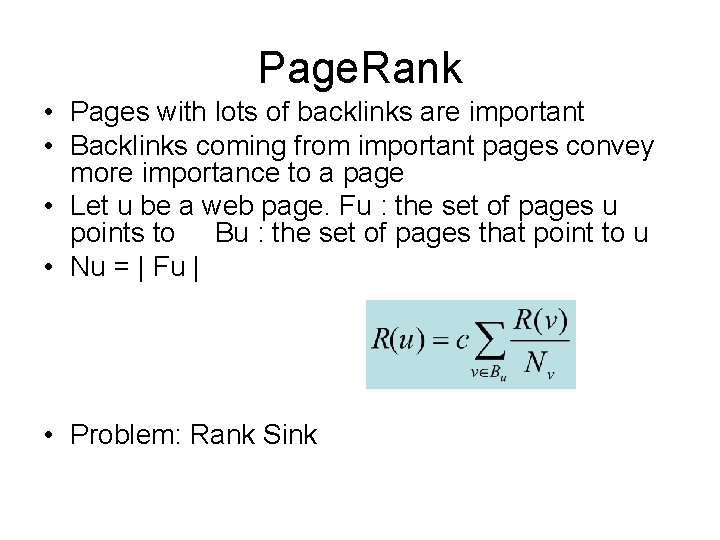

Page. Rank • Pages with lots of backlinks are important • Backlinks coming from important pages convey more importance to a page • Let u be a web page. Fu : the set of pages u points to Bu : the set of pages that point to u • Nu = | Fu | • Problem: Rank Sink

Rank Sink • Page cycles pointed by some incoming link • Problem: this loop will accumulate rank but never distribute any rank outside

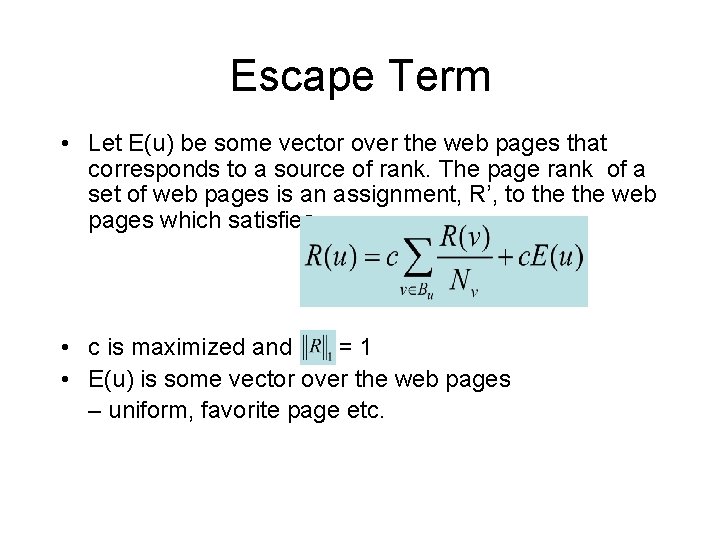

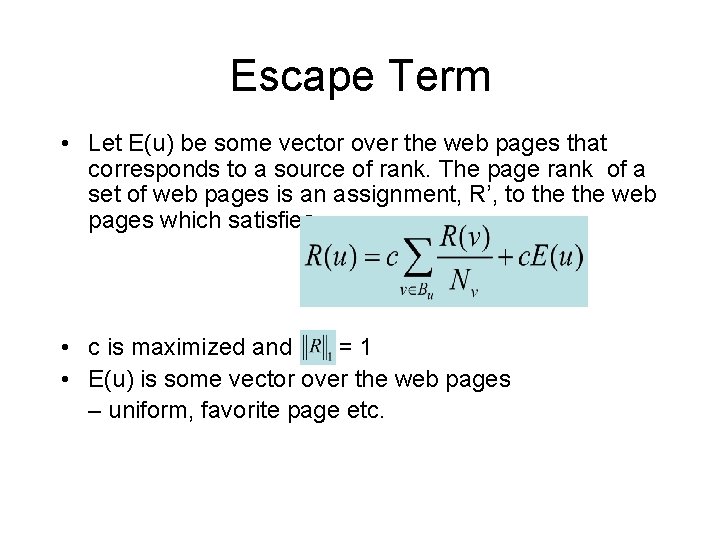

Escape Term • Let E(u) be some vector over the web pages that corresponds to a source of rank. The page rank of a set of web pages is an assignment, R’, to the web pages which satisfies • c is maximized and =1 • E(u) is some vector over the web pages – uniform, favorite page etc.

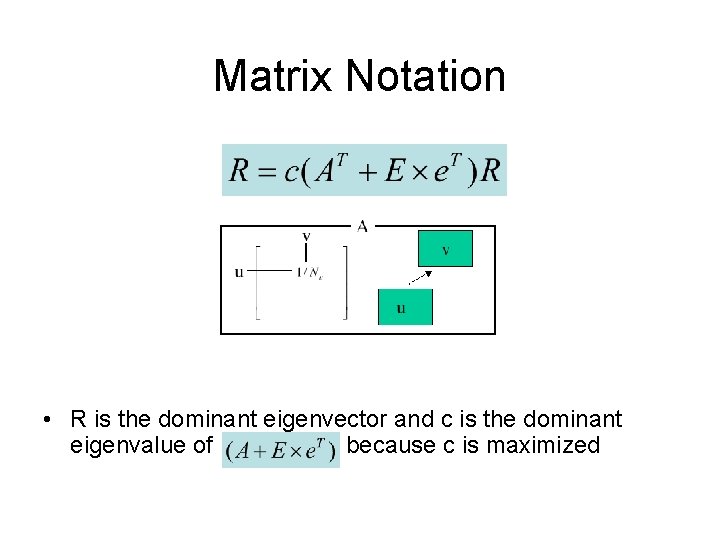

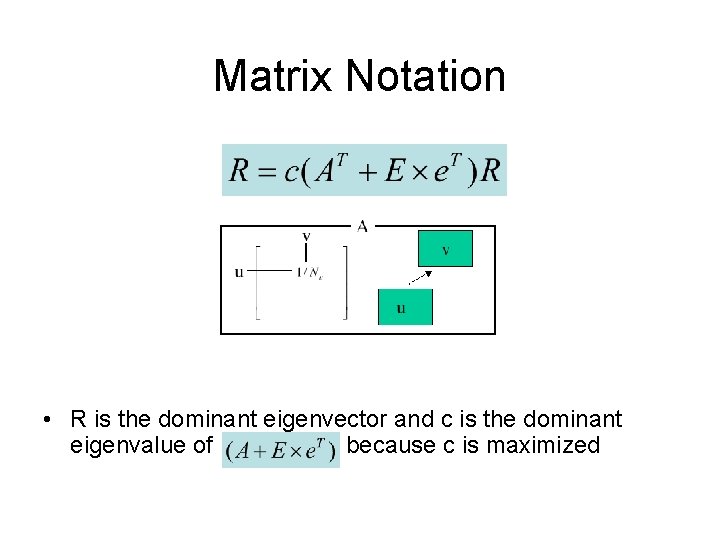

Matrix Notation • R is the dominant eigenvector and c is the dominant eigenvalue of because c is maximized

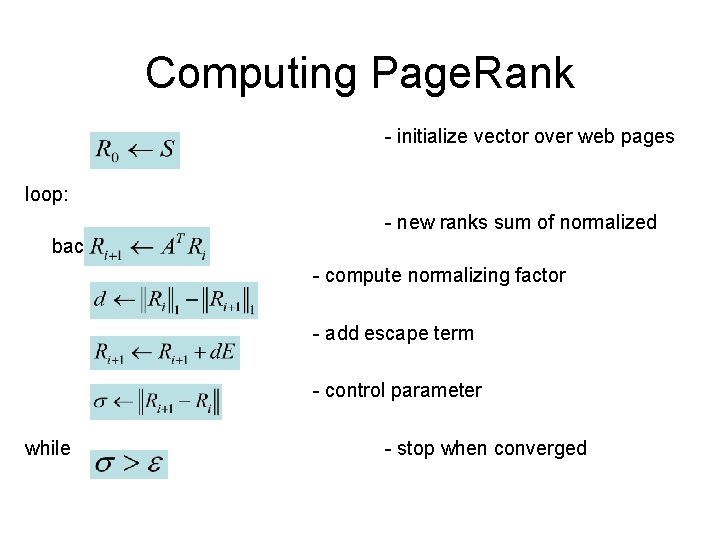

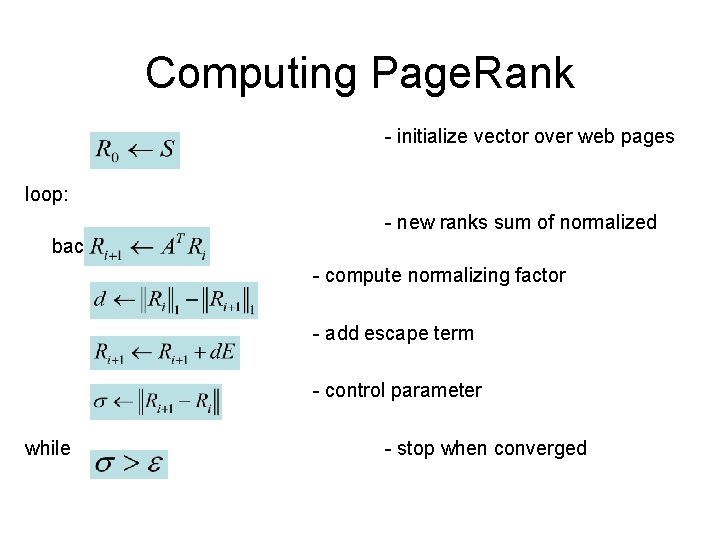

Computing Page. Rank - initialize vector over web pages loop: - new ranks sum of normalized backlink ranks - compute normalizing factor - add escape term - control parameter while - stop when converged

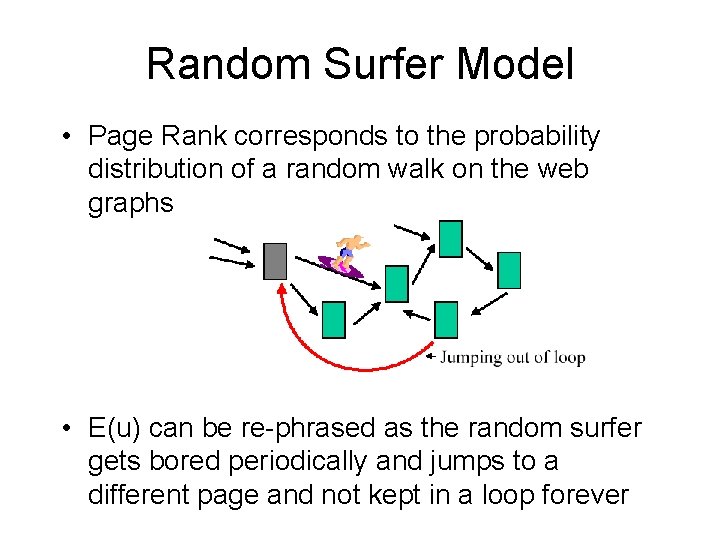

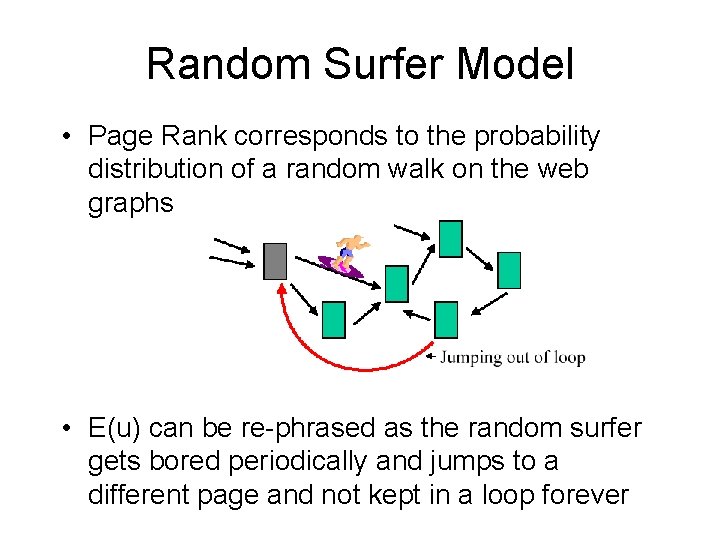

Random Surfer Model • Page Rank corresponds to the probability distribution of a random walk on the web graphs • E(u) can be re-phrased as the random surfer gets bored periodically and jumps to a different page and not kept in a loop forever

Implementation • Computing resources — 24 million pages — 75 million URLs • Memory and disk storage Weight Vector (4 byte float) Matrix A (linear access)

Implementation (Con't) • • • Unique integer ID for each URL Sort and Remove dangling links Rank initial assignment Iteration until convergence Add back dangling links and Re-compute

Convergence Properties • Graph (V, E) is an expander with factor if for all (not too large) subsets S: |As| |s| • Random walk converges fast to a limiting probability distribution on a set of nodes in the graph.

Convergence Properties (con't) • Page. Rank computation is O(log(|V|)) due to rapidly mixing graph G of the web.

Personalized Page. Rank • Rank Source E can be initialized : – uniformly over all pages: e. g. copyright warnings, disclaimers, mailing lists archives result in overly high ranking – total weight on a single page, e. g. Netscape, Mc. Carthy great variation of ranks under different single pages as rank source – and everything in-between, e. g. server root pages allow manipulation by commercial interests

Applications I • Estimate web traffic – Server/page aliases – Link/traffic disparity, • Backlink predictor – Citation counts have been used to predict future citations – very difficult to map the citation structure of the web completely – avoid the local maxima that citation counts get stuck in and get better performance

Applications II - Ranking Proxy • Surfer's Navigation Aid • Annotating links by Page. Rank (bar graph) • Not query dependent

Issues • Users are no random walkers – Content based methods • Starting point distribution – Actual usage data as starting vector • • Reinforcing effects/bias towards main pages How about traffic to ranking pages? No query specific rank Linkage spam – Page. Rank favors pages that managed to get other pages to link to them – Linkage not necessarily a sign of relevancy, only of promotion (advertisement…)

Evaluation I

Evaluation II

Conclusion • Page. Rank is a global ranking based on the web's graph structure • Page. Rank use backlinks information to bring order to the web • Page. Rank can separate out representative pages as cluster center • A great variety of applications