Compiler Code Optimization

- Slides: 59

Compiler Code Optimization

- A language definition says what the meaning of a program is. It does not say what code must be generated.
  - Compiling `*` as repeated `+` would be valid.
- Optimization is about compiling efficient code.
- Optimization is about improving code, not making it optimal (which is beyond reach).

Part 1, Local Optimization

- Part 1 is about local optimizations.
  - Defined as optimizations that can be done without building global data structures representing an entire procedure.
  - The boundary between global and local optimizations is most certainly not a precise one.

Notion of Basic Block

A basic block is a sequence of instructions

- with a single entry point
- and a single exit point (this last condition may be relaxed).

It can be processed serially, starting with known conditions on entry. Many local optimizations are based on basic blocks.

Constant Folding

- A programmer may write `7 * 24` for the number of hours in a week.
- We do not want to see the multiplication in the generated code; instead the compiler should replace it with `168`.
- Note that in Ada, static expressions are defined within the language. But of course an Ada compiler can do more.

More on Constant Folding

- Must be careful not to transform run-time errors into compile-time errors (e.g. folding `5 / 0`).
- Overflow also has to be handled carefully.
- Must be careful to give exactly the same result that would have occurred at run time (particularly important with floating-point).
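
The two rules above can be sketched as a folding routine for one binary operation on integer constants. This is a minimal sketch, assuming 32-bit signed integers whose overflow is detected at run time (as in Ada); the function name `fold` and the `INT_MIN`/`INT_MAX` bounds are illustrative, not taken from any real compiler. Floating-point folding is deliberately left out. The routine refuses to fold whenever folding would move a run-time error to compile time.

```python
INT_MIN, INT_MAX = -2**31, 2**31 - 1   # assumed 32-bit signed integer type


def fold(op, a, b):
    """Fold `a op b` for integer constants a and b.

    Returns the folded value, or None when the expression must be left for
    run time because evaluating it would raise an error there.
    """
    if op == "+":
        result = a + b
    elif op == "-":
        result = a - b
    elif op == "*":
        result = a * b
    elif op == "/":
        if b == 0:
            return None                  # keep the division by zero at run time
        q = abs(a) // abs(b)             # truncate toward zero, like Ada and C99 "/"
        result = q if (a >= 0) == (b >= 0) else -q
    else:
        return None                      # unknown operator: don't touch it
    if not INT_MIN <= result <= INT_MAX:
        return None                      # would overflow: let the generated code raise it
    return result


print(fold("*", 7, 24))   # 168
print(fold("/", 5, 0))    # None: not folded
```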

Common Subexpressions

CSE is common subexpression elimination: don't compute the same expression more than once. For example:

    x := (y + z) + (a * b);
    k := (y + z)**4;

We only need to compute y + z once.

More on CSE

Must worry about side effects:

    x := (y + z) * (r + q);
    y := y + m;
    z := (y + z) + g;

In this case y + z is NOT a common subexpression, because y changes between the two uses.

Simple approach:

- Keep a hash table of the subexpressions available within a basic block.
- Destroy the affected entries on each assignment.
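
The simple approach above can be sketched as follows. This is a minimal sketch, assuming the basic block has already been broken into three-address assignments `(target, op, left, right)` whose operands are plain variable names or constants; the temporaries `t1`, `t2`, ... and the `"copy"` operation are hypothetical names for illustration. Each assignment destroys every table entry that mentions the assigned variable, which is exactly what stops `y + z` from being reused in the side-effect example.

```python
def local_cse(block):
    """Eliminate common subexpressions within one basic block.

    block: list of (target, op, left, right) meaning target := left op right.
    A recomputation of an available expression is replaced by
    (target, "copy", holder, None).
    """
    available = {}                      # (op, left, right) -> variable holding it
    out = []
    for target, op, left, right in block:
        key = (op, left, right)
        if key in available:
            out.append((target, "copy", available[key], None))
        else:
            out.append((target, op, left, right))
        # Assigning to target destroys entries that use it or are held in it.
        available = {k: v for k, v in available.items()
                     if target not in (k[1], k[2]) and v != target}
        if target not in (left, right):  # y := y + m does not make y + m available
            available.setdefault(key, target)
    return out


# x := (y + z) + (a * b); k := (y + z)**4;
first = [("t1", "+", "y", "z"), ("t2", "*", "a", "b"), ("x", "+", "t1", "t2"),
         ("t3", "+", "y", "z"), ("k", "**", "t3", 4)]
print(local_cse(first)[3])    # ('t3', 'copy', 't1', None)
```

Running the side-effect example the same way leaves the second `y + z` alone, because the assignment to `y` removed it from the table.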

CSE and Side Effects

Suppose we have

    x := (a + b) + f(x) + (a + b);

and f is a function that modifies b. Is (a + b) a common subexpression? Check the language definition carefully:

- order-of-evaluation rules
- rules about side effects

Commutative Operators

The expressions (a + b) and (b + a) have the same value, so we can replace one by the other:

    x := a + f(y);            -- probably better to compute f first
    x := (a + b) * g (b + a); -- a + b is a CSE

In general we can commute operands to improve register usage. We can also commute subtraction: a - b = -(b - a).
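
One way to let a CSE hash table see through commutativity is to put the operands of `+` and `*` into a canonical order before hashing. A minimal sketch, assuming operands are variable names or constants; ordering them by their string form is an arbitrary illustrative choice (a compiler might instead order by value number or by register usage, as the slide suggests).

```python
COMMUTATIVE = {"+", "*"}   # assumed: + and * (also commutative in IEEE arithmetic)


def canonical_key(op, left, right):
    """Key for a table of subexpressions in which a+b and b+a collide.

    Operands of a commutative operator are put in a fixed order (here simply
    sorted by their string form); other operators keep their order.
    """
    if op in COMMUTATIVE and str(right) < str(left):
        left, right = right, left
    return (op, left, right)


print(canonical_key("+", "b", "a") == canonical_key("+", "a", "b"))   # True
print(canonical_key("-", "b", "a") == canonical_key("-", "a", "b"))   # False
```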

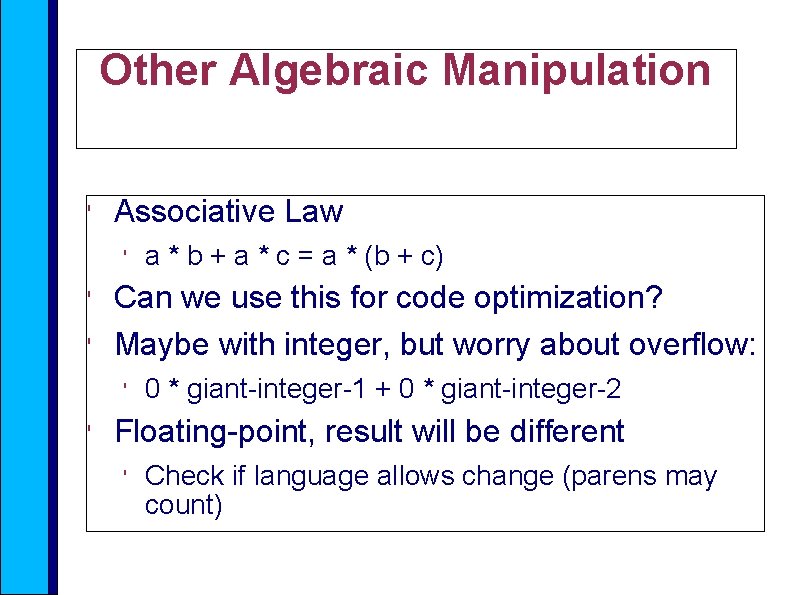

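Other Algebraic Manipulation

Associative and distributive laws, e.g.

    a * b + a * c = a * (b + c)

Can we use these for code optimization?

- Maybe with integers, but worry about overflow: 0 * giant-integer-1 + 0 * giant-integer-2 is just 0, but the factored form first computes giant-integer-1 + giant-integer-2, which may overflow.
- With floating-point, the result may well be different.
- Check whether the language allows the change (parentheses may count).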
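
Both worries can be made concrete. A minimal sketch, assuming 32-bit integers whose overflow raises an exception; the `checked` helper is hypothetical and only models that rule, and the giant integers are taken to be `INT_MAX`.

```python
INT_MIN, INT_MAX = -2**31, 2**31 - 1   # assumed 32-bit integers with overflow checking


def checked(v):
    """Model an integer operation that raises on overflow."""
    if not INT_MIN <= v <= INT_MAX:
        raise OverflowError(v)
    return v


def original(a, b, c):        # a * b + a * c
    return checked(checked(a * b) + checked(a * c))


def factored(a, b, c):        # a * (b + c)
    return checked(a * checked(b + c))


giant = INT_MAX
print(original(0, giant, giant))          # 0
try:
    factored(0, giant, giant)
except OverflowError:
    print("factored form overflows")      # b + c is out of range

# Floating point: regrouping changes the rounded result.
print((0.1 + 0.2) + 0.3 == 0.1 + (0.2 + 0.3))   # False
```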

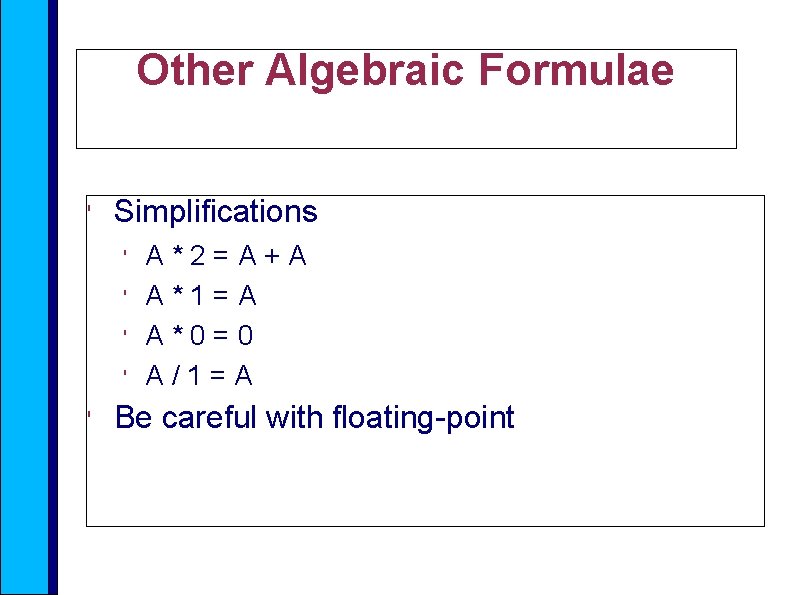

Other Algebraic Formulae

Simplifications:

    A * 2 = A + A
    A * 1 = A
    A * 0 = 0
    A / 1 = A

Be careful with floating-point.
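
A simplifier for these identities has to know the operand type. A minimal sketch: `simplify_mul_div` is a hypothetical helper that returns a replacement or `None`, assuming IEEE 754 doubles for the floating-point case. `A * 0 = 0` is the identity that breaks for floating-point, because infinities, NaNs and negative operands do not give `+0.0`.

```python
import math


def simplify_mul_div(op, a, const, is_float):
    """Try the slide's simplifications on `a op const`.

    Returns the replacement (an operand, a constant, or an ("+", a, a)
    expression), or None if no rewrite is safe for this type.
    """
    if op in ("*", "/") and const == 1:
        return a                   # A*1 = A and A/1 = A
    if op == "*" and const == 2:
        return ("+", a, a)         # A*2 = A+A, exact even in floating point
    if op == "*" and const == 0 and not is_float:
        return 0                   # A*0 = 0 holds for integers only
    return None


# Why A*0 is not rewritten for floating point:
print(math.inf * 0.0)                     # nan
print(math.copysign(1.0, -5.0 * 0.0))     # -1.0 (the product is -0.0)
```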

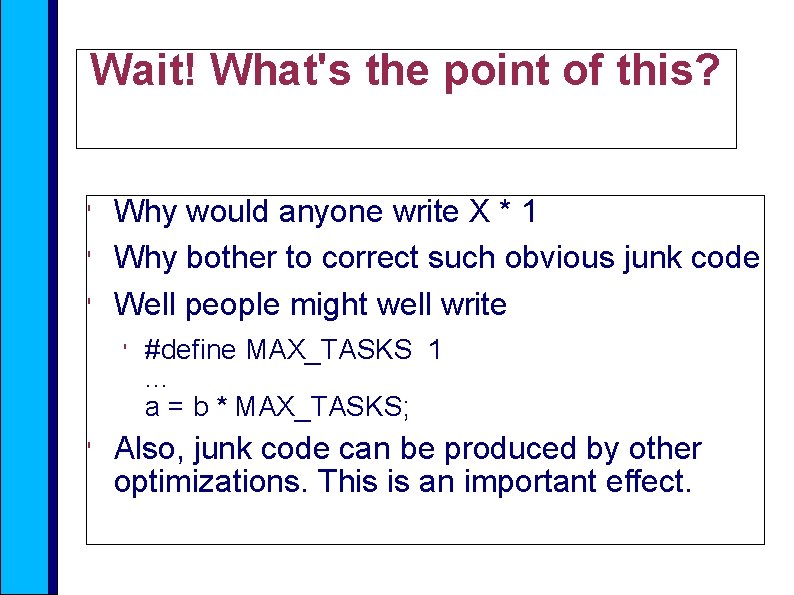

Wait! What's the point of this?

Why would anyone write X * 1? Why bother to correct such obvious junk code? Well, people might well write

    #define MAX_TASKS 1
    ...
    a = b * MAX_TASKS;

Also, junk code can be produced by other optimizations. This is an important effect.

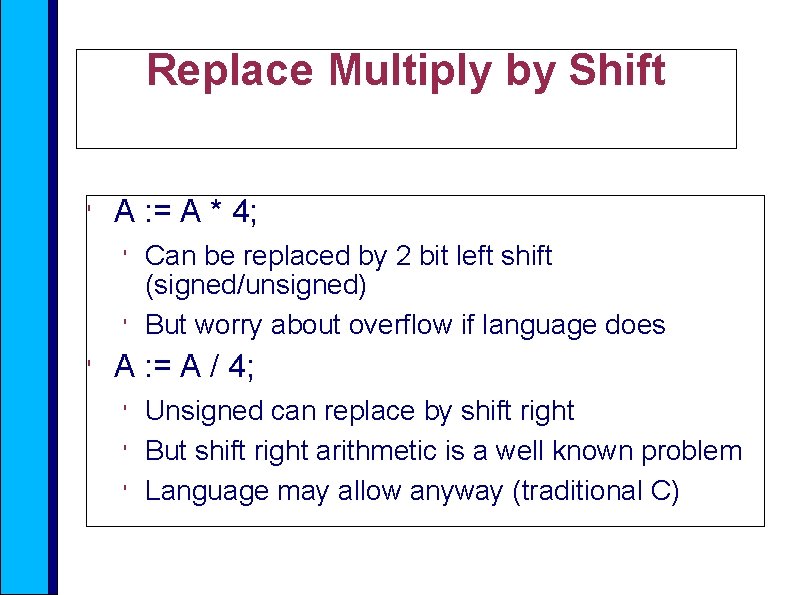

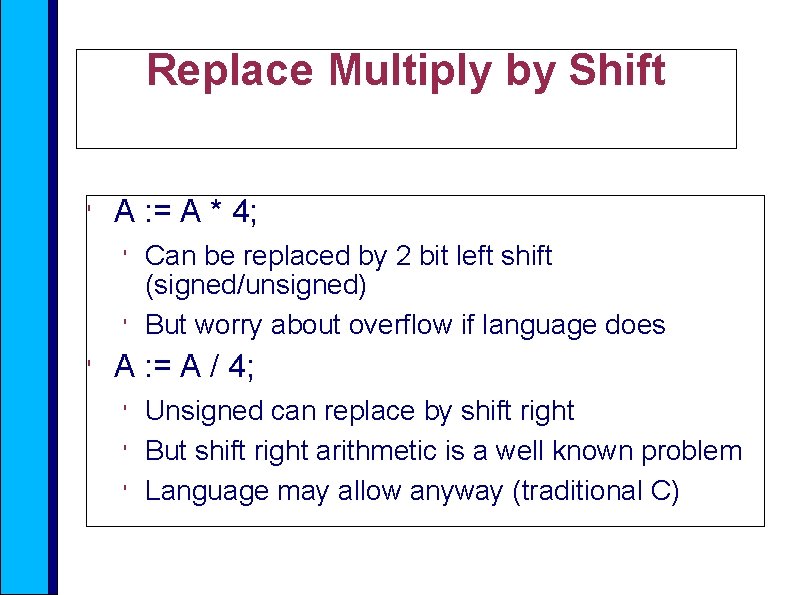

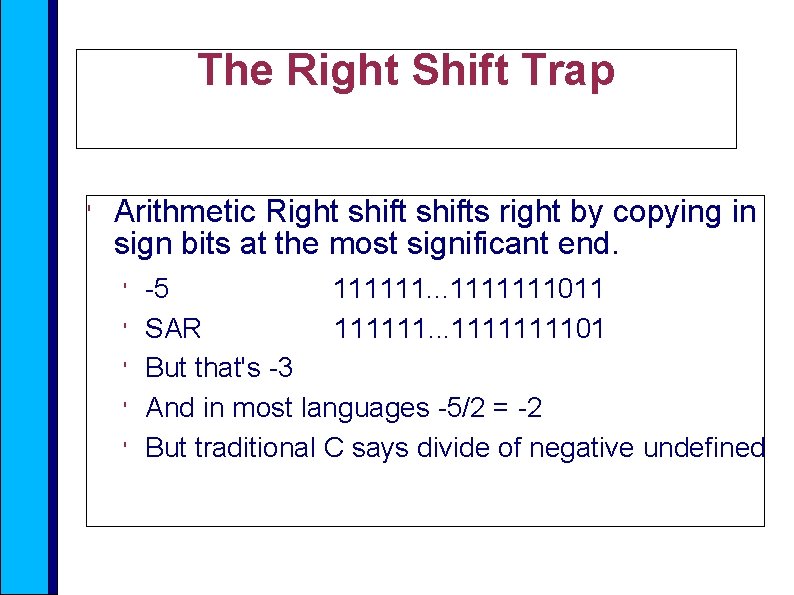

Replace Multiply by Shift

    A := A * 4;

This can be replaced by a 2-bit left shift (signed or unsigned), but worry about overflow if the language requires overflow to be detected.

    A := A / 4;

For unsigned values this can be replaced by a right shift. For signed values, shift right arithmetic is a well-known problem, but the language may allow it anyway (traditional C).

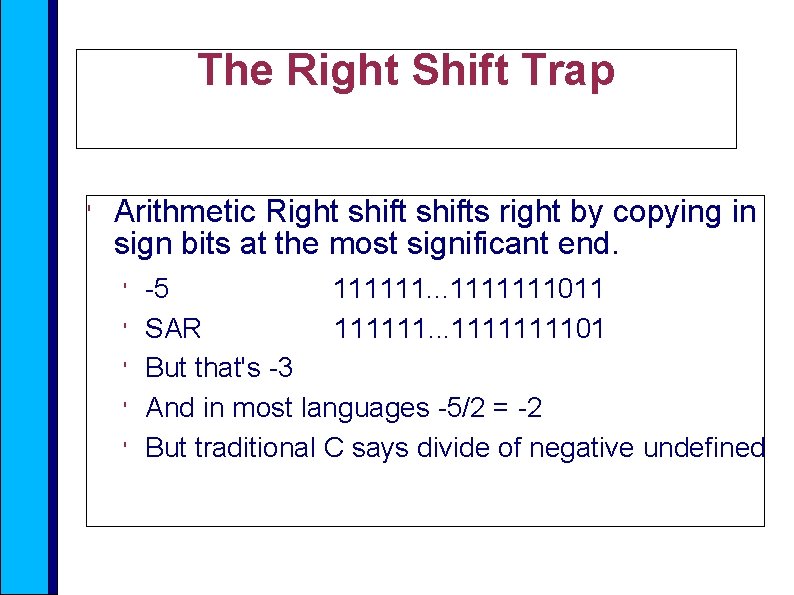

The Right Shift Trap

Arithmetic right shift (SAR) shifts right by copying in sign bits at the most significant end:

    -5       111111...1111111011
    SAR 1    111111...1111111101

But that's -3, and in most languages -5 / 2 = -2. Traditional C, however, leaves the result of dividing a negative number implementation-defined.
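
The trap, and the usual repair, can be checked on 32-bit values. A minimal sketch, assuming two's-complement 32-bit integers and a language whose `/` truncates toward zero; `sar`, `trunc_div` and `div_pow2` are hypothetical helper names. Adding 2^k - 1 to a negative dividend before shifting is a standard fix, not something the slides prescribe: it makes the shift round toward zero instead of toward minus infinity.

```python
def to_i32(x):
    """Wrap x to a 32-bit two's-complement value."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x & 0x80000000 else x


def sar(x, k):
    """Arithmetic shift right: sign bits are copied in at the top."""
    return to_i32(x) >> k          # Python's >> on negative ints is arithmetic


def trunc_div(a, b):
    """Division truncating toward zero, as in Ada or C99."""
    q = abs(a) // abs(b)
    return q if (a >= 0) == (b >= 0) else -q


def div_pow2(x, k):
    """x / 2**k (truncating) with shifts only: bias negative x by 2**k - 1."""
    bias = sar(x, 31) & ((1 << k) - 1)     # 2**k - 1 if x < 0, else 0
    return sar(to_i32(x + bias), k)


print(sar(-5, 1), trunc_div(-5, 2), div_pow2(-5, 1))   # -3 -2 -2
print(to_i32(-5 * 4) == to_i32(-5 << 2))               # True: A * 4 is A shifted left 2
```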

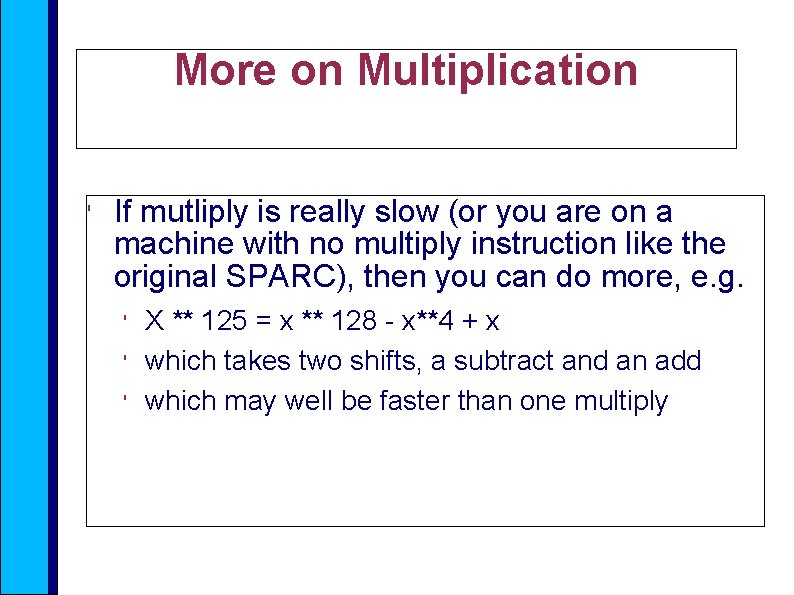

More on Multiplication

If multiply is really slow (or you are on a machine with no multiply instruction, like the original SPARC), then you can do more, e.g.

    X * 125 = X * 128 - X * 4 + X

which takes two shifts, a subtract and an add, and may well be faster than one multiply.
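
Finding such a decomposition for an arbitrary constant can be automated. A minimal sketch using non-adjacent-form (signed-digit) recoding of the constant, a standard technique swapped in here because the slide does not say how the decomposition is found; `shift_add_plan` and `mul_by_plan` are hypothetical names. For 125 it reproduces `X * 128 - X * 4 + X`.

```python
def shift_add_plan(c):
    """Recode a positive constant c as a sum of terms d * 2**bit, d in {+1, -1}.

    The non-adjacent form never has two nonzero digits side by side, which
    keeps the number of shifts and adds/subtracts small.
    """
    if c <= 0:
        raise ValueError("expects a positive constant")
    terms, bit = [], 0
    while c:
        if c & 1:
            d = 2 - (c & 3)        # +1 if c % 4 == 1, -1 if c % 4 == 3
            terms.append((d, bit))
            c -= d
        c >>= 1
        bit += 1
    return terms


def mul_by_plan(x, terms):
    """Multiply using only shifts, adds and subtracts."""
    result = 0
    for d, bit in terms:
        result = result + (x << bit) if d > 0 else result - (x << bit)
    return result


print(shift_add_plan(125))   # [(1, 0), (-1, 2), (1, 7)], i.e. X - X*4 + X*128
```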

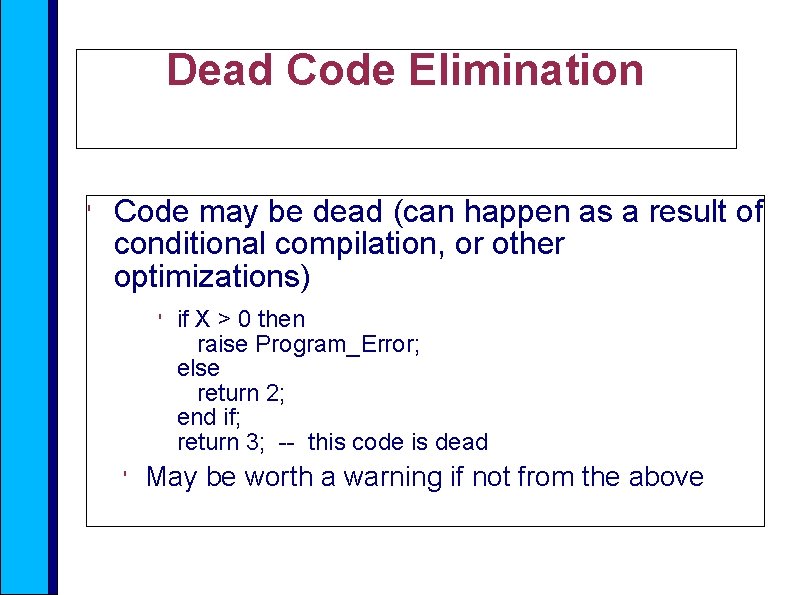

Dead Code Elimination

Code may be dead (this can happen as a result of conditional compilation, or of other optimizations):

    if X > 0 then
       raise Program_Error;
    else
       return 2;
    end if;
    return 3;   -- this code is dead

It may be worth a warning if the dead code did not arise from one of the causes above.
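
Detecting the dead `return 3;` needs one small rule: an `if` whose branches both leave the procedure cannot fall through. A minimal sketch, assuming statements are represented as tuples and that there are no labels or gotos that could jump into the middle of a statement list; `always_exits` and `remove_dead` are hypothetical names.

```python
def always_exits(stmt):
    """True if control can never fall through past stmt."""
    kind = stmt[0]
    if kind in ("return", "raise"):
        return True
    if kind == "if":                        # ("if", condition, then_part, else_part)
        return exits(stmt[2]) and exits(stmt[3])
    return False


def exits(stmts):
    return any(always_exits(s) for s in stmts)


def remove_dead(stmts):
    """Drop statements that follow one that control can never fall out of."""
    out = []
    for s in stmts:
        if s[0] == "if":
            s = ("if", s[1], remove_dead(s[2]), remove_dead(s[3]))
        out.append(s)
        if always_exits(s):
            break                           # the rest of this list is unreachable
    return out


body = [("if", "X > 0", [("raise", "Program_Error")], [("return", "2")]),
        ("return", "3")]
print(remove_dead(body))   # the final ("return", "3") is gone
```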

Inlining

- Instead of generating a call to a small procedure, put the procedure code inline, substituting parameters appropriately.
- This saves the call.
- The compiler must know the body. Some languages (Ada, C++) enable this notion explicitly, but it can also be done implicitly by the compiler for simple cases.

Inlining Cache Effects

- A simple direct-mapped cache uses the low-order bits of the address to determine the cache line.
- This means that two addresses may always end up in the same cache line, so that a reference to either address evicts the other.
- This happens with instructions in the icache.

Inlining Cache Effects

Suppose we have a loop:

    for J in 1 .. N loop
       A (J) := sqrt (A (J));
    end loop;

What if the code for the assignment and the code for the sqrt function map to the same cache line? We get an efficiency disaster! Inlining the sqrt code avoids this anomaly.
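
The conflict is easy to see by computing which line an address lands in. A minimal sketch, assuming a 32 KiB direct-mapped instruction cache with 64-byte lines; both the cache geometry and the two code addresses are hypothetical. Any two addresses that differ by a multiple of the cache size map to the same line.

```python
LINE_SIZE = 64      # assumed bytes per cache line
NUM_LINES = 512     # assumed number of lines: a 32 KiB direct-mapped icache


def cache_line(addr):
    """Direct-mapped: the address bits just above the line offset pick the line."""
    return (addr // LINE_SIZE) % NUM_LINES


loop_code = 0x401000                           # hypothetical address of the loop body
sqrt_code = loop_code + LINE_SIZE * NUM_LINES  # hypothetical: exactly one cache size away
print(cache_line(loop_code), cache_line(sqrt_code))   # 64 64: they evict each other
```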

Peephole Optimization

Look at a few consecutive instructions (few might be 2 to 4) and see if an obvious replacement is possible:

    MOV %eax => mema
    MOV mema => %eax

We can obviously eliminate the second instruction, without needing any global knowledge of mema.
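
The store-then-reload rule fits in a two-instruction window. A minimal sketch, assuming instructions are `(op, src, dst)` tuples in the slide's `MOV src => dst` form, that the second instruction is not a jump target, and that `mema` is ordinary memory (not memory-mapped I/O); `remove_reload` is a hypothetical name.

```python
def remove_reload(code):
    """Peephole over a window of two instructions.

    code: list of (op, src, dst) tuples.
    A MOV that copies back the value the previous MOV just copied is dropped.
    """
    out = []
    for ins in code:
        prev = out[-1] if out else None
        if (prev and ins[0] == prev[0] == "MOV"
                and ins[1] == prev[2] and ins[2] == prev[1]):
            continue                # MOV r => m ; MOV m => r: r already holds the value
        out.append(ins)
    return out


print(remove_reload([("MOV", "%eax", "mema"), ("MOV", "mema", "%eax"), ("ADD", "%ebx", "%eax")]))
# [('MOV', '%eax', 'mema'), ('ADD', '%ebx', '%eax')]
```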

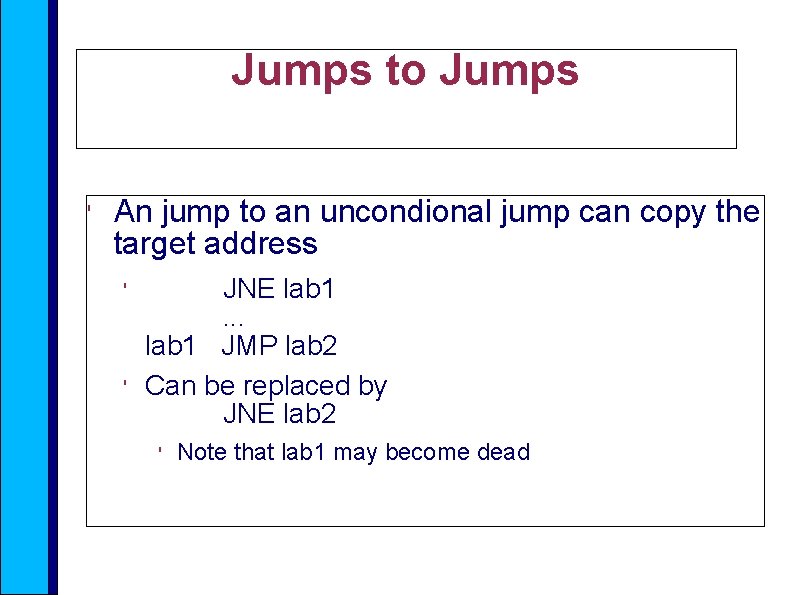

Jumps to Jumps

A jump to an unconditional jump can copy the target address:

          JNE lab1
          ...
    lab1: JMP lab2

The JNE can be replaced by JNE lab2. Note that lab1 may become dead.

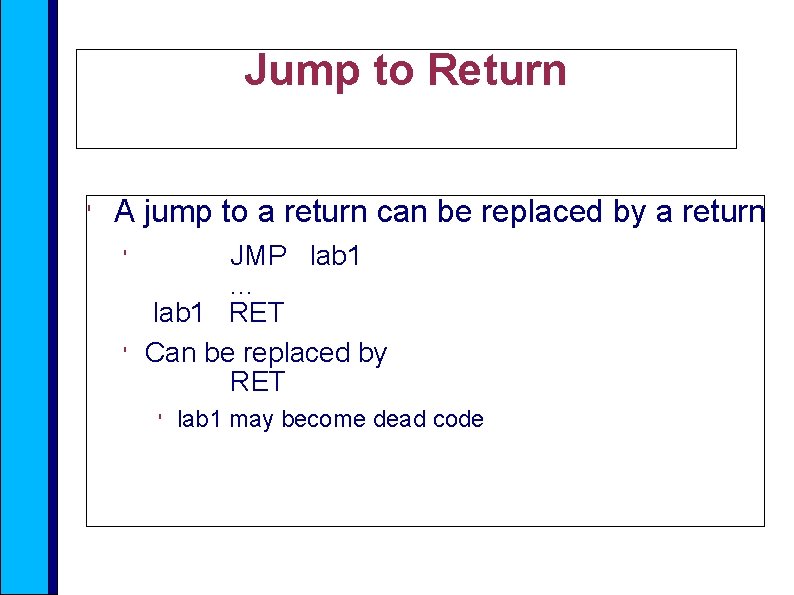

Jump to Return

A jump to a return can be replaced by a return:

          JMP lab1
          ...
    lab1: RET

The JMP can be replaced by RET. lab1 may become dead code.
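
Both jump rules can be applied in one pass over the code. A minimal sketch, assuming instructions are `(label, op, target)` tuples and that any opcode starting with `J` is a jump; `thread_jumps` is a hypothetical name. Only an unconditional `JMP` becomes a `RET`, since the x86 has no conditional return instruction.

```python
def thread_jumps(code):
    """Apply the jump-to-jump and jump-to-return rules.

    code: list of (label, op, target), with label/target None when absent.
    """
    at = {lab: (op, tgt) for lab, op, tgt in code if lab}
    out = []
    for lab, op, tgt in code:
        if op.startswith("J"):
            seen = set()
            # Jump to jump: follow chains of unconditional JMPs (guarding against cycles).
            while tgt in at and at[tgt][0] == "JMP" and tgt not in seen:
                seen.add(tgt)
                tgt = at[tgt][1]
            # Jump to return: only an unconditional JMP can become a RET.
            if op == "JMP" and tgt in at and at[tgt][0] == "RET":
                op, tgt = "RET", None
        out.append((lab, op, tgt))
    return out


code = [(None, "JNE", "lab1"), (None, "JMP", "lab3"),
        ("lab1", "JMP", "lab2"), ("lab2", "INC", "eax"), ("lab3", "RET", None)]
print(thread_jumps(code)[:2])   # [(None, 'JNE', 'lab2'), (None, 'RET', None)]
```

After this pass `lab1` is no longer referenced; deleting it is a separate dead-code step.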

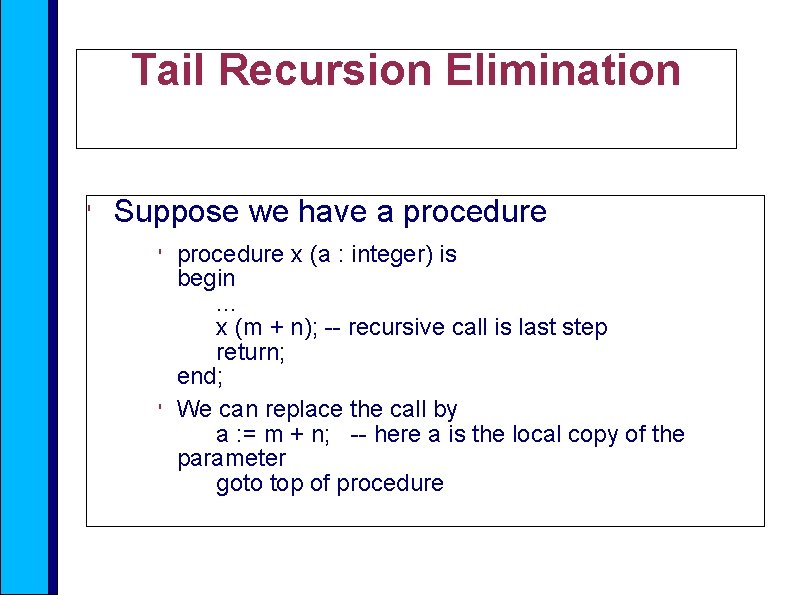

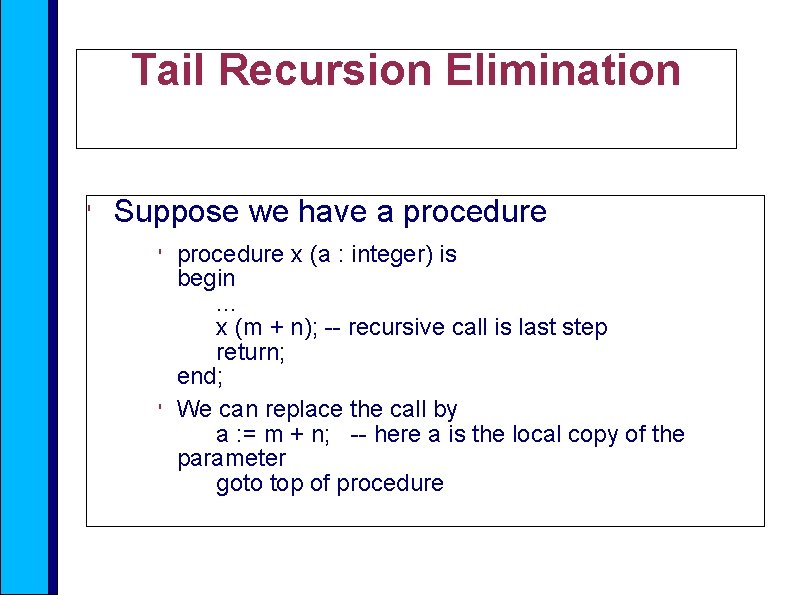

Tail Recursion Elimination

Suppose we have a procedure:

    procedure x (a : integer) is
    begin
       ...
       x (m + n);   -- recursive call is last step
       return;
    end;

We can replace the call by:

    a := m + n;     -- here a is the local copy of the parameter
    goto top of procedure
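
The transformation can be shown on a small tail-recursive function. A minimal sketch: `total` and `total_tre` are hypothetical examples (summing 1 to n with an accumulator parameter), not taken from the slides. Python does not perform this optimization itself, so the recursive form needs one stack frame per call and would exceed CPython's default recursion limit for large n, while the converted loop runs in constant stack space. The simultaneous assignment matters: each new parameter value must be computed from the old values, just as the arguments of the call would be.

```python
def total(n, acc=0):
    """Recursive form: the recursive call is the last step."""
    if n == 0:
        return acc
    return total(n - 1, acc + n)


def total_tre(n, acc=0):
    """Same procedure after tail recursion elimination."""
    while True:                        # "top of procedure"
        if n == 0:
            return acc
        n, acc = n - 1, acc + n        # assign the new parameter values, then loop


print(total(100), total_tre(100))      # 5050 5050
print(total_tre(100000))               # 5000050000, no deep recursion needed
```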

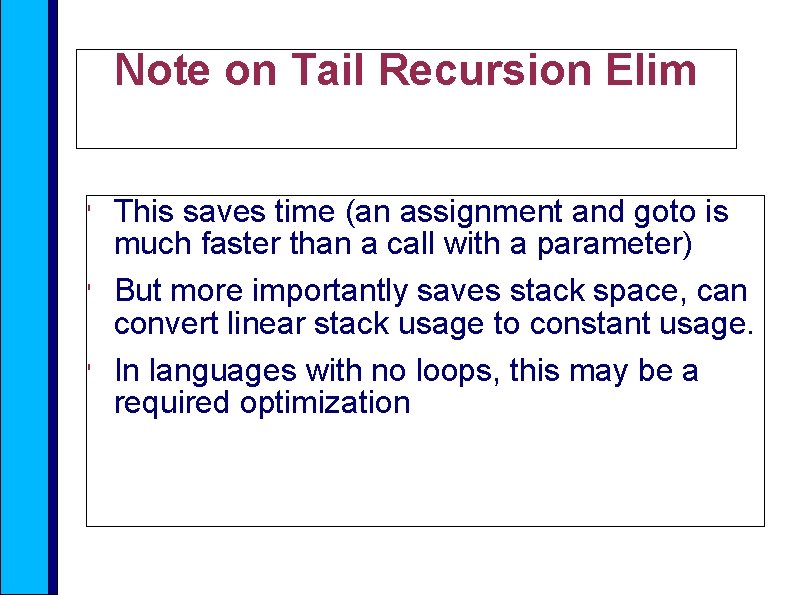

Note on Tail Recursion Elim

- This saves time (an assignment and a goto are much faster than a call with a parameter).
- But more importantly it saves stack space: it can convert linear stack usage into constant usage.
- In languages with no loops, this may be a required optimization.

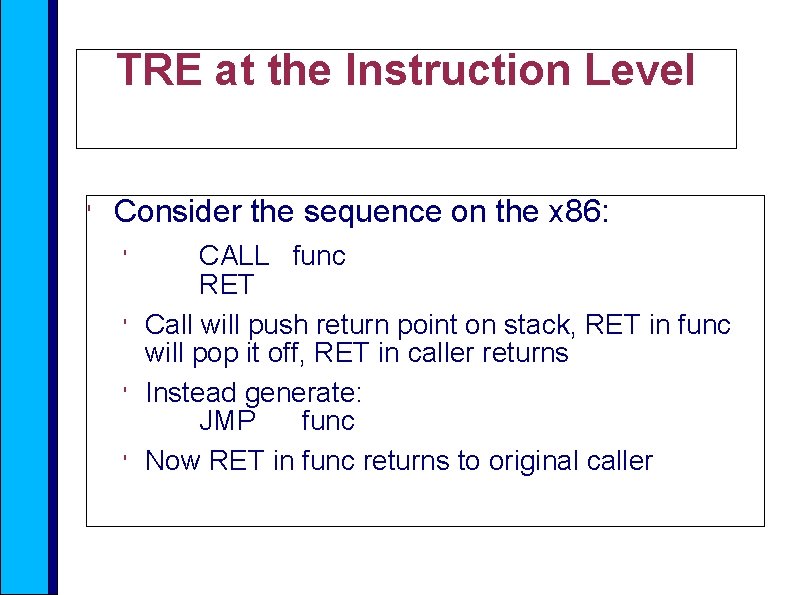

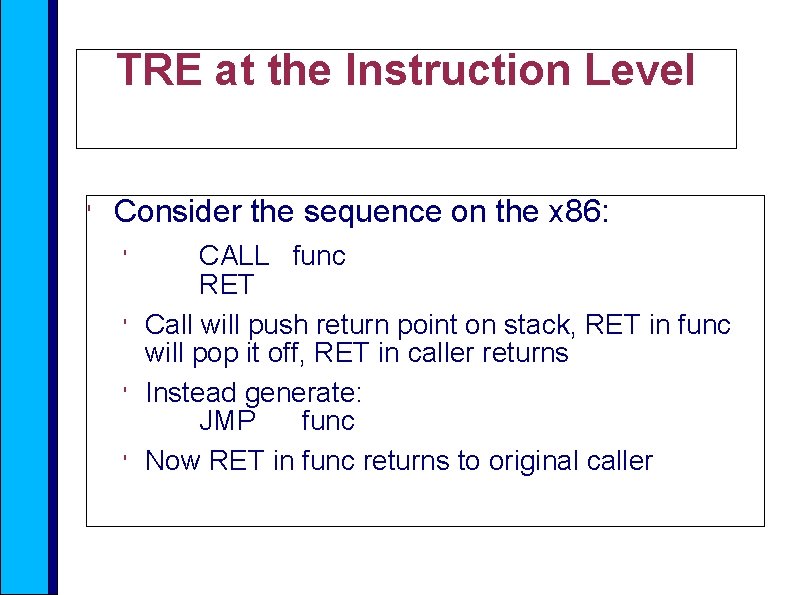

TRE at the Instruction Level

Consider the sequence on the x86:

    CALL func
    RET

The CALL pushes the return point on the stack, the RET in func pops it off, and the RET in the caller then returns. Instead generate:

    JMP func

Now the RET in func returns directly to the original caller.
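
As a peephole rule this is a two-instruction pattern. A minimal sketch, assuming instructions are plain strings and that the caller has nothing of its own left on the stack at the CALL (no pushed arguments or frame), since otherwise func's RET would not find the return address on top; `call_ret_to_jmp` is a hypothetical name.

```python
def call_ret_to_jmp(code):
    """Replace `CALL f` immediately followed by `RET` with `JMP f`.

    The RET is left in place: it may carry a label, and if not it is now dead
    code for a later pass to remove.
    """
    out = list(code)
    for i in range(len(out) - 1):
        if out[i].startswith("CALL ") and out[i + 1] == "RET":
            out[i] = "JMP " + out[i][len("CALL "):]
    return out


print(call_ret_to_jmp(["MOV eax, 1", "CALL func", "RET"]))
# ['MOV eax, 1', 'JMP func', 'RET']
```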

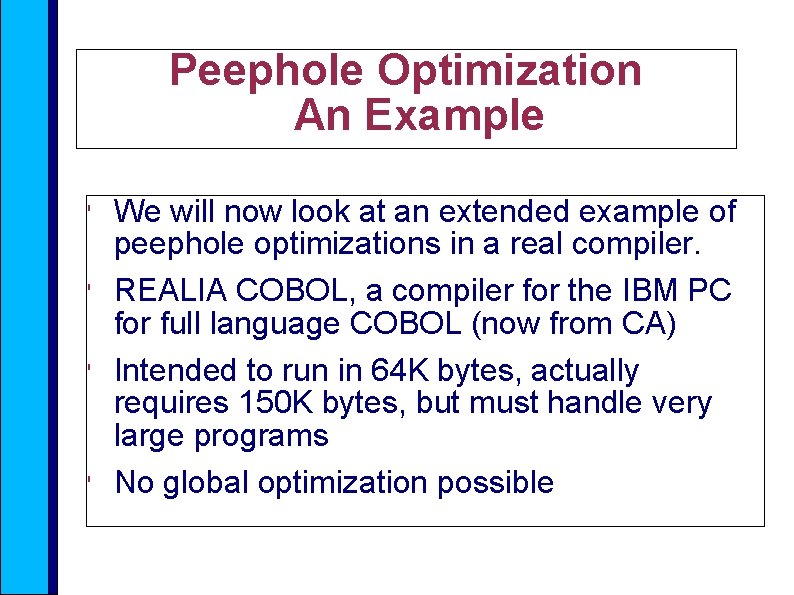

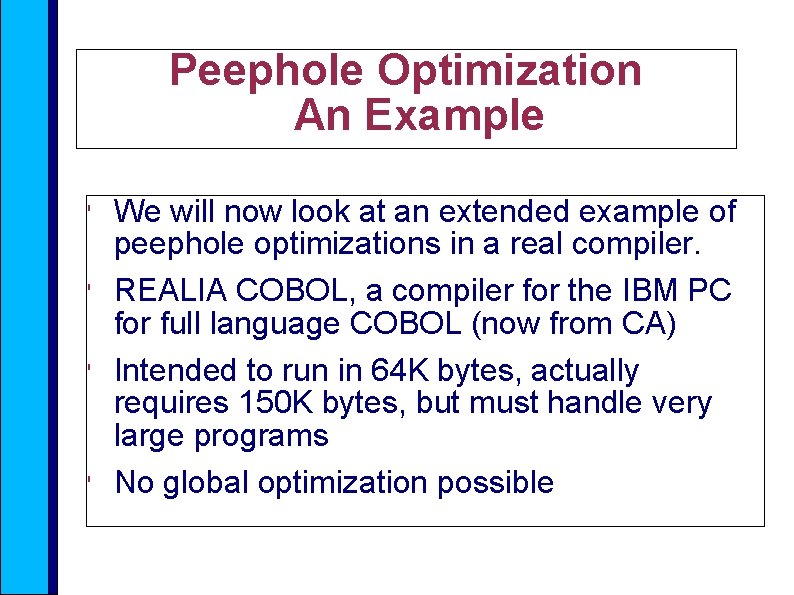

Peephole Optimization: An Example

- We will now look at an extended example of peephole optimizations in a real compiler.
- REALIA COBOL, a compiler for the IBM PC for full-language COBOL (now from CA).
  - Intended to run in 64K bytes (actually requires 150K bytes), but must handle very large programs.
  - No global optimization possible.

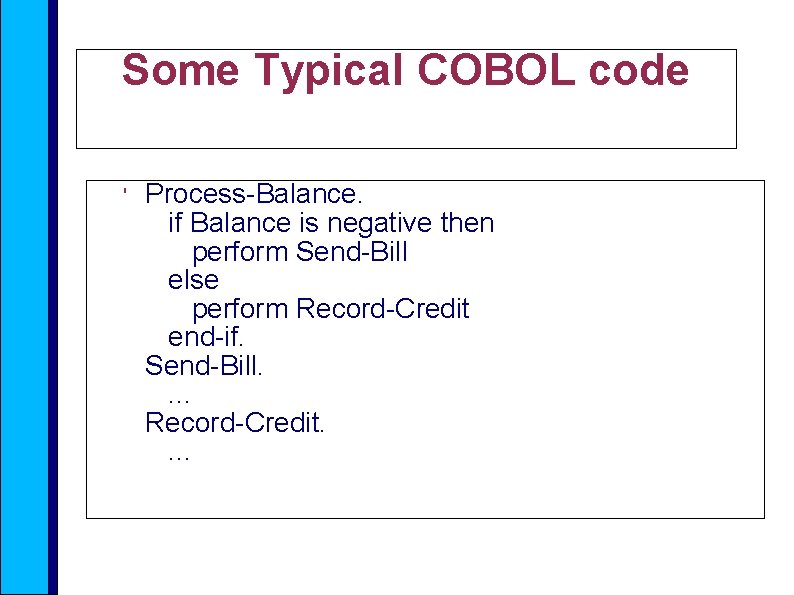

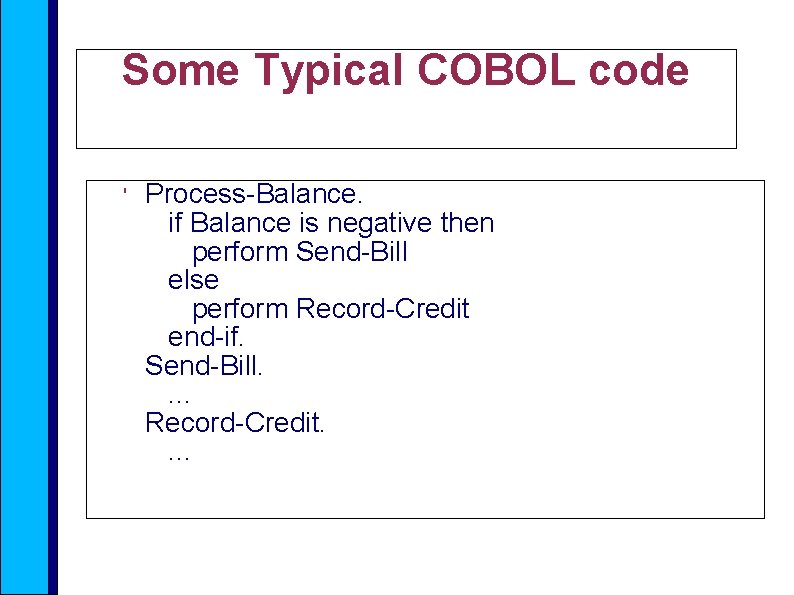

Some Typical COBOL code

    Process-Balance.
        if Balance is negative
            perform Send-Bill
        else
            perform Record-Credit
        end-if.
    Send-Bill.
        ...
    Record-Credit.
        ...

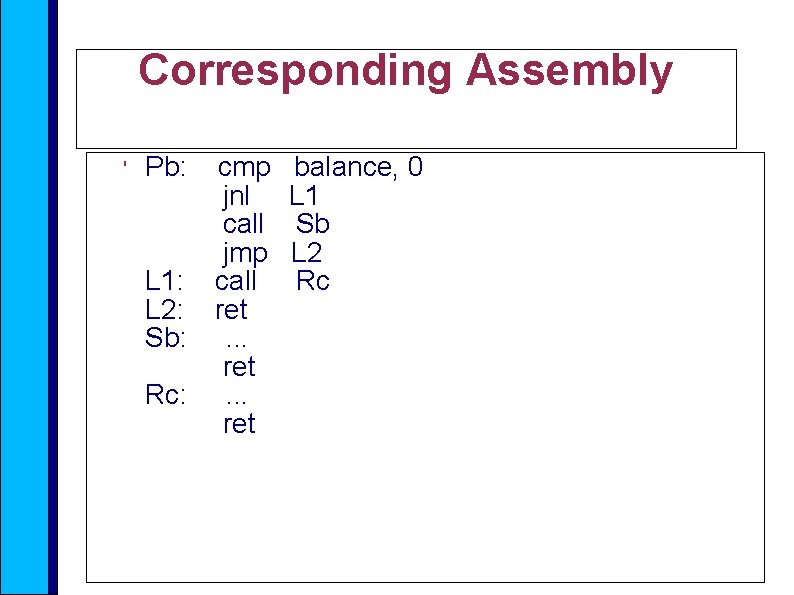

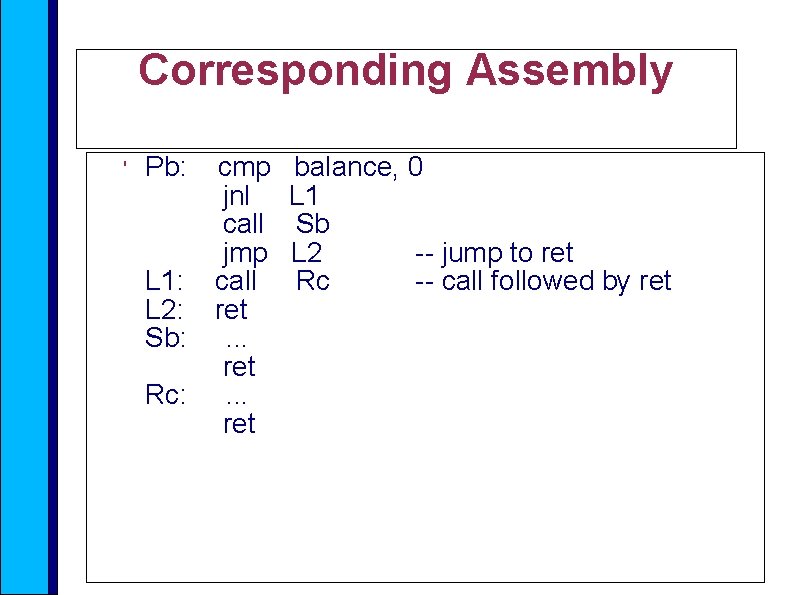

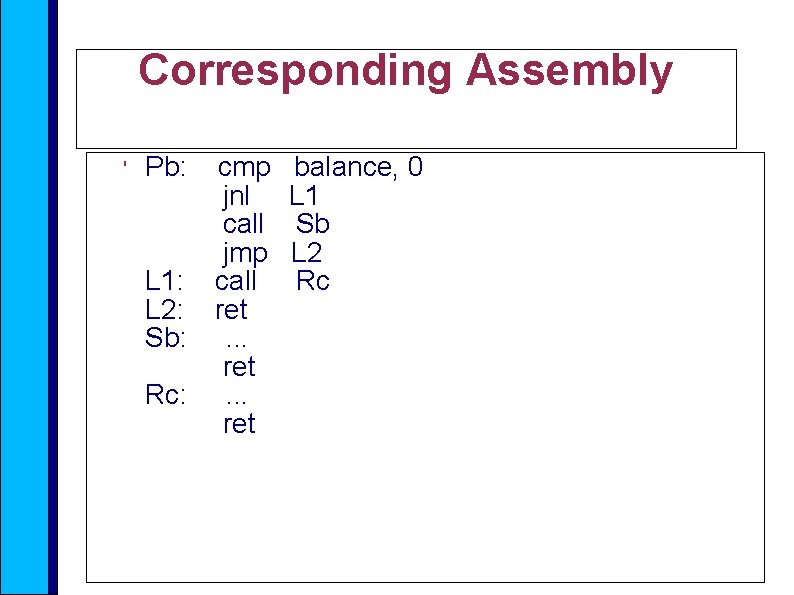

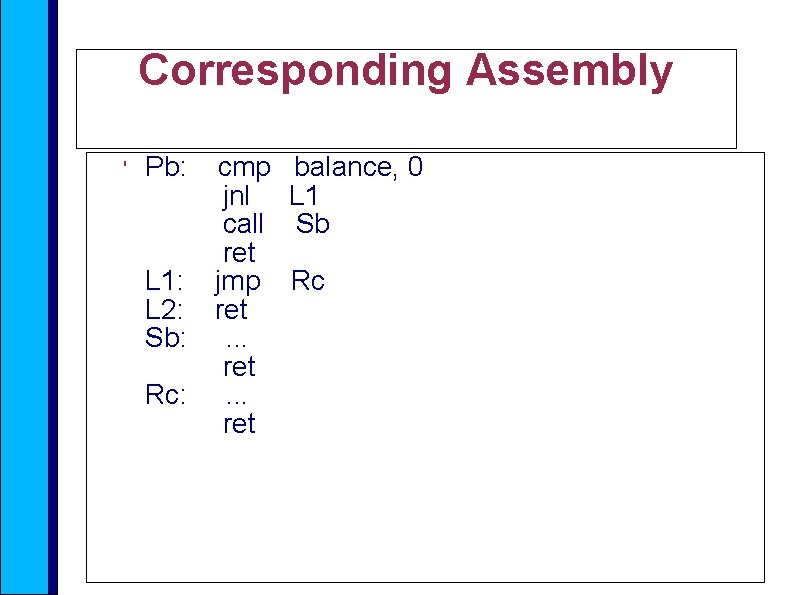

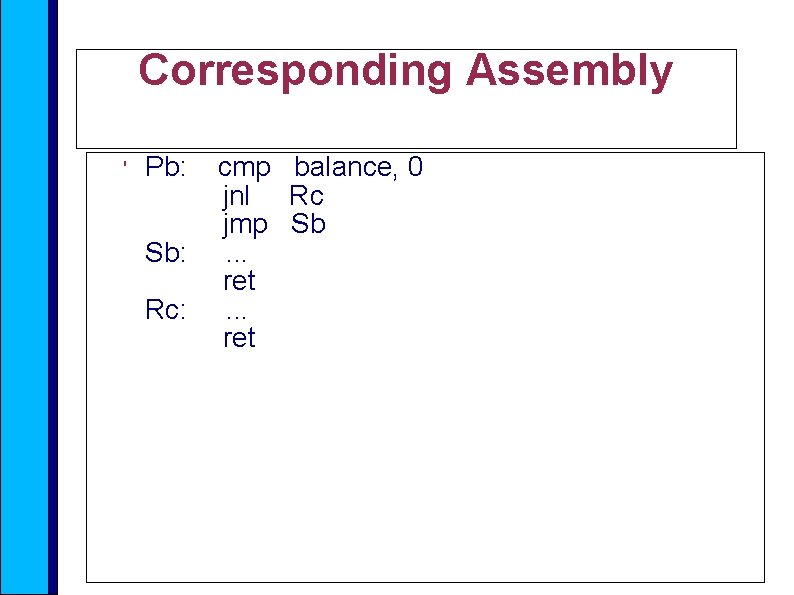

Corresponding Assembly ' Pb: cmp jnl call jmp L 1: call L 2: ret Sb: . . . ret Rc: . . . ret balance, 0 L 1 Sb L 2 Rc

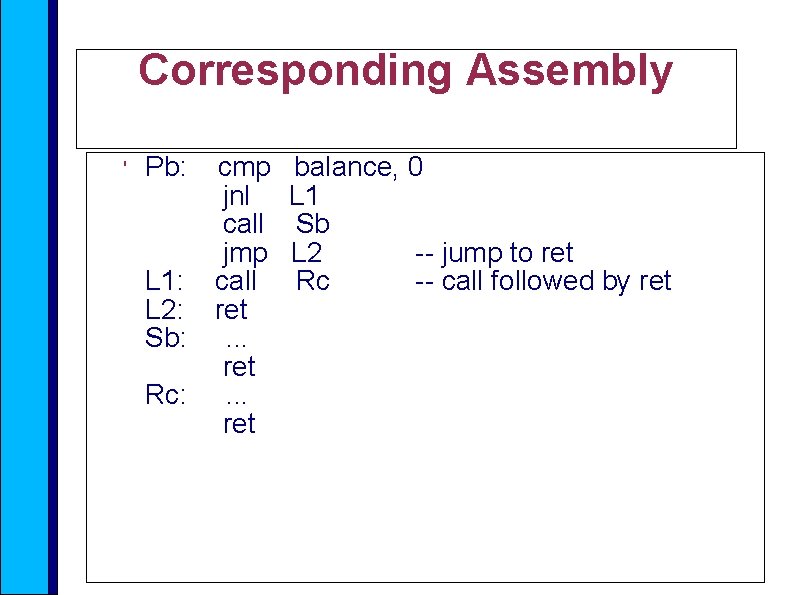

Corresponding Assembly

    Pb:  cmp  balance, 0
         jnl  L1
         call Sb
         jmp  L2         -- jump to ret
    L1:  call Rc         -- call followed by ret
    L2:  ret
    Sb:  ...
         ret
    Rc:  ...
         ret

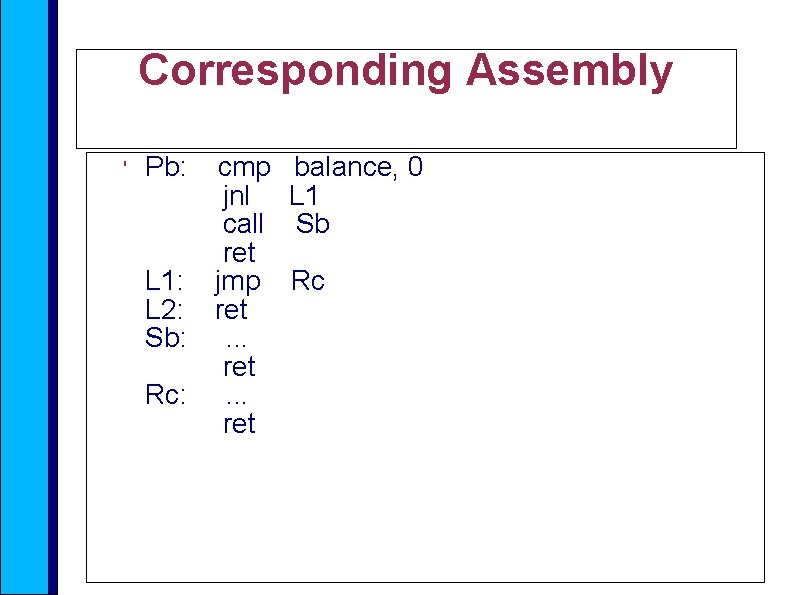

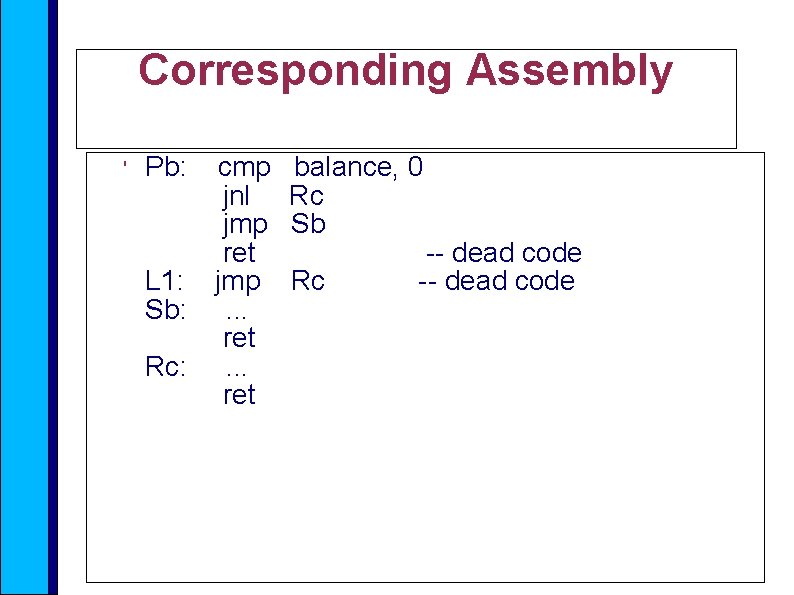

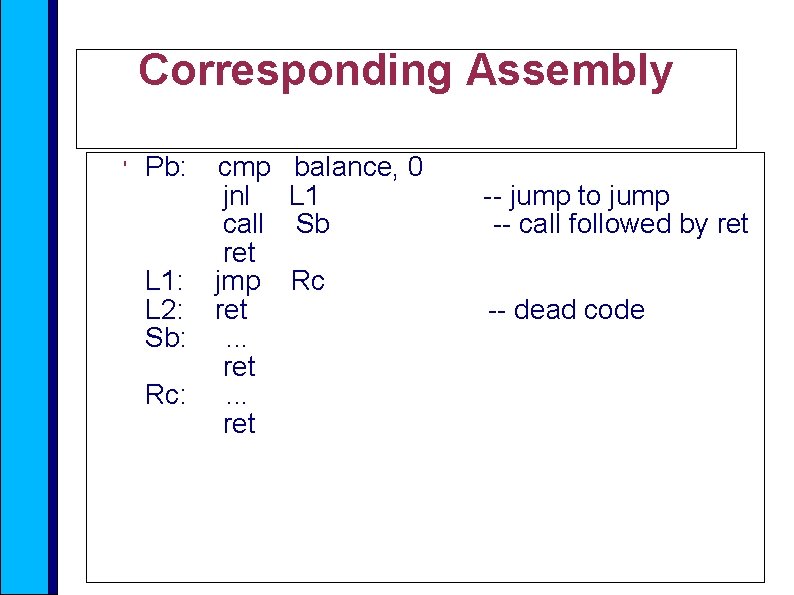

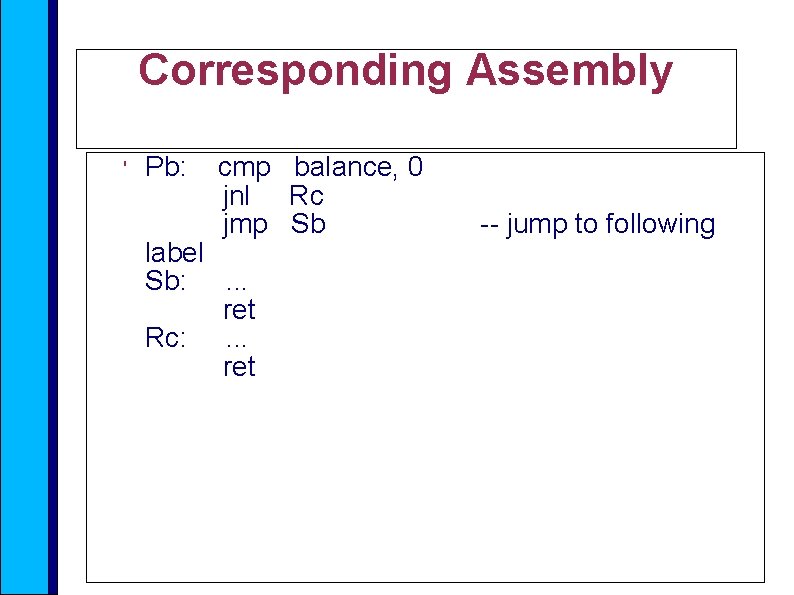

Corresponding Assembly

    Pb:  cmp  balance, 0
         jnl  L1         -- jump to jump
         call Sb         -- call followed by ret
         ret
    L1:  jmp  Rc
    L2:  ret             -- dead code
    Sb:  ...
         ret
    Rc:  ...
         ret

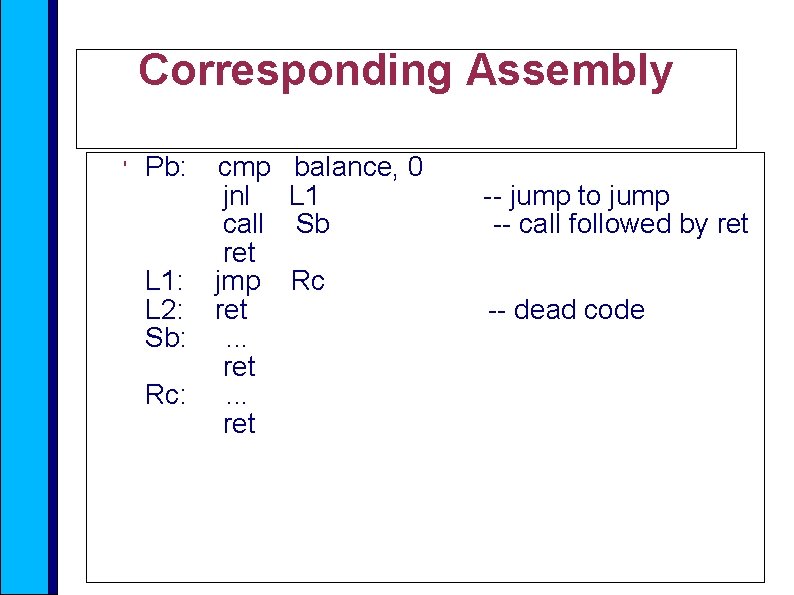

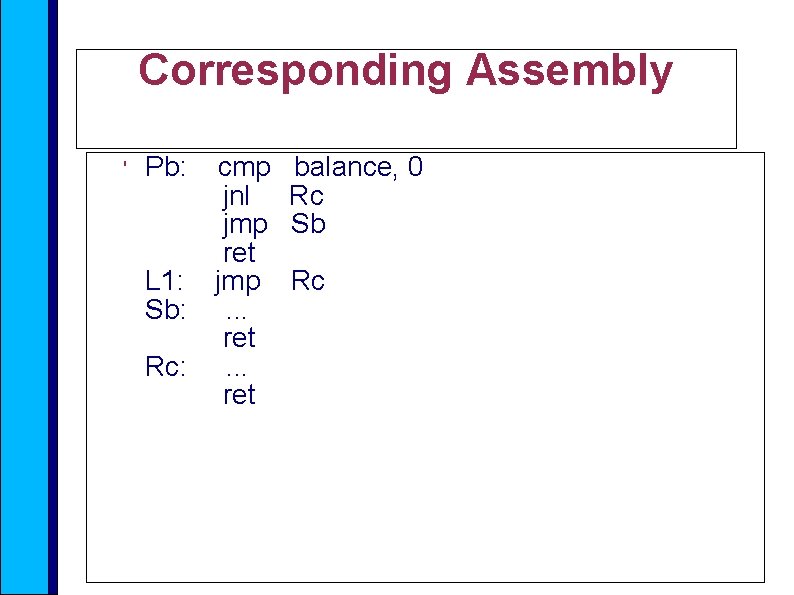

Corresponding Assembly

    Pb:  cmp  balance, 0
         jnl  Rc
         jmp  Sb
         ret             -- dead code
    L1:  jmp  Rc         -- dead code
    Sb:  ...
         ret
    Rc:  ...
         ret

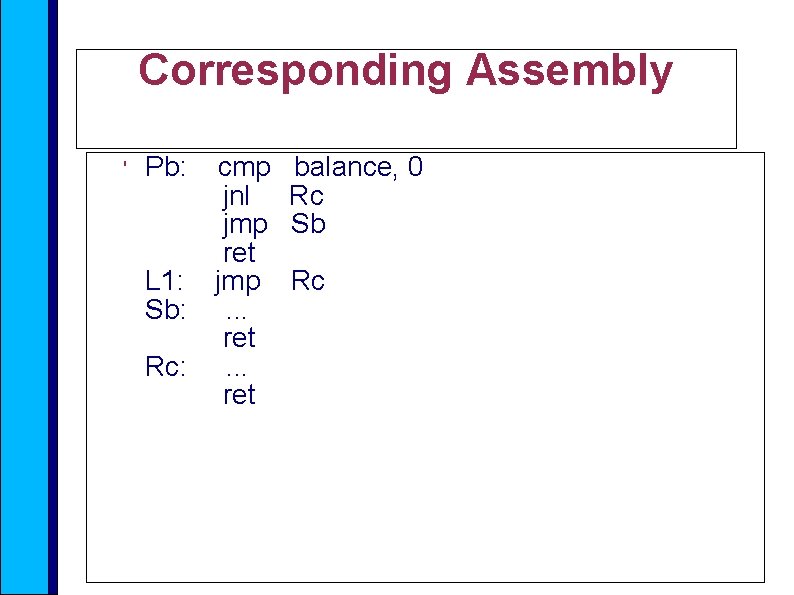

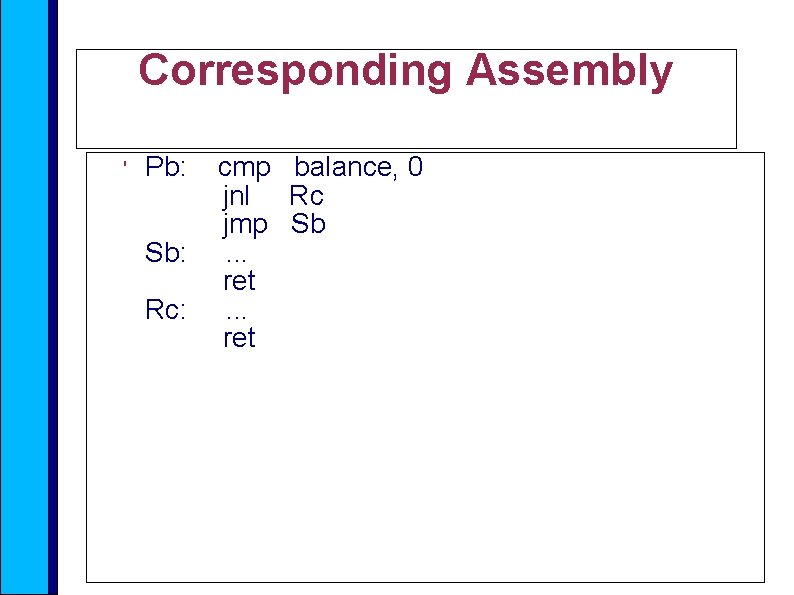

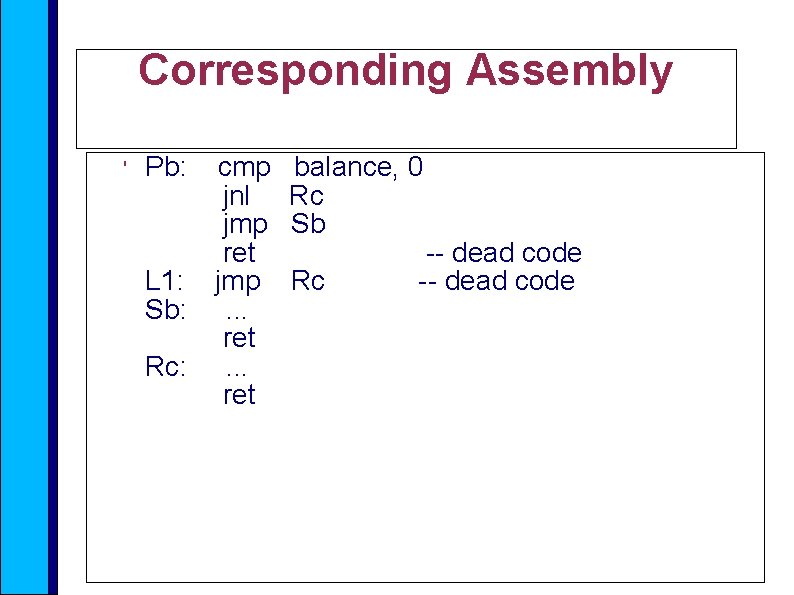

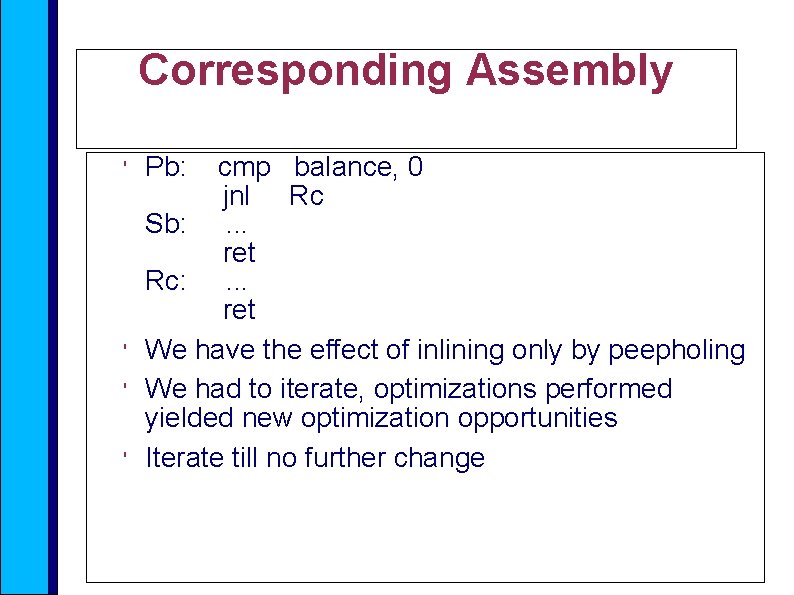

Corresponding Assembly ' Pb: Sb: Rc: cmp balance, 0 jnl Rc jmp Sb. . . ret

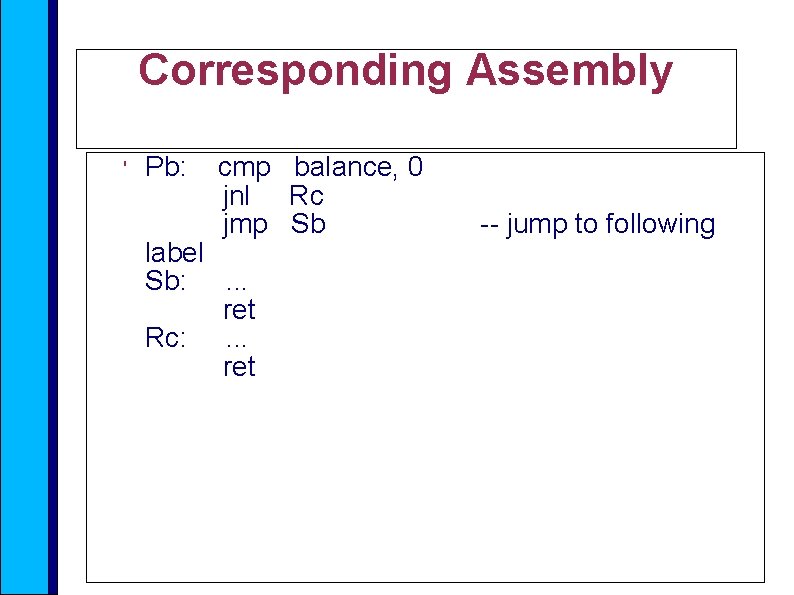

Corresponding Assembly

    Pb:  cmp  balance, 0
         jnl  Rc
         jmp  Sb         -- jump to following label
    Sb:  ...
         ret
    Rc:  ...
         ret

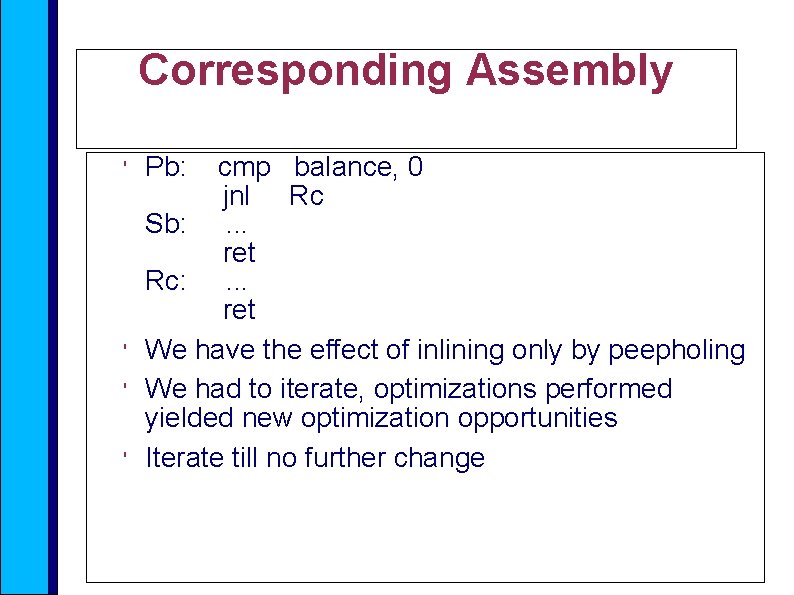

Corresponding Assembly

    Pb:  cmp  balance, 0
         jnl  Rc
    Sb:  ...
         ret
    Rc:  ...
         ret

- We have the effect of inlining purely by peepholing.
- We had to iterate: the optimizations performed yielded new optimization opportunities.
- Iterate until there is no further change.
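
The whole sequence can be replayed mechanically. A minimal sketch, assuming instructions are `(label, op, arg)` tuples, paragraph bodies are stood in for by a single `...` pseudo-instruction, and `Pb`, `Sb`, `Rc` are entry points that other code may perform. The rule set is just the five rewrites used on the slides, applied one at a time until nothing changes; the rules fire in a slightly different order than on the slides, but the fixed point is the same.

```python
JUMPS = {"jmp", "jnl"}      # the jump opcodes that occur in this example


def peephole_once(prog, entries):
    """Apply one rewrite from the slides and return the new program, or None.

    prog: list of (label, op, arg); entries: labels reachable from outside.
    """
    at = {ins[0]: i for i, ins in enumerate(prog) if ins[0]}
    out = list(prog)
    for i, (lab, op, arg) in enumerate(out):
        nxt = out[i + 1] if i + 1 < len(out) else None
        if op in JUMPS:
            tgt = out[at[arg]]
            if op == "jmp" and tgt[1] == "ret":              # jump to ret
                out[i] = (lab, "ret", None)
                return out
            if tgt[1] == "jmp" and tgt[2] != arg:            # jump to jump
                out[i] = (lab, op, tgt[2])
                return out
            if op == "jmp" and lab is None and nxt and nxt[0] == arg:
                del out[i]                                   # jump to following label
                return out
        if op == "call" and nxt and nxt[1] == "ret":         # call followed by ret
            out[i] = (lab, "jmp", arg)
            return out
    # Dead code: after jmp/ret, drop instructions whose label nobody references.
    refs = {arg for _, op, arg in out if op in JUMPS or op == "call"} | entries
    for i in range(1, len(out)):
        if out[i - 1][1] in ("jmp", "ret") and out[i][0] not in refs:
            del out[i]
            return out
    return None


def optimize(prog, entries):
    """Iterate until no further change."""
    while True:
        nxt = peephole_once(prog, entries)
        if nxt is None:
            return prog
        prog = nxt


prog = [("Pb", "cmp", "balance, 0"), (None, "jnl", "L1"), (None, "call", "Sb"),
        (None, "jmp", "L2"), ("L1", "call", "Rc"), ("L2", "ret", None),
        ("Sb", "...", "send-bill"), (None, "ret", None),
        ("Rc", "...", "record-credit"), (None, "ret", None)]
for ins in optimize(prog, {"Pb", "Sb", "Rc"}):
    print(ins)
```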

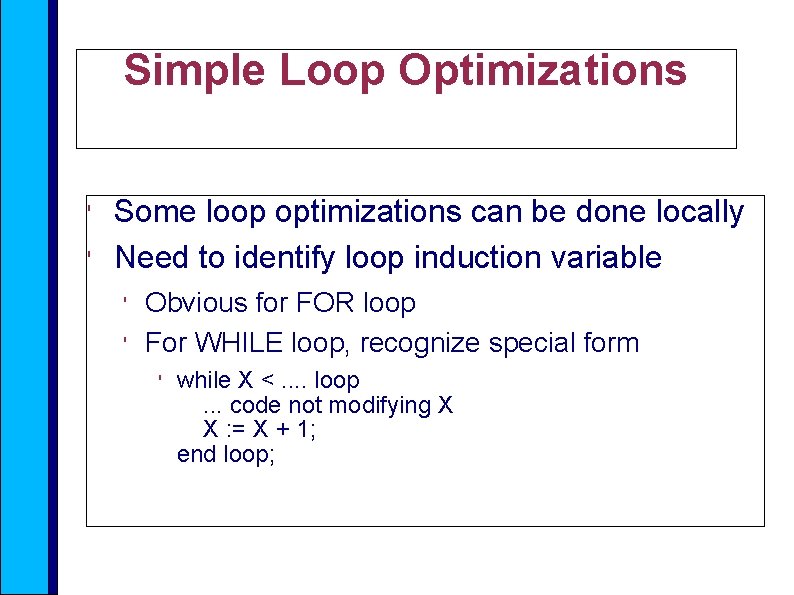

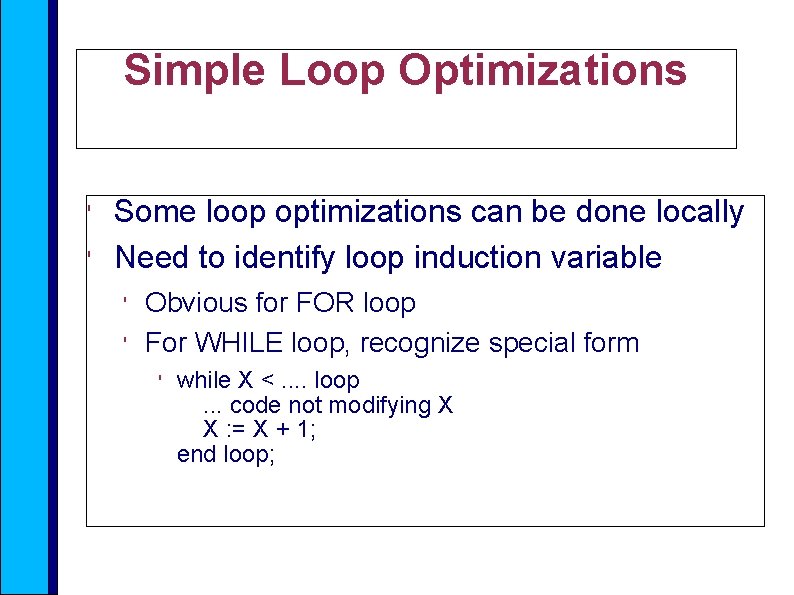

Simple Loop Optimizations

Some loop optimizations can be done locally. We need to identify the loop induction variable. This is obvious for a FOR loop; for a WHILE loop, we recognize a special form:

    while X < ... loop
       ...   -- code not modifying X
       X := X + 1;
    end loop;

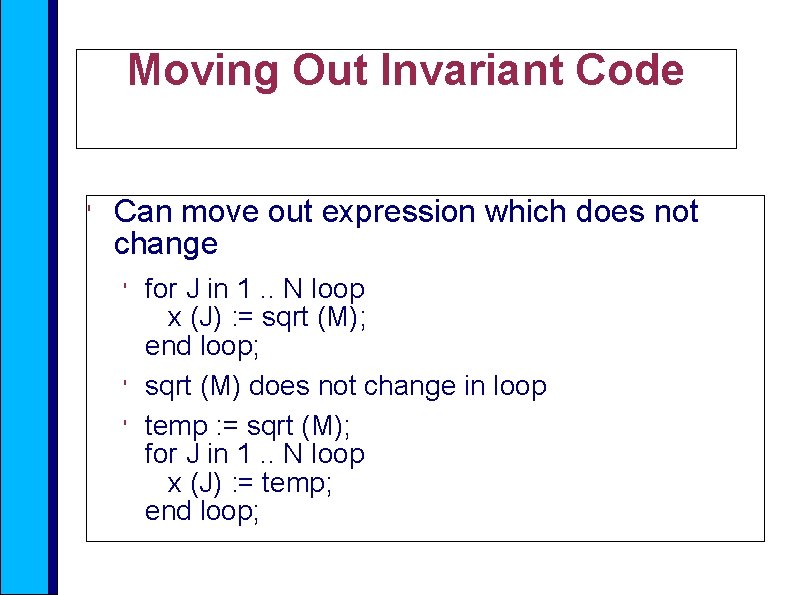

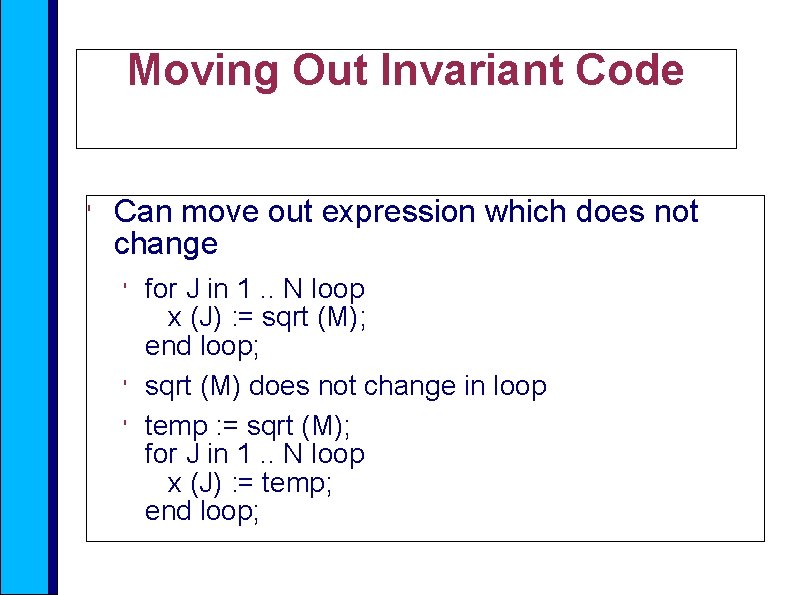

Moving Out Invariant Code

We can move out an expression which does not change:

    for J in 1 .. N loop
       x (J) := sqrt (M);
    end loop;

sqrt (M) does not change in the loop, so this becomes:

    temp := sqrt (M);
    for J in 1 .. N loop
       x (J) := temp;
    end loop;

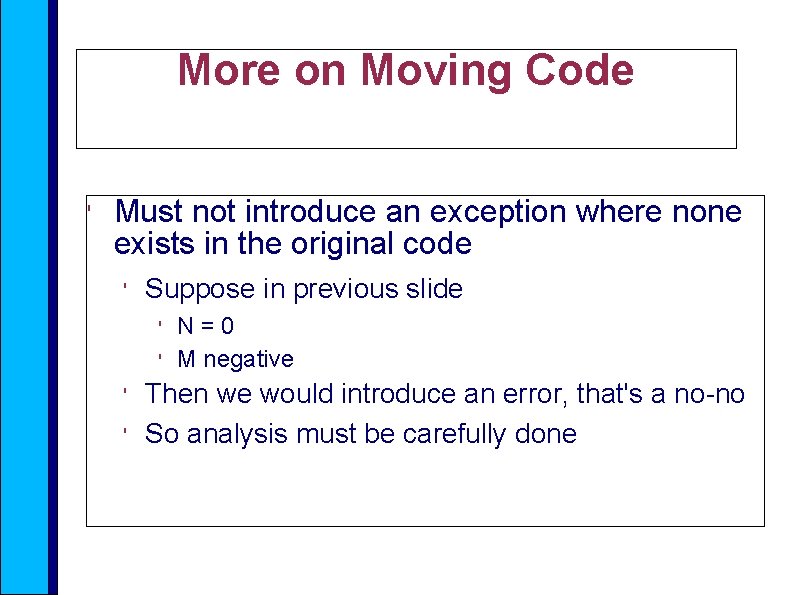

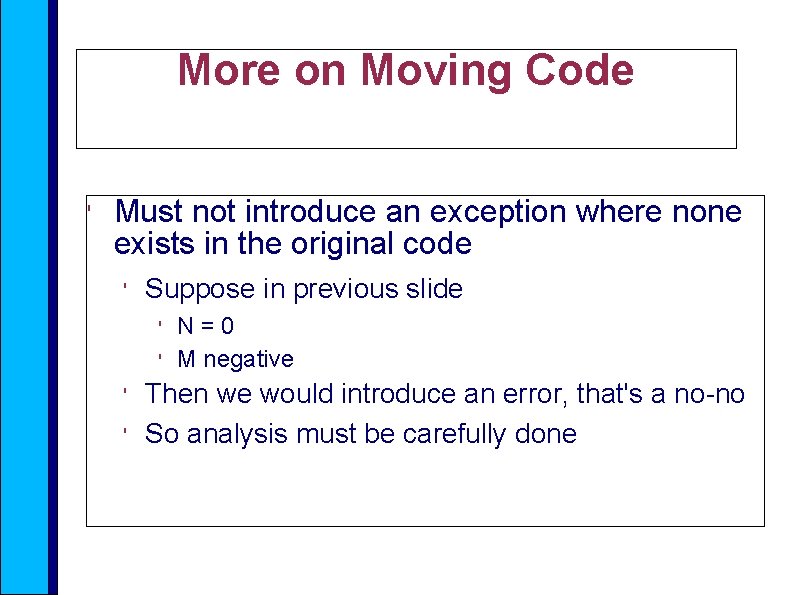

More on Moving Code

- Must not introduce an exception where none exists in the original code.
- Suppose, in the previous example, N = 0 and M is negative.
  - The original loop never calls sqrt, but the moved code would raise an error. That's a no-no.
- So the analysis must be done carefully.
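
One common way to hoist safely is to guard the hoisted code with the loop's entry test, so it runs only when the original loop body would have run at least once. A minimal sketch in which Python's `math.sqrt` stands in for `sqrt` (it raises `ValueError` for a negative argument); the three function names are hypothetical, and the guarding technique is a standard one rather than something the slides specify.

```python
import math


def loop_original(x, n, m):
    for j in range(1, n + 1):        # for J in 1 .. N loop
        x[j - 1] = math.sqrt(m)      #    x (J) := sqrt (M);


def loop_hoisted_naive(x, n, m):
    temp = math.sqrt(m)              # runs even when N = 0: may raise where the original didn't
    for j in range(1, n + 1):
        x[j - 1] = temp


def loop_hoisted_guarded(x, n, m):
    if n >= 1:                       # hoist only onto a path where the loop body runs
        temp = math.sqrt(m)
        for j in range(1, n + 1):
            x[j - 1] = temp


loop_original([], 0, -4.0)           # fine: sqrt is never called
loop_hoisted_guarded([], 0, -4.0)    # fine as well
# loop_hoisted_naive([], 0, -4.0) would raise ValueError: the no-no from the slide
```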

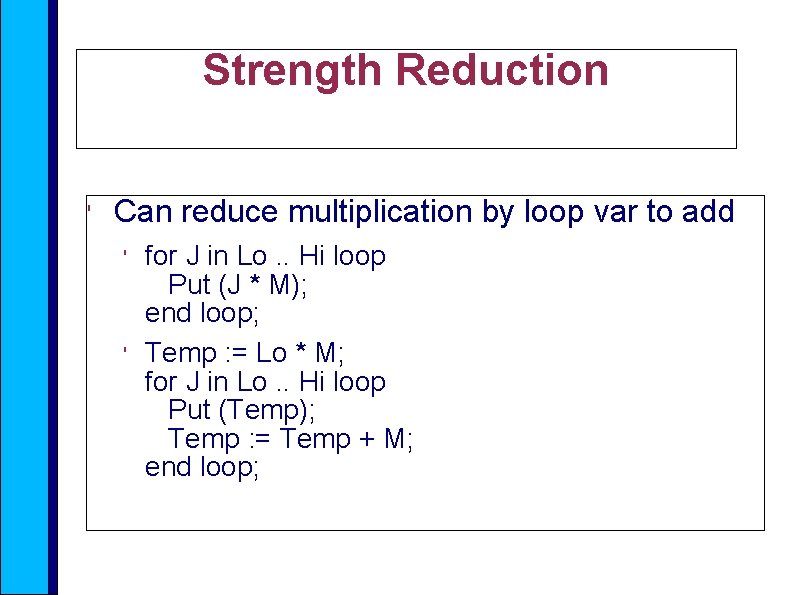

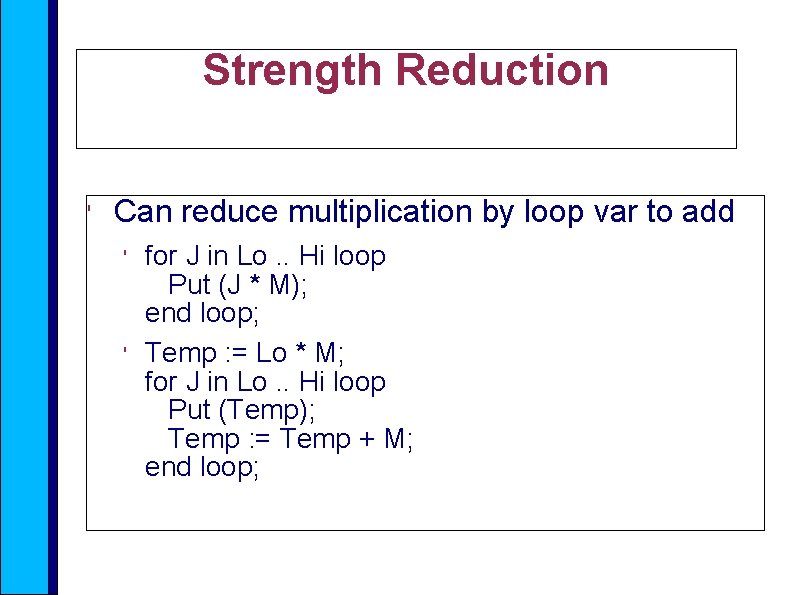

Strength Reduction

We can reduce a multiplication by the loop variable to an addition:

    for J in Lo .. Hi loop
       Put (J * M);
    end loop;

becomes

    Temp := Lo * M;
    for J in Lo .. Hi loop
       Put (Temp);
       Temp := Temp + M;
    end loop;

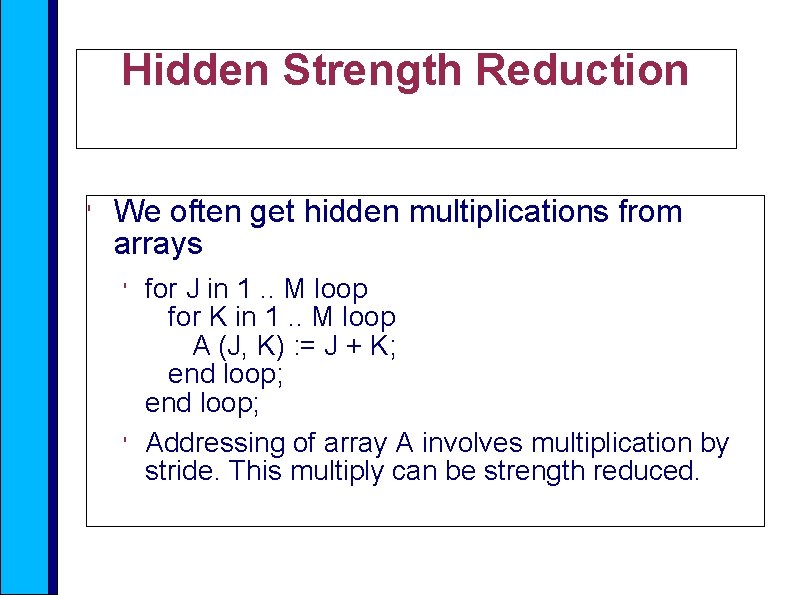

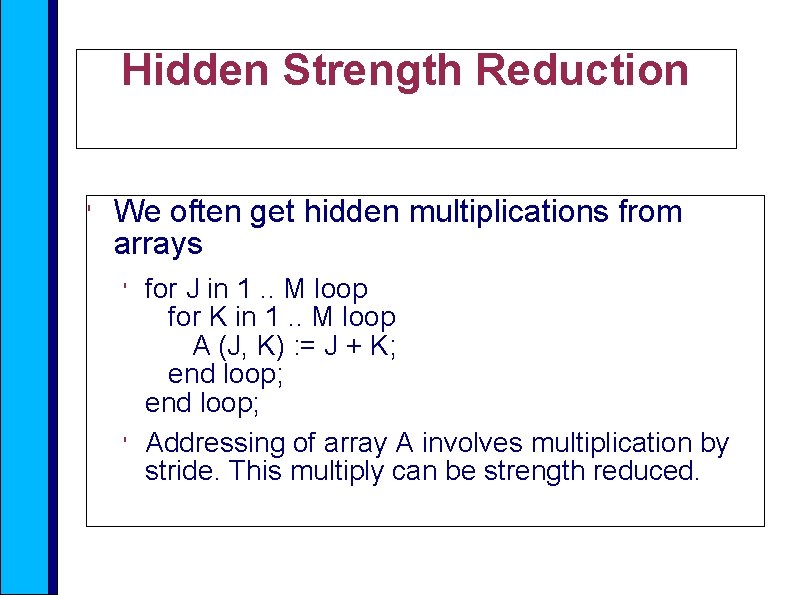

Hidden Strength Reduction

We often get hidden multiplications from arrays:

    for J in 1 .. M loop
       for K in 1 .. M loop
          A (J, K) := J + K;
       end loop;
    end loop;

Addressing of array A involves multiplication by the stride. This multiply can be strength reduced.
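
The hidden multiply is in the address computation. A minimal sketch, assuming an M by M array stored in row-major order with 1-based indices and elements of `size` bytes starting at address `base` (all assumptions; the slides don't fix a layout). The reduced version keeps a running row address and a running element address, so the only multiply left is the one computing the row stride, outside both loops.

```python
def addresses_naive(base, m, size):
    out = []
    for j in range(1, m + 1):
        for k in range(1, m + 1):
            out.append(base + ((j - 1) * m + (k - 1)) * size)   # multiplies per element
    return out


def addresses_reduced(base, m, size):
    out = []
    row = base                      # address of A (J, 1)
    stride = m * size               # the one remaining multiply, outside both loops
    for j in range(1, m + 1):
        addr = row
        for k in range(1, m + 1):
            out.append(addr)
            addr += size            # next element in the row
        row += stride               # next row
    return out


print(addresses_reduced(0, 2, 4))   # [0, 4, 8, 12]
```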

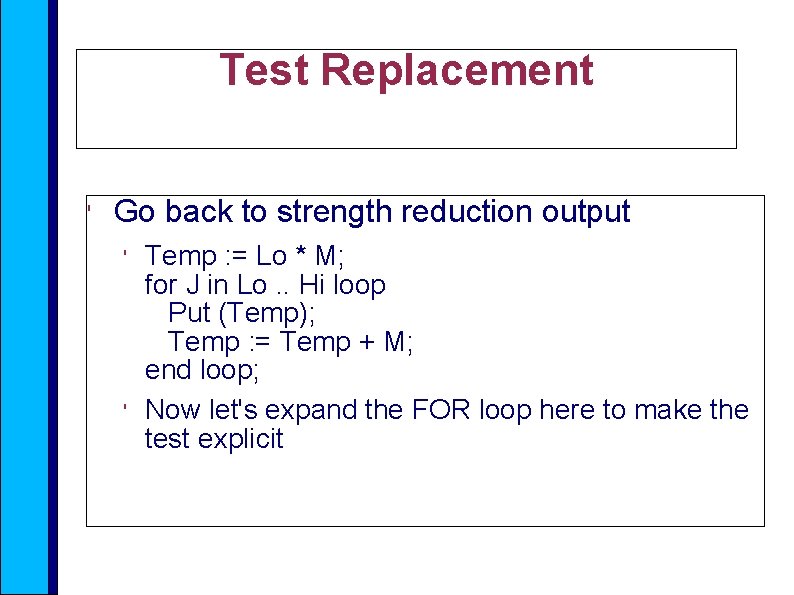

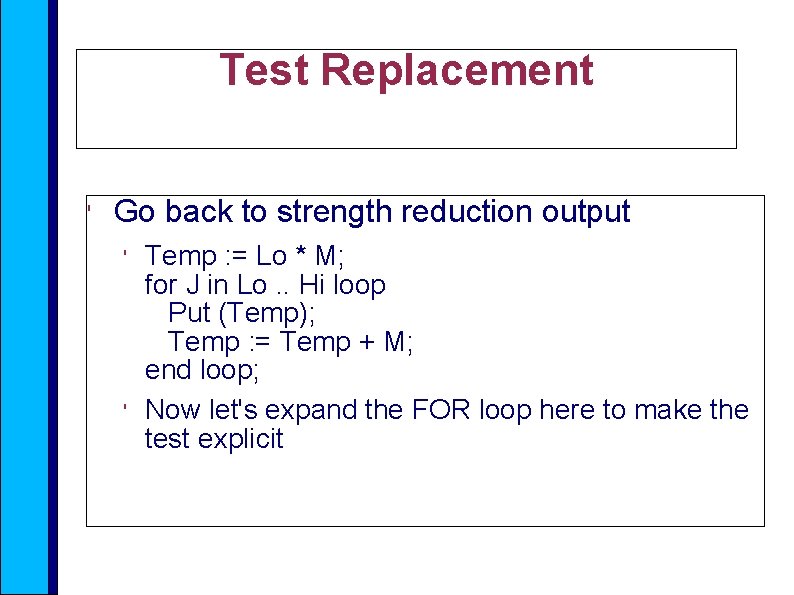

Test Replacement

Go back to the strength reduction output:

    Temp := Lo * M;
    for J in Lo .. Hi loop
       Put (Temp);
       Temp := Temp + M;
    end loop;

Now let's expand the FOR loop to make the test explicit.

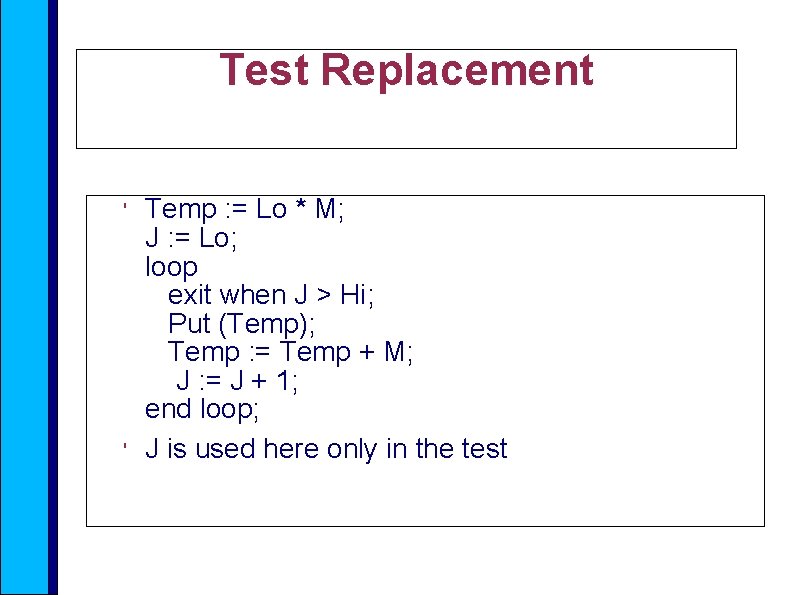

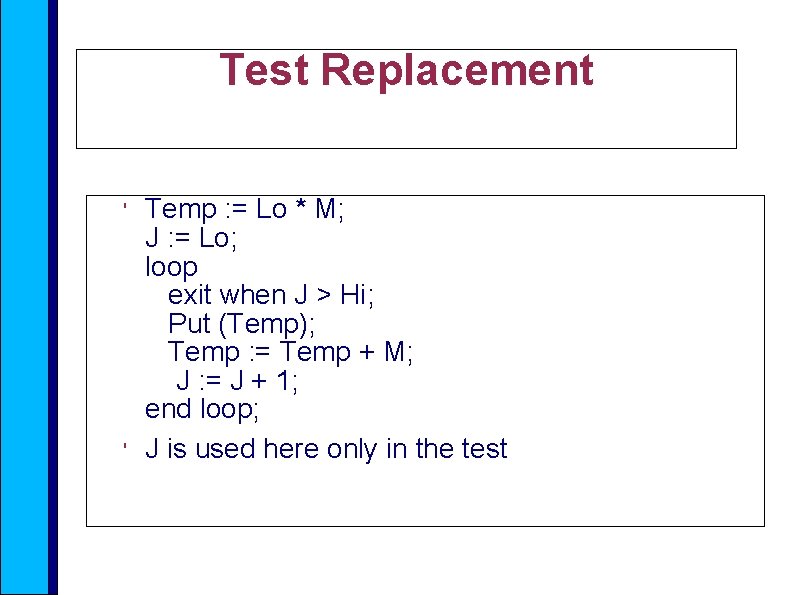

Test Replacement

    Temp := Lo * M;
    J := Lo;
    loop
       exit when J > Hi;
       Put (Temp);
       Temp := Temp + M;
       J := J + 1;
    end loop;

J is used here only in the test.

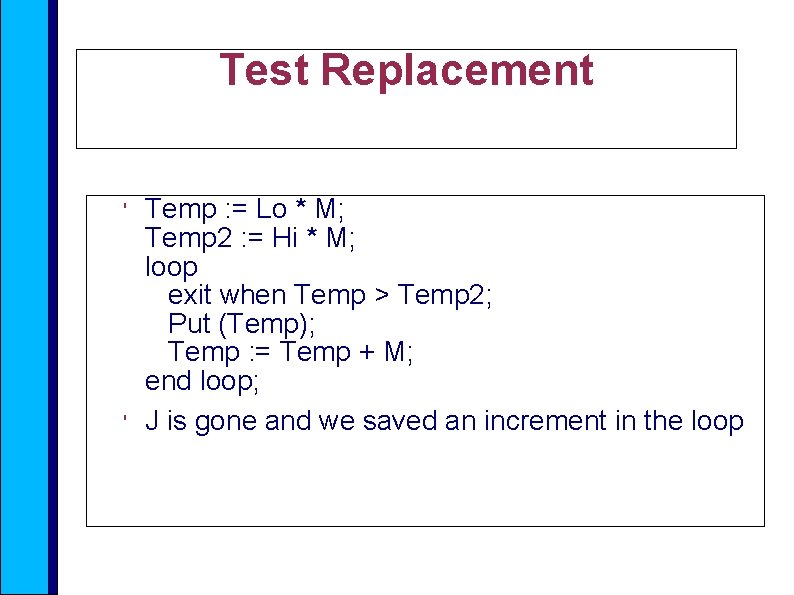

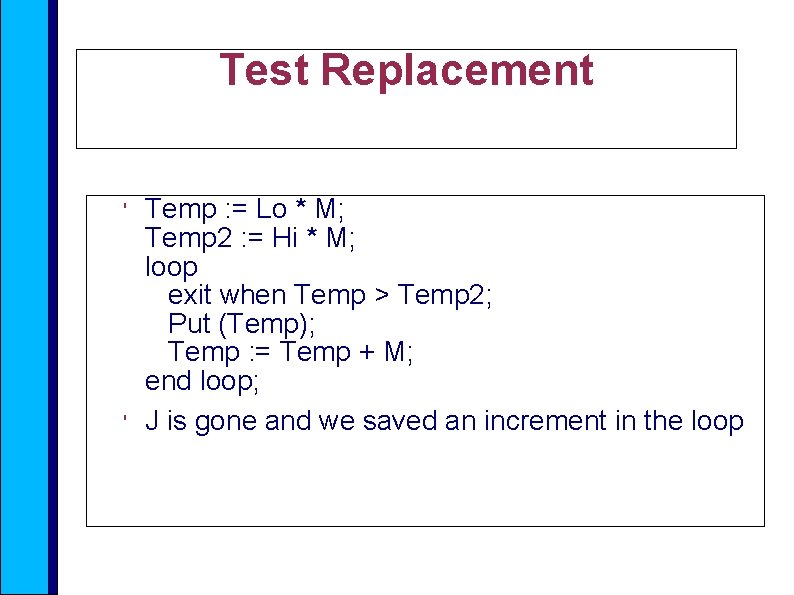

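Test Replacement

    Temp := Lo * M;
    Temp2 := Hi * M;
    loop
       exit when Temp > Temp2;
       Put (Temp);
       Temp := Temp + M;
    end loop;

J is gone, and we saved an increment in the loop. Note that this replacement is valid only if M is known to be positive: with M = 0 the loop would never exit, and with negative M the comparison would have to be reversed.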
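
The three versions of the loop can be compared directly. A minimal sketch in which `Put` is modelled by collecting the values in a list; the function names are hypothetical. The guard in `put_test_replaced` reflects the precondition that M be positive, which the compiler must know before it may replace the test.

```python
def put_original(lo, hi, m):
    return [j * m for j in range(lo, hi + 1)]           # Put (J * M)


def put_reduced(lo, hi, m):
    out, temp = [], lo * m
    for _ in range(lo, hi + 1):                          # J still drives the loop
        out.append(temp)
        temp += m
    return out


def put_test_replaced(lo, hi, m):
    if m <= 0:
        raise ValueError("test replacement needs M > 0")
    out, temp, temp2 = [], lo * m, hi * m
    while True:
        if temp > temp2:                                 # exit when Temp > Temp2
            break
        out.append(temp)
        temp += m
    return out


print(put_test_replaced(1, 5, 3))   # [3, 6, 9, 12, 15]
```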

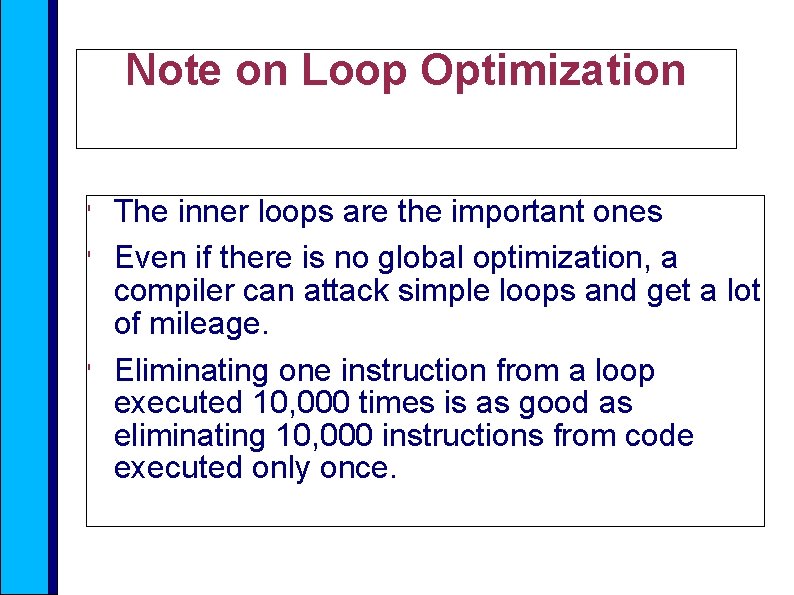

Note on Loop Optimization

- The inner loops are the important ones.
- Even if there is no global optimization, a compiler can attack simple loops and get a lot of mileage.
- Eliminating one instruction from a loop executed 10,000 times is as good as eliminating 10,000 instructions from code executed only once.

Jump Elimination

- Jumps are really deadly on modern machines.
- If they are predicted right, not too bad: the look-ahead mechanism loads the right instructions.
- But if predicted wrong, the processor may have to abandon lots of pipeline work and wait for the icache. The penalty can be tens of clocks (even hundreds).
- If statements are hard (how to predict?).

Use special instructions

Consider a typical maximum computation:

    if A >= B then
       C := A;
    else
       C := B;
    end if;

For simplicity, assume all values are unsigned and all are in registers.

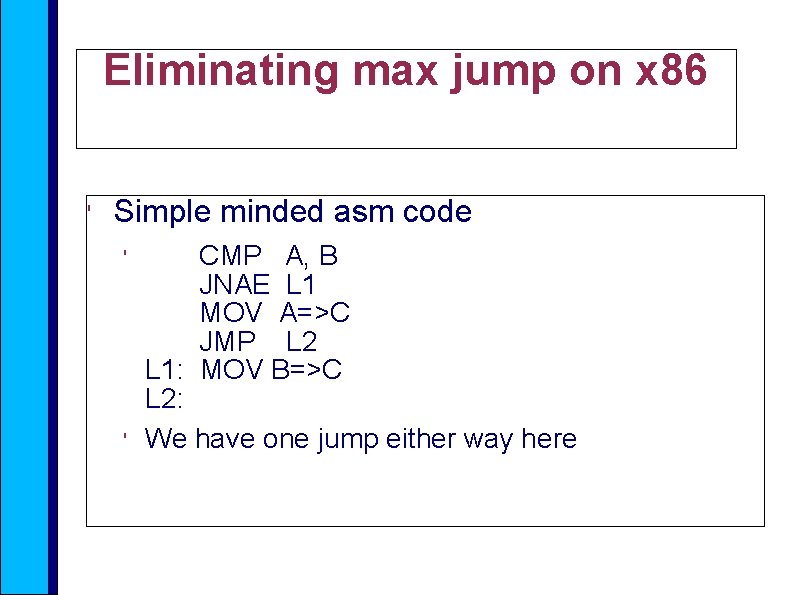

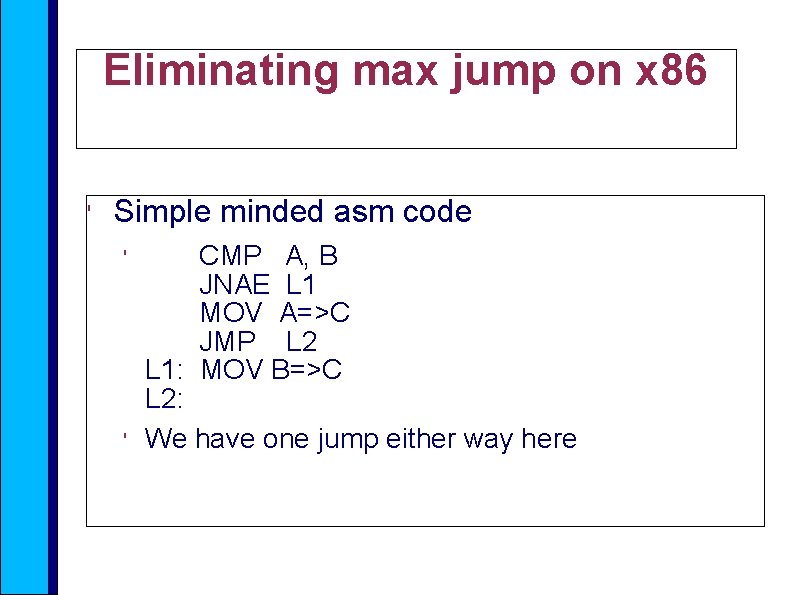

Eliminating max jump on x86

Simple-minded asm code:

        CMP  A, B
        JNAE L1
        MOV  A=>C
        JMP  L2
    L1: MOV  B=>C
    L2:

We have one jump either way here.

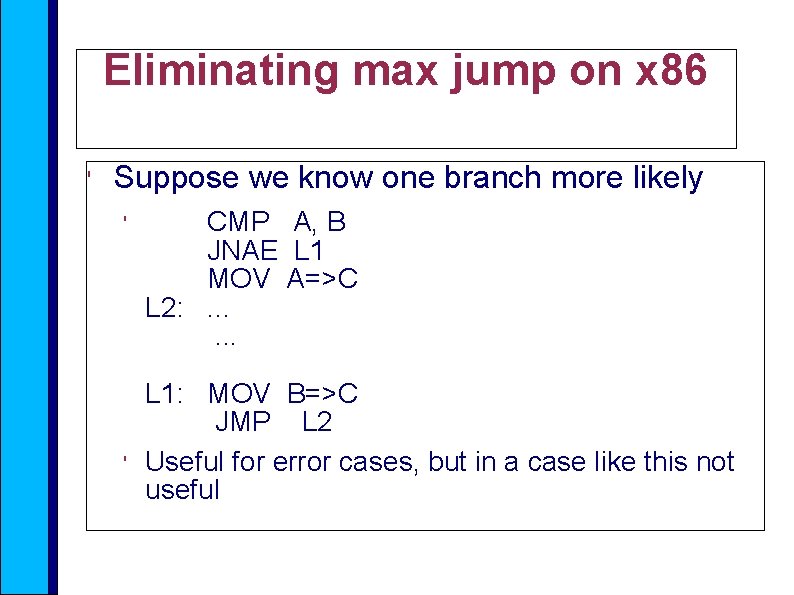

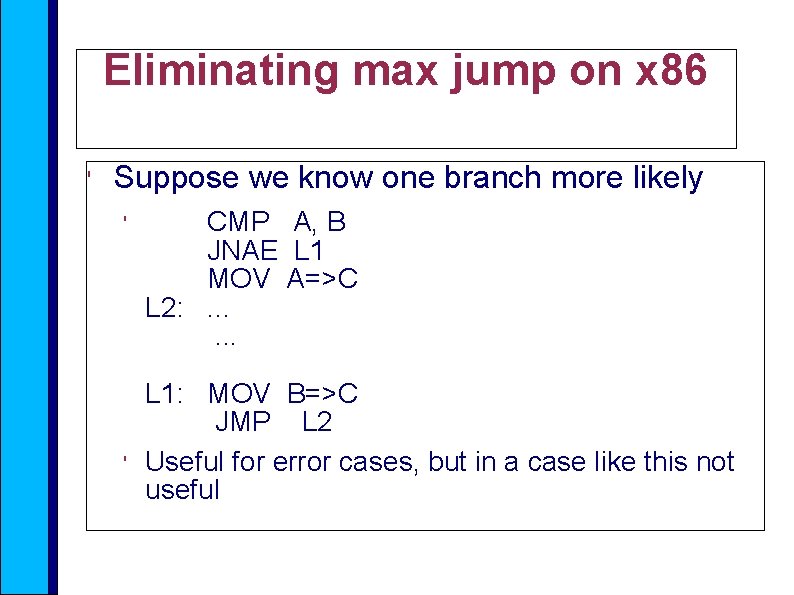

Eliminating max jump on x86

Suppose we know one branch is more likely:

        CMP  A, B
        JNAE L1
        MOV  A=>C
    L2: ...
        ...
    L1: MOV  B=>C
        JMP  L2

Now the likely path takes no jump at all. This is useful for error cases, but in a case like this it is not useful.

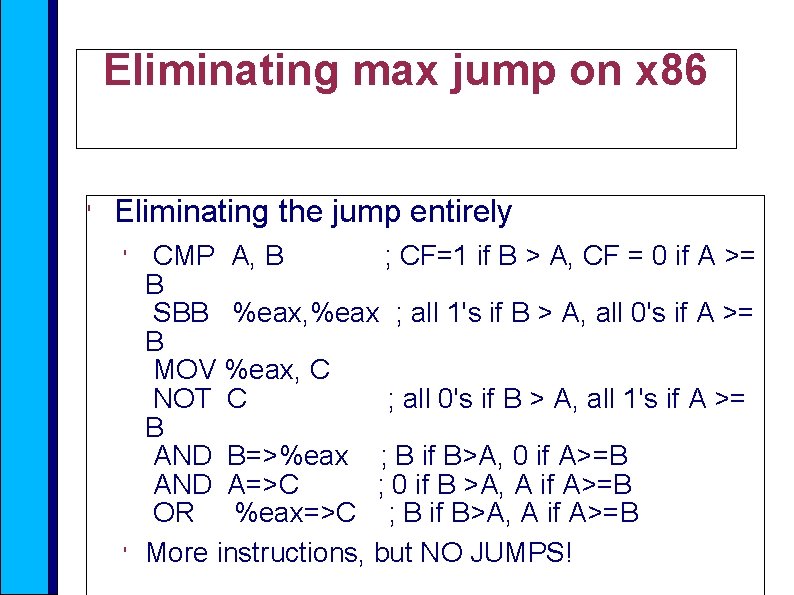

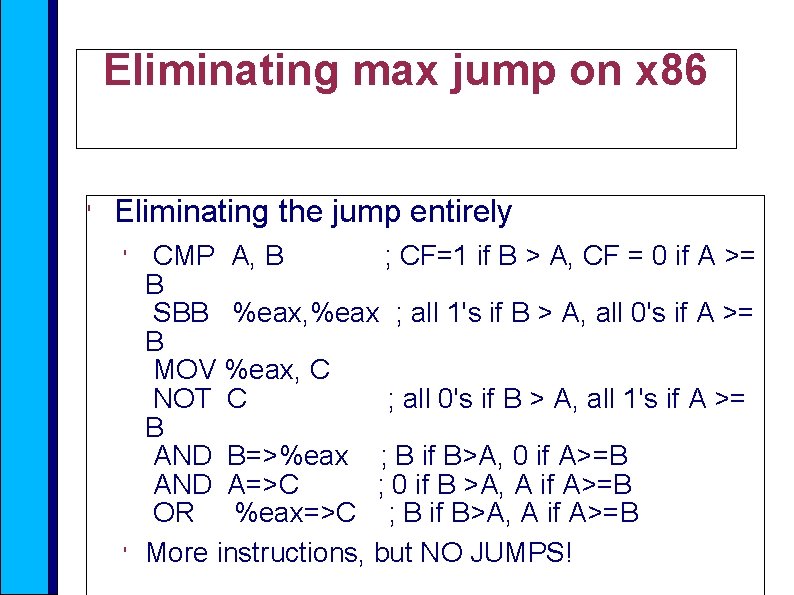

Eliminating max jump on x86

Eliminating the jump entirely:

        CMP  A, B        ; CF = 1 if B > A, CF = 0 if A >= B
        SBB  %eax, %eax  ; all 1's if B > A, all 0's if A >= B
        MOV  %eax=>C
        NOT  C           ; all 0's if B > A, all 1's if A >= B
        AND  B=>%eax     ; B if B > A, 0 if A >= B
        AND  A=>C        ; 0 if B > A, A if A >= B
        OR   %eax=>C     ; B if B > A, A if A >= B

More instructions, but NO JUMPS!
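
The flag trick can be checked by replaying each instruction on 32-bit values. A minimal sketch, assuming unsigned 32-bit operands as on the slide; Python's `a < b` is used only to compute the carry flag that `CMP` would set, and the variable names mirror the slide's registers.

```python
MASK32 = 0xFFFFFFFF   # emulate 32-bit unsigned registers


def max_branch_free(a, b):
    """Replay the CMP/SBB/NOT/AND/OR sequence from the slide."""
    a &= MASK32
    b &= MASK32
    cf = int(a < b)                # CMP A, B: borrow (CF = 1) iff A < B unsigned
    eax = (0 - cf) & MASK32        # SBB %eax, %eax: eax - eax - CF
    c = eax                        # MOV %eax=>C
    c = ~c & MASK32                # NOT C
    eax &= b                       # AND B=>%eax: B if B > A, else 0
    c &= a                         # AND A=>C:    A if A >= B, else 0
    c |= eax                       # OR %eax=>C
    return c


print(max_branch_free(3, 7), max_branch_free(7, 3))   # 7 7
```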

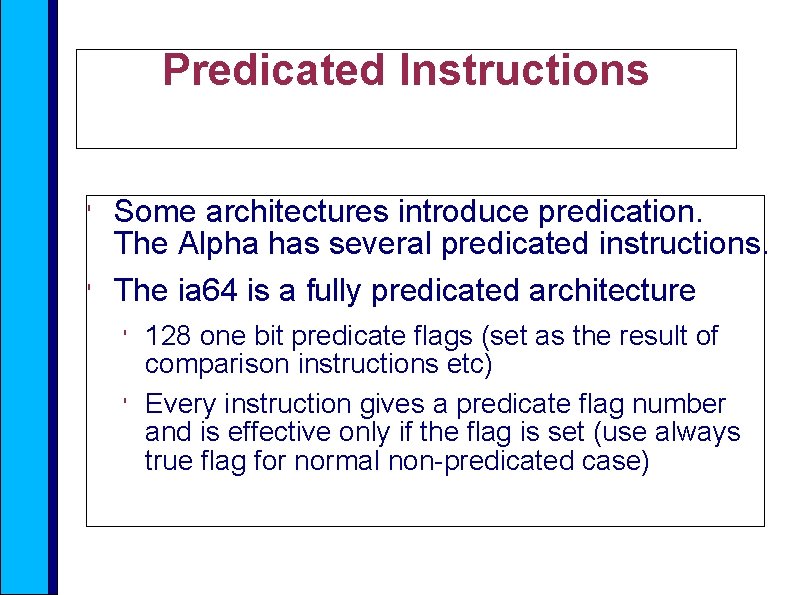

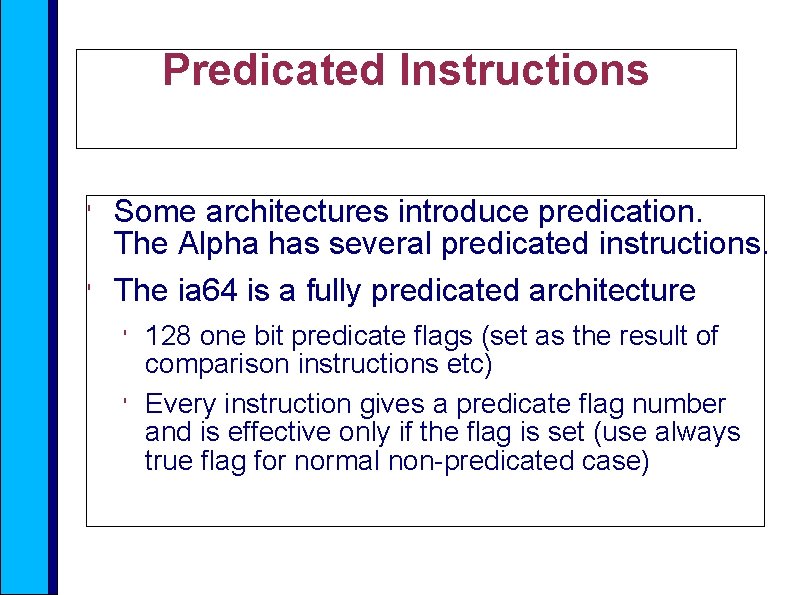

Predicated Instructions

- Some architectures introduce predication.
- The Alpha has several predicated instructions (conditional moves).
- The IA-64 is a fully predicated architecture:
  - 64 one-bit predicate registers (set as the result of comparison instructions etc.).
  - Every instruction names a predicate register and takes effect only if that predicate is set (an always-true predicate is used for the normal non-predicated case).

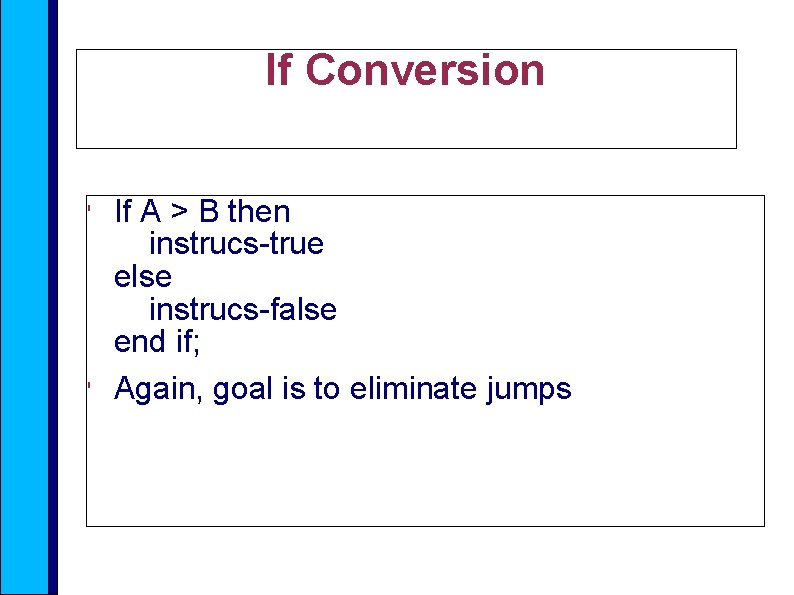

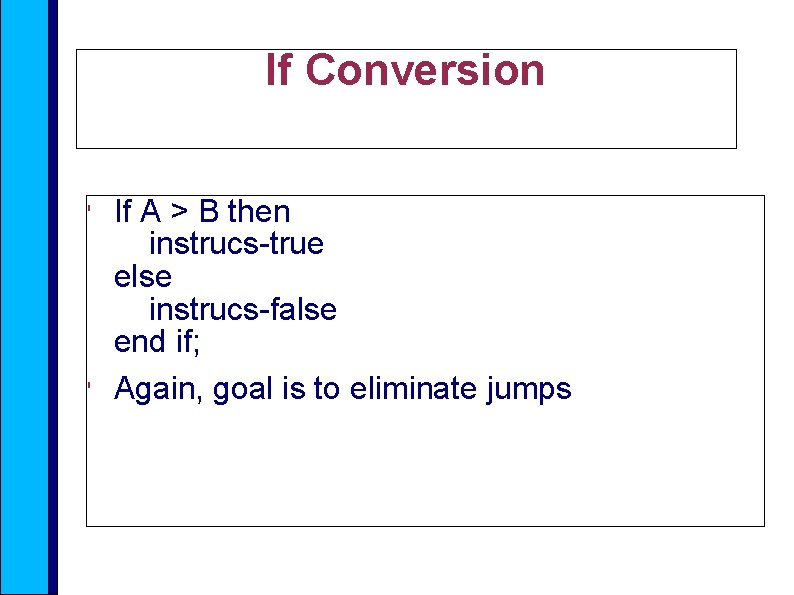

If Conversion

    if A > B then
       instrucs-true
    else
       instrucs-false
    end if;

Again, the goal is to eliminate jumps.

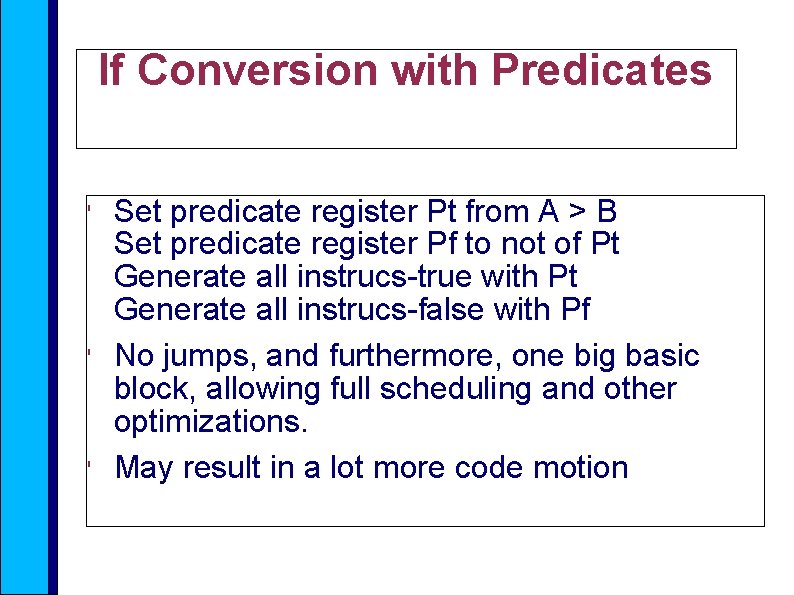

If Conversion with Predicates

- Set predicate register Pt from A > B.
- Set predicate register Pf to the negation of Pt.
- Generate all of instrucs-true predicated on Pt.
- Generate all of instrucs-false predicated on Pf.
- No jumps, and furthermore one big basic block, allowing full scheduling and other optimizations. May result in a lot more code motion.
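
If conversion can be modelled with a tiny interpreter. A minimal sketch, assuming a made-up instruction format `(predicate, destination, computation)`; `p0` stands for the always-true predicate and `pt`/`pf` for the slide's Pt and Pf. The `if` inside the interpreter models what the hardware does (nullifying an instruction whose predicate is false); the program being interpreted contains no jumps. The then and else bodies chosen here are only an example.

```python
def run_predicated(program, state):
    """Execute a straight-line list of predicated instructions.

    program: list of (pred, dest, compute); compute(state) gives the value.
    An instruction writes dest only if predicate register `pred` is true.
    """
    state = dict(state, p0=True)        # p0: the always-true predicate
    for pred, dest, compute in program:
        if state[pred]:                 # models the hardware nullifying the instruction
            state[dest] = compute(state)
    return state


# if A > B then C := A - B; else C := B - A; end if;   (no jumps after if conversion)
if_converted = [
    ("p0", "pt", lambda s: s["A"] > s["B"]),   # set Pt from A > B
    ("p0", "pf", lambda s: not s["pt"]),       # set Pf to not Pt
    ("pt", "C", lambda s: s["A"] - s["B"]),    # instrucs-true, predicated on Pt
    ("pf", "C", lambda s: s["B"] - s["A"]),    # instrucs-false, predicated on Pf
]

print(run_predicated(if_converted, {"A": 9, "B": 4})["C"])   # 5
print(run_predicated(if_converted, {"A": 2, "B": 6})["C"])   # 4
```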

Effects of Optimization

Optimization can:

- Make the program run faster: good.
- Make the program run slower: probably bad.
- Make the program shorter: good.
- Make the program longer: not necessarily bad (e.g. inlining may increase size but also increase speed).
- Change results: not good, but not always preventable.

Optimization Changing Results

- Suppose we have an uninitialized variable.
- In unoptimized code, this variable lives in static memory and just happens to be initialized to zero, or is on the stack, which happens to contain zero.
- But when we optimize, the variable is in a register, and we no longer have a zero.
- The program was wrong before, and optimization reveals the bug!

Optimization Changing Results

- Floating-point on the x86 using the x87 unit is always done in 80 bits in registers; memory forms are typically 32 and 64 bits.
- So arithmetic in registers is more accurate, and optimization (keeping values in registers) may cause greater accuracy.
- But that may change results, e.g. an infinite loop might result because convergence does not happen at the greater accuracy.

Optimization Changing Results

- If a program corrupts memory, perhaps the corruption is benign in unoptimized code and goes unnoticed.
- But when we optimize, something important gets clobbered.
- There is no bound on the difference in behavior in a case like this!

Is Optimization Always Good?

- No, since it may reveal bugs, as per the previous slides.
- No: if the program is fast enough and small enough, optimization is not useful.
- No: optimization may interfere with debugging.
- No: optimization may make certification done at the object level harder by making the code hard to follow, e.g. with extensive code motion.

A Little Project

- Go check the GCC manual.
- Look up all the optimization options.
- Ask on the list if any of them does not make sense to you!
- The GCC manual is on the web site.