Assessment Literacy Series PA Module 6 Conducting Reviews


- Slides: 73

Assessment Literacy Series: PA Module #6 – Conducting Reviews Keystone Activities © Pennsylvania Department of Education

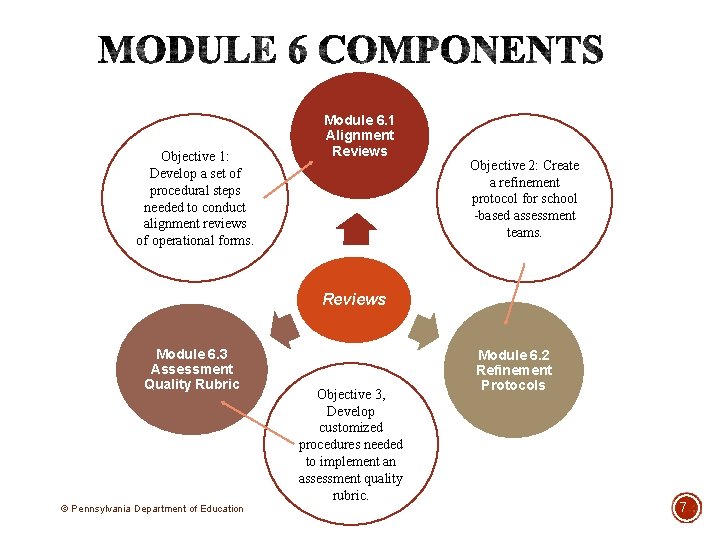

OBJECTIVES

Participants will be able to:

- Develop a set of procedural steps needed to conduct alignment reviews of operational forms.
- Create a refinement protocol for school-based assessment teams.
- Develop customized procedures needed to implement an assessment quality rubric.

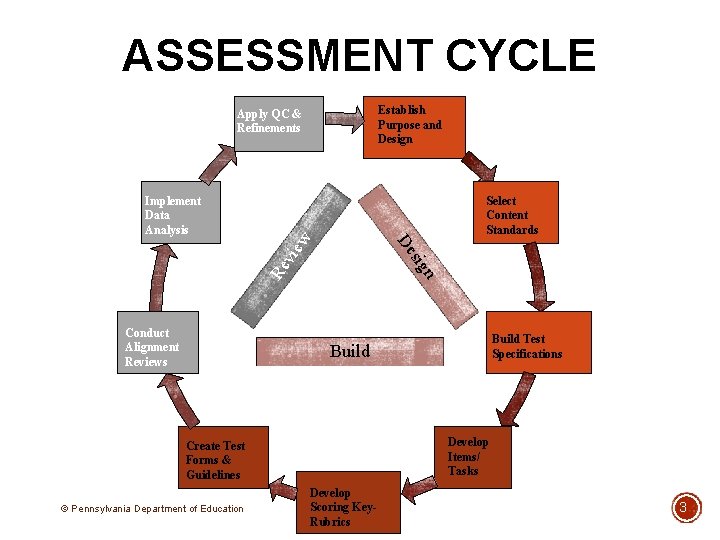

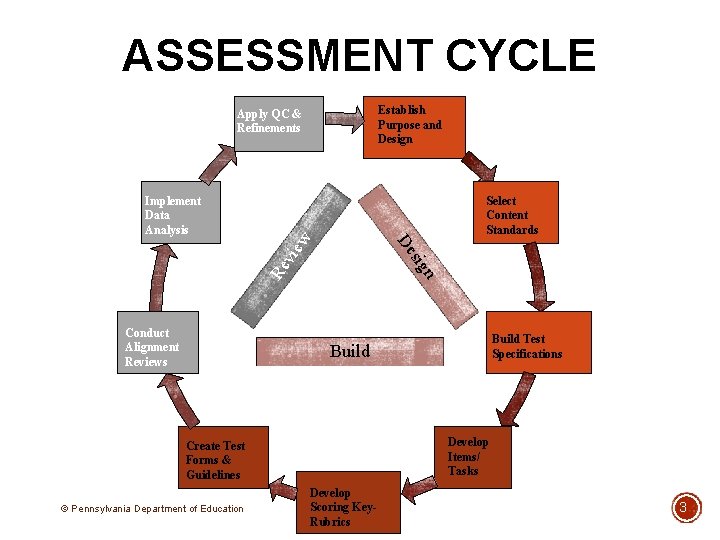

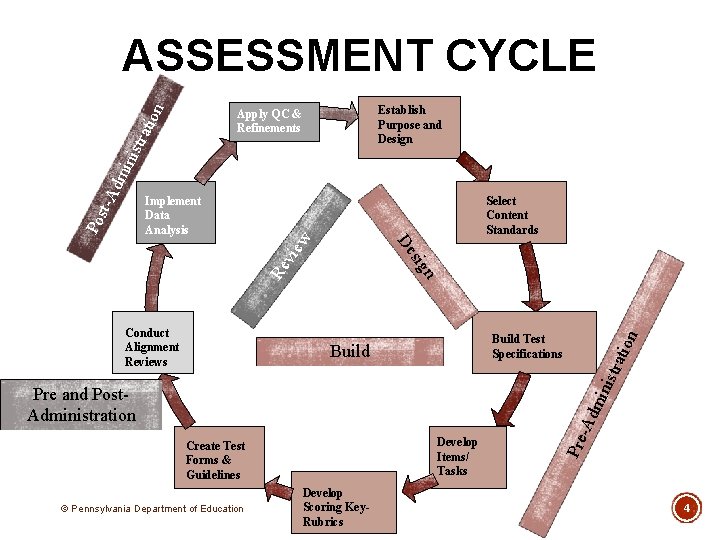

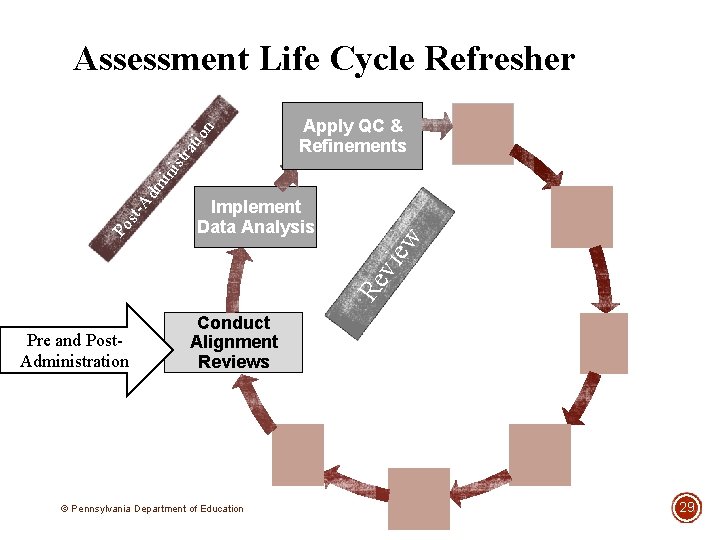

ASSESSMENT CYCLE

Phases: Design, Build, Review.

Steps shown in the cycle diagram:

- Establish Purpose and Design
- Select Content Standards
- Build Test Specifications
- Develop Items/Tasks
- Develop Scoring Key/Rubrics
- Create Test Forms & Guidelines
- Conduct Alignment Reviews
- Implement Data Analysis
- Apply QC & Refinements

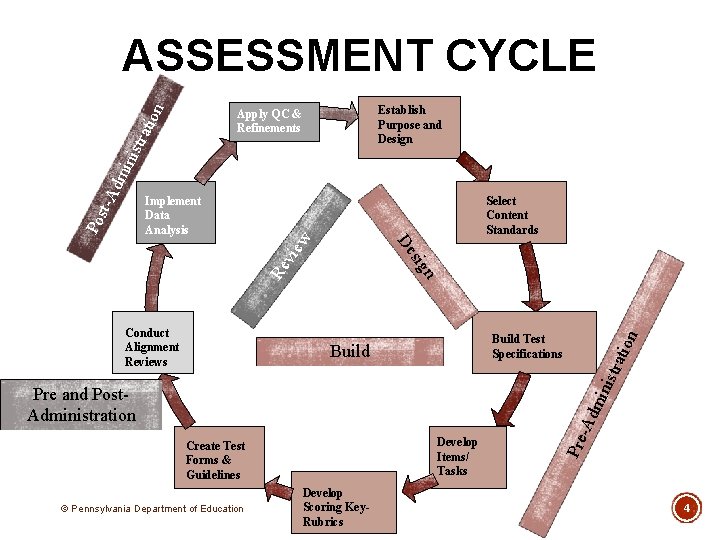

ASSESSMENT CYCLE: PRE- AND POST-ADMINISTRATION

The cycle diagram (phases Design, Build, Review) is shown again with Pre-Administration and Post-Administration segments marked. Steps shown: Establish Purpose and Design; Select Content Standards; Build Test Specifications; Develop Items/Tasks; Develop Scoring Key/Rubrics; Create Test Forms & Guidelines; Conduct Alignment Reviews; Implement Data Analysis; Apply QC & Refinements.

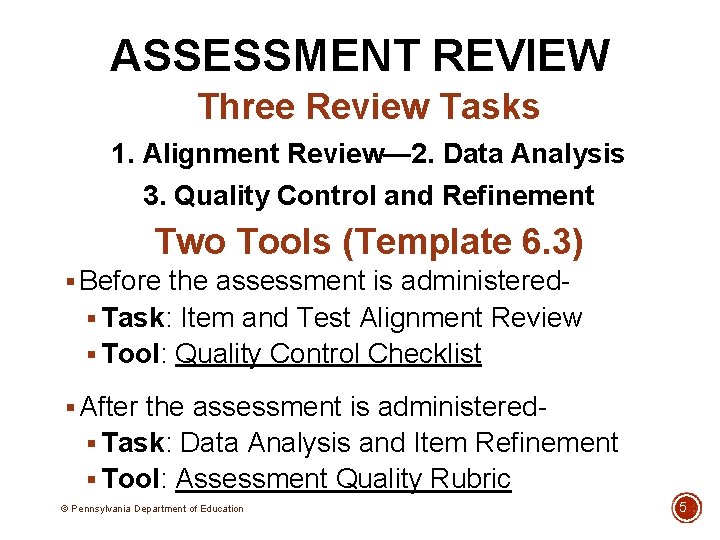

ASSESSMENT REVIEW

Three Review Tasks

1. Alignment Review
2. Data Analysis
3. Quality Control and Refinement

Two Tools (Template 6.3)

- Before the assessment is administered
  - Task: Item and Test Alignment Review
  - Tool: Quality Control Checklist
- After the assessment is administered
  - Task: Data Analysis and Item Refinement
  - Tool: Assessment Quality Rubric

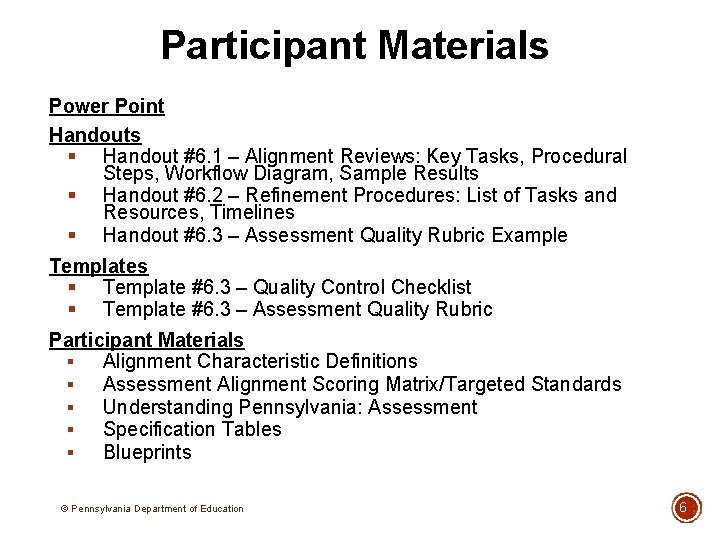

Participant Materials

PowerPoint Handouts

- Handout #6.1 – Alignment Reviews: Key Tasks, Procedural Steps, Workflow Diagram, Sample Results
- Handout #6.2 – Refinement Procedures: List of Tasks and Resources, Timelines
- Handout #6.3 – Assessment Quality Rubric

Example Templates

- Template #6.3 – Quality Control Checklist
- Template #6.3 – Assessment Quality Rubric

Participant Materials

- Alignment Characteristic Definitions
- Assessment Alignment Scoring Matrix/Targeted Standards
- Understanding Pennsylvania: Assessment
- Specification Tables
- Blueprints

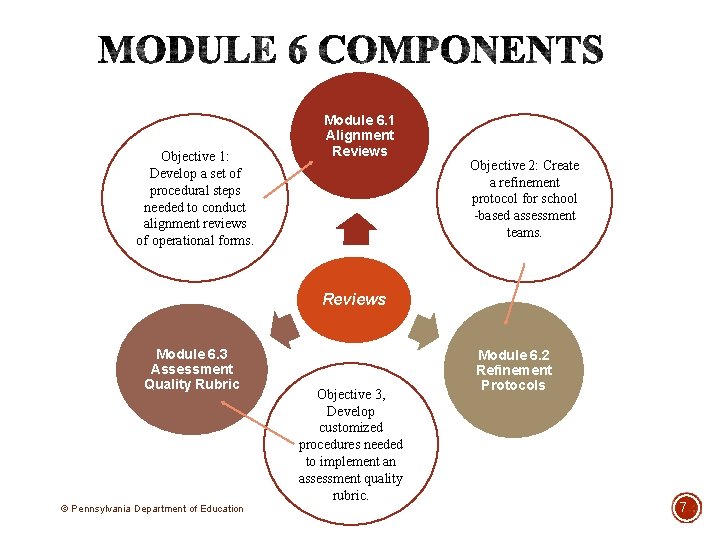

Reviews

- Objective 1: Develop a set of procedural steps needed to conduct alignment reviews of operational forms. (Module 6.1: Alignment Reviews)
- Objective 2: Create a refinement protocol for school-based assessment teams. (Module 6.2: Refinement Protocols)
- Objective 3: Develop customized procedures needed to implement an assessment quality rubric. (Module 6.3: Assessment Quality Rubric)

PM 1 MODULE 6. 1 ALIGNMENT REVIEWS © Pennsylvania Department of Education 8

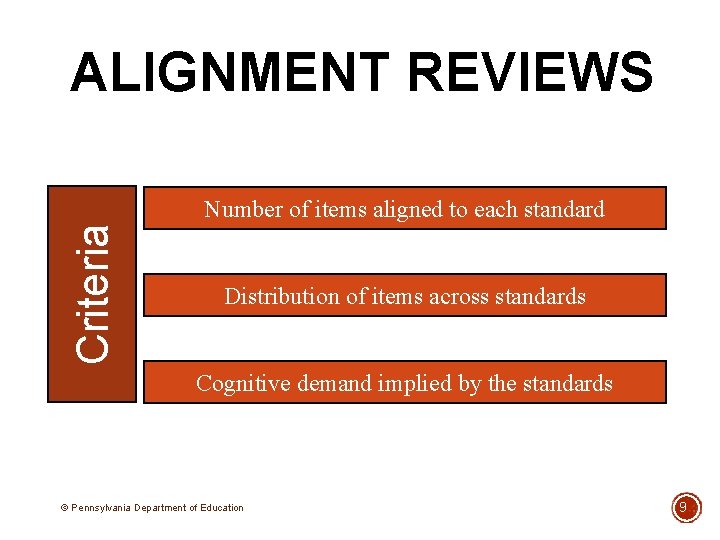

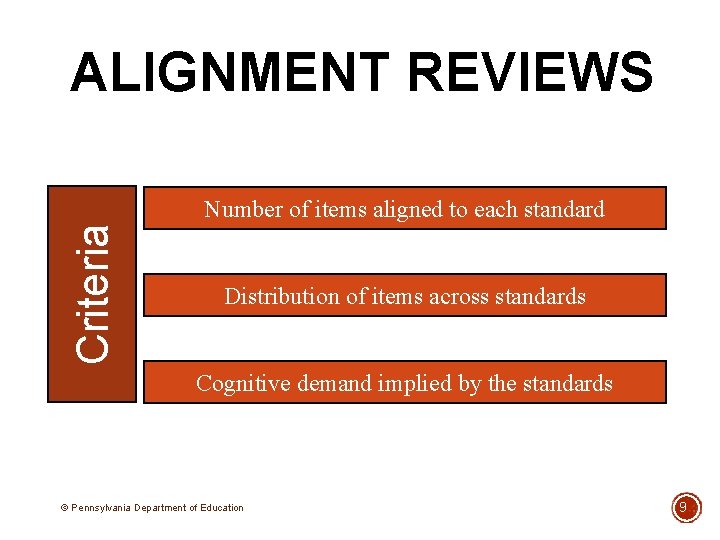

ALIGNMENT REVIEWS Criteria Number of items aligned to each standard Distribution of items across standards Cognitive demand implied by the standards © Pennsylvania Department of Education 9

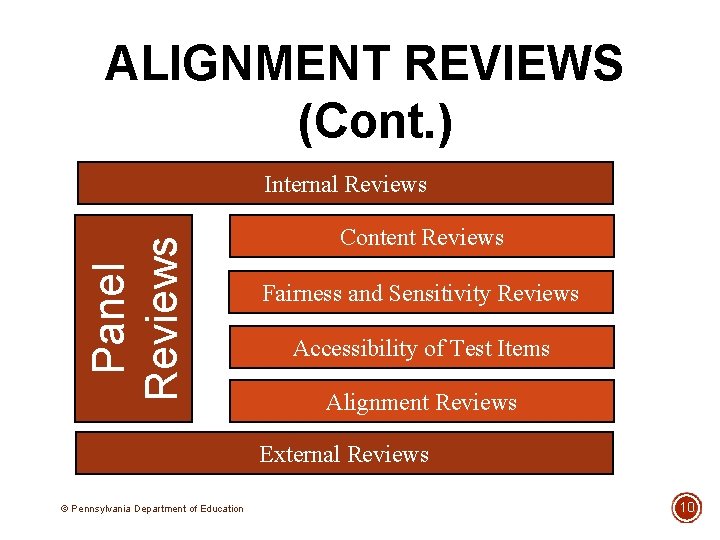

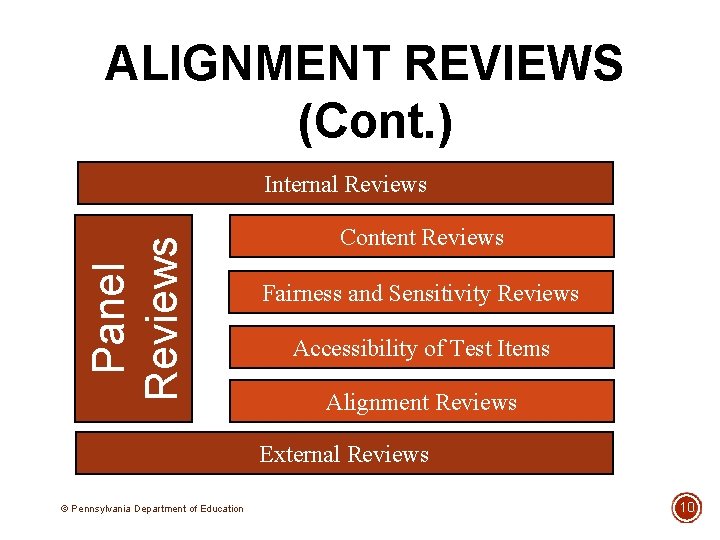

ALIGNMENT REVIEWS (Cont. ) Panel Reviews Internal Reviews Content Reviews Fairness and Sensitivity Reviews Accessibility of Test Items Alignment Reviews External Reviews © Pennsylvania Department of Education 10

PM 1

Defined

- The degree to which content standards and assessments address the same construct (Webb, 1997).

Relevance

- Relevance means that the alignment characteristics of the assessment support inferences about the latent construct being measured. Relevance is a key validity characteristic of an assessment.

ALIGNMENT REVIEW PROCESSES 1. Alignment Focus 2. (Alignment Models) Webb Alignment Model 3. PA Assessment Alignment Model 4. Work Flow Diagram 5. Alignment Procedural Steps 6. Alignment Summary Example © Pennsylvania Department of Education 12

Items/Tasks • The degree to which the items/tasks address the targeted content standards in terms of • (a) content match, and • (b) cognitive demand/ higher order thinking skills. © Pennsylvania Department of Education 13

1. ALIGNMENT FOCUS (CONT.)

Operational Form

- The degree to which the completed assessment reflects (as described in the specification table and blueprint) the (a) content pattern of emphasis and (b) item/task sufficiency.
- The review also addresses developmental appropriateness and linguistic demand.

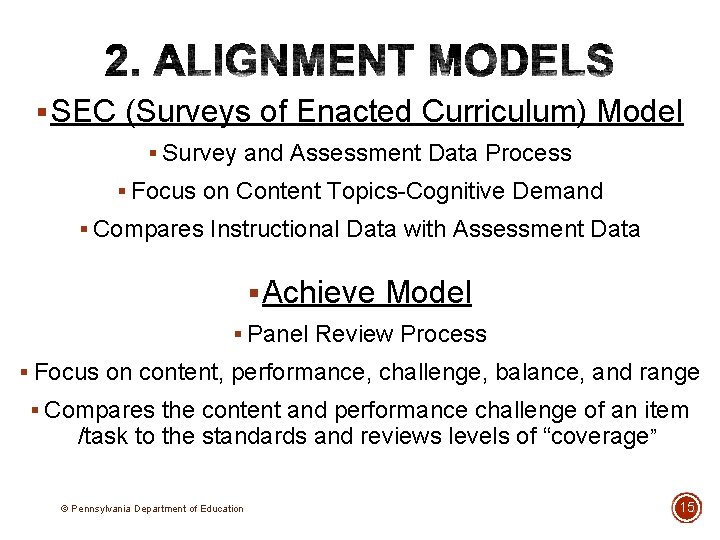

§ SEC (Surveys of Enacted Curriculum) Model § Survey and Assessment Data Process § Focus on Content Topics-Cognitive Demand § Compares Instructional Data with Assessment Data § Achieve Model § Panel Review Process § Focus on content, performance, challenge, balance, and range § Compares the content and performance challenge of an item /task to the standards and reviews levels of “coverage” © Pennsylvania Department of Education 15

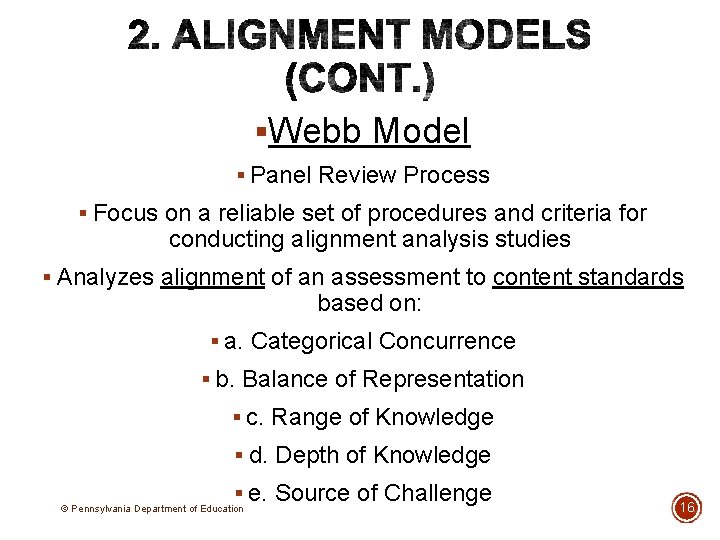

§Webb Model § Panel Review Process § Focus on a reliable set of procedures and criteria for conducting alignment analysis studies § Analyzes alignment of an assessment to content standards based on: § a. Categorical Concurrence § b. Balance of Representation § c. Range of Knowledge § d. Depth of Knowledge § e. Source of Challenge © Pennsylvania Department of Education 16

• Categorical Concurrence • The same categories of the content standards are included in the assessment. • Items could be aligned to more than one content standard. • Balance of Representation • Ensures the distribution of the content standards across the operational form matches the test blueprint. © Pennsylvania Department of Education 10 17

• Range of Knowledge • The extent of knowledge required to answer parallels the knowledge the standard requires. • Depth of Knowledge • The cognitive demand of the content standard must align to the cognitive demand of the test item. • Source of Challenge • Students give a correct or incorrect response for the wrong reason (e. g. , linguistic demand). © Pennsylvania Department of Education 11 18

Handout #6.1: Key Tasks

1. Content Match (CM)
   - Items/tasks match a specific content standard based upon the narrative description of the standard and a professional understanding of the knowledge, skill, and/or concept being described.
2. Cognitive Demand/Depth of Knowledge (DoK)
   - Items/tasks reflect the cognitive demand/higher-order thinking skill(s) articulated in the standards, with extended performance tasks typically focused on several, integrated content standards.
3. Content Pattern (CP)
   - Item/task distributions represent the emphasis placed on the targeted content standards in terms of “density” and “instructional focus”, while encompassing the range of standards articulated on the test blueprint.
4. Item/Task Sufficiency (ITS)
   - Item/task distributions consist of sufficient opportunities for test-takers to demonstrate skills, knowledge, and concept mastery at the appropriate developmental range.

Handout #6.1: Alignment Workflow

The workflow diagram shows the following sequence:

- Select content experts (teachers).
- Identify technical support from LEA.
- Organize materials, establish timeline, logistics, etc.
- Conduct panelist training (initial or refresher).
- Begin independent panelist review with focus on:
  - Content Match (CM)
  - Cognitive Demand/Depth of Knowledge (DoK)
  - Content Pattern (CP)
  - Item/Task Sufficiency (ITS)
- Record findings, present to other panelists.
- Establish consensus on issues and document needed changes.
- Begin refinement tasks.

PM 2 -8 Handout #6. 1: Procedural Steps 1. Identify a team of teachers to conduct the alignment review (best accomplished by department or grade-level committees) with technical support from the district. 2. Organize items/tasks, operational forms, test specification tables, and targeted content standards. 3. Conduct panelist training on the alignment criteria and rating scheme. Use calibration techniques with a “training set” of materials prior to conducting the actual review. © Pennsylvania Department of Education 22

Handout #6.1: Procedural Steps (PM 2-8)

Evaluate the following areas:

- Content Match (CM) and Depth of Knowledge (DoK)
  - Read each item/task in terms of matching the standards, both in content reflection and in cognitive demand (DoK).
  - For SA, ECR, and Extended Performance Tasks, ensure that scoring rubrics are focused on specific content-based expectations.
  - After reviewing all items/tasks, including scoring rubrics, count the number of item/task points assigned to each targeted content standard.

5. ALIGNMENT PROCEDURAL STEPS (CONT. ) Handout #6. 1: Procedural Steps PM 2 -8 Evaluate the following area: • Content Pattern § Determine if the items/tasks are sampling the complexity and extensiveness of the targeted content standards. § If assessment’s range is too narrowly defined, refine blueprints and replace items/tasks to match the range of skills and knowledge implied within the targeted standards. © Pennsylvania Department of Education 24

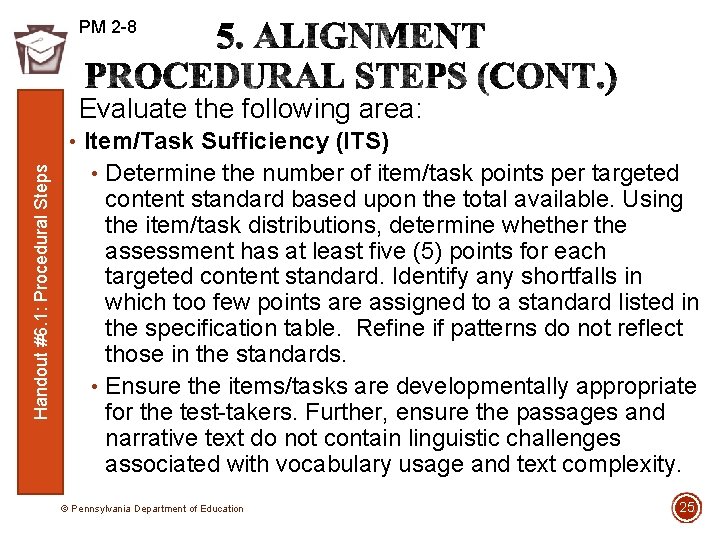

PM 2 -8 Evaluate the following area: Handout #6. 1: Procedural Steps • Item/Task Sufficiency (ITS) • Determine the number of item/task points per targeted content standard based upon the total available. Using the item/task distributions, determine whether the assessment has at least five (5) points for each targeted content standard. Identify any shortfalls in which too few points are assigned to a standard listed in the specification table. Refine if patterns do not reflect those in the standards. • Ensure the items/tasks are developmentally appropriate for the test-takers. Further, ensure the passages and narrative text do not contain linguistic challenges associated with vocabulary usage and text complexity. © Pennsylvania Department of Education 25
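The sufficiency check described above (at least five points for each targeted content standard) can be sketched in Python. This is a minimal illustration, not part of Handout #6.1: the function names, the `(standard, points)` data layout, and the sample form are all hypothetical.

```python
from collections import Counter

MIN_POINTS = 5  # threshold stated in the procedural step: five points per standard


def points_per_standard(items):
    """Sum item/task points for each targeted content standard.

    `items` is a list of (standard_id, points) pairs, one per item/task.
    """
    totals = Counter()
    for standard, points in items:
        totals[standard] += points
    return totals


def sufficiency_shortfalls(items, targeted_standards, min_points=MIN_POINTS):
    """Return {standard: points} for standards with fewer than `min_points`."""
    totals = points_per_standard(items)
    return {
        standard: totals.get(standard, 0)
        for standard in targeted_standards
        if totals.get(standard, 0) < min_points
    }


# Hypothetical operational form: standard IDs and point values are made up.
form_items = [("S1", 1), ("S1", 1), ("S1", 4), ("S2", 2), ("S2", 2), ("S3", 5)]
shortfalls = sufficiency_shortfalls(form_items, ["S1", "S2", "S3", "S4"])
print(shortfalls)  # {'S2': 4, 'S4': 0}
```

Standards listed in the specification table but absent from the form (here `S4`) are reported with zero points, which matches the instruction to identify any shortfalls.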

PM 2 -8 Handout #6. 1: Table 1 • Record findings and present to the larger group with recommendations for improvements (e. g. , new items/tasks, design changes, item/task refinements, etc. ). • Document the group’s findings and prepare for refinement tasks. © Pennsylvania Department of Education 26

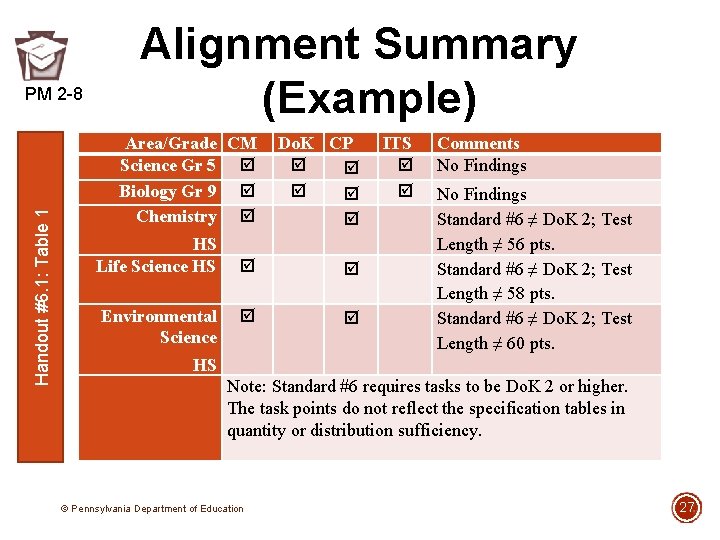

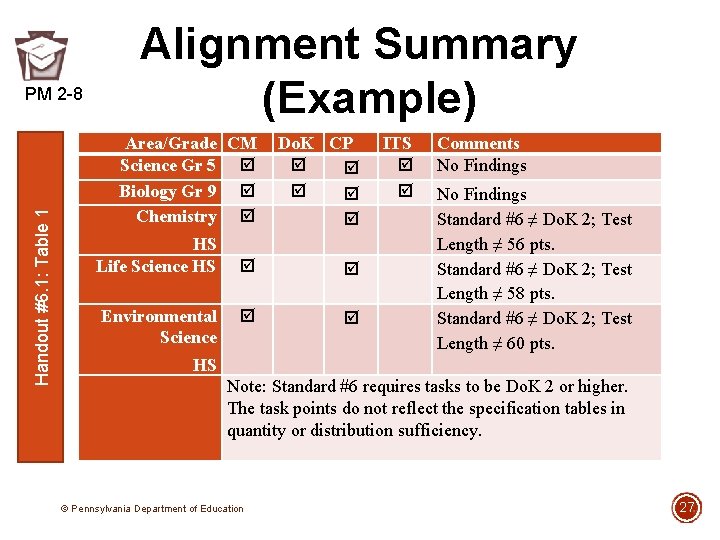

Handout #6.1: Table 1 (PM 2-8)

Alignment Summary (Example)

- Columns: Area/Grade, CM, DoK, CP, ITS, Comments
- Rows (Area/Grade): Science Gr 5; Biology Gr 9; Chemistry HS; Life Science HS; Environmental Science HS
- Comments recorded in the table: No Findings; Standard #6 ≠ DoK 2, Test Length ≠ 56 pts.; Standard #6 ≠ DoK 2, Test Length ≠ 58 pts.; Standard #6 ≠ DoK 2, Test Length ≠ 60 pts.

Note: Standard #6 requires tasks to be DoK 2 or higher. The task points do not reflect the specification tables in quantity or distribution sufficiency.

MODULE 6. 2 REFINEMENT PROTOCOLS © Pennsylvania Department of Education 28

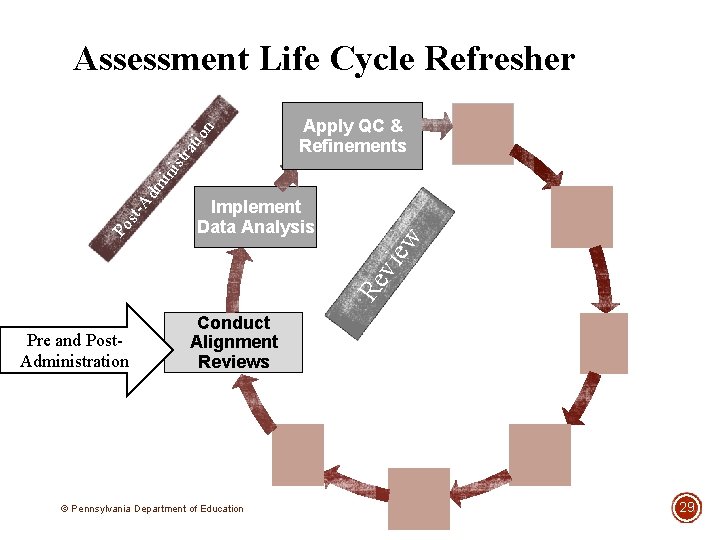

Assessment Life Cycle Refresher

Review phase (Pre- and Post-Administration):

- Conduct Alignment Reviews
- Implement Data Analysis
- Apply QC & Refinements

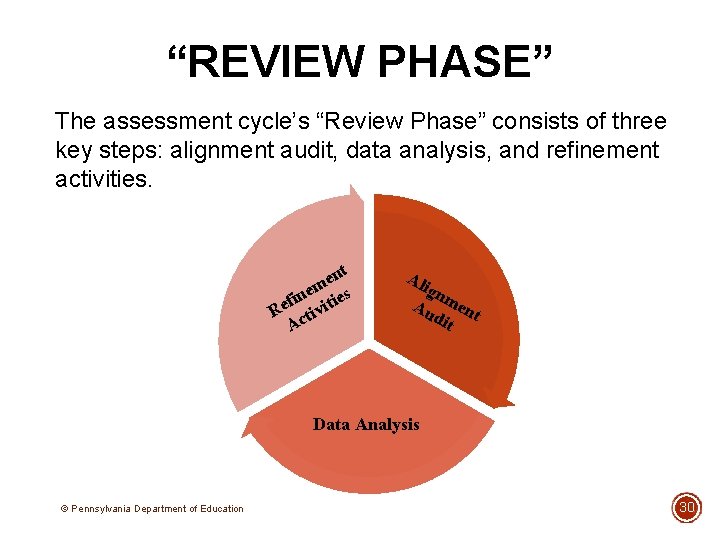

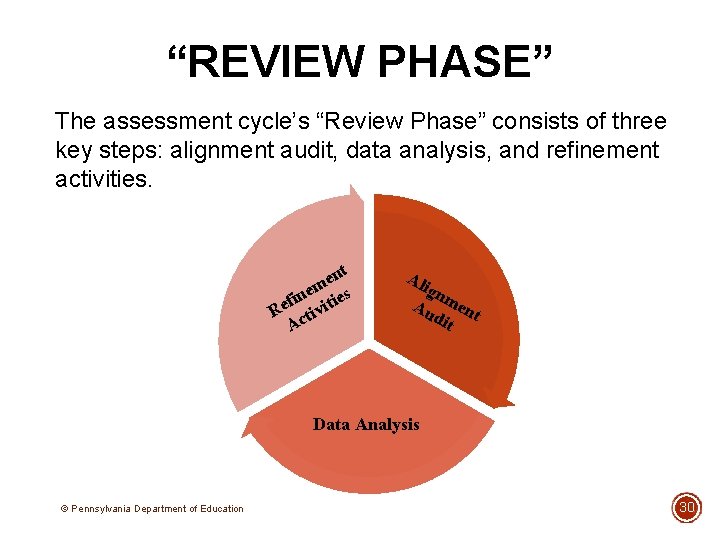

“REVIEW PHASE”

The assessment cycle’s “Review Phase” consists of three key steps: alignment audit, data analysis, and refinement activities.

![ALIGNMENT AUDIT TASKS REVISITED Alignment audits are focused on the following four key tasks ALIGNMENT AUDIT [TASKS REVISITED] Alignment audits are focused on the following four key tasks:](https://slidetodoc.com/presentation_image_h2/3cd8a3209c534068de07109fb22bc82b/image-31.jpg)

ALIGNMENT AUDIT [TASKS REVISITED] Alignment audits are focused on the following four key tasks: 1. Items/tasks match a specific content standard based upon the narrative description of the standard and a professional understanding of the knowledge, skill, and/or concept being described. 2. Items/tasks reflect the cognitive demand/higher-order thinking skill(s) articulated in the standards, with extended performance tasks typically focused on several, integrated content standards. © Pennsylvania Department of Education 31

![ALIGNMENT AUDIT TASKS REVISITED CONT 3 Itemtask distributions represent the emphasis placed on ALIGNMENT AUDIT [TASKS REVISITED, CONT. ] 3. Item/task distributions represent the emphasis placed on](https://slidetodoc.com/presentation_image_h2/3cd8a3209c534068de07109fb22bc82b/image-32.jpg)

ALIGNMENT AUDIT [TASKS REVISITED, CONT. ] 3. Item/task distributions represent the emphasis placed on the targeted content standards in terms of “density” and “instructional focus”, while encompassing the range of standards articulated on the test blueprint. 4. Item/task distributions consist of sufficient opportunities for test-takers to demonstrate skills, knowledge, and concept mastery at the appropriate developmental range. © Pennsylvania Department of Education 32

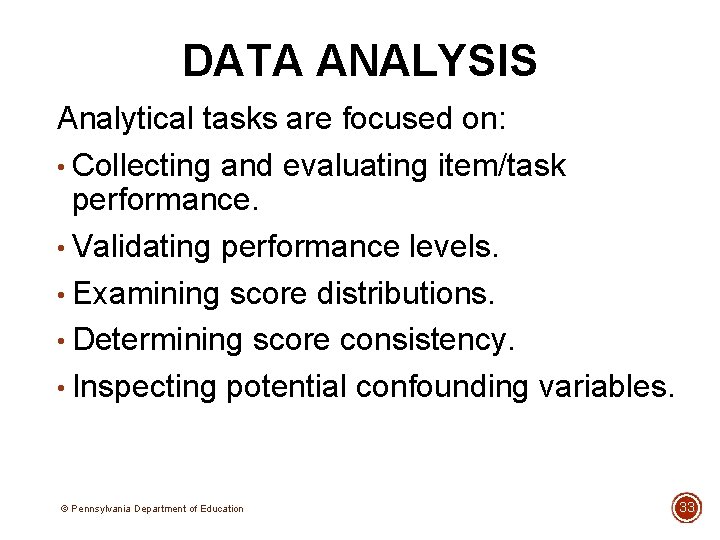

DATA ANALYSIS Analytical tasks are focused on: • Collecting and evaluating item/task performance. • Validating performance levels. • Examining score distributions. • Determining score consistency. • Inspecting potential confounding variables. © Pennsylvania Department of Education 33

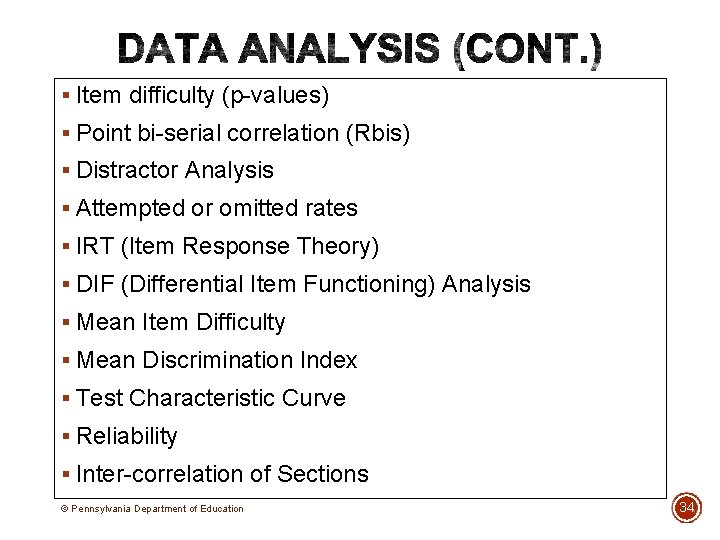

§ Item difficulty (p-values) § Point bi-serial correlation (Rbis) § Distractor Analysis § Attempted or omitted rates § IRT (Item Response Theory) § DIF (Differential Item Functioning) Analysis § Mean Item Difficulty § Mean Discrimination Index § Test Characteristic Curve § Reliability § Inter-correlation of Sections © Pennsylvania Department of Education 34
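Two of the statistics listed above, item difficulty (p-value) and the point-biserial correlation, can be computed with the Python standard library. The sketch below assumes dichotomously scored (0/1) items and correlates each item with the uncorrected total score (the item itself is included in the total); the function names and the small response matrix are hypothetical.

```python
from statistics import mean, pstdev


def item_difficulty(item_scores):
    """p-value: proportion of test-takers who answered a 0/1-scored item correctly."""
    return mean(item_scores)


def point_biserial(item_scores, total_scores):
    """Pearson correlation between a 0/1 item score and the total test score."""
    mean_item, mean_total = mean(item_scores), mean(total_scores)
    sd_item, sd_total = pstdev(item_scores), pstdev(total_scores)
    if sd_item == 0 or sd_total == 0:
        return float("nan")  # undefined when everyone scores the same
    products = [
        (x - mean_item) * (y - mean_total)
        for x, y in zip(item_scores, total_scores)
    ]
    return mean(products) / (sd_item * sd_total)


# Hypothetical responses: rows are test-takers, columns are items (1 = correct).
responses = [
    [1, 1, 0],
    [1, 0, 0],
    [1, 1, 1],
    [0, 0, 0],
    [1, 1, 1],
]
totals = [sum(row) for row in responses]
first_item = [row[0] for row in responses]

print(item_difficulty(first_item))                    # proportion correct on item 1
print(round(point_biserial(first_item, totals), 3))   # discrimination of item 1
```

With only five test-takers these values are purely illustrative; operational analyses need far larger samples.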

DATA ANALYSIS (CONT. ) Post-administration data are used to evaluate the psychometric properties of the assessment by examining aspects such as: • rater reliability • internal consistency • inter-item correlations • decision consistency • measurement error © Pennsylvania Department of Education 35
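As one illustration of internal consistency, the sketch below computes Cronbach's alpha. The slide does not name a specific coefficient, so the choice of alpha is an assumption, as are the function name and the sample data.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a matrix of scores (rows: test-takers, columns: items).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = [
        pvariance([row[i] for row in responses]) for i in range(n_items)
    ]
    total_variance = pvariance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses: rows are test-takers, columns are items (1 = correct).
responses = [
    [1, 1, 0],
    [1, 0, 0],
    [1, 1, 1],
    [0, 0, 0],
    [1, 1, 1],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

Population variances are used for both the items and the totals so that the ratio is computed on a consistent basis.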

Sample Size

- Preliminary item statistics: n=100
- DIF (Differential Item Functioning) Analysis: n=100 per group
- Performance of Operational Forms and Items:
  - IRT (Item Response Theory): n=500-1000
  - Rasch Model: smaller samples

REFINEMENT ACTIVITIES Handout #6. 2: Refinement Procedures PM 2 -8 Refinement activities are those tasks used to: • Create new items/tasks. • Improve existing items/tasks. • Create alternate forms. © Pennsylvania Department of Education 37

Handout #6. 2: Table 1 PM 6 REFINEMENT ACTIVITIES (CONT. ) Refinement activities are those tasks used to: • improve human-scoring guidelines. • streamline administration protocols. • conduct professional development seminars/workshops. © Pennsylvania Department of Education 38

T 1 -7 MODULE 6. 3 ASSESSMENT QUALITY REVIEW TOOLS © Pennsylvania Department of Education 39

Ensuring the assessment: • Reflects the developed test blueprint or specification table. • Matches targeted content standards. • Includes multiple ways for test-takers to demonstrate knowledge, skills, and abilities. Eliminating potential validity threats by reviewing for: • Bias • Fairness • Sensitive Topics • Accessibility/Universal Design Features © Pennsylvania Department of Education 40

Template #6. 3: Quality Control Checklist © Pennsylvania Department of Education 41

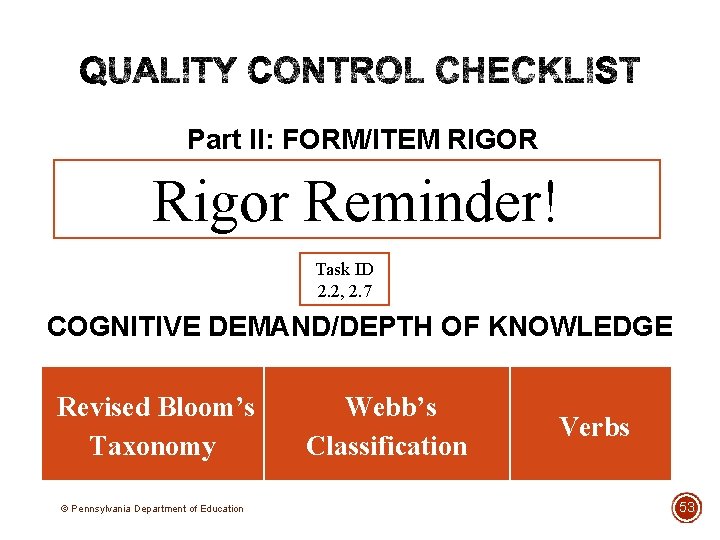

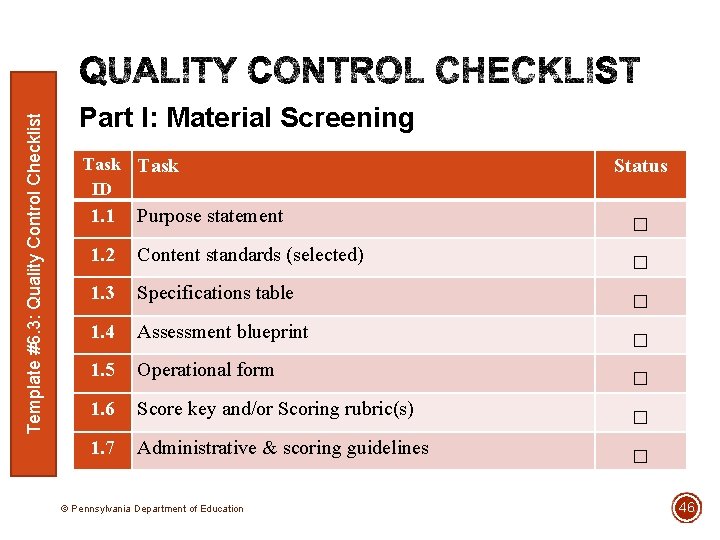

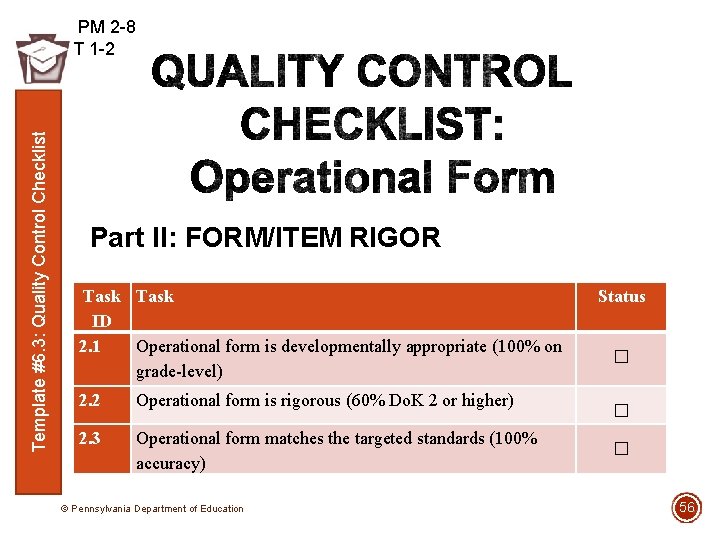

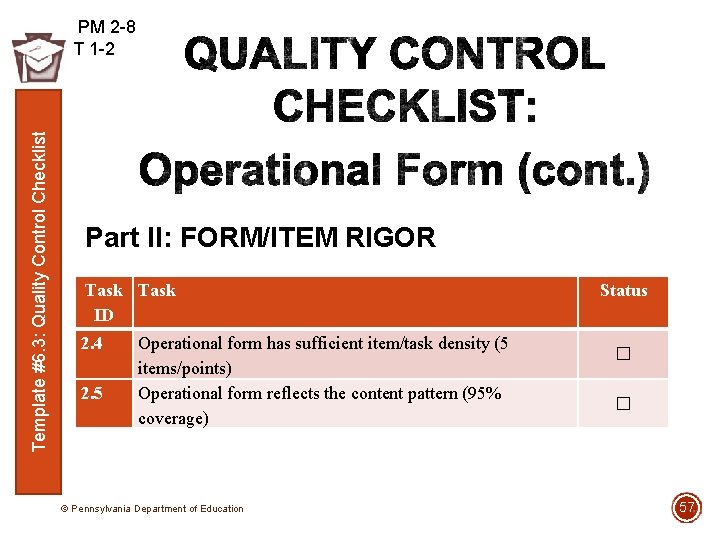

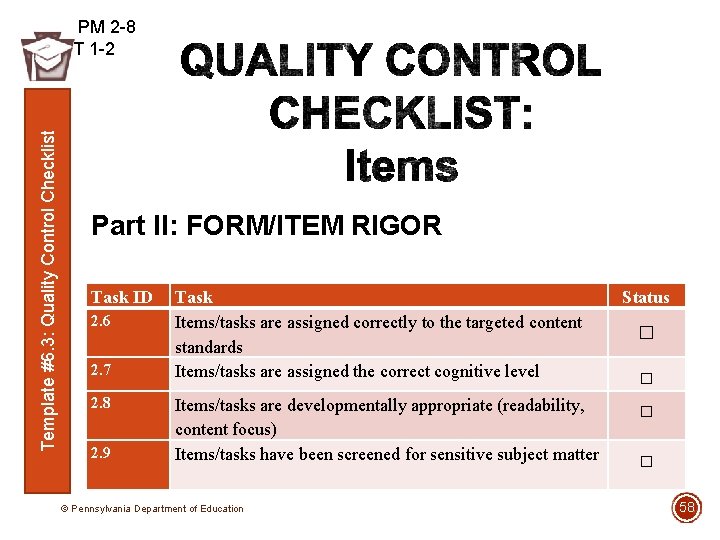

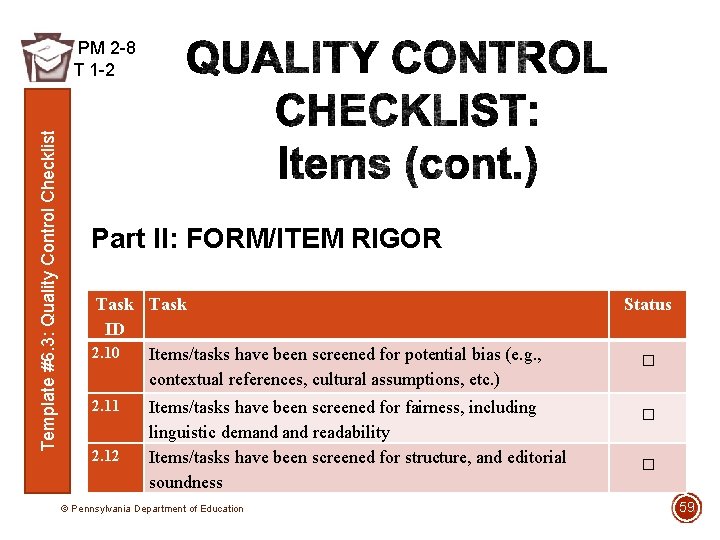

Template #6. 3: Quality Control Checklist • Checklist is designed to provide a “quick reference” to those criteria associated with high-quality, item/task development. • Checklist is organized into three parts: • Part I: Material Screening • Part II: Form/Item Rigor • Part III: Standardized Protocols © Pennsylvania Department of Education 42

§ Review assessment items and tasks for: 1. Content accuracy §Factually correct prompts and answers that are connected to the curriculum. 2. Item stems (Multiple Choice items) §Stems should present a definite, explicit, and singular question; avoid extraneous information in the stem. 3. Distractors (Multiple Choice items) §There should be one and only one correct answer and the distractors must be incorrect; however, distractors should seem plausible to students who have not mastered the material; all choices should be internally consistent (parallel), contain the same level of detail, and be grammatically consistent with the stem. © Pennsylvania Department of Education 43

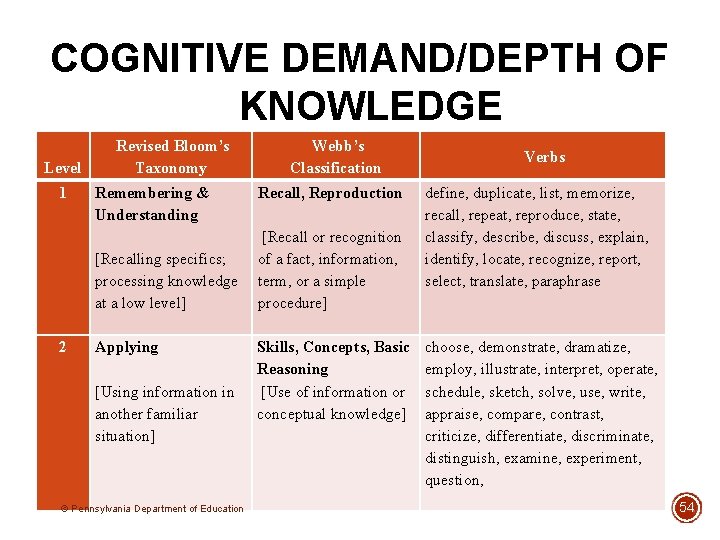

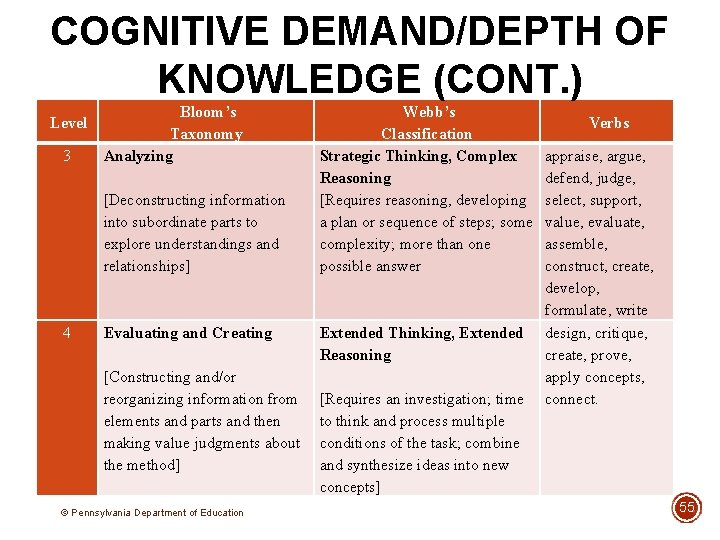

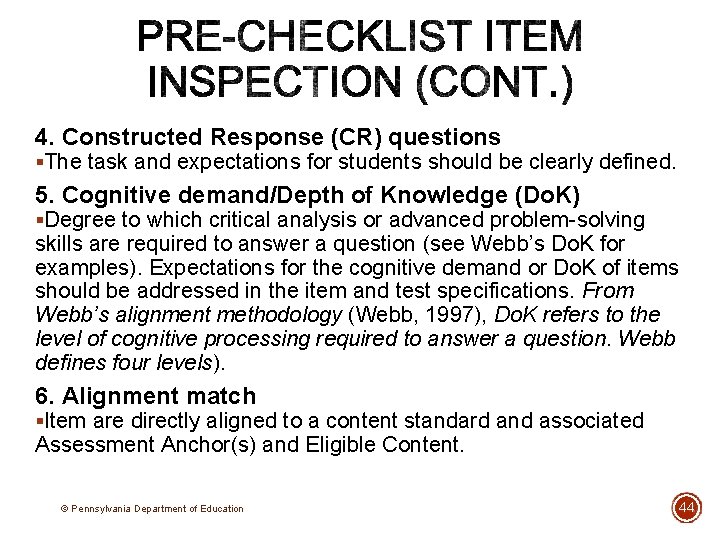

4. Constructed Response (CR) questions
   - The task and expectations for students should be clearly defined.
5. Cognitive demand/Depth of Knowledge (DoK)
   - Degree to which critical analysis or advanced problem-solving skills are required to answer a question (see Webb’s DoK for examples). Expectations for the cognitive demand or DoK of items should be addressed in the item and test specifications. In Webb’s alignment methodology (Webb, 1997), DoK refers to the level of cognitive processing required to answer a question. Webb defines four levels.
6. Alignment match
   - Items are directly aligned to a content standard and associated Assessment Anchor(s) and Eligible Content.

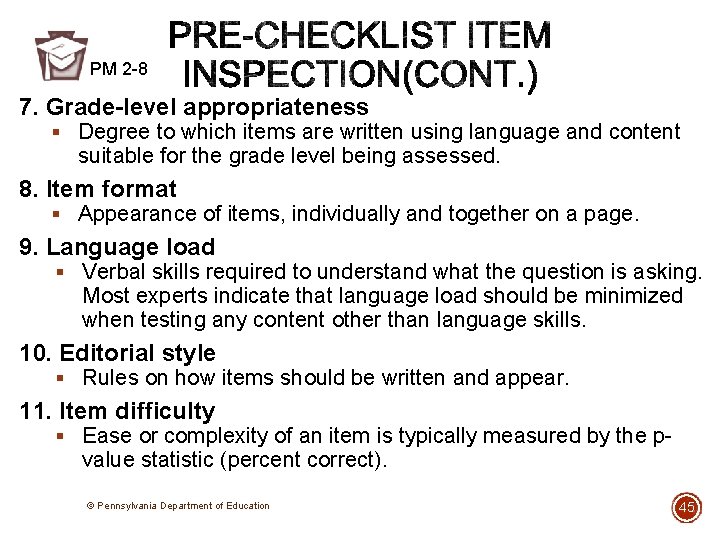

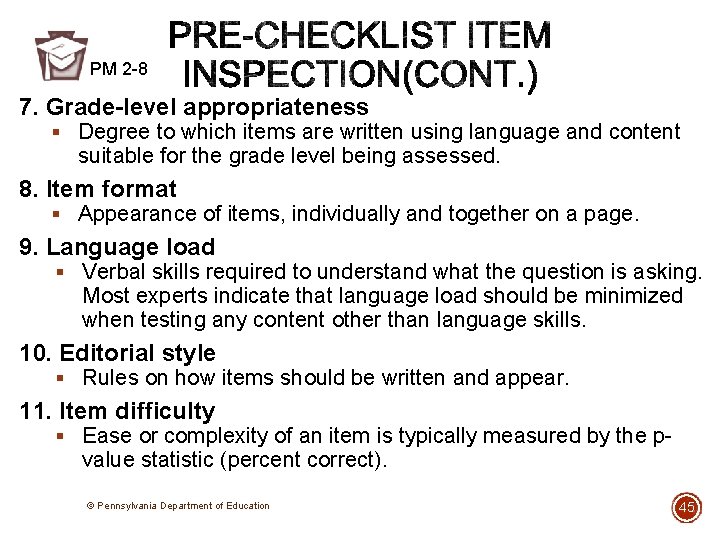

PM 2-8

7. Grade-level appropriateness
   - Degree to which items are written using language and content suitable for the grade level being assessed.
8. Item format
   - Appearance of items, individually and together on a page.
9. Language load
   - Verbal skills required to understand what the question is asking. Most experts indicate that language load should be minimized when testing any content other than language skills.
10. Editorial style
    - Rules on how items should be written and appear.
11. Item difficulty
    - Ease or complexity of an item, typically measured by the p-value statistic (percent correct).

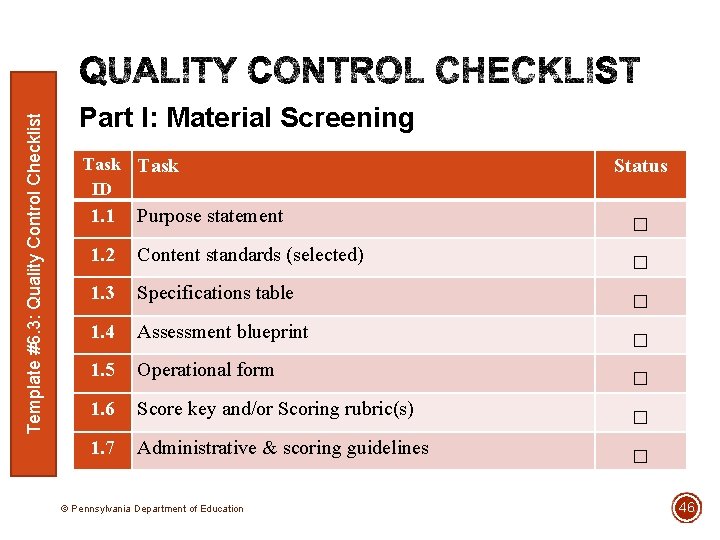

Template #6.3: Quality Control Checklist

Part I: Material Screening (Task ID, Task, Status)

- □ 1.1 Purpose statement
- □ 1.2 Content standards (selected)
- □ 1.3 Specifications table
- □ 1.4 Assessment blueprint
- □ 1.5 Operational form
- □ 1.6 Score key and/or scoring rubric(s)
- □ 1.7 Administrative & scoring guidelines

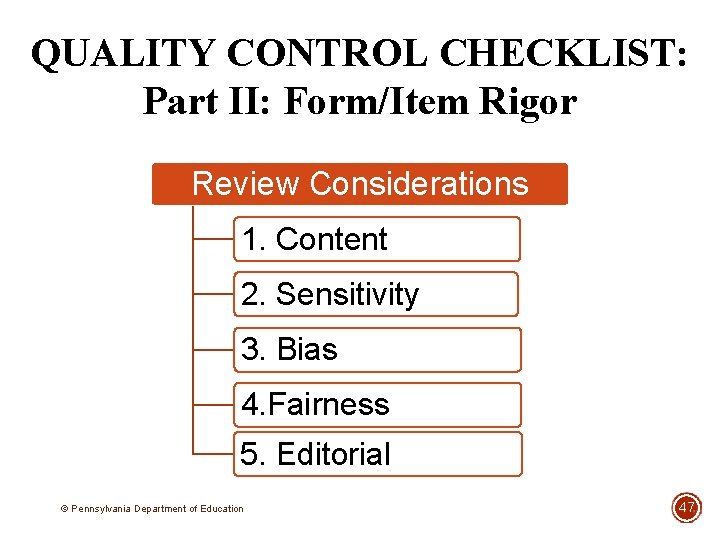

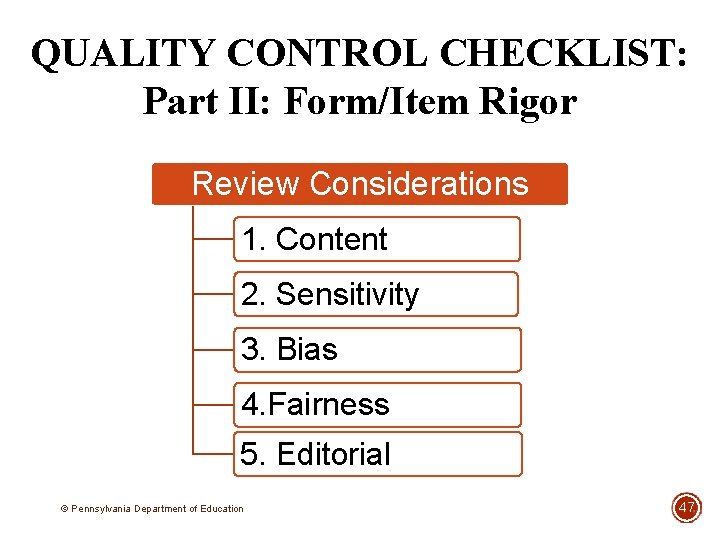

QUALITY CONTROL CHECKLIST: Part II: Form/Item Rigor Review Considerations 1. Content 2. Sensitivity 3. Bias 4. Fairness 5. Editorial © Pennsylvania Department of Education 47

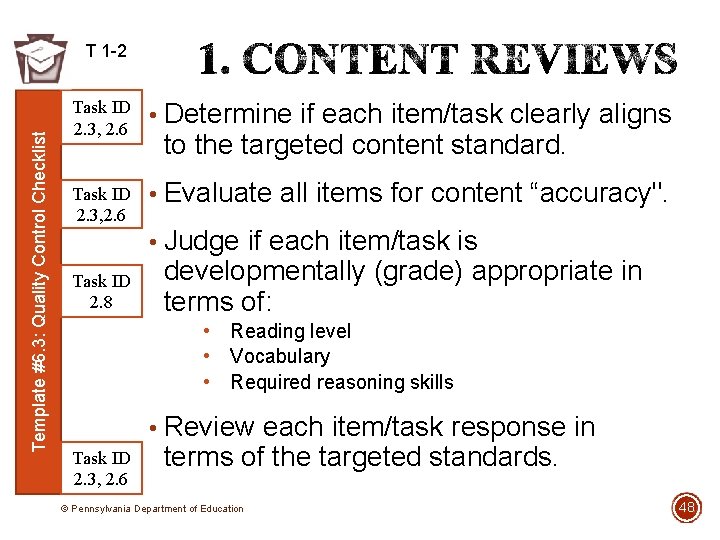

Template #6.3: Quality Control Checklist (T 1-2)

- Determine if each item/task clearly aligns to the targeted content standard. (Task ID 2.3, 2.6)
- Evaluate all items for content “accuracy”. (Task ID 2.3, 2.6)
- Judge if each item/task is developmentally (grade) appropriate in terms of: (Task ID 2.8)
  - Reading level
  - Vocabulary
  - Required reasoning skills
- Review each item/task response in terms of the targeted standards. (Task ID 2.3, 2.6)

Template #6.3: Quality Control Checklist (PM 2-8, T 2)

Task ID 2.9

- Sensitive to different cultures, religions, ethnic and socioeconomic groups, and disabilities.
- Balanced by gender roles.
- Positive in their language, situations, and imagery.
- Void of text that may elicit strong emotional responses by specific groups of students.

Template #6.3: Quality Control Checklist (PM 2-8, T 2)

Task ID 2.10

- Bias is the presence of some characteristic of an item/task that results in the differential performance of two individuals with the same ability but from different subgroups.
- Bias-free items/tasks provide an equal opportunity for all students to demonstrate their knowledge and skills.
- Bias is not the same as stereotyping.

Template #6.3: Quality Control Checklist (PM 2-8, T 2)

Task ID 2.11

- Fairness generally refers to the opportunity for test-takers to learn the content being measured.
- Item/task concepts and skills should have been taught to the test-taker prior to evaluating content mastery.
- Item/task should be more complex for large-scale assessments.
- Fairness reviews assumptions that earlier grades taught the foundational content.

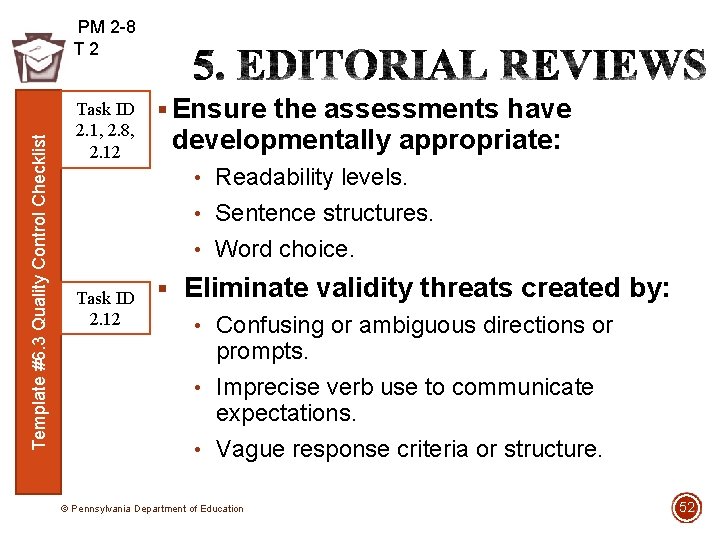

Template #6. 3 Quality Control Checklist PM 2 -8 T 2 Task ID 2. 1, 2. 8, 2. 12 § Ensure the assessments have developmentally appropriate: • Readability levels. • Sentence structures. • Word choice. Task ID 2. 12 § Eliminate validity threats created by: • Confusing or ambiguous directions or prompts. • Imprecise verb use to communicate expectations. • Vague response criteria or structure. © Pennsylvania Department of Education 52

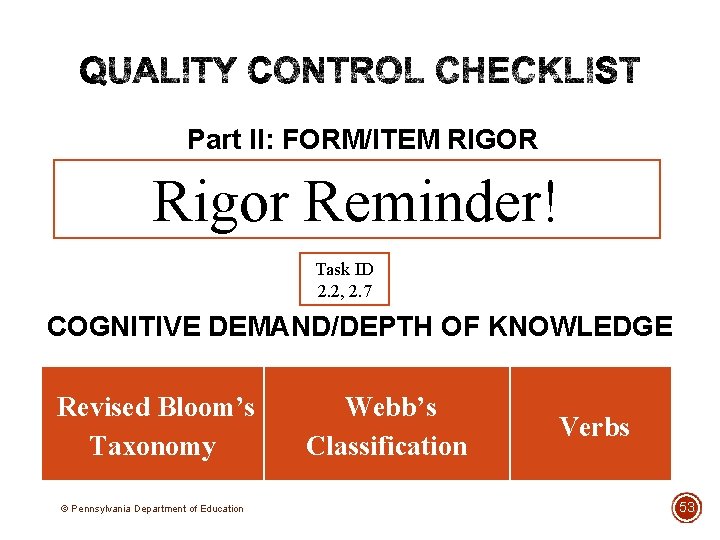

Part II: FORM/ITEM RIGOR

Rigor Reminder! (Task ID 2.2, 2.7)

COGNITIVE DEMAND/DEPTH OF KNOWLEDGE: Revised Bloom’s Taxonomy, Webb’s Classification, and Verbs

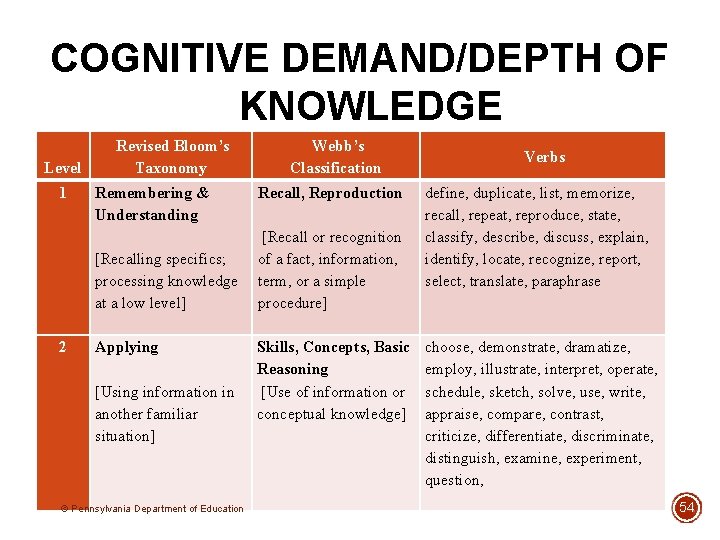

COGNITIVE DEMAND/DEPTH OF KNOWLEDGE

| Level | Revised Bloom’s Taxonomy | Webb’s Classification | Verbs |
| --- | --- | --- | --- |
| 1 | Remembering & Understanding [Recalling specifics; processing knowledge at a low level] | Recall, Reproduction [Recall or recognition of a fact, information, term, or a simple procedure] | define, duplicate, list, memorize, recall, repeat, reproduce, state, classify, describe, discuss, explain, identify, locate, recognize, report, select, translate, paraphrase |
| 2 | Applying [Using information in another familiar situation] | Skills, Concepts, Basic Reasoning [Use of information or conceptual knowledge] | choose, demonstrate, dramatize, employ, illustrate, interpret, operate, schedule, sketch, solve, use, write, appraise, compare, contrast, criticize, differentiate, discriminate, distinguish, examine, experiment, question |

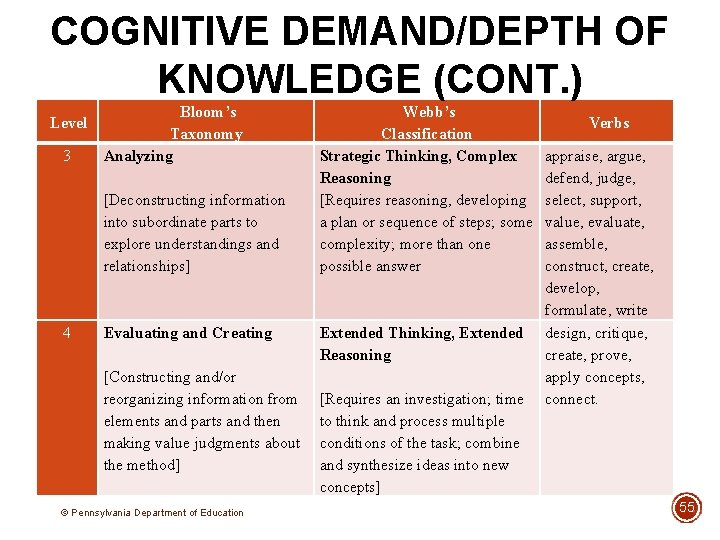

COGNITIVE DEMAND/DEPTH OF KNOWLEDGE (CONT.)

| Level | Bloom’s Taxonomy | Webb’s Classification | Verbs |
| --- | --- | --- | --- |
| 3 | Analyzing [Deconstructing information into subordinate parts to explore understandings and relationships] | Strategic Thinking, Complex Reasoning [Requires reasoning, developing a plan or sequence of steps; some complexity; more than one possible answer] | appraise, argue, defend, judge, select, support, value, evaluate, assemble, construct, create, develop, formulate, write |
| 4 | Evaluating and Creating [Constructing and/or reorganizing information from elements and parts and then making value judgments about the method] | Extended Thinking, Extended Reasoning [Requires an investigation; time to think and process multiple conditions of the task; combine and synthesize ideas into new concepts] | design, critique, create, prove, apply concepts, connect |

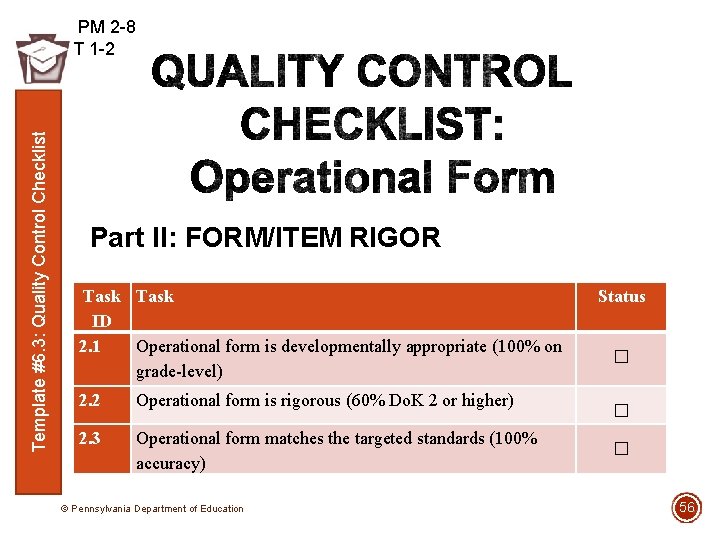

Template #6.3: Quality Control Checklist (PM 2-8, T 1-2)

Part II: FORM/ITEM RIGOR (Task ID, Task, Status)

- □ 2.1 Operational form is developmentally appropriate (100% on grade-level)
- □ 2.2 Operational form is rigorous (60% DoK 2 or higher)
- □ 2.3 Operational form matches the targeted standards (100% accuracy)
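The rigor criterion in Task 2.2 (60% DoK 2 or higher) can be checked with a few lines of Python. This sketch assumes the percentage is taken over items/tasks rather than over points, which the checklist does not specify; the function names and the sample DoK ratings are hypothetical.

```python
def rigor_share(dok_levels, min_level=2):
    """Fraction of items/tasks rated at `min_level` or higher on Webb's DoK scale."""
    if not dok_levels:
        raise ValueError("no items/tasks to evaluate")
    return sum(1 for level in dok_levels if level >= min_level) / len(dok_levels)


def meets_rigor(dok_levels, min_level=2, required_share=0.60):
    """True when the form reaches the required share (assumed: share of items)."""
    return rigor_share(dok_levels, min_level) >= required_share


# Hypothetical panel ratings, one DoK level (1-4) per item/task on the form.
form_dok = [1, 2, 2, 3, 1, 2, 1, 4, 2, 1]
print(rigor_share(form_dok))   # 0.6
print(meets_rigor(form_dok))   # True
```

If a district weights the criterion by points instead, the same functions apply once each item's DoK level is repeated once per point.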

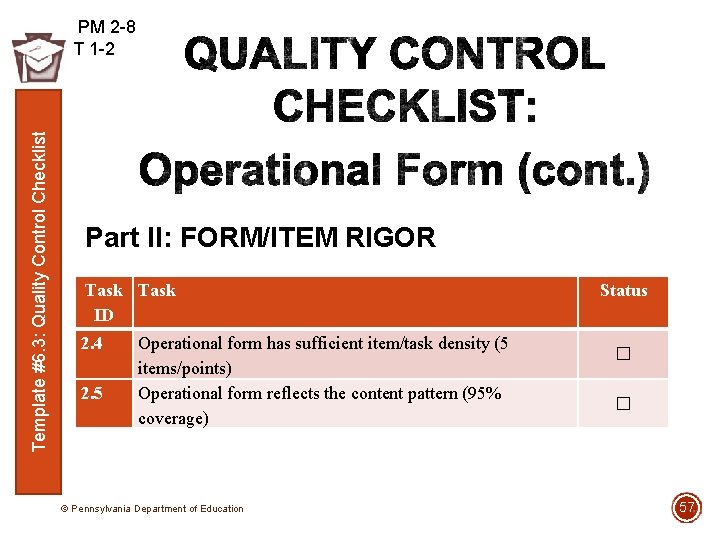

Template #6.3: Quality Control Checklist (PM 2-8, T 1-2)

Part II: FORM/ITEM RIGOR (Task ID, Task, Status)

- □ 2.4 Operational form has sufficient item/task density (5 items/points)
- □ 2.5 Operational form reflects the content pattern (95% coverage)

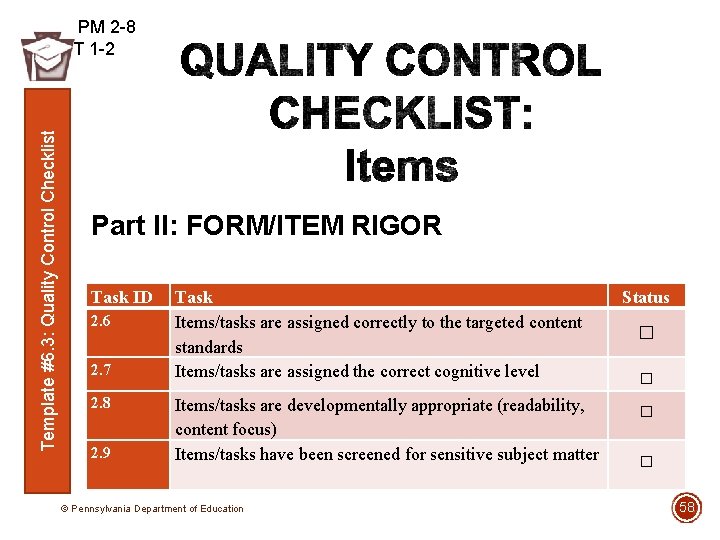

Template #6.3: Quality Control Checklist (PM 2-8, T 1-2)

Part II: FORM/ITEM RIGOR (Task ID, Task, Status)

- □ 2.6 Items/tasks are assigned correctly to the targeted content standards
- □ 2.7 Items/tasks are assigned the correct cognitive level
- □ 2.8 Items/tasks are developmentally appropriate (readability, content focus)
- □ 2.9 Items/tasks have been screened for sensitive subject matter

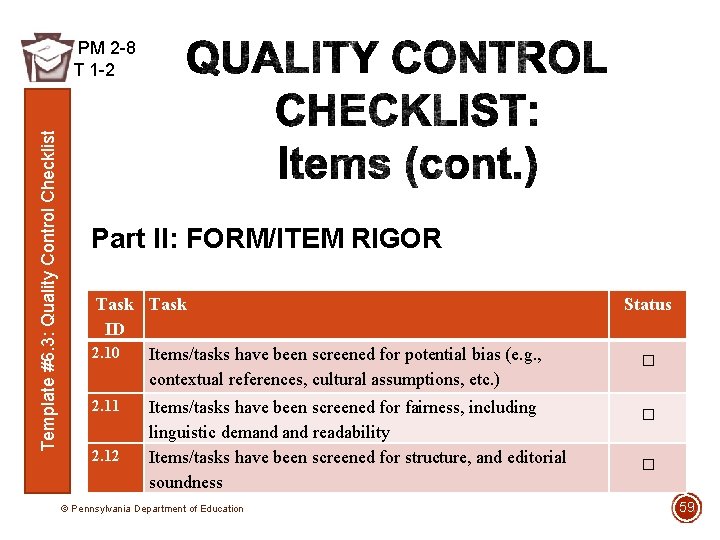

Template #6.3: Quality Control Checklist (PM 2-8, T 1-2)

Part II: FORM/ITEM RIGOR (Task ID, Task, Status)

- □ 2.10 Items/tasks have been screened for potential bias (e.g., contextual references, cultural assumptions, etc.)
- □ 2.11 Items/tasks have been screened for fairness, including linguistic demand and readability
- □ 2.12 Items/tasks have been screened for structure and editorial soundness

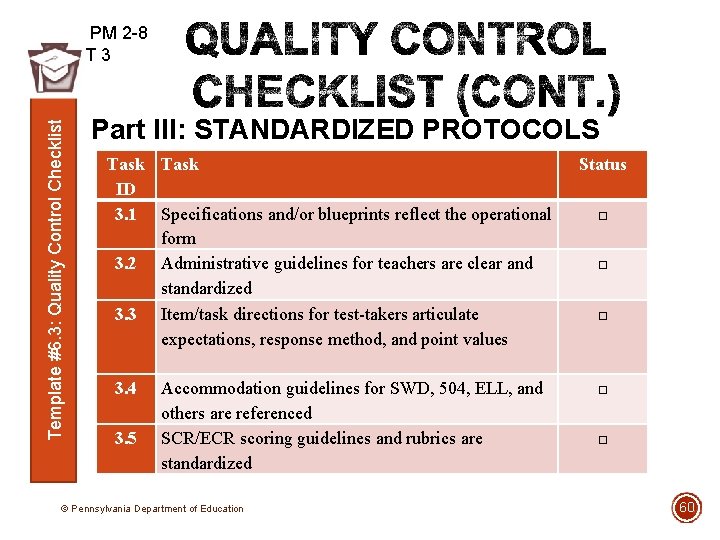

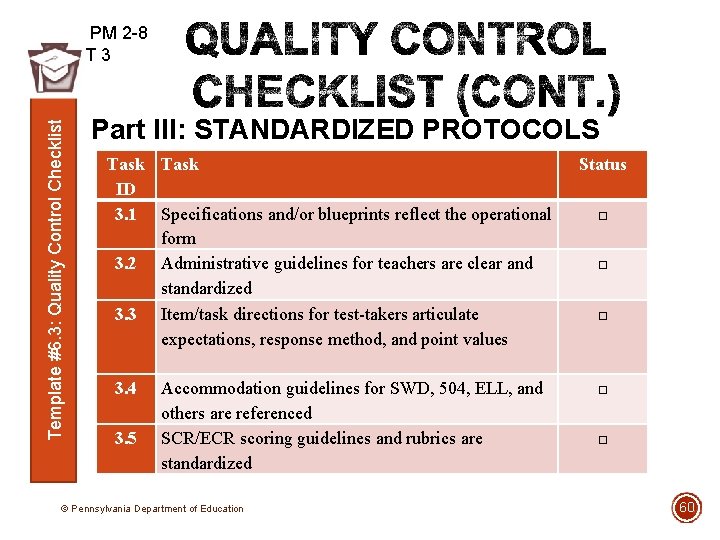

Template #6.3: Quality Control Checklist (PM 2-8, T 3)

Part III: STANDARDIZED PROTOCOLS (Task ID, Task, Status)

- □ 3.1 Specifications and/or blueprints reflect the operational form
- □ 3.2 Administrative guidelines for teachers are clear and standardized
- □ 3.3 Item/task directions for test-takers articulate expectations, response method, and point values
- □ 3.4 Accommodation guidelines for SWD, 504, ELL, and others are referenced
- □ 3.5 SCR/ECR scoring guidelines and rubrics are standardized

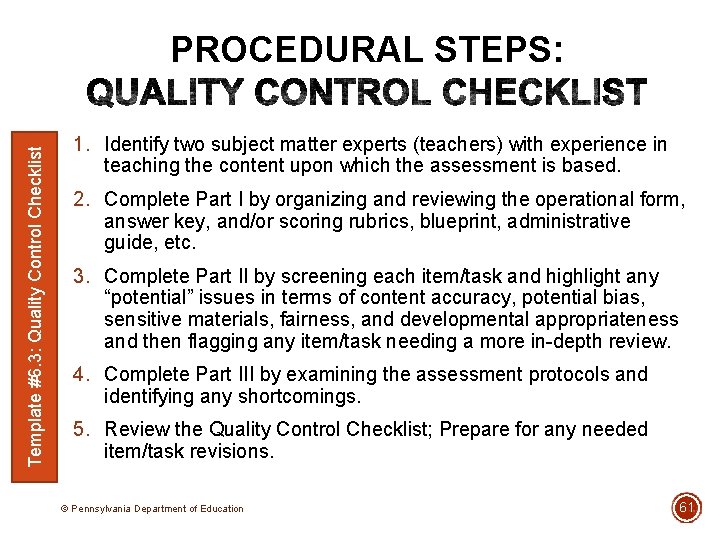

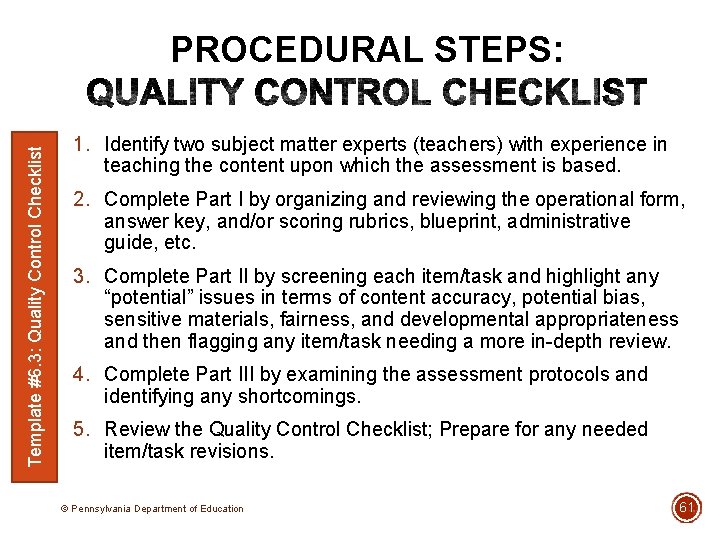

Template #6.3: Quality Control Checklist

PROCEDURAL STEPS:

1. Identify two subject matter experts (teachers) with experience in teaching the content upon which the assessment is based.
2. Complete Part I by organizing and reviewing the operational form, answer key and/or scoring rubrics, blueprint, administrative guide, etc.
3. Complete Part II by screening each item/task, highlighting any potential issues in terms of content accuracy, potential bias, sensitive materials, fairness, and developmental appropriateness, and then flagging any item/task needing a more in-depth review.
4. Complete Part III by examining the assessment protocols and identifying any shortcomings.
5. Review the Quality Control Checklist and prepare for any needed item/task revisions.

Template #6. 3: Assessment Quality Rubric ASSESSMENT QUALITY RUBRIC © Pennsylvania Department of Education 62

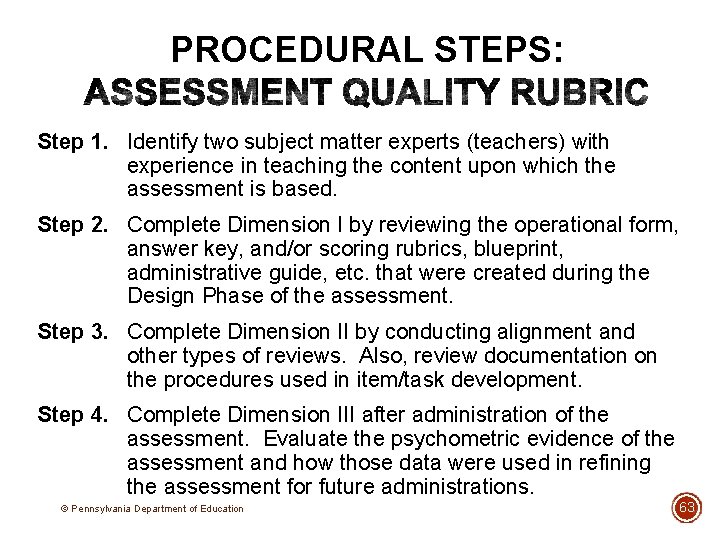

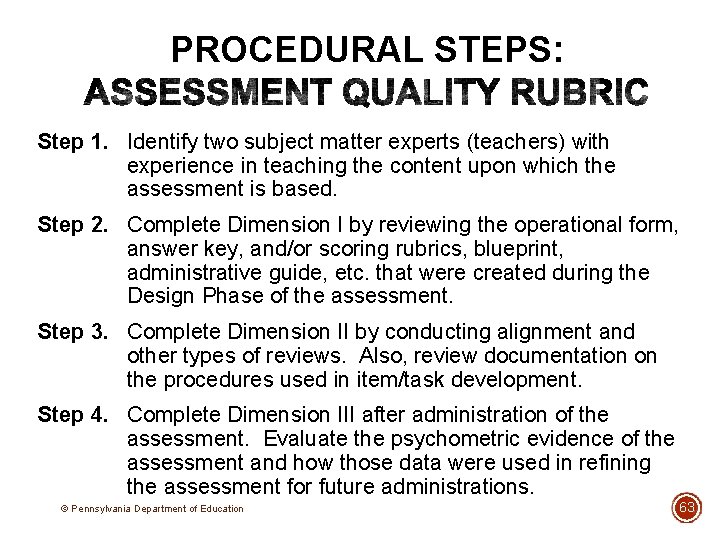

PROCEDURAL STEPS: Step 1. Identify two subject matter experts (teachers) with experience in teaching the content upon which the assessment is based. Step 2. Complete Dimension I by reviewing the operational form, answer key, and/or scoring rubrics, blueprint, administrative guide, etc. that were created during the Design Phase of the assessment. Step 3. Complete Dimension II by conducting alignment and other types of reviews. Also, review documentation on the procedures used in item/task development. Step 4. Complete Dimension III after administration of the assessment. Evaluate the psychometric evidence of the assessment and how those data were used in refining the assessment for future administrations. © Pennsylvania Department of Education 63

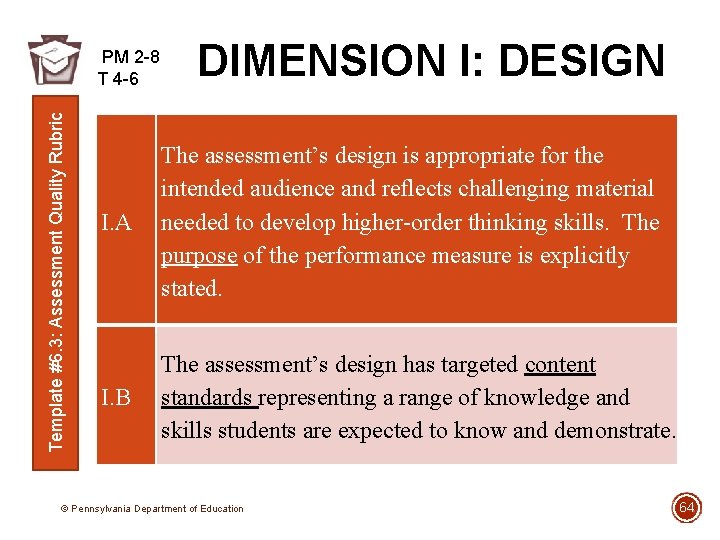

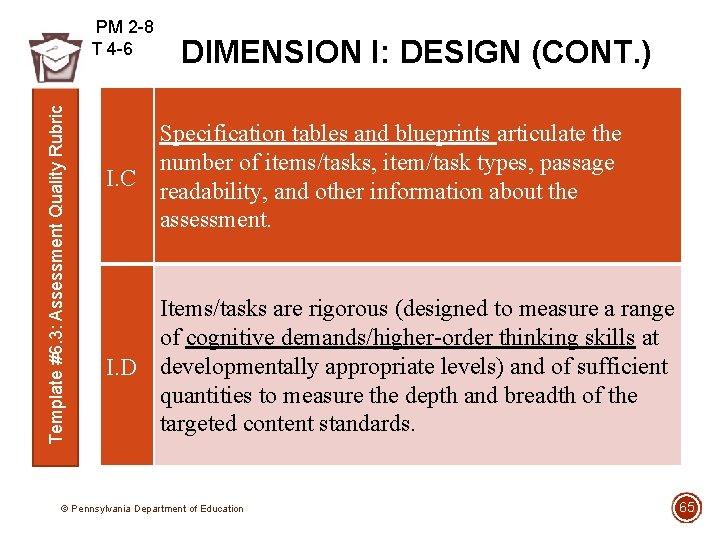

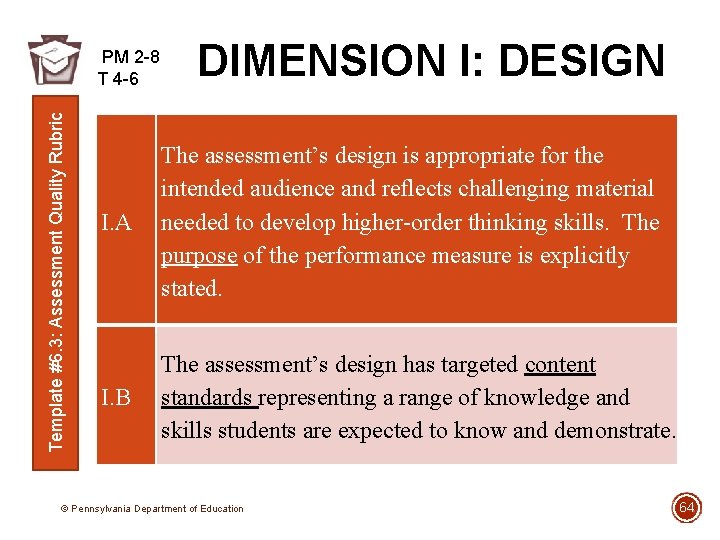

Template #6. 3: Assessment Quality Rubric PM 2 -8 T 4 -6 DIMENSION I: DESIGN I. A The assessment’s design is appropriate for the intended audience and reflects challenging material needed to develop higher-order thinking skills. The purpose of the performance measure is explicitly stated. I. B The assessment’s design has targeted content standards representing a range of knowledge and skills students are expected to know and demonstrate. © Pennsylvania Department of Education 64

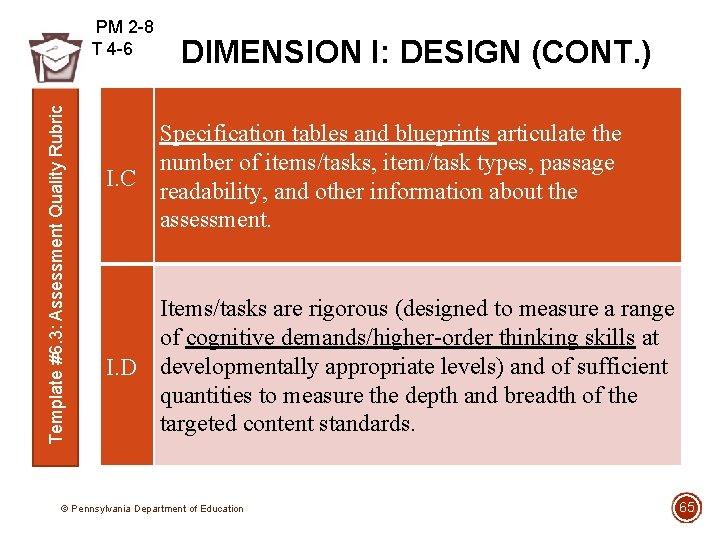

Template #6.3: Assessment Quality Rubric (PM 2-8, T 4-6)

DIMENSION I: DESIGN (CONT.)

- I.C Specification tables and blueprints articulate the number of items/tasks, item/task types, passage readability, and other information about the assessment.
- I.D Items/tasks are rigorous (designed to measure a range of cognitive demands/higher-order thinking skills at developmentally appropriate levels) and of sufficient quantities to measure the depth and breadth of the targeted content standards.

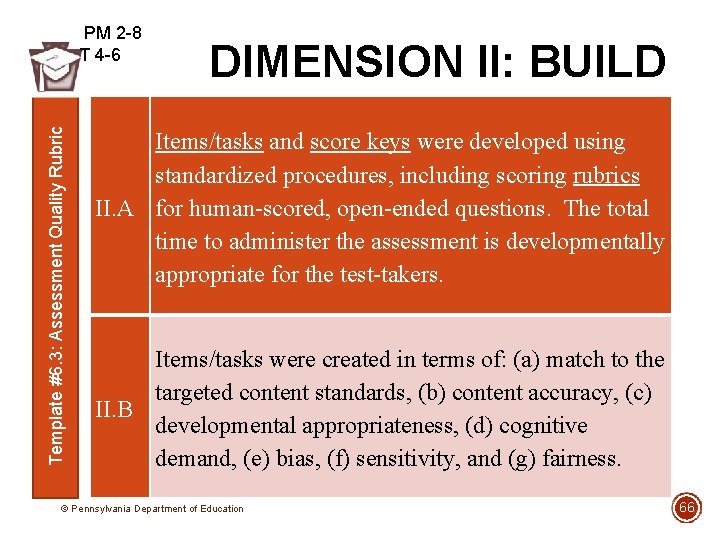

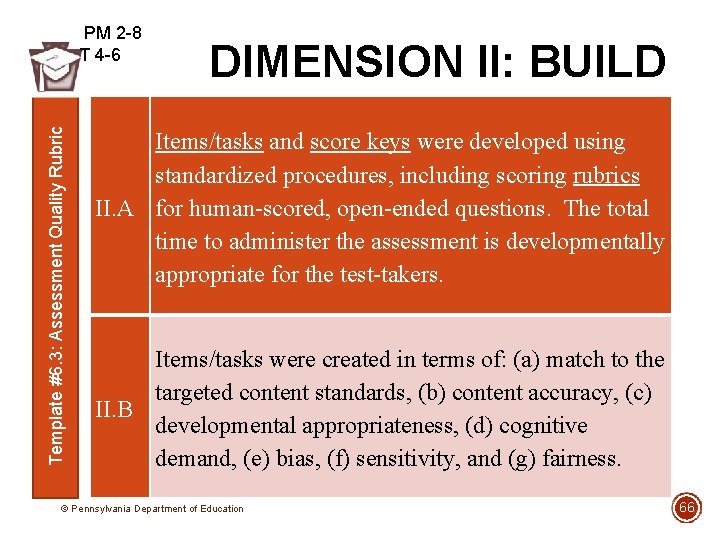

Template #6.3: Assessment Quality Rubric (PM 2-8, T 4-6)

DIMENSION II: BUILD

- II.A Items/tasks and score keys were developed using standardized procedures, including scoring rubrics for human-scored, open-ended questions. The total time to administer the assessment is developmentally appropriate for the test-takers.
- II.B Items/tasks were created in terms of: (a) match to the targeted content standards, (b) content accuracy, (c) developmental appropriateness, (d) cognitive demand, (e) bias, (f) sensitivity, and (g) fairness.

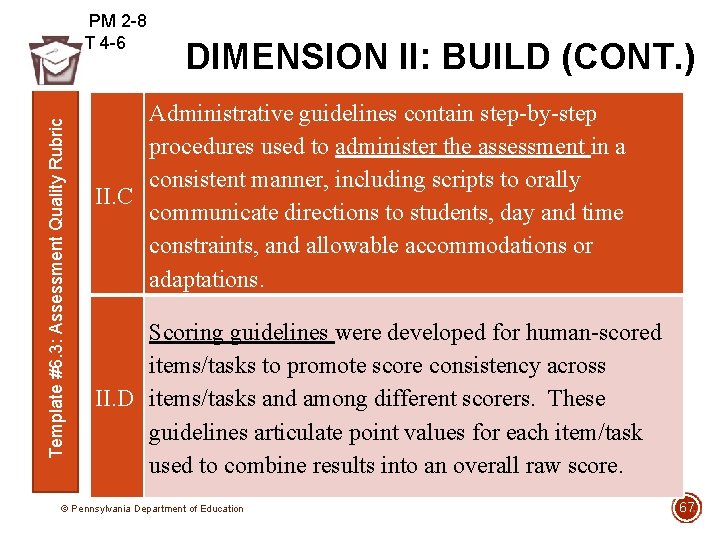

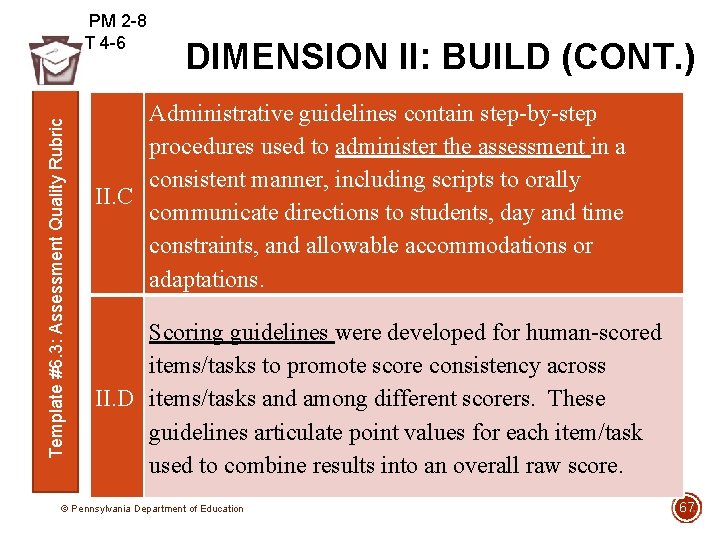

Template #6.3: Assessment Quality Rubric (PM 2-8, T 4-6)

DIMENSION II: BUILD (CONT.)

- II.C Administrative guidelines contain step-by-step procedures used to administer the assessment in a consistent manner, including scripts to orally communicate directions to students, day and time constraints, and allowable accommodations or adaptations.
- II.D Scoring guidelines were developed for human-scored items/tasks to promote score consistency across items/tasks and among different scorers. These guidelines articulate point values for each item/task used to combine results into an overall raw score.

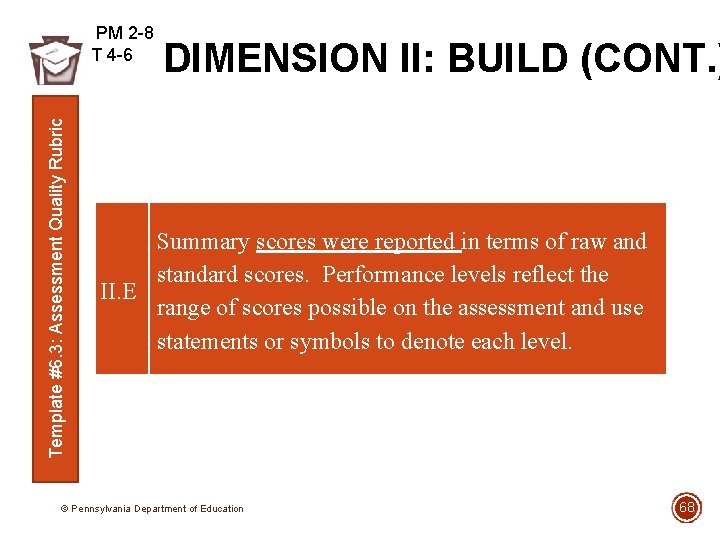

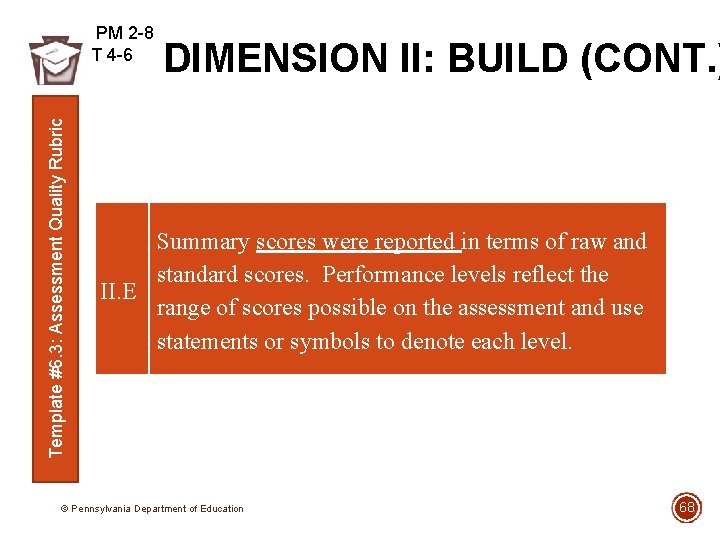

Template #6.3: Assessment Quality Rubric (PM 2-8, T 4-6)

DIMENSION II: BUILD (CONT.)

- II.E Summary scores were reported in terms of raw and standard scores. Performance levels reflect the range of scores possible on the assessment and use statements or symbols to denote each level.

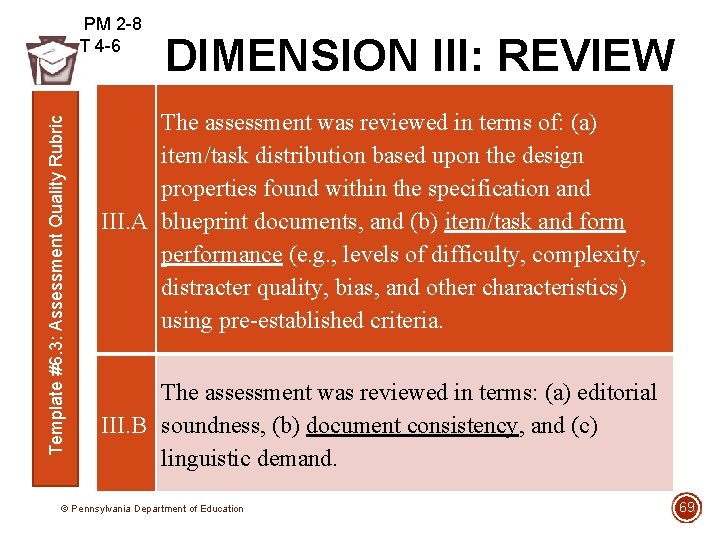

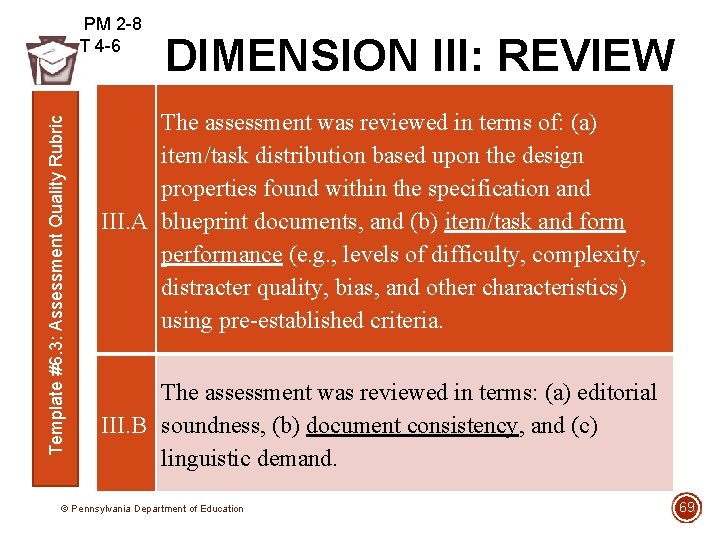

Template #6.3: Assessment Quality Rubric (PM 2-8, T 4-6)

DIMENSION III: REVIEW

- III.A The assessment was reviewed in terms of: (a) item/task distribution based upon the design properties found within the specification and blueprint documents, and (b) item/task and form performance (e.g., levels of difficulty, complexity, distractor quality, bias, and other characteristics) using pre-established criteria.
- III.B The assessment was reviewed in terms of: (a) editorial soundness, (b) document consistency, and (c) linguistic demand.

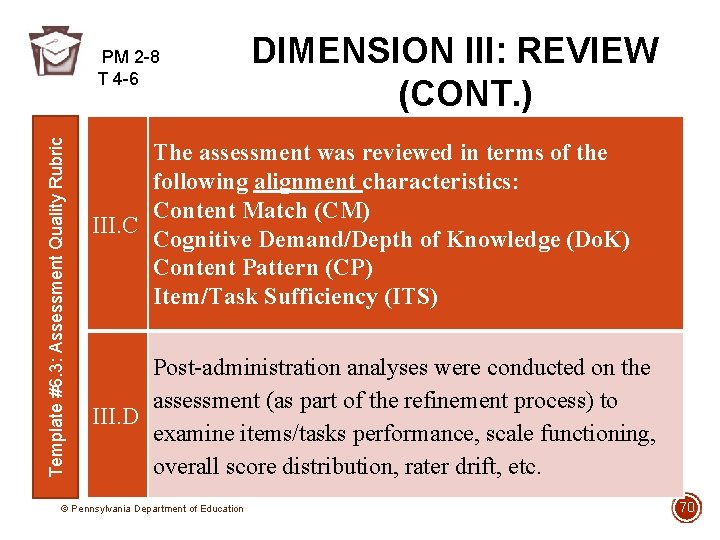

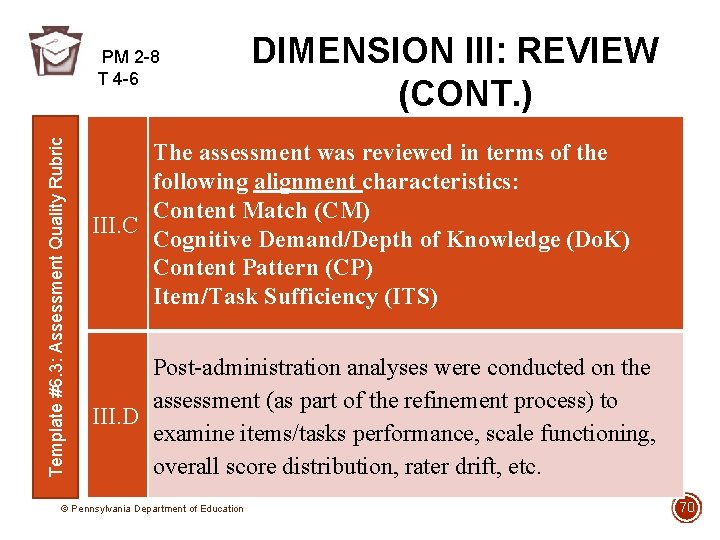

Template #6.3: Assessment Quality Rubric (PM 2-8, T 4-6)

DIMENSION III: REVIEW (CONT.)

- III.C The assessment was reviewed in terms of the following alignment characteristics:
  - Content Match (CM)
  - Cognitive Demand/Depth of Knowledge (DoK)
  - Content Pattern (CP)
  - Item/Task Sufficiency (ITS)
- III.D Post-administration analyses were conducted on the assessment (as part of the refinement process) to examine item/task performance, scale functioning, overall score distribution, rater drift, etc.

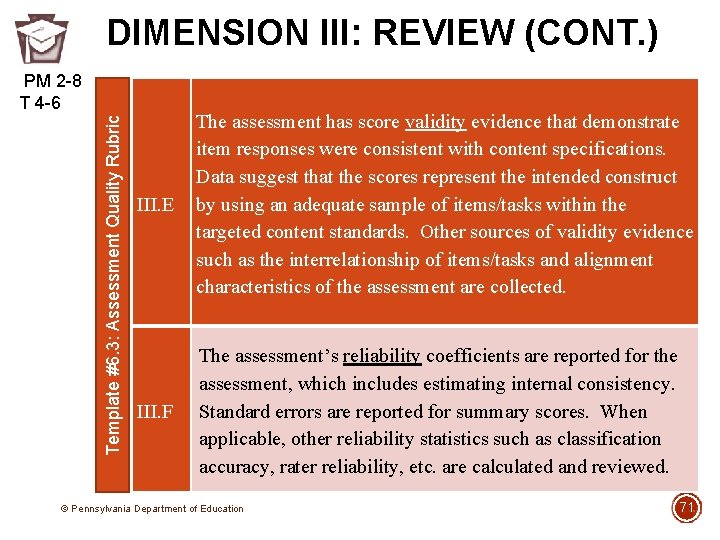

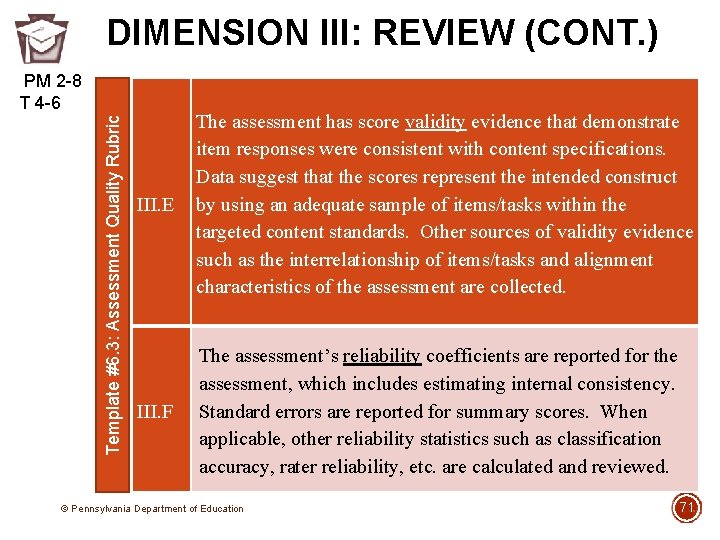

Template #6.3: Assessment Quality Rubric (PM 2-8, T 4-6)

DIMENSION III: REVIEW (CONT.)

- III.E The assessment has score validity evidence that demonstrates item responses were consistent with content specifications. Data suggest that the scores represent the intended construct by using an adequate sample of items/tasks within the targeted content standards. Other sources of validity evidence, such as the interrelationship of items/tasks and alignment characteristics of the assessment, are collected.
- III.F Reliability coefficients are reported for the assessment, including an estimate of internal consistency. Standard errors are reported for summary scores. When applicable, other reliability statistics such as classification accuracy, rater reliability, etc. are calculated and reviewed.

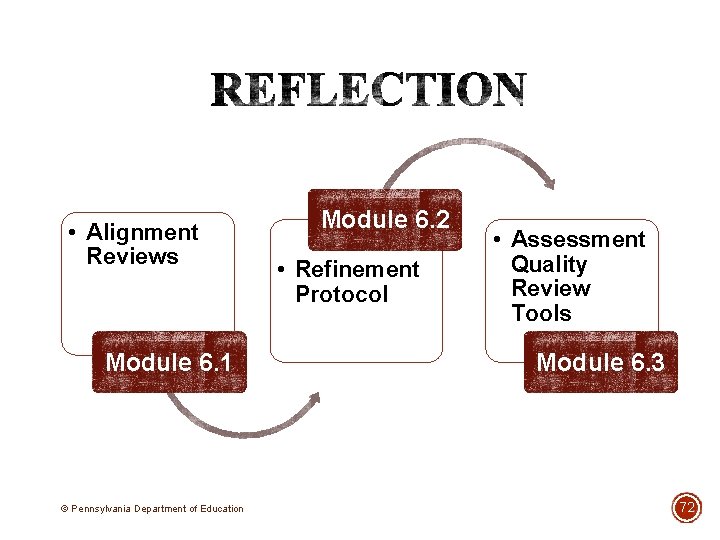

Module 6.1 • Alignment Reviews
Module 6.2 • Refinement Protocol
Module 6.3 • Assessment Quality Review Tools

SUMMARY
§ Outlined steps needed to conduct alignment reviews of operational forms.
§ Explored the refinement tasks.
§ Examined an assessment quality control checklist tool and an assessment quality rubric.
