- Slides: 28

Artificial Neural Networks (ANNs) Lecture 12

Outline

1. Overview of ANN
2. Basic Feedforward ANN
3. Linear Perceptron Algorithm
4. Nonlinear and Multilayer Perceptron
5. Advanced ANN

1. Overview of ANNs

- Inspired by the neuroscience of the brain
- Neurons are linked together by axons (strands of fiber)
- Axons transmit nerve impulses between neurons
- Dendrites connect neurons to the axons of other neurons at synapses
- Learning happens through changes in synaptic connection strength

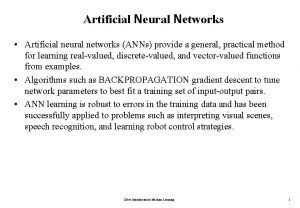

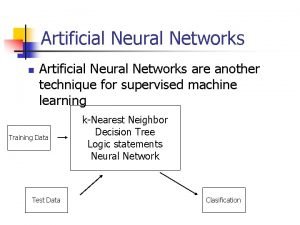

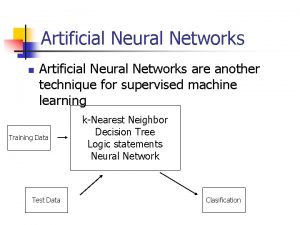

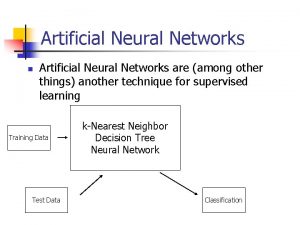

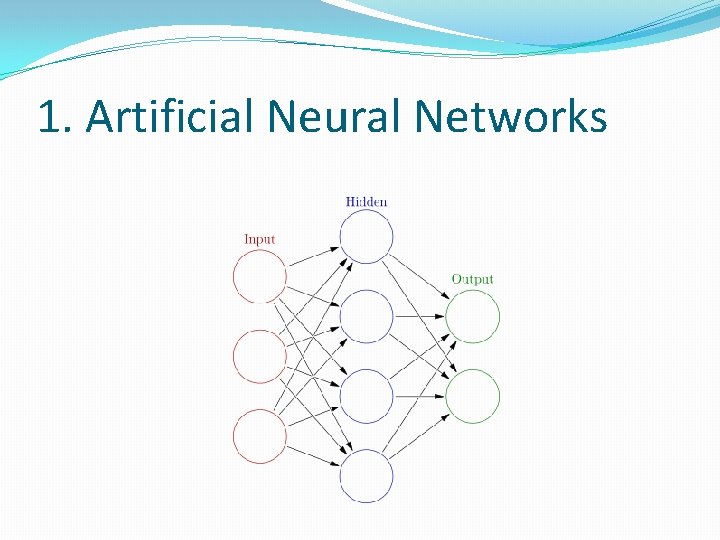

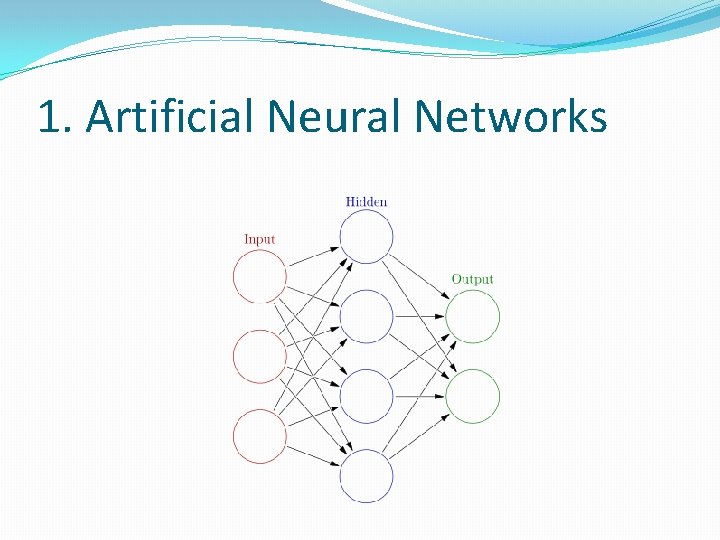

1. Artificial Neural Networks

Artificial Neural Networks (ANNs)

- Perceptron
  - Invented at Cornell Aeronautical Laboratory in 1957 by Frank Rosenblatt
  - Single-layer feedforward neural network
  - Initially promising but ultimately disappointing: only able to learn linearly separable patterns
  - Minsky and Papert analyzed this limitation in 1969; multilayer perceptrons later overcame it

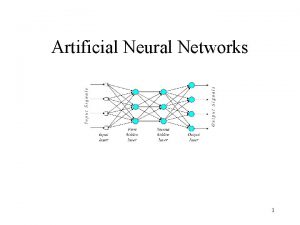

Artificial Neural Networks (ANNs)

- Basic types of ANNs
  - Feedforward
    - No directed cycles
    - Multilayer perceptron
  - Recurrent
    - Directed cycles
    - Often used for handwriting recognition

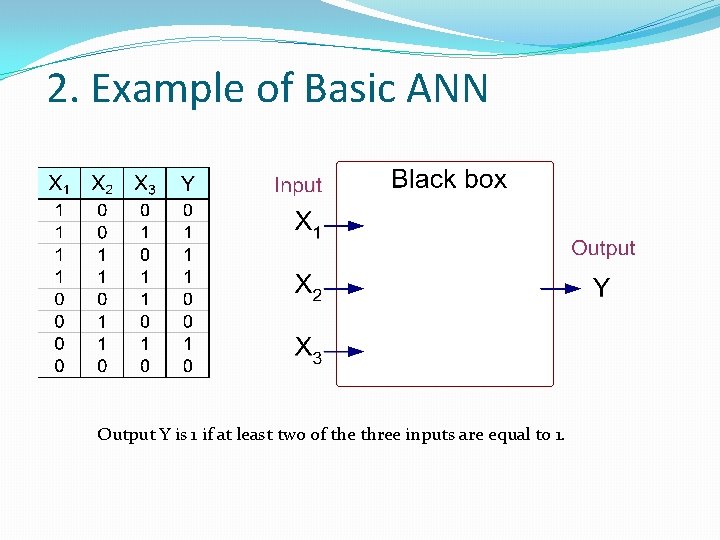

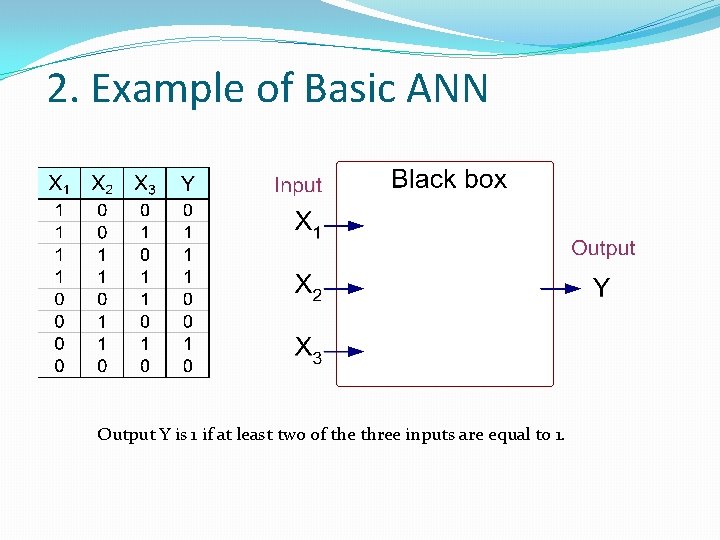

2. Example of Basic ANN

Output Y is 1 if at least two of the three inputs are equal to 1.

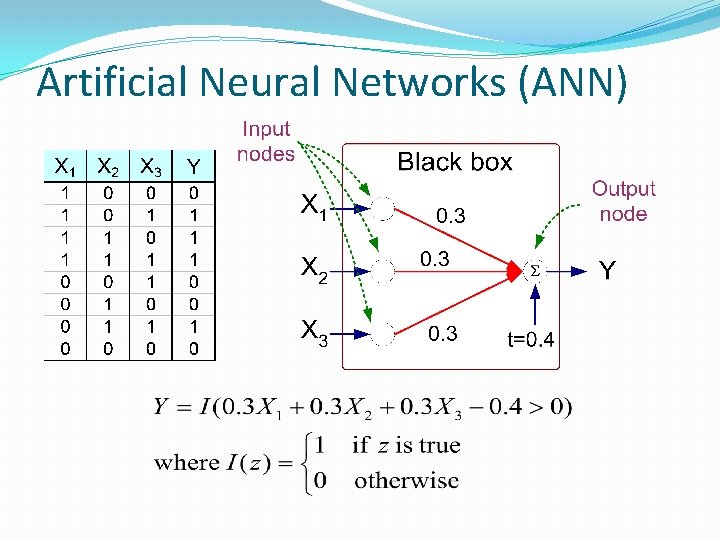

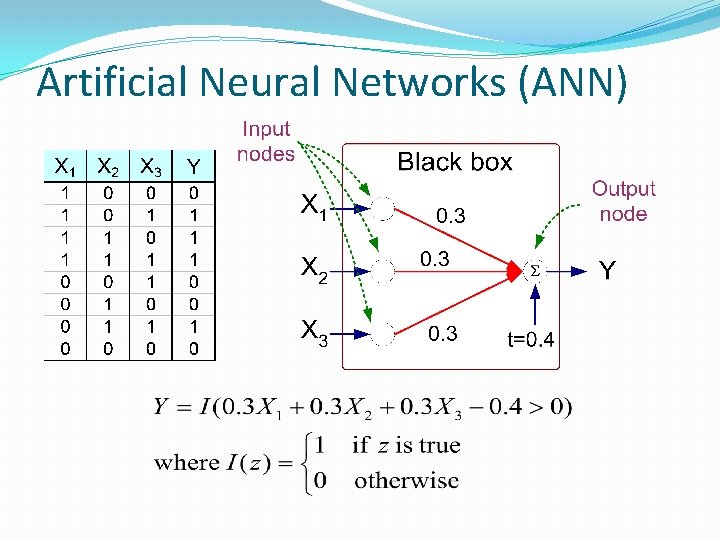
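The rule "Y is 1 if at least two of the three inputs are 1" can be reproduced by a single thresholded unit. The sketch below is illustrative only: the equal weights of 0.3 and the threshold of 0.4 are assumed values chosen to satisfy the rule, and the function names are hypothetical rather than taken from the slides.

```python
from itertools import product


def perceptron_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0


# Assumed parameters (not read from the slide images):
# any two active inputs give 0.6 > 0.4, a single active input gives 0.3 < 0.4.
WEIGHTS = (0.3, 0.3, 0.3)
THRESHOLD = 0.4


def majority(x1, x2, x3):
    """Output 1 when at least two of the three binary inputs are 1."""
    return perceptron_output((x1, x2, x3), WEIGHTS, THRESHOLD)


# Print the full truth table for the three binary inputs.
for x1, x2, x3 in product((0, 1), repeat=3):
    print(x1, x2, x3, "->", majority(x1, x2, x3))
```

Any weights and threshold that place the threshold between the sum for one active input and the sum for two would work equally well.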

Artificial Neural Networks (ANN)

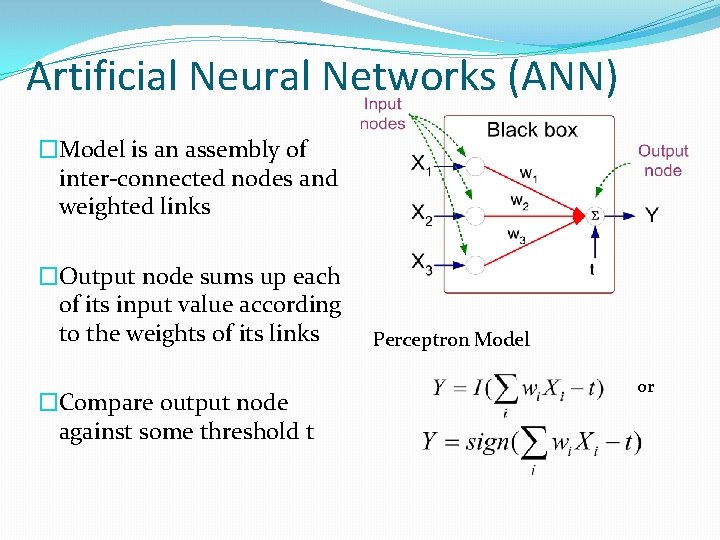

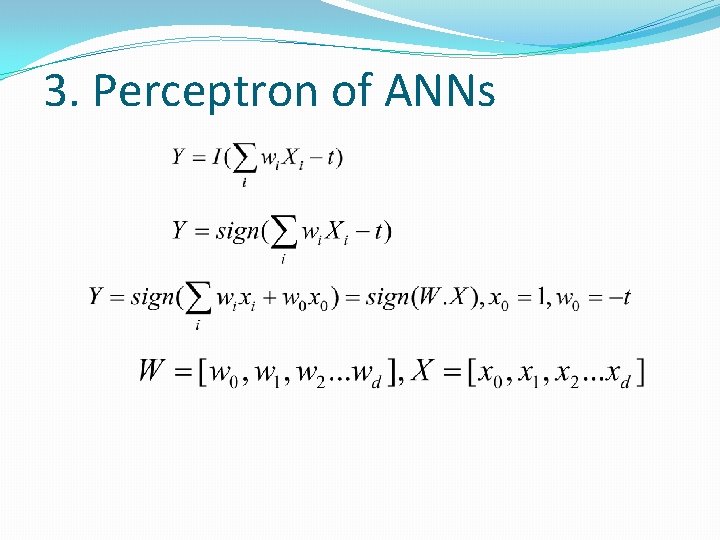

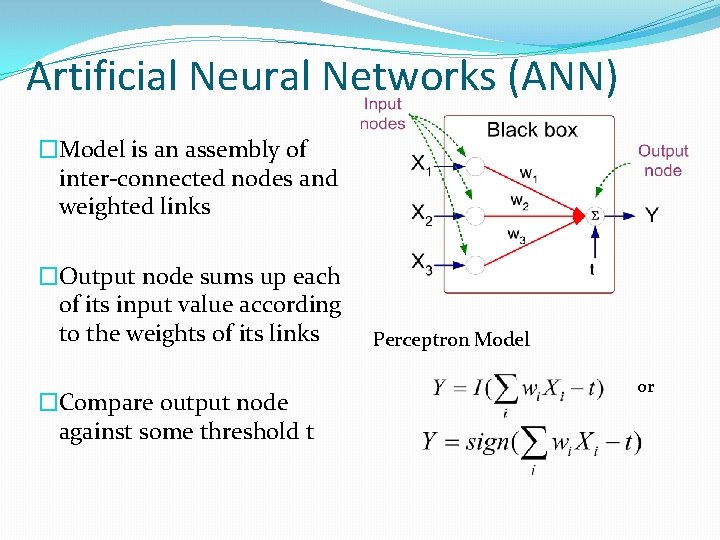

Artificial Neural Networks (ANN)

- The model is an assembly of interconnected nodes and weighted links
- The output node sums its input values, each weighted by its link
- The weighted sum is compared against some threshold t; this is the perceptron model

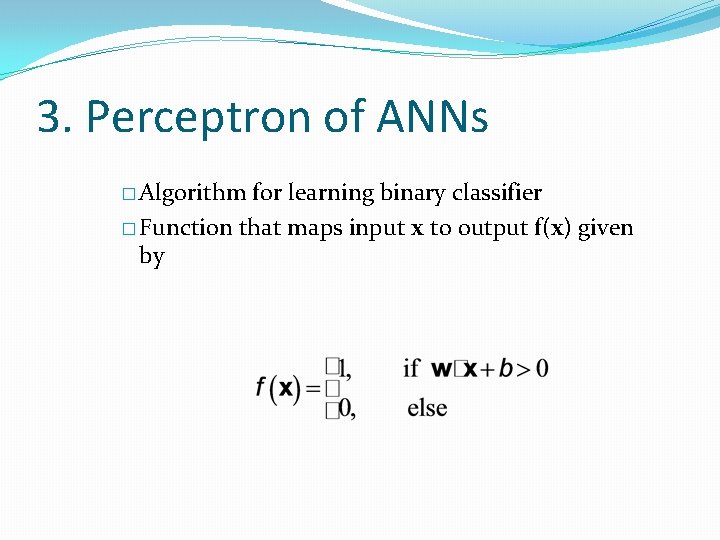

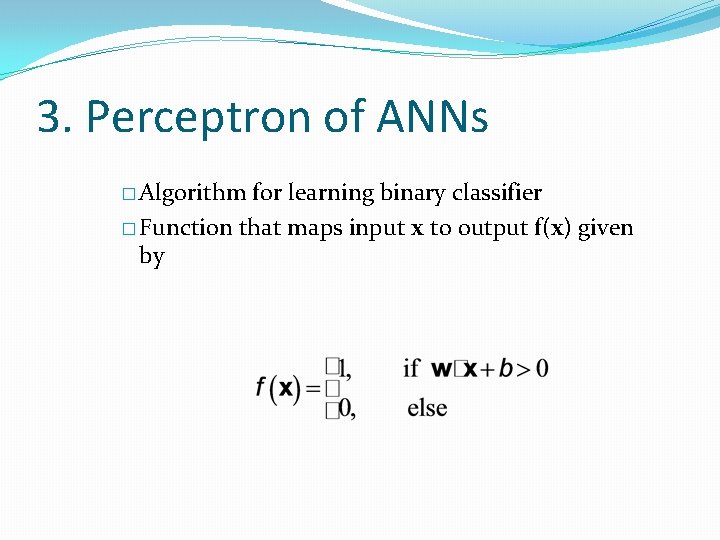

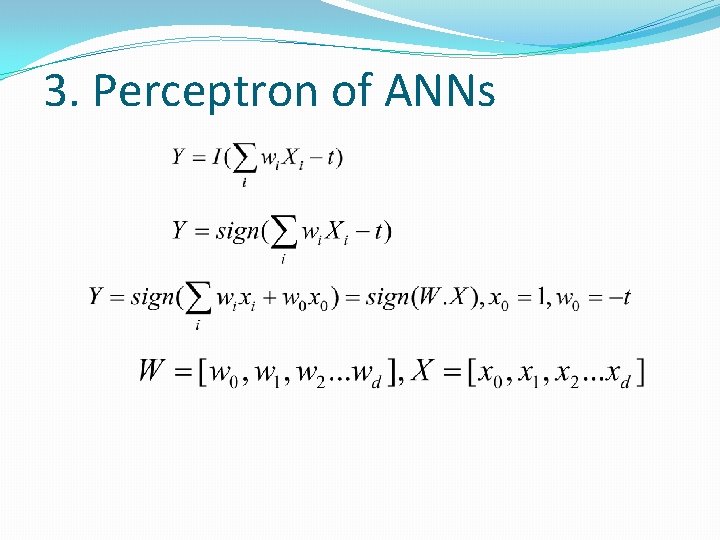
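The perceptron model is commonly written in one of two closely related forms. The notation below is an assumption, since the slide's own equations survive only as images: $X_i$ denotes the inputs, $w_i$ the link weights, $t$ the threshold, and $I(\cdot)$ the indicator function.

$$
Y = I\Big(\sum_i w_i X_i - t > 0\Big)
\quad\text{or}\quad
Y = \operatorname{sign}\Big(\sum_i w_i X_i - t\Big)
$$

The first form outputs 0 or 1; the second outputs -1 or +1.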

3. Perceptron of ANNs

- Algorithm for learning a binary classifier
- A function that maps input x to output f(x), given by:

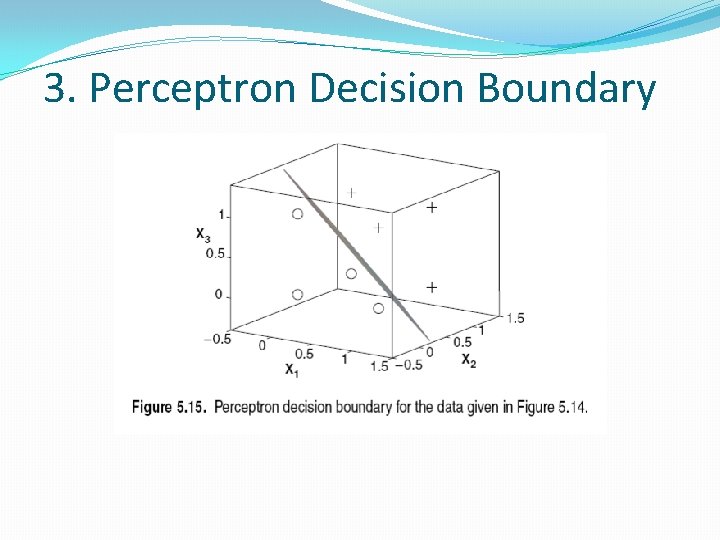

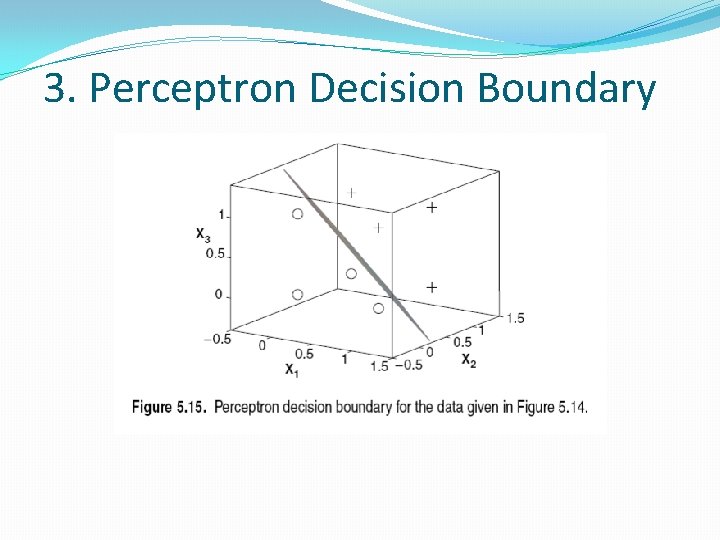

3. Perceptron Decision Boundary

3. Perceptron of ANNs

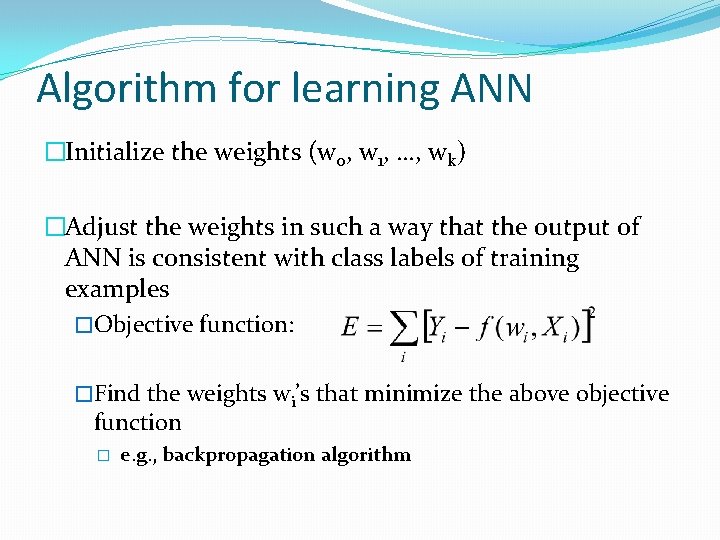

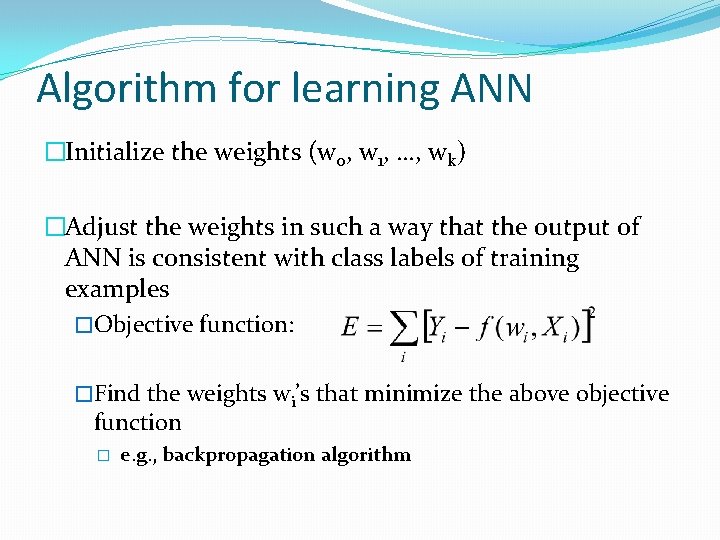

Algorithm for learning ANN

- Initialize the weights (w_0, w_1, …, w_k)
- Adjust the weights so that the output of the ANN is consistent with the class labels of the training examples
- Define an objective function that measures the disagreement between the ANN output and the class labels
- Find the weights w_i that minimize the objective function, e.g., with the backpropagation algorithm

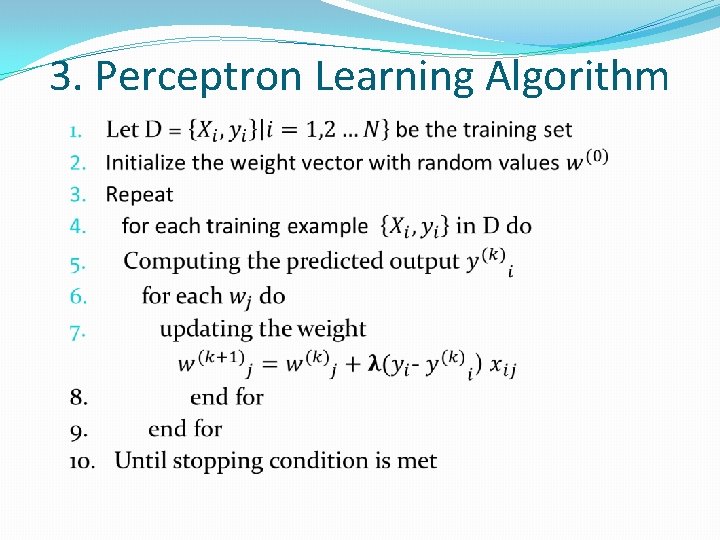

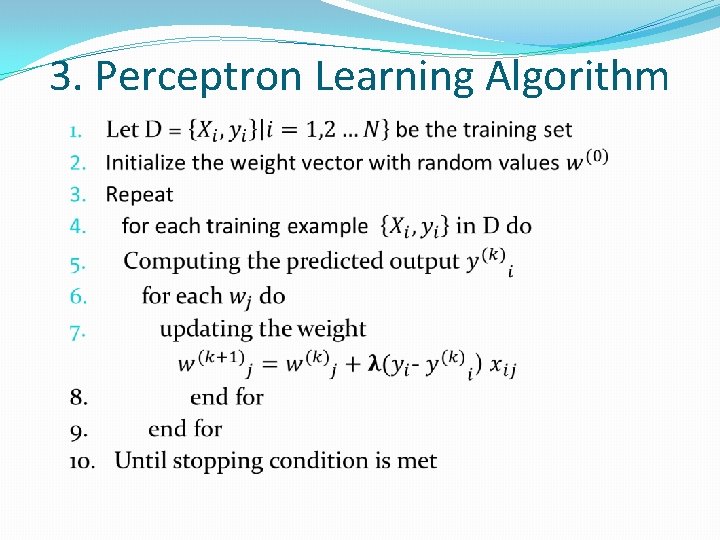
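A common choice of objective function is the sum of squared errors. It is assumed here for illustration, because the slide's equation survives only as an image; $y_i$ is the class label of training example $\mathbf{x}_i$ and $f(\mathbf{w}, \mathbf{x}_i)$ is the ANN output for that example.

$$
E(\mathbf{w}) = \sum_i \big(y_i - f(\mathbf{w}, \mathbf{x}_i)\big)^2
$$

Learning then amounts to finding the weights $\mathbf{w}$ that minimize $E(\mathbf{w})$.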

3. Perceptron Learning Algorithm

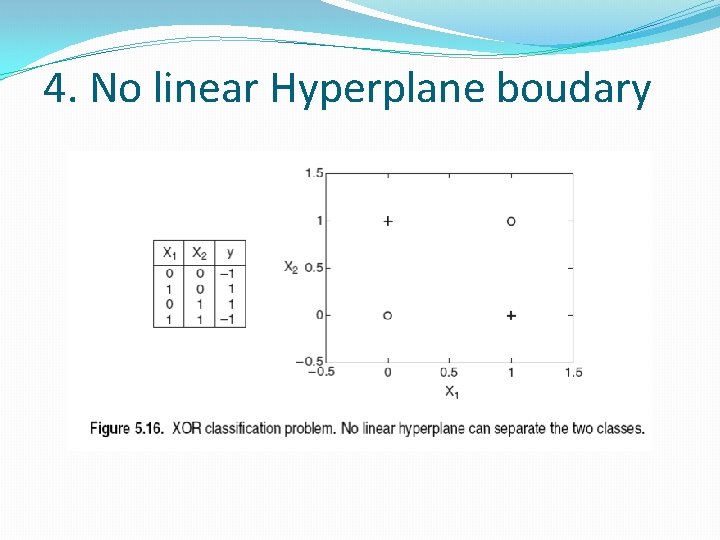

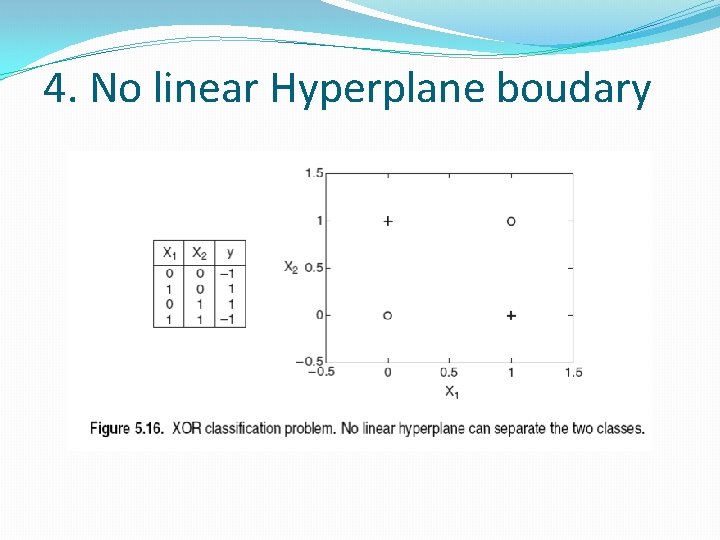
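A minimal sketch of perceptron learning follows, assuming the usual update rule in which each misclassified example moves the weights by the learning rate times the error times the input. The function names, the learning rate of 1.0, the bias term standing in for the threshold, and the training set (the "at least two of three inputs" rule from earlier in the lecture) are all illustrative assumptions.

```python
from itertools import product


def predict(weights, bias, x):
    """Thresholded weighted sum: 1 if bias + w.x > 0, else 0."""
    activation = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, max_epochs=1000):
    """Repeat passes over the data until no example is misclassified."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * xi
                           for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:  # every training example is classified correctly
            break
    return weights, bias


# Training data: label is 1 when at least two of the three inputs are 1.
samples = list(product((0, 1), repeat=3))
labels = [1 if sum(x) >= 2 else 0 for x in samples]

weights, bias = train_perceptron(samples, labels)
print("weights:", weights, "bias:", bias)
```

Because this data set is linearly separable, the loop stops after a finite number of updates; on data that is not linearly separable it would run until `max_epochs` without reaching zero mistakes.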

4. No Linear Hyperplane Boundary

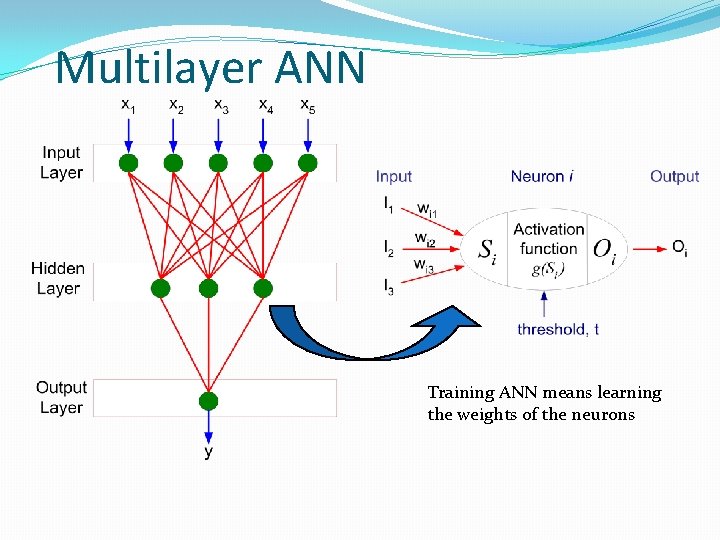

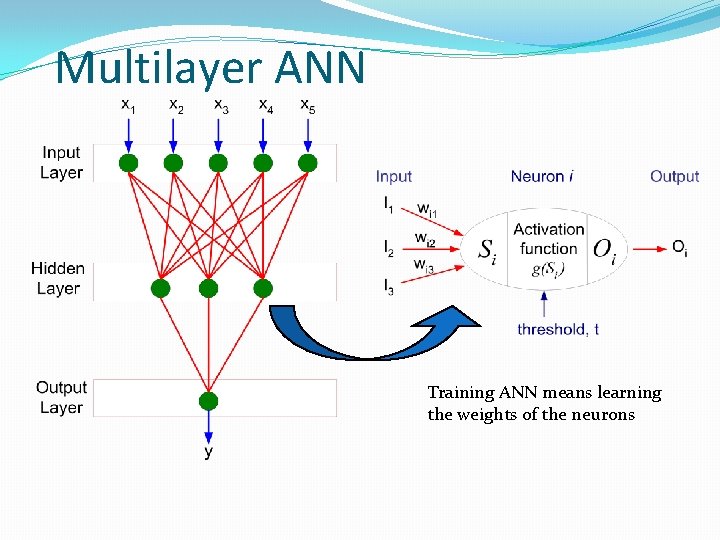

Multilayer ANN

Training an ANN means learning the weights of the neurons.

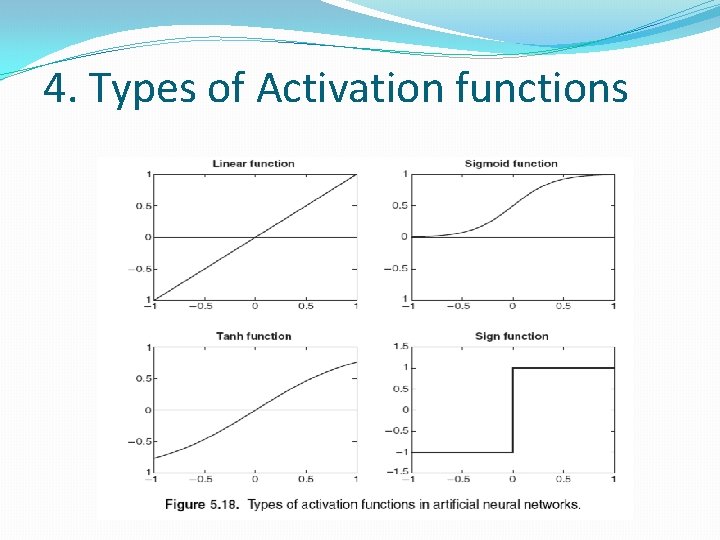

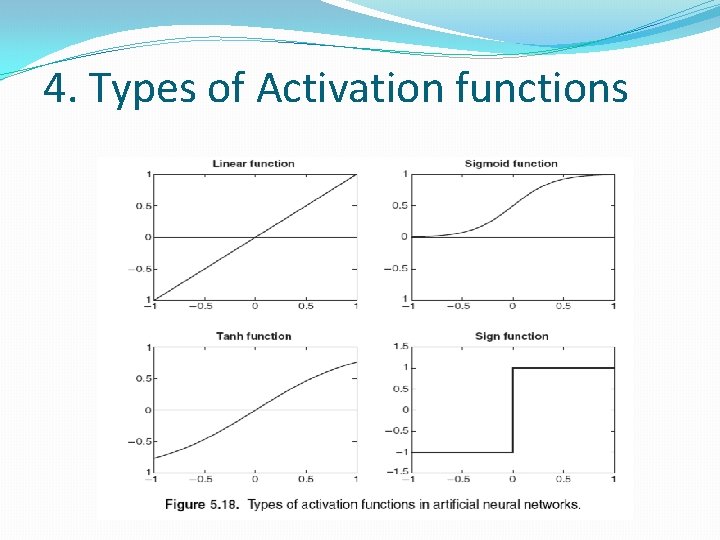

4. Types of Activation functions

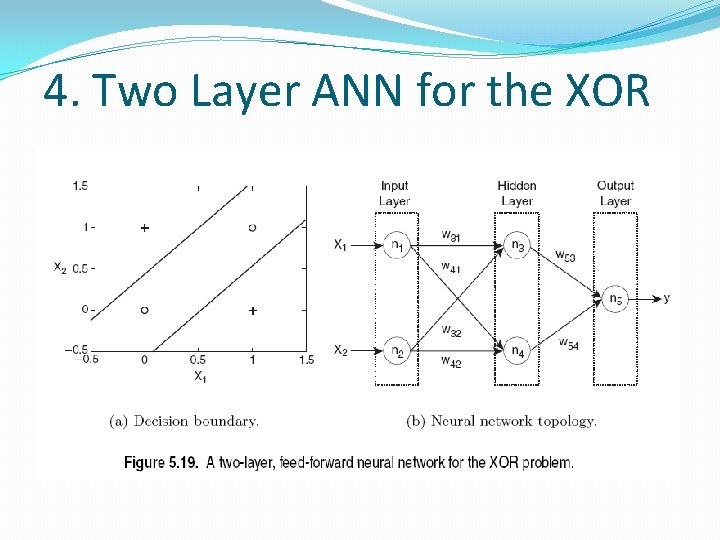

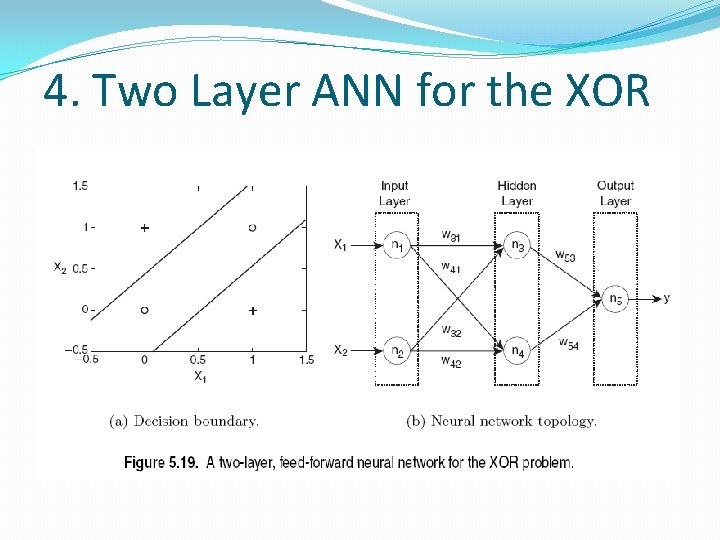
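The sketch below lists a few activation functions that are commonly used in ANNs. Which functions the slide images actually show is not recoverable from the text, so this selection (linear, sign, sigmoid, tanh) is an assumption made for illustration.

```python
import math


def linear(z):
    """Identity activation: the output equals the weighted sum."""
    return z


def sign(z):
    """Hard threshold: +1 for non-negative input, -1 otherwise."""
    return 1.0 if z >= 0 else -1.0


def sigmoid(z):
    """Logistic function, squashing z into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Hyperbolic tangent, squashing z into the open interval (-1, 1)."""
    return math.tanh(z)


# Compare the functions on a few moderate input values.
for z in (-2.0, 0.0, 2.0):
    print(z, linear(z), sign(z), round(sigmoid(z), 4), round(tanh(z), 4))
```

The smooth functions (sigmoid, tanh) are differentiable everywhere, which is what gradient-based training of multilayer networks relies on; the sign function is not.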

4. Two Layer ANN for the XOR

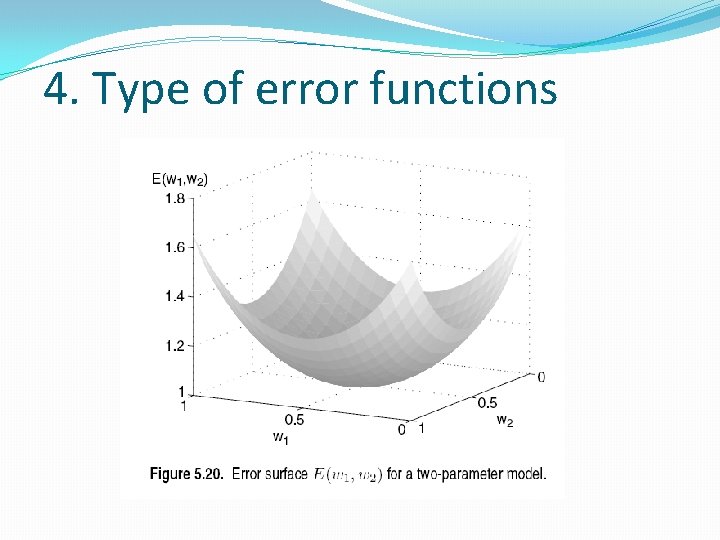
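XOR cannot be computed by a single perceptron, but a network with one hidden layer can compute it. The sketch below uses hand-set weights, chosen here for illustration and not read from the slide image: one hidden unit acts as OR, the other as AND, and the output unit fires when OR is on but AND is off.

```python
def step(z):
    """Threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def xor_network(x1, x2):
    """Two-layer network with assumed, hand-set weights that computes XOR."""
    h_or = step(x1 + x2 - 0.5)     # hidden unit 1: fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)    # hidden unit 2: fires only if both inputs are 1
    return step(h_or - h_and - 0.5)  # output: OR and not AND


# Print the truth table.
for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, "->", xor_network(x1, x2))
```

Each hidden unit defines one linear boundary; combining the two boundaries in the output unit gives the nonlinear decision region that XOR requires.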

4. Types of error functions

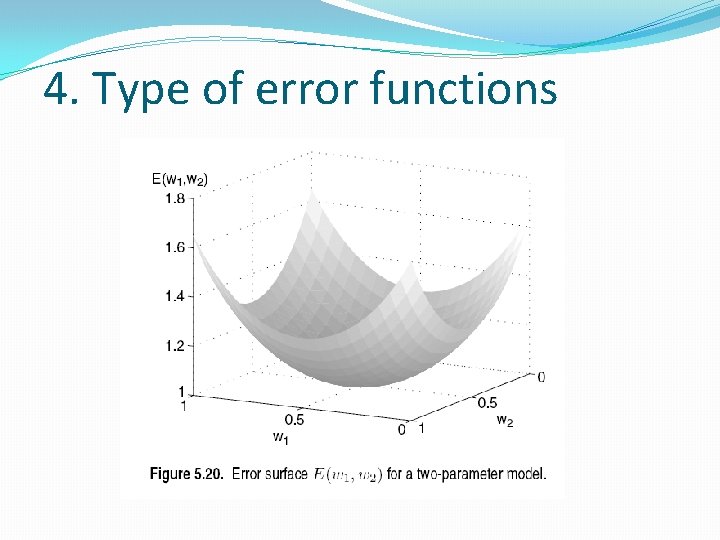
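Two error functions that are widely used when training ANNs are the mean squared error and the binary cross-entropy. Whether these are the ones shown in the slide image is an assumption; the function names and the `eps` clipping parameter are illustrative.

```python
import math


def mean_squared_error(targets, outputs):
    """Average of the squared differences between targets and outputs."""
    return sum((t - o) ** 2 for t, o in zip(targets, outputs)) / len(targets)


def binary_cross_entropy(targets, outputs, eps=1e-12):
    """Average negative log-likelihood for 0/1 targets and outputs in (0, 1)."""
    total = 0.0
    for t, o in zip(targets, outputs):
        o = min(max(o, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= t * math.log(o) + (1 - t) * math.log(1.0 - o)
    return total / len(targets)


targets = [1, 0, 1, 0]
confident = [0.9, 0.1, 0.8, 0.2]   # outputs close to the targets
uncertain = [0.6, 0.4, 0.5, 0.5]   # outputs near 0.5

print(mean_squared_error(targets, confident), mean_squared_error(targets, uncertain))
print(binary_cross_entropy(targets, confident), binary_cross_entropy(targets, uncertain))
```

Both measures decrease as the outputs move toward the targets; cross-entropy penalizes confident wrong predictions more heavily than squared error does.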

5. Advanced ANNs

- Recurrent ANN examples
  - Fully recurrent
  - Long short-term memory (Sepp Hochreiter and Jürgen Schmidhuber)

Artificial Neural Networks (ANNs)

- Recurrent ANN examples
  - Hopfield (ECANs)
    - Symmetric connections
    - John Hopfield, 1982
    - Attractor network: dynamics guaranteed to converge
    - Can function as associative memory
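The associative-memory behavior of a Hopfield network can be sketched in a few lines. This example assumes the standard Hebbian storage rule with symmetric weights and no self-connections, states of +1 and -1, and asynchronous updates (one unit at a time); the function names and the stored pattern are hypothetical.

```python
def train_hopfield(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (Hebbian rule)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights, state, max_sweeps=10):
    """Update units one at a time until a full sweep changes nothing."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:  # reached a stable state (an attractor)
            break
    return state


stored = [1, -1, 1, -1, 1, -1]
weights = train_hopfield([stored])

corrupted = [-1, -1, 1, -1, 1, -1]  # first element flipped
print(recall(weights, corrupted))
```

Starting from the corrupted input, the network settles back into the stored pattern, which is the sense in which it acts as an associative memory.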

Artificial Neural Networks (ANNs)

- Deep learning architectures
  - Hierarchical temporal memory (Jeff Hawkins and Dileep George)
  - Deep belief networks (Geoffrey Hinton)
  - Convolutional networks (Yann LeCun, Yoshua Bengio)
  - Deep Spatiotemporal Inference Networks (Itamar Arel)
  - Google DeepMind

Artificial Neural Networks (ANNs)

- Basic learning mechanisms
  - Supervised learning
    - Infer the mapping implied by the training data
    - Gradient descent/backpropagation
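Gradient descent can be illustrated on the simplest possible case: a single linear unit trained to minimize the mean squared error. This is a sketch under stated assumptions, not the lecture's own procedure; the function name, learning rate, step count, and toy data (generated from y = 2x + 1) are all illustrative.

```python
def fit_linear_unit(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every training example with the current weights.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy training data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = fit_linear_unit(xs, ys)
print(round(w, 3), round(b, 3))
```

On this data the fitted parameters should approach w = 2 and b = 1. Backpropagation applies the same idea to multilayer networks, using the chain rule to obtain the gradient for every weight.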

Artificial Neural Networks (ANNs)

- Basic learning mechanisms
  - Unsupervised learning
    - Minimize some given cost/energy function
  - Reinforcement learning
    - Data is generated by the agent's interactions with the environment
    - The agent observes accumulated costs and adjusts its actions accordingly

Artificial Neural Networks (ANNs)

- Characteristics of ANNs
  - Choice of model
    - Depends upon application
    - Complex models generally more difficult to learn
  - Learning algorithm
    - May require considerable experimentation to determine appropriate cost function and parameters

Artificial Neural Networks (ANNs)

- Characteristics of ANNs
  - Choice of threshold function
  - ANNs can be robust
  - Easily implemented in parallel
  - Neuromorphic computing (IBM)

Relevant Reference Books

- Gödel, Escher, Bach: an Eternal Golden Braid, by Douglas R. Hofstadter, 1999. Pulitzer Prize winner.
