Artificial Neural Networks (ANN)

- Slides: 16

Artificial Neural Networks (ANNs) were first proposed in the 1940s as an attempt to simulate the human brain’s cognitive learning processes. They can model complex problems that are poorly understood. ANNs are simple computer programs that model a problem space through trial and error.

Fundamentals of Neural Computing

The basic processing element in the human nervous system is the neuron. Networks of these interconnected cells receive information from sensors in the eye, the ear, and other sense organs. Information received by a neuron either excites it, so that it passes a message along the network, or inhibits it, suppressing the flow of information. A neuron’s sensitivity can change over time or with experience.

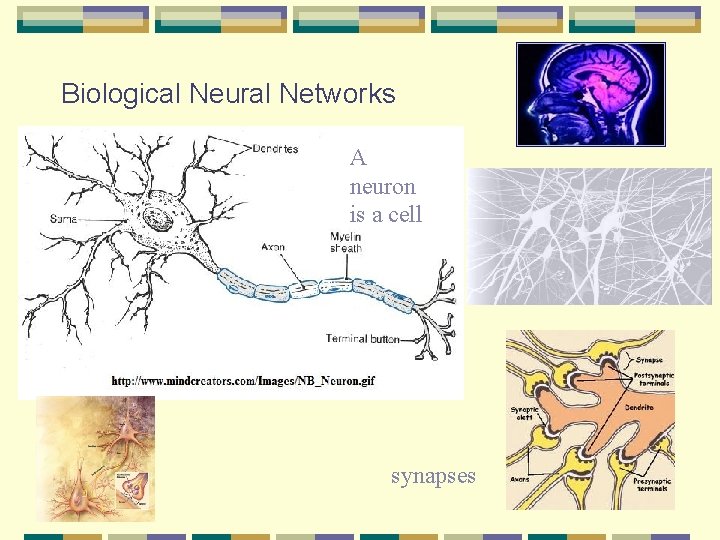

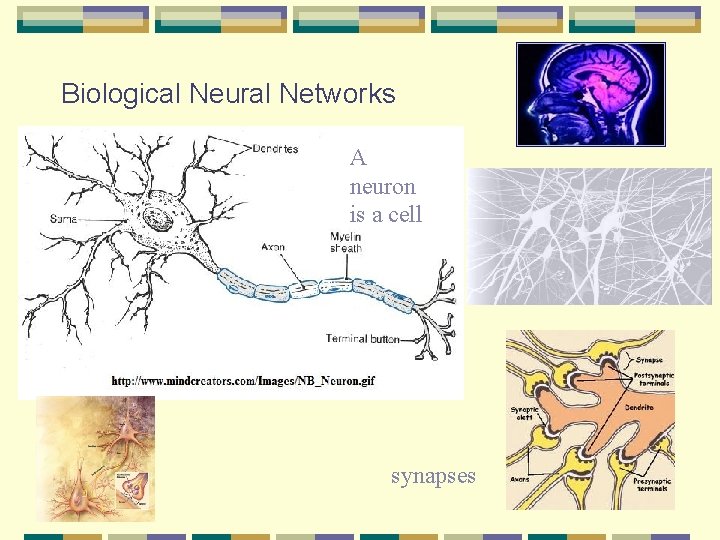

Biological Neural Networks

A neuron is a cell; neurons pass signals to one another through connections called synapses.

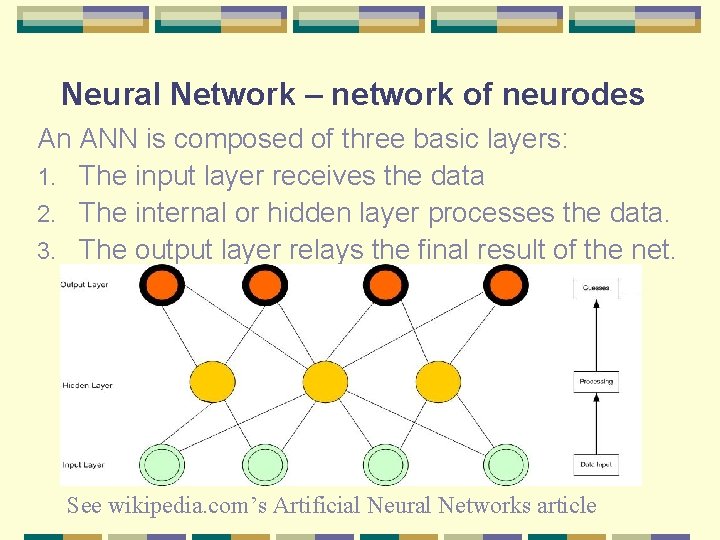

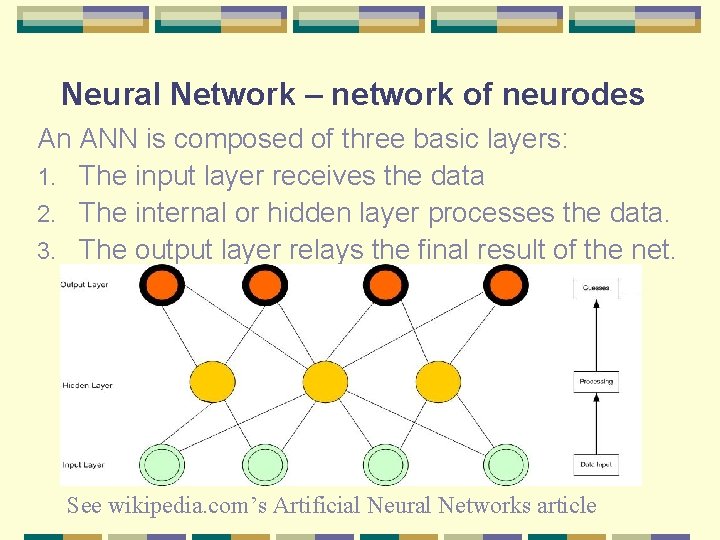

Neural Network: A Network of Neurodes

An ANN is composed of three basic kinds of layers:

1. The input layer receives the data.
2. The internal, or hidden, layer processes the data (a network may have more than one hidden layer).
3. The output layer relays the final result of the network.

See Wikipedia’s Artificial Neural Networks article.
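
The flow of data through the three layers can be sketched as a forward pass. This is a minimal illustration, assuming sigmoid neurodes and fully connected layers; the function names (`layer_forward`, `network_forward`) and every weight value are hypothetical, chosen only to show the shape of the computation.

```python
import math

def sigmoid(s: float) -> float:
    """Logistic sigmoid: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-s))

def layer_forward(inputs, weights, biases):
    """Compute one layer's outputs.

    weights[j][i] is the (hypothetical) weight from input i to neurode j.
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        state = sum(w * x for w, x in zip(w_row, inputs)) + b  # weighted sum
        outputs.append(sigmoid(state))                          # activation
    return outputs

def network_forward(x, hidden_w, hidden_b, out_w, out_b):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer_forward(x, hidden_w, hidden_b)  # hidden layer processes the data
    return layer_forward(hidden, out_w, out_b)     # output layer relays the result

# Hypothetical weights for a network with 2 inputs, 3 hidden neurodes, 1 output.
hidden_w = [[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]]
hidden_b = [0.0, -0.2, 0.1]
out_w = [[1.0, -1.5, 0.7]]
out_b = [0.05]

y = network_forward([1.0, 0.0], hidden_w, hidden_b, out_w, out_b)
print(y)
```

The input layer does no computation here; it simply hands the raw values to the hidden layer, which matches its role as the layer that "receives the data."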

Inside the Neurode

A neurode usually has multiple inputs, each with its own weight (importance). A bias input can be added to shift the weighted sum up or down, which moves the point at which the neurode becomes active. The state function consolidates the weighted inputs into a single value, typically their weighted sum. The transfer function (also called the activation function) processes this state value to produce the output; a sigmoid is a common choice.
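
A single neurode can be sketched by keeping the state function and the transfer function separate, mirroring the description above. This is a minimal sketch assuming a weighted-sum state function and a sigmoid transfer function; the function names and the example weights are hypothetical.

```python
import math

def state_function(inputs, weights, bias):
    """Consolidate the weighted inputs, plus the bias, into one value."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

def transfer_function(state):
    """Sigmoid transfer (activation) function: output lies in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-state))

def neurode(inputs, weights, bias=0.0):
    """One simulated neuron: state function followed by transfer function."""
    return transfer_function(state_function(inputs, weights, bias))

# Three inputs with hypothetical weights; the bias shifts the weighted sum.
print(state_function([1.0, 0.5, -1.0], [0.4, 0.2, 0.1], bias=0.3))
print(neurode([1.0, 0.5, -1.0], [0.4, 0.2, 0.1], bias=0.3))
```

Raising the bias raises the state value for the same inputs, so the sigmoid output moves closer to 1; lowering it has the opposite effect.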

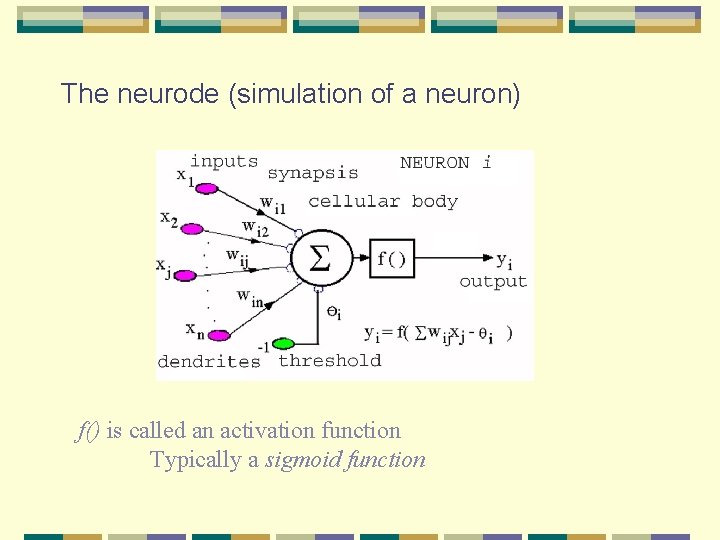

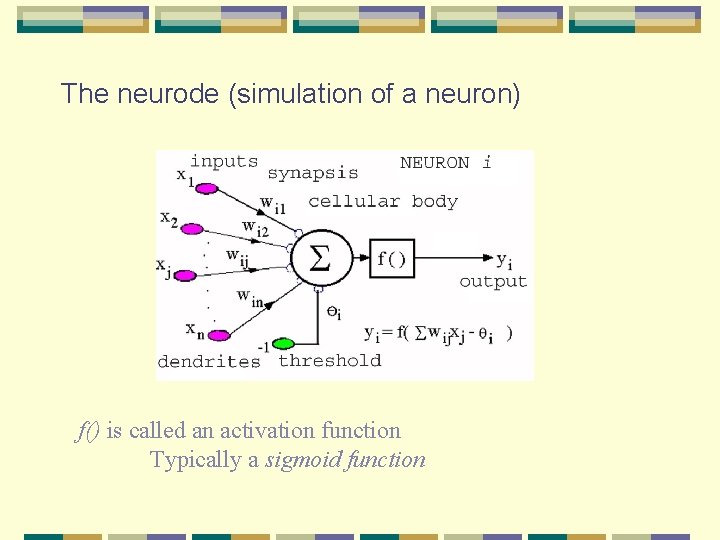

The Neurode (Simulation of a Neuron)

The function f() is called the activation function; it is typically a sigmoid function.
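
Written as a formula, the neurode's output combines the state function and the activation function. The symbols here are introduced for illustration: n inputs x_i with weights w_i, a bias b, and the logistic sigmoid as f.

```latex
y = f\left(\sum_{i=1}^{n} w_i x_i + b\right), \qquad f(s) = \frac{1}{1 + e^{-s}}
```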

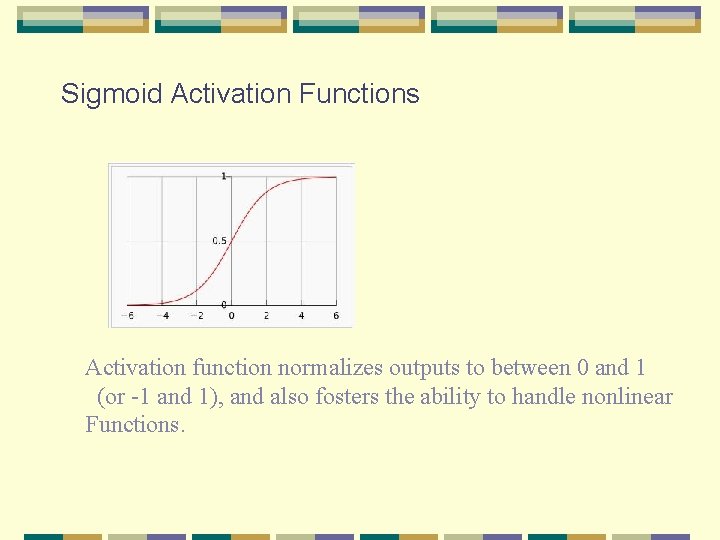

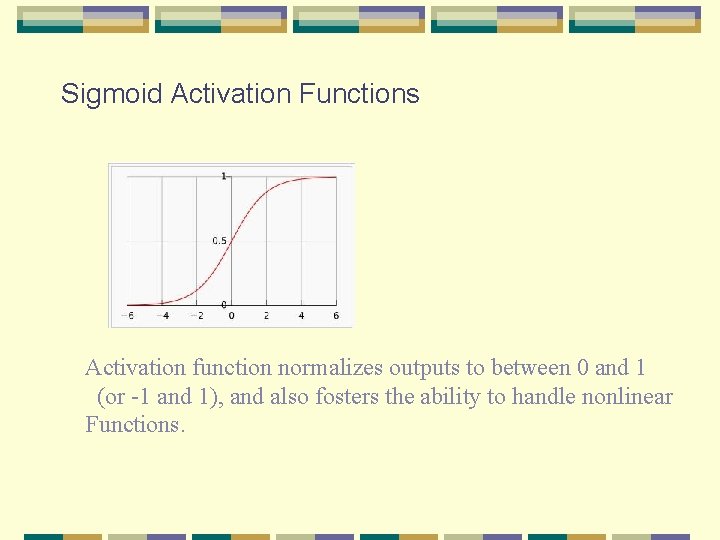

Sigmoid Activation Functions

A sigmoid activation function squashes each output into the range 0 to 1 (the logistic function) or -1 to 1 (the hyperbolic tangent). Because it is nonlinear, it also lets the network model nonlinear functions.
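
The two output ranges can be compared directly. This sketch assumes the logistic function for the 0-to-1 case and `math.tanh` for the -1-to-1 case; the wrapper names are hypothetical. The two are closely related: tanh(s) = 2 * logistic(2s) - 1.

```python
import math

def logistic(s: float) -> float:
    """Sigmoid with outputs in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-s))

def tanh_activation(s: float) -> float:
    """Sigmoid-shaped function with outputs in (-1, 1)."""
    return math.tanh(s)

# Large positive or negative states are squashed toward the ends of the range.
for s in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"s={s:+.1f}  logistic={logistic(s):.4f}  tanh={tanh_activation(s):+.4f}")
```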

Types of Neural Networks

Perceptron
- No hidden layers
- Can only learn linearly separable functions
- Cannot learn nonlinear functions

Multilayer feed-forward networks
- Includes at least one hidden layer
- Uses backpropagation to modify neuron weights
- Can learn nonlinear functions
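
XOR is the classic function that is not linearly separable, so no single perceptron can compute it, while a network with one hidden layer can. The sketch below shows this with hard-threshold units and hand-picked weights rather than trained sigmoid neurodes; the weights and function names are illustrative assumptions, and the point is only what a hidden layer makes representable.

```python
def step(s: float) -> int:
    """Threshold activation: fire (1) if the weighted sum is positive."""
    return 1 if s > 0 else 0

def unit(inputs, weights, bias):
    """A single threshold unit (a perceptron-style neurode)."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)

def xor_net(x1: int, x2: int) -> int:
    # Hidden layer: one unit computes OR, the other computes NAND.
    h_or = unit([x1, x2], [1, 1], -0.5)
    h_nand = unit([x1, x2], [-1, -1], 1.5)
    # Output unit computes AND of the two hidden units, which equals XOR.
    return unit([h_or, h_nand], [1, 1], -1.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_net(a, b))
```

Each hidden unit draws one straight line through the input space; the output unit combines the two regions, which a single line could not separate.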

Types of Learning

In unsupervised learning paradigms, the ANN receives input data but no feedback about the desired results. It forms clusters of the training records based on similarities in the data. In a supervised learning paradigm, the ANN compares its guess with feedback containing the desired results. The most common supervised method is backpropagation, which measures the difference between the guess and the desired result using squared errors.
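
One common way to write the squared-error measure used in supervised training, assuming desired (target) outputs t_k and network outputs y_k summed over the output neurodes (symbols introduced here for illustration); the factor of one half is a convention that simplifies the derivative used by backpropagation.

```latex
E = \frac{1}{2} \sum_{k} \left(t_k - y_k\right)^2
```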

Learning From Experience

The process is:

1. A piece of data is presented to the neural net, and the ANN “guesses” an output.
2. The prediction is compared with the actual, or correct, value. If the guess was correct, no action is taken.
3. An incorrect guess causes the ANN to examine itself to determine which parameters (its weights) to adjust.
4. Another piece of data is presented and the process is repeated.
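
These four steps correspond closely to the classic perceptron learning rule, which changes the weights only when a guess is wrong. The sketch below is an illustration of that rule, not the only way to implement the process; the learning rate, epoch limit, function names, and the logical-AND training data are all assumptions.

```python
def predict(weights, bias, x):
    """Step 1: the network 'guesses' an output (1 or 0)."""
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if s > 0 else 0

def train_perceptron(data, n_inputs, learning_rate=1.0, max_epochs=100):
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in data:               # Step 4: present the next piece of data
            guess = predict(weights, bias, x)
            error = target - guess           # Step 2: compare with the correct value
            if error == 0:
                continue                     # correct guess: no action taken
            errors += 1
            # Step 3: adjust the weights (and bias) that produced the wrong guess.
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
        if errors == 0:                      # a full pass with no mistakes: stop
            break
    return weights, bias

# Logical AND is linearly separable, so the perceptron rule converges on it.
and_data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(and_data, n_inputs=2)
print(w, b, [predict(w, b, x) for x, _ in and_data])
```

A learning rate of 1.0 keeps the arithmetic exact for these integer inputs; with weights starting at zero, a different positive rate would only rescale the learned weights.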

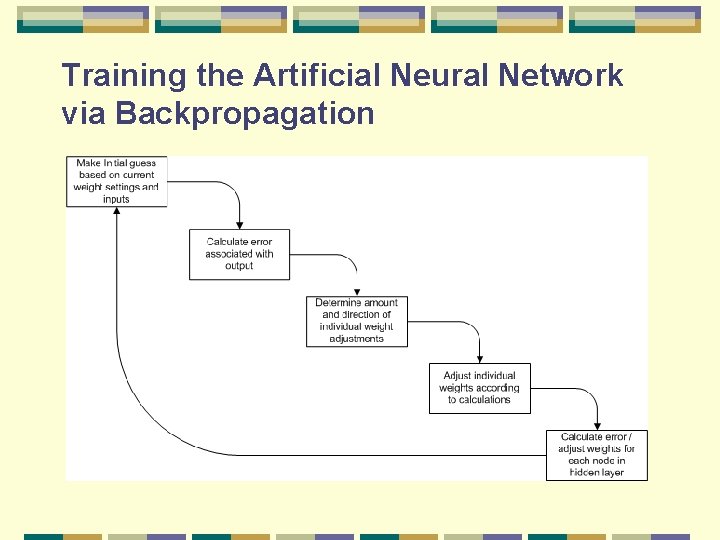

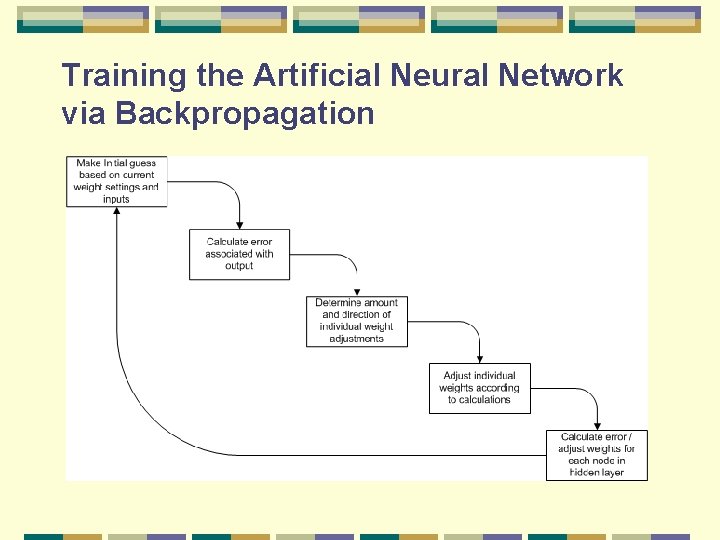

Training the Artificial Neural Network via Backpropagation

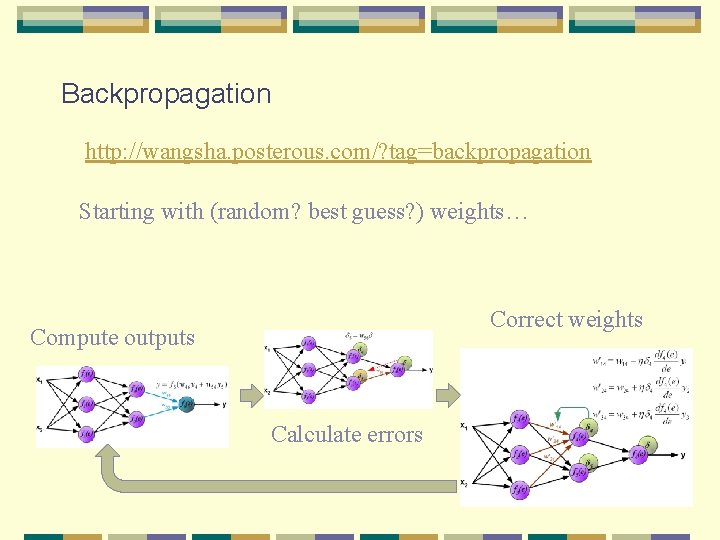

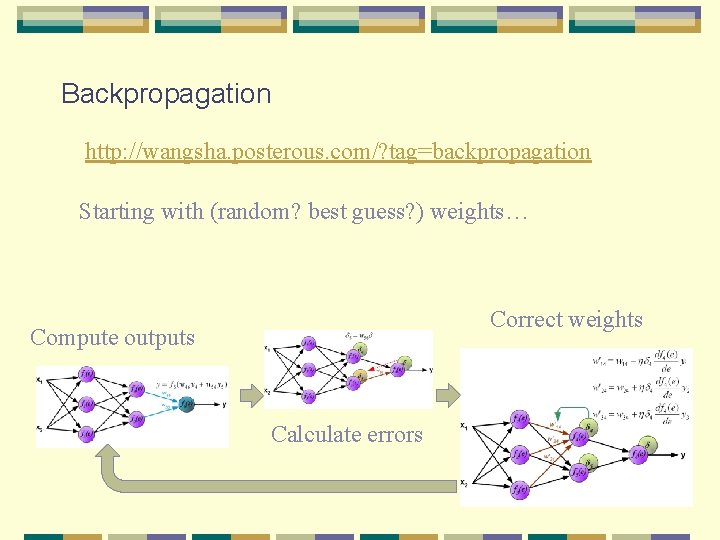

Backpropagation

Source: http://wangsha.posterous.com/?tag=backpropagation

Starting with initial weights (random, or a best guess), training repeats a cycle: compute outputs, calculate errors, correct weights.
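
The compute-outputs, calculate-errors, correct-weights cycle can be sketched for a small network learning XOR. Every specific here is an illustrative assumption rather than something the slides prescribe: sigmoid neurodes, 3 hidden neurodes, half squared error, per-example gradient-descent updates, a learning rate of 0.5, and small random starting weights. Depending on the starting weights, a network this small can occasionally stall in a local minimum.

```python
import math
import random

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def train_xor(n_hidden=3, learning_rate=0.5, epochs=5000, seed=0):
    rng = random.Random(seed)
    # Starting weights: small random values (one common choice).
    w_h = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
    b_h = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    w_o = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b_o = rng.uniform(-1, 1)
    data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

    def forward(x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(w_h, b_h)]
        y = sigmoid(sum(w * hj for w, hj in zip(w_o, h)) + b_o)
        return h, y

    def total_error():
        # Sum of squared errors over the training set, with the factor 1/2.
        return sum(0.5 * (t - forward(x)[1]) ** 2 for x, t in data)

    history = [total_error()]
    for _ in range(epochs):
        for x, t in data:
            h, y = forward(x)                       # 1. compute outputs
            delta_o = (y - t) * y * (1 - y)         # 2. calculate error at the output
            # Propagate the error back to each hidden neurode (before w_o changes).
            delta_h = [delta_o * w_o[j] * h[j] * (1 - h[j]) for j in range(n_hidden)]
            for j in range(n_hidden):               # 3. correct weights (gradient descent)
                w_o[j] -= learning_rate * delta_o * h[j]
                for i in range(2):
                    w_h[j][i] -= learning_rate * delta_h[j] * x[i]
                b_h[j] -= learning_rate * delta_h[j]
            b_o -= learning_rate * delta_o
        history.append(total_error())
    return history, forward

history, forward = train_xor()
print(f"error before: {history[0]:.4f}  after: {history[-1]:.4f}")
for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, round(forward(x)[1], 3))
```

The "backward" part is the `delta_h` line: each hidden neurode's share of the blame is the output error scaled by the weight connecting it to the output, which is what lets a multilayer network adjust weights it cannot compare against a target directly.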

Benefits Associated with Neural Computing

- Avoidance of explicit programming
- Reduced need for experts
- ANNs are adaptable to changed inputs
- No need for a refined knowledge base
- ANNs are dynamic and improve with use
- Able to process erroneous or incomplete data
- Allows for generalization from specific information
- Allows inclusion of common sense into the problem-solving domain

Limitations Associated with Neural Computing

- ANNs cannot “explain” their inference
- Their “black box” nature makes accountability and reliability issues difficult to address
- The repetitive training process is time-consuming
- Highly skilled machine learning analysts and designers are still a scarce resource
- ANN technology pushes the limits of current hardware
- ANNs require users to place “faith” in the output

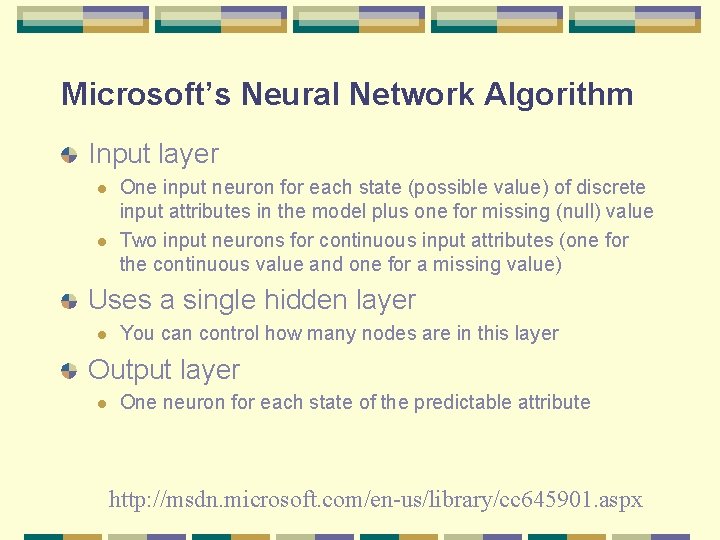

Microsoft’s Neural Network Algorithm

Input layer
- One input neuron for each state (possible value) of a discrete input attribute, plus one for a missing (null) value
- Two input neurons for each continuous input attribute: one for the continuous value and one for a missing value

Uses a single hidden layer
- You can control how many nodes are in this layer

Output layer
- One neuron for each state of the predictable attribute

Source: http://msdn.microsoft.com/en-us/library/cc645901.aspx
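
The input- and output-layer sizing rules above can be turned into simple arithmetic. This sketch only counts neurons according to the rules as stated on this slide; it does not call any SQL Server API, and the function names, attribute names, and states are hypothetical.

```python
def count_input_neurons(attributes):
    """Count input neurons under the scheme described above.

    attributes: list of (name, kind, states) tuples, where kind is
    "discrete" or "continuous" and states lists a discrete attribute's
    possible values (ignored for continuous attributes).
    """
    total = 0
    for _name, kind, states in attributes:
        if kind == "discrete":
            total += len(states) + 1   # one per state, plus one for missing (null)
        elif kind == "continuous":
            total += 2                 # one for the value, one for missing
        else:
            raise ValueError(f"unknown attribute kind: {kind}")
    return total

def count_output_neurons(predictable_states):
    """One output neuron per state of the predictable attribute."""
    return len(predictable_states)

# Hypothetical model: attribute names and states are invented for illustration.
inputs = [
    ("Gender", "discrete", ["M", "F"]),
    ("Region", "discrete", ["North", "South", "East", "West"]),
    ("Income", "continuous", None),
]
print(count_input_neurons(inputs))           # 3 + 5 + 2 = 10
print(count_output_neurons(["Yes", "No"]))   # 2
```

The hidden layer is left out of the count because, per the slide, its size is a setting you control rather than something derived from the attributes.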