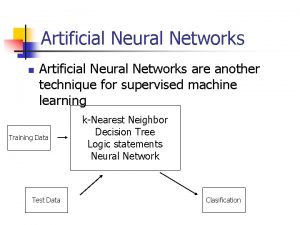

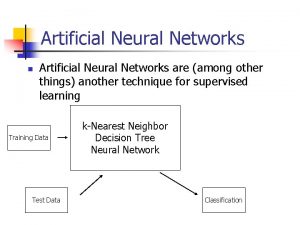

Artificial Neural Networks Introduction Artificial Neural Networks I

- Slides: 61


My whereabouts

- Dr. M. Castellani
- Centria (Centre for Artificial Intelligence)
- Room 2.50, Ext. 10757
- Email: mcas@fct.unl.pt

Research interests:

- Machine Learning, Approximate Reasoning
- Evolutionary techniques for generation of Fuzzy Logic Systems
- Evolutionary techniques for generation of Neural Network Systems
- Spiking Neural Network models
- Data Classification, Time Series Modelling and Control

22/11/2020, Artificial Neural Networks - I

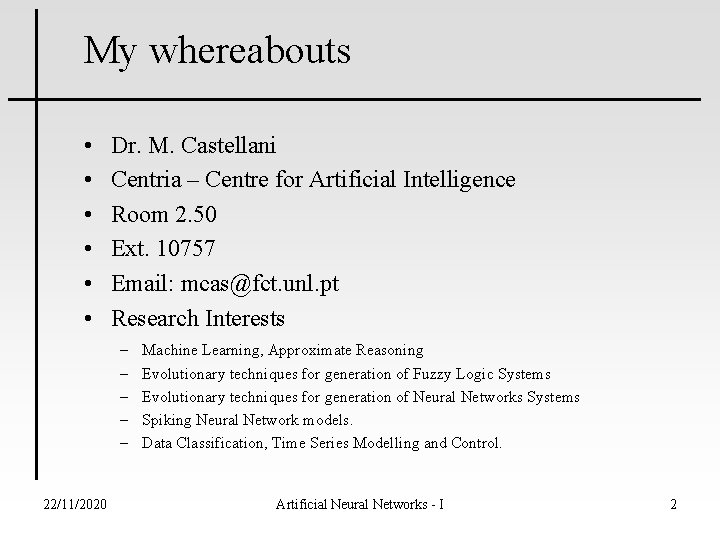

Table of Contents

- I. Introduction to ANNs
  - Taxonomy
  - Features
  - Learning
  - Applications
- II. Supervised ANNs
  - Examples
  - Applications
  - Further topics
- III. Unsupervised ANNs
  - Examples
  - Applications
  - Further topics

Contents - I

- Introduction to ANNs
  - Processing elements (neurons)
  - Architecture
- Functional Taxonomy of ANNs
- Structural Taxonomy of ANNs
- Features
- Learning Paradigms
- Applications

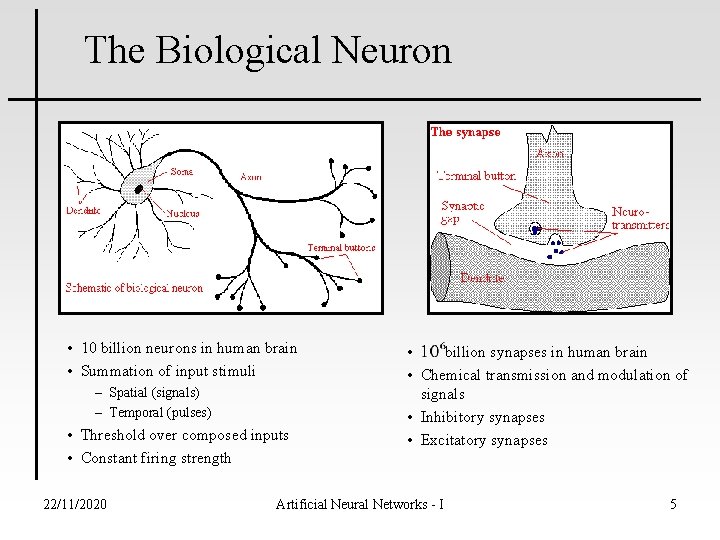

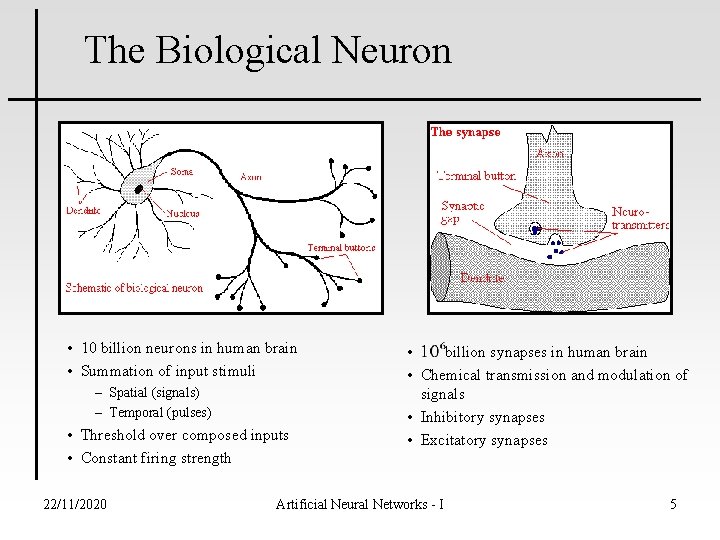

The Biological Neuron

- 10 billion neurons in human brain
- Summation of input stimuli
  - Spatial (signals)
  - Temporal (pulses)
- Threshold over composed inputs
- Constant firing strength
- billion synapses in human brain
- Chemical transmission and modulation of signals
- Inhibitory synapses
- Excitatory synapses

Biological Neural Networks

- 10,000 synapses per neuron
- Computational power = connectivity
- Plasticity
  - new connections (?)
  - strength of connections modified

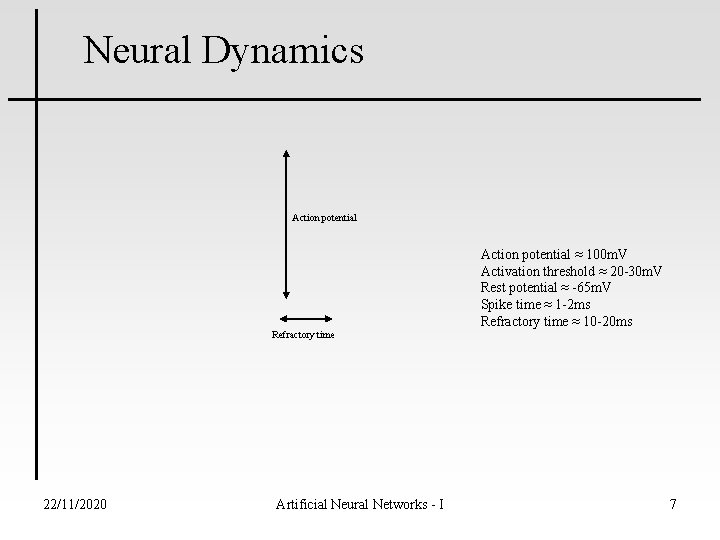

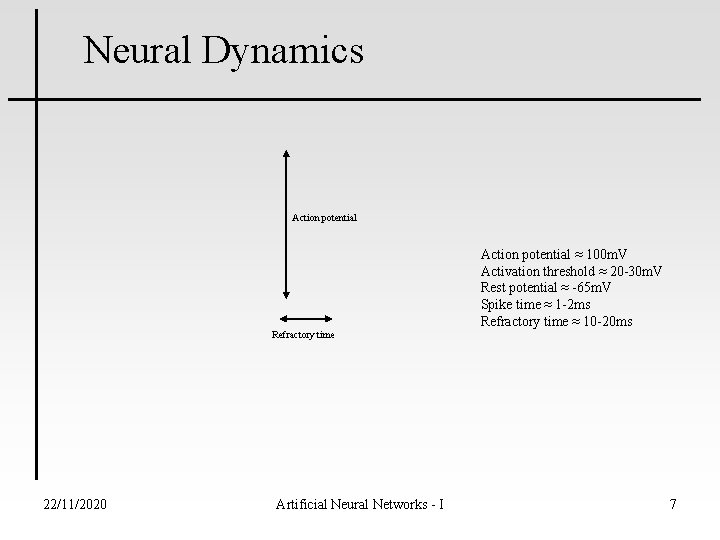

Neural Dynamics

[Figure labels: action potential, refractory time.]

- Action potential ≈ 100 mV
- Activation threshold ≈ 20-30 mV
- Rest potential ≈ -65 mV
- Spike time ≈ 1-2 ms
- Refractory time ≈ 10-20 ms

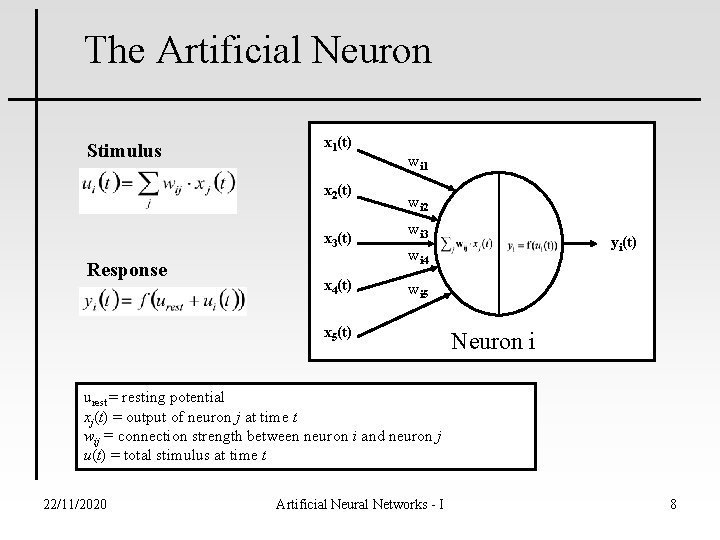

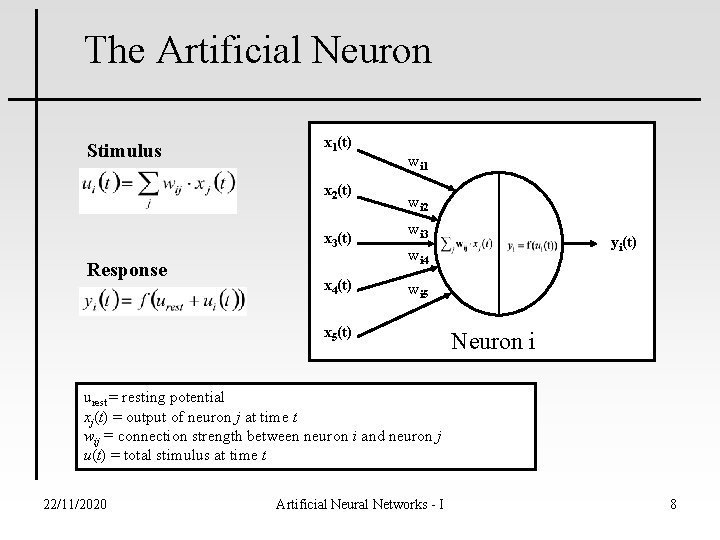

The Artificial Neuron

[Figure: neuron i receives the stimulus $x_1(t), \dots, x_5(t)$ through the weights $w_{i1}, \dots, w_{i5}$ and produces the response $y_i(t)$.]

- $u_{rest}$ = resting potential
- $x_j(t)$ = output of neuron j at time t
- $w_{ij}$ = connection strength between neuron i and neuron j
- $u(t)$ = total stimulus at time t

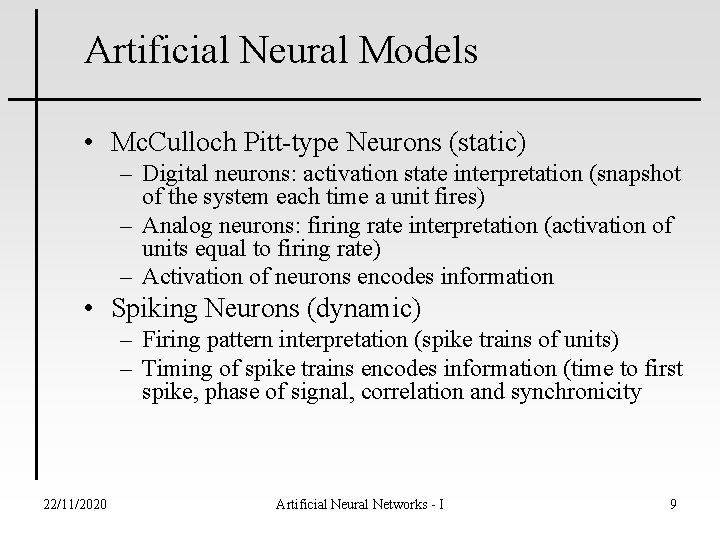

Artificial Neural Models

- McCulloch-Pitts-type neurons (static)
  - Digital neurons: activation state interpretation (snapshot of the system each time a unit fires)
  - Analog neurons: firing rate interpretation (activation of units equal to firing rate)
  - Activation of neurons encodes information
- Spiking neurons (dynamic)
  - Firing pattern interpretation (spike trains of units)
  - Timing of spike trains encodes information (time to first spike, phase of signal, correlation and synchronicity)

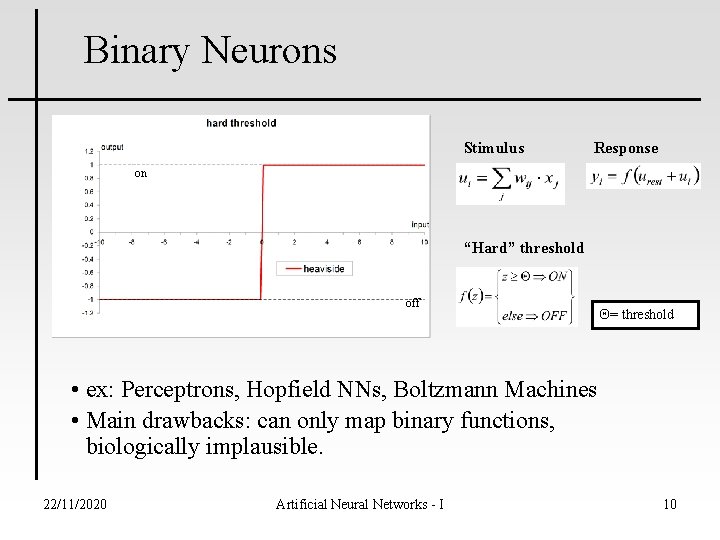

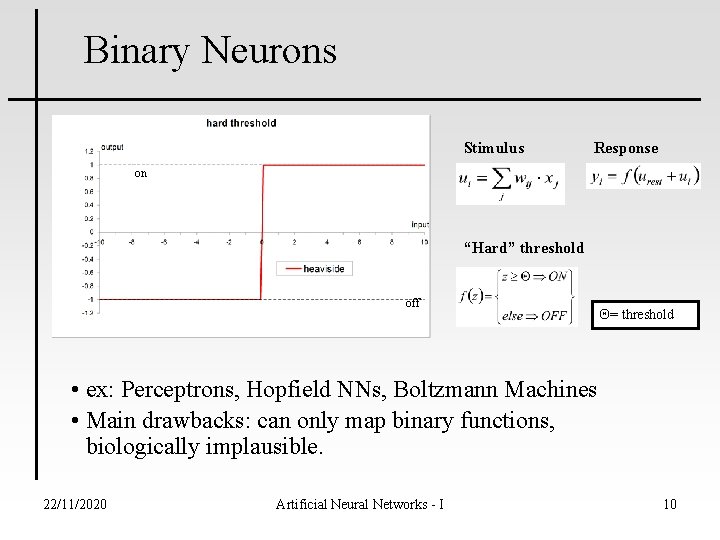

Binary Neurons

[Figure: stimulus-response curve with a “hard” threshold; the response switches from off to on at the threshold.]

- Examples: Perceptrons, Hopfield NNs, Boltzmann Machines
- Main drawbacks: can only map binary functions, biologically implausible.
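As a rough illustration of a hard-threshold unit, the sketch below sums the weighted inputs and switches the response on once the sum reaches the threshold. The function name `binary_neuron`, the weights and the threshold value are illustrative assumptions, not taken from the slides.

```python
import numpy as np

def binary_neuron(x, w, theta):
    """Return 1 ("on") if the weighted stimulus reaches the threshold, else 0 ("off")."""
    u = np.dot(w, x)              # total stimulus: weighted sum of the inputs
    return 1 if u >= theta else 0

# Hypothetical weights and threshold that make the unit behave like a logical AND.
w_and = np.array([1.0, 1.0])
theta_and = 1.5
outputs = [binary_neuron(np.array(p, dtype=float), w_and, theta_and)
           for p in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(outputs)  # [0, 0, 0, 1]
```

Only the input (1, 1) produces a weighted sum (2.0) above the threshold of 1.5, so the unit maps a binary function, as noted in the drawbacks.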

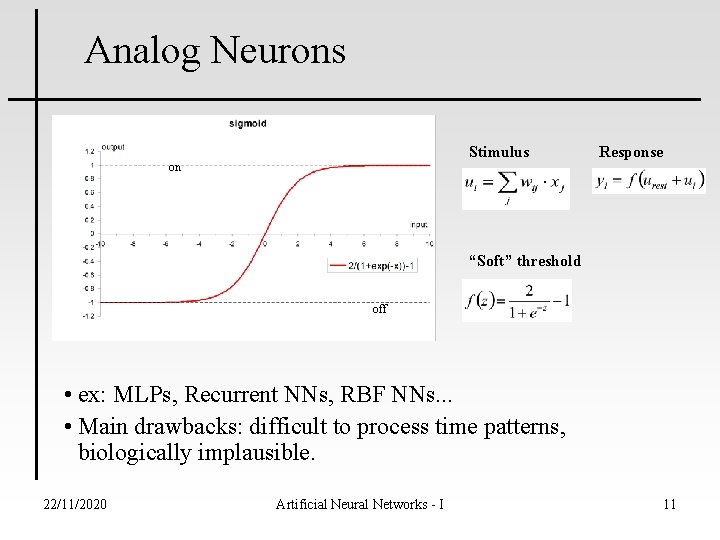

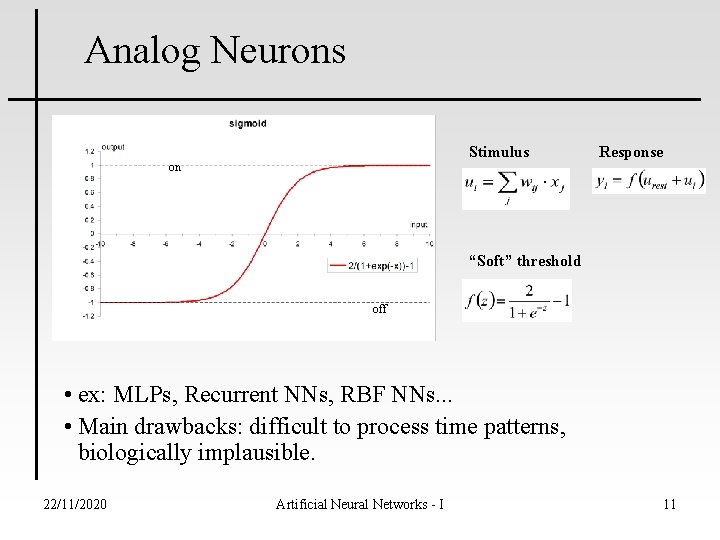

Analog Neurons

[Figure: stimulus-response curve with a “soft” threshold; the response rises gradually from off to on.]

- Examples: MLPs, Recurrent NNs, RBF NNs...
- Main drawbacks: difficult to process time patterns, biologically implausible.
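A soft threshold can be sketched with a logistic (sigmoid) function. This particular choice, along with the `gain` parameter and the function name `analog_neuron`, is an assumption made for illustration; the slides do not say which soft-threshold function is used.

```python
import numpy as np

def analog_neuron(x, w, theta, gain=1.0):
    """Graded response in (0, 1): logistic function of the weighted stimulus minus the threshold."""
    u = np.dot(w, x)                                   # total stimulus
    return 1.0 / (1.0 + np.exp(-gain * (u - theta)))   # soft threshold around theta

w = np.array([1.0, 1.0])
at_threshold = analog_neuron(np.array([1.0, 1.0]), w, theta=2.0)   # stimulus equals threshold
below = analog_neuron(np.array([0.0, 1.0]), w, theta=2.0)
above = analog_neuron(np.array([2.0, 1.0]), w, theta=2.0)
print(below < at_threshold < above)  # True
```

Raising `gain` steepens the curve, so the unit approaches the hard-threshold behaviour of a binary neuron.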

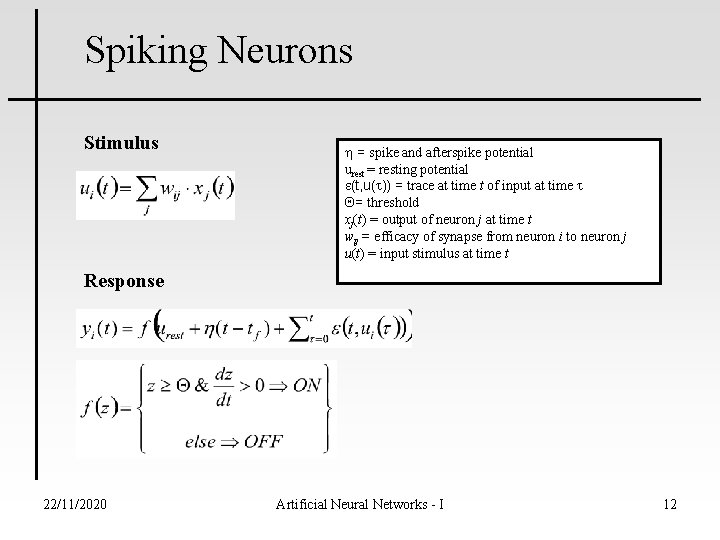

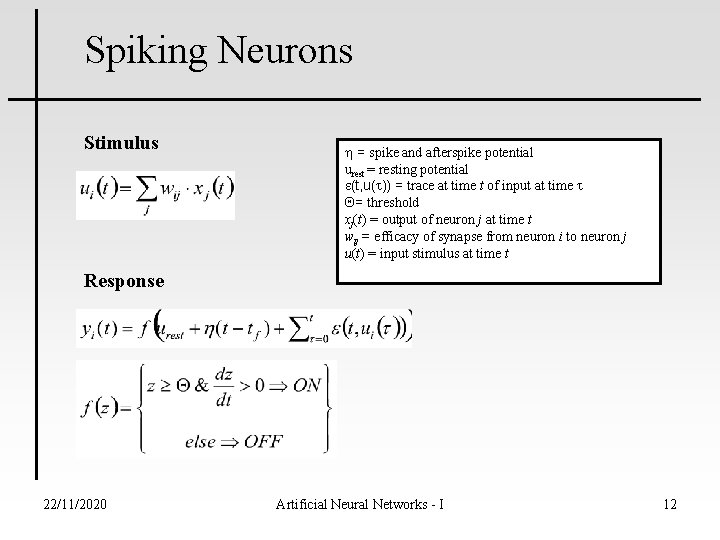

Spiking Neurons

[Figure: spiking neuron mapping stimulus to response.]

Quantities labelled in the figure:

- spike and afterspike potential
- $u_{rest}$ = resting potential
- $e(t, u(t))$ = trace at time t of input at time t
- threshold
- $x_j(t)$ = output of neuron j at time t
- $w_{ij}$ = efficacy of synapse from neuron i to neuron j
- $u(t)$ = input stimulus at time t

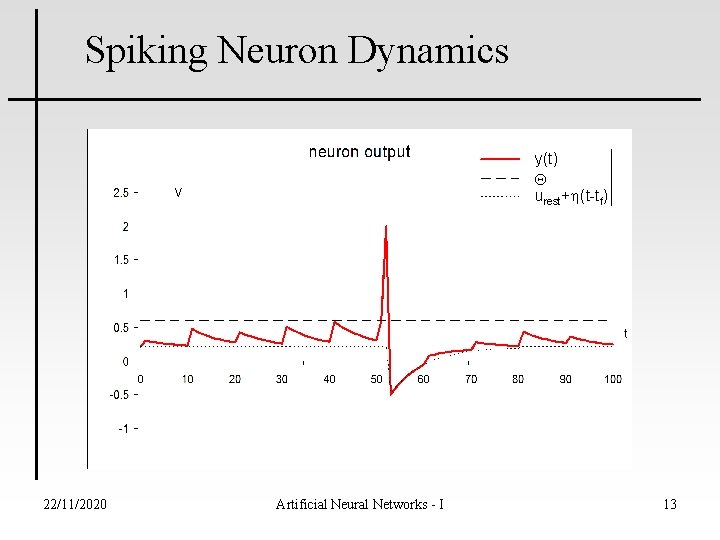

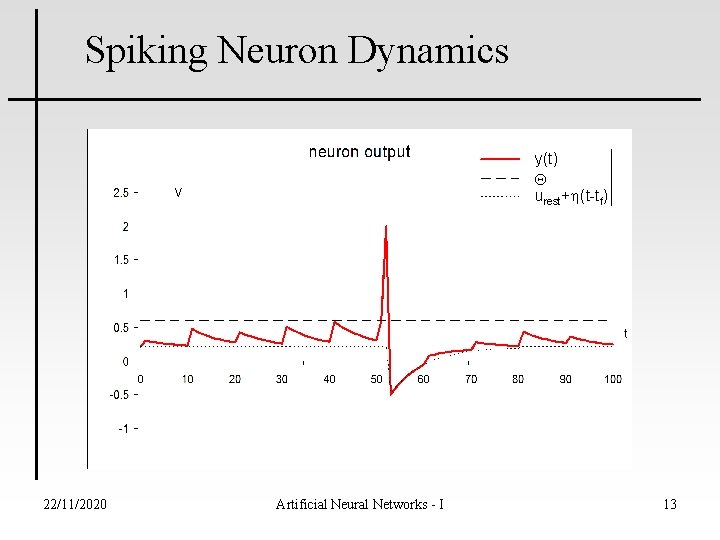

Spiking Neuron Dynamics

[Figure: spiking response $y(t)$; the plot is annotated with $u_{rest}$ plus a term depending on $(t - t_f)$.]

Hebb’s Postulate of Learning

- Biological formulation
- When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A’s efficiency, as one of the cells firing B, is increased.

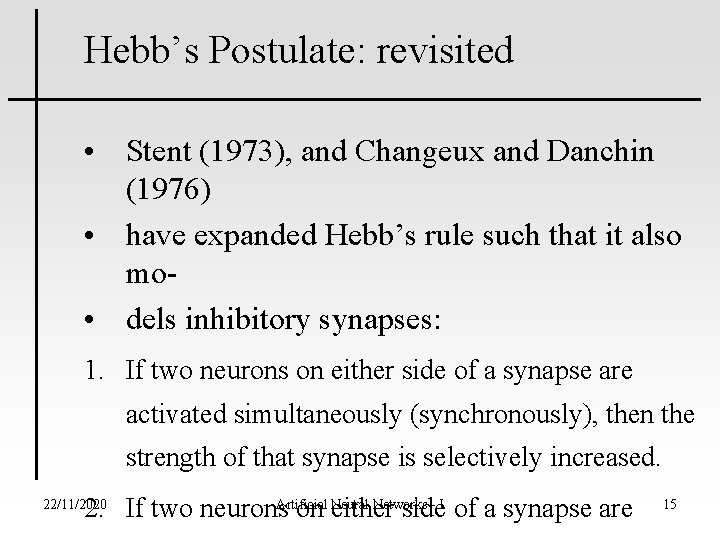

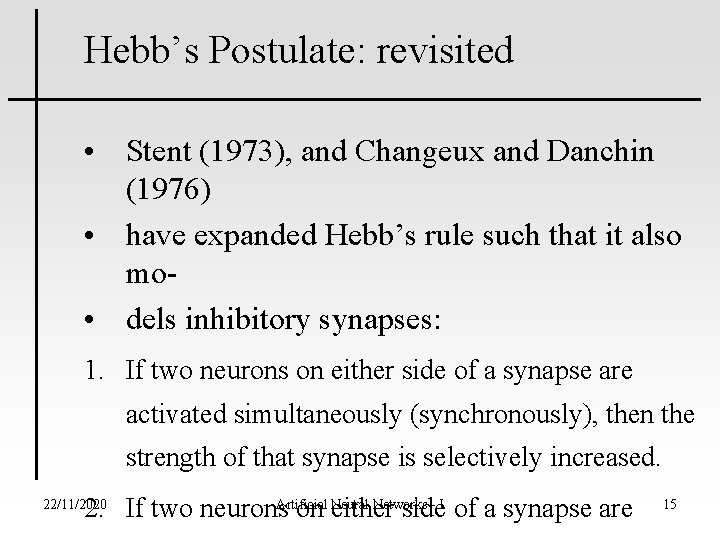

Hebb’s Postulate: revisited

Stent (1973), and Changeux and Danchin (1976) have expanded Hebb’s rule such that it also models inhibitory synapses:

1. If two neurons on either side of a synapse are activated simultaneously (synchronously), then the strength of that synapse is selectively increased.
2. If two neurons on either side of a synapse are …

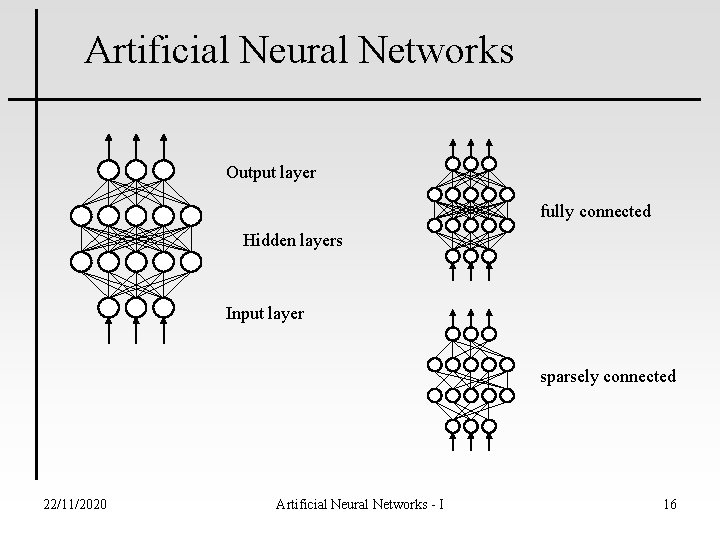

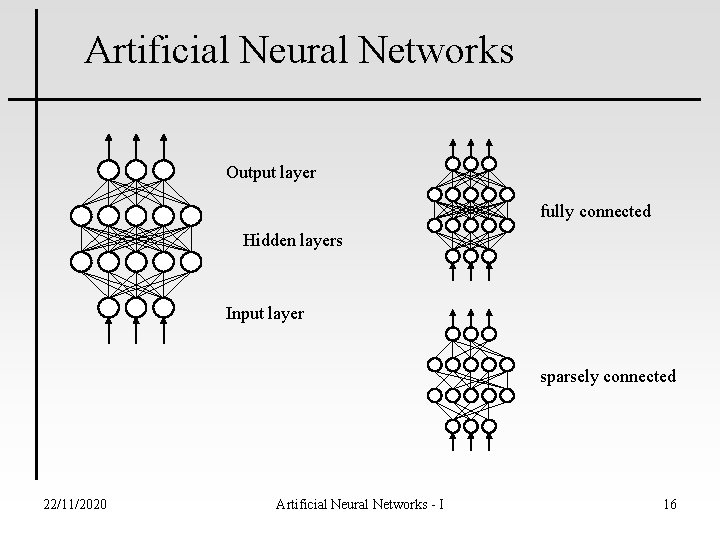

Artificial Neural Networks

[Figure: layered network with an input layer, hidden layers and an output layer; the labels mark fully connected and sparsely connected layers.]

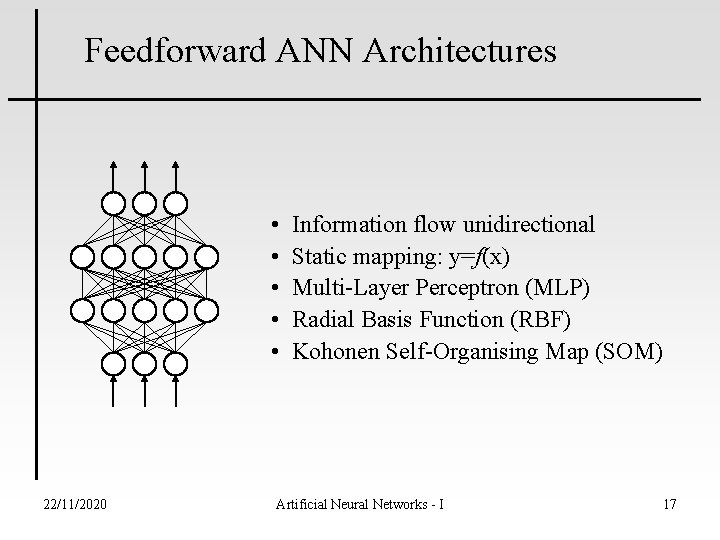

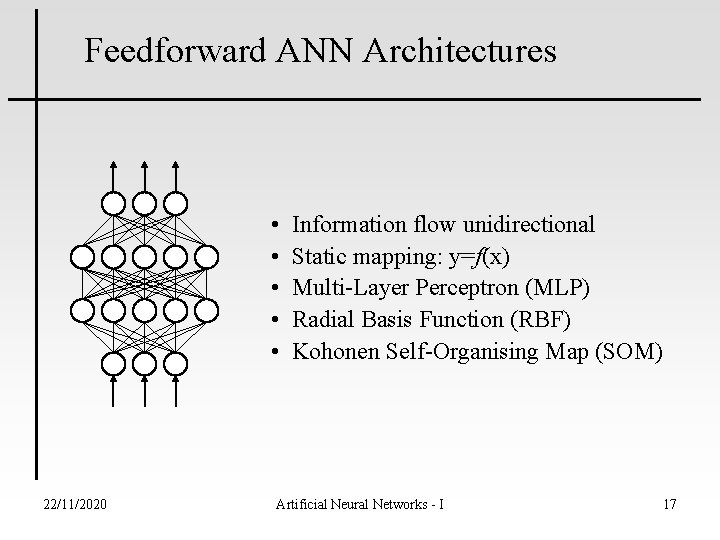

Feedforward ANN Architectures

- Information flow unidirectional
- Static mapping: $y = f(x)$
- Multi-Layer Perceptron (MLP)
- Radial Basis Function (RBF)
- Kohonen Self-Organising Map (SOM)

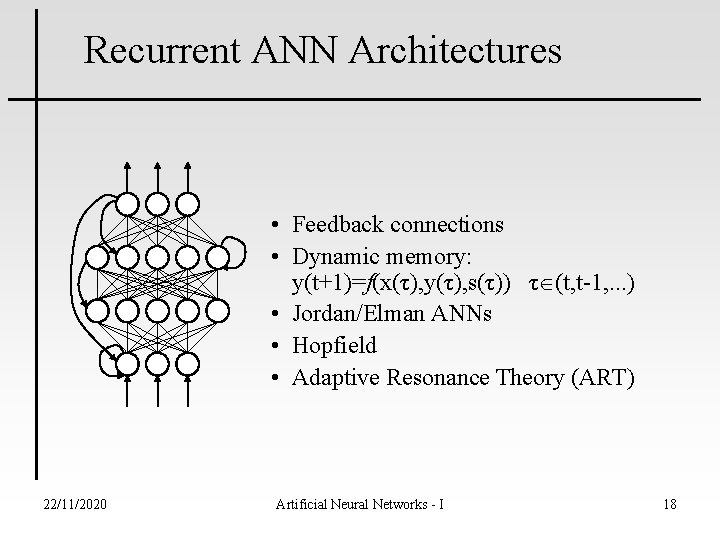

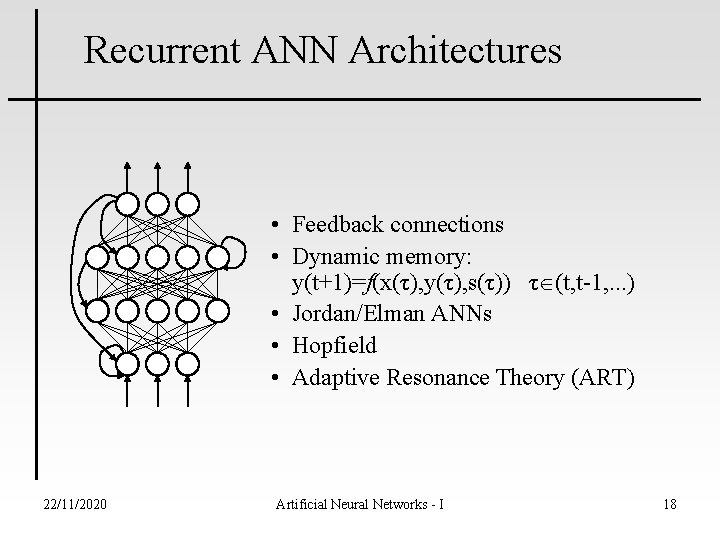

Recurrent ANN Architectures

- Feedback connections
- Dynamic memory: $y(t+1) = f(x(\tau), y(\tau), s(\tau))$, $\tau \in \{t, t-1, \dots\}$
- Jordan/Elman ANNs
- Hopfield
- Adaptive Resonance Theory (ART)

History

- Early stages
  - 1943 McCulloch-Pitts: neuron as computational element
  - 1948 Wiener: cybernetics
  - 1949 Hebb: learning rule
  - 1958 Rosenblatt: perceptron
  - 1960 Widrow-Hoff: least mean square algorithm
- Recession
  - 1969 Minsky-Papert: limitations of the perceptron model
- Revival
  - 1982 Hopfield: recurrent network model
  - 1982 Kohonen: self-organizing maps
  - 1986 Rumelhart et al.: backpropagation

ANN Capabilities

- Learning
- Approximate reasoning
- Generalisation capability
- Noise filtering
- Parallel processing
- Distributed knowledge base
- Fault tolerance

Main Problems with ANN

- Knowledge base not transparent (black box) (partially resolved)
- Learning sometimes difficult/slow
- Limited storage capability

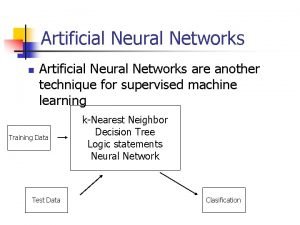

ANN Learning Paradigms

- Supervised learning
  - Classification
  - Control
  - Function approximation
  - Associative memory
- Unsupervised learning
  - Clustering
- Reinforcement learning
  - Control

Supervised Learning

- Teacher presents ANN input-output pairs
- ANN weights adjusted according to error
- Iterative algorithms (e.g. Delta rule, BP rule)
- One-shot learning (Hopfield)
- Quality of training examples is critical

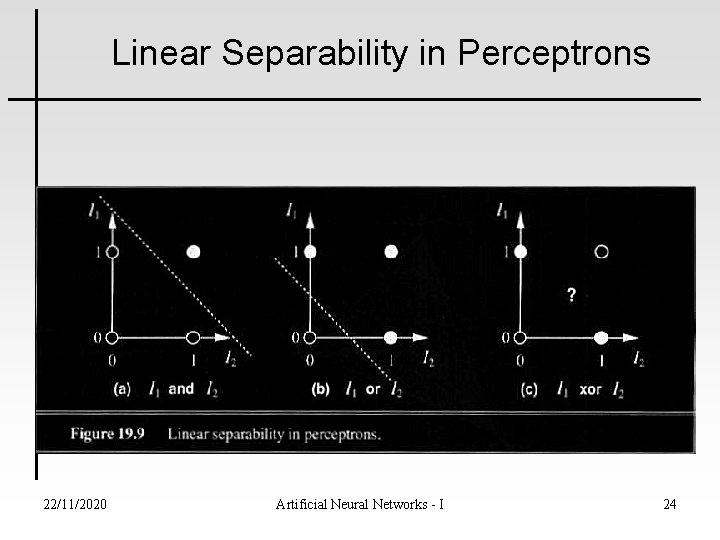

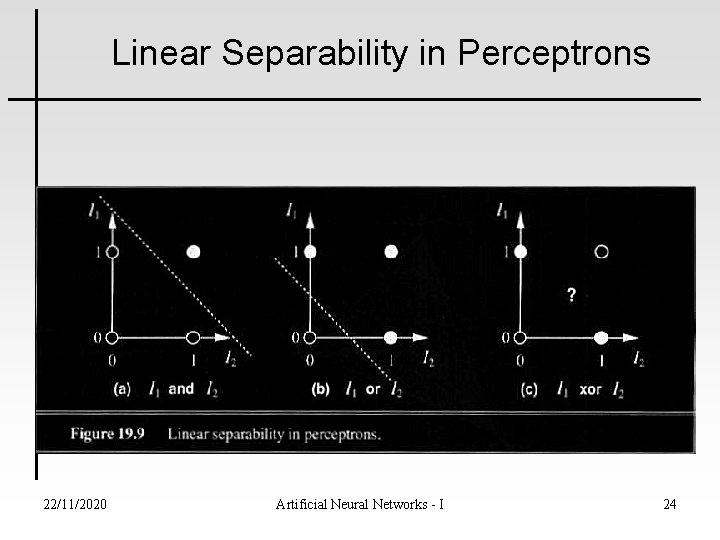

Linear Separability in Perceptrons

Presented by Martin Ho, Eddy Li, Eric Wong and Kitty Wong - Copyright© 2000

Learning Linearly Separable Functions (1)

What can these functions learn?

- Bad news: there are not many linearly separable functions.
- Good news: there is a perceptron algorithm that will learn any linearly separable function, given enough training examples.
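The following is a minimal sketch of the classic perceptron learning rule, to make the good news and the bad news concrete. It assumes a single threshold unit with a bias input; the function names `train_perceptron` and `predict` and the learning rate are hypothetical choices. AND is linearly separable and is learned, whereas XOR is not linearly separable and cannot be fitted by any single threshold unit.

```python
import numpy as np

def train_perceptron(X, d, lr=1.0, max_epochs=100):
    """Perceptron learning rule for one threshold unit with a constant bias input."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])   # append the bias input
    w = np.zeros(Xb.shape[1])
    for _ in range(max_epochs):
        errors = 0
        for x, target in zip(Xb, d):
            y = 1 if np.dot(w, x) > 0 else 0
            if y != target:
                w += lr * (target - y) * x          # shift the boundary towards the misclassified example
                errors += 1
        if errors == 0:                             # every training example is classified correctly
            break
    return w

def predict(w, X):
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return (Xb @ w > 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
d_and = np.array([0, 0, 0, 1])      # linearly separable
d_xor = np.array([0, 1, 1, 0])      # not linearly separable

w_and = train_perceptron(X, d_and)
w_xor = train_perceptron(X, d_xor)
print(predict(w_and, X))            # matches d_and
print(predict(w_xor, X))            # cannot match d_xor
```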

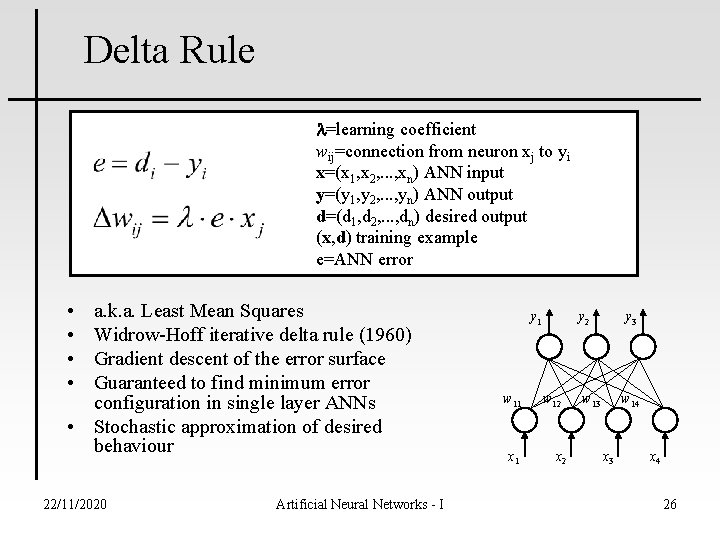

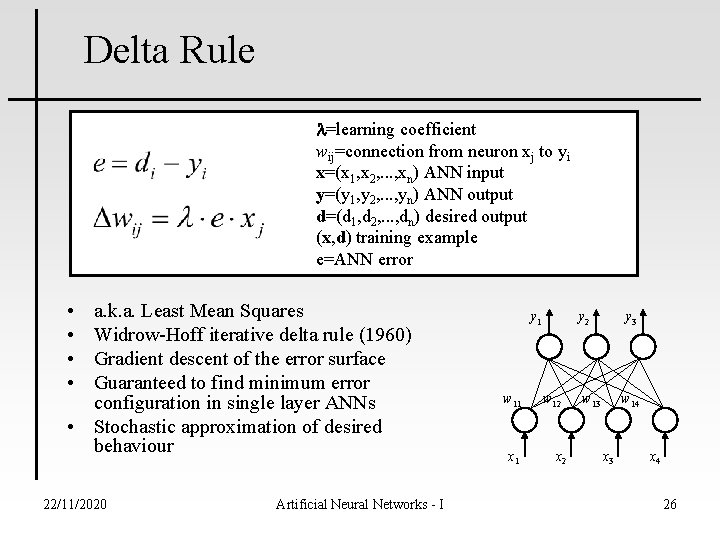

Delta Rule

[Figure: single-layer network connecting the inputs $x_1, \dots, x_4$ to the outputs through the weights $w_{ij}$.]

- $l$ = learning coefficient
- $w_{ij}$ = connection from neuron $x_j$ to $y_i$
- $x = (x_1, x_2, \dots, x_n)$: ANN input
- $y = (y_1, y_2, \dots, y_n)$: ANN output
- $d = (d_1, d_2, \dots, d_n)$: desired output
- $(x, d)$: training example
- $e$ = ANN error

Properties:

- a.k.a. Least Mean Squares
- Widrow-Hoff iterative delta rule (1960)
- Gradient descent of the error surface
- Guaranteed to find minimum error configuration in single layer ANNs
- Stochastic approximation of desired behaviour
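The sketch below shows one way the delta rule could be applied, assuming linear output units and the standard Widrow-Hoff update $\Delta w_{ij} = l\,(d_i - y_i)\,x_j$. The target mapping `W_true`, the data and the function name `delta_rule_epoch` are made up for the example.

```python
import numpy as np

def delta_rule_epoch(W, X, D, l=0.05):
    """One pass of the Widrow-Hoff delta rule over the training set (linear outputs)."""
    for x, d in zip(X, D):
        y = W @ x                   # ANN output for input x
        e = d - y                   # ANN error on this training example
        W += l * np.outer(e, x)     # w_ij += l * e_i * x_j
    return W

rng = np.random.default_rng(0)
W_true = np.array([[2.0, -1.0], [0.5, 3.0]])   # hypothetical mapping to be learned
X = rng.normal(size=(200, 2))                  # training inputs
D = X @ W_true.T                               # desired outputs (noise-free)

W = np.zeros((2, 2))
for _ in range(20):
    W = delta_rule_epoch(W, X, D)
print(np.round(W, 3))
```

Because the network is a single layer and the data are noise-free, the weights settle on the mapping that generated the desired outputs.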

Unsupervised Learning

- ANN adapts weights to cluster input data
- Hebbian learning
  - Connection stimulus-response strengthened (Hebbian)
- Competitive learning algorithms
  - Kohonen & ART
  - Input weights adjusted to resemble stimulus
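A bare-bones competitive learning step can be sketched as a winner-take-all update: the unit whose weights best match the stimulus is moved towards it. This is not a full Kohonen map or ART network (there is no neighbourhood function or vigilance test); the data, initial weights and the name `competitive_step` are illustrative assumptions.

```python
import numpy as np

def competitive_step(W, x, l=0.1):
    """Move the weight vector of the winning unit towards the stimulus x."""
    winner = int(np.argmin(np.linalg.norm(W - x, axis=1)))   # unit whose weights best resemble x
    W[winner] += l * (x - W[winner])
    return winner

rng = np.random.default_rng(0)
cluster_a = rng.normal(loc=[0.0, 0.0], scale=0.3, size=(100, 2))
cluster_b = rng.normal(loc=[5.0, 5.0], scale=0.3, size=(100, 2))
data = rng.permutation(np.vstack([cluster_a, cluster_b]))    # shuffle the stimuli

W = np.array([[1.0, 1.0], [4.0, 4.0]])   # hypothetical initial weights of two units
for x in data:
    competitive_step(W, x)
print(np.round(W, 2))
```

After one pass each weight vector sits near the centre of one cluster, which is the sense in which the input weights come to resemble the stimulus.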

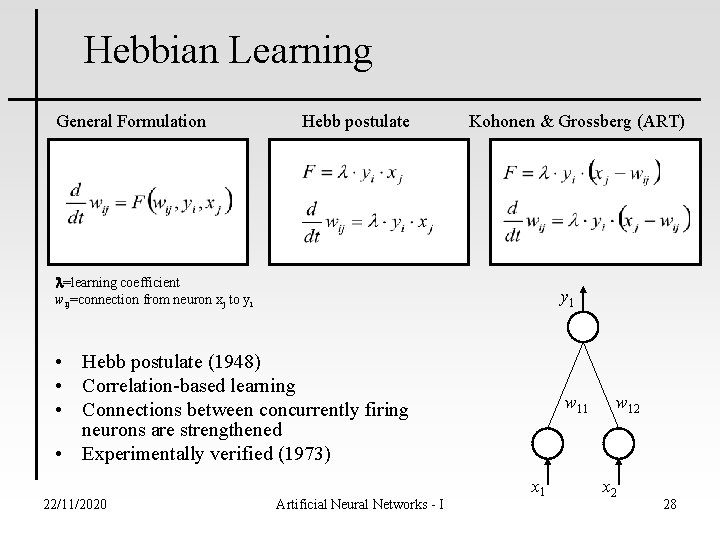

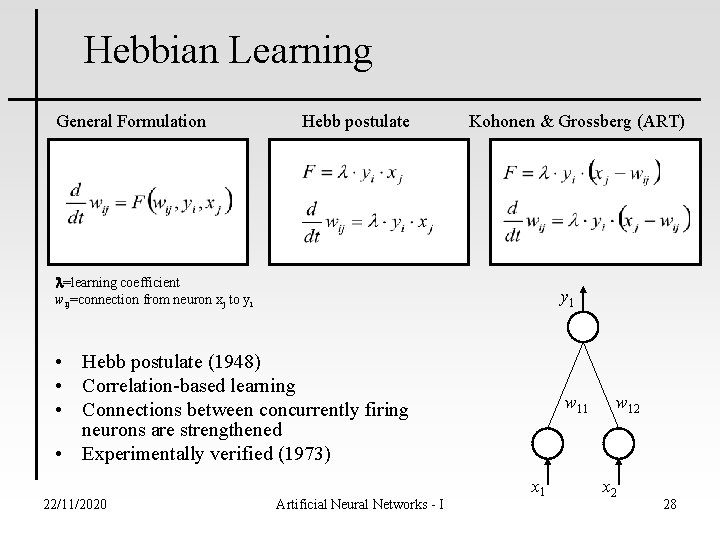

Hebbian Learning

[Equation images: general formulation; Hebb postulate; Kohonen & Grossberg (ART).]

- $l$ = learning coefficient
- $w_{ij}$ = connection from neuron $x_j$ to $y_i$

Key points:

- Hebb postulate (1949)
- Correlation-based learning
- Connections between concurrently firing neurons are strengthened
- Experimentally verified (1973)
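In its plainest form the Hebb postulate is often written as $\Delta w_{ij} = l\, y_i\, x_j$. The sketch below assumes that form; the slide's own formulas are images and may differ, and the name `hebbian_update` is hypothetical.

```python
import numpy as np

def hebbian_update(W, x, y, l=0.1):
    """Plain Hebbian step: w_ij grows in proportion to the product y_i * x_j."""
    return W + l * np.outer(y, x)

W = np.zeros((1, 2))
x = np.array([1.0, 0.0])     # only the first input neuron fires
y = np.array([1.0])          # the output neuron fires at the same time
for _ in range(3):
    W = hebbian_update(W, x, y)
print(W)                     # only the connection from the firing input has grown
```

The connection from the silent input stays at zero, while the connection between the two concurrently firing neurons is strengthened on every step.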

Learning principle for artificial neural networks

ENERGY MINIMIZATION

We need an appropriate definition of energy for artificial neural networks; having that, we can use mathematical optimisation techniques to find how to change the weights of the synaptic connections between neurons.

ENERGY = measure of task performance error

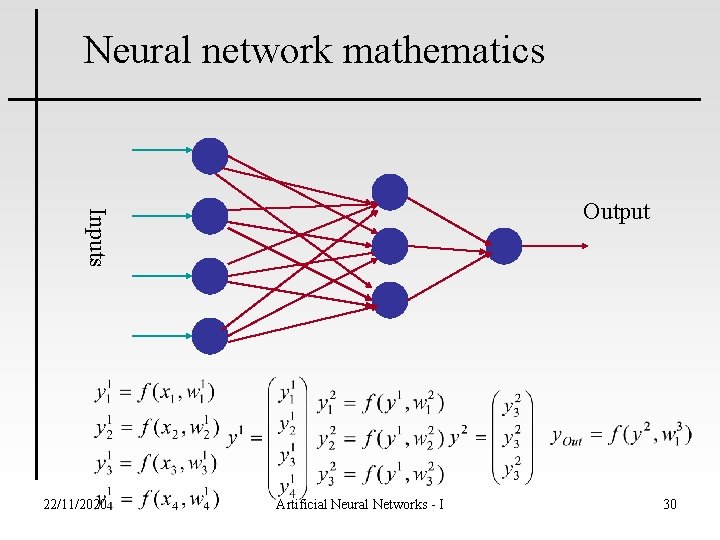

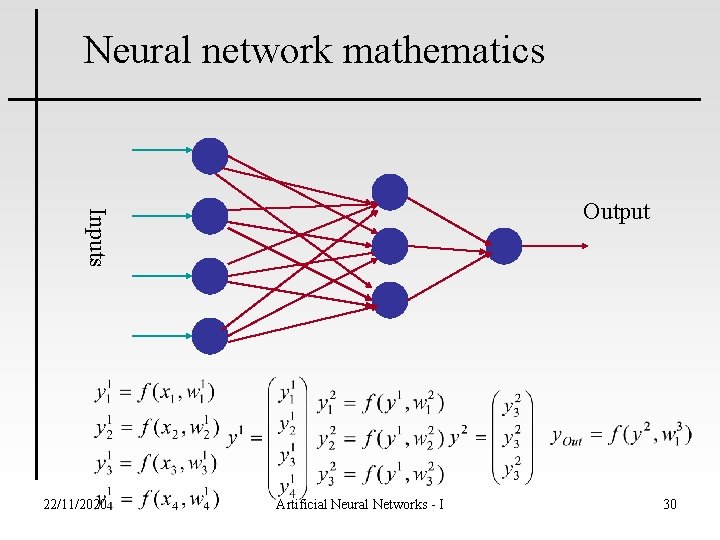

Neural network mathematics

[Figure: neural network with labelled inputs and output.]

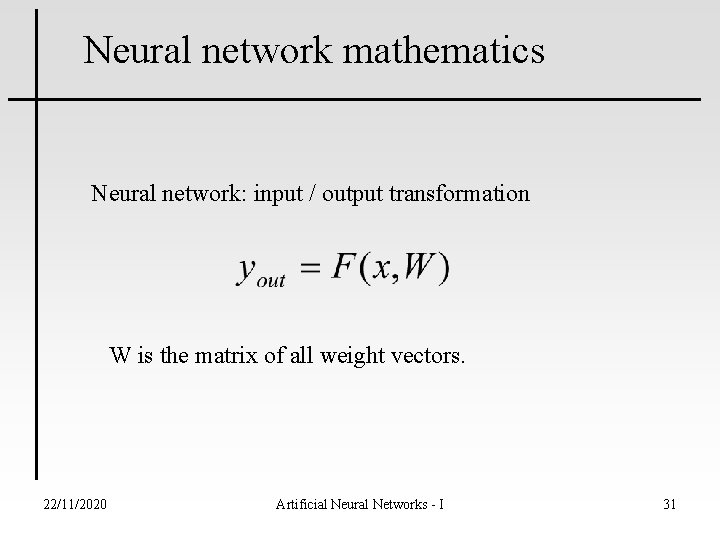

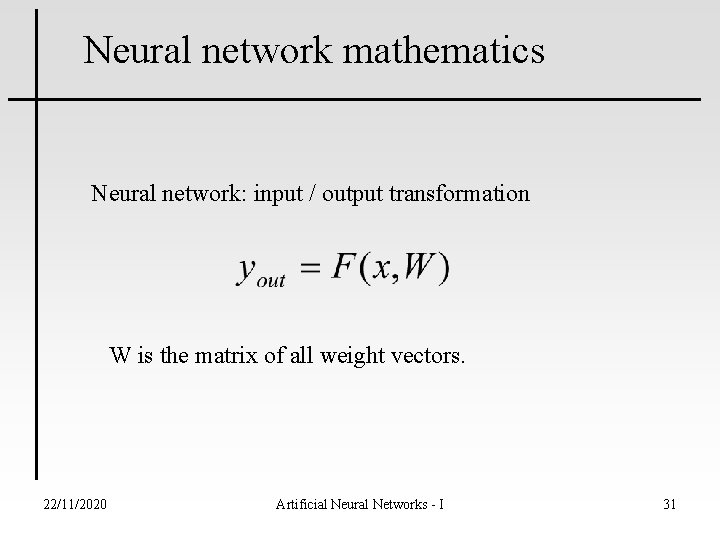

Neural network mathematics

Neural network: input / output transformation [equation image].

W is the matrix of all weight vectors.

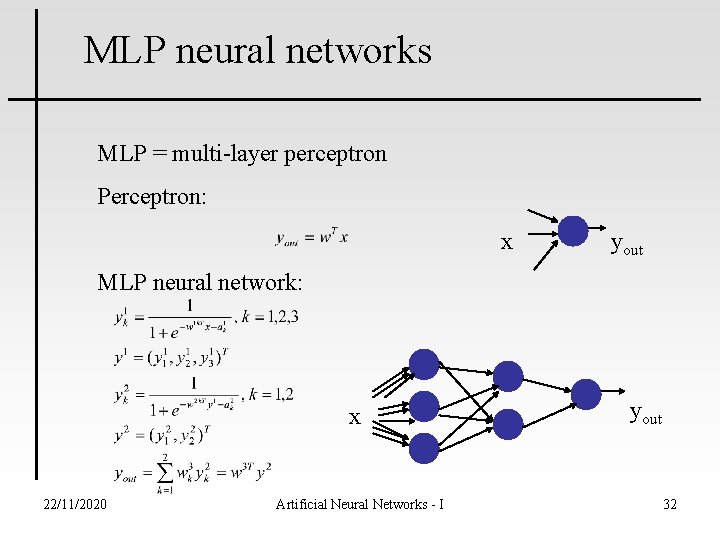

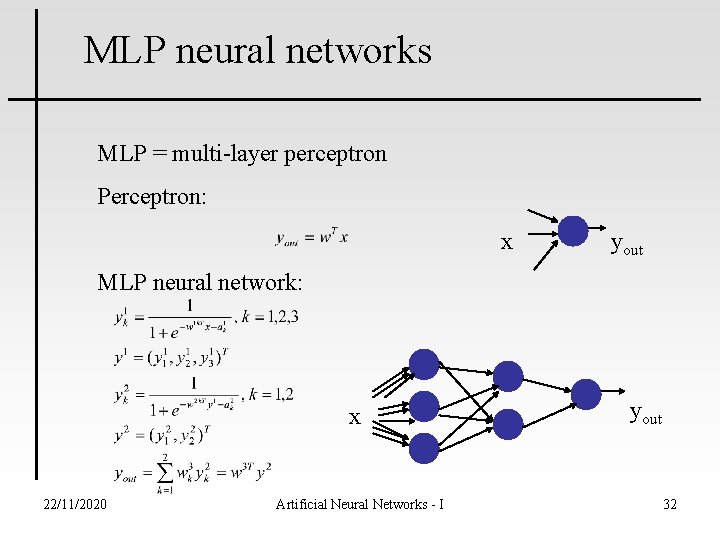

MLP neural networks

MLP = multi-layer perceptron

- Perceptron: [figure: input x mapped to output $y_{out}$]
- MLP neural network: [figure: input x mapped through several layers to output $y_{out}$]

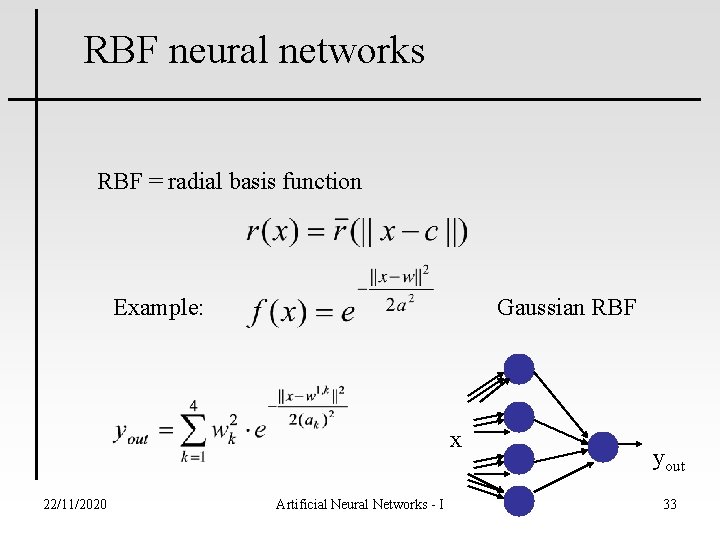

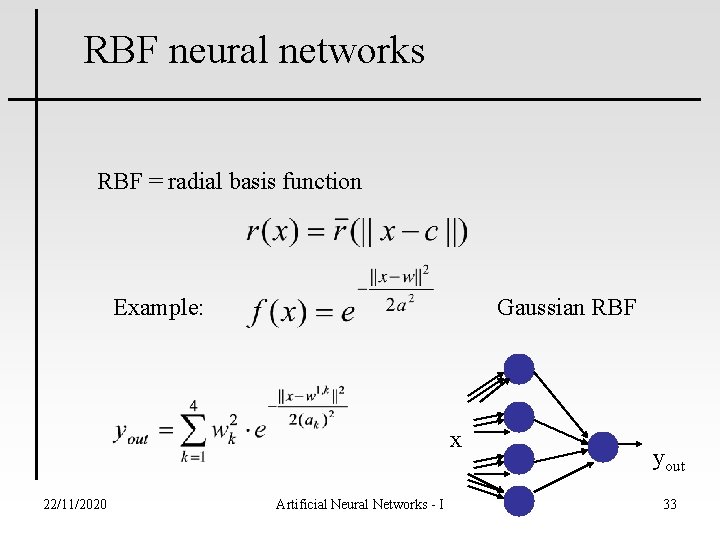

RBF neural networks

RBF = radial basis function

Example: Gaussian RBF [equation image]

[Figure: RBF neural network mapping input x to output $y_{out}$.]

Neural network tasks

- control
- classification
- prediction
- approximation

These can be reformulated in general as FUNCTION APPROXIMATION tasks.

Approximation: given a set of values of a function $g(x)$, build a neural network that approximates the $g(x)$ values for any input $x$.

Neural network approximation

Task specification:

- Data: set of value pairs $(x_t, y_t)$, with $y_t = g(x_t) + z_t$; $z_t$ is random measurement noise.
- Objective: find a neural network that represents the input / output transformation (a function) $F(x, W)$ such that $F(x, W)$ approximates $g(x)$ for every $x$.

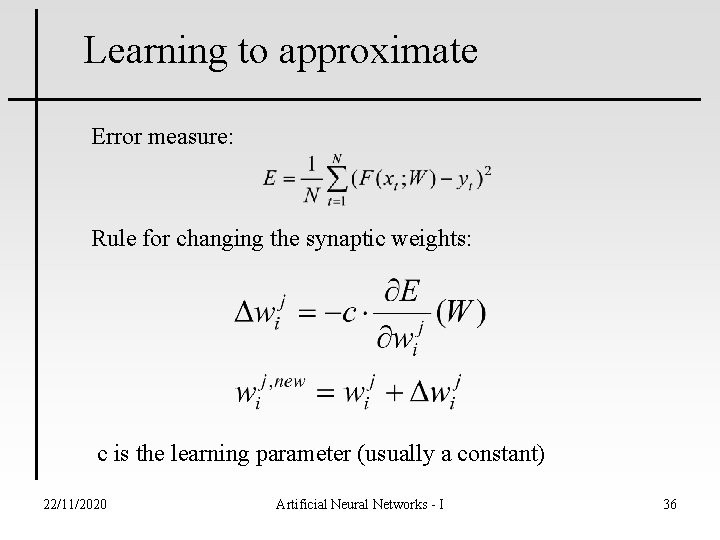

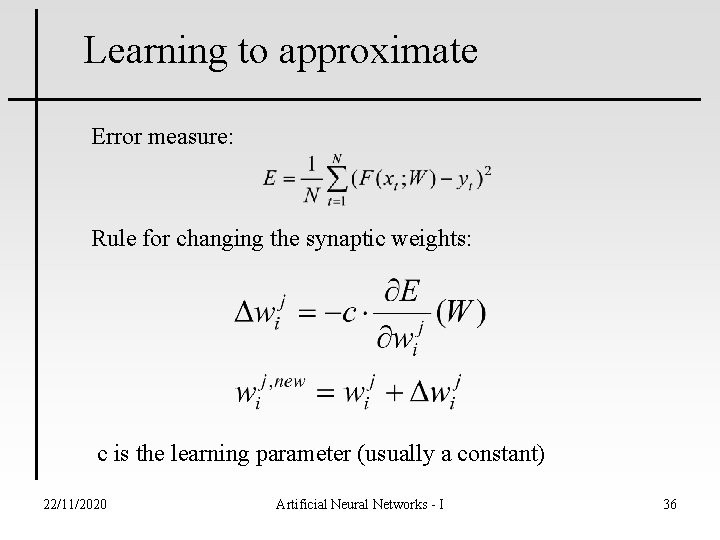

Learning to approximate

- Error measure: [equation image]
- Rule for changing the synaptic weights: [equation image]
- c is the learning parameter (usually a constant)
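A common way to write these two quantities is given below. It assumes a sum-of-squares error over the data pairs $(x_t, y_t)$ and plain gradient descent; the slide's own equations are images and may differ in detail (for example in the normalising factor).

$$
E(W) = \frac{1}{2} \sum_t \bigl( F(x_t, W) - y_t \bigr)^2,
\qquad
\Delta w = -c \, \frac{\partial E}{\partial w}
$$

Here $w$ stands for any single synaptic weight in $W$. Each step moves the weights a small distance, set by $c$, in the direction that reduces the error.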

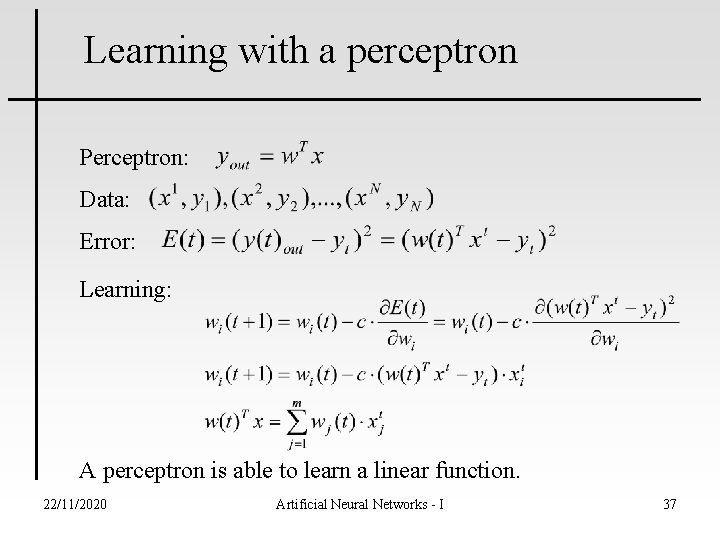

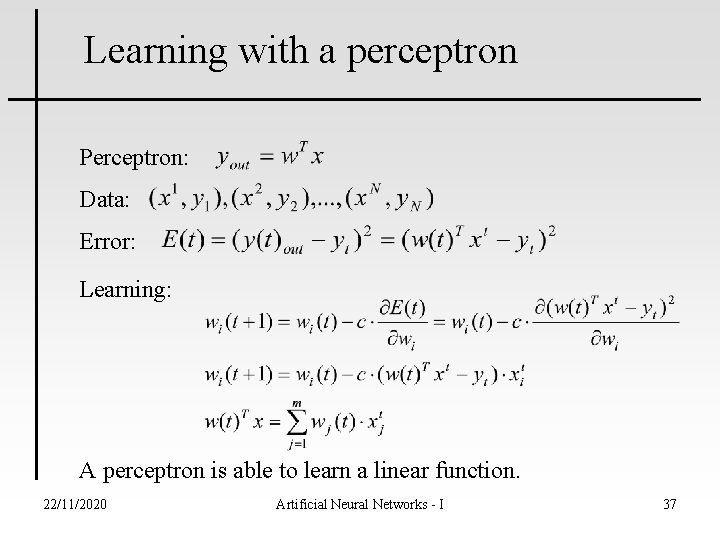

Learning with a perceptron

- Perceptron: [equation image]
- Data: [equation image]
- Error: [equation image]
- Learning: [equation image]

A perceptron is able to learn a linear function.

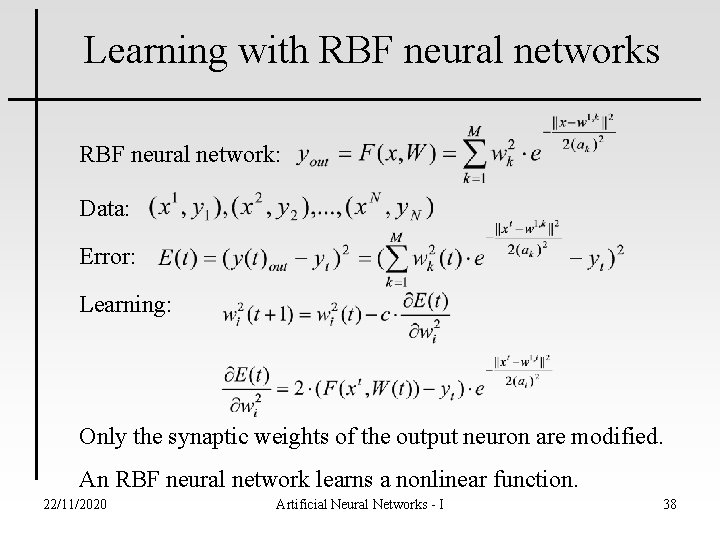

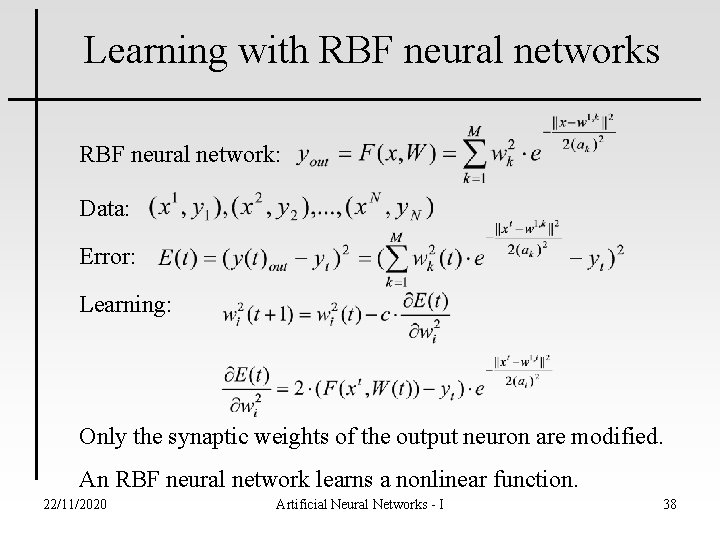

Learning with RBF neural networks

- RBF neural network: [equation image]
- Data: [equation image]
- Error: [equation image]
- Learning: [equation image]

Only the synaptic weights of the output neuron are modified.

An RBF neural network learns a nonlinear function.
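The sketch below illustrates the idea with Gaussian basis functions whose centres and width are fixed, so that only the output weights are trained by gradient descent on the squared error. The target function $\sin(x)$, the number of centres, the width and the learning parameter `c` are illustrative assumptions.

```python
import numpy as np

def rbf_features(x, centres, width):
    """Gaussian radial basis activations for 1-D inputs x."""
    return np.exp(-((x[:, None] - centres[None, :]) ** 2) / (2.0 * width ** 2))

def train_output_weights(Phi, y, c=0.5, steps=2000):
    """Batch gradient descent on the mean squared error; only the output weights change."""
    w = np.zeros(Phi.shape[1])
    for _ in range(steps):
        err = Phi @ w - y
        w -= c * Phi.T @ err / len(y)
    return w

x = np.linspace(-3.0, 3.0, 50)
y = np.sin(x)                              # hypothetical nonlinear target g(x)
centres = np.linspace(-3.0, 3.0, 10)       # fixed centres, not learned
Phi = rbf_features(x, centres, width=0.7)

mse_before = np.mean(y ** 2)               # error of the untrained network (all weights zero)
w = train_output_weights(Phi, y)
mse_after = np.mean((Phi @ w - y) ** 2)
print(mse_before, mse_after)
```

Because the hidden activations never change, the problem is linear in the output weights, which is why the learning rule stays as simple as the one for a single-layer network.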

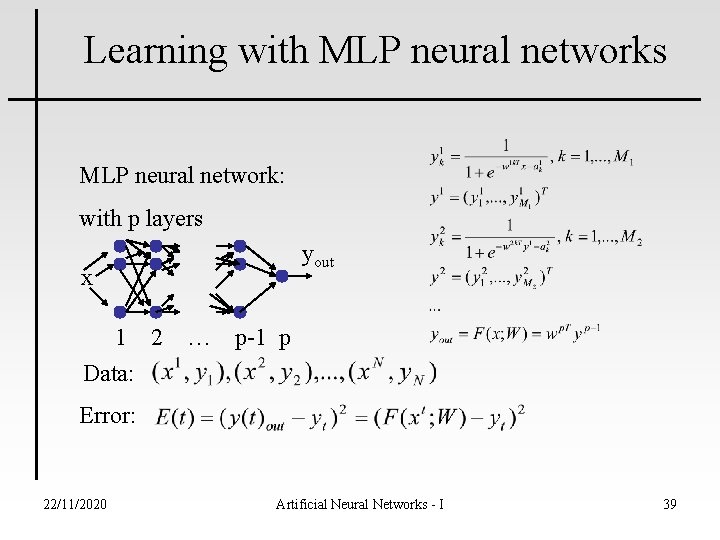

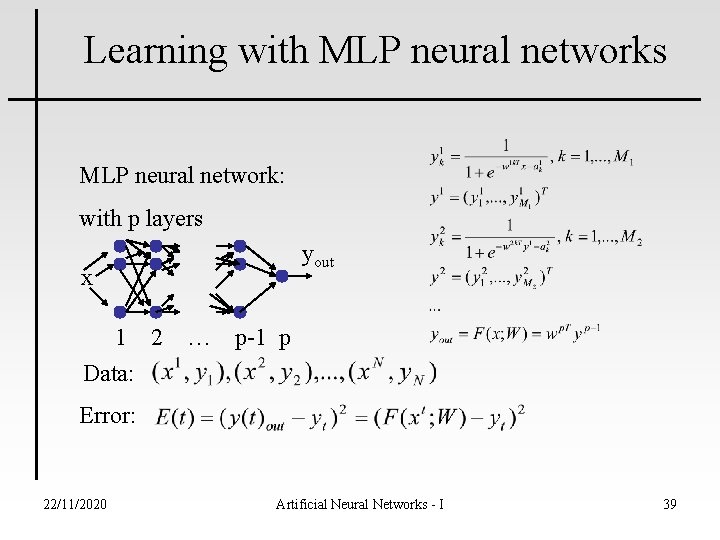

Learning with MLP neural networks

- MLP neural network with p layers: [figure: input x, layers 1, 2, …, p-1, p, output $y_{out}$]
- Data: [equation image]
- Error: [equation image]

Learning with backpropagation

Learning: apply the chain rule for differentiation:

- calculate first the changes for the synaptic weights of the output neuron;
- calculate the changes backward starting from layer p-1, and propagate backward the local error terms.

The method is still relatively complicated but it is much simpler than the original optimisation problem.

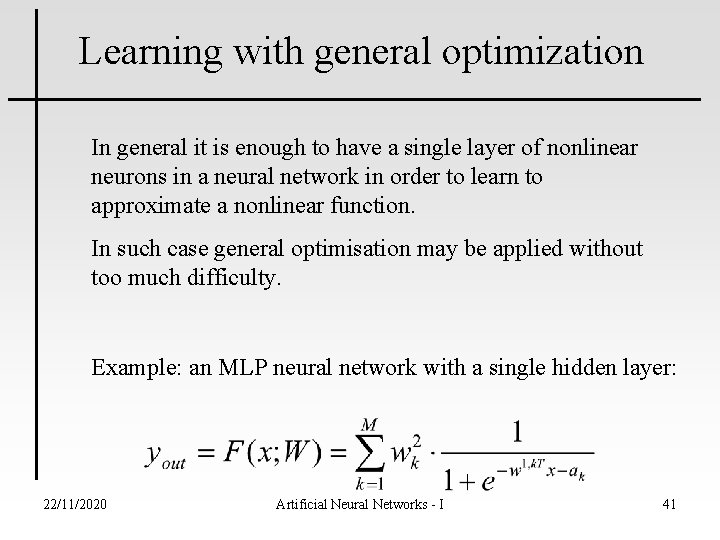

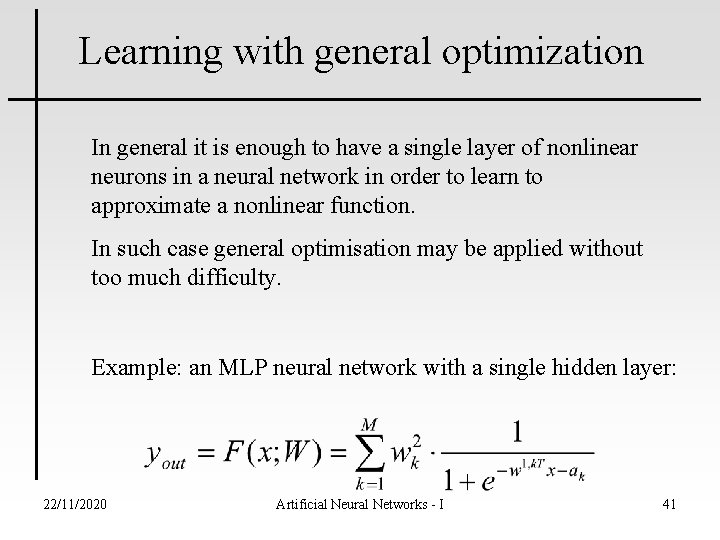

Learning with general optimization

In general it is enough to have a single layer of nonlinear neurons in a neural network in order to learn to approximate a nonlinear function.

In such a case general optimisation may be applied without too much difficulty.

Example: an MLP neural network with a single hidden layer: [equation image]

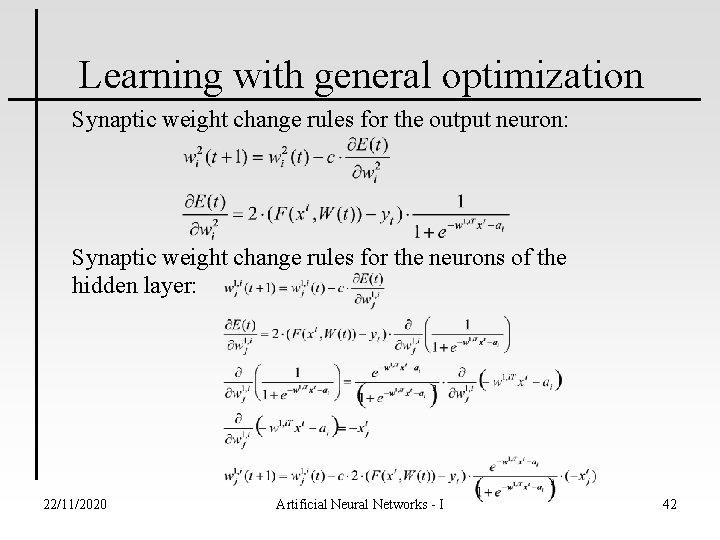

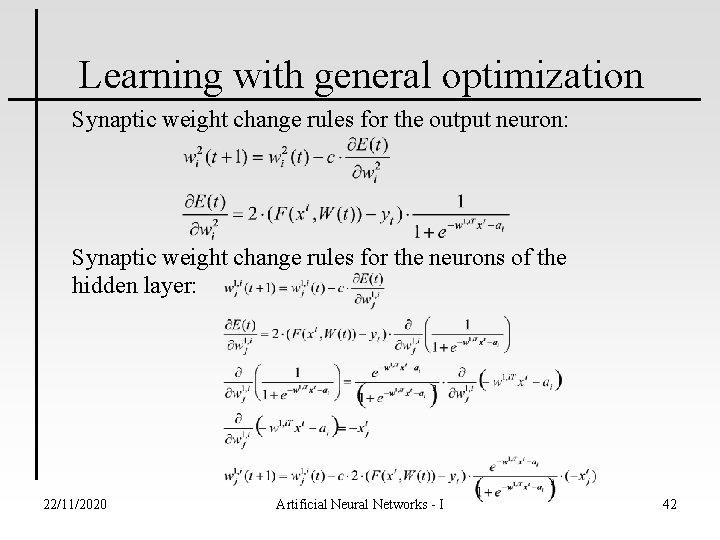

Learning with general optimization

- Synaptic weight change rules for the output neuron: [equation image]
- Synaptic weight change rules for the neurons of the hidden layer: [equation image]
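As a hedged illustration of such rules, the sketch below derives the weight changes for a network with one hidden layer using the chain rule. It assumes tanh hidden neurons, a linear output neuron and the error $E = \frac{1}{2}(y_{out} - y)^2$ for a single data pair; the slide's own formulas are images and may use a different activation function. The function names and the toy data are hypothetical.

```python
import numpy as np

def forward(x, W1, b1, w2, b2):
    h = np.tanh(W1 @ x + b1)        # hidden layer of nonlinear neurons
    y_out = w2 @ h + b2             # linear output neuron
    return h, y_out

def gradients(x, y, W1, b1, w2, b2):
    """Gradients of E = 0.5 * (y_out - y)^2, obtained with the chain rule."""
    h, y_out = forward(x, W1, b1, w2, b2)
    delta_out = y_out - y                          # local error term of the output neuron
    grad_w2 = delta_out * h
    grad_b2 = delta_out
    delta_hid = delta_out * w2 * (1.0 - h ** 2)    # error propagated back through tanh
    grad_W1 = np.outer(delta_hid, x)
    grad_b1 = delta_hid
    return grad_W1, grad_b1, grad_w2, grad_b2

def train(X, Y, n_hidden=8, c=0.05, epochs=200, seed=0):
    """Per-example gradient descent with learning parameter c."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(scale=0.5, size=(n_hidden, X.shape[1]))
    b1 = np.zeros(n_hidden)
    w2 = rng.normal(scale=0.5, size=n_hidden)
    b2 = 0.0
    for _ in range(epochs):
        for x, y in zip(X, Y):
            gW1, gb1, gw2, gb2 = gradients(x, y, W1, b1, w2, b2)
            W1 -= c * gW1
            b1 -= c * gb1
            w2 -= c * gw2
            b2 -= c * gb2
    return W1, b1, w2, b2

# Toy data: noisy samples of a hypothetical nonlinear function.
rng = np.random.default_rng(1)
X = np.linspace(-2.0, 2.0, 40).reshape(-1, 1)
Y = np.sin(X[:, 0]) + 0.05 * rng.normal(size=40)
params = train(X, Y)
```

The output-neuron rule uses the output error directly, while the hidden-layer rule reuses that error term scaled by the outgoing weight and the slope of the hidden activation.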

New methods for learning with neural networks

- Bayesian learning: the distribution of the neural network parameters is learnt
- Support vector learning: the minimal representative subset of the available data is used to calculate the synaptic weights of the neurons

Reinforcement Learning

- Sequential tasks
- Desired action may not be known
- Critic evaluation of ANN behaviour
- Weights adjusted according to critic
- May require credit assignment
- Population-based learning
  - Evolutionary Algorithms
  - Swarming Techniques
  - Immune Networks

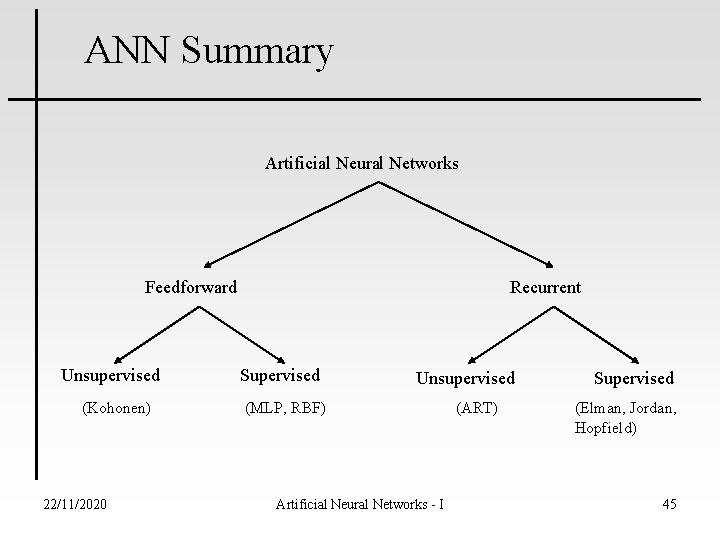

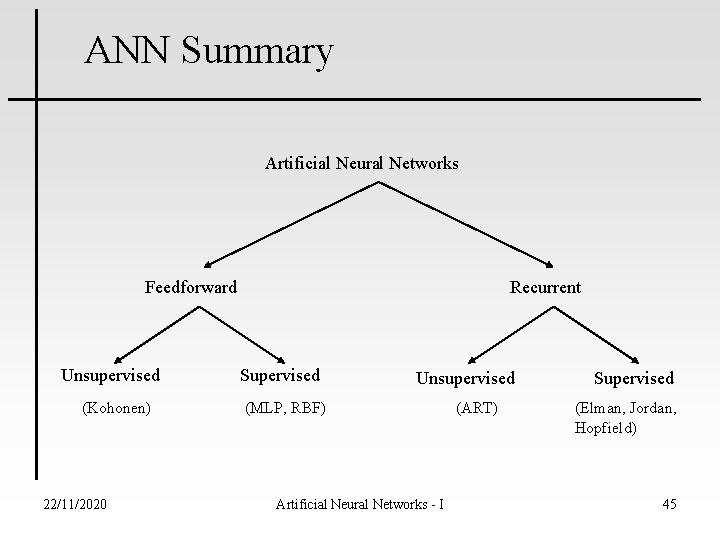

ANN Summary

- Artificial Neural Networks
  - Feedforward
    - Unsupervised (Kohonen)
    - Supervised (MLP, RBF)
  - Recurrent
    - Unsupervised (ART)
    - Supervised (Elman, Jordan, Hopfield)

ANN Application Areas

- Classification
- Clustering
- Associative memory
- Control
- Function approximation

ANN Classifier systems

- Learning capability
- Statistical classifier systems
- Data driven
- Generalisation capability
- Handle and filter large input data
- Reconstruct noisy and incomplete patterns
- Classification rules not transparent

Applications for ANN Classifiers

- Pattern recognition
  - Industrial inspection
  - Fault diagnosis
  - Image recognition
  - Target recognition
  - Speech recognition
  - Natural language processing
- Character recognition
  - Handwriting recognition
  - Automatic text-to-speech conversion

Clustering with ANNs

- Fast parallel distributed processing
- Handle large input information
- Robust to noise and incomplete patterns
- Data driven
- Plasticity/Adaptation
- Visualisation of results
- Accuracy sometimes poor

ANN Clustering Applications

- Natural language processing
  - Document clustering
  - Document retrieval
  - Automatic query
- Image segmentation
- Data mining
  - Data set partitioning
  - Detection of emerging clusters
- Fuzzy partitioning
- Condition-action association

Associative ANN Memories

- Stimulus-response association
- Auto-associative memory
- Content addressable memory
- Fast parallel distributed processing
- Robust to noise and incomplete patterns
- Limited storage capability
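A small Hopfield-style sketch can illustrate auto-associative, content-addressable recall. It assumes bipolar (+1/-1) patterns, one-shot Hebbian storage and asynchronous updates; the two stored patterns and the function names `store` and `recall` are made up for the example.

```python
import numpy as np

def store(patterns):
    """One-shot Hebbian storage of bipolar (+1/-1) patterns in a weight matrix."""
    P = np.asarray(patterns, dtype=float)
    W = P.T @ P / P.shape[1]
    np.fill_diagonal(W, 0.0)          # no self-connections
    return W

def recall(W, probe, max_sweeps=10):
    """Update one neuron at a time until the state stops changing."""
    s = np.array(probe, dtype=float)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            new = 1.0 if W[i] @ s >= 0 else -1.0
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s

pattern_a = np.array([1, -1, 1, -1, 1, -1, 1, -1])
pattern_b = np.array([1, 1, 1, 1, -1, -1, -1, -1])
W = store([pattern_a, pattern_b])

noisy = pattern_a.copy()
noisy[0] = -noisy[0]                  # corrupt one element of the stored pattern
restored = recall(W, noisy)
print(restored)
```

The corrupted probe settles back onto the stored pattern, which is the robustness to noisy and incomplete patterns listed above. Storing many more patterns in a network this small would make recall unreliable, in line with the limited storage capability.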

Application of ANN Associative Memories

- Character recognition
- Handwriting recognition
- Noise filtering
- Data compression
- Information retrieval

ANN Control Systems

- Learning/adaptation capability
- Data driven
- Non-linear mapping
- Fast response
- Fault tolerance
- Generalisation capability
- Handle and filter large input data
- Reconstruct noisy and incomplete patterns
- Control rules not transparent
- Learning may be problematic

ANN Control Schemes

- ANN controller
- Conventional controller + ANN for unknown or non-linear dynamics
- Indirect control schemes
  - ANN models direct plant dynamics
  - ANN models inverse plant dynamics

ANN Control Applications

- Non-linear process control
  - Chemical reaction control
  - Industrial process control
  - Water treatment
  - Intensive care of patients
- Servo control
  - Robot manipulators
  - Autonomous vehicles
  - Automotive control
- Dynamic system control
  - Helicopter flight control
  - Underwater robot control

ANN Function Modelling

- ANN as universal function approximator
- Dynamic system modelling
- Learning capability
- Data driven
- Non-linear mapping
- Generalisation capability
- Handle and filter large input data
- Reconstruct noisy and incomplete inputs

ANN Modelling Applications

- Modelling of highly nonlinear industrial processes
- Financial market prediction
- Weather forecasts
- River flow prediction
- Fault/breakage prediction
- Monitoring of critically ill patients

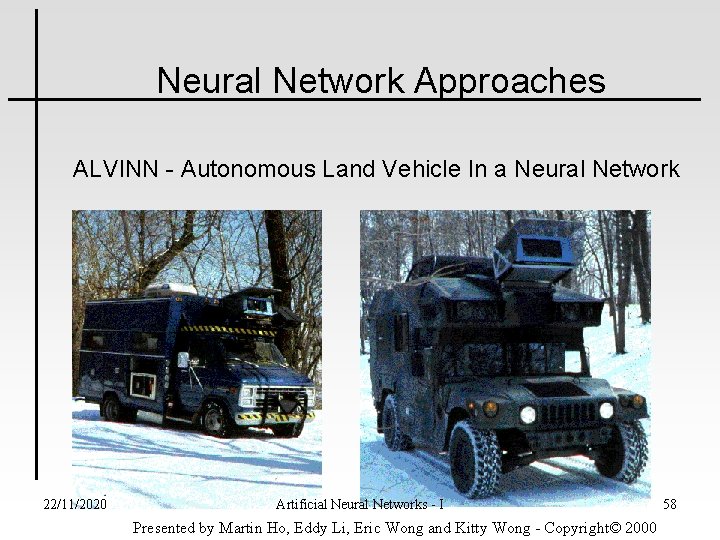

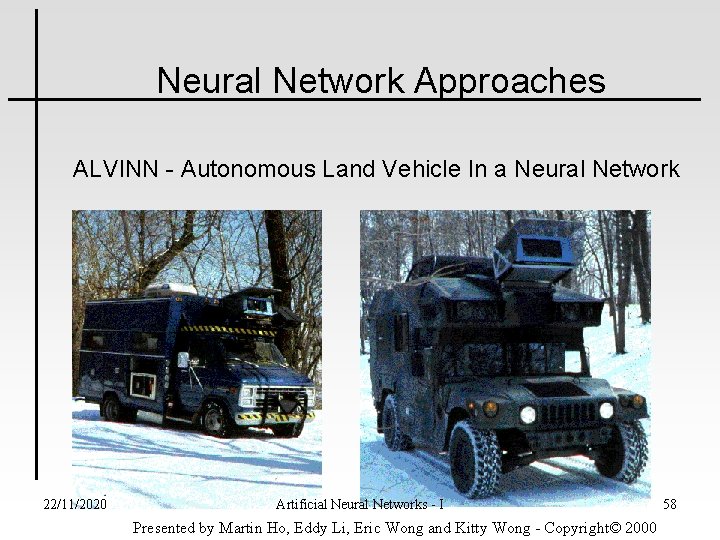

Neural Network Approaches

ALVINN - Autonomous Land Vehicle In a Neural Network

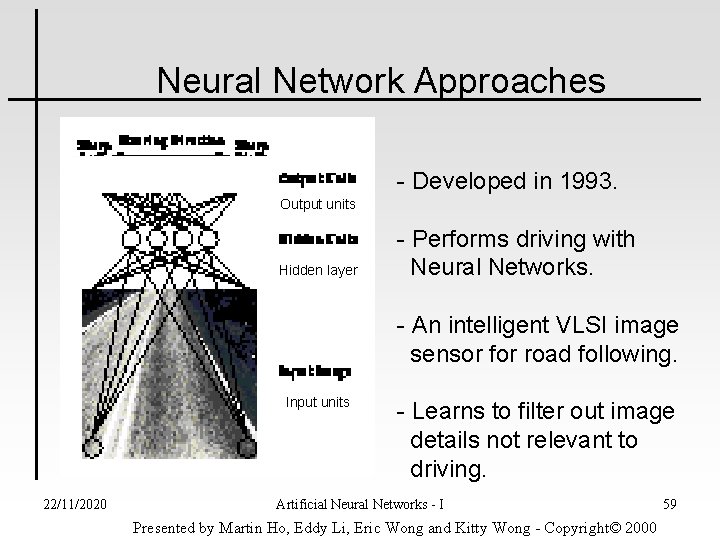

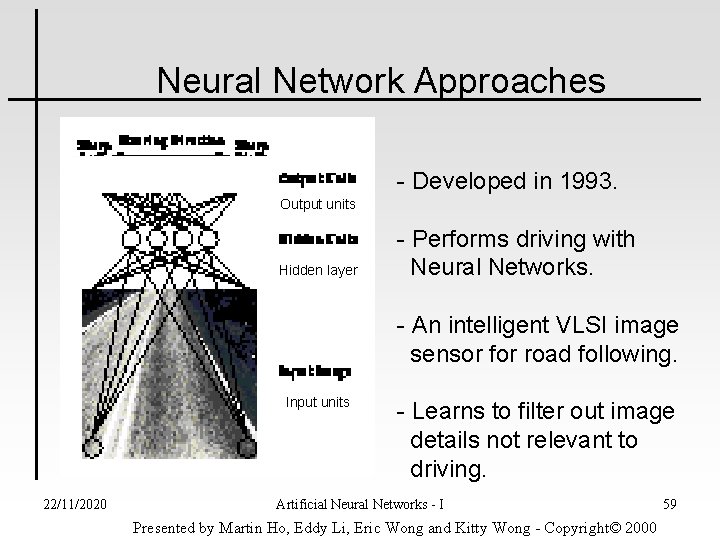

Neural Network Approaches

[Figure: ALVINN network with input units, a hidden layer and output units.]

- Developed in 1993.
- Performs driving with Neural Networks.
- An intelligent VLSI image sensor for road following.
- Learns to filter out image details not relevant to driving.

Summary

- Artificial neural networks are inspired by the learning processes that take place in biological systems.
- Artificial neurons and neural networks try to imitate the working mechanisms of their biological counterparts.
- Learning can be perceived as an optimisation process.
- Biological neural learning happens by the modification of the synaptic strength. Artificial neural networks learn in the same way.
- The synapse strength modification rules for artificial neural networks can be derived by applying mathematical optimisation methods.


Newff matlab

Newff matlab Convolutional neural network

Convolutional neural network Introduction to convolutional neural networks

Introduction to convolutional neural networks Pengertian artificial neural network

Pengertian artificial neural network Artificial neural network in data mining

Artificial neural network in data mining Artificial neural network terminology

Artificial neural network terminology Conclusion neural network

Conclusion neural network Visualizing and understanding convolutional networks

Visualizing and understanding convolutional networks Fat shattering dimension

Fat shattering dimension Freed et al 2001 ib psychology

Freed et al 2001 ib psychology Audio super resolution using neural networks

Audio super resolution using neural networks Convolutional neural networks for visual recognition

Convolutional neural networks for visual recognition Leon gatys

Leon gatys Nvdla

Nvdla Deep neural networks and mixed integer linear optimization

Deep neural networks and mixed integer linear optimization Neural networks and learning machines 3rd edition

Neural networks and learning machines 3rd edition Lstm components

Lstm components Neural networks for rf and microwave design

Neural networks for rf and microwave design 11-747 neural networks for nlp

11-747 neural networks for nlp Perceptron xor

Perceptron xor Sparse convolutional neural networks

Sparse convolutional neural networks On the computational efficiency of training neural networks

On the computational efficiency of training neural networks Threshold logic unit

Threshold logic unit Fuzzy logic lecture

Fuzzy logic lecture Convolutional neural networks

Convolutional neural networks Few shot learning with graph neural networks

Few shot learning with graph neural networks Deep forest towards an alternative to deep neural networks

Deep forest towards an alternative to deep neural networks Convolutional neural networks

Convolutional neural networks Neuraltools neural networks

Neuraltools neural networks Andrew ng lstm

Andrew ng lstm Predicting nba games using neural networks

Predicting nba games using neural networks Neural networks and learning machines

Neural networks and learning machines The wake-sleep algorithm for unsupervised neural networks

The wake-sleep algorithm for unsupervised neural networks Audio super resolution using neural networks

Audio super resolution using neural networks Convolutional neural network alternatives

Convolutional neural network alternatives Virtual circuit network and datagram network

Virtual circuit network and datagram network Basestore iptv

Basestore iptv Pxdes

Pxdes Cpsc 322: introduction to artificial intelligence

Cpsc 322: introduction to artificial intelligence Cpsc 322 ubc

Cpsc 322 ubc Introduction to storage area networks

Introduction to storage area networks Circuit switched wan

Circuit switched wan Introduction to communication networks

Introduction to communication networks Introduction to wide area networks

Introduction to wide area networks Introduction to switched networks

Introduction to switched networks Body paragraph

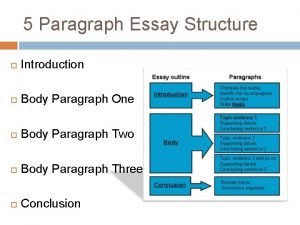

Body paragraph A neural probabilistic language model

A neural probabilistic language model улмфи

улмфи Spinal cord

Spinal cord Neural theory

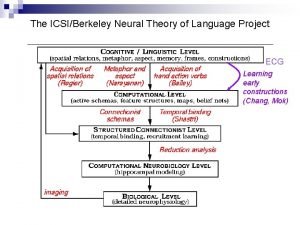

Neural theory Textured neural avatars

Textured neural avatars Tubo neural

Tubo neural Show and tell a neural image caption generator

Show and tell a neural image caption generator Student teacher neural network

Student teacher neural network Mesoderm somites

Mesoderm somites Neural circuits the organization of neuronal pools

Neural circuits the organization of neuronal pools Spina bifida

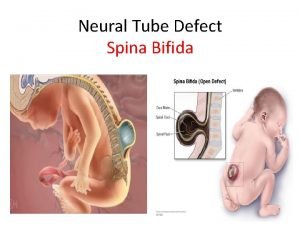

Spina bifida Neural recovery strategies

Neural recovery strategies Neural packet classification

Neural packet classification Cost function neural network

Cost function neural network Mink ph reviews

Mink ph reviews Meshnet: mesh neural network for 3d shape representation

Meshnet: mesh neural network for 3d shape representation