After IRON File Systems: My Story of Systems Research


- Slides: 71

After IRON File Systems: My Story of Systems Research
Andrea C. Arpaci-Dusseau, Professor @ University of Wisconsin-Madison

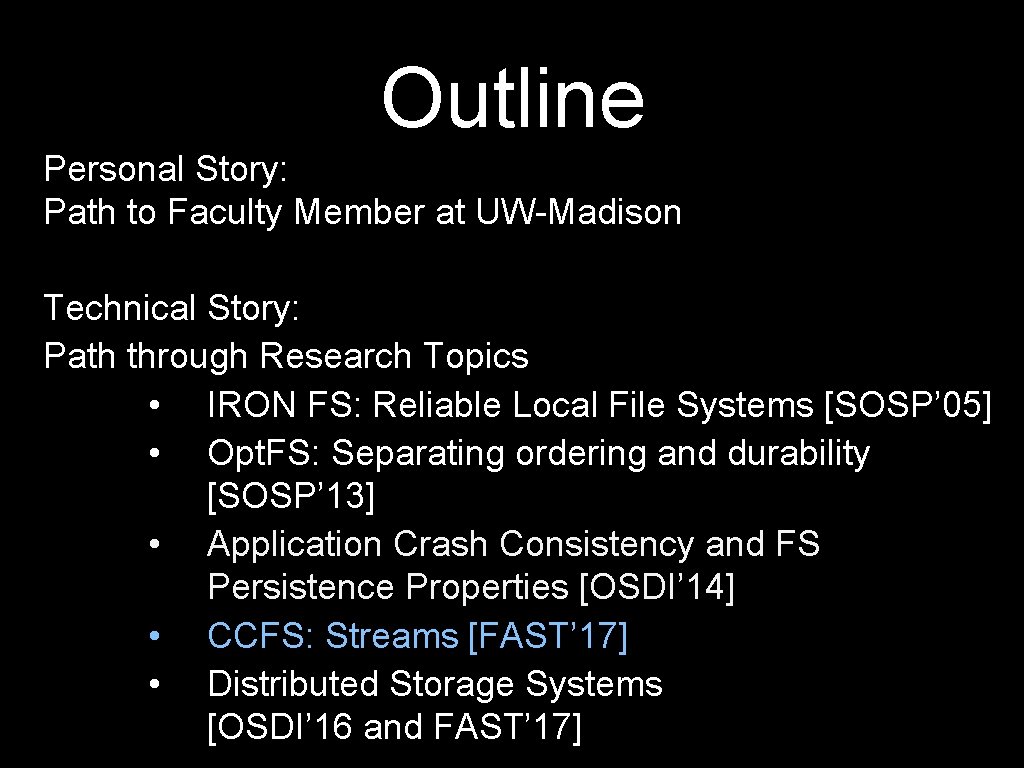

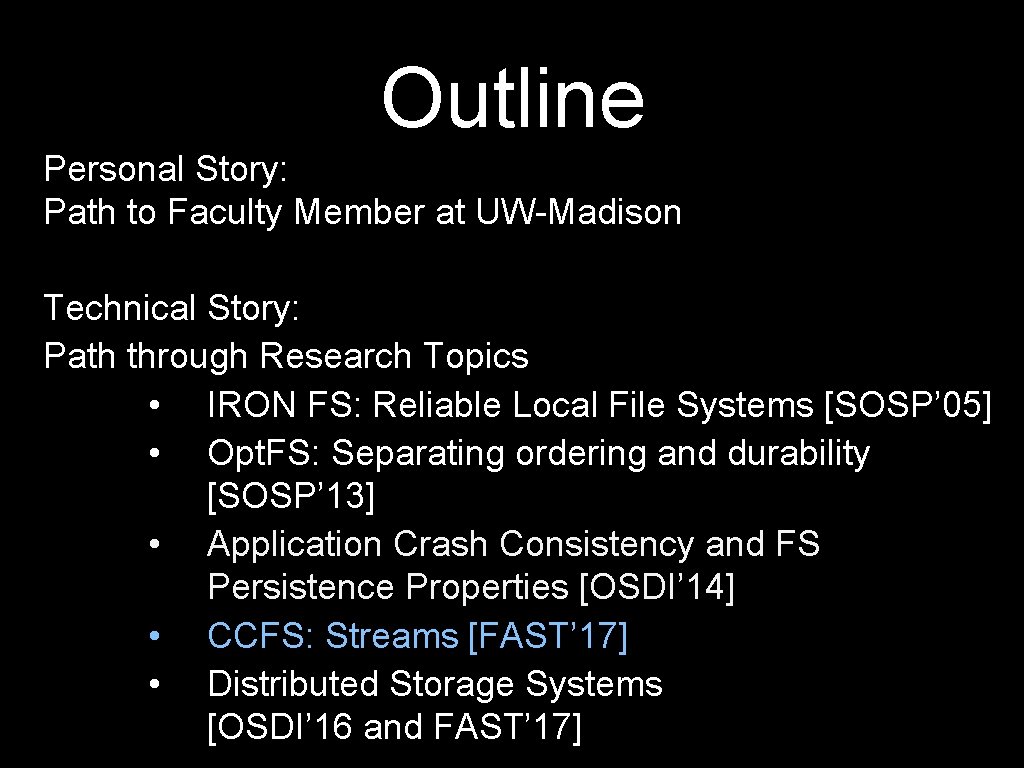

Outline
Personal Story: Path to Faculty Member at UW-Madison
Technical Story: Path through Research Topics
• IRON FS: Reliable Local File Systems [SOSP '05]
• OptFS: Separating ordering and durability [SOSP '13]
• Application Crash Consistency and FS Persistence Properties [OSDI '14]
• CCFS: Streams [FAST '17]
• Distributed Storage Systems [OSDI '16 and FAST '17]

Why am I a Computer Scientist?

One reason I went to grad school

Graduate School

Graduate School: Languages, Scheduling, and Sorting
• Andrea C. Arpaci-Dusseau, Remzi H. Arpaci-Dusseau, David E. Culler, Joseph M. Hellerstein, and David A. Patterson. Searching for the Sorting Record: Experiences in Tuning NOW-Sort. Symposium on Parallel and Distributed Tools (SPDT).
• Andrea C. Arpaci-Dusseau, Remzi H. Arpaci-Dusseau, David E. Culler, Joseph M. Hellerstein, and David A. Patterson. High-Performance Sorting on Networks of Workstations. SIGMOD Conference.
• Andrea C. Arpaci-Dusseau, David E. Culler, and Alan M. Mainwaring. Scheduling with Implicit Information in Distributed Systems. Sigmetrics.
• Andrea Arpaci-Dusseau and David Culler. Extending Proportional-Share Scheduling to a Network of Workstations. In International Conference on Parallel and Distributed Processing Techniques and Applications (PDPTA).
• Andrea C. Dusseau, Remzi H. Arpaci, and David E. Culler. Effective Distributed Scheduling of Parallel Workloads. 1996 ACM Sigmetrics.
• Remzi H. Arpaci, Andrea C. Dusseau, Amin M. Vahdat, Lok T. Liu, Thomas E. Anderson, and David A. Patterson. The Interaction of Parallel and Sequential Workloads on a Network of Workstations. Sigmetrics.
• David E. Culler, Andrea Dusseau, Seth Copen Goldstein, Arvind Krishnamurthy, Steven Lumetta, Thorsten von Eicken, and Katherine Yelick. Parallel Programming in Split-C. In Proceedings of

UW-Madison
Goal: Be a systems researcher and publish in SOSP/OSDI…

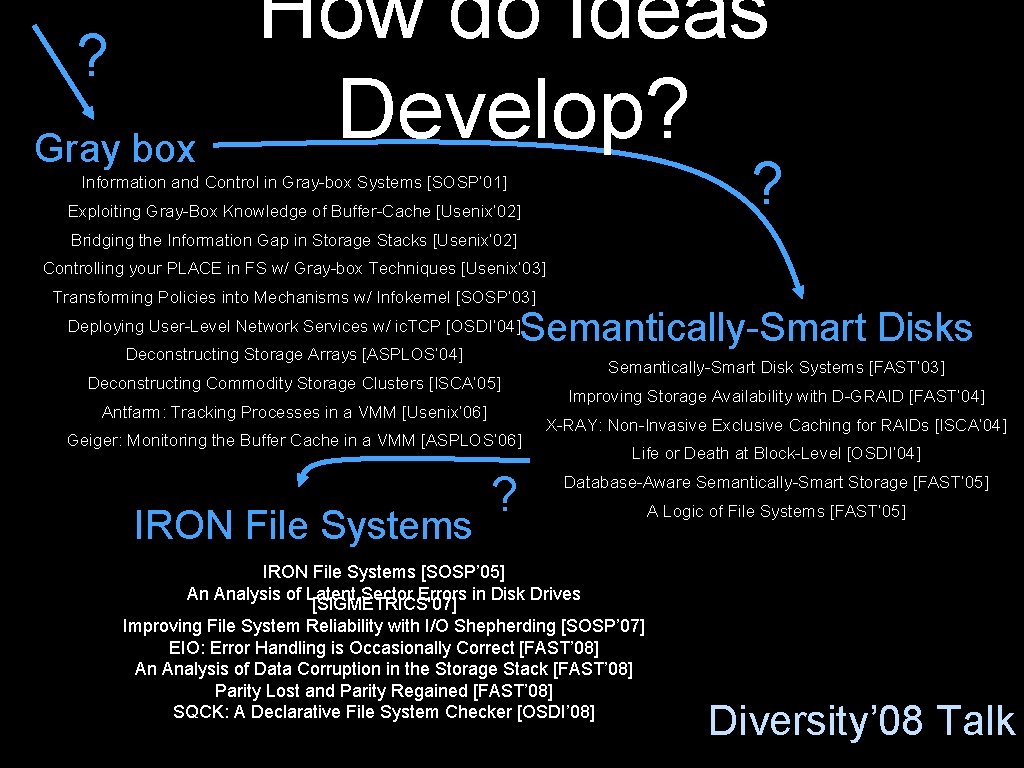

How do Ideas Develop?
Topics: Gray box, Semantically-Smart Disks, IRON File Systems
• Information and Control in Gray-box Systems [SOSP '01]
• Exploiting Gray-Box Knowledge of Buffer-Cache [Usenix '02]
• Bridging the Information Gap in Storage Stacks [Usenix '02]
• Controlling your PLACE in FS w/ Gray-box Techniques [Usenix '03]
• Transforming Policies into Mechanisms w/ Infokernel [SOSP '03]
• Deploying User-Level Network Services w/ icTCP [OSDI '04]
• Deconstructing Storage Arrays [ASPLOS '04]
• Deconstructing Commodity Storage Clusters [ISCA '05]
• Antfarm: Tracking Processes in a VMM [Usenix '06]
• Geiger: Monitoring the Buffer Cache in a VMM [ASPLOS '06]
• Semantically-Smart Disk Systems [FAST '03]
• Improving Storage Availability with D-GRAID [FAST '04]
• X-RAY: Non-Invasive Exclusive Caching for RAIDs [ISCA '04]
• Life or Death at Block-Level [OSDI '04]
• Database-Aware Semantically-Smart Storage [FAST '05]
• IRON File Systems [SOSP '05]
• An Analysis of Latent Sector Errors in Disk Drives [SIGMETRICS '07]
• Improving File System Reliability with I/O Shepherding [SOSP '07]
• EIO: Error Handling is Occasionally Correct [FAST '08]
• An Analysis of Data Corruption in the Storage Stack [FAST '08]
• Parity Lost and Parity Regained [FAST '08]
• SQCK: A Declarative File System Checker [OSDI '08]
• A Logic of File Systems [FAST '05]
Diversity '08 Talk

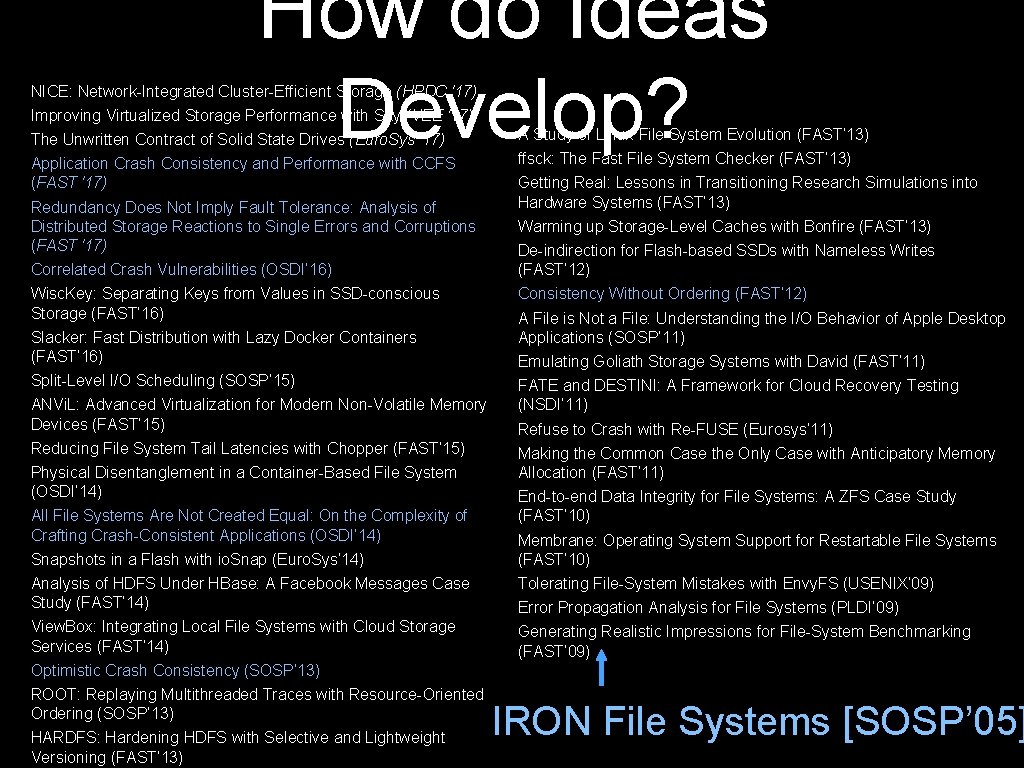

How do Ideas Develop?
• NICE: Network-Integrated Cluster-Efficient Storage (HPDC '17)
• Improving Virtualized Storage Performance with Sky (VEE '17)
• The Unwritten Contract of Solid State Drives (EuroSys '17)
• A Study of Linux File System Evolution (FAST '13)
• Application Crash Consistency and Performance with CCFS (FAST '17)
• ffsck: The Fast File System Checker (FAST '13)
• Getting Real: Lessons in Transitioning Research Simulations into Hardware Systems (FAST '13)
• Redundancy Does Not Imply Fault Tolerance: Analysis of Distributed Storage Reactions to Single Errors and Corruptions (FAST '17)
• Warming up Storage-Level Caches with Bonfire (FAST '13)
• De-indirection for Flash-based SSDs with Nameless Writes (FAST '12)
• Correlated Crash Vulnerabilities (OSDI '16)
• Consistency Without Ordering (FAST '12)
• WiscKey: Separating Keys from Values in SSD-conscious Storage (FAST '16)
• A File is Not a File: Understanding the I/O Behavior of Apple Desktop Applications (SOSP '11)
• Slacker: Fast Distribution with Lazy Docker Containers (FAST '16)
• Emulating Goliath Storage Systems with David (FAST '11)
• Split-Level I/O Scheduling (SOSP '15)
• ANViL: Advanced Virtualization for Modern Non-Volatile Memory Devices (FAST '15)
• Reducing File System Tail Latencies with Chopper (FAST '15)
• Physical Disentanglement in a Container-Based File System (OSDI '14)
• All File Systems Are Not Created Equal: On the Complexity of Crafting Crash-Consistent Applications (OSDI '14)
• Snapshots in a Flash with ioSnap (EuroSys '14)
• FATE and DESTINI: A Framework for Cloud Recovery Testing (NSDI '11)
• Refuse to Crash with Re-FUSE (EuroSys '11)
• Making the Common Case the Only Case with Anticipatory Memory Allocation (FAST '11)
• End-to-end Data Integrity for File Systems: A ZFS Case Study (FAST '10)
• Membrane: Operating System Support for Restartable File Systems (FAST '10)
• Analysis of HDFS Under HBase: A Facebook Messages Case Study (FAST '14)
• Tolerating File-System Mistakes with EnvyFS (USENIX '09)
• ViewBox: Integrating Local File Systems with Cloud Storage Services (FAST '14)
• Generating Realistic Impressions for File-System Benchmarking (FAST '09)
• Error Propagation Analysis for File Systems (PLDI '09)
• Optimistic Crash Consistency (SOSP '13)
• ROOT: Replaying Multithreaded Traces with Resource-Oriented Ordering (SOSP '13)
• HARDFS: Hardening HDFS with Selective and Lightweight Versioning (FAST '13)
• IRON File Systems [SOSP '05]

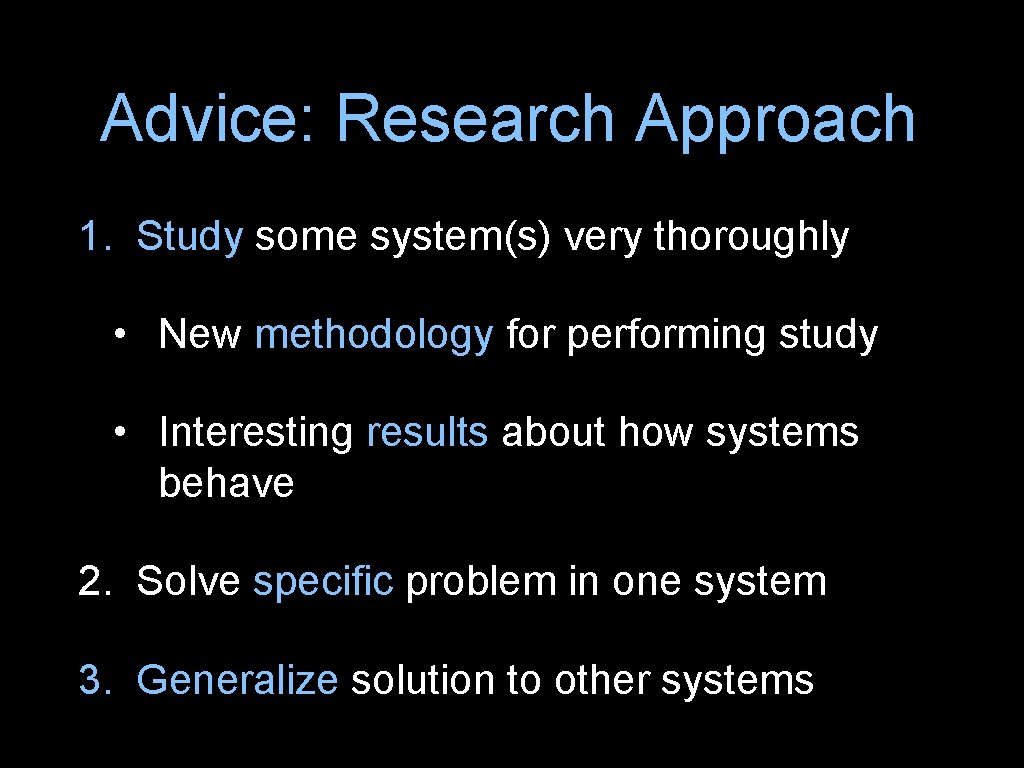

Advice: Research Approach
1. Study some system(s) very thoroughly
• New methodology for performing study
• Interesting results about how systems behave
2. Solve specific problem in one system
3. Generalize solution to other systems

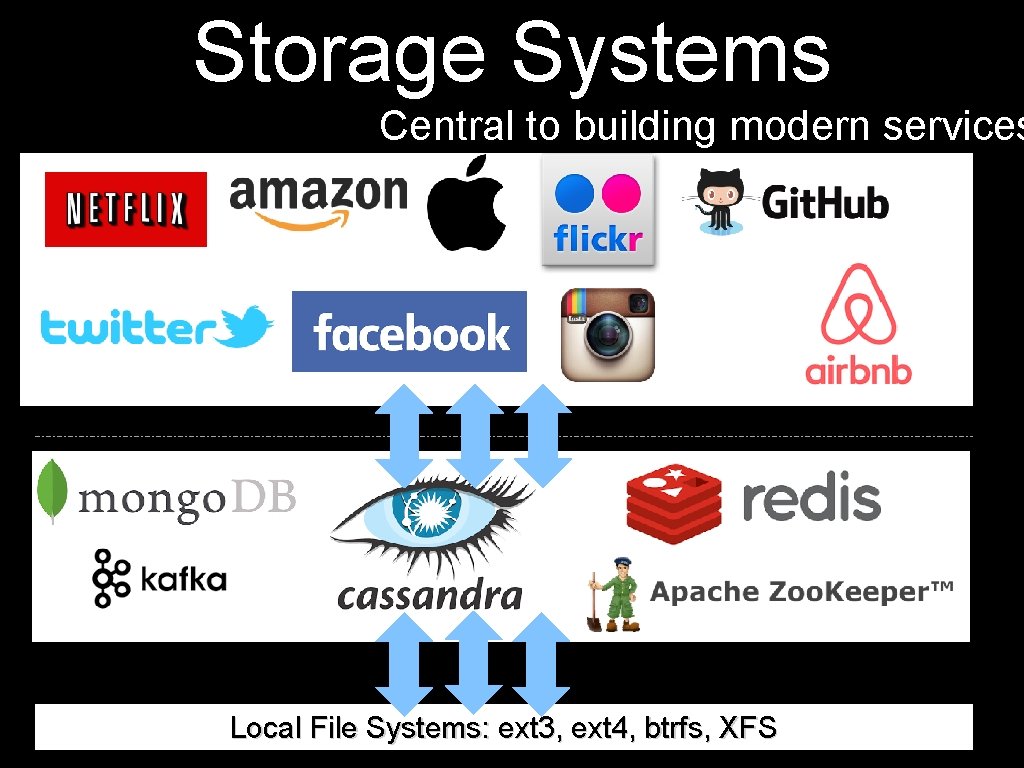

Storage Systems
Central to building modern services
Local File Systems: ext3, ext4, btrfs, XFS

![IRON File Systems SOSP 05 IRON File System Question Observed anomalous behavior in previous IRON File Systems [SOSP’ 05] IRON File System Question Observed anomalous behavior in previous](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-12.jpg)

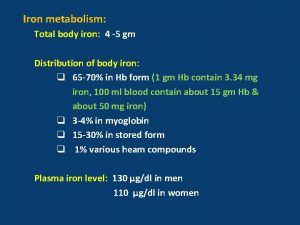

IRON File Systems [SOSP '05]: IRON File System Question
Observed anomalous behavior in previous work on semantically-smart storage
How do local file systems react to faults?
• Corrupted data
• Read/write error codes from lower layer
How to measure file system reactions?

![TypeAware Fault Injection IRON File Systems SOSP 05 File systems consist of many ondisk Type-Aware Fault Injection IRON File Systems [SOSP’ 05] File systems consist of many on-disk](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-13.jpg)

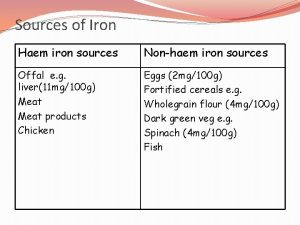

IRON File Systems [SOSP '05]: Type-Aware Fault Injection
File systems consist of many on-disk structures
• e.g., superblock, inode, data block
Methodology: Created layer beneath file system to inject faults in each of these types
Diagram labels: Super, Inodes, Data

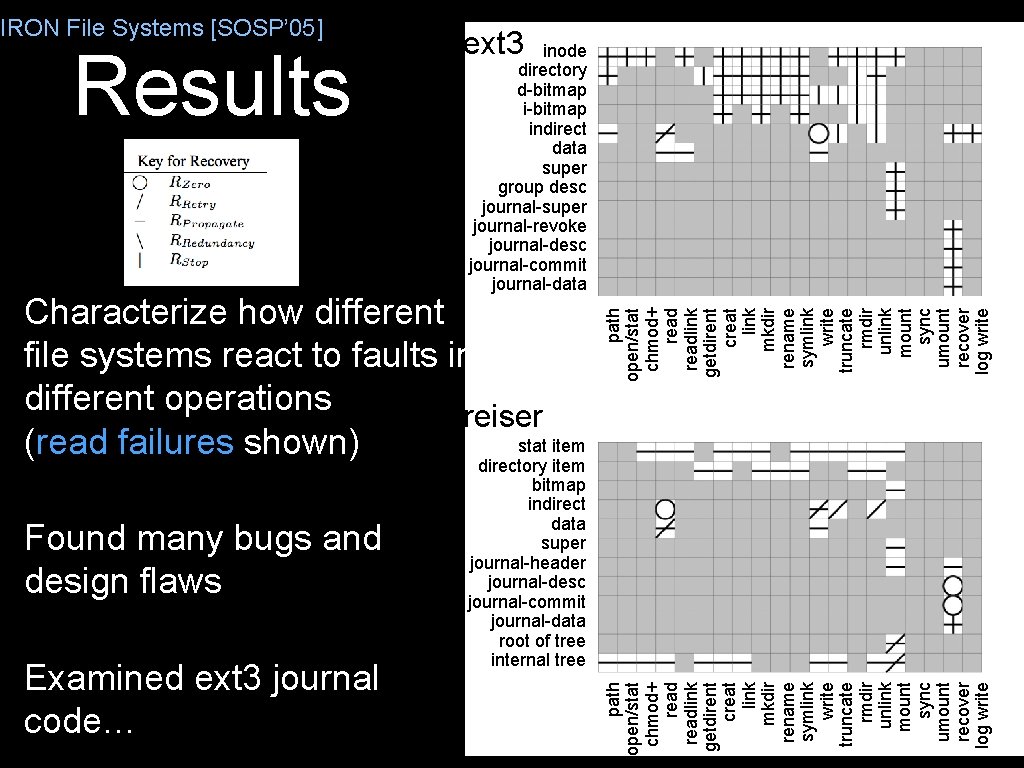

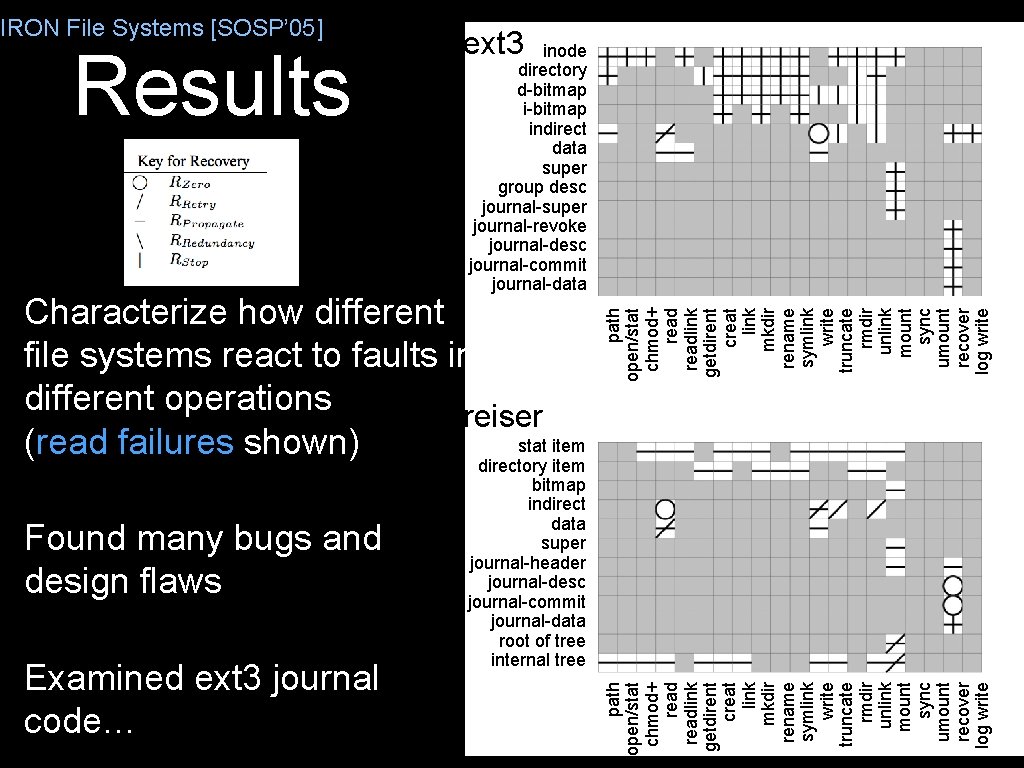

IRON File Systems [SOSP '05]: Results
Characterize how different file systems react to faults in different operations (read failures shown)
• Found many bugs and design flaws
• Examined ext3 journal code…
Figure: one fault-injection matrix per file system (ext3 and ReiserFS); rows are on-disk block types, columns are workloads.
• ext3 block types: inode, directory, d-bitmap, indirect, data, super, group desc, journal-super, journal-revoke, journal-desc, journal-commit, journal-data
• ReiserFS block types: stat item, directory item, bitmap, indirect, data, super, journal-header, journal-desc, journal-commit, journal-data, root of tree, internal tree
• Workloads: path, open/stat, chmod+, readlink, getdirent, creat, link, mkdir, rename, symlink, write, truncate, rmdir, unlink, mount, sync, umount, recover, log write

![IRON File Systems SOSP 05 Journaling File System Crash consistency Achieve atomic updates IRON File Systems [SOSP’ 05] Journaling File System Crash consistency • Achieve atomic updates](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-15.jpg)

IRON File Systems [SOSP '05]: Journaling File System
Crash consistency
• Achieve atomic updates despite crashes
How?
• Use write-ahead log to record info about update
• If crash occurs during update to in-place data, replay log to repair
Turns multiple writes into single atomic action

![IRON File Systems SOSP 05 Example File Append Workload Appends block to single file IRON File Systems [SOSP’ 05] Example: File Append Workload: Appends block to single file](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-16.jpg)

IRON File Systems [SOSP '05]: Example: File Append
Workload: Appends block to single file
Must update file-system structures atomically
• Bitmap: Mark new block as allocated
• Inode: Point to new block
• Data block: Contain data of append

![IRON File Systems SOSP 05 Ordered Journaling Protocol Append of data block Data inod IRON File Systems [SOSP’ 05] Ordered Journaling Protocol: Append of data block Data inod](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-17.jpg)

IRON File Systems [SOSP '05]: Ordered Journaling
Protocol: Append of data block
• Data
• Transaction Begin + Metadata
• Wait; Transaction Commit
• Wait; Checkpoint inode, bitmap
Diagram labels: Memory (inode, bitmap, data); Disk (File System Proper, Journal (Log) holding Tb, inode, bitmap, Te)

![IRON File Systems SOSP 05 Journaling Observations Waiting for writes to complete and be IRON File Systems [SOSP’ 05] Journaling Observations Waiting for writes to complete and be](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-18.jpg)

IRON File Systems [SOSP '05]: Journaling Observations
• Waiting for writes to complete and be acknowledged by storage device adds delay
• Forcing writes to be ordered causes slowness

![Transaction Checksums IRON File Systems SOSP 05 Key idea Use checksums to replace ordering Transaction Checksums IRON File Systems [SOSP’ 05] Key idea: Use checksums to replace ordering](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-19.jpg)

IRON File Systems [SOSP '05]: Transaction Checksums
Key idea: Use checksums to replace ordering
How to avoid waiting and ordering?
• Compute checksum over journal
• Write journal metadata and Tx commit together
• Upon crash: redo iff checksum matches contents
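The three bullets above can be illustrated with a minimal sketch. It assumes a toy in-memory journal; the function names `make_transaction` and `recover`, the dictionary layout, and the choice of CRC32 are all illustrative assumptions, not the ext4 on-disk format or the paper's code.

```python
import zlib

def journal_checksum(blocks):
    """CRC32 chained over every journaled block, in order."""
    checksum = 0
    for block in blocks:
        checksum = zlib.crc32(block, checksum)
    return checksum

def make_transaction(blocks):
    """Build a transaction whose commit record carries a checksum over the
    journal contents, so contents and commit can be issued together
    instead of waiting for the contents before writing the commit."""
    return {"blocks": list(blocks), "commit_checksum": journal_checksum(blocks)}

def recover(transaction):
    """After a crash, redo the transaction iff the stored checksum matches
    the journal contents actually found on disk."""
    if journal_checksum(transaction["blocks"]) == transaction["commit_checksum"]:
        return transaction["blocks"]   # complete: safe to replay
    return None                        # torn: commit landed, contents did not

tx = make_transaction([b"inode v2", b"bitmap v2"])

# Hypothetical crash: the commit record reached disk but the second
# journal block still holds stale bytes.
torn = {"blocks": [b"inode v2", b"bitmap v1"],
        "commit_checksum": tx["commit_checksum"]}
```

In this sketch `recover(tx)` returns the blocks for replay, while `recover(torn)` returns `None`, which is the "redo iff checksum matches" rule without any ordering point between contents and commit.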

![Journaling Tx Checksum Protocol Write IRON File Systems SOSP 05 Journaling + Tx. Checksum Protocol: Write. . . IRON File Systems [SOSP’ 05] •](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-20.jpg)

IRON File Systems [SOSP '05]: Journaling + Tx Checksum
Protocol: Write...
• Data
• Transaction Begin + Metadata
• Transaction Commit with Checksum
• Wait; Checkpoint inode, bitmap
Diagram labels: Memory (inode, bitmap, data); Disk (File System Proper, Journal (Log) holding Tb, inode, bitmap, Te)

![IRON File Systems SOSP 05 All Done Success Improves performance Paper published IRON File Systems [SOSP’ 05] All Done? Success? • Improves performance • Paper published](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-21.jpg)

IRON File Systems [SOSP '05]: All Done?
Success?
• Improves performance
• Paper published at SOSP '05
• Transactional checksum adopted into Linux ext4 (and still ships)
But, idea lingered
• Can checksums replace ordering more generally?
• Moved on…

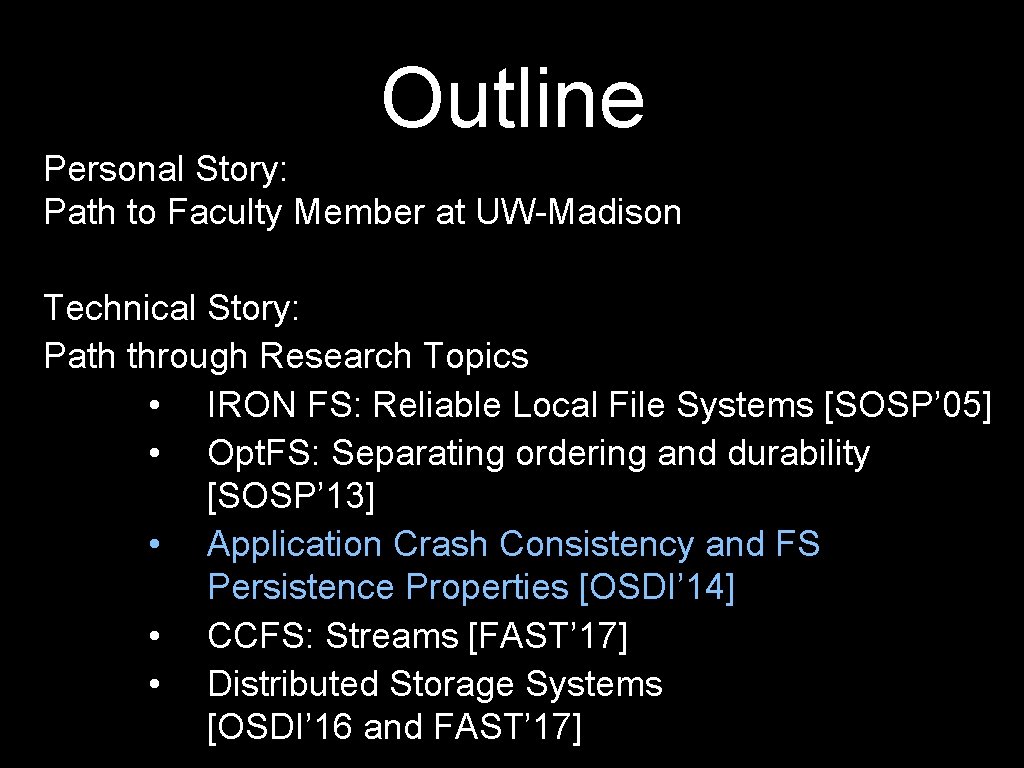

Outline
Personal Story: Path to Faculty Member at UW-Madison
Technical Story: Path through Research Topics
• IRON FS: Reliable Local File Systems [SOSP '05]
• OptFS: Separating ordering and durability [SOSP '13]
• Application Crash Consistency and FS Persistence Properties [OSDI '14]
• CCFS: Streams [FAST '17]
• Distributed Storage Systems [OSDI '16 and FAST '17]

Advice: Talk to Industry
Work on real problems that are not being solved by others
Or, work with people who do…

![Coerced Cache Eviction DSN 11 Can We Trust Wait Experts say not always Coerced Cache Eviction [DSN’ 11] Can We Trust Wait? Experts say not always •](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-24.jpg)

Coerced Cache Eviction [DSN '11]: Can We Trust Wait?
Experts say not always
• Some hard drives ignore barriers/flush
Documentation hints at problem:
• From the fcntl man page on Mac OS X:
  • F_FULLFSYNC: Does the same thing as fsync(2) then asks the drive to flush all buffered data to device. Certain FireWire drives have also been known to ignore the request to flush their buffered data.
• From VirtualBox documentation:
  • If desired, the virtual disk images can be flushed when the guest issues the IDE FLUSH CACHE command. Normally these requests are ignored for improved performance.

![Caches Incent Cheating Coerced Cache Eviction DSN 11 Hard disk performance Critical for performance Caches Incent Cheating Coerced Cache Eviction [DSN’ 11] Hard disk performance Critical for performance](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-25.jpg)

Coerced Cache Eviction [DSN '11]: Caches Incent Cheating
Hard disk performance
Caches are critical for performance
• Reads: avoid re-fetching
• Writes: avoid committing to layer beneath
• Reorder writes, squash writes
Any layer of distributed storage system could cache data!

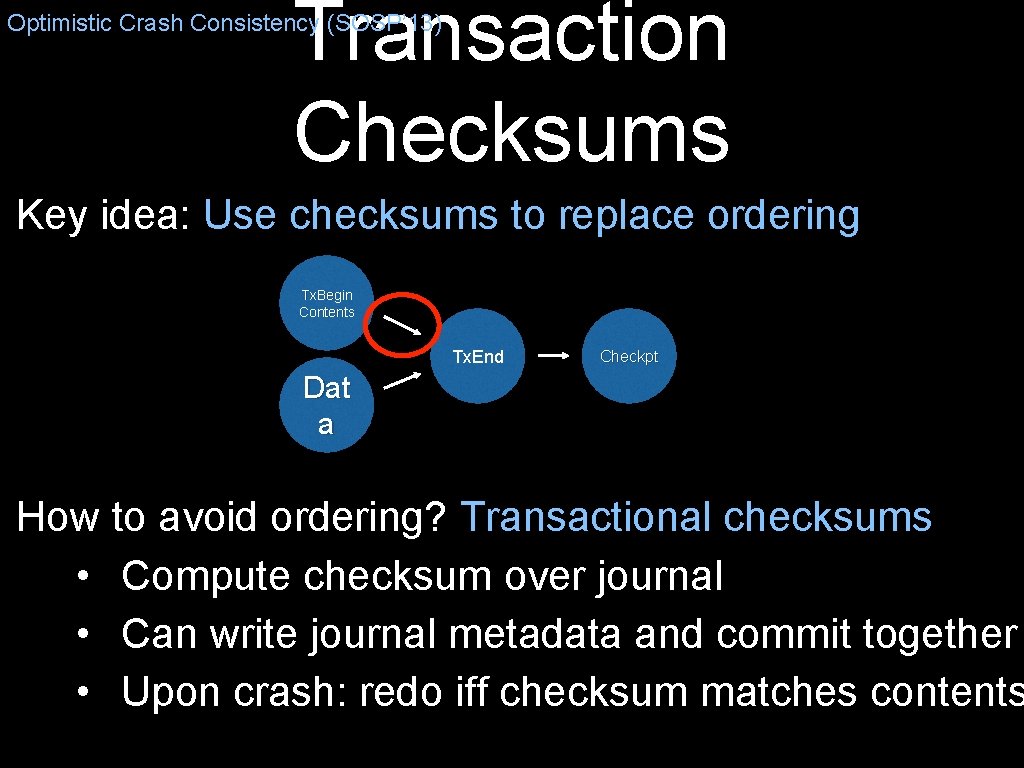

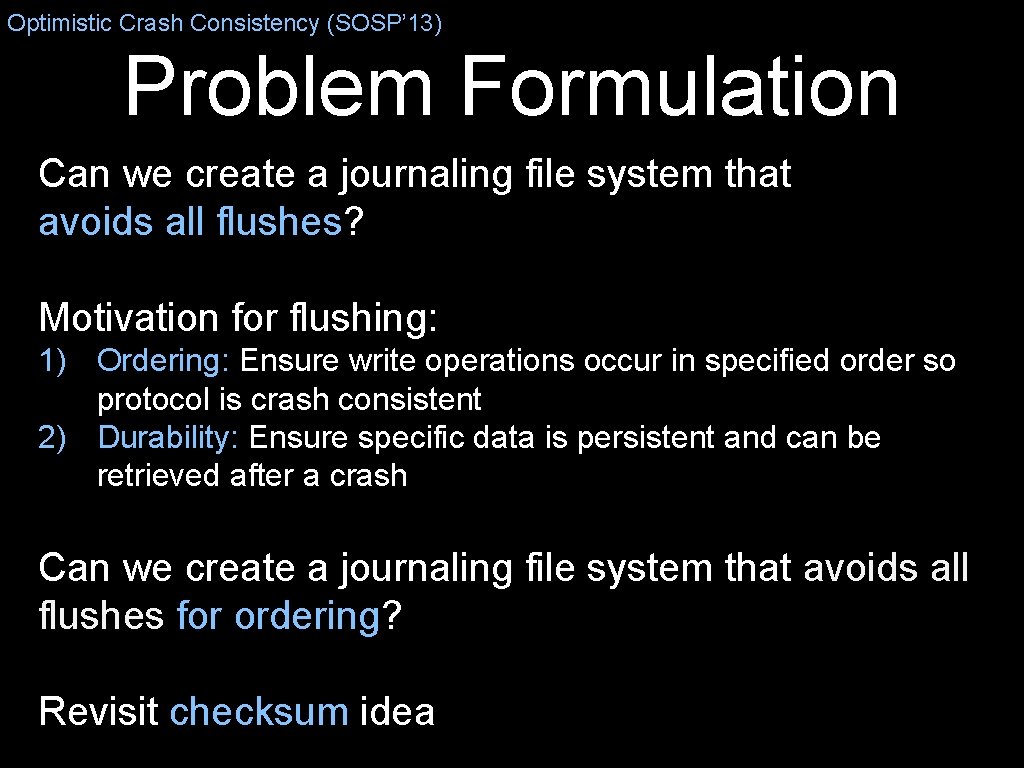

Optimistic Crash Consistency [SOSP '13]: Problem Formulation
Can we create a journaling file system that avoids all flushes?
Motivation for flushing:
1) Ordering: Ensure write operations occur in specified order so protocol is crash consistent
2) Durability: Ensure specific data is persistent and can be retrieved after a crash
Can we create a journaling file system that avoids all flushes for ordering?
• Revisit checksum idea

Optimistic Crash Consistency [SOSP '13]: Optimistic FS Approach
Observation: Most of the time, the system doesn't crash
• Okay if writes go "out of order", as long as the system doesn't crash!
Provide new primitive osync() to applications
• write(A), osync(), write(B)
• Application uses fsync() to flush data for durability

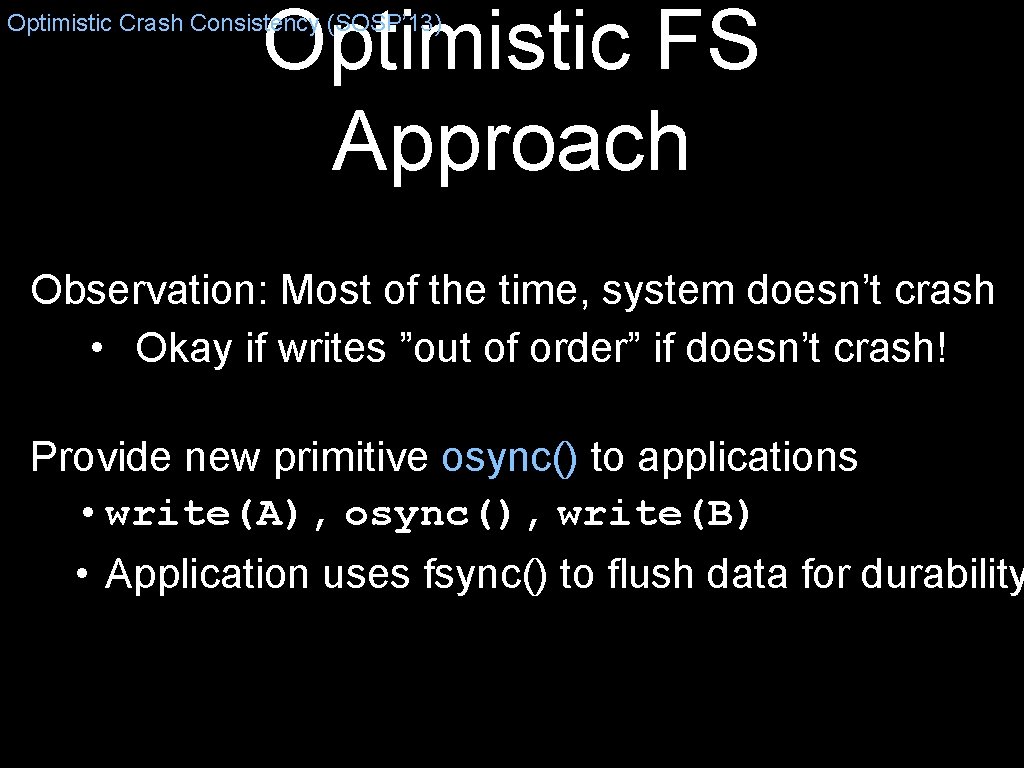

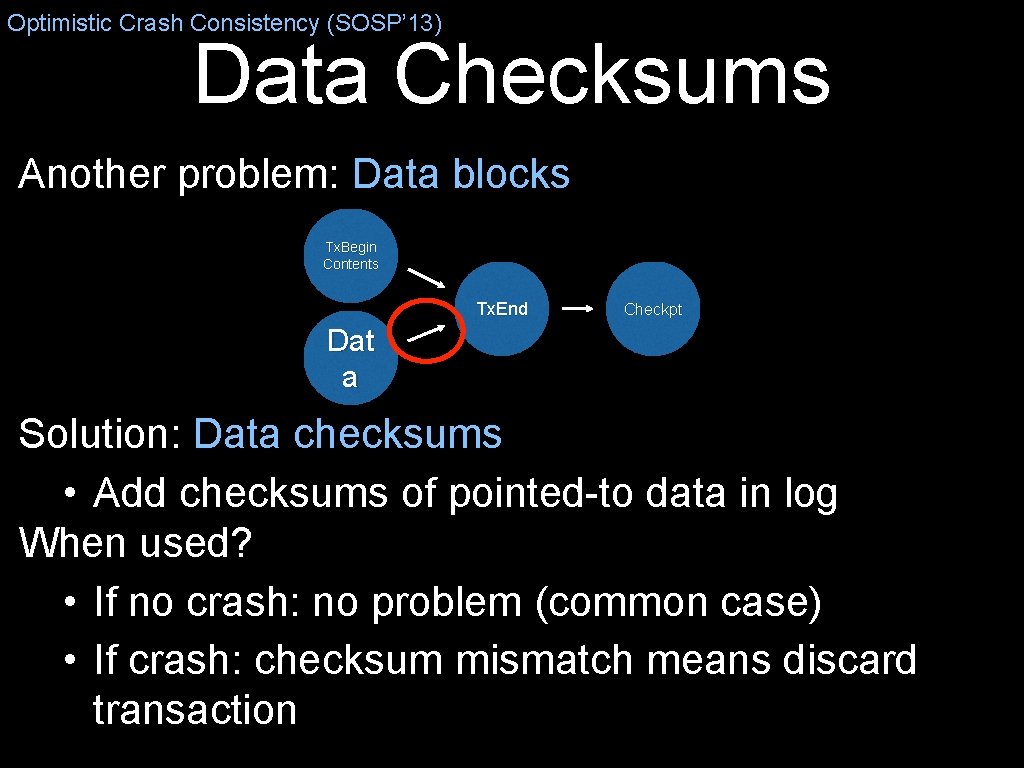

Optimistic Crash Consistency [SOSP '13]: Transaction Checksums
Key idea: Use checksums to replace ordering
Diagram labels: TxBegin, Contents, TxEnd, Checkpoint, Data
How to avoid ordering? Transactional checksums
• Compute checksum over journal
• Can write journal metadata and commit together
• Upon crash: redo iff checksum matches contents

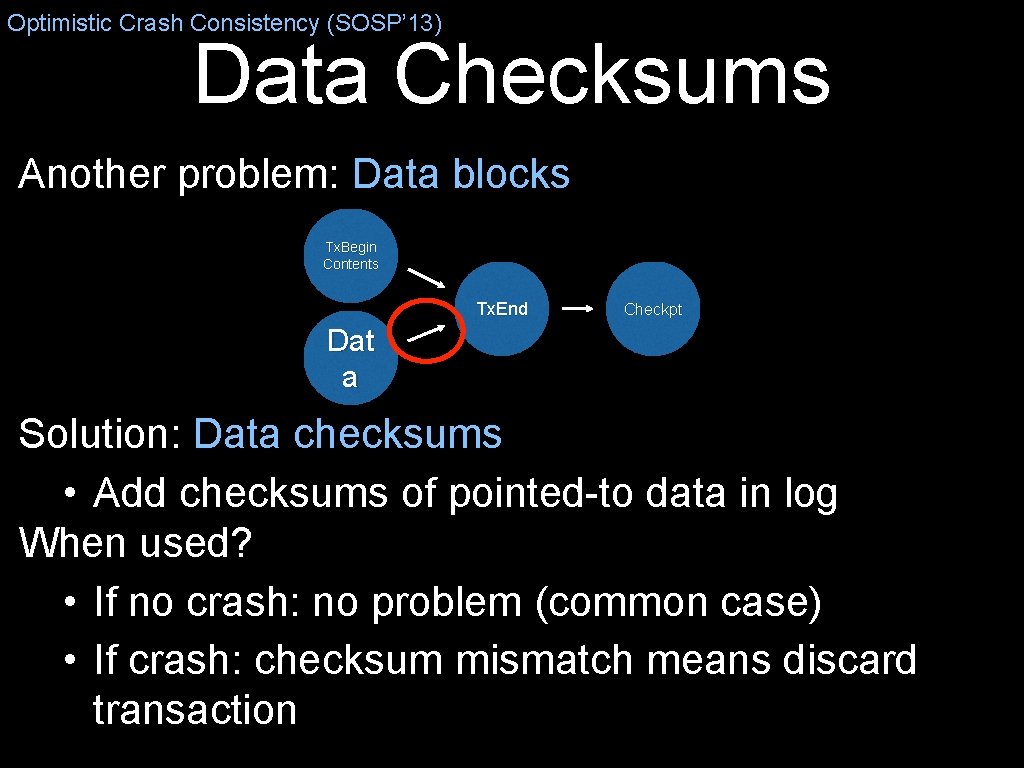

Optimistic Crash Consistency [SOSP '13]: Data Checksums
Another problem: Data blocks
Diagram labels: TxBegin, Contents, TxEnd, Checkpoint, Data
Solution: Data checksums
• Add checksums of pointed-to data in log
When used?
• If no crash: no problem (common case)
• If crash: checksum mismatch means discard transaction

Optimistic Crash Consistency [SOSP '13]: One More Problem
Must separate journaling from checkpointing
Diagram labels: TxBegin, Contents, TxEnd, Checkpoint, Data
How to know when logging is complete?
New: Async Durability Notification (ADN)
• After writes are persisted, OS notified by drive
• Drive provides information, is not controlled
• Only background work must wait for ADN

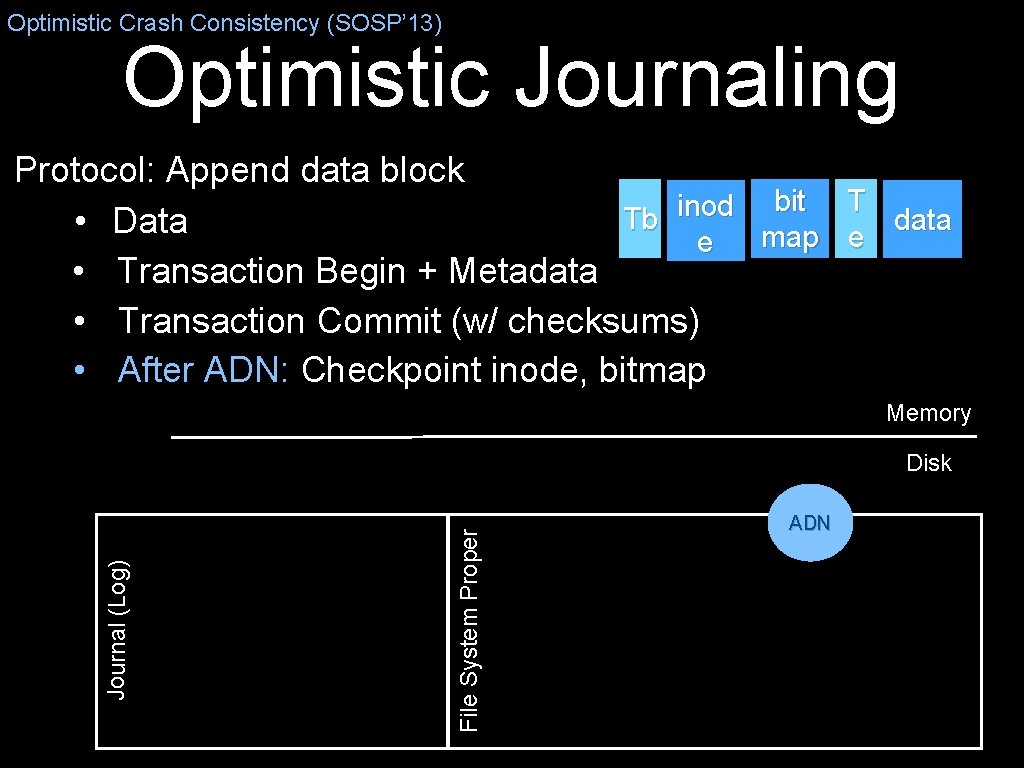

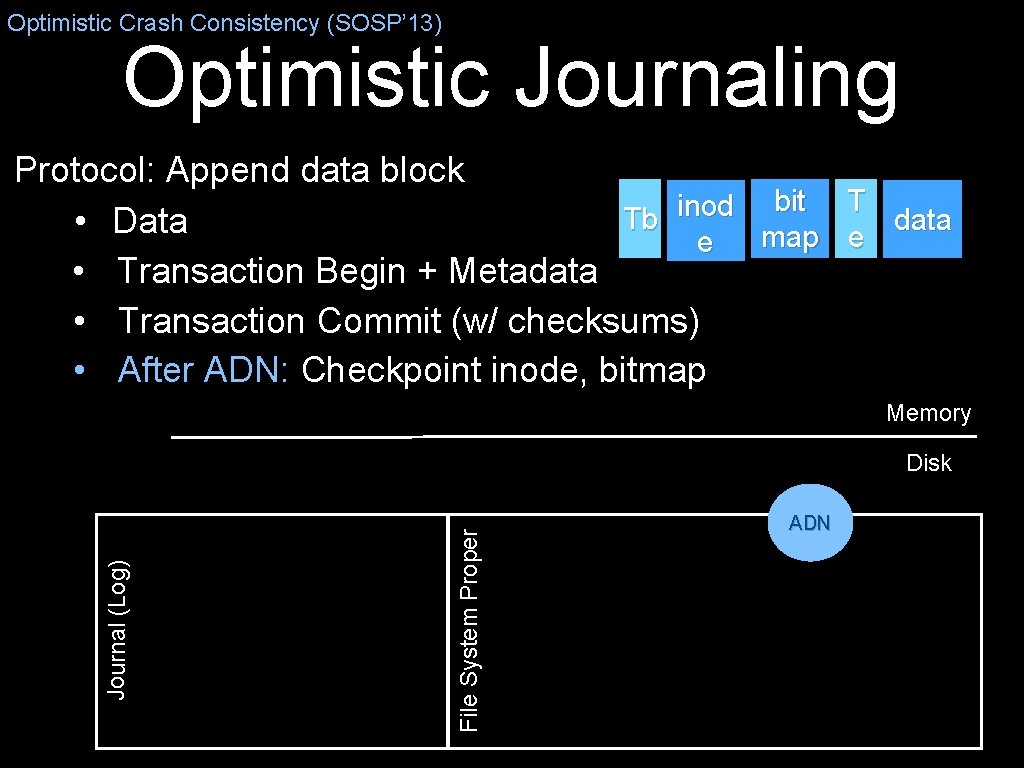

Optimistic Crash Consistency [SOSP '13]: Optimistic Journaling
Protocol: Append data block
• Data
• Transaction Begin + Metadata
• Transaction Commit (w/ checksums)
• After ADN: Checkpoint inode, bitmap
Diagram labels: Memory (inode, bitmap, data); Disk (File System Proper, Journal (Log) holding Tb, inode, bitmap, Te); ADN
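One way to picture the separation of journaling from checkpointing is the toy model below. It is a sketch under stated assumptions: the class `OptimisticJournal`, the `on_adn` callback, and integer transaction ids that increase in commit order are all hypothetical, and no real drive interface is modeled.

```python
class OptimisticJournal:
    """Toy model: commits are issued without flushes; checkpointing is
    background work that waits for an async durability notification."""

    def __init__(self):
        self.journal = {}        # tx_id -> {block name: bytes}, maybe not durable yet
        self.durable = set()     # tx_ids the drive has reported as persisted
        self.checkpointed = {}   # in-place file-system state

    def commit(self, tx_id, blocks):
        # Foreground path: log the transaction and return immediately.
        self.journal[tx_id] = dict(blocks)

    def on_adn(self, tx_id):
        # Hypothetical callback: the drive informs the OS that tx_id is
        # persistent. The drive only reports; it is not told when to flush.
        self.durable.add(tx_id)

    def checkpoint(self):
        # Apply transactions in commit order, stopping at the first one
        # that has not been confirmed durable yet.
        for tx_id in sorted(self.journal):
            if tx_id not in self.durable:
                break
            self.checkpointed.update(self.journal.pop(tx_id))
            self.durable.discard(tx_id)
```

For example, if transaction 2 is reported durable before transaction 1, `checkpoint()` applies nothing until the notification for transaction 1 also arrives; only the background checkpoint waits, never the committing application.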

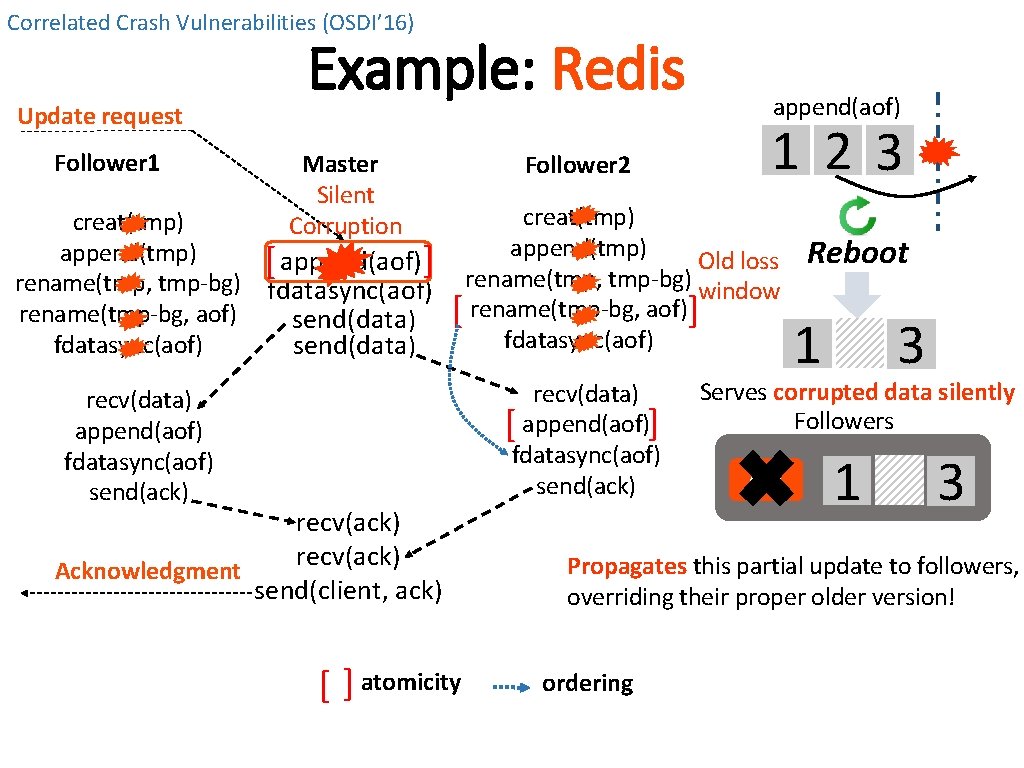

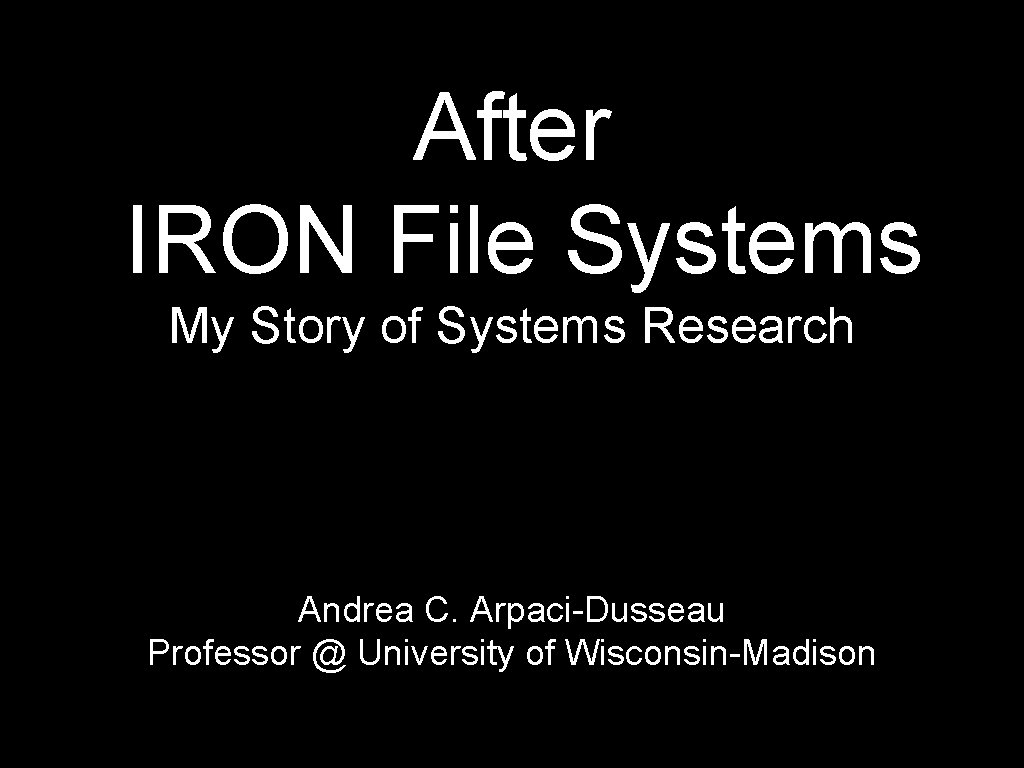

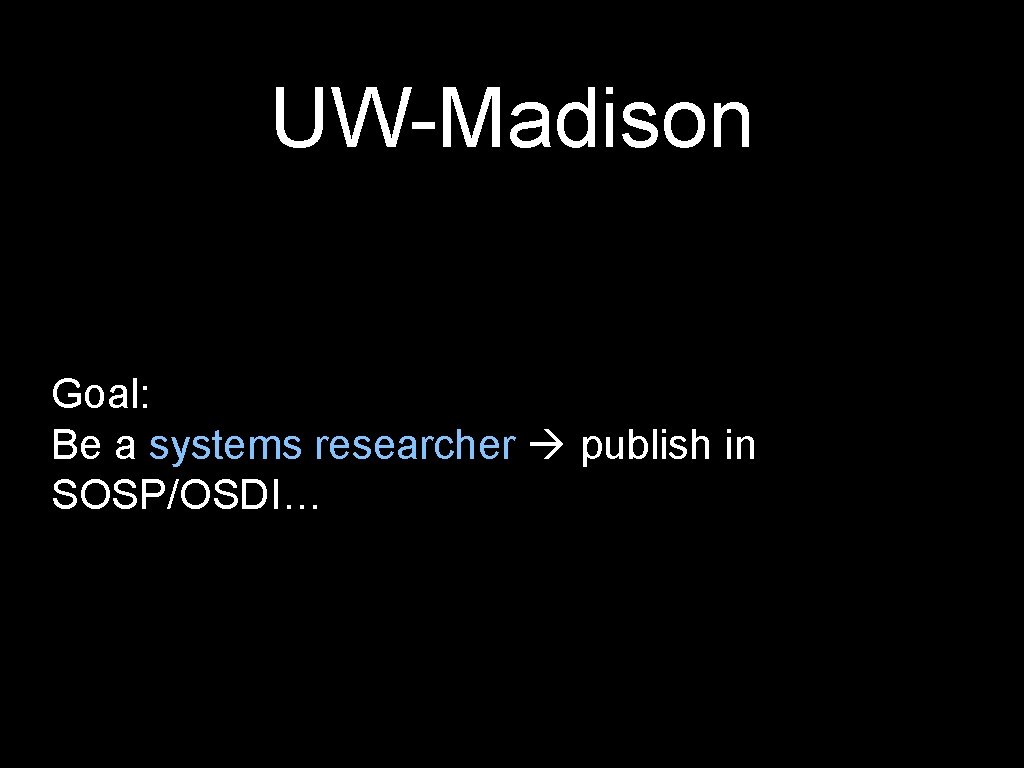

![Optimistic Crash Consistency SOSP 13 SQLite Analysis Crashpoints Inconsistent Consistentold Consistentnew Timeop ext 4

Optimistic Crash Consistency (SOSP’ 13) SQLite Analysis Crashpoints Inconsistent Consistent[old] Consistent[new] Time/op ext 4](https://slidetodoc.com/presentation_image_h/a01bb248c057cb237ac5b98f6af9d6b8/image-32.jpg)

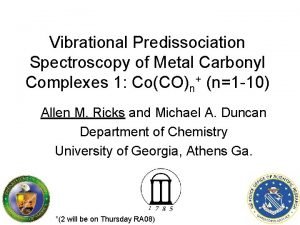

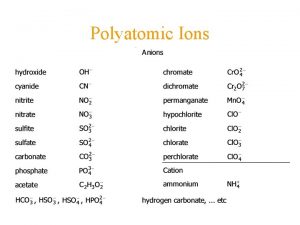

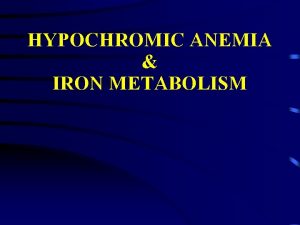

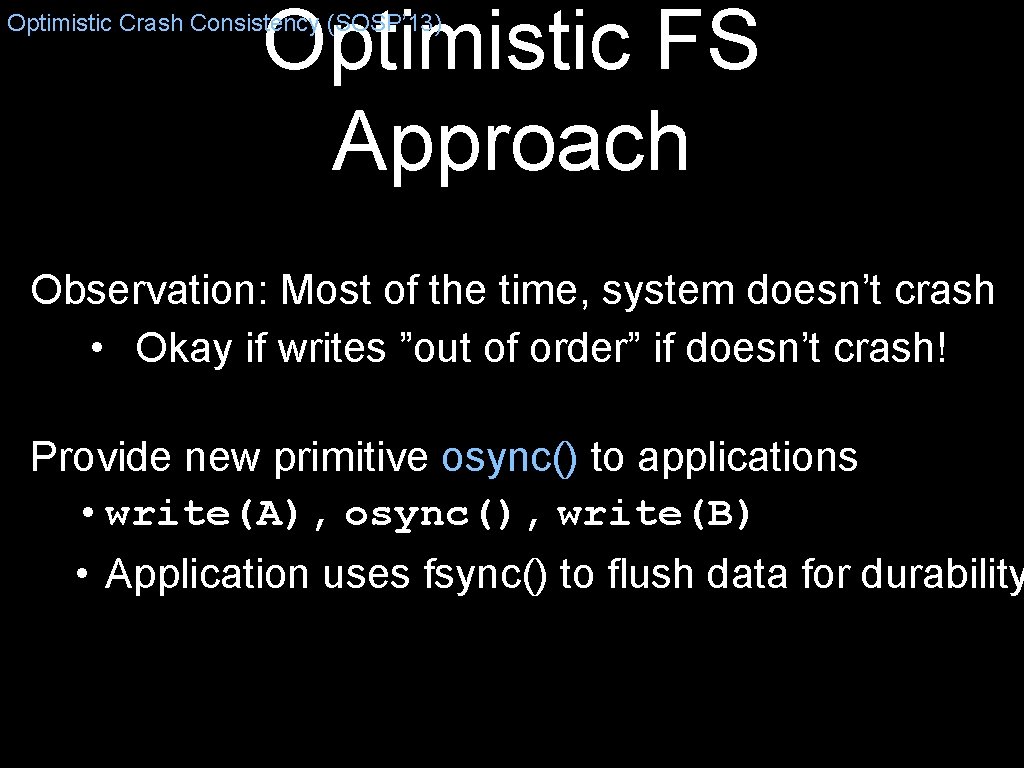

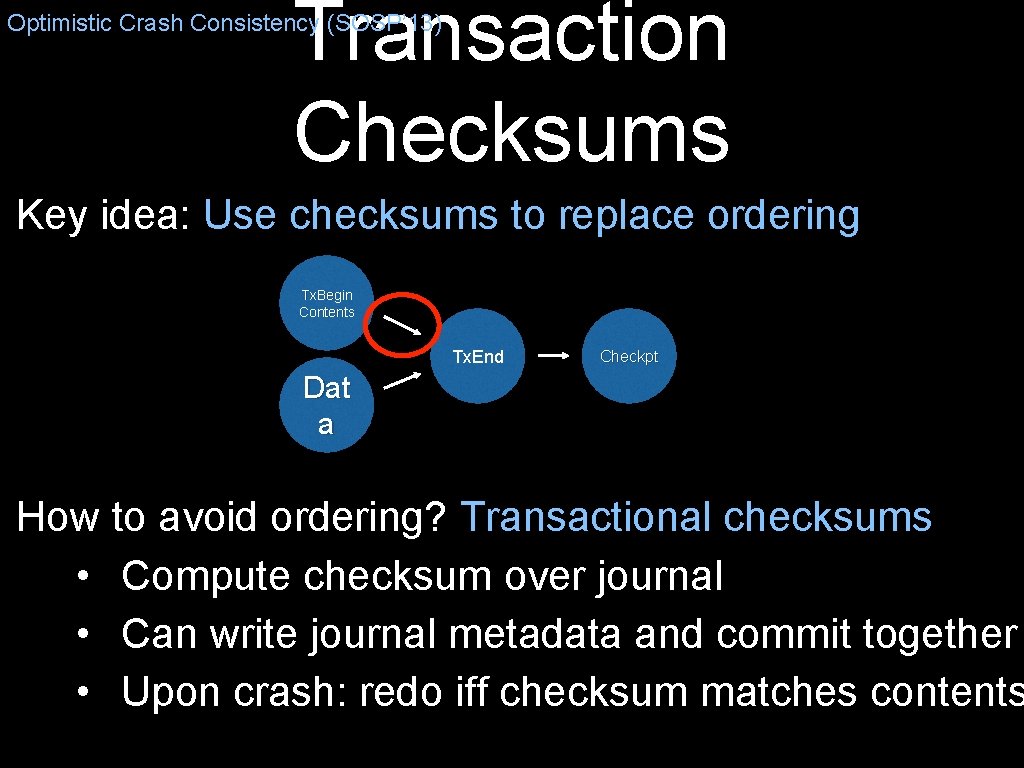

Optimistic Crash Consistency [SOSP '13]: SQLite Analysis

| Configuration | Crashpoints | Inconsistent | Consistent [old] | Consistent [new] | Time/op |
| --- | --- | --- | --- | --- | --- |
| ext4 (fast/risky) | 100 | 73 | 8 | 19 | ~15 ms |
| ext4 (slow/safe) | 100 | 0 | 50 | 50 | ~150 ms |
| OptFS | 100 | 0 | 76 | 24 | ~15 ms |

OptFS: Fast and crash consistent
• 10x faster than slow/safe ext4 for update workload
• Prefix consistency: Always consistent (but old)

Outline
Personal Story: Path to Faculty Member at UW-Madison
Technical Story: Path through Research Topics
• IRON FS: Reliable Local File Systems [SOSP '05]
• OptFS: Separating ordering and durability [SOSP '13]
• Application Crash Consistency and FS Persistence Properties [OSDI '14]
• CCFS: Streams [FAST '17]
• Distributed Storage Systems [OSDI '16 and FAST '17]

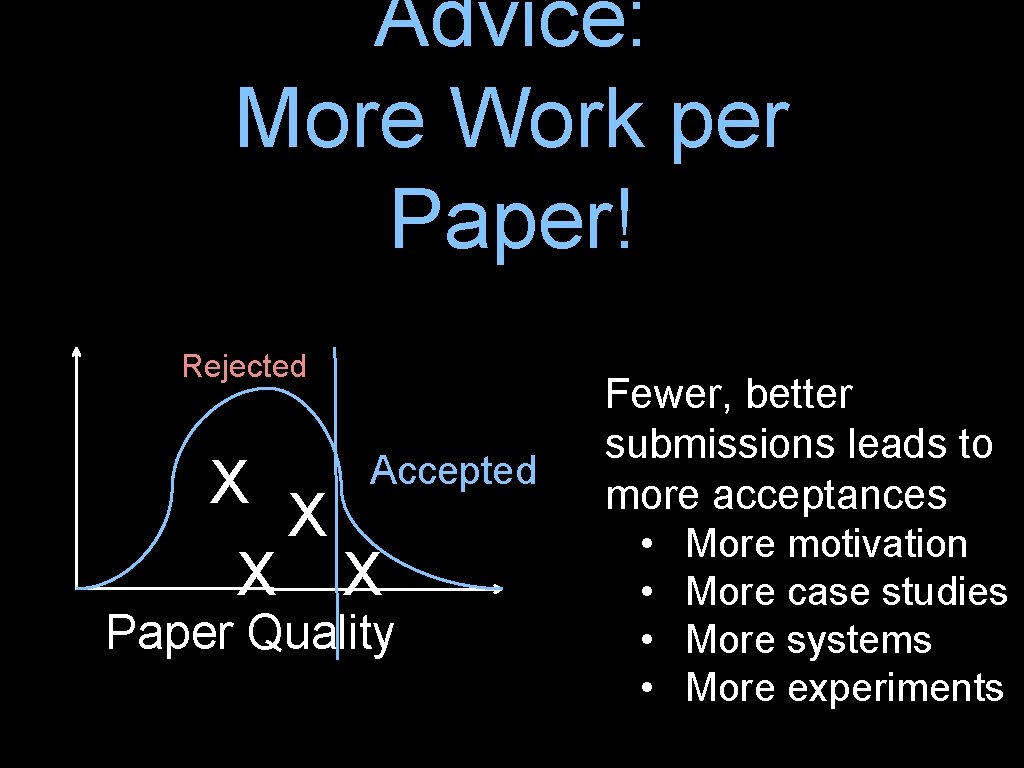

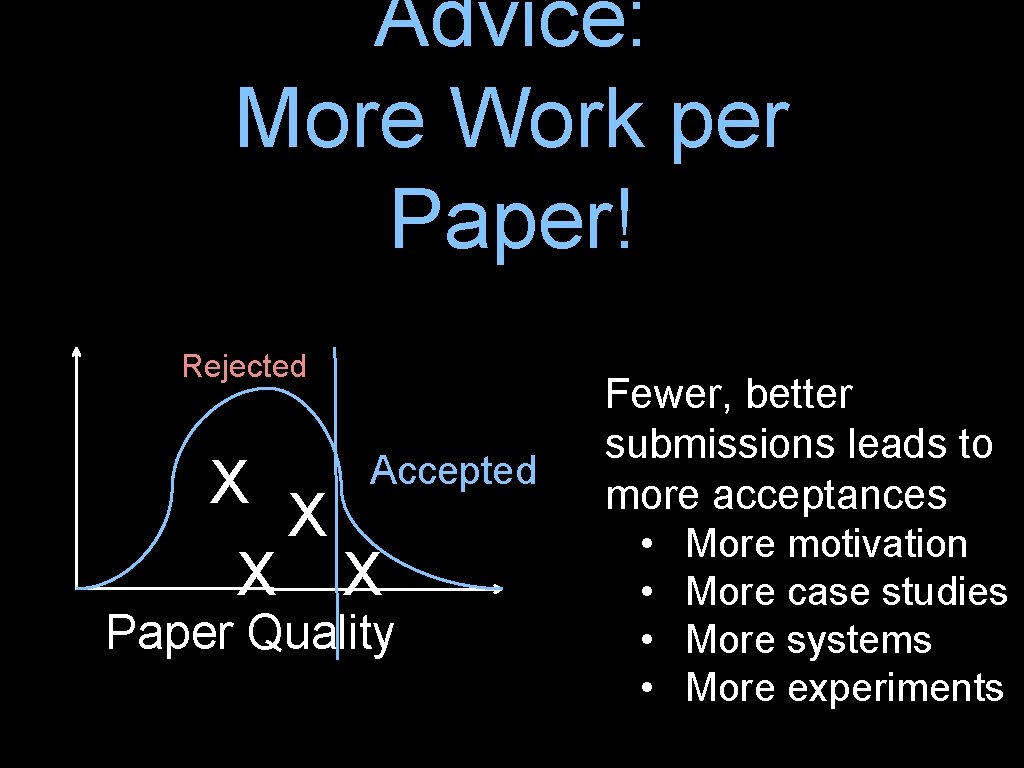

Advice: More Work per Paper!
Fewer, better submissions lead to more acceptances
• More motivation
• More case studies
• More systems
• More experiments
Figure: submissions (X) along a Paper Quality axis; three fall in the Rejected region and one in the Accepted region

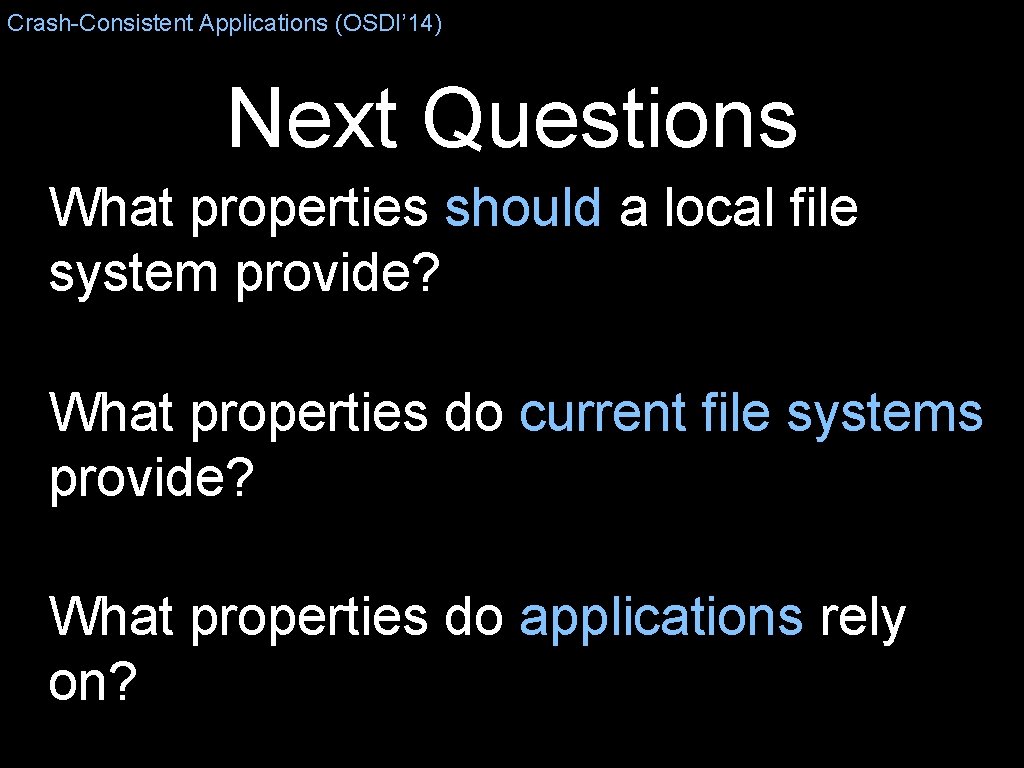

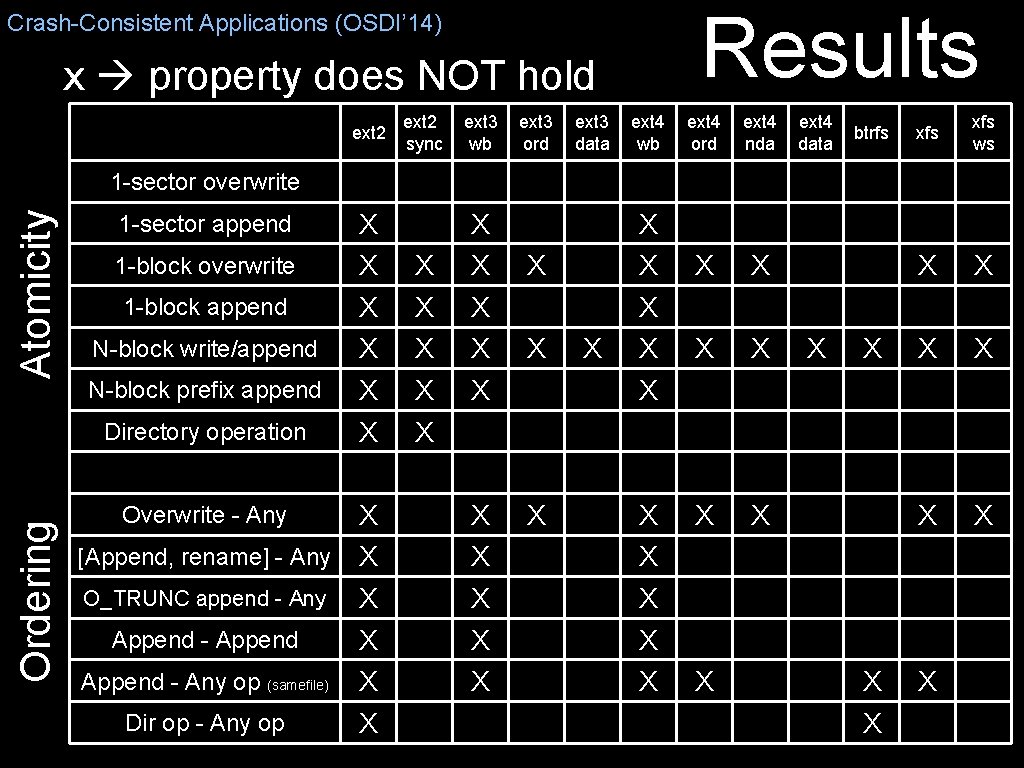

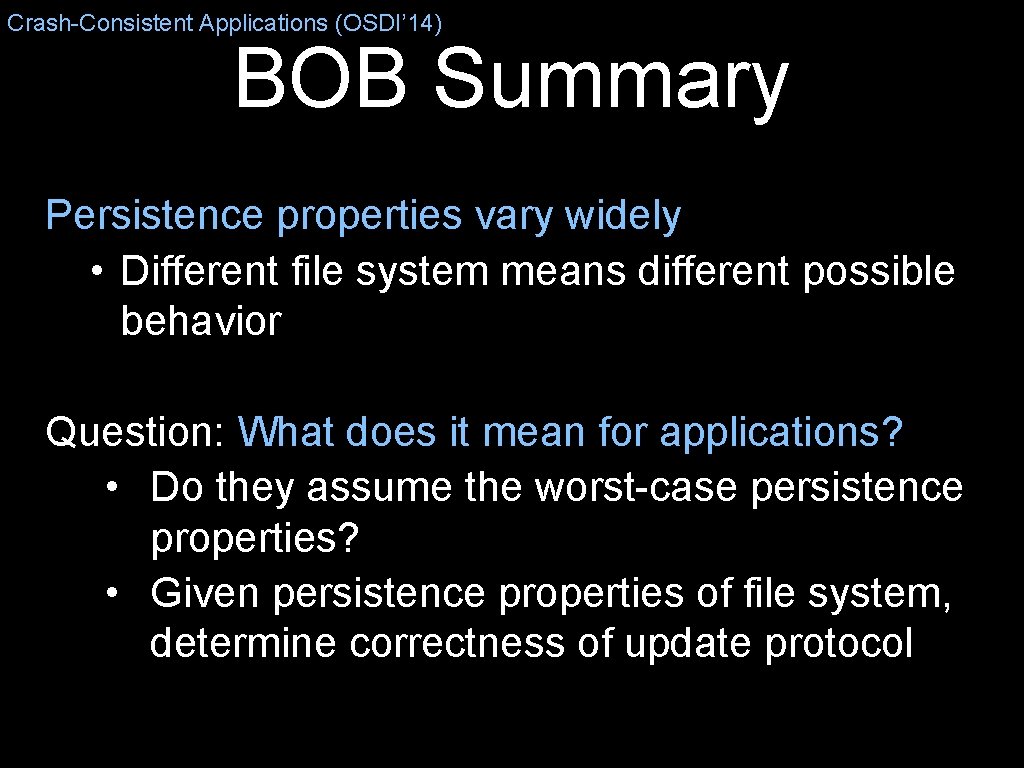

Crash-Consistent Applications [OSDI '14]: Next Questions
• What properties should a local file system provide?
• What properties do current file systems provide?
• What properties do applications rely on?

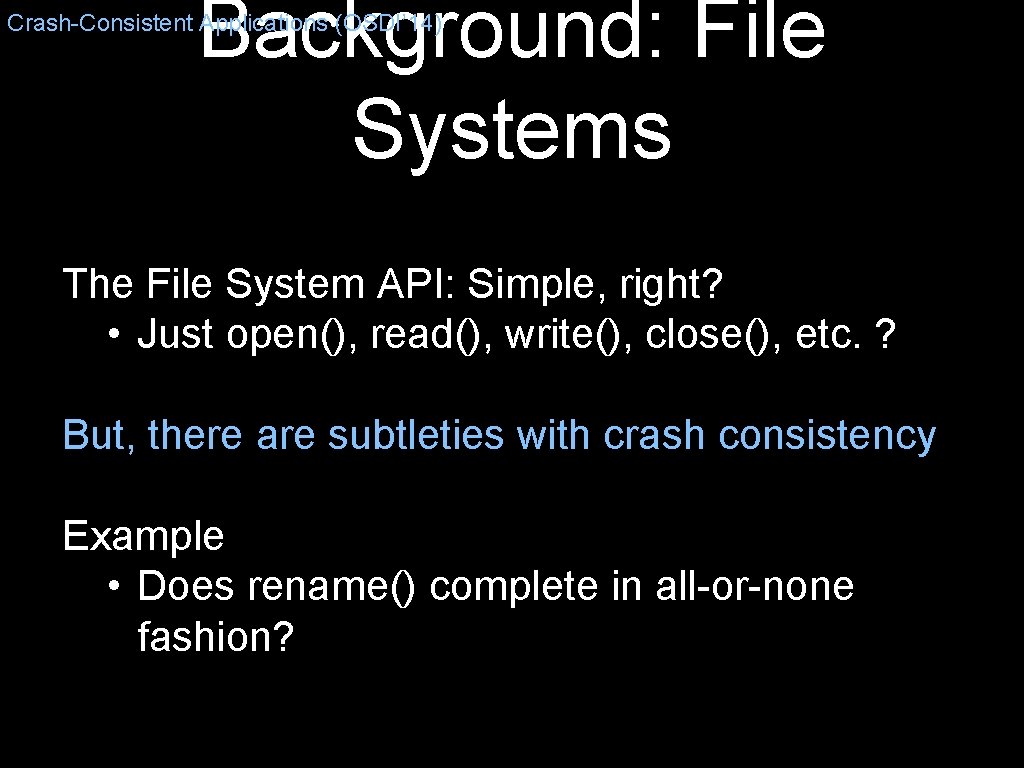

Crash-Consistent Applications [OSDI '14]: Background: File Systems
The File System API: Simple, right?
• Just open(), read(), write(), close(), etc.?
But, there are subtleties with crash consistency
Example
• Does rename() complete in all-or-none fashion?

Crash-Consistent Applications [OSDI '14]: Persistence Properties
Persistence properties of a file system: Which post-crash on-disk states are possible?
Atomicity
• Does update happen all at once?
• Example: rename(), write(multiple blocks)
Ordering
• Does A before B in program order imply A before B in persisted order?
• e.g., write() ordering maintained?
How to determine?

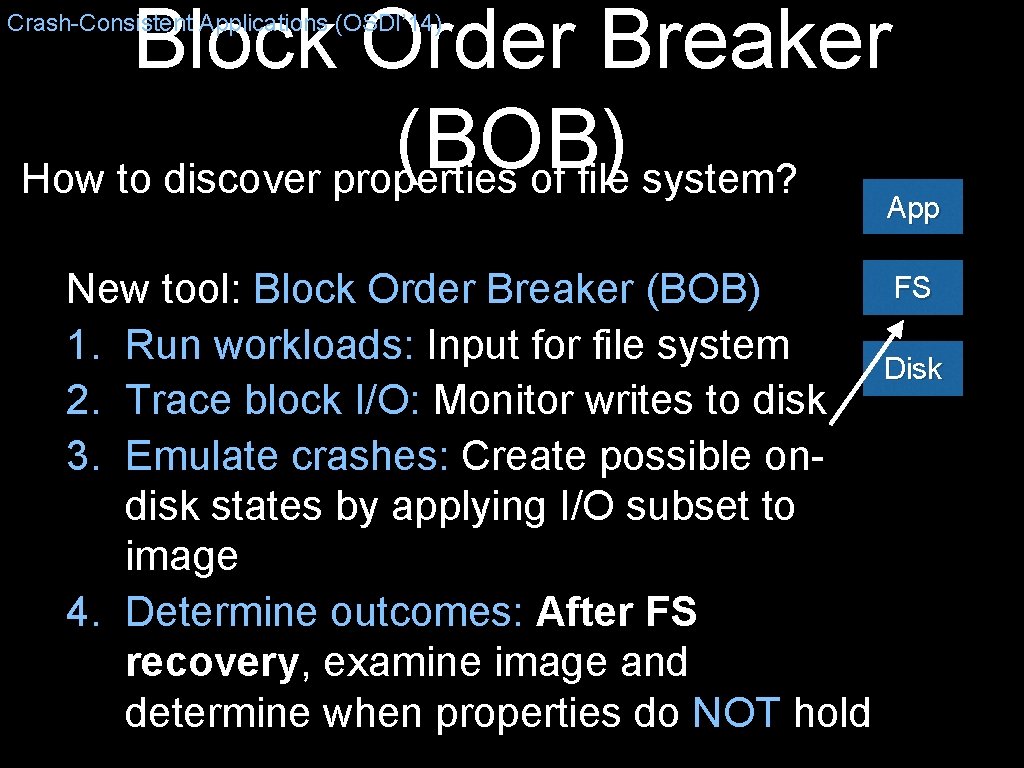

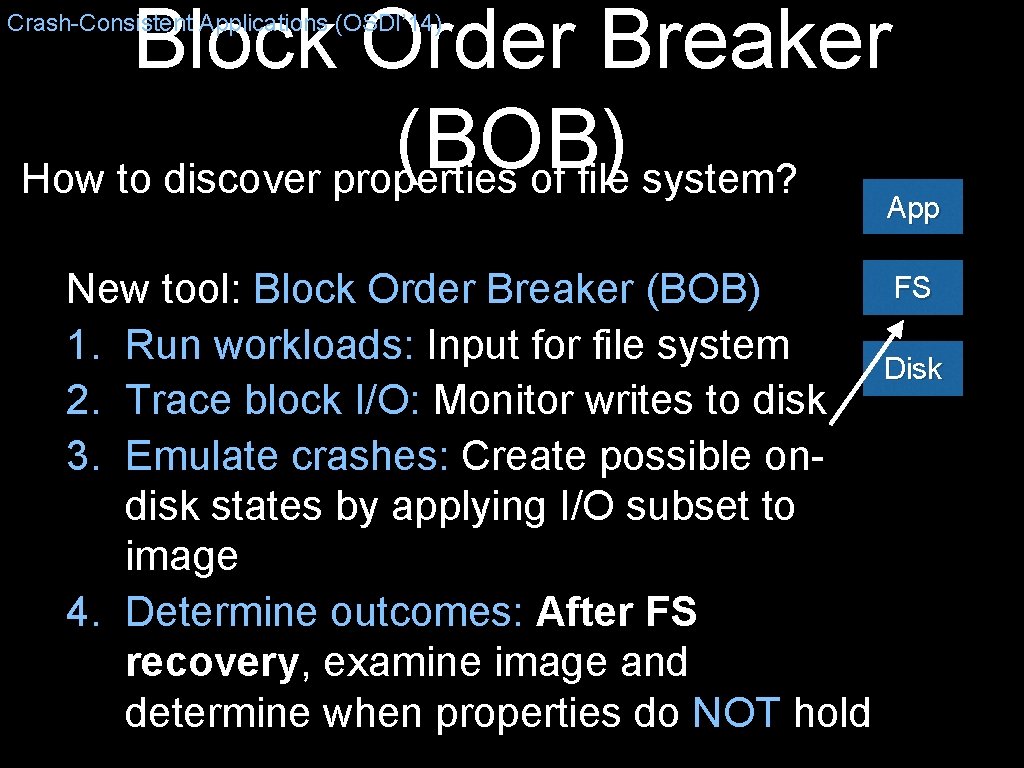

Crash-Consistent Applications [OSDI '14]: Block Order Breaker (BOB)
How to discover properties of file system?
New tool: Block Order Breaker (BOB)
1. Run workloads: Input for file system
2. Trace block I/O: Monitor writes to disk
3. Emulate crashes: Create possible on-disk states by applying I/O subset to image
4. Determine outcomes: After FS recovery, examine image and determine when properties do NOT hold
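Steps 3 and 4 can be sketched on a toy disk image. This is not BOB itself: the image is modeled as a plain dictionary from block number to contents, the file-system recovery step is omitted, and the names `crash_states` and `ordering_violations` are assumptions made for illustration.

```python
from itertools import combinations

def crash_states(initial_image, traced_writes):
    """Yield (applied_indices, image) for every subset of the traced block
    writes, modeling a disk that may persist any subset before a crash.
    Exponential in the trace length, so only suitable for tiny traces."""
    indices = range(len(traced_writes))
    for size in range(len(traced_writes) + 1):
        for subset in combinations(indices, size):
            image = dict(initial_image)
            for i in subset:                  # apply survivors in issue order
                block_no, data = traced_writes[i]
                image[block_no] = data
            yield subset, image

def ordering_violations(initial_image, traced_writes, first, second):
    """Return the crash states where write `second` persisted but the
    earlier write `first` did not, i.e. where ordering does NOT hold."""
    return [subset
            for subset, _ in crash_states(initial_image, traced_writes)
            if second in subset and first not in subset]

# Hypothetical two-write trace: block 0 is written before block 1.
trace = [(0, b"A"), (1, b"B")]
```

With this trace there are four possible crash states, and the only one that breaks ordering is the state where block 1 reached disk without block 0. A real tool would then run file-system recovery on each image before checking the property.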

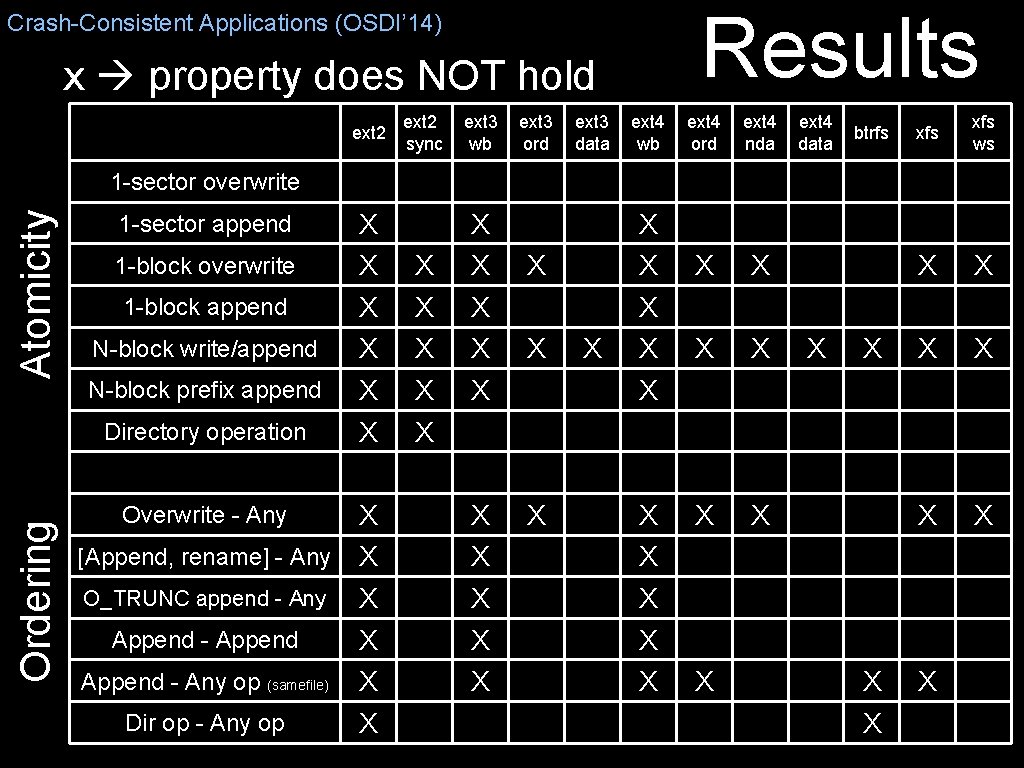

Crash-Consistent Applications [OSDI '14]: Results
Table: X marks where a property does NOT hold (the per-cell X marks are not recoverable from the flattened table).
• File-system configurations (columns): ext2, ext2-sync, ext3-wb, ext3-ord, ext3-data, ext4-wb, ext4-ord, ext4-nda, ext4-data, btrfs, xfs, xfs-ws
• Atomicity (rows): 1-sector overwrite, 1-sector append, 1-block overwrite, 1-block append, N-block write/append, N-block prefix append, Directory operation
• Ordering (rows): [Append, rename] - Any, O_TRUNC append - Any, Append - Append (same file), Append - Any op, Dir op - Any op, Overwrite - Any

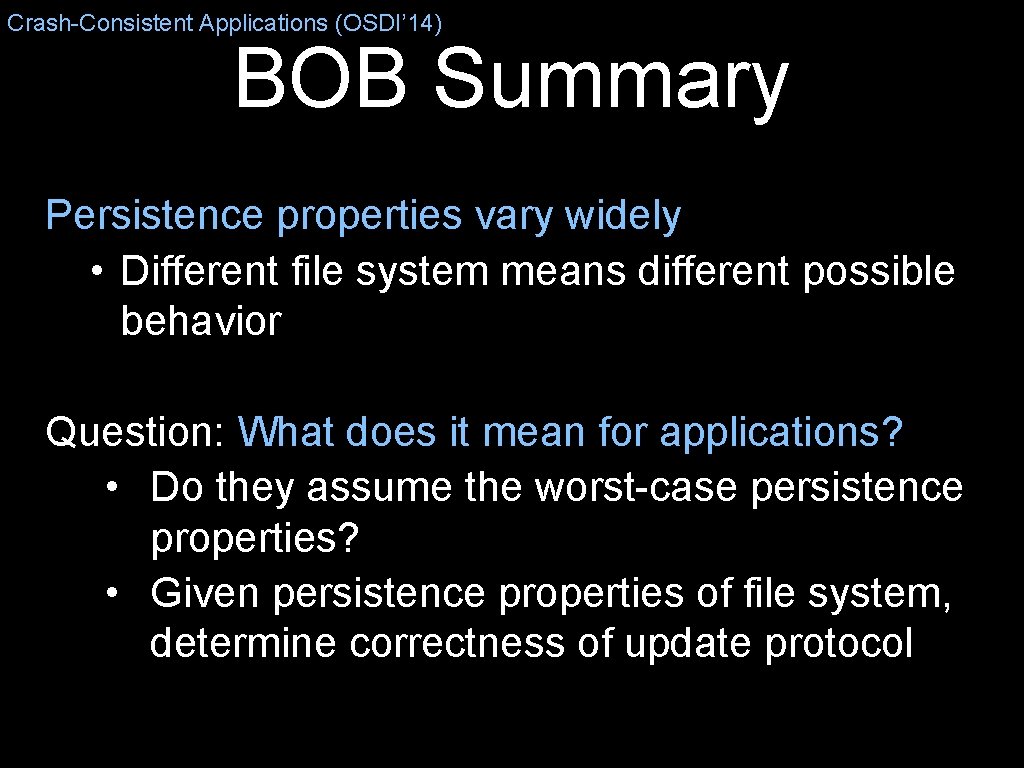

Crash-Consistent Applications [OSDI '14]: BOB Summary
Persistence properties vary widely
• Different file system means different possible behavior
Question: What does it mean for applications?
• Do they assume the worst-case persistence properties?
• Given persistence properties of file system, determine correctness of update protocol

Crash-Consistent Applications [OSDI '14]: Application Crash Vulnerabilities
Each application has an update protocol: a series of system calls that update persistent file-system state
// a data logging protocol from BDB
creat(log)
trunc(log)
append(log)
What's missing?
• Truncate must be atomic
• Need fdatasync() at end
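The "fdatasync() at end" point can be shown with a small sketch of a durable log append. It assumes a POSIX system (opening a directory and calling fsync on it does not work on Windows); `append_log_durably` is a hypothetical helper, not code from BDB, and it does not address the separate requirement that the truncate be atomic.

```python
import os

def append_log_durably(path, record):
    """Append `record` to the log at `path` and force it to stable
    storage before returning."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o644)
    try:
        view = memoryview(record)
        while len(view) > 0:            # os.write may write only a prefix
            written = os.write(fd, view)
            view = view[written:]
        os.fsync(fd)                    # persistence point for the append
    finally:
        os.close(fd)
    # If the file was just created, its directory entry must be persisted
    # too, otherwise the whole log can vanish after a crash.
    dir_fd = os.open(os.path.dirname(os.path.abspath(path)), os.O_RDONLY)
    try:
        os.fsync(dir_fd)
    finally:
        os.close(dir_fd)
```

On Linux, `os.fdatasync(fd)` could replace the first `os.fsync(fd)` to skip flushing metadata that is not needed to read the data back. As the talk points out, the sync call is only as trustworthy as the layers beneath it.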

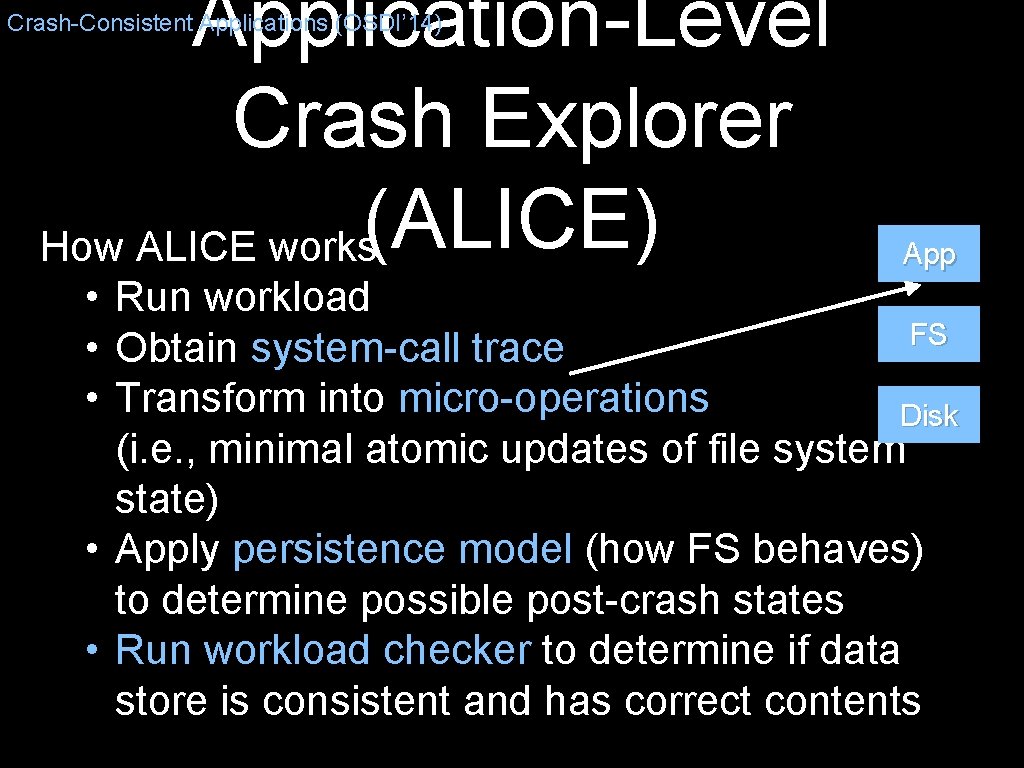

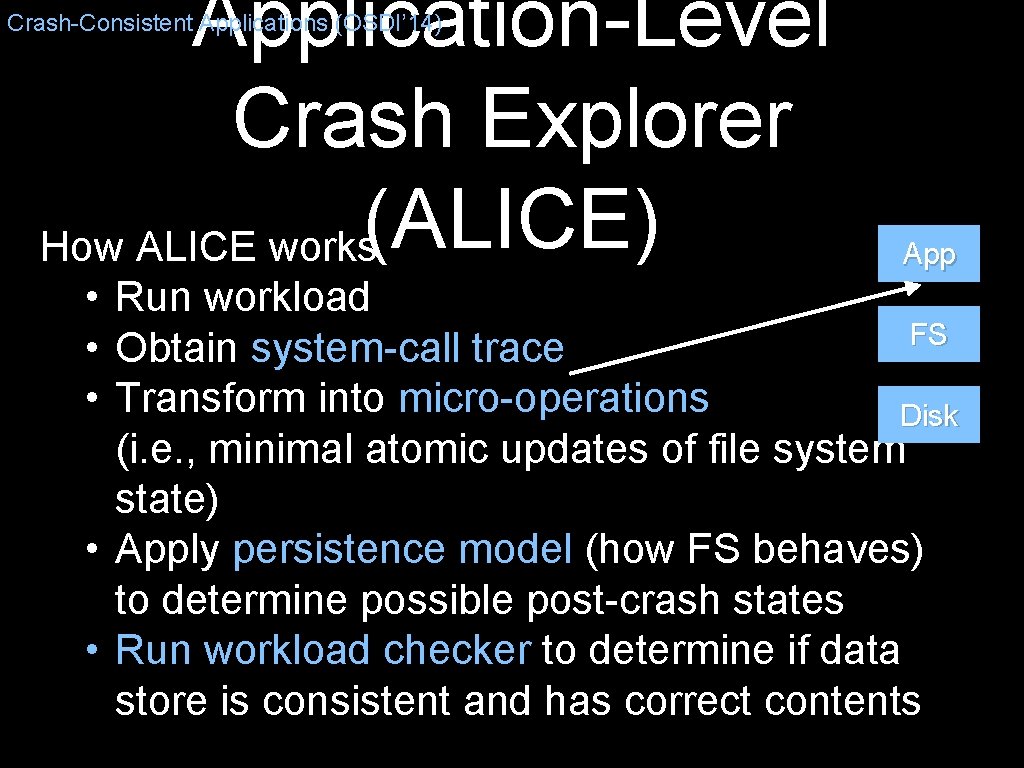

Crash-Consistent Applications [OSDI '14]: Application-Level Crash Explorer (ALICE)
How ALICE works:
• Run workload
• Obtain system-call trace
• Transform into micro-operations (i.e., minimal atomic updates of file system state)
• Apply persistence model (how FS behaves) to determine possible post-crash states
• Run workload checker to determine if data store is consistent and has correct contents

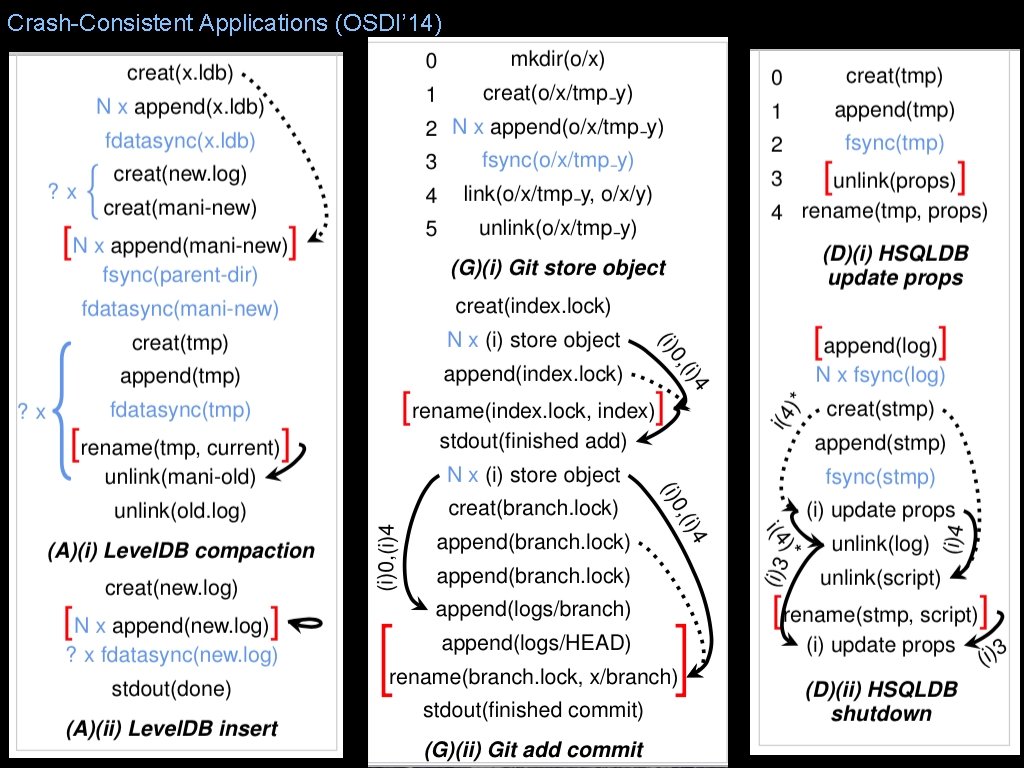

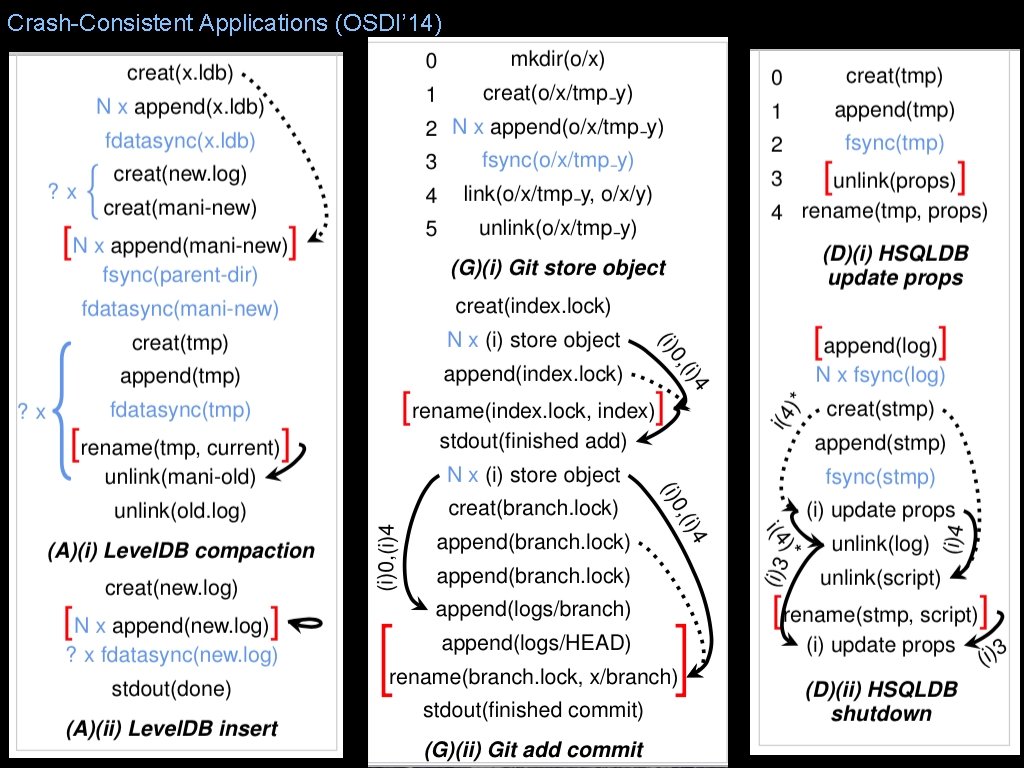

Crash-Consistent Applications [OSDI '14]: Protocol Diagrams
Output of ALICE: Protocol Diagrams
• Blue: sync() operation
• [Red brackets]: Required atomicity
• Arrows: Required ordering

Crash-Consistent Applications [OSDI '14]: Applications
• KV Stores: LevelDB, GDBM, LMDB
• Relational DBs: SQLite, PostgreSQL, HSQLDB
• Version Control Systems: Git, Mercurial
• Distributed Systems: HDFS, ZooKeeper
• Virtualization Software: VMware Player

Crash-Consistent Applications (OSDI’ 14)

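Crash-Consistent Applications [OSDI '14]: Application Vulnerabilities
Assuming weakest persistence properties
Table: vulnerability counts per application under the columns Atomicity, Ordering, Durability. Empty cells were lost when the table was flattened, so rows with fewer than three values cannot be mapped to columns; values are listed in their original order.
• LevelDB 1.1: 1, 4, 3
• LevelDB 1.15: 1, 3
• LMDB: 1
• GDBM: 1, 2, 2
• HSQLDB: 1, 6, 3
• SQLite: 1
• PostgreSQL: 1
• Git: 1, 7, 1
• Mercurial: 5, 6, 2
• VMware: 1
• HDFS: 2
• ZooKeeper: 1, 1, 2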

Crash-Consistent Applications [OSDI '14]: On Real File Systems?
How do applications behave on real file systems?

| File system | Vulnerabilities |
| --- | --- |
| ext3 (writeback) | 19 |
| ext3 (ordered) | 11 |
| ext3 (data) | 9 |
| ext4 (ordered) | 13 |
| btrfs | 31 |

Correctness issues found in all applications
• Some more problematic than others

Outline
Personal Story: Path to Faculty Member at UW-Madison
Technical Story: Path through Research Topics
• IRON FS: Reliable Local File Systems [SOSP '05]
• OptFS: Separating ordering and durability [SOSP '13]
• Application Crash Consistency and FS Persistence Properties [OSDI '14]
• CCFS: Streams [FAST '17]
• Distributed Storage Systems [OSDI '16 and FAST '17]

Advice: Motivation
Right motivation is important
• Need to convince audience you are solving an important problem
• Need to pitch your work
Don't always use initial motivation
• As you learn more, you can change the problem you are solving
Give multiple motivations
• Different reviewers are convinced by different aspects

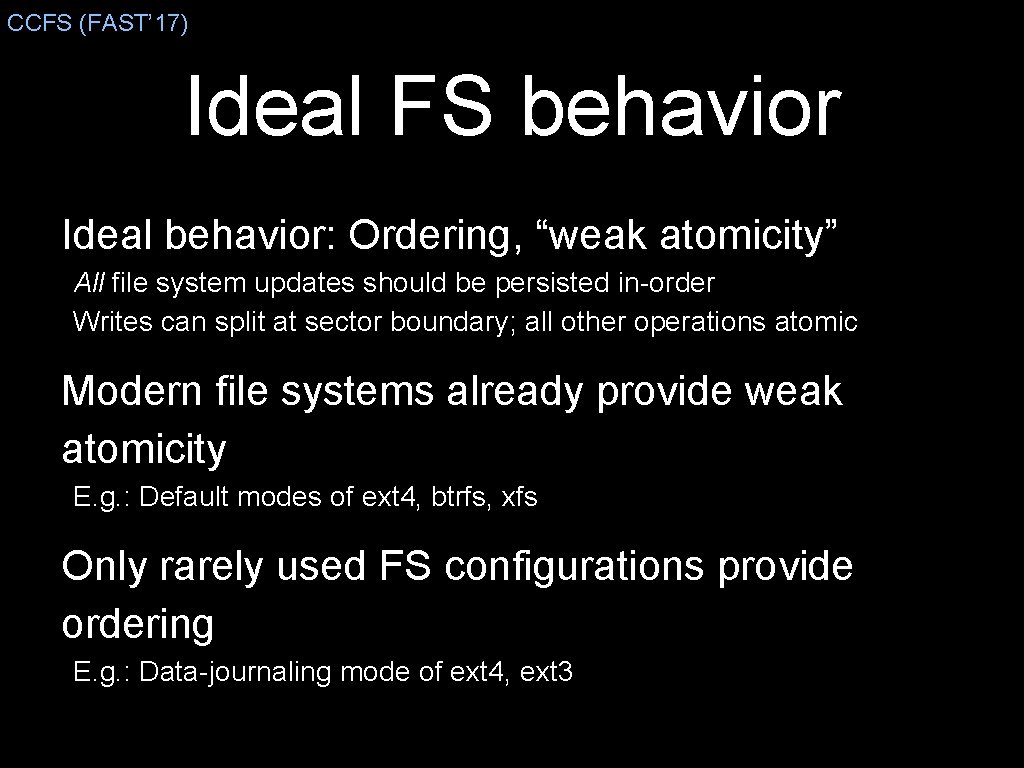

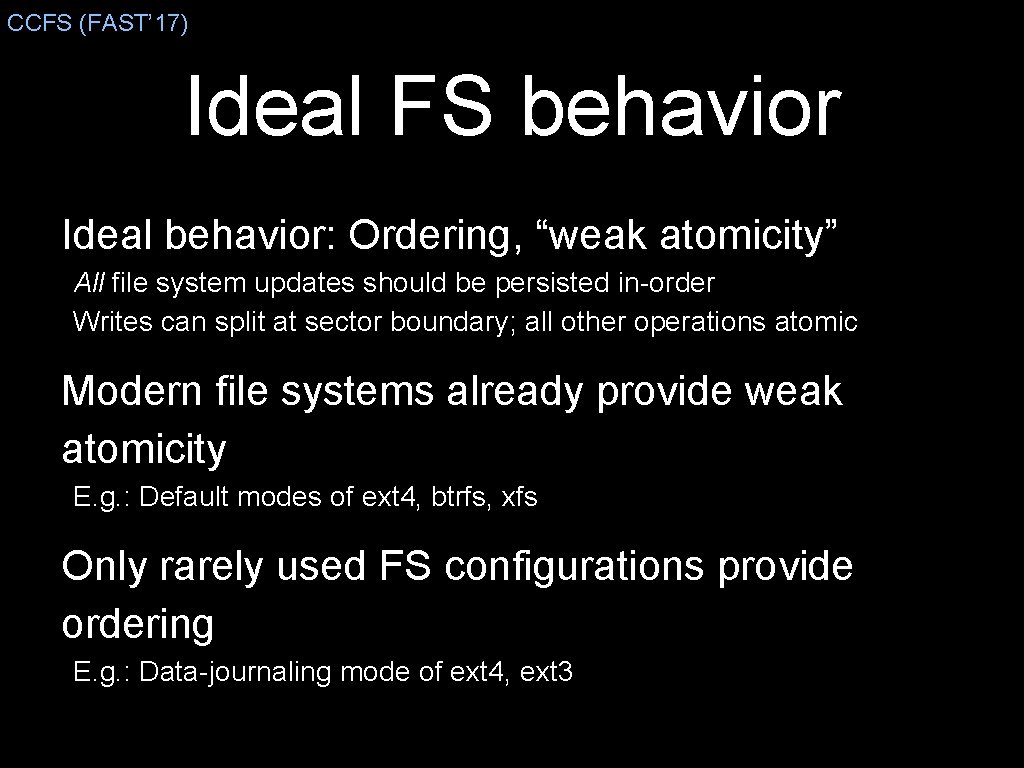

CCFS [FAST '17]: Ideal FS behavior
Ideal behavior: Ordering, "weak atomicity"
• All file system updates should be persisted in-order
• Writes can split at sector boundary; all other operations atomic
Modern file systems already provide weak atomicity
• E.g.: Default modes of ext4, btrfs, xfs
Only rarely used FS configurations provide ordering
• E.g.: Data-journaling mode of ext4, ext3

CCFS [FAST '17]: False Ordering Dependencies
Problem: Ordering across independent applications
Solution: Order only within each application
• Avoids performance overhead, provides application consistency
Timeline (Time 1 to 4):
• Application A: pwrite(f1, 0, 150 MB);
• Application B: write(f2, "hello"); write(f3, "world"); fsync(f3);

CCFS [FAST '17]: Stream Abstraction
New abstraction: Order only within a stream
• Each application is usually put into a separate stream
Timeline (Time 1 to 4):
• Application A (stream-A): pwrite(f1, 0, 150 MB);
• Application B (stream-B): write(f2, "hello"); write(f3, "world"); fsync(f3);
Annotation: 0.06 seconds

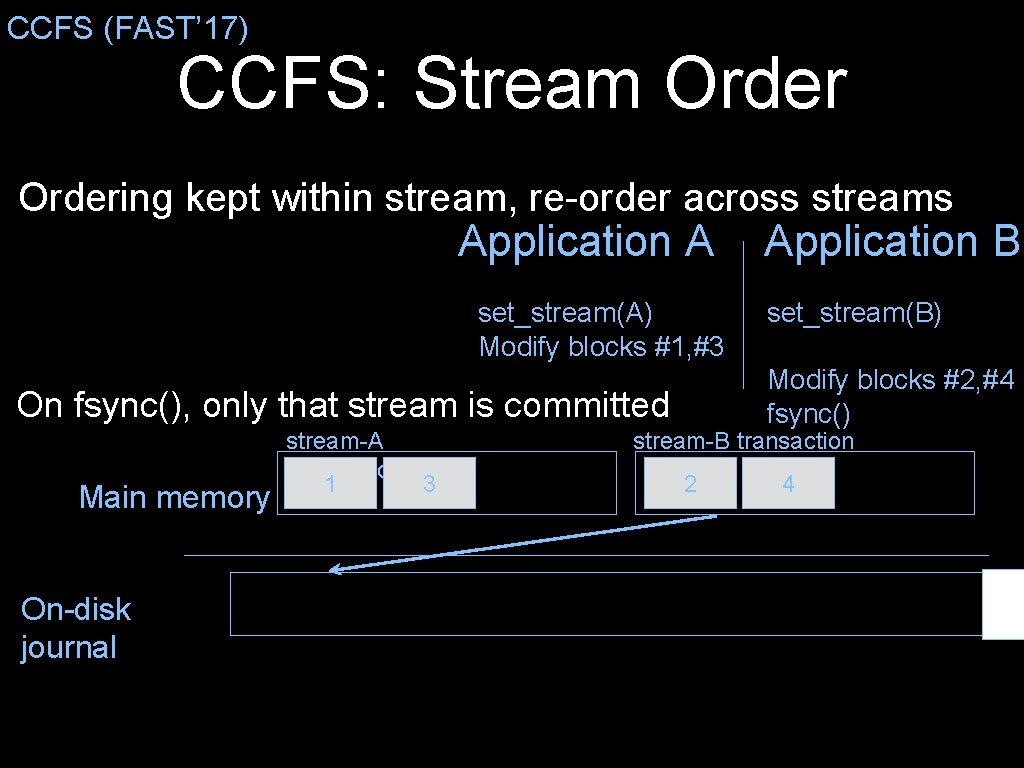

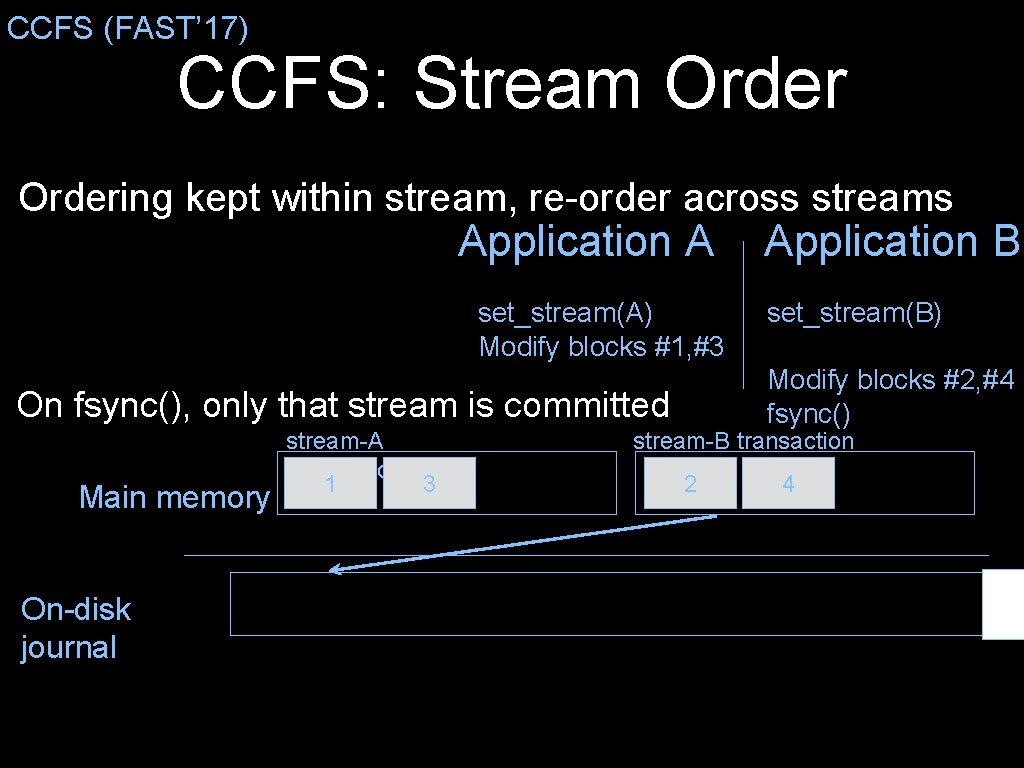

CCFS [FAST '17]: CCFS: Stream
Ordering kept within stream, re-order across streams
• Application A: set_stream(A); Modify blocks #1, #3
• Application B: set_stream(B); Modify blocks #2, #4; fsync()
On fsync(), only that stream is committed
Diagram labels: Main memory (stream-A transaction: blocks 1, 3; stream-B transaction: blocks 2, 4); On-disk journal
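The per-stream commit rule can be modeled in a few lines. This is a toy in-memory sketch; `StreamJournal`, `modify`, and `fsync` are hypothetical names that stand in for the stream abstraction, and none of the CCFS journaling machinery is represented.

```python
from collections import defaultdict

class StreamJournal:
    """Toy model of per-stream ordering: updates are ordered within a
    stream, and fsync() commits only the calling stream."""

    def __init__(self):
        self.pending = defaultdict(list)   # stream -> ordered in-memory updates
        self.on_disk = []                  # committed (stream, block) pairs

    def modify(self, stream, block):
        self.pending[stream].append(block)

    def fsync(self, stream):
        # Commit this stream's updates in their original order. Updates of
        # other streams stay in memory, so a large write by an unrelated
        # application adds no latency here.
        for block in self.pending.pop(stream, []):
            self.on_disk.append((stream, block))

journal = StreamJournal()
journal.modify("A", 1)
journal.modify("B", 2)
journal.modify("A", 3)
journal.modify("B", 4)
journal.fsync("B")
```

After the `fsync("B")` call only blocks 2 and 4 are committed, in that order, while blocks 1 and 3 of stream A remain pending. A globally ordered file system would have had to persist all four.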

CCFS [FAST '17]: CCFS Challenges
1. Independent streams modify same meta-data block: Byte-level journaling
2. Both streams update directory's modification date: Delta journaling
3. Directory entries contain pointers to adjoining entry: Pointer-less data structures
4. Directory entry freed by stream A can be reused by stream B: Order-less space reuse
5. Ordering technique: Data journaling cost: Selective data journaling
6. Ordering technique: Delayed allocation requires reordering

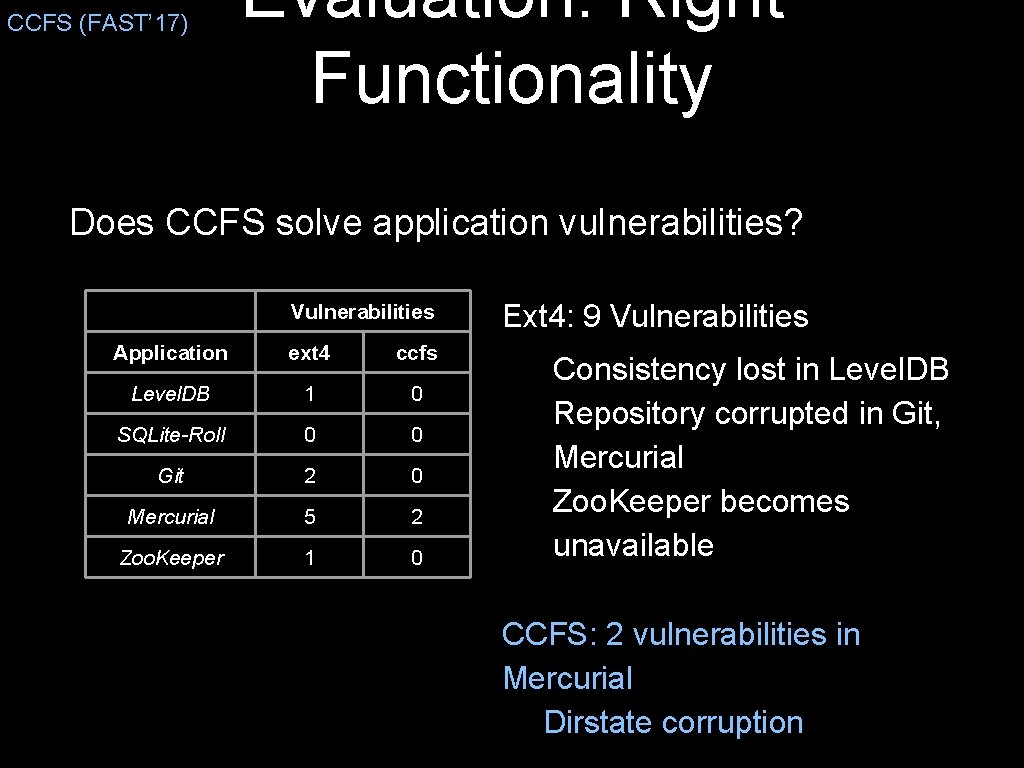

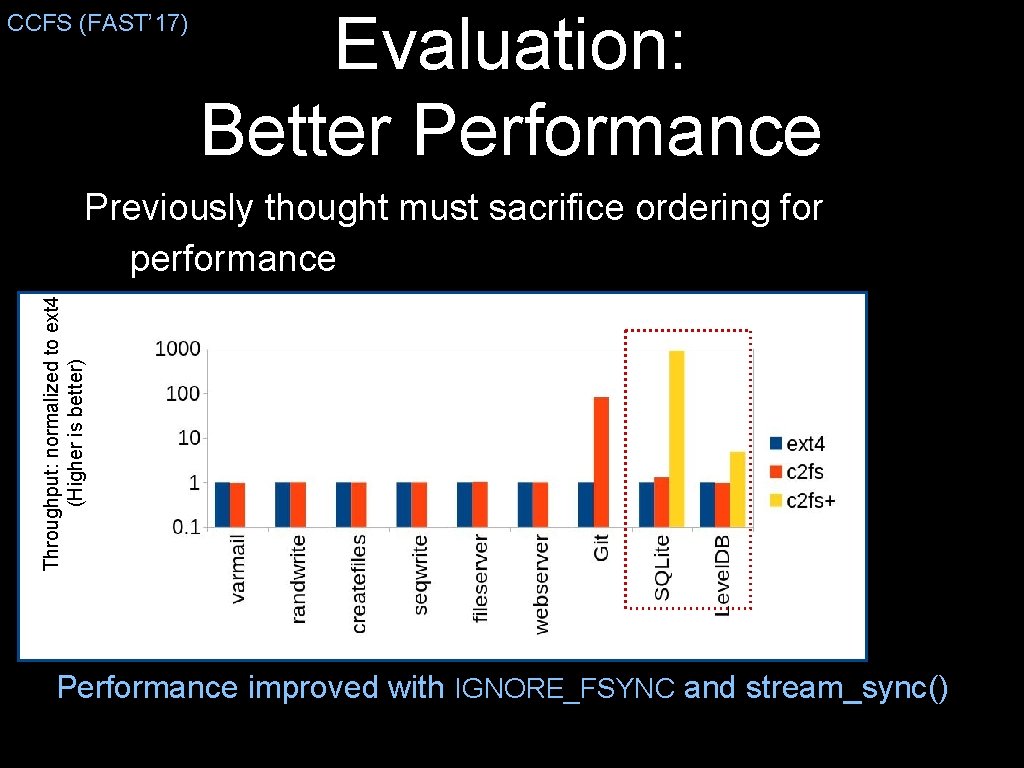

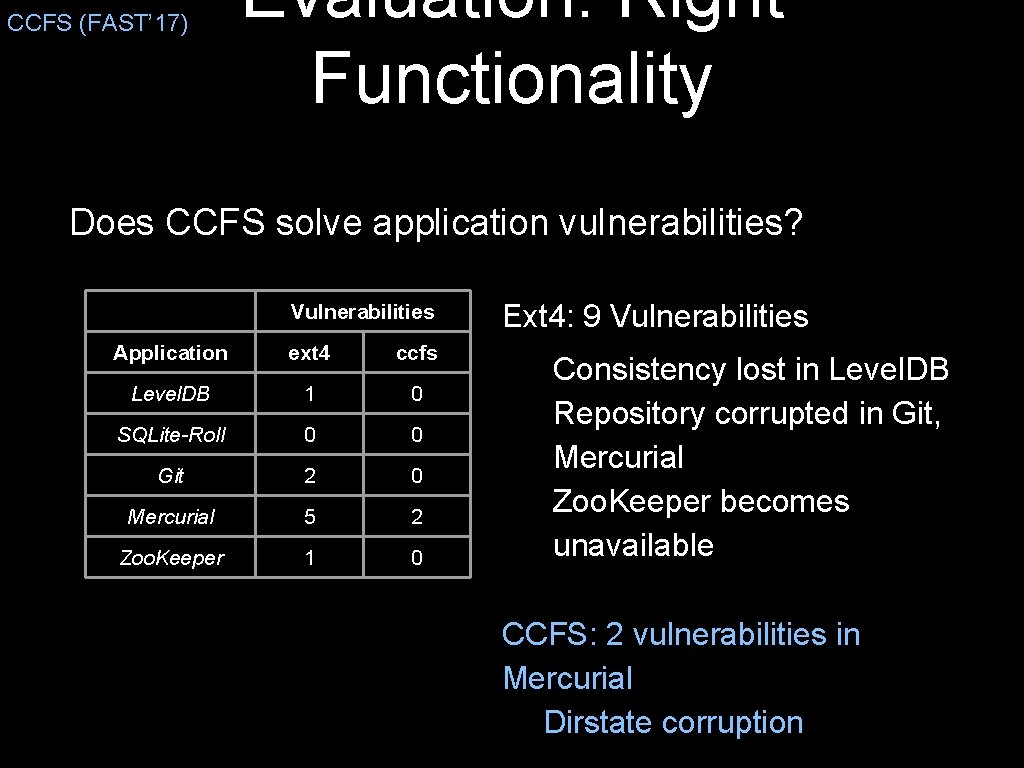

CCFS [FAST '17]: Evaluation: Right Functionality
Does CCFS solve application vulnerabilities?

| Application | Vulnerabilities on ext4 | Vulnerabilities on ccfs |
| --- | --- | --- |
| LevelDB | 1 | 0 |
| SQLite-Roll | 0 | 0 |
| Git | 2 | 0 |
| Mercurial | 5 | 2 |
| ZooKeeper | 1 | 0 |

ext4: 9 vulnerabilities
• Consistency lost in LevelDB
• Repository corrupted in Git, Mercurial
• ZooKeeper becomes unavailable
CCFS: 2 vulnerabilities in Mercurial
• Dirstate corruption

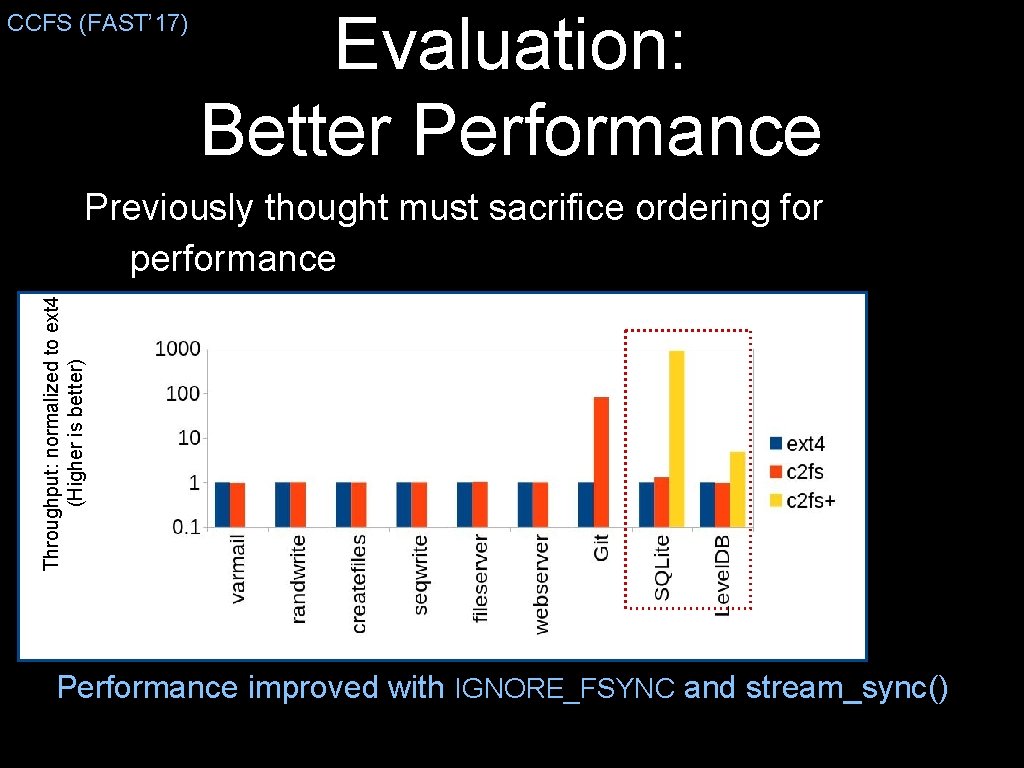

CCFS [FAST '17]: Evaluation: Better Performance
Figure: throughput normalized to ext4 (higher is better)
• Previously thought must sacrifice ordering for performance
• Performance within an application? Performance improved with IGNORE_FSYNC and stream_sync()

Outline
Personal Story: Path to Faculty Member at UW-Madison
Technical Story: Path through Research Topics
• IRON FS: Reliable Local File Systems [SOSP '05]
• OptFS: Separating ordering and durability [SOSP '13]
• Application Crash Consistency and FS Persistence Properties [OSDI '14]
• CCFS: Streams [FAST '17]
• Distributed Storage Systems [OSDI '16 and FAST '17]

Advice: Use Your Expertise
Explore topics where you have leverage
• some added advantage compared to other researchers
Doesn't have to be same sub-area, could be:
• some complex system you know
• some methodology
• some technique

Distributed Storage
Not much is known about how current distributed storage systems handle storage faults
So, measure…
Two fault models for storage:
1. Correlated crashes while updating data
2. Storage corruptions

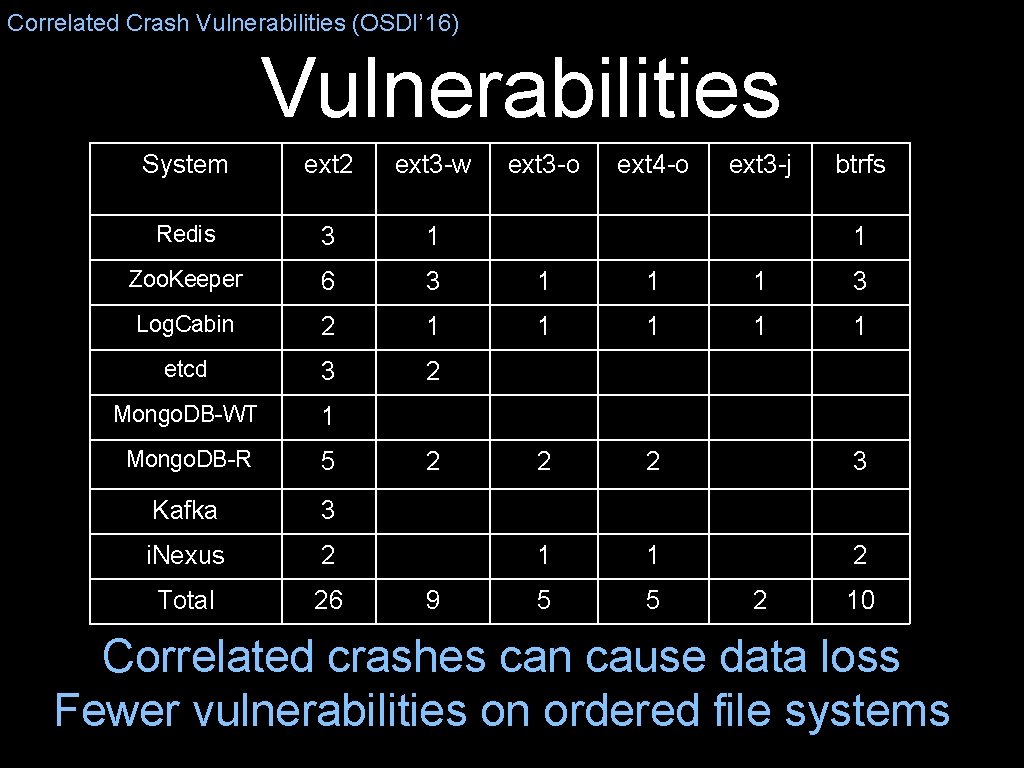

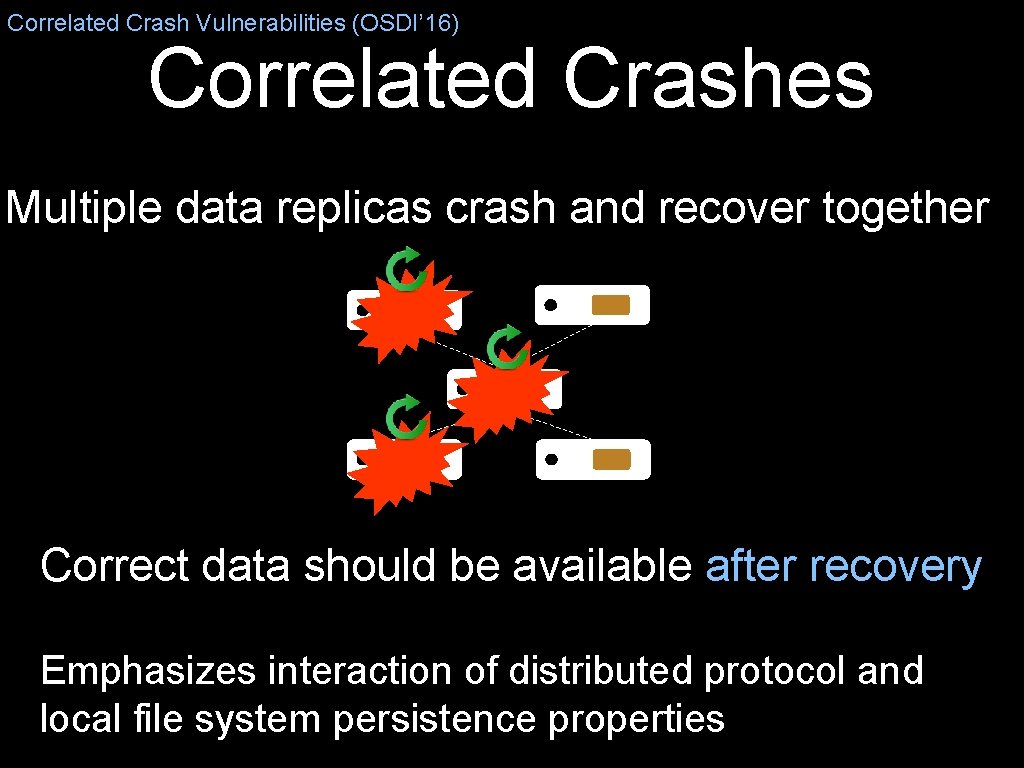

Correlated Crash Vulnerabilities [OSDI '16]: Correlated Crashes
• Multiple data replicas crash and recover together
• Correct data should be available after recovery
• Emphasizes interaction of distributed protocol and local file system persistence properties

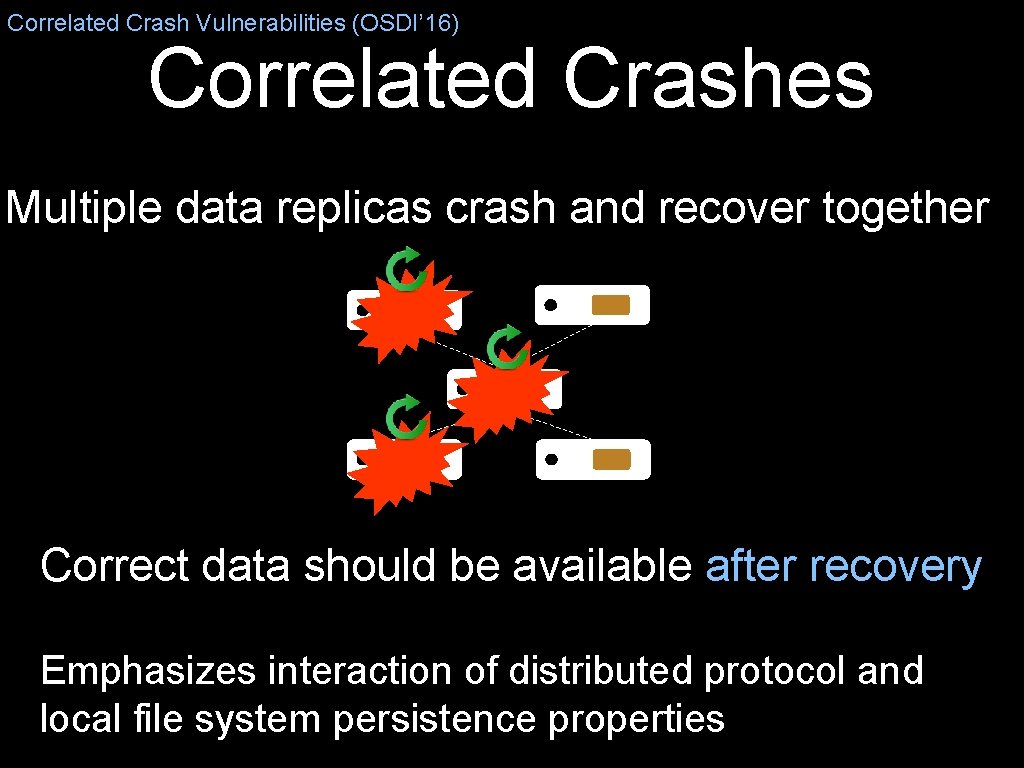

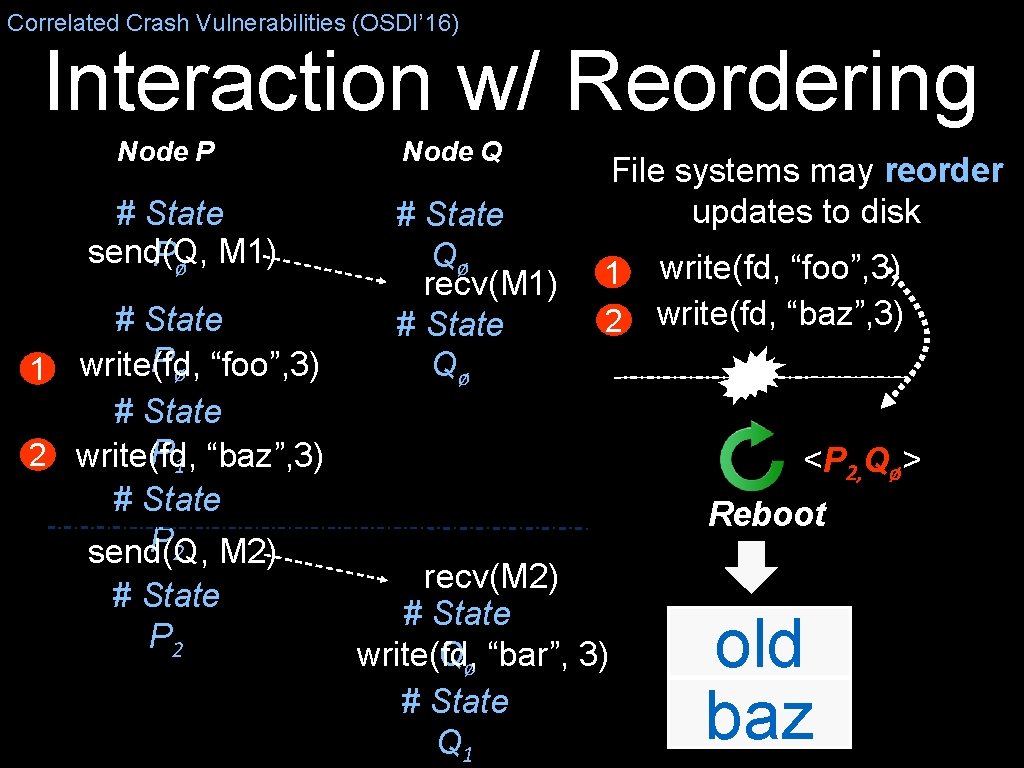

Correlated Crash Vulnerabilities [OSDI '16]: Interaction w/ Reordering
Node P (initial state Pø):
• send(Q, M1)  # State Pø
• (1) write(fd, "foo", 3)  # State P1
• (2) write(fd, "baz", 3)  # State P2
• send(Q, M2)  # State P2
Node Q (initial state Qø):
• recv(M1)  # State Qø
• recv(M2)  # State Qø
• write(fd, "bar", 3)  # State Q1
File systems may reorder updates to disk
Diagram: crash at <P2, Qø>, then Reboot; on disk, write 1 still shows old contents while write 2 ("baz") has persisted

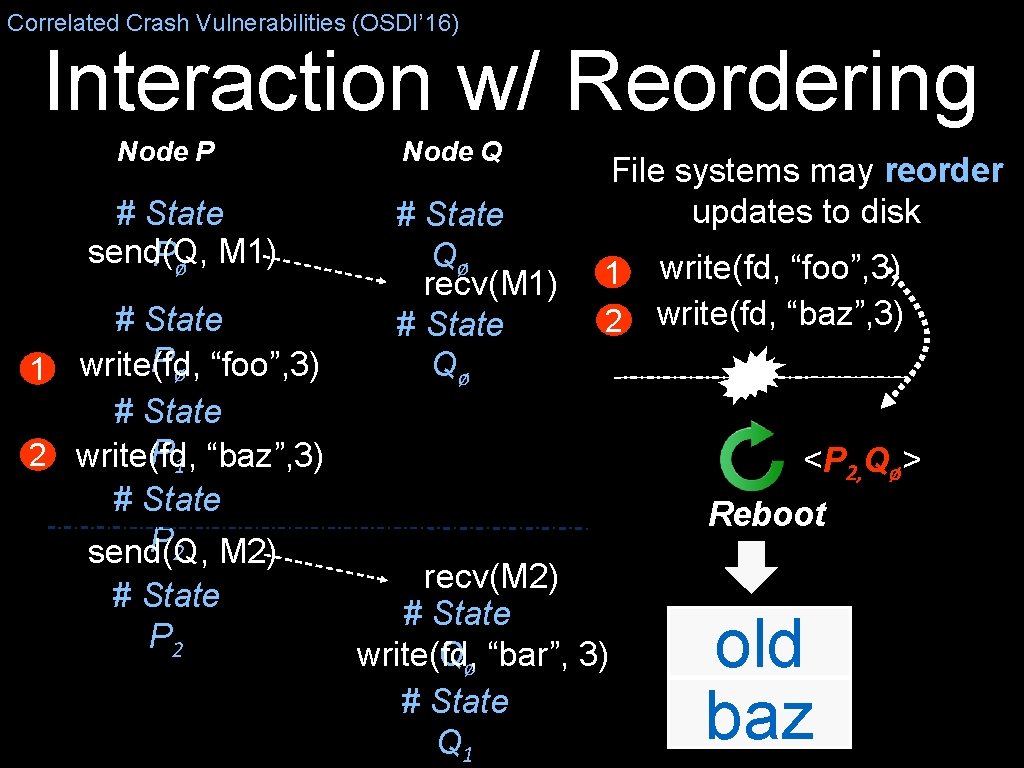

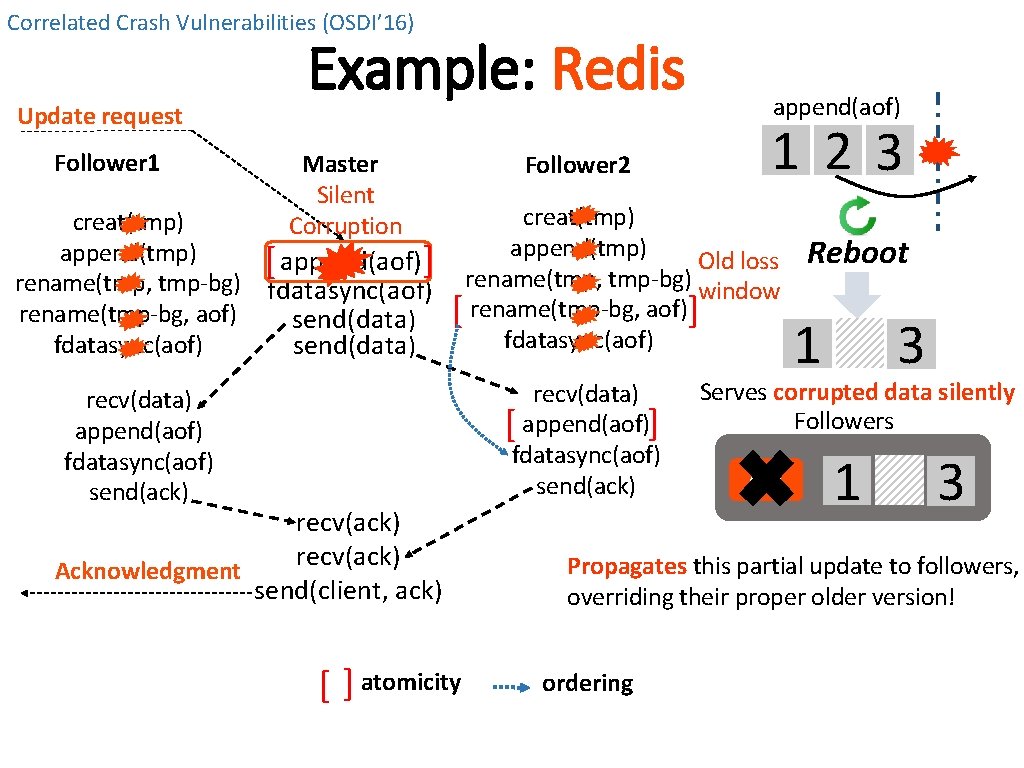

Correlated Crash Vulnerabilities [OSDI '16]: Example: Redis (Silent Corruption)
Diagram: an update request flows through Master, Follower 1, and Follower 2, ending with an acknowledgment to the client. System calls shown across the nodes: creat(tmp), append(tmp), rename(tmp, tmp-bg), rename(tmp-bg, aof), append(aof), fdatasync(aof), send(data), recv(data), send(ack), recv(ack), send(client, ack). Brackets mark required atomicity and ordering; a loss window is marked on the timeline.
After reboot:
• Serves corrupted data silently
• Propagates this partial update to followers, overriding their proper older version!

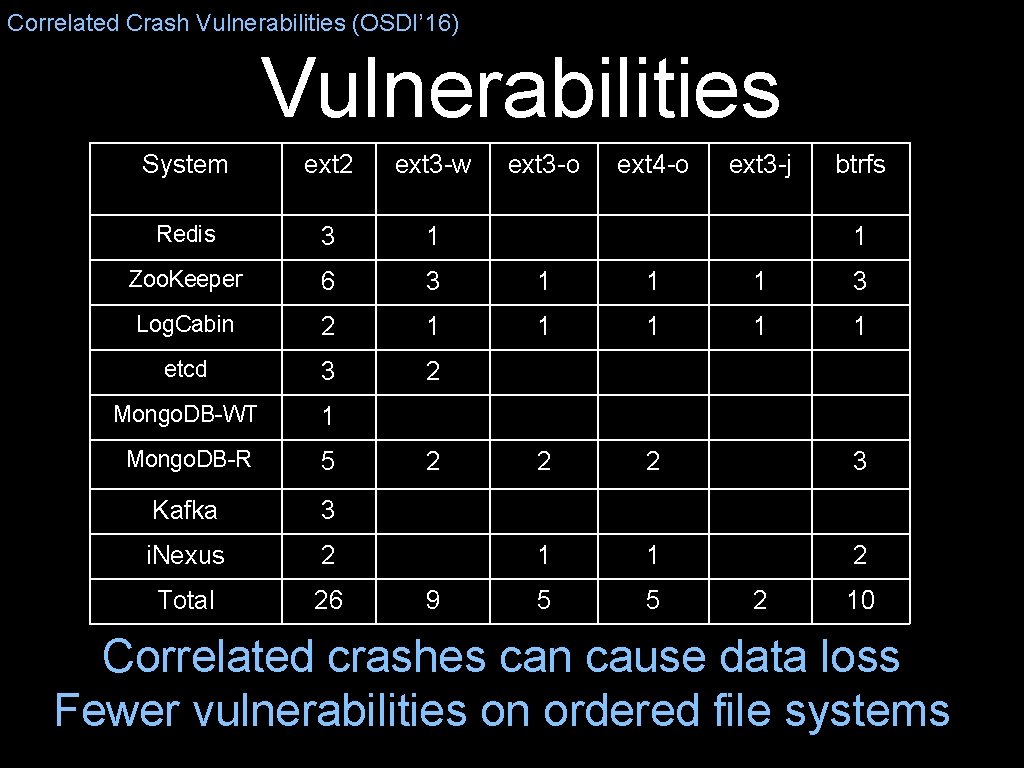

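Correlated Crash Vulnerabilities [OSDI '16]: Vulnerabilities
Table: vulnerabilities per system on each file system (columns: ext2, ext3-w, ext3-o, ext4-o, ext3-j, btrfs). Cell positions were lost when the table was flattened; values are listed in their original order.
• Redis: 3, 1
• ZooKeeper: 6, 3, 1, 1, 1, 3
• LogCabin: 2, 1, 1, 1
• etcd: 3, 2
• MongoDB-WT: 1
• MongoDB-R: 5, 2, 2, 3
• Kafka: 3
• iNexus: 2, 1, 1, 2
• Total: 26, 5, 5, 2, 9 (trailing values: 1, 2, 10)
Correlated crashes can cause data loss
Fewer vulnerabilities on ordered file systems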

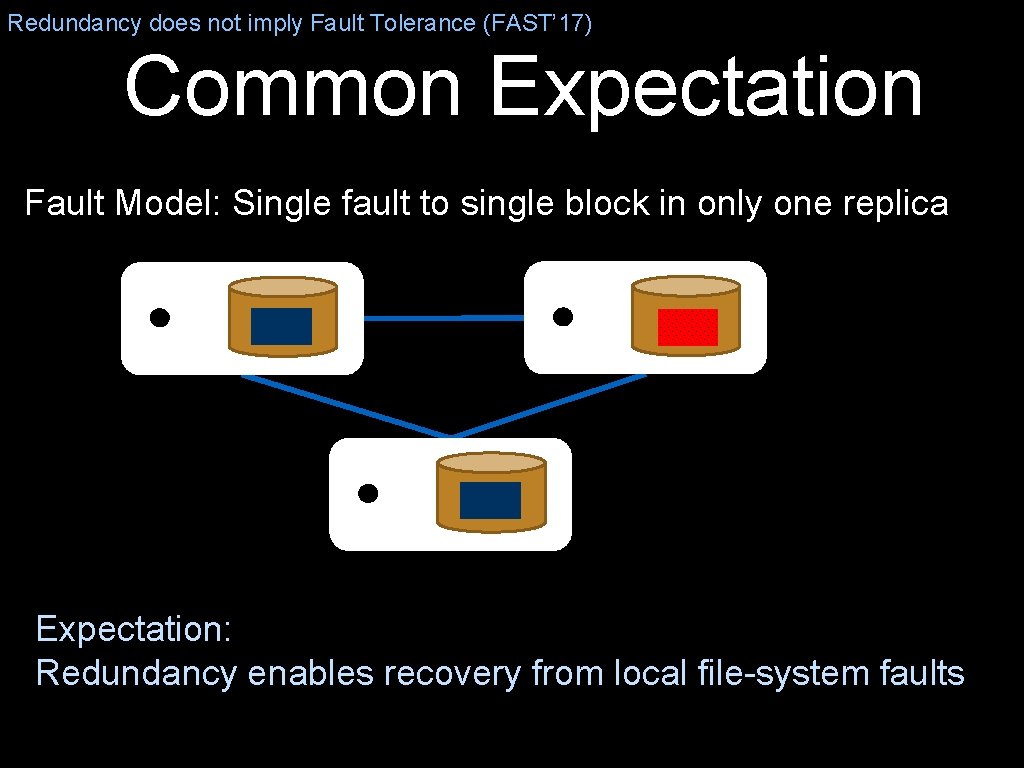

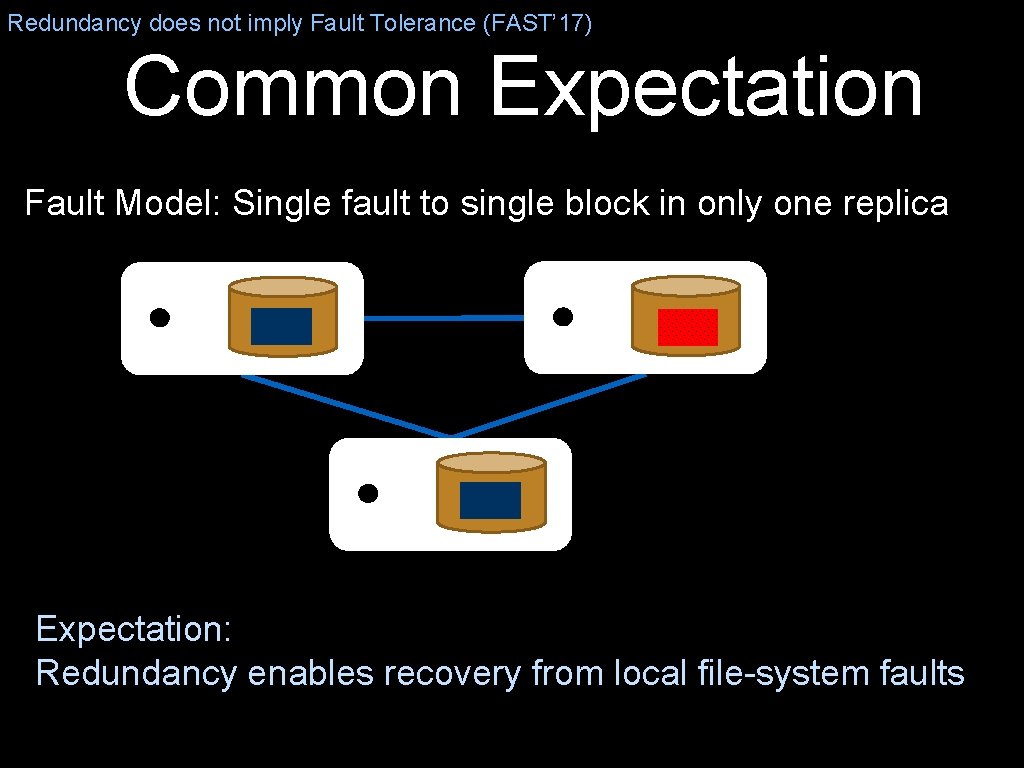

Redundancy does not imply Fault Tolerance [FAST '17]: Common Expectation
Fault Model: Single fault to single block in only one replica
Expectation: Redundancy enables recovery from local file-system faults

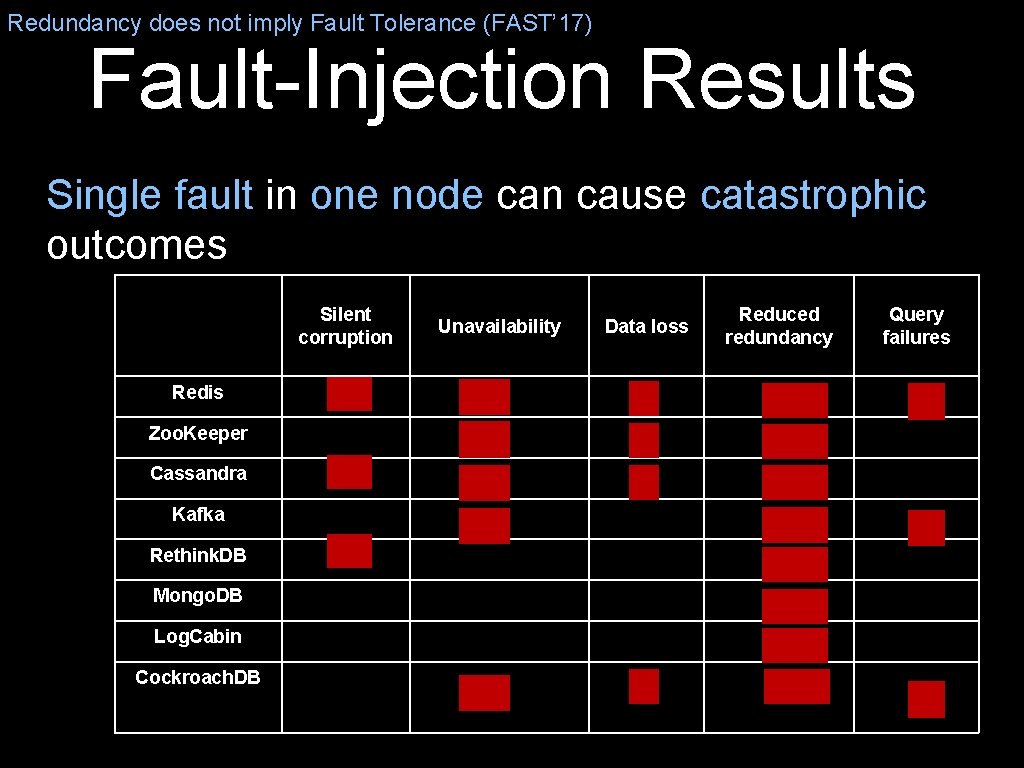

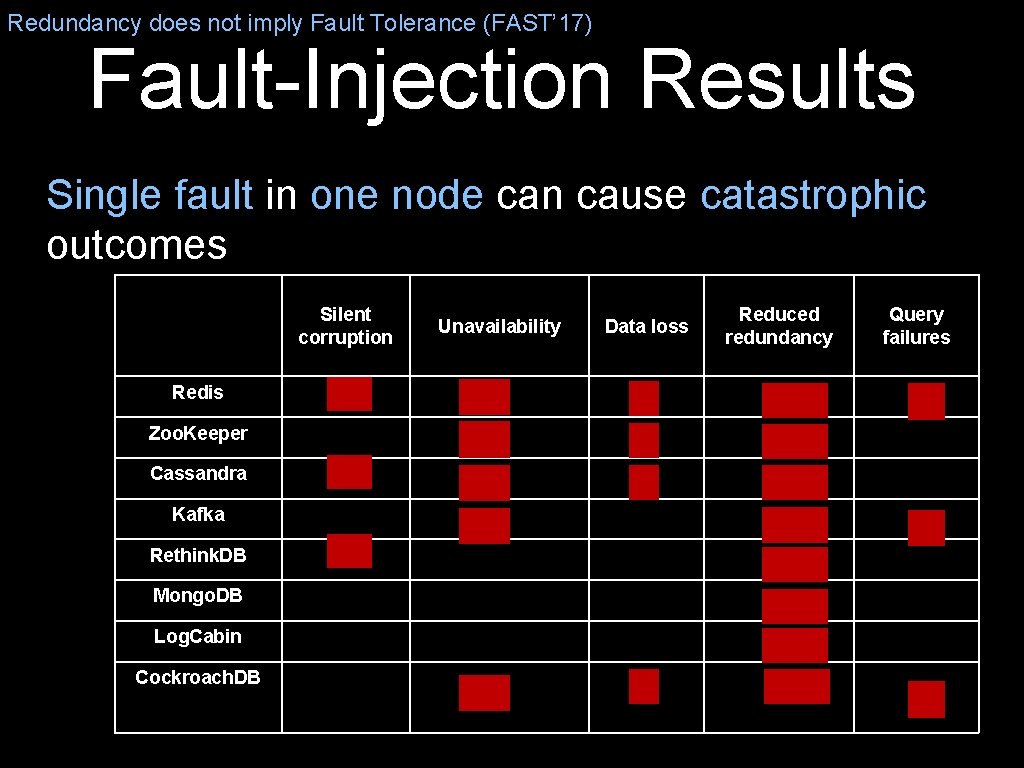

Redundancy does not imply Fault Tolerance [FAST '17]: Fault-Injection Results
Single fault in one node can cause catastrophic outcomes
• Systems: Redis, ZooKeeper, Cassandra, Kafka, RethinkDB, MongoDB, LogCabin, CockroachDB
• Outcomes: Silent corruption, Unavailability, Data loss, Reduced redundancy, Query failures

Advice: Keep Eyes Open
• When doing measurement and analysis work, the goal is to find something intriguing…
• Something broken that you can fix
• Not just fixing a bug or minor problem, but a fundamental flaw in how many systems are built

Redundancy does not imply Fault Tolerance [FAST '17]: Crash and Corruption Entanglement
Kafka message log (entries 0, 1, 2; each entry stores checksum + data)
• Node crash during Append(log, entry 2): Checksum mismatch. Action: Truncate log at 1. Lose uncommitted data
• Disk corruption: Checksum mismatch. Action: Truncate log at 0. Lose committed data!
Need to differentiate checksum mismatches due to crashes vs. corruptions
Common problem across many distributed storage systems
Fun problem to fix in general way!
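The entanglement can be reproduced on a toy log. The sketch below shows the naive rule from the slide (truncate at the first checksum mismatch) next to one hypothetical heuristic for telling the two causes apart. The heuristic, the function names, and the CRC32 entry format are assumptions for illustration, not Kafka's recovery code or a fix proposed in the talk.

```python
import zlib

def entry(data):
    """A log entry is a (checksum, data) pair."""
    return (zlib.crc32(data), data)

def first_mismatch(log):
    """Index of the first entry whose checksum does not match, or None."""
    for i, (checksum, data) in enumerate(log):
        if zlib.crc32(data) != checksum:
            return i
    return None

def naive_recover(log):
    """Truncate at the first checksum mismatch, whatever caused it."""
    i = first_mismatch(log)
    return log if i is None else log[:i]

def classify_mismatch(log):
    """Hypothetical heuristic: a torn append can only damage the tail, so
    a bad entry followed by a valid one suggests corruption instead."""
    i = first_mismatch(log)
    if i is None:
        return "clean"
    later_valid = any(zlib.crc32(d) == c for c, d in log[i + 1:])
    return "corruption" if later_valid else "crash-or-tail-corruption"

log = [entry(b"e0"), entry(b"e1"), entry(b"e2")]
torn = log[:2] + [(log[2][0], b"e\x00")]        # crash while appending entry 2
corrupt = [log[0], (log[1][0], b"xx"), log[2]]  # entry 1 corrupted on disk
```

`naive_recover(torn)` keeps entries 0 and 1 and loses only uncommitted data, whereas `naive_recover(corrupt)` keeps just entry 0 and discards the committed entry 2. The heuristic cannot separate a crash from corruption of the last entry, which is one reason a general solution is harder than this sketch.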

Outline Personal Story: Path to Faculty Member at UW-Madison Technical Story: Path through Research Topics • IRON FS: Reliable Local File Systems [SOSP’x] • Application Crash Consistency and FS Persistence Properties [] • OptFS: Separating ordering and durability [] • CCFS: Streams [FAST’ 17] • Distributed Storage Systems [OSDI’ 16 and FAST’ 17]

Advice Summary 1) Study systems, solve specific problem, generalize 2) Talk to industry for real unsolved problems 3) More work per paper 4) Spend time on motivation 5) Use your expertise 6) Keep your eyes open for odd behavior Last: Have great students and collaborators

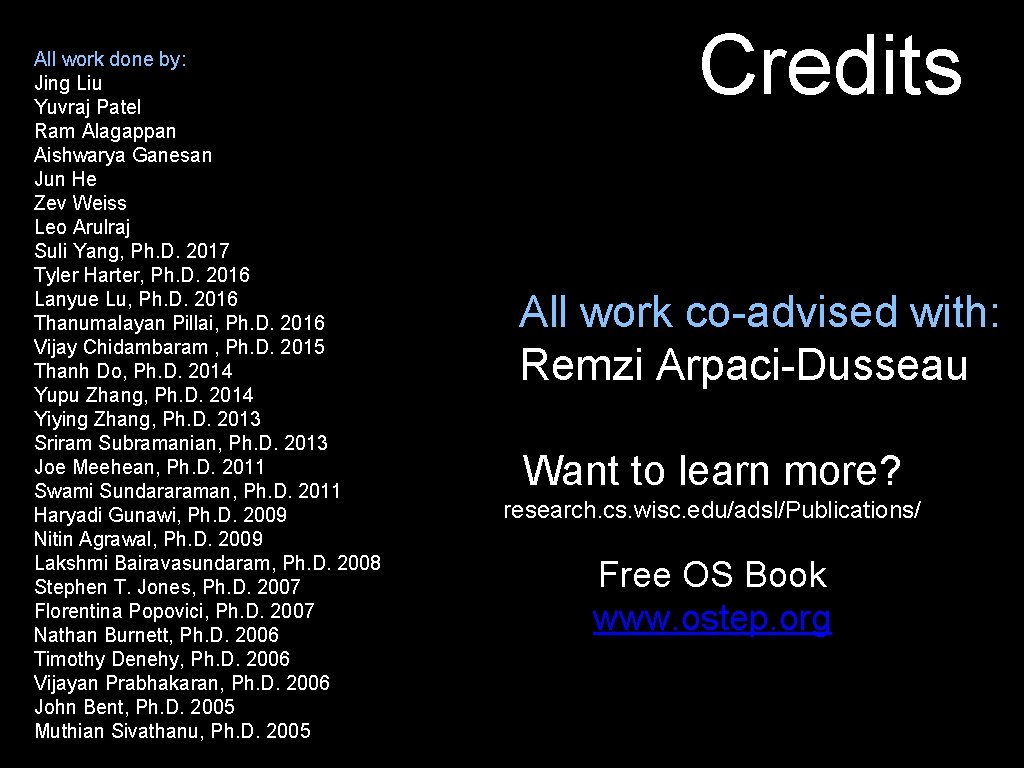

Credits
All work done by: Jing Liu, Yuvraj Patel, Ram Alagappan, Aishwarya Ganesan, Jun He, Zev Weiss, Leo Arulraj, Suli Yang (Ph.D. 2017), Tyler Harter (Ph.D. 2016), Lanyue Lu (Ph.D. 2016), Thanumalayan Pillai (Ph.D. 2016), Vijay Chidambaram (Ph.D. 2015), Thanh Do (Ph.D. 2014), Yupu Zhang (Ph.D. 2014), Yiying Zhang (Ph.D. 2013), Sriram Subramanian (Ph.D. 2013), Joe Meehean (Ph.D. 2011), Swami Sundararaman (Ph.D. 2011), Haryadi Gunawi (Ph.D. 2009), Nitin Agrawal (Ph.D. 2009), Lakshmi Bairavasundaram (Ph.D. 2008), Stephen T. Jones (Ph.D. 2007), Florentina Popovici (Ph.D. 2007), Nathan Burnett (Ph.D. 2006), Timothy Denehy (Ph.D. 2006), Vijayan Prabhakaran (Ph.D. 2006), John Bent (Ph.D. 2005), Muthian Sivathanu (Ph.D. 2005)
All work co-advised with: Remzi Arpaci-Dusseau
Want to learn more? research.cs.wisc.edu/adsl/Publications/
Free OS Book: www.ostep.org

Advice: Questions Always have a question you are answering Don’t just explain what you are doing and how you are doing it Explain why you are doing this

File-file yang dibuat oleh user pada jenis file di linux

File-file yang dibuat oleh user pada jenis file di linux After me after me after me

After me after me after me If anyone desires to come after me

If anyone desires to come after me Mass of iron in an iron tablet

Mass of iron in an iron tablet Iron sharpens iron friendship

Iron sharpens iron friendship Physical image vs logical image

Physical image vs logical image Fungsi sistem file

Fungsi sistem file Remote file access in distributed file system

Remote file access in distributed file system Markup tag tells the web browser

Markup tag tells the web browser In a file-oriented information system, a transaction file

In a file-oriented information system, a transaction file Iron man story map

Iron man story map After twenty years summary

After twenty years summary Mobile file systems

Mobile file systems Rpcs34

Rpcs34 Mutable file system

Mutable file system Module 4 operating systems and file management

Module 4 operating systems and file management Once upon a time by nadine gordimer theme

Once upon a time by nadine gordimer theme Element of narrative text

Element of narrative text Short stories definition

Short stories definition Straight news story

Straight news story Every picture has a story and every story has a moment

Every picture has a story and every story has a moment Story

Story Iron defficincy

Iron defficincy Meera kaur

Meera kaur Vital villages thriving towns introduction

Vital villages thriving towns introduction Iron carbonyl fe co 5 is

Iron carbonyl fe co 5 is Aluminum and iron iii oxide balanced equation

Aluminum and iron iii oxide balanced equation Steel stronger than iron

Steel stronger than iron Iron bird transport

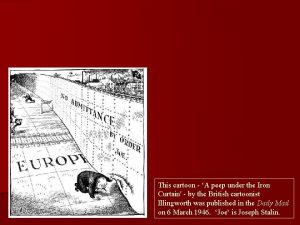

Iron bird transport Peep under the iron curtain cartoon analysis

Peep under the iron curtain cartoon analysis Iron man

Iron man The iron man newspaper report

The iron man newspaper report The iron curtain symbolized the division of

The iron curtain symbolized the division of Scope of bureaucracy

Scope of bureaucracy Methyl red

Methyl red The iron curtain

The iron curtain Iron age dates

Iron age dates What is cutting speed in milling

What is cutting speed in milling Soldering iron

Soldering iron Soldering iron for clothes

Soldering iron for clothes Triple sugar iron agar positive and negative results

Triple sugar iron agar positive and negative results A full section

A full section Kligler iron agar results

Kligler iron agar results Iron mountain pickup

Iron mountain pickup Total iron binding capacity

Total iron binding capacity Total iron binding capacity

Total iron binding capacity Chemical properties of alloys

Chemical properties of alloys Ti3n compound name

Ti3n compound name Ratio strength to percentage strength

Ratio strength to percentage strength Abeam behind or further aft, astern or toward the stern.

Abeam behind or further aft, astern or toward the stern. Chlorsis

Chlorsis Iron triangle definition ap gov

Iron triangle definition ap gov Percent by mass

Percent by mass Minerals are inorganic elements that the body

Minerals are inorganic elements that the body Iron deficiency anemia labs

Iron deficiency anemia labs Gossips bridle

Gossips bridle Lysine iron agar test

Lysine iron agar test Iron shower kenning

Iron shower kenning Iron parts name

Iron parts name Magnetism and electromagnetism

Magnetism and electromagnetism Bh curve of iron

Bh curve of iron Iron ring replacement mcgill

Iron ring replacement mcgill Total body iron

Total body iron Serum ferritin in iron deficiency anemia

Serum ferritin in iron deficiency anemia Iron element symbol

Iron element symbol Anemia face

Anemia face Iron curtain

Iron curtain Iron age time period

Iron age time period How elements heavier than iron are formed

How elements heavier than iron are formed Cold iron point poe

Cold iron point poe How is iron extracted from hematite

How is iron extracted from hematite Iron deficiency anemia smear

Iron deficiency anemia smear