

Advanced Microarchitecture
Prof. Mikko H. Lipasti
University of Wisconsin-Madison
Lecture notes based on notes by Ilhyun Kim
Updated by Mikko Lipasti

Outline
• Instruction scheduling overview
  – Scheduling atomicity
  – Speculative scheduling
  – Scheduling recovery
• Complexity-effective instruction scheduling techniques
• Building large instruction windows
  – Runahead, CFP, iCFP
• Scalable load/store handling
• Control Independence

Readings
• Read on your own:
  – Shen & Lipasti, Chapter 10 on Advanced Register Data Flow (skim)
  – I. Kim and M. Lipasti, “Understanding Scheduling Replay Schemes,” in Proceedings of the 10th International Symposium on High-Performance Computer Architecture (HPCA-10), February 2004.
  – Srikanth Srinivasan, Ravi Rajwar, Haitham Akkary, Amit Gandhi, and Mike Upton, “Continual Flow Pipelines,” in Proceedings of ASPLOS 2004, October 2004.
  – Ahmed S. Al-Zawawi, Vimal K. Reddy, Eric Rotenberg, Haitham H. Akkary, “Transparent Control Independence,” in Proceedings of ISCA-34, 2007.
• To be discussed in class:
  – T. Sha, M. Martin, A. Roth, “NoSQ: Store-Load Communication without a Store Queue,” in Proceedings of the 39th Annual IEEE/ACM International Symposium on Microarchitecture, 2006.
  – Pierre Salverda, Craig B. Zilles, “Fundamental Performance Constraints in Horizontal Fusion of In-Order Cores,” HPCA 2008, pp. 252-263.
  – Andrew Hilton, Santosh Nagarakatte, Amir Roth, “iCFP: Tolerating All-Level Cache Misses in In-Order Processors,” Proceedings of HPCA 2009.
  – G. H. Loh, Y. Xie, and B. Black, “Processor Design in 3D Die-Stacking Technologies,” IEEE Micro 27(3), May 2007, pp. 31-48.

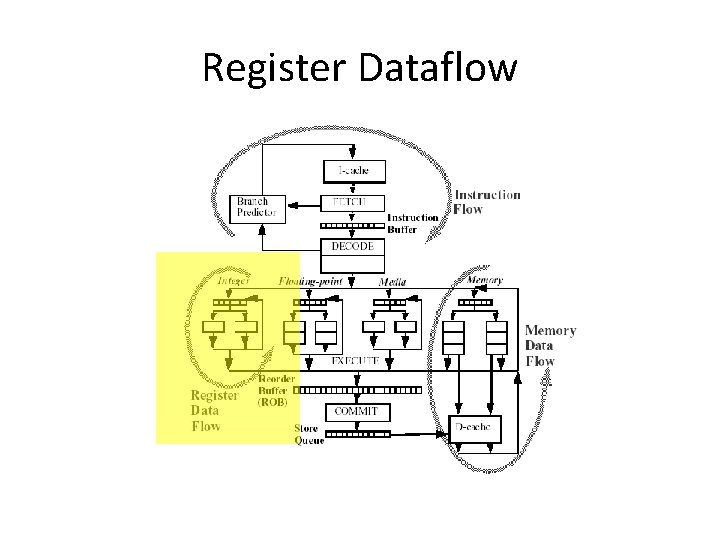

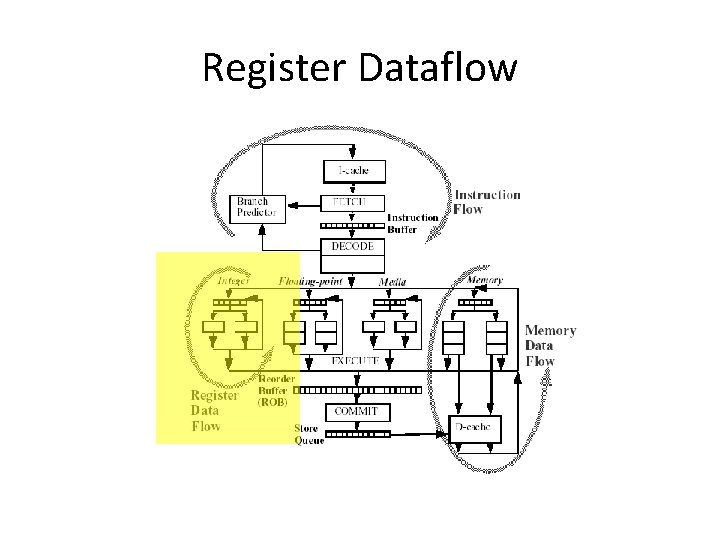

Register Dataflow

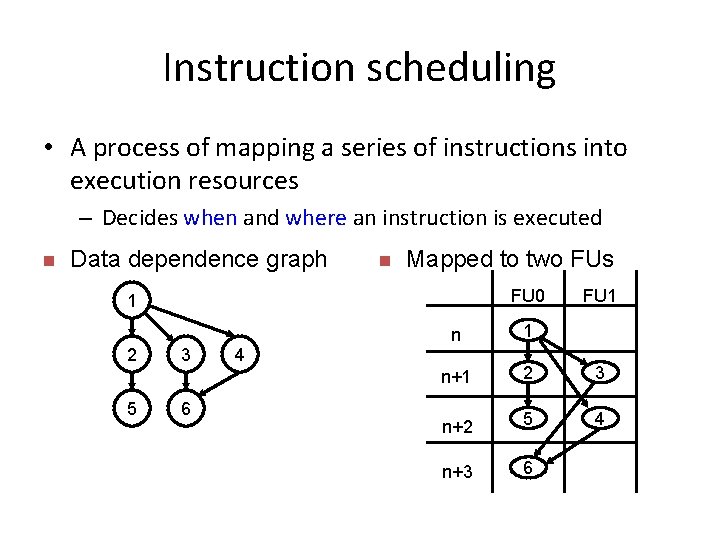

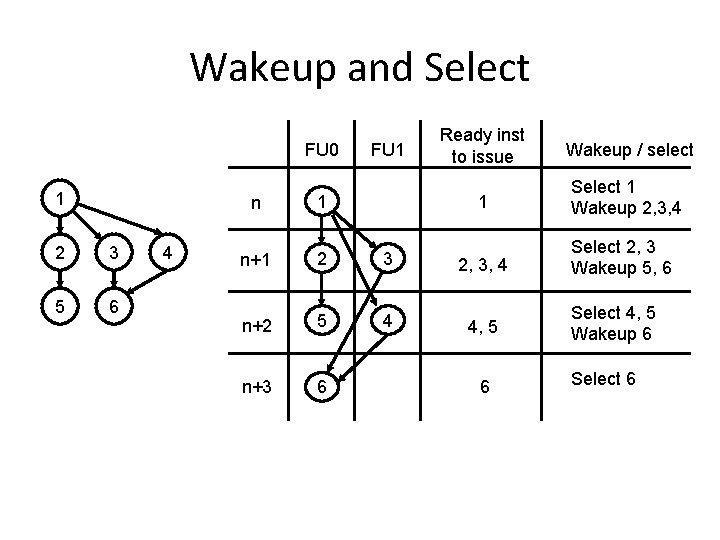

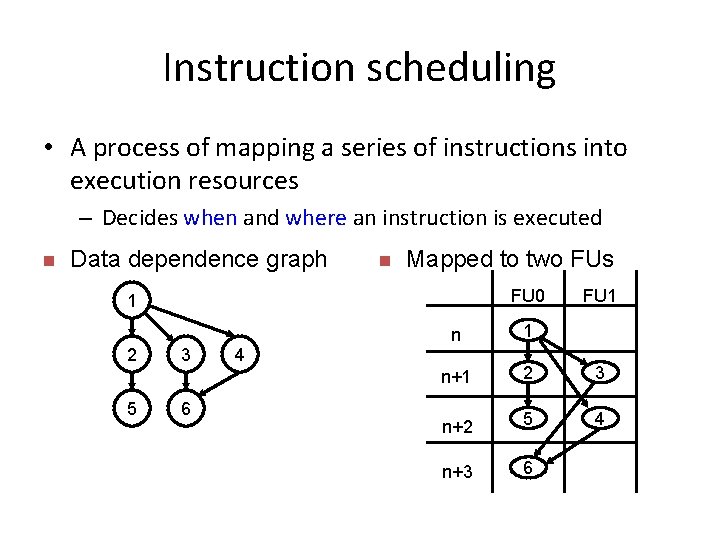

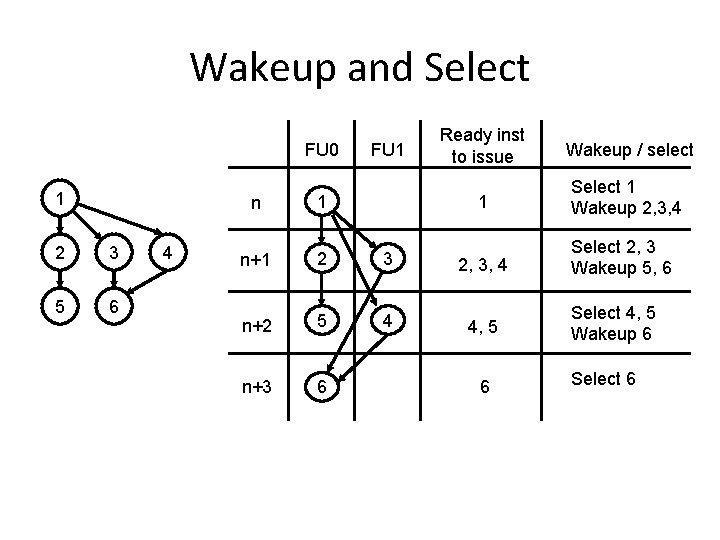

Instruction scheduling
• A process of mapping a series of instructions onto execution resources
  – Decides when and where an instruction is executed

[Figure: data dependence graph of instructions 1-6 and its mapping to two functional units]

| Cycle | FU0 | FU1 |
|-------|-----|-----|
| n     | 1   |     |
| n+1   | 2   | 3   |
| n+2   | 5   | 4   |
| n+3   | 6   |     |

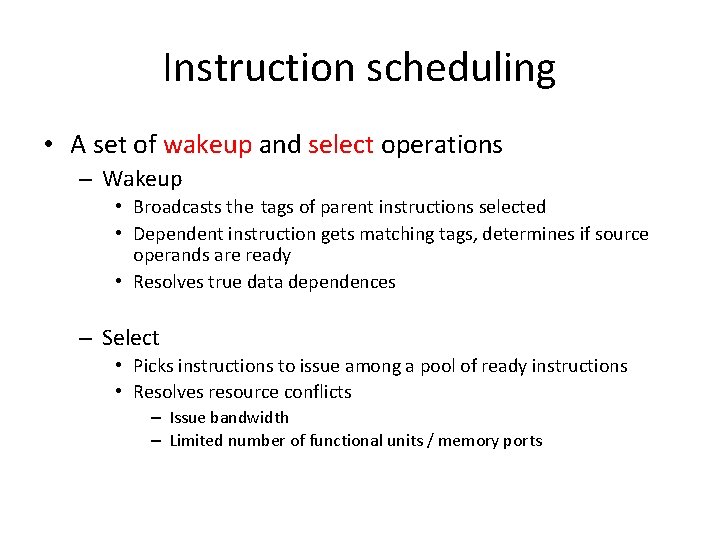

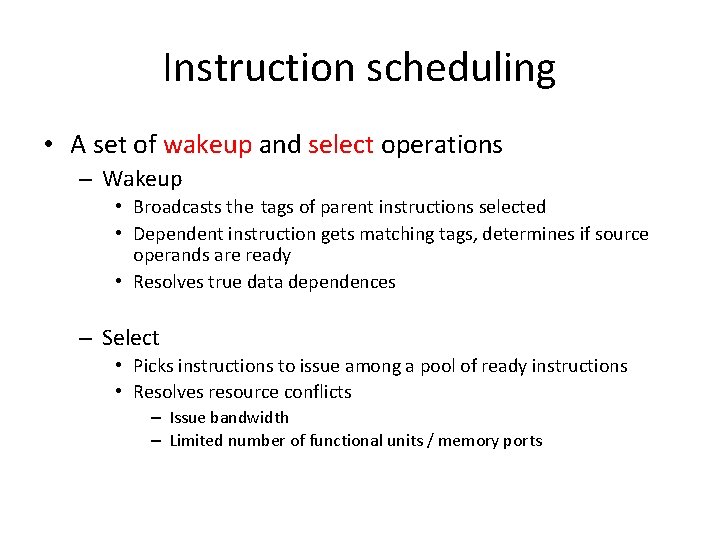

Instruction scheduling
• A set of wakeup and select operations
  – Wakeup
    • Broadcasts the tags of the selected parent instructions
    • Each dependent instruction compares the broadcast tags with its source tags to determine whether its source operands are ready
    • Resolves true data dependences
  – Select
    • Picks instructions to issue from the pool of ready instructions
    • Resolves resource conflicts
      – Issue bandwidth
      – Limited number of functional units / memory ports

Scheduling loop
• Basic wakeup and select operations

[Figure: the scheduling loop. Wakeup logic: each window entry compares the broadcast tags (tag 1 … tag W) against its source tags tagL and tagR; matches are ORed to set readyL and readyR, and a ready entry raises a request. Select logic: receives request 0 … request n and returns grant 0 … grant n. The selected instruction issues to an FU, and its tag is broadcast back to the wakeup logic, closing the loop.]

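Wakeup and Select

[Figure: the same dependence graph of instructions 1-6, scheduled on FU0 and FU1 over cycles n to n+3]

| Cycle | Ready insts to issue | Select | Wakeup  |
|-------|----------------------|--------|---------|
| n     | 1                    | 1      | 2, 3, 4 |
| n+1   | 2, 3, 4              | 2, 3   | 5, 6    |
| n+2   | 4, 5                 | 4, 5   | 6       |
| n+3   | 6                    | 6      |         |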
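The wakeup/select table above can be reproduced with a short simulation. This is a minimal sketch, not the lecture's own code: it assumes single-cycle execution latency, oldest-first selection, and a hypothetical set of dependence edges (`PARENTS`) chosen only to be consistent with the schedule on the slide. Cycle 0 stands for cycle n.

```python
from collections import defaultdict

def schedule(parents, num_fus=2):
    """Simulate an atomic wakeup/select loop; returns {cycle: [issued ids]}."""
    # Number of source operands each instruction is still waiting on.
    pending = {inst: len(srcs) for inst, srcs in parents.items()}
    children = defaultdict(list)
    for inst, srcs in parents.items():
        for src in srcs:
            children[src].append(inst)

    ready = sorted(inst for inst, n in pending.items() if n == 0)
    issued = {}
    cycle = 0
    while ready:
        # Select: oldest first, limited by the number of functional units.
        selected, ready = ready[:num_fus], ready[num_fus:]
        issued[cycle] = selected
        # Wakeup: broadcast the selected tags; a child becomes ready once
        # every one of its source tags has been matched.
        for tag in selected:
            for child in children[tag]:
                pending[child] -= 1
                if pending[child] == 0:
                    ready.append(child)
        ready.sort()
        cycle += 1
    return issued

# Hypothetical edges (assumption): 1 feeds 2, 3, 4; 2 feeds 5; 3 and 5 feed 6.
PARENTS = {1: [], 2: [1], 3: [1], 4: [1], 5: [2], 6: [3, 5]}
print(schedule(PARENTS))  # {0: [1], 1: [2, 3], 2: [4, 5], 3: [6]}
```

Instruction 4 is ready in cycle n+1 but loses selection to the older instructions 2 and 3, which is the resource conflict that select logic resolves.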

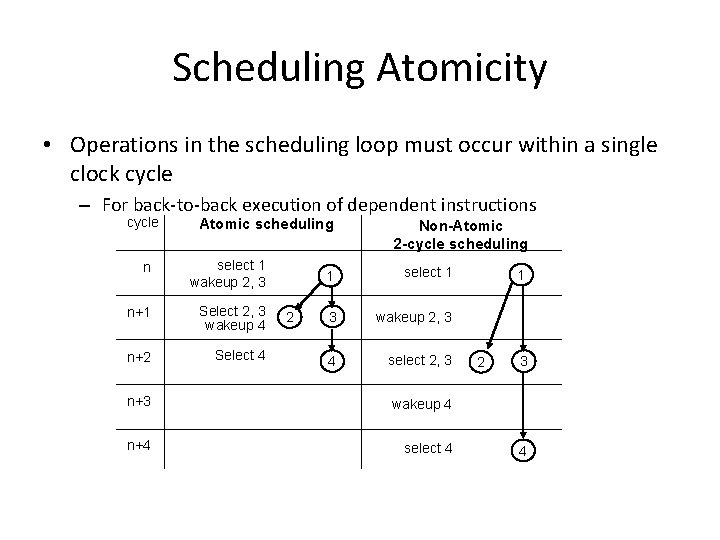

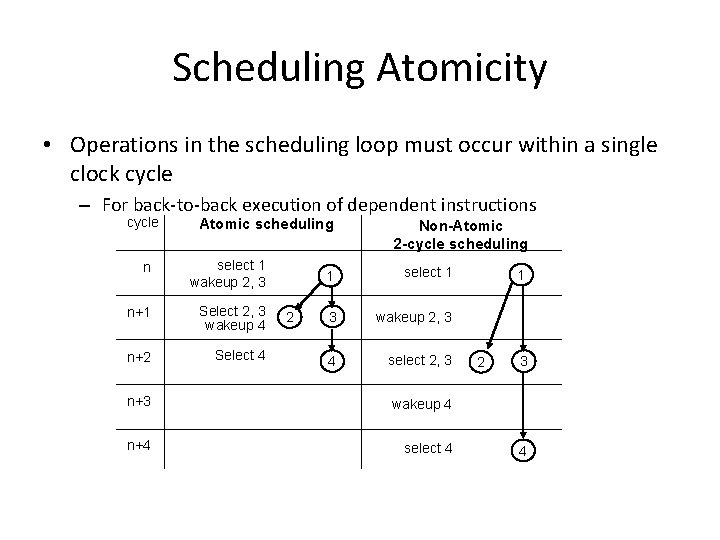

Scheduling Atomicity
• Operations in the scheduling loop must occur within a single clock cycle
  – Required for back-to-back execution of dependent instructions

[Figure: dependence graph of instructions 1-4]

| Cycle | Atomic scheduling     | Non-atomic 2-cycle scheduling |
|-------|-----------------------|-------------------------------|
| n     | select 1, wakeup 2, 3 | select 1                      |
| n+1   | select 2, 3, wakeup 4 | wakeup 2, 3                   |
| n+2   | select 4              | select 2, 3                   |
| n+3   |                       | wakeup 4                      |
| n+4   |                       | select 4                      |
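The cost of breaking atomicity can be worked out mechanically. The sketch below is an illustration under stated assumptions: unlimited functional units, single-cycle execution, and a hypothetical graph `CHAIN` in which 1 feeds 2 and 3, which both feed 4. The parameter `loop_cycles` (an assumed name) is the latency of one wakeup-plus-select iteration.

```python
def issue_cycles(parents, loop_cycles=1):
    """Earliest select cycle for each instruction, ignoring resource limits.

    loop_cycles=1 models atomic scheduling; loop_cycles=2 models a
    scheduling loop pipelined over two cycles.
    """
    cycles = {}
    # Assumes instruction ids are in program (topological) order.
    for inst in sorted(parents):
        cycles[inst] = max(
            (cycles[src] + loop_cycles for src in parents[inst]), default=0
        )
    return cycles

# Hypothetical dependence graph (assumption): 1 -> {2, 3} -> 4.
CHAIN = {1: [], 2: [1], 3: [1], 4: [2, 3]}
print(issue_cycles(CHAIN, loop_cycles=1))  # {1: 0, 2: 1, 3: 1, 4: 2}
print(issue_cycles(CHAIN, loop_cycles=2))  # {1: 0, 2: 2, 3: 2, 4: 4}
```

With a 2-cycle loop every dependence edge costs an extra cycle, so the chain finishes selecting at n+4 instead of n+2.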

Implication of scheduling atomicity
• Pipelining is a standard way to improve clock frequency
• Hard to pipeline instruction scheduling logic without losing ILP
  – ~10% IPC loss in 2-cycle scheduling
  – ~19% IPC loss in 3-cycle scheduling
• A major obstacle to building high-frequency microprocessors

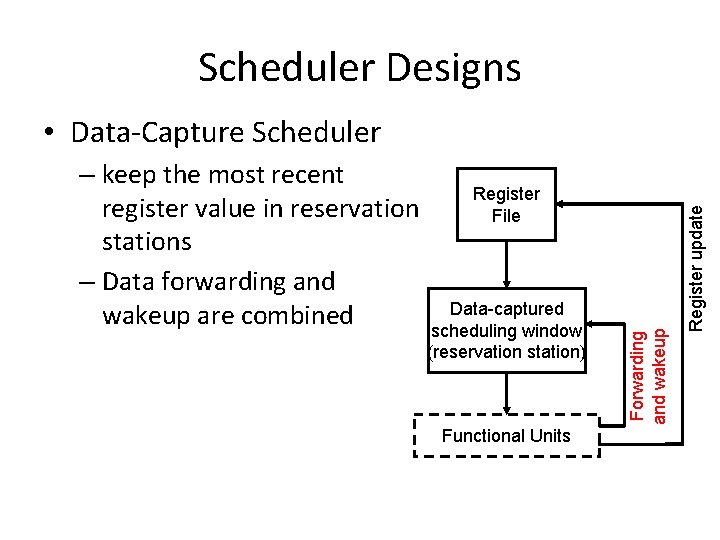

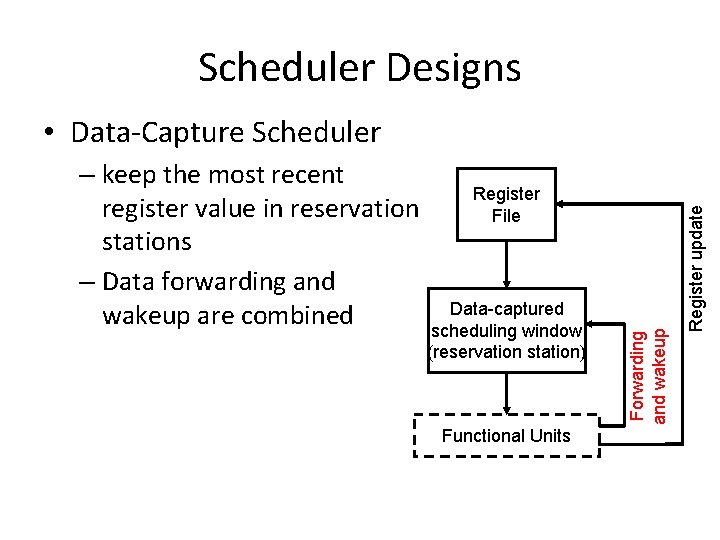

Scheduler Designs
• Data-Capture Scheduler
  – Keeps the most recent register value in the reservation stations
  – Data forwarding and wakeup are combined

[Figure: the register file feeds a data-captured scheduling window (reservation stations), which issues to the functional units; a combined forwarding-and-wakeup path returns to the window, and a register-update path returns to the register file.]

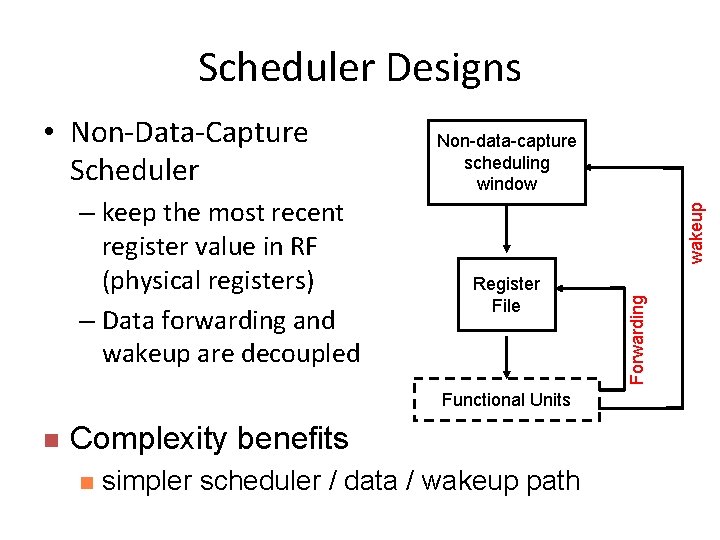

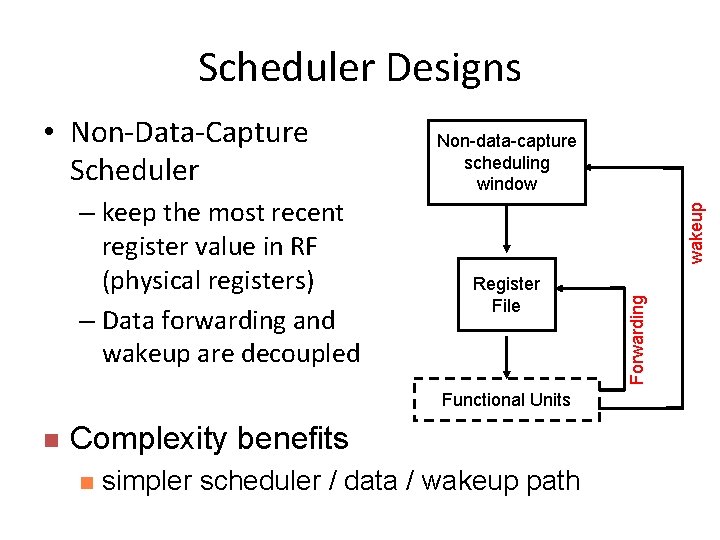

Scheduler Designs
• Non-Data-Capture Scheduler
  – Keeps the most recent register value in the RF (physical registers)
  – Data forwarding and wakeup are decoupled
• Complexity benefits
  – Simpler scheduler / data / wakeup path

[Figure: a non-data-capture scheduling window issues to the register file and then the functional units; the wakeup path returns to the scheduling window, while the forwarding path is separate.]

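Mapping to pipeline stages
• AMD K7 (data-capture)
• Pentium 4 (non-data-capture)

[Figure: pipeline stage diagrams for both designs. In the K7 the data and wakeup paths are combined (Data / wakeup); in the Pentium 4 the wakeup and data paths are separate.]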

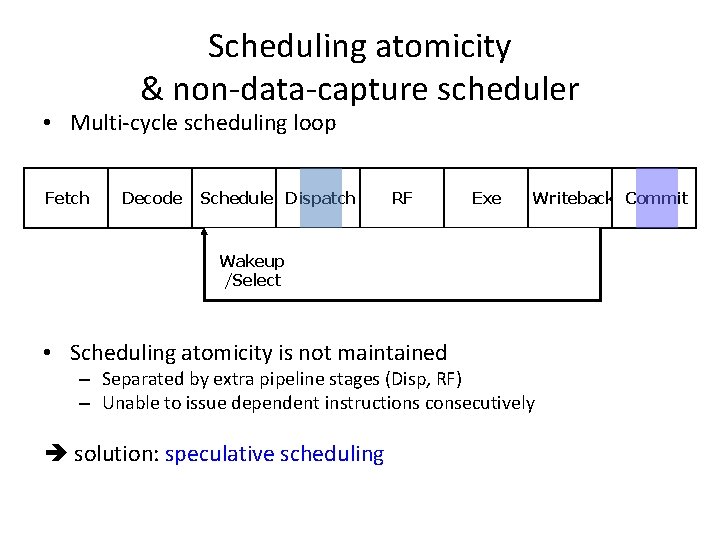

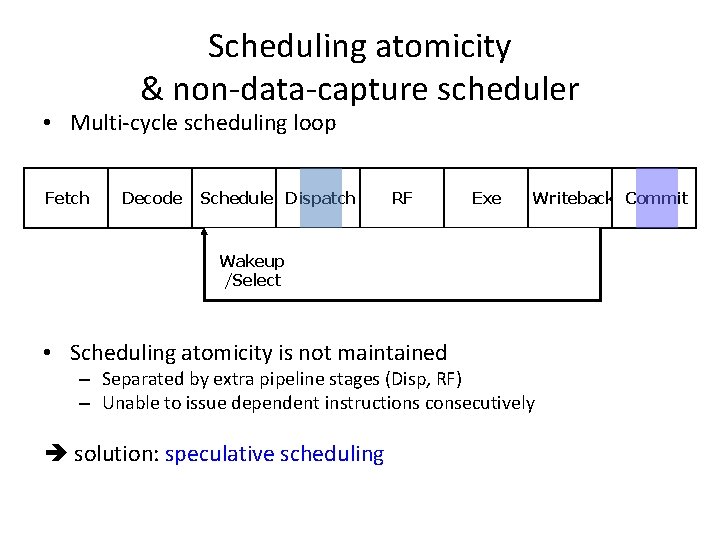

Scheduling atomicity & non-data-capture scheduler
• Multi-cycle scheduling loop

[Figure: two pipelines compared. Atomic Sched/Exe: Fetch, Decode, Sched/Exe (wakeup/select), Writeback, Commit. Non-data-capture: Fetch, Decode, Schedule (wakeup/select), Dispatch, RF, Exe, Writeback, Commit.]

• Scheduling atomicity is not maintained
  – Schedule and execute are separated by extra pipeline stages (Disp, RF)
  – Unable to issue dependent instructions consecutively
• Solution: speculative scheduling

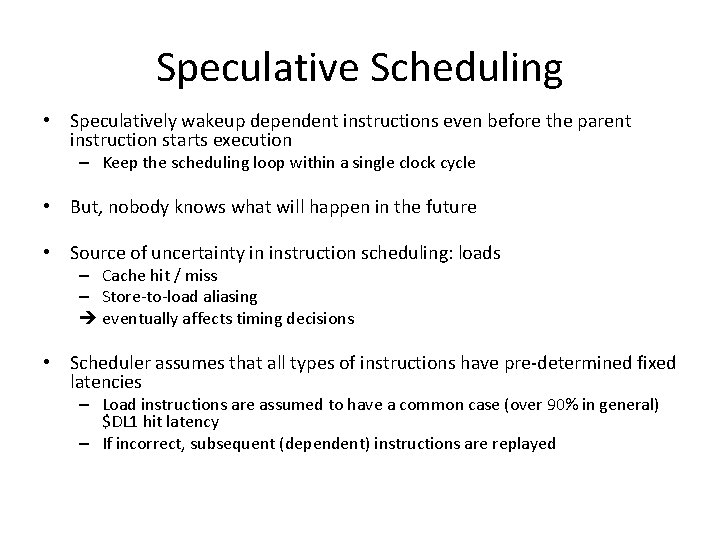

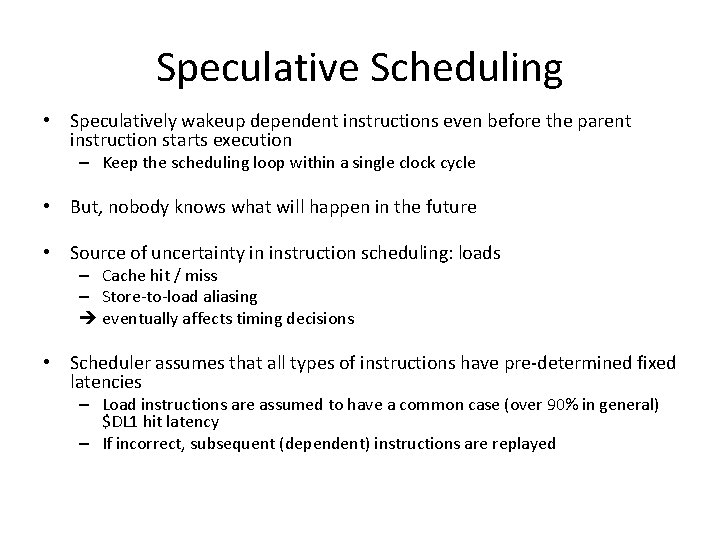

Speculative Scheduling
• Speculatively wake up dependent instructions even before the parent instruction starts execution
  – Keeps the scheduling loop within a single clock cycle
• But nobody knows what will happen in the future
• Source of uncertainty in instruction scheduling: loads
  – Cache hit / miss
  – Store-to-load aliasing
  – Both eventually affect timing decisions
• Scheduler assumes that all types of instructions have pre-determined fixed latencies
  – Load instructions are assumed to have the common-case L1 data cache (DL1) hit latency (over 90% of loads in general)
  – If the assumption is incorrect, subsequent (dependent) instructions are replayed
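A minimal sketch of the idea, under stated assumptions: the latencies in `ASSUMED_LATENCY`, the four-instruction `PROGRAM`, and the function name are all hypothetical, resource limits are ignored, and a replayed instruction is idealized as executing as soon as its operands are truly available. The scheduler wakes each dependent at the parent's issue cycle plus the assumed latency; verification flags any instruction that issued before its operands were actually ready.

```python
# Hypothetical latencies (in cycles) that the scheduler assumes per class.
ASSUMED_LATENCY = {"alu": 1, "load": 3}

def speculative_schedule(program, actual_latency=None):
    """program: list of (name, kind, sources) in program order.

    actual_latency maps a name to its real latency when it differs from
    the assumed one (for example, a load that misses in the cache).
    Returns (speculative issue cycles, names that must be replayed).
    """
    actual_latency = actual_latency or {}
    issue, done, kind_of, replayed = {}, {}, {}, []
    for name, kind, srcs in program:
        kind_of[name] = kind
        # Speculative wakeup: parent issue cycle plus its assumed latency.
        issue[name] = max(
            (issue[s] + ASSUMED_LATENCY[kind_of[s]] for s in srcs), default=0
        )
        # Verification: were the operands really available at issue time?
        operands_ready = max((done[s] for s in srcs), default=0)
        if operands_ready > issue[name]:
            replayed.append(name)
        start = max(issue[name], operands_ready)
        done[name] = start + actual_latency.get(name, ASSUMED_LATENCY[kind])
    return issue, replayed

PROGRAM = [
    ("LD", "load", []),
    ("ADD", "alu", ["LD"]),
    ("OR", "alu", ["ADD"]),
    ("AND", "alu", []),
]
print(speculative_schedule(PROGRAM)[1])              # [] on a cache hit
print(speculative_schedule(PROGRAM, {"LD": 10})[1])  # ['ADD', 'OR'] on a miss
```

The independent AND is never replayed, while OR is replayed even though it does not read the load directly: the misprediction propagates along dependence edges.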

Speculative Scheduling
• Overview

[Figure: pipeline Fetch, Decode, Schedule (spec wakeup/select), Dispatch, RF, Exe, Writeback/Recover, Commit. Speculatively issued instructions fill the stages after Schedule; when a latency changes, an input value is invalid and the instructions are rescheduled.]

• Re-schedule when latency is mispredicted
• Unlike the original Tomasulo’s algorithm
  – Instructions are scheduled BEFORE actual execution occurs
  – Assumes instructions have pre-determined fixed latencies
    • ALU operations: fixed latency
    • Load operations: assumes DL1 hit latency (common case)

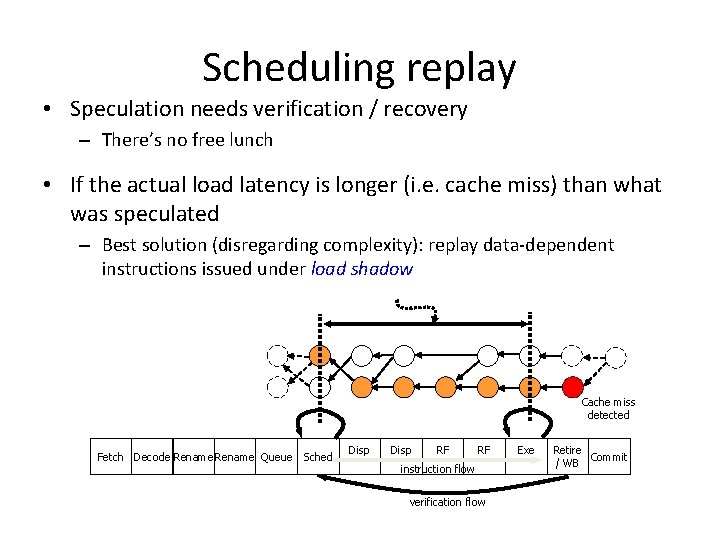

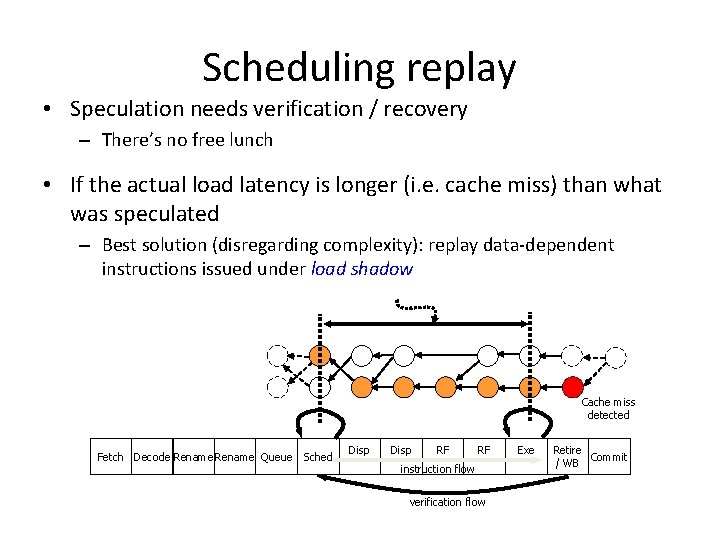

Scheduling replay
• Speculation needs verification / recovery
  – There’s no free lunch
• If the actual load latency is longer than what was speculated (i.e., a cache miss)
  – Best solution (disregarding complexity): replay data-dependent instructions issued under the load shadow

[Figure: pipeline Fetch, Decode, Rename, Queue, Sched, Disp, RF, RF, Exe, Retire / WB, Commit. The instruction flow runs forward through the stages; when a cache miss is detected, the verification flow runs back to the scheduler.]

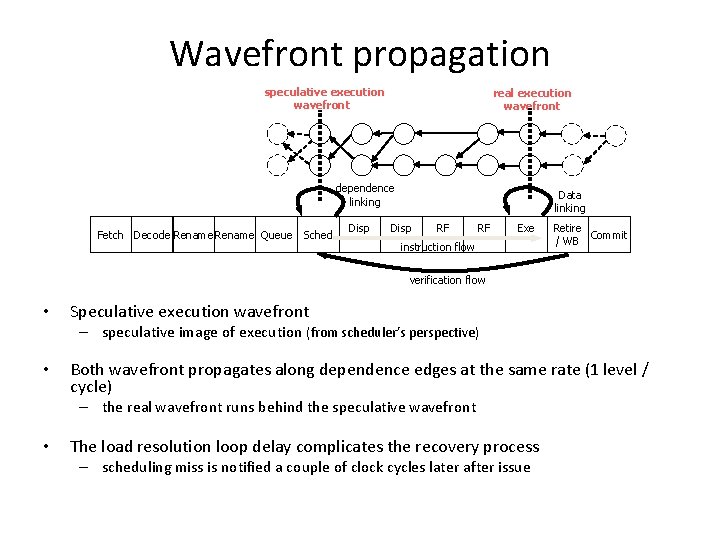

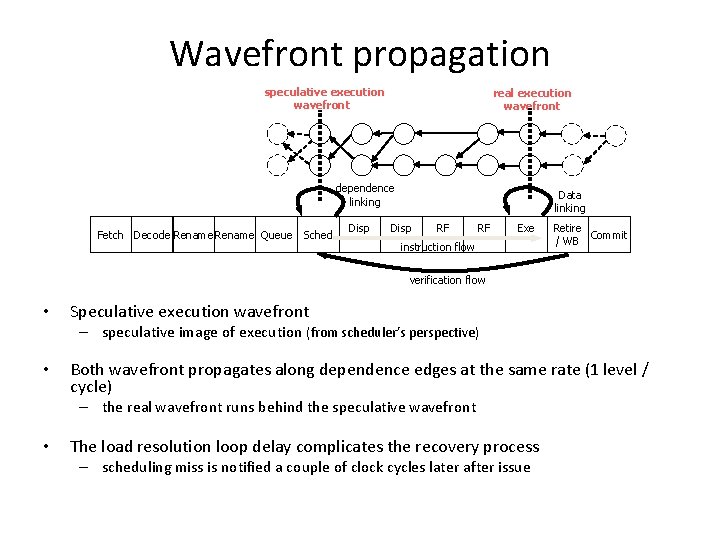

Wavefront propagation

[Figure: pipeline Fetch, Decode, Rename, Queue, Sched, Disp, Disp, RF, RF, Exe, Retire / WB, Commit, with the instruction flow running forward and the verification flow running back. The speculative execution wavefront (dependence linking) is at the scheduler; the real execution wavefront (data linking) is at the execution stage.]

• Speculative execution wavefront
  – Speculative image of execution (from the scheduler’s perspective)
• Both wavefronts propagate along dependence edges at the same rate (1 level / cycle)
  – The real wavefront runs behind the speculative wavefront
• The load resolution loop delay complicates the recovery process
  – A scheduling miss is reported a couple of clock cycles after issue

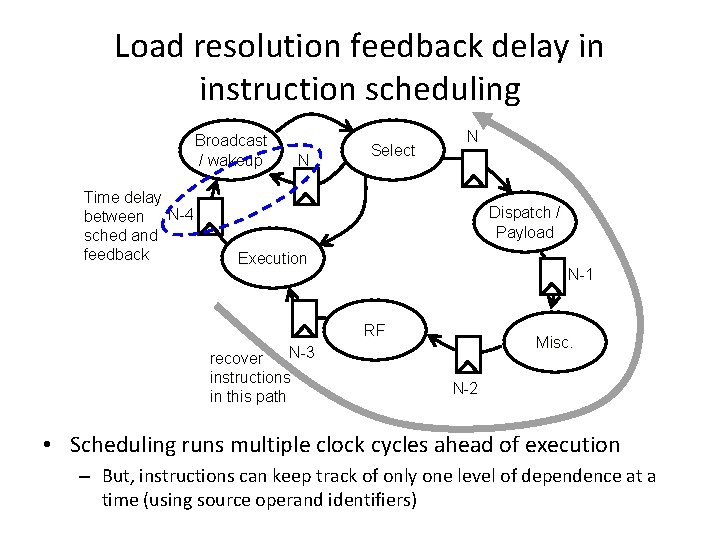

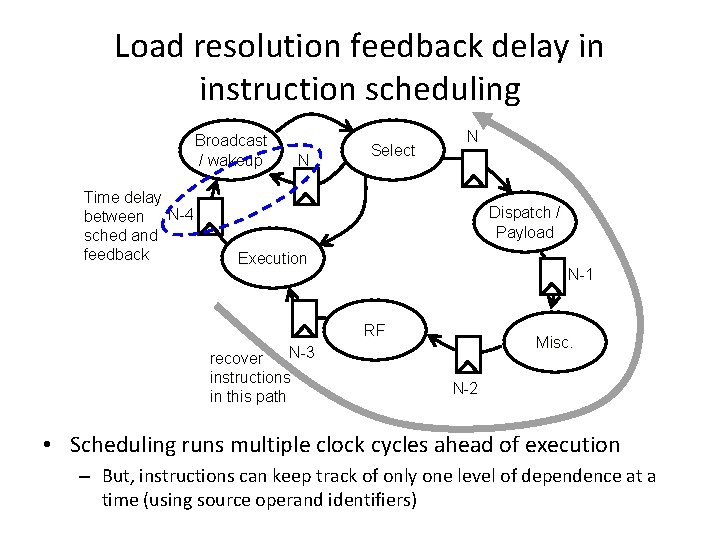

Load resolution feedback delay in instruction scheduling

[Figure: the load resolution loop through Broadcast / wakeup, Select, Dispatch / Payload, RF, Misc., and Execution, occupied by instructions N through N-4. The loop length is the time delay between scheduling and feedback; the instructions in this path must be recovered.]

• Scheduling runs multiple clock cycles ahead of execution
  – But instructions can keep track of only one level of dependence at a time (using source operand identifiers)

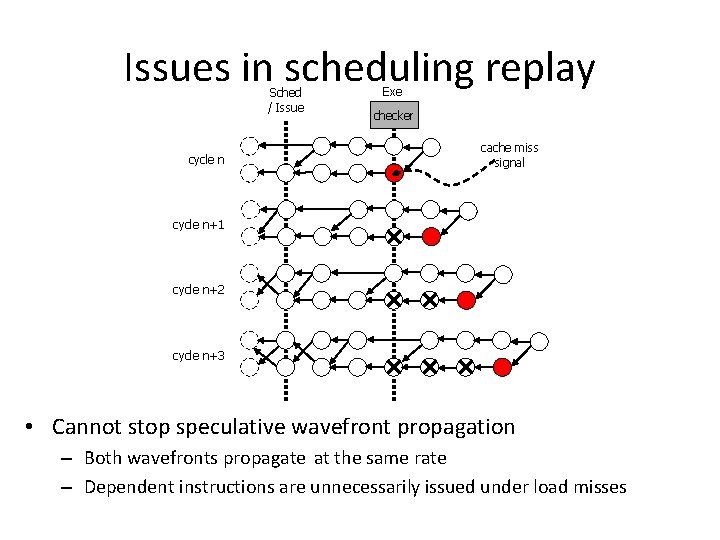

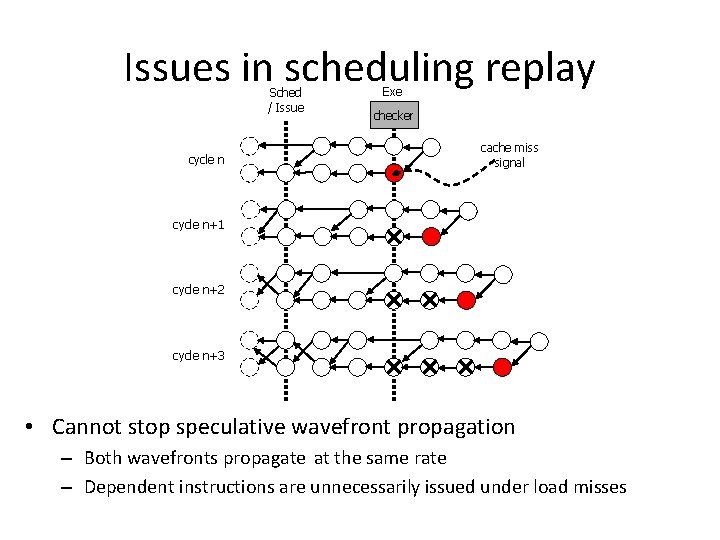

Issues in scheduling replay

[Figure: timeline from cycle n to cycle n+3 showing Sched / Issue, Exe, and the checker raising the cache miss signal.]

• Cannot stop speculative wavefront propagation
  – Both wavefronts propagate at the same rate
  – Dependent instructions are unnecessarily issued under load misses

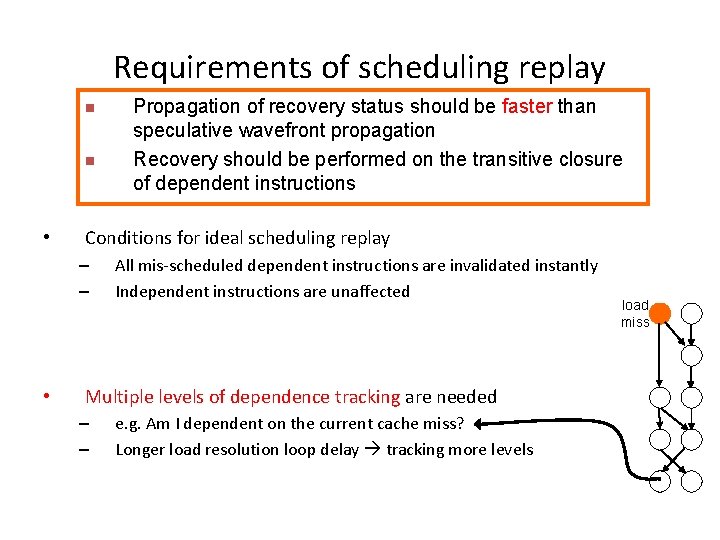

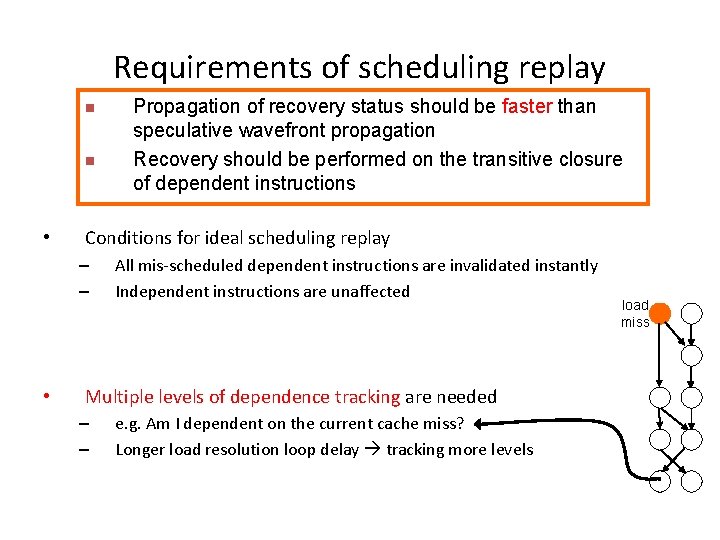

Requirements of scheduling replay
• Conditions for ideal scheduling replay
  – All mis-scheduled dependent instructions are invalidated instantly
  – Independent instructions are unaffected
• Multiple levels of dependence tracking are needed
  – e.g., Am I dependent on the current cache miss?
  – Longer load resolution loop delay → tracking more levels
• Propagation of recovery status should be faster than speculative wavefront propagation
• Recovery should be performed on the transitive closure of dependent instructions

[Figure: dependence graph rooted at a load miss]

Scheduling replay schemes
• Alpha 21264: Non-selective replay
  – Replays all dependent and independent instructions issued under the load shadow
  – Analogous to squashing recovery in branch misprediction
  – Simple but high performance penalty
    • Independent instructions are unnecessarily replayed

[Figure: a load (LD) flows through Sched, Disp, RF, Exe, Retire and misses in the cache; the ADD, OR, AND, and BR instructions issued before the LD miss is resolved are in the load shadow. Invalidate & replay ALL instructions in the load shadow.]
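The difference between non-selective replay and the ideal (selective) policy comes down to which set of instructions is invalidated. The sketch below is an illustration only: the issue cycles, the dependence edges in `ISSUED`, and the two-cycle `SHADOW` window are hypothetical values, and the function names are not from the lecture.

```python
def non_selective_replay(issued, shadow):
    """Replay every instruction issued inside the load shadow (squash style)."""
    first, last = shadow
    return {name for name, (cycle, _) in issued.items() if first <= cycle <= last}

def selective_replay(issued, shadow, load):
    """Replay only the transitive dependents of `load` issued in its shadow."""
    first, last = shadow
    tainted = {load}
    replay = set()
    # Visit in issue order so that a parent is classified before its children.
    for name, (cycle, srcs) in sorted(issued.items(), key=lambda kv: kv[1][0]):
        if name != load and tainted.intersection(srcs):
            tainted.add(name)
            if first <= cycle <= last:
                replay.add(name)
    return replay

# name -> (issue cycle, sources); all values are hypothetical.
ISSUED = {
    "LD": (0, []),
    "ADD": (2, ["LD"]),
    "AND": (2, []),
    "OR": (3, ["ADD"]),
    "BR": (3, ["AND"]),
}
SHADOW = (2, 3)  # assumed cycles between speculative wakeup and miss detection

print(sorted(non_selective_replay(ISSUED, SHADOW)))    # ['ADD', 'AND', 'BR', 'OR']
print(sorted(selective_replay(ISSUED, SHADOW, "LD")))  # ['ADD', 'OR']
```

The non-selective scheme needs no dependence tracking at all, which is why it is simple, but here it replays AND and BR even though neither depends on the load.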

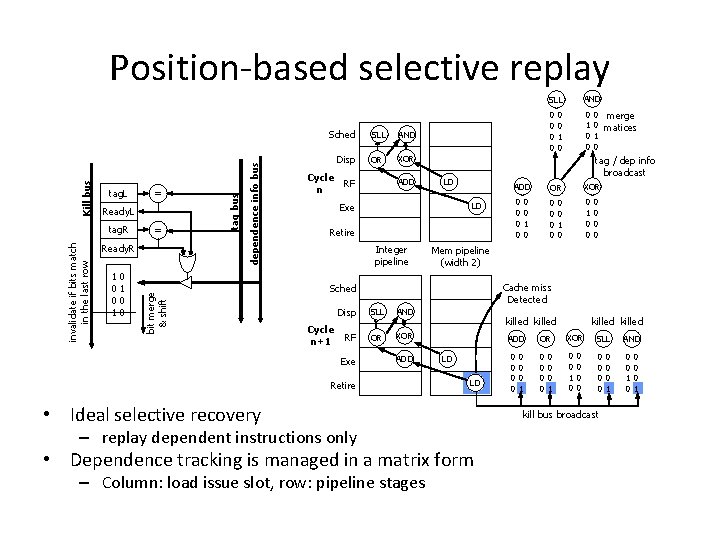

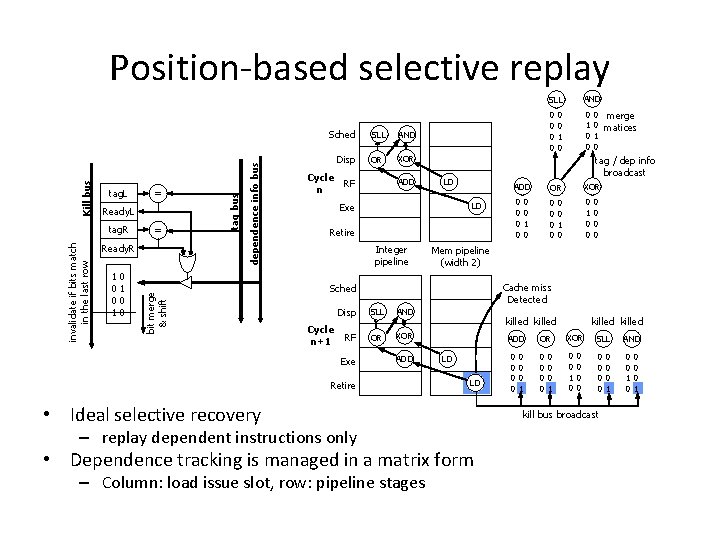

Position-based selective replay
• Ideal selective recovery
  – Replay dependent instructions only
• Dependence tracking is managed in a matrix form
  – Column: load issue slot, row: pipeline stages

[Figure: a scheduler entry with tagL / tagR comparators on the tag bus, ReadyL / ReadyR bits, and a dependence matrix fed by the dependence info bus (bit merge & shift); the entry is invalidated if bits match in the last row on the kill bus. Example over cycle n and cycle n+1: LD, ADD, OR, AND, XOR, and SLL flow through Sched, Disp, RF, Exe, and Retire in the integer pipeline and the memory pipeline (width 2). Each instruction merges the matrices from the tag / dependence info broadcast; when the cache miss is detected, the kill bus broadcast kills the dependent instructions.]
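The matrix bookkeeping can be sketched in a few lines. This is a simplified model, not the mechanism as published: the depth of 3 stages, the two load issue slots, and all function names are assumptions. Every matrix shifts down one row per cycle, a woken instruction ORs together its parents' matrices, and a kill on a load slot invalidates every entry whose last row has that slot's bit set.

```python
DEPTH, SLOTS = 3, 2  # assumed: 3 tracked pipeline stages, 2 load issue slots

def empty():
    return [[0] * SLOTS for _ in range(DEPTH)]

def for_load(slot):
    """Matrix of a load just issued in `slot`: one bit in the first row."""
    matrix = empty()
    matrix[0][slot] = 1
    return matrix

def merge(*matrices):
    """Bit merge: OR the dependence matrices broadcast by all parents."""
    return [
        [int(any(m[r][c] for m in matrices)) for c in range(SLOTS)]
        for r in range(DEPTH)
    ]

def shift(matrix):
    """Advance one cycle: every bit moves down one pipeline stage."""
    return [[0] * SLOTS] + [row[:] for row in matrix[:-1]]

def killed(matrix, slot):
    """Kill bus check: invalidate if the bit matches in the last row."""
    return matrix[-1][slot] == 1

window = {}

def tick():
    for name in window:
        window[name] = shift(window[name])

window["LD"] = for_load(0)           # cycle 0: load issues in slot 0
tick()
window["ADD"] = merge(window["LD"])  # cycle 1: ADD is woken by LD
window["XOR"] = merge()              # cycle 1: XOR has no load parent
tick()
window["OR"] = merge(window["ADD"], window["XOR"])  # cycle 2: woken by both
# Cycle 2: the load in slot 0 reaches the last tracked stage and misses.
victims = sorted(n for n, m in window.items() if n != "LD" and killed(m, 0))
print(victims)  # ['ADD', 'OR']
```

Because all matrices shift in lockstep, the row of a bit encodes how far the originating load has progressed, so a single kill broadcast reaches every level of dependents at once without walking the dependence chain.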

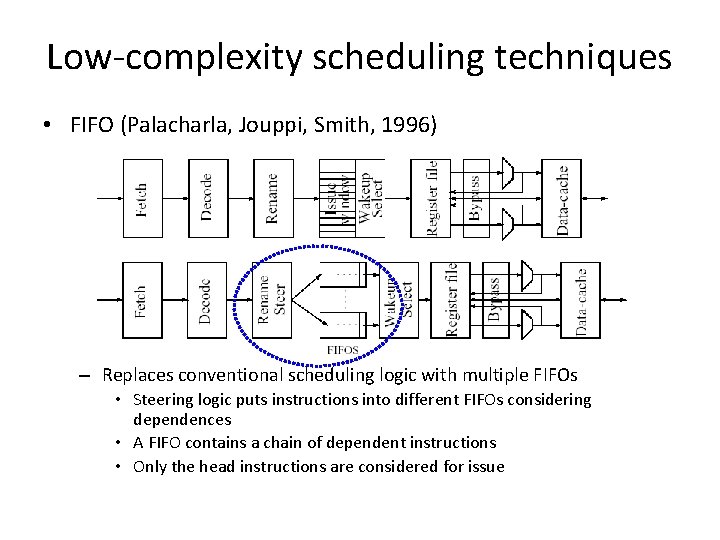

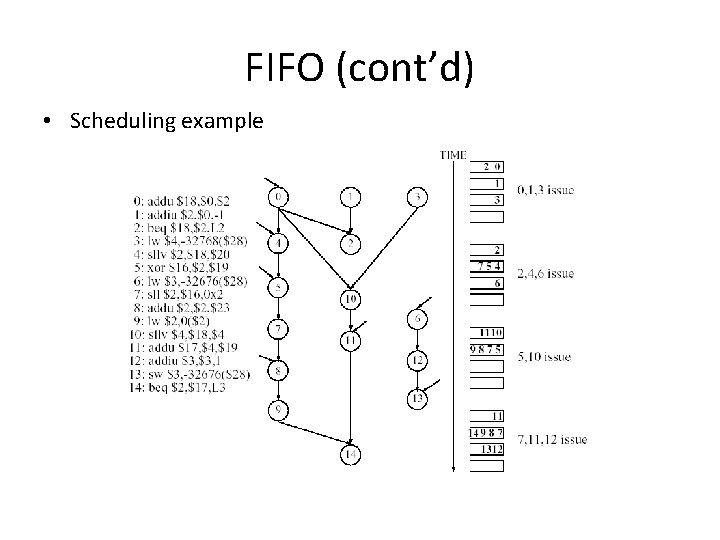

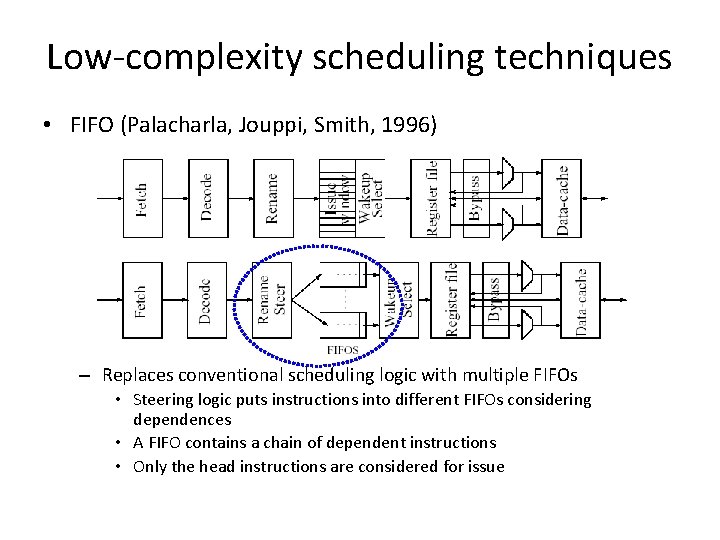

Low-complexity scheduling techniques
• FIFO (Palacharla, Jouppi, Smith, 1996)
  – Replaces conventional scheduling logic with multiple FIFOs
    • Steering logic puts instructions into different FIFOs considering dependences
    • A FIFO contains a chain of dependent instructions
    • Only the head instructions are considered for issue
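A simplified version of dependence-based steering can be sketched as follows. This is an illustration under assumptions, not the exact heuristic from the paper: an instruction is placed behind a producer that sits at the tail of some FIFO, otherwise into an empty FIFO, otherwise dispatch stalls. The function names and the five-instruction example are hypothetical.

```python
from collections import deque

def steer(fifos, inst, sources):
    """Append `inst` to a FIFO; return its index, or None if dispatch stalls."""
    # Prefer a FIFO whose tail produces one of our sources, so that each
    # FIFO holds a chain of dependent instructions.
    for idx, fifo in enumerate(fifos):
        if fifo and fifo[-1] in sources:
            fifo.append(inst)
            return idx
    # Otherwise start a new chain in an empty FIFO.
    for idx, fifo in enumerate(fifos):
        if not fifo:
            fifo.append(inst)
            return idx
    return None  # no suitable FIFO: stall

def issue_candidates(fifos):
    """Only the head of each FIFO is considered for issue."""
    return [fifo[0] for fifo in fifos if fifo]

fifos = [deque() for _ in range(3)]
program = [(1, []), (2, [1]), (3, [1]), (4, [2]), (5, [3])]
placement = [steer(fifos, inst, srcs) for inst, srcs in program]
print(placement)                # [0, 0, 1, 0, 1]
print(issue_candidates(fifos))  # [1, 3]
```

Select logic then examines one candidate per FIFO instead of every window entry, which is where the complexity reduction comes from.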

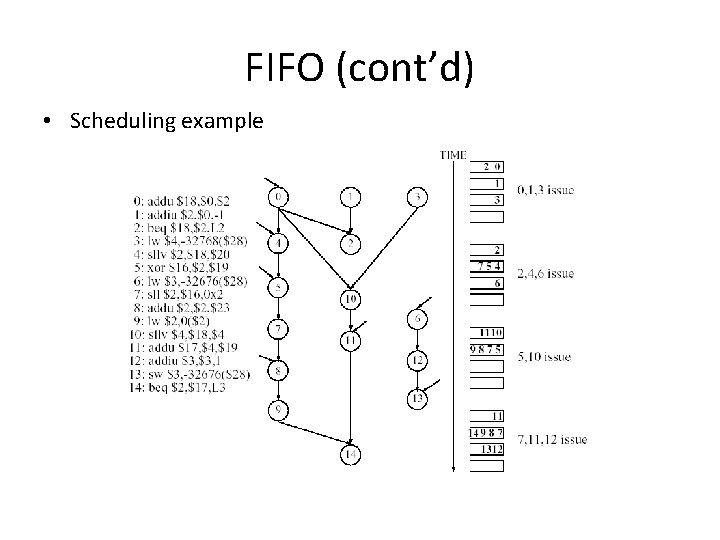

FIFO (cont’d) • Scheduling example

FIFO (cont’d)
• Performance
  – Comparable performance to conventional scheduling
  – Reduced scheduling logic complexity
• Many related papers on clustered microarchitecture
• Can in-order clusters provide high performance? [Zilles reading]

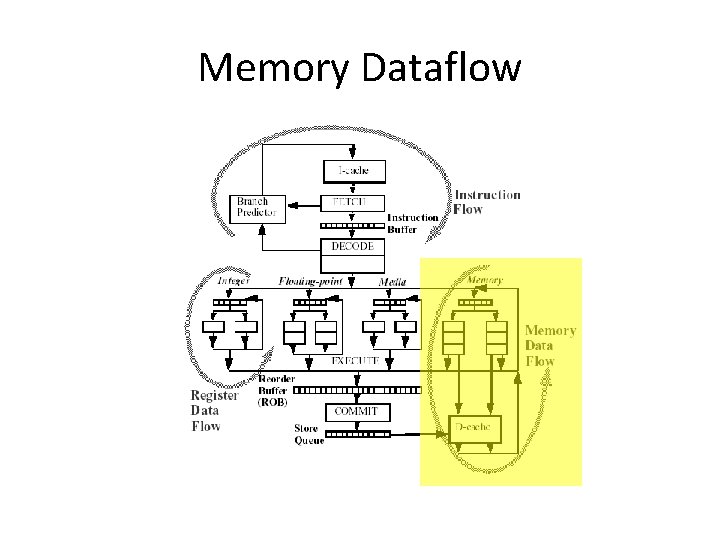

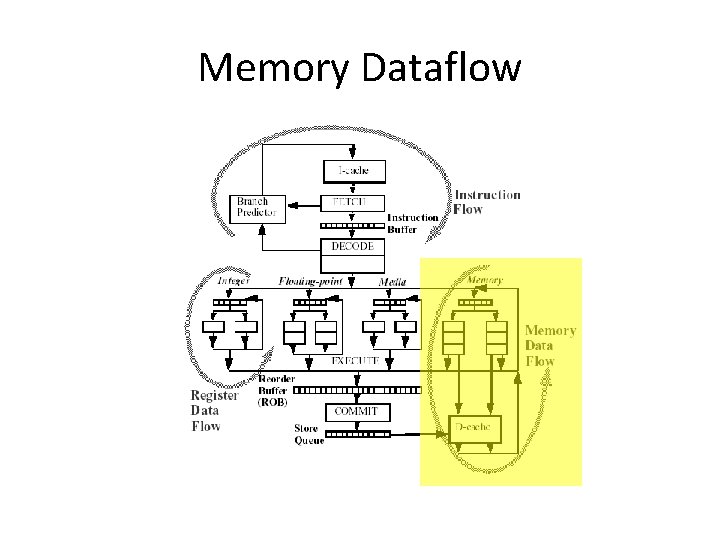

Memory Dataflow

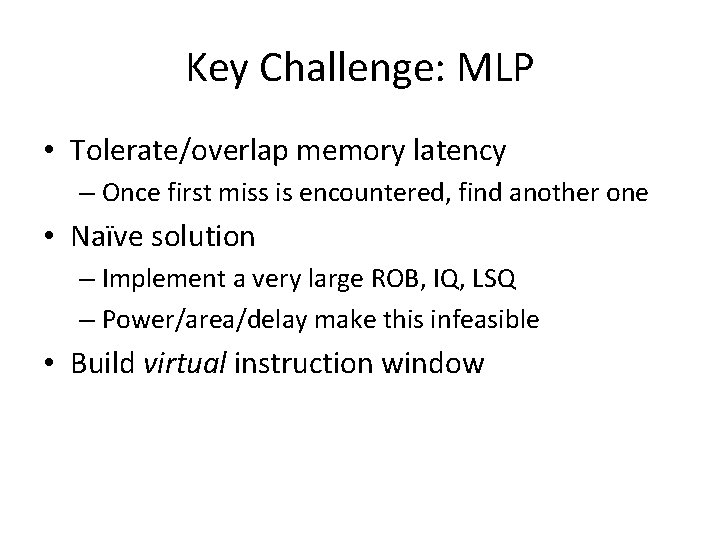

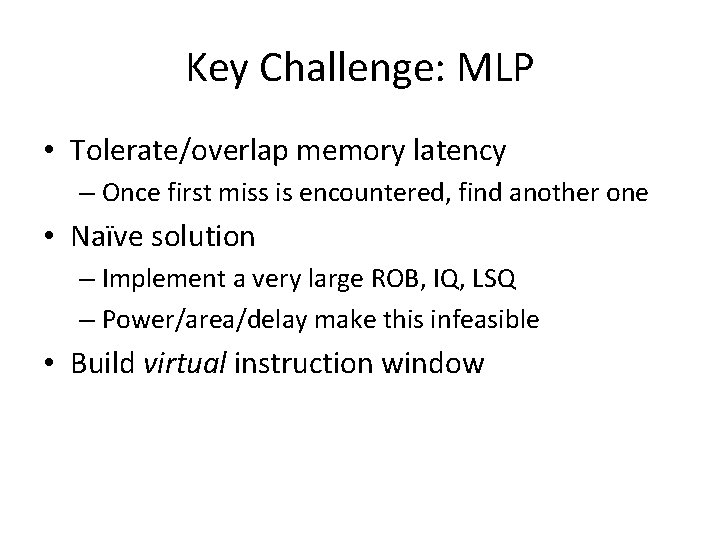

Key Challenge: MLP (memory-level parallelism)
• Tolerate/overlap memory latency
  – Once the first miss is encountered, find another one
• Naïve solution
  – Implement a very large ROB, IQ, LSQ
  – Power/area/delay make this infeasible
• Build a virtual instruction window

Runahead
• Use poison bits to eliminate the miss-dependent load program slice
  – Forward load slice processing is a very old idea
    • Massive Memory Machine [Garcia-Molina et al. 84]
    • Datascalar [Burger, Kaxiras, Goodman 97]
  – Runahead proposed by [Dundas, Mudge 97]
• Checkpoint state, keep running
• When the miss completes, return to the checkpoint
  – May need a runahead cache for store/load communication
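Poison-bit propagation during runahead can be sketched as a single pass over the instructions that follow the missing load. This is a minimal model under assumptions: register names, the six-instruction `PROGRAM`, and the function name are hypothetical, and stores and the runahead cache are left out. Instructions whose sources are poisoned are skipped and poison their destination; independent loads execute and act as prefetches.

```python
def runahead(program, missing_load):
    """program: list of (name, op, dest, sources) in program order.

    Returns (loads that run ahead as prefetches, skipped instructions).
    """
    poisoned = set()  # registers whose value depends on the miss
    prefetches, skipped = [], []
    for name, op, dest, srcs in program:
        if name == missing_load or poisoned.intersection(srcs):
            # Part of the miss-dependent slice: do not execute, and
            # propagate the poison bit to the destination register.
            poisoned.add(dest)
            skipped.append(name)
        else:
            # Independent of the miss: executes normally, so its
            # destination holds a valid value again.
            poisoned.discard(dest)
            if op == "load":
                prefetches.append(name)
    return prefetches, skipped

PROGRAM = [
    ("LD1", "load", "r1", ["r9"]),  # the load that misses
    ("ADD", "alu", "r2", ["r1"]),
    ("LD2", "load", "r3", ["r2"]),  # address depends on the miss
    ("LD3", "load", "r4", ["r8"]),  # independent load
    ("MOV", "alu", "r1", ["r7"]),   # overwrites r1 with a valid value
    ("LD4", "load", "r5", ["r1"]),
]
print(runahead(PROGRAM, "LD1"))
# (['LD3', 'LD4'], ['LD1', 'ADD', 'LD2'])
```

LD3 and LD4 are the payoff: if they also miss, their latency overlaps with that of LD1. When LD1 returns, execution restarts from the checkpoint and everything here is re-executed with warm caches.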

![Waiting Instruction Buffer (Lebeck et al., ISCA 2002)](https://slidetodoc.com/presentation_image/4b498134fbf39975625cd8cf44922c3c/image-30.jpg)

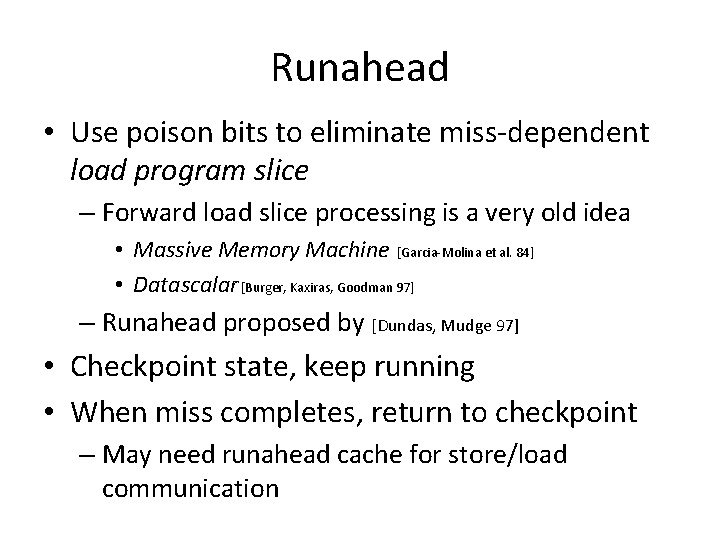

Waiting Instruction Buffer [Lebeck et al. ISCA 2002]
• Capture forward load slice in a separate buffer
  – Propagate poison bits to identify the slice
• Relieve pressure on the issue queue
• Reinsert instructions when the load completes
• Very similar to the Intel Pentium 4 replay mechanism
  – But not publicly known at the time

![Continual Flow Pipelines (Srinivasan et al., 2004)](https://slidetodoc.com/presentation_image/4b498134fbf39975625cd8cf44922c3c/image-31.jpg)

Continual Flow Pipelines [Srinivasan et al. 2004]
• Slice buffer extension of WIB
  – Store operands in the slice buffer as well, to free up buffer entries in the OOO window
  – Relieve pressure on rename/physical registers
• Applicable to
  – data-capture machines (Intel P6) or
  – physical register file machines (Pentium 4)
• Recently extended to in-order machines (iCFP)
• Challenge: how to buffer loads/stores

Scalable Load/Store Queues
• Load queue/store queue
  – Large instruction window: many loads and stores have to be buffered (about 25% and 15% of the instruction mix, respectively)
  – Expensive searches
    • Positional-associative searches in SQ
    • Associative lookups in LQ
      – Coherence, speculative load scheduling
  – Power/area/delay are prohibitive

Store Queue/Load Queue Scaling
• Multilevel queues
• Bloom filters (quick check for independence)
• Eliminate associative load queue via replay [Cain 2004]
  – Issue loads again at commit, in order
  – Check to see if the same value is returned
  – Filter load checks for efficiency:
    • Most loads don’t issue out of order (no speculation)
    • Most loads don’t coincide with coherence traffic
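The Bloom filter bullet can be made concrete with a small counting filter over in-flight store addresses. This is a sketch under assumptions: the class name, the filter size, the number of hash functions, and the use of `hashlib.blake2b` as the hash are illustrative choices, not a description of any real design. A negative answer proves the load is independent of every tracked store, so the expensive associative search can be skipped; a positive answer may be a false positive and only means the full search is needed.

```python
import hashlib

class StoreBloomFilter:
    """Counting Bloom filter over the addresses of in-flight stores."""

    def __init__(self, bits=64, hashes=2):
        self.bits = bits
        self.hashes = hashes
        self.counts = [0] * bits  # counters allow removal at store commit

    def _indexes(self, addr):
        for k in range(self.hashes):
            digest = hashlib.blake2b(
                f"{k}:{addr}".encode(), digest_size=4
            ).digest()
            yield int.from_bytes(digest, "little") % self.bits

    def insert(self, addr):
        """Called when a store enters the store queue."""
        for i in self._indexes(addr):
            self.counts[i] += 1

    def remove(self, addr):
        """Called when the store commits and leaves the store queue."""
        for i in self._indexes(addr):
            self.counts[i] -= 1

    def may_alias(self, addr):
        """False means definitely independent; True means search the SQ."""
        return all(self.counts[i] > 0 for i in self._indexes(addr))

bloom = StoreBloomFilter()
bloom.insert(0x1000)
print(bloom.may_alias(0x1000))  # True: the store queue must be searched
bloom.remove(0x1000)
print(bloom.may_alias(0x1000))  # False: the load can skip the search
```

Since most loads do not alias an in-flight store, most lookups return False and never touch the associative structure.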

SVW and NoSQ
• Store Vulnerability Window (SVW)
  – Assign sequence numbers to stores
  – Track writes to cache with sequence numbers
  – Efficiently filter out safe loads/stores by only checking against writes in the vulnerability window
• NoSQ
  – Rely on load/store alias prediction to satisfy dependent pairs
  – Use the SVW technique to check
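The SVW filter can be sketched as a small table of store sequence numbers (SSNs). This is a simplified model with assumed names and parameters: the table size, the 8-byte indexing granularity, and the method names are hypothetical, and store-to-load forwarding is not modeled. Each load remembers the SSN of the youngest store that had committed when it executed; at commit it needs re-execution only if a store with a larger SSN has since written its (hashed) address.

```python
class SVW:
    """Store Vulnerability Window filter for load re-execution."""

    def __init__(self, entries=16):
        # Per hashed address: SSN of the last committed store to it.
        self.table = [0] * entries
        self.ssn_commit = 0  # SSN of the youngest committed store

    def _index(self, addr):
        return (addr >> 3) % len(self.table)  # assumed 8-byte granularity

    def commit_store(self, addr):
        self.ssn_commit += 1
        self.table[self._index(addr)] = self.ssn_commit

    def load_executes(self):
        """SSN the load is not vulnerable to: stores committed before the
        load executed have already written the cache it read from."""
        return self.ssn_commit

    def must_reexecute(self, addr, ssn_not_vulnerable):
        """At load commit: did a store in the vulnerability window write
        this (hashed) address?"""
        return self.table[self._index(addr)] > ssn_not_vulnerable

svw = SVW()
svw.commit_store(0x100)          # SSN 1
ssn_a = svw.load_executes()      # load A reads 0x100 early
ssn_b = svw.load_executes()      # load B reads 0x308 early
svw.commit_store(0x100)          # SSN 2: older store commits after A executed
print(svw.must_reexecute(0x100, ssn_a))  # True: A may have read stale data
print(svw.must_reexecute(0x308, ssn_b))  # False: B is filtered out as safe
```

Hash collisions in the table can only cause extra re-executions, never a missed one, so the filter stays safe while removing most checks.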

Store/Load Optimizations
• Weakness: predictor still fails
  – Machine should fail gracefully, not fall off a cliff
  – Glass jaw
• Several other concurrent proposals
  – DMDC, Fire-and-forget, …

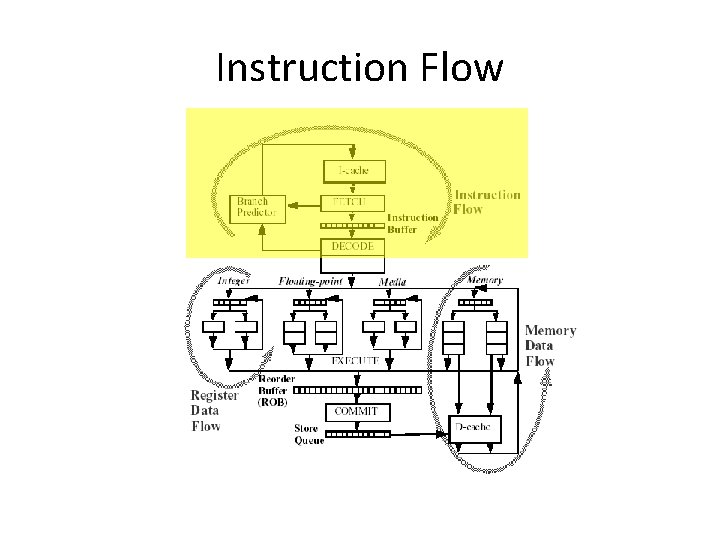

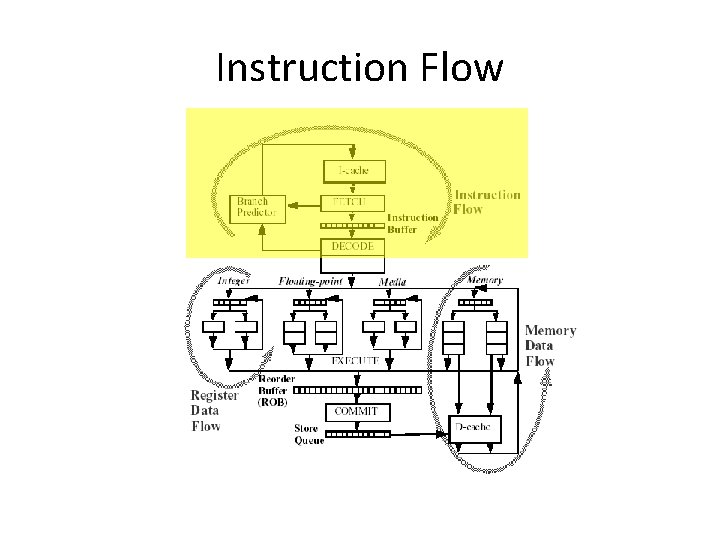

Instruction Flow

Transparent Control Independence
• Control flow graph convergence
  – Execution reconverges after branches
  – If-then-else constructs, etc.
• Can we fetch/execute instructions beyond the convergence point?
• How do we resolve ambiguous register and memory dependences?
  – Writes may or may not occur in the branch shadow
• TCI employs a CFP-like slice buffer to solve these problems
  – Instructions with ambiguous dependences are buffered
  – They are reinserted the same way a forward load miss slice is reinserted
• “Best” CI proposal to date, but still very complex and expensive, with moderate payback

Summary of Advanced Microarchitecture
• Instruction scheduling overview
  – Scheduling atomicity
  – Speculative scheduling
  – Scheduling recovery
• Complexity-effective instruction scheduling techniques
• Building large instruction windows
  – Runahead, CFP, iCFP
• Scalable load/store handling
• Control Independence
