
Main Memory. Prof. Mikko H. Lipasti, University of Wisconsin-Madison. ECE/CS 752, Spring 2016. Lecture notes based on notes by Jim Smith and Mark Hill, updated by Mikko Lipasti.

Readings

- Discuss in class: read Sec. 1, skim Sec. 2, read Sec. 3 of Bruce Jacob, “The Memory System: You Can't Avoid It, You Can't Ignore It, You Can't Fake It,” Synthesis Lectures on Computer Architecture, 2009, 4:1, 1-77.
- Review #2, due 3/30/2016: Steven Pelley, Peter Chen, and Thomas Wenisch, "Memory Persistency," Proc. ISCA 2014, June 2014 (online PDF).

Summary: Main Memory

- DRAM chips
- Memory organization
  - Interleaving
  - Banking
- Memory controller design
- Hybrid Memory Cube
- Phase Change Memory (reading)
- Virtual memory
- TLBs
- Interaction of caches and virtual memory (Baer et al.)
- Large pages, virtualization

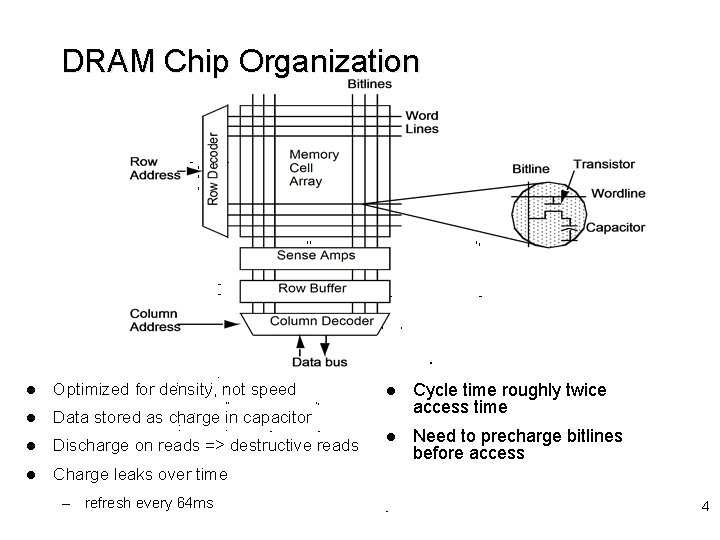

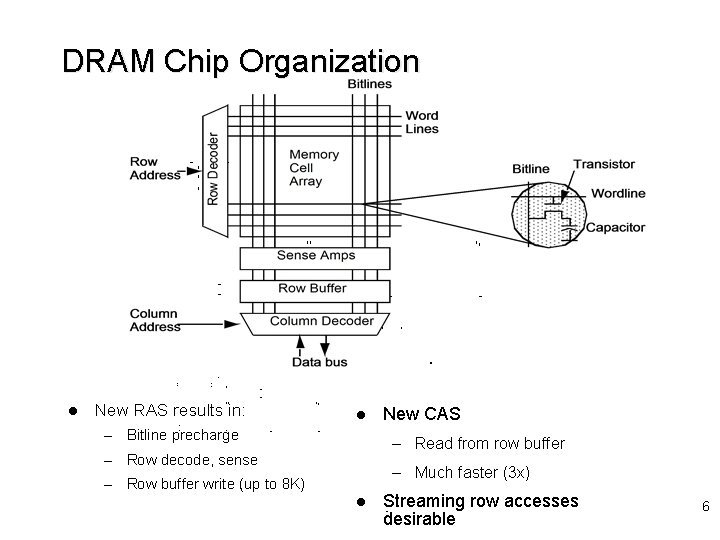

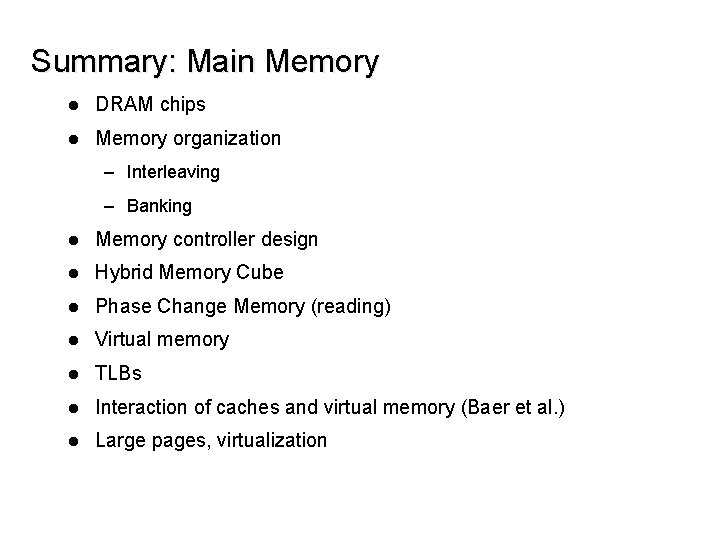

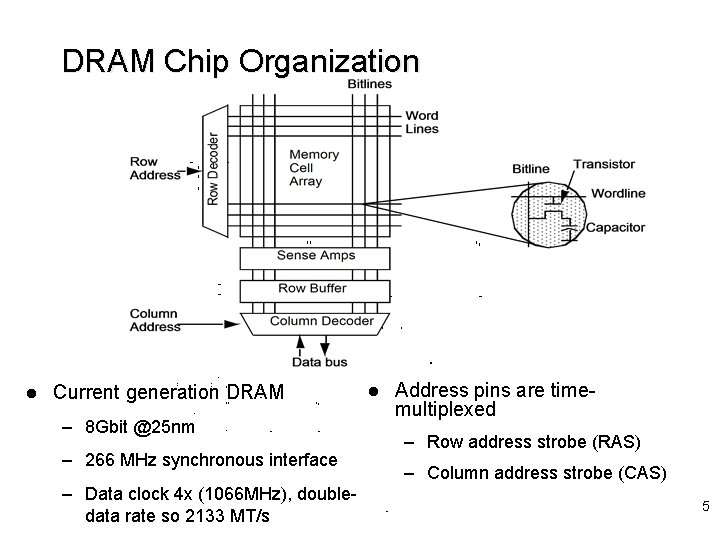

DRAM Chip Organization

- Optimized for density, not speed
- Data stored as charge in a capacitor
- Discharge on reads => destructive reads
- Charge leaks over time: refresh every 64 ms
- Cycle time roughly twice access time
- Need to precharge bitlines before access

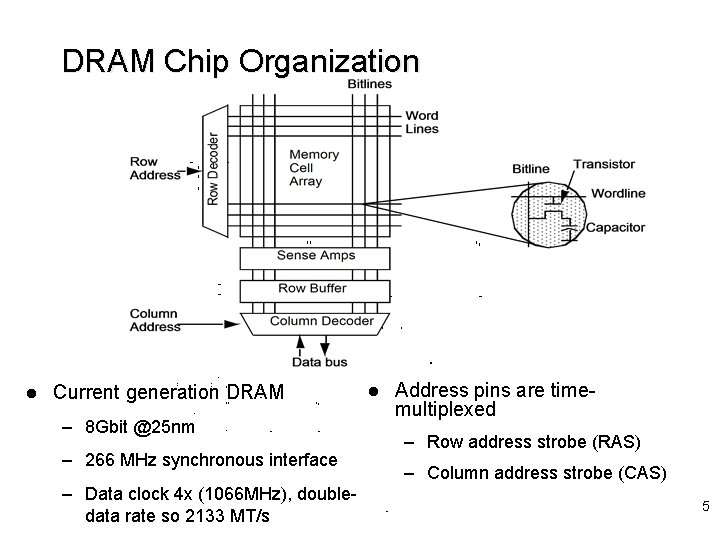

DRAM Chip Organization

- Current-generation DRAM
  - 8 Gbit @ 25 nm
  - 266 MHz synchronous interface
  - Data clock 4x (1066 MHz), double data rate, so 2133 MT/s
- Address pins are time-multiplexed
  - Row address strobe (RAS)
  - Column address strobe (CAS)

DRAM Chip Organization

- New RAS results in:
  - Bitline precharge
  - Row decode, sense
  - Row buffer write (up to 8K)
- New CAS
  - Read from row buffer
  - Much faster (3x)
- Streaming row accesses desirable

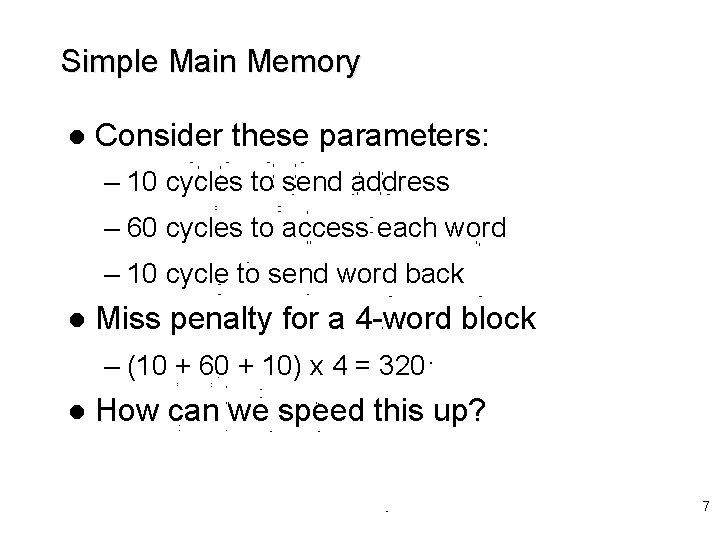

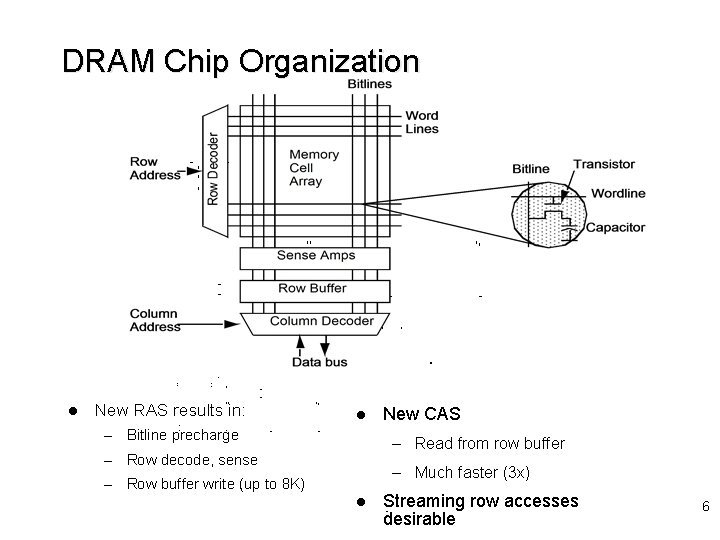

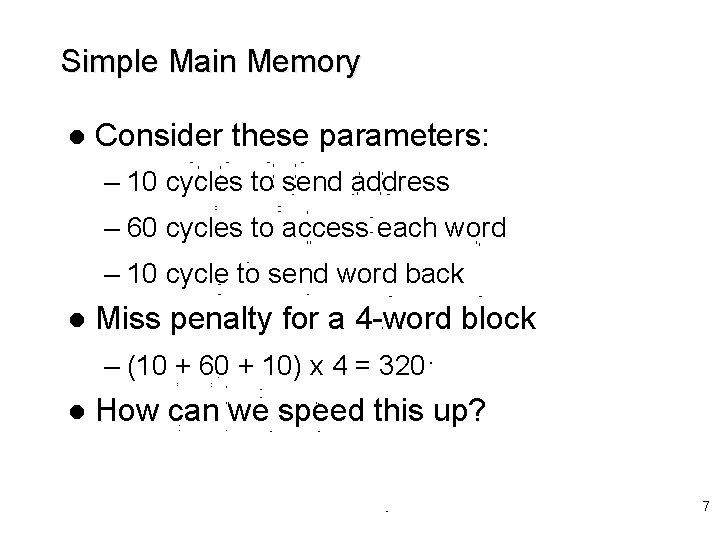

Simple Main Memory

- Consider these parameters:
  - 10 cycles to send address
  - 60 cycles to access each word
  - 10 cycles to send word back
- Miss penalty for a 4-word block
  - (10 + 60 + 10) x 4 = 320 cycles
- How can we speed this up?

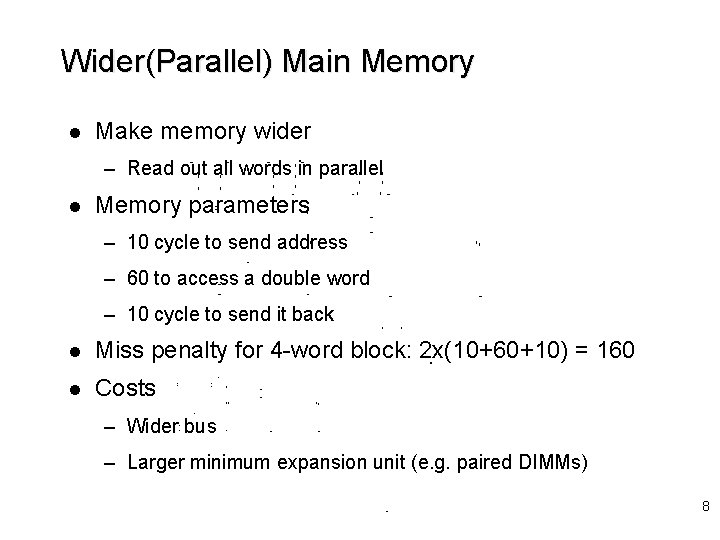

Wider (Parallel) Main Memory

- Make memory wider
  - Read out all words in parallel
- Memory parameters
  - 10 cycles to send address
  - 60 cycles to access a double word
  - 10 cycles to send it back
- Miss penalty for a 4-word block: 2 x (10 + 60 + 10) = 160 cycles
- Costs
  - Wider bus
  - Larger minimum expansion unit (e.g., paired DIMMs)
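The miss-penalty arithmetic for the simple and the wider organization can be checked with a short Python sketch. The name `miss_penalty` and its parameters are illustrative and not from the lecture; the sketch assumes every access pays the full address, access, and return latency with no overlap between accesses.

```python
def miss_penalty(block_words, words_per_access, addr=10, access=60, xfer=10):
    """Cycles to fetch a block when each access returns `words_per_access` words.

    Assumes accesses are fully serialized (no overlap between transfers).
    """
    accesses = -(-block_words // words_per_access)  # ceiling division
    return accesses * (addr + access + xfer)


simple = miss_penalty(block_words=4, words_per_access=1)  # one word per access
wide = miss_penalty(block_words=4, words_per_access=2)    # a double word per access
print(simple, wide)
```

Under these assumptions, doubling the width halves the number of serialized accesses, which is where the factor of two between the two slides comes from.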

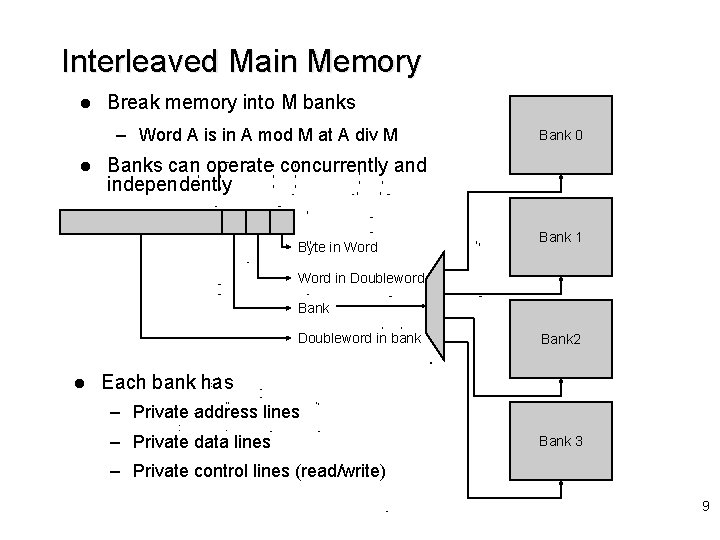

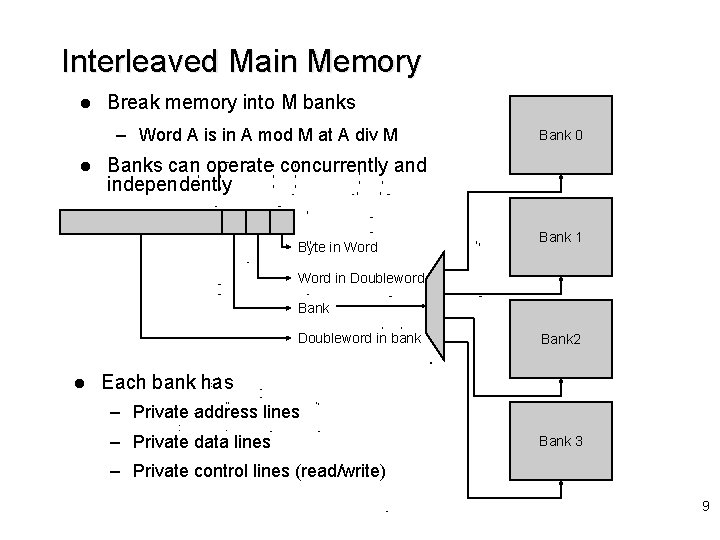

Interleaved Main Memory

- Break memory into M banks
  - Word A is in bank A mod M, at location A div M
- Banks can operate concurrently and independently
- Each bank has
  - Private address lines
  - Private data lines
  - Private control lines (read/write)

[Figure: four banks (Bank 0 to Bank 3); address fields: doubleword in bank, bank, word in doubleword, byte in word]
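The bank mapping (word A lives in bank A mod M at location A div M) can be written as a one-line function. The name `locate` and the choice of M = 4 are illustrative assumptions.

```python
def locate(word_addr, num_banks):
    """Return (bank, index_within_bank) for low-order interleaving."""
    return word_addr % num_banks, word_addr // num_banks


# Consecutive word addresses rotate through the banks (M = 4 chosen for illustration).
for a in range(8):
    print(a, locate(a, 4))
```

Because consecutive words land in different banks, a sequential block fetch can keep all M banks busy at once.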

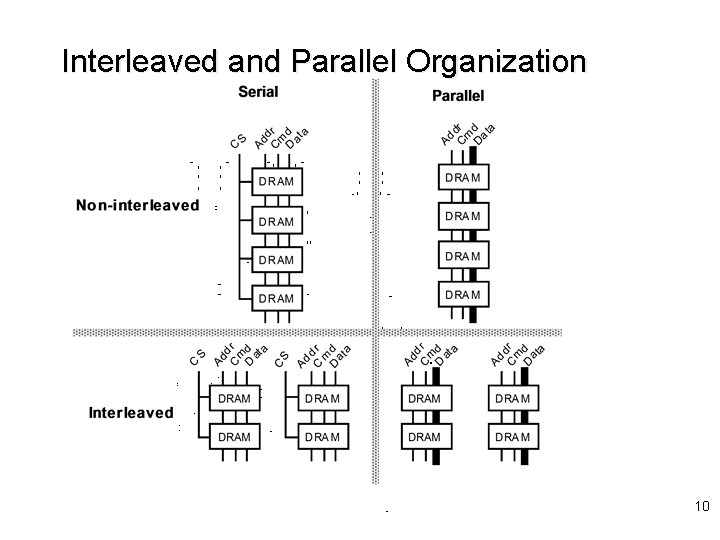

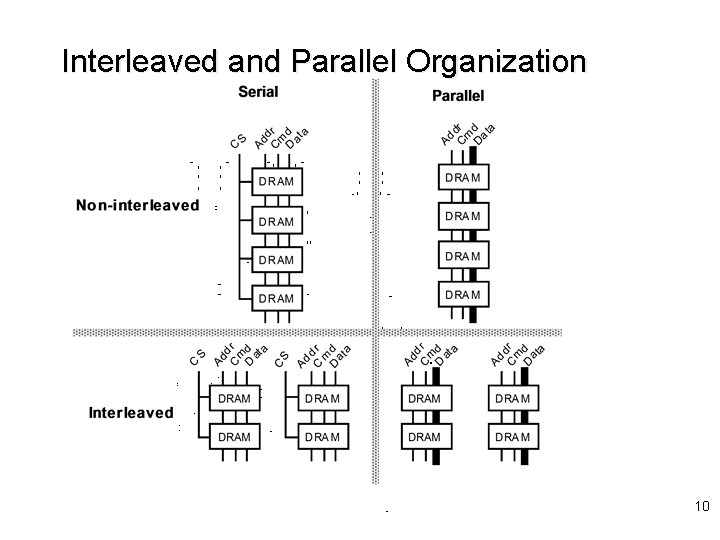

Interleaved and Parallel Organization

Interleaved Memory Examples

Ai = address to bank i; Ti = data transfer

- Unit stride:
- Stride 3:

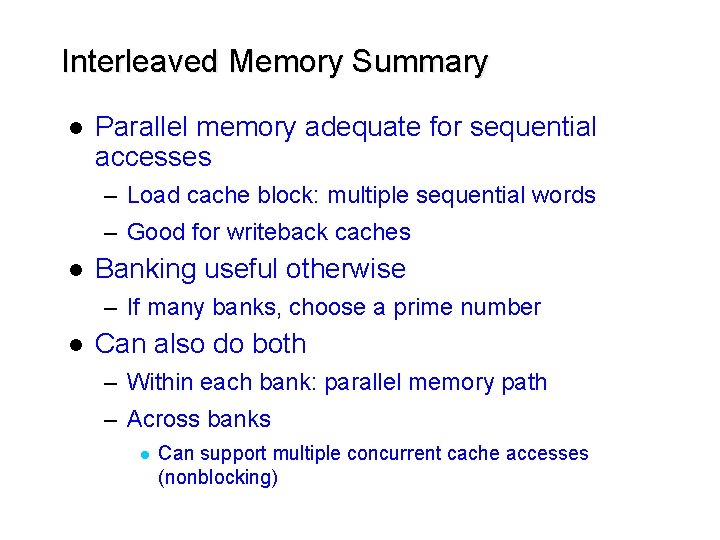

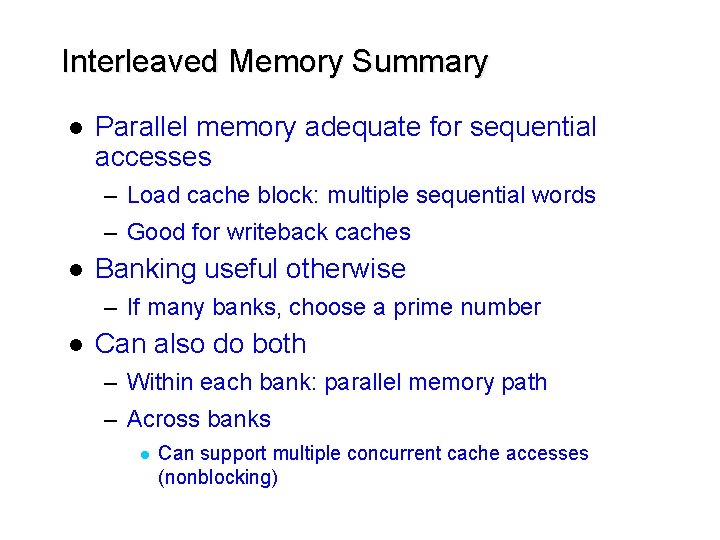

Interleaved Memory Summary

- Parallel memory adequate for sequential accesses
  - Load cache block: multiple sequential words
  - Good for writeback caches
- Banking useful otherwise
  - If many banks, choose a prime number of banks
- Can also do both
  - Within each bank: parallel memory path
  - Across banks
    - Can support multiple concurrent cache accesses (nonblocking)
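One way to see why a prime number of banks helps is to count how many distinct banks a strided access stream touches. The sketch below is illustrative (the function name and the sample strides are assumptions); for a stream at least as long as the bank count, the result equals M / gcd(stride, M).

```python
from math import gcd


def banks_touched(stride, num_banks):
    """Distinct banks hit by num_banks consecutive accesses with the given stride."""
    return len({(i * stride) % num_banks for i in range(num_banks)})


for m in (8, 7):
    for stride in (1, 2, 3, 4):
        # The brute-force count matches the closed form M / gcd(stride, M).
        assert banks_touched(stride, m) == m // gcd(stride, m)
        print(f"M={m} stride={stride}: {banks_touched(stride, m)} banks")
```

With 8 banks, stride 2 and stride 4 use only 4 and 2 banks respectively; with 7 banks every stride that is not a multiple of 7 spreads across all banks.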

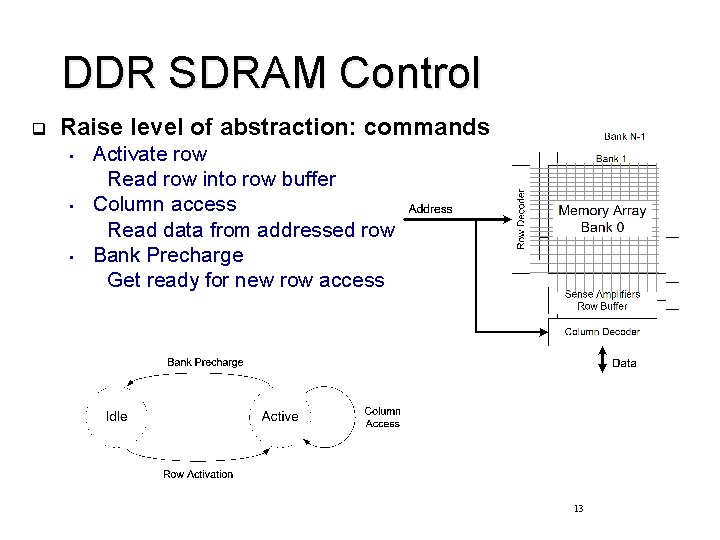

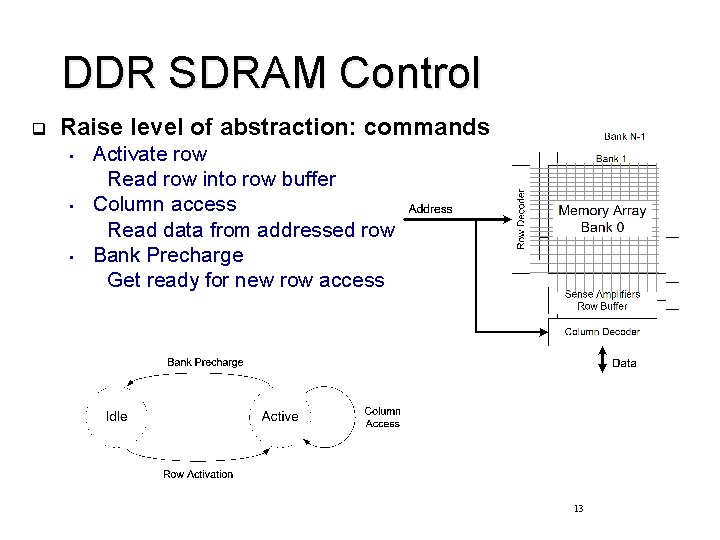

DDR SDRAM Control

- Raise level of abstraction: commands
  - Activate row: read row into row buffer
  - Column access: read data from addressed row
  - Bank precharge: get ready for new row access

DDR SDRAM Timing

- Read access

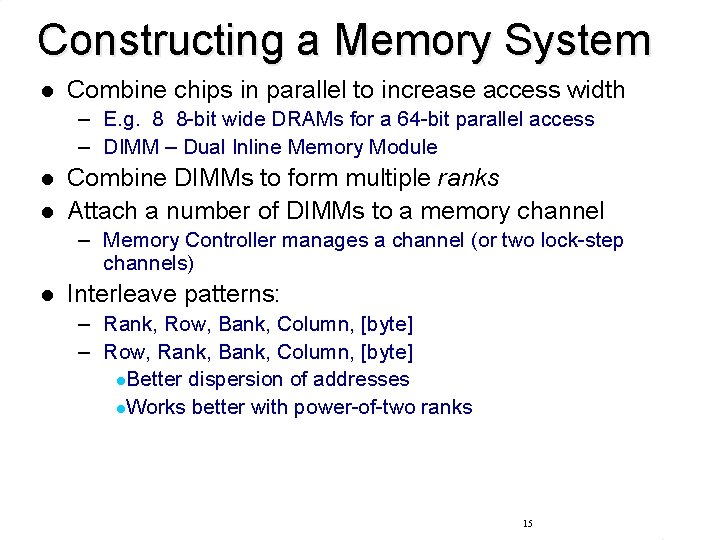

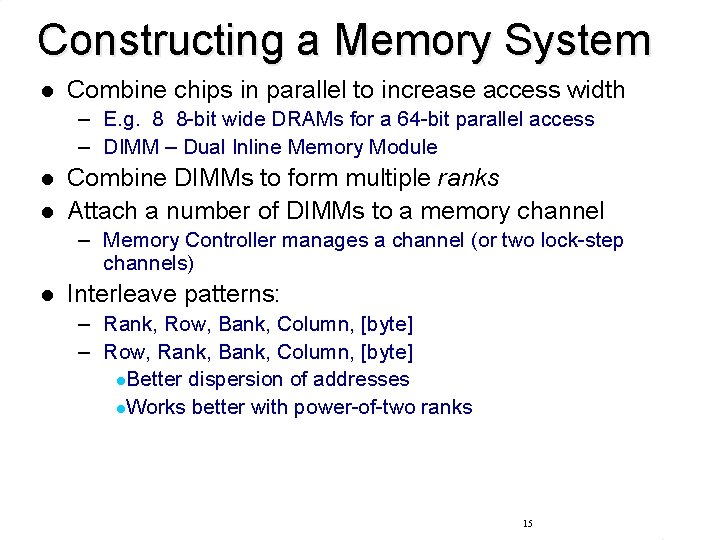

Constructing a Memory System

- Combine chips in parallel to increase access width
  - E.g., eight 8-bit-wide DRAMs for a 64-bit parallel access
  - DIMM: Dual Inline Memory Module
- Combine DIMMs to form multiple ranks
- Attach a number of DIMMs to a memory channel
  - Memory controller manages a channel (or two lock-step channels)
- Interleave patterns:
  - Rank, Row, Bank, Column, [byte]
  - Row, Rank, Bank, Column, [byte]
    - Better dispersion of addresses
    - Works better with power-of-two ranks
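The two interleave patterns differ only in where the rank bits sit within the physical address. The sketch below decodes an address under each pattern; the field widths (1 rank bit, 14 row bits, 3 bank bits, 10 column bits, 3 byte bits) are hypothetical values picked for illustration, not taken from the lecture.

```python
def decode(addr, fields):
    """Split addr into named fields, listed from most to least significant."""
    out = {}
    shift = sum(bits for _, bits in fields)
    for name, bits in fields:
        shift -= bits
        out[name] = (addr >> shift) & ((1 << bits) - 1)
    return out


# Hypothetical field widths, for illustration only.
RANK_ROW = [("rank", 1), ("row", 14), ("bank", 3), ("column", 10), ("byte", 3)]
ROW_RANK = [("row", 14), ("rank", 1), ("bank", 3), ("column", 10), ("byte", 3)]

addr = 1 << 16  # first address just past the bank/column/byte fields
print(decode(addr, RANK_ROW))  # lands in a new row of the same rank
print(decode(addr, ROW_RANK))  # lands in the other rank
```

With the rank bits below the row bits, neighboring regions of the address space alternate between ranks, which is one way to read the "better dispersion" remark.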

Memory Controller and Channel

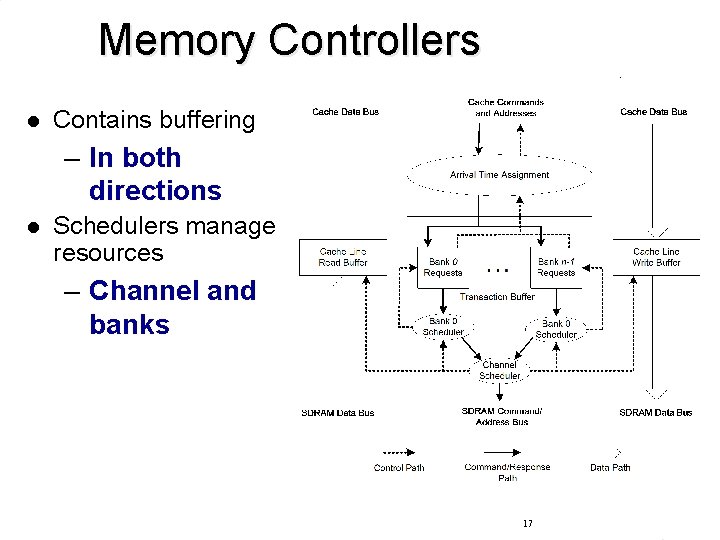

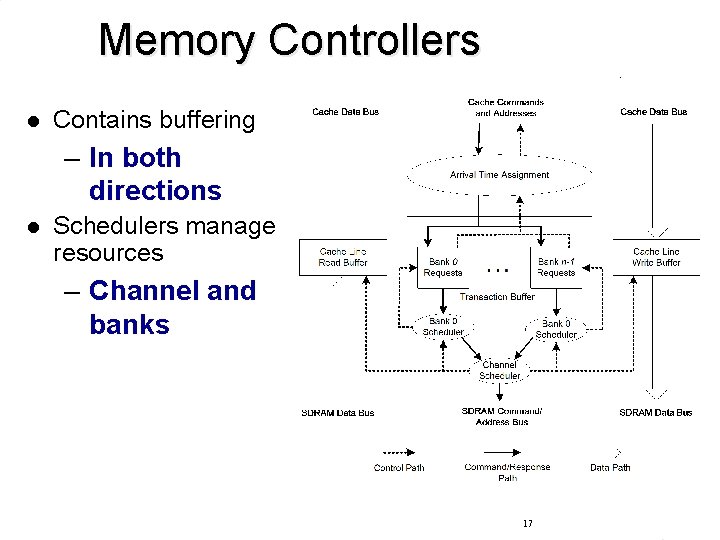

Memory Controllers

- Contains buffering
  - In both directions
- Schedulers manage resources
  - Channel and banks

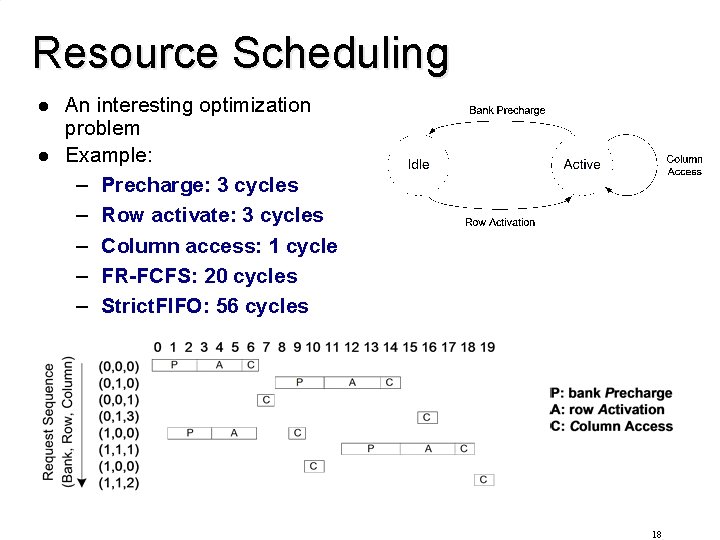

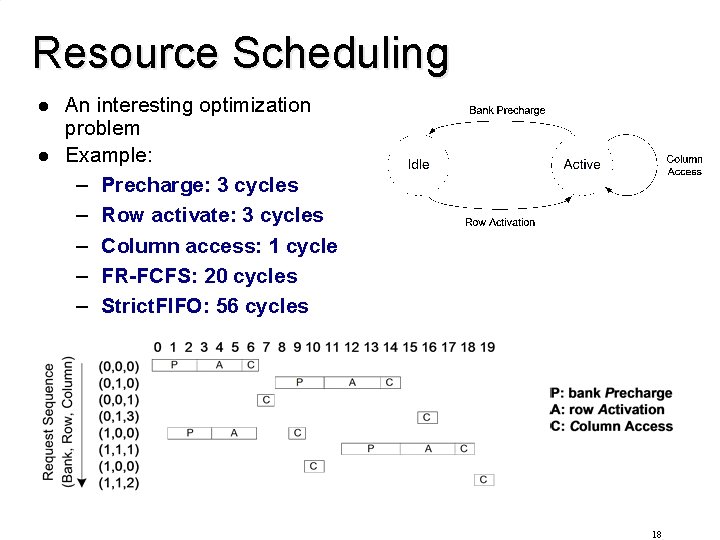

Resource Scheduling

- An interesting optimization problem
- Example:
  - Precharge: 3 cycles
  - Row activate: 3 cycles
  - Column access: 1 cycle
  - FR-FCFS: 20 cycles
  - Strict FIFO: 56 cycles
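A minimal sketch of the idea behind FR-FCFS (first-ready, first-come-first-served) for a single bank follows. It reuses the precharge, activate, and column-access latencies from the example, but the request stream is hypothetical, so it does not reproduce the 20-cycle and 56-cycle figures, which depend on the request pattern in the slide's figure. The model also assumes one bank that starts precharged, no command overlap, and no starvation control.

```python
PRECHARGE, ACTIVATE, COLUMN = 3, 3, 1  # cycle counts from the example above


def service_cycles(order):
    """Total cycles to serve row requests in the given order on one bank."""
    cycles, open_row = 0, None
    for row in order:
        if row != open_row:
            if open_row is not None:
                cycles += PRECHARGE  # close the currently open row
            cycles += ACTIVATE       # open the requested row
            open_row = row
        cycles += COLUMN
    return cycles


def fr_fcfs(requests):
    """Serve the oldest request to the open row if one exists, else the oldest overall."""
    pending, order, open_row = list(requests), [], None
    while pending:
        pick = open_row if open_row in pending else pending[0]
        pending.remove(pick)  # removes the oldest matching request
        order.append(pick)
        open_row = pick
    return order


requests = [0, 1, 0, 1]  # hypothetical stream of row numbers
print(service_cycles(requests), service_cycles(fr_fcfs(requests)))
```

Reordering groups requests to the same row, so the precharge and activate costs are paid once per row instead of once per request.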

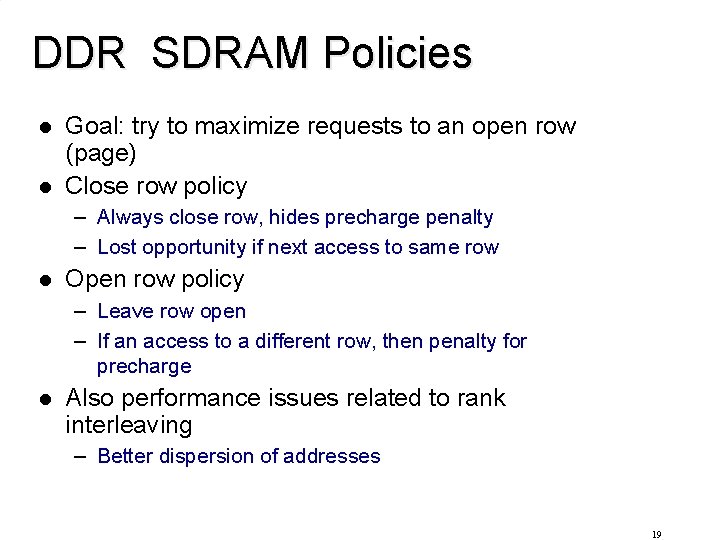

DDR SDRAM Policies

- Goal: try to maximize requests to an open row (page)
- Close row policy
  - Always close row, hides precharge penalty
  - Lost opportunity if next access to same row
- Open row policy
  - Leave row open
  - If an access to a different row, then penalty for precharge
- Also performance issues related to rank interleaving
  - Better dispersion of addresses
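The trade-off between the two policies can be framed as a break-even row-hit rate. The sketch below is a simplified average-latency model, not a statement about any real controller: it assumes the close-row policy hides precharge completely, that an open-row miss pays precharge plus activate plus column access, and it borrows the 3/3/1 cycle counts as default values.

```python
def open_row_latency(hit_rate, precharge=3, activate=3, column=1):
    """Expected cycles per access when rows are left open."""
    miss = precharge + activate + column
    return hit_rate * column + (1 - hit_rate) * miss


def close_row_latency(activate=3, column=1):
    """Cycles per access when every row is closed early (precharge assumed hidden)."""
    return activate + column


def break_even_hit_rate(precharge=3, activate=3):
    """Row-hit rate at which both policies have equal expected latency."""
    return precharge / (precharge + activate)


print(break_even_hit_rate(), open_row_latency(0.8), close_row_latency())
```

Under these assumptions, the open-row policy wins whenever the row-hit rate exceeds precharge / (precharge + activate).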

Memory Scheduling Contest

- http://www.cs.utah.edu/~rajeev/jwac12/
- Clean, simple infrastructure
- Traces provided
- Very easy to make fair comparisons
- Comes with 6 schedulers
- Also targets power-down modes (not just page open/close scheduling)
- Three tracks:
  1. Delay (or Performance)
  2. Energy-Delay Product (EDP)
  3. Performance-Fairness Product (PFP)

![Future: Hybrid Memory Cube (Micron proposal; Pawlowski, Hot Chips 11)](https://slidetodoc.com/presentation_image/06355b55ac0d9740c859b9b381835cc6/image-21.jpg)

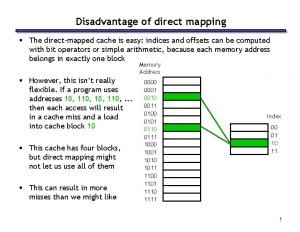

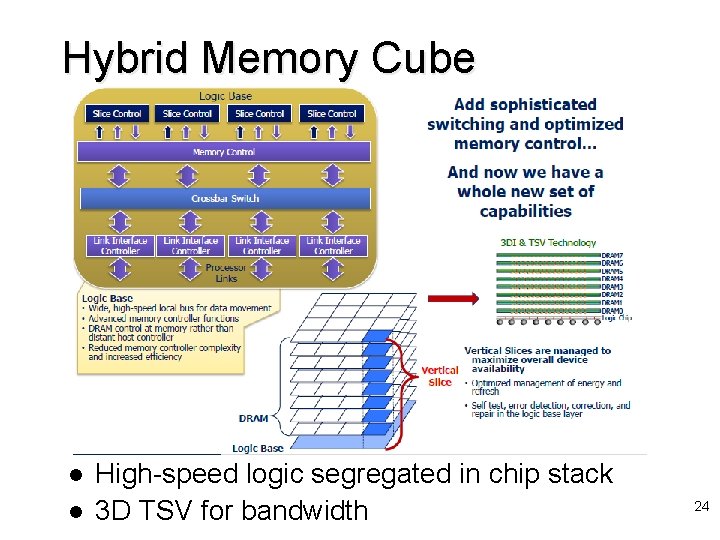

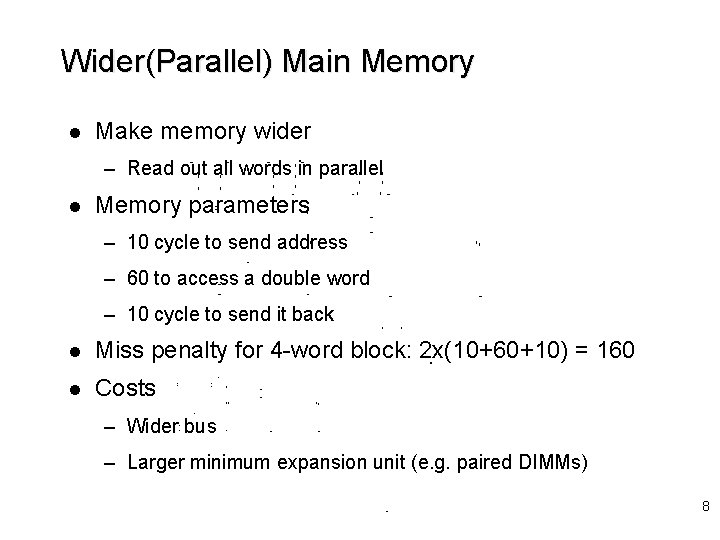

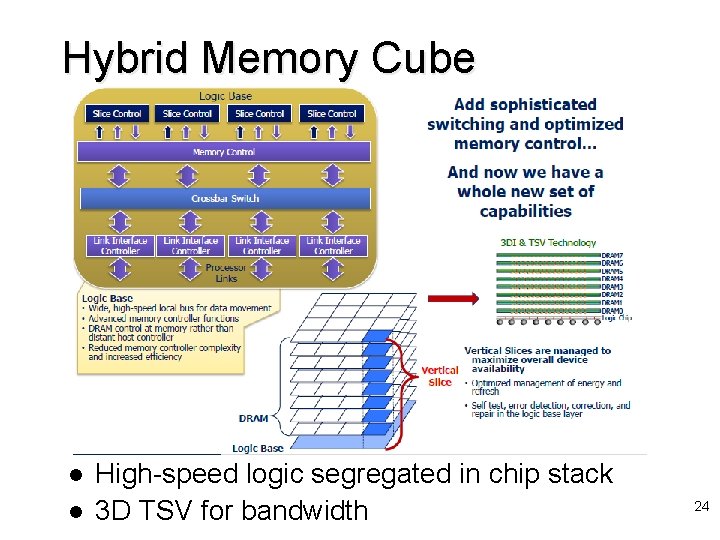

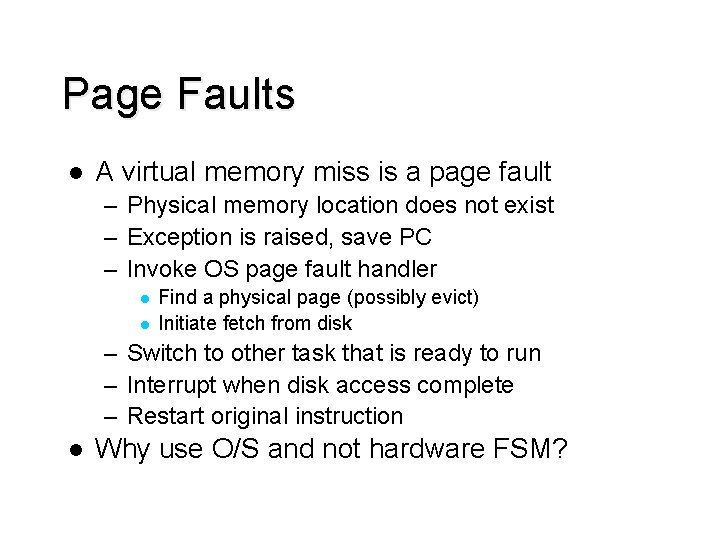

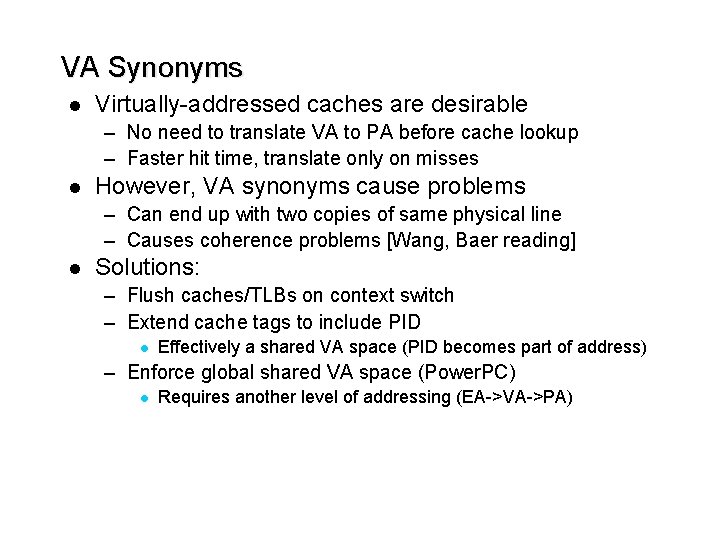

Future: Hybrid Memory Cube

- Micron proposal [Pawlowski, Hot Chips 11]
  - www.hybridmemorycube.org

![Hybrid Memory Cube MCM (Micron proposal; Pawlowski, Hot Chips 11)](https://slidetodoc.com/presentation_image/06355b55ac0d9740c859b9b381835cc6/image-22.jpg)

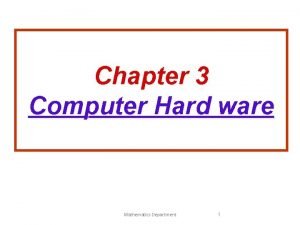

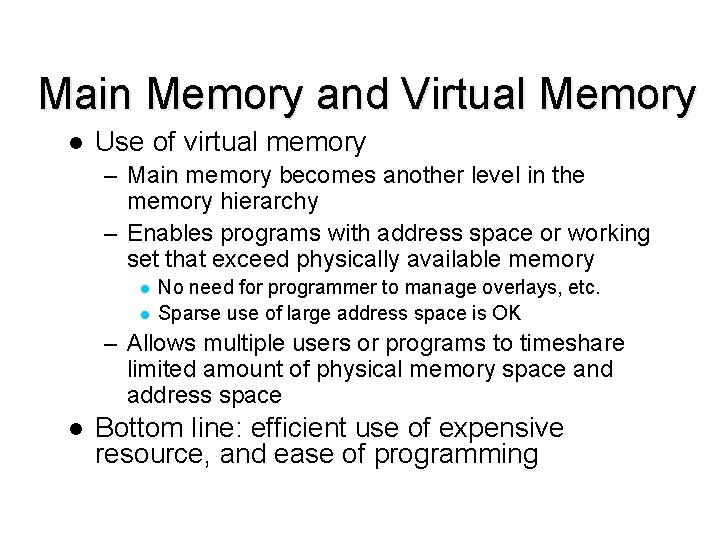

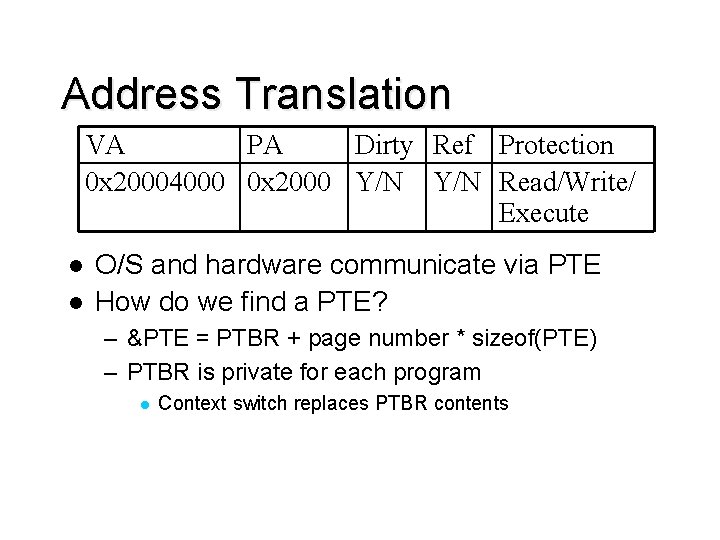

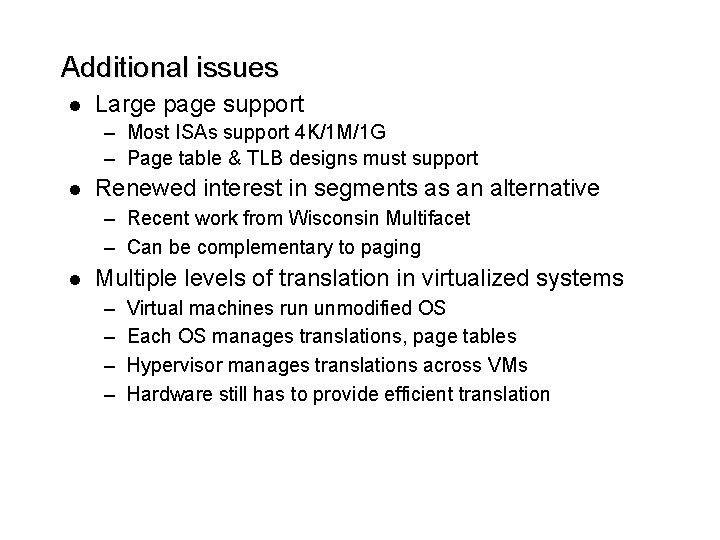

Hybrid Memory Cube MCM

- Micron proposal [Pawlowski, Hot Chips 11]
  - www.hybridmemorycube.org

Network of DRAM

- Traditional DRAM: star topology
- HMC: mesh, etc. are feasible

Hybrid Memory Cube

- High-speed logic segregated in chip stack
- 3D TSV for bandwidth

Future: Resistive memory

- PCM: store bit in phase state of material
- Alternatives:
  - Memristor (HP Labs)
  - STT-MRAM
- Nonvolatile
- Dense: crosspoint architecture (no access device)
- Relatively fast for read
- Very slow for write (also high power)
- Write endurance often limited
  - Write leveling (also done for flash)
  - Avoid redundant writes (read, cmp, write)
  - Fix individual bit errors (write, read, cmp, fix)

Main Memory and Virtual Memory

- Use of virtual memory
  - Main memory becomes another level in the memory hierarchy
  - Enables programs whose address space or working set exceeds physically available memory
    - No need for programmer to manage overlays, etc.
    - Sparse use of large address space is OK
  - Allows multiple users or programs to timeshare a limited amount of physical memory space and address space
- Bottom line: efficient use of an expensive resource, and ease of programming

Virtual Memory

- Enables
  - Use more memory than system has
  - Think program is only one running
    - Don’t have to manage address space usage across programs
    - E.g., think it always starts at address 0x0
  - Memory protection
    - Each program has private VA space: no one else can clobber it
  - Better performance
    - Start running a large program before all of it has been loaded from disk

Virtual Memory – Placement

- Main memory managed in larger blocks
  - Page size typically 4K to 16K
- Fully flexible placement; fully associative
  - Operating system manages placement
  - Indirection through page table
  - Maintain mapping between:
    - Virtual address (seen by programmer)
    - Physical address (seen by main memory)

Virtual Memory – Placement

- Fully associative implies expensive lookup?
  - In caches, yes: check multiple tags in parallel
- In virtual memory, expensive lookup is avoided by using a level of indirection
  - Lookup table or hash table
  - Called a page table

Virtual Memory – Identification

| Virtual Address | Physical Address | Dirty bit |
| --- | --- | --- |
| 0x20004000 | 0x2000 | Y/N |

- Similar to cache tag array
  - Page table entry contains VA, PA, dirty bit
- Virtual address:
  - Matches programmer view; based on register values
  - Can be the same for multiple programs sharing same system, without conflicts
- Physical address:
  - Invisible to programmer, managed by O/S
  - Created/deleted on demand basis, can change

Virtual Memory – Replacement

- Similar to caches:
  - FIFO
  - LRU; overhead too high
    - Approximated with reference bit checks
    - “Clock algorithm” intermittently clears all bits
  - Random
- O/S decides, manages
  - CS 537
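Reference-bit approximations of LRU come in several flavors. The sketch below is the textbook second-chance variant, in which a sweeping hand clears reference bits one frame at a time, rather than the "clear all bits intermittently" scheme named on the slide. The class name, method names, and the choice to set the reference bit on insertion are illustrative assumptions.

```python
class ClockReplacer:
    """Second-chance (clock) page replacement over a fixed set of frames."""

    def __init__(self, num_frames):
        self.pages = [None] * num_frames   # page held by each frame
        self.ref = [False] * num_frames    # reference bit per frame
        self.hand = 0

    def access(self, page):
        """Touch a page; return the evicted page, or None if nothing was evicted."""
        if page in self.pages:
            self.ref[self.pages.index(page)] = True
            return None
        while True:
            if self.pages[self.hand] is None or not self.ref[self.hand]:
                victim = self.pages[self.hand]
                self.pages[self.hand] = page
                self.ref[self.hand] = True
                self.hand = (self.hand + 1) % len(self.pages)
                return victim
            self.ref[self.hand] = False    # give this frame a second chance
            self.hand = (self.hand + 1) % len(self.pages)


clock = ClockReplacer(3)
for p in (1, 2, 3, 4, 2, 5):
    print(p, "evicts", clock.access(p))
```

A page that was referenced since the hand last passed survives one more sweep, which approximates recency without maintaining a full LRU ordering.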

Virtual Memory – Write Policy

- Write back
  - Disks are too slow to write through
- Page table maintains dirty bit
  - Hardware must set dirty bit on first write
  - O/S checks dirty bit on eviction
  - Dirty pages written to backing store
    - Disk write, 10+ ms

Virtual Memory Implementation

- Caches have fixed policies, hardware FSM for control, pipeline stall
- VM has very different miss penalties
  - Remember disks are 10+ ms!
- Hence engineered differently

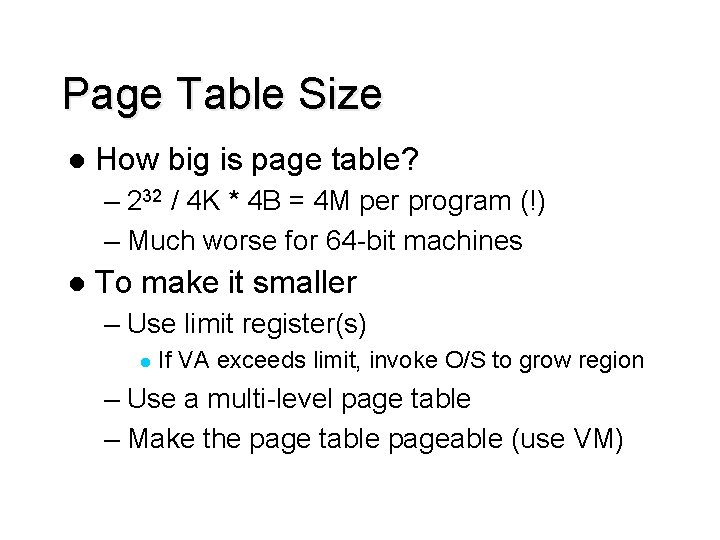

Page Faults

- A virtual memory miss is a page fault
  - Physical memory location does not exist
  - Exception is raised, save PC
  - Invoke OS page fault handler
    - Find a physical page (possibly evict)
    - Initiate fetch from disk
  - Switch to other task that is ready to run
  - Interrupt when disk access complete
  - Restart original instruction
- Why use O/S and not hardware FSM?

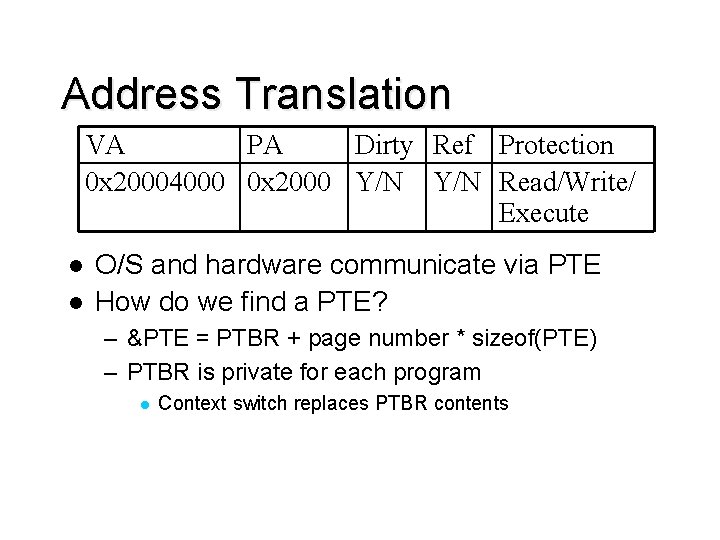

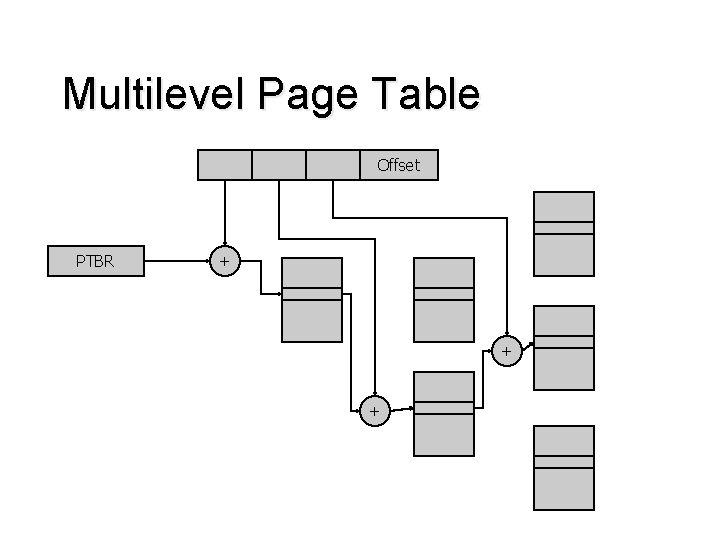

Address Translation

| VA | PA | Dirty | Ref | Protection |
| --- | --- | --- | --- | --- |
| 0x20004000 | 0x2000 | Y/N | Y/N | Read/Write/Execute |

- O/S and hardware communicate via PTE
- How do we find a PTE?
  - &PTE = PTBR + page number * sizeof(PTE)
  - PTBR is private for each program
    - Context switch replaces PTBR contents

Address Translation

[Figure labels: Virtual Page Number, Offset, PTBR, +, D, VA, PA]
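The lookup formula `&PTE = PTBR + page number * sizeof(PTE)` can be sketched in Python as follows. The 4 KB page size and 4-byte PTE are assumptions, the PTBR value is made up, and the dictionary stands in for the in-memory page table; none of the function names come from the lecture.

```python
PAGE_SIZE = 4096  # assumed 4 KB pages
PTE_SIZE = 4      # assumed 4-byte page table entries


def pte_address(ptbr, va):
    """Address of the PTE for va in a flat (single-level) page table."""
    vpn = va // PAGE_SIZE
    return ptbr + vpn * PTE_SIZE


def translate(page_table, va):
    """Map va to a physical address; page_table maps VPN -> physical page number."""
    vpn, offset = divmod(va, PAGE_SIZE)
    return page_table[vpn] * PAGE_SIZE + offset


ptbr = 0x100000                 # hypothetical page table base
page_table = {0x20004: 0x2}     # VA 0x20004000 maps to PA 0x2000, as in the example entry
print(hex(pte_address(ptbr, 0x20004000)), hex(translate(page_table, 0x20004ABC)))
```

Only the virtual page number is translated; the page offset is carried over unchanged into the physical address.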

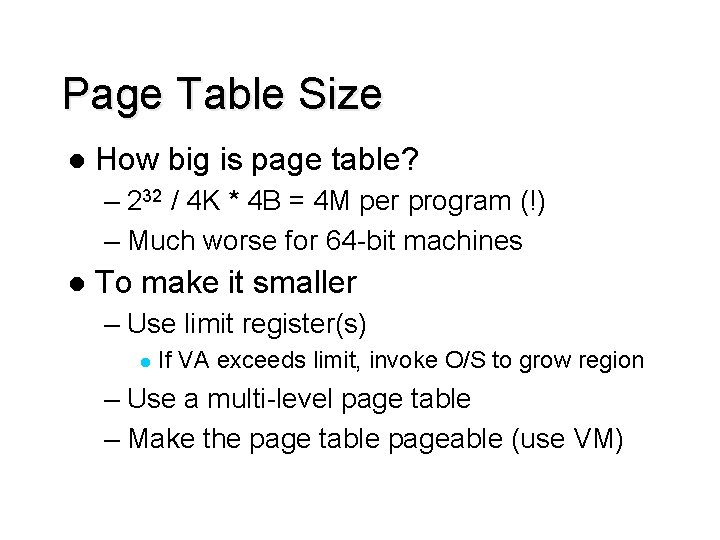

Page Table Size

- How big is the page table?
  - 2^32 / 4K * 4B = 4 MB per program (!)
  - Much worse for 64-bit machines
- To make it smaller
  - Use limit register(s)
    - If VA exceeds limit, invoke O/S to grow region
  - Use a multi-level page table
  - Make the page table pageable (use VM)
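The size estimate for a flat page table can be reproduced directly. The function name is illustrative; the 4 KB page and 4-byte PTE match the slide's arithmetic, and the 64-bit case assumes the full 64-bit virtual address is mapped with the same parameters.

```python
def flat_page_table_bytes(va_bits, page_bytes=4096, pte_bytes=4):
    """Size of a single-level page table with one PTE per virtual page."""
    return (2 ** va_bits // page_bytes) * pte_bytes


print(flat_page_table_bytes(32) // 2 ** 20, "MiB for a 32-bit address space")
print(flat_page_table_bytes(64) // 2 ** 50, "PiB for a 64-bit address space")
```

The 64-bit figure shows why a flat table is impractical there and why limit registers, multi-level tables, or pageable page tables are used instead.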

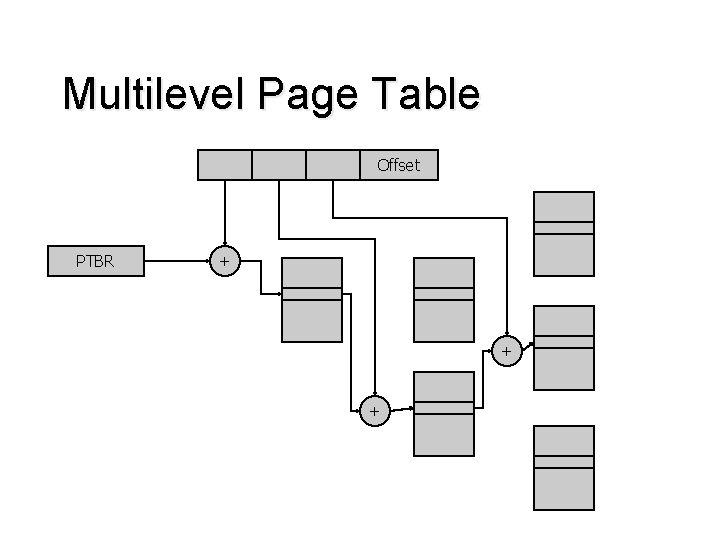

Multilevel Page Table

[Figure: multilevel page table walk; labels: PTBR, Offset, three adders (+)]
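A multilevel table walk can be sketched with nested dictionaries standing in for the per-level tables. The 10/10/12 bit split is an assumption (a common choice for 32-bit addresses with 4 KB pages), and `PageFault` and `walk` are hypothetical names.

```python
class PageFault(Exception):
    """Raised when a level of the table has no entry for the address."""


def walk(root, va, level_bits=(10, 10), offset_bits=12):
    """Translate va by indexing one table per level, starting at root."""
    offset = va & ((1 << offset_bits) - 1)
    shift = offset_bits + sum(level_bits)
    node = root
    for bits in level_bits:
        shift -= bits
        index = (va >> shift) & ((1 << bits) - 1)
        if index not in node:
            raise PageFault(hex(va))
        node = node[index]          # next-level table, or the frame number at the leaf
    return (node << offset_bits) | offset


# Only the tables that are actually used need to exist.
root = {0x80: {0x4: 0x2}}
print(hex(walk(root, 0x20004ABC)))
```

Unused regions of the address space cost nothing beyond a missing entry in an upper-level table, which is how the multilevel organization shrinks the table for sparse address spaces.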

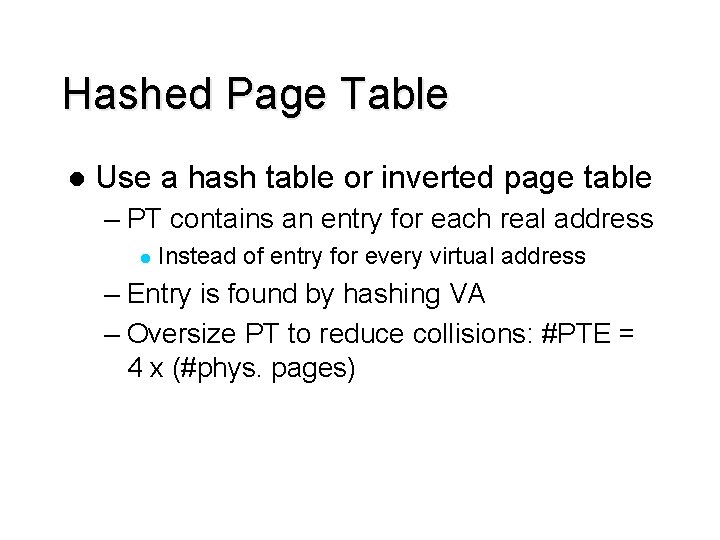

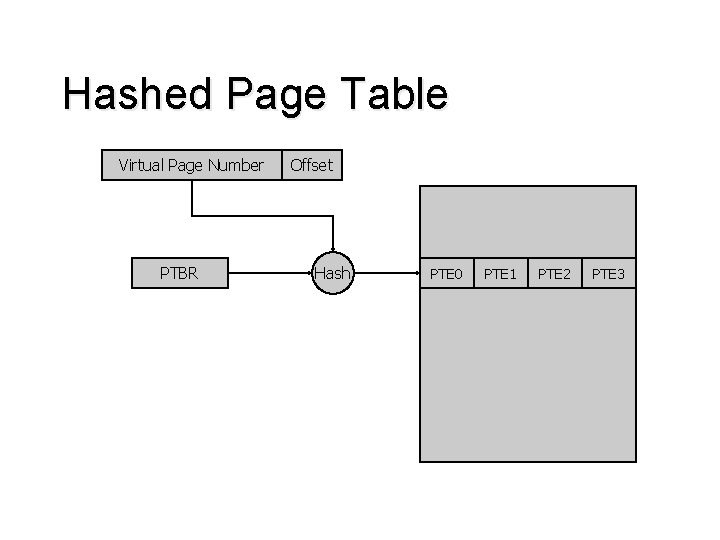

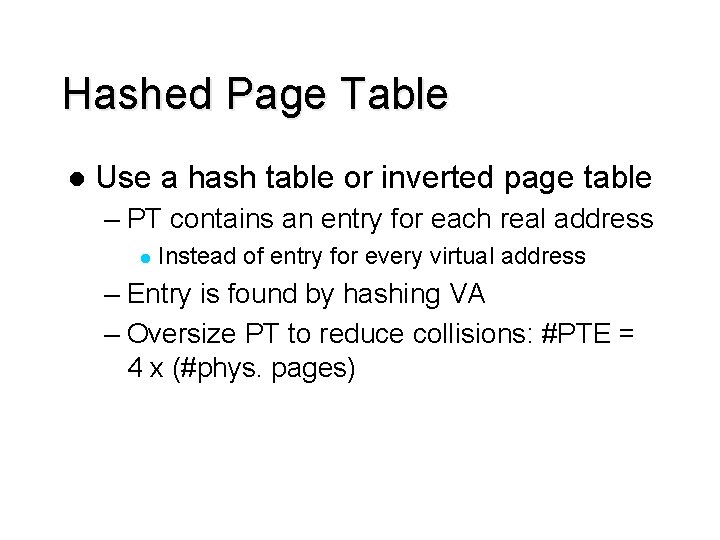

Hashed Page Table

- Use a hash table or inverted page table
  - PT contains an entry for each real address
    - Instead of an entry for every virtual address
  - Entry is found by hashing VA
  - Oversize PT to reduce collisions: #PTE = 4 x (#phys. pages)

Hashed Page Table

[Figure: hashed page table lookup; labels: Virtual Page Number, Offset, PTBR, Hash, PTE0, PTE1, PTE2, PTE3]
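A hashed page table sized at four entries per physical page might look like the sketch below. The collision handling (linear probing), the trivial hash (VPN modulo table size), and all names are assumptions made for illustration; real designs use stronger hashes and often chain entries, and this sketch omits deletion.

```python
class HashedPageTable:
    """Hash table of (VPN, PFN) pairs sized relative to physical memory."""

    def __init__(self, num_phys_pages, oversize=4):
        self.slots = [None] * (oversize * num_phys_pages)

    def _probe(self, vpn):
        start = vpn % len(self.slots)          # toy hash function
        return ((start + i) % len(self.slots) for i in range(len(self.slots)))

    def insert(self, vpn, pfn):
        for s in self._probe(vpn):
            if self.slots[s] is None or self.slots[s][0] == vpn:
                self.slots[s] = (vpn, pfn)
                return
        raise RuntimeError("page table full")

    def lookup(self, vpn):
        """Return the PFN for vpn, or None if the page is not mapped."""
        for s in self._probe(vpn):
            if self.slots[s] is None:
                return None
            if self.slots[s][0] == vpn:
                return self.slots[s][1]
        return None


pt = HashedPageTable(num_phys_pages=2)   # 8 slots
pt.insert(3, 0)
pt.insert(11, 1)                         # collides with VPN 3, lands in the next slot
print(pt.lookup(3), pt.lookup(11), pt.lookup(19))
```

Each entry stores the VPN as a tag because several virtual pages can hash to the same slot; oversizing the table keeps probe sequences short.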

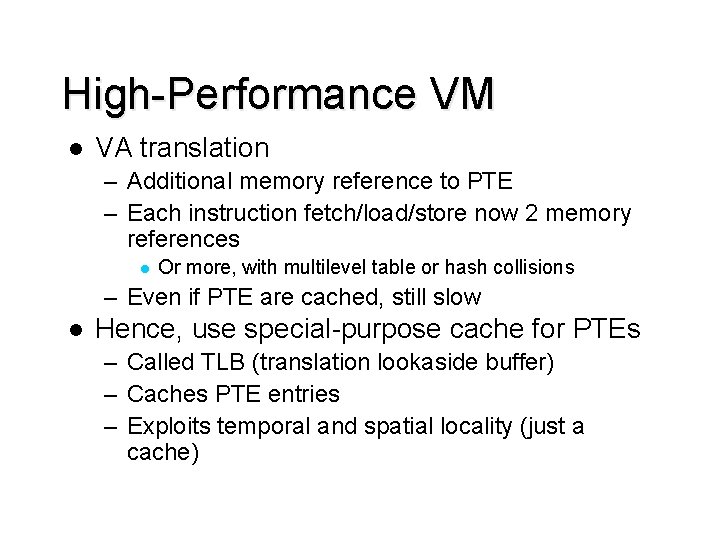

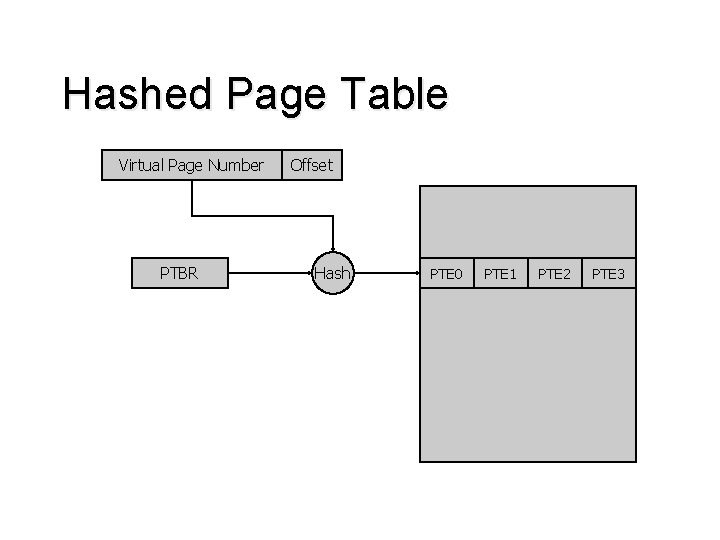

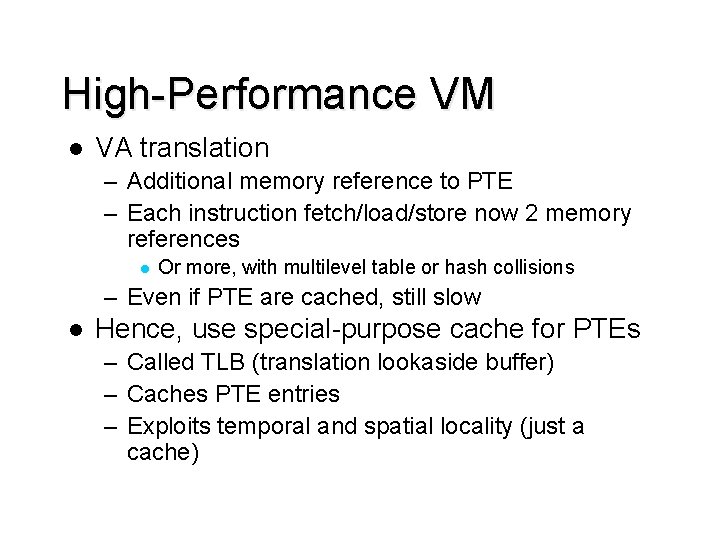

High-Performance VM

- VA translation
  - Additional memory reference to PTE
  - Each instruction fetch/load/store now 2 memory references
    - Or more, with multilevel table or hash collisions
  - Even if PTEs are cached, still slow
- Hence, use special-purpose cache for PTEs
  - Called TLB (translation lookaside buffer)
  - Caches PTE entries
  - Exploits temporal and spatial locality (just a cache)
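The effect of a TLB can be sketched as a small cache in front of the page table. The sketch below assumes a fully associative TLB with LRU replacement and a capacity of at least one entry, and it uses a dictionary as the page table; these choices and all names are illustrative.

```python
from collections import OrderedDict


class TLB:
    """Fully associative TLB with LRU replacement (an illustrative model)."""

    def __init__(self, capacity, page_table, page_size=4096):
        self.capacity = capacity
        self.page_table = page_table      # VPN -> PFN, stands in for the in-memory table
        self.page_size = page_size
        self.entries = OrderedDict()
        self.hits = self.misses = 0

    def translate(self, va):
        vpn, offset = divmod(va, self.page_size)
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)             # mark as most recently used
        else:
            self.misses += 1                          # would cost a page table access
            self.entries[vpn] = self.page_table[vpn]
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)      # evict least recently used
        return self.entries[vpn] * self.page_size + offset


tlb = TLB(capacity=1, page_table={0: 7, 1: 3})
for va in (0x10, 0x20, 0x1000, 0x0):
    print(hex(va), "->", hex(tlb.translate(va)))
print(tlb.hits, tlb.misses)
```

Accesses within the same page hit in the TLB and skip the extra memory reference to the PTE, which is the locality the slide refers to.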

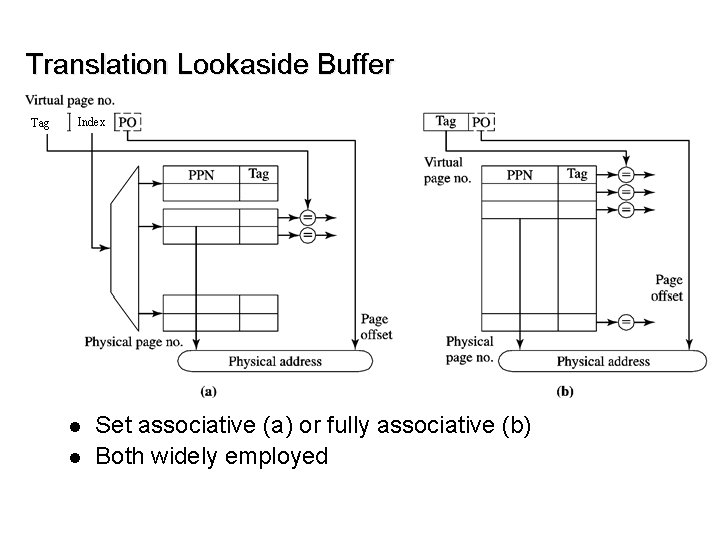

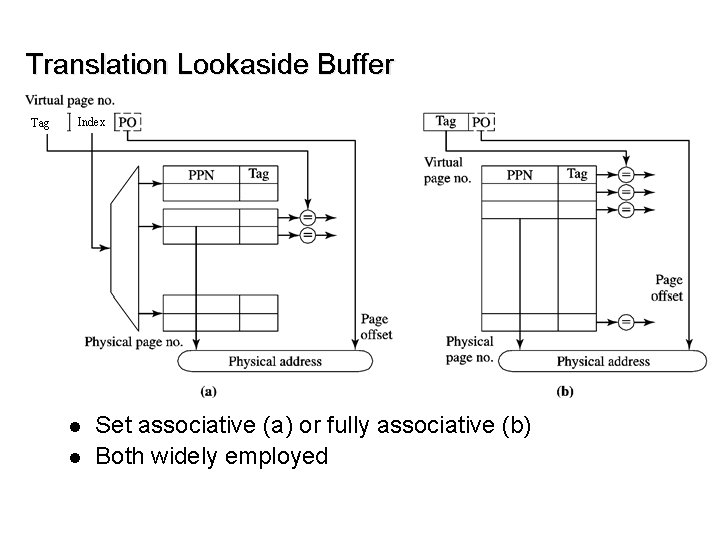

Translation Lookaside Buffer

[Figure labels: Tag, Index]

- Set associative (a) or fully associative (b)
- Both widely employed

Interaction of TLB and Cache

- Serial lookup: first TLB then D-cache
- Excessive cycle time

Virtually Indexed Physically Tagged L1

- Parallel lookup of TLB and cache
- Faster cycle time
- Index bits must be untranslated
  - Restricts size of n-associative cache to n x (virtual page size)
  - E.g., a 4-way SA cache with 4 KB pages has a max. size of 16 KB
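The size restriction follows from requiring that the set index and block offset fit inside the page offset, so that each way holds at most one page. A small sketch, with illustrative function names:

```python
def max_vipt_cache_bytes(associativity, page_bytes):
    """Largest cache whose index bits all come from the untranslated page offset."""
    return associativity * page_bytes


def index_is_untranslated(cache_bytes, associativity, page_bytes):
    """True if one way of the cache is no larger than a page."""
    return cache_bytes // associativity <= page_bytes


print(max_vipt_cache_bytes(4, 4096))                 # the 4-way, 4 KB page example
print(index_is_untranslated(32 * 1024, 4, 4096))     # too big at 4 ways
print(index_is_untranslated(32 * 1024, 8, 4096))     # fits at 8 ways
```

Raising associativity is therefore one way to grow a virtually indexed, physically tagged cache without increasing the page size.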

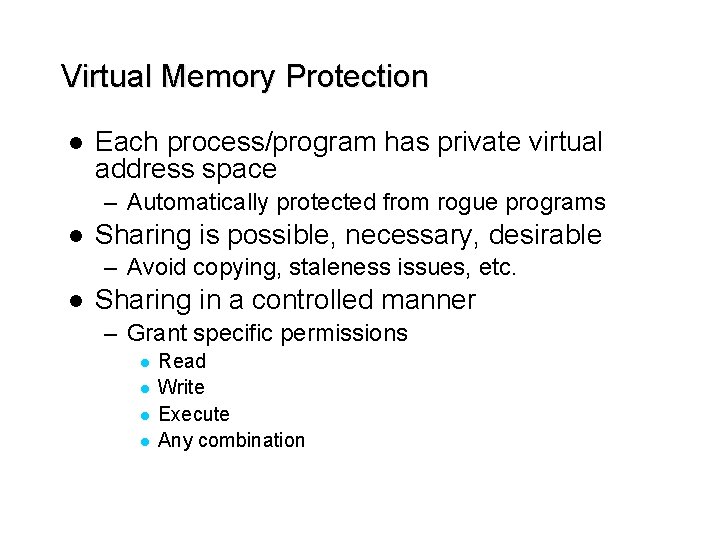

Virtual Memory Protection

- Each process/program has private virtual address space
  - Automatically protected from rogue programs
- Sharing is possible, necessary, desirable
  - Avoid copying, staleness issues, etc.
- Sharing in a controlled manner
  - Grant specific permissions
    - Read
    - Write
    - Execute
    - Any combination

Protection

- Process model
  - Privileged kernel
  - Independent user processes
- Privileges vs. policy
  - Architecture provided primitives
  - OS implements policy
  - Problems arise when h/w implements policy
- Separate policy from mechanism!

Protection Primitives

- User vs. kernel
  - At least one privileged mode
  - Usually implemented as mode bits
- How do we switch to kernel mode?
  - Protected “gates” or system calls
  - Change mode and continue at pre-determined address
- Hardware to compare mode bits to access rights
  - Only access certain resources in kernel mode
  - E.g., modify page mappings

Protection Primitives

- Base and bounds
  - Privileged registers: base <= address <= bounds
- Segmentation
  - Multiple base and bound registers
  - Protection bits for each segment
- Page-level protection (most widely used)
  - Protection bits in page table entry
  - Cache them in TLB for speed
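Both the base-and-bounds check and a page-level permission check reduce to simple comparisons. In the sketch below, the `Perm` flag names mirror the read/write/execute permissions discussed in these notes, while the function names and encoding are illustrative assumptions rather than any particular ISA's format.

```python
from enum import Flag, auto


class Perm(Flag):
    READ = auto()
    WRITE = auto()
    EXECUTE = auto()


def in_bounds(base, bounds, address):
    """Base-and-bounds check: base <= address <= bounds."""
    return base <= address <= bounds


def access_allowed(page_perms, requested):
    """Page-level check: every requested permission must be granted by the PTE."""
    return (page_perms & requested) == requested


code_page = Perm.READ | Perm.EXECUTE
print(in_bounds(0x1000, 0x1FFF, 0x1800), access_allowed(code_page, Perm.WRITE))
```

In hardware, the permission bits would be read from the TLB entry alongside the translation, so the check adds no extra memory access.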

VM Sharing

- Share memory locations by:
  - Map shared physical location into both address spaces:
    - E.g., PA 0xC00DA becomes:
      - VA 0x2D000DA for process 0
      - VA 0x4D000DA for process 1
  - Either process can read/write shared location
- However, causes synonym problem

VA Synonyms

- Virtually-addressed caches are desirable
  - No need to translate VA to PA before cache lookup
  - Faster hit time, translate only on misses
- However, VA synonyms cause problems
  - Can end up with two copies of same physical line
  - Causes coherence problems [Wang, Baer reading]
- Solutions:
  - Flush caches/TLBs on context switch
  - Extend cache tags to include PID
    - Effectively a shared VA space (PID becomes part of address)
  - Enforce global shared VA space (PowerPC)
    - Requires another level of addressing (EA->VA->PA)

Additional issues

- Large page support
  - Most ISAs support 4K/1M/1G
  - Page table & TLB designs must support them
- Renewed interest in segments as an alternative
  - Recent work from Wisconsin Multifacet
  - Can be complementary to paging
- Multiple levels of translation in virtualized systems
  - Virtual machines run unmodified OS
  - Each OS manages translations, page tables
  - Hypervisor manages translations across VMs
  - Hardware still has to provide efficient translation

Summary: Main Memory

- DRAM chips
- Memory organization
  - Interleaving
  - Banking
- Memory controller design
- Hybrid Memory Cube
- Phase Change Memory (reading)
- Virtual memory
- TLBs
- Interaction of caches and virtual memory (Baer et al.)
- Large pages, virtualization

Mikko lipasti

Mikko lipasti Mikko h. lipasti

Mikko h. lipasti Mikko lipasti

Mikko lipasti Pri register

Pri register Mikko h. lipasti

Mikko h. lipasti Ece 751

Ece 751 Mikko h. lipasti

Mikko h. lipasti Mikko lipasti

Mikko lipasti Mikko h. lipasti

Mikko h. lipasti Mikko lipasti

Mikko lipasti Mikko lipasti

Mikko lipasti Mikko lipasti

Mikko lipasti Sgt gaedeke

Sgt gaedeke Rocky slowly got up from the mat

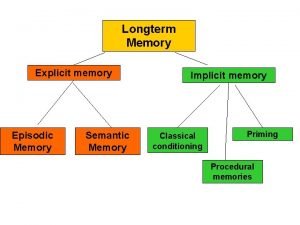

Rocky slowly got up from the mat Excplicit memory

Excplicit memory Long term memory vs short term memory

Long term memory vs short term memory Internal memory and external memory

Internal memory and external memory Primary memory and secondary memory

Primary memory and secondary memory Logical versus physical address space

Logical versus physical address space Which memory is the actual working memory?

Which memory is the actual working memory? Virtual memory

Virtual memory Virtual memory in memory hierarchy consists of

Virtual memory in memory hierarchy consists of Eidetic memory vs iconic memory

Eidetic memory vs iconic memory Shared vs distributed memory

Shared vs distributed memory Hh embryo

Hh embryo Mikko kesonen kuopio

Mikko kesonen kuopio Mikko posti

Mikko posti Mikko häikiö

Mikko häikiö Mikko leitsamo

Mikko leitsamo Mikko juusela

Mikko juusela Mikko manka tampereen yliopisto

Mikko manka tampereen yliopisto Potentiometric accelerometer

Potentiometric accelerometer Mikko vienonen

Mikko vienonen Modulaarinen tuotekehitys

Modulaarinen tuotekehitys Mikko karppinen

Mikko karppinen Mikko lappalainen

Mikko lappalainen Mikko keränen kamk

Mikko keränen kamk Mikko mäkelä metropolia

Mikko mäkelä metropolia Mikko ranta njs

Mikko ranta njs Mikko ulander

Mikko ulander Mikko lindeman

Mikko lindeman Mikko tiira

Mikko tiira Mikko nieminen

Mikko nieminen Mikko bentlin

Mikko bentlin Sote kokonaisarkkitehtuuri

Sote kokonaisarkkitehtuuri Mikko routala

Mikko routala System by mikko

System by mikko Ked ferno

Ked ferno Ericsson psirt

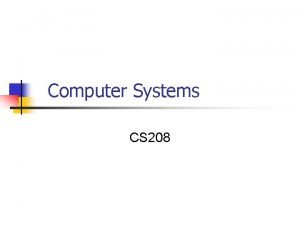

Ericsson psirt Drawback of direct mapping

Drawback of direct mapping Two kinds of main memory are

Two kinds of main memory are Internal memory rom

Internal memory rom Major components of computer system

Major components of computer system