Voice DSP Processing III Yaakov J Stein Chief

![Special Excitations Shift technique reduces random CB operations from O(N 2) to O(N( ]a Special Excitations Shift technique reduces random CB operations from O(N 2) to O(N( ]a](https://slidetodoc.com/presentation_image_h2/c15ebded124301d35abee017547b859b/image-31.jpg)

- Slides: 54

Voice DSP Processing III Yaakov J. Stein Chief Scientist RAD Data Communications Stein Voice. DSP 3. 1

Voice DSP Part 1 Speech biology and what we can learn from it Part 2 Speech DSP (AGC, VAD, features, echo cancellation) Part 3 Speech compression techiques Part 4 Speech Recognition Stein Voice. DSP 3. 2

Voice DSP - Part 3 Simple coders – G. 711 A-law m-law – Delta – ADPCM Other methods – MBE – MELP – STC – Waveform Interpolation CELP coders – LPC-10 – RELP/GSM – CELP Stein Voice. DSP 3. 3

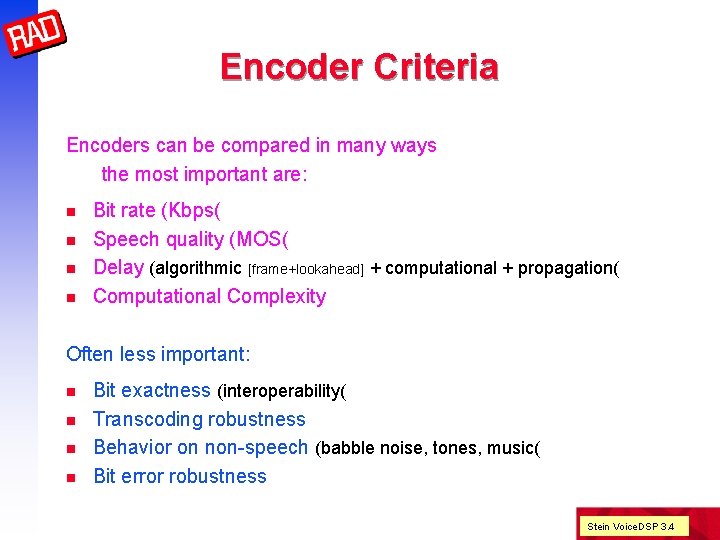

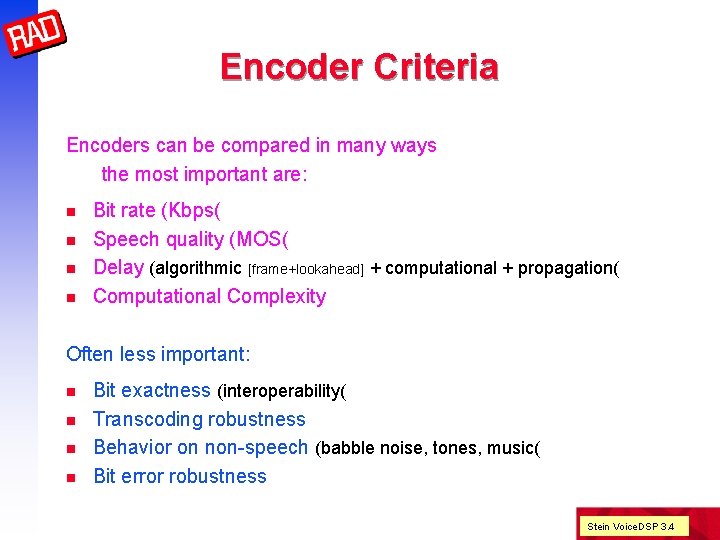

Encoder Criteria Encoders can be compared in many ways the most important are: n n Bit rate (Kbps( Speech quality (MOS( Delay (algorithmic [frame+lookahead] + computational + propagation( Computational Complexity Often less important: n n Bit exactness (interoperability( Transcoding robustness Behavior on non-speech (babble noise, tones, music( Bit error robustness Stein Voice. DSP 3. 4

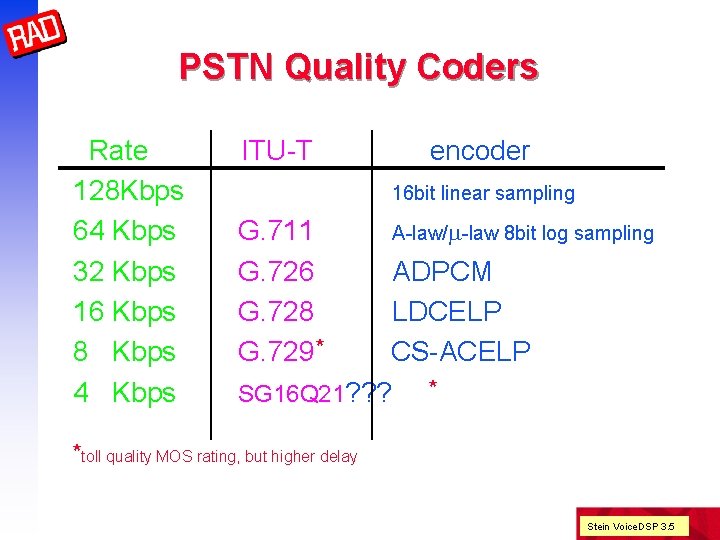

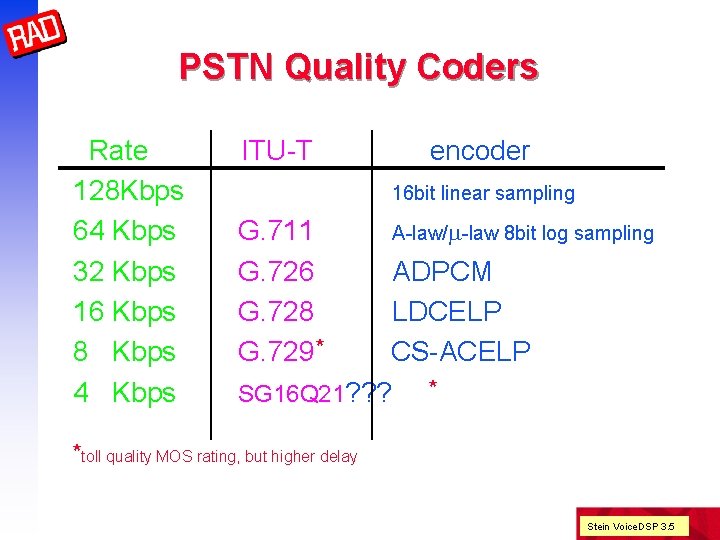

PSTN Quality Coders Rate 128 Kbps 64 Kbps 32 Kbps 16 Kbps 8 Kbps 4 Kbps ITU-T encoder 16 bit linear sampling G. 711 G. 726 G. 728 G. 729* A-law/m-law 8 bit log sampling ADPCM LDCELP CS-ACELP * SG 16 Q 21? ? ? *toll quality MOS rating, but higher delay Stein Voice. DSP 3. 5

Digital Cellular Standards Stein Voice. DSP 3. 6

Military / Satellite Standards Stein Voice. DSP 3. 7

Voice DSP Simple coders Stein Voice. DSP 3. 8

G. 711 16 bit linear sampling at 8 KHz means 128 Kbps Minimal toll quality linear sampling is 12 bit (96 Kbps( 8 bit linear sampling (256 levels) is noticeably noisy Due to – prevalence of low amplitudes – logarithmic response of ear we can use logarithmic sampling Different standards for different places Stein Voice. DSP 3. 9

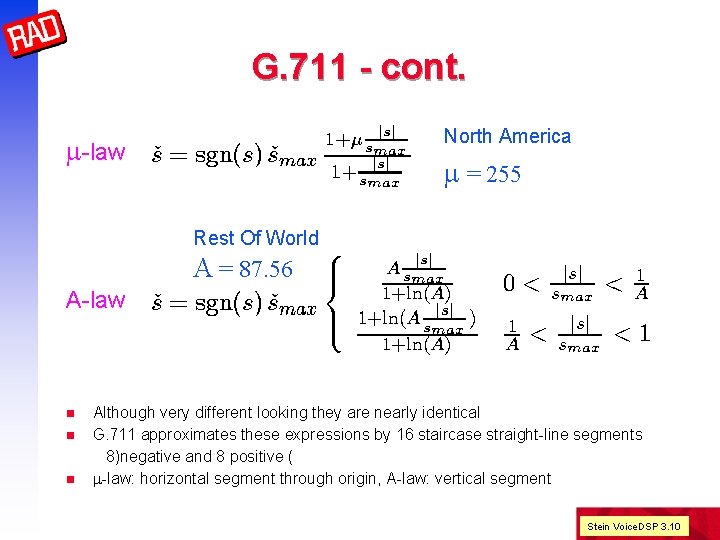

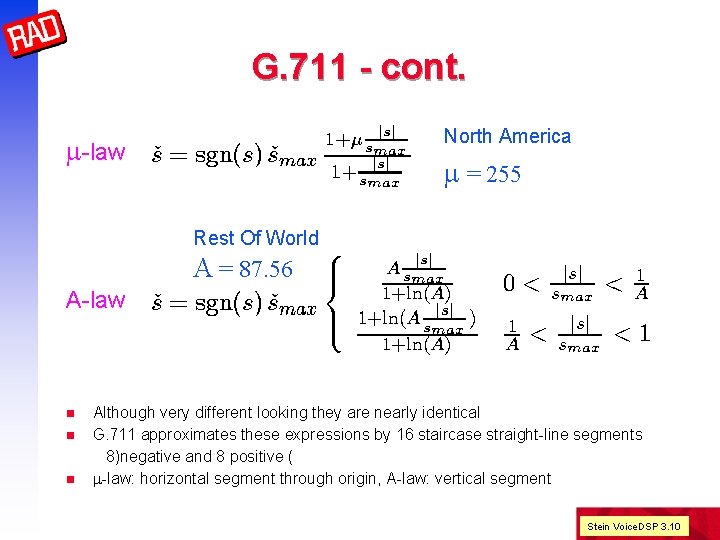

G. 711 - cont. North America m-law m = 255 Rest Of World A = 87. 56 A-law n n n Although very different looking they are nearly identical G. 711 approximates these expressions by 16 staircase straight-line segments 8)negative and 8 positive ( m-law: horizontal segment through origin, A-law: vertical segment Stein Voice. DSP 3. 10

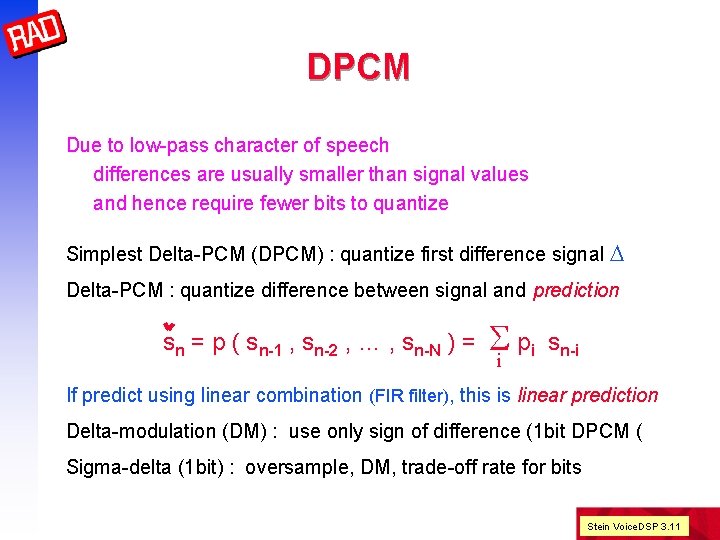

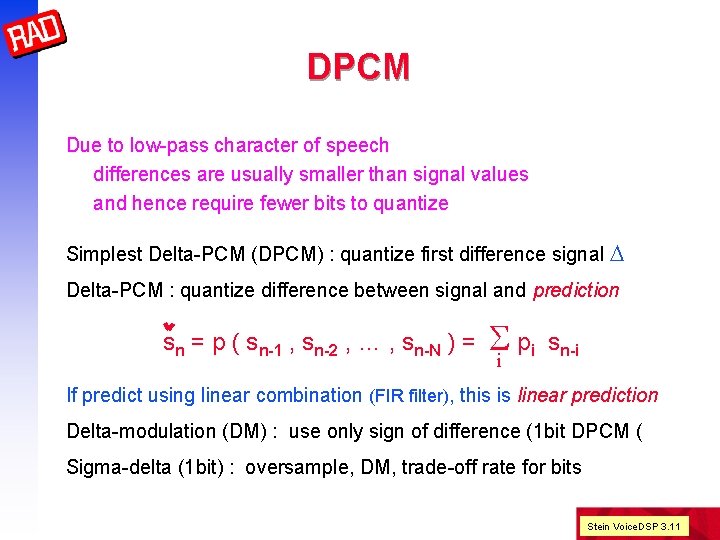

DPCM Due to low-pass character of speech differences are usually smaller than signal values and hence require fewer bits to quantize Simplest Delta-PCM (DPCM) : quantize first difference signal D Delta-PCM : quantize difference between signal and prediction sn = p ( sn-1 , sn-2 , … , sn-N ) = Si pi sn-i If predict using linear combination (FIR filter), this is linear prediction Delta-modulation (DM) : use only sign of difference (1 bit DPCM ( Sigma-delta (1 bit) : oversample, DM, trade-off rate for bits Stein Voice. DSP 3. 11

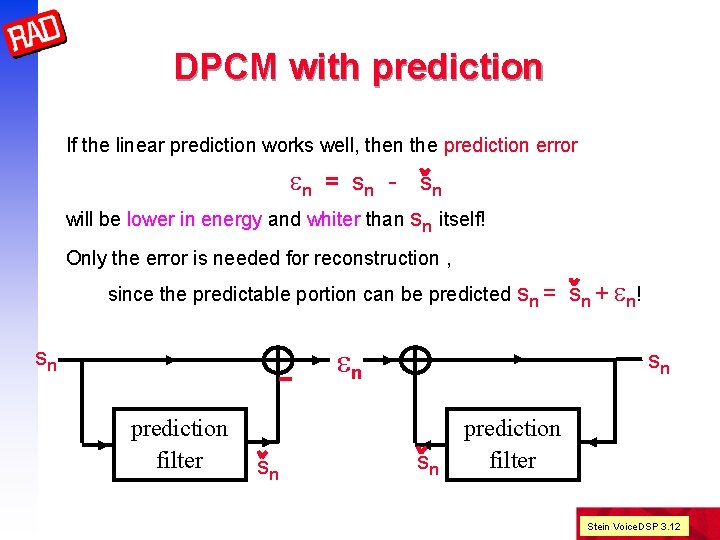

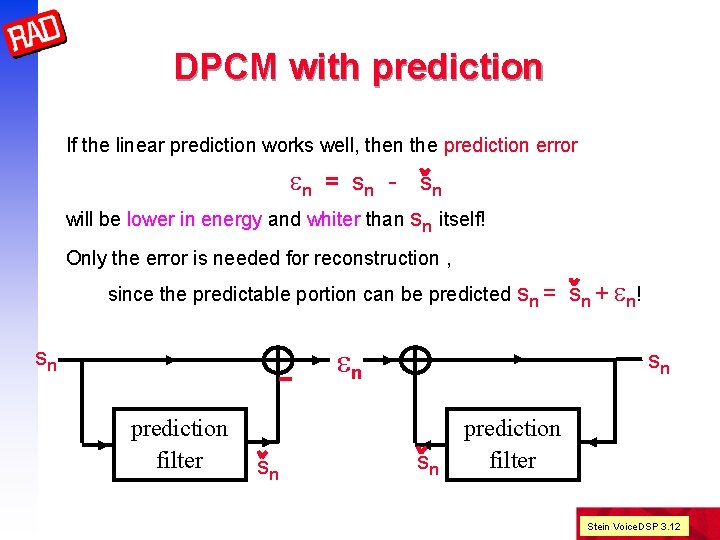

DPCM with prediction If the linear prediction works well, then the prediction error en = sn - sn will be lower in energy and whiter than sn itself! Only the error is needed for reconstruction , since the predictable portion can be predicted sn = sn prediction filter sn en sn + en! sn sn prediction filter Stein Voice. DSP 3. 12

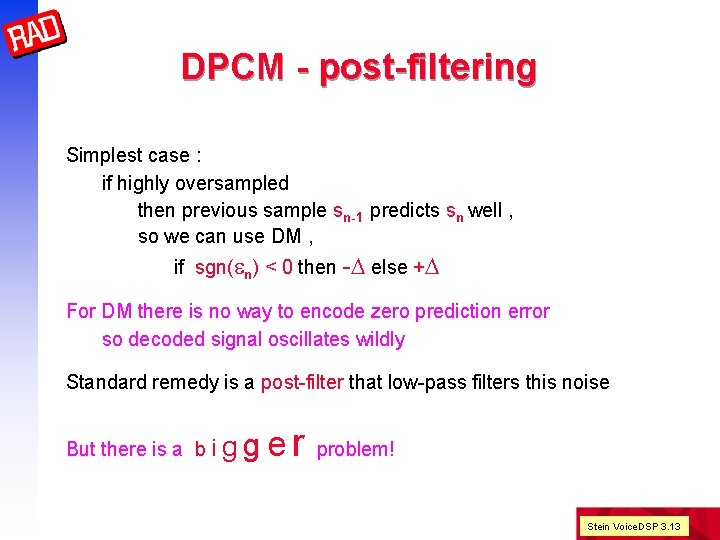

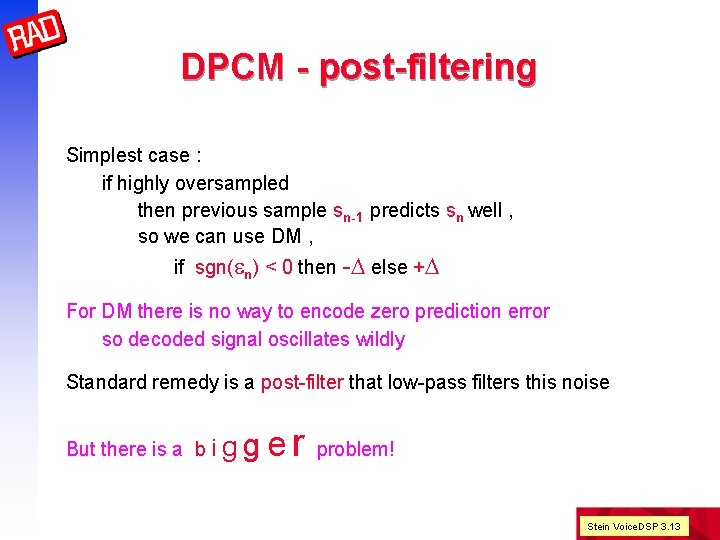

DPCM - post-filtering Simplest case : if highly oversampled then previous sample sn-1 predicts sn well , so we can use DM , if sgn(en) < 0 then -D else +D For DM there is no way to encode zero prediction error so decoded signal oscillates wildly Standard remedy is a post-filter that low-pass filters this noise But there is a b i g ger problem! Stein Voice. DSP 3. 13

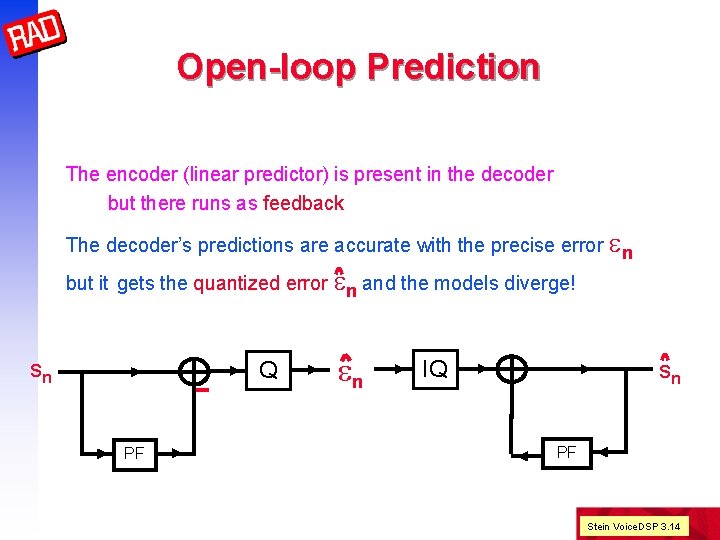

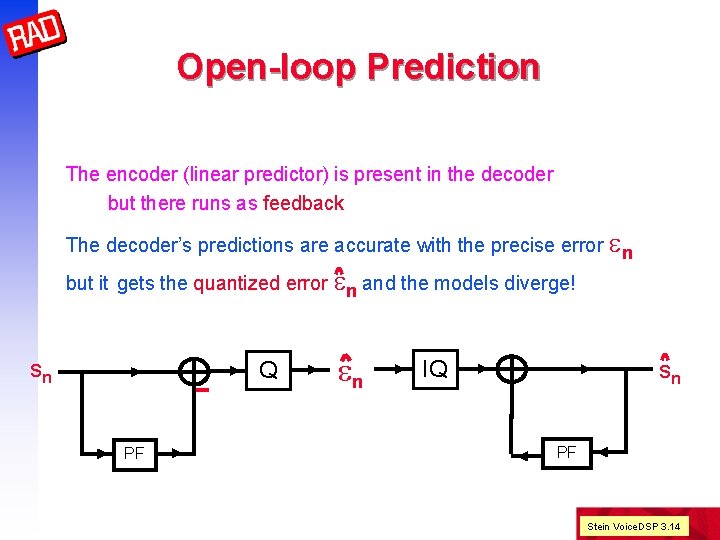

Open-loop Prediction The encoder (linear predictor) is present in the decoder but there runs as feedback The decoder’s predictions are accurate with the precise error en but it gets the quantized error en and the models diverge! sn PF Q en IQ sn PF Stein Voice. DSP 3. 14

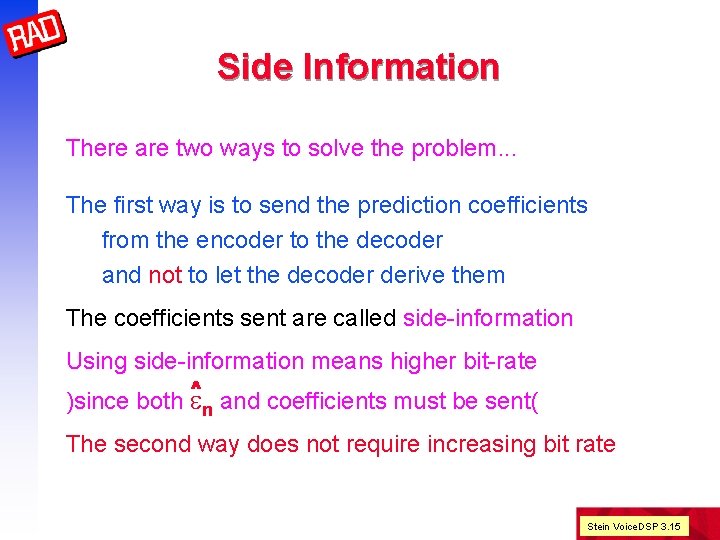

Side Information There are two ways to solve the problem. . . The first way is to send the prediction coefficients from the encoder to the decoder and not to let the decoder derive them The coefficients sent are called side-information Using side-information means higher bit-rate )since both en and coefficients must be sent( The second way does not require increasing bit rate Stein Voice. DSP 3. 15

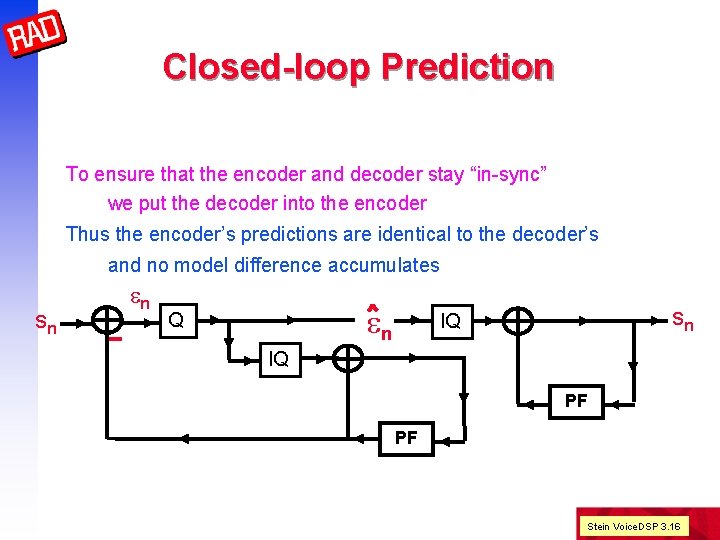

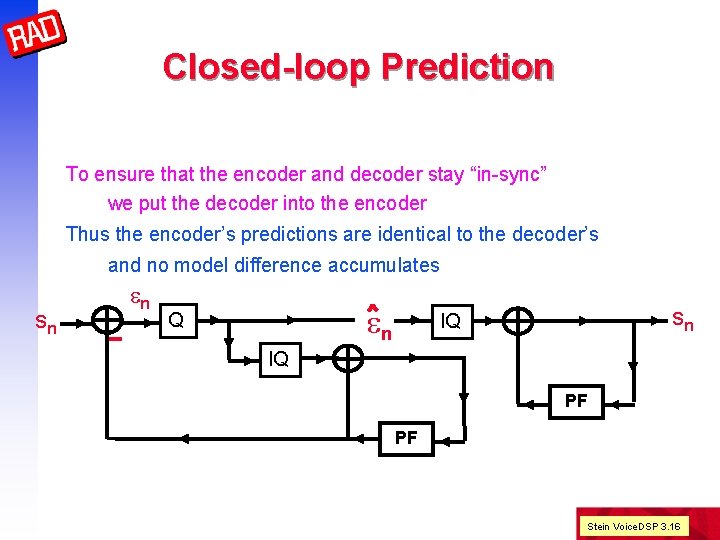

Closed-loop Prediction To ensure that the encoder and decoder stay “in-sync” we put the decoder into the encoder Thus the encoder’s predictions are identical to the decoder’s and no model difference accumulates - sn en en Q sn IQ IQ PF PF Stein Voice. DSP 3. 16

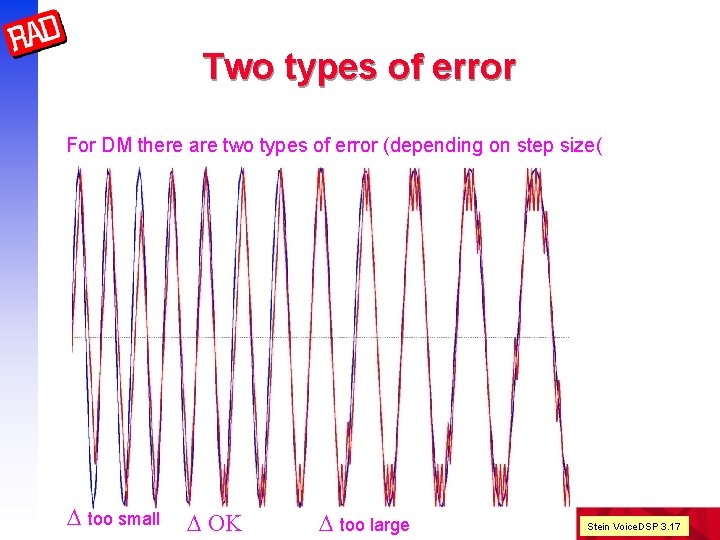

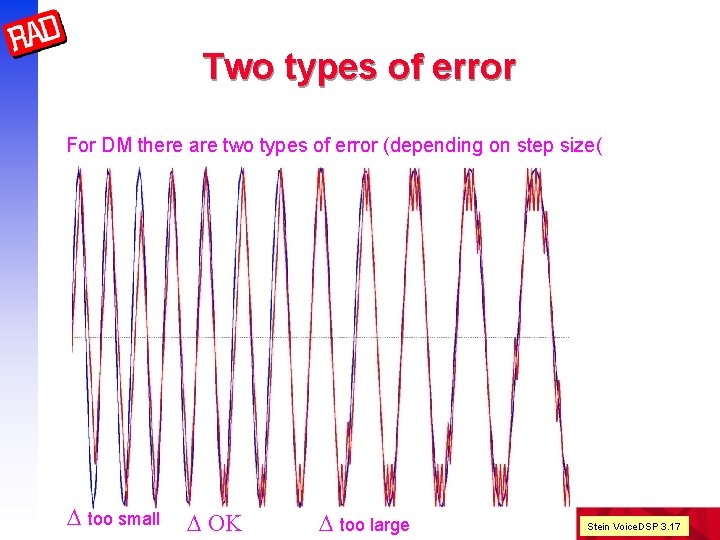

Two types of error For DM there are two types of error (depending on step size( D too small D OK D too large Stein Voice. DSP 3. 17

Adaptive Step Size Speech signals are very nonstationary We need to adapt the step size to match signal behavior – Increase D when signal changes rapidly – Decrease D when signal is relatively constant Simplest method (for DM only: ( – If present bit is the same as previous multiply D by K (K=1. 5( – If present bit is different, divide D by K – Constrain D to a predefined range More general method: – Collect N samples in buffer (N = 128 … 512( – Compute standard deviation in buffer – Set D to a fraction of standard deviation • Send D to decoder as side-information or • Use backward adaptation (closed-loop D computation( Stein Voice. DSP 3. 18

ADPCM n G. 726 has – Adaptive predictor – Adaptive quantizer and inverse quantizer – Adaptation speed control – Tone and transition detector – Mechanism to prevent loss from tandeming n Computational complexity relatively high (10 MIPS( 24 and 16 Kbps modes defined, but not toll quality n G. 727 same rates but embedded for packetize networks ADPCM only used general low-pass characteristic of speech What is the next step? Stein Voice. DSP 3. 19

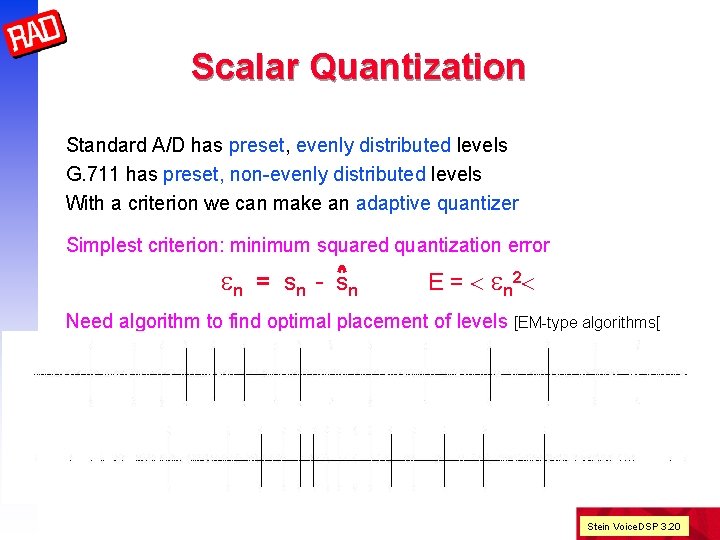

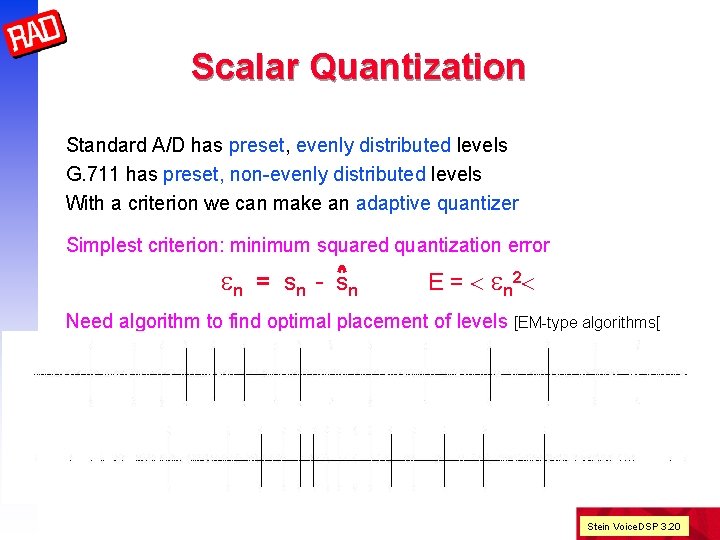

Scalar Quantization Standard A/D has preset, evenly distributed levels G. 711 has preset, non-evenly distributed levels With a criterion we can make an adaptive quantizer Simplest criterion: minimum squared quantization error en = sn - sn E = < e n 2< Need algorithm to find optimal placement of levels [EM-type algorithms[ Stein Voice. DSP 3. 20

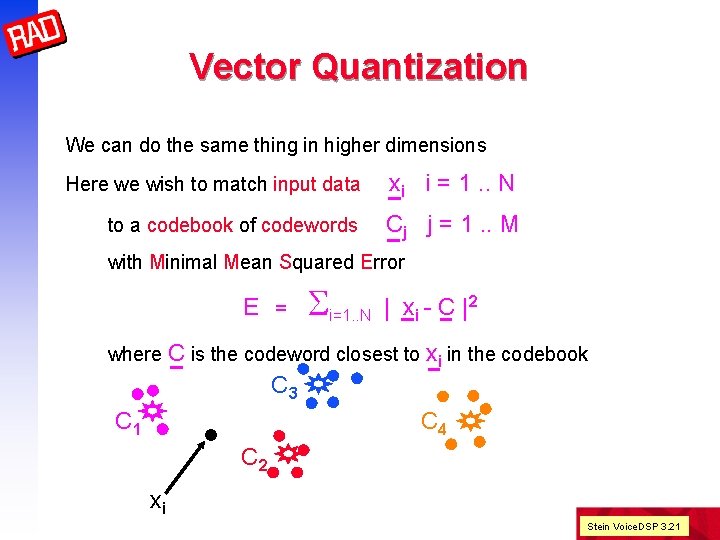

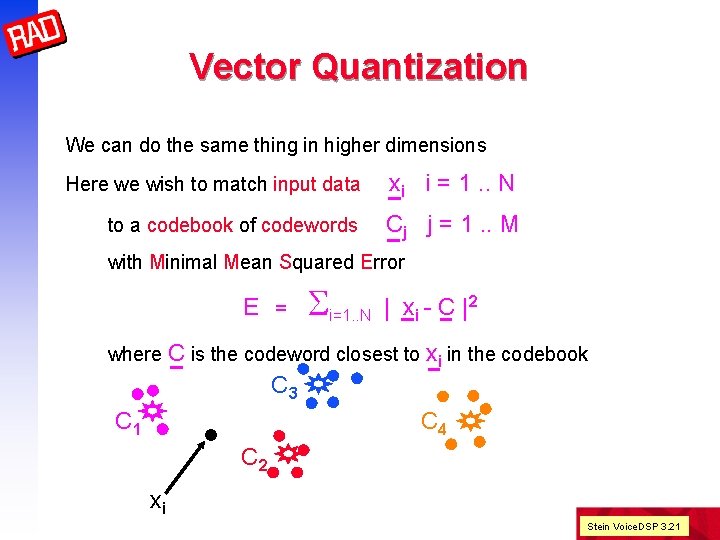

Vector Quantization We can do the same thing in higher dimensions Here we wish to match input data xi i = 1. . N to a codebook of codewords Cj j = 1. . M with Minimal Mean Squared Error E = Si=1. . N | xi - C |2 where C is the codeword closest to xi in the codebook C 3 C 1 C 4 C 2 xi Stein Voice. DSP 3. 21

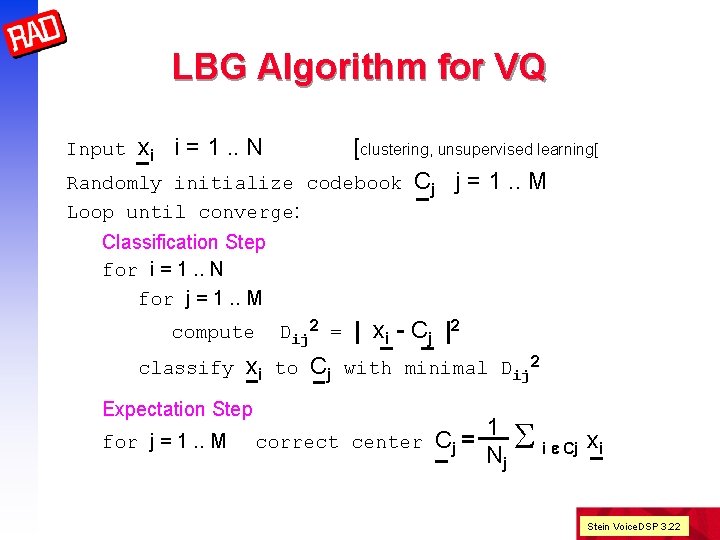

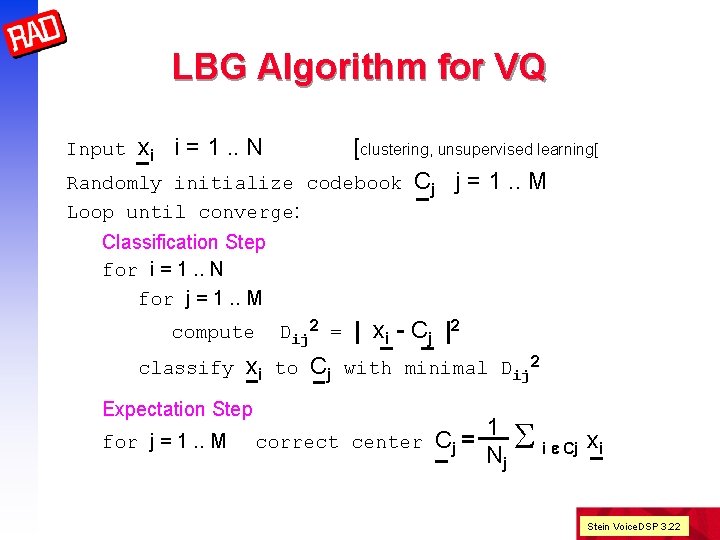

LBG Algorithm for VQ [clustering, unsupervised learning[ Randomly initialize codebook Cj j = 1. . M Input xi i = 1. . N Loop until converge: Classification Step for i = 1. . N for j = 1. . M compute classify Dij 2 = | xi - Cj |2 xi to Cj with minimal Dij 2 Expectation Step for j = 1. . M correct center Cj = 1 S i e Cj xi Nj Stein Voice. DSP 3. 22

Speech Application of VQ OK, I understand what to do with scalar quantization what is VQ good for? We could try to simply VQ frames of speech samples but this doesn’t work well! We can VQ spectra or sub-band components We often VQ parameter sets (e. g. LPC coefficients( We also VQ model error signals Stein Voice. DSP 3. 23

Voice DSP CELP coders Stein Voice. DSP 3. 24

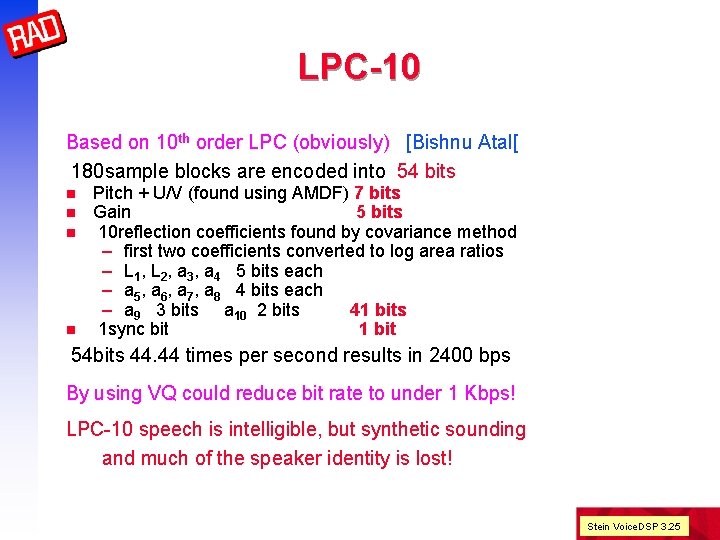

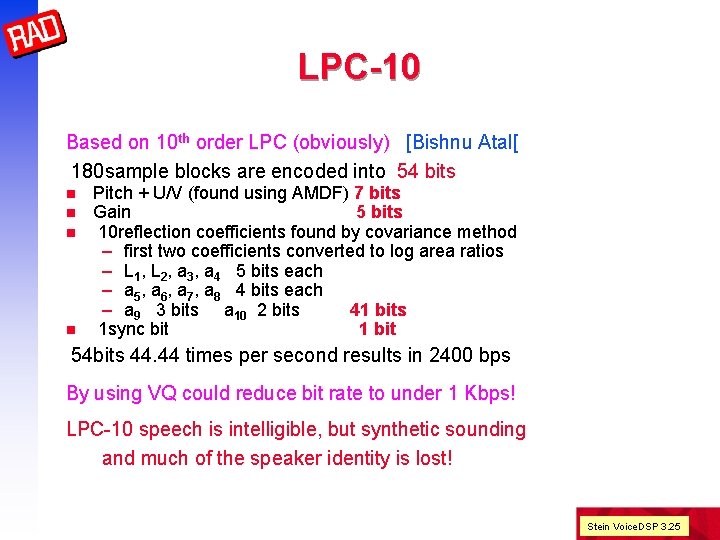

LPC-10 Based on 10 th order LPC (obviously) [Bishnu Atal[ 180 sample blocks are encoded into 54 bits n n Pitch + U/V (found using AMDF) 7 bits Gain 5 bits 10 reflection coefficients found by covariance method – first two coefficients converted to log area ratios – L 1, L 2, a 3, a 4 5 bits each – a 5, a 6, a 7, a 8 4 bits each – a 9 3 bits a 10 2 bits 41 bits 1 sync bit 1 bit 54 bits 44. 44 times per second results in 2400 bps By using VQ could reduce bit rate to under 1 Kbps! LPC-10 speech is intelligible, but synthetic sounding and much of the speaker identity is lost! Stein Voice. DSP 3. 25

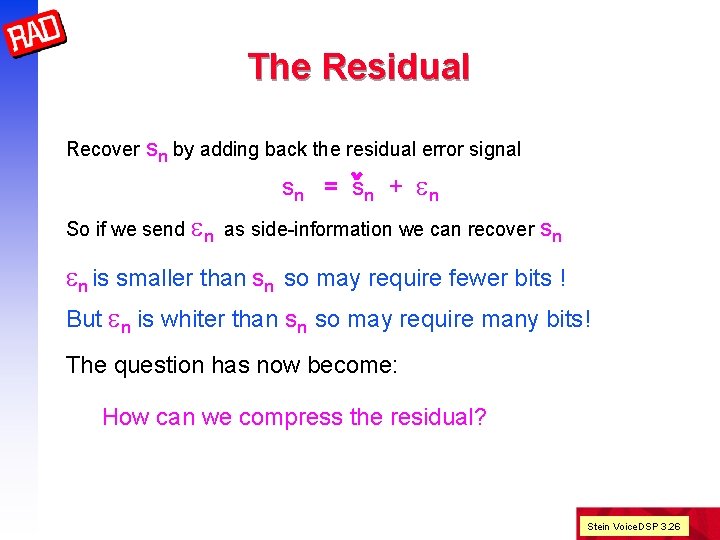

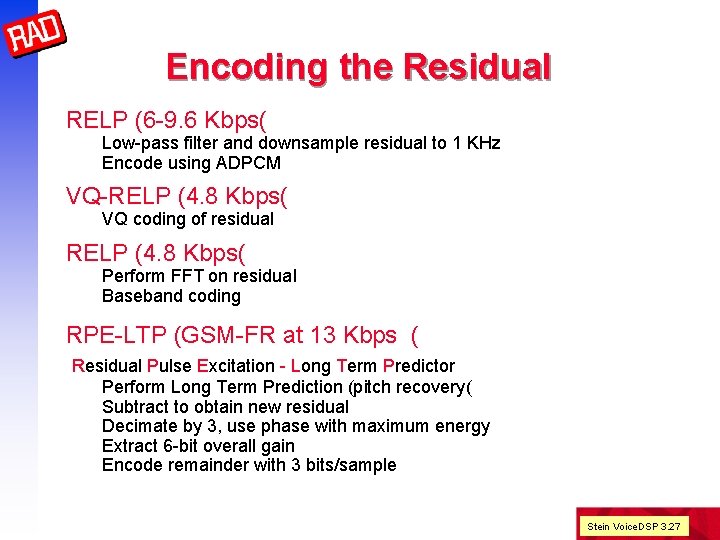

The Residual Recover sn by adding back the residual error signal sn = sn + en So if we send en as side-information we can recover sn en is smaller than sn so may require fewer bits ! But en is whiter than sn so may require many bits! The question has now become: How can we compress the residual? Stein Voice. DSP 3. 26

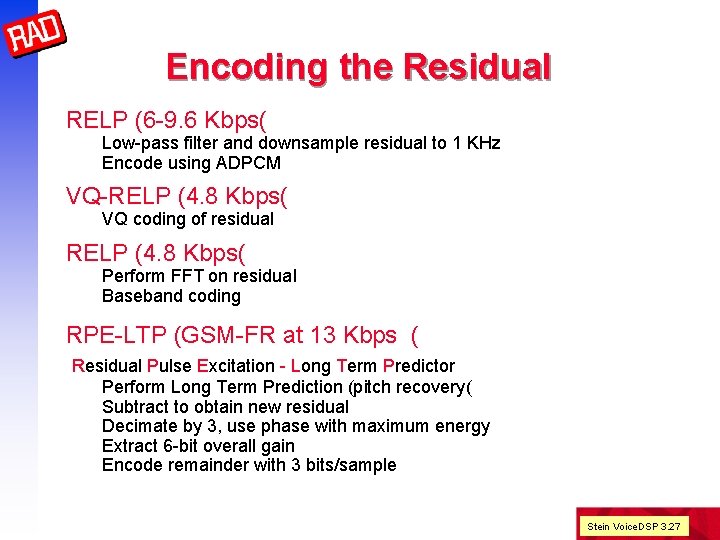

Encoding the Residual RELP (6 -9. 6 Kbps( Low-pass filter and downsample residual to 1 KHz Encode using ADPCM VQ-RELP (4. 8 Kbps( VQ coding of residual RELP (4. 8 Kbps( Perform FFT on residual Baseband coding RPE-LTP (GSM-FR at 13 Kbps ( Residual Pulse Excitation - Long Term Predictor Perform Long Term Prediction (pitch recovery( Subtract to obtain new residual Decimate by 3, use phase with maximum energy Extract 6 -bit overall gain Encode remainder with 3 bits/sample Stein Voice. DSP 3. 27

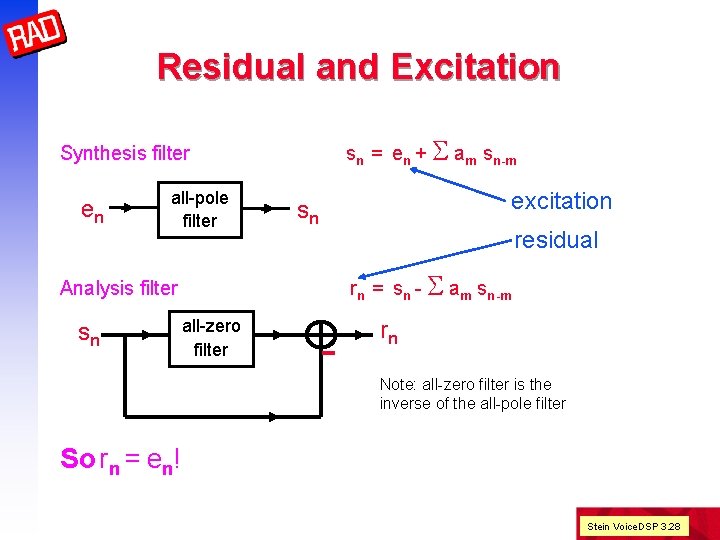

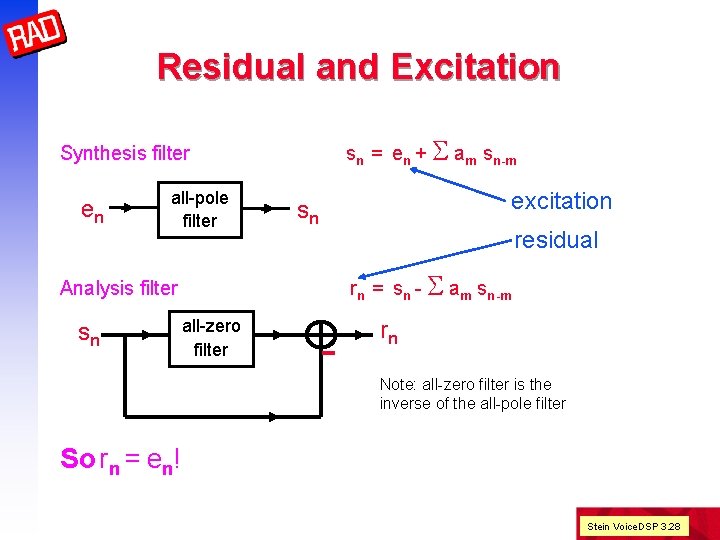

Residual and Excitation sn = en + S am sn-m Synthesis filter en all-pole filter excitation sn residual rn = sn - S am sn-m Analysis filter all-zero filter - sn rn Note: all-zero filter is the inverse of the all-pole filter So rn = en! Stein Voice. DSP 3. 28

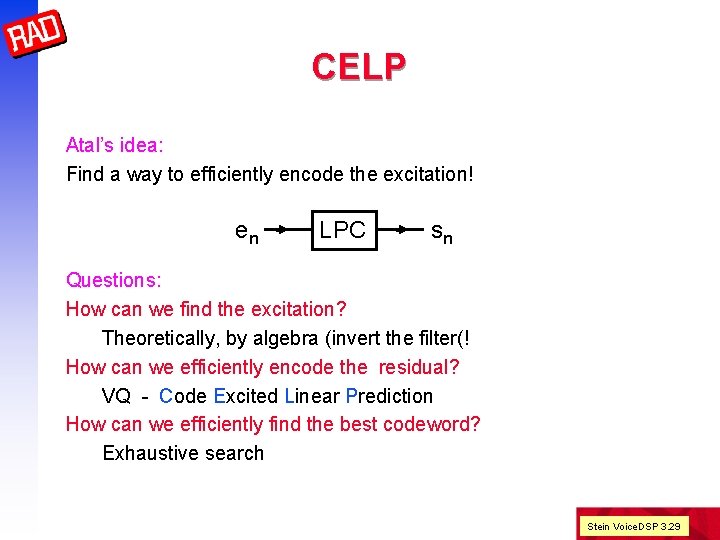

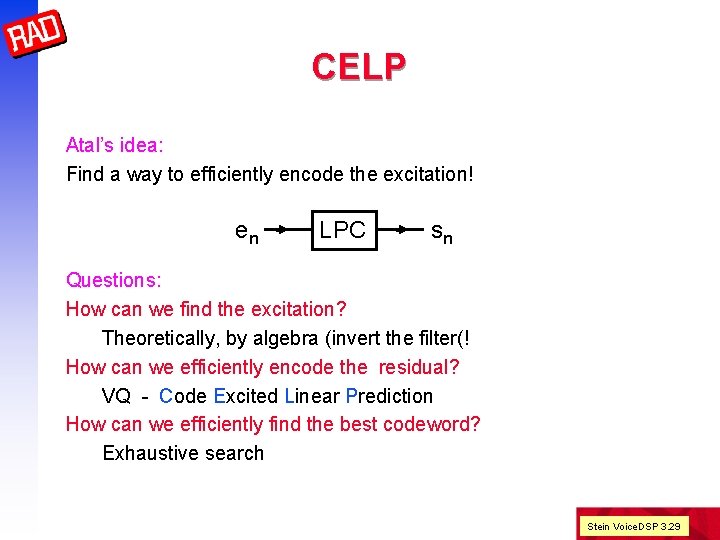

CELP Atal’s idea: Find a way to efficiently encode the excitation! en LPC sn Questions: How can we find the excitation? Theoretically, by algebra (invert the filter(! How can we efficiently encode the residual? VQ - Code Excited Linear Prediction How can we efficiently find the best codeword? Exhaustive search Stein Voice. DSP 3. 29

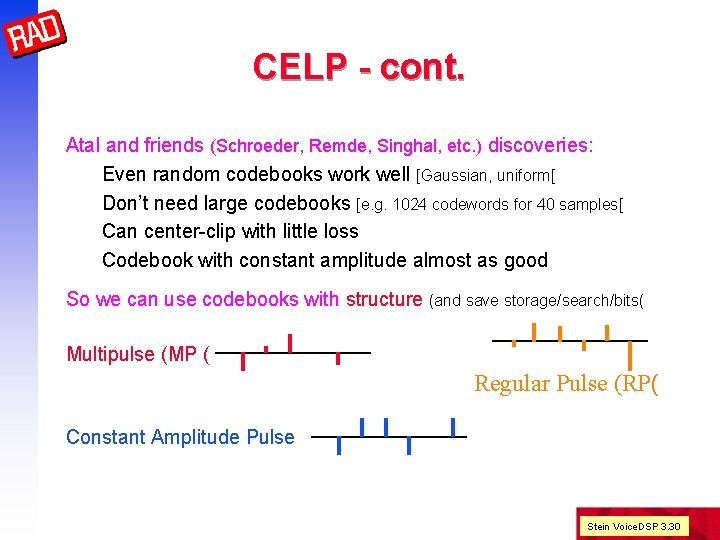

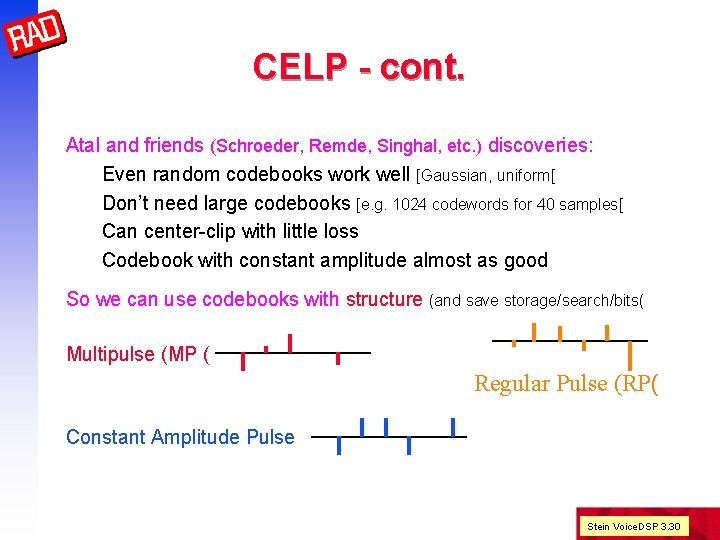

CELP - cont. Atal and friends (Schroeder, Remde, Singhal, etc. ) discoveries: Even random codebooks work well [Gaussian, uniform[ Don’t need large codebooks [e. g. 1024 codewords for 40 samples[ Can center-clip with little loss Codebook with constant amplitude almost as good So we can use codebooks with structure (and save storage/search/bits( Multipulse (MP ( Regular Pulse (RP( Constant Amplitude Pulse Stein Voice. DSP 3. 30

![Special Excitations Shift technique reduces random CB operations from ON 2 to ON a Special Excitations Shift technique reduces random CB operations from O(N 2) to O(N( ]a](https://slidetodoc.com/presentation_image_h2/c15ebded124301d35abee017547b859b/image-31.jpg)

Special Excitations Shift technique reduces random CB operations from O(N 2) to O(N( ]a b c d e f] [c d e f g h] [e f g h I j. . . [ Using a small number of +1 amplitude pulses leads to MIPS reduction n Since most values are zero, there are few operations n Since amplitudes +1 no true multiplications n In a CB containing CW and -CW we can save half n Algebraic codebooks exploit algebraic structure Example: choose pulses according to Hadamard matrix Using FHT reduces computation n Conjugate structure codebooks Excitation is sum of codewords from two related CBs Stein Voice. DSP 3. 31

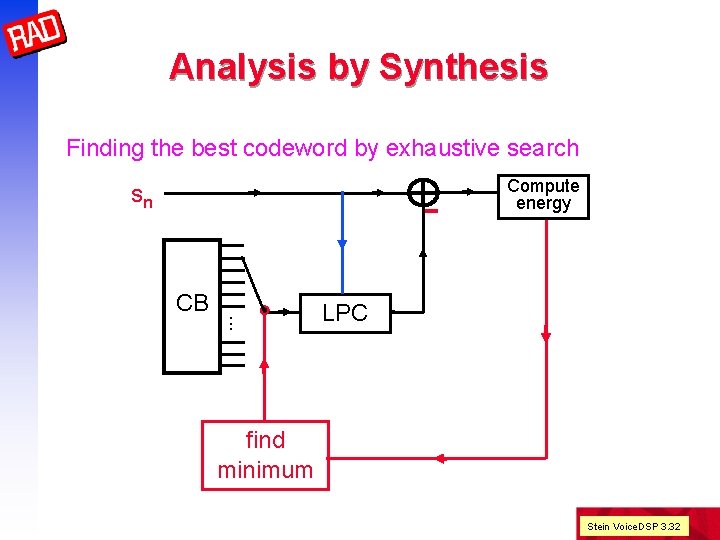

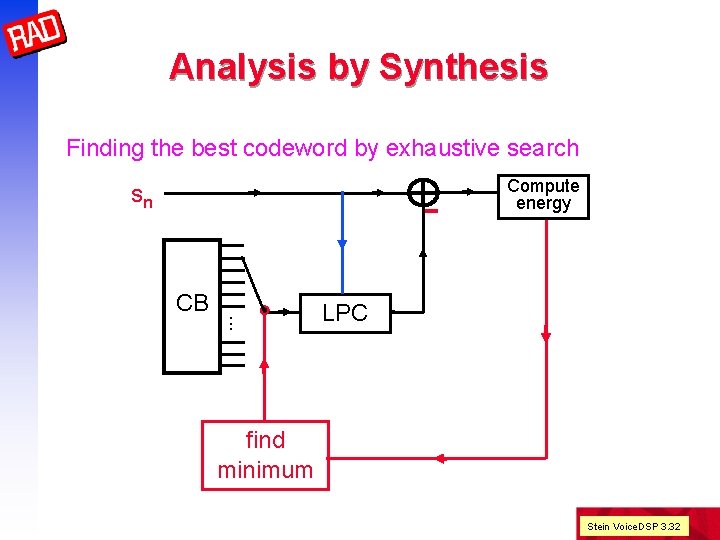

Analysis by Synthesis Finding the best codeword by exhaustive search sn CB. . . Compute energy LPC find minimum Stein Voice. DSP 3. 32

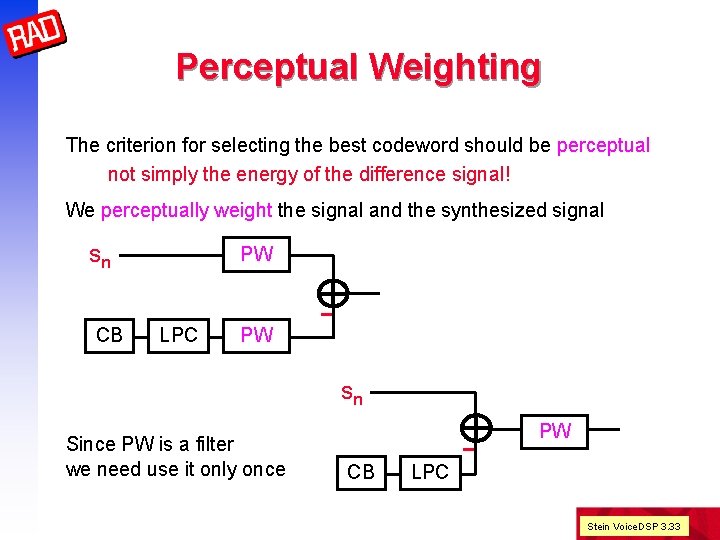

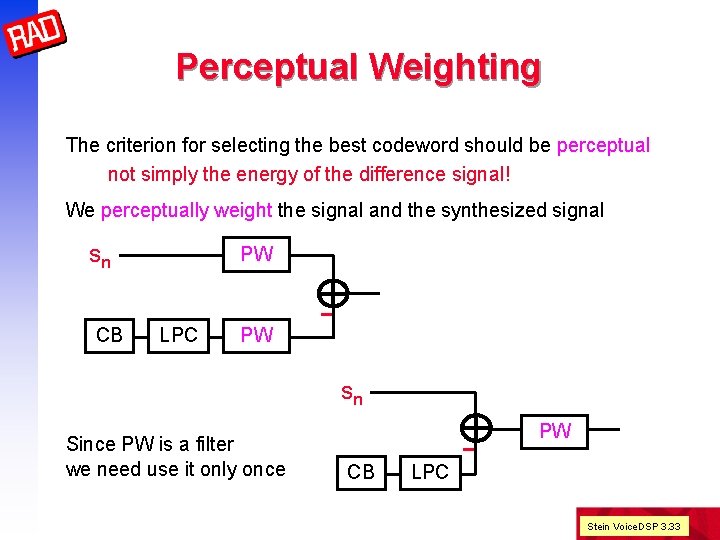

Perceptual Weighting The criterion for selecting the best codeword should be perceptual not simply the energy of the difference signal! We perceptually weight the signal and the synthesized signal sn LPC PW - CB PW sn CB LPC - Since PW is a filter we need use it only once PW Stein Voice. DSP 3. 33

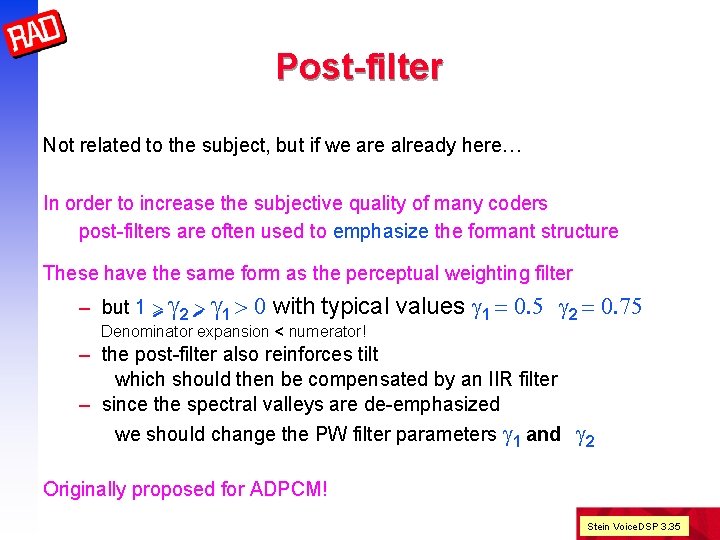

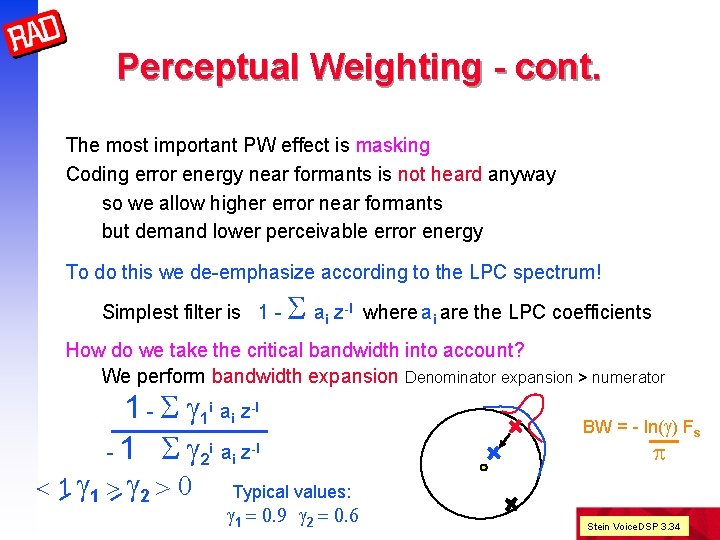

Perceptual Weighting - cont. The most important PW effect is masking Coding error energy near formants is not heard anyway so we allow higher error near formants but demand lower perceivable error energy To do this we de-emphasize according to the LPC spectrum! Simplest filter is 1 - S ai z-I where ai are the LPC coefficients How do we take the critical bandwidth into account? We perform bandwidth expansion Denominator expansion > numerator 1 - S g 1 i ai z-I - 1 S g 2 i ai z-I < 1 g 1 > g 2 > 0 Typical values: g 1 = 0. 9 g 2 = 0. 6 BW = - ln(g) Fs p Stein Voice. DSP 3. 34

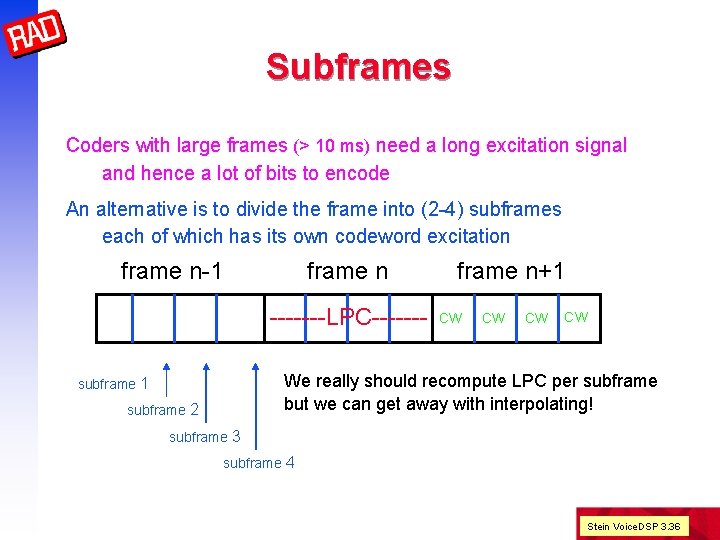

Post-filter Not related to the subject, but if we are already here… In order to increase the subjective quality of many coders post-filters are often used to emphasize the formant structure These have the same form as the perceptual weighting filter – but 1 > g 2 > g 1 > 0 with typical values g 1 = 0. 5 g 2 = 0. 75 Denominator expansion < numerator! – the post-filter also reinforces tilt which should then be compensated by an IIR filter – since the spectral valleys are de-emphasized we should change the PW filter parameters g 1 and g 2 Originally proposed for ADPCM! Stein Voice. DSP 3. 35

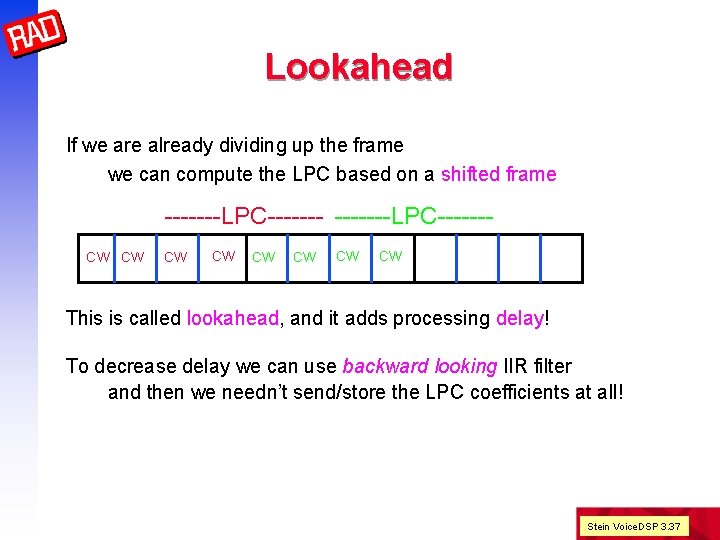

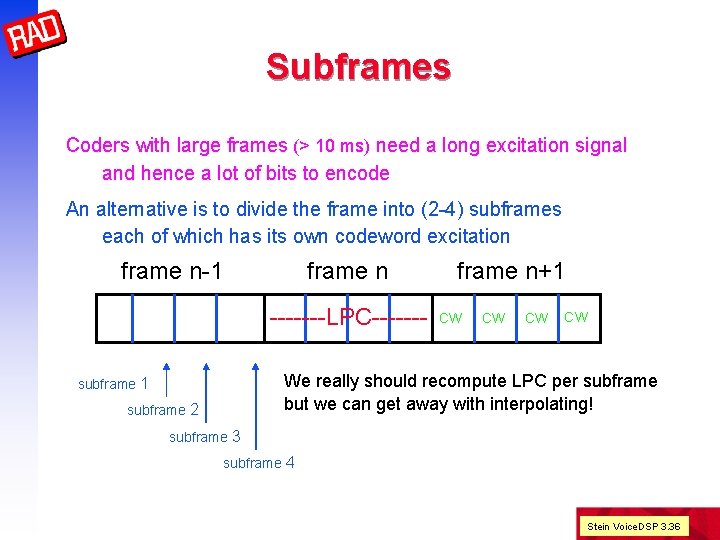

Subframes Coders with large frames (> 10 ms) need a long excitation signal and hence a lot of bits to encode An alternative is to divide the frame into (2 -4) subframes each of which has its own codeword excitation frame n-1 frame n -------LPC------- frame n+1 CW CW We really should recompute LPC per subframe but we can get away with interpolating! subframe 1 subframe 2 subframe 3 subframe 4 Stein Voice. DSP 3. 36

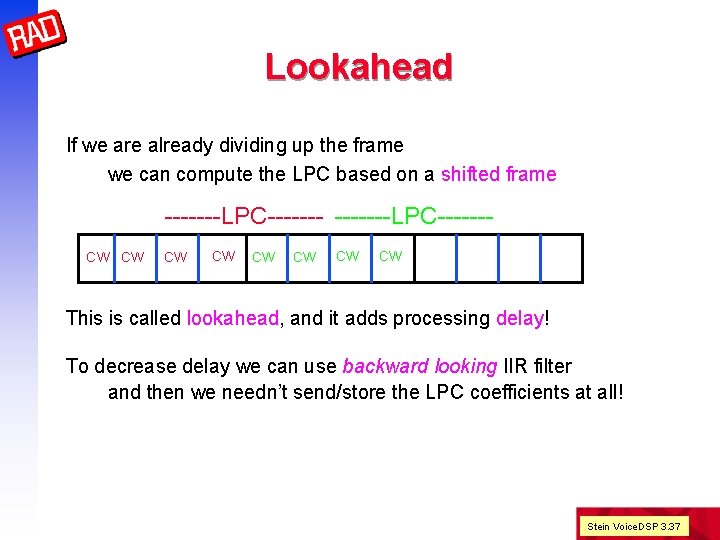

Lookahead If we are already dividing up the frame we can compute the LPC based on a shifted frame -------LPC-------LPC------CW CW This is called lookahead, and it adds processing delay! To decrease delay we can use backward looking IIR filter and then we needn’t send/store the LPC coefficients at all! Stein Voice. DSP 3. 37

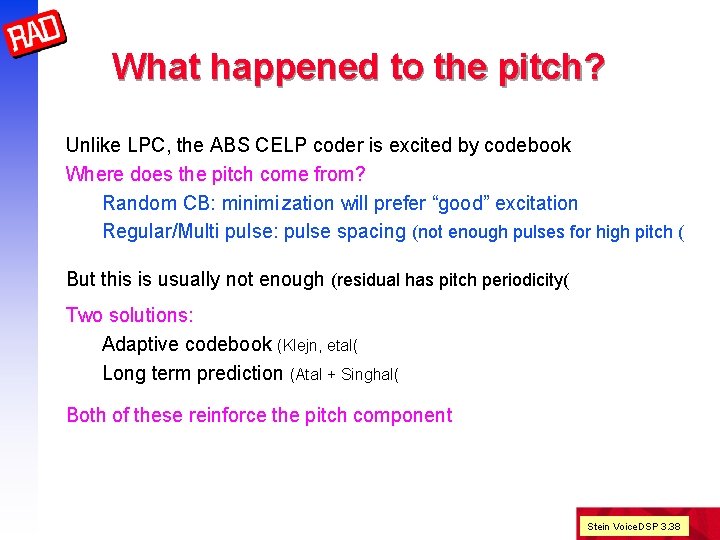

What happened to the pitch? Unlike LPC, the ABS CELP coder is excited by codebook Where does the pitch come from? Random CB: minimi zation will prefer “good” excitation Regular/Multi pulse: pulse spacing (not enough pulses for high pitch ( But this is usually not enough (residual has pitch periodicity( Two solutions: Adaptive codebook (Klejn, etal( Long term prediction (Atal + Singhal( Both of these reinforce the pitch component Stein Voice. DSP 3. 38

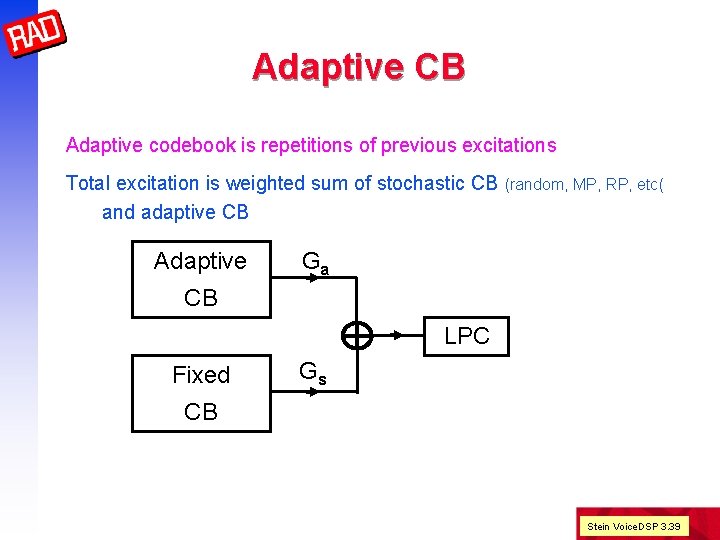

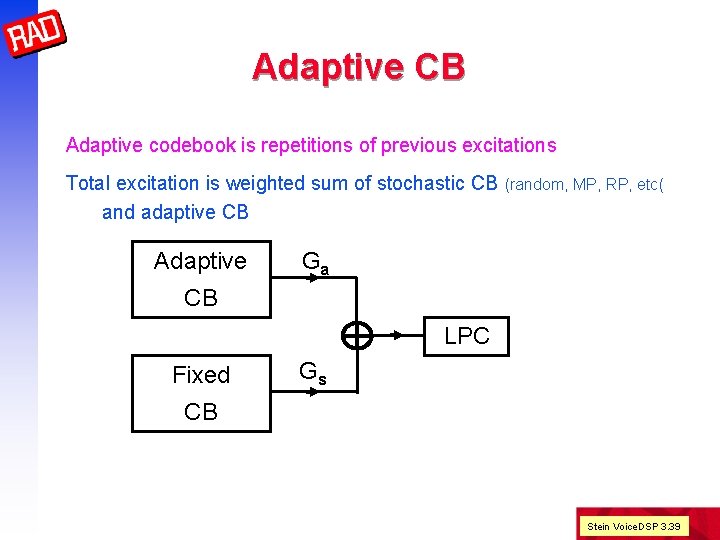

Adaptive CB Adaptive codebook is repetitions of previous excitations Total excitation is weighted sum of stochastic CB (random, MP, RP, etc( and adaptive CB Adaptive Ga CB LPC Fixed Gs CB Stein Voice. DSP 3. 39

Long Term Prediction Using long-term (pitch predictor) and short-term (LPC) prediction sn gain pitch predictor Long term predictor may have only one delay, but then non-integer 1 -1 b z -d LPC - codebook perceptual weighting error computation Stein Voice. DSP 3. 40

Federal Standard CELP FS 1016 at 4. 8 Kbps has MOS 3. 2 Developed by AT&T Bell Labs for DOD 144 bits / 30 ms frame 10 th order LPC on 30 ms Hamming window no pre-emphasis, additional 15 Hz BW expansion (quality and LSP robustness( Conversion to LSP and nonuniform scalar quantization to 34 bits 4 subframes (7. 5 ms) LSP interpolation 512 entry fixed CB - static -1, 0, +1 from center-clipped Gaussian 5 +bit nonuniform quantized gain 56 bits 256 entry adaptive CB - 8 bits + 5 bit nonuniform quantized gain 48 bits optional noninteger delays, optional Perceptual weighting Postfilter + spectral tilt compensation, removable for noise or tandeming FEC 4 bits SYNC 1 bit reserved 1 bit Stein Voice. DSP 3. 41

G. 728 16 Kbps with MOS similar to G. 726 at 32 Kbps Low 5 sample (0. 625 msec) delay High computational complexity (about 30 MIPS( CELP with Backward LPC order 50 (why not? - we don’t transmit side-information(! Frame of 2. 5 ms (20 samples( 4 subframes of 0. 625 ms (5 samples( Perceptual weighting Only 10 bit index to fixed CB is transmitted 10 bits per 0. 625 ms is 16 Kbps! Stein Voice. DSP 3. 42

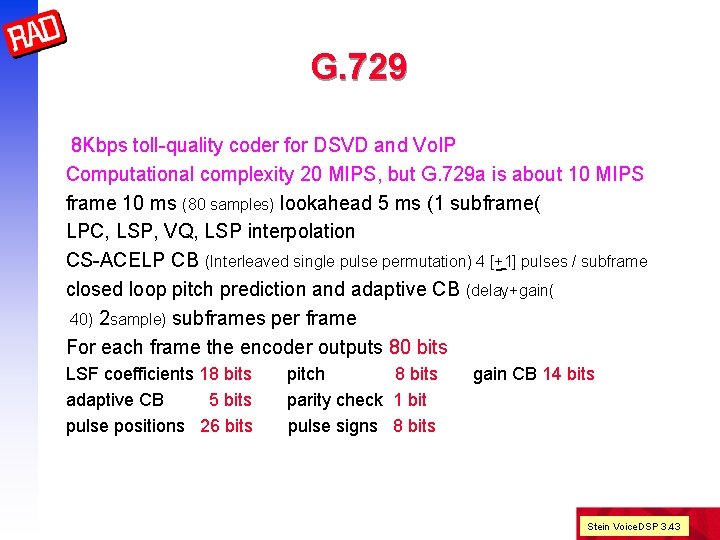

G. 729 8 Kbps toll-quality coder for DSVD and Vo. IP Computational complexity 20 MIPS, but G. 729 a is about 10 MIPS frame 10 ms (80 samples) lookahead 5 ms (1 subframe( LPC, LSP, VQ, LSP interpolation CS-ACELP CB (Interleaved single pulse permutation) 4 [+1] pulses / subframe closed loop pitch prediction and adaptive CB (delay+gain( 40) 2 sample) subframes per frame For each frame the encoder outputs 80 bits LSF coefficients 18 bits adaptive CB 5 bits pulse positions 26 bits pitch 8 bits parity check 1 bit pulse signs 8 bits gain CB 14 bits Stein Voice. DSP 3. 43

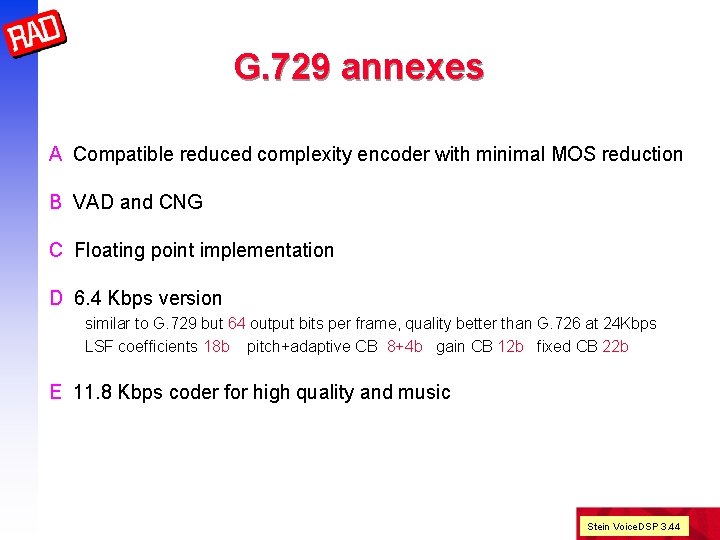

G. 729 annexes A Compatible reduced complexity encoder with minimal MOS reduction B VAD and CNG C Floating point implementation D 6. 4 Kbps version similar to G. 729 but 64 output bits per frame, quality better than G. 726 at 24 Kbps LSF coefficients 18 b pitch+adaptive CB 8+4 b gain CB 12 b fixed CB 22 b E 11. 8 Kbps coder for high quality and music Stein Voice. DSP 3. 44

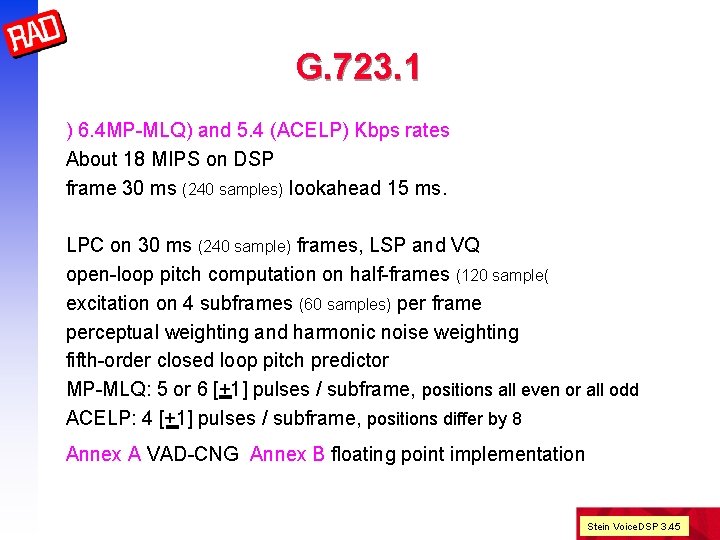

G. 723. 1 ) 6. 4 MP-MLQ) and 5. 4 (ACELP) Kbps rates About 18 MIPS on DSP frame 30 ms (240 samples) lookahead 15 ms. LPC on 30 ms (240 sample) frames, LSP and VQ open-loop pitch computation on half-frames (120 sample( excitation on 4 subframes (60 samples) per frame perceptual weighting and harmonic noise weighting fifth-order closed loop pitch predictor MP-MLQ: 5 or 6 [+1] pulses / subframe, positions all even or all odd ACELP: 4 [+1] pulses / subframe, positions differ by 8 Annex A VAD-CNG Annex B floating point implementation Stein Voice. DSP 3. 45

Voice DSP Other Methods MBE/MELP STC/WI Stein Voice. DSP 3. 46

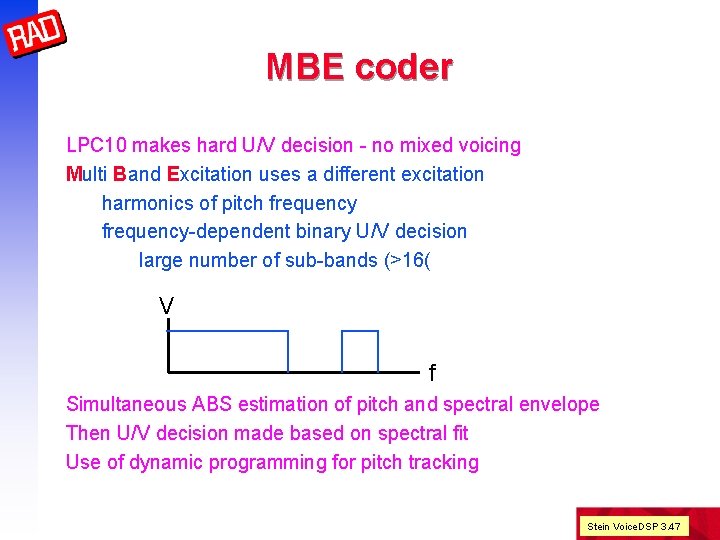

MBE coder LPC 10 makes hard U/V decision - no mixed voicing Multi Band Excitation uses a different excitation harmonics of pitch frequency-dependent binary U/V decision large number of sub-bands (>16( V f Simultaneous ABS estimation of pitch and spectral envelope Then U/V decision made based on spectral fit Use of dynamic programming for pitch tracking Stein Voice. DSP 3. 47

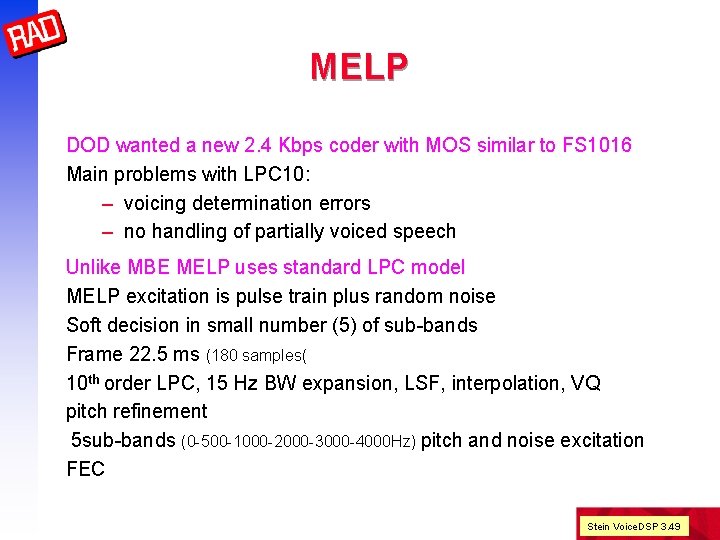

MBE coder - cont. DVSI made various MBE, AMBE and IMBE for satellite (INMARSAT( Bit rates 2. 4 - 9. 6 Kbps (toll quality at 3. 6 Kbps( Integral FEC for bit-error robustness As an example: 128 bits for each 20 ms frame pitch 8 bits U/V decisions K bits (K < 12( spectral amplitudes (DCT) 75 -K bits FEC (Golay codes) 45 bits Stein Voice. DSP 3. 48

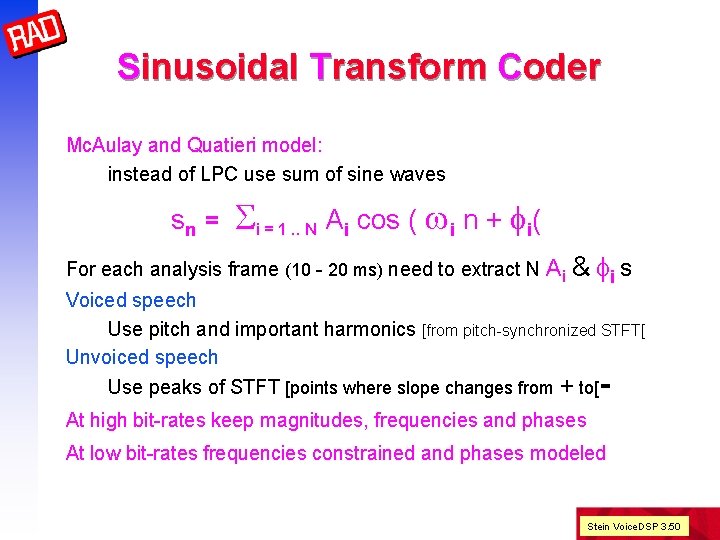

MELP DOD wanted a new 2. 4 Kbps coder with MOS similar to FS 1016 Main problems with LPC 10: – voicing determination errors – no handling of partially voiced speech Unlike MBE MELP uses standard LPC model MELP excitation is pulse train plus random noise Soft decision in small number (5) of sub-bands Frame 22. 5 ms (180 samples( 10 th order LPC, 15 Hz BW expansion, LSF, interpolation, VQ pitch refinement 5 sub-bands (0 -500 -1000 -2000 -3000 -4000 Hz) pitch and noise excitation FEC Stein Voice. DSP 3. 49

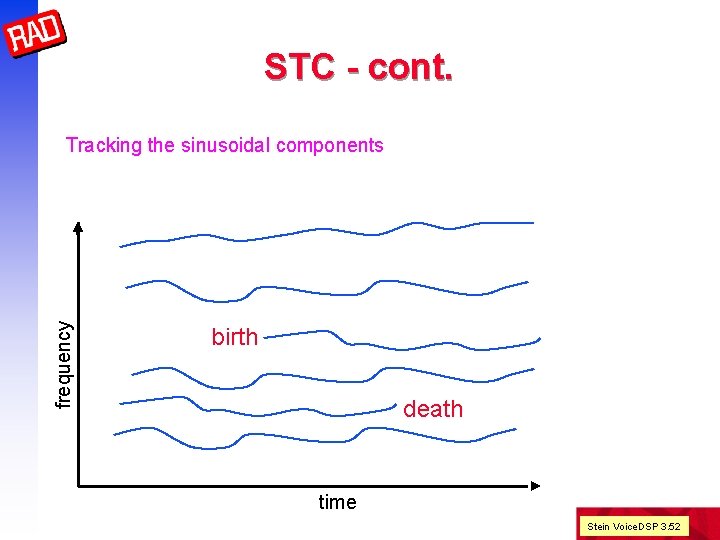

Sinusoidal Transform Coder Mc. Aulay and Quatieri model: instead of LPC use sum of sine waves sn = Si = 1. . N Ai cos ( wi n + fi( For each analysis frame (10 - 20 ms) need to extract N Ai & fi s Voiced speech Use pitch and important harmonics [from pitch-synchronized STFT[ Unvoiced speech Use peaks of STFT [points where slope changes from + to[ - At high bit-rates keep magnitudes, frequencies and phases At low bit-rates frequencies constrained and phases modeled Stein Voice. DSP 3. 50

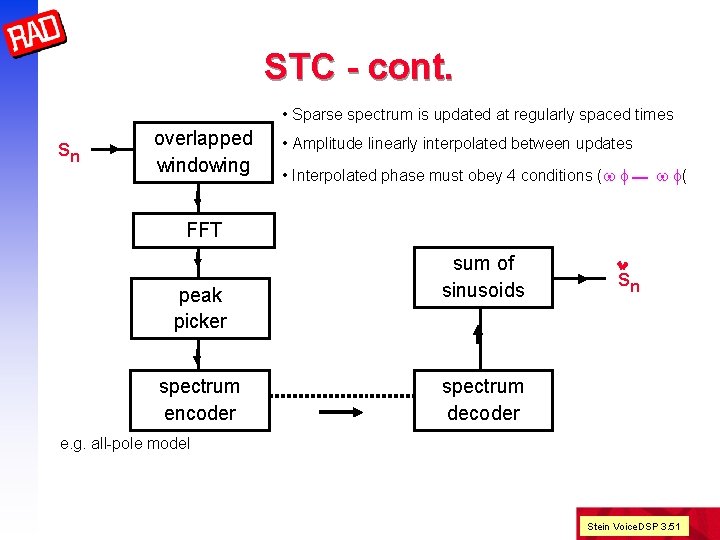

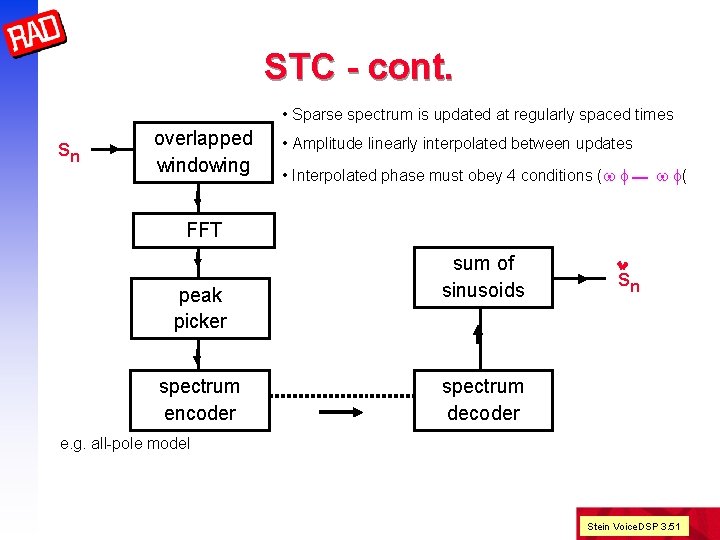

STC - cont. • Sparse spectrum is updated at regularly spaced times sn overlapped windowing • Amplitude linearly interpolated between updates • Interpolated phase must obey 4 conditions (w f w f( FFT peak picker spectrum encoder sum of sinusoids sn spectrum decoder e. g. all-pole model Stein Voice. DSP 3. 51

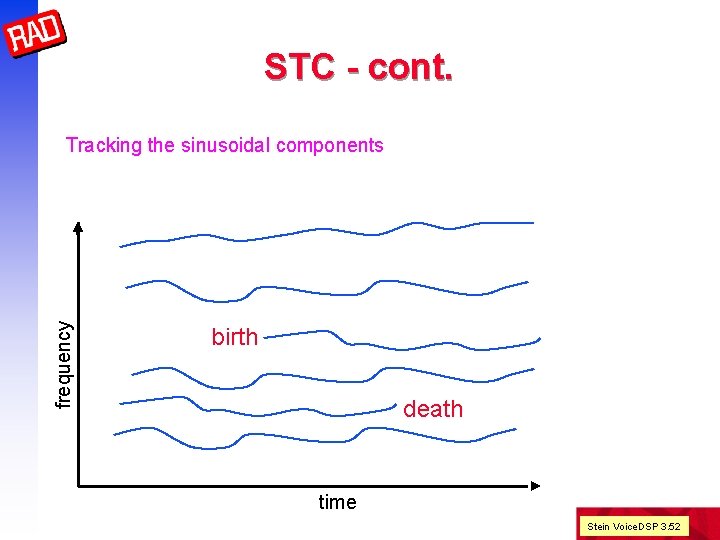

STC - cont. frequency Tracking the sinusoidal components birth death time Stein Voice. DSP 3. 52

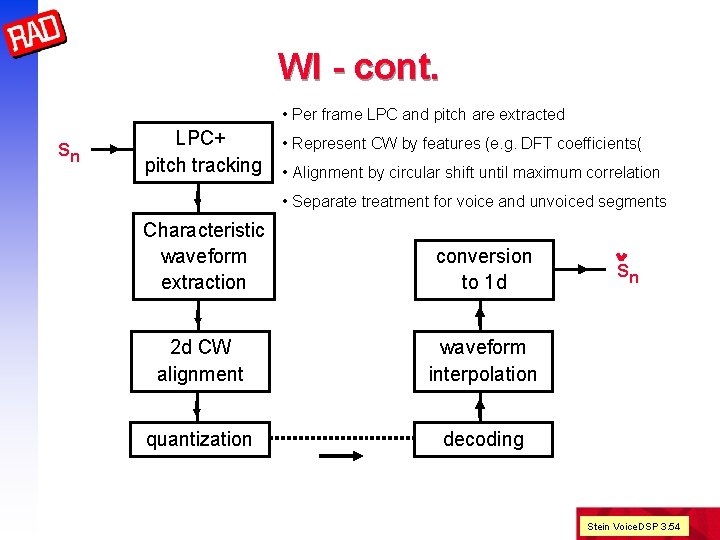

Waveform Interpolation Voiced speech is a sequence of pitch-cycle waveforms The characteristic waveform usually changes slowly with time Useful to think of waveform in 2 d time Phase in pitch period This waveform can be the speech signal or the LPC residual Stein Voice. DSP 3. 53

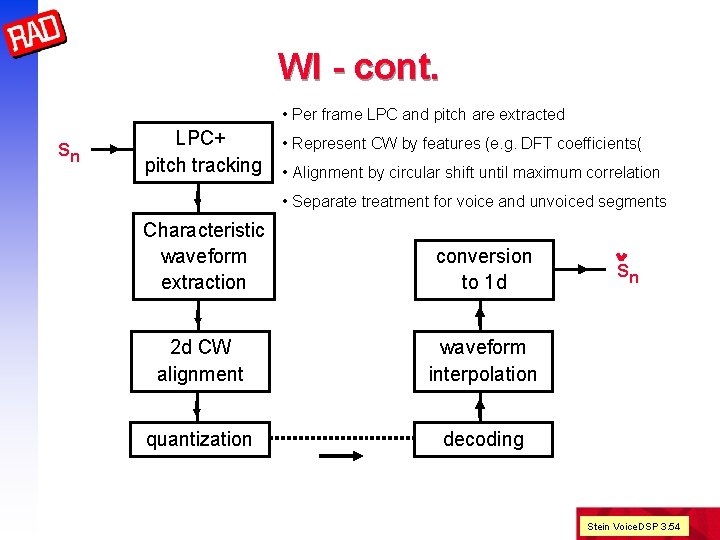

WI - cont. • Per frame LPC and pitch are extracted sn LPC+ • Represent CW by features (e. g. DFT coefficients( pitch tracking • Alignment by circular shift until maximum correlation • Separate treatment for voice and unvoiced segments Characteristic waveform extraction conversion to 1 d 2 d CW alignment waveform interpolation quantization decoding sn Stein Voice. DSP 3. 54