Use Data-Oriented Design to write efficient code

- Slides: 71

Use Data-Oriented Design to write efficient code

Alessio Coltellacci, System developer

@lightplay8 on Twitter, NotBad4U on GitHub


Understanding the field

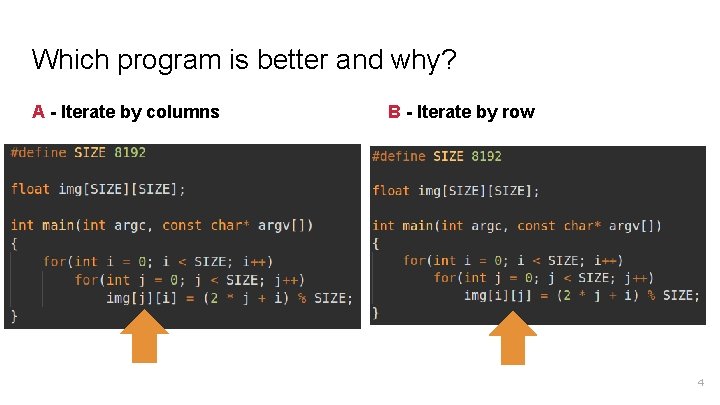

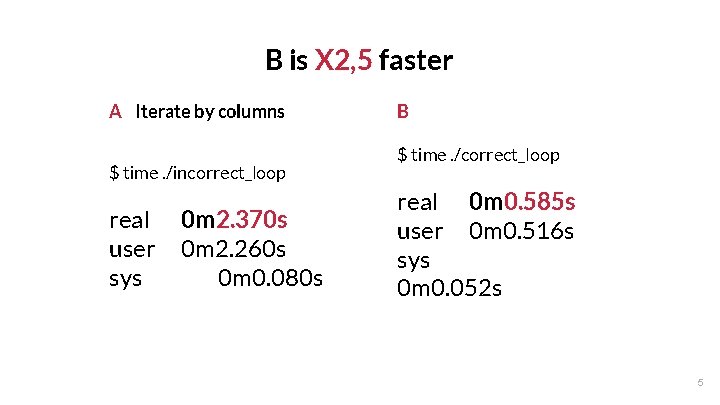

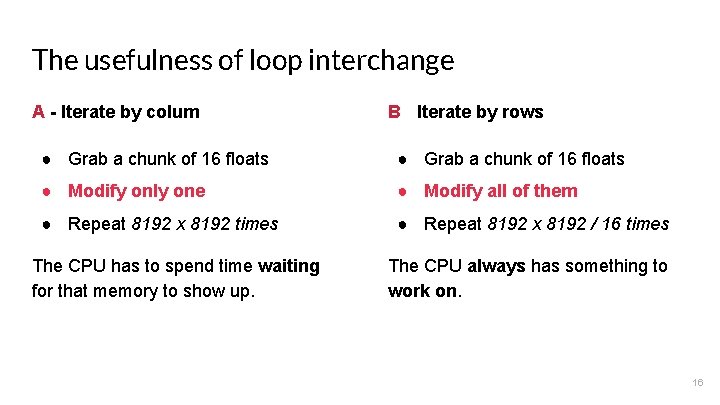

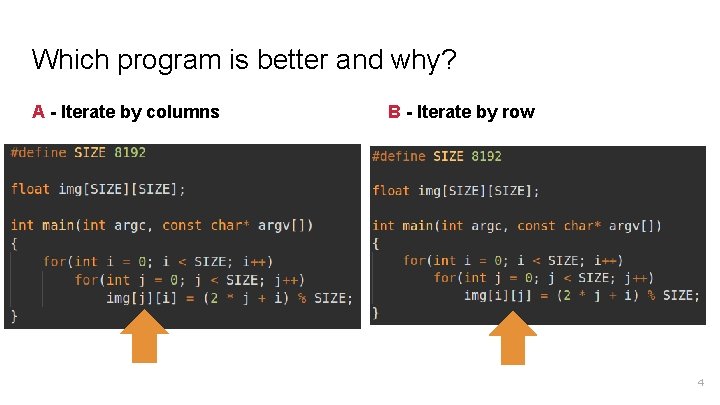

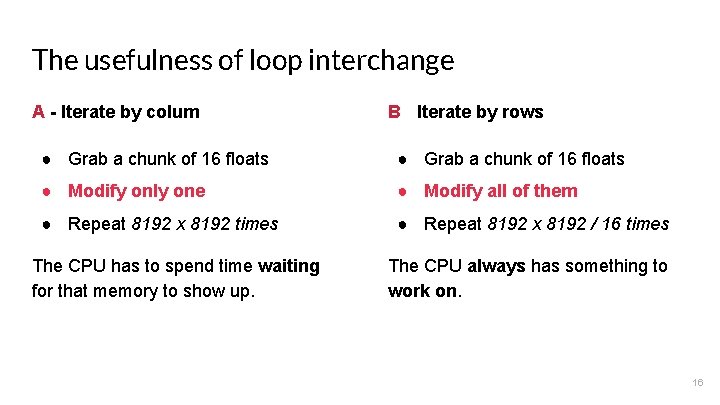

Which program is better and why?

- A - Iterate by columns
- B - Iterate by rows

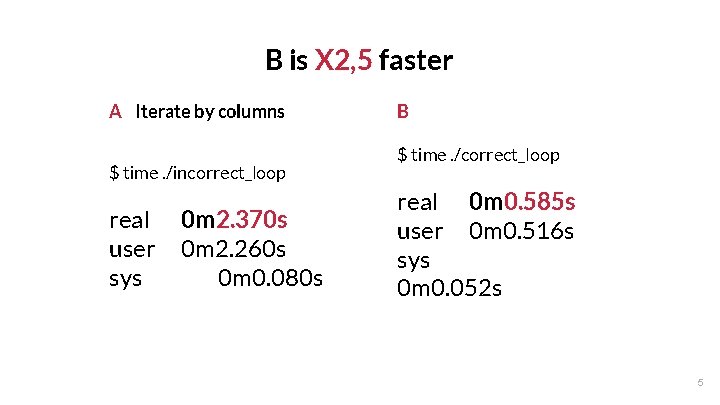

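B is faster (×2.5 on the slide; the timings below give 2.370 s / 0.585 s ≈ ×4 in wall-clock time)

| Version | Command | real | user | sys |
| --- | --- | --- | --- | --- |
| A - Iterate by columns | `time ./incorrect_loop` | 0m2.370s | 0m2.260s | 0m0.080s |
| B - Iterate by rows | `time ./correct_loop` | 0m0.585s | 0m0.516s | 0m0.052s |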

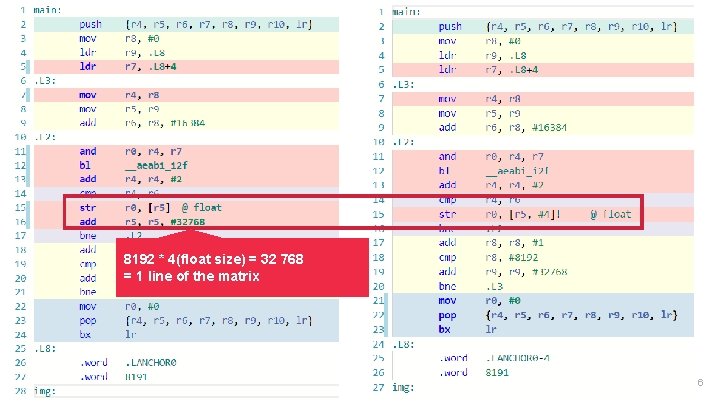

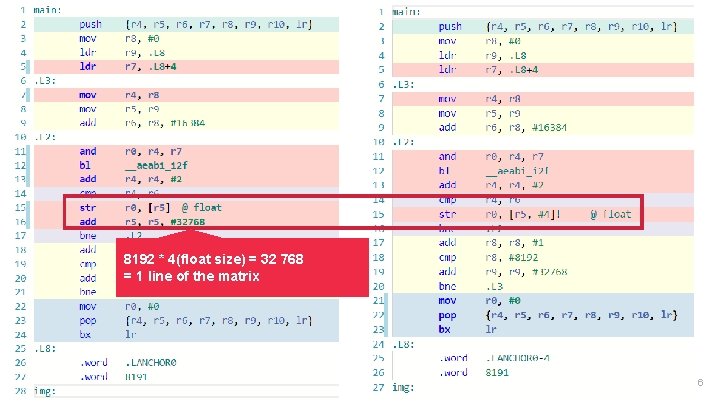

8192 × 4 bytes (float size) = 32 768 bytes = 1 row of the matrix

We need to remember how CPUs work

The central processing unit (CPU) carries out the instructions of a computer program by performing arithmetic, logic, control, and input/output (I/O) operations.

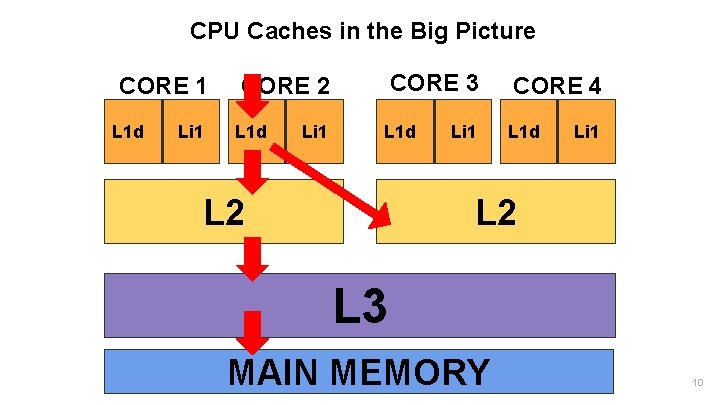

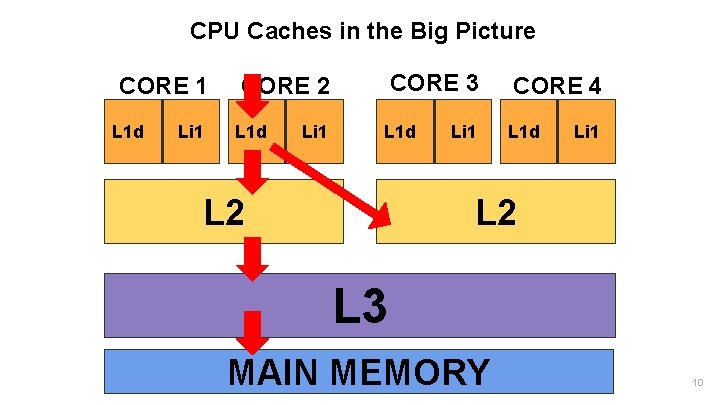

CPU Caches in the Big Picture

Diagram: four cores (CORE 1 to CORE 4), each with its own L1d (data) and L1i (instruction) caches, backed by L2 caches, an L3 cache, and main memory.

MESI cache coherence protocol

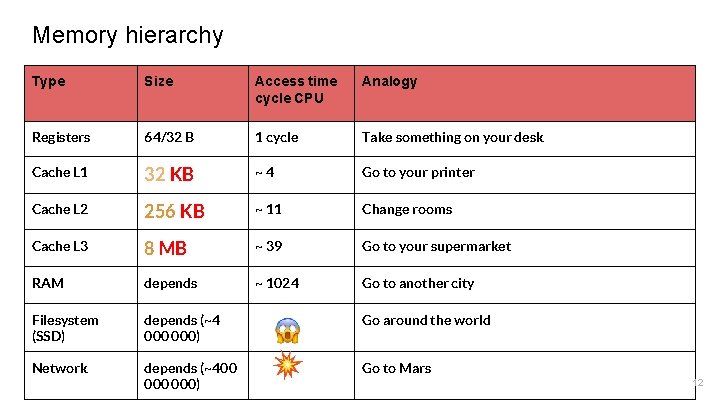

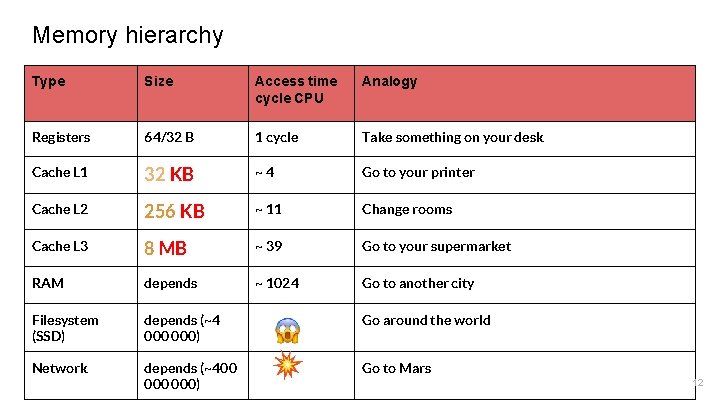

Memory hierarchy

| Type | Size | Access time (CPU cycles) | Analogy |
| --- | --- | --- | --- |
| Registers | 64/32 bits | 1 | Take something on your desk |
| Cache L1 | 32 KB | ~4 | Go to your printer |
| Cache L2 | 256 KB | ~11 | Change rooms |
| Cache L3 | 8 MB | ~39 | Go to your supermarket |
| RAM | depends | ~1024 | Go to another city |
| Filesystem (SSD) | depends | (~4 000) | Go around the world |
| Network | depends | (~400 000) | Go to Mars |

Why don’t we have a big L1 cache? With a large cache, the latency to find an item in the cache approaches the latency of a lookup in main memory.

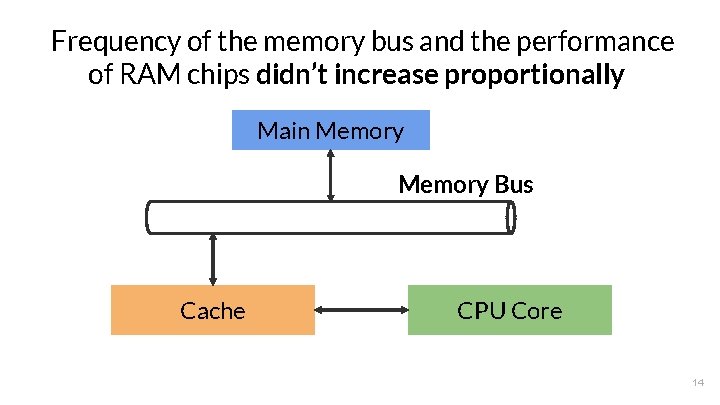

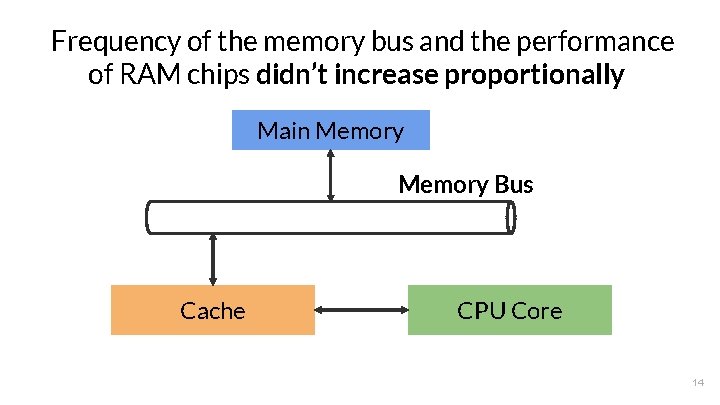

The frequency of the memory bus and the performance of RAM chips did not increase in proportion to CPU core speed.

Diagram: Main Memory, Bus, Cache, CPU Core

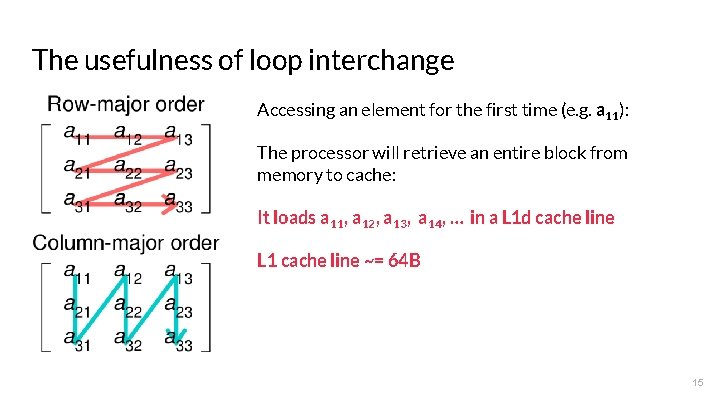

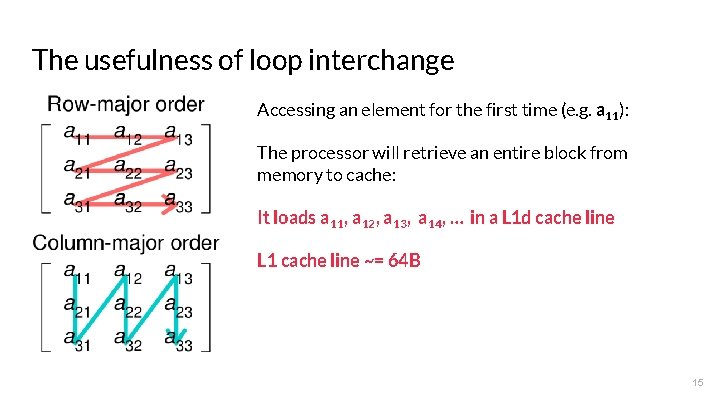

The usefulness of loop interchange

Accessing an element for the first time (e.g. a11): the processor retrieves an entire block from memory into the cache. It loads a11, a12, a13, a14, … into an L1d cache line.

L1 cache line ≈ 64 B

The usefulness of loop interchange

| A - Iterate by columns | B - Iterate by rows |
| --- | --- |
| Grab a chunk of 16 floats | Grab a chunk of 16 floats |
| Modify only one | Modify all of them |
| Repeat 8192 × 8192 times | Repeat 8192 × 8192 / 16 times |
| The CPU has to spend time waiting for that memory to show up. | The CPU always has something to work on. |
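The two traversal orders can be sketched in JavaScript, the language of the demo later in the deck. This is a minimal illustration and not the program that was timed: the matrix size, the function names, and the choice to sum instead of modify are assumptions made so the two versions can be compared.

```javascript
// Square matrix stored row by row in one contiguous Float32Array.
// N is kept small here; the slides use 8192.
const N = 1024;
const matrix = new Float32Array(N * N).fill(1);

// B - iterate by rows: consecutive iterations touch adjacent floats,
// so every float of a loaded 64-byte cache line (16 floats) gets used.
function sumByRows(m, n) {
  let sum = 0;
  for (let row = 0; row < n; row++) {
    for (let col = 0; col < n; col++) {
      sum += m[row * n + col];
    }
  }
  return sum;
}

// A - iterate by columns: consecutive iterations are n * 4 bytes apart,
// so each access lands in a different cache line.
function sumByColumns(m, n) {
  let sum = 0;
  for (let col = 0; col < n; col++) {
    for (let row = 0; row < n; row++) {
      sum += m[row * n + col];
    }
  }
  return sum;
}

console.log(sumByRows(matrix, N), sumByColumns(matrix, N));
```

Both functions return the same value; only the order of the two loops differs, which is what loop interchange refers to.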

Profiling!

perf: a tool for Linux profiling with performance counters

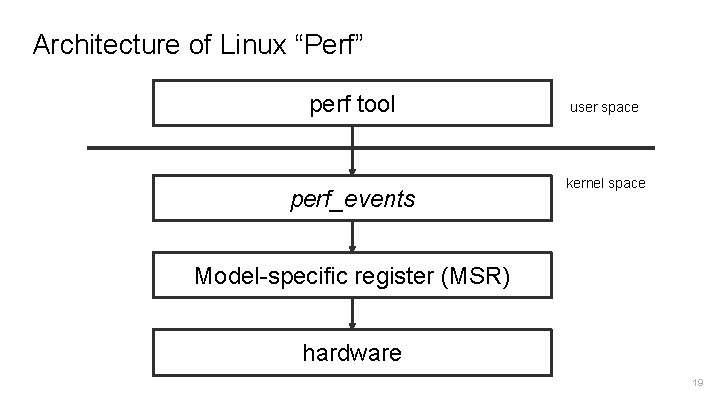

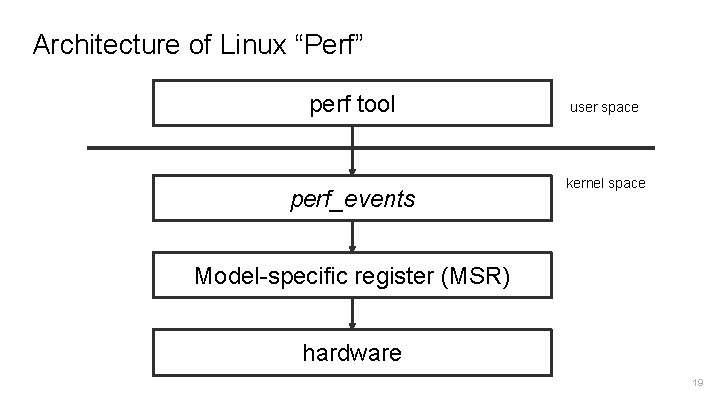

Architecture of Linux “perf”

- User space: perf tool
- Kernel space: perf_events
- Hardware: model-specific registers (MSR)

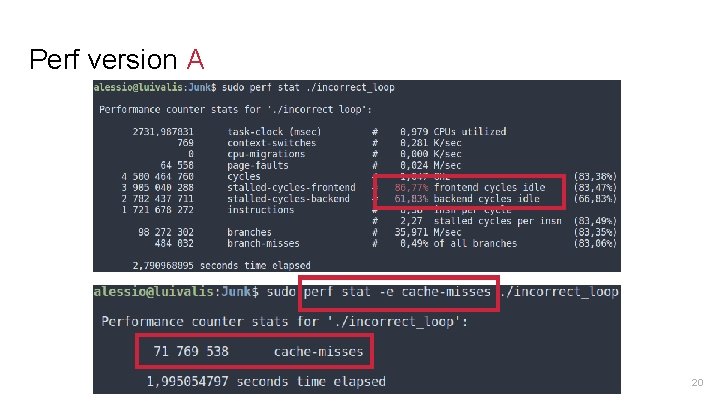

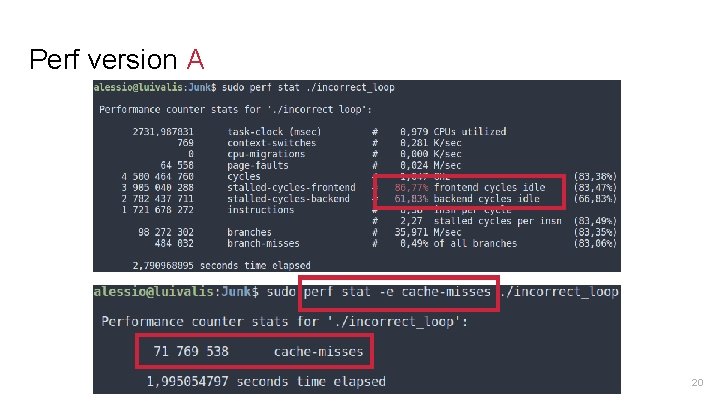

Perf version A

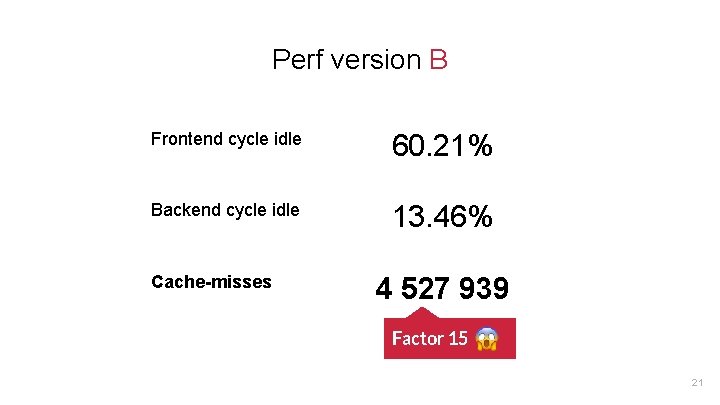

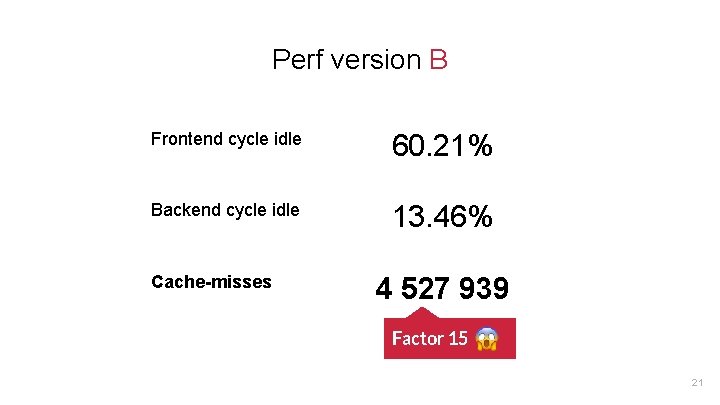

Perf version B

- Frontend cycles idle: 60.21%
- Backend cycles idle: 13.46%
- Cache-misses: 4 527 939
- Factor 15

Let’s talk about Data-Oriented Design

We want to help the processor by preparing our data to be processed in a more efficient way.

Memory access pattern

Prefer sequential access to random access, so that you benefit from data already prefetched into the cache.

NOTE: Remember the first example

Use packed, contiguous chunks of memory for data structures

Data locality

Keep data in the order in which you process it.
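A small sketch of these three rules, under the assumption that the work is a simple sum over a typed array. The function names (`sumInOrder`, `shuffled`, `packInOrder`) are hypothetical and only serve the illustration.

```javascript
// Sum `data` by visiting its elements in the order given by `order`.
function sumInOrder(data, order) {
  let sum = 0;
  for (let i = 0; i < order.length; i++) sum += data[order[i]];
  return sum;
}

// Fisher-Yates shuffle, used here only to build a random visiting order.
function shuffled(indices) {
  const out = indices.slice();
  for (let i = out.length - 1; i > 0; i--) {
    const j = Math.floor(Math.random() * (i + 1));
    const tmp = out[i];
    out[i] = out[j];
    out[j] = tmp;
  }
  return out;
}

// Copy `data` into a new contiguous array laid out in processing order,
// so that later passes over it are sequential.
function packInOrder(data, order) {
  const packed = new Float64Array(order.length);
  for (let i = 0; i < order.length; i++) packed[i] = data[order[i]];
  return packed;
}

const n = 1 << 16;
const data = Float64Array.from({ length: n }, (_, i) => i);
const sequential = Uint32Array.from({ length: n }, (_, i) => i);
const random = shuffled(sequential);

// Same elements, same result: only the memory access pattern differs.
console.log(sumInOrder(data, sequential), sumInOrder(data, random));

// After packing, the random processing order becomes a sequential walk.
const packed = packInOrder(data, random);
console.log(sumInOrder(packed, sequential));
```

Timing the sequential and random walks on a larger array is one way to observe the effect; how large the gap is depends on the machine and the runtime.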

Use algorithms that process a single task at a time. They are easier to profile with microbenchmarks.

“Efficiency through algorithms. Performance through data structures.” Chandler Carruth, CppCon 2014

Let’s talk about data structures

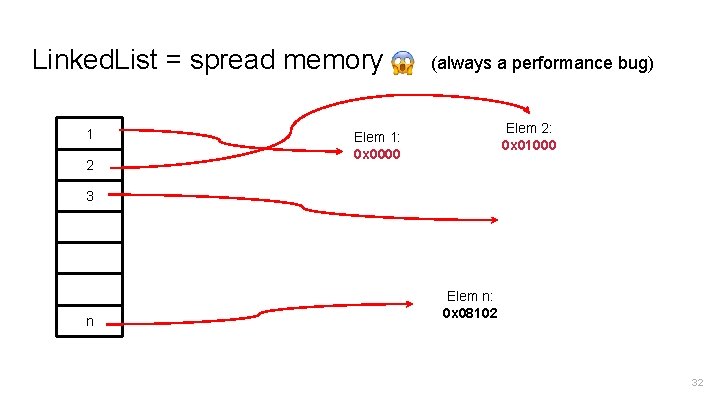

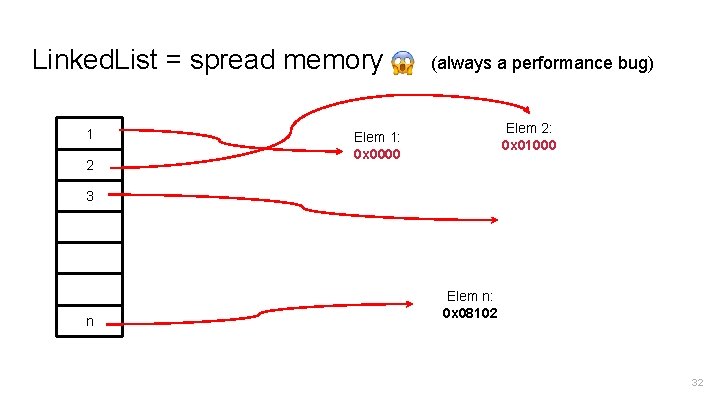

A linked list uses pointers to the next element. The pointer might point to memory that isn't in cache.

LinkedList = spread memory (always a performance bug)

Elem 1: 0x0000 → Elem 2: 0x01000 → Elem 3: 0x08000 → … → Elem n: 0x08102
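The contrast can be sketched as follows. The node shape (`value`, `next`) and the function names are assumptions for the example. Where a JavaScript engine places each node in memory is up to the engine, so this shows the access pattern and not a guaranteed layout.

```javascript
// Linked list: every node is a separate heap object reached through `next`.
function buildList(values) {
  let head = null;
  for (let i = values.length - 1; i >= 0; i--) {
    head = { value: values[i], next: head };
  }
  return head;
}

// Each step has to follow a pointer before the next value can be read.
function sumList(head) {
  let sum = 0;
  for (let node = head; node !== null; node = node.next) sum += node.value;
  return sum;
}

// Typed array: the values sit next to each other in one block of memory.
function sumArray(values) {
  let sum = 0;
  for (let i = 0; i < values.length; i++) sum += values[i];
  return sum;
}

const values = Float64Array.from({ length: 1000 }, (_, i) => i);
const list = buildList(values);
console.log(sumList(list), sumArray(values));
```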

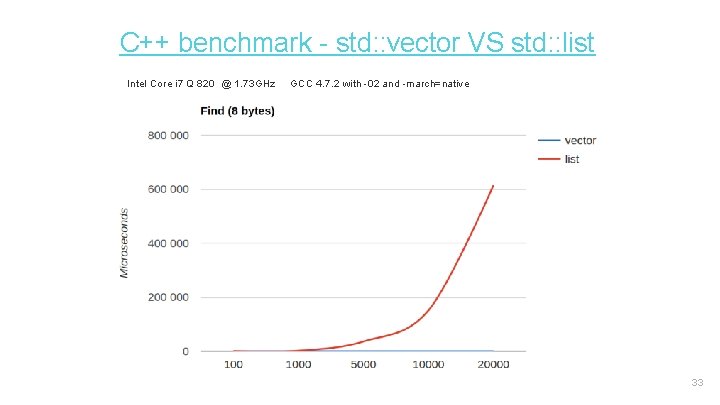

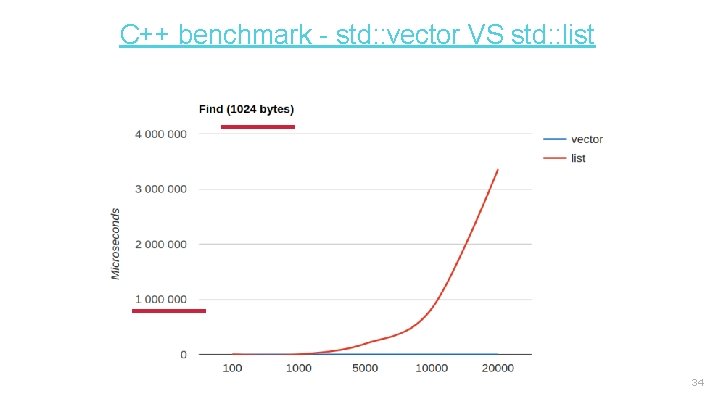

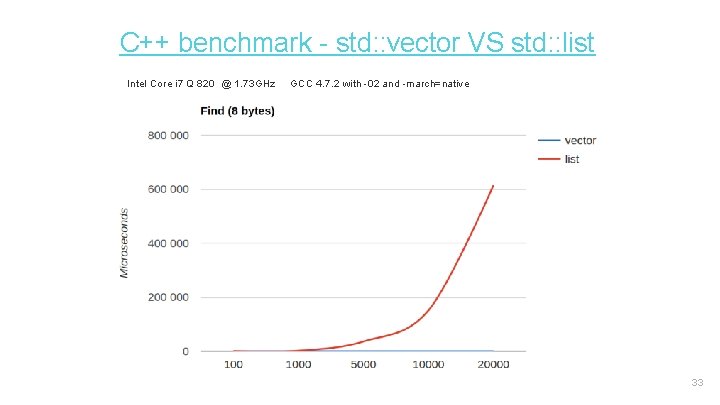

C++ benchmark - std::vector vs std::list

Intel Core i7 Q820 @ 1.73 GHz, GCC 4.7.2 with -O2 and -march=native

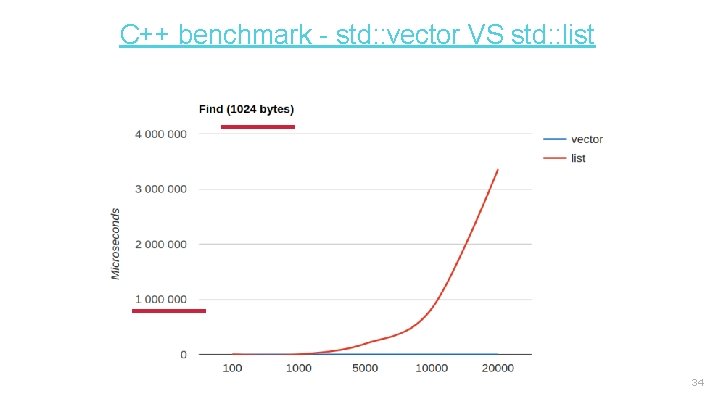

C++ benchmark - std::vector vs std::list

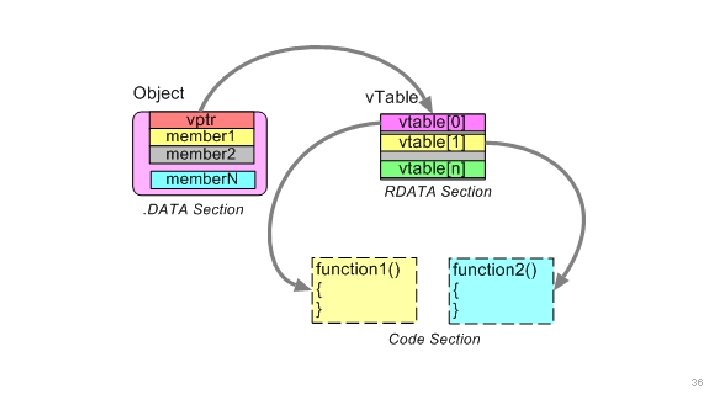

Generic data structures are slow when they rely on runtime polymorphism (dynamic dispatch).
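One way to picture the alternative is shown below, assuming two hypothetical shape types. The first function resolves `area()` per element at run time; the second keeps one homogeneous array per kind and runs one plain loop over each.

```javascript
class Circle {
  constructor(radius) { this.radius = radius; }
  area() { return Math.PI * this.radius * this.radius; }
}

class Square {
  constructor(side) { this.side = side; }
  area() { return this.side * this.side; }
}

// Generic container: the call target of `area()` depends on each element.
function totalAreaPolymorphic(shapes) {
  let total = 0;
  for (let i = 0; i < shapes.length; i++) total += shapes[i].area();
  return total;
}

// Data-oriented alternative: one contiguous array per kind of shape,
// one loop per kind, no per-element dispatch.
function totalAreaByKind(circleRadii, squareSides) {
  let total = 0;
  for (let i = 0; i < circleRadii.length; i++) {
    total += Math.PI * circleRadii[i] * circleRadii[i];
  }
  for (let i = 0; i < squareSides.length; i++) {
    total += squareSides[i] * squareSides[i];
  }
  return total;
}

const shapes = [new Circle(1), new Square(3), new Circle(2), new Square(4)];
const circleRadii = Float64Array.of(1, 2);
const squareSides = Float64Array.of(3, 4);
console.log(totalAreaPolymorphic(shapes), totalAreaByKind(circleRadii, squareSides));
```

The two totals can differ in the last floating-point digits because the additions happen in a different order.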

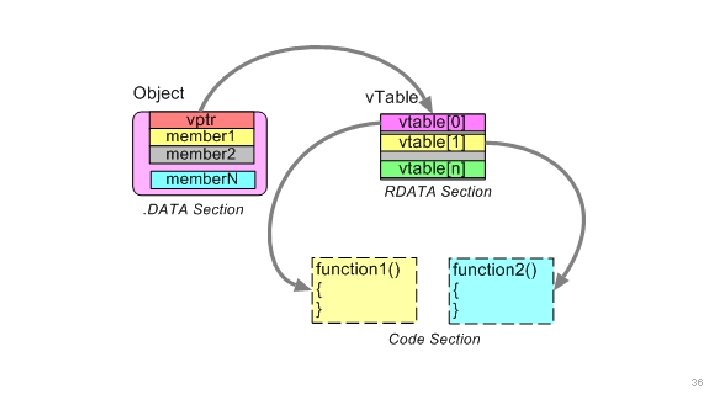


Use Plain Old Data

- Simple structs that hold all of their data themselves.
- Separate the logic from your data.
- Try to avoid pointers, virtual functions, inheritance, ...

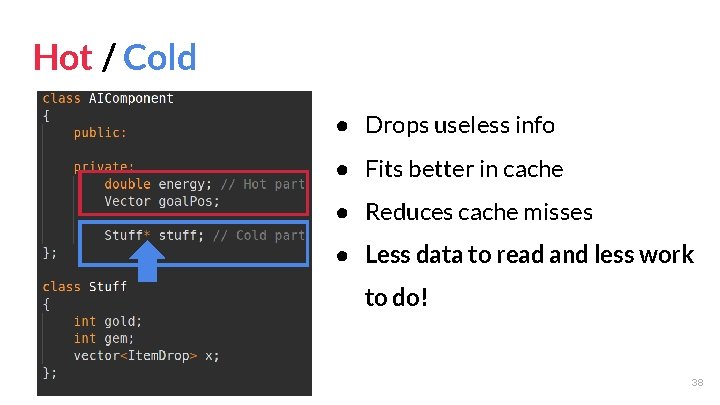

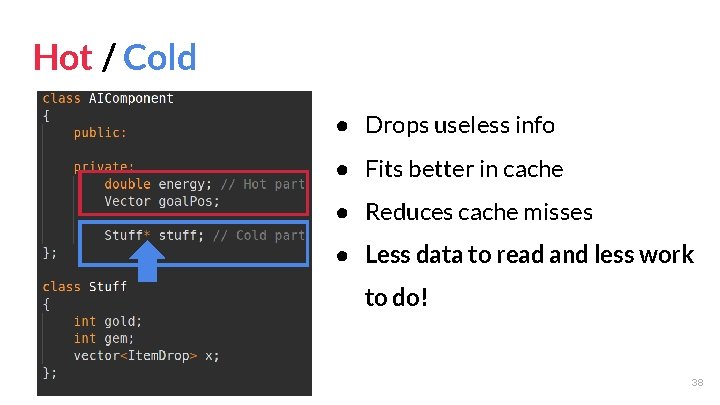

Hot / Cold splitting

- Drops useless info
- Fits better in cache
- Reduces cache misses
- Less data to read and less work to do!
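A minimal sketch of the split, assuming a hypothetical entity whose position and velocity are read on every update (hot) while a descriptive record is read only occasionally (cold). All names are illustrative.

```javascript
function createStore(capacity) {
  return {
    count: 0,
    // Hot data: touched by every update, packed in typed arrays.
    x: new Float32Array(capacity),
    vx: new Float32Array(capacity),
    // Cold data: rarely read, kept out of the arrays the hot loop walks.
    cold: new Array(capacity),
  };
}

// Returns the index that links the hot and cold parts of one entity.
function addEntity(store, x, vx, coldInfo) {
  const id = store.count++;
  store.x[id] = x;
  store.vx[id] = vx;
  store.cold[id] = coldInfo;
  return id;
}

// The hot loop reads and writes only the hot arrays.
function update(store, dt) {
  for (let i = 0; i < store.count; i++) {
    store.x[i] += store.vx[i] * dt;
  }
}

const store = createStore(1024);
const first = addEntity(store, 0, 2, { name: "first", createdAt: "n/a" });
update(store, 0.5);
console.log(store.x[first], store.cold[first].name);
```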

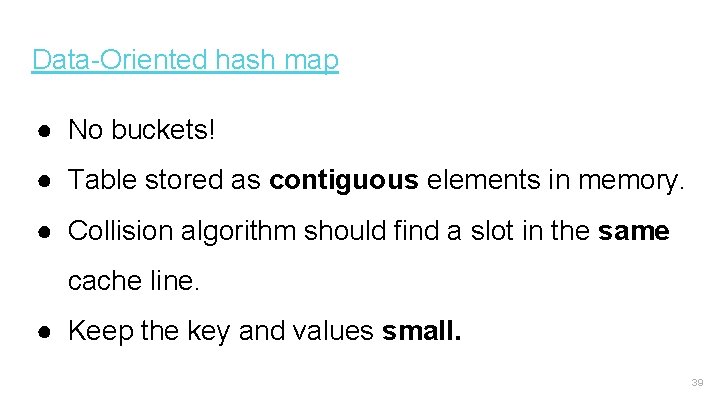

Data-Oriented hash map

- No buckets!
- Table stored as contiguous elements in memory.
- Collision algorithm should find a slot in the same cache line.
- Keep the keys and values small.

https://github.com/sozu-proxy/sozu
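These points can be sketched as an open-addressing table with linear probing. This is one possible reading of the slide, not a reference implementation. The class name `FlatMap`, the restriction to non-negative 32-bit integer keys, the hash constant, and the lack of deletion are all assumptions made to keep the example short.

```javascript
const EMPTY = -1; // assumption: keys are non-negative 32-bit integers

class FlatMap {
  // `capacity` must be a power of two so the hash can be masked.
  constructor(capacity) {
    this.mask = capacity - 1;
    this.size = 0;
    // No buckets: slot i keeps its key at 2*i and its value at 2*i + 1,
    // all in one contiguous Int32Array.
    this.slots = new Int32Array(capacity * 2).fill(EMPTY);
  }

  // Multiplicative hash, then linear probing: on a collision the next
  // candidate is the neighbouring slot, which is usually in the same cache line.
  indexOf(key) {
    let i = (Math.imul(key, 0x9e3779b1) >>> 0) & this.mask;
    while (this.slots[2 * i] !== EMPTY && this.slots[2 * i] !== key) {
      i = (i + 1) & this.mask;
    }
    return i;
  }

  set(key, value) {
    const i = this.indexOf(key);
    if (this.slots[2 * i] === EMPTY) {
      // One slot always stays empty so that probing terminates.
      if (this.size === this.mask) throw new Error("FlatMap is full");
      this.slots[2 * i] = key;
      this.size++;
    }
    this.slots[2 * i + 1] = value;
  }

  get(key) {
    const i = this.indexOf(key);
    return this.slots[2 * i] === key ? this.slots[2 * i + 1] : undefined;
  }
}

const map = new FlatMap(8);
map.set(1, 10);
map.set(9, 90);
console.log(map.get(1), map.get(9), map.get(5));
```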

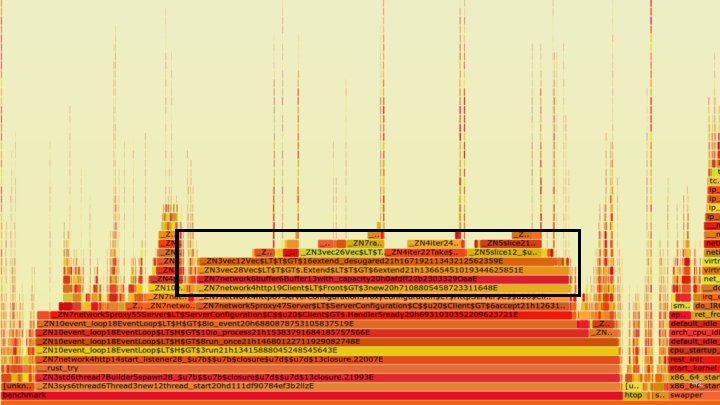

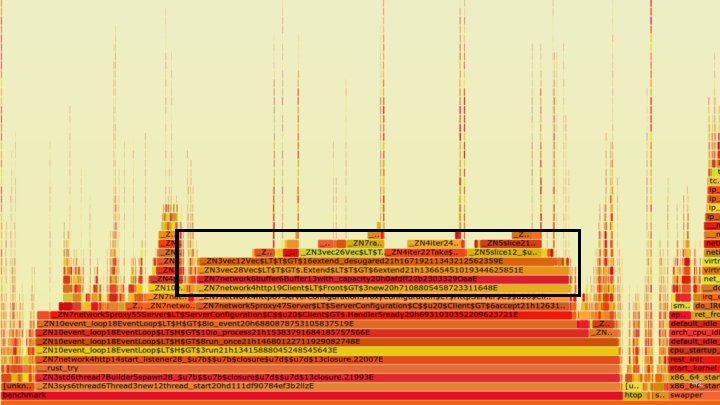

At the beginning, for each request we allocated a new vector.

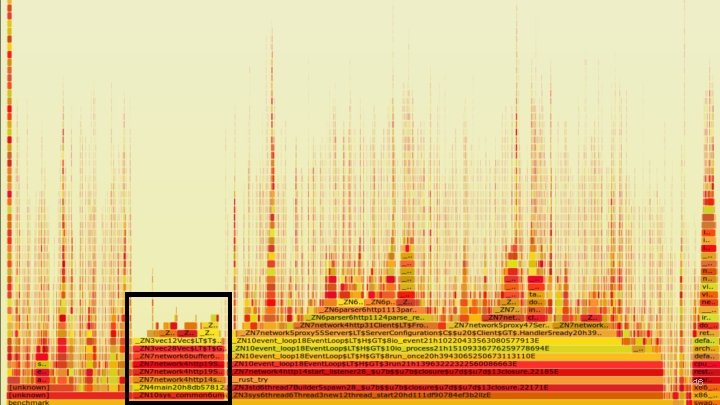

A lot of time was spent allocating memory instead of processing the requests.

So we switched to a SLAB

Pre-allocated storage for a uniform data type.
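The idea can be sketched in a few lines of JavaScript. This is not sozu's code; the class name `Slab`, its methods, and the fixed slot size are assumptions for the illustration, and there is no protection against removing the same slot twice.

```javascript
class Slab {
  constructor(capacity, slotSize) {
    this.slotSize = slotSize;
    // All storage is allocated once, up front.
    this.storage = new Uint8Array(capacity * slotSize);
    // Stack of free slot indices; slot 0 is on top at the start.
    this.free = new Uint32Array(capacity);
    for (let i = 0; i < capacity; i++) this.free[i] = capacity - 1 - i;
    this.freeCount = capacity;
  }

  // Reserve a slot and return its index, or -1 when the slab is full.
  insert() {
    if (this.freeCount === 0) return -1;
    return this.free[--this.freeCount];
  }

  // View on the slot's bytes; it shares the slab's memory, nothing is copied.
  buffer(slot) {
    return this.storage.subarray(slot * this.slotSize, (slot + 1) * this.slotSize);
  }

  // Give the slot back so a later insert() can reuse it.
  remove(slot) {
    this.free[this.freeCount++] = slot;
  }
}

const slab = new Slab(2, 16);
const request = slab.insert();
slab.buffer(request)[0] = 42; // per-request buffer without a new allocation
slab.remove(request);
```

Handling a request then takes a slot, writes into its buffer, and returns the slot when done, so the per-request vector allocation disappears.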

BTW, a SLAB is possible in Java with sun.misc.Unsafe or ByteBuffer: https://github.com/RichardWarburton/slab

Workers use CPU affinity

CPU affinity binds a process (or thread) to a CPU core: pthread_setaffinity_np

That reduces the cache miss rate, because the worker uses the same L1d/L2 all the time.

Yeah, but I use a language with a runtime system... Can I use Data-Oriented Design?

Data-Oriented Design with JavaScript, C++, C, Rust

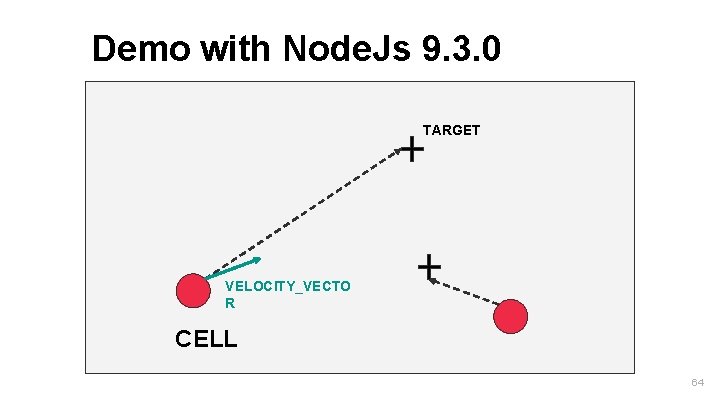

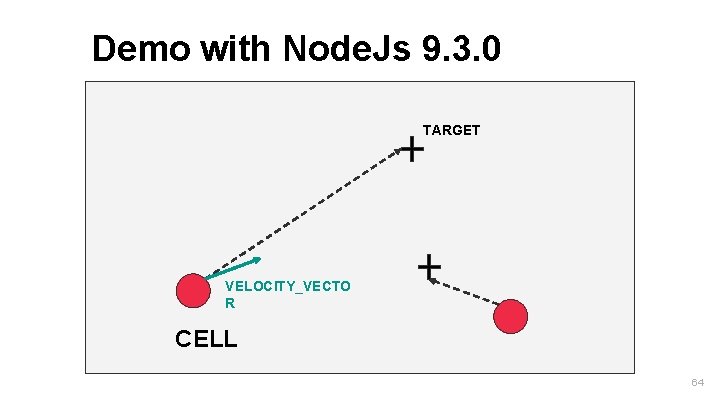

Demo with Node.js 9.3.0

Diagram labels: CELL, VELOCITY_VECTOR, TARGET

Let’s do an OOP version

- Use a JS object to represent an entity.
- Attach an update method to its prototype.
- Store all the different entities in the same JS array.

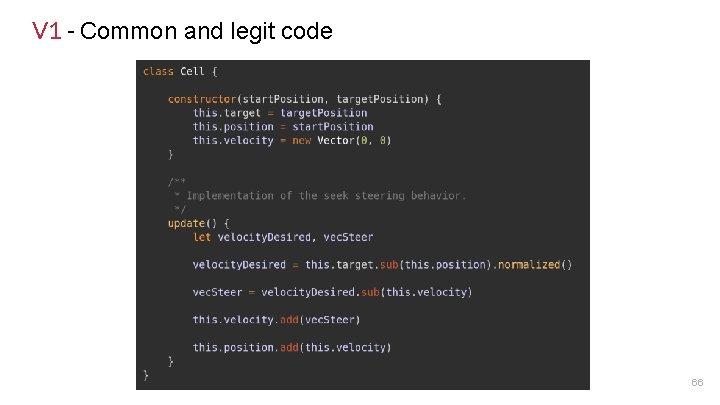

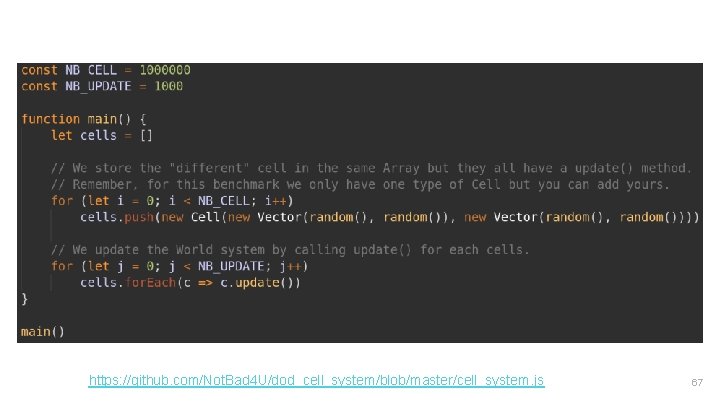

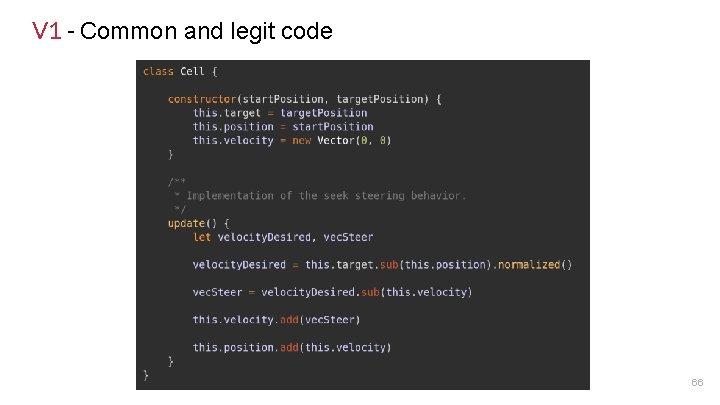

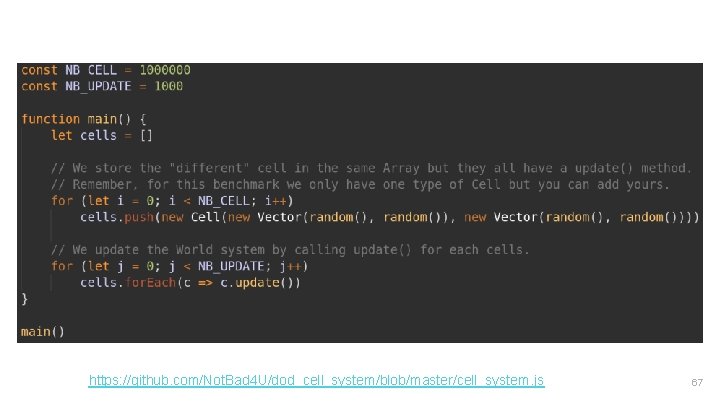

V1 - Common and legit code

https://github.com/NotBad4U/dod_cell_system/blob/master/cell_system.js
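The linked file is the reference for this version. As a rough sketch of the general shape only, with hypothetical entity types and fields that are not taken from `cell_system.js`, an OOP layout of this kind looks like this:

```javascript
// Hypothetical entity: one JS object per cell.
function Cell(x, y, vx, vy) {
  this.x = x;
  this.y = y;
  this.vx = vx;
  this.vy = vy;
}

// The update logic lives on the prototype.
Cell.prototype.update = function (dt) {
  this.x += this.vx * dt;
  this.y += this.vy * dt;
};

// A second, hypothetical kind of entity stored in the same array.
function Wall(x, y) {
  this.x = x;
  this.y = y;
}

Wall.prototype.update = function () {
  // Static entity: nothing to do.
};

// Every entity is a separate allocation made with `new`.
function createEntities(count) {
  const entities = [];
  for (let i = 0; i < count; i++) {
    entities.push(i % 2 === 0 ? new Cell(i, i, 1, 2) : new Wall(i, i));
  }
  return entities;
}

// One loop over mixed entities: each call is resolved through the prototype.
function updateAll(entities, dt) {
  for (let i = 0; i < entities.length; i++) {
    entities[i].update(dt);
  }
}

const entities = createEntities(1000);
updateAll(entities, 0.016);
```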

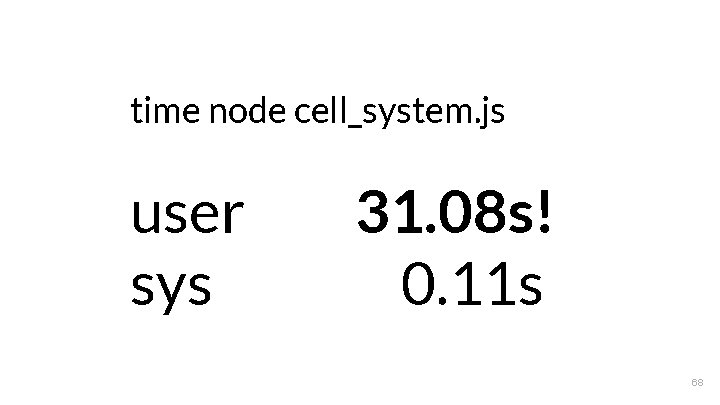

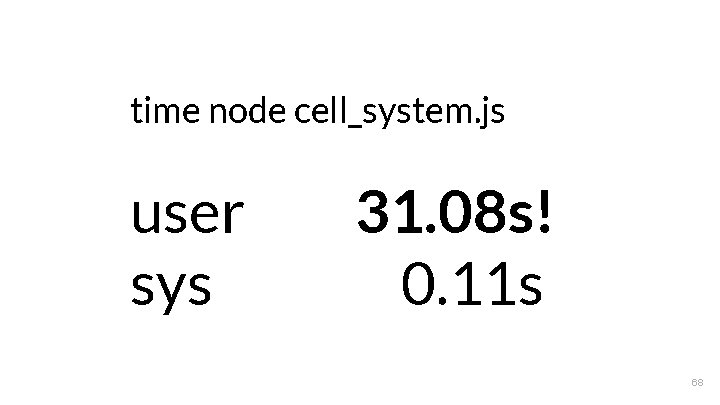

`time node cell_system.js`

- user: 31.08 s!
- sys: 0.11 s

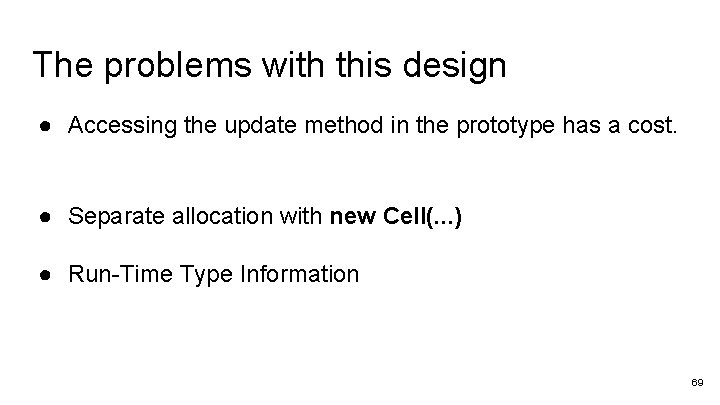

The problems with this design

- Accessing the update method in the prototype has a cost.
- Each entity is a separate allocation made with new Cell(...).
- Run-Time Type Information

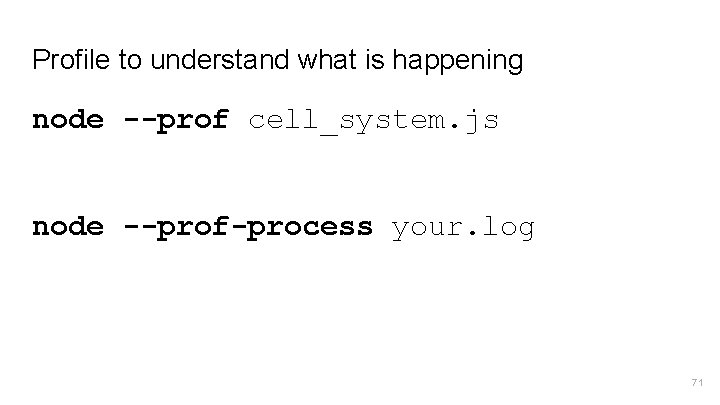

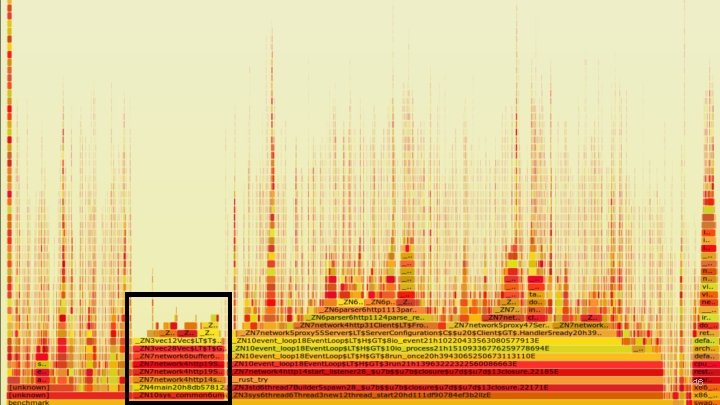

Profiling!

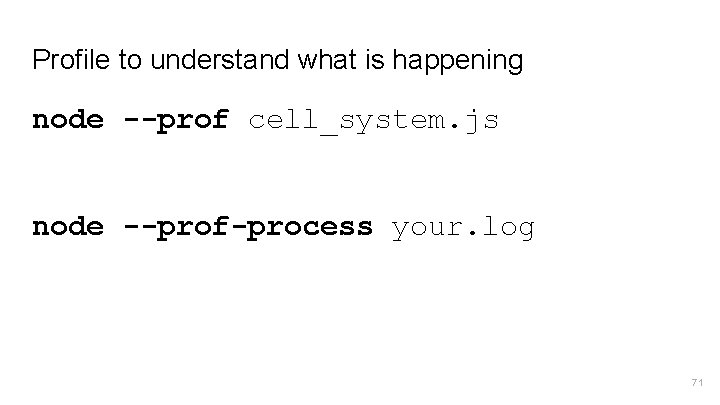

Profile to understand what is happening

- `node --prof cell_system.js`
- `node --prof-process your.log`

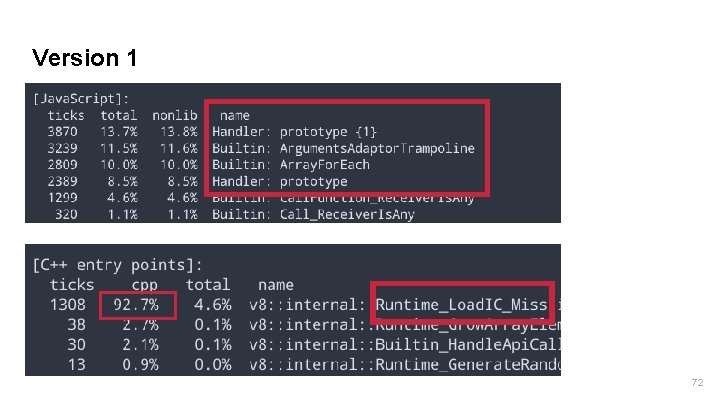

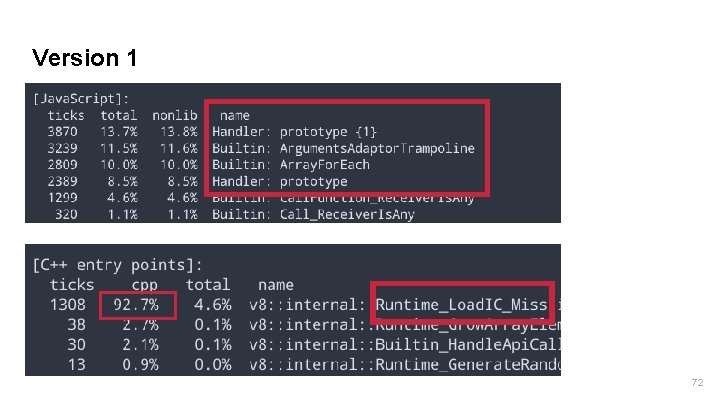

Version 1

Let’s try to optimize this

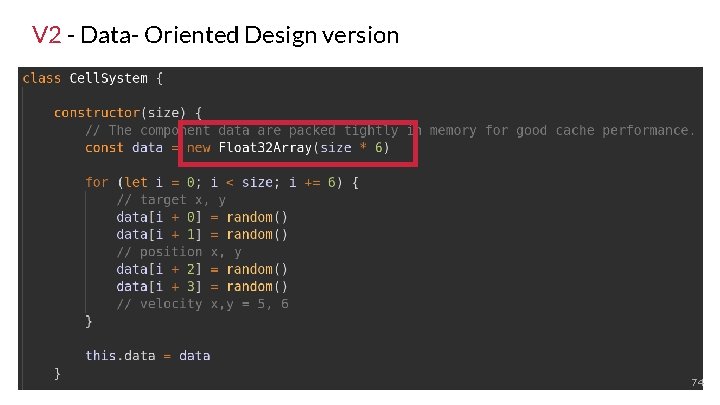

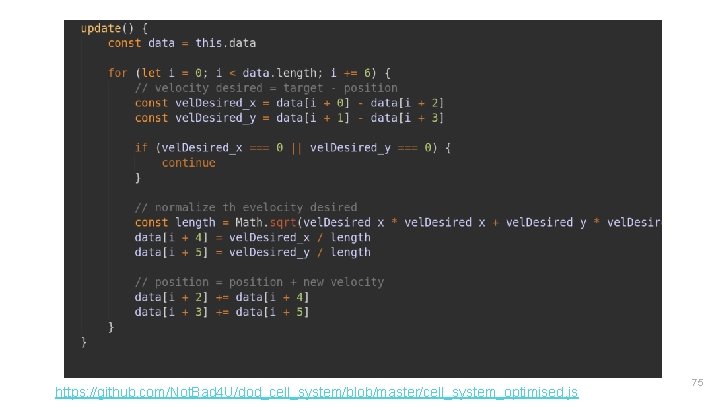

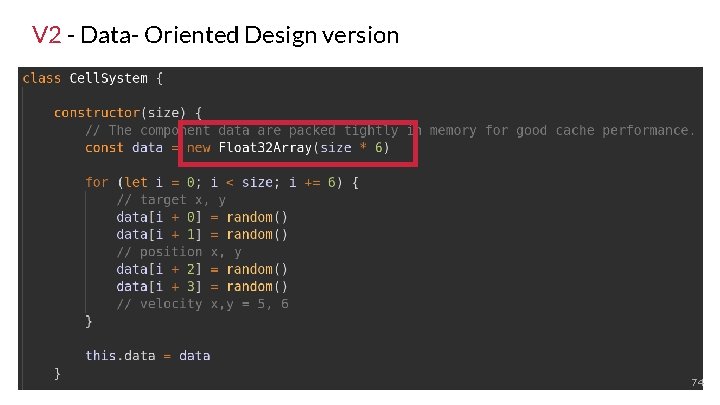

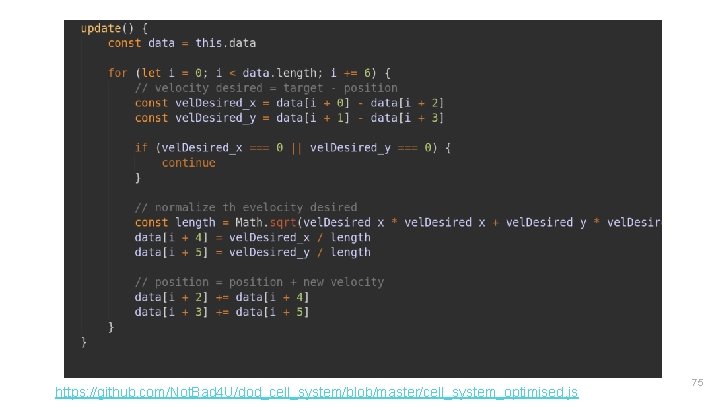

V2 - Data-Oriented Design version

https://github.com/NotBad4U/dod_cell_system/blob/master/cell_system_optimised.js
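The linked file is the reference for this version. A minimal sketch of what a data-oriented layout can look like in JavaScript is given here, assuming one typed array per field (structure of arrays); the field and function names are hypothetical and not taken from `cell_system_optimised.js`.

```javascript
// One typed array per field: all x values are contiguous, all y values, etc.
function createCells(count) {
  return {
    count: count,
    x: new Float32Array(count),
    y: new Float32Array(count),
    vx: new Float32Array(count),
    vy: new Float32Array(count),
  };
}

// Plain function over plain data: no per-entity object, no prototype lookup.
function updateCells(cells, dt) {
  const { count, x, y, vx, vy } = cells;
  for (let i = 0; i < count; i++) {
    x[i] += vx[i] * dt;
    y[i] += vy[i] * dt;
  }
}

const cells = createCells(1000);
cells.vx.fill(1);
cells.vy.fill(2);
updateCells(cells, 0.5);
```

All cells are allocated in four blocks up front, and the update walks each block sequentially.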

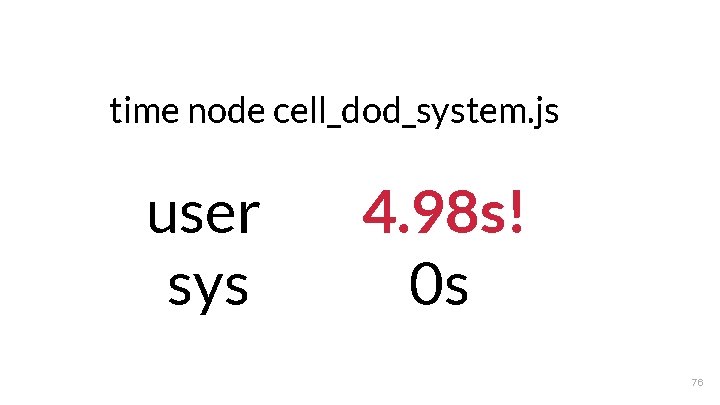

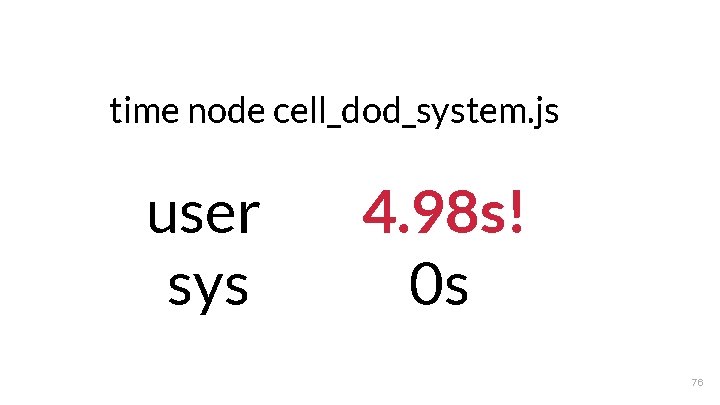

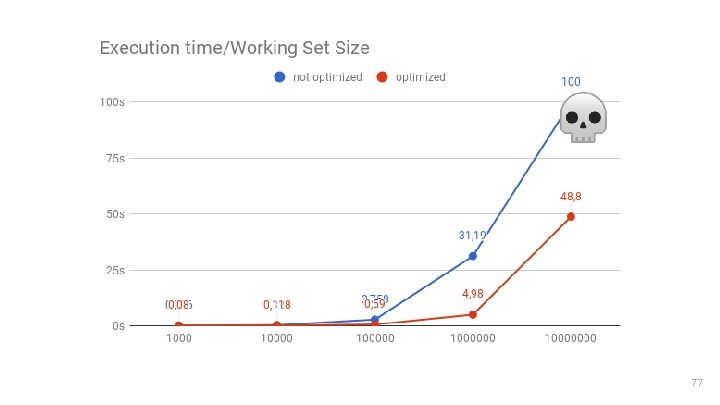

`time node cell_dod_system.js`

- user: 4.98 s!
- sys: 0 s

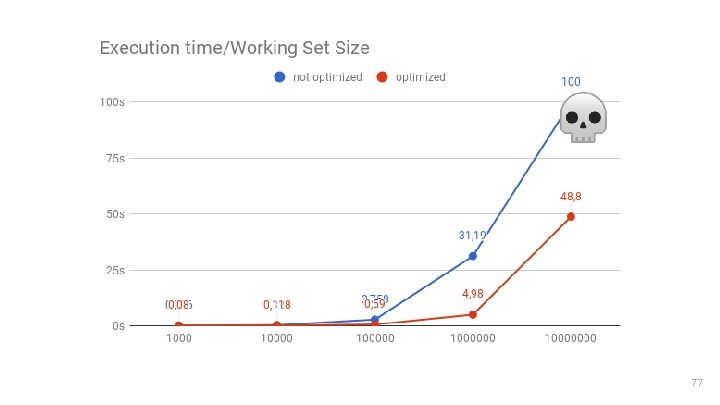

NOTE: Lower is better

Profiling (to be sure)!

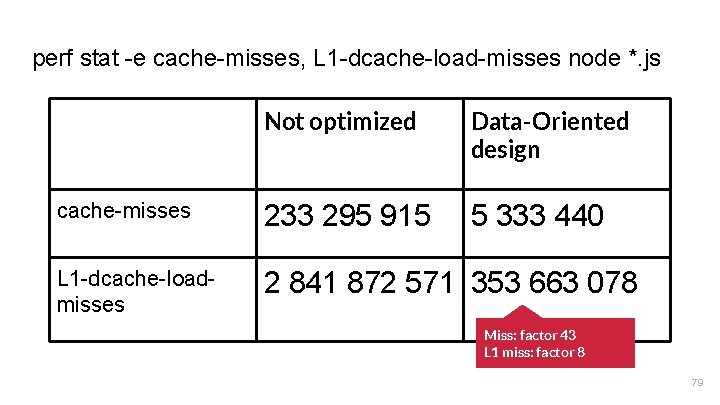

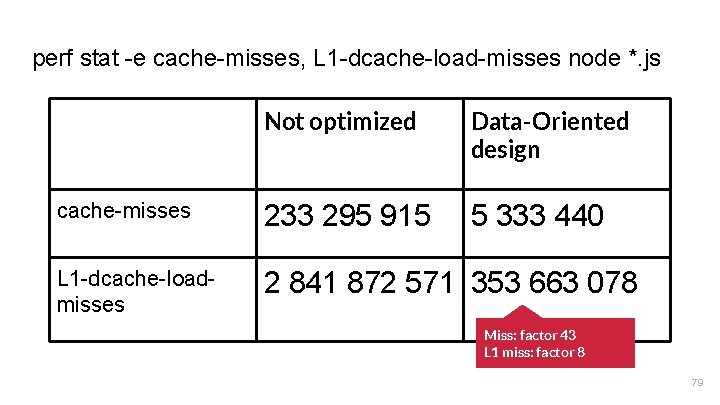

`perf stat -e cache-misses,L1-dcache-load-misses node *.js`

| Event | Not optimized | Data-Oriented Design | Factor |
| --- | --- | --- | --- |
| cache-misses | 233 295 915 | 5 333 440 | 43 |
| L1-dcache-load-misses | 2 841 872 571 | 353 663 078 | 8 |

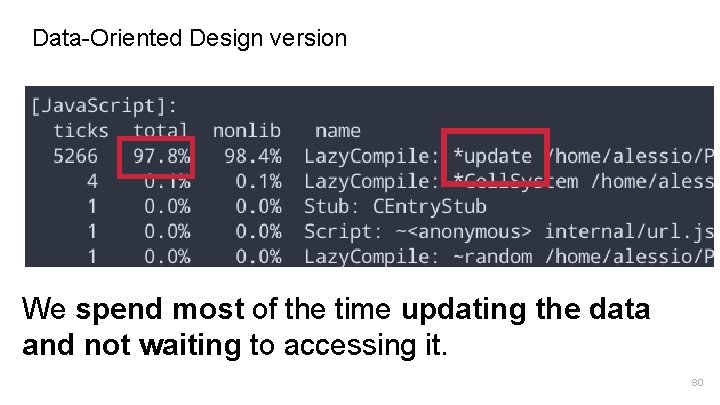

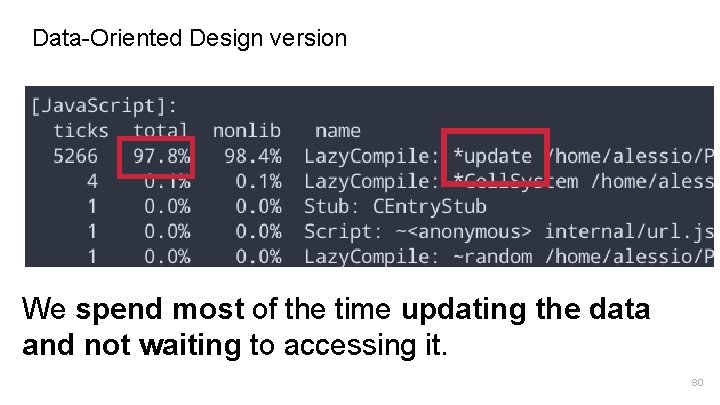

Data-Oriented Design version

We spend most of the time updating the data, not waiting to access it.

- Profile your code often.
- Use contiguous, dense, cache-oriented data structures.
- Know how your languages and runtime systems work.

Furthermore, we have SIMD-friendly and parallel computing data layouts.

Go further

- Impact of virtualization
- Optimizing page table access (TLB)
- ...

Now you know where to look and profile

For more information

- What Every Programmer Should Know About Memory, by Ulrich Drepper
- http://www.dataorienteddesign.com/site.php
- Data Oriented Design Resources
- Data-Oriented Design and C++, by Mike Acton at CppCon 2014
- C++ in Huge AAA Games, by Nicolas Fleury at CppCon 2014

15€ Clever Cloud coupon: devfestToulouse2018

https://docs.google.com/presentation/d/14IBNbjYnCYrNdMq6hnYdUc2GLbrhS3godn6Nv93fmnA/edit?usp=sharing