

- Slides: 26

Efficient storage! Efficient CPU use! Implementation effort?

Efficient implementations of alignment-based algorithms

B. F. van Dongen

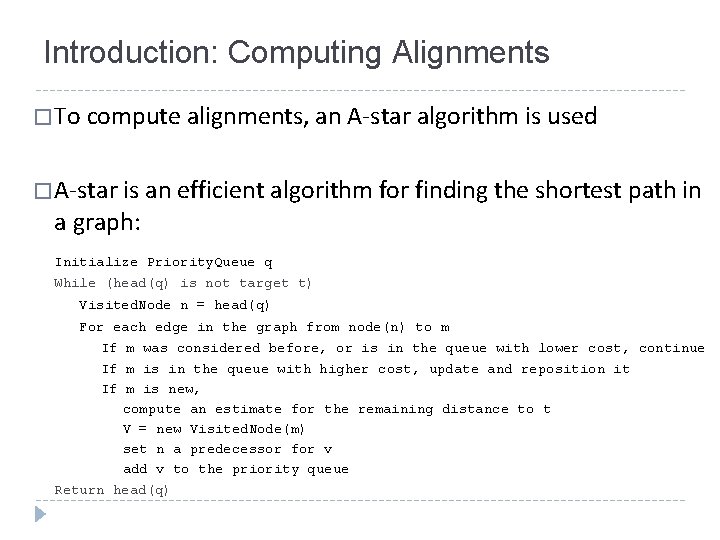

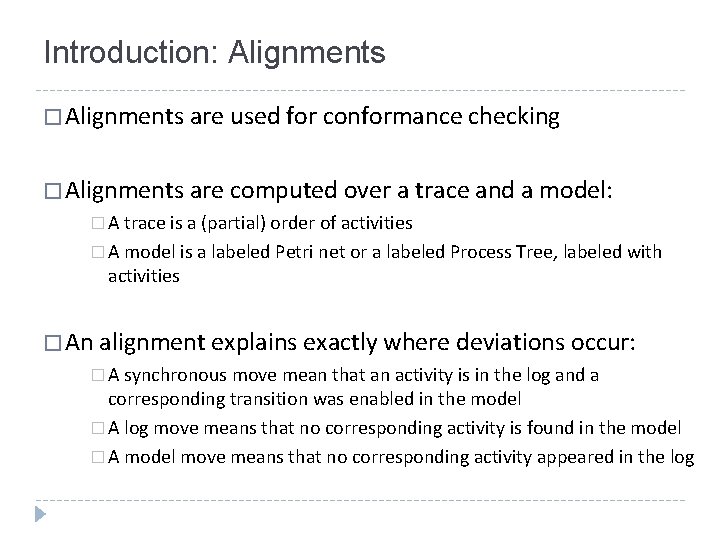

Introduction: Alignments

- Alignments are used for conformance checking
- Alignments are computed over a trace and a model:
  - A trace is a (partial) order of activities
  - A model is a labeled Petri net or a labeled process tree, labeled with activities
- An alignment explains exactly where deviations occur:
  - A synchronous move means that an activity is in the log and a corresponding transition was enabled in the model
  - A log move means that an activity in the log has no corresponding activity in the model
  - A model move means that the model makes a move for which no corresponding activity appeared in the log
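
As a rough illustration, the three move types could be modeled as below. This is a minimal sketch that assumes the commonly used standard cost function (synchronous moves cost 0, log and model moves cost 1) and ignores invisible model transitions; `MoveType`, `Move` and `Alignments.cost` are hypothetical names, not taken from any library.

```java
import java.util.List;

/** The three kinds of moves an alignment can contain. */
enum MoveType { SYNCHRONOUS, LOG, MODEL }

/** One step of an alignment: a move type plus the activity involved (hypothetical shape). */
record Move(MoveType type, String activity) {}

final class Alignments {
    private Alignments() {}

    /** Assumed standard cost: log and model moves cost 1, synchronous moves cost 0. */
    static int cost(List<Move> alignment) {
        int total = 0;
        for (Move m : alignment) {
            if (m.type() != MoveType.SYNCHRONOUS) {
                total++; // every deviation adds one to the cost
            }
        }
        return total;
    }
}
```

For the trace <a, b, c> and a model run a, d, c, the alignment sync(a), log(b), model(d), sync(c) has cost 2 under this assumption.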

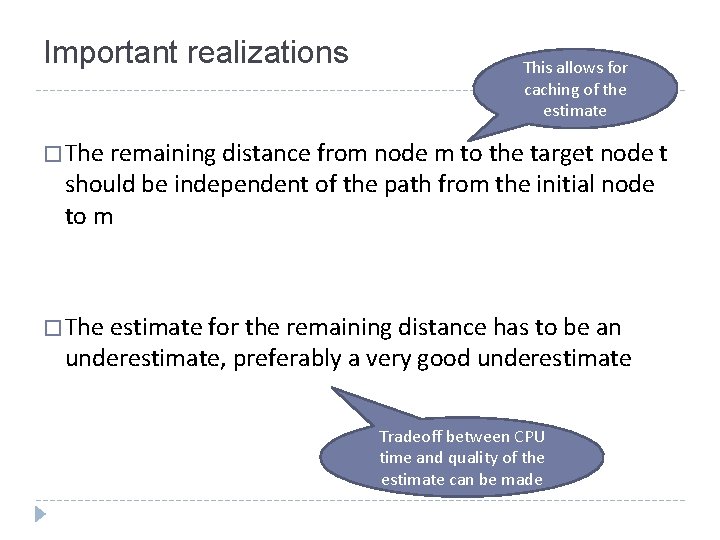

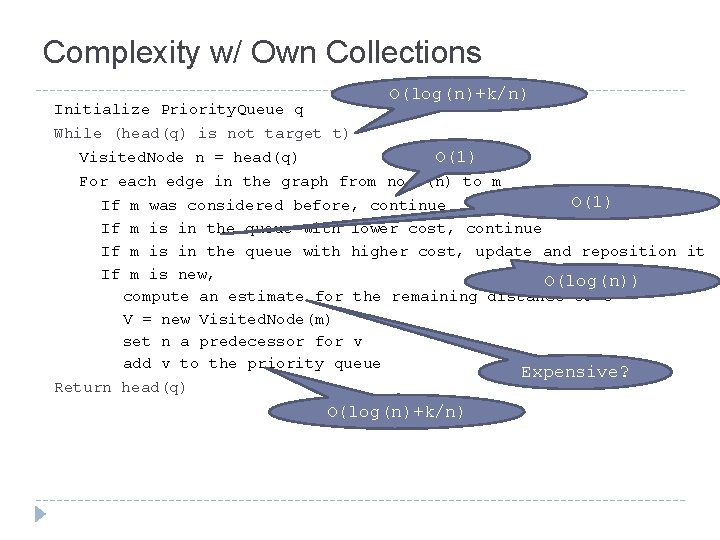

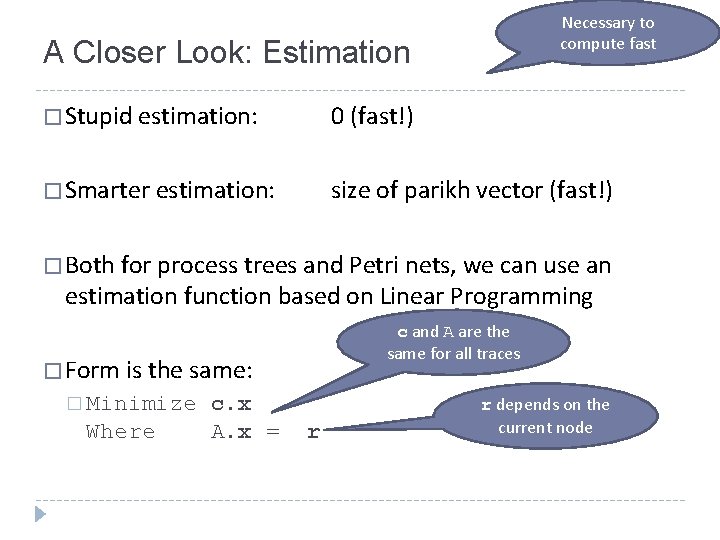

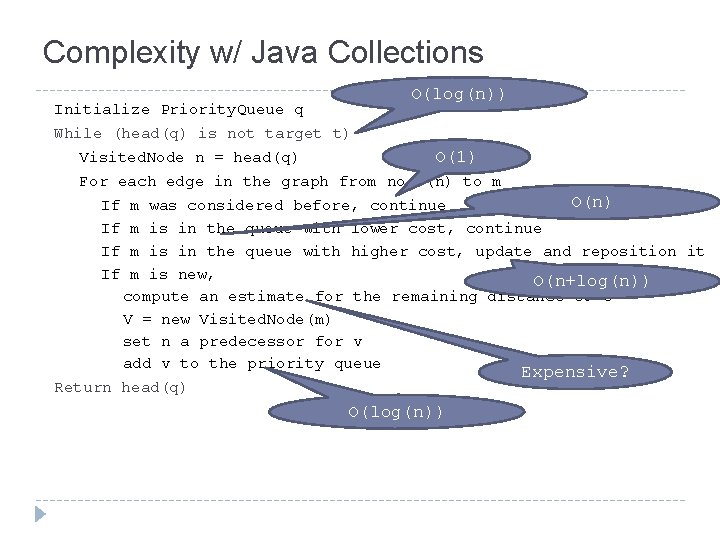

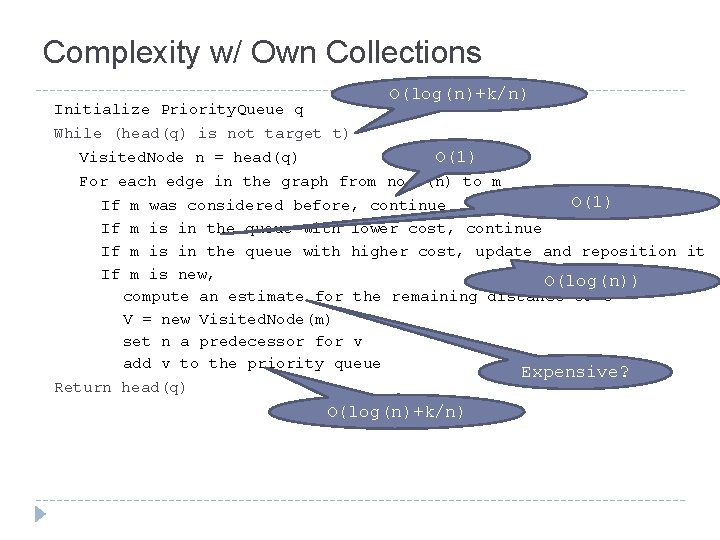

Introduction: Computing Alignments

- To compute alignments, the A-star algorithm is used
- A-star is an efficient algorithm for finding a shortest path in a graph, guided by an estimate of the remaining distance to the target:
  - Initialize PriorityQueue q with a visit of the initial node
  - While head(q) is not the target t:
    - VisitedNode n = head(q), removed from q
    - Mark node(n) as considered
    - For each edge in the graph from node(n) to m:
      - If m was considered before, or is in the queue with lower cost, continue
      - If m is in the queue with higher cost, update and reposition it
      - If m is new:
        - compute an estimate for the remaining distance to t
        - v = new VisitedNode(m)
        - set n as the predecessor of v
        - add v to the priority queue
  - Return head(q)
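
The loop above can be sketched in Java as follows. This is an illustration under stated assumptions, not the implementation discussed in these slides: the `Graph` interface, `Edge` record and `AStar` class are hypothetical, it uses `java.util.PriorityQueue` (so repositioning is a remove followed by an add), and it tests for the target when a visit is polled. With a set of considered nodes like this, the result is guaranteed optimal when the estimate is consistent, which is slightly stronger than being an underestimate.

```java
import java.util.*;
import java.util.function.Predicate;

/** A weighted edge to node {@code to}. */
record Edge<N>(N to, int cost) {}

/** Hypothetical graph abstraction: outgoing edges plus an estimate of the remaining distance. */
interface Graph<N> {
    List<Edge<N>> edges(N from);

    int estimate(N node); // must never overestimate the remaining distance to a target
}

final class AStar {
    private AStar() {}

    /** A visit: the node, its predecessor visit, the distance g so far and f = g + estimate. */
    static final class Visit<N> {
        final N node;
        final Visit<N> predecessor;
        final int g;
        final int f;

        Visit(N node, Visit<N> predecessor, int g, int h) {
            this.node = node;
            this.predecessor = predecessor;
            this.g = g;
            this.f = g + h;
        }
    }

    /** Returns the visit of the first target node taken from the queue, or null if none is reachable. */
    static <N> Visit<N> search(Graph<N> graph, N start, Predicate<N> isTarget) {
        PriorityQueue<Visit<N>> queue =
                new PriorityQueue<Visit<N>>(Comparator.comparingInt((Visit<N> v) -> v.f));
        Map<N, Visit<N>> queued = new HashMap<>(); // node -> its visit currently in the queue
        Set<N> considered = new HashSet<>();       // nodes already taken from the queue
        Visit<N> first = new Visit<>(start, null, 0, graph.estimate(start));
        queue.add(first);
        queued.put(start, first);
        while (!queue.isEmpty()) {
            Visit<N> n = queue.poll();             // remove the head: O(log n)
            queued.remove(n.node);
            if (isTarget.test(n.node)) return n;
            considered.add(n.node);
            for (Edge<N> e : graph.edges(n.node)) {
                N m = e.to();
                if (considered.contains(m)) continue;
                int g = n.g + e.cost();
                Visit<N> old = queued.get(m);
                if (old != null && old.g <= g) continue;                 // queued with lower cost
                int h = old != null ? old.f - old.g : graph.estimate(m); // reuse the estimate
                Visit<N> v = new Visit<>(m, n, g, h);
                if (old != null) queue.remove(old);                      // reposition: O(n) remove
                queue.add(v);
                queued.put(m, v);
            }
        }
        return null;
    }
}
```

Following the `predecessor` links of the returned visit yields the path, and hence the alignment.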

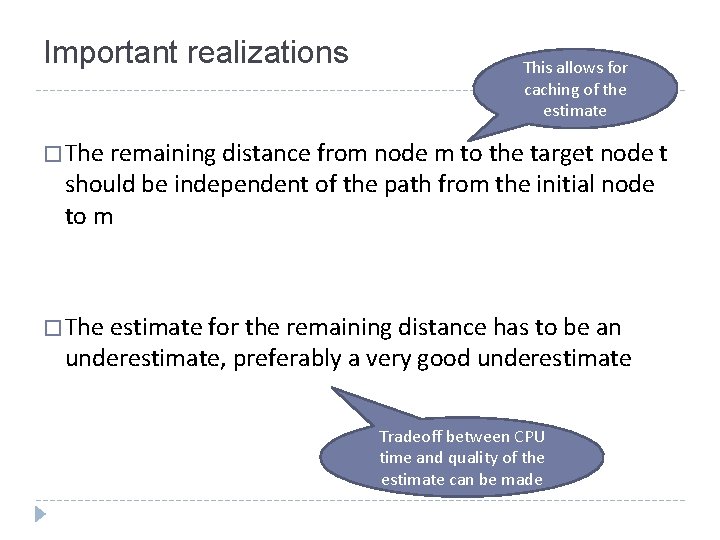

Important realizations

- The remaining distance from node m to the target node t should be independent of the path from the initial node to m. This allows the estimate to be cached.
- The estimate for the remaining distance has to be an underestimate, preferably a very good one. A trade-off can be made between CPU time and the quality of the estimate.
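
Because the estimate depends only on the node and not on the path to it, it can be memoized per node. A minimal sketch, assuming nodes are encoded as byte arrays and that `estimator` stands for some (possibly expensive) estimation function; `EstimateCache` is a hypothetical name.

```java
import java.nio.ByteBuffer;
import java.util.HashMap;
import java.util.Map;
import java.util.function.ToIntFunction;

/** Caches estimates per node; valid only because the estimate does not depend on the path taken. */
final class EstimateCache {
    private final Map<ByteBuffer, Integer> cache = new HashMap<>();
    private final ToIntFunction<byte[]> estimator; // hypothetical (possibly expensive) estimator
    int computations = 0;                           // how often the estimator actually ran

    EstimateCache(ToIntFunction<byte[]> estimator) {
        this.estimator = estimator;
    }

    int estimate(byte[] node) {
        // ByteBuffer.wrap gives content-based equals/hashCode, so equal byte arrays share an entry.
        // The array must not be modified after it has been used as a key.
        return cache.computeIfAbsent(ByteBuffer.wrap(node), key -> {
            computations++;
            return estimator.applyAsInt(node);
        });
    }
}
```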

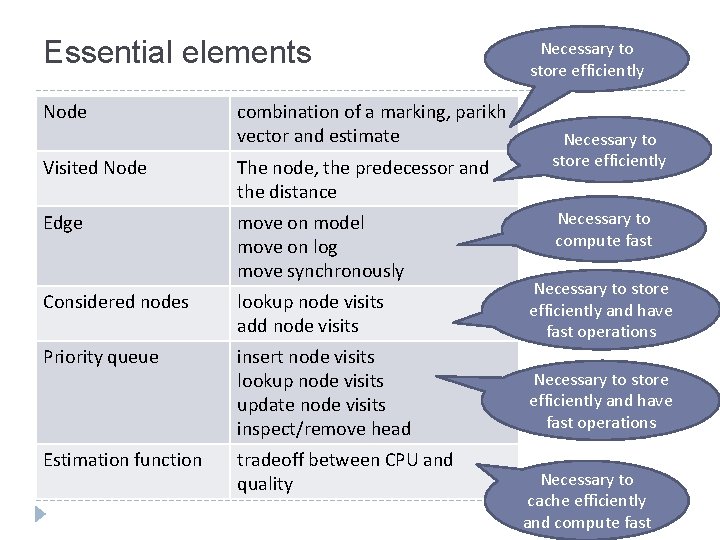

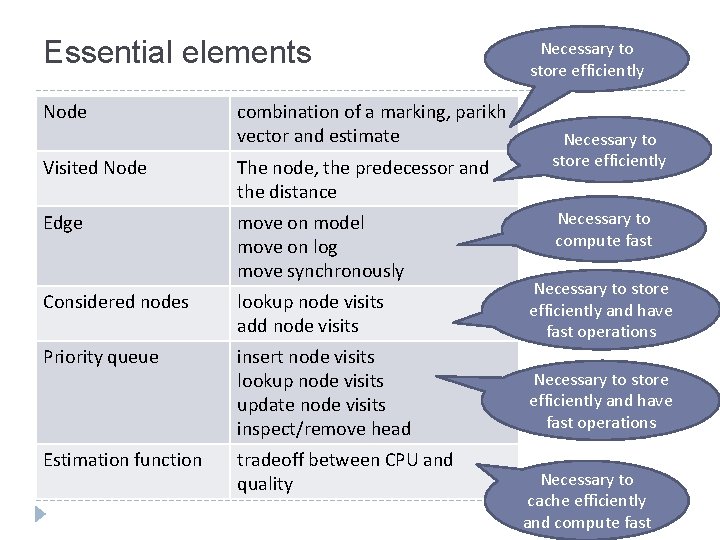

Essential elements

| Element | Contents / operations | Requirement |
|---|---|---|
| Node | Combination of a marking, a Parikh vector and an estimate | Necessary to store efficiently |
| Visited node | The node, the predecessor and the distance | Necessary to store efficiently |
| Edge | Move on model, move on log, move synchronously | Necessary to compute fast |
| Considered nodes | Look up node visits, add node visits | Necessary to store efficiently and have fast operations |
| Priority queue | Insert, look up and update node visits; inspect/remove the head | Necessary to store efficiently and have fast operations |
| Estimation function | Trade-off between CPU time and quality | Necessary to cache efficiently and compute fast |

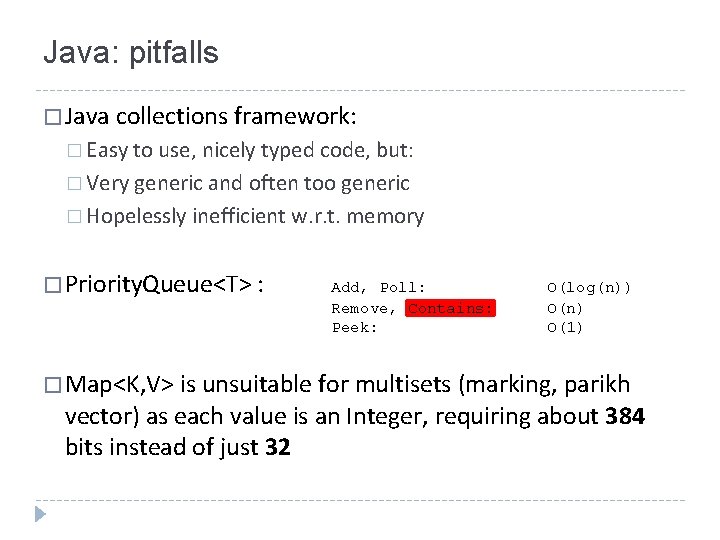

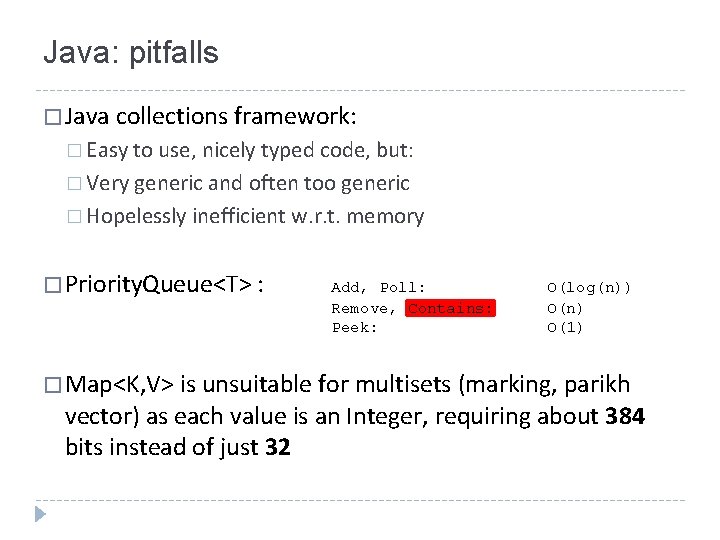

Java: pitfalls

- Java collections framework:
  - Easy to use, nicely typed code, but:
    - Very generic, and often too generic
    - Hopelessly inefficient with respect to memory
- PriorityQueue<T>: Add, Poll: O(log(n)); Remove, Contains: O(n); Peek: O(1)
- Map<K, V> is unsuitable for multisets (marking, Parikh vector), as each value is a boxed Integer, requiring about 384 bits instead of just 32

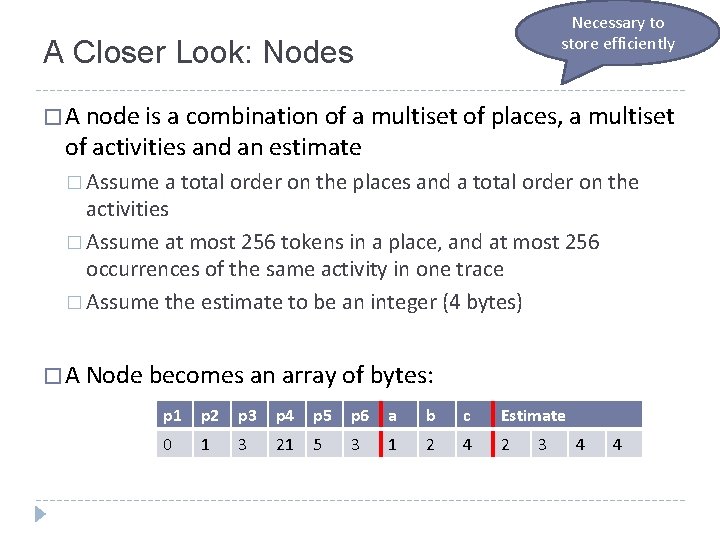

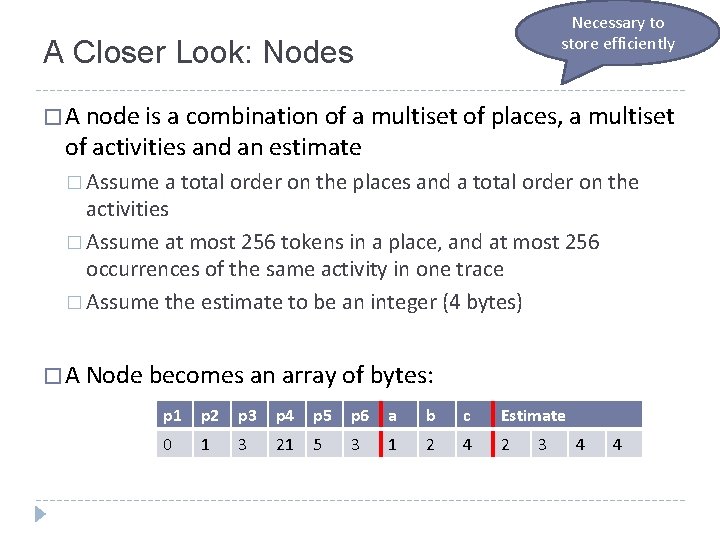

A Closer Look: Nodes

Necessary to store efficiently.

- A node is a combination of a multiset of places (the marking), a multiset of activities (the Parikh vector) and an estimate
- Assume a total order on the places and a total order on the activities
- Assume fewer than 256 tokens in a place and fewer than 256 occurrences of the same activity in one trace, so that each count fits in one byte
- Assume the estimate to be an integer (4 bytes)
- A node then becomes an array of bytes:

| p1 | p2 | p3 | p4 | p5 | p6 | a | b | c | Estimate (4 bytes) |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 3 | 21 | 5 | 3 | 1 | 2 | 4 | 2 3 4 4 |
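
A sketch of this packing, assuming counts between 0 and 255 that are read back as unsigned values and a big-endian 4-byte estimate; `NodeCodec` is a hypothetical helper, not code from the original implementation.

```java
import java.nio.ByteBuffer;

/** Packs a node into bytes: one byte per place, one per activity, then a 4-byte estimate. */
final class NodeCodec {
    private NodeCodec() {}

    static byte[] encode(int[] marking, int[] parikh, int estimate) {
        ByteBuffer buf = ByteBuffer.allocate(marking.length + parikh.length + Integer.BYTES);
        for (int tokens : marking) buf.put(checkedByte(tokens));
        for (int count : parikh) buf.put(checkedByte(count));
        buf.putInt(estimate); // ByteBuffer is big-endian by default
        return buf.array();
    }

    /** Reads the estimate from the last four bytes. */
    static int estimate(byte[] node) {
        return ByteBuffer.wrap(node).getInt(node.length - Integer.BYTES);
    }

    /** Reads a count, undoing Java's signed byte interpretation. */
    static int count(byte[] node, int index) {
        return node[index] & 0xFF;
    }

    private static byte checkedByte(int v) {
        if (v < 0 || v > 255) throw new IllegalArgumentException("count does not fit in a byte: " + v);
        return (byte) v;
    }
}
```

With six places and three activities, every node takes exactly 13 bytes, which is what makes the fixed-offset layout of the next slide possible.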

![A Closer Look: Lists of nodes](https://slidetodoc.com/presentation_image_h2/00be9f727823980bfe1498b0bfd4663e/image-8.jpg)

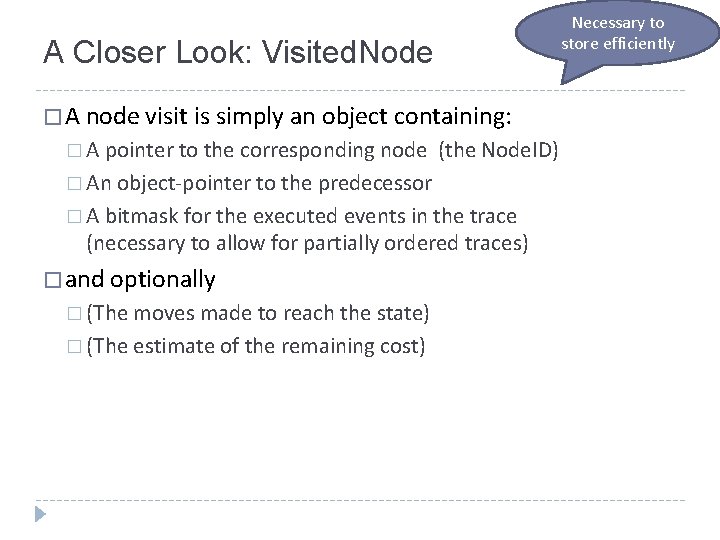

A Closer Look: Lists of nodes

Necessary to store efficiently.

- List<byte[]> should not be used here!
- A list of nodes is a single array of bytes, where the first byte of node n is stored at index n*13 (in our example)
- Add: O(1); Remove: O(n); Lookup: O(n); Update: O(n)

| p1 | p2 | p3 | p4 | p5 | p6 | a | b | c | Estimate (4 bytes) |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 3 | 21 | 5 | 3 | 1 | 2 | 4 | 2 3 4 4 |
| 0 | 0 | 2 | 11 | 4 | 0 | 0 | 2 | 4 | 0 -2 4 4 |
| 0 | 3 | 1 | 10 | 3 | 0 | 0 | 2 | 3 | 0 5 0 9 |
| 0 | 2 | 4 | 2 | 2 | 0 | 0 | 1 | 2 | 0 2 4 2 |
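
A minimal sketch of such a list, assuming fixed-size nodes and Java 9 or later (for the range overload of `Arrays.equals`); the class name `NodeList` and its doubling growth policy are illustrative choices. Removal is omitted: it would require shifting all later bytes, which is where its O(n) cost comes from.

```java
import java.util.Arrays;

/** All nodes in one growing byte array; node n starts at offset n * nodeSize. */
final class NodeList {
    private final int nodeSize;
    private byte[] data;
    private int size; // number of nodes stored

    NodeList(int nodeSize) {
        this.nodeSize = nodeSize;
        this.data = new byte[nodeSize * 16];
    }

    /** Appends a node and returns its ID; amortized O(1). */
    int add(byte[] node) {
        if ((size + 1) * nodeSize > data.length) data = Arrays.copyOf(data, data.length * 2);
        System.arraycopy(node, 0, data, size * nodeSize, nodeSize);
        return size++;
    }

    /** Copies node {@code id} out of the shared array. */
    byte[] get(int id) {
        return Arrays.copyOfRange(data, id * nodeSize, (id + 1) * nodeSize);
    }

    /** Linear scan, O(n): returns the ID of an equal node, or -1. */
    int indexOf(byte[] node) {
        for (int id = 0; id < size; id++) {
            int from = id * nodeSize;
            if (Arrays.equals(data, from, from + nodeSize, node, 0, nodeSize)) return id;
        }
        return -1;
    }

    int size() {
        return size;
    }
}
```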

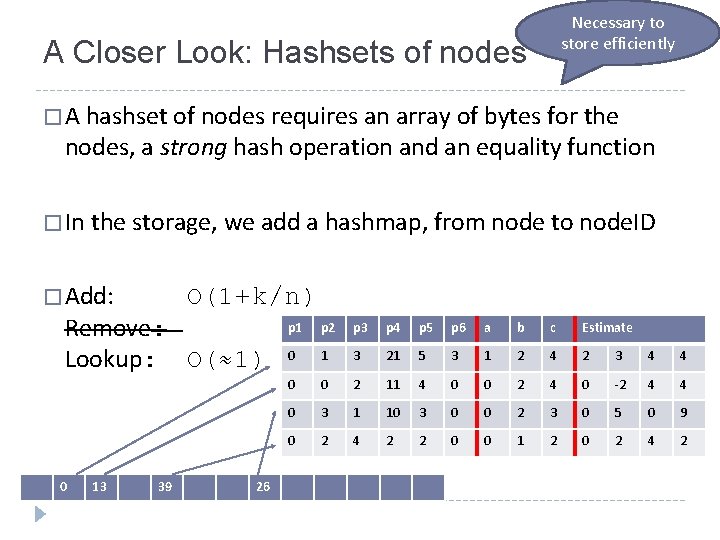

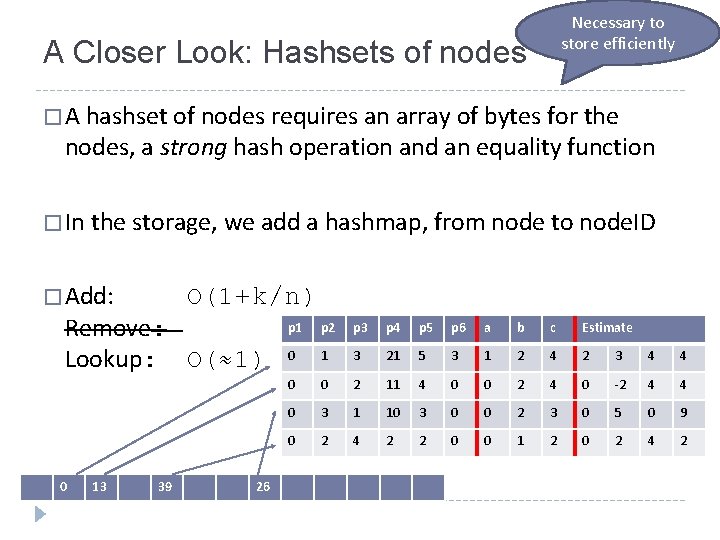

A Closer Look: Hashsets of nodes

Necessary to store efficiently.

- A hashset of nodes requires an array of bytes for the nodes, a strong hash operation and an equality function
- In the storage, we add a hashmap from node to node ID; the nodes stay in the byte array (13 bytes each), and in the example the hashmap refers to them by their offsets 0, 13, 39 and 26
- Add: O(1+k/n); Remove, Lookup: O(≈1)
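
One way to add the node-to-ID index is an open-addressing hash table that stores only node IDs and hashes and compares the bytes in place. In this sketch, FNV-1a (one of the functions compared in the hash-function slide) is an assumed choice, as are linear probing and the load factor of 1/2; `NodeStore` is a hypothetical name, and removal is omitted.

```java
import java.util.Arrays;

/** Nodes in one byte array, indexed by an open-addressing hash table of node IDs (-1 = empty slot). */
final class NodeStore {
    private final int nodeSize;
    private byte[] data;
    private int size;                  // number of stored nodes
    private int[] table = new int[16]; // capacity is always a power of two

    NodeStore(int nodeSize) {
        this.nodeSize = nodeSize;
        this.data = new byte[nodeSize * 16];
        Arrays.fill(table, -1);
    }

    /** FNV-1a (32-bit) over bytes [from, from + nodeSize) of the given array. */
    private int hash(byte[] a, int from) {
        int h = 0x811C9DC5;
        for (int i = from; i < from + nodeSize; i++) {
            h ^= a[i] & 0xFF;
            h *= 0x01000193;
        }
        return h;
    }

    /** Returns the ID of a stored node equal to {@code node}, or -1; expected O(1). */
    int lookup(byte[] node) {
        int mask = table.length - 1;
        for (int slot = hash(node, 0) & mask; table[slot] != -1; slot = (slot + 1) & mask) {
            int from = table[slot] * nodeSize;
            if (Arrays.equals(data, from, from + nodeSize, node, 0, nodeSize)) return table[slot];
        }
        return -1;
    }

    /** Adds the node if it is not yet stored and returns its ID. */
    int add(byte[] node) {
        int existing = lookup(node);
        if (existing != -1) return existing;
        if ((size + 1) * nodeSize > data.length) data = Arrays.copyOf(data, data.length * 2);
        int id = size++;
        System.arraycopy(node, 0, data, id * nodeSize, nodeSize);
        if (2 * size > table.length) rehash(table.length * 2); // keeps the load factor at most 1/2
        else insert(id);
        return id;
    }

    private void insert(int id) {
        int mask = table.length - 1;
        int slot = hash(data, id * nodeSize) & mask;
        while (table[slot] != -1) slot = (slot + 1) & mask;
        table[slot] = id;
    }

    private void rehash(int capacity) {
        table = new int[capacity];
        Arrays.fill(table, -1);
        for (int id = 0; id < size; id++) insert(id); // includes the node just added
    }

    int size() {
        return size;
    }
}
```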

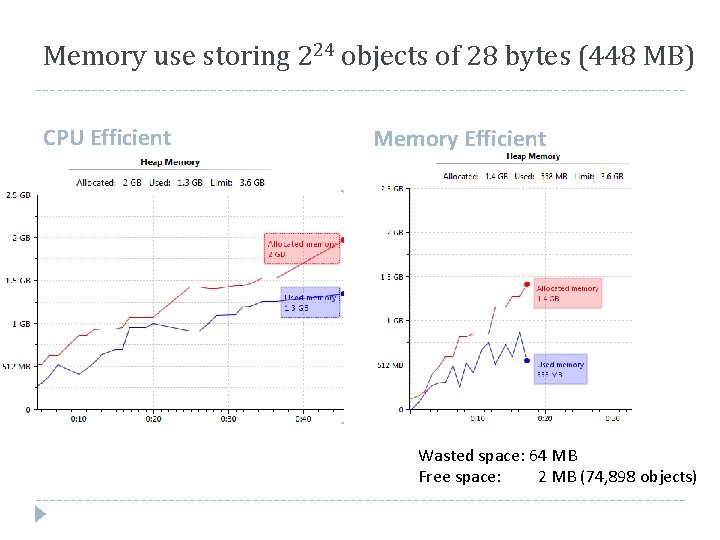

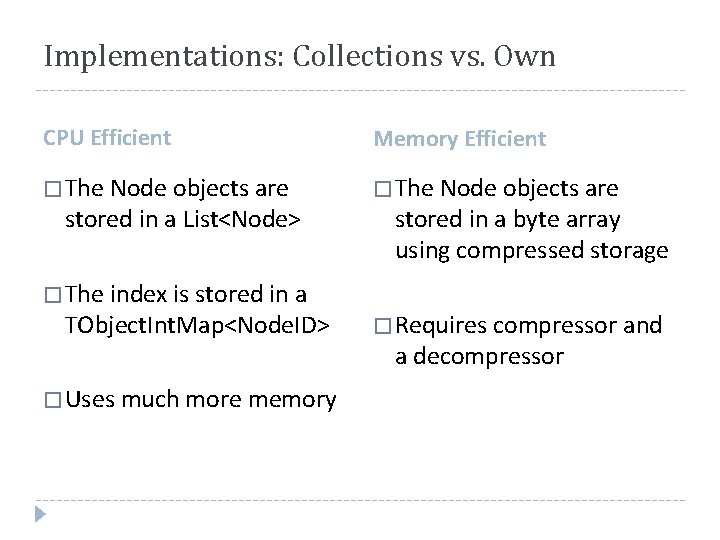

Implementations: Collections vs. Own

- CPU efficient:
  - The Node objects are stored in a List<Node>
  - The index is stored in a TObjectIntMap<Node>, mapping each node to its ID
  - Uses much more memory
- Memory efficient:
  - Nodes are stored in a byte array using compressed storage
  - Requires a compressor and a decompressor

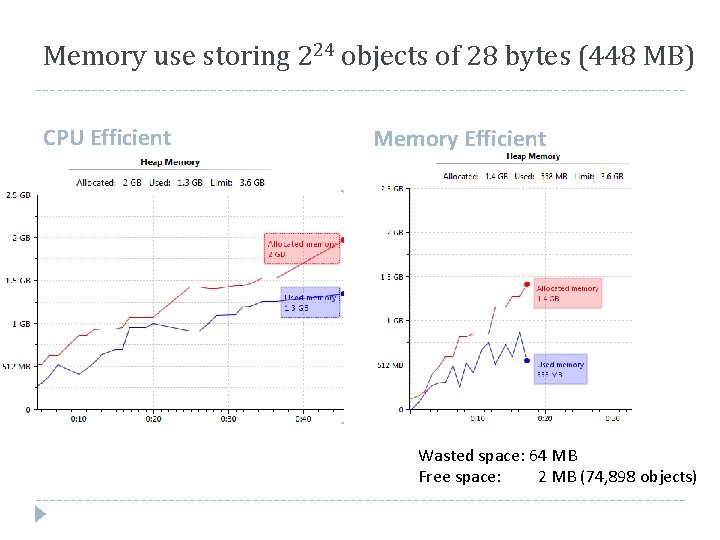

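Memory use when storing $2^{24}$ objects of 28 bytes (448 MB): CPU-efficient vs. memory-efficient implementation.

[Figure: memory use of both implementations; annotations: wasted space 64 MB, free space 2 MB (room for 74,898 more objects).]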

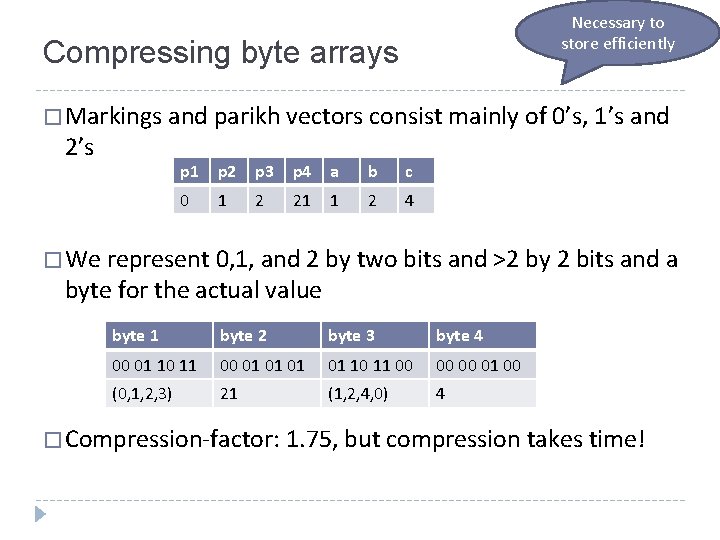

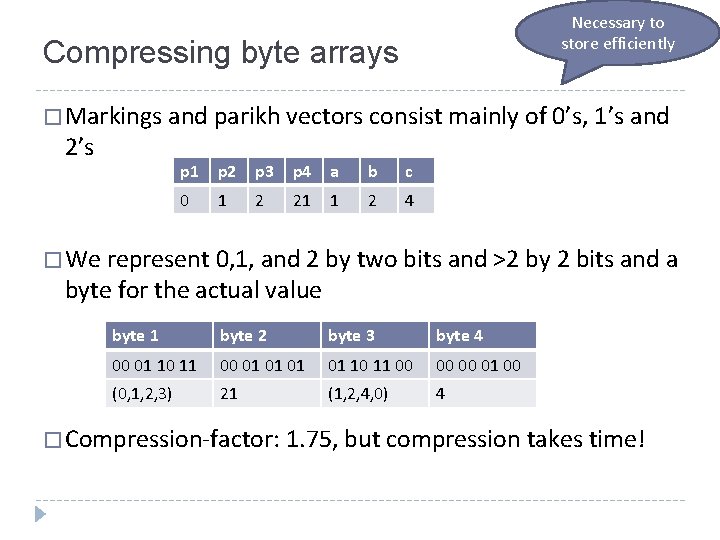

Compressing byte arrays

Necessary to store efficiently.

- Markings and Parikh vectors consist mainly of 0's, 1's and 2's:

| p1 | p2 | p3 | p4 | a | b | c |
|---|---|---|---|---|---|---|
| 0 | 1 | 2 | 21 | 1 | 2 | 4 |

- We represent 0, 1 and 2 by two bits, and values >2 by two bits (11) plus a byte holding the actual value:

| Byte 1 | Byte 2 | Byte 3 | Byte 4 |
|---|---|---|---|
| 00 01 10 11 | 00 01 01 01 | 01 10 11 00 | 00 00 01 00 |
| (0, 1, 2, 3) | 21 | (1, 2, 4, 0) | 4 |

- Compression factor: 1.75 (7 bytes become 4), but compression takes time!
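
The encoding can be sketched as follows, assuming values from 0 to 255, one code byte per group of four values (first value in the high bits, last group padded with `00`), and a decoder that knows how many values to expect (the number of places plus activities). `CountCompressor` is a hypothetical name.

```java
import java.io.ByteArrayOutputStream;

/**
 * Packs small counts: each group of four values gets one code byte with a 2-bit code per value
 * (00 = 0, 01 = 1, 10 = 2, 11 = "larger, see extra byte"), followed by one byte per larger value.
 */
final class CountCompressor {
    private CountCompressor() {}

    static byte[] compress(int[] values) {
        for (int v : values) {
            if (v < 0 || v > 255) throw new IllegalArgumentException("value out of range: " + v);
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (int start = 0; start < values.length; start += 4) {
            int end = Math.min(start + 4, values.length);
            int codes = 0;
            for (int i = start; i < start + 4; i++) {
                int code = i < end ? Math.min(values[i], 3) : 0; // pad the last group with 00
                codes |= code << (6 - 2 * (i - start));           // first value in the high bits
            }
            out.write(codes);
            for (int i = start; i < end; i++) {
                if (values[i] > 2) out.write(values[i]);          // extra byte for the actual value
            }
        }
        return out.toByteArray();
    }

    static int[] decompress(byte[] data, int count) {
        int[] values = new int[count];
        int pos = 0;
        for (int start = 0; start < count; start += 4) {
            int codes = data[pos++] & 0xFF;
            for (int i = start; i < Math.min(start + 4, count); i++) {
                int code = (codes >> (6 - 2 * (i - start))) & 3;
                values[i] = code < 3 ? code : data[pos++] & 0xFF;
            }
        }
        return values;
    }
}
```

For the vector above, `compress` yields the four bytes 27, 21, 108 and 4, i.e. `00011011`, `00010101`, `01101100` and `00000100`.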

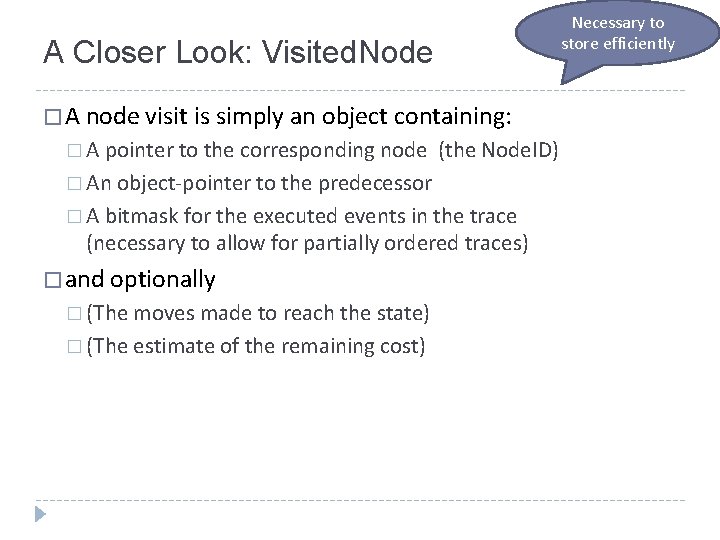

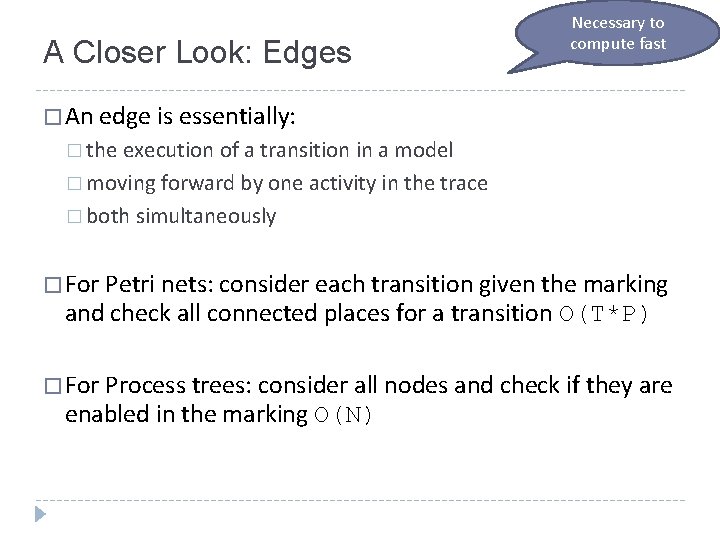

A Closer Look: VisitedNode

Necessary to store efficiently.

- A node visit is simply an object containing:
  - A pointer to the corresponding node (the node ID)
  - An object pointer to the predecessor
  - A bitmask for the executed events in the trace (necessary to allow for partially ordered traces)
- and optionally:
  - The moves made to reach the state
  - The estimate of the remaining cost
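
A sketch of such an object, assuming a `java.util.BitSet` as the bitmask and an `int` distance; the field and method names are illustrative. Following the predecessor pointers recovers the sequence of visited nodes, from which the alignment can be read off.

```java
import java.util.ArrayDeque;
import java.util.BitSet;
import java.util.Deque;

/** A node visit: node ID, predecessor visit, executed trace events and the distance so far. */
final class VisitedNode {
    final long nodeId;             // index of the node in the node storage
    final VisitedNode predecessor; // null for the initial visit
    final BitSet executedEvents;   // bit e is set once event e of the (partially ordered) trace ran
    final int costSoFar;           // distance from the initial node

    VisitedNode(long nodeId, VisitedNode predecessor, BitSet executedEvents, int costSoFar) {
        this.nodeId = nodeId;
        this.predecessor = predecessor;
        this.executedEvents = (BitSet) executedEvents.clone(); // defensive copy
        this.costSoFar = costSoFar;
    }

    /** Node IDs from the initial node to this one, recovered by following predecessors. */
    Deque<Long> path() {
        Deque<Long> ids = new ArrayDeque<>();
        for (VisitedNode v = this; v != null; v = v.predecessor) ids.addFirst(v.nodeId);
        return ids;
    }
}
```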

A Closer Look: Edges

Necessary to compute fast.

- An edge is essentially:
  - the execution of a transition in the model,
  - moving forward by one activity in the trace, or
  - both simultaneously
- For Petri nets: given the marking, consider each transition and check all connected places: O(T*P)
- For process trees: consider all nodes and check whether they are enabled in the marking: O(N)
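
For Petri nets, the O(T*P) enabledness check can be sketched with dense arc-weight matrices (an assumption; sparse adjacency lists would only visit connected places). `PetriNet`, `enabled` and `fire` are hypothetical names. In the alignment search, an enabled transition yields a model move, and additionally a synchronous move if its label matches an activity that can be executed next in the trace.

```java
import java.util.ArrayList;
import java.util.List;

/** A Petri net given by arc weights: pre[t][p] tokens consumed and post[t][p] tokens produced. */
final class PetriNet {
    final int[][] pre;
    final int[][] post;

    PetriNet(int[][] pre, int[][] post) {
        this.pre = pre;
        this.post = post;
    }

    /** Checks every transition against every place: O(T * P). */
    List<Integer> enabled(int[] marking) {
        List<Integer> result = new ArrayList<>();
        for (int t = 0; t < pre.length; t++) {
            boolean ok = true;
            for (int p = 0; p < marking.length && ok; p++) ok = marking[p] >= pre[t][p];
            if (ok) result.add(t);
        }
        return result;
    }

    /** The marking reached by firing transition t (t must be enabled). */
    int[] fire(int[] marking, int t) {
        int[] next = marking.clone();
        for (int p = 0; p < next.length; p++) next[p] += post[t][p] - pre[t][p];
        return next;
    }
}
```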

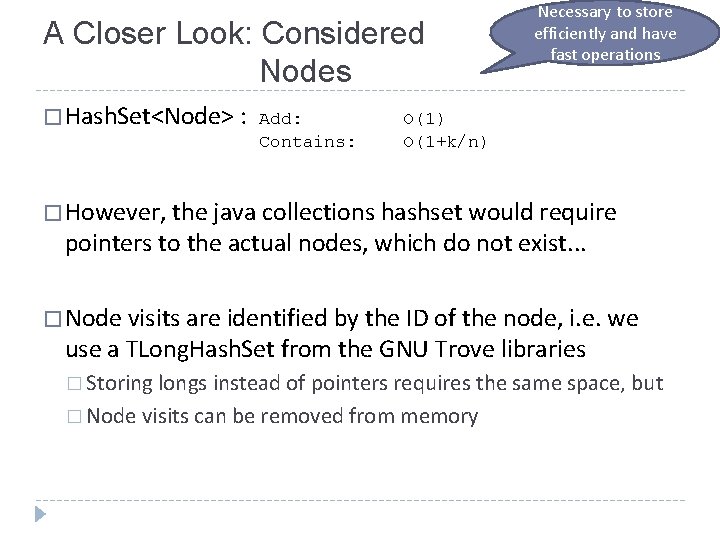

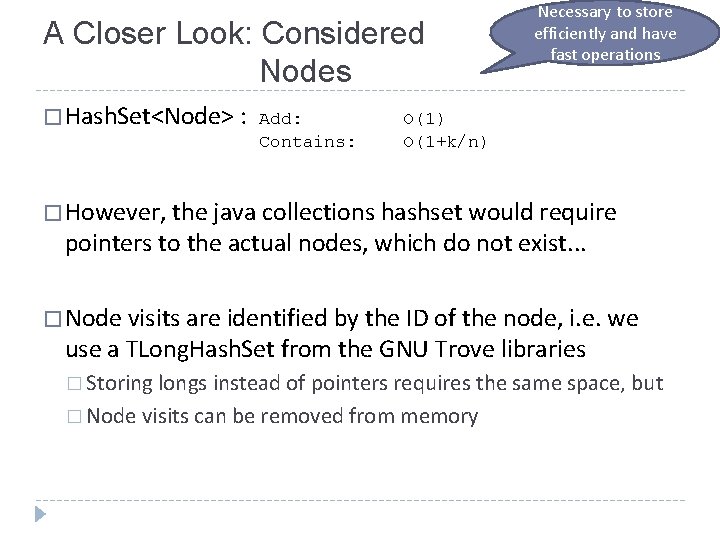

A Closer Look: Considered Nodes

Necessary to store efficiently and have fast operations.

- HashSet<Node>: Add: O(1); Contains: O(1+k/n)
- However, the Java collections HashSet would require pointers to the actual node objects, which do not exist...
- Node visits are identified by the ID of the node, i.e. we use a TLongHashSet from the GNU Trove libraries
- Storing longs instead of pointers requires the same space, but the node visits themselves can then be removed from memory
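
Trove's `TLongHashSet` is not reproduced here; as a dependency-free stand-in, the idea of storing primitive `long` IDs can be sketched with a small open-addressing set. `LongSet`, the reserved `Long.MIN_VALUE` sentinel and the multiplicative hash are assumptions of this sketch, and removal is omitted.

```java
import java.util.Arrays;

/** A minimal open-addressing set of long IDs (no removal), standing in for a primitive hash set. */
final class LongSet {
    private static final long EMPTY = Long.MIN_VALUE; // reserved: this value cannot be stored
    private long[] slots = new long[16];              // capacity is always a power of two
    private int size;

    LongSet() {
        Arrays.fill(slots, EMPTY);
    }

    private static int slotOf(long key, int mask) {
        long h = key * 0x9E3779B97F4A7C15L; // multiplicative (Fibonacci) hashing
        return (int) (h ^ (h >>> 32)) & mask;
    }

    boolean contains(long key) {
        int mask = slots.length - 1;
        for (int i = slotOf(key, mask); slots[i] != EMPTY; i = (i + 1) & mask) {
            if (slots[i] == key) return true;
        }
        return false;
    }

    /** Returns true if the key was not yet present. */
    boolean add(long key) {
        if (key == EMPTY) throw new IllegalArgumentException("reserved key");
        if (contains(key)) return false;
        if (2 * (size + 1) > slots.length) grow(); // keep the load factor at most 1/2
        insert(key);
        size++;
        return true;
    }

    private void insert(long key) {
        int mask = slots.length - 1;
        int i = slotOf(key, mask);
        while (slots[i] != EMPTY) i = (i + 1) & mask;
        slots[i] = key;
    }

    private void grow() {
        long[] old = slots;
        slots = new long[old.length * 2];
        Arrays.fill(slots, EMPTY);
        for (long k : old) if (k != EMPTY) insert(k);
    }

    int size() {
        return size;
    }
}
```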

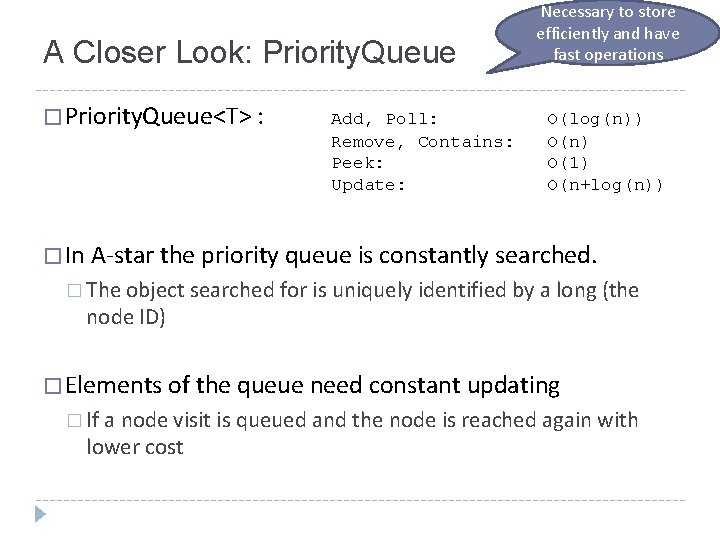

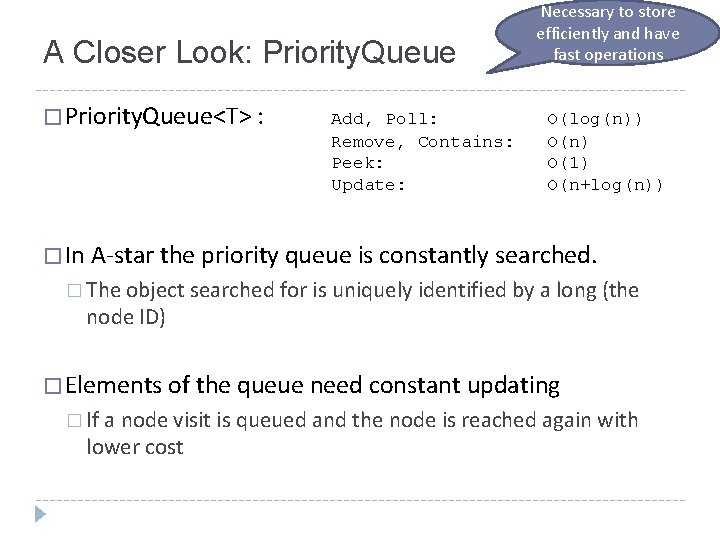

A Closer Look: PriorityQueue

Necessary to store efficiently and have fast operations.

- PriorityQueue<T>: Add, Poll: O(log(n)); Remove, Contains: O(n); Peek: O(1); Update (a remove followed by an add): O(n+log(n))
- In A-star the priority queue is constantly searched
- The object searched for is uniquely identified by a long (the node ID)
- Elements of the queue need constant updating: if a node visit is queued and the node is reached again with lower cost, its entry has to be updated and repositioned

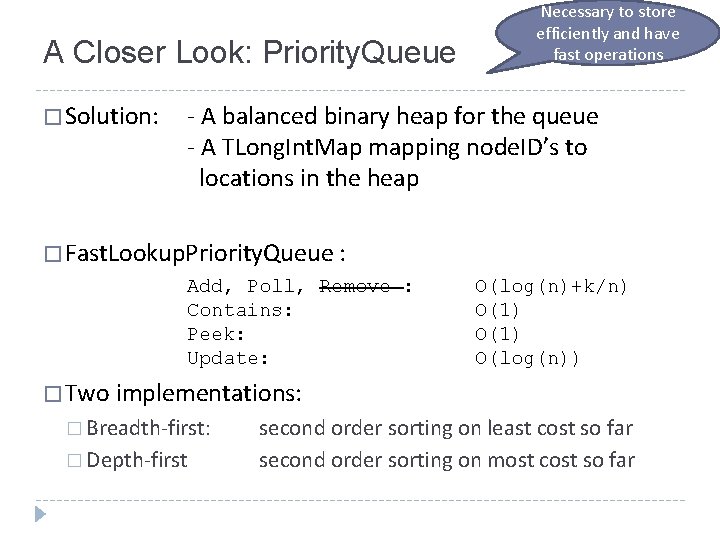

A Closer Look: PriorityQueue

Necessary to store efficiently and have fast operations.

- Solution:
  - A balanced binary heap for the queue
  - A TLongIntMap mapping node IDs to locations in the heap
- FastLookupPriorityQueue: Add, Poll, Remove: O(log(n)+k/n); Contains, Peek: O(1); Update: O(log(n))
- Two implementations:
  - Breadth-first: second-order sorting on least cost so far
  - Depth-first: second-order sorting on most cost so far
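
A minimal sketch of the heap-plus-index idea, assuming priority f = cost so far + estimate and the breadth-first tie-break. To stay dependency-free it uses `HashMap<Long, Integer>` where the slides use Trove's `TLongIntMap`, so it pays the boxing overhead criticized earlier; `FastLookupQueue` and `addOrUpdate` are hypothetical names, and arbitrary removal is omitted.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Binary min-heap of node IDs with a node ID -> heap position index (O(1) contains, O(log n) update). */
final class FastLookupQueue {
    private final List<long[]> heap = new ArrayList<>(); // entries {nodeId, f, costSoFar}
    private final Map<Long, Integer> position = new HashMap<>();

    boolean contains(long nodeId) { return position.containsKey(nodeId); }

    boolean isEmpty() { return heap.isEmpty(); }

    long peek() { return heap.get(0)[0]; }

    /** Adds the node, or repositions it if it is already queued with a worse f. */
    void addOrUpdate(long nodeId, long f, long costSoFar) {
        Integer at = position.get(nodeId);
        if (at == null) {
            heap.add(new long[]{nodeId, f, costSoFar});
            position.put(nodeId, heap.size() - 1);
            siftUp(heap.size() - 1);
        } else if (f < heap.get(at)[1]) {
            heap.set(at, new long[]{nodeId, f, costSoFar});
            siftUp(at); // a lower f can only move the entry towards the root
        }
    }

    long poll() {
        long[] top = heap.get(0);
        long[] last = heap.remove(heap.size() - 1);
        position.remove(top[0]);
        if (!heap.isEmpty()) {
            heap.set(0, last);
            position.put(last[0], 0);
            siftDown(0);
        }
        return top[0];
    }

    /** Order by f; on ties, prefer the lower cost so far (breadth-first variant). */
    private boolean less(long[] a, long[] b) {
        return a[1] != b[1] ? a[1] < b[1] : a[2] < b[2];
    }

    private void swap(int i, int j) {
        long[] a = heap.get(i), b = heap.get(j);
        heap.set(i, b);
        heap.set(j, a);
        position.put(b[0], i);
        position.put(a[0], j);
    }

    private void siftUp(int i) {
        while (i > 0 && less(heap.get(i), heap.get((i - 1) / 2))) {
            swap(i, (i - 1) / 2);
            i = (i - 1) / 2;
        }
    }

    private void siftDown(int i) {
        while (true) {
            int l = 2 * i + 1, r = l + 1, min = i;
            if (l < heap.size() && less(heap.get(l), heap.get(min))) min = l;
            if (r < heap.size() && less(heap.get(r), heap.get(min))) min = r;
            if (min == i) return;
            swap(i, min);
            i = min;
        }
    }
}
```

The depth-first variant would only flip the tie-break in `less` to prefer the higher cost so far.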

Complexity with Java collections

- Initialize PriorityQueue q
- While head(q) is not target t (peek: O(1)):
  - VisitedNode n = head(q), removed from q (poll: O(log(n)))
  - For each edge in the graph from node(n) to m:
    - If m was considered before, continue
    - If m is in the queue with lower cost, continue (contains: O(n))
    - If m is in the queue with higher cost, update and reposition it (O(n+log(n)))
    - If m is new:
      - compute an estimate for the remaining distance to t (expensive?)
      - v = new VisitedNode(m)
      - set n as the predecessor of v
      - add v to the priority queue (O(log(n)))
- Return head(q)

Complexity with own collections

- Initialize PriorityQueue q
- While head(q) is not target t (peek: O(1)):
  - VisitedNode n = head(q), removed from q (poll: O(log(n)+k/n))
  - For each edge in the graph from node(n) to m:
    - If m was considered before, continue
    - If m is in the queue with lower cost, continue (contains: O(1))
    - If m is in the queue with higher cost, update and reposition it (O(log(n)))
    - If m is new:
      - compute an estimate for the remaining distance to t (expensive?)
      - v = new VisitedNode(m)
      - set n as the predecessor of v
      - add v to the priority queue (O(log(n)+k/n))
- Return head(q)

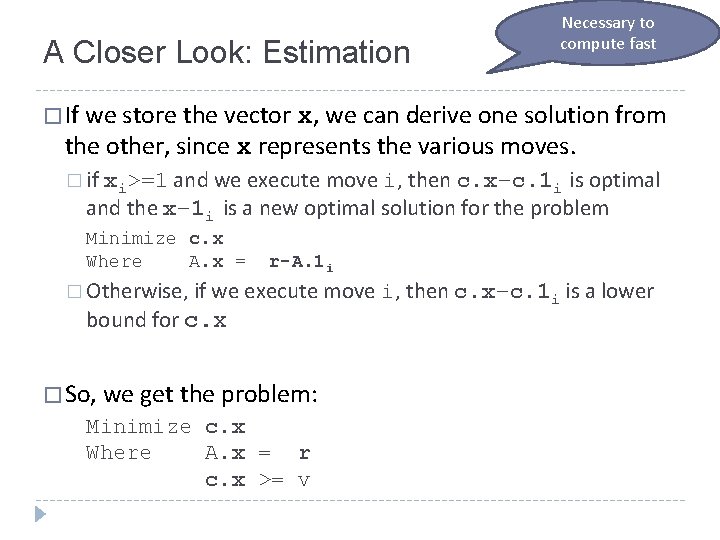

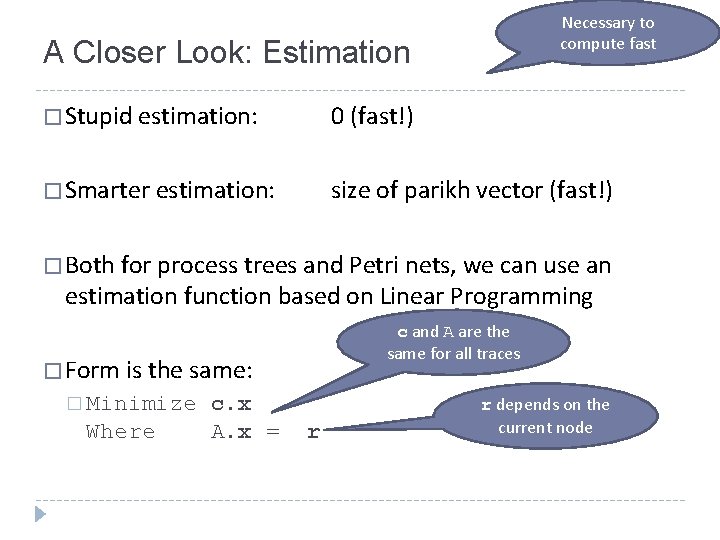

A Closer Look: Estimation

Necessary to compute fast.

- Stupid estimation: 0 (fast!)
- Smarter estimation: size of the Parikh vector (fast!)
- Both for process trees and Petri nets, we can use an estimation function based on linear programming. The form is the same:

$$\min\; c \cdot x \quad \text{subject to} \quad A\,x = r$$

where $c$ and $A$ are the same for all traces, and $r$ depends on the current node.

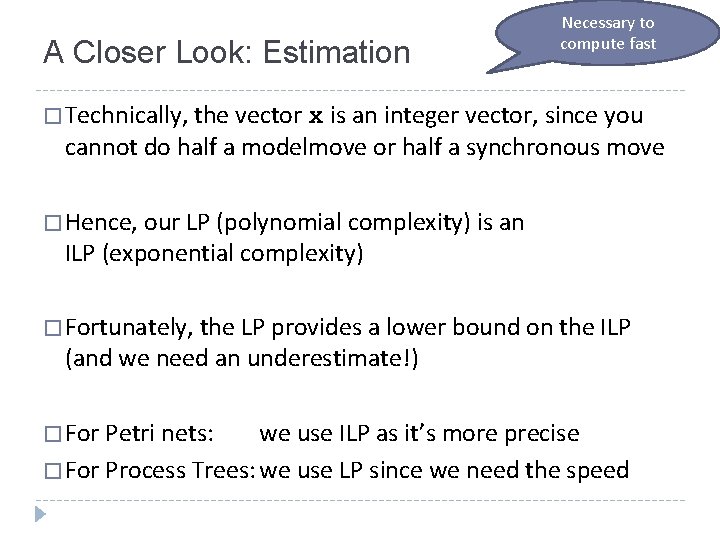

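A Closer Look: Estimation

Necessary to compute fast.

- If we store the vector $x$, we can derive one solution from the other, since $x$ represents the various moves. Let $\mathbf{1}_i$ denote the vector with a 1 for move $i$ and 0 elsewhere:
  - If $x_i \ge 1$ and we execute move $i$, then $c \cdot x - c \cdot \mathbf{1}_i$ is optimal, and $x - \mathbf{1}_i$ is a new optimal solution for the problem: minimize $c \cdot x$ subject to $A\,x = r - A\,\mathbf{1}_i$
  - Otherwise, if we execute move $i$, then $v = c \cdot x - c \cdot \mathbf{1}_i$ is a lower bound for the new optimum
- So, we get the problem: minimize $c \cdot x$ subject to $A\,x = r - A\,\mathbf{1}_i$ and $c \cdot x \ge v$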
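
The reuse rule can be checked mechanically. A sketch, assuming the stored solution `x` and the cost vector `c` are plain arrays indexed by move; `EstimateReuse` and `Derived` are hypothetical names. When the result is only a lower bound, the LP still has to be solved, but the bound can be passed along as the extra constraint.

```java
/** Derives the successor's estimate from a stored LP solution x (counts per move). */
final class EstimateReuse {
    private EstimateReuse() {}

    /** A derived value, whether it is exact, and the reusable solution (null if not exact). */
    record Derived(double value, boolean exact, double[] solution) {}

    static Derived afterMove(double[] x, double[] c, int i) {
        double cx = 0;
        for (int j = 0; j < x.length; j++) cx += c[j] * x[j];
        double value = cx - c[i]; // c.x - c.1_i
        if (x[i] >= 1) {
            double[] next = x.clone();
            next[i] -= 1; // x - 1_i is optimal for the right-hand side r - A.1_i
            return new Derived(value, true, next);
        }
        // x_i < 1: c.x - c.1_i only bounds the new optimum from below; the LP must be re-solved
        return new Derived(value, false, null);
    }
}
```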

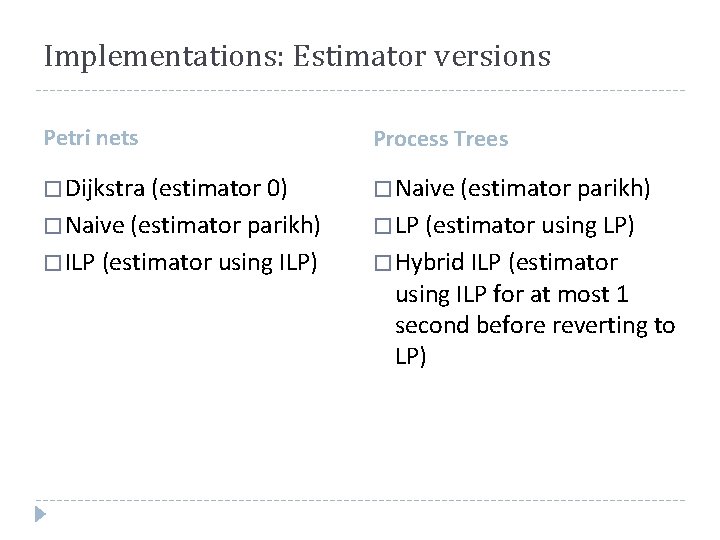

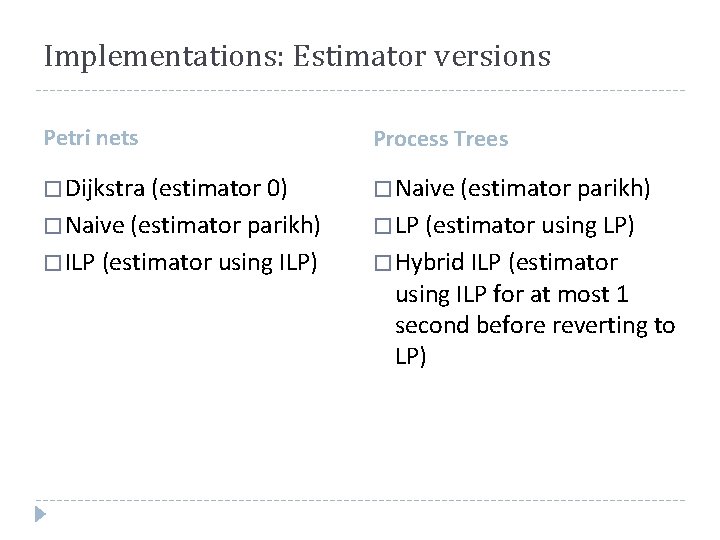

A Closer Look: Estimation

Necessary to compute fast.

- Technically, the vector $x$ is an integer vector, since you cannot do half a model move or half a synchronous move
- Hence, our LP (polynomial complexity) is actually an ILP (exponential complexity)
- Fortunately, the LP provides a lower bound on the ILP (and we need an underestimate!)
- For Petri nets: we use the ILP, as it is more precise
- For process trees: we use the LP, since we need the speed

Implementations: Estimator versions (Petri nets, process trees)

- Dijkstra (estimator 0)
- Naive (estimator: size of the Parikh vector)
- LP (estimator using LP)
- ILP (estimator using ILP)
- Hybrid ILP (estimator using ILP for at most 1 second before reverting to LP)
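
A sketch of the simpler estimators and of the hybrid fallback, under loud assumptions: the ILP and LP solvers are stand-ins passed in as functions (no solver library is used), the naive estimator reads "size of the Parikh vector" as the sum of its entries, and a new executor is created per call only to keep the example short. All names are hypothetical.

```java
import java.util.concurrent.*;
import java.util.function.IntSupplier;

/** Hypothetical estimator variants: Dijkstra (0), naive (Parikh size) and a time-limited hybrid. */
final class Estimators {
    private Estimators() {}

    /** Dijkstra: no information about the remaining distance. */
    static int dijkstra() {
        return 0;
    }

    /** Naive: the size of the Parikh vector, taken here as the sum of its entries. */
    static int naive(int[] parikh) {
        int sum = 0;
        for (int count : parikh) sum += count;
        return sum;
    }

    /** Hybrid: use the ILP result if it arrives within the time limit, otherwise the LP result. */
    static int hybrid(Callable<Integer> ilp, IntSupplier lp, long timeoutMillis) {
        ExecutorService pool = Executors.newSingleThreadExecutor(r -> {
            Thread t = new Thread(r);
            t.setDaemon(true); // an abandoned ILP must not keep the JVM alive
            return t;
        });
        Future<Integer> result = pool.submit(ilp);
        try {
            return result.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            result.cancel(true); // interrupt the ILP stand-in
            return lp.getAsInt();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return lp.getAsInt();
        } catch (ExecutionException e) {
            return lp.getAsInt(); // the ILP failed: fall back as well
        } finally {
            pool.shutdownNow();
        }
    }
}
```

With `timeoutMillis = 1000` this mirrors the "at most 1 second" rule of the Hybrid ILP variant.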

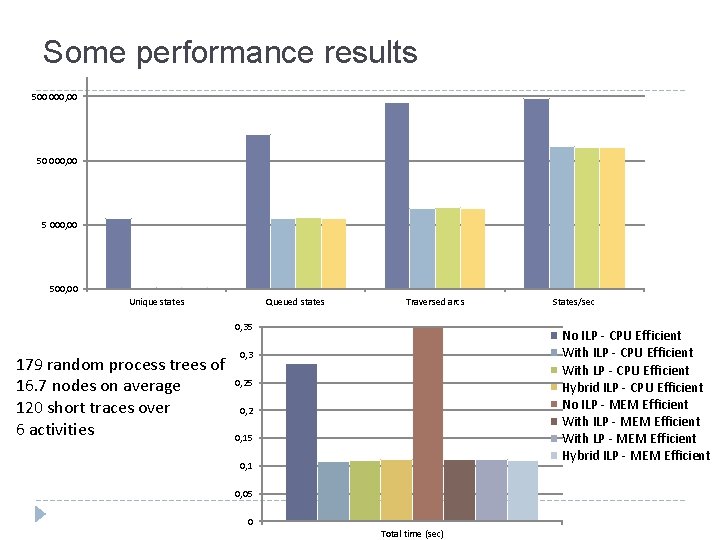

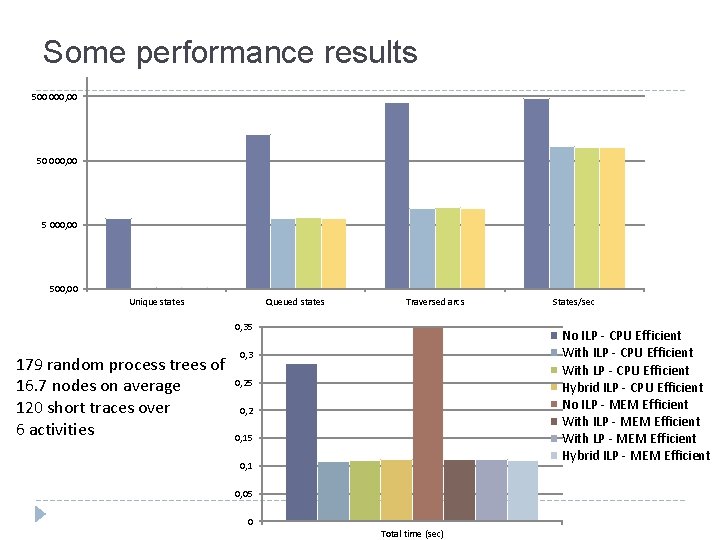

Some performance results

179 random process trees of 16.7 nodes on average; 120 short traces over 6 activities.

[Figure: unique states, queued states, traversed arcs, states/sec and total time (sec) for No ILP, With LP and Hybrid ILP, each in a CPU-efficient and a memory-efficient variant.]

Conclusion

- Fast, memory-efficient implementations can be essential
- Memory efficiency comes at a cost of code readability
- There's negligible overhead for memory efficiency
- A-star efficiency requires good estimation functions, but
- Good estimation functions can be expensive

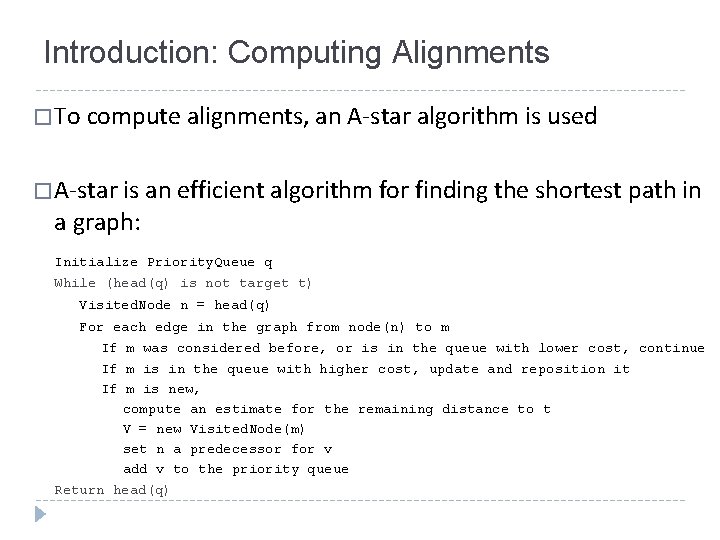

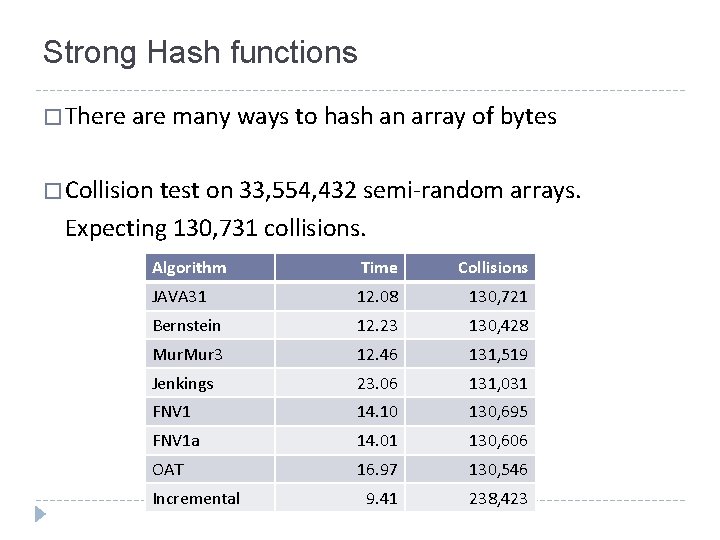

Strong hash functions

- There are many ways to hash an array of bytes
- Collision test on 33,554,432 semi-random arrays. Expecting 130,731 collisions.

| Algorithm | Time | Collisions |
|---|---|---|
| JAVA 31 | 12.08 | 130,721 |
| Bernstein | 12.23 | 130,428 |
| Mur3 | 12.46 | 131,519 |
| Jenkins | 23.06 | 131,031 |
| FNV1 | 14.10 | 130,695 |
| FNV1a | 14.01 | 130,606 |
| OAT | 16.97 | 130,546 |
| Incremental | 9.41 | 238,423 |
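
For reference, FNV-1a and the expected collision count can be sketched as below. The class name `HashStats` is hypothetical; the formula assumes hashes uniformly distributed over $m = 2^{32}$ values and counts an array as a collision when its hash equals that of an earlier one. For $n = 33{,}554{,}432 = 2^{25}$ it gives about 130,731, matching the expectation quoted above.

```java
/** FNV-1a (32-bit) over a byte array, and the expected collision count for uniform hashes. */
final class HashStats {
    private HashStats() {}

    static int fnv1a(byte[] data) {
        int hash = 0x811C9DC5;  // FNV 32-bit offset basis
        for (byte b : data) {
            hash ^= b & 0xFF;   // FNV-1a: xor first ...
            hash *= 0x01000193; // ... then multiply by the FNV prime (FNV-1 does the reverse)
        }
        return hash;
    }

    /**
     * Expected number of keys whose hash equals an earlier key's hash when n keys are hashed
     * uniformly into m values: n - m * (1 - (1 - 1/m)^n).
     */
    static double expectedCollisions(double n, double m) {
        double distinct = -m * Math.expm1(n * Math.log1p(-1.0 / m)); // expected distinct hashes
        return n - distinct;
    }
}
```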