Unsupervised learning is the process of finding structure


Unsupervised learning is the process of finding structure, patterns or correlations in the given data. We distinguish:
• Unsupervised Hebbian learning
  – Principal component analysis
• Unsupervised competitive learning
  – Clustering
  – Data compression

2/28/2021 Rudolf Mak TU/e Computer Science

Unsupervised Competitive Learning
In unsupervised competitive learning the neurons take part in a competition for each input. The winner of the competition, and sometimes some other neurons, are allowed to change their weights:
• In simple competitive learning only the winner is allowed to learn (change its weights).
• In self-organizing maps other neurons in the neighborhood of the winner may also learn.

Applications
• Speech recognition
• OCR, e.g. handwritten characters
• Image compression (using code-book vectors)
• Texture maps
  – Classification of cloud patterns (cumulus, etc.)
• Contextual maps

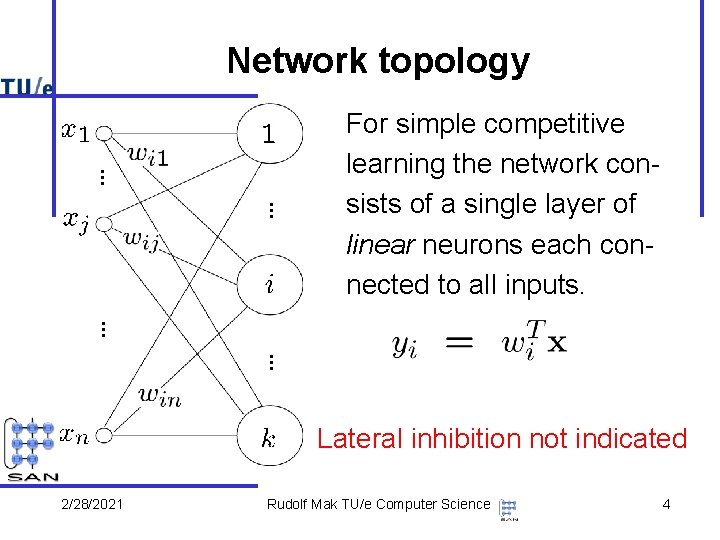

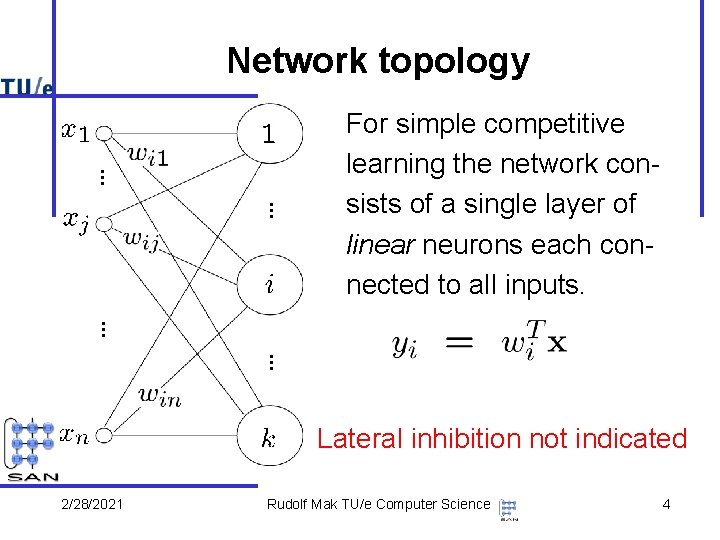

Network topology
For simple competitive learning the network consists of a single layer of linear neurons, each connected to all inputs. (Lateral inhibition is not shown.)

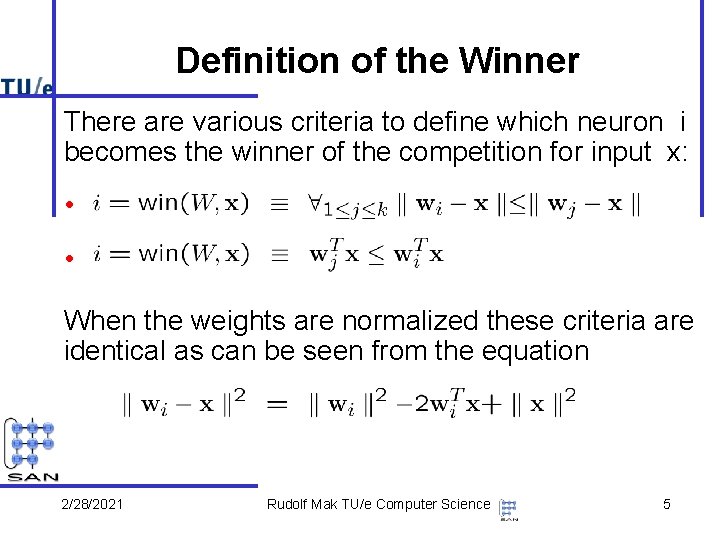

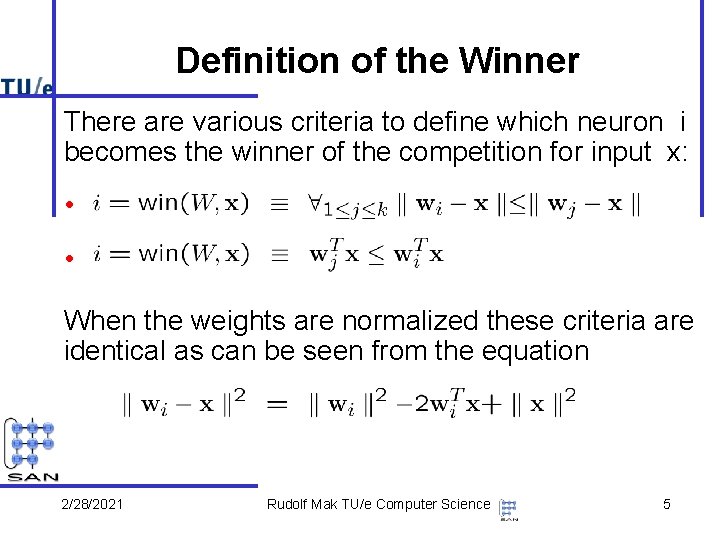

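Definition of the Winner
There are various criteria to define which neuron i becomes the winner of the competition for input x:
• Maximal activation: $\mathrm{win}(W, x) = \arg\max_i \, w_i^T x$
• Minimal distance: $\mathrm{win}(W, x) = \arg\min_i \, \|w_i - x\|$
When the weights are normalized ($\|w_i\| = 1$ for all i), these criteria are identical, as can be seen from the equation
$$\|w_i - x\|^2 = \|w_i\|^2 - 2\, w_i^T x + \|x\|^2.$$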
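Here is a minimal Python sketch of the two criteria. It assumes that the weight vectors are stored as plain lists (one per neuron) and uses 0-based neuron indices. The function names `win_max_activation` and `win_min_distance` and the sample vectors are hypothetical. With normalized weights both criteria pick the same neuron. With unnormalized weights they can disagree.

```python
import math

def dot(u, v):
    """Inner product w^T x of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    """Scale v to unit Euclidean length."""
    n = math.sqrt(dot(v, v))
    return [a / n for a in v]

def win_max_activation(W, x):
    """Winner = neuron with the largest activation w_i^T x (0-based index)."""
    return max(range(len(W)), key=lambda i: dot(W[i], x))

def win_min_distance(W, x):
    """Winner = neuron whose weight vector is closest to x (0-based index)."""
    return min(range(len(W)), key=lambda i: math.dist(W[i], x))

x = [0.3, 0.9]
W = [normalize([1.0, 0.0]), normalize([1.0, 1.0]), normalize([0.0, 1.0])]
print(win_max_activation(W, x), win_min_distance(W, x))  # 2 2: same winner

W_raw = [[1.0, 0.0], [3.0, 3.0]]  # not normalized
x2 = [1.0, 0.2]
print(win_max_activation(W_raw, x2), win_min_distance(W_raw, x2))  # 1 0: criteria disagree
```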

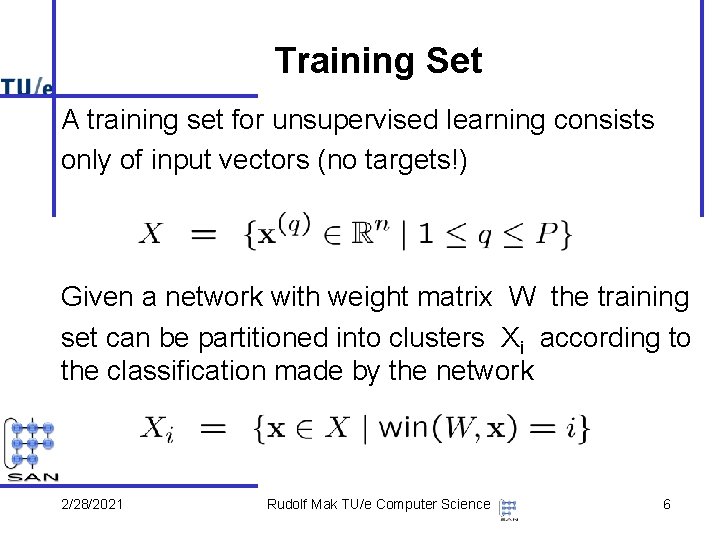

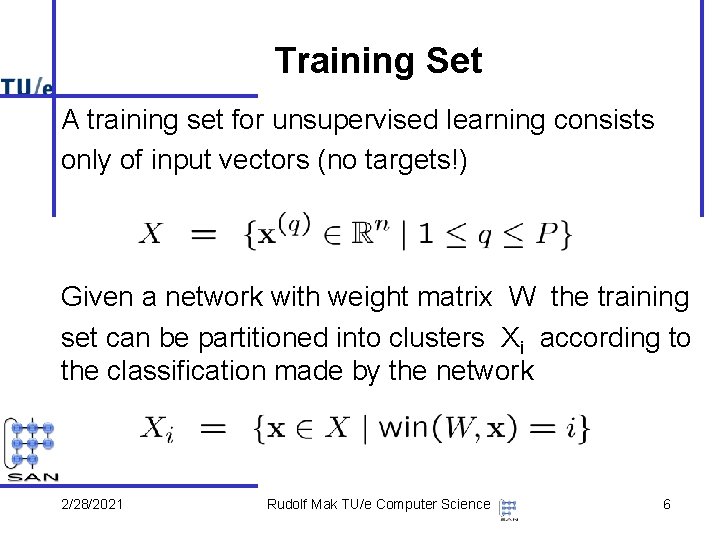

Training Set
A training set for unsupervised learning consists only of input vectors (no targets!). Given a network with weight matrix W, the training set can be partitioned into clusters $X_i$ according to the classification made by the network: $X_i$ contains the input vectors x for which neuron i is the winner, i.e. $\mathrm{win}(W, x) = i$.
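The partition into clusters can be sketched as follows. The sketch assumes the minimum-distance criterion for `win`, a hypothetical helper named after the slides' win(W, x). Indices are 0-based, so `clusters[0]` corresponds to $X_1$. The weights and inputs are illustrative.

```python
import math

def win(W, x):
    """Winner by minimum Euclidean distance (ties go to the lowest index)."""
    return min(range(len(W)), key=lambda i: math.dist(W[i], x))

def partition(W, X):
    """Split training set X into clusters X_1..X_k according to win(W, x)."""
    clusters = [[] for _ in W]
    for x in X:
        clusters[win(W, x)].append(x)
    return clusters

W = [[0.0], [5.0]]             # hypothetical 1-D weights of two neurons
X = [[0.0], [2.0], [7.0]]      # hypothetical training set
print(partition(W, X))         # [[[0.0], [2.0]], [[7.0]]]
```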

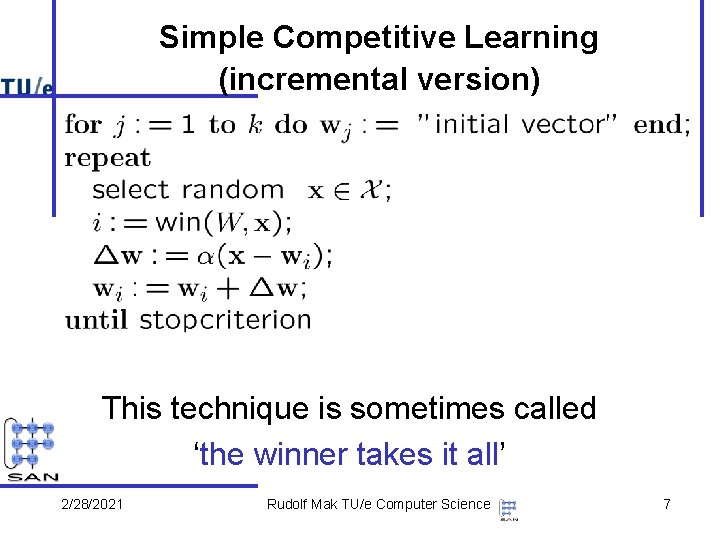

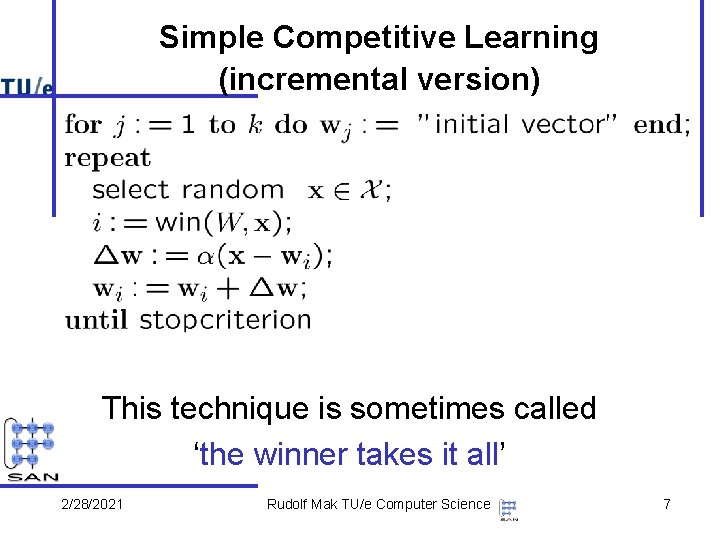

Simple Competitive Learning (incremental version)
This technique is sometimes called ‘the winner takes it all’.
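The text above does not spell out the incremental rule. This sketch assumes the common form $w_i \leftarrow w_i + \eta\,(x - w_i)$, applied to the winner i only. It also assumes a minimum-distance winner and a random input order in each epoch. The function names, data and parameter values are illustrative.

```python
import math
import random

def win(W, x):
    """Winner by minimum Euclidean distance (0-based index)."""
    return min(range(len(W)), key=lambda i: math.dist(W[i], x))

def scl_incremental(W, X, eta, epochs, seed=0):
    """Winner takes all: after each input x, only the winning weight vector
    moves a fraction eta towards x, w_i <- w_i + eta * (x - w_i)."""
    rng = random.Random(seed)
    W = [list(w) for w in W]   # copy, so the caller's weights are untouched
    order = list(X)
    for _ in range(epochs):
        rng.shuffle(order)     # present the inputs in a random order each epoch
        for x in order:
            i = win(W, x)
            W[i] = [wj + eta * (xj - wj) for wj, xj in zip(W[i], x)]
    return W

X = [[0.0], [0.2], [0.4], [9.8], [10.0], [10.2]]  # hypothetical 1-D data, two clusters
W = scl_incremental([[1.0], [8.0]], X, eta=0.1, epochs=200)
print(W)  # each weight ends up near its cluster center (about 0.2 and 10.0)
```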

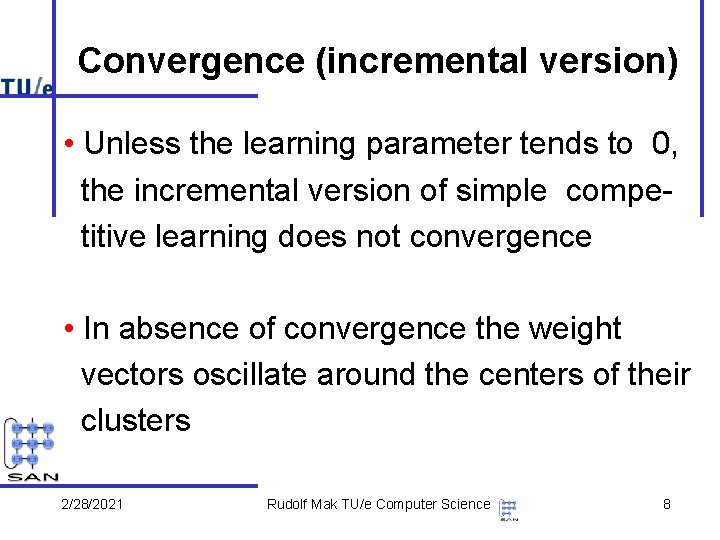

Convergence (incremental version)
• Unless the learning parameter tends to 0, the incremental version of simple competitive learning does not converge.
• In the absence of convergence, the weight vectors oscillate around the centers of their clusters.
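The two bullets can be illustrated with a single neuron. The sketch assumes the update $w \leftarrow w + \eta_t\,(x - w)$ and a hypothetical cluster {1, 3} presented cyclically. With a constant rate the weight keeps oscillating around the cluster center. With the decreasing rate $\eta_t = 1/t$ the weight equals the running mean, so it settles on the mean.

```python
def single_neuron_trace(xs, eta):
    """One neuron that wins every input x_t: w <- w + eta(t) * (x_t - w).
    Returns the weight after every step, starting from w = 0."""
    w = 0.0
    trace = []
    for t, x in enumerate(xs, start=1):
        w += eta(t) * (x - w)
        trace.append(w)
    return trace

cluster = [1.0, 3.0] * 50  # hypothetical 1-D cluster with mean 2.0, presented cyclically

constant = single_neuron_trace(cluster, lambda t: 0.5)
decaying = single_neuron_trace(cluster, lambda t: 1.0 / t)

print(constant[-4:])  # keeps alternating between two values around the mean
print(decaying[-1])   # with eta(t) = 1/t the weight is the running mean, approximately 2.0
```

In this example the constant-rate weight alternates between 5/3 and 7/3, so it never converges.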

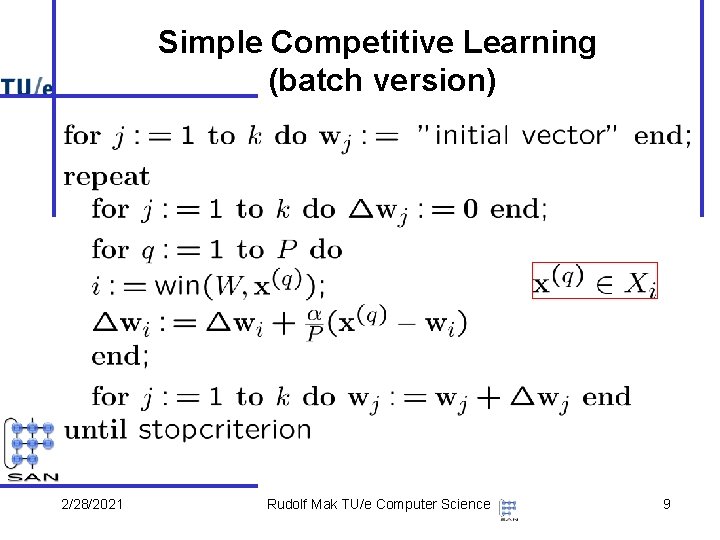

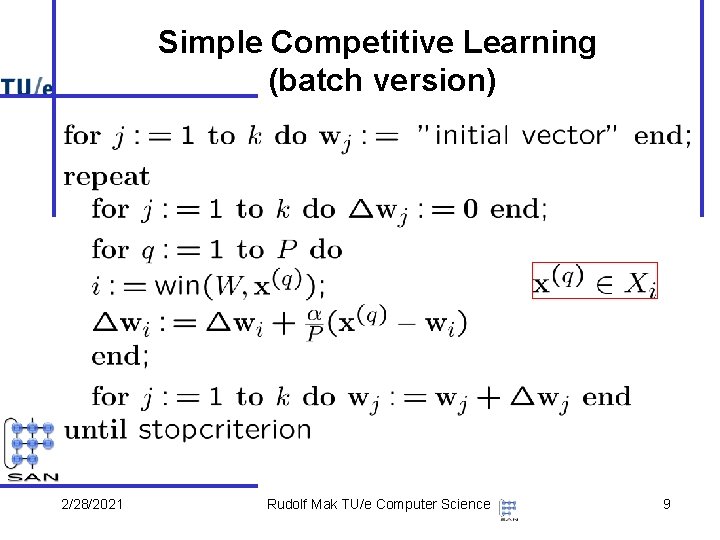

Simple Competitive Learning (batch version)

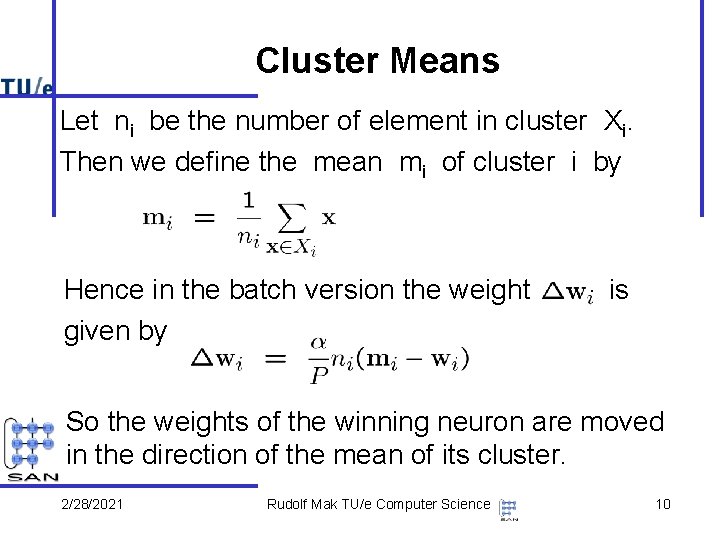

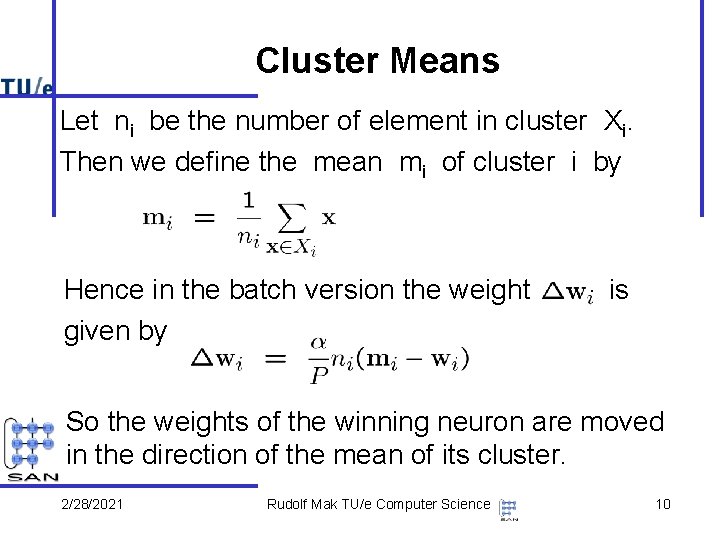

Cluster Means
Let $n_i$ be the number of elements in cluster $X_i$. Then we define the mean $m_i$ of cluster i by
$$m_i = \frac{1}{n_i} \sum_{x \in X_i} x.$$
Hence in the batch version the weight change given by
$$\Delta w_i = \eta \sum_{x \in X_i} (x - w_i)$$
is
$$\Delta w_i = \eta\, n_i\, (m_i - w_i).$$
So the weights of the winning neuron are moved in the direction of the mean of its cluster.
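Here is a sketch of one batch step. It assumes the minimum-distance winner and a common learning rate `eta`. The changes for all inputs are accumulated with the clusters fixed by the current weights, and only then applied. In the usage example cluster 1 is {0, 2}, with $n_1 = 2$ and $m_1 = 1$. So $\eta\, n_1 (m_1 - w_1) = 0.25 \cdot 2 \cdot 1 = 0.5$, which is exactly the change of $w_1$. Names and data are hypothetical.

```python
import math

def win(W, x):
    """Winner by minimum Euclidean distance (0-based index)."""
    return min(range(len(W)), key=lambda i: math.dist(W[i], x))

def scl_batch_step(W, X, eta):
    """One batch epoch: accumulate dw_i = eta * sum_{x in X_i} (x - w_i) over the
    whole training set, then apply all updates at once."""
    dW = [[0.0] * len(w) for w in W]
    for x in X:
        i = win(W, x)  # clusters are determined by the weights before the update
        dW[i] = [d + eta * (xj - wj) for d, xj, wj in zip(dW[i], x, W[i])]
    return [[wj + dj for wj, dj in zip(w, d)] for w, d in zip(W, dW)]

W = [[0.0], [5.0]]          # hypothetical weights
X = [[0.0], [2.0], [7.0]]   # hypothetical training set
print(scl_batch_step(W, X, eta=0.25))  # [[0.5], [5.5]]
```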

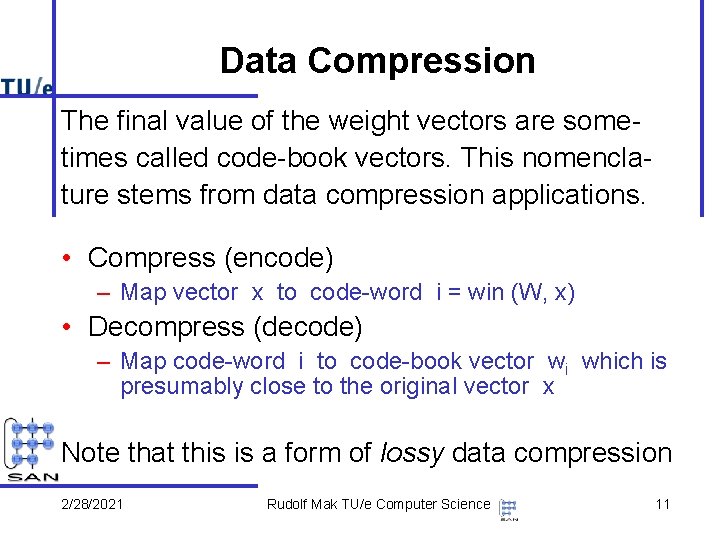

Data Compression
The final values of the weight vectors are sometimes called code-book vectors. This nomenclature stems from data compression applications:
• Compress (encode)
  – Map vector x to code-word i = win(W, x)
• Decompress (decode)
  – Map code-word i to code-book vector $w_i$, which is presumably close to the original vector x
Note that this is a form of lossy data compression.
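Encoding and decoding with a code book can be sketched as follows. The code-book vectors and data are hypothetical, the winner uses the minimum-distance criterion, and code-words are 0-based indices. Decoding returns code-book vectors rather than the original data, which is why the scheme is lossy.

```python
import math

def win(W, x):
    """Winner by minimum Euclidean distance (0-based index)."""
    return min(range(len(W)), key=lambda i: math.dist(W[i], x))

def encode(W, xs):
    """Compress: replace each vector by the index of its winning neuron."""
    return [win(W, x) for x in xs]

def decode(W, codes):
    """Decompress: replace each code-word by its code-book vector."""
    return [W[i] for i in codes]

codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]  # hypothetical code-book vectors
data = [[0.1, 0.05], [0.9, 1.2], [0.2, 0.8]]
codes = encode(codebook, data)
print(codes)                    # [0, 1, 2]
print(decode(codebook, codes))  # close to, but not equal to, the original data
```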

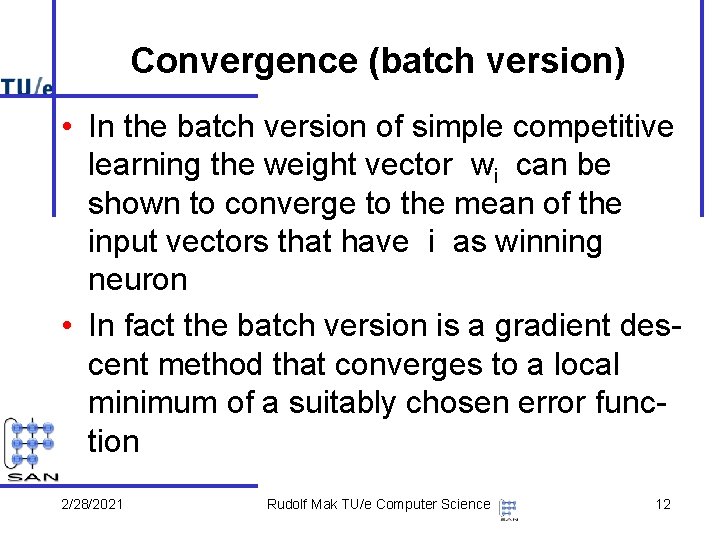

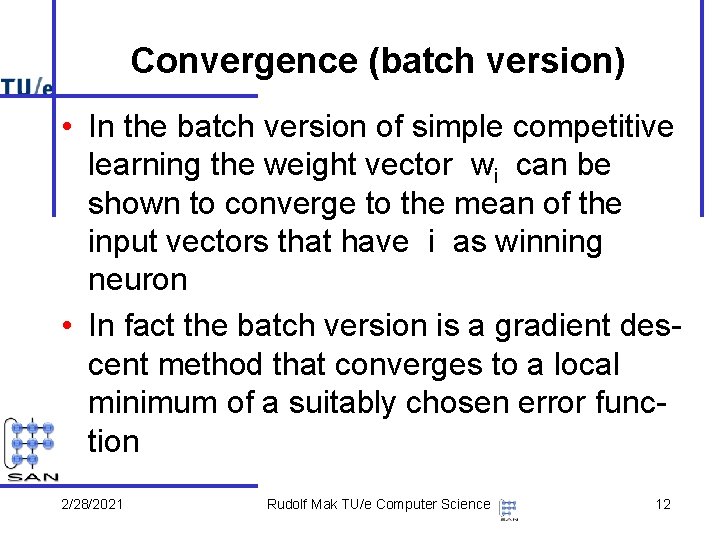

Convergence (batch version)
• In the batch version of simple competitive learning, the weight vector $w_i$ can be shown to converge to the mean of the input vectors that have i as winning neuron.
• In fact, the batch version is a gradient descent method that converges to a local minimum of a suitably chosen error function.

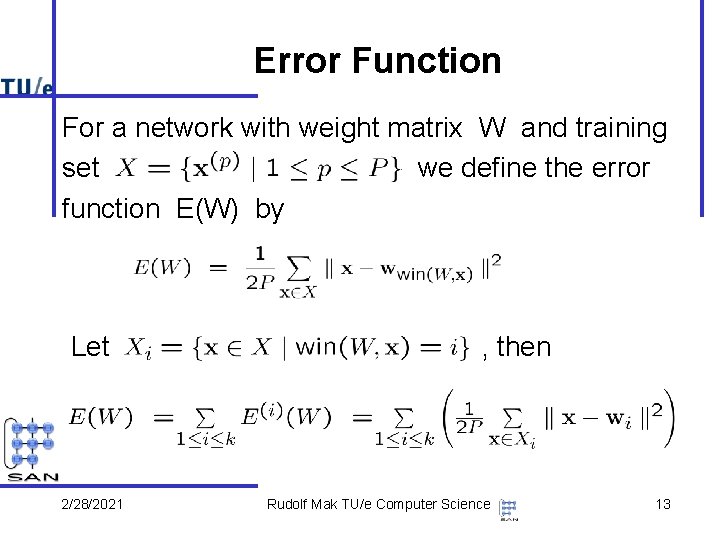

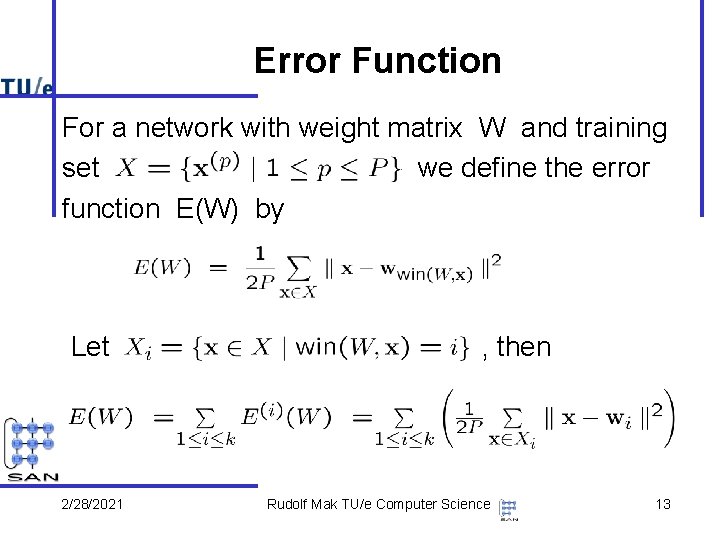

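Error Function
For a network with weight matrix W and training set $\{x^{(1)}, \dots, x^{(P)}\}$ we define the error function E(W) by
$$E(W) = \frac{1}{2} \sum_{p=1}^{P} \big\| x^{(p)} - w_{\mathrm{win}(W, x^{(p)})} \big\|^2.$$
Let $E_i(W) = \frac{1}{2} \sum_{x \in X_i} \| x - w_i \|^2$; then $E(W) = \sum_{i=1}^{k} E_i(W)$.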

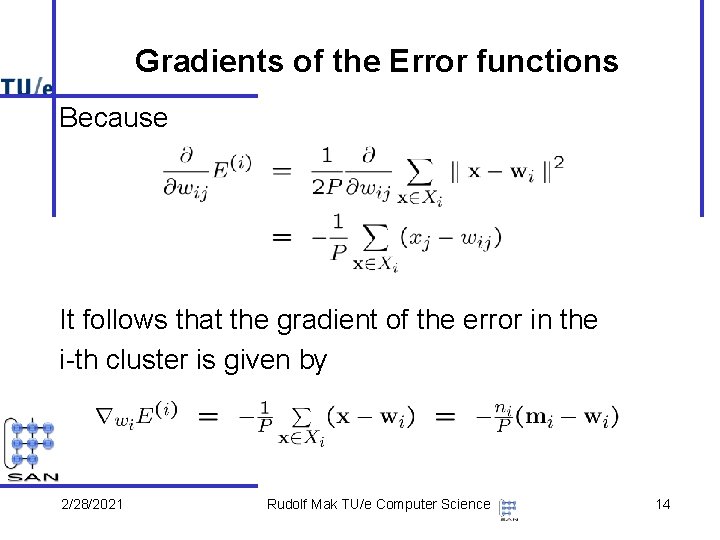

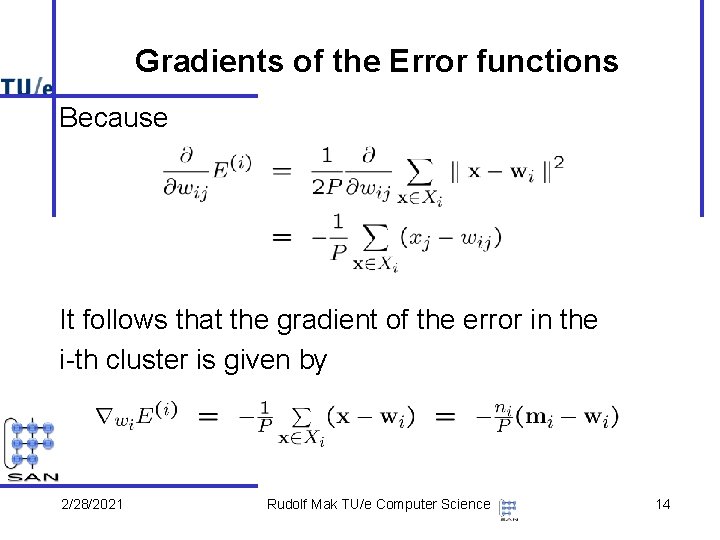

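Gradients of the Error Functions
Because $\nabla_{w_i} \tfrac{1}{2}\|x - w_i\|^2 = w_i - x$, and for fixed clusters only the inputs $x \in X_i$ contribute terms that depend on $w_i$, it follows that the gradient of the error $E_i$ in the i-th cluster is given by
$$\nabla_{w_i} E_i(W) = \sum_{x \in X_i} (w_i - x) = n_i\,(w_i - m_i).$$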
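This is a numerical sanity check of the gradient formula. It assumes the error function $E(W) = \tfrac12 \sum_p \|x^{(p)} - w_{\mathrm{win}(W,x^{(p)})}\|^2$; the factor 1/2 is an assumption of this sketch. It also assumes that no input lies on a cluster boundary, so a tiny perturbation of $w_i$ does not change the clusters. Weights, inputs and function names are hypothetical.

```python
import math

def win(W, x):
    """Winner by minimum Euclidean distance (0-based index)."""
    return min(range(len(W)), key=lambda i: math.dist(W[i], x))

def error(W, X):
    """E(W) = 1/2 * sum over inputs of ||x - w_win(W,x)||^2."""
    return 0.5 * sum(math.dist(x, W[win(W, x)]) ** 2 for x in X)

def cluster_gradient(W, X, i):
    """Analytic gradient w.r.t. w_i: sum over X_i of (w_i - x) = n_i (w_i - m_i)."""
    members = [x for x in X if win(W, x) == i]
    return [sum(W[i][j] - x[j] for x in members) for j in range(len(W[i]))]

def numeric_gradient(W, X, i, h=1e-6):
    """Central finite differences of E in each coordinate of w_i."""
    grad = []
    for j in range(len(W[i])):
        plus = [list(w) for w in W]
        minus = [list(w) for w in W]
        plus[i][j] += h
        minus[i][j] -= h
        grad.append((error(plus, X) - error(minus, X)) / (2 * h))
    return grad

W = [[0.5, 0.0], [4.0, 4.0]]  # hypothetical weights and inputs
X = [[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [5.0, 4.0], [4.0, 5.0]]
print(cluster_gradient(W, X, 0))   # [-0.5, -1.0]
print(numeric_gradient(W, X, 0))   # approximately the same
```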

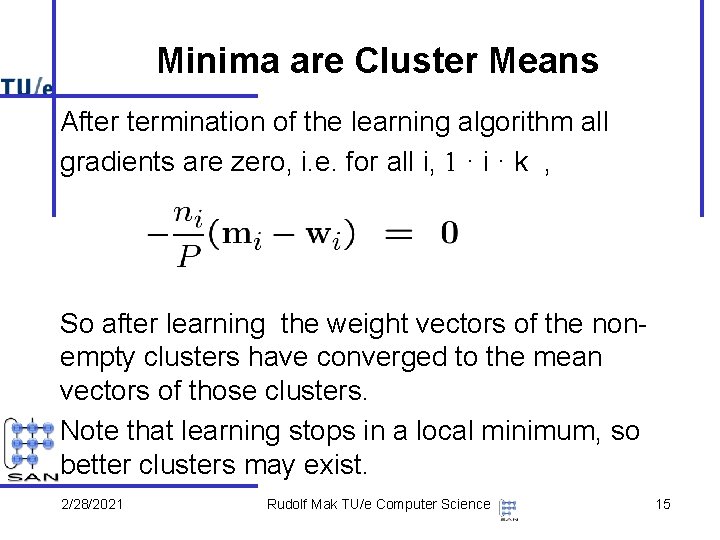

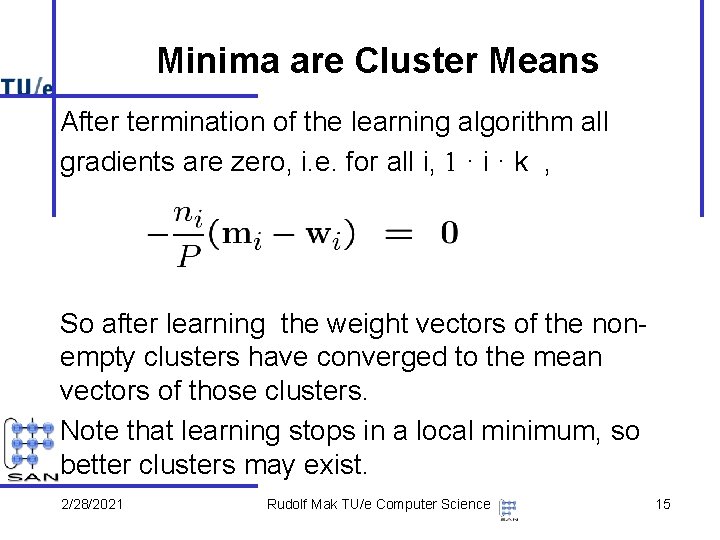

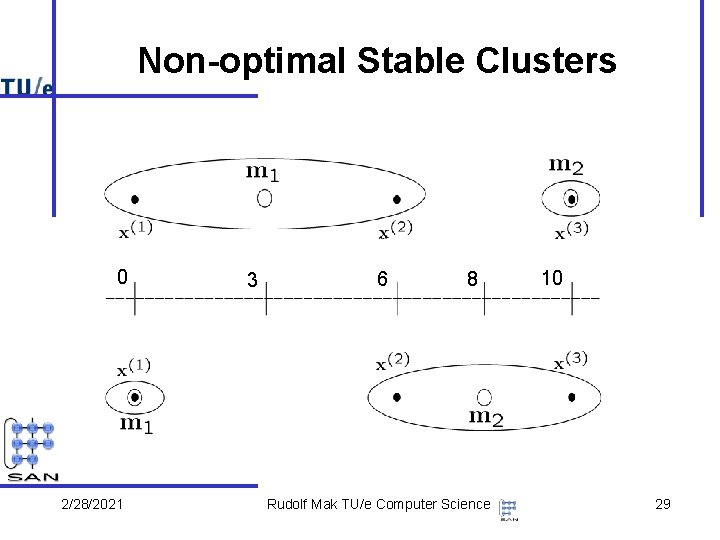

Minima are Cluster Means
After termination of the learning algorithm all gradients are zero, i.e. for all i, $1 \le i \le k$,
$$n_i\,(w_i - m_i) = 0.$$
So after learning, the weight vectors of the nonempty clusters have converged to the mean vectors of those clusters. Note that learning stops in a local minimum, so better clusters may exist.

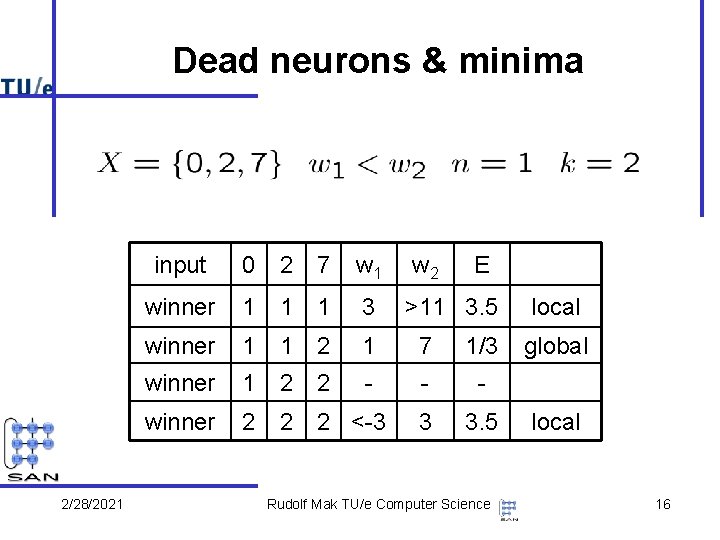

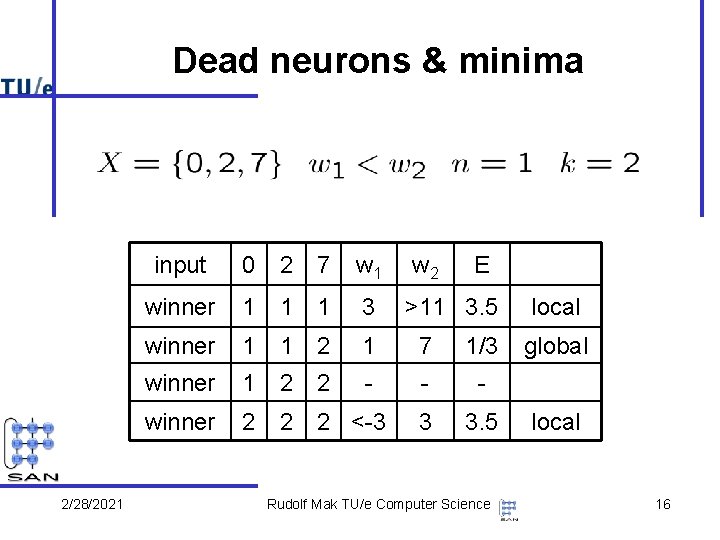

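Dead neurons & minima
Inputs 0, 2 and 7; two neurons with weights $w_1$ and $w_2$.

| Winner of 0 | Winner of 2 | Winner of 7 | $w_1$ | $w_2$ | Minimum |
|---|---|---|---|---|---|
| 1 | 1 | 1 | 3 | < -3 or > 11 | local |
| 1 | 1 | 2 | 1 | 7 | global |
| 1 | 2 | 2 | - | - | - |
| 2 | 2 | 2 | < -3 or > 11 | 3 | local |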
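The example can be checked with a short script. It assumes one-dimensional inputs, the minimum-distance winner, $E = \tfrac12 \sum (x - w_{\mathrm{win}})^2$ (factor 1/2 assumed), and 0-based indices, so index 0 is neuron 1. A configuration counts as stable when every neuron that wins an input sits at the mean of its cluster. The values 12 and -4 are arbitrary picks from the dead-neuron ranges (w > 11 or w < -3). The pair [0, 4.5] puts the weights at the means of the partition {0}, {2, 7}. Function names are hypothetical.

```python
def assign(w, xs):
    """1-D winners by minimum distance (0-based neuron indices)."""
    return [min(range(len(w)), key=lambda i: abs(x - w[i])) for x in xs]

def is_stable(w, xs):
    """Fixed point of batch SCL: every neuron that wins something sits at the
    mean of its cluster; neurons that win nothing (dead) may be anywhere."""
    winners = assign(w, xs)
    for i, wi in enumerate(w):
        cluster = [x for x, j in zip(xs, winners) if j == i]
        if cluster and abs(wi - sum(cluster) / len(cluster)) > 1e-12:
            return False
    return True

def error(w, xs):
    """E = 1/2 * sum of squared distances to the winning weight (factor 1/2 assumed)."""
    return 0.5 * sum((x - w[i]) ** 2 for x, i in zip(xs, assign(w, xs)))

xs = [0.0, 2.0, 7.0]
for w in ([3.0, 12.0], [1.0, 7.0], [0.0, 4.5], [-4.0, 3.0]):
    print(w, assign(w, xs), is_stable(w, xs), error(w, xs))
```

With this definition of E, both configurations with a dead neuron give E = 13 and the global minimum gives E = 1. For [0, 4.5], input 2 is won by neuron 1 (index 0) rather than neuron 2, so the partition {0}, {2, 7} is not a fixed point.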

K-means clustering as SCL
• K-means clustering is a popular statistical method to organize multi-dimensional data into K groups.
• K-means clustering can be seen as an instance of simple competitive learning in which each neuron has its own learning rate.
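Here is one way to read the second bullet, sketched under assumptions. Take the batch update with a separate rate $\eta_i$ per neuron and choose $\eta_i = 1/n_i$. Then $w_i + \frac{1}{n_i}\sum_{x\in X_i}(x - w_i) = m_i$, so each winning weight jumps straight to its cluster mean, which is the K-means update. The function name, the `rate` parameter and the data are hypothetical.

```python
import math

def win(W, x):
    """Winner by minimum Euclidean distance (0-based index)."""
    return min(range(len(W)), key=lambda i: math.dist(W[i], x))

def scl_batch_step_rates(W, X, rate):
    """Batch SCL step in which neuron i uses its own learning rate rate(n_i):
    w_i <- w_i + rate(n_i) * sum_{x in X_i} (x - w_i). Dead neurons do not move."""
    clusters = [[] for _ in W]
    for x in X:
        clusters[win(W, x)].append(x)
    new_W = []
    for w, members in zip(W, clusters):
        if not members:
            new_W.append(list(w))
            continue
        eta_i = rate(len(members))
        new_W.append([wj + eta_i * sum(x[j] - wj for x in members) for j, wj in enumerate(w)])
    return new_W

W = [[0.0, 0.0], [5.0, 5.0]]  # hypothetical start weights and data
X = [[0.0, 1.0], [2.0, 1.0], [6.0, 5.0], [4.0, 7.0], [5.0, 6.0]]
print(scl_batch_step_rates(W, X, lambda n: 0.1))      # small common rate: a partial step
print(scl_batch_step_rates(W, X, lambda n: 1.0 / n))  # eta_i = 1/n_i: jumps to the cluster means
```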

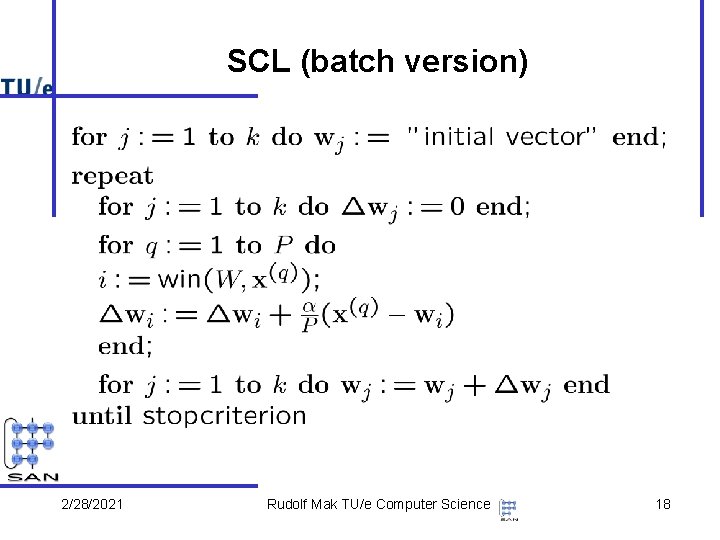

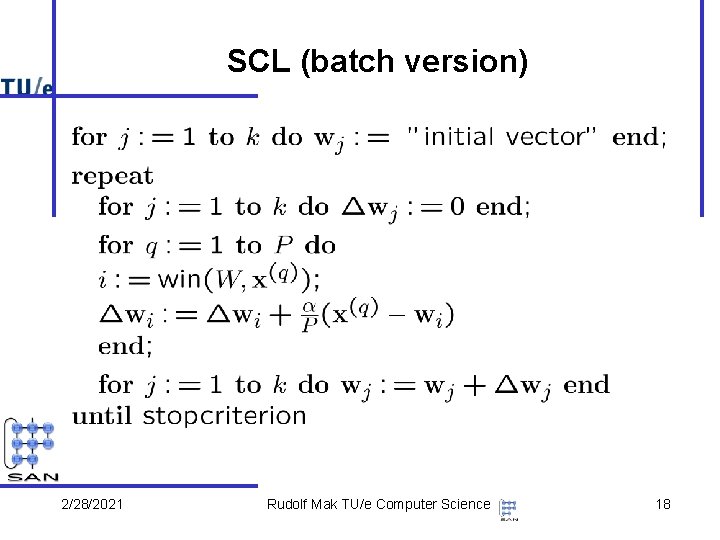

SCL (batch version)

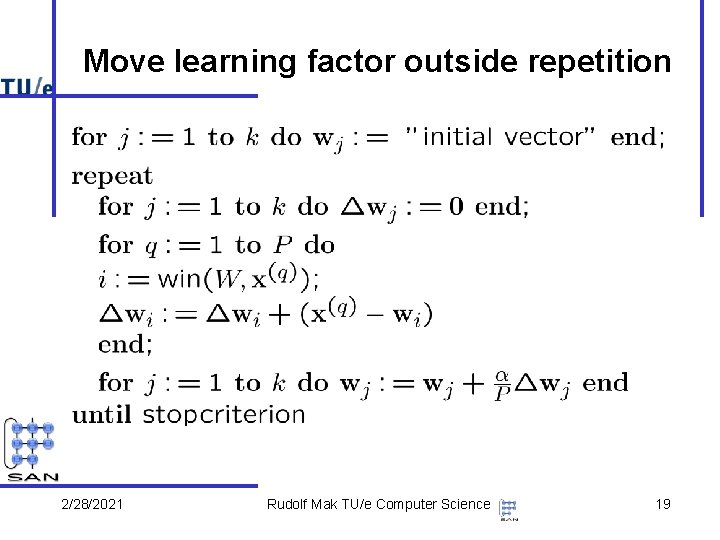

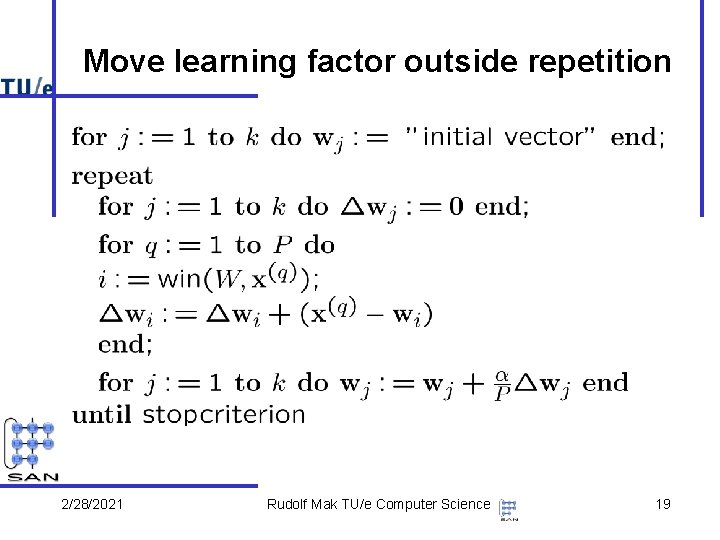

Move learning factor outside repetition

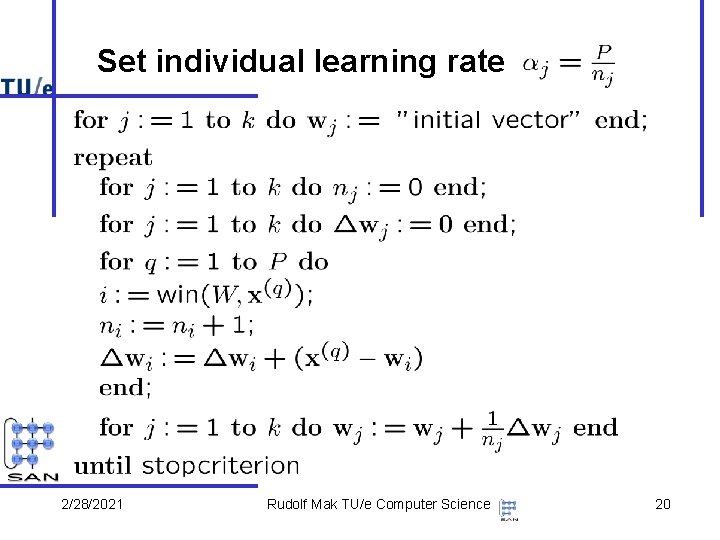

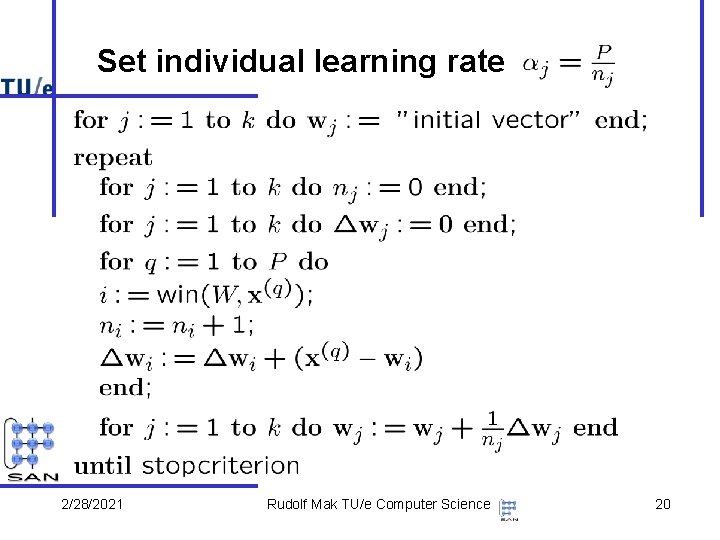

Set individual learning rate

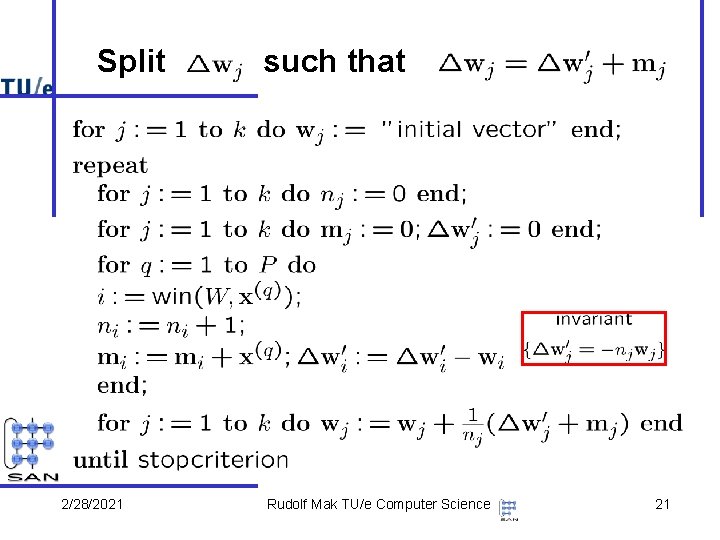

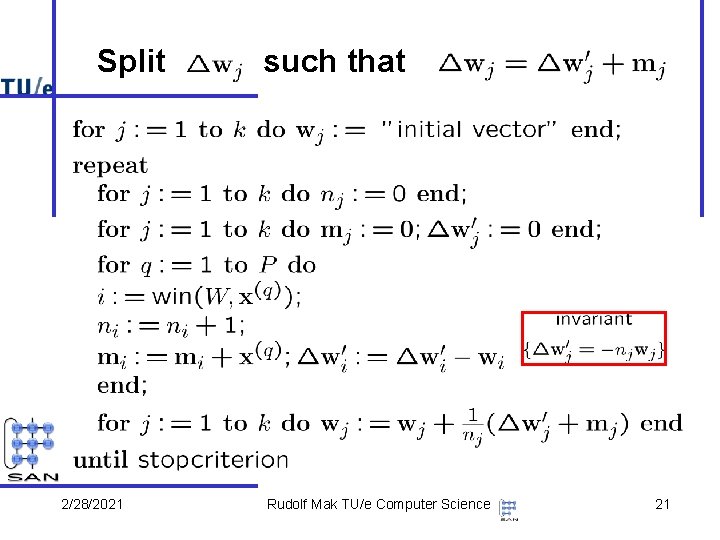

Split such that

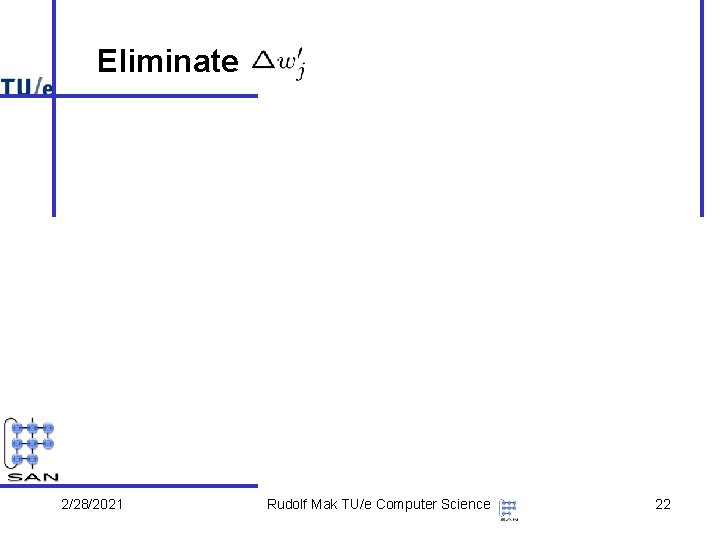

Eliminate

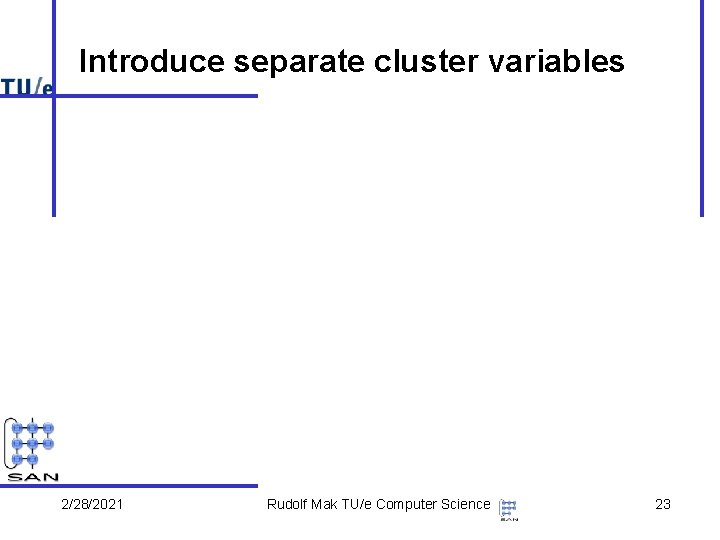

Introduce separate cluster variables

Reuse $m_j$: K-means clustering I

K-means Clustering II

Convergence of K-means Clustering
The convergence proof of the K-means clustering algorithm involves showing two facts:
• Reassigning a vector to a different cluster does not increase the error function.
• Updating the mean of a cluster does not increase the error function.
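Here is a Lloyd-style K-means loop, written as a sketch; it may differ in detail from versions I and II on the slides. It alternates reassignment and mean updates, records the error after each pass (factor 1/2 assumed), and stops when no vector changes cluster. By the two facts above, the recorded errors should never increase. Names and data are hypothetical.

```python
import math

def kmeans(X, means, max_iter=100):
    """Alternate (1) assigning every vector to its nearest mean and (2) replacing
    the mean of every nonempty cluster by the average of its members.
    Returns the final means and the error after each pass."""
    means = [list(m) for m in means]
    history = []
    assignment = None
    for _ in range(max_iter):
        new_assignment = [min(range(len(means)), key=lambda i: math.dist(means[i], x)) for x in X]
        if new_assignment == assignment:
            break  # no vector changed cluster: a stable (possibly only local) minimum
        assignment = new_assignment
        for i in range(len(means)):
            members = [x for x, a in zip(X, assignment) if a == i]
            if members:  # an empty cluster keeps its old mean (a dead neuron)
                means[i] = [sum(col) / len(members) for col in zip(*members)]
        history.append(0.5 * sum(math.dist(x, means[a]) ** 2 for x, a in zip(X, assignment)))
    return means, history

X = [[0.0], [1.0], [2.0], [10.0], [11.0], [12.0]]  # hypothetical data
means, history = kmeans(X, [[0.0], [1.0]])          # deliberately poor start
print(means)    # [[1.0], [11.0]]
print(history)  # non-increasing error values
```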

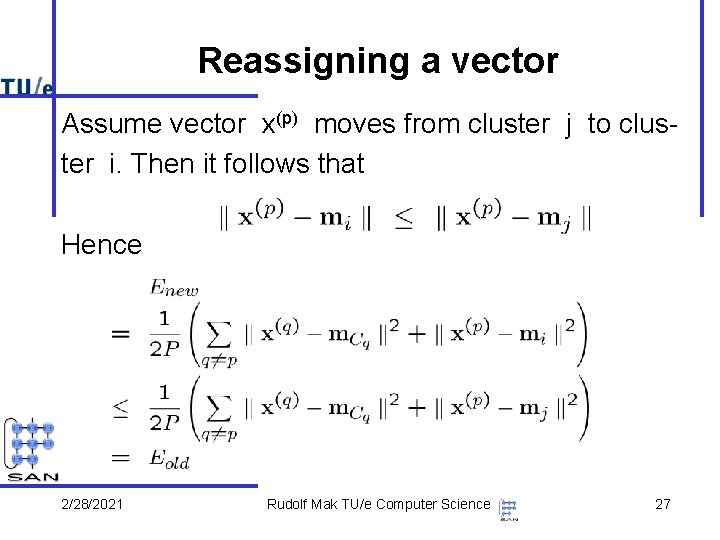

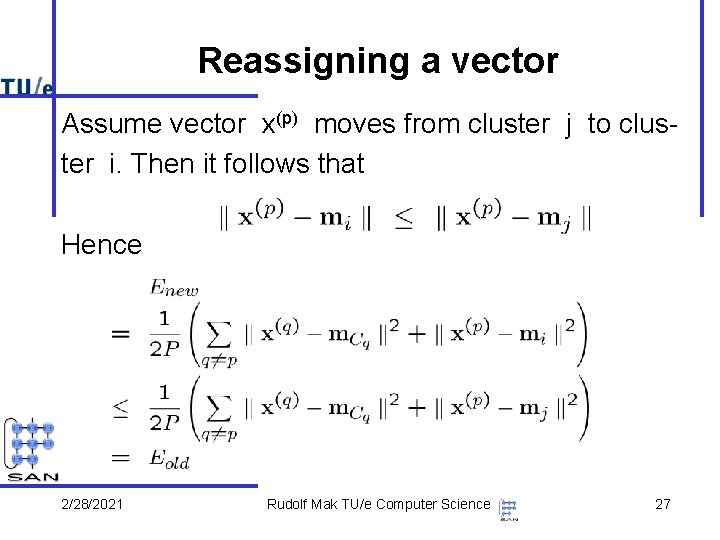

Reassigning a vector
Assume vector $x^{(p)}$ moves from cluster j to cluster i. Then it follows that
$$\|x^{(p)} - m_i\| \le \|x^{(p)} - m_j\|.$$
Hence the contribution of $x^{(p)}$ to the error function does not increase.

Updating the Mean of a Cluster
Consider cluster $X_i$ with its old mean and its new mean.
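The step can be made explicit with a standard identity. In this sketch $c$ denotes the old mean (any fixed vector works), and $m_i$ is the new mean of the current members of $X_i$; this notation is an assumption. The cross term vanishes because $\sum_{x \in X_i} (x - m_i) = n_i m_i - n_i m_i = 0$:

```latex
\begin{aligned}
\sum_{x \in X_i} \|x - c\|^2
  &= \sum_{x \in X_i} \|(x - m_i) + (m_i - c)\|^2 \\
  &= \sum_{x \in X_i} \|x - m_i\|^2
     + 2\,(m_i - c)^T \sum_{x \in X_i} (x - m_i)
     + n_i \|m_i - c\|^2 \\
  &= \sum_{x \in X_i} \|x - m_i\|^2 + n_i \|m_i - c\|^2
   \;\ge\; \sum_{x \in X_i} \|x - m_i\|^2 .
\end{aligned}
```

So replacing the old mean $c$ by the new mean $m_i$ does not increase the error of cluster $X_i$, and the errors of the other clusters are unchanged.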

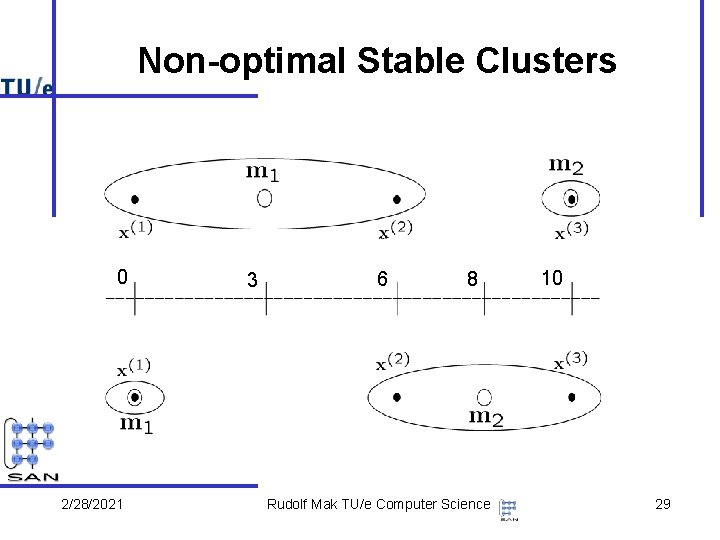

Non-optimal Stable Clusters
[Figure: one-dimensional example; axis marks 0, 3, 6, 8, 10]